CSE 190 Neural Networks: Ethical Issues in Artificial Intelligence


CSE 190 Neural Networks: Ethical Issues in Artificial Intelligence

Gary Cottrell
Week 10, Lecture 3, 9/26/2020

Introduction

Some of the issues:
- Will robots have rights?
- What if robots become much smarter than us? (the singularity)
- What if robots kill people or, worse, make humans extinct?

As researchers, we need to think about these issues now.

Introduction

Some of the issues:
- Will robots have rights?

Movie time!

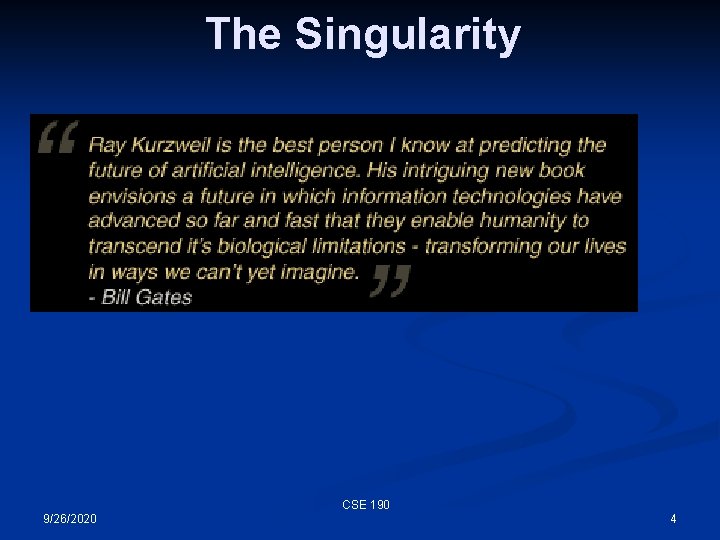

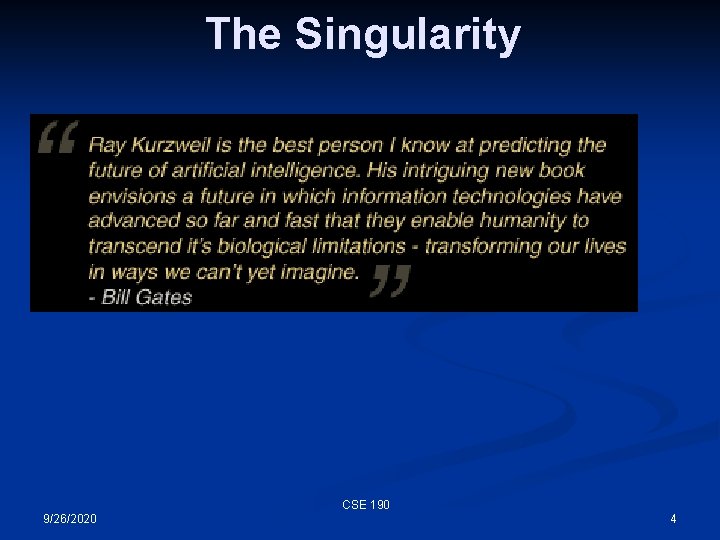

The Singularity

The Singularity

“Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.”

From “The Coming Technological Singularity” (1993) by Vernor Vinge (SDSU professor and science-fiction author)

The Three Laws of Robotics (Isaac Asimov)

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.


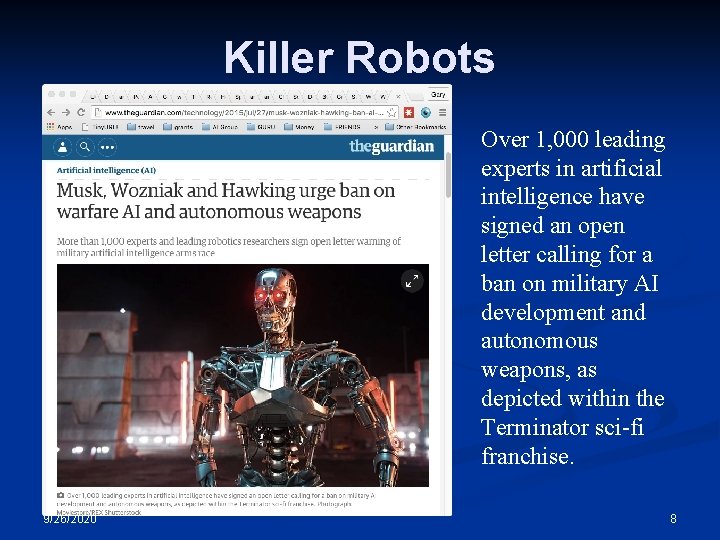

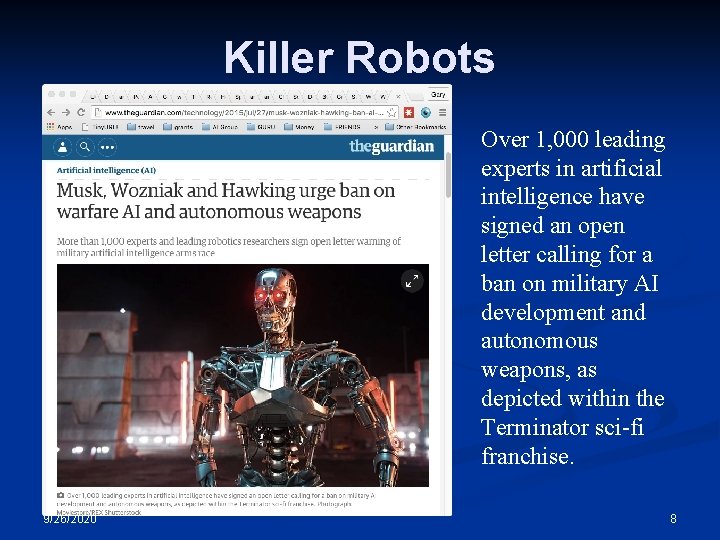

Killer Robots

Over 1,000 leading experts in artificial intelligence have signed an open letter calling for a ban on military AI development and on autonomous weapons like those depicted in the Terminator sci-fi franchise.

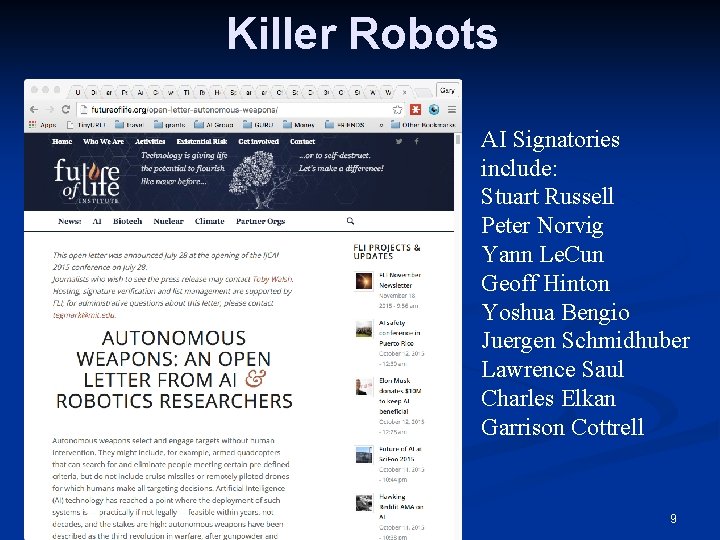

Killer Robots

AI signatories include Stuart Russell, Peter Norvig, Yann LeCun, Geoff Hinton, Yoshua Bengio, Juergen Schmidhuber, Lawrence Saul, Charles Elkan, and Garrison Cottrell.

Killer Robots

Other signatories include Stephen Hawking, Elon Musk, Steve Wozniak, Dan Dennett, and Noam Chomsky.

But not: Barack Obama, Vladimir Putin, ISIS.

Killer Robots

What are some arguments for autonomous weapons?

Evan Ackerman’s arguments

Killer Robots

What are some arguments for autonomous weapons?

“The problem with this [pronouncement by AI researchers] is that no letter, UN declaration, or even a formal ban ratified by multiple nations is going to prevent people from being able to build autonomous, weaponized robots. Generally speaking, technology itself is not inherently good or bad: it’s what we choose to do with it that’s good or bad, and you can’t just cover your eyes and start screaming “STOP!!!” if you see something sinister on the horizon when there’s so much simultaneous potential for positive progress. What we really need, then, is a way of making autonomous armed robots ethical, because we’re not going to be able to prevent them from existing.”

Evan Ackerman’s arguments

Killer Robots

What are some arguments for autonomous weapons?

“The problem with this [treaty by major countries] is that no treaty, UN declaration, or even a formal ban ratified by multiple nations is going to prevent people from being able to build nuclear bombs. Generally speaking, technology itself is not inherently good or bad: it’s what we choose to do with it that’s good or bad, and you can’t just cover your eyes and start screaming “STOP!!!” if you see something sinister on the horizon when there’s so much simultaneous potential for positive progress. What we really need, then, is a way of making nuclear bombs ethical, because we’re not going to be able to prevent them from existing.”

Evan Ackerman’s arguments (with “nuclear bombs” substituted for “autonomous armed robots”)

Killer Robots

What are some arguments for autonomous weapons?

“What we really need, then, is a way of making autonomous armed robots ethical, because we’re not going to be able to prevent them from existing.”

“Could autonomous armed robots perform better than armed humans in combat, resulting in fewer casualties (combatant or non-combatant) on both sides? I think that it will be possible for robots to be as good (or better) at identifying hostile enemy combatants as humans, since there are rules that can be followed (called Rules of Engagement) to determine whether or not using force is justified. For example, does your target have a weapon? Is that weapon pointed at you? Has the weapon been fired? Have you been hit? These are all things that a robot can determine using any number of sensors that currently exist.”

Evan Ackerman’s arguments

Killer Robots

What are some arguments for autonomous weapons?

Why technology can lead to a reduction in casualties on the battlefield:
- The ability to act conservatively: i.e., they do not need to protect themselves in cases of low certainty of target identification. Autonomous armed robotic vehicles do not need to have self-preservation as a foremost drive, if at all. They can be used in a self-sacrificing manner if needed and appropriate without reservation by a commanding officer. There is no need for a ‘shoot first, ask-questions later’ approach, but rather a ‘first-do-no-harm’ strategy can be utilized instead. They can truly assume risk on behalf of the noncombatant, something that soldiers are schooled in, but which some have difficulty achieving in practice.

Ronald C. Arkin’s arguments

Killer Robots

What are some arguments for autonomous weapons?

Why technology can lead to a reduction in casualties on the battlefield:
- Unmanned robotic systems can be designed without emotions that cloud their judgment or result in anger and frustration with ongoing battlefield events.

Ronald C. Arkin’s arguments

Killer Robots

What are some arguments for autonomous weapons?

Why technology can lead to a reduction in casualties on the battlefield:
- Intelligent electronic systems can integrate more information from more sources far faster before responding with lethal force than a human possibly could in real-time.
- When working in a team of combined human soldiers and autonomous systems as an organic asset, they have the potential capability of independently and objectively monitoring ethical behavior in the battlefield by all parties, providing evidence and reporting infractions that might be observed. This presence alone might possibly lead to a reduction in human ethical infractions.

Ronald C. Arkin’s arguments

Ethical Considerations

Some of the issues:
- Will robots have rights?
- What if robots become much smarter than us? (the singularity)
- What if robots kill people or, worse, make humans extinct?

As researchers, we need to think about these issues now. I’m not saying to decide one way or another, just that you need to think about it!