CSE 190 Honors Seminar in High Performance Computing

- Slides: 68

CSE 190 Honors Seminar in High Performance Computing, Spring 2000

Prof. Sid Karin, skarin@ucsd.edu, x 45075

CSE 190 SAN DIEGO SUPERCOMPUTER CENTER

- Definitions
- History
- SDSC/NPACI
- Applications

Definitions of Supercomputers

- The most powerful machines available.
- Machines that cost about $25M in year-2000 dollars.
- Machines powerful enough to model physical processes with accurate laws of nature and realistic geometry, and to incorporate large quantities of observational/experimental data.

Supercomputer Performance Metrics

- Benchmarks
  - Applications
  - Kernels
  - Selected Algorithms
- Theoretical Peak Speed
  - (Guaranteed not to exceed speed)
- TOP 500 List

Misleading Performance Specifications in the Supercomputer Field

- David H. Bailey
- RNR Technical Report RNR-92-005, December 1, 1992
- http://www.nasa.gov/Pubs/TechReports/RNRreports/dbailey/RNR-92-005/RNR-92-005.html

- Definitions
- History
- SDSC/NPACI
- Applications

Applications

- Cryptography
- Nuclear Weapons Design
- Weather / Climate
- Scientific Simulation
- Petroleum Exploration
- Aerospace Design
- Automotive Design
- Pharmaceutical Design
- Data Mining
- Data Assimilation

Applications cont’d.

- Processes too complex to instrument
  - Automotive crash testing
  - Air flow
- Processes too fast to observe
  - Molecular interactions
- Processes too small to observe
  - Molecular interactions
- Processes too slow to observe
  - Astrophysics

Applications cont’d.

- Performance
- Price
- Performance / Price

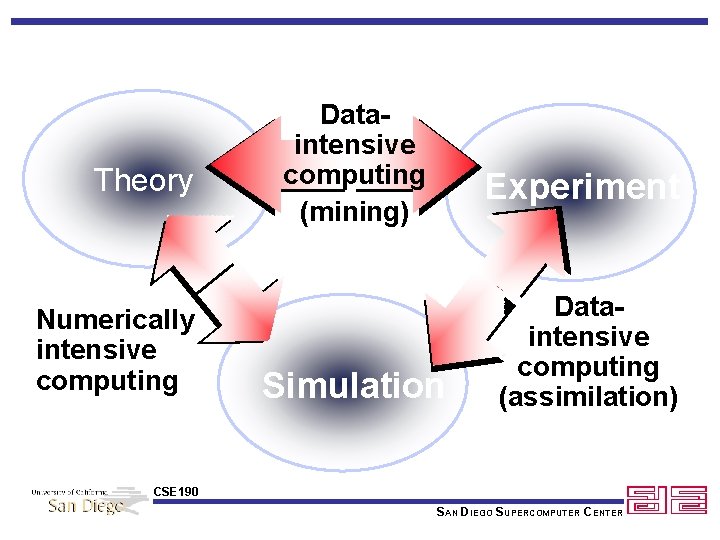

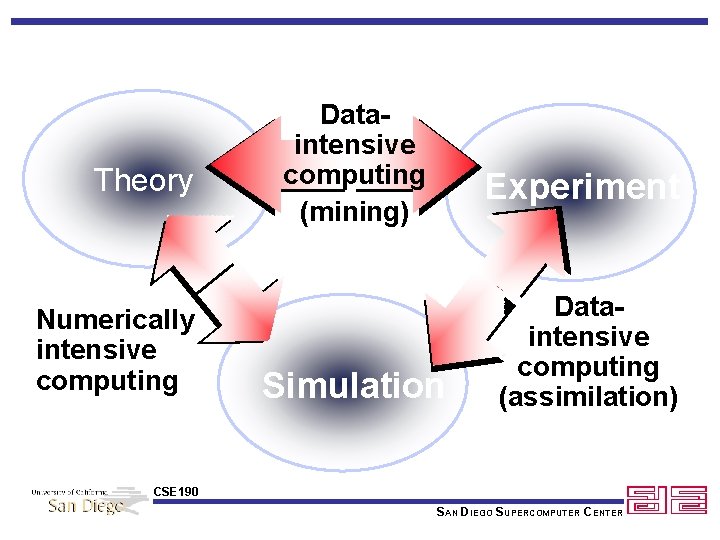

Diagram labels: Theory; Experiment; Simulation; Numerically intensive computing; Data-intensive computing (mining); Data-intensive computing (assimilation)

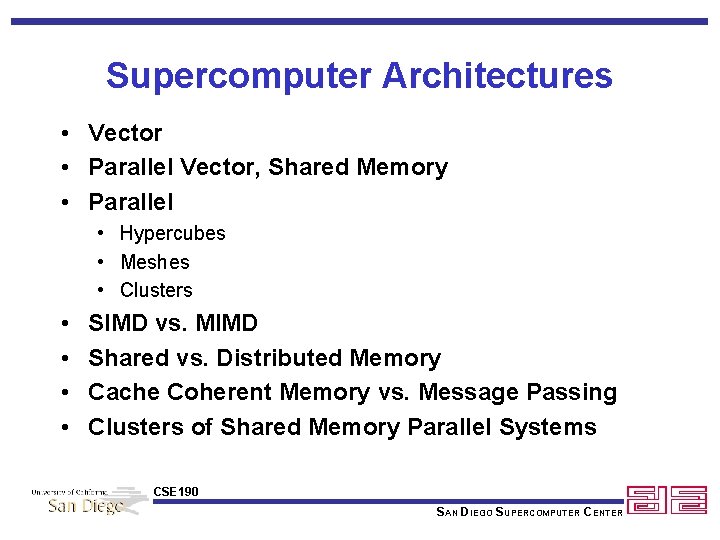

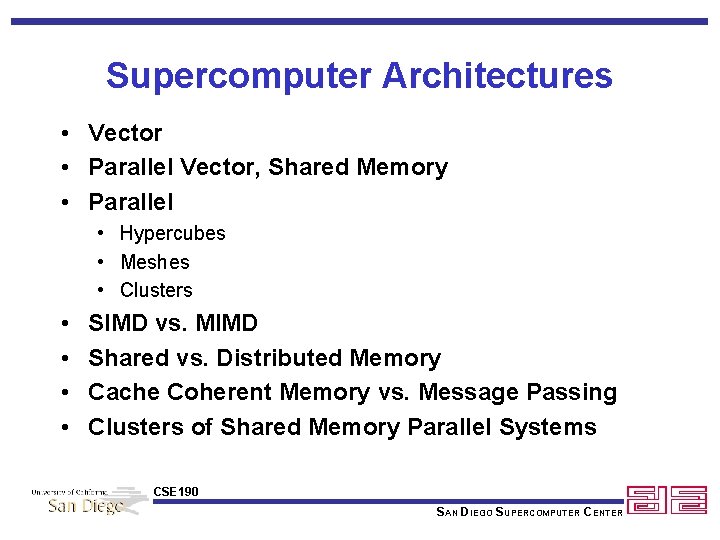

Supercomputer Architectures

- Vector
- Parallel Vector, Shared Memory
- Parallel
  - Hypercubes
  - Meshes
  - Clusters
- SIMD vs. MIMD
- Shared vs. Distributed Memory
- Cache Coherent Memory vs. Message Passing
- Clusters of Shared Memory Parallel Systems
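
The shared-memory vs. message-passing distinction on this slide can be illustrated with a toy Python sketch. It is only an analogy under stated assumptions: threads stand in for processors, and the function names are hypothetical. In the shared-memory version every thread reads the same array and adds into one lock-protected total; in the message-passing version each "node" works on a private copy of its slice and shares its result only by sending a message through a queue that stands in for the interconnect.

```python
import queue
import threading

def shared_memory_sum(data, nthreads=4):
    """Shared-memory model: every thread adds into one shared total."""
    total = [0]                    # shared state, visible to all threads
    lock = threading.Lock()        # serializes updates to the shared total
    chunk = (len(data) + nthreads - 1) // nthreads

    def worker(lo, hi):
        partial = sum(data[lo:hi])  # read directly from the shared array
        with lock:
            total[0] += partial

    threads = [threading.Thread(target=worker, args=(i * chunk, (i + 1) * chunk))
               for i in range(nthreads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]

def message_passing_sum(data, nnodes=4):
    """Distributed-memory model: each node works on a private copy of its
    slice and shares results only by sending messages."""
    mailbox = queue.Queue()        # stands in for the interconnect
    chunk = (len(data) + nnodes - 1) // nnodes
    slices = [list(data[i * chunk:(i + 1) * chunk]) for i in range(nnodes)]

    def node(local):
        mailbox.put(sum(local))    # "send" the partial sum to node 0

    threads = [threading.Thread(target=node, args=(s,)) for s in slices]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(mailbox.get() for _ in range(nnodes))  # node 0 "receives"

data = list(range(1, 101))
print(shared_memory_sum(data), message_passing_sum(data))  # 5050 5050
```

On a real distributed-memory machine the slices would live in separate address spaces and the messages would travel over a network; here both versions run in one Python process, so the sketch shows only the programming model, not the performance behavior.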

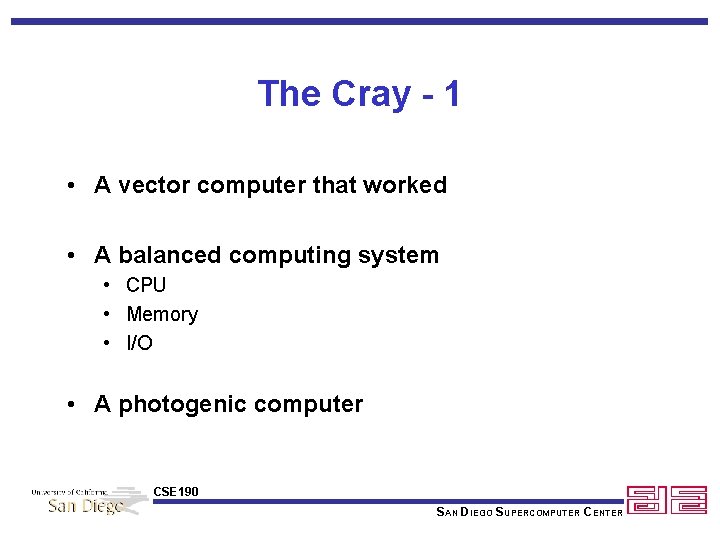

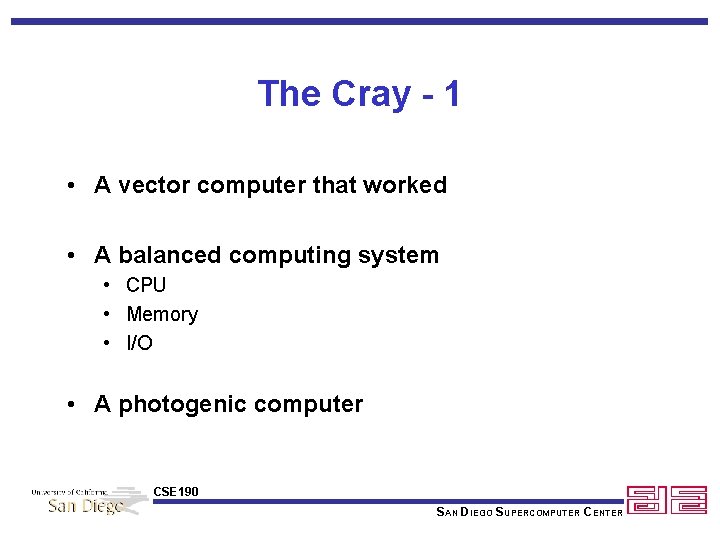

The Cray-1

- A vector computer that worked
- A balanced computing system
  - CPU
  - Memory
  - I/O
- A photogenic computer

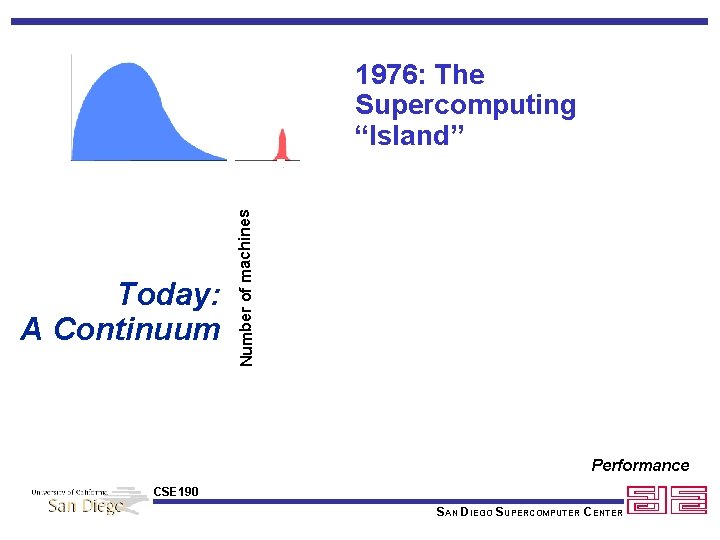

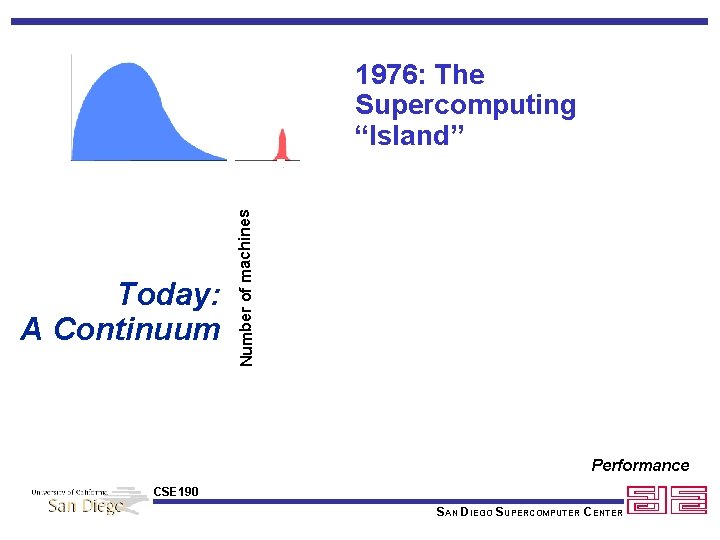

Figure: number of machines vs. performance, contrasting “1976: The Supercomputing ‘Island’” with “Today: A Continuum”.



The Cray X-MP

- Shared Memory
- Parallel Vector
- Followed by the Cray Y-MP, C-90, J-90, T-90, …


The Cray-2

- Parallel Vector, Shared Memory
- Very Large Memory (256 MW)
  - Actually 256 × 2^20 words = 268,435,456 words (about 268 million)
  - One word = 8 bytes
- Liquid immersion cooling
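
The memory-size arithmetic for the Cray-2 can be checked in a few lines. This sketch assumes the binary meaning of "M" (2^20) and uses the slide's 8-byte word; under that assumption 256 MW is exactly 2^31 bytes.

```python
WORD_BYTES = 8           # one Cray-2 word = 8 bytes, per the slide
MEGA_BINARY = 2 ** 20    # assumption: "M" in "256 MW" means 2**20

def words_in(megawords):
    """Number of words in `megawords` binary megawords."""
    return megawords * MEGA_BINARY

words = words_in(256)
print(words)                          # 268435456
print(words * WORD_BYTES)             # 2147483648 (bytes)
print(words * WORD_BYTES // 2 ** 30)  # 2 (GiB)
```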


Cray Companies

- Control Data
- Cray Research Inc.
- Cray Computer Corporation
- SRC Inc.

Thinking Machines

- SIMD vs. MIMD
- Evolution from CM-1 to CM-2
- ARPA Involvement


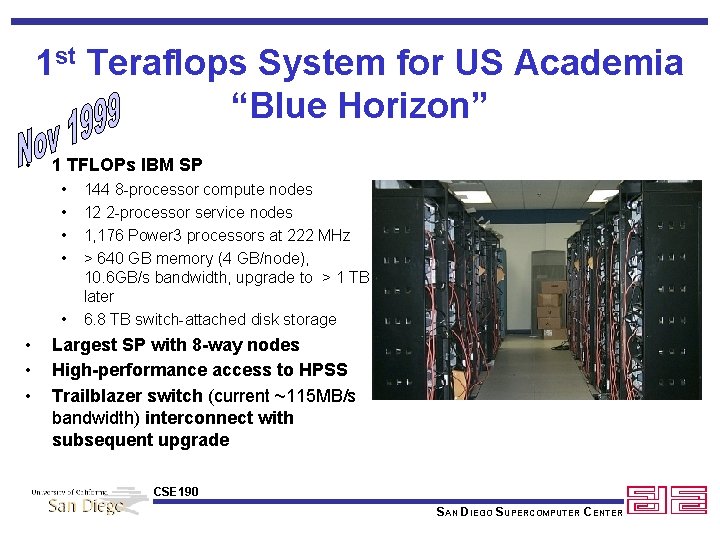

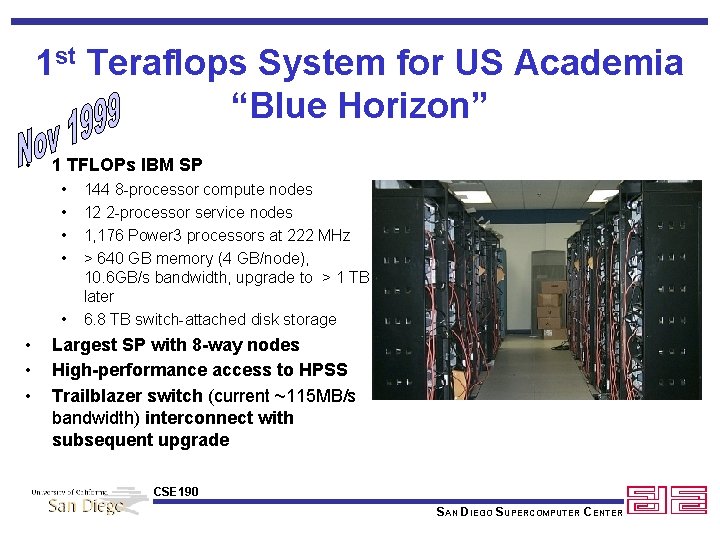

1st Teraflops System for US Academia: “Blue Horizon”

- 1 TFLOPS IBM SP
- 144 8-processor compute nodes
- 12 2-processor service nodes
- 1,176 POWER3 processors at 222 MHz
- Over 640 GB memory (4 GB/node), 10.6 GB/s bandwidth; upgrade to over 1 TB later
- 6.8 TB switch-attached disk storage
- Largest SP with 8-way nodes
- High-performance access to HPSS
- Trailblazer switch interconnect (currently ~115 MB/s bandwidth), with subsequent upgrade
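
The processor count and the roughly 1 TFLOPS theoretical peak for Blue Horizon follow from simple arithmetic. This sketch assumes that each POWER3 processor completes 4 floating-point operations per cycle (two fused multiply-add units) and that only the 1,152 compute processors count toward the peak; both are assumptions, not statements from the slide.

```python
COMPUTE_NODES, CPUS_PER_COMPUTE_NODE = 144, 8
SERVICE_NODES, CPUS_PER_SERVICE_NODE = 12, 2
CLOCK_HZ = 222e6
FLOPS_PER_CYCLE = 4   # assumption: two fused multiply-add units per POWER3

def total_processors():
    """All processors in the system, compute plus service nodes."""
    return (COMPUTE_NODES * CPUS_PER_COMPUTE_NODE
            + SERVICE_NODES * CPUS_PER_SERVICE_NODE)

def peak_flops(cpus, clock_hz=CLOCK_HZ, flops_per_cycle=FLOPS_PER_CYCLE):
    """Theoretical peak = processors x clock rate x flops per cycle."""
    return cpus * clock_hz * flops_per_cycle

compute_cpus = COMPUTE_NODES * CPUS_PER_COMPUTE_NODE
print(total_processors())                          # 1176
print(compute_cpus)                                # 1152
print(round(peak_flops(compute_cpus) / 1e12, 3))   # 1.023 (TFLOPS)
```

Under these assumptions the result matches the 1.023 TFLOPS theoretical peak quoted for the machine.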

UCSD Currently #10 on Dongarra’s Top 500 List

- Actual Linpack benchmark: 558 Gflops sustained on 120 nodes
- Projected Linpack benchmark: 650 Gflops on 144 nodes
- Theoretical peak: 1.023 Tflops
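
The gap between sustained Linpack performance and theoretical peak is commonly expressed as an efficiency, Rmax / Rpeak. This sketch assumes the peak of a partial run scales with the number of nodes used, with each node's peak taken as 8 processors × 222 MHz × 4 flops per cycle (the 4 flops per cycle is an assumption).

```python
# Assumption: per-node peak = 8 CPUs x 0.222 GHz x 4 flops per cycle.
NODE_PEAK_GFLOPS = 8 * 0.222 * 4

def linpack_efficiency(rmax_gflops, nodes):
    """Fraction of theoretical peak achieved: Rmax / Rpeak."""
    rpeak_gflops = nodes * NODE_PEAK_GFLOPS
    return rmax_gflops / rpeak_gflops

print(f"{linpack_efficiency(558, 120):.1%}")   # 65.5% (measured, 120 nodes)
print(f"{linpack_efficiency(650, 144):.1%}")   # 63.5% (projected, 144 nodes)
```

By this measure, both the measured and the projected runs reach roughly two-thirds of theoretical peak.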

First Tera MTA is at SDSC

Tera MTA

- Architectural Characteristics
  - Multithreaded architecture
  - Randomized, flat, shared memory
  - 8 CPUs, 8 GB RAM now; going to 16 later this year
  - High bandwidth to memory (one word per cycle per CPU)
- Benefits
  - Reduced programming effort: a single parallel model for one or many processors
  - Good scalability
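
A simple latency-hiding model shows why a multithreaded processor benefits from many threads. The sketch below is a deliberately simplified, hypothetical simulation, not the MTA's actual pipeline. Each cycle the processor issues one instruction from any ready thread. Every instruction is assumed to be a memory reference that blocks its thread for `latency` cycles.

```python
def utilization(num_threads, latency, cycles=10_000):
    """Fraction of cycles in which the processor issues an instruction.

    Hypothetical model: one instruction per cycle from any ready thread;
    every instruction is a memory reference, so the issuing thread is
    blocked for `latency` cycles before it can issue again.
    """
    ready_at = [0] * num_threads   # first cycle at which each thread may issue
    issued = 0
    start = 0                      # round-robin starting point
    for cycle in range(cycles):
        for k in range(num_threads):
            i = (start + k) % num_threads
            if ready_at[i] <= cycle:
                ready_at[i] = cycle + latency
                issued += 1
                start = (i + 1) % num_threads
                break
    return issued / cycles

for threads in (1, 8, 32, 64, 128):
    print(threads, round(utilization(threads, latency=64), 3))
```

In this model utilization grows roughly linearly with the number of threads until the thread count reaches the memory latency (in cycles), and then it saturates at one instruction per cycle.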

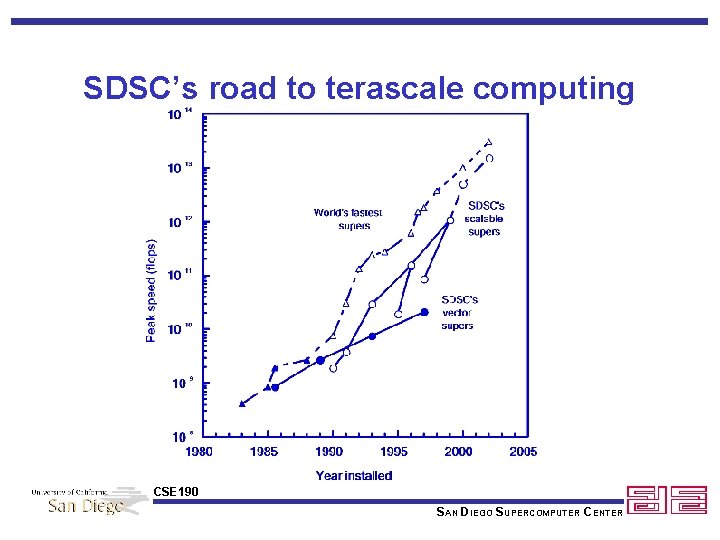

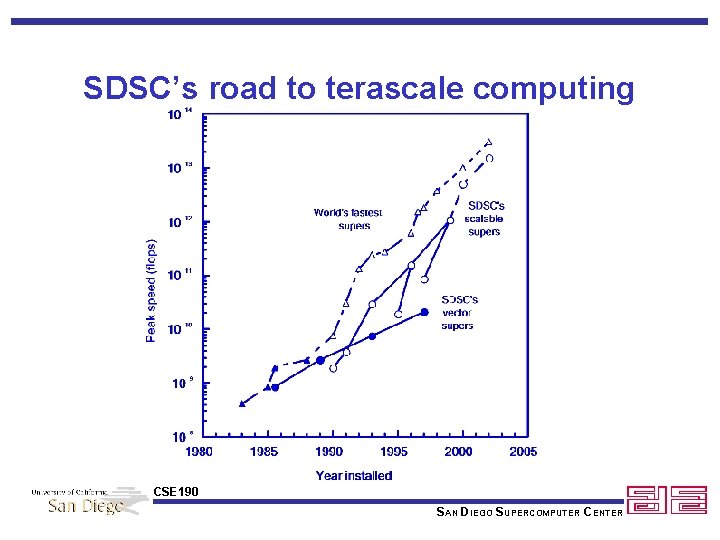

SDSC’s road to terascale computing

ASCI Blue Mountain Site Prep

Floor plan: 12,000 sq ft (120 ft × 100 ft)


ASCI Blue Mountain Facilities Accomplishments

- 12,000 sq. ft. of floor space
- 1.6 MW of power
- 530 tons of cooling capability
- 384 cabinets to house the 6,144 CPUs
- 48 cabinets for metarouters
- 96 cabinets for disks
- 9 cabinets for 36 HIPPI switches
- About 348 miles of fiber cable

ASCI Blue Mountain SST System Final Configuration

- Cray Origin 2000: 3.072 TeraFLOPS peak
- 48 × 128-CPU Origin 2000 (250 MHz R10K)
- 6,144 CPUs: 48 × 128-CPU SMPs
- 1,536 GB memory total
  - 32 GB memory per 128-CPU SMP
- 76 TB Fibre Channel RAID disks
- 36 × HIPPI-800 switch cluster interconnect
- To be deployed later this year: 9 × HIPPI-6400 32-way switch cluster interconnect
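
The headline figures in this configuration are mutually consistent, which a quick calculation confirms. The sketch assumes the R10000 completes 2 floating-point operations per cycle (one add and one multiply) and that 1 GB = 1024 MB; neither rate nor unit convention is stated on the slide.

```python
SMPS = 48
CPUS_PER_SMP = 128
GB_PER_SMP = 32
CLOCK_HZ = 250e6
FLOPS_PER_CYCLE = 2   # assumption: one FP add + one FP multiply per cycle

cpus = SMPS * CPUS_PER_SMP
memory_gb = SMPS * GB_PER_SMP
peak_tflops = cpus * CLOCK_HZ * FLOPS_PER_CYCLE / 1e12
mb_per_cpu = GB_PER_SMP * 1024 / CPUS_PER_SMP   # assumption: 1 GB = 1024 MB

print(cpus, memory_gb, peak_tflops, mb_per_cpu)  # 6144 1536 3.072 256.0
```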

ASCI Blue Mountain Accomplishments

- On-site integration of the 48 × 128 system completed (including upgrades)
- HiPPI-800 interconnect completed
- 18 GB Fibre Channel disk completed
- Integrated visualization (16 IR pipes)
- Most site prep completed
- System integrated into LANL secure computing environment
- Web-based tool for tracking status

ASCI Blue Mountain Accomplishments (cont.)

- Linpack: achieved 1.608 TeraFLOPS
  - Accelerated schedule: 2 weeks after install
  - System validation run on 40 × 126 configuration
  - f90/MPI version run of over 6 hours
- sPPM: turbulence modeling code
  - Validated full system integration
  - Used all 12 HiPPI boards/SMP and 36 switches
  - Used special “MPI” HiPPI bypass library
- ASCI codes scaling

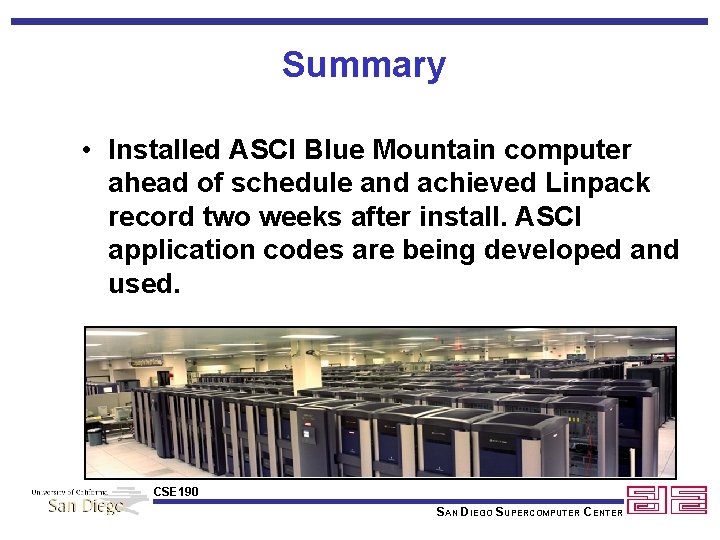

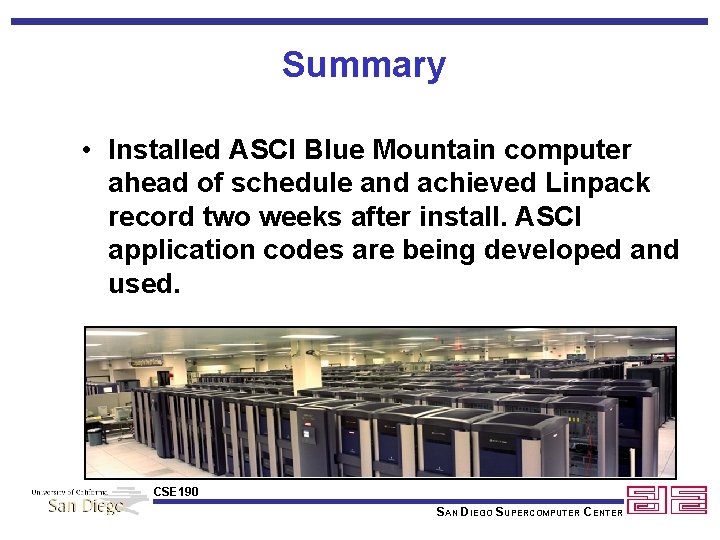

Summary

- Installed the ASCI Blue Mountain computer ahead of schedule and achieved a Linpack record two weeks after installation.
- ASCI application codes are being developed and used.

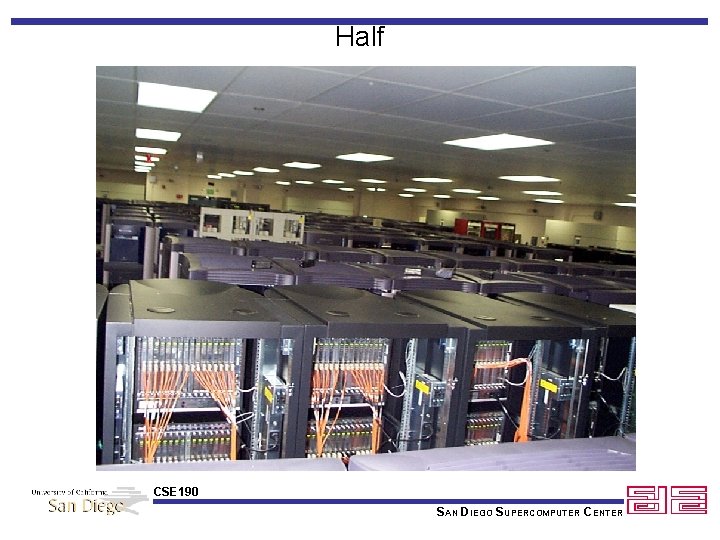

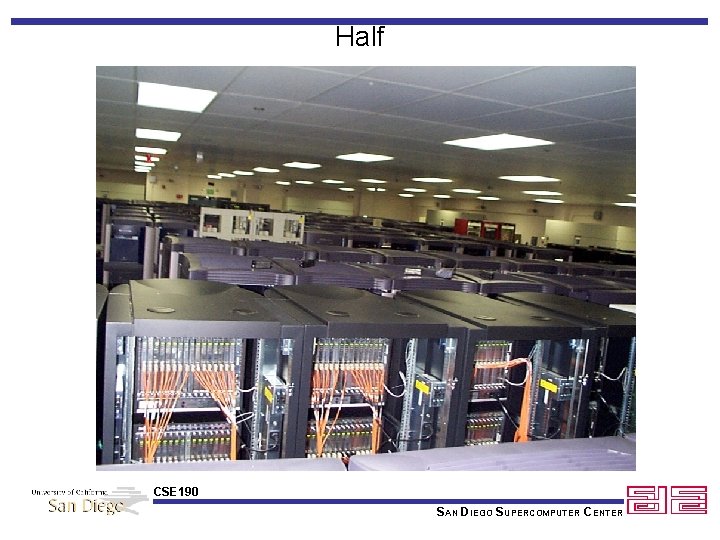

Half

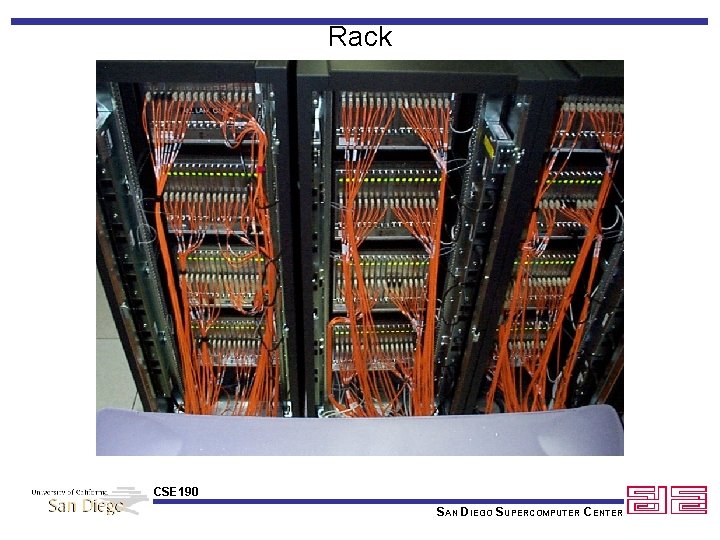

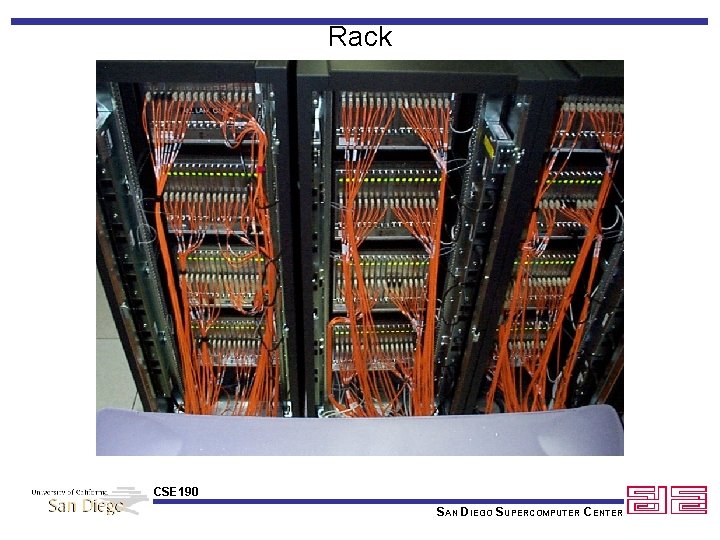

Rack

Trench

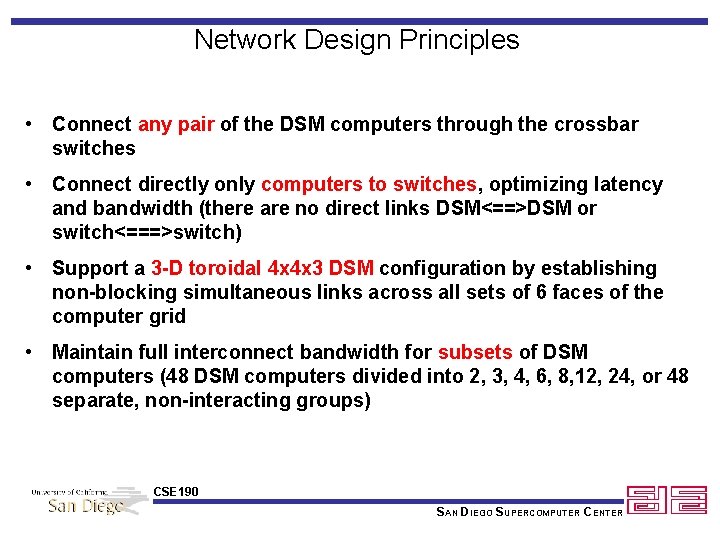

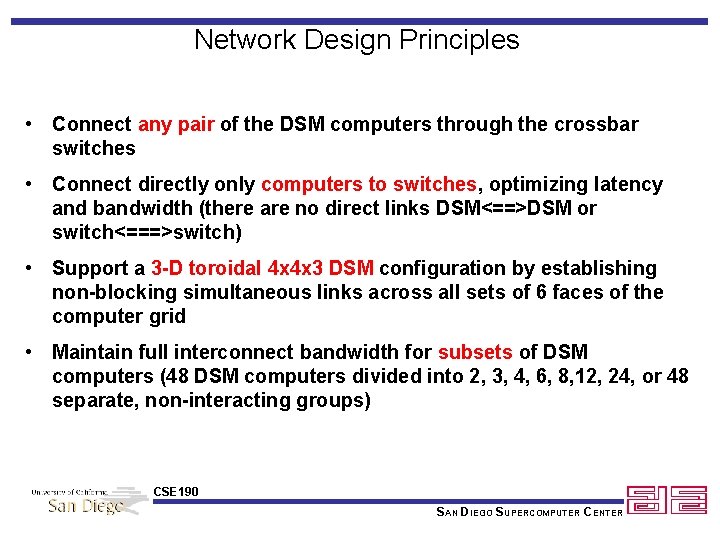

Network Design Principles

- Connect any pair of the DSM computers through the crossbar switches
- Link computers directly to switches only, optimizing latency and bandwidth (there are no direct DSM-to-DSM or switch-to-switch links)
- Support a 3-D toroidal 4 × 4 × 3 DSM configuration by establishing non-blocking simultaneous links across all sets of 6 faces of the computer grid
- Maintain full interconnect bandwidth for subsets of DSM computers (48 DSM computers divided into 2, 3, 4, 6, 8, 12, 24, or 48 separate, non-interacting groups)
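
In a 4 × 4 × 3 toroidal layout every DSM has exactly six neighbors, one across each face of the grid, with wraparound at the edges. A minimal sketch of the neighbor calculation follows. The coordinate-to-index ordering (x varies fastest) and the function names are assumptions for illustration. The sketch also checks that 48 divides evenly into each of the group sizes listed in the last principle.

```python
DIMS = (4, 4, 3)   # x, y, z extent of the DSM grid

def rank_of(x, y, z, dims=DIMS):
    """Map grid coordinates to a DSM index (assumed ordering: x varies fastest)."""
    nx, ny, _ = dims
    return x + nx * (y + ny * z)

def coords_of(rank, dims=DIMS):
    """Inverse of rank_of."""
    nx, ny, _ = dims
    return rank % nx, (rank // nx) % ny, rank // (nx * ny)

def torus_neighbors(rank, dims=DIMS):
    """Return the six face neighbors of `rank`, wrapping around at the edges."""
    c = coords_of(rank, dims)
    result = []
    for axis in range(3):
        for step in (-1, +1):
            n = list(c)
            n[axis] = (n[axis] + step) % dims[axis]
            result.append(rank_of(*n, dims=dims))
    return result

num_dsms = DIMS[0] * DIMS[1] * DIMS[2]
print(num_dsms)            # 48
print(torus_neighbors(0))  # [3, 1, 12, 4, 32, 16]
print(all(num_dsms % g == 0 for g in (2, 3, 4, 6, 8, 12, 24, 48)))  # True
```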

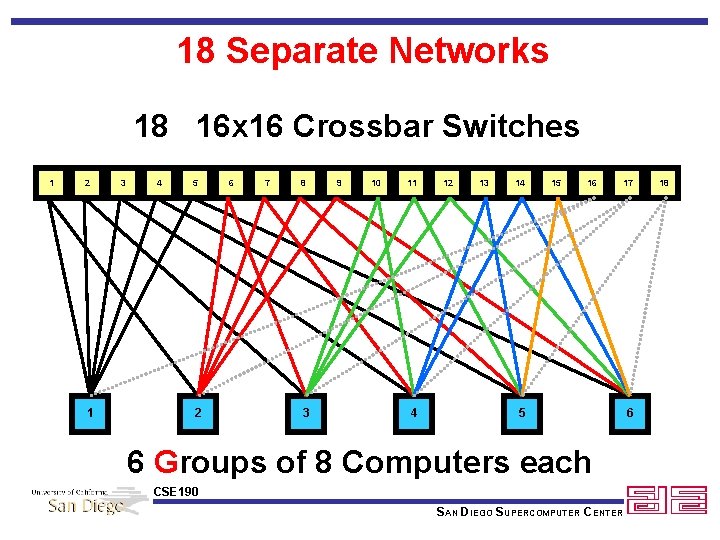

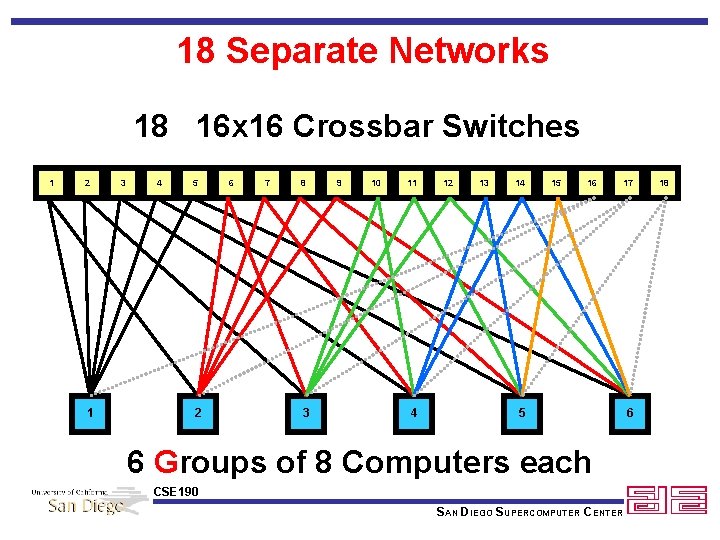

18 Separate Networks

Figure: eighteen 16 × 16 crossbar switches connecting 6 groups of 8 computers each.

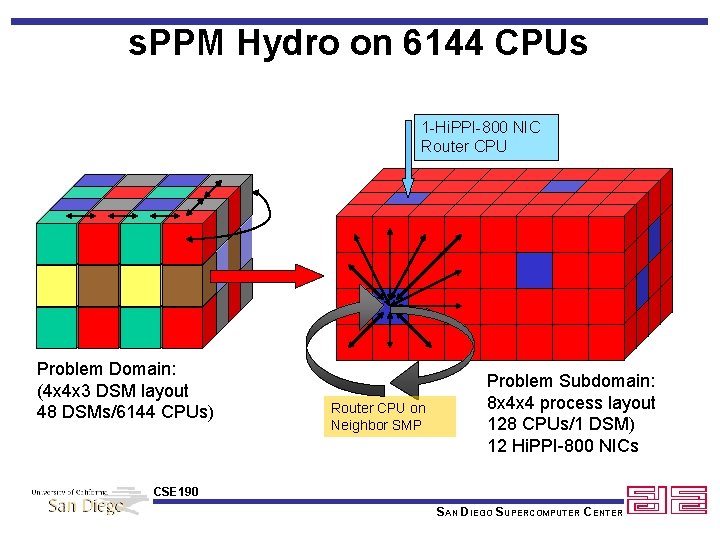

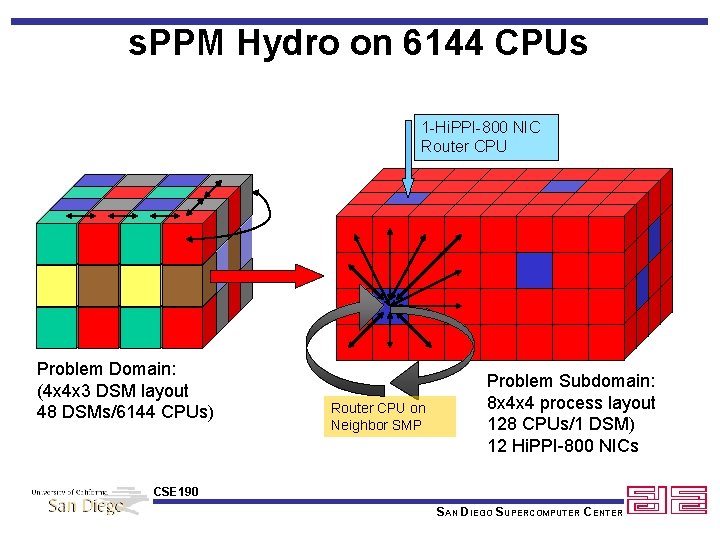

sPPM Hydro on 6144 CPUs

- Problem domain: 4 × 4 × 3 DSM layout (48 DSMs / 6,144 CPUs)
- Problem subdomain: 8 × 4 × 4 process layout (128 CPUs / 1 DSM)
- Figure labels: 1 HiPPI-800 NIC; router CPU; router CPU on neighbor SMP; 12 HiPPI-800 NICs
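
The sPPM layout is a two-level domain decomposition. A 4 × 4 × 3 grid of DSMs each holds an 8 × 4 × 4 grid of processes, which gives a global 32 × 16 × 12 process grid. The sketch below maps a global process coordinate to its DSM and to its local position inside that DSM. The function names, the coordinate convention, and the periodic boundaries are hypothetical choices made only to illustrate the idea. The sketch also flags when a neighboring process lives on a different DSM, which is presumably the case where traffic must leave the SMP over the HiPPI interconnect.

```python
DSM_GRID = (4, 4, 3)    # DSMs per axis
PROC_GRID = (8, 4, 4)   # processes per DSM per axis
GLOBAL = tuple(d * p for d, p in zip(DSM_GRID, PROC_GRID))  # (32, 16, 12)

def locate(gx, gy, gz):
    """Split a global process coordinate into (DSM coordinate, local coordinate)."""
    g = (gx, gy, gz)
    dsm = tuple(c // p for c, p in zip(g, PROC_GRID))
    local = tuple(c % p for c, p in zip(g, PROC_GRID))
    return dsm, local

def crosses_dsm(g, axis, step):
    """True if the neighbor of global coordinate `g` along `axis`
    (periodic boundaries assumed) belongs to a different DSM."""
    n = list(g)
    n[axis] = (n[axis] + step) % GLOBAL[axis]
    return locate(*g)[0] != locate(*n)[0]

total = GLOBAL[0] * GLOBAL[1] * GLOBAL[2]
print(GLOBAL, total)                   # (32, 16, 12) 6144
print(locate(9, 5, 11))                # ((1, 1, 2), (1, 1, 3))
print(crosses_dsm((7, 0, 0), 0, +1))   # True: x=7 -> x=8 is in the next DSM
print(crosses_dsm((3, 0, 0), 0, +1))   # False: same DSM
```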

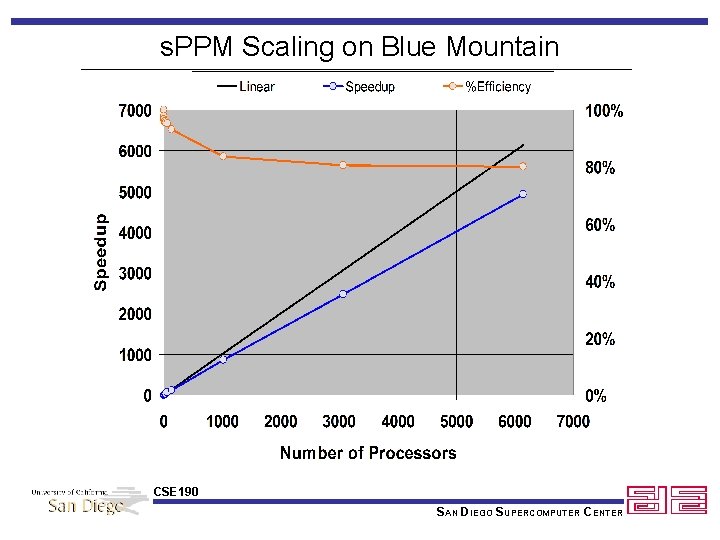

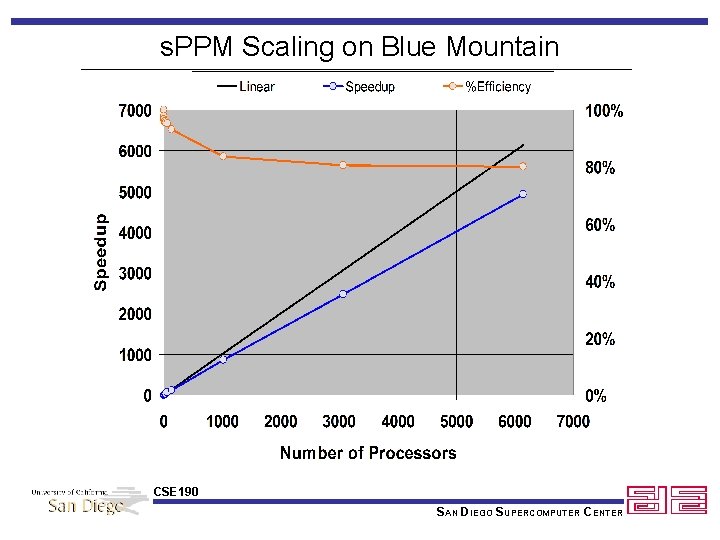

sPPM Scaling on Blue Mountain

- Definitions
- History
- SDSC/NPACI
- Applications

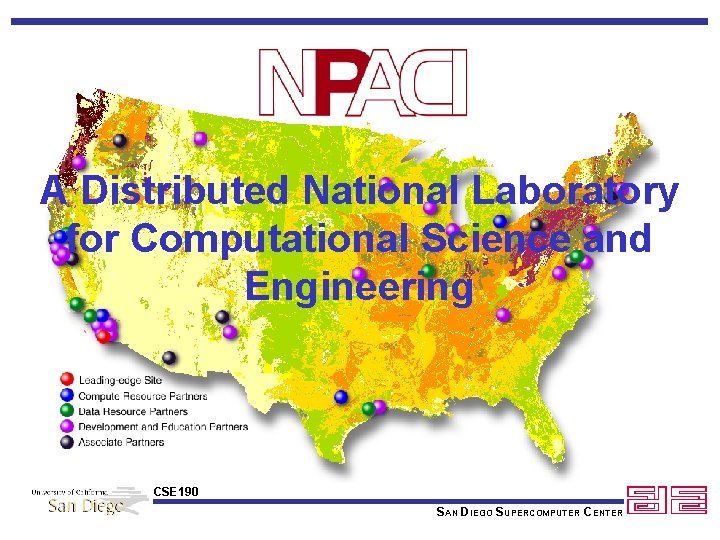

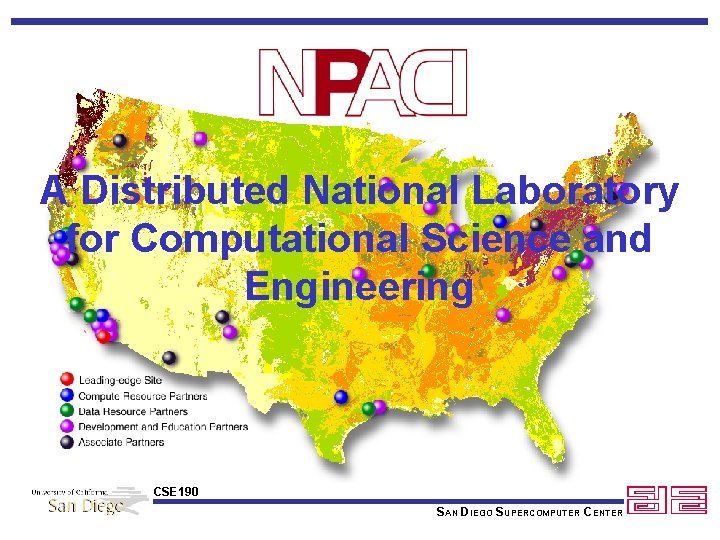

SDSC: A National Laboratory for Computational Science and Engineering

A Distributed National Laboratory for Computational Science and Engineering

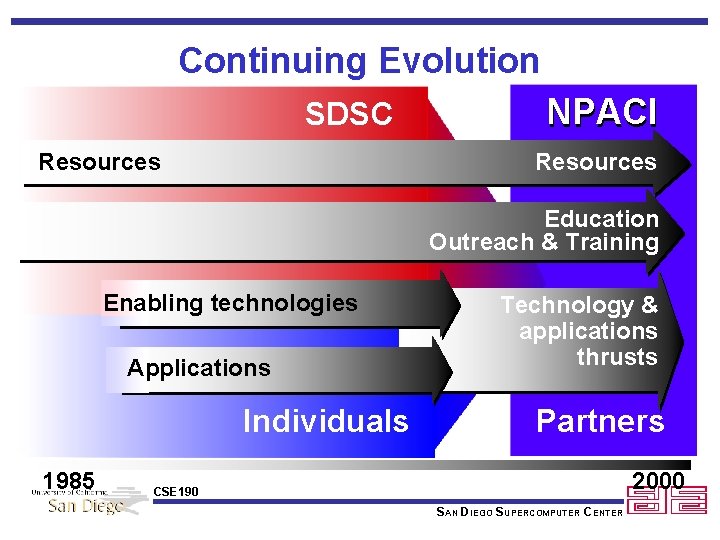

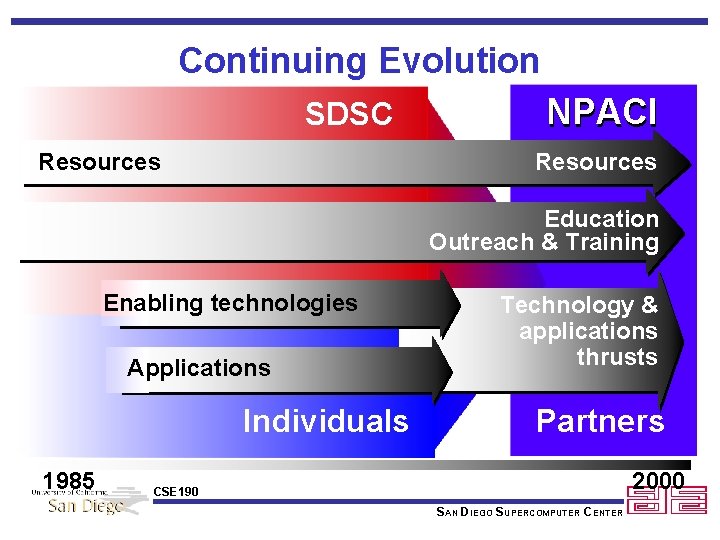

Continuing Evolution

Figure labels: SDSC Resources; NPACI Resources; Education, Outreach & Training; Enabling technologies; Applications; Individuals (1985); Technology & applications thrusts; Partners (2000)

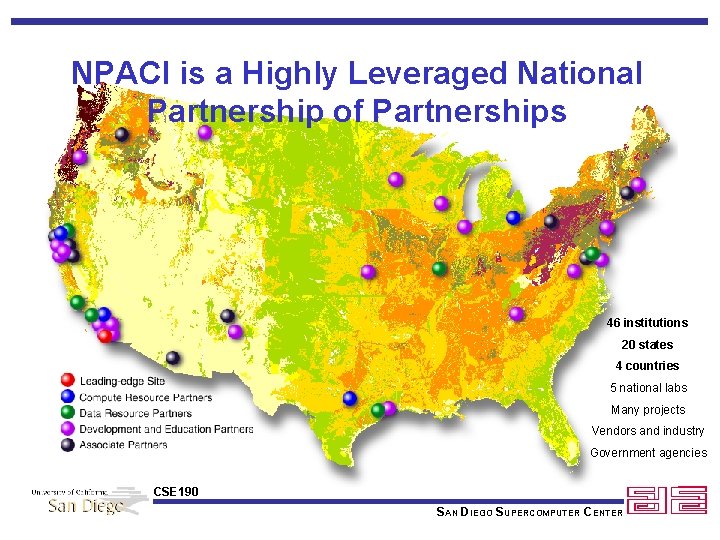

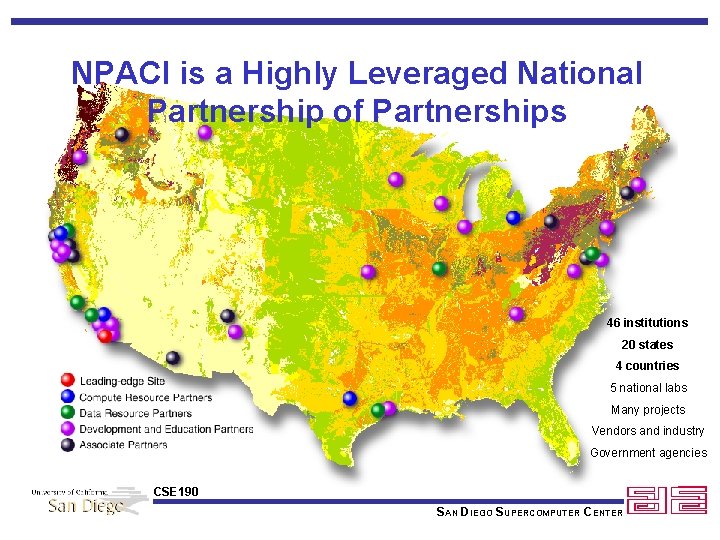

NPACI is a Highly Leveraged National Partnership of Partnerships

- 46 institutions
- 20 states
- 4 countries
- 5 national labs
- Many projects
- Vendors and industry
- Government agencies

Mission

Accelerate scientific discovery through the development and implementation of computational and computer science techniques.

Vision: Changing How Science Is Done

- Collect data from digital libraries, laboratories, and observation
- Analyze the data with models run on the grid
- Visualize and share data over the Web
- Publish results in a digital library

Goals: Fulfilling the Mission

Embracing the Scientific Community

- Capability Computing
  - Provide compute and information resources of exceptional capability
- Discovery Environments
  - Develop and deploy novel, integrated, easy-to-use computational environments
- Computational Literacy
  - Extend the excitement, benefits, and opportunities of computational science

NPACI Objectives

- Deploy teraflops-scale computers
- Create a national metacomputing infrastructure
- Enable data-intensive computing
- Create persistent intellectual infrastructure
- Conduct outreach to new communities
- Advance computing technology in support of science

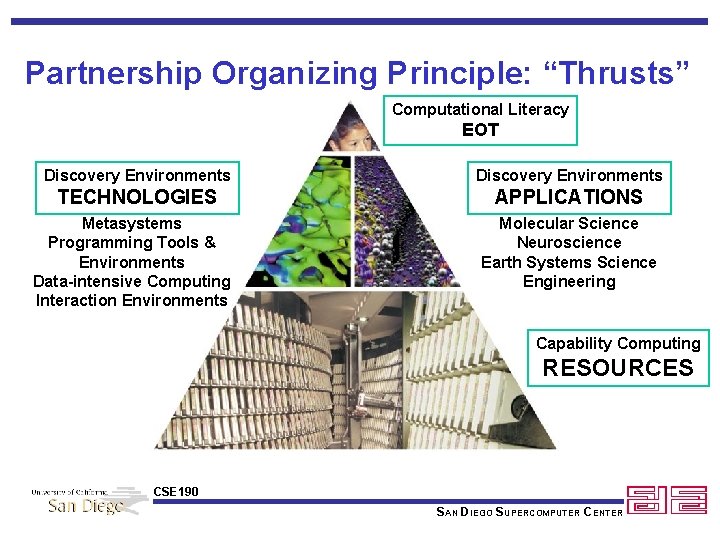

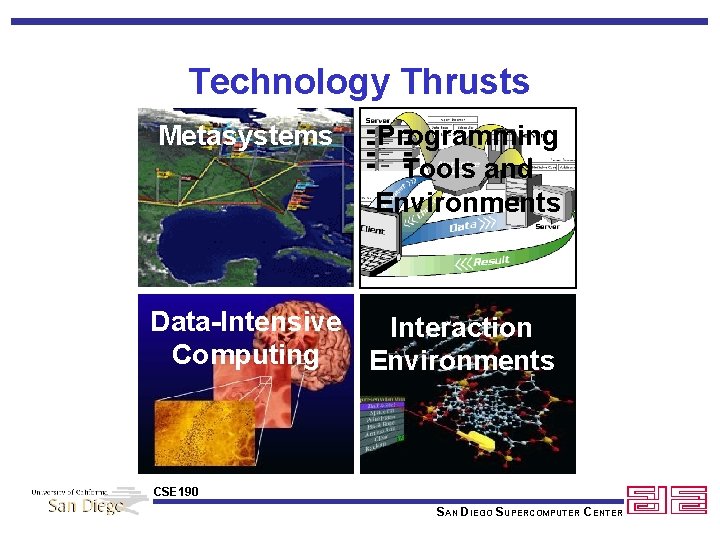

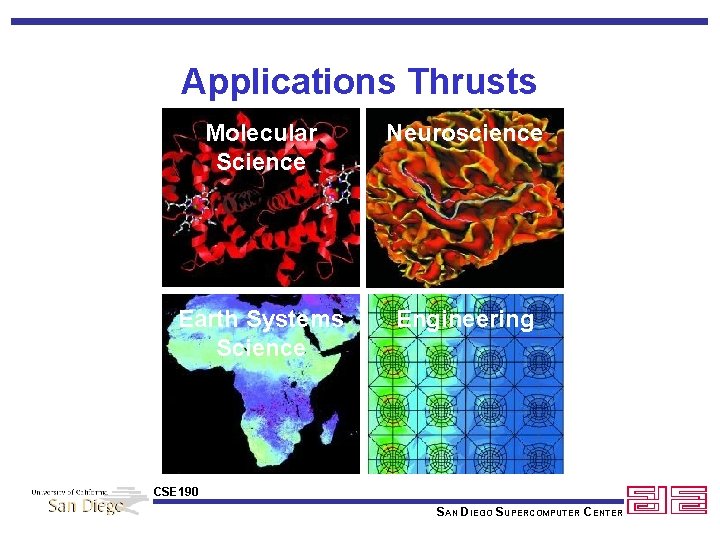

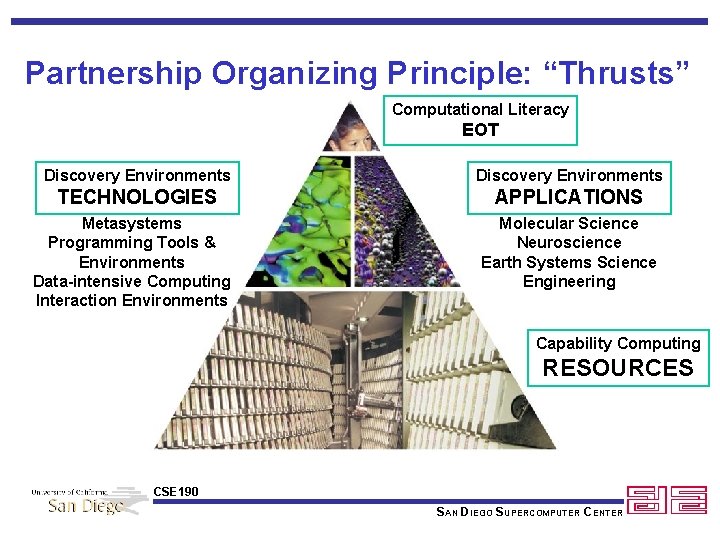

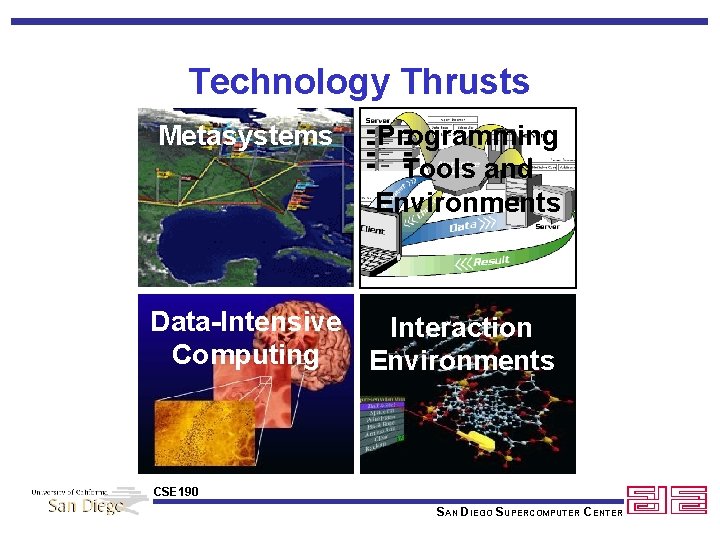

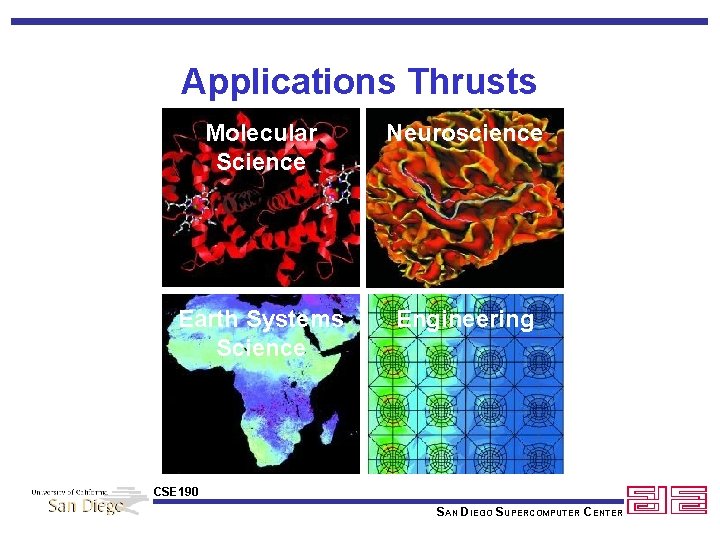

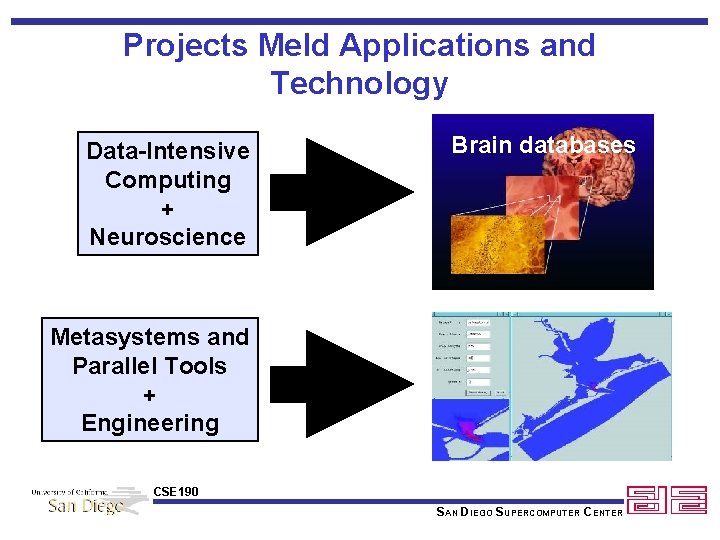

Partnership Organizing Principle: “Thrusts”

- Computational Literacy: EOT
- Discovery Environments
  - Technologies: Metasystems; Programming Tools & Environments; Data-intensive Computing; Interaction Environments
  - Applications: Molecular Science; Neuroscience; Earth Systems Science; Engineering
- Capability Computing: Resources

Technology Thrusts

- Metasystems
- Programming Tools and Environments
- Data-Intensive Computing
- Interaction Environments

Applications Thrusts

- Molecular Science
- Neuroscience
- Earth Systems Science
- Engineering

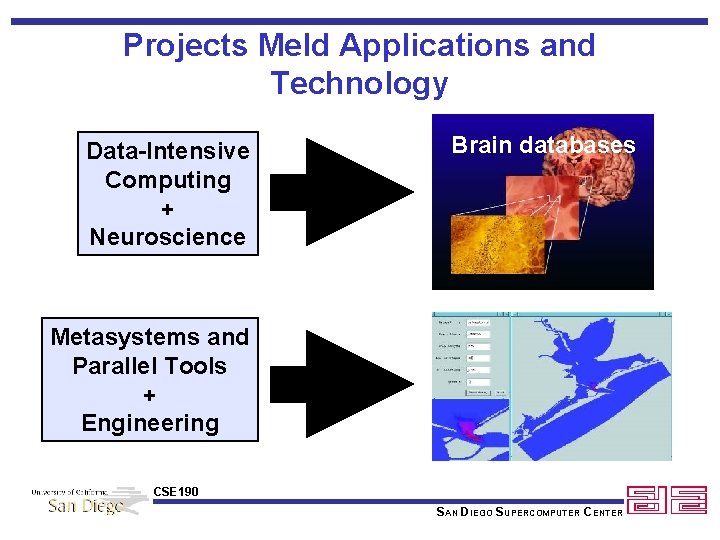

Projects Meld Applications and Technology

- Data-Intensive Computing + Neuroscience
  - Brain databases
- Metasystems and Parallel Tools + Engineering

- Definitions
- History
- SDSC/NPACI
- Applications

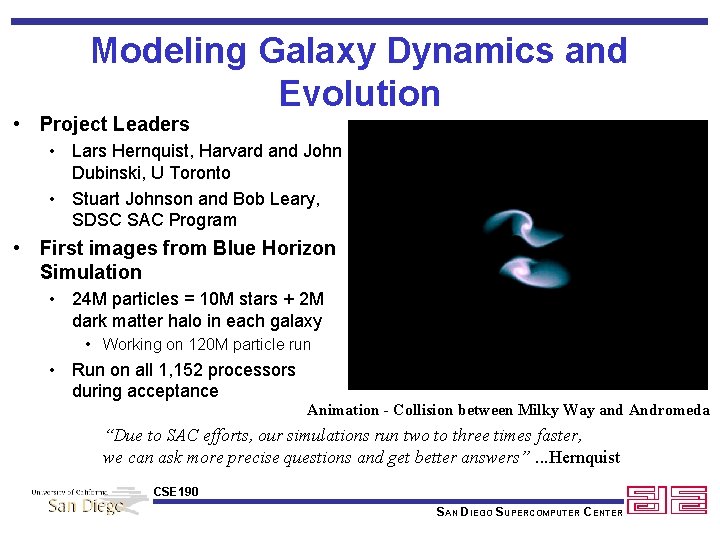

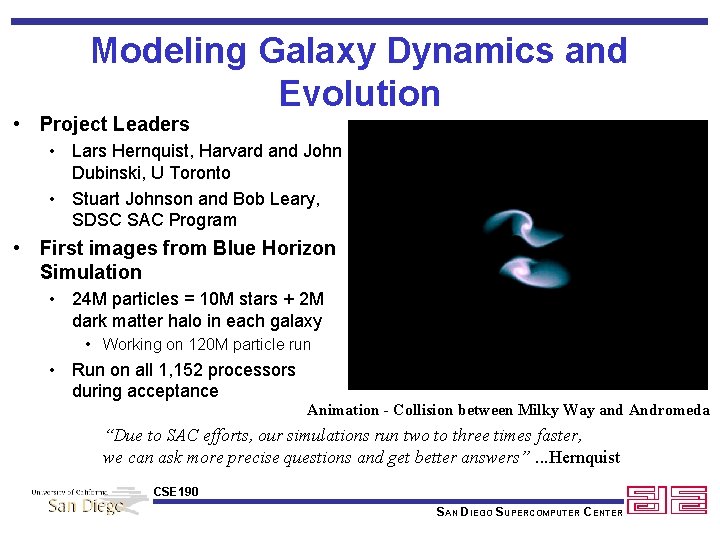

Modeling Galaxy Dynamics and Evolution

- Project Leaders
  - Lars Hernquist, Harvard, and John Dubinski, U Toronto
  - Stuart Johnson and Bob Leary, SDSC SAC Program
- First images from Blue Horizon simulation
  - 24 M particles: 10 M stars + 2 M dark-matter halo particles in each of the two galaxies
  - Working on a 120 M particle run
  - Run on all 1,152 processors during acceptance

Animation: collision between the Milky Way and Andromeda

“Due to SAC efforts, our simulations run two to three times faster, we can ask more precise questions and get better answers.” (Hernquist)

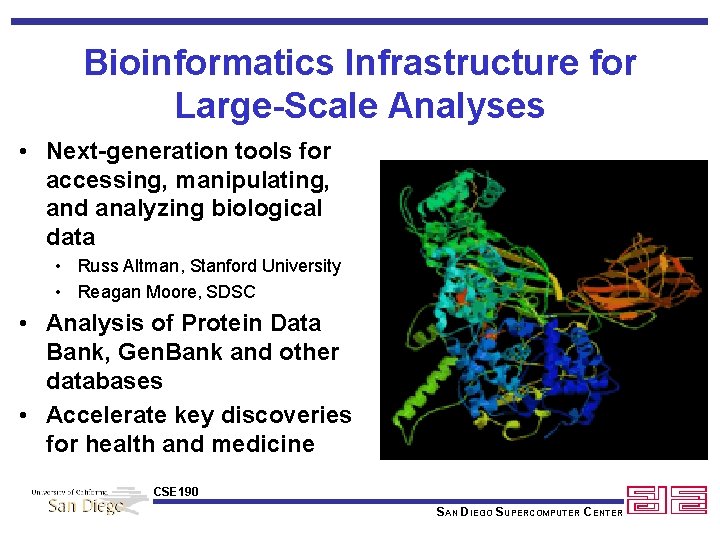

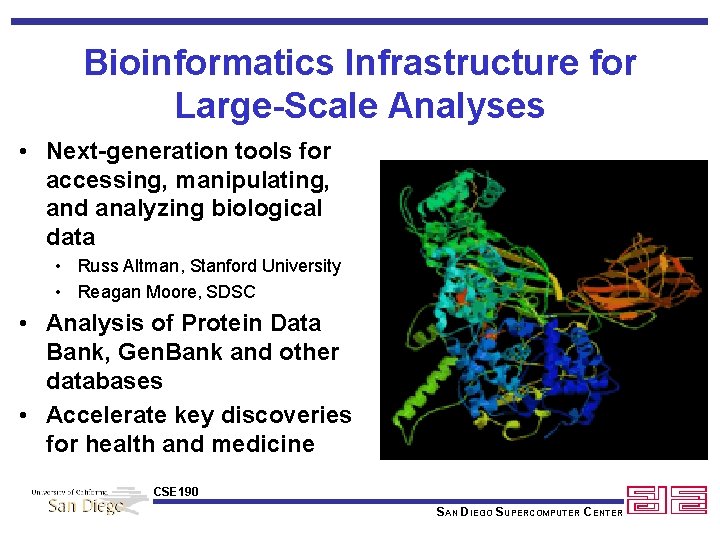

Bioinformatics Infrastructure for Large-Scale Analyses

- Next-generation tools for accessing, manipulating, and analyzing biological data
- Russ Altman, Stanford University
- Reagan Moore, SDSC
- Analysis of Protein Data Bank, GenBank, and other databases
- Accelerate key discoveries for health and medicine

Protein Folding in a Distributed Computing Environment

- Simulating protein movement governing reactions within cells
- Andrew Grimshaw, U Virginia
- Charles Brooks, The Scripps Research Institute
- Bernard Pailthorpe, UCSD/SDSC
- Computationally intensive
- Distributed computing power from Legion

Telescience for Advanced Tomography Applications

- Integrates remote instrumentation, distributed computing, federated databases, image archives, and visualization tools
- Mark Ellisman, UCSD
- Fran Berman, UCSD
- Carl Kesselman, USC
- 3-D tomographic reconstruction of biological specimens

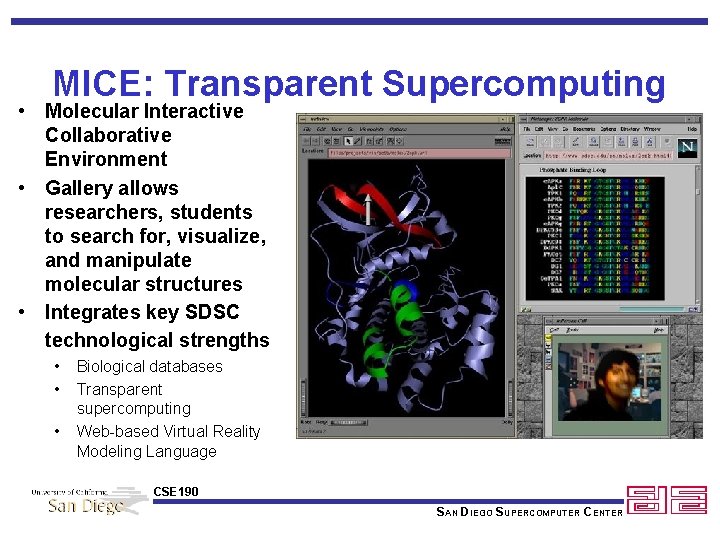

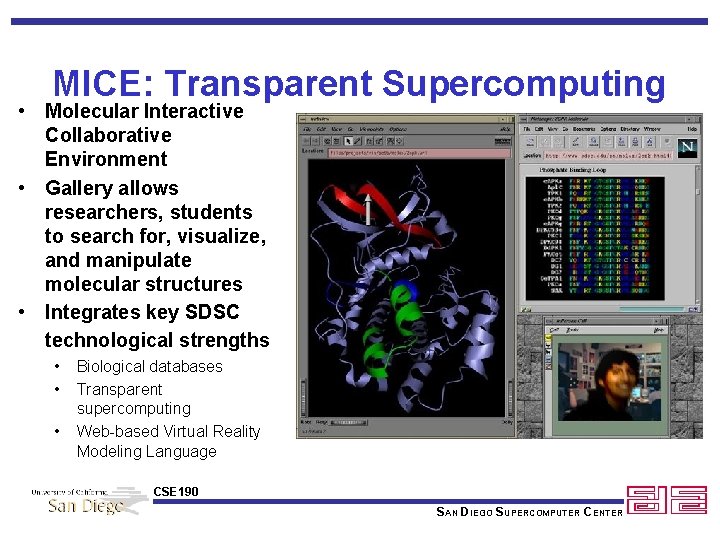

MICE: Transparent Supercomputing

- Molecular Interactive Collaborative Environment
- Gallery allows researchers and students to search for, visualize, and manipulate molecular structures
- Integrates key SDSC technological strengths
  - Biological databases
  - Transparent supercomputing
  - Web-based Virtual Reality Modeling Language

The Protein Data Bank

- The world’s single scientific resource for depositing and searching protein structures
- Protein structure data growing exponentially
  - 10,500 structures in the PDB today
  - 20,000 by the year 2001
- Vital to the advancement of biological sciences
- Working towards a digital continuum from primary data to final scientific publication
- Capture of primary data from high-energy synchrotrons (e.g., Stanford Linear Accelerator Center) requires 50 Mbps network bandwidth

1CD3: the PDB’s 10,000th structure.

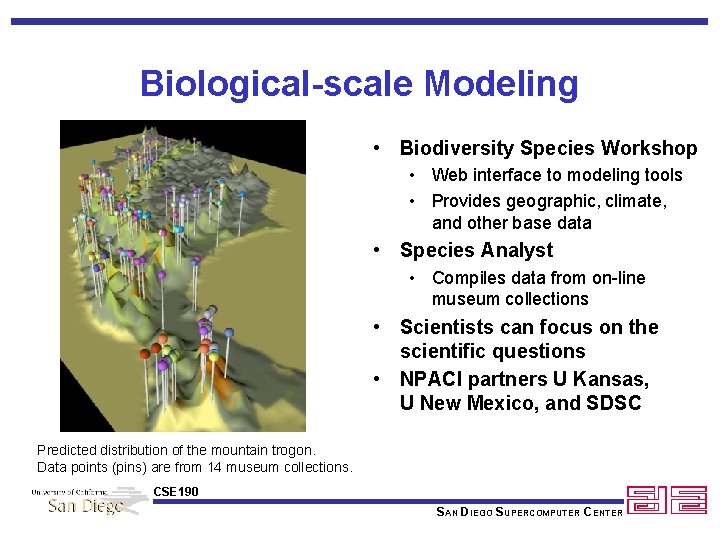

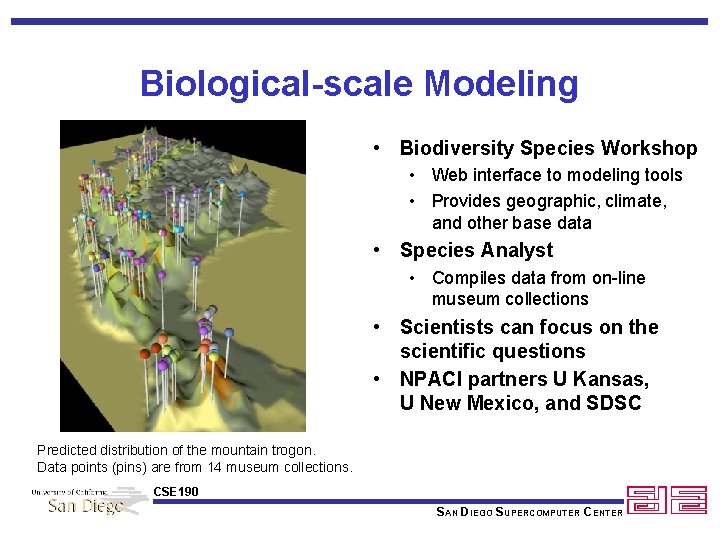

Biological-scale Modeling

- Biodiversity Species Workshop
  - Web interface to modeling tools
  - Provides geographic, climate, and other base data
- Species Analyst
  - Compiles data from online museum collections
- Scientists can focus on the scientific questions
- NPACI partners U Kansas, U New Mexico, and SDSC

Predicted distribution of the mountain trogon. Data points (pins) are from 14 museum collections.

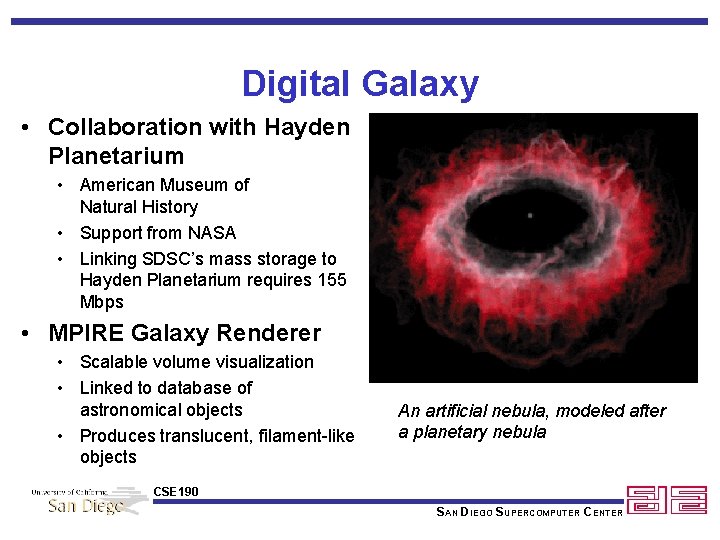

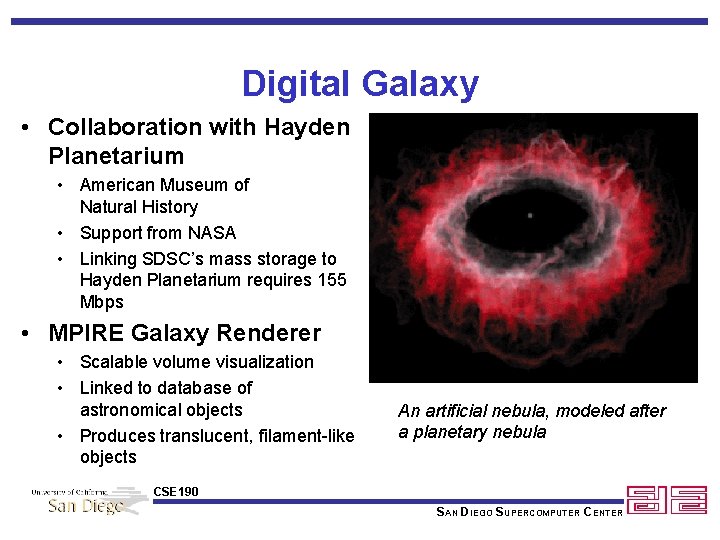

Digital Galaxy

- Collaboration with Hayden Planetarium
  - American Museum of Natural History
  - Support from NASA
  - Linking SDSC’s mass storage to Hayden Planetarium requires 155 Mbps
- MPIRE Galaxy Renderer
  - Scalable volume visualization
  - Linked to database of astronomical objects
  - Produces translucent, filament-like objects

An artificial nebula, modeled after a planetary nebula.

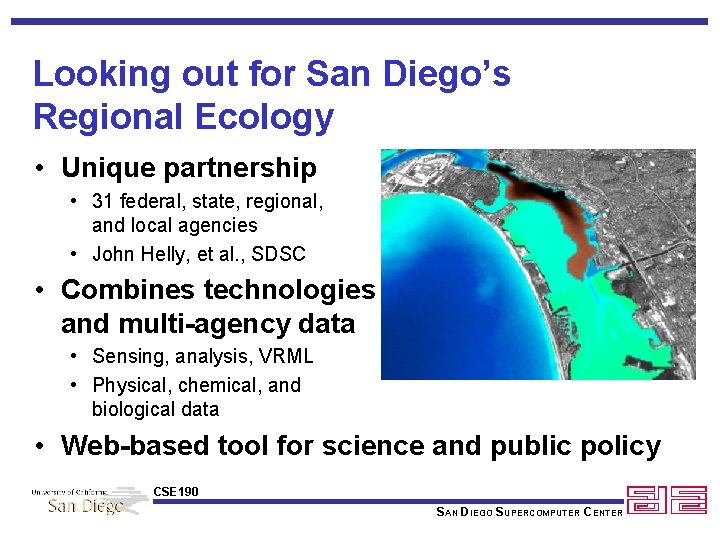

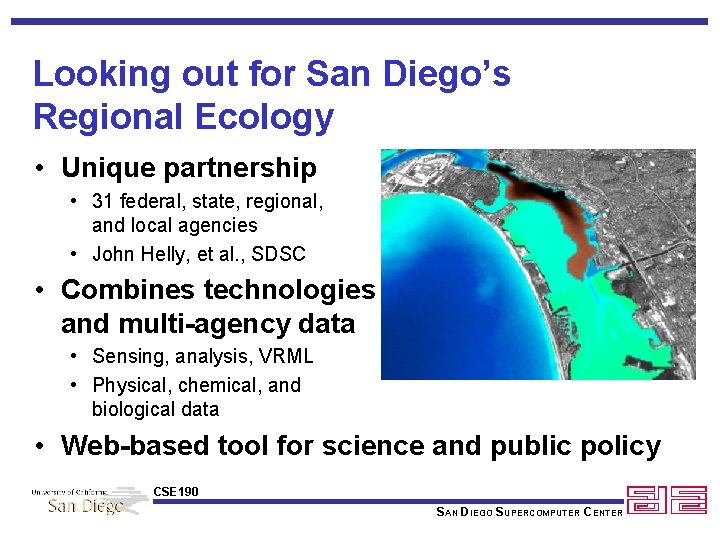

Looking out for San Diego’s Regional Ecology

- Unique partnership
  - 31 federal, state, regional, and local agencies
  - John Helly et al., SDSC
- Combines technologies and multi-agency data
  - Sensing, analysis, VRML
  - Physical, chemical, and biological data
- Web-based tool for science and public policy

AMICO: The Art of Managing Art

- Art Museum Image Consortium (AMICO)
  - 28 art museums working toward educational use of digital multimedia
- Launch of the AMICO Library includes more than 50,000 works of art
- AMICO, CDL, SDSC
  - XML information mediation
  - SDSC SRB data management
- Links between images, scholarly research, and educational material

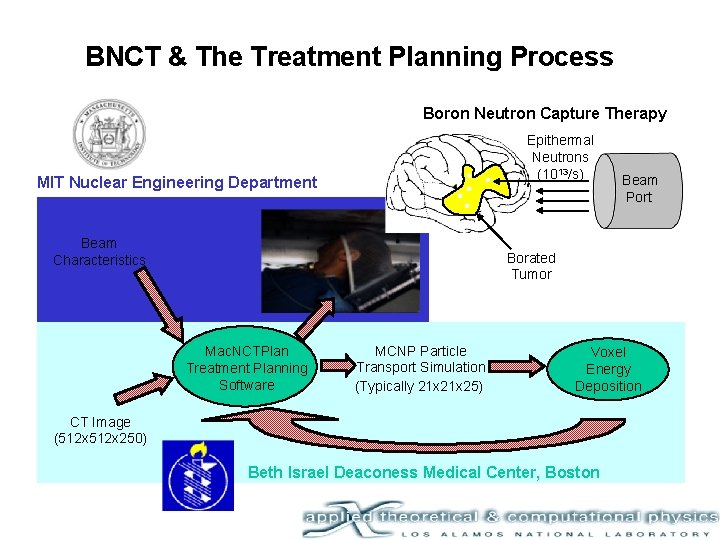

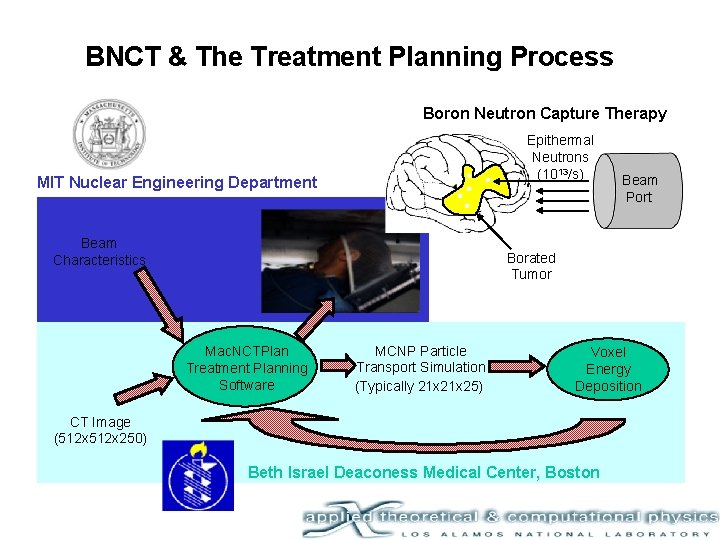

BNCT & The Treatment Planning Process

Boron Neutron Capture Therapy

Figure labels:

- MIT Nuclear Engineering Department: epithermal neutrons (10^13/s), beam port, borated tumor
- Beam characteristics
- MacNCTPlan treatment planning software
- MCNP particle transport simulation
- Voxel energy deposition (typically 21 × 25)
- CT image (512 × 250)
- Beth Israel Deaconess Medical Center, Boston
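
MCNP is a large production code. The toy sketch below only illustrates the underlying Monte Carlo idea of tallying energy deposition per voxel on a grid the size of the typical 21 × 25 case. Every physical detail is a hypothetical simplification chosen for illustration. Particles enter along one edge and travel straight in +x. Step lengths are exponentially distributed with an assumed mean free path, and each collision deposits a fixed fraction of the remaining energy.

```python
import random

def deposit_energy(nx=21, ny=25, particles=10_000, mean_free_path=3.0,
                   deposit_fraction=0.3, seed=1):
    """Toy Monte Carlo tally of energy deposited per voxel (not MCNP physics).

    Each particle starts at x = 0 in a random row with energy 1.0, moves in +x
    with exponentially distributed step lengths (in voxel units), and at each
    collision deposits `deposit_fraction` of its remaining energy.
    """
    rng = random.Random(seed)
    grid = [[0.0] * nx for _ in range(ny)]
    escaped = 0.0
    for _ in range(particles):
        row = rng.randrange(ny)
        x, energy = 0.0, 1.0
        while True:
            x += rng.expovariate(1.0 / mean_free_path)
            if x >= nx:                  # particle leaves the grid
                escaped += energy
                break
            dep = energy * deposit_fraction
            grid[row][int(x)] += dep
            energy -= dep
            if energy < 1e-3:            # cutoff: deposit the remainder locally
                grid[row][int(x)] += energy
                break
    return grid, escaped

grid, escaped = deposit_energy()
deposited = sum(map(sum, grid))
print(round(deposited + escaped, 6))     # energy is conserved: 10000.0
```

The tally grid plays the role of the voxel energy deposition map. Real treatment planning replaces each simplification with measured beam characteristics, CT-derived geometry, and full transport physics.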

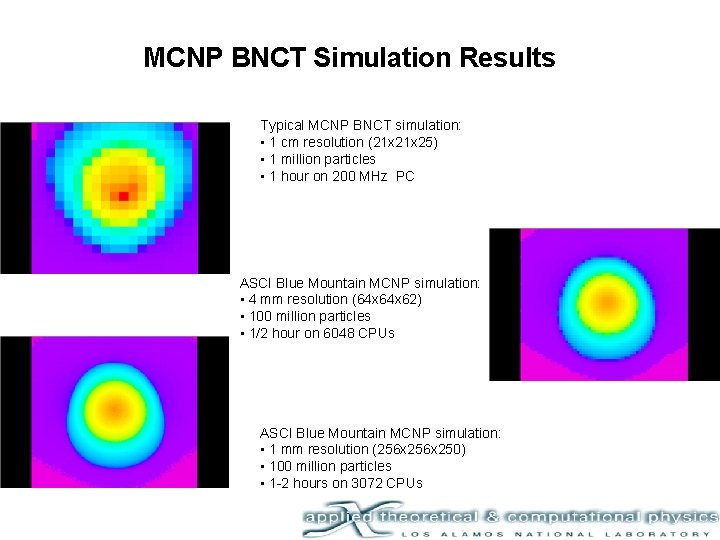

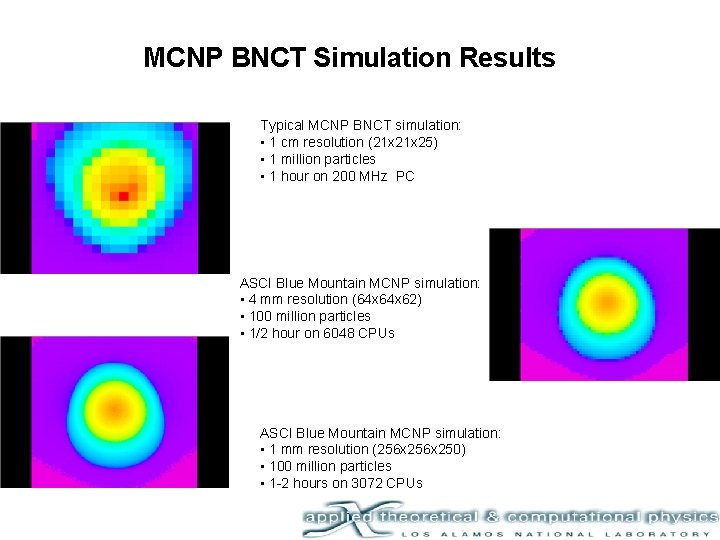

MCNP BNCT Simulation Results

- Typical MCNP BNCT simulation:
  - 1 cm resolution (21 × 25)
  - 1 million particles
  - 1 hour on a 200 MHz PC
- ASCI Blue Mountain MCNP simulation:
  - 4 mm resolution (64 × 62)
  - 100 million particles
  - 1/2 hour on 6,048 CPUs
- ASCI Blue Mountain MCNP simulation:
  - 1 mm resolution (256 × 250)
  - 100 million particles
  - 1 to 2 hours on 3,072 CPUs
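
These three runs trade resolution, particle count, and processor count against one another. A short calculation makes the trade-offs explicit: voxel count, average particles per voxel, and total CPU-hours. One assumption is not from the slide: Monte Carlo statistical noise per voxel is taken to scale roughly as one over the square root of the particles tallied there. Under that assumption, particles per voxel serves as a rough proxy for noise. CPU-hours on a 200 MHz PC and on R10000 processors are not necessarily comparable.

```python
import math

def summarize(grid_x, grid_y, particles, hours, cpus):
    """Return (voxels, particles per voxel, relative noise, CPU-hours)."""
    voxels = grid_x * grid_y
    per_voxel = particles / voxels
    rel_noise = 1 / math.sqrt(per_voxel)   # assumed 1/sqrt(N) Monte Carlo scaling
    return voxels, per_voxel, rel_noise, hours * cpus

# Figures from the slide; the "1 to 2 hours" run is taken at its 2-hour upper bound.
runs = {
    "1 cm, 200 MHz PC":    (21, 25, 1_000_000, 1.0, 1),
    "4 mm, Blue Mountain": (64, 62, 100_000_000, 0.5, 6048),
    "1 mm, Blue Mountain": (256, 250, 100_000_000, 2.0, 3072),
}

for label, args in runs.items():
    voxels, per_voxel, noise, cpu_hours = summarize(*args)
    print(f"{label:20s} voxels={voxels:6d} particles/voxel={per_voxel:8.0f} "
          f"noise~{noise:.3f} CPU-hours={cpu_hours:6.0f}")
```

By this rough measure the 4 mm run has about 13 times more particles per voxel than the 1 cm PC run. The 1 mm run uses 100 times as many particles as the PC run but still has fewer particles per voxel than either of the other two runs.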