CSCI 6307 Foundation of Systems Review Final Exam

- Slides: 34

CSCI 6307 Foundation of Systems Review: Final Exam. Xiang Lian, The University of Texas – Pan American, Edinburg, TX 78539, lianx@utpa.edu

Review

- Chapters 2 and 5 in the architecture textbook
- Chapters 1–3 and 6 in the operating system textbook
- Lecture slides (A5, R1–R6, O1–O6)
- In-class exercises (3)–(5)
- Assignments 3–5

Review

- 5 questions (100 points)
- 1 bonus question (20 extra points)

Chapter 2 Instructions

- Binary representation
  - 2's complement
  - Binary representation of positive and negative integers
  - Addition, negation

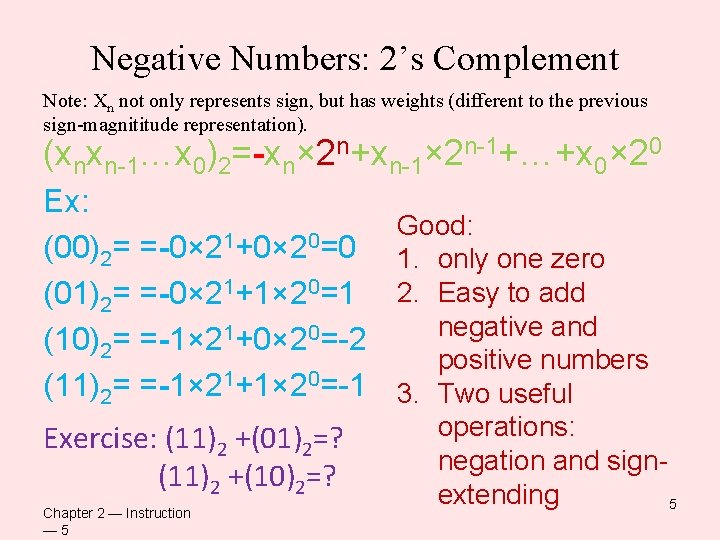

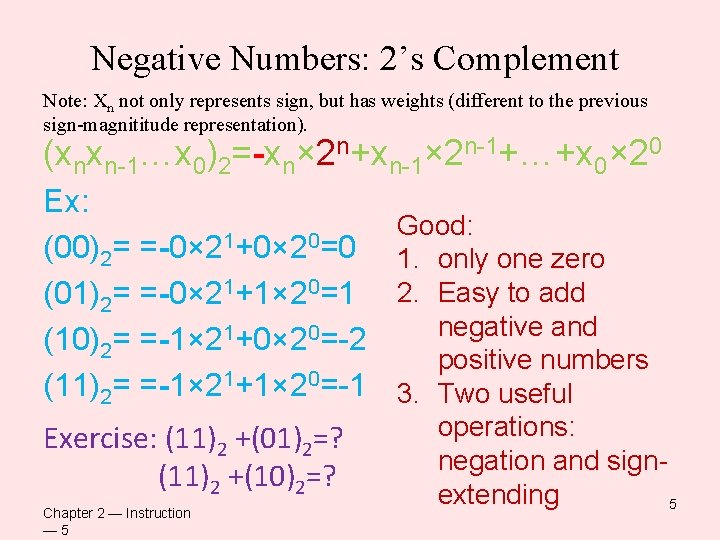

Negative Numbers: 2’s Complement

Note: $x_n$ not only represents the sign but also carries a weight (unlike the previous sign-magnitude representation).

$$(x_n x_{n-1} \dots x_0)_2 = -x_n \times 2^n + x_{n-1} \times 2^{n-1} + \dots + x_0 \times 2^0$$

Examples:

- $(00)_2 = -0 \times 2^1 + 0 \times 2^0 = 0$
- $(01)_2 = -0 \times 2^1 + 1 \times 2^0 = 1$
- $(10)_2 = -1 \times 2^1 + 0 \times 2^0 = -2$
- $(11)_2 = -1 \times 2^1 + 1 \times 2^0 = -1$

Exercise: $(11)_2 + (01)_2 = ?$ and $(11)_2 + (10)_2 = ?$

Advantages:

1. Only one zero
2. Easy to add negative and positive numbers
3. Two useful operations: negation and sign extension
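The weighted-sum definition above can be checked mechanically. The following is a minimal Python sketch, not part of the original slides; the helper names `twos_complement_value` and `add_fixed_width` are hypothetical, and the addition helper assumes both operands have the same width and that any carry out of the top bit is discarded.

```python
def twos_complement_value(bits: str) -> int:
    """Decode a bit string using the slide's weights:
    the leading bit has weight -2^n, every other bit has weight +2^k."""
    n = len(bits) - 1
    value = -int(bits[0]) * 2 ** n
    for k, bit in enumerate(reversed(bits[1:])):
        value += int(bit) * 2 ** k
    return value


def add_fixed_width(a: str, b: str) -> str:
    """Add two equal-width bit strings and drop the carry out of the top bit."""
    width = len(a)
    total = (int(a, 2) + int(b, 2)) % (2 ** width)
    return format(total, f"0{width}b")


# Reproduce the four 2-bit examples from the slide.
for bits in ("00", "01", "10", "11"):
    print(bits, "->", twos_complement_value(bits))

# -1 + 1 in 2 bits: the carry is dropped and the result is 00.
print(add_fixed_width("11", "01"))
```

Note that a sum whose true value does not fit in the given width overflows, so the decoded result is only meaningful when the true sum lies in the representable range.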

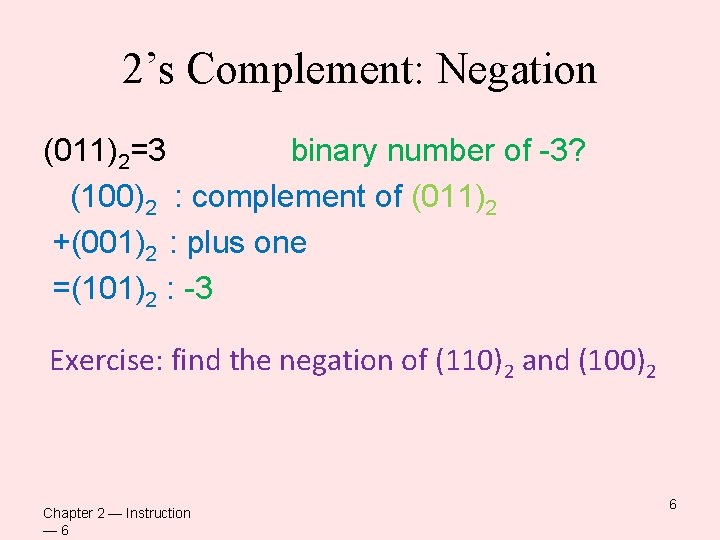

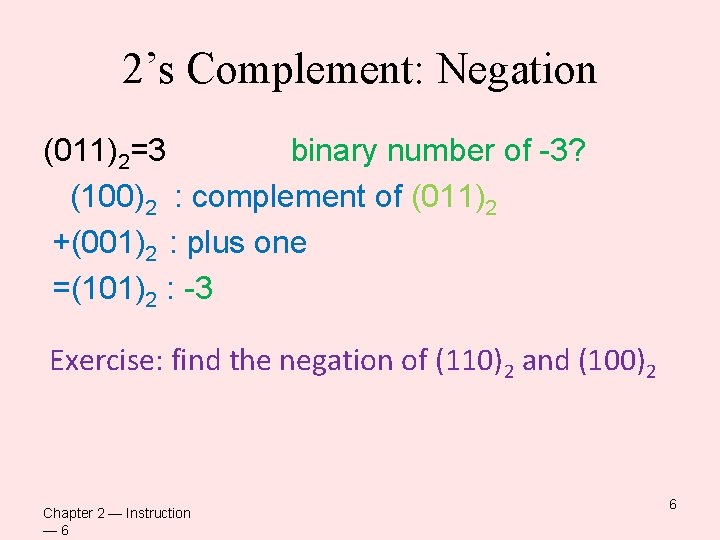

2’s Complement: Negation

$(011)_2 = 3$. What is the binary representation of $-3$?

- $(100)_2$: complement of $(011)_2$
- $+\,(001)_2$: plus one
- $= (101)_2$: $-3$

Exercise: find the negation of $(110)_2$ and $(100)_2$.
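The complement-and-add-one rule can be sketched in a few lines of Python. This is an illustrative sketch only; the function name `negate` is hypothetical, and the result is kept at the same width as the input.

```python
def negate(bits: str) -> str:
    """Two's complement negation: flip every bit, then add one."""
    width = len(bits)
    mask = (1 << width) - 1
    complemented = int(bits, 2) ^ mask     # flip every bit
    negated = (complemented + 1) & mask    # add one, keep the same width
    return format(negated, f"0{width}b")


print(negate("011"))  # 101, the slide's representation of -3
print(negate("101"))  # 011, negating twice returns the original
```

Because the range is asymmetric, the most negative pattern of a given width has no positive counterpart at that width, so negating it cannot produce a representable result.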

Chapter 5 Large and Fast: Exploiting Memory Hierarchy

- Memory hierarchy
- Direct mapped cache
  - Address interpretation
    - Tag number
    - Index number

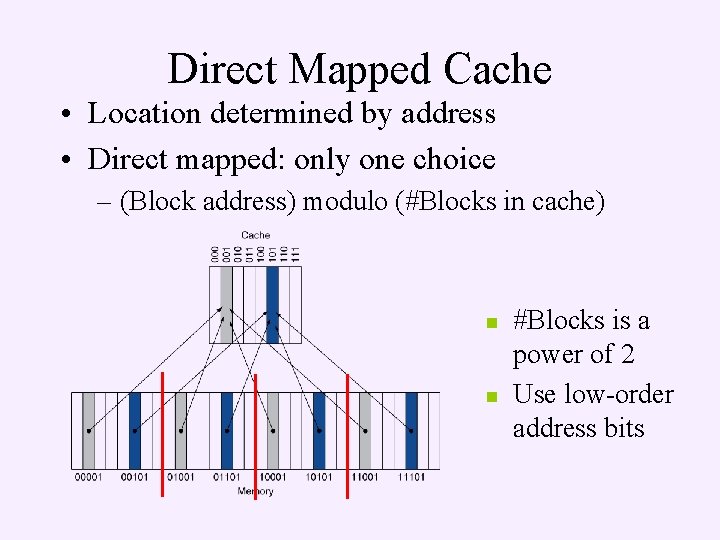

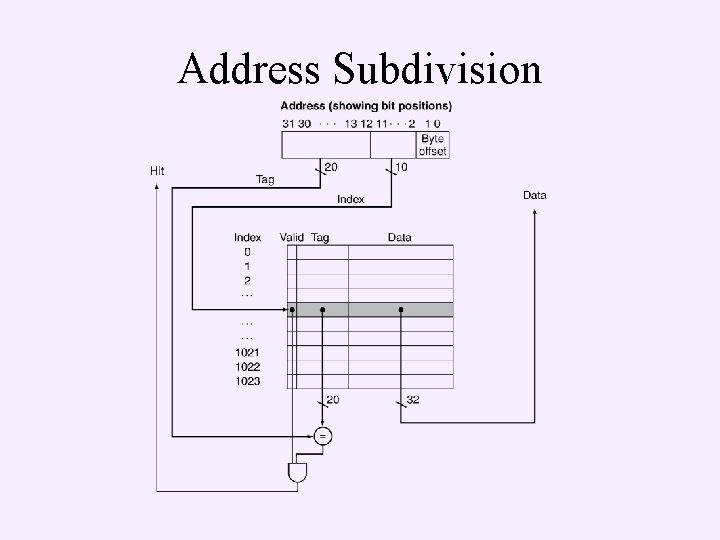

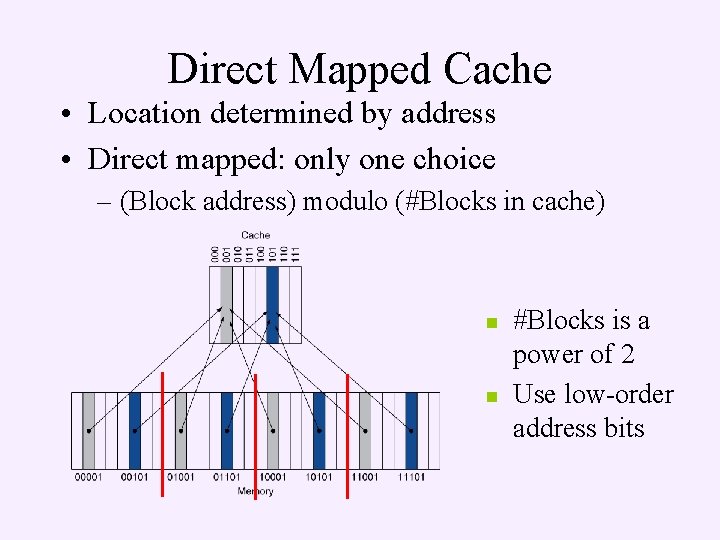

Direct Mapped Cache

- Location determined by address
- Direct mapped: only one choice
  - (Block address) modulo (#Blocks in cache)
- #Blocks is a power of 2
- Use low-order address bits

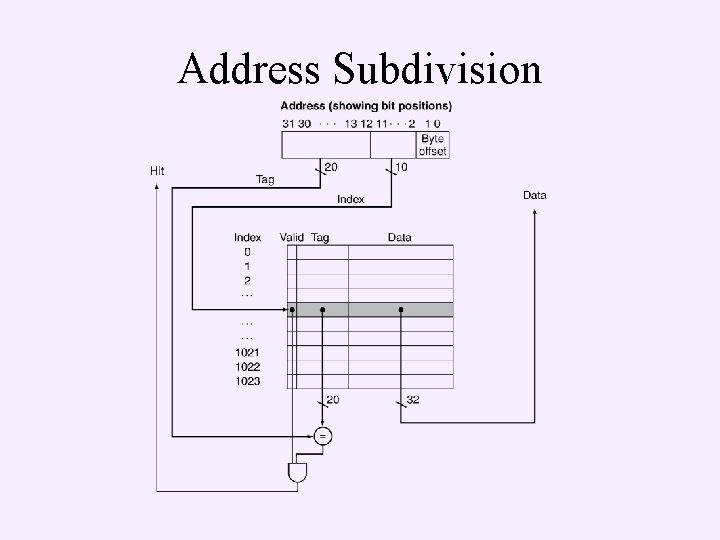

Address Subdivision

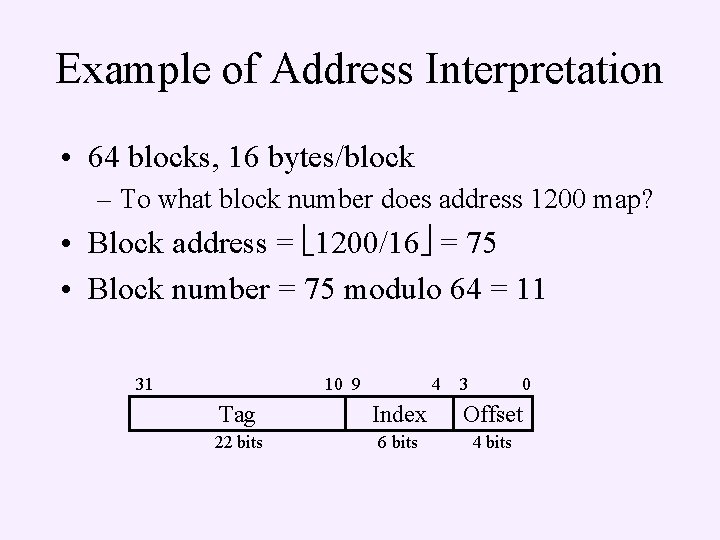

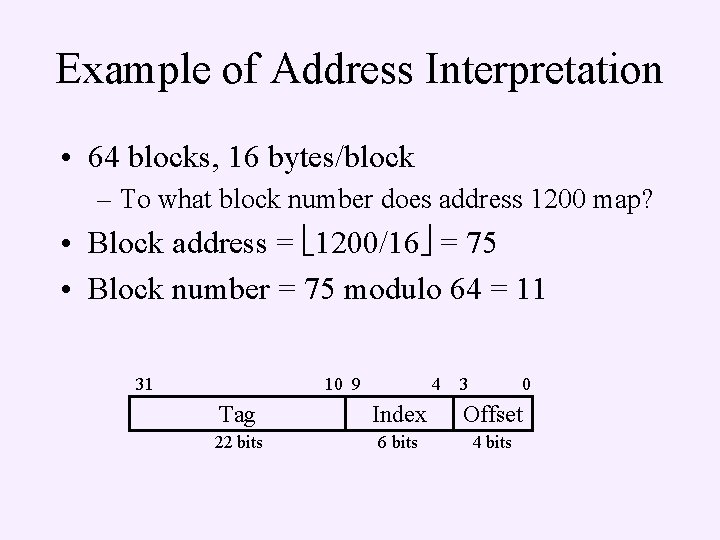

Example of Address Interpretation

- 64 blocks, 16 bytes/block
  - To what block number does address 1200 map?
- Block address = 1200/16 = 75
- Block number = 75 modulo 64 = 11

| Field  | Bits  | Width   |
|--------|-------|---------|
| Tag    | 31–10 | 22 bits |
| Index  | 9–4   | 6 bits  |
| Offset | 3–0   | 4 bits  |
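The same interpretation can be expressed with shifts and masks. The sketch below assumes the slide's parameters (64 blocks, 16 bytes per block, 32-bit byte addresses); the function name `split_address` and the constant names are hypothetical.

```python
BLOCK_SIZE = 16    # bytes per block, so 4 offset bits
NUM_BLOCKS = 64    # blocks in the cache, so 6 index bits
OFFSET_BITS = 4
INDEX_BITS = 6


def split_address(addr: int) -> tuple[int, int, int]:
    """Split a byte address into (tag, index, offset) for a direct mapped cache."""
    offset = addr & (BLOCK_SIZE - 1)                   # low 4 bits
    index = (addr >> OFFSET_BITS) & (NUM_BLOCKS - 1)   # block address modulo 64
    tag = addr >> (OFFSET_BITS + INDEX_BITS)           # remaining high bits
    return tag, index, offset


print(split_address(1200))  # (1, 11, 0)
```

Because the number of blocks is a power of 2, the modulo operation reduces to keeping the low-order bits of the block address, which is why a mask is enough.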

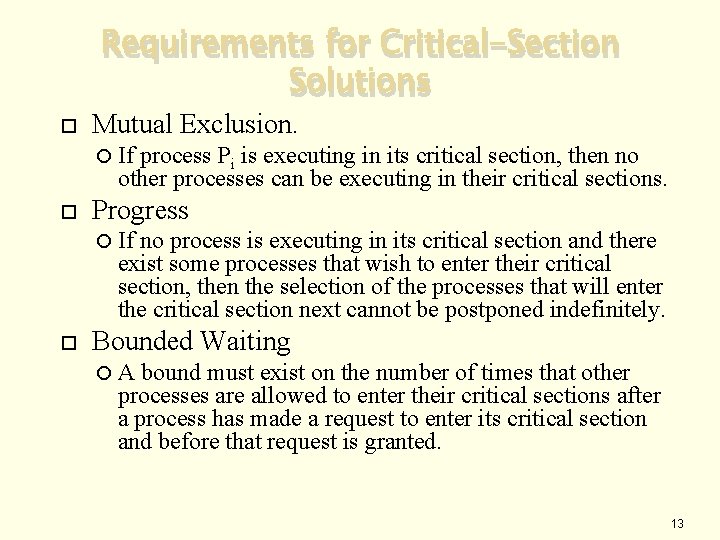

R1 – R3: Process and Threads

- Critical sections
  - Ensure that when one process is executing in its critical section, no other process is allowed to execute in its critical section (mutual exclusion)
- Requirements for critical-section solutions
  - Mutual exclusion
  - Progress
- Semaphore and its P() and V() functions
  - Definition
  - Usage
- Job scheduling methods
  - FCFS, SJF (or SJN), high priority job first, job with the closest deadline first
  - Waiting time and turnaround time

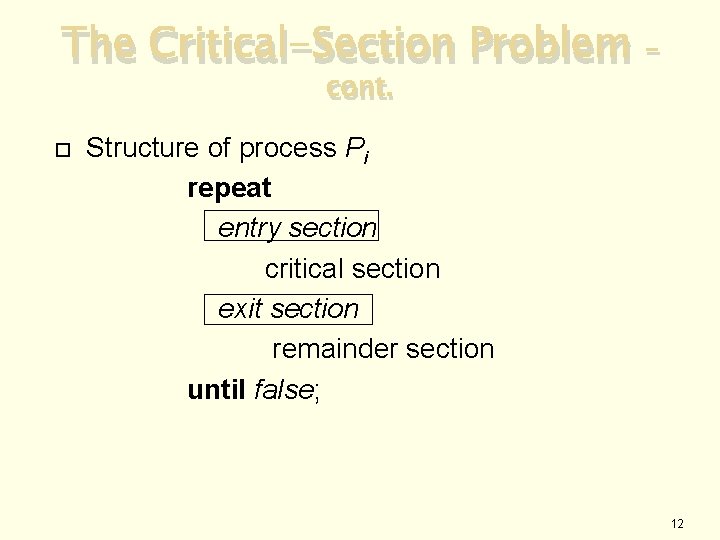

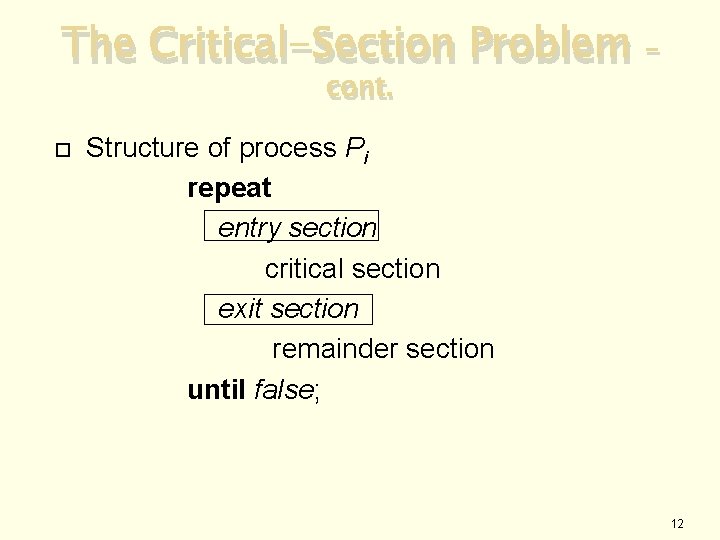

The Critical-Section Problem (cont.)

Structure of process Pi:

    repeat
        entry section
        critical section
        exit section
        remainder section
    until false;

Requirements for Critical-Section Solutions

- Mutual Exclusion: If process Pi is executing in its critical section, then no other processes can be executing in their critical sections.
- Progress: If no process is executing in its critical section and there exist some processes that wish to enter their critical section, then the selection of the processes that will enter the critical section next cannot be postponed indefinitely.
- Bounded Waiting: A bound must exist on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted.

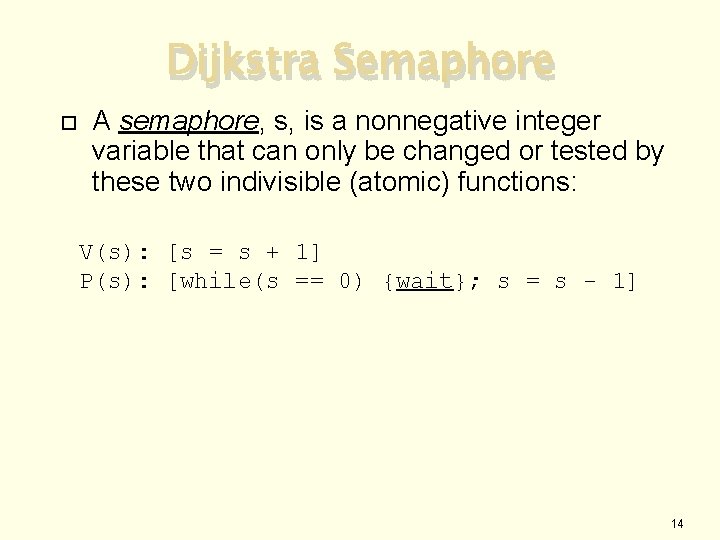

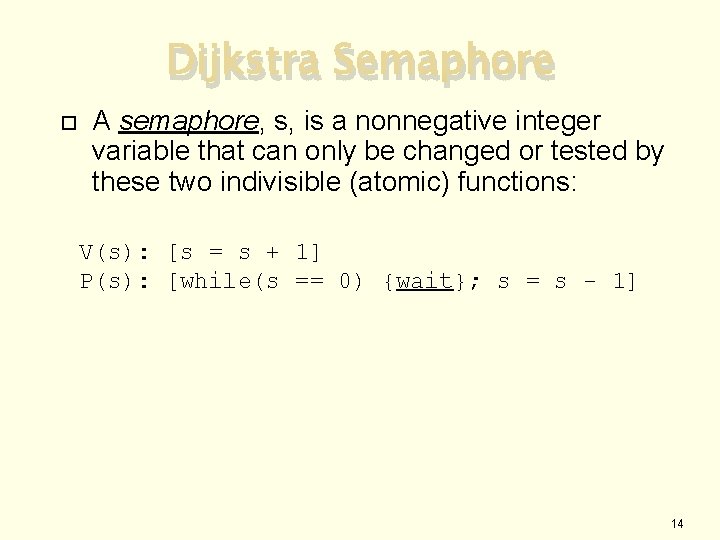

Dijkstra Semaphore

A semaphore, s, is a nonnegative integer variable that can only be changed or tested by these two indivisible (atomic) functions:

    V(s): [s = s + 1]
    P(s): [while (s == 0) {wait}; s = s - 1]
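One way to make P() and V() indivisible in practice is to guard the counter with a condition variable. The following Python sketch is an illustration under that assumption, not the definition used in the course; the class name `DijkstraSemaphore` is hypothetical, and Python's standard library already offers `threading.Semaphore` with equivalent behavior.

```python
import threading


class DijkstraSemaphore:
    """A counting semaphore built on a condition variable (illustrative only)."""

    def __init__(self, initial: int = 1) -> None:
        if initial < 0:
            raise ValueError("a semaphore value must be nonnegative")
        self._value = initial
        self._cond = threading.Condition()

    def P(self) -> None:
        """Wait until the value is positive, then decrement it."""
        with self._cond:
            while self._value == 0:
                self._cond.wait()
            self._value -= 1

    def V(self) -> None:
        """Increment the value and wake one waiting thread, if any."""
        with self._cond:
            self._value += 1
            self._cond.notify()


s = DijkstraSemaphore(1)
s.P()   # value drops to 0; a second P() would now block
s.V()   # value returns to 1
```

The lock inside the condition variable is what makes each test-and-update step atomic with respect to other threads calling P() or V().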

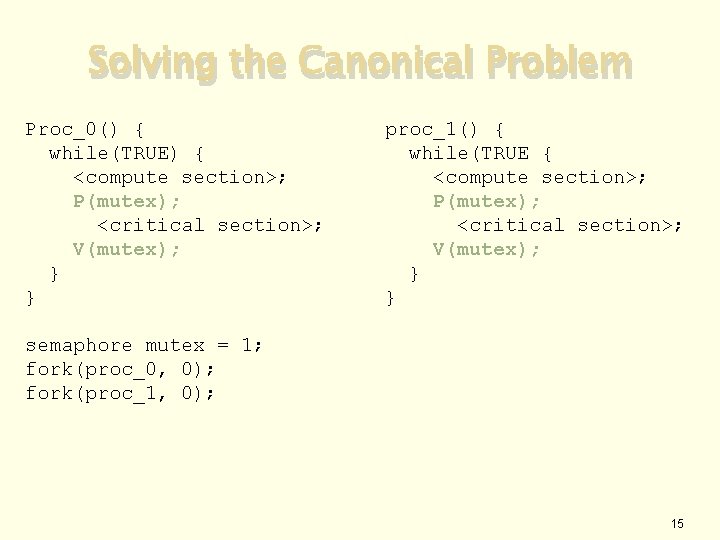

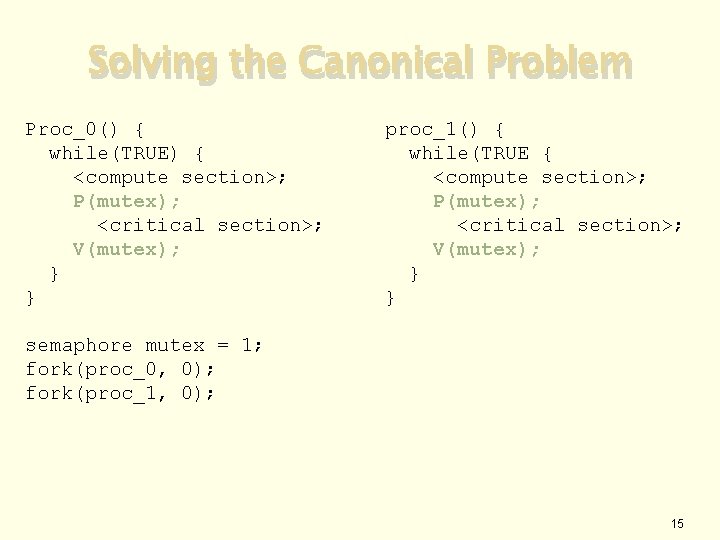

Solving the Canonical Problem

    proc_0() {
        while (TRUE) {
            <compute section>;
            P(mutex);
            <critical section>;
            V(mutex);
        }
    }

    proc_1() {
        while (TRUE) {
            <compute section>;
            P(mutex);
            <critical section>;
            V(mutex);
        }
    }

    semaphore mutex = 1;
    fork(proc_0, 0);
    fork(proc_1, 0);

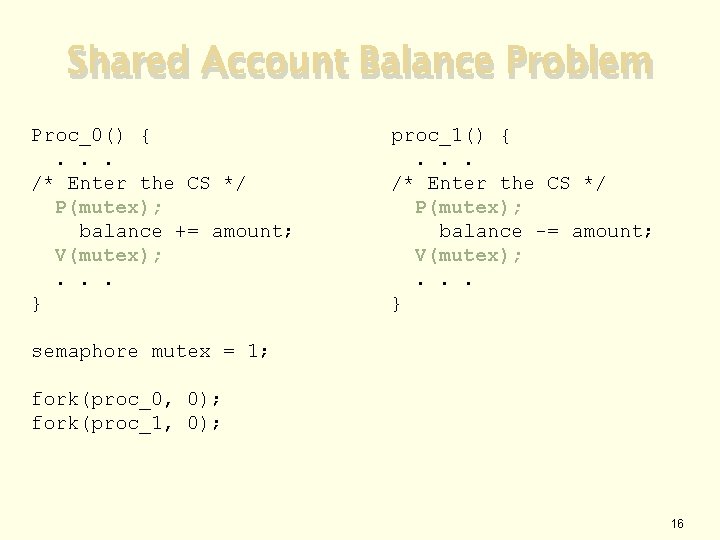

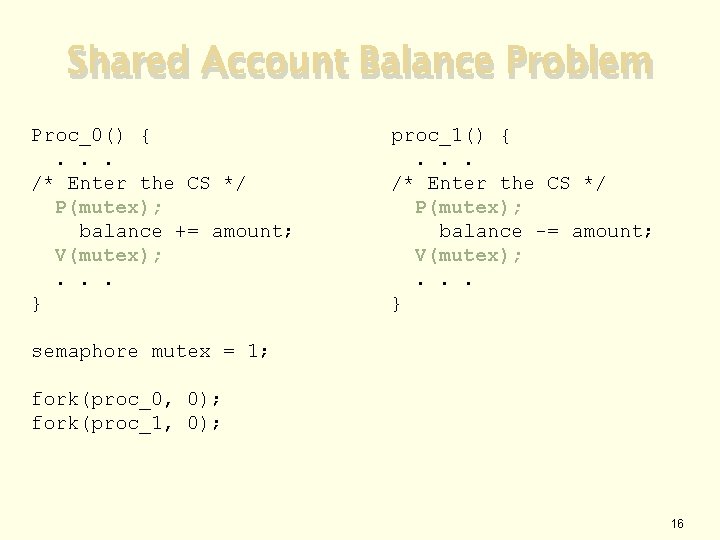

Shared Account Balance Problem

    proc_0() {
        ...
        /* Enter the CS */
        P(mutex);
        balance += amount;
        V(mutex);
        ...
    }

    proc_1() {
        ...
        /* Enter the CS */
        P(mutex);
        balance -= amount;
        V(mutex);
        ...
    }

    semaphore mutex = 1;
    fork(proc_0, 0);
    fork(proc_1, 0);
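The shared balance pattern can be tried out with threads standing in for the two processes. This is a minimal sketch under that assumption; `threading.Semaphore(1)` plays the role of `mutex`, with `acquire()` as P and `release()` as V, and the names `deposit`, `withdraw`, and `run` as well as the iteration counts are hypothetical.

```python
import threading

mutex = threading.Semaphore(1)   # semaphore mutex = 1
balance = 0


def deposit(amount: int, times: int) -> None:
    global balance
    for _ in range(times):
        mutex.acquire()          # P(mutex): enter the critical section
        balance += amount
        mutex.release()          # V(mutex): leave the critical section


def withdraw(amount: int, times: int) -> None:
    global balance
    for _ in range(times):
        mutex.acquire()
        balance -= amount
        mutex.release()


def run(times: int = 10_000) -> int:
    """Run one depositor and one withdrawer to completion; return the final balance."""
    global balance
    balance = 0
    workers = [
        threading.Thread(target=deposit, args=(1, times)),
        threading.Thread(target=withdraw, args=(1, times)),
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return balance


print(run())  # 0: every deposit is matched by a withdrawal
```

Since each update of `balance` happens inside the critical section, no increment or decrement can be lost to interleaving.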

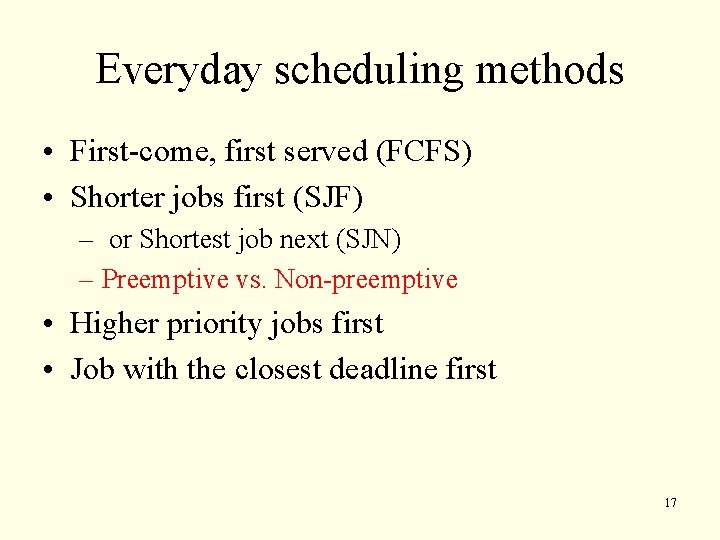

Everyday scheduling methods

- First-come, first-served (FCFS)
- Shorter jobs first (SJF)
  - or shortest job next (SJN)
  - Preemptive vs. non-preemptive
- Higher priority jobs first
- Job with the closest deadline first

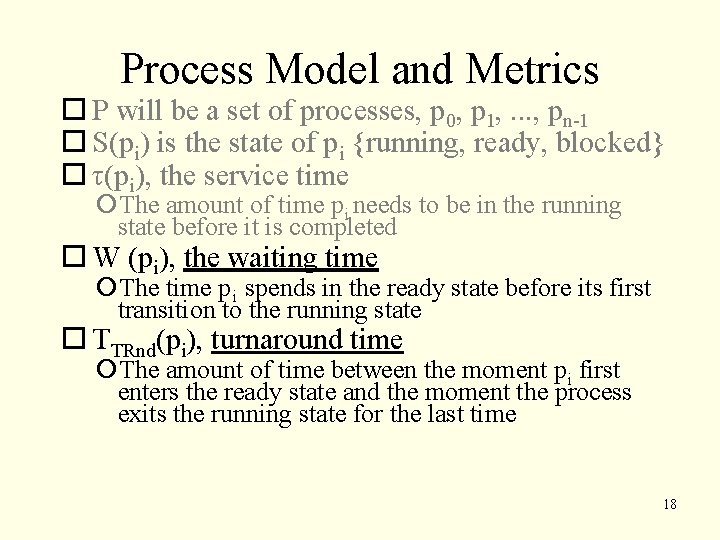

Process Model and Metrics

- P is a set of processes, $p_0, p_1, \dots, p_{n-1}$
- $S(p_i)$ is the state of $p_i$: one of {running, ready, blocked}
- $\tau(p_i)$, the service time: the amount of time $p_i$ needs to be in the running state before it is completed
- $W(p_i)$, the waiting time: the time $p_i$ spends in the ready state before its first transition to the running state
- $T_{TRnd}(p_i)$, the turnaround time: the amount of time between the moment $p_i$ first enters the ready state and the moment the process exits the running state for the last time
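To see how the metrics behave under different policies, the sketch below computes waiting and turnaround times for non-preemptive FCFS and SJF. It assumes all processes enter the ready state at time 0 and that context switches cost nothing; the service times and the function names are hypothetical values chosen for illustration.

```python
def schedule_metrics(service_times, order):
    """Run processes back to back in the given order (non-preemptive).

    Returns two dicts keyed by process index: waiting time and turnaround time.
    """
    clock = 0
    waiting, turnaround = {}, {}
    for i in order:
        waiting[i] = clock            # time spent ready before first running
        clock += service_times[i]
        turnaround[i] = clock         # time from arrival (0) to completion
    return waiting, turnaround


def average(values):
    values = list(values)
    return sum(values) / len(values)


service = [350, 125, 475, 250, 75]    # hypothetical service times

fcfs_order = list(range(len(service)))
sjf_order = sorted(range(len(service)), key=lambda i: service[i])

for name, order in (("FCFS", fcfs_order), ("SJF", sjf_order)):
    w, t = schedule_metrics(service, order)
    print(name, average(w.values()), average(t.values()))
# FCFS 595.0 850.0
# SJF 305.0 560.0
```

With these inputs SJF roughly halves the average waiting time, at the cost of making the longest job wait until last.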

R4 – R5: Memory Management

- Functions of memory manager
  - Abstraction
  - Allocation
  - Address translation
  - Virtual memory
- Memory allocation (see lecture slides R4 and exercises)
  - Fixed partition method
    - First-fit
    - Next-fit
    - Best-fit
    - Worst-fit

R4 – R5: Memory Management (cont'd)

- Page reference stream
  - Given a sequence of addresses, translate it into a page reference stream
- Fetch policy: when a page should be loaded
  - On-demand paging
- Replacement policy: which page is unloaded (see lecture slides and exercises)
  - Policies
    - Random
    - Belady's optimal algorithm*
    - LRU*
    - LFU
    - FIFO
- Placement policy: where a page should be loaded

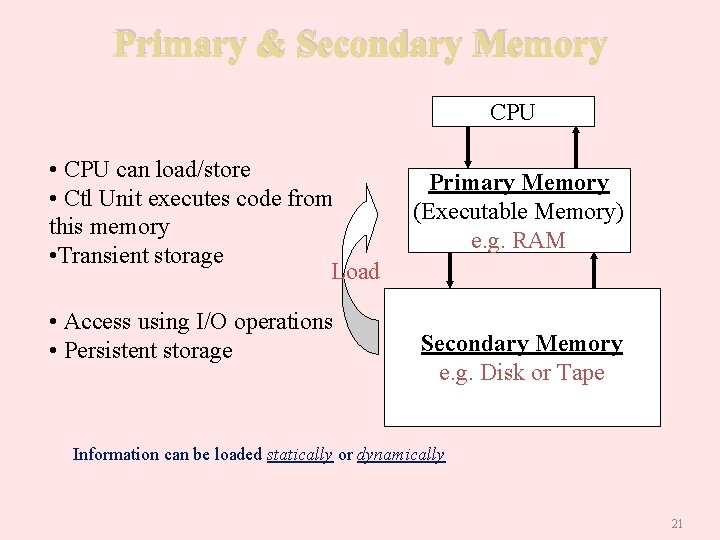

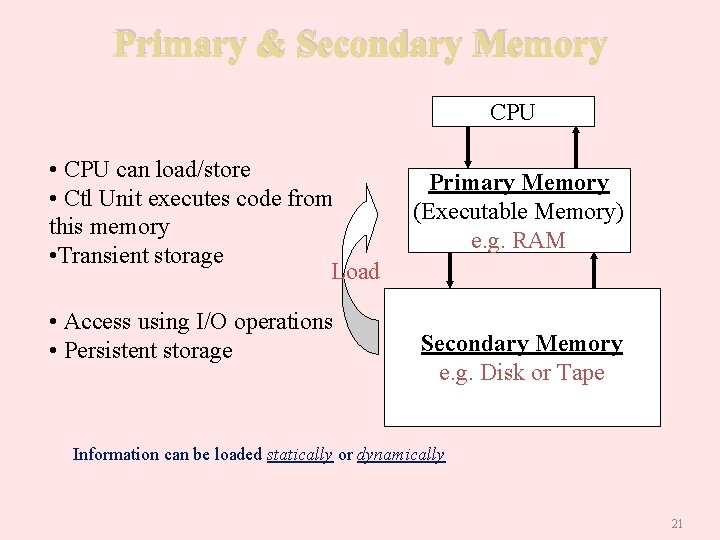

Primary & Secondary Memory

- Primary memory (executable memory), e.g. RAM
  - CPU can load/store
  - Control unit executes code from this memory
  - Transient storage
- Secondary memory, e.g. disk or tape
  - Access using I/O operations
  - Persistent storage
- Information can be loaded statically or dynamically

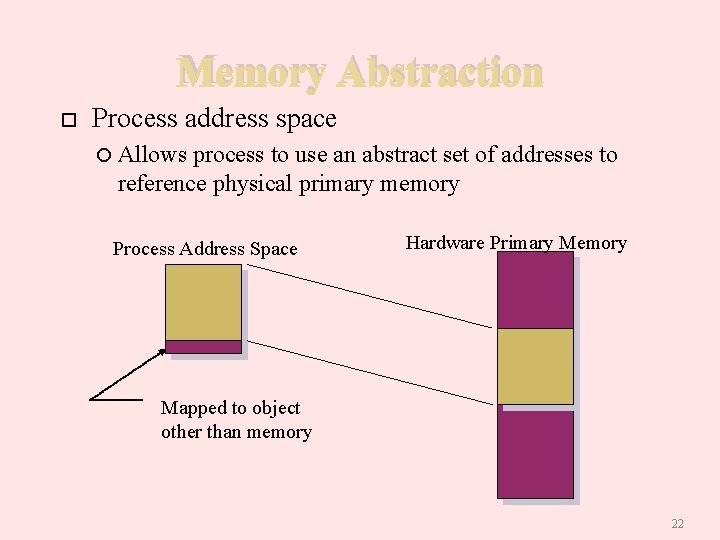

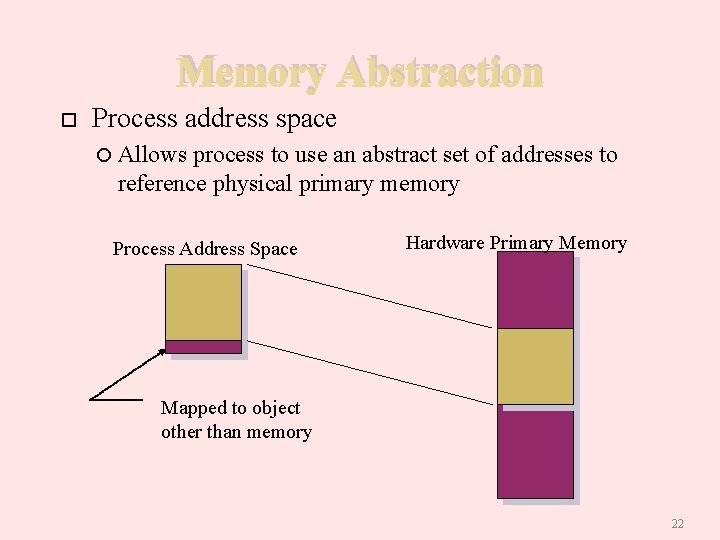

Memory Abstraction

- Process address space: allows a process to use an abstract set of addresses to reference physical primary memory

Figure: the process address space is mapped to hardware primary memory; part of it may be mapped to an object other than memory.

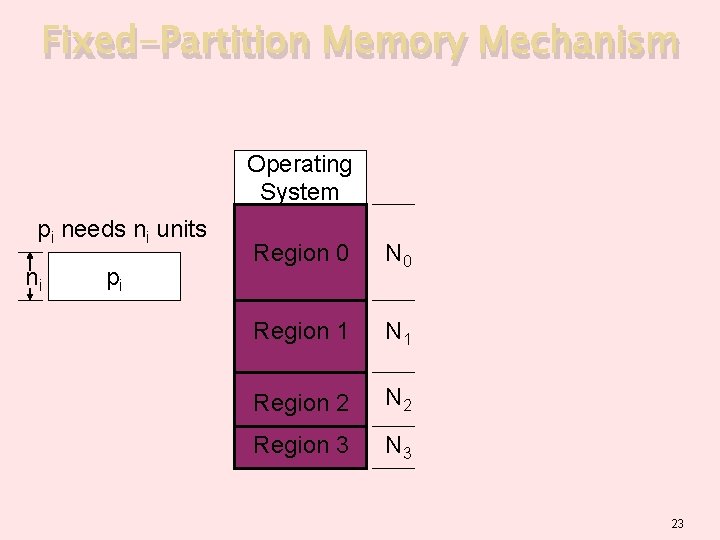

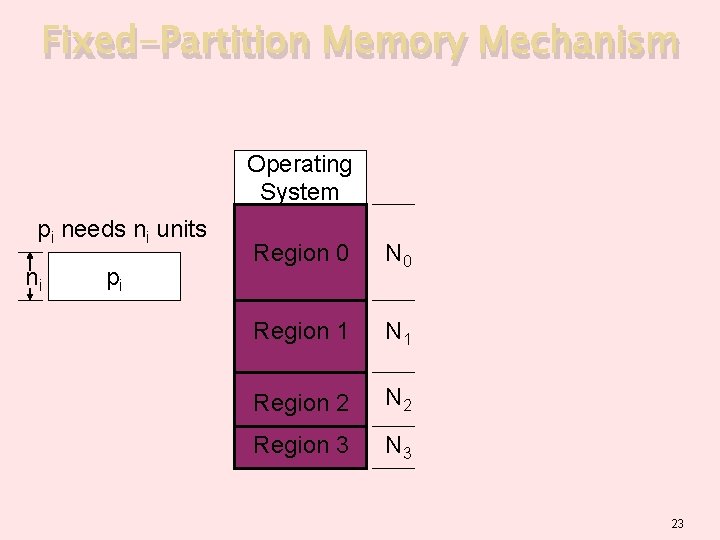

Fixed-Partition Memory Mechanism

Figure: memory holds the operating system and four fixed regions: Region 0 (size $N_0$), Region 1 (size $N_1$), Region 2 (size $N_2$), and Region 3 (size $N_3$). Process $p_i$ needs $n_i$ units.

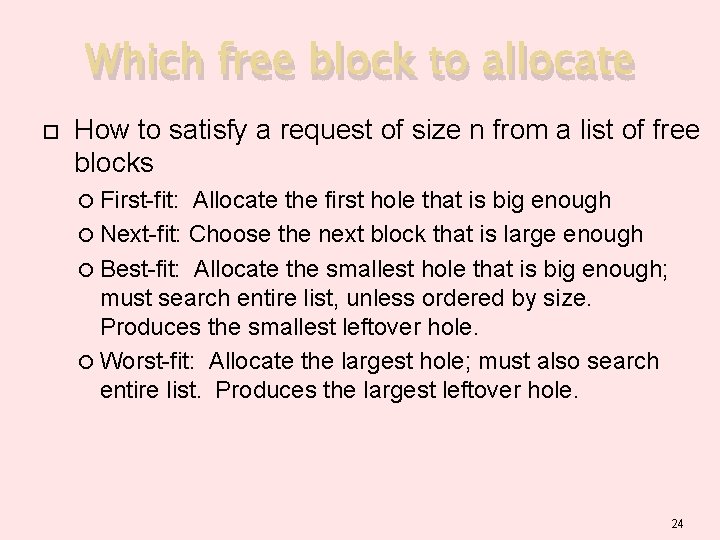

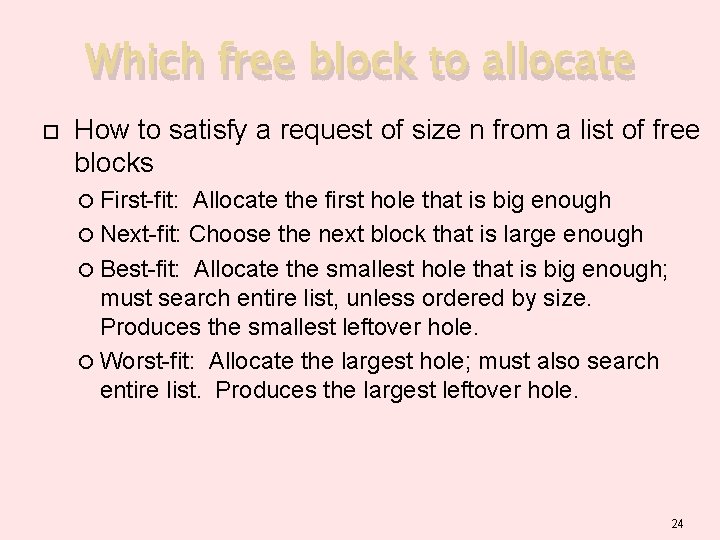

Which free block to allocate

How to satisfy a request of size n from a list of free blocks:

- First-fit: Allocate the first hole that is big enough.
- Next-fit: Choose the next block that is large enough.
- Best-fit: Allocate the smallest hole that is big enough; must search the entire list, unless it is ordered by size. Produces the smallest leftover hole.
- Worst-fit: Allocate the largest hole; must also search the entire list. Produces the largest leftover hole.
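The four strategies differ only in which qualifying hole they pick. The sketch below models the free list as a plain Python list of hole sizes and returns the index of the chosen hole, or `None` when nothing fits. The function names, the sample hole sizes, and the `start` parameter of `next_fit` (the position where the previous search stopped) are assumptions made for illustration.

```python
def first_fit(holes, n):
    """Index of the first hole that is big enough."""
    for i, size in enumerate(holes):
        if size >= n:
            return i
    return None


def next_fit(holes, n, start):
    """Like first-fit, but scan circularly from where the last search stopped."""
    count = len(holes)
    for step in range(count):
        i = (start + step) % count
        if holes[i] >= n:
            return i
    return None


def best_fit(holes, n):
    """Index of the smallest hole that is big enough."""
    candidates = [i for i, size in enumerate(holes) if size >= n]
    return min(candidates, key=lambda i: holes[i]) if candidates else None


def worst_fit(holes, n):
    """Index of the largest hole, provided it is big enough."""
    candidates = [i for i, size in enumerate(holes) if size >= n]
    return max(candidates, key=lambda i: holes[i]) if candidates else None


holes = [100, 500, 200, 300, 600]     # hypothetical free-block sizes
print(first_fit(holes, 212))          # 1 (the 500-unit hole)
print(next_fit(holes, 212, start=2))  # 3 (the 300-unit hole)
print(best_fit(holes, 212))           # 3 (the 300-unit hole)
print(worst_fit(holes, 212))          # 4 (the 600-unit hole)
```

A real allocator would also split the chosen hole and return the leftover to the free list; that bookkeeping is omitted here to keep the selection rule visible.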

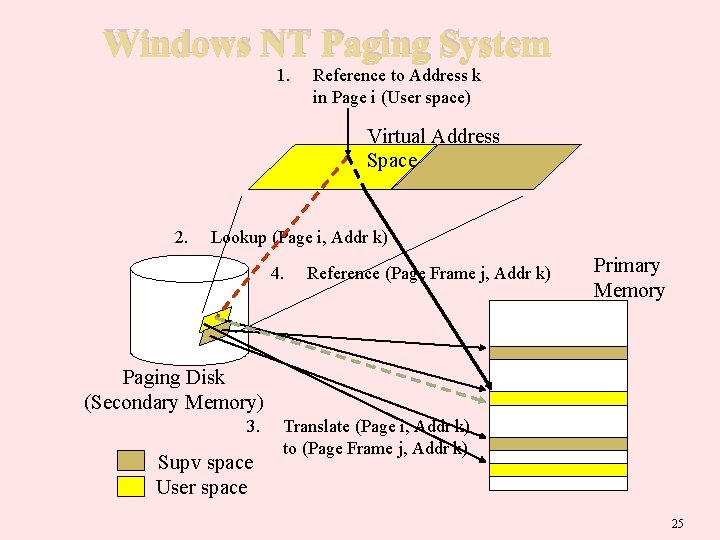

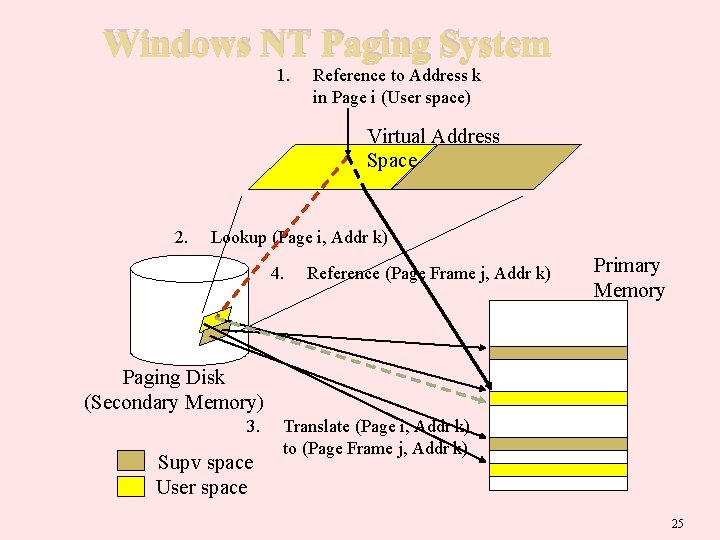

Windows NT Paging System

1. Reference to address k in page i (user space)
2. Lookup (page i, addr k)
3. Translate (page i, addr k) to (page frame j, addr k)
4. Reference (page frame j, addr k)

Figure labels: virtual address space (supervisor space and user space), primary memory, paging disk (secondary memory).

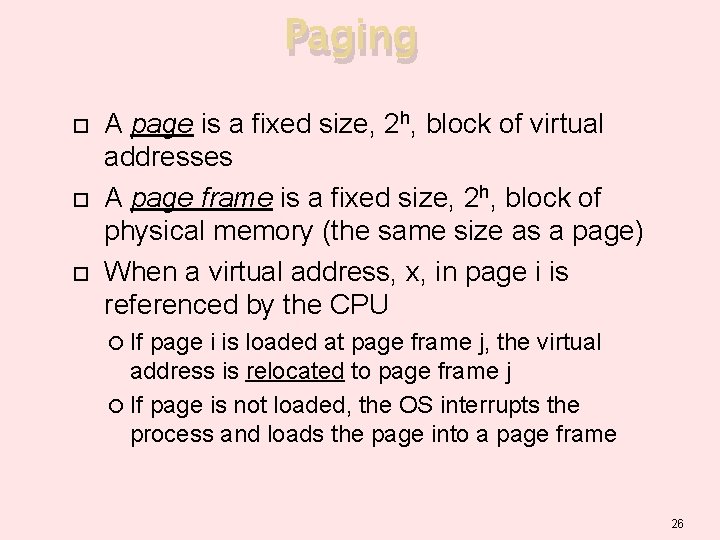

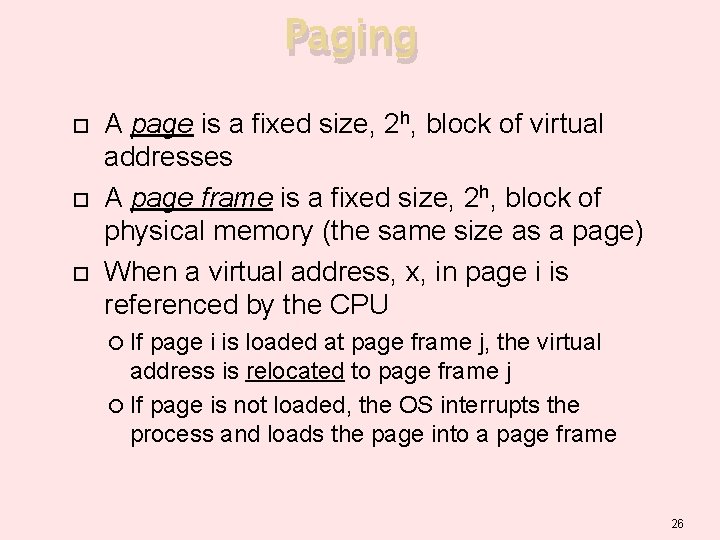

Paging

- A page is a fixed-size, $2^h$, block of virtual addresses
- A page frame is a fixed-size, $2^h$, block of physical memory (the same size as a page)
- When a virtual address, x, in page i is referenced by the CPU:
  - If page i is loaded at page frame j, the virtual address is relocated to page frame j
  - If the page is not loaded, the OS interrupts the process and loads the page into a page frame

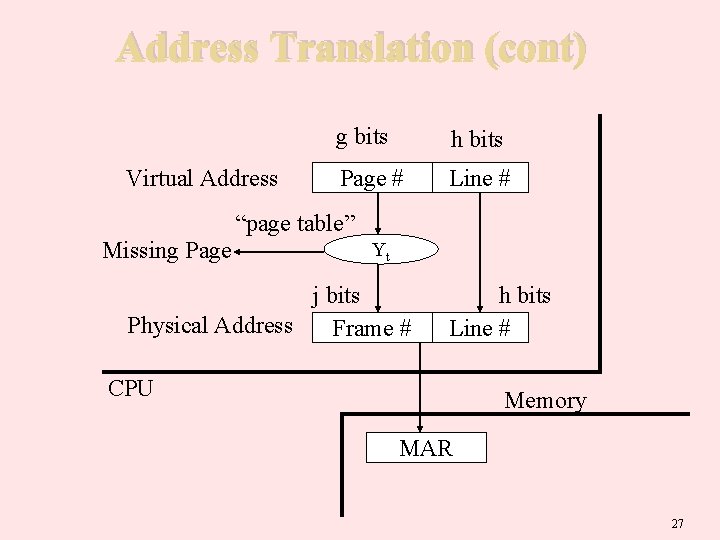

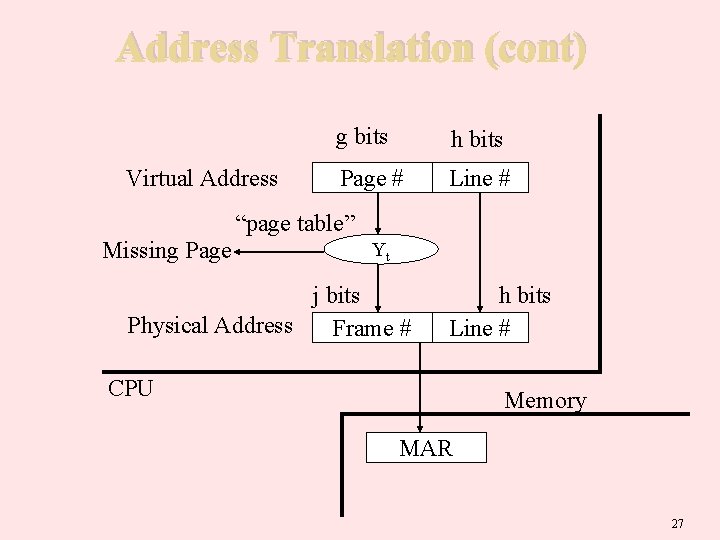

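Address Translation (cont)

Figure: the CPU issues a virtual address made of a g-bit page number and an h-bit line number. The “page table” (labeled Yt in the figure) maps the page number to a j-bit frame number or signals a missing page. The physical address, made of the frame number and the unchanged h-bit line number, goes to the memory's MAR.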
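The translation step can be sketched with a dictionary standing in for the page table. This is an illustrative sketch only: the line-number width `H_BITS = 8`, the sample page table contents, and the names `translate` and `PageFault` are hypothetical.

```python
H_BITS = 8   # hypothetical line-number width, so pages hold 2**8 addresses


class PageFault(Exception):
    """Raised when the referenced page is not loaded in any page frame."""


def translate(vaddr: int, page_table: dict[int, int], h_bits: int = H_BITS) -> int:
    """Map a virtual address to a physical address through the page table."""
    page = vaddr >> h_bits                 # high-order bits: page number
    line = vaddr & ((1 << h_bits) - 1)     # low-order h bits: line number
    if page not in page_table:
        raise PageFault(f"page {page} is missing")
    frame = page_table[page]
    return (frame << h_bits) | line        # frame number followed by the same line


page_table = {0: 5, 1: 2}                  # page number -> frame number
print(hex(translate(0x0134, page_table)))  # 0x234: page 1 maps to frame 2
```

The line number passes through unchanged because pages and page frames have the same size; only the page number is replaced.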

Paging Algorithms

- Two basic types of paging algorithms
  - Static allocation
  - Dynamic allocation
- Three basic policies in defining any paging algorithm
  - Fetch policy: when a page should be loaded
  - Replacement policy: which page is unloaded
  - Placement policy: where a page should be loaded

Page references

- Processes continually reference memory and so generate a stream of page references
- The page reference stream tells us everything about how a process uses memory
  - For a given page size, we only need to consider the page number
  - If we have a reference to a page, then immediately following references to the same page will never generate a page fault
- Address stream: 0100, 0432, 0101, 0612, 0103, 0104, 0101, 0611, 0102, 0103, 0104, 0101, 0610, 0103, 0104, 0101, 0609, 0102, 0105
- Suppose the page size is 100 bytes. What is the page reference stream?
- We use page reference streams to evaluate paging algorithms
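The conversion from addresses to a page reference stream is a division by the page size followed by dropping immediate repeats. The sketch below applies it to the slide's addresses with the slide's 100-byte page size; the variable names are hypothetical, and the addresses are written without leading zeros because Python does not accept them in integer literals.

```python
from itertools import groupby

PAGE_SIZE = 100   # bytes per page, as given on the slide

addresses = [100, 432, 101, 612, 103, 104, 101, 611, 102, 103,
             104, 101, 610, 103, 104, 101, 609, 102, 105]

# Page number of each reference.
pages = [addr // PAGE_SIZE for addr in addresses]

# Immediately repeated references cannot fault, so collapse each run to one entry.
reduced = [page for page, _ in groupby(pages)]

print(pages)
print(reduced)  # [1, 4, 1, 6, 1, 6, 1, 6, 1, 6, 1]
```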

Fetch Policy

- Determines when a page should be brought into primary memory
- Usually don’t have prior knowledge about what pages will be needed
- Majority of paging mechanisms use a demand fetch policy
  - Page is loaded only when the process references it

Replacement Policy

- When there is no empty page frame in memory, we need to find one to replace
  - Write it out to the swap area if it has been changed since it was read in from the swap area
  - Dirty bit or modified bit
    - Pages that have been changed are referred to as “dirty”
    - These pages must be written out to disk because the disk version is out of date
    - This is called “cleaning” the page
- Which page to remove from memory to make room for a new page?
  - We need a page replacement algorithm
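Replacement algorithms are usually compared by counting page faults on a reference stream. The sketch below does this for FIFO and LRU under demand fetching with a fixed number of frames. The function names are hypothetical, and the sample stream is the one obtained from the earlier address example with 100-byte pages after dropping immediate repeats; the choice of 2 frames is an assumption for illustration.

```python
from collections import OrderedDict, deque


def fifo_faults(stream, frames):
    """Count page faults when the oldest loaded page is evicted first."""
    resident = deque()
    faults = 0
    for page in stream:
        if page in resident:
            continue
        faults += 1
        if len(resident) == frames:
            resident.popleft()             # evict the page loaded earliest
        resident.append(page)
    return faults


def lru_faults(stream, frames):
    """Count page faults when the least recently used page is evicted."""
    resident = OrderedDict()               # ordered from least to most recent use
    faults = 0
    for page in stream:
        if page in resident:
            resident.move_to_end(page)     # a hit refreshes recency
            continue
        faults += 1
        if len(resident) == frames:
            resident.popitem(last=False)   # evict the least recently used page
        resident[page] = True
    return faults


stream = [1, 4, 1, 6, 1, 6, 1, 6, 1, 6, 1]
print(fifo_faults(stream, 2))  # 4
print(lru_faults(stream, 2))   # 3
```

On this stream LRU keeps the heavily used page 1 resident, while FIFO evicts it once simply because it was loaded first.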

R6: Deadlock

- 4 necessary conditions of deadlock
  - Mutual exclusion
  - Hold and wait
  - No preemption
  - Circular wait
- How to deal with deadlock in OS
  - Prevention
  - Avoidance
  - Recovery
- Please see examples in lecture slides and exercises in Assignment 5

Dealing with Deadlocks

Three ways:

- Prevention: place restrictions on resource requests to make deadlock impossible
- Avoidance: plan ahead to avoid deadlock
- Recovery: check for deadlock (periodically or sporadically) and recover from it

Please see examples in lecture slides and exercises in Assignment 5
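The "check for deadlock" step of recovery is often illustrated as a search for a circular wait. The sketch below is one such illustration, not the method from the lecture slides: it assumes a wait-for graph given as a dictionary that maps each process to the processes it is waiting on, and it reports whether the graph contains a cycle. The function name and the sample graphs are hypothetical, and a cycle implies deadlock only when each resource has a single instance.

```python
def has_circular_wait(wait_for):
    """Return True if the wait-for graph contains a cycle (depth-first search)."""
    WHITE, GRAY, BLACK = 0, 1, 2           # unvisited, on current path, finished
    color = {}

    def visit(p):
        color[p] = GRAY
        for q in wait_for.get(p, ()):
            state = color.get(q, WHITE)
            if state == GRAY:              # back edge: q is already on this path
                return True
            if state == WHITE and visit(q):
                return True
        color[p] = BLACK
        return False

    return any(color.get(p, WHITE) == WHITE and visit(p) for p in wait_for)


# p0 waits on p1 and p1 waits on p0: a circular wait.
print(has_circular_wait({"p0": ["p1"], "p1": ["p0"]}))  # True

# p0 waits on p1, which is not waiting on anything.
print(has_circular_wait({"p0": ["p1"], "p1": []}))      # False
```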

Good Luck! Q/A