CSCE 771 Natural Language Processing Lecture 12 Classifiers


CSCE 771 Natural Language Processing

Lecture 12: Classifiers Part 2

Topics
- Classifiers
- Maxent Classifiers
- Maximum Entropy Markov Models
- Information Extraction and chunking intro

Readings: Chapter 6, 7.1

February 25, 2013

Overview

Last Time
- Confusion Matrix
- Brill Demo
- NLTK Ch 6 - Text Classification

Today
- Confusion Matrix
- Brill Demo
- NLTK Ch 6 - Text Classification

Readings
- NLTK Ch 6

CSCE 771 Spring 2013

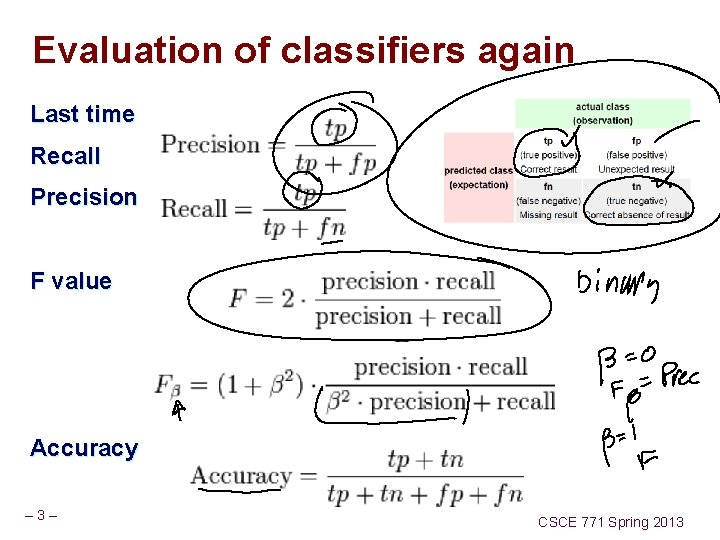

Evaluation of classifiers again Last time Recall Precision F value Accuracy – 3– CSCE 771 Spring 2013
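As a reminder of how the four measures relate, here is a minimal sketch that computes them from raw counts. The function name `prf` and the counts are hypothetical, chosen only for illustration.

```python
def prf(tp, fp, fn, tn):
    """Return (precision, recall, f1, accuracy) from raw decision counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, f1, accuracy

# Hypothetical counts for one binary classifier on 100 documents.
p, r, f, a = prf(tp=8, fp=2, fn=4, tn=86)
print(p, r, f, a)
```

Accuracy looks high here mostly because true negatives dominate, which is one reason precision and recall are reported alongside it.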

Reuters Data set

- 21578 documents
- 118 categories
- a document can be in multiple classes
- 118 binary classifiers, one per category

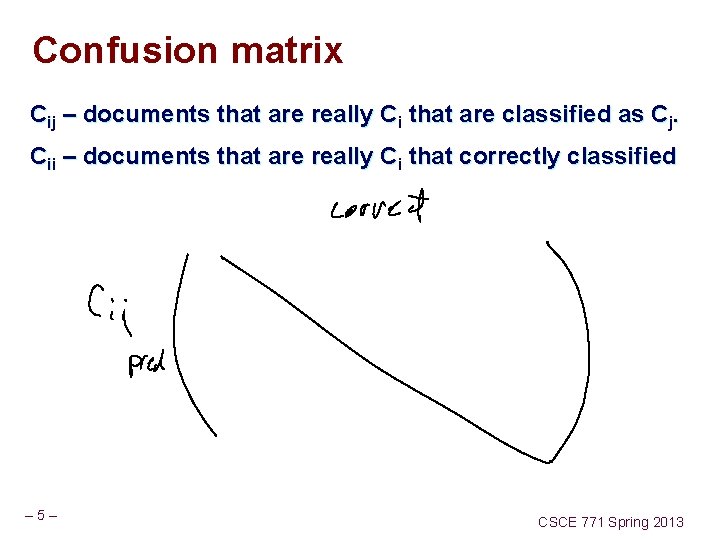

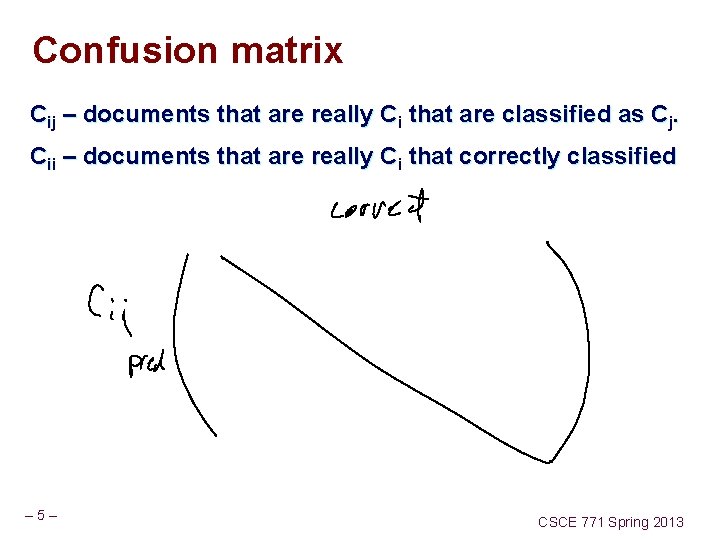

Confusion matrix Cij – documents that are really Ci that are classified as Cj. Cii – documents that are really Ci that correctly classified – 5– CSCE 771 Spring 2013
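A confusion matrix can be sketched as a counter over (true label, predicted label) pairs. The label lists below are made up for illustration; `confusion_matrix` is a hypothetical helper, not an NLTK function.

```python
from collections import Counter

def confusion_matrix(gold, predicted):
    """Count (true, predicted) pairs; cell (Ci, Cj) corresponds to Cij."""
    return Counter(zip(gold, predicted))

# Hypothetical gold and predicted tags for five tokens.
gold = ["NN", "NN", "VB", "JJ", "NN"]
pred = ["NN", "VB", "VB", "NN", "NN"]

cm = confusion_matrix(gold, pred)
# The diagonal cells (Cii) hold the correctly classified items.
correct = sum(n for (g, p), n in cm.items() if g == p)
print(cm[("NN", "VB")], correct)
```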

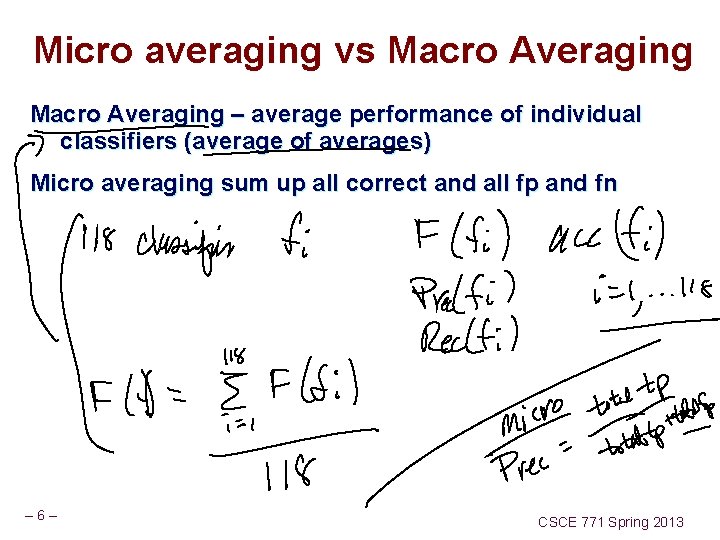

Micro averaging vs Macro Averaging – average performance of individual classifiers (average of averages) Micro averaging sum up all correct and all fp and fn – 6– CSCE 771 Spring 2013
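The difference between the two averages shows up when class sizes are unequal. The sketch below uses precision only, with hypothetical (tp, fp) counts for three binary classifiers; the category names are just labels for the example.

```python
def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0

# Hypothetical (tp, fp) counts: one large class, two small ones.
counts = {"earn": (90, 10), "grain": (5, 5), "ship": (1, 3)}

# Macro: average the per-classifier precisions.
macro = sum(precision(tp, fp) for tp, fp in counts.values()) / len(counts)

# Micro: pool the counts first, then compute precision once.
total_tp = sum(tp for tp, _ in counts.values())
total_fp = sum(fp for _, fp in counts.values())
micro = precision(total_tp, total_fp)

print(round(macro, 3), round(micro, 3))
```

With these counts the micro average is dominated by the large class, while the macro average gives each classifier equal weight.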

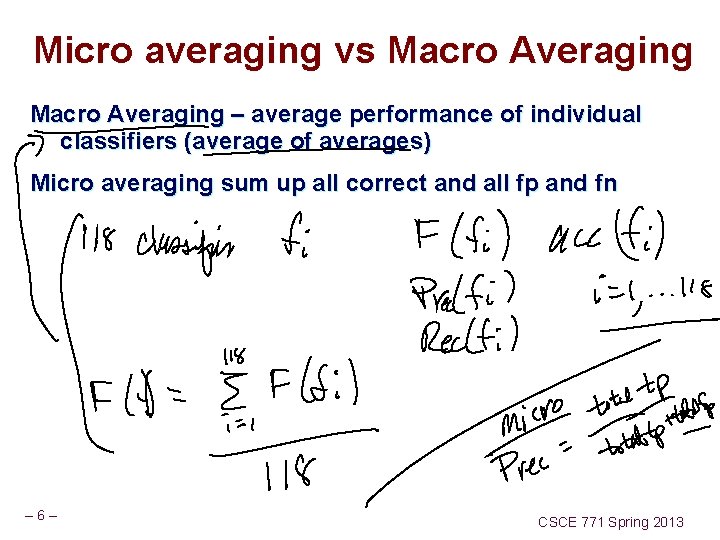

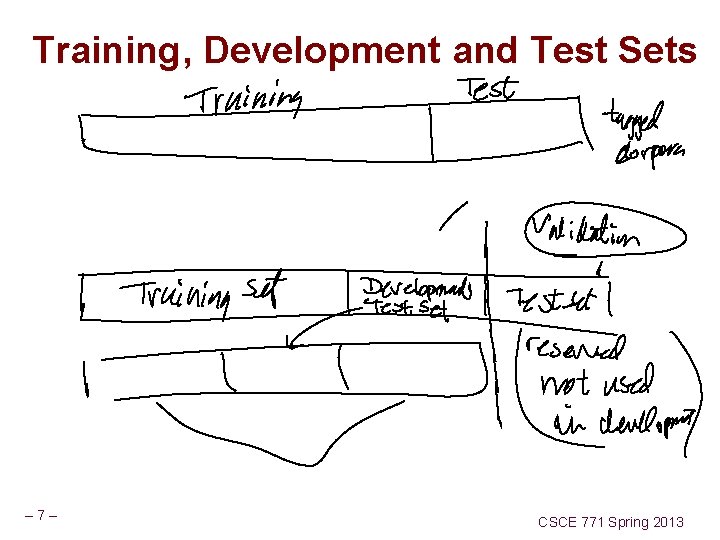

Training, Development and Test Sets – 7– CSCE 771 Spring 2013

![Codeconsecutivepostagger py revisited to trace history development def posfeaturessentence i history consecpostagfeatures if Code_consecutive_pos_tagger. py revisited to trace history development def pos_features(sentence, i, history): # [_consec-pos-tag-features] if](https://slidetodoc.com/presentation_image_h2/9236bbe298e3d1eb6e84a7a0054c1ef8/image-8.jpg)

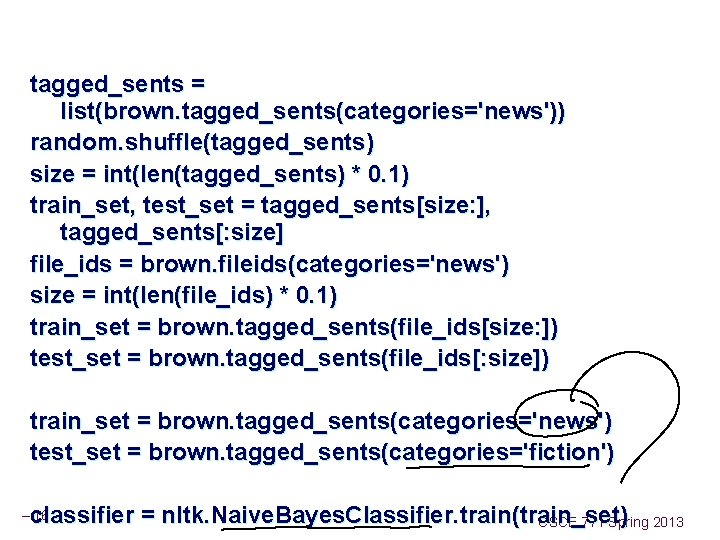

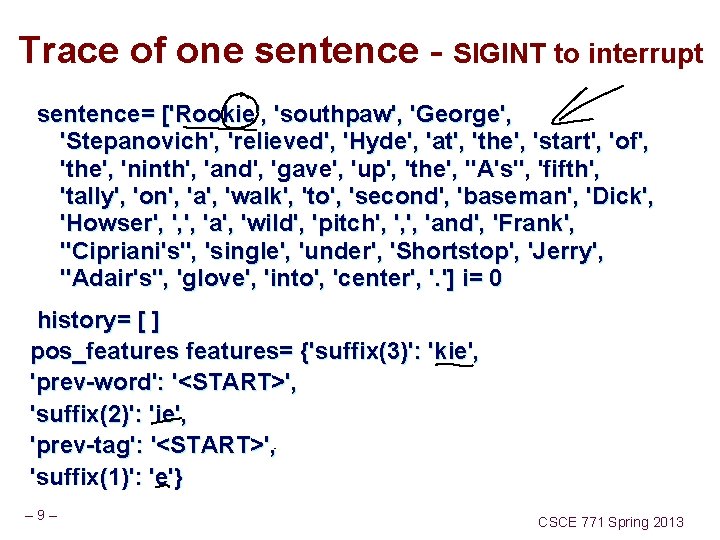

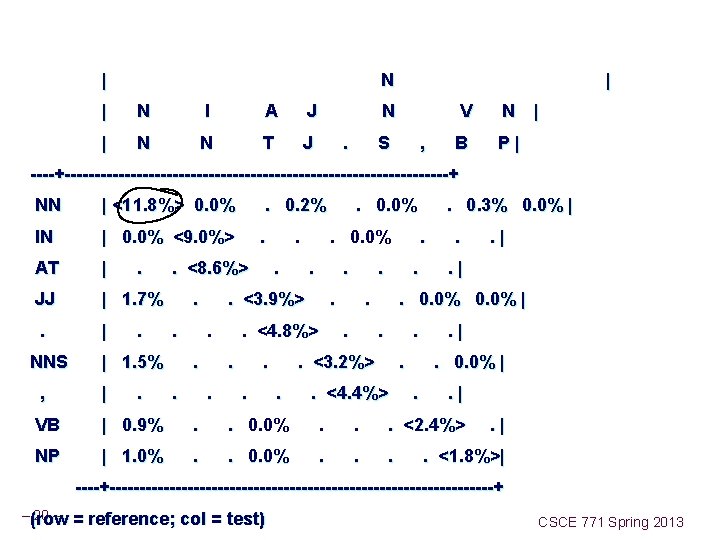

Code_consecutive_pos_tagger. py revisited to trace history development def pos_features(sentence, i, history): # [_consec-pos-tag-features] if debug == 1 : print "pos_features *****************" if debug == 1 : print " sentence=", sentence if debug == 1 : print " i=", i if debug == 1 : print " history=", history features = {"suffix(1)": sentence[i][-1: ], "suffix(2)": sentence[i][-2: ], "suffix(3)": sentence[i][-3: ]} if i == 0: features["prev-word"] = "<START>" features["prev-tag"] = "<START>" else: features["prev-word"] = sentence[i-1] features["prev-tag"] = history[i-1] if debug == 1 : print "pos_features=", features – 8 – return features CSCE 771 Spring 2013

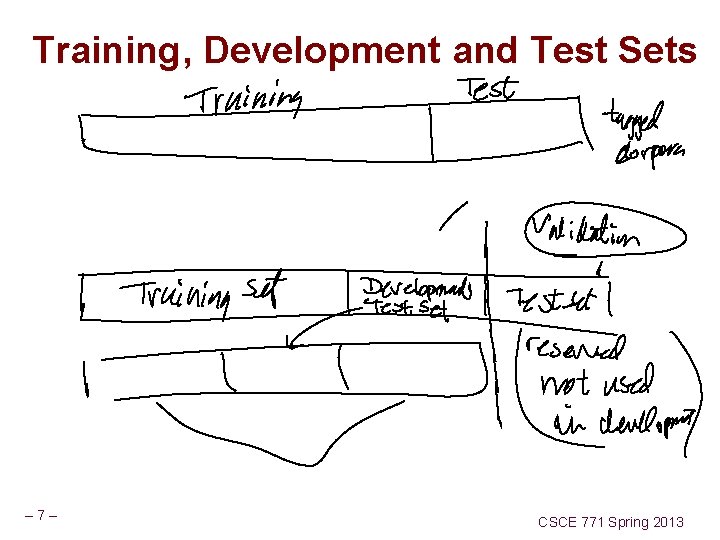

Trace of one sentence - SIGINT to interrupt sentence= ['Rookie', 'southpaw', 'George', 'Stepanovich', 'relieved', 'Hyde', 'at', 'the', 'start', 'of', 'the', 'ninth', 'and', 'gave', 'up', 'the', "A's", 'fifth', 'tally', 'on', 'a', 'walk', 'to', 'second', 'baseman', 'Dick', 'Howser', ', ', 'a', 'wild', 'pitch', ', ', 'and', 'Frank', "Cipriani's", 'single', 'under', 'Shortstop', 'Jerry', "Adair's", 'glove', 'into', 'center', '. '] i= 0 history= [ ] pos_features= {'suffix(3)': 'kie', 'prev-word': '<START>', 'suffix(2)': 'ie', 'prev-tag': '<START>', 'suffix(1)': 'e'} – 9– CSCE 771 Spring 2013

![Trace continued posfeatures sentence Rookie i 1 history NN posfeatures suffix3 Trace continued pos_features ******************* sentence= ['Rookie', …'. '] i= 1 history= ['NN'] pos_features= {'suffix(3)':](https://slidetodoc.com/presentation_image_h2/9236bbe298e3d1eb6e84a7a0054c1ef8/image-10.jpg)

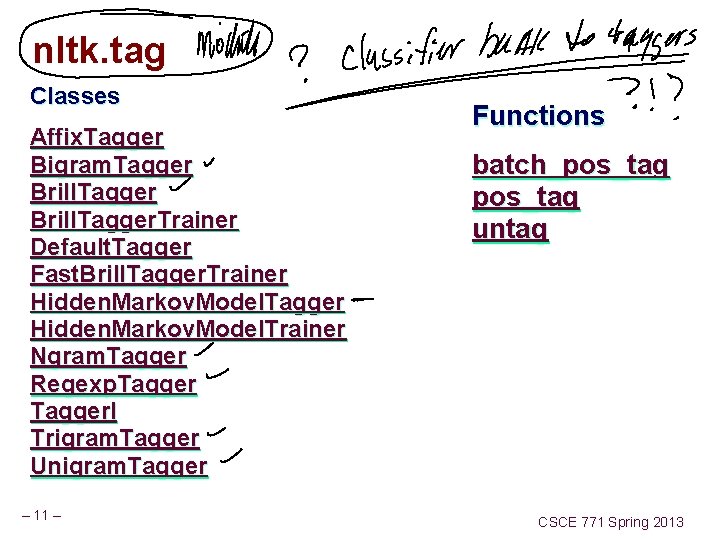

Trace continued pos_features ******************* sentence= ['Rookie', …'. '] i= 1 history= ['NN'] pos_features= {'suffix(3)': 'paw', 'prev-word': 'Rookie', 'suffix(2)': 'aw', 'prev-tag': 'NN', 'suffix(1)': 'w'} pos_features ******************* sentence= ['Rookie', 'southpaw', … '. '] i= 2 history= ['NN', 'NN'] pos_features= {'suffix(3)': 'rge', 'prev-word': 'southpaw', 'suffix(2)': 'ge', 'prev-tag': 'NN', 'suffix(1)': 'e'} – 10 – CSCE 771 Spring 2013
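To check the trace by hand, the feature extractor can be rerun without the debug printing. This is a Python 3 restatement of the slide's function, using only the first three words of the traced sentence.

```python
def pos_features(sentence, i, history):
    """Suffix features plus the previous word and its predicted tag."""
    word = sentence[i]
    features = {
        "suffix(1)": word[-1:],
        "suffix(2)": word[-2:],
        "suffix(3)": word[-3:],
    }
    if i == 0:
        features["prev-word"] = "<START>"
        features["prev-tag"] = "<START>"
    else:
        features["prev-word"] = sentence[i - 1]
        # history holds the tags already predicted for positions 0..i-1
        features["prev-tag"] = history[i - 1]
    return features

sentence = ["Rookie", "southpaw", "George"]
first = pos_features(sentence, 0, [])
second = pos_features(sentence, 1, ["NN"])
print(second)
```

The point of the trace is that `history` grows by one tag per position, so each call sees the tagger's own earlier decisions.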

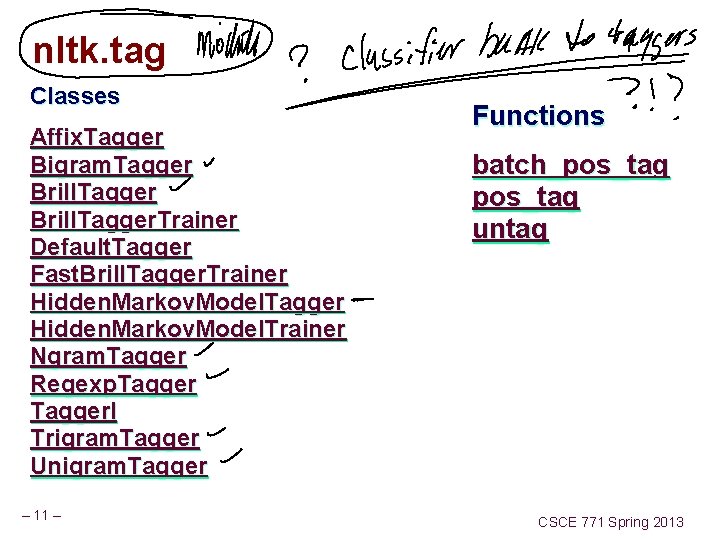

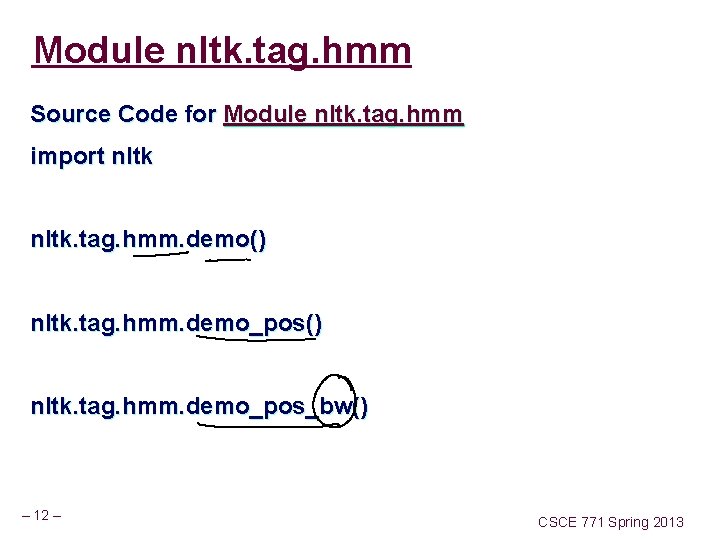

nltk. tag Classes Affix. Tagger Bigram. Tagger Brill. Tagger. Trainer Default. Tagger Fast. Brill. Tagger. Trainer Hidden. Markov. Model. Tagger Hidden. Markov. Model. Trainer Ngram. Tagger Regexp. Tagger. I Trigram. Tagger Unigram. Tagger – 11 – Functions batch_pos_tag untag CSCE 771 Spring 2013

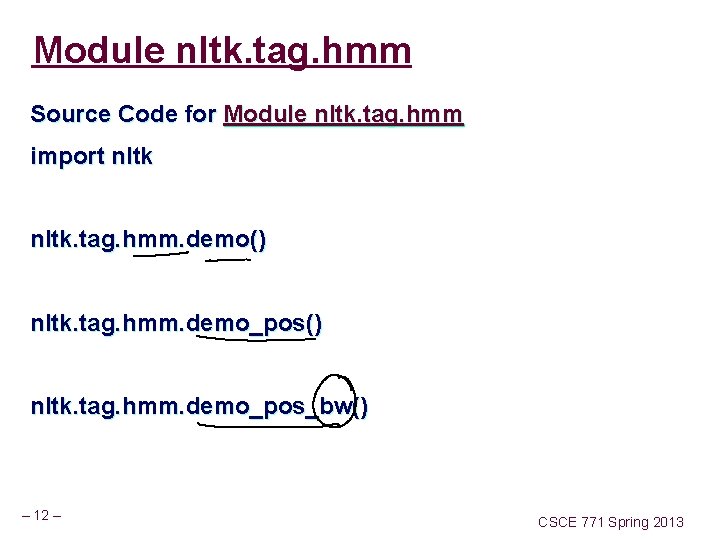

Module nltk. tag. hmm Source Code for Module nltk. tag. hmm import nltk. tag. hmm. demo() nltk. tag. hmm. demo_pos_bw() – 12 – CSCE 771 Spring 2013

HMM demo

    import nltk
    nltk.tag.hmm.demo()
    nltk.tag.hmm.demo_pos_bw()

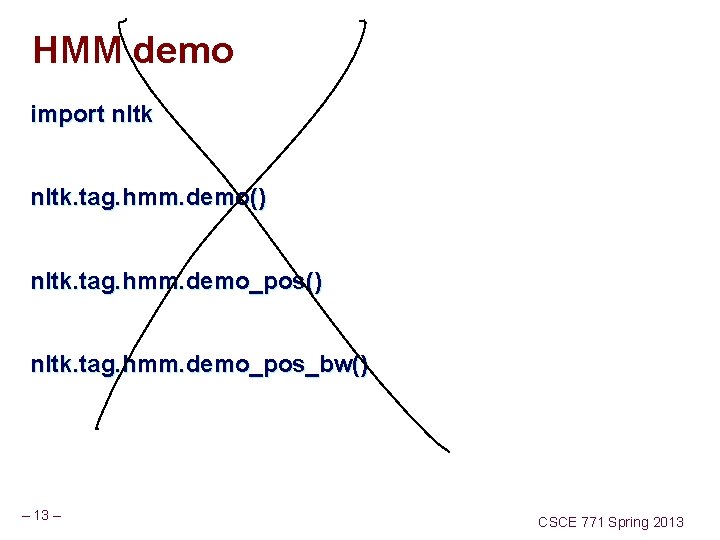

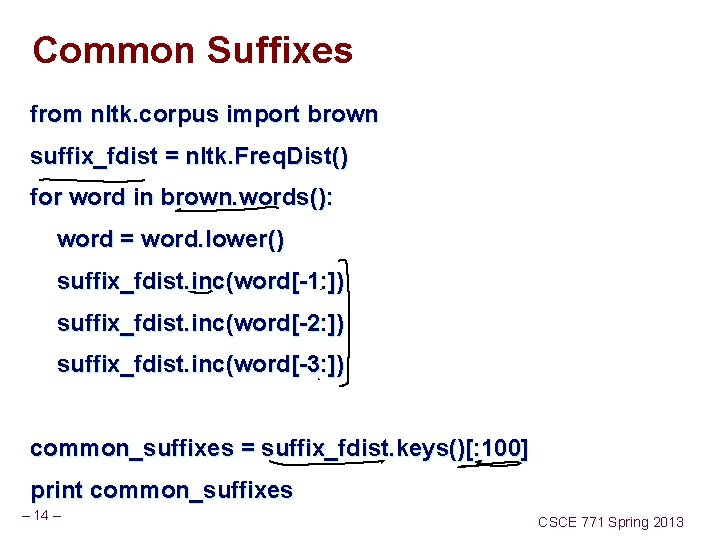

Common Suffixes from nltk. corpus import brown suffix_fdist = nltk. Freq. Dist() for word in brown. words(): word = word. lower() suffix_fdist. inc(word[-1: ]) suffix_fdist. inc(word[-2: ]) suffix_fdist. inc(word[-3: ]) common_suffixes = suffix_fdist. keys()[: 100] print common_suffixes – 14 – CSCE 771 Spring 2013
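The same counting idea can be sketched without NLTK, using `collections.Counter` from the standard library. The helper name `common_suffixes` and the tiny word list are assumptions for illustration; the slide's version runs over the whole Brown corpus.

```python
from collections import Counter

def common_suffixes(words, n=100):
    """Return the n most frequent 1-, 2- and 3-character word endings."""
    counts = Counter()
    for word in words:
        word = word.lower()
        for k in (1, 2, 3):
            counts[word[-k:]] += 1
    return [suffix for suffix, _ in counts.most_common(n)]

# Hypothetical toy word list standing in for brown.words().
words = ["running", "walking", "Talking", "dog"]
top = common_suffixes(words, n=3)
print(top)
```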

    rtepair = nltk.corpus.rte.pairs(['rte3_dev.xml'])[33]
    extractor = nltk.RTEFeatureExtractor(rtepair)
    print extractor.text_words
    # set(['Russia', 'Organisation', 'Shanghai', …
    print extractor.hyp_words
    # set(['member', 'SCO', 'China'])
    print extractor.overlap('word')
    # set([])
    print extractor.overlap('ne')
    # set(['SCO', 'China'])
    print extractor.hyp_extra('word')
    # set(['member'])

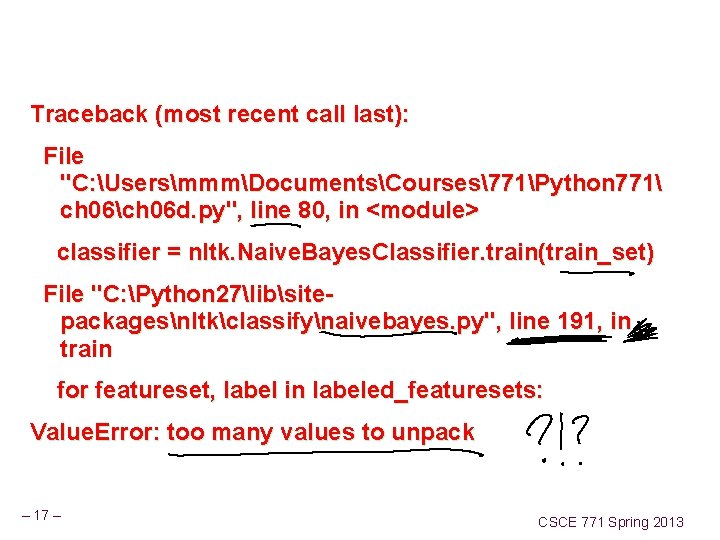

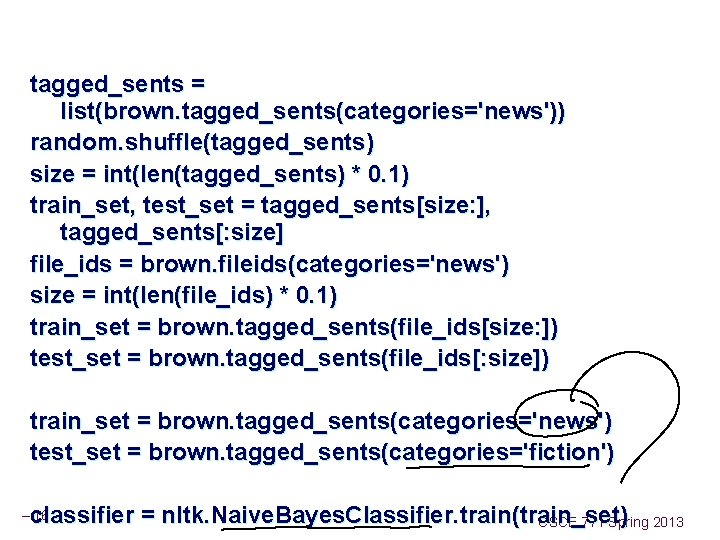

tagged_sents = list(brown. tagged_sents(categories='news')) random. shuffle(tagged_sents) size = int(len(tagged_sents) * 0. 1) train_set, test_set = tagged_sents[size: ], tagged_sents[: size] file_ids = brown. fileids(categories='news') size = int(len(file_ids) * 0. 1) train_set = brown. tagged_sents(file_ids[size: ]) test_set = brown. tagged_sents(file_ids[: size]) train_set = brown. tagged_sents(categories='news') test_set = brown. tagged_sents(categories='fiction') classifier = nltk. Naive. Bayes. Classifier. train(train_set) CSCE 771 Spring 2013 – 16 –

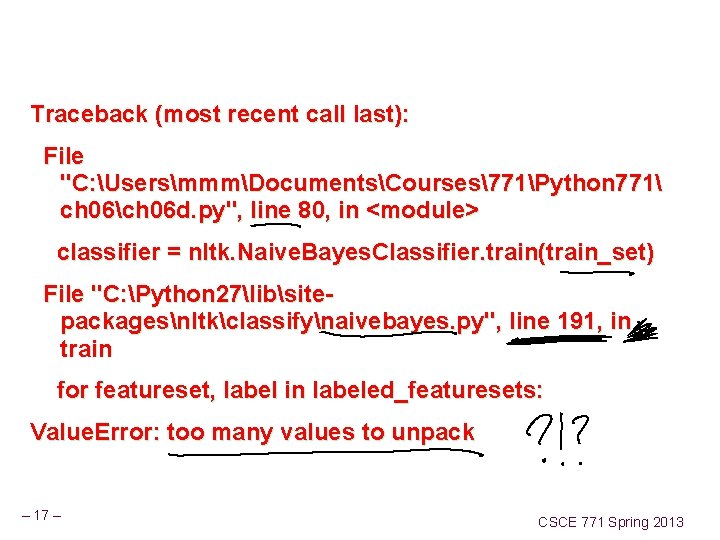

Traceback (most recent call last): File "C: UsersmmmDocumentsCourses771Python 771 ch 06ch 06 d. py", line 80, in <module> classifier = nltk. Naive. Bayes. Classifier. train(train_set) File "C: Python 27libsitepackagesnltkclassifynaivebayes. py", line 191, in train for featureset, label in labeled_featuresets: Value. Error: too many values to unpack – 17 – CSCE 771 Spring 2013
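The failing loop in the traceback unpacks each training item as `featureset, label`, so the classifier expects a list of (feature dictionary, label) pairs. A tagged sentence is instead a list of many (word, tag) pairs, which cannot be unpacked into two names. A minimal sketch of the conversion, assuming a hypothetical feature extractor `suffix_features`:

```python
def suffix_features(word):
    """Hypothetical feature extractor, used only for illustration."""
    return {"suffix(1)": word[-1:], "suffix(2)": word[-2:]}

def to_featuresets(tagged_sents):
    """Flatten tagged sentences into (featureset, label) pairs."""
    return [(suffix_features(word), tag)
            for sent in tagged_sents
            for (word, tag) in sent]

# One hypothetical tagged sentence in Brown-style (word, tag) form.
tagged_sents = [[("The", "AT"), ("dog", "NN"), ("barked", "VBD")]]
train_set = to_featuresets(tagged_sents)

# Each item now unpacks into exactly two values.
for featureset, label in train_set:
    print(label, featureset)
```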

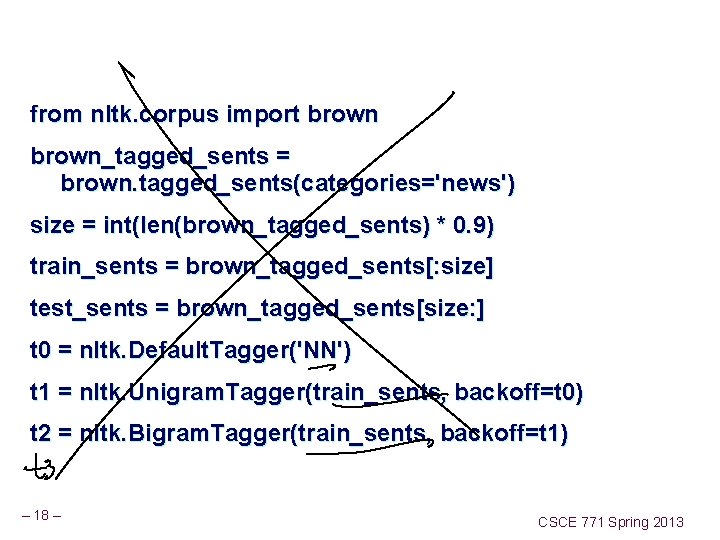

from nltk. corpus import brown_tagged_sents = brown. tagged_sents(categories='news') size = int(len(brown_tagged_sents) * 0. 9) train_sents = brown_tagged_sents[: size] test_sents = brown_tagged_sents[size: ] t 0 = nltk. Default. Tagger('NN') t 1 = nltk. Unigram. Tagger(train_sents, backoff=t 0) t 2 = nltk. Bigram. Tagger(train_sents, backoff=t 1) – 18 – CSCE 771 Spring 2013

![def taglisttaggedsents return tag for sent in taggedsents for word tag in sent def def tag_list(tagged_sents): return [tag for sent in tagged_sents for (word, tag) in sent] def](https://slidetodoc.com/presentation_image_h2/9236bbe298e3d1eb6e84a7a0054c1ef8/image-19.jpg)

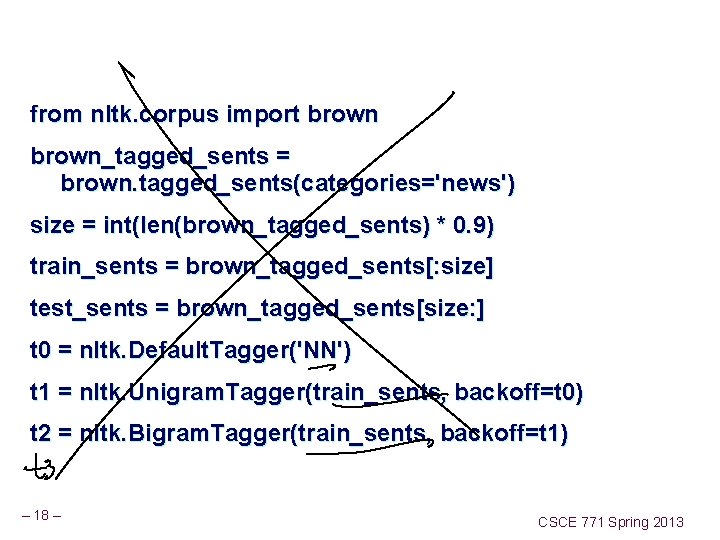

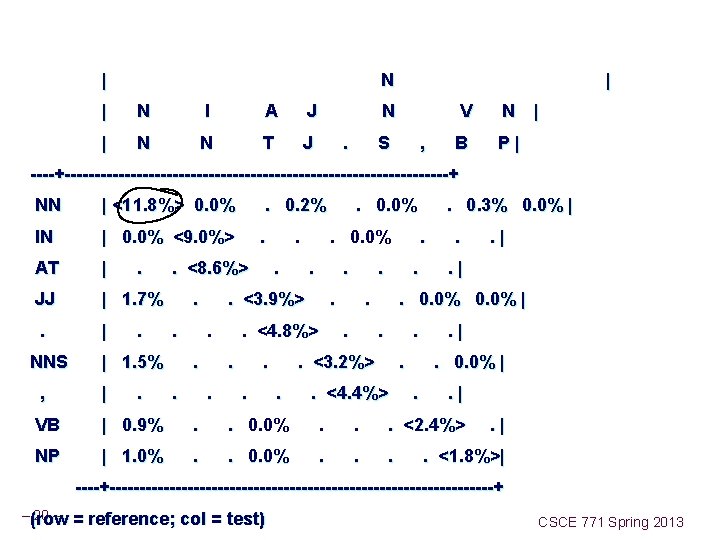

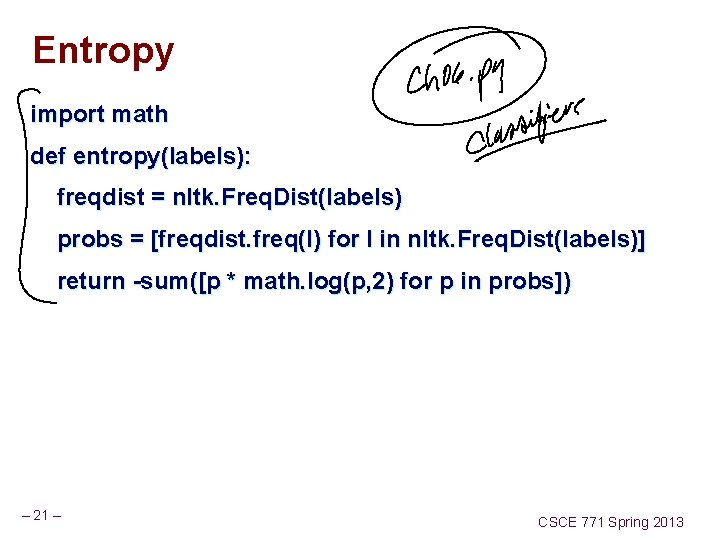

def tag_list(tagged_sents): return [tag for sent in tagged_sents for (word, tag) in sent] def apply_tagger(tagger, corpus): return [tagger. tag(nltk. tag. untag(sent)) for sent in corpus] gold = tag_list(brown. tagged_sents(categories='editorial')) test = tag_list(apply_tagger(t 2, brown. tagged_sents(categories='editorial'))) cm = nltk. Confusion. Matrix(gold, test) print cm. pp(sort_by_count=True, show_percents=True, truncate=9) – 19 – CSCE 771 Spring 2013

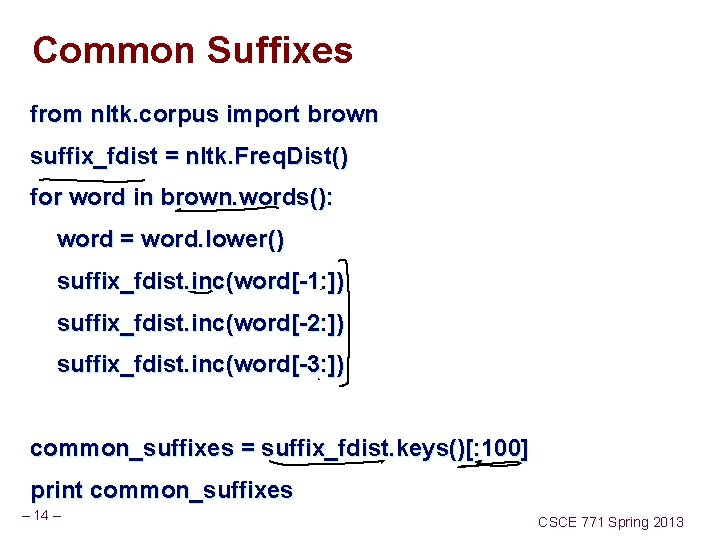

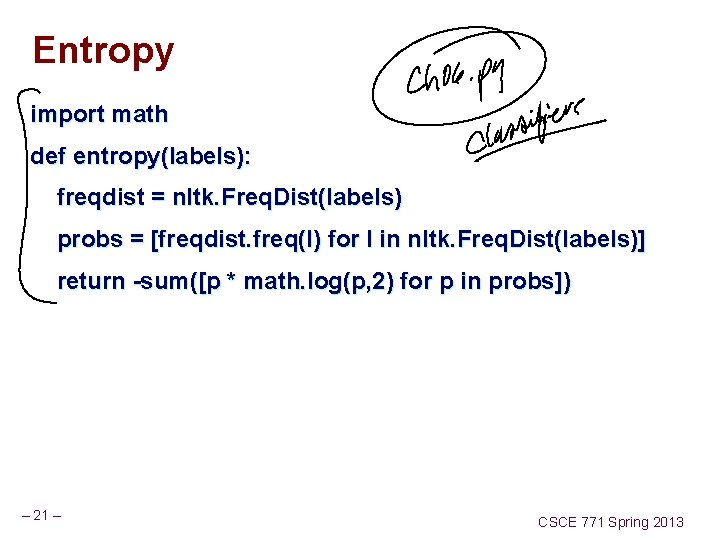

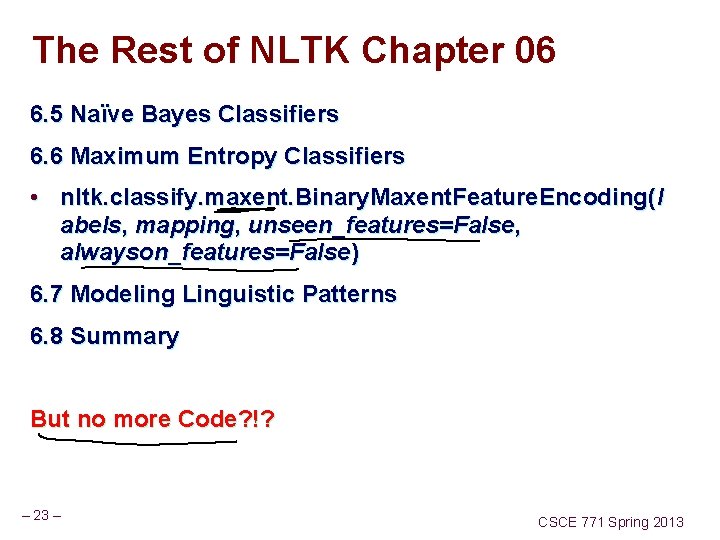

Entropy import math def entropy(labels): freqdist = nltk. Freq. Dist(labels) probs = [freqdist. freq(l) for l in nltk. Freq. Dist(labels)] return -sum([p * math. log(p, 2) for p in probs]) – 21 – CSCE 771 Spring 2013

    print entropy(['male', 'male', 'male', 'male'])
    -0.0
    print entropy(['male', 'female', 'male', 'male'])
    0.811278124459
    print entropy(['female', 'male', 'female', 'male'])
    1.0
    print entropy(['female', 'female', 'male', 'female'])
    0.811278124459
    print entropy(['female', 'female', 'female', 'female'])
    -0.0
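A value of about 0.811 bits corresponds to a 3:1 split between two labels, and 1.0 bit to an even split. The sketch below recomputes these cases without NLTK, using `collections.Counter` in place of `FreqDist`; the variable names are illustrative.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of the label distribution."""
    total = len(labels)
    probs = [count / total for count in Counter(labels).values()]
    return -sum(p * math.log2(p) for p in probs)

h_pure = entropy(["male"] * 4)                          # one label only
h_skew = entropy(["male", "female", "male", "male"])    # 3:1 split
h_even = entropy(["female", "male", "female", "male"])  # 2:2 split
print(h_pure, h_skew, h_even)
```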

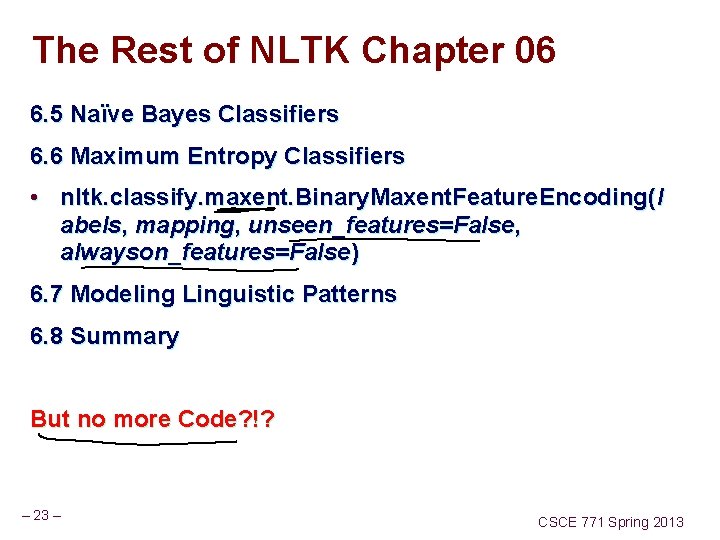

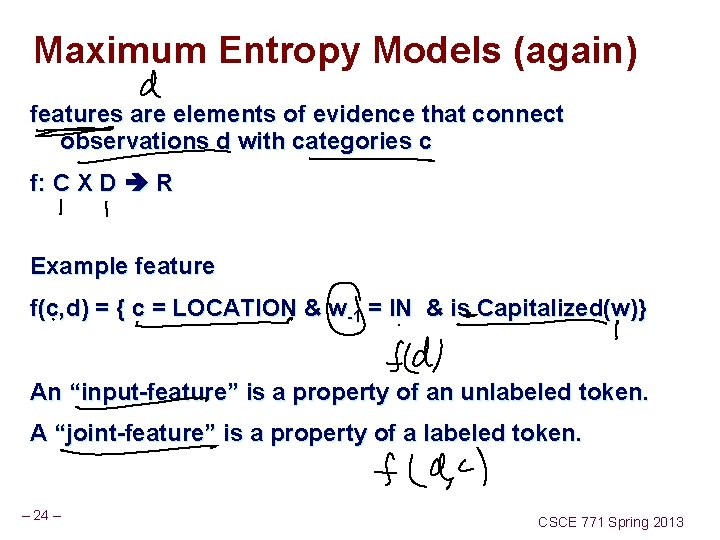

The Rest of NLTK Chapter 06 6. 5 Naïve Bayes Classifiers 6. 6 Maximum Entropy Classifiers • nltk. classify. maxent. Binary. Maxent. Feature. Encoding(l abels, mapping, unseen_features=False, alwayson_features=False) 6. 7 Modeling Linguistic Patterns 6. 8 Summary But no more Code? !? – 23 – CSCE 771 Spring 2013

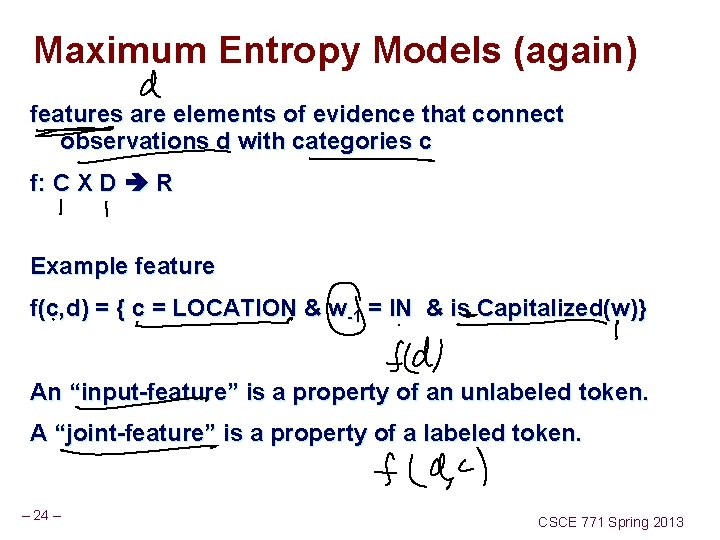

Maximum Entropy Models (again) features are elements of evidence that connect observations d with categories c f: C X D R Example feature f(c, d) = { c = LOCATION & w-1 = IN & is Capitalized(w)} An “input-feature” is a property of an unlabeled token. A “joint-feature” is a property of a labeled token. – 24 – CSCE 771 Spring 2013

Feature-Based Linear Classifiers

$$p(c \mid d, \lambda) = \frac{\exp \sum_i \lambda_i f_i(c, d)}{\sum_{c'} \exp \sum_i \lambda_i f_i(c', d)}$$
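In the usual exponential (softmax) form of a maxent model, each class gets the score exp(Σ λ_i f_i(c, d)), and the scores are normalized over all classes. A minimal sketch under that assumption follows; the two features, their weights, and the datum are hypothetical.

```python
import math

def maxent_probs(weights, features, classes, d):
    """p(c | d, lambda) proportional to exp(sum_i lambda_i * f_i(c, d))."""
    scores = {c: math.exp(sum(w * f(c, d) for w, f in zip(weights, features)))
              for c in classes}
    z = sum(scores.values())  # normalizer over all classes
    return {c: s / z for c, s in scores.items()}

# Hypothetical indicator features over (class, datum) pairs.
def f_loc_cap(c, d):
    fires = c == "LOCATION" and d["prev"] == "in" and d["word"][0].isupper()
    return 1.0 if fires else 0.0

def f_other_lower(c, d):
    return 1.0 if c == "OTHER" and d["word"].islower() else 0.0

probs = maxent_probs([1.8, 0.6], [f_loc_cap, f_other_lower],
                     ["LOCATION", "OTHER"], {"prev": "in", "word": "Quebec"})
print(probs)
```

Only the first feature fires for this datum, so LOCATION scores exp(1.8) against exp(0) = 1 for OTHER.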

Maxent Model revisited

Maximum Entropy Markov Models (MEMM)

- Apply a Maxent classifier repeatedly along a sequence, one position at a time
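One simple way to apply a classifier along a sequence is greedy left-to-right decoding, where each decision is fed back in as a feature for the next position. This sketch assumes greedy decoding (MEMMs are often decoded with Viterbi search instead), and `toy_classify` is a hypothetical stand-in for a trained Maxent classifier.

```python
def greedy_tag(sentence, classify, featurize):
    """Tag left to right, passing earlier predictions in as history."""
    history = []
    for i in range(len(sentence)):
        history.append(classify(featurize(sentence, i, history)))
    return list(zip(sentence, history))

def featurize(sentence, i, history):
    return {"word": sentence[i].lower(),
            "prev-tag": history[i - 1] if i > 0 else "<START>"}

def toy_classify(features):
    """Hypothetical rule-based stand-in for a trained classifier."""
    if features["word"] == "the":
        return "DT"
    return "NN" if features["prev-tag"] == "DT" else "VB"

tagged = greedy_tag(["The", "dog", "barked"], toy_classify, featurize)
print(tagged)
```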


Named Entity Recognition (NER)

entity:
1. a: being, existence; especially: independent, separate, or self-contained existence; b: the existence of a thing as contrasted with its attributes
2. something that has separate and distinct existence and objective or conceptual reality
3. an organization (as a business or governmental unit) that has an identity separate from those of its members

A named entity is one of those with a name.

- http://nlp.stanford.edu/software/CRF-NER.shtml

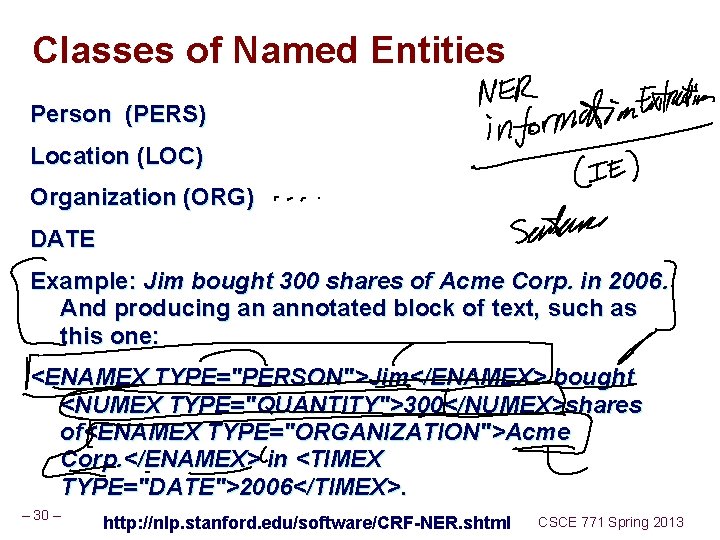

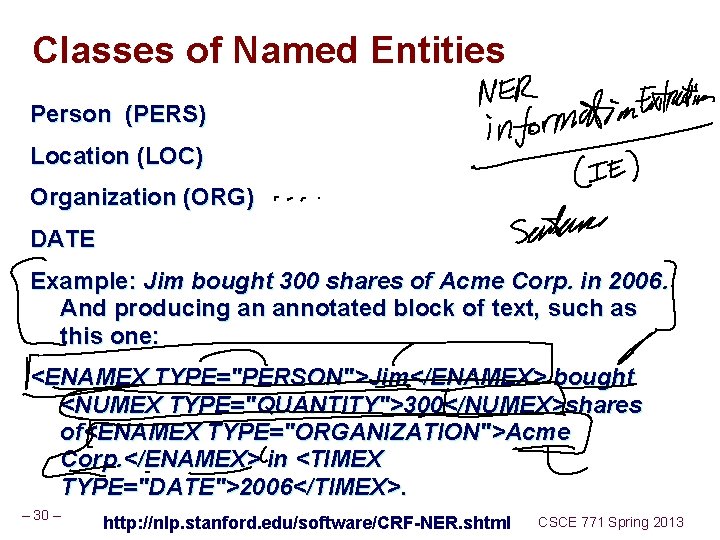

Classes of Named Entities Person (PERS) Location (LOC) Organization (ORG) DATE Example: Jim bought 300 shares of Acme Corp. in 2006. And producing an annotated block of text, such as this one: <ENAMEX TYPE="PERSON">Jim</ENAMEX> bought <NUMEX TYPE="QUANTITY">300</NUMEX>shares of<ENAMEX TYPE="ORGANIZATION">Acme Corp. </ENAMEX> in <TIMEX TYPE="DATE">2006</TIMEX>. – 30 – http: //nlp. stanford. edu/software/CRF-NER. shtml CSCE 771 Spring 2013

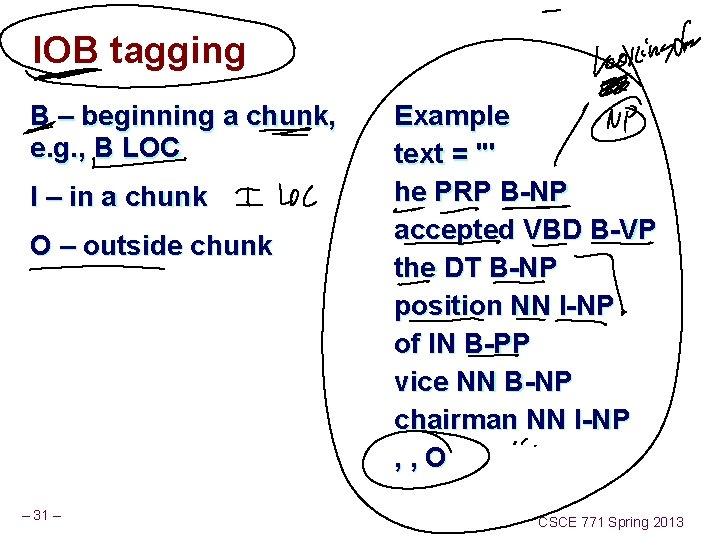

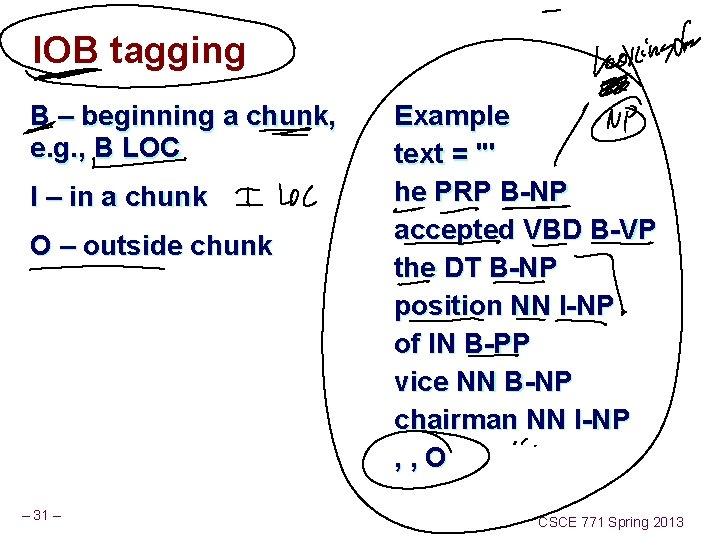

IOB tagging B – beginning a chunk, e. g. , B LOC I – in a chunk O – outside chunk – 31 – Example text = ''' he PRP B-NP accepted VBD B-VP the DT B-NP position NN I-NP of IN B-PP vice NN B-NP chairman NN I-NP , , O CSCE 771 Spring 2013
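Reading chunks back out of IOB tags can be sketched in a few lines: a B- tag opens a chunk, a matching I- tag extends it, and anything else closes it. The helper `iob_to_chunks` is hypothetical and not part of NLTK; it uses the rows from the example above.

```python
def iob_to_chunks(tagged):
    """Group (word, pos, iob) triples into (chunk_type, [words]) chunks."""
    chunks, current = [], None
    for word, _pos, iob in tagged:
        if iob.startswith("B-"):
            current = (iob[2:], [word])
            chunks.append(current)
        elif iob.startswith("I-") and current and current[0] == iob[2:]:
            current[1].append(word)
        else:
            # "O", or an I- tag that does not continue the open chunk
            current = None
    return chunks

rows = """he PRP B-NP
accepted VBD B-VP
the DT B-NP
position NN I-NP
of IN B-PP
vice NN B-NP
chairman NN I-NP
, , O"""
tagged = [tuple(line.split()) for line in rows.splitlines()]
chunks = iob_to_chunks(tagged)
print(chunks)
```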

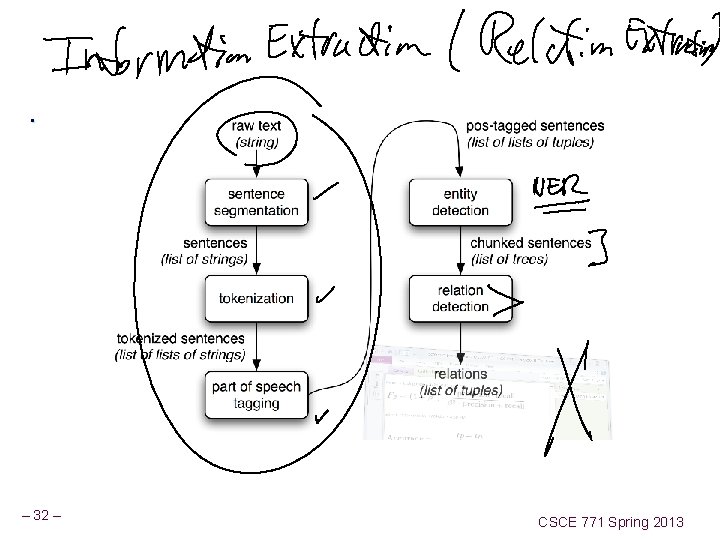

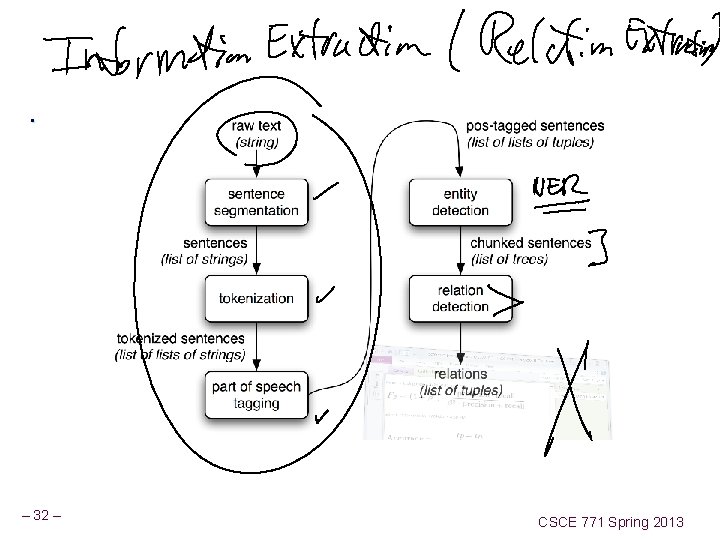

. – 32 – CSCE 771 Spring 2013

Chunking - partial parsing – 33 – CSCE 771 Spring 2013

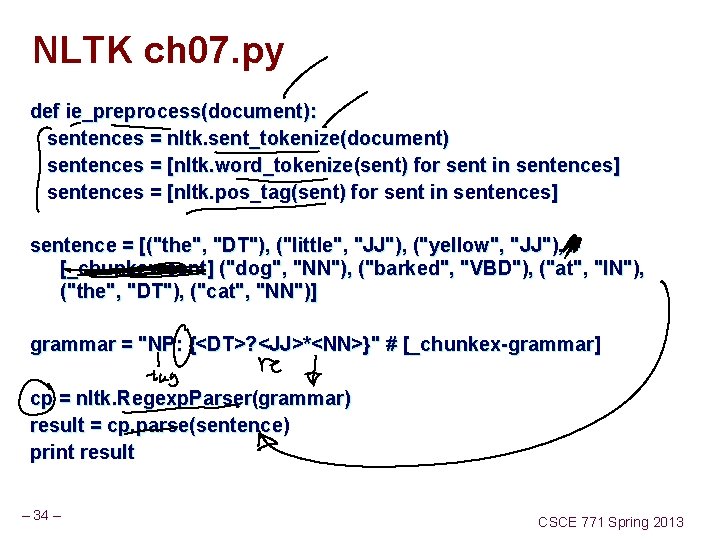

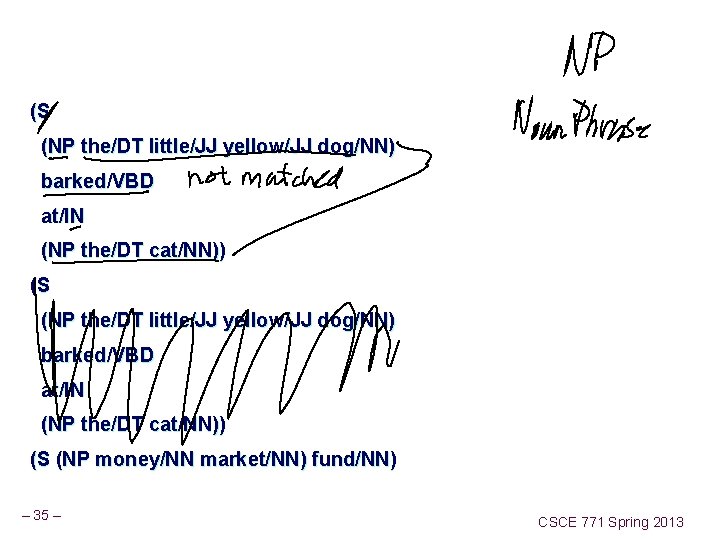

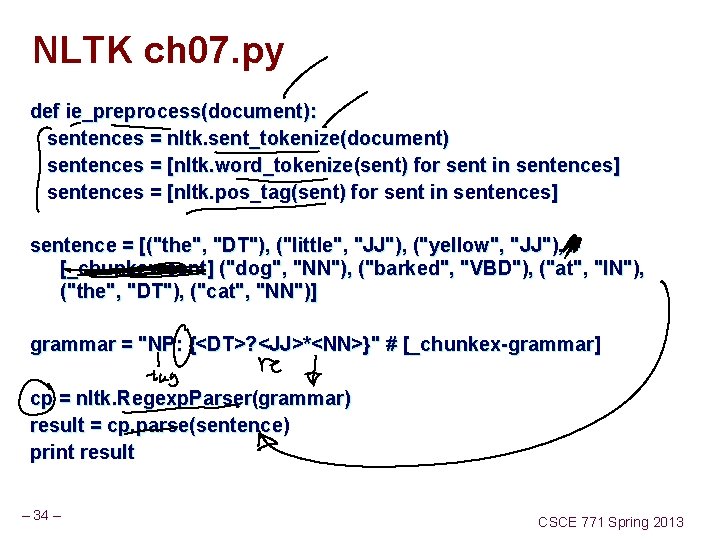

NLTK ch 07. py def ie_preprocess(document): sentences = nltk. sent_tokenize(document) sentences = [nltk. word_tokenize(sent) for sent in sentences] sentences = [nltk. pos_tag(sent) for sent in sentences] sentence = [("the", "DT"), ("little", "JJ"), ("yellow", "JJ"), # [_chunkex-sent] ("dog", "NN"), ("barked", "VBD"), ("at", "IN"), ("the", "DT"), ("cat", "NN")] grammar = "NP: {<DT>? <JJ>*<NN>}" # [_chunkex-grammar] cp = nltk. Regexp. Parser(grammar) result = cp. parse(sentence) print result – 34 – CSCE 771 Spring 2013

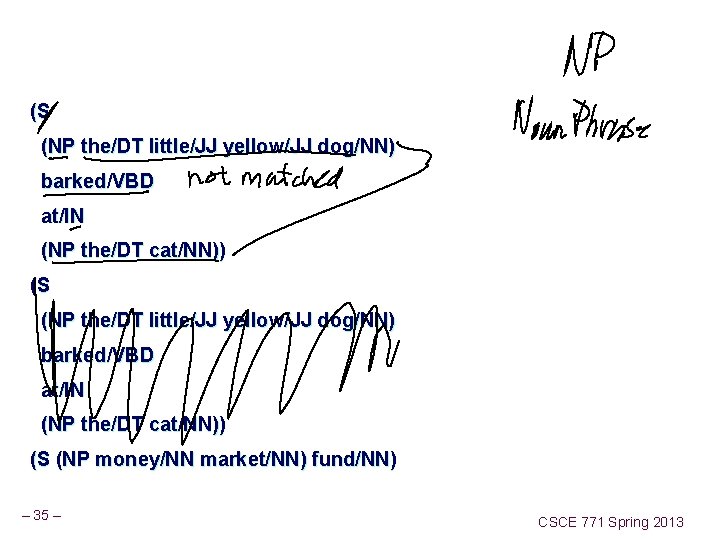

(S (NP the/DT little/JJ yellow/JJ dog/NN) barked/VBD at/IN (NP the/DT cat/NN)) (S (NP money/NN market/NN) fund/NN) – 35 – CSCE 771 Spring 2013

chunkex-draw

    grammar = "NP: {<DT>?<JJ>*<NN>}"  # [_chunkex-grammar]
    cp = nltk.RegexpParser(grammar)   # [_chunkex-cp]
    result = cp.parse(sentence)       # [_chunkex-test]
    print result                      # [_chunkex-print]
    result.draw()

Chunk two consecutive nouns

    nouns = [("money", "NN"), ("market", "NN"), ("fund", "NN")]
    grammar = "NP: {<NN><NN>}  # Chunk two consecutive nouns"
    cp = nltk.RegexpParser(grammar)
    print cp.parse(nouns)
    (S (NP money/NN market/NN) fund/NN)
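To see why only the first two nouns are chunked, it helps to treat a tag pattern as an ordinary regular expression over the string of tags, matched left to right without overlap. The sketch below is a simplified stand-in for that idea, not NLTK's implementation: it assumes literal tags only (no wildcards such as `<V.*>`), and `chunk` and `tag_pattern_to_regex` are hypothetical helpers.

```python
import re

def tag_pattern_to_regex(pattern):
    """Turn '<DT>?<JJ>*<NN>' into a regex over a string of '<TAG>' tokens."""
    pattern = pattern.replace(" ", "")
    return re.compile(re.sub(
        r"<([^>]+)>", lambda m: "(?:<" + re.escape(m.group(1)) + ">)", pattern))

def chunk(tagged, pattern, label="NP"):
    """Wrap each non-overlapping match of the tag pattern in (label, tokens)."""
    regex = tag_pattern_to_regex(pattern)
    tag_string = "".join("<%s>" % tag for _, tag in tagged)
    # Map the character offset where each tag starts to its token index.
    starts, offset = {}, 0
    for idx, (_, tag) in enumerate(tagged):
        starts[offset] = idx
        offset += len(tag) + 2
    starts[offset] = len(tagged)
    result, pos = [], 0
    for m in regex.finditer(tag_string):
        if m.end() == m.start():
            continue  # skip empty matches
        i, j = starts[m.start()], starts[m.end()]
        result.extend(tagged[pos:i])
        result.append((label, tagged[i:j]))
        pos = j
    result.extend(tagged[pos:])
    return result

nouns = [("money", "NN"), ("market", "NN"), ("fund", "NN")]
print(chunk(nouns, "<NN><NN>"))
```

After the first match consumes `money market`, only one `<NN>` remains, so `fund` stays outside any chunk.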

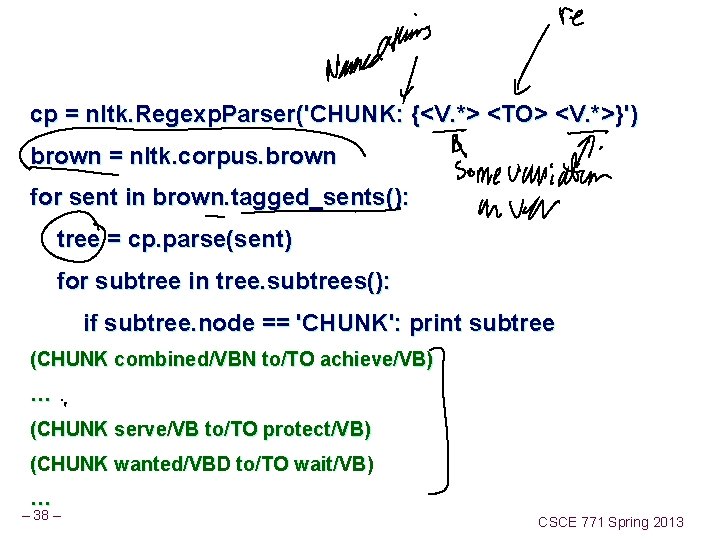

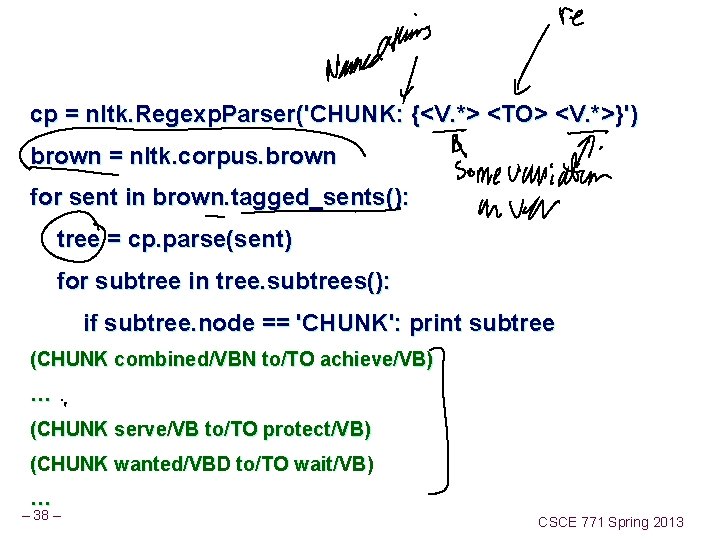

cp = nltk. Regexp. Parser('CHUNK: {<V. *> <TO> <V. *>}') brown = nltk. corpus. brown for sent in brown. tagged_sents(): tree = cp. parse(sent) for subtree in tree. subtrees(): if subtree. node == 'CHUNK': print subtree (CHUNK combined/VBN to/TO achieve/VB) … (CHUNK serve/VB to/TO protect/VB) (CHUNK wanted/VBD to/TO wait/VB) … – 38 – CSCE 771 Spring 2013

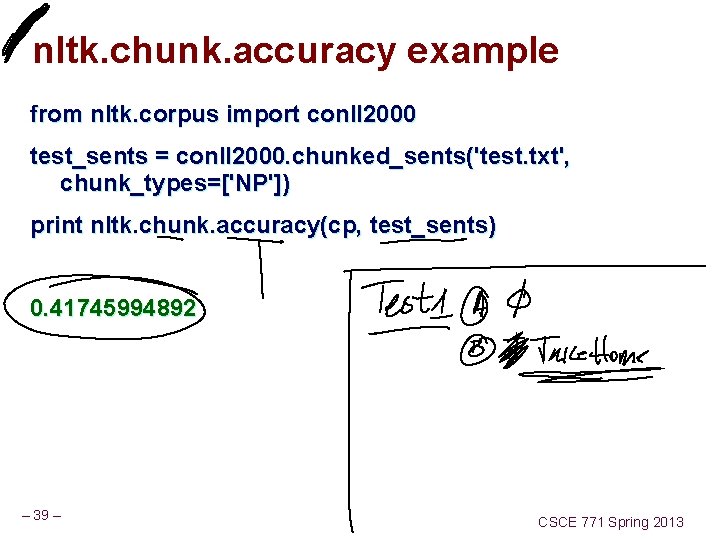

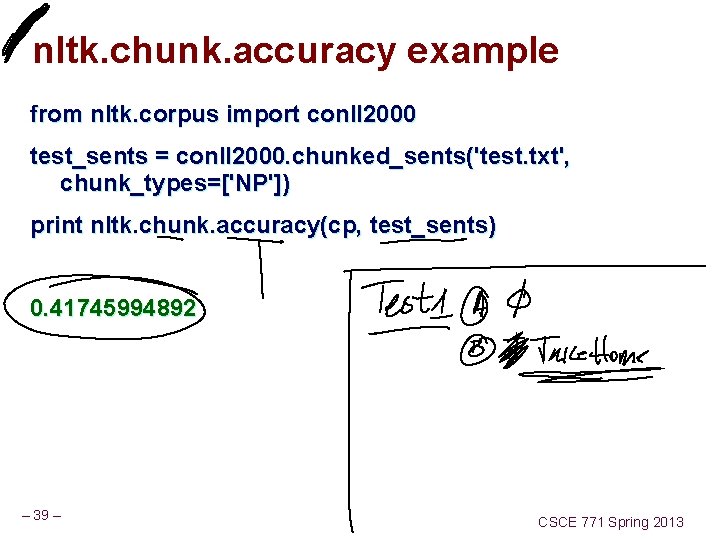

nltk. chunk. accuracy example from nltk. corpus import conll 2000 test_sents = conll 2000. chunked_sents('test. txt', chunk_types=['NP']) print nltk. chunk. accuracy(cp, test_sents) 0. 41745994892 – 39 – CSCE 771 Spring 2013

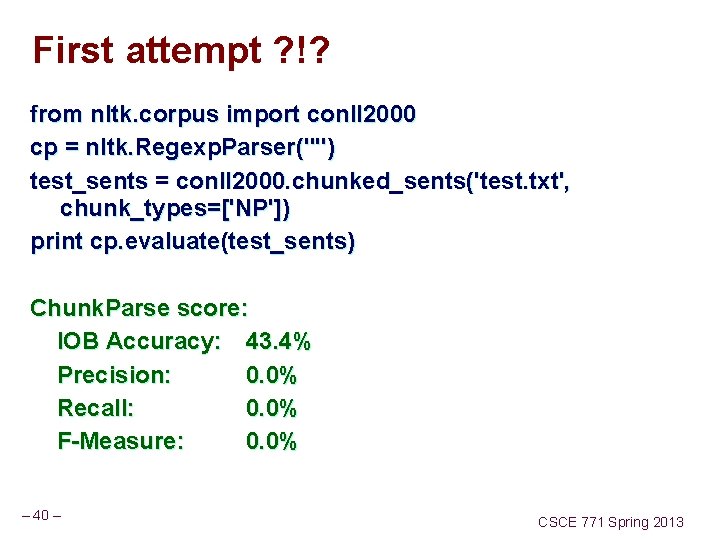

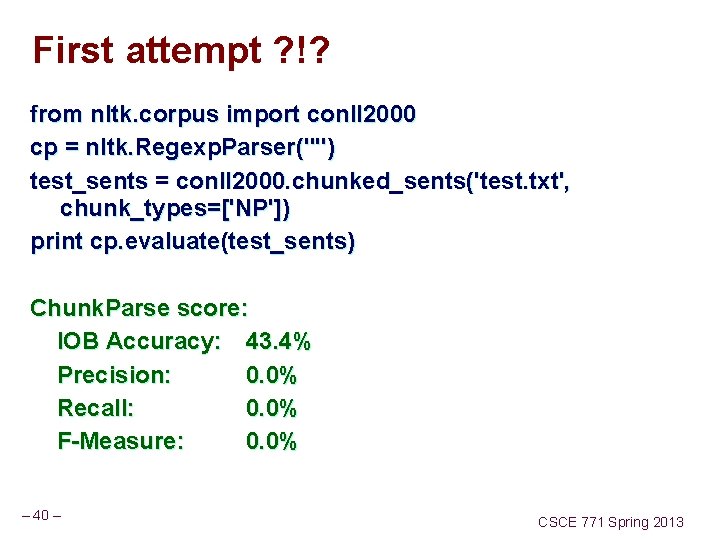

First attempt ? !? from nltk. corpus import conll 2000 cp = nltk. Regexp. Parser("") test_sents = conll 2000. chunked_sents('test. txt', chunk_types=['NP']) print cp. evaluate(test_sents) Chunk. Parse score: IOB Accuracy: 43. 4% Precision: 0. 0% Recall: 0. 0% F-Measure: 0. 0% – 40 – CSCE 771 Spring 2013
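One way to read these numbers: a parser with an empty grammar proposes no chunks, so every token is tagged O. IOB accuracy is then just the share of tokens whose gold tag is O, while chunk precision and recall are zero because no gold chunk is ever found. A toy sketch of that distinction, with a hypothetical five-token example and helper names that are not NLTK's:

```python
def iob_accuracy(gold, guess):
    """Fraction of tokens whose IOB tag matches the gold tag."""
    return sum(g == p for g, p in zip(gold, guess)) / len(gold)

def chunk_spans(tags):
    """Return the set of (start, end) spans of chunks in an IOB tag list."""
    spans, start = set(), None
    for i, tag in enumerate(tags + ["O"]):  # sentinel closes a trailing chunk
        if start is not None and not tag.startswith("I-"):
            spans.add((start, i))
            start = None
        if tag.startswith("B-"):
            start = i
    return spans

gold = ["B-NP", "I-NP", "O", "B-NP", "O"]
guess = ["O"] * len(gold)  # what a chunker with no rules produces

acc = iob_accuracy(gold, guess)
gold_spans, guess_spans = chunk_spans(gold), chunk_spans(guess)
hits = len(gold_spans & guess_spans)
precision = hits / len(guess_spans) if guess_spans else 0.0
recall = hits / len(gold_spans) if gold_spans else 0.0
print(acc, precision, recall)
```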

    from nltk.corpus import conll2000
    test_sents = conll2000.chunked_sents('test.txt', chunk_types=['NP'])
    print nltk.chunk.accuracy(cp, test_sents)
    0.41745994892

    Carlyle NNP B-NP
    Group NNP I-NP
    , , O

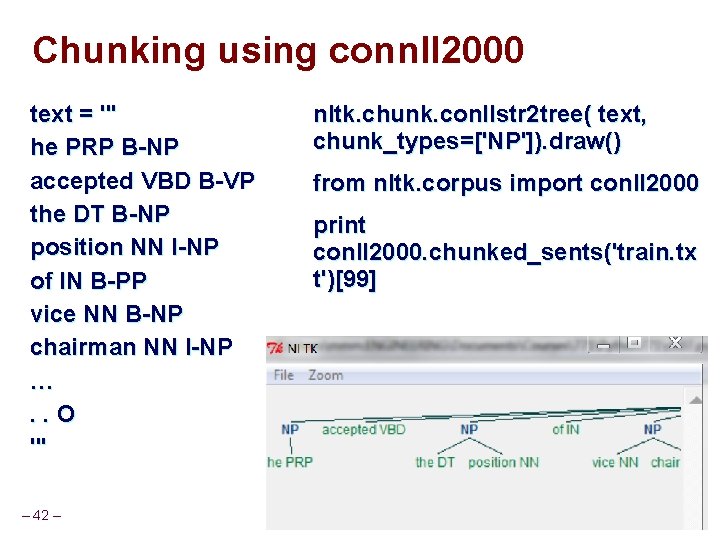

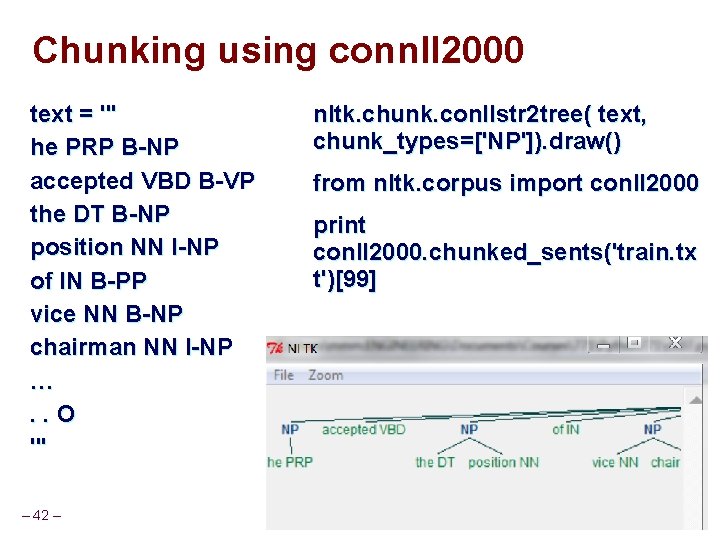

Chunking using connll 2000 text = ''' he PRP B-NP accepted VBD B-VP the DT B-NP position NN I-NP of IN B-PP vice NN B-NP chairman NN I-NP …. . O ''' – 42 – nltk. chunk. conllstr 2 tree( text, chunk_types=['NP']). draw() from nltk. corpus import conll 2000 print conll 2000. chunked_sents('train. tx t')[99] CSCE 771 Spring 2013

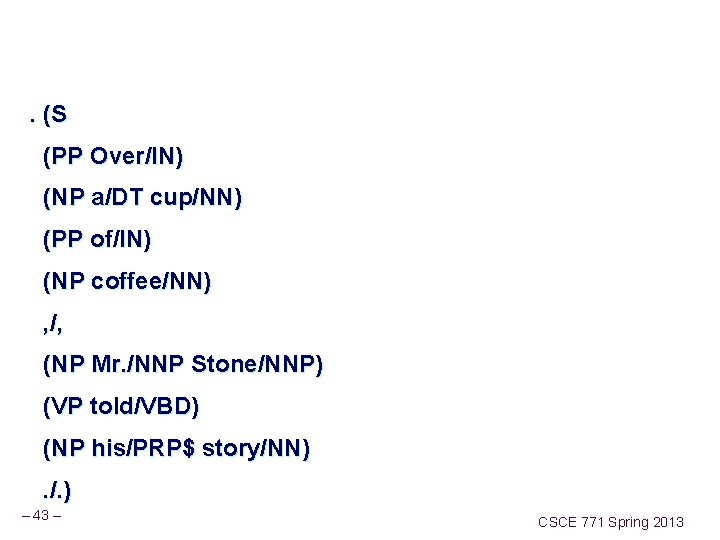

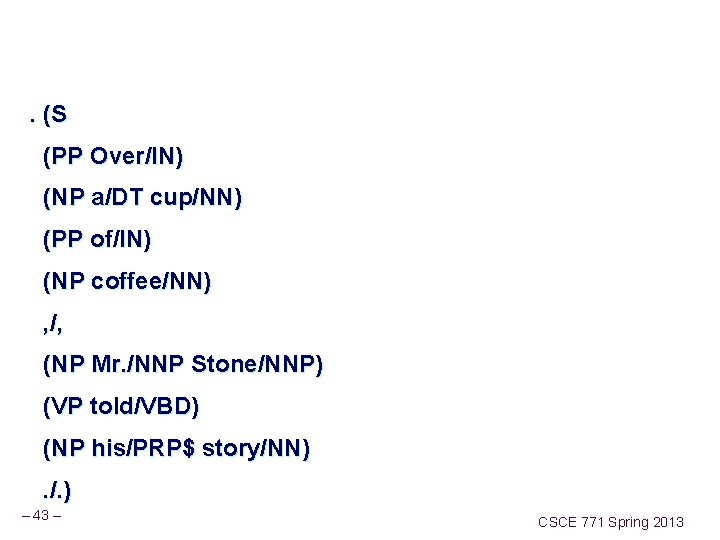

. (S (PP Over/IN) (NP a/DT cup/NN) (PP of/IN) (NP coffee/NN) , /, (NP Mr. /NNP Stone/NNP) (VP told/VBD) (NP his/PRP$ story/NN). /. ) – 43 – CSCE 771 Spring 2013

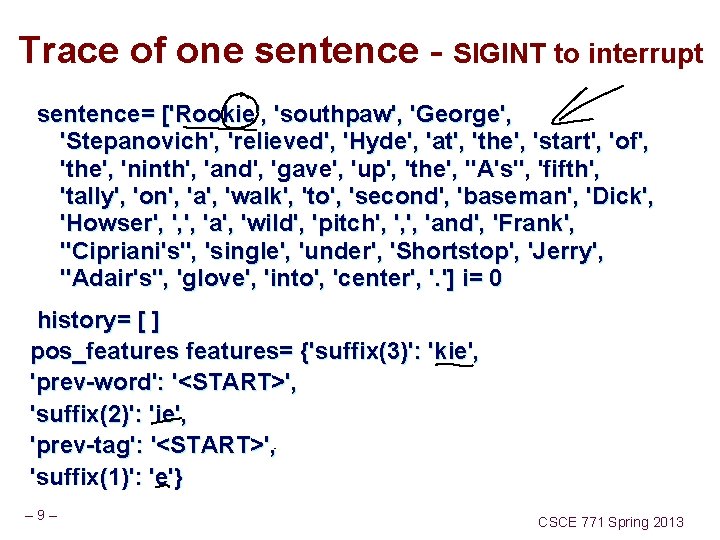

![A Real Attempt grammar rNP CDJNP cp nltk Regexp Parsergrammar print A Real Attempt grammar = r"NP: {<[CDJNP]. *>+}" cp = nltk. Regexp. Parser(grammar) print](https://slidetodoc.com/presentation_image_h2/9236bbe298e3d1eb6e84a7a0054c1ef8/image-44.jpg)

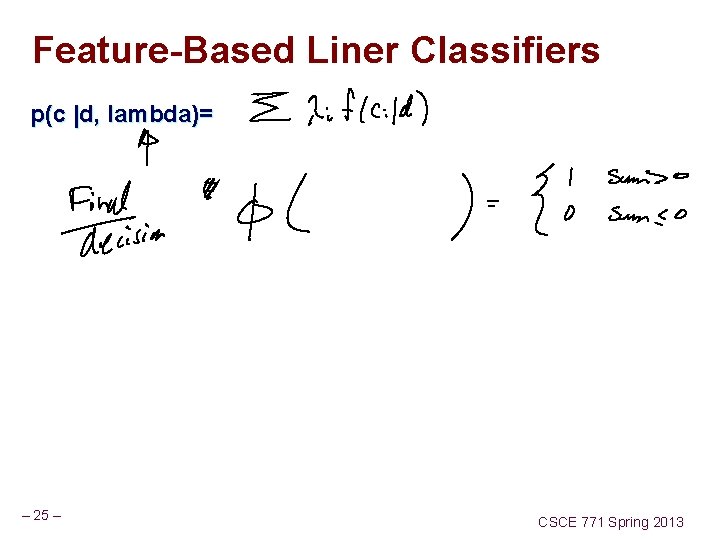

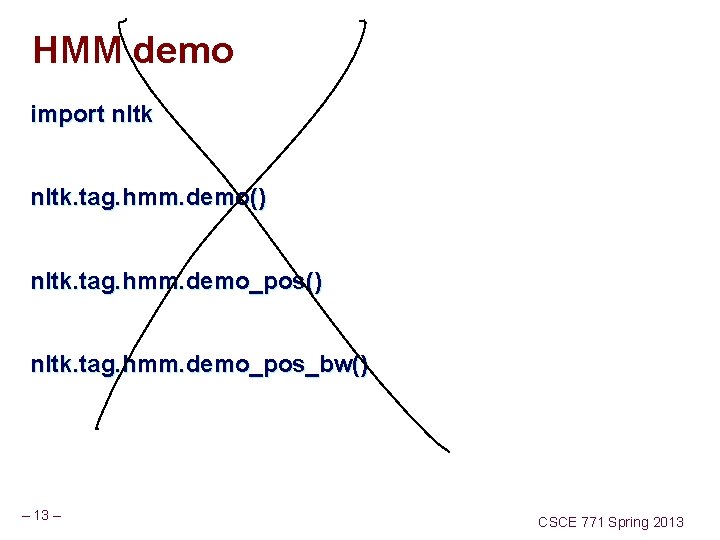

A Real Attempt grammar = r"NP: {<[CDJNP]. *>+}" cp = nltk. Regexp. Parser(grammar) print cp. evaluate(test_sents) Chunk. Parse score: IOB Accuracy: 87. 7% Precision: 70. 6% Recall: 67. 8% F-Measure: 69. 2% – 44 – CSCE 771 Spring 2013
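The reported F-Measure can be checked by hand, assuming it is the balanced F score (the harmonic mean of precision and recall):

$$F = \frac{2PR}{P + R} = \frac{2 \times 70.6 \times 67.8}{70.6 + 67.8} = \frac{9573.36}{138.4} \approx 69.2\%$$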

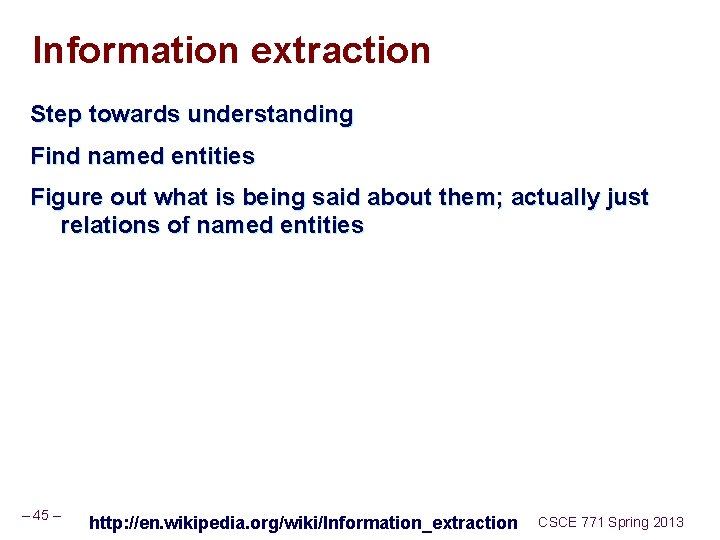

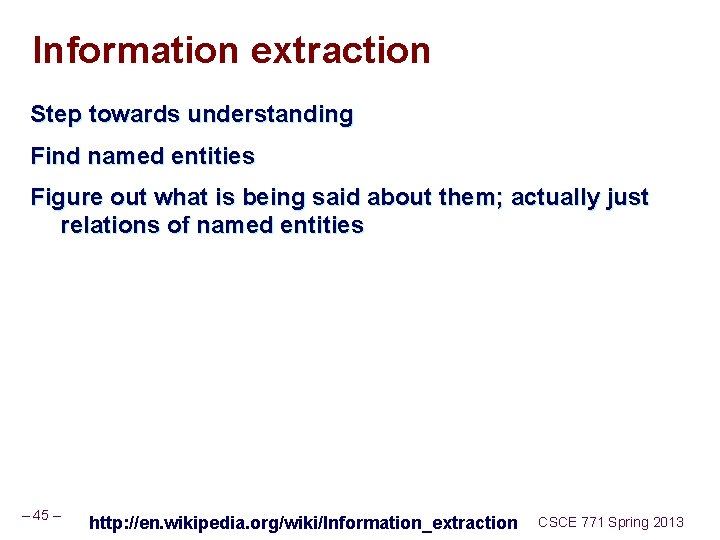

Information extraction Step towards understanding Find named entities Figure out what is being said about them; actually just relations of named entities – 45 – http: //en. wikipedia. org/wiki/Information_extraction CSCE 771 Spring 2013

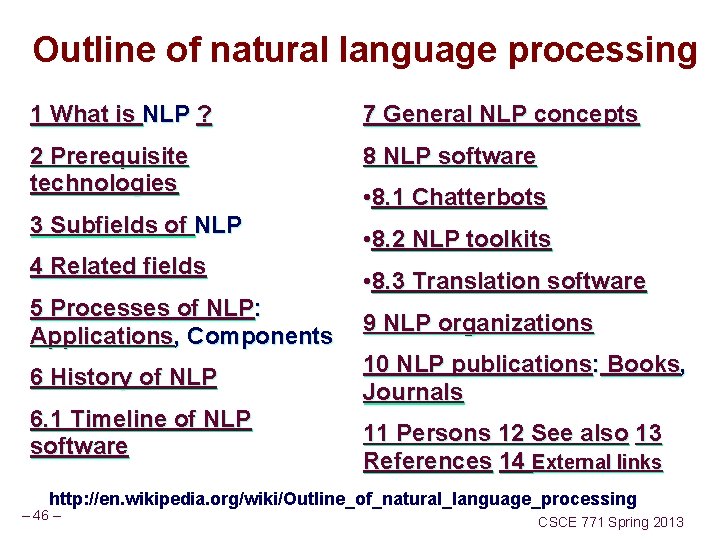

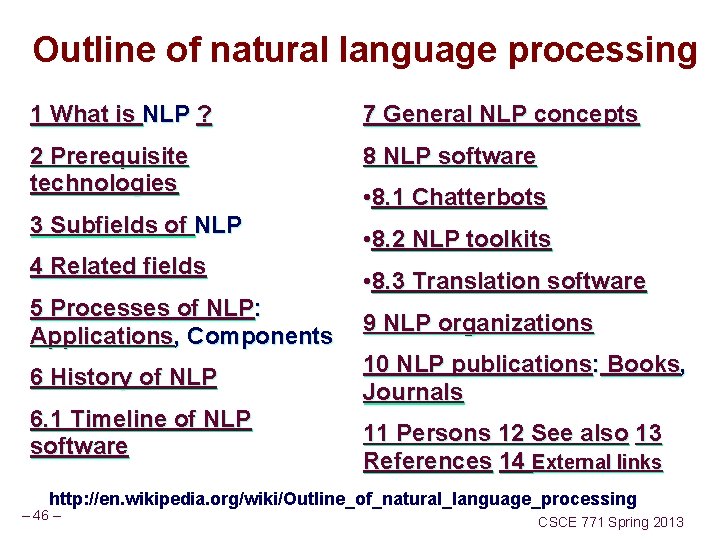

Outline of natural language processing 1 What is NLP ? 7 General NLP concepts 2 Prerequisite technologies 8 NLP software 3 Subfields of NLP 4 Related fields 5 Processes of NLP: Applications, Components 6 History of NLP 6. 1 Timeline of NLP software • 8. 1 Chatterbots • 8. 2 NLP toolkits • 8. 3 Translation software 9 NLP organizations 10 NLP publications: Books, Journals 11 Persons 12 See also 13 References 14 External links http: //en. wikipedia. org/wiki/Outline_of_natural_language_processing – 46 – CSCE 771 Spring 2013

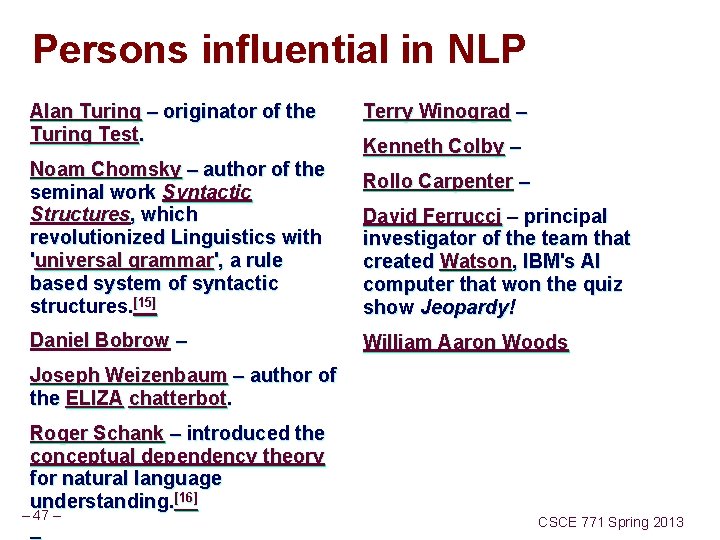

Persons influential in NLP Alan Turing – originator of the Turing Test. Noam Chomsky – author of the seminal work Syntactic Structures, which revolutionized Linguistics with 'universal grammar', a rule based system of syntactic structures. [15] Daniel Bobrow – Terry Winograd – Kenneth Colby – Rollo Carpenter – David Ferrucci – principal investigator of the team that created Watson, IBM's AI computer that won the quiz show Jeopardy! William Aaron Woods Joseph Weizenbaum – author of the ELIZA chatterbot. Roger Schank – introduced the conceptual dependency theory for natural language understanding. [16] – 47 – CSCE 771 Spring 2013