CSCE 771 Natural Language Processing Lecture 10 NLTK


- Slides: 31

CSCE 771 Natural Language Processing
Lecture 10: NLTK POS Tagging, Part 3

Topics
- Taggers
- Rule-Based Taggers
- Probabilistic Taggers
- Transformation-Based Taggers (Brill)
- Supervised learning

Readings: Chapter 5.4-?

February 18, 2013

Overview

Last Time
- Overview of POS Tags

Today
- Part of Speech Tagging
- Parts of Speech
- Rule-Based taggers
- Stochastic taggers
- Transformational taggers

Readings
- Chapter 5.4-5.?

CSCE 771 Spring 2011

![Slide 3: tags of the words following 'often' in the Brown 'learned' category](https://slidetodoc.com/presentation_image_h/23a34e2b72a7fc5412e13ff945755ff4/image-3.jpg)

Tags of the words that follow 'often' (Brown corpus, category 'learned'):

    brown_lrnd_tagged = brown.tagged_words(categories='learned', simplify_tags=True)
    # tag of the second word of every bigram whose first word is 'often'
    tags = [b[1] for (a, b) in nltk.ibigrams(brown_lrnd_tagged) if a[0] == 'often']
    fd = nltk.FreqDist(tags)
    fd.tabulate()   # tabulate() prints the table itself

    VN V VD ADJ DET ADV P , CNJ . TO VBZ VG WH
    15 12 8 5 5 4 3 3 1 1 1
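The same count can be sketched in plain Python 3 without NLTK. The tagged list below is invented for illustration (it is not Brown corpus data), and `tags_following` is a hypothetical helper, not an NLTK function.

```python
from collections import Counter

# Toy (word, tag) sequence, invented for illustration; not Brown corpus data.
tagged_words = [
    ("he", "PRO"), ("often", "ADV"), ("walks", "V"),
    ("and", "CNJ"), ("often", "ADV"), ("runs", "V"),
    ("often", "ADV"), ("tired", "ADJ"),
]

def tags_following(tagged, target):
    """Count the tags of the words that immediately follow `target`."""
    # zip(tagged, tagged[1:]) yields consecutive pairs, i.e. the bigrams.
    return Counter(b[1] for a, b in zip(tagged, tagged[1:]) if a[0] == target)

counts = tags_following(tagged_words, "often")
for tag, n in counts.most_common():
    print(tag, n)
```

Pairing the list with itself shifted by one position plays the role of `nltk.ibigrams` in the slide code.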

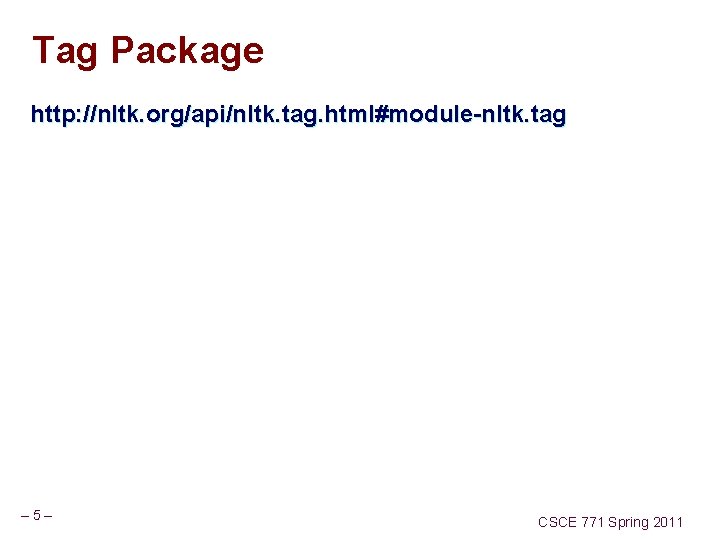

Highly ambiguous words

    >>> brown_news_tagged = brown.tagged_words(categories='news', simplify_tags=True)
    >>> data = nltk.ConditionalFreqDist((word.lower(), tag)
    ...                                 for (word, tag) in brown_news_tagged)
    >>> for word in data.conditions():
    ...     if len(data[word]) > 3:
    ...         tags = data[word].keys()
    ...         print word, ' '.join(tags)
    ...
    best ADJ ADV NP V
    better ADJ ADV V DET
    ….
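A conditional frequency distribution is, in essence, one counter of tags per word. The following plain Python 3 sketch shows that structure on invented data; `tag_distribution` and `ambiguous_words` are hypothetical names, not part of NLTK.

```python
from collections import Counter, defaultdict

# Toy tagged words, invented for illustration.
tagged_words = [
    ("Best", "ADJ"), ("best", "ADV"), ("best", "NP"), ("best", "V"),
    ("better", "ADJ"), ("better", "ADV"),
    ("the", "DET"), ("The", "DET"),
]

def tag_distribution(tagged):
    """Map each lower-cased word to a Counter of the tags it occurs with."""
    dist = defaultdict(Counter)
    for word, tag in tagged:
        dist[word.lower()][tag] += 1
    return dist

def ambiguous_words(dist, min_tags):
    """Return {word: sorted tags} for words seen with more than `min_tags` tags."""
    return {w: sorted(c) for w, c in dist.items() if len(c) > min_tags}

data = tag_distribution(tagged_words)
for word, tags in ambiguous_words(data, 3).items():
    print(word, " ".join(tags))
```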

Tag Package

http://nltk.org/api/nltk.tag.html#module-nltk.tag

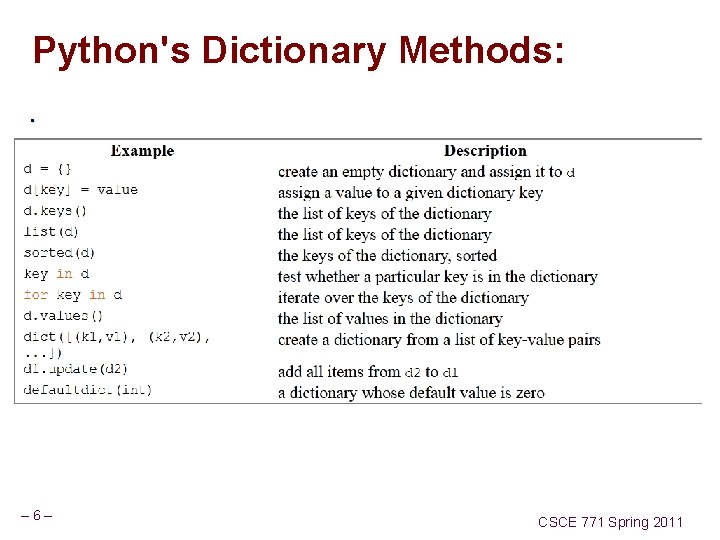

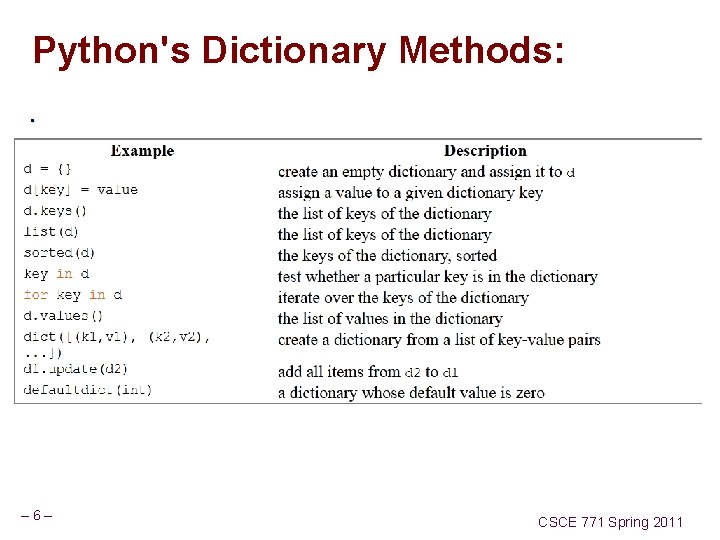

Python's Dictionary Methods

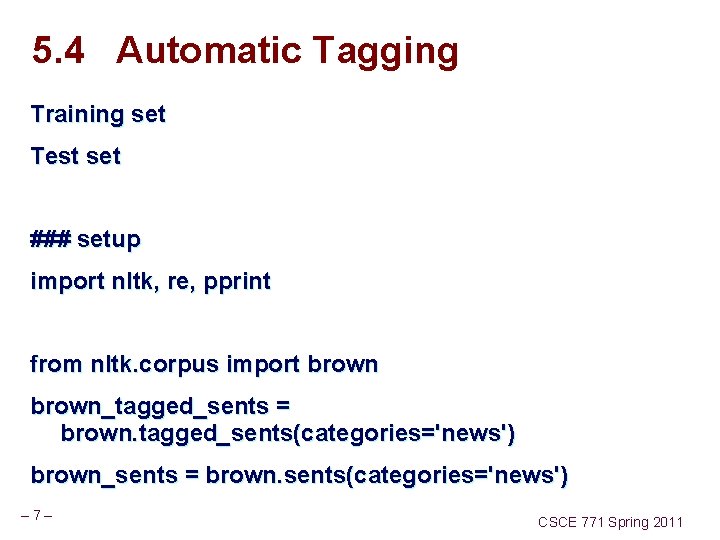

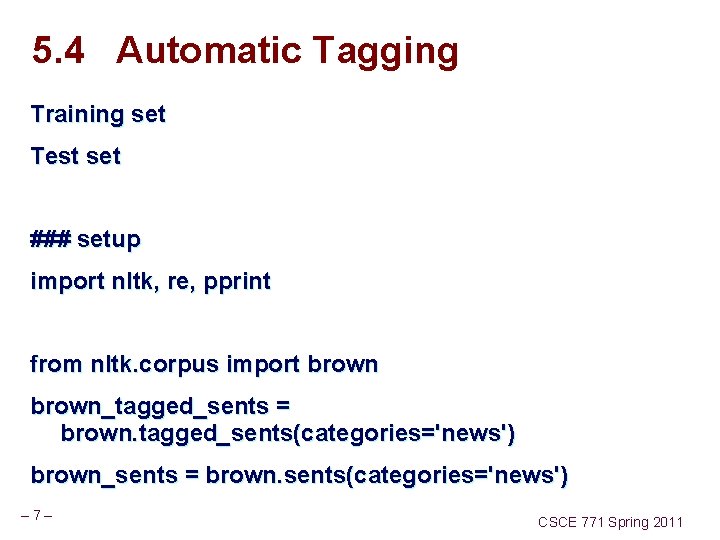

5.4 Automatic Tagging

- Training set
- Test set

Setup:

    import nltk, re, pprint
    from nltk.corpus import brown

    brown_tagged_sents = brown.tagged_sents(categories='news')
    brown_sents = brown.sents(categories='news')

![Slide 8: default tagger (NN)](https://slidetodoc.com/presentation_image_h/23a34e2b72a7fc5412e13ff945755ff4/image-8.jpg)

Default tagger: NN

    tags = [tag for (word, tag) in brown.tagged_words(categories='news')]
    print nltk.FreqDist(tags).max()   # most frequent tag in the news category

    raw = 'I do not like green eggs and ham, I …Sam I am!'
    tokens = nltk.word_tokenize(raw)
    default_tagger = nltk.DefaultTagger('NN')
    print default_tagger.tag(tokens)
    [('I', 'NN'), ('do', 'NN'), ('not', 'NN'), ('like', 'NN'), …

    print default_tagger.evaluate(brown_tagged_sents)
    0.130894842572
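The score reported by `evaluate` is token accuracy: the fraction of tokens whose predicted tag equals the gold tag. A minimal plain Python 3 sketch of a default tagger and that measure follows; the gold sentences are invented, and `default_tag` and `accuracy` are hypothetical helpers rather than NLTK calls.

```python
def default_tag(tokens, tag="NN"):
    """Assign the same tag to every token."""
    return [(tok, tag) for tok in tokens]

def accuracy(tagger, gold_sents):
    """Fraction of tokens whose predicted tag equals the gold tag."""
    correct = total = 0
    for sent in gold_sents:
        words = [w for w, _ in sent]
        predicted = tagger(words)
        for (_, gold_tag), (_, guess) in zip(sent, predicted):
            correct += (gold_tag == guess)
            total += 1
    return correct / total if total else 0.0

# Toy gold-standard sentences, invented for illustration.
gold = [
    [("the", "AT"), ("dog", "NN"), ("barks", "VBZ")],
    [("a", "AT"), ("cat", "NN")],
]
print(accuracy(default_tag, gold))
```

On this toy data two of the five tokens are nouns, so the default tagger is right exactly as often as 'NN' occurs, which is why the most frequent tag is the natural default.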

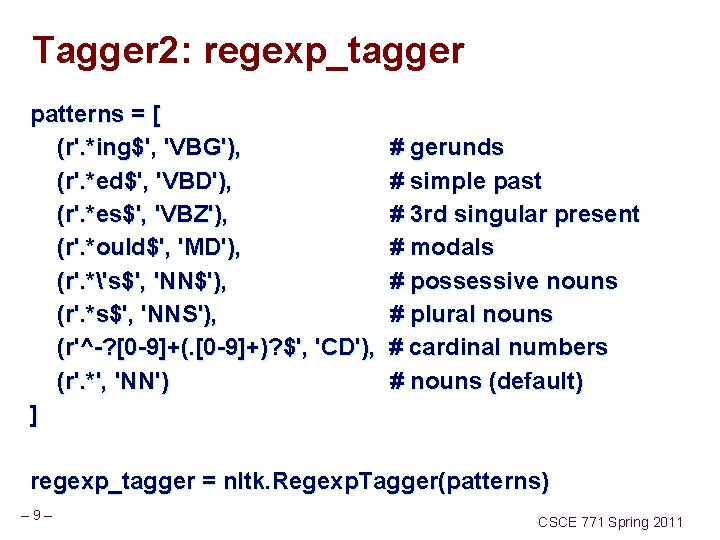

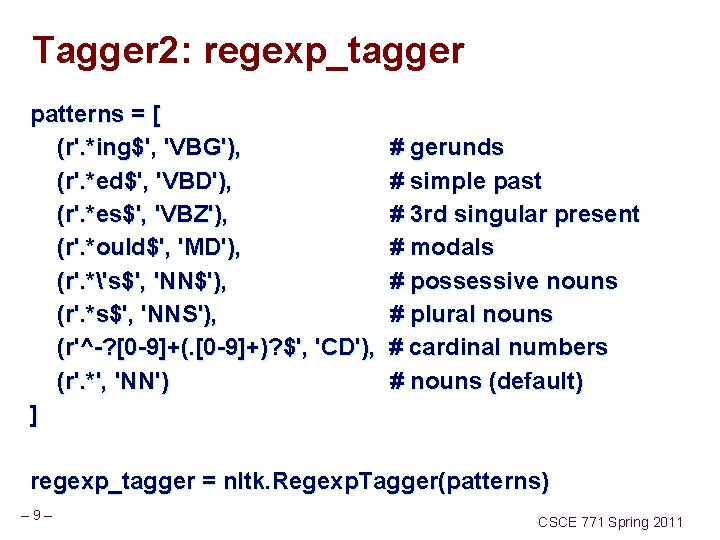

Tagger 2: regexp_tagger

    patterns = [
        (r'.*ing$', 'VBG'),               # gerunds
        (r'.*ed$', 'VBD'),                # simple past
        (r'.*es$', 'VBZ'),                # 3rd singular present
        (r'.*ould$', 'MD'),               # modals
        (r'.*\'s$', 'NN$'),               # possessive nouns
        (r'.*s$', 'NNS'),                 # plural nouns
        (r'^-?[0-9]+(.[0-9]+)?$', 'CD'),  # cardinal numbers
        (r'.*', 'NN')                     # nouns (default)
    ]
    regexp_tagger = nltk.RegexpTagger(patterns)

![Slide 10: evaluating the regexp tagger](https://slidetodoc.com/presentation_image_h/23a34e2b72a7fc5412e13ff945755ff4/image-10.jpg)

Evaluate

    regexp_tagger = nltk.RegexpTagger(patterns)
    print regexp_tagger.tag(brown_sents[3])
    [('``', 'NN'), ('Only', 'NN'), ('a', 'NN'), ('relative', 'NN'), …

    print regexp_tagger.evaluate(brown_tagged_sents)
    0.203263917895
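The pattern list is ordered, and the first pattern that matches a token decides its tag. The sketch below reproduces that behaviour with the standard `re` module in plain Python 3. It is an illustration under assumptions, not NLTK's implementation: `regexp_tag` is a hypothetical helper, and the decimal point in the number pattern is escaped here, which the slide's pattern does not do.

```python
import re

# Ordered (regex, tag) pairs; the first match wins.
PATTERNS = [
    (r".*ing$", "VBG"),                  # gerunds
    (r".*ed$", "VBD"),                   # simple past
    (r".*es$", "VBZ"),                   # 3rd singular present
    (r".*ould$", "MD"),                  # modals
    (r".*'s$", "NN$"),                   # possessive nouns
    (r".*s$", "NNS"),                    # plural nouns
    (r"^-?[0-9]+(\.[0-9]+)?$", "CD"),    # cardinal numbers (dot escaped: an assumption)
    (r".*", "NN"),                       # nouns (default)
]
COMPILED = [(re.compile(p), t) for p, t in PATTERNS]

def regexp_tag(tokens):
    """Tag each token with the tag of the first pattern that matches it."""
    tagged = []
    for tok in tokens:
        for regex, tag in COMPILED:
            if regex.match(tok):
                tagged.append((tok, tag))
                break
    return tagged

print(regexp_tag(["running", "walked", "boxes", "would", "John's", "cats", "13.5", "dog"]))
print(regexp_tag(["king"]))  # a noun, yet the suffix rule fires first
```

The second call shows why accuracy stays low: suffix rules fire on any word with that ending, so 'king' is tagged VBG.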

Unigram Tagger: most likely tag for the 100 most frequent words

    fd = nltk.FreqDist(brown.words(categories='news'))
    cfd = nltk.ConditionalFreqDist(brown.tagged_words(categories='news'))
    most_freq_words = fd.keys()[:100]   # NLTK 2: keys() is sorted by decreasing frequency
    likely_tags = dict((word, cfd[word].max()) for word in most_freq_words)
    baseline_tagger = nltk.UnigramTagger(model=likely_tags)
    print baseline_tagger.evaluate(brown_tagged_sents)
    0.455784951369

![Slide 12: likely_tags with backoff to NN](https://slidetodoc.com/presentation_image_h/23a34e2b72a7fc5412e13ff945755ff4/image-12.jpg)

Likely_tags; Backoff to NN

    sent = brown.sents(categories='news')[3]
    baseline_tagger.tag(sent)
    ('Only', 'NN'), ('a', 'NN'), ('relative', 'NN'), ('handful', 'NN'), ('of', 'NN'),

    baseline_tagger = nltk.UnigramTagger(model=likely_tags,
                                         backoff=nltk.DefaultTagger('NN'))
    print baseline_tagger.tag(sent)
    ('Only', 'NN'), ('a', 'AT'), ('relative', 'NN'), ('handful', 'NN'), ('of', 'IN'),

    print baseline_tagger.evaluate(brown_tagged_sents)
    0.581776955666
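A lookup tagger is a dictionary from frequent words to their most likely tag, with a fallback for everything else. The following plain Python 3 sketch shows the idea on an invented corpus; `build_lookup` and `lookup_tag` are hypothetical names, not NLTK functions.

```python
from collections import Counter, defaultdict

def build_lookup(tagged_sents, n_words):
    """Most likely tag for each of the `n_words` most frequent words."""
    word_freq = Counter()
    tag_freq = defaultdict(Counter)
    for sent in tagged_sents:
        for word, tag in sent:
            word_freq[word] += 1
            tag_freq[word][tag] += 1
    return {w: tag_freq[w].most_common(1)[0][0]
            for w, _ in word_freq.most_common(n_words)}

def lookup_tag(tokens, model, backoff_tag=None):
    """Tag known words from `model`; others get `backoff_tag` (None = no backoff)."""
    return [(tok, model.get(tok, backoff_tag)) for tok in tokens]

# Toy training sentences, invented for illustration.
train = [
    [("the", "AT"), ("dog", "NN"), ("saw", "VBD"), ("the", "AT"), ("cat", "NN")],
    [("the", "AT"), ("saw", "NN"), ("cut", "VBD"), ("the", "AT"), ("log", "NN")],
    [("the", "AT"), ("cat", "NN"), ("saw", "VBD"), ("a", "AT"), ("dog", "NN")],
]
model = build_lookup(train, 2)
print(lookup_tag(["the", "bird", "saw"], model))        # unknown word -> None
print(lookup_tag(["the", "bird", "saw"], model, "NN"))  # unknown word -> NN
```

Without a backoff tag, words outside the model are left untagged; supplying 'NN' fills those gaps with the best single guess.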

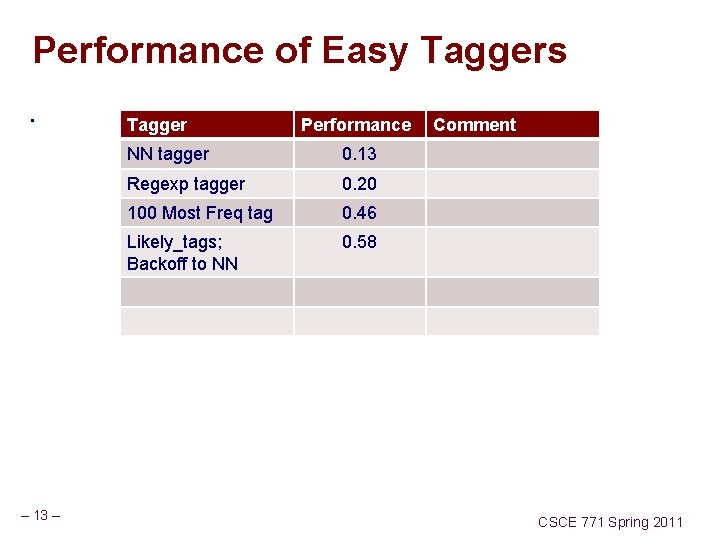

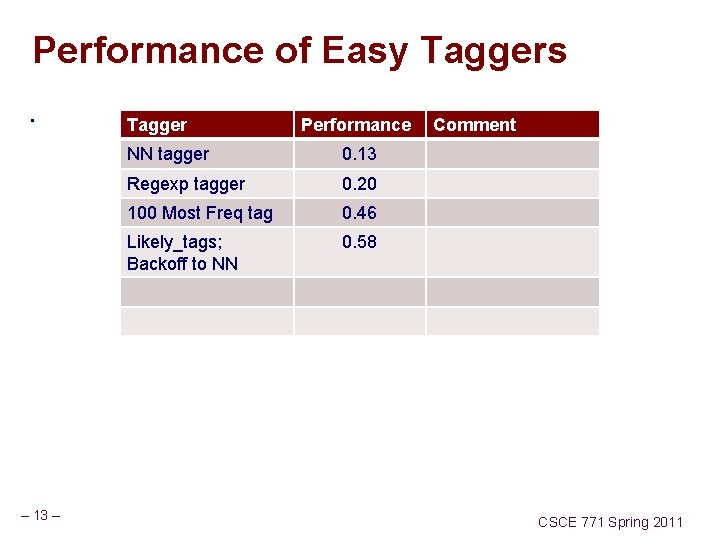

Performance of Easy Taggers

| Tagger | Performance | Comment |
|---|---|---|
| NN tagger | 0.13 | |
| Regexp tagger | 0.20 | |
| 100 Most Freq tag | 0.46 | |
| Likely_tags; Backoff to NN | 0.58 | |

![Slide 14: performance(cfd, wordlist)](https://slidetodoc.com/presentation_image_h/23a34e2b72a7fc5412e13ff945755ff4/image-14.jpg)

    def performance(cfd, wordlist):
        # lookup table: most likely tag for each word in wordlist
        lt = dict((word, cfd[word].max()) for word in wordlist)
        baseline_tagger = nltk.UnigramTagger(model=lt, backoff=nltk.DefaultTagger('NN'))
        return baseline_tagger.evaluate(brown.tagged_sents(categories='news'))

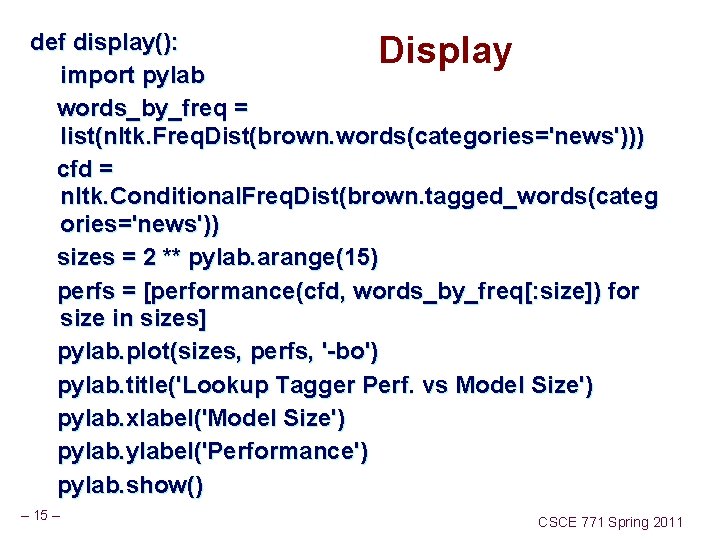

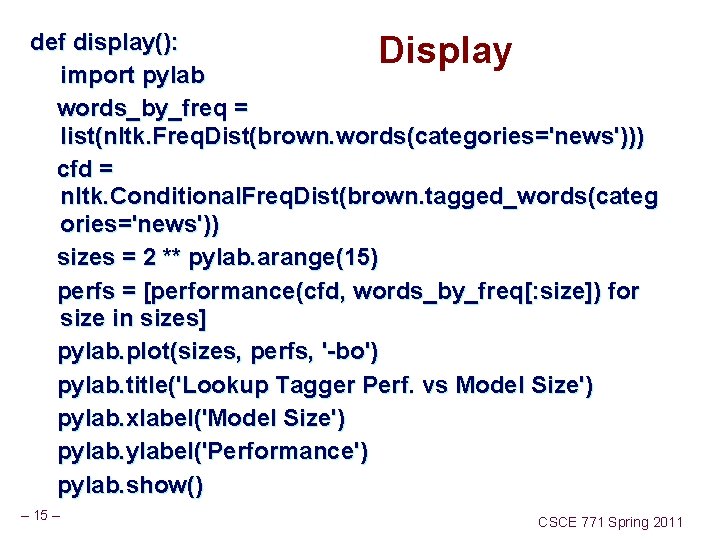

Display

    def display():
        import pylab
        words_by_freq = list(nltk.FreqDist(brown.words(categories='news')))
        cfd = nltk.ConditionalFreqDist(brown.tagged_words(categories='news'))
        sizes = 2 ** pylab.arange(15)   # model sizes 1, 2, 4, ..., 2**14
        perfs = [performance(cfd, words_by_freq[:size]) for size in sizes]
        pylab.plot(sizes, perfs, '-bo')
        pylab.title('Lookup Tagger Perf. vs Model Size')
        pylab.xlabel('Model Size')
        pylab.ylabel('Performance')
        pylab.show()

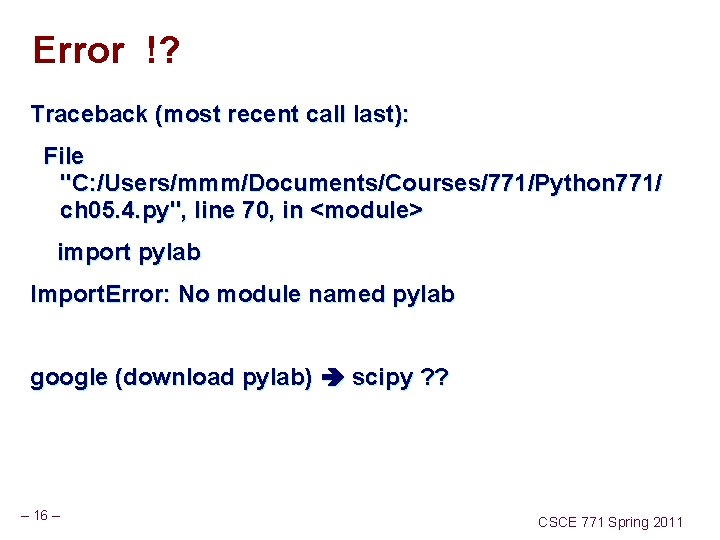

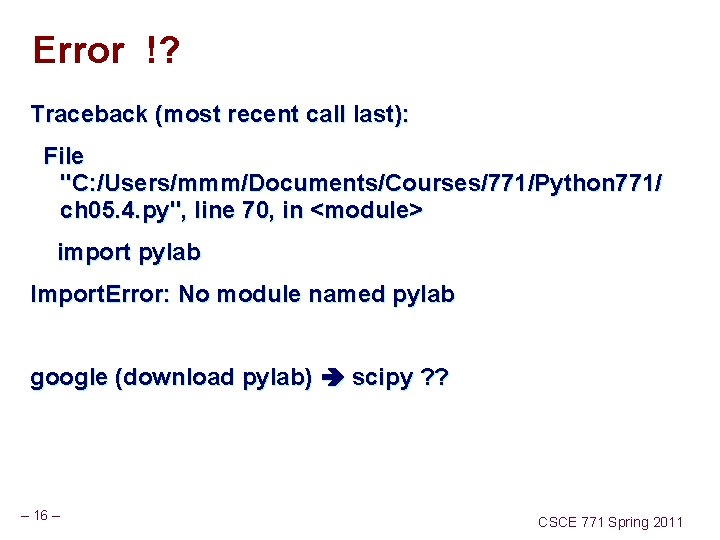

Error!?

    Traceback (most recent call last):
      File "C:/Users/mmm/Documents/Courses/771/Python771/ch05.4.py", line 70, in <module>
        import pylab
    ImportError: No module named pylab

Fix: google "download pylab" (scipy??). The pylab module is provided by the matplotlib package.

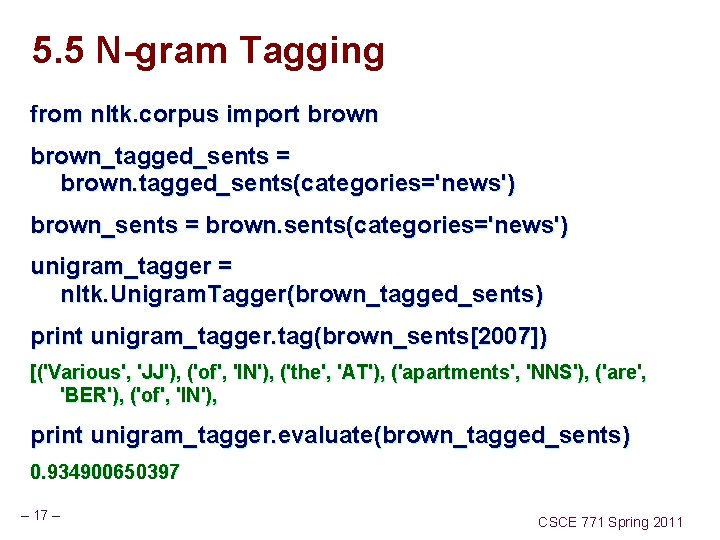

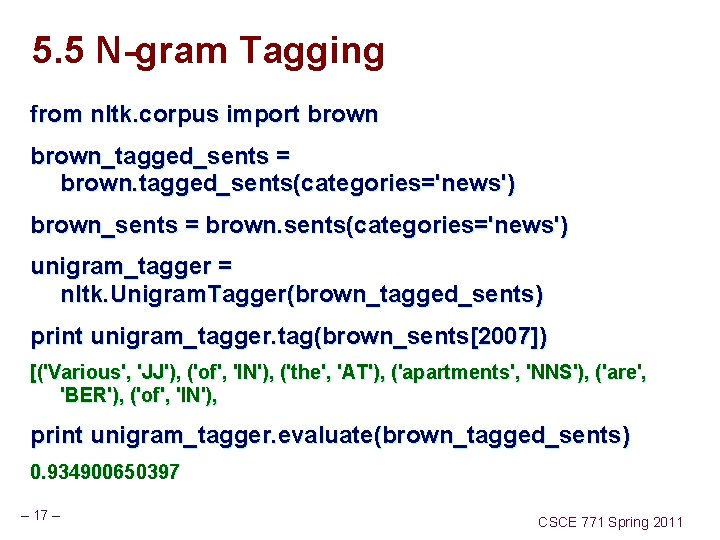

5.5 N-gram Tagging

    from nltk.corpus import brown

    brown_tagged_sents = brown.tagged_sents(categories='news')
    brown_sents = brown.sents(categories='news')
    unigram_tagger = nltk.UnigramTagger(brown_tagged_sents)
    print unigram_tagger.tag(brown_sents[2007])
    [('Various', 'JJ'), ('of', 'IN'), ('the', 'AT'), ('apartments', 'NNS'), ('are', 'BER'), ('of', 'IN'), …

    # scored on the same sentences the tagger was trained on
    print unigram_tagger.evaluate(brown_tagged_sents)
    0.934900650397

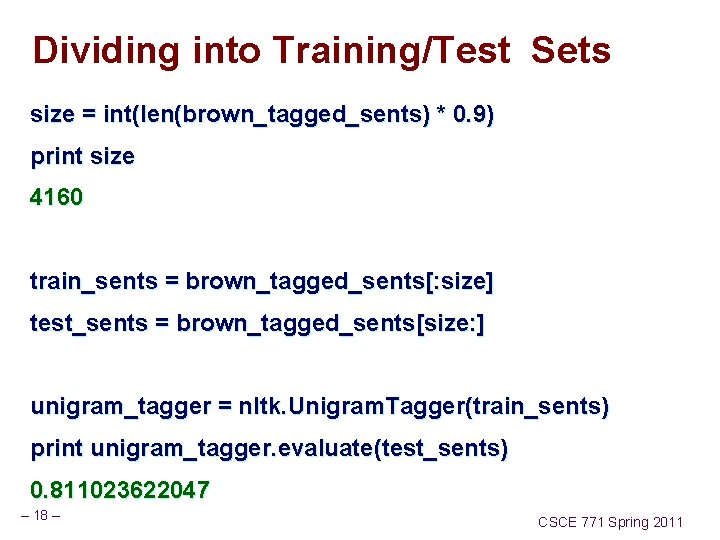

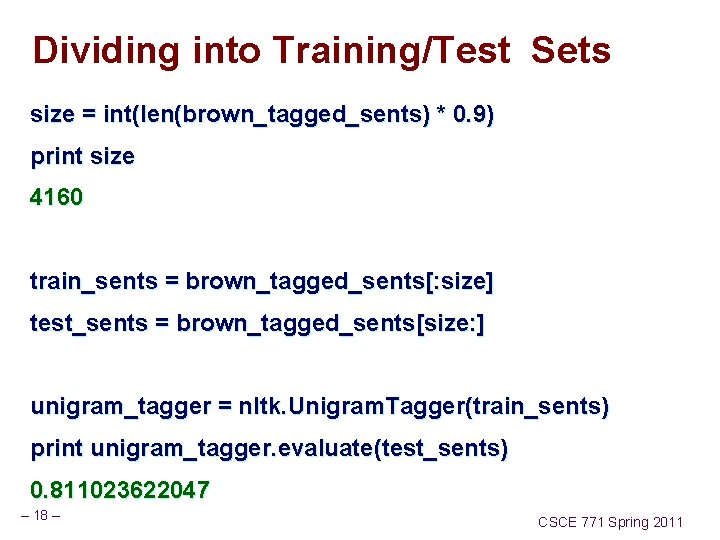

Dividing into Training/Test Sets

    size = int(len(brown_tagged_sents) * 0.9)
    print size
    4160

    train_sents = brown_tagged_sents[:size]
    test_sents = brown_tagged_sents[size:]
    unigram_tagger = nltk.UnigramTagger(train_sents)
    print unigram_tagger.evaluate(test_sents)
    0.811023622047
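Scoring a tagger on its own training data overstates its quality, because every word it is asked about has already been seen. The plain Python 3 sketch below illustrates the 90/10 split on ten invented sentences; `train_unigram`, `accuracy` and `split` are hypothetical helpers, not NLTK functions.

```python
from collections import Counter, defaultdict

def train_unigram(tagged_sents):
    """Learn the most frequent tag of every word in the training sentences."""
    counts = defaultdict(Counter)
    for sent in tagged_sents:
        for word, tag in sent:
            counts[word][tag] += 1
    return {w: c.most_common(1)[0][0] for w, c in counts.items()}

def accuracy(model, tagged_sents):
    """Fraction of tokens tagged correctly; unknown words count as errors."""
    pairs = [(w, t) for sent in tagged_sents for w, t in sent]
    return sum(model.get(w) == t for w, t in pairs) / len(pairs)

def split(sents, train_fraction=0.9):
    """First `train_fraction` of the sentences for training, the rest for testing."""
    size = int(len(sents) * train_fraction)
    return sents[:size], sents[size:]

# Ten toy sentences, invented for illustration; the last one has an unseen word.
sents = ([[("the", "AT"), ("dog", "NN"), ("runs", "VBZ")]] * 9
         + [[("the", "AT"), ("blog", "NN"), ("runs", "VBZ")]])

train_sents, test_sents = split(sents)
model = train_unigram(train_sents)
print(accuracy(model, train_sents))  # seen data
print(accuracy(model, test_sents))   # held-out data
```

The held-out score is lower because 'blog' never occurred in training, the same effect that separates 0.93 from 0.81 on the slides.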

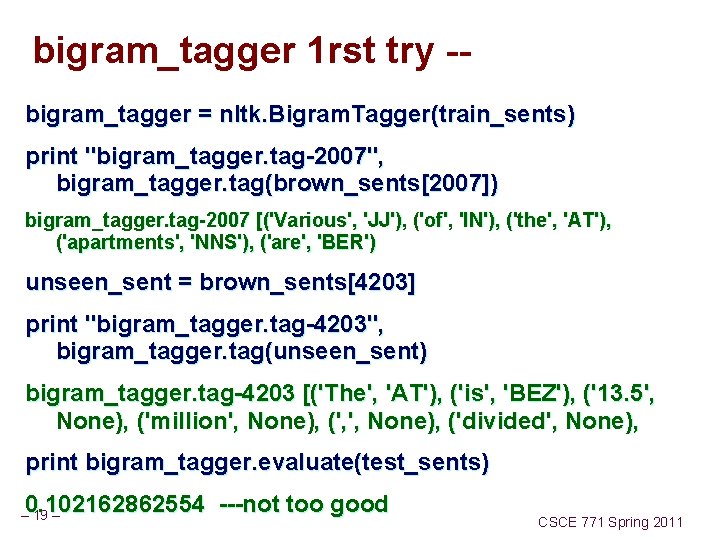

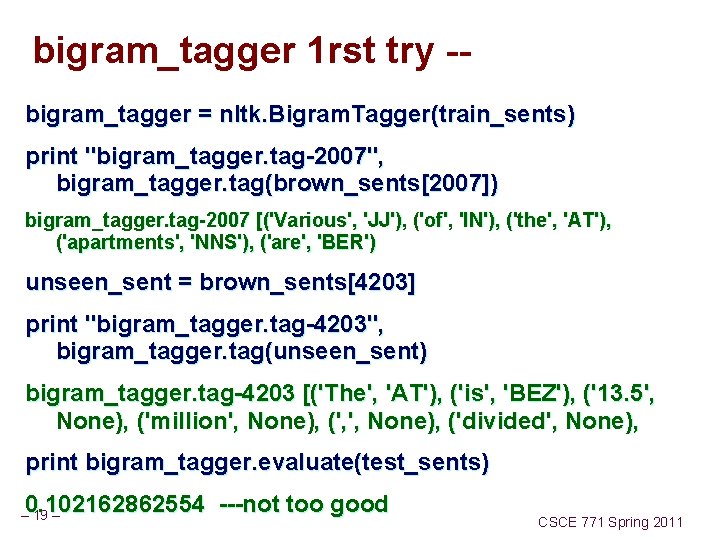

bigram_tagger: first try

    bigram_tagger = nltk.BigramTagger(train_sents)
    print "bigram_tagger.tag-2007", bigram_tagger.tag(brown_sents[2007])
    bigram_tagger.tag-2007 [('Various', 'JJ'), ('of', 'IN'), ('the', 'AT'), ('apartments', 'NNS'), ('are', 'BER') …

    unseen_sent = brown_sents[4203]
    print "bigram_tagger.tag-4203", bigram_tagger.tag(unseen_sent)
    bigram_tagger.tag-4203 [('The', 'AT'), ('is', 'BEZ'), ('13.5', None), ('million', None), (',', None), ('divided', None), …

    print bigram_tagger.evaluate(test_sents)
    0.102162862554

Not too good: once the tagger meets a word it has not seen in that context it assigns None, and the tags that follow fail as well because their context now contains None.

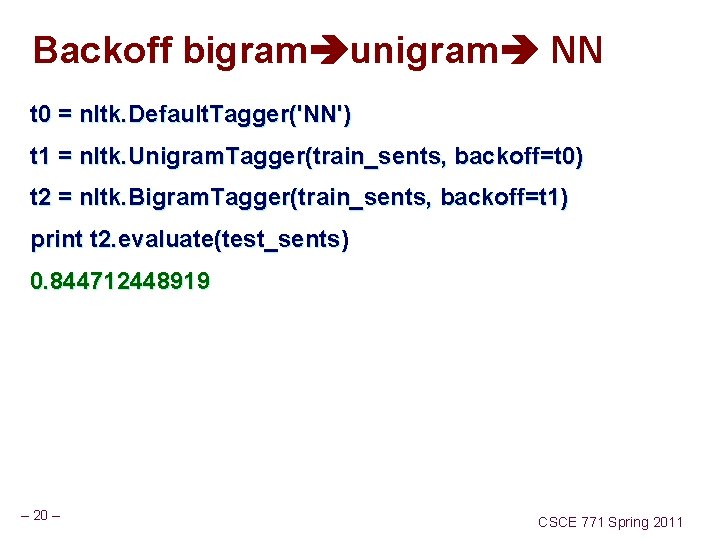

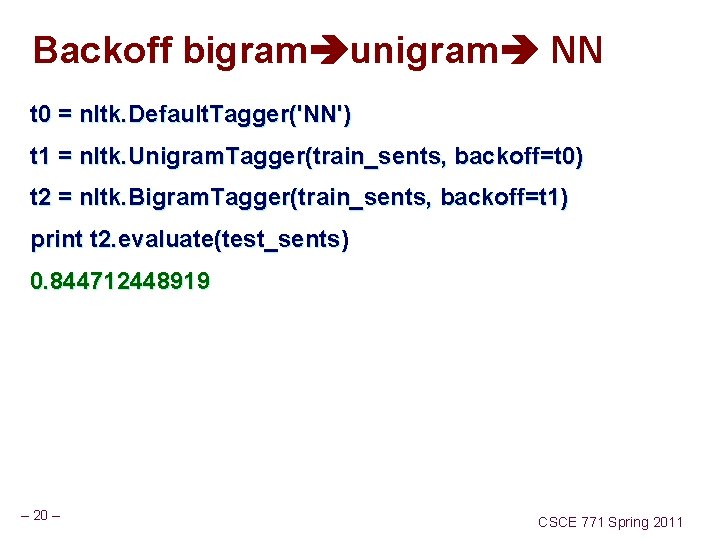

Backoff: bigram -> unigram -> NN

    t0 = nltk.DefaultTagger('NN')
    t1 = nltk.UnigramTagger(train_sents, backoff=t0)
    t2 = nltk.BigramTagger(train_sents, backoff=t1)
    print t2.evaluate(test_sents)
    0.844712448919
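The backoff chain can be pictured as three lookups tried in order: the bigram context (previous tag plus current word), then the word alone, then the default tag. The following plain Python 3 sketch shows this on invented sentences. It illustrates the idea only and is not NLTK's implementation; `train` and `tag` are hypothetical names.

```python
from collections import Counter, defaultdict

def train(tagged_sents):
    """Collect the best tag per word and per (previous tag, word) context."""
    uni, bi = defaultdict(Counter), defaultdict(Counter)
    for sent in tagged_sents:
        prev = None  # None marks the start of a sentence
        for word, tag in sent:
            uni[word][tag] += 1
            bi[(prev, word)][tag] += 1
            prev = tag

    def best(table):
        return {k: c.most_common(1)[0][0] for k, c in table.items()}

    return best(uni), best(bi)

def tag(tokens, uni, bi, default="NN"):
    """Try the bigram context first, then the word alone, then the default tag."""
    tagged, prev = [], None
    for word in tokens:
        guess = bi.get((prev, word)) or uni.get(word) or default
        tagged.append((word, guess))
        prev = guess
    return tagged

# Toy training sentences, invented for illustration.
train_sents = [
    [("they", "PPSS"), ("saw", "VBD"), ("the", "AT"), ("saw", "NN")],
    [("the", "AT"), ("saw", "NN"), ("cut", "VBD"), ("wood", "NN")],
    [("we", "PPSS"), ("saw", "VBD"), ("wood", "NN")],
    [("they", "PPSS"), ("saw", "VBD"), ("it", "PPO")],
]
uni, bi = train(train_sents)
print(tag(["we", "saw", "the", "saw"], uni, bi))  # context separates the two 'saw's
print(tag(["dogs", "saw", "wood"], uni, bi))      # unseen word falls back to NN
```

In the second call 'dogs' is unknown and gets the default, 'saw' after NN is an unseen bigram context and falls back to the unigram tag, and 'wood' after VBD is resolved by the bigram table, so no token is left as None.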

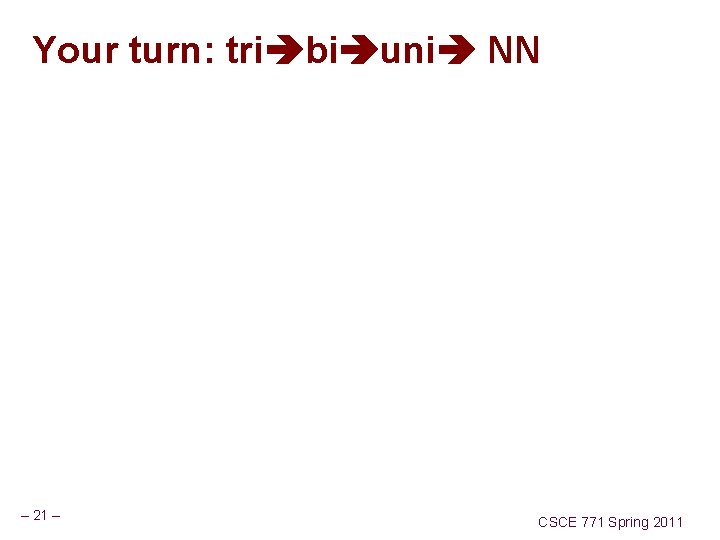

Your turn: trigram -> bigram -> unigram -> NN

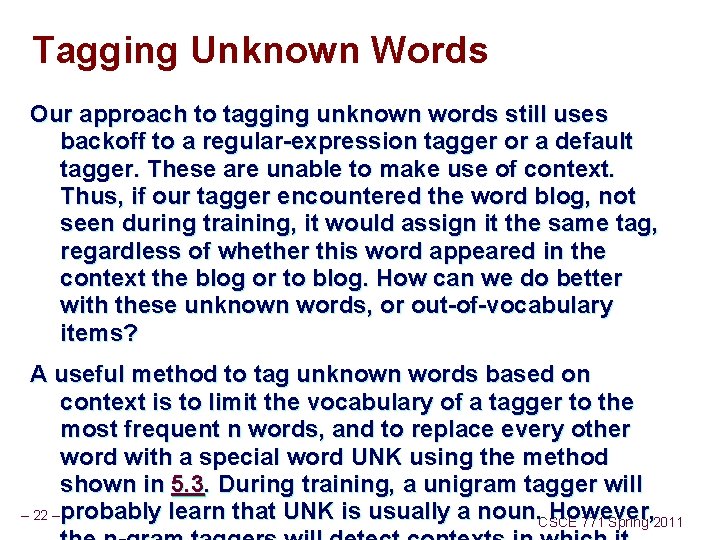

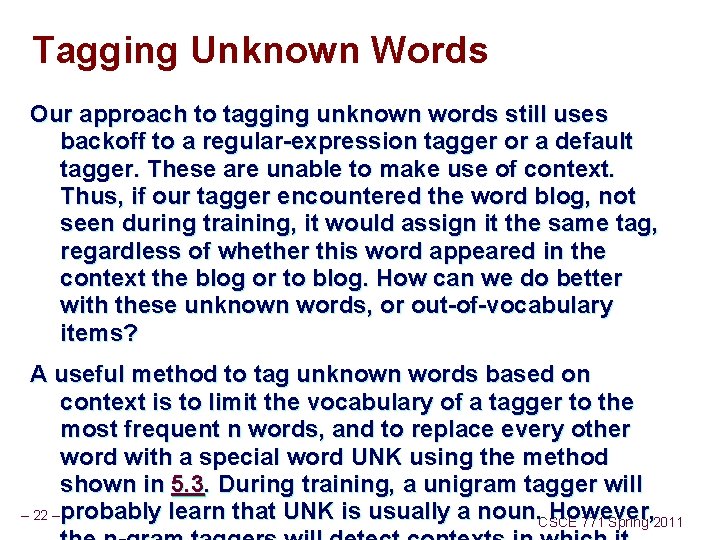

Tagging Unknown Words

Our approach to tagging unknown words still uses backoff to a regular-expression tagger or a default tagger. These are unable to make use of context. Thus, if our tagger encountered the word "blog", not seen during training, it would assign it the same tag, regardless of whether this word appeared in the context "the blog" or "to blog". How can we do better with these unknown words, or out-of-vocabulary items?

A useful method to tag unknown words based on context is to limit the vocabulary of a tagger to the most frequent n words, and to replace every other word with a special word UNK using the method shown in 5.3. During training, a unigram tagger will probably learn that UNK is usually a noun. However, …
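The vocabulary-limiting step might look like the plain Python 3 sketch below. The corpus is invented and `limit_vocabulary` is a hypothetical helper; it is one possible way to do the replacement, not necessarily the method of section 5.3.

```python
from collections import Counter

def limit_vocabulary(tagged_sents, n, unk="UNK"):
    """Keep the n most frequent words; map every other word to `unk`."""
    freq = Counter(w for sent in tagged_sents for w, _ in sent)
    vocab = {w for w, _ in freq.most_common(n)}
    replaced = [[(w if w in vocab else unk, t) for w, t in sent]
                for sent in tagged_sents]
    return replaced, vocab

# Toy tagged sentences, invented for illustration.
sents = [
    [("the", "AT"), ("blog", "NN"), ("is", "BEZ"), ("new", "JJ")],
    [("the", "AT"), ("post", "NN"), ("is", "BEZ"), ("old", "JJ")],
    [("the", "AT"), ("site", "NN")],
]
replaced, vocab = limit_vocabulary(sents, 2)

# Which tag does UNK carry most often after the replacement?
unk_tags = Counter(t for sent in replaced for w, t in sent if w == "UNK")
print(replaced[0])
print(unk_tags.most_common(1))
```

A tagger trained on `replaced` sees UNK as an ordinary word, so its tag statistics (and, for n-gram taggers, its contexts) are learned like those of any other token.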

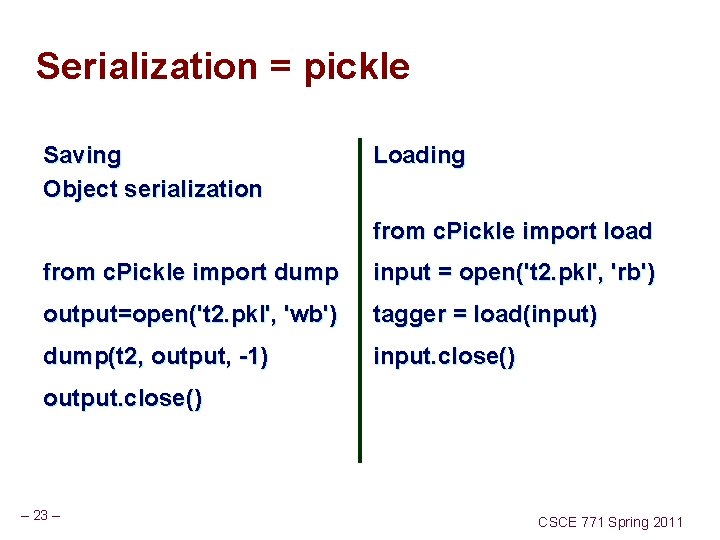

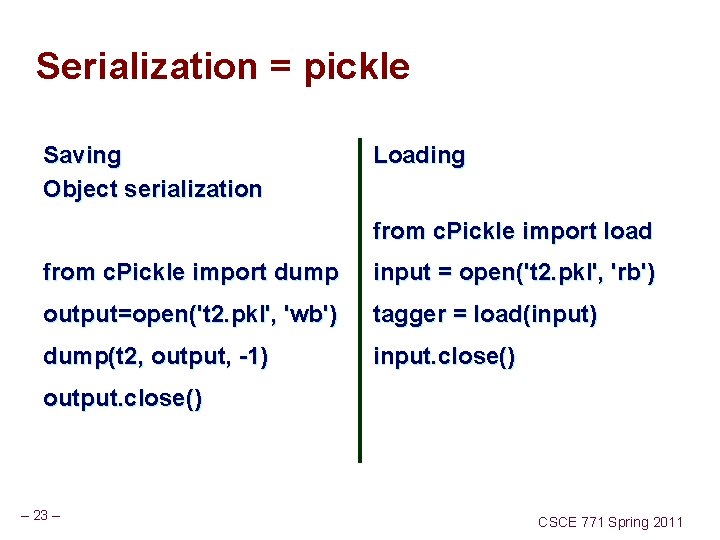

Serialization = pickle

Saving (object serialization):

    from cPickle import dump
    output = open('t2.pkl', 'wb')
    dump(t2, output, -1)   # -1 selects the highest pickle protocol
    output.close()

Loading:

    from cPickle import load
    input = open('t2.pkl', 'rb')
    tagger = load(input)
    input.close()
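In Python 3 the `cPickle` module no longer exists; the standard `pickle` module covers the same use. The sketch below shows the save/load round trip with a plain dictionary standing in for the trained tagger (an assumption, so that the example runs without NLTK) and a temporary directory instead of the working directory.

```python
import os
import pickle
import tempfile

# Stand-in for a trained tagger: any picklable object is handled the same way.
model = {"the": "AT", "of": "IN"}

path = os.path.join(tempfile.mkdtemp(), "t2.pkl")

# Saving: binary mode, highest protocol (what -1 selects in the slide code).
with open(path, "wb") as output:
    pickle.dump(model, output, pickle.HIGHEST_PROTOCOL)

# Loading: the file is closed automatically when the with-block ends.
with open(path, "rb") as infile:
    restored = pickle.load(infile)

print(restored == model)
```

Only unpickle files you created or trust, since loading a pickle can execute arbitrary code.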

Performance Limitations

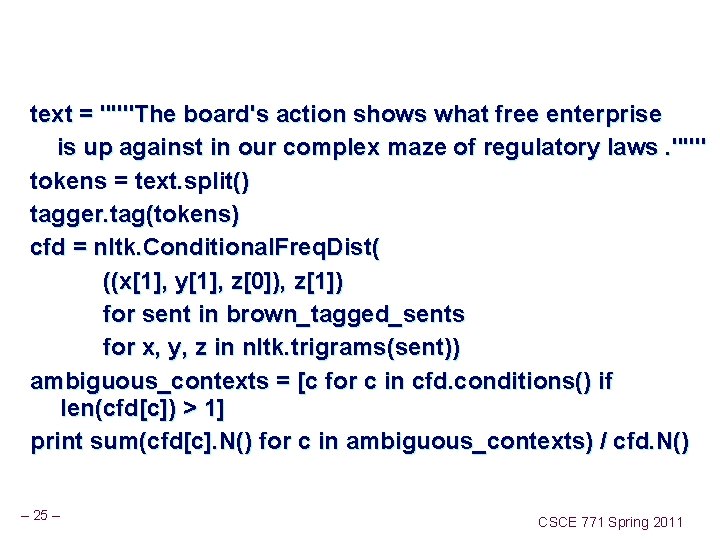

    text = """The board's action shows what free enterprise is up against in our complex maze of regulatory laws."""
    tokens = text.split()
    tagger.tag(tokens)

    # context = tags of the two previous words plus the current word
    cfd = nltk.ConditionalFreqDist(
        ((x[1], y[1], z[0]), z[1])
        for sent in brown_tagged_sents
        for x, y, z in nltk.trigrams(sent))
    ambiguous_contexts = [c for c in cfd.conditions() if len(cfd[c]) > 1]
    # float() avoids Python 2 integer division, which would print 0
    print sum(cfd[c].N() for c in ambiguous_contexts) / float(cfd.N())
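The quantity computed above is the share of trigram tokens whose context (two previous tags plus the current word) has been seen with more than one tag, which bounds what a trigram tagger can get right. A plain Python 3 sketch on invented sentences follows; `ambiguous_fraction` is a hypothetical helper.

```python
from collections import Counter, defaultdict

def ambiguous_fraction(tagged_sents):
    """Share of trigram tokens whose context occurs with more than one tag."""
    cfd = defaultdict(Counter)
    for sent in tagged_sents:
        # x, y, z are three consecutive (word, tag) pairs
        for x, y, z in zip(sent, sent[1:], sent[2:]):
            cfd[(x[1], y[1], z[0])][z[1]] += 1
    total = sum(sum(c.values()) for c in cfd.values())
    ambiguous = sum(sum(c.values()) for c in cfd.values() if len(c) > 1)
    return ambiguous / total if total else 0.0

# Toy tagged sentences, invented for illustration.
sents = [
    [("the", "AT"), ("old", "JJ"), ("man", "NN")],
    [("the", "AT"), ("old", "JJ"), ("man", "VB")],
    [("a", "AT"), ("big", "JJ"), ("dog", "NN"), ("barks", "VBZ")],
]
print(ambiguous_fraction(sents))
```

Two of the four trigram tokens share the context (AT, JJ, 'man') with conflicting tags, so whichever tag the tagger picks for that context, it must be wrong on one of them.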

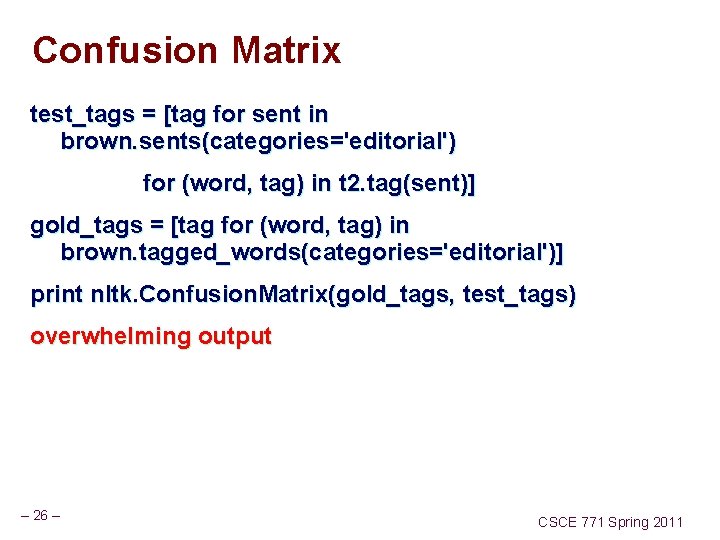

Confusion Matrix

    test_tags = [tag for sent in brown.sents(categories='editorial')
                     for (word, tag) in t2.tag(sent)]
    gold_tags = [tag for (word, tag) in brown.tagged_words(categories='editorial')]
    print nltk.ConfusionMatrix(gold_tags, test_tags)

Overwhelming output.
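One way to tame the output is to count (gold, predicted) pairs and list only the most frequent mismatches. The plain Python 3 sketch below does this on invented tag sequences; `confusion_counts` and `top_confusions` are hypothetical helpers, not part of `nltk.ConfusionMatrix`.

```python
from collections import Counter

def confusion_counts(gold_tags, test_tags):
    """Count how often each gold tag was predicted as each test tag."""
    if len(gold_tags) != len(test_tags):
        raise ValueError("tag sequences must have the same length")
    return Counter(zip(gold_tags, test_tags))

def top_confusions(counts, n=3):
    """The n most frequent (gold, predicted) pairs where the two differ."""
    errors = Counter({pair: c for pair, c in counts.items() if pair[0] != pair[1]})
    return errors.most_common(n)

# Toy tag sequences, invented for illustration.
gold = ["NN", "VB", "NN", "JJ", "VB", "VB"]
test = ["NN", "NN", "NN", "NN", "NN", "VB"]

counts = confusion_counts(gold, test)
for (g, p), c in top_confusions(counts, 1):
    print("gold %s tagged as %s: %d times" % (g, p, c))
```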


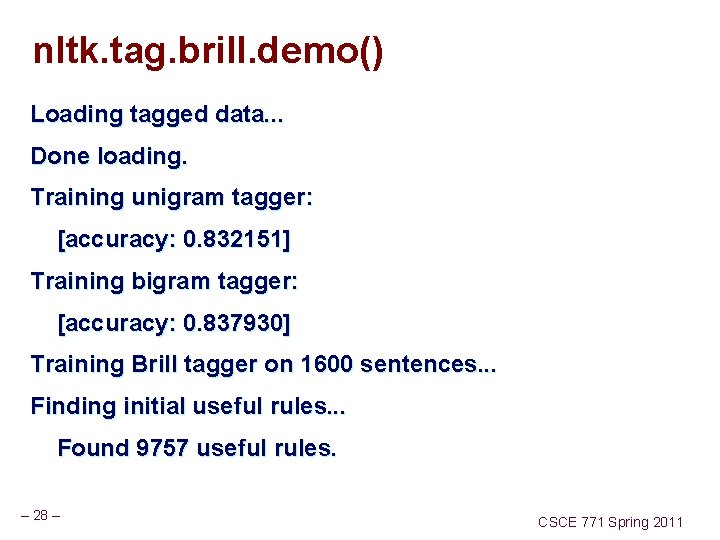

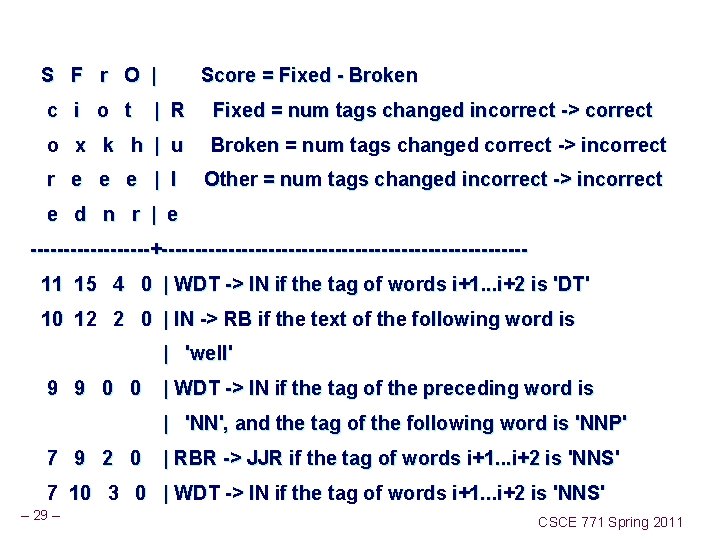

    nltk.tag.brill.demo()

    Loading tagged data...
    Done loading.
    Training unigram tagger: [accuracy: 0.832151]
    Training bigram tagger: [accuracy: 0.837930]
    Training Brill tagger on 1600 sentences...
    Finding initial useful rules...
    Found 9757 useful rules.

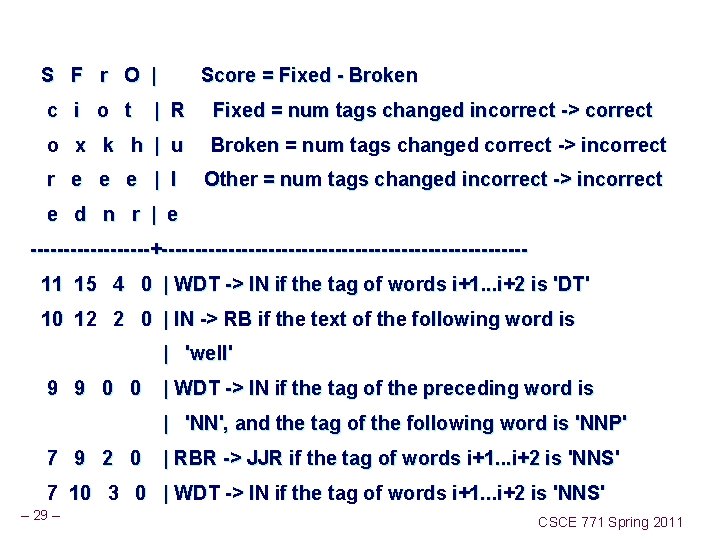

Brill rules found by the demo:

- Score = Fixed - Broken
- Fixed = num tags changed incorrect -> correct
- Broken = num tags changed correct -> incorrect
- Other = num tags changed incorrect -> incorrect

| Score | Fixed | Broken | Other | Rule |
|---|---|---|---|---|
| 11 | 15 | 4 | 0 | WDT -> IN if the tag of words i+1...i+2 is 'DT' |
| 10 | 12 | 2 | 0 | IN -> RB if the text of the following word is 'well' |
| 9 | 9 | 0 | 0 | WDT -> IN if the tag of the preceding word is 'NN', and the tag of the following word is 'NNP' |
| 7 | 9 | 2 | 0 | RBR -> JJR if the tag of words i+1...i+2 is 'NNS' |
| 7 | 10 | 3 | 0 | WDT -> IN if the tag of words i+1...i+2 is 'NNS' |

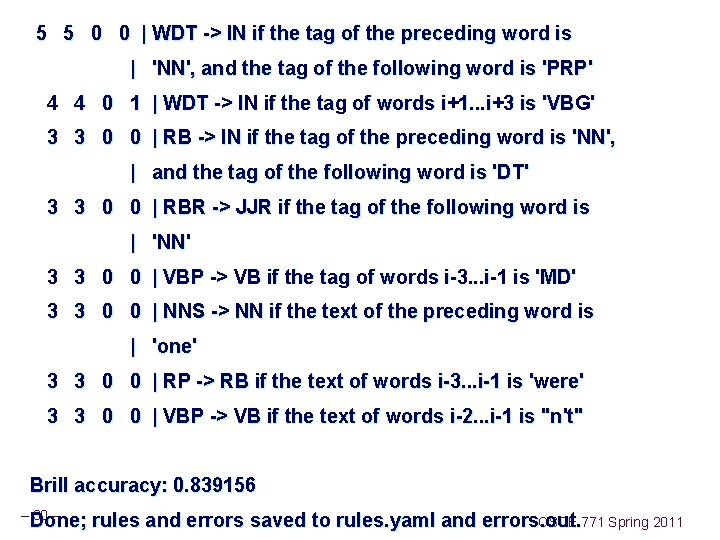

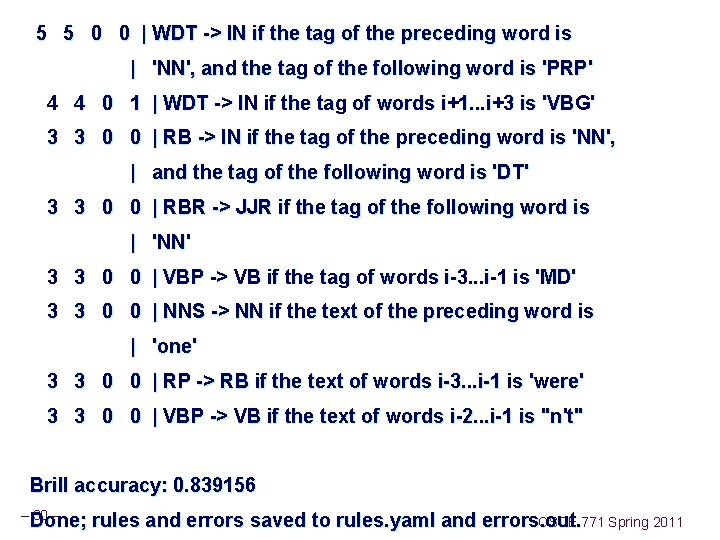

| Score | Fixed | Broken | Other | Rule |
|---|---|---|---|---|
| 5 | 5 | 0 | 0 | WDT -> IN if the tag of the preceding word is 'NN', and the tag of the following word is 'PRP' |
| 4 | 4 | 0 | 1 | WDT -> IN if the tag of words i+1...i+3 is 'VBG' |
| 3 | 3 | 0 | 0 | RB -> IN if the tag of the preceding word is 'NN', and the tag of the following word is 'DT' |
| 3 | 3 | 0 | 0 | RBR -> JJR if the tag of the following word is 'NN' |
| 3 | 3 | 0 | 0 | VBP -> VB if the tag of words i-3...i-1 is 'MD' |
| 3 | 3 | 0 | 0 | NNS -> NN if the text of the preceding word is 'one' |
| 3 | 3 | 0 | 0 | RP -> RB if the text of words i-3...i-1 is 'were' |
| 3 | 3 | 0 | 0 | VBP -> VB if the text of words i-2...i-1 is "n't" |

Brill accuracy: 0.839156

Done; rules and errors saved to rules.yaml and errors.out.
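The Score, Fixed, Broken and Other columns can be reproduced for a single rule by applying it to the current tags and comparing the changes against the gold tags. The plain Python 3 sketch below does this for one rule template ("change A to B if the preceding tag is C") on invented data. It is a simplification, not the NLTK Brill trainer; `apply_rule` and `score_rule` are hypothetical names.

```python
def apply_rule(tags, from_tag, to_tag, prev_tag):
    """Retag from_tag -> to_tag wherever the preceding (original) tag is prev_tag."""
    new = list(tags)
    for i in range(1, len(tags)):
        if tags[i] == from_tag and tags[i - 1] == prev_tag:
            new[i] = to_tag
    return new

def score_rule(current, gold, from_tag, to_tag, prev_tag):
    """Count the rule's effects: Score = Fixed - Broken, as in the demo output."""
    proposed = apply_rule(current, from_tag, to_tag, prev_tag)
    fixed = broken = other = 0
    for old, new, truth in zip(current, proposed, gold):
        if old == new:
            continue            # the rule did not touch this position
        if new == truth:
            fixed += 1          # incorrect -> correct
        elif old == truth:
            broken += 1         # correct -> incorrect
        else:
            other += 1          # incorrect -> incorrect
    return {"score": fixed - broken, "fixed": fixed, "broken": broken, "other": other}

# Toy tag sequences, invented for illustration.
current = ["TO", "NN", "AT", "NN", "TO", "NN", "TO", "NN"]
gold    = ["TO", "VB", "AT", "NN", "TO", "VB", "TO", "NN"]

result = score_rule(current, gold, from_tag="NN", to_tag="VB", prev_tag="TO")
print(result)
```

Training repeatedly picks the candidate rule with the highest score, applies it, and rescores the remaining candidates, which is why the rules in the listing appear in decreasing score order.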
