CSCE 531 Compiler Construction, Ch. 6: Run-Time Organization


CSCE 531 Compiler Construction, Ch. 6: Run-Time Organization. Spring 2008. Marco Valtorta (mgv@cse.sc.edu), University of South Carolina, Department of Computer Science and Engineering.

Acknowledgment • The slides are based on the textbook and other sources, including slides from Bent Thomsen’s course at the University of Aalborg in Denmark and several other fine textbooks • The three main other compiler textbooks I considered are: – Aho, Alfred V. , Monica S. Lam, Ravi Sethi, and Jeffrey D. Ullman. Compilers: Principles, Techniques, & Tools, 2 nd ed. Addison-Welsey, 2007. (The “dragon book”) – Appel, Andrew W. Modern Compiler Implementation in Java, 2 nd ed. Cambridge, 2002. (Editions in ML and C also available; the “tiger books”) – Grune, Dick, Henri E. Bal, Ceriel J. H. Jacobs, and UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering Koen G. Langendoen. Modern Compiler Design.

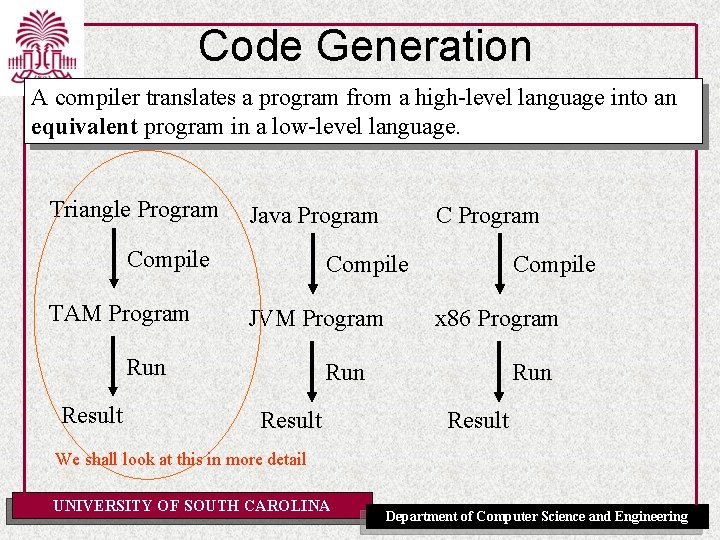

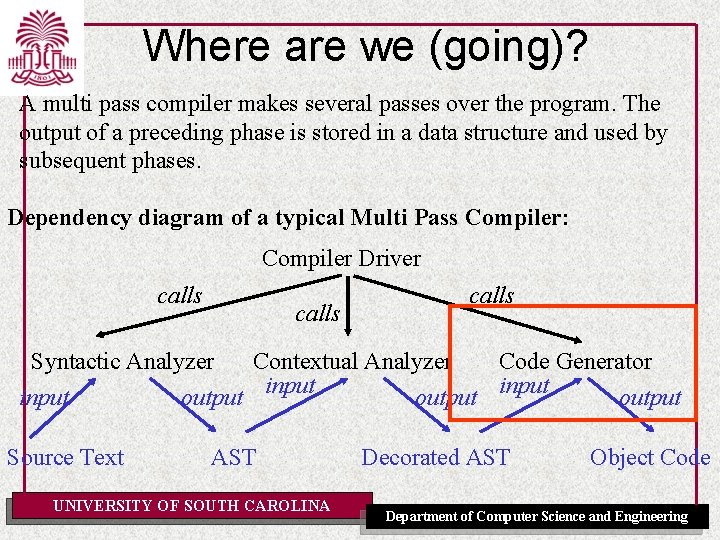

Where are we (going)? A multi pass compiler makes several passes over the program. The output of a preceding phase is stored in a data structure and used by subsequent phases. Dependency diagram of a typical Multi Pass Compiler: Compiler Driver calls Syntactic Analyzer Contextual Analyzer Code Generator input output Source Text AST UNIVERSITY OF SOUTH CAROLINA Decorated AST Object Code Department of Computer Science and Engineering

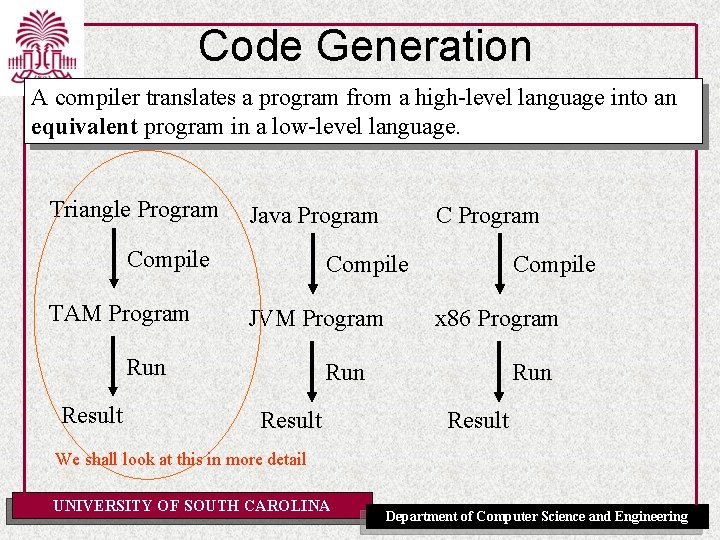

Code Generation A compiler translates a program from a high-level language into an equivalent program in a low-level language. Triangle Program Java Program Compile TAM Program Compile JVM Program Run Result C Program Compile x 86 Program Run Result We shall look at this in more detail UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

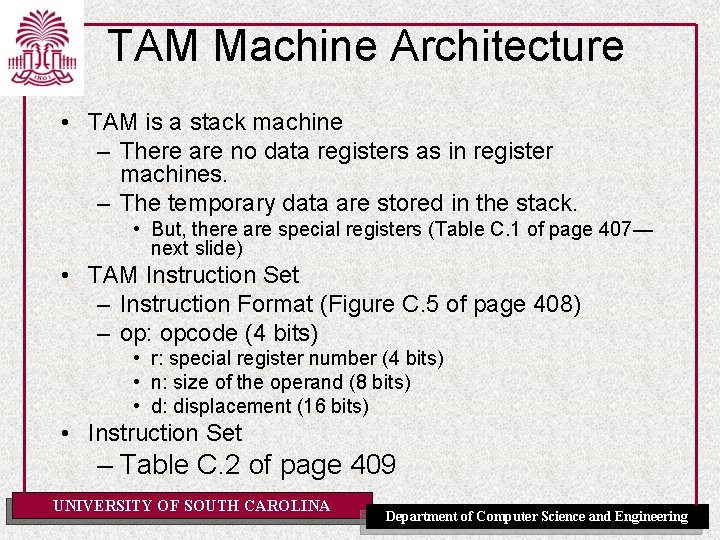

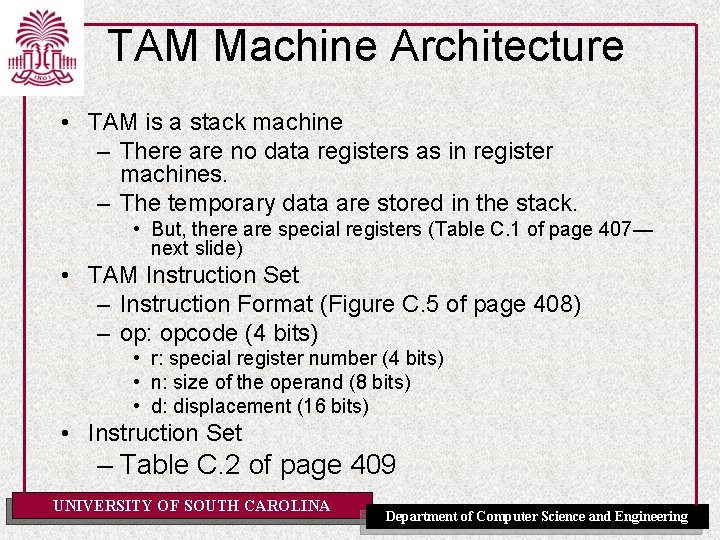

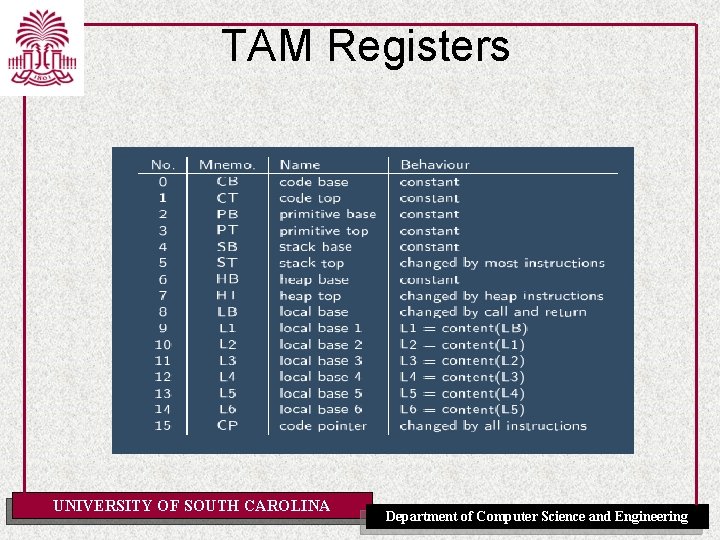

TAM Machine Architecture

- TAM is a stack machine
  - There are no data registers as in register machines.
  - The temporary data are stored on the stack.
- But there are special registers (Table C.1 on page 407; see next slide)
- TAM instruction set
  - Instruction format (Figure C.5 on page 408)
    - op: opcode (4 bits)
    - r: special register number (4 bits)
    - n: size of the operand (8 bits)
    - d: displacement (16 bits)
  - Instruction set: Table C.2 on page 409

TAM Registers UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

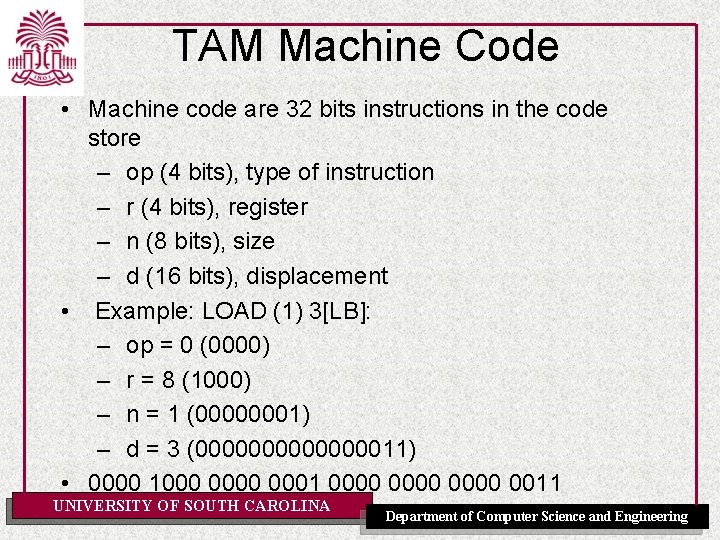

TAM Machine Code

- Machine code consists of 32-bit instructions in the code store:
  - op (4 bits): type of instruction
  - r (4 bits): register
  - n (8 bits): size
  - d (16 bits): displacement
- Example: LOAD (1) 3[LB]
  - op = 0 (0000)
  - r = 8 (1000)
  - n = 1 (00000001)
  - d = 3 (0000000000000011)
  - Encoded: 0000 1000 0000 0001 0000 0000 0000 0011
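
The field widths above are enough to pack an instruction by hand. The following is a minimal sketch, assuming the fields are laid out in the order op, r, n, d starting from the most significant bit; `encode_tam` and the constant names are hypothetical helpers, not part of any TAM tool.

```python
def encode_tam(op: int, r: int, n: int, d: int) -> int:
    """Pack the four instruction fields into one 32-bit word.

    Assumed layout (most significant bit first):
    op (4 bits) | r (4 bits) | n (8 bits) | d (16 bits)
    """
    if not (0 <= op < 16 and 0 <= r < 16 and 0 <= n < 256 and 0 <= d < 65536):
        raise ValueError("field value does not fit in its bit width")
    return (op << 28) | (r << 24) | (n << 16) | d


# Values taken from the LOAD (1) 3[LB] example: op = 0, r = 8, n = 1, d = 3.
LOAD_OPCODE = 0
LB_REGISTER = 8

word = encode_tam(LOAD_OPCODE, LB_REGISTER, 1, 3)
bits = format(word, "032b")
grouped = " ".join(bits[i:i + 4] for i in range(0, 32, 4))
print(grouped)
```

Grouping the bits in fours makes it easy to compare the printed word against the individual field values.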

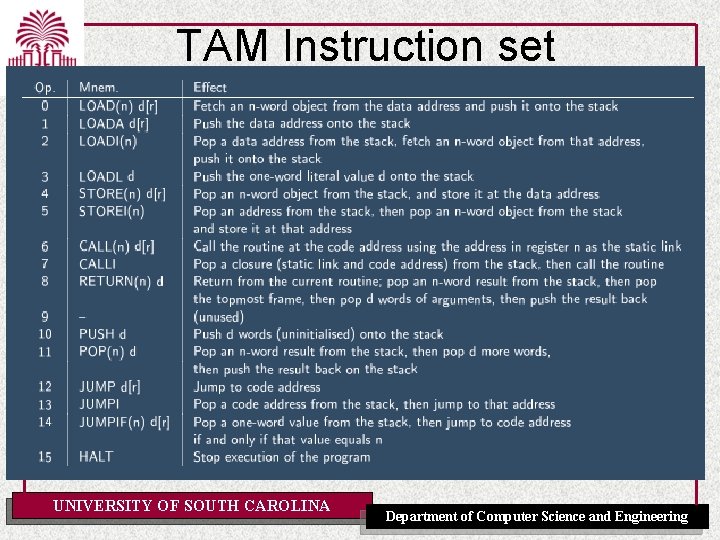

TAM Instruction set UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

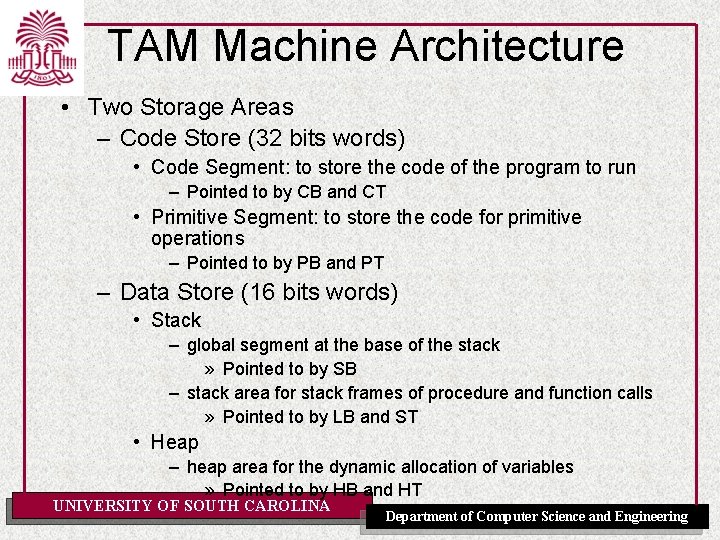

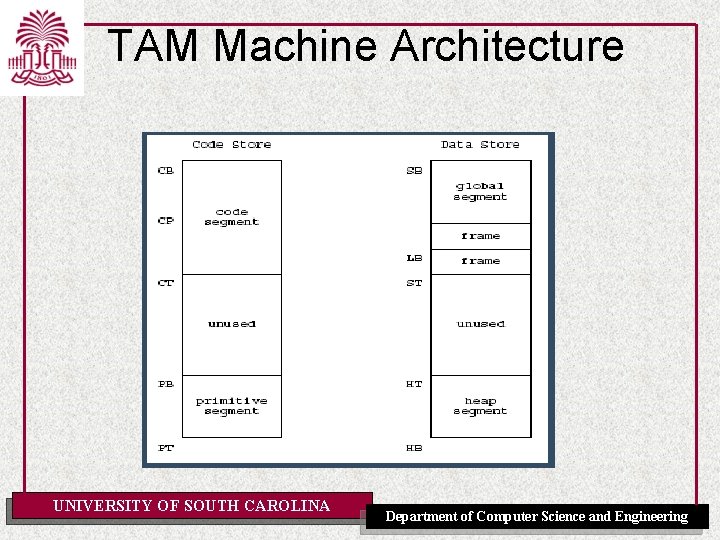

TAM Machine Architecture

- Two storage areas
  - Code store (32-bit words)
    - Code segment: stores the code of the program to run; pointed to by CB and CT
    - Primitive segment: stores the code for primitive operations; pointed to by PB and PT
  - Data store (16-bit words)
    - Stack
      - Global segment at the base of the stack; pointed to by SB
      - Stack area for stack frames of procedure and function calls; pointed to by LB and ST
    - Heap
      - Heap area for the dynamic allocation of variables; pointed to by HB and HT

TAM Machine Architecture UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

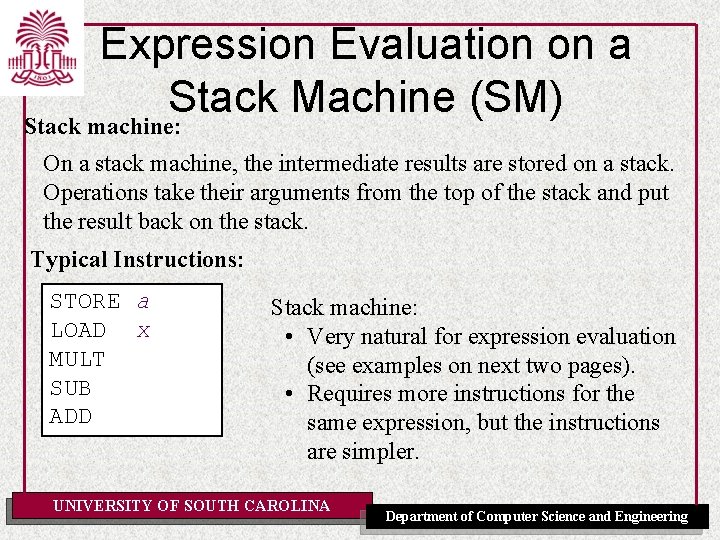

Expression Evaluation on a Stack Machine (SM)

On a stack machine, the intermediate results are stored on a stack. Operations take their arguments from the top of the stack and put the result back on the stack.

Typical instructions: STORE a, LOAD x, MULT, SUB, ADD

Stack machine:

- Very natural for expression evaluation (see the examples on the next two slides).
- Requires more instructions for the same expression, but the instructions are simpler.

Expression Evaluation on a Stack Machine

Example 1: Computing (a * b) + (1 - (c * 2)) on a stack machine.

    LOAD a      // stack: a
    LOAD b      // stack: a b
    MULT        // stack: (a*b)
    LOAD #1     // stack: (a*b) 1
    LOAD c      // stack: (a*b) 1 c
    LOAD #2     // stack: (a*b) 1 c 2
    MULT        // stack: (a*b) 1 (c*2)
    SUB         // stack: (a*b) (1-(c*2))
    ADD         // stack: (a*b)+(1-(c*2))

Note the correspondence between the instructions and the expression written in postfix notation: a b * 1 c 2 * - +
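
To see the stack discipline in action, Example 1 can be replayed on a toy interpreter. This is a minimal sketch, not TAM itself: the `run` function, the string form of the instructions, and the sample values for a, b, and c are all assumptions made for illustration.

```python
def run(program, env):
    """Execute a list of stack-machine instructions and return the final stack."""
    stack = []
    for instruction in program:
        op, *args = instruction.split()
        if op == "LOAD":
            operand = args[0]
            # '#k' denotes the literal k; anything else is a variable name.
            if operand.startswith("#"):
                stack.append(int(operand[1:]))
            else:
                stack.append(env[operand])
        elif op in ("ADD", "SUB", "MULT"):
            # Binary operations pop the right operand first, then the left one.
            right = stack.pop()
            left = stack.pop()
            if op == "ADD":
                stack.append(left + right)
            elif op == "SUB":
                stack.append(left - right)
            else:
                stack.append(left * right)
        else:
            raise ValueError(f"unknown instruction: {instruction}")
    return stack


# (a * b) + (1 - (c * 2)) in postfix order: a b * 1 c 2 * - +
program = ["LOAD a", "LOAD b", "MULT",
           "LOAD #1", "LOAD c", "LOAD #2", "MULT", "SUB", "ADD"]
result = run(program, {"a": 3, "b": 4, "c": 5})
print(result)  # the final stack holds a single value: the expression's result
```

The instruction list is exactly the postfix form of the expression, which is why no parentheses or temporaries are needed.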

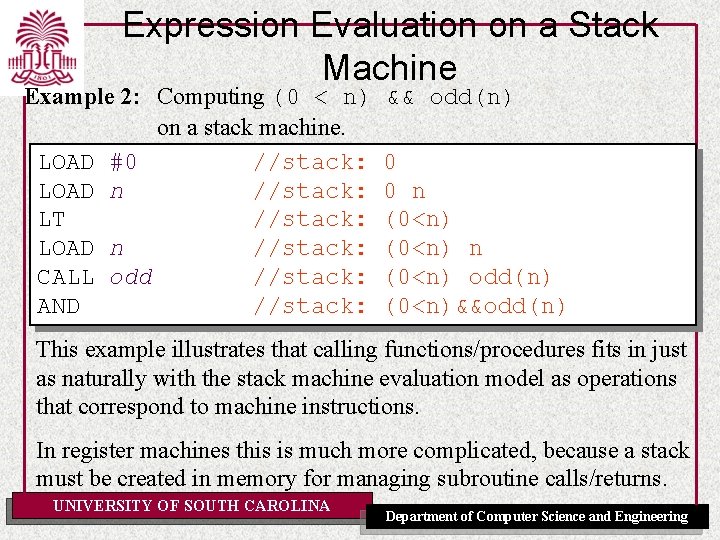

Expression Evaluation on a Stack Machine Example 2: Computing (0 < n) on a stack machine. LOAD #0 //stack: LOAD n //stack: LT //stack: LOAD n //stack: CALL odd //stack: AND //stack: && odd(n) 0 0 n (0<n) odd(n) (0<n)&&odd(n) This example illustrates that calling functions/procedures fits in just as naturally with the stack machine evaluation model as operations that correspond to machine instructions. In register machines this is much more complicated, because a stack must be created in memory for managing subroutine calls/returns. UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

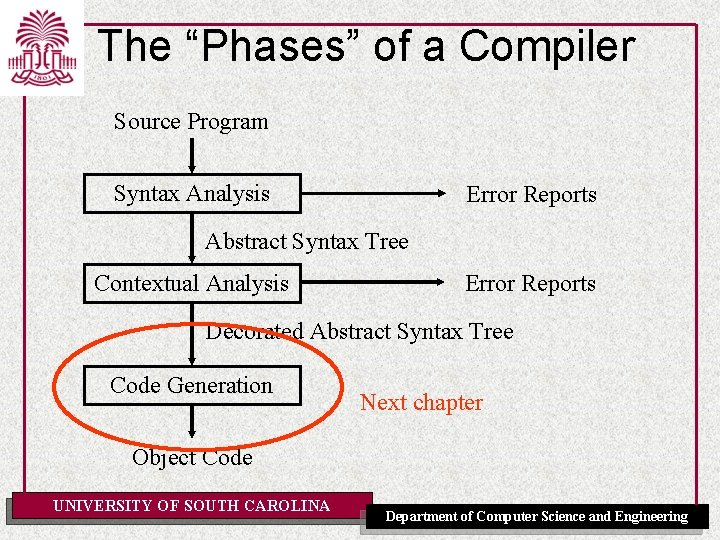

The “Phases” of a Compiler Source Program Syntax Analysis Error Reports Abstract Syntax Tree Contextual Analysis Error Reports Decorated Abstract Syntax Tree Code Generation Next chapter Object Code UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

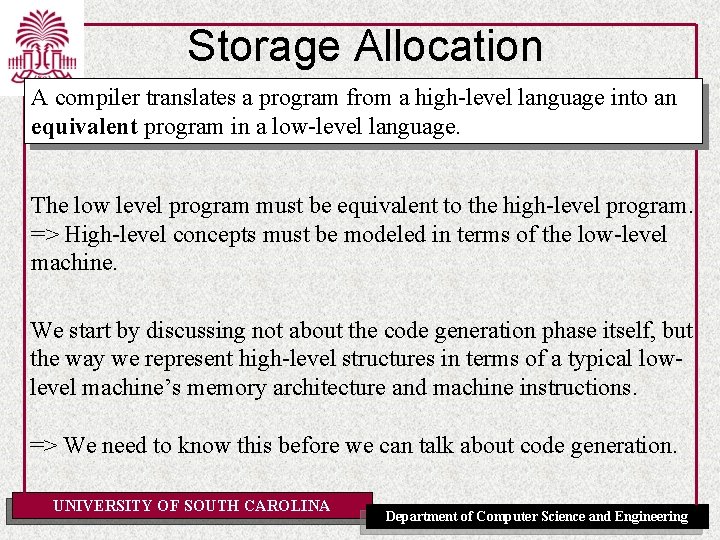

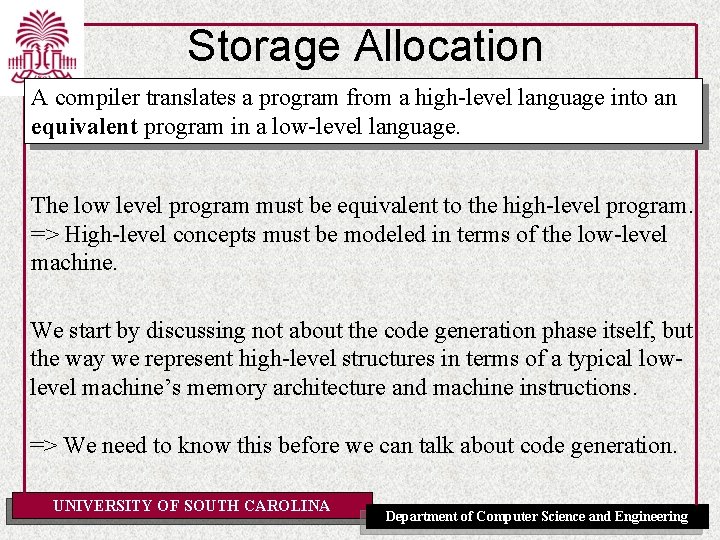

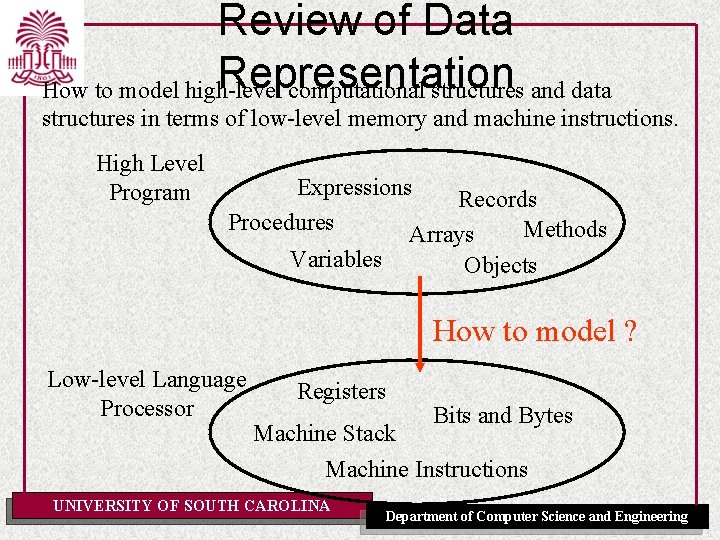

Storage Allocation A compiler translates a program from a high-level language into an equivalent program in a low-level language. The low level program must be equivalent to the high-level program. => High-level concepts must be modeled in terms of the low-level machine. We start by discussing not about the code generation phase itself, but the way we represent high-level structures in terms of a typical lowlevel machine’s memory architecture and machine instructions. => We need to know this before we can talk about code generation. UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

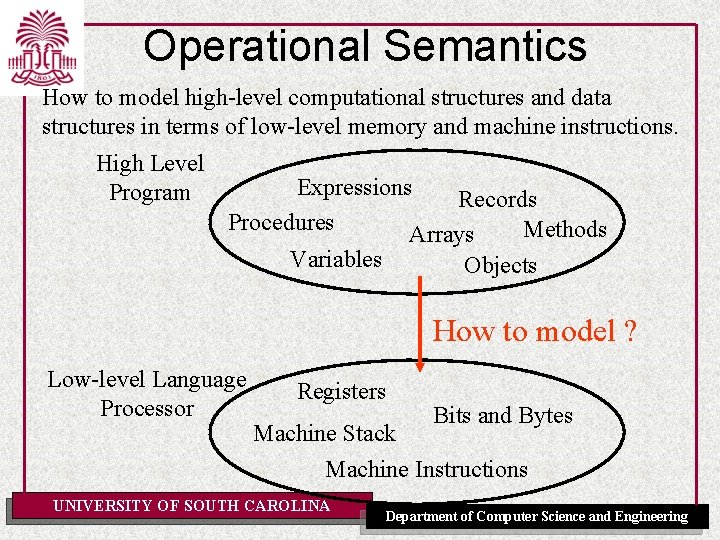

Operational Semantics How to model high-level computational structures and data structures in terms of low-level memory and machine instructions. High Level Program Expressions Records Procedures Methods Arrays Variables Objects How to model ? Low-level Language Processor Registers Bits and Bytes Machine Stack Machine Instructions UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

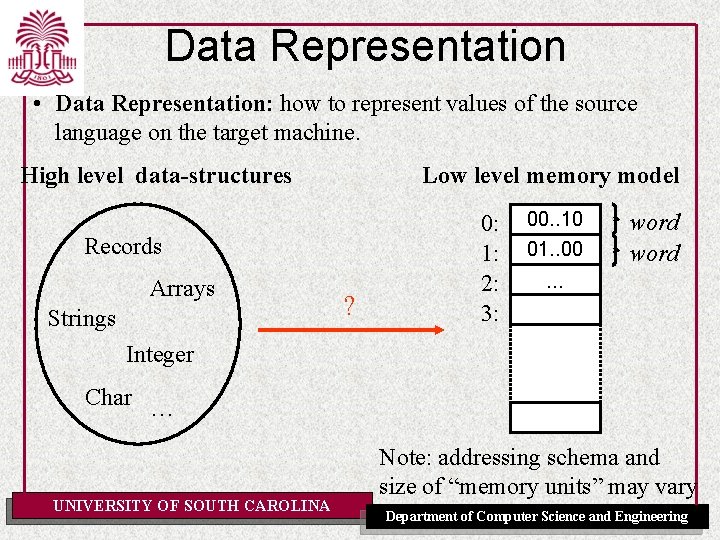

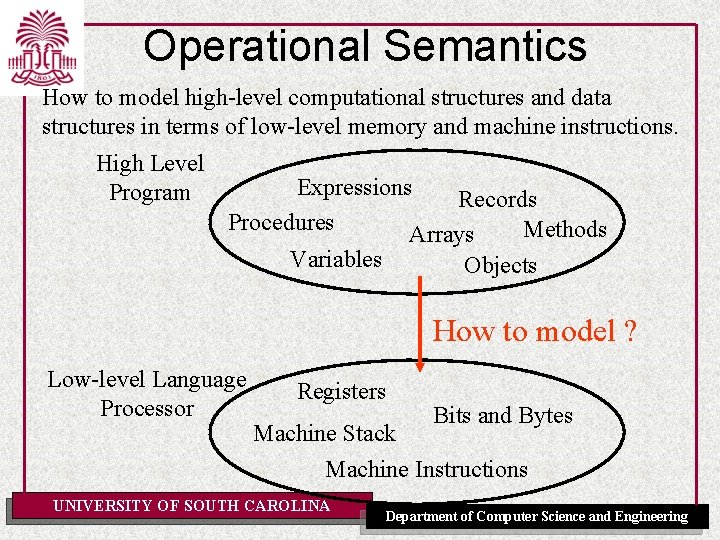

Data Representation • Data Representation: how to represent values of the source language on the target machine. High level data-structures Low level memory model Records Arrays Strings ? 0: 1: 2: 3: 00. . 10 01. . 00 word . . . Integer Char … UNIVERSITY OF SOUTH CAROLINA Note: addressing schema and size of “memory units” may vary Department of Computer Science and Engineering

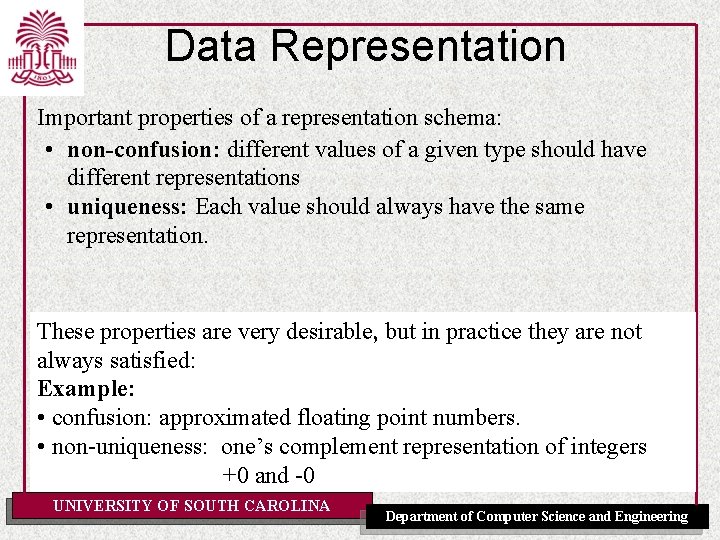

Data Representation Important properties of a representation schema: • non-confusion: different values of a given type should have different representations • uniqueness: Each value should always have the same representation. These properties are very desirable, but in practice they are not always satisfied: Example: • confusion: approximated floating point numbers. • non-uniqueness: one’s complement representation of integers +0 and -0 UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

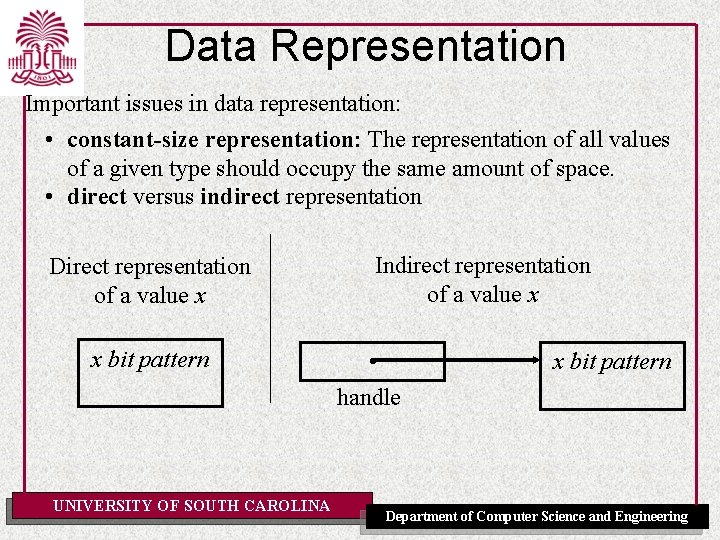

Data Representation

Important issues in data representation:

- Constant-size representation: the representation of all values of a given type should occupy the same amount of space.
- Direct versus indirect representation:
  - Direct representation of a value x: x is the bit pattern itself.
  - Indirect representation of a value x: x is a handle (pointer) to the bit pattern.

Indirect Representation Q: What reasons could there be for choosing indirect representations? To make the representation “constant size” even if representation requires different amounts of memory for different values. Both are represented by pointers =>Same size • small x bit pattern • big x bit pattern UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

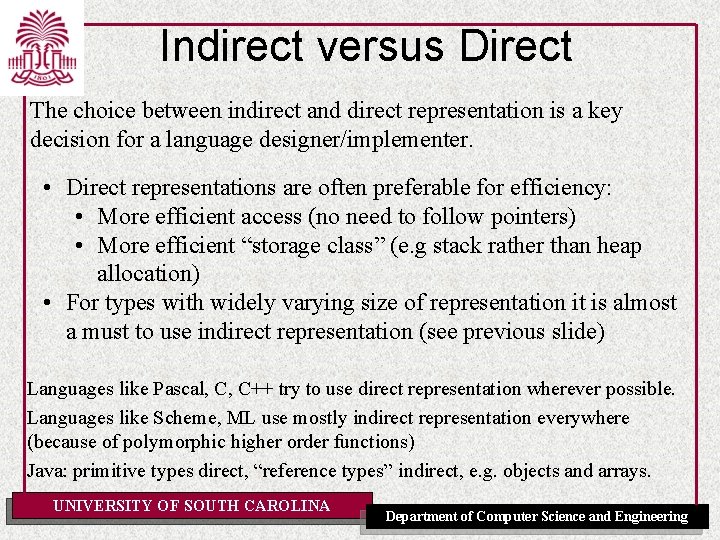

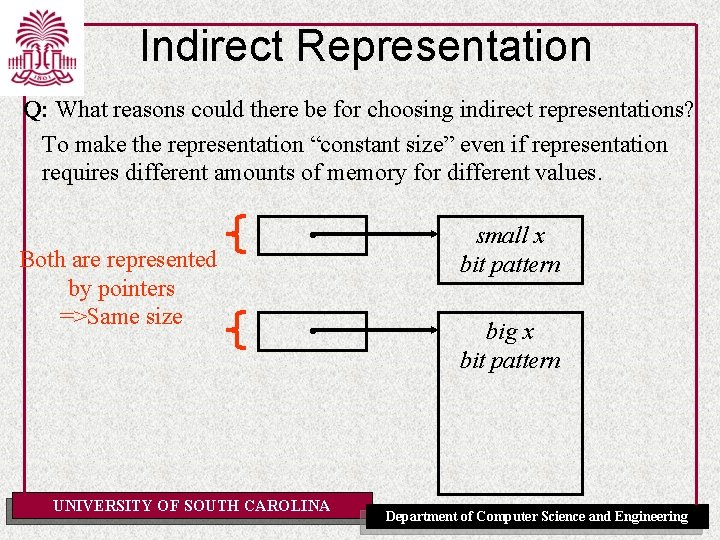

Indirect versus Direct The choice between indirect and direct representation is a key decision for a language designer/implementer. • Direct representations are often preferable for efficiency: • More efficient access (no need to follow pointers) • More efficient “storage class” (e. g stack rather than heap allocation) • For types with widely varying size of representation it is almost a must to use indirect representation (see previous slide) Languages like Pascal, C, C++ try to use direct representation wherever possible. Languages like Scheme, ML use mostly indirect representation everywhere (because of polymorphic higher order functions) Java: primitive types direct, “reference types” indirect, e. g. objects and arrays. UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

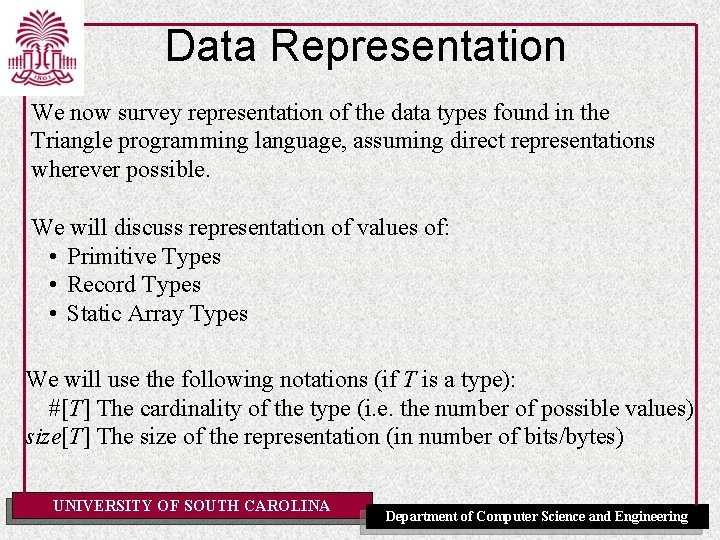

Data Representation We now survey representation of the data types found in the Triangle programming language, assuming direct representations wherever possible. We will discuss representation of values of: • Primitive Types • Record Types • Static Array Types We will use the following notations (if T is a type): #[T] The cardinality of the type (i. e. the number of possible values) size[T] The size of the representation (in number of bits/bytes) UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

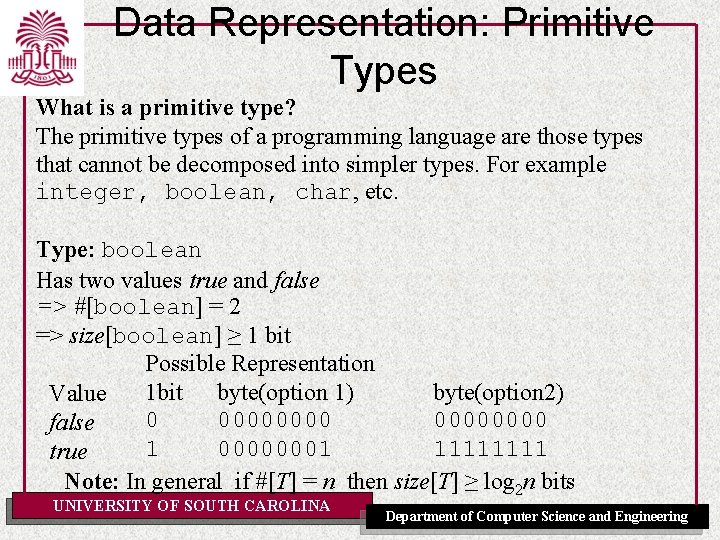

Data Representation: Primitive Types

What is a primitive type? The primitive types of a programming language are those types that cannot be decomposed into simpler types, for example integer, boolean, char, etc.

Type: boolean

Has two values, true and false => #[boolean] = 2 => size[boolean] ≥ 1 bit

Possible representations:

| Value | 1 bit | byte (option 1) | byte (option 2) |
|---|---|---|---|
| false | 0 | 00000000 | 00000000 |
| true | 1 | 00000001 | 11111111 |

Note: In general, if #[T] = n then size[T] ≥ log₂ n bits.
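
The bound size[T] ≥ log₂ n can be turned into a whole number of bits by rounding up. A minimal sketch follows; the helper name `min_bits` is hypothetical.

```python
def min_bits(cardinality: int) -> int:
    """Smallest whole number of bits that can distinguish `cardinality` values.

    Equivalent to ceil(log2(cardinality)), computed with integer arithmetic
    to avoid floating-point rounding.
    """
    if cardinality < 1:
        raise ValueError("a type must have at least one value")
    return (cardinality - 1).bit_length()


print(min_bits(2))      # boolean: two values
print(min_bits(65536))  # a type with 2^16 values
```

Implementations usually round this lower bound up further, to a byte or a word, because those are the units the target machine addresses efficiently.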

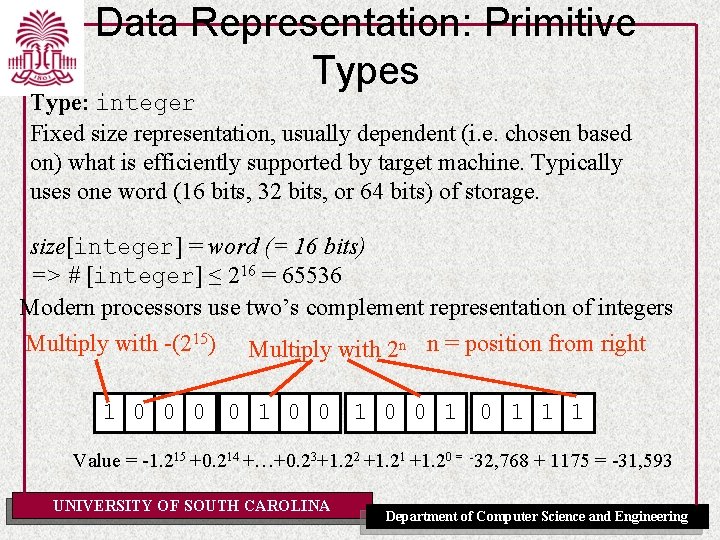

Data Representation: Primitive Types

Type: integer

Fixed-size representation, usually chosen based on what is efficiently supported by the target machine. Typically uses one word (16 bits, 32 bits, or 64 bits) of storage.

size[integer] = word (= 16 bits) => #[integer] ≤ 2^16 = 65536

Modern processors use two’s complement representation of integers: the leftmost bit is multiplied by -(2^15), and every other bit is multiplied by 2^n, where n is its position from the right.

Example (16 bits): 1 0 0 … 1 0 1 1 1

Value = -1·2^15 + 0·2^14 + … + 0·2^3 + 1·2^2 + 1·2^1 + 1·2^0 = -32,768 + 1,175 = -31,593
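
The weighting rule for two's complement can be checked mechanically. The sketch below is illustrative only: `twos_complement_value` is a hypothetical helper, and the full 16-bit pattern is an assumption, chosen so that its leading and trailing bits match the example and its non-sign bits add up to 1,175.

```python
def twos_complement_value(bits: str) -> int:
    """Interpret a string of '0'/'1' characters as a two's complement integer."""
    width = len(bits)
    unsigned = int(bits, 2)
    # The leftmost bit carries weight -(2^(width-1)) instead of +(2^(width-1)),
    # which is the same as subtracting 2^width when that bit is set.
    return unsigned - (1 << width) if bits[0] == "1" else unsigned


# Assumed full pattern for the 16-bit example: 1000 0100 1001 0111
pattern = "1000010010010111"
value = twos_complement_value(pattern)
print(value)

# The same function reproduces the extremes of a 4-bit system.
print(twos_complement_value("0111"), twos_complement_value("1000"))
```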

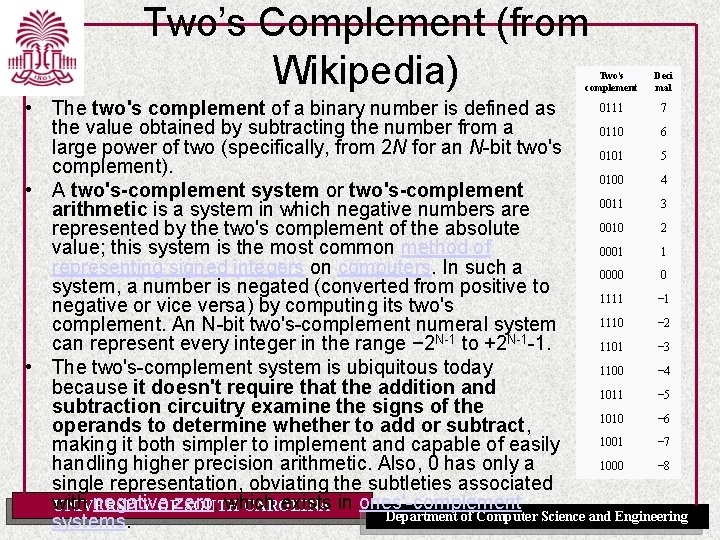

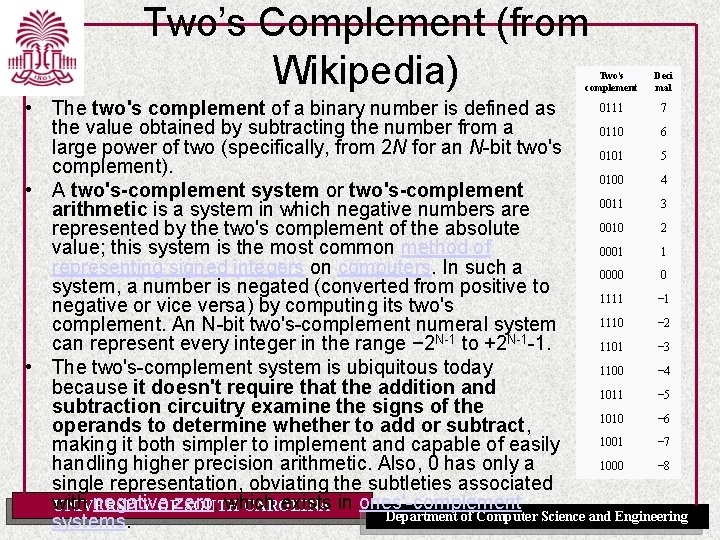

Two’s Complement (from Wikipedia) Two's complement Deci mal 0111 7 • The two's complement of a binary number is defined as the value obtained by subtracting the number from a 0110 6 large power of two (specifically, from 2 N for an N-bit two's 0101 5 complement). 0100 4 • A two's-complement system or two's-complement 0011 3 arithmetic is a system in which negative numbers are 0010 2 represented by the two's complement of the absolute value; this system is the most common method of 0001 1 representing signed integers on computers. In such a 0000 0 system, a number is negated (converted from positive to 1111 − 1 negative or vice versa) by computing its two's 1110 − 2 complement. An N-bit two's-complement numeral system can represent every integer in the range − 2 N-1 to +2 N-1 -1. 1101 − 3 • The two's-complement system is ubiquitous today 1100 − 4 because it doesn't require that the addition and 1011 − 5 subtraction circuitry examine the signs of the 1010 − 6 operands to determine whether to add or subtract, 1001 − 7 making it both simpler to implement and capable of easily 1000 − 8 handling higher precision arithmetic. Also, 0 has only a single representation, obviating the subtleties associated with negative which exists in ones'-complement UNIVERSITY OFzero, SOUTH CAROLINA Department of Computer Science and Engineering systems.

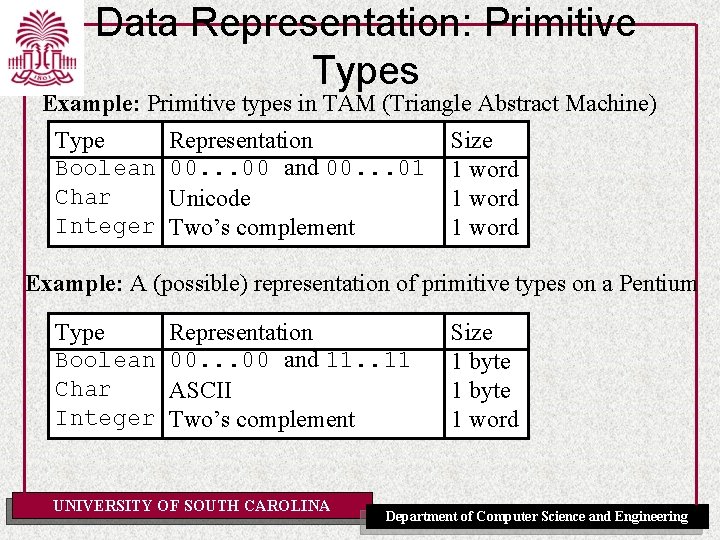

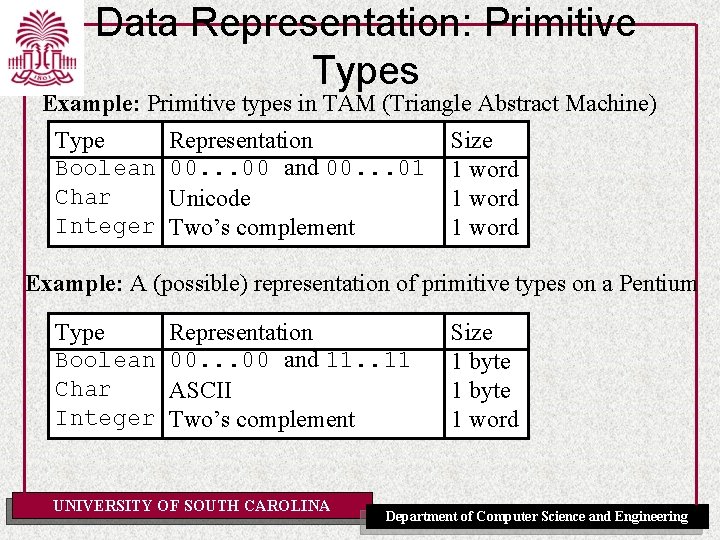

Data Representation: Primitive Types Example: Primitive types in TAM (Triangle Abstract Machine) Type Boolean Char Integer Representation 00. . . 00 and 00. . . 01 Unicode Two’s complement Size 1 word Example: A (possible) representation of primitive types on a Pentium Type Boolean Char Integer Representation 00. . . 00 and 11. . 11 ASCII Two’s complement UNIVERSITY OF SOUTH CAROLINA Size 1 byte 1 word Department of Computer Science and Engineering

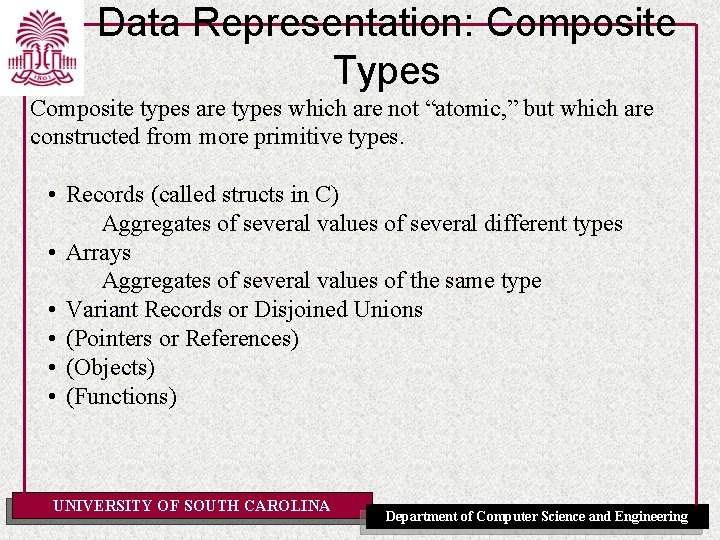

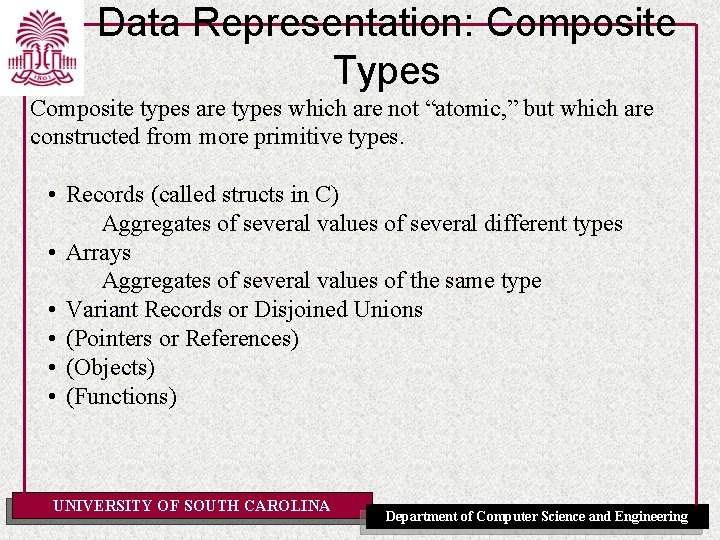

Data Representation: Composite Types Composite types are types which are not “atomic, ” but which are constructed from more primitive types. • Records (called structs in C) Aggregates of several values of several different types • Arrays Aggregates of several values of the same type • Variant Records or Disjoined Unions • (Pointers or References) • (Objects) • (Functions) UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

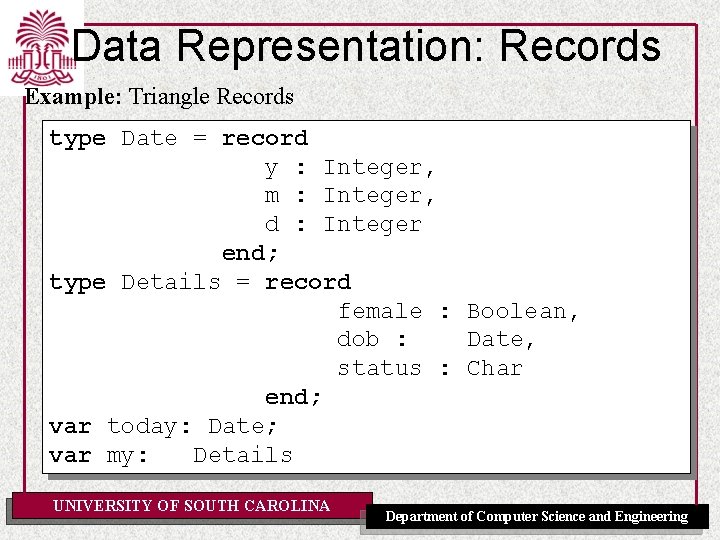

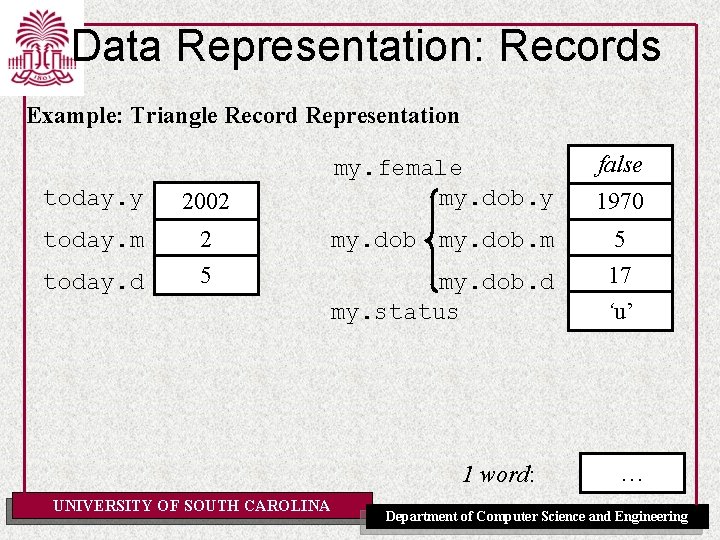

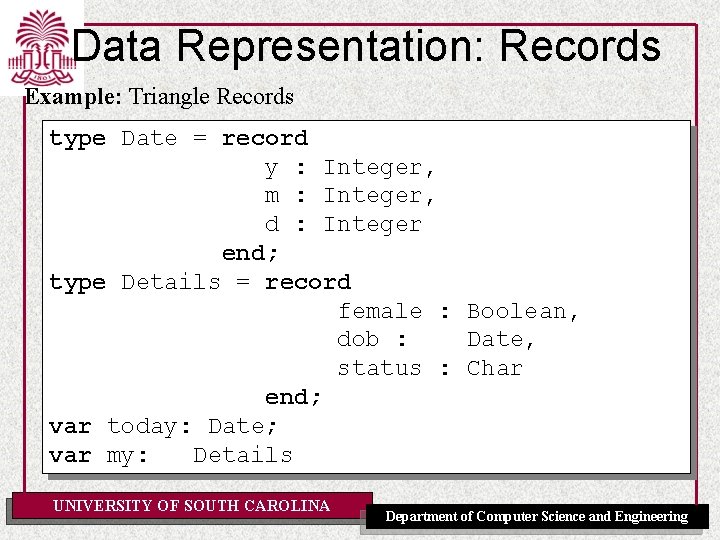

Data Representation: Records

Example: Triangle Records

    type Date = record
                  y : Integer,
                  m : Integer,
                  d : Integer
                end;
    type Details = record
                     female : Boolean,
                     dob : Date,
                     status : Char
                   end;
    var today: Date;
    var my: Details

Data Representation: Records

Example: Triangle Record Representation (each row occupies 1 word)

| Location | Value |
|---|---|
| today.y | 2002 |
| today.m | 2 |
| today.d | 5 |

| Location | Value |
|---|---|
| my.female | false |
| my.dob.y | 1970 |
| my.dob.m | 5 |
| my.dob.d | 17 |
| my.status | ‘u’ |

Data Representation: Records occur in some form or other in most programming languages: Ada, Pascal, Triangle (here they are actually called records) C, C++ (here they are called structs). The usual representation of a record type is just the concatenation of individual representations of each of its component types. r. I 1 value of type T 1 r. I 2 value of type T 2 r. In value of type Tn UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

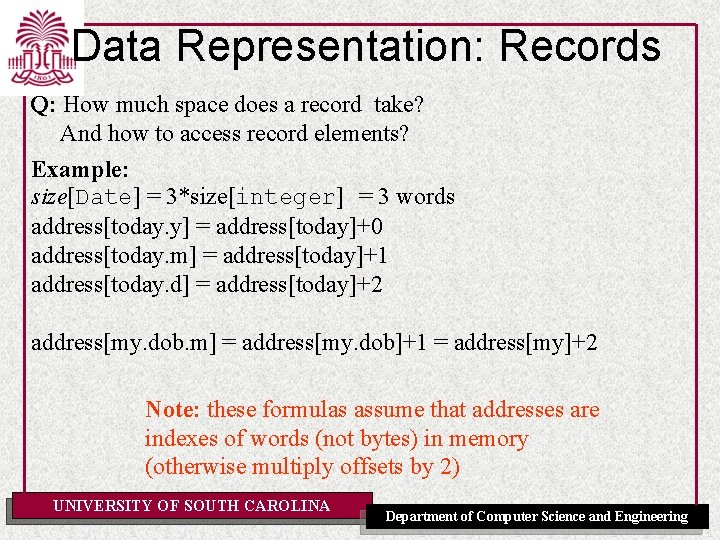

Data Representation: Records

Q: How much space does a record take? And how to access record elements?

Example:

- size[Date] = 3*size[integer] = 3 words
- address[today.y] = address[today]+0
- address[today.m] = address[today]+1
- address[today.d] = address[today]+2
- address[my.dob.m] = address[my.dob]+1 = address[my]+2

Note: these formulas assume that addresses are indexes of words (not bytes) in memory (otherwise multiply offsets by 2).
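
The offset arithmetic above follows directly from concatenating the fields. As a minimal sketch, assuming word addressing and one word per primitive type, a compiler could compute the layout like this (the `SIZES` table and `layout` function are hypothetical names):

```python
# Size of each known type in words (assumption: every primitive takes 1 word).
SIZES = {"Integer": 1, "Boolean": 1, "Char": 1}


def layout(fields):
    """Return ({field name: offset}, total size) for a list of (name, type) pairs."""
    offsets = {}
    offset = 0
    for name, type_name in fields:
        offsets[name] = offset      # a field starts where the previous one ended
        offset += SIZES[type_name]
    return offsets, offset


date_offsets, date_size = layout(
    [("y", "Integer"), ("m", "Integer"), ("d", "Integer")])
SIZES["Date"] = date_size           # record types become usable as field types

details_offsets, details_size = layout(
    [("female", "Boolean"), ("dob", "Date"), ("status", "Char")])

# Offset of my.dob.m relative to address[my]: offset of dob plus offset of m.
dob_m_offset = details_offsets["dob"] + date_offsets["m"]
print(date_size, details_offsets, dob_m_offset)
```

Nested field access is just the sum of the offsets along the path, so the whole address computation can be done at compile time.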

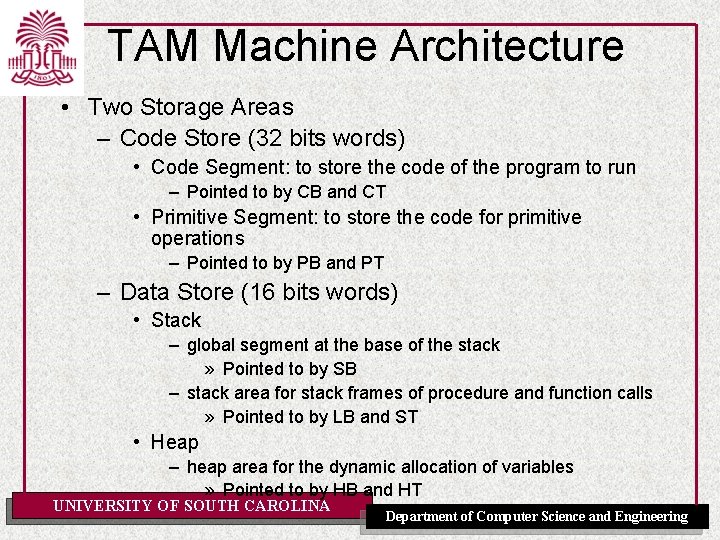

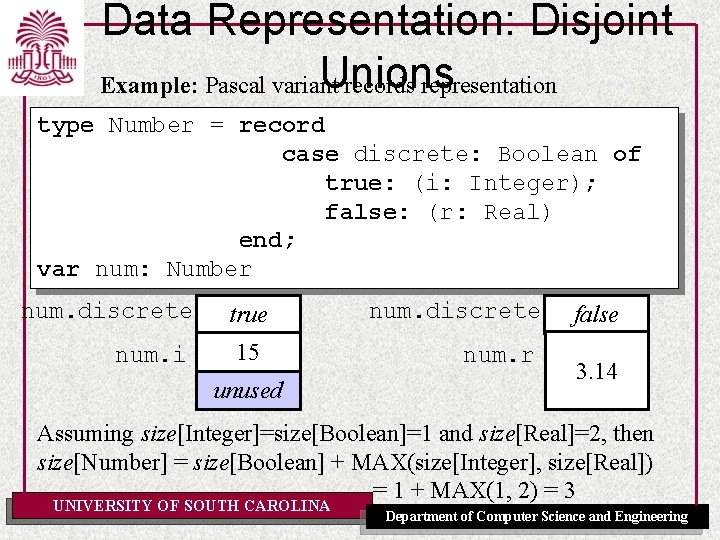

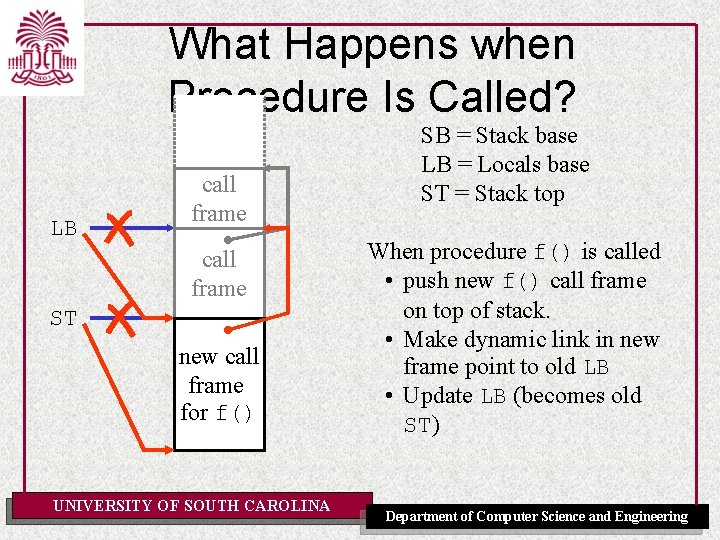

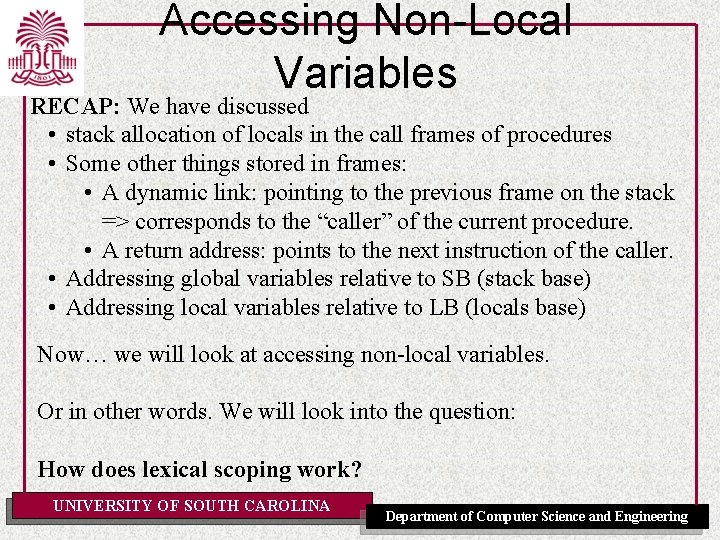

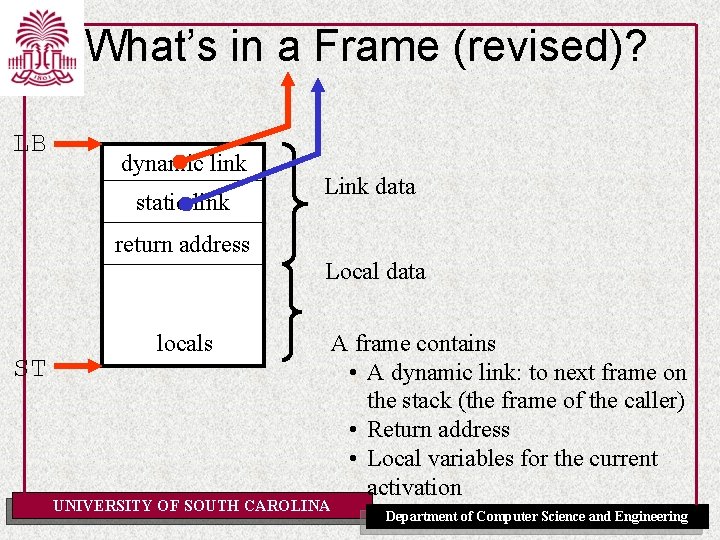

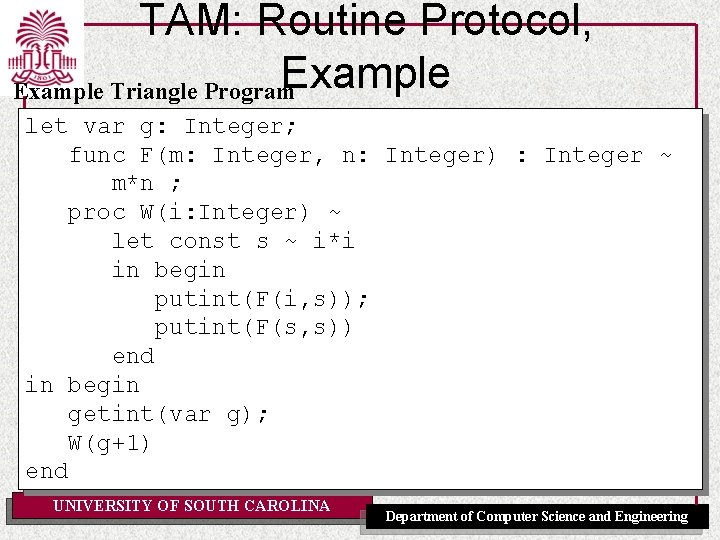

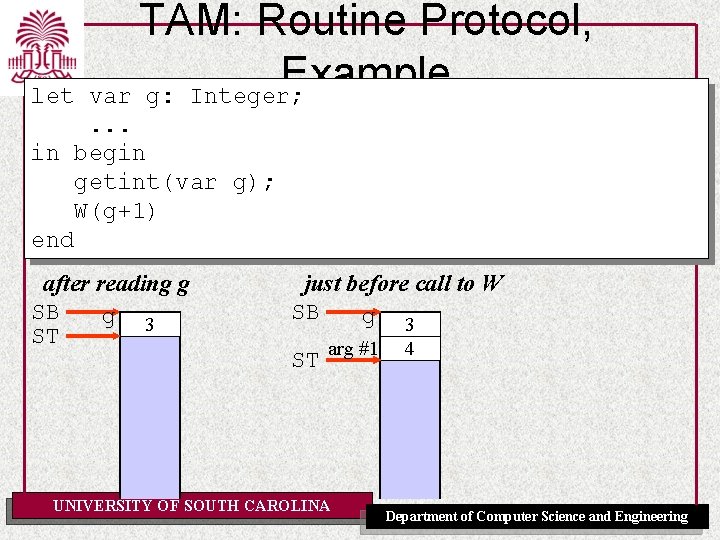

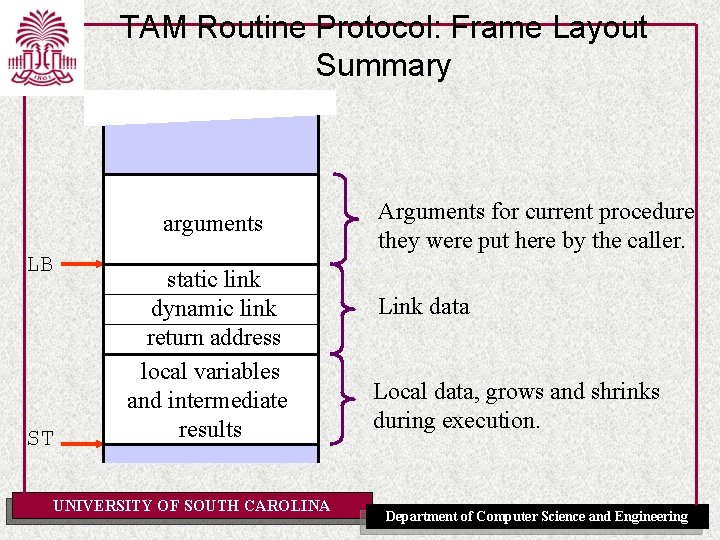

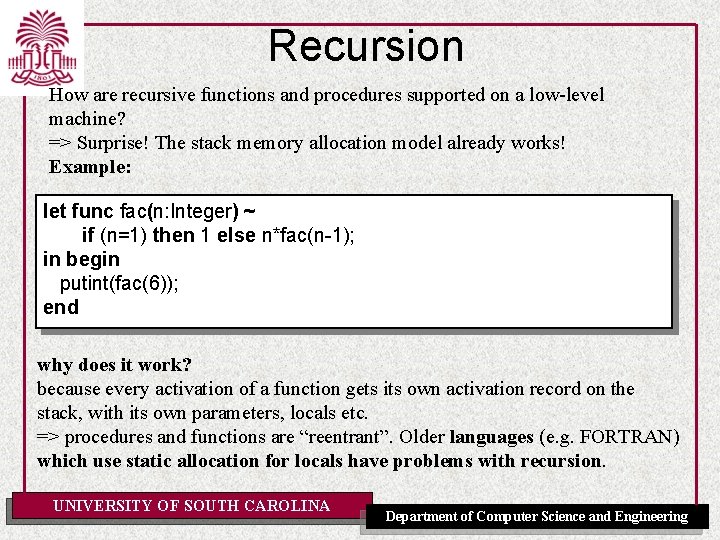

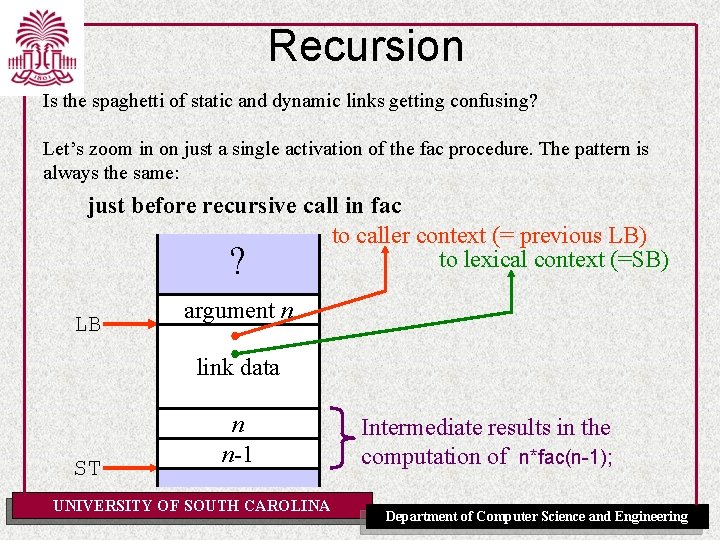

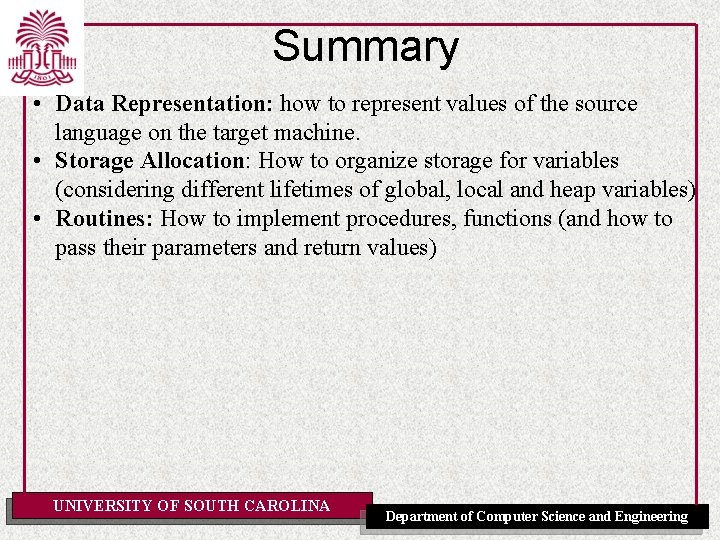

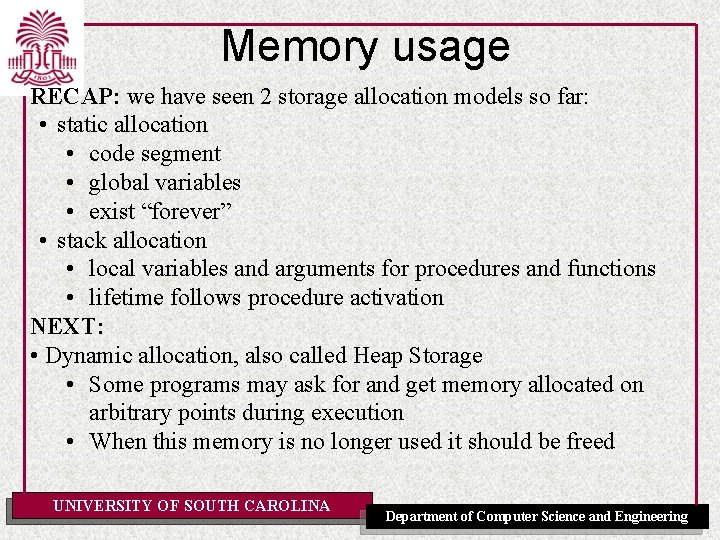

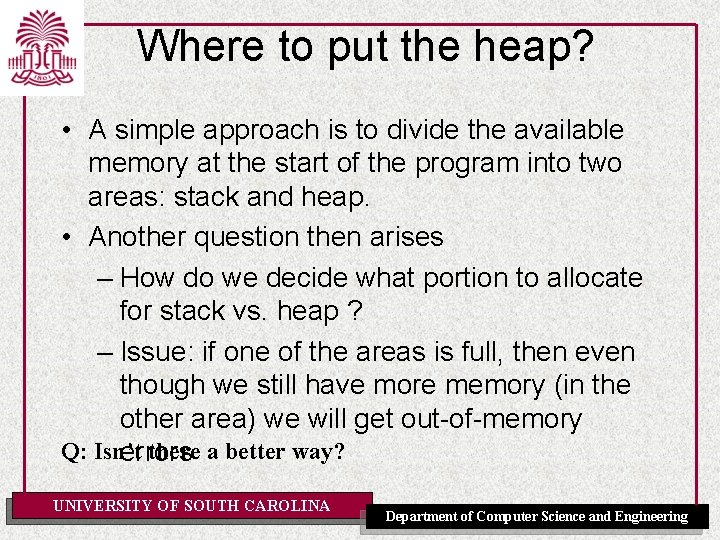

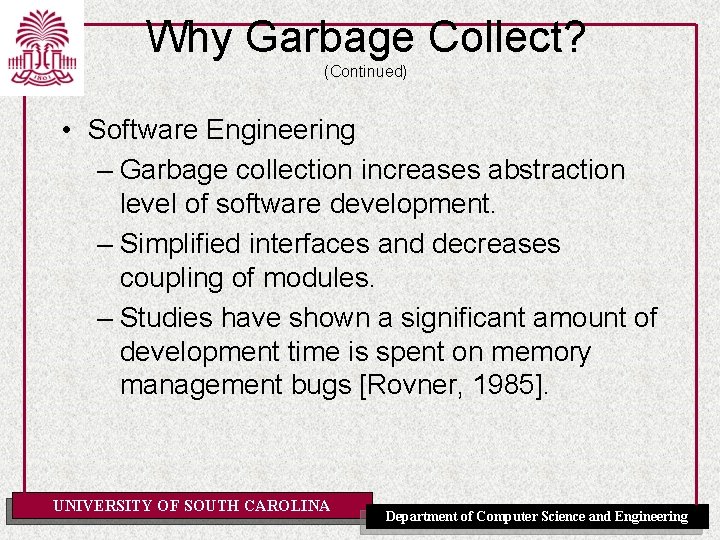

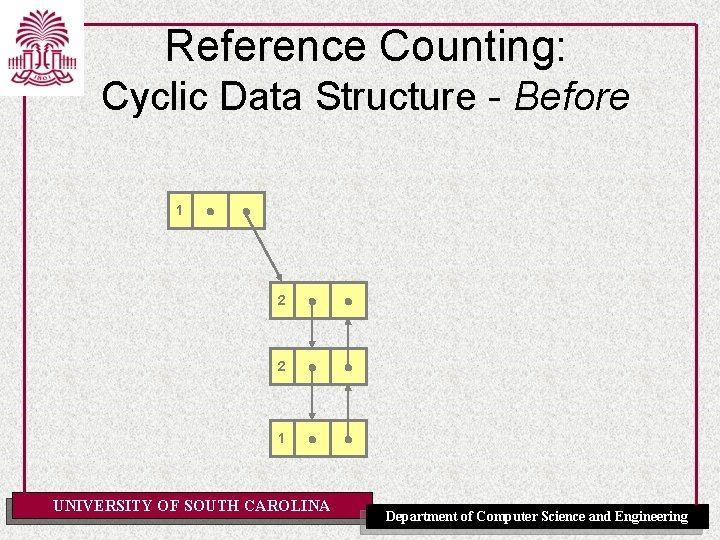

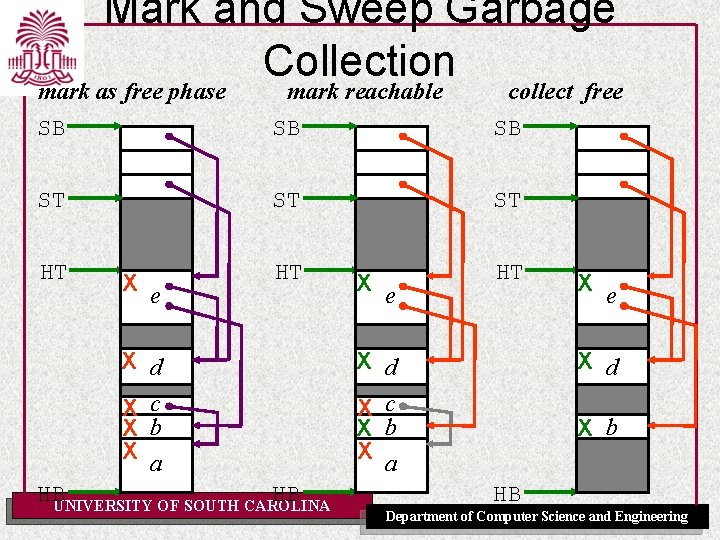

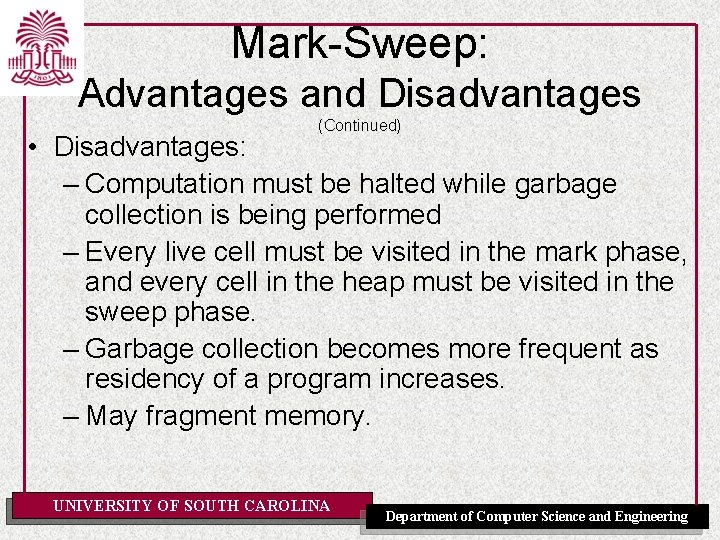

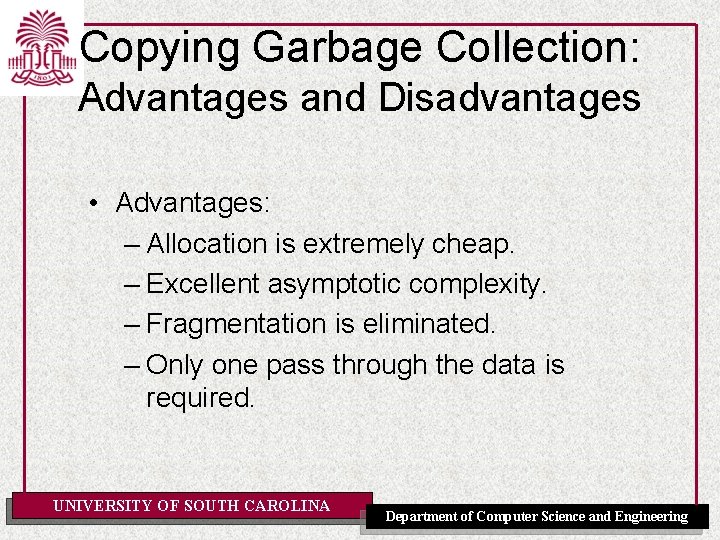

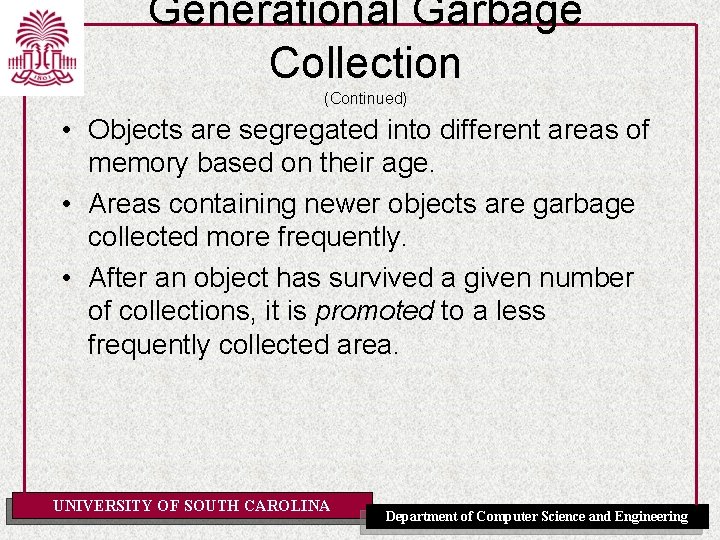

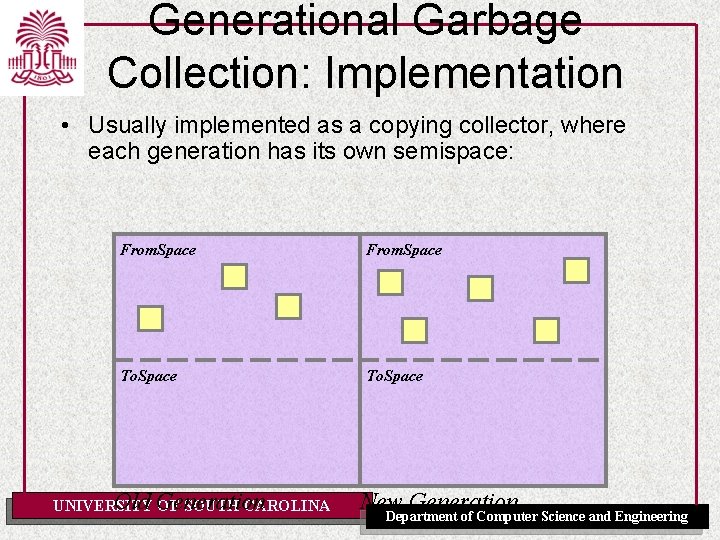

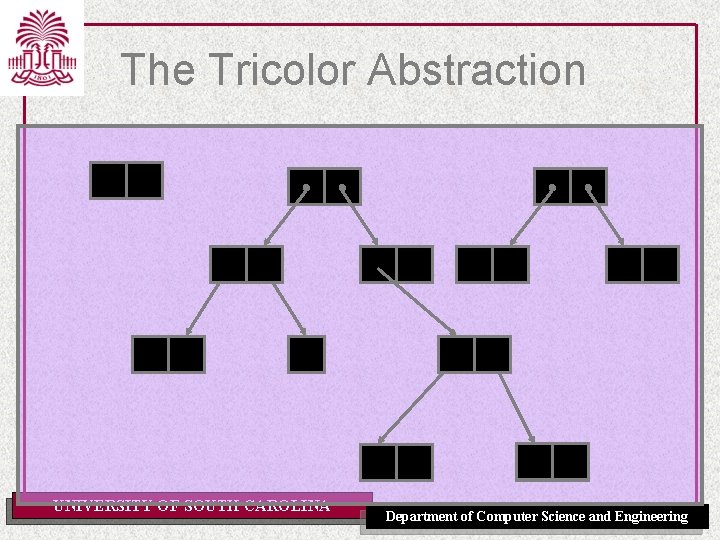

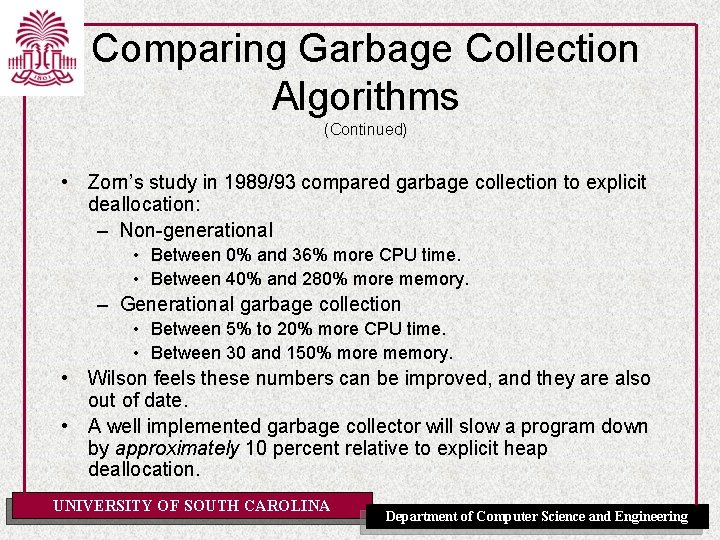

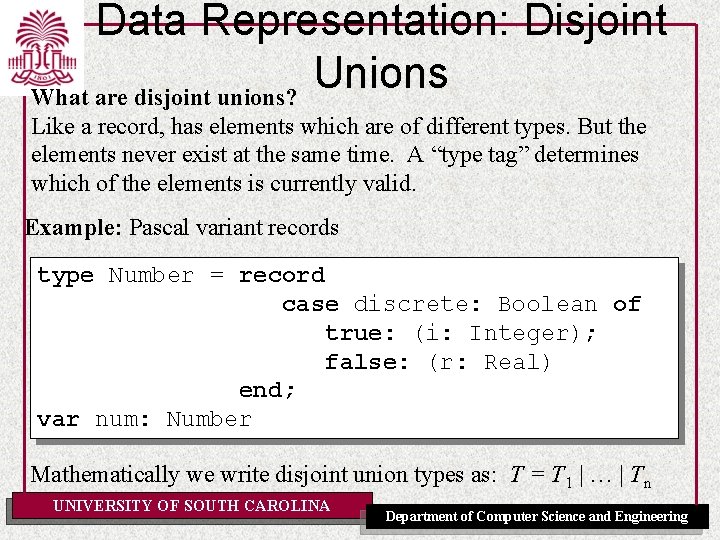

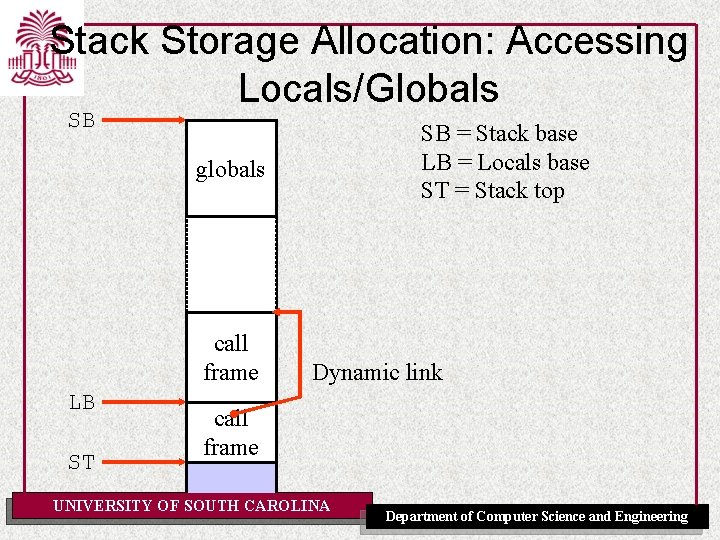

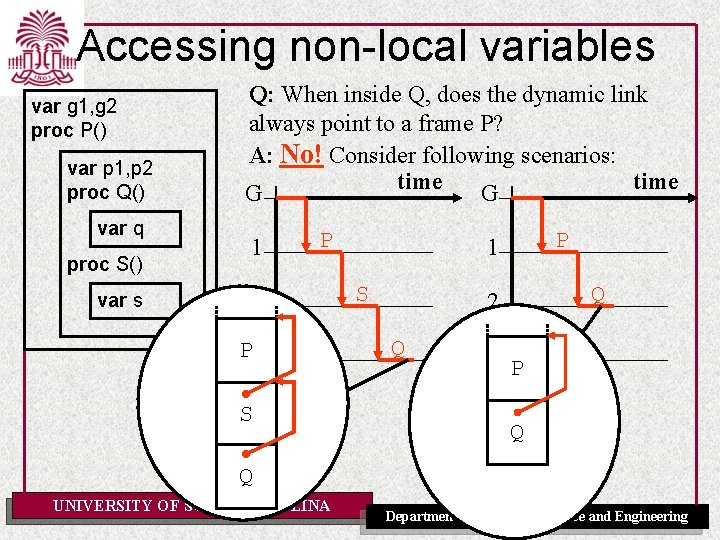

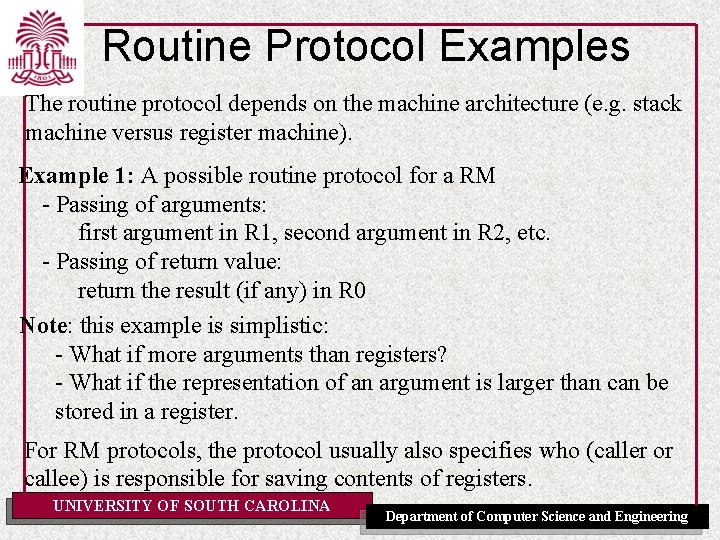

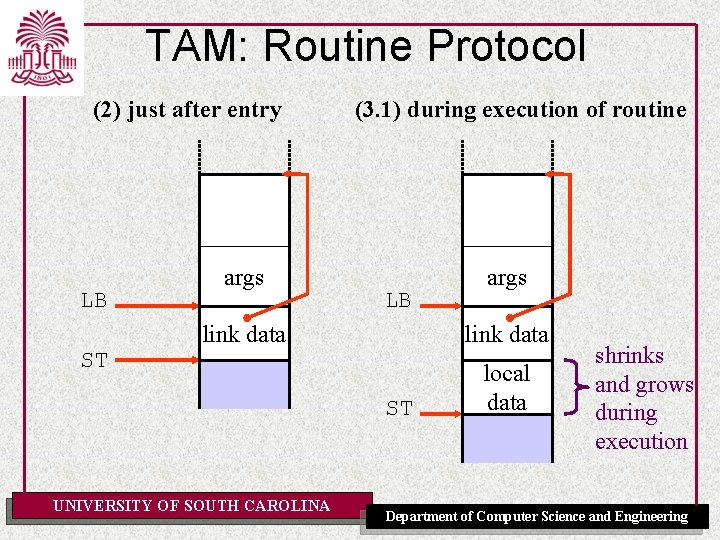

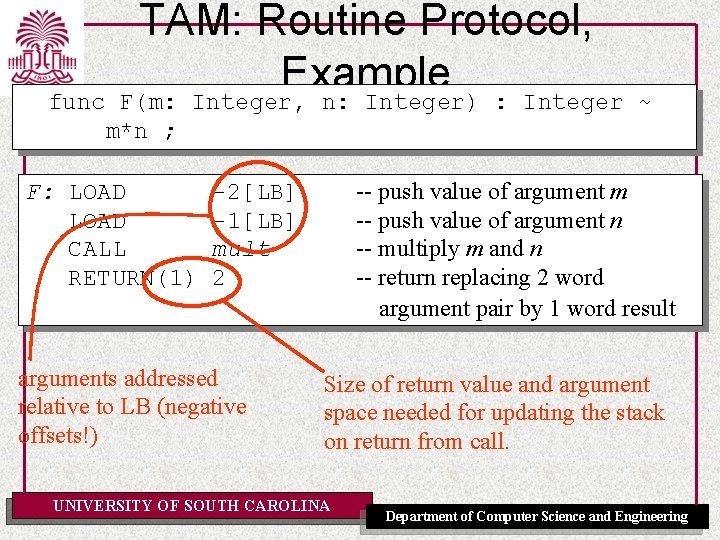

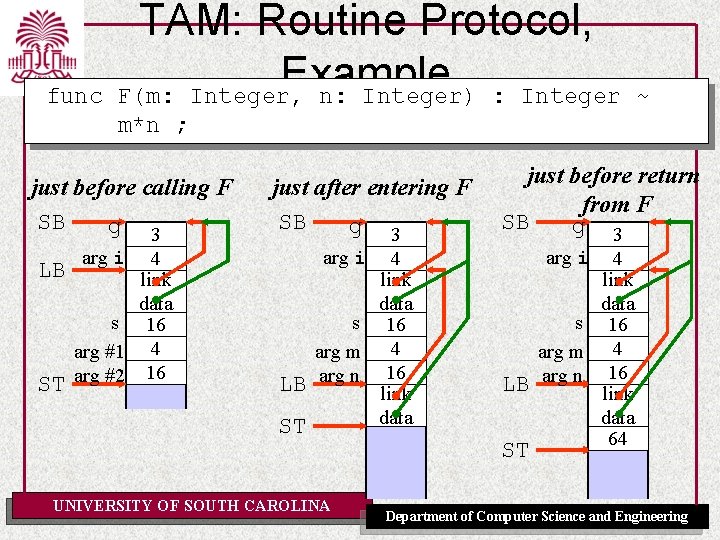

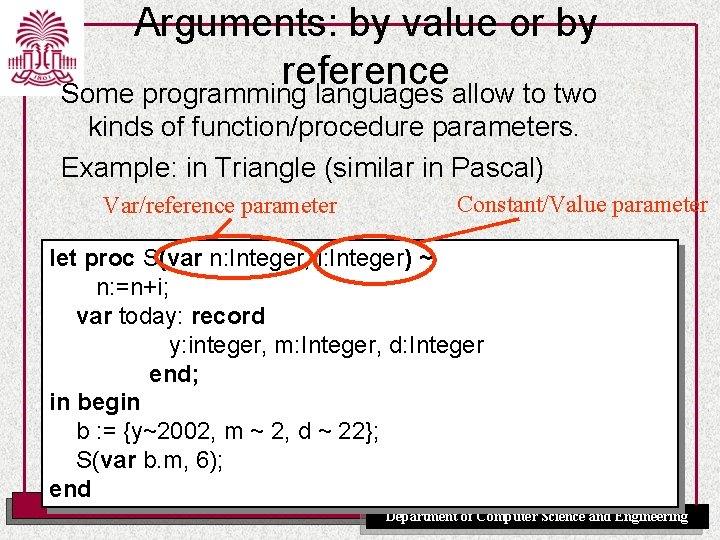

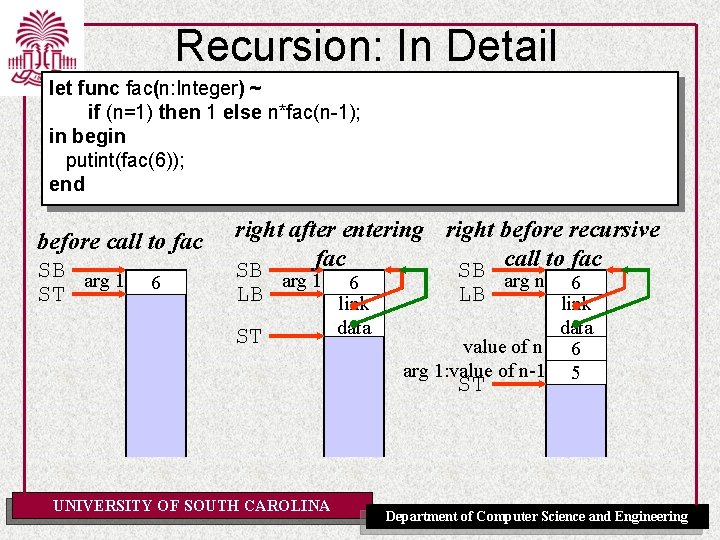

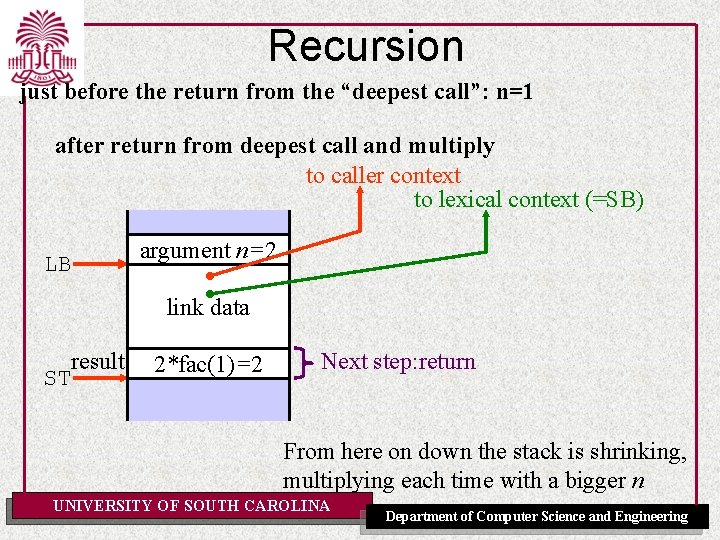

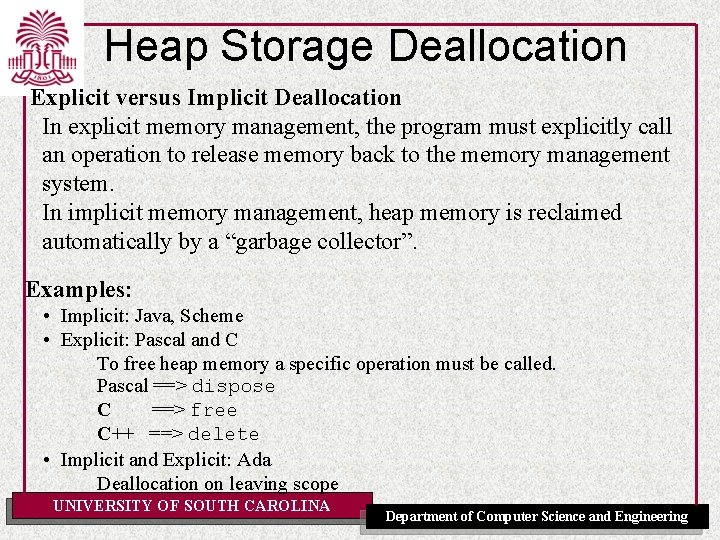

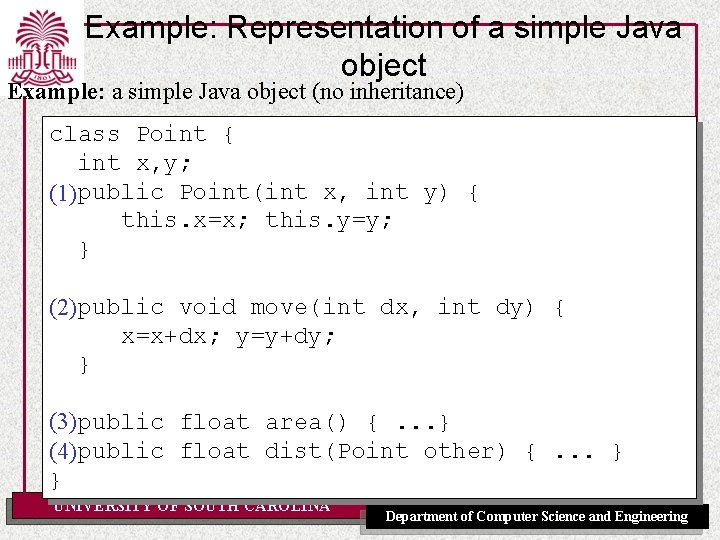

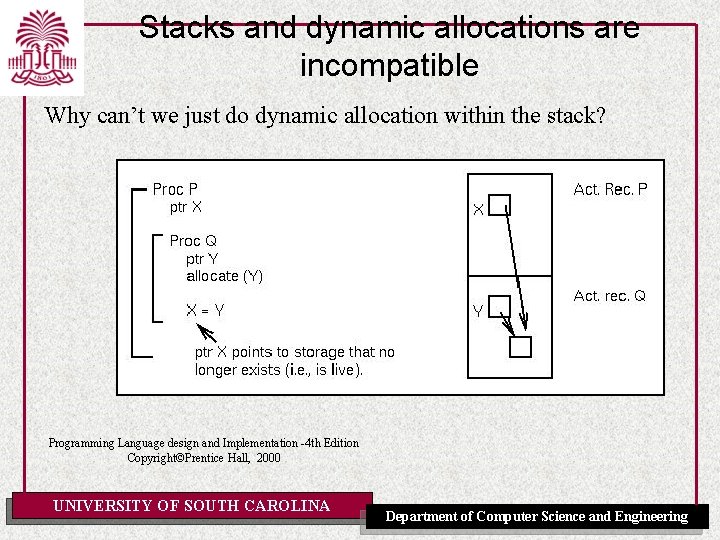

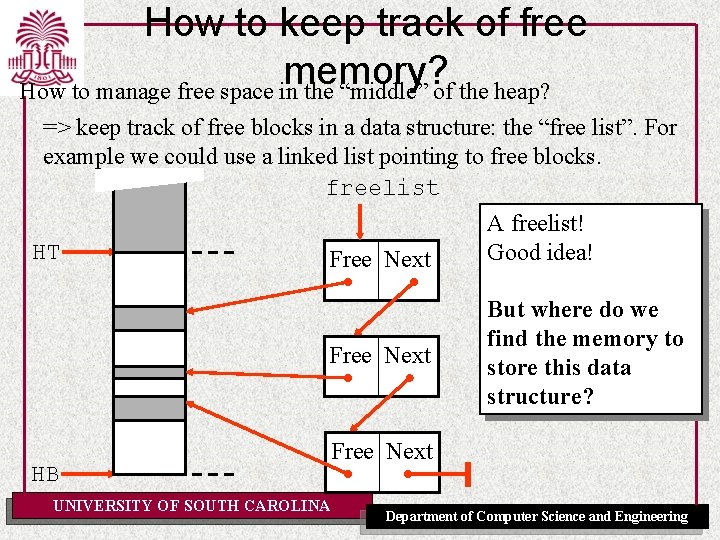

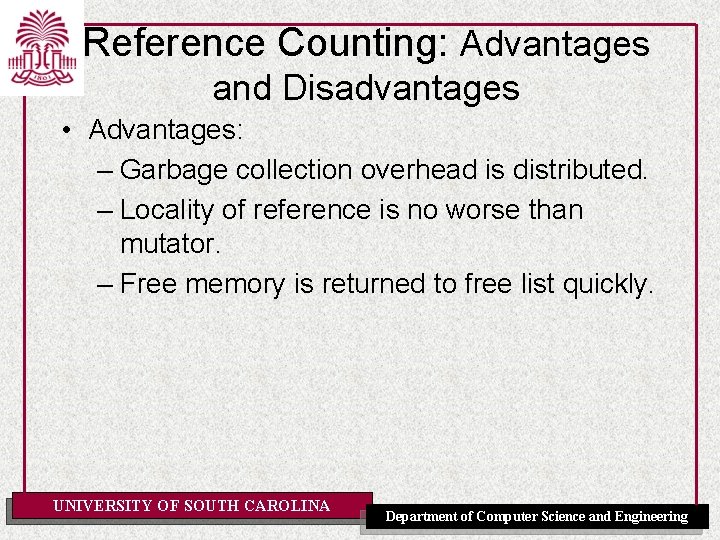

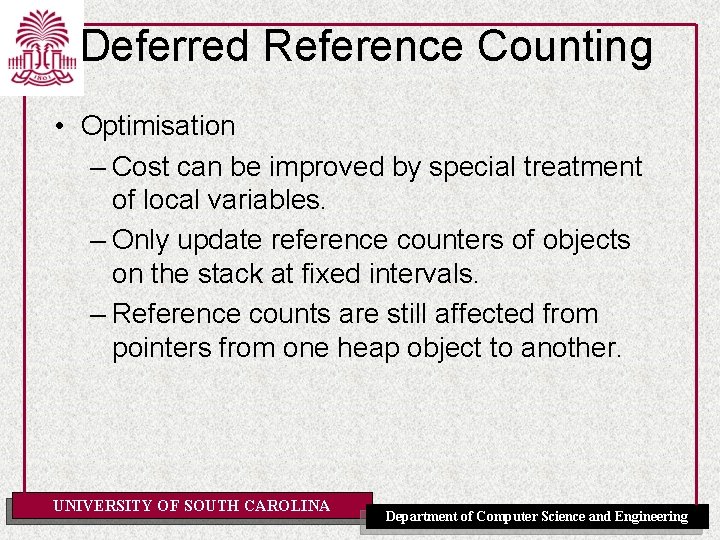

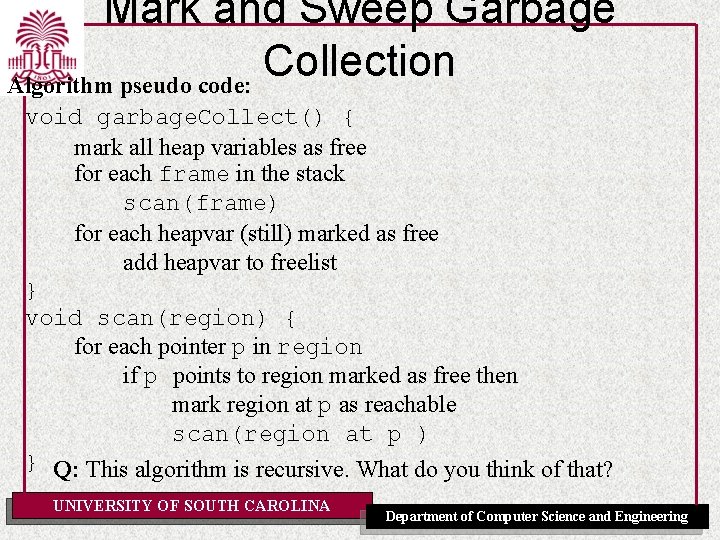

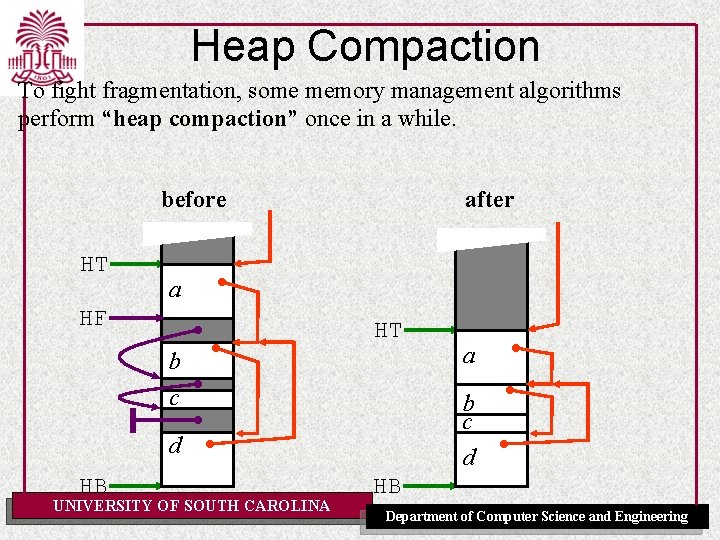

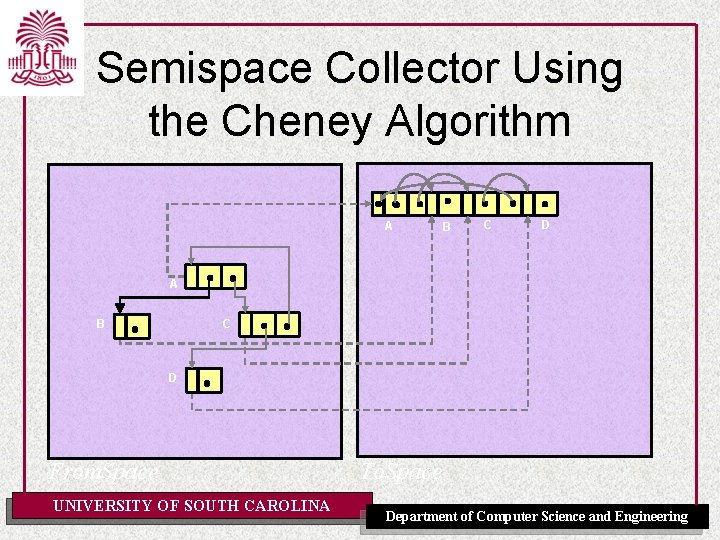

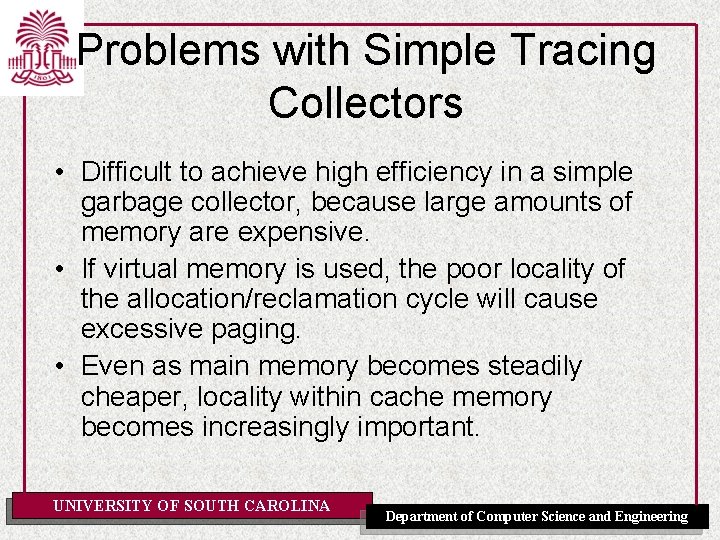

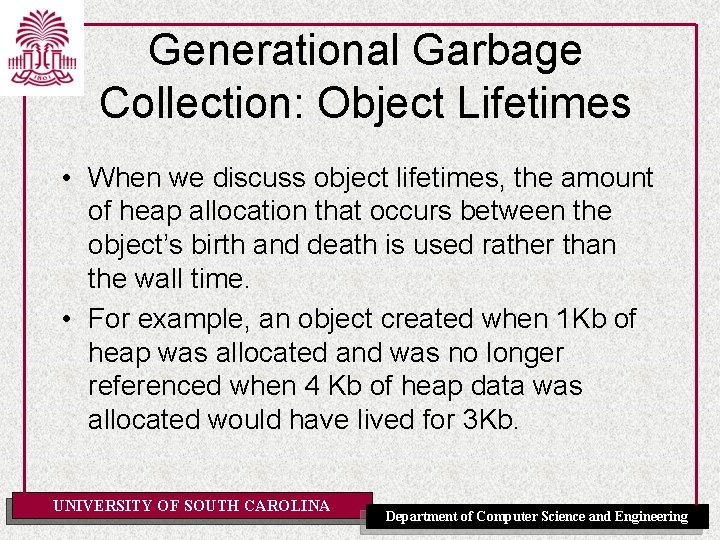

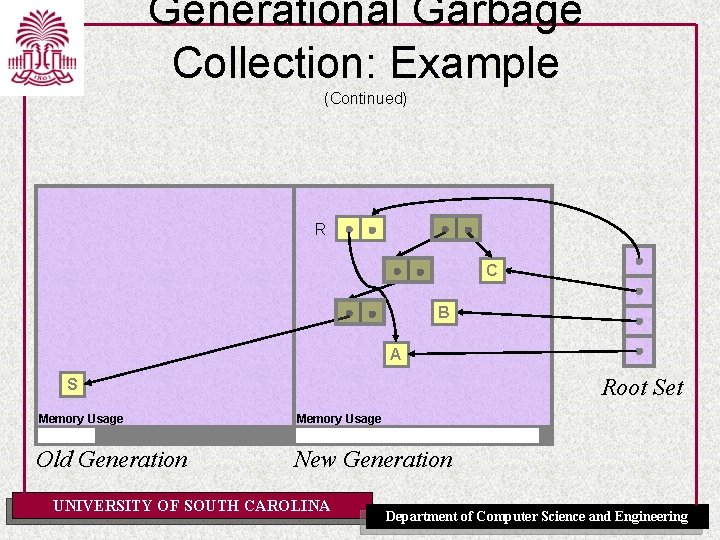

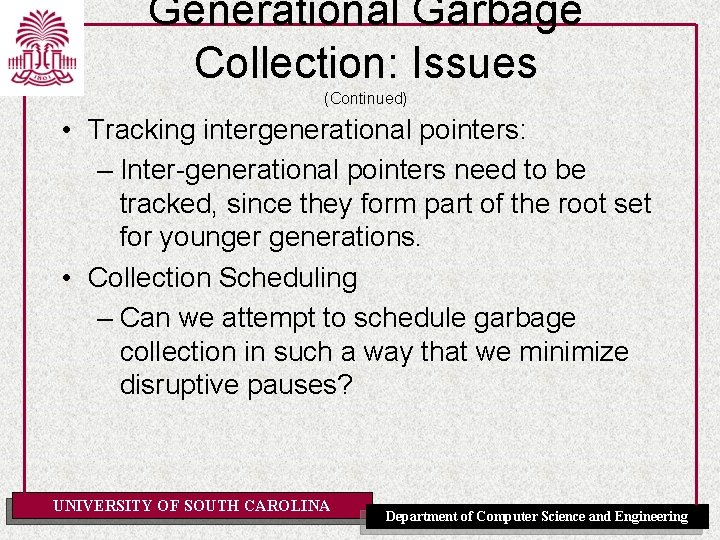

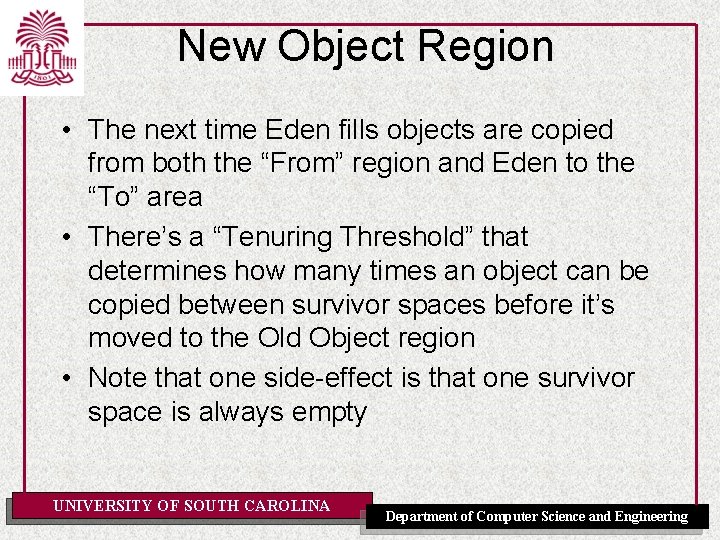

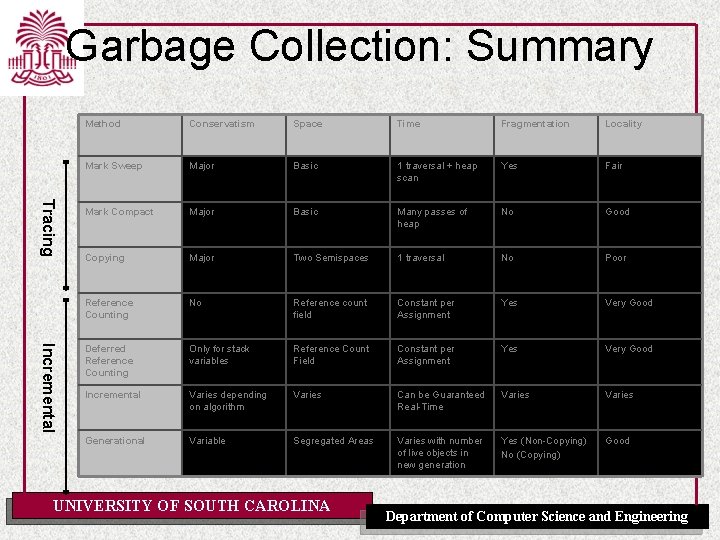

Data Representation: Disjoint Unions

What are disjoint unions? Like a record, a disjoint union has elements which are of different types, but the elements never exist at the same time. A “type tag” determines which of the elements is currently valid.

Example: Pascal variant records

    type Number = record
                    case discrete: Boolean of
                      true: (i: Integer);
                      false: (r: Real)
                  end;
    var num: Number

Mathematically we write disjoint union types as: T = T1 | … | Tn

Data Representation: Disjoint Unions

Example: Pascal variant records representation

    type Number = record
                    case discrete: Boolean of
                      true: (i: Integer);
                      false: (r: Real)
                  end;
    var num: Number

- Integer variant: num.discrete = true, num.i = 15, remaining word unused
- Real variant: num.discrete = false, num.r = 3.14

Assuming size[Integer] = size[Boolean] = 1 and size[Real] = 2, then size[Number] = size[Boolean] + MAX(size[Integer], size[Real]) = 1 + MAX(1, 2) = 3

![Data Representation Disjoint Unions type T record sizeT sizeT tag case Data Representation: Disjoint Unions type T = record size[T] = size[T ] tag case](https://slidetodoc.com/presentation_image/1786819c480edab3cd15e8b89c5d411c/image-34.jpg)

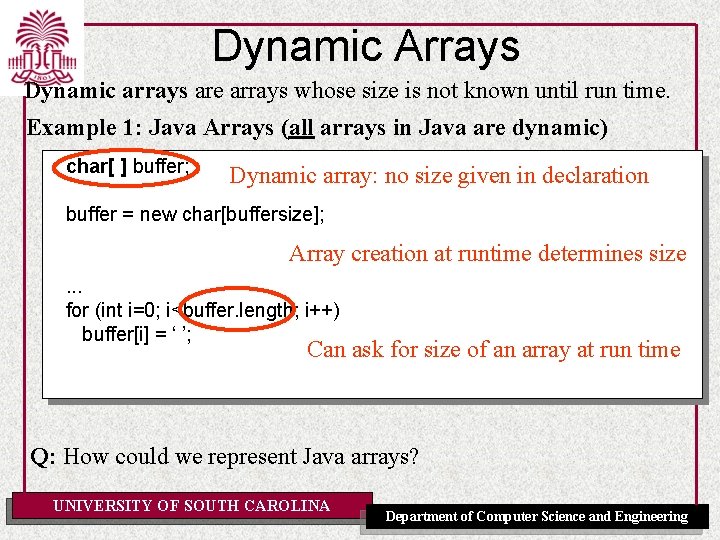

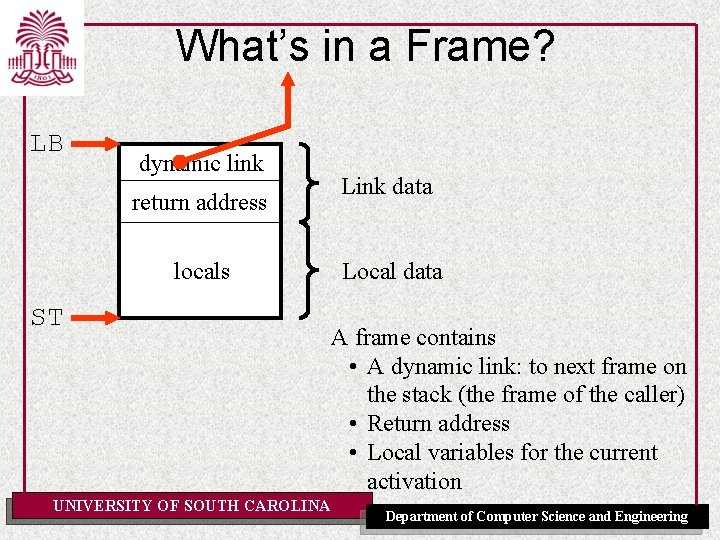

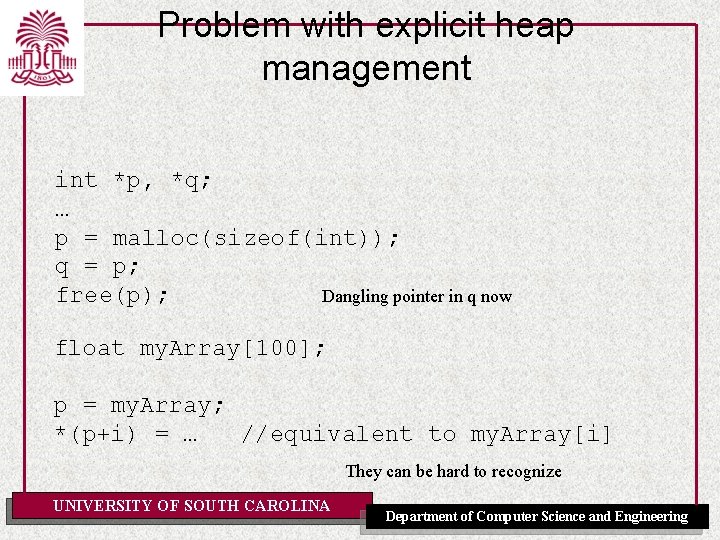

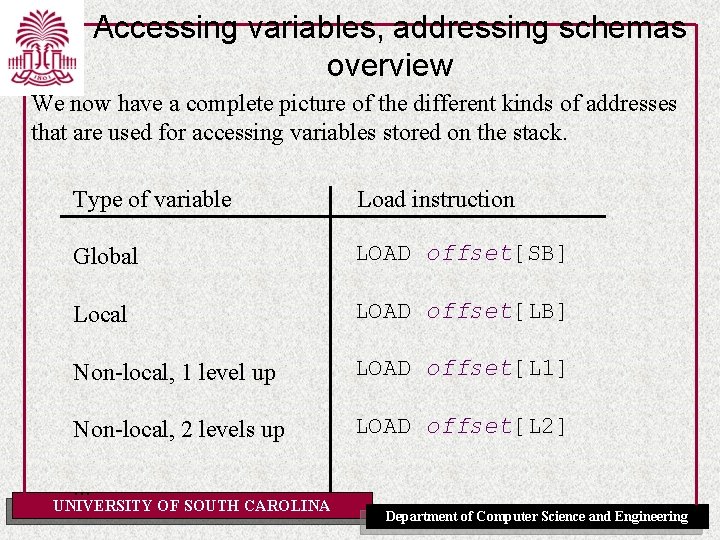

Data Representation: Disjoint Unions

    type T = record
               case Itag: Ttag of
                 v1: (I1: T1);
                 v2: (I2: T2);
                 ...
                 vn: (In: Tn);
             end;
    var u: T

- size[T] = size[Ttag] + MAX(size[T1], ..., size[Tn])
- address[u.Itag] = address[u]
- address[u.I1] = address[u]+size[Ttag]
- ...
- address[u.In] = address[u]+size[Ttag]

Layout: u.Itag = v1 followed by u.I1 of type T1, or u.Itag = v2 followed by u.I2 of type T2, or … or u.Itag = vn followed by u.In of type Tn.
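
The size and address formulas for disjoint unions can be sketched in a few lines. The names `SIZE` and `union_layout` are hypothetical, and the sizes are the ones assumed in the Number example (Boolean and Integer one word, Real two words).

```python
SIZE = {"Boolean": 1, "Integer": 1, "Real": 2}  # sizes in words (assumed)


def union_layout(tag_type, variants):
    """Return (total size, {component: offset}) for a disjoint union.

    `variants` maps each variant's field name to its type. Every variant
    starts right after the tag, so all variants share the same offset.
    """
    tag_size = SIZE[tag_type]
    total = tag_size + max(SIZE[t] for t in variants.values())
    offsets = {"tag": 0}
    for field in variants:
        offsets[field] = tag_size
    return total, offsets


number_size, number_offsets = union_layout(
    "Boolean", {"i": "Integer", "r": "Real"})
print(number_size, number_offsets)
```

Because the variants overlap in memory, the union is only as large as its tag plus its largest variant, at the cost of leaving some words unused for the smaller variants.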

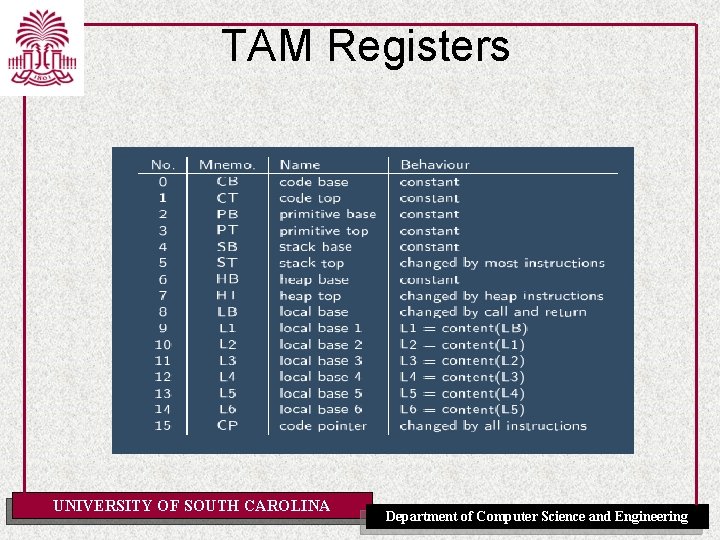

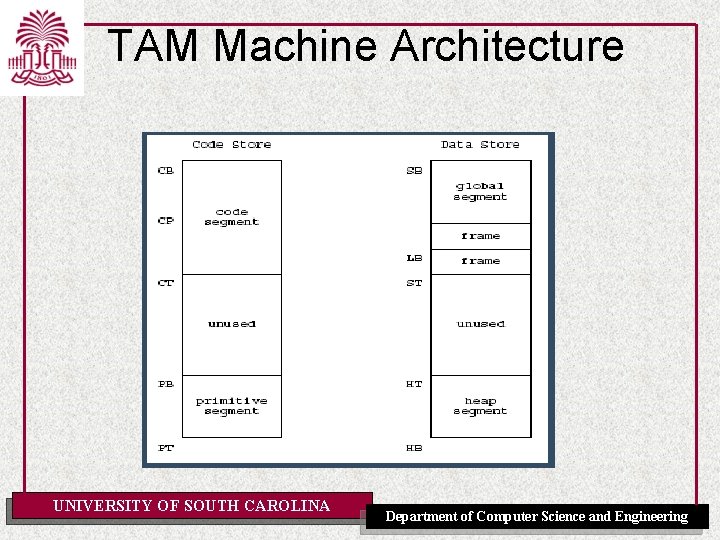

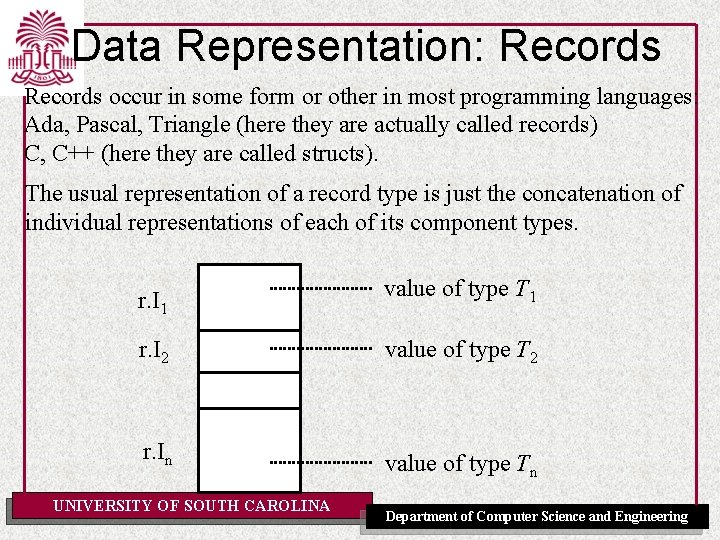

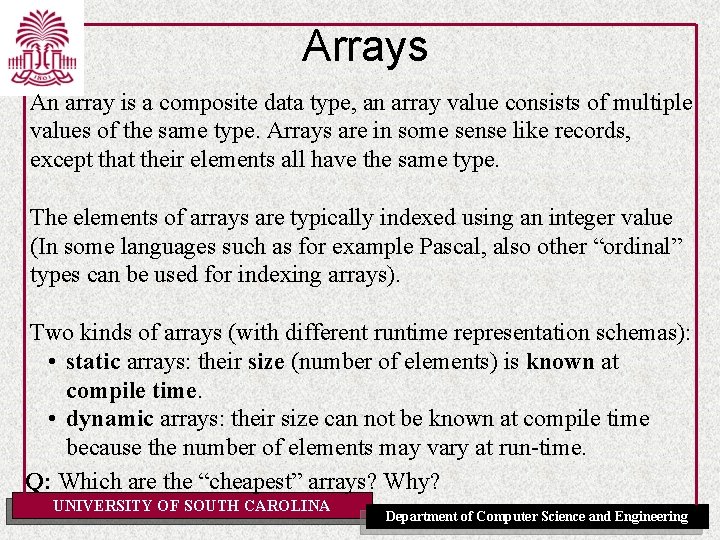

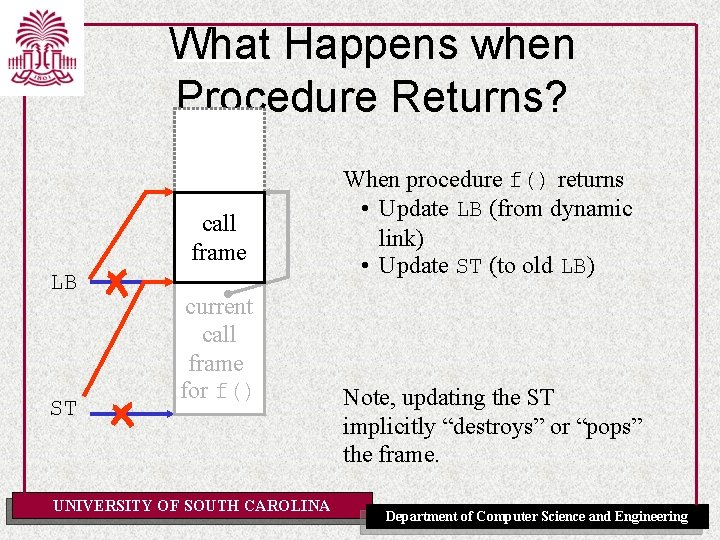

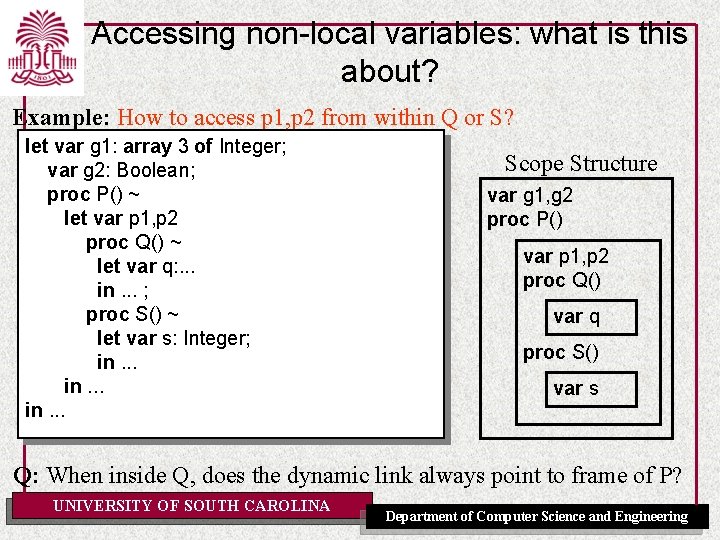

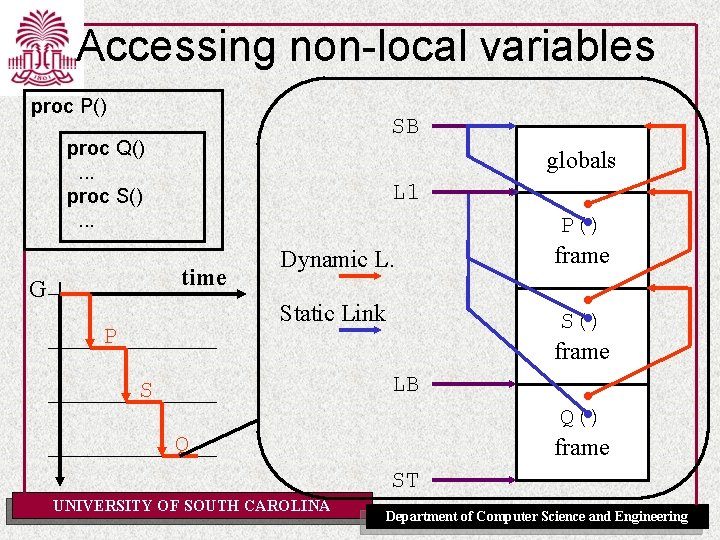

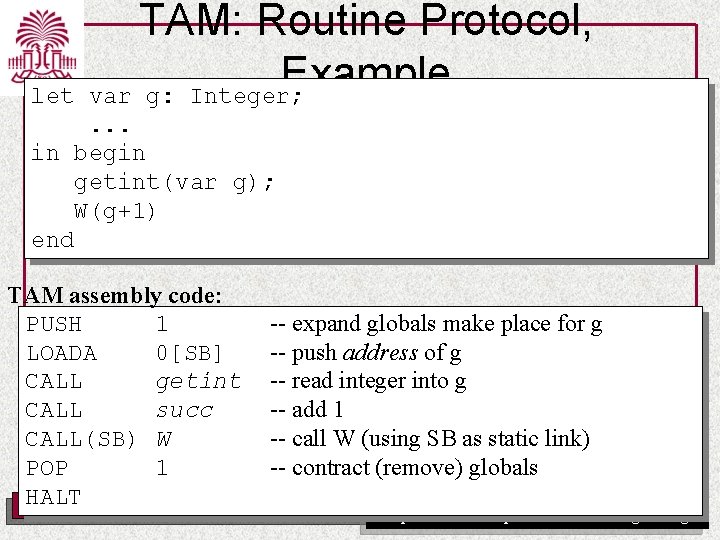

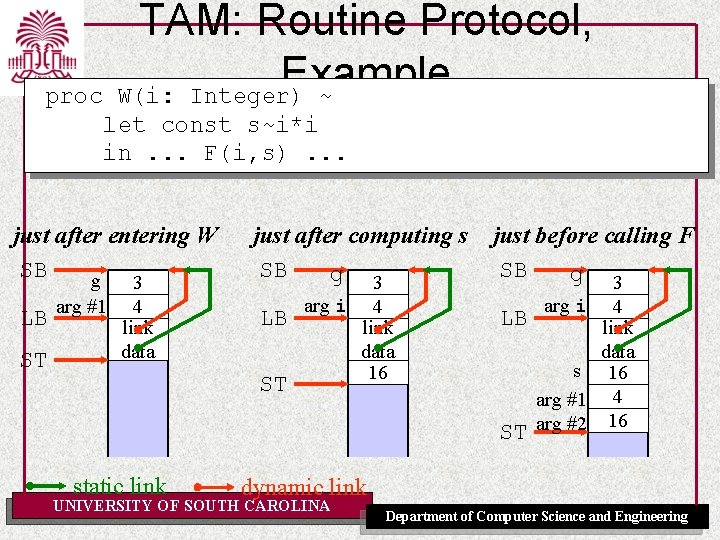

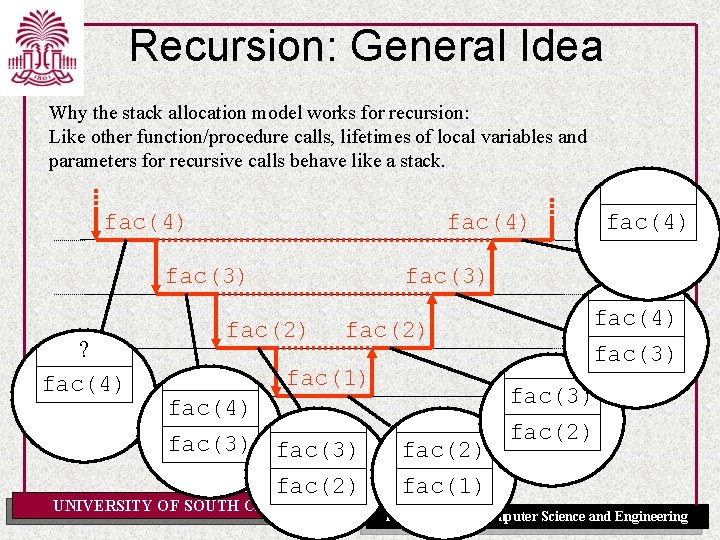

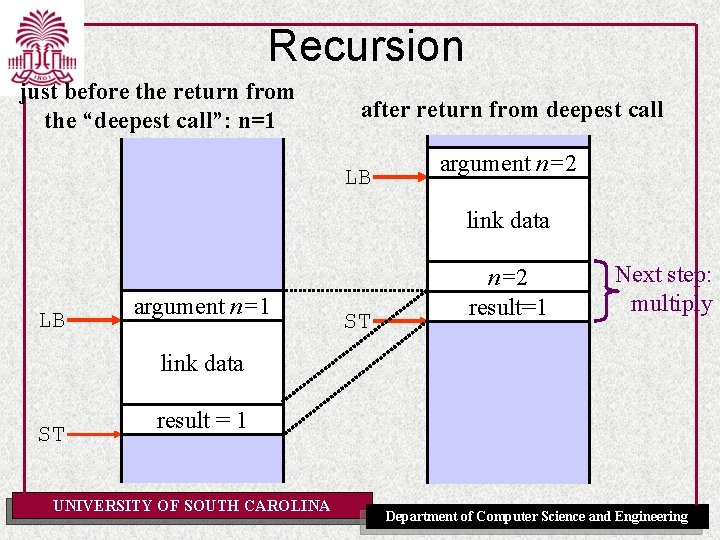

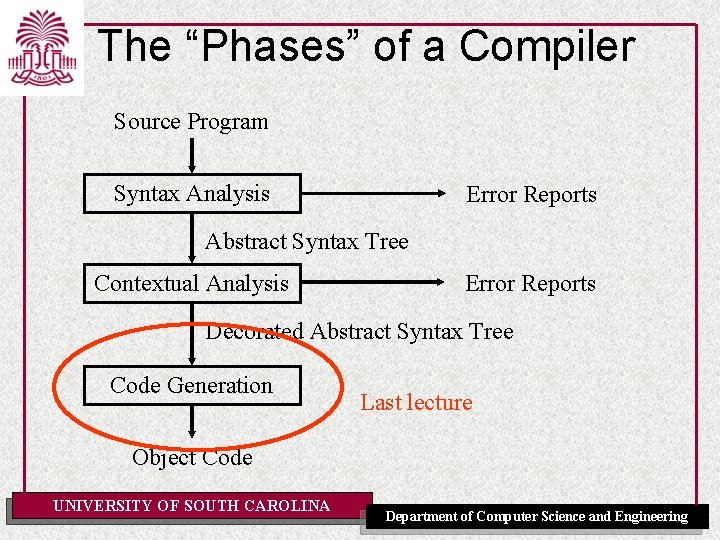

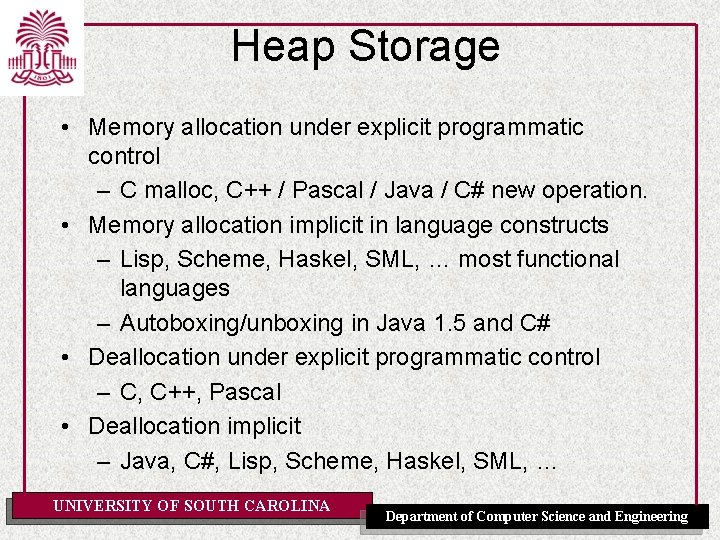

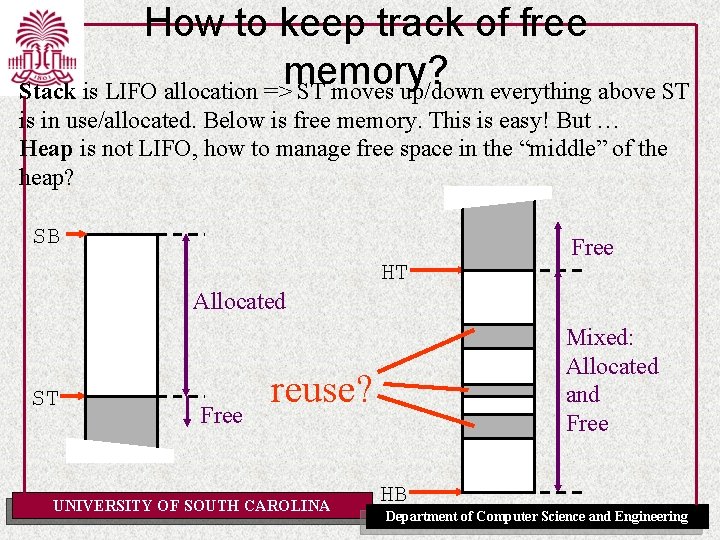

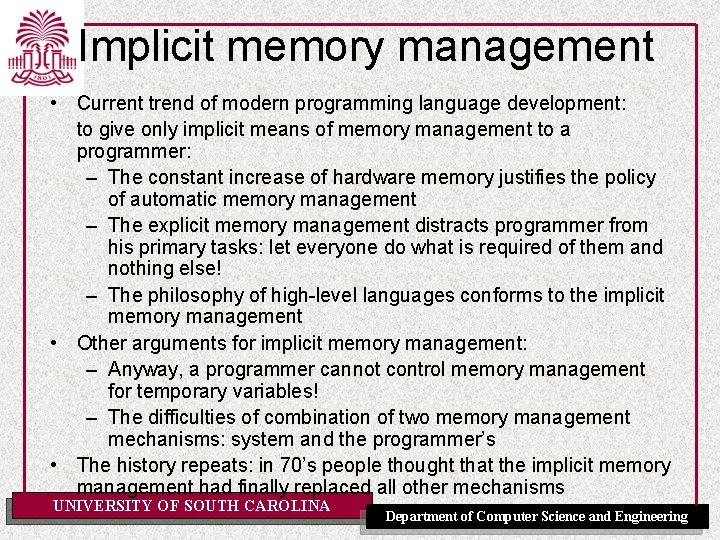

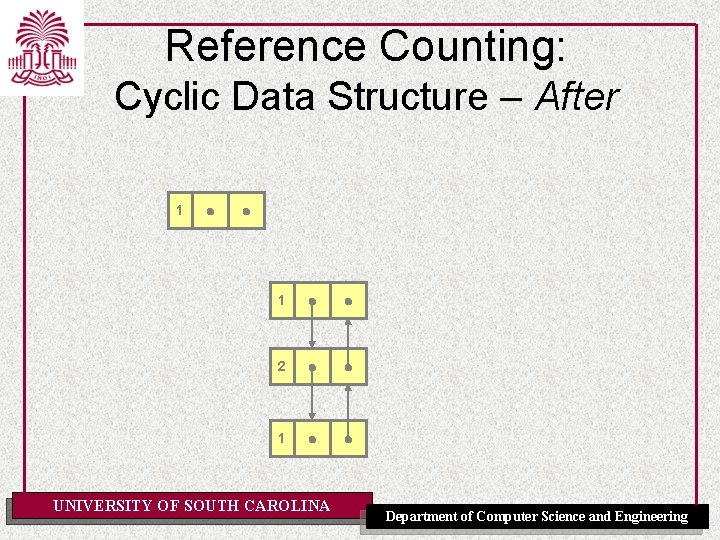

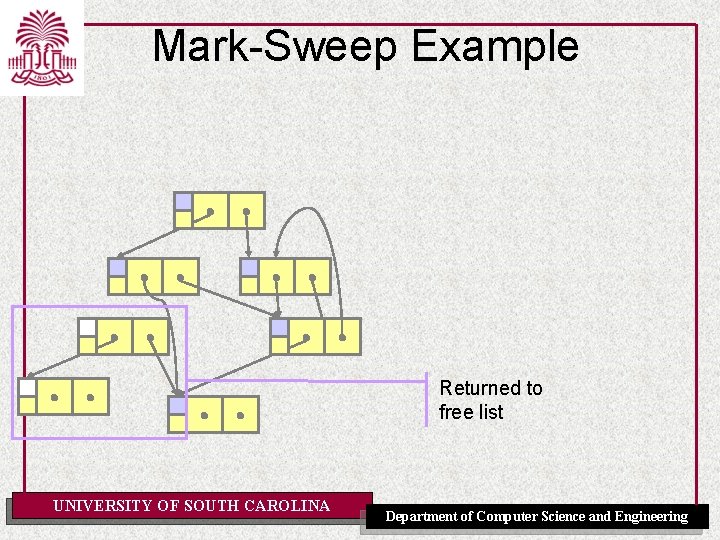

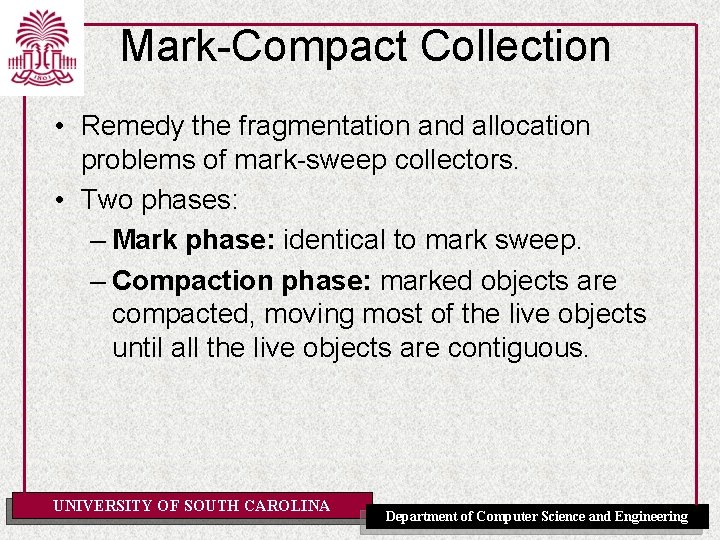

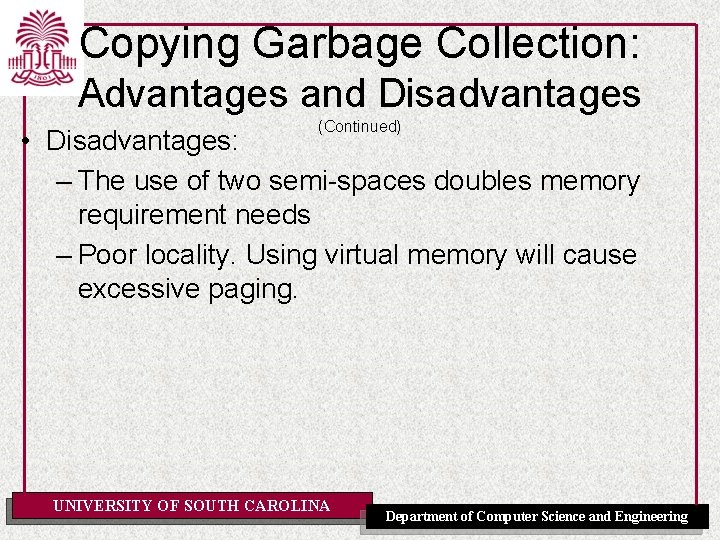

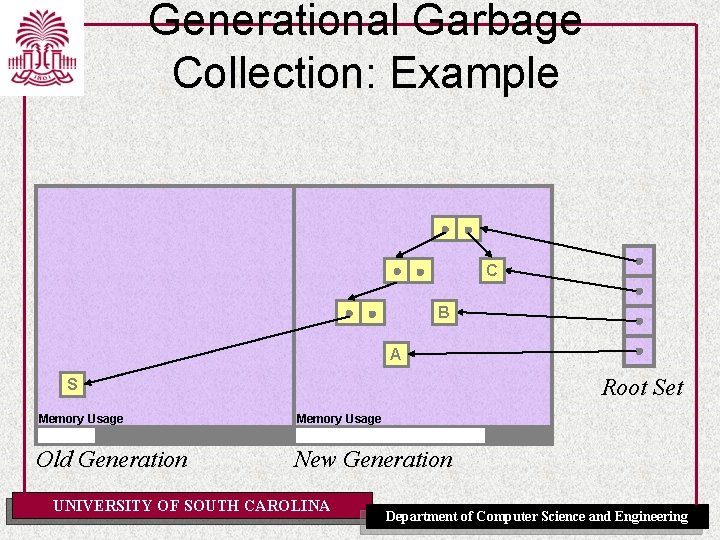

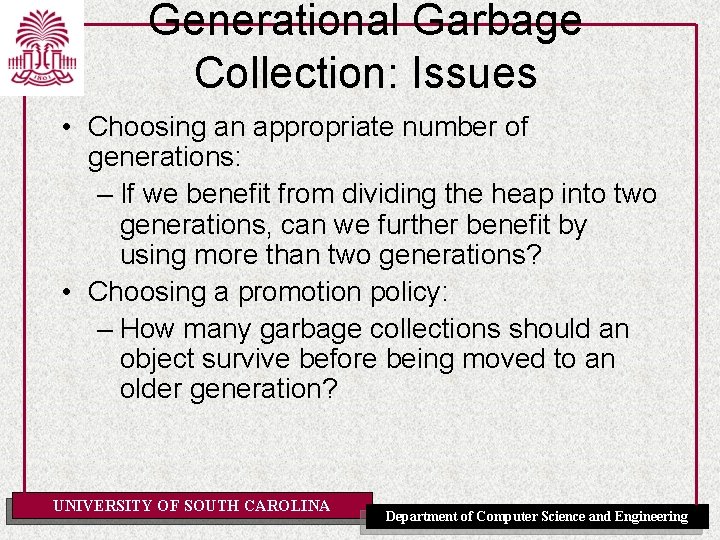

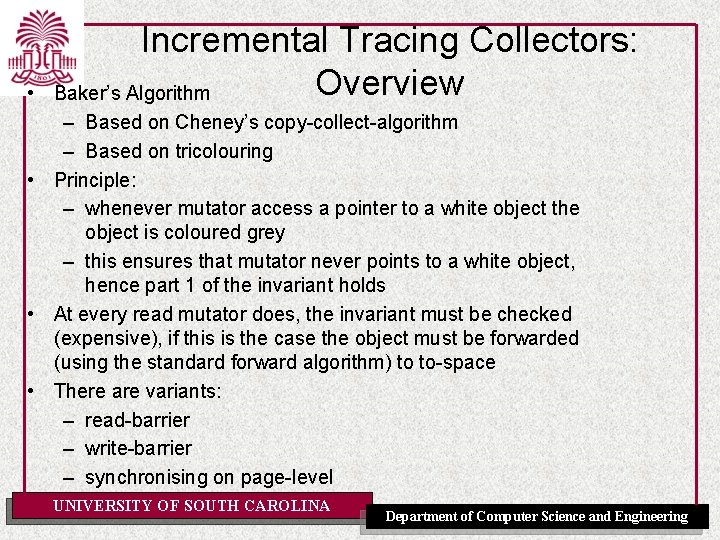

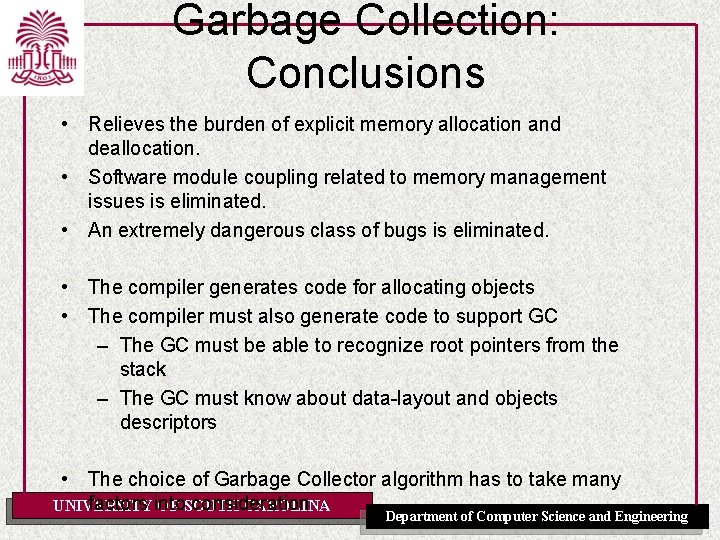

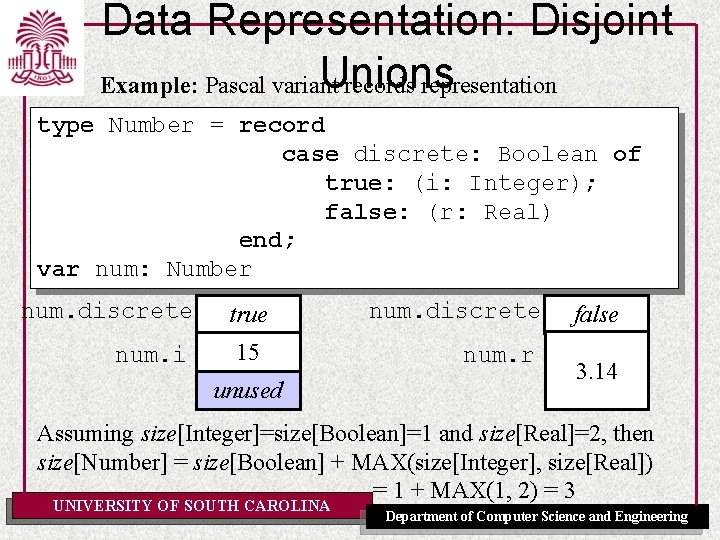

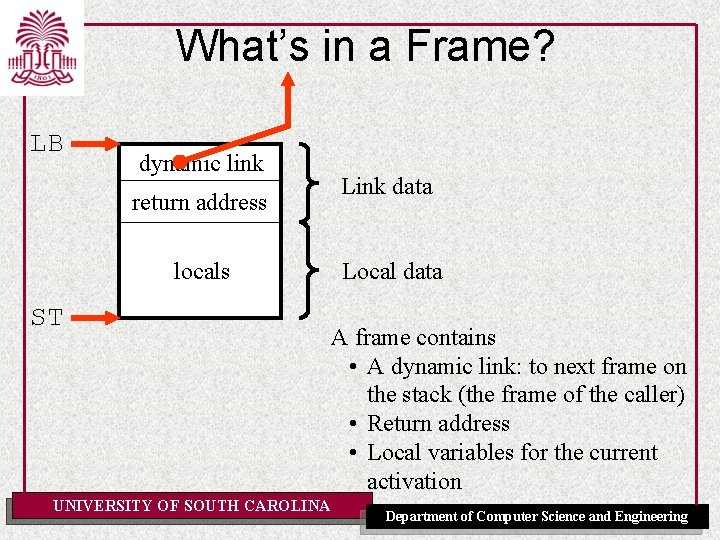

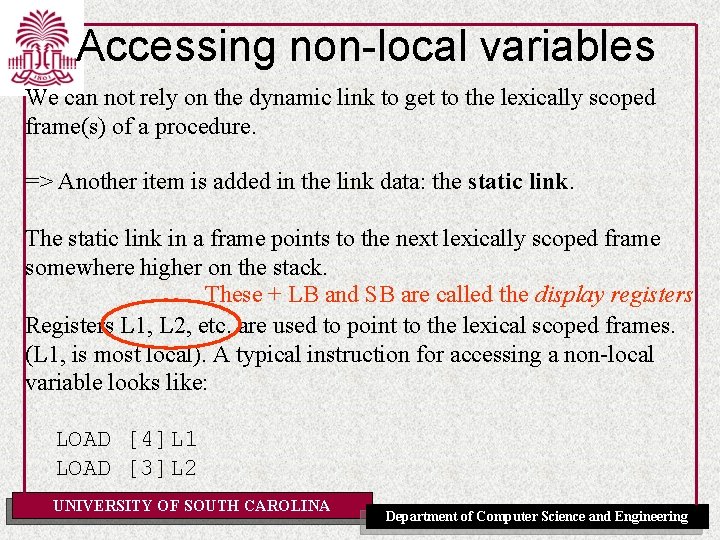

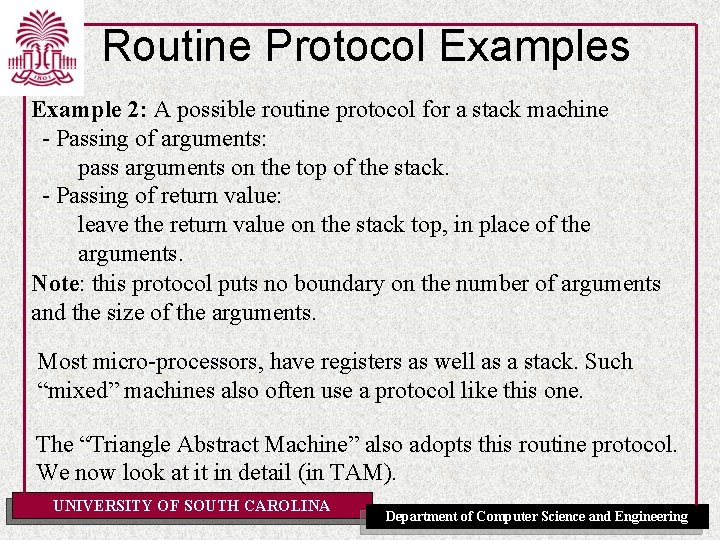

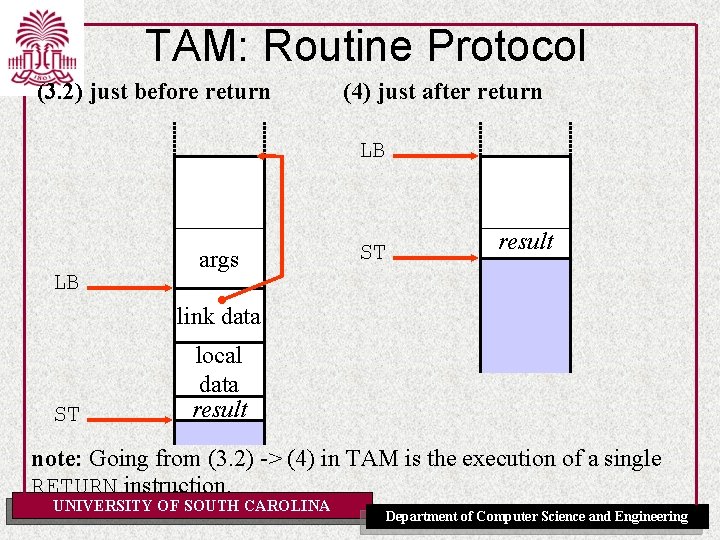

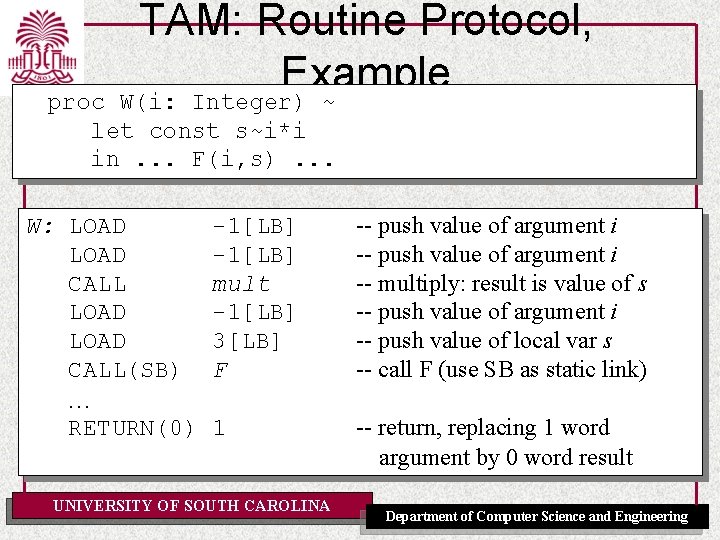

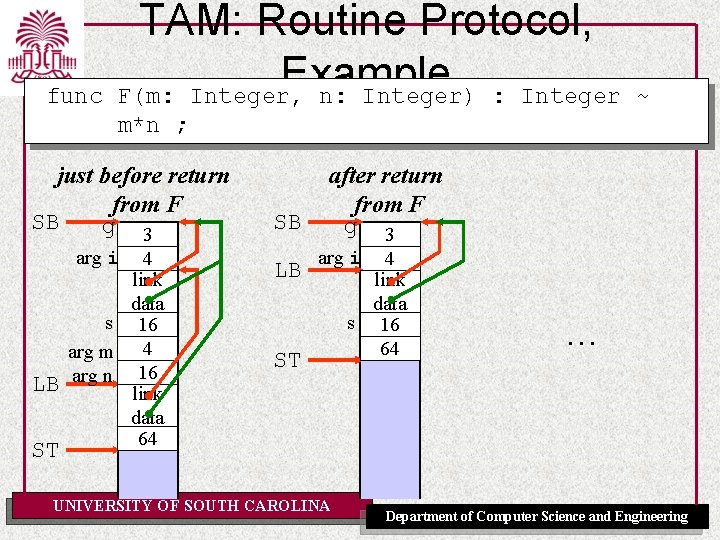

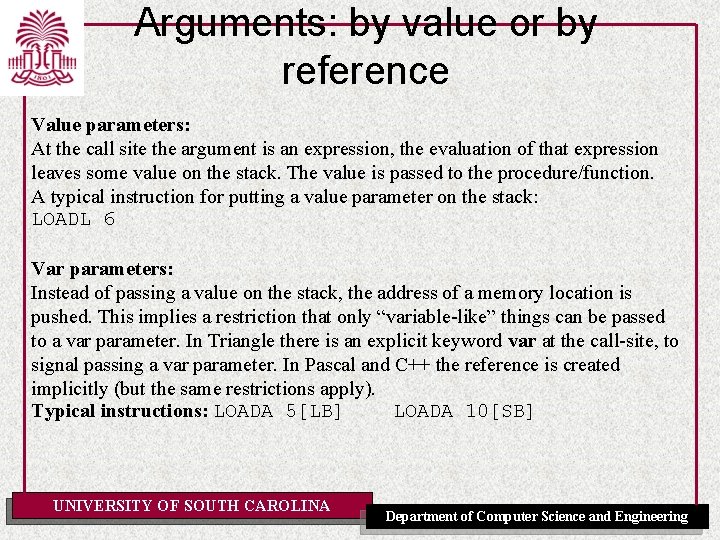

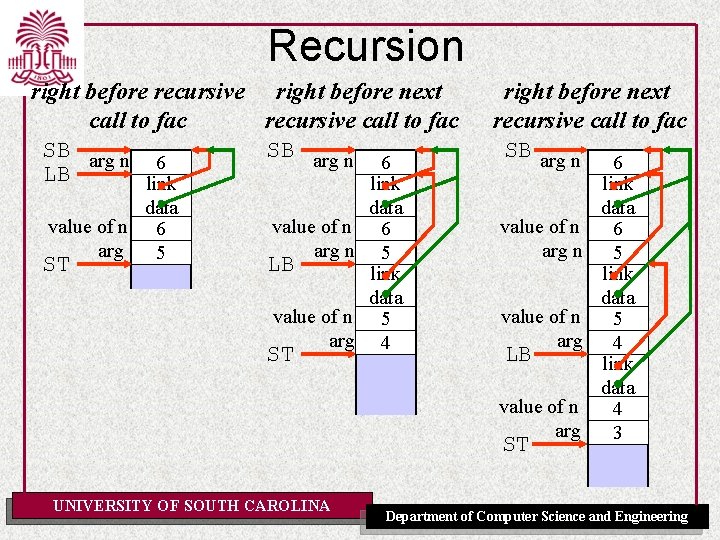

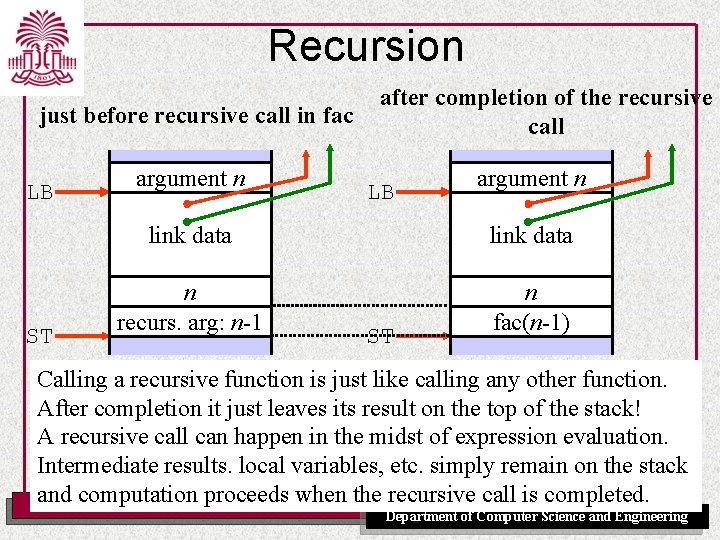

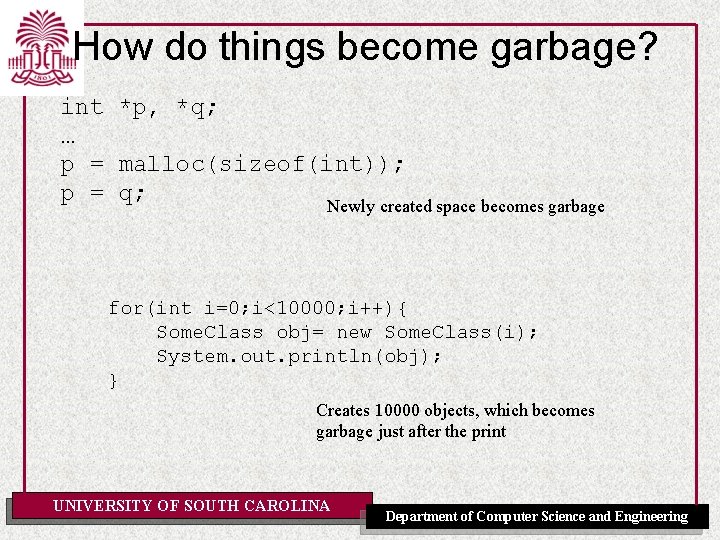

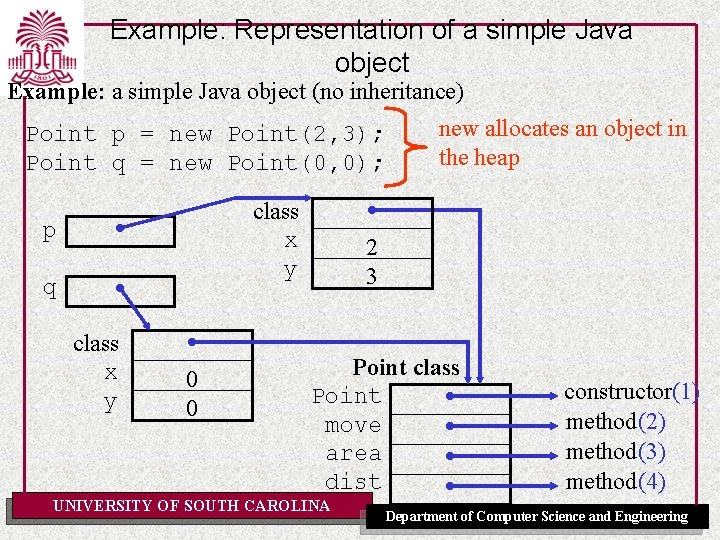

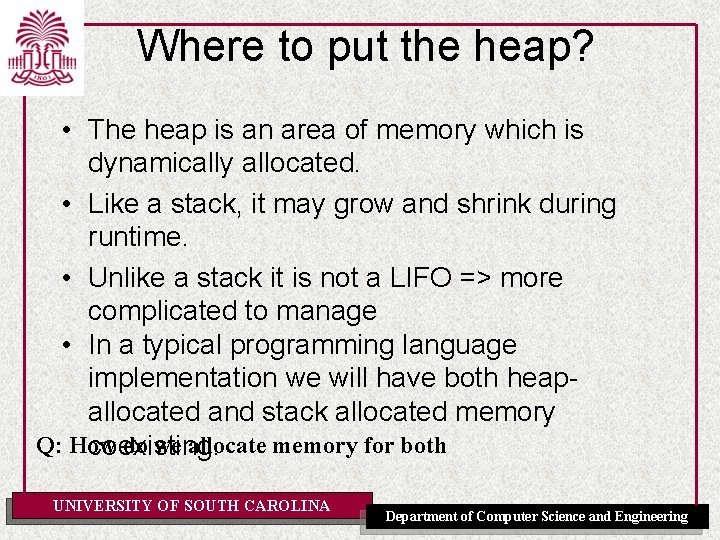

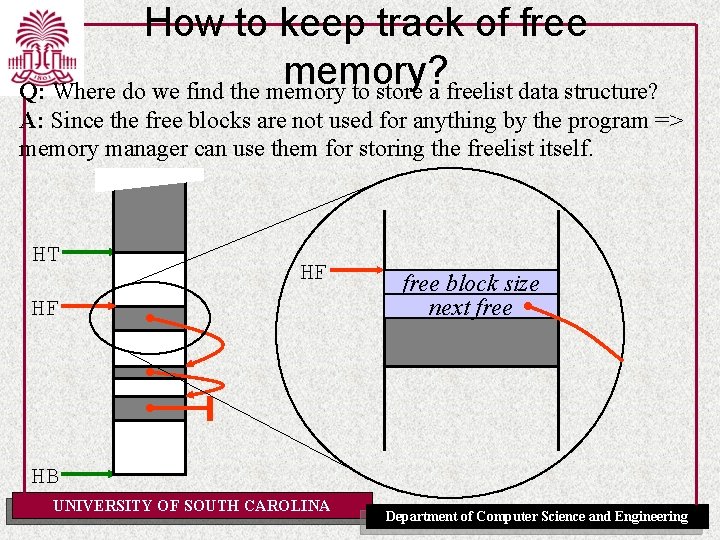

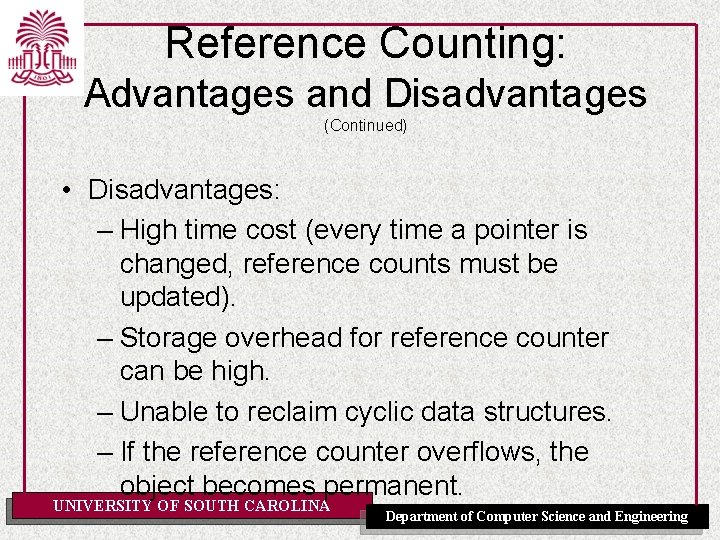

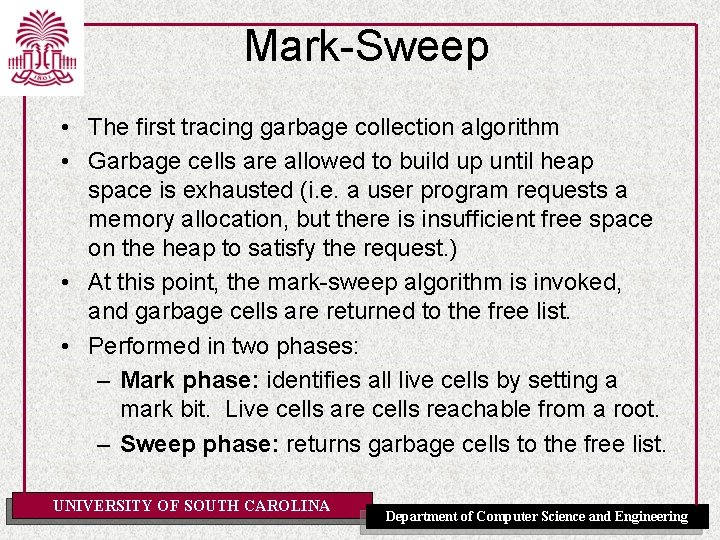

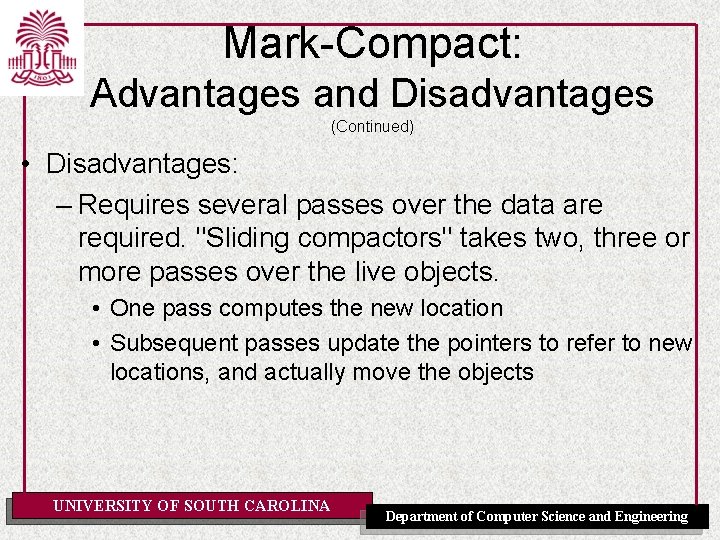

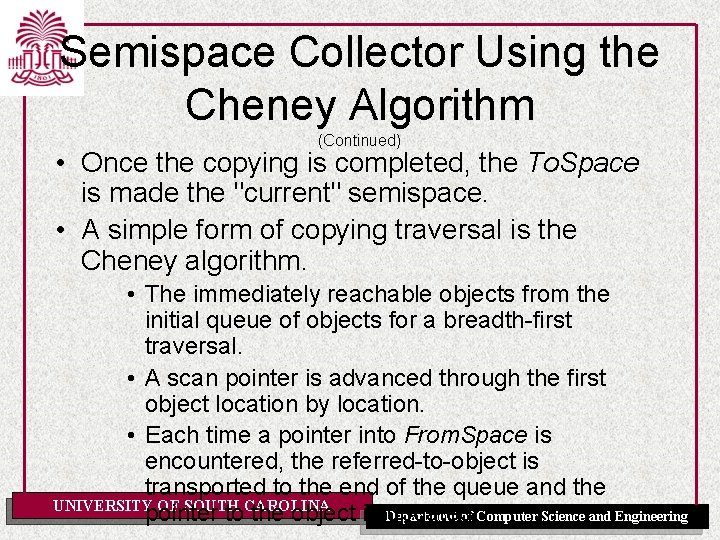

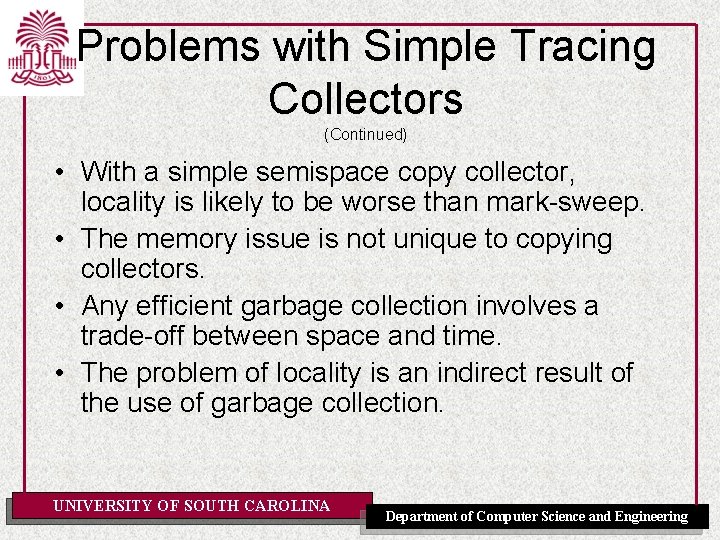

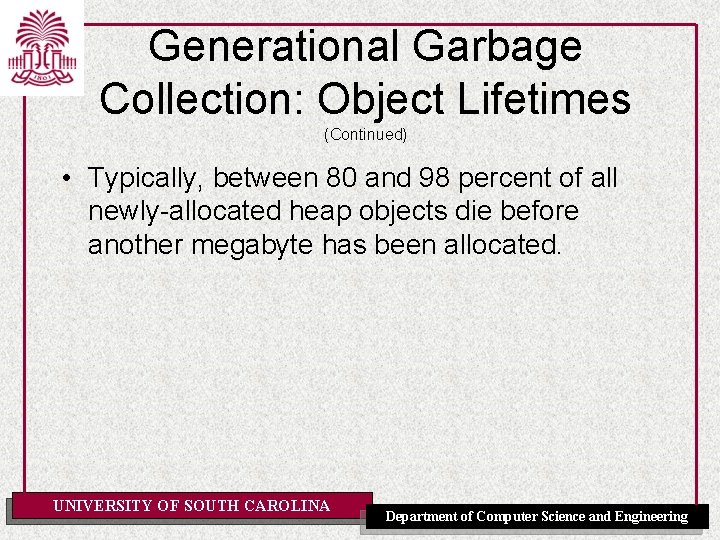

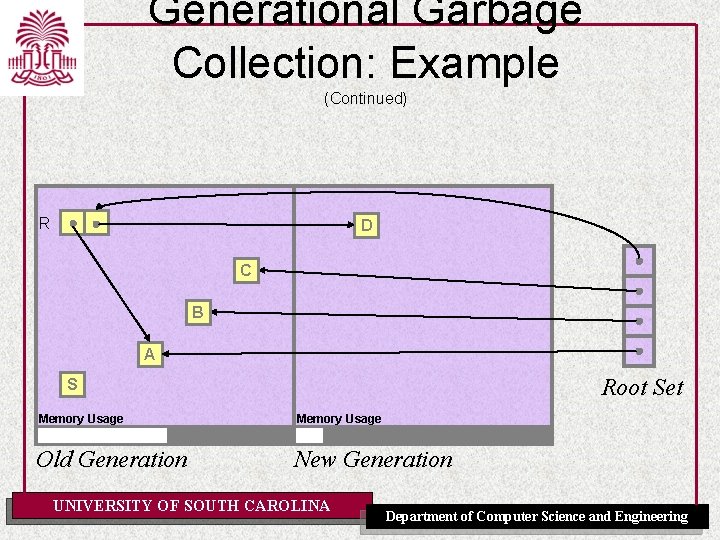

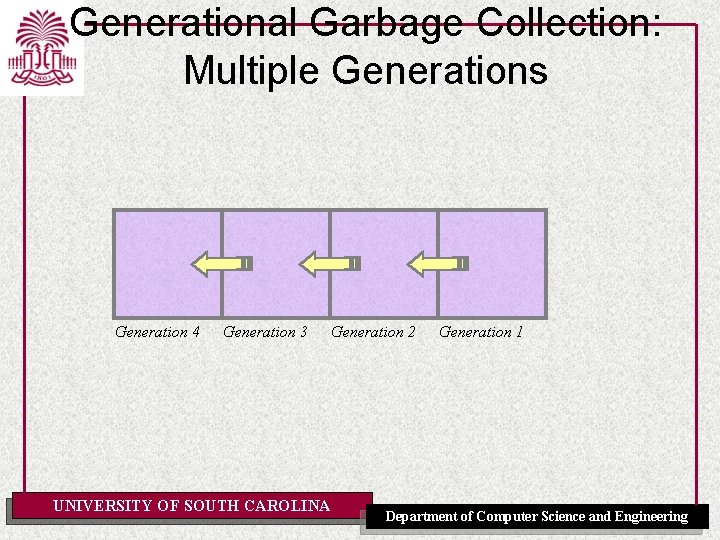

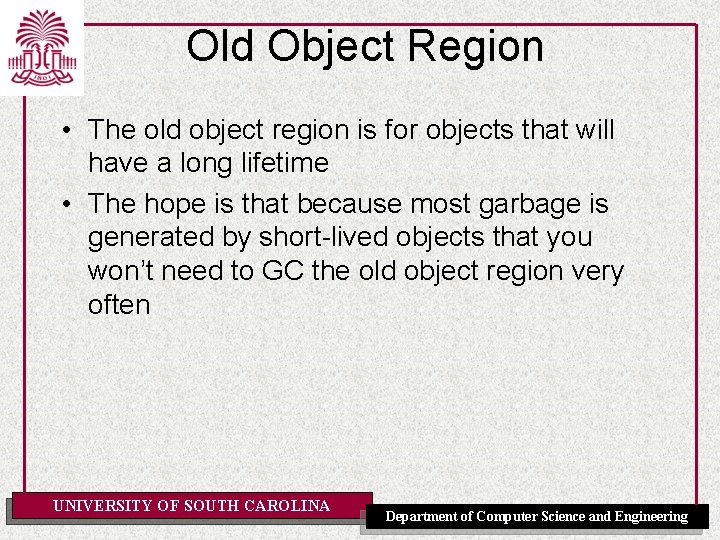

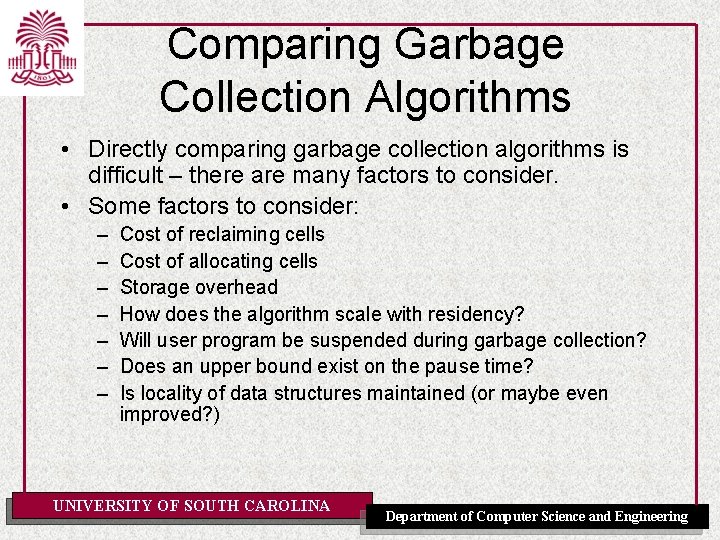

Arrays An array is a composite data type, an array value consists of multiple values of the same type. Arrays are in some sense like records, except that their elements all have the same type. The elements of arrays are typically indexed using an integer value (In some languages such as for example Pascal, also other “ordinal” types can be used for indexing arrays). Two kinds of arrays (with different runtime representation schemas): • static arrays: their size (number of elements) is known at compile time. • dynamic arrays: their size can not be known at compile time because the number of elements may vary at run-time. Q: Which are the “cheapest” arrays? Why? UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

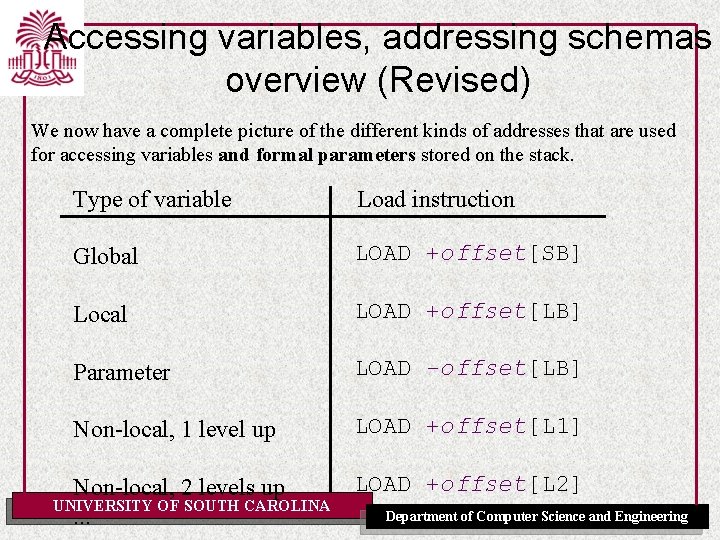

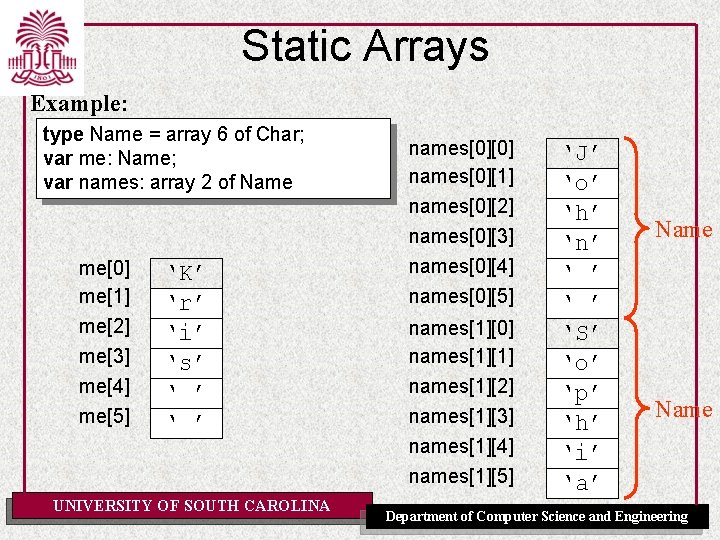

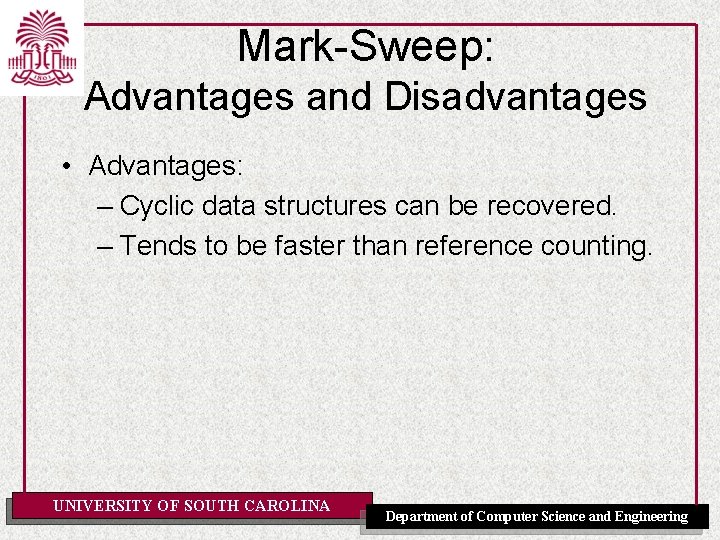

Static Arrays Example: type Name = array 6 of Char; var me: Name; var names: array 2 of Name me[0] me[1] me[2] me[3] me[4] me[5] ‘K’ ‘r’ ‘i’ ‘s’ ‘ ’ names[0][0] names[0][1] names[0][2] names[0][3] names[0][4] names[0][5] names[1][0] names[1][1] names[1][2] names[1][3] names[1][4] names[1][5] UNIVERSITY OF SOUTH CAROLINA ‘J’ ‘o’ ‘h’ ‘n’ ‘ ’ ‘S’ ‘o’ ‘p’ ‘h’ ‘i’ ‘a’ Name Department of Computer Science and Engineering

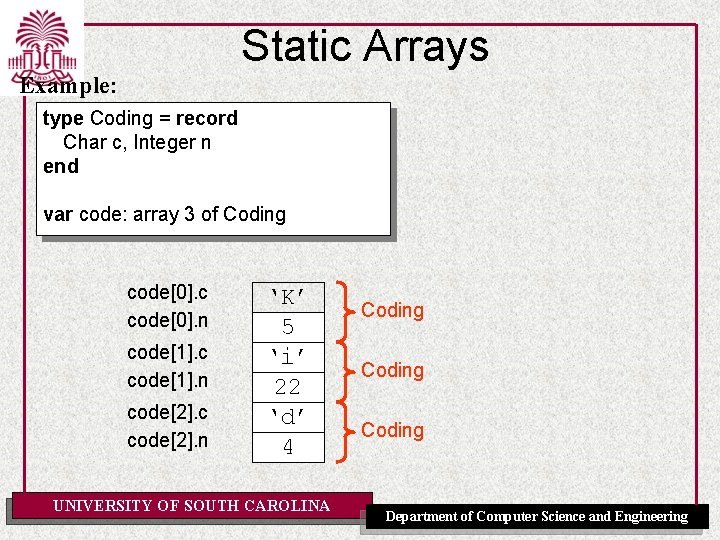

Static Arrays

Example:

    type Coding = record c: Char, n: Integer end
    var code: array 3 of Coding

| Location | Value |
|---|---|
| code[0].c | ‘K’ |
| code[0].n | 5 |
| code[1].c | ‘i’ |
| code[1].n | 22 |
| code[2].c | ‘d’ |
| code[2].n | 4 |

Each pair code[i].c, code[i].n forms one Coding.

![Static Arrays type T array n of TE var a T a0 Static Arrays type T = array n of TE; var a : T; a[0]](https://slidetodoc.com/presentation_image/1786819c480edab3cd15e8b89c5d411c/image-38.jpg)

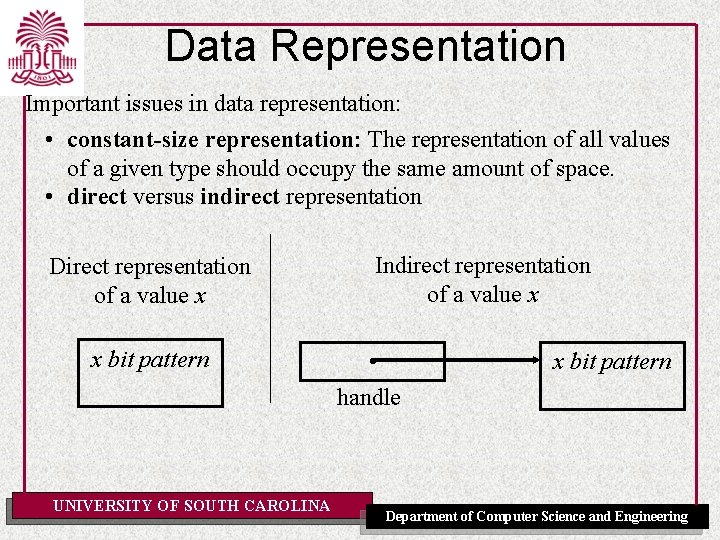

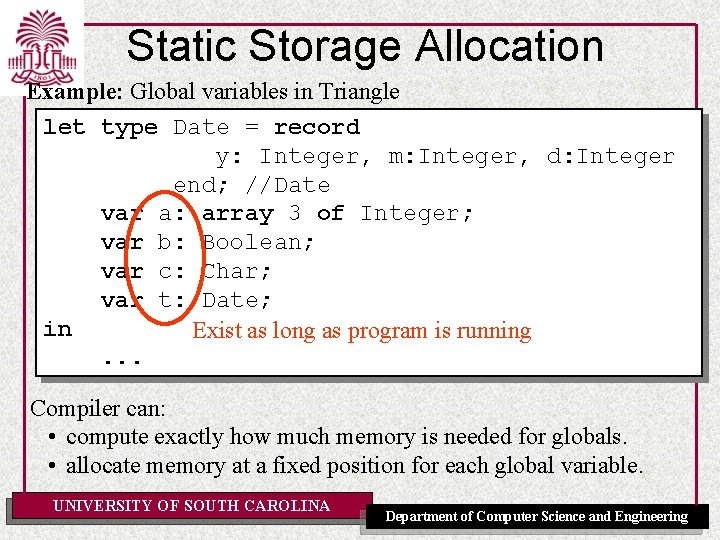

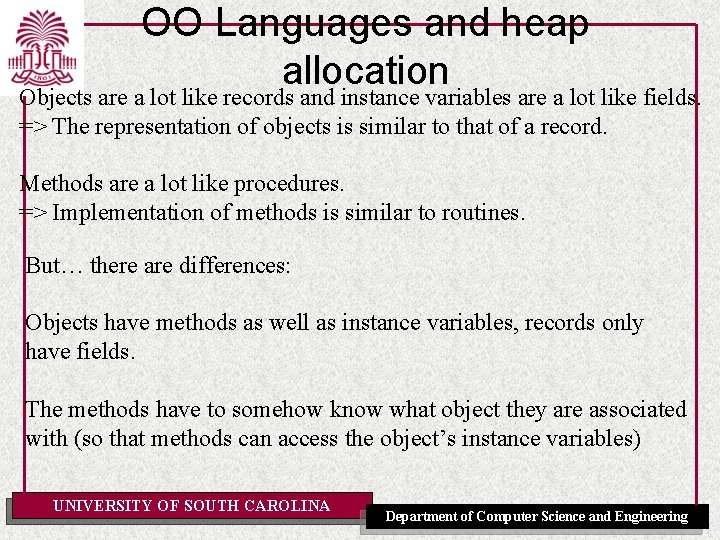

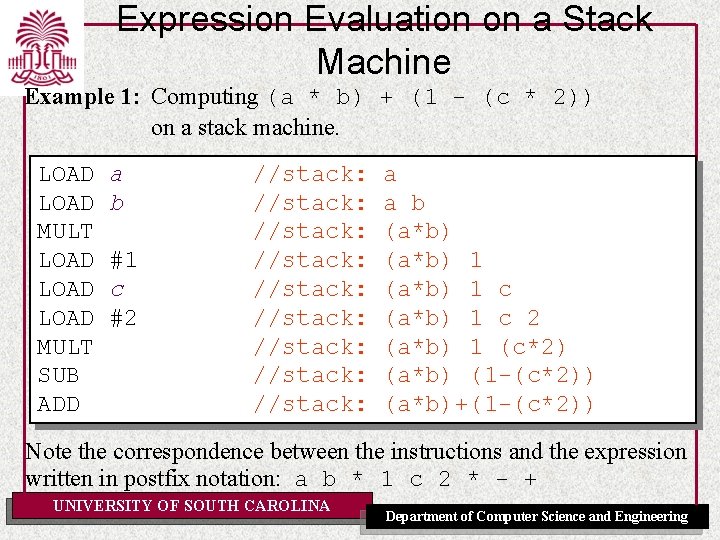

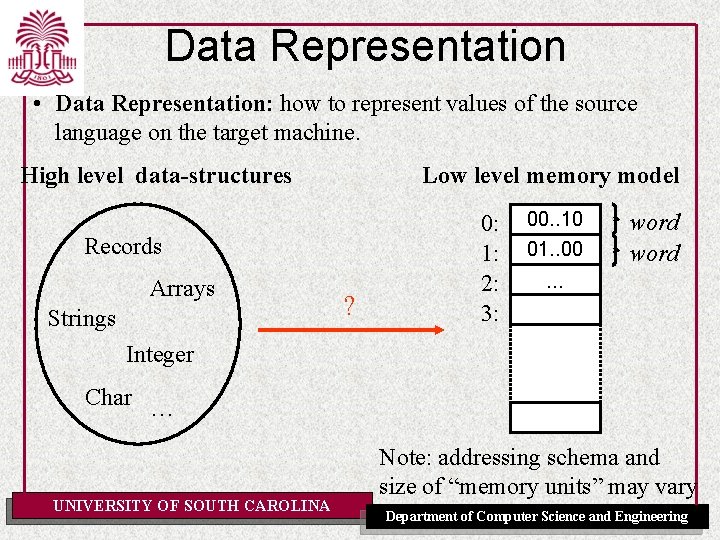

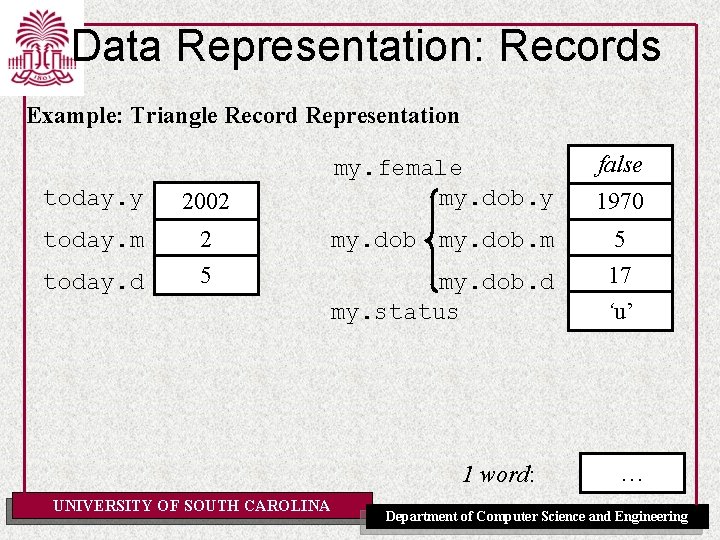

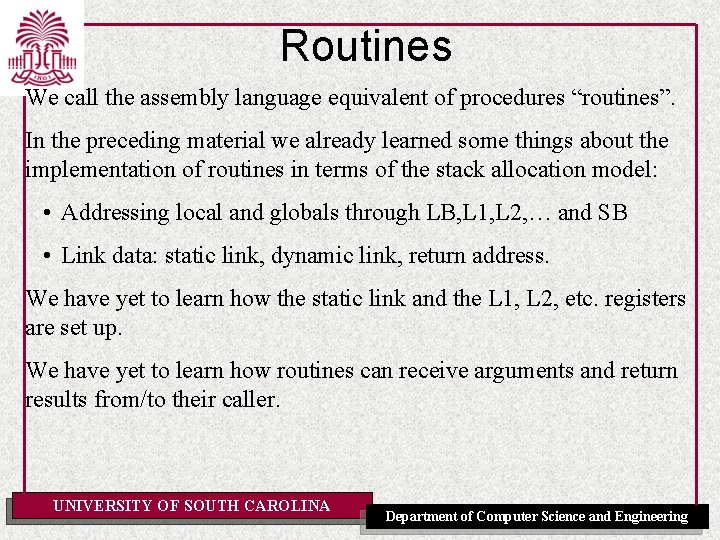

Static Arrays

    type T = array n of TE;
    var a : T;

Elements a[0], a[1], a[2], …, a[n-1] are stored consecutively.

- size[T] = n * size[TE]
- address[a[0]] = address[a]
- address[a[1]] = address[a]+size[TE]
- address[a[2]] = address[a]+2*size[TE]
- …
- address[a[i]] = address[a]+i*size[TE]
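
The indexing formula composes with record offsets and with nested arrays. Below is a minimal sketch under the assumption of word addressing with one-word Char and Integer values; `element_address` is a hypothetical helper, and the bounds check is an optional extra rather than something the formula requires.

```python
def element_address(base, index, element_size, length):
    """address[a[i]] = address[a] + i * size[TE], with an index range check."""
    if not 0 <= index < length:
        raise IndexError(f"index {index} out of range 0..{length - 1}")
    return base + index * element_size


# var code: array 3 of Coding, where size[Coding] = size[Char] + size[Integer] = 2
CODING_SIZE = 2
N_OFFSET = 1                     # field n follows the one-word field c
code_base = 0                    # assumed address of code
addr_code_1_n = element_address(code_base, 1, CODING_SIZE, 3) + N_OFFSET

# var names: array 2 of Name, where Name = array 6 of Char, so size[Name] = 6
names_base = 0                   # assumed address of names
row = element_address(names_base, 1, 6, 2)   # address of names[1]
addr_names_1_2 = element_address(row, 2, 1, 6)

print(addr_code_1_n, addr_names_1_2)
```

When the index is a compile-time constant the whole address can be folded into a single displacement; otherwise the multiplication and addition are emitted as run-time instructions.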

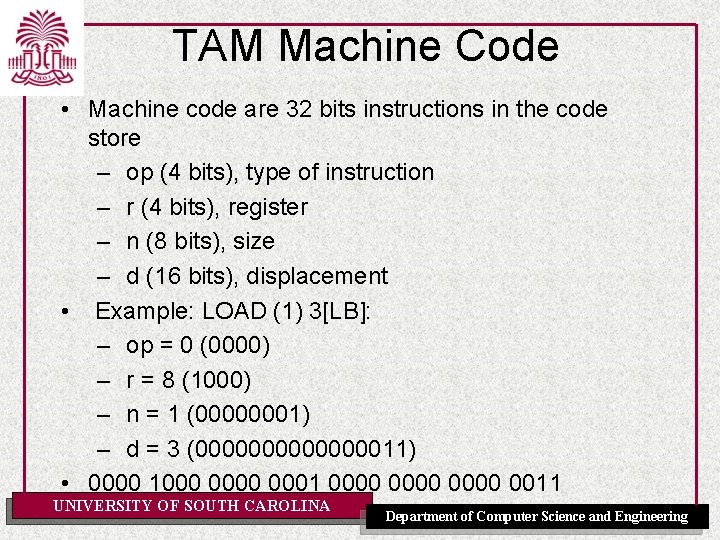

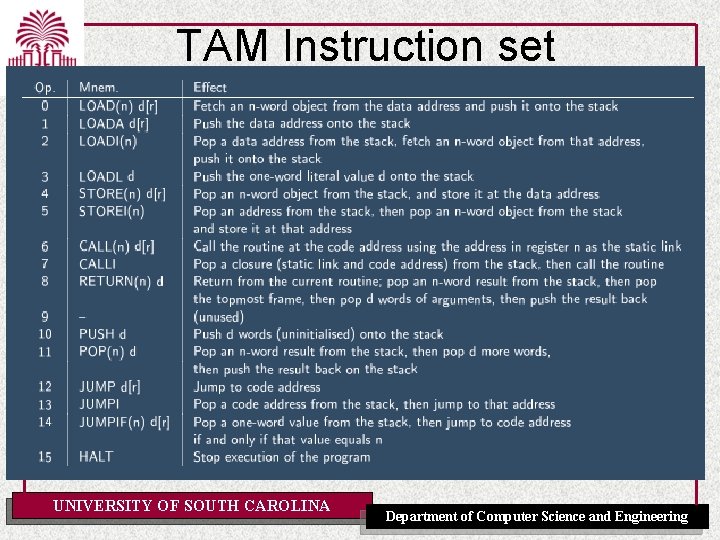

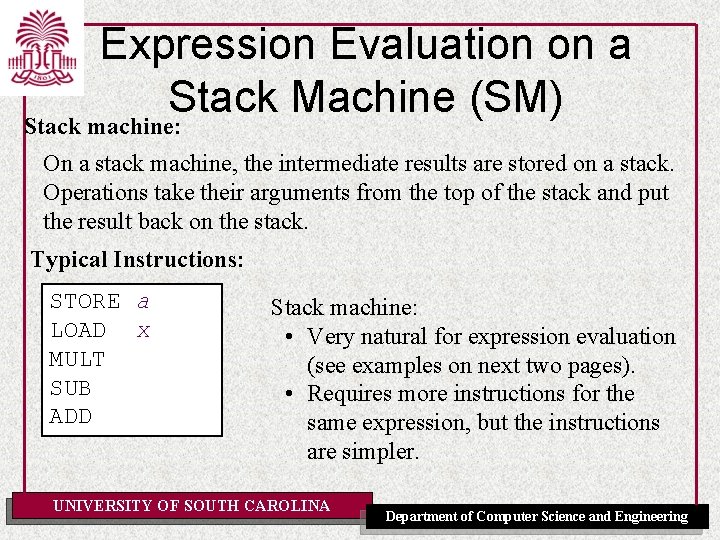

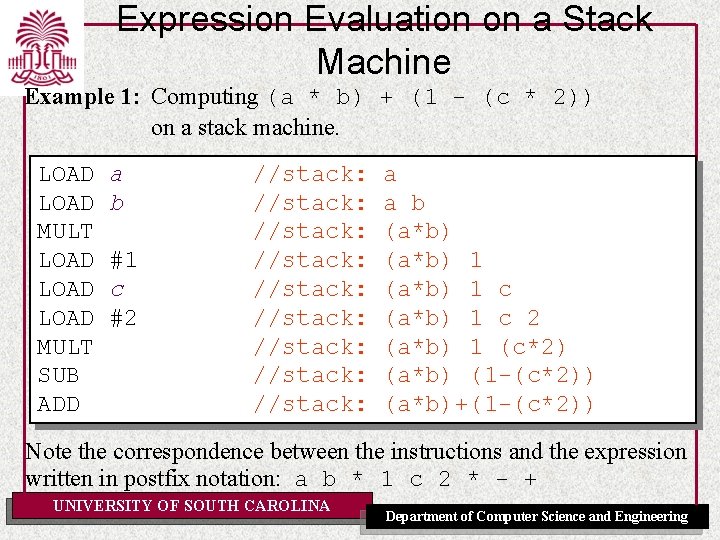

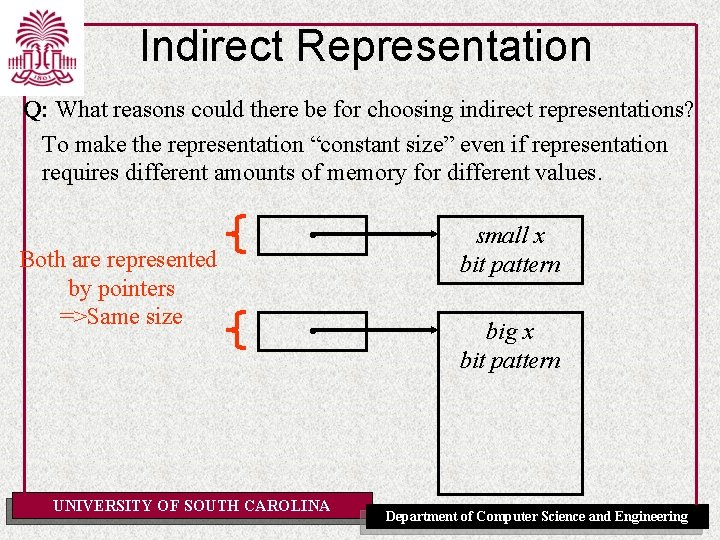

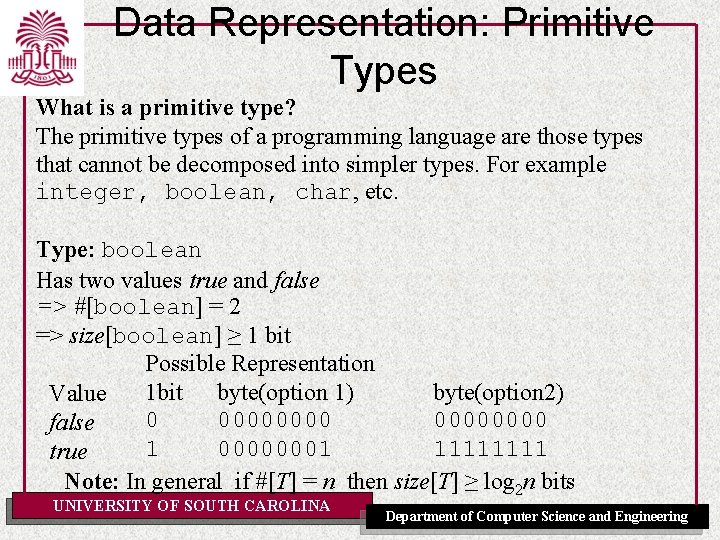

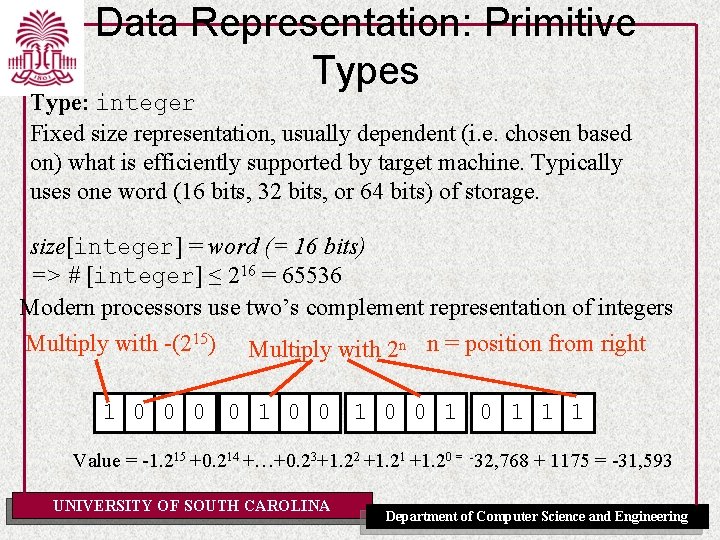

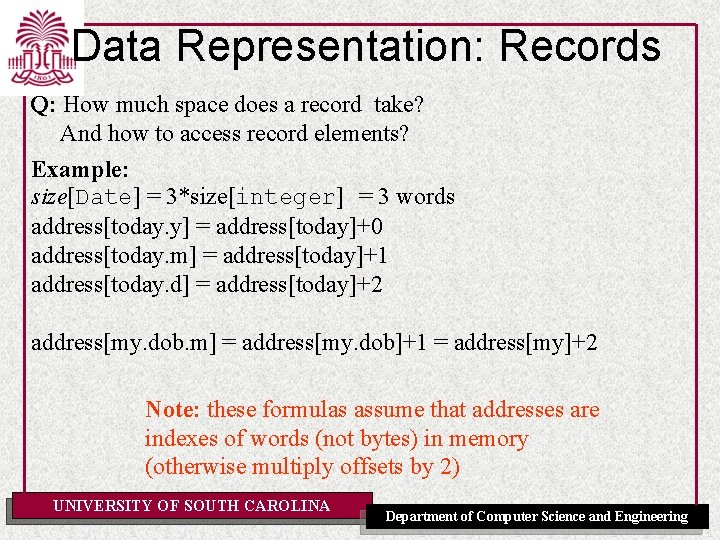

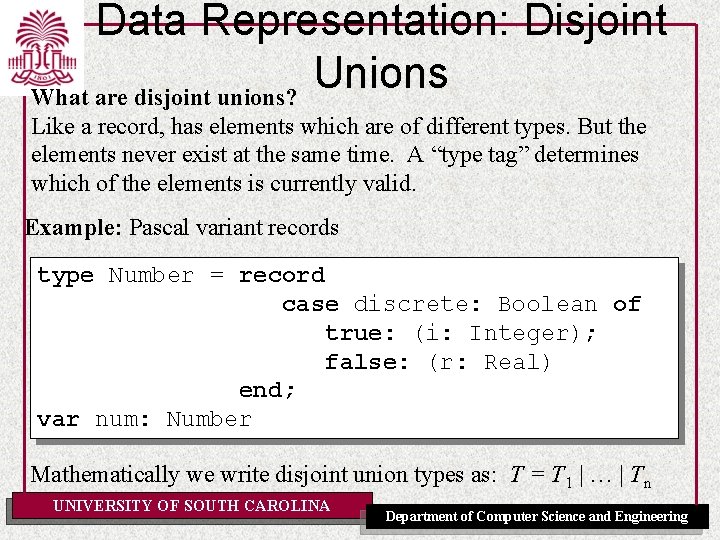

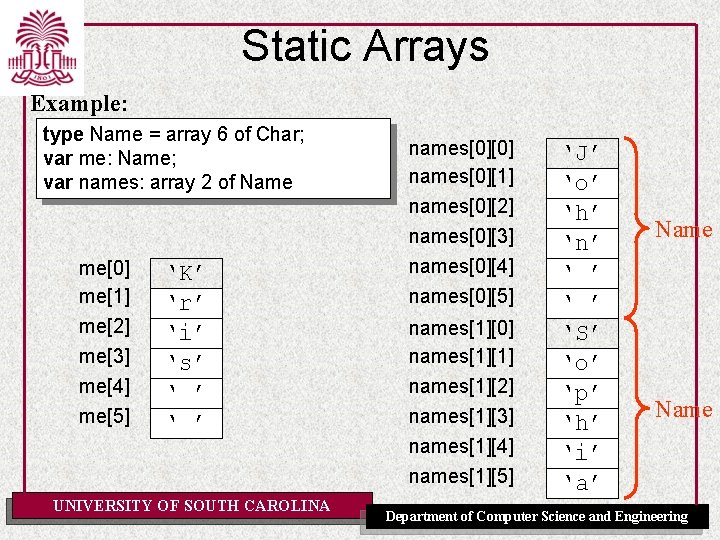

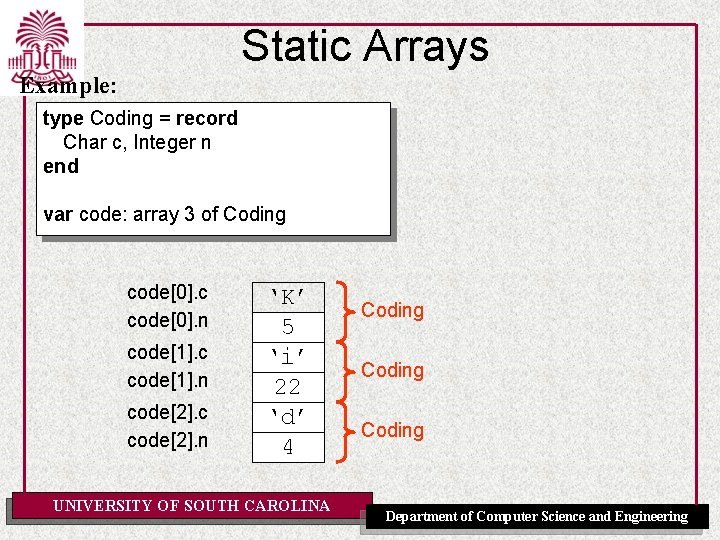

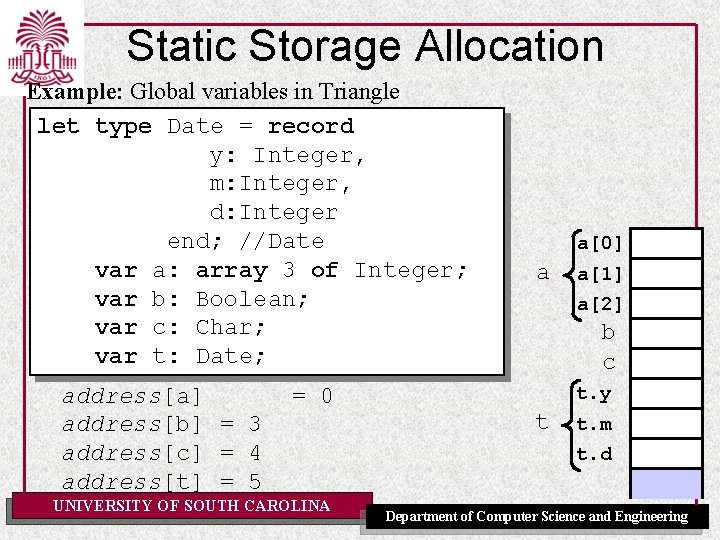

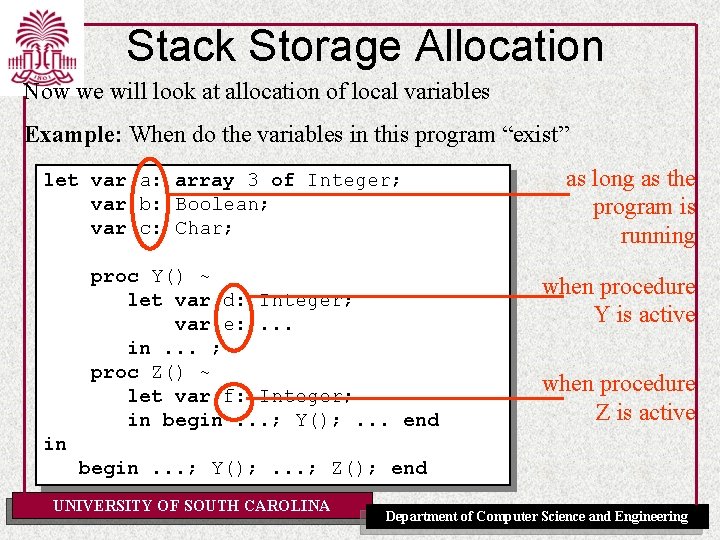

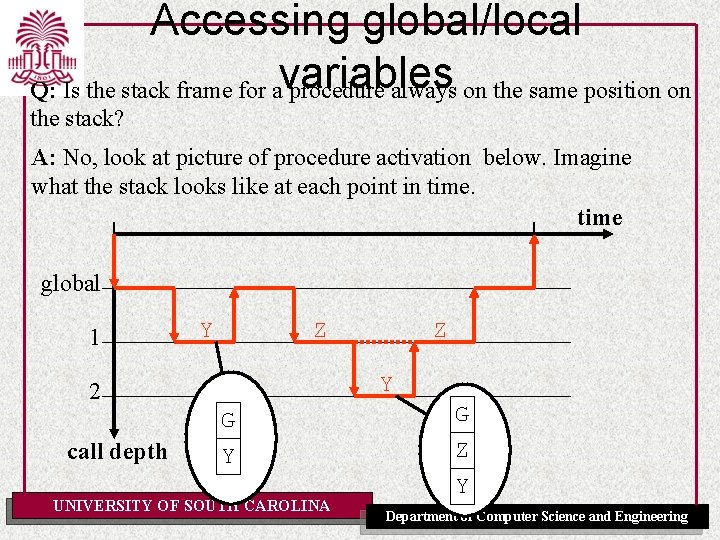

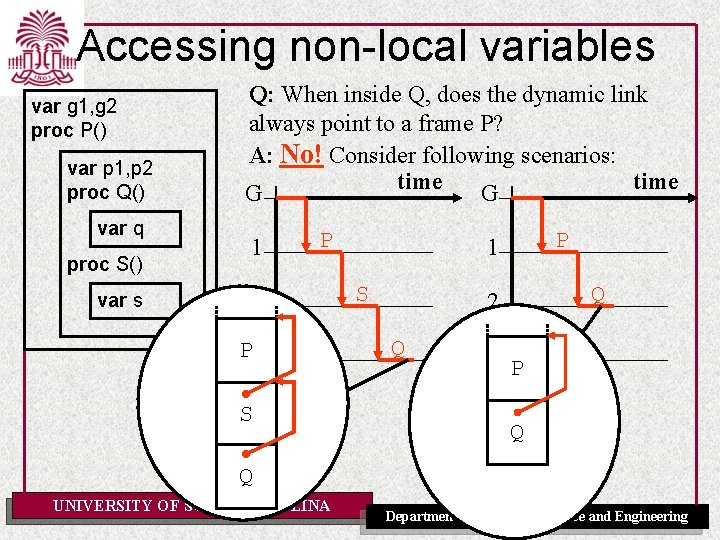

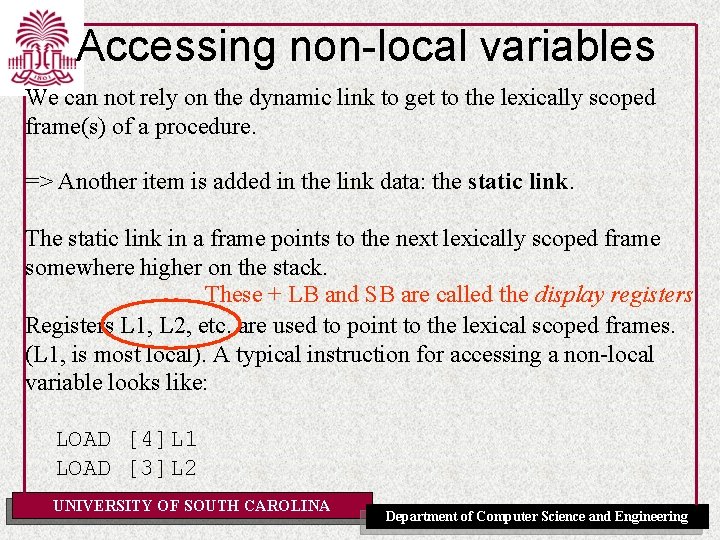

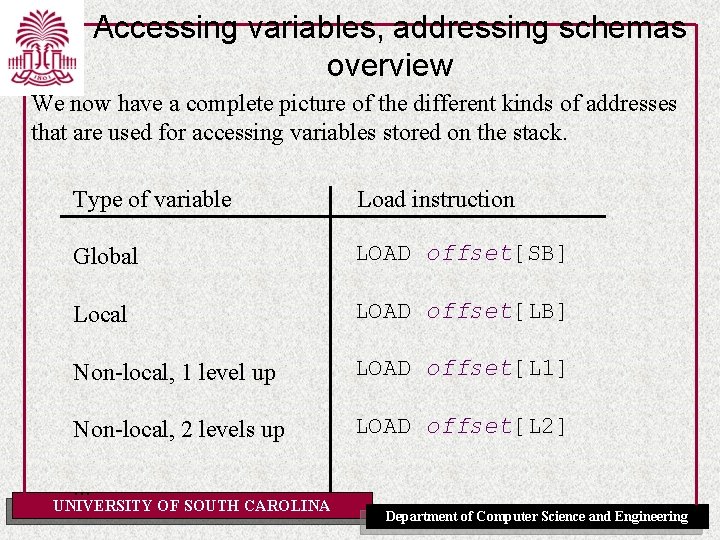

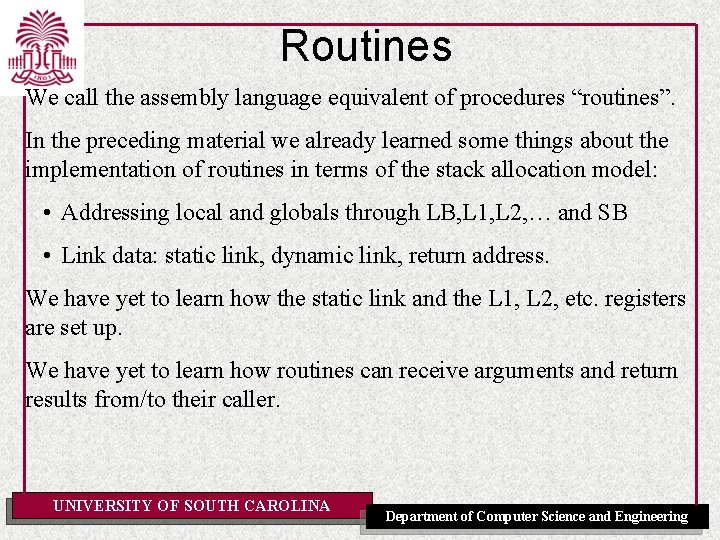

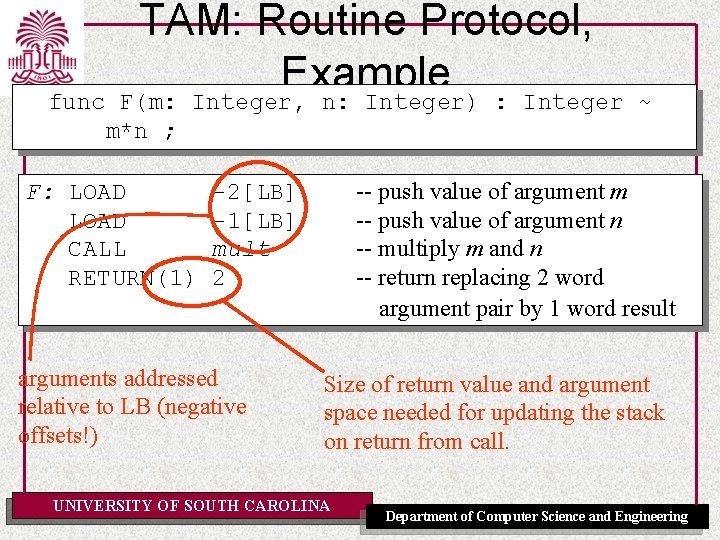

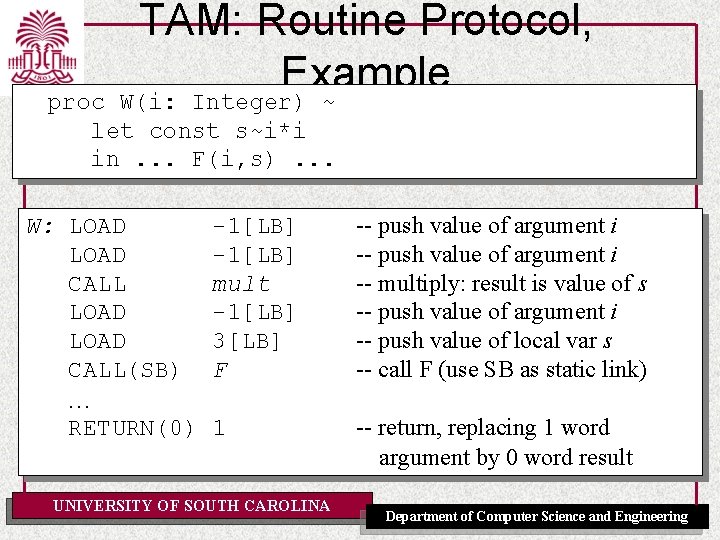

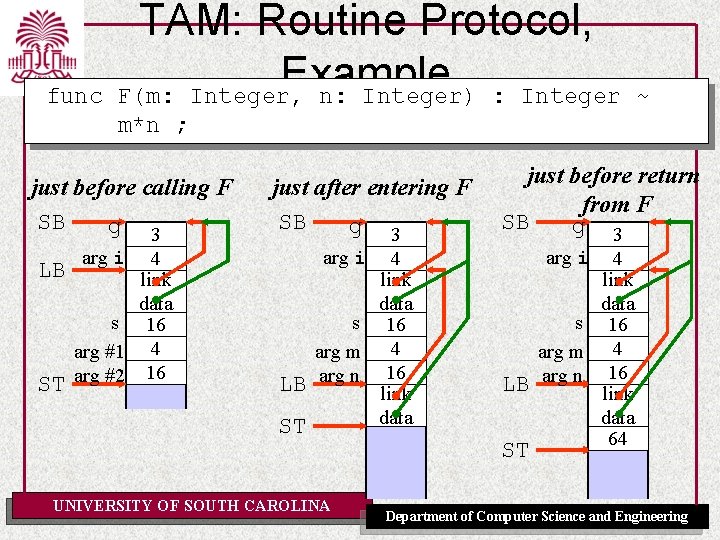

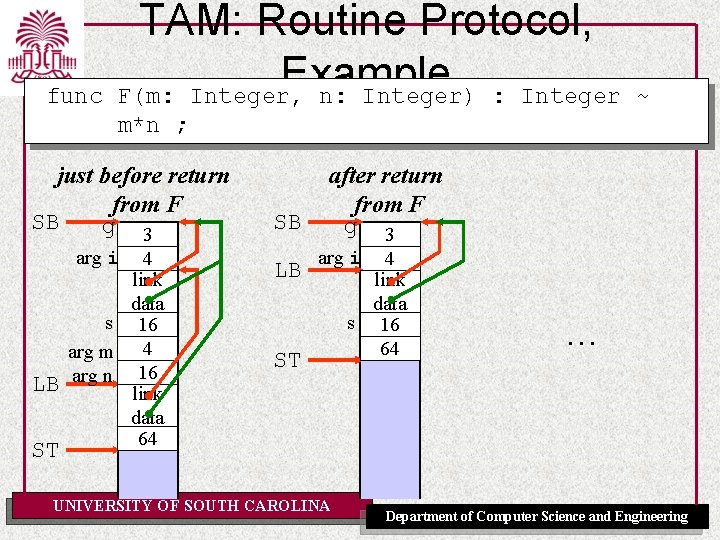

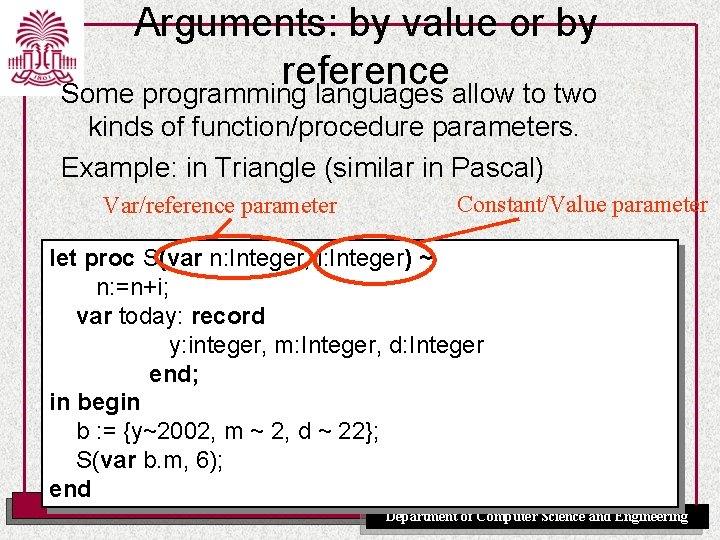

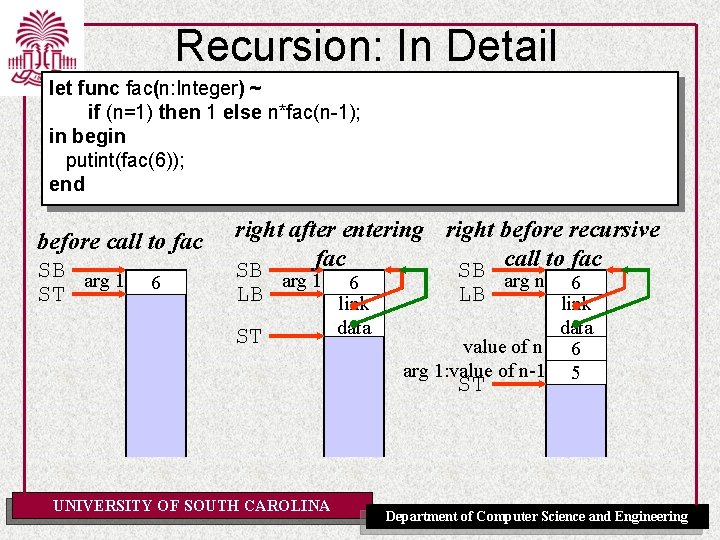

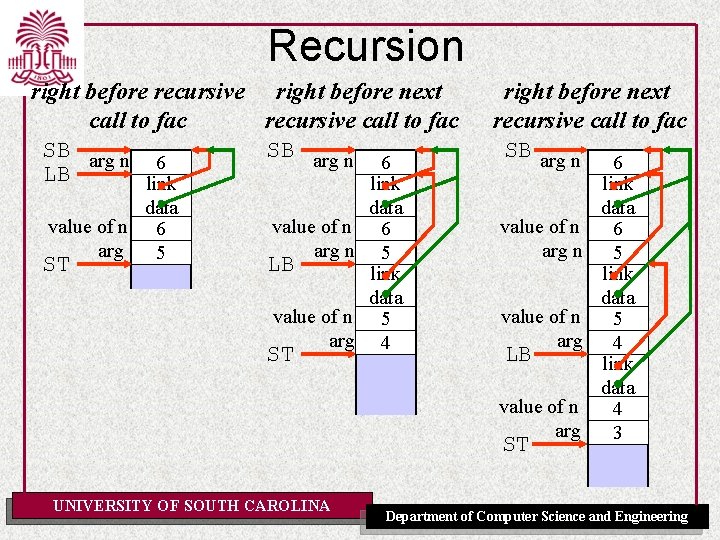

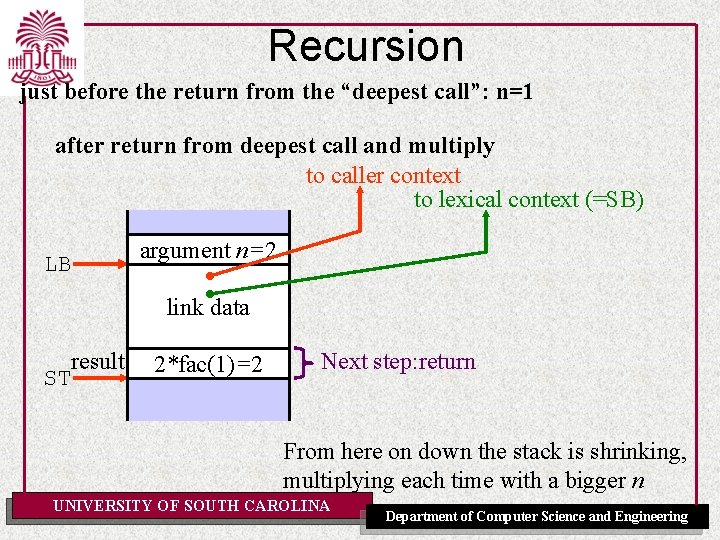

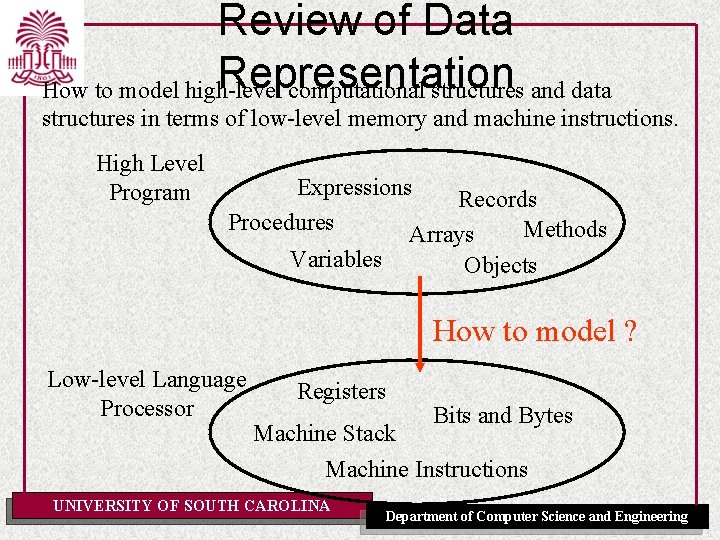

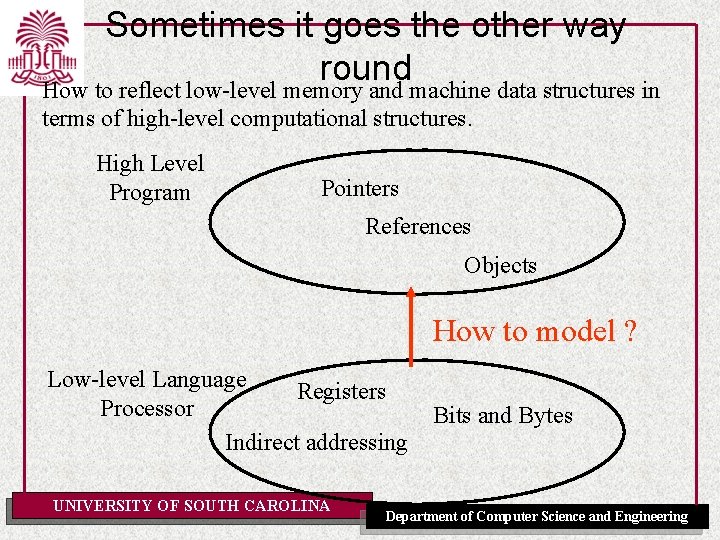

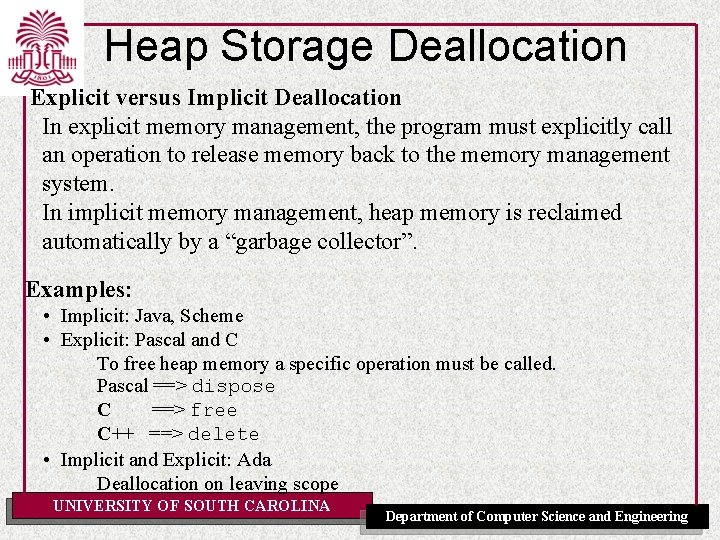

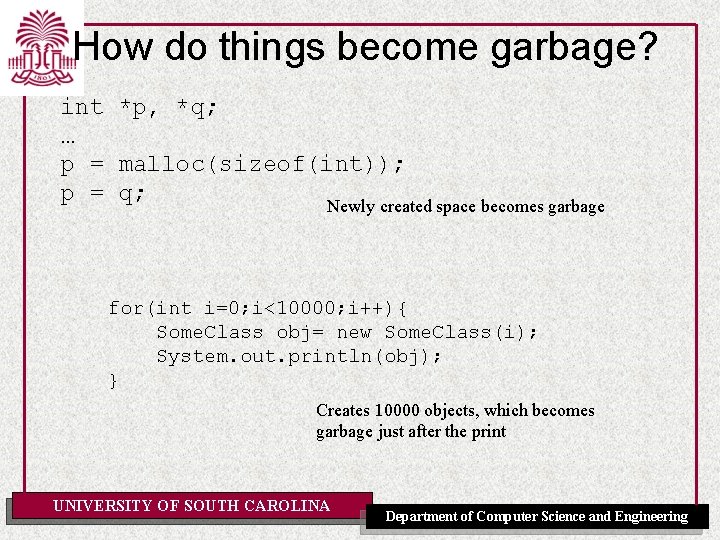

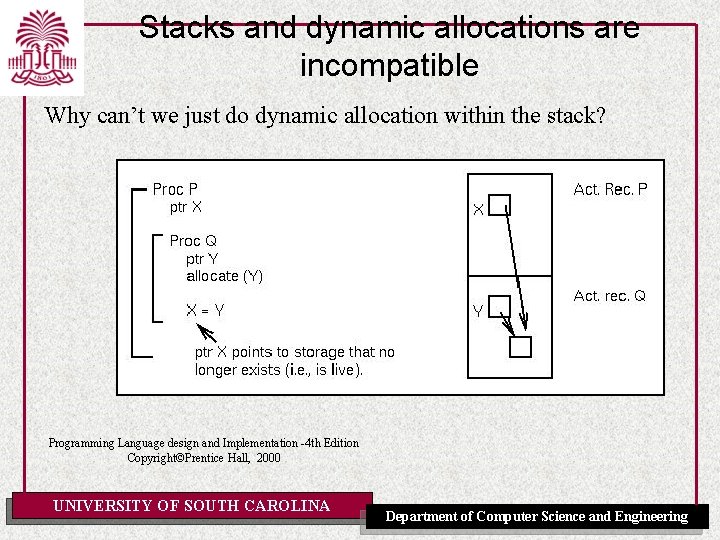

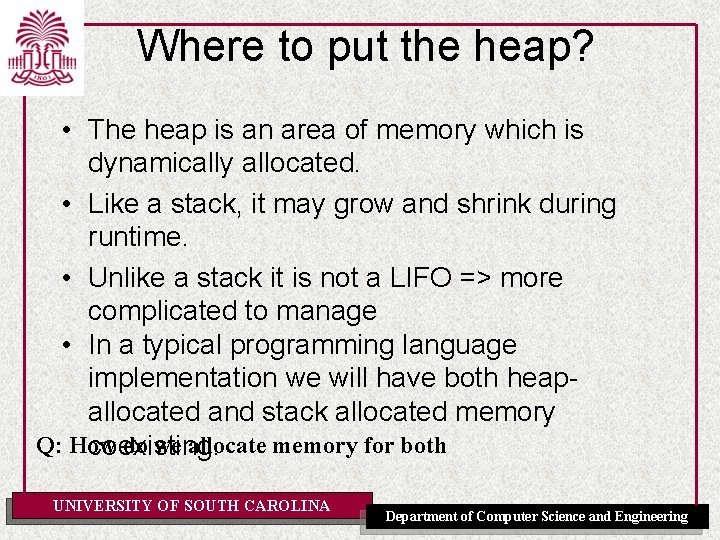

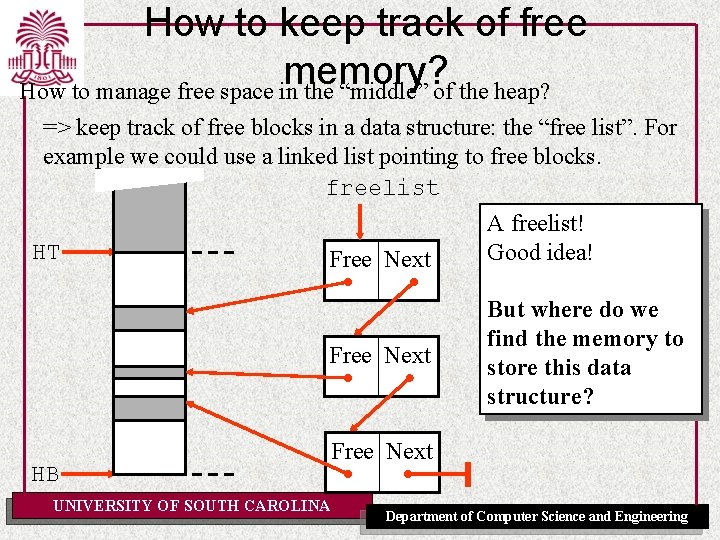

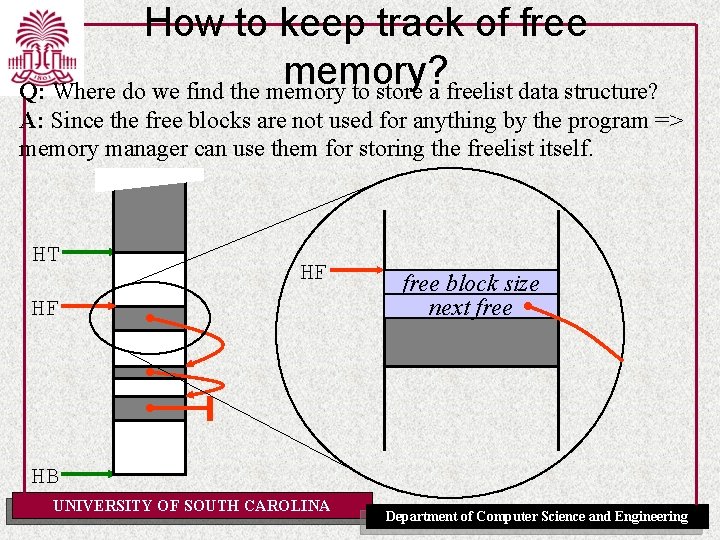

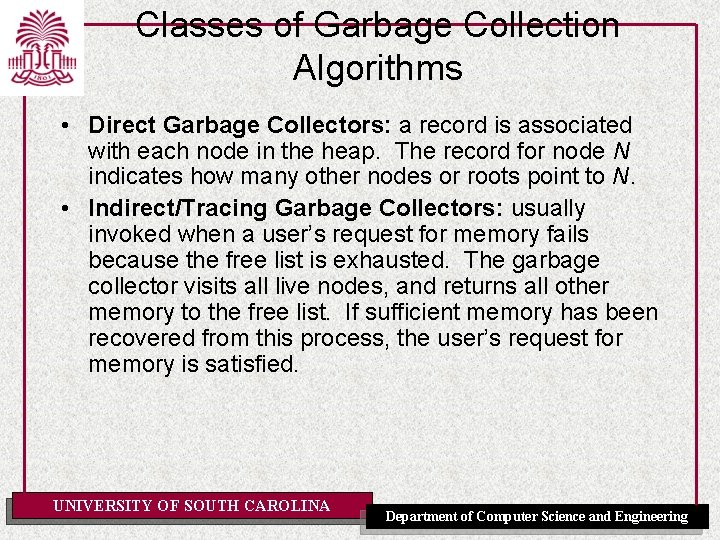

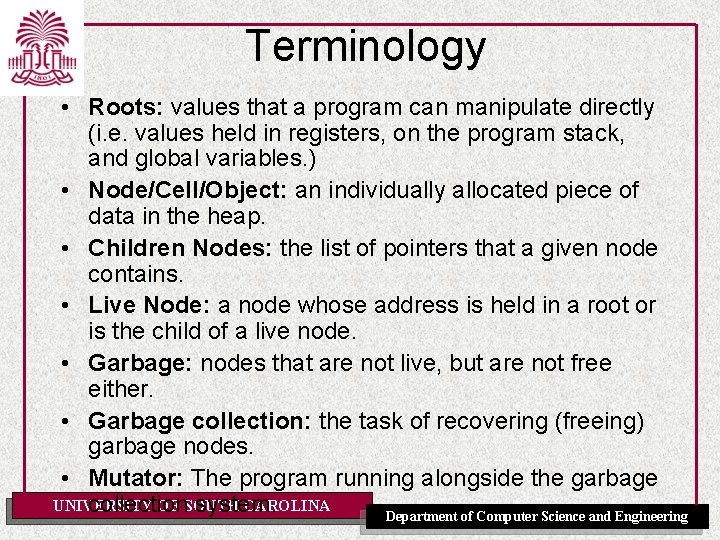

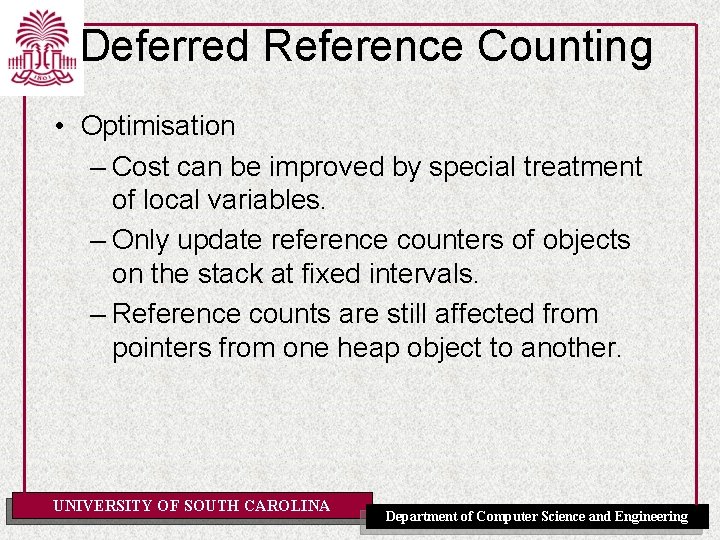

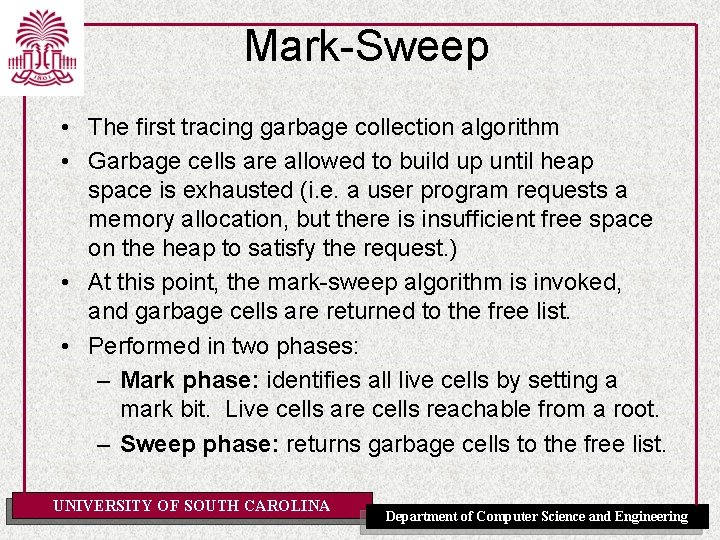

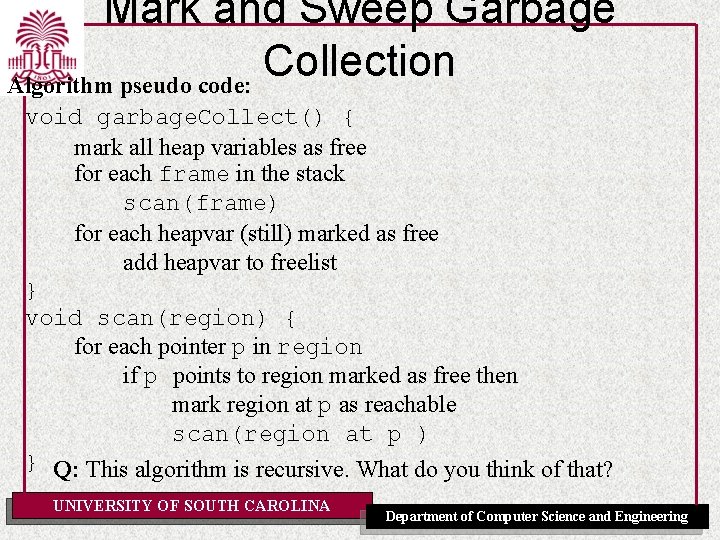

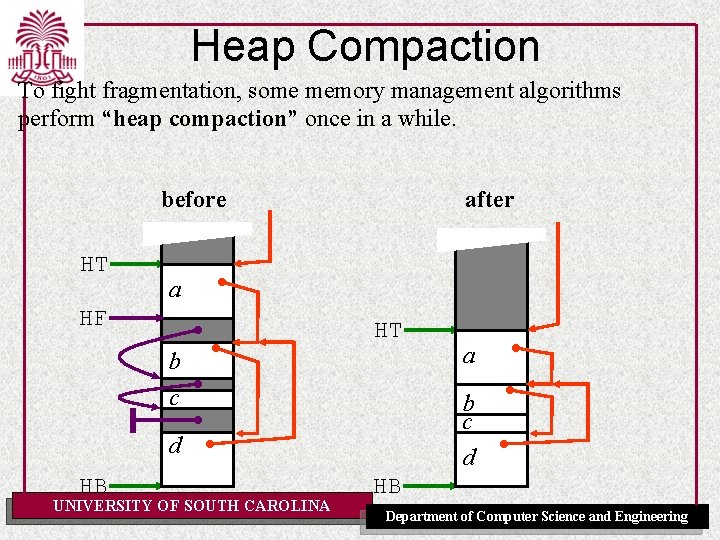

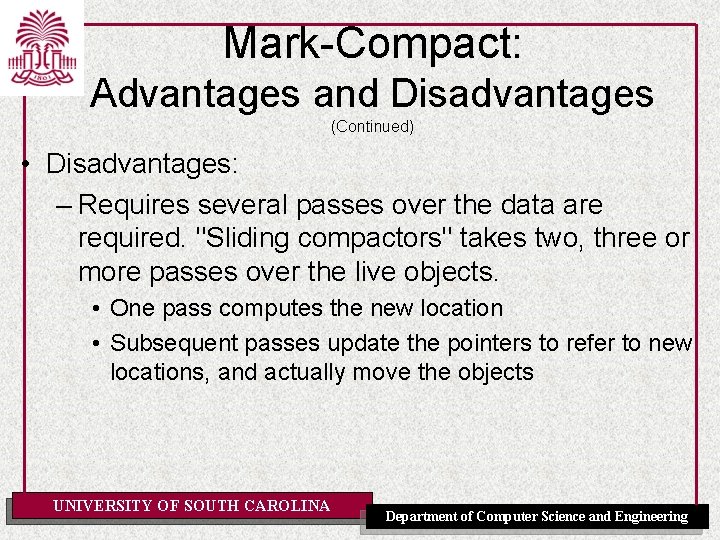

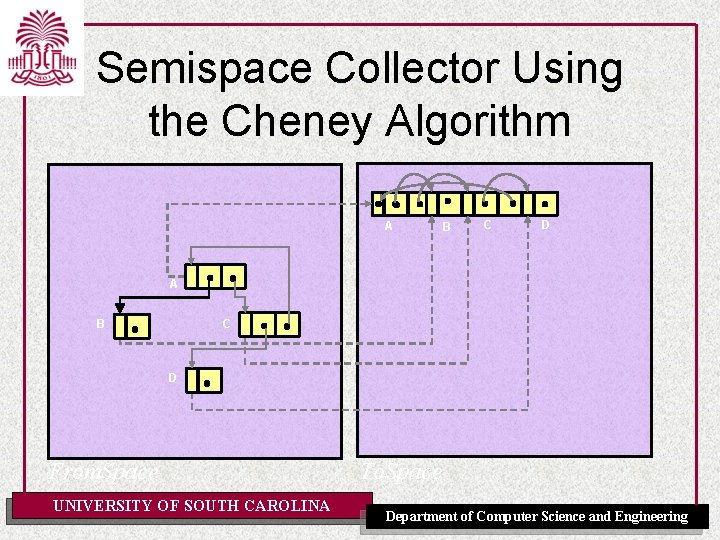

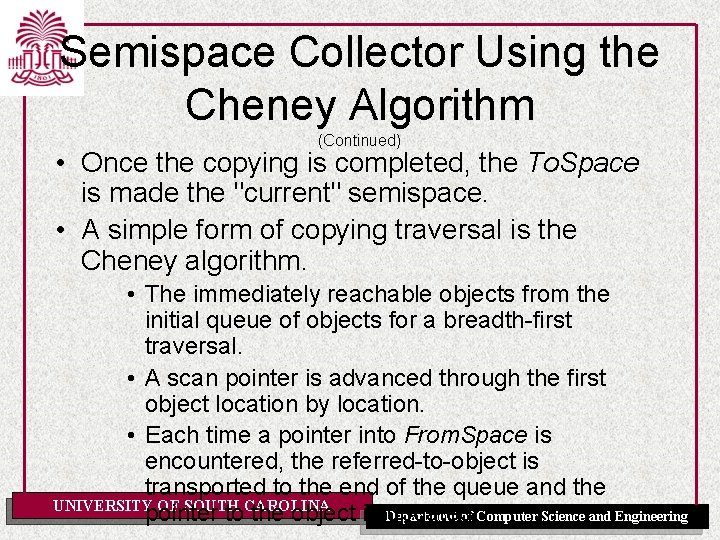

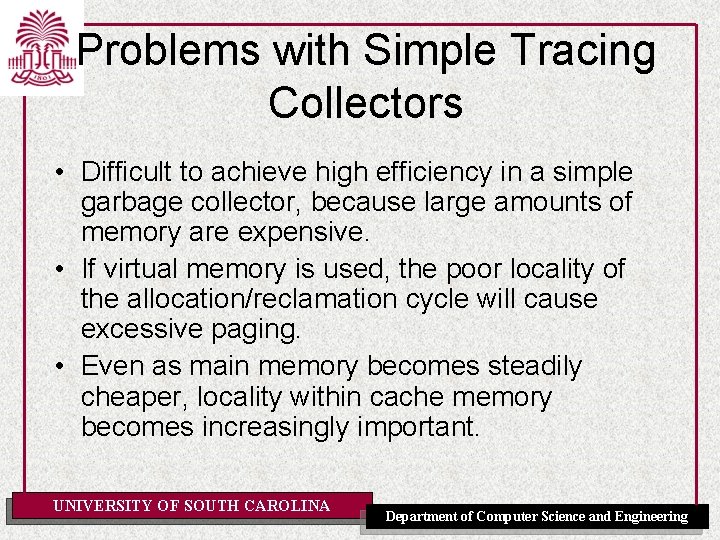

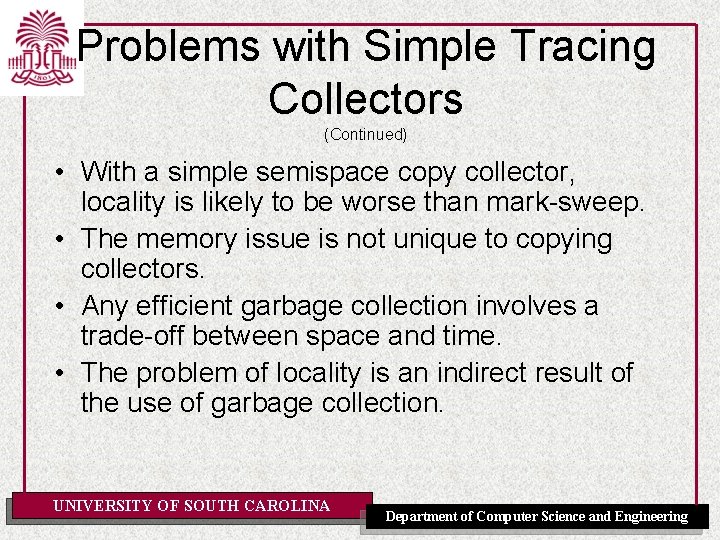

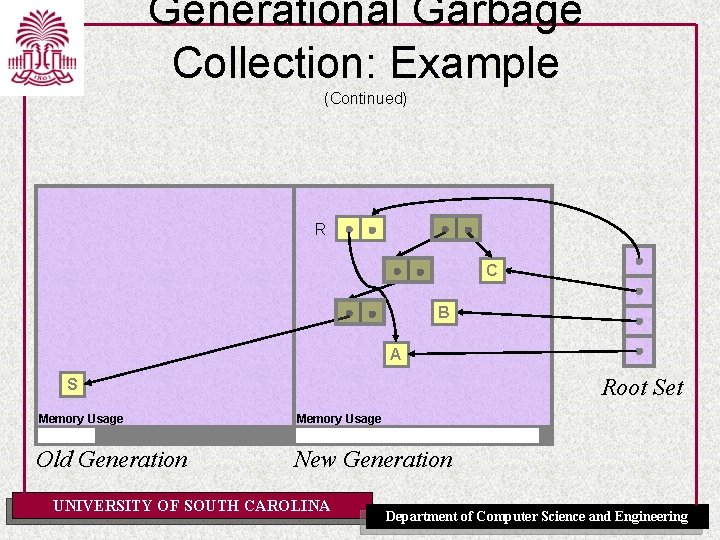

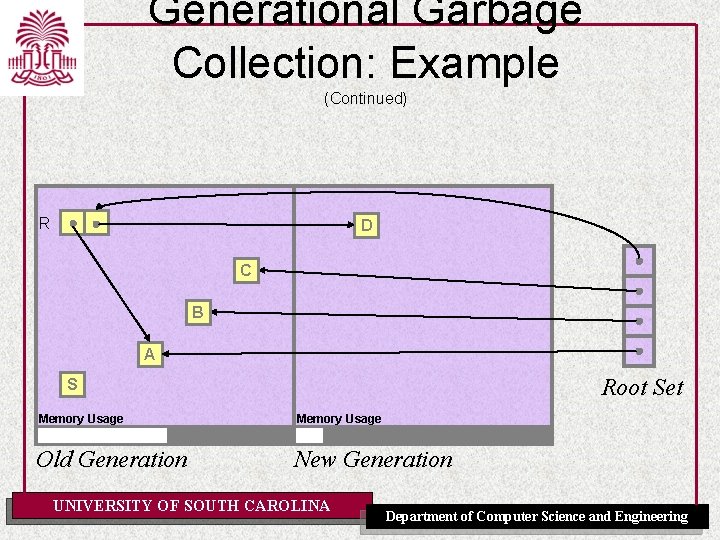

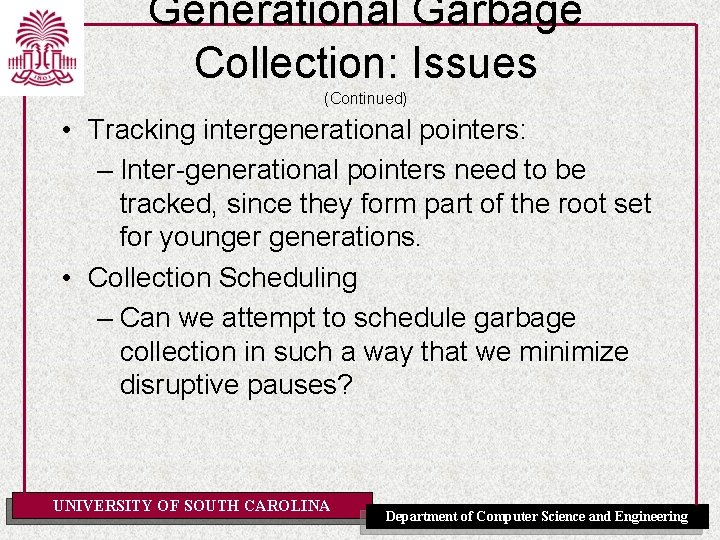

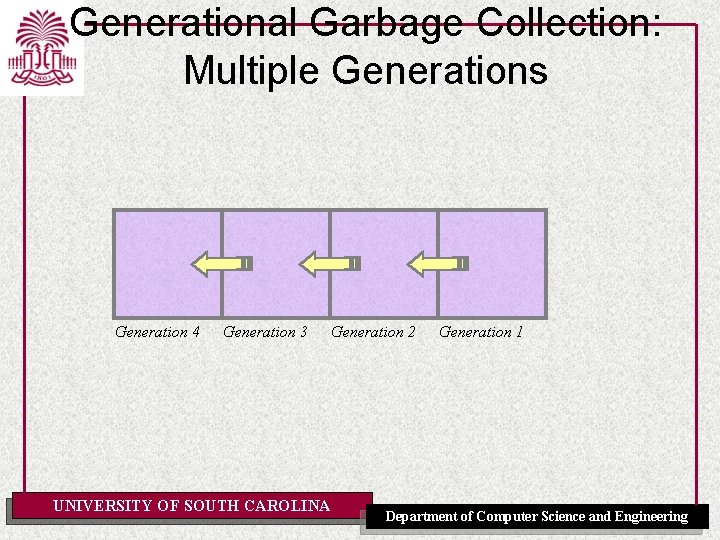

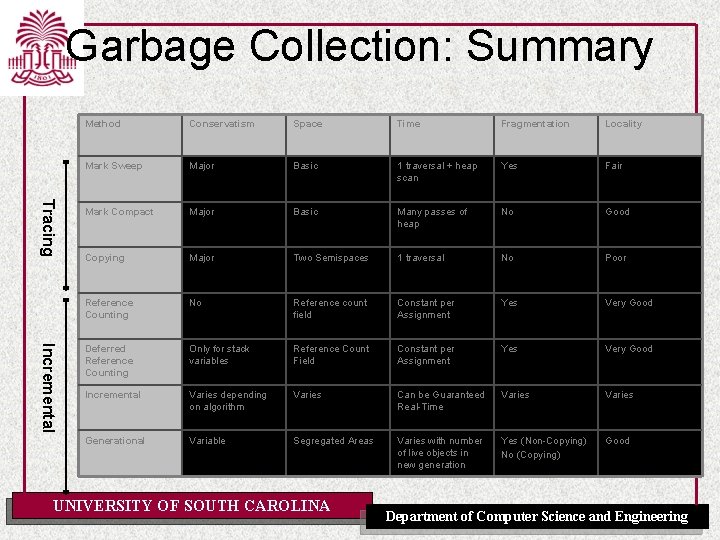

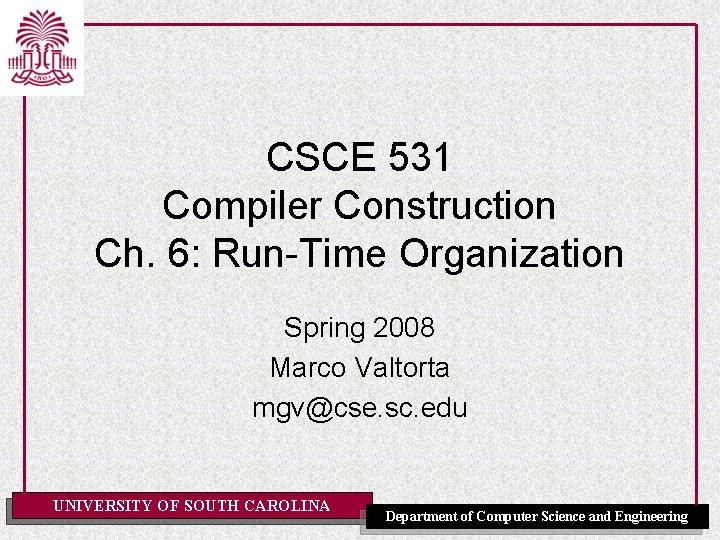

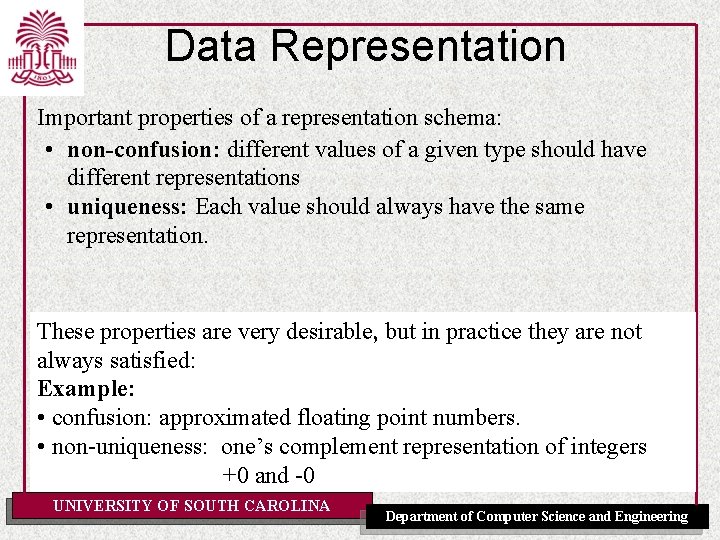

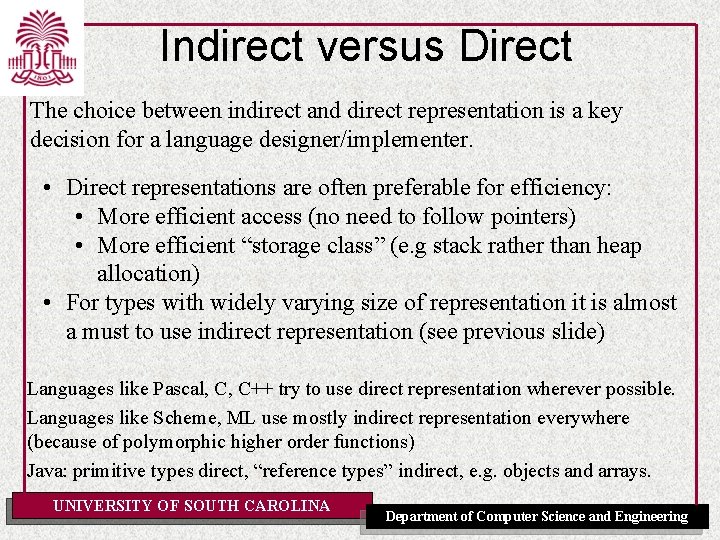

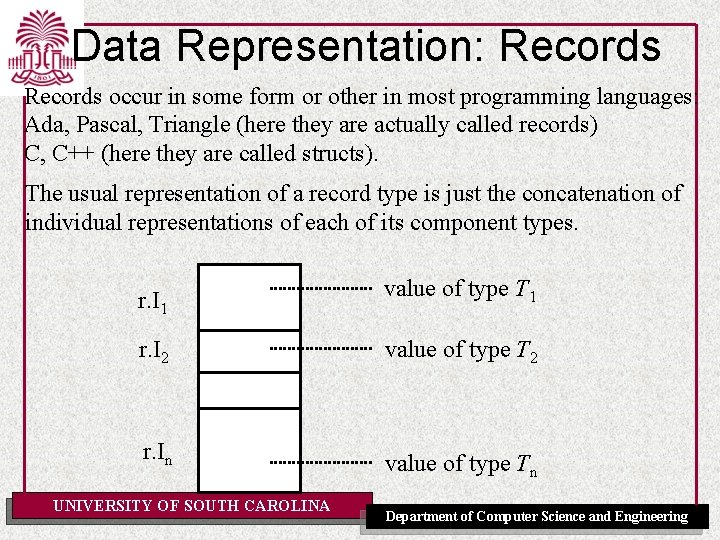

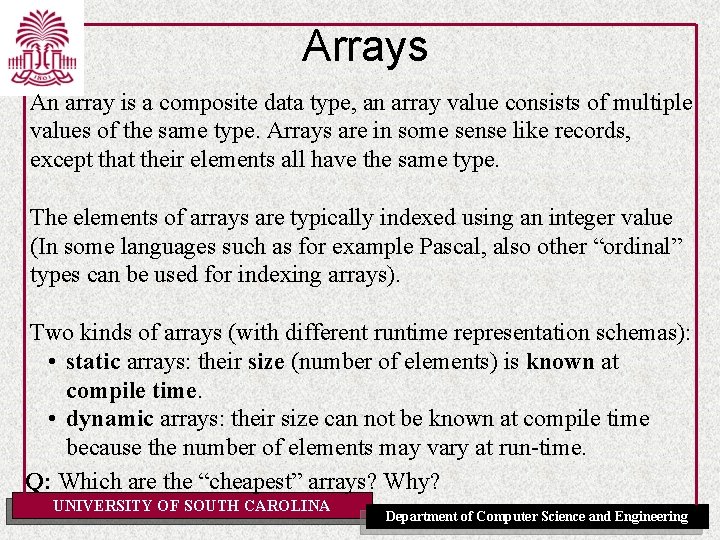

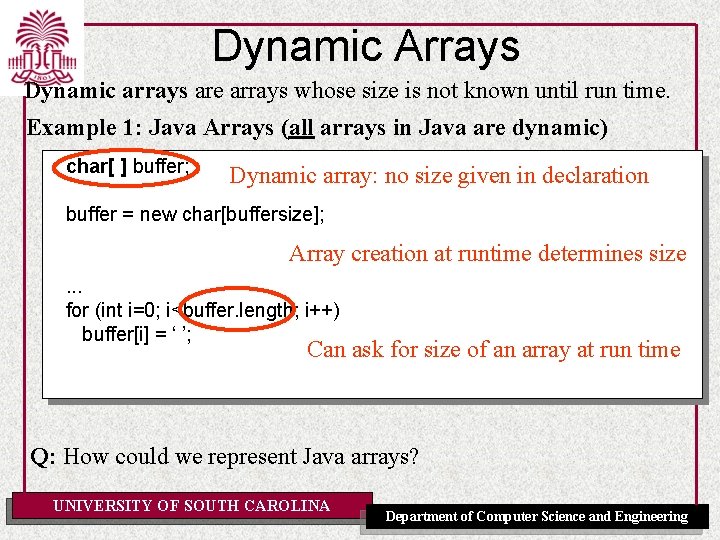

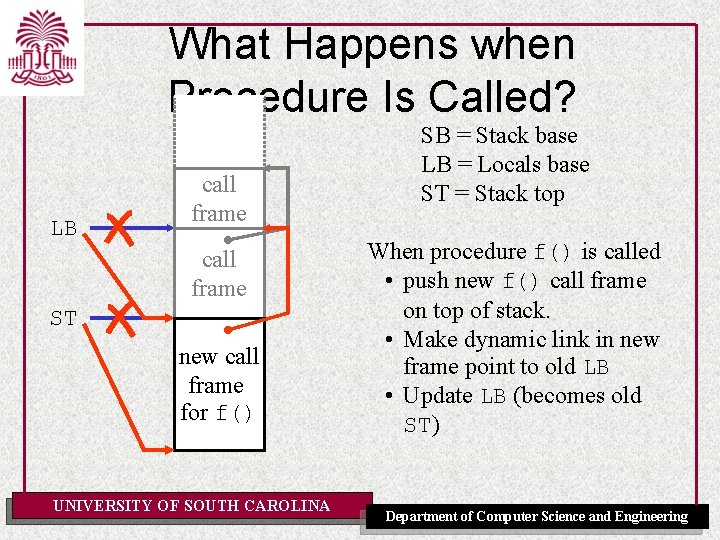

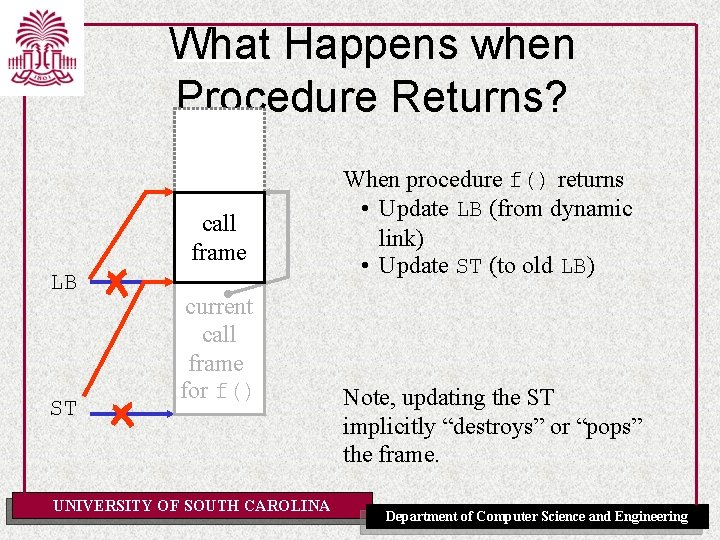

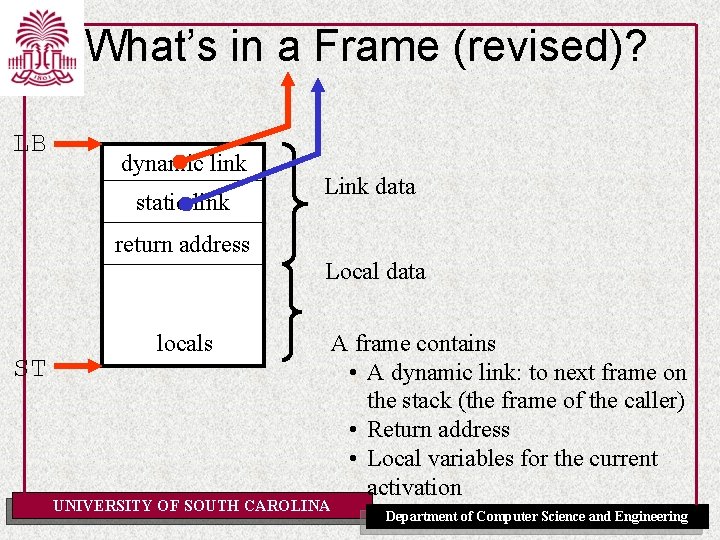

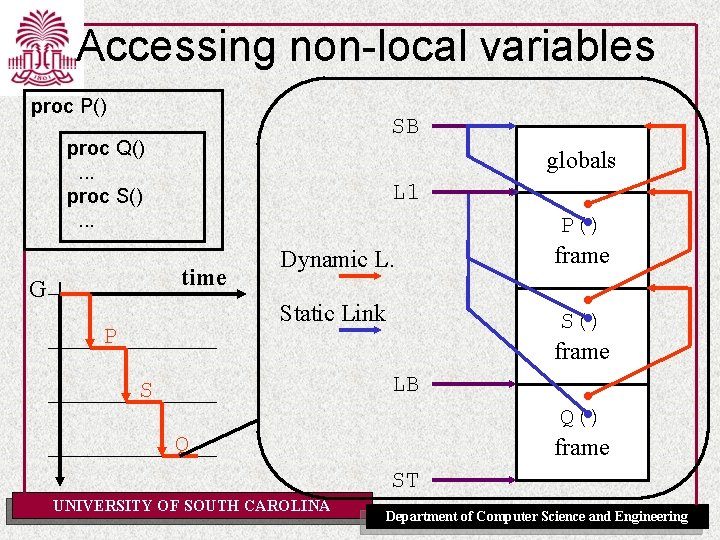

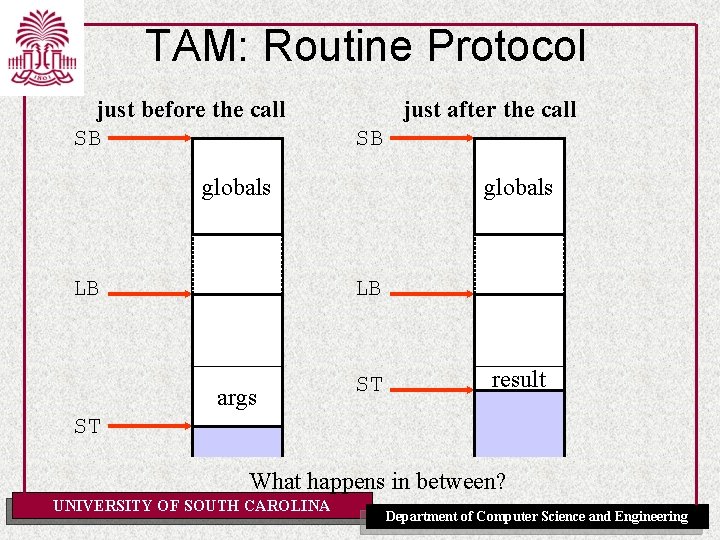

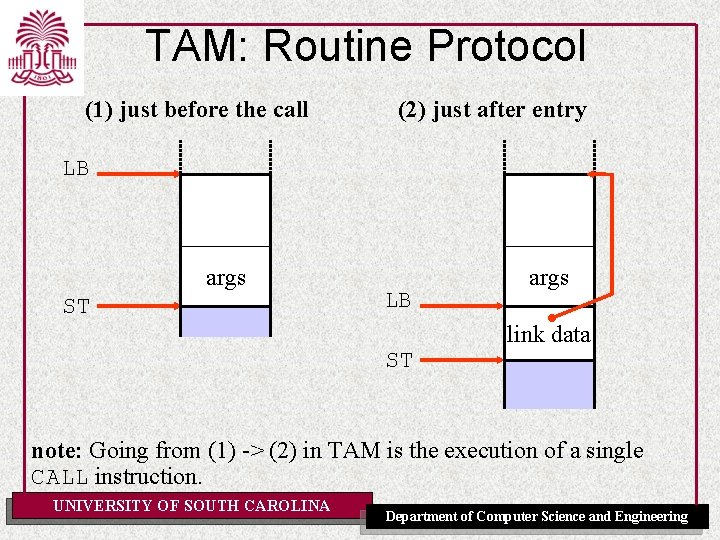

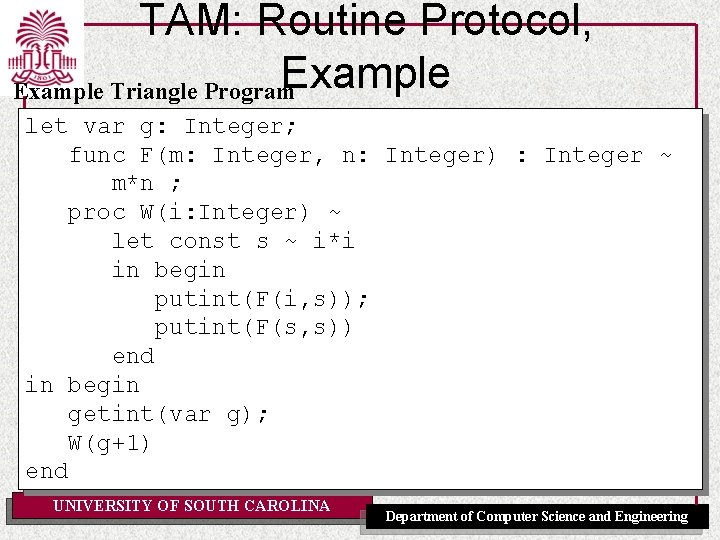

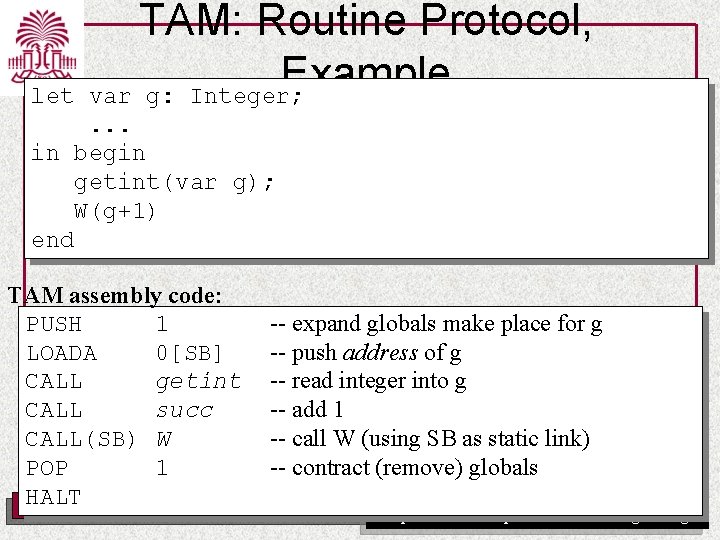

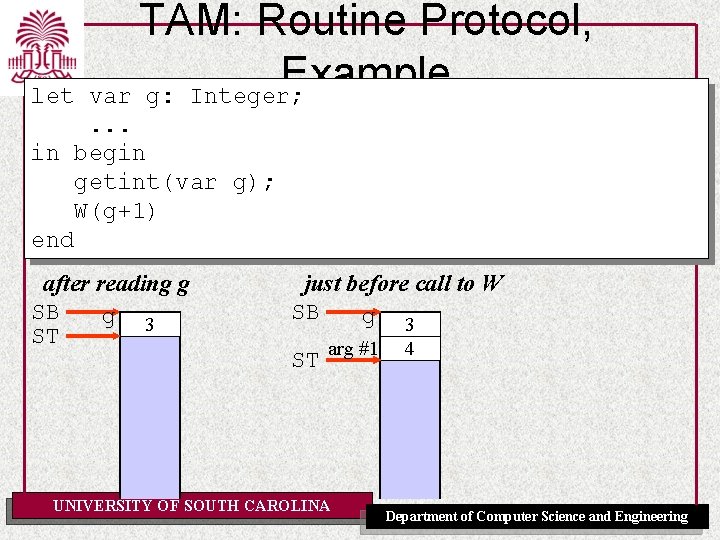

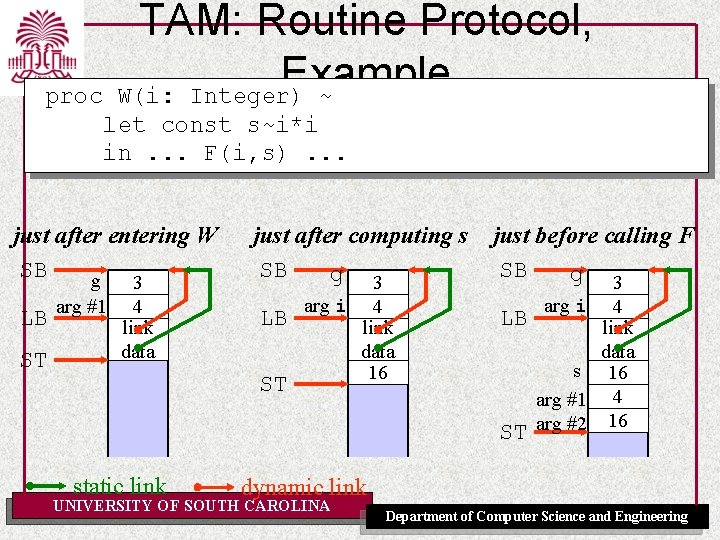

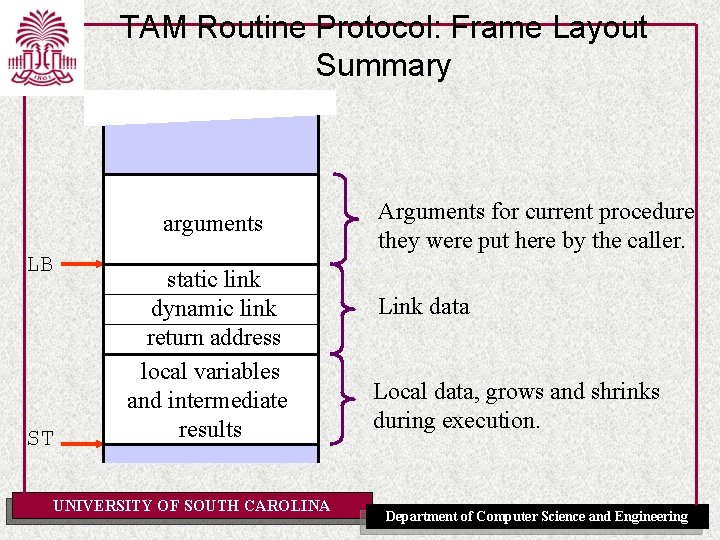

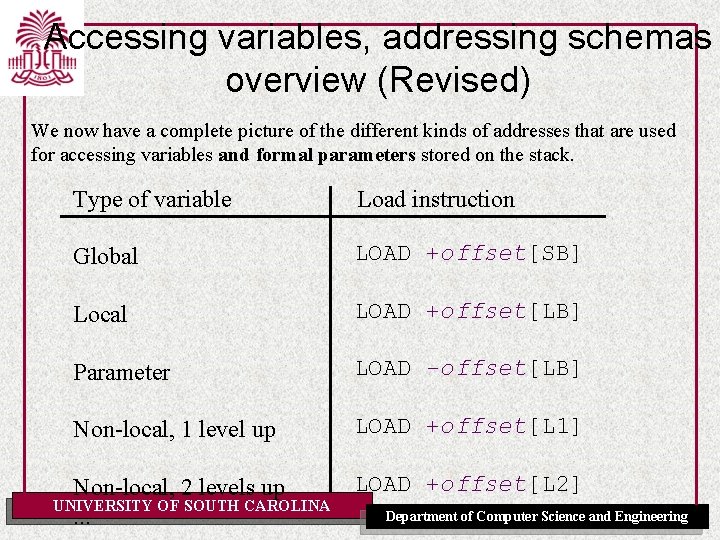

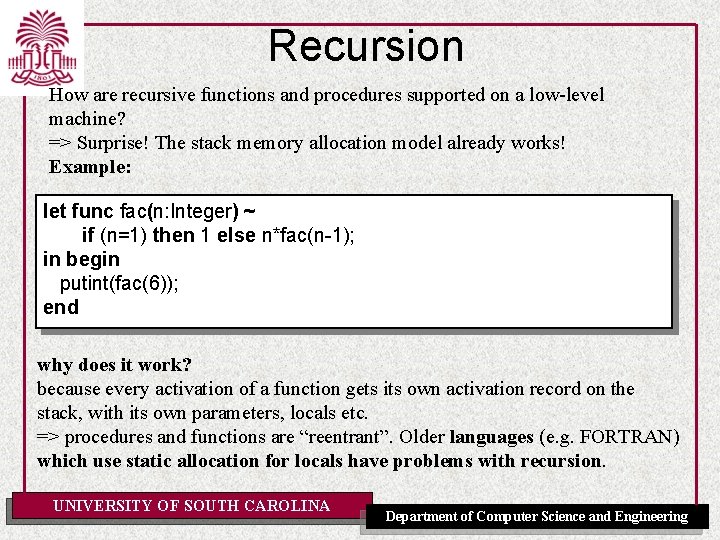

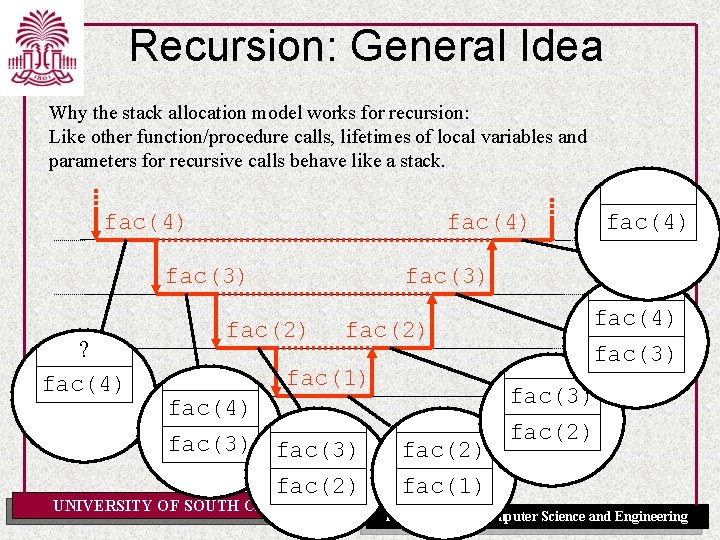

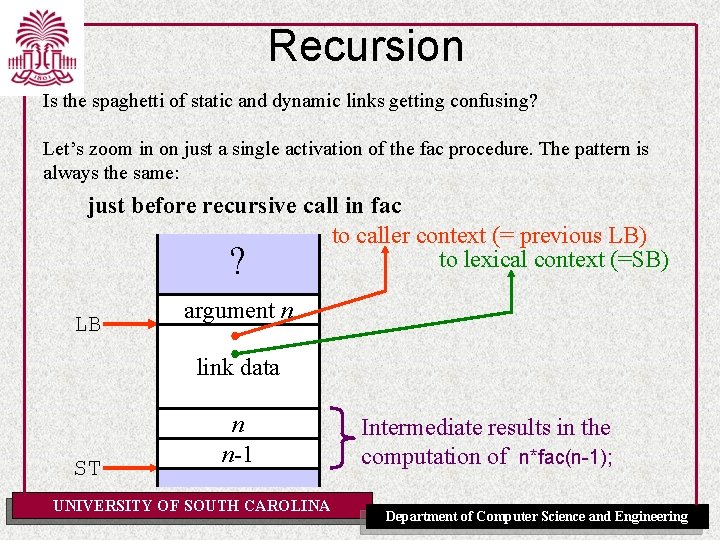

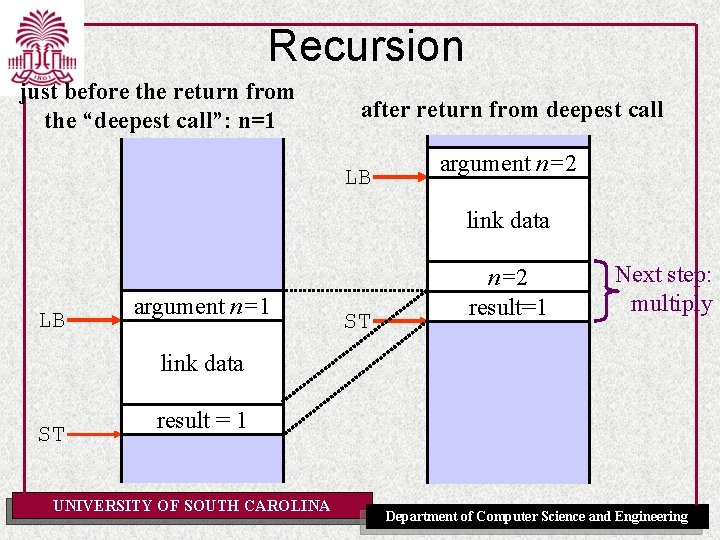

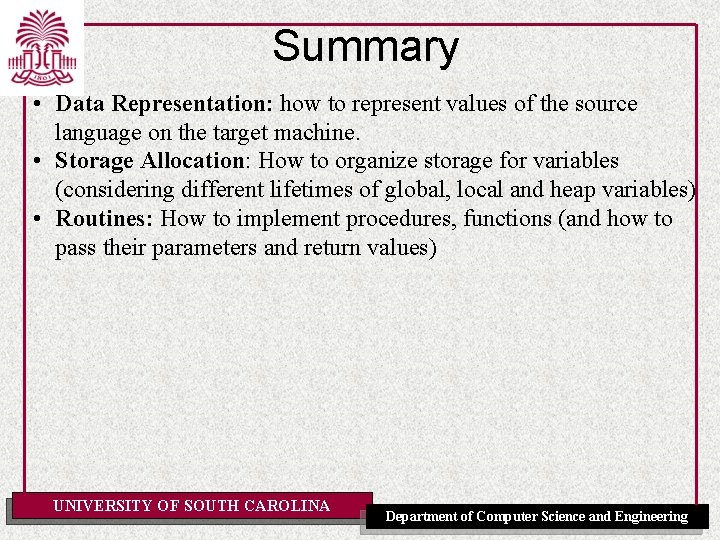

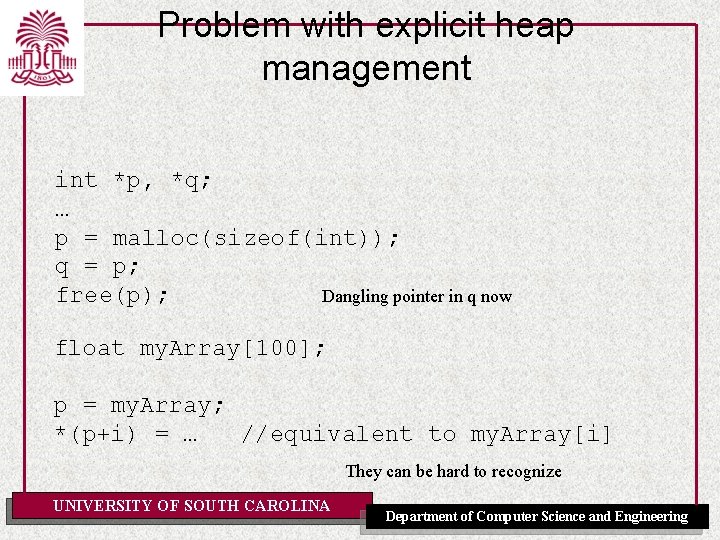

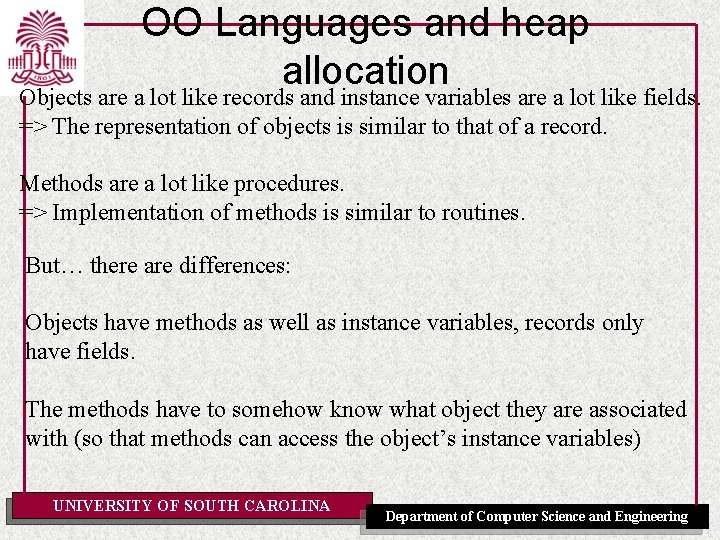

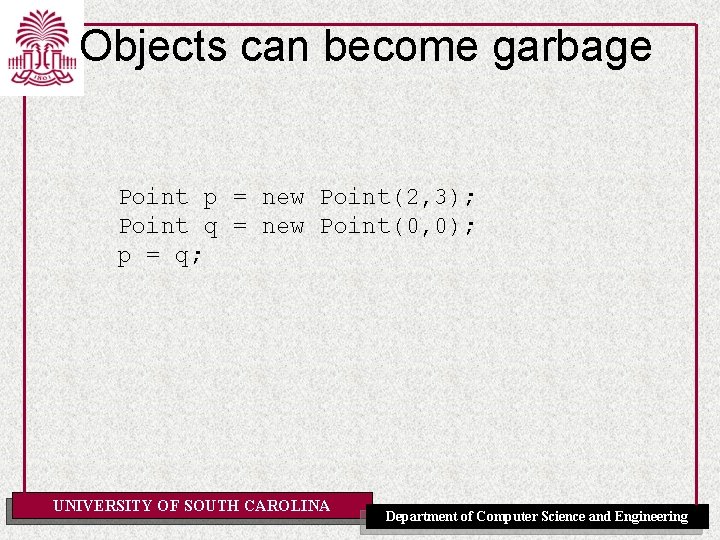

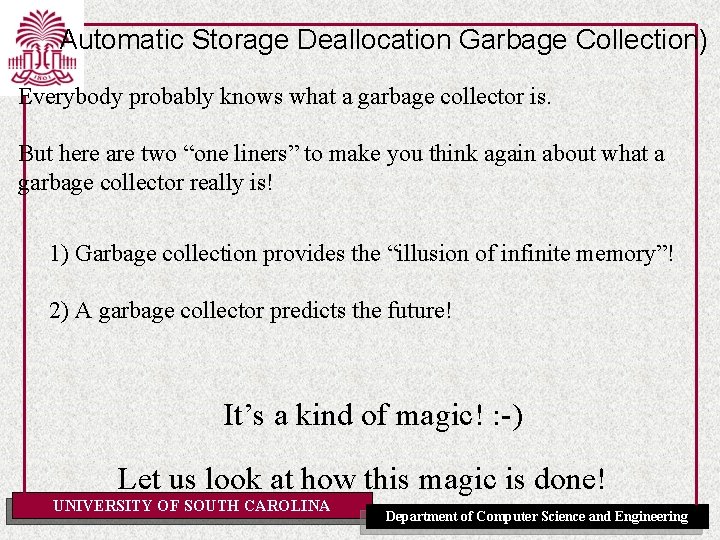

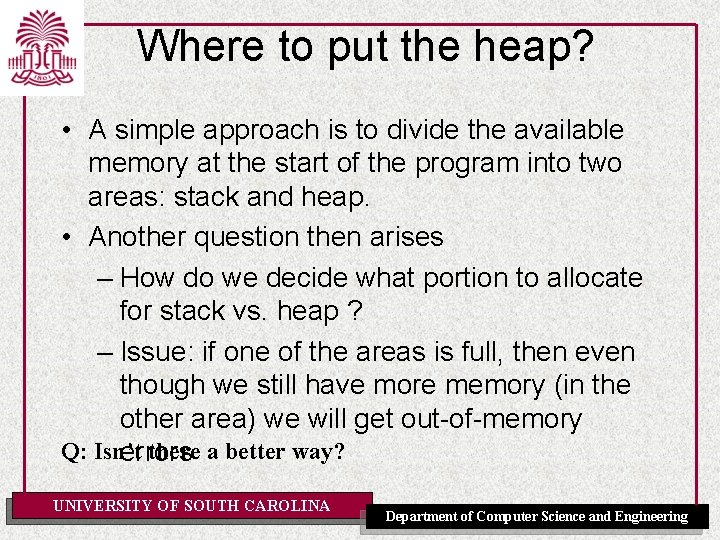

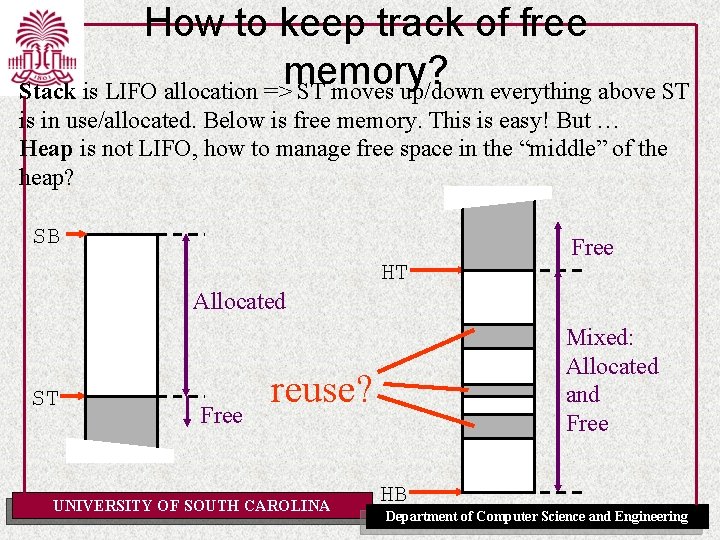

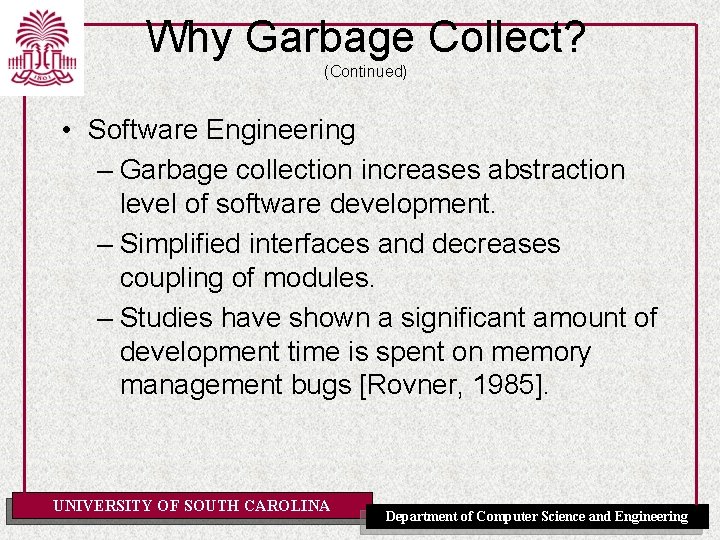

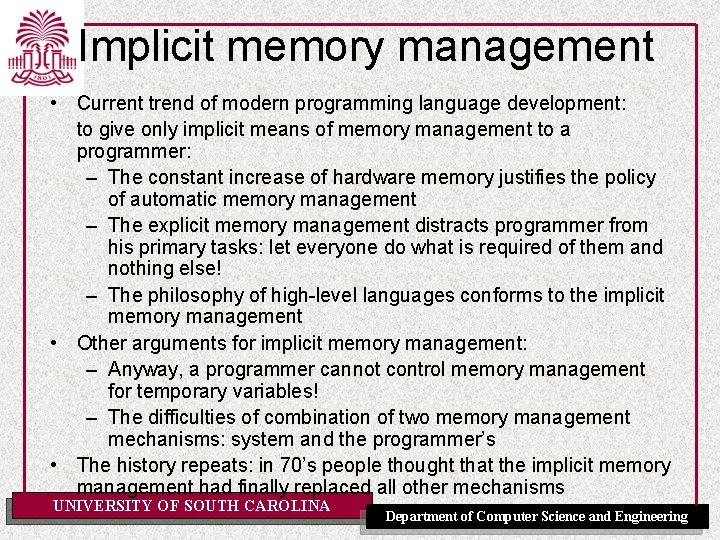

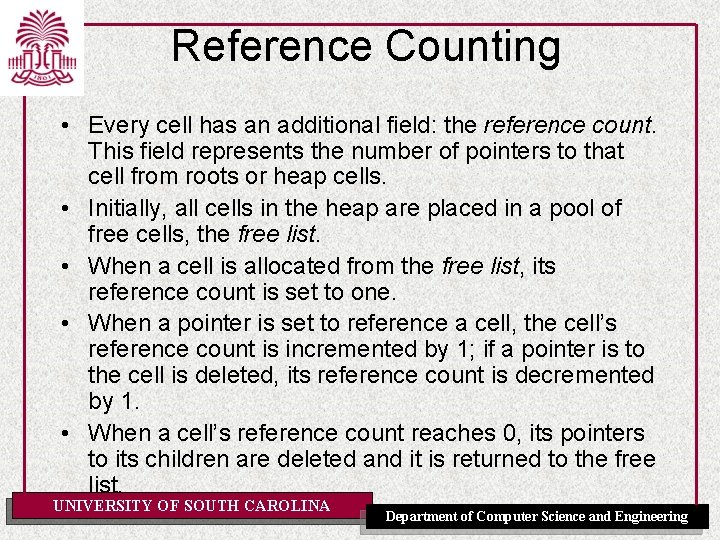

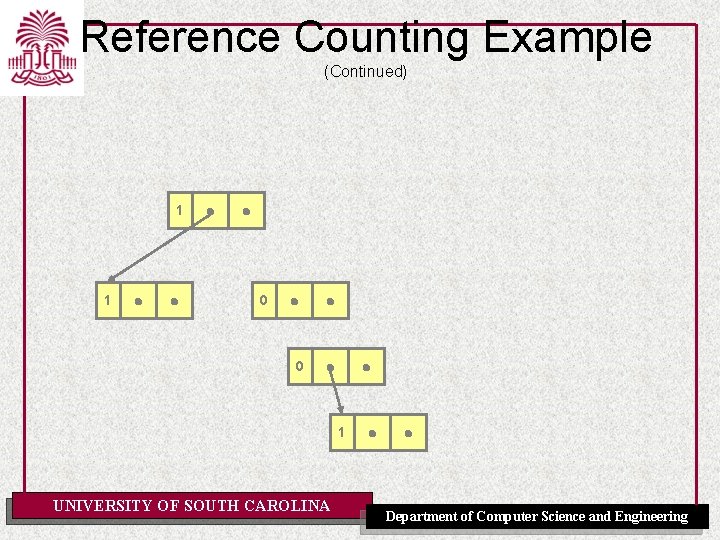

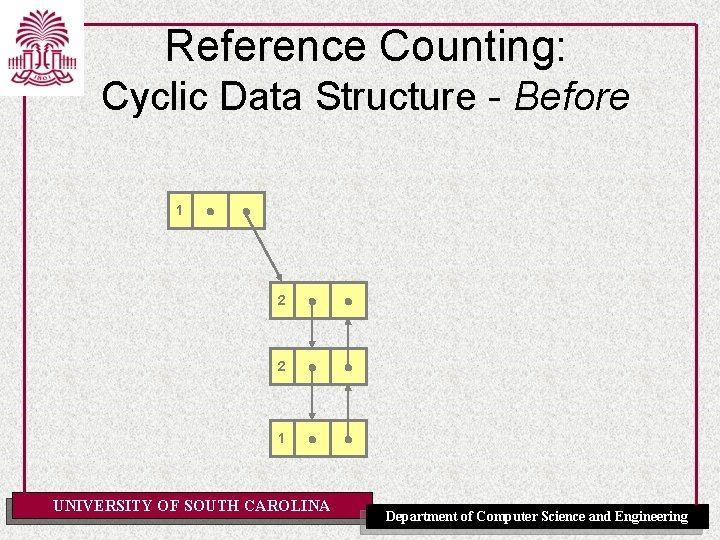

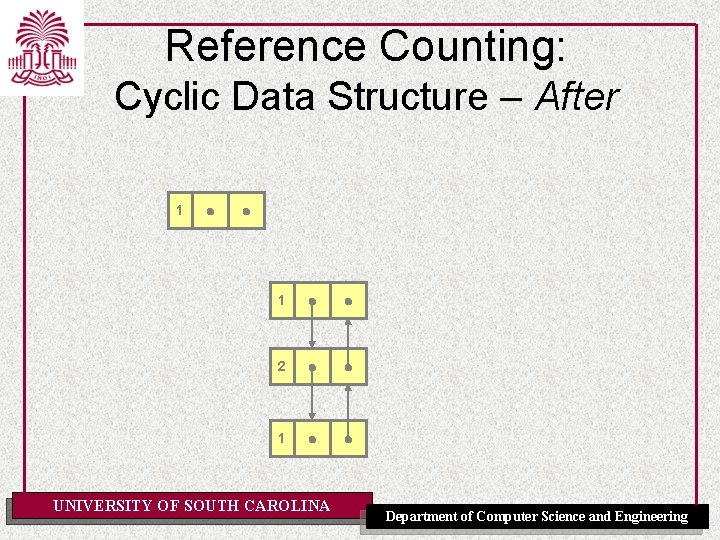

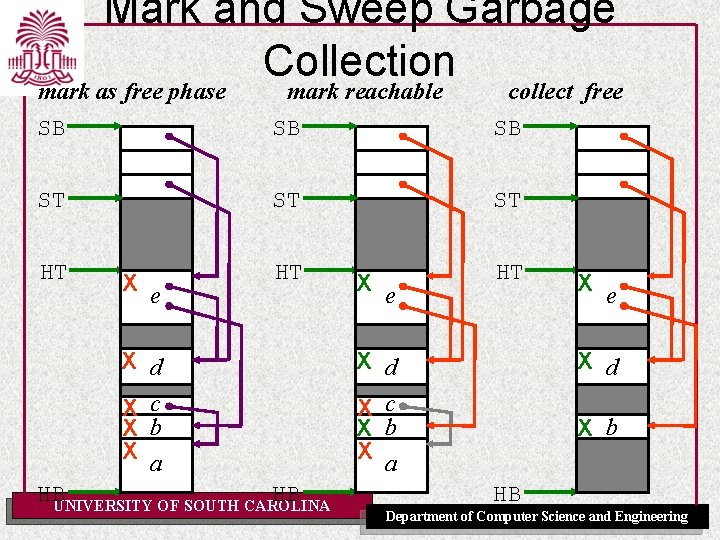

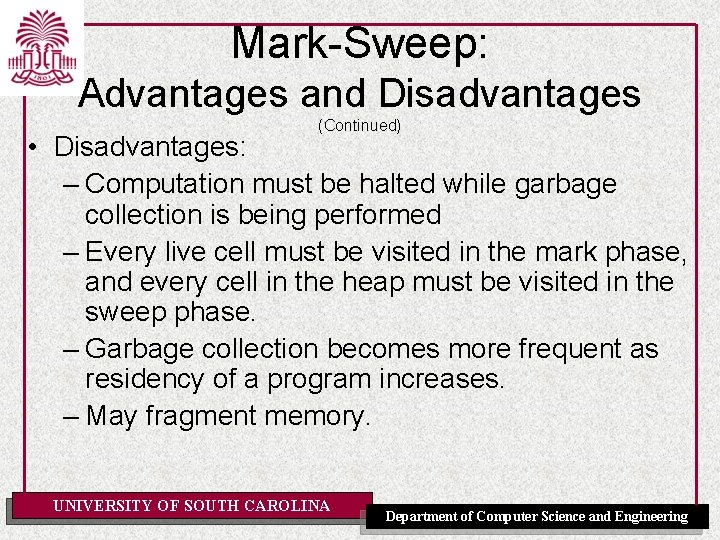

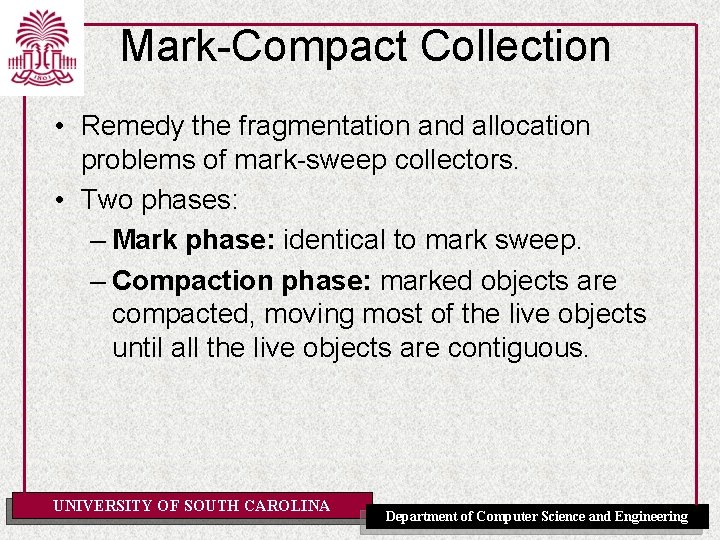

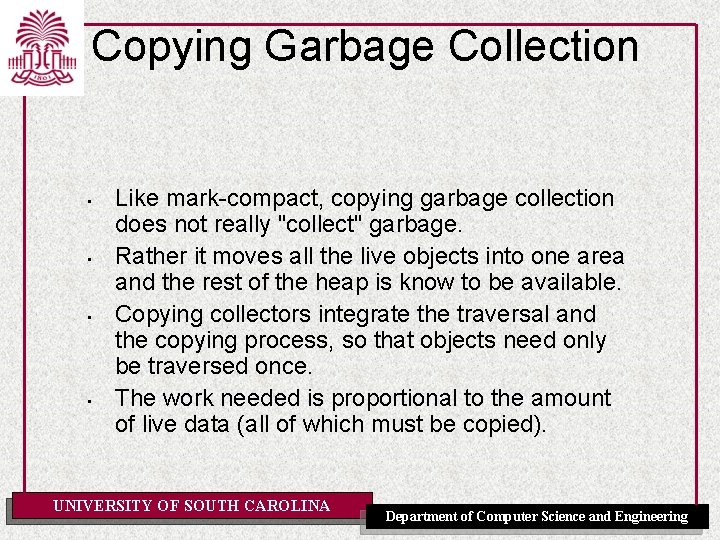

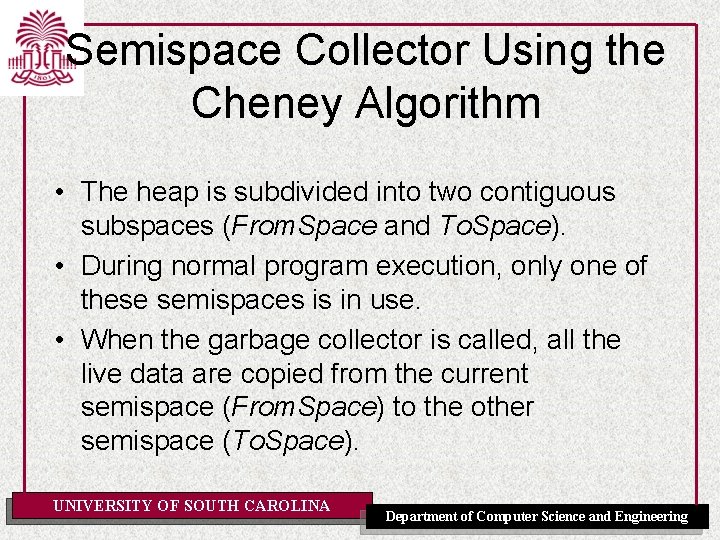

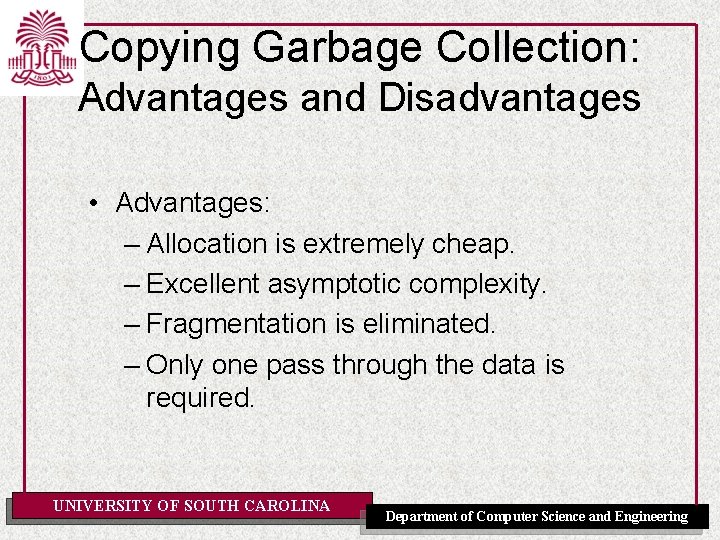

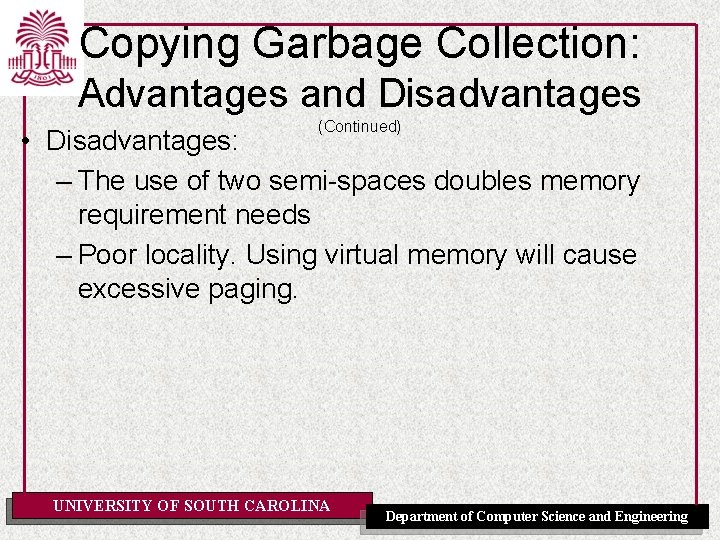

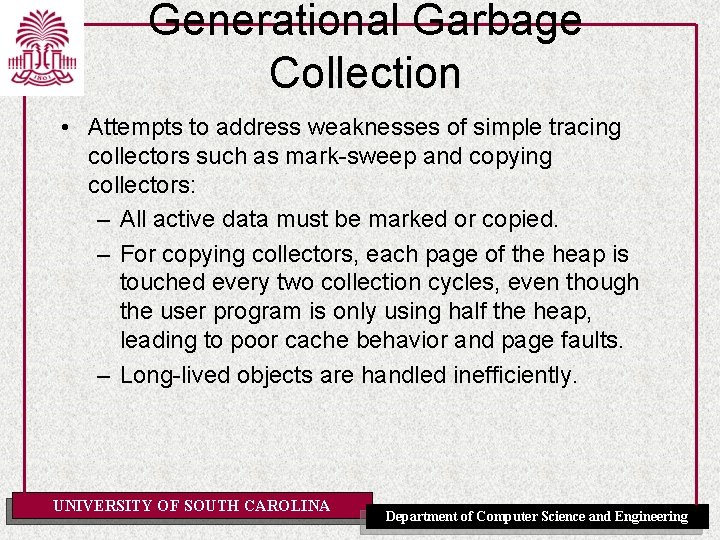

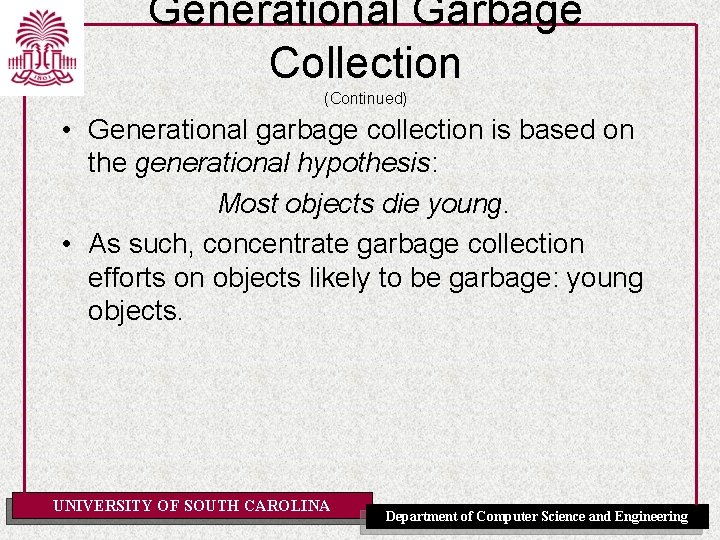

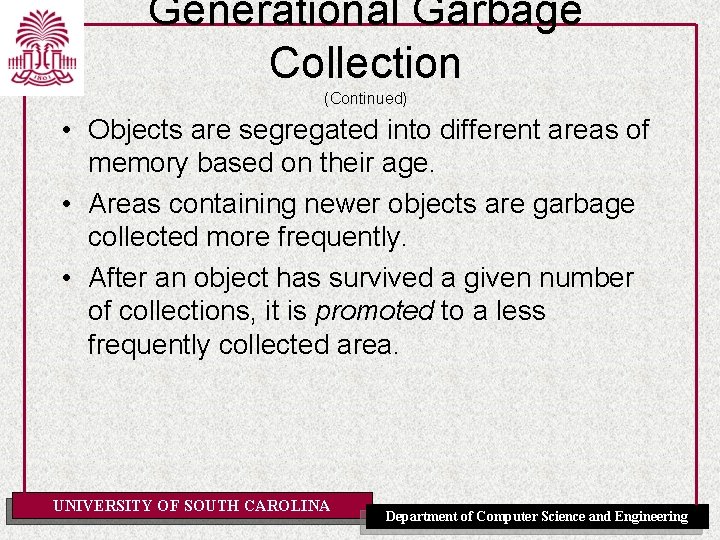

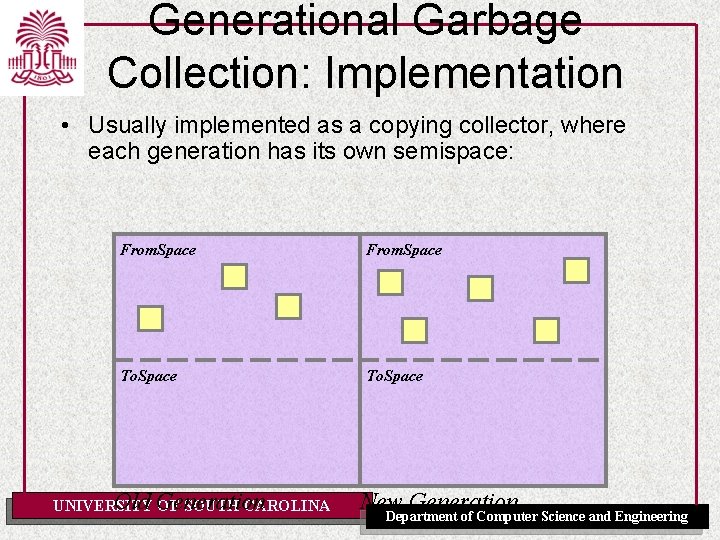

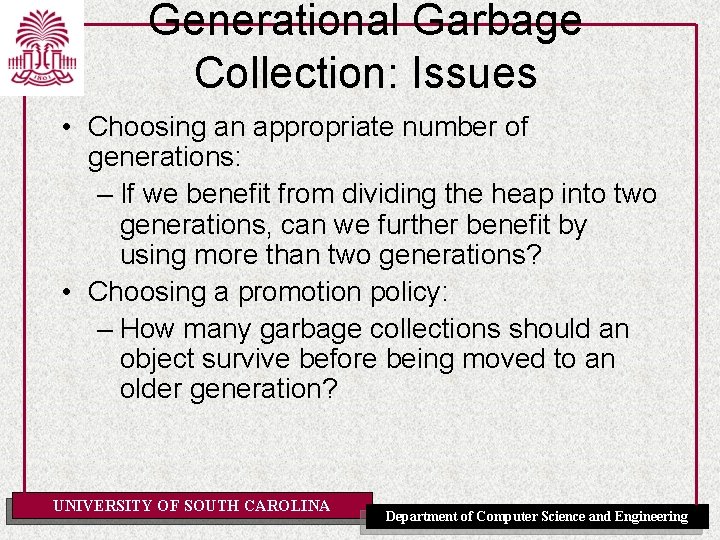

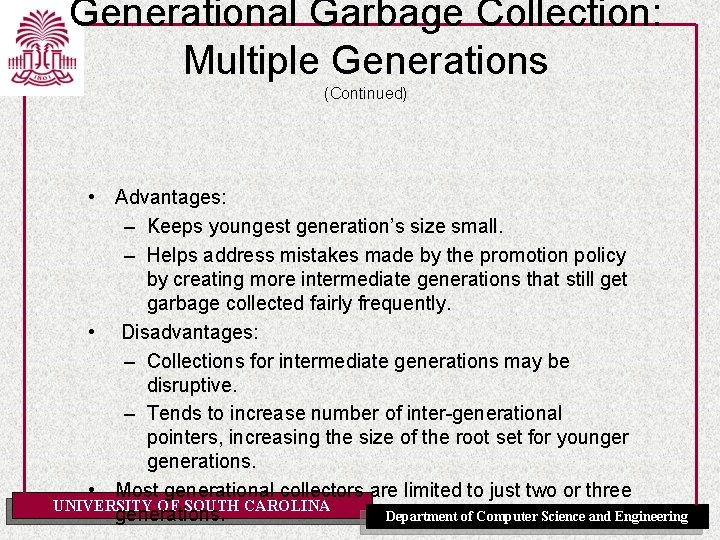

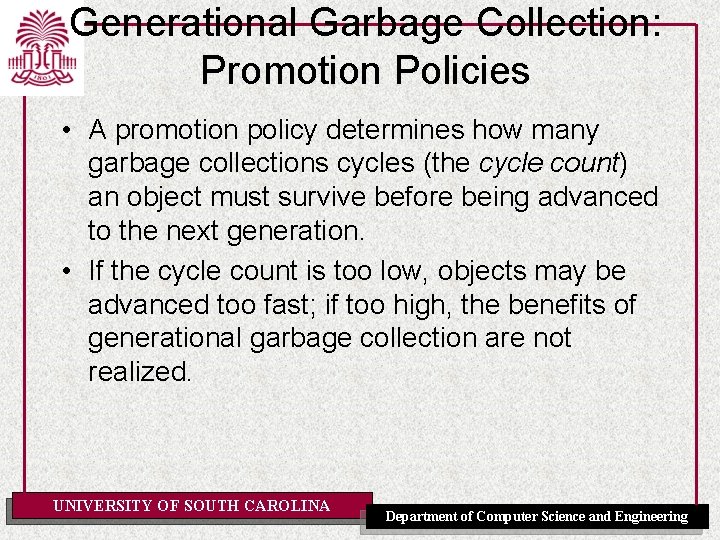

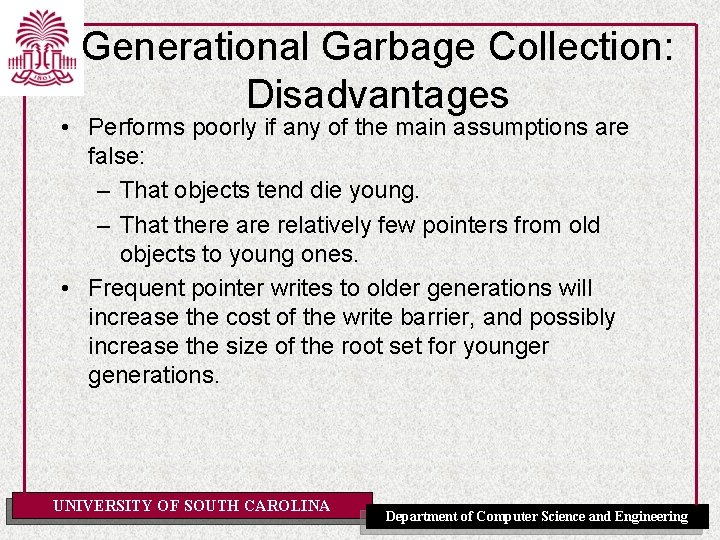

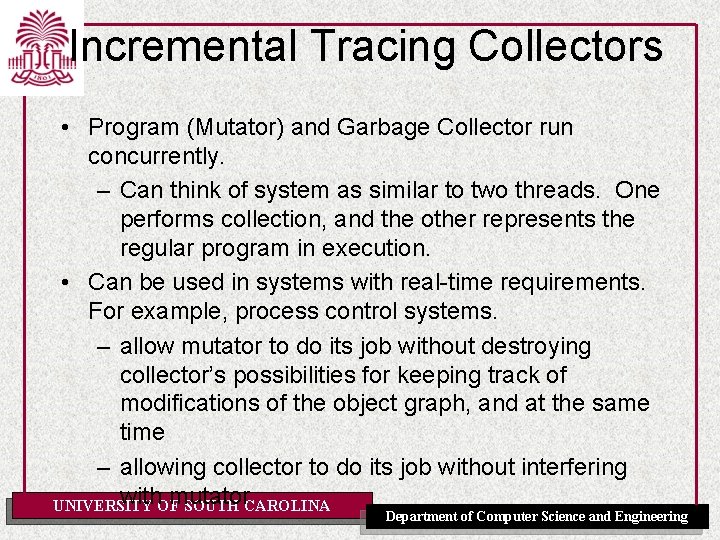

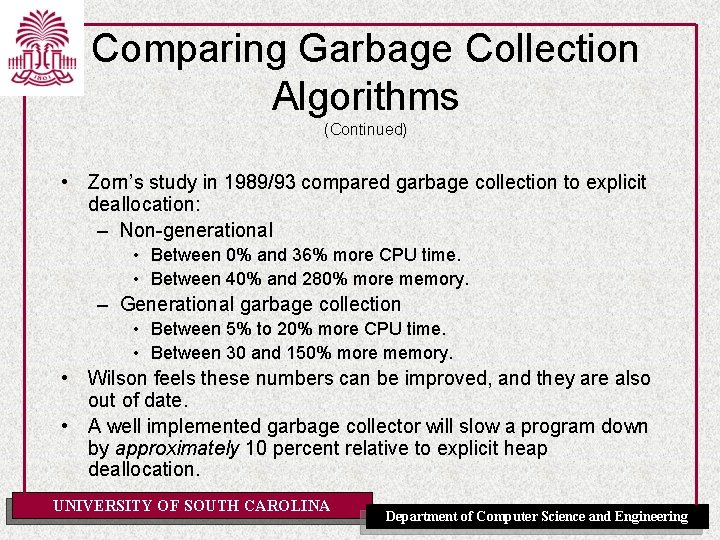

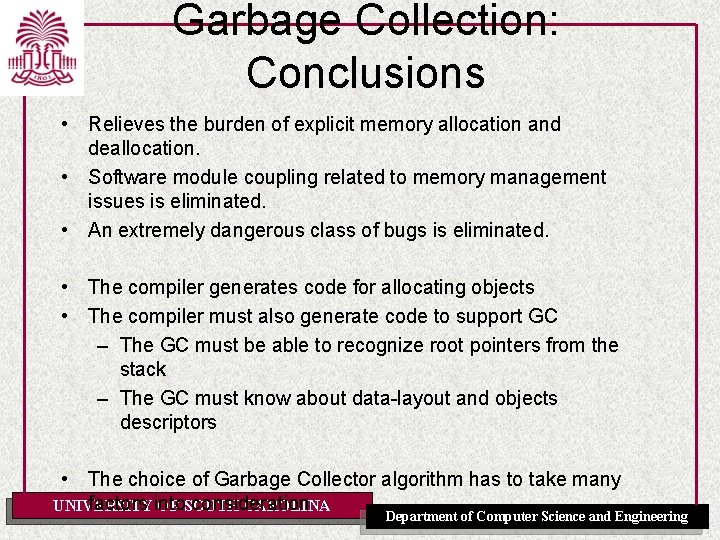

Dynamic Arrays

Dynamic arrays are arrays whose size is not known until run time.

Example 1: Java arrays (all arrays in Java are dynamic)

    char[] buffer;                            // dynamic array: no size given in declaration
    buffer = new char[buffersize];            // array creation at run time determines size
    ...
    for (int i = 0; i < buffer.length; i++)   // can ask for size of an array at run time
        buffer[i] = ' ';

Q: How could we represent Java arrays?

![Dynamic Arrays Java Arrays char buffer buffer new charlen A possible representation Dynamic Arrays Java Arrays char[ ] buffer; buffer = new char[len]; A possible representation](https://slidetodoc.com/presentation_image/1786819c480edab3cd15e8b89c5d411c/image-40.jpg)

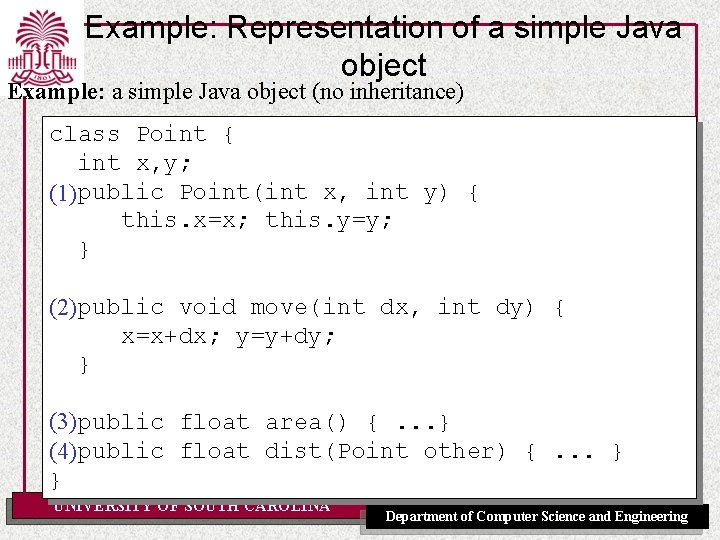

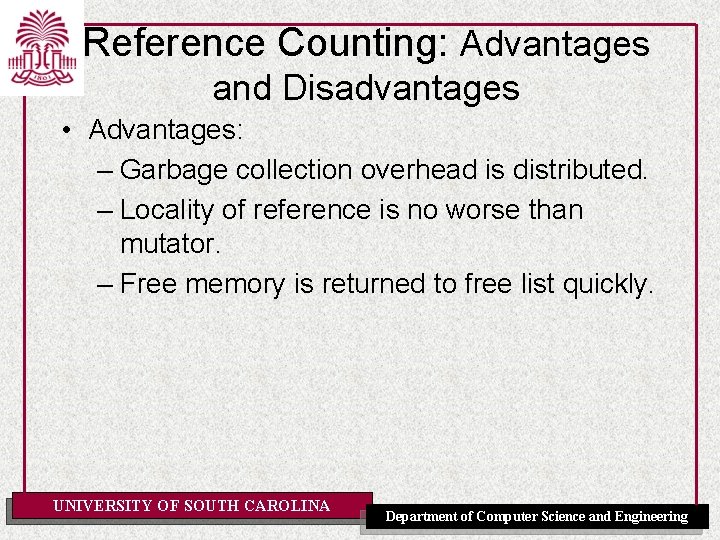

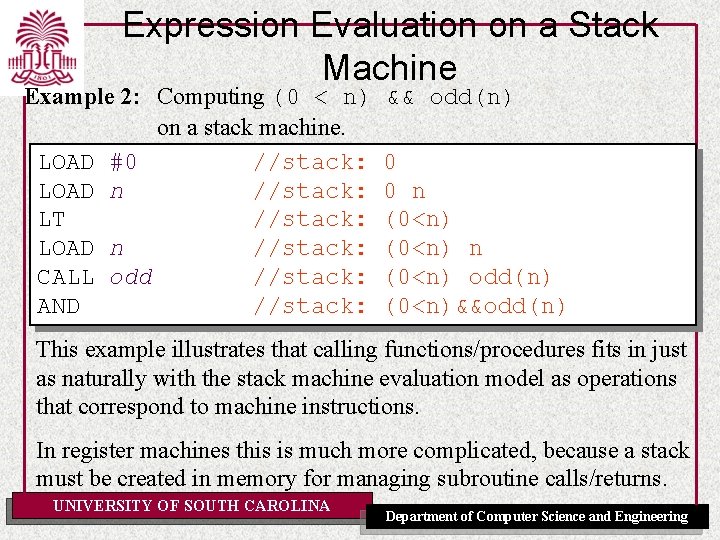

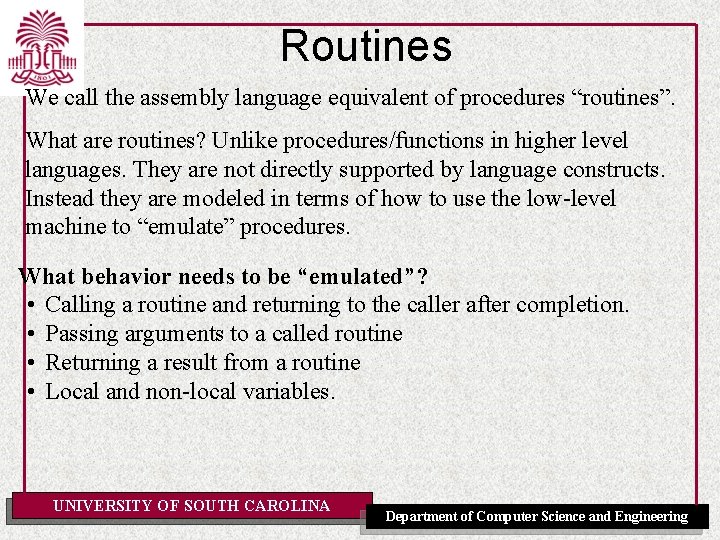

Dynamic Arrays

Java arrays:

    char[] buffer;
    buffer = new char[len];

A possible representation for Java arrays: the variable consists of two words, buffer.length (here 7) and buffer.origin, a pointer to the elements, which are stored elsewhere.

- buffer[0] to buffer[6]: ‘C’ ‘o’ ‘m’ ‘p’ ‘i’ ‘l’ ‘e’

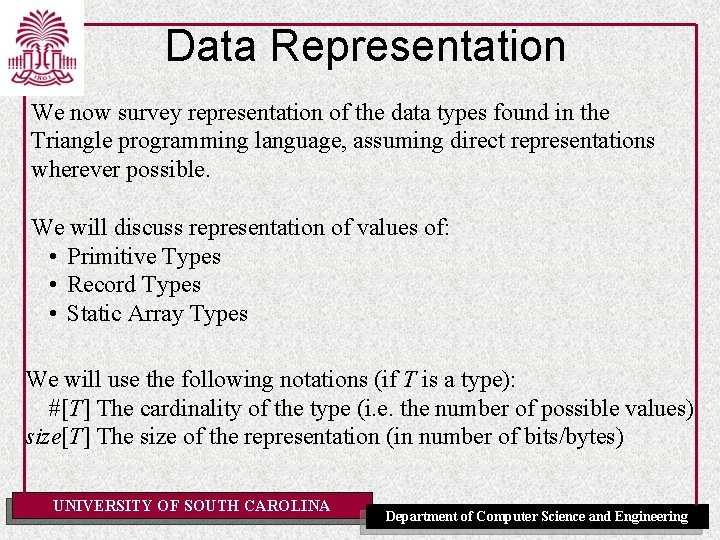

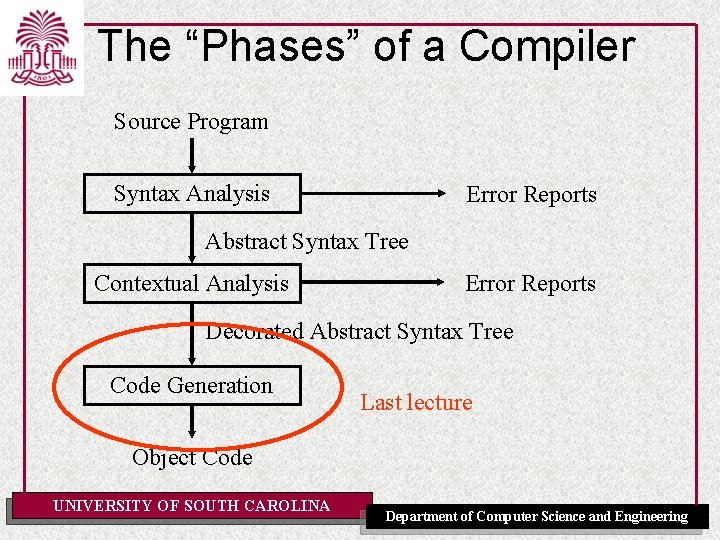

![Dynamic Arrays Java Arrays char buffer buffer new charlen Another possible representation Dynamic Arrays Java Arrays char[ ] buffer; buffer = new char[len]; Another possible representation](https://slidetodoc.com/presentation_image/1786819c480edab3cd15e8b89c5d411c/image-41.jpg)

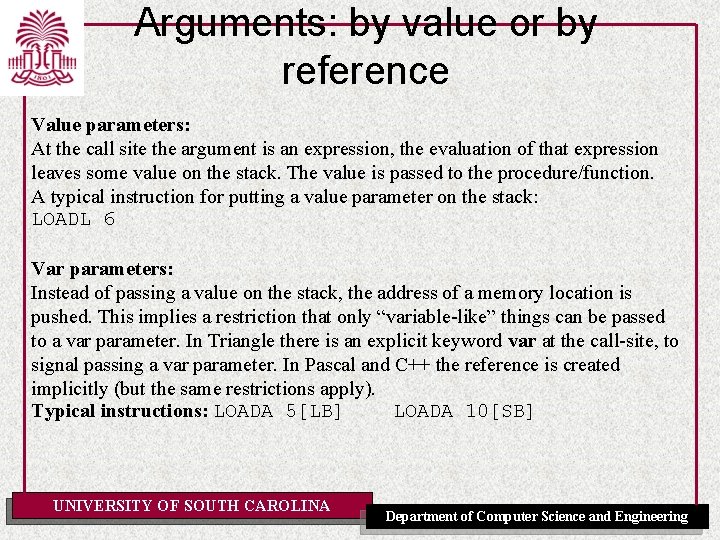

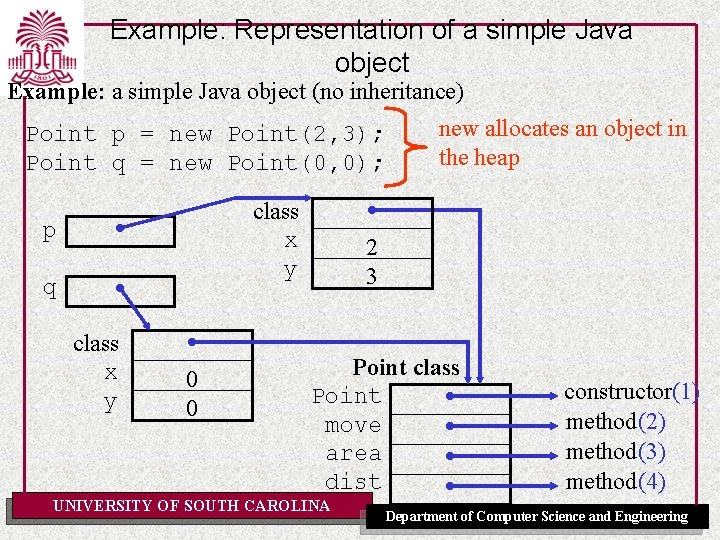

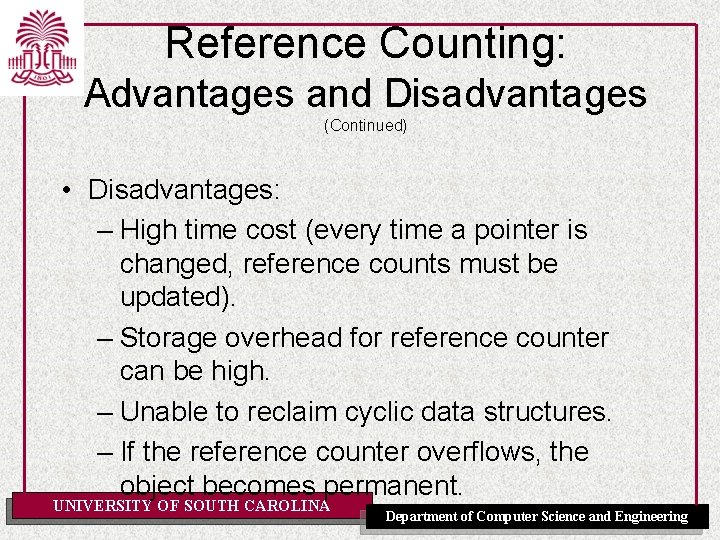

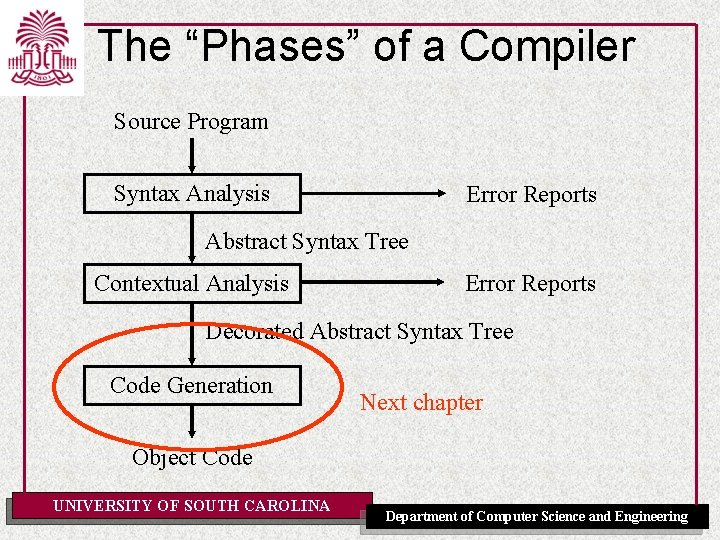

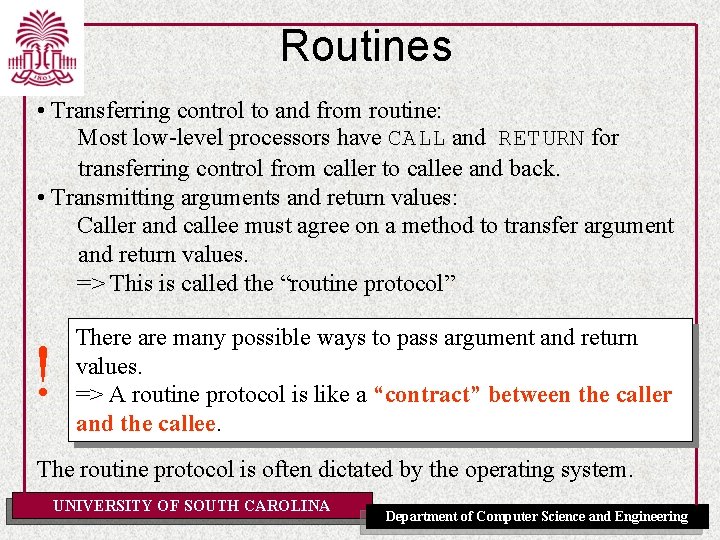

Dynamic Arrays

Java arrays:

    char[] buffer;
    buffer = new char[len];

Another possible representation for Java arrays: the variable buffer is a single pointer to a block that holds buffer.length (here 7) followed by the elements.

- buffer[0] to buffer[6]: ‘C’ ‘o’ ‘m’ ‘p’ ‘i’ ‘l’ ‘e’

Note: In reality Java also stores a type in its representation for arrays, because Java arrays are objects (instances of classes).
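
The second representation, a handle pointing to a block that starts with the length, can be simulated with a flat list standing in for the heap. This is a sketch under stated assumptions: the `heap` list and the function names are hypothetical, allocation simply appends to the end of the list, and the type word that real Java arrays carry is left out.

```python
heap = []  # simulated word-addressed heap


def new_array(length, fill=" "):
    """Allocate a block [length, e0, ..., e(length-1)] and return its address."""
    handle = len(heap)
    heap.append(length)           # word 0 of the block: the array's length
    heap.extend([fill] * length)  # the elements follow the length word
    return handle


def array_length(handle):
    return heap[handle]


def store_element(handle, index, value):
    if not 0 <= index < heap[handle]:
        raise IndexError("array index out of bounds")
    heap[handle + 1 + index] = value  # skip the length word


def load_element(handle, index):
    if not 0 <= index < heap[handle]:
        raise IndexError("array index out of bounds")
    return heap[handle + 1 + index]


buffer = new_array(7)
for i, ch in enumerate("Compile"):
    store_element(buffer, i, ch)
print(array_length(buffer), load_element(buffer, 0), heap)
```

Keeping the length next to the elements means the variable itself is a single constant-size pointer, and the run-time bounds check needs only one extra load.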

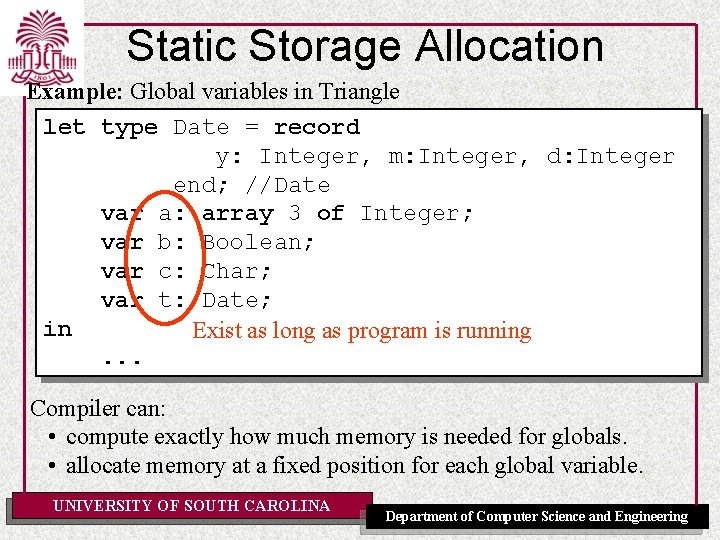

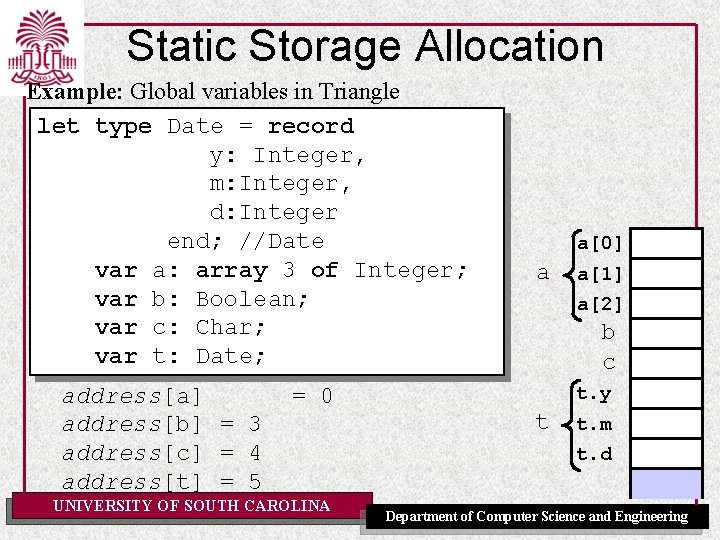

Static Storage Allocation

Example: Global variables in Triangle

    let
      type Date = record y: Integer, m: Integer, d: Integer end; //Date
      var a: array 3 of Integer;
      var b: Boolean;
      var c: Char;
      var t: Date;
    in
      ...

Global variables exist as long as the program is running.

The compiler can:

- compute exactly how much memory is needed for globals.
- allocate memory at a fixed position for each global variable.

Static Storage Allocation Example: Global variables in Triangle let type Date = record y: Integer, m: Integer, d: Integer end; //Date var a: array 3 of Integer; var b: Boolean; var c: Char; var t: Date; address[a] address[b] = 3 address[c] = 4 address[t] = 5 = 0 UNIVERSITY OF SOUTH CAROLINA a[0] a a[1] a[2] b c t. y t t. m t. d Department of Computer Science and Engineering

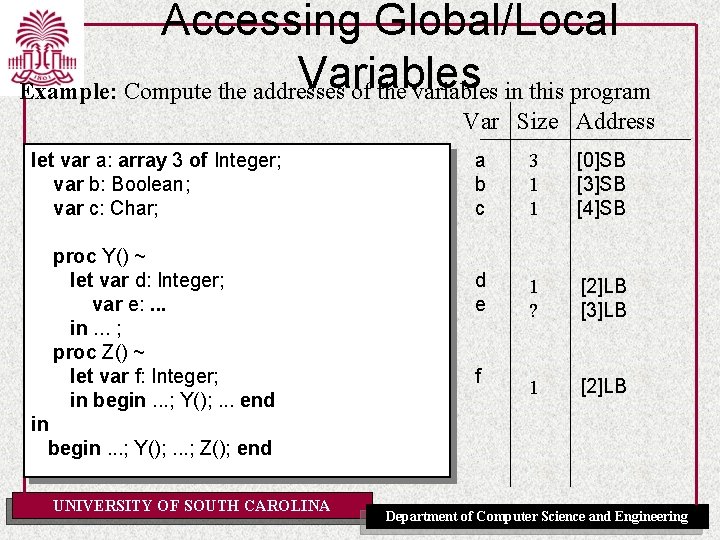

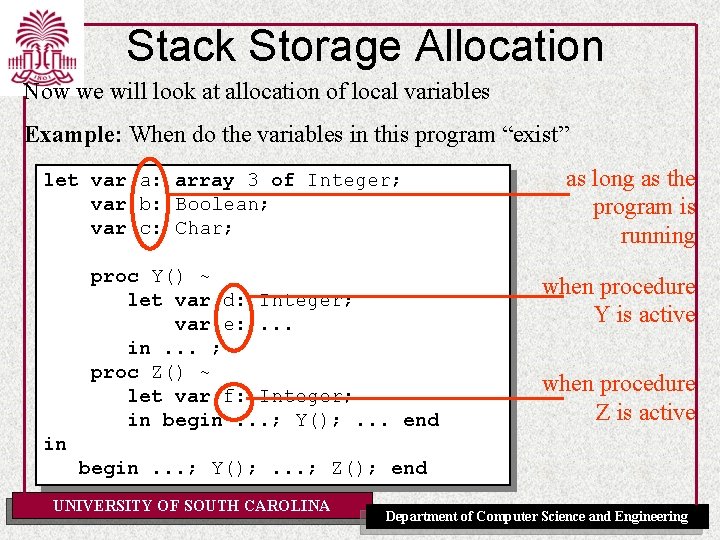

Stack Storage Allocation Now we will look at allocation of local variables Example: When do the variables in this program “exist” let var a: array 3 of Integer; var b: Boolean; var c: Char; proc Y() ~ let var d: Integer; var e: . . . in. . . ; proc Z() ~ let var f: Integer; in begin. . . ; Y(); . . . end as long as the program is running when procedure Y is active when procedure Z is active in begin. . . ; Y(); . . . ; Z(); end UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

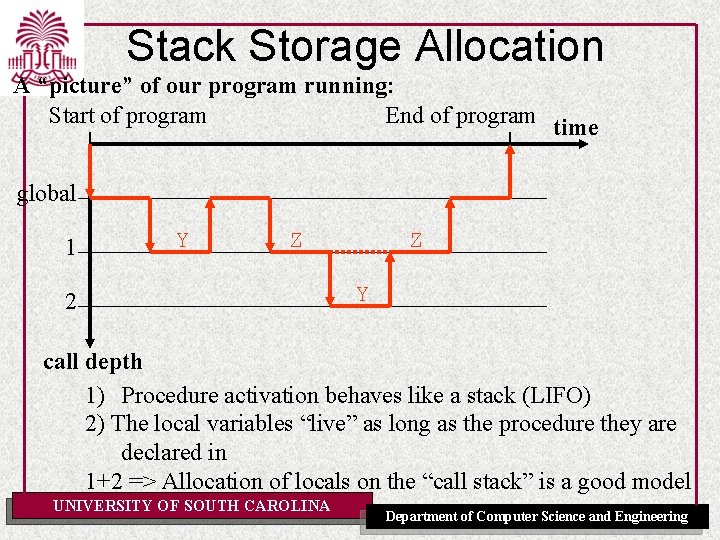

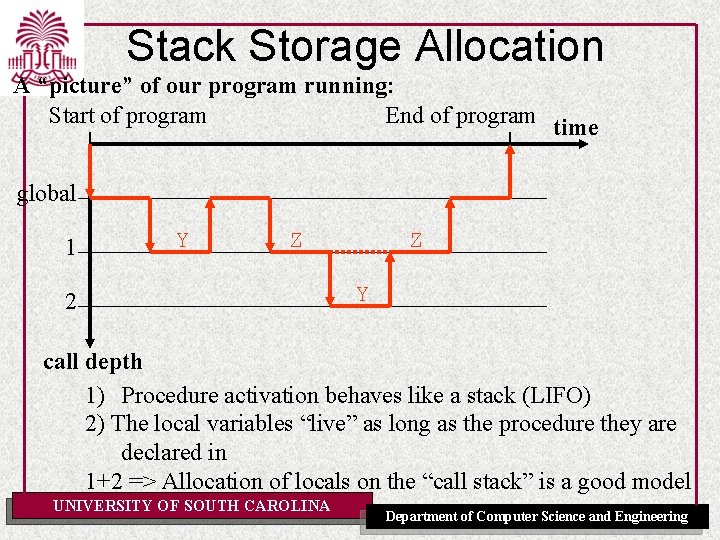

Stack Storage Allocation A “picture” of our program running: Start of program End of program time global 1 Y Z 2 Z Y call depth 1) Procedure activation behaves like a stack (LIFO) 2) The local variables “live” as long as the procedure they are declared in 1+2 => Allocation of locals on the “call stack” is a good model UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

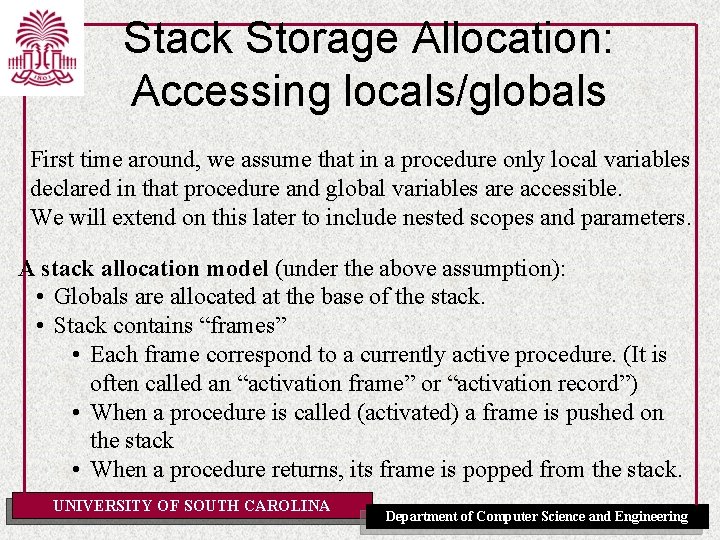

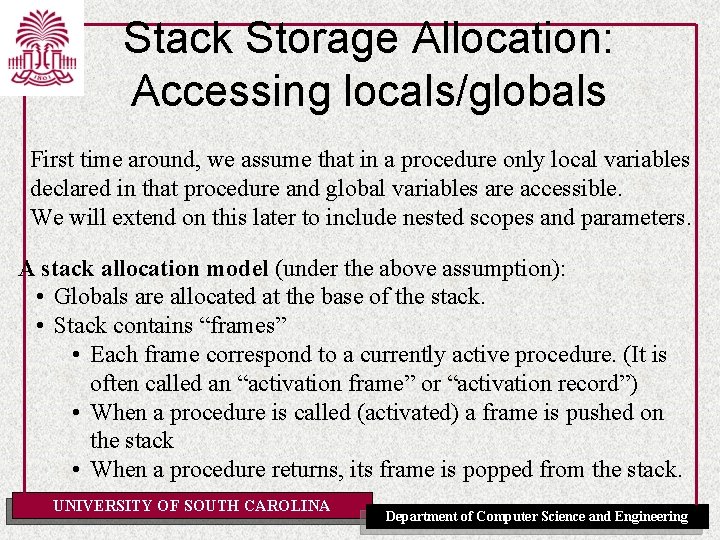

Stack Storage Allocation: Accessing Locals/Globals

First time around, we assume that in a procedure only local variables declared in that procedure and global variables are accessible. We will extend this later to include nested scopes and parameters.

A stack allocation model (under the above assumption):

- Globals are allocated at the base of the stack.
- The stack contains “frames”.
  - Each frame corresponds to a currently active procedure. (It is often called an “activation frame” or “activation record”.)
  - When a procedure is called (activated), a frame is pushed on the stack.
  - When a procedure returns, its frame is popped from the stack.

Stack Storage Allocation: Accessing Locals/Globals SB SB = Stack base LB = Locals base ST = Stack top globals call frame LB ST Dynamic link call frame UNIVERSITY OF SOUTH CAROLINA Department of Computer Science and Engineering

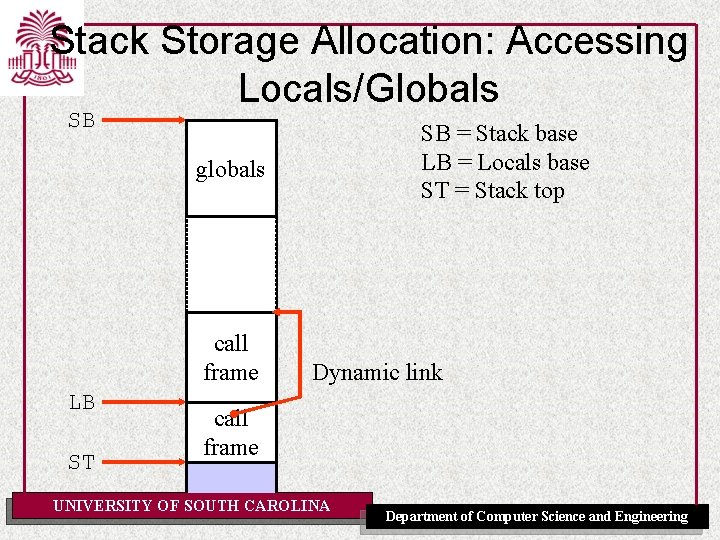

What’s in a Frame?

Frame layout from LB up to ST: link data (dynamic link, return address), then local data (locals).

A frame contains:

- A dynamic link: to the previous frame on the stack (the frame of the caller)
- Return address
- Local variables for the current activation

What Happens when Procedure Is Called?

SB = Stack base, LB = Locals base, ST = Stack top

When procedure f() is called:

- Push new f() call frame on top of the stack.
- Make the dynamic link in the new frame point to the old LB.
- Update LB (becomes old ST).

What Happens when Procedure Returns?

When procedure f() returns:

- Update LB (from dynamic link)
- Update ST (to old LB)

Note: updating ST implicitly “destroys” or “pops” the frame.
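
The call and return steps can be traced with a small simulation. This is a sketch, not TAM: the class `StackMachine`, the return addresses 10, 20, and 30, and the choice of one local per procedure are all hypothetical, while the frame layout (dynamic link, return address, locals) follows the frame description above.

```python
class StackMachine:
    """Toy data stack with globals at the base and one frame per active call."""

    def __init__(self, n_globals):
        self.stack = [0] * n_globals  # globals occupy addresses 0..n_globals-1
        self.LB = 0                   # locals base; no procedure frame yet

    @property
    def ST(self):
        return len(self.stack)        # stack top: first free address

    def call(self, return_address, n_locals):
        old_lb = self.LB
        self.LB = self.ST             # new frame starts at the old stack top
        self.stack.append(old_lb)             # dynamic link -> caller's frame
        self.stack.append(return_address)     # where to resume in the caller
        self.stack.extend([0] * n_locals)     # space for the locals

    def ret(self):
        dynamic_link = self.stack[self.LB]
        return_address = self.stack[self.LB + 1]
        del self.stack[self.LB:]      # ST := old LB, which pops the frame
        self.LB = dynamic_link        # LB := dynamic link
        return return_address


m = StackMachine(n_globals=5)         # a (3 words), b, c
m.call(return_address=10, n_locals=1)     # main program calls Y
lb_y_from_main = m.LB
m.ret()
m.call(return_address=20, n_locals=1)     # main program calls Z
m.call(return_address=30, n_locals=1)     # Z calls Y
lb_y_from_z = m.LB
resume_in_z = m.ret()
m.ret()
print(lb_y_from_main, lb_y_from_z, resume_in_z, m.LB, m.ST)
```

The two activations of Y get different LB values, which is why locals have to be addressed relative to LB rather than at fixed positions.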

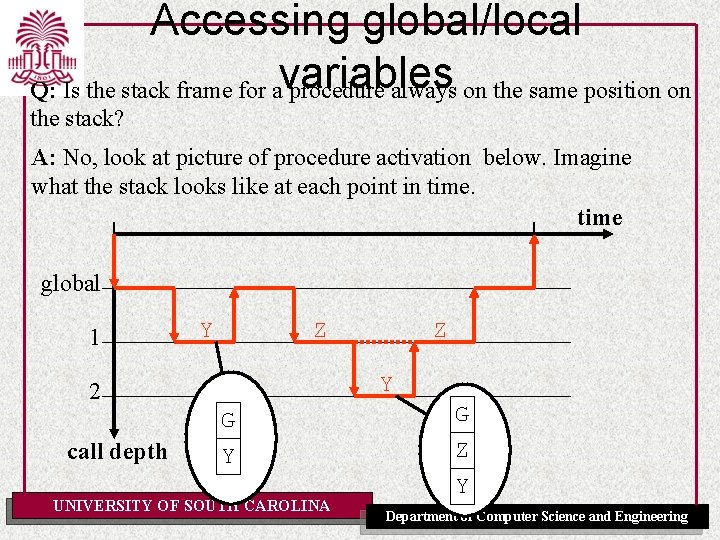

Accessing Global/Local Variables

Q: Is the stack frame for a procedure always at the same position on the stack?
A: No, look at the picture of procedure activations below. Imagine what the stack looks like at each point in time.
[Figure: call depth over time for the global code G and the procedures Y and Z.]

Accessing Global/Local Variables

RECAP: We are still working under the assumption of a “flat” block structure.
How do we access global and local variables on the stack?
• The global frame is always at the same place in the stack. => Address global variables relative to SB. A typical instruction to access a global variable: LOAD 4[SB]
• Frames are not always at the same position in the stack; the position depends on the number of frames already on the stack. => Address local variables relative to LB. A typical instruction to access a local variable: LOAD 3[LB]

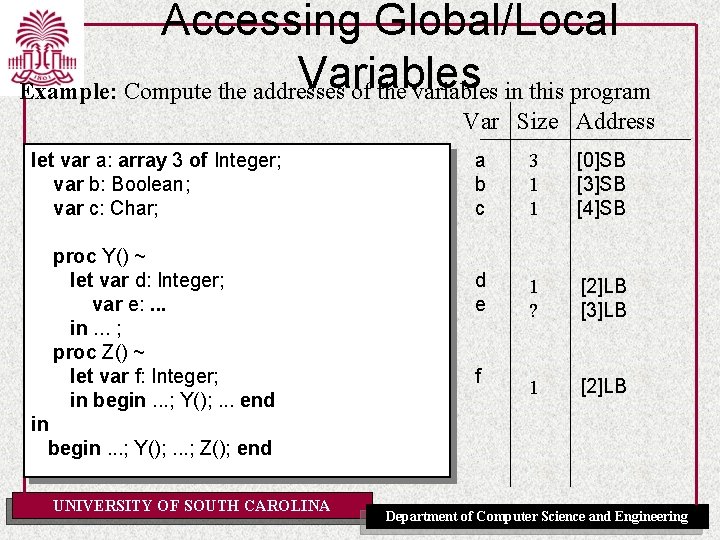

Accessing Global/Local Variables

Example: Compute the addresses of the variables in this program.

    let
      var a: array 3 of Integer;
      var b: Boolean;
      var c: Char;
      proc Y() ~ let var d: Integer; var e: . . . in . . . ;
      proc Z() ~ let var f: Integer in begin . . . ; Y(); . . . end
    in begin . . . ; Y(); . . . ; Z(); end

| Var | Size | Address |
|---|---|---|
| a | 3 | 0[SB] |
| b | 1 | 3[SB] |
| c | 1 | 4[SB] |
| d | 1 | 2[LB] |
| e | ? | 3[LB] |
| f | 1 | 2[LB] |
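The address table can be reproduced mechanically: each variable gets the next free offset in its frame. The sketch below assumes that locals start at offset 2 because the link data (dynamic link and return address) occupies the first two words of a frame in the flat model; the function name `assign_addresses` and the placeholder size for `e` are illustrative only.

```python
def assign_addresses(decls, base, first_offset):
    """decls: list of (name, size in words). Returns {name: 'offset[base]'}."""
    addresses, offset = {}, first_offset
    for name, size in decls:
        addresses[name] = f"{offset}[{base}]"
        offset += size                 # the next variable follows this one
    return addresses


LINK_DATA_SIZE = 2   # dynamic link + return address (flat block structure)
E_SIZE = 1           # placeholder: the slide leaves the size of e open;
                     # e's own address does not depend on it

globals_ = assign_addresses([("a", 3), ("b", 1), ("c", 1)], "SB", 0)
locals_y = assign_addresses([("d", 1), ("e", E_SIZE)], "LB", LINK_DATA_SIZE)
locals_z = assign_addresses([("f", 1)], "LB", LINK_DATA_SIZE)
```

Note that `d` and `f` share the address 2[LB]: they live in different frames, so the same offset is reused.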

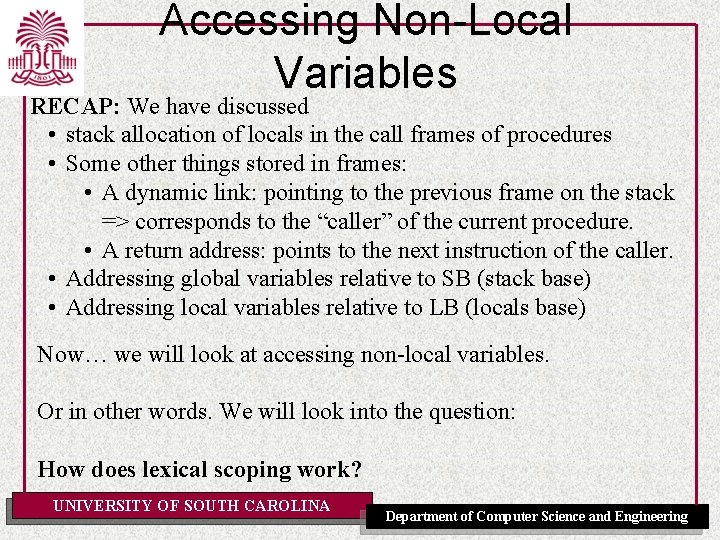

Accessing Non-Local Variables

RECAP: We have discussed
• Stack allocation of locals in the call frames of procedures
• Some other things stored in frames:
– A dynamic link: points to the previous frame on the stack => corresponds to the “caller” of the current procedure.
– A return address: points to the next instruction of the caller.
• Addressing global variables relative to SB (stack base)
• Addressing local variables relative to LB (locals base)
Now we will look at accessing non-local variables. In other words, we will look into the question: how does lexical scoping work?

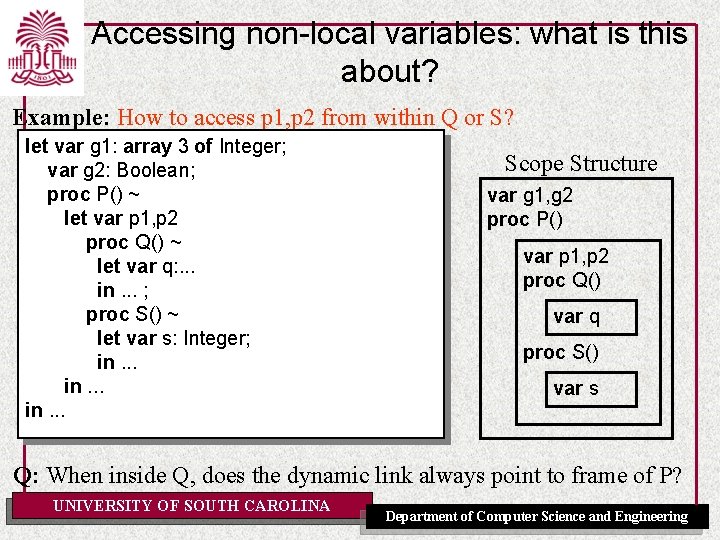

Accessing non-local variables: what is this about?

Example: How to access p1, p2 from within Q or S?

    let
      var g1: array 3 of Integer;
      var g2: Boolean;
      proc P() ~
        let
          var p1, p2
          proc Q() ~ let var q: . . . in . . . ;
          proc S() ~ let var s: Integer in . . .
        in . . .

Scope structure:

    var g1, g2
    proc P()
      var p1, p2
      proc Q()
        var q
      proc S()
        var s

Q: When inside Q, does the dynamic link always point to the frame of P?

Accessing non-local variables

Scope structure:

    var g1, g2
    proc P()
      var p1, p2
      proc Q()
        var q
      proc S()
        var s

Q: When inside Q, does the dynamic link always point to a frame of P?
A: No! Consider the following scenarios.
[Figure: three call scenarios over time, starting from the global code G, in which Q is reached through different chains of calls to P, S, and Q.]

Accessing non-local variables

We cannot rely on the dynamic link to get to the lexically enclosing frame(s) of a procedure. => Another item is added to the link data: the static link.
The static link in a frame points to the frame of the lexically enclosing procedure, somewhere higher on the stack.
Registers L1, L2, etc. are used to point to the lexically enclosing frames (L1 is the most local). These, together with LB and SB, are called the display registers.
Typical instructions for accessing a non-local variable look like: LOAD 4[L1] and LOAD 3[L2]

What’s in a Frame (revised)?

A frame contains
• A dynamic link: to the next frame on the stack (the frame of the caller)
• A static link: to the frame of the lexically enclosing procedure
• Return address
• Local variables for the current activation
The dynamic link, static link, and return address are the link data; the local variables are the local data.
[Figure: frame layout from LB to ST: dynamic link, static link, return address, locals.]

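Accessing non-local variables

[Figure: stack for the call sequence G, P, S, Q, with Q() and S() declared inside P(). From SB: globals, P() frame, S() frame, Q() frame (LB), ST. The dynamic link of each frame points to the frame of its caller; the static links of the S() and Q() frames both point to the P() frame, which L1 designates while Q is running.]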

Accessing variables, addressing schemas overview

We now have a complete picture of the different kinds of addresses that are used for accessing variables stored on the stack.

| Type of variable | Load instruction |
|---|---|
| Global | LOAD offset[SB] |
| Local | LOAD offset[LB] |
| Non-local, 1 level up | LOAD offset[L1] |
| Non-local, 2 levels up | LOAD offset[L2] |
| . . . | . . . |
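One way to picture what L1, L2, ... stand for is to follow static links from the current frame. The sketch below is a simplification under assumptions: frames are Python objects rather than stack words, the globals are modeled as an outermost frame `G`, and the stored values are made up. It reuses the call chain G, P, S, Q from the earlier slides.

```python
class Frame:
    """One activation record: link data plus locals keyed by offset."""

    def __init__(self, name, static_link, dynamic_link, local_vars):
        self.name = name
        self.static_link = static_link      # frame of the enclosing procedure
        self.dynamic_link = dynamic_link    # frame of the caller
        self.local_vars = local_vars        # {offset: value}


def load_nonlocal(current, levels_up, offset):
    """Mimic LOAD offset[Ln]: follow n static links, then index that frame."""
    frame = current
    for _ in range(levels_up):
        frame = frame.static_link
    return frame.local_vars[offset]


# Call chain: G calls P, P calls S, S calls Q.
# Q and S are both declared inside P, so both use P's frame as static link.
g = Frame("G", None, None, {0: 7})          # hypothetical global value
p = Frame("P", g, g, {3: 11, 4: 22})        # hypothetical p1 and p2
s = Frame("S", p, p, {3: 5})
q = Frame("Q", p, s, {3: 9})

p1_seen_from_q = load_nonlocal(q, 1, 3)     # one level up: P's frame
```

Inside Q the dynamic link leads to S, but the static link leads to P, which is why `p1` is found at one level up regardless of who called Q.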

Routines

We call the assembly language equivalent of procedures “routines”.
In the preceding material we already learned some things about the implementation of routines in terms of the stack allocation model:
• Addressing locals and globals through LB, L1, L2, … and SB
• Link data: static link, dynamic link, return address
We have yet to learn how the static link and the L1, L2, etc. registers are set up.
We have yet to learn how routines can receive arguments from and return results to their caller.

Routines

We call the assembly language equivalent of procedures “routines”.
What are routines? Unlike procedures/functions in higher-level languages, they are not directly supported by language constructs. Instead, they are modeled in terms of how to use the low-level machine to “emulate” procedures.
What behavior needs to be “emulated”?
• Calling a routine and returning to the caller after completion
• Passing arguments to a called routine
• Returning a result from a routine
• Local and non-local variables

Routines

• Transferring control to and from a routine: most low-level processors have CALL and RETURN instructions for transferring control from caller to callee and back.
• Transmitting arguments and return values: caller and callee must agree on a method to transfer arguments and return values. => This is called the “routine protocol”!
There are many possible ways to pass arguments and return values. => A routine protocol is like a “contract” between the caller and the callee.
The routine protocol is often dictated by the operating system.

Routine Protocol Examples

The routine protocol depends on the machine architecture (e.g. stack machine versus register machine).
Example 1: A possible routine protocol for a register machine (RM)
– Passing of arguments: first argument in R1, second argument in R2, etc.
– Passing of return value: return the result (if any) in R0
Note: this example is simplistic:
– What if there are more arguments than registers?
– What if the representation of an argument is larger than can be stored in a register?
For register machines, the protocol usually also specifies who (caller or callee) is responsible for saving the contents of registers.

Routine Protocol Examples

Example 2: A possible routine protocol for a stack machine
– Passing of arguments: pass arguments on the top of the stack.
– Passing of return value: leave the return value on the stack top, in place of the arguments.
Note: this protocol puts no bound on the number of arguments or on the size of the arguments.
Most microprocessors have registers as well as a stack. Such “mixed” machines also often use a protocol like this one.
The “Triangle Abstract Machine” (TAM) also adopts this routine protocol. We now look at it in detail (in TAM).

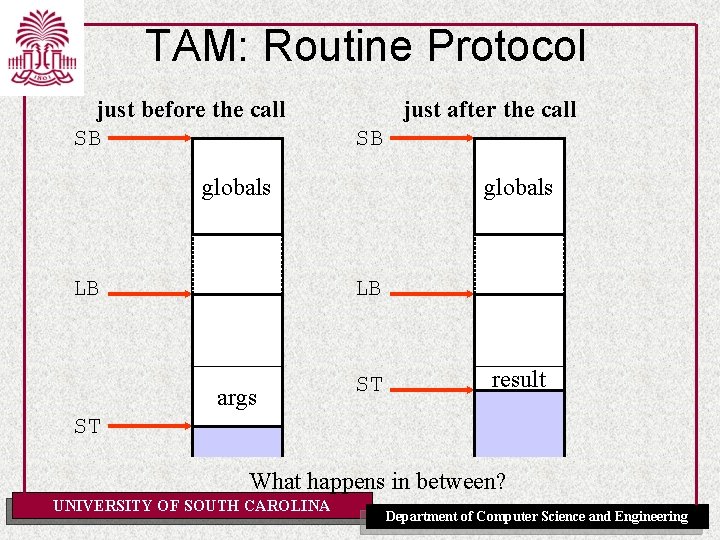

TAM: Routine Protocol

[Figure: two stack snapshots. Just before the call: globals at SB, then the caller’s frame (LB), with the arguments on top (ST). Just after the call: the arguments have been replaced by the result (ST).]
What happens in between?

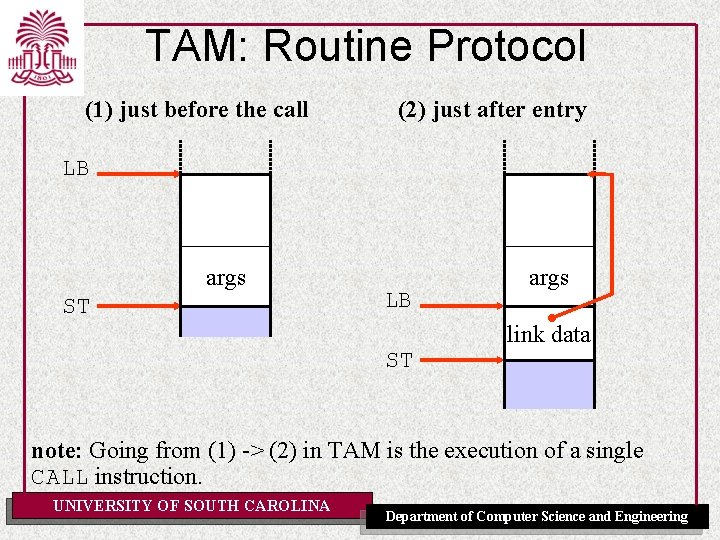

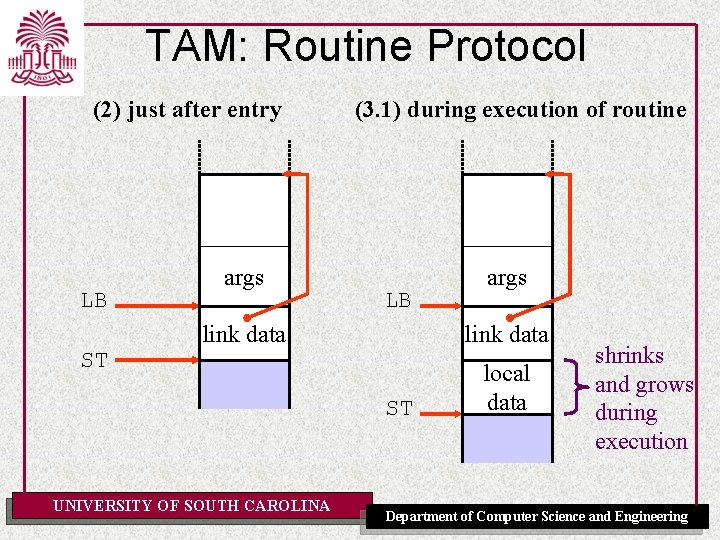

TAM: Routine Protocol

[Figure: (1) just before the call: the arguments are on top of the caller’s stack (ST). (2) Just after entry: the link data has been pushed above the arguments; LB points to the link data and ST to the word after it.]
Note: going from (1) to (2) in TAM is the execution of a single CALL instruction.

TAM: Routine Protocol

[Figure: (2) just after entry: args, then link data (LB), then ST. (3.1) During execution of the routine: args, link data (LB), local data, ST.]
The local data shrinks and grows during execution.

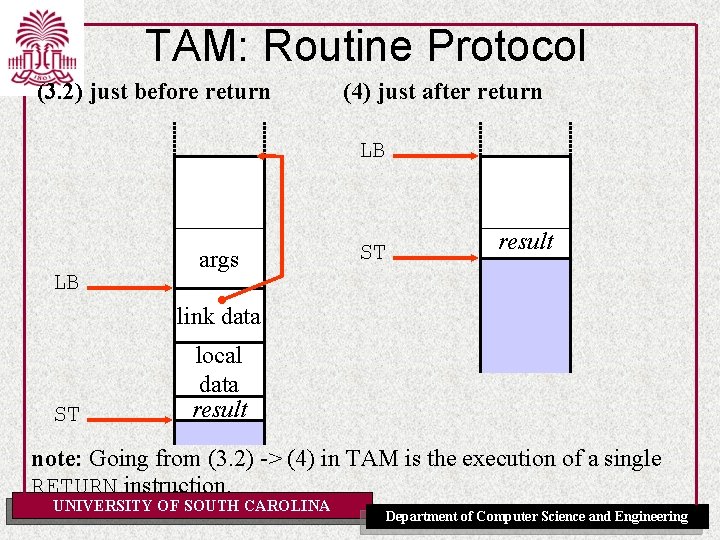

TAM: Routine Protocol

[Figure: (3.2) just before return: args, link data (LB), local data, with the result on top (ST). (4) Just after return: the result has replaced the arguments on top of the caller’s stack; LB points to the caller’s frame again.]
Note: going from (3.2) to (4) in TAM is the execution of a single RETURN instruction.

TAM: Routine Protocol, Example Triangle Program

    let
      var g: Integer;
      func F(m: Integer, n: Integer) : Integer ~ m*n ;
      proc W(i: Integer) ~
        let const s ~ i*i
        in begin
          putint(F(i, s));
          putint(F(s, s))
        end
    in begin
      getint(var g);
      W(g+1)
    end

TAM: Routine Protocol, Example

    let var g: Integer; . . .
    in begin getint(var g); W(g+1) end

TAM assembly code:

    PUSH 1          -- expand globals: make space for g
    LOADA 0[SB]     -- push address of g
    CALL getint     -- read integer into g
    LOAD 0[SB]      -- push value of g
    CALL succ       -- add 1
    CALL(SB) W      -- call W (using SB as static link)
    POP 1           -- contract (remove) globals
    HALT

TAM: Routine Protocol, Example

    func F(m: Integer, n: Integer) : Integer ~ m*n ;

    F:  LOAD -2[LB]     -- push value of argument m
        LOAD -1[LB]     -- push value of argument n
        CALL mult       -- multiply m and n
        RETURN(1) 2     -- return, replacing the 2-word argument pair by the 1-word result

The arguments are addressed relative to LB (negative offsets!).
RETURN(1) 2 carries the size of the return value and the size of the argument space, both needed for updating the stack on return from the call.

TAM: Routine Protocol, Example

    proc W(i: Integer) ~ let const s ~ i*i in . . . F(i, s) . . .

    W:  LOAD -1[LB]     -- push value of argument i
        LOAD -1[LB]     -- push value of argument i again
        CALL mult       -- multiply: result is value of s
        LOAD -1[LB]     -- push value of argument i
        LOAD 3[LB]      -- push value of local s
        CALL(SB) F      -- call F (use SB as static link)
        …
        RETURN(0) 1     -- return, replacing the 1-word argument by a 0-word result

TAM: Routine Protocol, Example

    let var g: Integer; . . .
    in begin getint(var g); W(g+1) end

Stack contents, listed from SB to ST:
• After reading g: g = 3
• Just before the call to W: g = 3, arg #1 = 4

TAM: Routine Protocol, Example

    proc W(i: Integer) ~ let const s ~ i*i in . . . F(i, s) . . .

Stack contents, listed from SB to ST (LB points to the link data of W’s frame, which holds the static link and the dynamic link):
• Just after entering W: g = 3, arg #1 = 4, link data
• Just after computing s: g = 3, arg i = 4, link data, s = 16
• Just before calling F: g = 3, arg i = 4, link data, s = 16, arg #1 = 4, arg #2 = 16

TAM: Routine Protocol, Example

    func F(m: Integer, n: Integer) : Integer ~ m*n ;

Stack contents, listed from SB to ST:
• Just before calling F (LB at W’s link data): g = 3, arg i = 4, link data, s = 16, arg #1 = 4, arg #2 = 16
• Just after entering F (LB at F’s link data): g = 3, arg i = 4, link data, s = 16, arg m = 4, arg n = 16, link data
• Just before return from F (LB at F’s link data): g = 3, arg i = 4, link data, s = 16, arg m = 4, arg n = 16, link data, 64

TAM: Routine Protocol, Example

    func F(m: Integer, n: Integer) : Integer ~ m*n ;

Stack contents, listed from SB to ST:
• Just before return from F (LB at F’s link data): g = 3, arg i = 4, link data, s = 16, arg m = 4, arg n = 16, link data, 64
• After return from F (LB at W’s link data): g = 3, arg i = 4, link data, s = 16, 64, …

TAM Routine Protocol: Frame Layout Summary

From lower to higher addresses:
• Arguments: the arguments for the current procedure; they were put here by the caller.
• Link data (LB points here): static link, dynamic link, return address.
• Local data (up to ST): local variables and intermediate results; grows and shrinks during execution.
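The effect of CALL and RETURN(n) d on this layout can be sketched with a Python list standing in for the TAM stack. This is a simplified model, not the TAM interpreter: the helper names, the dictionary holding LB, and the return addresses 900 and 500 are assumptions made for the illustration. The run replays the call F(i, s) with i = 4 and s = 16 from the example.

```python
def call(stack, regs, static_link, return_address):
    """CALL: push 3 words of link data and make LB point at them."""
    new_lb = len(stack)
    stack.extend([static_link, regs["LB"], return_address])
    regs["LB"] = new_lb


def ret(stack, regs, result_size, args_size):
    """RETURN(n) d: pop the frame, replacing d argument words by n result words."""
    lb = regs["LB"]
    result = stack[len(stack) - result_size:]   # top n words
    return_address = stack[lb + 2]
    regs["LB"] = stack[lb + 1]                  # restore LB from the dynamic link
    del stack[lb - args_size:]                  # drop args, link data, locals
    stack.extend(result)
    return return_address


def load(stack, regs, offset):
    """LOAD offset[LB]: push a copy of the word at LB + offset."""
    stack.append(stack[regs["LB"] + offset])


def mult(stack):
    b, a = stack.pop(), stack.pop()
    stack.append(a * b)


# State in W just after computing s: g, arg i, W's link data, s.
regs = {"LB": 2}
stack = [3, 4, 0, 0, 900, 16]
load(stack, regs, -1)                 # push argument i
load(stack, regs, 3)                  # push local s
call(stack, regs, static_link=0, return_address=500)
load(stack, regs, -2)                 # F: push m
load(stack, regs, -1)                 # F: push n
mult(stack)
resume_at = ret(stack, regs, result_size=1, args_size=2)
```

At the end the stack reads g = 3, i = 4, W's link data, s = 16, and the result 64, matching the "after return from F" snapshot.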

Accessing variables, addressing schemas overview (Revised)

We now have a complete picture of the different kinds of addresses that are used for accessing variables and formal parameters stored on the stack.

| Type of variable | Load instruction |
|---|---|
| Global | LOAD +offset[SB] |
| Local | LOAD +offset[LB] |
| Parameter | LOAD -offset[LB] |
| Non-local, 1 level up | LOAD +offset[L1] |
| Non-local, 2 levels up | LOAD +offset[L2] |
| . . . | . . . |

Arguments: by value or by reference

Some programming languages allow two kinds of function/procedure parameters.
Example: in Triangle (similar in Pascal), n below is a var (reference) parameter and i is a constant (value) parameter.

    let
      proc S(var n: Integer, i: Integer) ~ n := n+i;
      var today: record y: Integer, m: Integer, d: Integer end
    in begin
      today := {y ~ 2002, m ~ 2, d ~ 22};
      S(var today.m, 6)
    end

Arguments: by value or by reference

Value parameters: at the call site the argument is an expression, and the evaluation of that expression leaves some value on the stack. The value is passed to the procedure/function.
A typical instruction for putting a value parameter on the stack: LOADL 6

Var parameters: instead of passing a value on the stack, the address of a memory location is pushed. This implies a restriction that only “variable-like” things can be passed to a var parameter. In Triangle there is an explicit keyword var at the call site to signal passing a var parameter. In Pascal and C++ the reference is created implicitly (but the same restrictions apply).
Typical instructions: LOADA 5[LB] and LOADA 10[SB]
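The difference between the two parameter kinds can be sketched with an explicit memory array, so that "passing an address" is visible. The layout of the record in `memory` and the function names are assumptions for this illustration; the values follow the Triangle example with the record {y ~ 2002, m ~ 2, d ~ 22}.

```python
memory = [2002, 2, 22]   # the record fields y, m, d at addresses 0, 1, 2


def s_by_reference(n_addr, i):
    """n is a var parameter: the callee gets an address and writes through it."""
    memory[n_addr] = memory[n_addr] + i


def s_by_value(n, i):
    """n is a value parameter: the callee only changes its own copy."""
    n = n + i
    return n


copy_result = s_by_value(memory[1], 6)   # caller's m is untouched
m_after_value_call = memory[1]
s_by_reference(1, 6)                     # corresponds to S(var today.m, 6)
m_after_reference_call = memory[1]
```

Only the by-reference call changes the month field, from 2 to 8.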

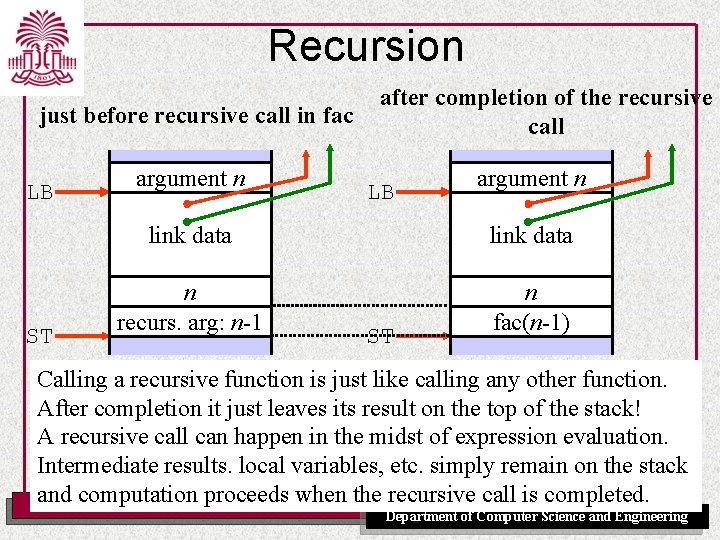

Recursion

How are recursive functions and procedures supported on a low-level machine? => Surprise! The stack memory allocation model already works!
Example:

    let
      func fac(n: Integer) : Integer ~
        if (n = 1) then 1 else n*fac(n-1)
    in begin
      putint(fac(6))
    end

Why does it work? Because every activation of a function gets its own activation record on the stack, with its own parameters, locals, etc. => Procedures and functions are “reentrant”.
Older languages (e.g. early FORTRAN), which use static allocation for locals, have problems with recursion.

Recursion: General Idea

Why the stack allocation model works for recursion: like other function/procedure calls, the lifetimes of local variables and parameters for recursive calls behave like a stack.
[Figure: the stack of activations fac(4), fac(3), fac(2), fac(1) growing with each recursive call and shrinking again as each call returns.]

Recursion: In Detail

    let
      func fac(n: Integer) : Integer ~
        if (n = 1) then 1 else n*fac(n-1)
    in begin
      putint(fac(6))
    end

Stack contents, listed from SB to ST:
• Before the call to fac: arg 1 = 6
• Right after entering fac: arg n = 6, link data (LB)
• Right before the recursive call to fac: arg n = 6, link data (LB), value of n = 6, arg 1 (value of n-1) = 5

Recursion

Stack contents, listed from SB to ST (LB points to the link data of the topmost frame):
• Right before the recursive call to fac: arg n = 6, link data, value of n = 6, arg = 5
• Right before the next recursive call to fac: arg n = 6, link data, value of n = 6, arg n = 5, link data, value of n = 5, arg = 4
• Right before the next recursive call to fac: arg n = 6, link data, value of n = 6, arg n = 5, link data, value of n = 5, arg n = 4, link data, value of n = 4, arg = 3

Recursion

Is the spaghetti of static and dynamic links getting confusing? Let’s zoom in on just a single activation of the fac procedure. The pattern is always the same.
Just before the recursive call in fac, the frame holds, from bottom to top:
• argument n
• link data (LB): one link to the caller context (= previous LB) and one to the lexical context (= SB)
• n and n-1 (ST): intermediate results in the computation of n*fac(n-1)

Recursion

Just before the return from the “deepest call” (n = 1), from bottom to top:
• Caller frame (what’s in here?): argument n = 2, link data, n = 2
• Deepest frame: argument n = 1, link data (LB), result = 1 (ST)
After the return from the deepest call: argument n = 2, link data (LB), n = 2, result = 1 (ST).
Next step: multiply.

Recursion

After the return from the deepest call (n = 1) and the multiply, from bottom to top: argument n = 2, link data (LB), with one link to the caller context and one to the lexical context (= SB), then result 2*fac(1) = 2 (ST).
Next step: return.
From here on the stack is shrinking, multiplying each time by a bigger n.

Recursion

Just before the recursive call in fac: argument n, link data (LB), n, recursive arg n-1 (ST).
After completion of the recursive call: argument n, link data (LB), n, fac(n-1) (ST).
Calling a recursive function is just like calling any other function. After completion it just leaves its result on the top of the stack!
A recursive call can happen in the midst of expression evaluation. Intermediate results, local variables, etc. simply remain on the stack, and computation proceeds when the recursive call is completed.

Summary

• Data Representation: how to represent values of the source language on the target machine.
• Storage Allocation: how to organize storage for variables (considering the different lifetimes of global, local, and heap variables).
• Routines: how to implement procedures and functions (and how to pass their parameters and return values).

The “Phases” of a Compiler

[Figure: Source Program → Syntax Analysis (error reports) → Abstract Syntax Tree → Contextual Analysis (error reports) → Decorated Abstract Syntax Tree → Code Generation (marked “last lecture”) → Object Code.]

Review of Data Representation

How to model high-level computational structures and data structures in terms of low-level memory and machine instructions.
• High-level program: expressions, records, procedures, methods, arrays, variables, objects
• Low-level language processor: registers, bits and bytes, machine stack, machine instructions
How to model the former in terms of the latter?

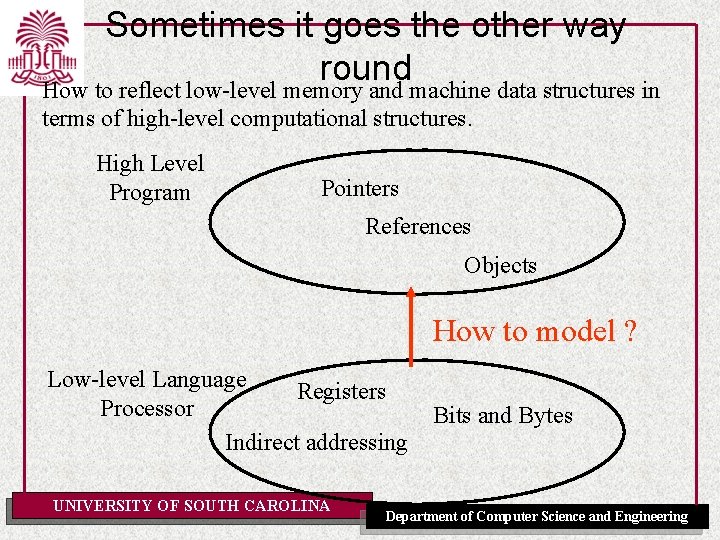

Sometimes it goes the other way round

How to reflect low-level memory and machine data structures in terms of high-level computational structures.
• High-level program: pointers, references, objects
• Low-level language processor: registers, indirect addressing, bits and bytes

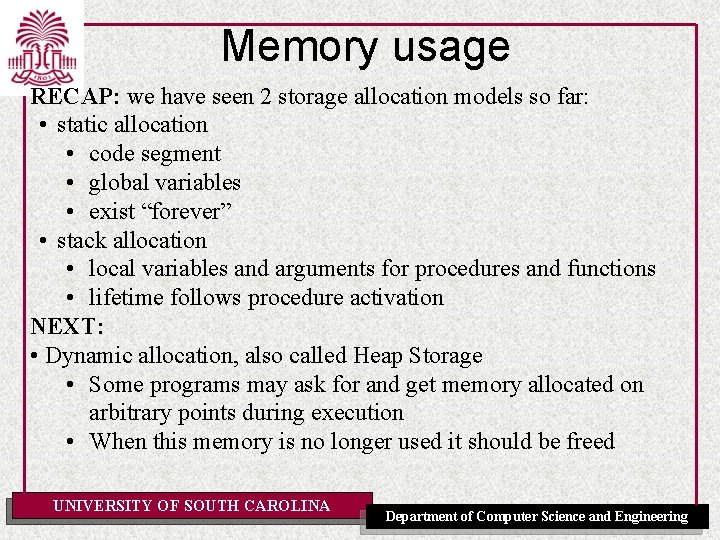

Memory usage

RECAP: we have seen 2 storage allocation models so far:
• Static allocation
– code segment
– global variables
– exist “forever”
• Stack allocation
– local variables and arguments for procedures and functions
– lifetime follows procedure activation
NEXT:
• Dynamic allocation, also called heap storage
– Some programs may ask for and get memory allocated at arbitrary points during execution.
– When this memory is no longer used, it should be freed.

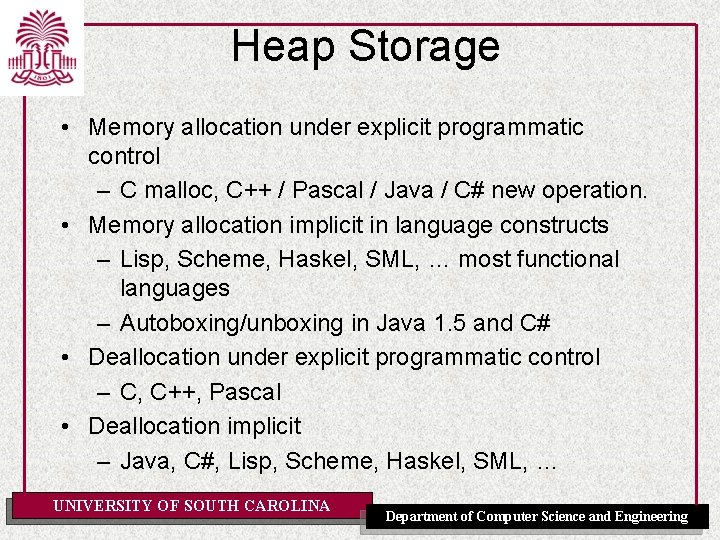

Heap Storage

• Memory allocation under explicit programmatic control
– C malloc; C++ / Pascal / Java / C# new operation
• Memory allocation implicit in language constructs
– Lisp, Scheme, Haskell, SML, … most functional languages
– Autoboxing/unboxing in Java 1.5 and C#
• Deallocation under explicit programmatic control
– C, C++, Pascal
• Deallocation implicit
– Java, C#, Lisp, Scheme, Haskell, SML, …

Heap Storage Deallocation

Explicit versus implicit deallocation:
In explicit memory management, the program must explicitly call an operation to release memory back to the memory management system.
In implicit memory management, heap memory is reclaimed automatically by a “garbage collector”.
Examples:
• Implicit: Java, Scheme
• Explicit: Pascal and C. To free heap memory a specific operation must be called: Pascal ==> dispose, C ==> free, C++ ==> delete
• Implicit and explicit: Ada (deallocation on leaving scope)

How do things become garbage?

    int *p, *q;
    …
    p = malloc(sizeof(int));
    p = q;

The newly created space becomes garbage.

    for (int i = 0; i < 10000; i++) {
        SomeClass obj = new SomeClass(i);
        System.out.println(obj);
    }

Creates 10000 objects, each of which becomes garbage just after the print.

Problem with explicit heap management

    int *p, *q;
    …
    p = malloc(sizeof(int));
    q = p;
    free(p);

There is a dangling pointer in q now.

    float myArray[100];
    p = myArray;
    *(p+i) = …   // equivalent to myArray[i]

They can be hard to recognize.

OO Languages and heap allocation

Objects are a lot like records, and instance variables are a lot like fields. => The representation of objects is similar to that of a record.
Methods are a lot like procedures. => The implementation of methods is similar to that of routines.
But there are differences:
• Objects have methods as well as instance variables; records only have fields.
• The methods have to somehow know what object they are associated with (so that methods can access the object’s instance variables).

Example: Representation of a simple Java object

Example: a simple Java object (no inheritance). The numbers (1) to (4) label the constructor and the methods.

    class Point {
        int x, y;
        public Point(int x, int y) { this.x = x; this.y = y; }       // (1)
        public void move(int dx, int dy) { x = x+dx; y = y+dy; }     // (2)
        public float area() { . . . }                                // (3)
        public float dist(Point other) { . . . }                     // (4)
    }

Example: Representation of a simple Java object

Example: a simple Java object (no inheritance)

    Point p = new Point(2, 3);
    Point q = new Point(0, 0);

new allocates an object in the heap.
[Figure: p refers to a heap object with fields class, x = 2, y = 3; q refers to a heap object with fields class, x = 0, y = 0. The class field of both objects points to the Point class object, whose entries are Point → constructor (1), move → method (2), area → method (3), dist → method (4).]

Objects can become garbage

    Point p = new Point(2, 3);
    Point q = new Point(0, 0);
    p = q;

Automatic Storage Deallocation (Garbage Collection)

Everybody probably knows what a garbage collector is. But here are two “one-liners” to make you think again about what a garbage collector really is!
1) Garbage collection provides the “illusion of infinite memory”!
2) A garbage collector predicts the future!
It’s a kind of magic! :-) Let us look at how this magic is done!

Stacks and dynamic allocations are incompatible

Why can’t we just do dynamic allocation within the stack?
[Figure credit: Programming Language Design and Implementation, 4th Edition, Copyright © Prentice Hall, 2000.]

Where to put the heap?

• The heap is an area of memory which is dynamically allocated.
• Like a stack, it may grow and shrink during runtime.
• Unlike a stack, it is not LIFO => more complicated to manage.
• In a typical programming language implementation we will have both heap-allocated and stack-allocated memory.
Q: How do we allocate memory so that both can coexist?

Where to put the heap?

• A simple approach is to divide the available memory at the start of the program into two areas: stack and heap.
• Another question then arises:
– How do we decide what portion to allocate for stack vs. heap?
– Issue: if one of the areas is full, we will get out-of-memory errors even though we still have more memory (in the other area).
Q: Isn’t there a better way?

Where to put the heap?

Q: Isn’t there a better way?
A: Yes, there is an often-used “trick”: let both stack and heap share the same memory area, but grow towards each other from opposite ends!
[Figure: one memory area. The stack memory area runs from SB to ST and grows downward; the heap memory area runs from HT to HB and can expand upward; the free space lies between ST and HT.]
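The shared-area trick can be sketched in a few lines. In this illustrative model (class and method names are assumptions), the stack occupies addresses below ST, the heap occupies addresses from HT upward, and memory is exhausted only when the two would overlap.

```python
class Memory:
    """Stack and heap sharing one area and growing towards each other."""

    def __init__(self, size):
        self.ST = 0        # stack occupies addresses [0, ST)
        self.HT = size     # heap occupies addresses [HT, size)

    def push(self, n_words):
        if self.ST + n_words > self.HT:
            raise MemoryError("stack would run into the heap")
        self.ST += n_words

    def heap_expand(self, n_words):
        if self.HT - n_words < self.ST:
            raise MemoryError("heap would run into the stack")
        self.HT -= n_words
        return self.HT     # address of the newly gained heap block


mem = Memory(10)
mem.push(4)                    # stack now uses words 0..3
block = mem.heap_expand(3)     # heap now uses words 7..9
mem.push(3)                    # still fits: stack uses words 0..6
```

Neither area has a fixed budget: the stack could use 7 words here only because the heap needed just 3.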

How to keep track of free memory?

The stack is LIFO allocation => ST moves up/down; everything above ST is in use (allocated), and everything below it is free memory. This is easy!
But the heap is not LIFO: how do we manage free space in the “middle” of the heap?
[Figure: from SB to ST the memory is allocated; from ST to HT it is free; from HT to HB it is mixed, allocated and free. How can the free parts be reused?]

How to keep track of free memory?

How to manage free space in the “middle” of the heap? => Keep track of free blocks in a data structure: the “free list”. For example, we could use a linked list pointing to the free blocks.
A free list! Good idea! But where do we find the memory to store this data structure?
[Figure: a freelist pointer leading to a chain of free blocks between HT and HB, each with a “next” pointer.]

How to keep track of free memory?

Q: Where do we find the memory to store a free list data structure?
A: Since the free blocks are not used for anything by the program => the memory manager can use them for storing the free list itself.
[Figure: the heap between HT and HB; HF points to the first free block, and each free block stores its size and a pointer to the next free block.]
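A free list threaded through the free blocks themselves might look like the following sketch. Everything here is an assumption made for illustration: the heap is a Python list of words, each free block stores its size in word 0 and the address of the next free block in word 1, allocation is first fit, and the caller passes the block size back to `free`. Coalescing of adjacent free blocks is left out.

```python
NIL = -1   # marks the end of the free list


class Heap:
    def __init__(self, size):
        self.mem = [0] * size                  # size must be at least 2
        self.mem[0], self.mem[1] = size, NIL   # one big free block
        self.free_head = 0                     # "HF" on the slide

    def alloc(self, size):
        """First fit. Returns the block address, or NIL if nothing fits."""
        size = max(size, 2)                    # a freed block must hold a header
        prev, cur = NIL, self.free_head
        while cur != NIL:
            cur_size, nxt = self.mem[cur], self.mem[cur + 1]
            # Take an exact fit, or split if the rest can hold a header.
            if cur_size == size or cur_size >= size + 2:
                if cur_size > size:
                    rest = cur + size          # the tail stays on the free list
                    self.mem[rest] = cur_size - size
                    self.mem[rest + 1] = nxt
                    nxt = rest
                if prev == NIL:
                    self.free_head = nxt
                else:
                    self.mem[prev + 1] = nxt
                return cur
            prev, cur = cur, nxt
        return NIL

    def free(self, addr, size):
        """Write a header into the block and push it on the free list."""
        self.mem[addr] = max(size, 2)
        self.mem[addr + 1] = self.free_head
        self.free_head = addr


heap = Heap(10)
a = heap.alloc(3)      # taken from the front of the single free block
b = heap.alloc(4)
heap.free(a, 3)        # the block at a becomes the head of the free list
c = heap.alloc(3)      # reuses the block that a occupied
```

No extra memory is needed for bookkeeping: the size and next fields live in words the program is not using.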

Why Garbage Collect? (Continued)

• Software Engineering
– Garbage collection increases the abstraction level of software development.
– It simplifies interfaces and decreases coupling of modules.
– Studies have shown that a significant amount of development time is spent on memory management bugs [Rovner, 1985].

Implicit memory management

• Current trend in modern programming language development: give the programmer only implicit means of memory management:
– The constant growth of hardware memory justifies a policy of automatic memory management.
– Explicit memory management distracts programmers from their primary tasks: let everyone do what is required of them and nothing else!
– The philosophy of high-level languages fits implicit memory management.
• Other arguments for implicit memory management:
– A programmer cannot control memory management for temporary variables anyway!
– It is difficult to combine two memory management mechanisms: the system’s and the programmer’s.
• History repeats itself: in the 70’s people thought that implicit memory management had finally replaced all other mechanisms.

Classes of Garbage Collection Algorithms

• Direct Garbage Collectors: a record is associated with each node in the heap. The record for node N indicates how many other nodes or roots point to N.
• Indirect/Tracing Garbage Collectors: usually invoked when a user’s request for memory fails because the free list is exhausted. The garbage collector visits all live nodes and returns all other memory to the free list. If sufficient memory has been recovered from this process, the user’s request for memory is satisfied.

Terminology

• Roots: values that a program can manipulate directly (i.e. values held in registers, on the program stack, and in global variables).
• Node/Cell/Object: an individually allocated piece of data in the heap.
• Children Nodes: the list of pointers that a given node contains.
• Live Node: a node whose address is held in a root or is the child of a live node.
• Garbage: nodes that are not live, but are not free either.
• Garbage collection: the task of recovering (freeing) garbage nodes.
• Mutator: the program running alongside the garbage collection system.

Types of garbage collectors

• The “classic” algorithms
– Reference counting
– Mark and sweep
• Copying garbage collection
• Generational garbage collection
• Incremental tracing garbage collection

Reference Counting

• Every cell has an additional field: the reference count. This field represents the number of pointers to that cell from roots or heap cells.
• Initially, all cells in the heap are placed in a pool of free cells, the free list.
• When a cell is allocated from the free list, its reference count is set to one.
• When a pointer is set to reference a cell, the cell’s reference count is incremented by 1; if a pointer to the cell is deleted, its reference count is decremented by 1.
• When a cell’s reference count reaches 0, its pointers to its children are deleted and it is returned to the free list.
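These rules can be sketched directly. The names `Cell`, `new_cell`, `add_pointer`, and `delete_pointer` are illustrative assumptions, and the free list is just a Python list that records which cells were reclaimed.

```python
class Cell:
    def __init__(self, name):
        self.name = name
        self.rc = 0            # the reference count field
        self.children = []     # pointers held by this cell


free_list = []


def new_cell(name):
    cell = Cell(name)
    cell.rc = 1                # one pointer exists: the one handed to the caller
    return cell


def add_pointer(source, target):
    source.children.append(target)
    target.rc += 1


def delete_pointer(target):
    target.rc -= 1
    if target.rc == 0:
        for child in target.children:   # delete the pointers to the children
            delete_pointer(child)
        target.children.clear()
        free_list.append(target)        # return the cell to the free list


a = new_cell("a")          # a root points to a
b = new_cell("b")          # a root points to b
add_pointer(a, b)          # a -> b, so b.rc is now 2
delete_pointer(b)          # the root pointer to b goes away; b stays alive via a
b_alive_after_first_delete = b.rc == 1 and not free_list
delete_pointer(a)          # a dies, which in turn releases b
```

Freeing `a` cascades to `b`, which shows how the cost of collection is spread over ordinary pointer updates.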

Reference Counting Example

[Figure sequence over four slides: a graph of heap cells annotated with their reference counts. A pointer is deleted, the count of the cell it referenced drops to 0, and that cell is returned to the free list.]

Reference Counting: Advantages and Disadvantages

• Advantages:
– Garbage collection overhead is distributed.
– Locality of reference is no worse than that of the mutator.
– Free memory is returned to the free list quickly.

Reference Counting: Advantages and Disadvantages (Continued)

• Disadvantages:
– High time cost (every time a pointer is changed, reference counts must be updated).
– Storage overhead for the reference counter can be high.
– Unable to reclaim cyclic data structures.
– If the reference counter overflows, the object becomes permanent.

Reference Counting: Cyclic Data Structure

[Figures, before and after: a cyclic group of cells annotated with reference counts. After the last pointer into the cycle from outside is deleted, the cells in the cycle still have non-zero counts and are not reclaimed.]
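The cycle problem can be reproduced with two cells that point at each other. This is a minimal sketch with made-up cell names `A` and `B`, using dictionaries for the counts and the pointers.

```python
refcount = {"A": 0, "B": 0}
points_to = {"A": [], "B": []}
freed = []


def add_ref(target):
    refcount[target] += 1


def drop_ref(target):
    refcount[target] -= 1
    if refcount[target] == 0:
        for child in points_to[target]:
            drop_ref(child)
        points_to[target] = []
        freed.append(target)


add_ref("A")                   # a root points to A
points_to["A"].append("B")     # A -> B
add_ref("B")
points_to["B"].append("A")     # B -> A closes the cycle
add_ref("A")

drop_ref("A")                  # the root pointer to A is deleted
```

Neither cell is reachable from a root any more, yet both counts are still 1 and nothing is freed. A tracing collector would reclaim both.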

Deferred Reference Counting

• Optimisation
– Cost can be improved by special treatment of local variables.
– Only update reference counters of objects on the stack at fixed intervals.
– Reference counts are still affected by pointers from one heap object to another.

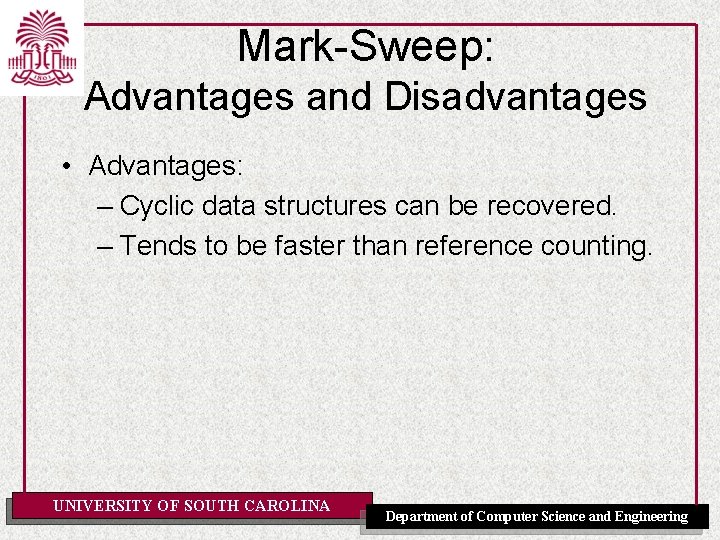

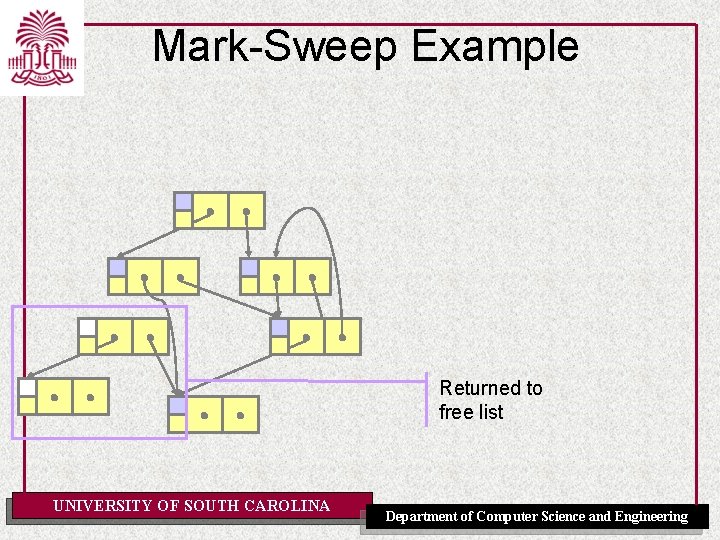

Mark-Sweep

• The first tracing garbage collection algorithm.
• Garbage cells are allowed to build up until heap space is exhausted (i.e. a user program requests a memory allocation, but there is insufficient free space on the heap to satisfy the request).
• At this point, the mark-sweep algorithm is invoked, and garbage cells are returned to the free list.
• Performed in two phases:
– Mark phase: identifies all live cells by setting a mark bit. Live cells are cells reachable from a root.
– Sweep phase: returns garbage cells to the free list.

Mark and Sweep Garbage Collection before gc mark as free phase SB SB ST ST HT HT e X d X c X b X a d c b a HB UNIVERSITY OF SOUTH CAROLINA X e HB Department of Computer Science and Engineering

Mark and Sweep Garbage Collection [Figure: three panels showing the stack (SB, ST) and heap (HT, HB) after the "mark as free", "mark reachable", and "collect free" phases.]

Mark-Sweep Example [Figure; label: "Returned to free list".]

Mark and Sweep Garbage Collection
Algorithm pseudo code:

    void garbageCollect() {
        mark all heap variables as free
        for each frame in the stack
            scan(frame)
        for each heapvar (still) marked as free
            add heapvar to freelist
    }

    void scan(region) {
        for each pointer p in region
            if p points to region marked as free then {
                mark region at p as reachable
                scan(region at p)
            }
    }

Q: This algorithm is recursive. What do you think of that?
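One way to respond to the question about recursion is to replace the recursive `scan` with an explicit worklist, so the collector does not depend on a deep call stack at the very moment memory is scarce. The sketch below is an illustration in Python, not the course's implementation; the heap is modelled (as an assumption) by a dictionary from a cell name to the names it points to.

```python
def garbage_collect(heap, roots):
    """Mark-sweep over a toy heap.

    heap:  dict mapping each cell name to the list of cell names it points to
    roots: cell names referenced from the stack frames
    Returns the sorted list of cells to be placed on the free list.
    """
    marked = set()                 # cells proven reachable (the "mark bit")
    worklist = list(roots)         # explicit stack instead of recursion
    while worklist:
        name = worklist.pop()
        if name in marked:
            continue               # already visited; also makes cycles terminate
        marked.add(name)
        worklist.extend(heap[name])
    # Sweep phase: every cell in the heap is visited once.
    return sorted(name for name in heap if name not in marked)

heap = {"a": ["b"], "b": ["a"], "c": ["d"], "d": [], "e": ["e"]}
free = garbage_collect(heap, roots=["c"])
```

Here `free` is `["a", "b", "e"]`: the unreachable cycle `a`/`b` and the self-referencing cell `e` are reclaimed. The worklist can still grow with the size of the live data, so this only moves the bookkeeping off the call stack.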

Mark-Sweep: Advantages and Disadvantages • Advantages: – Cyclic data structures can be recovered. – Tends to be faster than reference counting.

Mark-Sweep: Advantages and Disadvantages (Continued) • Disadvantages: – Computation must be halted while garbage collection is being performed. – Every live cell must be visited in the mark phase, and every cell in the heap must be visited in the sweep phase. – Garbage collection becomes more frequent as the residency of a program increases. – May fragment memory.

Mark-Sweep: Advantages and Disadvantages (Continued) • Disadvantages: – Has negative implications for locality of reference. Old objects get surrounded by new ones (not suited for virtual memory applications). • However, if objects tend to survive in clusters in memory, as they apparently often do, this can greatly reduce the cost of the sweep phase.

Mark-Compact Collection • Remedies the fragmentation and allocation problems of mark-sweep collectors. • Two phases: – Mark phase: identical to mark-sweep. – Compaction phase: marked objects are compacted, moving most of the live objects until all the live objects are contiguous.

Heap Compaction To fight fragmentation, some memory management algorithms perform “heap compaction” once in a while. [Figure: the heap (HT, HB) before and after compaction.]

Mark-Compact: Advantages and Disadvantages • Advantages: – The contiguous free area eliminates the fragmentation problem. Allocating objects of various sizes is simple. – The garbage space is "squeezed out" without disturbing the original ordering of objects. This improves locality.

Mark-Compact: Advantages and Disadvantages (Continued) • Disadvantages: – Several passes over the data are required. "Sliding compactors" take two, three or more passes over the live objects. • One pass computes the new locations. • Subsequent passes update the pointers to refer to the new locations, and actually move the objects.
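The passes of a sliding compactor can be sketched as follows. This is a toy model under stated assumptions: each object is a dictionary with hypothetical keys `addr`, `size`, `marked`, and `ptrs` (addresses of the objects it points to), the list is sorted by address, and the mark phase has already run. For brevity the pointer-update and move passes are written as one expression.

```python
def compact(heap, roots):
    """Slide all marked objects towards address 0, preserving their order.

    Returns (new_heap, new_roots, first_free_address).
    """
    # Pass 1: compute the new address of every live object.
    forward = {}
    free = 0
    for obj in heap:
        if obj["marked"]:
            forward[obj["addr"]] = free
            free += obj["size"]

    # Pass 2: update root pointers to the new locations.
    new_roots = [forward[r] for r in roots]

    # Passes 2 and 3 for heap objects: rewrite their pointers and "move" them.
    # Live objects only point to live objects, so every lookup succeeds.
    new_heap = [
        {"addr": forward[o["addr"]], "size": o["size"], "marked": False,
         "ptrs": [forward[p] for p in o["ptrs"]]}
        for o in heap if o["marked"]
    ]
    return new_heap, new_roots, free

heap = [
    {"addr": 0,  "size": 4, "marked": True,  "ptrs": [10]},
    {"addr": 4,  "size": 6, "marked": False, "ptrs": []},    # garbage
    {"addr": 10, "size": 2, "marked": True,  "ptrs": [0]},
]
new_heap, new_roots, free_ptr = compact(heap, roots=[0])
```

After compaction the two live objects sit at addresses 0 and 4, the pointer that used to hold 10 now holds 4, and everything from address 6 upward is one contiguous free area.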

Copying Garbage Collection • Like mark-compact, copying garbage collection does not really "collect" garbage. • Rather, it moves all the live objects into one area, and the rest of the heap is known to be available. • Copying collectors integrate the traversal and the copying process, so that objects need only be traversed once. • The work needed is proportional to the amount of live data (all of which must be copied).

Semispace Collector Using the Cheney Algorithm • The heap is subdivided into two contiguous subspaces (FromSpace and ToSpace). • During normal program execution, only one of these semispaces is in use. • When the garbage collector is called, all the live data are copied from the current semispace (FromSpace) to the other semispace (ToSpace).

Semispace Collector Using the Cheney Algorithm [Figure, shown on two consecutive slides: objects A, B, C, D; FromSpace and ToSpace.]

Semispace Collector Using the Cheney Algorithm (Continued) • Once the copying is completed, the ToSpace is made the "current" semispace. • A simple form of copying traversal is the Cheney algorithm. • The immediately reachable objects form the initial queue of objects for a breadth-first traversal. • A scan pointer is advanced through the first object, location by location. • Each time a pointer into FromSpace is encountered, the referred-to object is transported to the end of the queue and the pointer to the object is updated.

Semispace Collector Using the Cheney Algorithm (Continued) • Objects reachable via multiple paths must not be copied to ToSpace multiple times. • When an object is transported to ToSpace, a forwarding pointer is installed in the old version of the object. • The forwarding pointer signifies that the old object is obsolete and indicates where to find the new copy.
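The breadth-first copy with a scan pointer and forwarding pointers can be sketched in a few lines of Python. This is a simplified model, not a real collector: from-space is assumed to be a dictionary from an object name to its list of pointer fields, to-space is a list whose indices play the role of new addresses, and the forwarding pointers live in a separate dictionary rather than in the old objects.

```python
def cheney_collect(from_space, roots):
    """Copy everything reachable from roots into a fresh to-space.

    Returns (to_space, new_roots); to_space[i] holds the fields of the
    object whose new address is i.
    """
    to_space = []        # also serves as the breadth-first queue
    forwarding = {}      # old name -> new address (the forwarding pointers)

    def forward(old):
        # Transport the object on first encounter; afterwards just
        # follow the forwarding pointer so it is never copied twice.
        if old not in forwarding:
            forwarding[old] = len(to_space)
            to_space.append(list(from_space[old]))   # fields still name from-space objects
        return forwarding[old]

    new_roots = [forward(r) for r in roots]

    scan = 0             # objects before scan have fully updated fields
    while scan < len(to_space):
        to_space[scan] = [forward(p) for p in to_space[scan]]
        scan += 1
    return to_space, new_roots

from_space = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["A"]}
to_space, new_roots = cheney_collect(from_space, roots=["A"])
```

In this run `C` is reachable through both `A` and `B` but is copied only once, and the unreachable object `D` is never touched, which is why the work is proportional to the live data only.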

Copying Garbage Collection: Advantages and Disadvantages • Advantages: – Allocation is extremely cheap. – Excellent asymptotic complexity. – Fragmentation is eliminated. – Only one pass through the data is required.

Copying Garbage Collection: Advantages and Disadvantages (Continued) • Disadvantages: – The use of two semispaces doubles the memory requirement. – Poor locality. Using virtual memory will cause excessive paging.

Problems with Simple Tracing Collectors • It is difficult to achieve high efficiency in a simple garbage collector, because large amounts of memory are expensive. • If virtual memory is used, the poor locality of the allocation/reclamation cycle will cause excessive paging. • Even as main memory becomes steadily cheaper, locality within cache memory becomes increasingly important.

Problems with Simple Tracing Collectors (Continued) • With a simple semispace copy collector, locality is likely to be worse than with mark-sweep. • The memory issue is not unique to copying collectors. • Any efficient garbage collection involves a trade-off between space and time. • The problem of locality is an indirect result of the use of garbage collection.

Generational Garbage Collection • Attempts to address weaknesses of simple tracing collectors such as mark-sweep and copying collectors: – All active data must be marked or copied. – For copying collectors, each page of the heap is touched every two collection cycles, even though the user program is only using half the heap, leading to poor cache behavior and page faults. – Long-lived objects are handled inefficiently.

Generational Garbage Collection (Continued) • Generational garbage collection is based on the generational hypothesis: Most objects die young. • As such, concentrate garbage collection efforts on objects likely to be garbage: young objects.

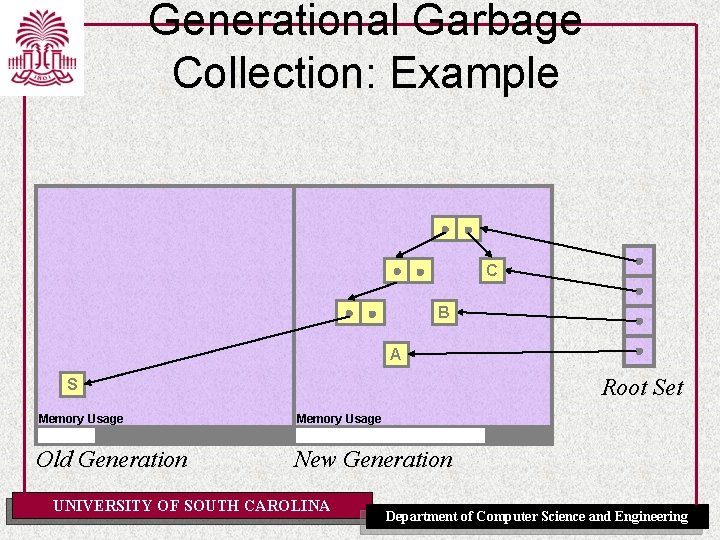

Generational Garbage Collection: Object Lifetimes • When we discuss object lifetimes, we measure the amount of heap allocation that occurs between the object’s birth and death rather than wall-clock time. • For example, an object that was created when 1 KB of heap had been allocated and was no longer referenced when 4 KB of heap data had been allocated lived for 3 KB.

Generational Garbage Collection: Object Lifetimes (Continued) • Typically, between 80 and 98 percent of all newly-allocated heap objects die before another megabyte has been allocated.

Generational Garbage Collection (Continued) • Objects are segregated into different areas of memory based on their age. • Areas containing newer objects are garbage collected more frequently. • After an object has survived a given number of collections, it is promoted to a less frequently collected area.

Generational Garbage Collection: Example [Figure: root set; objects A, B, C, S; memory usage of the Old Generation and the New Generation.]

Generational Garbage Collection: Example (Continued) [Figure: the same diagram with object R added.]

Generational Garbage Collection: Example (Continued) [Figure: the same diagram with objects R and D.]

Generational Garbage Collection: Example (Continued) • This example demonstrates several interesting characteristics of generational garbage collection: – The young generation can be collected independently of the older generations (resulting in shorter pause times). – An inter-generational pointer was created from R to D. These pointers must be treated as part of the root set of the New Generation. – Garbage collection in the new generation results in S becoming unreachable, and thus garbage. Garbage in older generations (sometimes called tenured garbage) cannot be reclaimed via garbage collections in younger generations.

Generational Garbage Collection: Implementation • Usually implemented as a copying collector, where each generation has its own semispaces. [Figure: FromSpace and ToSpace shown for the Old Generation and for the New Generation.]

Generational Garbage Collection: Issues • Choosing an appropriate number of generations: – If we benefit from dividing the heap into two generations, can we further benefit by using more than two generations? • Choosing a promotion policy: – How many garbage collections should an object survive before being moved to an older generation?

Generational Garbage Collection: Issues (Continued) • Tracking inter-generational pointers: – Inter-generational pointers need to be tracked, since they form part of the root set for younger generations. • Collection scheduling: – Can we attempt to schedule garbage collection in such a way that we minimize disruptive pauses?

Generational Garbage Collection: Multiple Generations [Figure: heap divided into Generation 4, Generation 3, Generation 2, and Generation 1.]

Generational Garbage Collection: Multiple Generations (Continued) • Advantages: – Keeps youngest generation’s size small. – Helps address mistakes made by the promotion policy by creating more intermediate generations that still get garbage collected fairly frequently. • Disadvantages: – Collections for intermediate generations may be disruptive. – Tends to increase number of inter-generational pointers, increasing the size of the root set for younger generations. • Most generational collectors are limited to just two or three generations.

Generational Garbage Collection: Promotion Policies • A promotion policy determines how many garbage collection cycles (the cycle count) an object must survive before being advanced to the next generation. • If the cycle count is too low, objects may be advanced too fast; if too high, the benefits of generational garbage collection are not realized.

Generational Garbage Collection: Promotion Policies (Continued) • With a cycle count of just one, objects created just before the garbage collection will be advanced, even though the generational hypothesis states they are likely to die soon. • Increasing the cycle count to two denies advancement to recently created objects. • Under most conditions, increasing the cycle count beyond two does not significantly reduce the amount of data advanced.

Generational Garbage Collection: Inter-generational Pointers • Inter-generational pointers can be created in two ways: – When an object containing pointers is promoted to an older generation. – When a pointer to an object in a newer generation is stored in an object in an older generation. • The garbage collector can easily detect promotion-caused inter-generational pointers, but handling pointer stores is a more complicated task.

Generational Garbage Collection: Inter-generational Pointers • Pointer stores can be tracked via the use of a write barrier: – Pointer stores must be accompanied by extra bookkeeping instructions that let the garbage collector know of pointers that have been updated. • Often implemented at the compiler level.
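A write barrier of this kind can be illustrated with a small Python model. The names `Obj`, `write_field`, and `remembered_set` are assumptions made for this sketch; in a compiled language the extra check would be emitted by the compiler next to every pointer store.

```python
class Obj:
    def __init__(self, name, generation):
        self.name = name
        self.generation = generation   # 0 = youngest; larger numbers are older
        self.fields = {}

# Names of older objects that may contain pointers into a younger generation.
# A young-generation collection treats these as additional roots.
remembered_set = set()

def write_field(obj, field, target):
    """Pointer store plus the bookkeeping that forms the write barrier."""
    obj.fields[field] = target
    if target is not None and obj.generation > target.generation:
        remembered_set.add(obj.name)   # old -> young pointer: record the source

r = Obj("R", generation=1)   # old object
d = Obj("D", generation=0)   # young object
s = Obj("S", generation=0)   # young object

write_field(r, "x", d)       # old -> young: recorded
write_field(d, "y", s)       # young -> young: nothing to record
```

After these two stores only `R` is in the remembered set, mirroring the R-to-D pointer in the example slides. The cost of the barrier is paid on every pointer store, which is why programs that frequently write pointers into old objects are less well served by this scheme.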

Generational Garbage Collection: Collection Scheduling • Generational garbage collection aims to reduce pause times. When should these (hopefully short) pause times occur? • Two strategies exist: – Hide collections when the user is least likely to notice a pause, or – Trigger efficient collections when there is likely to be lots of garbage to collect.

Generational Garbage Collection: Advantages • In practice it has proven to be an effective garbage collection technique. • Minor garbage collections are performed quickly. • Good cache and virtual memory behavior.

Sun HotSpot • Sun JDK 1.0 used mark-compact. • Sun improved memory management in the Java 2 VMs (JDK 1.2 and on) by switching to a generational garbage collection scheme. • The heap is separated into two regions: – New Objects – Old Objects

New Object Region • The idea is to use a very fast allocation mechanism and hope that objects all become garbage before you have to garbage collect. • The New Object Region is subdivided into three smaller regions: – Eden, where objects are allocated – 2 “Survivor” semi-spaces: “From” and “To”

New Object Region • The Eden area is set up like a stack - an object allocation is implemented as a pointer increment • When the Eden area is full, the GC does a reachability test and then copies all the live objects from Eden to the “To” region • The labels on the regions are swapped – “To” becomes “From” - now the “From” area has objects

New Object Region • The next time Eden fills, objects are copied from both the “From” region and Eden to the “To” area. • There’s a “Tenuring Threshold” that determines how many times an object can be copied between survivor spaces before it’s moved to the Old Object region. • Note that one side-effect is that one survivor space is always empty.
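The Eden/survivor scheme described on these slides can be modelled roughly as follows. This is an illustrative sketch, not HotSpot's actual implementation: the threshold value, the function name `minor_collect`, and the representation of objects as `(name, copies)` pairs are all assumptions, and the reachability test is replaced by a given set of live names.

```python
TENURING_THRESHOLD = 2  # hypothetical value, chosen only for this example

def minor_collect(eden, from_space, old, live):
    """One collection of the New Object Region.

    eden, from_space: lists of (name, copies) pairs, where copies counts
                      how often the object has been copied so far
    old:              list of names in the Old Object region
    live:             set of names that pass the reachability test
    Returns the new (eden, from_space, old).
    """
    to_space = []
    promoted = []
    for name, copies in eden + from_space:
        if name not in live:
            continue                       # garbage is simply not copied
        if copies >= TENURING_THRESHOLD:
            promoted.append(name)          # tenured: moved to the old region
        else:
            to_space.append((name, copies + 1))
    # Swap labels: "To" becomes "From"; Eden and the old "From" are now empty.
    return [], to_space, old + promoted

eden, survivors, old = minor_collect([("a", 0), ("b", 0), ("c", 0)], [], [], {"a", "b"})
eden, survivors, old = minor_collect([("d", 0)], survivors, old, {"a", "d"})
eden, survivors, old = minor_collect([], survivors, old, {"a", "d"})
```

In this run `a` is copied twice between the young spaces and is tenured at the third collection, while `b` and `c` die young and cost nothing beyond the reachability test.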

Old Object Region • The old object region is for objects that will have a long lifetime. • The hope is that, because most garbage is generated by short-lived objects, you won’t need to GC the old object region very often.

Generational Garbage Collection [Figure: New Object Region (Eden, SS1, SS2) and Old Object Region at the first and second GC; the order of SS1 and SS2 is swapped between the two collections.]

Generational Garbage Collection: Disadvantages • Performs poorly if any of the main assumptions are false: – That objects tend to die young. – That there are relatively few pointers from old objects to young ones. • Frequent pointer writes to older generations will increase the cost of the write barrier, and possibly increase the size of the root set for younger generations.

Incremental Tracing Collectors • Program (Mutator) and Garbage Collector run concurrently. – Can think of the system as similar to two threads. One performs collection, and the other represents the regular program in execution. • Can be used in systems with real-time requirements, for example, process control systems. – Allow the mutator to do its job without destroying the collector’s ability to keep track of modifications of the object graph, and at the same time – allow the collector to do its job without interfering with the mutator.

Coherence & Conservatism • Coherence: A proper state must be maintained between the mutator and the collector. • Conservatism: How aggressive the garbage collector is at finding objects to be deallocated.

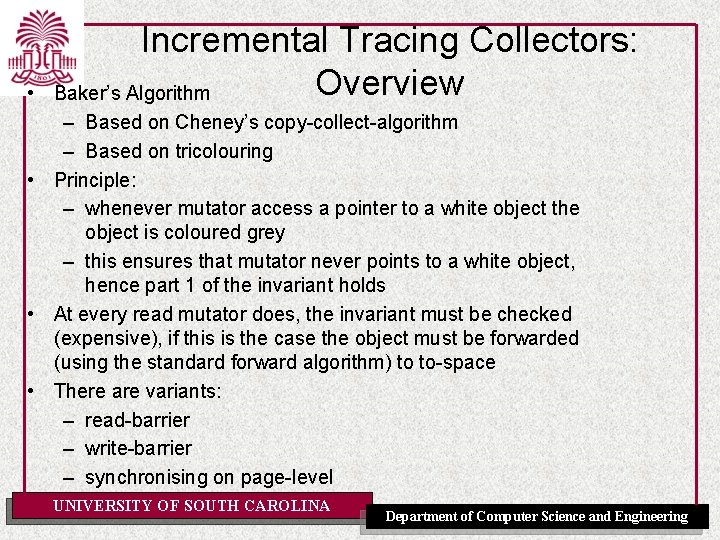

Tricoloring • White – Not yet traversed. A candidate for collection. • Black – Already traversed and found to be live. Will not be reclaimed. • Grey – In traversal process. Defining characteristic is that its children have not necessarily been explored.
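The three colours can be made concrete with a marking loop that processes one grey object per step, which is the granularity at which an incremental collector could interleave with the mutator. The sketch below is a plain (non-concurrent) illustration in Python; `mark_step`, the `colour` dictionary, and the graph encoding are assumptions of this example, and no read or write barrier is modelled.

```python
from collections import deque

WHITE, GREY, BLACK = "white", "grey", "black"

def mark_step(graph, colour, grey_queue):
    """Blacken one grey object after greying its white children.

    Returns False once no grey objects remain, i.e. marking is complete.
    """
    if not grey_queue:
        return False
    node = grey_queue.popleft()
    for child in graph[node]:
        if colour[child] == WHITE:
            colour[child] = GREY           # discovered, children not yet explored
            grey_queue.append(child)
    colour[node] = BLACK                   # fully traversed, will not be reclaimed
    return True

graph = {"a": ["b"], "b": ["c"], "c": [], "x": ["a"]}
colour = {name: WHITE for name in graph}

# The root set is greyed first; here the only root is "a".
colour["a"] = GREY
grey_queue = deque(["a"])

steps = 0
while mark_step(graph, colour, grey_queue):
    steps += 1
```

When the loop ends, `a`, `b`, and `c` are black and `x` is still white, so `x` is the only candidate for collection. A real incremental collector additionally needs a barrier so that the mutator cannot hide a white object behind a black one between steps.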

The Tricolor Abstraction

Incremental Tracing Collectors: Overview • Baker’s Algorithm – Based on Cheney’s copy-collect algorithm – Based on tricolouring • Principle: – whenever the mutator accesses a pointer to a white object, the object is coloured grey – this ensures that the mutator never points to a white object, hence part 1 of the invariant holds • At every read the mutator does, the invariant must be checked (expensive); if the pointer refers to a white object, the object must be forwarded (using the standard forward algorithm) to to-space • There are variants: – read-barrier – write-barrier – synchronising on page-level

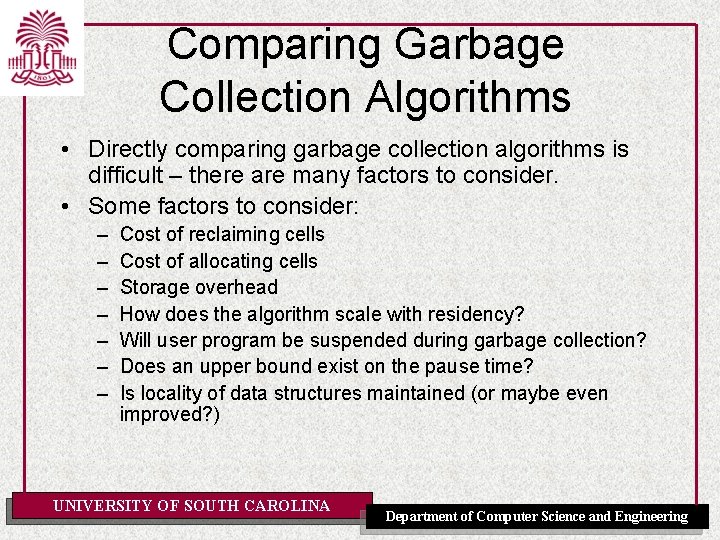

Garbage Collection: Summary (the slide groups the rows under the labels "Tracing" and "Incremental")

| Method | Conservatism | Space | Time | Fragmentation | Locality |
| --- | --- | --- | --- | --- | --- |
| Mark Sweep | Major | Basic | 1 traversal + heap scan | Yes | Fair |
| Mark Compact | Major | Basic | Many passes of heap | No | Good |
| Copying | Major | Two semispaces | 1 traversal | No | Poor |
| Reference Counting | No | Reference count field | Constant per assignment | Yes | Very good |
| Deferred Reference Counting | Only for stack variables | Reference count field | Constant per assignment | Yes | Very good |
| Incremental | Varies depending on algorithm | Varies | Can be guaranteed real-time | Varies | |
| Generational | Variable | Segregated areas | Varies with number of live objects in new generation | Yes (non-copying), No (copying) | Good |

Comparing Garbage Collection Algorithms • Directly comparing garbage collection algorithms is difficult – there are many factors to consider. • Some factors to consider: – Cost of reclaiming cells – Cost of allocating cells – Storage overhead – How does the algorithm scale with residency? – Will the user program be suspended during garbage collection? – Does an upper bound exist on the pause time? – Is locality of data structures maintained (or maybe even improved)?

Comparing Garbage Collection Algorithms (Continued) • Zorn’s study in 1989/93 compared garbage collection to explicit deallocation: – Non-generational garbage collection • Between 0% and 36% more CPU time. • Between 40% and 280% more memory. – Generational garbage collection • Between 5% and 20% more CPU time. • Between 30% and 150% more memory. • Wilson feels these numbers can be improved, and they are also out of date. • A well implemented garbage collector will slow a program down by approximately 10 percent relative to explicit heap deallocation.

Garbage Collection: Conclusions (Continued) • Despite this cost, garbage collection is a feature in many widely used languages: – Lisp (1959) – SML, Haskell, Miranda (1980-) – Perl (1987) – Java (1995) – C# (2001) – Microsoft’s Common Language Runtime (2002)

Different choices for different reasons • JVM – Sun Classic: Mark, Sweep and Compact – Sun HotSpot: Generational (two generations + Eden) • -Xincgc: an incremental collector that breaks the old-object region into smaller chunks and GCs them individually • -Xconcgc: Concurrent GC allows other threads to keep running in parallel with the GC – BEA JRockit JVM: concurrent, even on another processor – IBM: Improved Concurrent Mark, Sweep and Compact with a notion of weak references – Real-Time Java • Scoped LTMemory, VTMemory, RawMemory • .NET CLR – Managed and unmanaged memory (memory blob) – PC version: Self-tuning Generational Garbage Collector – .NET CF: Mark, Sweep and Compact

Garbage Collection: Conclusions • Relieves the burden of explicit memory allocation and deallocation. • Software module coupling related to memory management issues is eliminated. • An extremely dangerous class of bugs is eliminated. • The compiler generates code for allocating objects. • The compiler must also generate code to support GC: – The GC must be able to recognize root pointers from the stack. – The GC must know about data layout and object descriptors. • The choice of garbage collection algorithm has to take many factors into consideration.