CSCE 492 Software Engineering Lecture 9 Testing Topics


CSCE 492 Software Engineering, Lecture 9: Testing

- Topics: Testing
- Readings:

Spring 2008

Overview

- Last time: achieving quality attributes (nonfunctional requirements)
- Today's lecture: testing = achieving functional requirements
- References: Chapter 8, Testing
- Next time: requirements meetings with individual groups, starting at 10:15
- Sample test

– 2– CSCE 492 Spring 2008

Testing: Why Test?

The earlier an error is found, the cheaper it is to fix.

Errors/bugs terminology:

- A fault is a condition that causes the software to fail.
- A failure is the inability of a piece of software to perform according to its specifications.

Testing Approaches

- Development-time techniques
  - Automated tools: compilers, lint, etc.
- Offline techniques
  - Walkthroughs
  - Inspections
- Online techniques
  - Black box testing (not looking at the code)
  - White box testing

Testing Levels

- Unit-level testing
- Integration testing
- System testing
- Test cases/test suites
- Regression tests

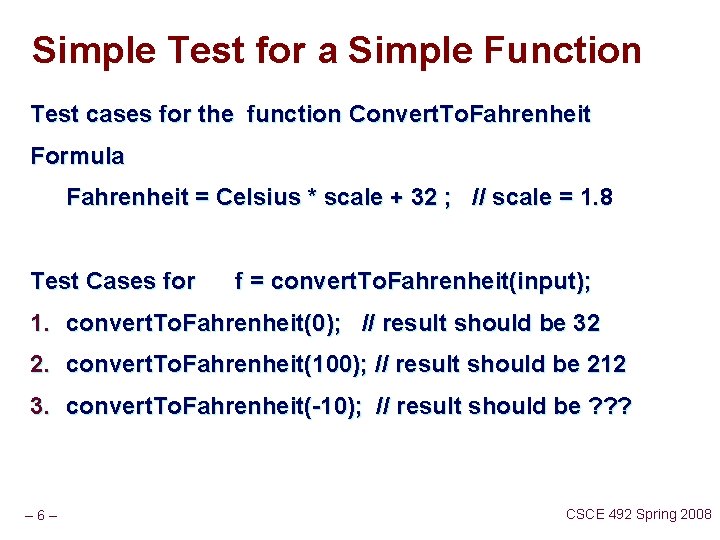

Simple Test for a Simple Function

Test cases for the function `convertToFahrenheit`.

Formula: Fahrenheit = Celsius * scale + 32, where scale = 1.8

Test cases for `f = convertToFahrenheit(input);`

1. `convertToFahrenheit(0);` // result should be 32
2. `convertToFahrenheit(100);` // result should be 212
3. `convertToFahrenheit(-10);` // result should be ???
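The three test cases above can be run as a small Java sketch. It assumes a static method `convertToFahrenheit(double)` in a hypothetical class `TempConverter`, and it uses a hand-rolled check instead of a test framework so it runs stand-alone. By the slide's formula, test case 3 works out to -10 * 1.8 + 32 = 14.

```java
// Sketch only: TempConverter is a hypothetical class name; the formula is the slide's.
class TempConverter {
    static final double SCALE = 1.8; // Fahrenheit degrees per Celsius degree

    static double convertToFahrenheit(double celsius) {
        return celsius * SCALE + 32;
    }

    // Compare doubles with a tolerance instead of ==, since 1.8 has no exact binary form.
    static boolean close(double actual, double expected) {
        return Math.abs(actual - expected) < 1e-9;
    }

    public static void main(String[] args) {
        double[] inputs = {0, 100, -10};
        double[] expected = {32, 212, 14}; // predicted results: -10 * 1.8 + 32 = 14
        for (int i = 0; i < inputs.length; i++) {
            double actual = convertToFahrenheit(inputs[i]);
            System.out.println(inputs[i] + " C -> " + actual + " F: "
                    + (close(actual, expected[i]) ? "pass" : "FAIL"));
        }
    }
}
```

Comparing with a small tolerance rather than `==` is a deliberate choice: computed floating-point results can differ from the "exact" answer in the last bit, so exact equality is a fragile test.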

Principles of Object-Oriented Testing

- Object-oriented systems are built out of two or more interrelated objects.
- Determining the correctness of O-O systems requires testing the methods that change or communicate the state of an object.
- Testing methods in an object-oriented system is similar to testing subprograms in process-oriented systems.

Testing Terminology

- Error: any discrepancy between an actual, measured value and a theoretical, predicted value. Error also refers to a human action that results in a failure or fault in the software.
- Fault: a condition that causes the software to malfunction or fail.
- Failure: the inability of a piece of software to perform according to its specifications. Failures are caused by faults, but not all faults cause failures. A piece of software has failed if its actual behaviour differs in any way from its expected behaviour.
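To make the fault/failure distinction concrete, here is a hypothetical Java method (`maxOf` is an illustrative name, not from the lecture) that contains a fault: the running maximum starts at 0 instead of at the first element. The fault is present on every call, but it only causes a failure when all of the inputs are negative.

```java
// Hypothetical example of a fault that does not always cause a failure.
class FaultDemo {
    static int maxOf(int[] values) {
        int best = 0; // FAULT: should start at values[0]
        for (int v : values) {
            if (v > best) {
                best = v;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Fault present, no failure: the expected maximum (7) is returned.
        System.out.println(maxOf(new int[] {3, 7, 2}));
        // Fault causes a failure: the expected maximum is -1, but 0 is returned.
        System.out.println(maxOf(new int[] {-5, -1, -9}));
    }
}
```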

Code Inspections

- A formal procedure in which a team of programmers reads through code, explaining what it does.
- Inspectors play "devil's advocate", trying to find bugs.
- Time-consuming process!
- Can be divisive and lead to interpersonal problems.
- Often used only for safety-critical or time-critical systems.

Walkthroughs

- Similar to inspections, except that inspectors "mentally execute" the code using simple test data.
- Expensive in terms of human resources.
- Impossible for many systems.
- Usually used as a discussion aid.

Test Plan

- A test plan specifies how we will demonstrate that the software is free of faults and behaves according to the requirements specification.
- A test plan breaks the testing process into specific tests, addressing specific data items and values.
- Each test has a test specification that documents the purpose of the test.

Test Plan

- If a test is to be accomplished by a series of smaller tests, the test specification describes the relationship between the smaller and the larger tests.
- The test specification must describe the conditions that indicate when the test is complete and a means for evaluating the results.

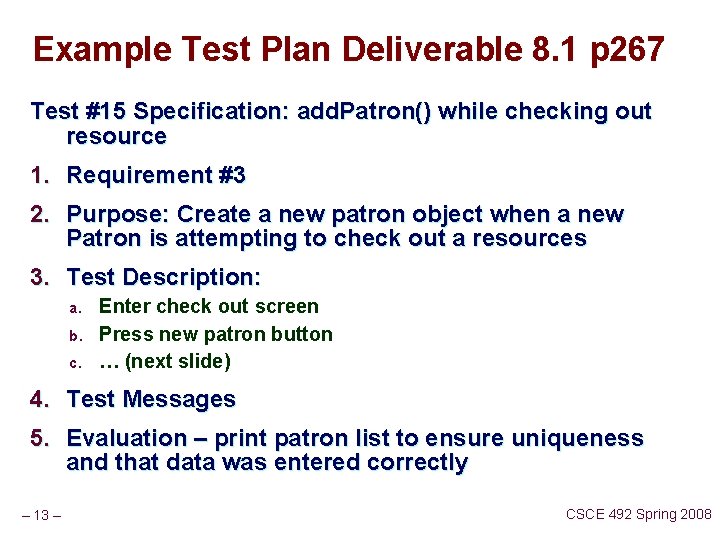

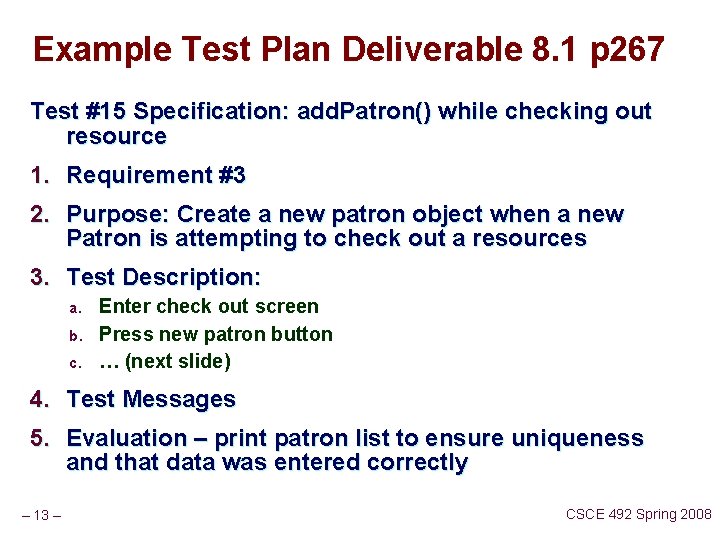

Example Test Plan (Deliverable 8.1, p. 267)

Test #15 Specification: addPatron() while checking out a resource

1. Requirement #3
2. Purpose: Create a new Patron object when a new patron is attempting to check out a resource
3. Test Description:
   - a. Enter check out screen
   - b. Press new patron button
   - c. … (next slide)
4. Test Messages
5. Evaluation: print the patron list to ensure uniqueness and that the data was entered correctly

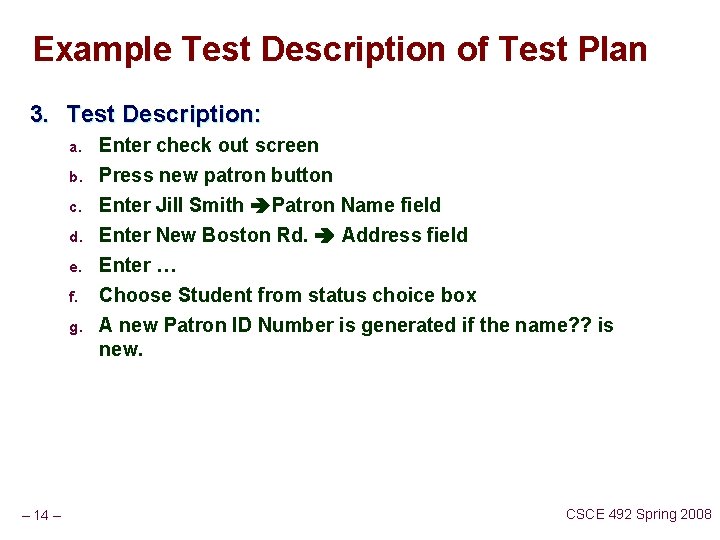

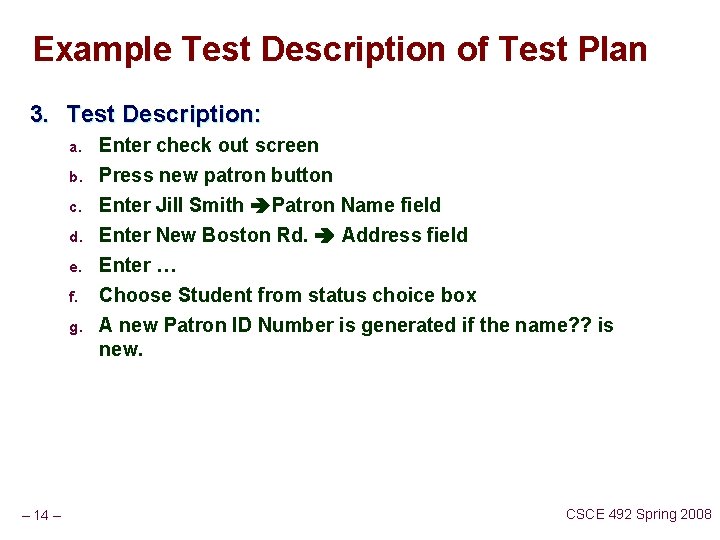

Example Test Description of Test Plan

3. Test Description:
   - a. Enter check out screen
   - b. Press new patron button
   - c. Enter Jill Smith in the Patron Name field
   - d. Enter New Boston Rd. in the Address field
   - e. Enter …
   - f. Choose Student from the status choice box
   - g. A new Patron ID Number is generated if the name?? is new.
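Parts of Test #15 could be automated as sketched below. The `PatronRegistry` class, its `addPatron(name, address, status)` signature and the sequential ID scheme are all hypothetical, since the slides do not show the system's code. The sketch assumes, as step g suggests, that a new Patron ID is generated only when the name is new, and it ends with the plan's evaluation step of printing the patron list.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model of the library system exercised by Test #15.
class PatronRegistry {
    static final class Patron {
        final int id;
        final String name;
        final String address;
        final String status;

        Patron(int id, String name, String address, String status) {
            this.id = id;
            this.name = name;
            this.address = address;
            this.status = status;
        }

        @Override
        public String toString() {
            return id + ": " + name + ", " + address + " (" + status + ")";
        }
    }

    private final Map<String, Patron> byName = new LinkedHashMap<>();
    private int nextId = 1;

    // Assumed behaviour: a new ID is generated only if the name is new;
    // otherwise the existing patron's ID is returned.
    int addPatron(String name, String address, String status) {
        Patron existing = byName.get(name);
        if (existing != null) {
            return existing.id;
        }
        Patron created = new Patron(nextId++, name, address, status);
        byName.put(name, created);
        return created.id;
    }

    int patronCount() {
        return byName.size();
    }

    // Evaluation step from the test plan: print the patron list for inspection.
    void printPatrons() {
        for (Patron p : byName.values()) {
            System.out.println(p);
        }
    }

    public static void main(String[] args) {
        PatronRegistry registry = new PatronRegistry();
        // Steps c, d and f: name, address and status (step e's field is elided on the slide).
        int first = registry.addPatron("Jill Smith", "New Boston Rd.", "Student");
        // Re-entering the same name must not create a second patron.
        int second = registry.addPatron("Jill Smith", "New Boston Rd.", "Student");
        System.out.println("same ID: " + (first == second) + ", patrons: " + registry.patronCount());
        registry.printPatrons();
    }
}
```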

Test Oracle

- A test oracle is the set of predicted results for a set of tests, and is used to determine the success of testing.
- Test oracles are extremely difficult to create and are ideally created from the requirements specification.
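A test oracle can be as simple as a table of predicted results written down before the tests run. The sketch below uses integer absolute value (`Math::abs`) as an illustrative unit under test; `OracleDemo` and `countPassing` are hypothetical names. Each actual result is judged against the oracle's prediction for the same input.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.IntUnaryOperator;

// Sketch: an oracle as a map from test input to predicted result.
class OracleDemo {
    // Executes the unit on every oracle input and counts results matching the prediction.
    static int countPassing(Map<Integer, Integer> oracle, IntUnaryOperator unitUnderTest) {
        int passed = 0;
        for (Map.Entry<Integer, Integer> e : oracle.entrySet()) {
            int actual = unitUnderTest.applyAsInt(e.getKey());
            boolean ok = actual == e.getValue();
            System.out.println("input " + e.getKey() + ": expected " + e.getValue()
                    + ", got " + actual + (ok ? " pass" : " FAIL"));
            if (ok) {
                passed++;
            }
        }
        return passed;
    }

    public static void main(String[] args) {
        // Predicted results, written before running the unit under test.
        Map<Integer, Integer> oracle = new LinkedHashMap<>();
        oracle.put(5, 5);
        oracle.put(-5, 5);
        oracle.put(0, 0);
        int passed = countPassing(oracle, Math::abs);
        System.out.println(passed + " of " + oracle.size() + " tests passed");
    }
}
```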

Test Cases

- A test case is a set of inputs to the system.
- Successfully testing a system hinges on selecting representative test cases.
- Poorly chosen test cases may fail to illuminate the faults in a system.
- In most systems exhaustive testing is impossible, so a white box or black box testing strategy is typically selected.

Black Box Testing

- The tester knows nothing about the internal structure of the code.
- Test cases are formulated based on the expected output of methods.
- The tester generates test cases to represent all possible situations, in order to ensure that the observed and expected behaviour is the same.

Black Box Testing

- In black box testing, we ignore the internals of the system and focus on the relationship between inputs and outputs.
- Exhaustive testing would mean examining the output of the system for every conceivable input.
- Clearly not practical for any real system!
- Instead, we use equivalence partitioning and boundary analysis to identify characteristic inputs.

Equivalence Partitioning

- Suppose the system asks for "a number between 100 and 999 inclusive".
- This gives three equivalence classes of input:
  - less than 100
  - 100 to 999
  - greater than 999
- We thus test the system against characteristic values from each equivalence class.
- Example: 50 (invalid), 500 (valid), 1500 (invalid).
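The equivalence classes above map directly onto code. This sketch assumes a hypothetical validator `isValid(int)` for the "number between 100 and 999 inclusive" requirement and tests it with the slide's representatives 50, 500 and 1500, one per class.

```java
// Sketch: one representative test value per equivalence class.
class EquivalencePartitionDemo {
    // Hypothetical validator for "a number between 100 and 999 inclusive".
    static boolean isValid(int n) {
        return n >= 100 && n <= 999;
    }

    public static void main(String[] args) {
        int[] representatives = {50, 500, 1500}; // below, inside, above the range
        boolean[] expected = {false, true, false};
        for (int i = 0; i < representatives.length; i++) {
            boolean actual = isValid(representatives[i]);
            System.out.println(representatives[i] + " -> " + actual
                    + (actual == expected[i] ? " pass" : " FAIL"));
        }
    }
}
```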

Boundary Values

- Arises from the observation that programs often fail at input boundaries.
- Suppose the system asks for "a number between 100 and 999 inclusive".
- The boundaries are 100 and 999.
- We therefore test for the values:
  - 99, 100, 101 (the lower boundary)
  - 998, 999, 1000 (the upper boundary)
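Boundary tests catch faults that equivalence-class representatives can miss. In this sketch, `isValid` and `isValidFaulty` are hypothetical validators; the faulty one has an off-by-one error (`< 999` instead of `<= 999`). The representative 500 passes on both versions, but the boundary input 999 exposes the fault.

```java
import java.util.function.IntPredicate;

// Sketch: running the six boundary values against a correct and a faulty validator.
class BoundaryValueDemo {
    // Specification: accept a number between 100 and 999 inclusive.
    static boolean isValid(int n) {
        return n >= 100 && n <= 999;
    }

    // Hypothetical faulty version with an off-by-one error at the upper boundary.
    static boolean isValidFaulty(int n) {
        return n >= 100 && n < 999;
    }

    static final int[] INPUTS = {99, 100, 101, 998, 999, 1000};
    static final boolean[] EXPECTED = {false, true, true, true, true, false};

    // Runs every boundary value and counts results that differ from the expected ones.
    static int countFailures(IntPredicate candidate) {
        int failures = 0;
        for (int i = 0; i < INPUTS.length; i++) {
            if (candidate.test(INPUTS[i]) != EXPECTED[i]) {
                System.out.println("failure at input " + INPUTS[i]);
                failures++;
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println("isValid failures: " + countFailures(BoundaryValueDemo::isValid));
        System.out.println("isValidFaulty failures: " + countFailures(BoundaryValueDemo::isValidFaulty));
    }
}
```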

White Box Testing

- The tester uses knowledge of the programming constructs to determine which test cases to use.
- If one or more loops exist in a method, the tester should test the execution of each loop for 0, 1, max, and max + 1 iterations, where max is the maximum possible number of iterations.
- Similarly, conditions should be tested for both true and false.
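The 0, 1, max, max + 1 rule can be applied as follows. The unit here is hypothetical: `sum` adds up at most `MAX` readings (MAX = 5 is an arbitrary assumption) and rejects longer inputs, so the max + 1 case checks that the limit is enforced.

```java
import java.util.Arrays;

// Sketch: driving one loop 0, 1, max and max + 1 times.
class LoopTestDemo {
    static final int MAX = 5; // assumed maximum number of loop iterations

    // Hypothetical unit: the loop body runs once per reading.
    static int sum(int[] readings) {
        if (readings.length > MAX) {
            throw new IllegalArgumentException("more than " + MAX + " readings");
        }
        int total = 0;
        for (int r : readings) {
            total += r;
        }
        return total;
    }

    // Builds an input that makes the loop run exactly `count` times.
    static int[] ones(int count) {
        int[] a = new int[count];
        Arrays.fill(a, 1);
        return a;
    }

    public static void main(String[] args) {
        for (int count : new int[] {0, 1, MAX, MAX + 1}) {
            try {
                System.out.println(count + " iterations -> sum " + sum(ones(count)));
            } catch (IllegalArgumentException e) {
                System.out.println(count + " iterations -> rejected: " + e.getMessage());
            }
        }
    }
}
```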

White Box Testing

- In white box testing, we use knowledge of the internal structure of the system to guide the development of tests.
- The ideal: examine every possible run of the system.
- Not possible in practice!
- Instead: aim to test every statement at least once!

Example: `if (x > 5) { System.out.println("hello"); } else { System.out.println("bye"); }`

There are two possible paths through this code, corresponding to x > 5 and x <= 5.
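To exercise both paths, the snippet can be wrapped in a method. This sketch returns the string instead of printing it so that a test can check it; `greet` is a hypothetical name. The inputs 6 and 5 cover the x > 5 and x <= 5 paths, and because they sit on either side of 5 they also probe the boundary of the condition.

```java
// Sketch: the slide's if/else as a testable method, with one test per path.
class BranchCoverageDemo {
    static String greet(int x) {
        if (x > 5) {
            return "hello";
        } else {
            return "bye";
        }
    }

    public static void main(String[] args) {
        System.out.println(greet(6)); // covers the x > 5 path
        System.out.println(greet(5)); // covers the x <= 5 path
    }
}
```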

Unit Testing

- The units comprising a system are individually tested.
- The code is examined for faults in algorithms, data and syntax.
- A set of test cases is formulated and input, and the results are evaluated.
- The module being tested should be reviewed in the context of the requirements specification.

Integration Testing

- The goal is to ensure that groups of components work together as specified in the requirements document.
- Four kinds of integration tests exist:
  - Structure tests
  - Functional tests
  - Stress tests
  - Performance tests

System Testing

- The goal is to ensure that the system actually does what the customer expects it to do.
- Testing is carried out by customers mimicking real-world activities.
- Customers should also intentionally enter erroneous values to determine the system's behaviour in those instances.

Testing Steps

- Determine what the test is supposed to measure
- Decide how to carry out the tests
- Develop the test cases
- Determine the expected results of each test (test oracle)
- Execute the tests
- Compare results to the test oracle

Analysis of Test Results

- The test analysis report documents testing and provides information that allows a failure to be duplicated, found, and fixed.
- The test analysis report references the relevant sections of the requirements specification, the implementation plan, and the test plan, and connects these to each test.

Special Issues for Testing Object-Oriented Systems

- Because object interaction is essential to O-O systems, integration testing must be more extensive.
- Inheritance makes testing more difficult by requiring more contexts (all subclasses) for testing an inherited module.
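The following hypothetical classes show why an inherited method needs retesting in each subclass context. `Account.withdraw` is inherited unchanged by `FeeAccount`, but it calls `fee()`, which the subclass overrides, so the same test gives a different result in each context. The class names and the fee of 2 are illustrative assumptions.

```java
// Hypothetical base class: withdraw() depends on the overridable hook fee().
class Account {
    protected int balance;

    Account(int balance) {
        this.balance = balance;
    }

    int fee() {
        return 0;
    }

    // Inherited method under test: succeeds only if the balance covers amount + fee.
    boolean withdraw(int amount) {
        int total = amount + fee();
        if (total > balance) {
            return false;
        }
        balance -= total;
        return true;
    }
}

// Hypothetical subclass: inherits withdraw() unchanged but overrides fee().
class FeeAccount extends Account {
    FeeAccount(int balance) {
        super(balance);
    }

    @Override
    int fee() {
        return 2;
    }
}

class InheritanceTestDemo {
    // The same test, run once per context.
    static boolean withdrawWholeBalance(Account a) {
        return a.withdraw(100);
    }

    public static void main(String[] args) {
        System.out.println("Account:    " + withdrawWholeBalance(new Account(100)));
        System.out.println("FeeAccount: " + withdrawWholeBalance(new FeeAccount(100)));
    }
}
```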

Configuration Management

- Software systems often have multiple versions or releases.
- Configuration management is the process of controlling development that produces multiple software systems.
- An evolutionary development approach often results in multiple versions of the system.
- Regression testing is the process of retesting elements of the system that were tested in a previous version or release.
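A regression test reruns an existing suite against a new release and flags any result that changed. In this sketch the baseline is the Celsius-to-Fahrenheit conversion from the earlier slide, and `convertV2` is a hypothetical later release rewritten with integer arithmetic. The original three test inputs still pass on it; an extra input, 37 (an assumption added here), reveals the truncation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.DoubleUnaryOperator;

// Sketch: compare a new release against the previous release's results.
class RegressionDemo {
    // Release 1: the conversion formula from the earlier slide.
    static double convertV1(double celsius) {
        return celsius * 1.8 + 32;
    }

    // Release 2 (hypothetical): integer arithmetic silently truncates fractions.
    static double convertV2(double celsius) {
        return (int) celsius * 9 / 5 + 32;
    }

    static final double[] SUITE = {0, 100, -10, 37};

    // Reruns the suite and returns the inputs whose result differs from the baseline.
    static List<Double> regressions(DoubleUnaryOperator oldRelease, DoubleUnaryOperator newRelease) {
        List<Double> changed = new ArrayList<>();
        for (double input : SUITE) {
            double baseline = oldRelease.applyAsDouble(input);
            double current = newRelease.applyAsDouble(input);
            if (Math.abs(baseline - current) > 1e-9) {
                changed.add(input);
            }
        }
        return changed;
    }

    public static void main(String[] args) {
        System.out.println("changed results for inputs: "
                + regressions(RegressionDemo::convertV1, RegressionDemo::convertV2));
    }
}
```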

Alpha/Beta Testing

- In-house testing is usually called alpha testing.
- For software products, there is usually an additional stage of testing, called beta testing.
- Beta testing involves distributing tested code to "beta test sites" (usually prospective customers) for evaluation and use.
- It typically involves a formal procedure for reporting bugs.
- Delivering buggy beta test code is embarrassing!