
CSC 4510 Support Vector Machines 2 (SVMs)

CSC 4510, Spring 2012. © Paula Matuszek 2012.

So What’s an SVM?
• A Support Vector Machine (SVM) is a classifier
  – It uses the features of instances to decide which class each instance belongs to
• It is a supervised machine-learning classifier
  – Training cases are used to calculate the parameters of a model, which can then be applied to new instances to make a decision
• It is a binary classifier: it distinguishes between two classes
• For the squirrel vs. bird example, Grandis used size, a histogram of pixels, and a measure of texture as the features

Basic Idea Underlying SVMs
• Find a line, a plane, or a hyperplane that cleanly separates our classes
  – This is the same concept we have seen in regression
• Do it by finding the separator with the greatest margin between the classes
  – This is not a concept we have seen in regression. What does it mean?

Soft Margins
• Intuitively, it still looks like we can make a decent separation here
  – We can’t make a clean margin
  – But we can almost do so, if we allow some errors
• We introduce slack variables, which measure the degree of misclassification of each point
• A soft margin is one that lets us make some errors in order to get a wider margin
• There is a tradeoff between a wide margin and classification errors
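The slack variables can be made concrete with a few lines of code. This is a minimal sketch, assuming the boundary is written $\mathbf{w}\cdot\mathbf{x} + b = 0$ with margin planes at $\pm 1$, so that the slack of a point is $\xi_i = \max(0,\ 1 - y_i(\mathbf{w}\cdot\mathbf{x}_i + b))$ and the soft-margin objective is $\tfrac12\lVert\mathbf{w}\rVert^2 + C\sum_i \xi_i$. The hyperplane, the points, and the value of C are hypothetical toy values.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def slack(w, b, x, y):
    # xi = max(0, 1 - y * (w . x + b)) for a label y in {+1, -1}
    #   xi == 0     : on or outside the correct margin plane
    #   0 < xi <= 1 : inside the margin, still on the correct side
    #   xi > 1      : on the wrong side of the boundary (misclassified)
    return max(0.0, 1.0 - y * (dot(w, x) + b))

def soft_margin_objective(w, b, points, labels, C):
    # 0.5 * ||w||^2 favors a wide margin; C * sum(xi) charges for the errors
    return 0.5 * dot(w, w) + C * sum(slack(w, b, x, y) for x, y in zip(points, labels))

w, b = (1.0, 0.0), 0.0                      # hypothetical boundary x1 = 0
points = [(2.0, 0.0), (0.5, 0.0), (-0.5, 0.0)]
labels = [+1, +1, +1]
print([slack(w, b, x, y) for x, y in zip(points, labels)])   # [0.0, 0.5, 1.5]
print(soft_margin_objective(w, b, points, labels, C=1.0))    # 2.5
```

The middle point sits inside the margin but on the correct side; the last one is misclassified, so its slack exceeds 1. Raising C makes those errors cost more relative to the margin term.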

Non-Linearly-Separable Data
• What if we can’t do a good linear separation of our data?
• As with regression, allowing non-linearity will give us much better models of many data sets
• In SVMs, we do this by using a kernel
• A kernel is a function that lets us work as if our data had been mapped into a higher-dimensional feature space, where we can find a separating hyperplane

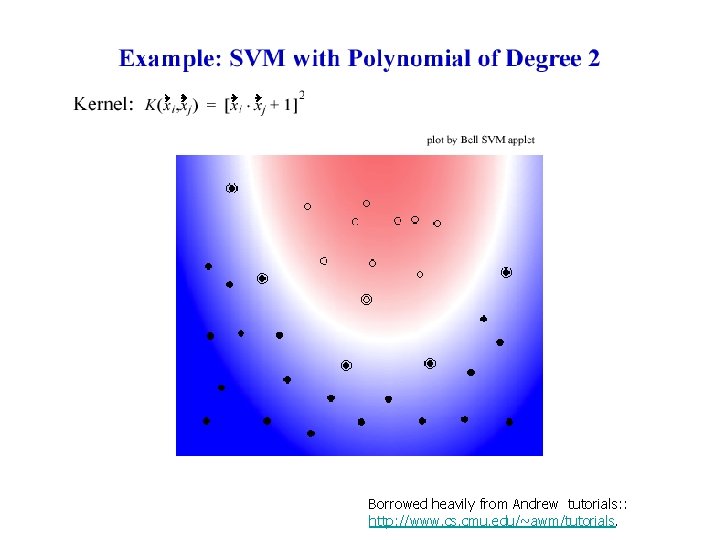

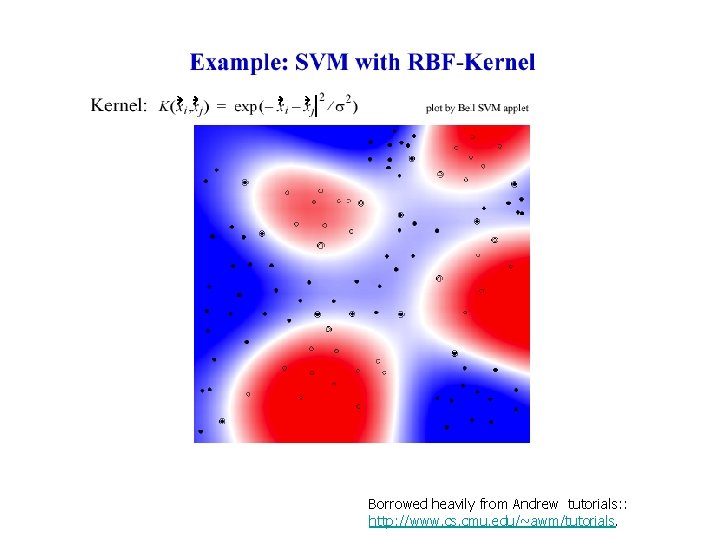

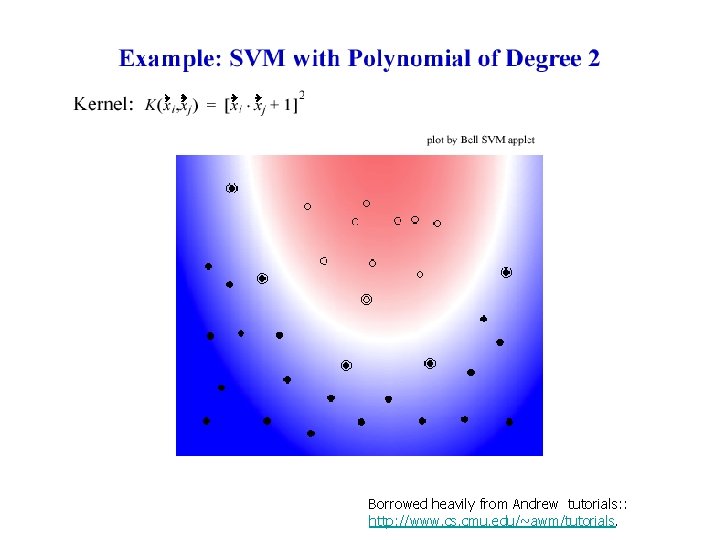

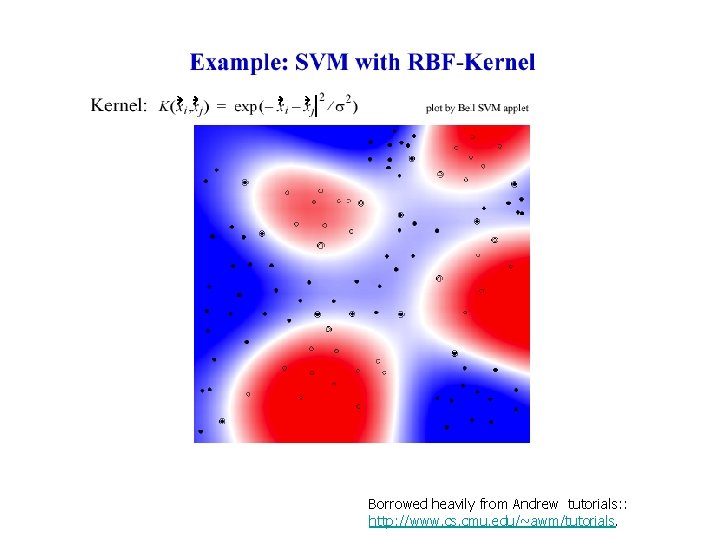

Kernels for SVMs
• As we saw in Orange, we always specify a kernel for an SVM
• Linear is the simplest, but seldom a good match to the data
• Other common ones are
  – polynomial
  – RBF (Gaussian Radial Basis Function)

Borrowed heavily from Andrew Moore’s tutorials: http://www.cs.cmu.edu/~awm/tutorials.


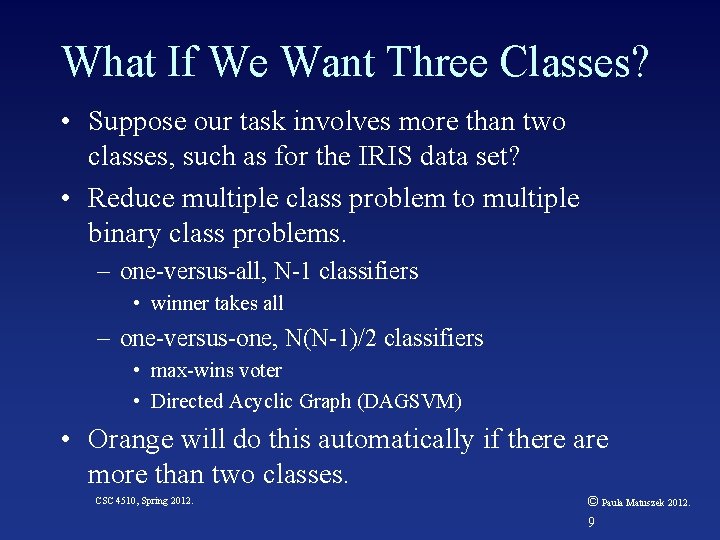

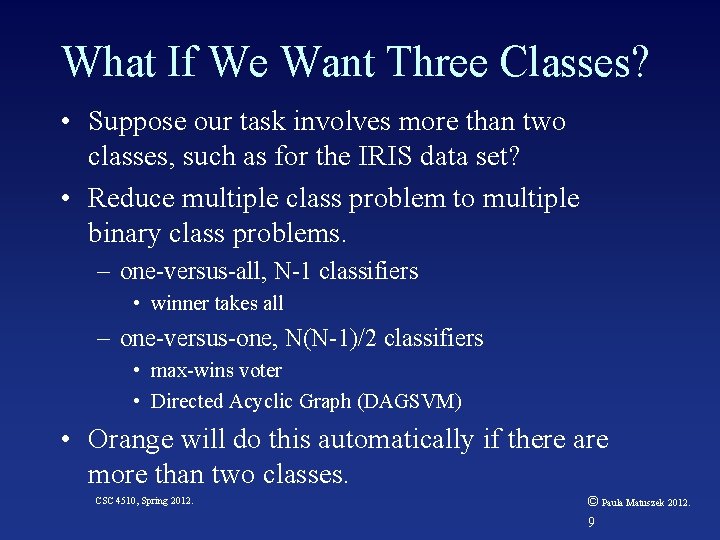

What If We Want Three Classes?
• Suppose our task involves more than two classes, as in the IRIS data set
• Reduce the multiple-class problem to multiple binary-class problems:
  – one-versus-all: N classifiers, one per class
    • winner takes all
  – one-versus-one: N(N-1)/2 classifiers, one per pair of classes
    • max-wins voting
    • Directed Acyclic Graph (DAGSVM)
• Orange will do this automatically if there are more than two classes.
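Max-wins voting for one-versus-one can be sketched in a few lines. The three class names and the threshold classifiers below are hypothetical stand-ins for trained pairwise SVMs; in practice each entry of `pairwise` would be a binary SVM trained only on the data from those two classes.

```python
from collections import Counter
from itertools import combinations

def one_vs_one_predict(x, classes, pairwise):
    # pairwise[(a, b)] is a binary classifier that returns either a or b.
    votes = Counter()
    for a, b in combinations(classes, 2):   # N(N-1)/2 pairs
        votes[pairwise[(a, b)](x)] += 1
    # Max-wins: most votes wins; max() keeps the earliest class on a tie
    return max(classes, key=lambda c: votes[c])

# Hypothetical pairwise classifiers on a single numeric feature
classes = ["low", "mid", "high"]
pairwise = {
    ("low", "mid"):  lambda x: "low" if x < 1.0 else "mid",
    ("low", "high"): lambda x: "low" if x < 1.5 else "high",
    ("mid", "high"): lambda x: "mid" if x < 2.0 else "high",
}
print(one_vs_one_predict(0.5, classes, pairwise))  # low
print(one_vs_one_predict(1.2, classes, pairwise))  # mid
print(one_vs_one_predict(3.0, classes, pairwise))  # high
```

With three classes, one-versus-one and one-versus-all both need three classifiers; the difference grows quickly with N, since N(N-1)/2 is quadratic.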

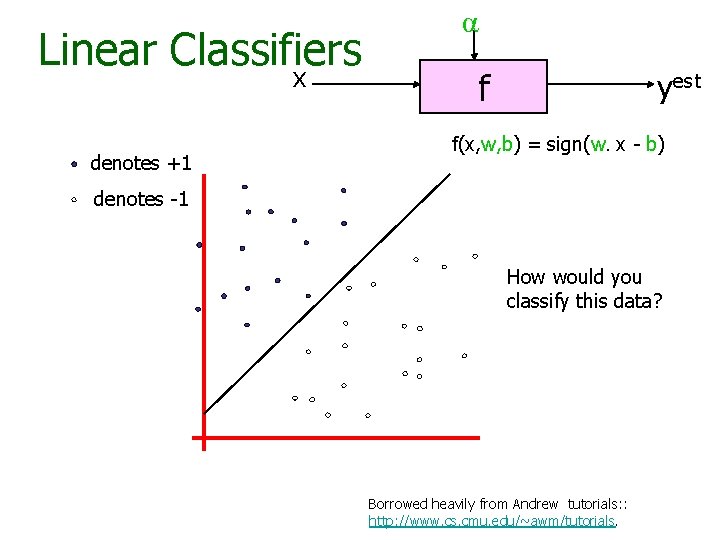

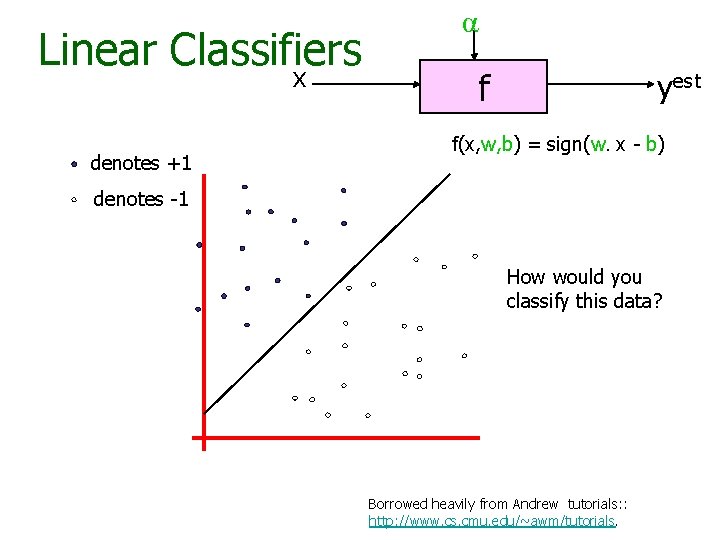

Linear Classifiers
• A linear classifier f maps an input x to an estimated label y_est: $f(\mathbf{x}, \mathbf{w}, b) = \operatorname{sign}(\mathbf{w}\cdot\mathbf{x} - b)$
• In the figure, one symbol denotes +1 and the other denotes −1
• How would you classify this data?
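The linear classifier on this slide translates directly into code. A minimal sketch; returning +1 when $\mathbf{w}\cdot\mathbf{x} - b = 0$ exactly is an arbitrary tie-breaking choice made here, and the weights are hypothetical.

```python
def dot(u, v):
    # w . x for equal-length vectors
    return sum(ui * vi for ui, vi in zip(u, v))

def f(x, w, b):
    # f(x, w, b) = sign(w . x - b), mapped to the labels +1 / -1
    return 1 if dot(w, x) - b >= 0 else -1

w, b = (1.0, 1.0), 1.0         # hypothetical weights: boundary x1 + x2 = 1
print(f((2.0, 0.0), w, b))     # 1
print(f((0.0, 0.0), w, b))     # -1
```

Every choice of w and b gives a different line; the question on the next slides is which of the many separating lines to prefer.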

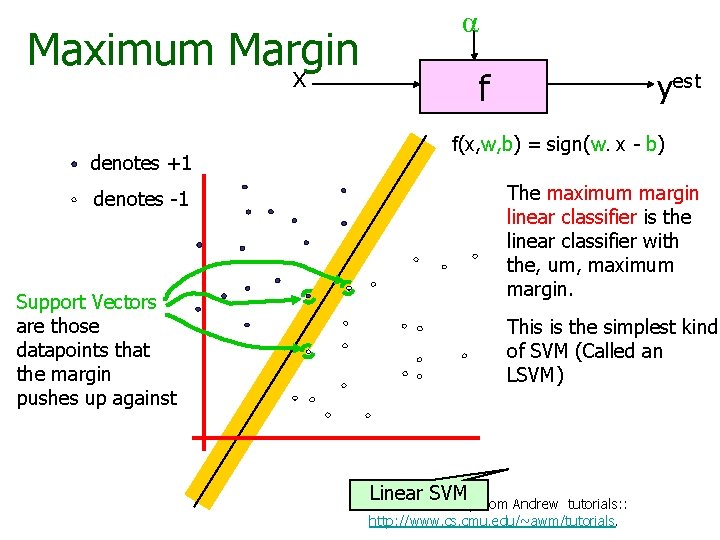

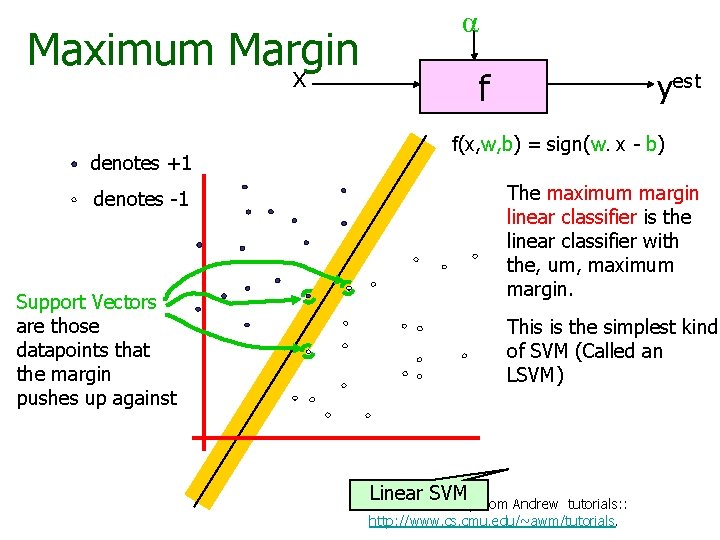

Maximum Margin
• $f(\mathbf{x}, \mathbf{w}, b) = \operatorname{sign}(\mathbf{w}\cdot\mathbf{x} - b)$; in the figure, one symbol denotes +1 and the other denotes −1
• The maximum margin linear classifier is the linear classifier with the, um, maximum margin
• Support vectors are those data points that the margin pushes up against
• This is the simplest kind of SVM, called a linear SVM (LSVM)

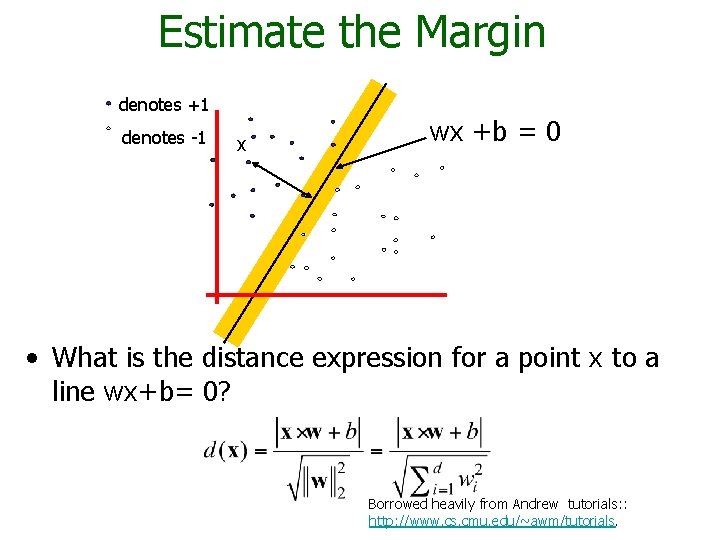

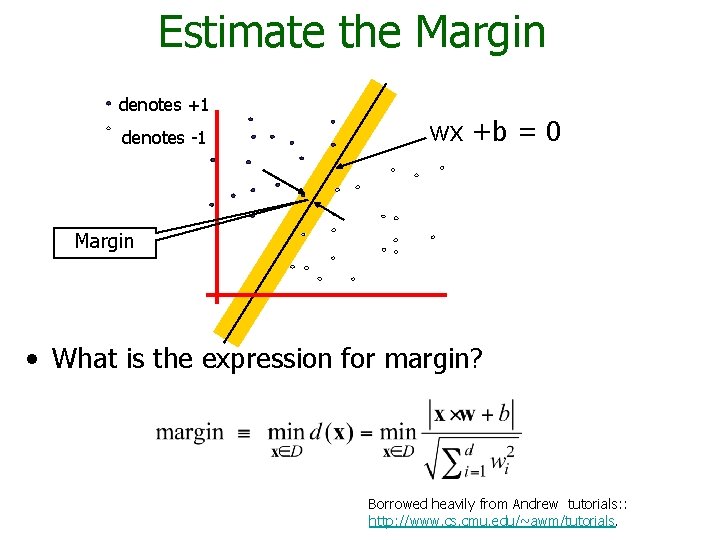

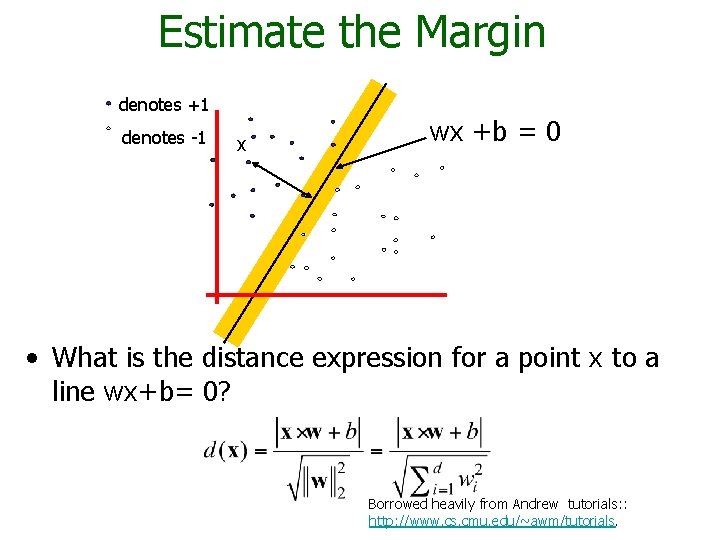

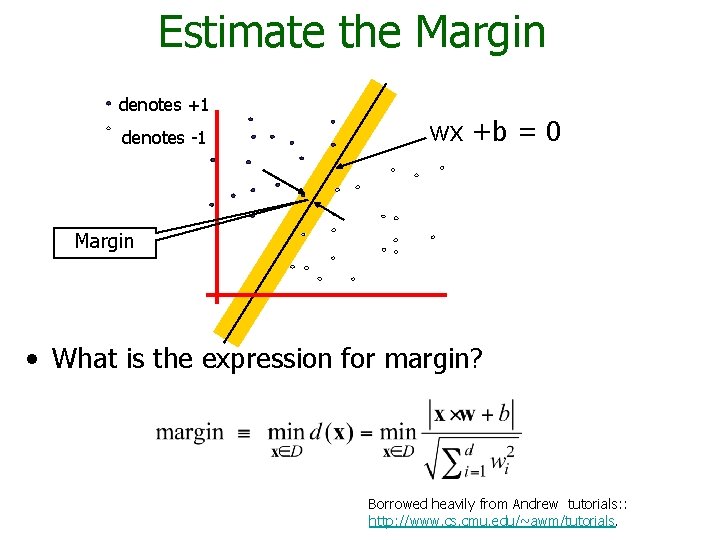

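Estimate the Margin
• Figure: +1 and −1 points on either side of the line $\mathbf{w}\cdot\mathbf{x} + b = 0$, with a point $\mathbf{x}$ marked
• What is the expression for the distance from a point $\mathbf{x}$ to the line $\mathbf{w}\cdot\mathbf{x} + b = 0$?
• (These slides write the boundary with $+b$; it is the same family of lines as $\mathbf{w}\cdot\mathbf{x} - b = 0$ with the sign of $b$ flipped.)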
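The standard answer is $\lvert\mathbf{w}\cdot\mathbf{x} + b\rvert / \lVert\mathbf{w}\rVert$: the signed value $\mathbf{w}\cdot\mathbf{x} + b$ divided by the length of the normal vector $\mathbf{w}$. A small sketch with hypothetical values:

```python
import math

def distance_to_hyperplane(x, w, b):
    # |w . x + b| / ||w||: length of the projection onto the unit normal w / ||w||
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return abs(score) / math.sqrt(sum(wi * wi for wi in w))

w, b = (3.0, 4.0), -5.0        # hypothetical line 3*x1 + 4*x2 = 5, so ||w|| = 5
print(distance_to_hyperplane((0.0, 0.0), w, b))   # 1.0
print(distance_to_hyperplane((3.0, 4.0), w, b))   # 4.0
```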

Estimate the Margin
• Figure: the line $\mathbf{w}\cdot\mathbf{x} + b = 0$ with the margin marked on either side
• What is the expression for the margin?
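One standard derivation of the margin, assuming $\mathbf{w}$ and $b$ are scaled so that the closest points on each side lie on the plus-plane $\mathbf{w}\cdot\mathbf{x} + b = +1$ and the minus-plane $\mathbf{w}\cdot\mathbf{x} + b = -1$. Since $\mathbf{w}$ is perpendicular to both planes, the closest point on the plus-plane to a point on the minus-plane lies along $\mathbf{w}$:

```latex
\begin{aligned}
&\mathbf{x}^- \text{ on the minus-plane},\qquad \mathbf{x}^+ = \mathbf{x}^- + \lambda\mathbf{w} \text{ on the plus-plane} \\
&\mathbf{w}\cdot(\mathbf{x}^- + \lambda\mathbf{w}) + b = 1
  \;\Rightarrow\; -1 + \lambda\lVert\mathbf{w}\rVert^2 = 1
  \;\Rightarrow\; \lambda = \frac{2}{\lVert\mathbf{w}\rVert^2} \\
&M = \lVert\mathbf{x}^+ - \mathbf{x}^-\rVert = \lambda\lVert\mathbf{w}\rVert = \frac{2}{\lVert\mathbf{w}\rVert}
\end{aligned}
```

So a smaller $\lVert\mathbf{w}\rVert$ means a wider margin.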

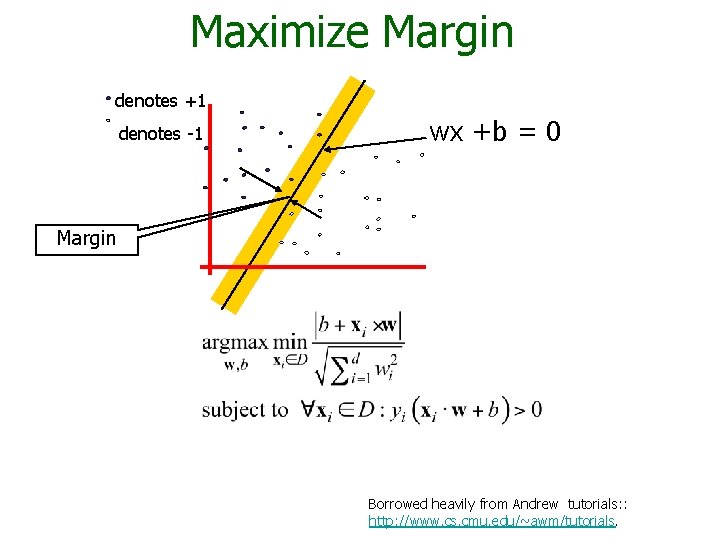

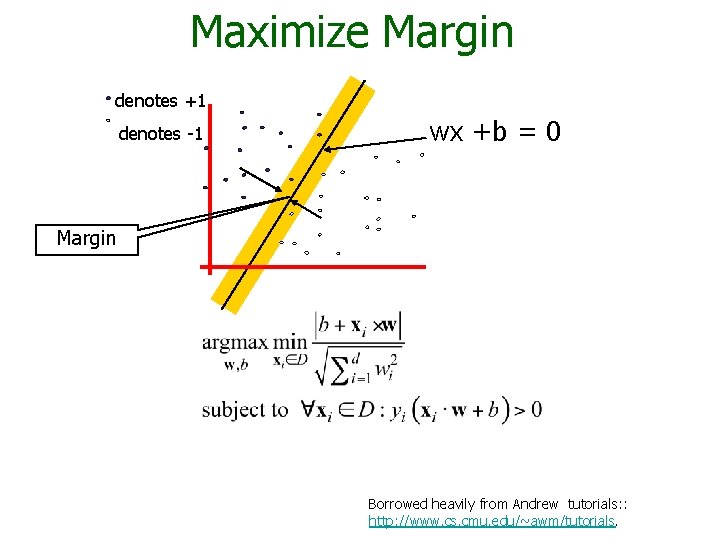

Maximize Margin
• Figure: the line $\mathbf{w}\cdot\mathbf{x} + b = 0$ with its margin, separating the +1 points from the −1 points; we want the widest such margin
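Written out, maximizing the margin $2/\lVert\mathbf{w}\rVert$ (for $\mathbf{w}$ and $b$ scaled so the closest points satisfy $\mathbf{w}\cdot\mathbf{x} + b = \pm 1$) is equivalent to minimizing $\tfrac12\lVert\mathbf{w}\rVert^2$, which gives the standard quadratic program below, with labels $y_i \in \{+1, -1\}$. The second problem is the soft-margin version, with one slack variable $\xi_i$ per point and error cost $C$:

```latex
\begin{aligned}
&\min_{\mathbf{w},\,b}\ \tfrac{1}{2}\lVert\mathbf{w}\rVert^2
  \quad\text{subject to}\quad y_i\,(\mathbf{w}\cdot\mathbf{x}_i + b) \ge 1, \quad i = 1, \dots, n \\[6pt]
&\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \tfrac{1}{2}\lVert\mathbf{w}\rVert^2 + C\sum_{i=1}^{n}\xi_i
  \quad\text{subject to}\quad y_i\,(\mathbf{w}\cdot\mathbf{x}_i + b) \ge 1 - \xi_i,\ \ \xi_i \ge 0
\end{aligned}
```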

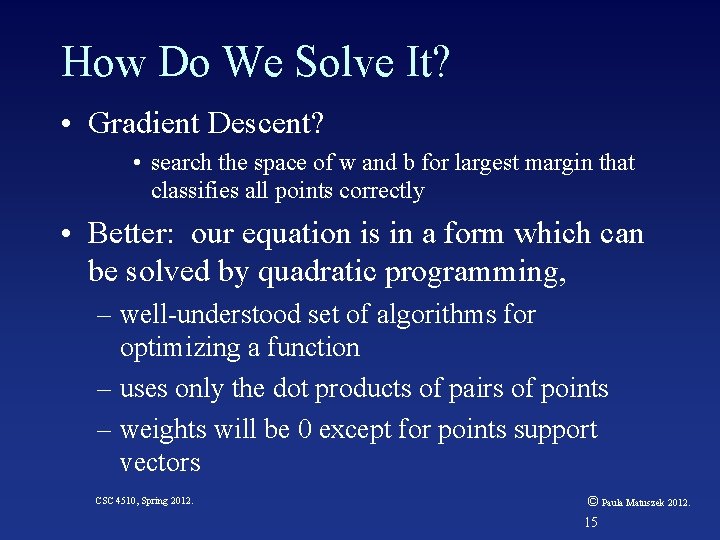

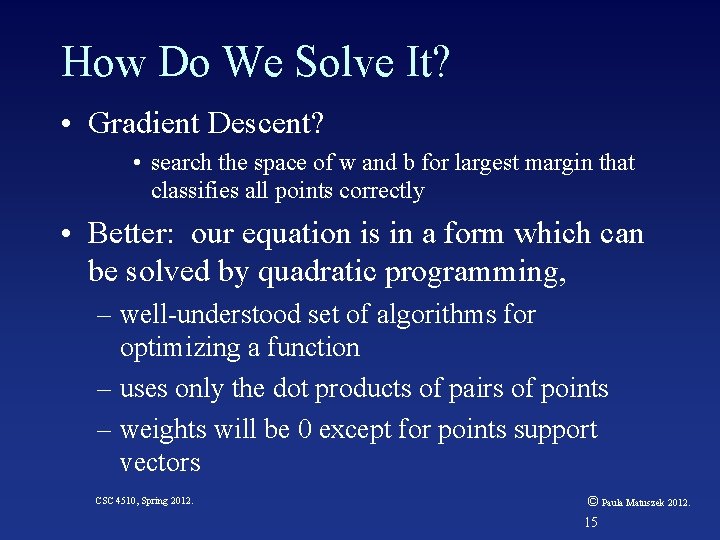

How Do We Solve It?
• Gradient descent?
  – Search the space of w and b for the largest margin that classifies all points correctly
• Better: our problem is in a form that can be solved by quadratic programming (QP)
  – A well-understood set of algorithms for optimizing a quadratic function subject to linear constraints
  – The solution uses only the dot products of pairs of points
  – The weight assigned to each training point will be 0 except for the support vectors
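The QP solution assigns a weight $\alpha_i \ge 0$ to each training point, with $\mathbf{w} = \sum_i \alpha_i y_i \mathbf{x}_i$ and decision value $\sum_i \alpha_i y_i (\mathbf{x}_i\cdot\mathbf{x}) + b$. The sketch below uses a hypothetical three-point toy problem whose $\alpha$ values were solved by hand (not by a QP library); it shows that only dot products are needed and that the non-support vector, with $\alpha = 0$, drops out.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def decision(x, points, labels, alphas, b):
    # f(x) = sum_i alpha_i * y_i * (x_i . x) + b
    # Only dot products with training points are needed, and points whose
    # alpha_i is 0 (non-support vectors) are skipped because they add nothing.
    return sum(a * y * dot(xi, x)
               for xi, y, a in zip(points, labels, alphas) if a > 0) + b

# Toy problem: +1 at x1 = 1 and x1 = 3, -1 at x1 = -1.
points = [(1.0, 0.0), (-1.0, 0.0), (3.0, 0.0)]
labels = [+1, -1, +1]
alphas = [0.5, 0.5, 0.0]  # hand-solved for this toy problem
b = 0.0

support = [x for x, a in zip(points, alphas) if a > 0]
w = [sum(a * y * xi[k] for xi, y, a in zip(points, labels, alphas)) for k in range(2)]
print(support)                                          # [(1.0, 0.0), (-1.0, 0.0)]
print(w)                                                # [1.0, 0.0]
print(decision((2.0, 5.0), points, labels, alphas, b))  # 2.0
```

Here $\lVert\mathbf{w}\rVert = 1$, so the margin $2/\lVert\mathbf{w}\rVert$ is 2, exactly the gap between the two support vectors at $x_1 = -1$ and $x_1 = 1$.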

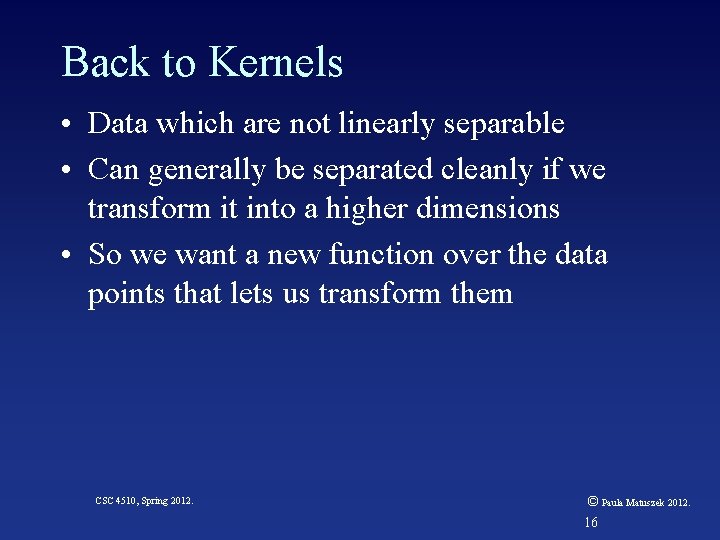

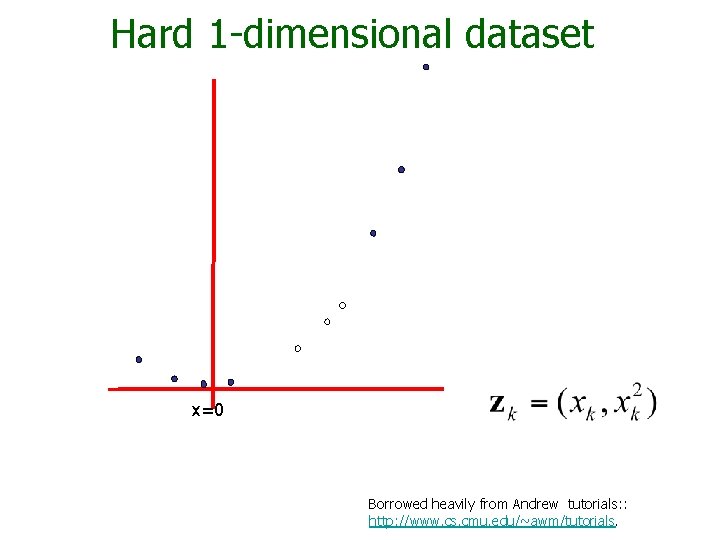

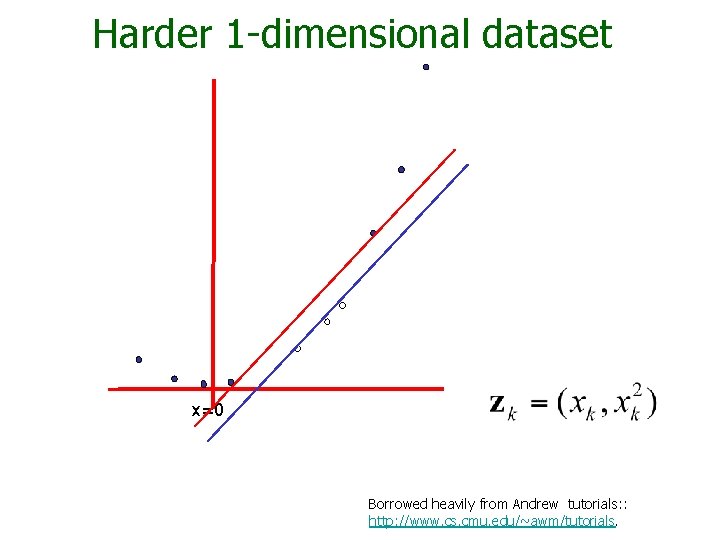

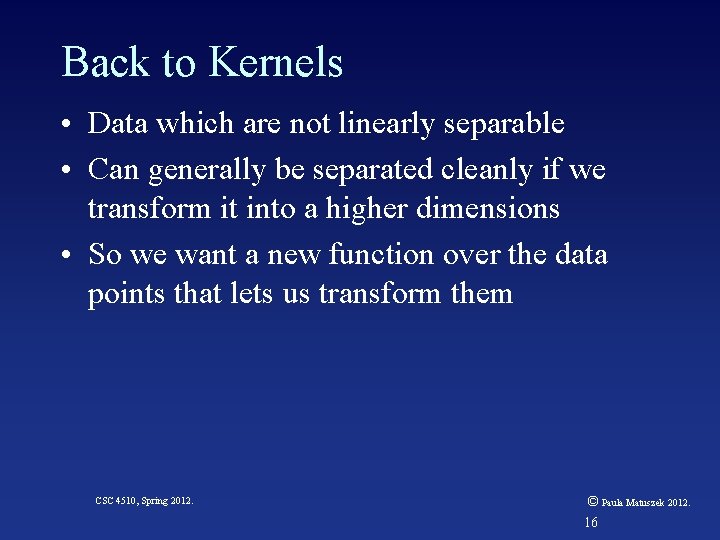

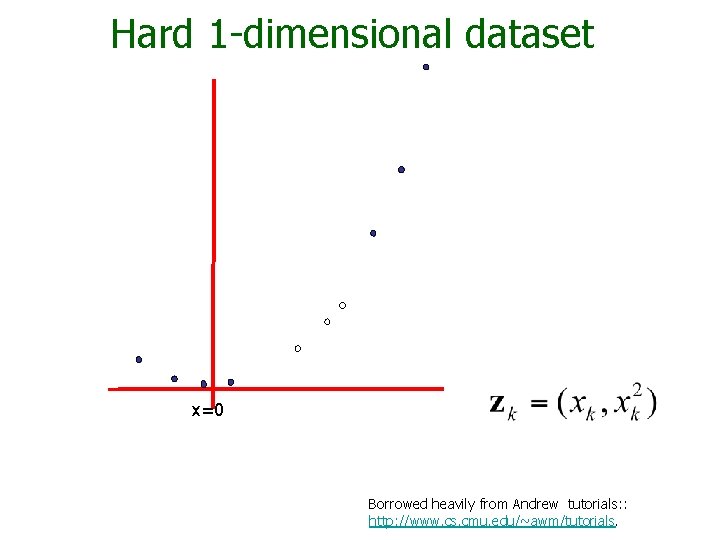

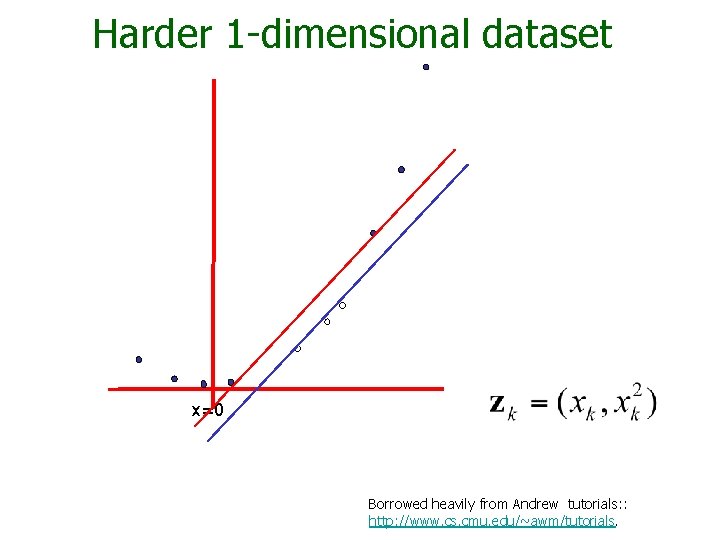

Back to Kernels
• Data that are not linearly separable can often be separated cleanly if we transform them into a higher-dimensional space
• So we want a new function over the data points that lets us transform them

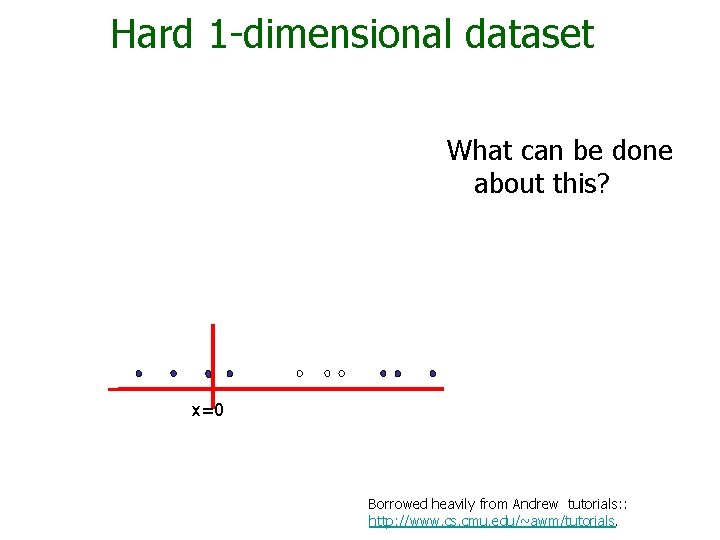

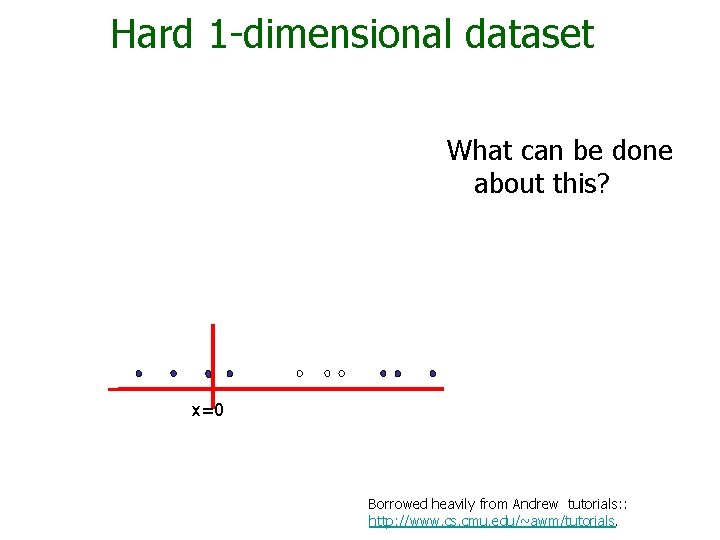

Hard 1-dimensional dataset
• Figure: points of both classes along a single axis, with x = 0 marked
• What can be done about this?

Hard 1-dimensional dataset (figure, with x = 0 marked)

Harder 1-dimensional dataset (figure, with x = 0 marked)
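A data set like this can become linearly separable after a simple non-linear transformation. The sketch below assumes one common choice, the map $x \mapsto (x, x^2)$, and uses hypothetical toy data in which the +1 points sit near 0 and the −1 points lie farther out on both sides. No single threshold on x separates them, but a straight line in the $(x, x^2)$ plane does; the line $x^2 = 2.5$ was picked by hand, not found by an SVM.

```python
def separable_by_threshold(xs, ys):
    # Try every cut point between consecutive sorted values, in both orientations
    values = sorted(xs)
    cuts = [values[0] - 1] + [(a + c) / 2 for a, c in zip(values, values[1:])] + [values[-1] + 1]
    for t in cuts:
        for s in (+1, -1):
            if all((s if x > t else -s) == y for x, y in zip(xs, ys)):
                return True
    return False

def phi(x):
    # Map a 1-D point into 2-D: z = (x, x^2)
    return (x, x * x)

xs = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]   # hypothetical toy data
ys = [-1, -1, +1, +1, +1, -1, -1]

w, b = (0.0, -1.0), 2.5   # hand-picked line in z-space: x^2 = 2.5

def classify(x):
    z = phi(x)
    return 1 if w[0] * z[0] + w[1] * z[1] + b > 0 else -1

print(separable_by_threshold(xs, ys))                 # False
print(all(classify(x) == y for x, y in zip(xs, ys)))  # True
```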

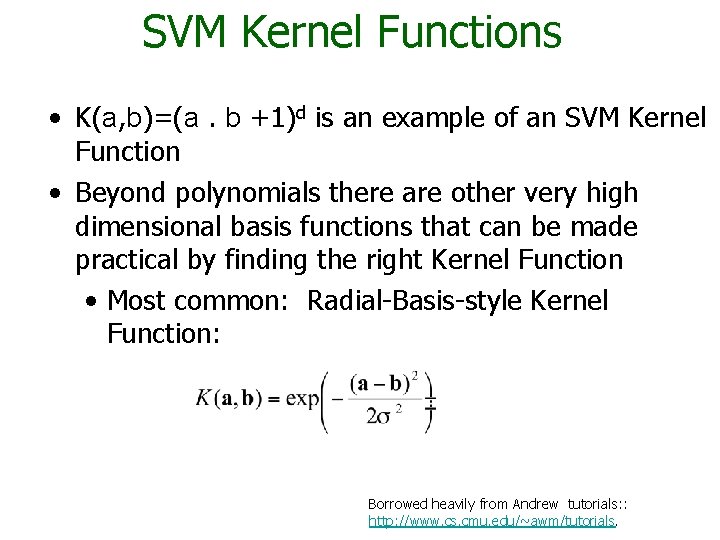

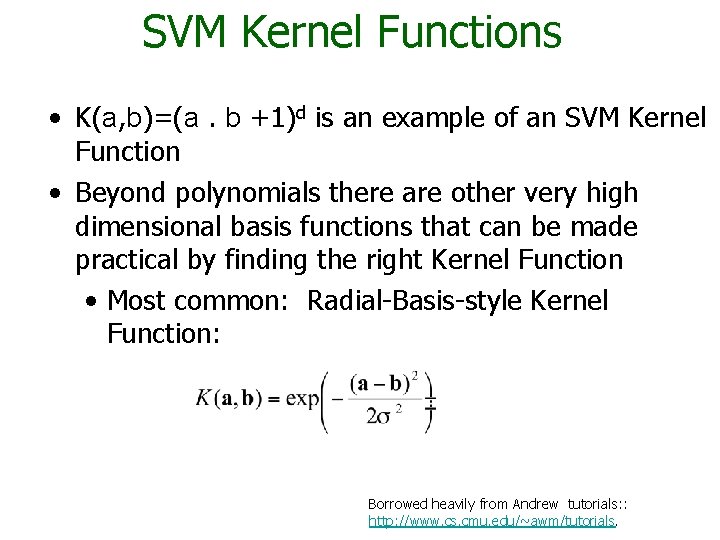

SVM Kernel Functions
• $K(\mathbf{a}, \mathbf{b}) = (\mathbf{a}\cdot\mathbf{b} + 1)^d$ is an example of an SVM kernel function (a polynomial kernel of degree $d$)
• Beyond polynomials, there are other very high-dimensional basis functions that can be made practical by finding the right kernel function
• Most common: the radial-basis-style kernel function $K(\mathbf{a}, \mathbf{b}) = \exp\left(-\frac{\lVert\mathbf{a} - \mathbf{b}\rVert^2}{2\sigma^2}\right)$
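Both kernels are cheap to compute directly from the original vectors. A minimal sketch, assuming the polynomial form $(\mathbf{a}\cdot\mathbf{b} + 1)^d$ and the common Gaussian RBF form $\exp(-\lVert\mathbf{a}-\mathbf{b}\rVert^2 / 2\sigma^2)$; the test vectors are arbitrary.

```python
import math

def polynomial_kernel(a, b, d):
    # K(a, b) = (a . b + 1)^d
    return (sum(ai * bi for ai, bi in zip(a, b)) + 1) ** d

def rbf_kernel(a, b, sigma):
    # K(a, b) = exp(-||a - b||^2 / (2 sigma^2)); 1 for identical points,
    # falling toward 0 as the points move apart
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2 * sigma ** 2))

print(polynomial_kernel((1, 2), (3, 1), d=2))          # 36, i.e. (1*3 + 2*1 + 1)^2
print(rbf_kernel((1.0, 2.0), (1.0, 2.0), sigma=1.0))   # 1.0
```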

Kernel Trick
• We don’t actually have to compute the mapping into the higher-dimensional space
• The QP formulation uses the data only through dot products of pairs of points
• So we replace each dot product with a kernel function
• This means we can work in much higher dimensions without a hopeless computational cost
• The kernel trick in SVMs refers to all of this: using a kernel function instead of the dot product to separate non-linear data without an impossible performance cost.
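The trick can be checked numerically for the degree-2 polynomial kernel on 2-D inputs. Expanding $(\mathbf{a}\cdot\mathbf{b} + 1)^2$ shows it equals an ordinary dot product after mapping each point to a 6-D feature vector $\phi(\mathbf{v}) = (v_1^2, v_2^2, \sqrt2 v_1 v_2, \sqrt2 v_1, \sqrt2 v_2, 1)$. The sketch computes both sides; the kernel never builds the 6-D vectors.

```python
import math

def poly2_kernel(a, b):
    # (a . b + 1)^2, computed directly in the original 2-D space
    return (a[0] * b[0] + a[1] * b[1] + 1) ** 2

def phi(v):
    # Explicit 6-D feature map whose dot product equals poly2_kernel
    x1, x2 = v
    r2 = math.sqrt(2)
    return (x1 * x1, x2 * x2, r2 * x1 * x2, r2 * x1, r2 * x2, 1.0)

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

p, q = (1.0, 2.0), (3.0, -1.0)
print(poly2_kernel(p, q))      # 4.0
print(dot(phi(p), phi(q)))     # 4.0, up to floating-point rounding
```

For degree d in m input dimensions the explicit feature space grows combinatorially, while the kernel still costs one dot product and one power.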

Back to Orange

SVM Type
• C-SVM: This is the soft margin SVM we discussed, where C is the cost of an error. The higher the value of C, the closer the fit to the data and the narrower the margin
• ν-SVM: An alternate approach to noisy data, in which ν bounds the proportion of training points that may lie inside the margin or be misclassified (ν is an upper bound on that fraction and a lower bound on the fraction of support vectors). The larger we make ν, the more errors we allow and the wider the margin can be
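The effect of C can be seen by training a tiny linear C-SVM. This sketch swaps in plain full-batch subgradient descent on $\tfrac12\lVert\mathbf{w}\rVert^2 + C\sum_i \max(0,\ 1 - y_i(\mathbf{w}\cdot\mathbf{x}_i + b))$ in place of the QP solver a real SVM package would use; the learning rate, epoch count, and four toy points are hypothetical.

```python
import math

def train_linear_csvm(points, labels, C=1.0, lr=0.001, epochs=5000):
    """Full-batch subgradient descent on 0.5*||w||^2 + C * sum(hinge losses)."""
    dim = len(points[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = list(w), 0.0  # gradient of 0.5*||w||^2 is w
        for x, y in zip(points, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score < 1:  # positive slack: the hinge term contributes
                for k in range(dim):
                    grad_w[k] -= C * y * x[k]
                grad_b -= C * y
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

def margin_width(w):
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

points = [(2.0, 2.0), (3.0, 3.0), (-2.0, -2.0), (-3.0, -1.0)]  # toy data
labels = [+1, +1, -1, -1]
w_lo, b_lo = train_linear_csvm(points, labels, C=0.01)
w_hi, b_hi = train_linear_csvm(points, labels, C=1.0)
print(margin_width(w_lo), margin_width(w_hi))  # smaller C gives the wider margin
```

With the small C, every point ends up inside the margin (each has positive slack) but the margin is wide; with the larger C, the points are pushed onto or outside the margin planes and the margin shrinks.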

Kernel Parameters
• For a linear “kernel” we specify the cost, or complexity, C
• For more complex kernels there are more parameters. Some of them:
  – For polynomial, d is the degree, and controls how complex we allow the match to be
  – For RBF, g (gamma) controls the width of the kernel, that is, how steeply the transformation curve falls off with distance; a larger g gives a narrower kernel and a more tightly fitted boundary
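The role of g can be seen by evaluating the RBF kernel for two points a fixed distance apart. This assumes the LIBSVM-style form $K(\mathbf{a}, \mathbf{b}) = \exp(-g\lVert\mathbf{a}-\mathbf{b}\rVert^2)$, which is the Gaussian kernel with $g = 1/(2\sigma^2)$; the g values are arbitrary.

```python
import math

def rbf(a, b, g):
    # Assumed LIBSVM-style form: K(a, b) = exp(-g * ||a - b||^2)
    return math.exp(-g * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

# Kernel value for two points at distance 1, for increasing g
values = [rbf((0.0,), (1.0,), g) for g in (0.1, 1.0, 10.0)]
print(values)
```

As g grows, the similarity of even moderately close points drops toward 0, so each training point influences only its immediate neighborhood and the boundary can bend more sharply.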

Setting the SVM Parameters
• SVMs are quite sensitive to their parameters
• There are no good a priori rules for setting them. The usual recommendation is “try some and see what works in your domain”
• The widget has an “automatic parameter search” option; using it is generally a good idea
• Generally, a C-SVM with an RBF kernel, with the data normalized and the parameters set automatically, will give good results
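Normalization matters because the RBF kernel depends on distances, so a feature with a large numeric range would otherwise dominate. A minimal sketch of min-max scaling to [0, 1], assuming the ranges are learned from the training data and then reused for new data; the values are hypothetical.

```python
def fit_min_max(rows):
    # Per-feature (min, max) learned from the training data only
    cols = list(zip(*rows))
    return [(min(c), max(c)) for c in cols]

def apply_min_max(rows, ranges):
    # Map each feature to [0, 1]; a constant feature is mapped to 0.0
    scaled = []
    for row in rows:
        scaled.append([
            (v - lo) / (hi - lo) if hi > lo else 0.0
            for v, (lo, hi) in zip(row, ranges)
        ])
    return scaled

train = [[1.0, 200.0], [3.0, 400.0], [2.0, 300.0]]
ranges = fit_min_max(train)
print(apply_min_max(train, ranges))           # [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
print(apply_min_max([[4.0, 250.0]], ranges))  # [[1.5, 0.25]]
```

New instances are scaled with the training ranges, so they can fall slightly outside [0, 1], as the first feature of the last example does.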

How We Automate: Grid Search
• To automate parameter selection, we try sets of parameter values, increasing each value exponentially
  – For instance, we might try C at 1, 2, 4, 8, 16, 32
  – Calculate the accuracy for each value and choose the best
• We can do two passes, coarse then fine:
  – C = 1, 8, 64 for the first pass, then a finer grid around the best value
• We can use cross-validation to estimate accuracy, learning repeatedly on subsets of the data
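The two-pass search can be sketched as follows. Here `evaluate(C, g)` is a hypothetical stand-in for cross-validated accuracy of an RBF C-SVM; the coarse C values follow the slide (1, 8, 64), while the coarse g values and the fine-grid spacing are assumptions made for illustration.

```python
import itertools
import math

def grid_search(evaluate, c_values, g_values):
    """Return (best_score, best_C, best_g) over every (C, g) pair."""
    best = None
    for C, g in itertools.product(c_values, g_values):
        score = evaluate(C, g)  # e.g. cross-validated accuracy
        if best is None or score > best[0]:
            best = (score, C, g)
    return best

def coarse_then_fine(evaluate):
    # Coarse pass: values grow by a factor of 8, like C = 1, 8, 64 on the slide
    coarse_c = [8.0 ** k for k in range(0, 3)]    # 1, 8, 64
    coarse_g = [8.0 ** k for k in range(-2, 2)]   # 1/64, 1/8, 1, 8 (hypothetical range)
    _, c0, g0 = grid_search(evaluate, coarse_c, coarse_g)
    # Fine pass: steps of sqrt(2), within a factor of 4 of the coarse winner
    def around(center):
        return [center * 2.0 ** (k / 2) for k in range(-4, 5)]
    return grid_search(evaluate, around(c0), around(g0))

def toy_score(C, g):
    # Hypothetical stand-in for cross-validated accuracy, peaking at C = 8, g = 0.5
    return -((math.log2(C) - 3) ** 2) - ((math.log2(g) + 1) ** 2)

_, best_c, best_g = coarse_then_fine(toy_score)
print(best_c, best_g)  # 8.0 0.5
```

The coarse pass costs 12 evaluations and the fine pass 81; searching the fine grid over the whole coarse range would cost far more, which is why the two-pass approach is common.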

What Do We Do With SVMs?
• SVMs are popular because they are successful in a wide variety of domains. Some examples:
  – Medicine: detecting breast cancer. (Y. Ireaneus Anna Rejani and S. Thamarai Selvi, “Early Detection of Breast Cancer Using SVM Classifier Technique,” International Journal on Computer Science and Engineering, Vol. 1(3), 2009, 127-130)
  – Natural language processing: text classification. (http://www.cs.cornell.edu/People/tj/publications/joachims_98a.pdf)
  – Psychology: decoding cognitive states from fMRI data. (Maria Grazia Di Bono and Marco Zorzi, “Decoding Cognitive States from fMRI Data Using Support Vector Regression,” PsychNology Journal, 2008, Volume 6, Number 2, 189-201)

Summary
• SVMs are a form of supervised classifier
• The basic SVM is binary and linear, but there are non-linear and multi-class extensions
• “One key insight and one neat trick”¹
  – key insight: the maximum margin separator
  – neat trick: the kernel trick
• A good method to try first if you have no knowledge about the domain
• Applicable in a wide variety of domains

¹ Russell and Norvig, Artificial Intelligence: A Modern Approach, third edition, 2010, p. 744.