CSC 3050 Computer Architecture: Advanced Computer Architectures

- Slides: 45

CSC 3050 – Computer Architecture
Advanced Computer Architectures

Prof. Yeh-Ching Chung
School of Data Science
Chinese University of Hong Kong, Shenzhen

National Tsing Hua University ® copyright OIA

Outline

- Introduction
- Multi-Core Architectures
- Multi-Threading
- Parallel Computers

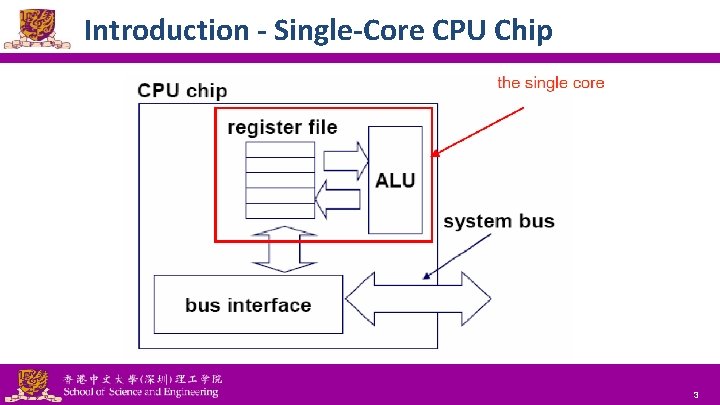

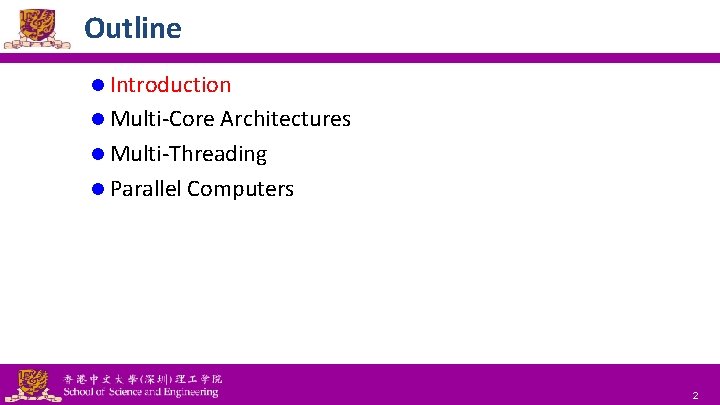

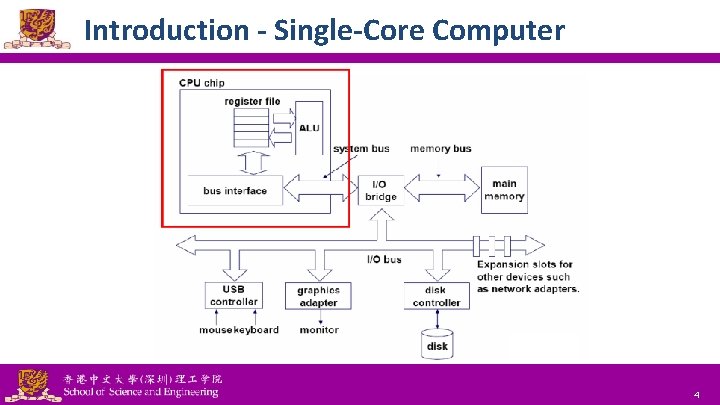

Introduction - Single-Core CPU Chip

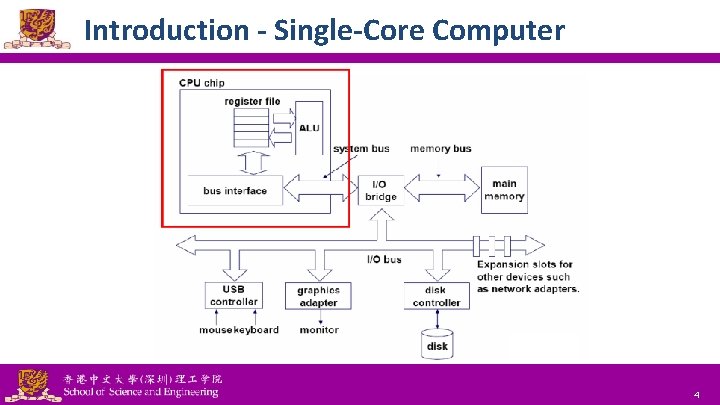

Introduction - Single-Core Computer


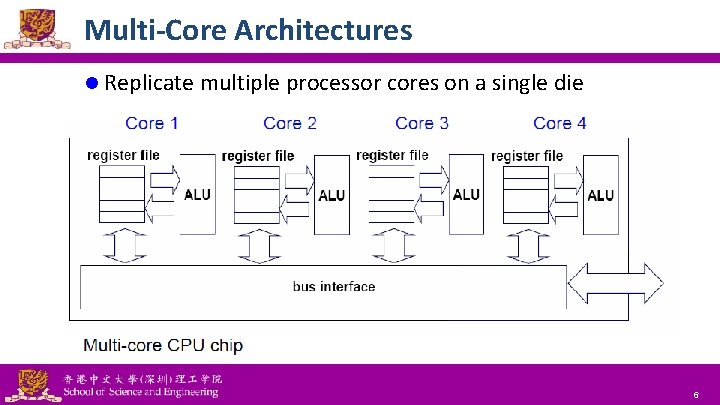

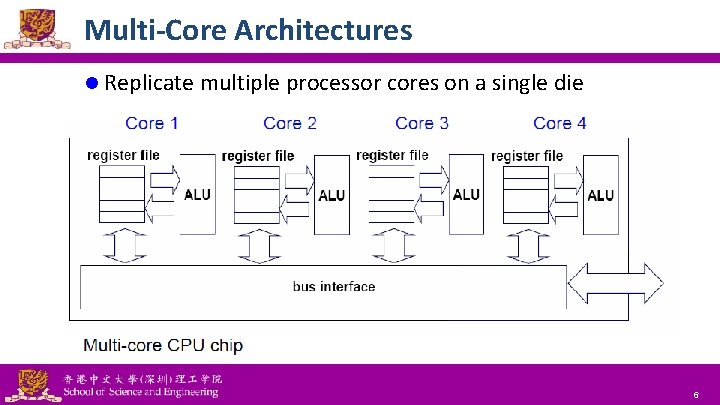

Multi-Core Architectures

- Replicate multiple processor cores on a single die

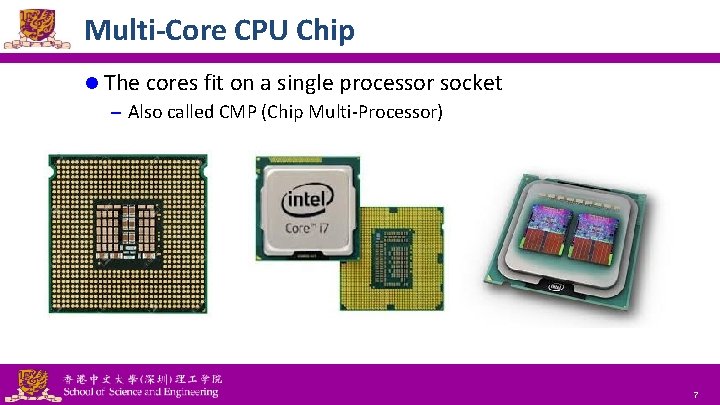

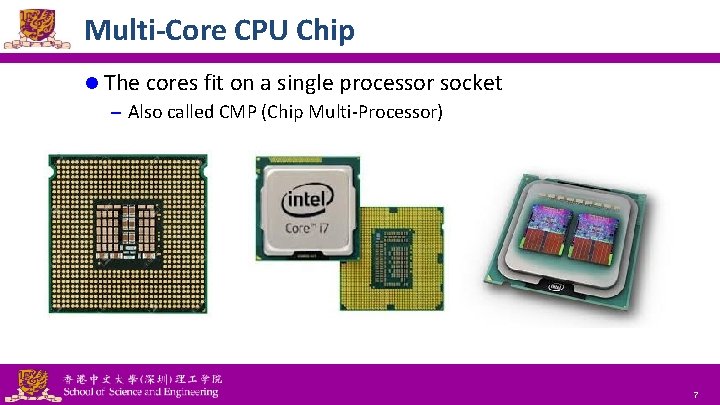

Multi-Core CPU Chip

- The cores fit on a single processor socket
  - Also called CMP (Chip Multi-Processor)

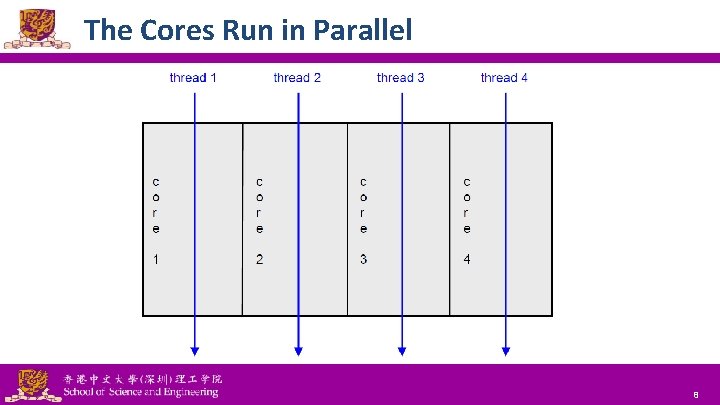

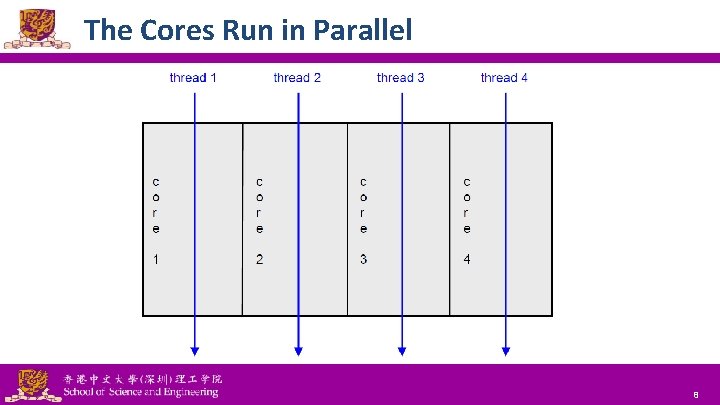

The Cores Run in Parallel

Interaction with Operating System

- The OS perceives each core as a separate processor
- The OS scheduler maps threads/processes to different cores
- Most major operating systems support multi-core today
  - Windows, Linux, Mac OS X, etc.
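As a small illustration of the OS exposing each core as a separate processor, the sketch below asks the operating system how many processors it reports. The helper name `visible_cores` is made up for this example, and the count is of logical processors, so it may be larger than the number of physical cores on some machines.

```python
import os


def visible_cores() -> int:
    """Return the number of logical processors the OS reports.

    os.cpu_count() can return None when the count cannot be determined,
    so fall back to 1 in that case.
    """
    return os.cpu_count() or 1


print(f"The OS reports {visible_cores()} logical processor(s)")
```

The scheduler is free to place runnable threads and processes on any of these processors.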

Why Multicore

- Difficult to make single-core clock frequencies even higher
- Deeply pipelined circuits
  - Heat problems
  - Speed of light problems
  - Difficult design and verification
  - Large design teams necessary
  - Server farms are expensive
- Many new applications are multithreaded
- General trend in computer architecture (shift towards more parallelism)

Instruction-Level Parallelism

- Parallelism at the machine-instruction level
- The processor can re-order and pipeline instructions, split them into microinstructions, do aggressive branch prediction, etc.
- Instruction-level parallelism enabled rapid increases in processor speeds over the last 15 years

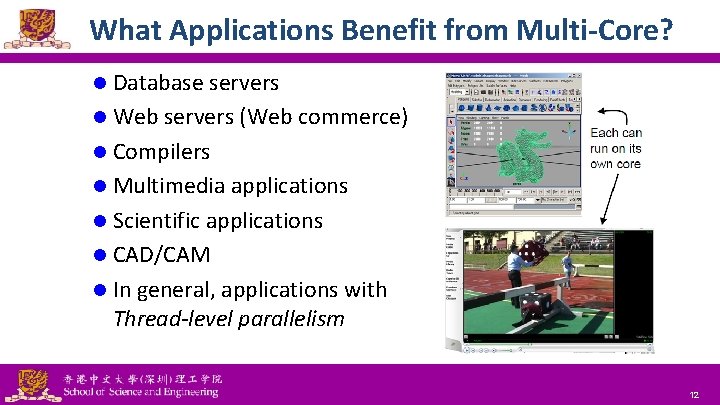

What Applications Benefit from Multi-Core?

- Database servers
- Web servers (Web commerce)
- Compilers
- Multimedia applications
- Scientific applications
- CAD/CAM
- In general, applications with thread-level parallelism

More Examples

- Editing a photo while recording a TV show through a digital video recorder
- Downloading software while running an anti-virus program
- “Anything that can be threaded today will map efficiently to multi-core”
- But some applications are difficult to parallelize


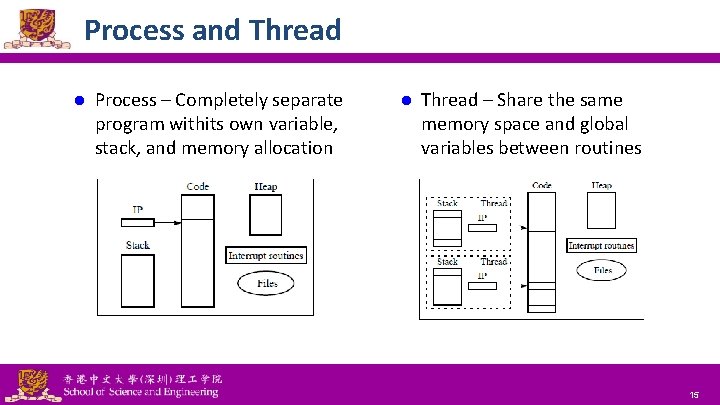

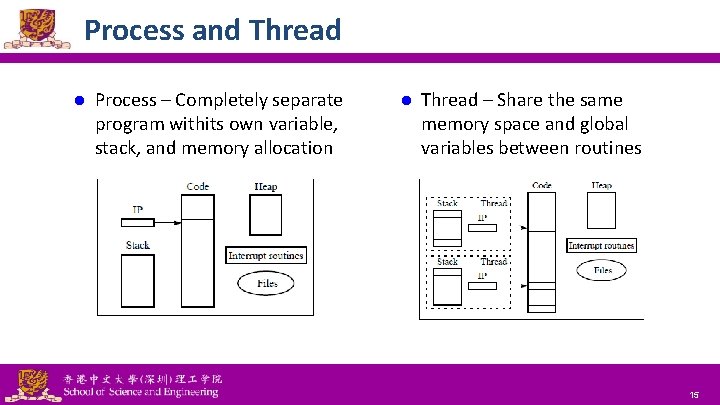

Process and Thread

- Process
  - A completely separate program with its own variables, stack, and memory allocation
- Thread
  - Shares the same memory space and global variables with the other routines in the same process
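The thread definition above can be sketched with Python's standard `threading` module: several threads update one global variable because they live in the same memory space. The names `counter`, `worker`, and `run_threads` are hypothetical, and the lock is added here because unsynchronized updates to shared data can be lost.

```python
import threading

counter = 0                 # global variable, visible to every thread
lock = threading.Lock()     # protects the shared counter from lost updates


def worker(increments: int) -> None:
    """Add `increments` to the shared global counter, one step at a time."""
    global counter
    for _ in range(increments):
        with lock:
            counter += 1


def run_threads(num_threads: int = 4, increments: int = 1000) -> int:
    """Start the threads, wait for all of them, and return the shared total."""
    global counter
    counter = 0
    threads = [
        threading.Thread(target=worker, args=(increments,))
        for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


print(run_threads())  # 4000: every thread wrote to the same variable
```

Separate processes would each get their own copy of `counter`, so they would need explicit communication to produce a combined total.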

Thread-Level Parallelism

- This parallelism is on a coarser scale
- A server can serve each client in a separate thread (Web server, database server)
- A computer game can do AI, graphics, and physics in three separate threads
- Single-core superscalar processors cannot fully exploit TLP
- Multi-core architectures are the next step in processor evolution
  - Explicitly exploiting TLP
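The "one thread per client" pattern mentioned above might look like the following minimal sketch. `handle_client` and `serve` are hypothetical names, and the "request" is just a client number. In CPython the global interpreter lock limits CPU-bound threads to one at a time, so this shows the structure of thread-level parallelism rather than a guaranteed speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def handle_client(client_id: int) -> str:
    """Stand-in for the work a server does for one client."""
    return f"response for client {client_id}"


def serve(clients, max_workers: int = 4) -> list:
    """Handle each client in a pool thread and collect the responses in order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(handle_client, clients))


print(serve(range(3)))
```

On a multi-core machine the OS can schedule these threads on different cores, which is the thread-level parallelism a single-core superscalar processor cannot fully exploit.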

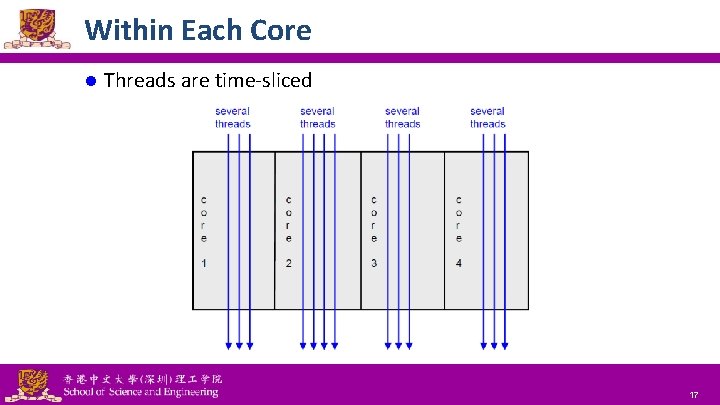

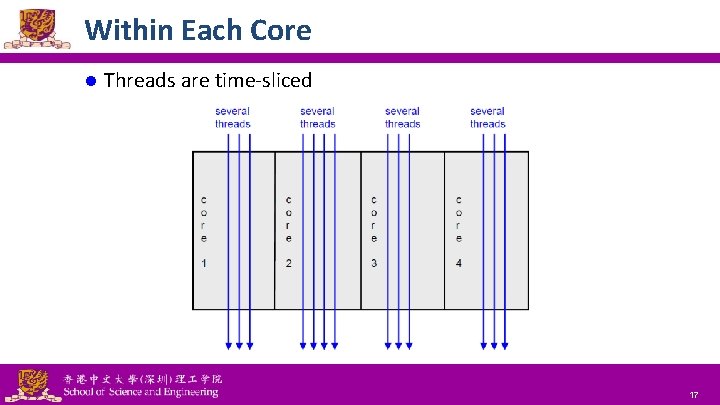

Within Each Core

- Threads are time-sliced

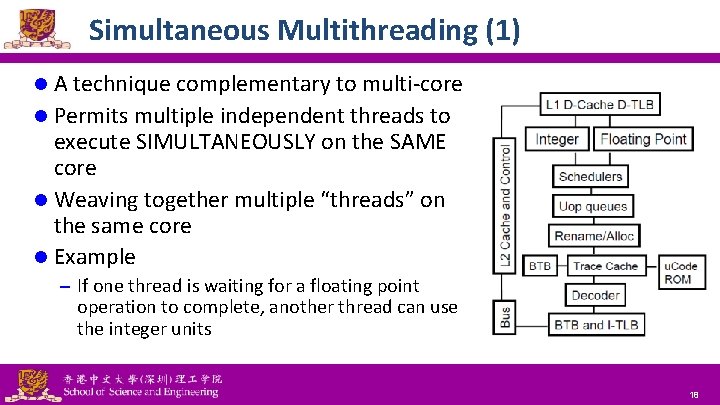

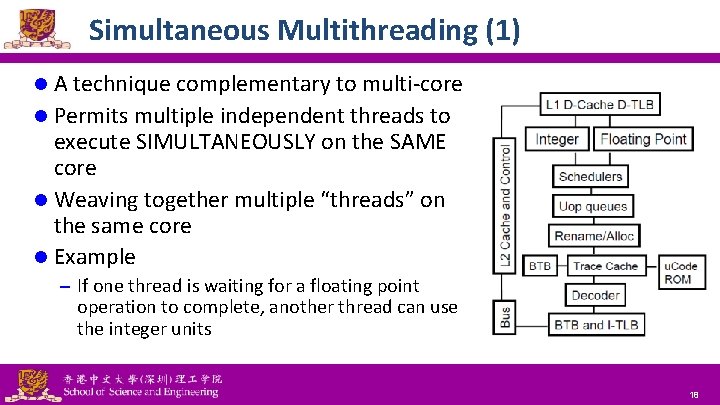

Simultaneous Multithreading (1)

- A technique complementary to multi-core
- Permits multiple independent threads to execute SIMULTANEOUSLY on the SAME core
- Weaving together multiple “threads” on the same core
- Example
  - If one thread is waiting for a floating-point operation to complete, another thread can use the integer units

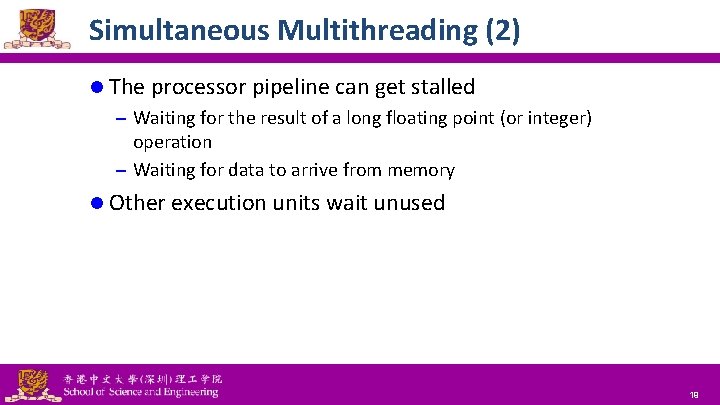

Simultaneous Multithreading (2)

- The processor pipeline can get stalled
  - Waiting for the result of a long floating-point (or integer) operation
  - Waiting for data to arrive from memory
- Other execution units wait unused

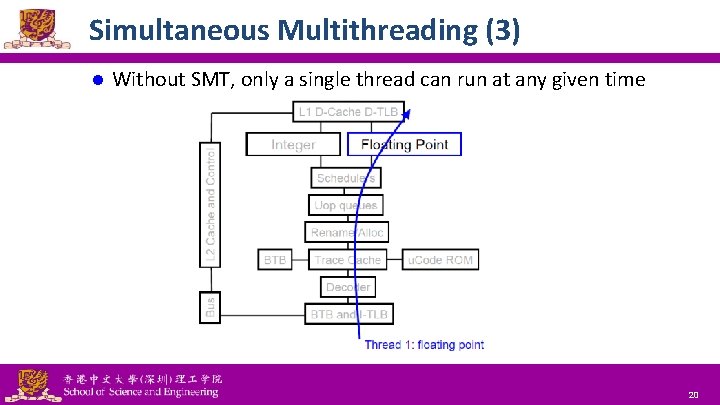

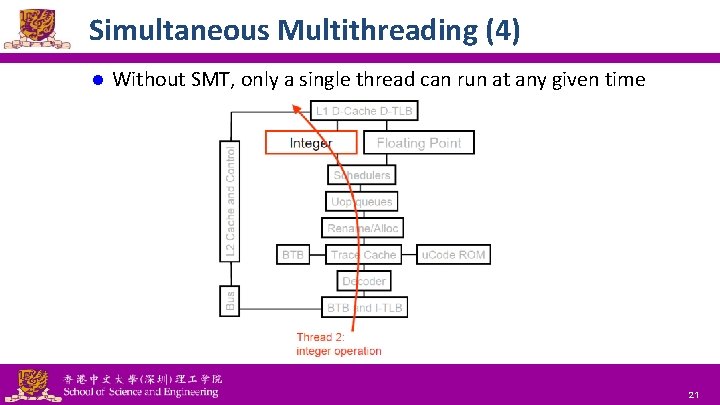

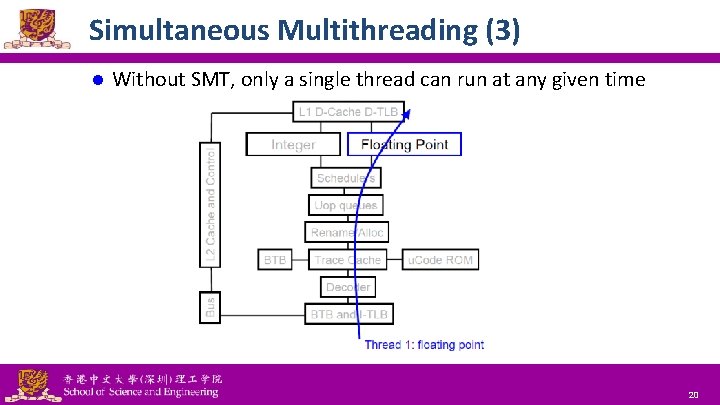

Simultaneous Multithreading (3)

- Without SMT, only a single thread can run at any given time

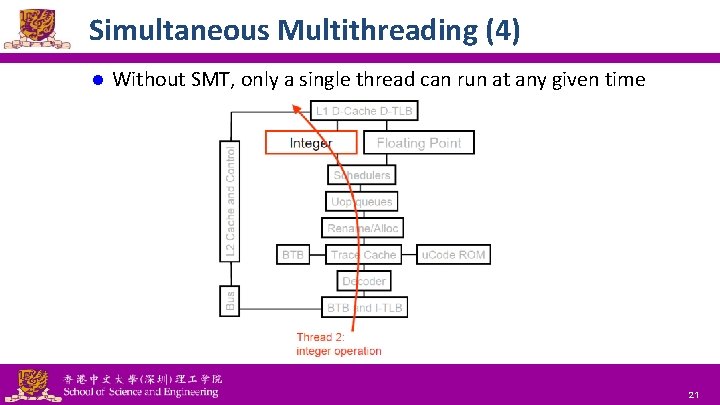

Simultaneous Multithreading (4)

- Without SMT, only a single thread can run at any given time

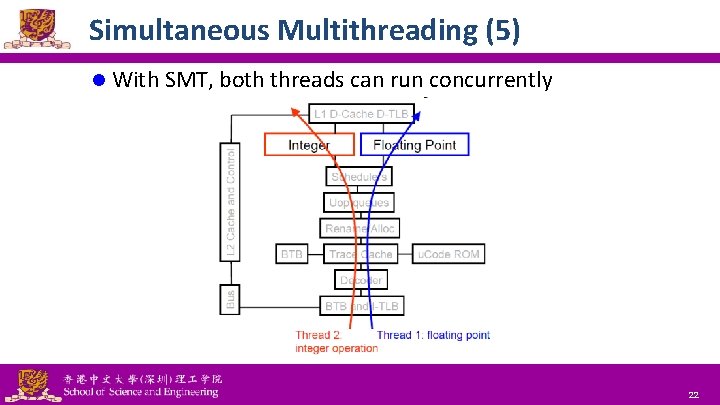

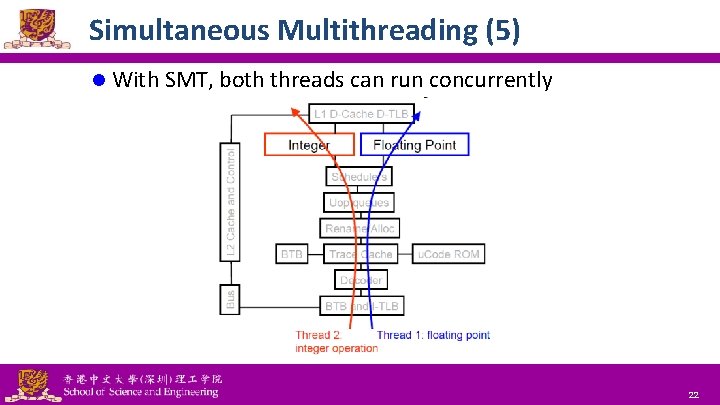

Simultaneous Multithreading (5)

- With SMT, both threads can run concurrently

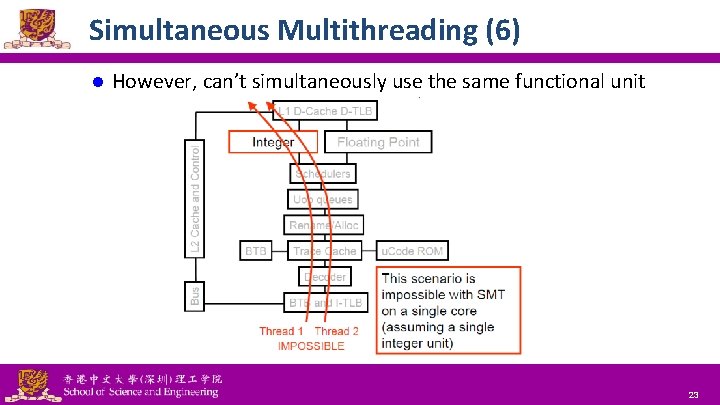

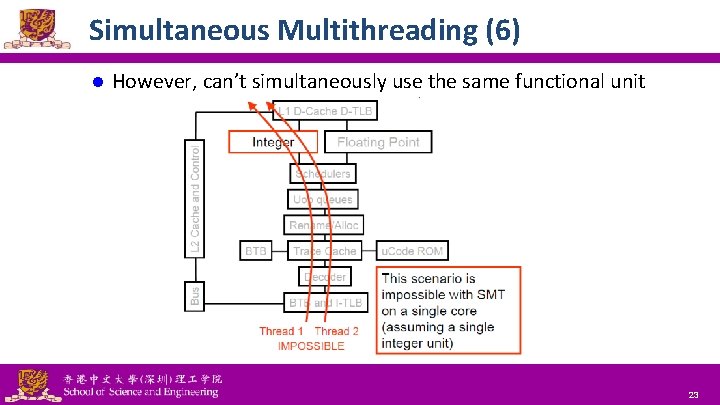

Simultaneous Multithreading (6)

- However, the two threads cannot simultaneously use the same functional unit

Simultaneous Multithreading (6)

- SMT is not a “true” parallel processor
  - Enables better threading (e.g., up to 30%)
  - OS and applications perceive each simultaneous thread as a separate “virtual processor”
  - The chip has only a single copy of each resource

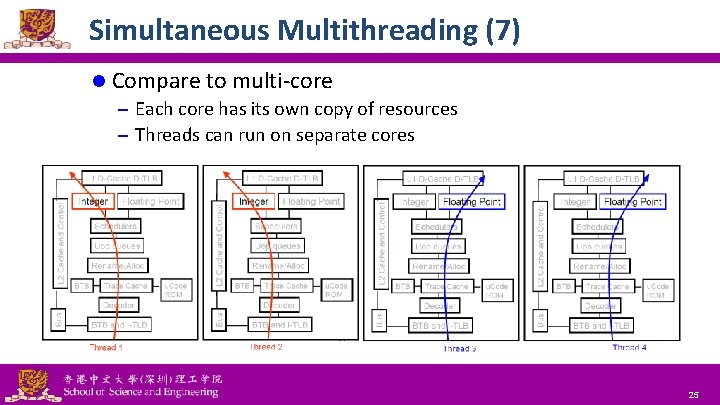

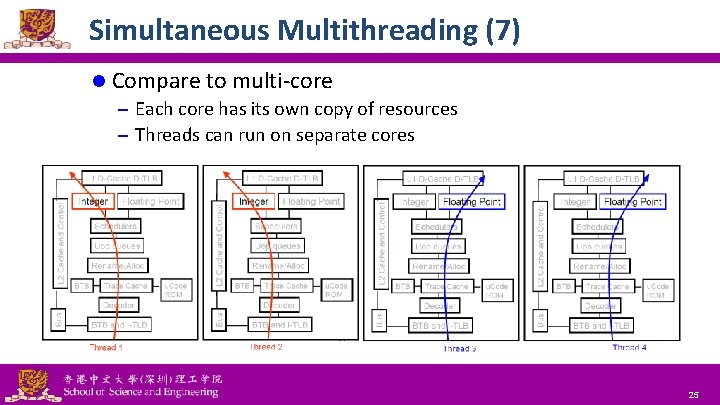

Simultaneous Multithreading (7)

- Compare to multi-core
  - Each core has its own copy of resources
  - Threads can run on separate cores

Combine Multi-Core and SMT (1)

- Cores can be SMT-enabled (or not)
- The different combinations
  - Single-core, non-SMT: standard uniprocessor
  - Single-core, with SMT
  - Multi-core, non-SMT
  - Multi-core, with SMT
- The number of SMT threads
  - 2, 4, or sometimes 8 simultaneous threads
- Intel calls them “hyper-threads”

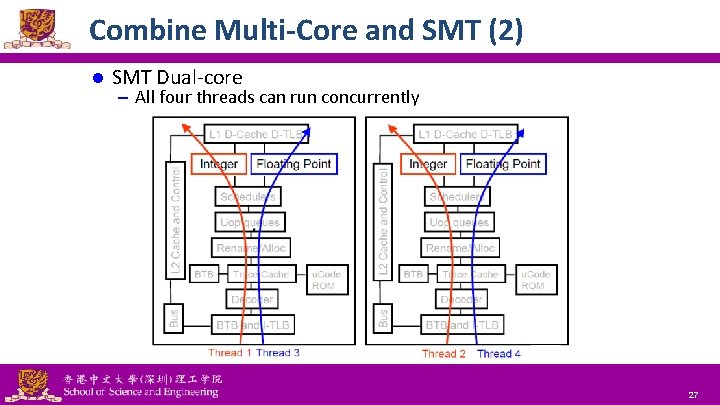

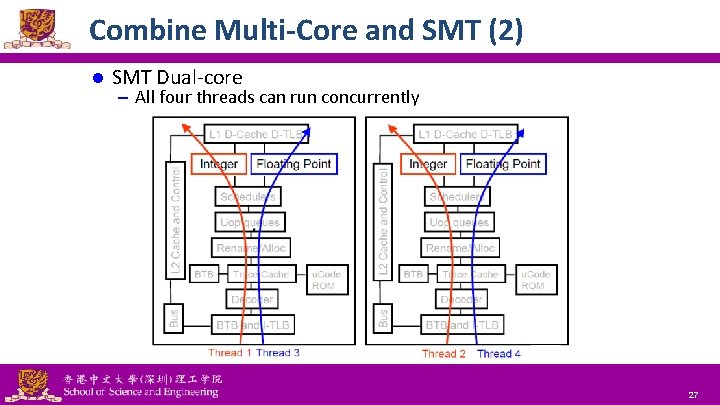

Combine Multi-Core and SMT (2)

- SMT dual-core
  - All four threads can run concurrently

Comparisons of Multi-Core and SMT

- Multi-Core
  - Since there are several cores, each is smaller and not as powerful (but also easier to design and manufacture)
  - However, great with thread-level parallelism
- SMT
  - Can have one large and fast superscalar core
  - Great performance on a single thread
  - Mostly still only exploits instruction-level parallelism



Multiprocessors – Introduction (1)

- A multiprocessor is any computer with several processors

Multiprocessors – Introduction (2)

- Flynn’s classification
- SISD (Single Instruction Single Data)
  - Von Neumann machine
- SIMD (Single Instruction Multiple Data)
  - CM 2, modern graphics cards, etc.
- MISD (Multiple Instruction Single Data)
  - Systolic array
- MIMD (Multiple Instruction Multiple Data)
  - CM 5, cluster, etc.

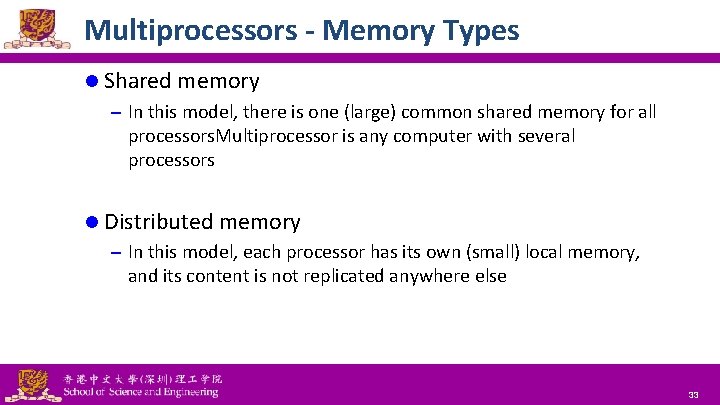

Multiprocessors - Memory Types

- Shared memory
  - In this model, there is one (large) common shared memory for all processors
- Distributed memory
  - In this model, each processor has its own (small) local memory, and its content is not replicated anywhere else

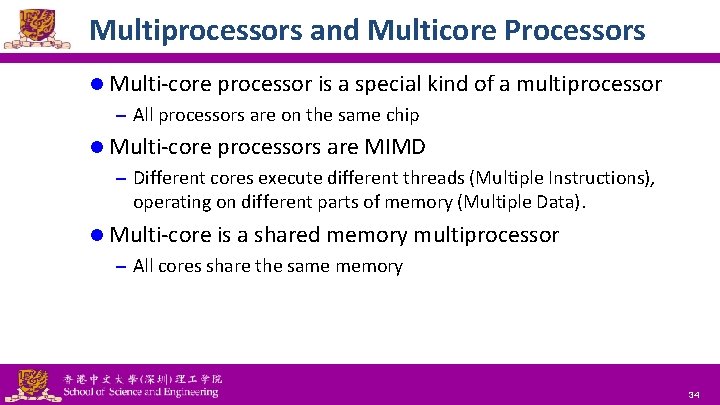

Multiprocessors and Multicore Processors

- A multi-core processor is a special kind of multiprocessor
  - All processors are on the same chip
- Multi-core processors are MIMD
  - Different cores execute different threads (Multiple Instructions), operating on different parts of memory (Multiple Data)
- Multi-core is a shared memory multiprocessor
  - All cores share the same memory

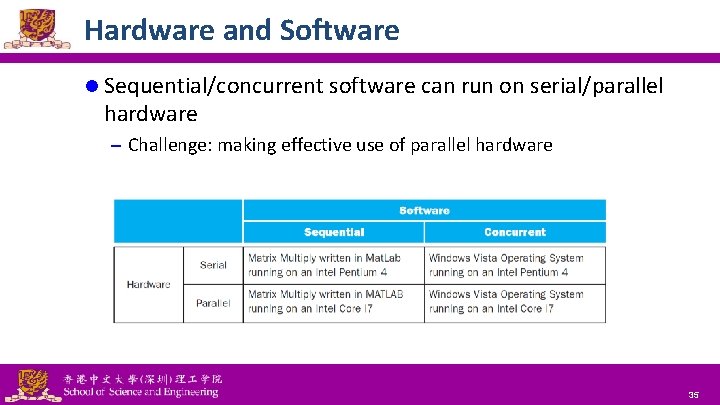

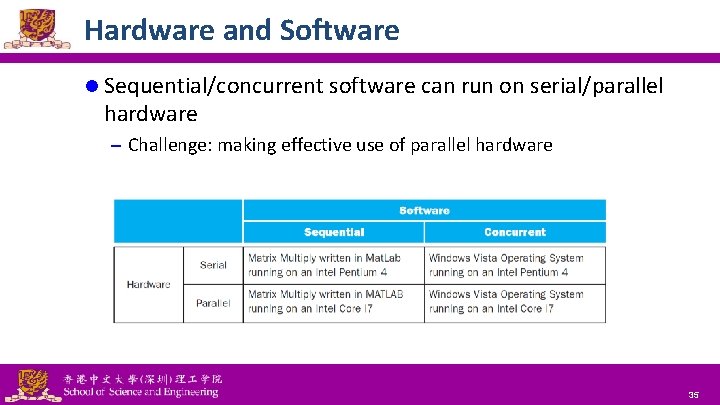

Hardware and Software

- Sequential/concurrent software can run on serial/parallel hardware
  - Challenge: making effective use of parallel hardware

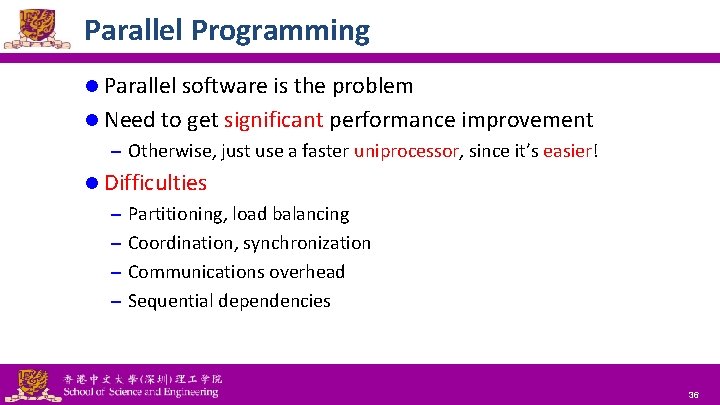

Parallel Programming

- Parallel software is the problem
- Need to get significant performance improvement
  - Otherwise, just use a faster uniprocessor, since it’s easier!
- Difficulties
  - Partitioning, load balancing
  - Coordination, synchronization
  - Communications overhead
  - Sequential dependencies
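To make partitioning, load balancing, and the final sequential step concrete, here is a minimal sketch that splits a list into nearly equal chunks, sums each chunk in a worker thread, and then combines the partial sums. The names `partition` and `parallel_sum` are hypothetical. In CPython the global interpreter lock prevents a real speedup for this CPU-bound work, so read it as an illustration of the structure only.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data: list, parts: int) -> list:
    """Split data into `parts` contiguous chunks whose sizes differ by at most 1."""
    base, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        size = base + (1 if i < extra else 0)   # spread the remainder evenly
        chunks.append(data[start:start + size])
        start += size
    return chunks


def parallel_sum(data: list, workers: int = 4) -> int:
    """Sum each chunk in its own task, then combine the partial results."""
    chunks = partition(data, workers)            # partitioning / load balancing
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, chunks))   # coordination happens here
    return sum(partial_sums)                     # sequential dependency: final combine


print(parallel_sum(list(range(101))))  # 5050
```

Even in this tiny example, the last `sum` cannot start until every worker has finished, which is the kind of sequential dependency that limits speedup.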

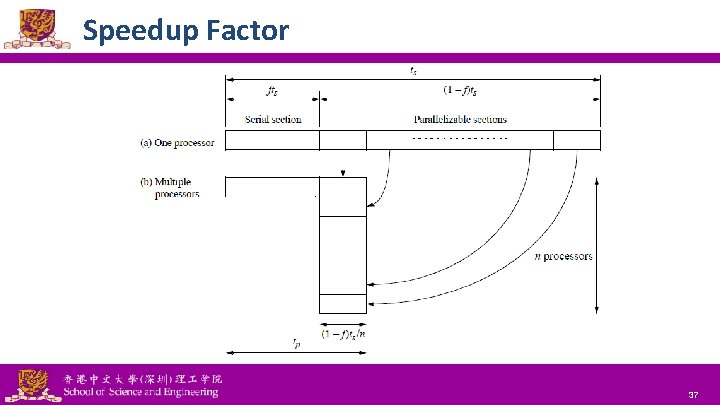

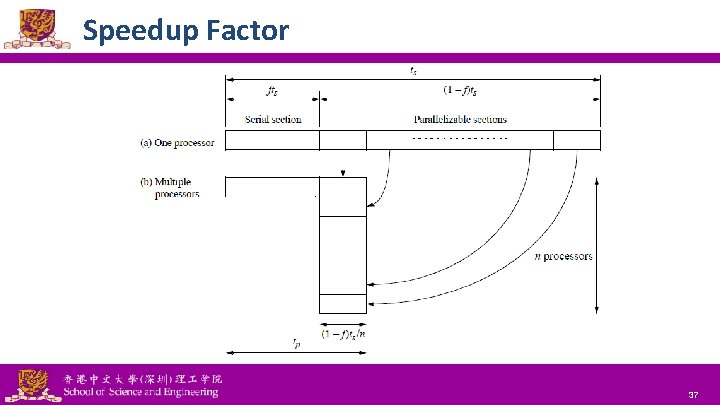

Speedup Factor
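The body of this slide is a figure whose text is not recoverable here. A commonly used definition of the speedup factor, given as an assumption about what the figure conveys, compares the run time on one processor with the run time on $p$ processors. The symbols $S(p)$, $E(p)$, $t_s$, and $t_p$ are chosen for this note.

$$
S(p) = \frac{t_s}{t_p}, \qquad E(p) = \frac{S(p)}{p}
$$

Here $t_s$ is the execution time on a single processor and $t_p$ is the execution time on $p$ processors. The ideal case is $S(p) = p$, which corresponds to an efficiency of $E(p) = 1$.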

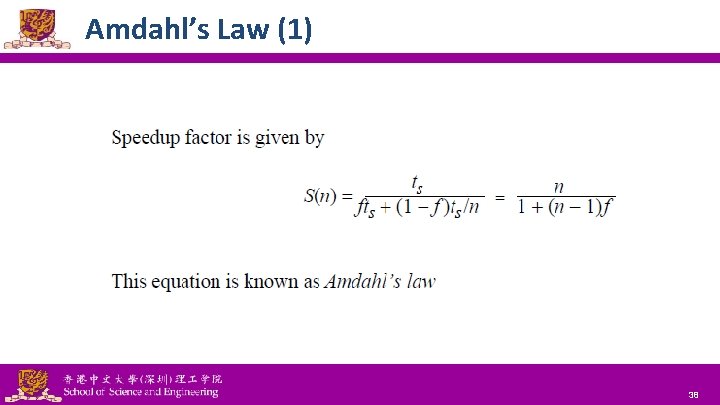

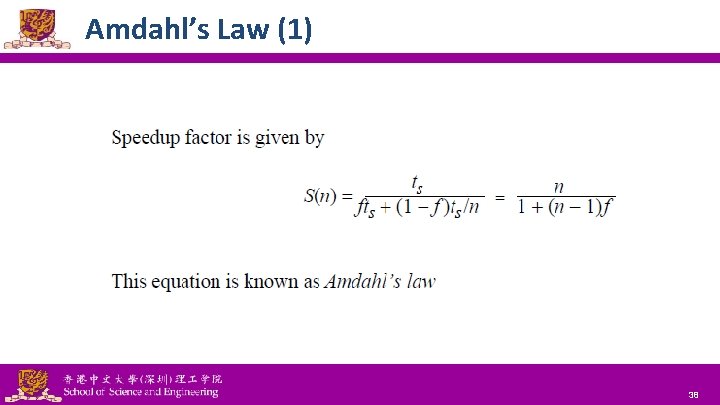

Amdahl’s Law (1)

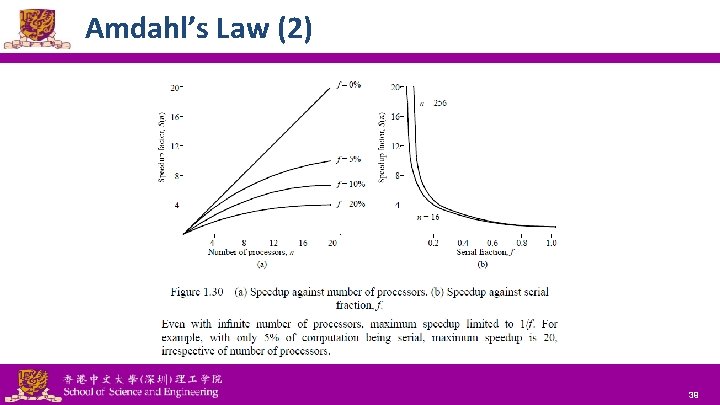

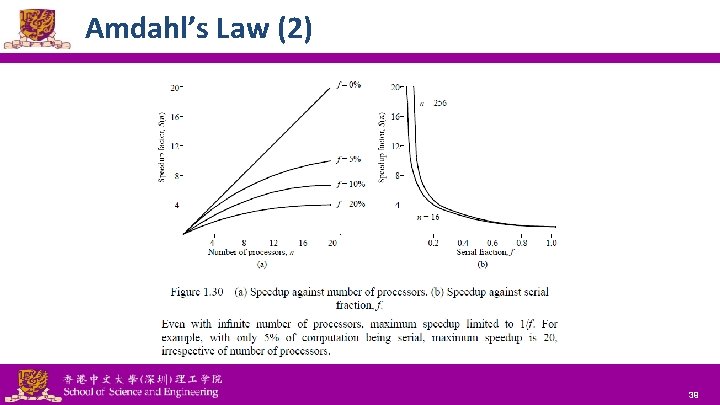

Amdahl’s Law (2)
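The slide content is in figures, so the following is a sketch of Amdahl's Law in its standard form rather than a transcription: if a fraction $f$ of the work can be parallelized and the rest is serial, the speedup on $p$ processors is $1 / ((1 - f) + f / p)$. The function and parameter names are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Speedup for a fixed-size problem under Amdahl's Law.

    parallel_fraction: share of the single-processor run time that can be
                       spread across processors (between 0 and 1).
    processors:        number of processors (at least 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


# The serial part caps the speedup at 1 / (1 - f), however many processors are used.
for p in (1, 10, 100, 1000):
    print(p, round(amdahl_speedup(0.9, p), 2))
```

With 90% of the work parallelizable, the speedup approaches but never reaches 10, because the serial 10% always has to run on one processor.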

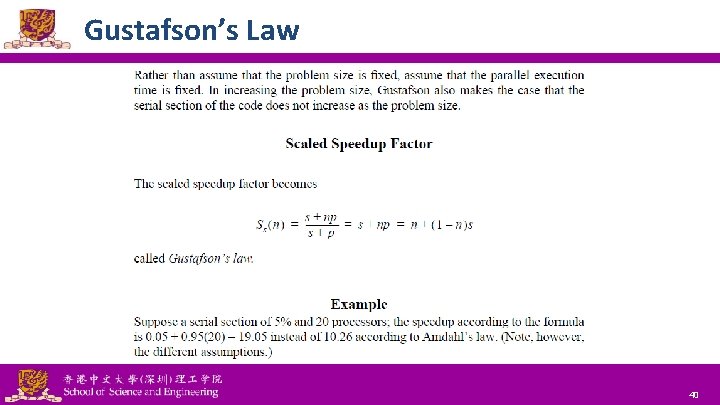

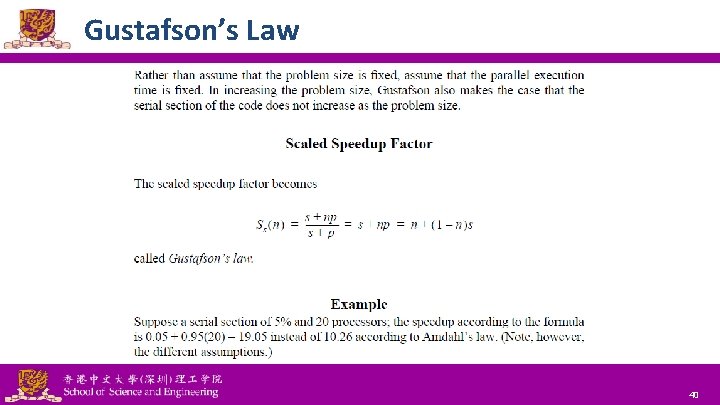

Gustafson’s Law
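The slide content is in figures, so this is a sketch of Gustafson's Law in its standard form rather than a transcription: when the problem size grows with the number of processors and $f$ is the parallel fraction of the run time measured on the parallel machine, the scaled speedup is $(1 - f) + f \cdot p$. The function and parameter names are hypothetical.

```python
def gustafson_speedup(parallel_fraction: float, processors: int) -> float:
    """Scaled speedup under Gustafson's Law.

    parallel_fraction: share of the run time on the parallel machine that is
                       spent in parallel work (between 0 and 1).
    processors:        number of processors (at least 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return serial_fraction + parallel_fraction * processors


# Unlike the fixed-size case, the scaled speedup keeps growing with p.
for p in (1, 10, 100, 1000):
    print(p, round(gustafson_speedup(0.9, p), 1))
```

The speedup grows linearly in the number of processors here because the parallel part of the workload is assumed to grow with the machine.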

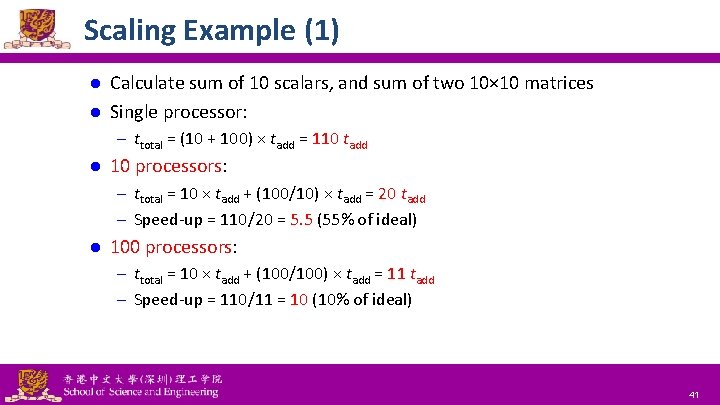

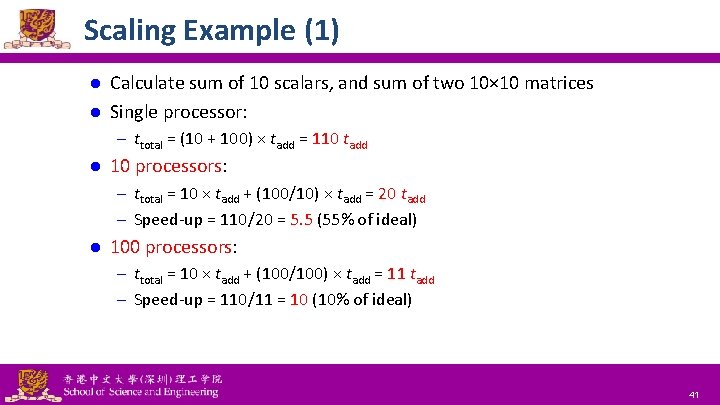

Scaling Example (1)

- Calculate the sum of 10 scalars, and the sum of two 10 × 10 matrices
- Single processor:
  - $t_{\text{total}} = (10 + 100) \times t_{\text{add}} = 110\, t_{\text{add}}$
- 10 processors:
  - $t_{\text{total}} = 10 \times t_{\text{add}} + (100/10) \times t_{\text{add}} = 20\, t_{\text{add}}$
  - Speed-up = 110/20 = 5.5 (55% of ideal)
- 100 processors:
  - $t_{\text{total}} = 10 \times t_{\text{add}} + (100/100) \times t_{\text{add}} = 11\, t_{\text{add}}$
  - Speed-up = 110/11 = 10 (10% of ideal)

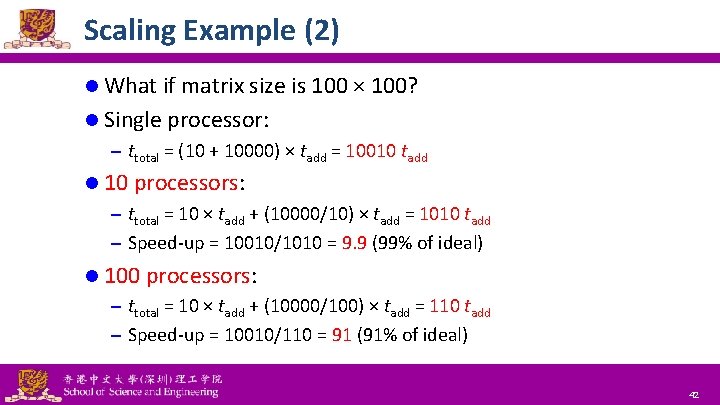

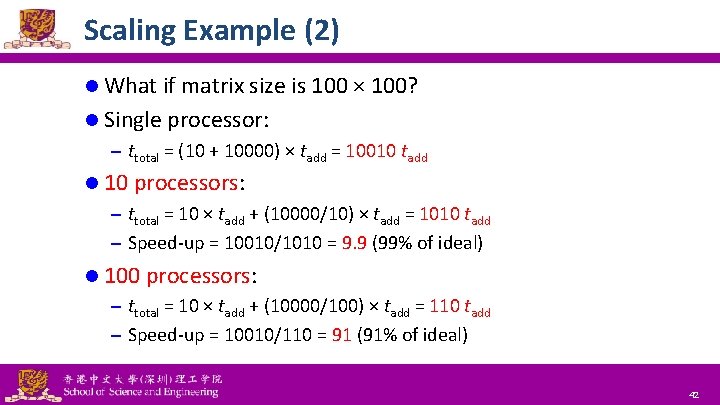

Scaling Example (2)

- What if matrix size is 100 × 100?
- Single processor:
  - $t_{\text{total}} = (10 + 10000) \times t_{\text{add}} = 10010\, t_{\text{add}}$
- 10 processors:
  - $t_{\text{total}} = 10 \times t_{\text{add}} + (10000/10) \times t_{\text{add}} = 1010\, t_{\text{add}}$
  - Speed-up = 10010/1010 = 9.9 (99% of ideal)
- 100 processors:
  - $t_{\text{total}} = 10 \times t_{\text{add}} + (10000/100) \times t_{\text{add}} = 110\, t_{\text{add}}$
  - Speed-up = 10010/110 = 91 (91% of ideal)
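The arithmetic in the two scaling examples can be checked with a few lines of code. The sketch assumes, as the formulas do, that the 10 scalar additions run sequentially while the matrix additions are divided evenly among the processors. The function name `scaling` and its parameters are hypothetical, and times are measured in units of one addition.

```python
def scaling(scalar_adds: int, matrix_adds: int, processors: int):
    """Return (parallel time, speedup, fraction of ideal speedup)."""
    t_single = scalar_adds + matrix_adds                 # everything on one processor
    t_parallel = scalar_adds + matrix_adds / processors  # only the matrix part is split
    speedup = t_single / t_parallel
    return t_parallel, speedup, speedup / processors


for matrix_adds in (10 * 10, 100 * 100):
    for p in (10, 100):
        t, s, frac = scaling(10, matrix_adds, p)
        print(f"{matrix_adds} matrix adds, {p} processors: "
              f"t = {t:g} t_add, speed-up = {s:.1f}, {frac:.0%} of ideal")
```

The larger matrix gets much closer to the ideal speedup because the fixed sequential part (10 additions) becomes a smaller share of the total work.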

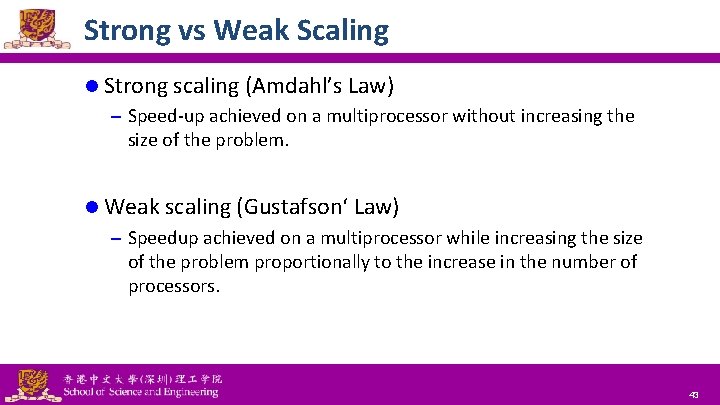

Strong vs Weak Scaling

- Strong scaling (Amdahl’s Law)
  - Speedup achieved on a multiprocessor without increasing the size of the problem
- Weak scaling (Gustafson’s Law)
  - Speedup achieved on a multiprocessor while increasing the size of the problem proportionally to the increase in the number of processors

The Big Picture: Where are We Now?

- Multiprocessor
  - A computer system with at least two processors
  - Can deliver high throughput for independent jobs via job-level parallelism or process-level parallelism
  - And improve the run time of a single program that has been specially crafted to run on a multiprocessor (a parallel processing program)

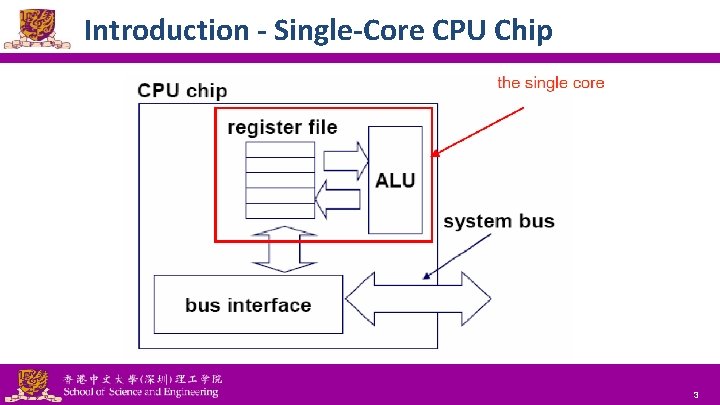

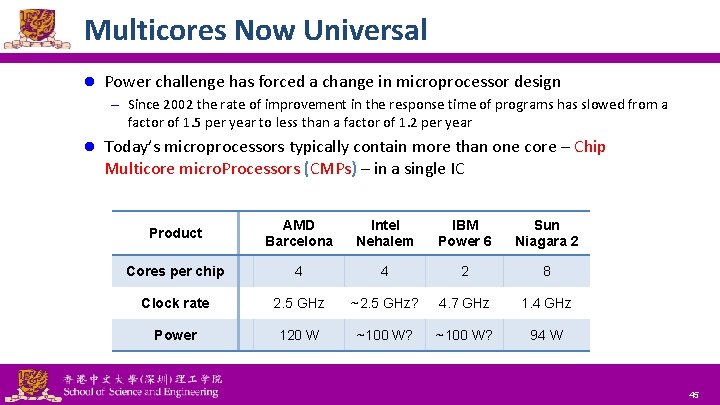

Multicores Now Universal

- Power challenge has forced a change in microprocessor design
  - Since 2002 the rate of improvement in the response time of programs has slowed from a factor of 1.5 per year to less than a factor of 1.2 per year
- Today’s microprocessors typically contain more than one core
  - Chip Multicore microProcessors (CMPs), in a single IC

| Product | AMD Barcelona | Intel Nehalem | IBM Power 6 | Sun Niagara 2 |
| --- | --- | --- | --- | --- |
| Cores per chip | 4 | 4 | 2 | 8 |
| Clock rate | 2.5 GHz | ~2.5 GHz? | 4.7 GHz | 1.4 GHz |
| Power | 120 W | ~100 W? | ~100 W? | 94 W |