CS447 Computer Architecture Lecture 14 Pipelining 2 October

CS-447 Computer Architecture
Lecture 14: Pipelining (2)
October 8th, 2008
Majd F. Sakr
msakr@qatar.cmu.edu
www.qatar.cmu.edu/~msakr/15447-f08/
15-447 Computer Architecture, Fall 2008 ©

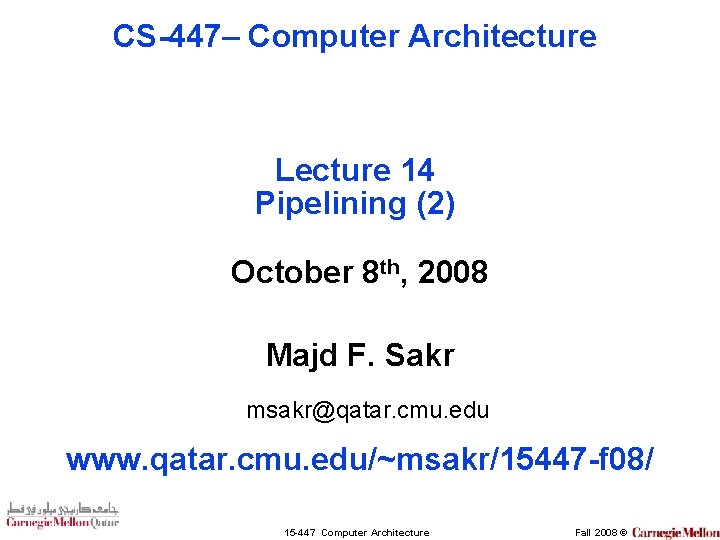

Sequential Laundry
(Figure: timeline from 6 PM to midnight; loads A, B, C and D are done one after another in task order, each taking 30 + 30 + 30 minutes.)
° Washing = drying = folding = 30 minutes

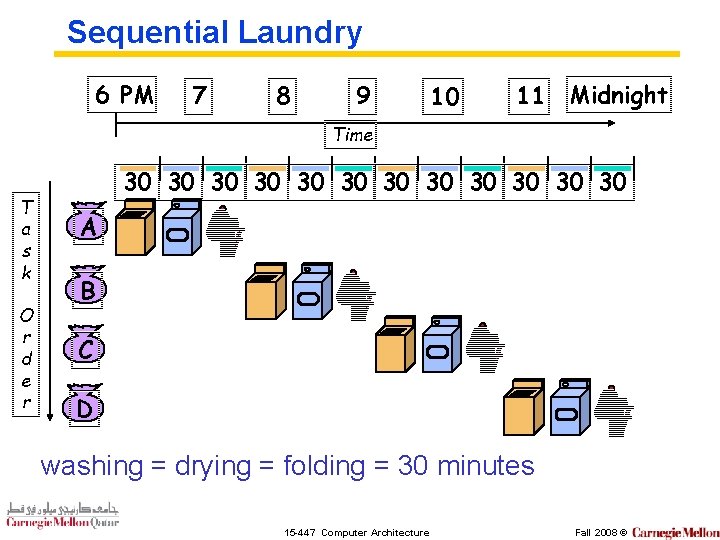

Sequential Laundry
(Figure: the same four loads, A to D, run back to back; at 90 minutes per load, the last load finishes at midnight.)

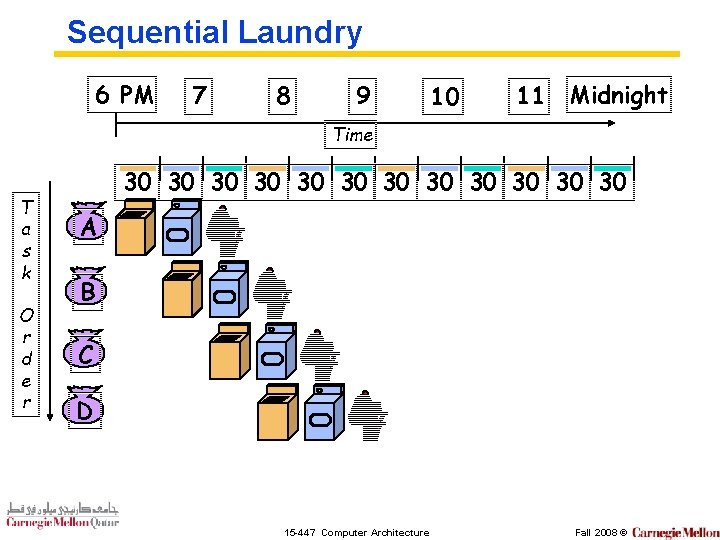

Sequential Laundry
(Figure: the sequential schedule from 6 PM to midnight above a pipelined schedule in which each load starts washing 30 minutes after the previous one, so all four loads are done by 9 PM.)
Ideal Pipelining:
  • 3 loads in parallel
  • No additional resources
  • Throughput increased by a factor of 3 (once the pipeline is full)
  • Latency per load is the same
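As a quick check on the ideal-pipelining numbers, here is a minimal Python sketch. It assumes every stage takes exactly 30 minutes and that a new load can start washing as soon as the washer is free; the function names are illustrative, not from the lecture.

```python
def sequential_minutes(loads: int, stage_minutes: int, stages: int) -> int:
    # Each load runs every stage before the next load may start.
    return loads * stages * stage_minutes


def pipelined_minutes(loads: int, stage_minutes: int, stages: int) -> int:
    # The first load needs `stages` steps; each later load finishes one step later.
    return (stages + loads - 1) * stage_minutes


print(sequential_minutes(4, 30, 3))  # 360 minutes: 6 PM to midnight
print(pipelined_minutes(4, 30, 3))   # 180 minutes: 6 PM to 9 PM
```

Either way a single load still takes 3 × 30 = 90 minutes from wash to fold; only the rate at which loads complete changes.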

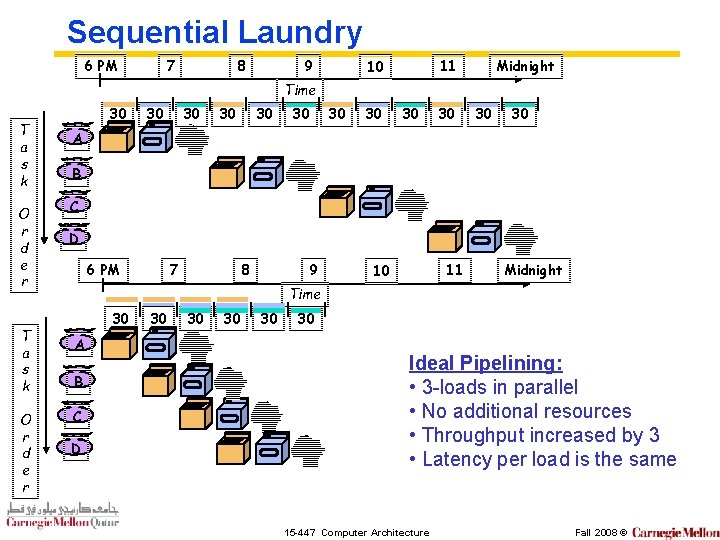

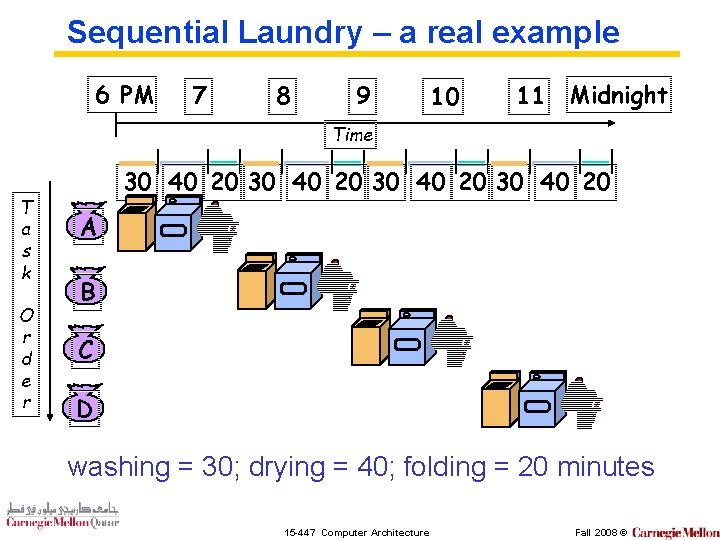

Sequential Laundry: A Real Example
(Figure: loads A to D done one after another from 6 PM to midnight, with stage times of 30, 40 and 20 minutes.)
° Washing = 30 minutes; drying = 40 minutes; folding = 20 minutes

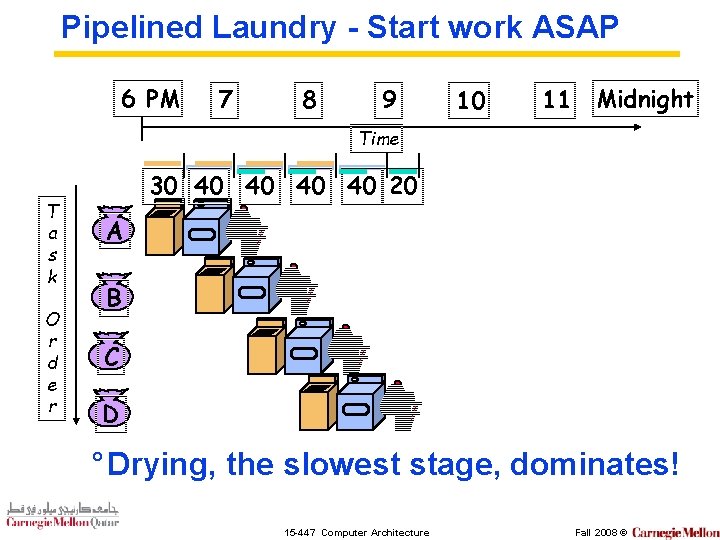

Pipelined Laundry: Start Work ASAP
(Figure: loads A to D overlapped from 6 PM; after the first 30-minute wash, the timeline advances in 40-minute drying steps and ends with a 20-minute fold, finishing at 9:30 PM.)
° Drying, the slowest stage, dominates!
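The "start work ASAP" schedule can be reproduced with a small simulation. This is a sketch under one stated assumption: finished clothes can wait in a basket, so a machine is free the moment its load is done. The function name is hypothetical.

```python
def pipeline_finish_times(loads: int, stage_minutes: list[int]) -> list[int]:
    """Finish time (minutes after 6 PM) of each load, starting work ASAP.

    A load enters stage s as soon as (1) it has finished stage s - 1 and
    (2) the previous load has finished stage s, since there is only one
    washer, one dryer and one folder.  Assumes finished clothes can wait
    in a basket, so a machine is free the moment its load is done.
    """
    prev_done = [0] * len(stage_minutes)  # when the previous load left each stage
    finish = []
    for _ in range(loads):
        t = 0  # when this load finished its previous stage
        done = []
        for s, duration in enumerate(stage_minutes):
            t = max(t, prev_done[s]) + duration
            done.append(t)
        prev_done = done
        finish.append(t)
    return finish


print(pipeline_finish_times(4, [30, 40, 20]))  # [90, 130, 170, 210]
```

The last load finishes after 210 minutes (9:30 PM) instead of 360 minutes sequentially. After the first load, one load completes every 40 minutes, the drying time, which is exactly why the slowest stage dominates.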

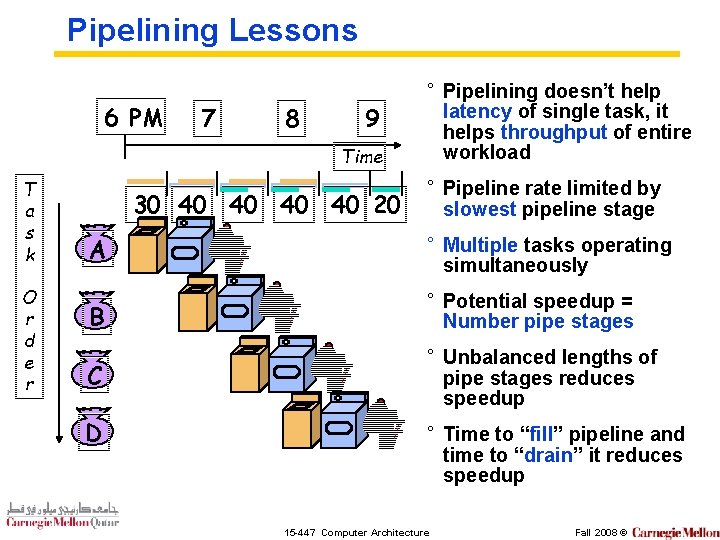

Pipelining Lessons
(Figure: the pipelined laundry timeline for loads A to D with the 30-, 40- and 20-minute stages.)
° Pipelining doesn’t help the latency of a single task; it helps the throughput of the entire workload
° Pipeline rate is limited by the slowest pipeline stage
° Multiple tasks operate simultaneously
° Potential speedup = number of pipe stages
° Unbalanced lengths of pipe stages reduce speedup
° Time to “fill” the pipeline and time to “drain” it reduce speedup
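The effect of filling and draining on the potential speedup can be quantified for a balanced pipeline. In the sketch below (the function name is illustrative), n tasks take n·k stage times sequentially but only k + (n − 1) stage times when pipelined.

```python
def pipeline_speedup(n_tasks: int, stages: int) -> float:
    """Speedup of a balanced k-stage pipeline over running tasks one at a time.

    Sequential: n * k stage times.
    Pipelined:  k stage times to fill, then one task per stage time,
                i.e. k + (n - 1) stage times in total.
    """
    return (n_tasks * stages) / (stages + n_tasks - 1)


for n in (1, 4, 100, 10_000):
    print(n, round(pipeline_speedup(n, 3), 3))
```

With 3 stages the speedup is 1.0 for a single task and 2.0 for the four loads. It approaches the stage count, 3, only as the fill and drain time is amortized over many tasks.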

Pipelining
° Doesn’t improve latency!
° We execute billions of instructions, so throughput is what matters!

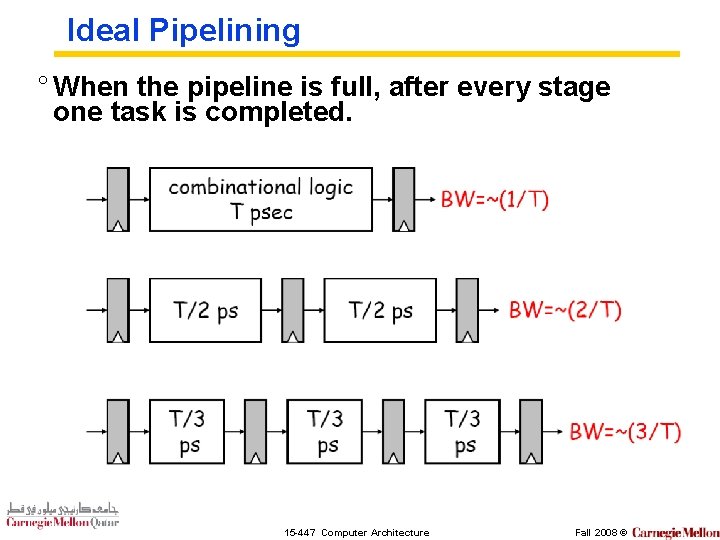

Ideal Pipelining
° When the pipeline is full, one task completes every stage time.

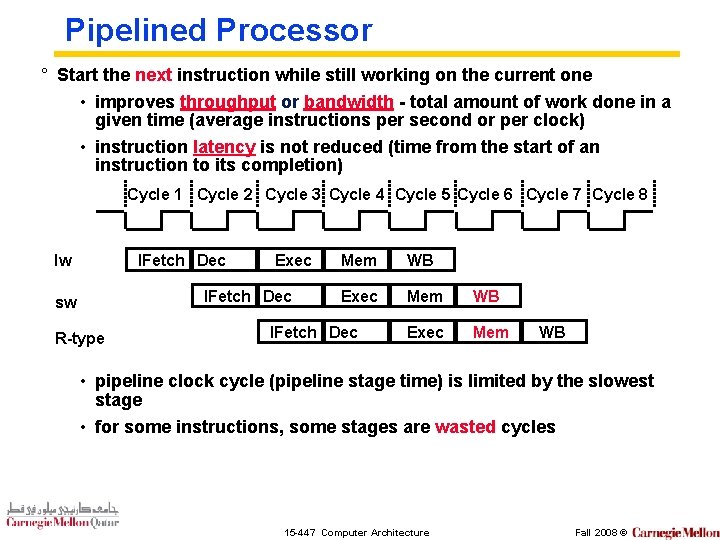

Pipelined Processor
° Start the next instruction while still working on the current one
  • Improves throughput, or bandwidth: the total amount of work done in a given time (average instructions per second or per clock)
  • Does not reduce instruction latency (the time from the start of an instruction to its completion)
(Figure: lw, sw and an R-type instruction started in consecutive cycles over Cycles 1 to 8, each passing through IFetch, Dec, Exec, Mem and WB.)
  • The pipeline clock cycle (pipeline stage time) is limited by the slowest stage
  • For some instructions, some stages are wasted cycles
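To see why the pipeline clock is set by the slowest stage, and why a single instruction can even get slower, the sketch below uses made-up stage latencies. The numbers are hypothetical, chosen only so that the stages are unbalanced, and pipeline-register overhead is ignored.

```python
# Hypothetical stage latencies in picoseconds; illustrative only.
STAGE_PS = {"IFetch": 200, "Dec": 100, "Exec": 200, "Mem": 200, "WB": 100}

# A single-cycle design needs a clock long enough for lw, which uses every stage.
single_cycle_clock = sum(STAGE_PS.values())

# A pipelined design gives every stage the same cycle: that of the slowest stage.
pipeline_clock = max(STAGE_PS.values())

# One lw still passes through all five stages, one pipeline cycle each.
pipelined_lw_latency = pipeline_clock * len(STAGE_PS)

print(single_cycle_clock, pipeline_clock, pipelined_lw_latency)  # 800 200 1000
print(single_cycle_clock / pipeline_clock)  # 4.0: steady-state throughput gain
```

The gain is 4 rather than 5 because Dec and WB need only half a cycle. Meanwhile the latency of one lw rises from 800 ps to 1000 ps.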

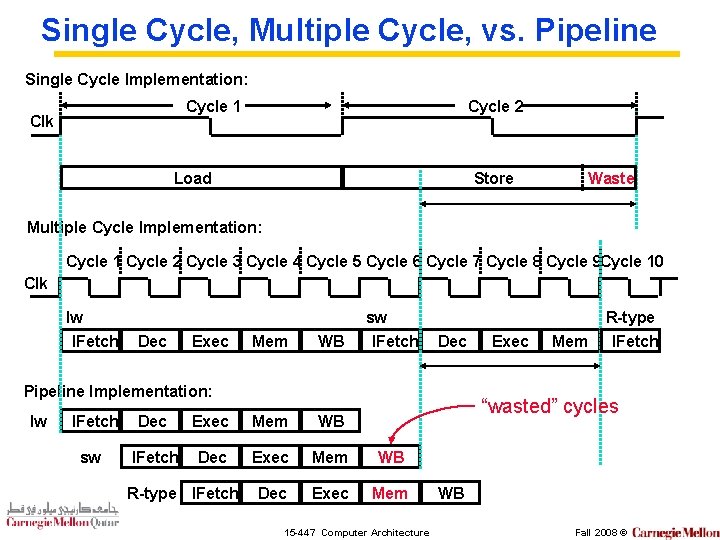

Single Cycle, Multiple Cycle, vs. Pipeline
(Figure: lw, sw and an R-type instruction under three implementations. Single cycle: one long clock cycle per instruction, with the unused part of the store’s cycle marked as waste. Multiple cycle: Cycles 1 to 10, each instruction taking only the stages it needs, one after another. Pipeline: the three instructions overlapped one cycle apart, with stages an instruction does not need marked as “wasted” cycles.)
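Counting cycles for the same lw, sw, R-type sequence makes the comparison concrete. This sketch rests on several simplifying assumptions. Everything is measured in short (one-stage) cycles, the stages are balanced, and the multicycle design skips WB for sw and Mem for R-type.

```python
# Short (one-stage) cycles each instruction needs in the multicycle design.
# Assumption: sw skips WB and R-type skips Mem; stages are balanced.
STAGES_NEEDED = {"lw": 5, "sw": 4, "R-type": 4}
PIPELINE_DEPTH = 5


def single_cycle_time(program):
    # One long cycle per instruction, sized for the slowest one (lw).
    return len(program) * max(STAGES_NEEDED.values())


def multicycle_time(program):
    # Each instruction uses only the short cycles it needs.
    return sum(STAGES_NEEDED[op] for op in program)


def pipeline_time(program):
    # Every instruction goes through all 5 stages; a new one starts each cycle.
    return PIPELINE_DEPTH + len(program) - 1


prog = ["lw", "sw", "R-type"]
print(single_cycle_time(prog), multicycle_time(prog), pipeline_time(prog))  # 15 13 7
```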

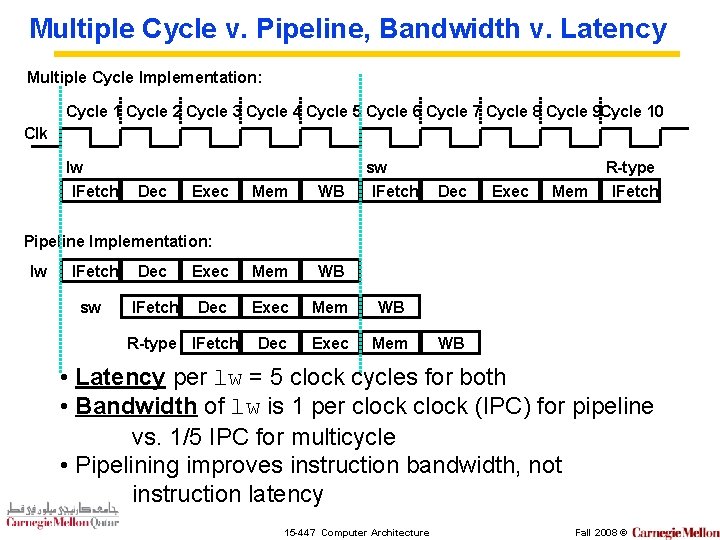

Multiple Cycle v. Pipeline, Bandwidth v. Latency
(Figure: lw, sw and R-type over Cycles 1 to 10, executed one after another in the multicycle implementation and overlapped in the pipeline.)
  • Latency per lw = 5 clock cycles for both
  • Bandwidth of lw is 1 instruction per clock (IPC = 1) for the pipeline vs. 1/5 IPC for multicycle
  • Pipelining improves instruction bandwidth, not instruction latency

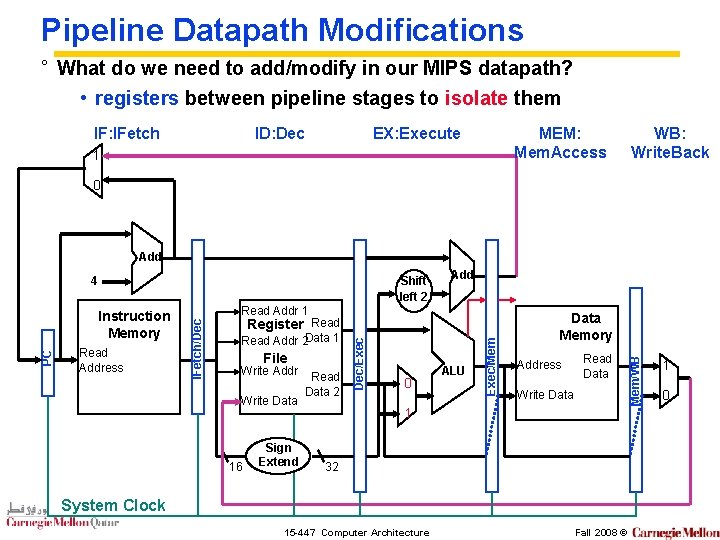

Pipeline Datapath Modifications
° What do we need to add/modify in our MIPS datapath?
  • Registers between pipeline stages to isolate them
(Figure: the MIPS datapath divided into IF: IFetch, ID: Dec, EX: Execute, MEM: Mem. Access and WB: WriteBack, with IFetch/Dec, Dec/Exec, Exec/Mem and Mem/WB pipeline registers clocked by the system clock.)
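A toy model helps picture what the pipeline registers do on each clock edge. In this sketch, which is an illustration and not the real datapath, each slot holds the instruction currently in a stage, and a clock edge latches every instruction into the next stage. Data values and hazards are ignored, and the `Pipeline` class is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

STAGES = ["IF", "ID", "EX", "MEM", "WB"]


@dataclass
class Pipeline:
    """Toy model of the five stages and the registers between them.

    slots[i] is the instruction currently in STAGES[i].  The boundaries
    between neighbouring slots stand in for the IF/ID, ID/EX, EX/MEM and
    MEM/WB pipeline registers: on each clock edge every instruction is
    latched into the next stage.  Data values and hazards are ignored.
    """
    slots: list = field(default_factory=lambda: [None] * len(STAGES))

    def clock(self, fetched: Optional[str]) -> Optional[str]:
        """Advance one cycle; return the instruction that just left WB."""
        retired = self.slots[-1]
        self.slots = [fetched] + self.slots[:-1]
        return retired


p = Pipeline()
program = ["lw", "sw", "add", "sub"]
for cycle in range(1, 9):
    nxt = program[cycle - 1] if cycle <= len(program) else None
    p.clock(nxt)
    print(f"cycle {cycle}:", dict(zip(STAGES, p.slots)))
```

In cycle 5 the pipeline is full (lw in WB, sub in ID), and by cycle 8 only sub remains, in WB.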

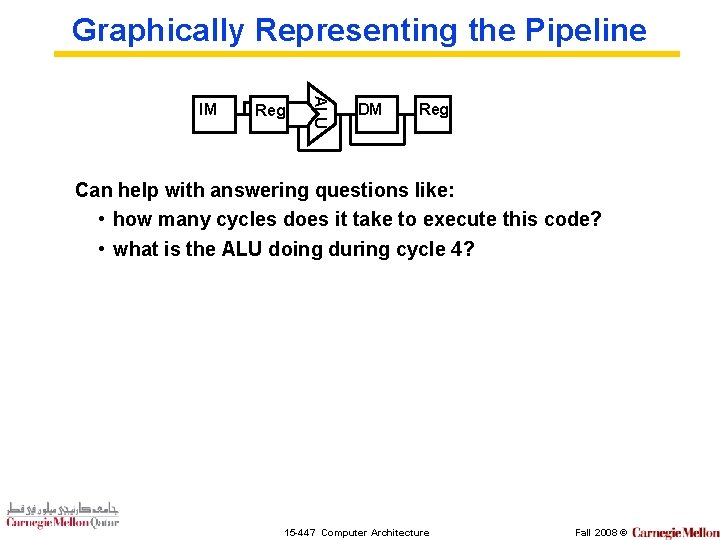

Graphically Representing the Pipeline
(Figure: one instruction drawn as IM, Reg, ALU, DM, Reg.)
Can help with answering questions like:
  • How many cycles does it take to execute this code?
  • What is the ALU doing during cycle 4?
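Both questions can be answered mechanically from the diagram. The sketch below assumes the ideal case: no stalls, one instruction issued per cycle starting in cycle 1, and five instructions numbered Inst 0 to Inst 4. The helper names are hypothetical.

```python
STAGES = ["IM", "Reg", "ALU", "DM", "Reg"]


def stage_of(inst: int, cycle: int):
    """Unit used by instruction `inst` (0-based, one issued per cycle from
    cycle 1) during clock `cycle` (1-based), or None if it is not in flight."""
    k = cycle - 1 - inst
    return STAGES[k] if 0 <= k < len(STAGES) else None


def total_cycles(n_insts: int) -> int:
    # The first instruction needs 5 cycles; each later one finishes 1 cycle later.
    return len(STAGES) + n_insts - 1


def alu_user(cycle: int, n_insts: int):
    """Which instruction (if any) is in the ALU during `cycle`."""
    users = [i for i in range(n_insts) if stage_of(i, cycle) == "ALU"]
    return users[0] if users else None


n = 5
for i in range(n):
    row = [stage_of(i, c) or "" for c in range(1, total_cycles(n) + 1)]
    print(f"Inst {i}: " + " ".join(f"{s:>4}" for s in row))
print("cycles:", total_cycles(n))                    # 9
print("ALU in cycle 4 serves Inst", alu_user(4, n))  # 1
```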

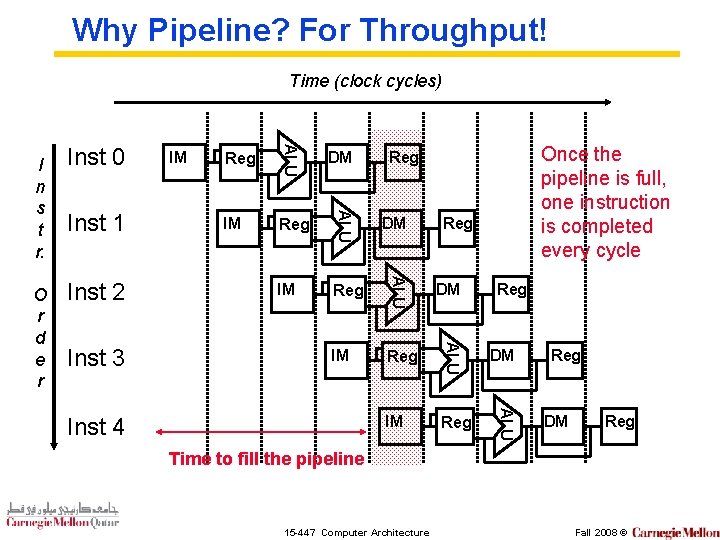

Why Pipeline? For Throughput!
(Figure: Inst 0 to Inst 4 in instruction order, each starting one clock cycle after the previous one and passing through IM, Reg, ALU, DM, Reg; the first cycles are labeled “Time to fill the pipeline”.)
° Once the pipeline is full, one instruction is completed every cycle

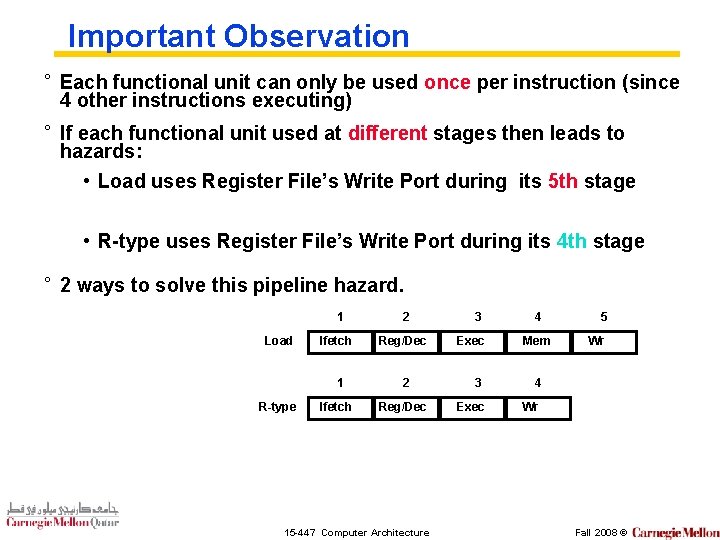

Important Observation
° Each functional unit can only be used once per instruction (since 4 other instructions are executing)
° If the same functional unit is used at different stages by different instructions, hazards result:
  • Load uses the Register File’s Write Port during its 5th stage
  • R-type uses the Register File’s Write Port during its 4th stage
° There are 2 ways to solve this pipeline hazard.
(Figure: Load passes through 1 Ifetch, 2 Reg/Dec, 3 Exec, 4 Mem, 5 Wr; R-type passes through 1 Ifetch, 2 Reg/Dec, 3 Exec, 4 Wr.)
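The write-port conflict can be detected by computing each instruction’s write cycle. The sketch below assumes one instruction is fetched per cycle, starting in cycle 1; the function name and the two stage tables are illustrative.

```python
def write_port_conflicts(program, write_stage):
    """Return the cycles in which two instructions need the single register-file
    write port.  Assumes one instruction is fetched per cycle, from cycle 1."""
    writer_in_cycle = {}
    conflicts = []
    for fetch_cycle, op in enumerate(program, start=1):
        wb_cycle = fetch_cycle + write_stage[op] - 1
        if wb_cycle in writer_in_cycle:
            conflicts.append(wb_cycle)
        writer_in_cycle[wb_cycle] = op
    return conflicts


# Stage in which each class writes the register file, as on the slide.
ORIGINAL = {"Load": 5, "R-type": 4}
print(write_port_conflicts(["Load", "R-type"], ORIGINAL))  # [5]

# If every class wrote in stage 5, fetch cycles are distinct, so write cycles are too.
UNIFORM = {"Load": 5, "R-type": 5}
print(write_port_conflicts(["Load", "R-type"], UNIFORM))  # []
```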

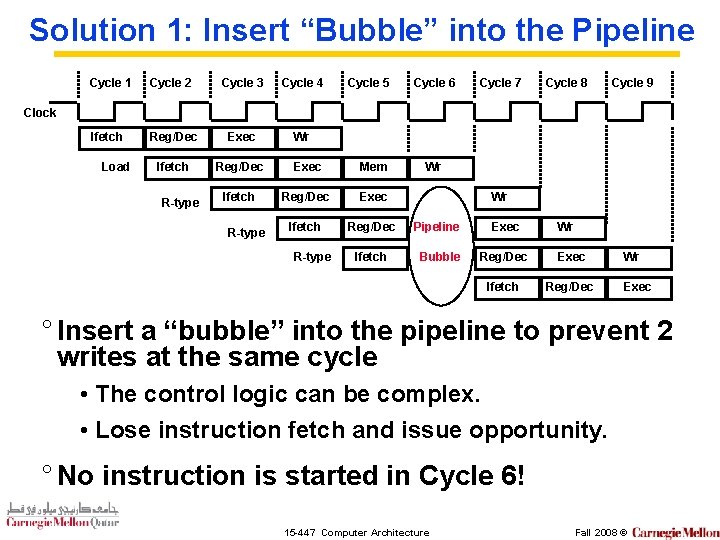

Solution 1: Insert “Bubble” into the Pipeline
(Figure: R-type and Load instructions over Cycles 1 to 9, with a pipeline bubble delaying an R-type so that its Wr does not fall in the same cycle as the Load’s Wr.)
° Insert a “bubble” into the pipeline to prevent 2 writes in the same cycle
  • The control logic can be complex.
  • We lose an instruction fetch and issue opportunity.
° No instruction is started in Cycle 6!
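The bubble approach can be sketched as a scheduler that leaves a fetch slot empty whenever the next instruction’s write would collide with an earlier one. This is a simplified model: it delays the fetch rather than inserting the bubble mid-pipeline. The function name and the instruction mix are hypothetical.

```python
WRITE_STAGE = {"Load": 5, "R-type": 4}  # stage of the register-file write


def schedule_with_bubbles(program):
    """Fetch cycle of each instruction when a bubble (an empty fetch slot)
    is inserted whenever the instruction's register write would land in the
    same cycle as an earlier instruction's write."""
    busy_write_cycles = set()
    fetch_cycles = []
    cycle = 1
    for op in program:
        while cycle + WRITE_STAGE[op] - 1 in busy_write_cycles:
            cycle += 1  # bubble: nothing new starts in this cycle
        busy_write_cycles.add(cycle + WRITE_STAGE[op] - 1)
        fetch_cycles.append(cycle)
        cycle += 1
    return fetch_cycles


print(schedule_with_bubbles(["Load", "R-type", "R-type"]))  # [1, 3, 4]
```

Cycle 2 goes unused here, which is the lost fetch and issue opportunity the slide warns about.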

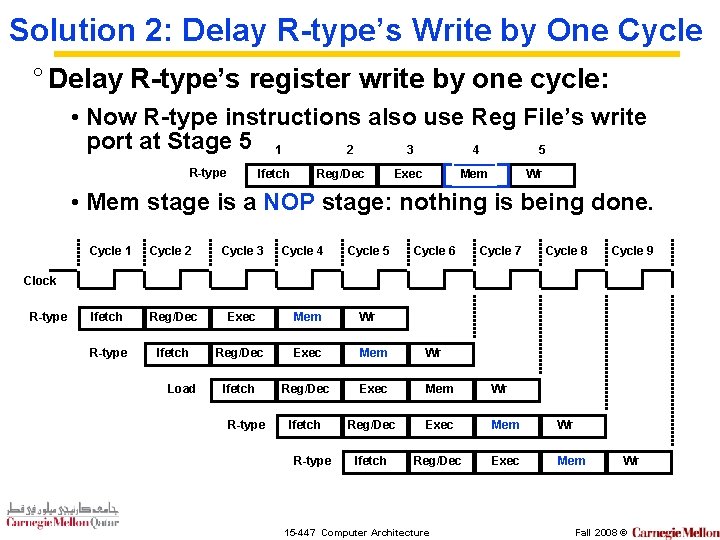

Solution 2: Delay R-type’s Write by One Cycle
° Delay R-type’s register write by one cycle:
  • Now R-type instructions also use the Reg File’s write port at Stage 5
  • The Mem stage is a NOP stage: nothing is being done.
(Figure: R-type now passes through 1 Ifetch, 2 Reg/Dec, 3 Exec, 4 Mem, 5 Wr; a mix of R-type and Load instructions over Cycles 1 to 9 then never has two writes in the same cycle.)

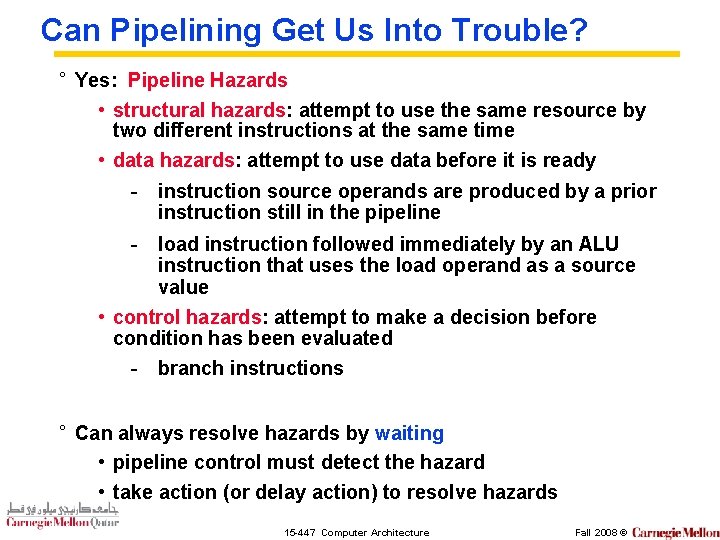

Can Pipelining Get Us Into Trouble?
° Yes: Pipeline Hazards
  • Structural hazards: attempt to use the same resource by two different instructions at the same time
  • Data hazards: attempt to use data before it is ready
    - instruction source operands are produced by a prior instruction still in the pipeline
    - load instruction followed immediately by an ALU instruction that uses the load operand as a source value
  • Control hazards: attempt to make a decision before the condition has been evaluated
    - branch instructions
° Can always resolve hazards by waiting
  • Pipeline control must detect the hazard
  • Take action (or delay action) to resolve hazards

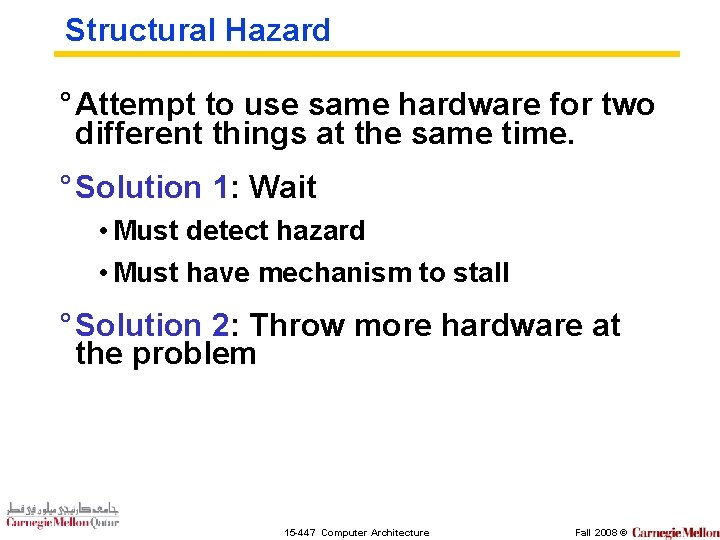

Structural Hazard
° Attempt to use the same hardware for two different things at the same time.
° Solution 1: Wait
  • Must detect the hazard
  • Must have a mechanism to stall
° Solution 2: Throw more hardware at the problem

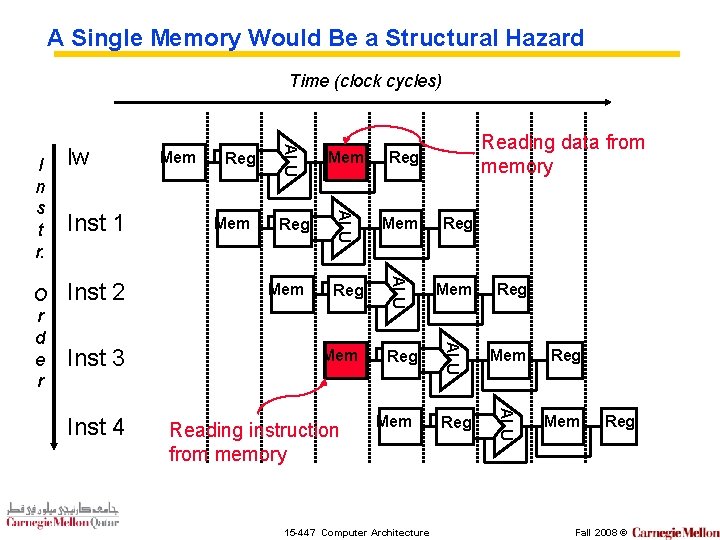

A Single Memory Would Be a Structural Hazard
(Figure: lw followed by Inst 1 to Inst 4, all using one memory; while lw is reading data from memory, Inst 3 is reading its instruction from the same memory in the same clock cycle.)

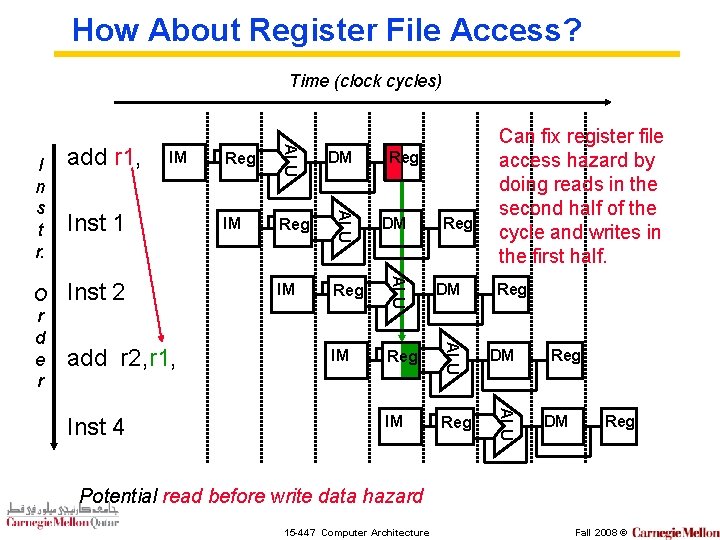

How About Register File Access?
(Figure: an add that writes r1, followed by Inst 1, Inst 2 and an add r2, r1 that reads r1; the register read of the last add falls in the same cycle as the first add’s register write, a potential read-before-write data hazard.)
° Can fix the register file access hazard by doing reads in the second half of the cycle and writes in the first half.

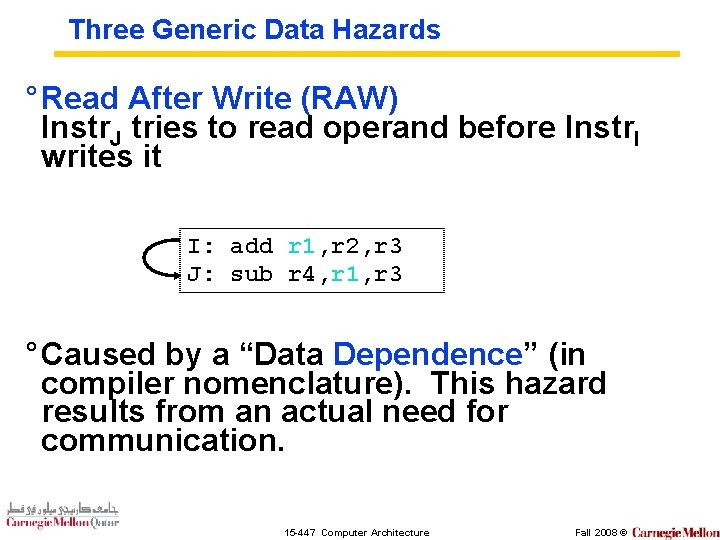

Three Generic Data Hazards
° Read After Write (RAW)
  Instr. J tries to read an operand before Instr. I writes it
    I: add r1, r2, r3
    J: sub r4, r1, r3
° Caused by a “data dependence” (in compiler nomenclature). This hazard results from an actual need for communication.

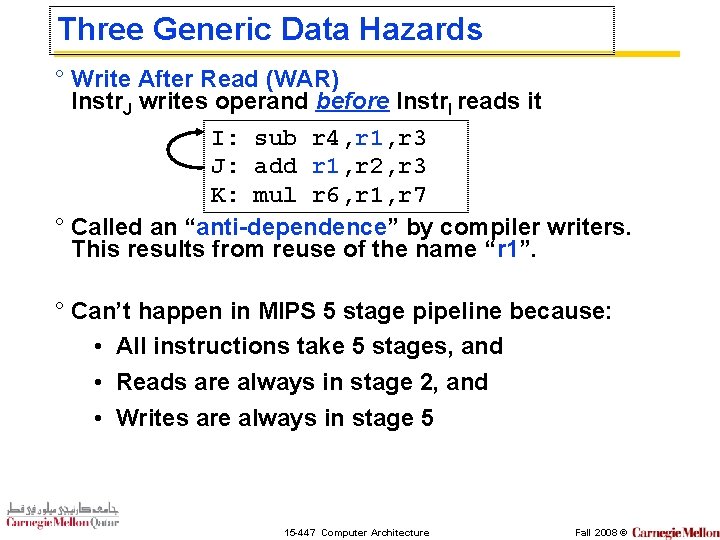

Three Generic Data Hazards
° Write After Read (WAR)
  Instr. J writes an operand before Instr. I reads it
    I: sub r4, r1, r3
    J: add r1, r2, r3
    K: mul r6, r1, r7
° Called an “anti-dependence” by compiler writers. This results from reuse of the name “r1”.
° Can’t happen in the MIPS 5-stage pipeline because:
  • All instructions take 5 stages, and
  • Reads are always in stage 2, and
  • Writes are always in stage 5

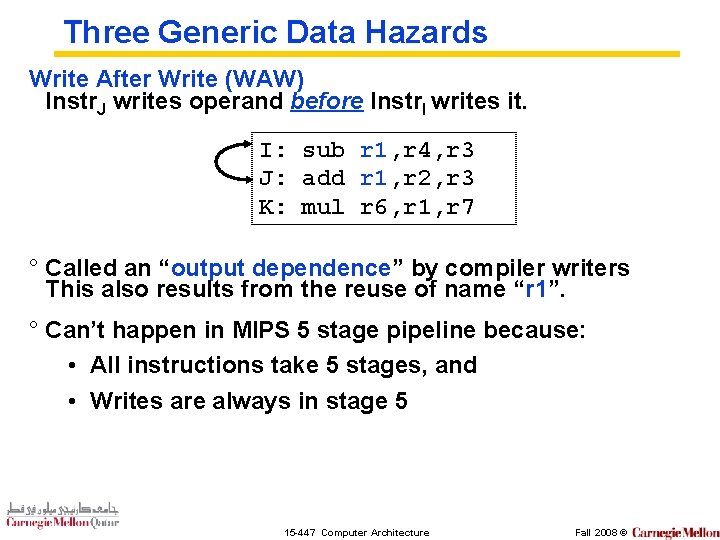

Three Generic Data Hazards
° Write After Write (WAW)
  Instr. J writes an operand before Instr. I writes it.
    I: sub r1, r4, r3
    J: add r1, r2, r3
    K: mul r6, r1, r7
° Called an “output dependence” by compiler writers. This also results from the reuse of the name “r1”.
° Can’t happen in the MIPS 5-stage pipeline because:
  • All instructions take 5 stages, and
  • Writes are always in stage 5
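The three cases can be told apart by comparing destination and source registers. The sketch below assumes the three-register form used on these slides (op dest, src1, src2), and the helper names are hypothetical. It finds dependences only. Whether a dependence becomes a hazard depends on the pipeline, and in the 5-stage MIPS pipeline only RAW can.

```python
def parse(instr: str):
    """Split 'add r1, r2, r3' into (destination, {sources}).

    Assumes the three-register form used on these slides: op dest, src1, src2.
    """
    _op, operands = instr.split(maxsplit=1)
    regs = [r.strip() for r in operands.split(",")]
    return regs[0], set(regs[1:])


def dependences(first: str, second: str):
    """Dependences from an earlier instruction I to a later instruction J."""
    dest_i, srcs_i = parse(first)
    dest_j, srcs_j = parse(second)
    kinds = []
    if dest_i in srcs_j:
        kinds.append("RAW")  # J reads what I writes: true data dependence
    if dest_j in srcs_i:
        kinds.append("WAR")  # J writes what I reads: anti-dependence
    if dest_i == dest_j:
        kinds.append("WAW")  # both write the same name: output dependence
    return kinds


print(dependences("add r1, r2, r3", "sub r4, r1, r3"))  # ['RAW']
print(dependences("sub r4, r1, r3", "add r1, r2, r3"))  # ['WAR']
print(dependences("sub r1, r4, r3", "add r1, r2, r3"))  # ['WAW']
```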

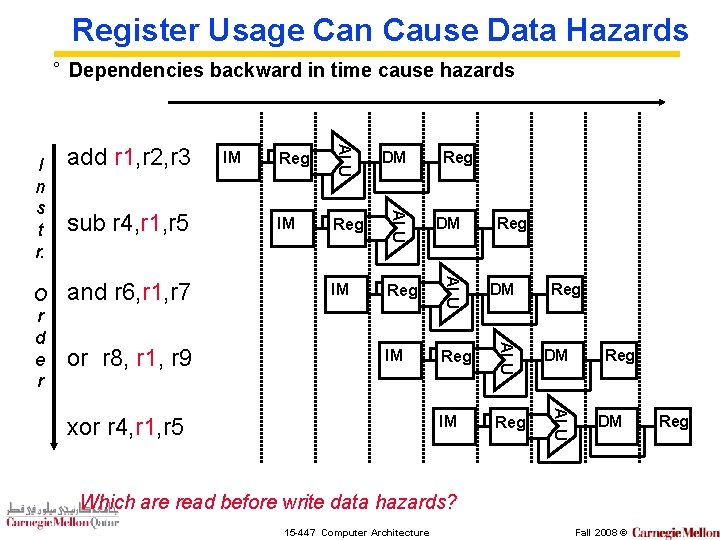

Register Usage Can Cause Data Hazards
° Dependencies backward in time cause hazards
(Figure: add r1, r2, r3 followed by sub r4, r1, r5; and r6, r1, r7; or r8, r1, r9; and xor r4, r1, r5, each passing through IM, Reg, ALU, DM, Reg one cycle apart.)
Which are read-before-write data hazards?
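The question can be answered by comparing each reader’s register-read cycle with the writer’s write-back cycle. This sketch assumes one instruction issued per cycle, with no stalls or forwarding. It also assumes the split-cycle register file from the earlier slide (write in the first half, read in the second), so a read in the same cycle as the write already sees the new value. The function name is hypothetical.

```python
def raw_hazards(writer_issue: int, readers: dict, write_stage=5, read_stage=2):
    """Readers of the writer's destination that would read a stale value.

    Assumes one instruction issued per cycle and a register file written in
    the first half of a cycle and read in the second half, so a read in the
    same cycle as the write already sees the new value.
    """
    write_cycle = writer_issue + write_stage - 1
    return [name for name, issue in readers.items()
            if issue + read_stage - 1 < write_cycle]


# add r1, r2, r3 issues in cycle 1; the readers issue in cycles 2 to 5.
readers = {"sub r4, r1, r5": 2, "and r6, r1, r7": 3,
           "or r8, r1, r9": 4, "xor r4, r1, r5": 5}
print(raw_hazards(1, readers))  # ['sub r4, r1, r5', 'and r6, r1, r7']
```

So sub and and are hazards, while or reads r1 in the same cycle it is written and is safe.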

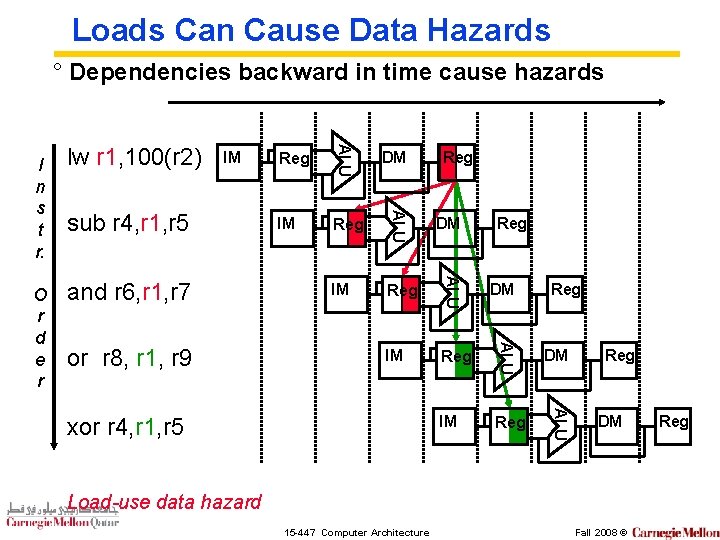

Loads Can Cause Data Hazards
° Dependencies backward in time cause hazards
(Figure: lw r1, 100(r2) followed by sub r4, r1, r5; and r6, r1, r7; or r8, r1, r9; and xor r4, r1, r5.)
Load-use data hazard

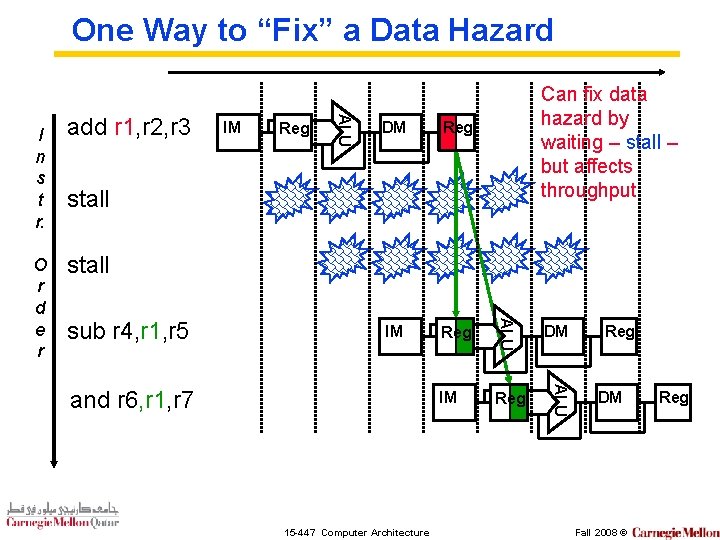

One Way to “Fix” a Data Hazard
(Figure: add r1, r2, r3, then stall cycles, then sub r4, r1, r5 and and r6, r1, r7.)
° Can fix a data hazard by waiting (stalling), but this affects throughput

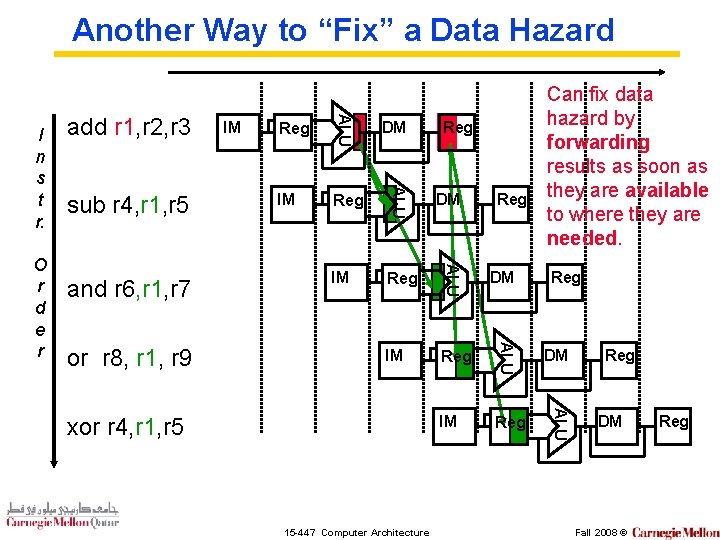

Another Way to “Fix” a Data Hazard
(Figure: add r1, r2, r3 followed by sub r4, r1, r5; and r6, r1, r7; or r8, r1, r9; and xor r4, r1, r5, with the result of the add forwarded to the dependent instructions as soon as it is available.)
° Can fix a data hazard by forwarding results as soon as they are available to where they are needed.

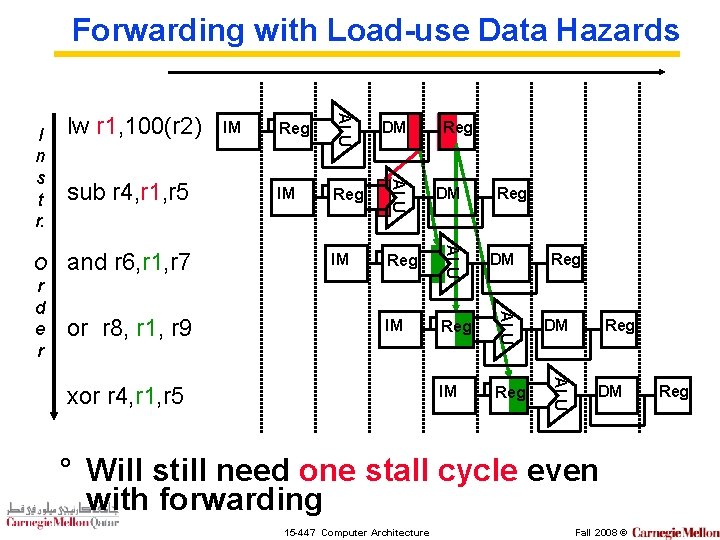

Forwarding with Load-use Data Hazards
(Figure: lw r1, 100(r2) followed by sub r4, r1, r5; and r6, r1, r7; or r8, r1, r9; and xor r4, r1, r5.)
° Will still need one stall cycle even with forwarding
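The stall counts for the last three slides follow from when a value is produced and when it is needed. The sketch rests on three assumptions: the split-cycle register file, forwarding paths into the ALU inputs from the pipeline registers after EX and after MEM, and a distance of 1 meaning the very next instruction. The function is hypothetical.

```python
def stall_cycles(producer: str, distance: int, forwarding: bool) -> int:
    """Stalls needed before a dependent instruction `distance` slots after its
    producer (1 = immediately following) in the 5-stage pipeline.

    Assumptions: split-cycle register file (write first half, read second
    half); with forwarding, an ALU result can feed the next instruction's EX
    right after the producer's EX, and load data right after its MEM.
    """
    if not forwarding:
        # The consumer's register read (stage 2) may not precede the write (stage 5).
        return max(0, 3 - distance)
    if producer == "lw":
        # Load data exists only after MEM, so an adjacent user waits one cycle.
        return max(0, 2 - distance)
    # An ALU result is ready after EX, just in time for the next instruction's EX.
    return max(0, 1 - distance)


print(stall_cycles("add", 1, forwarding=False))  # 2
print(stall_cycles("add", 1, forwarding=True))   # 0
print(stall_cycles("lw", 1, forwarding=True))    # 1
```

The last line is the load-use case: one stall remains even with forwarding.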

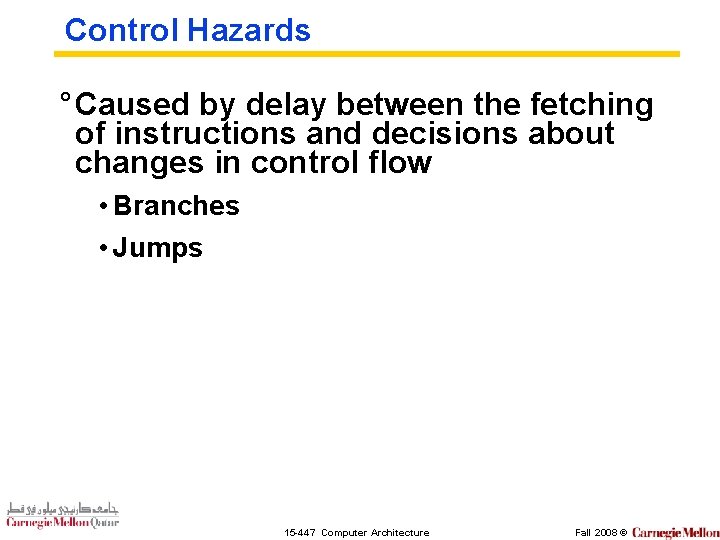

Control Hazards
° Caused by the delay between fetching instructions and making decisions about changes in control flow
  • Branches
  • Jumps

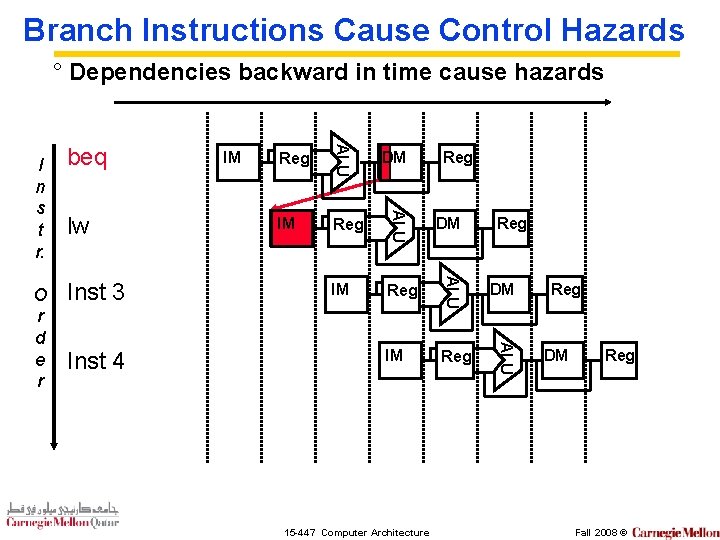

Branch Instructions Cause Control Hazards
° Dependencies backward in time cause hazards
(Figure: beq followed by lw, Inst 3 and Inst 4, which are fetched before the branch outcome is known.)

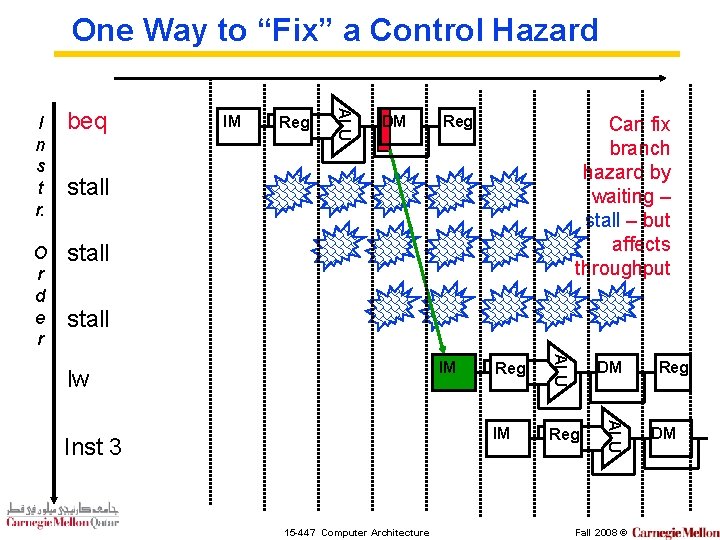

One Way to “Fix” a Control Hazard
(Figure: beq, then stall cycles, then lw and Inst 3.)
° Can fix a branch hazard by waiting (stalling), but this affects throughput
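The throughput cost of stalling on branches can be estimated from the branch frequency. The numbers below are hypothetical (20% branches, 2 stall cycles per branch). The model assumes every branch stalls for the same fixed number of cycles and that there are no other stalls.

```python
def effective_cpi(branch_fraction: float, stalls_per_branch: int,
                  base_cpi: float = 1.0) -> float:
    """CPI when every branch stalls the pipeline for a fixed number of cycles."""
    return base_cpi + branch_fraction * stalls_per_branch


# Hypothetical workload: 20% of instructions are branches, 2 stall cycles each.
cpi = effective_cpi(0.20, 2)
print(f"CPI = {cpi:.2f}, throughput = {1 / cpi:.2f} instructions per cycle")
```

With these numbers CPI rises from 1 to 1.4, so the pipeline completes about 0.71 instructions per cycle instead of 1.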

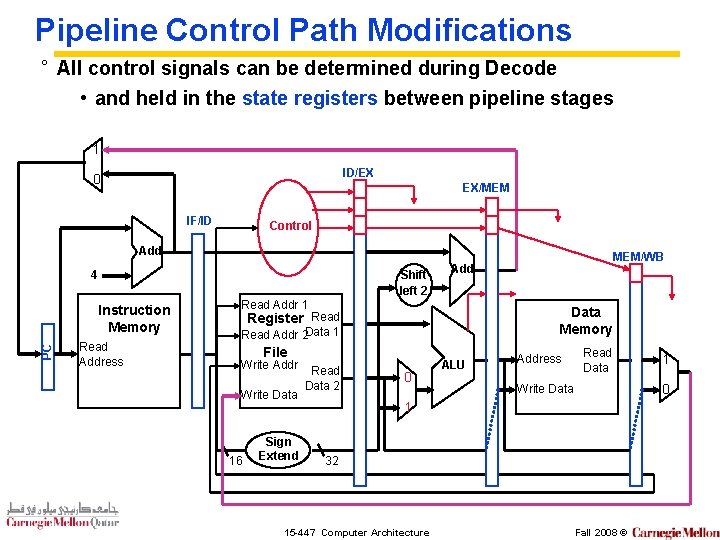

Pipeline Control Path Modifications
° All control signals can be determined during Decode
  • and held in the state registers between pipeline stages
(Figure: the pipelined MIPS datapath with a Control unit in the decode stage feeding the ID/EX, EX/MEM and MEM/WB pipeline registers; IF/ID sits between instruction fetch and decode.)
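One way to picture “determined during Decode and held in the state registers” is to group the signals by the stage that consumes them. Each pipeline register then carries only the groups still needed downstream. This sketch follows the usual MIPS control-signal names and values; the grouping into classes is an illustrative assumption, don’t-care values are shown as False, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExCtrl:   # used in EX
    reg_dst: bool
    alu_src: bool
    alu_op: int


@dataclass(frozen=True)
class MemCtrl:  # used in MEM
    branch: bool
    mem_read: bool
    mem_write: bool


@dataclass(frozen=True)
class WbCtrl:   # used in WB
    reg_write: bool
    mem_to_reg: bool


def decode_control(opcode: str):
    """Generate every control signal once, in ID (don't-cares shown as False)."""
    table = {
        "R-type": (ExCtrl(True, False, 0b10), MemCtrl(False, False, False), WbCtrl(True, False)),
        "lw":     (ExCtrl(False, True, 0b00), MemCtrl(False, True, False), WbCtrl(True, True)),
        "sw":     (ExCtrl(False, True, 0b00), MemCtrl(False, False, True), WbCtrl(False, False)),
        "beq":    (ExCtrl(False, False, 0b01), MemCtrl(True, False, False), WbCtrl(False, False)),
    }
    return table[opcode]


id_ex = decode_control("lw")  # ID/EX latches all three groups
ex_mem = id_ex[1:]            # EX has used its group; MEM and WB signals move on
mem_wb = ex_mem[1:]           # only the write-back signals reach MEM/WB
print(mem_wb)
```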

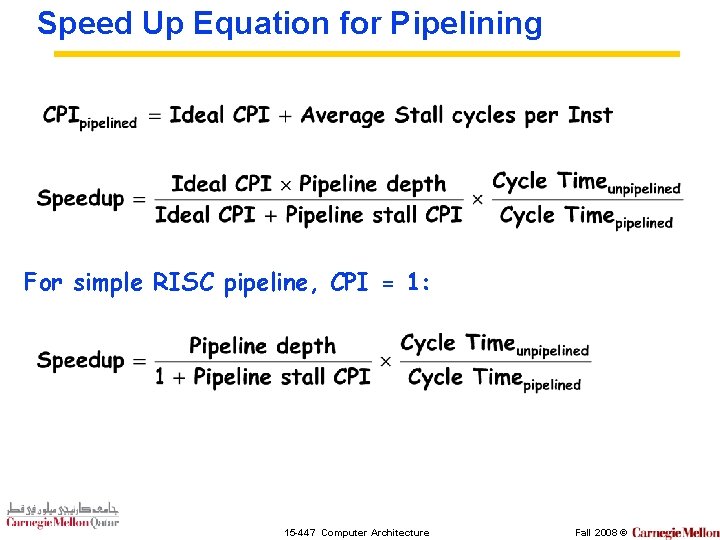

Speed Up Equation for Pipelining
For a simple RISC pipeline, CPI = 1:
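The equation on this slide did not survive extraction. The standard derivation from Hennessy and Patterson, which this slide appears to follow, is reproduced here as a reference sketch. It starts from the ratio of average instruction times and assumes the unpipelined machine spends one cycle per stage, so its CPI is Ideal CPI × Pipeline depth:

$$
\text{Speedup} = \frac{\text{CPI}_{\text{unpipelined}} \times \text{Cycle time}_{\text{unpipelined}}}{\text{CPI}_{\text{pipelined}} \times \text{Cycle time}_{\text{pipelined}}},
\qquad
\text{CPI}_{\text{pipelined}} = \text{Ideal CPI} + \text{Pipeline stall CPI}
$$

$$
\text{Speedup} = \frac{\text{Ideal CPI} \times \text{Pipeline depth}}{\text{Ideal CPI} + \text{Pipeline stall CPI}} \times \frac{\text{Cycle time}_{\text{unpipelined}}}{\text{Cycle time}_{\text{pipelined}}}
$$

Setting Ideal CPI = 1 gives:

$$
\text{Speedup} = \frac{\text{Pipeline depth}}{1 + \text{Pipeline stall CPI}} \times \frac{\text{Cycle time}_{\text{unpipelined}}}{\text{Cycle time}_{\text{pipelined}}}
$$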

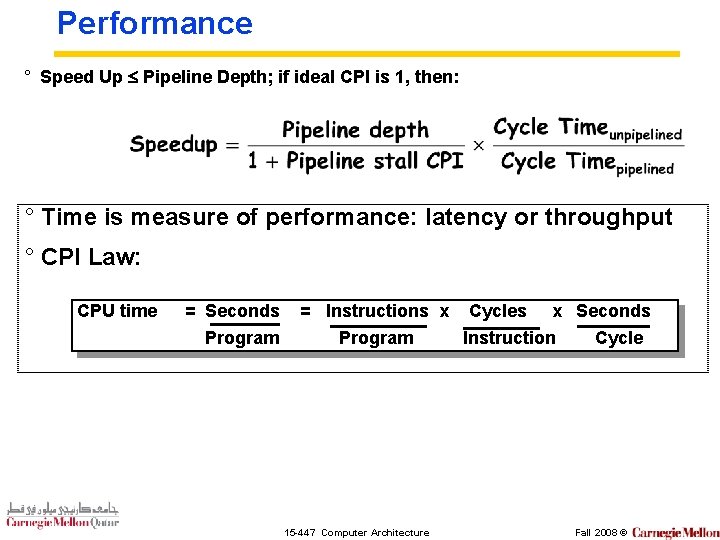

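Performance
° Speedup ≈ pipeline depth; if the ideal CPI is 1, then:
$$\text{Speedup} = \frac{\text{Pipeline depth}}{1 + \text{Pipeline stall CPI}} \times \frac{\text{Cycle time}_{\text{unpipelined}}}{\text{Cycle time}_{\text{pipelined}}}$$
° Time is the measure of performance: latency or throughput
° CPI Law:
$$\text{CPU time} = \frac{\text{Seconds}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Cycle}}$$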
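Both formulas translate directly into code. The inputs below are hypothetical: a 5-stage pipeline with 0.25 stall cycles per instruction and an unchanged cycle time, and a program of 10^9 instructions at CPI 1.25 on a 1 GHz clock. The function names are illustrative.

```python
def cpu_time(instructions: float, cpi: float, seconds_per_cycle: float) -> float:
    """CPI law: instructions/program x cycles/instruction x seconds/cycle."""
    return instructions * cpi * seconds_per_cycle


def speedup(depth: int, stall_cpi: float,
            cycle_unpipelined: float, cycle_pipelined: float) -> float:
    """Pipeline speedup with ideal CPI = 1."""
    return depth / (1 + stall_cpi) * (cycle_unpipelined / cycle_pipelined)


# Hypothetical: 5-stage pipeline, 0.25 stall cycles per instruction,
# and no change in cycle time relative to the unpipelined design.
print(speedup(5, 0.25, 1.0, 1.0))  # 4.0

# Hypothetical: 10^9 instructions at CPI 1.25 on a 1 GHz (1 ns) clock.
print(cpu_time(1e9, 1.25, 1e-9))
```

The second call gives about 1.25 seconds. The stalls cost one fifth of the ideal 5x speedup.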

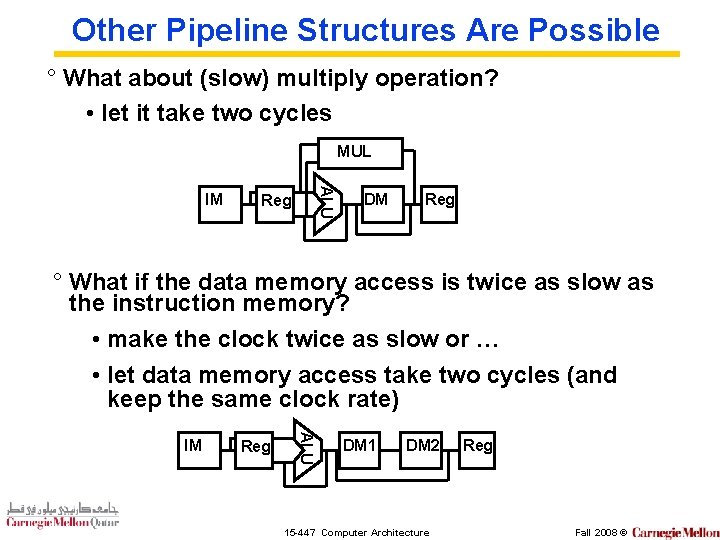

Other Pipeline Structures Are Possible
° What about a (slow) multiply operation?
  • Let it take two cycles
(Figure: IM, Reg, a two-cycle MUL unit alongside the ALU, DM, Reg.)
° What if the data memory access is twice as slow as the instruction memory?
  • Make the clock twice as slow, or
  • Let data memory access take two cycles (and keep the same clock rate)
(Figure: IM, Reg, ALU, DM1, DM2, Reg.)
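The two options for a slow data memory can be compared with made-up latencies. In this example data memory takes 400 ps against 200 ps for instruction memory; all numbers are hypothetical. The sketch ignores hazards and pipeline-register overhead.

```python
def run_time_ps(n_insts: int, stage_ps: list[int]) -> int:
    """Time to run n instructions through a pipeline clocked at its slowest stage.

    Ignores hazards and pipeline-register overhead.
    """
    clock = max(stage_ps)
    return (len(stage_ps) + n_insts - 1) * clock


# Hypothetical latencies (ps): data memory is twice as slow as instruction memory.
slow_clock = [200, 100, 200, 400, 100]      # IM, Reg, ALU, DM, Reg -> 400 ps clock
split_dm = [200, 100, 200, 200, 200, 100]   # IM, Reg, ALU, DM1, DM2, Reg -> 200 ps clock

print(run_time_ps(100, slow_clock))  # 41600
print(run_time_ps(100, split_dm))    # 21000
```

Splitting data memory access into two stages adds one stage of fill time but halves the clock. It wins on throughput and even on single-instruction latency here (6 × 200 = 1200 ps vs. 5 × 400 = 2000 ps).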

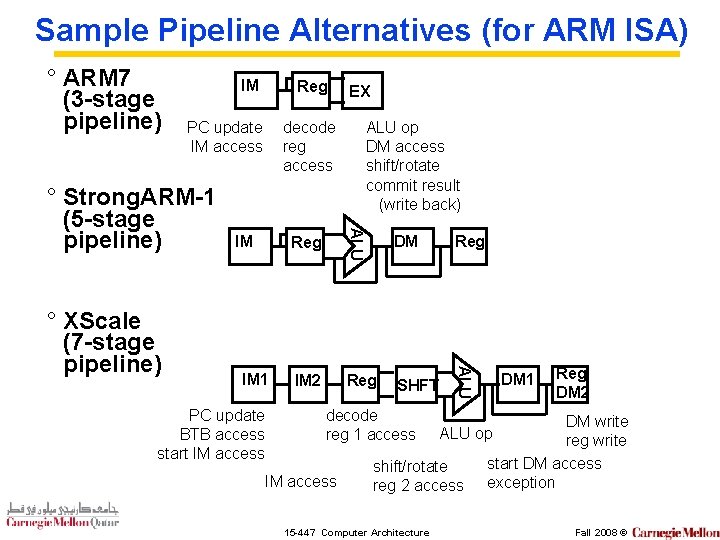

Sample Pipeline Alternatives (for ARM ISA)
° ARM7 (3-stage pipeline): IM (PC update, IM access), Reg (decode, reg access), EX (ALU op, shift/rotate, DM access, commit result (write back))
° StrongARM-1 (5-stage pipeline): IM, Reg, SHFT/ALU, DM, Reg
° XScale (7-stage pipeline): IM1 (PC update, BTB access, start IM access), IM2 (IM access), Reg (decode, reg 1 access), SHFT (shift/rotate, reg 2 access), ALU (ALU op), DM1 (start DM access, exception), DM2 (DM write, reg write)

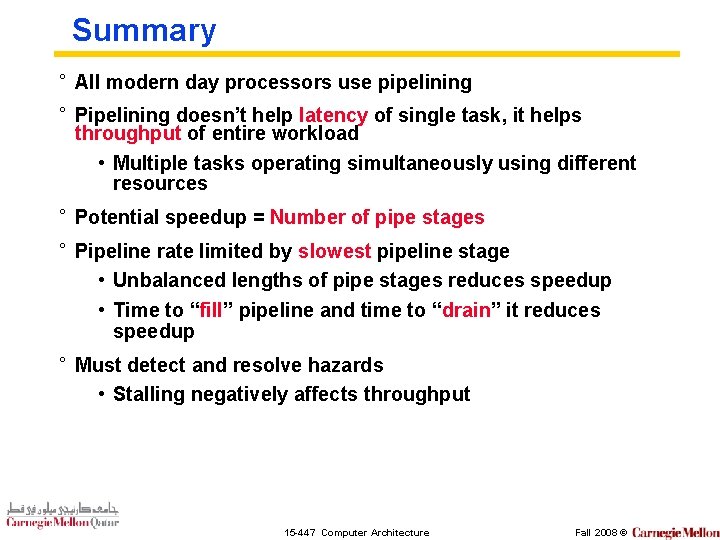

Summary
° All modern-day processors use pipelining
° Pipelining doesn’t help the latency of a single task; it helps the throughput of the entire workload
  • Multiple tasks operate simultaneously using different resources
° Potential speedup = number of pipe stages
° Pipeline rate is limited by the slowest pipeline stage
  • Unbalanced lengths of pipe stages reduce speedup
  • Time to “fill” the pipeline and time to “drain” it reduce speedup
° Must detect and resolve hazards
  • Stalling negatively affects throughput
