CS 798: Information Retrieval, Charlie Clarke, claclark@plg.uwaterloo.ca

- Slides: 30

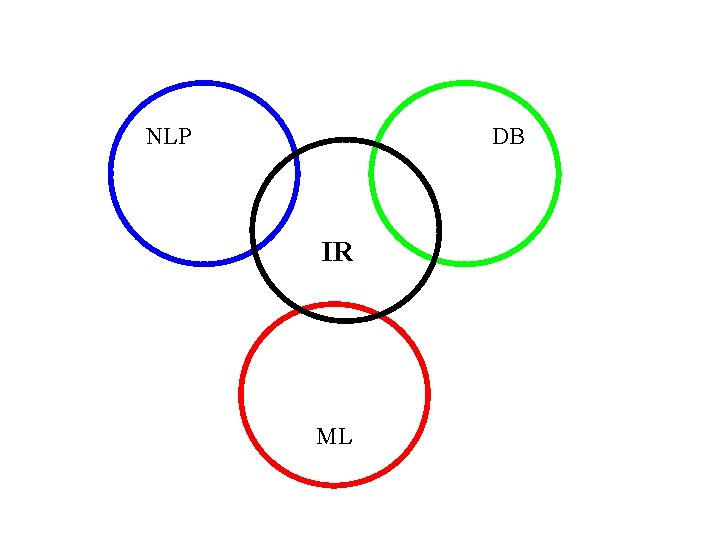

CS 798: Information Retrieval
Charlie Clarke
claclark@plg.uwaterloo.ca

Information retrieval is concerned with representing, searching, and manipulating large collections of human-language data.

Housekeeping
• Web page: http://plg.uwaterloo.ca/~claclark/cs798
• Area: “Applications/Databases”
• Meeting times: Mondays, 2:00-5:00, MC 2036

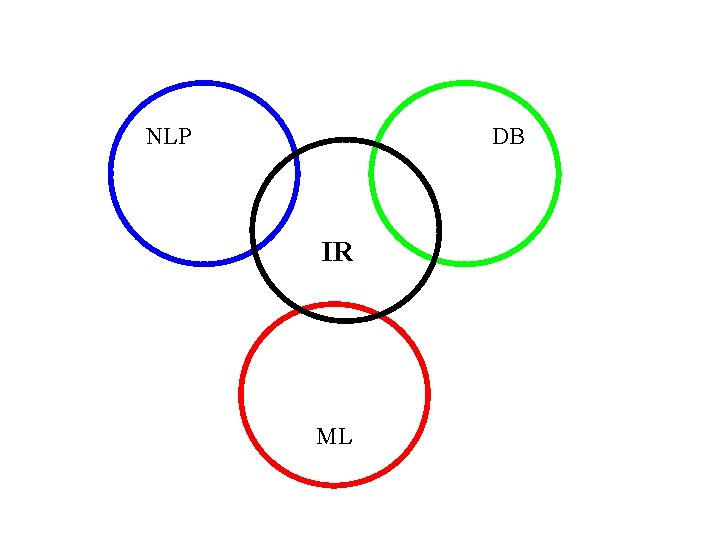

NLP DB IR ML

Topics
1. Basic techniques
2. Searching, browsing, ranking, retrieval
3. Indexing algorithms and data structures
4. Evaluation
5. Application areas

1. Basic Techniques
• Text representation & Tokenization
• Inverted indices
• Phrase searching example
• Vector space model
• Boolean retrieval
• Simple proximity ranking
• Test collections & Evaluation

2. Retrieval and Ranking
• Probabilistic retrieval and Okapi BM25F
• Language modeling
• Divergence from randomness
• Passage retrieval
• Classification
• Learning to rank
• Implicit user feedback

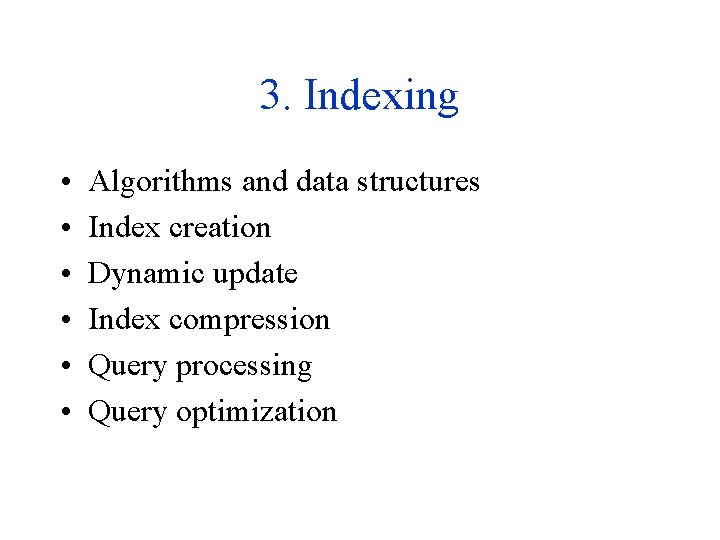

3. Indexing
• Algorithms and data structures
• Index creation
• Dynamic update
• Index compression
• Query processing
• Query optimization

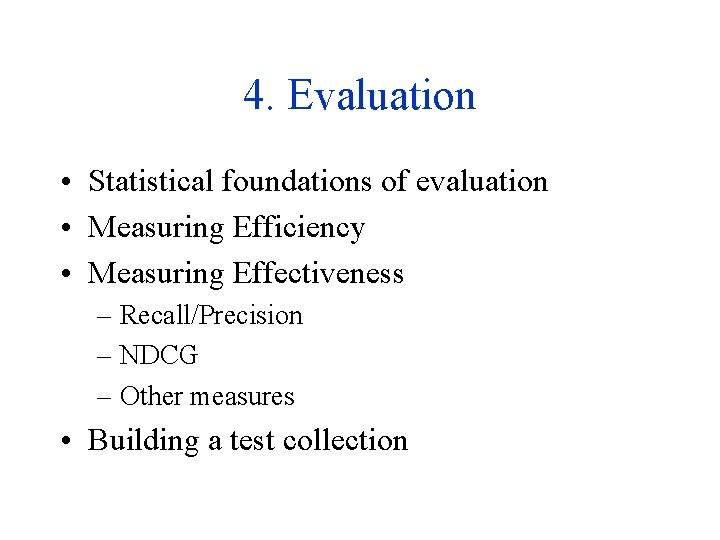

4. Evaluation
• Statistical foundations of evaluation
• Measuring Efficiency
• Measuring Effectiveness
  – Recall/Precision
  – NDCG
  – Other measures
• Building a test collection
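As a rough illustration of the effectiveness measures named on this slide, the sketch below computes precision and recall at a cutoff and one common formulation of NDCG (gain divided by log2(rank + 1)). The function names, document identifiers, and relevance grades are hypothetical, and other NDCG variants exist.

```python
import math

def precision_recall(ranking, relevant, k):
    """Precision and recall over the top k results of a ranking."""
    hits = sum(1 for doc in ranking[:k] if doc in relevant)
    precision = hits / k
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

def dcg(gains):
    """Discounted cumulative gain: the gain at rank r is divided by log2(r + 1)."""
    return sum(g / math.log2(rank + 1) for rank, g in enumerate(gains, start=1))

def ndcg(ranking, grades, k):
    """DCG of the top k results, normalized by the DCG of an ideal ordering."""
    gains = [grades.get(doc, 0) for doc in ranking[:k]]
    ideal = sorted(grades.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0

# Hypothetical run and judgments for a single topic.
ranking = ["d3", "d1", "d7", "d2"]
grades = {"d1": 2, "d2": 1, "d5": 1}   # graded relevance; unjudged documents count as 0
relevant = set(grades)                 # binary view of the same judgments

p, r = precision_recall(ranking, relevant, k=4)
score = ndcg(ranking, grades, k=4)
```

Precision and recall use only binary judgments, while NDCG rewards placing highly graded documents near the top of the ranking.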

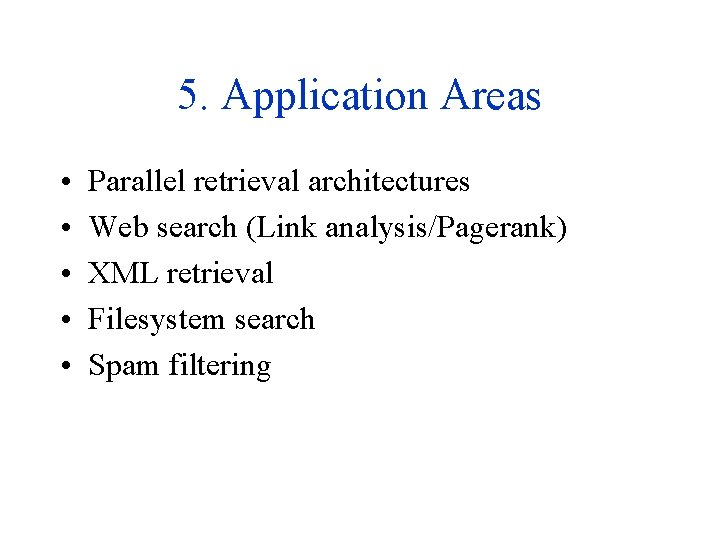

5. Application Areas
• Parallel retrieval architectures
• Web search (Link analysis/PageRank)
• XML retrieval
• Filesystem search
• Spam filtering

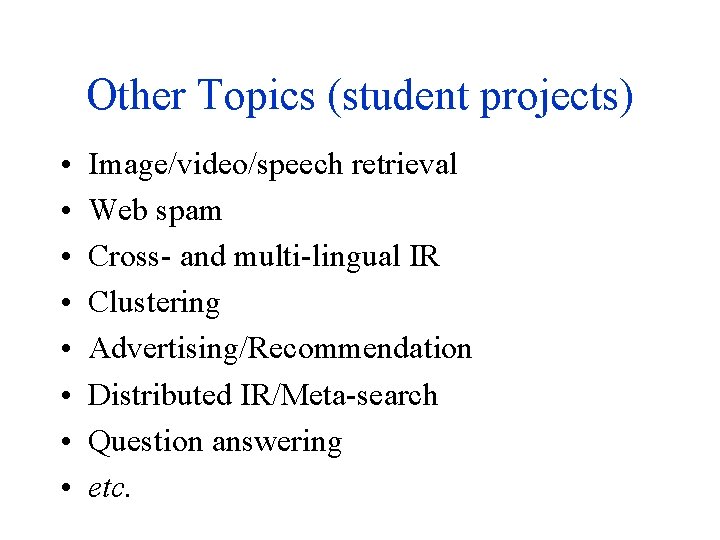

Other Topics (student projects)
• Image/video/speech retrieval
• Web spam
• Cross- and multi-lingual IR
• Clustering
• Advertising/Recommendation
• Distributed IR/Meta-search
• Question answering
• etc.

Resources
• Textbook (partial draft on Website): Büttcher, Clarke & Cormack. Information Retrieval: Data Structures, Algorithms and Evaluation. (Start reading ch. 1-3.)
• Wumpus: www.wumpus-search.org

Grading
• Short homework exercises from text (10%)
• A literature review based on a topic area selected by the student with the agreement of the instructor (30%)
• 30-minute presentation on your selected topic (20%)
• Class project (40%) – details coming up...

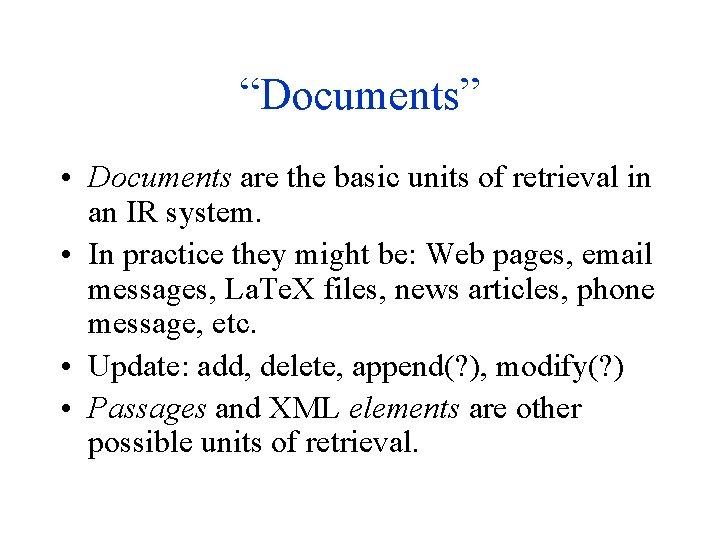

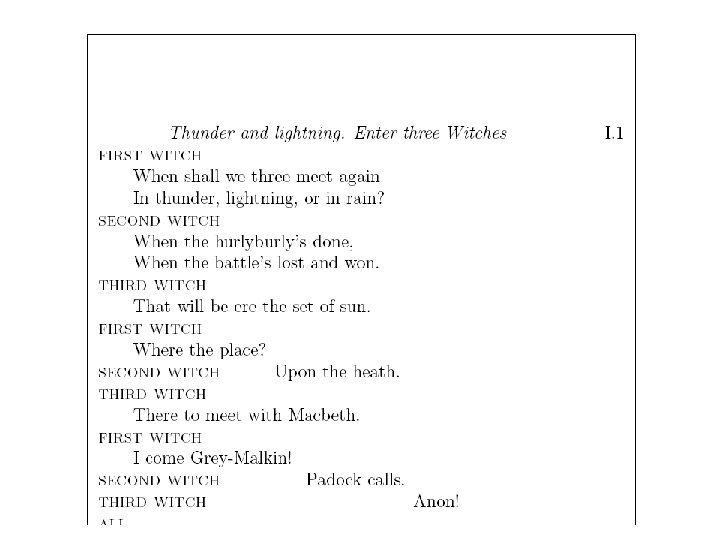

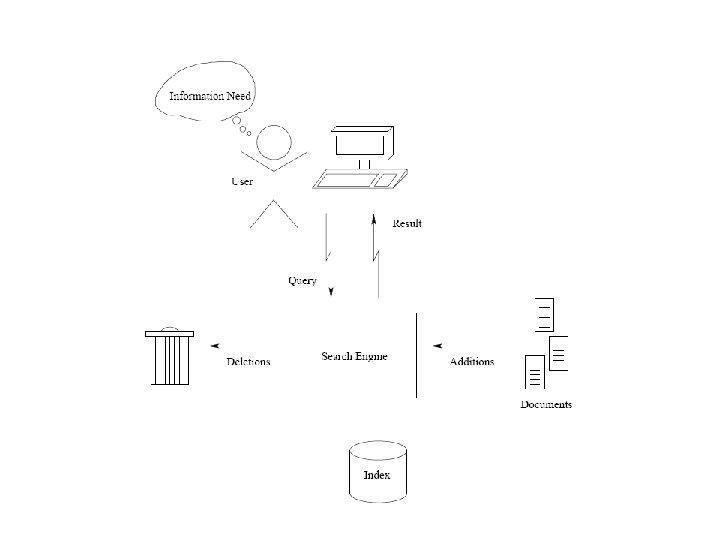

“Documents”
• Documents are the basic units of retrieval in an IR system.
• In practice they might be: Web pages, email messages, LaTeX files, news articles, phone messages, etc.
• Update: add, delete, append(?), modify(?)
• Passages and XML elements are other possible units of retrieval.

Probability Ranking Principle
If an IR system’s response to a query is a ranking of the documents in the collection in order of decreasing probability of relevance, the overall effectiveness of the system to its users will be maximized.
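A small sketch can make the principle concrete. Assuming each document already has an estimated probability of relevance (the values and names below are made up for illustration), sorting by decreasing probability maximizes one simple effectiveness measure, the expected number of relevant documents in the top k. This is a brute-force check on a toy example, not a proof of the general principle.

```python
from itertools import permutations

def prp_ranking(prob):
    """Order documents by decreasing estimated probability of relevance."""
    return sorted(prob, key=prob.get, reverse=True)

def expected_relevant_at_k(ranking, prob, k):
    """Expected number of relevant documents among the top k results."""
    return sum(prob[doc] for doc in ranking[:k])

# Hypothetical probability estimates for four documents.
prob = {"d1": 0.2, "d2": 0.9, "d3": 0.5, "d4": 0.7}

ranking = prp_ranking(prob)

# Compare against every possible ordering of the four documents.
best = max(expected_relevant_at_k(list(order), prob, 2)
           for order in permutations(prob))
```

Here `ranking` places `d2` first and `d1` last, and no other ordering achieves a higher expected count in the top two positions.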

Evaluating IR systems
• Efficiency vs. effectiveness
• Manual evaluation
  – Topic creation and judging
  – TREC (Text REtrieval Conference)
  – Google Has 10,000 Human Evaluators?
• Evaluation through implicit user feedback
• Specificity vs. exhaustivity

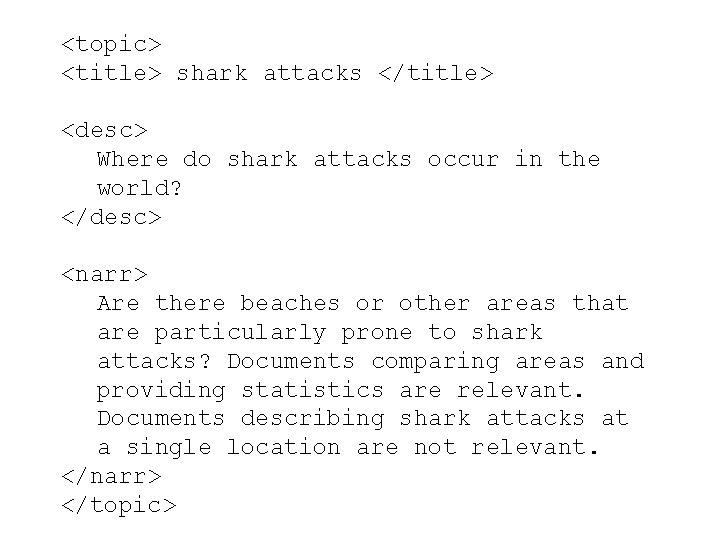

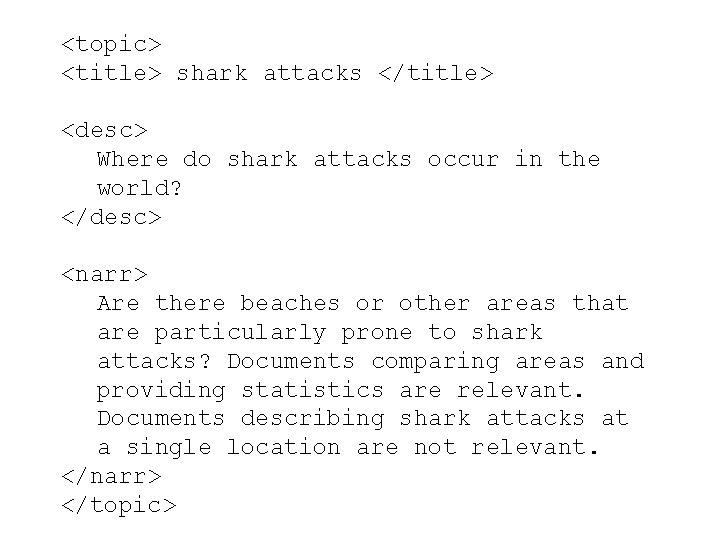

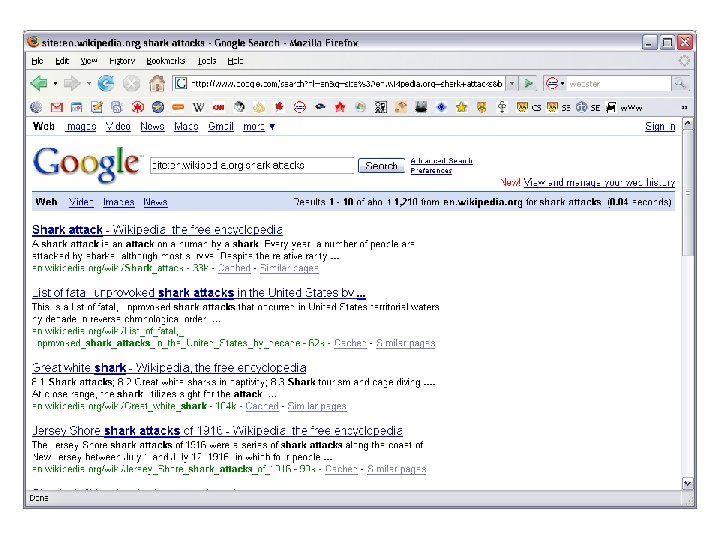

<topic>
<title> shark attacks </title>
<desc> Where do shark attacks occur in the world? </desc>
<narr> Are there beaches or other areas that are particularly prone to shark attacks? Documents comparing areas and providing statistics are relevant. Documents describing shark attacks at a single location are not relevant. </narr>
</topic>

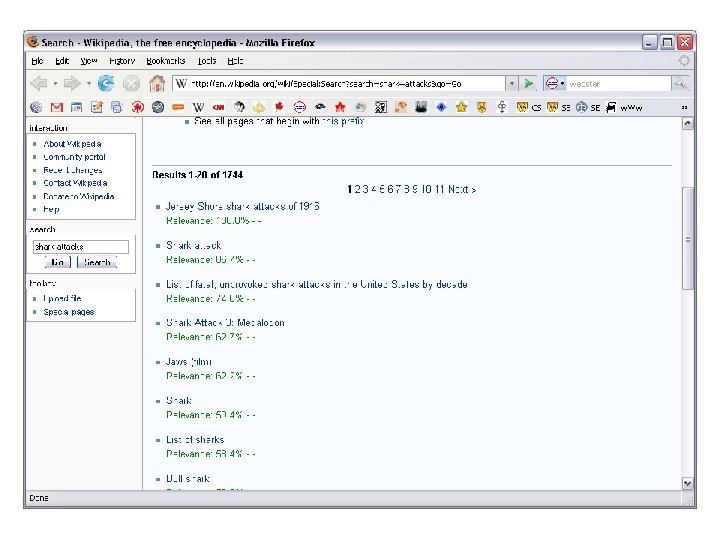

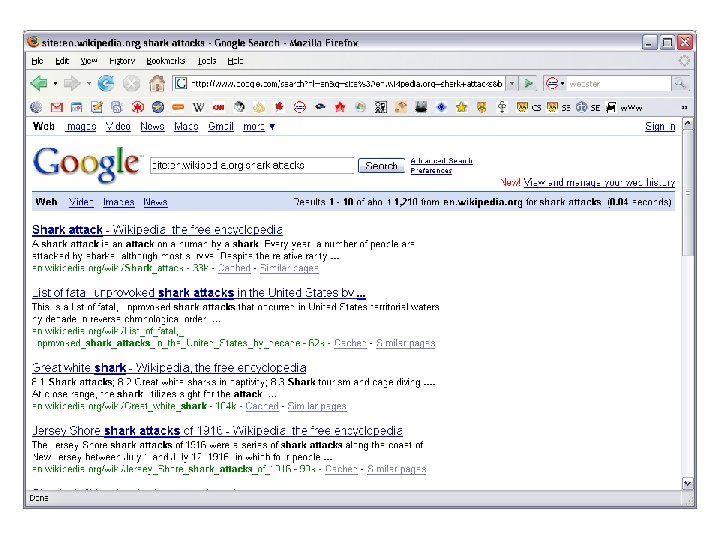

Class Project: Wikipedia Search
• Can we outperform Google on the Wikipedia?
• Basic project: Build a search engine for the Wikipedia (using any tools you can find).
• Ideas: PageRank, spelling, structure, element retrieval, summarization, external information, user interfaces

Class Project: Evaluation
• Each student will create and judge n topics.
• The value of n depends on the number of students. (But workload stays the same.)
• Quantitative measure of effectiveness.
• Qualitative assessment of user interfaces.
• Volunteer needed to operate the judging interface (for credit).

Class Project: Organization
• You may work in groups (check with me).
• You may work individually (check with me).
• You may create and share tools with other students. You get the credit. (E.g., a volunteer is needed to set up a class wiki.)
• Programming can’t be avoided, but can be minimized. ☺
• Programming can also be maximized.

Class Project: Grading
• Topic creation and judging: 10%
• Other project work: 30%
  – You are responsible for submitting one experimental run for evaluation.
  – Other activities are up to you.

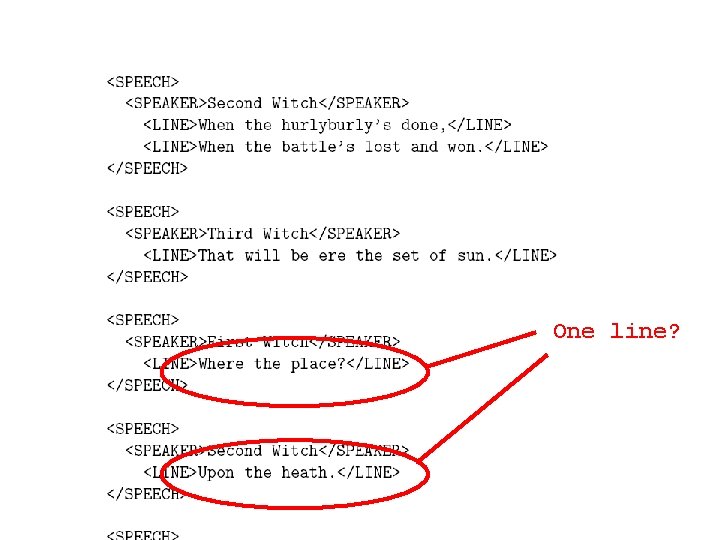

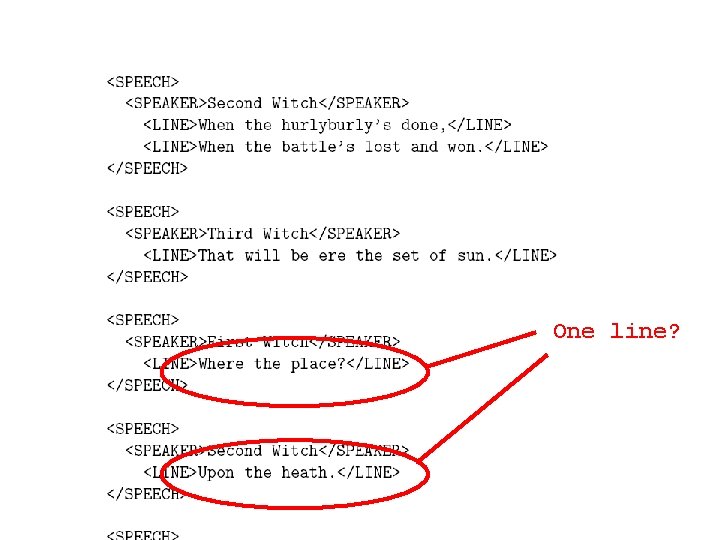

One line?

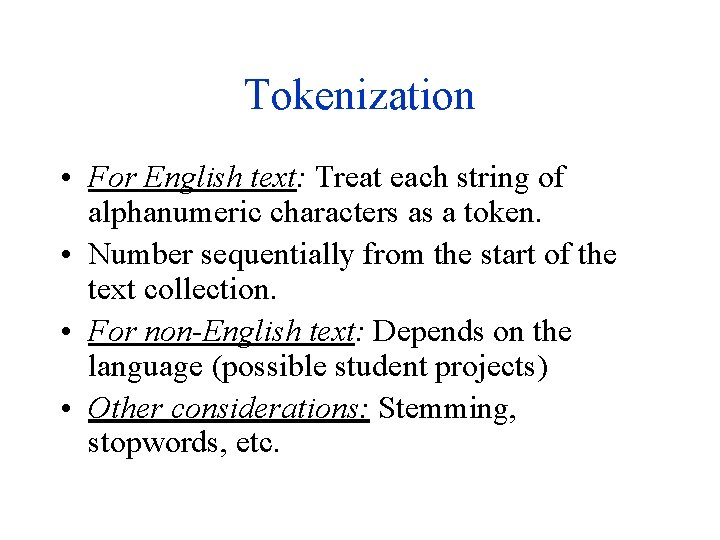

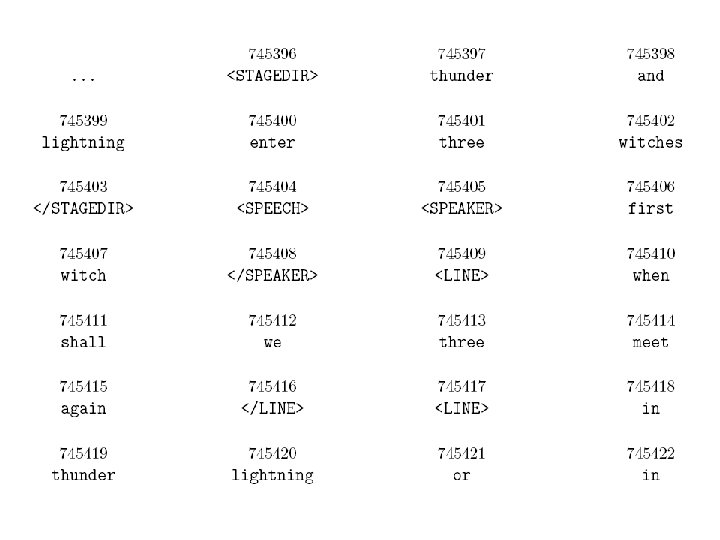

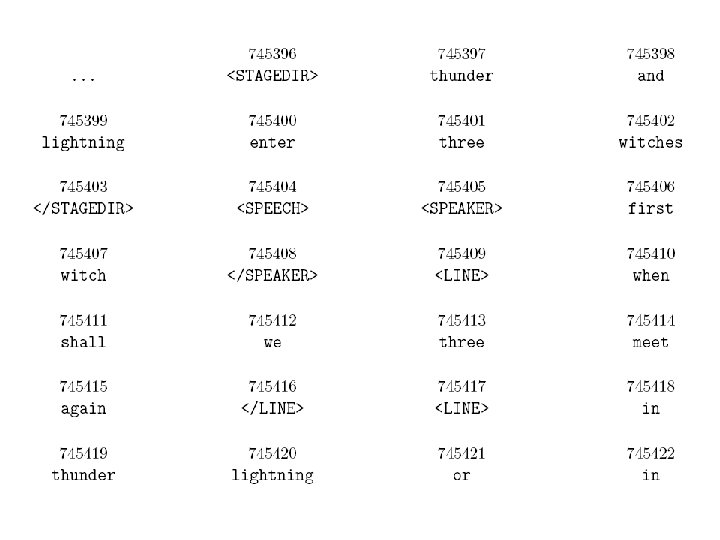

Tokenization
• For English text: Treat each string of alphanumeric characters as a token.
• Number sequentially from the start of the text collection.
• For non-English text: Depends on the language (possible student projects)
• Other considerations: Stemming, stopwords, etc.
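The English-text rule above can be sketched in a few lines. This is a minimal illustration, not the tokenizer used in the course: it assumes ASCII letters and digits, folds case (an added assumption), numbers tokens from 1, and does no stemming or stopword removal. The function name and sample documents are hypothetical.

```python
import re

# A token is a maximal run of ASCII letters and digits (assumption: English text only).
TOKEN_PATTERN = re.compile(r"[A-Za-z0-9]+")

def tokenize_collection(documents):
    """Return (position, token) pairs, numbered sequentially across the whole collection."""
    tokens = []
    position = 0
    for text in documents:
        for match in TOKEN_PATTERN.finditer(text):
            position += 1  # numbering continues across document boundaries
            tokens.append((position, match.group().lower()))  # case folding is an assumption
    return tokens

docs = ["Shark attacks!", "Where do shark attacks occur?"]
tokens = tokenize_collection(docs)
```

Because positions run across the whole collection, the second document's first token receives position 3 rather than restarting at 1.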

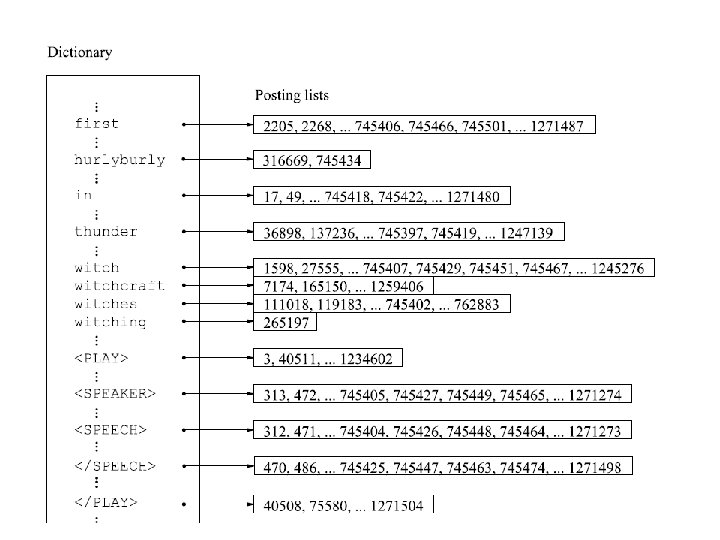

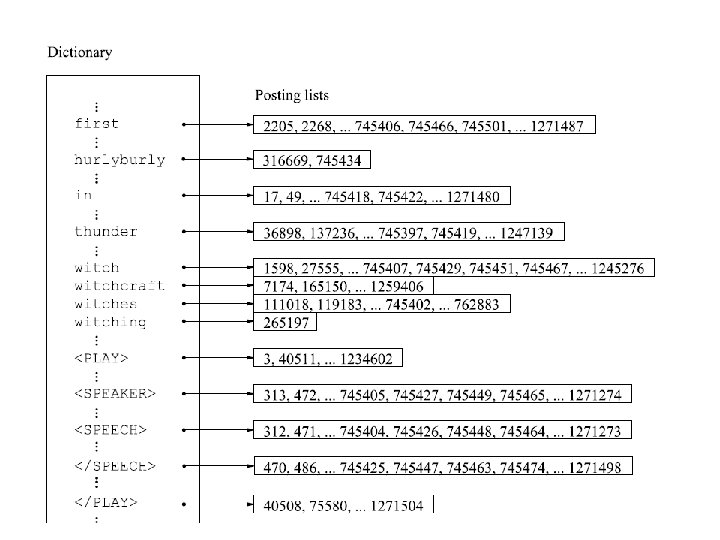

Inverted Indices
• Basic data structure
• More next day…
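Ahead of the fuller treatment next class, here is a minimal sketch of the idea, assuming the simple alphanumeric tokenization described earlier: the index maps each term to the sorted list of positions where it occurs, and a phrase query is answered by checking for consecutive positions. The names are hypothetical, and the sketch ignores document boundaries, compression, and everything else a real index needs.

```python
import re
from collections import defaultdict

TOKEN_PATTERN = re.compile(r"[A-Za-z0-9]+")

def build_index(documents):
    """Map each term to the ascending list of collection-wide positions where it occurs."""
    index = defaultdict(list)
    position = 0
    for text in documents:
        for match in TOKEN_PATTERN.finditer(text):
            position += 1
            index[match.group().lower()].append(position)
    return index

def phrase_positions(index, phrase):
    """Return the start positions at which the terms of the phrase occur consecutively.

    Simplification: positions span the whole collection, so a phrase could
    match across the boundary between two adjacent documents.
    """
    terms = [t.lower() for t in TOKEN_PATTERN.findall(phrase)]
    if not terms:
        return []
    later = [set(index.get(t, ())) for t in terms[1:]]
    return [start for start in index.get(terms[0], ())
            if all(start + offset + 1 in postings
                   for offset, postings in enumerate(later))]

docs = ["Shark attacks occur near beaches.", "Few shark attacks are fatal."]
index = build_index(docs)
matches = phrase_positions(index, "shark attacks")
```

Storing positions rather than only document identifiers is what makes phrase and proximity queries possible.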

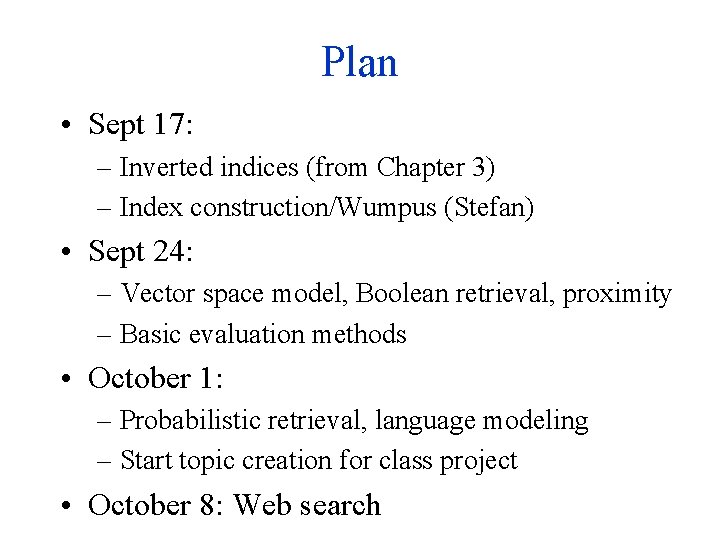

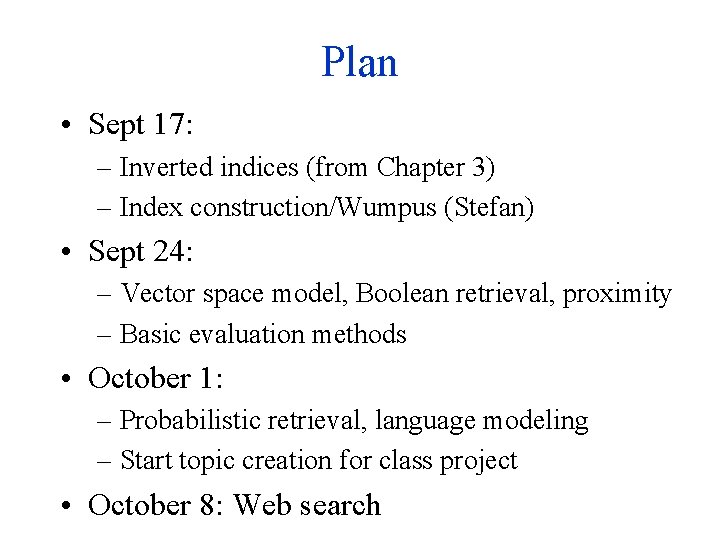

Plan
• Sept 17:
  – Inverted indices (from Chapter 3)
  – Index construction/Wumpus (Stefan)
• Sept 24:
  – Vector space model, Boolean retrieval, proximity
  – Basic evaluation methods
• October 1:
  – Probabilistic retrieval, language modeling
  – Start topic creation for class project
• October 8: Web search