CS 7810 Lecture 16: Simultaneous Multithreading: Maximizing On-Chip Parallelism


CS 7810 Lecture 16
Simultaneous Multithreading: Maximizing On-Chip Parallelism
D. M. Tullsen, S. J. Eggers, H. M. Levy
Proceedings of ISCA-22, June 1995

Processor Under-Utilization
• Wide gap between average and peak processor utilization
• Caused by dependences, long-latency instrs, and branch mispredicts
• Results in many idle cycles for many structures

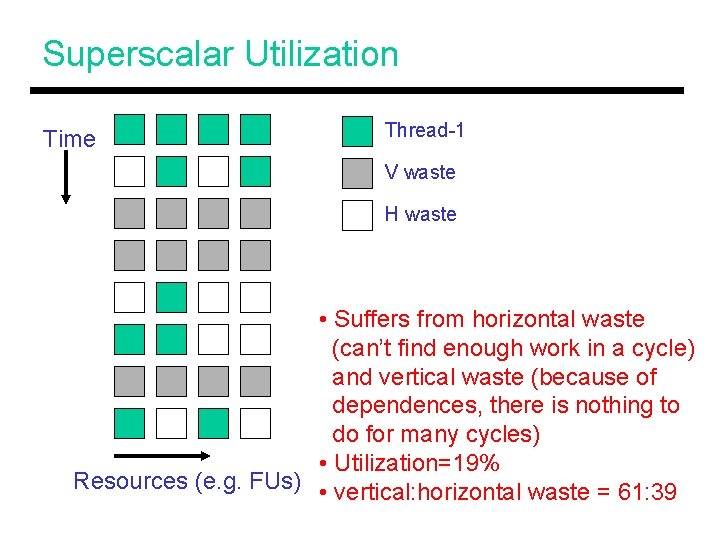

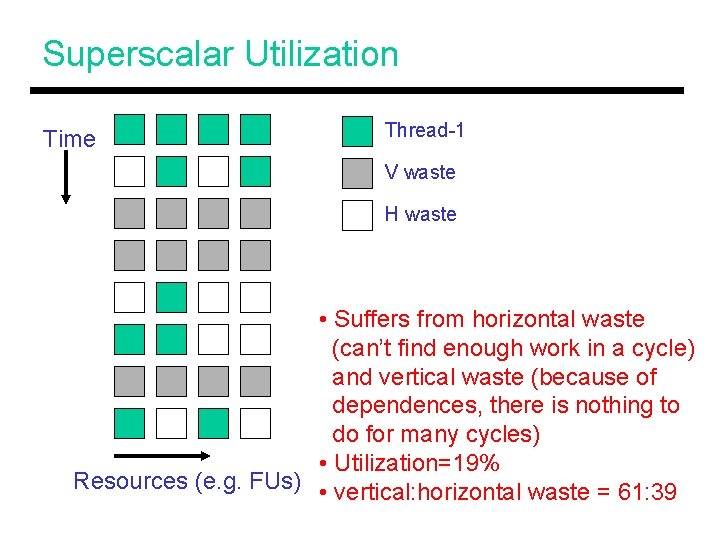

Superscalar Utilization
[Figure: issue slots of Thread-1 over time; axes: time and resources (e.g., FUs); vertical (V) and horizontal (H) waste marked]
• Suffers from horizontal waste (can't find enough work to fill a cycle) and vertical waste (because of dependences, there is nothing to do for many cycles)
• Utilization = 19%
• Vertical : horizontal waste = 61 : 39
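The two kinds of waste can be made concrete with a small accounting sketch. It takes a per-cycle count of issued instructions (a hypothetical trace, not data from the paper) and splits the empty issue slots into vertical waste (cycles in which nothing issues) and horizontal waste (unused slots in cycles that issue at least one instr). The names `classify_waste` and `WasteReport` are illustrative only; the 19% and 61:39 figures above come from the paper's simulations, not from this toy.

```python
from dataclasses import dataclass


@dataclass
class WasteReport:
    utilization: float       # fraction of all issue slots that were filled
    vertical_share: float    # fraction of wasted slots lost to fully idle cycles
    horizontal_share: float  # fraction of wasted slots lost to partly filled cycles


def classify_waste(issued_per_cycle: list[int], width: int) -> WasteReport:
    """Split empty issue slots into vertical and horizontal waste.

    issued_per_cycle[c] is the number of instrs issued in cycle c on a
    machine that can issue `width` instrs per cycle (hypothetical trace).
    """
    if not issued_per_cycle:
        raise ValueError("trace must contain at least one cycle")
    total_slots = width * len(issued_per_cycle)
    used = sum(issued_per_cycle)
    # Vertical waste: every slot of a cycle in which nothing issued.
    vertical = sum(width for n in issued_per_cycle if n == 0)
    # Horizontal waste: leftover slots in cycles that issued something.
    horizontal = sum(width - n for n in issued_per_cycle if n > 0)
    wasted = vertical + horizontal
    return WasteReport(
        utilization=used / total_slots,
        vertical_share=vertical / wasted if wasted else 0.0,
        horizontal_share=horizontal / wasted if wasted else 0.0,
    )


report = classify_waste([3, 0, 0, 1, 8, 2, 0, 4], width=8)
```

For that 8-wide trace, 18 of 64 slots are used (about 28% utilization), and the 46 empty slots split into 24 vertical and 22 horizontal.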

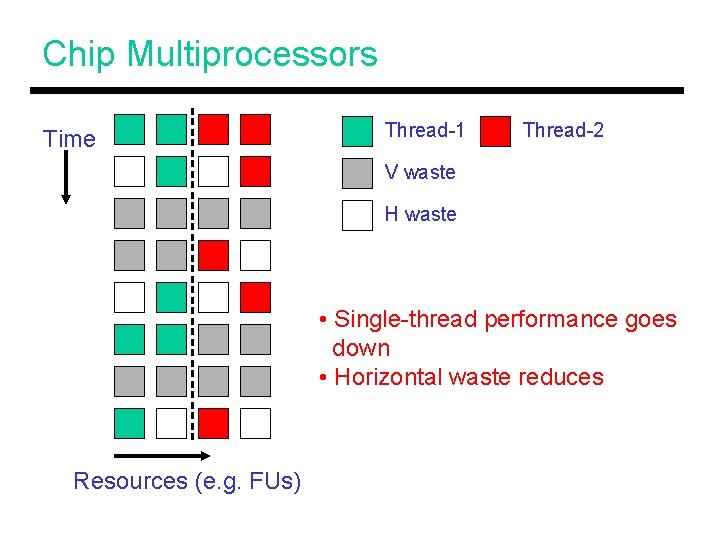

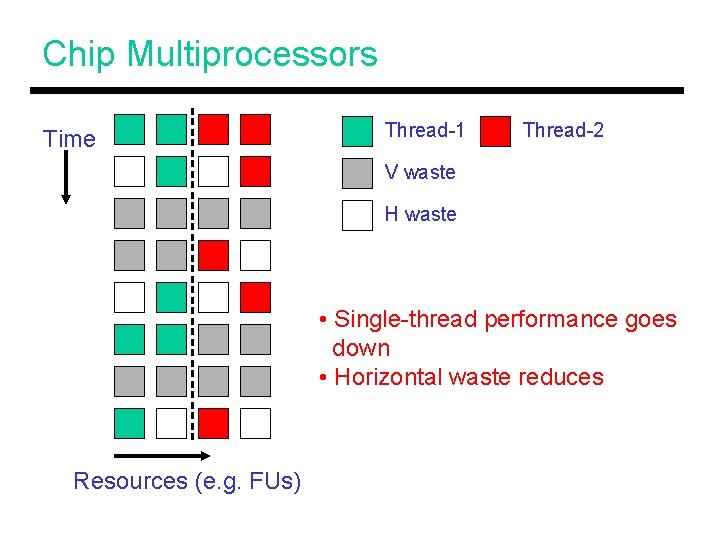

Chip Multiprocessors
[Figure: issue slots of Thread-1 and Thread-2 over time; axes: time and resources (e.g., FUs); V and H waste marked]
• Single-thread performance goes down
• Horizontal waste is reduced

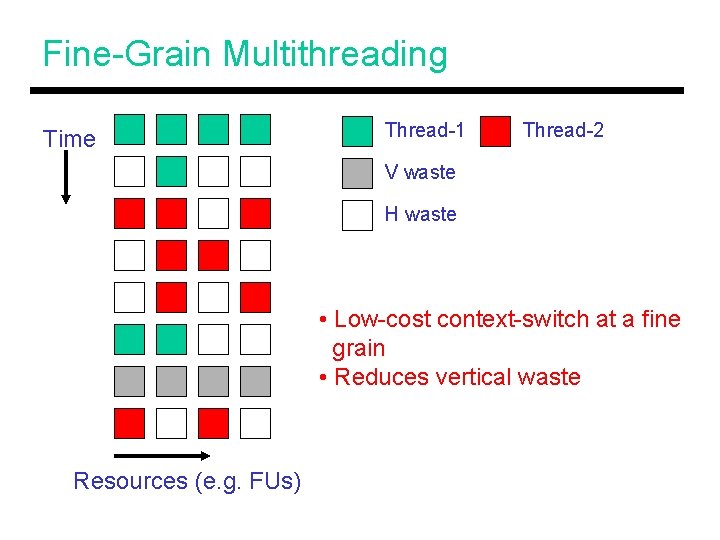

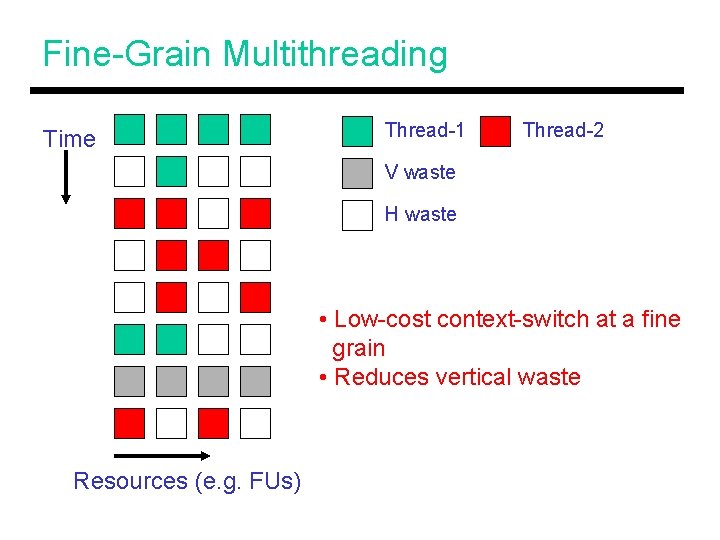

Fine-Grain Multithreading
[Figure: issue slots of Thread-1 and Thread-2 over time; axes: time and resources (e.g., FUs); V and H waste marked]
• Low-cost context switch at a fine grain
• Reduces vertical waste

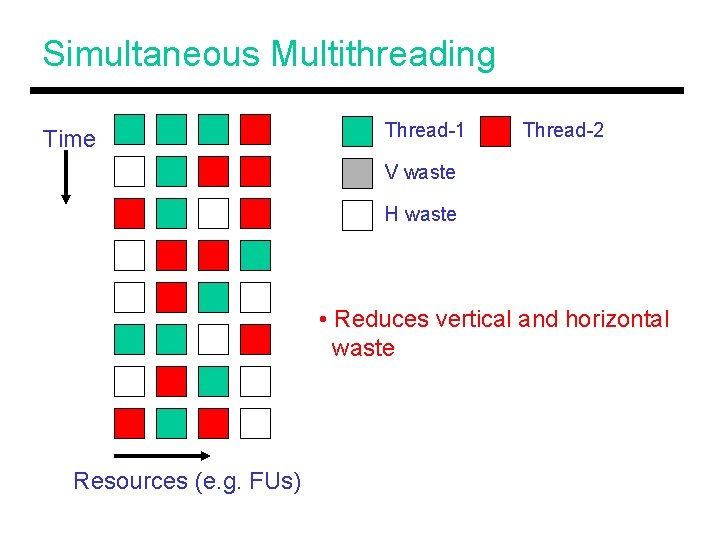

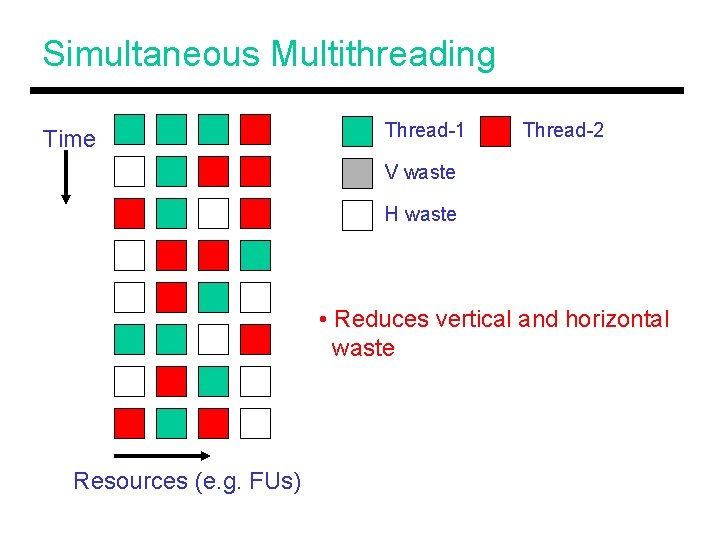

Simultaneous Multithreading
[Figure: issue slots of Thread-1 and Thread-2 over time; axes: time and resources (e.g., FUs); V and H waste marked]
• Reduces both vertical and horizontal waste
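The four organizations can be compared with a deliberately crude slot-filling model. Each thread has a hypothetical per-cycle count of ready instrs, and unissued instrs are simply dropped rather than carried over, which is a large simplification. The fine-grain model here skips threads with nothing ready (an assumption; some fine-grain designs switch every cycle regardless), and the CMP splits the issue width evenly across cores. All function names are illustrative.

```python
def superscalar(ready: list[list[int]], width: int) -> int:
    """Only thread 0 runs; a cycle where it has nothing ready is vertical waste."""
    return sum(min(width, r) for r in ready[0])


def chip_multiprocessor(ready: list[list[int]], width: int) -> int:
    """Each thread gets its own core with width // num_threads issue slots."""
    per_core = width // len(ready)
    return sum(min(per_core, r) for thread in ready for r in thread)


def fine_grain_mt(ready: list[list[int]], width: int) -> int:
    """One thread per cycle, chosen round-robin, skipping threads with nothing ready."""
    n, issued, last = len(ready), 0, -1
    for c in range(len(ready[0])):
        for k in range(1, n + 1):
            t = (last + k) % n
            if ready[t][c] > 0:
                issued += min(width, ready[t][c])
                last = t
                break
    return issued


def smt(ready: list[list[int]], width: int) -> int:
    """All threads compete for the same issue slots in every cycle."""
    return sum(min(width, sum(cycle)) for cycle in zip(*ready))


# Two threads, four cycles, 8-wide machine (32 issue slots in total).
ready = [[3, 0, 0, 5], [2, 7, 1, 6]]
```

On this trace the superscalar fills 8 slots, fine-grain MT 16, the CMP 18, and SMT 21. Fine-grain MT removes the idle cycles but still leaves partly empty cycles, while SMT attacks both; the CMP beating fine-grain MT is an artifact of this particular trace.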

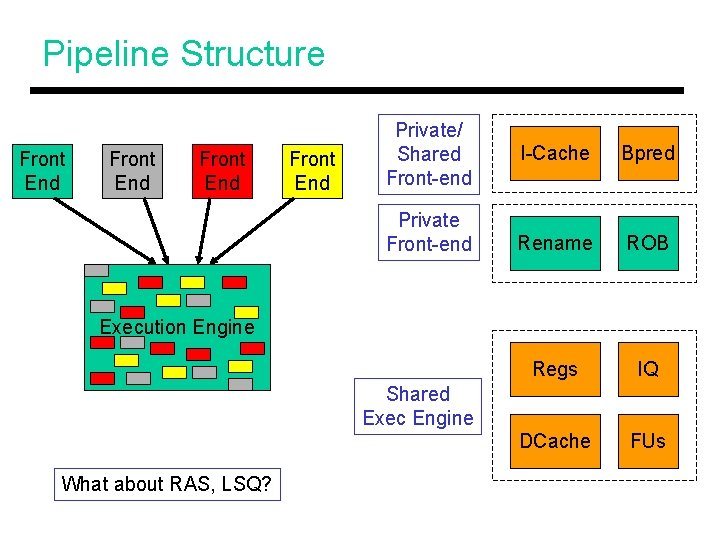

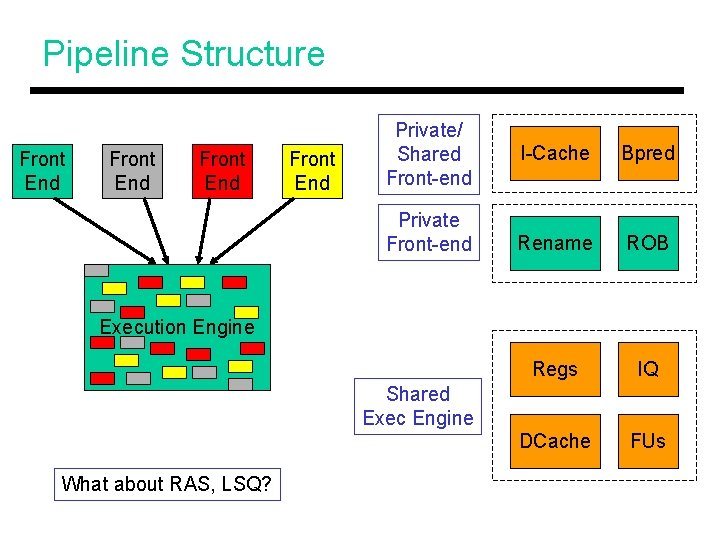

Pipeline Structure
[Figure: front end (I-Cache, Bpred), private front-end (Rename, ROB), and execution engine (Regs, IQ, DCache, FUs); labels: "Private/Shared Front-end", "Private Front-end", "Shared Exec Engine"]
• What about the RAS and LSQ?

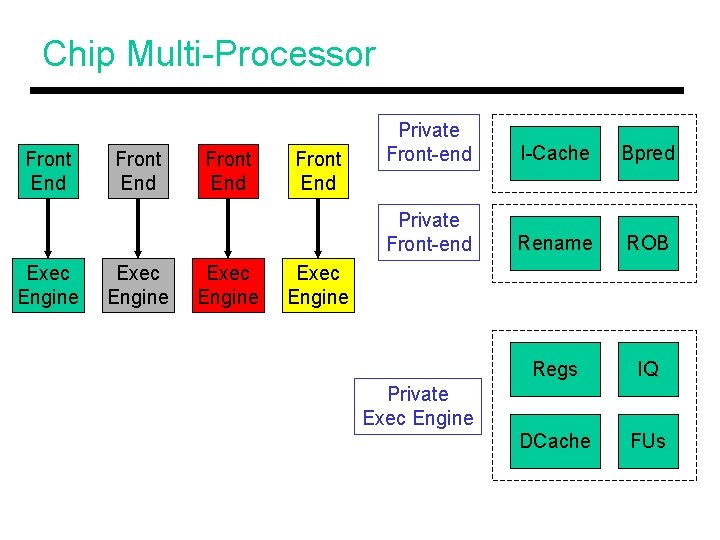

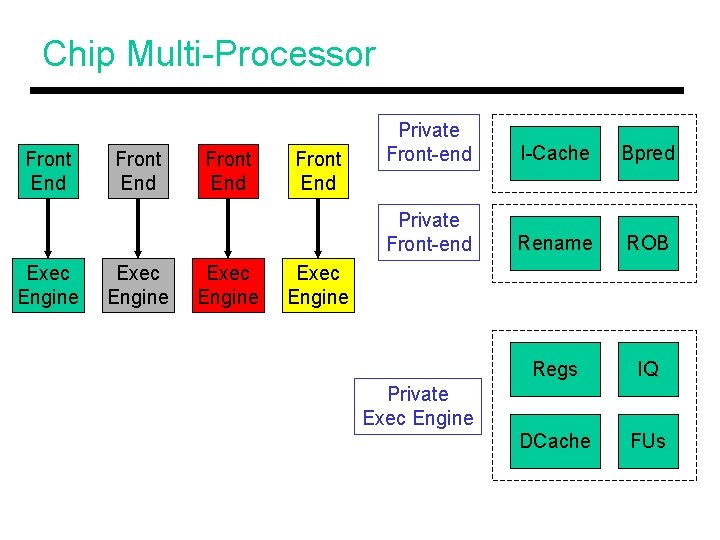

Chip Multi-Processor
[Figure: replicated front ends (I-Cache, Bpred), private front-ends (Rename, ROB), and private exec engines (Regs, IQ, DCache, FUs)]

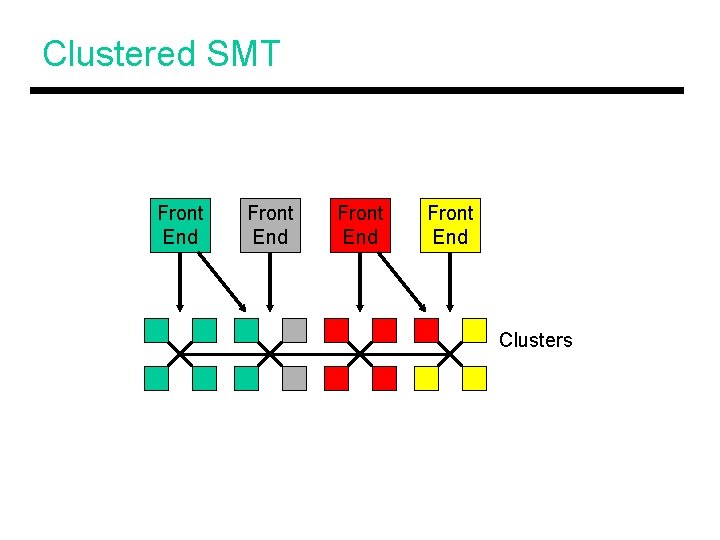

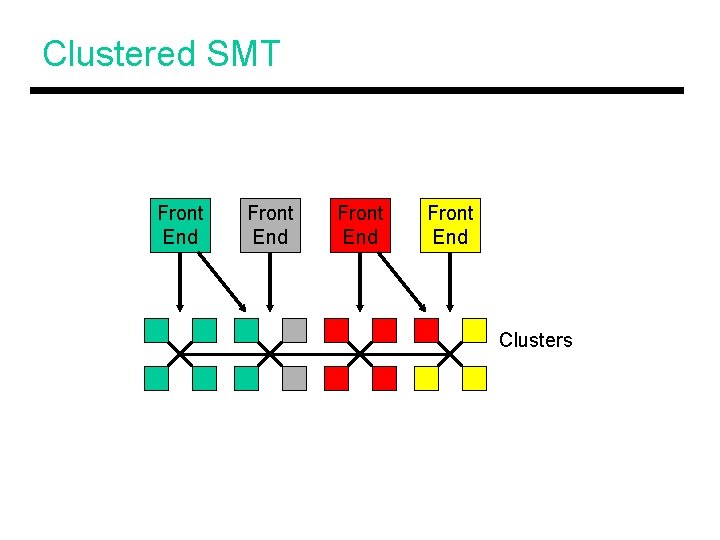

Clustered SMT
[Figure: a front end and multiple execution clusters]

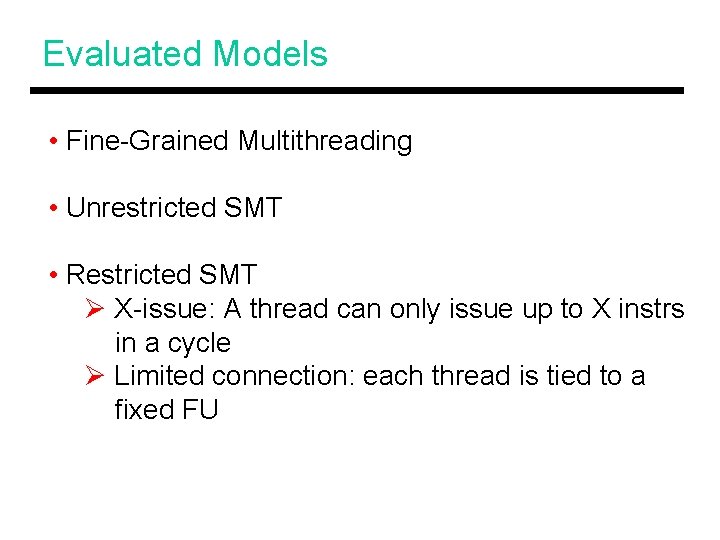

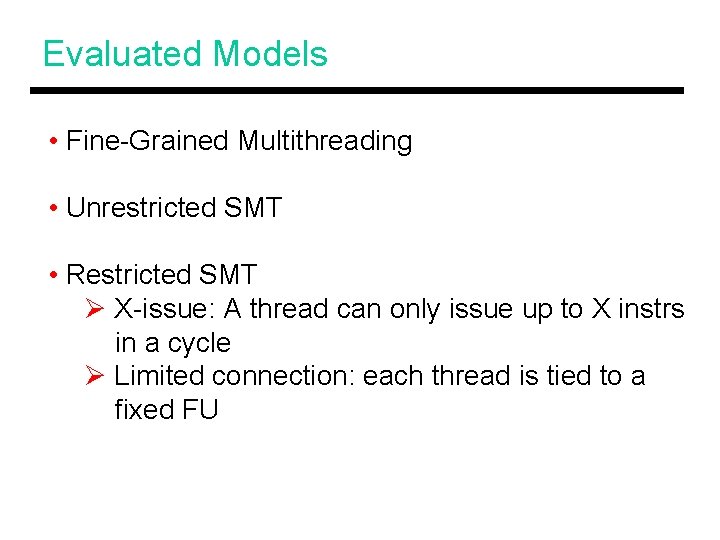

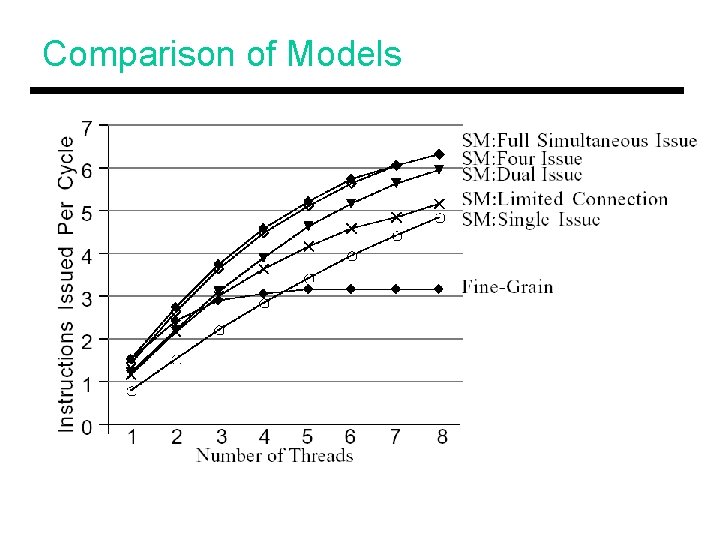

Evaluated Models
• Fine-Grained Multithreading
• Unrestricted SMT
• Restricted SMT
  ➢ X-issue: a thread can issue at most X instrs in a cycle
  ➢ Limited connection: each thread is tied to a fixed FU of each type
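The two restrictions can be sketched for a single cycle. The sketch assumes threads are served in a fixed priority order (thread 0 first), that each FU accepts one instr per cycle, and, for limited connection, a hypothetical mapping in which FU `f` only serves threads `t` with `t % num_fus == f`. Function names and the mapping are illustrative, not the paper's simulator.

```python
from typing import Optional


def restricted_issue(ready: list[int], width: int, cap: Optional[int] = None) -> list[int]:
    """Per-thread issue counts for one cycle.

    Threads are served in fixed priority order (thread 0 first).
    cap=None models unrestricted SMT; cap=X models X-issue.
    """
    slots, issued = width, []
    for r in ready:
        n = min(r, slots) if cap is None else min(r, slots, cap)
        issued.append(n)
        slots -= n
    return issued


def limited_connection_issue(ready: list[int], num_fus: int) -> int:
    """Instrs issued to one FU type in one cycle under limited connection.

    Hypothetical mapping: FU f serves only threads t with t % num_fus == f,
    and each FU accepts at most one instr per cycle.
    """
    issued = 0
    for f in range(num_fus):
        if any(ready[t] > 0 for t in range(f, len(ready), num_fus)):
            issued += 1
    return issued
```

With ready counts `[6, 5, 1, 0]` on an 8-wide machine, unrestricted SMT issues `[6, 2, 0, 0]`, four-issue gives `[4, 4, 0, 0]`, and single-issue gives `[1, 1, 1, 0]`. Under limited connection with eight threads and four FUs, a lone thread with three ready instrs can use only its one connected FU.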

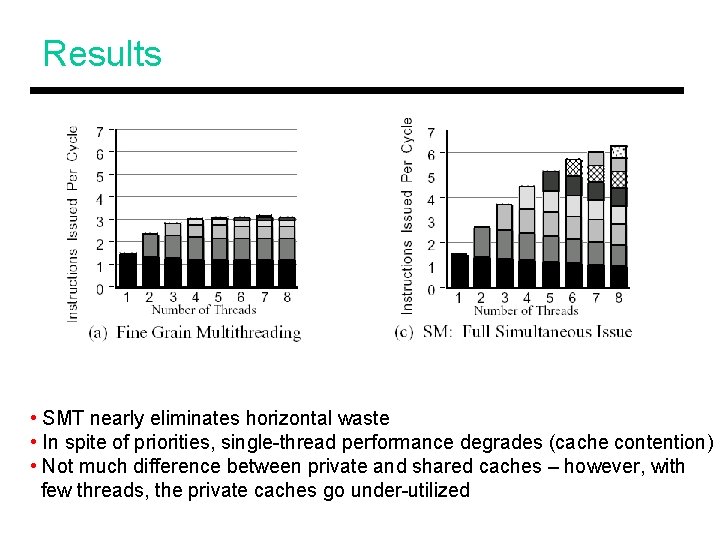

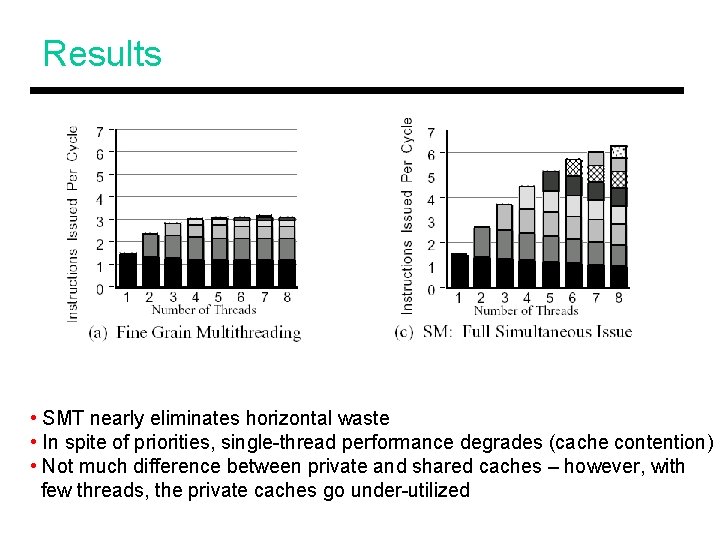

Results
• SMT nearly eliminates horizontal waste
• In spite of priorities, single-thread performance degrades (cache contention)
• Not much difference between private and shared caches; however, with few threads, the private caches go under-utilized

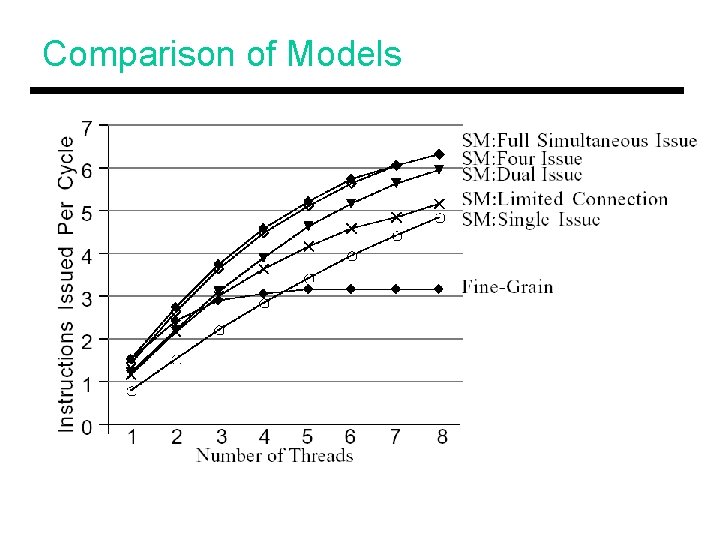

Comparison of Models

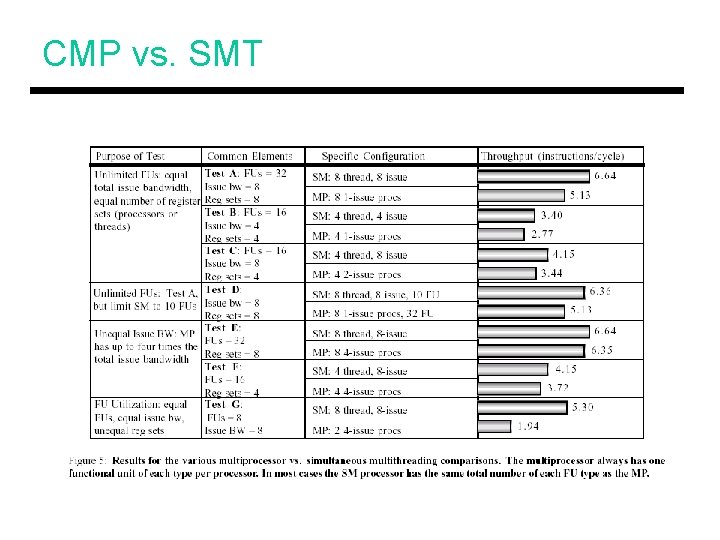

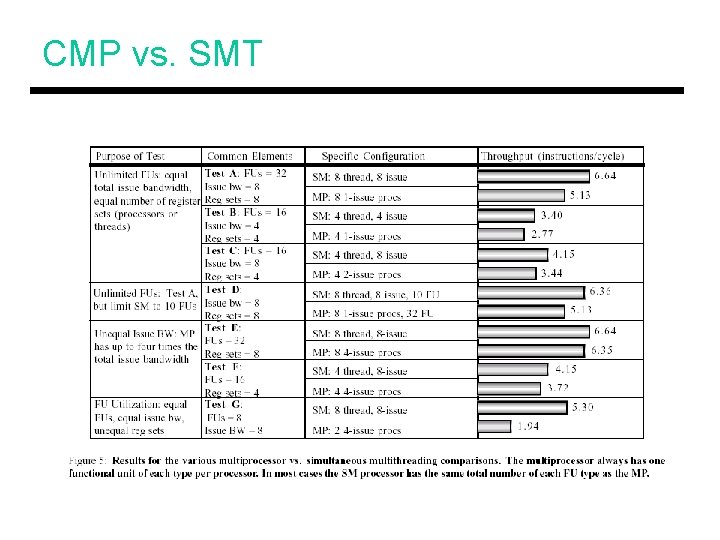

CMP vs. SMT

CS 7810 Lecture 16
Exploiting Choice: Instruction Fetch and Issue on an Implementable SMT Processor
D. M. Tullsen, S. J. Eggers, J. S. Emer, H. M. Levy, J. L. Lo, R. L. Stamm
Proceedings of ISCA-23, June 1996

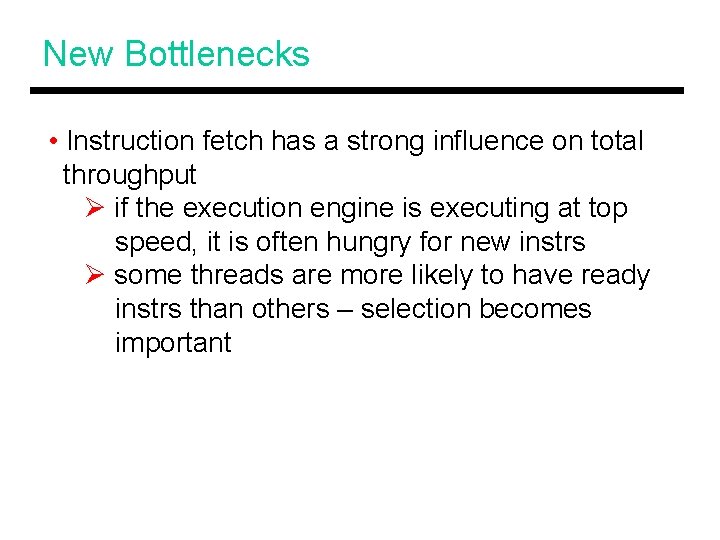

New Bottlenecks
• Instruction fetch has a strong influence on total throughput
  ➢ if the execution engine is executing at top speed, it is often hungry for new instrs
  ➢ some threads are more likely to have ready instrs than others, so selection becomes important

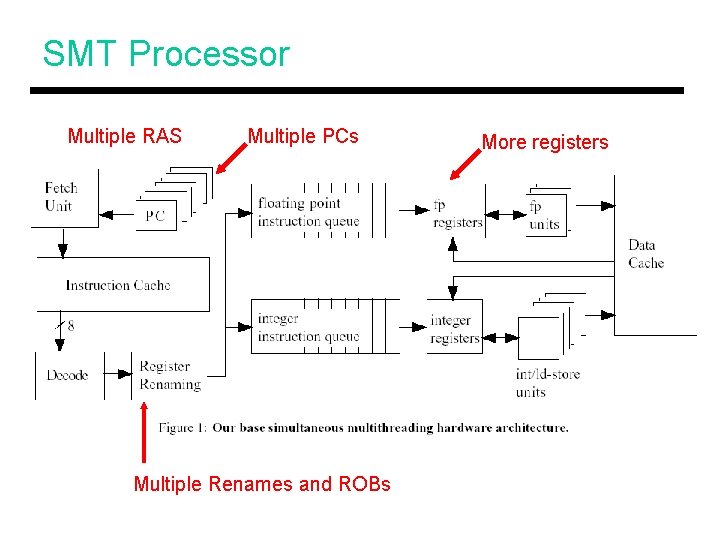

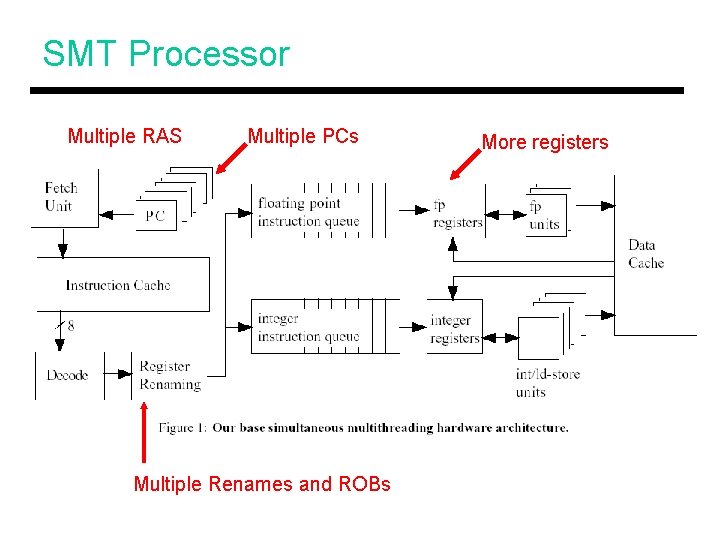

SMT Processor
[Figure: SMT pipeline annotated with multiple PCs, multiple RASs, multiple renames and ROBs, and more registers]

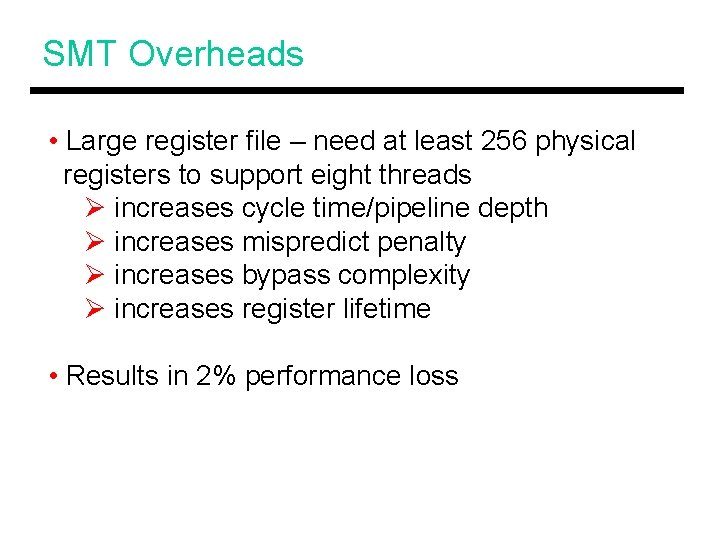

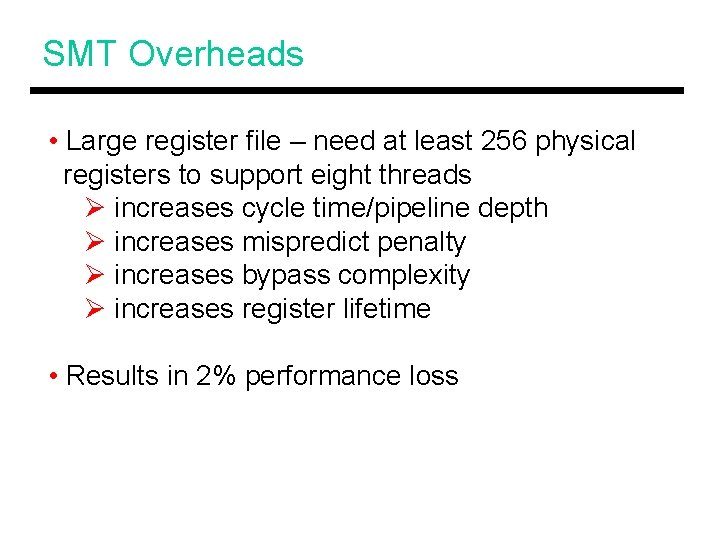

SMT Overheads
• Large register file: need at least 256 physical registers to support eight threads
  ➢ increases cycle time/pipeline depth
  ➢ increases mispredict penalty
  ➢ increases bypass complexity
  ➢ increases register lifetime
• Results in 2% performance loss
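The 256-register floor follows from replicating architectural state per thread. Assuming 32 architectural registers per thread (an assumption typical of RISC ISAs) and T = 8 threads, each register file needs:

```latex
R_{\text{phys}} = T \cdot R_{\text{arch}} + R_{\text{rename}}
               = 8 \cdot 32 + R_{\text{rename}}
               = 256 + R_{\text{rename}} \;\geq\; 256
```

Any registers used for renaming come on top of that; for example, a hypothetical 100 rename registers would bring each file to 356 entries, which is why register access stretches the cycle time or pipeline.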

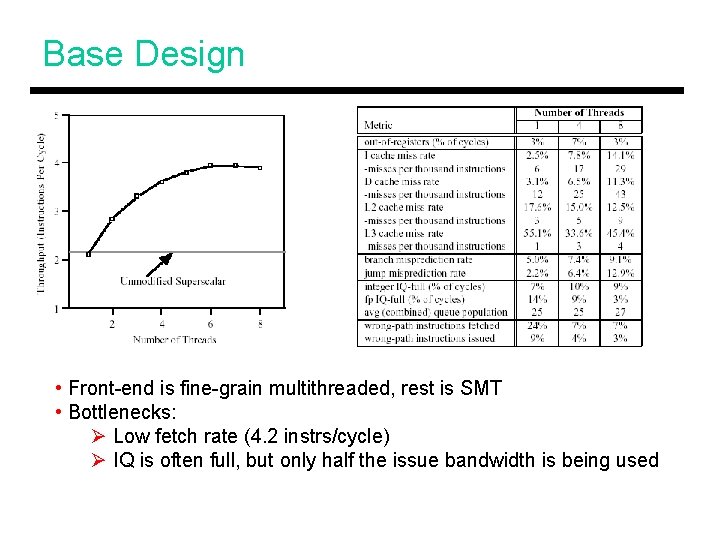

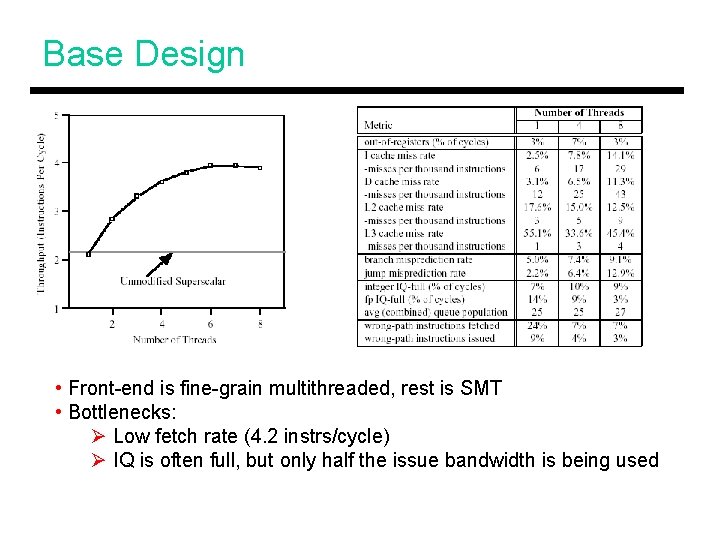

Base Design
• Front-end is fine-grain multithreaded; the rest is SMT
• Bottlenecks:
  ➢ Low fetch rate (4.2 instrs/cycle)
  ➢ IQ is often full, but only half the issue bandwidth is being used

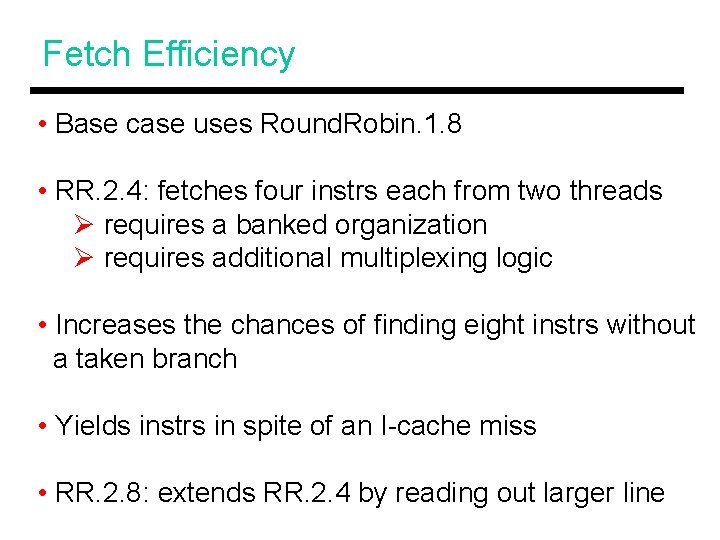

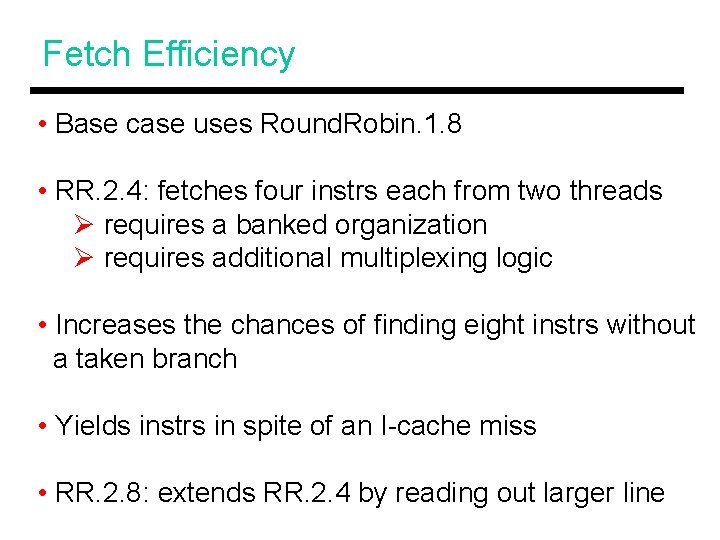

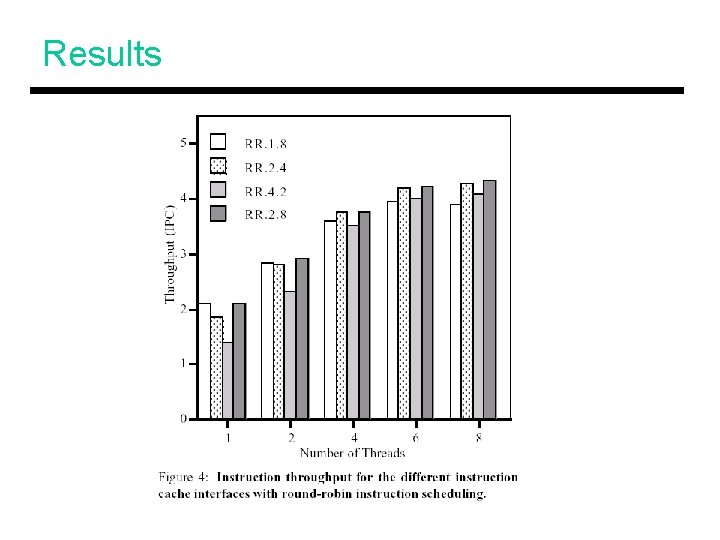

Fetch Efficiency
• Base case uses RR.1.8 (round-robin, up to eight instrs from one thread)
• RR.2.4: fetches four instrs each from two threads
  ➢ requires a banked organization
  ➢ requires additional multiplexing logic
• Increases the chances of finding eight instrs without a taken branch
• Yields instrs in spite of an I-cache miss
• RR.2.8: extends RR.2.4 by reading out a larger line (up to eight instrs from each of two threads, still delivering at most eight per cycle)
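The RR.x.y schemes can be sketched as "up to y instrs from each of x threads chosen round-robin, at most eight in total". This toy model ignores cache-line boundaries and bank conflicts; each thread is reduced to its upcoming instr stream (True marks a taken branch, after which fetch for that thread stops) and an I-cache miss flag. `ThreadFetchState`, `fetch_block`, and `rr_fetch` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ThreadFetchState:
    # Upcoming instrs in this thread's fetch stream; True marks a taken branch.
    next_instrs: list[bool]
    icache_miss: bool = False


def fetch_block(t: ThreadFetchState, limit: int) -> int:
    """Instrs one thread can supply this cycle, up to `limit`."""
    if t.icache_miss:
        return 0
    count = 0
    for is_taken_branch in t.next_instrs[:limit]:
        count += 1
        if is_taken_branch:  # fetch stops after a taken branch
            break
    return count


def rr_fetch(threads: list[ThreadFetchState], start: int, x: int, y: int, total: int = 8) -> int:
    """RR.x.y: up to y instrs from each of x threads (round-robin from `start`), at most `total`."""
    fetched = 0
    for k in range(x):
        t = threads[(start + k) % len(threads)]
        fetched += min(fetch_block(t, y), total - fetched)
    return fetched


threads = [
    ThreadFetchState([False, False, True] + [False] * 5),  # taken branch at instr 3
    ThreadFetchState([False] * 8),                          # no taken branch
    ThreadFetchState([False] * 8, icache_miss=True),        # stalled on an I-cache miss
]
```

Starting at thread 0, RR.1.8 gets 3 instrs, RR.2.4 gets 7, and RR.2.8 gets the full 8. Starting at the missing thread 2, RR.1.8 gets nothing while RR.2.4 still gets 3 from thread 0, which is the miss tolerance the slide mentions.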

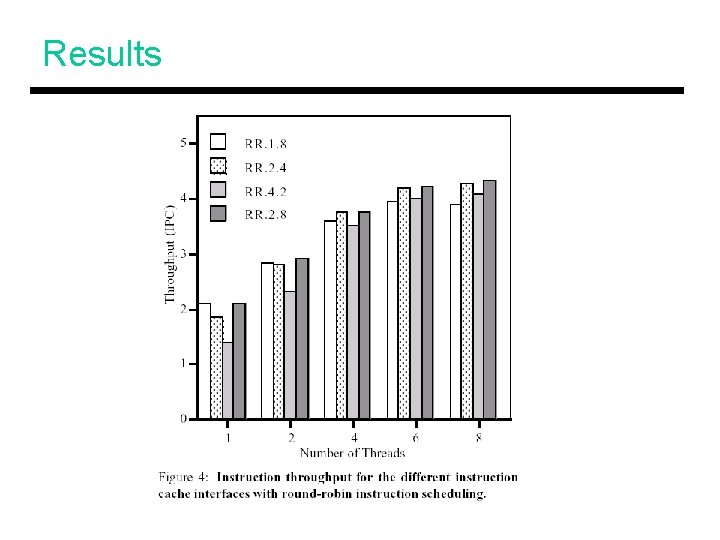

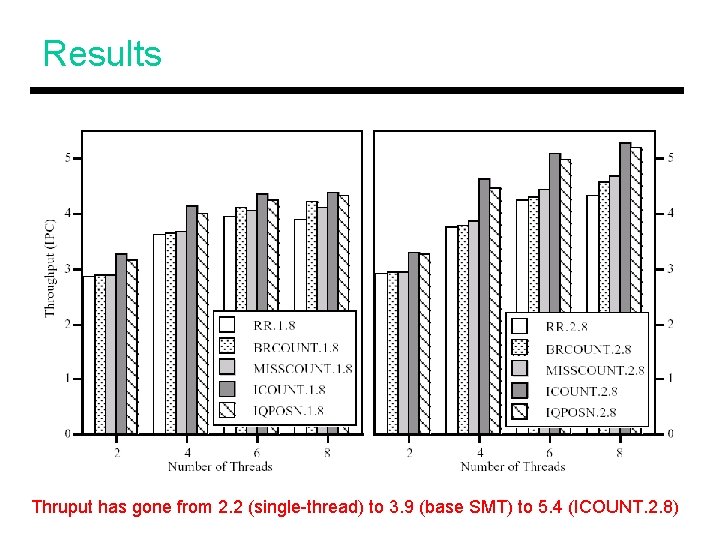

Results

Fetch Effectiveness
• Are we picking the best instructions?
• IQ-clog: instrs that sit in the issue queue for ages; does it make sense to fetch their dependents?
• Wrong-path instructions waste issue slots
• Ideally, we want useful instructions that have short issue-queue lifetimes

Fetch Effectiveness
• Useful instructions: throttle fetch for threads with a high branch-mispredict probability, estimated by branch confidence, the number of unresolved branches (BRCOUNT), or the in-flight window size
• Short lifetimes: throttle fetch for threads that encounter a cache miss (MISSCOUNT); give priority to threads whose instrs in the IQ are young (IQPOSN)
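Each heuristic can be viewed as a sort key over per-thread counters, with fetch going to the threads at the front of the resulting order. The field names below are hypothetical stand-ins for those counters: `oldest_iq_pos` is the distance of the thread's oldest IQ entry from the queue head (0 = at the head, `math.inf` = nothing queued), so IQPOSN favors threads whose oldest queued instr is far from the head.

```python
import math
from dataclasses import dataclass


@dataclass
class ThreadStatus:
    tid: int
    unresolved_branches: int  # BRCOUNT input
    outstanding_dmisses: int  # MISSCOUNT input
    oldest_iq_pos: float      # IQPOSN input: 0 = at IQ head, math.inf = none queued


def brcount_order(ts: list[ThreadStatus]) -> list[int]:
    """Fewest unresolved branches first (least likely to be on a wrong path)."""
    return [t.tid for t in sorted(ts, key=lambda t: t.unresolved_branches)]


def misscount_order(ts: list[ThreadStatus]) -> list[int]:
    """Fewest outstanding data-cache misses first (least likely to clog the IQ)."""
    return [t.tid for t in sorted(ts, key=lambda t: t.outstanding_dmisses)]


def iqposn_order(ts: list[ThreadStatus]) -> list[int]:
    """Threads whose oldest IQ entry is farthest from the head (youngest) first."""
    return [t.tid for t in sorted(ts, key=lambda t: -t.oldest_iq_pos)]


status = [
    ThreadStatus(0, unresolved_branches=3, outstanding_dmisses=0, oldest_iq_pos=2),
    ThreadStatus(1, unresolved_branches=0, outstanding_dmisses=2, oldest_iq_pos=10),
    ThreadStatus(2, unresolved_branches=1, outstanding_dmisses=1, oldest_iq_pos=math.inf),
]
```

For this state, BRCOUNT orders the threads `[1, 2, 0]`, MISSCOUNT `[0, 2, 1]`, and IQPOSN `[2, 1, 0]`; the heuristics disagree, which is why the choice of policy matters.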

ICOUNT
• ICOUNT: priority goes to the threads with the fewest unissued instrs (in decode, rename, and the IQ) → everyone gets a share of the issue queue
• Long-latency instructions will not dominate the IQ
• Threads that have a high issue rate will also have a high fetch rate
• In-flight windows are short and wrong-path instrs are minimized
• Increased fairness → more ready instrs per cycle
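A minimal sketch of ICOUNT.2.8 selection, assuming the per-thread unissued counts are already available and breaking ties toward the lower thread id (a simplifying assumption). `fetchable` is a hypothetical per-thread count of instrs each thread could supply this cycle (e.g., limited by a taken branch); both function names are illustrative.

```python
def icount_select(unissued: dict[int, int], n: int = 2) -> list[int]:
    """Pick the n threads with the fewest unissued instrs (ties: lower id first)."""
    return sorted(unissued, key=lambda tid: (unissued[tid], tid))[:n]


def icount_2_8(unissued: dict[int, int], fetchable: dict[int, int], total: int = 8) -> dict[int, int]:
    """ICOUNT.2.8: up to 8 instrs from each of the 2 chosen threads, at most `total` overall."""
    fetched, budget = {}, total
    for tid in icount_select(unissued, 2):
        take = min(fetchable[tid], 8, budget)  # highest-priority thread fills first
        fetched[tid] = take
        budget -= take
    return fetched


unissued = {0: 20, 1: 3, 2: 7, 3: 3}   # thread 0 is clogging the IQ
fetchable = {0: 8, 1: 5, 2: 8, 3: 8}
```

Here threads 1 and 3 are chosen; thread 1 supplies 5 instrs and thread 3 fills the remaining 3, while thread 0, with 20 unissued instrs, gets no fetch bandwidth until its backlog drains.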

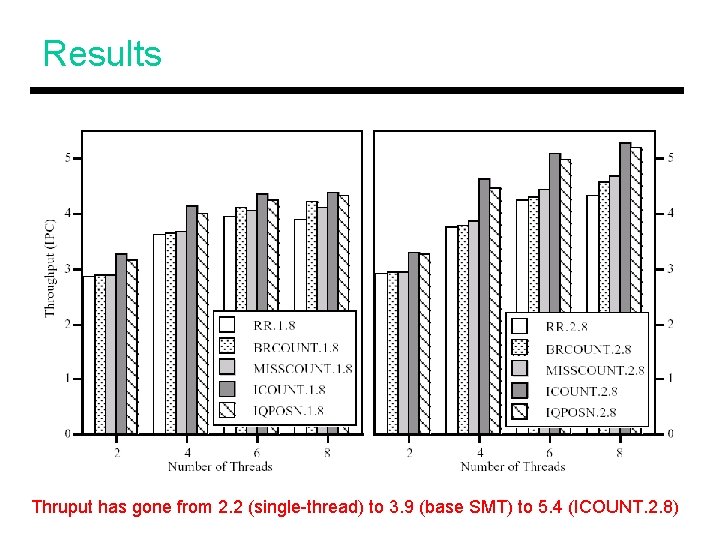

Results
• Thruput has gone from 2.2 IPC (single-thread) to 3.9 (base SMT) to 5.4 (ICOUNT.2.8)
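Expressed as ratios of the slide's throughput numbers:

```latex
\frac{3.9}{2.2} \approx 1.77, \qquad
\frac{5.4}{3.9} \approx 1.38, \qquad
\frac{5.4}{2.2} \approx 2.45
```

That is, base SMT gives about 1.77× single-thread throughput, the better fetch policy adds roughly another 38% on top of base SMT, and the combination is about 2.45× single-thread.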

Reducing IQ-clog
• IQBUF: a buffer before the issue queue
• ITAG: pre-examine the tags to detect I-cache misses and not waste fetch bandwidth
• OPT_last and SPEC_last: lower issue priority for optimistically issued and speculative instrs, respectively
• These techniques entail overheads and result in minor improvements

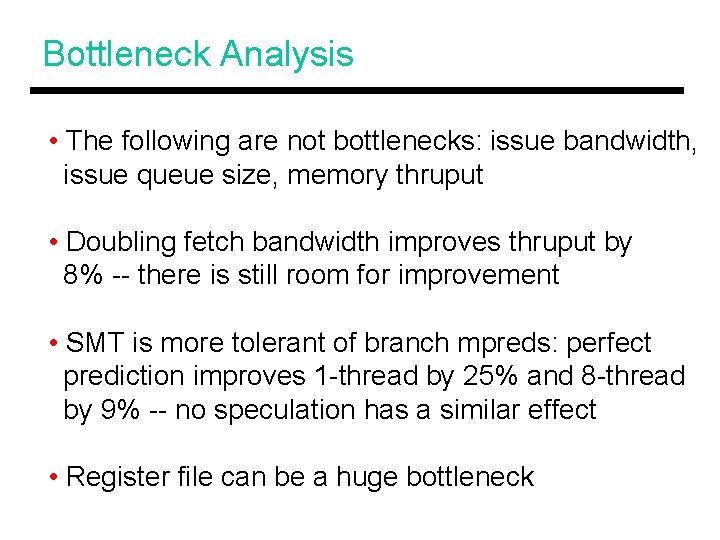

Bottleneck Analysis
• The following are not bottlenecks: issue bandwidth, issue queue size, memory thruput
• Doubling fetch bandwidth improves thruput by 8%, so there is still room for improvement
• SMT is more tolerant of branch mpreds: perfect prediction improves 1-thread thruput by 25% and 8-thread thruput by 9%; eliminating speculation has a similar effect
• Register file can be a huge bottleneck

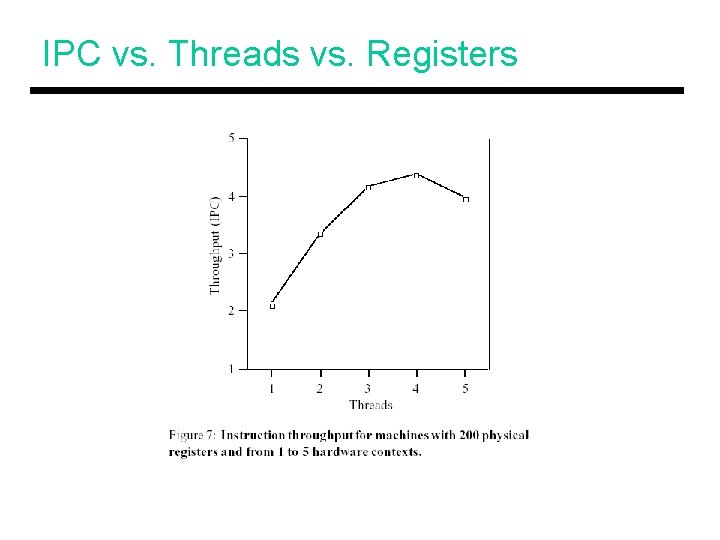

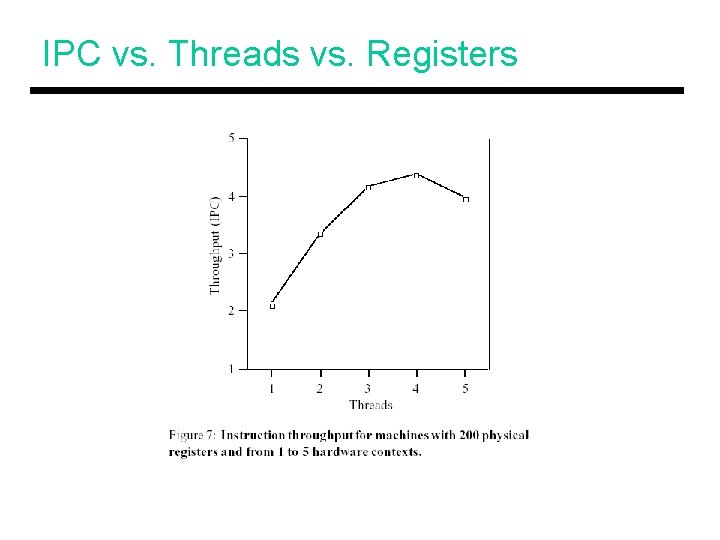

IPC vs. Threads vs. Registers

Power and Energy
• Energy is heavily influenced by “work done” and by execution time
• Compared to a single-thread machine, SMT does not reduce “work done”, but it reduces execution time → reduced energy
• Same work, less time → higher power!
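Both bullets follow from splitting energy into a work-dependent dynamic part and a time-dependent static part. This is a textbook simplification assumed here, not a model given in the slides, and it ignores the energy of SMT's own extra structures. With speedup s > 1 and unchanged work:

```latex
E = E_{\text{dyn}} + P_{\text{static}}\, t, \qquad
P = \frac{E}{t} = \frac{E_{\text{dyn}}}{t} + P_{\text{static}}

t' = \frac{t}{s}: \qquad
E' = E_{\text{dyn}} + \frac{P_{\text{static}}\, t}{s} < E, \qquad
P' = \frac{s\, E_{\text{dyn}}}{t} + P_{\text{static}} > P
```

With hypothetical values E_dyn = 10 J, P_static = 5 W, t = 2 s, and s = 2: energy drops from 20 J to 15 J, while average power rises from 10 W to 15 W.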
