CS 6501: Deep Learning for Visual Recognition – Softmax Classifier



Today’s Class: Softmax Classifier

- Inference / Making Predictions / Test Time
- Training a Softmax Classifier
- Stochastic Gradient Descent (SGD)

Supervised Learning - Classification: Training Data (labeled cat, dog, cat, ..., bear) and Test Data

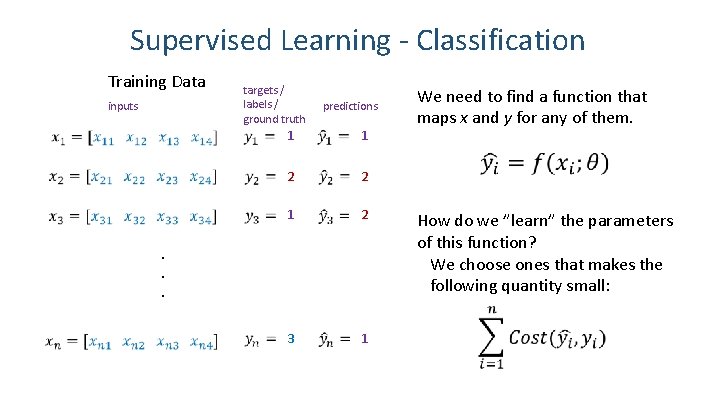

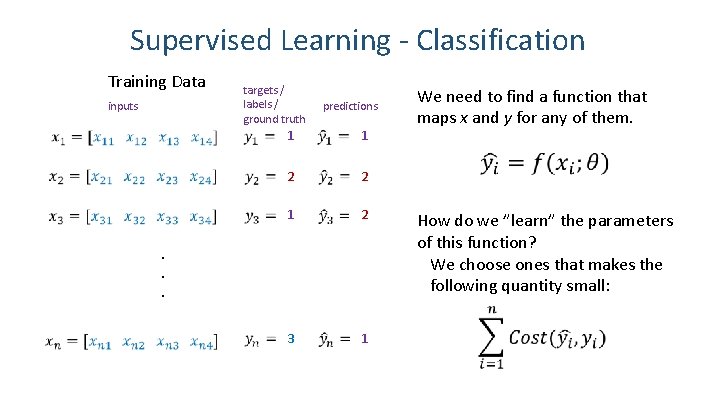

Supervised Learning - Classification

Training Data (inputs, with their targets and predictions):

| targets / labels / ground truth | predictions |
|---|---|
| 1 | 1 |
| 2 | 2 |
| 1 | 2 |
| 3 | 1 |
| ... | ... |

We need to find a function that maps x to y for any of them. How do we “learn” the parameters of this function? We choose the ones that make the following quantity small:

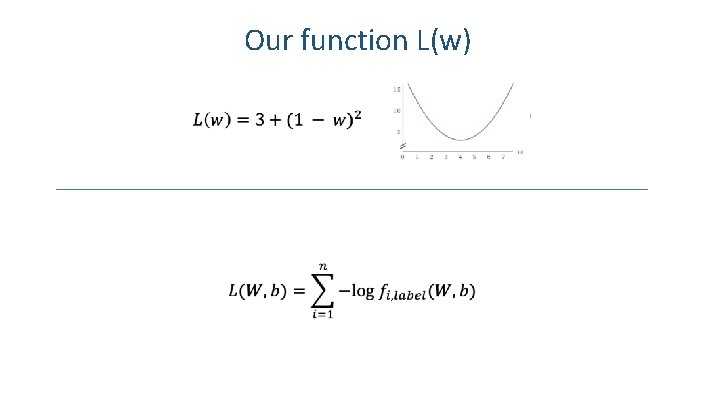

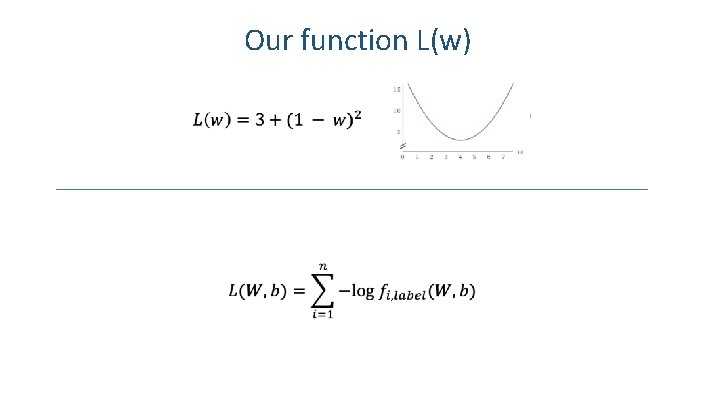

Supervised Learning – Linear Softmax

Training Data: inputs with targets / labels / ground truth 1, 2, 1, ..., 3

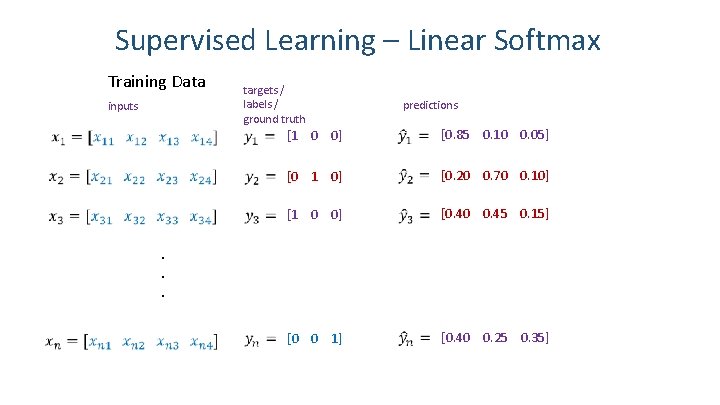

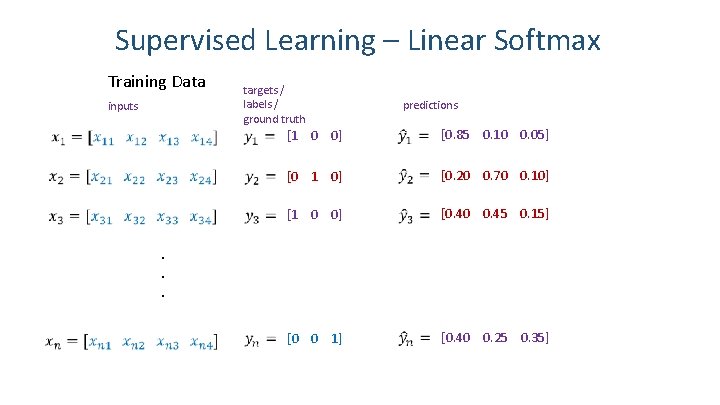

Supervised Learning – Linear Softmax

Training Data (inputs, with their targets and predictions):

| targets / labels / ground truth | predictions |
|---|---|
| [1 0 0] | [0.85 0.10 0.05] |
| [0 1 0] | [0.20 0.70 0.10] |
| [1 0 0] | [0.40 0.45 0.15] |
| [0 0 1] | [0.40 0.25 0.35] |
| ... | ... |

![Supervised Learning – Linear Softmax](https://slidetodoc.com/presentation_image_h2/844853bca4618207d0bd8c83344d5321/image-8.jpg)

Supervised Learning – Linear Softmax, with target [1 0 0]
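A linear softmax classifier can be sketched in a few lines of NumPy. This is a minimal illustration, not the course's reference code: the names `softmax` and `predict`, the 4-feature input, and the random parameters are all assumptions made for the example.

```python
import numpy as np

def softmax(scores):
    """Turn a vector of raw class scores into probabilities that sum to 1."""
    shifted = scores - np.max(scores)  # subtracting the max avoids overflow in exp
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum()

def predict(x, W, b):
    """Linear softmax classifier: one linear score per class, then softmax."""
    return softmax(W @ x + b)

# Hypothetical toy setup: 4 input features, 3 classes (cat, dog, bear).
rng = np.random.default_rng(0)
x = rng.normal(size=4)
W = rng.normal(size=(3, 4))
b = np.zeros(3)

probs = predict(x, W, b)                 # a vector such as [0.85 0.10 0.05]
predicted_class = int(np.argmax(probs))  # index of the most probable class
```

The output `probs` plays the role of the prediction vectors in the training-data table, and it is compared against a one-hot target such as [1 0 0].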

![How do we find a good w and b?](https://slidetodoc.com/presentation_image_h2/844853bca4618207d0bd8c83344d5321/image-9.jpg)

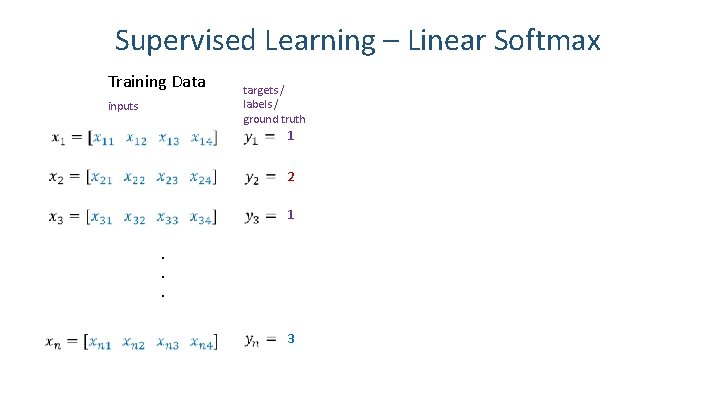

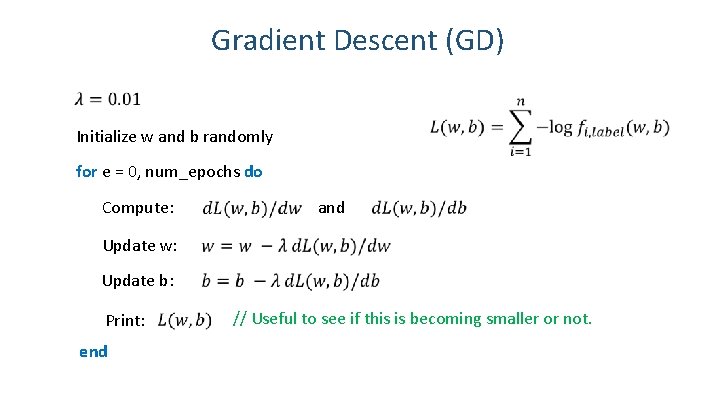

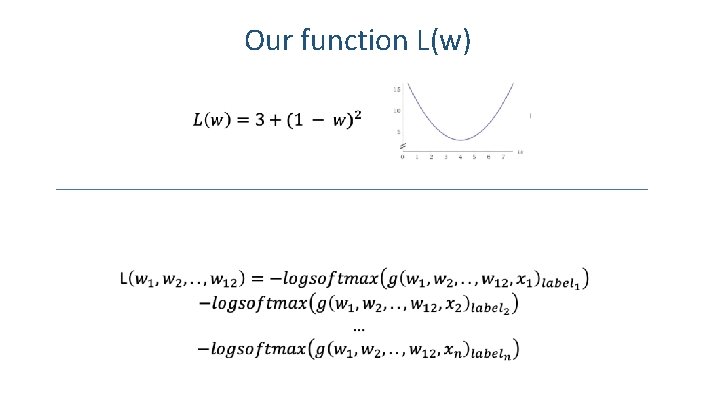

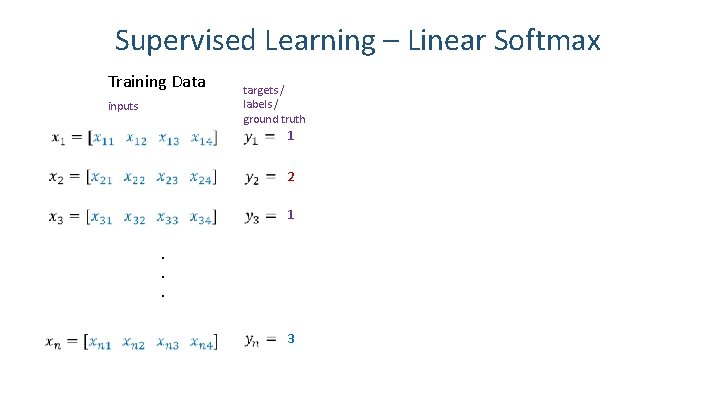

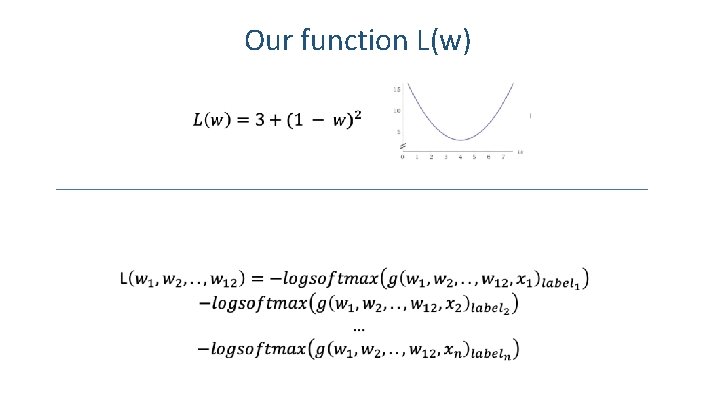

How do we find a good w and b? (target: [1 0 0])

We need to find w and b that minimize the following:

Why?
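One way to see why minimizing a loss gives good parameters is to evaluate it on the prediction table from the earlier slide. The sketch below assumes the quantity is the negative log-likelihood (cross-entropy) loss that is standard for softmax classifiers; the variable names are illustrative.

```python
import numpy as np

# One-hot targets and predicted probabilities from the training-data table.
targets = np.array([[1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]])
predictions = np.array([
    [0.85, 0.10, 0.05],
    [0.20, 0.70, 0.10],
    [0.40, 0.45, 0.15],
    [0.40, 0.25, 0.35],
])

# Probability that each prediction assigns to the correct class.
correct_class_probs = (targets * predictions).sum(axis=1)

# Assumed loss: negative log of the correct-class probability, summed over examples.
per_example_loss = -np.log(correct_class_probs)
total_loss = per_example_loss.sum()
```

The confident, correct first prediction (0.85 on the right class) contributes the least to the total, while the last prediction (0.35 on the right class) contributes the most, so lowering this sum pushes probability toward the correct classes.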

Gradient Descent (GD)

    Initialize w and b randomly
    for e = 0, num_epochs do
        Compute: dL/dw and dL/db
        Update w: w = w – lambda * (dL/dw)
        Update b: b = b – lambda * (dL/db)
        Print: L(w, b)  // Useful to see if this is becoming smaller or not.
    end
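The loop above can be sketched in NumPy for a linear softmax classifier. This is an illustration under stated assumptions: the toy data, the learning rate, and the helper names are hypothetical, and the gradient used is the standard softmax cross-entropy gradient (predicted probabilities minus one-hot targets).

```python
import numpy as np

def softmax_rows(scores):
    """Row-wise softmax with max-subtraction for numerical stability."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum(axis=1, keepdims=True)

def loss_and_grads(X, Y, W, b):
    """Cross-entropy loss summed over all examples, plus gradients for W and b."""
    probs = softmax_rows(X @ W.T + b)
    loss = -np.log((probs * Y).sum(axis=1)).sum()
    d_scores = probs - Y  # gradient of the loss with respect to the class scores
    return loss, d_scores.T @ X, d_scores.sum(axis=0)

# Hypothetical toy data: three well-separated 2-D clusters, one per class.
rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=90)
centers = np.array([[2.0, 0.0], [-2.0, 2.0], [0.0, -3.0]])
X = centers[labels] + 0.5 * rng.normal(size=(90, 2))
Y = np.eye(3)[labels]  # one-hot targets

W = 0.01 * rng.normal(size=(3, 2))  # small random weights
b = np.zeros(3)                     # zero biases (a common starting choice)
learning_rate = 0.001               # the lambda in the update rule (assumed value)
num_epochs = 200

losses = []
for epoch in range(num_epochs):
    loss, dW, db = loss_and_grads(X, Y, W, b)  # compute dL/dw and dL/db
    W -= learning_rate * dW                    # update w
    b -= learning_rate * db                    # update b
    losses.append(loss)                        # track whether the loss shrinks
```

Recording the loss every epoch serves the same purpose as the `Print` step in the pseudocode: if the values in `losses` stop decreasing or start growing, the learning rate is usually the first thing to revisit.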

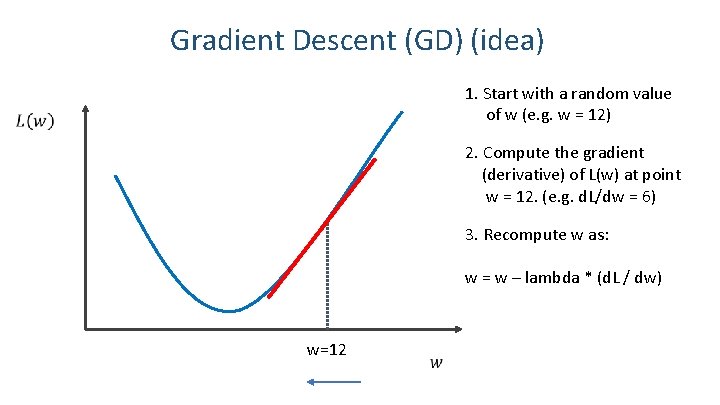

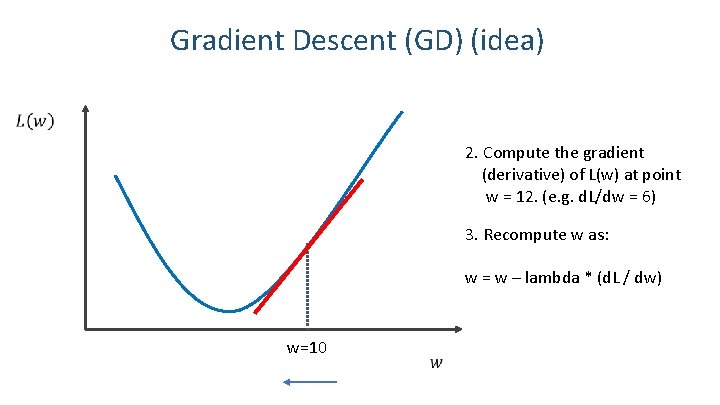

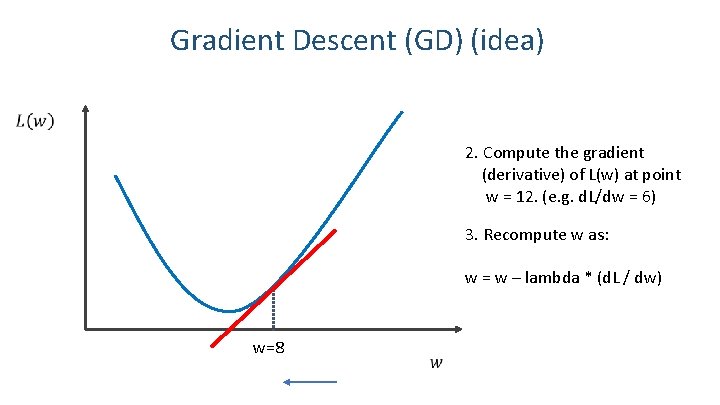

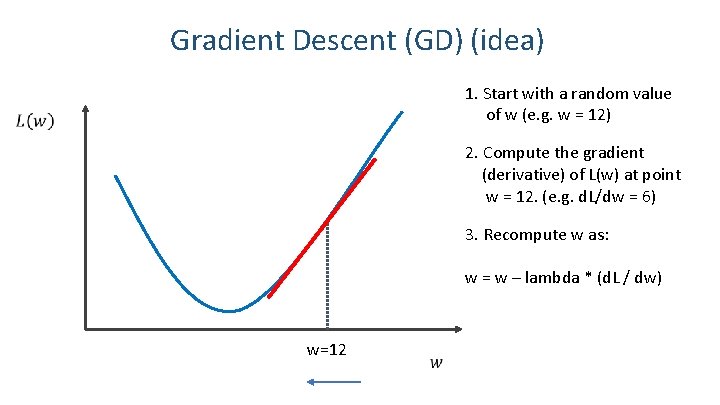

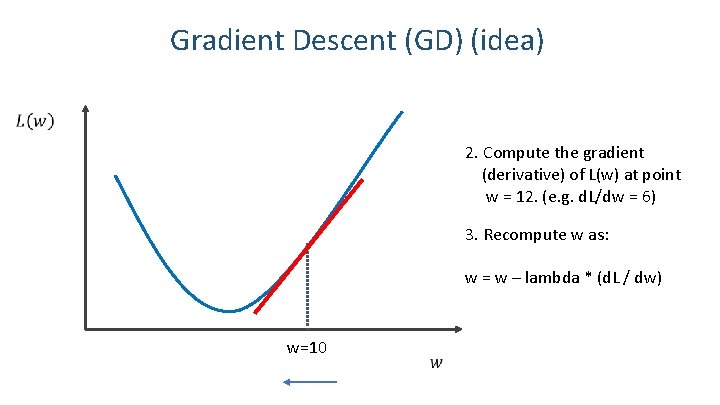

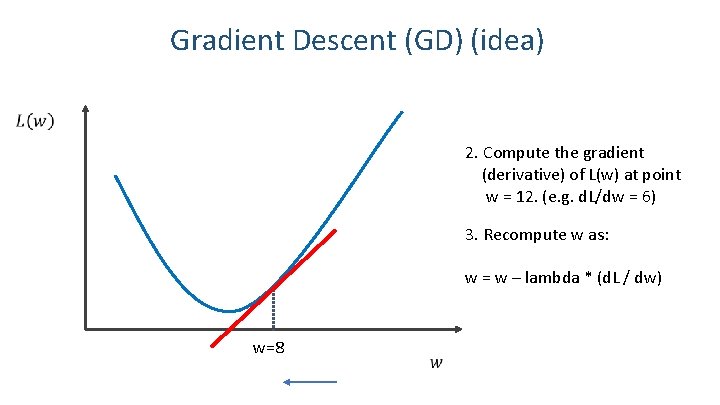

Gradient Descent (GD) (idea)

1. Start with a random value of w (e.g. w = 12).
2. Compute the gradient (derivative) of L(w) at the current point (e.g. dL/dw = 6 at w = 12).
3. Recompute w as: w = w – lambda * (dL/dw)

Repeating steps 2 and 3 moves w one step at a time: w = 12, then w = 10, then w = 8.
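The three steps can be traced on a one-dimensional example. The loss below is hypothetical, chosen only so that dL/dw = 6 at w = 12 as in the example values; the step size of 1/3 is likewise an assumption, picked so that the first update lands on w = 10.

```python
def loss(w):
    """Hypothetical 1-D loss, chosen so that dL/dw = 6 at w = 12."""
    return w ** 2 / 4.0

def d_loss(w):
    """Derivative of the hypothetical loss above."""
    return w / 2.0

w = 12.0               # step 1: starting value
step_size = 1.0 / 3.0  # the lambda in the update rule (assumed value)

trajectory = [w]
for _ in range(20):
    gradient = d_loss(w)          # step 2: derivative at the current w
    w = w - step_size * gradient  # step 3: move against the gradient
    trajectory.append(w)
```

Because the gradient of this particular loss shrinks as w approaches the minimum at 0, later steps get smaller on their own; the sequence in `trajectory` therefore only matches the illustrative values 12 and 10 for the first update.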

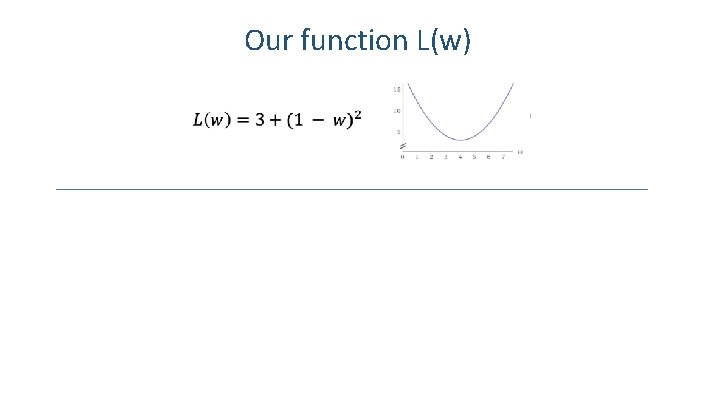

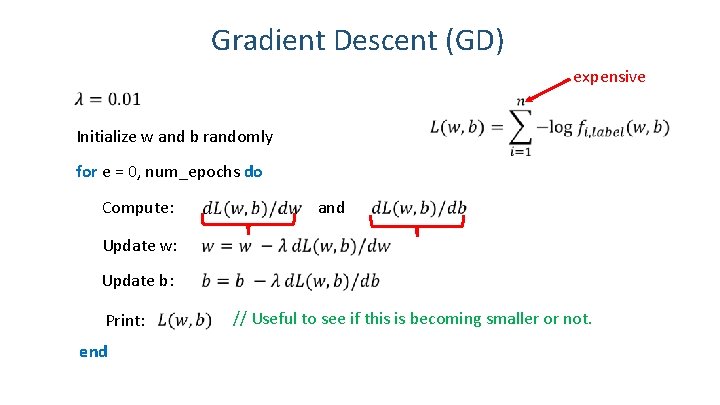

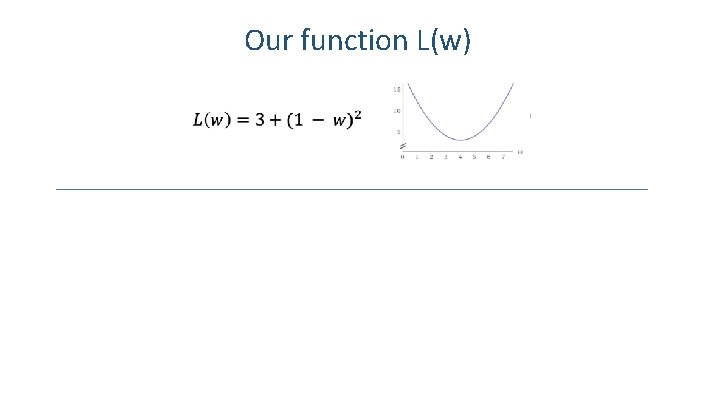

Our function L(w)


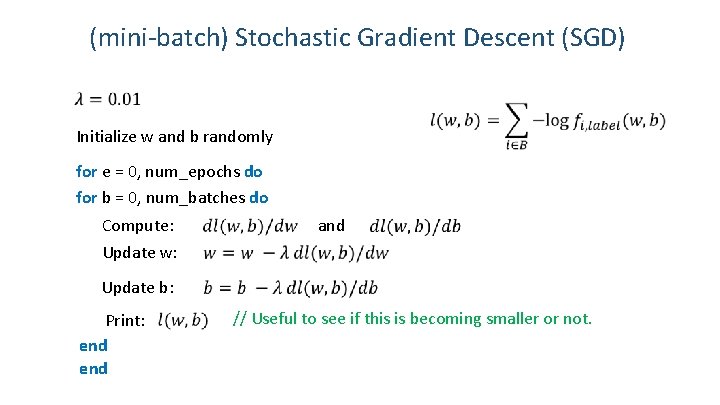

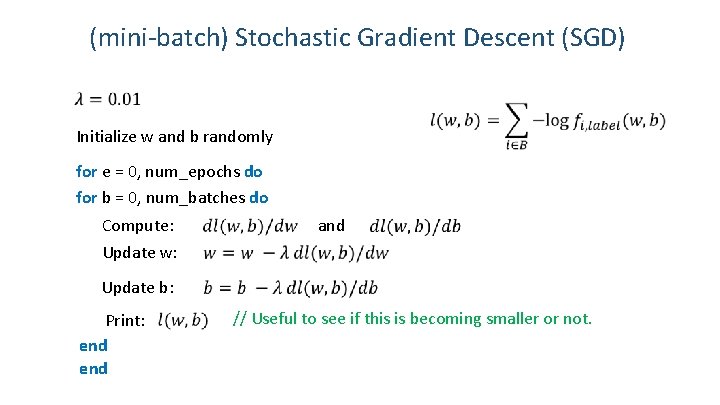

Gradient Descent (GD) is expensive: every update computes dL/dw and dL/db over the entire training set.

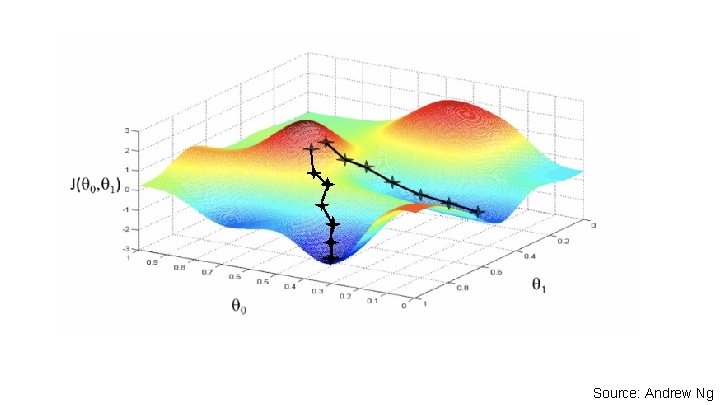

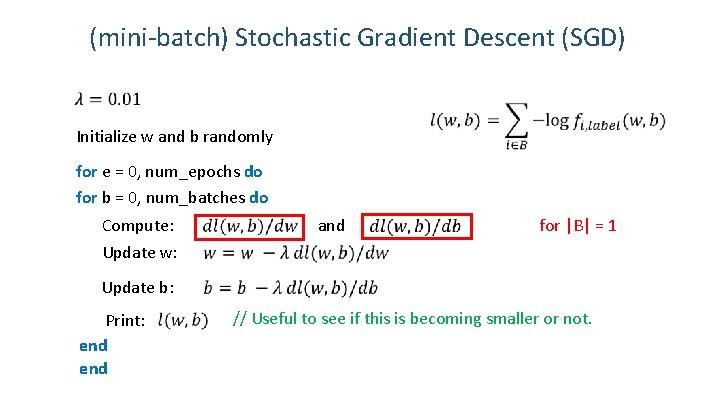

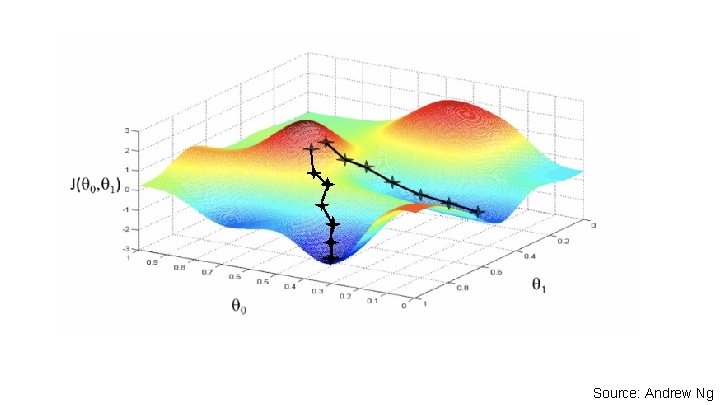

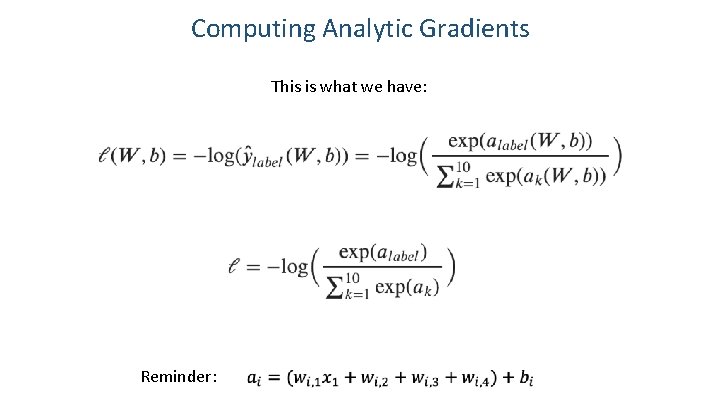

(mini-batch) Stochastic Gradient Descent (SGD)

    Initialize w and b randomly
    for e = 0, num_epochs do
        for j = 0, num_batches do
            Compute: dL/dw and dL/db on mini-batch B
            Update w: w = w – lambda * (dL/dw)
            Update b: b = b – lambda * (dL/db)
            Print: L(w, b)  // Useful to see if this is becoming smaller or not.
        end
    end

Source: Andrew Ng

(mini-batch) Stochastic Gradient Descent (SGD) for |B| = 1: the same loop, with a single training example in each mini-batch.
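A mini-batch version of the training loop might look like the sketch below. The function name `sgd_train`, the toy data, the learning rate, and the choice to average the gradient over each batch are all assumptions made for illustration; setting `batch_size=1` gives the |B| = 1 case.

```python
import numpy as np

def softmax_rows(scores):
    """Row-wise softmax with max-subtraction for numerical stability."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum(axis=1, keepdims=True)

def sgd_train(X, Y, batch_size, num_epochs=20, learning_rate=0.05, seed=0):
    """Mini-batch SGD for a linear softmax classifier with cross-entropy loss."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = 0.01 * rng.normal(size=(Y.shape[1], d))
    b = np.zeros(Y.shape[1])
    for epoch in range(num_epochs):
        order = rng.permutation(n)                   # reshuffle the data each epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]    # indices of mini-batch B
            probs = softmax_rows(X[idx] @ W.T + b)
            d_scores = (probs - Y[idx]) / len(idx)   # gradient averaged over the batch
            W -= learning_rate * (d_scores.T @ X[idx])
            b -= learning_rate * d_scores.sum(axis=0)
    return W, b

# Hypothetical toy data: three well-separated 2-D clusters, one per class.
rng = np.random.default_rng(1)
labels = rng.integers(0, 3, size=120)
centers = np.array([[2.0, 0.0], [-2.0, 2.0], [0.0, -3.0]])
X = centers[labels] + 0.5 * rng.normal(size=(120, 2))
Y = np.eye(3)[labels]

W, b = sgd_train(X, Y, batch_size=16)
accuracy = (np.argmax(X @ W.T + b, axis=1) == labels).mean()

W1, b1 = sgd_train(X, Y, batch_size=1)  # the |B| = 1 case
accuracy_single = (np.argmax(X @ W1.T + b1, axis=1) == labels).mean()
```

Each update now touches only `batch_size` examples, so one pass over the data performs many cheap updates where full-batch gradient descent performs a single expensive one.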

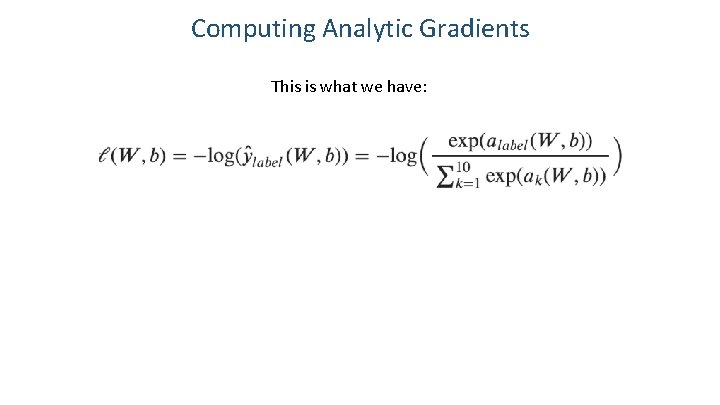

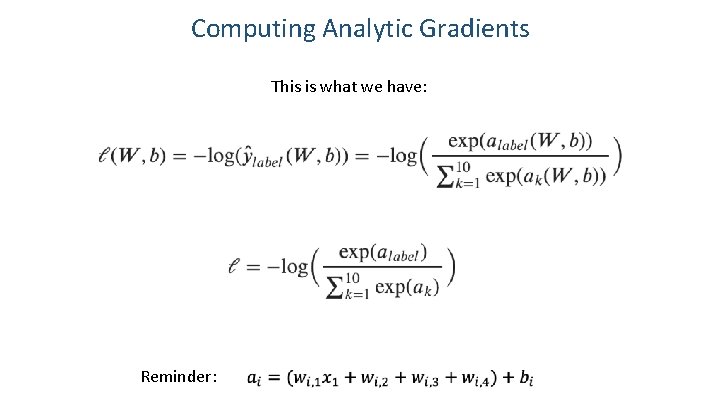

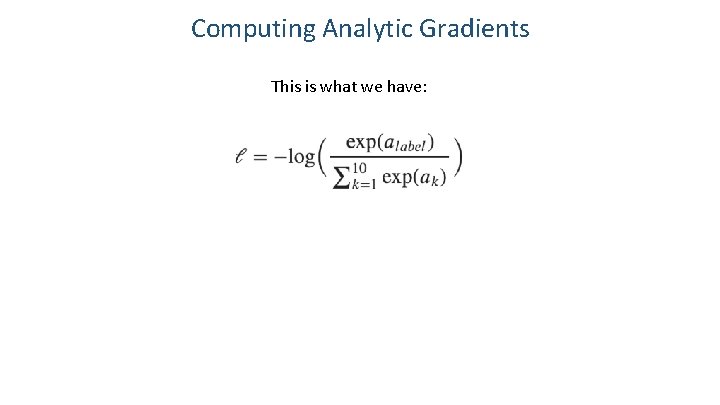

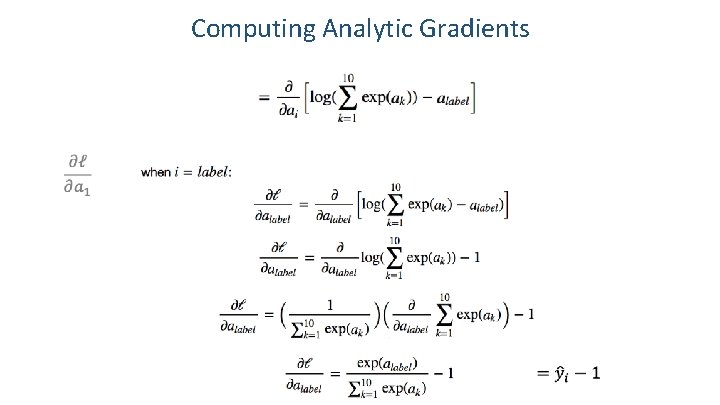

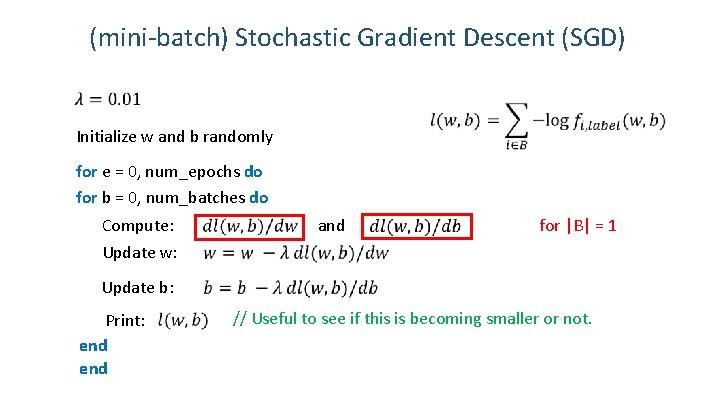

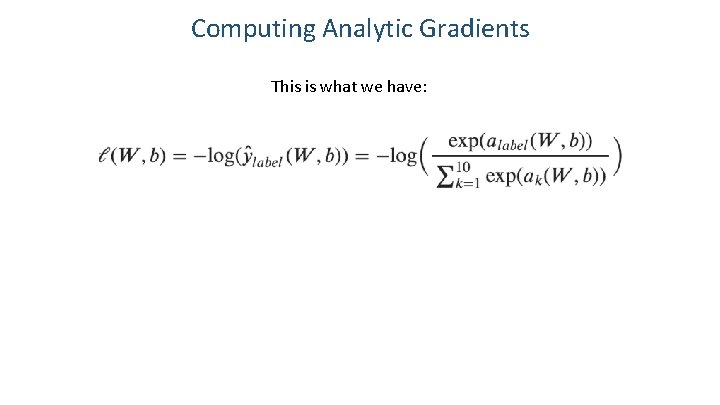

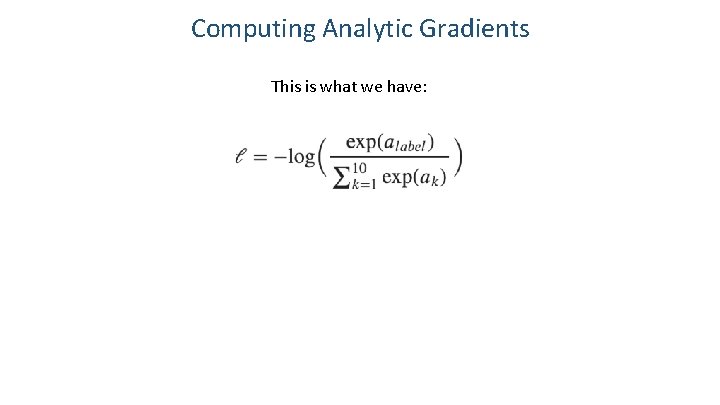

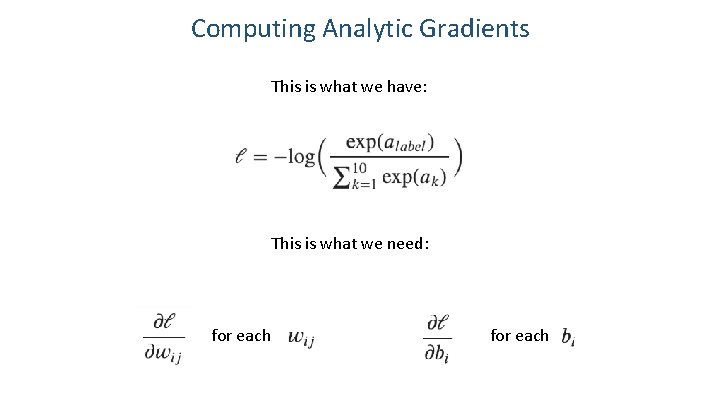

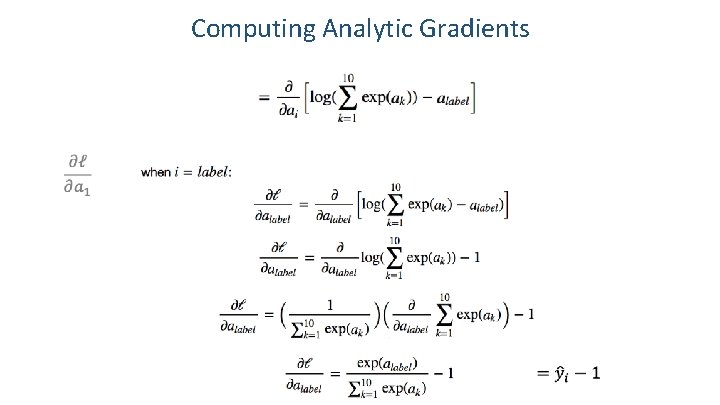

Computing Analytic Gradients

- This is what we have:
- Reminder:
- This is what we need: for each

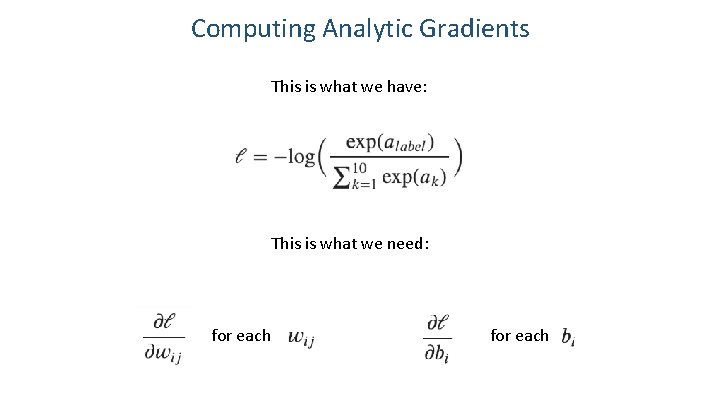

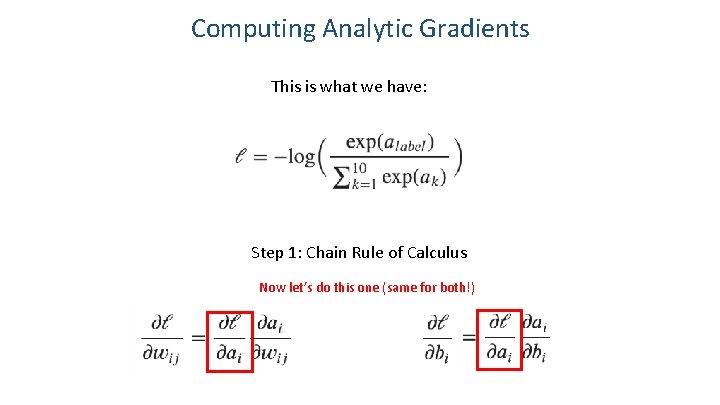

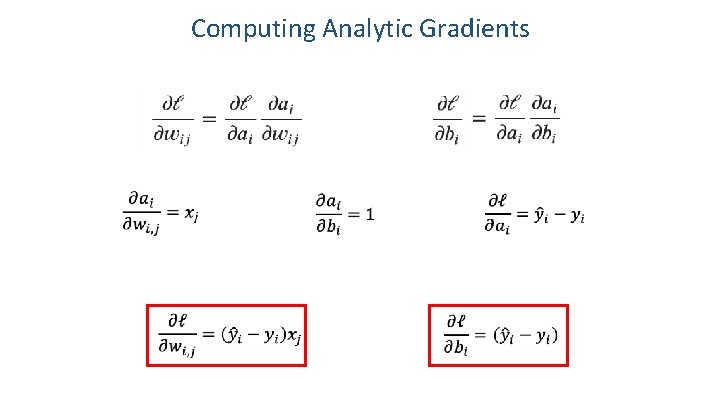

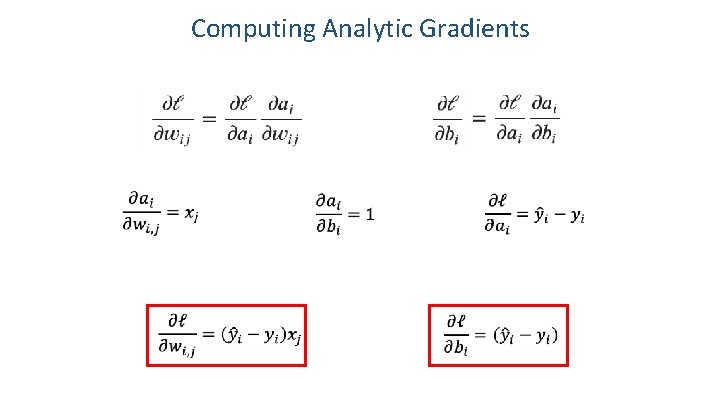

Step 1: Chain Rule of Calculus

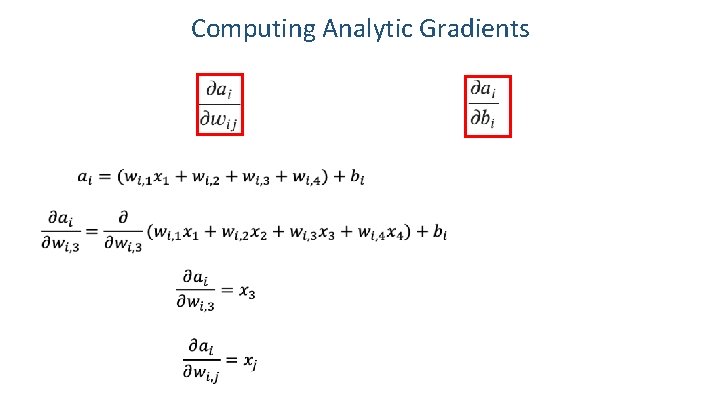

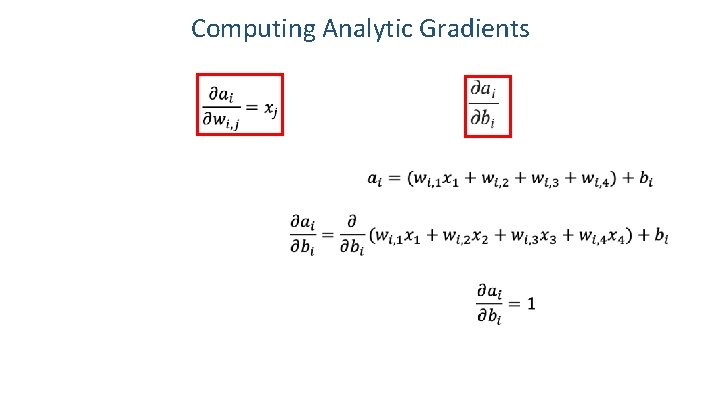

Step 1: Chain Rule of Calculus. Let’s do these first:
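The chain-rule step can be written out as follows. This is a sketch under assumed notation, since the slide equations are not preserved in the text: $a_i$ denotes the linear score of class $i$, $f_i$ its softmax probability, $x_j$ the $j$-th input feature, and $\ell$ the loss for one training example.

$$
a_i = \sum_j w_{ij} x_j + b_i, \qquad
f_i = \frac{e^{a_i}}{\sum_k e^{a_k}}, \qquad
\ell = -\log f_{\text{label}}
$$

Since $w_{ij}$ and $b_i$ affect the loss only through $a_i$, the chain rule gives

$$
\frac{\partial \ell}{\partial w_{ij}} = \frac{\partial \ell}{\partial a_i} \, \frac{\partial a_i}{\partial w_{ij}}, \qquad
\frac{\partial \ell}{\partial b_i} = \frac{\partial \ell}{\partial a_i} \, \frac{\partial a_i}{\partial b_i}
$$

The second factor in each product is the easy one:

$$
\frac{\partial a_i}{\partial w_{ij}} = x_j, \qquad
\frac{\partial a_i}{\partial b_i} = 1
$$

The remaining factor, $\partial \ell / \partial a_i$, is shared by both expressions.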

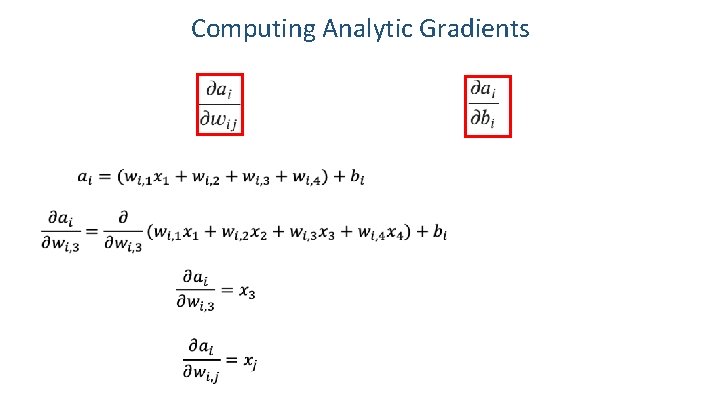

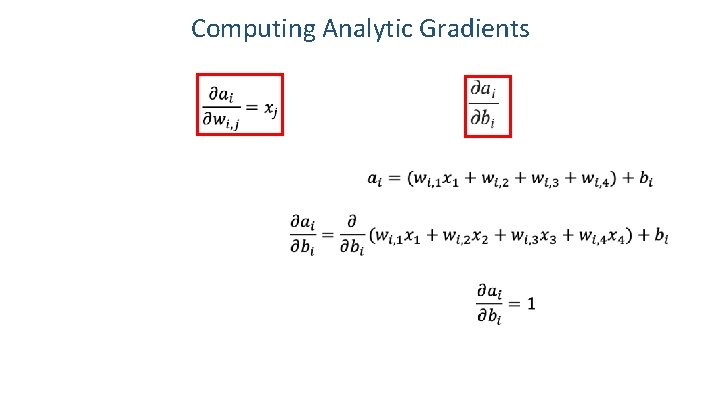

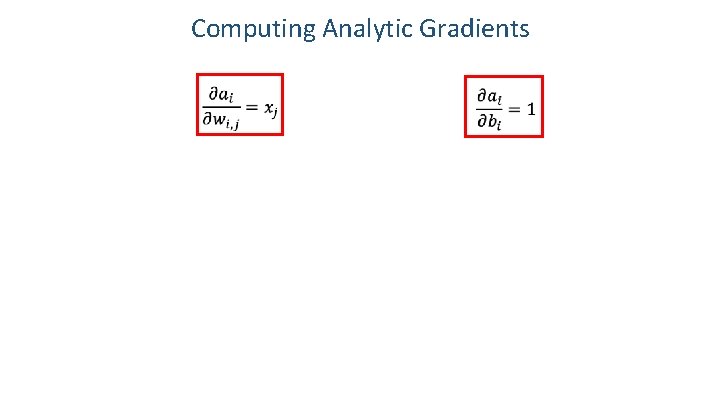


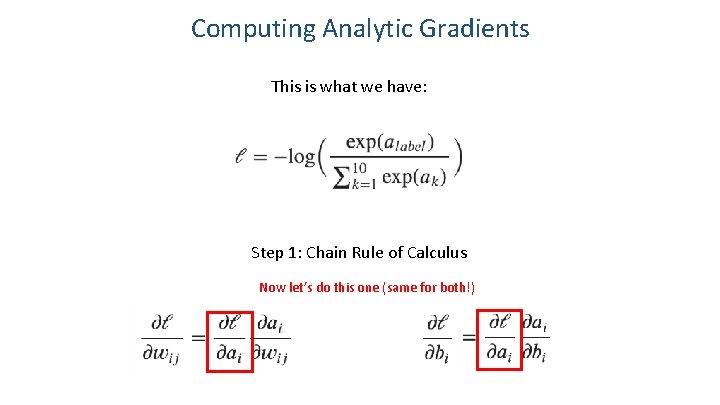

Step 1: Chain Rule of Calculus. Now let’s do this one (same for both!):

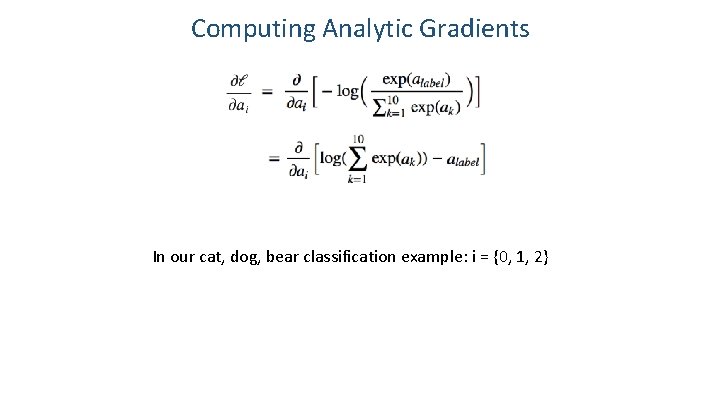

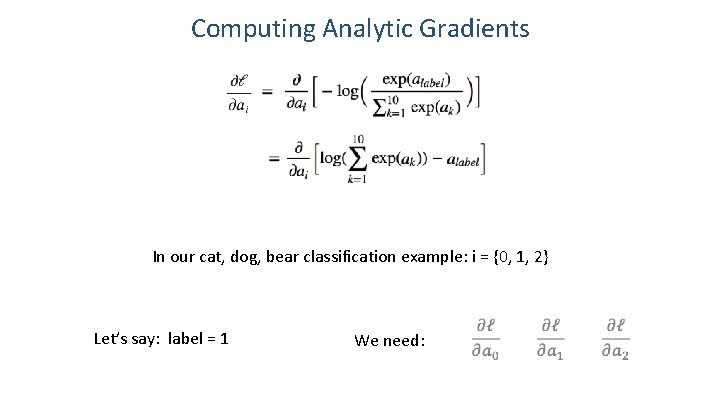

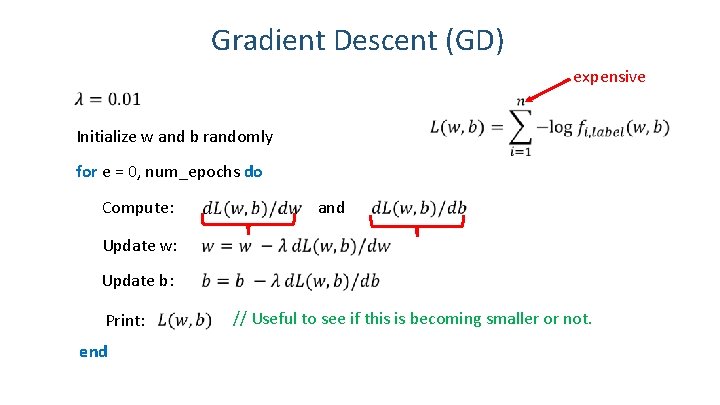

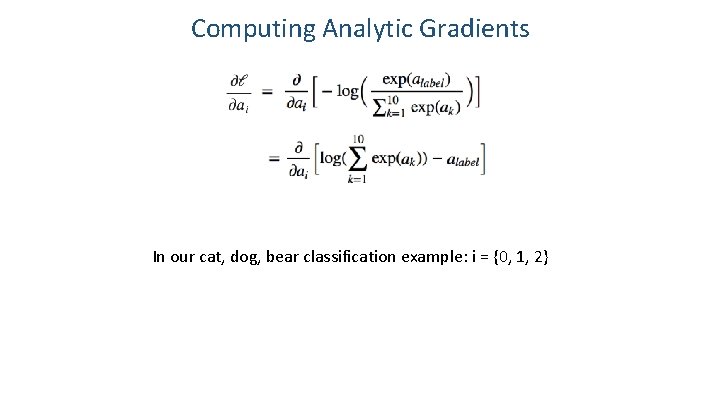

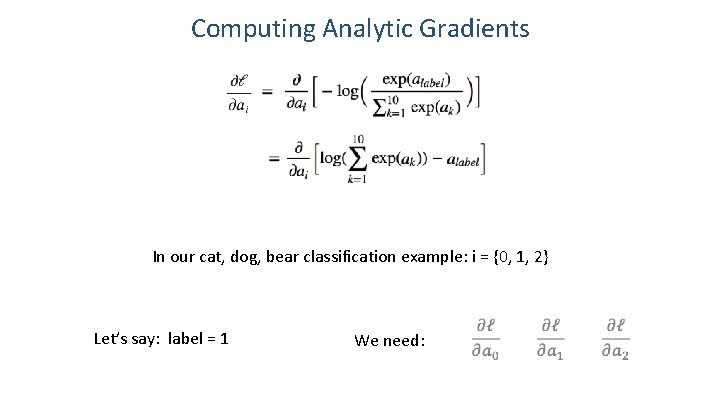

In our cat, dog, bear classification example: i ∈ {0, 1, 2}. Let’s say: label = 1. We need:
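For the three-class case with label = 1, the derivative of the loss with respect to each score can be worked out directly. The notation is assumed rather than taken from the slides: $a_i$ is the score of class $i$, $f_i = e^{a_i} / \sum_k e^{a_k}$ is its softmax probability, and $\ell = -\log f_{\text{label}}$ is the loss for one example.

$$
\ell = -\log f_{\text{label}} = -a_{\text{label}} + \log \sum_k e^{a_k}
$$

$$
\frac{\partial \ell}{\partial a_i} = \frac{e^{a_i}}{\sum_k e^{a_k}} - \mathbb{1}[i = \text{label}] = f_i - \mathbb{1}[i = \text{label}]
$$

With label = 1 this gives

$$
\frac{\partial \ell}{\partial a_0} = f_0, \qquad
\frac{\partial \ell}{\partial a_1} = f_1 - 1, \qquad
\frac{\partial \ell}{\partial a_2} = f_2
$$

In words: the gradient with respect to the scores is the predicted probability vector minus the one-hot target.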


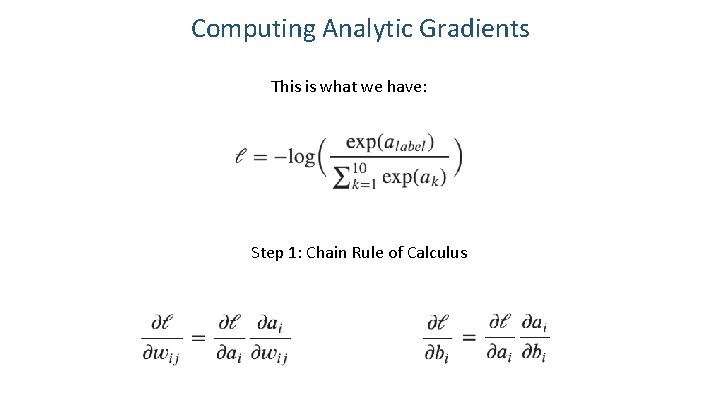

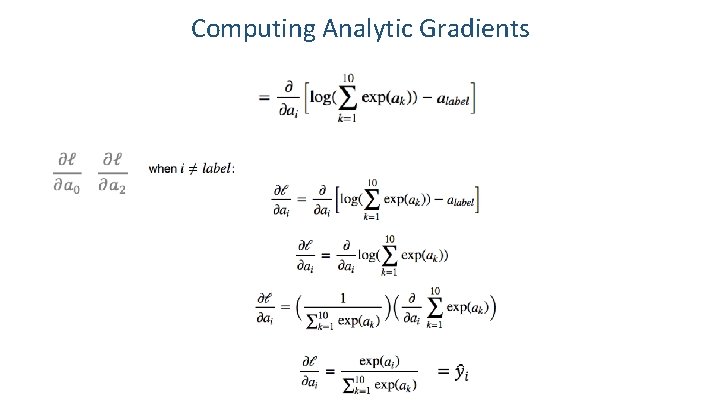

![Remember this slide?](https://slidetodoc.com/presentation_image_h2/844853bca4618207d0bd8c83344d5321/image-34.jpg)

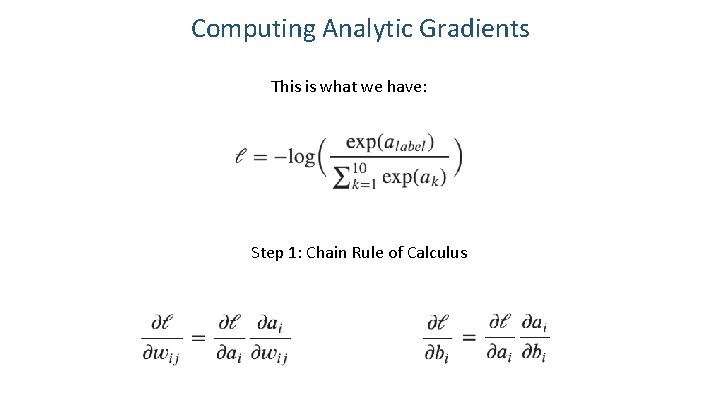

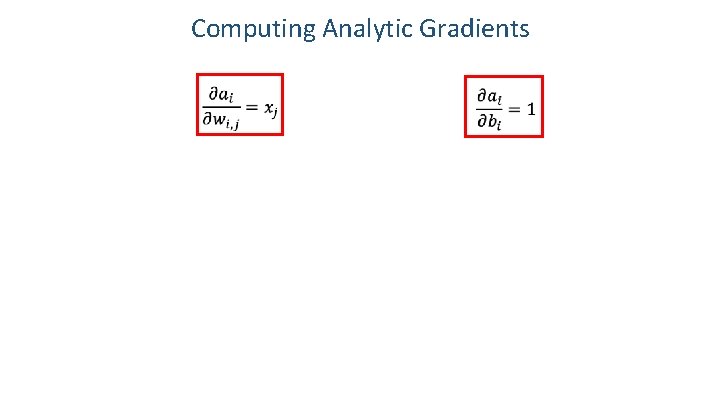

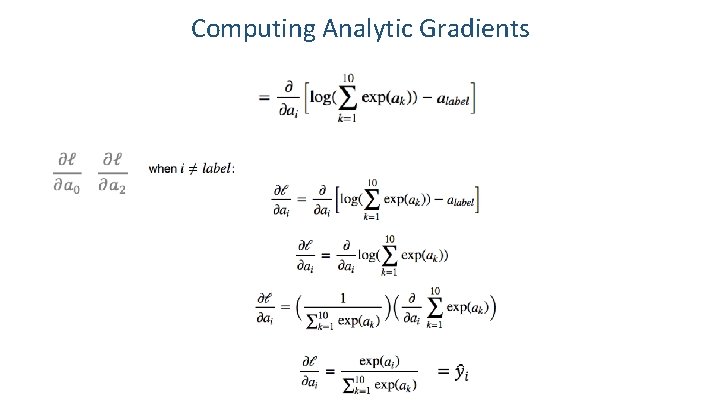

Remember this slide? (target: [1 0 0])

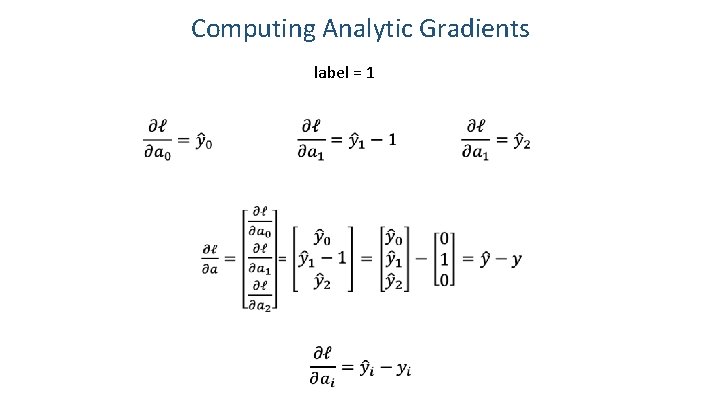

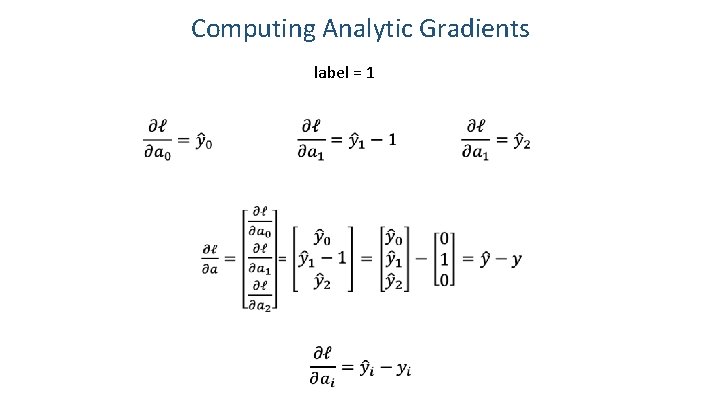


label = 1
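An analytic gradient is easy to get wrong, so it is worth comparing it against a numerical estimate. The sketch below assumes the standard softmax cross-entropy result (probabilities minus the one-hot target) for label = 1 and checks it with central finite differences; the score values are hypothetical.

```python
import numpy as np

def softmax(a):
    """Softmax of a score vector, with max-subtraction for stability."""
    exp_a = np.exp(a - np.max(a))
    return exp_a / exp_a.sum()

def loss(a, label):
    """Negative log-probability of the correct class."""
    return -np.log(softmax(a)[label])

a = np.array([0.5, -1.2, 2.0])  # hypothetical scores for cat, dog, bear
label = 1

# Analytic gradient: softmax probabilities, minus 1 at the label position.
analytic = softmax(a)
analytic[label] -= 1.0

# Numerical gradient: central finite differences, one coordinate at a time.
eps = 1e-6
numeric = np.zeros_like(a)
for i in range(len(a)):
    step = np.zeros_like(a)
    step[i] = eps
    numeric[i] = (loss(a + step, label) - loss(a - step, label)) / (2 * eps)

max_difference = np.max(np.abs(analytic - numeric))
```

A `max_difference` many orders of magnitude below the gradient values themselves indicates that the analytic and numerical gradients agree.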


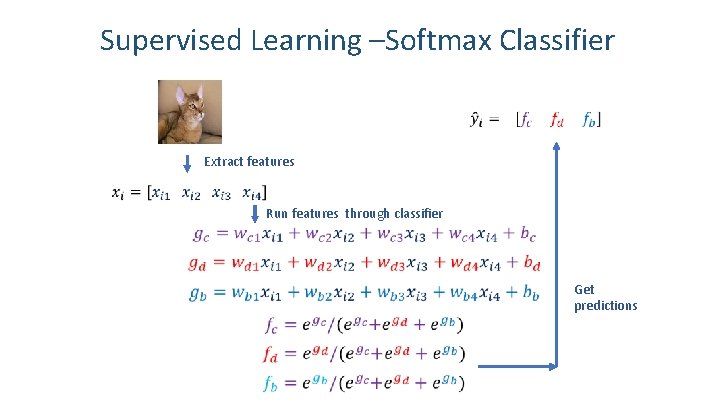

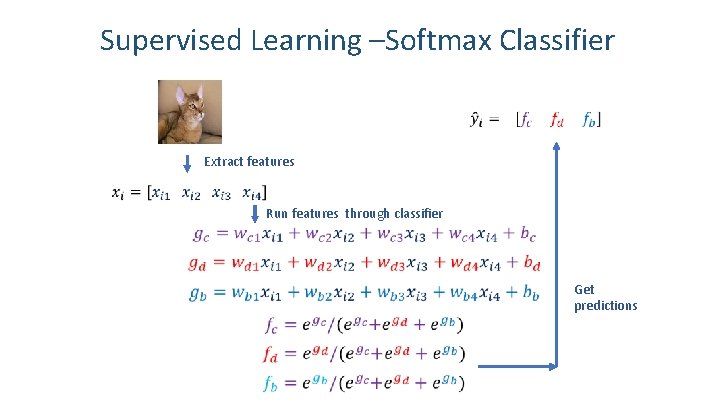

Supervised Learning – Softmax Classifier

1. Extract features
2. Run features through classifier
3. Get predictions

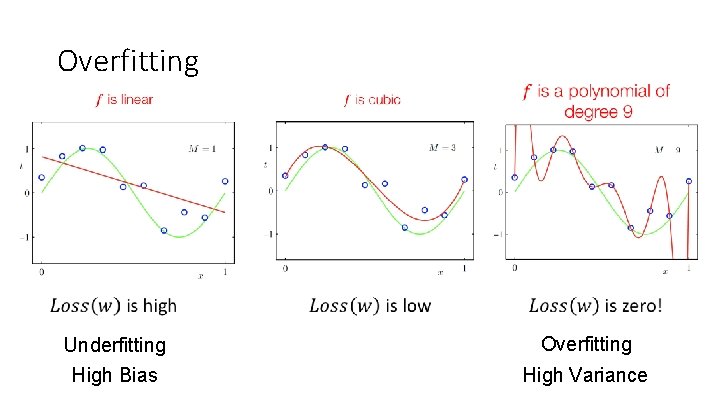

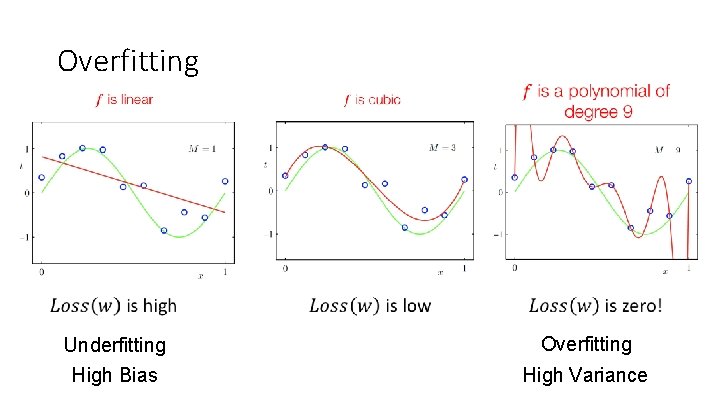

Overfitting

- Underfitting: High Bias
- Overfitting: High Variance

More …

- Regularization
- Momentum updates
- Hinge Loss, Least Squares Loss, Logistic Regression Loss

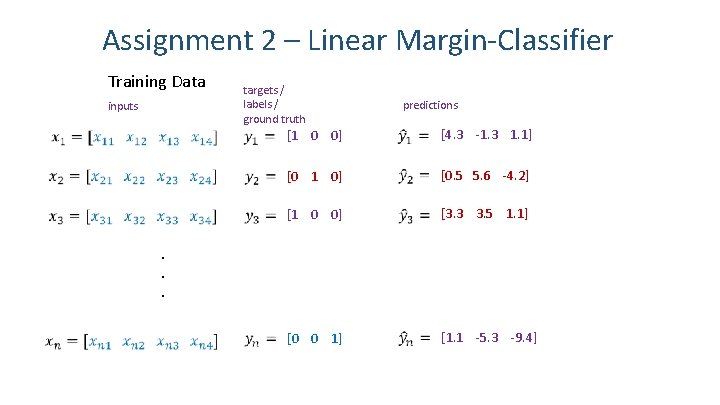

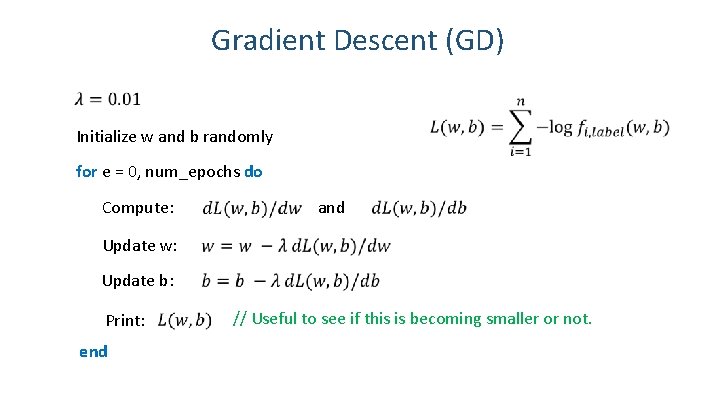

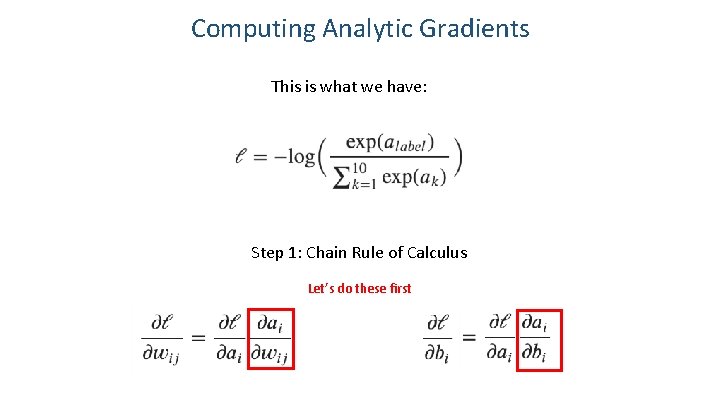

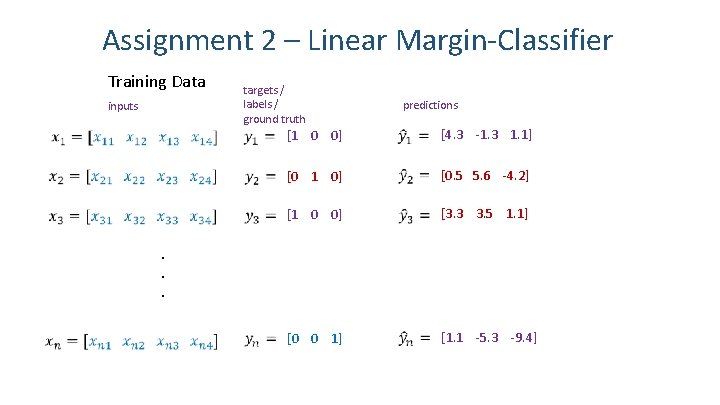

Assignment 2 – Linear Margin-Classifier

Training Data (inputs, with their targets and predictions):

| targets / labels / ground truth | predictions |
|---|---|
| [1 0 0] | [4.3 -1.3 1.1] |
| [0 1 0] | [0.5 5.6 -4.2] |
| [1 0 0] | [3.3 3.5 1.1] |
| [0 0 1] | [1.1 -5.3 -9.4] |
| ... | ... |
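Unlike softmax outputs, the predictions in this table are raw scores, not probabilities. The slides do not spell out the loss used in the assignment, so the sketch below is only an assumption: it applies a common multiclass hinge loss (summed over the wrong classes, with margin 1) to the scores from the table. The function name `multiclass_hinge_loss` is illustrative.

```python
import numpy as np

# Class indices of the one-hot targets, and raw scores, from the table.
targets = np.array([0, 1, 0, 2])
scores = np.array([
    [4.3, -1.3, 1.1],
    [0.5, 5.6, -4.2],
    [3.3, 3.5, 1.1],
    [1.1, -5.3, -9.4],
])

def multiclass_hinge_loss(scores, targets, margin=1.0):
    """Assumed loss: sum over wrong classes of max(0, s_wrong - s_correct + margin)."""
    rows = np.arange(len(targets))
    correct = scores[rows, targets][:, None]          # score of the correct class
    margins = np.maximum(0.0, scores - correct + margin)
    margins[rows, targets] = 0.0                      # the correct class adds no loss
    return margins.sum(axis=1)

per_example_loss = multiclass_hinge_loss(scores, targets)
predicted = scores.argmax(axis=1)  # class with the highest score
```

Under this assumed loss, the first two rows incur no loss because the correct class wins by more than the margin, while the last two rows are penalized because a wrong class scores higher than the correct one.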

![Supervised Learning – Linear Softmax](https://slidetodoc.com/presentation_image_h2/844853bca4618207d0bd8c83344d5321/image-43.jpg)

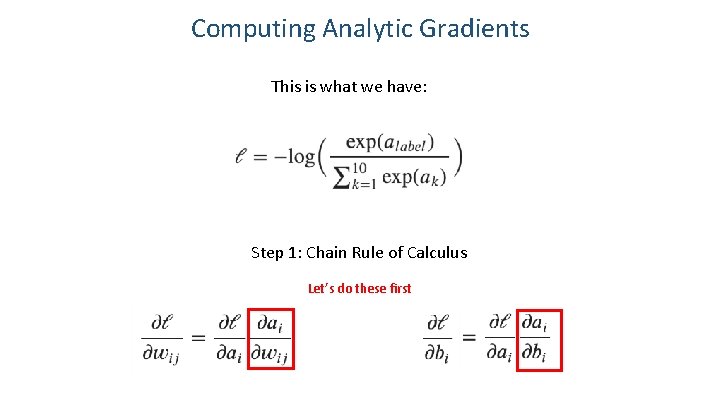

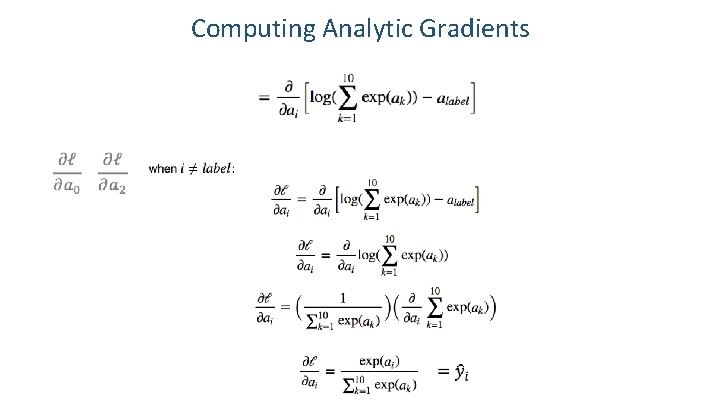

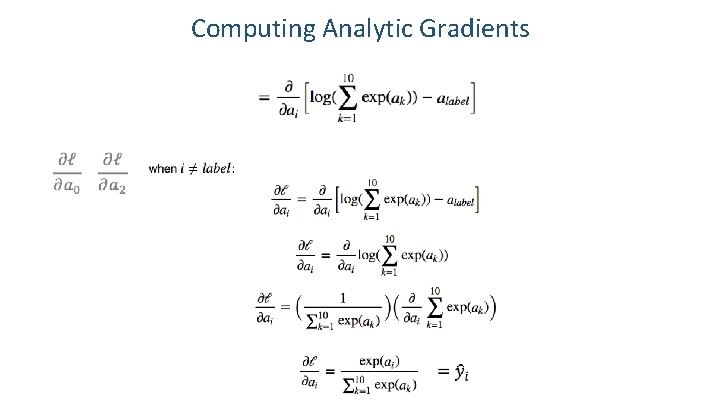


![How do we find a good w and b?](https://slidetodoc.com/presentation_image_h2/844853bca4618207d0bd8c83344d5321/image-44.jpg)


Questions?