CS 621 Artificial Intelligence: Lecture 24, 11/10/05

- Slides: 26

CS 621 Artificial Intelligence
Lecture 24 - 11/10/05
Prof. Pushpak Bhattacharyya, IIT Bombay

Feedforward Nets

Perceptron

- Cannot classify data that is not linearly separable
- Real-life problems are typically non-linear

Basic Computing Paradigm

- Setting up hyperplanes
  - Use higher power surfaces
  - Tolerate error
  - Use multiple perceptrons

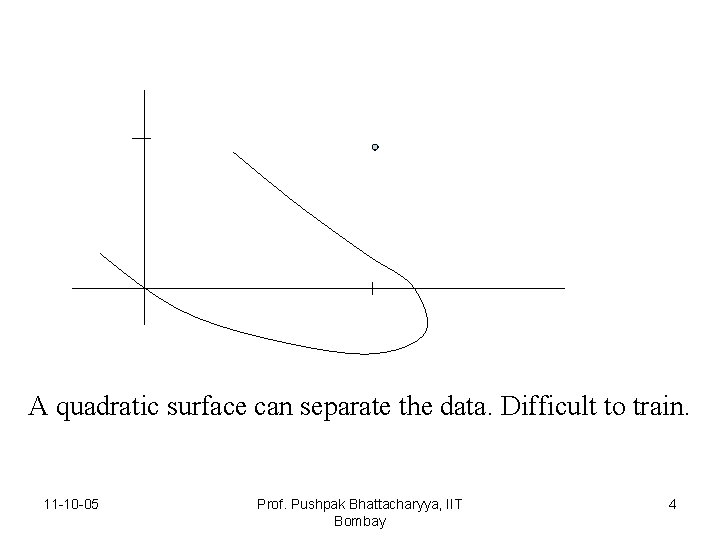

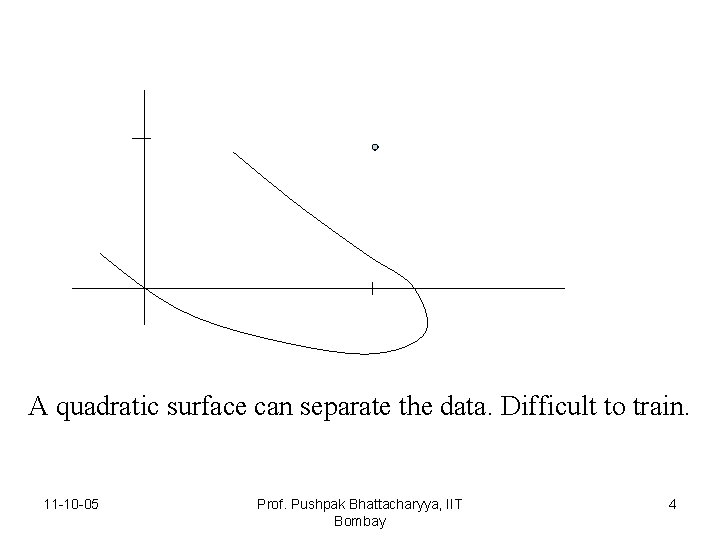

A quadratic surface can separate the data, but it is difficult to train.

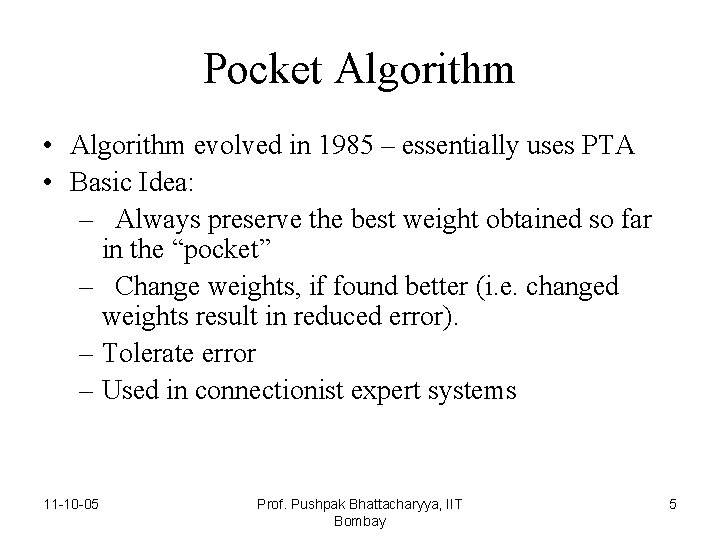

Pocket Algorithm

- Algorithm evolved in 1985; essentially uses PTA
- Basic idea:
  - Always preserve the best weight obtained so far in the “pocket”
  - Change weights, if found better (i.e. changed weights result in reduced error)
  - Tolerate error
  - Used in connectionist expert systems
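The idea above can be sketched in a few lines of Python. This is a minimal illustration, not the original 1985 formulation: it assumes a 0/1 threshold unit with a bias weight, cycles through the data in a fixed order, and measures "better" by the number of misclassified training examples. The names `predict`, `count_errors` and `pocket` are hypothetical.

```python
def predict(weights, x):
    """Threshold unit: weights[0] is the bias, the rest pair with x."""
    s = weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))
    return 1 if s > 0 else 0

def count_errors(weights, data):
    """Number of (input, target) pairs the weights misclassify."""
    return sum(1 for x, t in data if predict(weights, x) != t)

def pocket(data, epochs=100):
    """Run perceptron updates, keeping the best weights seen so far."""
    n = len(data[0][0])
    weights = [0.0] * (n + 1)
    pocket_w, pocket_err = list(weights), count_errors(weights, data)
    for _ in range(epochs):
        for x, t in data:
            y = predict(weights, x)
            if y == t:
                continue
            # Perceptron training step on a misclassified example.
            delta = t - y
            weights[0] += delta
            for i, xi in enumerate(x):
                weights[i + 1] += delta * xi
            # Replace the pocket only if the new weights reduce the error.
            err = count_errors(weights, data)
            if err < pocket_err:
                pocket_w, pocket_err = list(weights), err
    return pocket_w, pocket_err

AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_w, and_err = pocket(AND_DATA)
xor_w, xor_err = pocket(XOR_DATA)
print("AND errors:", and_err, "XOR errors:", xor_err)
```

On linearly separable data such as AND, the pocket ends up holding a zero-error weight vector. On XOR no weight vector classifies all four points, so the pocket only ever holds the least-bad weights seen, which is the sense in which the algorithm tolerates error.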

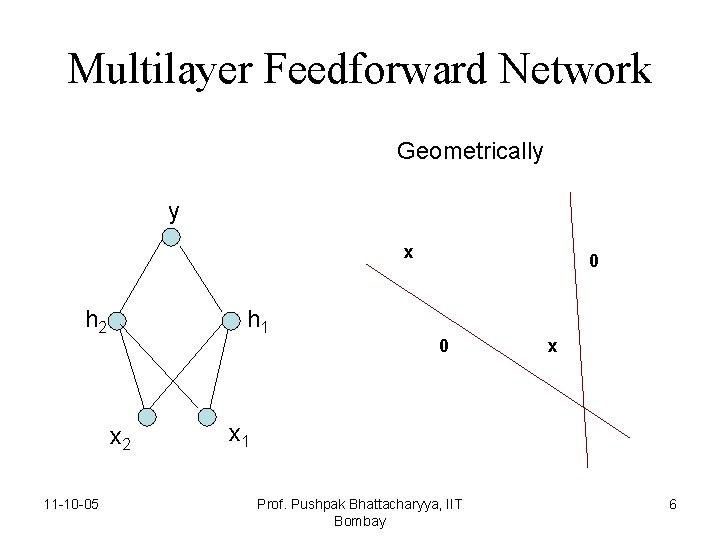

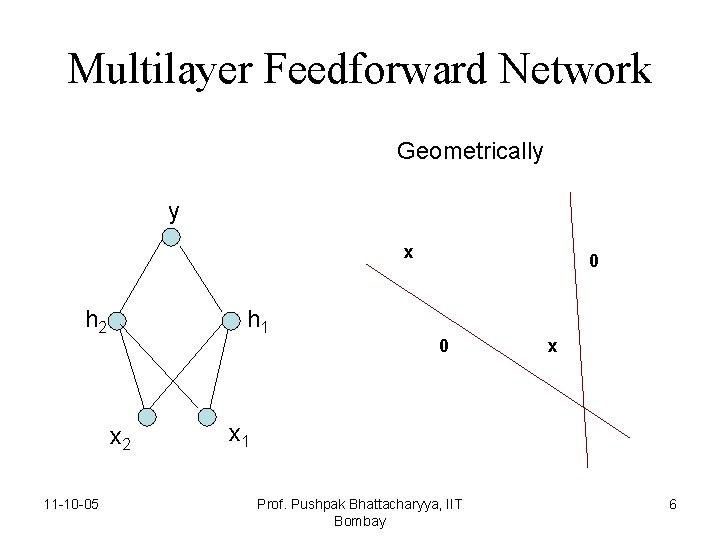

Multilayer Feedforward Network

Geometrically

[Figure: network with inputs x1, x2, hidden neurons h1, h2 and output y, shown with a plot of the data points over the x1-x2 plane]

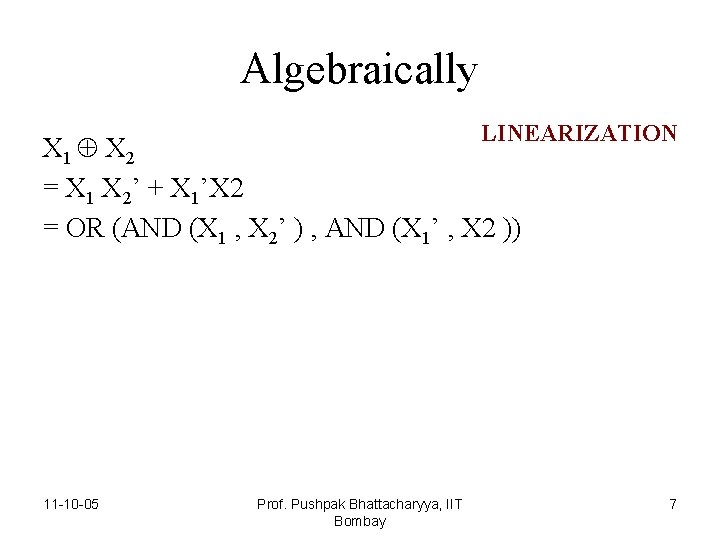

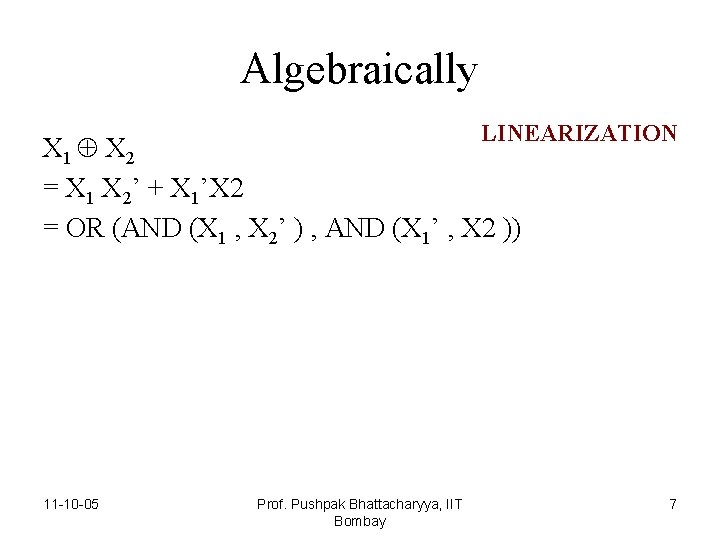

Algebraically

LINEARIZATION

$$
X_1 \oplus X_2 = X_1 X_2' + X_1' X_2 = \mathrm{OR}(\mathrm{AND}(X_1, X_2'),\ \mathrm{AND}(X_1', X_2))
$$

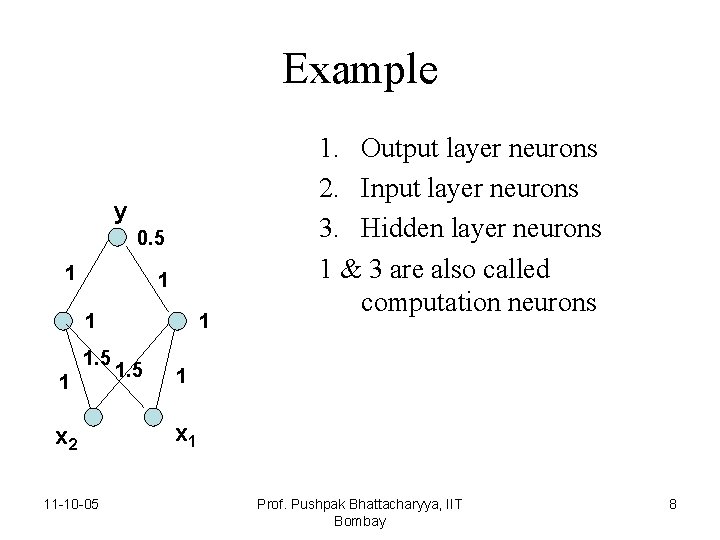

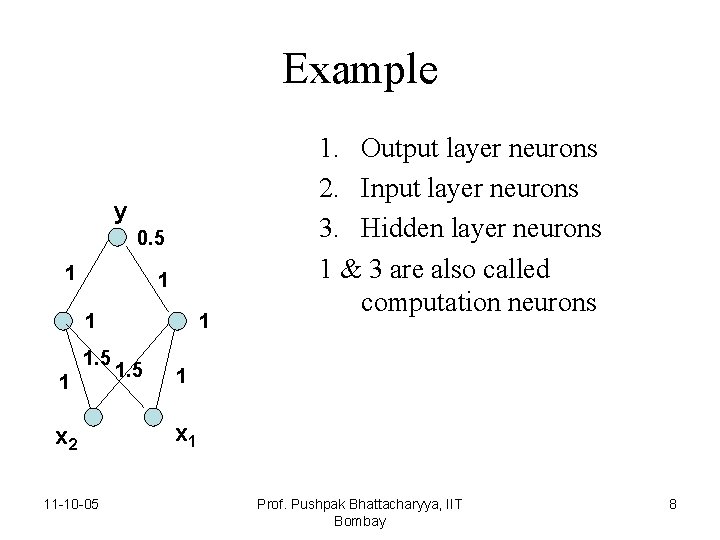

Example

[Figure: network with inputs x1, x2 and output y; numeric labels in the figure: 0.5, 1, 1.5]

1. Output layer neurons
2. Input layer neurons
3. Hidden layer neurons

1 & 3 are also called computation neurons.

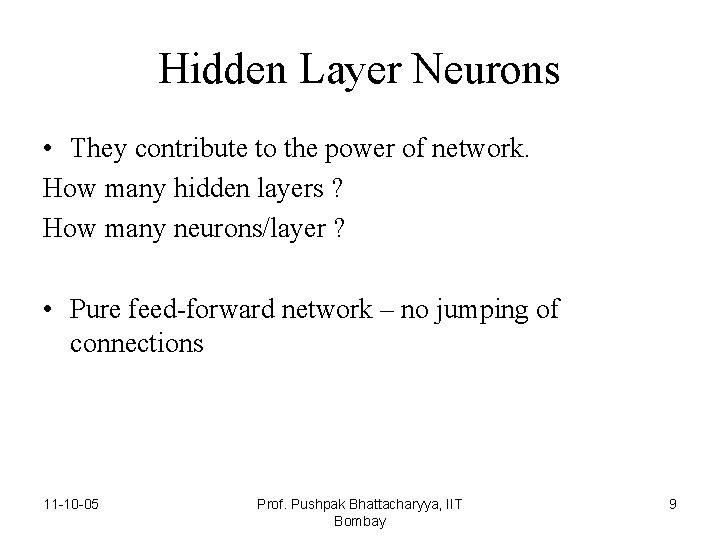

Hidden Layer Neurons

- They contribute to the power of the network. How many hidden layers? How many neurons per layer?
- Pure feed-forward network: no jumping of connections

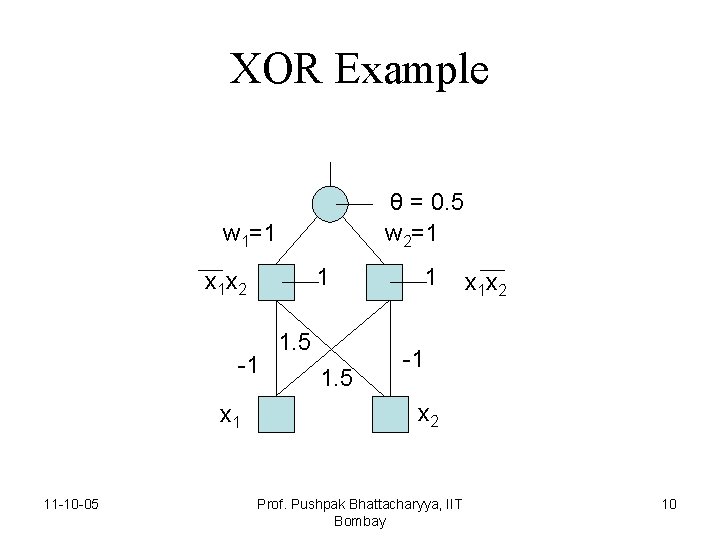

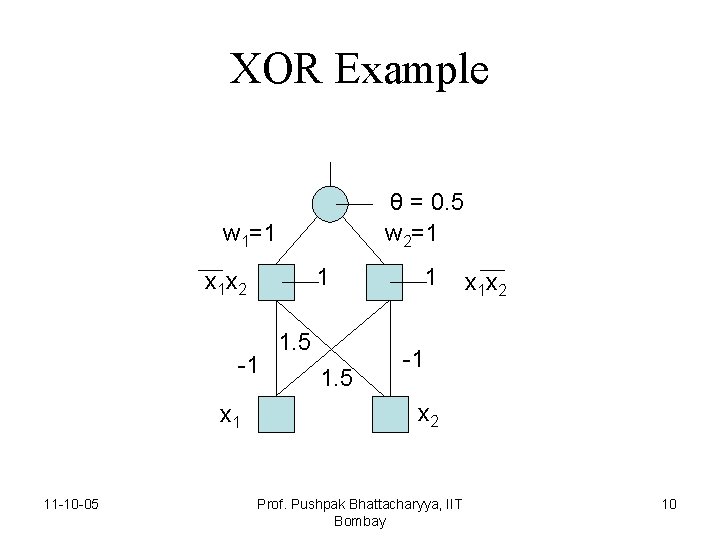

XOR Example

[Figure: network computing XOR from inputs x1 and x2 through two hidden neurons. Output neuron: θ = 0.5, w1 = 1, w2 = 1. Other edge and threshold labels in the figure: 1, -1, 1.5]
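A network of this shape can be checked with a short Python sketch. The output neuron uses the values given on the slide (w1 = w2 = 1, θ = 0.5). The hidden-layer weights and thresholds below are assumptions chosen for illustration so that each hidden neuron computes one AND term of the linearization; they are not read off the figure. The names `step` and `xor_net` are hypothetical.

```python
def step(net, theta):
    """Threshold neuron: outputs 1 when the weighted sum exceeds theta."""
    return 1 if net > theta else 0

def xor_net(x1, x2):
    # Hidden layer (assumed weights and thresholds, for illustration only).
    h1 = step(1 * x1 + (-1) * x2, 0.5)   # x1 AND (NOT x2)
    h2 = step((-1) * x1 + 1 * x2, 0.5)   # (NOT x1) AND x2
    # Output neuron as on the slide: w1 = w2 = 1, theta = 0.5, i.e. an OR.
    return step(1 * h1 + 1 * h2, 0.5)

truth_table = [(x1, x2, xor_net(x1, x2)) for x1 in (0, 1) for x2 in (0, 1)]
for row in truth_table:
    print(row)
```

Each hidden neuron is itself a perceptron over a linearly separable function, and the output neuron ORs them, so the network as a whole computes a function that no single perceptron can.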

Constraints on neurons in multi-layer perceptrons: the compute-neurons must be non-linear. Non-linearity is the source of power.

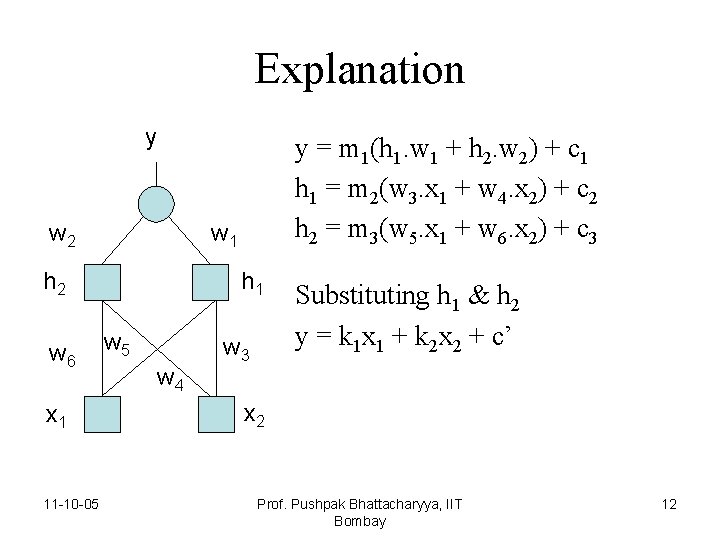

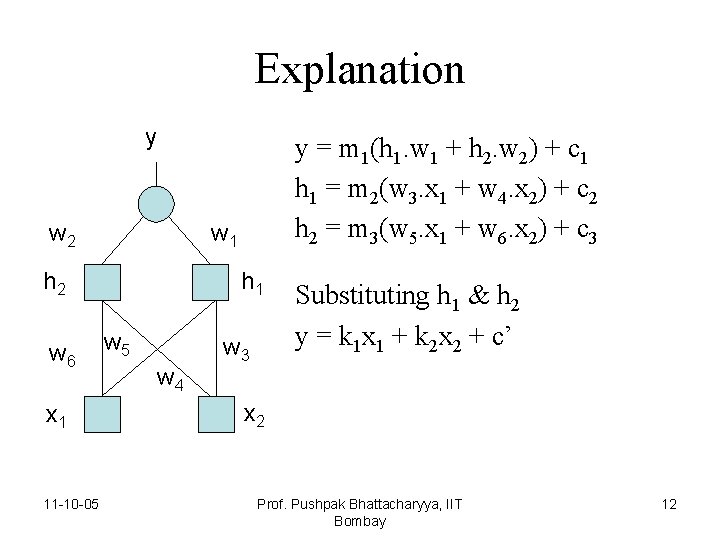

Explanation

[Figure: network with inputs $x_1$, $x_2$, hidden neurons $h_1$, $h_2$, output $y$, and weights $w_1$ to $w_6$]

$$
\begin{aligned}
y &= m_1 (h_1 w_1 + h_2 w_2) + c_1 \\
h_1 &= m_2 (w_3 x_1 + w_4 x_2) + c_2 \\
h_2 &= m_3 (w_5 x_1 + w_6 x_2) + c_3
\end{aligned}
$$

Substituting $h_1$ and $h_2$:

$$
y = k_1 x_1 + k_2 x_2 + c'
$$
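The substitution can be written out term by term. The expressions for $k_1$, $k_2$ and $c'$ below are not on the slide; they follow directly from expanding the three equations given there.

$$
\begin{aligned}
y &= m_1 \big( w_1 [m_2 (w_3 x_1 + w_4 x_2) + c_2] + w_2 [m_3 (w_5 x_1 + w_6 x_2) + c_3] \big) + c_1 \\
  &= \underbrace{m_1 (w_1 m_2 w_3 + w_2 m_3 w_5)}_{k_1}\, x_1
   + \underbrace{m_1 (w_1 m_2 w_4 + w_2 m_3 w_6)}_{k_2}\, x_2
   + \underbrace{m_1 (w_1 c_2 + w_2 c_3) + c_1}_{c'}
\end{aligned}
$$

Since $k_1$, $k_2$ and $c'$ are constants once the weights are fixed, the two-layer network is indistinguishable from a single linear neuron over $x_1$ and $x_2$.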

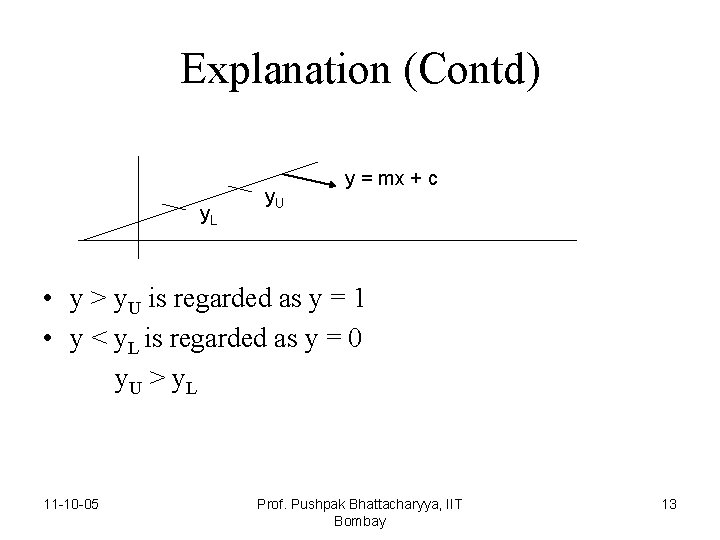

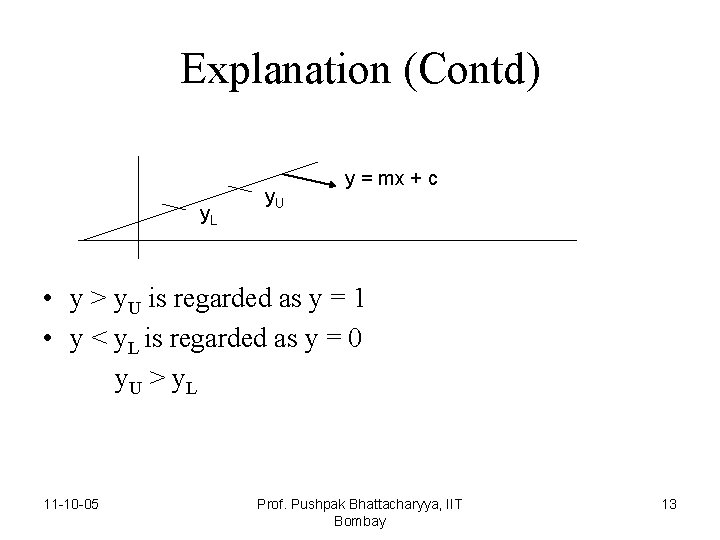

Explanation (Contd)

[Figure: linear characteristic $y = mx + c$ with the levels $y_L$ and $y_U$ marked]

- $y > y_U$ is regarded as $y = 1$
- $y < y_L$ is regarded as $y = 0$
- $y_U > y_L$

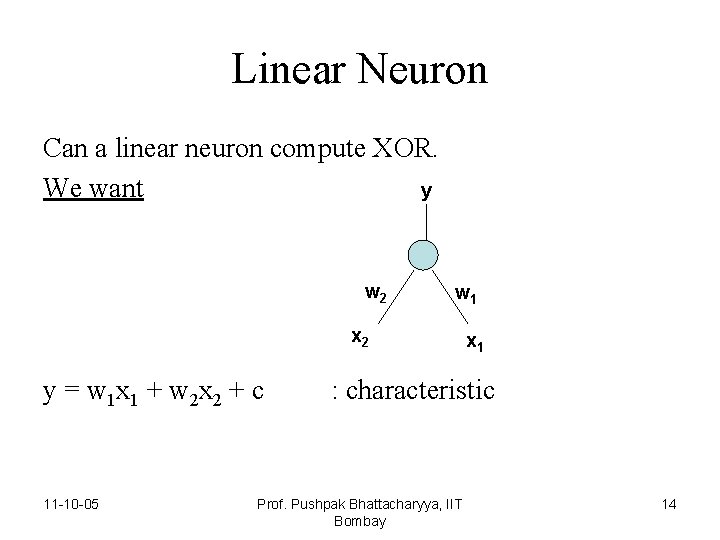

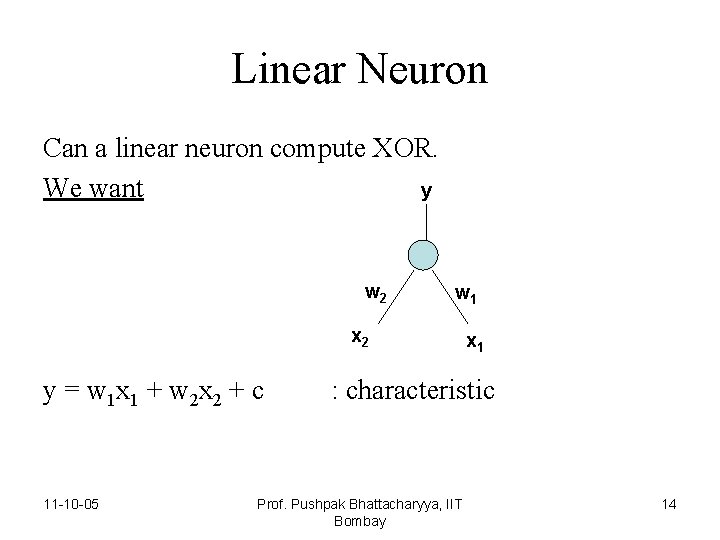

Linear Neuron

Can a linear neuron compute XOR?

[Figure: single neuron with inputs $x_1$, $x_2$, weights $w_1$, $w_2$ and output $y$]

We want a neuron with the characteristic $y = w_1 x_1 + w_2 x_2 + c$.

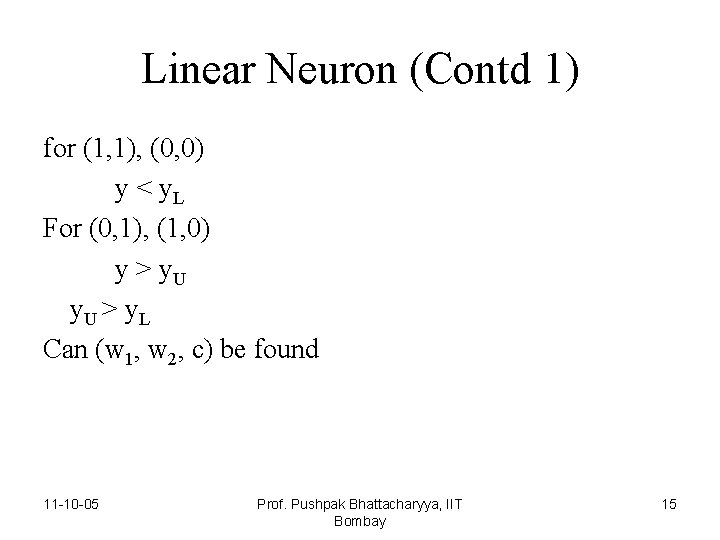

Linear Neuron (Contd 1)

- For (1, 1) and (0, 0): $y < y_L$
- For (0, 1) and (1, 0): $y > y_U$
- $y_U > y_L$

Can $(w_1, w_2, c)$ be found?

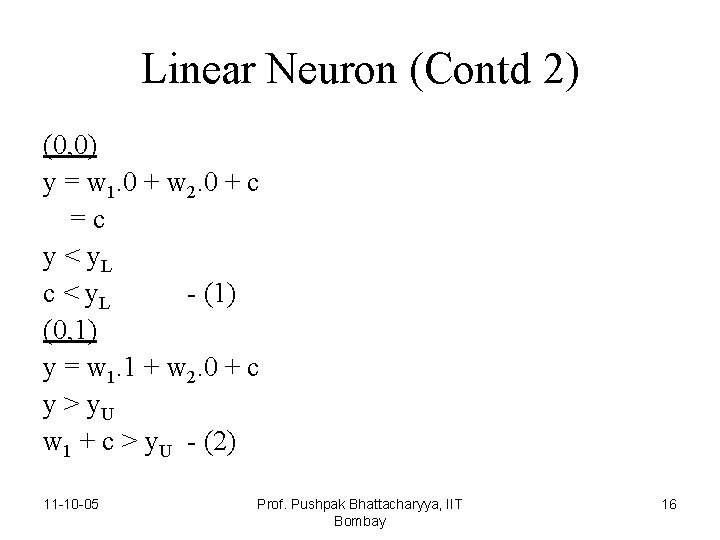

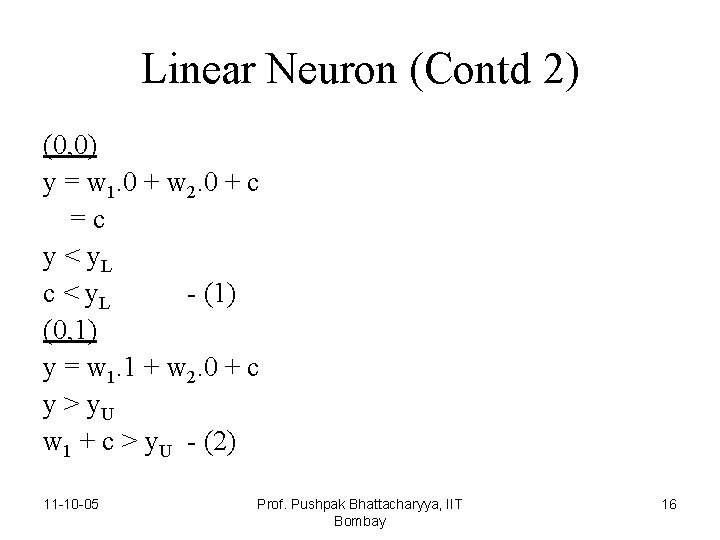

Linear Neuron (Contd 2) (0, 0) y = w 1. 0 + w 2. 0 + c =c y < y. L c < y. L - (1) (0, 1) y = w 1. 1 + w 2. 0 + c y > y. U w 1 + c > y. U - (2) 11 -10 -05 Prof. Pushpak Bhattacharyya, IIT Bombay 16

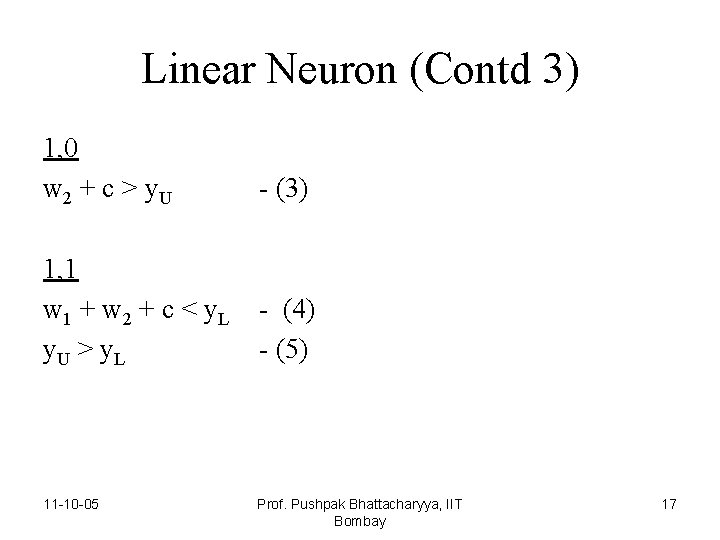

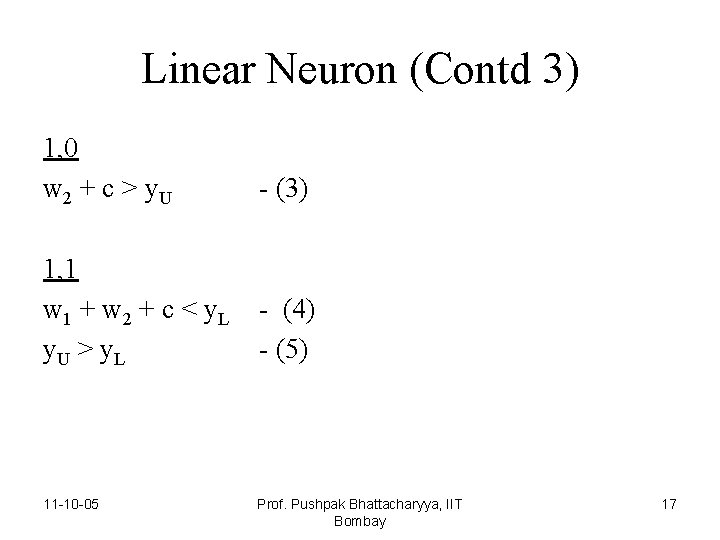

Linear Neuron (Contd 3) 1, 0 w 2 + c > y. U - (3) 1, 1 w 1 + w 2 + c < y. L y. U > y. L - (4) - (5) 11 -10 -05 Prof. Pushpak Bhattacharyya, IIT Bombay 17

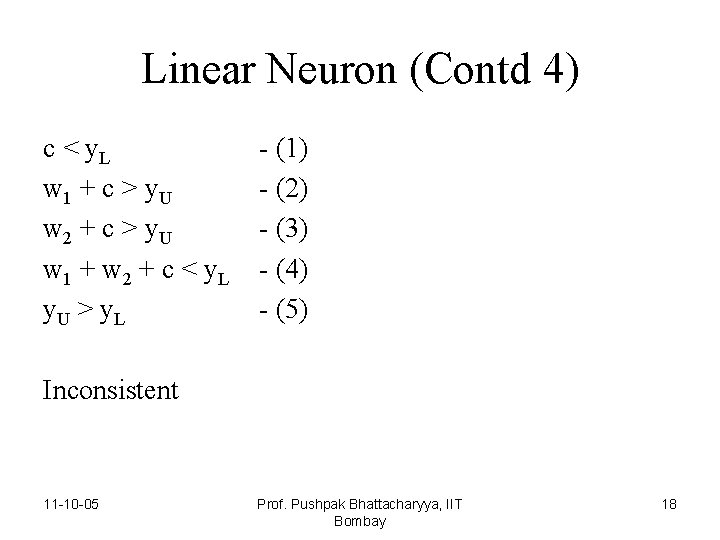

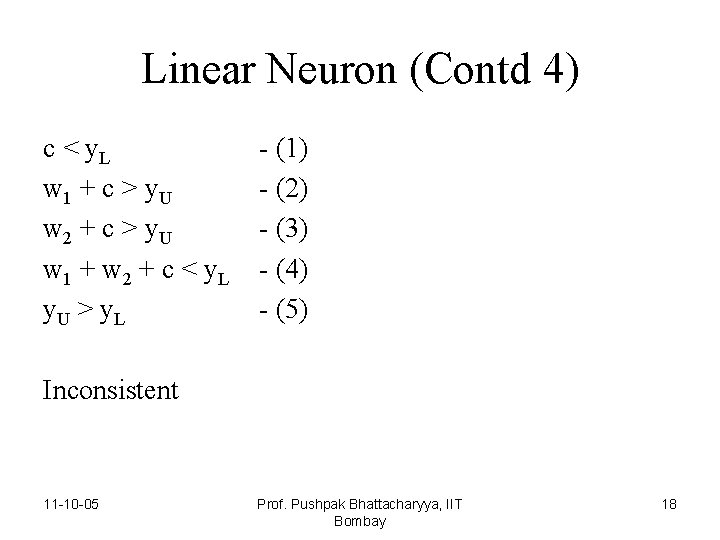

Linear Neuron (Contd 4)

$$
\begin{aligned}
c &< y_L && (1) \\
w_1 + c &> y_U && (2) \\
w_2 + c &> y_U && (3) \\
w_1 + w_2 + c &< y_L && (4) \\
y_U &> y_L && (5)
\end{aligned}
$$

Inconsistent
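One way to see the inconsistency, spelled out here as a worked step that the slide leaves implicit, is to add the constraints in pairs. Adding (2) and (3), then (1) and (4), gives two bounds on the same quantity:

$$
\begin{aligned}
(2) + (3):&\quad w_1 + w_2 + 2c > 2 y_U \\
(1) + (4):&\quad w_1 + w_2 + 2c < 2 y_L \\
\Rightarrow&\quad 2 y_U < w_1 + w_2 + 2c < 2 y_L \;\Rightarrow\; y_U < y_L
\end{aligned}
$$

This contradicts (5), so no choice of $(w_1, w_2, c)$ satisfies all five constraints.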

Observations

- A linear neuron cannot compute XOR.
- A multilayer network with linear-characteristic neurons is collapsible to a single linear neuron.
- Therefore addition of layers does not contribute to computing power.
- Neurons in a feedforward network must be non-linear.
- Threshold elements will do iff we can linearize a non-linear function.

Linearization is not in general possible:

- Need to know the function in closed form.
- Very large space even for boolean data.

Training Algorithm

- Looks at the pre-classified data
- Arrives at weight values

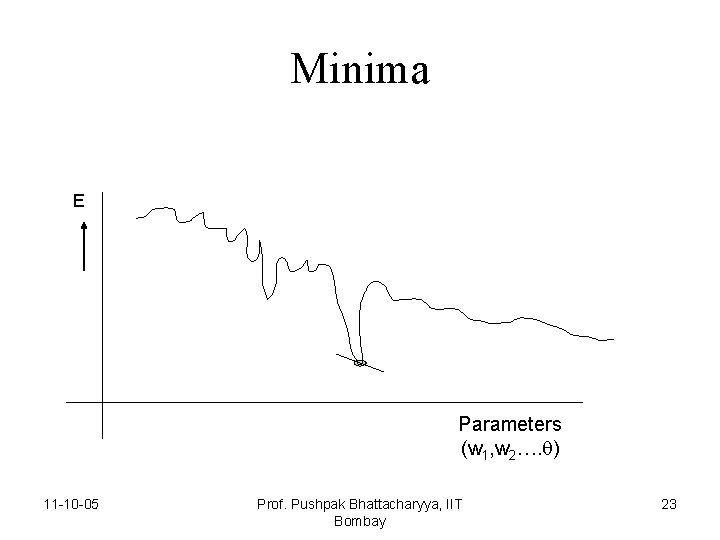

Why won’t PTA do?

- Since we do not know the desired outputs at the hidden layer neurons, PTA cannot be applied.
- So apply a training method called GRADIENT DESCENT.

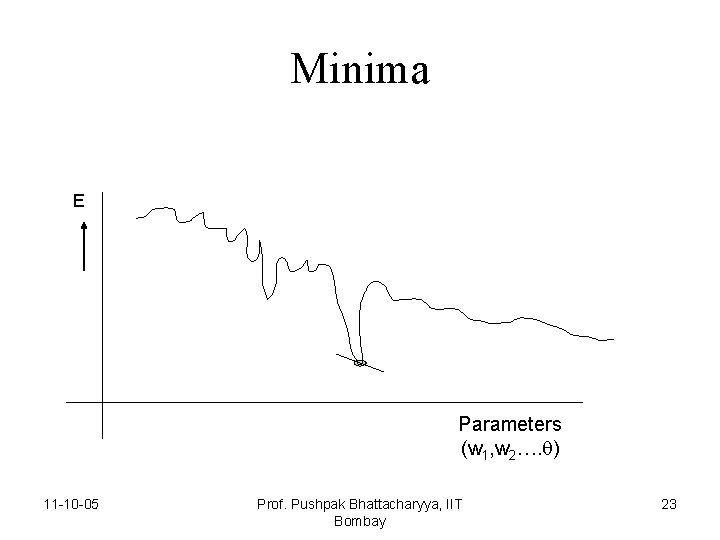

Minima

[Figure: error E plotted against the parameters (w1, w2, …), showing minima]

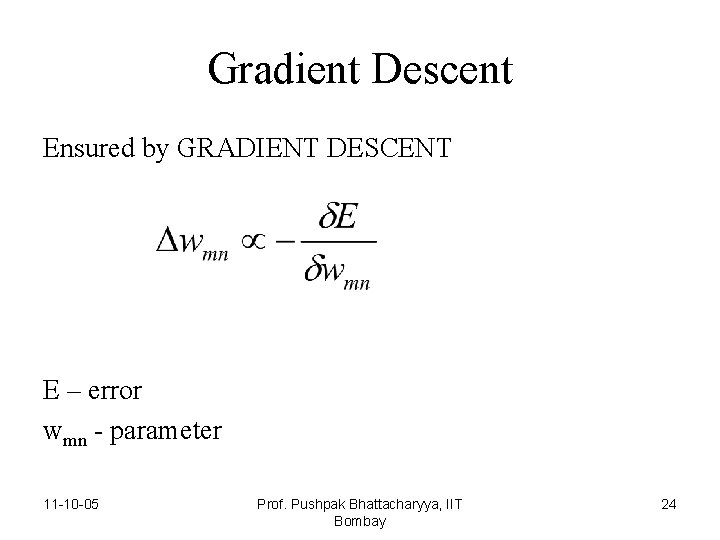

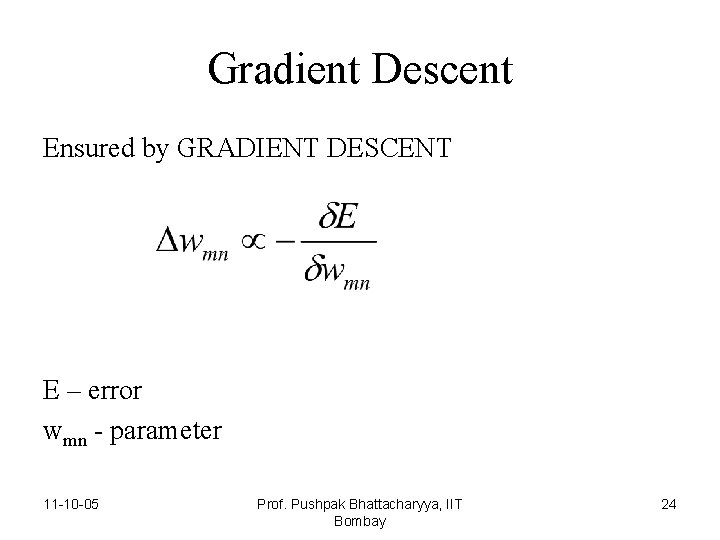

Gradient Descent

Ensured by GRADIENT DESCENT

- $E$: error
- $w_{mn}$: parameter
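A minimal sketch of the idea in Python follows. It assumes the usual update rule, in which each parameter moves against the gradient of the error by a step proportional to a learning rate `eta`. The learning rate, the function names, and the quadratic error surface are all hypothetical choices for illustration, not taken from the lecture.

```python
def gradient_descent(grad, w, eta=0.1, steps=200):
    """Repeatedly move every parameter against its error gradient."""
    for _ in range(steps):
        g = grad(w)
        w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w

# Hypothetical error surface E(w) = (w1 - 1)^2 + (w2 + 2)^2,
# whose single minimum is at (w1, w2) = (1, -2).
def error(w):
    return (w[0] - 1) ** 2 + (w[1] + 2) ** 2

def grad_error(w):
    """Partial derivatives of the error with respect to w1 and w2."""
    return [2 * (w[0] - 1), 2 * (w[1] + 2)]

w_start = [0.0, 0.0]
w_min = gradient_descent(grad_error, w_start)
print("error before:", error(w_start), "error after:", error(w_min))
```

On this bowl-shaped surface the error shrinks at every step. On an error surface with several minima, the same procedure settles in whichever minimum lies downhill from the starting weights.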

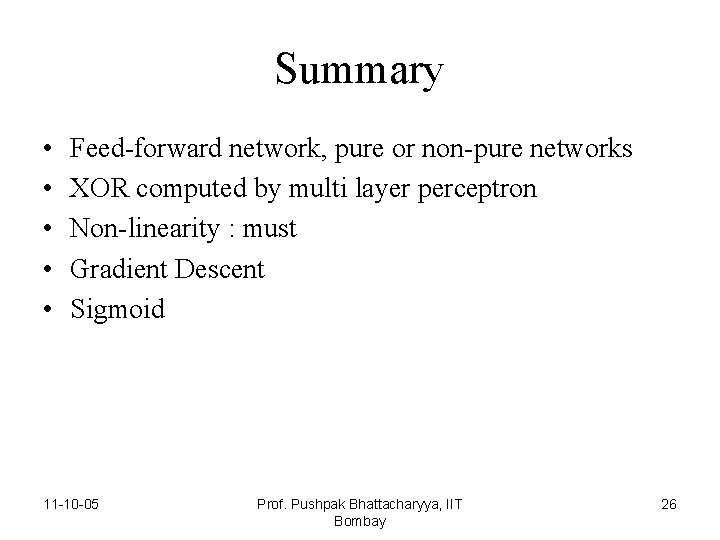

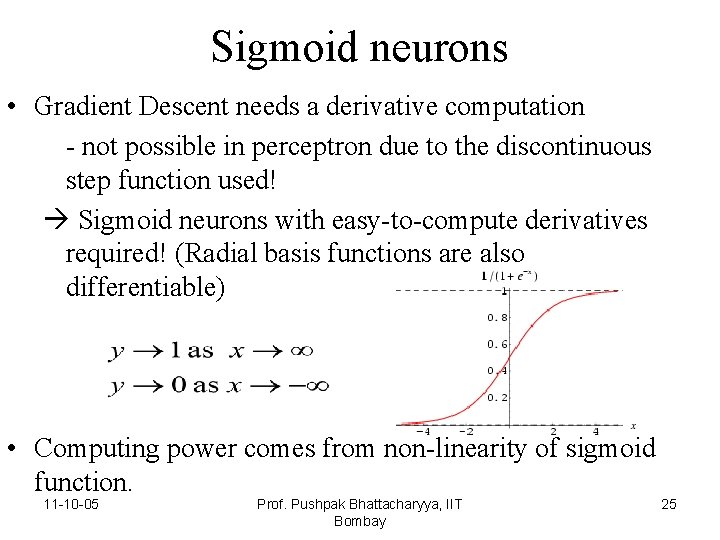

Sigmoid neurons

- Gradient descent needs a derivative computation, which is not possible in the perceptron due to the discontinuous step function used.
- Sigmoid neurons with easy-to-compute derivatives are required. (Radial basis functions are also differentiable.)
- Computing power comes from the non-linearity of the sigmoid function.
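To illustrate why the derivative is easy to compute, the sketch below assumes the logistic form of the sigmoid, $\sigma(x) = 1 / (1 + e^{-x})$, whose derivative can be written in terms of its own output as $\sigma(x)(1 - \sigma(x))$. The function names are hypothetical; the numerical derivative is included only as a cross-check.

```python
import math

def sigmoid(x):
    """Logistic sigmoid: a smooth, differentiable replacement for the step."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_prime(x):
    """Derivative expressed through the neuron's own output."""
    s = sigmoid(x)
    return s * (1.0 - s)

def numeric_derivative(f, x, h=1e-6):
    """Central-difference estimate, used only to check the closed form."""
    return (f(x + h) - f(x - h)) / (2 * h)

for x in (-2.0, 0.0, 1.5):
    print(x, sigmoid_prime(x), numeric_derivative(sigmoid, x))
```

Because the derivative reuses the value already computed in the forward pass, it costs one extra multiplication per neuron.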

Summary

- Feed-forward network: pure or non-pure networks
- XOR computed by multilayer perceptron
- Non-linearity: a must
- Gradient descent
- Sigmoid