
CS 603 Failure Recovery April 19, 2002

Failure Recovery
- Assumption: system designed for normal operation
  - Failure is an exception
- How to handle the exception?
  - Must maintain correctness
  - Can compromise performance
- Fault models provide mechanisms to describe failure and recovery
  - But how do we implement them?

Site Failure
- Problem: complete failure at a single site
  - Must have multiple sites
  - Thus a distributed problem
- Two examples
  - Distributed storage: Palladio
    - Think wide-area RAID
  - Distributed transactions: Epoch algorithm

Recovery Example: Palladio Storage System
- Work from HP Labs Storage Systems
  - Richard Golding
  - Elizabeth Borowsky (now at Boston College)
  - Some slides taken from their talks
- Goals
  - Disaster-resistant storage
    - Must store at multiple (widely distributed) sites
  - High availability
    - Can't wait for restoration after a disaster
  - High performance
    - Use the replication productively under normal operation

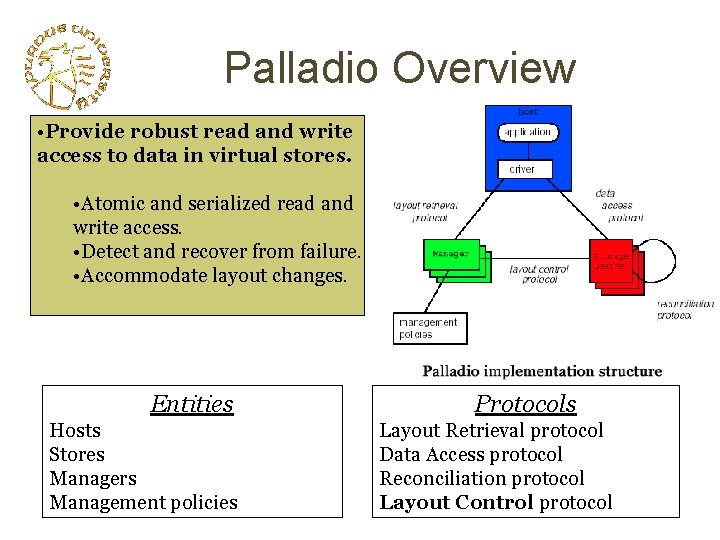

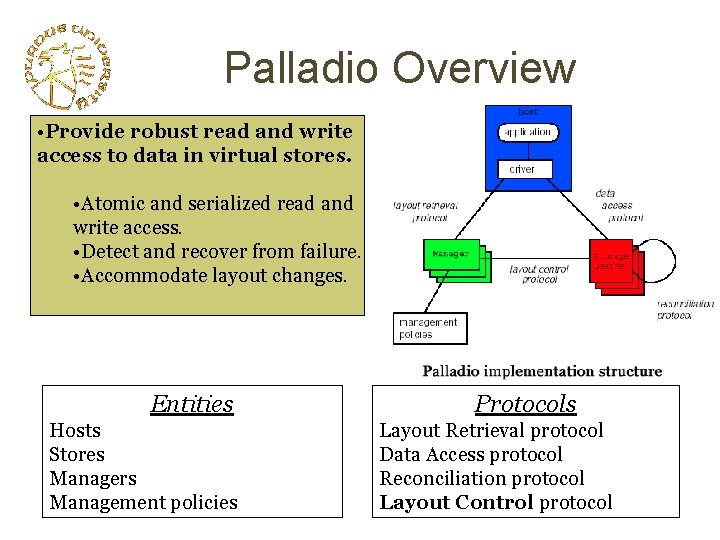

Palladio Overview
- Provide robust read and write access to data in virtual stores
- Atomic and serialized read and write access
- Detect and recover from failure
- Accommodate layout changes
- Entities: hosts, stores, managers, management policies
- Protocols: layout retrieval, data access, reconciliation, layout control

Protocols
- Access protocol: allows hosts to read and write data on a storage device as long as there are no failures or layout changes for the virtual store. It must provide serialized, atomic writes that can span multiple devices.
- Layout retrieval protocol: allows hosts to obtain the current layout of a virtual store, i.e., the mapping from the virtual store's address space onto the devices that store parts of it.
- Reconciliation protocol: runs between pairs of devices to bring them back to consistency after a failure.
- Layout control protocol: runs between managers and devices; it maintains consensus about the layout and failure status of the devices, and in doing so coordinates the other three protocols.
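To make "the mapping from the virtual store's address space onto the devices" concrete, here is a minimal sketch of what a retrieved layout might look like and how a host could use it to route an access. The representation (the hypothetical `Layout` class, fixed-size chunks, a replica list per chunk, the device names) is an assumption for illustration, not Palladio's actual layout format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    """Hypothetical layout: the virtual store is split into fixed-size chunks,
    and chunk i is replicated on the devices listed in replicas[i]."""
    epoch: int
    chunk_size: int
    replicas: tuple  # replicas[i] = tuple of device names holding chunk i

    def devices_for(self, address: int) -> tuple:
        """Return the devices that store the byte at `address`."""
        if address < 0:
            raise ValueError("negative address")
        chunk = address // self.chunk_size
        if chunk >= len(self.replicas):
            raise IndexError("address beyond end of virtual store")
        return self.replicas[chunk]

    def devices_for_range(self, start: int, length: int) -> set:
        """Return every device touched by an access of `length` bytes at `start`."""
        if length <= 0:
            return set()
        first = start // self.chunk_size
        last = (start + length - 1) // self.chunk_size
        touched = set()
        for chunk in range(first, last + 1):
            touched.update(self.devices_for(chunk * self.chunk_size))
        return touched


layout = Layout(epoch=7, chunk_size=4096,
                replicas=(("devA", "devB"), ("devB", "devC")))
print(layout.devices_for(100))                        # ('devA', 'devB')
print(sorted(layout.devices_for_range(4000, 200)))    # ['devA', 'devB', 'devC']
```

A write that crosses a chunk boundary touches the devices of both chunks, which is one reason the access protocol has to make multi-device writes atomic.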

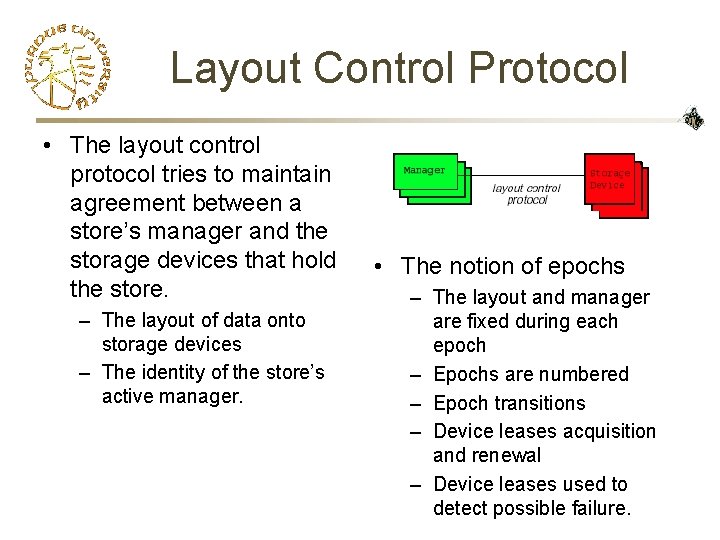

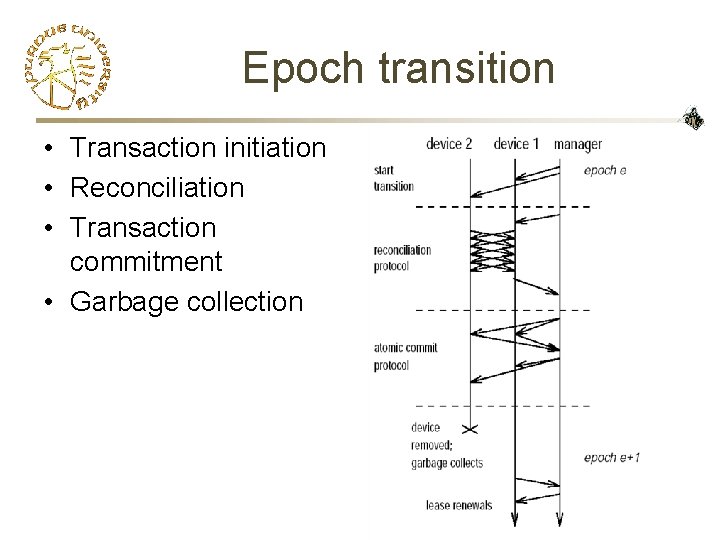

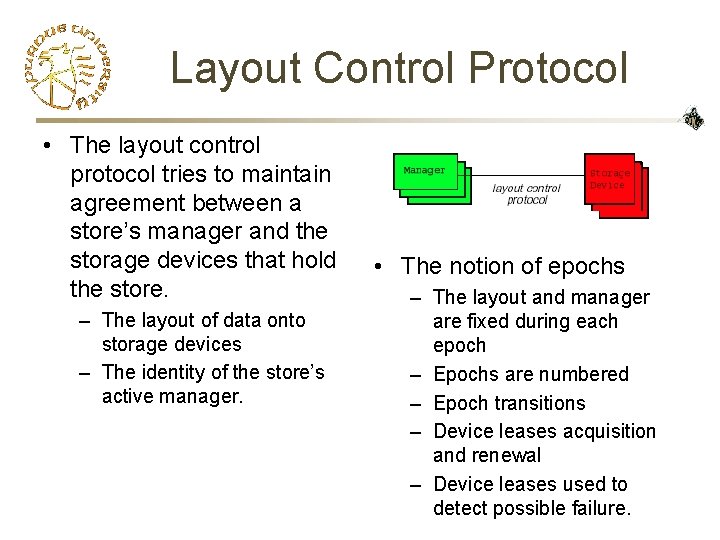

Layout Control Protocol
- The layout control protocol tries to maintain agreement between a store's manager and the storage devices that hold the store on:
  - The layout of data onto storage devices
  - The identity of the store's active manager
- The notion of epochs
  - The layout and manager are fixed during each epoch
  - Epochs are numbered
  - Epoch transitions
  - Device lease acquisition and renewal
  - Device leases are used to detect possible failures
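One way to read "the layout and manager are fixed during each epoch" is that a device tags its state with an epoch number and refuses requests issued under a different epoch. A minimal sketch under that assumption; `Device`, `StaleEpochError`, and the exact-match rule for writes are illustrative choices, not Palladio's actual interface.

```python
class StaleEpochError(Exception):
    """Raised when a request does not match the device's current epoch."""


class Device:
    """Hypothetical storage device: the layout and manager it knows about are
    tied to an epoch number and only change at an epoch transition."""

    def __init__(self, name: str):
        self.name = name
        self.epoch = 0          # no epoch installed yet
        self.layout = None
        self.manager = None
        self.blocks = {}

    def install_epoch(self, epoch: int, layout, manager: str) -> None:
        """Epoch transition: only a strictly newer epoch may replace the layout
        and manager, so both stay fixed for the duration of an epoch."""
        if epoch <= self.epoch:
            raise StaleEpochError(f"{self.name}: epoch {epoch} is not newer than {self.epoch}")
        self.epoch, self.layout, self.manager = epoch, layout, manager

    def write(self, epoch: int, block: int, data: bytes) -> None:
        """Accept a write only if the host issued it under the current epoch."""
        if epoch != self.epoch:
            raise StaleEpochError(
                f"{self.name}: write tagged epoch {epoch}, device is in epoch {self.epoch}")
        self.blocks[block] = data


dev = Device("devA")
dev.install_epoch(1, layout="L1", manager="m1")
dev.write(1, block=0, data=b"old")
dev.install_epoch(2, layout="L2", manager="m2")   # layout change => new epoch
try:
    dev.write(1, block=0, data=b"stale")           # host still using epoch 1
except StaleEpochError as err:
    print("rejected:", err)
```

Rejecting the stale write forces the host to rerun layout retrieval before retrying, so it cannot keep writing with an out-of-date layout.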

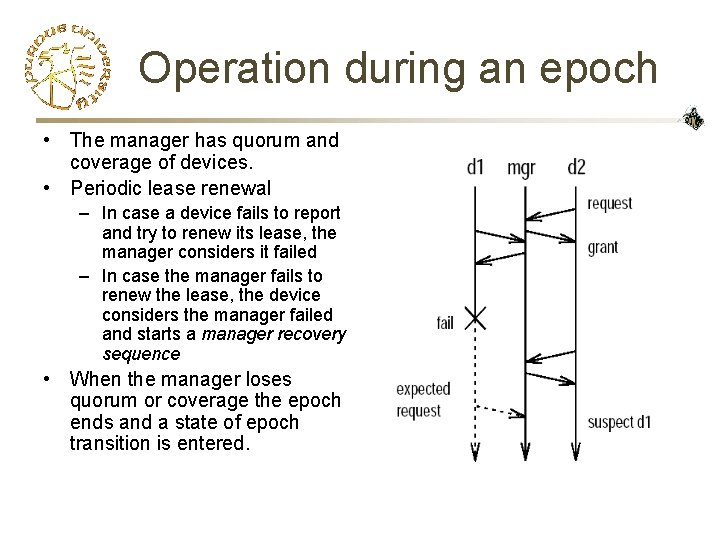

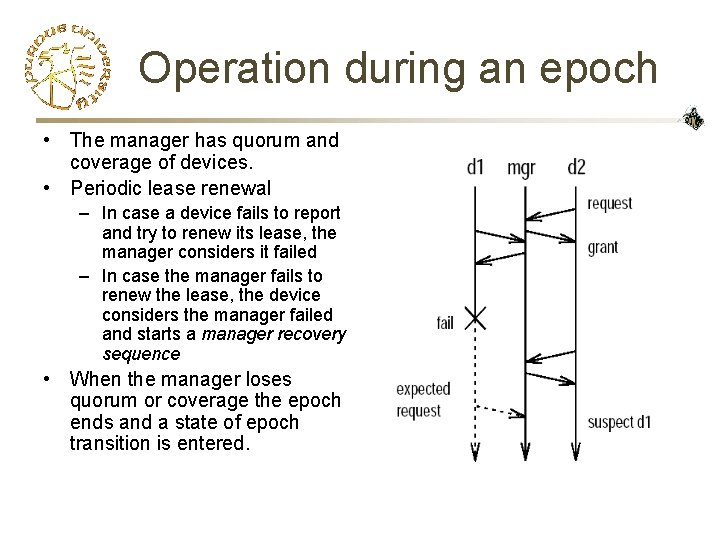

Operation during an epoch
- The manager has quorum and coverage of the devices.
- Periodic lease renewal
  - If a device fails to report and renew its lease, the manager considers it failed.
  - If the manager fails to renew the lease, the device considers the manager failed and starts a manager recovery sequence.
- When the manager loses quorum or coverage, the epoch ends and an epoch transition begins.
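The manager side of an epoch can be sketched as bookkeeping over lease renewals. Assumptions not taken from the slides: a fixed lease length, "quorum" meaning a strict majority of the store's devices, and "coverage" meaning at least one live replica of every chunk; `EpochMonitor` and its methods are hypothetical names.

```python
class EpochMonitor:
    """Hypothetical manager-side bookkeeping for one epoch."""

    def __init__(self, devices, chunk_replicas, lease_length: float):
        self.devices = set(devices)
        self.chunk_replicas = [set(r) for r in chunk_replicas]  # devices holding each chunk
        self.lease_length = lease_length
        self.last_renewal = {d: 0.0 for d in self.devices}       # all leases granted at t=0

    def renew(self, device: str, now: float) -> None:
        """Record that `device` renewed its lease at time `now`."""
        self.last_renewal[device] = now

    def live_devices(self, now: float) -> set:
        """Devices whose lease is still valid; the rest are considered failed."""
        return {d for d, t in self.last_renewal.items() if now - t <= self.lease_length}

    def has_quorum(self, now: float) -> bool:
        """Assumed rule: a strict majority of the store's devices is live."""
        return 2 * len(self.live_devices(now)) > len(self.devices)

    def has_coverage(self, now: float) -> bool:
        """Assumed rule: every chunk has at least one live replica."""
        live = self.live_devices(now)
        return all(replicas & live for replicas in self.chunk_replicas)

    def epoch_must_end(self, now: float) -> bool:
        """The epoch ends as soon as quorum or coverage is lost."""
        return not (self.has_quorum(now) and self.has_coverage(now))


monitor = EpochMonitor(["a", "b", "c"], [{"a", "b"}, {"c"}], lease_length=10.0)
monitor.renew("a", now=8.0)
monitor.renew("b", now=8.0)                 # "c" never renews after t=0
print(monitor.epoch_must_end(now=9.0))      # False: every lease is still valid
print(monitor.epoch_must_end(now=12.0))     # True: chunk 1's only replica ("c") expired
```

The example shows that quorum alone is not enough: two of three devices are still live at t=12, but the epoch must end because one chunk has lost coverage.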

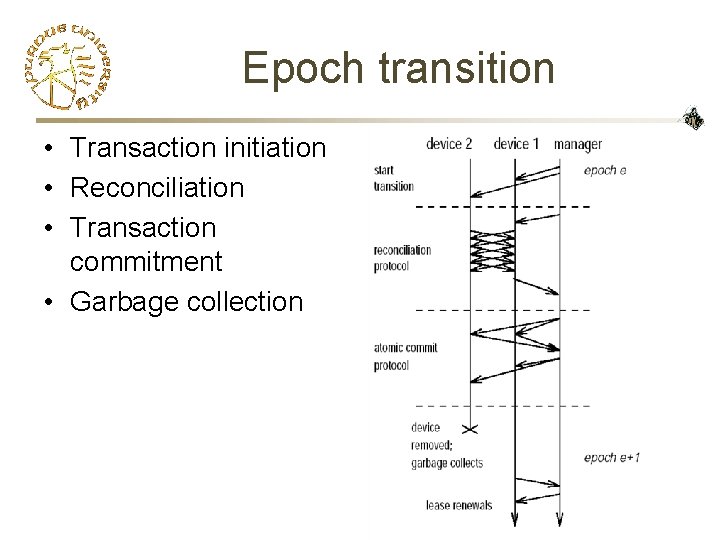

Epoch transition
- Transaction initiation
- Reconciliation
- Transaction commitment
- Garbage collection
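The four steps of an epoch transition run in a fixed order. A minimal sketch that walks them as a small state machine; the step names follow the slide, while the `Phase` enum, the `step_ok` callback, and falling back to a recovery state when a step fails are assumptions for illustration.

```python
from enum import Enum, auto


class Phase(Enum):
    INITIATION = auto()          # the transition transaction is started
    RECONCILIATION = auto()      # replicas are brought back to consistency
    COMMITMENT = auto()          # the transition transaction commits the new epoch
    GARBAGE_COLLECTION = auto()  # state left over from the old epoch is discarded
    NORMAL = auto()              # operating in the new epoch
    RECOVERY = auto()            # assumed fallback when a step fails


TRANSITION_STEPS = (Phase.INITIATION, Phase.RECONCILIATION,
                    Phase.COMMITMENT, Phase.GARBAGE_COLLECTION)


def run_epoch_transition(step_ok) -> list:
    """Run the transition steps in order and return the phases visited.

    `step_ok(phase)` is a caller-supplied (hypothetical) callback that performs
    one step and returns True on success. The walk ends in NORMAL if every step
    succeeds, or in RECOVERY right after the first failed step."""
    visited = []
    for phase in TRANSITION_STEPS:
        visited.append(phase)
        if not step_ok(phase):
            visited.append(Phase.RECOVERY)
            return visited
    visited.append(Phase.NORMAL)
    return visited


# ['INITIATION', 'RECONCILIATION', 'COMMITMENT', 'GARBAGE_COLLECTION', 'NORMAL']
print([p.name for p in run_epoch_transition(lambda p: True)])
# ['INITIATION', 'RECONCILIATION', 'RECOVERY']
print([p.name for p in run_epoch_transition(lambda p: p is not Phase.RECONCILIATION)])
```

Putting garbage collection after commitment means old-epoch state is only discarded once the new epoch is durable, so a failure earlier in the sequence still has the old state to fall back on.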

The recovery sequence
- Initiation: querying a recovery manager with the current layout and epoch number

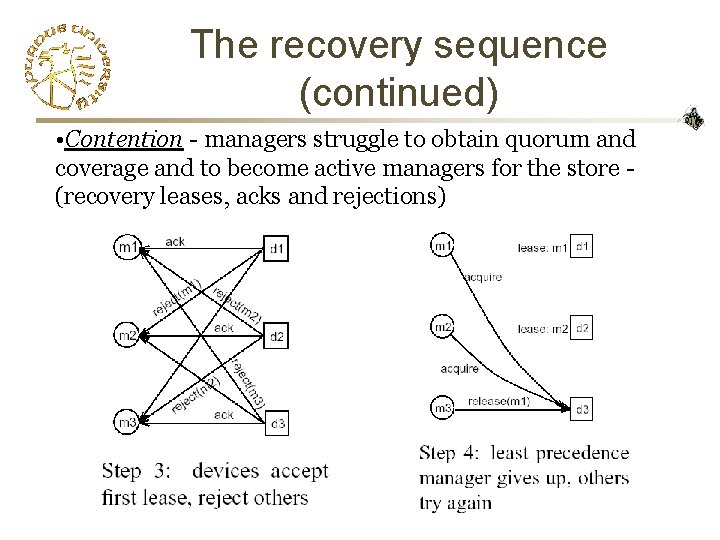

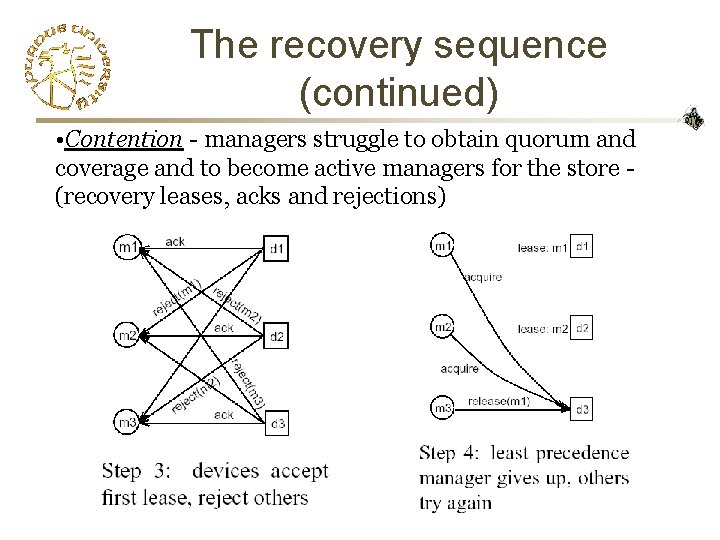

The recovery sequence (continued)
- Contention: managers compete to obtain quorum and coverage and to become the active manager for the store (recovery leases, acks, and rejections)

The recovery sequence (continued)
- Completion: setting the correct recovery leases and starting the epoch transition
- Failure: failure of devices and managers during recovery
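Contention and completion can be illustrated with recovery leases: each device grants its recovery lease to one bidder at a time and rejects lower bids, and a manager may complete only if a quorum of devices with coverage still holds its lease. The bid ordering (proposed epoch, then manager id), the majority quorum, and every name below are assumptions for illustration, not the protocol's actual rules.

```python
def quorum_and_coverage(names: set, n_devices: int, chunk_replicas) -> bool:
    """Assumed rules: strict majority of devices, and every chunk has a replica in `names`."""
    return 2 * len(names) > n_devices and all(set(r) & names for r in chunk_replicas)


class RecoveringDevice:
    """Hypothetical device-side handling of recovery-lease requests."""

    def __init__(self, name: str):
        self.name = name
        self.holder = None   # bid (proposed_epoch, manager_id) holding the recovery lease

    def request_lease(self, proposed_epoch: int, manager_id: str) -> bool:
        """Ack a bid at least as high as the current holder's, else reject."""
        bid = (proposed_epoch, manager_id)
        if self.holder is None or bid >= self.holder:
            self.holder = bid
            return True      # ack
        return False         # rejection


def contend(manager_id, proposed_epoch, devices, chunk_replicas) -> bool:
    """Contention: ask every device for its recovery lease and report whether
    the acks amount to quorum and coverage."""
    acked = {d.name for d in devices if d.request_lease(proposed_epoch, manager_id)}
    return quorum_and_coverage(acked, len(devices), chunk_replicas)


def can_complete(manager_id, proposed_epoch, devices, chunk_replicas) -> bool:
    """Completion: a later, higher bid may have taken leases away, so re-check
    that a quorum with coverage still holds this manager's lease."""
    holders = {d.name for d in devices if d.holder == (proposed_epoch, manager_id)}
    return quorum_and_coverage(holders, len(devices), chunk_replicas)


devices = [RecoveringDevice(n) for n in ("a", "b", "c")]
replicas = [{"a", "b"}, {"c"}]
print(contend("m1", 5, devices, replicas))        # True: m1 collects all three acks
print(contend("m2", 5, devices, replicas))        # True: (5, "m2") outbids (5, "m1")
print(can_complete("m1", 5, devices, replicas))   # False: m1 has lost its leases
print(can_complete("m2", 5, devices, replicas))   # True
```

The separate completion check is what keeps two managers from both proceeding: winning a contention round is only provisional until the leases are confirmed.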

Extensions
- Single manager vs. multiple managers
- Whole devices vs. device parts (chunks)
- Reintegrating devices
- Synchrony model (future)
- Failure suspectors (future)

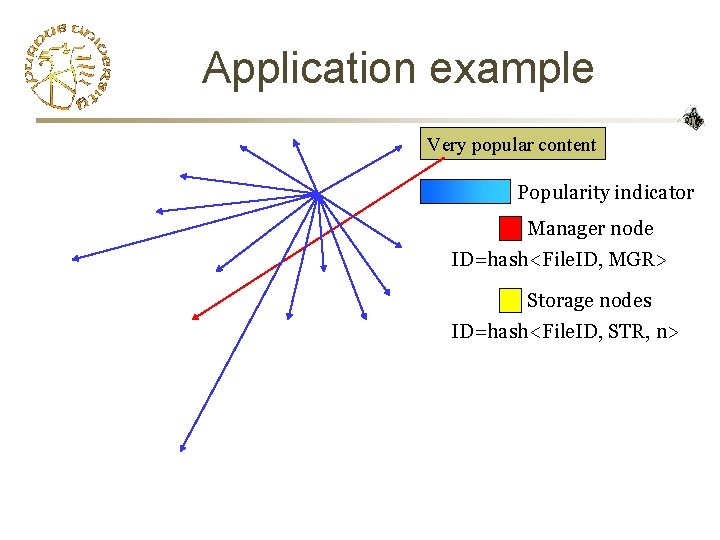

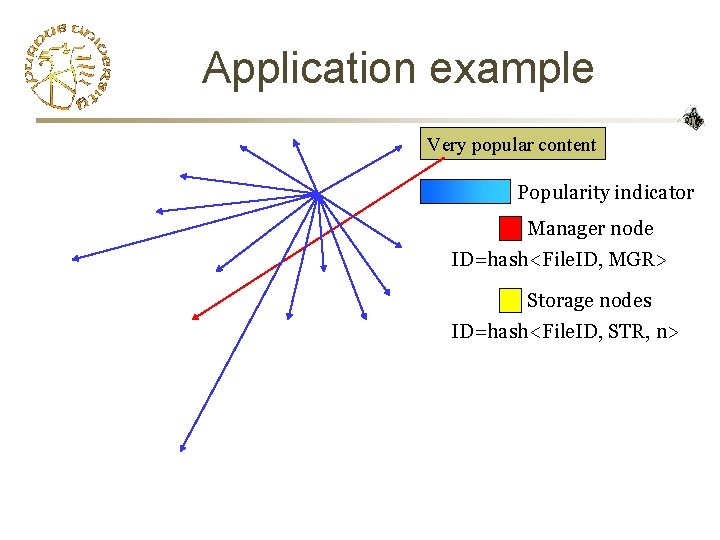

Application example
- Very popular content
- Popularity indicator
- Manager node: ID = hash<FileID, MGR>
- Storage nodes: ID = hash<FileID, STR, n>
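The node IDs on the slide, hash<FileID, MGR> and hash<FileID, STR, n>, suggest placement in a hash-based overlay, where the manager and the n-th storage node of a file are found by hashing these keys. A minimal sketch, assuming SHA-1 over a "|"-joined string, a 160-bit ID space, and a "numerically closest live node" placement rule; all three choices and the function names are assumptions, not part of the original design.

```python
import hashlib

RING = 2 ** 160  # assumed ID space (size of a SHA-1 digest)


def node_id(*parts) -> int:
    """Hash the key parts into a 160-bit integer ID (assumed encoding:
    str() of each part joined with '|', hashed with SHA-1)."""
    key = "|".join(str(p) for p in parts).encode("utf-8")
    return int.from_bytes(hashlib.sha1(key).digest(), "big")


def manager_id(file_id) -> int:
    """ID of the manager node: hash<FileID, MGR>."""
    return node_id(file_id, "MGR")


def storage_ids(file_id, replicas: int) -> list:
    """IDs of the storage nodes: hash<FileID, STR, n> for n = 0 .. replicas-1."""
    return [node_id(file_id, "STR", n) for n in range(replicas)]


def closest_node(target: int, nodes: list) -> int:
    """Assumed placement rule: the live node whose ID is closest to `target`
    on the ring, in the style of consistent hashing."""
    return min(nodes, key=lambda n: min((n - target) % RING, (target - n) % RING))


mgr = manager_id("FileID-42")
stores = storage_ids("FileID-42", replicas=3)
```

Because the IDs depend only on the file ID, any node can recompute where a file's manager and storage nodes live, which fits the "self-manageable storage" and "stable manager node" benefits listed next.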

Application example: benefits
- Stable manager node and stable storage nodes
- Self-manageable storage
- Increased availability
- Popularity is hard to fake
- Less load per node
- Could be applied recursively (?)

Conclusions & recap
- Palladio: a replication management system featuring
  - Modular protocol design
  - Active device participation
  - Distributed management function
  - Coverage and quorum condition

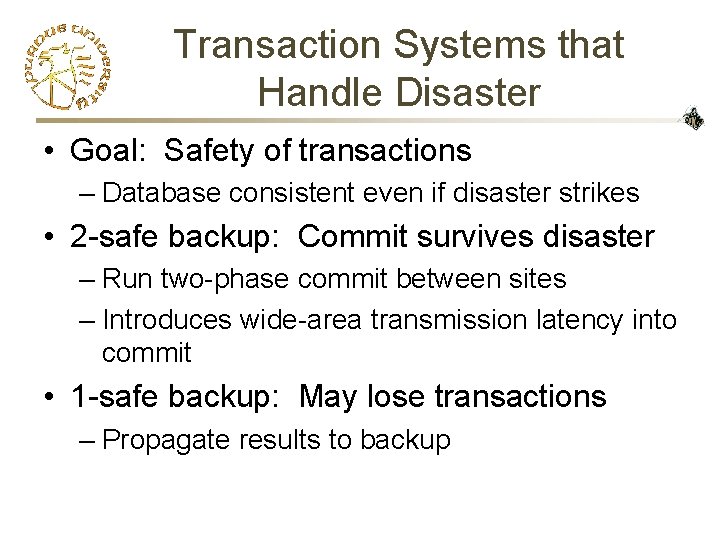

Transaction Systems that Handle Disaster
- Goal: safety of transactions
  - Database stays consistent even if disaster strikes
- 2-safe backup: commit survives disaster
  - Run two-phase commit between sites
  - Introduces wide-area transmission latency into commit
- 1-safe backup: may lose transactions
  - Propagate results to the backup after commit
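The difference between the two disciplines is whether the backup has a transaction before its commit is acknowledged. A toy sketch (the `PrimaryBackupPair` class is hypothetical; the two-phase commit, network, and disks are reduced to list appends) showing that a disaster between commit and propagation loses the transaction under 1-safe but not under 2-safe:

```python
class PrimaryBackupPair:
    """Toy model of a primary site with a remote backup."""

    def __init__(self, two_safe: bool):
        self.two_safe = two_safe
        self.primary_log = []
        self.backup_log = []
        self.pending = []        # committed at the primary, not yet shipped (1-safe)

    def commit(self, txn: str) -> None:
        """Commit `txn`; returning models acknowledging the commit to the client."""
        self.primary_log.append(txn)
        if self.two_safe:
            # 2-safe: the backup has the transaction before commit is acknowledged,
            # which puts a wide-area round trip on the commit path.
            self.backup_log.append(txn)
        else:
            # 1-safe: acknowledge now and ship the results later.
            self.pending.append(txn)

    def propagate(self) -> None:
        """Background shipping of committed transactions to the backup."""
        self.backup_log.extend(self.pending)
        self.pending.clear()

    def disaster(self) -> list:
        """The primary is destroyed: only what reached the backup survives."""
        self.primary_log, self.pending = [], []
        return list(self.backup_log)


for two_safe in (True, False):
    pair = PrimaryBackupPair(two_safe)
    pair.commit("T1")
    pair.propagate()
    pair.commit("T2")
    print(two_safe, pair.disaster())
# True ['T1', 'T2']
# False ['T1']
```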

Epoch Algorithm (Garcia-Molina, Polyzois, and Hagmann 1990)
- 1-safe backup
  - No performance penalty
- Multiple transaction streams
  - Use distribution to improve performance
- Multiple logs
  - Avoid a single bottleneck

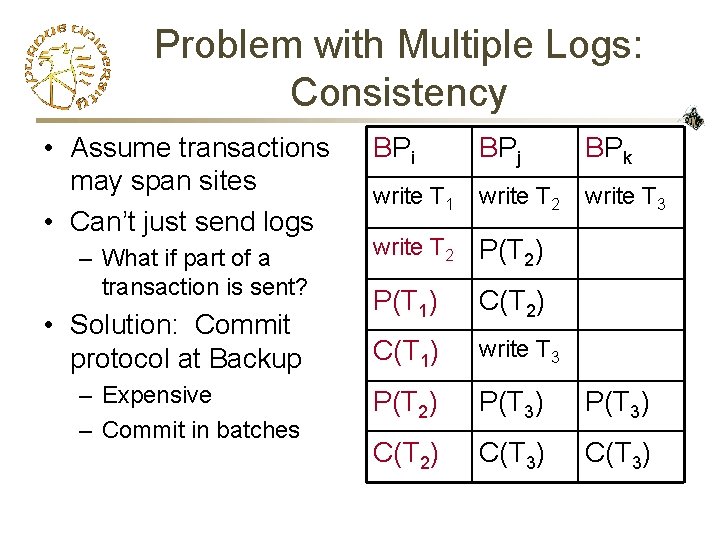

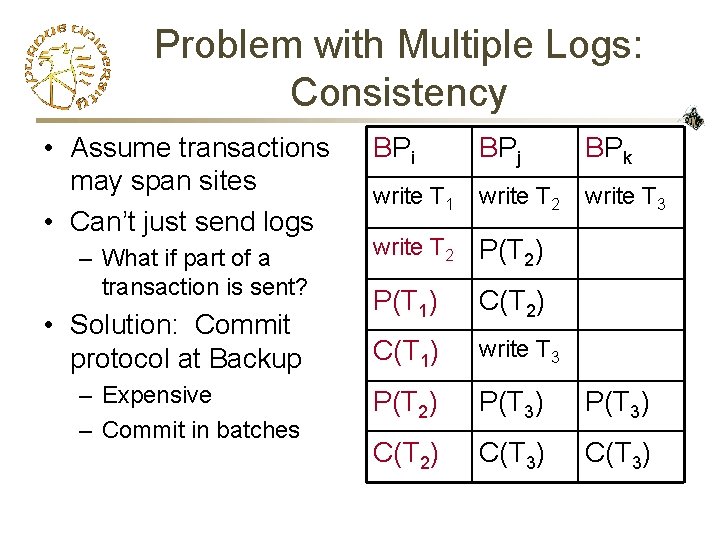

Problem with Multiple Logs: Consistency
- Assume transactions may span sites
- Can't just send logs
  - What if only part of a transaction is sent?
- Solution: commit protocol at the backup
  - Expensive
  - Commit in batches

(Figure: logs at backups BPi, BPj, and BPk. Records in extracted order, column assignment lost: write T1, write T2, write T3, write T2, P(T2), P(T1), C(T2), C(T1), write T3, P(T2), P(T3), C(T2), C(T3).)

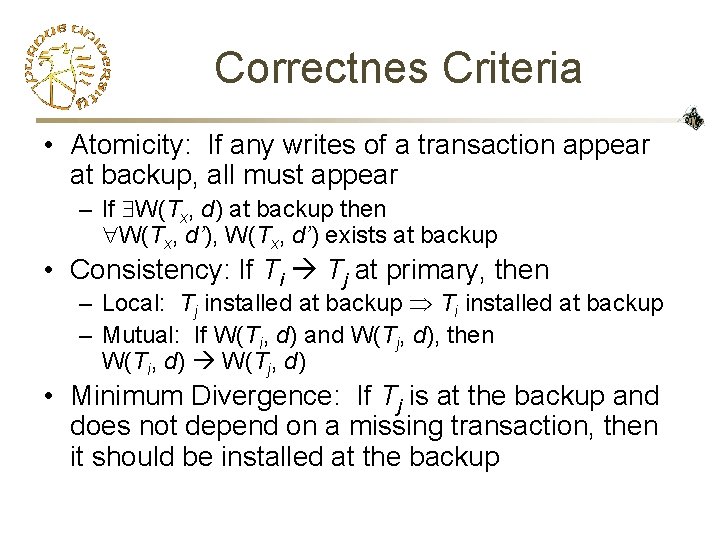

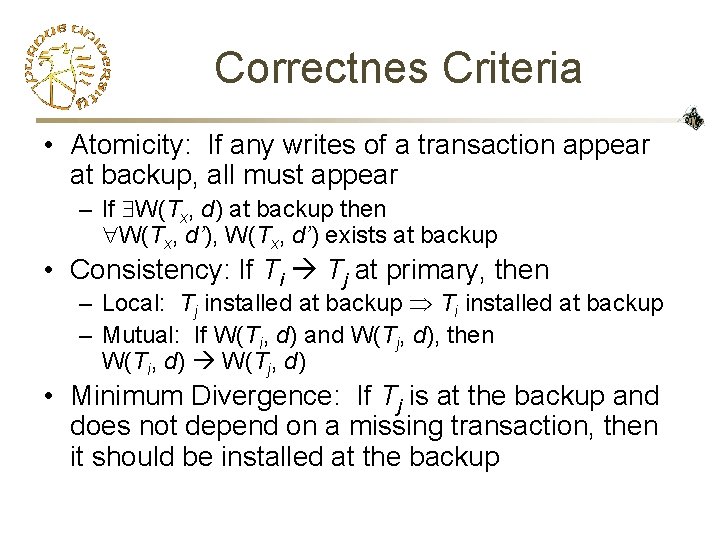

Correctness Criteria
- Atomicity: if any writes of a transaction appear at the backup, all must appear
  - If W(Tx, d) is at the backup, then every write W(Tx, d′) of Tx exists at the backup
- Consistency: if Ti → Tj at the primary (Tj depends on Ti), then
  - Local: Tj installed at backup ⇒ Ti installed at backup
  - Mutual: if W(Ti, d) and W(Tj, d), then W(Ti, d) is applied before W(Tj, d) at the backup
- Minimum divergence: if Tj is at the backup and does not depend on a missing transaction, then it should be installed at the backup
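The atomicity and consistency criteria can be checked mechanically against a backup state. A small sketch, assuming the primary's history is given as each transaction's set of written items plus dependency edges (Ti, Tj) meaning Ti → Tj; the representation and function names are illustrative, and minimum divergence is not modelled.

```python
def atomic(primary_writes: dict, backup_writes: dict) -> bool:
    """Atomicity: if any write of T is at the backup, all of T's writes are.

    primary_writes: {txn: set of data items txn wrote at the primary}
    backup_writes:  {txn: set of data items whose write by txn reached the backup}
    """
    return all(not items or items == primary_writes[t]
               for t, items in backup_writes.items())


def locally_consistent(installed: set, dependencies) -> bool:
    """Local consistency: Ti -> Tj at the primary and Tj installed implies Ti installed."""
    return all(ti in installed for ti, tj in dependencies if tj in installed)


def mutually_consistent(backup_order: list, primary_order: list) -> bool:
    """Mutual consistency for one data item d: the transactions that wrote d are
    applied at the backup in the same relative order as at the primary.
    Both arguments list transaction ids in the order their writes to d were applied."""
    rank = {t: i for i, t in enumerate(primary_order)}
    applied = [rank[t] for t in backup_order]
    return applied == sorted(applied)


pw = {"T1": {"a", "b"}, "T2": {"c"}}
print(atomic(pw, {"T1": {"a"}}))                         # False: only half of T1 arrived
print(locally_consistent({"T2"}, [("T1", "T2")]))        # False: T2 installed without T1
print(mutually_consistent(["T1", "T3"], ["T1", "T2", "T3"]))  # True
```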

Algorithm Overview
- Idea: group transactions that can be committed together into epochs
- Primaries write a marker in their logs
  - Must agree when it is safe to write the marker
  - Keep track of the current epoch number
  - Master broadcasts when to end the epoch
- Backups commit an epoch when all backups have received its marker
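At the backups, "commit an epoch when all backups have received its marker" amounts to taking the minimum, over all backups, of the highest marker each has received. A minimal sketch, assuming epochs are numbered from 1 and markers reach each backup in log order; `BackupEpochTracker` is a hypothetical name.

```python
class BackupEpochTracker:
    """Tracks which epochs every backup has received the end marker for."""

    def __init__(self, backups):
        self.highest_marker = {b: 0 for b in backups}  # 0 = no marker received yet
        self.committed_through = 0

    def marker_received(self, backup: str, epoch: int) -> list:
        """Backup `backup` received the marker ending `epoch`.
        Returns the epochs that have just become safe to commit."""
        self.highest_marker[backup] = max(self.highest_marker[backup], epoch)
        safe = min(self.highest_marker.values())
        newly = list(range(self.committed_through + 1, safe + 1))
        self.committed_through = max(self.committed_through, safe)
        return newly


tracker = BackupEpochTracker(["BP1", "BP2"])
print(tracker.marker_received("BP1", 1))  # []: BP2 has not seen marker 1
print(tracker.marker_received("BP2", 1))  # [1]
print(tracker.marker_received("BP1", 2))  # []: BP2 is still at marker 1
```

The slowest backup determines how far the committed prefix advances, which is the price of avoiding a per-transaction commit protocol at the backup.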

CS 603 Failure Recovery April 22, 2002

Single-Mark Algorithm
- Problem: is it locally safe to write the marker when the broadcast is received?
  - The site might be in the middle of a transaction
- Solution: share the epoch number at commit
  - Prepare-to-commit messages include the local epoch number
  - If the received number is greater than the local one, end the local epoch (write the marker)
- At the backup: when all sites have marker ○n, commit transaction Ti if either
  - C(Ti) precedes ○n, or
  - P(Ti) precedes ○n, the local site is not the coordinator, and the coordinator's log has C(Ti) preceding ○n
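The single-mark rule behaves much like a logical clock: sites piggyback their epoch number on commit-protocol messages (the slide names prepare-to-commit; the proofs also rely on acknowledgements and commit messages carrying it), and a site that receives a larger number writes its marker before handling the message. A minimal sketch of the primary side and of the backup's commit test; the tuple log records and every name here are assumptions. The scenario is the one from Lemma 1.

```python
class PrimarySite:
    """Hypothetical primary site running the single-mark rule."""

    def __init__(self, name: str):
        self.name = name
        self.epoch = 1      # current epoch; marker ("MARK", n) closes epoch n
        self.log = []

    def write_marker(self) -> None:
        """End the current epoch (e.g. on the master's broadcast)."""
        self.log.append(("MARK", self.epoch))
        self.epoch += 1

    def receive(self, msg_epoch: int) -> None:
        """Before handling a commit-protocol message, catch up to the sender's epoch."""
        while self.epoch < msg_epoch:
            self.write_marker()

    def record(self, kind: str, txn: str) -> int:
        """Log ("P", T) or ("C", T) and return the epoch to piggyback on the message."""
        self.log.append((kind, txn))
        return self.epoch


def precedes(log, record, n) -> bool:
    """True if `record` appears in `log` before marker n."""
    marker = ("MARK", n)
    return record in log and marker in log and log.index(record) < log.index(marker)


def backup_commits(txn, site, coordinator, logs, n) -> bool:
    """Backup rule once every log holds marker n: install txn at `site` if C(txn)
    precedes marker n there, or if P(txn) precedes it, `site` is not the
    coordinator, and the coordinator's log has C(txn) before marker n."""
    if precedes(logs[site], ("C", txn), n):
        return True
    return (site != coordinator
            and precedes(logs[site], ("P", txn), n)
            and precedes(logs[coordinator], ("C", txn), n))


# Coordinator Pc writes its marker before committing T.
pc, pi = PrimarySite("Pc"), PrimarySite("Pi")
pc.receive(pi.record("P", "T"))   # Pi prepares T and acks with epoch 1
pc.write_marker()                 # master's broadcast reaches Pc: ("MARK", 1)
pi.receive(pc.record("C", "T"))   # commit message carries epoch 2, so Pi marks first
pi.record("C", "T")
logs = {"Pc": pc.log, "Pi": pi.log}
print(backup_commits("T", "Pc", "Pc", logs, 1), backup_commits("T", "Pi", "Pc", logs, 1))
```

Both calls return False: T falls into epoch 2 at every site, so it is never half-installed in epoch 1.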

Correctness: Atomicity
- Lemma 1: If C(T) precedes ○n at Pi, then CC(T) precedes ○n at the coordinator Pc of T.
  - Proof. If Pi = Pc, this is trivial. Suppose Pi ≠ Pc, CP(T) precedes ○n at Pi, and ○n precedes CC(T) at Pc. The commit message from Pc to Pi carries Pc's epoch number, which is at least n + 1 because Pc wrote ○n before CC(T), so Pi writes ○n before processing the message. Thus ○n precedes CP(T) at Pi, a contradiction.
- Lemma 2: If CC(T) precedes ○n at the coordinator of T, then P(T) precedes ○n at every participant.
  - Proof. Suppose ○n precedes P(T) at some participant. When the coordinator received that participant's acknowledgement (carrying its epoch number), it bumped its own epoch if it had not already passed ○n, and then wrote the CC(T) entry. In either case ○n precedes CC(T), a contradiction.
- Atomicity: Suppose T's changes are installed at BPi once ○n is reached. If C(T) precedes ○n at BPi and Pc was the coordinator, then by Lemma 1 CC(T) precedes ○n at BPc. If BPi does not encounter a C(T) entry before ○n, it must have committed T because the coordinator told it to do so, which implies that CC(T) precedes ○n in the coordinator's log. Thus, in either case, CC(T) precedes ○n in the coordinator's log. By Lemma 2, P(T) precedes ○n in the logs of all participants. The participants for which CP(T) precedes ○n will commit T anyway; the rest will ask BPc and will be informed that T can commit.

Correctness: Consistency
- If Tx → Ty and Ty is installed at the backup during epoch n, then Tx is also installed (during epoch n or earlier)
- Suppose the dependency Tx → Ty is induced by conflicting accesses to a data item d at processor Pd.
  - By property 1, C(Tx, Pd) precedes P(Ty, Pd). Since Ty committed at the backup during epoch n, P(Ty, Pd) precedes ○n(Pd), which implies C(Tx, Pd) precedes ○n(Pd).
  - Thus Tx must commit during epoch n or earlier (see Lemmas 1 and 2).
- Mutual consistency: suppose Tx → Ty and both write data item d.
  - Since Tx → Ty at the primary, Tx commits in the same epoch as Ty or earlier.
  - If Tx is installed in an earlier epoch, W(Tx, d) is applied before W(Ty, d).
  - If both are installed during the same epoch, the writes are executed in the order in which they appear in the log. Since Tx → Ty at the primary, that order must be W(Tx, d) before W(Ty, d).

Double-Mark Algorithm
- The single-mark algorithm requires modifying the commit protocol
  - Hard to add to an existing (closed) system
- Solution: two marks
  - First mark, as before
    - Quiesce commits
  - When all sites acknowledge having marked their logs, send the second mark
    - After writing the second mark, resume commits
- At the backup: when all sites have marker □n, commit transaction Ti if either
  - C(Ti) precedes ○n, or
  - P(Ti) precedes □n, the local site is not the coordinator, and the coordinator's log has C(Ti) preceding ○n
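The master's side of the double-mark algorithm is two broadcast rounds separated by a quiescent period in which no site logs a commit. A minimal sketch, assuming sites are in-memory objects, messages are synchronous calls, and concurrent commit attempts are stood in for by a hook that runs between the rounds; `DoubleMarkSite`, `end_epoch`, and `while_waiting` are hypothetical names.

```python
class DoubleMarkSite:
    """Hypothetical primary site in the double-mark algorithm."""

    def __init__(self, name: str):
        self.name = name
        self.log = []
        self.commits_paused = False

    def first_mark(self, n: int) -> bool:
        """Write the first mark for epoch n, quiesce commits, and acknowledge."""
        self.log.append(("MARK1", n))
        self.commits_paused = True
        return True

    def second_mark(self, n: int) -> None:
        """Write the second mark for epoch n and resume commits."""
        self.log.append(("MARK2", n))
        self.commits_paused = False

    def commit(self, txn: str) -> None:
        """Log a commit record; refused while commits are quiesced."""
        if self.commits_paused:
            raise RuntimeError(f"{self.name}: commits are quiesced")
        self.log.append(("C", txn))


def end_epoch(sites, n: int, while_waiting=lambda: None) -> None:
    """Master: first mark everywhere, wait for every ack, then second mark.
    `while_waiting` (hypothetical hook) runs between the two rounds."""
    acks = [site.first_mark(n) for site in sites]   # every site gets the first mark
    while_waiting()
    if not all(acks):
        raise RuntimeError("not every site acknowledged the first mark")
    for site in sites:
        site.second_mark(n)


p1, p2 = DoubleMarkSite("P1"), DoubleMarkSite("P2")
p1.commit("T1")


def try_commit_t2():
    try:
        p2.commit("T2")
    except RuntimeError as err:
        print("blocked:", err)


end_epoch([p1, p2], 1, while_waiting=try_commit_t2)
p2.commit("T2")            # succeeds once the second mark is written
```

Because no commit record can fall between a site's first and second marks, the commit protocol itself needs no change, which is the point of this variant for closed systems.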

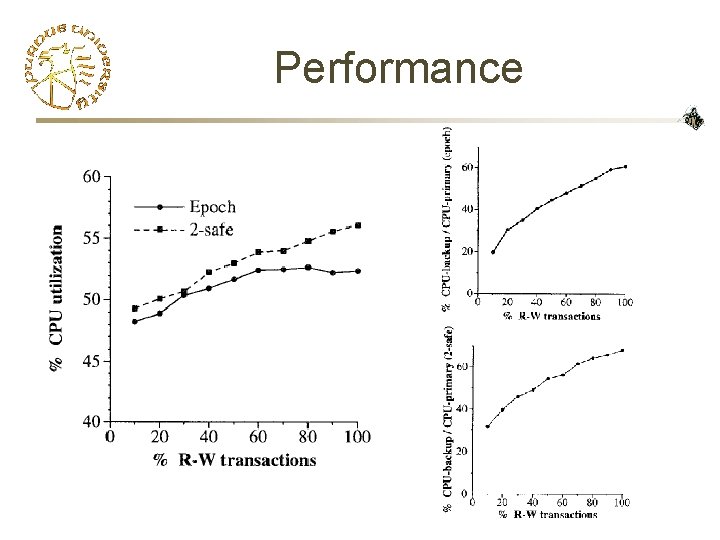

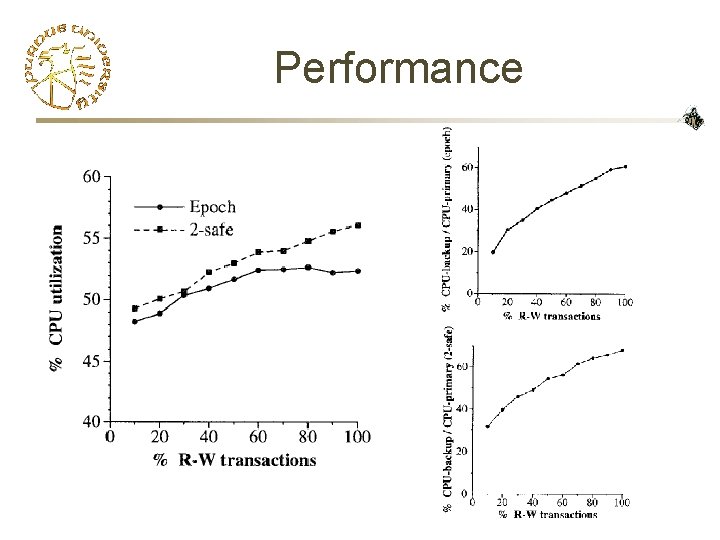

Performance

Communication