CS 533 Information Retrieval Dr Weiyi Meng Lecture


CS 533 Information Retrieval Dr. Weiyi Meng Lecture #15 March 28, 2000

Search Engines

Two general paradigms for finding information on the Web:
- Browsing: From a starting point, navigate through hyperlinks to find desired documents.
  - Yahoo’s category hierarchy facilitates browsing.

Search Engines (cont.)

- Searching: Submit a query to a search engine to find desired documents.
  - There are many well-known search engines on the Web: AltaVista, Excite, HotBot, Infoseek, Lycos, Northern Light, etc.

Browsing Versus Searching

- A category hierarchy is built mostly manually, whereas search engine databases can be created automatically.
- Search engines can index many more documents than a category hierarchy.

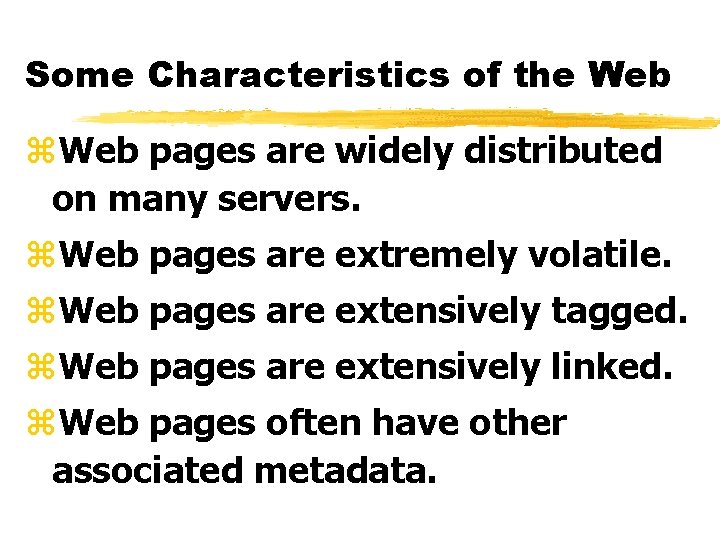

Browsing Versus Searching (cont.)

- Browsing is good for finding a few desired documents, whereas searching is better for finding many desired documents.
- Browsing is more accurate than searching (less junk is encountered).

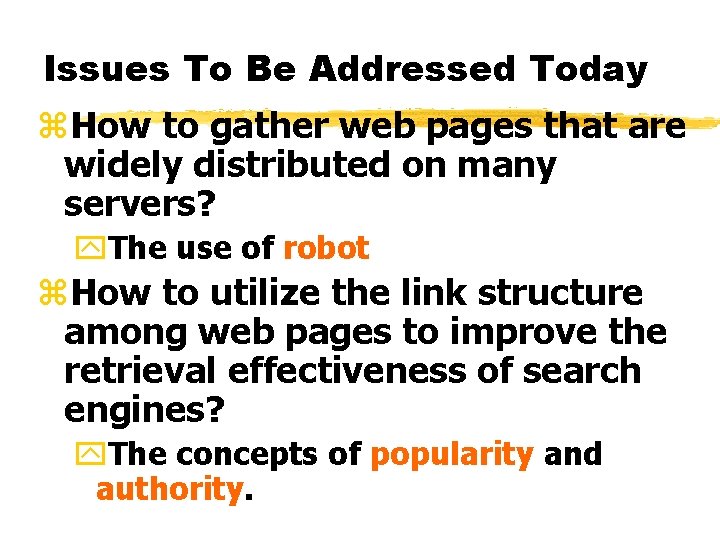

Search Engine

A search engine is essentially an information retrieval system for web pages plus a Web interface. So what’s new?

Some Characteristics of the Web

- Web pages are widely distributed on many servers.
- Web pages are extremely volatile.
- Web pages are extensively tagged.
- Web pages are extensively linked.
- Web pages often have other associated metadata.

Issues To Be Addressed Today

- How can we gather web pages that are widely distributed on many servers?
  - Use a robot.
- How can we use the link structure among web pages to improve the retrieval effectiveness of search engines?
  - The concepts of popularity and authority.

Robot (1)

A robot (also called a spider, crawler, or wanderer) is a program for fetching web pages from the Web. Main idea:
1. Place some initial URLs into a URL queue.
2. Repeat until the queue is empty:
   - Take the next URL from the queue and fetch the web page using HTTP.
   - Extract new URLs from the downloaded web page and add them to the queue.
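The two-step loop above can be sketched in Python as follows. This is a minimal sketch, not the lecture's code: `fetch` and `extract_urls` are hypothetical callables supplied by the caller (a real robot would issue an HTTP GET and parse the HTML), and the `seen` set and the `max_pages` cap are additions so that the loop terminates on a web that contains cycles.

```python
from collections import deque
from typing import Callable, Iterable, List

def crawl(seed_urls: Iterable[str],
          fetch: Callable[[str], str],
          extract_urls: Callable[[str, str], List[str]],
          max_pages: int = 1000) -> List[str]:
    """Breadth-first robot; returns URLs in the order they were fetched."""
    queue = deque(seed_urls)            # 1. place initial URLs into the URL queue
    seen = set(queue)                   # every URL ever enqueued (avoids refetching)
    fetched: List[str] = []
    while queue and len(fetched) < max_pages:  # 2. repeat until the queue is empty
        url = queue.popleft()           # take the next URL (FIFO order = breadth-first)
        page = fetch(url)               # fetch the page (an HTTP GET in a real robot)
        fetched.append(url)
        for new_url in extract_urls(page, url):  # extract new URLs from the page
            if new_url not in seen:
                seen.add(new_url)
                queue.append(new_url)   # ... and add them to the queue
    return fetched

# Hypothetical toy web: each "page" is a space-separated list of the URLs it links to.
web = {"a": "b c", "b": "c d", "c": "a", "d": ""}
print(crawl(["a"], fetch=web.__getitem__, extract_urls=lambda page, base: page.split()))
# prints ['a', 'b', 'c', 'd']
```

Using a FIFO queue is what makes the default order breadth-first; swapping it for a priority structure is the hook for the URL-ordering ideas discussed later.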

Robot (2)

Some specific issues:
1. What initial URLs should be used?
   - For general-purpose search engines, use URLs that are likely to reach a large portion of the Web, such as the Yahoo home page.
   - For local search engines covering one or several organizations, use the URLs of the home pages of these organizations. In addition, use an appropriate domain constraint.

Robot (3)

Example: To create a search engine for Binghamton University, use the initial URL www.binghamton.edu and the domain constraint “binghamton.edu”.
- Only URLs containing “binghamton.edu” will be used.

Robot (4)

To create a search engine for the Watson School, use the initial URL “watson.binghamton.edu” and the domain constraints “watson.binghamton.edu”, “cs.binghamton.edu”, “ee.binghamton.edu”, “me.binghamton.edu”, and “ssie.binghamton.edu”.
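A domain constraint such as those in the two examples above can be checked before a URL is enqueued. The sketch below is one possible reading, under an assumption: it compares the URL's host name against each constraint (exact match or subdomain), which is stricter than a plain substring test, because a substring test would also accept a host such as `notbinghamton.edu`. The function name `within_domains` and the test URLs are hypothetical.

```python
from typing import List
from urllib.parse import urlparse

def within_domains(url: str, domains: List[str]) -> bool:
    """True if the URL's host equals one of the domains or is a subdomain of it."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in domains)

# Domain constraints from the Binghamton and Watson School examples.
bu_domains = ["binghamton.edu"]
watson_domains = ["watson.binghamton.edu", "cs.binghamton.edu", "ee.binghamton.edu",
                  "me.binghamton.edu", "ssie.binghamton.edu"]

print(within_domains("http://www.binghamton.edu/", bu_domains))                # True
print(within_domains("http://www.cs.binghamton.edu/~meng/", watson_domains))   # True
print(within_domains("http://www.math.binghamton.edu/", watson_domains))       # False
```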

Robot (5)

2. How can URLs be extracted from a web page? Identify the tags and attributes that hold URLs:
   - Anchor tag: `<a href=“URL” … > … </a>`
   - Option tag: `<option value=“URL”…> …`
   - Map: `<area href=“URL” …>`
   - Frame: `<frame src=“URL” …>`
   - Link to an image: `<img src=“URL” …>`
   - Relative path vs. absolute path
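The tag/attribute list above maps directly onto Python's standard `html.parser` module. This is a minimal sketch: it collects only the five (tag, attribute) pairs listed on the slide and resolves relative paths against the page's own URL with `urllib.parse.urljoin`. It does not handle `<base href>`, JavaScript, or `<option>` values that are not URLs; those are left out as simplifications, and the sample page and its links are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple
from urllib.parse import urljoin

# (tag, attribute) pairs that hold URLs, following the slide's list.
URL_ATTRS = {("a", "href"), ("option", "value"), ("area", "href"),
             ("frame", "src"), ("img", "src")}

class UrlExtractor(HTMLParser):
    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.urls: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        # html.parser already lowercases tag and attribute names.
        for name, value in attrs:
            if (tag, name) in URL_ATTRS and value:
                # Resolve relative paths against the URL of the page being parsed.
                self.urls.append(urljoin(self.base_url, value))

def extract_urls(html: str, base_url: str) -> List[str]:
    parser = UrlExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.urls

page = '<a href="meng.html">home</a> <IMG SRC="/logo.gif"> <frame src="http://www.yahoo.com/">'
print(extract_urls(page, "http://panda.cs.binghamton.edu/~meng/"))
```

The relative `meng.html` becomes `http://panda.cs.binghamton.edu/~meng/meng.html`, and the root-relative `/logo.gif` becomes `http://panda.cs.binghamton.edu/logo.gif`.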

Robot (6)

3. How fast should we download web pages from the same server?
   - Downloading web pages from a web server consumes that server’s resources.
   - Be considerate of the web servers being crawled, e.g., fetch at most one page per minute from the same server.
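A robot can enforce such a per-server delay by remembering when it last fetched from each server. This is a minimal sketch, assuming a server is identified by the host (and port) of the URL; the class and method names are hypothetical, and the 60-second default simply encodes the slide's example of one page per minute. A crawler would call `wait_time` before each fetch, sleep or pick a URL from another server if the result is positive, and call `record_fetch` afterwards.

```python
import time
from typing import Callable, Dict
from urllib.parse import urlparse

class PolitenessPolicy:
    """Tracks the last access time per server and enforces a minimum delay between fetches."""

    def __init__(self, min_delay: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.min_delay = min_delay            # seconds; 60.0 = one page per minute per server
        self.clock = clock                    # injectable so the policy can be tested
        self.last_access: Dict[str, float] = {}

    @staticmethod
    def _server(url: str) -> str:
        return urlparse(url).netloc.lower()   # host[:port] identifies the server

    def wait_time(self, url: str) -> float:
        """Seconds to wait before `url` may be fetched (0.0 means fetch now)."""
        last = self.last_access.get(self._server(url))
        if last is None:
            return 0.0
        return max(0.0, last + self.min_delay - self.clock())

    def record_fetch(self, url: str) -> None:
        self.last_access[self._server(url)] = self.clock()

# Usage with a fake clock, so the example runs instantly.
now = [0.0]
policy = PolitenessPolicy(min_delay=60.0, clock=lambda: now[0])
policy.record_fetch("http://www.binghamton.edu/a.html")
now[0] = 10.0
print(policy.wait_time("http://www.binghamton.edu/b.html"))  # 50.0
print(policy.wait_time("http://www.yahoo.com/"))             # 0.0
```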

Robot (7)

Several research issues:
- Fetching more important pages first with limited resources.
- Fetching web pages in a specified subject area for creating domain-specific search engines.
- Efficiently re-fetching web pages to keep the web page index up to date.

![Robot 8 Efficient Crawling through URL Ordering Cho 98 z Default ordering is breadthfirst Robot (8) Efficient Crawling through URL Ordering [Cho 98] z Default ordering is breadth-first](https://slidetodoc.com/presentation_image_h/093685740c28dbf8a44bccb1c598f5f2/image-16.jpg)

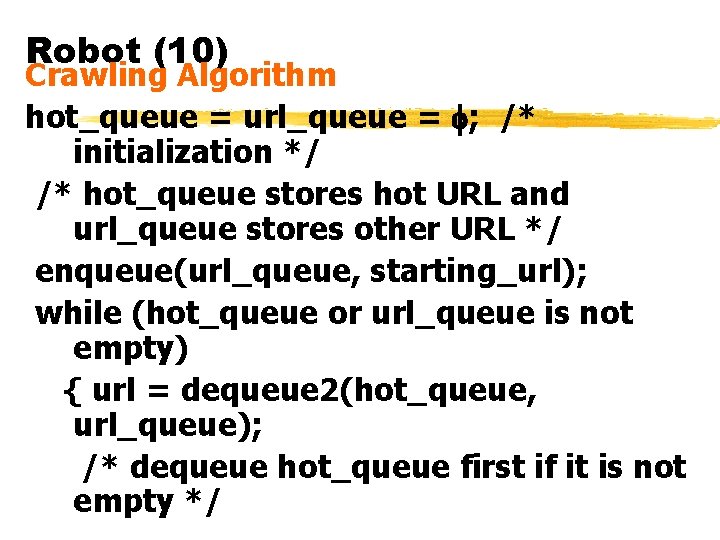

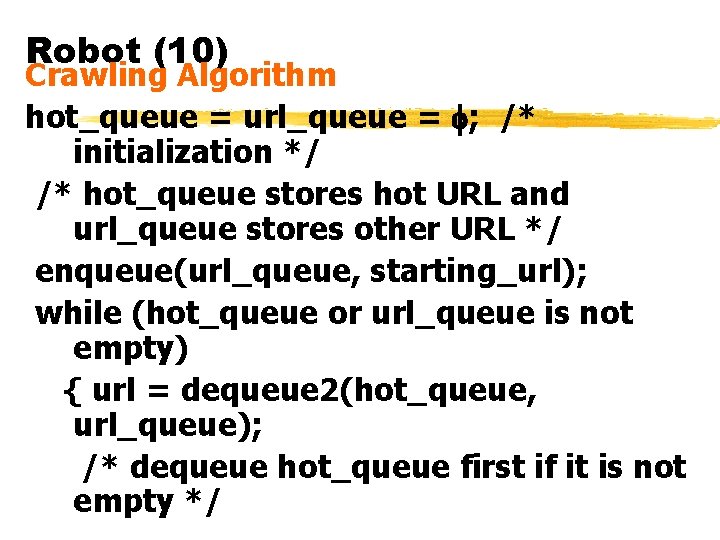

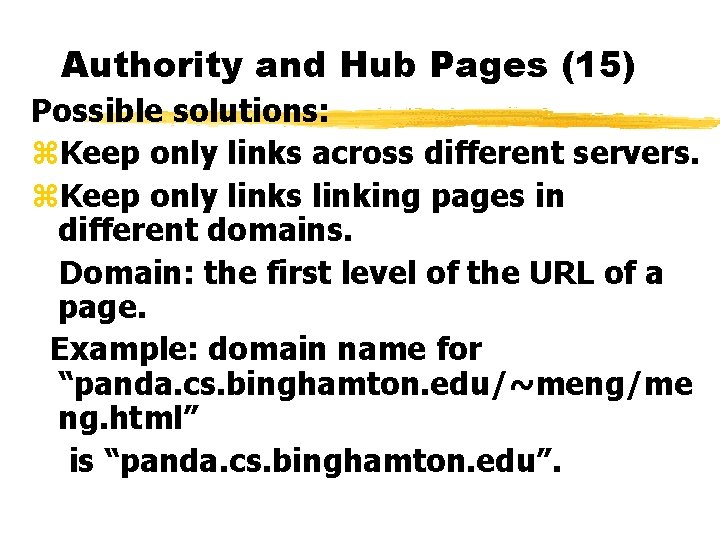

Robot (8) Efficient Crawling through URL Ordering [Cho 98]

- The default ordering is breadth-first search.
- Efficient crawling fetches important pages first.

Example importance definitions:
- Similarity of a page to a driving query
- Backlink count of a page (popularity metric)

Robot (9)

- Suppose the driving query is “computer”.
- A page is hot if “computer” appears in the title or appears at least 10 times in the body of the page.
- Some heuristics for finding a hot page before it is fetched:
  - The anchor text of its URL contains “computer”.
  - Its URL is within 3 links of a hot page.
- A URL satisfying either heuristic is called a hot URL.
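The hot-page test and the two hot-URL heuristics above can be written as simple predicates. This is a sketch under assumptions: words are matched case-insensitively as whole words (so “computers” does not count), and the caller is assumed to track `links_from_hot_page`, the number of links between the nearest known hot page and the URL. The function names and that parameter are hypothetical.

```python
import re
from typing import Optional

DRIVING_QUERY = "computer"  # the driving query from the example

def count_word(text: str, word: str) -> int:
    # Assumption: case-insensitive, whole-word matching (the slide does not specify).
    return len(re.findall(r"\b" + re.escape(word) + r"\b", text, flags=re.IGNORECASE))

def is_hot_page(title: str, body: str, word: str = DRIVING_QUERY, min_count: int = 10) -> bool:
    """Hot page: the word appears in the title, or at least min_count times in the body."""
    return count_word(title, word) > 0 or count_word(body, word) >= min_count

def is_hot_url(anchor_text: str, links_from_hot_page: Optional[int],
               word: str = DRIVING_QUERY, max_distance: int = 3) -> bool:
    """Hot URL: the anchor text contains the word, or the URL is within max_distance
    links of a hot page (links_from_hot_page=None means no hot page is known)."""
    if count_word(anchor_text, word) > 0:
        return True
    return links_from_hot_page is not None and links_from_hot_page <= max_distance

print(is_hot_page("Computer Science Department", ""))  # True (word in title)
print(is_hot_url("campus map", 2))                     # True (2 links from a hot page)
```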

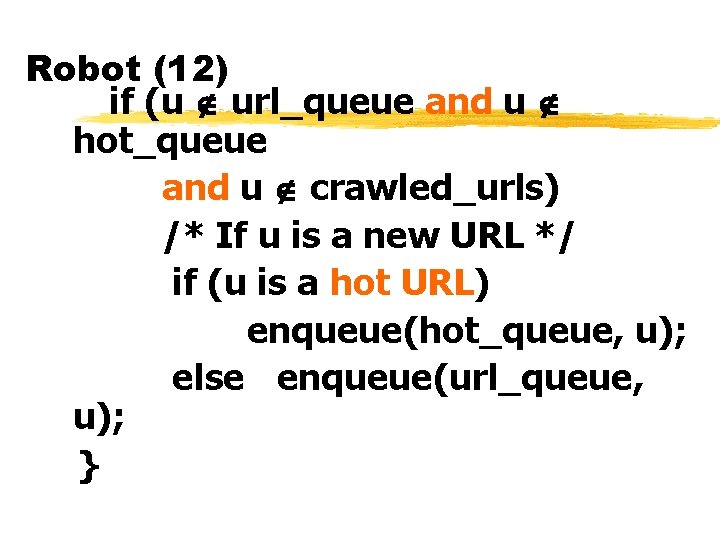

Robot (10) Crawling Algorithm

hot_queue = url_queue = ∅; /* initialization */
/* hot_queue stores hot URLs and url_queue stores other URLs */
enqueue(url_queue, starting_url);
while (hot_queue or url_queue is not empty) {
url = dequeue2(hot_queue, url_queue); /* dequeue hot_queue first if it is not empty */

![Robot 11 page fetchurl if page is hot then hoturl true enqueuecrawledurls Robot (11) page = fetch(url); if (page is hot) then hot[url] = true; enqueue(crawled_urls,](https://slidetodoc.com/presentation_image_h/093685740c28dbf8a44bccb1c598f5f2/image-19.jpg)

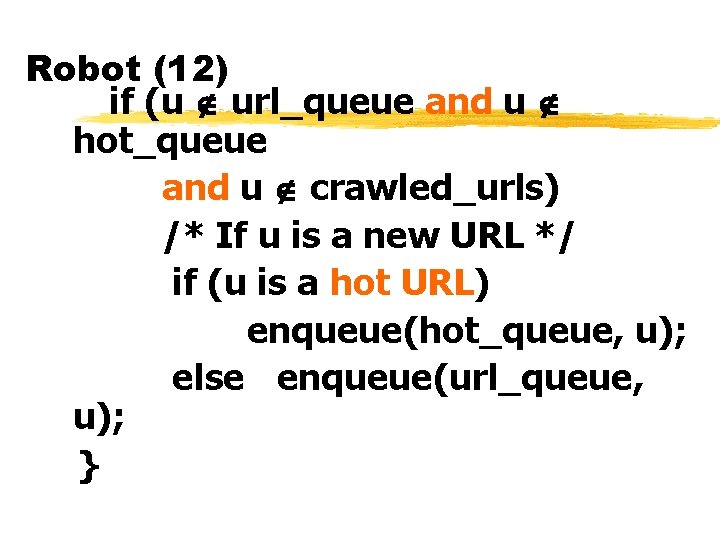

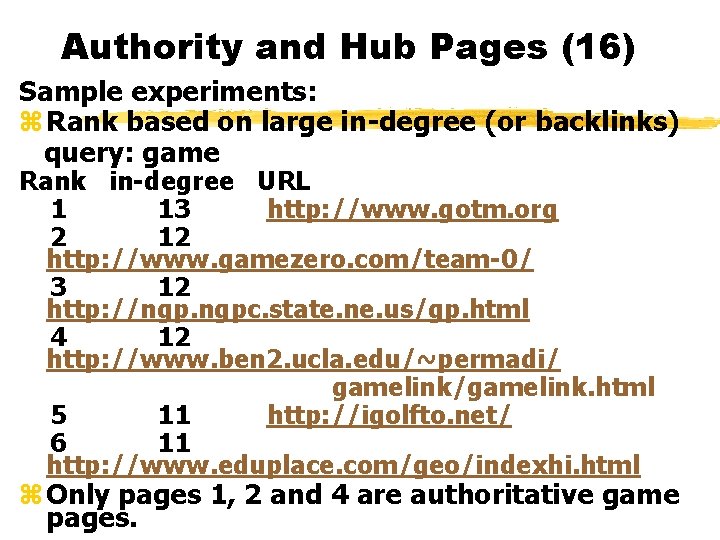

Robot (11)

page = fetch(url);
if (page is hot) then hot[url] = true;
enqueue(crawled_urls, url);
url_list = extract_urls(page);
for each u in url_list

Robot (12)

if (u ∉ url_queue and u ∉ hot_queue and u ∉ crawled_urls) /* if u is a new URL */
if (u is a hot URL) enqueue(hot_queue, u);
else enqueue(url_queue, u);
}
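The two-queue crawling algorithm on the three slides above translates into the Python sketch below. Assumptions: `fetch`, `extract_urls`, `is_hot_page`, and `is_hot_url` are hypothetical callables passed in by the caller, and the toy example uses a simplified hot-URL rule (a URL is hot if the page linking to it is hot) rather than the anchor-text and 3-link heuristics. The three membership tests are folded into one set, `known`, which holds exactly the URLs that are in `url_queue`, `hot_queue`, or `crawled_urls`.

```python
from collections import deque

def ordered_crawl(starting_url, fetch, extract_urls, is_hot_page, is_hot_url):
    """Two-queue crawl: hot URLs are always dequeued before other URLs."""
    hot_queue, url_queue = deque(), deque()   # initialization: both queues empty
    crawled_urls = []
    hot = {}                                  # hot[url] = True once the fetched page is hot
    url_queue.append(starting_url)
    known = {starting_url}                    # union of url_queue, hot_queue, crawled_urls
    while hot_queue or url_queue:
        # dequeue2: take from hot_queue first if it is not empty
        url = hot_queue.popleft() if hot_queue else url_queue.popleft()
        page = fetch(url)
        if is_hot_page(page):
            hot[url] = True
        crawled_urls.append(url)
        for u in extract_urls(page):
            if u not in known:                # u is a new URL
                known.add(u)
                if is_hot_url(u, url, hot):
                    hot_queue.append(u)
                else:
                    url_queue.append(u)
    return crawled_urls, hot

# Hypothetical toy web: url -> (page text, out-links).
web = {
    "s": ("intro", ["x", "h"]),
    "x": ("misc", ["y"]),
    "h": ("computer", ["c1"]),
    "c1": ("computer", []),
    "y": ("misc", []),
}
crawled, hot = ordered_crawl(
    "s",
    fetch=web.__getitem__,
    extract_urls=lambda page: page[1],
    is_hot_page=lambda page: "computer" in page[0],
    # Simplified hot-URL rule (an assumption): a URL is hot if the page linking to it is hot.
    is_hot_url=lambda u, parent, hot: hot.get(parent, False),
)
print(crawled)  # ['s', 'x', 'h', 'c1', 'y']
```

In plain breadth-first order, `y` would be fetched before `c1`; the hot queue lets `c1` jump ahead because its parent `h` turned out to be hot.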

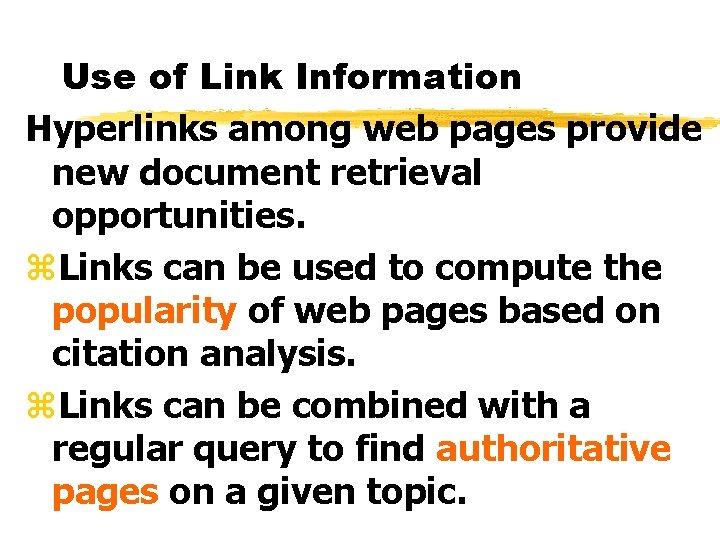

Use of Link Information

Hyperlinks among web pages provide new document retrieval opportunities:
- Links can be used to compute the popularity of web pages based on citation analysis.
- Links can be combined with a regular query to find authoritative pages on a given topic.

Link-Based Popularity (1)

PageRank citation ranking (Page 98):
- Each backlink of a page represents a citation to the page.
- PageRank is a measure of global web page popularity based on the backlinks of web pages.

Link-Based Popularity (2)

Basic ideas:
- If a page is linked to by many pages, then the page is likely to be important.
- If a page is linked to by important pages, then the page is likely to be important even if only a few pages link to it.

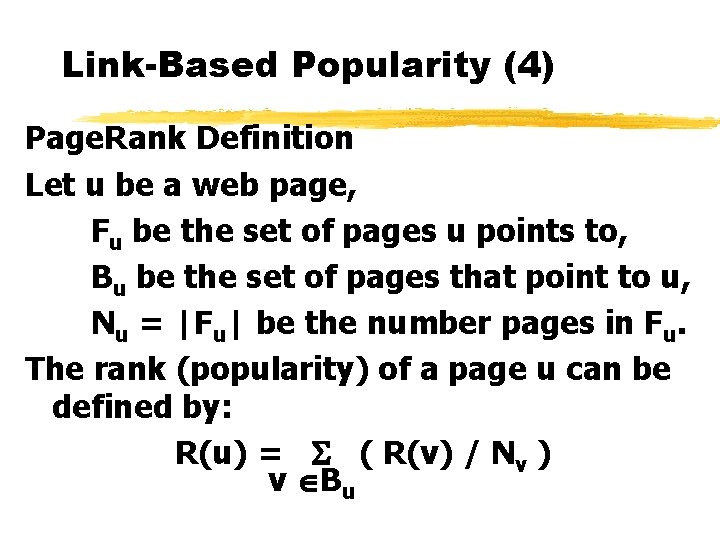

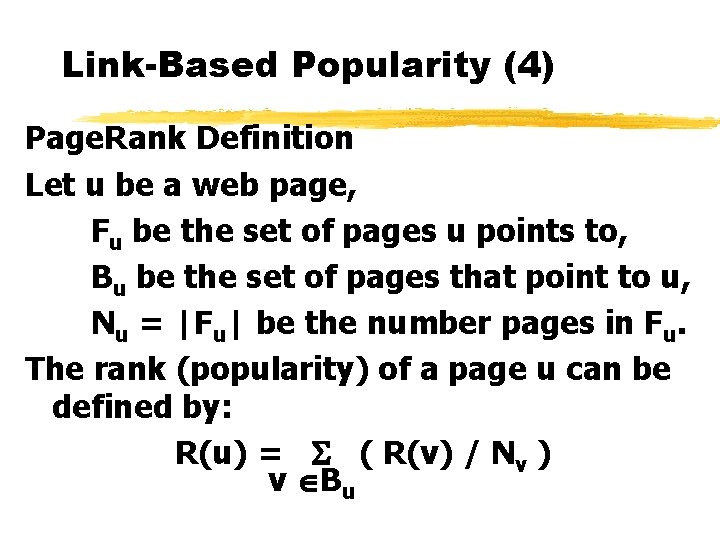

Link-Based Popularity (3)

Popularity propagation:
- The importance of a page is divided evenly and propagated to the pages it points to.

(Figure: a page with importance 10 and two out-links propagates 5 to each of the two pages it points to.)

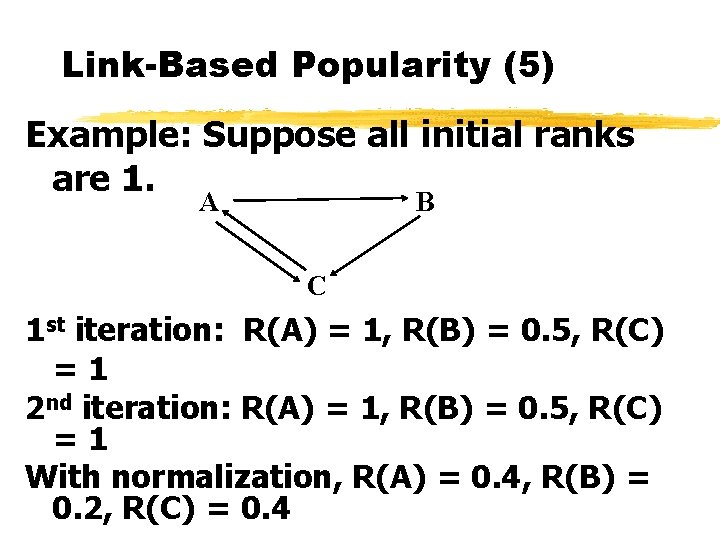

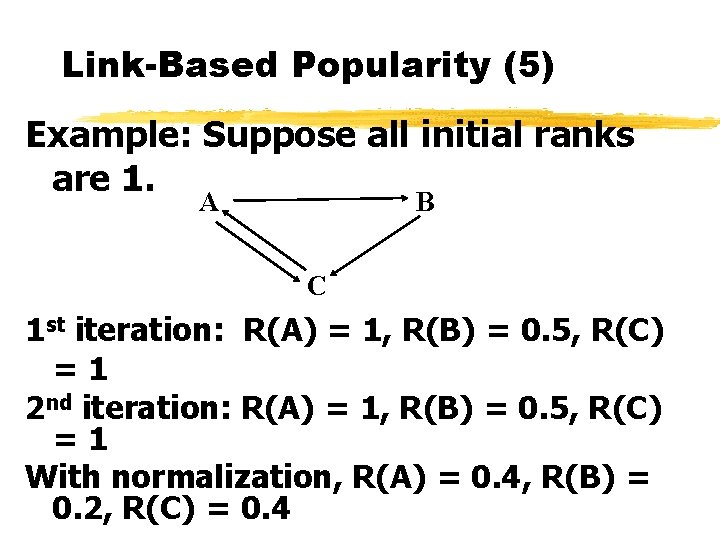

Link-Based Popularity (4)

PageRank definition: Let u be a web page, $F_u$ the set of pages u points to, $B_u$ the set of pages that point to u, and $N_u = |F_u|$ the number of pages in $F_u$. The rank (popularity) of a page u can be defined by:

$$R(u) = \sum_{v \in B_u} \frac{R(v)}{N_v}$$

Link-Based Popularity (5)

Example: Suppose all initial ranks are 1.

(Figure: a link graph over three pages A, B, and C.)

- 1st iteration: R(A) = 1, R(B) = 0.5, R(C) = 1
- 2nd iteration: R(A) = 1, R(B) = 0.5, R(C) = 1
- With normalization: R(A) = 0.4, R(B) = 0.2, R(C) = 0.4
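The figure's links did not survive extraction. Assuming they are A→B, A→C, B→C, and C→A (an assumption, chosen because it reproduces the slide's numbers), the sketch below iterates the definition R(u) = Σ R(v)/N_v with no damping factor. The slide's 1st-iteration values come out only if ranks are updated in place, in the order A, B, C; with this graph, updating all pages simultaneously from the initial ranks would give R(C) = 0.5 + 1 = 1.5 after the first iteration. The function name is hypothetical.

```python
from typing import Dict, List, Tuple

def pagerank_in_place(out_links: Dict[str, List[str]], order: List[str],
                      iterations: int = 20) -> Tuple[List[Dict[str, float]], Dict[str, float]]:
    """Iterate R(u) = sum over v in B_u of R(v) / N_v, updating pages in place in `order`.

    Returns the ranks after each iteration and the final ranks normalized to sum to 1.
    """
    backlinks: Dict[str, List[str]] = {u: [] for u in order}        # B_u
    for v, targets in out_links.items():
        for u in targets:
            backlinks[u].append(v)
    n_out = {v: len(targets) for v, targets in out_links.items()}   # N_v = |F_v|
    rank = {u: 1.0 for u in order}                # all initial ranks are 1
    history = []
    for _ in range(iterations):
        for u in order:                           # in place: later pages see updated ranks
            rank[u] = sum(rank[v] / n_out[v] for v in backlinks[u])
        history.append(dict(rank))
    total = sum(rank.values())
    return history, {u: r / total for u, r in rank.items()}

# Assumed links for the example figure: A->B, A->C, B->C, C->A.
graph = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
history, normalized = pagerank_in_place(graph, order=["A", "B", "C"])
print(history[0])   # {'A': 1.0, 'B': 0.5, 'C': 1.0}
print(normalized)   # approximately {'A': 0.4, 'B': 0.2, 'C': 0.4}
```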

Link-Based Popularity (6)

Using page popularity in a search engine:
- Re-rank the top k pages based on popularity.
- Rank web pages based on a combined score of regular similarity and popularity:

$$\mathrm{score}(q, d) = w_1 \, \mathrm{sim}(q, d) + w_2 \, R(d), \qquad 0 < w_1, w_2 < 1, \quad w_1 + w_2 = 1$$

Both sim(q, d) and R(d) need to be normalized to the range [0, 1].
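The combined score can be sketched as below. The slide only says both scores must lie in [0, 1]; this sketch assumes min-max normalization over the candidate documents, and the weights, document names, and score values in the usage lines are hypothetical.

```python
from typing import Dict, List

def min_max(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale scores linearly to [0, 1] (min-max normalization, an assumed choice)."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:                                   # all equal: map everything to 1.0
        return {d: 1.0 for d in scores}
    return {d: (s - lo) / (hi - lo) for d, s in scores.items()}

def combined_ranking(sim: Dict[str, float], rank: Dict[str, float], w1: float) -> List[str]:
    """Order documents by score(q, d) = w1 * sim(q, d) + w2 * R(d), with w2 = 1 - w1."""
    if not 0.0 < w1 < 1.0:
        raise ValueError("w1 must lie strictly between 0 and 1")
    w2 = 1.0 - w1
    sim_n = min_max(sim)
    rank_n = min_max({d: rank[d] for d in sim})    # normalize R(d) over the candidates
    score = {d: w1 * sim_n[d] + w2 * rank_n[d] for d in sim}
    return sorted(score, key=score.get, reverse=True)

# Hypothetical similarity and PageRank values for three documents.
sim = {"d1": 0.9, "d2": 0.8, "d3": 0.1}
rank = {"d1": 0.01, "d2": 0.05, "d3": 0.03}
print(combined_ranking(sim, rank, w1=0.9))  # ['d1', 'd2', 'd3']
print(combined_ranking(sim, rank, w1=0.7))  # ['d2', 'd1', 'd3']
```

Shifting weight toward popularity (smaller w1) lets the highly cited d2 overtake the slightly more similar d1.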

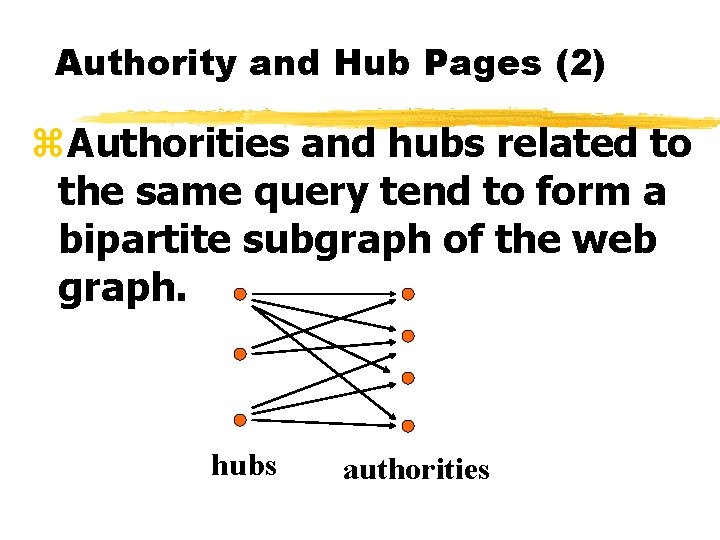

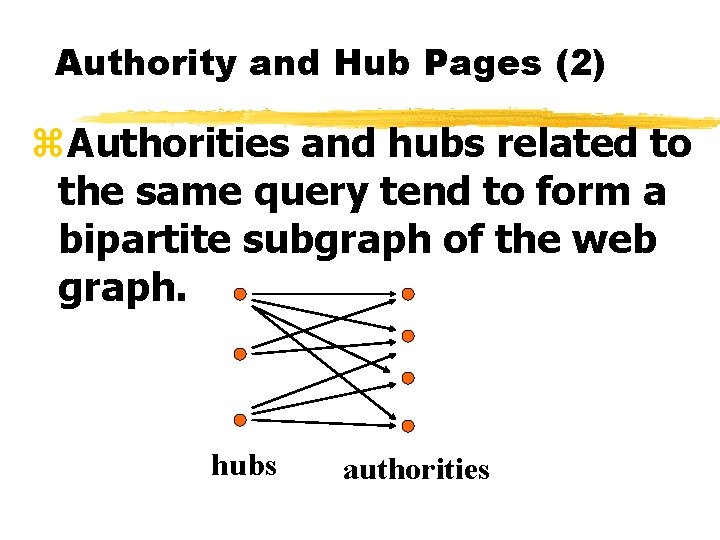

Link-Based Popularity (7)

- PageRank defines the global popularity of web pages.
- We often need to find authoritative pages that are relevant to a given query.
- Kleinberg (Kleinberg 98) proposed using authority and hub scores to measure the importance of a web page with respect to a given query.

Authority and Hub Pages (1)

The basic idea:
- A page is a good authoritative page with respect to a given query if it is referenced (i.e., pointed to) by many (good hub) pages that are related to the query.
- A page is a good hub page with respect to a given query if it points to many good authoritative pages with respect to the query.

Authority and Hub Pages (2)

- Authorities and hubs related to the same query tend to form a bipartite subgraph of the web graph.

(Figure: hub pages linking to authority pages.)

Authority and Hub Pages (3)

Main steps of the algorithm for finding good authorities and hubs related to a query q:
1. Submit q to a regular similarity-based search engine. Let S be the set of top n pages returned by the search engine.
   - S is called the root set, and n is often in the low hundreds.

Authority and Hub Pages (4)

2. Expand S into a larger set T (the base set):
   - Add pages that are pointed to by any page in S.
   - Add pages that point to any page in S. If a page has too many parent pages, only the first k parent pages are used, for some k.

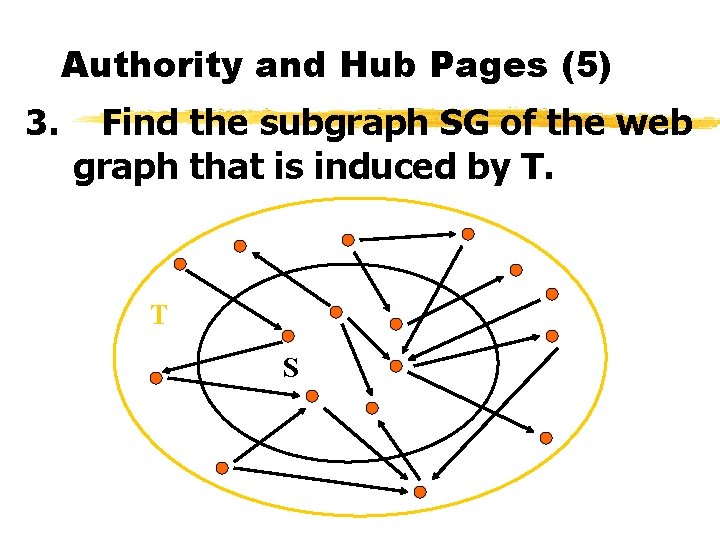

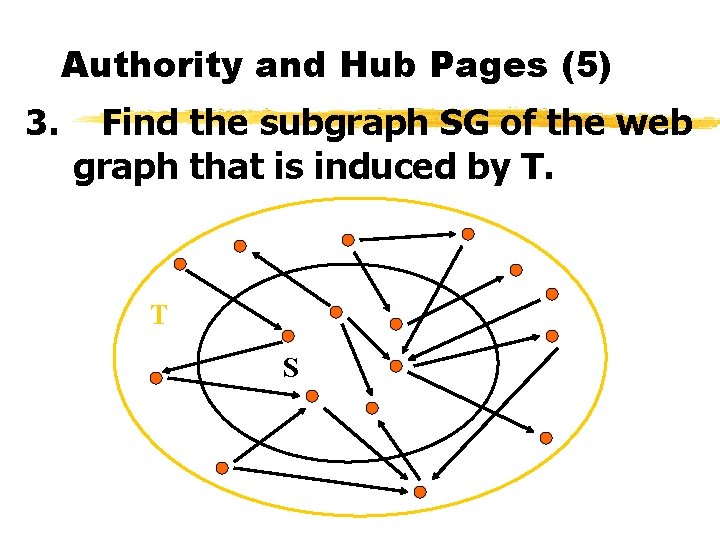

Authority and Hub Pages (5)

3. Find the subgraph SG of the web graph that is induced by T.

(Figure: the root set S contained in the base set T.)
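Steps 2 and 3 can be sketched with plain dictionaries. Assumptions: the link structure is available as two hypothetical lookup tables, `out_links` (pages a page points to) and `in_links` (pages pointing to a page, listed in the order that the “first k parent pages” rule should use); how a real system obtains backlinks is not covered on the slides, and the page names are made up.

```python
from typing import Dict, Iterable, List, Set, Tuple

def base_set(root_set: Iterable[str], out_links: Dict[str, List[str]],
             in_links: Dict[str, List[str]], k: int) -> Set[str]:
    """Step 2: T = S plus pages S points to plus at most k parents of each page in S."""
    S = list(root_set)
    T = set(S)
    for p in S:
        T.update(out_links.get(p, []))        # pages pointed to by p
        T.update(in_links.get(p, [])[:k])     # only the first k parent pages of p
    return T

def induced_subgraph(T: Set[str], out_links: Dict[str, List[str]]) -> Set[Tuple[str, str]]:
    """Step 3: keep exactly the links whose two endpoints are both in T."""
    return {(p, q) for p in T for q in out_links.get(p, []) if q in T}

# Hypothetical link data: out_links[p] = pages p points to; in_links[p] = pages pointing to p.
out_links = {"r1": ["a"], "a": ["b"], "b": ["r1", "z"], "c": ["r1"], "z": []}
in_links = {"r1": ["b", "c"]}
T = base_set(["r1"], out_links, in_links, k=1)
print(sorted(T))                               # ['a', 'b', 'r1']
print(sorted(induced_subgraph(T, out_links)))  # [('a', 'b'), ('b', 'r1'), ('r1', 'a')]
```

With k = 1 the second parent `c` is dropped, and the link b→z disappears from SG because `z` is not in T.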

Authority and Hub Pages (6)

4. Compute the authority score and hub score of each web page in T based on the subgraph SG(V, E). Given a page p, let
   - a(p) be the authority score of p,
   - h(p) be the hub score of p,
   - (p, q) be a directed edge in E from p to q.

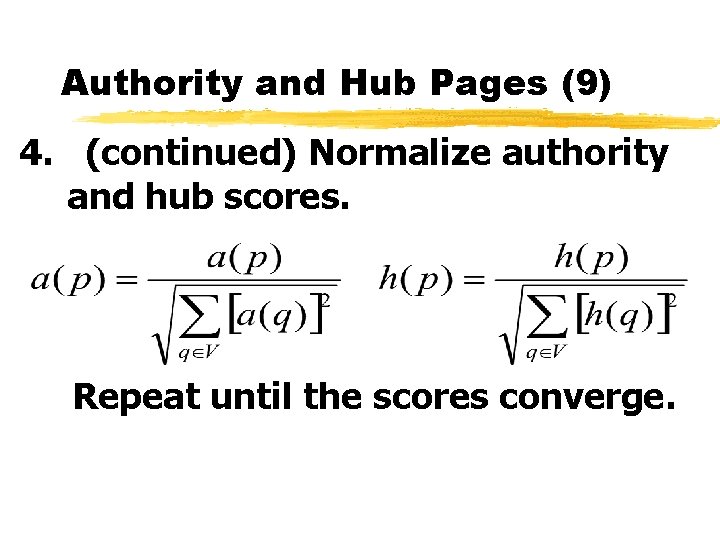

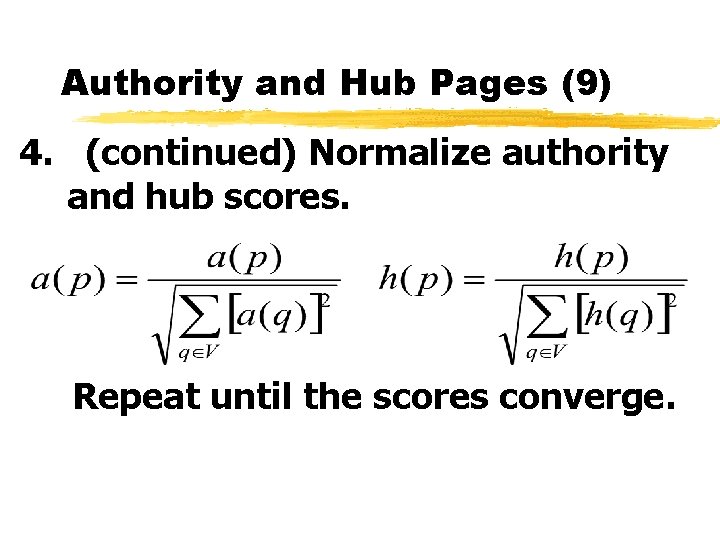

Authority and Hub Pages (7)

4. (continued) Two basic operations:
   - Operation I: Update each a(p) as the sum of all the hub scores of web pages that point to p.
   - Operation O: Update each h(p) as the sum of all the authority scores of web pages pointed to by p.

Authority and Hub Pages (8)

Operation I: for each page p,

$$a(p) = \sum_{q:\,(q, p) \in E} h(q)$$

(Figure: pages q1, q2, q3 each pointing to p.)

Operation O: for each page p,

$$h(p) = \sum_{q:\,(p, q) \in E} a(q)$$

(Figure: page p pointing to pages q1, q2, q3.)

Authority and Hub Pages (9)

4. (continued) Normalize the authority and hub scores (e.g., so that the squares of the authority scores sum to 1, and likewise for the hub scores). Repeat until the scores converge.

Authority and Hub Pages (10)

5. Sort the pages in descending order of authority score and in descending order of hub score.
6. Display the top authorities and the top hub pages.

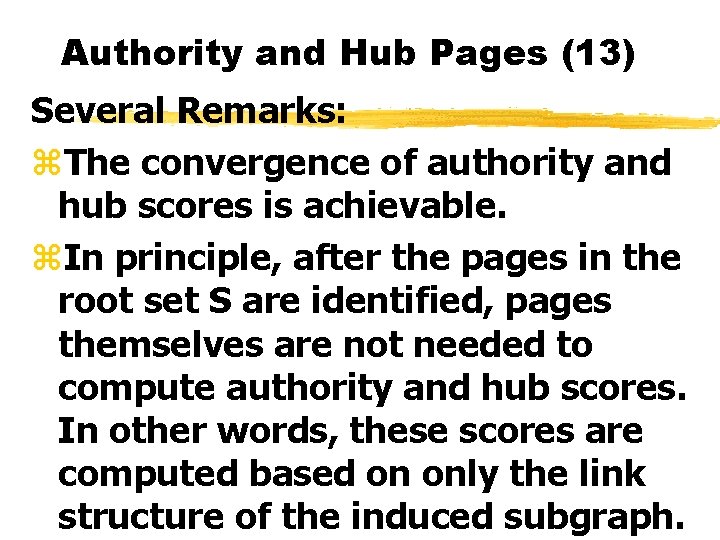

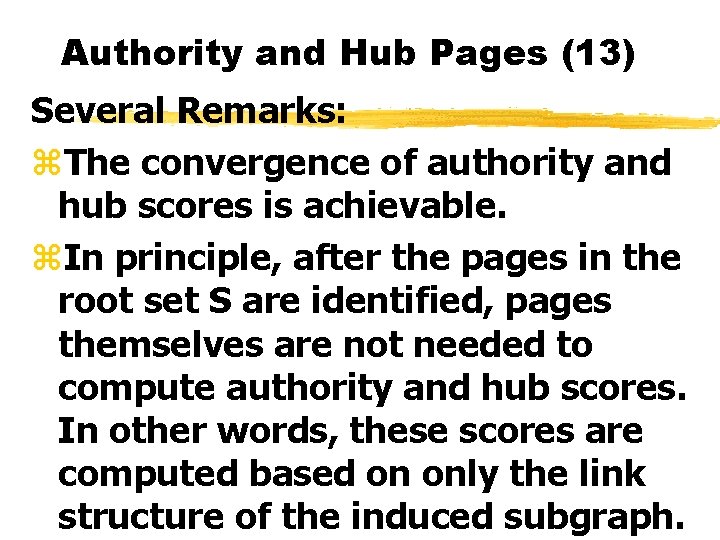

Authority and Hub Pages (11)

Algorithm (summary):

submit q to a search engine to obtain the root set S;
expand S into the base set T;
obtain the induced subgraph SG(V, E) using T;
initialize a(p) = h(p) = 1 for all p in V;
repeat until the scores converge {
apply Operation I to every p in V;
apply Operation O to every p in V;
normalize a(p) and h(p);
}
return pages with top authority and hub scores;
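The summary loop (Kleinberg's method is commonly called HITS) can be sketched in Python as below. Assumptions: the induced subgraph is given as a node list and an edge set, Operation O uses the authority scores just produced by Operation I, normalization divides each score vector by its Euclidean length (which matches the numbers in the lecture's worked example), and convergence is declared when no score changes by more than `tol`. The function name and parameters are illustrative.

```python
import math
from typing import Dict, Iterable, Set, Tuple

def hits(nodes: Iterable[str], edges: Set[Tuple[str, str]],
         max_iter: int = 100, tol: float = 1e-9) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Authority/hub iteration on SG(V, E); returns (a, h), each normalized to unit length."""
    V = list(nodes)
    a = {p: 1.0 for p in V}                     # initialize a(p) = h(p) = 1
    h = {p: 1.0 for p in V}
    for _ in range(max_iter):
        # Operation I: a(p) = sum of h(q) over edges (q, p)
        new_a = {p: 0.0 for p in V}
        for q, p in edges:
            new_a[p] += h[q]
        # Operation O: h(p) = sum of a(q) over edges (p, q), using the new authority scores
        new_h = {p: 0.0 for p in V}
        for p, q in edges:
            new_h[p] += new_a[q]
        # Normalize so that the squares of each score vector sum to 1
        norm_a = math.sqrt(sum(x * x for x in new_a.values())) or 1.0
        norm_h = math.sqrt(sum(x * x for x in new_h.values())) or 1.0
        new_a = {p: x / norm_a for p, x in new_a.items()}
        new_h = {p: x / norm_h for p, x in new_h.items()}
        change = max(max(abs(new_a[p] - a[p]), abs(new_h[p] - h[p])) for p in V)
        a, h = new_a, new_h
        if change < tol:                        # scores have converged
            break
    return a, h

# Links inferred from the worked example: q1 and q3 point to p1 and p2; q2 points to p1.
V = ["q1", "q2", "q3", "p1", "p2"]
E = {("q1", "p1"), ("q1", "p2"), ("q2", "p1"), ("q3", "p1"), ("q3", "p2")}
a, h = hits(V, E, max_iter=1)
print(round(a["p1"], 3), round(a["p2"], 3))   # 0.832 0.555
```

Running to convergence instead of a single iteration gives authority scores proportional to the principal eigenvector of AᵀA, where A is the adjacency matrix of SG.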

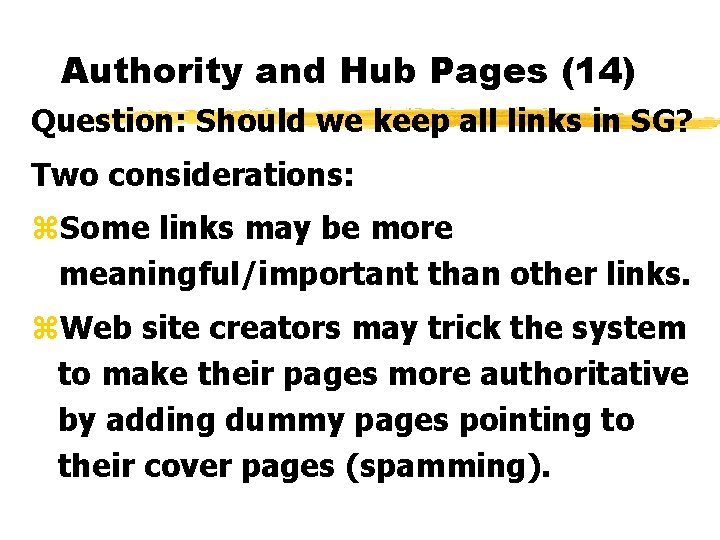

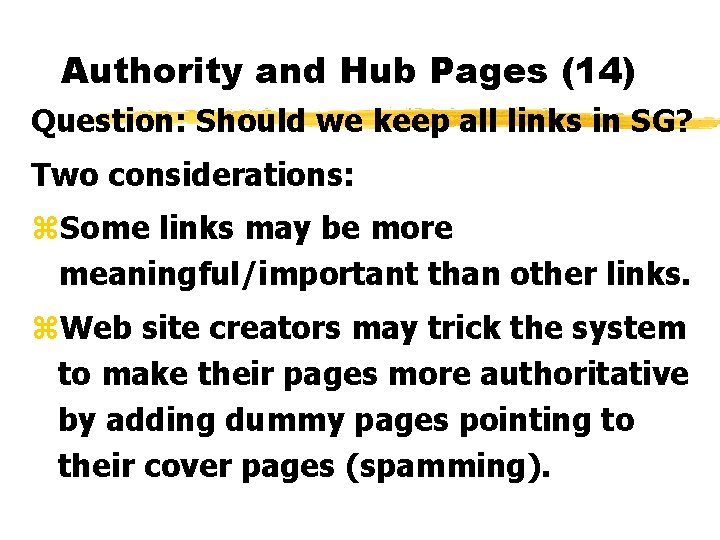

Authority and Hub Pages (12)

Example: Initialize all scores to 1.

(Figure: hub pages q1, q2, q3 linking to authority pages p1 and p2.)

1st iteration:
- I operation: a(p1) = 3, a(p2) = 2
- O operation: h(q1) = 5, h(q2) = 3, h(q3) = 5
- Normalization: a(p1) = 0.832, a(p2) = 0.555; h(q1) = 0.651, h(q2) = 0.391, h(q3) = 0.651
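The normalized values can be checked by hand. Assume the figure's links are q1→p1, q1→p2, q2→p1, q3→p1, q3→p2; this is the only arrangement consistent with the I and O results if only the q pages have out-links. Operation O uses the authority scores just computed (3 and 2), so h(q1) = 3 + 2 = 5. Dividing each score by the Euclidean norm of its score vector then gives:

$$a(p_1) = \frac{3}{\sqrt{3^2 + 2^2}} = \frac{3}{\sqrt{13}} \approx 0.832, \qquad a(p_2) = \frac{2}{\sqrt{13}} \approx 0.555$$

$$h(q_1) = h(q_3) = \frac{5}{\sqrt{5^2 + 3^2 + 5^2}} = \frac{5}{\sqrt{59}} \approx 0.651, \qquad h(q_2) = \frac{3}{\sqrt{59}} \approx 0.391$$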

Authority and Hub Pages (13)

Several remarks:
- The authority and hub scores can be shown to converge.
- In principle, once the pages in the root set S are identified, the page contents themselves are not needed to compute authority and hub scores. In other words, these scores are computed based only on the link structure of the induced subgraph.

Authority and Hub Pages (14)

Question: Should we keep all links in SG? Two considerations:
- Some links may be more meaningful or important than others.
- Web site creators may trick the system into treating their pages as more authoritative by adding dummy pages that point to their cover pages (spamming).

Authority and Hub Pages (15)

Possible solutions:
- Keep only links across different servers.
- Keep only links between pages in different domains.
  - Domain: the first level of the URL of a page. Example: the domain name for “panda.cs.binghamton.edu/~meng/meng.html” is “panda.cs.binghamton.edu”.
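The second solution amounts to a filter over the edges of SG. This is a minimal sketch, assuming the domain is the host name as in the slide's example; the function names and the example.com/example.org URLs are hypothetical, and a scheme is prepended when it is missing so that `urllib.parse` can locate the host.

```python
from typing import Set, Tuple
from urllib.parse import urlparse

def domain(url: str) -> str:
    """Host part of a URL, e.g. 'panda.cs.binghamton.edu' for the slide's example."""
    if "://" not in url:
        url = "http://" + url          # the slide's example URL has no scheme
    return (urlparse(url).hostname or "").lower()

def cross_domain_edges(edges: Set[Tuple[str, str]]) -> Set[Tuple[str, str]]:
    """Keep only links whose source and target pages are in different domains."""
    return {(p, q) for p, q in edges if domain(p) != domain(q)}

print(domain("panda.cs.binghamton.edu/~meng/meng.html"))  # panda.cs.binghamton.edu
edges = {("http://a.example.com/1", "http://a.example.com/2"),   # same domain: dropped
         ("http://a.example.com/1", "http://b.example.org/")}    # different domains: kept
print(cross_domain_edges(edges))
```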

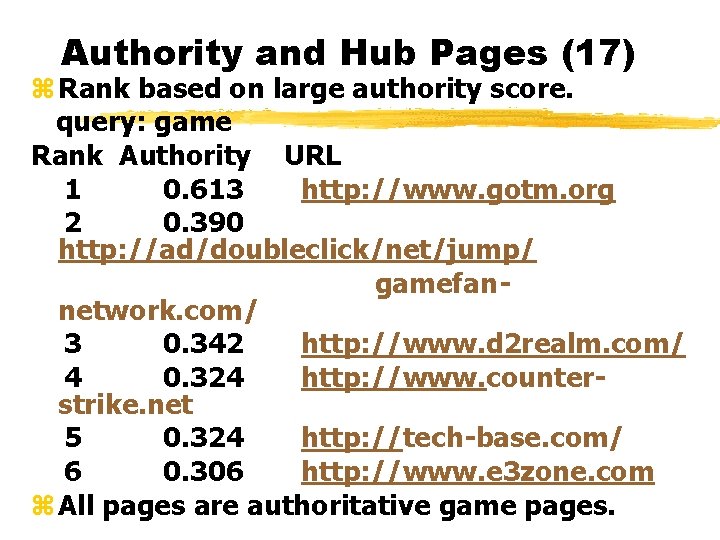

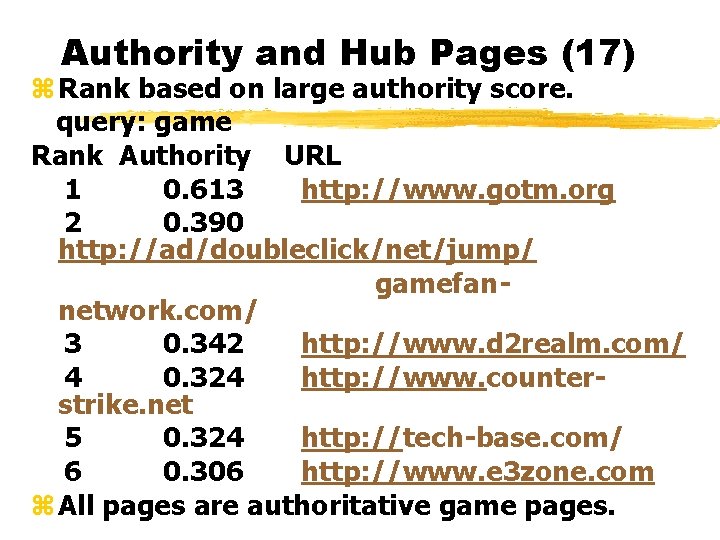

Authority and Hub Pages (16)

Sample experiments:
- Ranking by in-degree (number of backlinks), query: game

| Rank | In-degree | URL |
|---|---|---|
| 1 | 13 | http://www.gotm.org |
| 2 | 12 | http://www.gamezero.com/team-0/ |
| 3 | 12 | http://ngp.ngpc.state.ne.us/gp.html |
| 4 | 12 | http://www.ben2.ucla.edu/~permadi/gamelink/gamelink.html |
| 5 | 11 | http://igolfto.net/ |
| 6 | 11 | http://www.eduplace.com/geo/indexhi.html |

- Only pages 1, 2, and 4 are authoritative game pages.

Authority and Hub Pages (17)

- Ranking by authority score, query: game

| Rank | Authority | URL |
|---|---|---|
| 1 | 0.613 | http://www.gotm.org |
| 2 | 0.390 | http://ad/doubleclick/net/jump/gamefannetwork.com/ |
| 3 | 0.342 | http://www.d2realm.com/ |
| 4 | 0.324 | http://www.counterstrike.net |
| 5 | 0.324 | http://tech-base.com/ |
| 6 | 0.306 | http://www.e3zone.com |

- All pages are authoritative game pages.

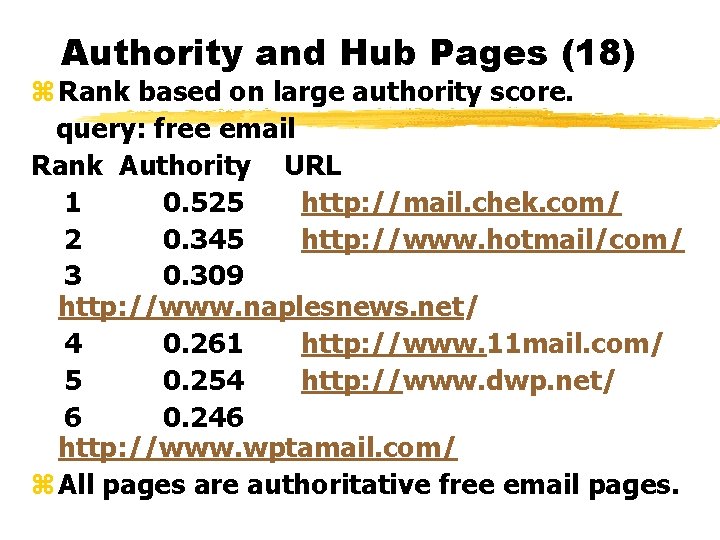

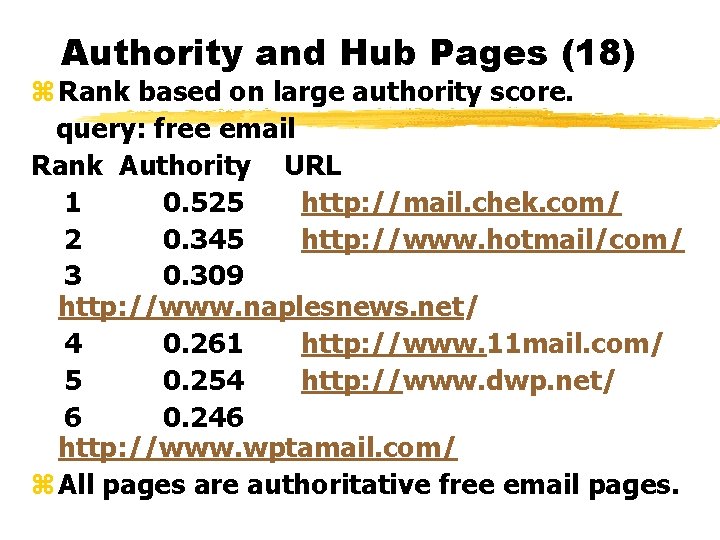

Authority and Hub Pages (18)

- Ranking by authority score, query: free email

| Rank | Authority | URL |
|---|---|---|
| 1 | 0.525 | http://mail.chek.com/ |
| 2 | 0.345 | http://www.hotmail/com/ |
| 3 | 0.309 | http://www.naplesnews.net/ |
| 4 | 0.261 | http://www.11mail.com/ |
| 5 | 0.254 | http://www.dwp.net/ |
| 6 | 0.246 | http://www.wptamail.com/ |

- All pages are authoritative free email pages.