CS 5321 Numerical Optimization 05 (2/19/2021): Conjugate Gradient Methods

- Slides: 11


Conjugate gradient methods

- For convex quadratic problems:
  - the steepest descent method converges slowly;
  - Newton's method must solve $Ax=b$ directly, which is expensive;
  - the conjugate gradient method solves $Ax=b$ iteratively.
- Outline:
  - Conjugate directions
  - Linear conjugate gradient method
  - Nonlinear conjugate gradient method

Quadratic optimization problem

- Consider the quadratic optimization problem
  $$\min_x f(x) = \tfrac{1}{2} x^T A x - b^T x,$$
  where $A$ is symmetric positive definite.
- The optimal solution is where $\nabla f(x) = 0$:
  $$\nabla\left(\tfrac{1}{2} x^T A x - b^T x\right) = Ax - b = 0.$$
- Define $r(x) = \nabla f(x) = Ax - b$ (the residual).
- Goal: solve $Ax = b$ without inverting $A$ (an iterative method).

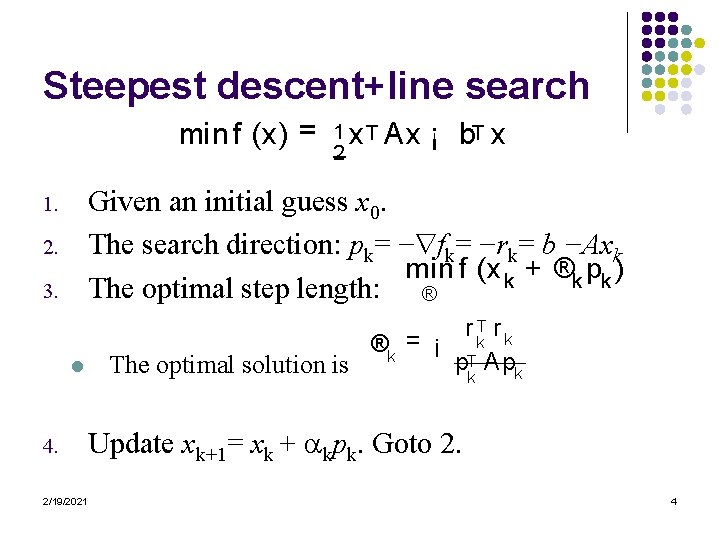

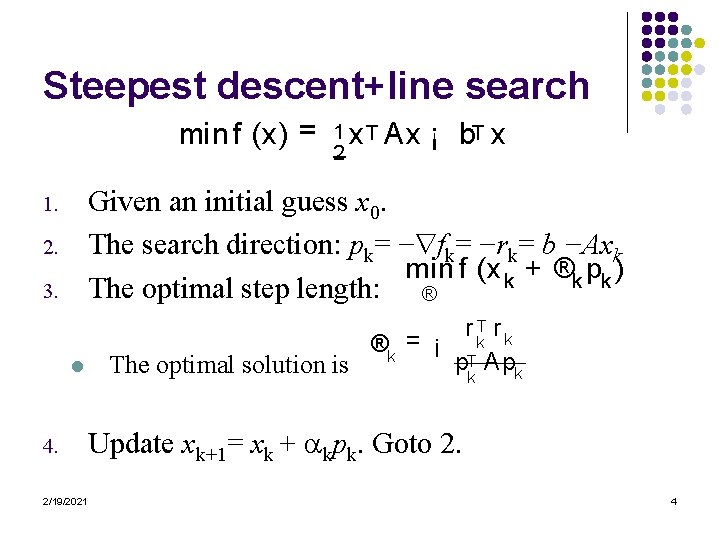
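As a quick sanity check of $\nabla f(x) = Ax - b$, the Python sketch below compares the residual with a central finite-difference gradient. The helper names (`matvec`, `dot`, `fd_gradient`) and the 2x2 data are illustrative assumptions, not part of the slides.

```python
def matvec(A, x):
    """Return the product A x for a matrix stored as a list of rows."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def dot(u, v):
    """Return the Euclidean inner product u^T v."""
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def f(A, b, x):
    """Quadratic objective f(x) = 1/2 x^T A x - b^T x."""
    return 0.5 * dot(x, matvec(A, x)) - dot(b, x)


def residual(A, b, x):
    """Residual r(x) = A x - b."""
    return [ax_i - b_i for ax_i, b_i in zip(matvec(A, x), b)]


def fd_gradient(A, b, x, h=1e-6):
    """Central finite-difference approximation of grad f(x)."""
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((f(A, b, x_plus) - f(A, b, x_minus)) / (2.0 * h))
    return grad


# Illustrative symmetric positive definite data (assumed, not from the slides).
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
x = [0.5, -1.0]

print(residual(A, b, x))     # [0.0, -4.5]
print(fd_gradient(A, b, x))  # approximately [0.0, -4.5]
```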

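Steepest descent + line search

For $\min f(x) = \tfrac{1}{2} x^T A x - b^T x$:

1. Given an initial guess $x_0$.
2. The search direction: $p_k = -\nabla f_k = -r_k = b - A x_k$.
3. The optimal step length: $\alpha_k = \arg\min_{\alpha} f(x_k + \alpha p_k)$. Setting $\frac{d}{d\alpha} f(x_k + \alpha p_k) = p_k^T r_k + \alpha\, p_k^T A p_k = 0$ and using $p_k = -r_k$ gives
   $$\alpha_k = \frac{r_k^T r_k}{p_k^T A p_k}.$$
4. Update $x_{k+1} = x_k + \alpha_k p_k$. Go to step 2.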

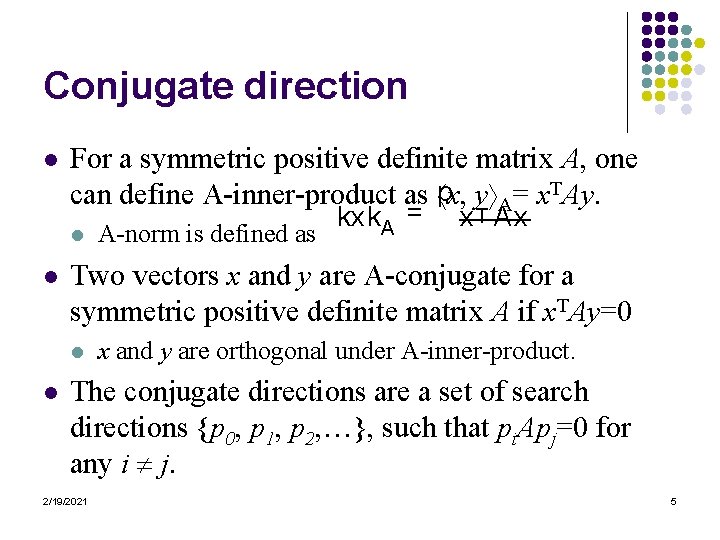
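A minimal pure-Python sketch of the four steps above, assuming a dense list-of-rows matrix. `steepest_descent`, `tol`, and `max_iter` are hypothetical names, and the residual-norm stopping rule is an assumption added for illustration.

```python
import math


def matvec(A, x):
    """Return A x for a matrix stored as a list of rows."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def steepest_descent(A, b, x0, tol=1e-10, max_iter=10_000):
    """Steepest descent with exact line search for 1/2 x^T A x - b^T x.

    Returns the final iterate and the number of iterations taken.
    """
    x = list(x0)
    for k in range(max_iter):
        r = [ax_i - b_i for ax_i, b_i in zip(matvec(A, x), b)]  # r_k = A x_k - b
        rr = dot(r, r)
        if math.sqrt(rr) <= tol:
            return x, k
        p = [-r_i for r_i in r]                  # p_k = -r_k
        alpha = rr / dot(p, matvec(A, p))        # alpha_k = r_k^T r_k / p_k^T A p_k
        x = [x_i + alpha * p_i for x_i, p_i in zip(x, p)]  # x_{k+1} = x_k + alpha_k p_k
    return x, max_iter


# Usage on the 2x2 example from these slides: A = diag(1/4, 1), b = (1, 1), x0 = (0, 0).
x, iters = steepest_descent([[0.25, 0.0], [0.0, 1.0]], [1.0, 1.0], [0.0, 0.0])
print(x, iters)  # x is close to (4, 1); iters is well above 2
```

Even on this well-conditioned 2x2 problem the iterates zigzag toward $x^* = (4, 1)$, which is the slow convergence the slides attribute to steepest descent.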

Conjugate direction

- For a symmetric positive definite matrix $A$, one can define the $A$-inner product $\langle x, y \rangle_A = x^T A y$.
- The $A$-norm is defined as $\|x\|_A = \sqrt{x^T A x}$.
- Two vectors $x$ and $y$ are $A$-conjugate if $x^T A y = 0$, i.e., $x$ and $y$ are orthogonal under the $A$-inner product.
- The conjugate directions are a set of search directions $\{p_0, p_1, p_2, \dots\}$ such that $p_i^T A p_j = 0$ for any $i \neq j$.

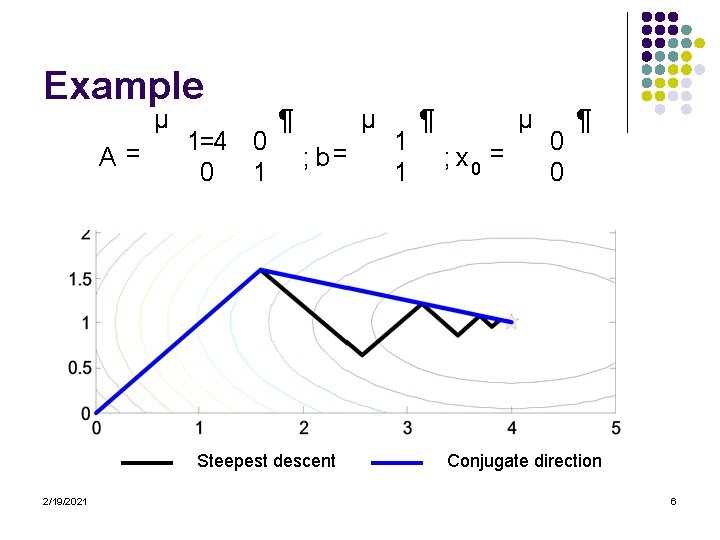

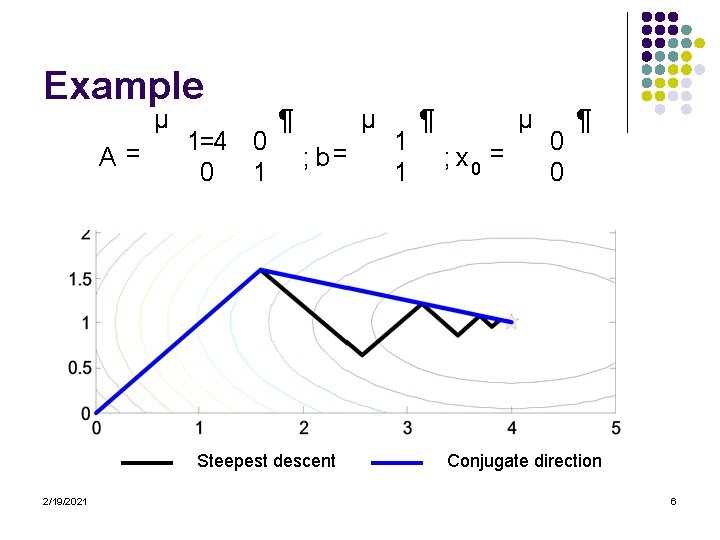
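The sketch below illustrates these definitions. It builds conjugate directions by Gram-Schmidt in the $A$-inner product (one possible construction, assumed here rather than taken from the slides) and then minimizes $f$ by one exact line search along each direction; for an $n \times n$ SPD matrix this reaches the solution after $n$ steps in exact arithmetic. All function names and the 3x3 matrix are illustrative.

```python
import math


def matvec(A, x):
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def a_inner(A, x, y):
    """A-inner product <x, y>_A = x^T A y."""
    return dot(x, matvec(A, y))


def a_norm(A, x):
    """A-norm ||x||_A = sqrt(x^T A x)."""
    return math.sqrt(a_inner(A, x, x))


def conjugate_gram_schmidt(A):
    """Turn the standard basis e_0, ..., e_{n-1} into mutually A-conjugate directions."""
    n = len(A)
    directions = []
    for i in range(n):
        v = [1.0 if j == i else 0.0 for j in range(n)]
        for p in directions:
            # Remove the A-component of v along the already accepted direction p.
            coeff = a_inner(A, v, p) / a_inner(A, p, p)
            v = [v_j - coeff * p_j for v_j, p_j in zip(v, p)]
        directions.append(v)
    return directions


def conjugate_direction_method(A, b, x0, directions):
    """Minimize 1/2 x^T A x - b^T x by one exact line search along each direction."""
    x = list(x0)
    for p in directions:
        r = [ax_i - b_i for ax_i, b_i in zip(matvec(A, x), b)]  # r = A x - b
        alpha = -dot(r, p) / a_inner(A, p, p)                   # argmin over alpha of f(x + alpha p)
        x = [x_i + alpha * p_i for x_i, p_i in zip(x, p)]
    return x


# Illustrative 3x3 SPD system (assumed data).
A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
P = conjugate_gram_schmidt(A)
x = conjugate_direction_method(A, b, [0.0, 0.0, 0.0], P)
print(a_inner(A, P[0], P[1]), a_inner(A, P[0], P[2]), a_inner(A, P[1], P[2]))
print(x, a_norm(A, P[0]))
```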

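Example

$$A = \begin{pmatrix} 1/4 & 0 \\ 0 & 1 \end{pmatrix}, \quad b = \begin{pmatrix} 1 \\ 1 \end{pmatrix}, \quad x_0 = \begin{pmatrix} 0 \\ 0 \end{pmatrix}$$

Figure panels: steepest descent; conjugate direction.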

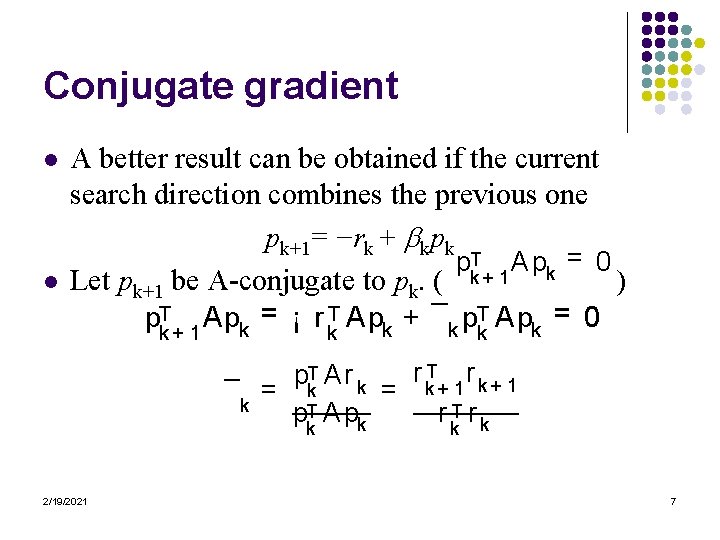

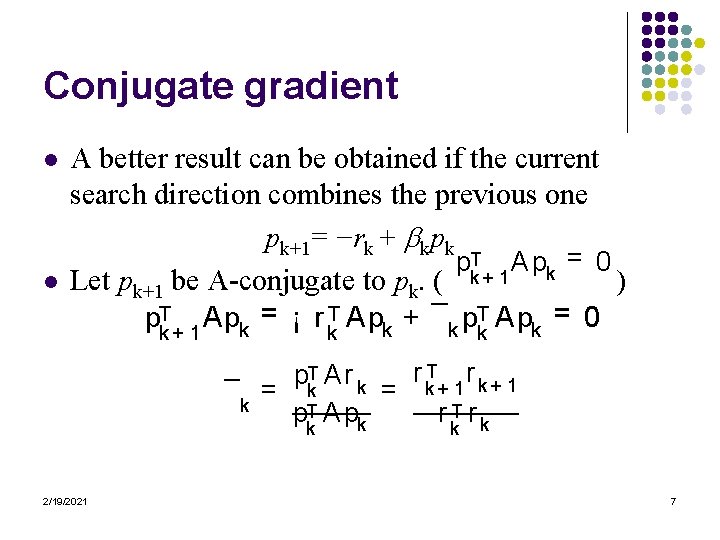
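To reproduce this example numerically, the sketch below runs two exact line searches for each approach. It assumes the conjugate directions are the coordinate axes $e_1, e_2$ (they are $A$-conjugate because $A$ is diagonal); the figure may use a different pair. The helper names are illustrative.

```python
def matvec(A, x):
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


A = [[0.25, 0.0], [0.0, 1.0]]
b = [1.0, 1.0]


def residual(x):
    """r(x) = A x - b."""
    return [ax_i - b_i for ax_i, b_i in zip(matvec(A, x), b)]


def exact_step(x, p):
    """Move from x to the minimizer of f along direction p."""
    alpha = -dot(residual(x), p) / dot(p, matvec(A, p))
    return [x_i + alpha * p_i for x_i, p_i in zip(x, p)]


# Steepest descent: two exact line searches along p_k = -r_k.
x_sd = [0.0, 0.0]
for _ in range(2):
    x_sd = exact_step(x_sd, [-r_i for r_i in residual(x_sd)])

# Conjugate directions: e1 and e2 satisfy e1^T A e2 = 0 because A is diagonal.
x_cd = [0.0, 0.0]
for p in ([1.0, 0.0], [0.0, 1.0]):
    x_cd = exact_step(x_cd, p)

print("steepest descent after 2 steps:", x_sd)      # approximately [2.56, 0.64]
print("conjugate directions after 2 steps:", x_cd)  # [4.0, 1.0]
```

After two steps the conjugate-direction iterate is already at $x^* = (4, 1)$, while steepest descent has only shrunk the error by a constant factor.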

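Conjugate gradient

- A better result can be obtained if the current search direction combines the new residual with the previous direction:
  $$p_{k+1} = -r_{k+1} + \beta_{k+1} p_k.$$
- Choose $\beta_{k+1}$ so that $p_{k+1}$ is $A$-conjugate to $p_k$:
  $$p_{k+1}^T A p_k = -r_{k+1}^T A p_k + \beta_{k+1} p_k^T A p_k = 0
  \quad\Longrightarrow\quad
  \beta_{k+1} = \frac{r_{k+1}^T A p_k}{p_k^T A p_k} = \frac{r_{k+1}^T r_{k+1}}{r_k^T r_k}.$$
- The last equality uses $\alpha_k A p_k = r_{k+1} - r_k$, the orthogonality $r_{k+1}^T r_k = 0$, and $\alpha_k\, p_k^T A p_k = r_k^T r_k$.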

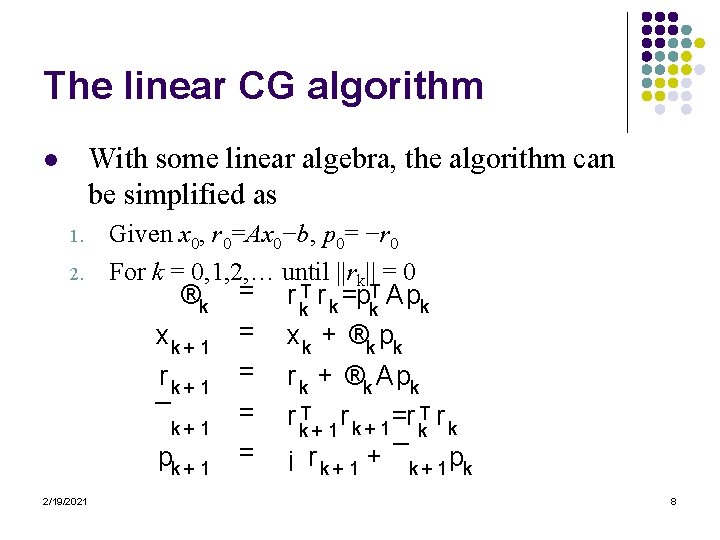

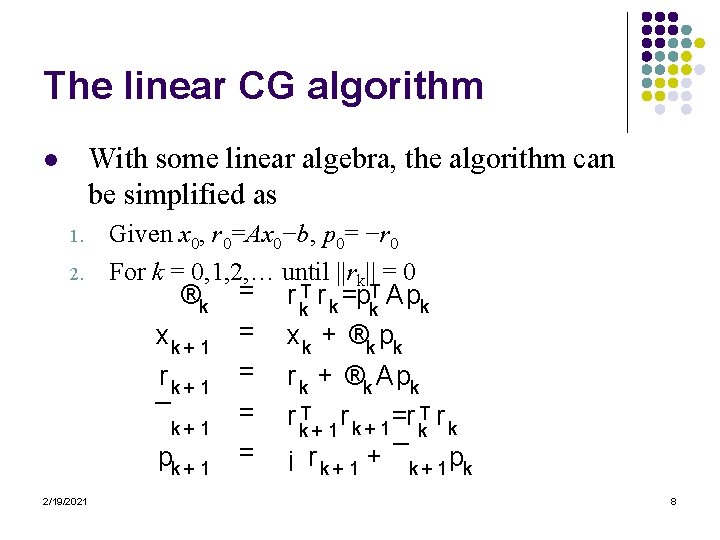
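A small numerical check of this derivation, under assumed data: after one step from $x_0 = 0$ on a 3x3 SPD system, the two expressions for $\beta_1$ agree and $p_1^T A p_0$ vanishes up to rounding. The matrix, right-hand side, and variable names are hypothetical.

```python
def matvec(A, x):
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]  # assumed SPD data
b = [1.0, 2.0, 3.0]
x0 = [0.0, 0.0, 0.0]

r0 = [ax_i - b_i for ax_i, b_i in zip(matvec(A, x0), b)]  # r_0 = A x_0 - b
p0 = [-r_i for r_i in r0]                                  # p_0 = -r_0
Ap0 = matvec(A, p0)
alpha0 = dot(r0, r0) / dot(p0, Ap0)
r1 = [r_i + alpha0 * ap_i for r_i, ap_i in zip(r0, Ap0)]  # r_1 = r_0 + alpha_0 A p_0

beta_conj = dot(r1, Ap0) / dot(p0, Ap0)  # from the conjugacy condition
beta_cg = dot(r1, r1) / dot(r0, r0)      # simplified CG formula
p1 = [-r_i + beta_cg * p_i for r_i, p_i in zip(r1, p0)]

print(beta_conj, beta_cg)  # both approximately 0.12
print(dot(p1, Ap0))        # approximately 0
```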

The linear CG algorithm

With some linear algebra, the algorithm can be simplified as:

1. Given $x_0$, set $r_0 = A x_0 - b$ and $p_0 = -r_0$.
2. For $k = 0, 1, 2, \dots$ until $\|r_k\| = 0$:
   $$\begin{aligned}
   \alpha_k &= \frac{r_k^T r_k}{p_k^T A p_k} \\
   x_{k+1} &= x_k + \alpha_k p_k \\
   r_{k+1} &= r_k + \alpha_k A p_k \\
   \beta_{k+1} &= \frac{r_{k+1}^T r_{k+1}}{r_k^T r_k} \\
   p_{k+1} &= -r_{k+1} + \beta_{k+1} p_k
   \end{aligned}$$

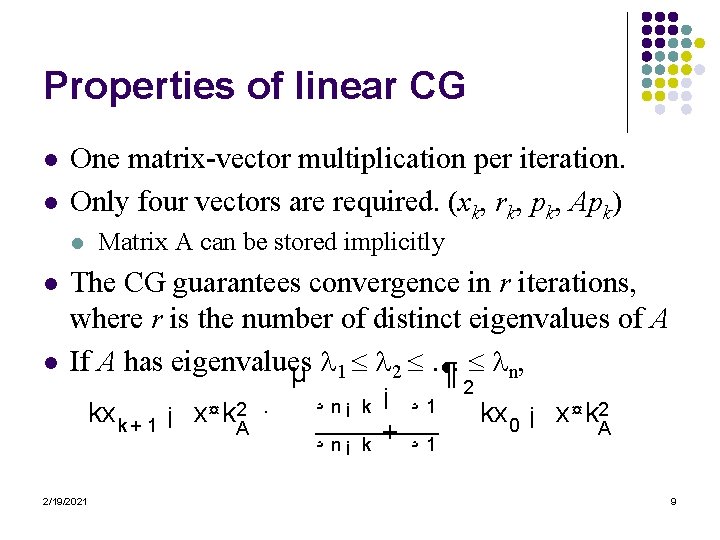

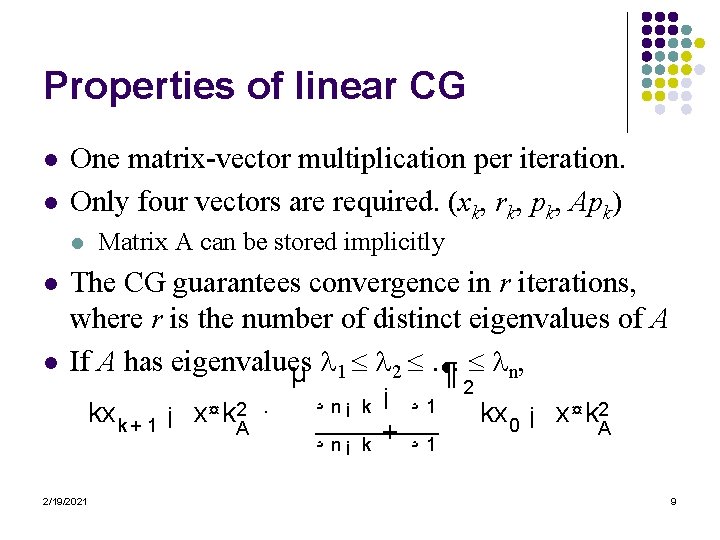
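A minimal pure-Python sketch of the algorithm above, assuming a dense list-of-rows matrix. Because floating-point rounding can delay termination, it stops when $\|r_k\|$ falls below a tolerance `tol` (an assumption replacing the exact test $\|r_k\| = 0$) or after `max_iter` iterations; `conjugate_gradient` is a hypothetical name.

```python
import math


def matvec(A, x):
    """Return A x for a matrix stored as a list of rows."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def conjugate_gradient(A, b, x0, tol=1e-10, max_iter=None):
    """Linear CG for A x = b, A symmetric positive definite.

    Returns the final iterate and the number of iterations performed.
    """
    if max_iter is None:
        max_iter = 10 * len(b)  # generous cap; exact arithmetic needs at most n
    x = list(x0)
    r = [ax_i - b_i for ax_i, b_i in zip(matvec(A, x), b)]  # r_0 = A x_0 - b
    p = [-r_i for r_i in r]                                  # p_0 = -r_0
    rr = dot(r, r)
    k = 0
    while math.sqrt(rr) > tol and k < max_iter:
        Ap = matvec(A, p)                 # one matrix-vector product per iteration
        alpha = rr / dot(p, Ap)           # alpha_k
        x = [x_i + alpha * p_i for x_i, p_i in zip(x, p)]      # x_{k+1}
        r = [r_i + alpha * ap_i for r_i, ap_i in zip(r, Ap)]   # r_{k+1}
        rr_new = dot(r, r)
        beta = rr_new / rr                # beta_{k+1}
        p = [-r_i + beta * p_i for r_i, p_i in zip(r, p)]      # p_{k+1}
        rr = rr_new
        k += 1
    return x, k


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]  # assumed SPD data
b = [1.0, 2.0, 3.0]
x, iters = conjugate_gradient(A, b, [0.0, 0.0, 0.0])
print(x, iters)  # iters is at most 3 for this 3x3 system
```

Caching `rr = r^T r` between iterations means each step needs only the single product `Ap`, matching the cost noted on the next slide.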

Properties of linear CG

- One matrix-vector multiplication per iteration.
- Only four vectors are required: $x_k$, $r_k$, $p_k$, $A p_k$.
- Matrix $A$ can be stored implicitly: only the product $Av$ is needed.
- In exact arithmetic, CG converges in at most $r$ iterations, where $r$ is the number of distinct eigenvalues of $A$.
- If $A$ has eigenvalues $\lambda_1 \le \lambda_2 \le \cdots \le \lambda_n$, then
  $$\|x_{k+1} - x^*\|_A^2 \le \left(\frac{\lambda_{n-k} - \lambda_1}{\lambda_{n-k} + \lambda_1}\right)^2 \|x_0 - x^*\|_A^2.$$

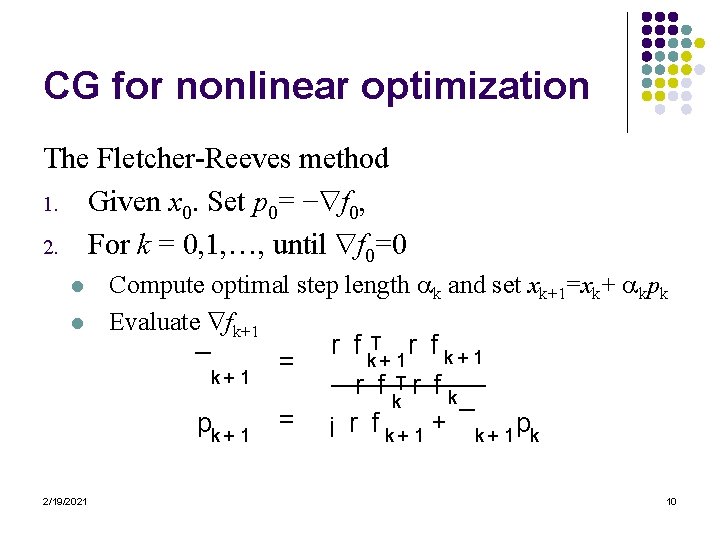

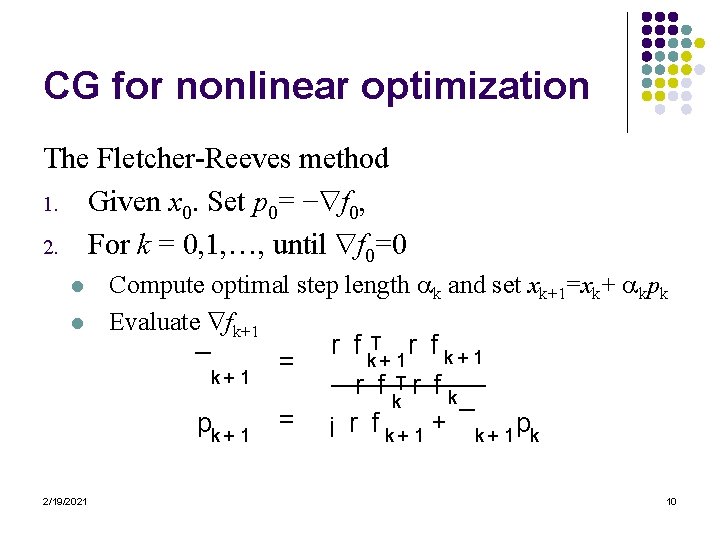
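The next sketch illustrates two of these properties under stated assumptions: $A$ is never formed, only supplied as a hypothetical function `apply_A(v)` returning $Av$, and the assumed operator $A = \operatorname{diag}(1, 1, 1, 5, 5, 5)$ has $r = 2$ distinct eigenvalues, so CG should stop after 2 iterations up to rounding.

```python
import math


def cg_matrix_free(apply_A, b, tol=1e-10, max_iter=100):
    """Linear CG from x_0 = 0 using only the operator v -> A v."""
    x = [0.0] * len(b)
    r = [-b_i for b_i in b]   # r_0 = A x_0 - b = -b
    p = list(b)               # p_0 = -r_0 = b
    rr = sum(r_i * r_i for r_i in r)
    k = 0
    while math.sqrt(rr) > tol and k < max_iter:
        Ap = apply_A(p)
        alpha = rr / sum(p_i * ap_i for p_i, ap_i in zip(p, Ap))
        x = [x_i + alpha * p_i for x_i, p_i in zip(x, p)]
        r = [r_i + alpha * ap_i for r_i, ap_i in zip(r, Ap)]
        rr_new = sum(r_i * r_i for r_i in r)
        p = [-r_i + (rr_new / rr) * p_i for r_i, p_i in zip(r, p)]
        rr = rr_new
        k += 1
    return x, k


# Hypothetical operator: A = diag(1, 1, 1, 5, 5, 5), never stored as a matrix.
DIAG = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]


def apply_A(v):
    return [d * v_i for d, v_i in zip(DIAG, v)]


x, iters = cg_matrix_free(apply_A, [1.0] * 6)
print(iters)  # 2
print(x)      # approximately [1, 1, 1, 0.2, 0.2, 0.2]
```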

CG for nonlinear optimization: the Fletcher-Reeves method

1. Given $x_0$, set $p_0 = -\nabla f_0$.
2. For $k = 0, 1, \dots$ until $\nabla f_k = 0$:
   - Compute the optimal step length $\alpha_k$ and set $x_{k+1} = x_k + \alpha_k p_k$.
   - Evaluate $\nabla f_{k+1}$.
   - Set $\beta_{k+1} = \dfrac{\nabla f_{k+1}^T \nabla f_{k+1}}{\nabla f_k^T \nabla f_k}$.
   - Set $p_{k+1} = -\nabla f_{k+1} + \beta_{k+1} p_k$.

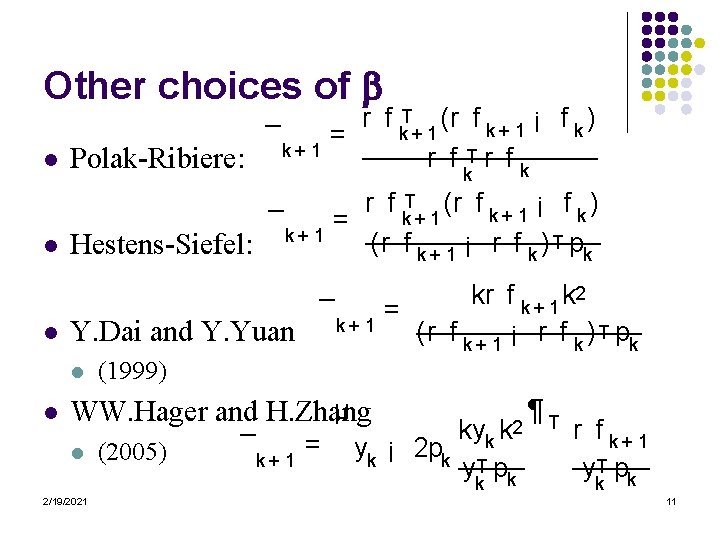

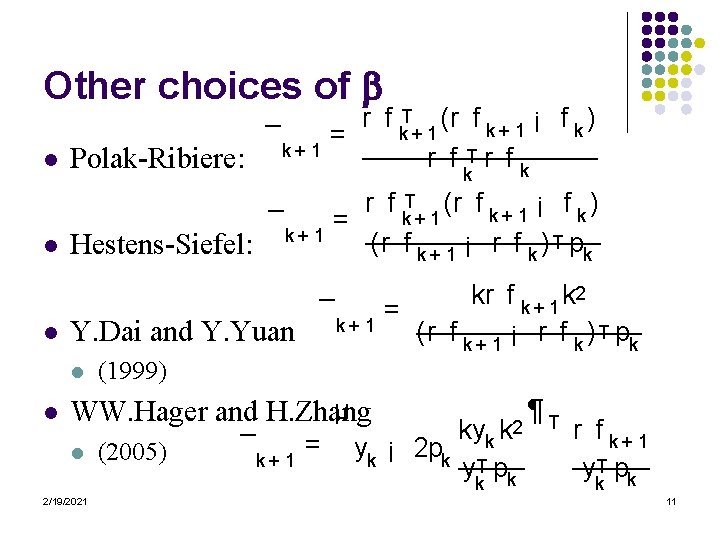
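A hedged pure-Python sketch of Fletcher-Reeves. Two assumptions go beyond the slide: the "optimal" step length is replaced by Armijo backtracking, and the direction is reset to $-\nabla f$ whenever it is not a descent direction or every $n$ iterations (a common safeguard). The test function `f`, its minimizer $(1, -2)$, and all helper names are illustrative.

```python
import math


def f(x):
    """Illustrative smooth convex test function with minimizer (1, -2)."""
    return (x[0] - 1.0) ** 2 + (x[0] - 1.0) ** 4 + 10.0 * (x[1] + 2.0) ** 2


def grad_f(x):
    return [2.0 * (x[0] - 1.0) + 4.0 * (x[0] - 1.0) ** 3, 20.0 * (x[1] + 2.0)]


def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def backtracking(f, x, fx, g, p, alpha=1.0, c1=1e-4, shrink=0.5, max_tries=60):
    """Armijo backtracking: shrink alpha until f decreases sufficiently along p."""
    slope = dot(g, p)  # directional derivative, negative for a descent direction
    for _ in range(max_tries):
        x_new = [x_i + alpha * p_i for x_i, p_i in zip(x, p)]
        if f(x_new) <= fx + c1 * alpha * slope:
            return alpha
        alpha *= shrink
    return alpha  # tiny step if the Armijo condition never held


def fletcher_reeves(f, grad_f, x0, tol=1e-8, max_iter=5000):
    n = len(x0)
    x = list(x0)
    g = grad_f(x)
    p = [-g_i for g_i in g]  # p_0 = -grad f_0
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) <= tol:
            return x, k
        alpha = backtracking(f, x, f(x), g, p)
        x = [x_i + alpha * p_i for x_i, p_i in zip(x, p)]
        g_new = grad_f(x)
        beta = dot(g_new, g_new) / dot(g, g)  # Fletcher-Reeves beta_{k+1}
        p = [-gn_i + beta * p_i for gn_i, p_i in zip(g_new, p)]
        # Safeguard (not on the slide): restart if p is not a descent direction
        # or after every n iterations.
        if dot(g_new, p) >= 0.0 or (k + 1) % n == 0:
            p = [-gn_i for gn_i in g_new]
        g = g_new
    return x, max_iter


x, iters = fletcher_reeves(f, grad_f, [-1.0, 1.0])
print(x, iters)  # x close to (1, -2)
```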

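Other choices of $\beta_{k+1}$

- Polak-Ribière:
  $$\beta_{k+1} = \frac{\nabla f_{k+1}^T (\nabla f_{k+1} - \nabla f_k)}{\nabla f_k^T \nabla f_k}$$
- Hestenes-Stiefel:
  $$\beta_{k+1} = \frac{\nabla f_{k+1}^T (\nabla f_{k+1} - \nabla f_k)}{(\nabla f_{k+1} - \nabla f_k)^T p_k}$$
- Y. Dai and Y. Yuan (1999):
  $$\beta_{k+1} = \frac{\|\nabla f_{k+1}\|^2}{(\nabla f_{k+1} - \nabla f_k)^T p_k}$$
- W. W. Hager and H. Zhang (2005), with $y_k = \nabla f_{k+1} - \nabla f_k$:
  $$\beta_{k+1} = \frac{1}{y_k^T p_k}\left(y_k - 2 p_k \frac{\|y_k\|^2}{y_k^T p_k}\right)^T \nabla f_{k+1}$$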
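The sketch below codes the $\beta_{k+1}$ formulas from this slide plus Fletcher-Reeves, writing $g$ for $\nabla f$. As a check it relies on a known property: on a strictly convex quadratic with exact line search, all these choices coincide. The quadratic, the starting point, and the function names are assumptions for illustration.

```python
def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def diff(u, v):
    return [u_i - v_i for u_i, v_i in zip(u, v)]


def beta_fr(g_new, g_old, p):
    """Fletcher-Reeves."""
    return dot(g_new, g_new) / dot(g_old, g_old)


def beta_pr(g_new, g_old, p):
    """Polak-Ribiere."""
    return dot(g_new, diff(g_new, g_old)) / dot(g_old, g_old)


def beta_hs(g_new, g_old, p):
    """Hestenes-Stiefel."""
    y = diff(g_new, g_old)
    return dot(g_new, y) / dot(y, p)


def beta_dy(g_new, g_old, p):
    """Dai-Yuan."""
    y = diff(g_new, g_old)
    return dot(g_new, g_new) / dot(y, p)


def beta_hz(g_new, g_old, p):
    """Hager-Zhang."""
    y = diff(g_new, g_old)
    yp = dot(y, p)
    yy = dot(y, y)
    w = [y_i - 2.0 * p_i * yy / yp for y_i, p_i in zip(y, p)]
    return dot(w, g_new) / yp


# One exact line search on f(x) = 1/2 x^T A x - b^T x (assumed data); grad f = A x - b.
A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]


def grad(x):
    return [sum(a * x_j for a, x_j in zip(row, x)) - b_i for row, b_i in zip(A, b)]


x0 = [0.0, 0.0, 0.0]
g0 = grad(x0)
p0 = [-g_i for g_i in g0]
Ap0 = [sum(a * p_j for a, p_j in zip(row, p0)) for row in A]
alpha0 = dot(g0, g0) / dot(p0, Ap0)  # exact step along p0
x1 = [x_i + alpha0 * p_i for x_i, p_i in zip(x0, p0)]
g1 = grad(x1)

BETAS = [("FR", beta_fr), ("PR", beta_pr), ("HS", beta_hs), ("DY", beta_dy), ("HZ", beta_hz)]
for name, beta in BETAS:
    print(name, beta(g1, g0, p0))  # all approximately 0.12 here
```

On a general nonlinear $f$ with an inexact line search these formulas give different values, which is why the choice of $\beta_{k+1}$ matters in practice.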