CS 4803 / 7643 Deep Learning Topics: (Finish) Computational Graphs

- Slides: 83

CS 4803 / 7643: Deep Learning

Topics:
- (Finish) Computational Graphs
  - Notation + example
- (Finish) Computing Gradients
  - Forward mode vs Reverse mode AD
  - Patterns in backprop
  - Backprop in FC+ReLU NNs

Dhruv Batra, Georgia Tech

Administrativia
- HW 1 Reminder
  - Due: 10/02, 11:55 pm
  - https://www.cc.gatech.edu/classes/AY2019/cs7643_fall/assets/hw1.pdf
  - https://www.cc.gatech.edu/classes/AY2019/cs7643_fall/hw1-q6/

(C) Dhruv Batra

Recap from last time

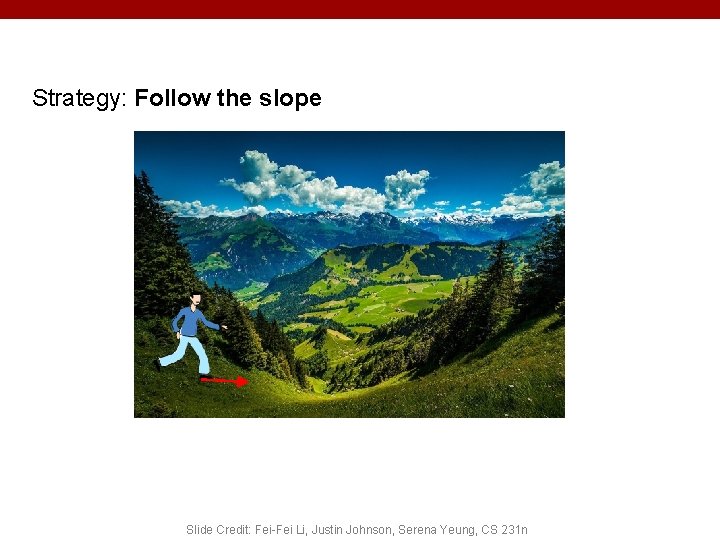

Strategy: Follow the slope

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

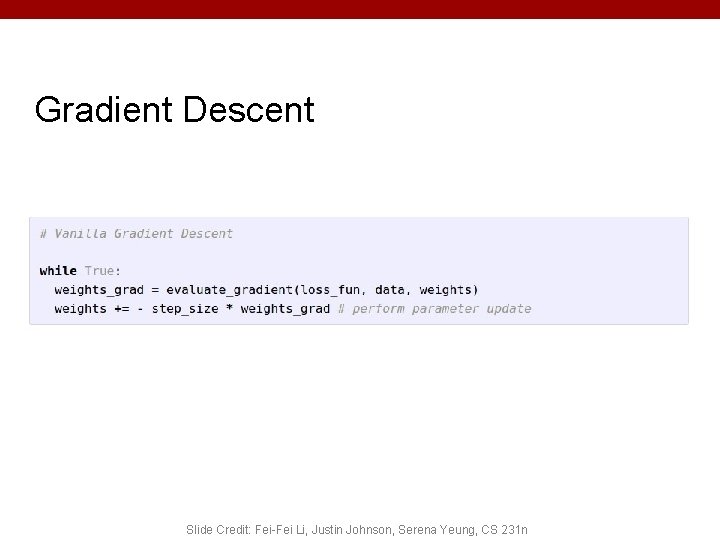

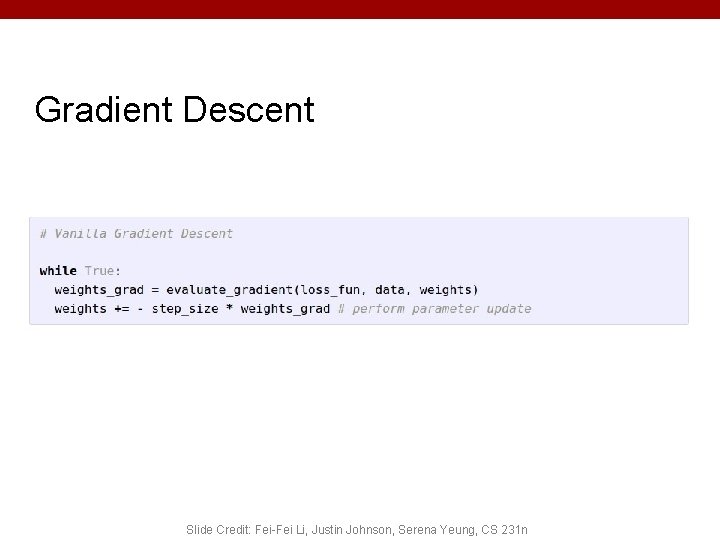

Gradient Descent

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n
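A minimal sketch of the vanilla update W := W - step_size * dL/dW, assuming a simple quadratic loss chosen only for illustration; the names `gradient_descent` and `grad_fn`, the step size, and the target vector are hypothetical, not from the slides.

```python
import numpy as np

def gradient_descent(grad_fn, w0, step_size=0.1, num_steps=100):
    """Vanilla gradient descent: repeatedly step against the gradient."""
    w = np.array(w0, dtype=float)
    for _ in range(num_steps):
        w = w - step_size * grad_fn(w)  # follow the negative slope
    return w

# Hypothetical quadratic loss L(w) = ||w - target||^2, minimized at w = target.
target = np.array([1.0, -2.0, 3.0])
grad_fn = lambda w: 2.0 * (w - target)

w_final = gradient_descent(grad_fn, w0=np.zeros(3))
print(w_final)  # close to [1, -2, 3]
```

With this step size each iteration shrinks the distance to the minimum by a factor of 0.8, so 100 steps land very close to `target`.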

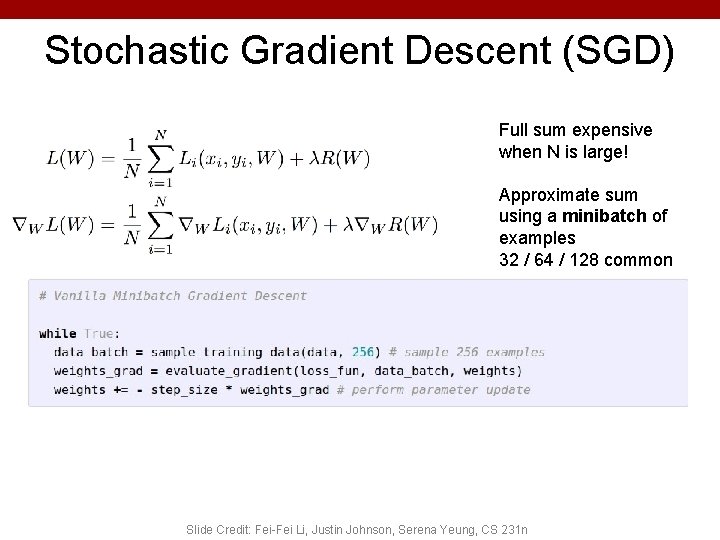

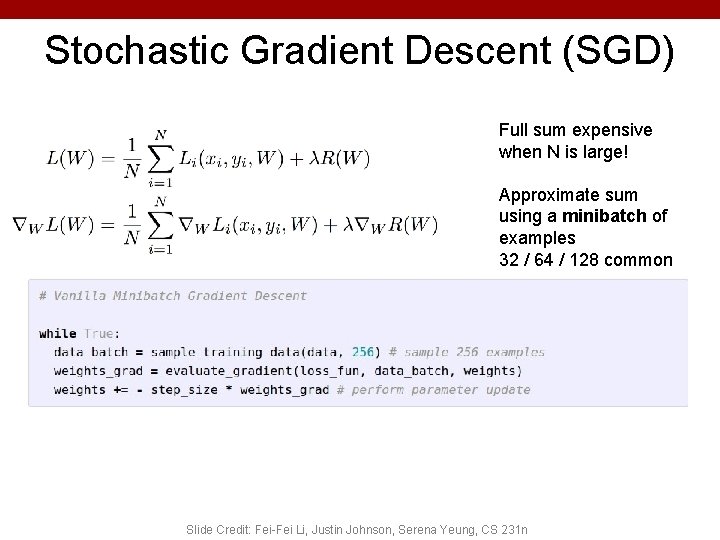

Stochastic Gradient Descent (SGD)

The full sum is expensive when N is large! Approximate the sum using a minibatch of examples; minibatch sizes of 32 / 64 / 128 are common.

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n
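A sketch of minibatch SGD, assuming a synthetic, noise-free least-squares problem (the data, step size, and helper names are illustrative). Each step estimates the gradient from 32 randomly sampled examples instead of summing over all N.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic least-squares problem (illustrative data, not from the slides).
N, D = 10_000, 5
X = rng.normal(size=(N, D))
w_true = rng.normal(size=D)
y = X @ w_true

def minibatch_grad(w, X_batch, y_batch):
    """Gradient of the mean squared error over one minibatch."""
    residual = X_batch @ w - y_batch
    return 2.0 * X_batch.T @ residual / len(y_batch)

def sgd(w, batch_size=32, step_size=0.05, num_steps=2000):
    for _ in range(num_steps):
        # Sample a minibatch instead of summing over all N examples.
        idx = rng.choice(N, size=batch_size, replace=False)
        w = w - step_size * minibatch_grad(w, X[idx], y[idx])
    return w

w_hat = sgd(np.zeros(D))
print(np.max(np.abs(w_hat - w_true)))  # small, since y is noise-free
```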

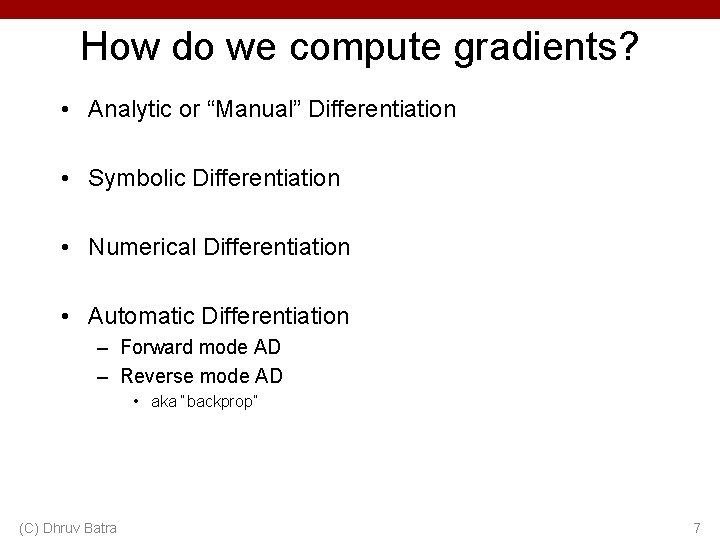

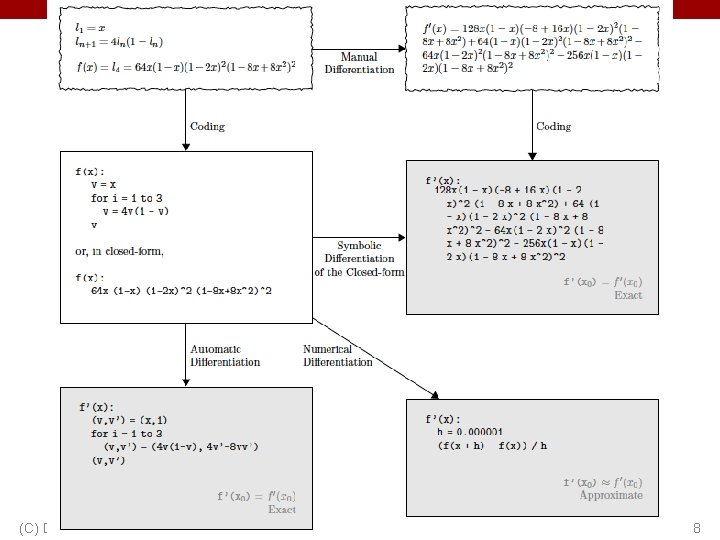

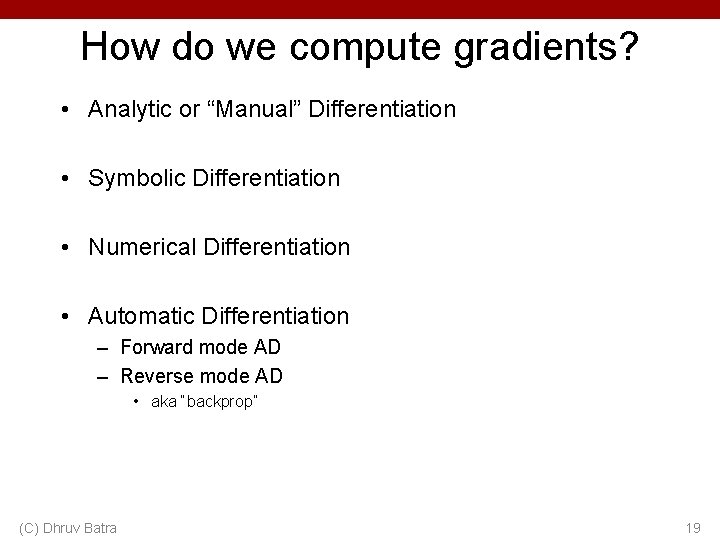

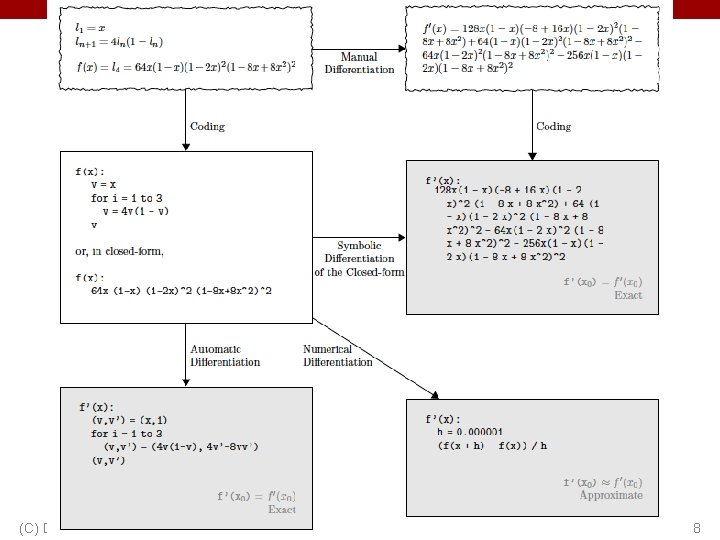

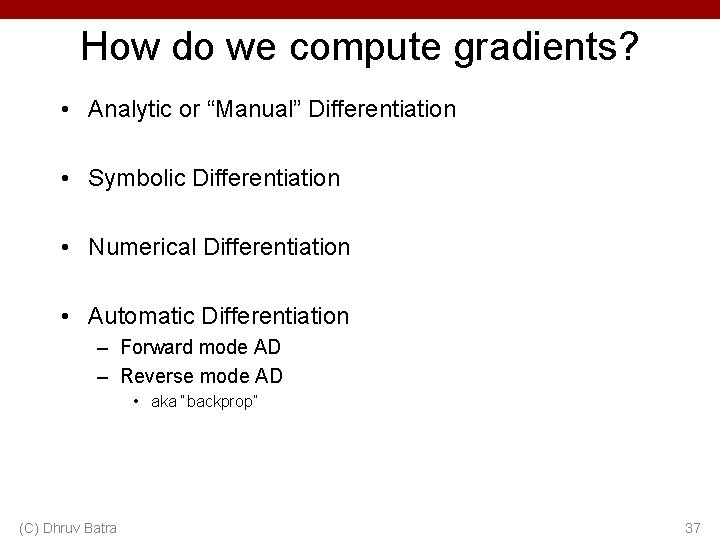

How do we compute gradients?
- Analytic or “Manual” Differentiation
- Symbolic Differentiation
- Numerical Differentiation
- Automatic Differentiation
  - Forward mode AD
  - Reverse mode AD
    - aka “backprop”



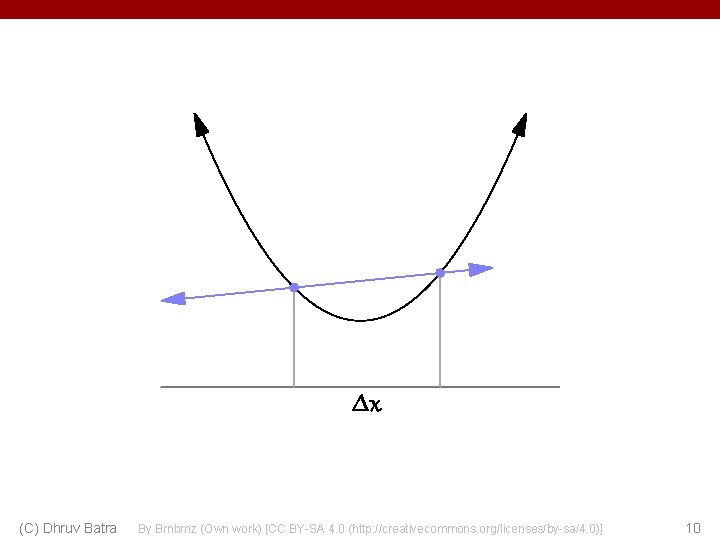

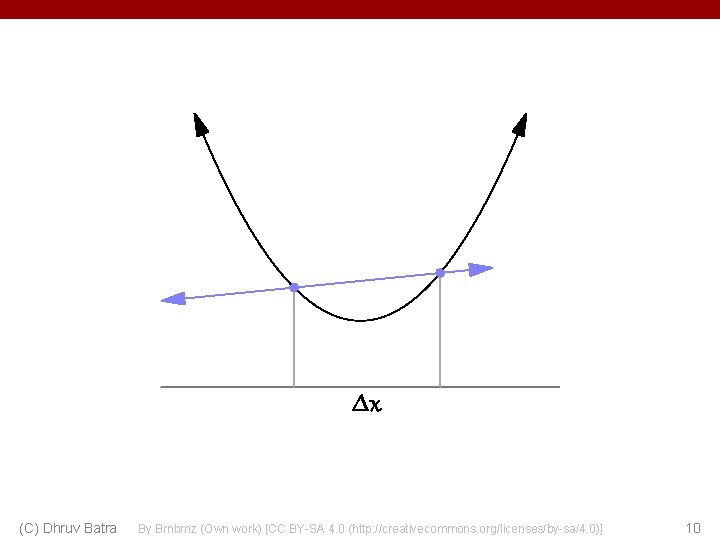

By Brnbrnz (Own work) [CC BY-SA 4.0 (http://creativecommons.org/licenses/by-sa/4.0)]

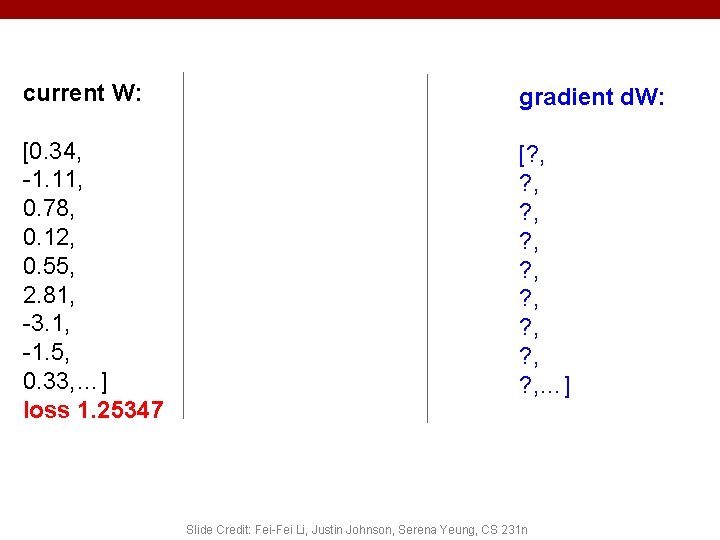

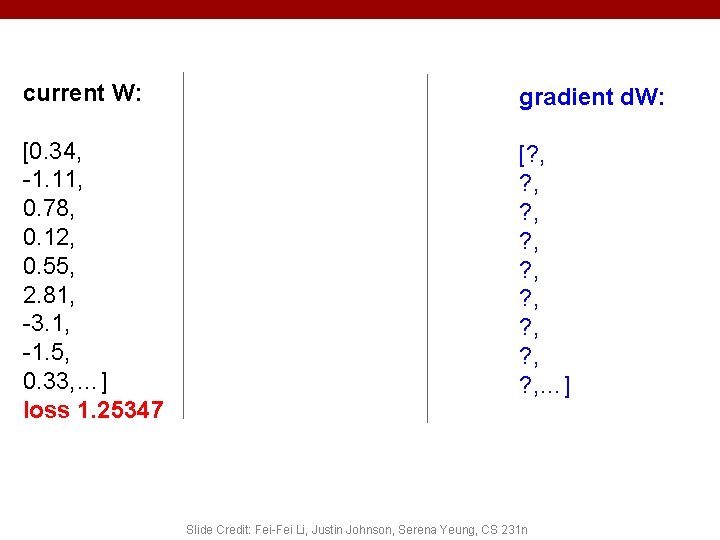

- current W: [0.34, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- gradient dW: [?, ?, ?, …]

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

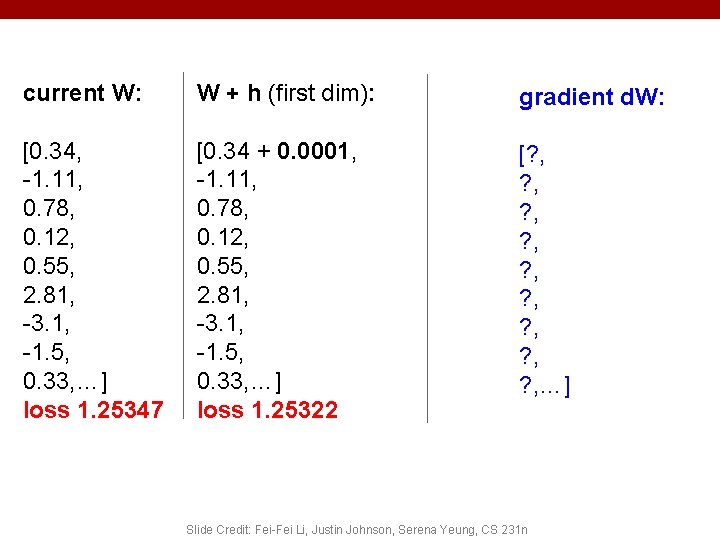

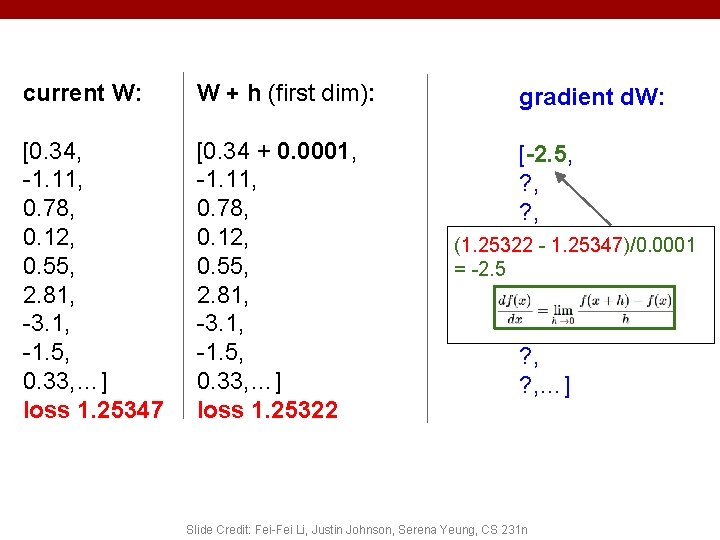

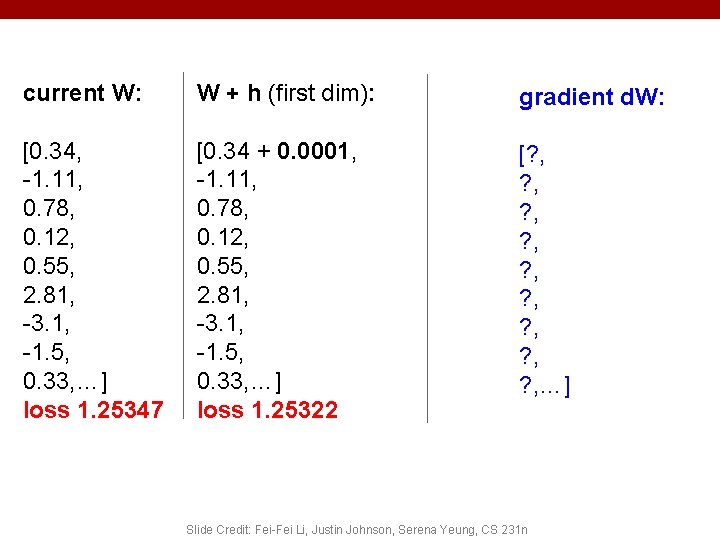

- current W: [0.34, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- W + h (first dim): [0.34 + 0.0001, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25322
- gradient dW: [?, ?, ?, …]

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

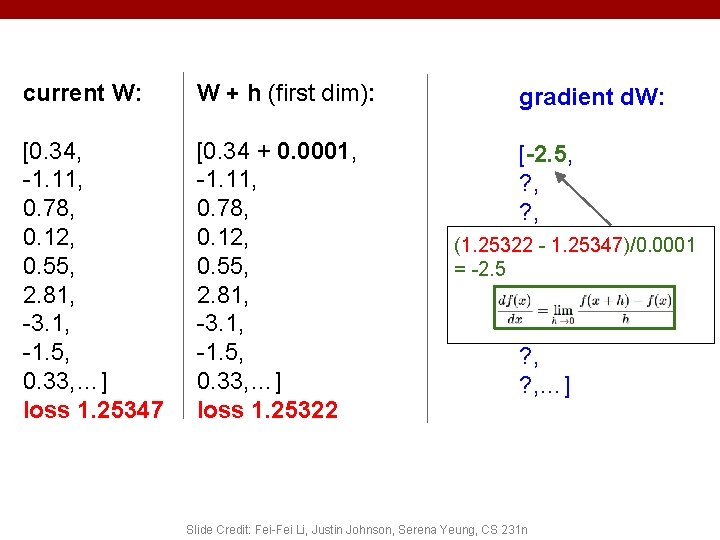

- current W: [0.34, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- W + h (first dim): [0.34 + 0.0001, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25322
- gradient dW: [-2.5, ?, ?, …], since (1.25322 - 1.25347)/0.0001 = -2.5

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

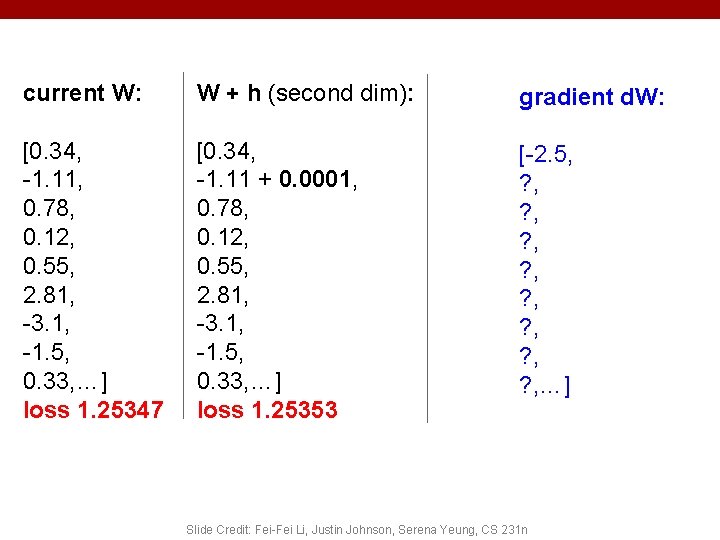

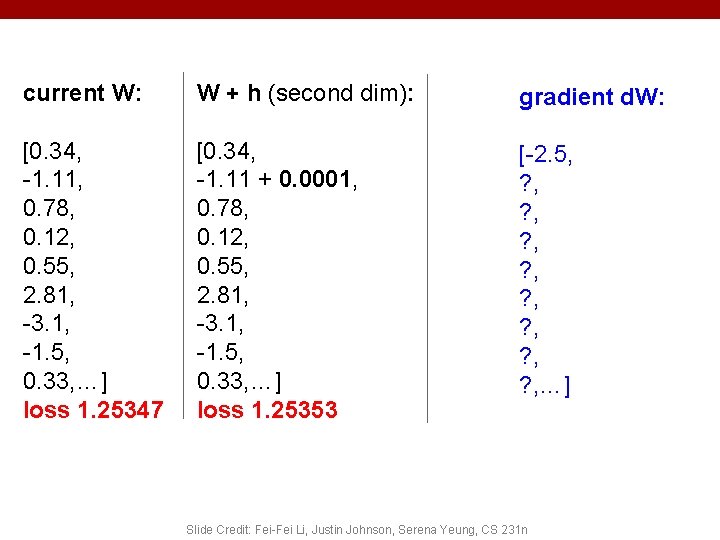

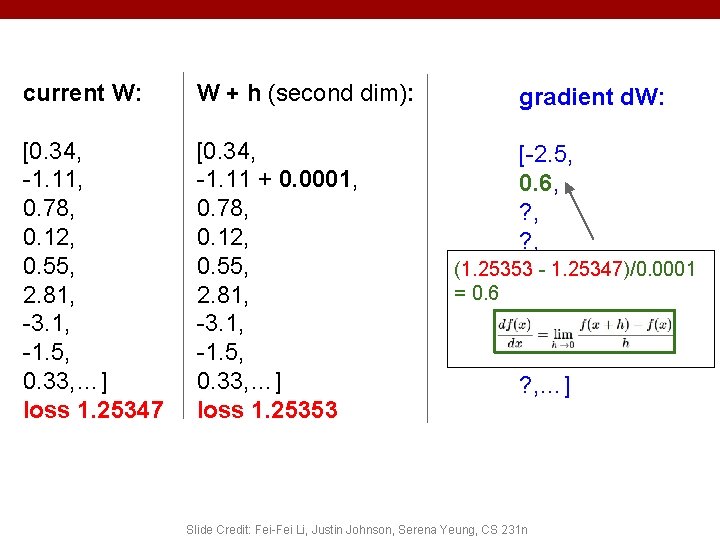

- current W: [0.34, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- W + h (second dim): [0.34, -1.11 + 0.0001, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25353
- gradient dW: [-2.5, ?, ?, …]

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

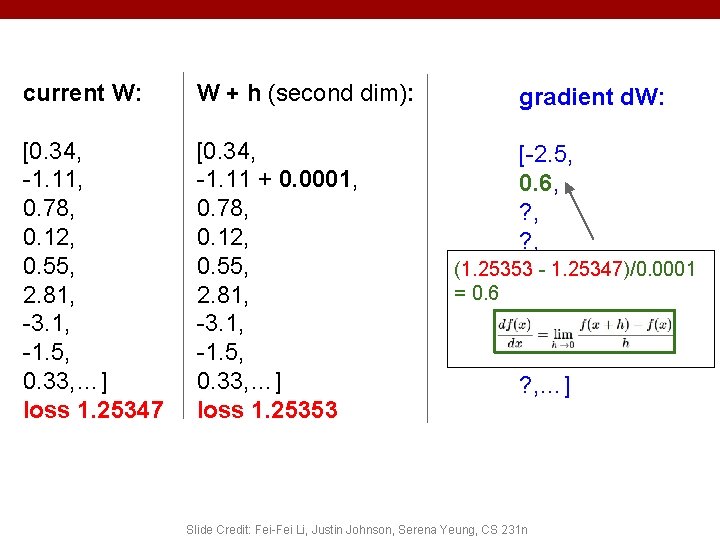

- current W: [0.34, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- W + h (second dim): [0.34, -1.11 + 0.0001, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25353
- gradient dW: [-2.5, 0.6, ?, …], since (1.25353 - 1.25347)/0.0001 = 0.6

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

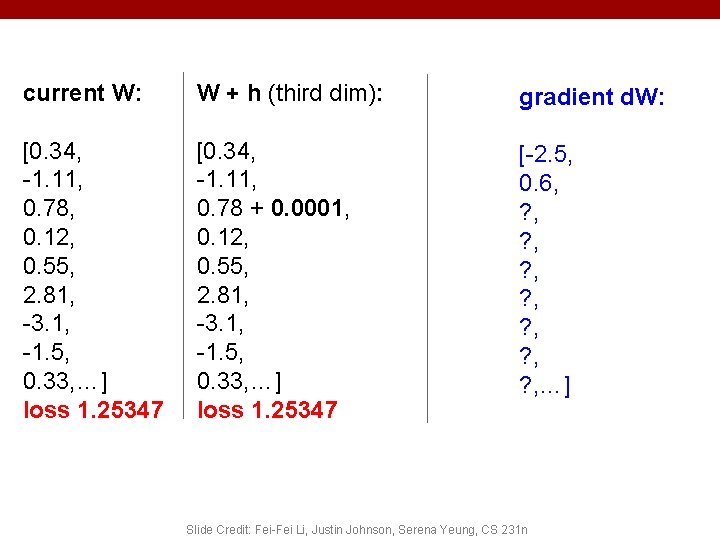

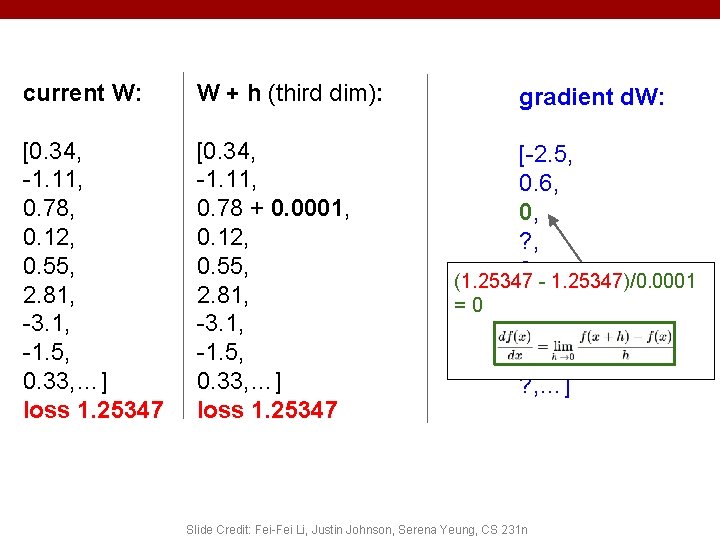

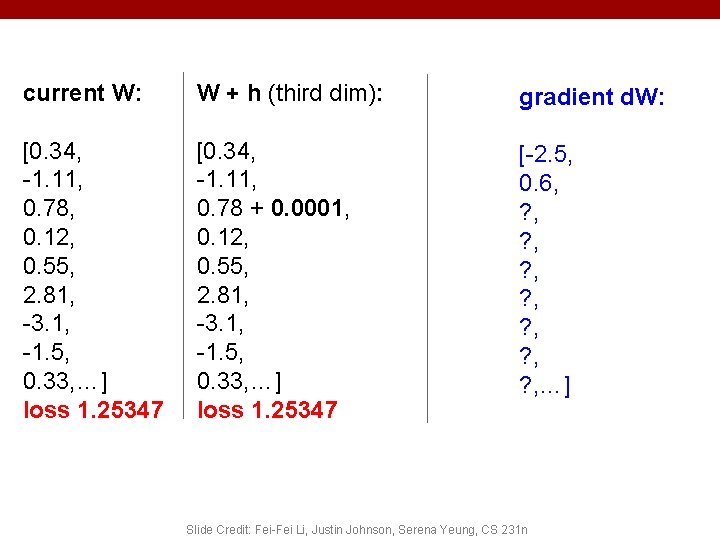

- current W: [0.34, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- W + h (third dim): [0.34, -1.11, 0.78 + 0.0001, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- gradient dW: [-2.5, 0.6, ?, …]

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

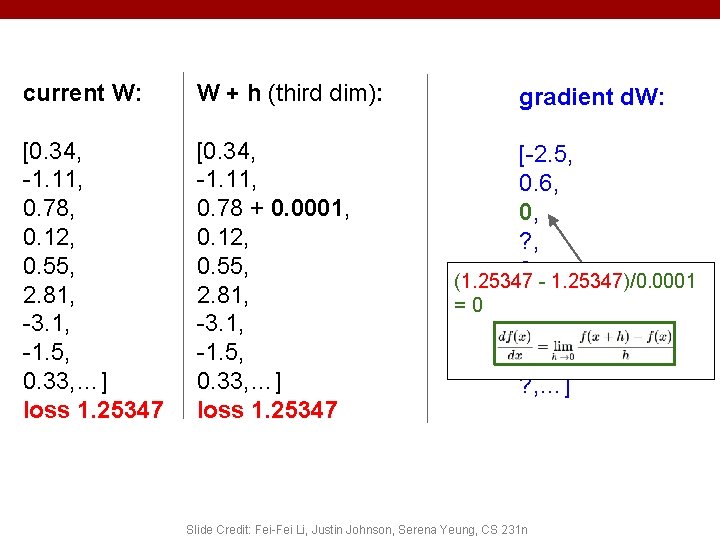

- current W: [0.34, -1.11, 0.78, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- W + h (third dim): [0.34, -1.11, 0.78 + 0.0001, 0.12, 0.55, 2.81, -3.1, -1.5, 0.33, …], loss 1.25347
- gradient dW: [-2.5, 0.6, 0, ?, …], since (1.25347 - 1.25347)/0.0001 = 0

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n
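The coordinate-by-coordinate procedure above (perturb one entry by h = 0.0001, re-evaluate the loss, divide the change by h) can be sketched as follows. The function name `numerical_gradient` and the test loss are illustrative assumptions.

```python
import numpy as np

def numerical_gradient(f, W, h=1e-4):
    """Forward-difference estimate of df/dW, one coordinate at a time."""
    W = np.array(W, dtype=float)
    grad = np.zeros_like(W)
    loss = f(W)                      # loss at the current W
    for i in range(W.size):
        W_plus = W.copy()
        W_plus.flat[i] += h          # W + h along dimension i
        grad.flat[i] = (f(W_plus) - loss) / h
    return grad

# The arithmetic from the slides for the first two dimensions.
print((1.25322 - 1.25347) / 0.0001)  # approx -2.5
print((1.25353 - 1.25347) / 0.0001)  # approx 0.6

# Sanity check on f(W) = sum(W**2), whose exact gradient is 2W.
print(numerical_gradient(lambda w: np.sum(w ** 2), [1.0, -2.0]))  # approx [2.0, -4.0]
```

Note the cost: one extra loss evaluation per parameter, which is why the numerical gradient is slow for networks with millions of weights.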

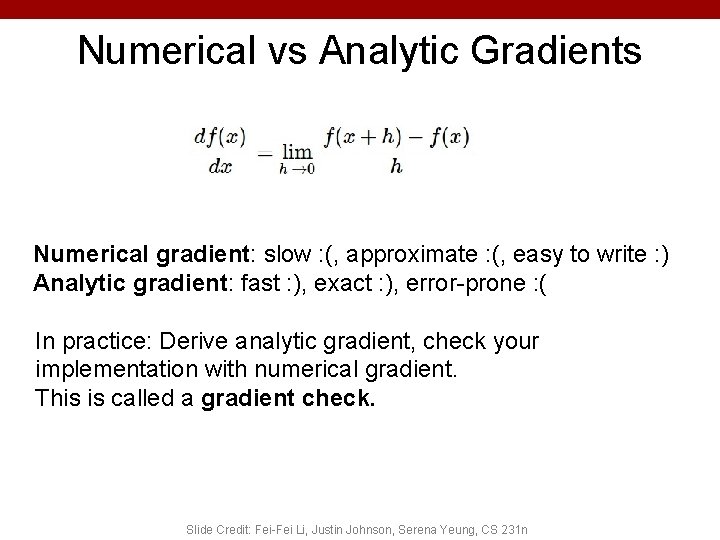

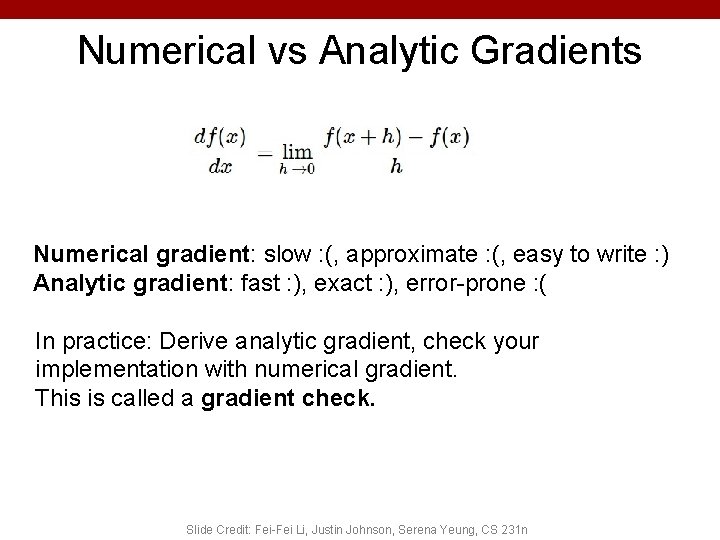

Numerical vs Analytic Gradients
- Numerical gradient: slow :(, approximate :(, easy to write :)
- Analytic gradient: fast :), exact :), error-prone :(

In practice: derive the analytic gradient, then check your implementation with the numerical gradient. This is called a gradient check.

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n
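A gradient check in this spirit, sketched under two assumptions not taken from the slides: it uses centered differences (a common substitute for the one-sided difference, usually more accurate) and compares gradients with a relative-error metric. The test function and its hand-derived gradient are illustrative.

```python
import numpy as np

def centered_numerical_grad(f, x, h=1e-5):
    """Centered-difference estimate: (f(x+h) - f(x-h)) / 2h per coordinate."""
    x = np.array(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        old = x.flat[i]
        x.flat[i] = old + h
        f_plus = f(x)
        x.flat[i] = old - h
        f_minus = f(x)
        x.flat[i] = old              # restore the original value
        grad.flat[i] = (f_plus - f_minus) / (2 * h)
    return grad

def relative_error(a, b, eps=1e-12):
    """Largest elementwise |a - b| / (|a| + |b|)."""
    return np.max(np.abs(a - b) / np.maximum(np.abs(a) + np.abs(b), eps))

# Analytic gradient of f(x) = sum(sin(x) * x) is cos(x) * x + sin(x).
f = lambda x: np.sum(np.sin(x) * x)
analytic = lambda x: np.cos(x) * x + np.sin(x)

x0 = np.array([0.3, -1.2, 2.5])
err = relative_error(analytic(x0), centered_numerical_grad(f, x0))
print(err)  # tiny when the analytic gradient is correct
```

A buggy analytic gradient (for example, forgetting the product rule and returning only `np.cos(x)`) produces a relative error many orders of magnitude larger.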


Matrix/Vector Derivatives Notation

Vector Derivative Example

Extension to Tensors

Chain Rule: Composite Functions

Chain Rule: Scalar Case

Chain Rule: Vector Case

Chain Rule: Jacobian view
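To make the Jacobian view concrete, a small numerical sketch: for h(x) = f(g(x)), the Jacobian of h equals J_f evaluated at g(x) times J_g evaluated at x. The particular g (a linear map R^3 to R^2) and f (R^2 to R^3) are arbitrary illustrative choices.

```python
import numpy as np

def numerical_jacobian(fn, x, h=1e-6):
    """Column i holds the centered-difference derivative of fn w.r.t. x[i]."""
    x = np.asarray(x, dtype=float)
    cols = []
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        cols.append((fn(x + e) - fn(x - e)) / (2 * h))
    return np.stack(cols, axis=1)

A = np.array([[1.0, 2.0, 0.0],
              [0.0, -1.0, 3.0]])

g = lambda x: A @ x                                            # g: R^3 -> R^2
f = lambda u: np.array([np.sin(u[0]), u[0] * u[1], u[1] ** 2])  # f: R^2 -> R^3

def J_g(x):
    return A                                   # 2 x 3

def J_f(u):
    return np.array([[np.cos(u[0]), 0.0],      # 3 x 2
                     [u[1],         u[0]],
                     [0.0,          2 * u[1]]])

x0 = np.array([0.5, -0.2, 0.1])
chain = J_f(g(x0)) @ J_g(x0)                   # (3x2) @ (2x3) -> 3x3
direct = numerical_jacobian(lambda x: f(g(x)), x0)
print(np.allclose(chain, direct, atol=1e-6))   # True
```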

Chain Rule: Graphical view

Chain Rule: Cascaded

Chain Rule: How should we multiply?
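For a cascade of Jacobians that ends in a scalar loss, the product can be associated starting from the input side (the forward-mode order) or starting from the loss side (the reverse-mode order). Both give the same numbers but very different costs. A sketch that counts multiply-adds for a hypothetical chain of random Jacobians; the layer sizes are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical chain: x in R^1000 -> R^500 -> R^500 -> R^500 -> scalar loss.
# Jacobian J_k has shape (out_k, in_k); the last one is (1, 500).
sizes = [1000, 500, 500, 500, 1]
jacobians = [rng.normal(size=(sizes[k + 1], sizes[k])) for k in range(len(sizes) - 1)]

def matmul_cost(a_shape, b_shape):
    """Multiply-adds needed for an (m x n) @ (n x p) product."""
    m, n = a_shape
    _, p = b_shape
    return m * n * p

def multiply_right_to_left(mats):
    """Forward-mode order: J_4 @ (J_3 @ (J_2 @ J_1))."""
    acc, cost = mats[0], 0
    for J in mats[1:]:
        cost += matmul_cost(J.shape, acc.shape)
        acc = J @ acc
    return acc, cost

def multiply_left_to_right(mats):
    """Reverse-mode order: ((J_4 @ J_3) @ J_2) @ J_1."""
    acc, cost = mats[-1], 0
    for J in reversed(mats[:-1]):
        cost += matmul_cost(acc.shape, J.shape)
        acc = acc @ J
    return acc, cost

fwd, fwd_cost = multiply_right_to_left(jacobians)
rev, rev_cost = multiply_left_to_right(jacobians)
print(np.allclose(fwd, rev), fwd_cost, rev_cost)
```

With these sizes the input-side order costs 500,500,000 multiply-adds, while the loss-side order costs 1,000,000, because every intermediate product in the latter is a single row.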

Plan for Today
- (Finish) Computational Graphs
  - Notation + example
- (Finish) Computing Gradients
  - Forward mode vs Reverse mode AD
  - Patterns in backprop
  - Backprop in FC+ReLU NNs


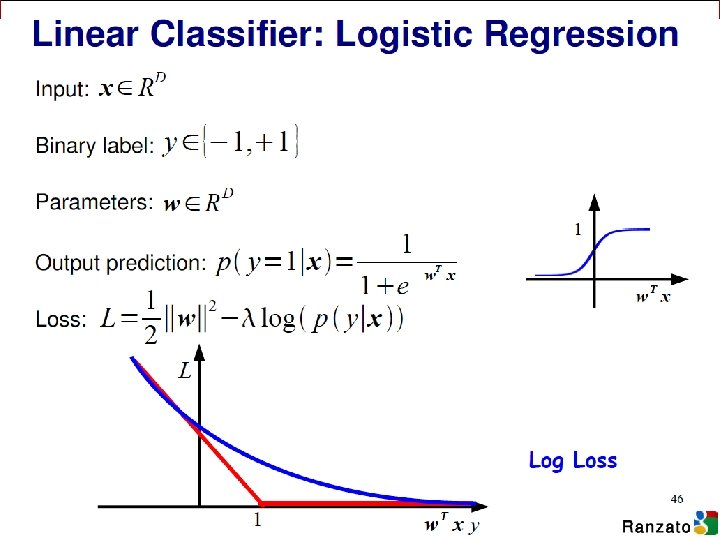

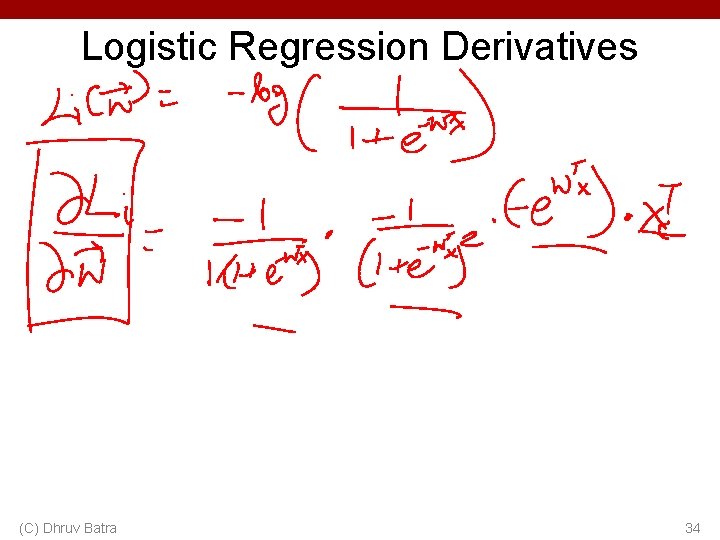

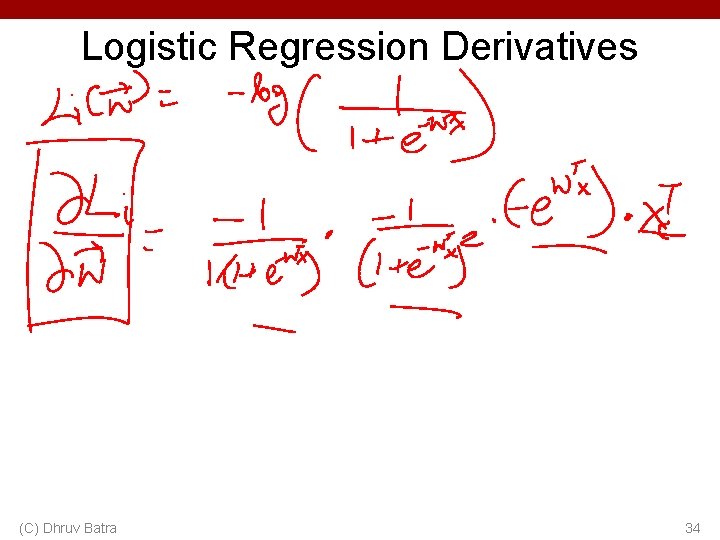

Logistic Regression Derivatives
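As a hedged sketch, assuming binary logistic regression with p = sigmoid(w . x) and cross-entropy loss L = -[y log p + (1 - y) log(1 - p)] for a label y in {0, 1}, the standard result dL/dw = (p - y) x can be checked against centered differences. The weights, input, and label are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, x, y):
    """Binary cross-entropy for one example, label y in {0, 1}."""
    p = sigmoid(w @ x)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def grad_w(w, x, y):
    """Analytic gradient dL/dw = (sigmoid(w . x) - y) * x."""
    return (sigmoid(w @ x) - y) * x

w = np.array([0.2, -0.5, 1.0])
x = np.array([1.0, 2.0, -0.3])
y = 1

h = 1e-6
numeric = np.array([(loss(w + h * e, x, y) - loss(w - h * e, x, y)) / (2 * h)
                    for e in np.eye(3)])
print(np.allclose(grad_w(w, x, y), numeric, atol=1e-6))  # True
```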

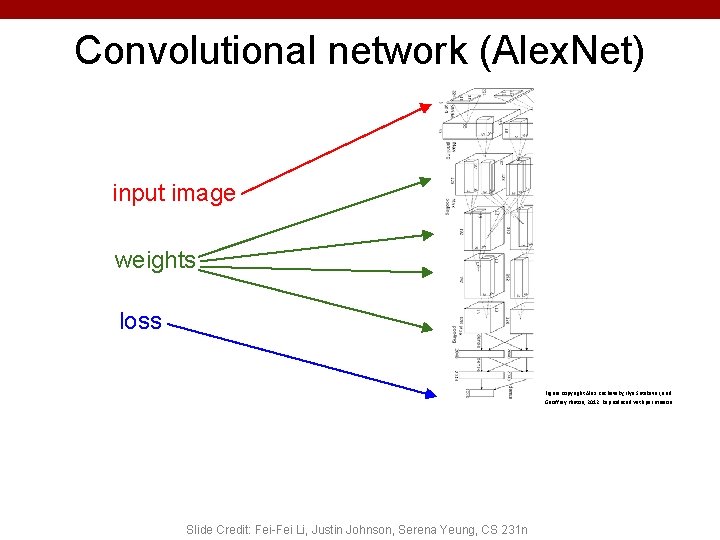

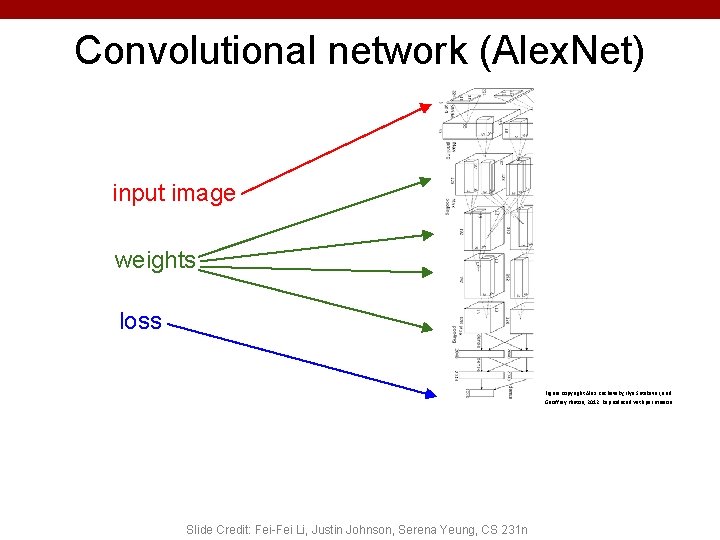

Convolutional network (AlexNet)

[Figure: AlexNet drawn as a computational graph from the input image and weights to the loss]

Figure copyright Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, 2012. Reproduced with permission.
Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

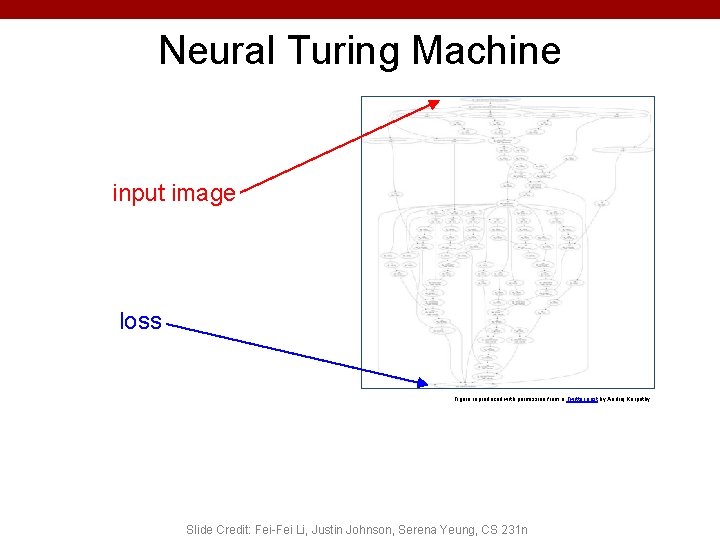

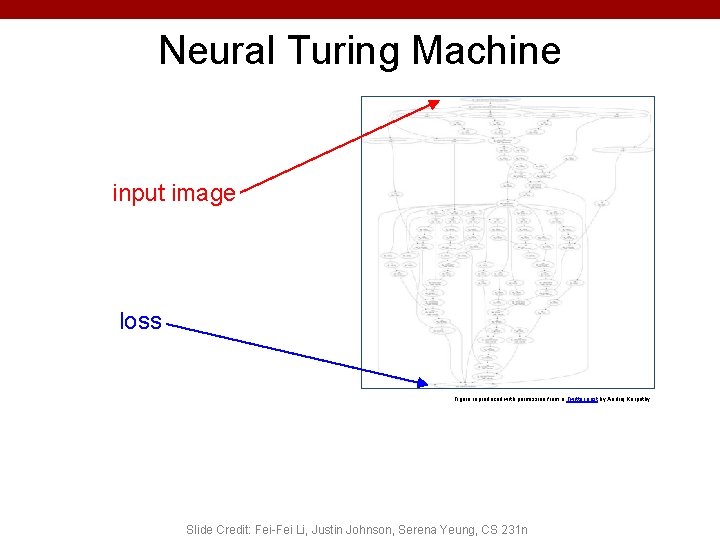

Neural Turing Machine

[Figure: a Neural Turing Machine drawn as a computational graph from the input image to the loss]

Figure reproduced with permission from a Twitter post by Andrej Karpathy.
Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n


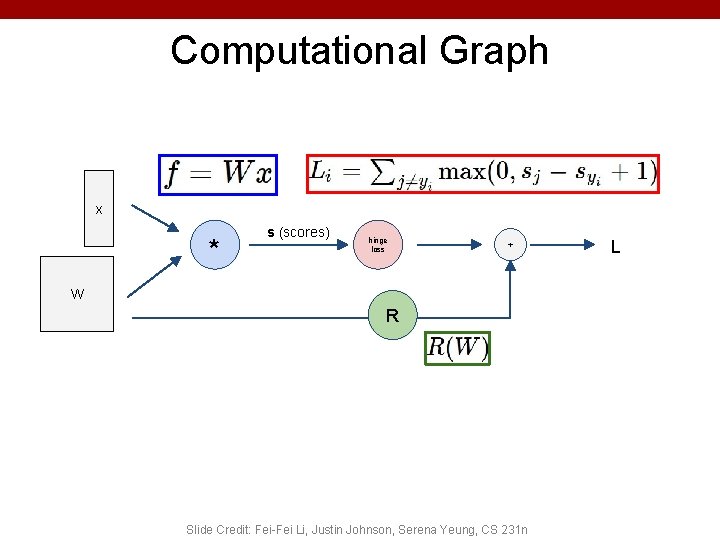

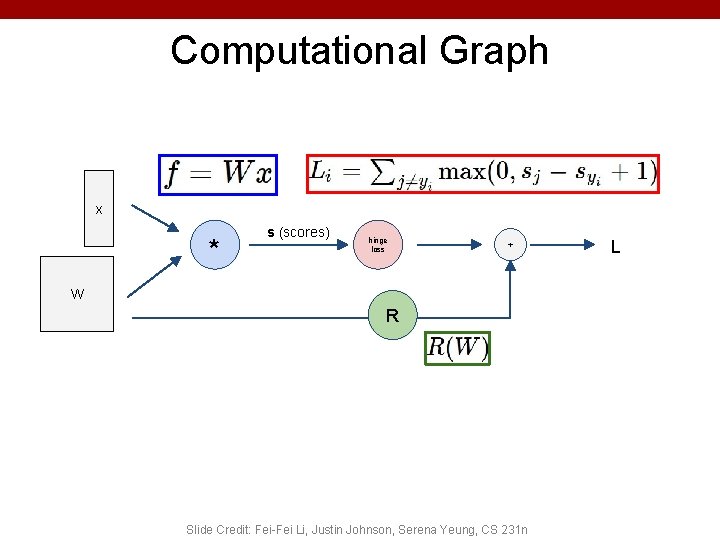

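Computational Graph

[Figure: x and W feed a * node that produces s (scores); s feeds the hinge loss; the hinge loss and the regularizer R(W) feed a + node that produces the loss L]

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n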
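The graph above can be written as explicit forward and backward steps, one per node. This sketch assumes a multiclass SVM hinge loss with margin 1 and L2 regularization R(W) = lam * sum(W**2), for a single example; `lam` and the random data are illustrative assumptions.

```python
import numpy as np

def svm_loss_and_grad(W, x, y, lam=0.1):
    # Forward: * node (scores), hinge loss node, regularizer node, + node.
    s = W @ x                               # scores, shape (C,)
    margins = np.maximum(0.0, s - s[y] + 1.0)
    margins[y] = 0.0                        # correct class contributes no loss
    data_loss = margins.sum()
    reg_loss = lam * np.sum(W * W)
    L = data_loss + reg_loss                # + node

    # Backward: walk the same graph in reverse order.
    ds = (margins > 0).astype(float)        # d(data_loss)/ds for wrong classes
    ds[y] = -ds.sum()                       # correct-class score
    dW = np.outer(ds, x)                    # through s = W x
    dW += 2 * lam * W                       # through R(W); gradients add at W
    return L, dW

rng = np.random.default_rng(1)
W = rng.normal(size=(3, 4))
x = rng.normal(size=4)
L, dW = svm_loss_and_grad(W, x, y=2)
```

W appears in two branches (the * node and R), so its gradient is the sum of the two contributions.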

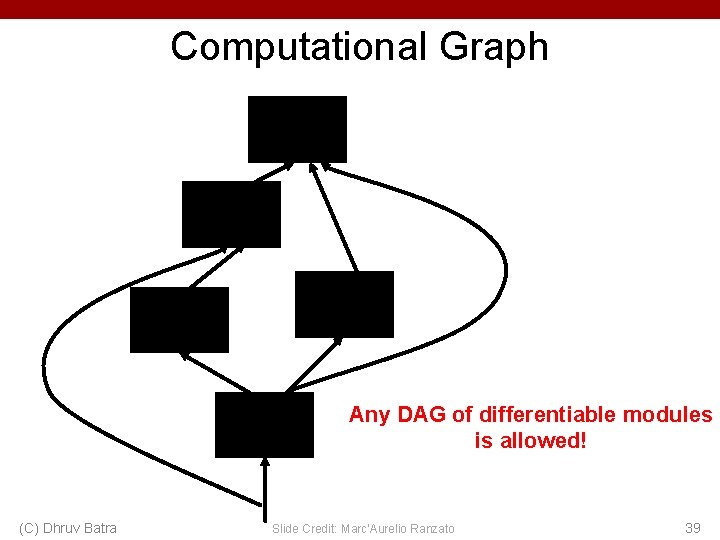

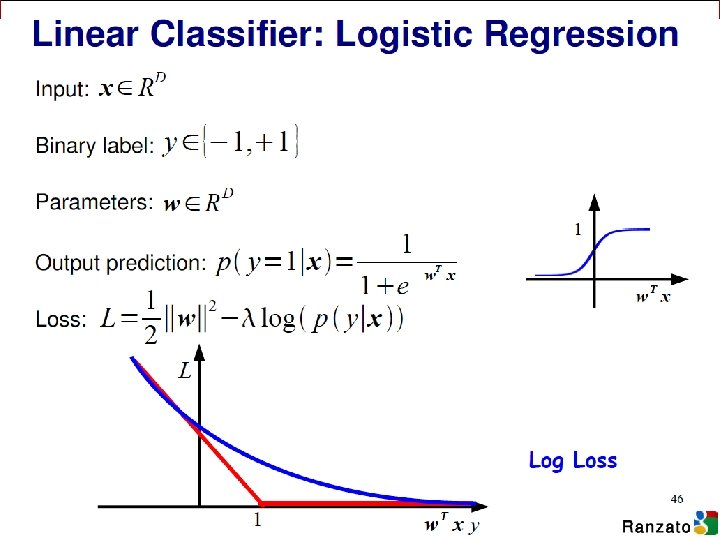

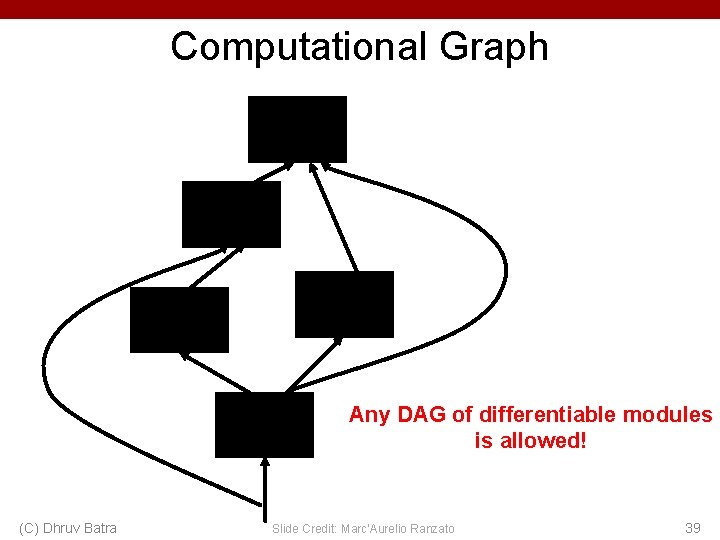

Computational Graph

Any DAG of differentiable modules is allowed!

Slide Credit: Marc'Aurelio Ranzato

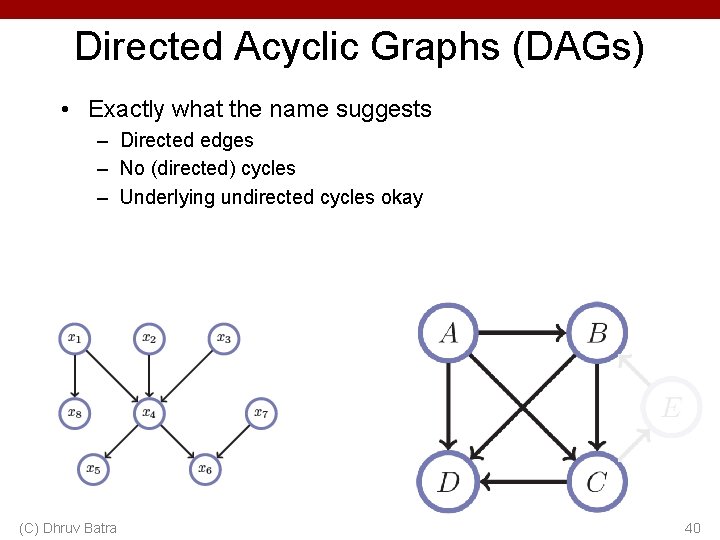

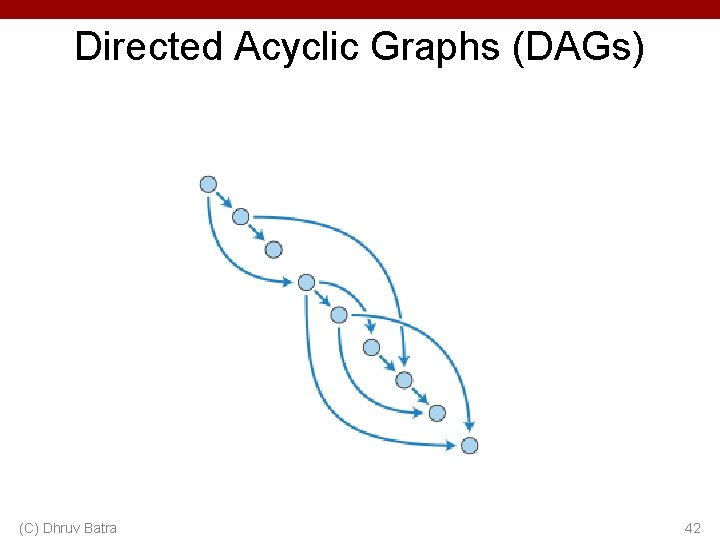

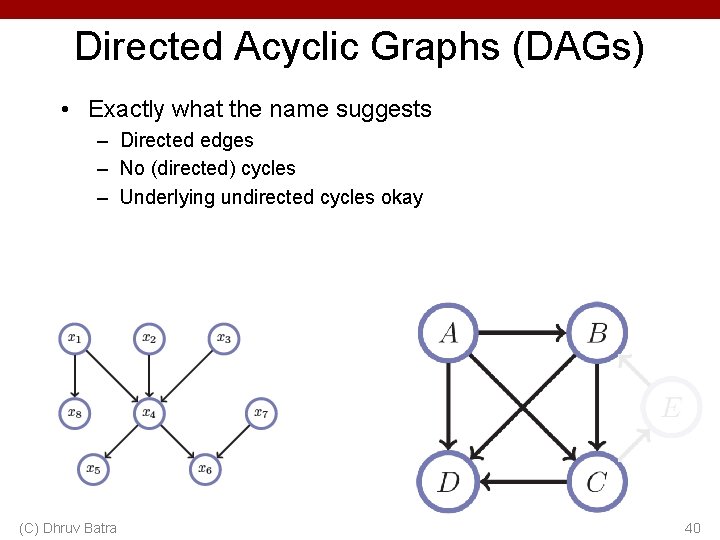

Directed Acyclic Graphs (DAGs)
- Exactly what the name suggests
  - Directed edges
  - No (directed) cycles
  - Underlying undirected cycles okay

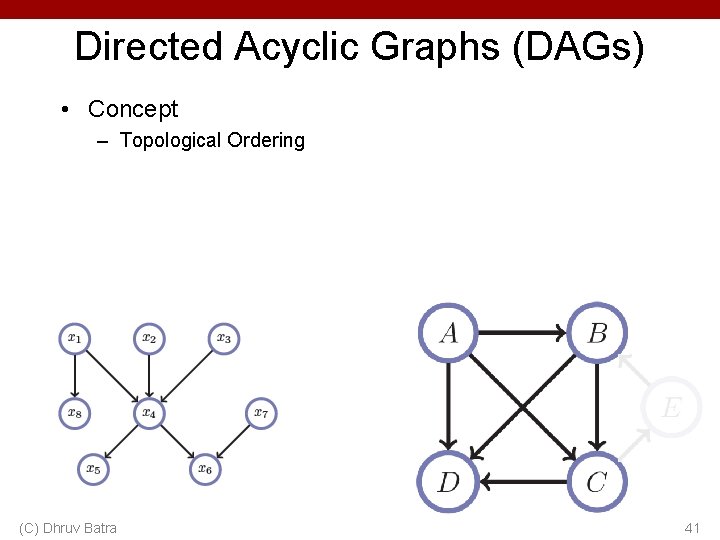

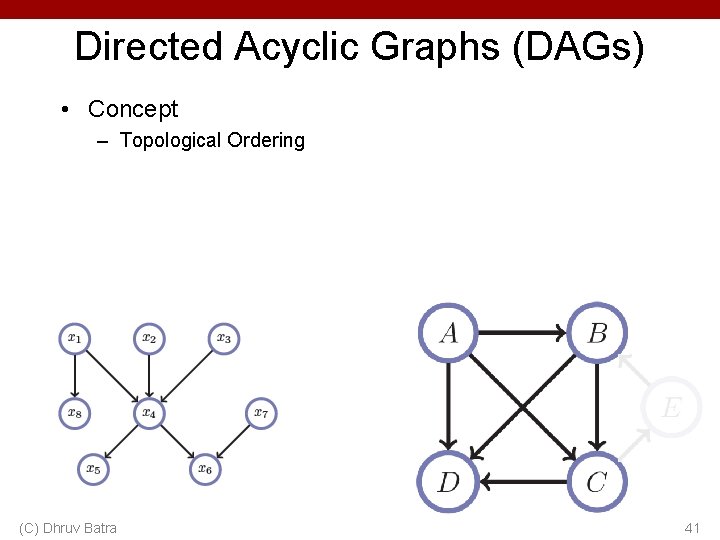

Directed Acyclic Graphs (DAGs)
- Concept
  - Topological Ordering
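A topological ordering lists every node after all of its parents; it is an order in which a forward pass can evaluate a DAG, and its reverse is a valid order for the backward pass. A sketch using Kahn's algorithm on a hypothetical five-node graph. Note that x1 reaches `add` along two paths, an undirected cycle that is allowed.

```python
from collections import deque

def topological_order(edges, nodes):
    """Kahn's algorithm: repeatedly emit a node with no remaining incoming edges."""
    children = {n: [] for n in nodes}
    indegree = {n: 0 for n in nodes}
    for src, dst in edges:
        children[src].append(dst)
        indegree[dst] += 1

    ready = deque(n for n in nodes if indegree[n] == 0)
    order = []
    while ready:
        n = ready.popleft()
        order.append(n)
        for c in children[n]:
            indegree[c] -= 1
            if indegree[c] == 0:
                ready.append(c)

    if len(order) != len(nodes):
        raise ValueError("graph has a directed cycle")
    return order

# Hypothetical graph: x1 feeds both "mul" and "sin"; both feed "add".
nodes = ["x1", "x2", "mul", "sin", "add"]
edges = [("x1", "mul"), ("x2", "mul"), ("x1", "sin"), ("mul", "add"), ("sin", "add")]
print(topological_order(edges, nodes))  # ['x1', 'x2', 'sin', 'mul', 'add']
```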

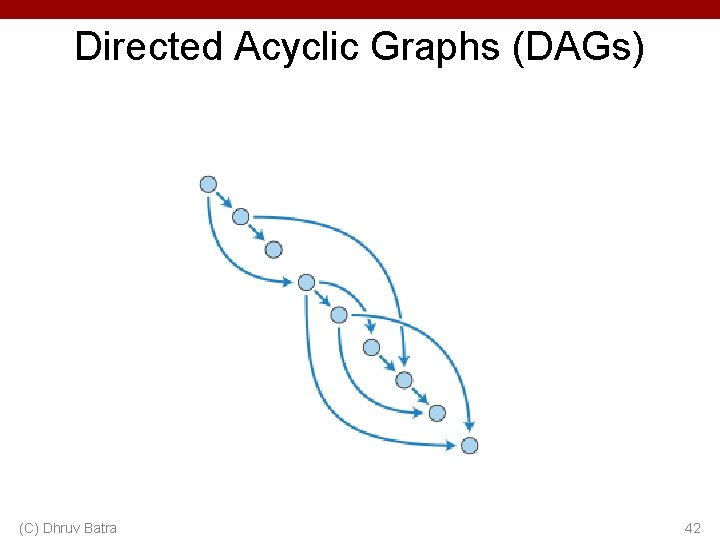

Directed Acyclic Graphs (DAGs)

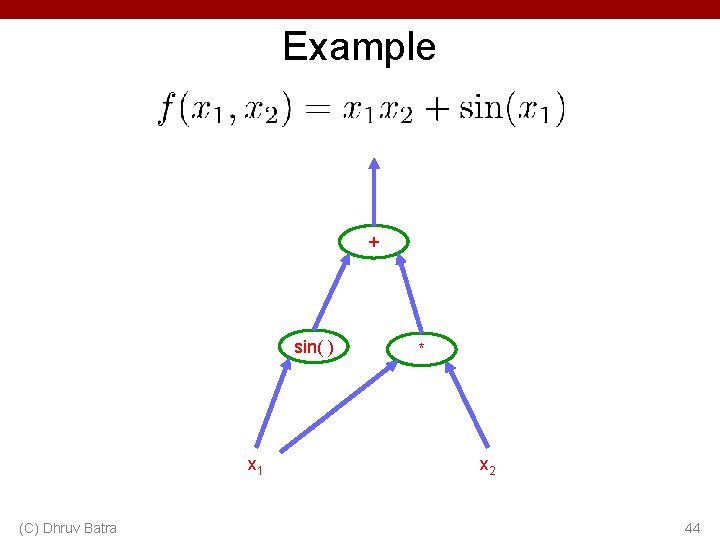

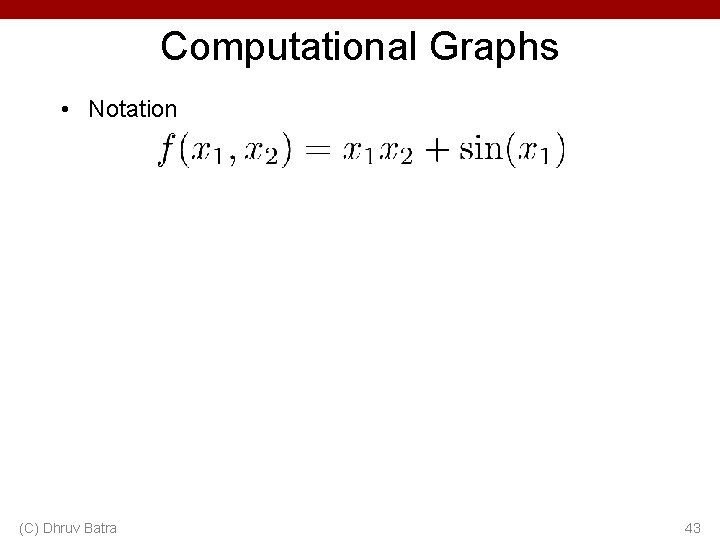

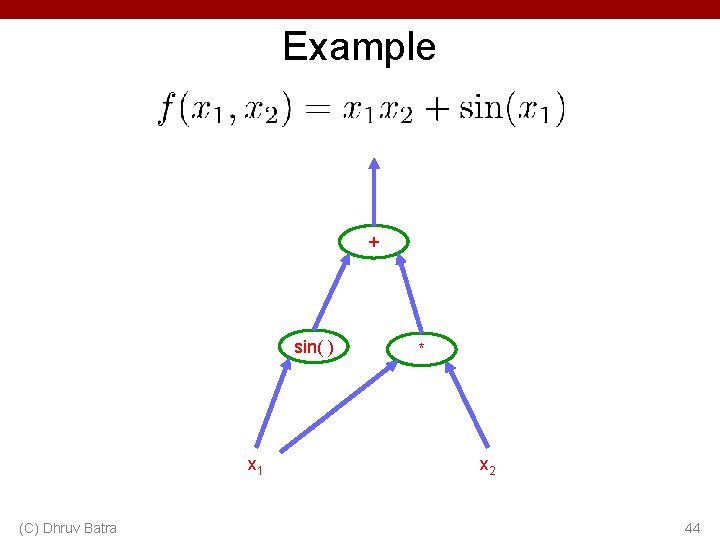

Computational Graphs
- Notation

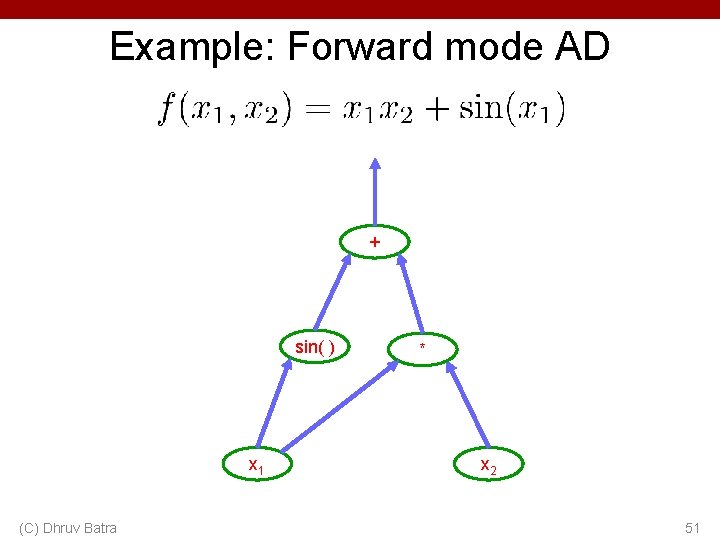

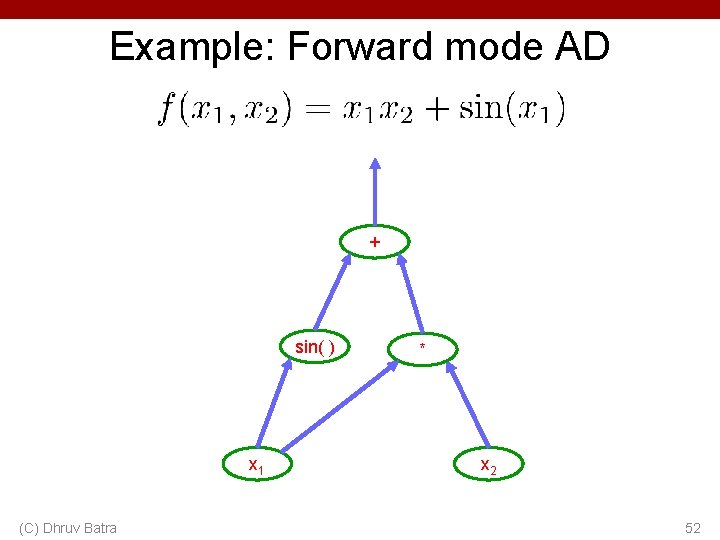

Example

[Figure: computational graph over inputs x1 and x2 with a * node, a sin( ) node, and a + node]

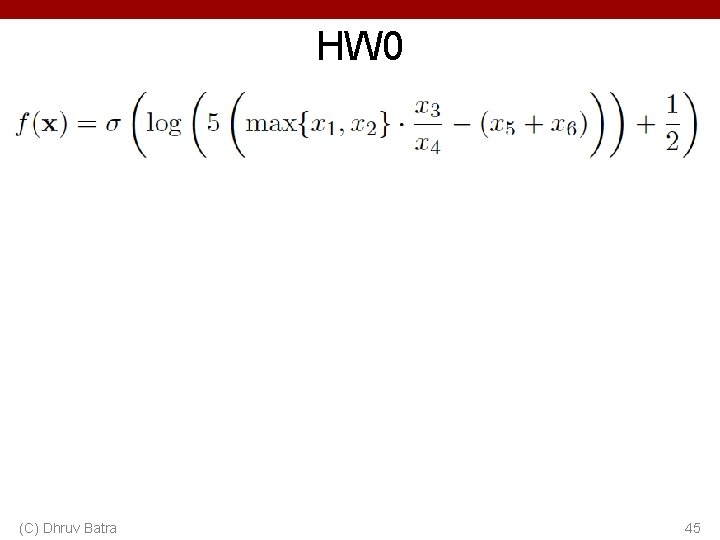

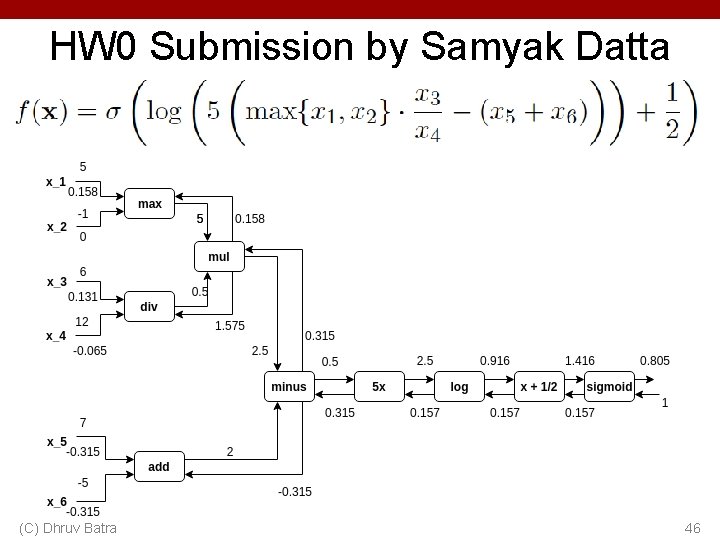

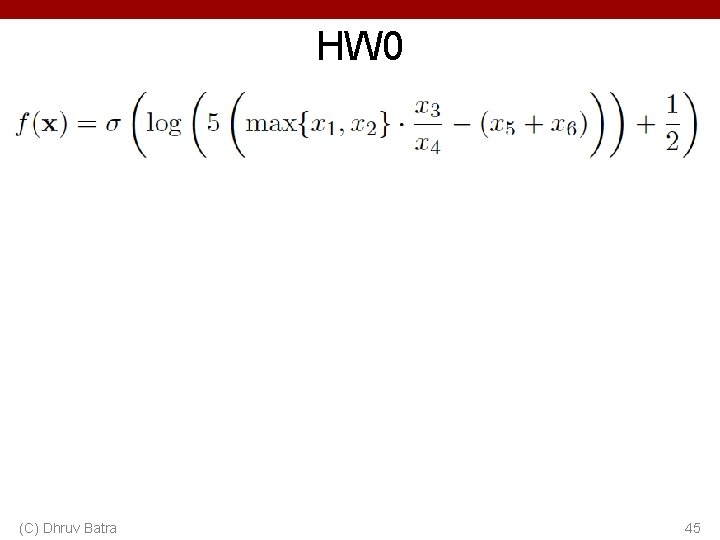

HW 0

HW 0 Submission by Samyak Datta

Forward mode vs Reverse Mode
- Key Computations

Forward mode AD

Reverse mode AD

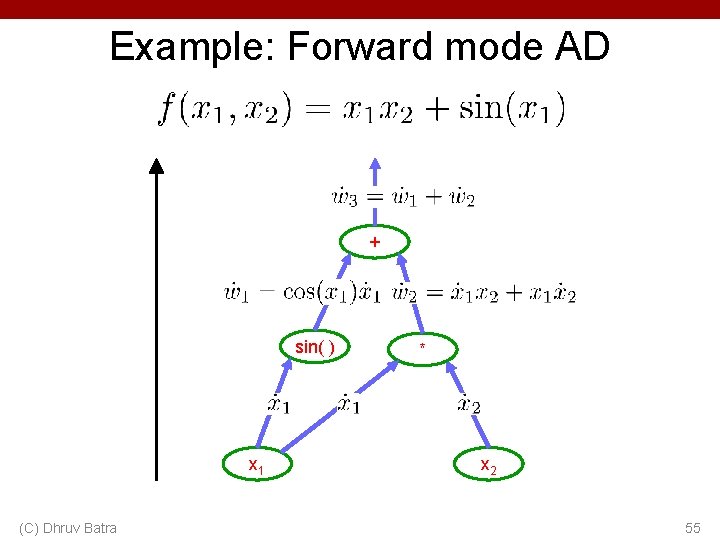

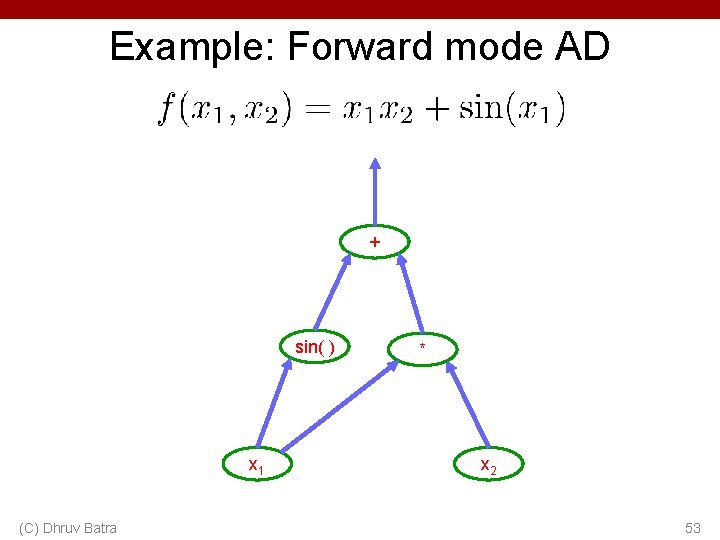

Example: Forward mode AD

[Figure: the x1, x2 example graph with +, sin( ) and * nodes]


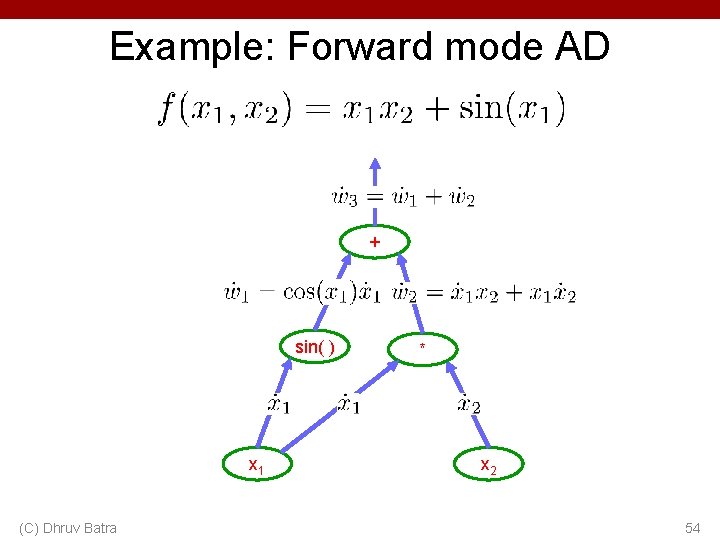

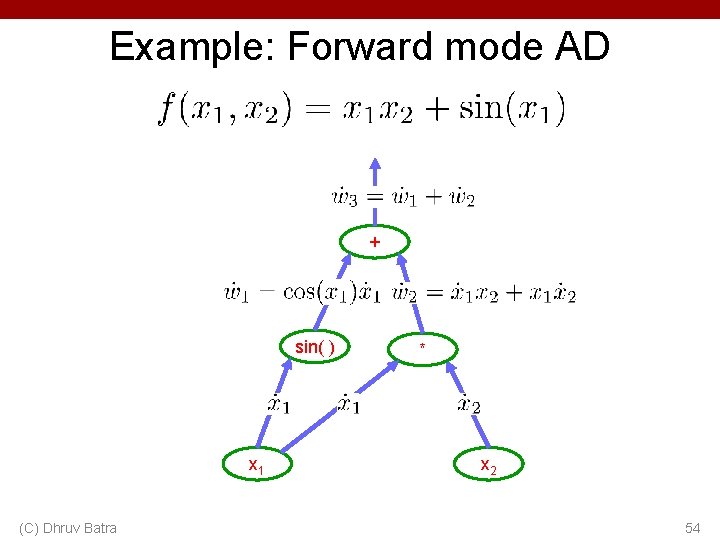


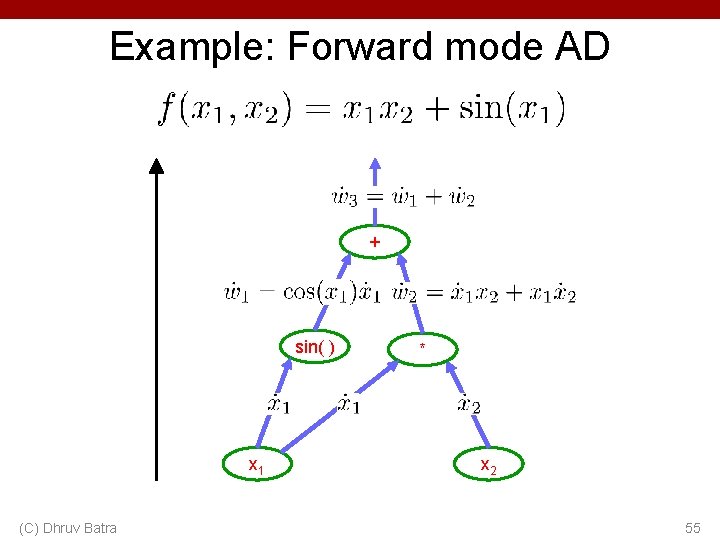

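Forward mode AD can be sketched with dual numbers: every value carries its derivative with respect to one seeded input, and every operation updates both. This sketch assumes the example graph computes f(x1, x2) = x1 * x2 + sin(x1); the figure shows +, sin( ) and * nodes over x1 and x2, but the exact wiring is an assumption here. One pass per input is needed to get the full gradient.

```python
import math
from dataclasses import dataclass

@dataclass
class Dual:
    val: float   # primal value
    dot: float   # derivative w.r.t. the seeded input

    def __add__(self, other):
        return Dual(self.val + other.val, self.dot + other.dot)

    def __mul__(self, other):
        # Product rule.
        return Dual(self.val * other.val, self.dot * other.val + self.val * other.dot)

def dsin(a):
    return Dual(math.sin(a.val), math.cos(a.val) * a.dot)

def f(x1, x2):
    # Assumed example function: f(x1, x2) = x1 * x2 + sin(x1).
    return x1 * x2 + dsin(x1)

a, b = 2.0, 3.0
df_dx1 = f(Dual(a, 1.0), Dual(b, 0.0)).dot   # pass 1: seed x1
df_dx2 = f(Dual(a, 0.0), Dual(b, 1.0)).dot   # pass 2: seed x2
print(df_dx1, df_dx2)  # x2 + cos(x1) and x1
```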

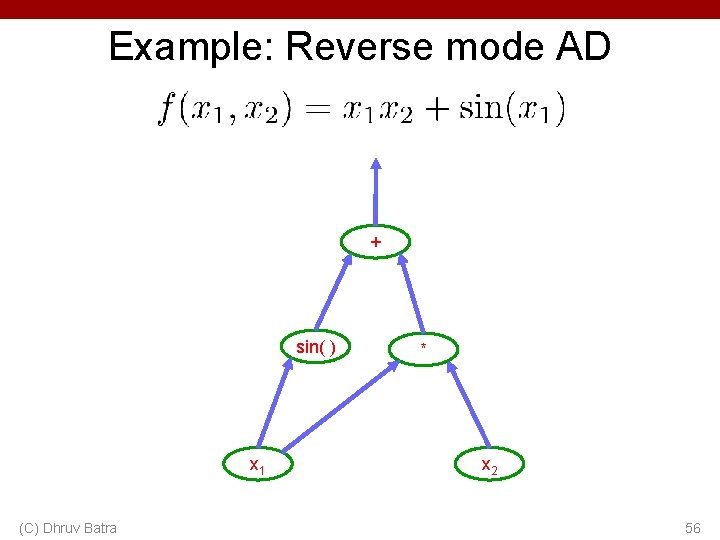

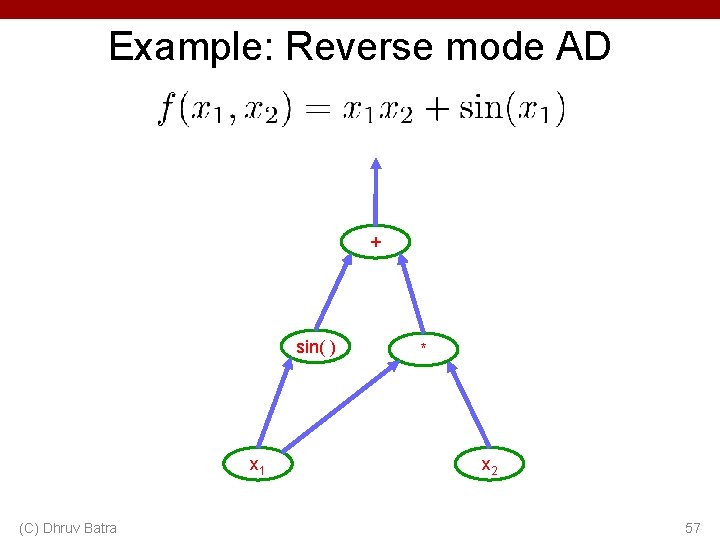

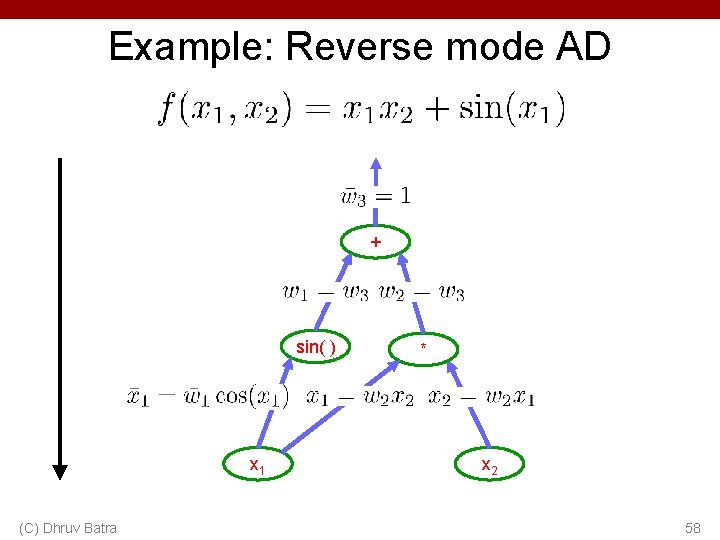

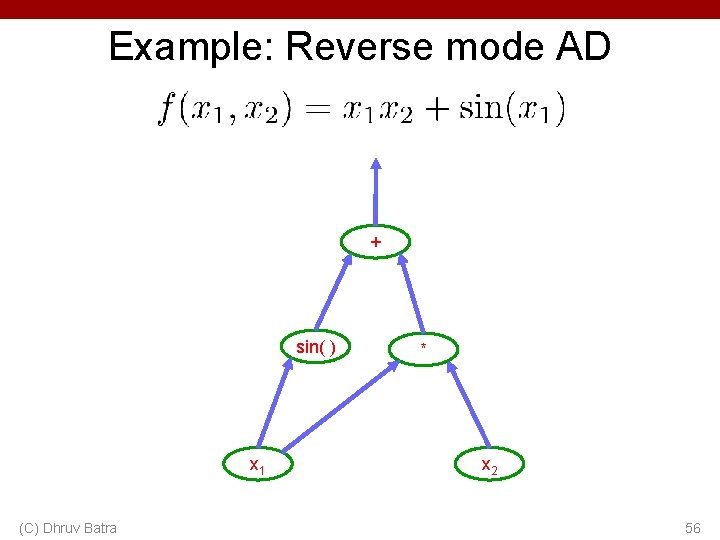

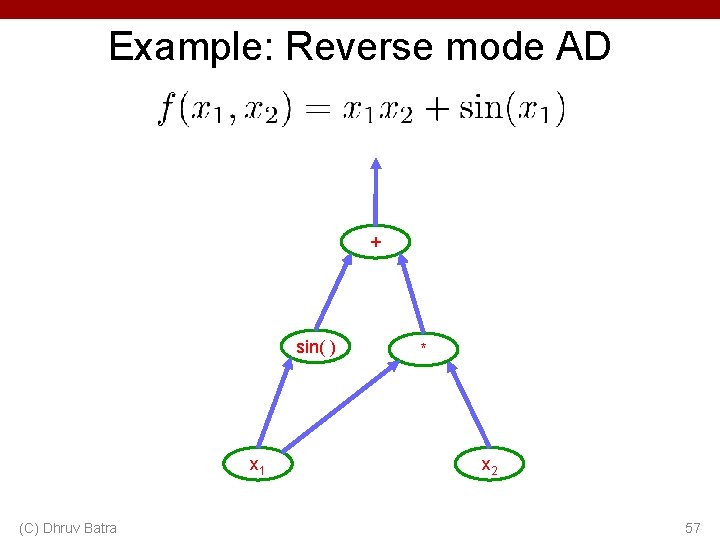

Example: Reverse mode AD

[Figure: the x1, x2 example graph with +, sin( ) and * nodes]

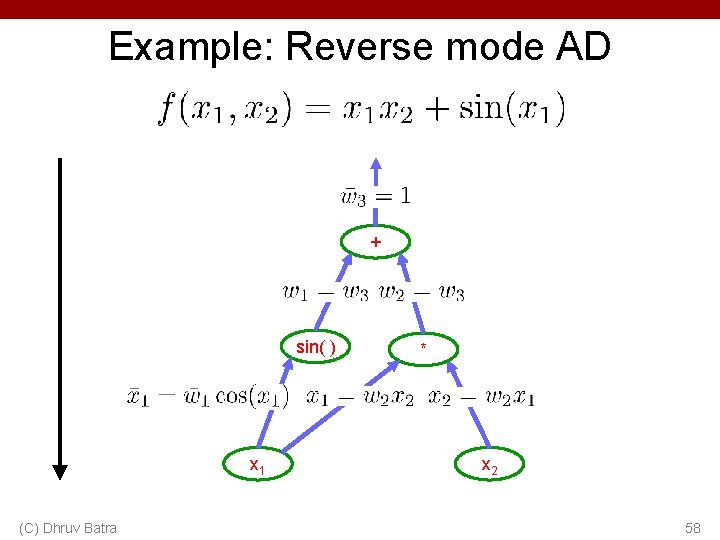

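Reverse mode, sketched for the same assumed function f(x1, x2) = x1 * x2 + sin(x1) (the wiring is an assumption, as above): one forward pass stores the intermediates, then one backward pass from the output accumulates the adjoints of all inputs at once. Because x1 feeds two nodes, its adjoint is the sum of two contributions.

```python
import math

def f_and_grad(x1, x2):
    # Forward pass: evaluate and keep intermediates for the backward pass.
    a = x1 * x2          # * node
    b = math.sin(x1)     # sin node
    f = a + b            # + node

    # Backward pass: adjoints, starting from df/df = 1.
    f_bar = 1.0
    a_bar = f_bar * 1.0             # + passes the gradient to both inputs
    b_bar = f_bar * 1.0
    x1_bar = a_bar * x2             # contribution through the * node
    x2_bar = a_bar * x1
    x1_bar += b_bar * math.cos(x1)  # contribution through sin; branches add
    return f, (x1_bar, x2_bar)

value, (g1, g2) = f_and_grad(2.0, 3.0)
print(value, g1, g2)
```

The gradients match the forward-mode sketch, but here a single backward pass produced both partial derivatives.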

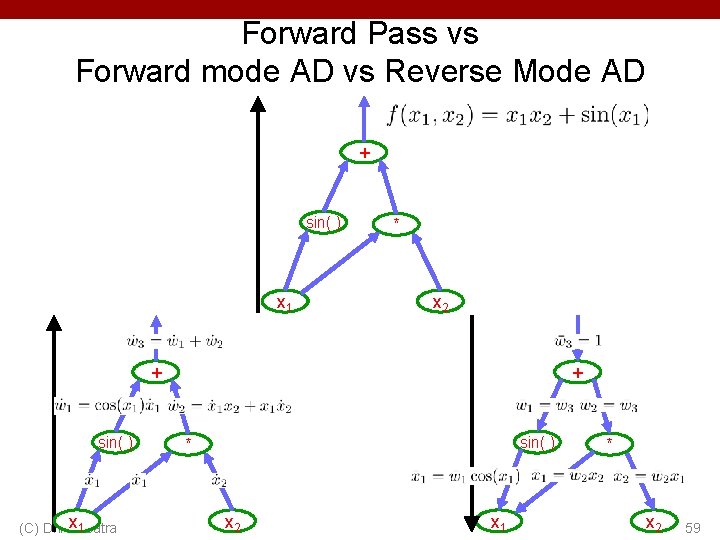

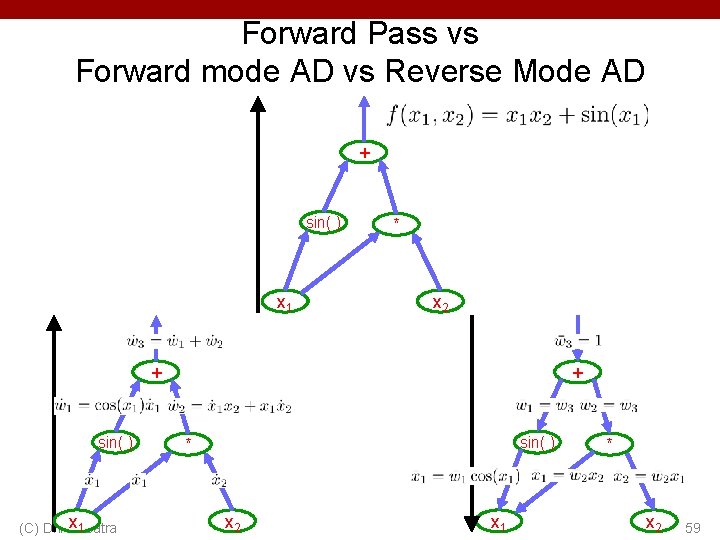

Forward Pass vs Forward mode AD vs Reverse Mode AD

[Figure: the same x1, x2 graph with +, sin( ) and * nodes, shown three times: forward pass, forward mode AD, reverse mode AD]

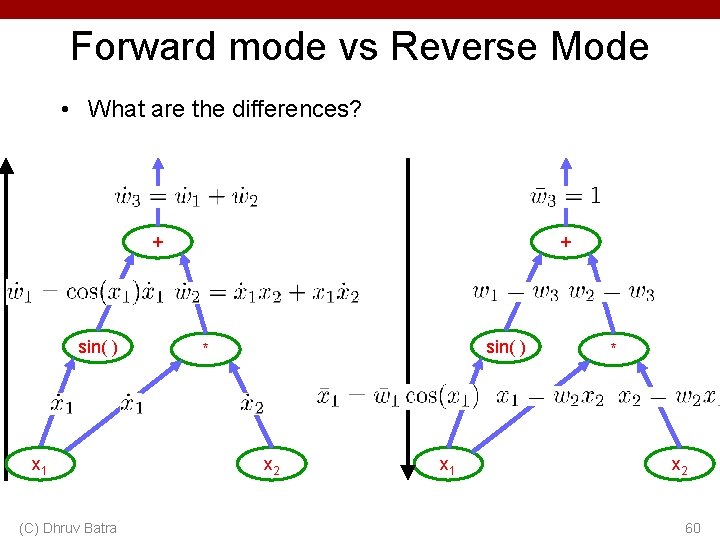

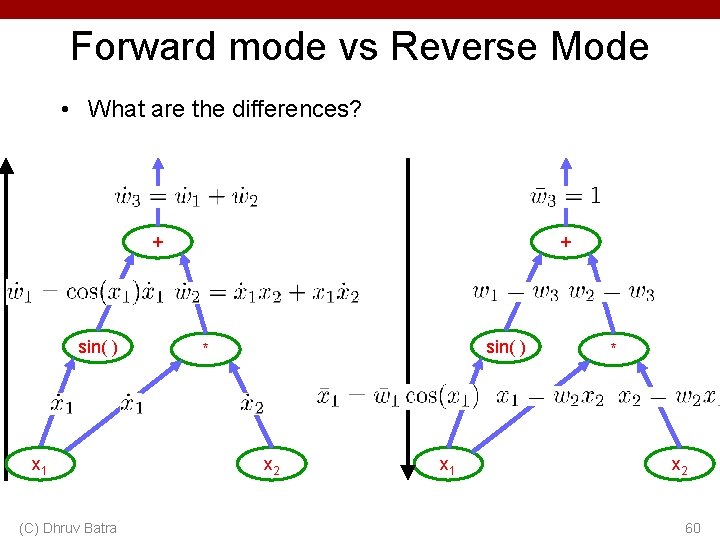



Forward mode vs Reverse Mode
- What are the differences?
- Which one is faster to compute?
  - Forward or backward?
- Which one is more memory efficient (less storage)?
  - Forward or backward?

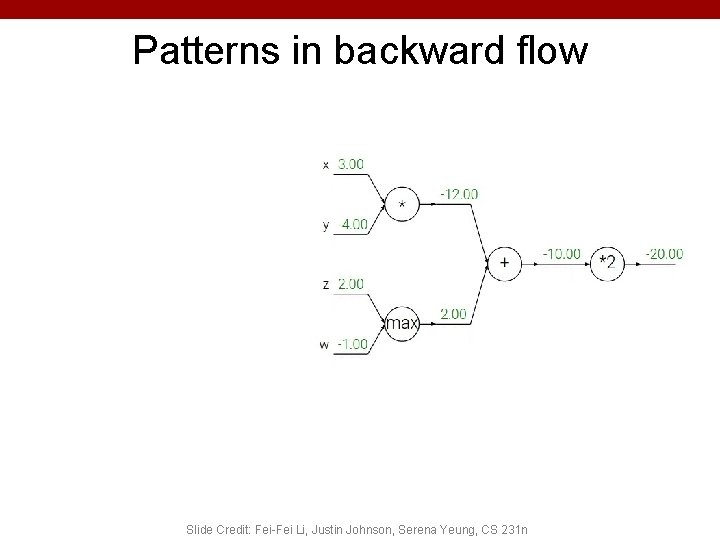

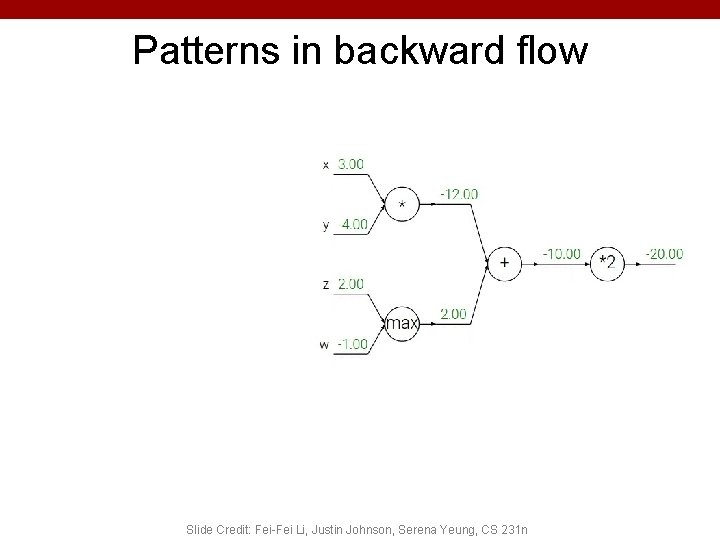

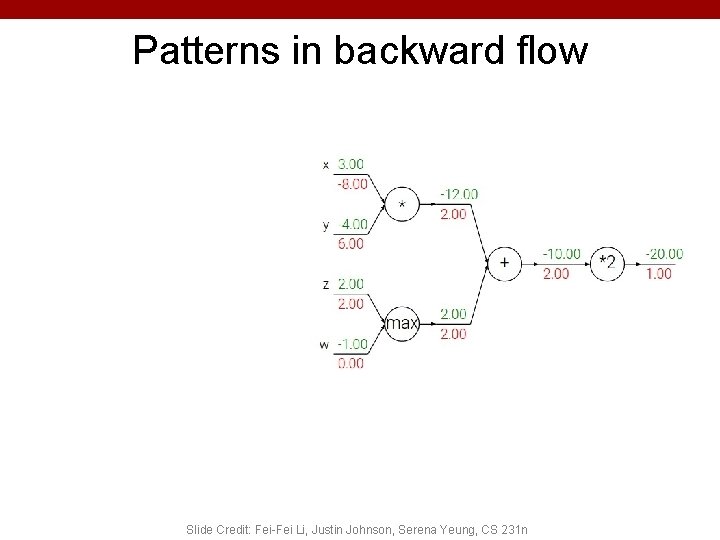

Patterns in backward flow

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

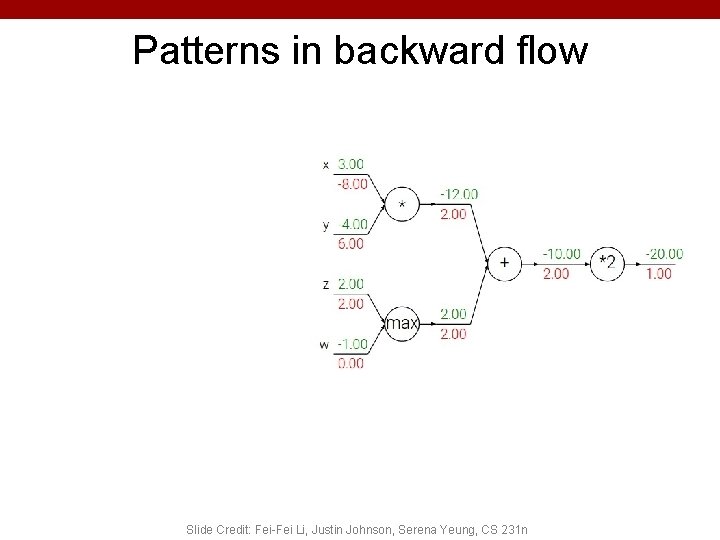


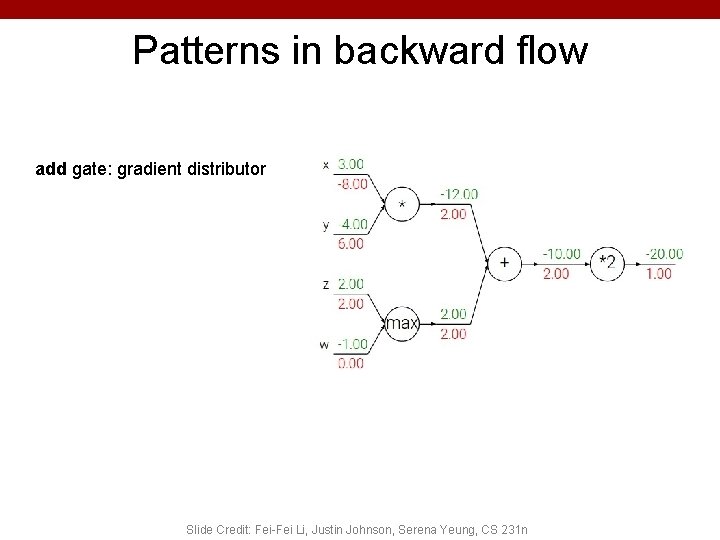

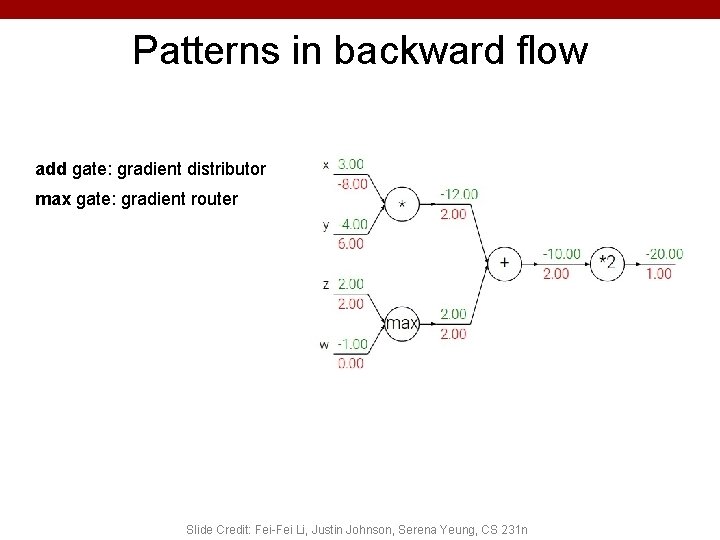

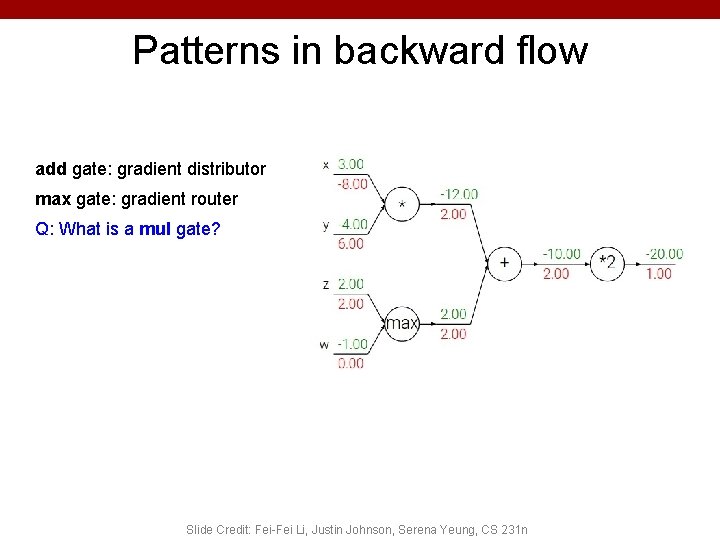

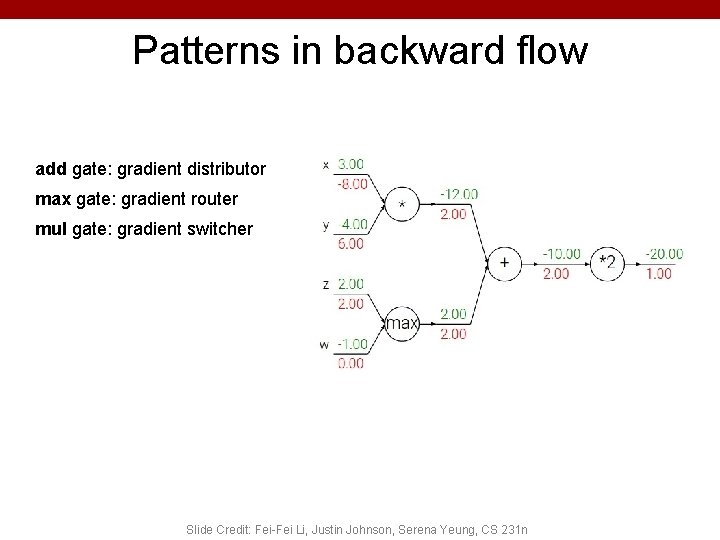

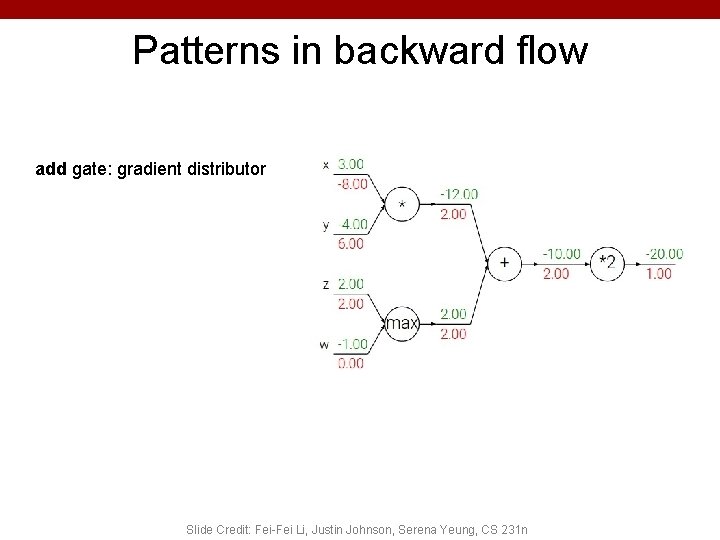

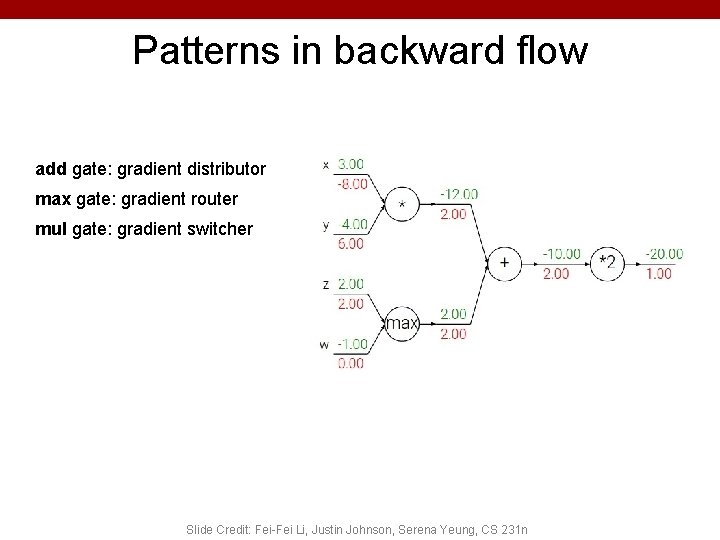


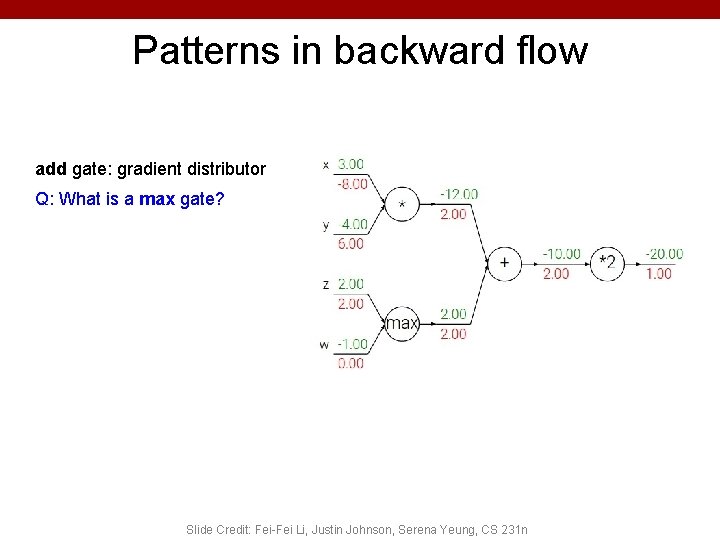

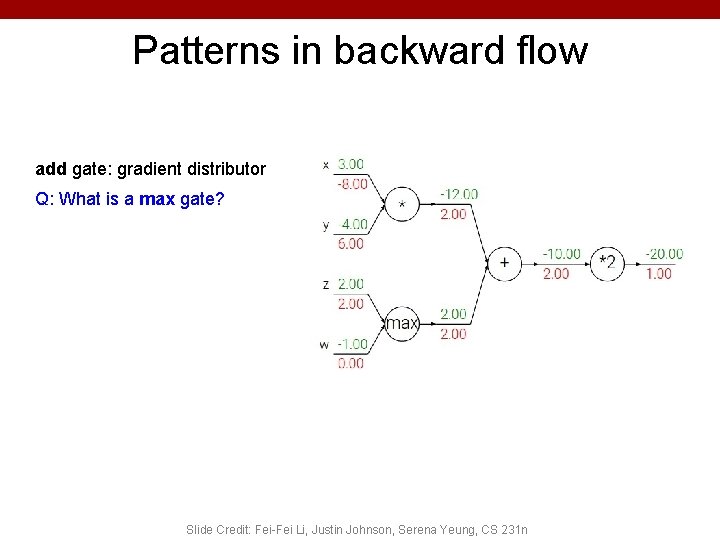


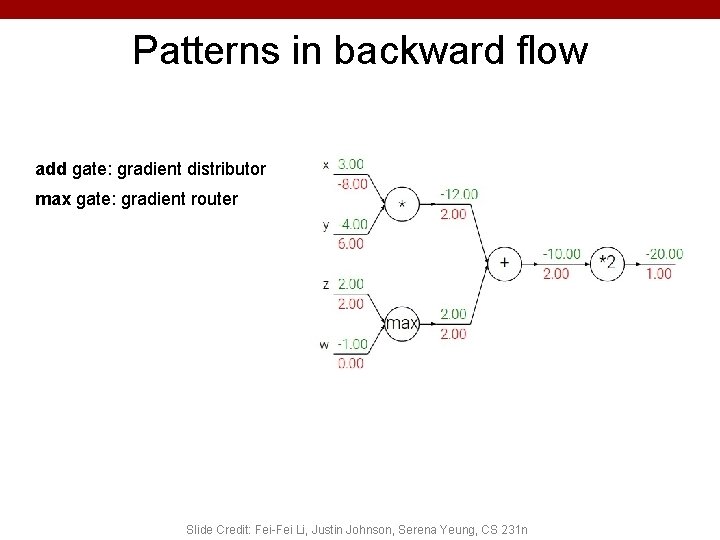


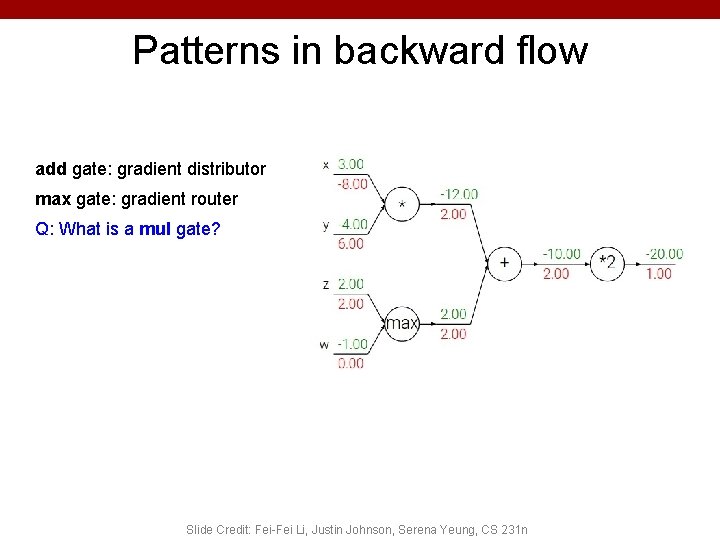


- add gate: gradient distributor
- max gate: gradient router
- mul gate: gradient switcher

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n
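The three backward rules, sketched for scalar inputs and an upstream gradient `dout` (function names are illustrative): add copies `dout` to both inputs, max sends it only to the larger input, and mul scales it by the *other* input.

```python
def add_backward(x, y, dout):
    # Gradient distributor: both inputs receive the upstream gradient unchanged.
    return dout, dout

def max_backward(x, y, dout):
    # Gradient router: only the input that won the max gets the gradient.
    # (Ties go to x here; that tie-breaking choice is an assumption.)
    return (dout, 0.0) if x >= y else (0.0, dout)

def mul_backward(x, y, dout):
    # Gradient switcher: each input's gradient is dout times the other input.
    return dout * y, dout * x

dout = 2.0
print(add_backward(3.0, -4.0, dout))   # (2.0, 2.0)
print(max_backward(3.0, -4.0, dout))   # (2.0, 0.0)
print(mul_backward(3.0, -4.0, dout))   # (-8.0, 6.0)
```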

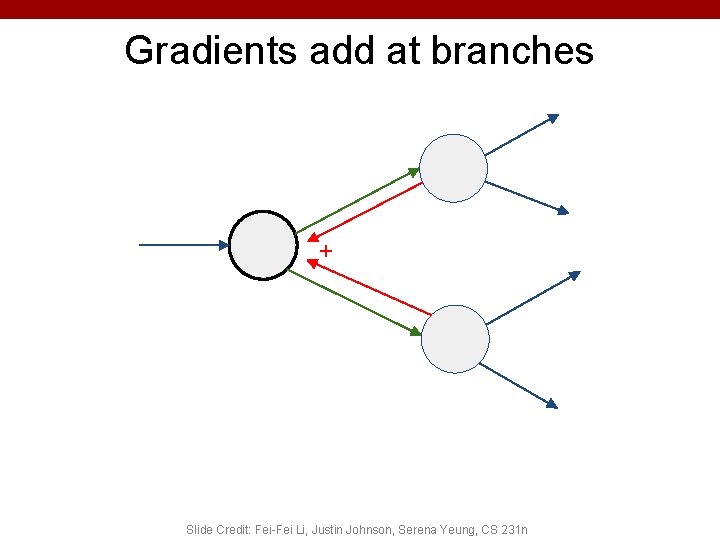

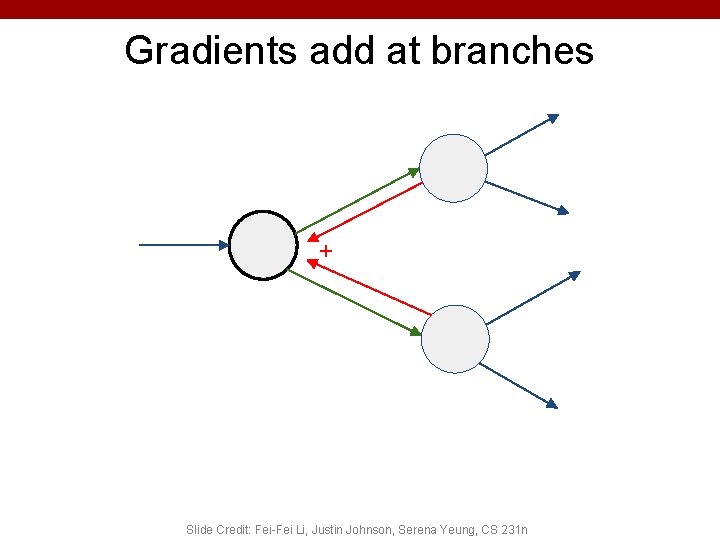

Gradients add at branches

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n
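When one variable feeds several downstream nodes, the backward pass sums the gradients arriving along each branch (the multivariate chain rule). A sketch with a hypothetical f(x) = sin(x) + x**2, where x branches into a sin node and a square node:

```python
import math

def f_forward_backward(x):
    # x branches into two nodes.
    a = math.sin(x)      # branch 1
    b = x ** 2           # branch 2
    f = a + b

    df = 1.0
    da, db = df, df                  # + gate distributes
    dx_from_a = da * math.cos(x)
    dx_from_b = db * 2 * x
    dx = dx_from_a + dx_from_b       # gradients add at the branch
    return f, dx

x = 0.7
_, dx = f_forward_backward(x)
h = 1e-6
numeric = ((math.sin(x + h) + (x + h) ** 2) - (math.sin(x - h) + (x - h) ** 2)) / (2 * h)
print(abs(dx - numeric) < 1e-6)  # True
```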

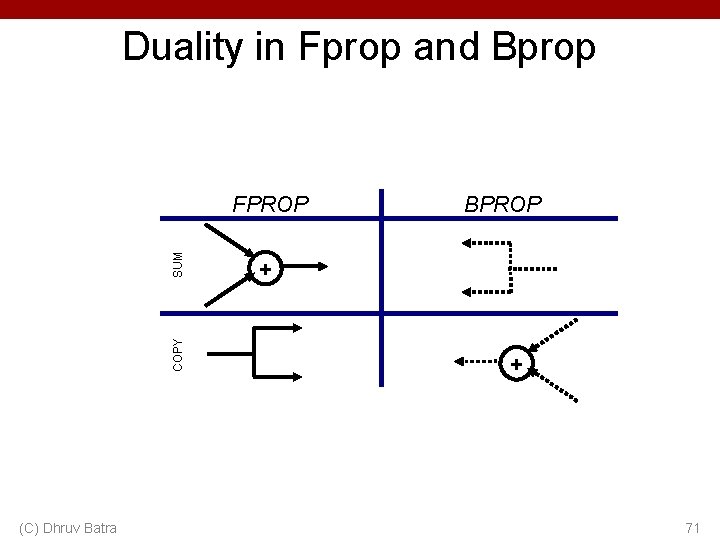

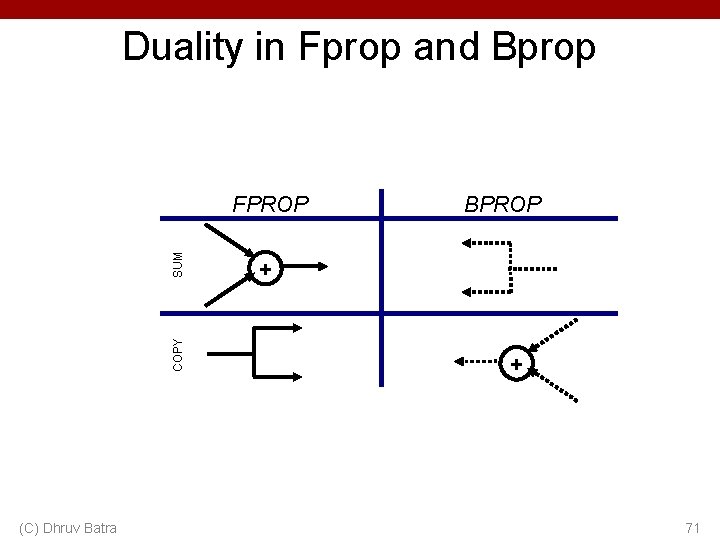

Duality in Fprop and Bprop

[Figure: a SUM (+) in FPROP becomes a COPY in BPROP, and a COPY in FPROP becomes a SUM (+) in BPROP]
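The copy/sum duality shows up in NumPy broadcasting: adding a bias b of shape (D,) to a minibatch of shape (N, D) copies b into every row during fprop, so bprop sums the upstream gradient over those rows. A sketch with illustrative shapes, using the scalar sum(Y * dY) so that its gradient with respect to Y is dY:

```python
import numpy as np

rng = np.random.default_rng(0)
N, D = 4, 3
X = rng.normal(size=(N, D))
b = rng.normal(size=D)

# FPROP: broadcasting copies b into each of the N rows (also a SUM node with X).
Y = X + b

# BPROP: the upstream gradient dY has shape (N, D).
dY = rng.normal(size=(N, D))
dX = dY                 # SUM in fprop -> COPY in bprop
db = dY.sum(axis=0)     # COPY in fprop -> SUM in bprop

# Check db[0] against a finite difference of sum(Y * dY) w.r.t. b[0].
h = 1e-6
e0 = np.array([h, 0.0, 0.0])
numeric = (np.sum((X + b + e0) * dY) - np.sum((X + b - e0) * dY)) / (2 * h)
print(np.isclose(db[0], numeric))  # True
```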

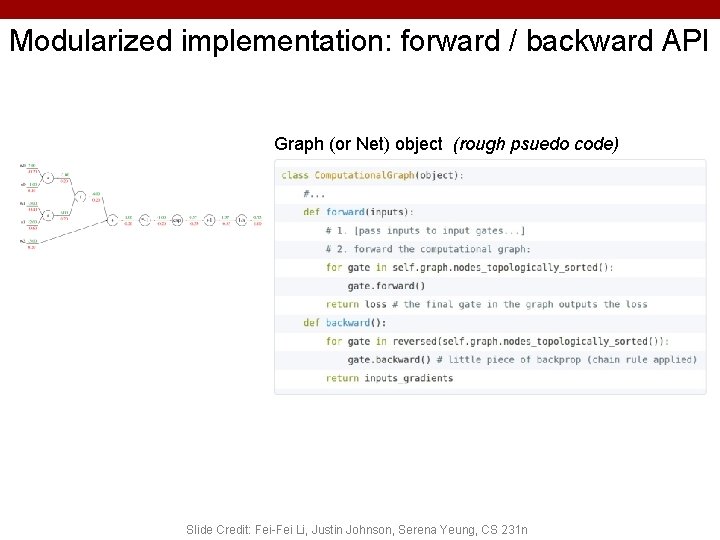

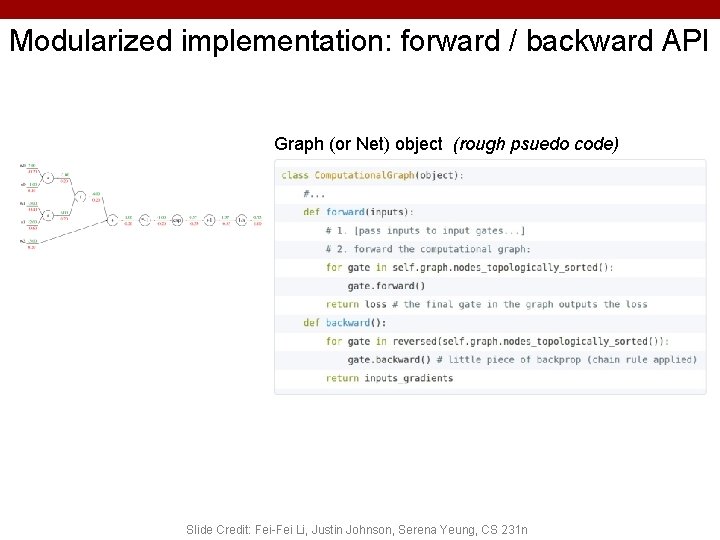

Modularized implementation: forward / backward API

Graph (or Net) object (rough pseudocode)

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

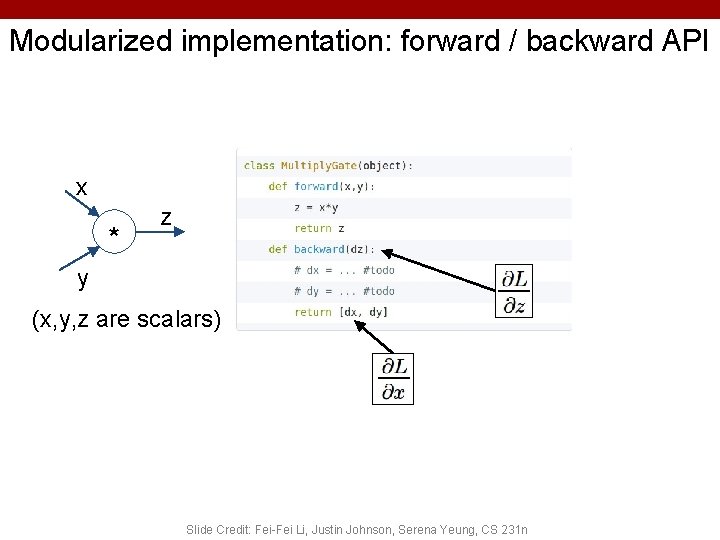

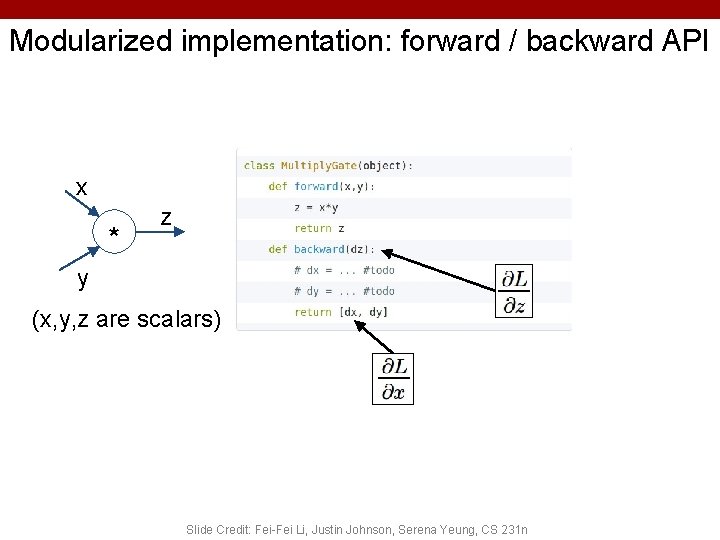

Modularized implementation: forward / backward API

[Figure: x and y feed a * gate that outputs z (x, y, z are scalars)]

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

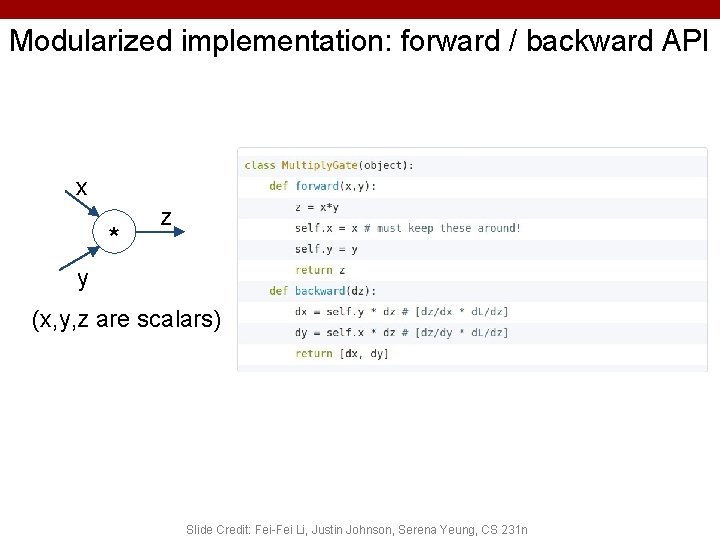

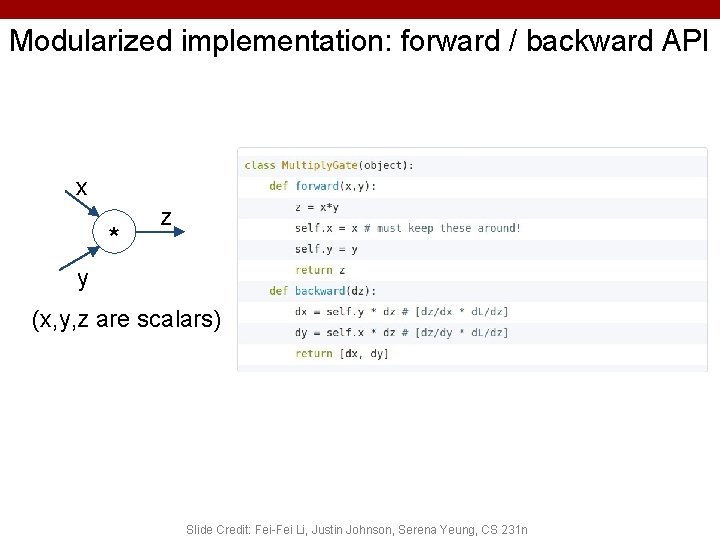

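A runnable sketch of the forward/backward API: each gate caches what its backward pass needs, and a small graph object runs its gates forward in topological order and backward in reverse. The class names `MultiplyGate`, `AddGate` and `Graph` and the fixed graph f = x * y + z are illustrative assumptions, not a specific library's API.

```python
class MultiplyGate:
    def forward(self, x, y):
        self.x, self.y = x, y          # cache inputs for backward
        return x * y

    def backward(self, dz):
        # dL/dx = dL/dz * dz/dx, with dz/dx = y (and vice versa).
        return dz * self.y, dz * self.x

class AddGate:
    def forward(self, x, y):
        return x + y

    def backward(self, dz):
        return dz, dz

class Graph:
    """Hypothetical graph f = (x * y) + z, gates kept in topological order."""

    def __init__(self):
        self.mul = MultiplyGate()
        self.add = AddGate()

    def forward(self, x, y, z):
        q = self.mul.forward(x, y)
        return self.add.forward(q, z)

    def backward(self, df=1.0):
        # Visit gates in reverse topological order, chaining upstream gradients.
        dq, dz = self.add.backward(df)
        dx, dy = self.mul.backward(dq)
        return dx, dy, dz

g = Graph()
out = g.forward(-2.0, 5.0, -4.0)
print(out, g.backward())  # -14.0 (5.0, -2.0, 1.0)
```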

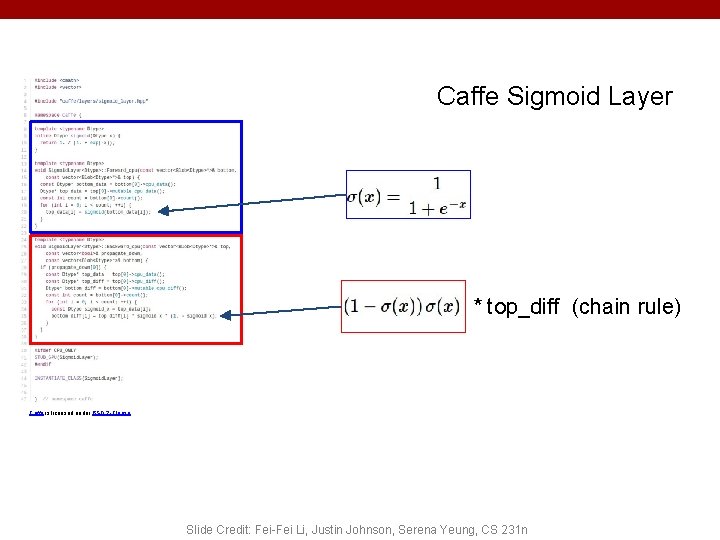

Example: Caffe layers

Caffe is licensed under BSD 2-Clause.
Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

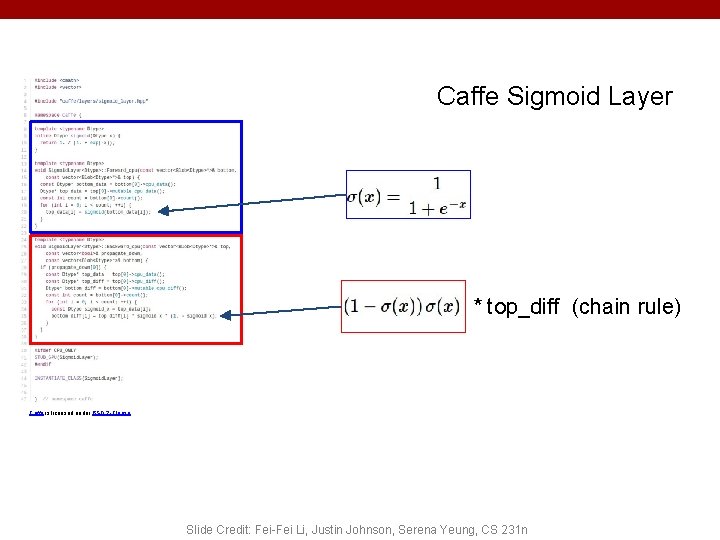

Caffe Sigmoid Layer

Backward pass: the local gradient is multiplied by top_diff (chain rule).

Caffe is licensed under BSD 2-Clause.
Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n
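The sigmoid backward rule in NumPy form: since sigmoid'(x) = sigmoid(x) * (1 - sigmoid(x)), the bottom gradient is top_diff times that local gradient, and the local gradient can reuse the forward output. This is a sketch, not Caffe's C++ API; the names `top_data`, `top_diff` and `bottom_diff` only mirror Caffe's naming.

```python
import numpy as np

def sigmoid_forward(bottom_data):
    top_data = 1.0 / (1.0 + np.exp(-bottom_data))
    return top_data

def sigmoid_backward(top_data, top_diff):
    # Local gradient sigma * (1 - sigma) from the cached output, times top_diff.
    return top_diff * top_data * (1.0 - top_data)

x = np.array([-1.0, 0.0, 2.0])
top = sigmoid_forward(x)
bottom_diff = sigmoid_backward(top, top_diff=np.ones_like(x))
print(bottom_diff[1])  # 0.25, the sigmoid slope at 0
```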

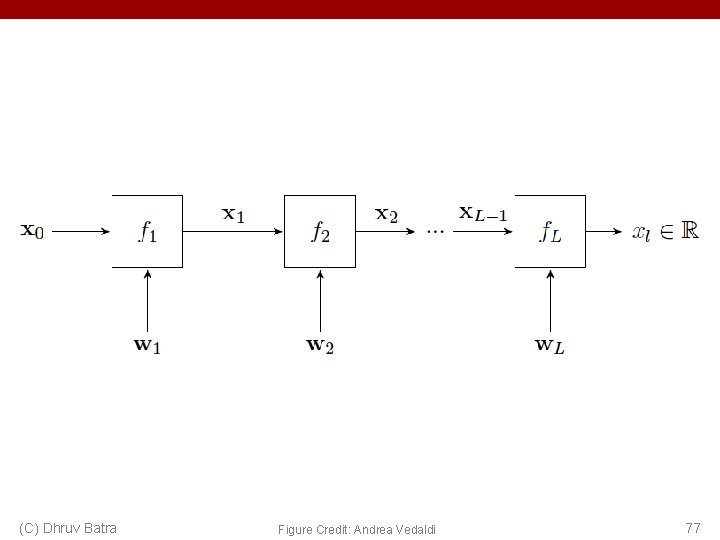

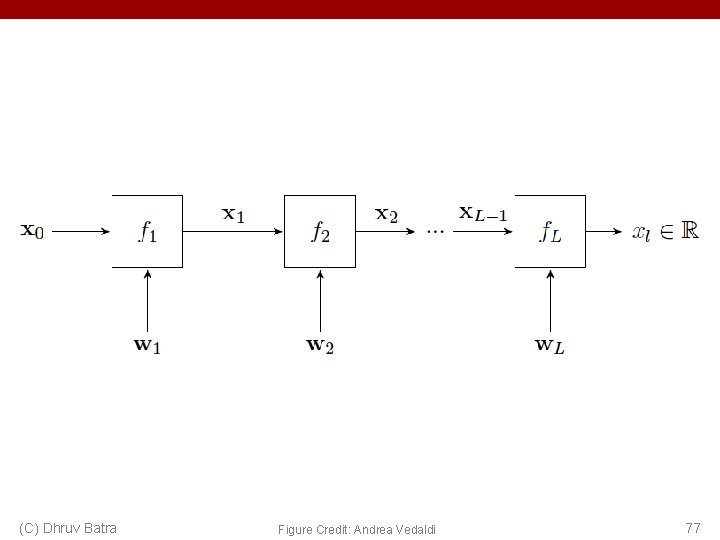

Figure Credit: Andrea Vedaldi

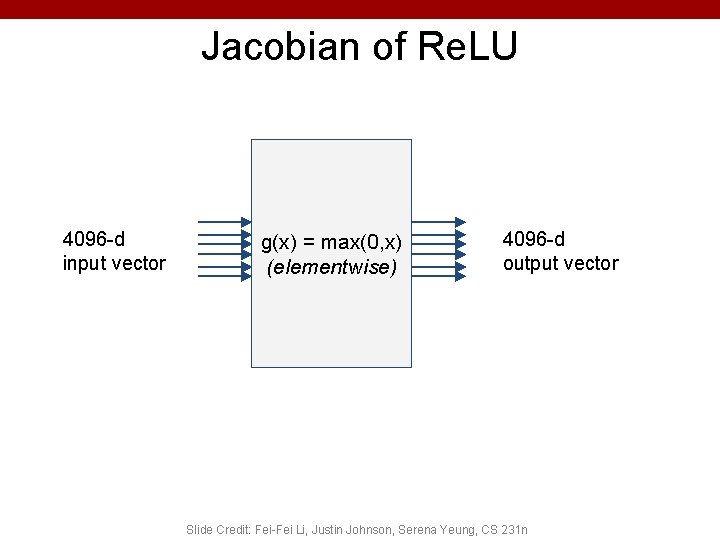

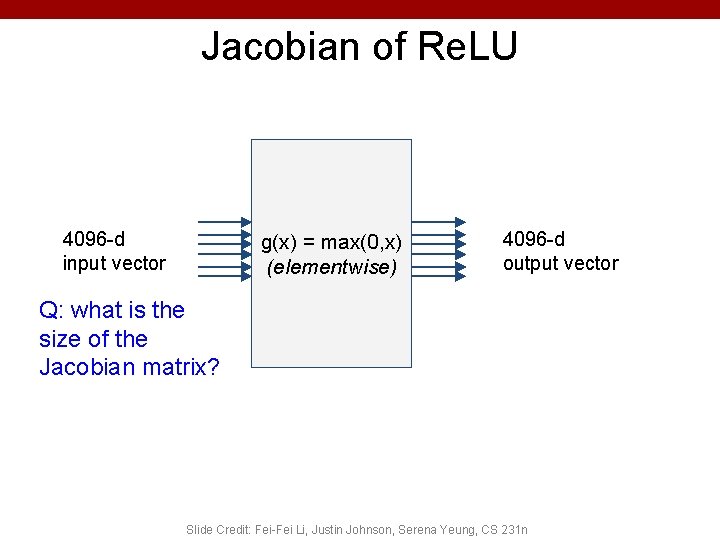

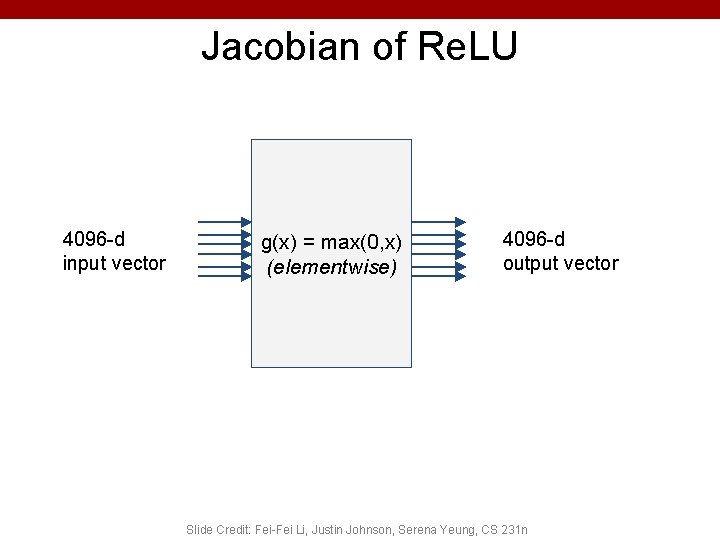

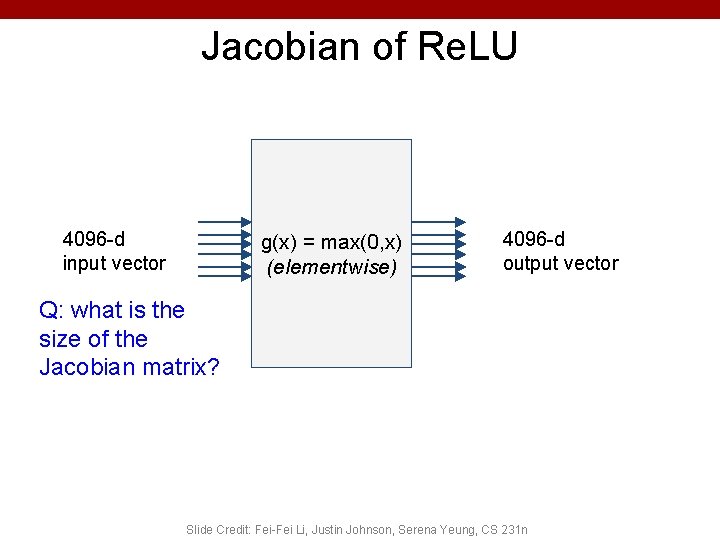

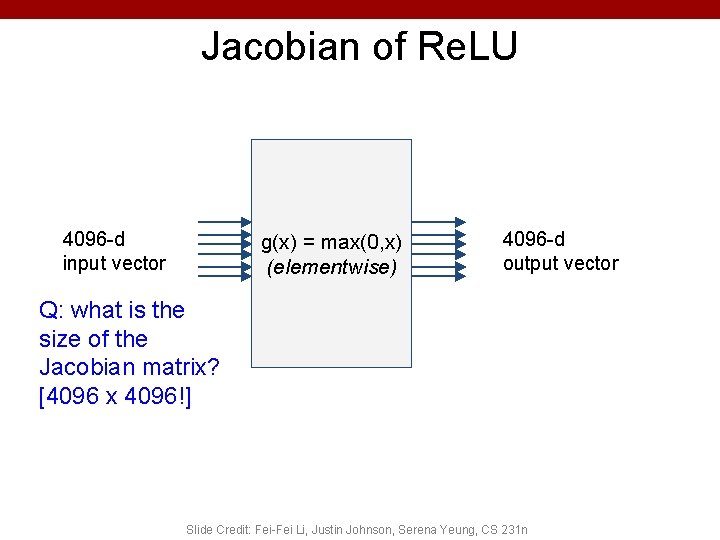

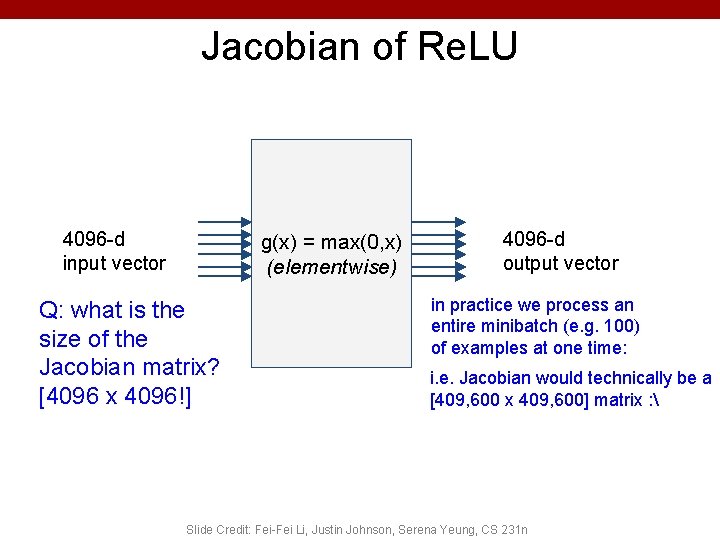

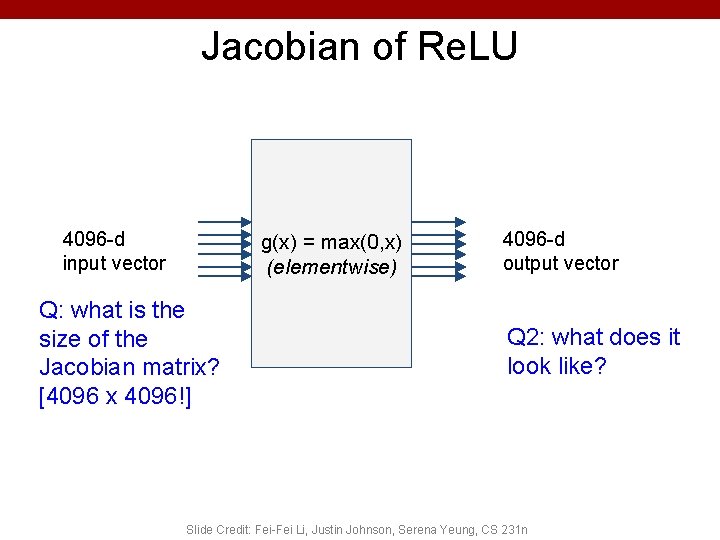

Jacobian of ReLU

g(x) = max(0, x) (elementwise) maps a 4096-d input vector to a 4096-d output vector.

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n


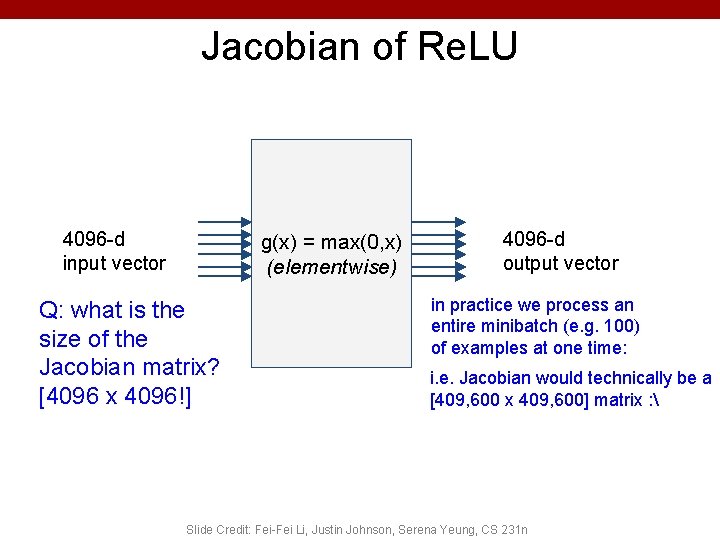

Q: What is the size of the Jacobian matrix? [4096 x 4096!]

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

In practice we process an entire minibatch (e.g. 100) of examples at one time, i.e. the Jacobian would technically be a [409,600 x 409,600] matrix.

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n

Q2: What does it look like?

Slide Credit: Fei-Fei Li, Justin Johnson, Serena Yeung, CS231n
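Because ReLU acts elementwise, its Jacobian is diagonal, with 1 where the input is positive and 0 elsewhere, so the backward pass never needs to build it: multiplying by it is just a mask. A sketch comparing both on a small hypothetical 6-d input:

```python
import numpy as np

def relu_backward(x, dout):
    # Same as multiplying by the diagonal Jacobian, without building it.
    # (At x == 0 the derivative is taken as 0 here, a common convention.)
    return dout * (x > 0)

rng = np.random.default_rng(0)
x = rng.normal(size=6)
dout = rng.normal(size=6)

J = np.diag((x > 0).astype(float))        # explicit 6 x 6 Jacobian of max(0, x)
print(np.allclose(J.T @ dout, relu_backward(x, dout)))  # True
```

For the 4096-d case the explicit matrix would hold 4096 x 4096 = 16,777,216 entries, while the mask needs only 4096.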

Jacobians of FC-Layer

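For a fully connected layer y = W x + b, backprop only needs Jacobian-vector products, which reduce to dL/dx = W^T (dL/dy), dL/dW = (dL/dy) x^T, and dL/db = dL/dy. A sketch that checks these against finite differences, assuming a single input vector and the scalar L = sum(y * dy) so that dL/dy = dy; the shapes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
D_in, D_out = 4, 3
W = rng.normal(size=(D_out, D_in))
b = rng.normal(size=D_out)
x = rng.normal(size=D_in)
dy = rng.normal(size=D_out)          # upstream gradient dL/dy

def L(W, b, x):
    return np.sum((W @ x + b) * dy)  # scalar whose gradient w.r.t. y is dy

# Backward pass for y = W x + b.
dx = W.T @ dy
dW = np.outer(dy, x)
db = dy

def num_grad(fn, v, h=1e-6):
    g = np.zeros_like(v)
    for i in range(v.size):
        e = np.zeros_like(v)
        e.flat[i] = h
        g.flat[i] = (fn(v + e) - fn(v - e)) / (2 * h)
    return g

print(np.allclose(dx, num_grad(lambda v: L(W, b, v), x)),
      np.allclose(dW, num_grad(lambda M: L(M, b, x), W)),
      np.allclose(db, num_grad(lambda v: L(W, v, x), b)))  # True True True
```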