
CS 434/534: Topics in Networked (Networking) Systems
Distributed Network OS (cont.); From Data to Function Store
Yang (Richard) Yang
Computer Science Department, Yale University
208A Watson
Email: yry@cs.yale.edu
http://zoo.cs.yale.edu/classes/cs434/

Outline
- Admin and recap
- High-level datapath programming
  - blackbox (trace-tree)
  - whitebox
- Network OS supporting programmable networks
  - overview
  - OpenDaylight
  - distributed network OS (Paxos, RAFT)
  - from data store to function store

Admin
- PS1: small bug to be fixed

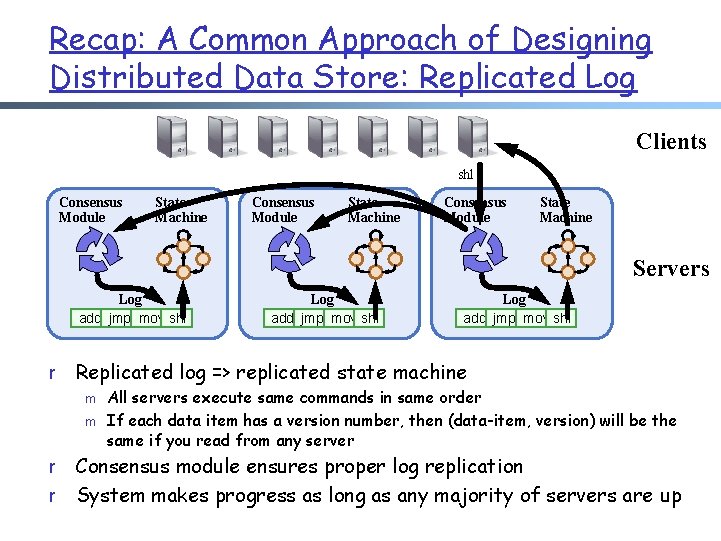

Recap: A Common Approach of Designing Distributed Data Store: Replicated Log
[Figure: clients send commands (e.g., shl) to servers; each server has a consensus module, a log (add, jmp, mov, shl), and a state machine]
- Replicated log => replicated state machine
  - All servers execute same commands in same order
  - If each data item has a version number, then (data-item, version) will be the same if you read from any server
- Consensus module ensures proper log replication
- System makes progress as long as any majority of servers are up
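As a minimal sketch of the replicated state machine idea, the snippet below applies one agreed log to three independent copies of a deterministic versioned key-value store. The `VersionedStore` class and the `(key, value)` command format are assumptions made for illustration; they are not from the slides.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedStore:
    """Deterministic state machine: a key-value store with per-key versions."""
    data: dict = field(default_factory=dict)  # key -> (value, version)

    def apply(self, command):
        key, value = command
        _, version = self.data.get(key, (None, 0))
        self.data[key] = (value, version + 1)


# Hypothetical log of (key, value) writes, assumed already agreed on by consensus.
replicated_log = [("x", 1), ("y", 7), ("x", 2)]

servers = [VersionedStore() for _ in range(3)]
for server in servers:
    for command in replicated_log:
        server.apply(command)  # same commands, same order, on every server
```

Because every server applies the same commands in the same order, reading `x` from any of them returns the same (value, version) pair.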

Recap: Approaches to Consensus
Two general approaches to consensus:
- Symmetric, leader-less:
  - All servers have equal roles
  - Clients can contact any server
  - Basic Paxos is an example
- Asymmetric, leader-based:
  - At any given time, one server is in charge, others accept its decisions
  - Clients communicate with the leader
  - Raft is an example

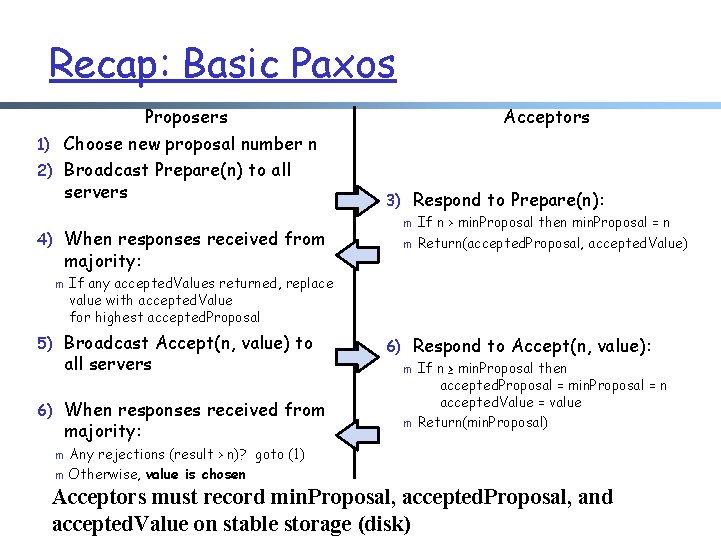

Recap: Basic Paxos

Proposers:
1) Choose new proposal number n
2) Broadcast Prepare(n) to all servers
4) When responses received from majority:
  - If any acceptedValues returned, replace value with acceptedValue for highest acceptedProposal
5) Broadcast Accept(n, value) to all servers
7) When responses received from majority:
  - Any rejections (result > n)? goto (1)
  - Otherwise, value is chosen

Acceptors:
3) Respond to Prepare(n):
  - If n > minProposal then minProposal = n
  - Return(acceptedProposal, acceptedValue)
6) Respond to Accept(n, value):
  - If n ≥ minProposal then acceptedProposal = minProposal = n; acceptedValue = value
  - Return(minProposal)

Acceptors must record minProposal, acceptedProposal, and acceptedValue on stable storage (disk)
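The acceptor and proposer steps can be sketched in a few lines. This is a simplified in-memory illustration, assuming positive integer proposal numbers, no message loss, and that every acceptor replies (so "majority" is trivially met); the class and function names are made up for the example.

```python
class Acceptor:
    """Acceptor state; proposal number 0 stands for 'nothing seen or accepted yet'."""

    def __init__(self):
        self.min_proposal = 0
        self.accepted_proposal = 0
        self.accepted_value = None

    def on_prepare(self, n):
        # Respond to Prepare(n): promise to ignore proposals numbered below n.
        if n > self.min_proposal:
            self.min_proposal = n
        return self.accepted_proposal, self.accepted_value

    def on_accept(self, n, value):
        # Respond to Accept(n, value): accept unless a higher Prepare was seen.
        if n >= self.min_proposal:
            self.accepted_proposal = self.min_proposal = n
            self.accepted_value = value
        return self.min_proposal


def propose(acceptors, n, value):
    """One proposer round; returns the chosen value, or None if rejected."""
    replies = [a.on_prepare(n) for a in acceptors]
    accepted = [(p, v) for p, v in replies if p > 0]
    if accepted:
        # Adopt the value of the highest-numbered accepted proposal.
        value = max(accepted, key=lambda pv: pv[0])[1]
    results = [a.on_accept(n, value) for a in acceptors]
    if any(result > n for result in results):
        return None  # the caller would restart with a larger proposal number
    return value


acceptors = [Acceptor() for _ in range(3)]
first = propose(acceptors, 1, "x")   # nothing accepted yet, so "x" is chosen
second = propose(acceptors, 2, "y")  # must adopt the already accepted "x"
stale = propose(acceptors, 1, "z")   # proposal number too old, rejected
```

The second proposer ends up re-proposing `"x"` rather than its own `"y"`, which is how Basic Paxos keeps a chosen value from being overwritten.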

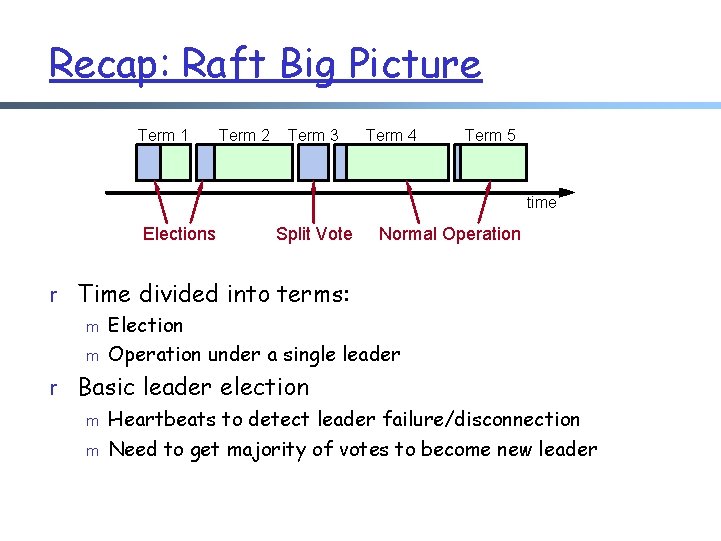

Recap: Raft Big Picture
[Figure: timeline divided into Terms 1-5, labeled Elections, Split Vote, Normal Operation]
- Time divided into terms:
  - Election
  - Operation under a single leader
- Basic leader election
  - Heartbeats to detect leader failure/disconnection
  - Need to get majority of votes to become new leader

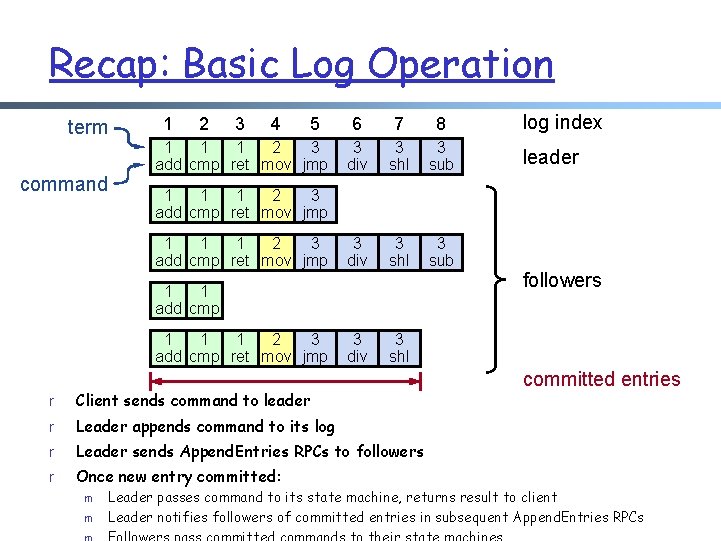

Recap: Basic Log Operation
[Figure: leader and follower logs by log index; each entry holds a term and a command (add, cmp, ret, mov, jmp, div, shl, sub); committed entries are marked]
- Client sends command to leader
- Leader appends command to its log
- Leader sends AppendEntries RPCs to followers
- Once new entry committed:
  - Leader passes command to its state machine, returns result to client
  - Leader notifies followers of committed entries in subsequent AppendEntries RPCs

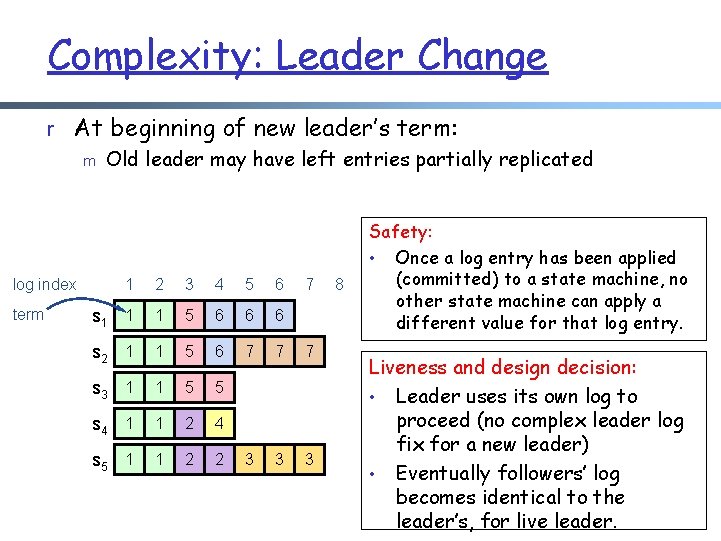

Complexity: Leader Change
- At beginning of new leader’s term:
  - Old leader may have left entries partially replicated

Term of each log entry, by server:
log index: 1 2 3 4 5 6 7 8
s1:        1 1 5 6 6 6
s2:        1 1 5 6 7 7 7
s3:        1 1 5 5
s4:        1 1 2 4
s5:        1 1 2 2 3 3 3

Safety:
- Once a log entry has been applied (committed) to a state machine, no other state machine can apply a different value for that log entry.

Liveness and design decision:
- Leader uses its own log to proceed (no complex leader log fix for a new leader)
- Eventually followers’ logs become identical to the leader’s, for a live leader.

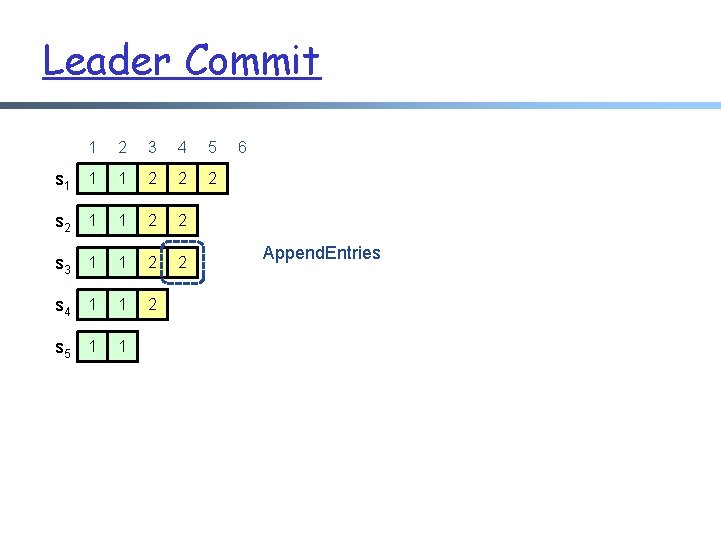

Leader Commit
Term of each log entry, by server (AppendEntries in progress):
log index: 1 2 3 4 5 6
s1:        1 1 2 2 2
s2:        1 1 2 2
s3:        1 1 2 2
s4:        1 1 2
s5:        1 1

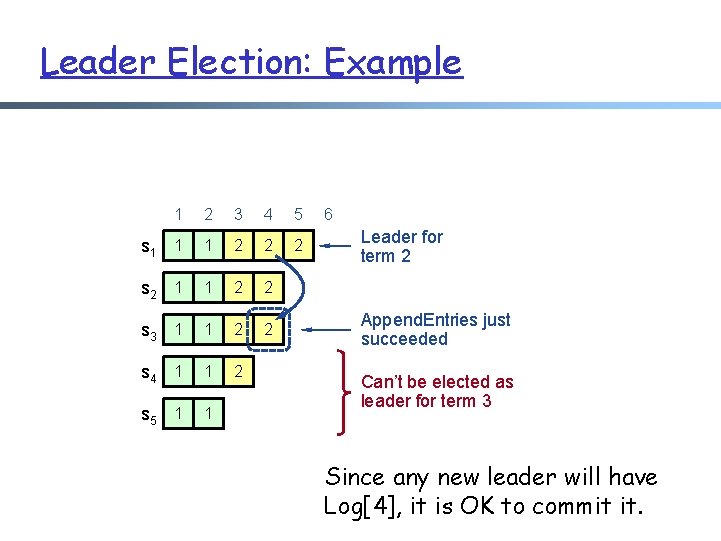

Leader Election Rule
- During elections, choose candidate with log from newer terms
  - Candidates include log info in RequestVote RPCs (index & term of last log entry)
  - Voting server V denies vote if its log is “more complete”:
    (lastTermV > lastTermC) || ((lastTermV == lastTermC) && (lastIndexV > lastIndexC))
  - Leader will likely have “most complete” log among electing majority

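Leader Election: Example
Term of each log entry, by server:
log index: 1 2 3 4 5 6
s1:        1 1 2 2 2    (leader for term 2)
s2:        1 1 2 2      (AppendEntries just succeeded)
s3:        1 1 2 2      (AppendEntries just succeeded)
s4:        1 1 2        (can’t be elected as leader for term 3)
s5:        1 1          (can’t be elected as leader for term 3)
Since any new leader will have Log[4], it is OK to commit it.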
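The vote-denial rule can be checked against this example. The sketch below encodes only the log comparison; it assumes the per-server term lists read from the figure and ignores everything else a real RequestVote handler would check (current term, whether the voter has already voted). The helper names are illustrative.

```python
def denies_vote(last_term_v, last_index_v, last_term_c, last_index_c):
    """True if voter V's log is 'more complete' than candidate C's."""
    return (last_term_v > last_term_c) or (
        last_term_v == last_term_c and last_index_v > last_index_c
    )


# Term of each entry per server, as read from the example figure.
logs = {
    "s1": [1, 1, 2, 2, 2],
    "s2": [1, 1, 2, 2],
    "s3": [1, 1, 2, 2],
    "s4": [1, 1, 2],
    "s5": [1, 1],
}


def last(log):
    """(term, index) of the last entry; (0, 0) for an empty log."""
    return (log[-1], len(log)) if log else (0, 0)


def votes_for(candidate):
    """Servers whose log comparison would allow a vote for the candidate."""
    cand_term, cand_index = last(logs[candidate])
    return [v for v, log in logs.items()
            if not denies_vote(*last(log), cand_term, cand_index)]


majority = len(logs) // 2 + 1
electable = {c: len(votes_for(c)) >= majority for c in logs}
```

Under these assumptions only s1, s2, and s3 can collect a majority, and each of them holds entry 4, which is why committing it is safe.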

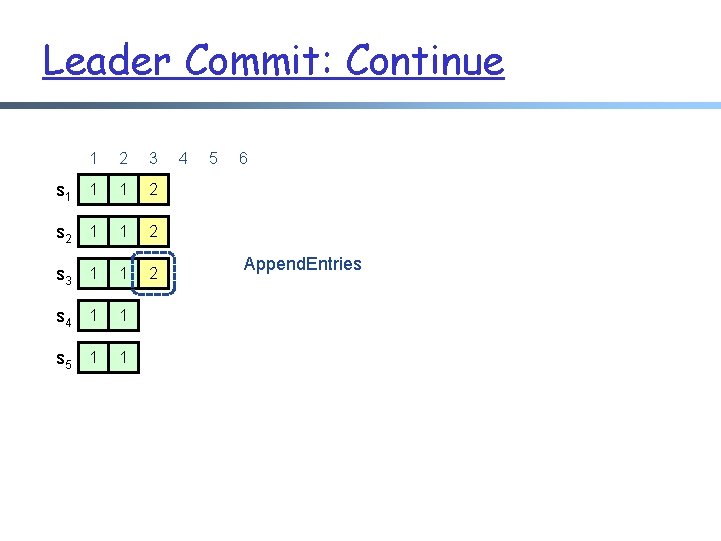

Leader Commit: Continue
Term of each log entry, by server (AppendEntries in progress):
log index: 1 2 3 4 5 6
s1:        1 1 2
s2:        1 1 2
s3:        1 1 2
s4:        1 1
s5:        1 1

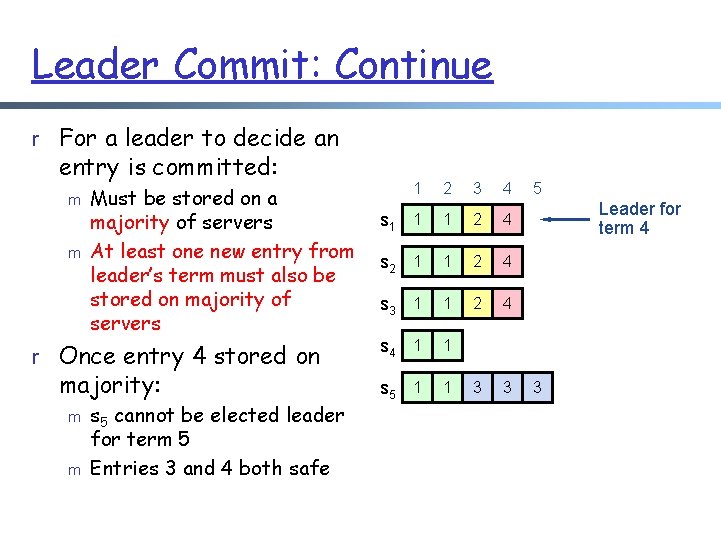

Leader Commit: Continue
- For a leader to decide an entry is committed:
  - Must be stored on a majority of servers
  - At least one new entry from leader’s term must also be stored on majority of servers
- Once entry 4 stored on majority:
  - s5 cannot be elected leader for term 5
  - Entries 3 and 4 both safe

Term of each log entry, by server:
log index: 1 2 3 4 5
s1:        1 1 2 4      (leader for term 4)
s2:        1 1 2 4
s3:        1 1 2 4
s4:        1 1
s5:        1 1 3 3 3
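A small sketch of the two-part commit condition, using the term lists from this slide. Entries are compared by (index, term) only, which relies on the log consistency invariant; the `before` state, in which entry 4 exists only on the leader, is an assumed intermediate state added for contrast.

```python
def stored_on(logs, leader_log, index):
    """Number of servers holding the leader's entry at a 1-based index."""
    return sum(1 for log in logs.values()
               if len(log) >= index and log[index - 1] == leader_log[index - 1])


def is_committed(logs, leader, current_term, index):
    """Commit rule: the entry is on a majority AND some entry from the
    leader's current term, at this index or later, is on a majority."""
    leader_log = logs[leader]
    majority = len(logs) // 2 + 1
    if len(leader_log) < index or stored_on(logs, leader_log, index) < majority:
        return False
    return any(
        leader_log[i - 1] == current_term
        and stored_on(logs, leader_log, i) >= majority
        for i in range(index, len(leader_log) + 1)
    )


# Assumed state before entry 4 (term 4) reaches s2 and s3.
before = {"s1": [1, 1, 2, 4], "s2": [1, 1, 2], "s3": [1, 1, 2],
          "s4": [1, 1], "s5": [1, 1, 3, 3, 3]}
# State shown on the slide: entry 4 is stored on a majority.
after = {"s1": [1, 1, 2, 4], "s2": [1, 1, 2, 4], "s3": [1, 1, 2, 4],
         "s4": [1, 1], "s5": [1, 1, 3, 3, 3]}

entry3_before = is_committed(before, "s1", 4, 3)
entry3_after = is_committed(after, "s1", 4, 3)
```

In the `before` state entry 3 already sits on three servers, yet it is not treated as committed, because s5 could still win an election and overwrite it.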

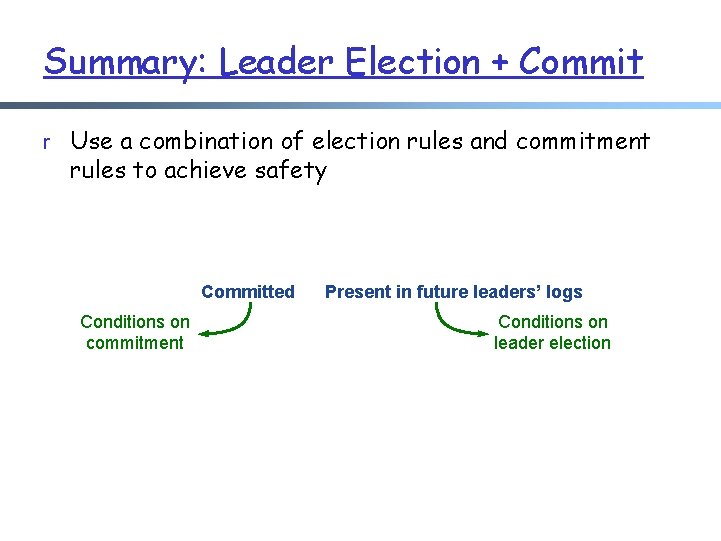

Summary: Leader Election + Commit
- Use a combination of election rules and commitment rules to achieve safety
[Figure: Committed => Present in future leaders’ logs, supported by conditions on commitment and conditions on leader election]

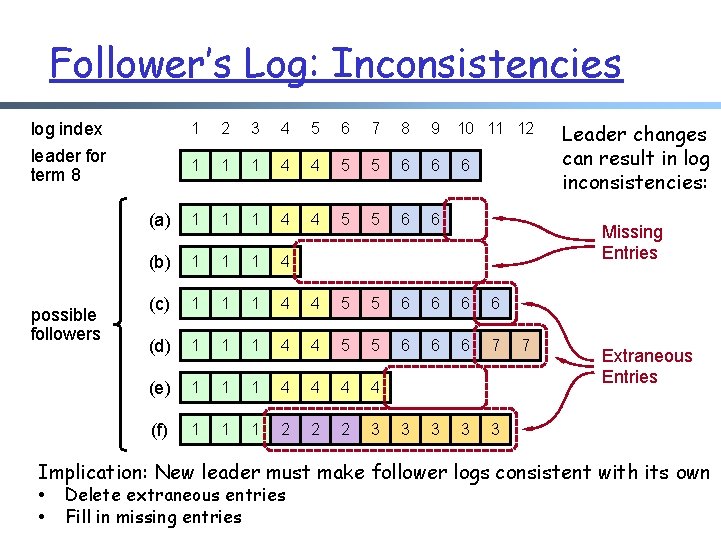

Follower’s Log: Inconsistencies
[Figure: logs over indexes 1-12 for the leader for term 8 and possible followers (a)-(f), with missing entries and extraneous entries marked]
Leader changes can result in log inconsistencies.
Implication: New leader must make follower logs consistent with its own
- Delete extraneous entries
- Fill in missing entries

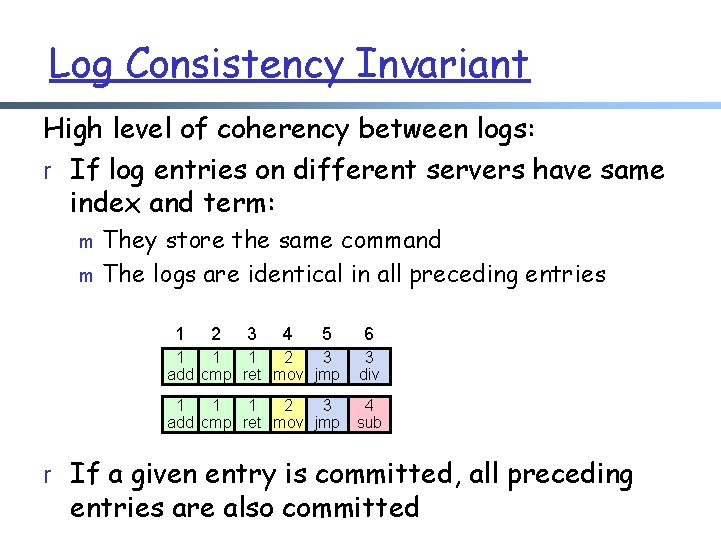

Log Consistency Invariant
High level of coherency between logs:
- If log entries on different servers have same index and term:
  - They store the same command
  - The logs are identical in all preceding entries
- If a given entry is committed, all preceding entries are also committed

Example (term:command per entry):
log index: 1     2     3     4     5     6
log 1:     1:add 1:cmp 1:ret 2:mov 3:jmp 3:div
log 2:     1:add 1:cmp 1:ret 2:mov 3:jmp 4:sub

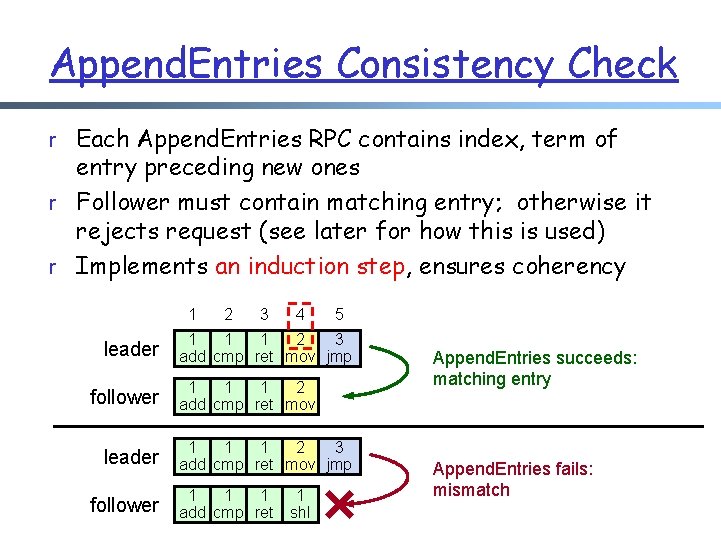

AppendEntries Consistency Check
- Each AppendEntries RPC contains index, term of entry preceding new ones
- Follower must contain matching entry; otherwise it rejects request (see later for how this is used)
- Implements an induction step, ensures coherency

AppendEntries succeeds: matching entry
log index: 1     2     3     4     5
leader:    1:add 1:cmp 1:ret 2:mov 3:jmp
follower:  1:add 1:cmp 1:ret 2:mov

AppendEntries fails: mismatch
log index: 1     2     3     4     5
leader:    1:add 1:cmp 1:ret 2:mov 3:jmp
follower:  1:add 1:cmp 1:ret 1:shl
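The follower-side check can be sketched as a single predicate. Logs are modeled as lists of `(term, command)` tuples with 1-based indexes, and `prev_index == 0` is assumed to mean "no preceding entry"; the function name is illustrative.

```python
def consistency_check(follower_log, prev_index, prev_term):
    """Accept only if the follower holds the entry preceding the new ones."""
    if prev_index == 0:
        return True  # nothing precedes the new entries
    return (len(follower_log) >= prev_index
            and follower_log[prev_index - 1][0] == prev_term)


leader = [(1, "add"), (1, "cmp"), (1, "ret"), (2, "mov"), (3, "jmp")]
follower_ok = [(1, "add"), (1, "cmp"), (1, "ret"), (2, "mov")]
follower_bad = [(1, "add"), (1, "cmp"), (1, "ret"), (1, "shl")]

# The leader sends entry 5 (jmp); the preceding entry is index 4, term 2.
prev_index, prev_term = 4, leader[3][0]
succeeds = consistency_check(follower_ok, prev_index, prev_term)
fails = consistency_check(follower_bad, prev_index, prev_term)
```

The second follower has an entry at index 4, but from term 1 rather than term 2, so the request is rejected.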

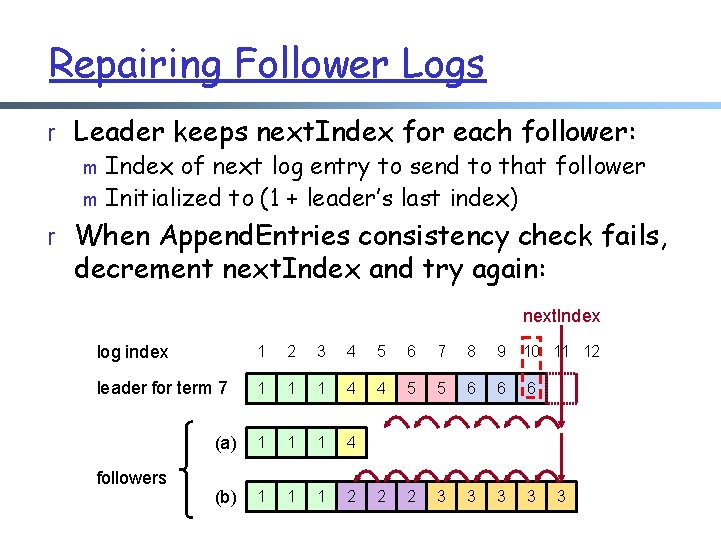

Repairing Follower Logs
- Leader keeps nextIndex for each follower:
  - Index of next log entry to send to that follower
  - Initialized to (1 + leader’s last index)
- When AppendEntries consistency check fails, decrement nextIndex and try again:

Term of each log entry:
log index:         1 2 3 4 5 6 7 8 9 10 11 12
leader for term 7: 1 1 1 4 4 5 5 6 6 6
follower (a):      1 1 1 4
follower (b):      1 1 1 2 2 2 3 3 3

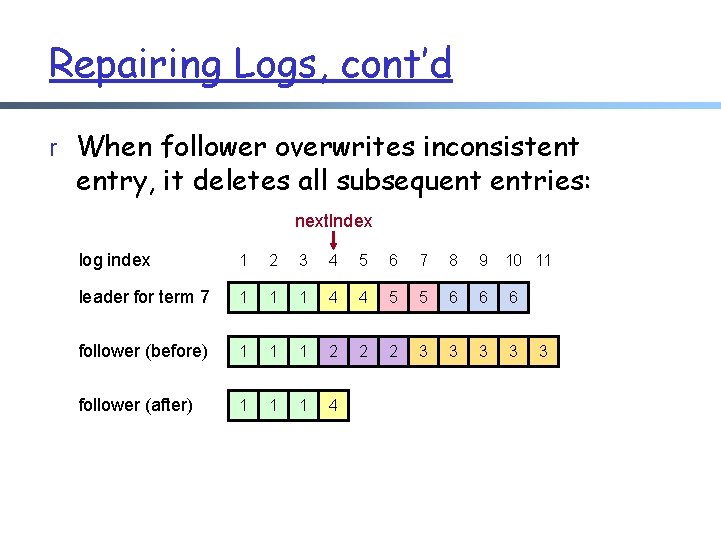

Repairing Logs, cont’d
- When follower overwrites inconsistent entry, it deletes all subsequent entries:

Term of each log entry:
log index:         1 2 3 4 5 6 7 8 9 10 11
leader for term 7: 1 1 1 4 4 5 5 6 6 6
follower (before): 1 1 1 2 2 2 3 3 3
follower (after):  1 1 1 4
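The repair procedure can be traced with a short simulation. This sketch models logs as lists of terms, sends at most one entry per AppendEntries call so that the intermediate "after" state is visible, and runs leader and follower in one process; these are simplifying assumptions, and the function names are illustrative.

```python
def append_entries(log, prev_index, prev_term, entries):
    """Follower side: consistency check, then overwrite or append."""
    if prev_index > 0 and (len(log) < prev_index
                           or log[prev_index - 1] != prev_term):
        return False
    for offset, term in enumerate(entries):
        pos = prev_index + offset  # 0-based slot for this entry
        if pos < len(log) and log[pos] != term:
            del log[pos:]  # inconsistent entry: drop it and all that follow
        if pos >= len(log):
            log.append(term)
    return True


def repair(leader_log, follower_log):
    """Leader side: back off nextIndex on failure, advance on success.
    Returns the follower log after each successful AppendEntries."""
    next_index = len(leader_log) + 1
    history = []
    while True:
        prev_index = next_index - 1
        prev_term = leader_log[prev_index - 1] if prev_index > 0 else 0
        entries = leader_log[prev_index:prev_index + 1]
        if append_entries(follower_log, prev_index, prev_term, entries):
            history.append(list(follower_log))
            if next_index > len(leader_log):
                return history
            next_index += 1
        else:
            next_index -= 1


leader = [1, 1, 1, 4, 4, 5, 5, 6, 6, 6]
follower = [1, 1, 1, 2, 2, 2, 3, 3, 3]
history = repair(leader, follower)
```

With these logs, the first successful call lands at nextIndex 4 and leaves the follower at `[1, 1, 1, 4]`; subsequent calls fill in the remaining entries until the logs are identical.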

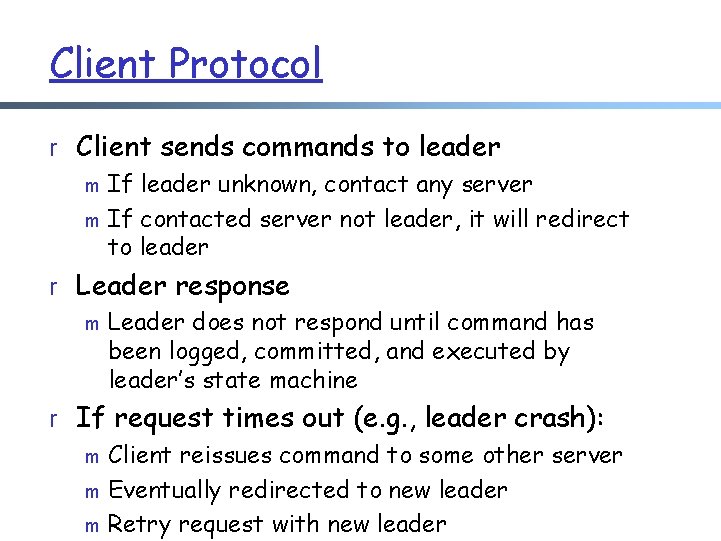

Client Protocol
- Client sends commands to leader
  - If leader unknown, contact any server
  - If contacted server not leader, it will redirect to leader
- Leader response
  - Leader does not respond until command has been logged, committed, and executed by leader’s state machine
- If request times out (e.g., leader crash):
  - Client reissues command to some other server
  - Eventually redirected to new leader
  - Retry request with new leader

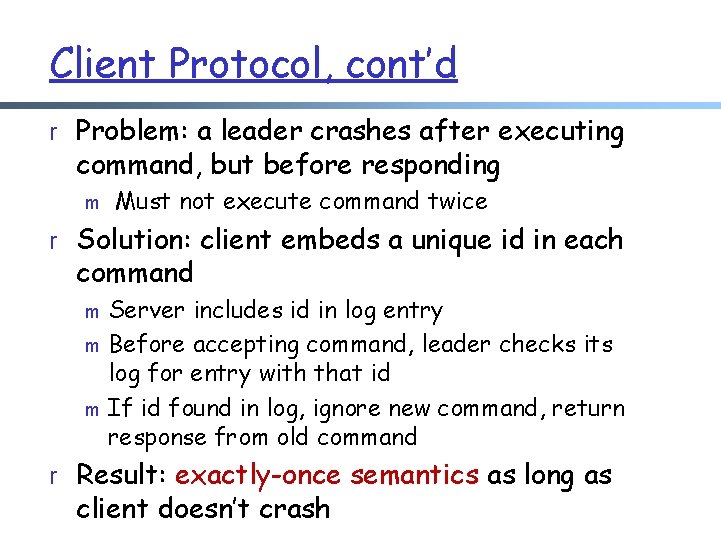

Client Protocol, cont’d
- Problem: a leader crashes after executing command, but before responding
  - Must not execute command twice
- Solution: client embeds a unique id in each command
  - Server includes id in log entry
  - Before accepting command, leader checks its log for entry with that id
  - If id found in log, ignore new command, return response from old command
- Result: exactly-once semantics as long as client doesn’t crash
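A minimal sketch of the duplicate-detection idea, assuming a single leader whose state machine is a counter. The `responses` dictionary stands in for searching the log by id, and replication and commitment are omitted; all names here are hypothetical.

```python
class Leader:
    """Toy leader: logs each command with its client-supplied id."""

    def __init__(self):
        self.log = []        # (command_id, amount) entries
        self.responses = {}  # command_id -> response, an index over the log
        self.counter = 0     # the state machine

    def handle(self, command_id, amount):
        if command_id in self.responses:
            # Id already in the log: ignore the new command, replay the response.
            return self.responses[command_id]
        self.log.append((command_id, amount))
        self.counter += amount
        self.responses[command_id] = self.counter
        return self.counter


leader = Leader()
first_reply = leader.handle("client1-req1", 5)
retry_reply = leader.handle("client1-req1", 5)  # client retried after a timeout
```

The retry gets the original response and the counter is incremented only once.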

Next 4 Slides Not Covered in Class

Configuration Changes
- System configuration:
  - ID, address for each server
  - Determines what constitutes a majority
- Consensus mechanism must support changes in the configuration:
  - Replace failed machine
  - Change degree of replication

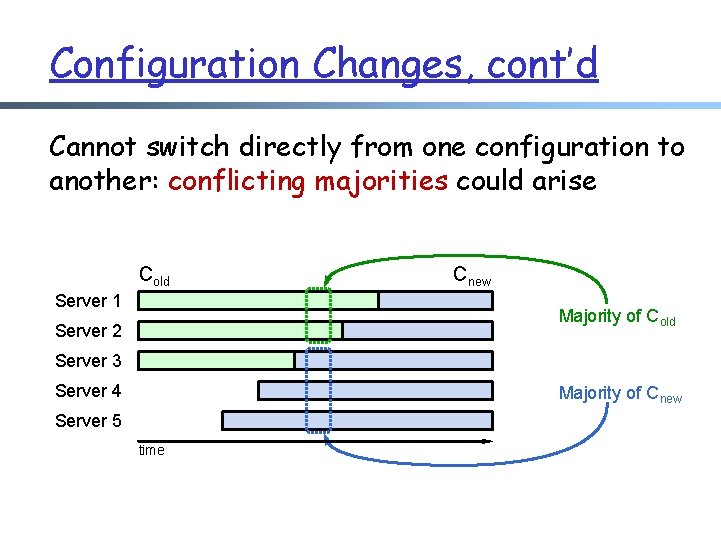

Configuration Changes, cont’d
Cannot switch directly from one configuration to another: conflicting majorities could arise
[Figure: Servers 1-5 over time during a direct switch from C_old to C_new; a majority of C_old and a majority of C_new exist at the same time]

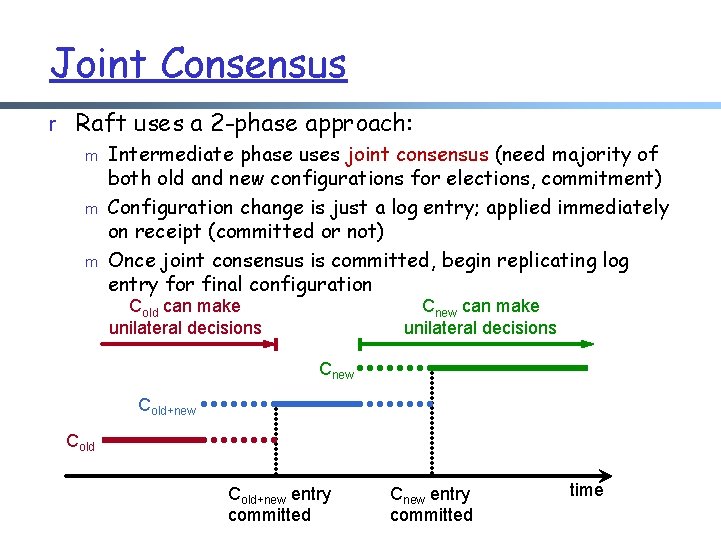

Joint Consensus
- Raft uses a 2-phase approach:
  - Intermediate phase uses joint consensus (need majority of both old and new configurations for elections, commitment)
  - Configuration change is just a log entry; applied immediately on receipt (committed or not)
  - Once joint consensus is committed, begin replicating log entry for final configuration
[Figure: timeline from C_old through C_old+new to C_new, marking when the C_old+new entry and the C_new entry are committed, and the periods in which C_old or C_new can make unilateral decisions]
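The difference between a plain majority and the joint-consensus requirement can be sketched with sets. The configurations below (three old servers, five new ones) are an assumed example, not read from the figure.

```python
def has_majority(votes, config):
    """True if the voters include more than half of the given configuration."""
    return len(votes & config) > len(config) // 2


def joint_agreement(votes, c_old, c_new):
    """Joint consensus: need a majority of BOTH configurations."""
    return has_majority(votes, c_old) and has_majority(votes, c_new)


# Hypothetical configurations for illustration.
c_old = {"s1", "s2", "s3"}
c_new = {"s1", "s2", "s3", "s4", "s5"}

old_side = {"s1", "s2"}        # majority of c_old only
new_side = {"s3", "s4", "s5"}  # majority of c_new only
both = {"s1", "s2", "s4"}      # majority of each configuration
```

`old_side` and `new_side` are disjoint, so with a direct switch they could decide independently; under joint consensus neither qualifies on its own.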

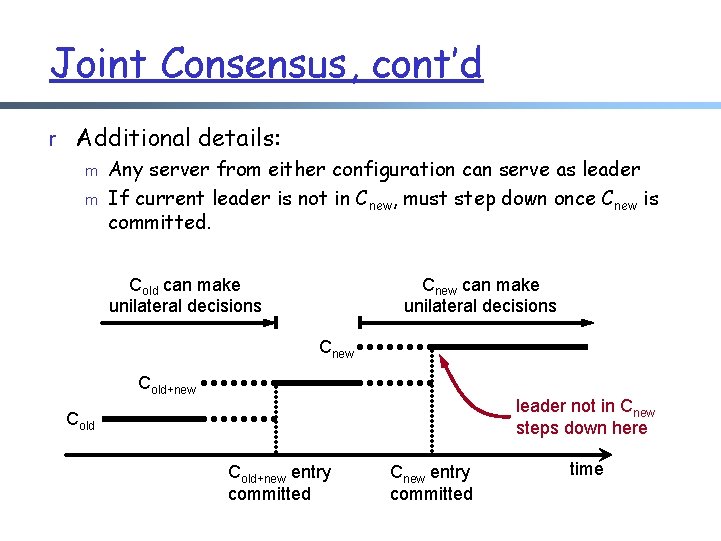

Joint Consensus, cont’d
- Additional details:
  - Any server from either configuration can serve as leader
  - If current leader is not in C_new, must step down once C_new is committed.
[Figure: timeline C_old, C_old+new, C_new with the C_old+new entry and C_new entry commit points; a leader not in C_new steps down when the C_new entry is committed]

End of Slides Not Covered in Class

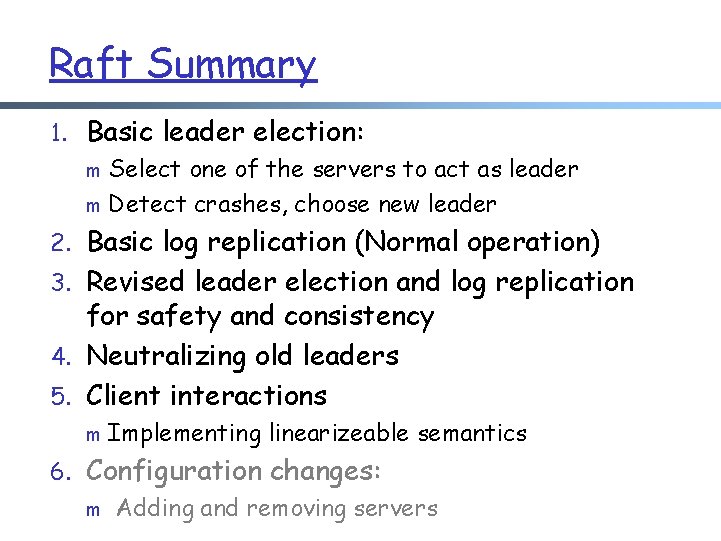

Raft Summary
1. Basic leader election:
  - Select one of the servers to act as leader
  - Detect crashes, choose new leader
2. Basic log replication (normal operation)
3. Revised leader election and log replication for safety and consistency
4. Neutralizing old leaders
5. Client interactions
  - Implementing linearizable semantics
6. Configuration changes:
  - Adding and removing servers

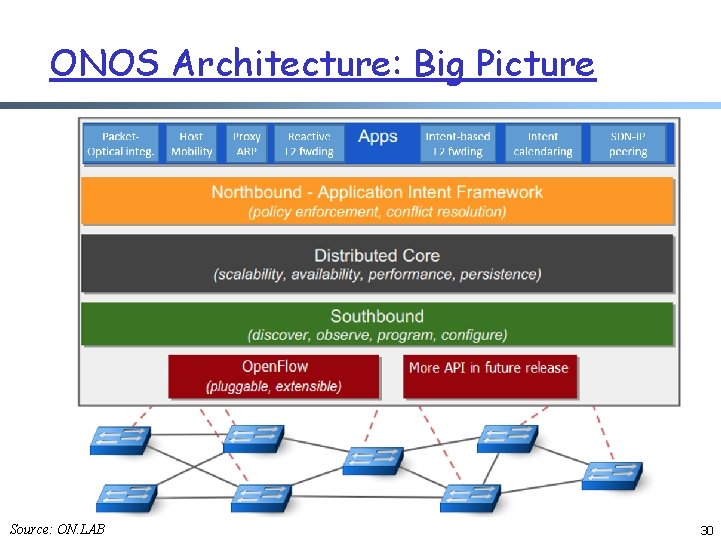

ONOS Architecture: Big Picture
Source: ON.LAB

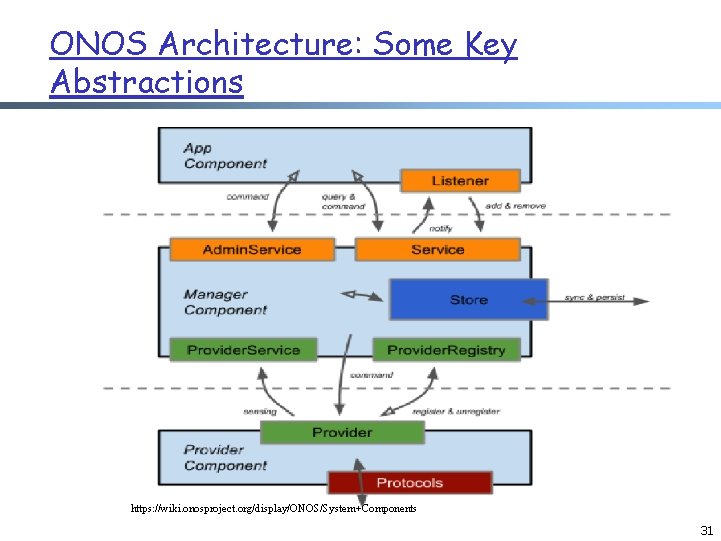

ONOS Architecture: Some Key Abstractions
https://wiki.onosproject.org/display/ONOS/System+Components

Discussions
- Programming tasks of a data store based programming model

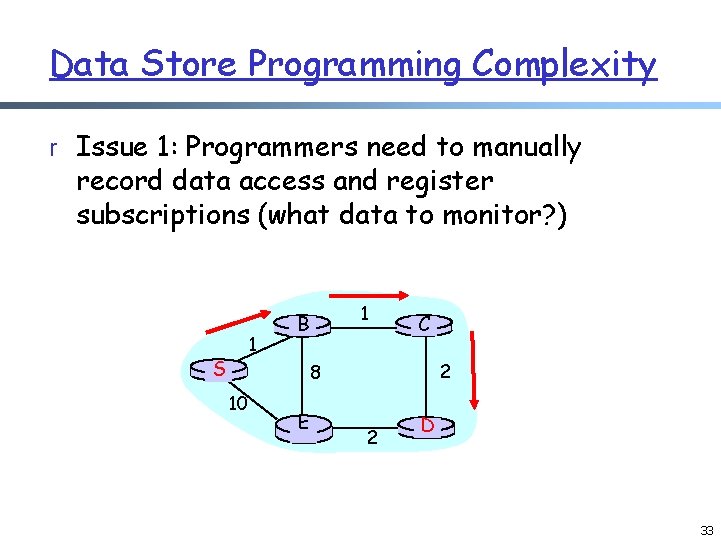

Data Store Programming Complexity
- Issue 1: Programmers need to manually record data access and register subscriptions (what data to monitor?)
[Figure: example graph with nodes S, B, C, D, E and numeric link labels]

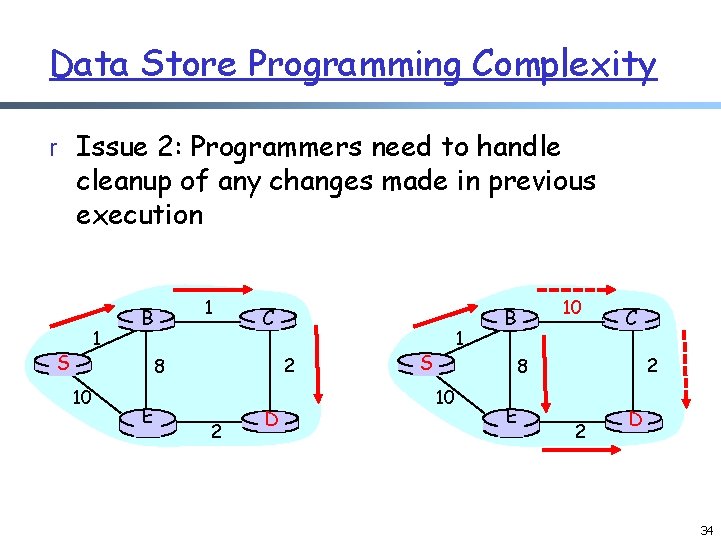

Data Store Programming Complexity
- Issue 2: Programmers need to handle cleanup of any changes made in previous execution
[Figure: the same example graph with nodes S, B, C, D, E, before and after one link label changes from 1 to 10]

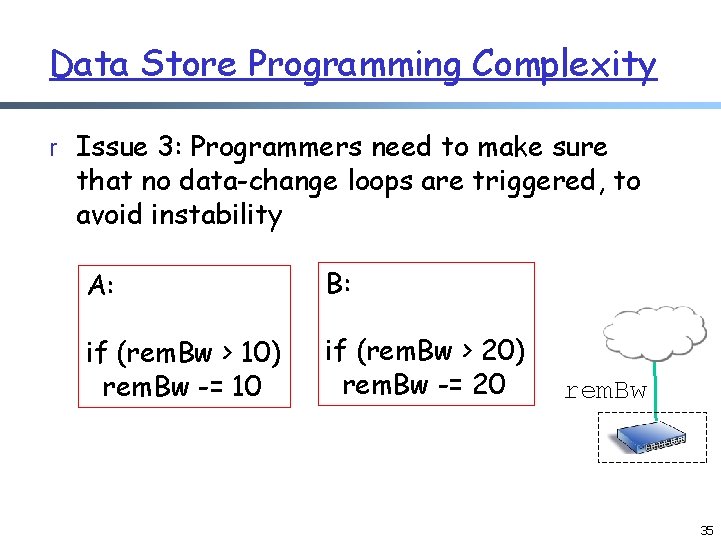

Data Store Programming Complexity
- Issue 3: Programmers need to make sure that no data-change loops are triggered, to avoid instability

A: if (remBw > 10) remBw -= 10
B: if (remBw > 20) remBw -= 20
[Figure: A and B both read and update remBw]
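One way to see the problem is to simulate A and B as subscribers that are re-triggered whenever the other one changes remBw. The notification scheme below (each change wakes the other handler) and the starting value 55 are assumptions chosen for illustration.

```python
from collections import deque


def run_subscriptions(rem_bw, first="A"):
    """Run handlers A and B until no handler changes rem_bw any more."""
    handlers = {
        "A": lambda bw: bw - 10 if bw > 10 else bw,
        "B": lambda bw: bw - 20 if bw > 20 else bw,
    }
    other = {"A": "B", "B": "A"}
    trace = []
    pending = deque([first])
    while pending:
        name = pending.popleft()
        new_bw = handlers[name](rem_bw)
        if new_bw != rem_bw:
            rem_bw = new_bw
            trace.append((name, rem_bw))
            pending.append(other[name])  # the change notifies the other subscriber
    return rem_bw, trace


final_a_first, trace_a_first = run_subscriptions(55, first="A")
final_b_first, trace_b_first = run_subscriptions(55, first="B")
```

A single trigger cascades into three updates here, and the final value depends on which handler runs first (15 versus 5), which is the kind of instability the programmer has to rule out by hand.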

Outline
- Admin and recap
- High-level datapath programming
  - blackbox (trace-tree)
  - whitebox
- Network OS supporting programmable networks
  - overview
  - OpenDaylight
  - distributed network OS (Paxos, RAFT)
  - from data store to function store

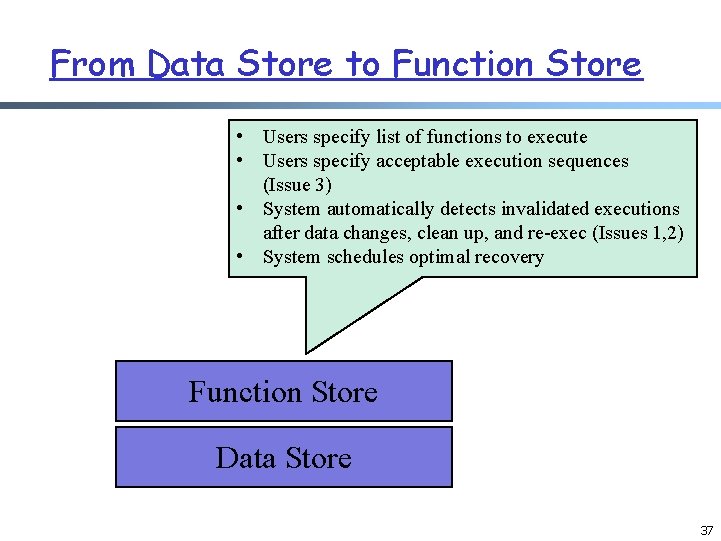

From Data Store to Function Store
- Users specify list of functions to execute
- Users specify acceptable execution sequences (Issue 3)
- System automatically detects invalidated executions after data changes, cleans up, and re-executes (Issues 1, 2)
- System schedules optimal recovery
[Figure: Function Store layered on top of Data Store]


APIs
- Function store API
  - add(func, attr, [req]): returns a handler
  - remove(handler)
  - specify the relationship between two functions via precedence (e.g., f1 -> f2 means f1 executes before f2)
    - A valid execution is one in which all precedence conditions are satisfied
- Data access API
  - read(xpath): wrapper for NOS data store read
  - update(xpath, op, val): wrapper for NOS data store update
  - test(xpath, boolean expression)
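To make the function store API concrete, here is a hypothetical in-memory sketch. Only `add` and `remove` are named on the slide; the `precede` and `is_valid` methods, the integer handlers, and treating `attr` and `req` as opaque values are assumptions for illustration.

```python
import itertools


class FunctionStore:
    """Hypothetical function store: registered functions plus precedence pairs."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.functions = {}      # handler -> (func, attr, req)
        self.precedence = set()  # (first, second) handler pairs

    def add(self, func, attr, req=None):
        handler = next(self._ids)
        self.functions[handler] = (func, attr, req)  # attr/req kept opaque
        return handler

    def remove(self, handler):
        del self.functions[handler]
        self.precedence = {(a, b) for a, b in self.precedence
                           if handler not in (a, b)}

    def precede(self, first, second):
        """Record first -> second: first must execute before second."""
        self.precedence.add((first, second))

    def is_valid(self, sequence):
        """A valid execution satisfies every precedence condition."""
        position = {h: i for i, h in enumerate(sequence)}
        return all(position[a] < position[b]
                   for a, b in self.precedence
                   if a in position and b in position)


store = FunctionStore()
f1 = store.add(lambda: "route", attr={})
f2 = store.add(lambda: "reserve", attr={})
store.precede(f1, f2)
```

With `f1 -> f2` registered, the sequence `[f1, f2]` is a valid execution and `[f2, f1]` is not.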

Issue to Think About
- Is the function store (λ-programming model) complete (i.e., compared w/ pure data store)?

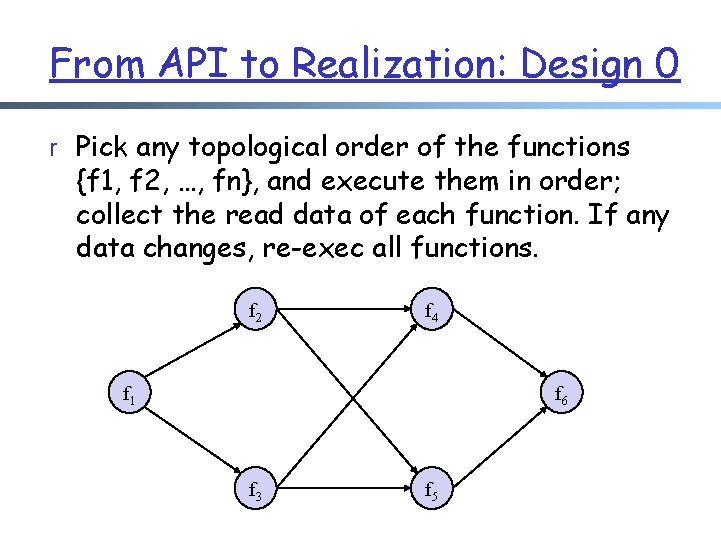

From API to Realization: Design 0
- Pick any topological order of the functions {f1, f2, …, fn}, and execute them in order; collect the read data of each function. If any data changes, re-exec all functions.
[Figure: precedence graph over functions f1-f6]
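Design 0 can be sketched with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The two functions, their precedence, and the dictionary used as a data store are hypothetical; the slide's actual graph over f1-f6 is not reproduced here.

```python
from graphlib import TopologicalSorter


def run_all(functions, predecessors, store):
    """Execute every function in a topological order of the precedence graph,
    recording which keys each function reads."""
    order = list(TopologicalSorter(predecessors).static_order())
    reads = {}
    for name in order:
        seen = set()

        def read(key):
            seen.add(key)  # collect the read data of this function
            return store[key]

        functions[name](read, store)
        reads[name] = seen
    return order, reads


# Hypothetical functions: f1 derives b from a, f2 derives c from b.
def f1(read, store):
    store["b"] = read("a") + 1


def f2(read, store):
    store["c"] = read("b") * 2


functions = {"f1": f1, "f2": f2}
predecessors = {"f1": set(), "f2": {"f1"}}  # f1 -> f2
store = {"a": 1}

order, reads = run_all(functions, predecessors, store)
store["a"] = 5                                          # some data changes ...
order, reads = run_all(functions, predecessors, store)  # ... so re-exec everything
```

Re-running everything is always correct but wasteful; the collected read sets are what a finer-grained design can exploit.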

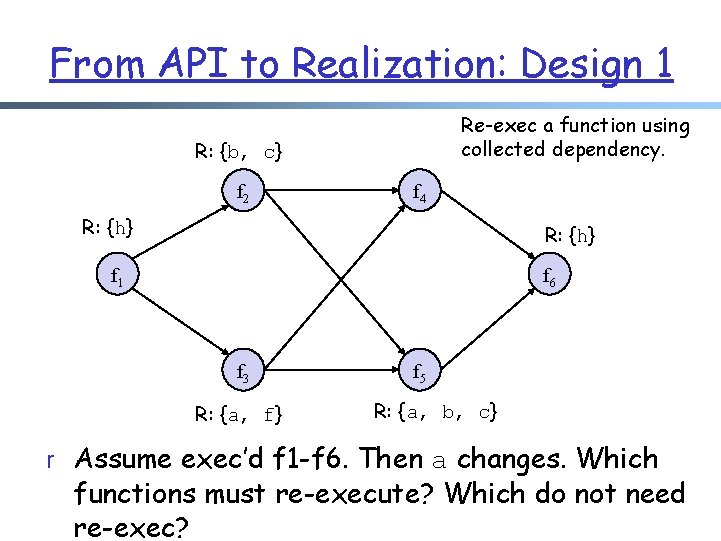

From API to Realization: Design 1
Re-exec a function using collected dependency.
[Figure: precedence graph over functions f1-f6, with collected read sets R: {b, c}, R: {h}, R: {a, f}, and R: {a, b, c} attached to some of the functions]
- Assume exec’d f1-f6. Then a changes. Which functions must re-execute? Which do not need re-exec?
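One way to reason about the question is a small dependency computation. The assignment of read sets to functions and the write set below are assumptions (the flattened figure does not say which function owns which set), and the sketch conservatively treats every re-executed function as changing everything it writes.

```python
def functions_to_reexecute(read_sets, write_sets, changed):
    """Functions whose reads are affected by the change, directly or through
    data written by another re-executed function."""
    dirty = set(changed)
    rerun = set()
    progress = True
    while progress:
        progress = False
        for name, reads in read_sets.items():
            if name not in rerun and reads & dirty:
                rerun.add(name)
                dirty |= write_sets.get(name, set())
                progress = True
    return rerun


# Hypothetical assignment of the slide's read sets to functions.
read_sets = {
    "f2": {"b", "c"},
    "f3": {"a", "f"},
    "f4": {"h"},
    "f5": {"a", "b", "c"},
}
write_sets = {"f3": {"h"}}  # assumed: f3 updates h

rerun = functions_to_reexecute(read_sets, write_sets, changed={"a"})
```

Under these assumptions f3 and f5 re-execute because they read a, f4 re-executes because f3 may change h, and f2 is left alone.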
