CS 430 INFO 430 Information Retrieval Lecture 2

- Slides: 26

CS 430 / INFO 430 Information Retrieval Lecture 2 Searching Full Text 2 1

Course Administration Web site: http: //www. cs. cornell. edu/courses/cs 430/2006 fa Notices: See the home page on the course Web site Sign-up sheet: If you did not sign up at the first class, please sign up now. Programming assignments: The web site require Java or C++. Other languages are under consideration. 2

Course Administration Please send all questions about the course to: cs 430 -l@lists. cornell. edu The message will be sent to William Arms Teaching Assistants 3

Course Administration Discussion class, Wednesday, August 30 Phillips Hall 203, 7: 30 to 8: 30 p. m. Prepare for the class as instructed on the course Web site. Participation in the discussion classes is one third of the grade, but tomorrow's class will not be included in the grade calculation. 4

Discussion Classes Format: Questions. Ask a member of the class to answer. Provide opportunity for others to comment. When answering: Stand up. Give your name. Make sure that the TA hears it. Speak clearly so that all the class can hear. Suggestions: Do not be shy at presenting partial answers. Differing viewpoints are welcome. 5

Discussion Class: Preparation You are given two problems to explore: • What is the medical evidence that red wine is good or bad for your health? • What in history led to the current turmoil in Palestine and the neighboring countries? In preparing for the class, focus on the question: What characteristics of the three search services are helpful or lead to difficulties in addressing these two problems? The aim of your preparation is to explore the search services, not to solve these two problems. Take care. Many of the documents that you might find are written from a one-sided viewpoint. 6

Discussion Class: Preparation In preparing for the discussion classes, you may find it useful to look at the slides from last year's class on the old Web site: http: //www. cs. cornell. edu/Courses/cs 430/2005 fa/ 7

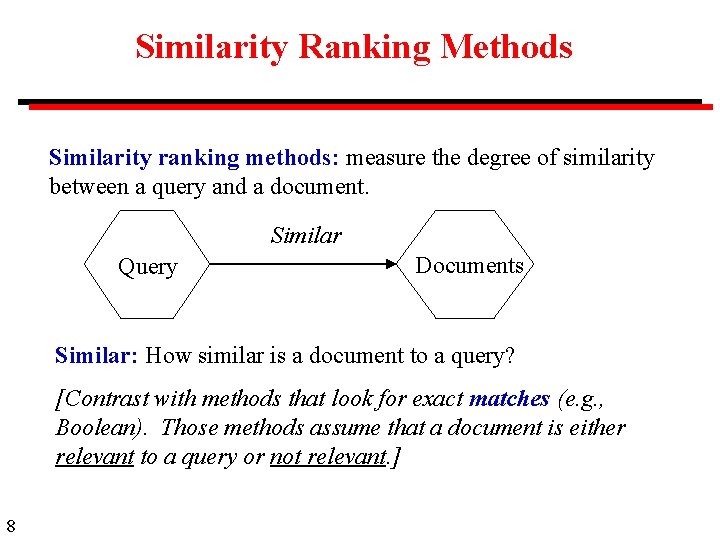

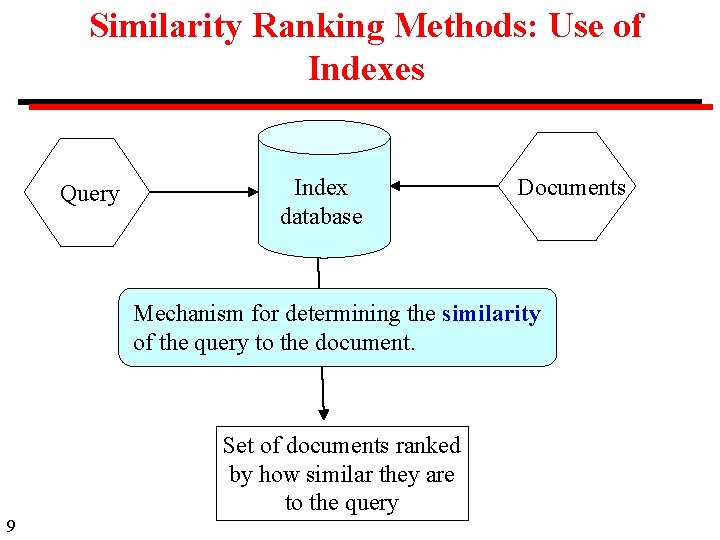

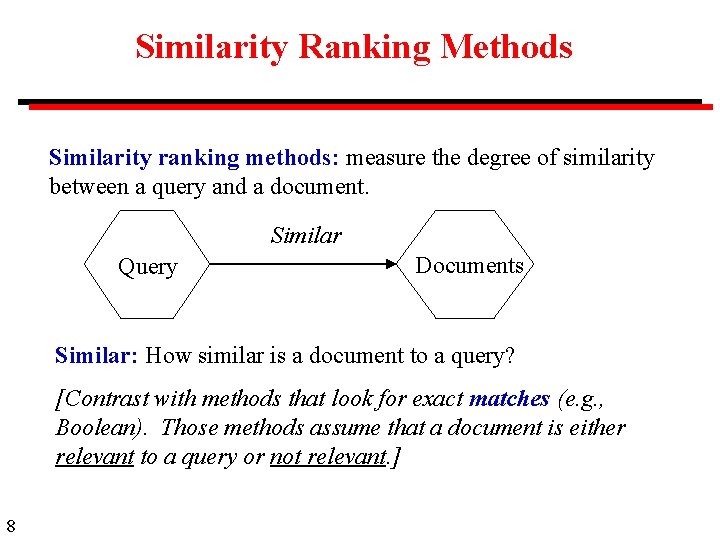

Similarity Ranking Methods Similarity ranking methods: measure the degree of similarity between a query and a document. Similar Query Documents Similar: How similar is a document to a query? [Contrast with methods that look for exact matches (e. g. , Boolean). Those methods assume that a document is either relevant to a query or not relevant. ] 8

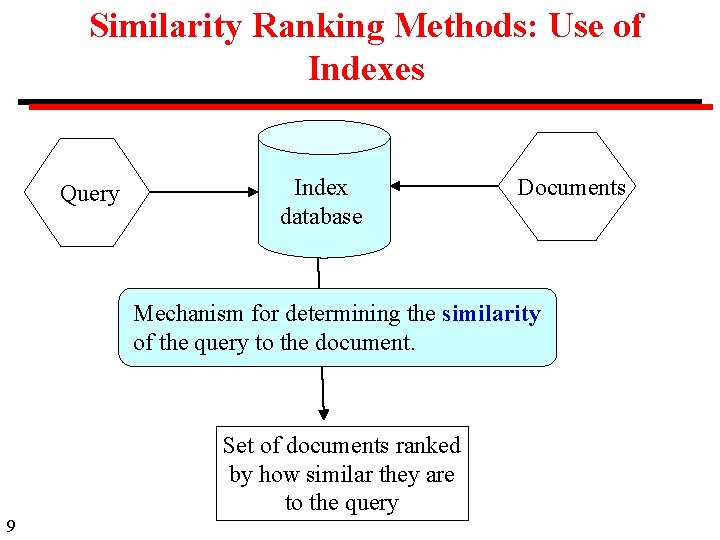

Similarity Ranking Methods: Use of Indexes Query Index database Documents Mechanism for determining the similarity of the query to the document. Set of documents ranked by how similar they are to the query 9

Term Similarity: Example Problem: Given two text documents, how similar are they? A documents can be any length from one word to thousands. The following examples use very short artificial documents. Example Here are three documents. How similar are they? d 1 d 2 d 3 10 ant bee dog hog dog ant dog cat gnu dog eel fox

Term Similarity: Basic Concept: Two documents are similar if they contain some of the same terms. 11

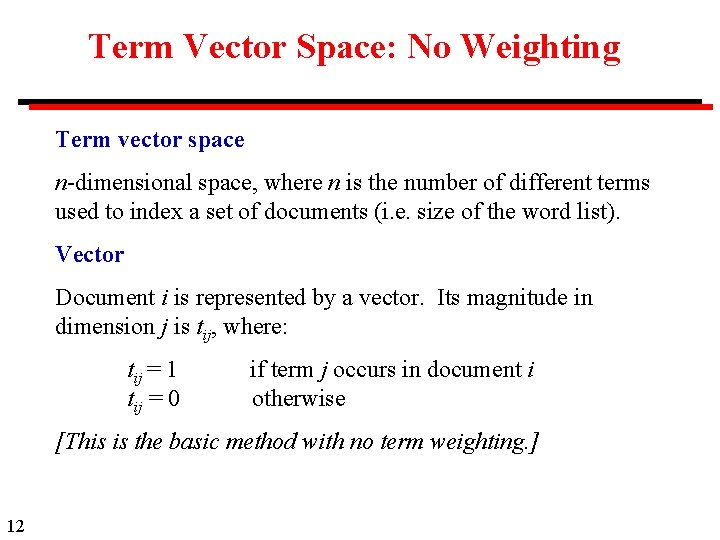

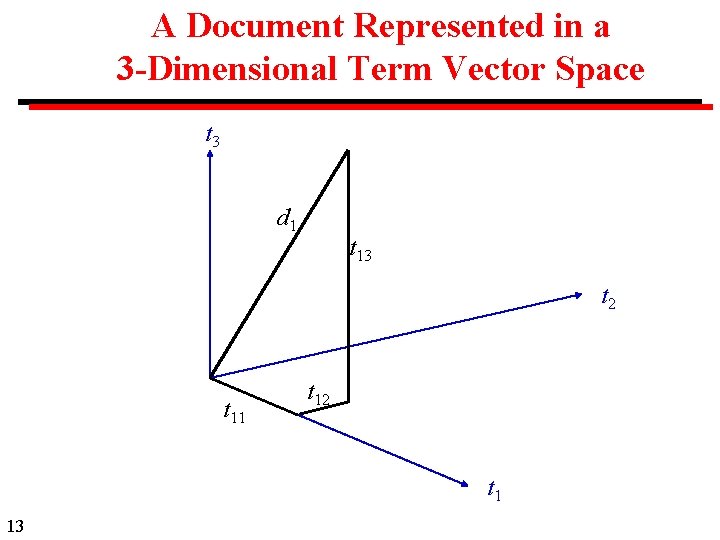

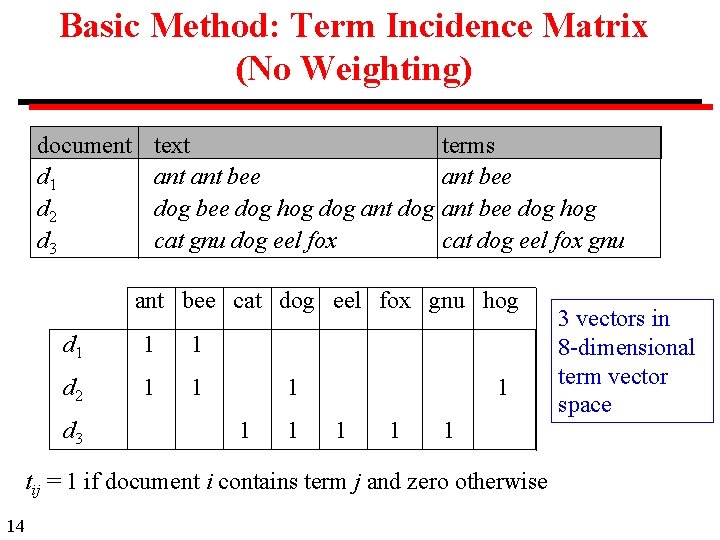

Term Vector Space: No Weighting Term vector space n-dimensional space, where n is the number of different terms used to index a set of documents (i. e. size of the word list). Vector Document i is represented by a vector. Its magnitude in dimension j is tij, where: tij = 1 tij = 0 if term j occurs in document i otherwise [This is the basic method with no term weighting. ] 12

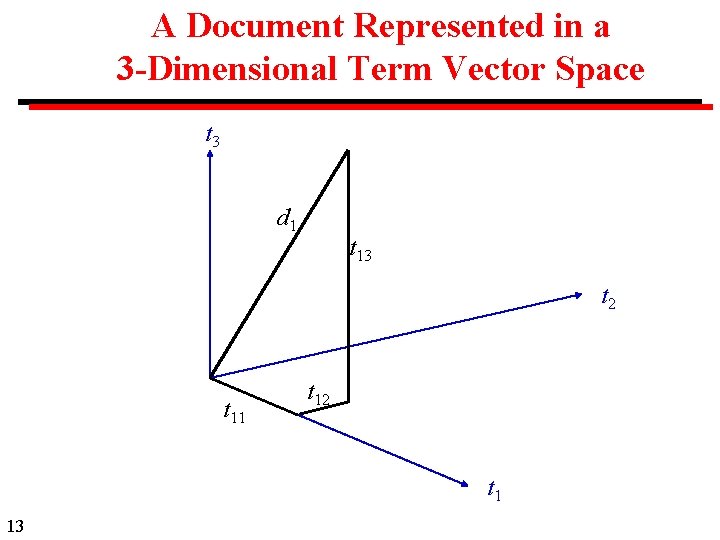

A Document Represented in a 3 -Dimensional Term Vector Space t 3 d 1 t 13 t 2 t 11 t 12 t 1 13

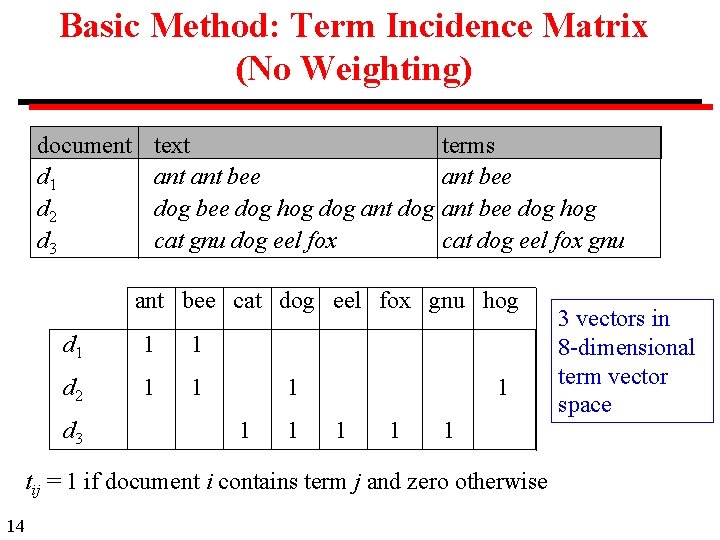

Basic Method: Term Incidence Matrix (No Weighting) document d 1 d 2 d 3 text ant bee dog hog dog ant dog cat gnu dog eel fox terms ant bee dog hog cat dog eel fox gnu ant bee cat dog eel fox gnu hog d 1 1 1 d 2 1 1 d 3 1 1 1 1 tij = 1 if document i contains term j and zero otherwise 14 3 vectors in 8 -dimensional term vector space

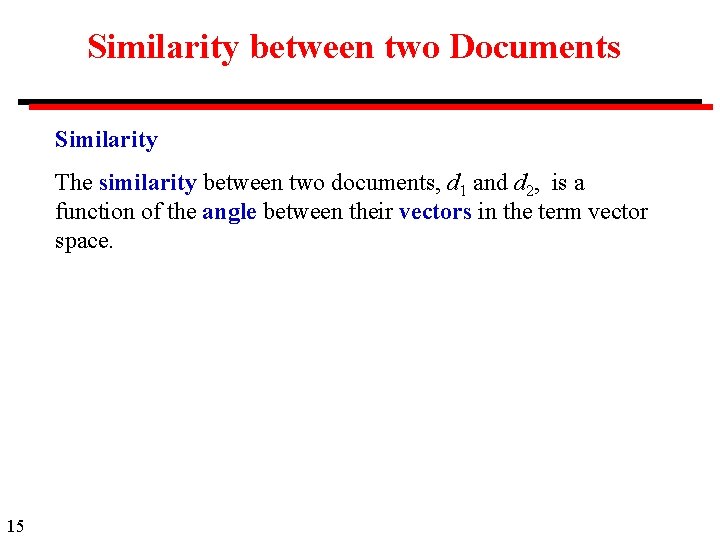

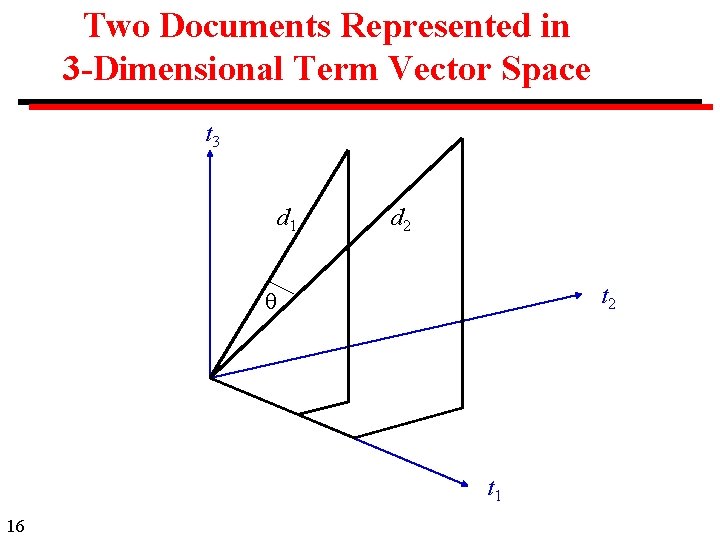

Similarity between two Documents Similarity The similarity between two documents, d 1 and d 2, is a function of the angle between their vectors in the term vector space. 15

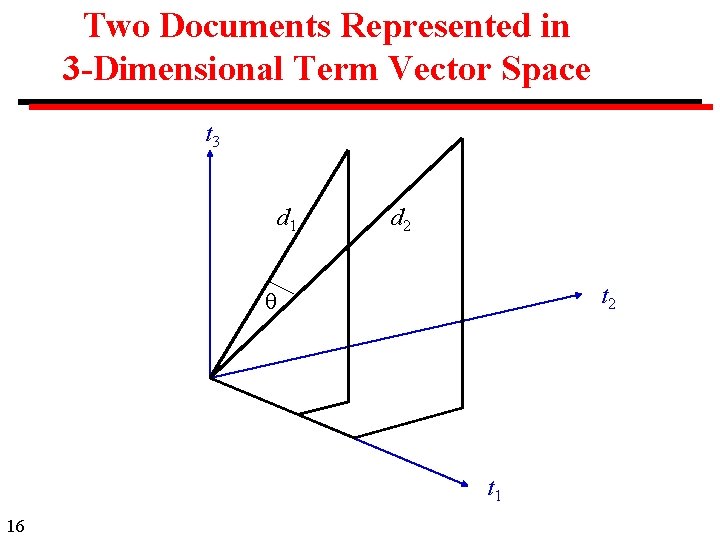

Two Documents Represented in 3 -Dimensional Term Vector Space t 3 d 1 d 2 t 1 16

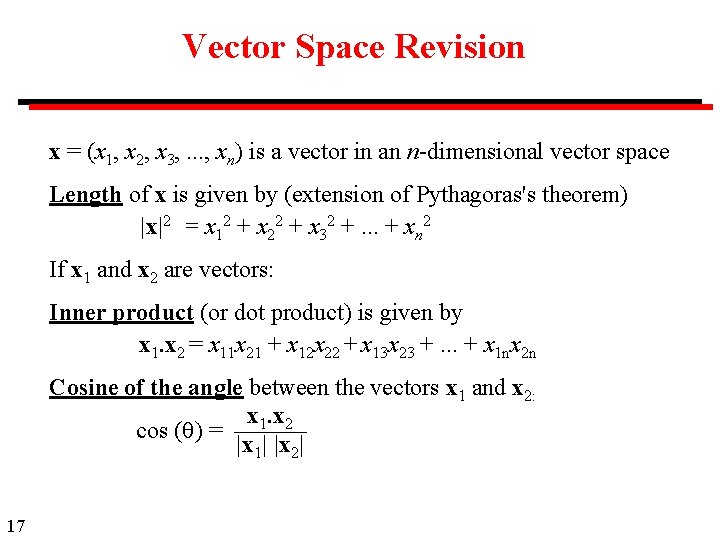

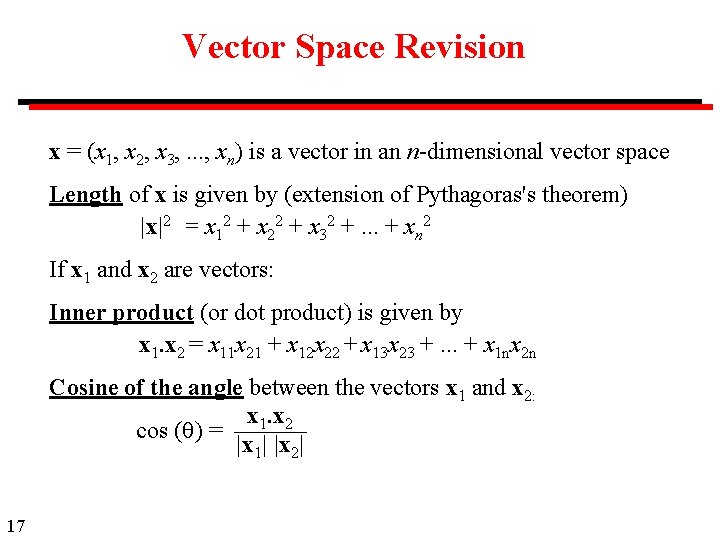

Vector Space Revision x = (x 1, x 2, x 3, . . . , xn) is a vector in an n-dimensional vector space Length of x is given by (extension of Pythagoras's theorem) |x|2 = x 12 + x 22 + x 32 +. . . + xn 2 If x 1 and x 2 are vectors: Inner product (or dot product) is given by x 1. x 2 = x 11 x 21 + x 12 x 22 + x 13 x 23 +. . . + x 1 nx 2 n Cosine of the angle between the vectors x 1 and x 2: x 1. x 2 cos ( ) = |x 1| |x 2| 17

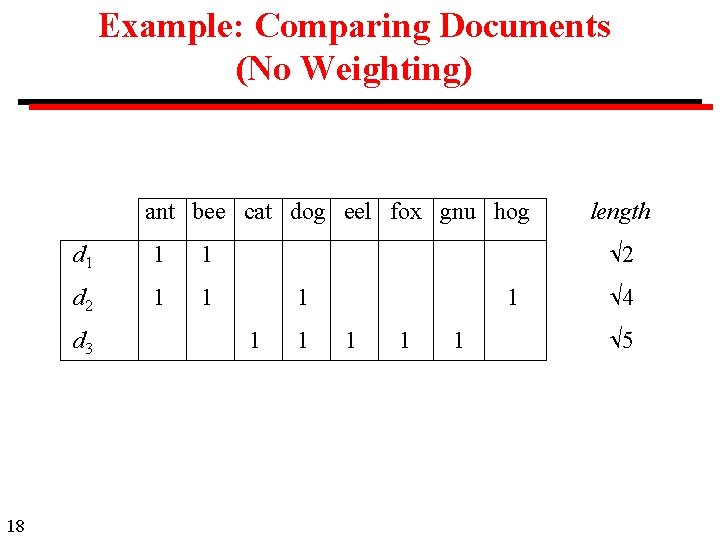

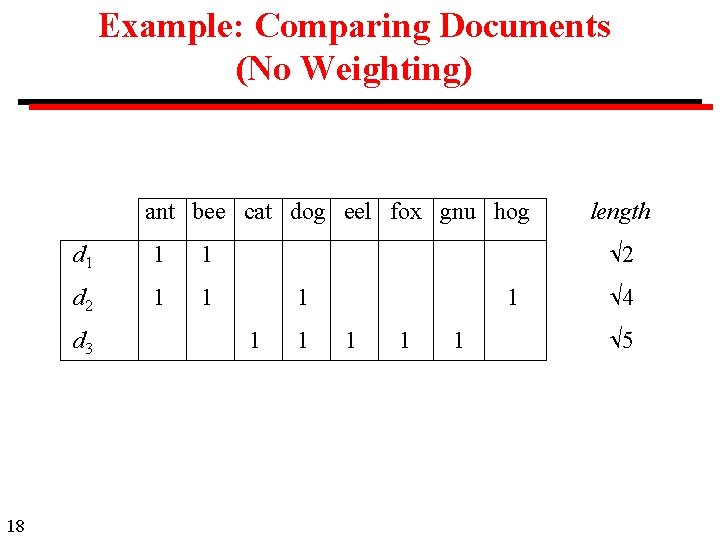

Example: Comparing Documents (No Weighting) ant bee cat dog eel fox gnu hog d 1 1 1 d 2 1 1 d 3 18 length 2 1 1 1 1 4 5

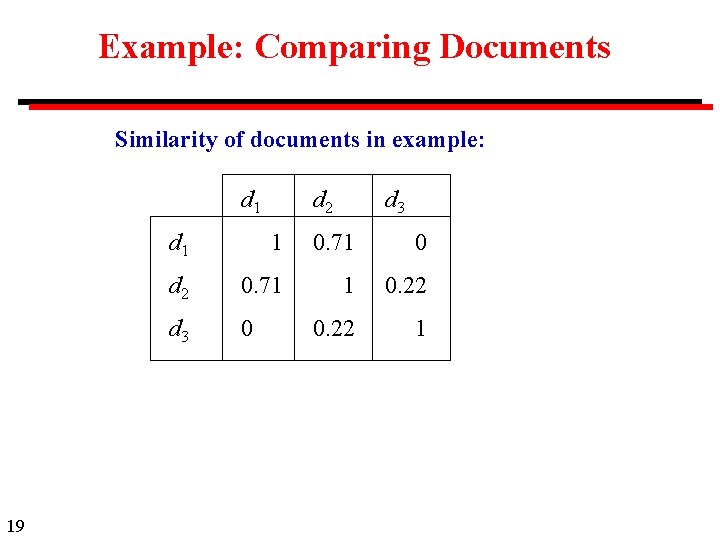

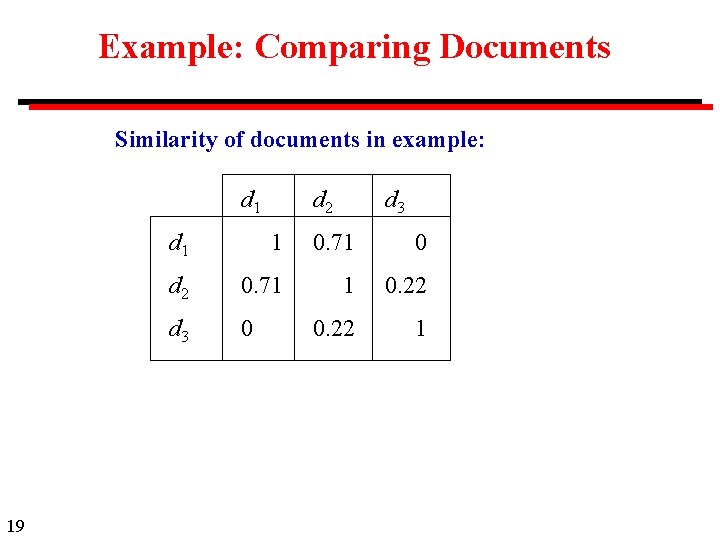

Example: Comparing Documents Similarity of documents in example: d 1 19 d 2 d 3 d 1 1 0. 71 0 d 2 0. 71 1 0. 22 d 3 0 0. 22 1

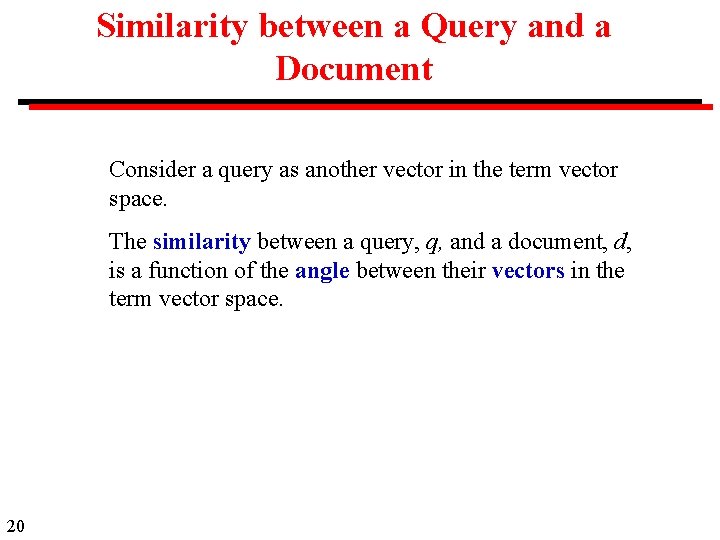

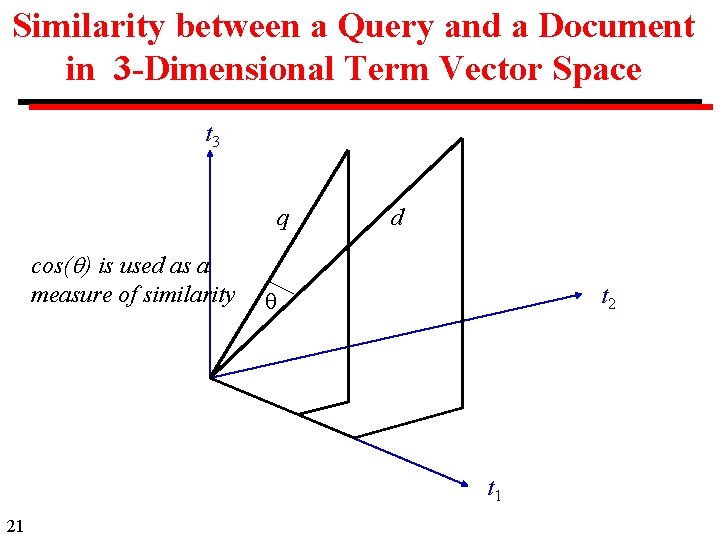

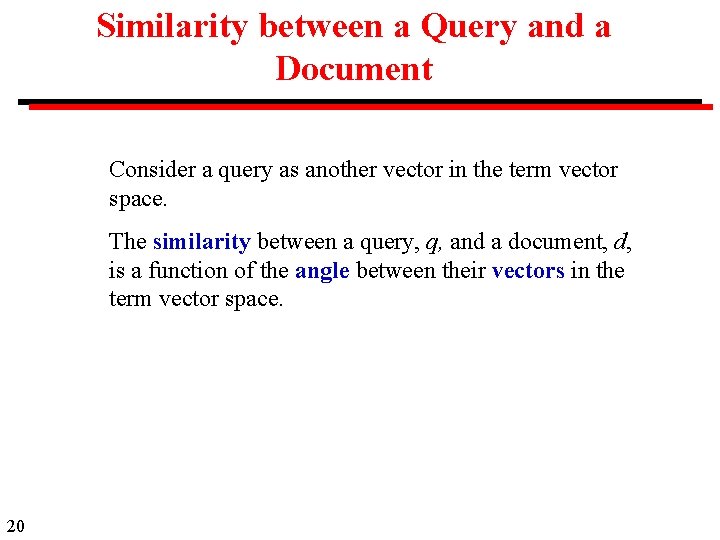

Similarity between a Query and a Document Consider a query as another vector in the term vector space. The similarity between a query, q, and a document, d, is a function of the angle between their vectors in the term vector space. 20

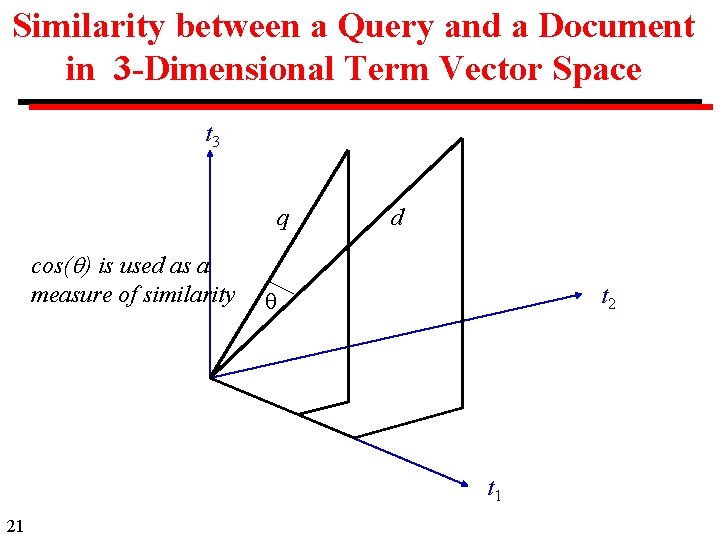

Similarity between a Query and a Document in 3 -Dimensional Term Vector Space t 3 q cos( ) is used as a measure of similarity d t 2 t 1 21

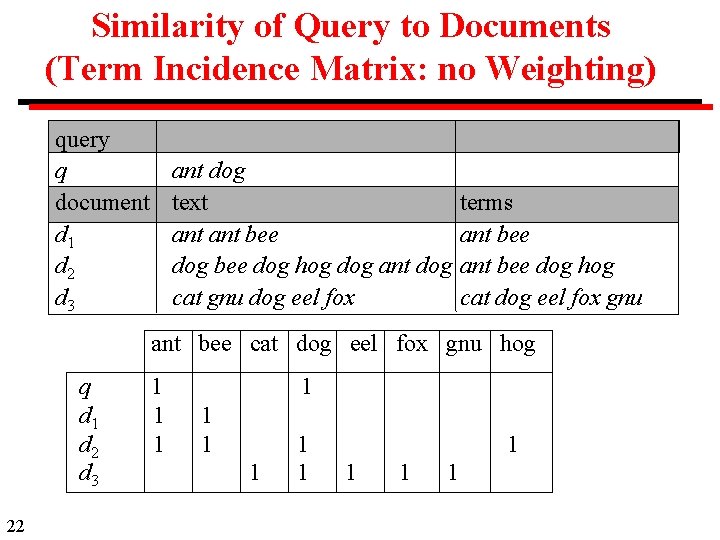

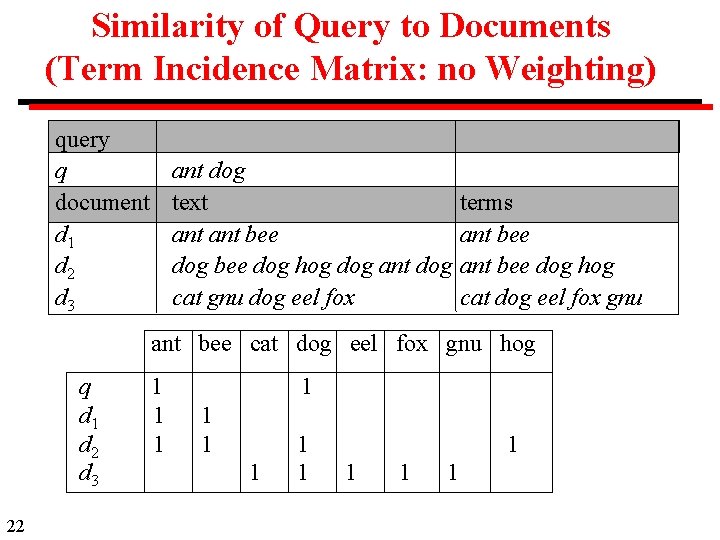

Similarity of Query to Documents (Term Incidence Matrix: no Weighting) query q document d 1 d 2 d 3 ant dog text ant bee dog hog dog ant dog cat gnu dog eel fox terms ant bee dog hog cat dog eel fox gnu ant bee cat dog eel fox gnu hog q d 1 d 2 d 3 22 1 1 1 1

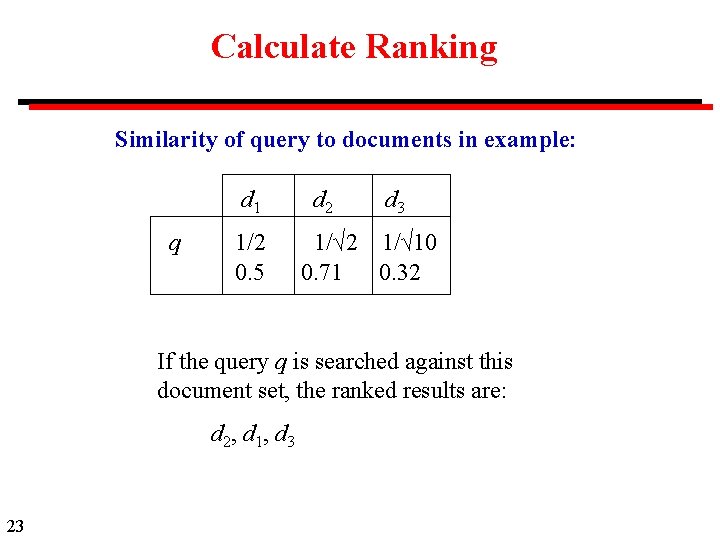

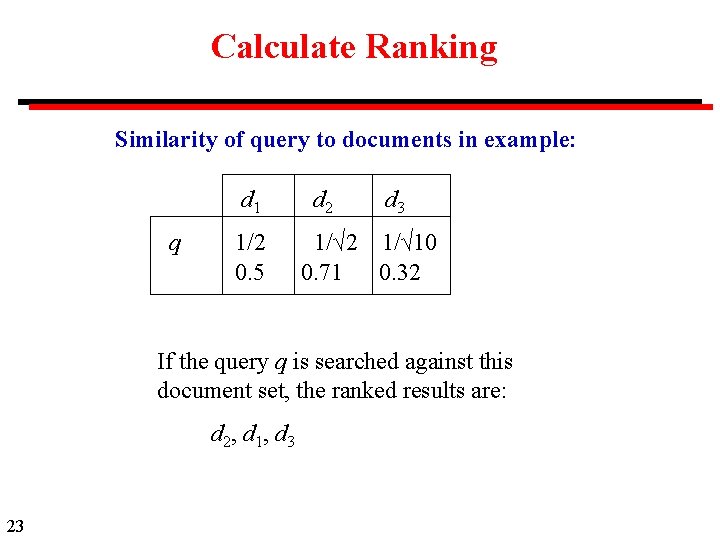

Calculate Ranking Similarity of query to documents in example: d 1 q 1/2 0. 5 d 2 d 3 1/√ 2 1/√ 10 0. 71 0. 32 If the query q is searched against this document set, the ranked results are: d 2, d 1, d 3 23

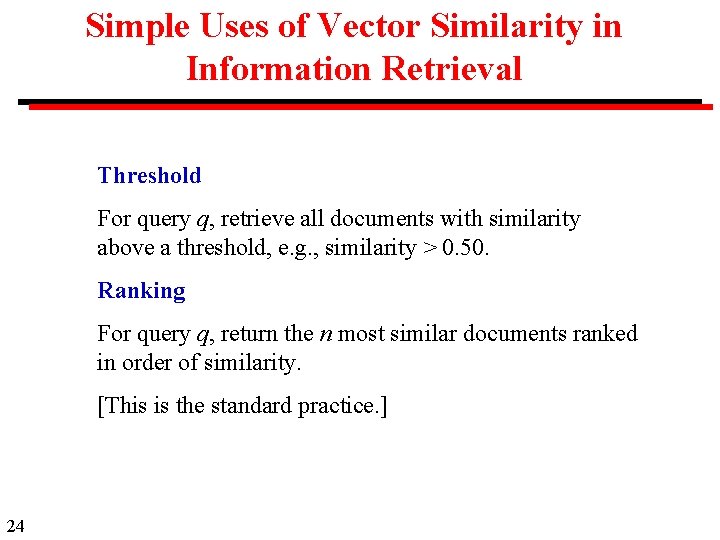

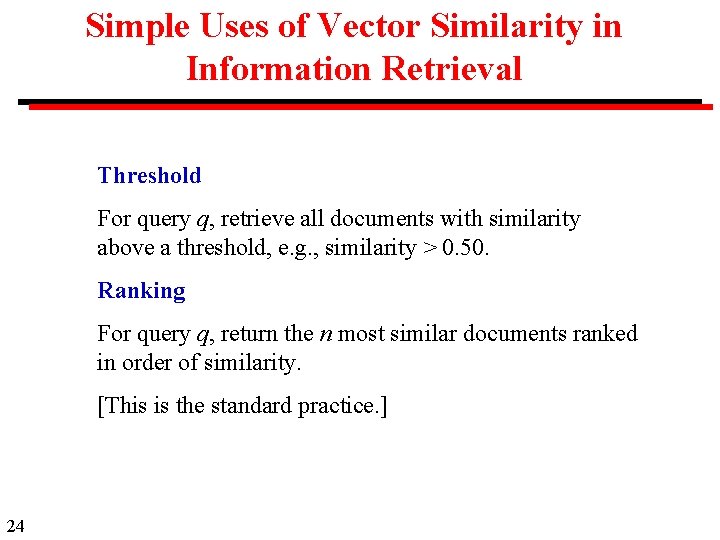

Simple Uses of Vector Similarity in Information Retrieval Threshold For query q, retrieve all documents with similarity above a threshold, e. g. , similarity > 0. 50. Ranking For query q, return the n most similar documents ranked in order of similarity. [This is the standard practice. ] 24

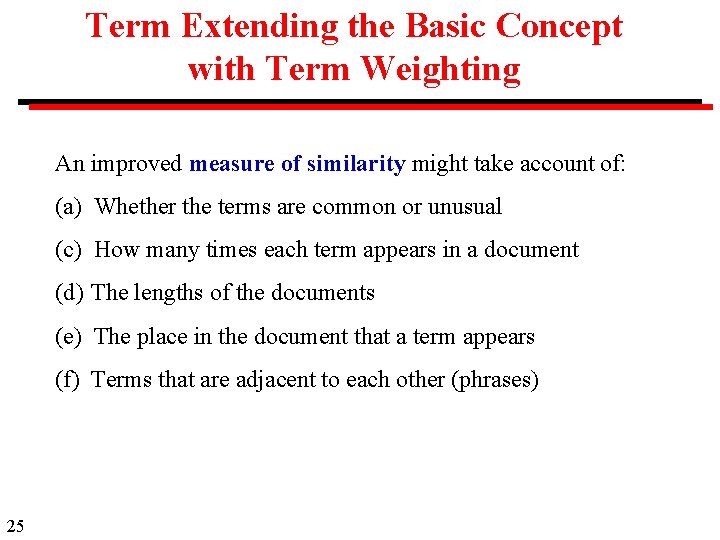

Term Extending the Basic Concept with Term Weighting An improved measure of similarity might take account of: (a) Whether the terms are common or unusual (c) How many times each term appears in a document (d) The lengths of the documents (e) The place in the document that a term appears (f) Terms that are adjacent to each other (phrases) 25

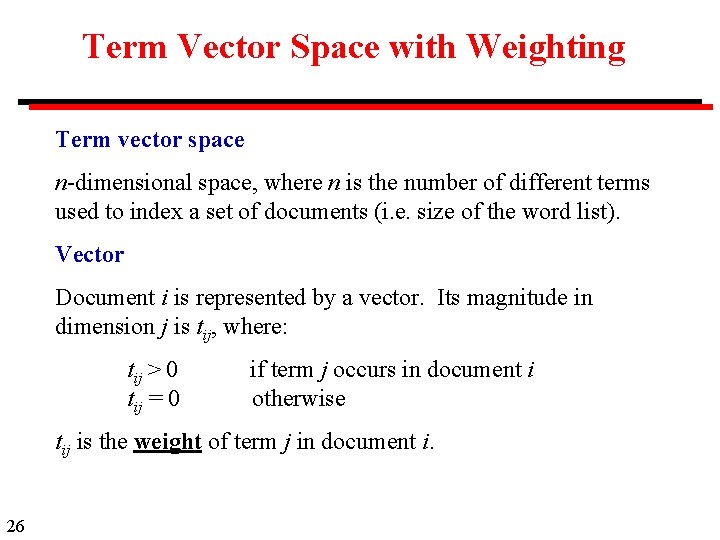

Term Vector Space with Weighting Term vector space n-dimensional space, where n is the number of different terms used to index a set of documents (i. e. size of the word list). Vector Document i is represented by a vector. Its magnitude in dimension j is tij, where: tij > 0 tij = 0 if term j occurs in document i otherwise tij is the weight of term j in document i. 26