CS 4100 Artificial Intelligence Prof C Hafner Class

- Slides: 48

CS 4100 Artificial Intelligence Prof. C. Hafner Class Notes March 20, 2012

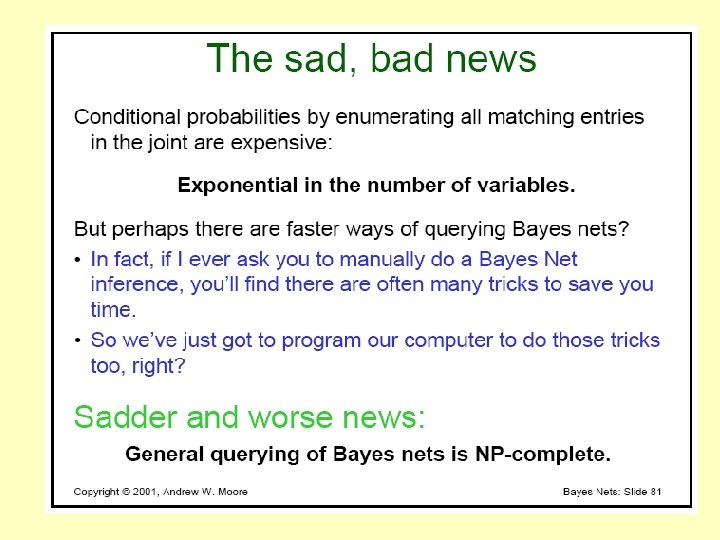

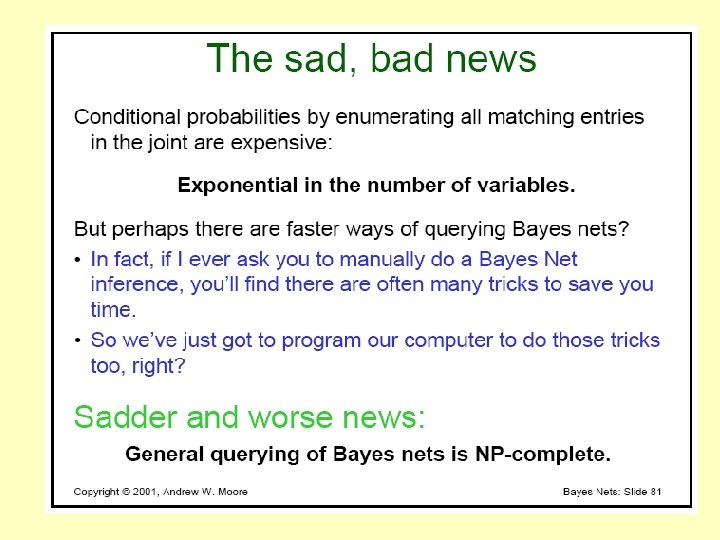

Outline

- Midterm planning problem: solution at http://www.ccs.neu.edu/course/cs4100sp12/classnotes/midterm-planning.doc
- Discuss term projects
- Continue uncertain reasoning in AI
  - Probability distribution (review)
  - Conditional Probability and the Chain Rule (cont.)
  - Bayes' Rule
  - Independence, "Expert" systems and the combinatorics of joint probabilities
  - Bayes networks
  - Assignment 6

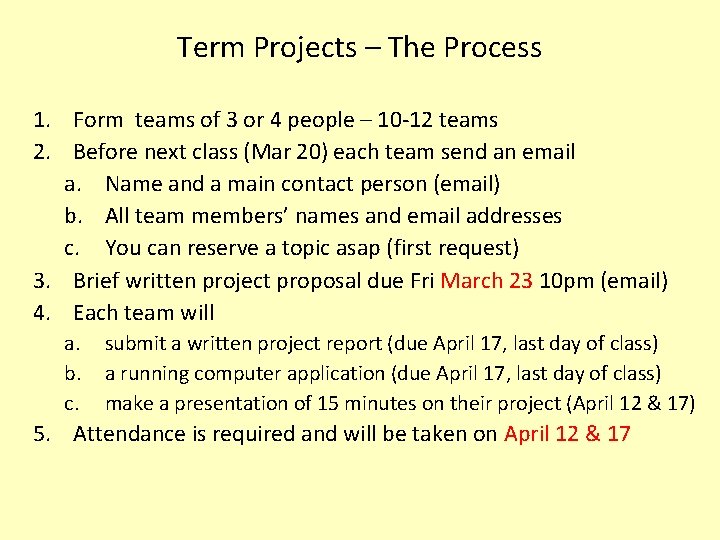

Term Projects – The Process

1. Form teams of 3 or 4 people (10-12 teams)
2. Before next class (Mar 20), each team sends an email with:
   a. Name and a main contact person (email)
   b. All team members' names and email addresses
   c. You can reserve a topic asap (first request)
3. Brief written project proposal due Fri March 23, 10 pm (email)
4. Each team will:
   a. submit a written project report (due April 17, last day of class)
   b. submit a running computer application (due April 17, last day of class)
   c. make a presentation of 15 minutes on their project (April 12 & 17)
5. Attendance is required and will be taken on April 12 & 17

Term Projects – The Content

1. Select a domain
2. Model the domain
   a. "Logical/state model": define an ontology w/ example world state
   b. Implementation in Protégé: demo with some queries
   c. "Dynamics model" (of how the world changes), using Situation Calculus formalism or STRIPS-type operators
3. Define and solve example planning problems: initial state → goal state
   a. Specify planning axioms or STRIPS-type operators
   b. Show (on paper) a proof or derivation of a trivial plan and then a more challenging one, using resolution or the POP algorithm

Ontology Design Example: Protégé

- Simplest example: Dog project
- Cooking ontology
  - Overall Design
  - Implement class taxonomy
  - Slots representing data types
  - Slots containing relationships

Cooking ontology (for meal or party planning)

- FoodItem: taxonomy can include Dairy, Meat, Starch, Veg, Fruit, Sweets. A higher level can be Protein, Carbs. Should include nuts due to possible allergies
- A Dish: taxonomy can be Appetizer, Main Course, Salad, Dessert. A Dish has Ingredients, which are instances of FoodItem
- A Recipe
  - Has Servings (a number)
  - Has steps
    - Each step includes a FoodItem, Amount, and Prep
    - An Amount is a number and units
    - Prep is a string
- Relationships
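Before building the classes and slots in Protégé, it can help to sketch the cooking ontology as plain data classes. The following is only a rough Python sketch, not a Protégé export: the field names, the `is_allergen` flag, and the link from `Recipe` to `Dish` are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FoodItem:
    name: str
    category: str              # e.g. "Dairy", "Meat", "Starch", "Veg", "Fruit", "Sweets", "Nuts"
    is_allergen: bool = False  # hypothetical slot, motivated by the nut-allergy remark


@dataclass
class Dish:
    name: str
    course: str                # e.g. "Appetizer", "Main Course", "Salad", "Dessert"
    ingredients: List[FoodItem] = field(default_factory=list)


@dataclass
class Amount:
    quantity: float            # "a number"
    units: str                 # "and units"


@dataclass
class Step:
    food_item: FoodItem
    amount: Amount
    prep: str                  # free-text preparation instruction


@dataclass
class Recipe:
    dish: Dish                 # assumed relationship: a recipe makes one dish
    servings: int
    steps: List[Step] = field(default_factory=list)


# Example world state: one dish with a one-step recipe.
walnuts = FoodItem("walnuts", "Nuts", is_allergen=True)
salad = Dish("Walnut salad", "Salad", ingredients=[walnuts])
recipe = Recipe(salad, servings=4,
                steps=[Step(walnuts, Amount(0.5, "cup"), "chop coarsely")])

# A simple query: which dishes contain an allergen?
allergen_dishes = [d.name for d in [salad]
                   if any(i.is_allergen for i in d.ingredients)]
```

Each data class corresponds to a class in the ontology, and each field corresponds to a slot holding either a data type (`servings`, `prep`) or a relationship (`ingredients`, `food_item`).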

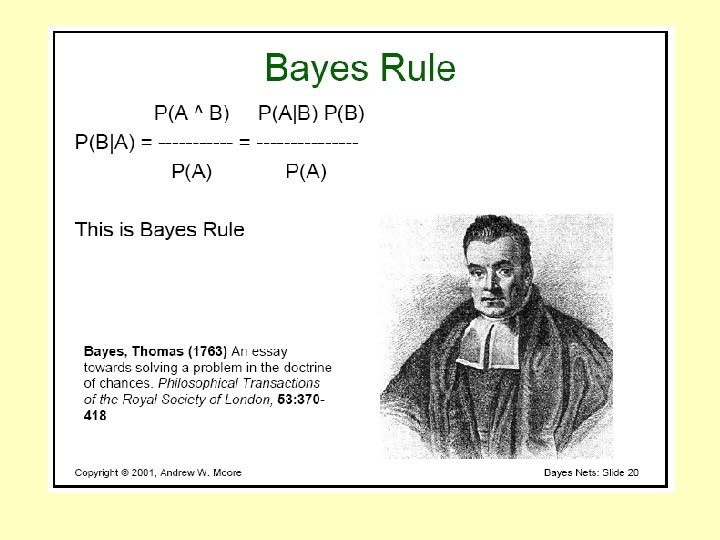

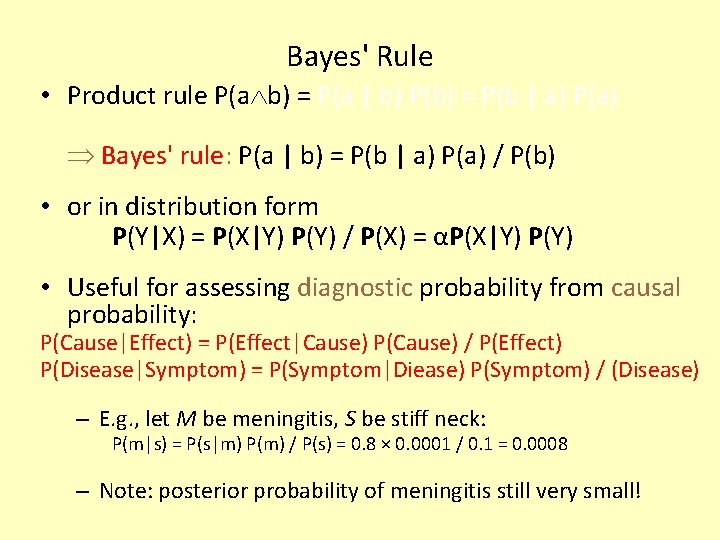

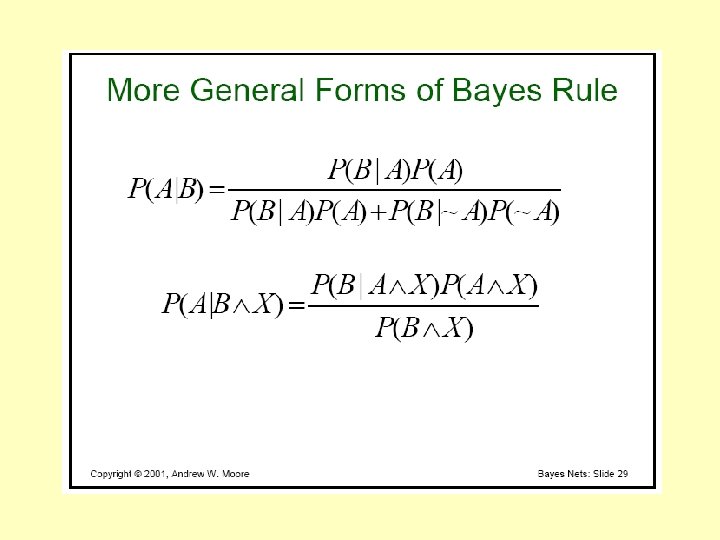

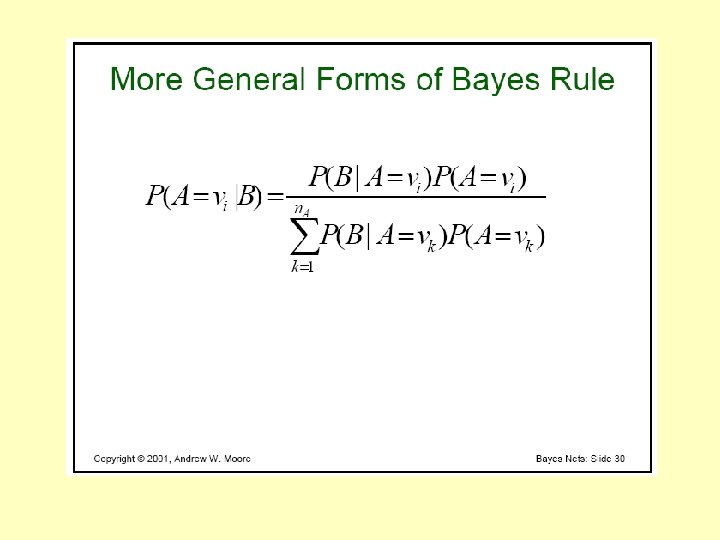

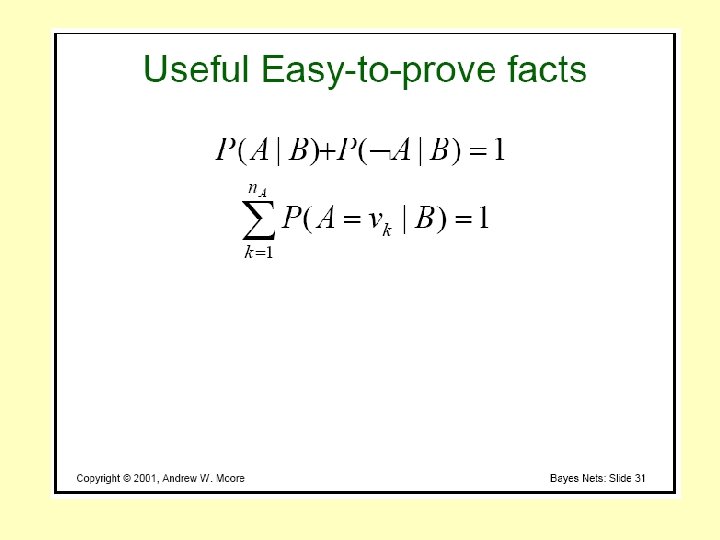

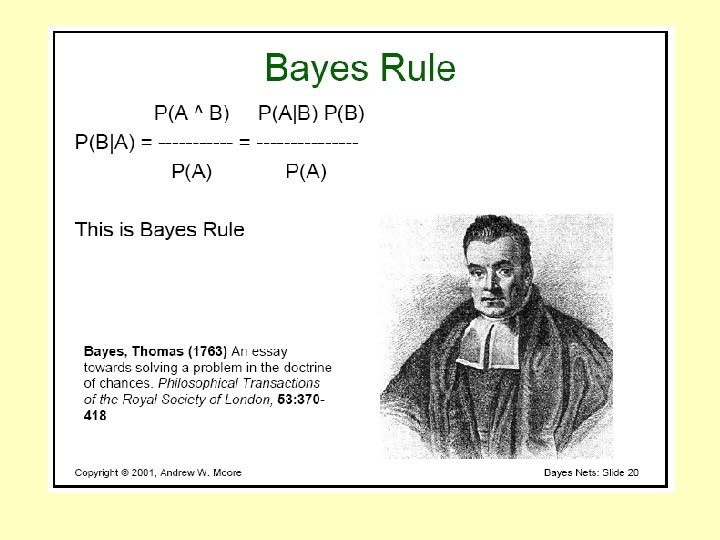

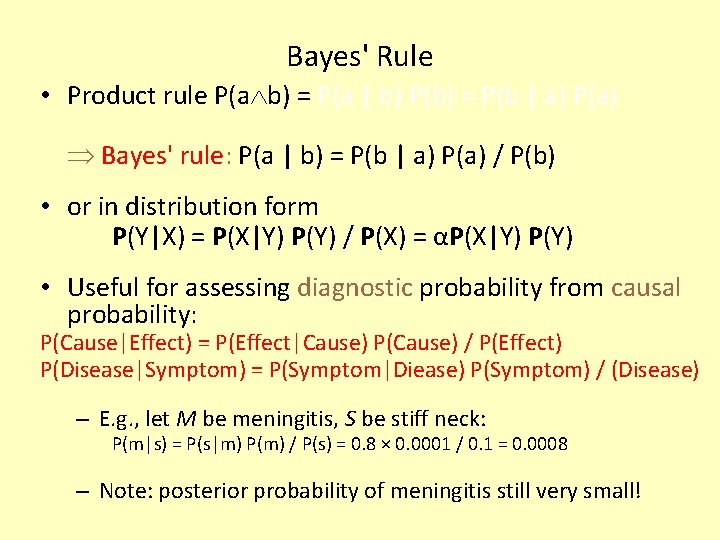

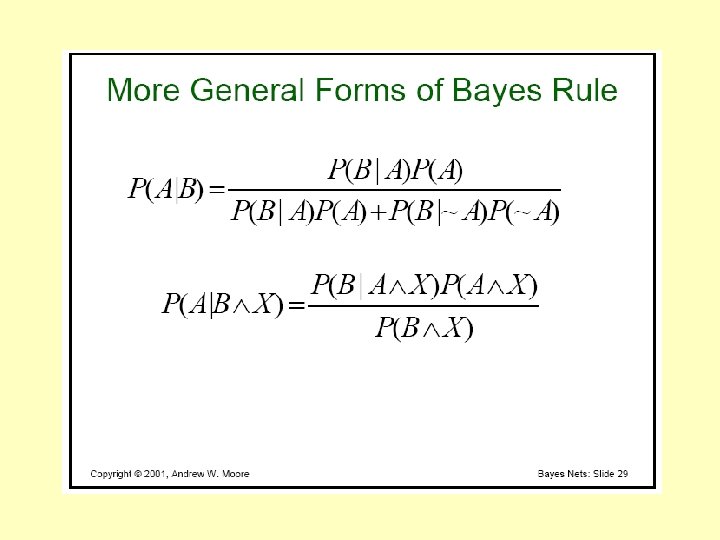

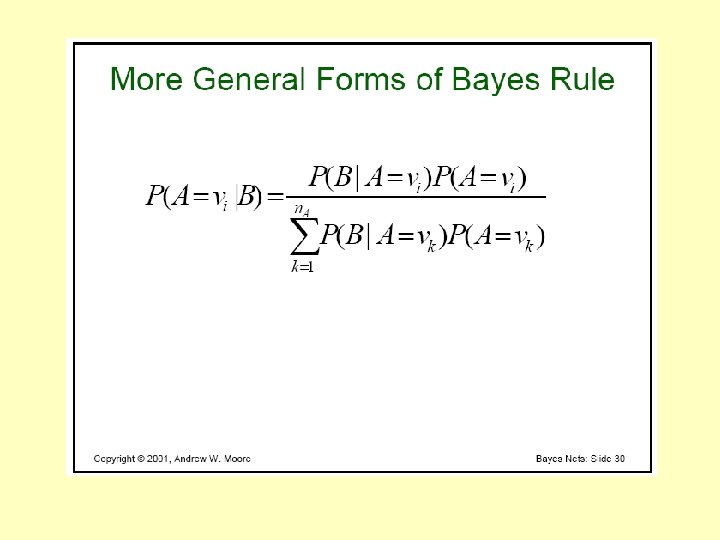

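Bayes' Rule

- Product rule: $P(a \land b) = P(a \mid b)\,P(b) = P(b \mid a)\,P(a)$
- Bayes' rule: $P(a \mid b) = P(b \mid a)\,P(a) \,/\, P(b)$
- or in distribution form: $\mathbf{P}(Y \mid X) = \mathbf{P}(X \mid Y)\,\mathbf{P}(Y) \,/\, \mathbf{P}(X) = \alpha\,\mathbf{P}(X \mid Y)\,\mathbf{P}(Y)$
- Useful for assessing diagnostic probability from causal probability:
  - $P(\mathit{Cause} \mid \mathit{Effect}) = P(\mathit{Effect} \mid \mathit{Cause})\,P(\mathit{Cause}) \,/\, P(\mathit{Effect})$
  - $P(\mathit{Disease} \mid \mathit{Symptom}) = P(\mathit{Symptom} \mid \mathit{Disease})\,P(\mathit{Disease}) \,/\, P(\mathit{Symptom})$
  - E.g., let M be meningitis, S be stiff neck: $P(m \mid s) = P(s \mid m)\,P(m) \,/\, P(s) = 0.8 \times 0.0001 \,/\, 0.1 = 0.0008$
  - Note: the posterior probability of meningitis is still very small!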
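The meningitis arithmetic can be checked with a few lines of Python. This is a minimal sketch; the function name `bayes_rule` is chosen here for illustration, and the three input probabilities are the ones given on the slide.

```python
def bayes_rule(p_effect_given_cause: float, p_cause: float, p_effect: float) -> float:
    """Return P(cause | effect) = P(effect | cause) * P(cause) / P(effect)."""
    return p_effect_given_cause * p_cause / p_effect


# Slide values: P(s | m) = 0.8, P(m) = 0.0001, P(s) = 0.1
p_m_given_s = bayes_rule(0.8, 0.0001, 0.1)
print(round(p_m_given_s, 6))  # 0.0008
```

Even though a stiff neck is a strong indicator given meningitis (0.8), the tiny prior (0.0001) keeps the posterior small.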

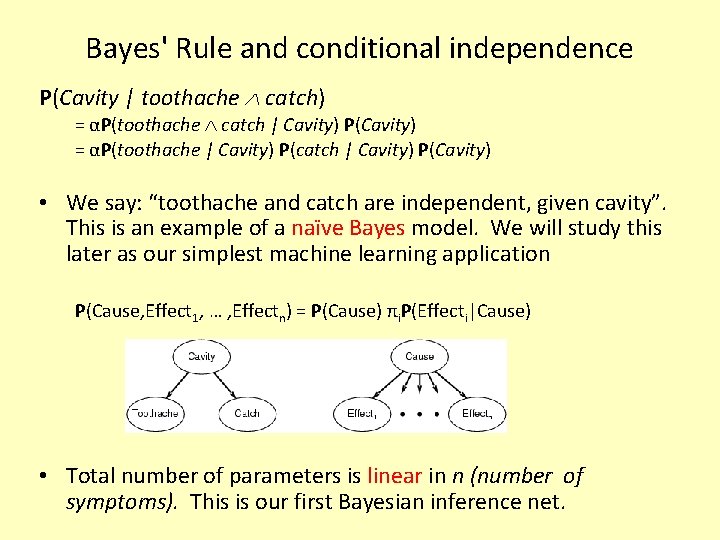

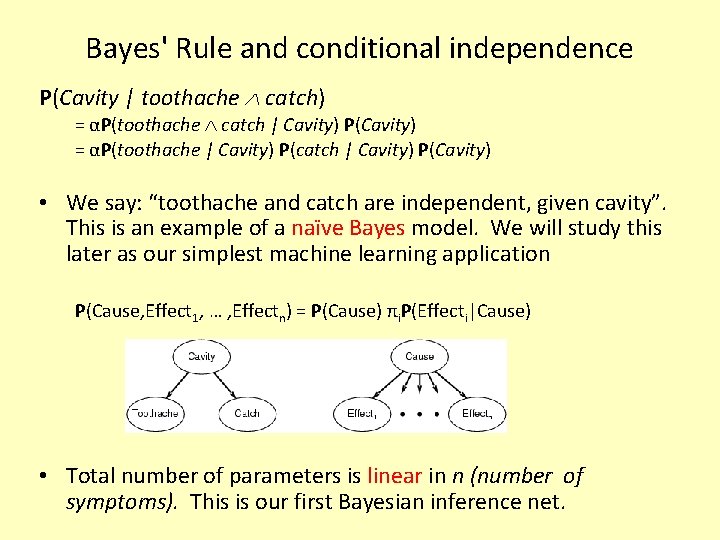

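Bayes' Rule and conditional independence

$$\mathbf{P}(\mathit{Cavity} \mid \mathit{toothache} \land \mathit{catch}) = \alpha\,\mathbf{P}(\mathit{toothache} \land \mathit{catch} \mid \mathit{Cavity})\,\mathbf{P}(\mathit{Cavity}) = \alpha\,\mathbf{P}(\mathit{toothache} \mid \mathit{Cavity})\,\mathbf{P}(\mathit{catch} \mid \mathit{Cavity})\,\mathbf{P}(\mathit{Cavity})$$

- We say: "toothache and catch are independent, given cavity". This is an example of a naïve Bayes model. We will study this later as our simplest machine learning application:

$$\mathbf{P}(\mathit{Cause}, \mathit{Effect}_1, \ldots, \mathit{Effect}_n) = \mathbf{P}(\mathit{Cause}) \prod_i \mathbf{P}(\mathit{Effect}_i \mid \mathit{Cause})$$

- Total number of parameters is linear in n (number of symptoms). This is our first Bayesian inference net.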
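The role of the normalizing constant α in the naïve Bayes model can be sketched in Python. The slide gives no numbers for the dentist example, so the probabilities below are assumed purely for illustration, and the function name `naive_bayes_posterior` is hypothetical.

```python
def naive_bayes_posterior(prior, likelihoods, evidence):
    """Compute P(Cause | evidence) under the naive Bayes assumption.

    prior:       {cause_value: P(cause_value)}
    likelihoods: {cause_value: {effect_name: P(effect is true | cause_value)}}
    evidence:    {effect_name: observed truth value}
    """
    unnormalized = {}
    for cause_value, p_cause in prior.items():
        score = p_cause
        for effect, observed in evidence.items():
            p_true = likelihoods[cause_value][effect]
            score *= p_true if observed else 1.0 - p_true
        unnormalized[cause_value] = score
    alpha = 1.0 / sum(unnormalized.values())  # normalizing constant
    return {c: alpha * s for c, s in unnormalized.items()}


# Assumed illustrative numbers (not from the slides).
prior = {True: 0.2, False: 0.8}                      # P(Cavity)
likelihoods = {
    True:  {"toothache": 0.6, "catch": 0.9},         # P(effect | cavity)
    False: {"toothache": 0.1, "catch": 0.2},         # P(effect | no cavity)
}
posterior = naive_bayes_posterior(prior, likelihoods,
                                  {"toothache": True, "catch": True})
```

With these assumed numbers the unnormalized scores are 0.2 × 0.6 × 0.9 = 0.108 for cavity and 0.8 × 0.1 × 0.2 = 0.016 for no cavity, so α = 1 / 0.124 and the posterior probability of a cavity is 0.108 / 0.124, roughly 0.87.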

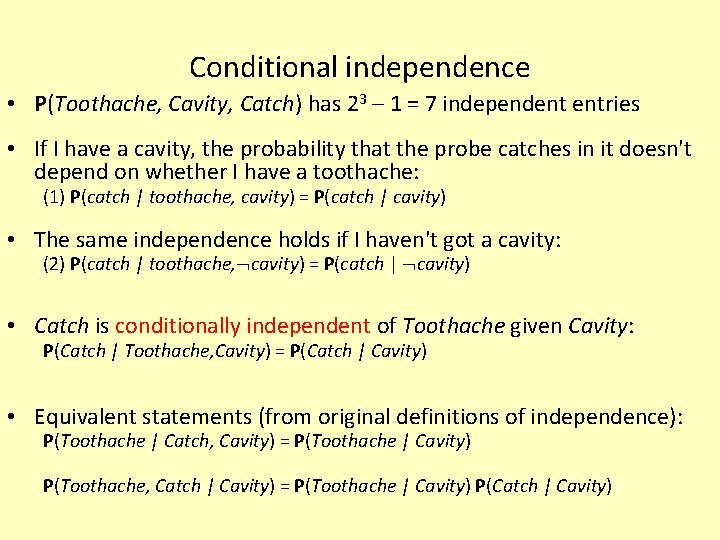

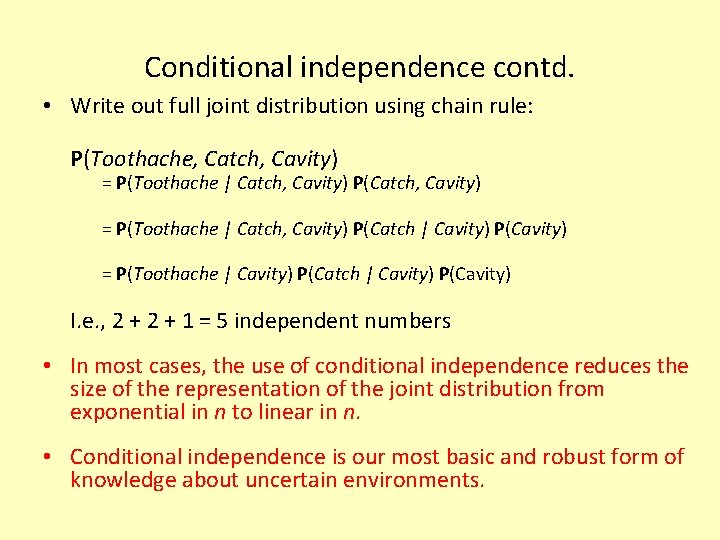

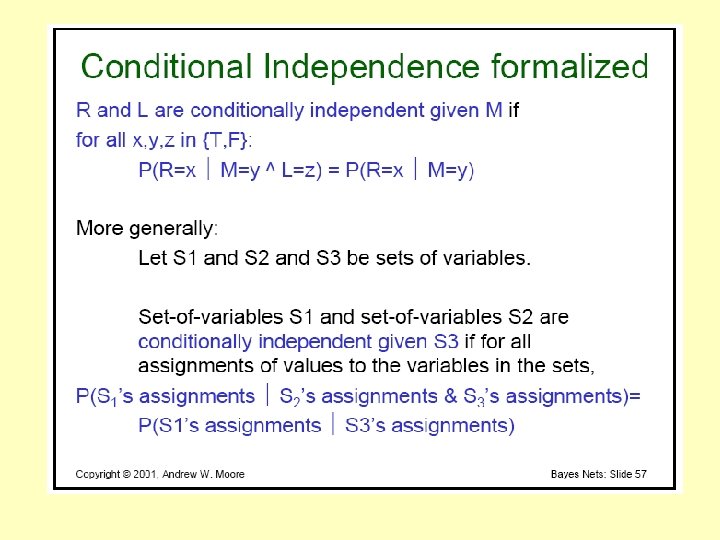

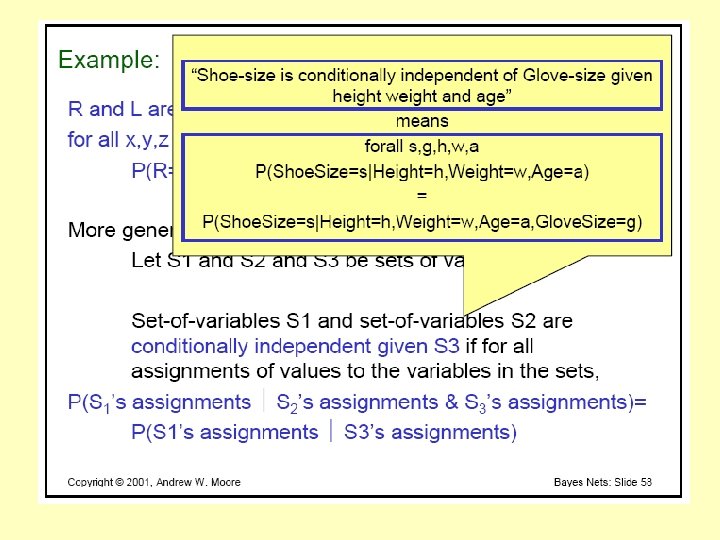

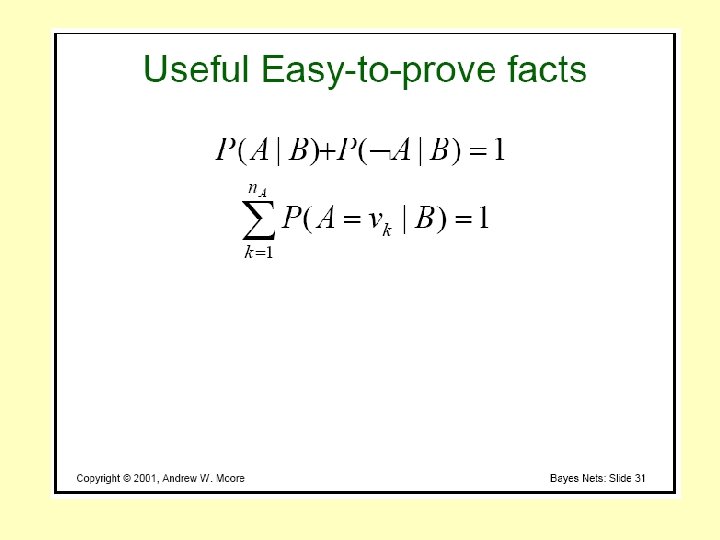

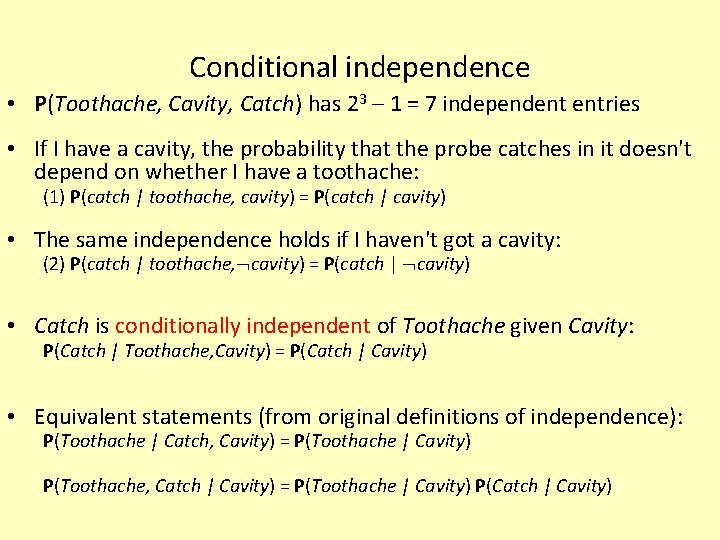

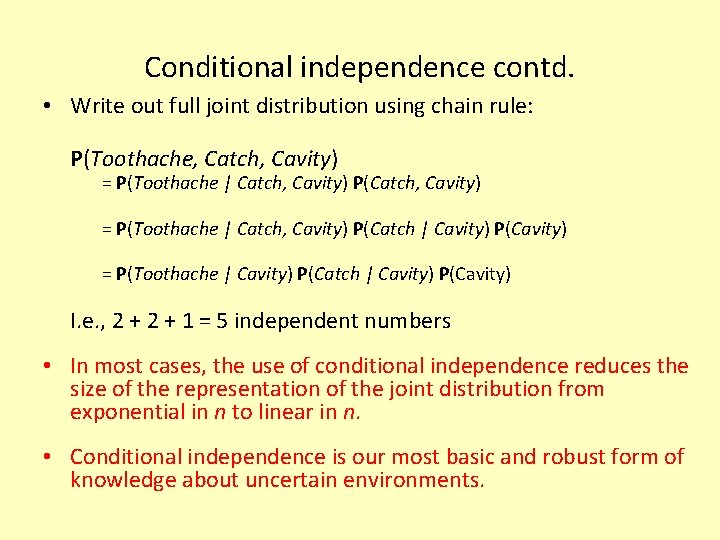

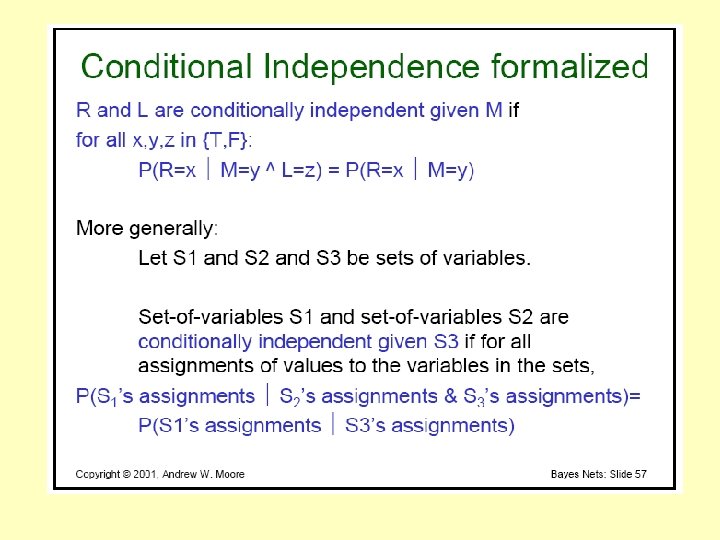

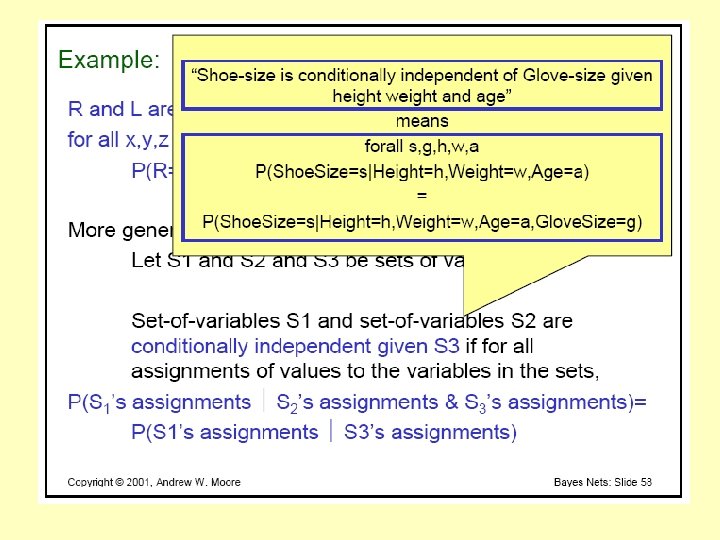

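Conditional independence

- $\mathbf{P}(\mathit{Toothache}, \mathit{Cavity}, \mathit{Catch})$ has $2^3 - 1 = 7$ independent entries
- If I have a cavity, the probability that the probe catches in it doesn't depend on whether I have a toothache:
  (1) $P(\mathit{catch} \mid \mathit{toothache}, \mathit{cavity}) = P(\mathit{catch} \mid \mathit{cavity})$
- The same independence holds if I haven't got a cavity:
  (2) $P(\mathit{catch} \mid \mathit{toothache}, \lnot\mathit{cavity}) = P(\mathit{catch} \mid \lnot\mathit{cavity})$
- Catch is conditionally independent of Toothache given Cavity:
  $\mathbf{P}(\mathit{Catch} \mid \mathit{Toothache}, \mathit{Cavity}) = \mathbf{P}(\mathit{Catch} \mid \mathit{Cavity})$
- Equivalent statements (from original definitions of independence):
  - $\mathbf{P}(\mathit{Toothache} \mid \mathit{Catch}, \mathit{Cavity}) = \mathbf{P}(\mathit{Toothache} \mid \mathit{Cavity})$
  - $\mathbf{P}(\mathit{Toothache}, \mathit{Catch} \mid \mathit{Cavity}) = \mathbf{P}(\mathit{Toothache} \mid \mathit{Cavity})\,\mathbf{P}(\mathit{Catch} \mid \mathit{Cavity})$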
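Statements (1) and (2) can be verified numerically against a full joint table. The sketch below uses an assumed set of joint probabilities in the style of the standard textbook dentist example; these numbers are not in the slides, and the helper names `prob`, `conditional`, and `catch_independent_of_toothache_given_cavity` are hypothetical.

```python
NAMES = ("toothache", "catch", "cavity")

# Assumed full joint distribution; keys are (toothache, catch, cavity).
joint = {
    (True,  True,  True):  0.108,
    (True,  False, True):  0.012,
    (False, True,  True):  0.072,
    (False, False, True):  0.008,
    (True,  True,  False): 0.016,
    (True,  False, False): 0.064,
    (False, True,  False): 0.144,
    (False, False, False): 0.576,
}


def prob(**fixed):
    """Marginal probability of the given partial assignment."""
    total = 0.0
    for world, p in joint.items():
        assignment = dict(zip(NAMES, world))
        if all(assignment[name] == value for name, value in fixed.items()):
            total += p
    return total


def conditional(query, given):
    """P(query | given), both passed as {variable_name: value} dicts."""
    return prob(**query, **given) / prob(**given)


def catch_independent_of_toothache_given_cavity(tol=1e-9):
    """Check P(catch | Toothache, Cavity) == P(catch | Cavity) for all values."""
    for cavity in (True, False):
        rhs = conditional({"catch": True}, {"cavity": cavity})
        for toothache in (True, False):
            lhs = conditional({"catch": True},
                              {"toothache": toothache, "cavity": cavity})
            if abs(lhs - rhs) > tol:
                return False
    return True
```

For this assumed table, P(catch | toothache, cavity) = 0.108 / 0.12 = 0.9 and P(catch | cavity) = 0.18 / 0.2 = 0.9, so the check passes; the same holds for the no-cavity case, where both sides are 0.2.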

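Conditional independence contd.

- Write out the full joint distribution using the chain rule:

$$\mathbf{P}(\mathit{Toothache}, \mathit{Catch}, \mathit{Cavity}) = \mathbf{P}(\mathit{Toothache} \mid \mathit{Catch}, \mathit{Cavity})\,\mathbf{P}(\mathit{Catch} \mid \mathit{Cavity})\,\mathbf{P}(\mathit{Cavity}) = \mathbf{P}(\mathit{Toothache} \mid \mathit{Cavity})\,\mathbf{P}(\mathit{Catch} \mid \mathit{Cavity})\,\mathbf{P}(\mathit{Cavity})$$

  I.e., 2 + 2 + 1 = 5 independent numbers (two for each conditional table, one for the prior on Cavity)
- In most cases, the use of conditional independence reduces the size of the representation of the joint distribution from exponential in n to linear in n.
- Conditional independence is our most basic and robust form of knowledge about uncertain environments.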
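The exponential-versus-linear claim can be made concrete by counting parameters. This is a small sketch assuming one Boolean cause and n Boolean effects that are conditionally independent given the cause; the function names are chosen here for illustration.

```python
def full_joint_params(n_effects: int) -> int:
    """Independent entries in the full joint over 1 cause + n Boolean effects."""
    return 2 ** (n_effects + 1) - 1


def naive_bayes_params(n_effects: int) -> int:
    """1 number for P(Cause) plus 2 per effect: P(effect | cause), P(effect | no cause)."""
    return 2 * n_effects + 1


for n in (2, 10, 20):
    print(n, full_joint_params(n), naive_bayes_params(n))
```

For n = 2 (Toothache and Catch) this gives 7 versus 5, matching the counts on the slides; for n = 20 the full joint needs 2,097,151 numbers while the factored form needs 41.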

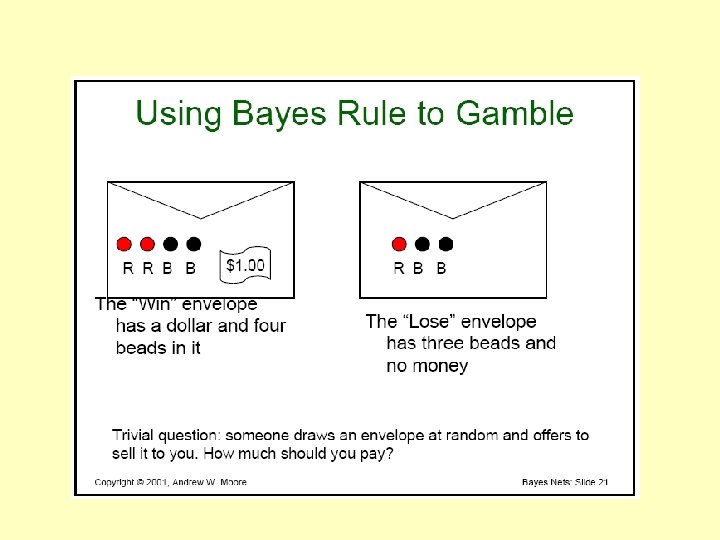

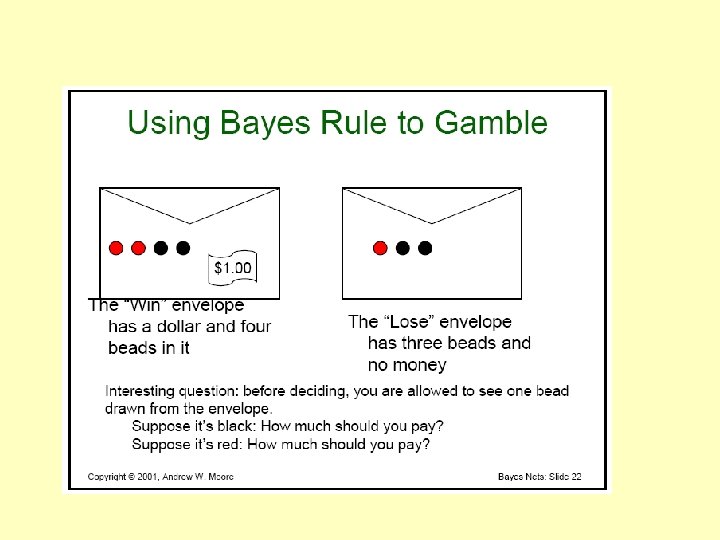

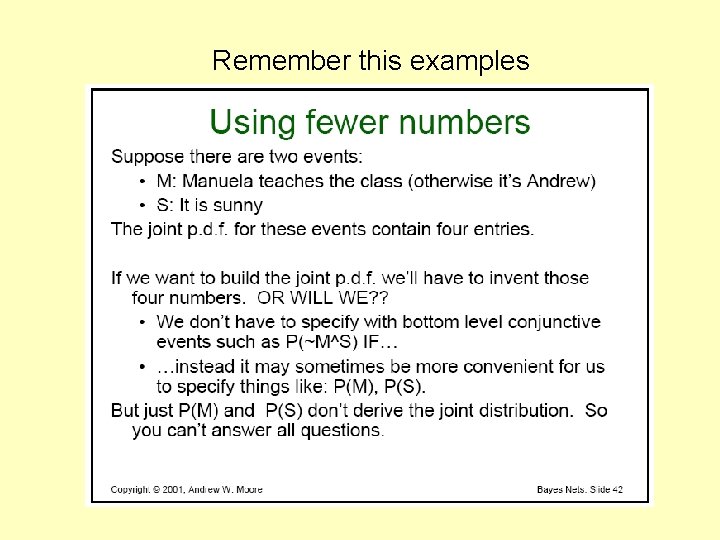

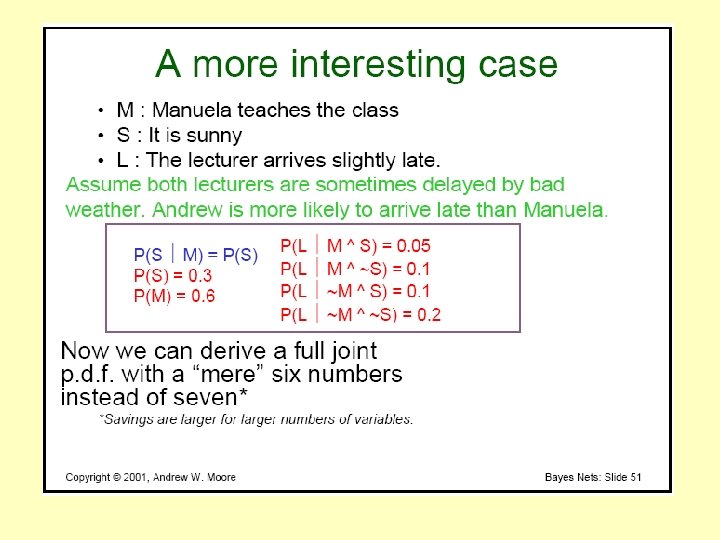

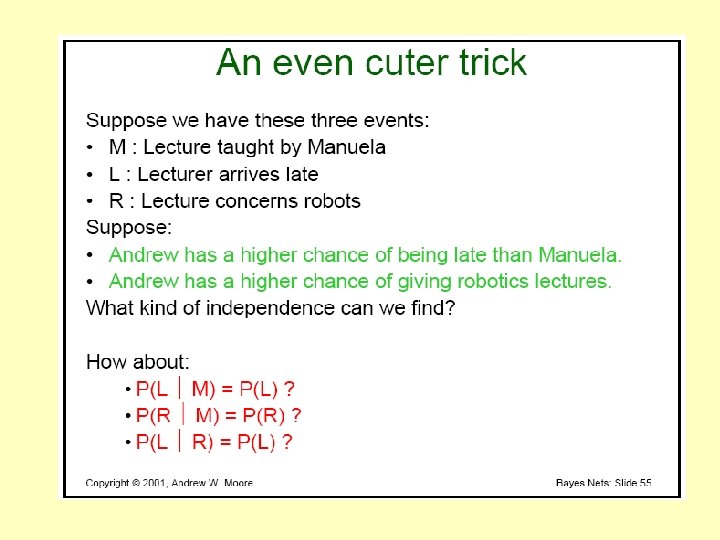

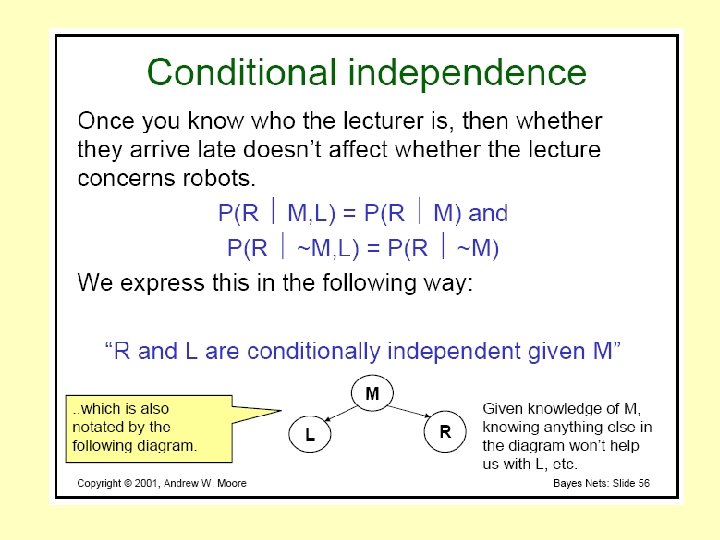

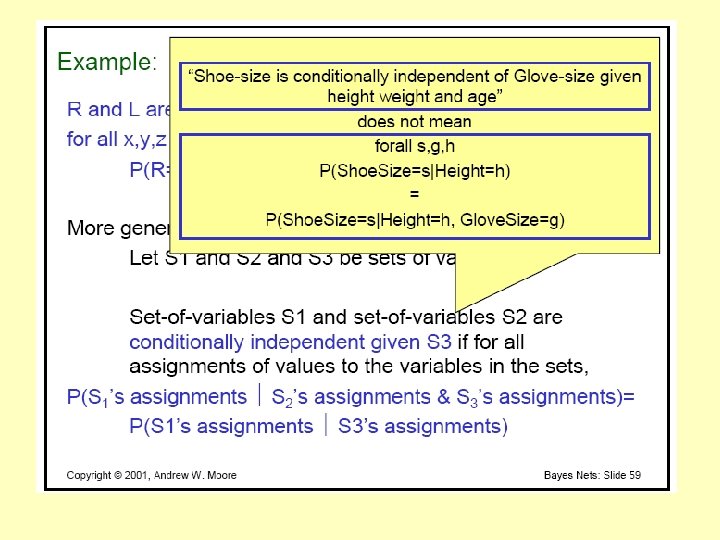

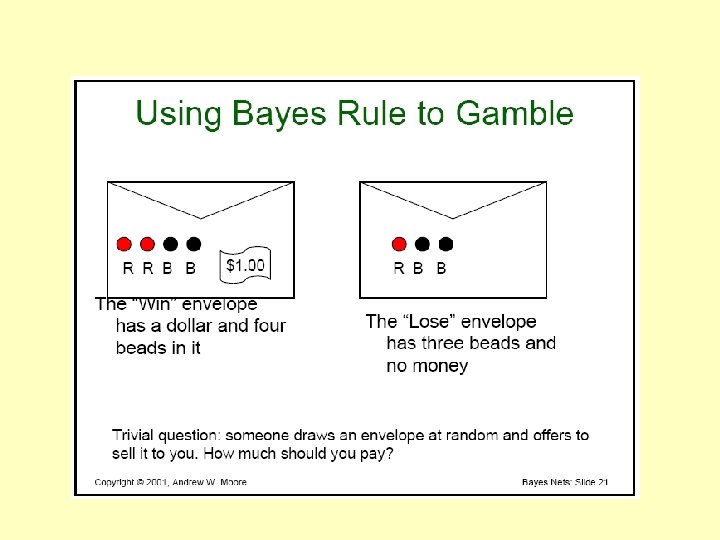

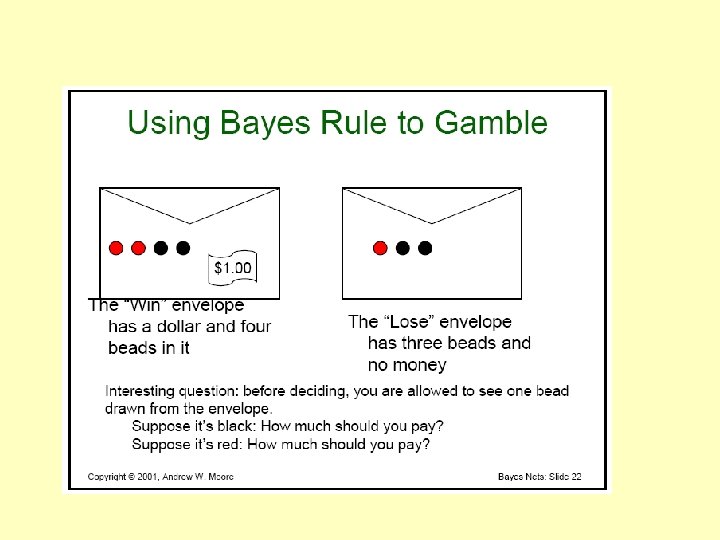

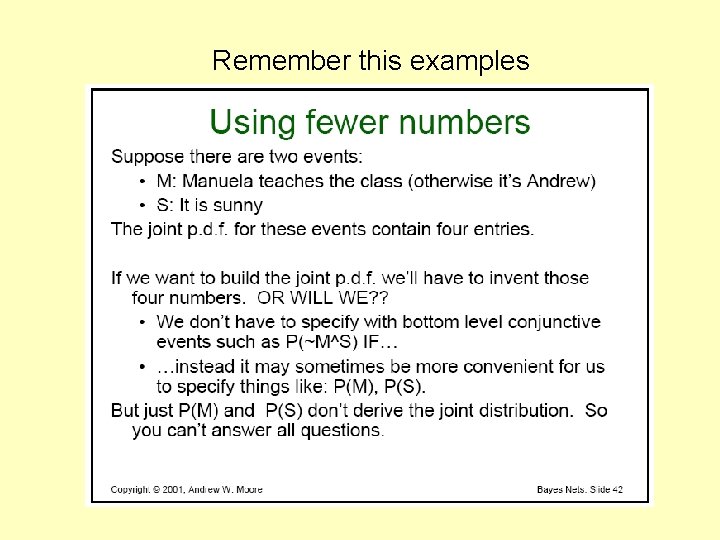

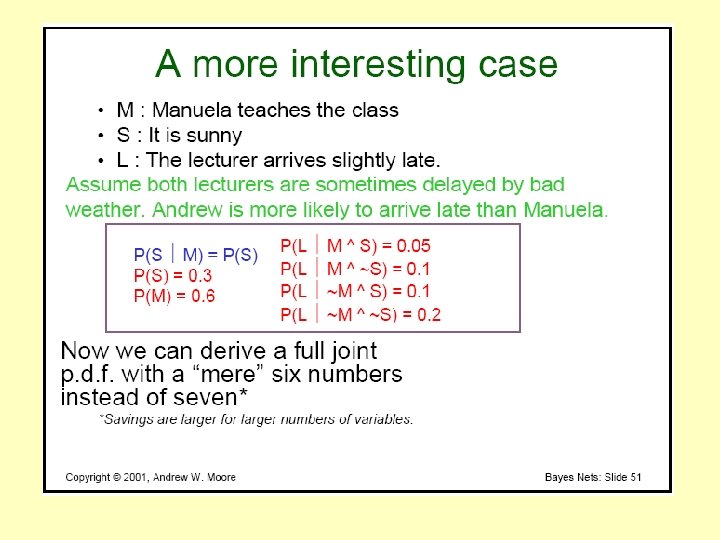

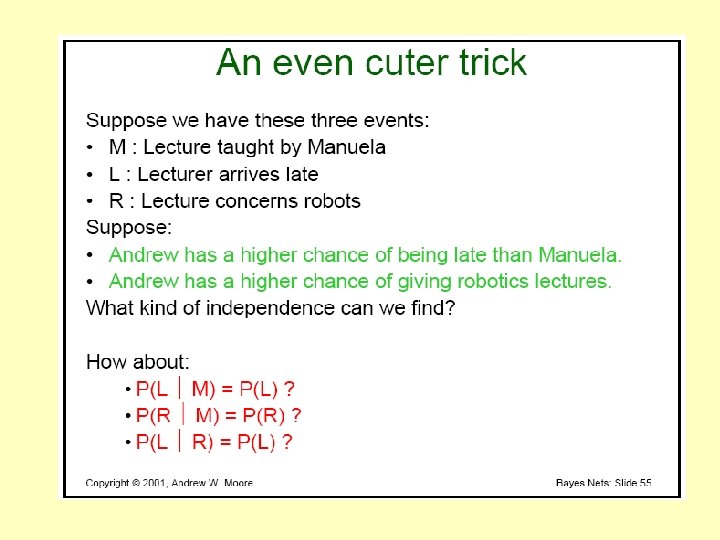

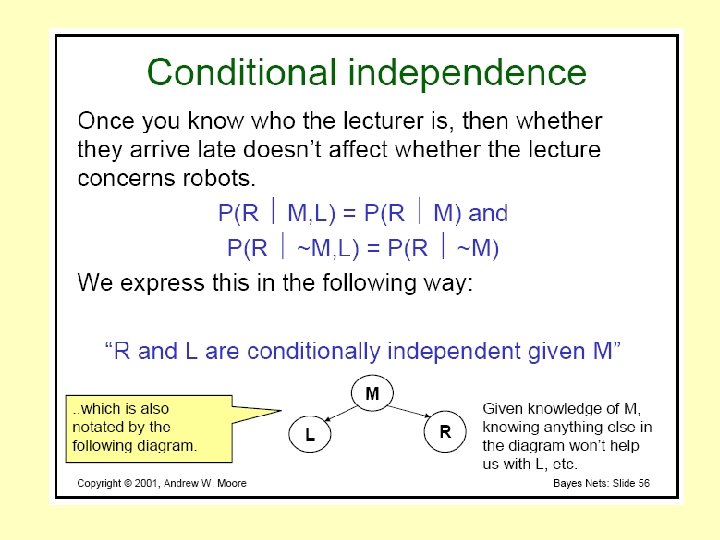

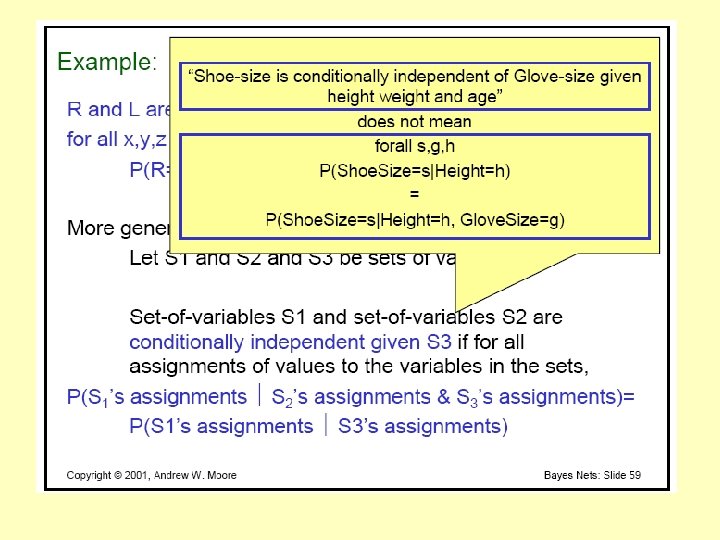

Remember this example

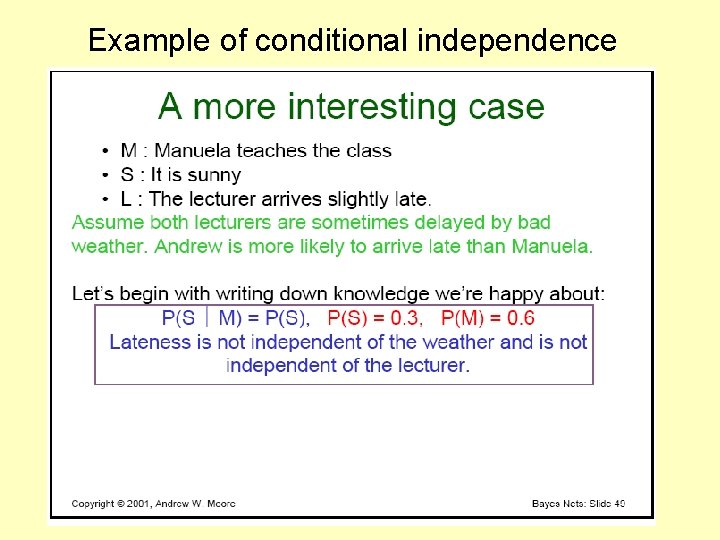

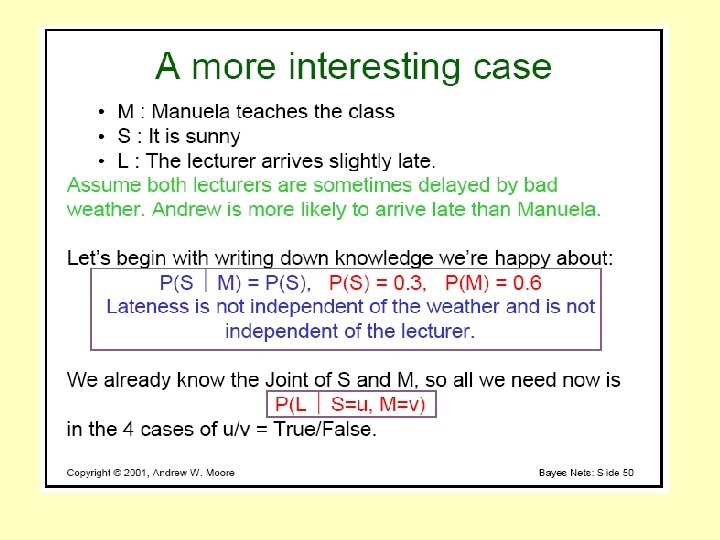

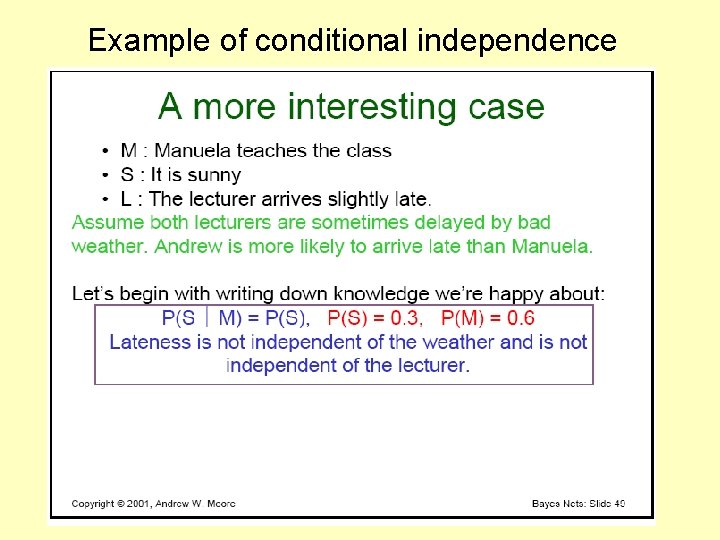

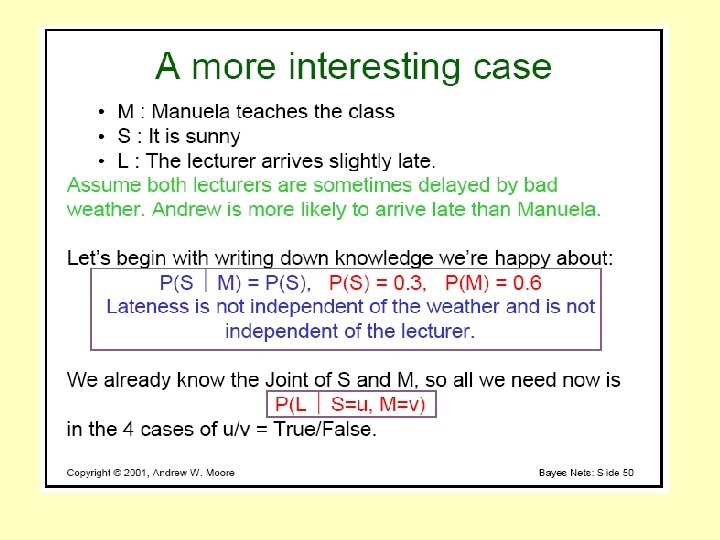

Example of conditional independence

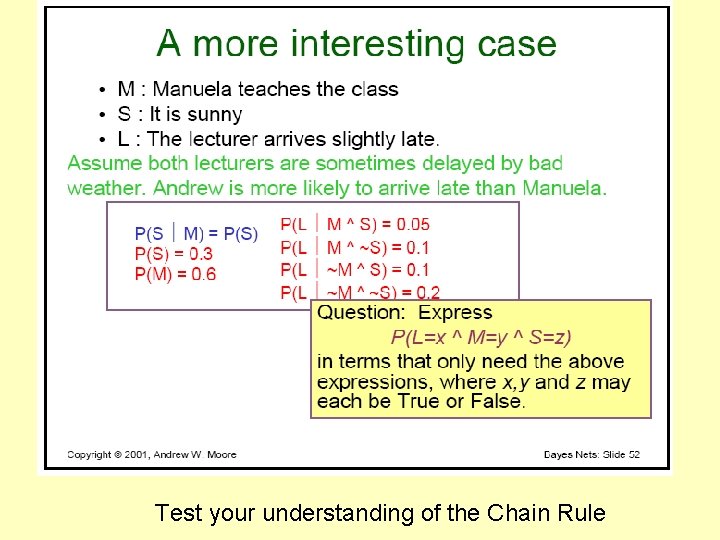

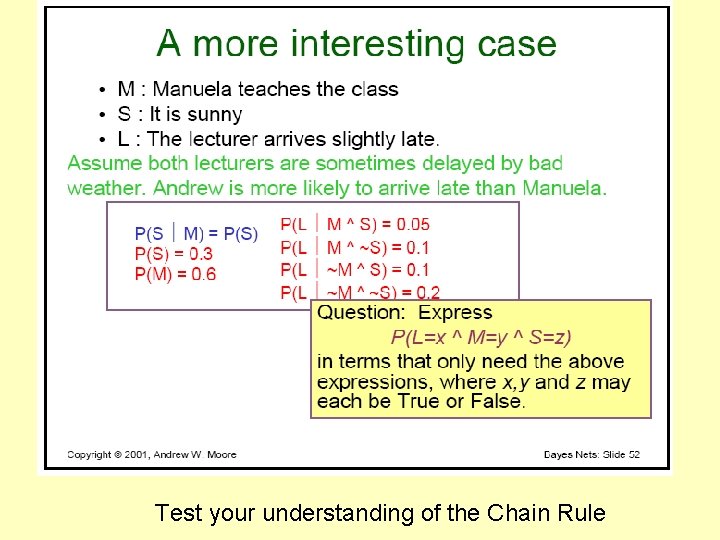

Test your understanding of the Chain Rule

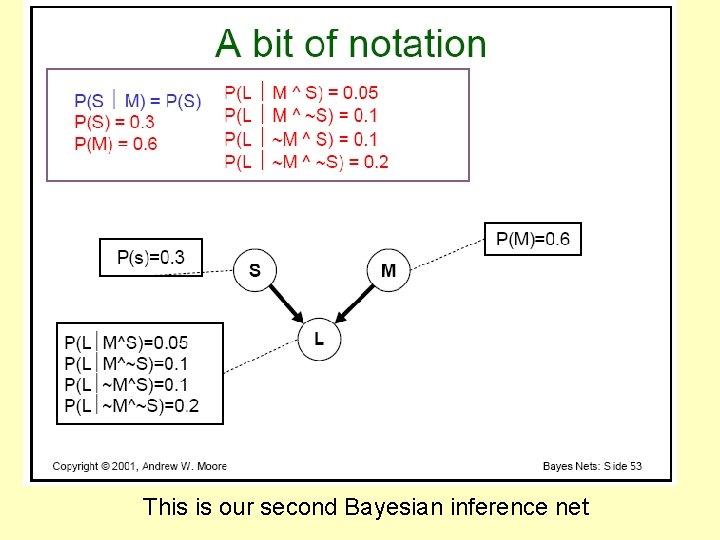

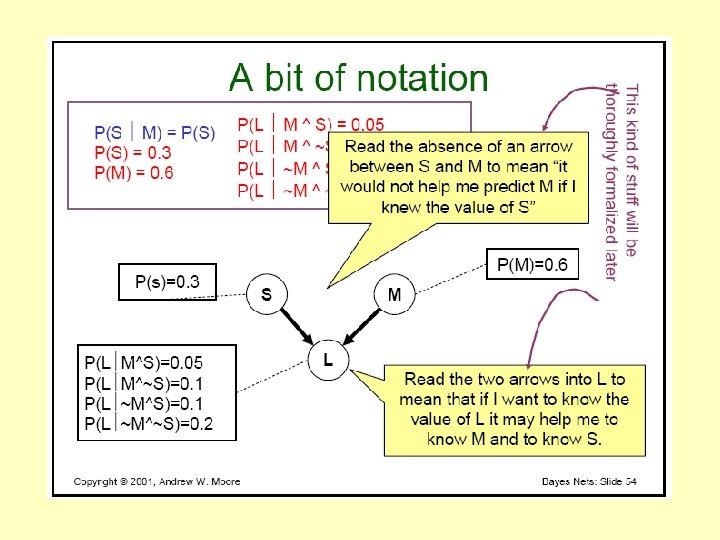

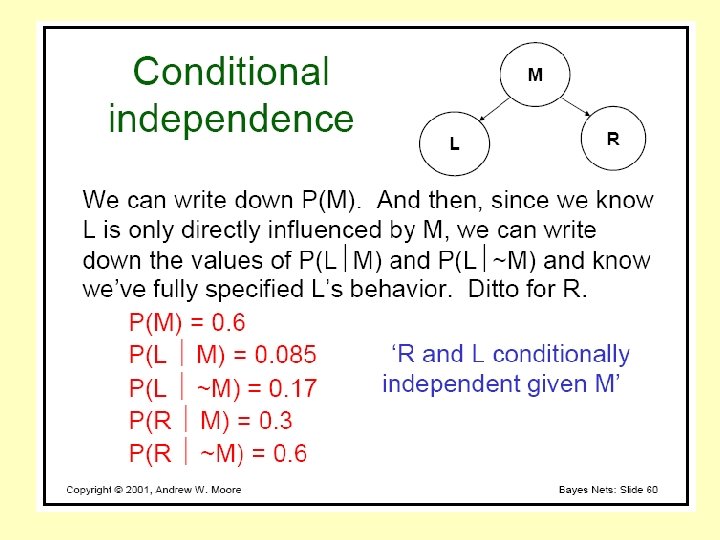

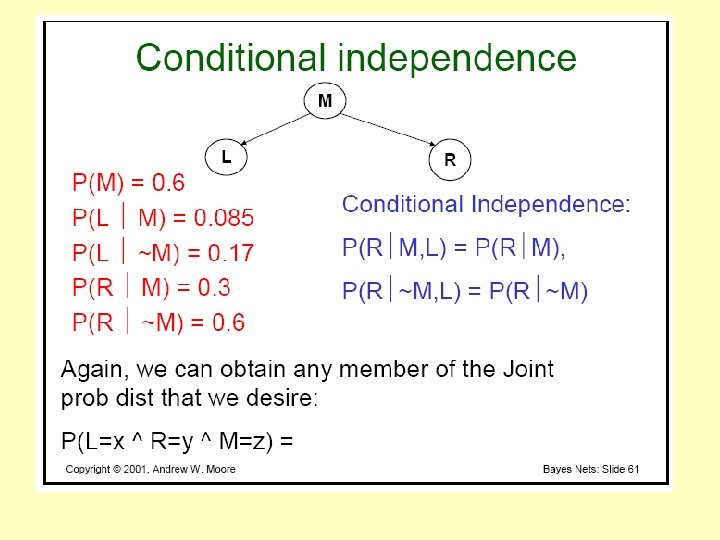

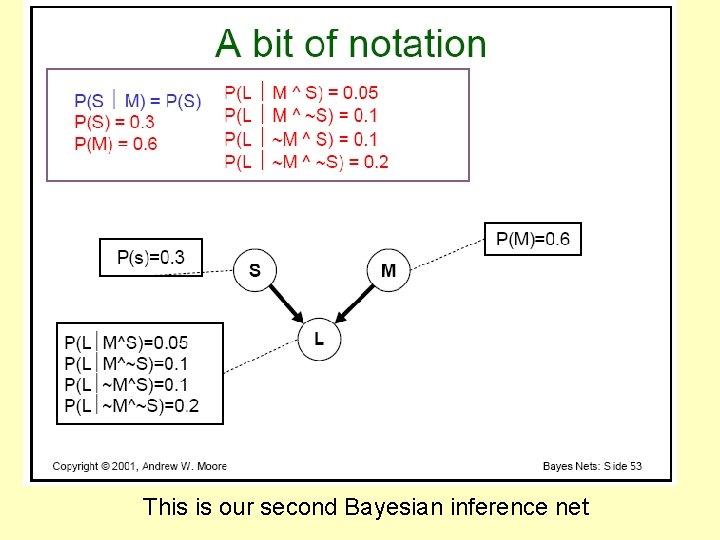

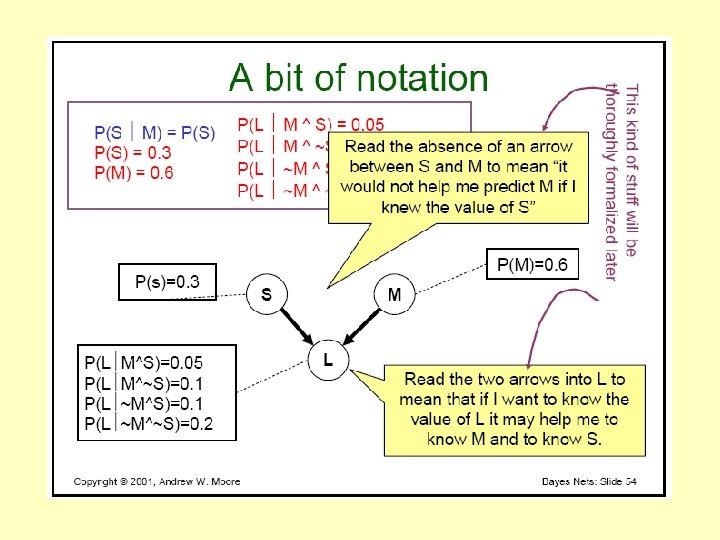

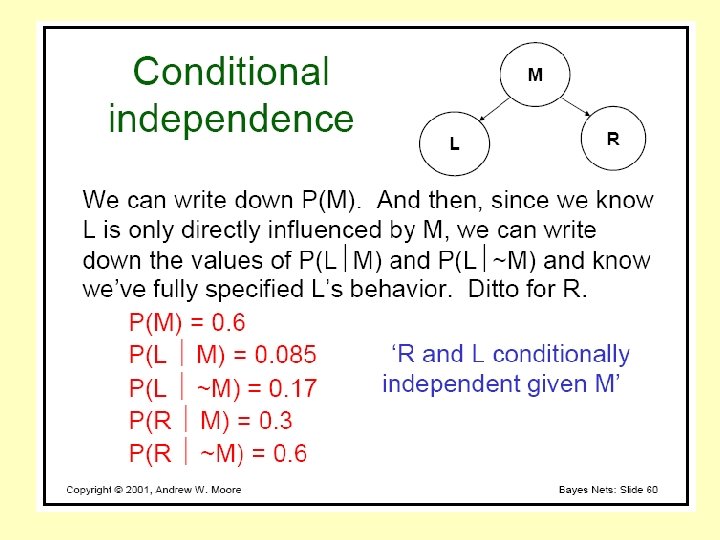

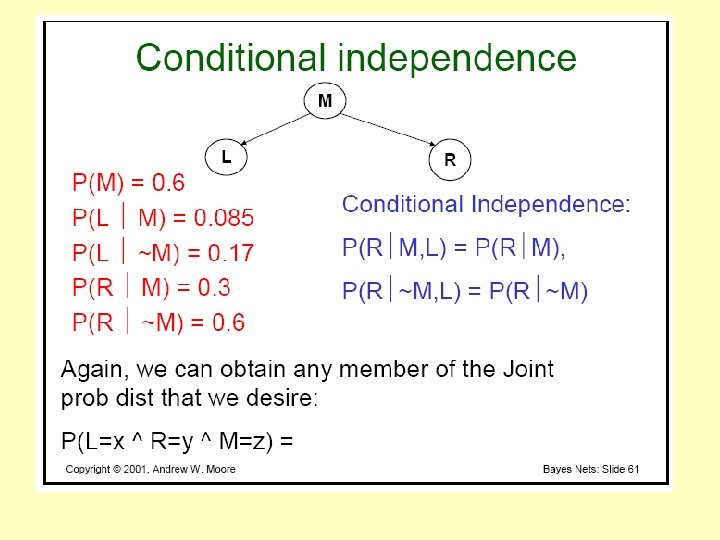

This is our second Bayesian inference net

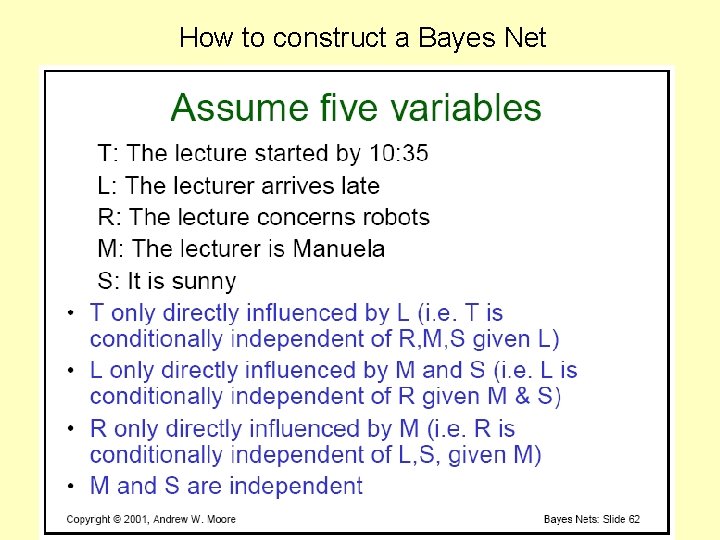

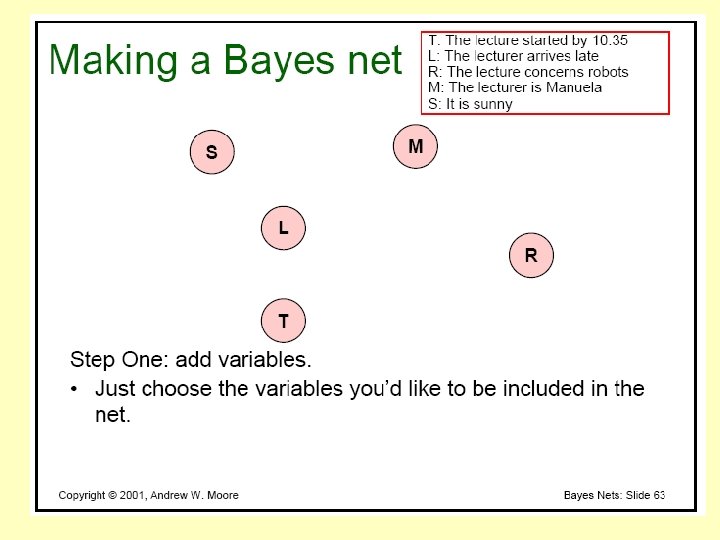

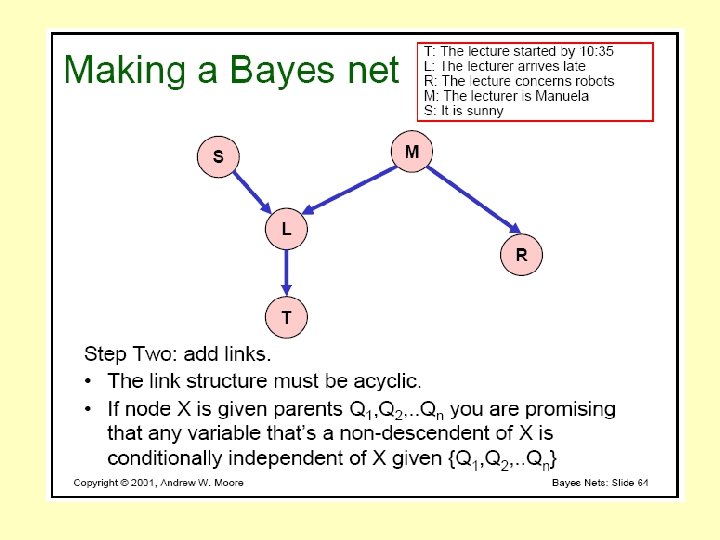

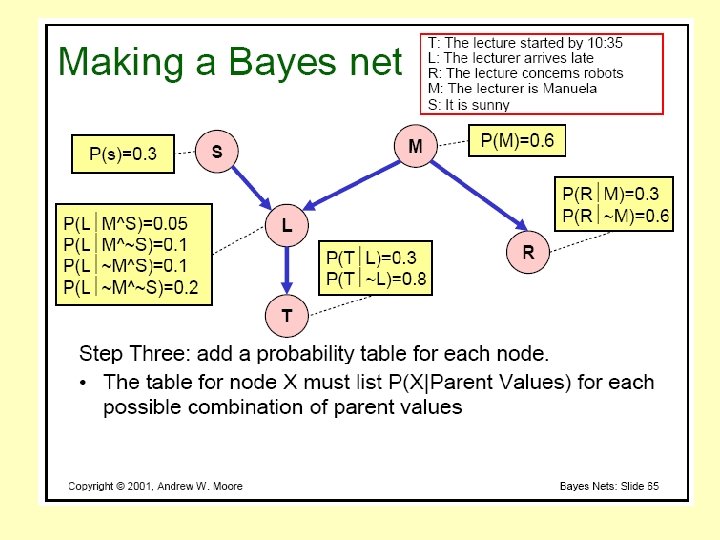

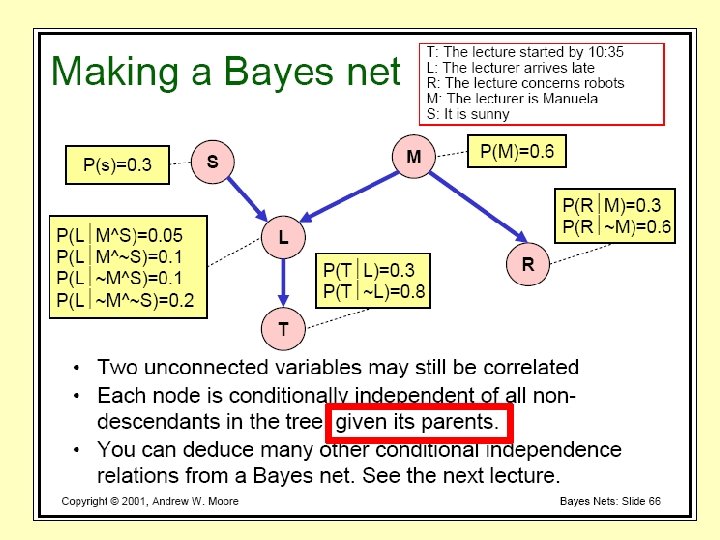

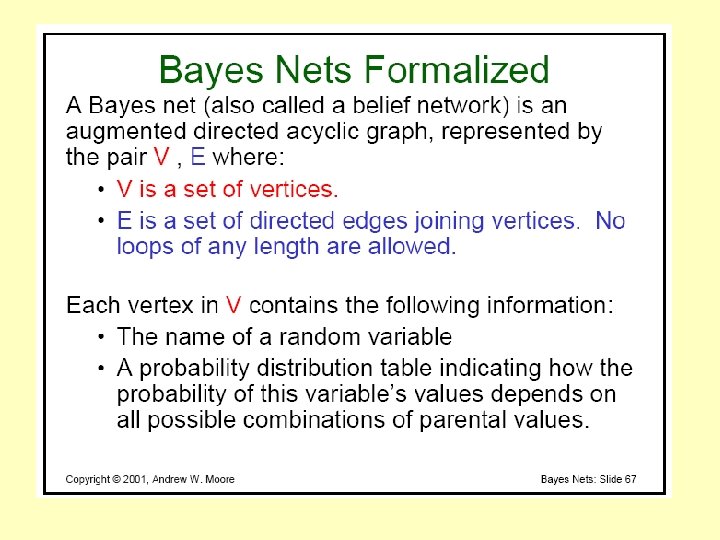

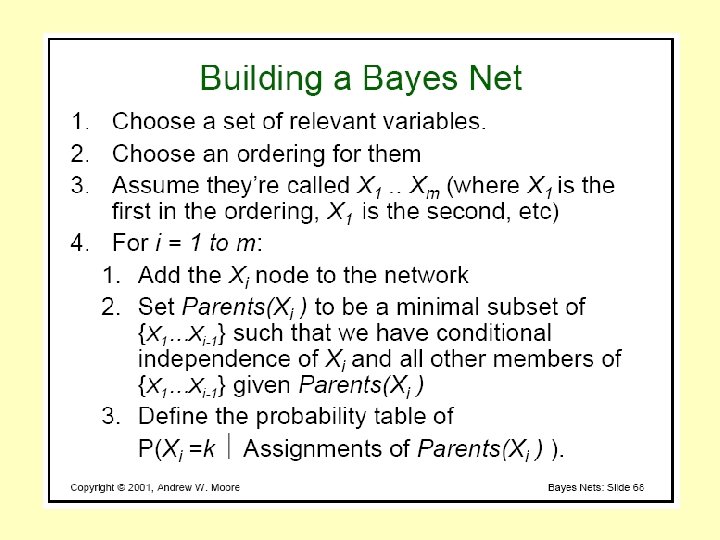

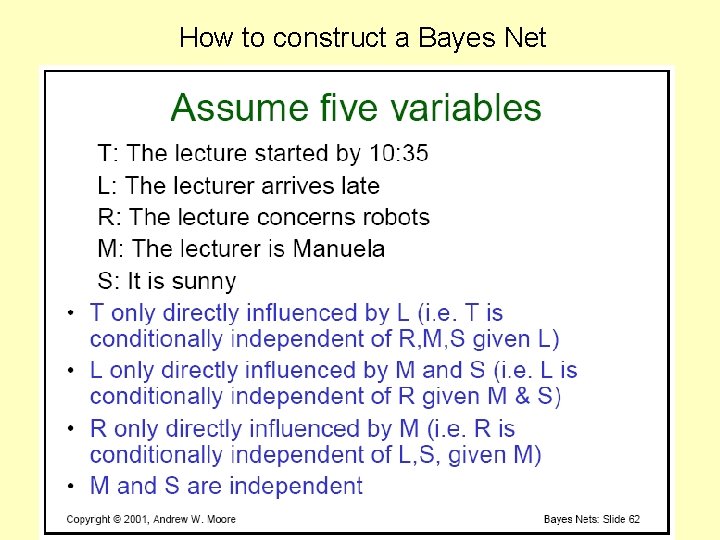

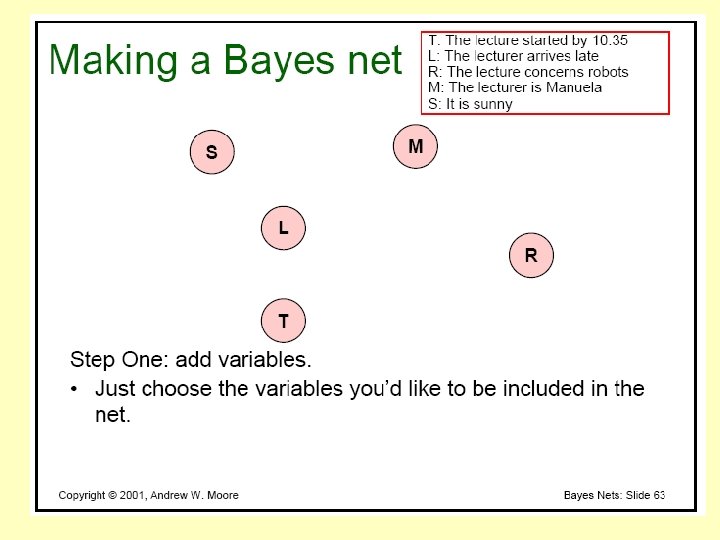

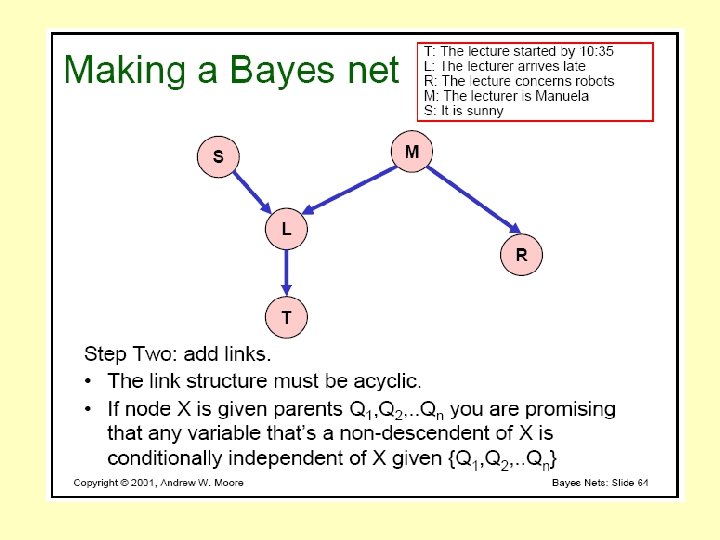

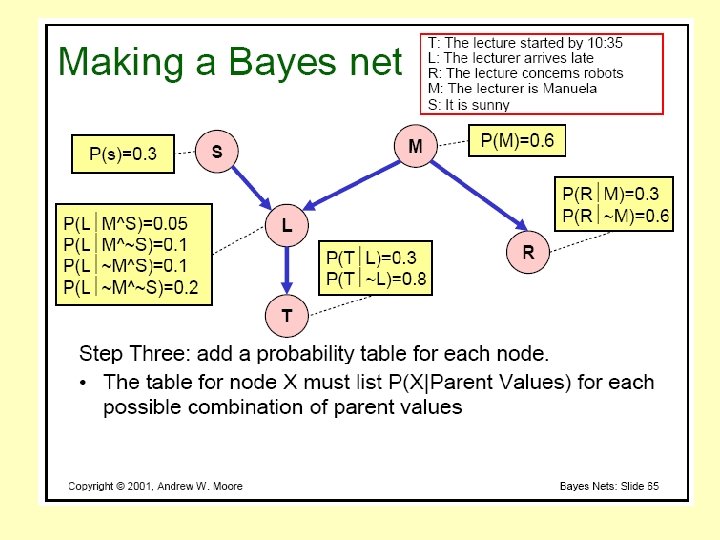

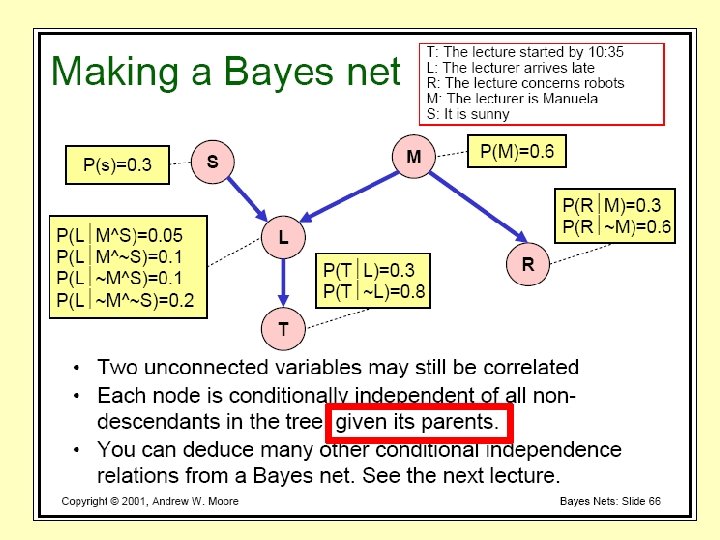

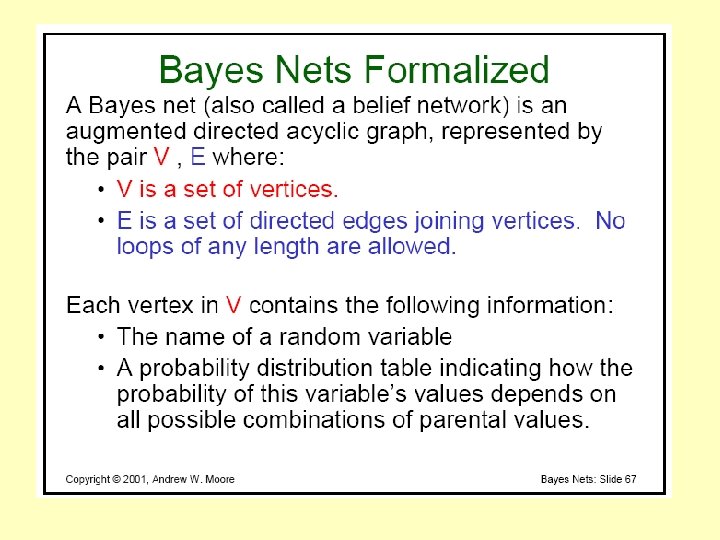

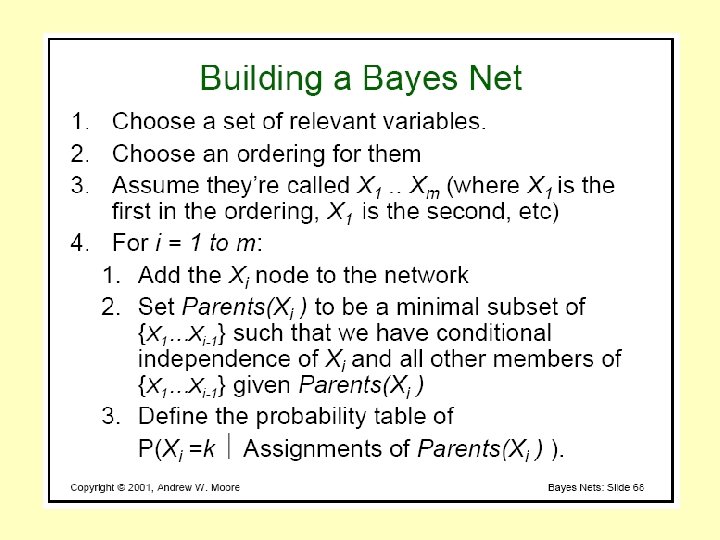

How to construct a Bayes Net

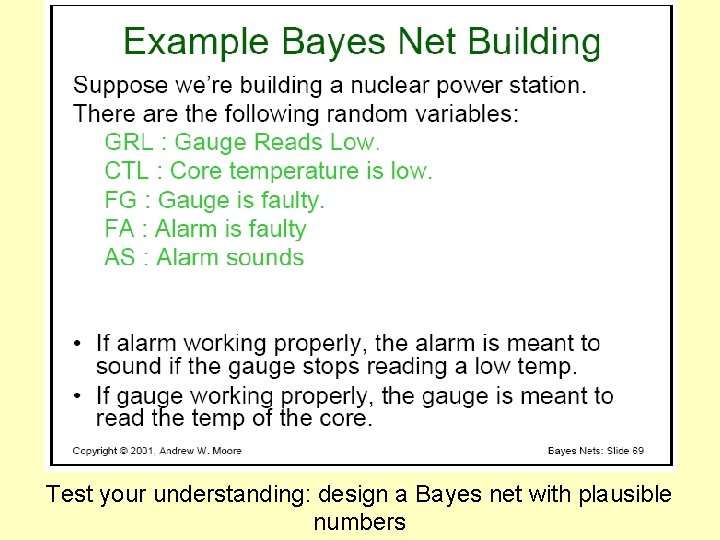

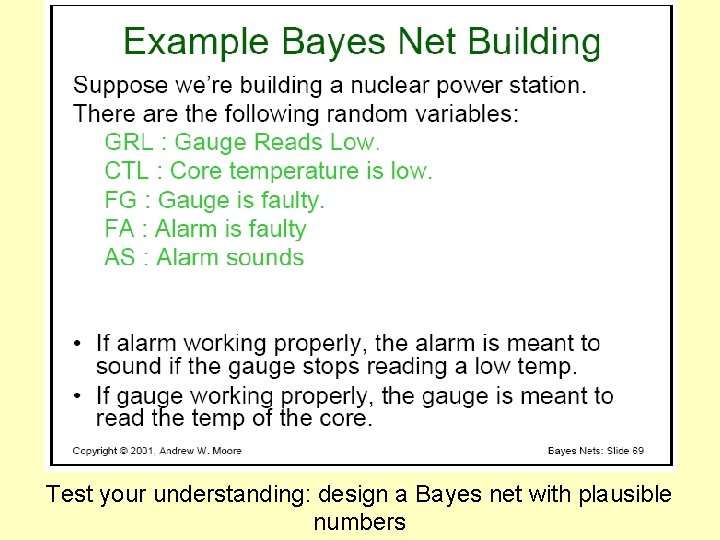

Test your understanding: design a Bayes net with plausible numbers

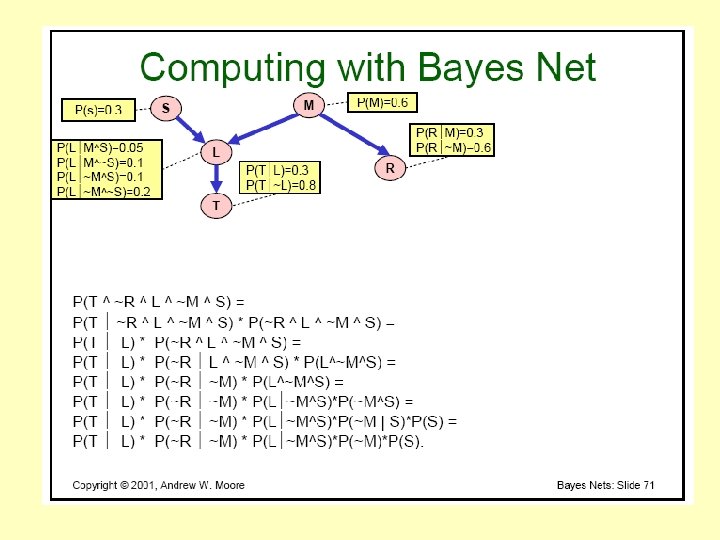

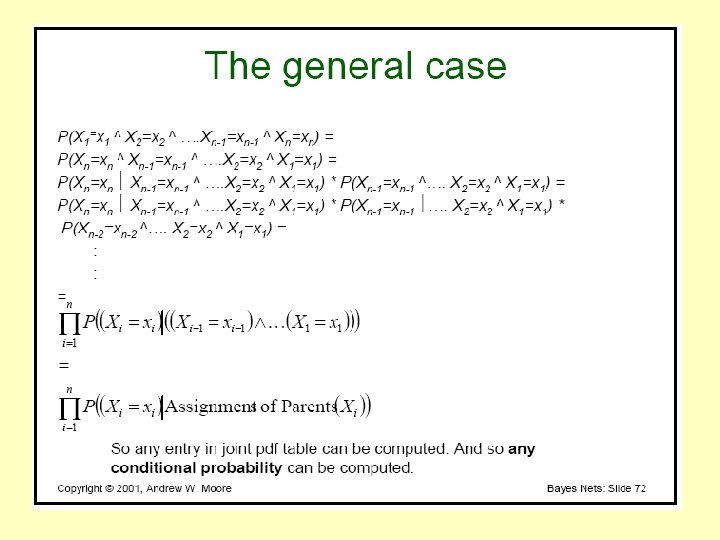

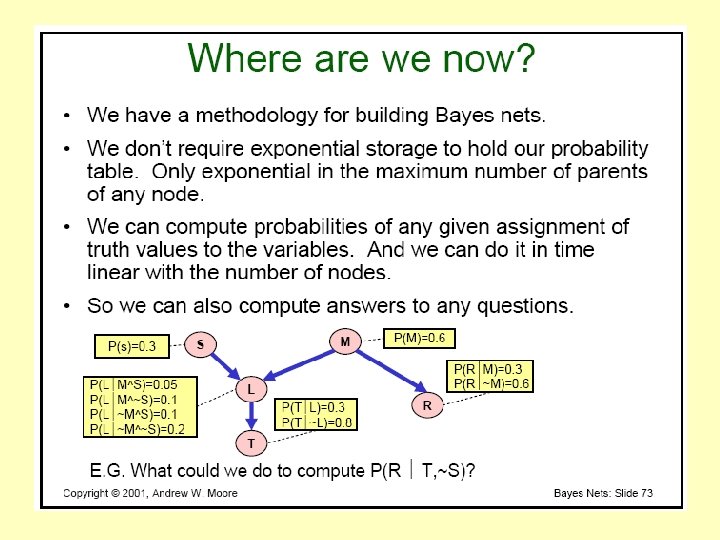

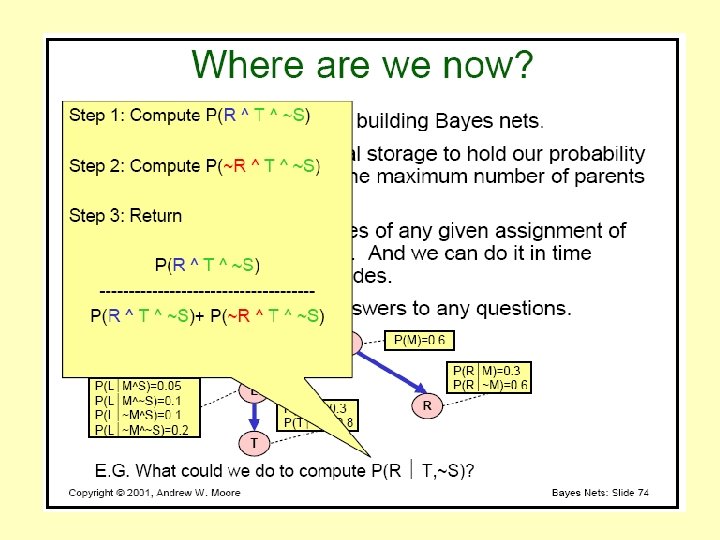

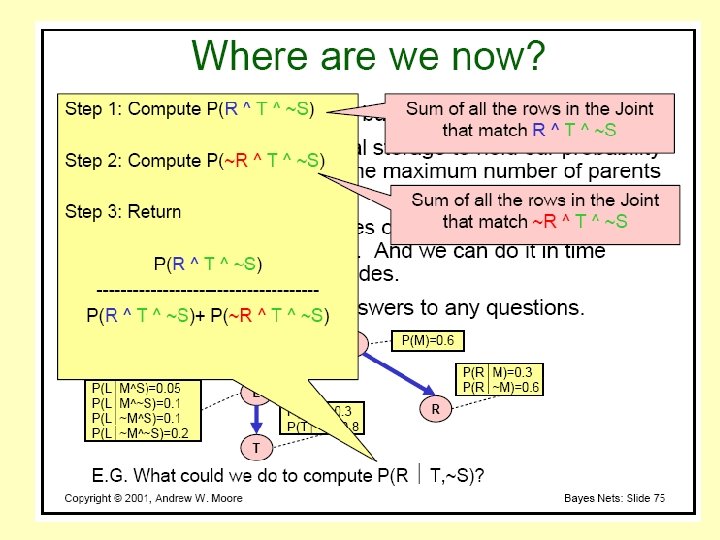

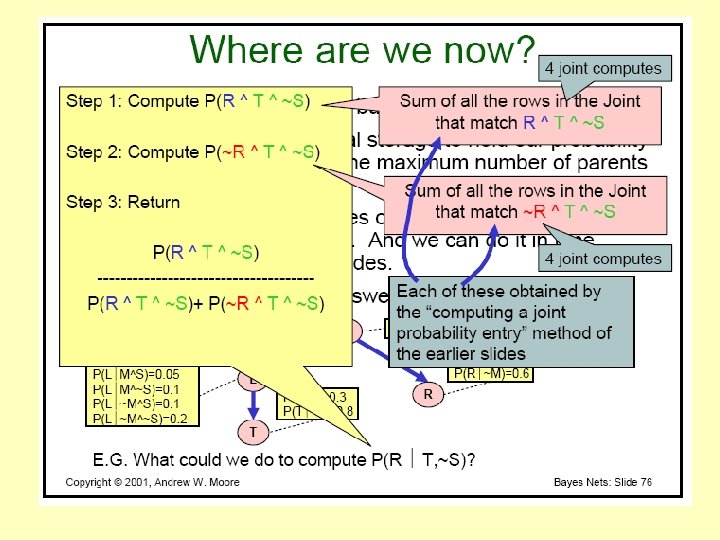

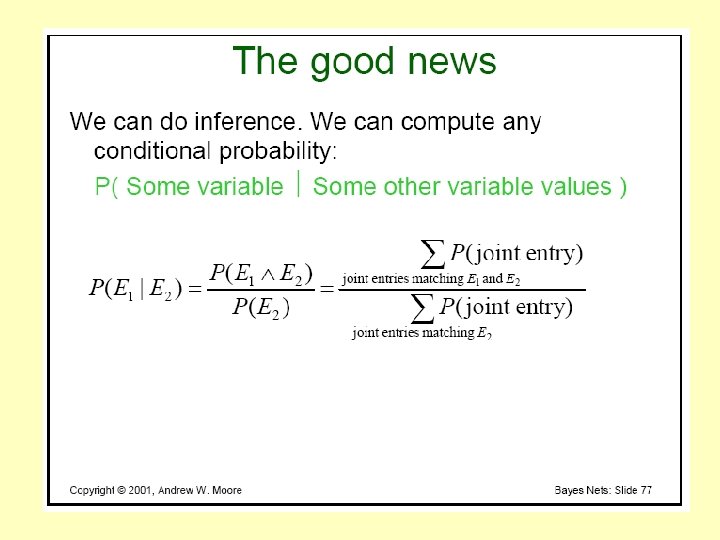

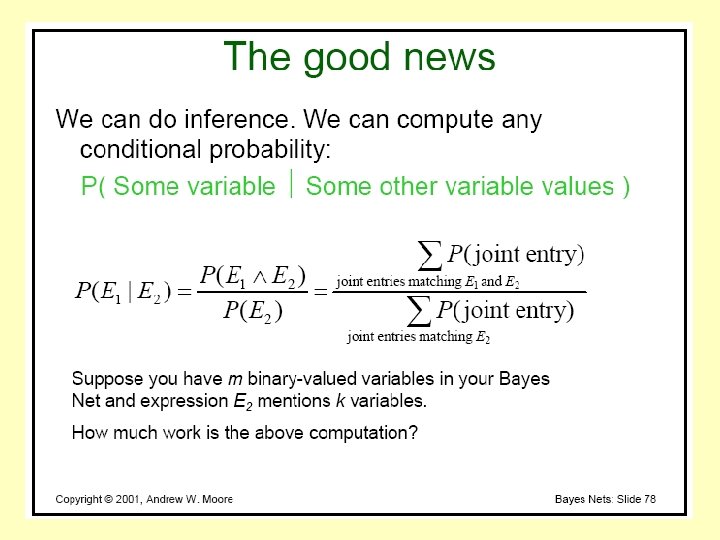

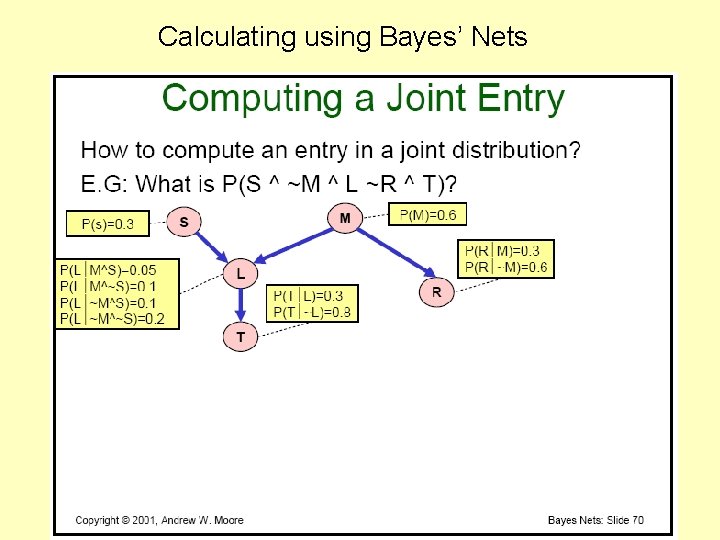

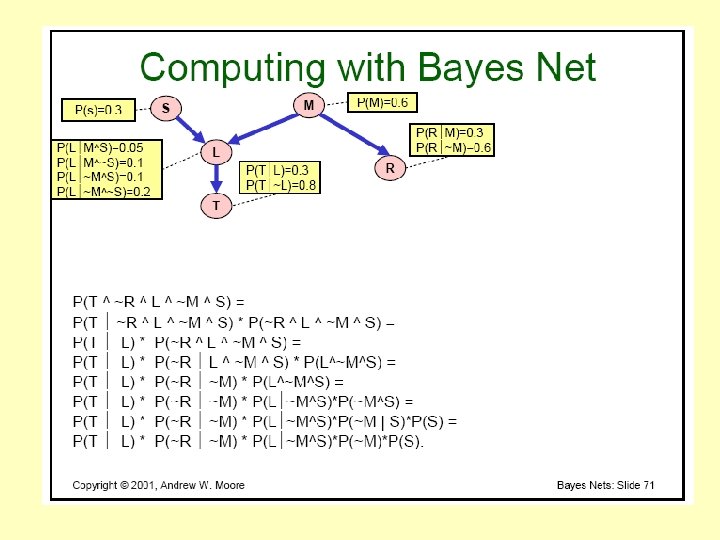

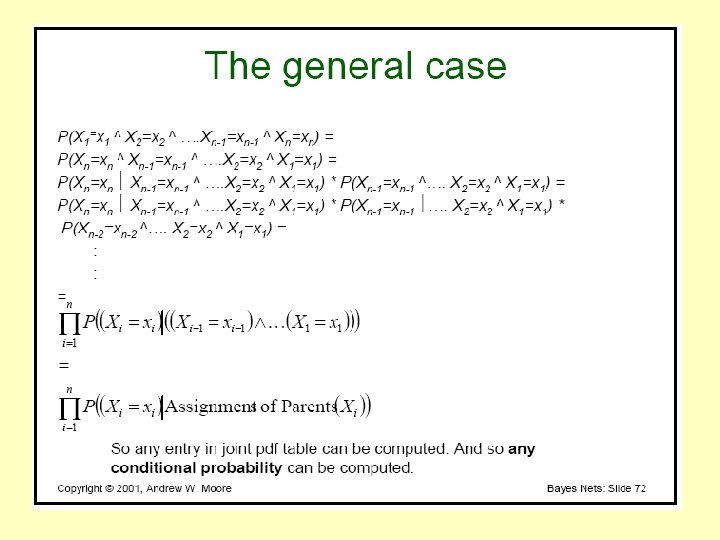

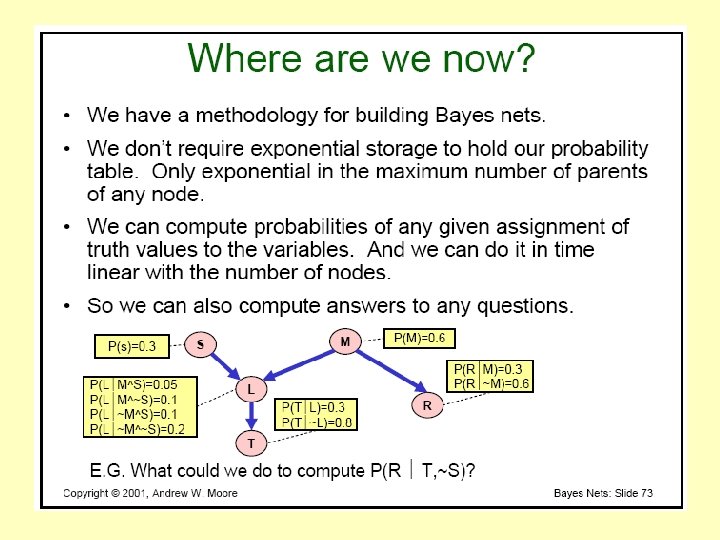

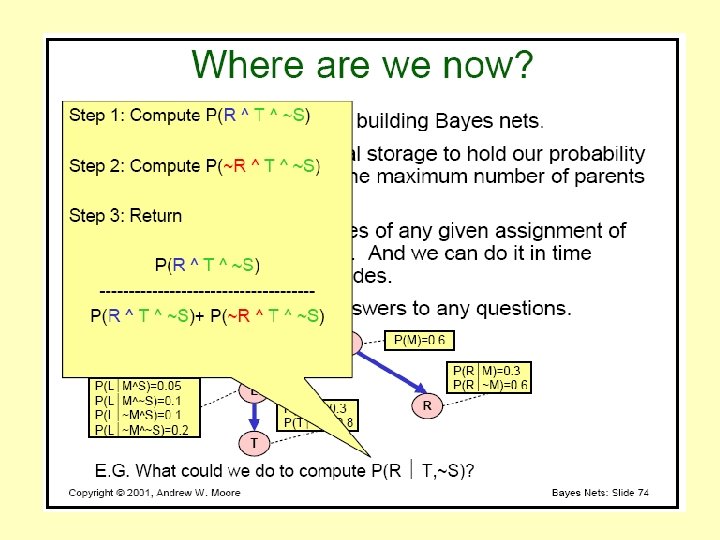

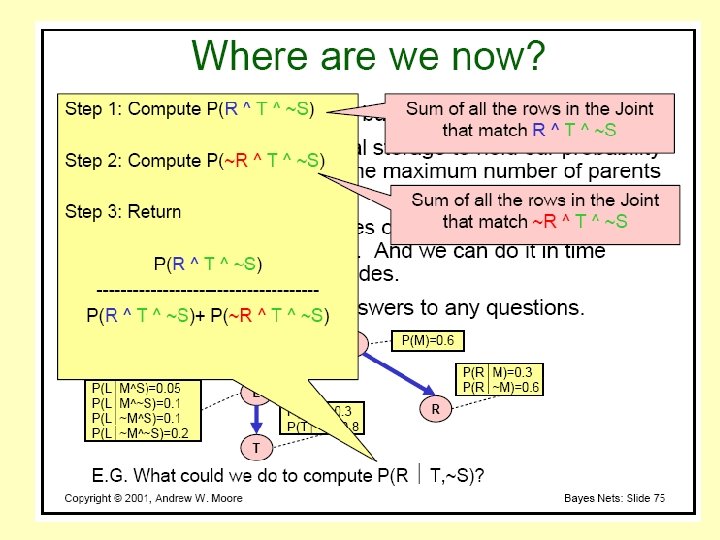

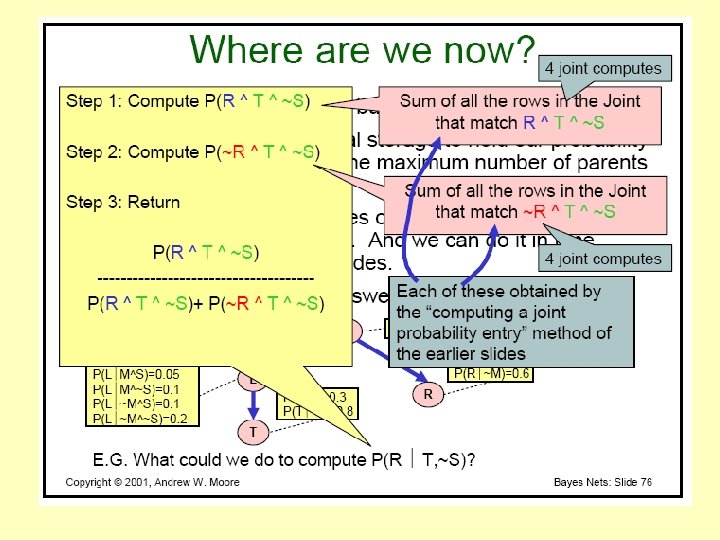

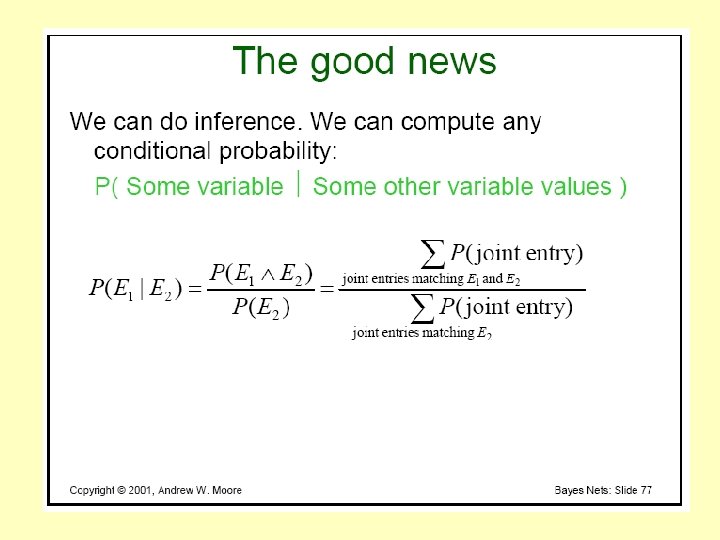

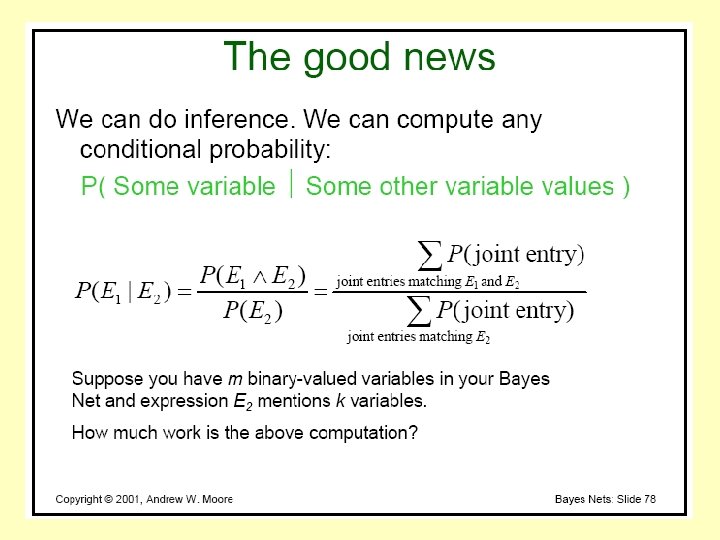

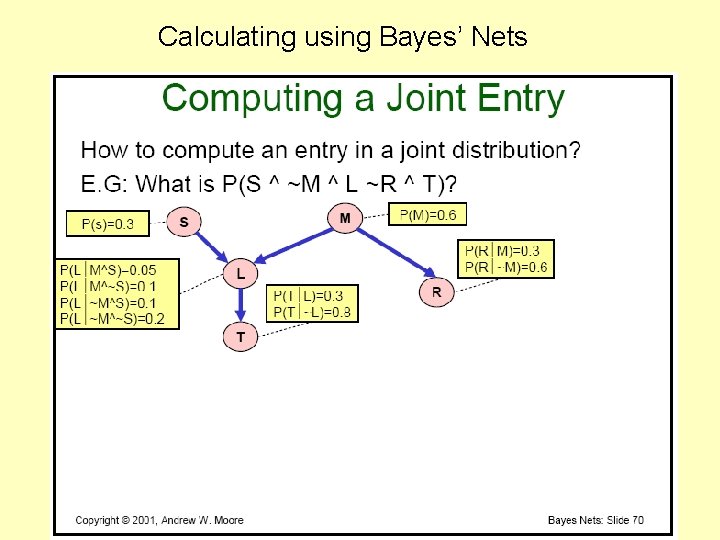

Calculating using Bayes’ Nets