CS 3610 Software Engineering Fall 2009 Dr Hisham

- Slides: 33

CS 3610: Software Engineering – Fall 2009 Dr. Hisham Haddad – CSIS Dept.

Chapter 13: Software Testing Strategies

Discussion of Software Testing Strategies

Testing is the process of exercising a program with the intent of finding errors prior to delivery to the end user, and it requires a strategy.

What Testing Shows

- errors
- requirements conformance
- performance issues
- an indication of quality

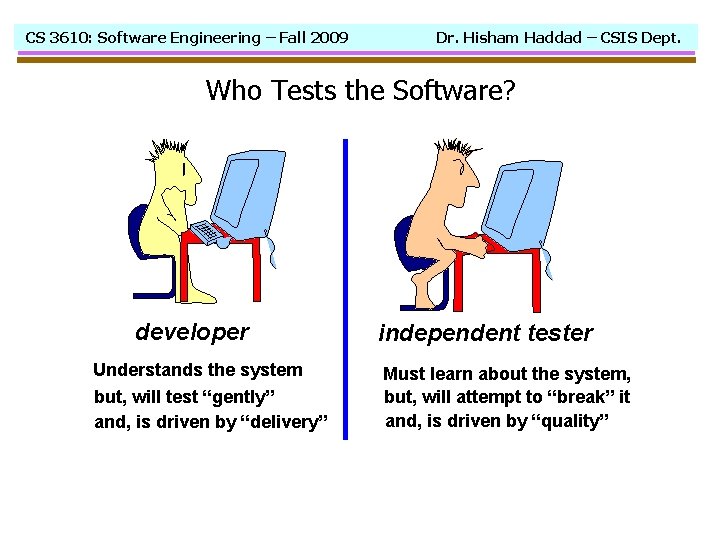

Who Tests the Software?

- Developer: understands the system, but will test “gently” and is driven by “delivery”.
- Independent tester: must learn about the system, but will attempt to “break” it and is driven by “quality”.

The Big Picture

Testing is an element of the verification and validation (V&V) process.

- Verification: the software correctly implements the functional specifications.
- Validation: the software is traceable to customer requirements (functional, behavioural, performance), i.e., software components can be mapped to requirements.

V&V activities are part of SQA (see chapter 26 on Quality Management). The testing group works with the developers and reports to the SQA team.

“You can’t test in quality. If it’s not there before you begin testing, it won’t be there when you’re finished testing.”

Testing Strategy (1)

A testing strategy is a plan (road map) that outlines the detailed testing activities (steps, test case design, test execution, effort, time, resources). It results in a Test Specification document. Many testing strategies have been proposed; however, they share common characteristics:

- Testing begins with effective formal technical reviews (FTRs).
- Testing starts at the component level and works outward.
- Testing is conducted by developers (small projects) and by testing groups (large projects).
- Different techniques are relevant at different points in the development process.
- Debugging must be accommodated in any testing strategy.

Conventional vs. OO

For conventional software, the module (component) is the initial focus, and integration of modules follows. For OO software, when “testing in the small”, the focus changes from an individual module (the conventional view) to an OO class (or package of classes) that encompasses attributes and operations and implies communication and collaboration.

Strategic Issues

- State software requirements in a quantifiable manner so that quality characteristics can be tested.
- State testing objectives explicitly.
- Understand the users of the software and develop a profile for each user category (use cases).
- Develop a testing plan that emphasizes “rapid cycle testing”.
- Build “robust” software that tests itself (exception handling).
- Use effective FTRs to filter errors prior to testing.
- Apply FTRs to the test strategy and the test cases themselves.
- Develop a continuous improvement approach for the testing process (collect data and develop metrics).

See page 361.
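The idea of “robust software that tests itself” can be illustrated with a minimal Python sketch. The function `withdraw` and its rules are hypothetical; the point is only that the code checks its own preconditions and postcondition and raises an exception instead of silently continuing with bad data.

```python
def withdraw(balance_cents: int, amount_cents: int) -> int:
    """Return the new balance after a withdrawal (hypothetical example)."""
    # Precondition checks: reject invalid input with an exception.
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    if amount_cents > balance_cents:
        raise ValueError("insufficient funds")

    new_balance = balance_cents - amount_cents

    # Postcondition check: the code verifies its own result before returning it.
    if new_balance < 0:
        raise RuntimeError("internal error: negative balance")
    return new_balance


print(withdraw(10_000, 2_500))  # 7500
try:
    withdraw(1_000, 5_000)
except ValueError as err:
    print("rejected:", err)     # rejected: insufficient funds
```

Amounts are kept in integer cents in this sketch to avoid floating-point rounding in the balance.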

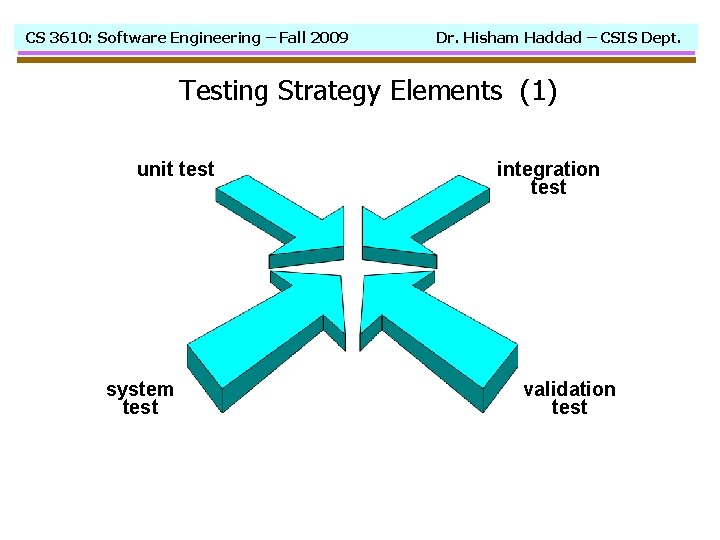

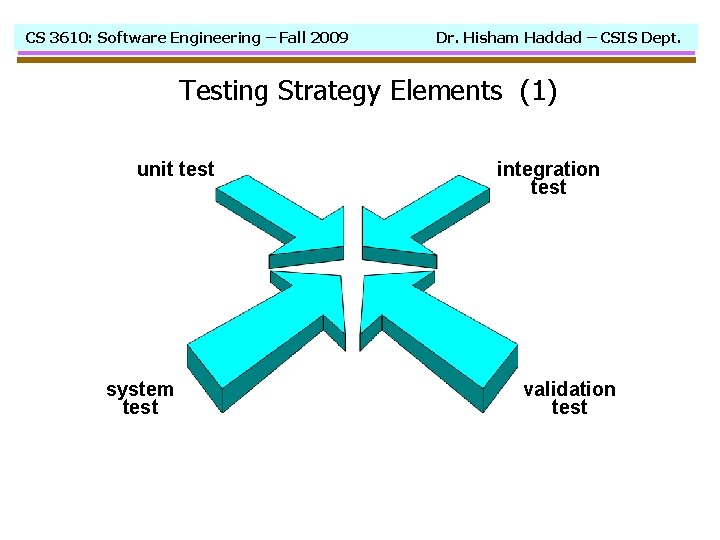

Testing Strategy Elements (1)

(Figure: the testing strategy proceeds through unit test, integration test, validation test, and system test.)

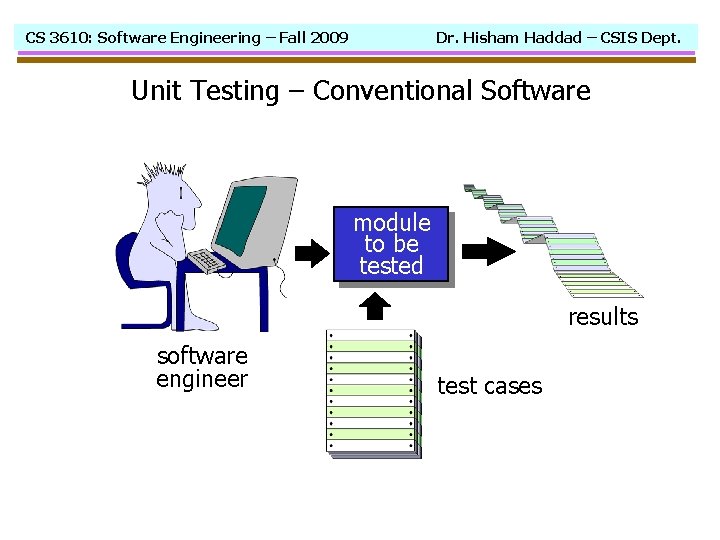

Testing Strategy Elements (2)

- Unit Testing: testing the functionality of individual modules (using white-box methods).
- Integration Testing: testing the functionality of integrated modules (using both white-box and black-box methods).
- Validation Testing: testing the software against all established requirements (functional, behaviour, performance, reliability, …) (using black-box methods).
- System Testing: testing the software for compatibility with other system elements (HW, users, databases, other systems).

See figure 13.1, page 358.

When does testing stop?

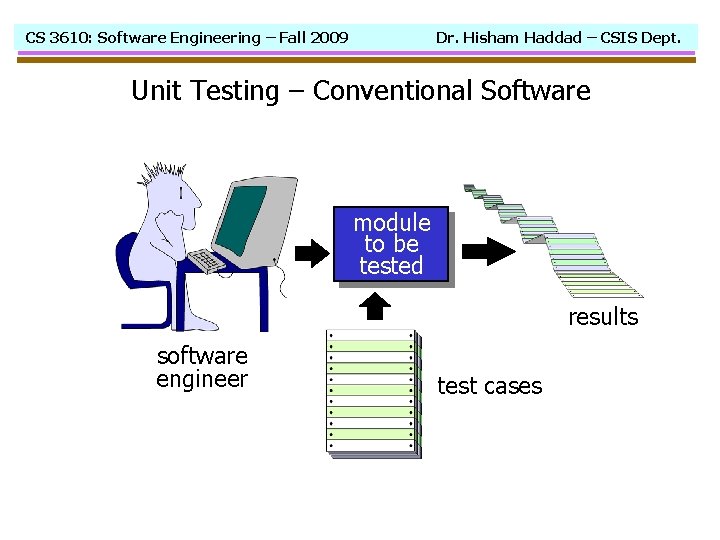

Unit Testing – Conventional Software

(Figure: the software engineer applies test cases to the module to be tested and examines the results.)

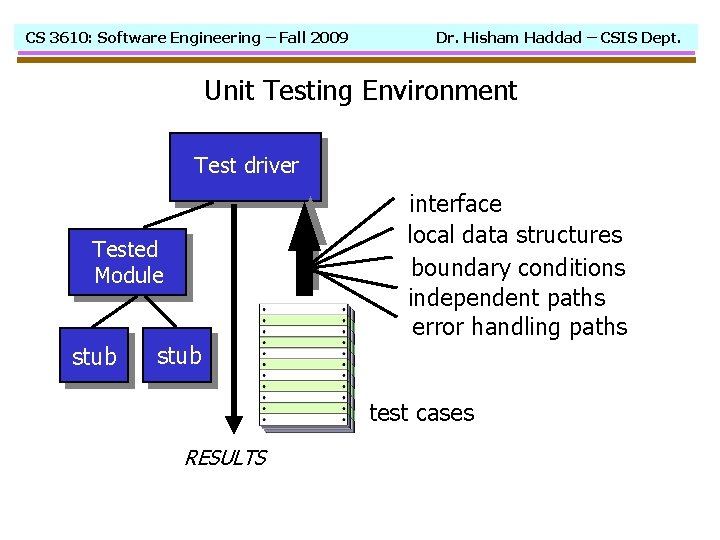

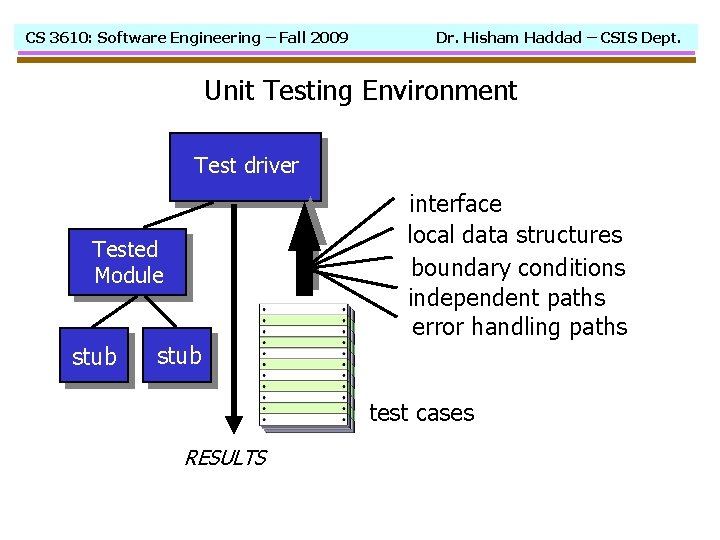

Unit Testing Environment

(Figure: a test driver applies test cases to the tested module, stubs stand in for the modules it calls, and the results are collected.) The test cases exercise the module's:

- interface
- local data structures
- boundary conditions
- independent paths
- error handling paths
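A minimal Python sketch of this environment, under assumed names: `order_total` is a hypothetical module under test, `tax_rate_stub` stands in for a lower-level module that may not exist yet, and `run_driver` plays the role of the test driver. The cases cover a typical value, a boundary condition, and an error handling path.

```python
from typing import Callable


# Hypothetical module under test: it reaches its lower-level
# tax-rate module through a parameter so a stub can be plugged in.
def order_total(subtotal_cents: int, region: str,
                tax_rate_lookup: Callable[[str], float]) -> int:
    if subtotal_cents < 0:
        raise ValueError("subtotal must be non-negative")
    rate = tax_rate_lookup(region)
    return round(subtotal_cents * (1 + rate))


# Stub: replaces the real tax-rate module and returns canned answers.
def tax_rate_stub(region: str) -> float:
    return {"A": 0.10, "B": 0.0}.get(region, 0.0)


# Driver: feeds test cases to the module under test and collects results.
def run_driver() -> list[tuple[str, bool]]:
    cases = [
        ("typical value", 1000, "A", 1100),
        ("boundary: zero subtotal", 0, "A", 0),
        ("region without tax", 1000, "B", 1000),
    ]
    results = []
    for name, subtotal, region, expected in cases:
        actual = order_total(subtotal, region, tax_rate_stub)
        results.append((name, actual == expected))

    # Error handling path: a negative subtotal must be rejected.
    try:
        order_total(-1, "A", tax_rate_stub)
        results.append(("error path: negative subtotal", False))
    except ValueError:
        results.append(("error path: negative subtotal", True))
    return results


for name, passed in run_driver():
    print(f"{name}: {'pass' if passed else 'FAIL'}")
```

Passing the lower-level module in as a parameter is one simple way to make stubbing possible; it is a design choice for this sketch, not something the slide prescribes.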

Unit Testing Errors

Some common computational errors:

- Incorrect arithmetic precedence
- Incorrect logical operators or precedence
- Incorrect initialization
- Incorrect symbolic representation of an expression
- Comparison of different data types
- Incorrect comparison of variables
- Improper or nonexistent loop termination
- Improperly modified loop variables
- Improper boundary checks
- Precision inaccuracy
- Others… (see page 363)
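The sketch below shows how an improper boundary check from the list above can pass a typical test yet fail at the limits. The function names and the requirement that valid scores lie in 0..100 inclusive are hypothetical.

```python
from typing import Callable


def in_range_buggy(score: int) -> bool:
    # Improper boundary check: strict comparisons exclude 0 and 100.
    return 0 < score < 100


def in_range(score: int) -> bool:
    # Corrected check: the valid range 0..100 is inclusive at both ends.
    return 0 <= score <= 100


# Boundary-value test cases: each limit, plus one value just outside it.
BOUNDARY_CASES = [(-1, False), (0, True), (100, True), (101, False)]


def failing_cases(check: Callable[[int], bool]) -> list[int]:
    """Return the inputs on which `check` disagrees with the expected result."""
    return [value for value, expected in BOUNDARY_CASES if check(value) != expected]


print(in_range_buggy(50), in_range(50))  # True True: a typical value hides the bug
print(failing_cases(in_range_buggy))     # [0, 100]
print(failing_cases(in_range))           # []
```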

Integration Testing

Options:

- Non-incremental approach (all at once!)
- Incremental construction strategy (one addition at a time)

Incremental approaches:

- Top-Down Integration: start with the main module and work downward, integrating subordinate units (in either depth-first or breadth-first order).
- Bottom-Up Integration: start with atomic units (working modules) and work upward, integrating other units (clusters).

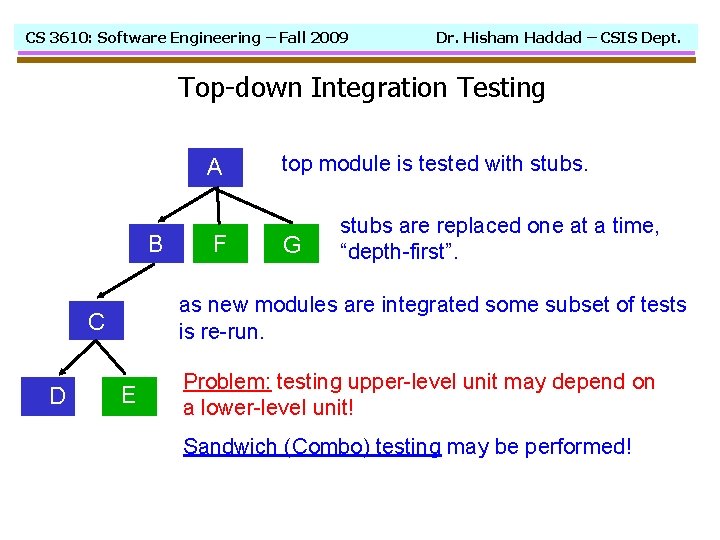

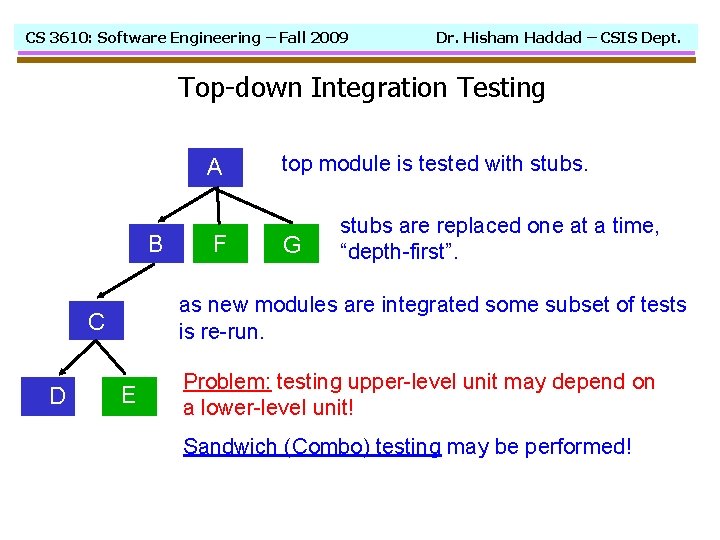

Top-down Integration Testing

(Figure: module hierarchy with modules A through G.)

- The top module is tested with stubs.
- Stubs are replaced one at a time, “depth-first”.
- As new modules are integrated, some subset of tests is re-run.

Problem: testing an upper-level unit may depend on a lower-level unit! Sandwich (combo) testing may be performed!
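A minimal sketch of the top-down steps, with hypothetical names: `module_a` is the top module, `average_stub` stands in for a lower-level module during the first step, and `average_real` replaces the stub afterward, at which point the same tests are re-run.

```python
from typing import Callable


# Hypothetical top module A. It calls a lower-level "average"
# module through a parameter so that a stub can be plugged in.
def module_a(scores: list[int], average: Callable[[list[int]], float]) -> str:
    return f"average score: {average(scores):.1f}"


# Stub for the lower-level module: returns a canned value.
def average_stub(scores: list[int]) -> float:
    return 50.0


# Real lower-level module, integrated later in place of the stub.
def average_real(scores: list[int]) -> float:
    return sum(scores) / len(scores) if scores else 0.0


# Tests for A that remain valid before and after integration.
def run_tests(average: Callable[[list[int]], float]) -> bool:
    return module_a([40, 60], average) == "average score: 50.0"


print(run_tests(average_stub))  # True: top module tested with the stub
print(run_tests(average_real))  # True: same tests re-run after the stub is replaced
```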

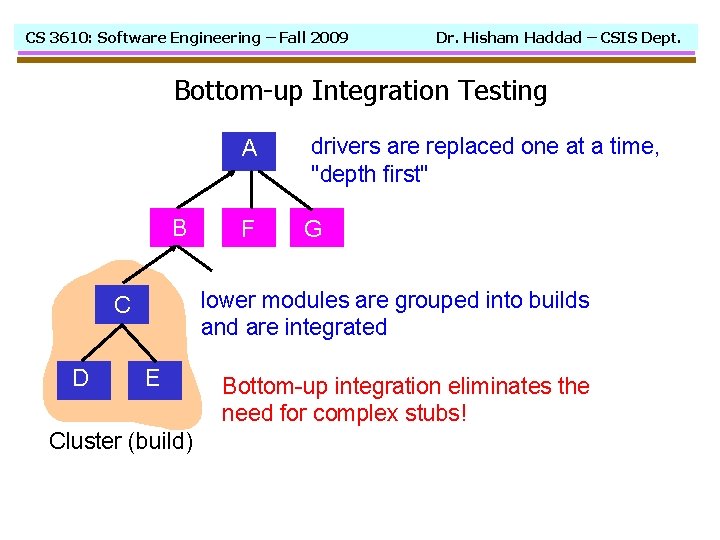

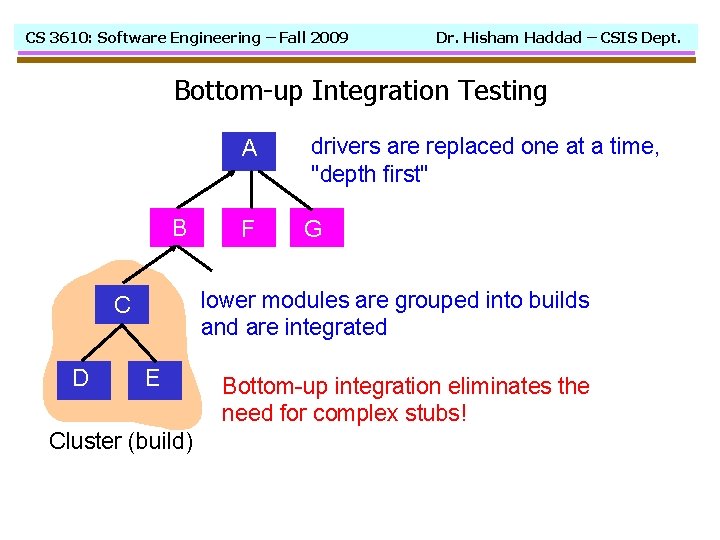

Bottom-up Integration Testing

(Figure: module hierarchy with modules A through G; lower-level modules are grouped into a cluster (build).)

- Lower-level modules are grouped into builds (clusters) and integrated.
- Drivers are replaced one at a time, “depth-first”.

Bottom-up integration eliminates the need for complex stubs!
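A minimal bottom-up sketch, assuming two hypothetical low-level modules (`parse_record` and `total_quantity`) that form one cluster. The driver `cluster_driver` is a throwaway control program that exercises the cluster until the real higher-level module is integrated; no stubs are needed because everything below the driver is real code.

```python
# Two hypothetical low-level modules that form one cluster (build).
def parse_record(line: str) -> tuple[str, int]:
    """Low-level module: parse 'name, quantity' into a tuple."""
    name, qty = line.split(",")
    return name.strip(), int(qty)


def total_quantity(records: list[tuple[str, int]]) -> int:
    """Low-level module: add up the quantities."""
    return sum(qty for _, qty in records)


# Driver: stands in for the higher-level module (not yet integrated)
# and exercises the cluster as a whole.
def cluster_driver(lines: list[str]) -> int:
    records = [parse_record(line) for line in lines]
    return total_quantity(records)


print(cluster_driver(["bolt, 3", "nut, 4"]))  # 7
print(cluster_driver([]))                     # 0
```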

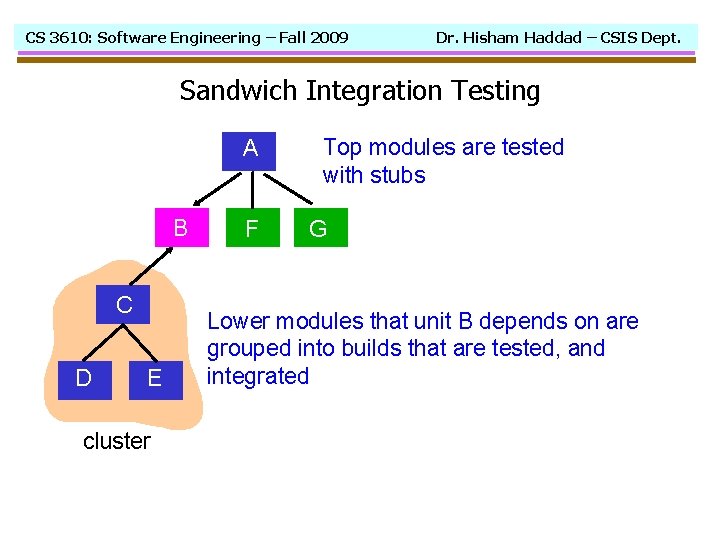

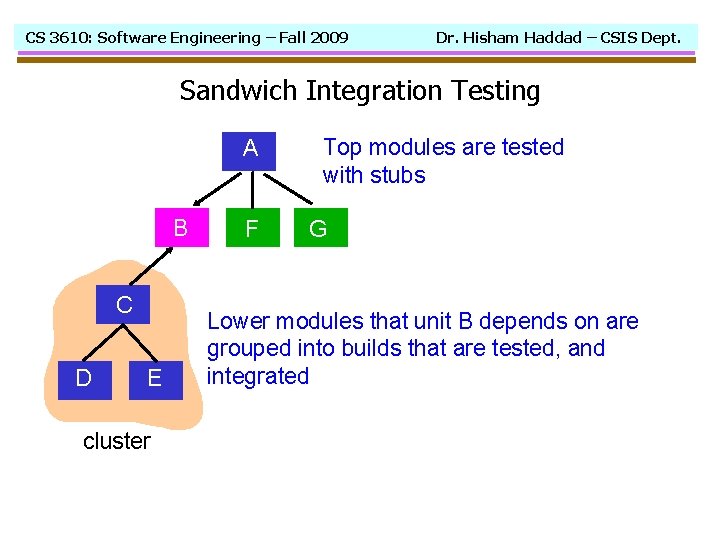

Sandwich Integration Testing

(Figure: module hierarchy with modules A through G and one cluster.)

- Top modules are tested with stubs.
- Lower-level modules that unit B depends on are grouped into builds, which are tested and integrated.

Integration Testing - Comments

- Writing stubs for top-down testing may be difficult.
- Top-down testing allows the control module to be tested early.
- The entire program is not tested until the last module is added.
- Bottom-up testing seems easier to conduct (no stubs).
- Sandwich testing is a compromise when selecting an integration testing strategy.
- Critical modules should be identified and tested as early as possible.
- The “Test Specification” is a document that contains the test plan, procedures, test cases, environment, resources, etc… It becomes part of the software configuration.

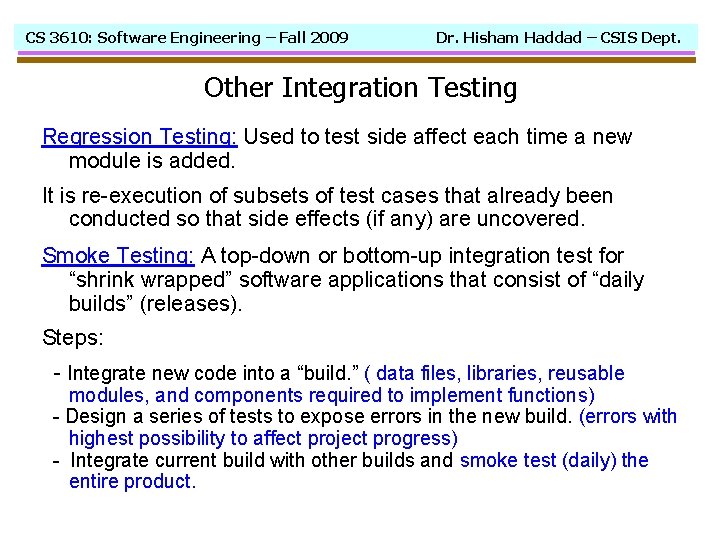

Other Integration Testing

Regression Testing: used to check for side effects each time a new module is added. It is the re-execution of a subset of test cases that have already been conducted, so that side effects (if any) are uncovered.

Smoke Testing: a top-down or bottom-up integration test for “shrink-wrapped” software applications that are developed through “daily builds” (releases). Steps:

- Integrate new code into a “build” (the data files, libraries, reusable modules, and components required to implement functions).
- Design a series of tests to expose errors in the new build (errors with the highest likelihood of affecting project progress).
- Integrate the current build with other builds and smoke test the entire product (daily).
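A minimal regression-testing sketch. The helpers `normalize_v1`/`normalize_v2` and the two test cases are hypothetical: version 2 adds a feature (collapsing inner whitespace) but accidentally drops lower-casing, and re-running the previously passing suite exposes that side effect.

```python
from typing import Callable


def run_suite(suite: dict[str, Callable[[], bool]]) -> list[str]:
    """Re-execute every test in the suite and return the names of failures."""
    return [name for name, test in suite.items() if not test()]


# Version 1 of a hypothetical helper module.
def normalize_v1(s: str) -> str:
    return s.strip().lower()


# Version 2 collapses inner whitespace but no longer lower-cases.
def normalize_v2(s: str) -> str:
    return " ".join(s.split())


def make_suite(normalize: Callable[[str], str]) -> dict[str, Callable[[], bool]]:
    # Test cases that passed against version 1, kept as the regression suite.
    return {
        "strips spaces": lambda: normalize("  abc ") == "abc",
        "lower-cases": lambda: normalize("ABC") == "abc",
    }


print(run_suite(make_suite(normalize_v1)))  # []
print(run_suite(make_suite(normalize_v2)))  # ['lower-cases']
```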

OO Testing (1)

- OO testing begins by evaluating the correctness and consistency of the OOA and OOD models.
- The nature of OO software changes testing strategies:
  - the concept of the ‘unit’ broadens due to encapsulation
  - class methods cannot be tested in isolation due to object collaborations and inheritance
  - class testing is driven by the class's methods and behavior (states)

OO Testing (2)

Conventional integration strategies (top-down and bottom-up) are not applicable to OO software. Class integration options:

- thread-based integration (the classes that respond to one event (input) to the system)
- use-based integration (independent classes first, then dependent classes)
- collaboration-based (cluster) integration (the classes required to complete one collaboration)

Note: always apply regression testing to uncover side effects. Test case design draws on conventional methods, but also encompasses special features.
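Use-based integration tests independent classes first and then the classes that use them. One way to compute such an ordering (an illustration, not a method given in the text) is a layered topological sort with Python's standard `graphlib` module (Python 3.9+). The class names and dependencies below are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical classes mapped to the classes they use (depend on).
uses = {
    "Invoice": {"Order"},
    "Order": {"Customer", "Product"},
    "Customer": set(),
    "Product": set(),
}


def integration_layers(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group classes into layers; each layer uses only classes in earlier layers."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    layers = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # classes whose dependencies are all done
        layers.append(ready)
        sorter.done(*ready)
    return layers


print(integration_layers(uses))
# [['Customer', 'Product'], ['Order'], ['Invoice']]
```

Classes caught in a dependency cycle cannot be layered this way; in this sketch `prepare()` would raise `CycleError`, which may hint that those classes need to be integrated together as a cluster.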

OO Testing Strategy

Class testing is the equivalent of unit testing:

- operations within the class are tested
- the state behavior of the class is examined

Integration testing applies three different strategies:

- thread-based testing: integrates the set of classes required to respond to one input or event
- use-based testing: integrates the set of classes required to respond to one use case (usage scenario)
- cluster testing: integrates the set of classes required to demonstrate one collaboration (determined from the object relationship and CRC models)
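A minimal class-testing sketch that examines state behavior. The `Account` class and its three states (`new`, `open`, `closed`) are hypothetical; the tests drive the object through a legal sequence of operations and check that an illegal transition is rejected.

```python
class Account:
    """Hypothetical class with three states: 'new', 'open', 'closed'."""

    def __init__(self) -> None:
        self.state = "new"
        self.balance = 0

    def open(self) -> None:
        if self.state != "new":
            raise RuntimeError(f"cannot open from state {self.state}")
        self.state = "open"

    def deposit(self, amount: int) -> None:
        if self.state != "open":
            raise RuntimeError(f"cannot deposit in state {self.state}")
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balance += amount

    def close(self) -> None:
        if self.state != "open":
            raise RuntimeError(f"cannot close from state {self.state}")
        self.state = "closed"


def test_valid_sequence() -> None:
    # Legal path through the states: new -> open -> closed.
    acct = Account()
    acct.open()
    acct.deposit(50)
    acct.close()
    assert acct.state == "closed" and acct.balance == 50


def test_illegal_transition() -> None:
    # An operation that is invalid in the current state must be rejected.
    acct = Account()
    acct.open()
    acct.close()
    try:
        acct.deposit(10)
    except RuntimeError:
        pass
    else:
        raise AssertionError("deposit after close was accepted")


test_valid_sequence()
test_illegal_transition()
print("state tests passed")
```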

Validation Testing

Validation testing focuses on software conformance with software requirements and is based on the Validation Criteria, a section of the SRS document. The criteria cover behavioral characteristics, software configuration items, performance characteristics, documentation, error recovery, maintainability, and others… Validation testing is mainly black-box based.

- Alpha test: acceptance test performed by the customer at the developer’s site to validate system requirements.
- Beta test: acceptance test performed by the customer at the customer’s site to validate system requirements.

System Testing

A series of tests for system compatibility with HW, users, databases, and other systems. Example tests:

- Recovery testing: test the system’s ability to recover from a failure. Force the system to fail and see how it responds.
- Security testing: test the built-in security mechanisms. Try to gain access as an unauthorized user of the system.
- Stress testing: test the system under abnormal conditions (resource allocation). Try to overwhelm the system.
- Performance testing: test the system’s run-time performance. Try to cause system degradation and failure.

Testing vs. Debugging

Testing uncovers errors, while debugging removes them. Testing is a process, while debugging is an art. The outcome of debugging is either “error cause is found” or “error cause is not found!” Debugging is difficult!

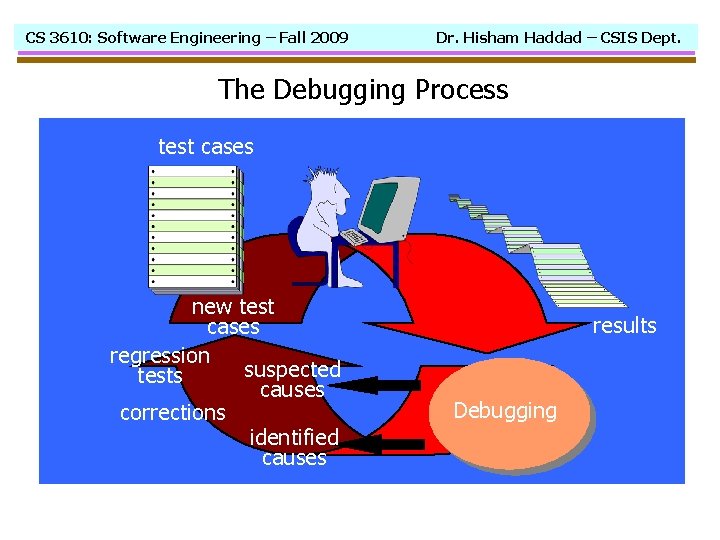

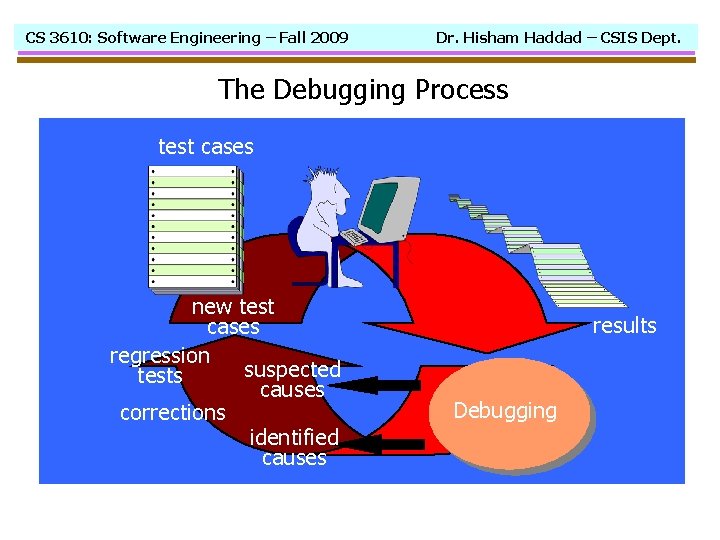

The Debugging Process

(Figure: the debugging cycle. Test cases produce results; debugging moves from suspected causes to identified causes; corrections are followed by regression tests and new test cases.)

Why Is Debugging Difficult?

- the cause may be a combination of non-errors (e.g., rounding inaccuracies)
- the cause may be a system or compiler error
- the cause may be an assumption that everyone believes
- causes may be distributed among processes/tasks
- the symptom may be irregular (intermittent) due to interactions between HW and SW
- the symptom may disappear when another problem is fixed
- the symptom and cause may be geographically separated
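The first item (a symptom produced by a combination of non-errors) can be seen with ordinary floating-point arithmetic in Python: every individual addition is performed correctly, yet the accumulated rounding makes an exact comparison fail.

```python
import math

total = 0.0
for _ in range(10):
    total += 0.1  # each addition is individually correct (to double precision)

print(total == 1.0)              # False
print(total)                     # 0.9999999999999999
print(math.isclose(total, 1.0))  # True: compare with a tolerance instead
```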

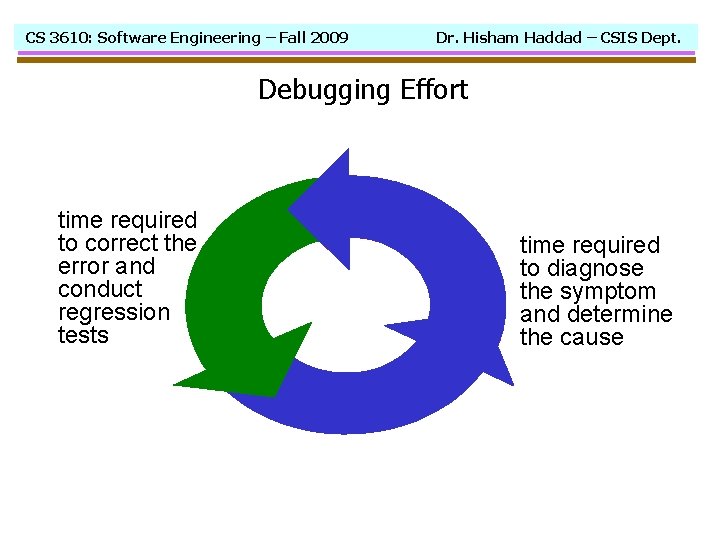

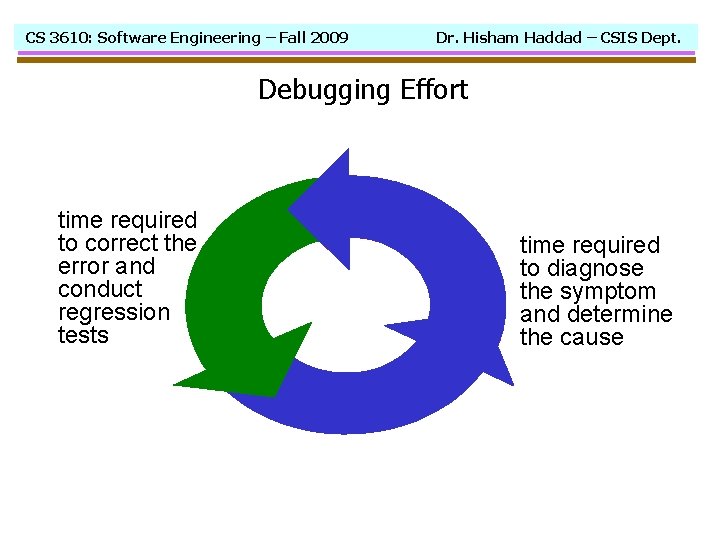

Debugging Effort

Debugging effort consists of:

- time required to diagnose the symptom and determine the cause
- time required to correct the error and conduct regression tests

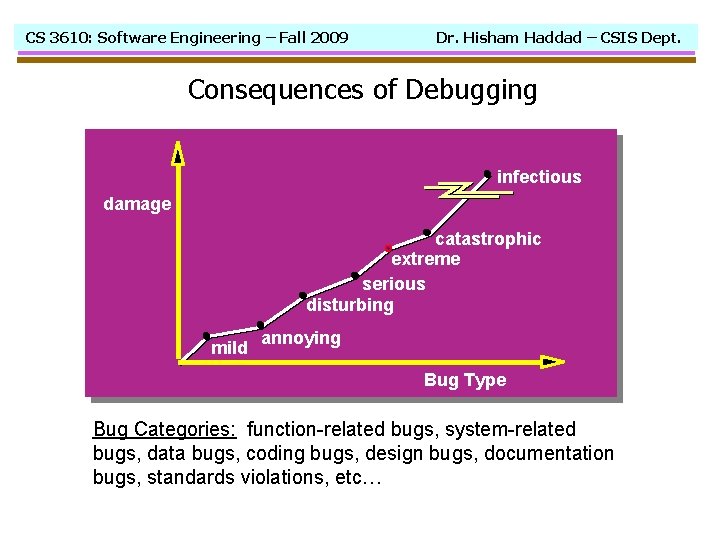

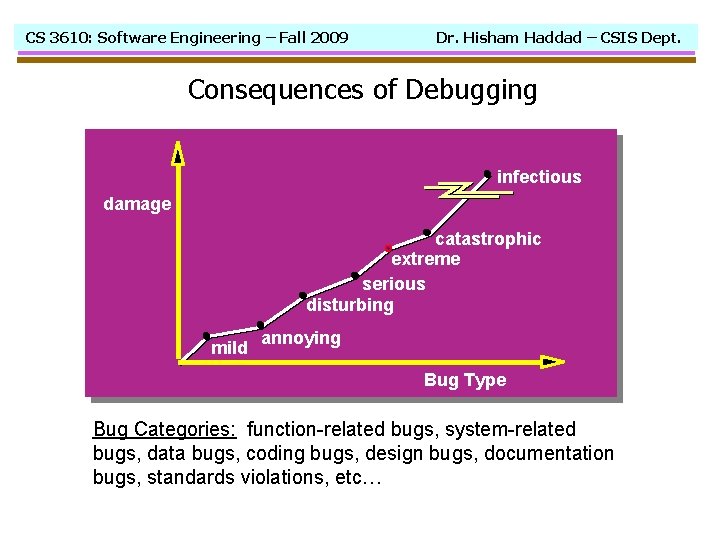

Consequences of Bugs

(Figure: damage caused by a bug plotted against bug type, on a scale of mild, annoying, disturbing, serious, extreme, catastrophic, and infectious.)

Bug categories: function-related bugs, system-related bugs, data bugs, coding bugs, design bugs, documentation bugs, standards violations, etc…

Debugging Techniques

- Brute force debugging: “let the system find the error!” (memory dumps, run-time traces, and inserted output statements)
- Backtracking: trace the code (manually) backward from the symptom to the source of the error.
- Cause elimination: by induction, formulate a “cause hypothesis” and use test data to prove or disprove it; or by deduction, list all possible causes and test each one for elimination.
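Cause elimination by deduction can be sketched as follows. The faulty function `mean` and the list of candidate causes are hypothetical; each candidate is paired with targeted test data, and a cause is eliminated when its data does not reproduce the failure. For simplicity this sketch only treats a raised exception as a failure, not a wrong result.

```python
from typing import Callable


# Hypothetical faulty function: should return the mean, but fails on some inputs.
def mean(values: list[float]) -> float:
    return sum(values) / len(values)


# Candidate causes, each paired with test data that would trigger it.
candidates: dict[str, list[float]] = {
    "empty input": [],
    "negative values": [-1.0, -3.0],
    "single element": [4.0],
}


def eliminate(fn: Callable[[list[float]], float],
              causes: dict[str, list[float]]) -> list[str]:
    """Run each cause's test data; keep only causes that reproduce a failure."""
    remaining = []
    for cause, data in causes.items():
        try:
            fn(data)
        except Exception:
            remaining.append(cause)  # failure reproduced: cause not eliminated
    return remaining


print(eliminate(mean, candidates))  # ['empty input']
```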

Debugging – Final Thoughts

- Don't run off half-cocked; think about the symptom you're seeing.
- Use tools (e.g., a dynamic debugger) to gain more insight.
- If at an impasse, get help from someone else.
- Be absolutely sure to conduct regression tests when you do "fix" the bug.

Suggested Problems

Consider working the following problems from chapter 13, page 385: 1, 2, 3, 4, 7, and 8. No submission is required for practice assignments. Work them for yourself!

Last Slide

End of chapter 13