CS 265 Dynamic Data Race Detection Koushik Sen


CS 265: Dynamic Data Race Detection Koushik Sen UC Berkeley

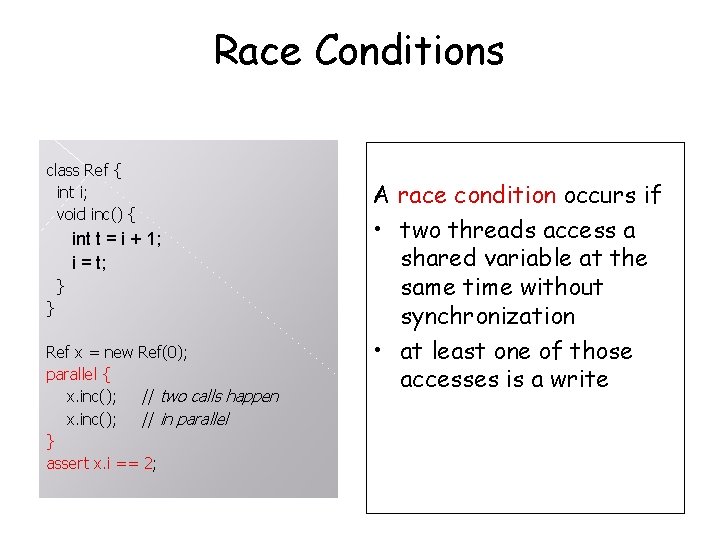

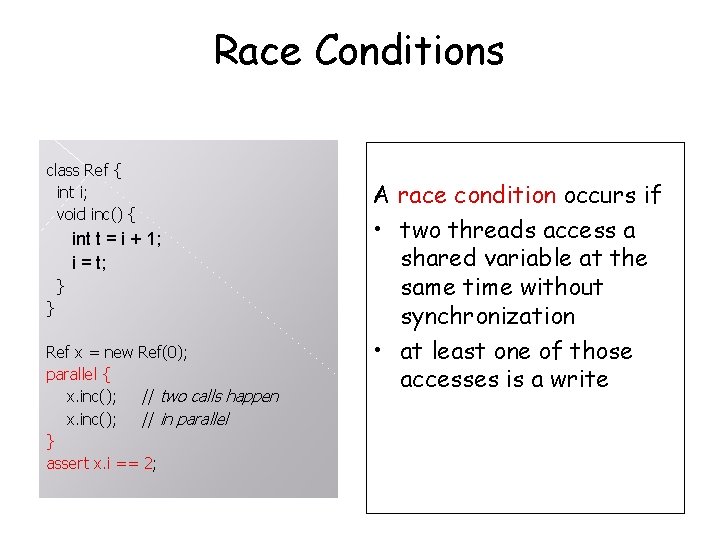

Race Conditions (courtesy Cormac Flanagan)

```java
class Ref {
    int i;
    void inc() {
        int t = i + 1;
        i = t;
    }
}

Ref x = new Ref(0);
parallel {
    x.inc(); // two calls happen
    x.inc(); // in parallel
}
assert x.i == 2;
```

A race condition occurs if
• two threads access a shared variable at the same time without synchronization
• at least one of those accesses is a write

Threads t1 and t2 can both execute RD(i) before either executes WR(i), giving the event order RD(i), RD(i), WR(i), WR(i). One increment is then lost and the assertion fails.

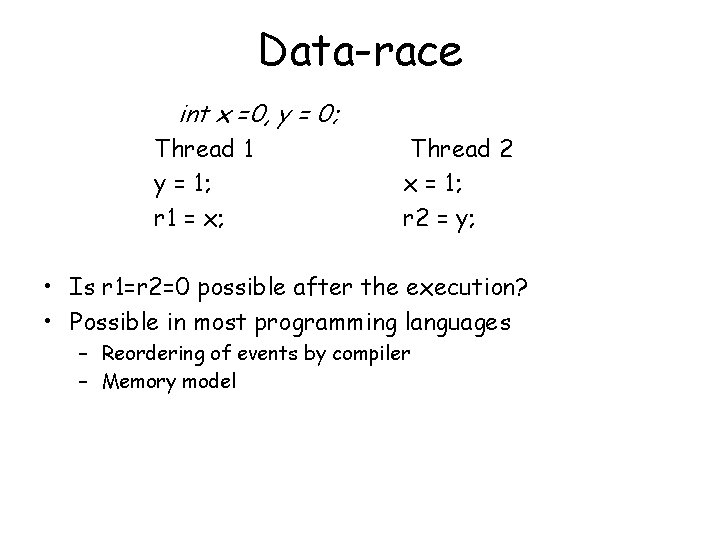

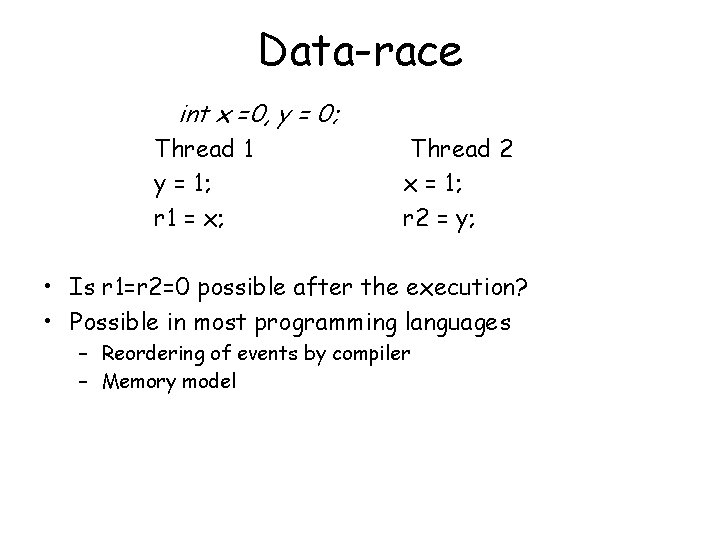

Data-race

```java
int x = 0, y = 0;

// Thread 1          // Thread 2
y = 1;               x = 1;
r1 = x;              r2 = y;
```

• Is r1 = r2 = 0 possible after the execution?
• Possible in most programming languages
  – Reordering of events by compiler
  – Memory model

Data race free -> Sequential consistency
• Memory models for programming languages
  – Specify exactly what the compiler will ensure
  – Consensus: data-race-free programs -> sequentially consistent
  – Java memory model [Manson et al., POPL 05]
    • A complex model for programs with races
    • Bugs/unclear implementations
  – C++ (new version) [Boehm, Adve, PLDI 08]
    • No semantics for programs with races!

Lock-Based Synchronization

```java
class Ref {
    int i; // guarded by this
    void inc() {
        synchronized (this) {
            int t = i + 1;
            i = t;
        }
    }
}

Ref x = new Ref(0);
parallel {
    x.inc(); // two calls happen
    x.inc(); // in parallel
}
assert x.i == 2;
```

• Field guarded by a lock
• Lock acquired before accessing field
• Ensures race freedom
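The slide's `parallel { ... }` block is schematic. A minimal runnable sketch of the same idea in plain Java is shown here, assuming two explicit `Thread` objects stand in for the parallel block; the class name `SyncRef` and the helper `runTwoIncrements` are made up for illustration.

```java
class SyncRef {
    private int i; // guarded by this

    // The whole read-modify-write runs while holding the lock on this.
    synchronized void inc() {
        int t = i + 1;
        i = t;
    }

    synchronized int get() {
        return i;
    }

    // Runs inc() on two threads and returns the final value of i.
    static int runTwoIncrements() {
        SyncRef x = new SyncRef();
        Thread a = new Thread(x::inc);
        Thread b = new Thread(x::inc);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return x.get();
    }

    public static void main(String[] args) {
        System.out.println(runTwoIncrements()); // prints 2
    }
}
```

Because both accesses to `i` happen inside the same monitor, the two increments cannot interleave, so the result is 2 on every schedule.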


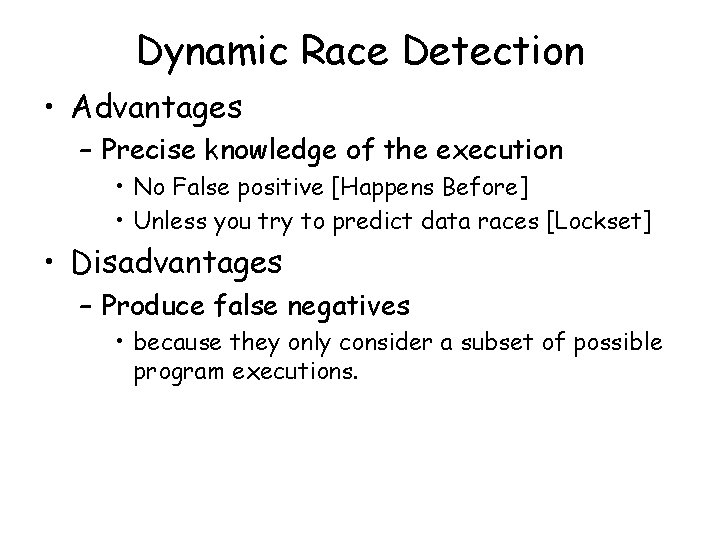

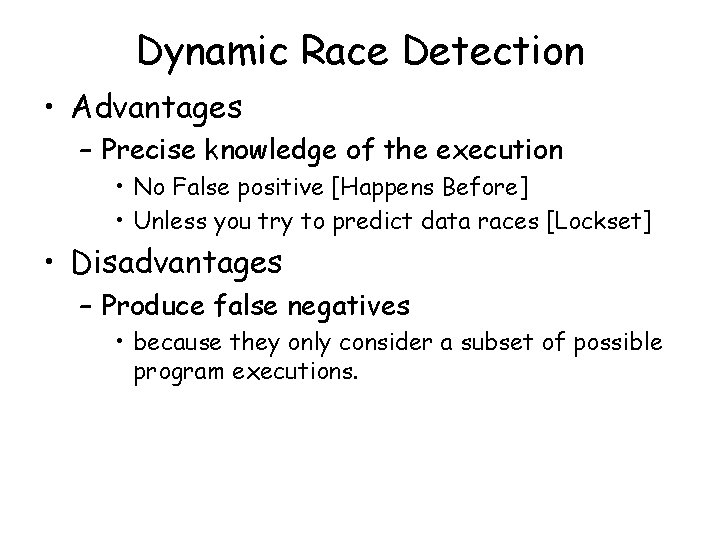

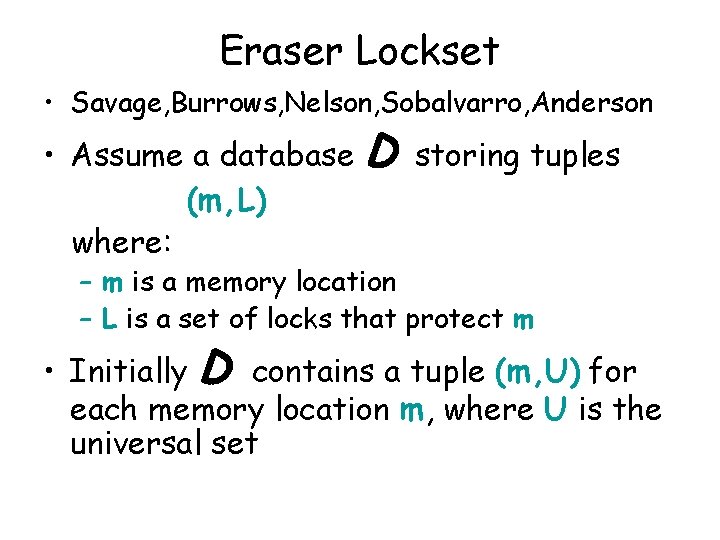

Dynamic Race Detection
• Happens Before [Dinning and Schonberg 1991]
• Lockset:
  – Eraser [Savage et al. 1997]
  – Precise Lockset [Choi et al. 2002]
• Hybrid [O'Callahan and Choi 2003]

Dynamic Race Detection
• Advantages
  – Precise knowledge of the execution
    • No false positives [Happens Before]
    • Unless you try to predict data races [Lockset]
• Disadvantages
  – Produces false negatives
    • because only a subset of the possible program executions is considered

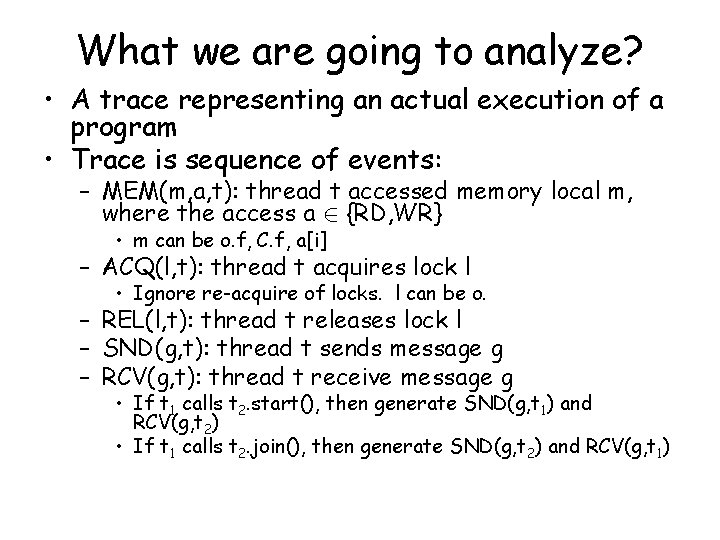

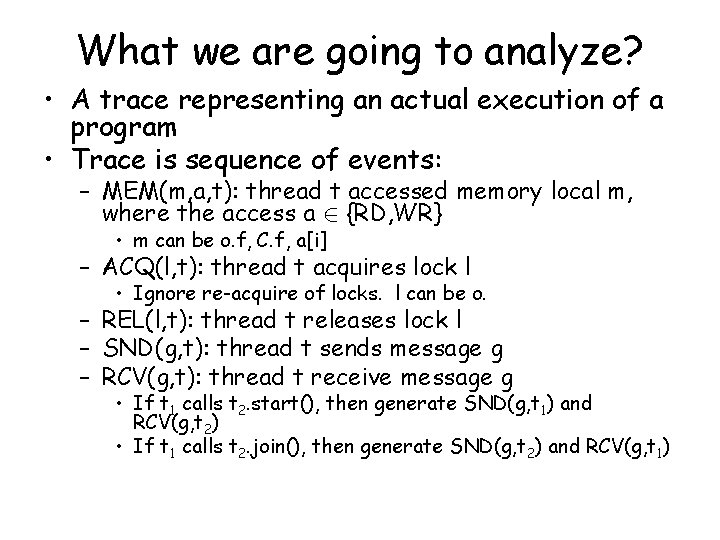

What are we going to analyze?
• A trace representing an actual execution of a program
• A trace is a sequence of events:
  – MEM(m, a, t): thread t accessed memory location m, where the access $a \in \{\mathrm{RD}, \mathrm{WR}\}$
    • m can be o.f, C.f, a[i]
  – ACQ(l, t): thread t acquires lock l
    • Ignore re-acquires of locks. l can be o.
  – REL(l, t): thread t releases lock l
  – SND(g, t): thread t sends message g
  – RCV(g, t): thread t receives message g
    • If t1 calls t2.start(), then generate SND(g, t1) and RCV(g, t2)
    • If t1 calls t2.join(), then generate SND(g, t2) and RCV(g, t1)

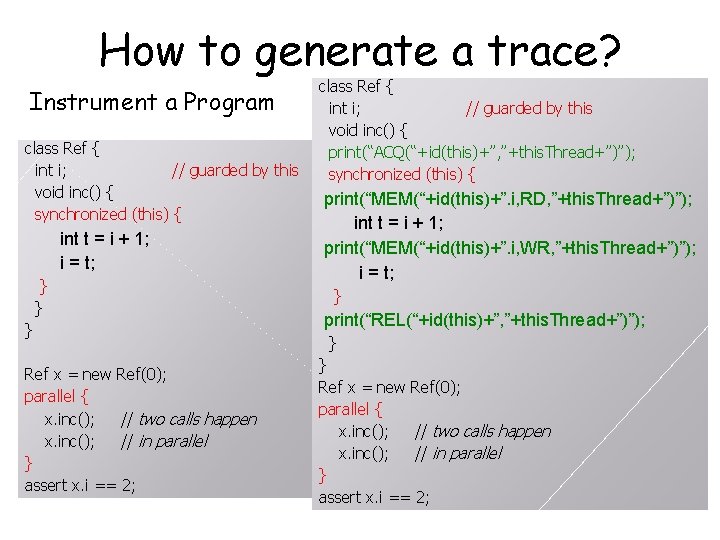

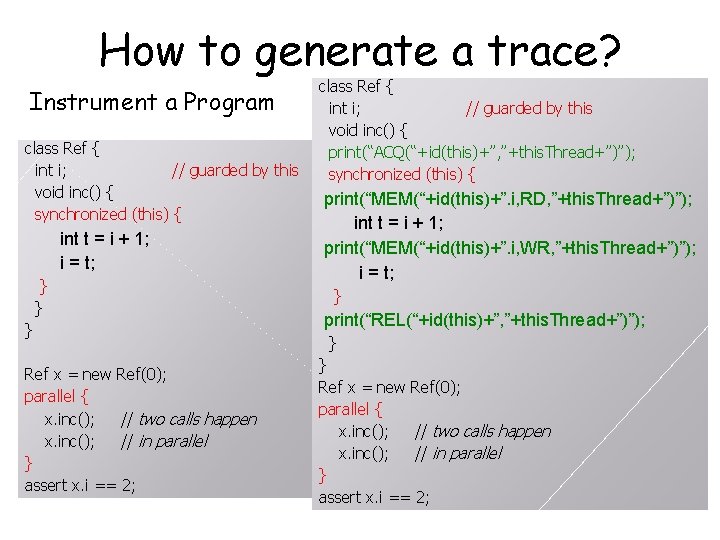

How to generate a trace? Instrument a Program

The lock-based `Ref` program is instrumented so that every synchronization and memory event prints a trace record (`id(this)` and `this.Thread` are the slide's schematic notation):

```java
class Ref {
    int i; // guarded by this
    void inc() {
        print("ACQ(" + id(this) + ", " + this.Thread + ")");
        synchronized (this) {
            print("MEM(" + id(this) + ".i, RD, " + this.Thread + ")");
            int t = i + 1;
            print("MEM(" + id(this) + ".i, WR, " + this.Thread + ")");
            i = t;
        }
        print("REL(" + id(this) + ", " + this.Thread + ")");
    }
}

Ref x = new Ref(0);
parallel {
    x.inc(); // two calls happen
    x.inc(); // in parallel
}
assert x.i == 2;
```

Sample Trace

```
ACQ(4365, t1)
MEM(4365.i, RD, t1)
MEM(4365.i, WR, t1)
REL(4365, t1)
ACQ(4365, t2)
MEM(4365.i, RD, t2)
MEM(4365.i, WR, t2)
REL(4365, t2)
```

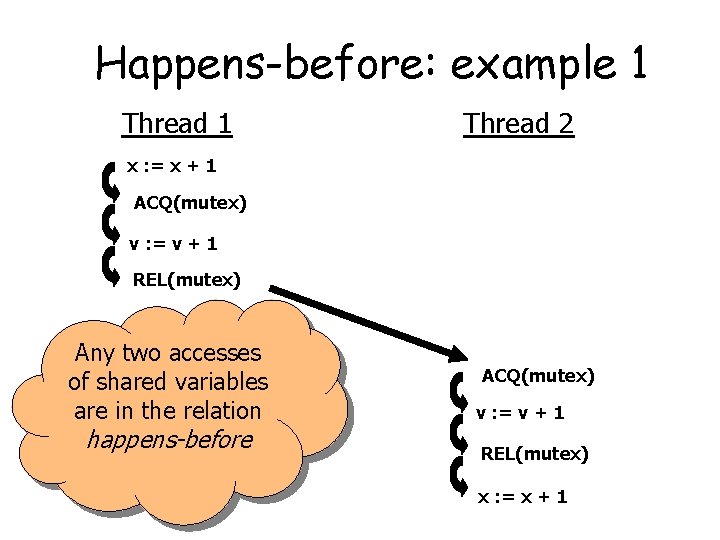

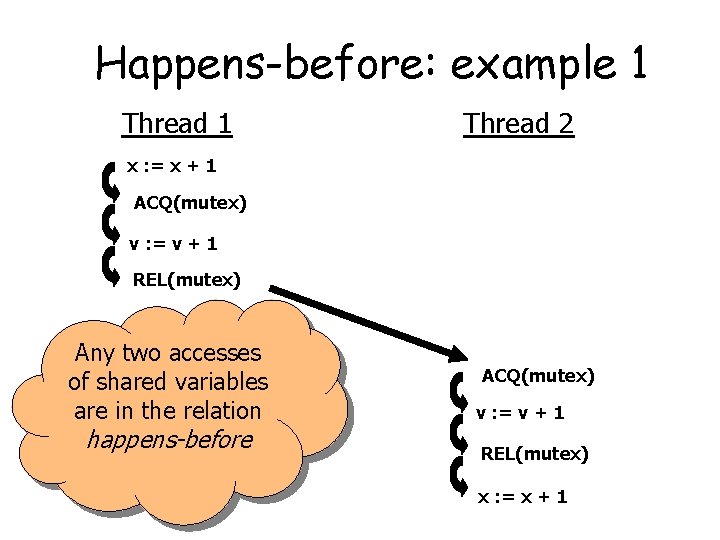

Compute Locks Held by a Thread

L(t) = locks held by thread t. How do we compute L(t)?

| Sample Trace | Locks Held |
|---|---|
| (initially) | L(t1)={}, L(t2)={} |
| ACQ(4365, t1) | L(t1)={4365}, L(t2)={} |
| MEM(4365.i, RD, t1) | L(t1)={4365}, L(t2)={} |
| MEM(4365.i, WR, t1) | L(t1)={4365}, L(t2)={} |
| REL(4365, t1) | L(t1)={}, L(t2)={} |
| ACQ(4365, t2) | L(t1)={}, L(t2)={4365} |
| MEM(4365.i, RD, t2) | L(t1)={}, L(t2)={4365} |
| MEM(4365.i, WR, t2) | L(t1)={}, L(t2)={4365} |
| REL(4365, t2) | L(t1)={}, L(t2)={} |
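One way to compute L(t) is a single pass over the trace: add the lock on ACQ, remove it on REL, and leave L(t) unchanged on MEM. The sketch below assumes a hypothetical `Event` class with string fields (the access type RD/WR is omitted because it does not affect L(t)); none of these names come from the slides.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class LocksHeld {
    // Hypothetical trace event: kind is "ACQ", "REL", or "MEM".
    static final class Event {
        final String kind;
        final String target; // lock id for ACQ/REL, memory location for MEM
        final String thread;

        Event(String kind, String target, String thread) {
            this.kind = kind;
            this.target = target;
            this.thread = thread;
        }
    }

    // For each event, returns L(t) of the acting thread t right after that event.
    static List<Set<String>> afterEachEvent(List<Event> trace) {
        Map<String, Set<String>> held = new HashMap<>();
        List<Set<String>> snapshots = new ArrayList<>();
        for (Event e : trace) {
            Set<String> locks = held.computeIfAbsent(e.thread, k -> new HashSet<>());
            if (e.kind.equals("ACQ")) {
                locks.add(e.target);
            } else if (e.kind.equals("REL")) {
                locks.remove(e.target);
            }
            snapshots.add(new HashSet<>(locks)); // copy, so later updates do not alias
        }
        return snapshots;
    }

    // The sample trace from the slide.
    static List<Event> sampleTrace() {
        return List.of(
            new Event("ACQ", "4365", "t1"),
            new Event("MEM", "4365.i", "t1"),
            new Event("MEM", "4365.i", "t1"),
            new Event("REL", "4365", "t1"),
            new Event("ACQ", "4365", "t2"),
            new Event("MEM", "4365.i", "t2"),
            new Event("MEM", "4365.i", "t2"),
            new Event("REL", "4365", "t2"));
    }

    public static void main(String[] args) {
        System.out.println(afterEachEvent(sampleTrace()));
    }
}
```

The snapshots for the sample trace match the "Locks Held" column: {4365} while a thread is between its ACQ and REL, and {} afterwards.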

Let us now analyze a trace
• Instrument Program
• Run Program => A Trace File
• Analyze Trace File


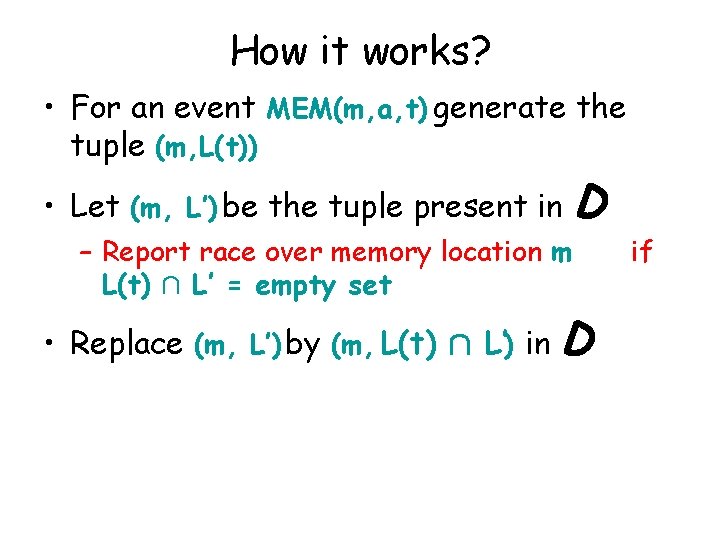

Happens-before relation
• [Dinning and Schonberg 1991]
• Idea: Infer a happens-before relation $\prec$ between events in a trace
• We say $e_1 \prec e_2$
  – if $e_1$ and $e_2$ are events from the same thread and $e_1$ appears before $e_2$ in the trace
  – if $e_1 = \mathrm{SND}(g, t)$ and $e_2 = \mathrm{RCV}(g, t')$
  – if there is an $e'$ such that $e_1 \prec e'$ and $e' \prec e_2$
• REL(l, t) and ACQ(l, t') generate SND(g, t) and RCV(g, t')
• We say $e_1$ and $e_2$ are in race if
  – $e_1$ and $e_2$ are not related by $\prec$,
  – $e_1$ and $e_2$ are from different threads, and
  – $e_1$ and $e_2$ access the same memory location and one of the accesses is a write
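The definition above can be turned into a small offline checker. The sketch below is an illustration, not the detector of Dinning and Schonberg: it builds the base edges (program order, and a release followed by a later acquire of the same lock), takes the transitive closure with a cubic loop, and reports conflicting accesses that stay unordered. The `Event` class, the `ev` helper and the `"location:i-j"` report format are assumptions made here.

```java
import java.util.ArrayList;
import java.util.List;

class HappensBeforeDetector {
    // Hypothetical trace event.
    static final class Event {
        final String thread;
        final String kind;   // "RD", "WR", "ACQ", or "REL"
        final String target; // memory location or lock

        Event(String thread, String kind, String target) {
            this.thread = thread;
            this.kind = kind;
            this.target = target;
        }

        boolean isAccess() {
            return kind.equals("RD") || kind.equals("WR");
        }
    }

    static Event ev(String thread, String kind, String target) {
        return new Event(thread, kind, target);
    }

    // Returns one entry "location:i-j" per pair of trace positions i < j that are in race.
    static List<String> findRaces(List<Event> trace) {
        int n = trace.size();
        boolean[][] hb = new boolean[n][n];
        // Base edges: program order, and REL(l, t) before a later ACQ(l, t').
        for (int i = 0; i < n; i++) {
            for (int j = i + 1; j < n; j++) {
                Event a = trace.get(i);
                Event b = trace.get(j);
                boolean lockEdge = a.kind.equals("REL") && b.kind.equals("ACQ")
                        && a.target.equals(b.target);
                hb[i][j] = a.thread.equals(b.thread) || lockEdge;
            }
        }
        // Transitive closure.
        for (int k = 0; k < n; k++) {
            for (int i = 0; i < n; i++) {
                for (int j = 0; j < n; j++) {
                    if (hb[i][k] && hb[k][j]) {
                        hb[i][j] = true;
                    }
                }
            }
        }
        List<String> races = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            for (int j = i + 1; j < n; j++) {
                Event a = trace.get(i);
                Event b = trace.get(j);
                boolean conflicting = a.isAccess() && b.isAccess()
                        && a.target.equals(b.target)
                        && (a.kind.equals("WR") || b.kind.equals("WR"));
                // hb[j][i] is never set for i < j, so checking hb[i][j] suffices.
                if (conflicting && !a.thread.equals(b.thread) && !hb[i][j]) {
                    races.add(a.target + ":" + i + "-" + j);
                }
            }
        }
        return races;
    }
}
```

The closure costs O(n³) in the trace length, which is acceptable only for tiny traces; vector clocks are the usual way to track the same relation incrementally.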

Happens-before: example 1

| Thread 1 | Thread 2 |
|---|---|
| x := x + 1 | |
| ACQ(mutex) | |
| v := v + 1 | |
| REL(mutex) | |
| | ACQ(mutex) |
| | v := v + 1 |
| | REL(mutex) |
| | x := x + 1 |

Any two accesses of shared variables are in the happens-before relation.

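Happens-before: example 2

| Thread 1 | Thread 2 |
|---|---|
| | ACQ(mutex) |
| | v := v + 1 |
| | REL(mutex) |
| | x := x + 1 |
| x := x + 1 | |
| ACQ(mutex) | |
| v := v + 1 | |
| REL(mutex) | |

Thread 1's x := x + 1 precedes its ACQ(mutex), so it is not ordered with Thread 2's x := x + 1. Therefore, only this second execution reveals the existing data race!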

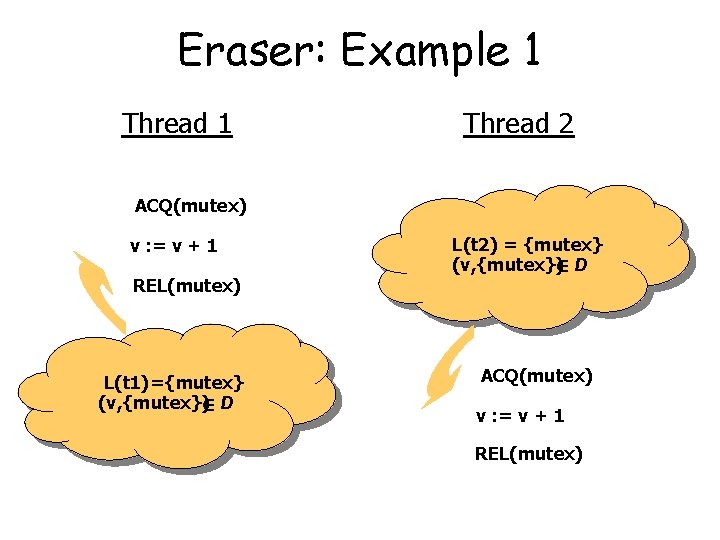

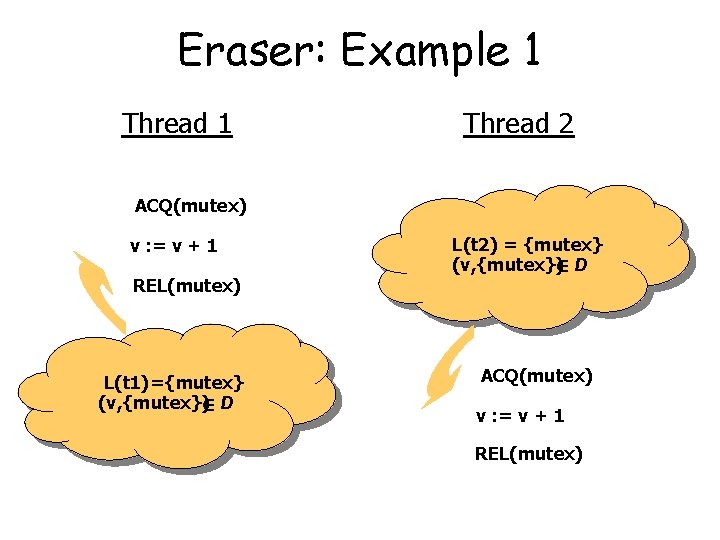

Eraser Lockset
• Savage, Burrows, Nelson, Sobalvarro, Anderson
• Assume a database D storing tuples (m, L) where:
  – m is a memory location
  – L is a set of locks that protect m
• Initially D contains a tuple (m, U) for each memory location m, where U is the universal set

How does it work?
• For an event MEM(m, a, t) generate the tuple (m, L(t))
• Let (m, L') be the tuple present in D
  – Report race over memory location m if $L(t) \cap L' = \emptyset$
• Replace (m, L') by $(m, L(t) \cap L')$ in D
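The update rule above fits in a few lines. This is a sketch of the basic rule only, not the Eraser tool itself: the `Event` class and method names are assumptions, and a missing map entry stands for the initial tuple (m, U).

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class EraserLockset {
    // Hypothetical trace event: kind is "ACQ", "REL", or "MEM".
    static final class Event {
        final String kind;
        final String target; // lock for ACQ/REL, memory location for MEM
        final String thread;

        Event(String kind, String target, String thread) {
            this.kind = kind;
            this.target = target;
            this.thread = thread;
        }
    }

    static Event ev(String kind, String target, String thread) {
        return new Event(kind, target, thread);
    }

    // Returns the memory locations for which a warning is reported.
    static Set<String> analyze(List<Event> trace) {
        Map<String, Set<String>> held = new HashMap<>(); // L(t)
        Map<String, Set<String>> d = new HashMap<>();    // D; a missing key means (m, U)
        Set<String> warnings = new TreeSet<>();
        for (Event e : trace) {
            Set<String> locks = held.computeIfAbsent(e.thread, k -> new HashSet<>());
            if (e.kind.equals("ACQ")) {
                locks.add(e.target);
            } else if (e.kind.equals("REL")) {
                locks.remove(e.target);
            } else {
                // MEM: intersect L(t) with the candidate set L' stored for m.
                Set<String> candidate = new HashSet<>(locks);
                Set<String> previous = d.get(e.target);
                if (previous != null) {
                    candidate.retainAll(previous);
                }
                if (candidate.isEmpty()) {
                    warnings.add(e.target);
                }
                d.put(e.target, candidate);
            }
        }
        return warnings;
    }
}
```

Under this basic rule an access made while holding no lock is reported immediately, even if only one thread ever touches the location.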

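Eraser: Example 1

| Thread 1 | Thread 2 | State after the access |
|---|---|---|
| ACQ(mutex) | | |
| v := v + 1 | | L(t1) = {mutex}, (v, {mutex}) ∈ D |
| REL(mutex) | | |
| | ACQ(mutex) | |
| | v := v + 1 | L(t2) = {mutex}, (v, {mutex}) ∈ D |
| | REL(mutex) | |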

Eraser: Example 2

| Thread 1 | Thread 2 | State after the access |
|---|---|---|
| ACQ(mutex1) | | |
| v := v + 1 | | L(t1) = {mutex1}, (v, {mutex1}) ∈ D |
| REL(mutex1) | | |
| | ACQ(mutex2) | |
| | v := v + 1 | L(t2) = {mutex2}, (v, {}) ∈ D: Warning!! |
| | REL(mutex2) | |

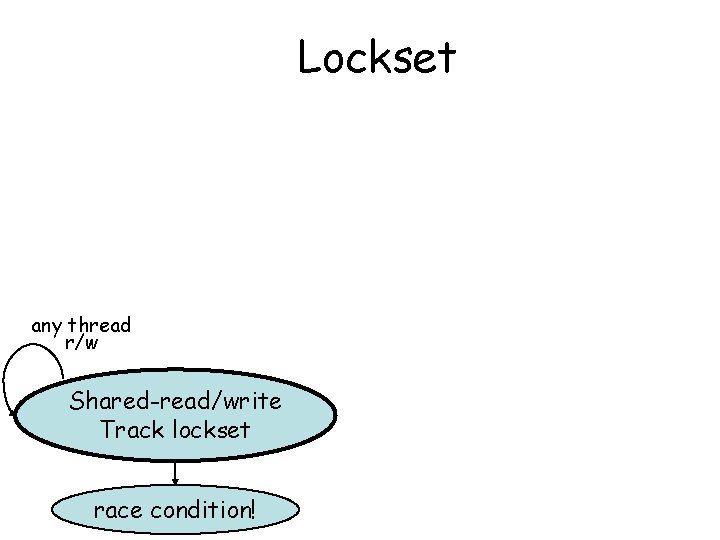

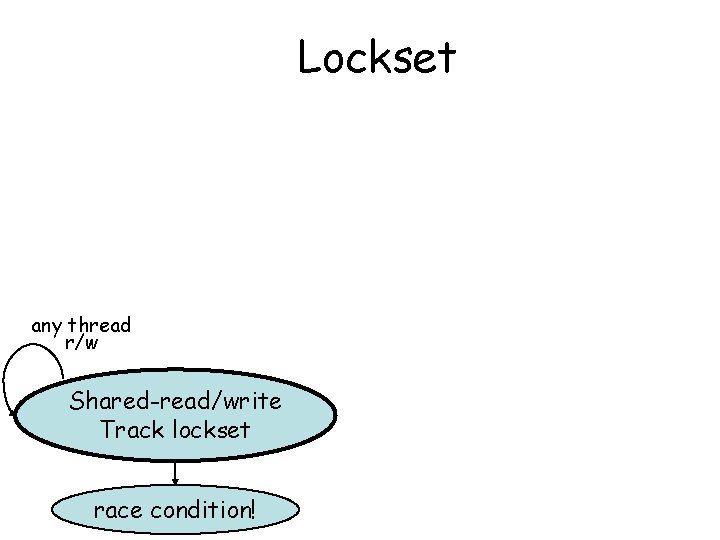

Lockset

[Figure: state diagram with states "Shared-exclusive" and "Shared-read/write", transition label "any thread r/w", and annotations "Track lockset" and "race condition!"]

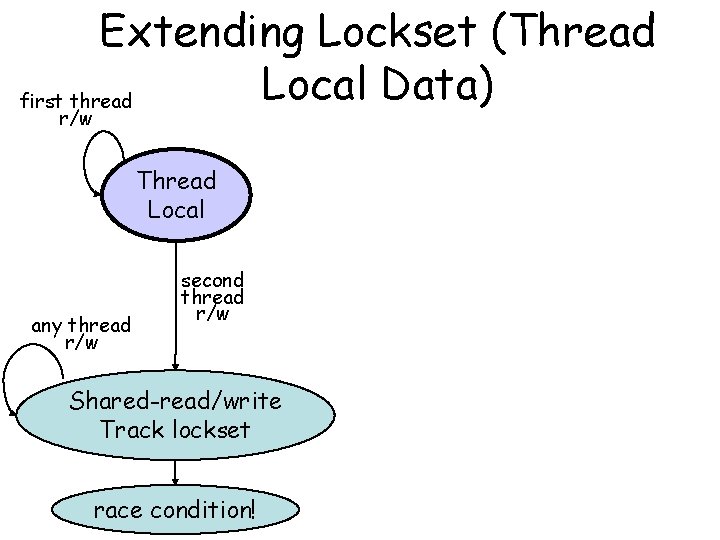

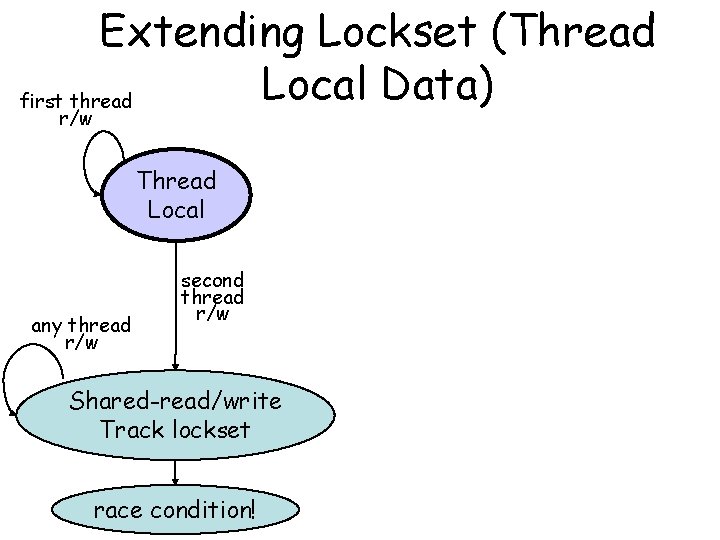

Extending Lockset (Thread Local Data)

[Figure: state diagram with states "Thread Local", "Shared-read/write" and "Shared-exclusive"; transition labels "first thread r/w", "second thread r/w" and "any thread r/w"; annotations "Track lockset" and "race condition!"]

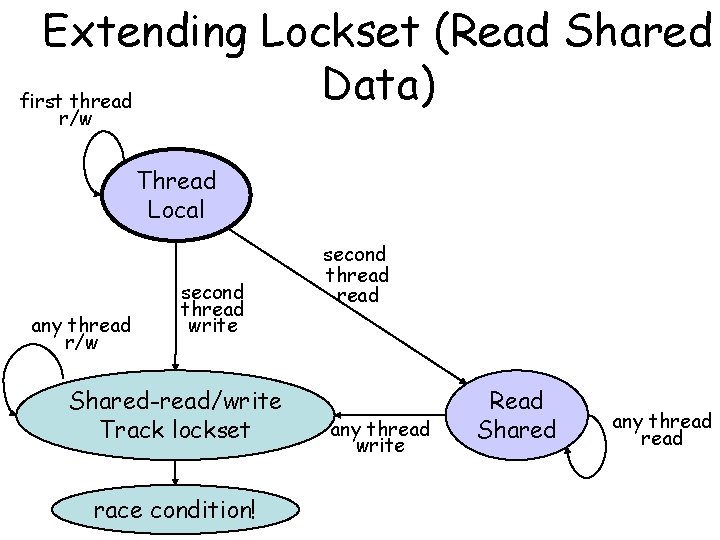

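Extending Lockset (Read Shared Data)

[Figure: state diagram with states "Thread Local", "Read Shared", "Shared-read/write" and "Shared-exclusive"; transition labels "first thread r/w", "second thread", "second thread write", "any thread", "any thread write" and "any thread r/w"; annotations "Track lockset" and "race condition!"]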

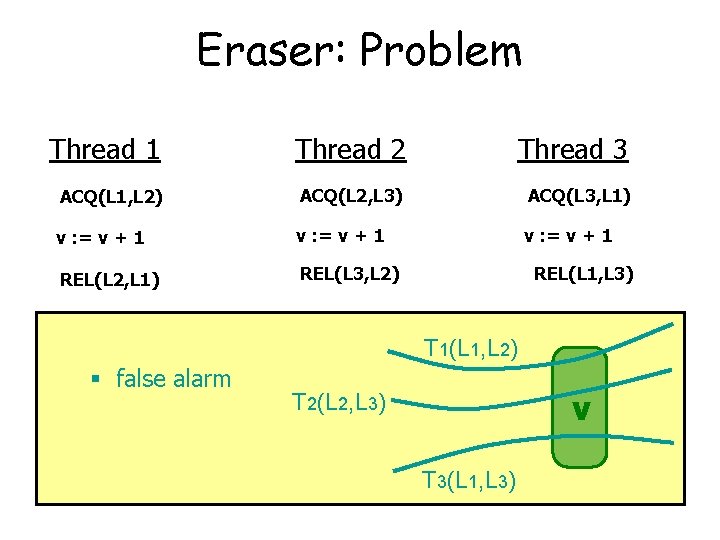

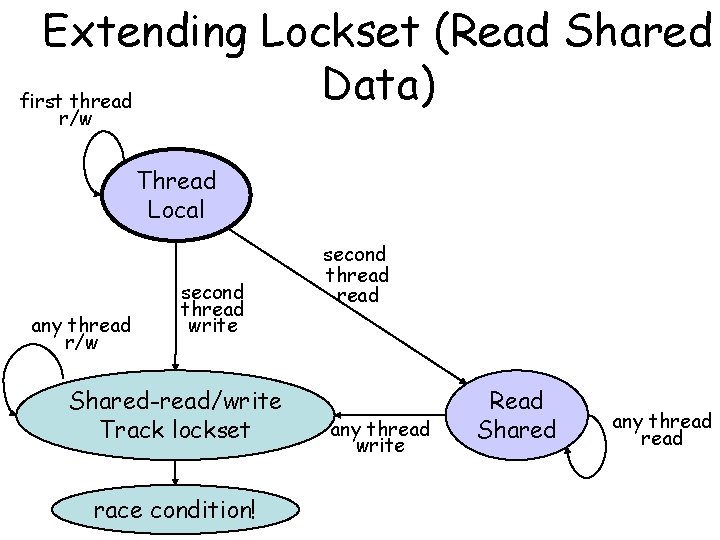

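Eraser: Problem

| Thread 1 | Thread 2 | Thread 3 |
|---|---|---|
| ACQ(L1, L2) | ACQ(L2, L3) | ACQ(L3, L1) |
| v := v + 1 | v := v + 1 | v := v + 1 |
| REL(L2, L1) | REL(L3, L2) | REL(L1, L3) |

T1 accesses v holding (L1, L2), T2 holding (L2, L3), and T3 holding (L1, L3). The intersection of the three locksets is empty, so Eraser reports a race on v. This is a false alarm: every pair of threads shares a lock, so no two accesses to v can happen at the same time.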

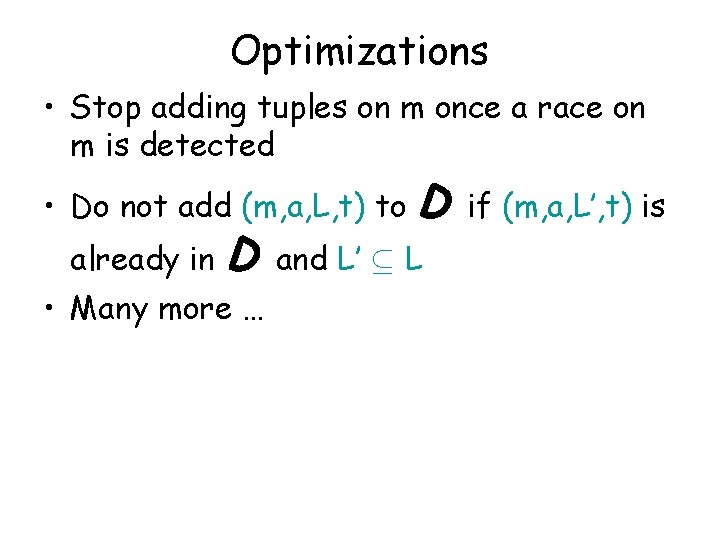

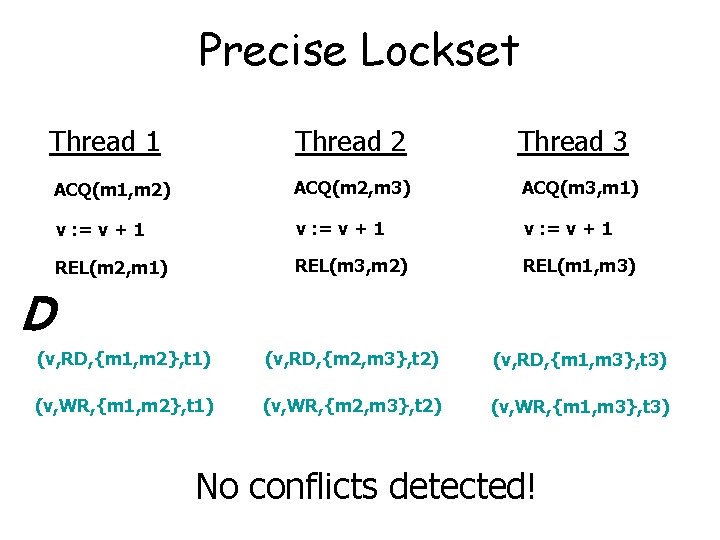

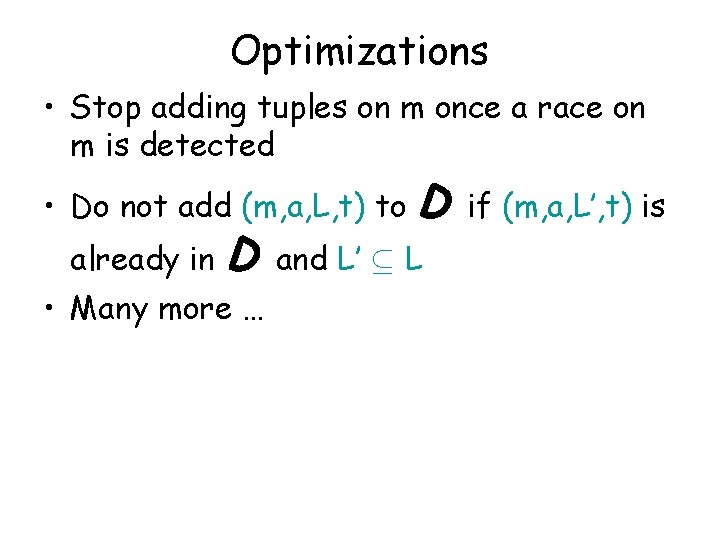

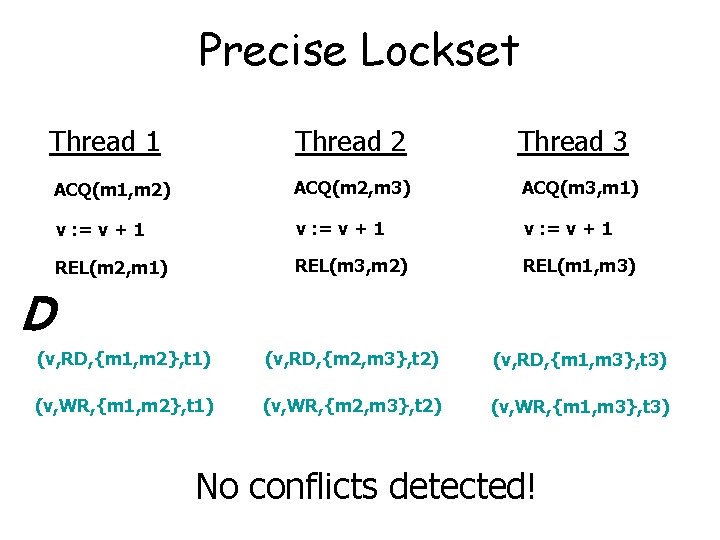

Precise Lockset
• Choi, Lee, Loginov, O'Callahan, Sarkar, Sridharan
• Assume a database D storing tuples (m, a, L, t) where:
  – m is a memory location
  – a is the type of access (read or write)
  – L is the set of locks held by t while accessing m
  – t is the thread accessing m
• Initially D is empty

How does it work?
• For an event MEM(m, a, t) generate the tuple (m, a, L(t), t)
• If there is a tuple (m', a', L', t') in D such that
  – $m = m'$,
  – $(a = \mathrm{WR}) \lor (a' = \mathrm{WR})$,
  – $L(t) \cap L' = \emptyset$, and
  – $t \neq t'$,
  then report race over memory location m
• Add (m, a, L(t), t) to D

Optimizations
• Stop adding tuples on m once a race on m is detected
• Do not add (m, a, L, t) to D if (m, a, L', t) is already in D and $L' \subseteq L$
• Many more …
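A direct, unoptimized reading of the precise lockset rule can be sketched as follows. It keeps every access tuple and compares each new access against all earlier ones; the `Event` and `Tuple` classes and the `ev` helper are assumptions for illustration, and none of the optimizations listed above are applied.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class PreciseLockset {
    // Hypothetical trace event.
    static final class Event {
        final String thread;
        final String kind;   // "RD", "WR", "ACQ", or "REL"
        final String target; // memory location or lock

        Event(String thread, String kind, String target) {
            this.thread = thread;
            this.kind = kind;
            this.target = target;
        }
    }

    // One database entry (m, a, L, t).
    static final class Tuple {
        final String m;
        final String a;
        final Set<String> locks;
        final String t;

        Tuple(String m, String a, Set<String> locks, String t) {
            this.m = m;
            this.a = a;
            this.locks = locks;
            this.t = t;
        }
    }

    static Event ev(String thread, String kind, String target) {
        return new Event(thread, kind, target);
    }

    // Returns the memory locations on which a race is reported.
    static Set<String> analyze(List<Event> trace) {
        Map<String, Set<String>> held = new HashMap<>(); // L(t)
        List<Tuple> d = new ArrayList<>();               // database D
        Set<String> races = new TreeSet<>();
        for (Event e : trace) {
            Set<String> locks = held.computeIfAbsent(e.thread, k -> new HashSet<>());
            if (e.kind.equals("ACQ")) {
                locks.add(e.target);
            } else if (e.kind.equals("REL")) {
                locks.remove(e.target);
            } else {
                for (Tuple old : d) {
                    boolean sameLocation = old.m.equals(e.target);
                    boolean oneWrite = e.kind.equals("WR") || old.a.equals("WR");
                    boolean noCommonLock = Collections.disjoint(locks, old.locks);
                    boolean otherThread = !old.t.equals(e.thread);
                    if (sameLocation && oneWrite && noCommonLock && otherThread) {
                        races.add(e.target);
                    }
                }
                d.add(new Tuple(e.target, e.kind, new HashSet<>(locks), e.thread));
            }
        }
        return races;
    }
}
```

Because locksets are compared pairwise instead of being intersected across all accesses, the three-thread pattern in which every pair of threads shares a lock produces no report.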

Precise Lockset

| Thread 1 | Thread 2 | Tuples added to D |
|---|---|---|
| x := x + 1 | | (x, RD, {}, t1), (x, WR, {}, t1) |
| ACQ(mutex) | | |
| v := v + 1 | | (v, RD, {mutex}, t1), (v, WR, {mutex}, t1) |
| REL(mutex) | | |
| | ACQ(mutex) | |
| | v := v + 1 | (v, RD, {mutex}, t2), (v, WR, {mutex}, t2) |
| | REL(mutex) | |
| | x := x + 1 | (x, RD, {}, t2), (x, WR, {}, t2) |

Conflict detected on x!

Precise Lockset

| Thread 1 | Thread 2 | Thread 3 |
|---|---|---|
| ACQ(m1, m2) | ACQ(m2, m3) | ACQ(m3, m1) |
| v := v + 1 | v := v + 1 | v := v + 1 |
| REL(m2, m1) | REL(m3, m2) | REL(m1, m3) |

D contains:
• (v, RD, {m1, m2}, t1), (v, WR, {m1, m2}, t1)
• (v, RD, {m2, m3}, t2), (v, WR, {m2, m3}, t2)
• (v, RD, {m1, m3}, t3), (v, WR, {m1, m3}, t3)

No conflicts detected!

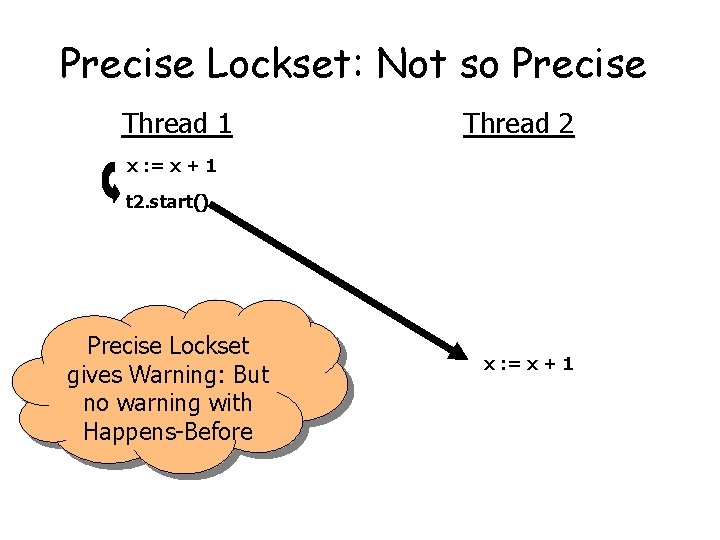

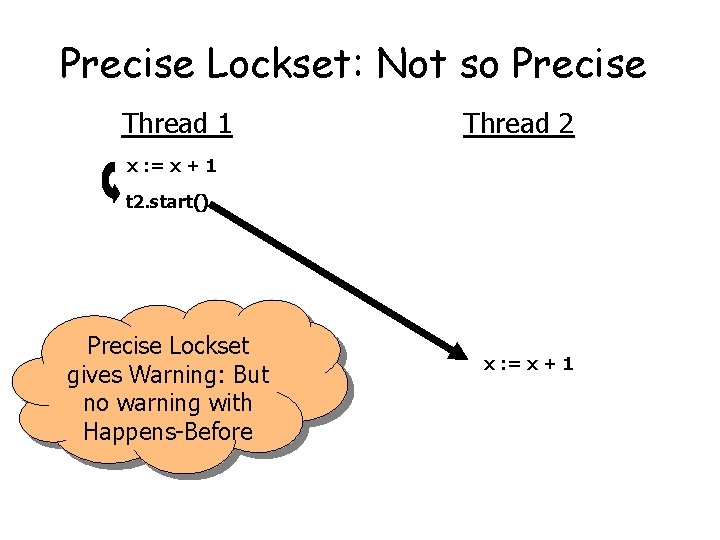

Precise Lockset: Not so Precise

| Thread 1 | Thread 2 |
|---|---|
| x := x + 1 | |
| t2.start() | |
| | x := x + 1 |

Precise Lockset gives a warning, but Happens-Before gives no warning.

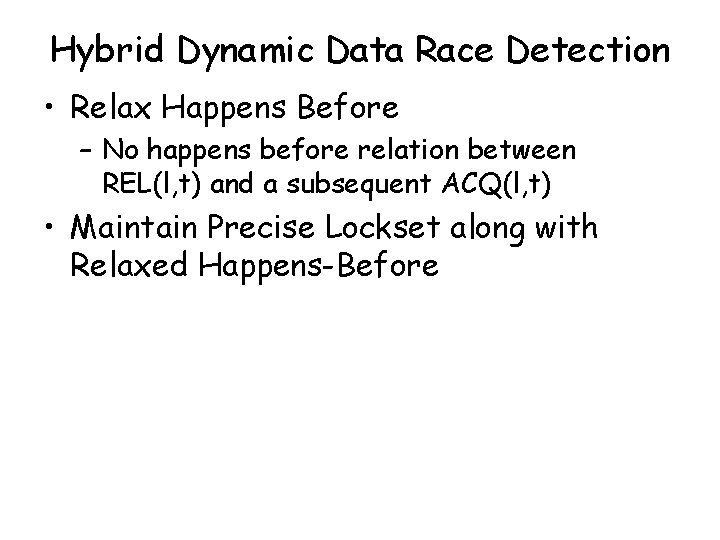

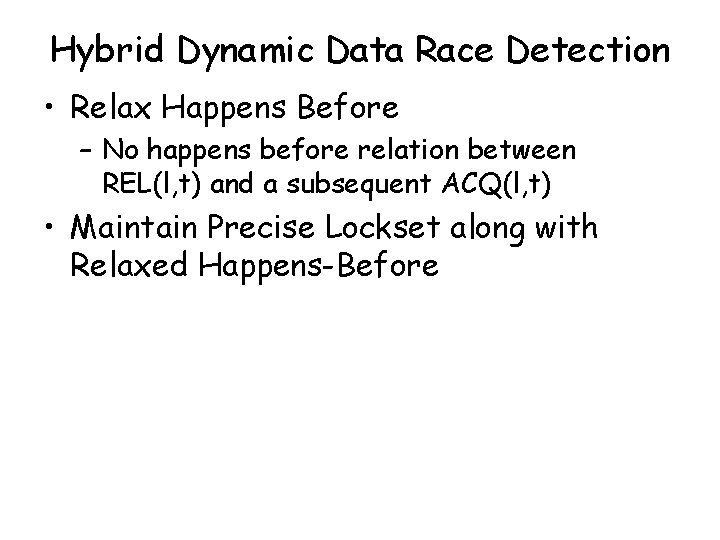

Hybrid Dynamic Data Race Detection
• Relax Happens Before
  – No happens-before relation between REL(l, t) and a subsequent ACQ(l, t')
• Maintain Precise Lockset along with Relaxed Happens-Before

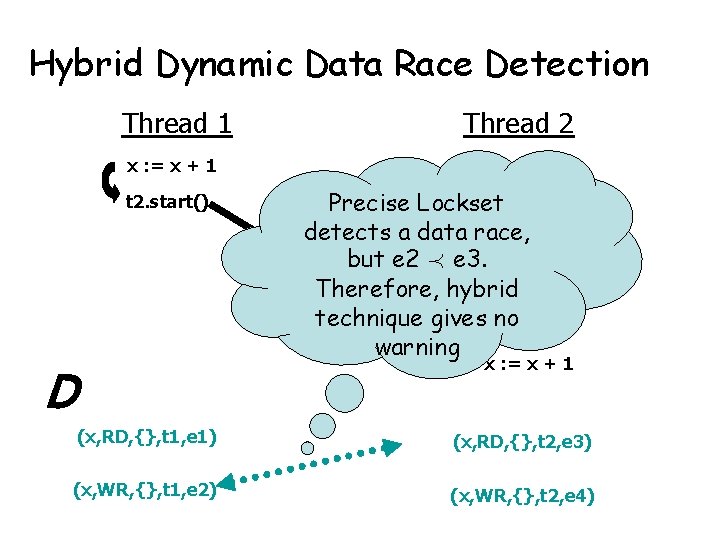

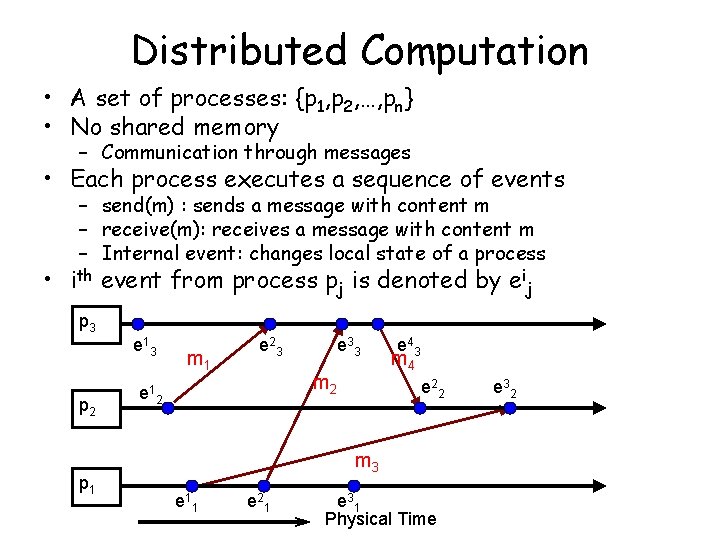

Hybrid
• O'Callahan and Choi
• Assume a database D storing tuples (m, a, L, t, e) where:
  – m is a memory location
  – a is the type of access (read or write)
  – L is the set of locks held by t while accessing m
  – t is the thread accessing m
  – e is the event associated with the access
• Initially D is empty

How does it work?
• For an event e = MEM(m, a, t) generate the tuple (m, a, L(t), t, e)
• If there is a tuple (m', a', L', t', e') in D such that
  – $m = m'$,
  – $(a = \mathrm{WR}) \lor (a' = \mathrm{WR})$,
  – $L(t) \cap L' = \emptyset$,
  – $t \neq t'$, and
  – e and e' are not related by the happens-before relation, i.e., $\neg(e \prec e') \land \neg(e' \prec e)$,
  then report race over memory location m
• Add (m, a, L(t), t, e) to D
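The hybrid check can be sketched by combining the pairwise lockset test with a relaxed happens-before relation that has only program-order and SND/RCV edges (no edges from lock release to acquire). This is an illustrative offline version under assumed names (`Event`, `ev`, `findRaces`), not the implementation of O'Callahan and Choi.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class HybridDetector {
    // Hypothetical trace event.
    static final class Event {
        final String thread;
        final String kind;   // "RD", "WR", "ACQ", "REL", "SND", or "RCV"
        final String target; // memory location, lock, or message

        Event(String thread, String kind, String target) {
            this.thread = thread;
            this.kind = kind;
            this.target = target;
        }
    }

    static Event ev(String thread, String kind, String target) {
        return new Event(thread, kind, target);
    }

    // Returns the memory locations on which the hybrid check reports a race.
    static Set<String> findRaces(List<Event> trace) {
        int n = trace.size();
        // Relaxed happens-before: program order and SND -> RCV only.
        boolean[][] hb = new boolean[n][n];
        for (int i = 0; i < n; i++) {
            for (int j = i + 1; j < n; j++) {
                Event a = trace.get(i);
                Event b = trace.get(j);
                boolean message = a.kind.equals("SND") && b.kind.equals("RCV")
                        && a.target.equals(b.target);
                hb[i][j] = a.thread.equals(b.thread) || message;
            }
        }
        for (int k = 0; k < n; k++) {
            for (int i = 0; i < n; i++) {
                for (int j = 0; j < n; j++) {
                    if (hb[i][k] && hb[k][j]) {
                        hb[i][j] = true;
                    }
                }
            }
        }
        Map<String, Set<String>> held = new HashMap<>();     // L(t)
        List<Integer> accesses = new ArrayList<>();          // positions of earlier accesses
        Map<Integer, Set<String>> locksAt = new HashMap<>(); // lockset of each access
        Set<String> races = new TreeSet<>();
        for (int j = 0; j < n; j++) {
            Event e = trace.get(j);
            Set<String> locks = held.computeIfAbsent(e.thread, k -> new HashSet<>());
            if (e.kind.equals("ACQ")) {
                locks.add(e.target);
            } else if (e.kind.equals("REL")) {
                locks.remove(e.target);
            } else if (e.kind.equals("RD") || e.kind.equals("WR")) {
                for (int i : accesses) {
                    Event old = trace.get(i);
                    // i < j, so only hb[i][j] can hold; hb[j][i] is never set.
                    boolean conflict = old.target.equals(e.target)
                            && (old.kind.equals("WR") || e.kind.equals("WR"))
                            && Collections.disjoint(locks, locksAt.get(i))
                            && !old.thread.equals(e.thread)
                            && !hb[i][j];
                    if (conflict) {
                        races.add(e.target);
                    }
                }
                accesses.add(j);
                locksAt.put(j, new HashSet<>(locks));
            }
        }
        return races;
    }
}
```

With t2.start() modelled as SND(g, t1) followed by RCV(g, t2), accesses before the start are ordered before the child's accesses and are not reported, while unlocked accesses on two unordered threads still are.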

Hybrid Dynamic Data Race Detection

| Thread 1 | Thread 2 | Tuples added to D |
|---|---|---|
| x := x + 1 | | (x, RD, {}, t1, e1), (x, WR, {}, t1, e2) |
| t2.start() | | |
| | x := x + 1 | (x, RD, {}, t2, e3), (x, WR, {}, t2, e4) |

Precise Lockset detects a data race, but $e_2 \prec e_3$. Therefore, the hybrid technique gives no warning.

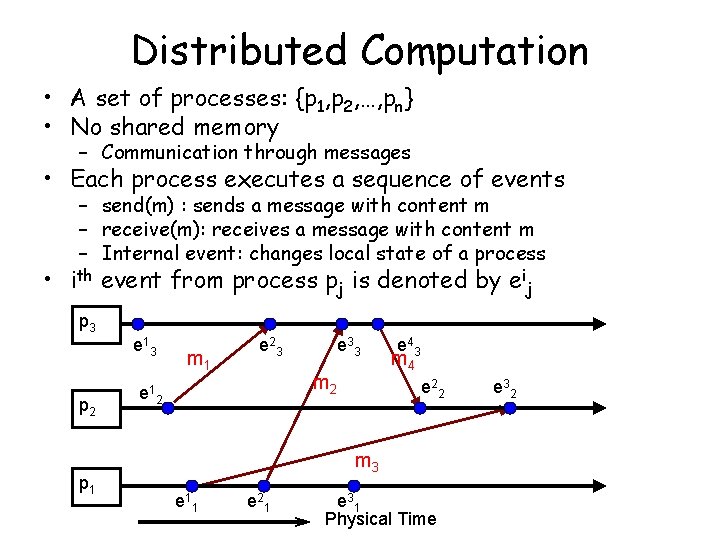

Distributed Computation
• A set of processes: {p1, p2, …, pn}
• No shared memory
  – Communication through messages
• Each process executes a sequence of events
  – send(m): sends a message with content m
  – receive(m): receives a message with content m
  – Internal event: changes local state of a process
• The i-th event from process $p_j$ is denoted by $e_i^j$

[Figure: space-time diagram over physical time with processes p1, p2, p3, events $e_1^1$ to $e_3^1$ on p1, $e_1^2$ to $e_3^2$ on p2, $e_1^3$ to $e_4^3$ on p3, and messages m1 to m4]

Distributed Computation as a Partial Order
• A distributed computation defines a partial order on the events
  – $e \to e'$ if
    • e and e' are events from the same process and e executes before e'
    • e is the send of a message and e' is the receive of the same message
    • there is an e'' such that $e \to e''$ and $e'' \to e'$

[Figure: the same space-time diagram of p1, p2, p3 with messages m1 to m4]

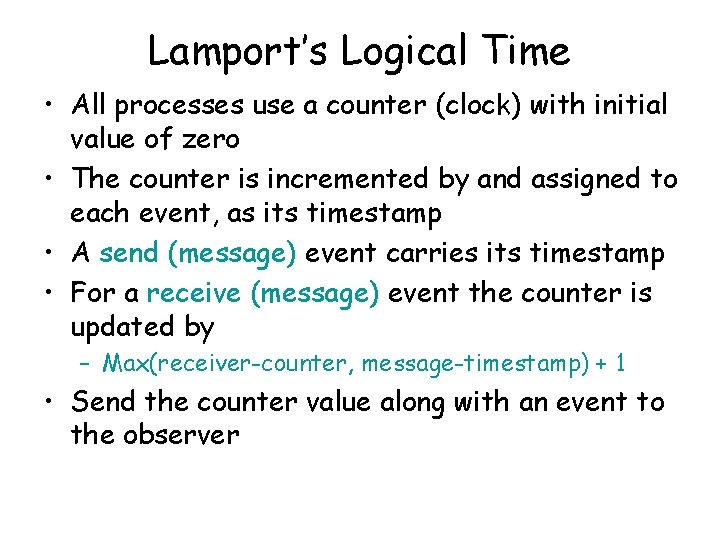

Distributed Computation as a Partial Order
• Problem: An external process or observer wants to infer the partial order of the computation for debugging
  – No global clock
  – At each event a process can send a message to the observer to inform it about the event
  – Message delay is unbounded

[Figure: the same space-time diagram, with an Observer receiving a notification for each event]

Can we infer the partial order?
• From the observation: $e_1^2,\ e_1^3,\ e_1^1,\ e_2^3,\ e_4^3,\ e_3^1,\ e_3^2,\ e_2^2$
• Can we associate a suitable value with every event such that
  – $V(e) < V(e') \iff e \to e'$
• We need the notion of a (logical) clock

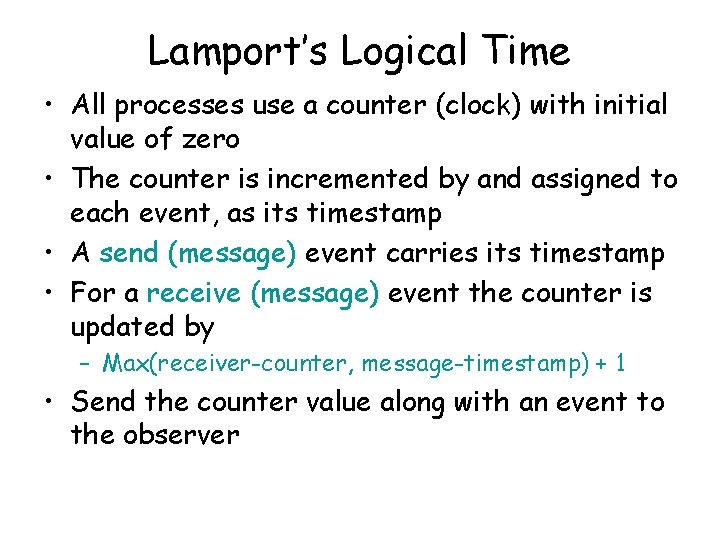

Lamport's Logical Time
• All processes use a counter (clock) with an initial value of zero
• The counter is incremented at each event, and its new value is assigned to the event as its timestamp
• A send (message) event carries its timestamp
• For a receive (message) event the counter is updated by
  – max(receiver-counter, message-timestamp) + 1
• Send the counter value along with an event to the observer
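The counter rules above can be sketched as a small class, one instance per process. The class and method names are chosen here for illustration only.

```java
class LamportClock {
    private int counter = 0; // initial value of zero

    // Internal event: increment and use the new value as the timestamp.
    int localEvent() {
        counter = counter + 1;
        return counter;
    }

    // Send event: the returned timestamp travels with the message.
    int send() {
        counter = counter + 1;
        return counter;
    }

    // Receive event: max(receiver-counter, message-timestamp) + 1.
    int receive(int messageTimestamp) {
        counter = Math.max(counter, messageTimestamp) + 1;
        return counter;
    }

    public static void main(String[] args) {
        LamportClock a = new LamportClock();
        LamportClock b = new LamportClock();
        int m1 = a.send();                 // 1
        b.localEvent();                    // 1
        System.out.println(b.receive(m1)); // prints 2
        int m2 = b.send();                 // 3
        System.out.println(a.receive(m2)); // prints 4
    }
}
```

In the usage above, the receive at `a` jumps from 1 to 4 because the incoming timestamp 3 is larger than the local counter.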

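Example

[Figure: the space-time diagram of p1, p2, p3 with messages m1 to m4, annotated with Lamport timestamps. p1: $e_1^1 = 1$, $e_2^1 = 2$, $e_3^1 = 3$; p2: $e_1^2 = 1$, $e_2^2 = 5$, $e_3^2 = 6$; p3: $e_1^3 = 1$, $e_2^3 = 2$, $e_3^3 = 3$, $e_4^3 = 4$]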

Example
• Problem with Lamport's logical clock:
  – $e \to e' \Rightarrow C(e) < C(e')$
  – $C(e) < C(e') \not\Rightarrow e \to e'$

[Figure: the same space-time diagram annotated with Lamport timestamps. For instance, $C(e_1^1) = 1 < C(e_2^3) = 2$, yet $e_1^1$ and $e_2^3$ are not ordered by $\to$.]

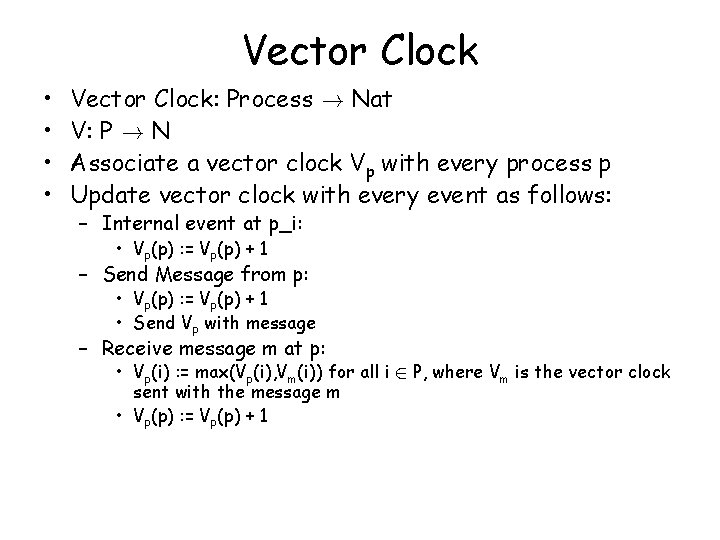

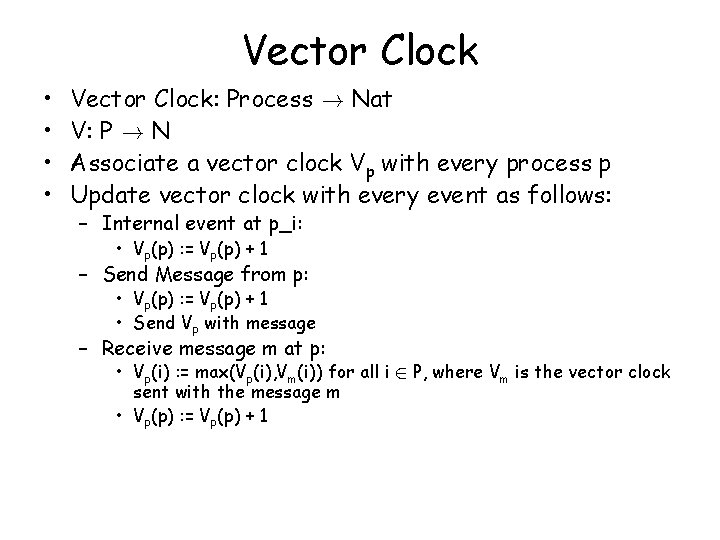

Vector Clock
• Vector clock: Process → Nat, $V : P \to \mathbb{N}$
• Associate a vector clock $V_p$ with every process p
• Update the vector clock with every event as follows:
  – Internal event at p:
    • $V_p(p) := V_p(p) + 1$
  – Send message from p:
    • $V_p(p) := V_p(p) + 1$
    • Send $V_p$ with the message
  – Receive message m at p:
    • $V_p(i) := \max(V_p(i), V_m(i))$ for all $i \in P$, where $V_m$ is the vector clock sent with the message m
    • $V_p(p) := V_p(p) + 1$
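The three update rules can be sketched as a class holding one integer per process. Names and the array representation are assumptions made for illustration; processes are identified by index 0..n-1.

```java
import java.util.Arrays;

class VectorClock {
    private final int[] v;  // v[i] is V_p(i)
    private final int self; // index of the owning process p

    VectorClock(int processes, int self) {
        this.v = new int[processes];
        this.self = self;
    }

    // Internal event at p: V_p(p) := V_p(p) + 1.
    int[] internalEvent() {
        v[self]++;
        return v.clone();
    }

    // Send from p: increment own entry; the returned copy is sent with the message.
    int[] send() {
        v[self]++;
        return v.clone();
    }

    // Receive at p: component-wise max with the message clock, then increment own entry.
    int[] receive(int[] messageClock) {
        for (int i = 0; i < v.length; i++) {
            v[i] = Math.max(v[i], messageClock[i]);
        }
        v[self]++;
        return v.clone();
    }

    public static void main(String[] args) {
        VectorClock p2 = new VectorClock(3, 1);
        VectorClock p3 = new VectorClock(3, 2);
        int[] m = p2.send();                                // (0, 1, 0)
        p3.internalEvent();                                 // (0, 0, 1)
        System.out.println(Arrays.toString(p3.receive(m))); // prints [0, 1, 2]
    }
}
```

Each method returns a copy of the clock, so a timestamp attached to an event or a message is not changed by later updates.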

Example

V = (a, b, c) means V(p1) = a, V(p2) = b, and V(p3) = c.

[Figure: the space-time diagram of p1, p2, p3 with messages m1 to m4, annotated with vector clocks. p1: $e_1^1$ = (1, 0, 0), $e_2^1$ = (2, 0, 0), $e_3^1$ = (3, 0, 0); p2: $e_1^2$ = (0, 1, 0), $e_2^2$ = (2, 2, 4), $e_3^2$ = (2, 3, 4); p3: $e_1^3$ = (0, 0, 1), $e_2^3$ = (0, 1, 2), $e_3^3$ = (2, 1, 3), $e_4^3$ = (2, 1, 4)]

Intuitive Meaning of a Vector Clock
• If $V_p = (a, b, c)$ after some event, then
  – p is affected by the a-th event from p1
  – p is affected by the b-th event from p2
  – p is affected by the c-th event from p3

Comparing Vector Clocks
• $V \le V'$ iff for all $p \in P$, $V(p) \le V'(p)$
• $V = V'$ iff for all $p \in P$, $V(p) = V'(p)$
• $V < V'$ iff $V \le V'$ and $V \neq V'$
• Theorem: $V_e < V_{e'}$ iff $e \to e'$
• Send an event along with its vector clock to the observer
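The comparison rules translate directly into code. The sketch below uses plain `int[]` clocks of equal length; the class name and the `concurrent` helper (neither clock is less than or equal to the other) are additions for illustration.

```java
import java.util.Arrays;

class VectorClockOrder {
    // V <= V' iff every component of V is <= the matching component of V'.
    static boolean leq(int[] a, int[] b) {
        for (int i = 0; i < a.length; i++) {
            if (a[i] > b[i]) {
                return false;
            }
        }
        return true;
    }

    // V < V' iff V <= V' and V != V'.
    static boolean lt(int[] a, int[] b) {
        return leq(a, b) && !Arrays.equals(a, b);
    }

    // Neither clock dominates the other: the two events are unordered.
    static boolean concurrent(int[] a, int[] b) {
        return !leq(a, b) && !leq(b, a);
    }

    public static void main(String[] args) {
        int[] e21 = {2, 0, 0}; // clock values taken from the slide's example
        int[] e33 = {2, 1, 3};
        int[] e31 = {3, 0, 0};
        int[] e32 = {2, 3, 4};
        System.out.println(lt(e21, e33));         // prints true
        System.out.println(concurrent(e31, e32)); // prints true
    }
}
```

By the theorem above, `lt` on the clocks of two events decides whether one happened before the other, which a single Lamport timestamp cannot do.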

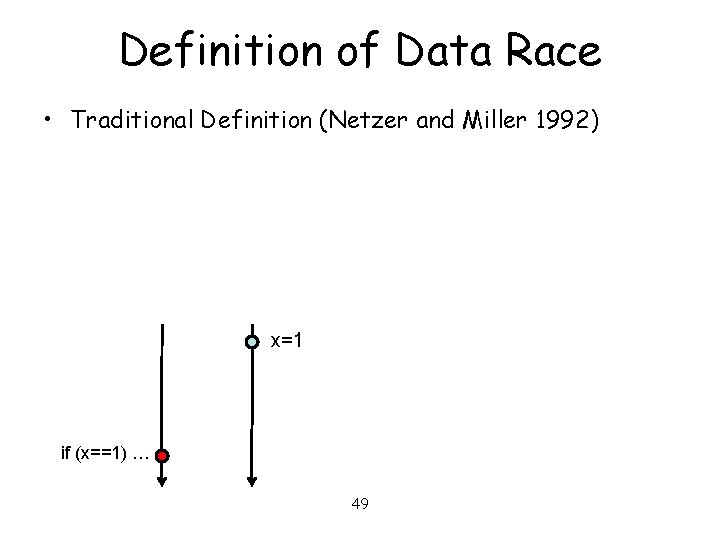

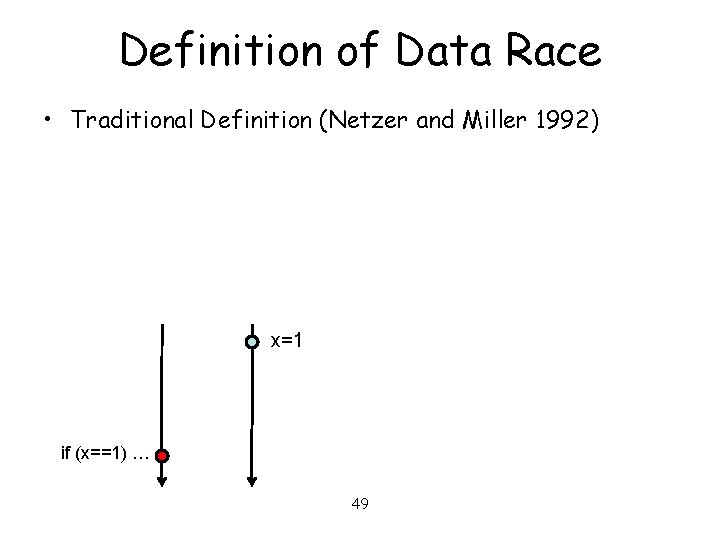

Definition of Data Race
• Traditional Definition (Netzer and Miller 1992)

[Figure: two threads, one executing x=1 and the other executing if (x==1) …]

[Figure: the same pair of statements with send(m) and receive(m) added between the threads; the pair is marked with an X]

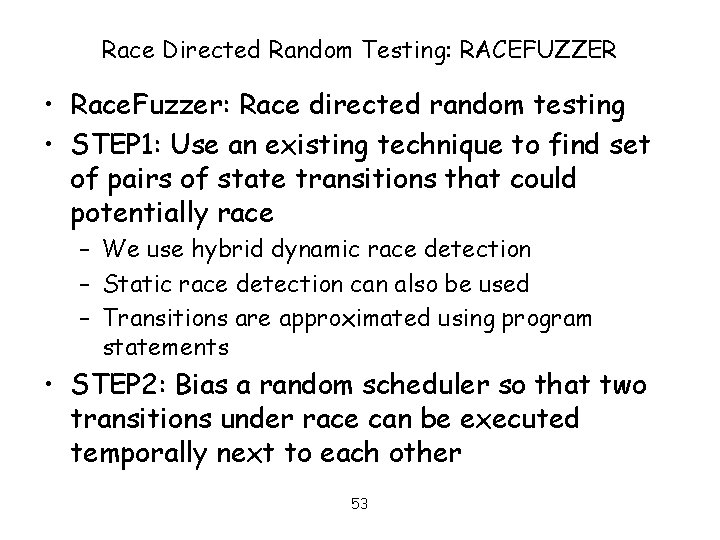

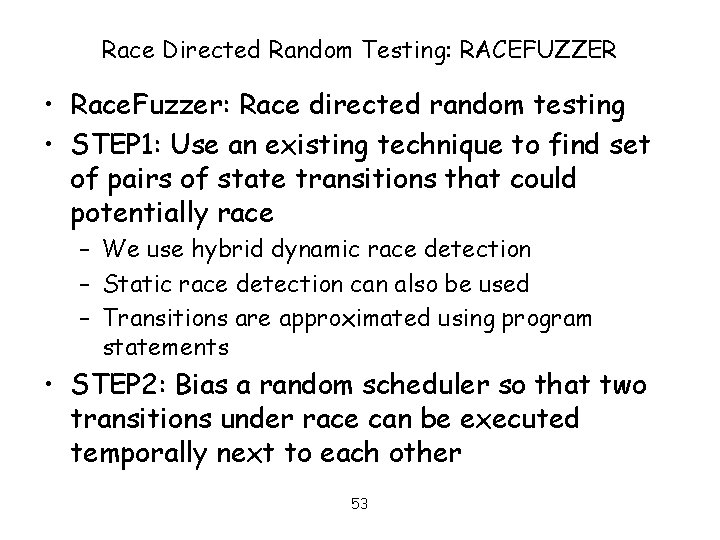

Operational Definition of Data Race
• We say that the executions of two statements are in race if they could be executed by different threads temporally next to each other, both access the same memory location, and at least one of the accesses is a write

[Figure: the x=1, send(m), receive(m), if (x==1) … example, with x=1 and if (x==1) … marked "temporally next to each other"]

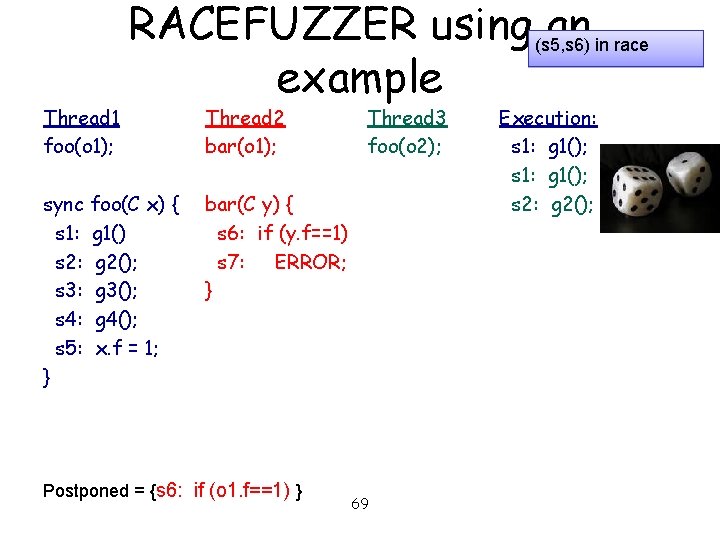

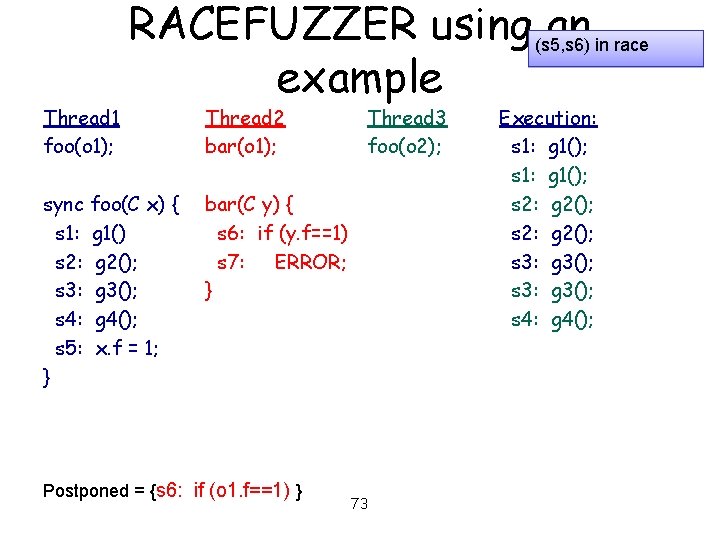

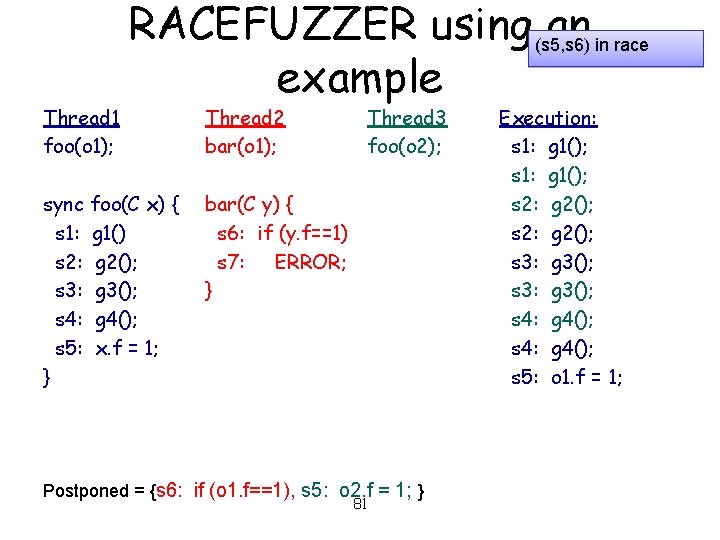

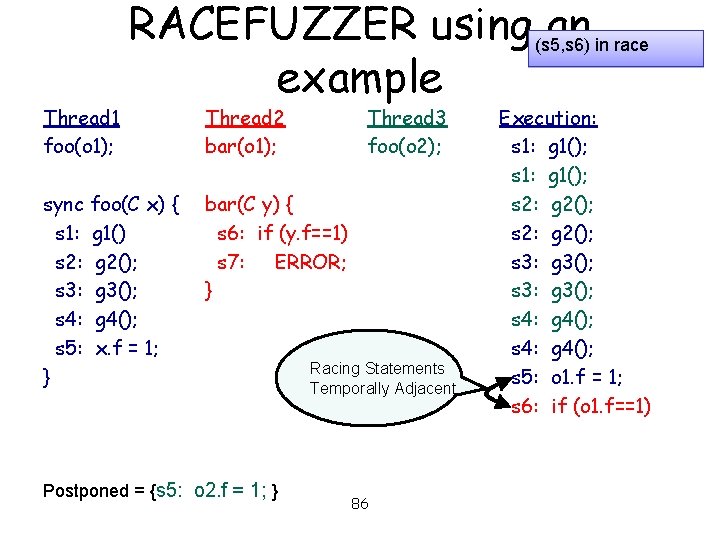

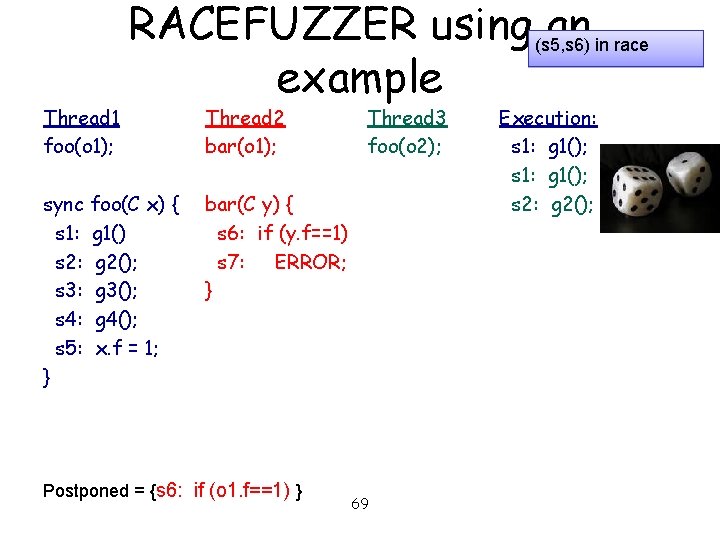

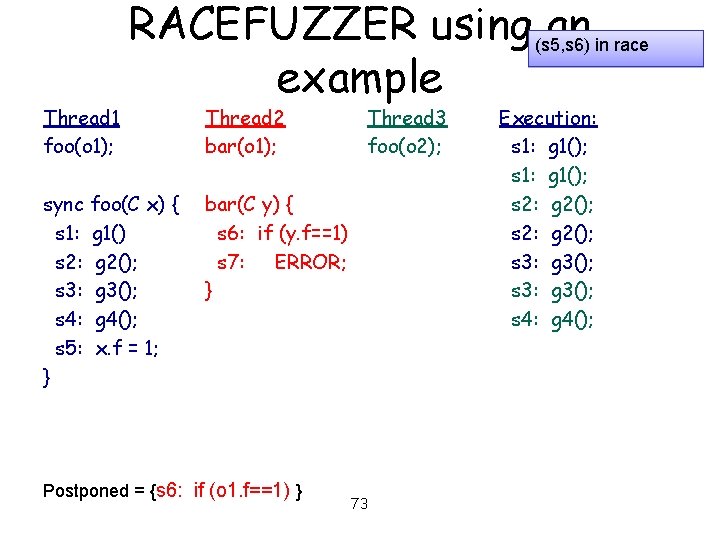

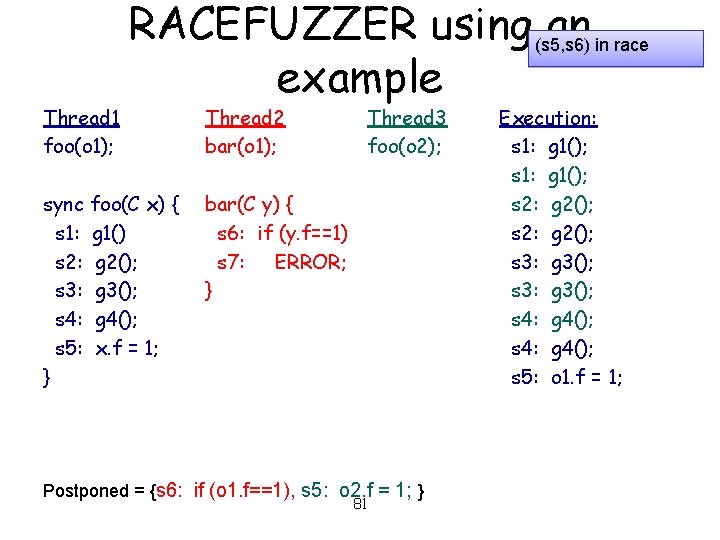

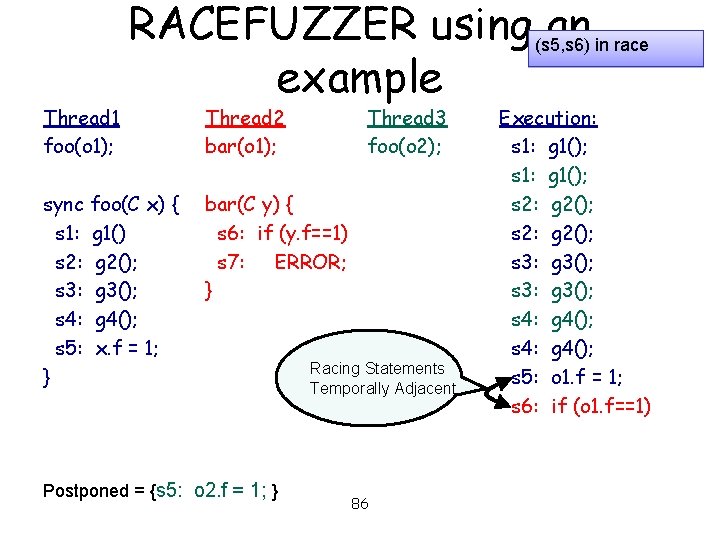

Race Directed Random Testing: RACEFUZZER
• RaceFuzzer: race directed random testing
• STEP 1: Use an existing technique to find a set of pairs of state transitions that could potentially race
  – We use hybrid dynamic race detection
  – Static race detection can also be used
  – Transitions are approximated using program statements
• STEP 2: Bias a random scheduler so that two transitions under race can be executed temporally next to each other

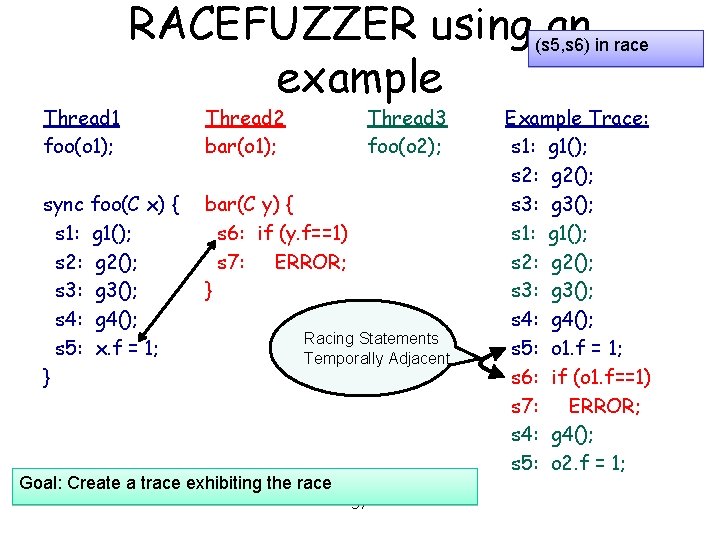

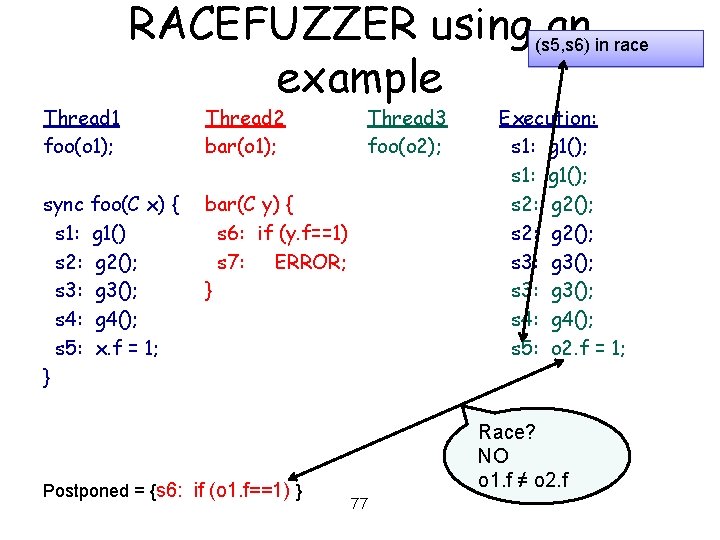

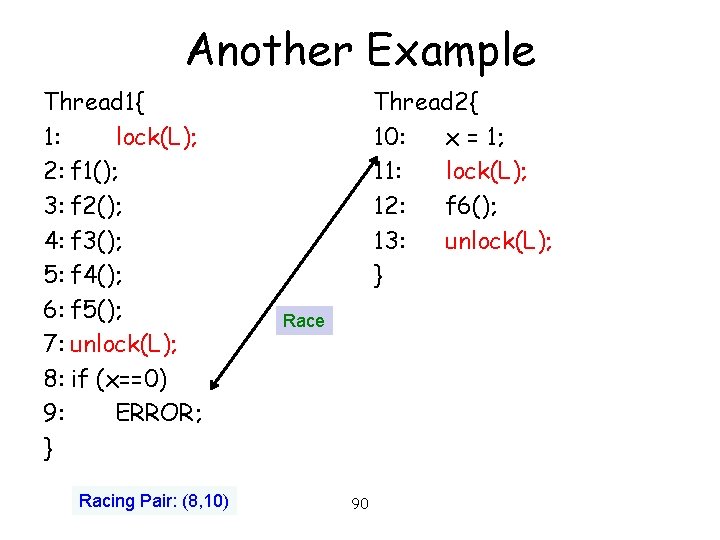

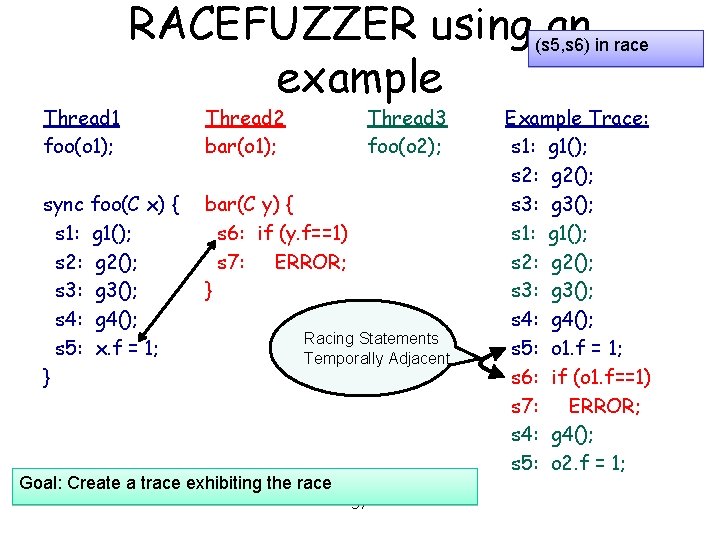

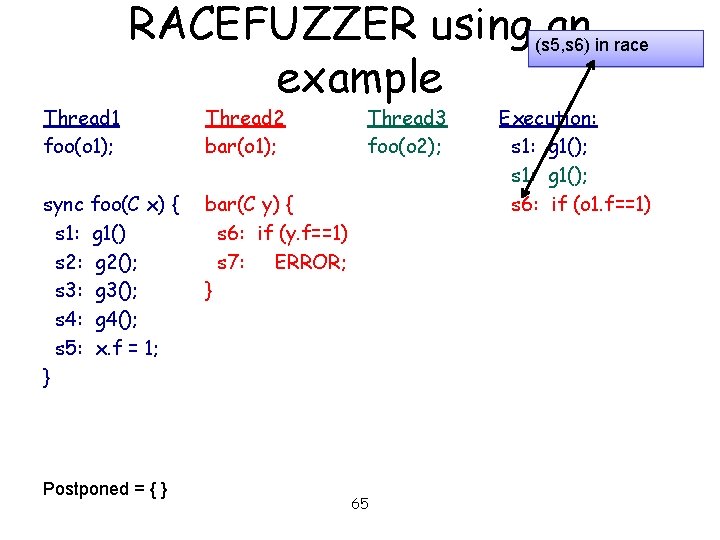

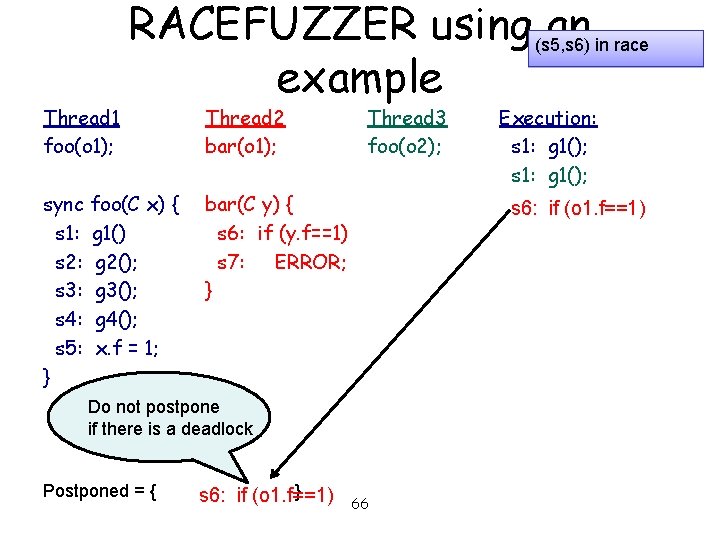

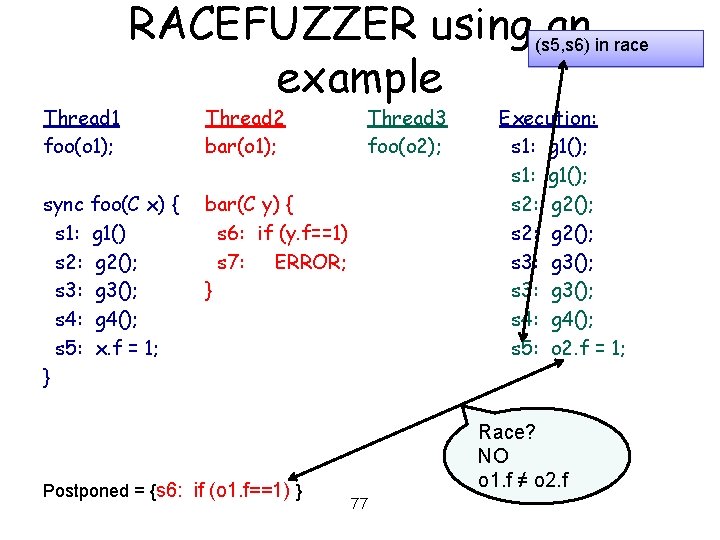

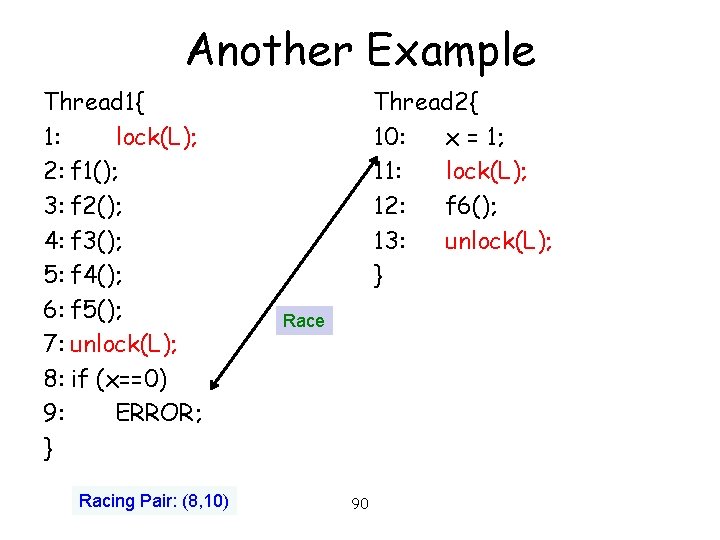

RACEFUZZER using an example

```java
// Thread 1: foo(o1);    Thread 2: bar(o1);    Thread 3: foo(o2);

sync foo(C x) {
    s1: g1();
    s2: g2();
    s3: g3();
    s4: g4();
    s5: x.f = 1;
}

bar(C y) {
    s6: if (y.f == 1)
    s7:     ERROR;
}
```

• Run ERASER: statement pair (s5, s6) is in race
• Goal: create a trace exhibiting the race, with the racing statements temporally adjacent
• Example trace: s1: g1(); s2: g2(); s3: g3(); s4: g4(); s5: o1.f = 1; s6: if (o1.f==1) s7: ERROR; s4: g4(); s5: o2.f = 1;

Scheduling with (s5, s6) in race:
• Execution: s1: g1(); Postponed = { }
• Thread 2 reaches s6: if (o1.f==1). It belongs to the racing pair, so it is postponed (do not postpone if there is a deadlock). Postponed = {s6: if (o1.f==1)}
• Execution: s1: g1(); s2: g2(); s3: g3(); s4: g4(); Postponed = {s6: if (o1.f==1)}
• Thread 3 reaches s5: o2.f = 1. Race with the postponed s6? NO, o1.f ≠ o2.f. The statement is postponed as well. Postponed = {s6: if (o1.f==1), s5: o2.f = 1}
• Thread 1 reaches s5: o1.f = 1. Race with the postponed s6? YES, o1.f = o1.f
• The two racing statements are executed next to each other. Execution: s1: g1(); s2: g2(); s3: g3(); s4: g4(); s5: o1.f = 1; s6: if (o1.f==1); Postponed = {s5: o2.f = 1}. Racing statements temporally adjacent
• Execution: s1: g1(); s2: g2(); s3: g3(); s4: g4(); s5: o1.f = 1; s6: if (o1.f==1) s7: ERROR;
• Finally the remaining postponed statement runs. Execution: s1: g1(); s2: g2(); s3: g3(); s4: g4(); s5: o1.f = 1; s6: if (o1.f==1) s7: ERROR; s5: o2.f = 1; Postponed = { }

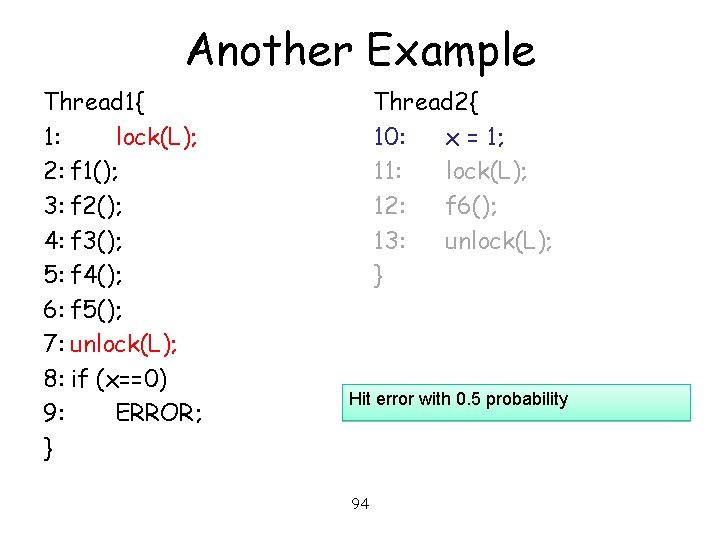

Another Example

```java
Thread 1 {
    1: lock(L);
    2: f1();
    3: f2();
    4: f3();
    5: f4();
    6: f5();
    7: unlock(L);
    8: if (x == 0)
    9:     ERROR;
}

Thread 2 {
    10: x = 1;
    11: lock(L);
    12: f6();
    13: unlock(L);
}
```

• Racing Pair: (8, 10)
• Postponed Set = {Thread 2}: Thread 2 is held at statement 10 until Thread 1 reaches statement 8
• The two racing statements are then executed in a random order, so the error is hit with probability 0.5

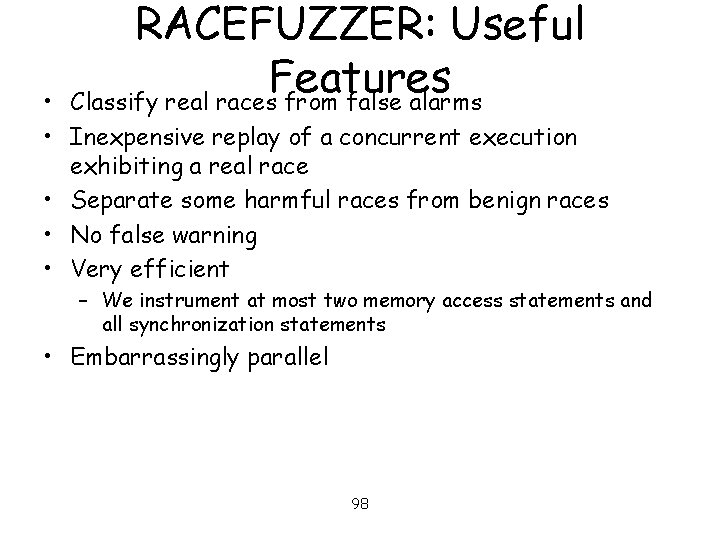

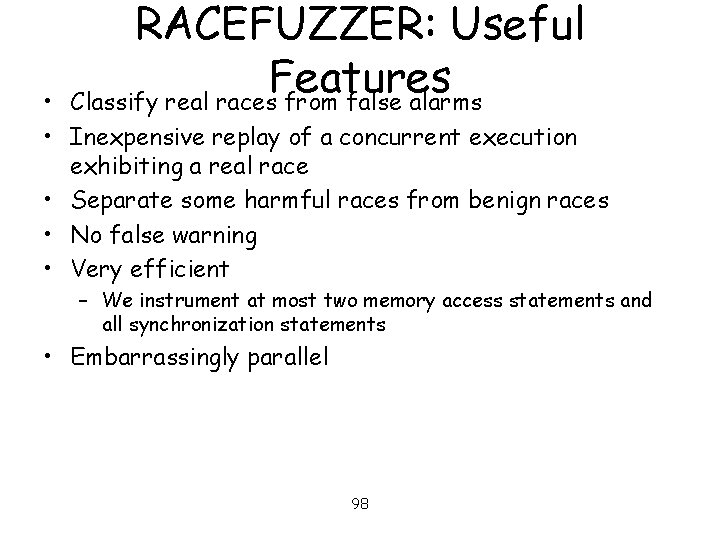

Implementation
• RaceFuzzer: part of the CalFuzzer tool suite
• Instrument using the SOOT compiler framework
• Instrumentation is used to "hijack" the scheduler
  – Implement a custom scheduler
  – Run one thread at a time
  – Use semaphores to control threads
• Deadlock detector
  – Because we cannot instrument native method calls

Original fragments:

```
lock(L1);          lock(L2);
X=1;               Y=2;
unlock(L1);        unlock(L2);
```

Instrumented fragments, as they appear on the slide:

```
ins_lock(L1);      ins_lock(L1);
ins_write(&X);     lock(L2);
X=1;               Y=2;
unlock(L1);        unlock(L2);
ins_unlock(L1);    ins_unlock(L1);
```

[Figure: both instrumented fragments are connected to a Custom Scheduler]

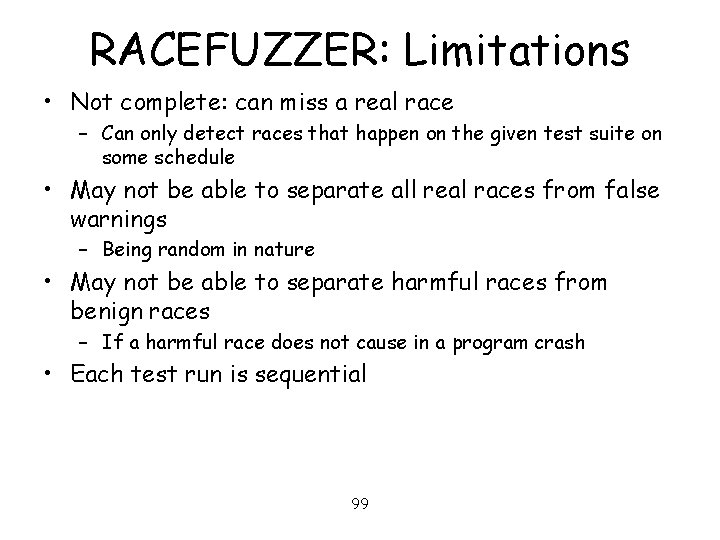

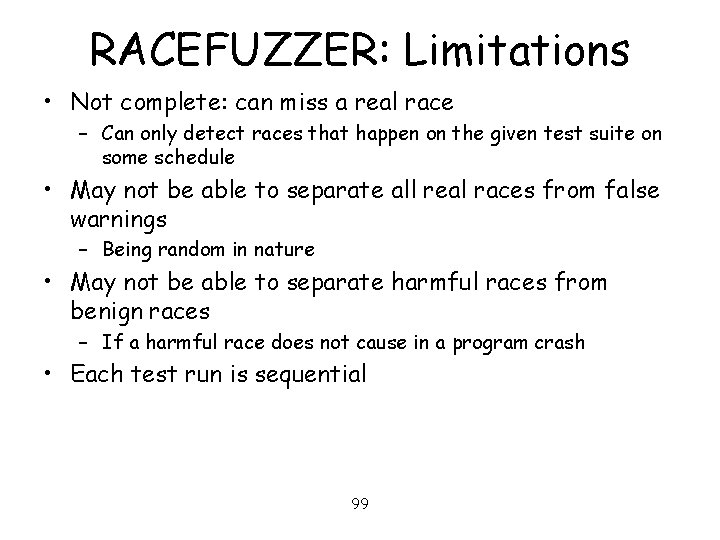

Experimental Results

RACEFUZZER: Useful Features
• Classify real races from false alarms
• Inexpensive replay of a concurrent execution exhibiting a real race
• Separate some harmful races from benign races
• No false warning
• Very efficient
  – We instrument at most two memory access statements and all synchronization statements
• Embarrassingly parallel

RACEFUZZER: Limitations
• Not complete: can miss a real race
  – Can only detect races that happen on the given test suite on some schedule
• May not be able to separate all real races from false warnings
  – Being random in nature
• May not be able to separate harmful races from benign races
  – If a harmful race does not cause a program crash
• Each test run is sequential

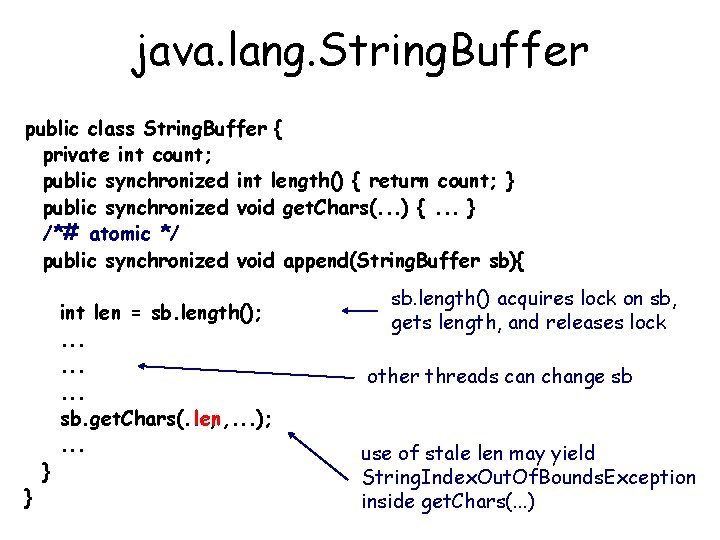

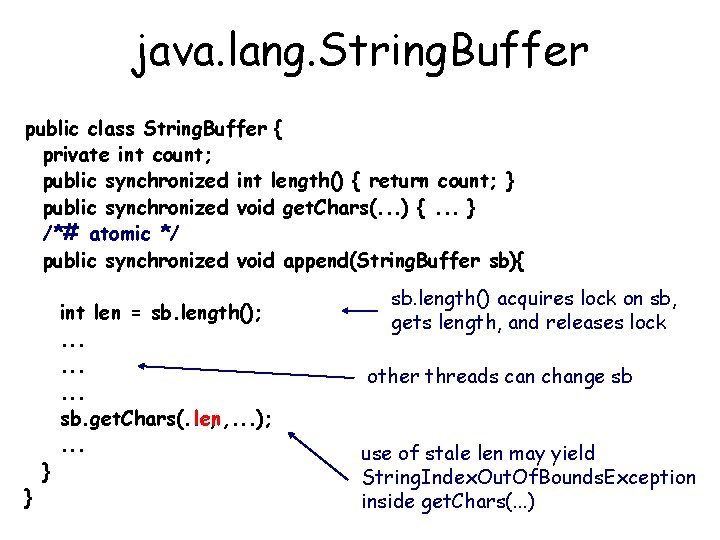

Summary
• Claim: testing (a.k.a. verification in industry) is the most practical way to find software bugs
  – We need to make software testing systematic and rigorous
• Random testing works amazingly well in practice
  – Randomizing a scheduler is more effective than randomizing inputs
  – We need to make random testing smarter and closer to verification
• Bias random testing
  – Prioritize random testing
    • Find interesting preemption points in the programs
    • Randomly preempt threads at these interesting points

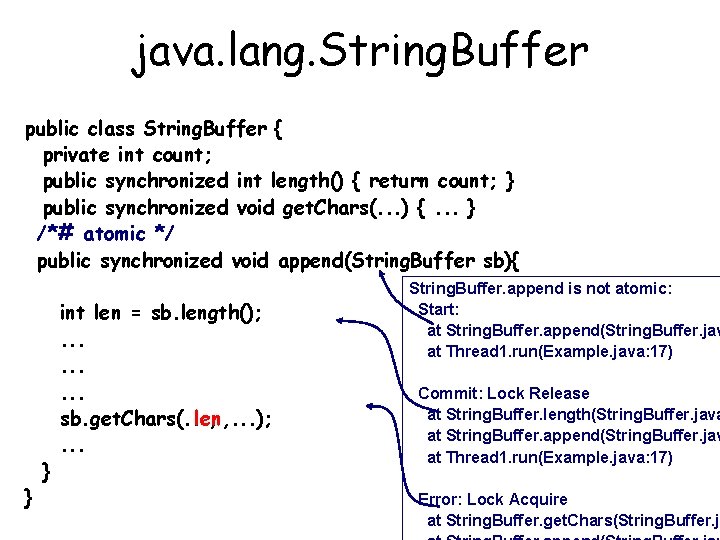

java.lang.StringBuffer

```java
/**
 * ... used by the compiler to implement the binary
 * string concatenation operator ...
 *
 * String buffers are safe for use by multiple threads.
 * The methods are synchronized so that all the operations
 * on any particular instance behave as if they occur in
 * some serial order that is consistent with the order of
 * the method calls made by each of the individual threads
 * involved.
 */
/*# atomic */
public class StringBuffer { ... }
```

java.lang.StringBuffer

```java
public class StringBuffer {
    private int count;

    public synchronized int length() { return count; }

    public synchronized void getChars(...) { ... }

    /*# atomic */
    public synchronized void append(StringBuffer sb) {
        int len = sb.length();
        ...
        sb.getChars(..., len, ...);
        ...
    }
}
```

• sb.length() acquires the lock on sb, gets the length, and releases the lock
• Other threads can change sb
• Use of a stale len may yield a StringIndexOutOfBoundsException inside getChars(...)

StringBuffer.append is not atomic:

```
Start:
  at StringBuffer.append(StringBuffer.jav
  at Thread1.run(Example.java:17)
Commit: Lock Release
  at StringBuffer.length(StringBuffer.java
  at StringBuffer.append(StringBuffer.jav
  at Thread1.run(Example.java:17)
Error: Lock Acquire
  at StringBuffer.getChars(StringBuffer.j
```


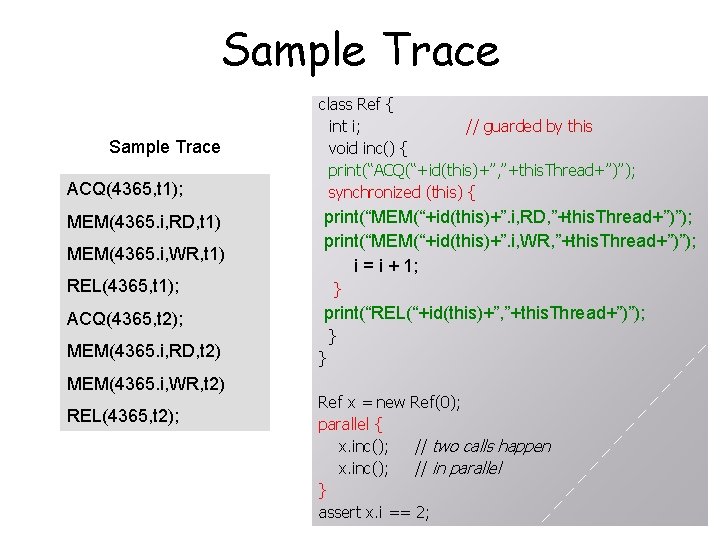

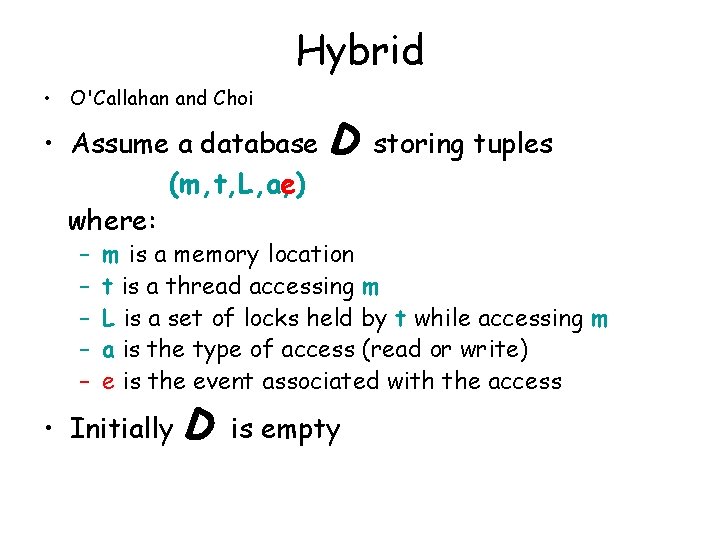

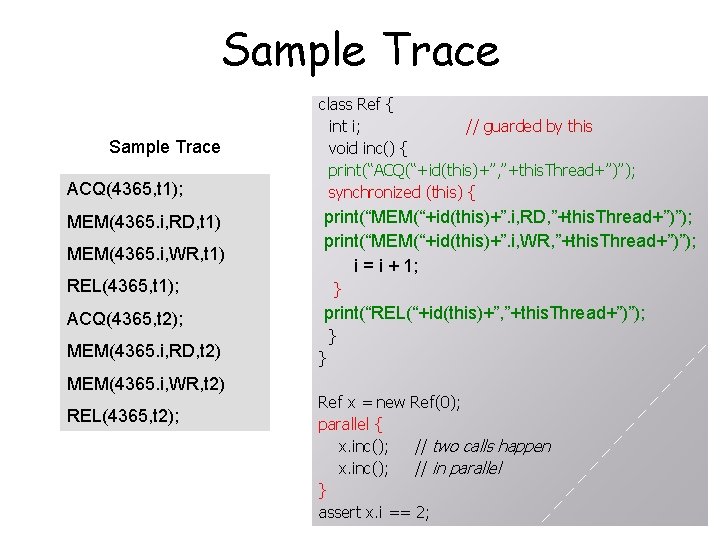

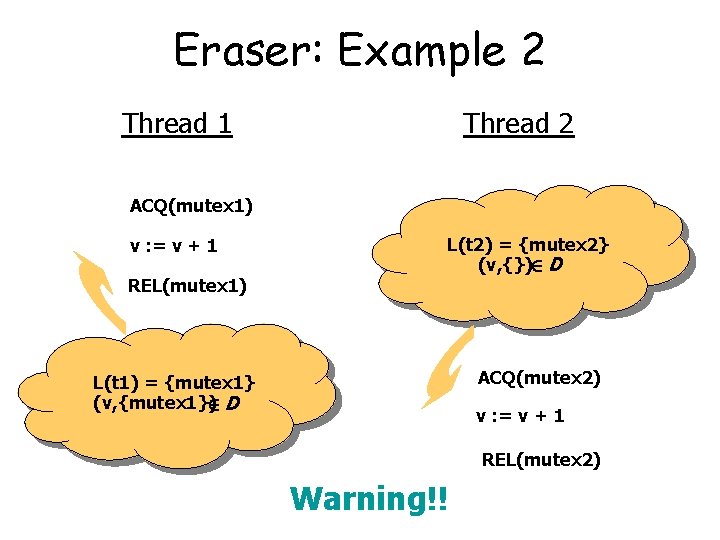

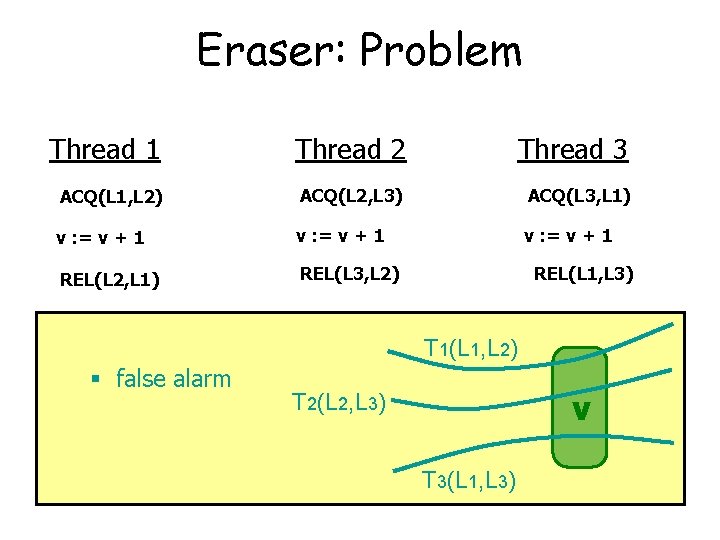

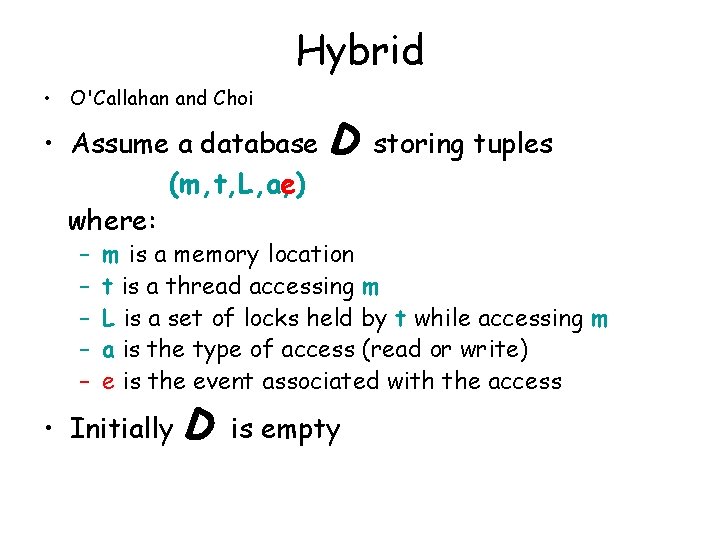

Related work
• Stoller et al. and Edelstein et al. [ConTest]
  – Inserts yield() and sleep() randomly in Java code
• Parallel randomized depth-first search by Dwyer et al.
  – Modifies search strategy in Java Pathfinder by Visser et al.
• Iterative context bounding (Musuvathi and Qadeer)
  – Systematic testing with bounded context switches
• Satish Narayanasamy, Zhenghao Wang, Jordan Tigani, Andrew Edwards and Brad Calder, "Automatically Classifying Benign and Harmful Data Races Using Replay Analysis", PLDI 07