CS 252 Graduate Computer Architecture Lecture 8 ILP


CS 252 Graduate Computer Architecture
Lecture 8, ILP 2: Precise Interrupts and Getting the CPI < 1
John Kubiatowicz
Electrical Engineering and Computer Sciences, University of California, Berkeley
http://www.eecs.berkeley.edu/~kubitron/cs252
http://www-inst.eecs.berkeley.edu/~cs252

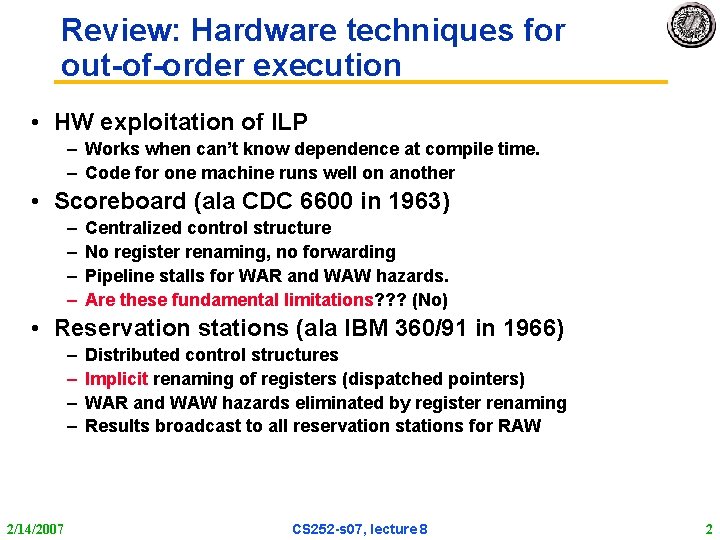

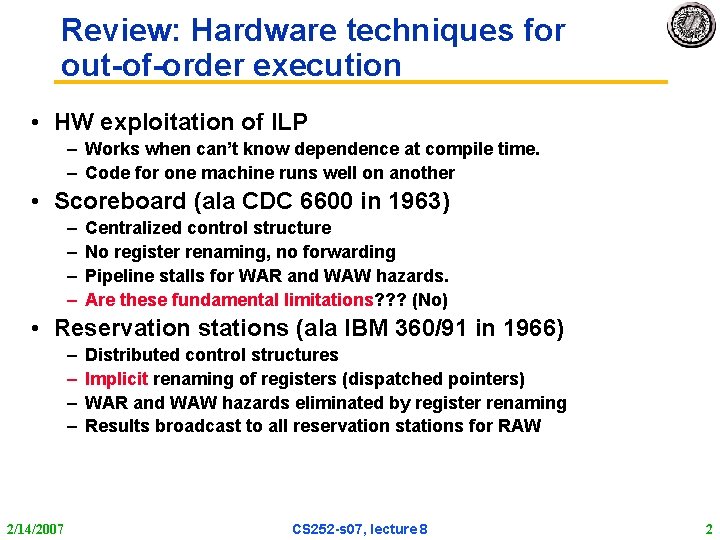

Review: Hardware techniques for out-of-order execution
• HW exploitation of ILP
  – Works when can’t know dependence at compile time.
  – Code for one machine runs well on another
• Scoreboard (à la CDC 6600 in 1963)
  – Centralized control structure
  – No register renaming, no forwarding
  – Pipeline stalls for WAR and WAW hazards.
  – Are these fundamental limitations??? (No)
• Reservation stations (à la IBM 360/91 in 1966)
  – Distributed control structures
  – Implicit renaming of registers (dispatched pointers)
  – WAR and WAW hazards eliminated by register renaming
  – Results broadcast to all reservation stations for RAW
2/14/2007, CS 252-s07, lecture 8

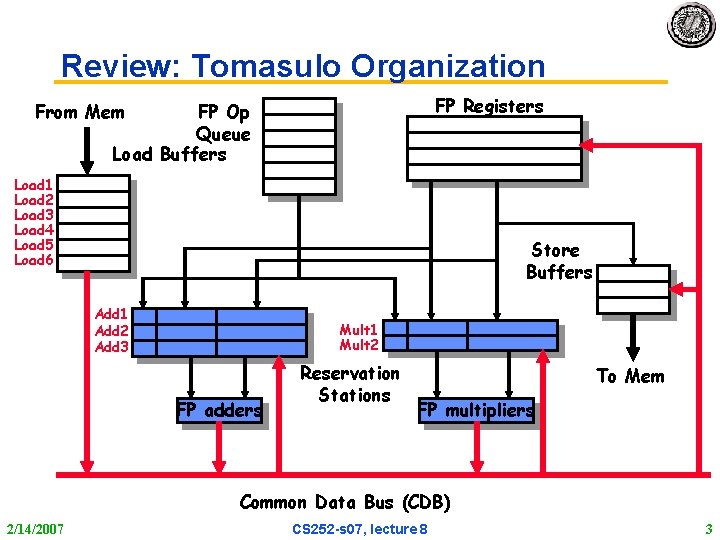

Review: Tomasulo Organization
[Figure: the FP Op Queue and FP Registers feed the Reservation Stations (Add1, Add2, Add3 for the FP adders; Mult1, Mult2 for the FP multipliers). Load Buffers (Load1 to Load6) bring data from memory, Store Buffers send data to memory, and results return on the Common Data Bus (CDB).]

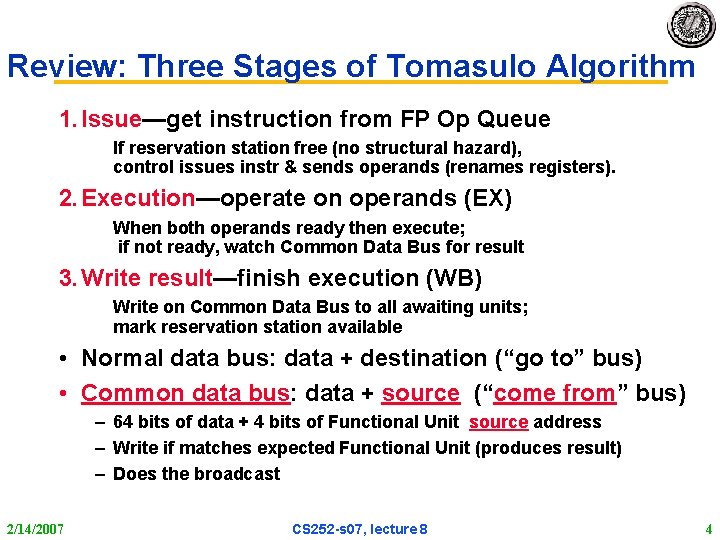

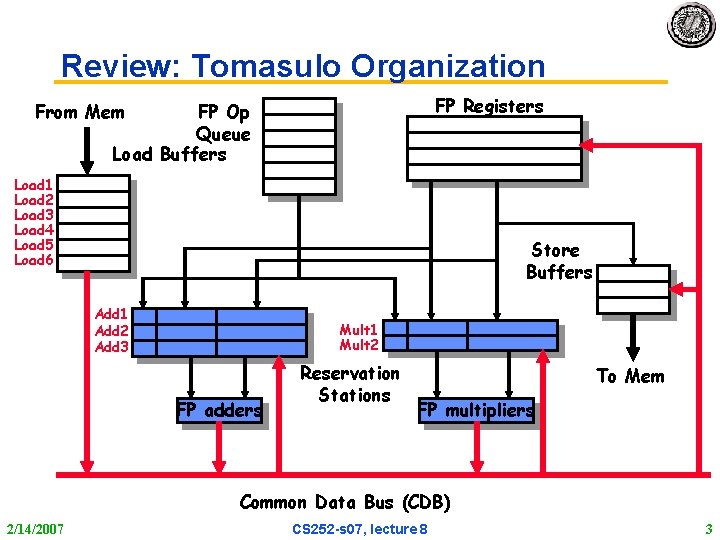

Review: Three Stages of Tomasulo Algorithm
1. Issue: get instruction from FP Op Queue
   If reservation station free (no structural hazard), control issues instr & sends operands (renames registers).
2. Execution: operate on operands (EX)
   When both operands ready then execute; if not ready, watch Common Data Bus for result
3. Write result: finish execution (WB)
   Write on Common Data Bus to all awaiting units; mark reservation station available
• Normal data bus: data + destination (“go to” bus)
• Common data bus: data + source (“come from” bus)
  – 64 bits of data + 4 bits of Functional Unit source address
  – Write if matches expected Functional Unit (produces result)
  – Does the broadcast
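The "come from" behaviour of the Common Data Bus can be sketched in a few lines of Python. This is only an illustration under assumed names: `ReservationStation`, the `vj/vk/qj/qk` fields, and `broadcast` are hypothetical and not taken from the lecture. Each waiting station compares the broadcast source tag with the tags it is waiting on and captures the value on a match.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReservationStation:
    name: str
    op: str
    # An operand is either a value (vj/vk) or the tag of its producer (qj/qk).
    vj: Optional[float] = None
    vk: Optional[float] = None
    qj: Optional[str] = None
    qk: Optional[str] = None

    def ready(self) -> bool:
        # Execution may start once no operand is still waiting on a tag.
        return self.qj is None and self.qk is None


def broadcast(stations, source_tag, value):
    """Common data bus write: data plus the tag of the unit that produced it."""
    for rs in stations:
        if rs.qj == source_tag:
            rs.vj, rs.qj = value, None
        if rs.qk == source_tag:
            rs.vk, rs.qk = value, None


# Add1 waits on Load1 for one operand; Mult1 waits on Load1 and on Add1.
add1 = ReservationStation("Add1", "ADDD", vj=2.0, qk="Load1")
mult1 = ReservationStation("Mult1", "MULTD", qj="Load1", qk="Add1")
broadcast([add1, mult1], "Load1", 10.0)
```

After the broadcast, `add1` has both operands and can execute, while `mult1` has captured one operand and keeps watching the bus for `Add1`.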

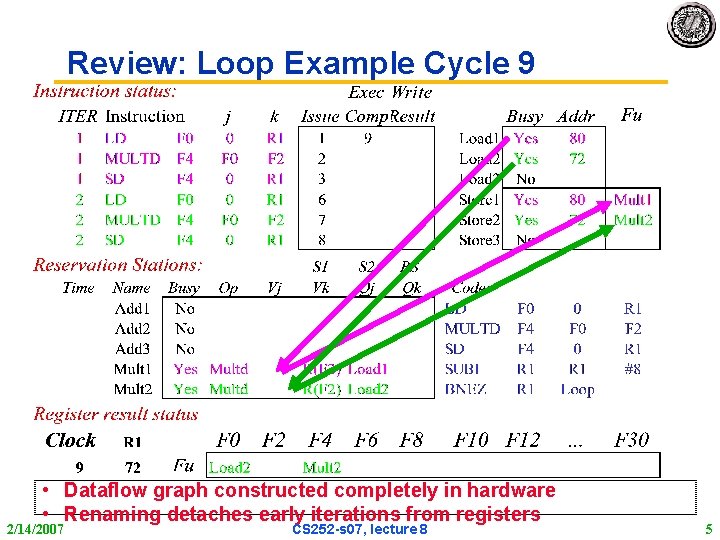

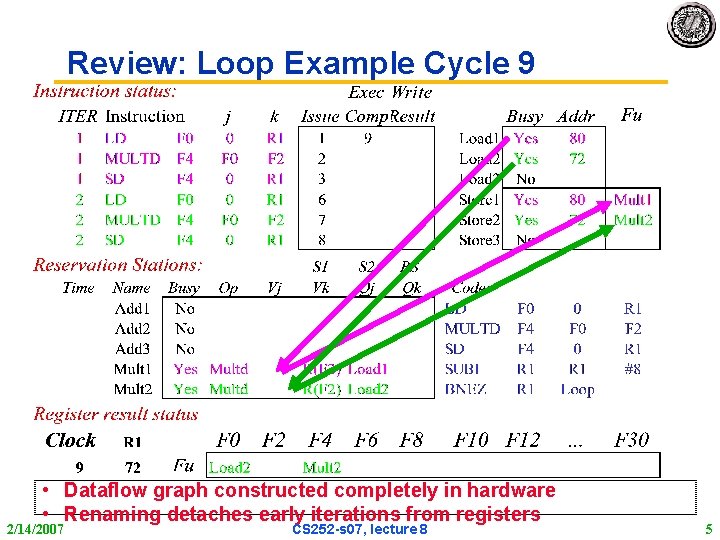

Review: Loop Example Cycle 9
• Dataflow graph constructed completely in hardware
• Renaming detaches early iterations from registers

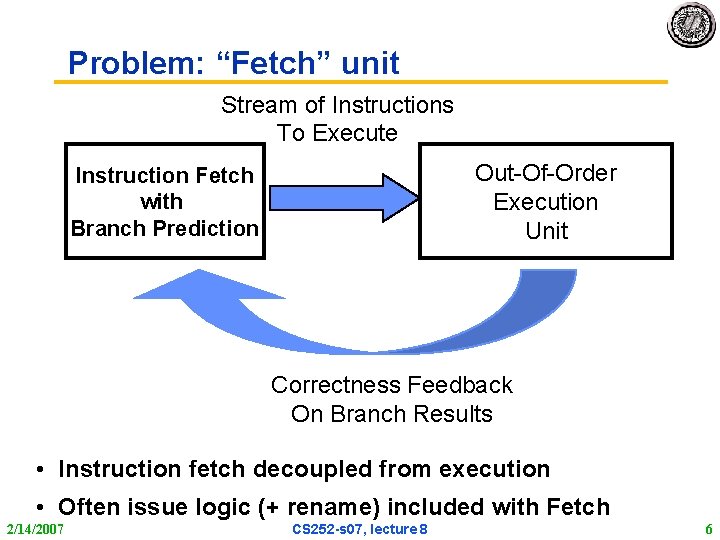

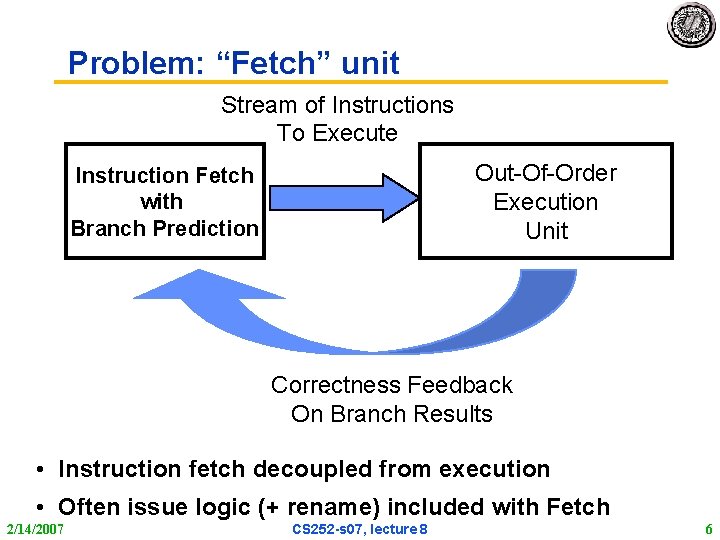

Problem: “Fetch” unit
[Figure: Instruction Fetch with Branch Prediction sends a stream of instructions to execute to the Out-Of-Order Execution Unit, which returns correctness feedback on branch results.]
• Instruction fetch decoupled from execution
• Often issue logic (+ rename) included with Fetch

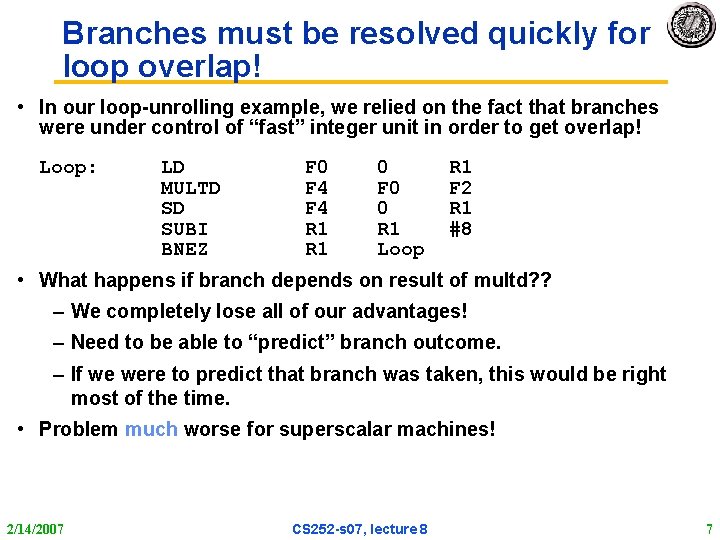

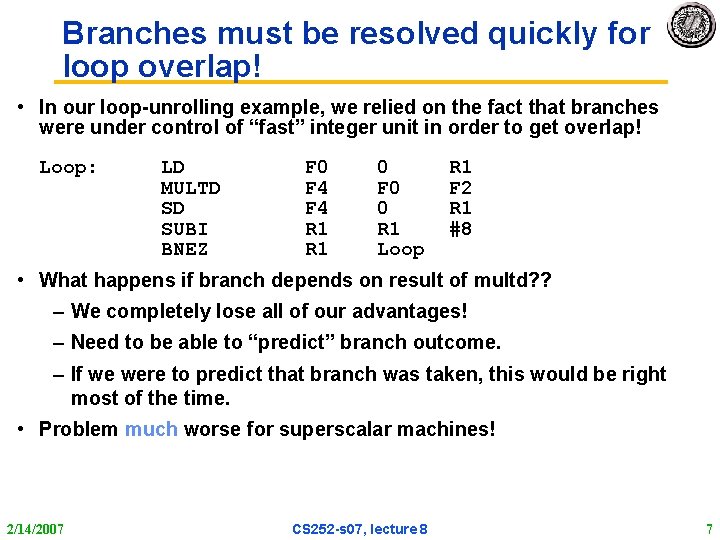

Branches must be resolved quickly for loop overlap!
• In our loop-unrolling example, we relied on the fact that branches were under control of “fast” integer unit in order to get overlap!
  Loop: LD    F0, 0(R1)
        MULTD F4, F0, F2
        SD    0(R1), F4
        SUBI  R1, R1, #8
        BNEZ  R1, Loop
• What happens if branch depends on result of MULTD??
  – We completely lose all of our advantages!
  – Need to be able to “predict” branch outcome.
  – If we were to predict that branch was taken, this would be right most of the time.
• Problem much worse for superscalar machines!

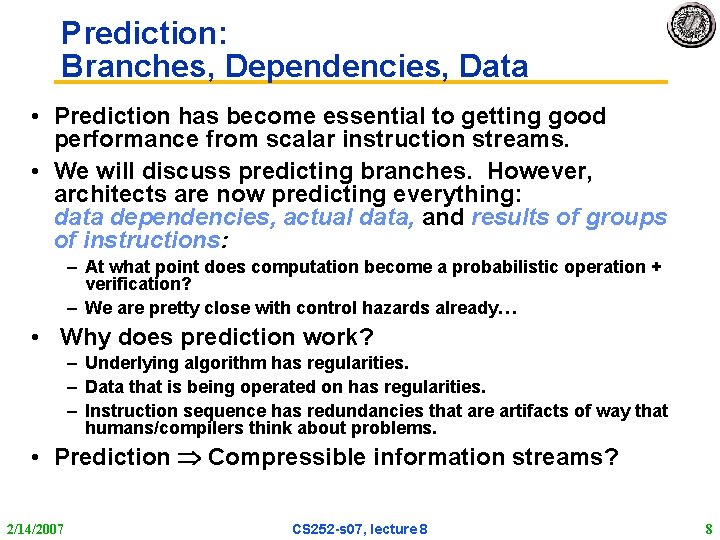

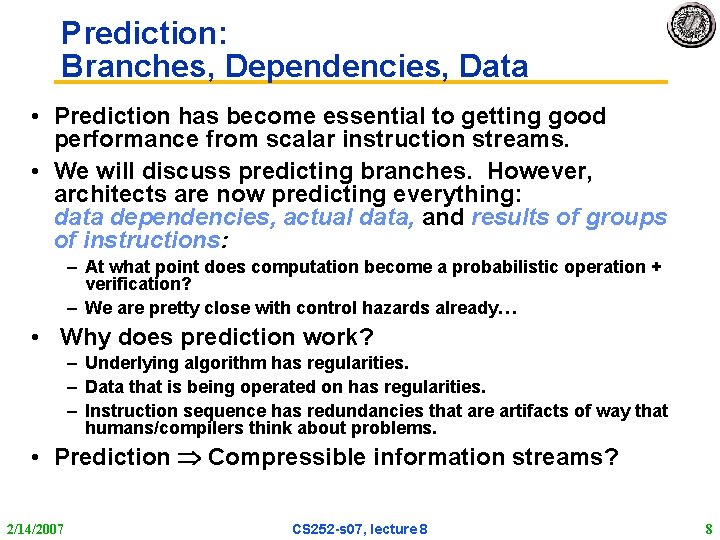

Prediction: Branches, Dependencies, Data
• Prediction has become essential to getting good performance from scalar instruction streams.
• We will discuss predicting branches. However, architects are now predicting everything: data dependencies, actual data, and results of groups of instructions:
  – At what point does computation become a probabilistic operation + verification?
  – We are pretty close with control hazards already…
• Why does prediction work?
  – Underlying algorithm has regularities.
  – Data that is being operated on has regularities.
  – Instruction sequence has redundancies that are artifacts of way that humans/compilers think about problems.
• Prediction ⇒ Compressible information streams?

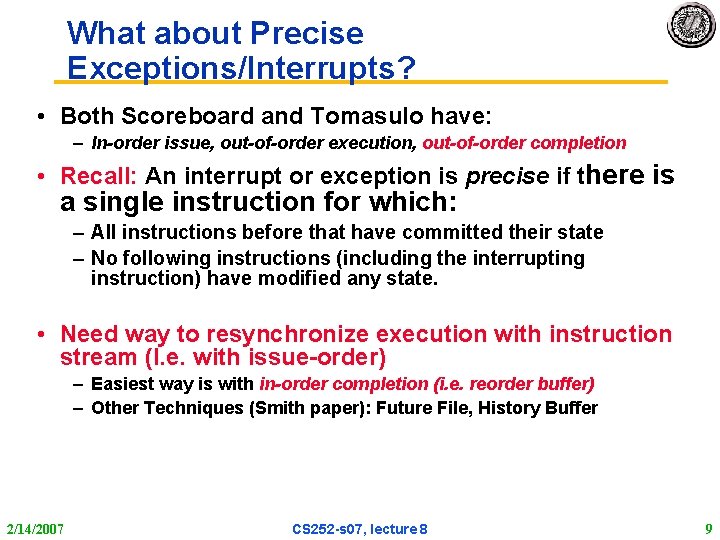

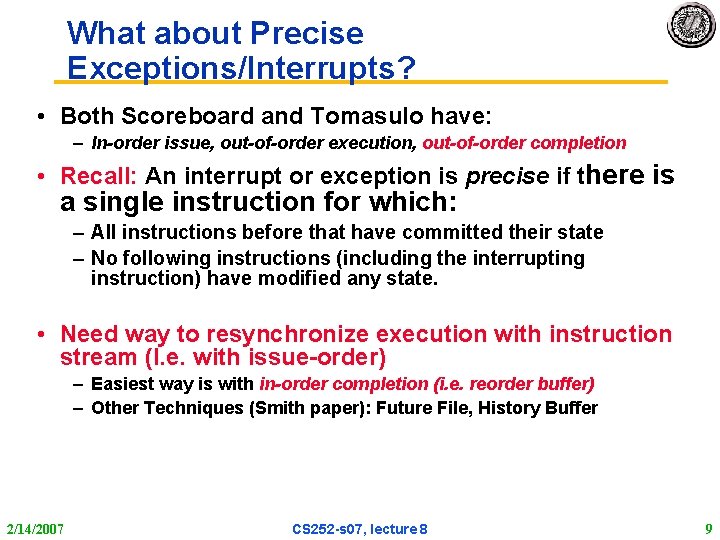

What about Precise Exceptions/Interrupts?
• Both Scoreboard and Tomasulo have:
  – In-order issue, out-of-order execution, out-of-order completion
• Recall: An interrupt or exception is precise if there is a single instruction for which:
  – All instructions before that have committed their state
  – No following instructions (including the interrupting instruction) have modified any state.
• Need way to resynchronize execution with instruction stream (i.e. with issue-order)
  – Easiest way is with in-order completion (i.e. reorder buffer)
  – Other Techniques (Smith paper): Future File, History Buffer

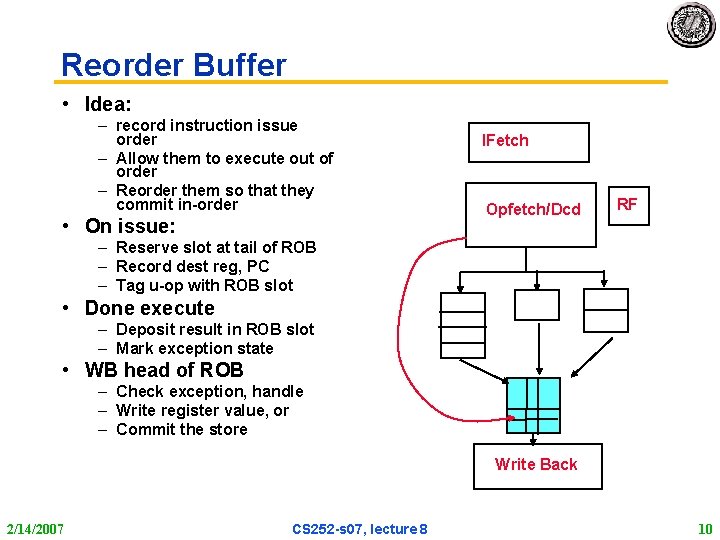

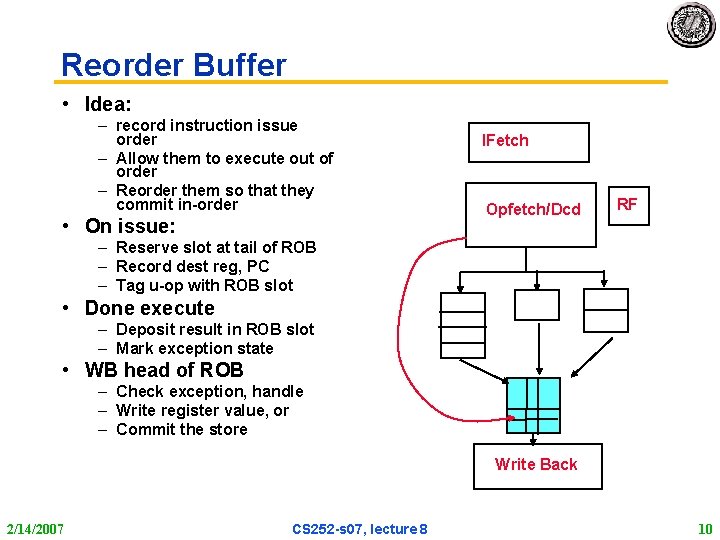

Reorder Buffer
[Figure: pipeline with IFetch, Opfetch/Dcd, RF, and Write Back stages]
• Idea:
  – Record instruction issue order
  – Allow them to execute out of order
  – Reorder them so that they commit in-order
• On issue:
  – Reserve slot at tail of ROB
  – Record dest reg, PC
  – Tag u-op with ROB slot
• Done execute
  – Deposit result in ROB slot
  – Mark exception state
• WB head of ROB
  – Check exception, handle
  – Write register value, or
  – Commit the store
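The issue / done-execute / write-back-at-head steps above can be sketched as a small Python model. This is a minimal sketch, not the lecture's design: the class `ReorderBuffer`, its dictionary entry layout, and the method names are assumptions made for illustration, and stores are left out.

```python
from collections import deque


class ReorderBuffer:
    def __init__(self, size):
        self.size = size
        self.entries = deque()  # head (oldest instruction) is on the left
        self.next_tag = 0

    def issue(self, dest, pc):
        """Reserve a slot at the tail; return its tag, or None if the ROB is full."""
        if len(self.entries) >= self.size:
            return None  # structural hazard: issue must stall
        entry = {"tag": self.next_tag, "dest": dest, "pc": pc,
                 "done": False, "value": None, "exception": False}
        self.next_tag += 1
        self.entries.append(entry)
        return entry["tag"]

    def complete(self, tag, value, exception=False):
        """Deposit a result (possibly out of order) and mark exception state."""
        for entry in self.entries:
            if entry["tag"] == tag:
                entry.update(done=True, value=value, exception=exception)
                return
        raise KeyError(tag)

    def commit(self, regs):
        """Retire finished entries from the head, in order.

        Returns the PC of an excepting instruction, or None.
        """
        while self.entries and self.entries[0]["done"]:
            head = self.entries[0]
            if head["exception"]:
                pc = head["pc"]
                self.entries.clear()  # discard it and everything younger
                return pc
            self.entries.popleft()
            if head["dest"] is not None:
                regs[head["dest"]] = head["value"]
        return None


rob = ReorderBuffer(size=4)
regs = {}
t0 = rob.issue("F0", 0x100)
t1 = rob.issue("F10", 0x104)
rob.complete(t1, 3.0)          # younger instruction finishes first
rob.commit(regs)               # nothing retires: head is not done yet
regs_before_head_done = dict(regs)
rob.complete(t0, 1.0)
rob.commit(regs)               # both retire, oldest first
```

The younger result sits in the buffer until the older instruction completes, so the register file only ever sees updates in program order.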

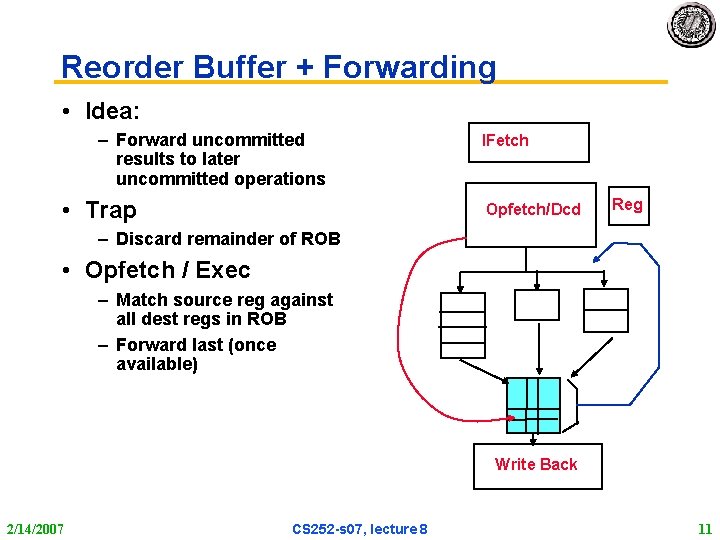

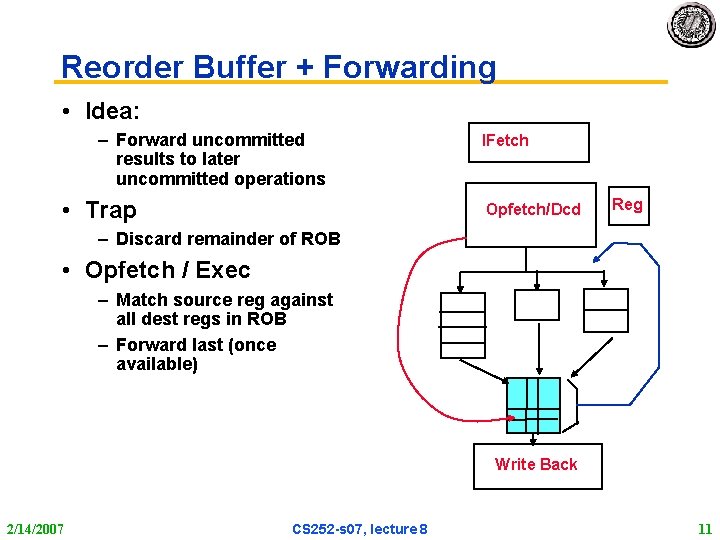

Reorder Buffer + Forwarding
[Figure: pipeline with IFetch, Opfetch/Dcd, Reg, and Write Back stages]
• Idea:
  – Forward uncommitted results to later uncommitted operations
• Trap
  – Discard remainder of ROB
• Opfetch / Exec
  – Match source reg against all dest regs in ROB
  – Forward last (once available)

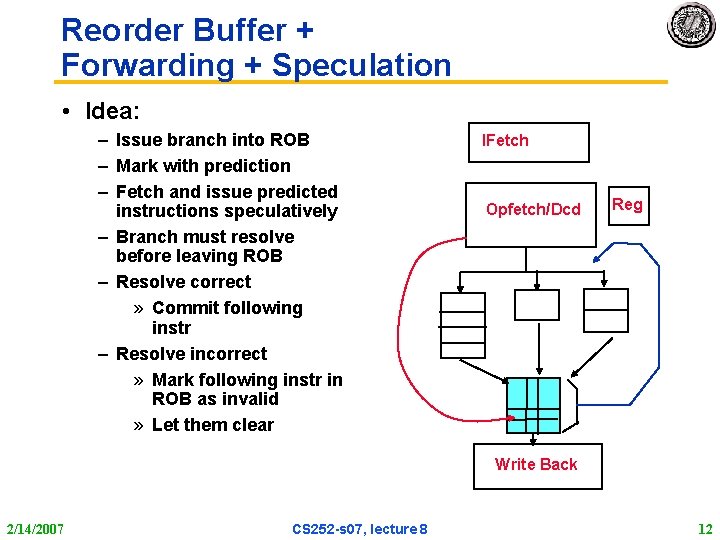

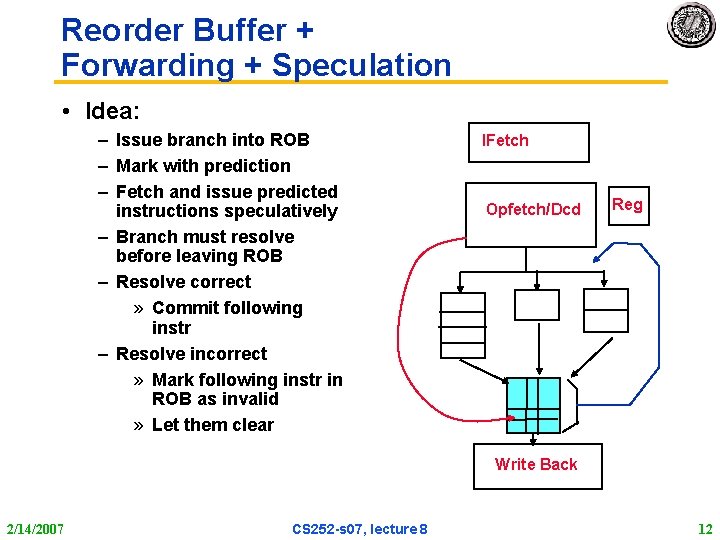

Reorder Buffer + Forwarding + Speculation
[Figure: pipeline with IFetch, Opfetch/Dcd, Reg, and Write Back stages]
• Idea:
  – Issue branch into ROB
  – Mark with prediction
  – Fetch and issue predicted instructions speculatively
  – Branch must resolve before leaving ROB
  – Resolve correct
    » Commit following instr
  – Resolve incorrect
    » Mark following instr in ROB as invalid
    » Let them clear
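As a rough illustration of the "resolve incorrect" case, the sketch below marks every entry younger than a mispredicted branch as invalid. The function name `resolve_branch` and the list-of-dicts ROB layout are assumptions for this example; the instruction strings reuse the sequence from the reorder buffer walkthrough in this lecture.

```python
def resolve_branch(rob, branch_index, predicted_taken, actual_taken):
    """rob is a list of entries, oldest first.

    On a misprediction, every entry issued after the branch is marked
    invalid so that it clears without committing. Returns the instructions
    that are still valid.
    """
    if predicted_taken != actual_taken:
        for entry in rob[branch_index + 1:]:
            entry["valid"] = False
    return [entry["instr"] for entry in rob if entry["valid"]]


program = [
    "LD F0, 10(R2)",
    "ADDD F10, F4, F0",
    "DIVD F2, F10, F6",
    "BNE F2, <...>",
    "LD F4, 0(R3)",      # speculative: issued past the branch
    "ADDD F0, F4, F6",   # speculative
    "ST 0(R3), F4",      # speculative
]
rob = [{"instr": text, "valid": True} for text in program]
survivors = resolve_branch(rob, branch_index=3,
                           predicted_taken=True, actual_taken=False)
```

With a correct prediction the function changes nothing and the speculative instructions simply commit in order behind the branch.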

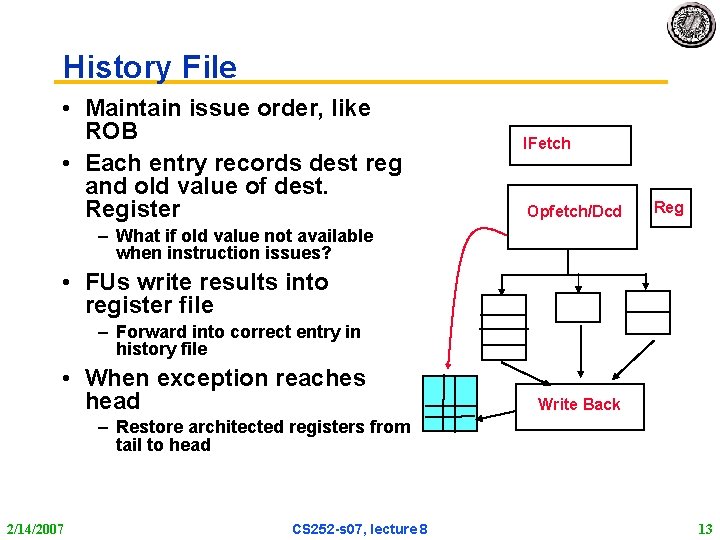

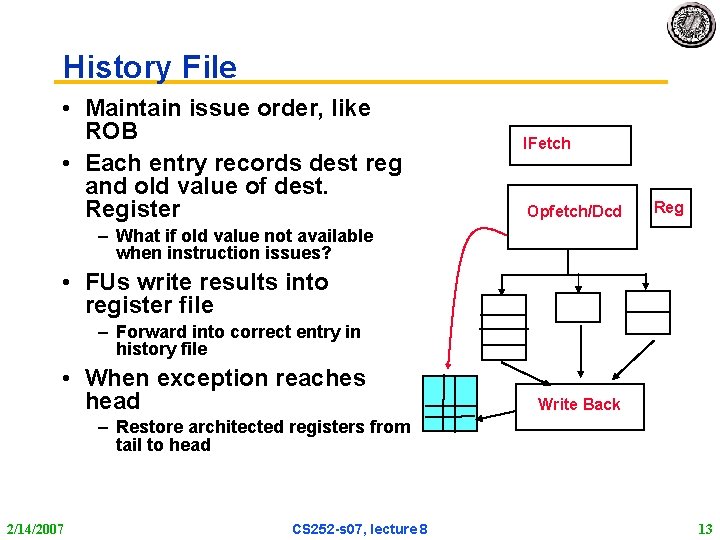

History File
[Figure: pipeline with IFetch, Opfetch/Dcd, Reg, and Write Back stages]
• Maintain issue order, like ROB
• Each entry records dest reg and old value of dest. register
  – What if old value not available when instruction issues?
• FUs write results into register file
  – Forward into correct entry in history file
• When exception reaches head
  – Restore architected registers from tail to head
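The restore step can be sketched as follows. This is a simplified illustration: it assumes every old value is already available when an instruction issues (the slide flags that this is not always true), and the function `run_with_history` and its arguments are hypothetical names.

```python
def run_with_history(regs, writes, fault_index):
    """writes is a list of (dest, new_value) pairs in issue order.

    Each write goes straight to the register file, and the old value is
    logged in the history file. The instruction at position fault_index
    then raises an exception, so it and every younger write are undone
    from tail (newest) back toward the head.
    """
    history = []
    for dest, value in writes:
        history.append((dest, regs[dest]))  # remember the old value
        regs[dest] = value                  # result written immediately
    while len(history) > fault_index:
        dest, old_value = history.pop()     # newest entry first
        regs[dest] = old_value
    return regs


regs = {"F0": 1.0, "F2": 2.0}
writes = [("F0", 10.0), ("F2", 20.0), ("F0", 30.0)]
restored = run_with_history(regs, writes, fault_index=1)
```

Undoing from the tail matters when the same register is written more than once: the second write to `F0` is rolled back to the value left by the first write, which precedes the faulting instruction and therefore stays.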

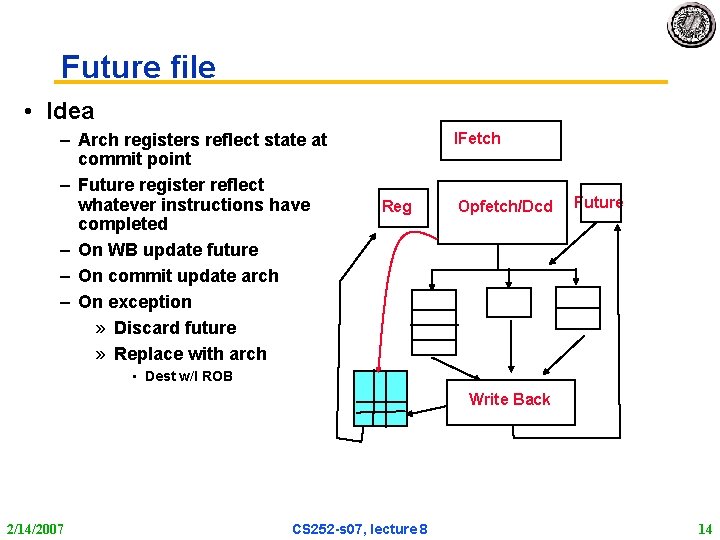

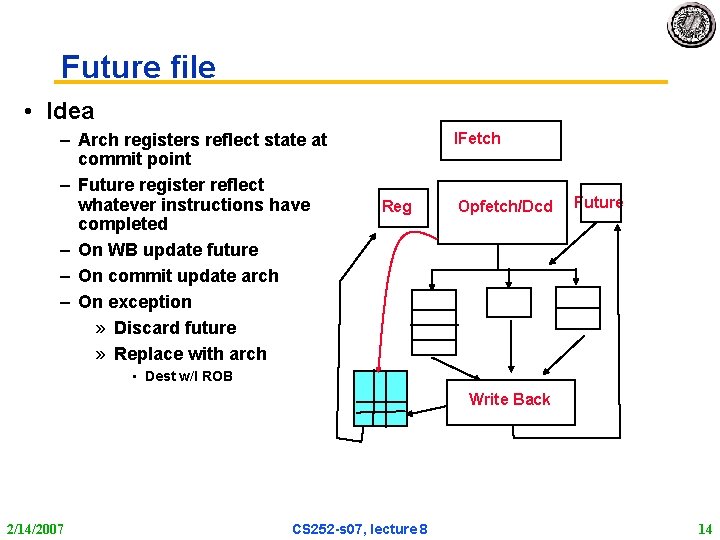

Future file
[Figure: pipeline with IFetch, Opfetch/Dcd, Reg, Future, and Write Back stages; Dest w/I ROB]
• Idea
  – Arch registers reflect state at commit point
  – Future registers reflect whatever instructions have completed
  – On WB update future
  – On commit update arch
  – On exception
    » Discard future
    » Replace with arch
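A minimal sketch of the two register files, assuming plain dictionaries for storage; the class `FutureFile` and its method names are illustrative only.

```python
class FutureFile:
    def __init__(self, initial):
        self.arch = dict(initial)    # state at the commit point
        self.future = dict(initial)  # state including completed, uncommitted results

    def writeback(self, dest, value):
        self.future[dest] = value    # on WB update future

    def commit(self, dest, value):
        self.arch[dest] = value      # on commit update arch

    def exception(self):
        self.future = dict(self.arch)  # discard future, replace with arch


ff = FutureFile({"F0": 1.0, "F2": 2.0})
ff.writeback("F0", 5.0)
ff.writeback("F2", 7.0)
ff.commit("F0", 5.0)   # only the older instruction has committed
ff.exception()         # the uncommitted write to F2 disappears
```

Operand reads would normally come from `future`, which is why an exception has to overwrite it with the architectural copy.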

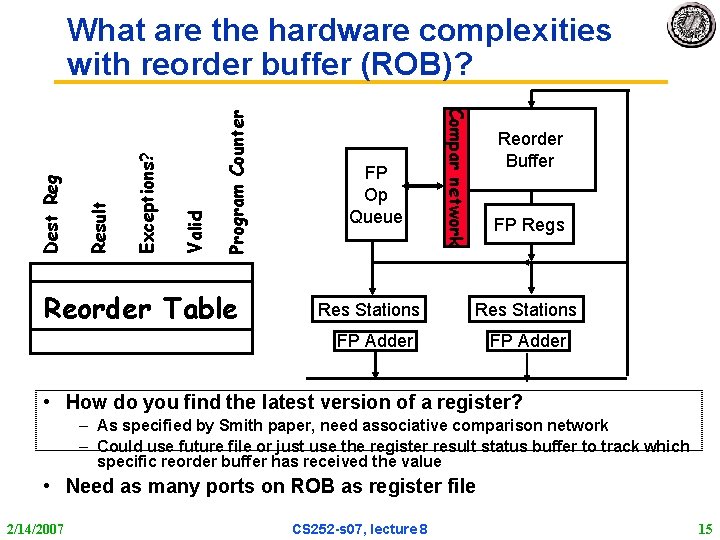

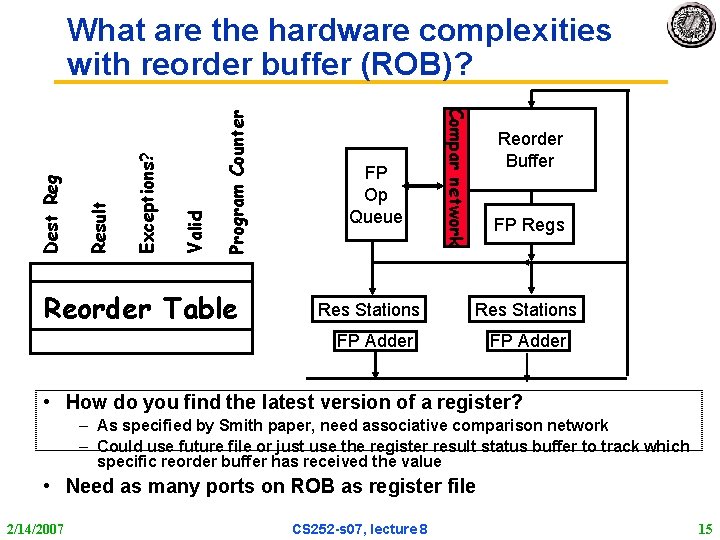

What are the hardware complexities with reorder buffer (ROB)?
[Figure: FP Op Queue, Reorder Buffer, Reorder Table (fields: Dest Reg, Result, Exceptions?, Valid, Program Counter), compare network, FP Regs, Res Stations, FP Adder]
• How do you find the latest version of a register?
  – As specified by Smith paper, need associative comparison network
  – Could use future file or just use the register result status buffer to track which specific reorder buffer has received the value
• Need as many ports on ROB as register file

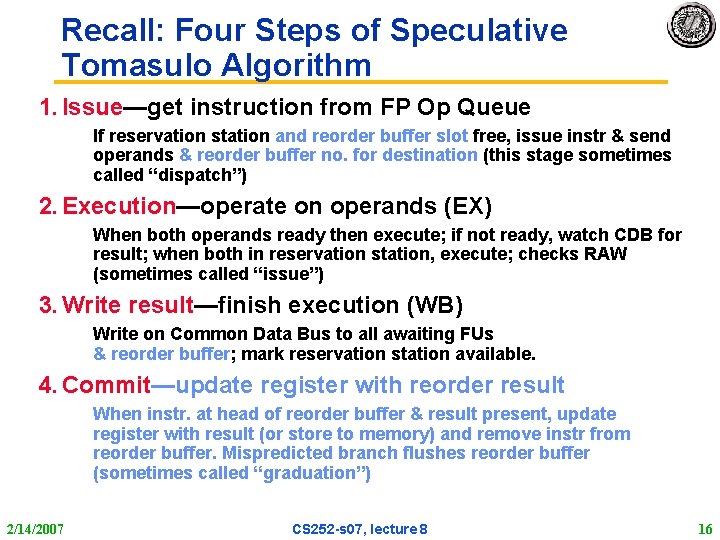

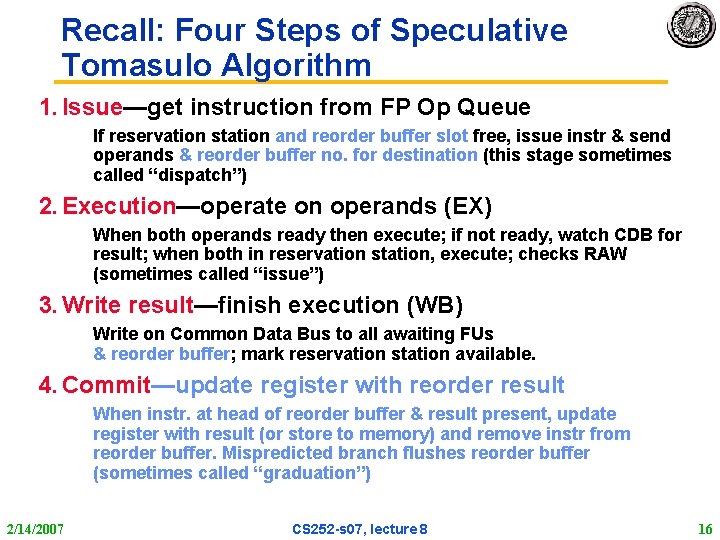

Recall: Four Steps of Speculative Tomasulo Algorithm
1. Issue: get instruction from FP Op Queue
   If reservation station and reorder buffer slot free, issue instr & send operands & reorder buffer no. for destination (this stage sometimes called “dispatch”)
2. Execution: operate on operands (EX)
   When both operands ready then execute; if not ready, watch CDB for result; when both in reservation station, execute; checks RAW (sometimes called “issue”)
3. Write result: finish execution (WB)
   Write on Common Data Bus to all awaiting FUs & reorder buffer; mark reservation station available.
4. Commit: update register with reorder result
   When instr. at head of reorder buffer & result present, update register with result (or store to memory) and remove instr from reorder buffer. Mispredicted branch flushes reorder buffer (sometimes called “graduation”)

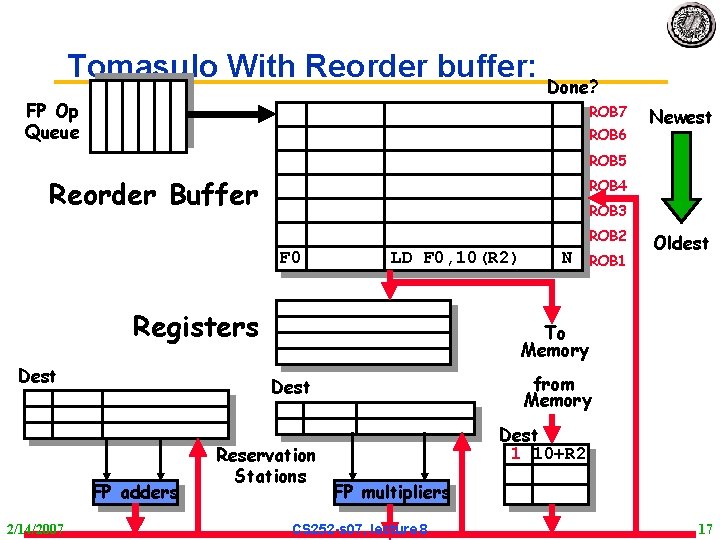

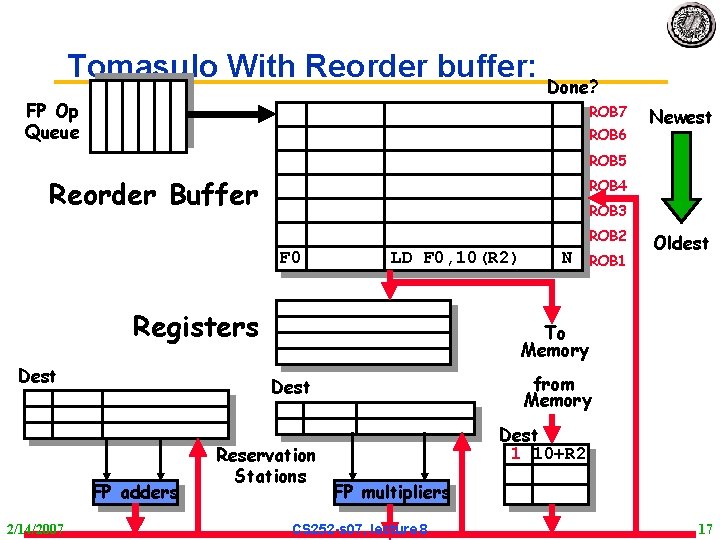

Tomasulo With Reorder Buffer
[Figure: FP Op Queue, Registers, Reorder Buffer (ROB1 oldest to ROB7 newest), Reservation Stations, FP adders, FP multipliers, paths to and from memory]
Reorder buffer (entry: dest, instruction, done?):
  ROB1 (oldest): F0, LD F0, 10(R2), N
Load buffer: dest 1, address 10+R2

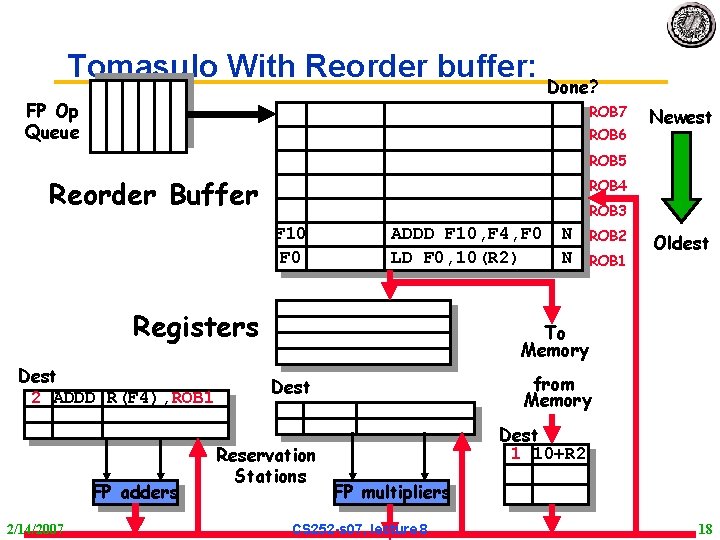

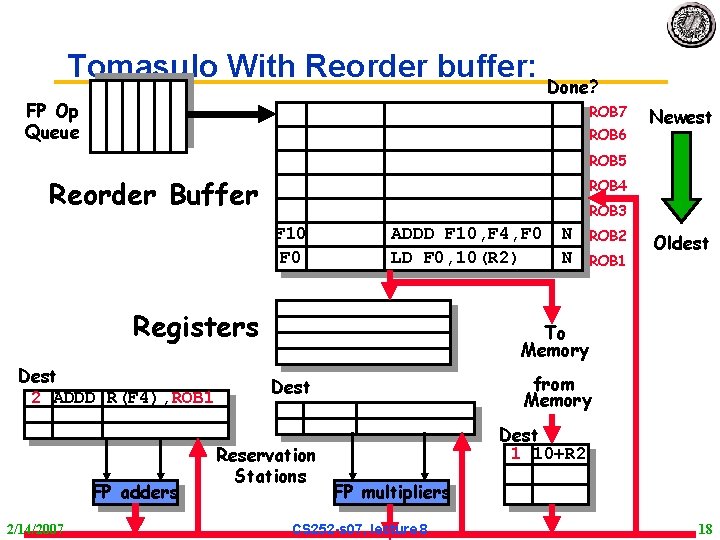

Tomasulo With Reorder Buffer
Reorder buffer (entry: dest, instruction, done?):
  ROB2 (newest): F10, ADDD F10, F4, F0, N
  ROB1 (oldest): F0, LD F0, 10(R2), N
FP adder reservation station: dest 2, ADDD R(F4), ROB1
Load buffer: dest 1, address 10+R2

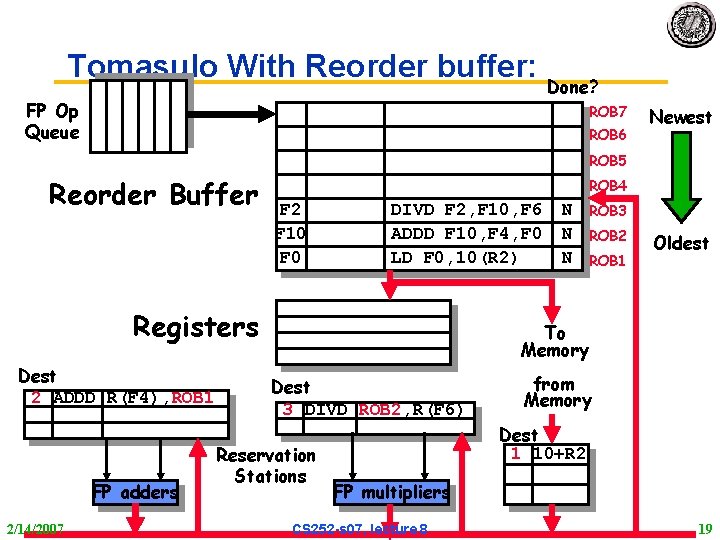

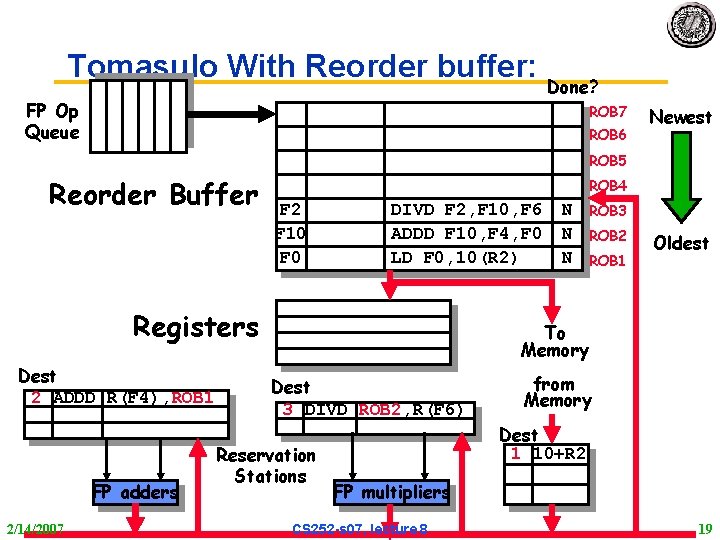

Tomasulo With Reorder Buffer
Reorder buffer (entry: dest, instruction, done?):
  ROB3 (newest): F2, DIVD F2, F10, F6, N
  ROB2: F10, ADDD F10, F4, F0, N
  ROB1 (oldest): F0, LD F0, 10(R2), N
FP adder reservation station: dest 2, ADDD R(F4), ROB1
FP multiplier reservation station: dest 3, DIVD ROB2, R(F6)
Load buffer: dest 1, address 10+R2

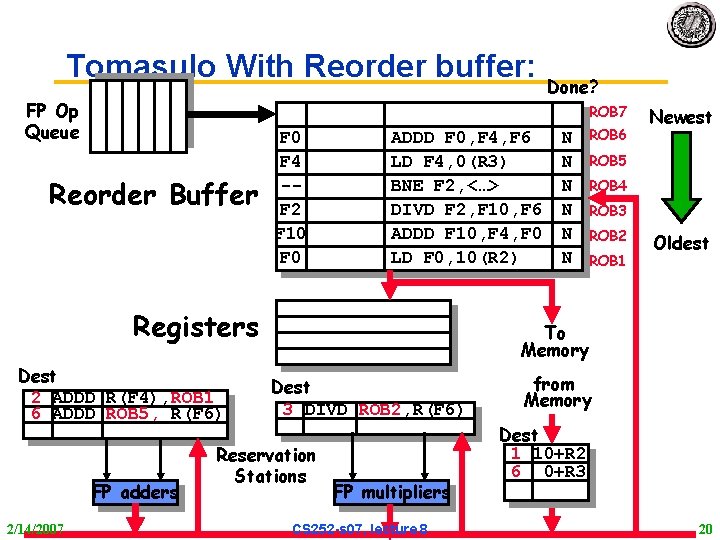

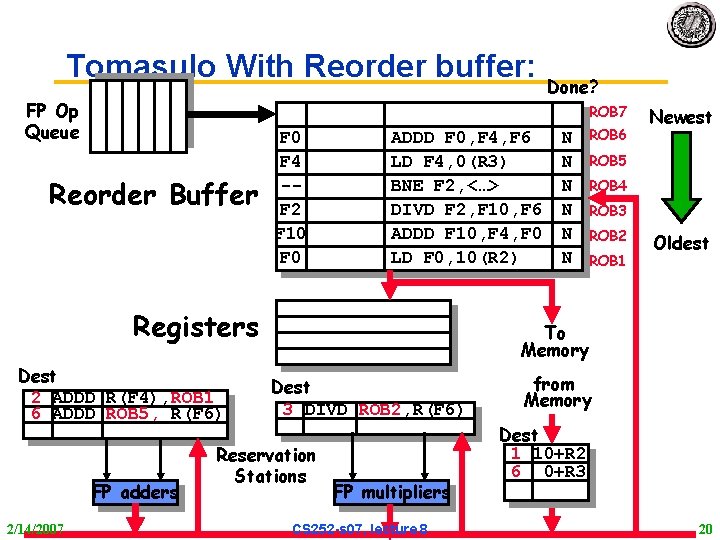

Tomasulo With Reorder Buffer
Reorder buffer (entry: dest, instruction, done?):
  ROB6 (newest): F0, ADDD F0, F4, F6, N
  ROB5: F4, LD F4, 0(R3), N
  ROB4: --, BNE F2, <…>, N
  ROB3: F2, DIVD F2, F10, F6, N
  ROB2: F10, ADDD F10, F4, F0, N
  ROB1 (oldest): F0, LD F0, 10(R2), N
FP adder reservation stations: dest 2, ADDD R(F4), ROB1; dest 6, ADDD ROB5, R(F6)
FP multiplier reservation station: dest 3, DIVD ROB2, R(F6)
Load buffers: dest 1, address 10+R2; dest 6, address 0+R3

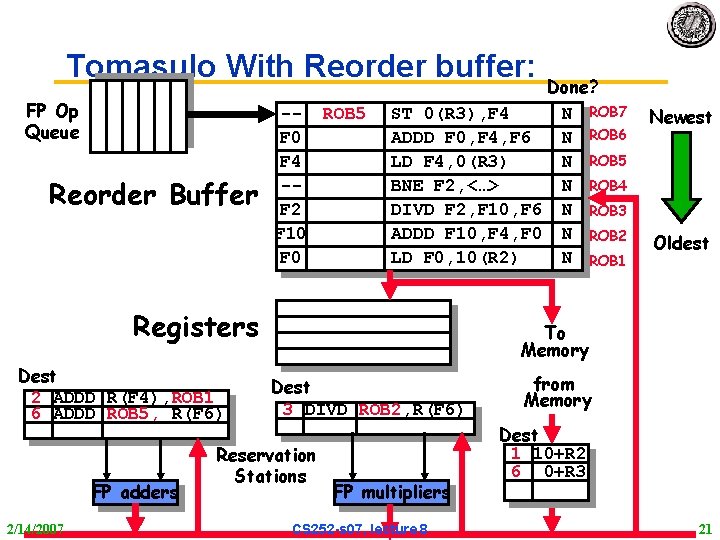

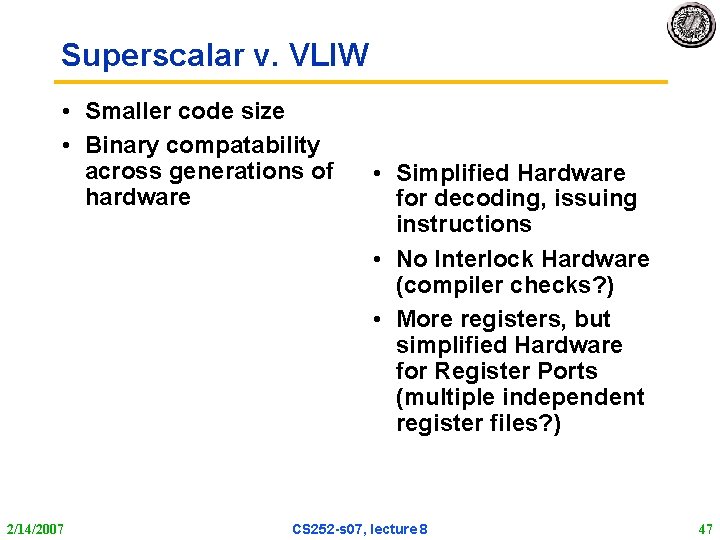

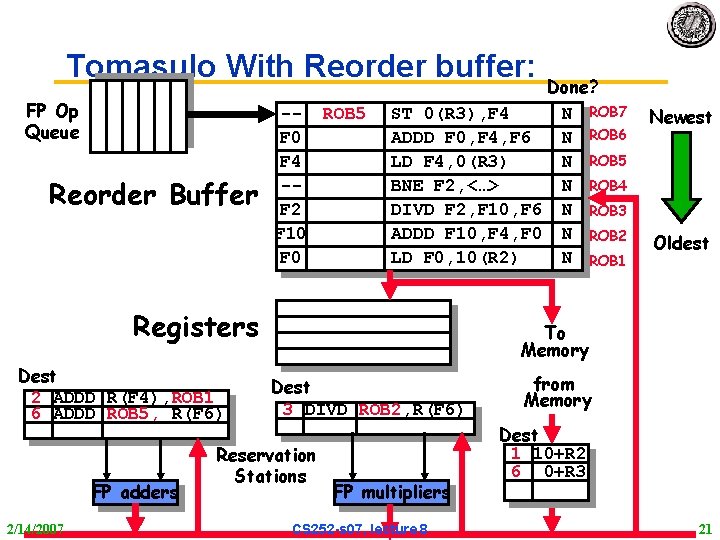

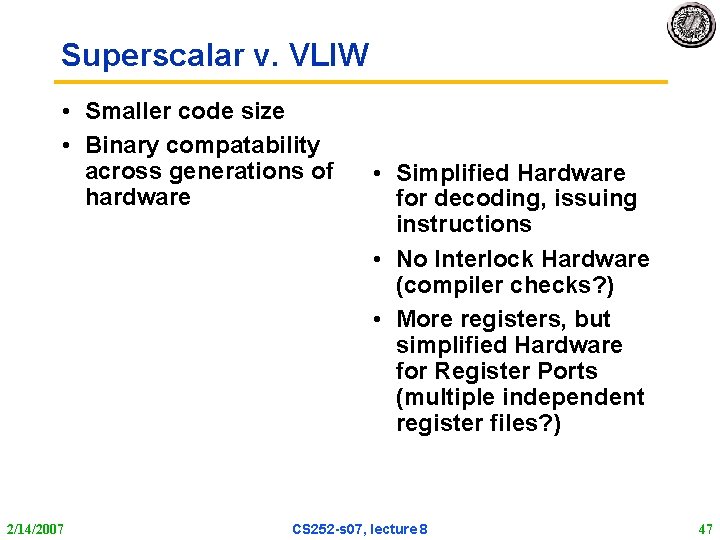

Tomasulo With Reorder Buffer
Reorder buffer (entry: dest, value, instruction, done?):
  ROB7 (newest): --, ROB5, ST 0(R3), F4, N
  ROB6: F0, (none), ADDD F0, F4, F6, N
  ROB5: F4, (none), LD F4, 0(R3), N
  ROB4: --, (none), BNE F2, <…>, N
  ROB3: F2, (none), DIVD F2, F10, F6, N
  ROB2: F10, (none), ADDD F10, F4, F0, N
  ROB1 (oldest): F0, (none), LD F0, 10(R2), N
FP adder reservation stations: dest 2, ADDD R(F4), ROB1; dest 6, ADDD ROB5, R(F6)
FP multiplier reservation station: dest 3, DIVD ROB2, R(F6)
Load buffers: dest 1, address 10+R2; dest 6, address 0+R3

![Tomasulo With Reorder buffer FP Op Queue Reorder Buffer M10 F 0 F Tomasulo With Reorder buffer: FP Op Queue Reorder Buffer -- M[10] F 0 F](https://slidetodoc.com/presentation_image_h2/7e9956ad609a6d317d57f7cd875cbfe3/image-22.jpg)

Tomasulo With Reorder Buffer
Reorder buffer (entry: dest, value, instruction, done?):
  ROB7 (newest): --, M[10], ST 0(R3), F4, Y
  ROB6: F0, (none), ADDD F0, F4, F6, N
  ROB5: F4, M[10], LD F4, 0(R3), Y
  ROB4: --, (none), BNE F2, <…>, N
  ROB3: F2, (none), DIVD F2, F10, F6, N
  ROB2: F10, (none), ADDD F10, F4, F0, N
  ROB1 (oldest): F0, (none), LD F0, 10(R2), N
FP adder reservation stations: dest 2, ADDD R(F4), ROB1; dest 6, ADDD M[10], R(F6)
FP multiplier reservation station: dest 3, DIVD ROB2, R(F6)
Load buffer: dest 1, address 10+R2

![Tomasulo With Reorder buffer FP Op Queue Reorder Buffer Done M10 ST 0R Tomasulo With Reorder buffer: FP Op Queue Reorder Buffer Done? -- M[10] ST 0(R](https://slidetodoc.com/presentation_image_h2/7e9956ad609a6d317d57f7cd875cbfe3/image-23.jpg)

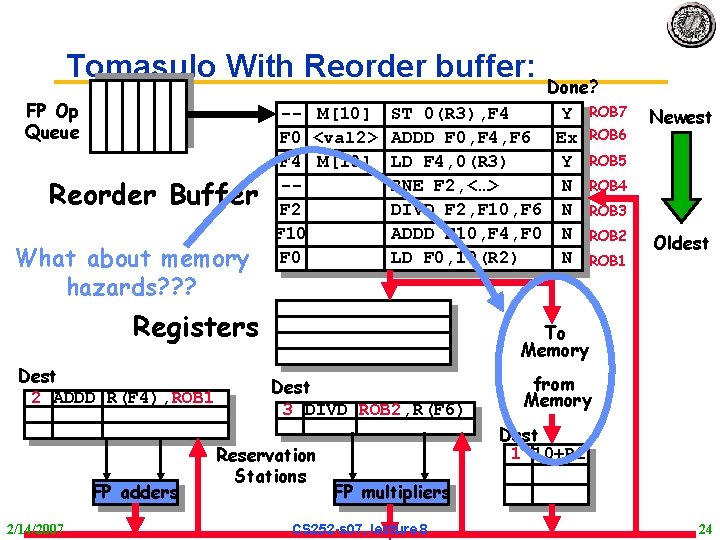

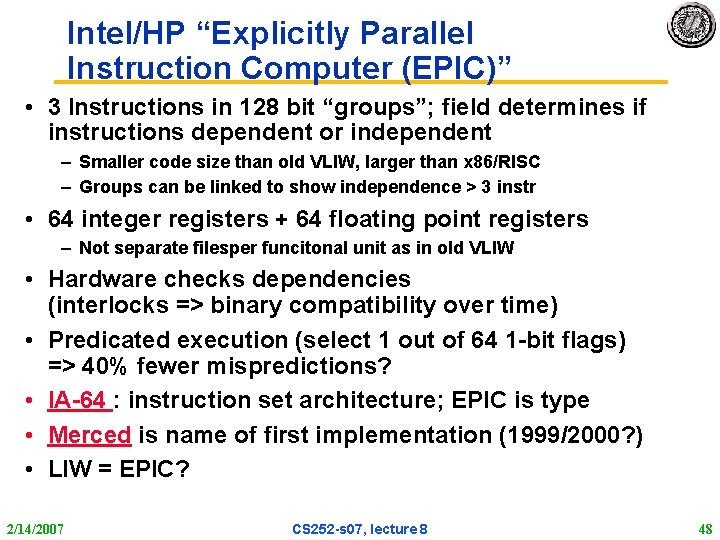

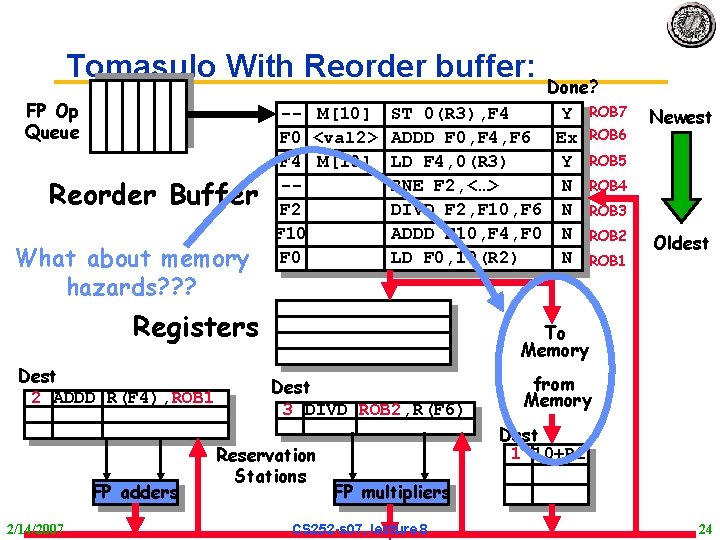

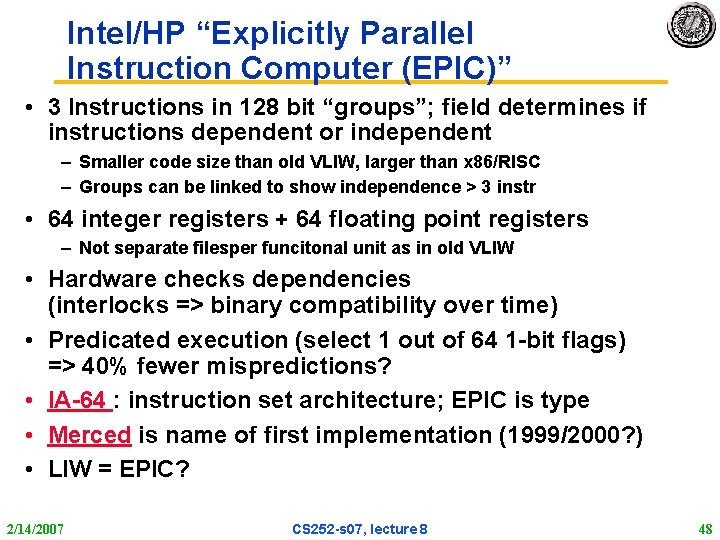

Tomasulo With Reorder Buffer
Reorder buffer (entry: dest, value, instruction, done?):
  ROB7 (newest): --, M[10], ST 0(R3), F4, Y
  ROB6: F0, <val2>, ADDD F0, F4, F6, Ex
  ROB5: F4, M[10], LD F4, 0(R3), Y
  ROB4: --, (none), BNE F2, <…>, N
  ROB3: F2, (none), DIVD F2, F10, F6, N
  ROB2: F10, (none), ADDD F10, F4, F0, N
  ROB1 (oldest): F0, (none), LD F0, 10(R2), N
FP adder reservation station: dest 2, ADDD R(F4), ROB1
FP multiplier reservation station: dest 3, DIVD ROB2, R(F6)
Load buffer: dest 1, address 10+R2

Tomasulo With Reorder Buffer: What about memory hazards???
[Figure: reorder buffer and reservation station contents are the same as on the previous slide: ROB7 ST 0(R3), F4 (Y); ROB6 ADDD F0, F4, F6 (Ex); ROB5 LD F4, 0(R3) (Y); ROB4 BNE F2, <…> (N); ROB3 DIVD F2, F10, F6 (N); ROB2 ADDD F10, F4, F0 (N); ROB1 LD F0, 10(R2) (N)]

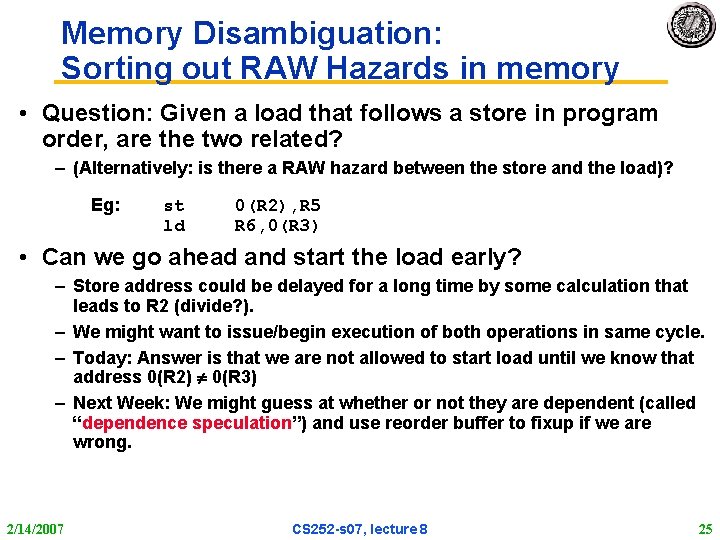

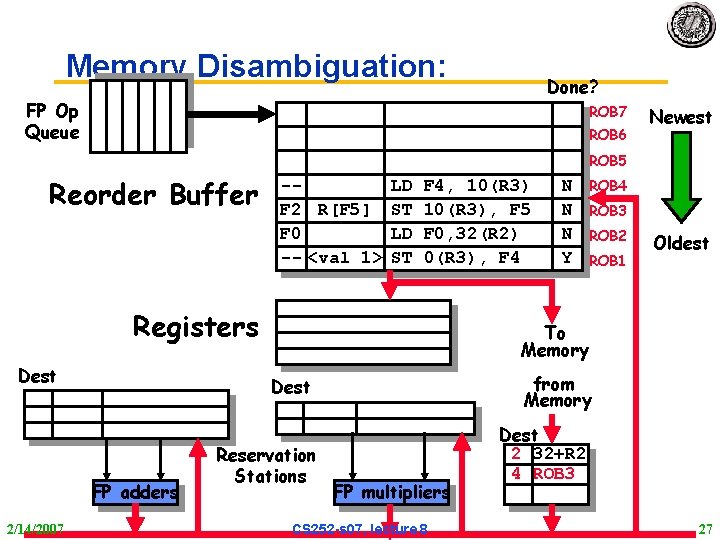

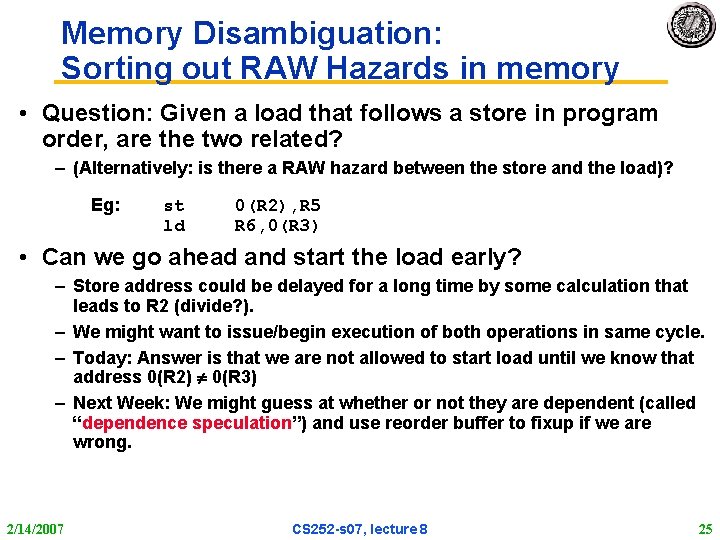

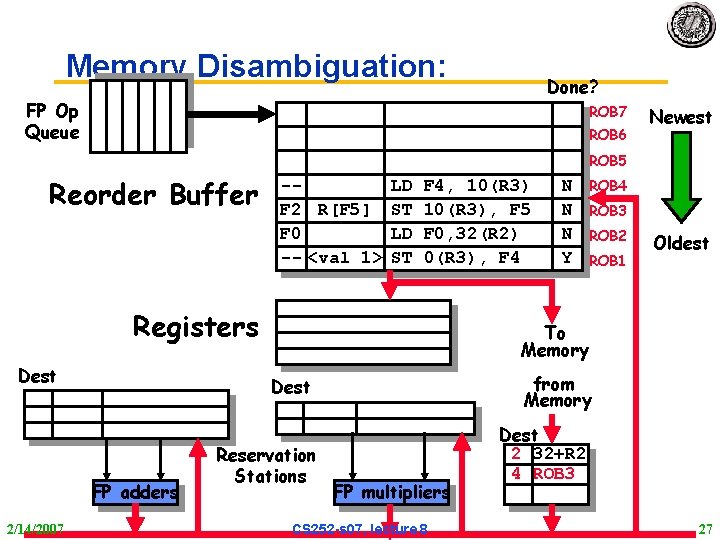

Memory Disambiguation: Sorting out RAW Hazards in memory
• Question: Given a load that follows a store in program order, are the two related?
  – (Alternatively: is there a RAW hazard between the store and the load?)
    E.g.: st 0(R2), R5
          ld R6, 0(R3)
• Can we go ahead and start the load early?
  – Store address could be delayed for a long time by some calculation that leads to R2 (divide?).
  – We might want to issue/begin execution of both operations in same cycle.
  – Today: Answer is that we are not allowed to start load until we know that address 0(R2) ≠ 0(R3)
  – Next Week: We might guess at whether or not they are dependent (called “dependence speculation”) and use reorder buffer to fix up if we are wrong.

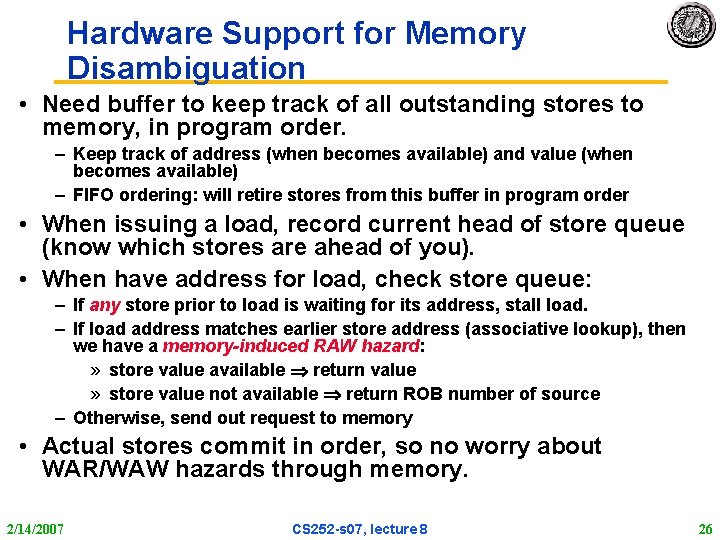

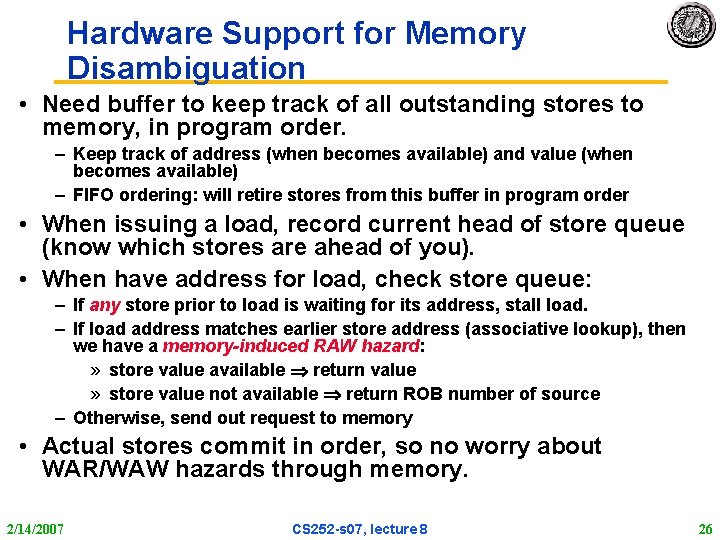

Hardware Support for Memory Disambiguation
• Need buffer to keep track of all outstanding stores to memory, in program order.
  – Keep track of address (when becomes available) and value (when becomes available)
  – FIFO ordering: will retire stores from this buffer in program order
• When issuing a load, record current head of store queue (know which stores are ahead of you).
• When have address for load, check store queue:
  – If any store prior to load is waiting for its address, stall load.
  – If load address matches earlier store address (associative lookup), then we have a memory-induced RAW hazard:
    » store value available ⇒ return value
    » store value not available ⇒ return ROB number of source
  – Otherwise, send out request to memory
• Actual stores commit in order, so no worry about WAR/WAW hazards through memory.
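The store queue check above can be sketched as a single function. This is a hedged illustration: `StoreEntry`, `check_load`, and the returned action labels are hypothetical names, and picking the youngest matching store when several match is an assumption made here (the slide only says the load address matches an earlier store).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StoreEntry:
    rob: int                       # ROB number of the store
    addr: Optional[int] = None     # None until the address is computed
    value: Optional[float] = None  # None until the store data is available


def check_load(older_stores, load_addr):
    """older_stores holds only the stores ahead of the load, oldest first.

    Returns one of:
      ("stall", None)     some earlier store address is still unknown
      ("forward", value)  matching store has its value: return it
      ("wait", rob)       matching store has no value yet: return its ROB number
      ("memory", None)    no match: send the request to memory
    """
    if any(store.addr is None for store in older_stores):
        return ("stall", None)
    for store in reversed(older_stores):  # assumption: youngest match wins
        if store.addr == load_addr:
            if store.value is not None:
                return ("forward", store.value)
            return ("wait", store.rob)
    return ("memory", None)


queue = [StoreEntry(rob=1, addr=0x20, value=4.5), StoreEntry(rob=3, addr=0x40)]
hit_with_value = check_load(queue, 0x20)
hit_without_value = check_load(queue, 0x40)
miss = check_load(queue, 0x60)
unknown = check_load(queue + [StoreEntry(rob=5)], 0x60)
```

The stall case is the conservative policy described for "today"; dependence speculation would instead let the load proceed and rely on the reorder buffer to recover.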

Memory Disambiguation
[Figure: reorder buffer holding, newest to oldest, LD F4, 10(R3) (ROB4, N); ST 10(R3), F5 (ROB3, N); LD F0, 32(R2) (ROB2, N); ST 0(R3), F4 (ROB1, Y). Load buffers: dest 2, address 32+R2; dest 4, waiting on ROB3.]

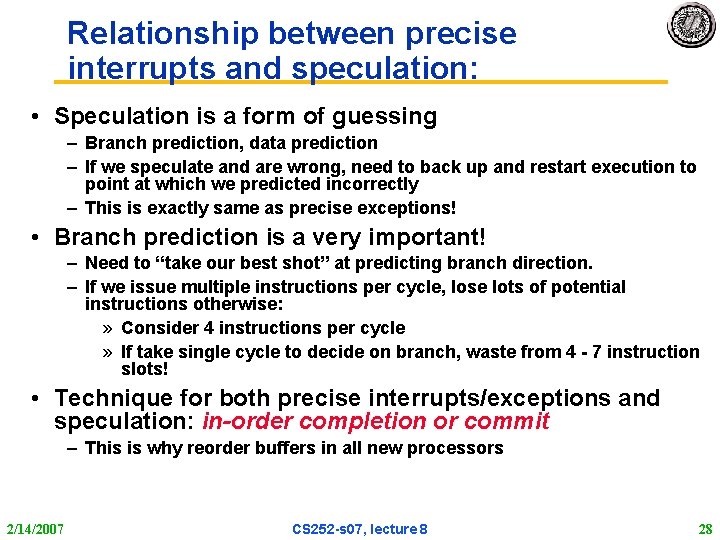

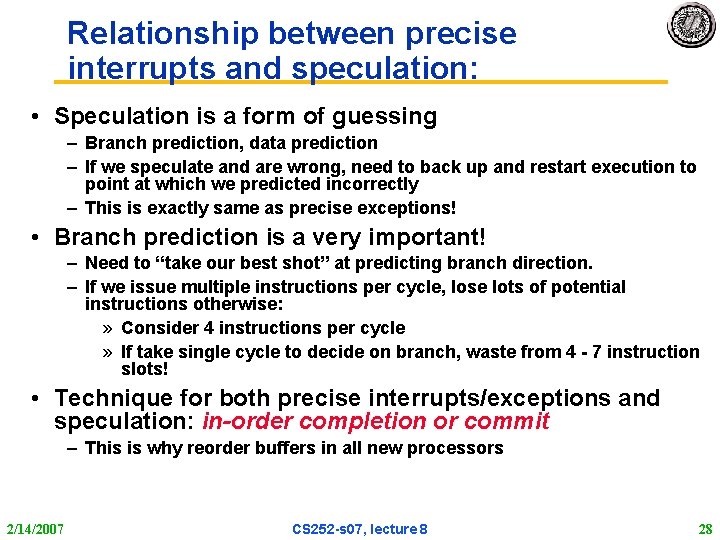

Relationship between precise interrupts and speculation:
• Speculation is a form of guessing
  – Branch prediction, data prediction
  – If we speculate and are wrong, need to back up and restart execution to point at which we predicted incorrectly
  – This is exactly same as precise exceptions!
• Branch prediction is very important!
  – Need to “take our best shot” at predicting branch direction.
  – If we issue multiple instructions per cycle, lose lots of potential instructions otherwise:
    » Consider 4 instructions per cycle
    » If take single cycle to decide on branch, waste from 4 to 7 instruction slots!
• Technique for both precise interrupts/exceptions and speculation: in-order completion or commit
  – This is why reorder buffers in all new processors

Administrative
• Midterm I: Wednesday 3/14
  Location: 306 Soda Hall
  Time: 5:30 - 8:30
  – Can have 1 sheet of 8½ x 11 handwritten notes, both sides
  – No microfiche of the book!
• This info is on the Lecture page (has been)
• Meet at La Val’s afterwards for Pizza and Beverages
  – Great way for me to get to know you better
  – I’ll Buy!

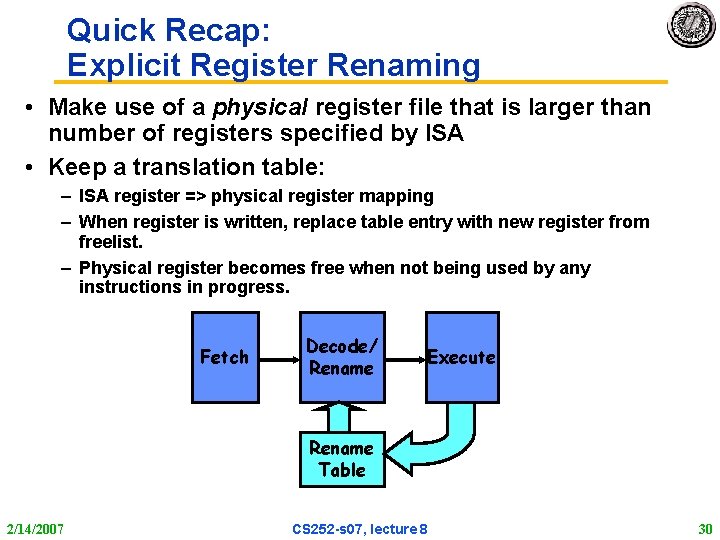

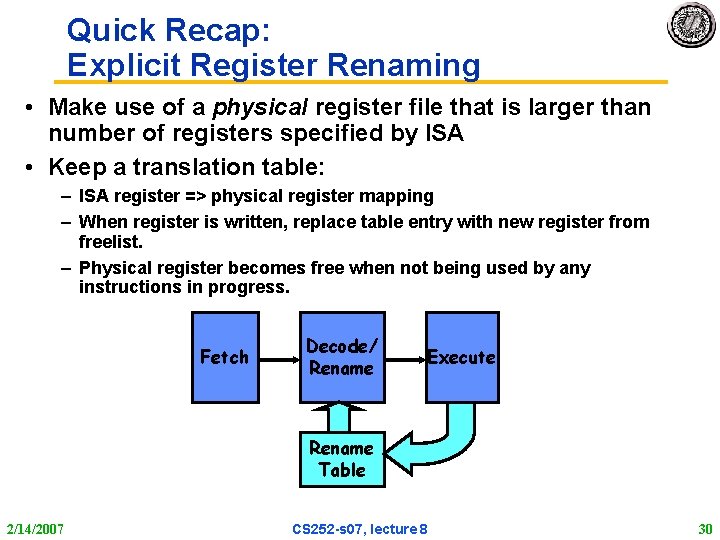

Quick Recap: Explicit Register Renaming
• Make use of a physical register file that is larger than number of registers specified by ISA
• Keep a translation table:
  – ISA register => physical register mapping
  – When register is written, replace table entry with new register from freelist.
  – Physical register becomes free when not being used by any instructions in progress.
[Figure: Fetch, Decode/Rename, Execute pipeline with the Rename Table attached to the Decode/Rename stage]

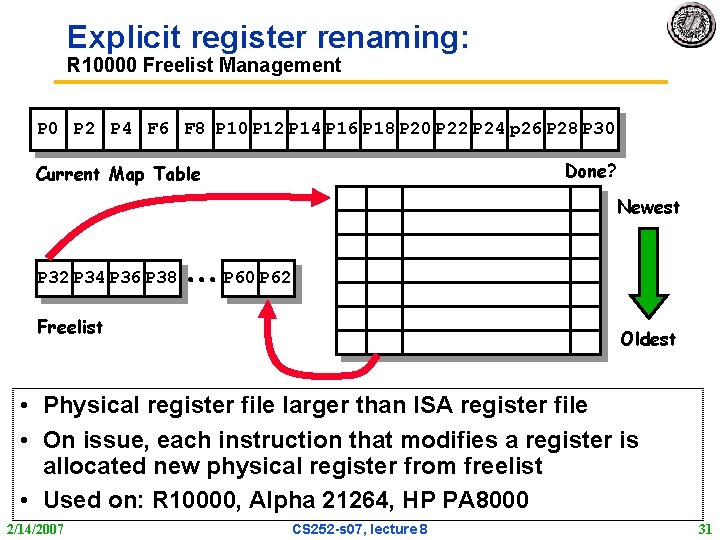

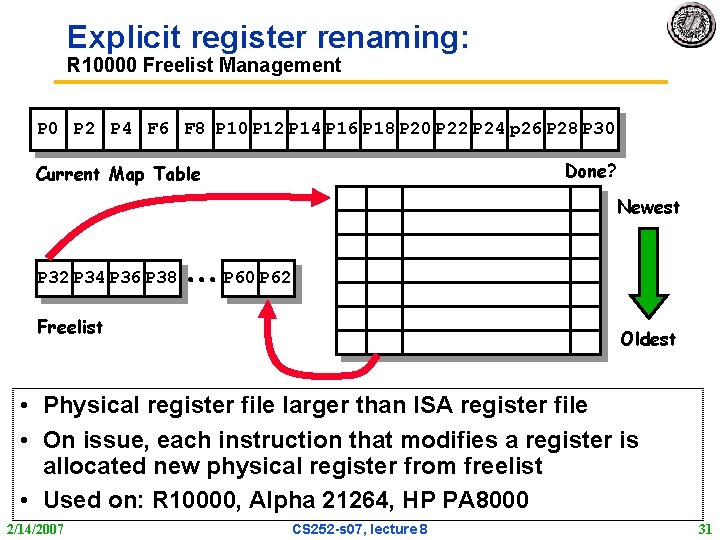

Explicit register renaming: R10000 Freelist Management
[Figure: current map table (P0, P2, P4, P6, P8, P10, P12, ..., P30), freelist (P32, P34, P36, P38, ..., P60, P62), and an empty list of in-flight instructions ordered newest to oldest with a Done? column]
• Physical register file larger than ISA register file
• On issue, each instruction that modifies a register is allocated new physical register from freelist
• Used on: R10000, Alpha 21264, HP PA 8000

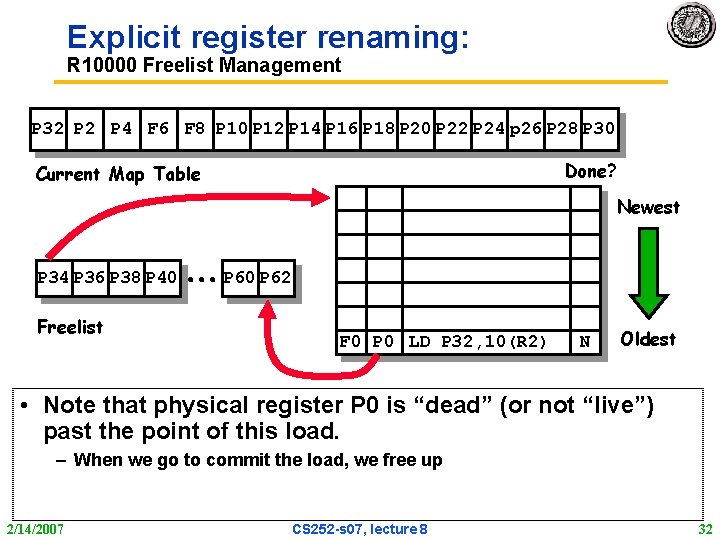

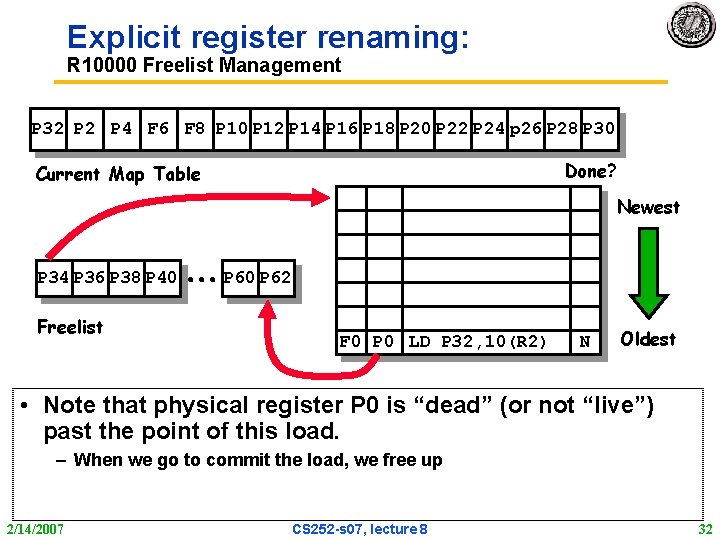

Explicit register renaming: R10000 Freelist Management
[Figure: F0 now maps to P32 in the current map table; freelist is P34, P36, P38, P40, ..., P60, P62]
In-flight instructions (dest, old physical register, instruction, done?):
  F0, P0, LD P32, 10(R2), N
• Note that physical register P0 is “dead” (or not “live”) past the point of this load.
  – When we go to commit the load, we free up

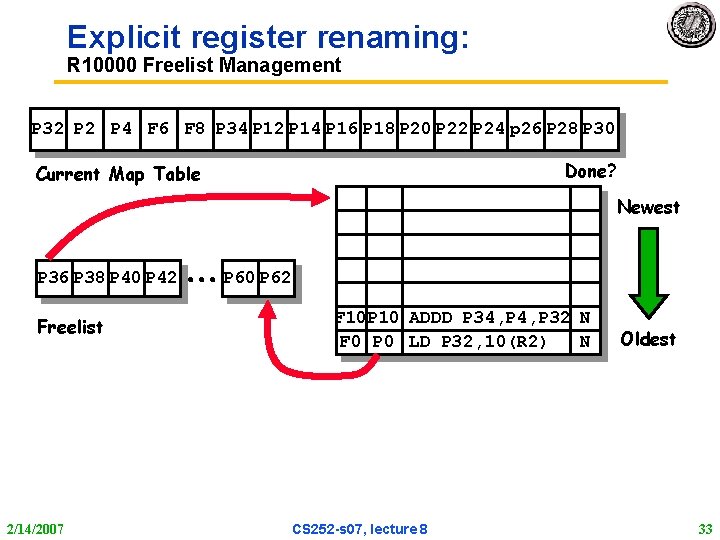

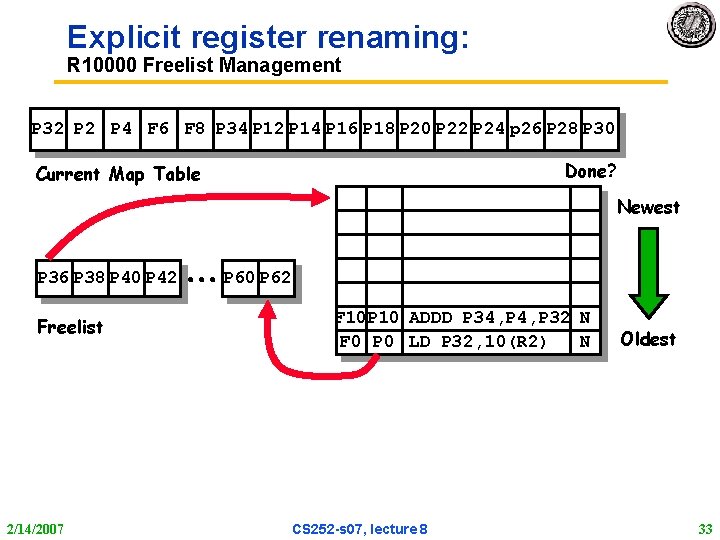

Explicit register renaming: R10000 Freelist Management
[Figure: F0 maps to P32 and F10 now maps to P34 in the current map table; freelist is P36, P38, P40, P42, ..., P60, P62]
In-flight instructions, newest to oldest (dest, old physical register, instruction, done?):
  F10, P10, ADDD P34, P32, N
  F0, P0, LD P32, 10(R2), N

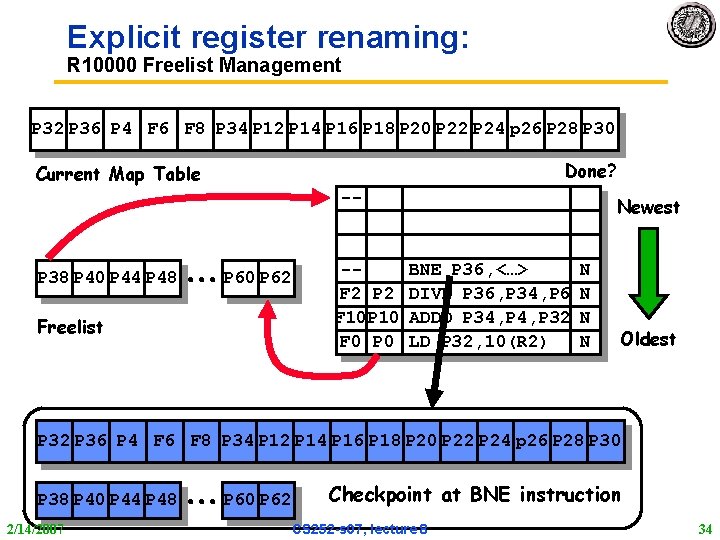

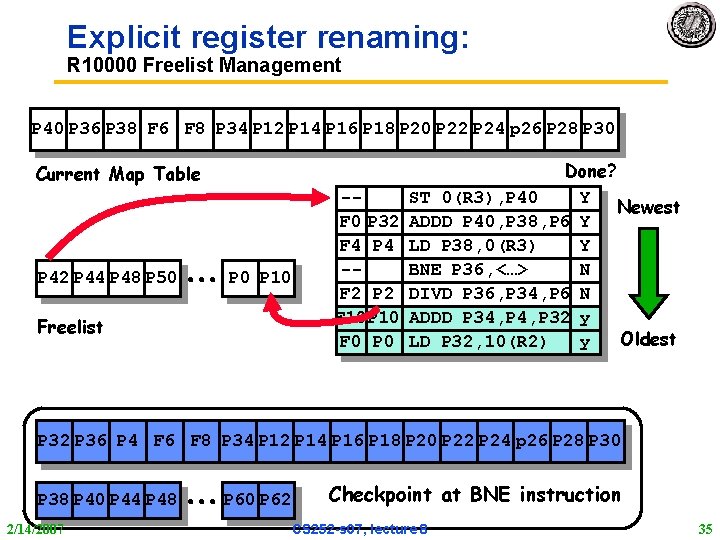

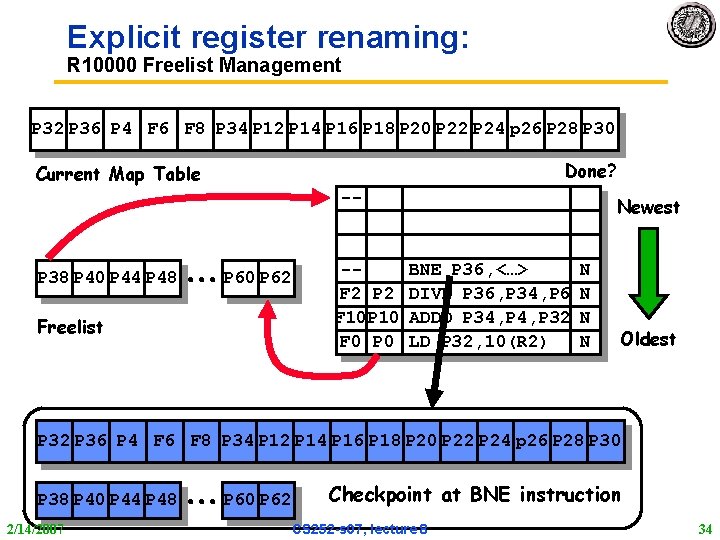

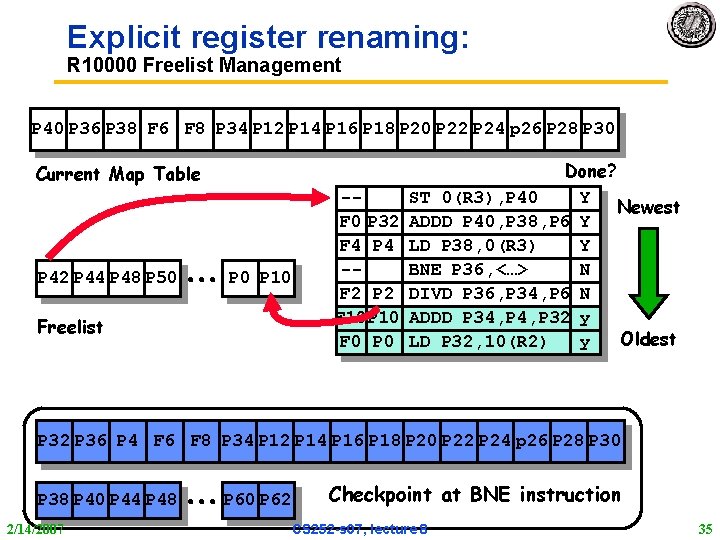

Explicit register renaming: R10000 Freelist Management
[Figure: current map table P32, P36, P4, P6, P8, P34, P12, ..., P30; freelist P38, P40, P44, P48, ..., P60, P62]
In-flight instructions, newest to oldest (dest, old physical register, instruction, done?):
  --, --, BNE P36, <…>, N
  F2, P2, DIVD P36, P34, P6, N
  F10, P10, ADDD P34, P32
  F0, P0, LD P32, 10(R2)
Checkpoint at BNE instruction: copy of the map table (P32, P36, P4, P6, P8, P34, P12, ..., P30) and of the freelist (P38, P40, P44, P48, ..., P60, P62)

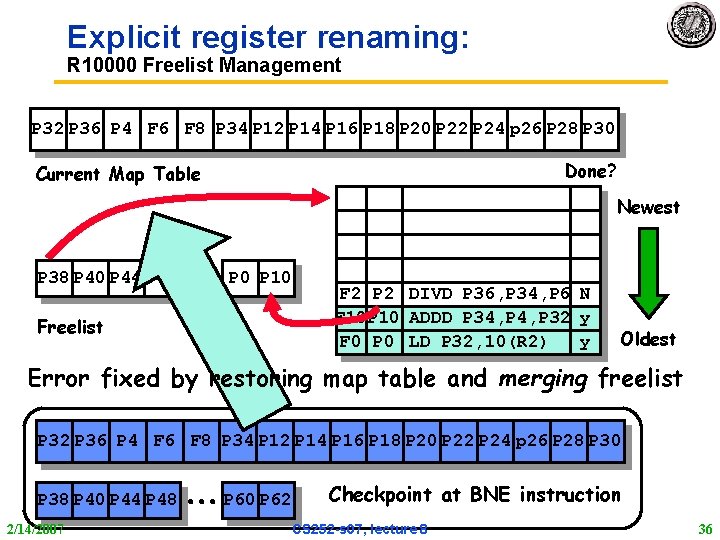

Explicit register renaming: R10000 Freelist Management
[Figure: current map table P40, P36, P38, P6, P8, P34, P12, ..., P30; freelist P42, P44, P48, P50, ..., P10]
In-flight instructions, newest to oldest (dest, old physical register, instruction, done?):
  --, --, ST 0(R3), P40, Y
  F0, P32, ADDD P40, P38, P6, Y
  F4, P4, LD P38, 0(R3), Y
  --, --, BNE P36, <…>, N
  F2, P2, DIVD P36, P34, P6, N
  F10, P10, ADDD P34, P32, Y
  F0, P0, LD P32, 10(R2), Y
Checkpoint at BNE instruction: map table P32, P36, P4, P6, P8, P34, P12, ..., P30; freelist P38, P40, P44, P48, ..., P60, P62

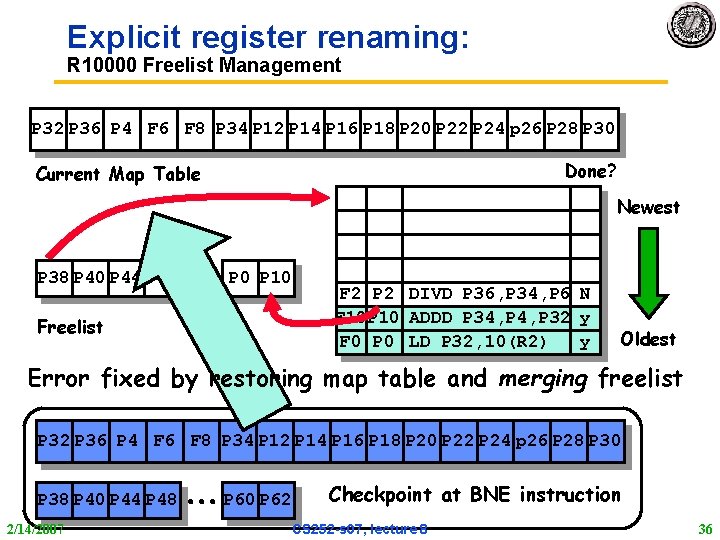

Explicit register renaming: R10000 Freelist Management
[Figure: current map table restored to P32, P36, P4, P6, P8, P34, P12, ..., P30; freelist P38, P40, P44, P48, ..., P0, P10]
In-flight instructions, newest to oldest (dest, old physical register, instruction, done?):
  F2, P2, DIVD P36, P34, P6, N
  F10, P10, ADDD P34, P32, Y
  F0, P0, LD P32, 10(R2), Y
Error fixed by restoring map table and merging freelist
Checkpoint at BNE instruction: map table P32, P36, P4, P6, P8, P34, P12, ..., P30; freelist P38, P40, P44, P48, ..., P60, P62
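The map table, freelist, and branch checkpoint from this walkthrough can be sketched in Python. This is an illustrative model only: `RenameTable` and its methods are hypothetical names, the physical register numbers are consecutive rather than the ones in the figures, and "merging" the freelist is interpreted here as the checkpointed freelist plus any registers freed by commits since the checkpoint.

```python
from collections import deque


class RenameTable:
    def __init__(self, arch_regs, num_phys):
        # Initially architectural register i maps to physical register Pi.
        self.map = {reg: f"P{i}" for i, reg in enumerate(arch_regs)}
        self.free = deque(f"P{i}" for i in range(len(arch_regs), num_phys))

    def rename(self, dest, srcs):
        """Return (new_dest, physical_sources, old_dest) for one instruction."""
        phys_srcs = [self.map[s] for s in srcs]  # read sources before remapping dest
        old = self.map[dest]
        new = self.free.popleft()                # allocate from the freelist
        self.map[dest] = new
        return new, phys_srcs, old

    def commit(self, old_phys):
        self.free.append(old_phys)               # old mapping is dead at commit

    def checkpoint(self):
        return dict(self.map), list(self.free)

    def restore(self, snapshot):
        snap_map, snap_free = snapshot
        # Keep registers freed by commits that happened after the checkpoint.
        freed_since = [p for p in self.free if p not in snap_free]
        self.map = dict(snap_map)
        self.free = deque(snap_free + freed_since)


rt = RenameTable(["F0", "F2", "F4"], num_phys=8)
ld_new, _, ld_old = rt.rename("F0", [])                 # LD F0, ...
add_new, add_srcs, _ = rt.rename("F4", ["F2", "F0"])    # ADDD F4, F2, F0
snap = rt.checkpoint()                                  # branch issues here
rt.rename("F2", [])                                     # speculative LD F2, ...
rt.commit(ld_old)                                       # older LD commits, frees P0
rt.restore(snap)                                        # branch was mispredicted
```

Undoing the speculative work needs no register copying: restoring the table mappings is enough, which is the point made on the following slide.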

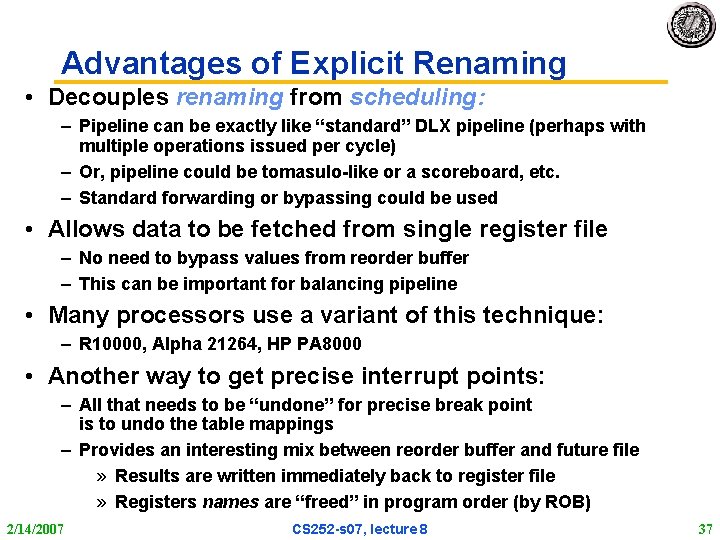

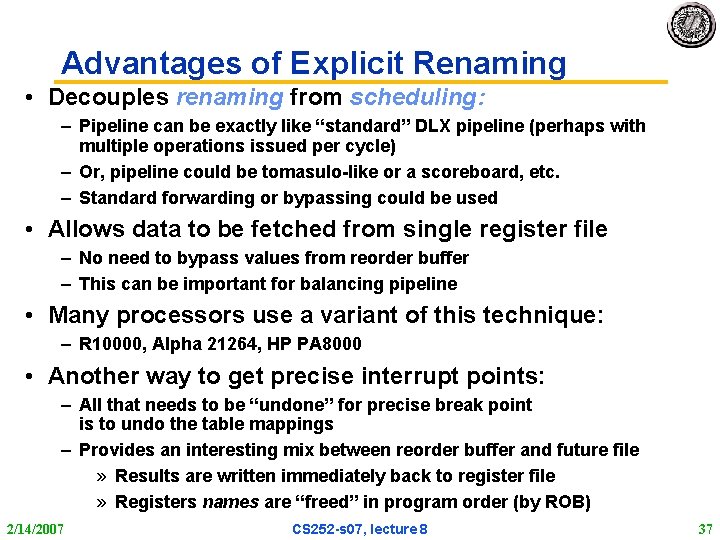

Advantages of Explicit Renaming
• Decouples renaming from scheduling:
  – Pipeline can be exactly like “standard” DLX pipeline (perhaps with multiple operations issued per cycle)
  – Or, pipeline could be Tomasulo-like or a scoreboard, etc.
  – Standard forwarding or bypassing could be used
• Allows data to be fetched from single register file
  – No need to bypass values from reorder buffer
  – This can be important for balancing pipeline
• Many processors use a variant of this technique:
  – R10000, Alpha 21264, HP PA 8000
• Another way to get precise interrupt points:
  – All that needs to be “undone” for precise break point is to undo the table mappings
  – Provides an interesting mix between reorder buffer and future file
    » Results are written immediately back to register file
    » Register names are “freed” in program order (by ROB)

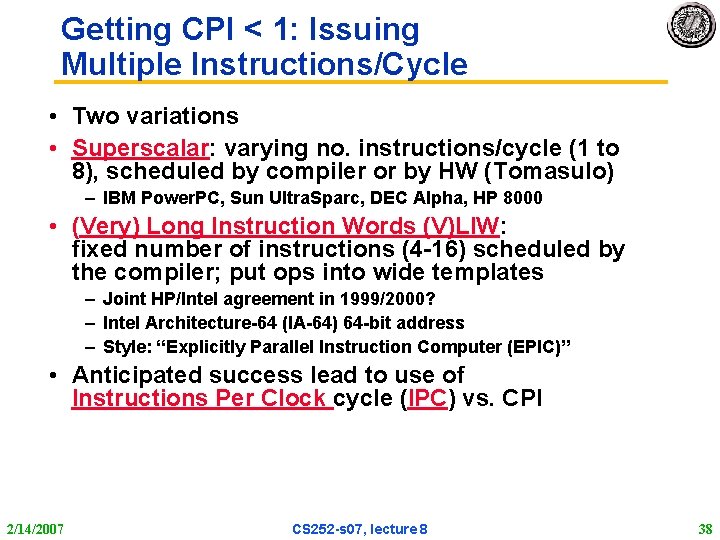

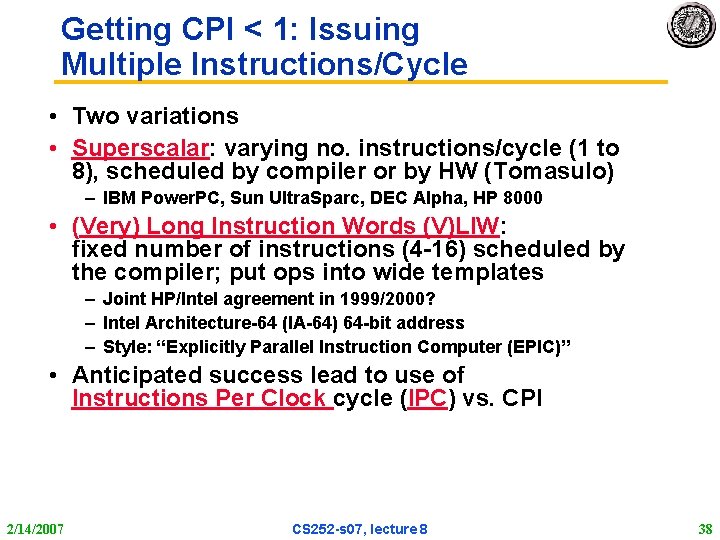

Getting CPI < 1: Issuing Multiple Instructions/Cycle
• Two variations
• Superscalar: varying no. instructions/cycle (1 to 8), scheduled by compiler or by HW (Tomasulo)
  – IBM PowerPC, Sun UltraSPARC, DEC Alpha, HP 8000
• (Very) Long Instruction Words (V)LIW: fixed number of instructions (4-16) scheduled by the compiler; put ops into wide templates
  – Joint HP/Intel agreement in 1999/2000?
  – Intel Architecture-64 (IA-64) 64-bit address
  – Style: “Explicitly Parallel Instruction Computer (EPIC)”
• Anticipated success led to use of Instructions Per Clock cycle (IPC) vs. CPI

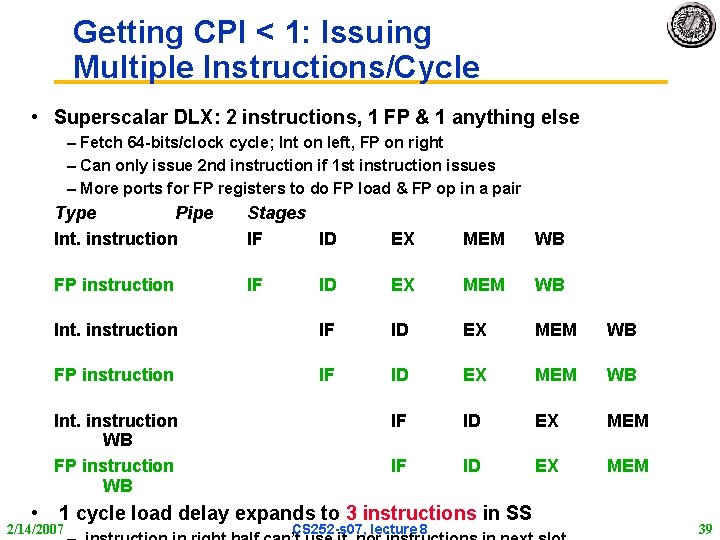

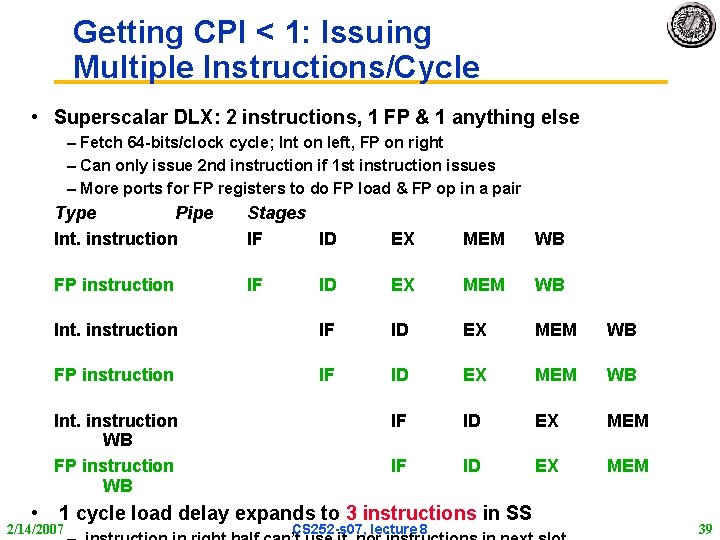

Getting CPI < 1: Issuing Multiple Instructions/Cycle
• Superscalar DLX: 2 instructions, 1 FP & 1 anything else
  – Fetch 64 bits/clock cycle; Int on left, FP on right
  – Can only issue 2nd instruction if 1st instruction issues
  – More ports for FP registers to do FP load & FP op in a pair

Pipe stages by clock cycle:

| Type | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Int. instruction | IF | ID | EX | MEM | WB | | |
| FP instruction | IF | ID | EX | MEM | WB | | |
| Int. instruction | | IF | ID | EX | MEM | WB | |
| FP instruction | | IF | ID | EX | MEM | WB | |
| Int. instruction | | | IF | ID | EX | MEM | WB |
| FP instruction | | | IF | ID | EX | MEM | WB |

• 1 cycle load delay expands to 3 instructions in SS

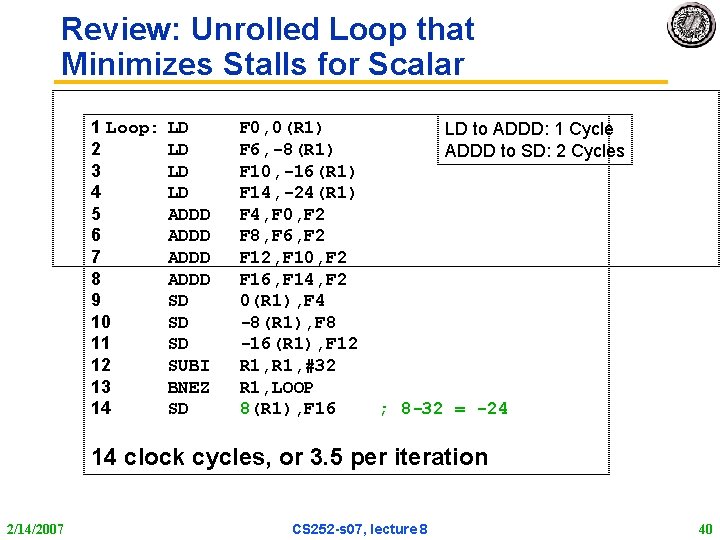

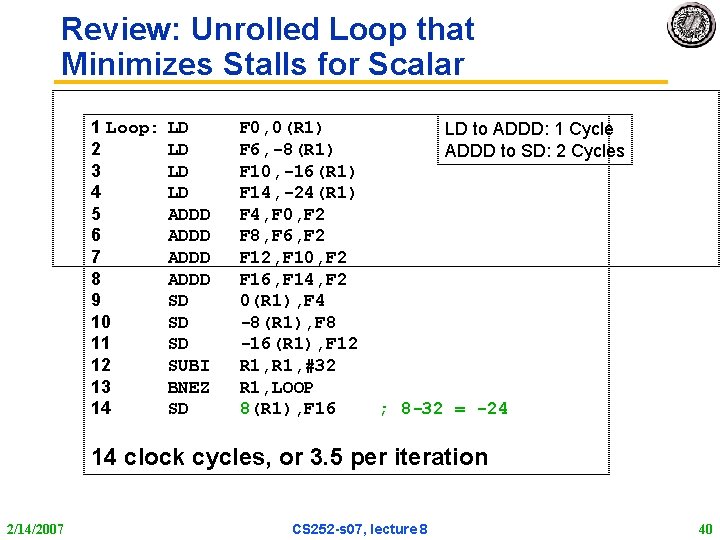

Review: Unrolled Loop that Minimizes Stalls for Scalar
   1 Loop: LD   F0, 0(R1)
   2       LD   F6, -8(R1)
   3       LD   F10, -16(R1)
   4       LD   F14, -24(R1)
   5       ADDD F4, F0, F2
   6       ADDD F8, F6, F2
   7       ADDD F12, F10, F2
   8       ADDD F16, F14, F2
   9       SD   0(R1), F4
  10       SD   -8(R1), F8
  11       SD   -16(R1), F12
  12       SUBI R1, R1, #32
  13       BNEZ R1, LOOP
  14       SD   8(R1), F16    ; 8 - 32 = -24
LD to ADDD: 1 cycle
ADDD to SD: 2 cycles
14 clock cycles, or 3.5 per iteration

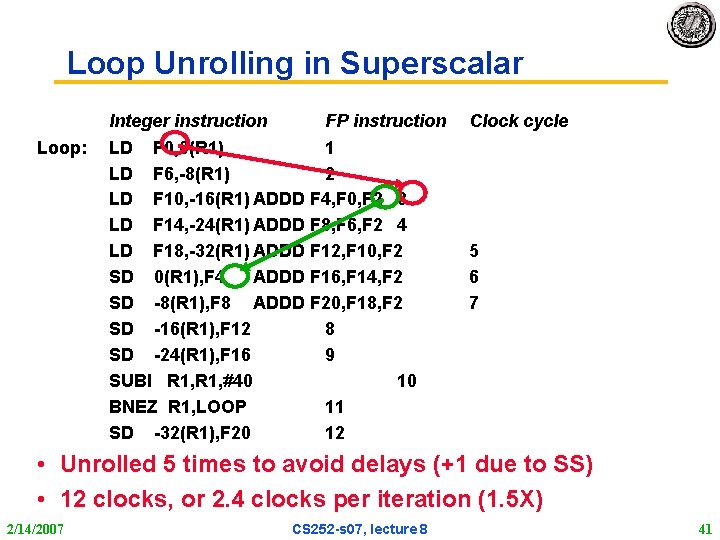

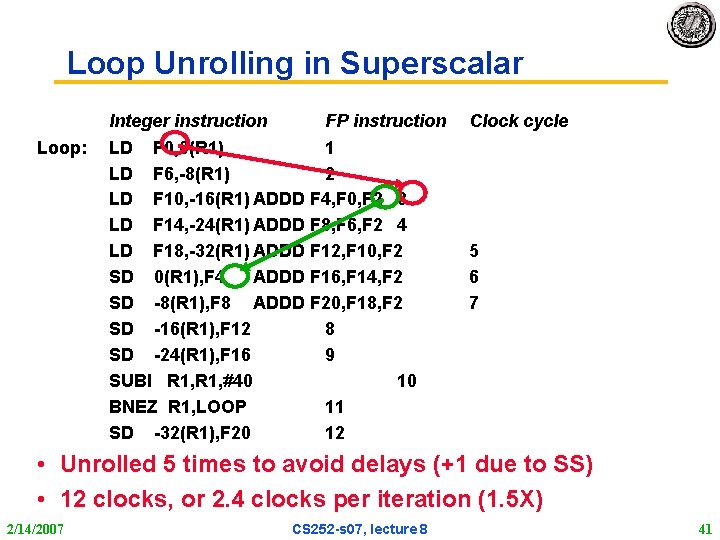

Loop Unrolling in Superscalar

| Clock cycle | Integer instruction | FP instruction |
|---|---|---|
| 1 | Loop: LD F0, 0(R1) | |
| 2 | LD F6, -8(R1) | |
| 3 | LD F10, -16(R1) | ADDD F4, F0, F2 |
| 4 | LD F14, -24(R1) | ADDD F8, F6, F2 |
| 5 | LD F18, -32(R1) | ADDD F12, F10, F2 |
| 6 | SD 0(R1), F4 | ADDD F16, F14, F2 |
| 7 | SD -8(R1), F8 | ADDD F20, F18, F2 |
| 8 | SD -16(R1), F12 | |
| 9 | SD -24(R1), F16 | |
| 10 | SUBI R1, R1, #40 | |
| 11 | BNEZ R1, LOOP | |
| 12 | SD -32(R1), F20 | |

• Unrolled 5 times to avoid delays (+1 due to SS)
• 12 clocks, or 2.4 clocks per iteration (1.5X)

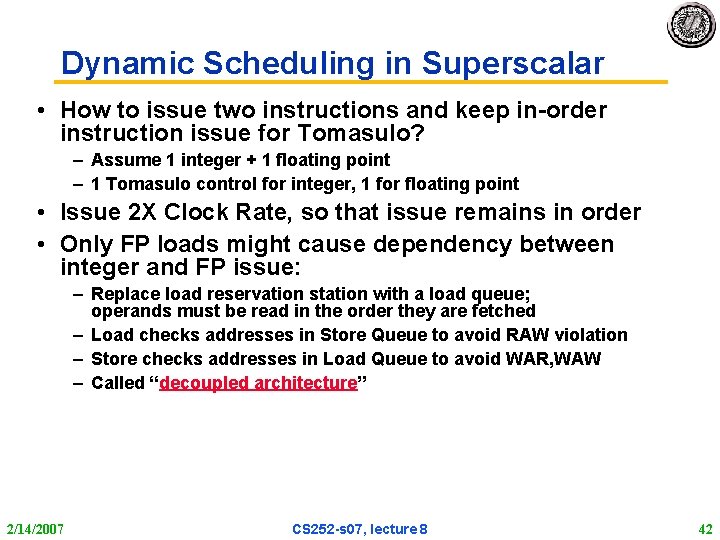

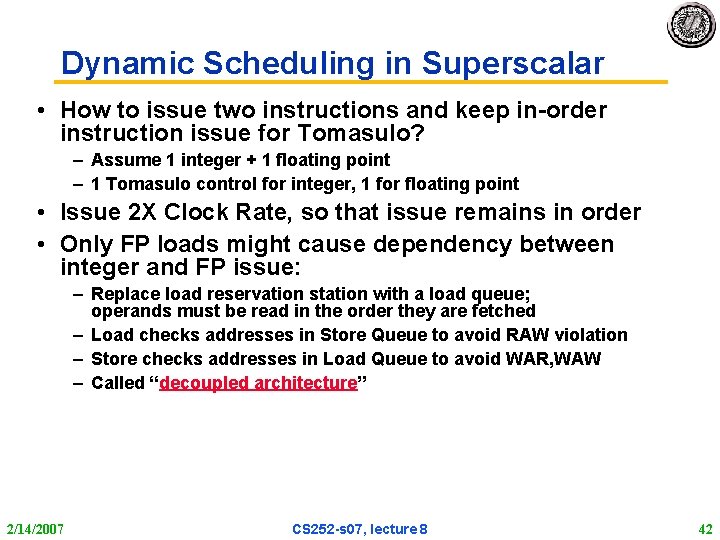

Dynamic Scheduling in Superscalar
• How to issue two instructions and keep in-order instruction issue for Tomasulo?
  – Assume 1 integer + 1 floating point
  – 1 Tomasulo control for integer, 1 for floating point
• Issue 2X Clock Rate, so that issue remains in order
• Only FP loads might cause dependency between integer and FP issue:
  – Replace load reservation station with a load queue; operands must be read in the order they are fetched
  – Load checks addresses in Store Queue to avoid RAW violation
  – Store checks addresses in Load Queue to avoid WAR, WAW
  – Called “decoupled architecture”

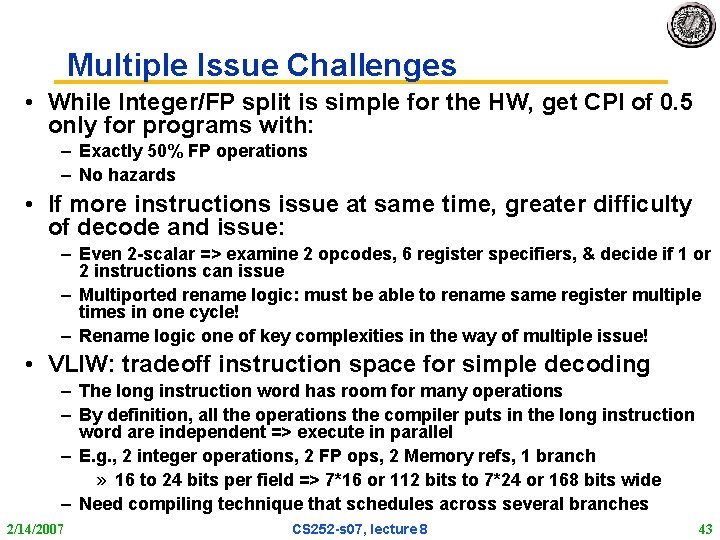

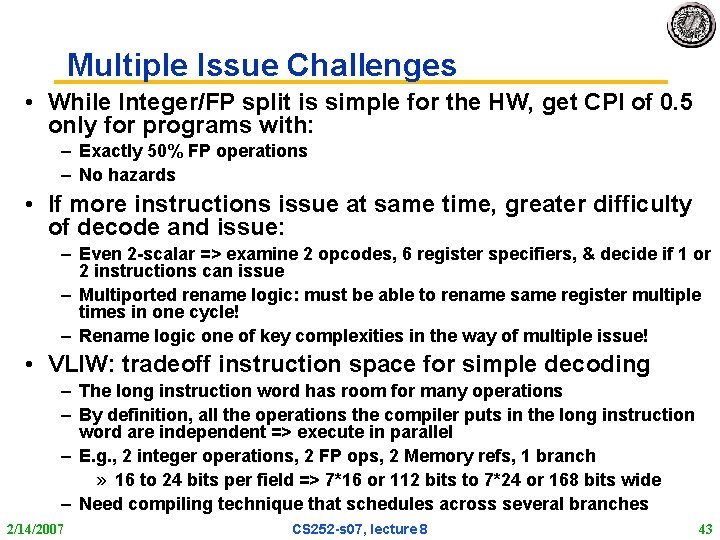

Multiple Issue Challenges
• While Integer/FP split is simple for the HW, get CPI of 0.5 only for programs with:
  – Exactly 50% FP operations
  – No hazards
• If more instructions issue at same time, greater difficulty of decode and issue:
  – Even 2-scalar => examine 2 opcodes, 6 register specifiers, & decide if 1 or 2 instructions can issue
  – Multiported rename logic: must be able to rename same register multiple times in one cycle!
  – Rename logic one of key complexities in the way of multiple issue!
• VLIW: tradeoff instruction space for simple decoding
  – The long instruction word has room for many operations
  – By definition, all the operations the compiler puts in the long instruction word are independent => execute in parallel
  – E.g., 2 integer operations, 2 FP ops, 2 Memory refs, 1 branch
    » 16 to 24 bits per field => 7*16 or 112 bits to 7*24 or 168 bits wide
  – Need compiling technique that schedules across several branches

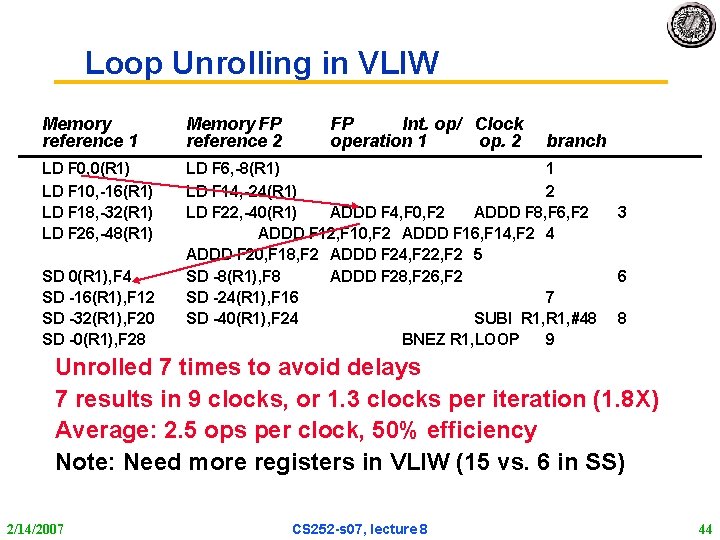

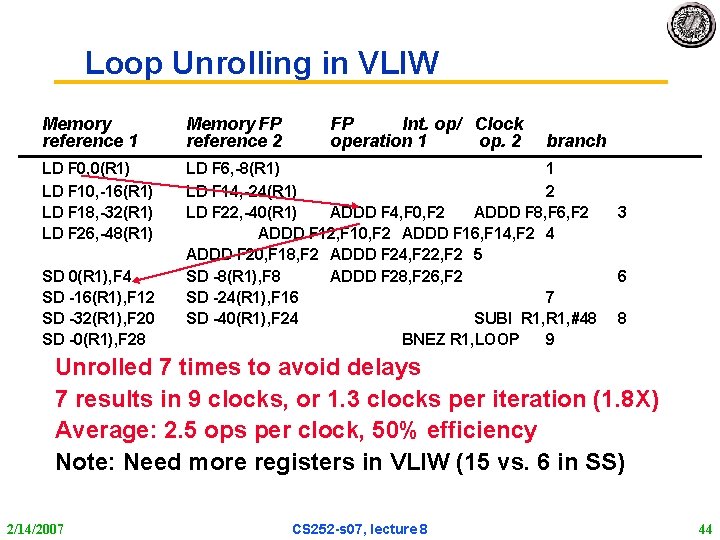

Loop Unrolling in VLIW

| Clock | Memory reference 1 | Memory reference 2 | FP operation 1 | FP op. 2 | Int. op/branch |
|---|---|---|---|---|---|
| 1 | LD F0, 0(R1) | LD F6, -8(R1) | | | |
| 2 | LD F10, -16(R1) | LD F14, -24(R1) | | | |
| 3 | LD F18, -32(R1) | LD F22, -40(R1) | ADDD F4, F0, F2 | ADDD F8, F6, F2 | |
| 4 | LD F26, -48(R1) | | ADDD F12, F10, F2 | ADDD F16, F14, F2 | |
| 5 | | | ADDD F20, F18, F2 | ADDD F24, F22, F2 | |
| 6 | SD 0(R1), F4 | SD -8(R1), F8 | ADDD F28, F26, F2 | | |
| 7 | SD -16(R1), F12 | SD -24(R1), F16 | | | |
| 8 | SD -32(R1), F20 | SD -40(R1), F24 | | | SUBI R1, R1, #48 |
| 9 | SD -0(R1), F28 | | | | BNEZ R1, LOOP |

• Unrolled 7 times to avoid delays
• 7 results in 9 clocks, or 1.3 clocks per iteration (1.8X)
• Average: 2.5 ops per clock, 50% efficiency
• Note: Need more registers in VLIW (15 vs. 6 in SS)
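The per-iteration figures quoted for the scalar, superscalar, and VLIW schedules follow from simple division. The short Python check below recomputes them from the clock and iteration counts on the slides; the variable names are illustrative, and the slides round the results (1.5X, 1.8X, 2.5 ops per clock, 50%).

```python
# (clock cycles for the unrolled loop body, original iterations covered)
schedules = {"scalar": (14, 4), "superscalar": (12, 5), "vliw": (9, 7)}

clocks_per_iter = {name: clocks / iters
                   for name, (clocks, iters) in schedules.items()}

# Speedups, each relative to the previous scheme as on the slides.
ss_speedup = clocks_per_iter["scalar"] / clocks_per_iter["superscalar"]
vliw_speedup = clocks_per_iter["superscalar"] / clocks_per_iter["vliw"]

# VLIW schedule: 7 loads, 7 adds, 7 stores, plus SUBI and BNEZ,
# issued into 9 long instruction words with 5 operation slots each.
vliw_ops = 7 + 7 + 7 + 2
ops_per_clock = vliw_ops / 9
efficiency = vliw_ops / (9 * 5)

for name, value in clocks_per_iter.items():
    print(f"{name}: {value:.2f} clocks per iteration")
print(f"superscalar vs scalar: {ss_speedup:.2f}x")
print(f"VLIW vs superscalar: {vliw_speedup:.2f}x")
print(f"VLIW: {ops_per_clock:.2f} ops per clock, {efficiency:.0%} of slots used")
```

Roughly half of the VLIW operation slots stay empty even after unrolling seven times, which is the cost paid for the simpler issue hardware.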

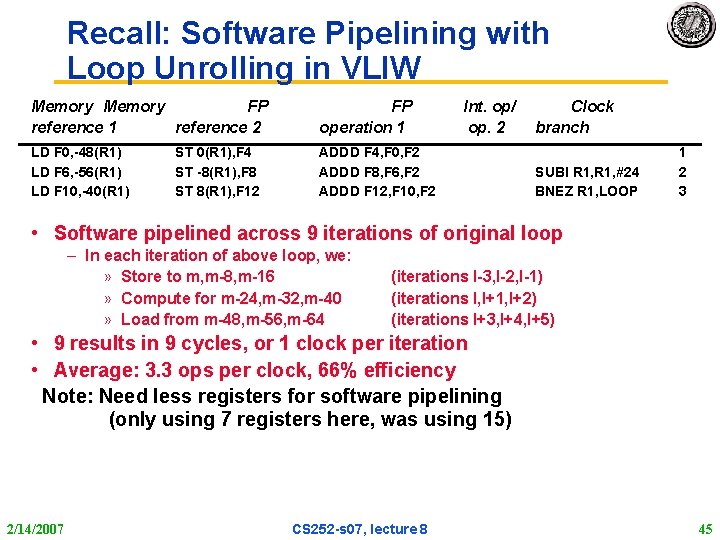

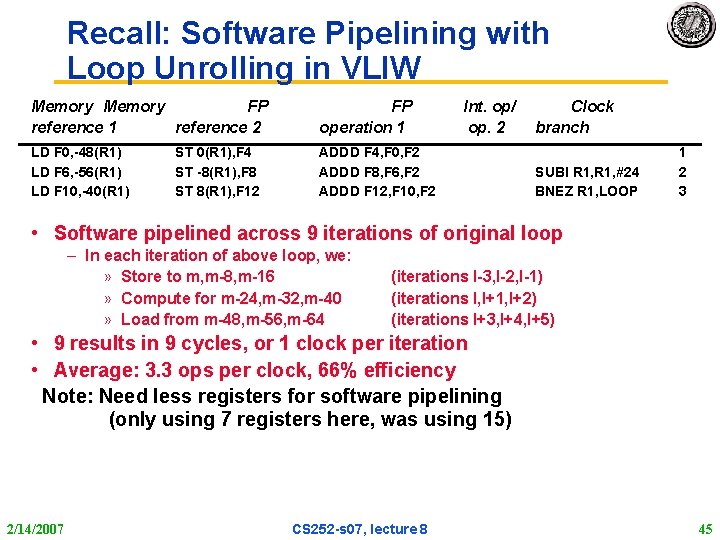

Recall: Software Pipelining with Loop Unrolling in VLIW

| Clock | Memory reference 1 | Memory reference 2 | FP operation 1 | FP op. 2 | Int. op/branch |
|---|---|---|---|---|---|
| 1 | LD F0, -48(R1) | ST 0(R1), F4 | ADDD F4, F0, F2 | | |
| 2 | LD F6, -56(R1) | ST -8(R1), F8 | ADDD F8, F6, F2 | | SUBI R1, R1, #24 |
| 3 | LD F10, -40(R1) | ST 8(R1), F12 | ADDD F12, F10, F2 | | BNEZ R1, LOOP |

• Software pipelined across 9 iterations of original loop
  – In each iteration of above loop, we:
    » Store to m, m-8, m-16 (iterations I-3, I-2, I-1)
    » Compute for m-24, m-32, m-40 (iterations I, I+1, I+2)
    » Load from m-48, m-56, m-64 (iterations I+3, I+4, I+5)
• 9 results in 9 cycles, or 1 clock per iteration
• Average: 3.3 ops per clock, 66% efficiency
• Note: Need fewer registers for software pipelining (only using 7 registers here, was using 15)

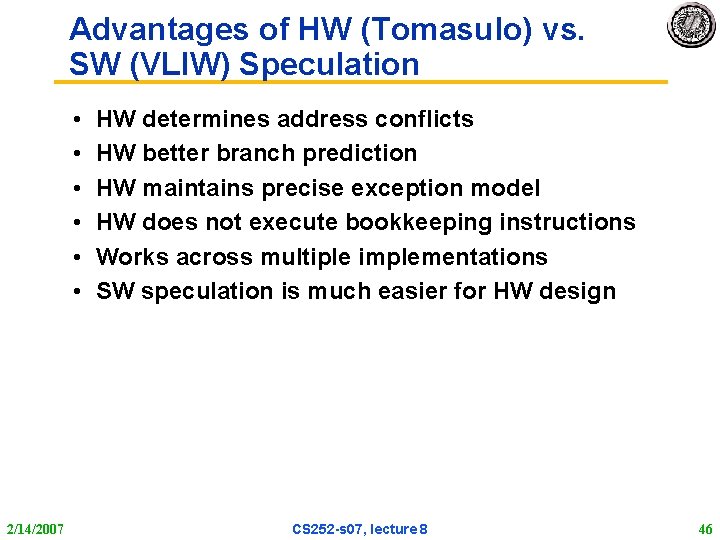

Advantages of HW (Tomasulo) vs. SW (VLIW) Speculation
• HW determines address conflicts
• HW better branch prediction
• HW maintains precise exception model
• HW does not execute bookkeeping instructions
• Works across multiple implementations
• SW speculation is much easier for HW design

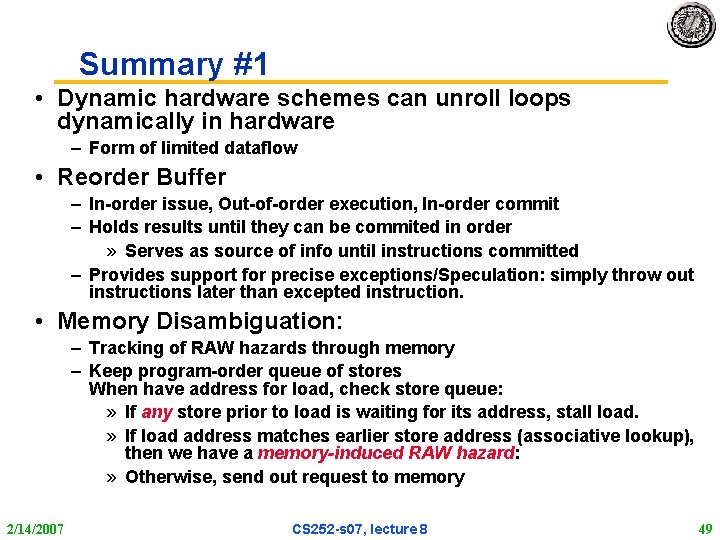

Superscalar v. VLIW
Superscalar:
• Smaller code size
• Binary compatibility across generations of hardware
VLIW:
• Simplified Hardware for decoding, issuing instructions
• No Interlock Hardware (compiler checks?)
• More registers, but simplified Hardware for Register Ports (multiple independent register files?)

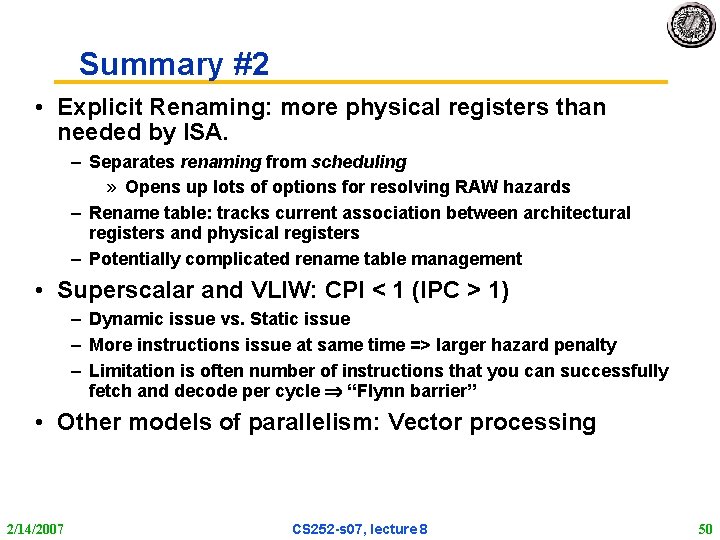

Intel/HP “Explicitly Parallel Instruction Computer (EPIC)”
• 3 Instructions in 128 bit “groups”; field determines if instructions dependent or independent
  – Smaller code size than old VLIW, larger than x86/RISC
  – Groups can be linked to show independence > 3 instr
• 64 integer registers + 64 floating point registers
  – Not separate files per functional unit as in old VLIW
• Hardware checks dependencies (interlocks => binary compatibility over time)
• Predicated execution (select 1 out of 64 1-bit flags) => 40% fewer mispredictions?
• IA-64: instruction set architecture; EPIC is type
• Merced is name of first implementation (1999/2000?)
• LIW = EPIC?

Summary #1
• Dynamic hardware schemes can unroll loops dynamically in hardware
  – Form of limited dataflow
• Reorder Buffer
  – In-order issue, Out-of-order execution, In-order commit
  – Holds results until they can be committed in order
    » Serves as source of info until instructions committed
  – Provides support for precise exceptions/Speculation: simply throw out instructions later than excepted instruction.
• Memory Disambiguation:
  – Tracking of RAW hazards through memory
  – Keep program-order queue of stores
  – When have address for load, check store queue:
    » If any store prior to load is waiting for its address, stall load.
    » If load address matches earlier store address (associative lookup), then we have a memory-induced RAW hazard
    » Otherwise, send out request to memory

Summary #2
• Explicit Renaming: more physical registers than needed by ISA.
  – Separates renaming from scheduling
    » Opens up lots of options for resolving RAW hazards
  – Rename table: tracks current association between architectural registers and physical registers
  – Potentially complicated rename table management
• Superscalar and VLIW: CPI < 1 (IPC > 1)
  – Dynamic issue vs. Static issue
  – More instructions issue at same time => larger hazard penalty
  – Limitation is often number of instructions that you can successfully fetch and decode per cycle: “Flynn barrier”
• Other models of parallelism: Vector processing