CS 1699 Intro to Computer Vision Detection III

- Slides: 63

CS 1699: Intro to Computer Vision

Detection III: Analyzing and Debugging Detection Methods

Prof. Adriana Kovashka, University of Pittsburgh

November 17, 2015

Today

- Review: Deformable part models
- How can we speed up detection?
- In what ways does detection fail?
- How can we visualize features and models?

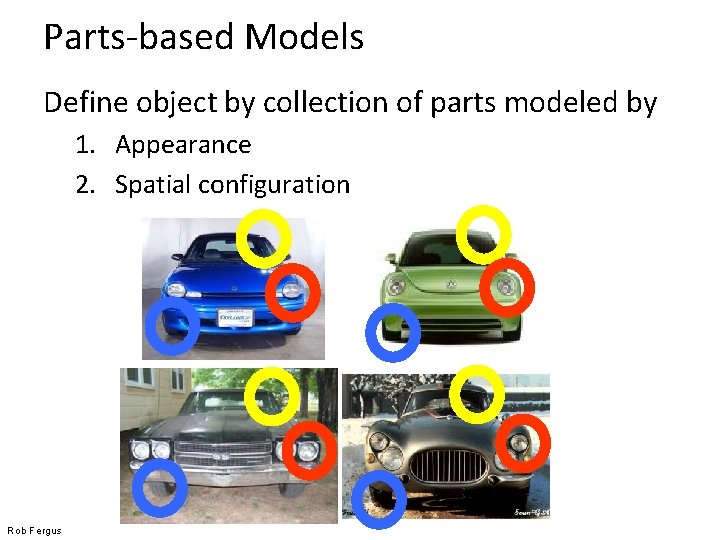

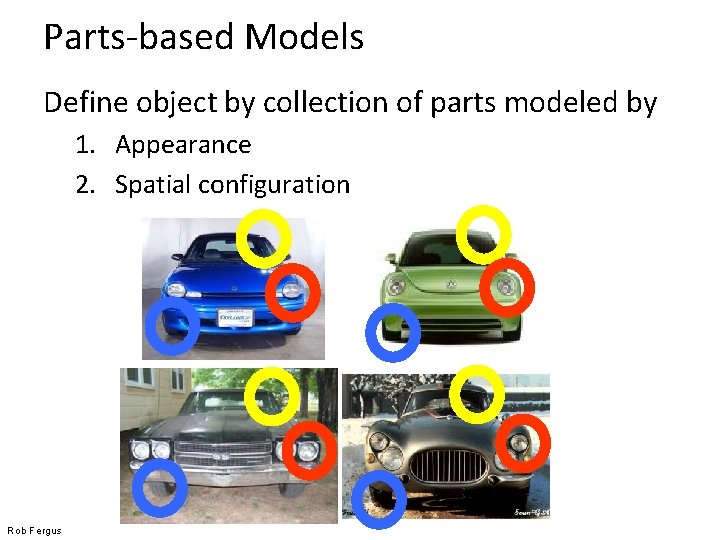

Parts-based Models

Define object by collection of parts modeled by

1. Appearance
2. Spatial configuration

Rob Fergus

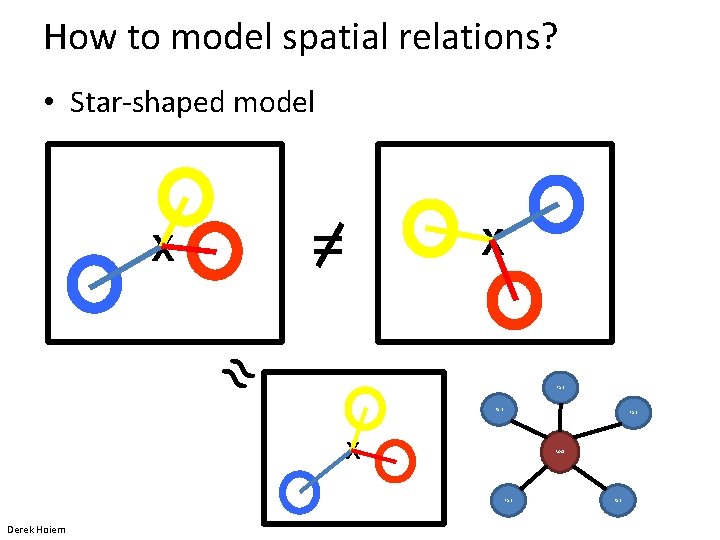

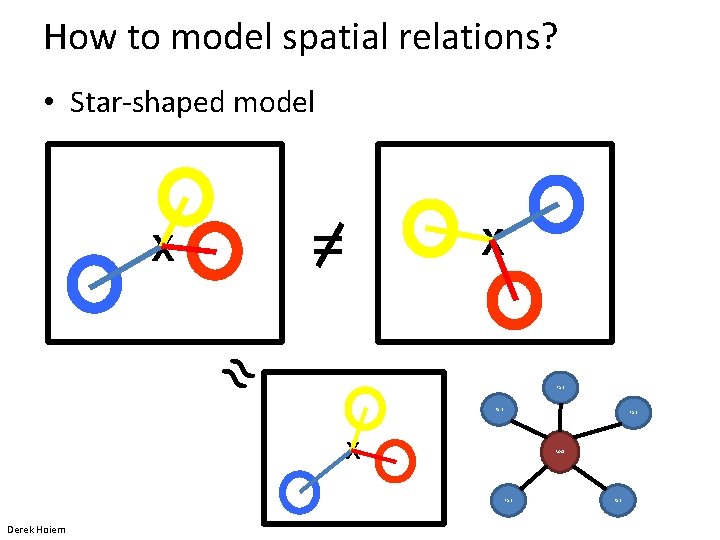

How to model spatial relations?

- Star-shaped model: each part is connected to a root

Derek Hoiem

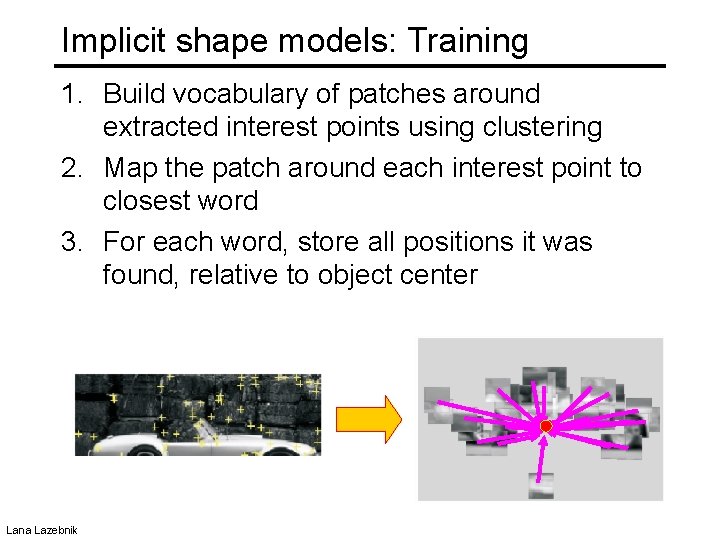

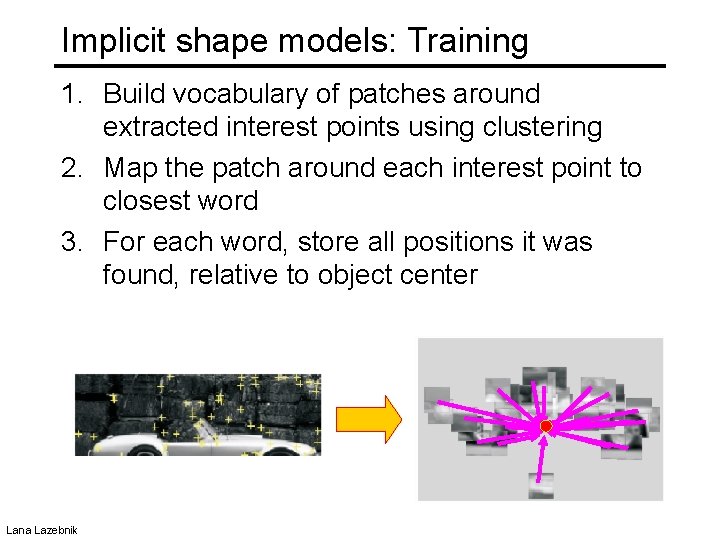

Implicit shape models: Training

1. Build vocabulary of patches around extracted interest points using clustering
2. Map the patch around each interest point to closest word
3. For each word, store all positions it was found, relative to object center

Lana Lazebnik

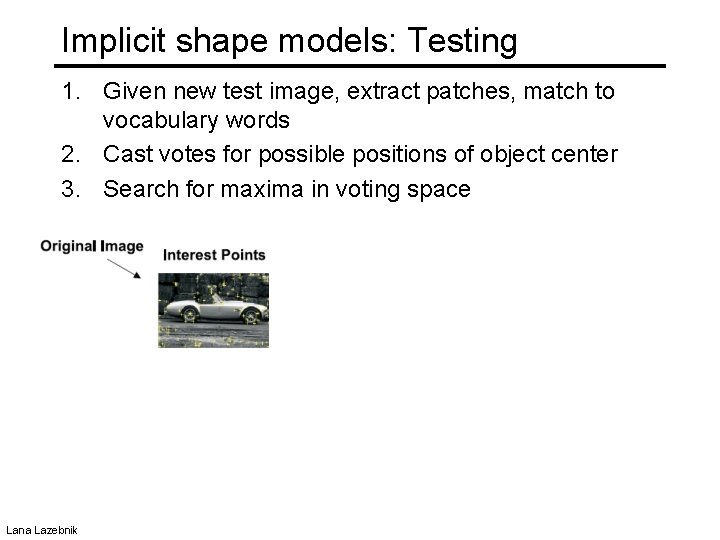

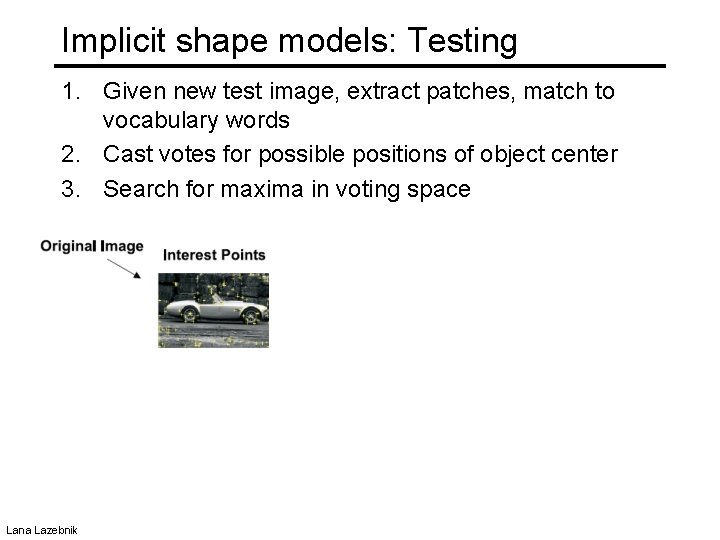

Implicit shape models: Testing

1. Given new test image, extract patches, match to vocabulary words
2. Cast votes for possible positions of object center
3. Search for maxima in voting space

Lana Lazebnik
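The training and testing steps of the implicit shape model amount to a generalized Hough vote. The sketch below is a minimal illustration, assuming patches have already been matched to integer word ids. The function names `train_ism` and `vote_for_center` and the toy data are hypothetical and are not code from the lecture.

```python
from collections import defaultdict

import numpy as np


def train_ism(words, positions, center):
    """For each visual word, store offsets from patch position to object center."""
    offsets = defaultdict(list)
    for w, (x, y) in zip(words, positions):
        offsets[w].append((center[0] - x, center[1] - y))
    return offsets


def vote_for_center(offsets, words, positions, shape):
    """Cast votes for the object center; return the accumulator and its maximum."""
    acc = np.zeros(shape, dtype=float)  # indexed [y, x]
    for w, (x, y) in zip(words, positions):
        stored = offsets.get(w, [])
        for dx, dy in stored:
            cx, cy = x + dx, y + dy
            if 0 <= cx < shape[1] and 0 <= cy < shape[0]:
                # Each matched patch spreads one unit of vote over its stored offsets.
                acc[cy, cx] += 1.0 / len(stored)
    cy, cx = np.unravel_index(np.argmax(acc), acc.shape)
    return acc, (int(cx), int(cy))


# Toy training image: word 0 seen left of the center, word 1 seen right of it.
model = train_ism(words=[0, 1], positions=[(8, 10), (12, 10)], center=(10, 10))

# Toy test image: the same configuration shifted by (+5, +3).
acc, peak = vote_for_center(model, [0, 1], [(13, 13), (17, 13)], shape=(32, 32))
print(peak)
```

Both test patches vote for the same location, so the maximum of the voting space lands on the shifted object center.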

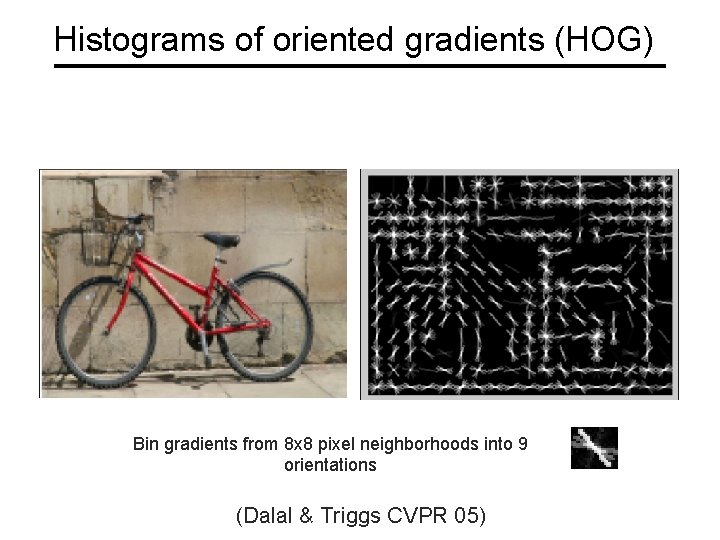

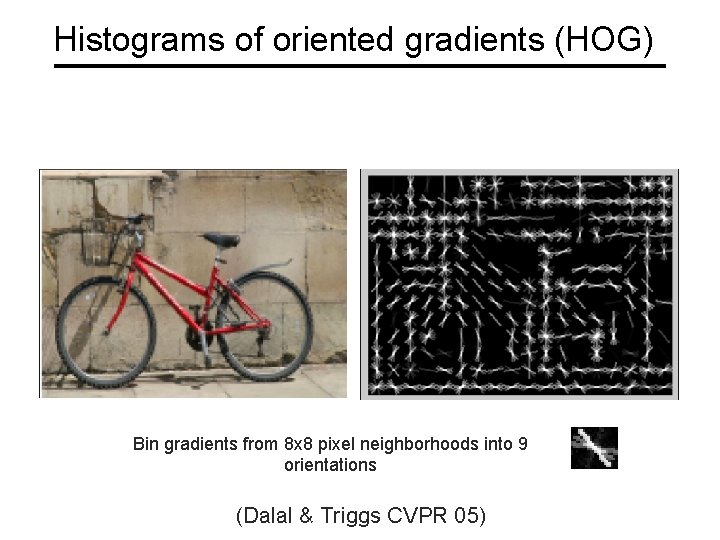

Histograms of oriented gradients (HOG)

Bin gradients from 8 x 8 pixel neighborhoods into 9 orientations

(Dalal & Triggs, CVPR 05)
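The binning step can be sketched with NumPy as follows. This is a simplified illustration only: it assumes a grayscale image, unsigned orientations, and hard bin assignment, and it omits the block normalization and interpolation used in the full Dalal & Triggs descriptor. The function name `hog_cells` is hypothetical.

```python
import numpy as np


def hog_cells(image, cell=8, bins=9):
    """Unnormalized per-cell histograms of unsigned gradient orientation."""
    image = image.astype(float)
    gy, gx = np.gradient(image)  # derivatives along rows (y) and columns (x)
    mag = np.hypot(gx, gy)
    ang = np.degrees(np.arctan2(gy, gx)) % 180.0  # unsigned orientation
    bin_idx = np.minimum((ang / (180.0 / bins)).astype(int), bins - 1)

    rows, cols = image.shape[0] // cell, image.shape[1] // cell
    hist = np.zeros((rows, cols, bins))
    for r in range(rows):
        for c in range(cols):
            sl = (slice(r * cell, (r + 1) * cell), slice(c * cell, (c + 1) * cell))
            # Each pixel votes for its orientation bin, weighted by gradient magnitude.
            hist[r, c] = np.bincount(
                bin_idx[sl].ravel(), weights=mag[sl].ravel(), minlength=bins
            )
    return hist


# Horizontal intensity ramp: every gradient points along x, so all energy falls in bin 0.
ramp = np.tile(np.arange(16, dtype=float), (16, 1))
h = hog_cells(ramp)
print(h.shape, h[0, 0])
```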

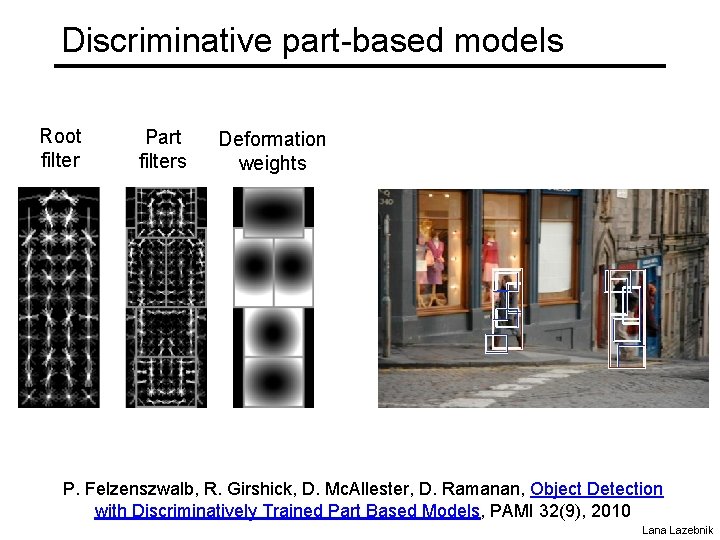

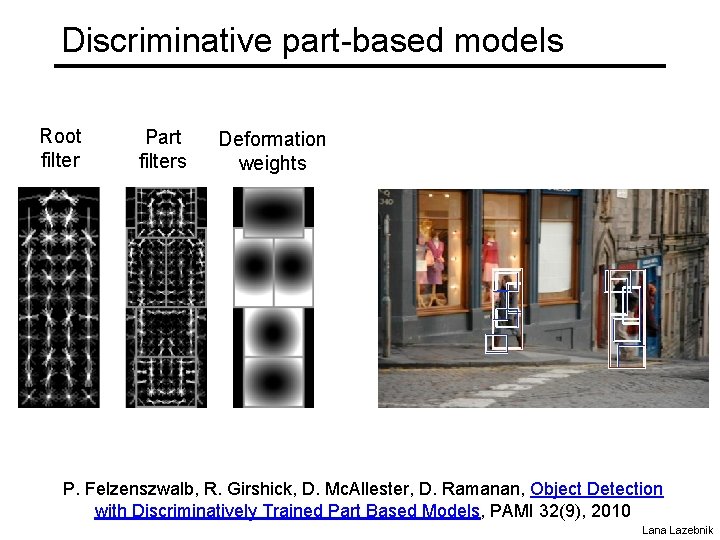

Discriminative part-based models

- Root filter
- Part filters
- Deformation weights

P. Felzenszwalb, R. Girshick, D. McAllester, D. Ramanan, Object Detection with Discriminatively Trained Part Based Models, PAMI 32(9), 2010

Lana Lazebnik

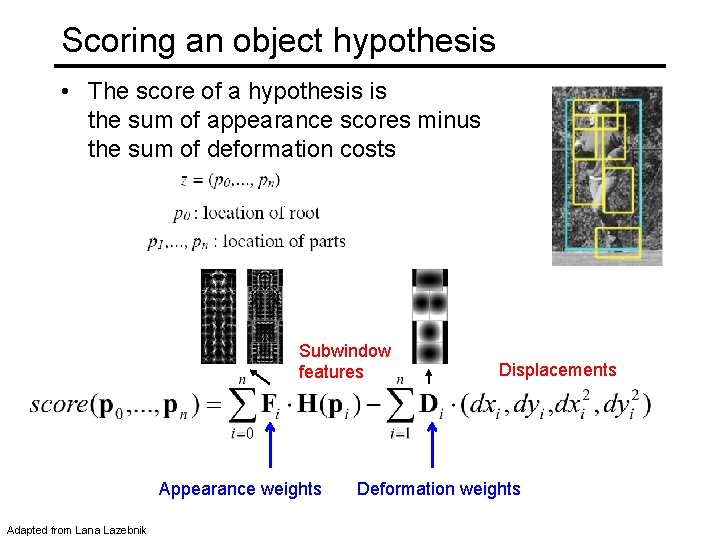

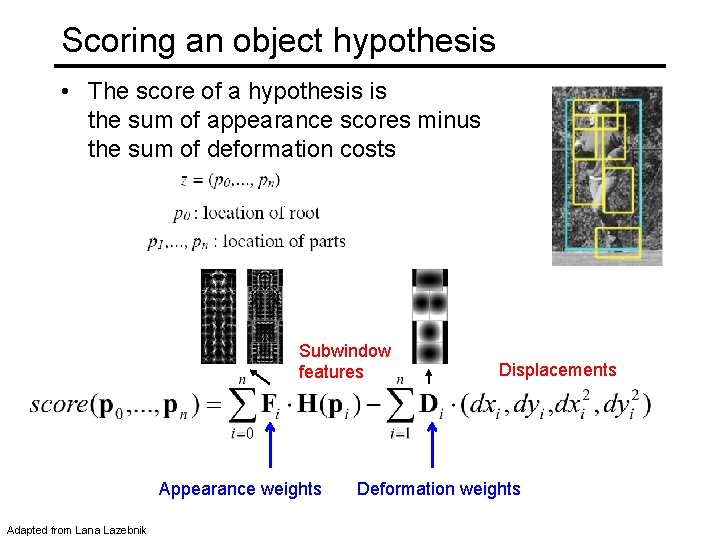

Scoring an object hypothesis

- The score of a hypothesis is the sum of appearance scores minus the sum of deformation costs
- Appearance scores: appearance weights applied to subwindow features
- Deformation costs: deformation weights applied to displacements

Adapted from Lana Lazebnik
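A minimal sketch of this score is shown below. It assumes the form used by Felzenszwalb et al., where each part's deformation cost is a weighted sum of (dx, dy, dx², dy²). The function name `hypothesis_score` and the toy numbers are hypothetical. In a real detector the subwindow features change with each part's placement; the toy example holds them fixed to isolate the deformation term.

```python
import numpy as np


def hypothesis_score(filters, features, deform_weights, displacements):
    """Sum of appearance scores minus sum of deformation costs.

    filters[i], features[i]: appearance weights and subwindow features for the
        root (i = 0) and each part (i >= 1), as arrays of equal shape.
    deform_weights[k]: four deformation weights for part k + 1.
    displacements[k]: (dx, dy) of part k + 1 from its anchor position.
    """
    appearance = sum(float(np.sum(f * x)) for f, x in zip(filters, features))
    deformation = 0.0
    for d, (dx, dy) in zip(deform_weights, displacements):
        deformation += float(np.dot(d, [dx, dy, dx * dx, dy * dy]))
    return appearance - deformation


# Toy model: one root filter and one part filter.
filters = [np.ones((2, 2)), np.ones((1, 1))]
features = [np.full((2, 2), 0.5), np.full((1, 1), 2.0)]
deform_weights = [np.array([0.0, 0.0, 0.1, 0.1])]

at_anchor = hypothesis_score(filters, features, deform_weights, [(0, 0)])
shifted = hypothesis_score(filters, features, deform_weights, [(2, 1)])
print(at_anchor, shifted)
```

Moving the part away from its anchor lowers the score by the deformation cost, even though the appearance term is unchanged.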

What is an Object? B. Alexe, T. Deselaers, and V. Ferrari Computer Vision and Pattern Recognition (CVPR) 2010

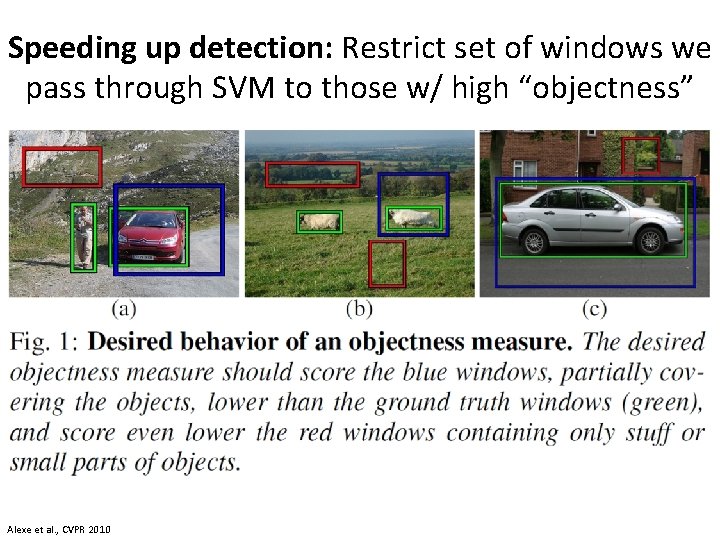

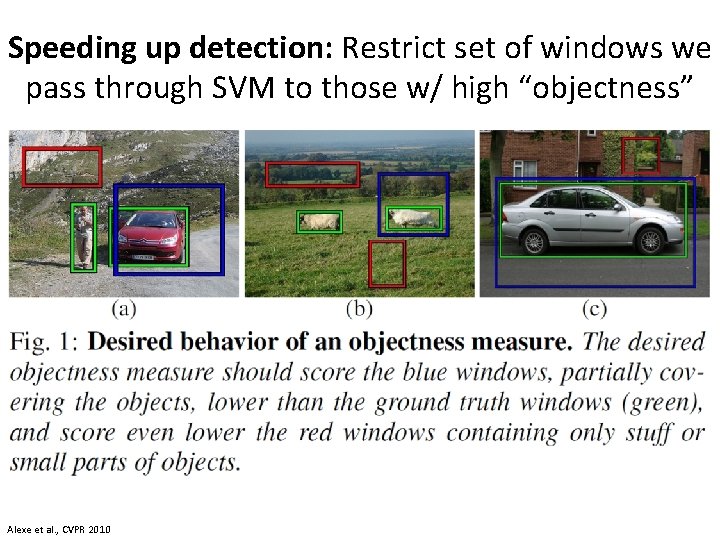

Speeding up detection: restrict the set of windows we pass through the SVM to those with high “objectness”

Alexe et al., CVPR 2010
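The pruning idea can be sketched as follows, assuming objectness scores have already been computed for every candidate window. The names `prune_then_classify` and `toy_classifier` are hypothetical, and the toy classifier is only a stand-in for an expensive SVM evaluation.

```python
import numpy as np


def prune_then_classify(windows, objectness, classifier, keep=2):
    """Run `classifier` only on the `keep` windows with the highest objectness."""
    order = np.argsort(objectness)[::-1][:keep]
    return [(windows[i], classifier(windows[i])) for i in order]


windows = [(0, 0, 10, 10), (5, 5, 40, 30), (20, 20, 25, 25), (2, 3, 50, 45)]
objectness = np.array([0.05, 0.90, 0.10, 0.70])

calls = []  # records which windows reach the expensive classifier


def toy_classifier(box):
    calls.append(box)
    x0, y0, x1, y1 = box
    return float((x1 - x0) * (y1 - y0))  # placeholder score, not a real SVM


results = prune_then_classify(windows, objectness, toy_classifier, keep=2)
print(len(calls), [w for w, _ in results])
```

Only two of the four windows are scored by the classifier; the rest are discarded on objectness alone.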

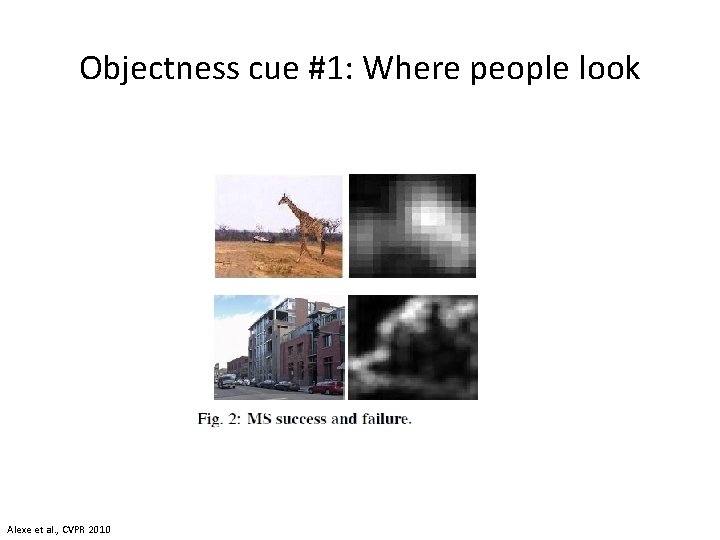

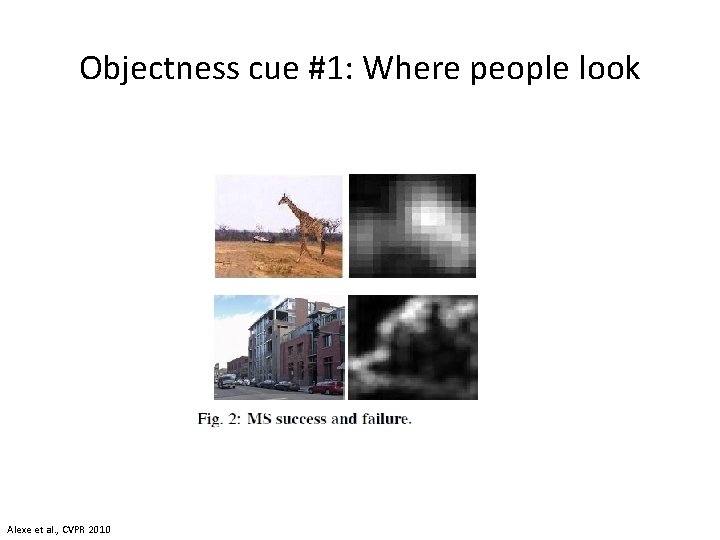

Objectness cue #1: Where people look

Alexe et al., CVPR 2010

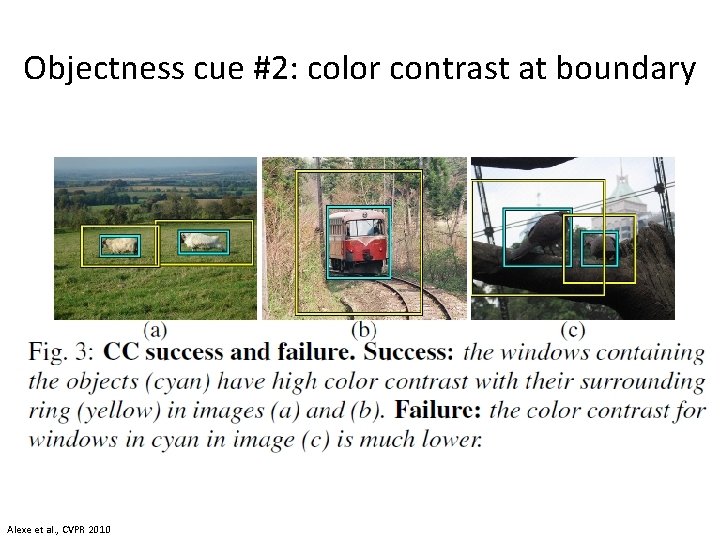

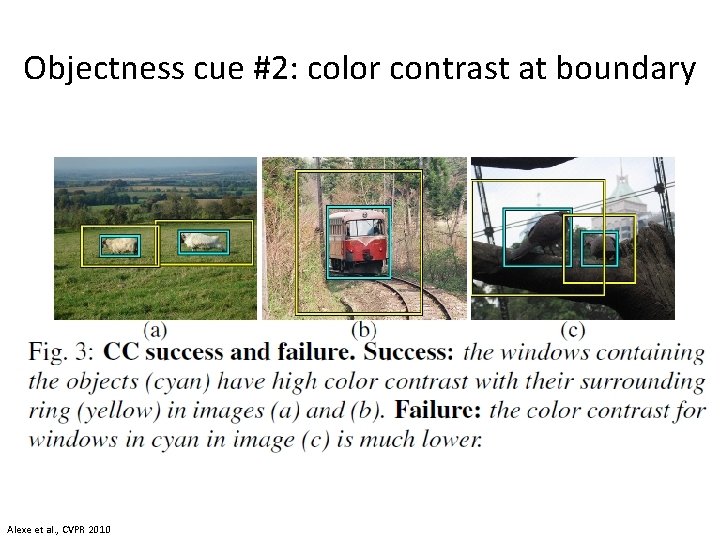

Objectness cue #2: color contrast at boundary

Alexe et al., CVPR 2010
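One way to measure contrast at the boundary is to compare the histogram of a window with the histogram of a ring surrounding it. The sketch below is a simplified stand-in: it assumes a single-channel image in [0, 1] and a chi-square distance, whereas the original cue works on color histograms. The function name `color_contrast` and its parameters are hypothetical.

```python
import numpy as np


def color_contrast(image, box, margin=4, bins=8):
    """Chi-square distance between histograms of a box and its surrounding ring.

    image: 2-D array with values in [0, 1]; box: (x0, y0, x1, y1), end-exclusive.
    """
    x0, y0, x1, y1 = box
    h, w = image.shape
    ox0, oy0 = max(x0 - margin, 0), max(y0 - margin, 0)
    ox1, oy1 = min(x1 + margin, w), min(y1 + margin, h)

    # Ring = enlarged box minus the box itself.
    ring = np.zeros((h, w), dtype=bool)
    ring[oy0:oy1, ox0:ox1] = True
    ring[y0:y1, x0:x1] = False

    def hist(values):
        counts, _ = np.histogram(values, bins=bins, range=(0.0, 1.0))
        return counts / max(counts.sum(), 1)

    p, q = hist(image[y0:y1, x0:x1]), hist(image[ring])
    denom = p + q
    nz = denom > 0
    return 0.5 * float(np.sum((p[nz] - q[nz]) ** 2 / denom[nz]))


# Bright square on a dark background.
image = np.full((32, 32), 0.1)
image[8:24, 8:24] = 0.9

tight = color_contrast(image, (8, 8, 24, 24))     # box fits the square
inside = color_contrast(image, (12, 12, 20, 20))  # box and ring both inside the square
print(tight, inside)
```

A box that fits the object tightly differs strongly from its surroundings, while a box placed entirely inside the object does not.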

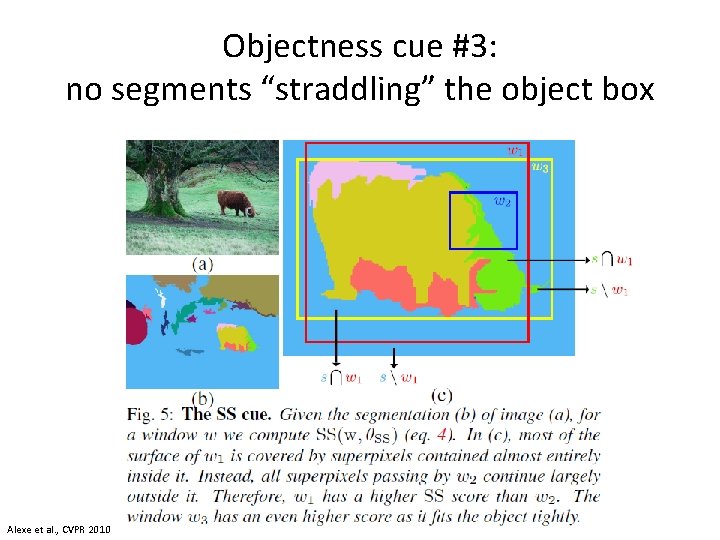

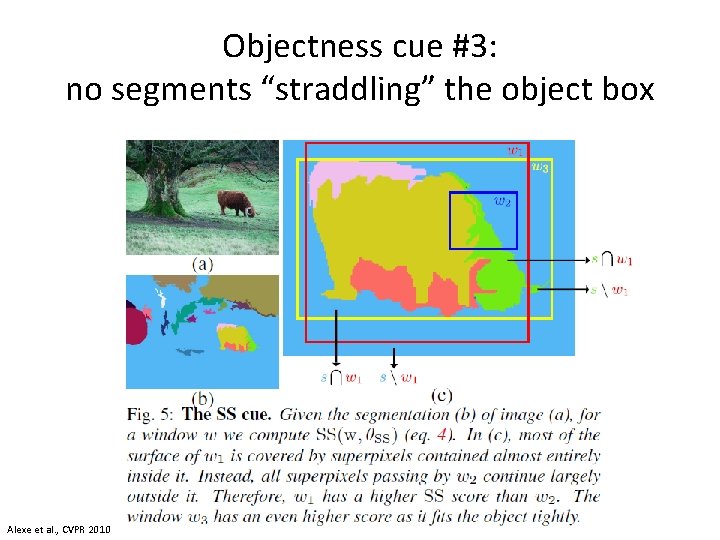

Objectness cue #3: no segments “straddling” the object box

Alexe et al., CVPR 2010
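The straddling idea can be sketched as below, assuming a precomputed integer label map of segments. Each segment contributes the smaller of its area inside and outside the box, so a segment lying entirely on one side costs nothing. The normalization by box area follows my reading of Alexe et al. and should be treated as an assumption; the function name `superpixel_straddling` is hypothetical.

```python
import numpy as np


def superpixel_straddling(segments, box):
    """1 minus the fraction of box area covered by segments that cross its boundary.

    segments: 2-D integer label map; box: (x0, y0, x1, y1), end-exclusive.
    """
    x0, y0, x1, y1 = box
    inside = np.zeros(segments.shape, dtype=bool)
    inside[y0:y1, x0:x1] = True
    area = int(inside.sum())

    penalty = 0
    for label in np.unique(segments):
        seg = segments == label
        n_in = int(np.count_nonzero(seg & inside))
        n_out = int(np.count_nonzero(seg & ~inside))
        penalty += min(n_in, n_out)  # zero unless the segment straddles the box
    return 1.0 - penalty / area


# Toy segmentation: one square segment (label 1) on a background segment (label 0).
segments = np.zeros((20, 20), dtype=int)
segments[5:15, 5:15] = 1

aligned = superpixel_straddling(segments, (5, 5, 15, 15))
shifted = superpixel_straddling(segments, (10, 5, 20, 15))
print(aligned, shifted)
```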

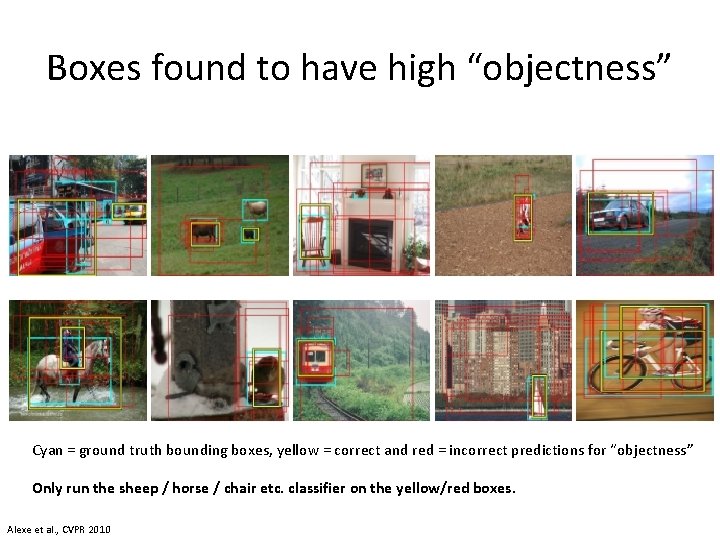

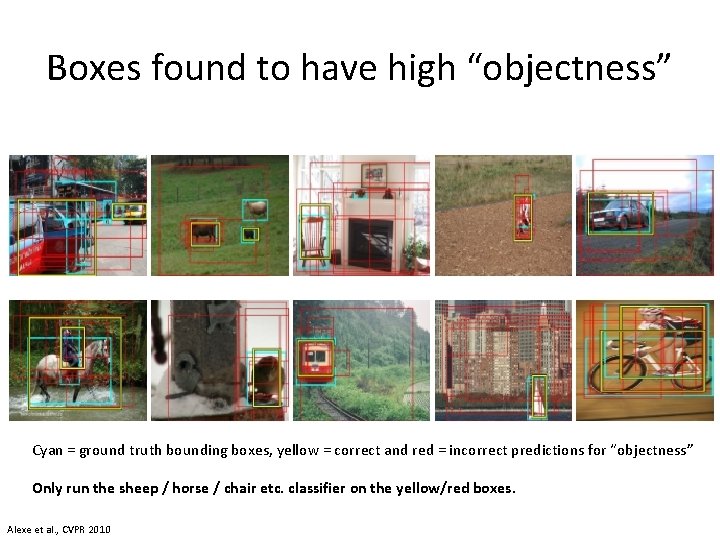

Boxes found to have high “objectness”

Cyan = ground truth bounding boxes; yellow = correct and red = incorrect predictions for “objectness”.

Only run the sheep / horse / chair etc. classifier on the yellow/red boxes.

Alexe et al., CVPR 2010

Diagnosing Error in Object Detectors D. Hoiem, Y. Chodpathumwan and Q. Dai European Conference on Computer Vision (ECCV) 2012

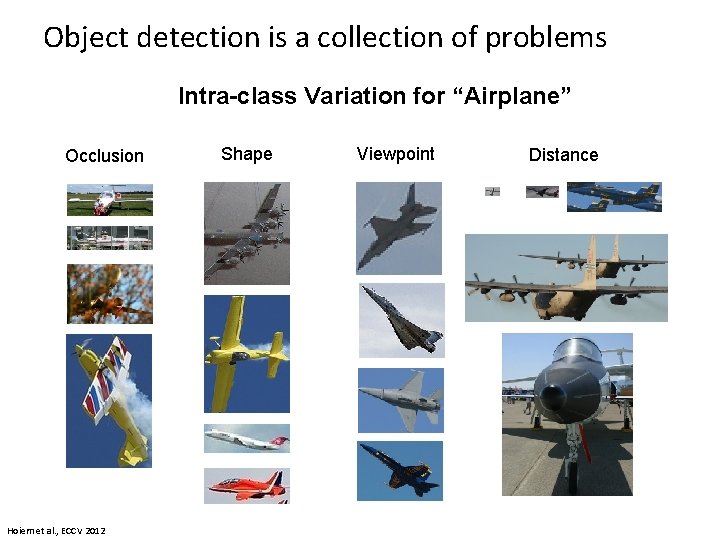

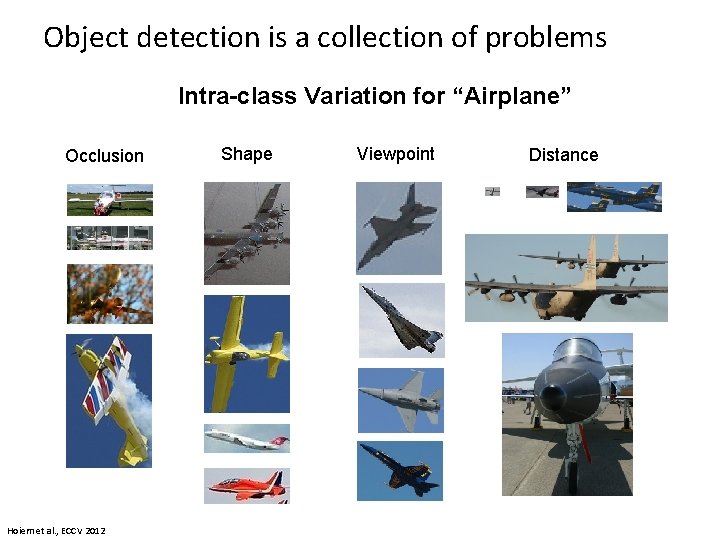

Object detection is a collection of problems

Intra-class Variation for “Airplane”: Occlusion, Shape, Viewpoint, Distance

Hoiem et al., ECCV 2012

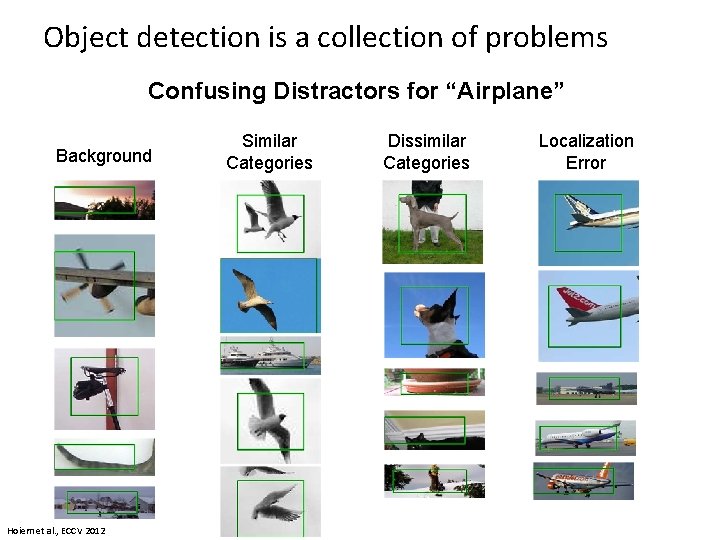

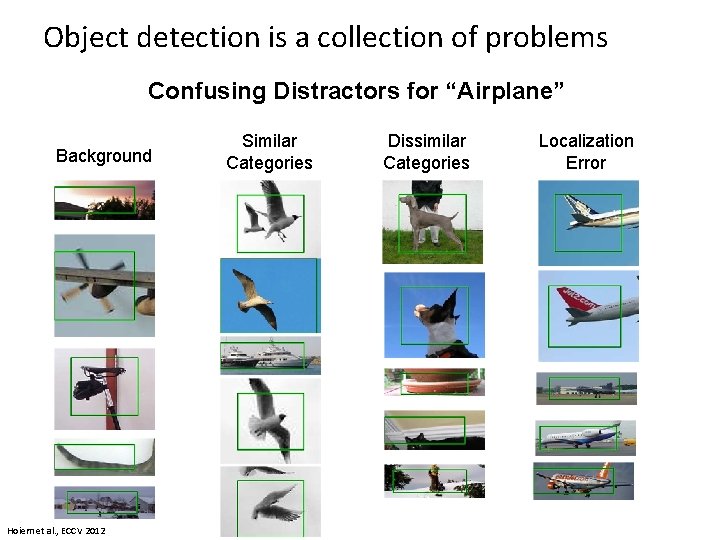

Object detection is a collection of problems

Confusing Distractors for “Airplane”: Background, Similar Categories, Dissimilar Categories, Localization Error

Hoiem et al., ECCV 2012

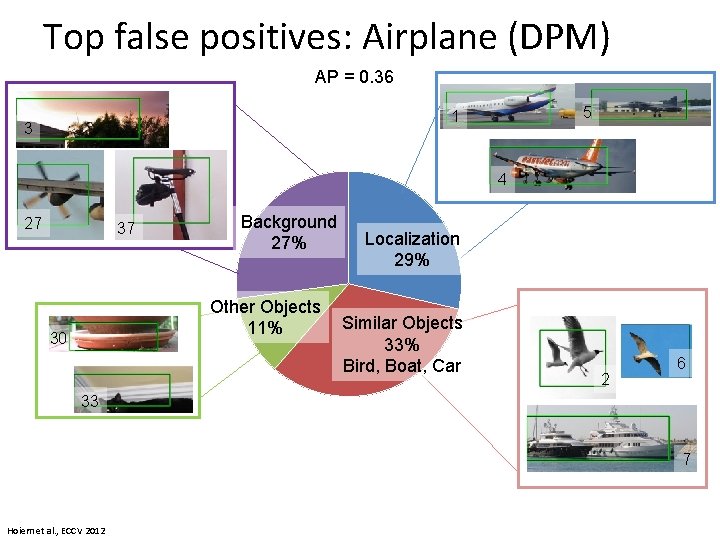

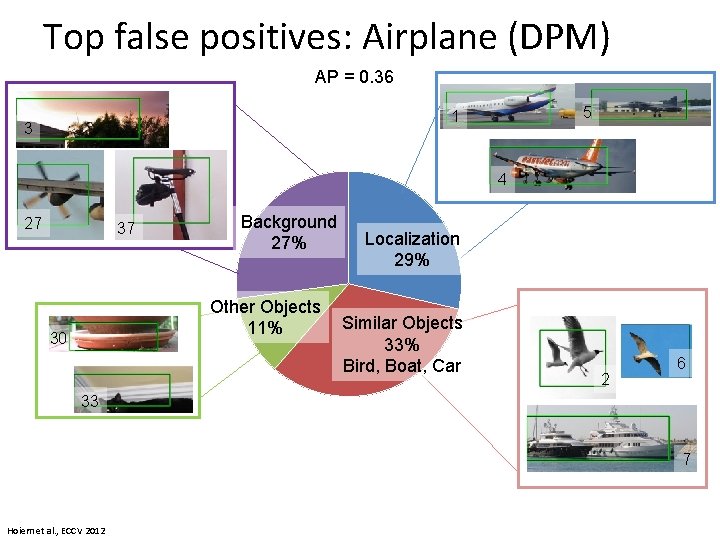

Top false positives: Airplane (DPM), AP = 0.36

- Similar Objects (Bird, Boat, Car): 33%
- Localization: 29%
- Background: 27%
- Other Objects: 11%

Hoiem et al., ECCV 2012

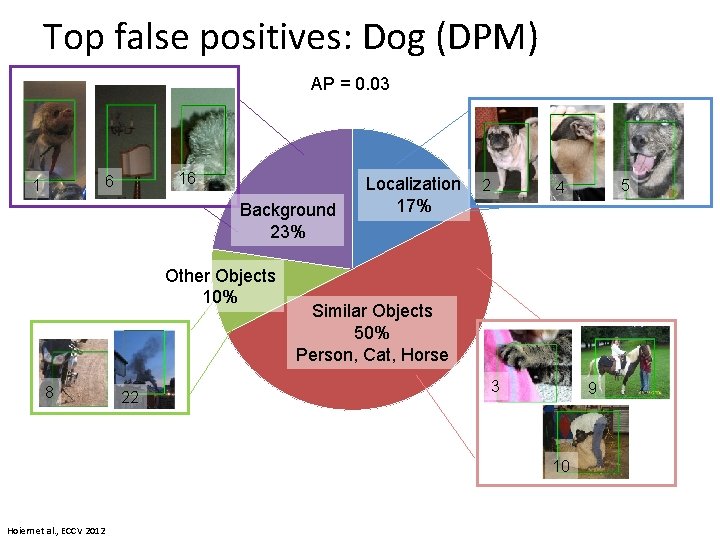

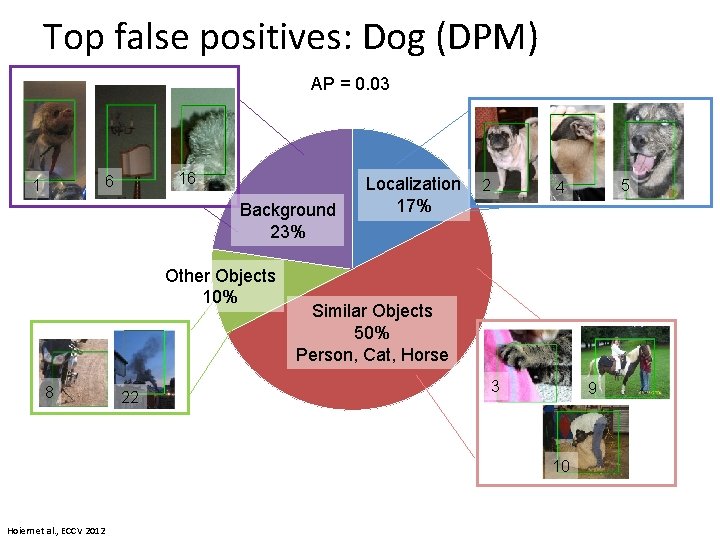

Top false positives: Dog (DPM), AP = 0.03

- Similar Objects (Person, Cat, Horse): 50%
- Background: 23%
- Localization: 17%
- Other Objects: 10%

Hoiem et al., ECCV 2012
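A breakdown like the ones above requires assigning each false positive to one error type. The sketch below shows one possible rule based on overlap (intersection over union) with ground-truth boxes. The thresholds 0.1 and 0.5, the choice of similar categories, and the function names are assumptions for illustration; consult Hoiem et al. for the exact criteria, which also handle duplicate detections.

```python
def iou(a, b):
    """Intersection over union of two boxes (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(ix1 - ix0, 0) * max(iy1 - iy0, 0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def false_positive_type(det_box, target, ground_truth, similar, low=0.1, high=0.5):
    """Classify a detection for class `target` given (label, box) ground truth."""
    best = {}
    for label, box in ground_truth:
        best[label] = max(best.get(label, 0.0), iou(det_box, box))
    if best.get(target, 0.0) >= high:
        return "correct"
    if best.get(target, 0.0) >= low:
        return "localization"
    overlapped = [l for l, v in best.items() if l != target and v >= low]
    if any(l in similar for l in overlapped):
        return "similar objects"
    if overlapped:
        return "other objects"
    return "background"


# Toy ground truth; the similar-category set is an illustrative assumption.
gt = [
    ("airplane", (0, 0, 100, 50)),
    ("bird", (200, 0, 260, 40)),
    ("person", (300, 300, 340, 400)),
]
detections = [
    (0, 0, 100, 50),       # matches the airplane
    (50, 0, 150, 50),      # partial overlap with the airplane
    (200, 0, 260, 40),     # fires on the bird
    (300, 300, 340, 400),  # fires on the person
    (500, 500, 550, 550),  # overlaps nothing
]
labels = [false_positive_type(d, "airplane", gt, similar={"bird"}) for d in detections]
print(labels)
```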

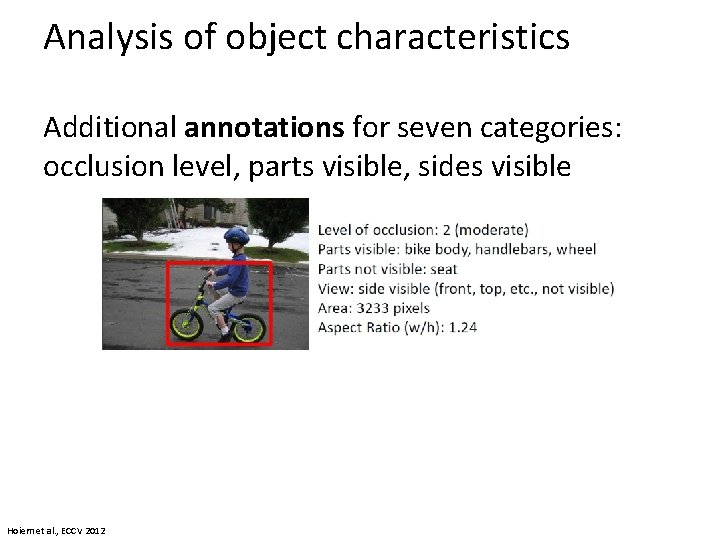

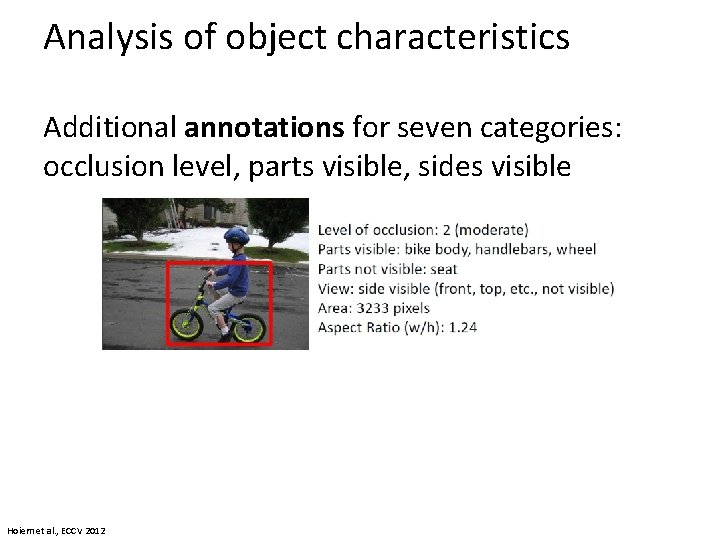

Analysis of object characteristics

Additional annotations for seven categories: occlusion level, parts visible, sides visible

Hoiem et al., ECCV 2012

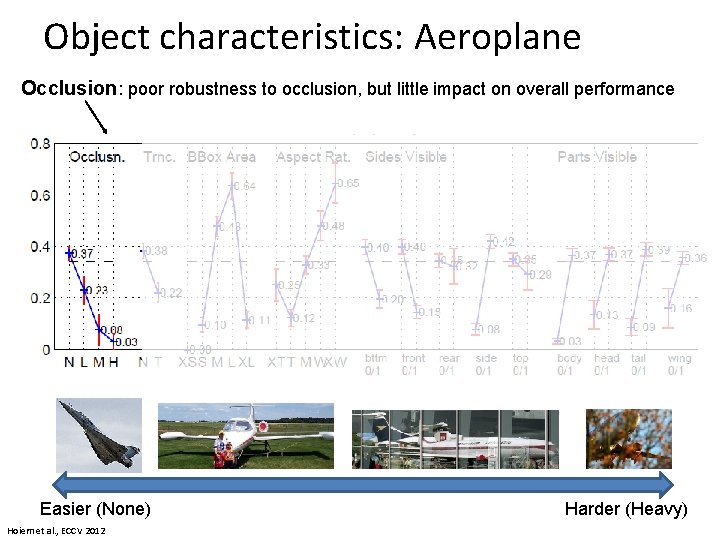

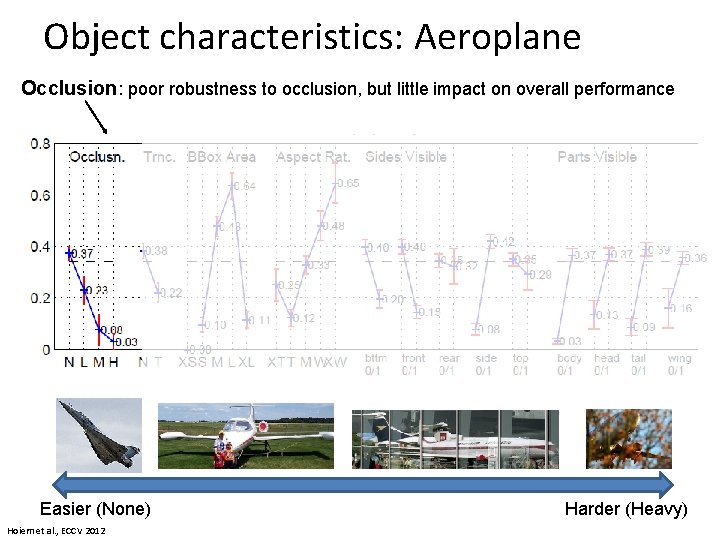

Object characteristics: Aeroplane

Occlusion: poor robustness to occlusion, but little impact on overall performance

Figure labels: Easier (None) to Harder (Heavy)

Hoiem et al., ECCV 2012

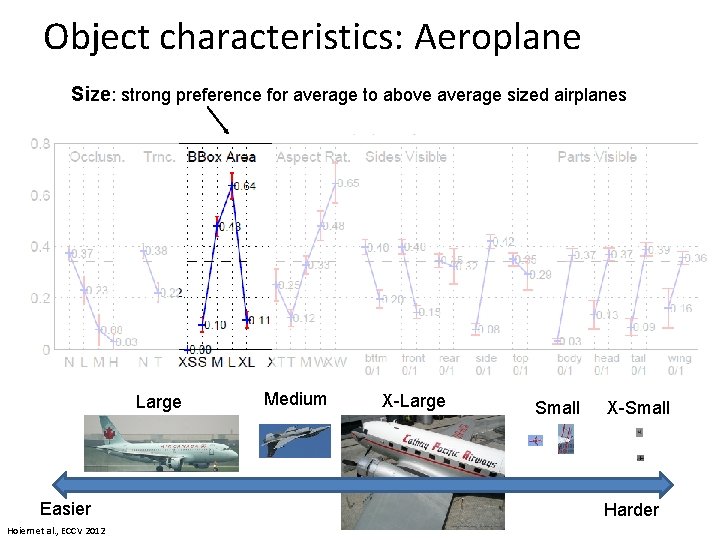

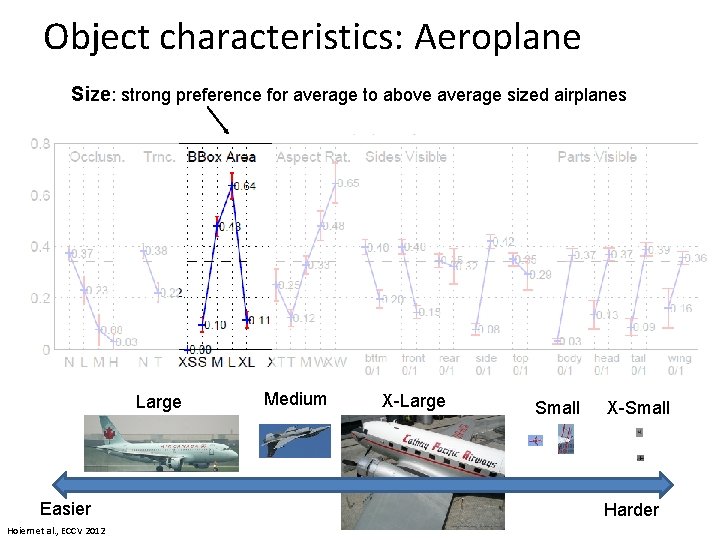

Object characteristics: Aeroplane

Size: strong preference for average to above average sized airplanes

Figure labels, from Easier to Harder: Large, Medium, X-Large, Small, X-Small

Hoiem et al., ECCV 2012

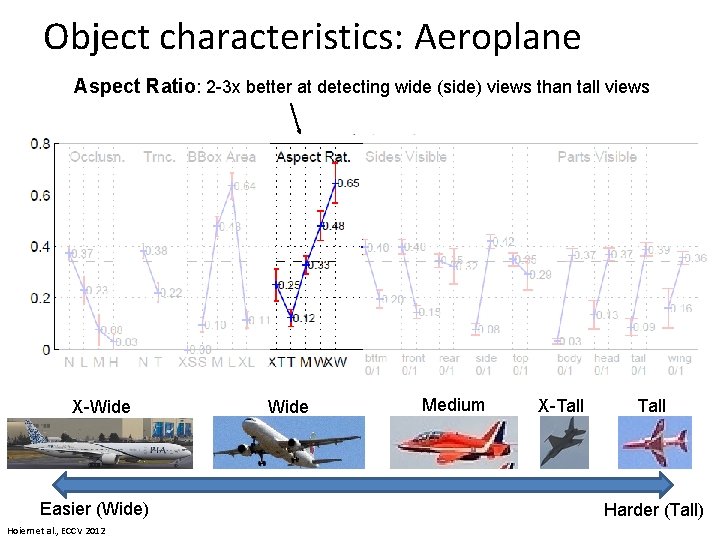

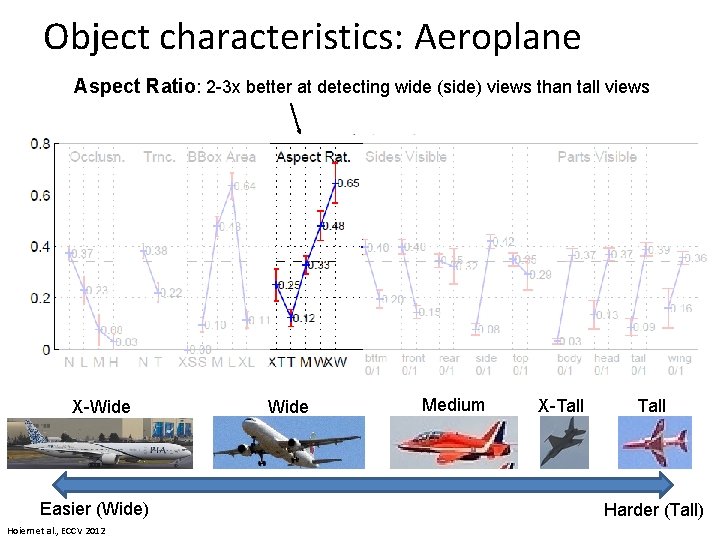

Object characteristics: Aeroplane

Aspect Ratio: 2-3x better at detecting wide (side) views than tall views

Figure labels, from Easier (Wide) to Harder (Tall): X-Wide, Wide, Medium, X-Tall

Hoiem et al., ECCV 2012

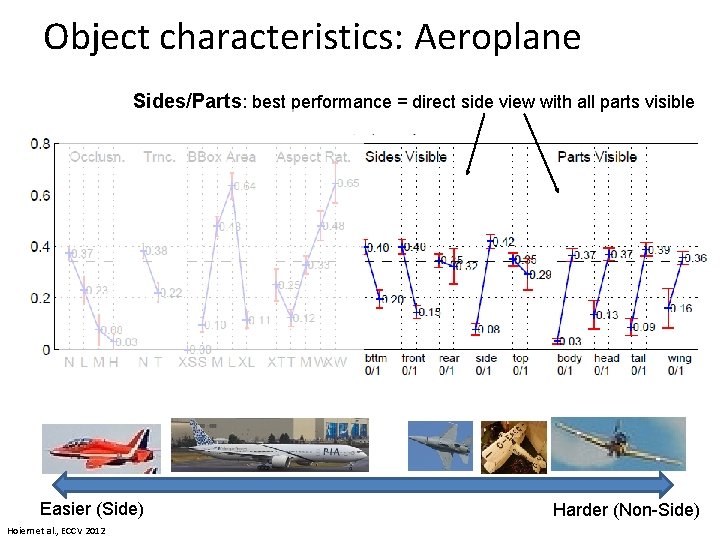

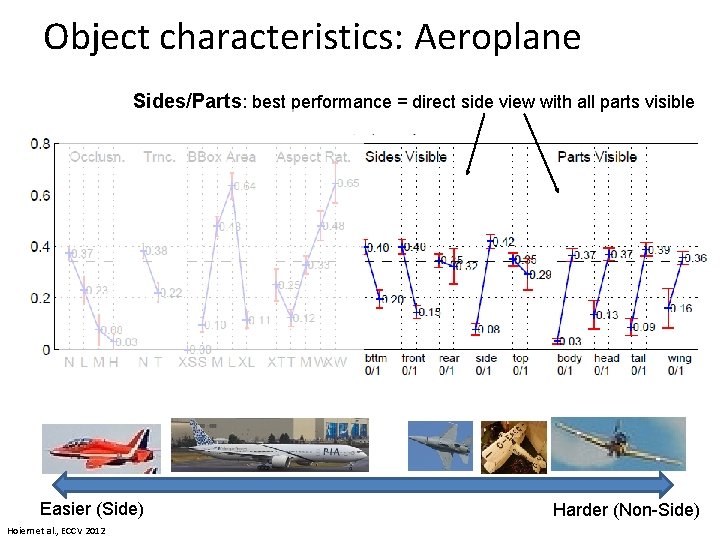

Object characteristics: Aeroplane

Sides/Parts: best performance = direct side view with all parts visible

Figure labels: Easier (Side) to Harder (Non-Side)

Hoiem et al., ECCV 2012

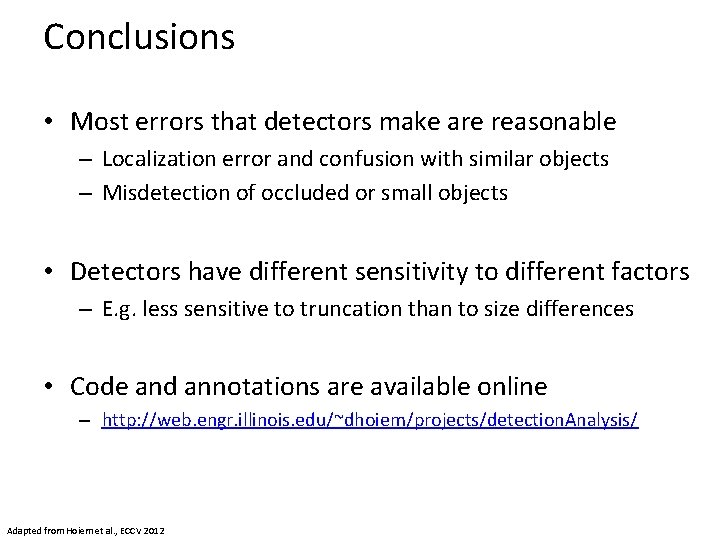

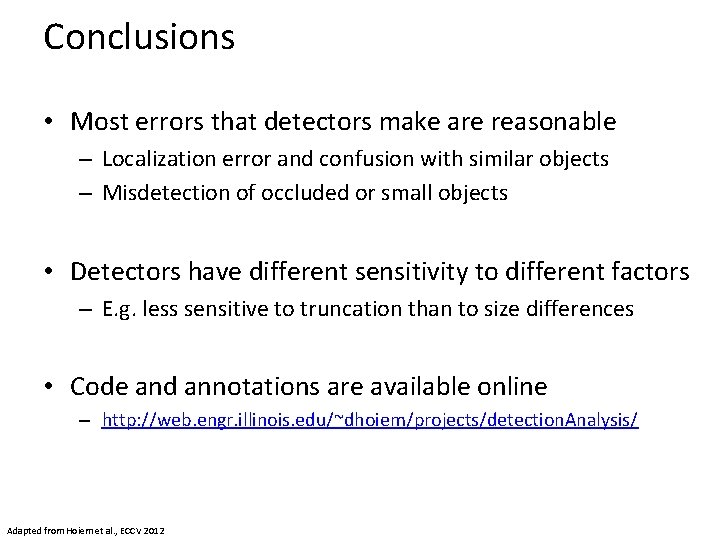

Conclusions

- Most errors that detectors make are reasonable
  - Localization error and confusion with similar objects
  - Misdetection of occluded or small objects
- Detectors have different sensitivity to different factors
  - E.g. less sensitive to truncation than to size differences
- Code and annotations are available online
  - http://web.engr.illinois.edu/~dhoiem/projects/detectionAnalysis/

Adapted from Hoiem et al., ECCV 2012

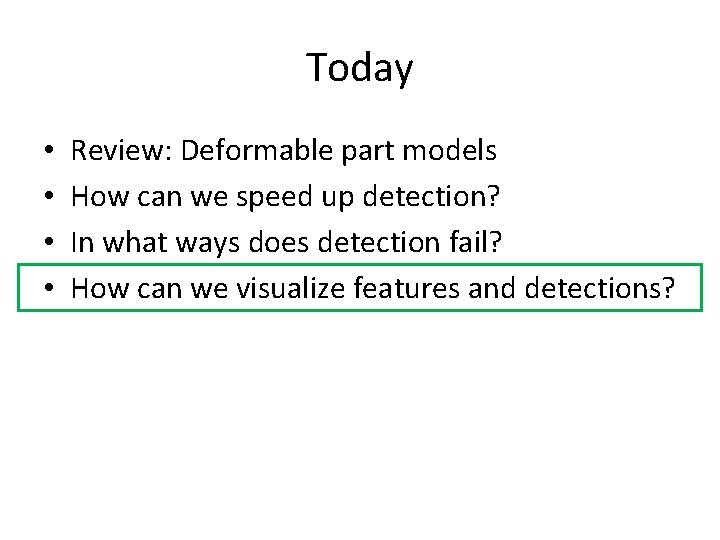

HOGgles: Visualizing Object Detection Features C. Vondrick, A. Khosla, T. Malisiewicz, and A. Torralba International Conference on Computer Vision (ICCV) 2013

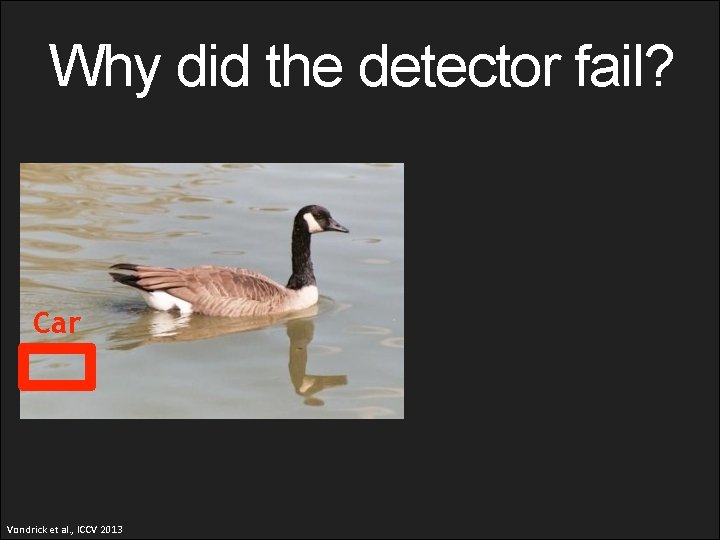

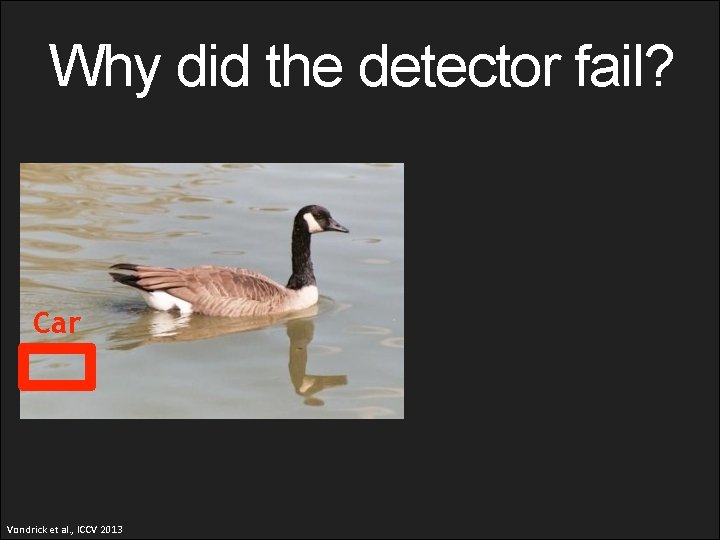

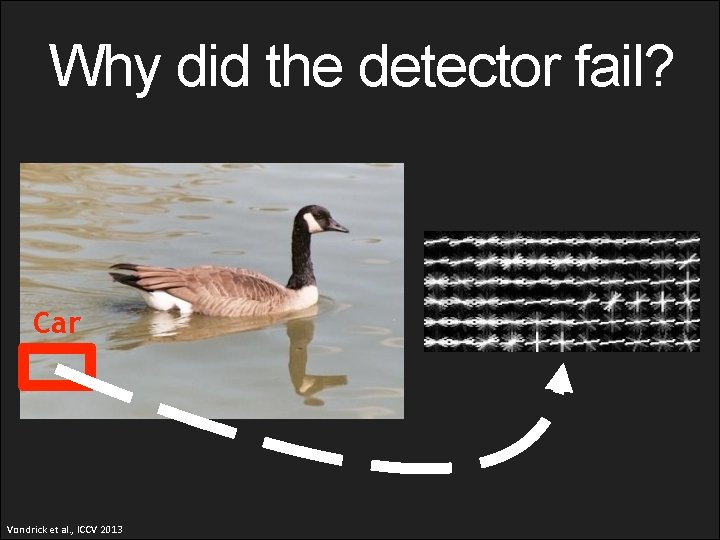

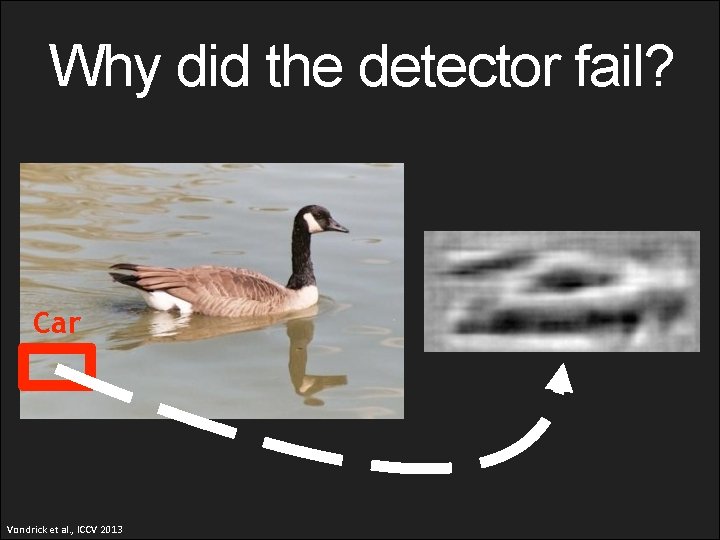

Why did the detector fail? (Car)

Vondrick et al., ICCV 2013

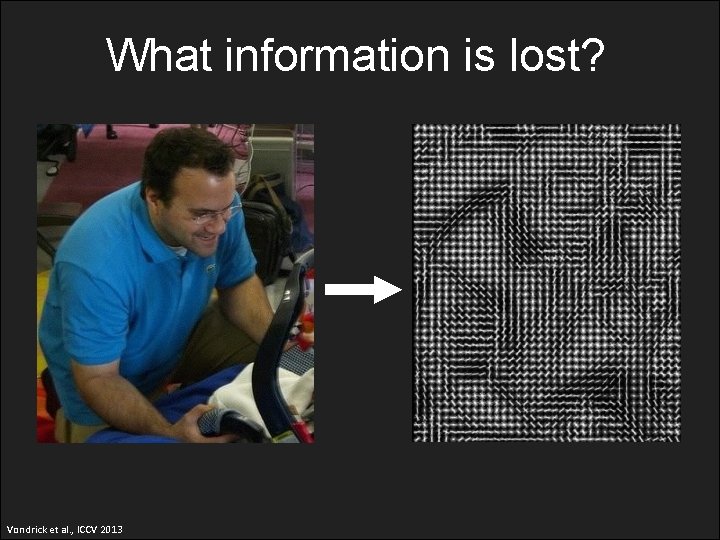

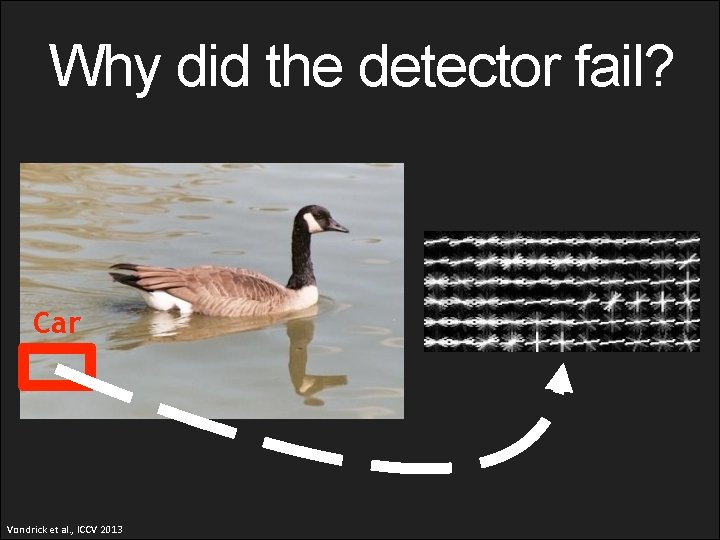

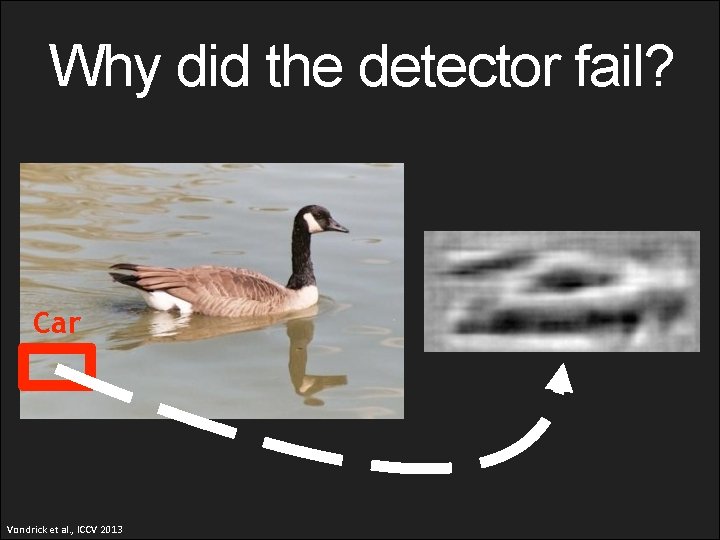

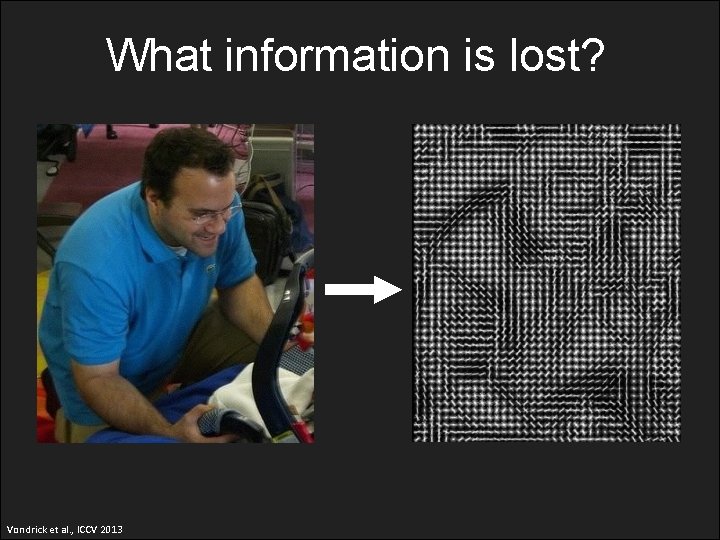

What information is lost?

Vondrick et al., ICCV 2013

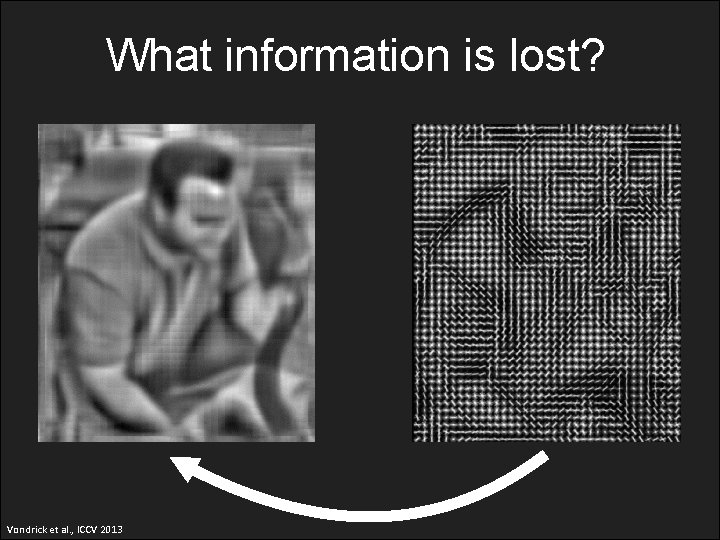

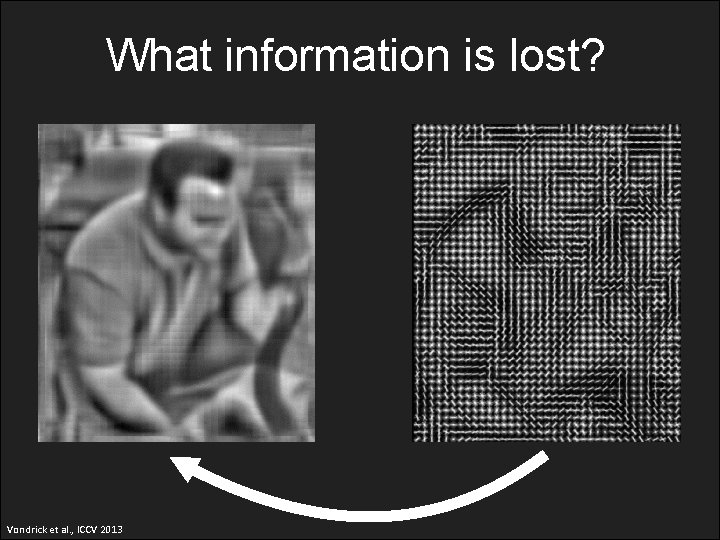

What information is lost?

Vondrick et al., ICCV 2013

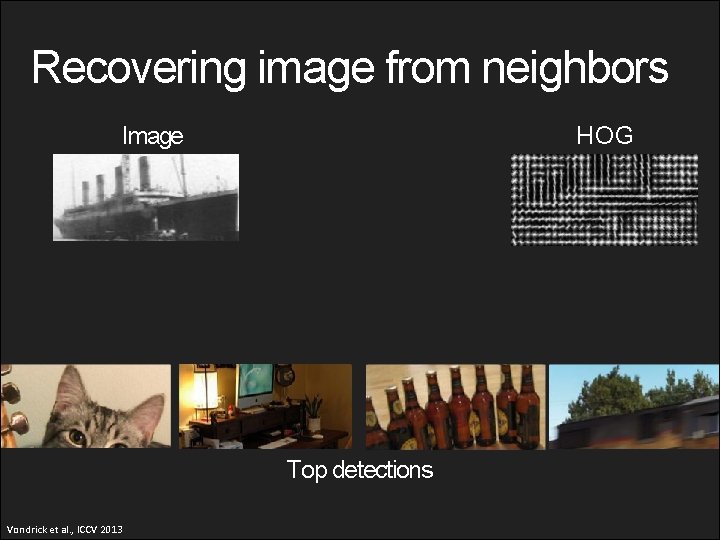

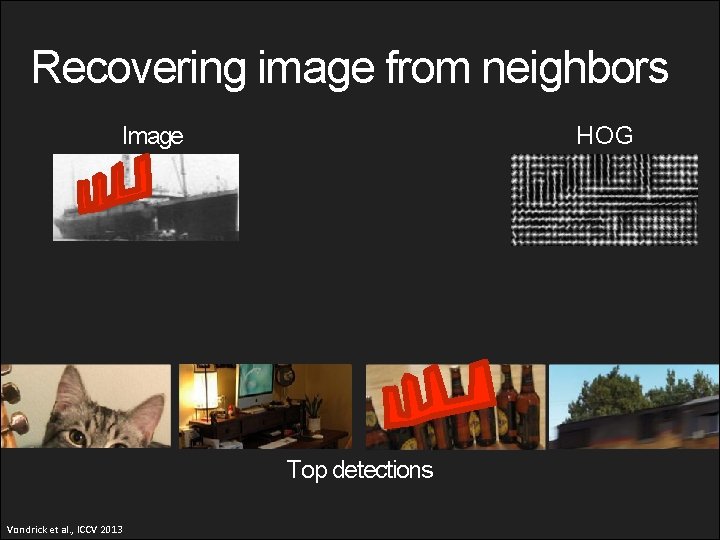

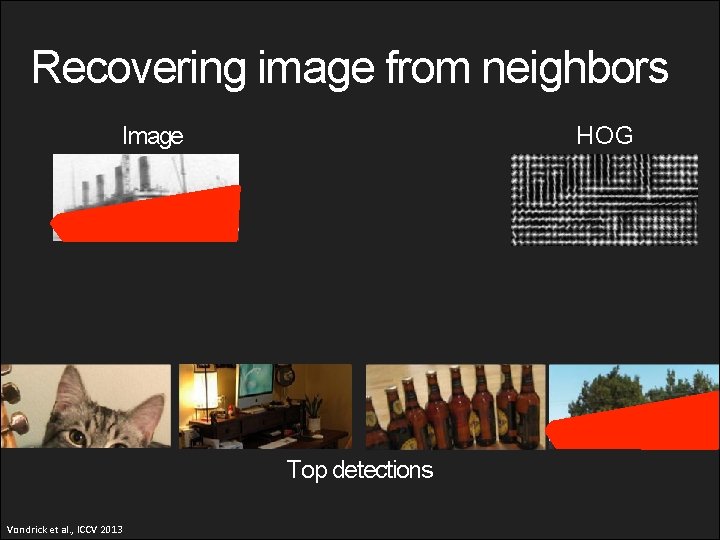

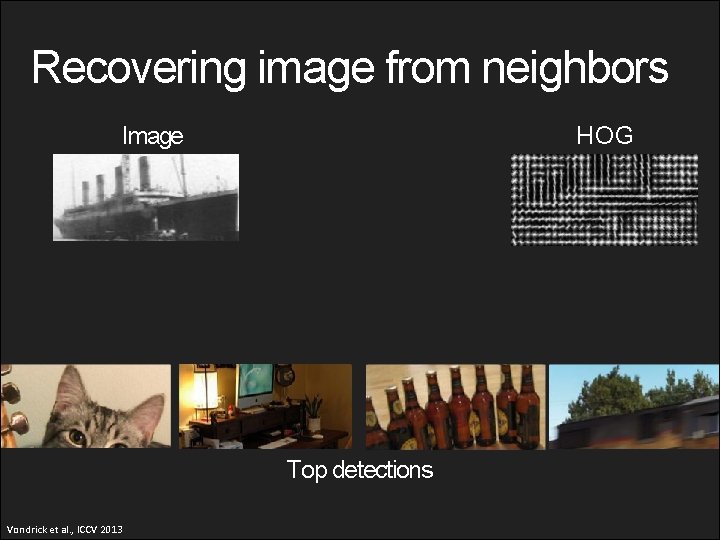

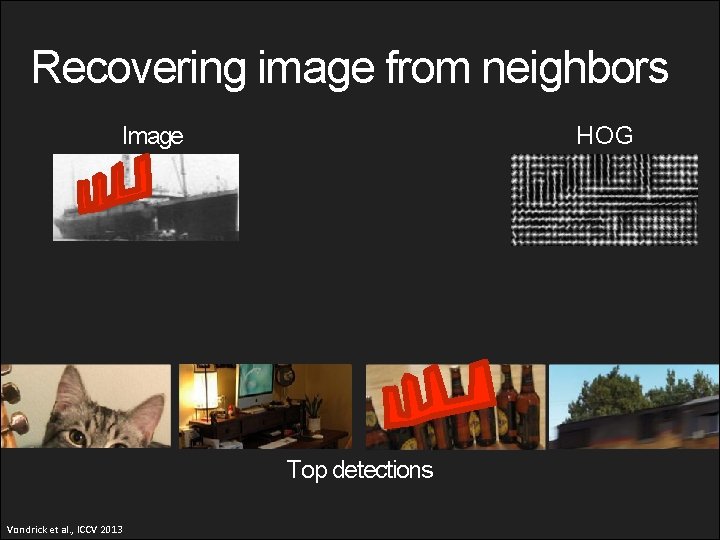

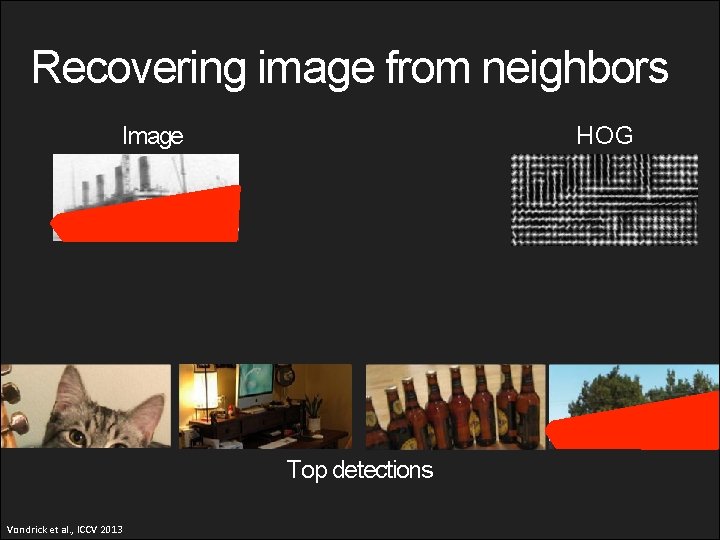

Recovering image from neighbors

Figure columns: Image, HOG, Top detections

Vondrick et al., ICCV 2013

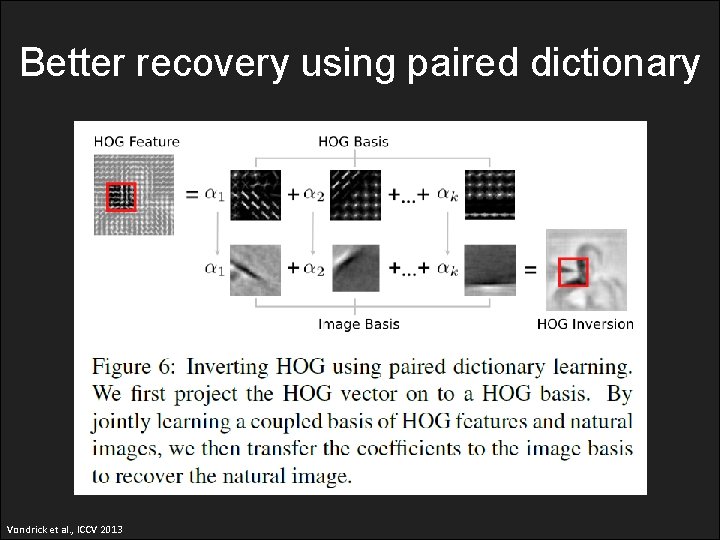

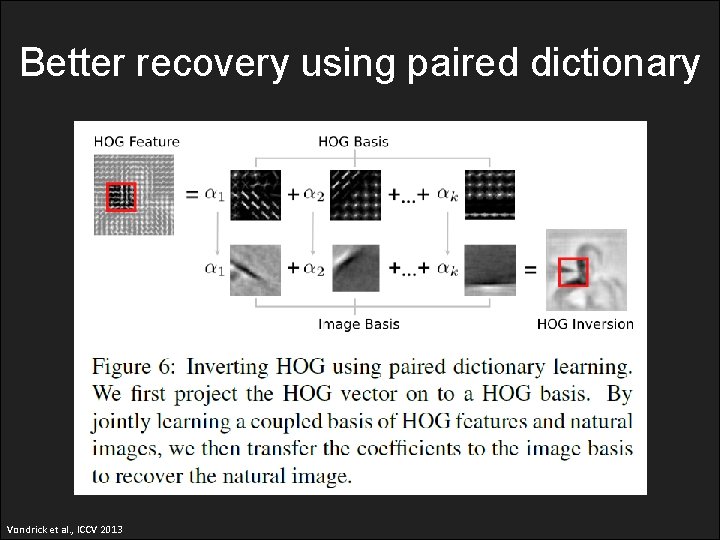

Better recovery using paired dictionary

Vondrick et al., ICCV 2013
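The paired-dictionary idea is that an image patch and its HOG descriptor share one sparse code over two coupled bases, so a descriptor can be inverted by solving for the code in HOG space and applying it to the image basis. The sketch below is a minimal illustration under stated assumptions: the bases `U` and `V` are random stand-ins rather than learned dictionaries, their sizes are arbitrary, and the sparse code is found with plain ISTA, which may differ from the solver used by Vondrick et al.

```python
import numpy as np


def sparse_code(V, y, lam=0.01, steps=500):
    """ISTA for min_a 0.5 * ||V a - y||^2 + lam * ||a||_1."""
    L = np.linalg.norm(V, 2) ** 2  # Lipschitz constant of the smooth term's gradient
    a = np.zeros(V.shape[1])
    for _ in range(steps):
        z = a - V.T @ (V @ a - y) / L                            # gradient step
        a = np.sign(z) * np.maximum(np.abs(z) - lam / L, 0.0)    # soft threshold
    return a


def invert_feature(U, V, y, lam=0.01):
    """Solve for the code in feature space, then reconstruct in image space."""
    a = sparse_code(V, y, lam)
    return U @ a, a


rng = np.random.default_rng(0)
K = 20                              # number of dictionary atoms (arbitrary)
U = rng.standard_normal((64, K))    # image-patch basis (random stand-in)
V = rng.standard_normal((36, K))    # HOG basis paired with U (random stand-in)

a_true = np.zeros(K)
a_true[[3, 11]] = [1.0, -0.5]       # a sparse code shared by both domains
y = V @ a_true                      # the observed "HOG" descriptor

x_hat, a_hat = invert_feature(U, V, y)
print(x_hat.shape, np.linalg.norm(V @ a_hat - y))
```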

A microscope to view HOG

2x more intuitive

Vondrick et al., ICCV 2013

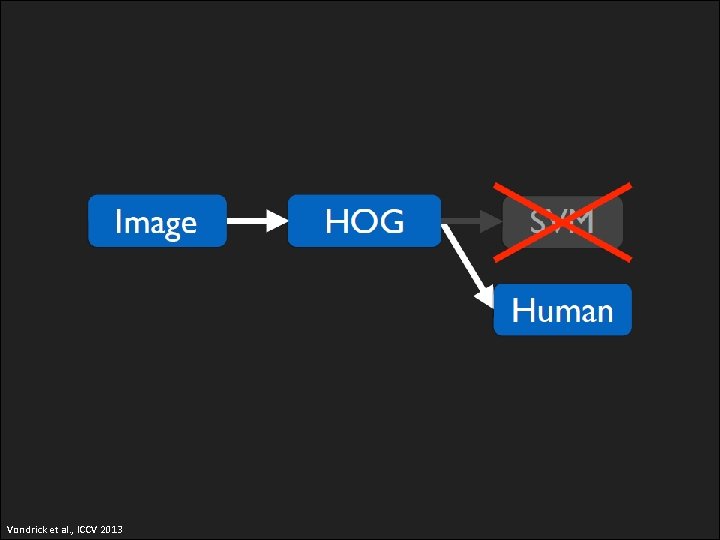

Human Vision vs HOG Vision

Vondrick et al., ICCV 2013

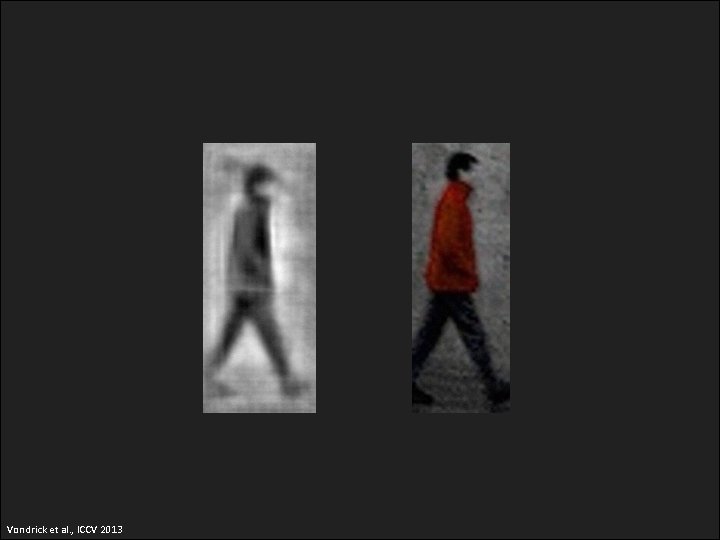

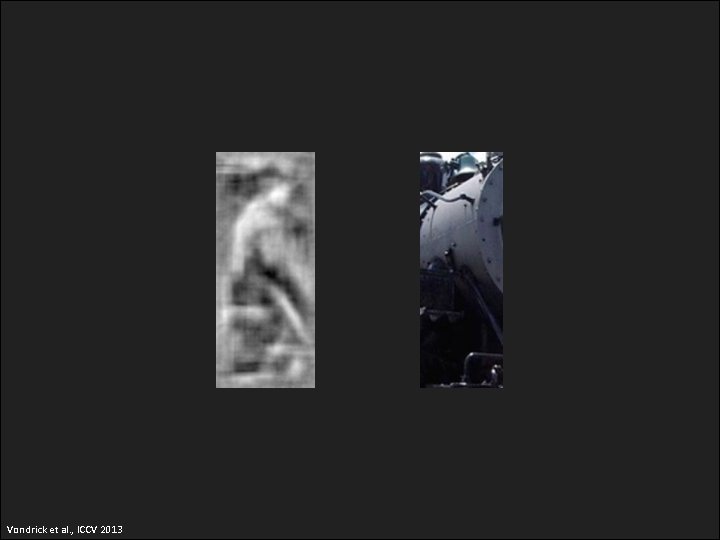

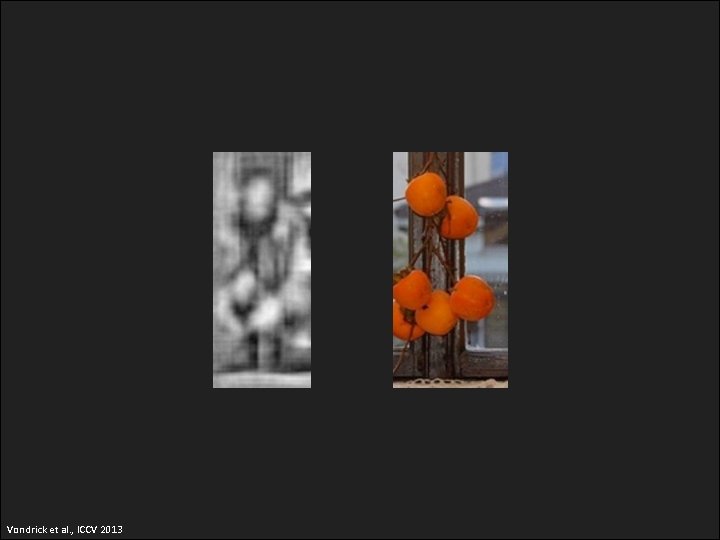

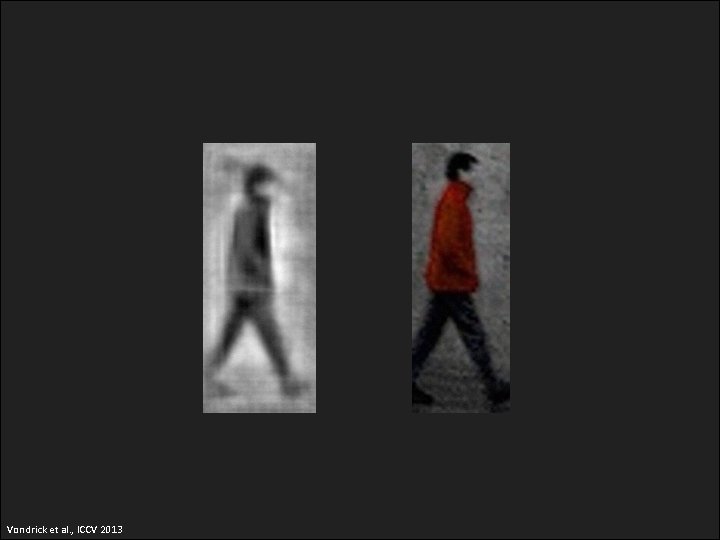

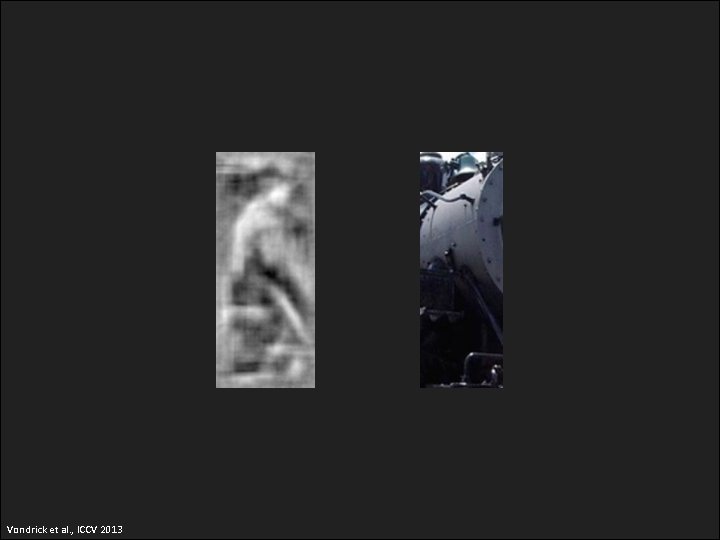

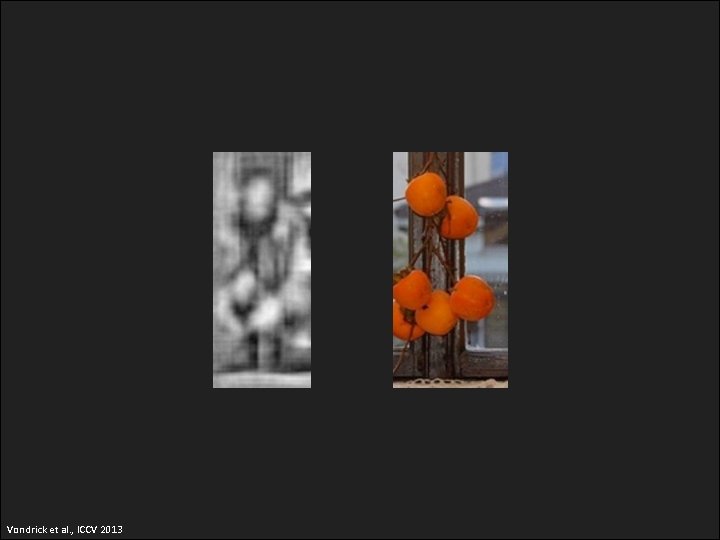

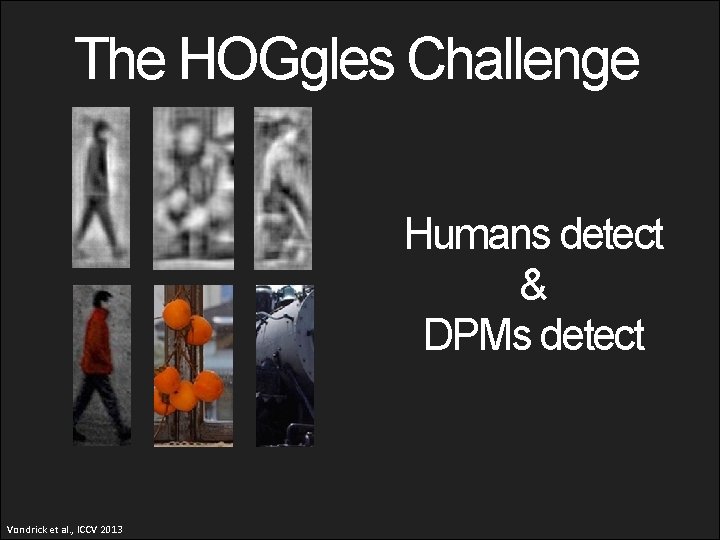

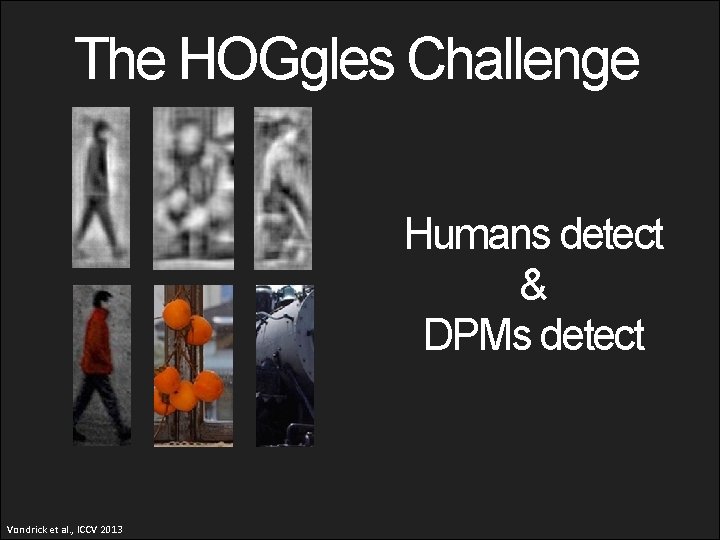

The HOGgles Challenge

Humans detect & DPMs detect

Vondrick et al., ICCV 2013

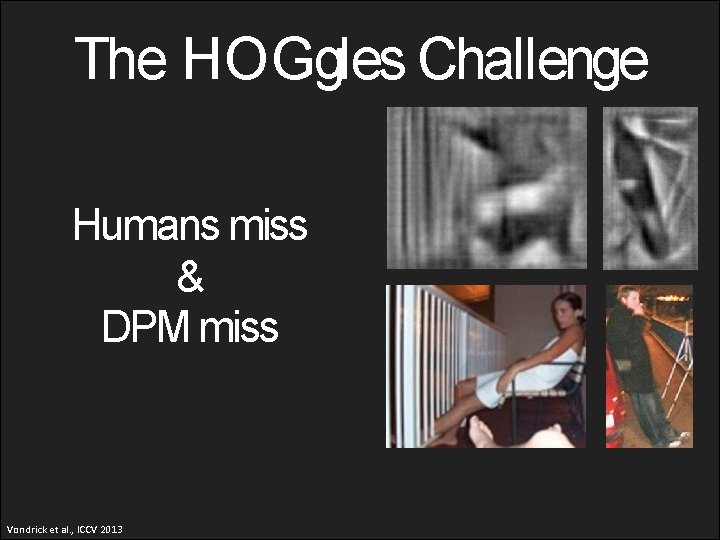

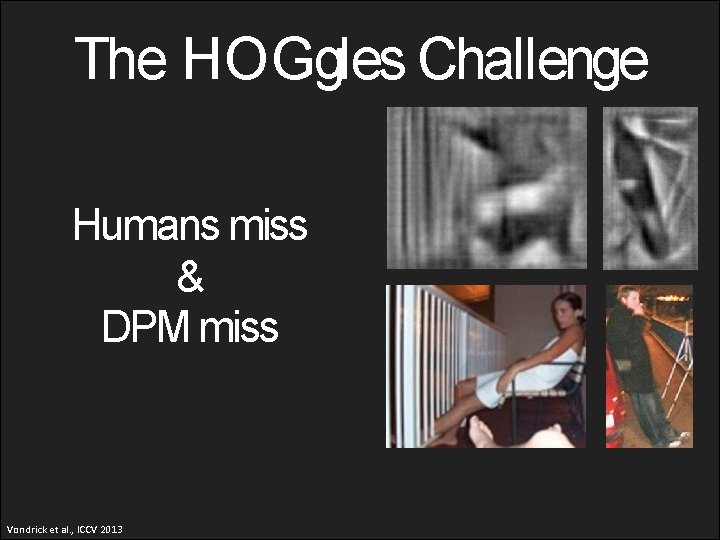

The HOGgles Challenge

Humans miss & DPMs miss

Vondrick et al., ICCV 2013

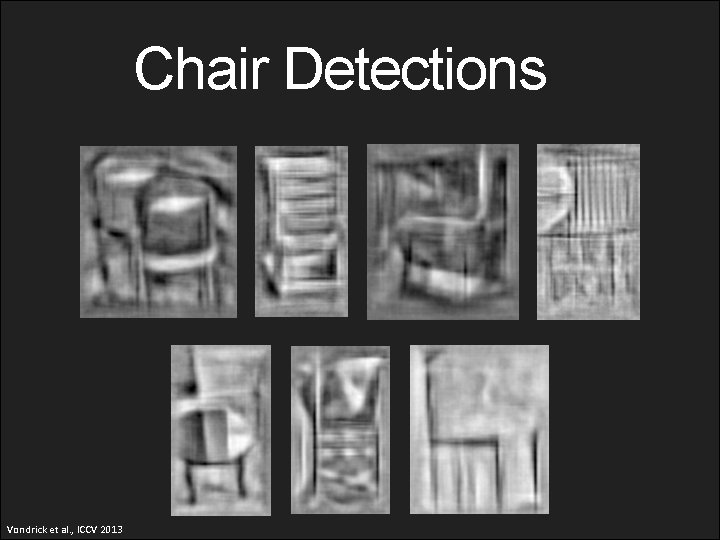

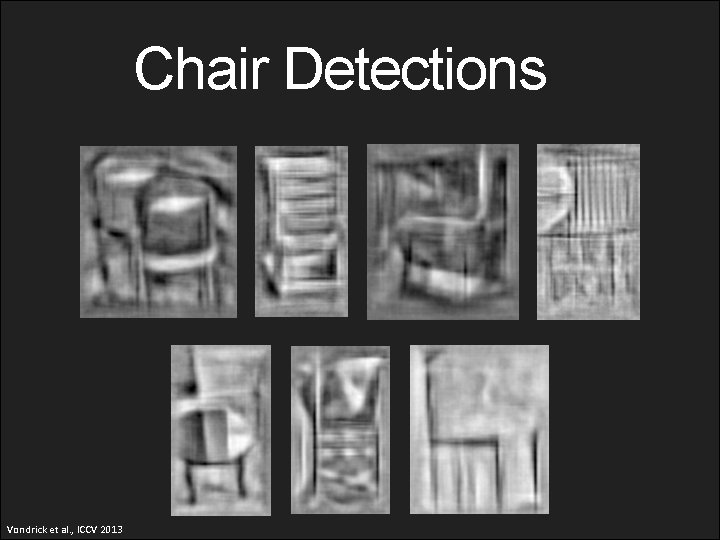

Chair Detections

Vondrick et al., ICCV 2013

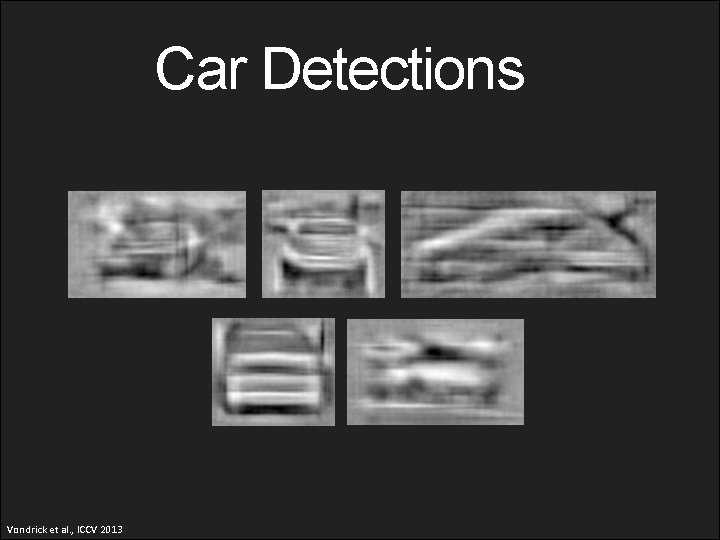

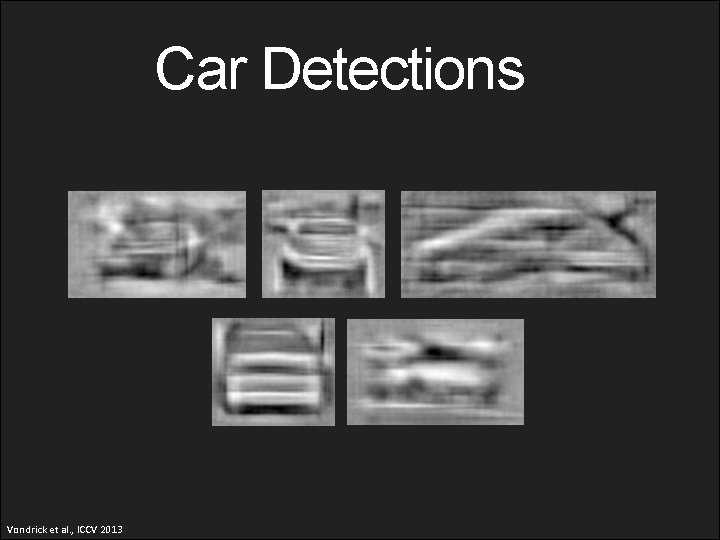

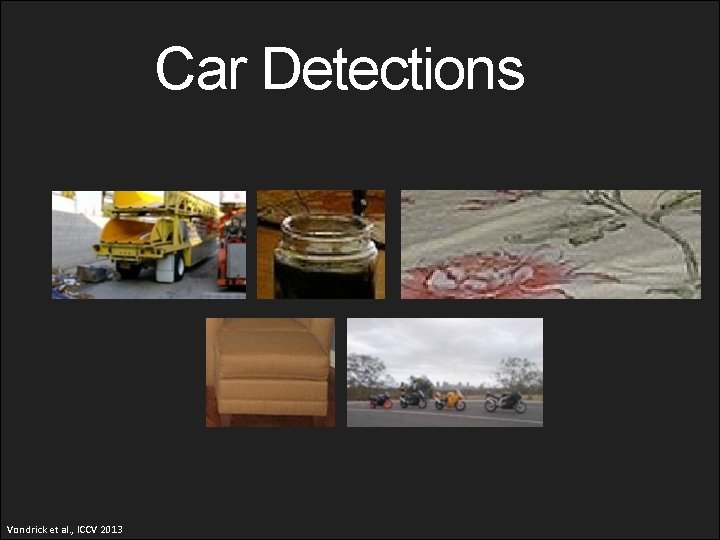

Car Detections

Vondrick et al., ICCV 2013

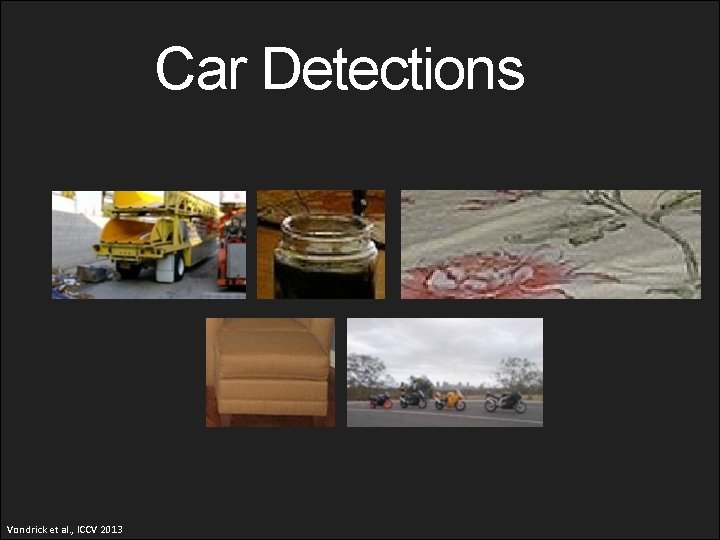

Car Detections

Vondrick et al., ICCV 2013

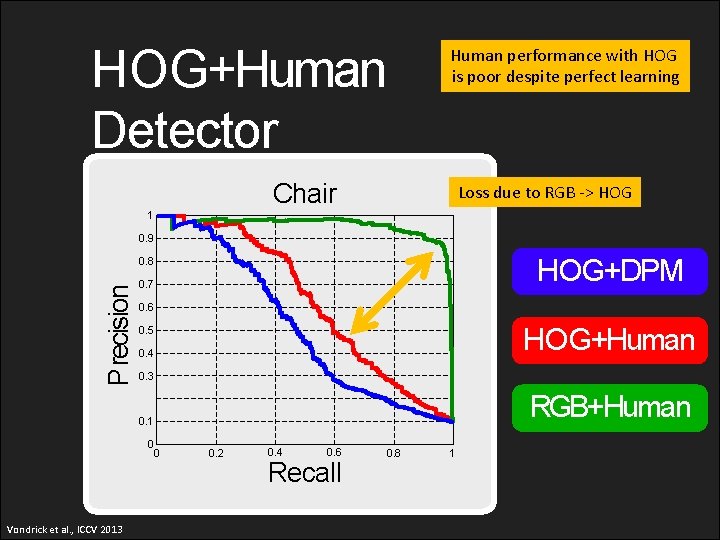

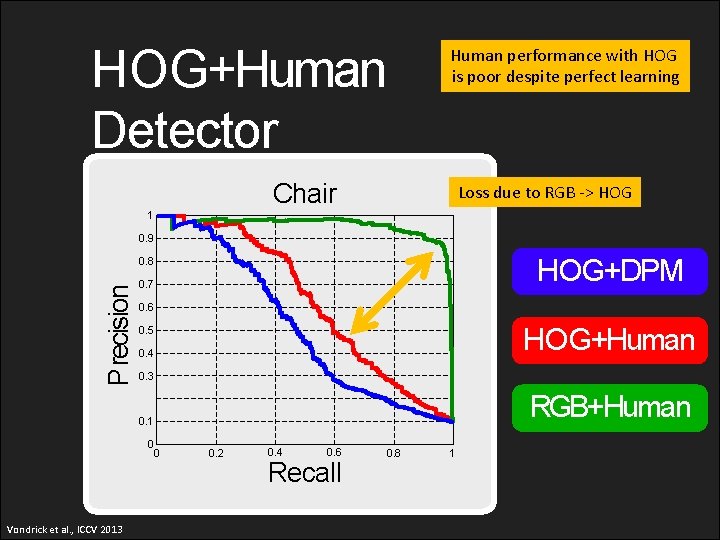

HOG+Human Detector

Human performance with HOG is poor despite perfect learning

[Figure: precision vs. recall curves for Chair, comparing HOG+DPM, HOG+Human, and RGB+Human, annotated “Loss due to RGB -> HOG”]

Vondrick et al., ICCV 2013

Why did the detector fail? (Car)

Vondrick et al., ICCV 2013

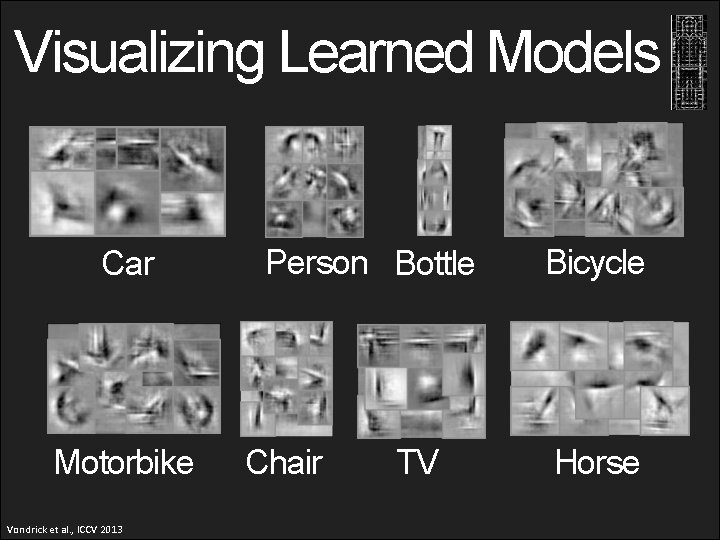

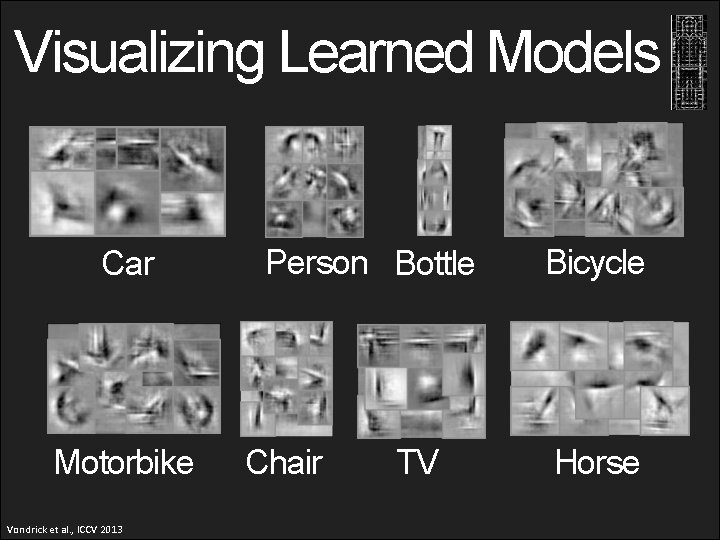

Visualizing Learned Models

Categories shown: Car, Motorbike, Person, Bottle, Chair, TV, Bicycle, Horse

Vondrick et al., ICCV 2013

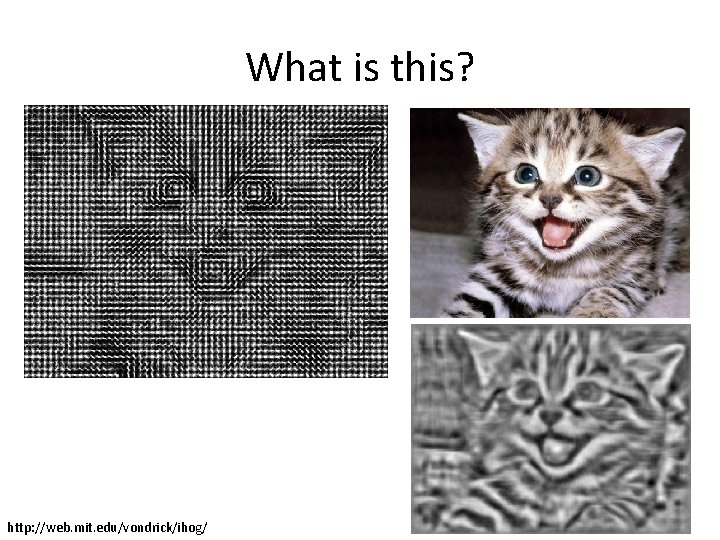

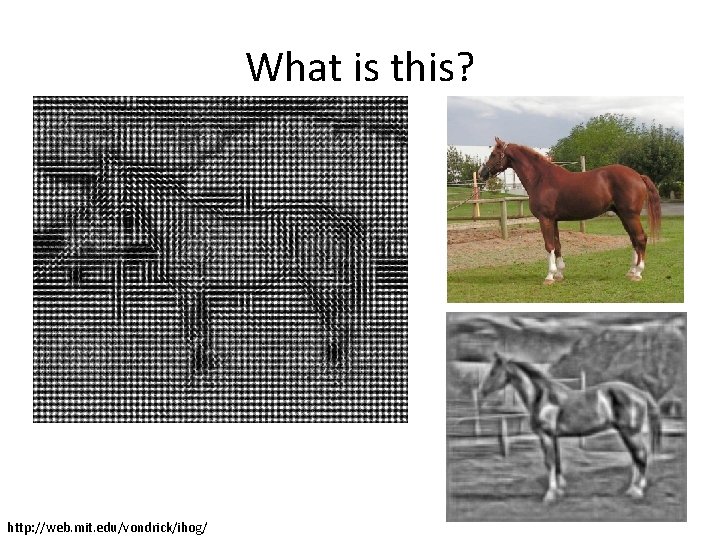

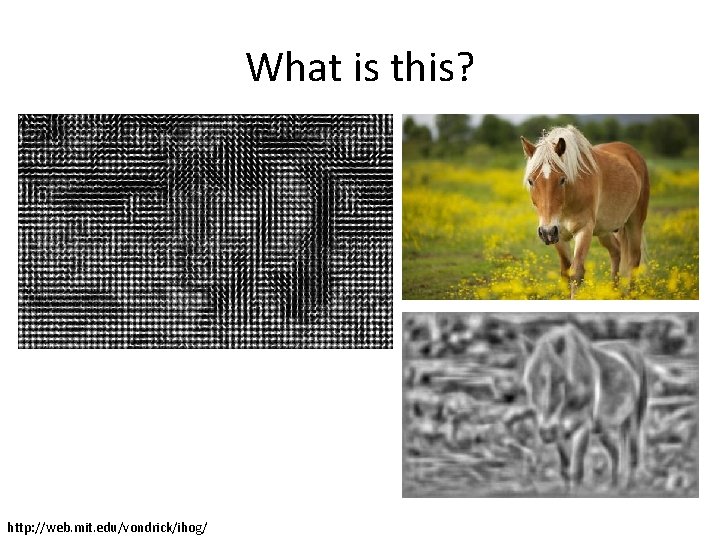

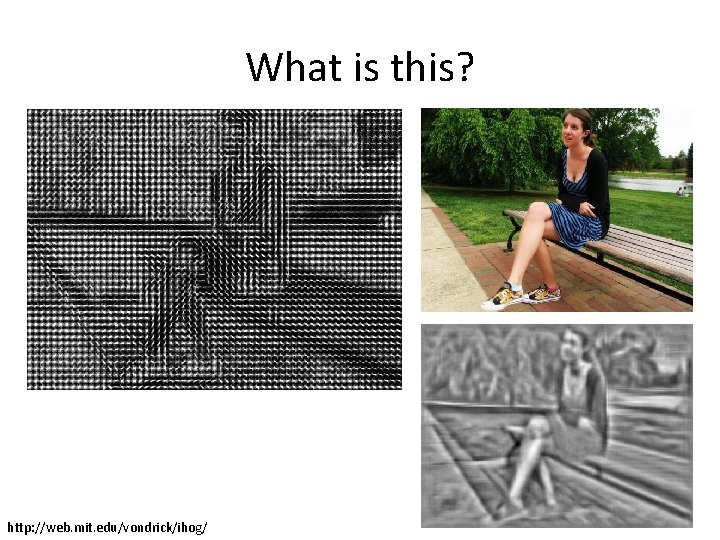

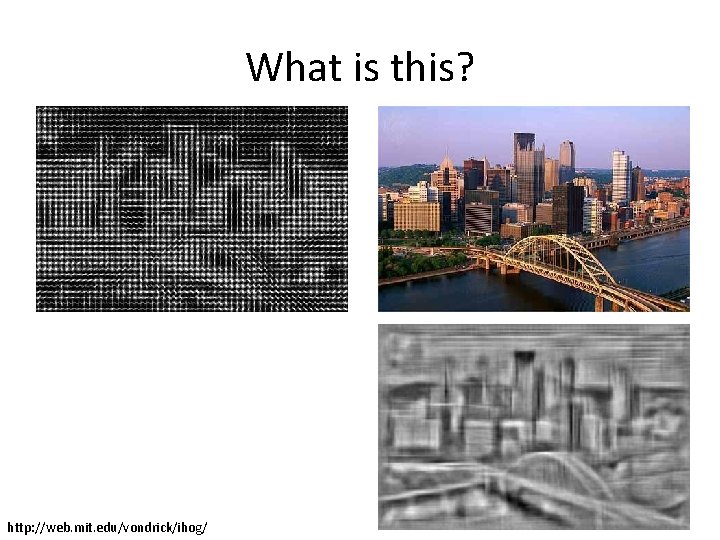

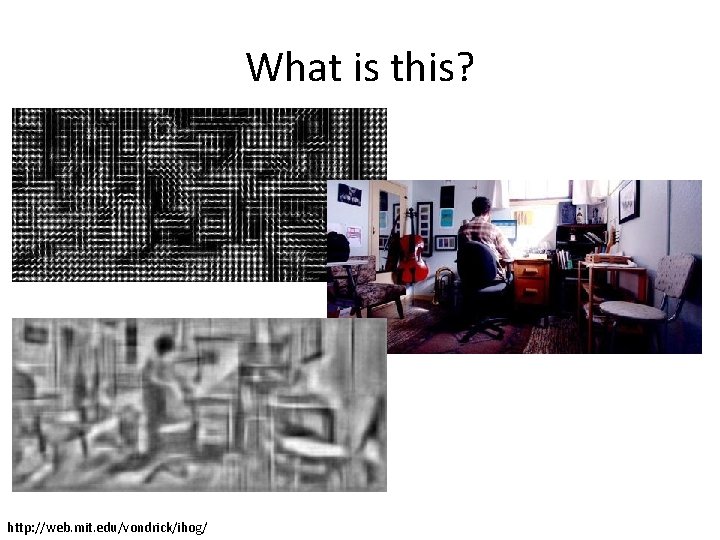

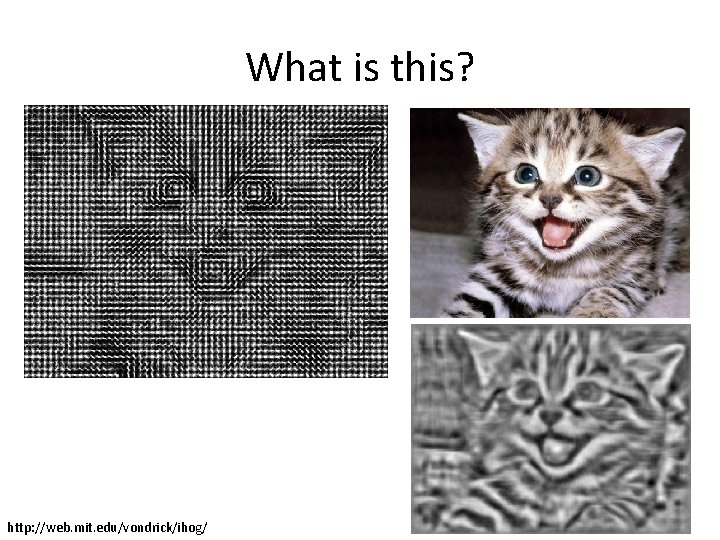

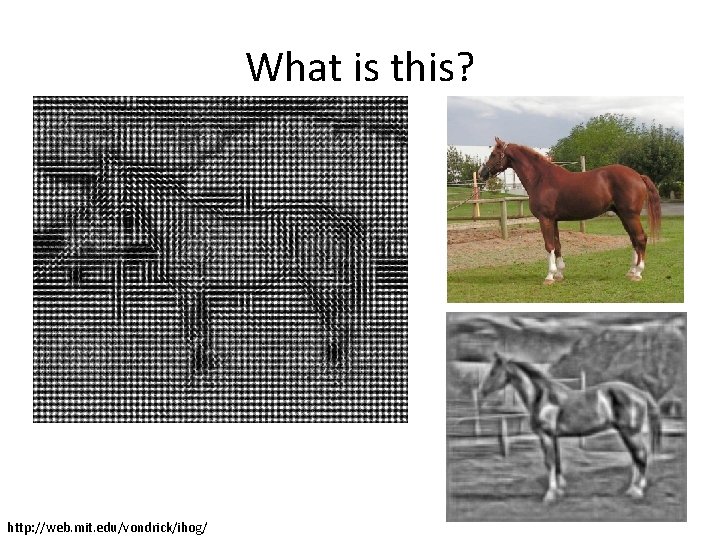

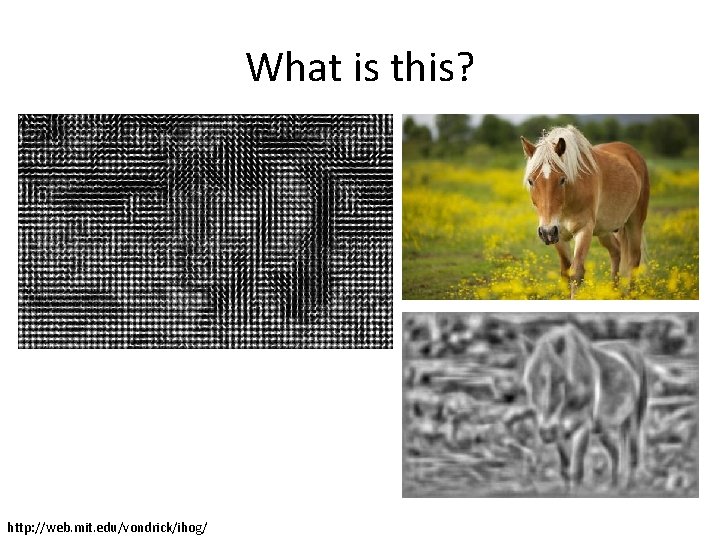

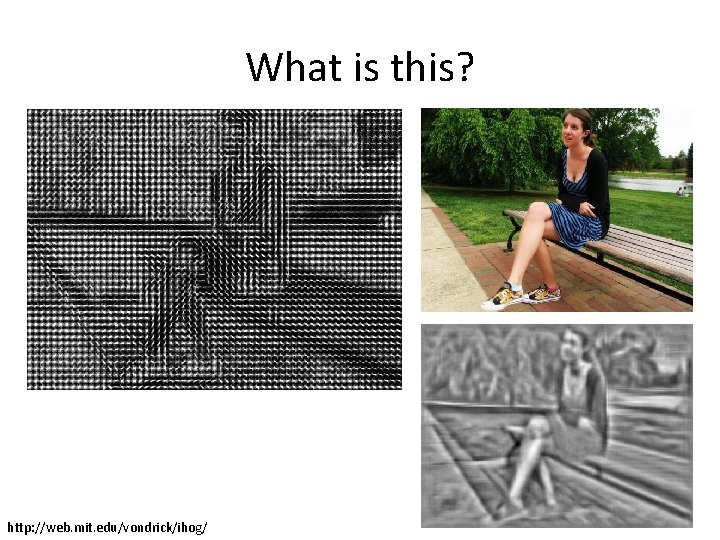

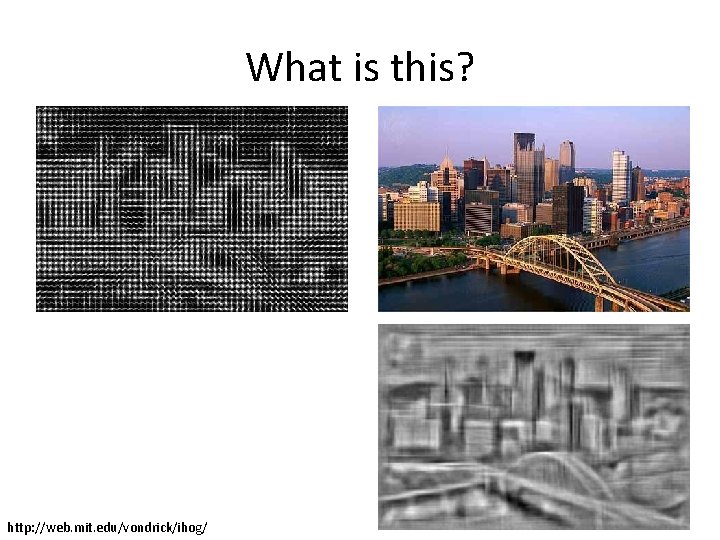

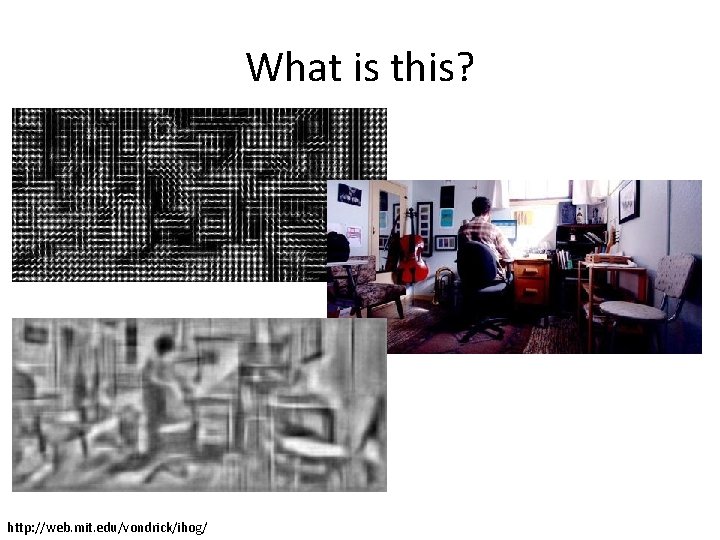

What is this?

http://web.mit.edu/vondrick/ihog/

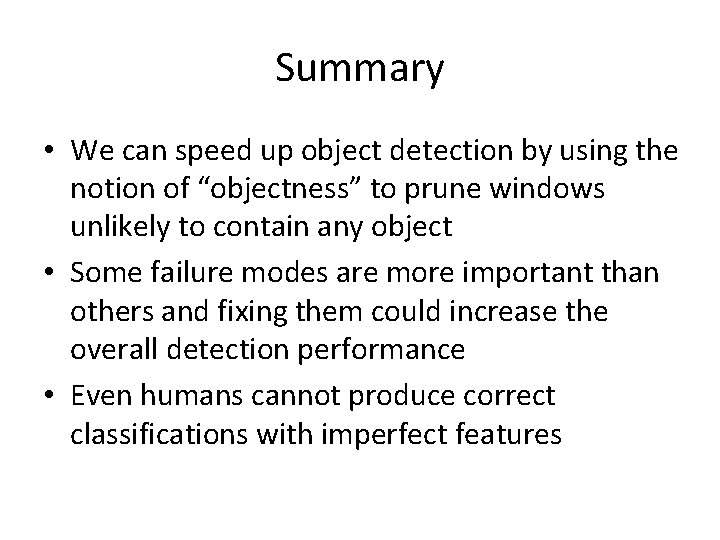

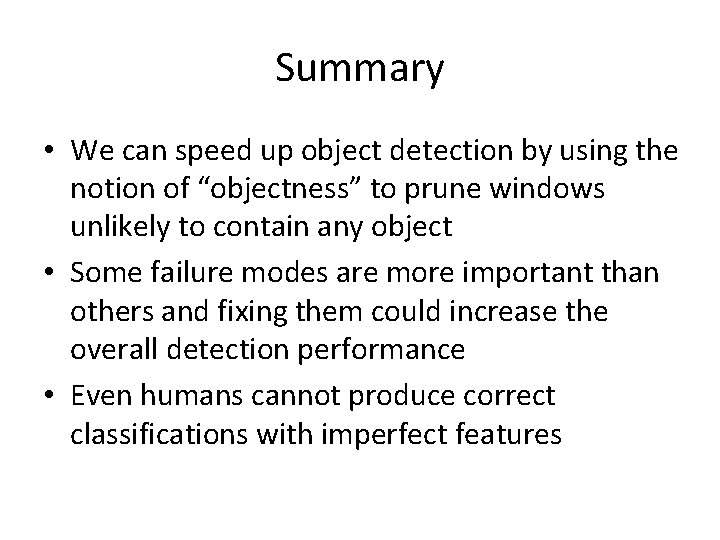

Summary

- We can speed up object detection by using the notion of “objectness” to prune windows unlikely to contain any object
- Some failure modes are more important than others, and fixing them could increase overall detection performance
- Even humans cannot produce correct classifications with imperfect features