CS 1674: Intro to Computer Vision, Recurrent Neural Networks

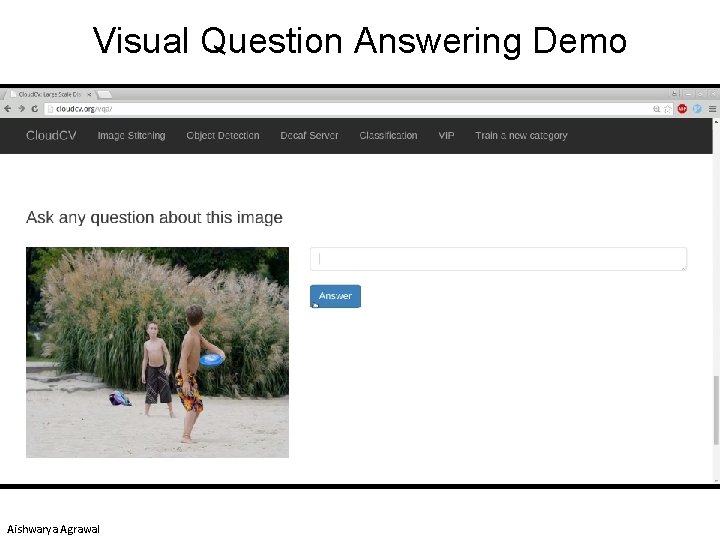

CS 1674: Intro to Computer Vision Recurrent Neural Networks Prof. Adriana Kovashka University of Pittsburgh December 5, 2016

Announcements

- Next time: review for the final exam
- By Tuesday at noon, send me three topics you want me to review (for participation credit!)
- Please complete the OMETs! (Thanks!)
- Grades before the final: see CourseWeb, “Overall” column (I won’t need to curve)

Plan for today

- Motivation/history
  - Vision and language, image captioning
- Tools
  - Recurrent neural networks
- Recent problem: Visual question answering
  - Some approaches

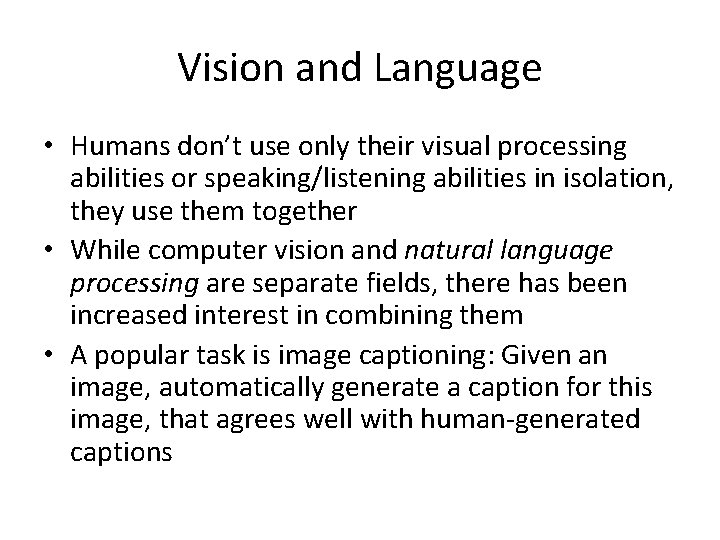

Vision and Language

- Humans don’t use their visual processing abilities or their speaking/listening abilities in isolation; they use them together.
- While computer vision and natural language processing are separate fields, there has been increasing interest in combining them.
- A popular task is image captioning: given an image, automatically generate a caption for it that agrees well with human-generated captions.

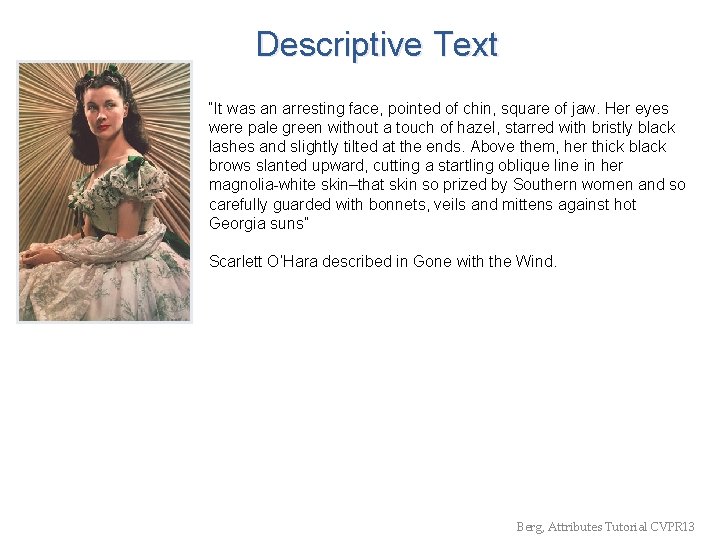

Descriptive Text “It was an arresting face, pointed of chin, square of jaw. Her eyes were pale green without a touch of hazel, starred with bristly black lashes and slightly tilted at the ends. Above them, her thick black brows slanted upward, cutting a startling oblique line in her magnolia-white skin–that skin so prized by Southern women and so carefully guarded with bonnets, veils and mittens against hot Georgia suns” Scarlett O’Hara, as described in *Gone with the Wind*. Berg, Attributes Tutorial CVPR 13

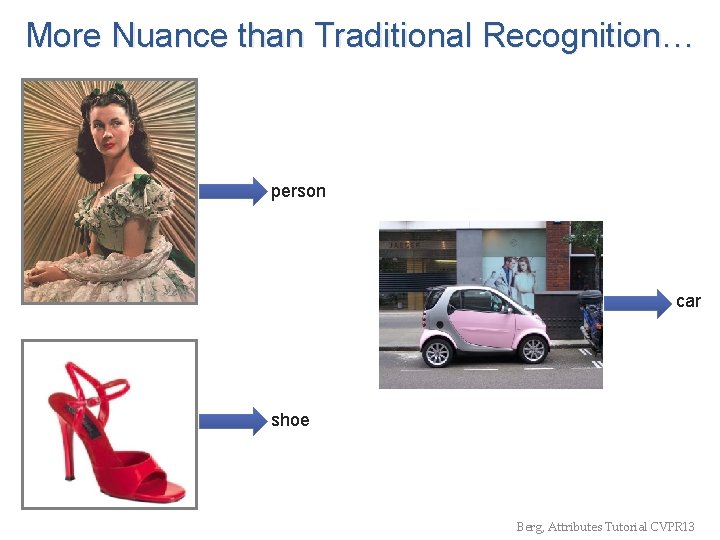

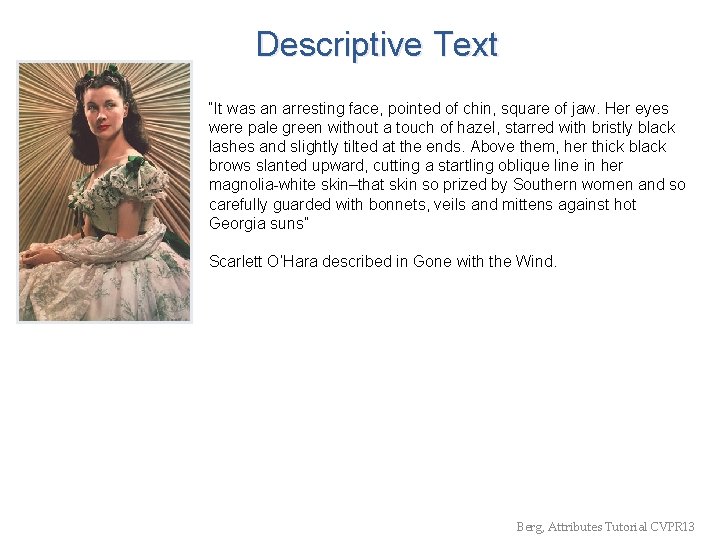

More Nuance than Traditional Recognition… person car shoe Berg, Attributes Tutorial CVPR 13

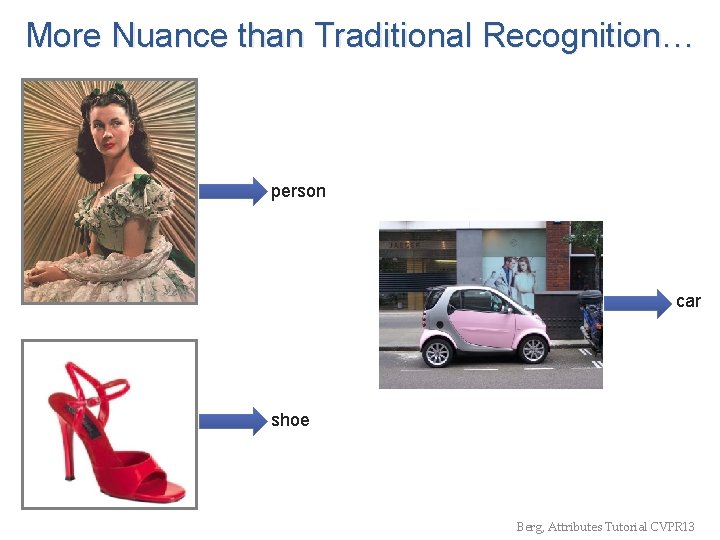

Toward Complex Structured Outputs car Berg, Attributes Tutorial CVPR 13

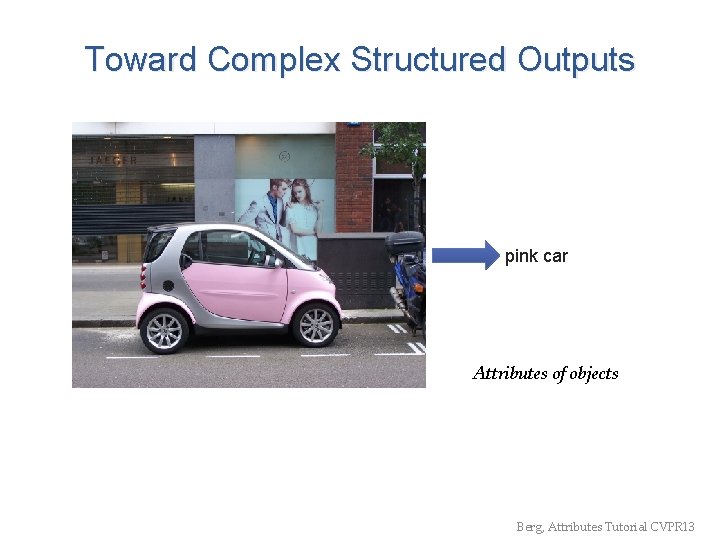

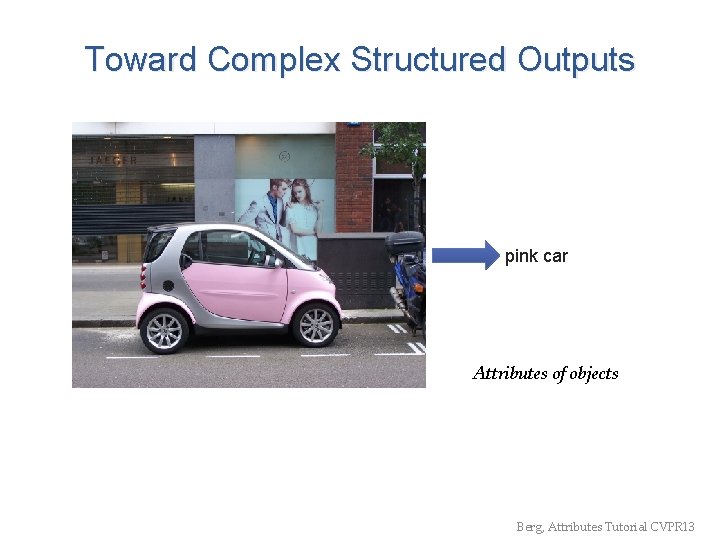

Toward Complex Structured Outputs pink car Attributes of objects Berg, Attributes Tutorial CVPR 13

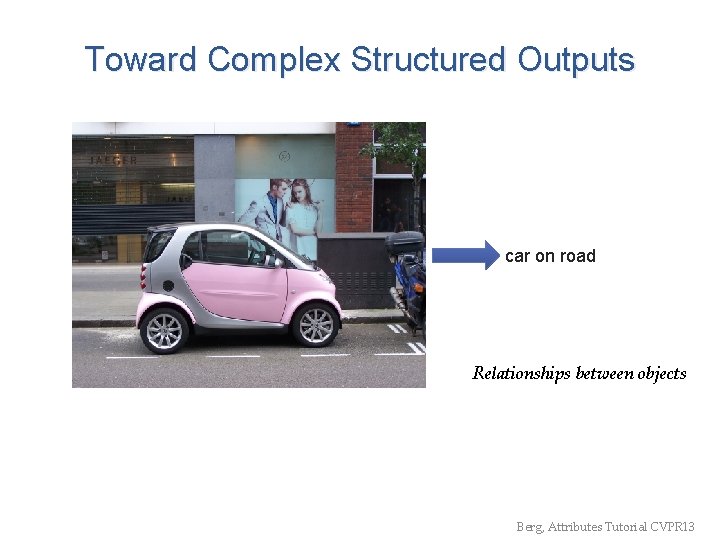

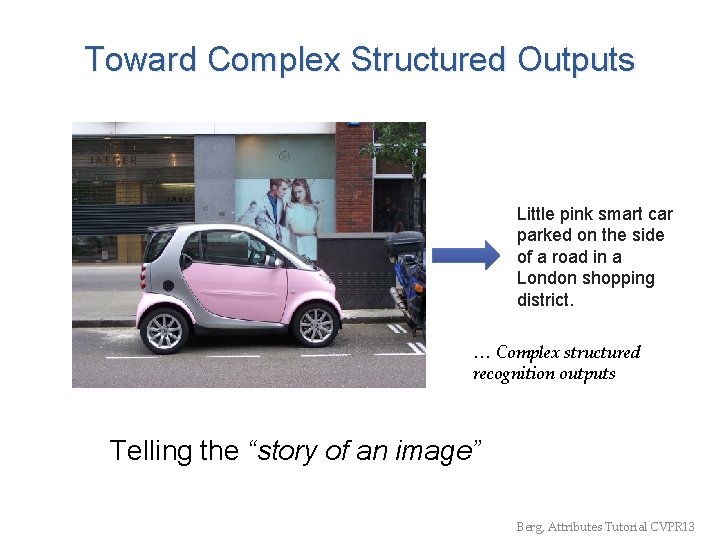

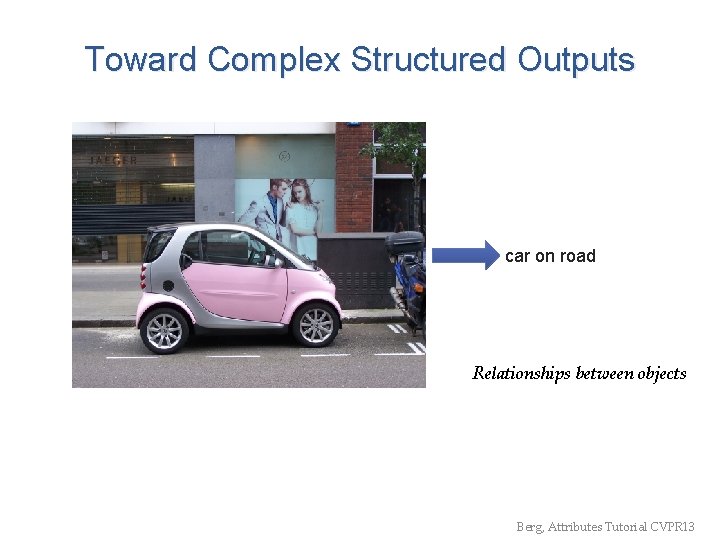

Toward Complex Structured Outputs car on road Relationships between objects Berg, Attributes Tutorial CVPR 13

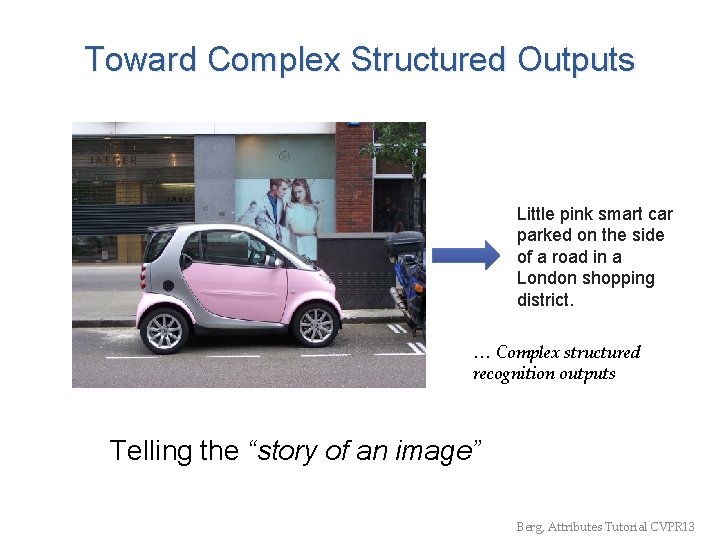

Toward Complex Structured Outputs Little pink smart car parked on the side of a road in a London shopping district. … Complex structured recognition outputs Telling the “story of an image” Berg, Attributes Tutorial CVPR 13

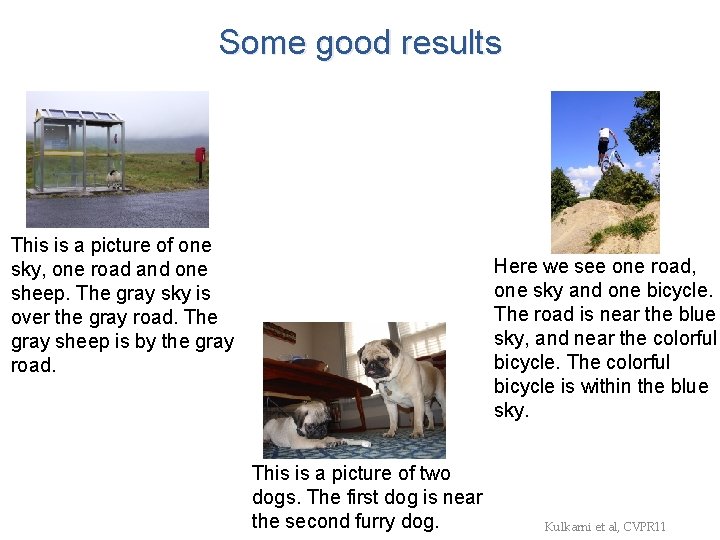

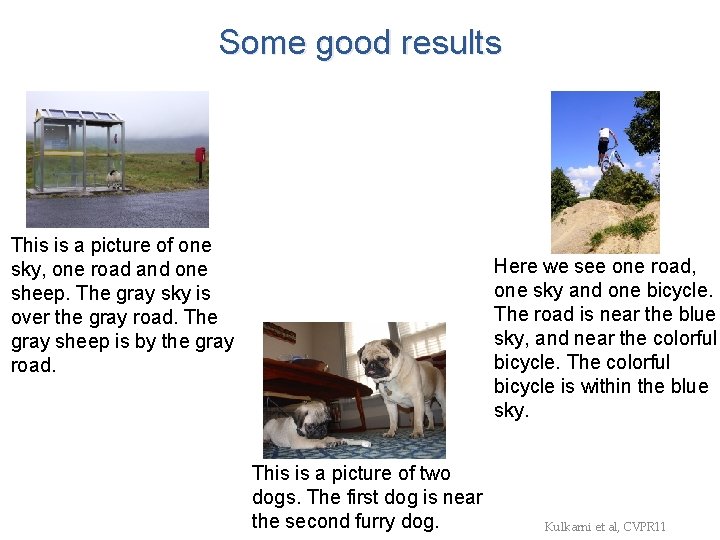

Some good results: This is a picture of one sky, one road and one sheep. The gray sky is over the gray road. The gray sheep is by the gray road. Here we see one road, one sky and one bicycle. The road is near the blue sky, and near the colorful bicycle. The colorful bicycle is within the blue sky. This is a picture of two dogs. The first dog is near the second furry dog. Kulkarni et al., CVPR 11

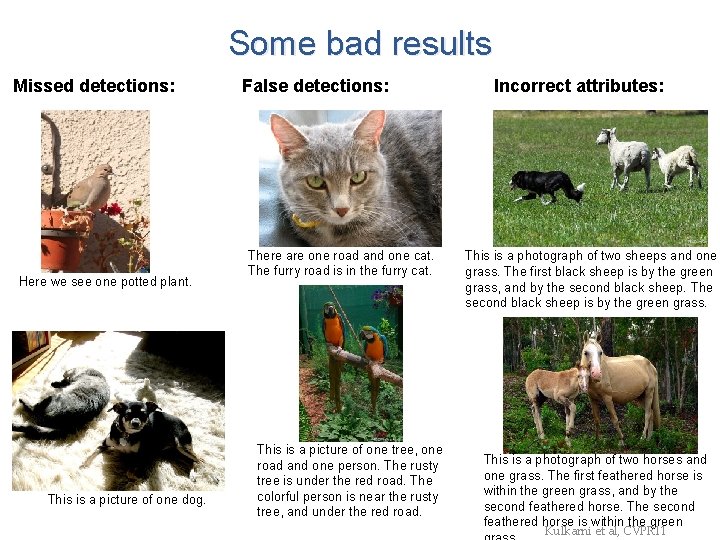

Some bad results

- Missed detections: Here we see one potted plant. This is a picture of one dog.
- False detections: There are one road and one cat. The furry road is in the furry cat. This is a picture of one tree, one road and one person. The rusty tree is under the red road. The colorful person is near the rusty tree, and under the red road.
- Incorrect attributes: This is a photograph of two sheeps and one grass. The first black sheep is by the green grass, and by the second black sheep. The second black sheep is by the green grass. This is a photograph of two horses and one grass. The first feathered horse is within the green grass, and by the second feathered horse. The second feathered horse is within the green

Kulkarni et al., CVPR 11

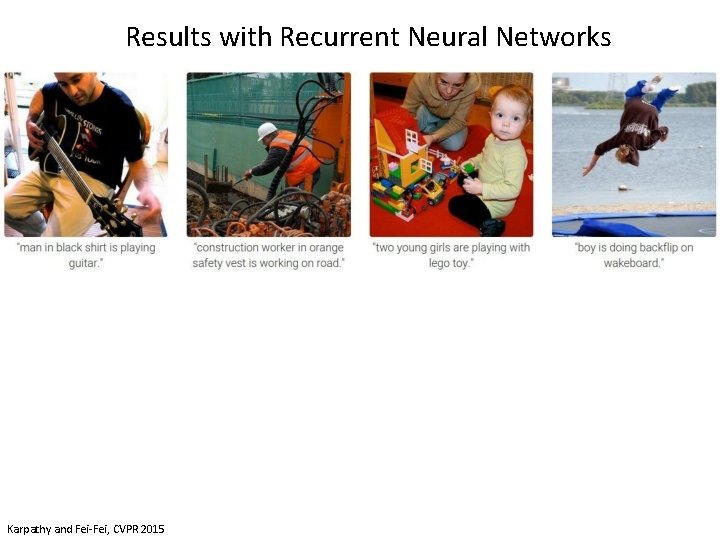

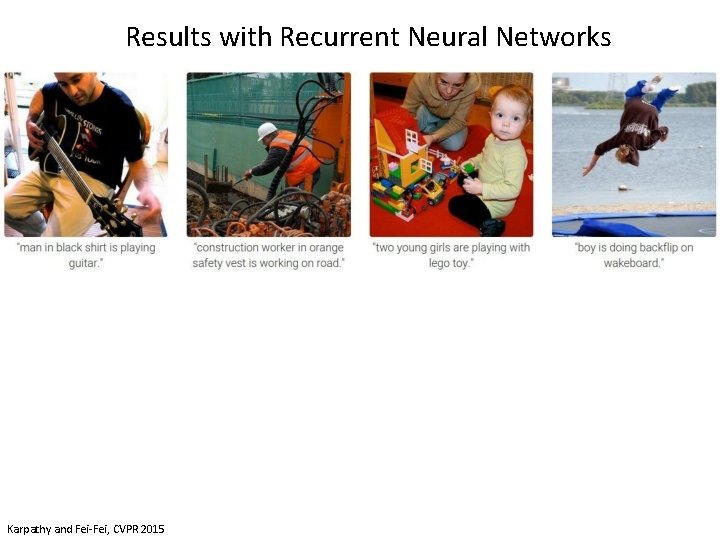

Results with Recurrent Neural Networks Karpathy and Fei-Fei, CVPR 2015

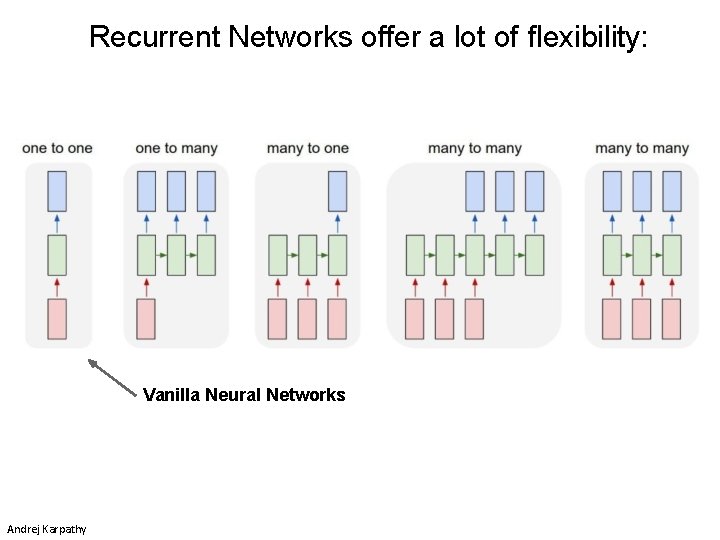

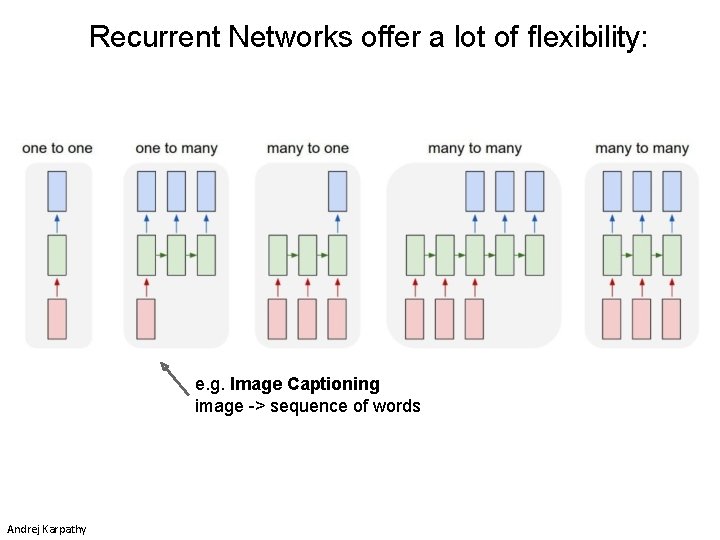

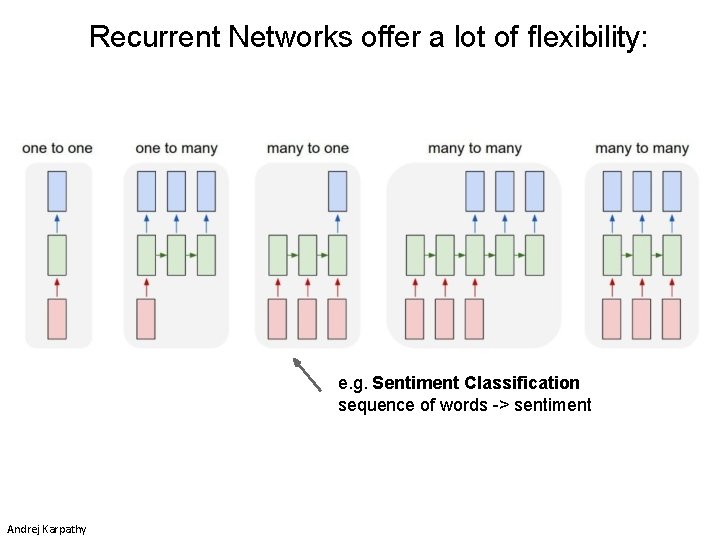

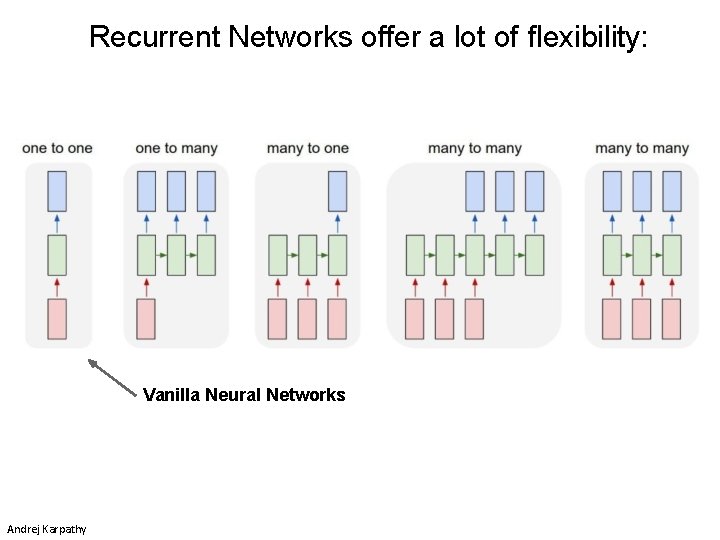

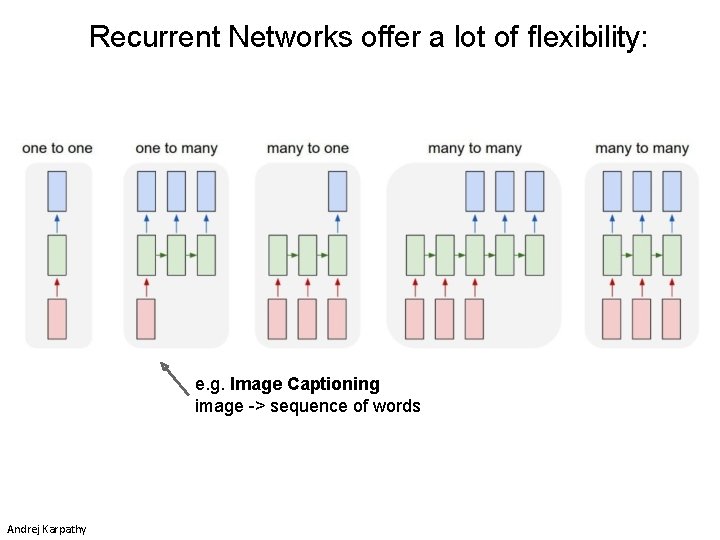

Recurrent Networks offer a lot of flexibility: Vanilla Neural Networks Andrej Karpathy

Recurrent Networks offer a lot of flexibility: e.g. Image Captioning, image -> sequence of words. Andrej Karpathy

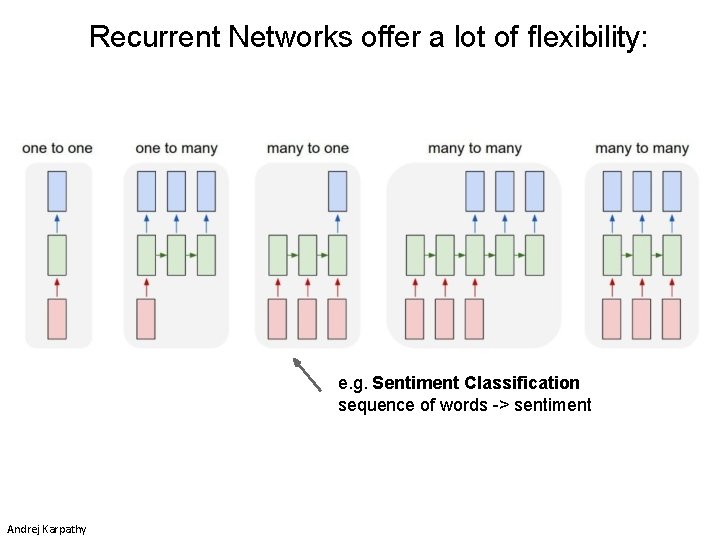

Recurrent Networks offer a lot of flexibility: e.g. Sentiment Classification, sequence of words -> sentiment. Andrej Karpathy

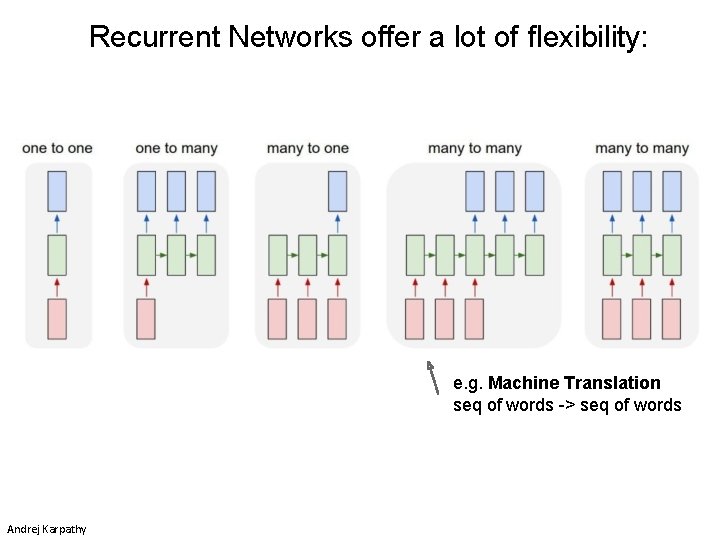

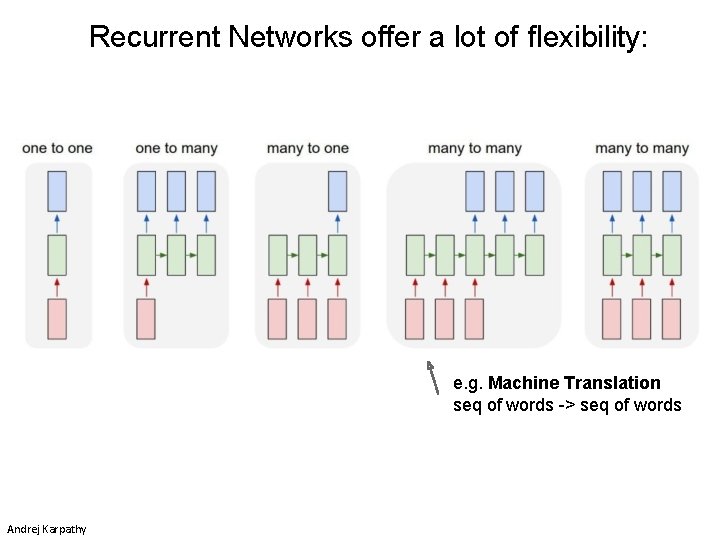

Recurrent Networks offer a lot of flexibility: e.g. Machine Translation, seq of words -> seq of words. Andrej Karpathy

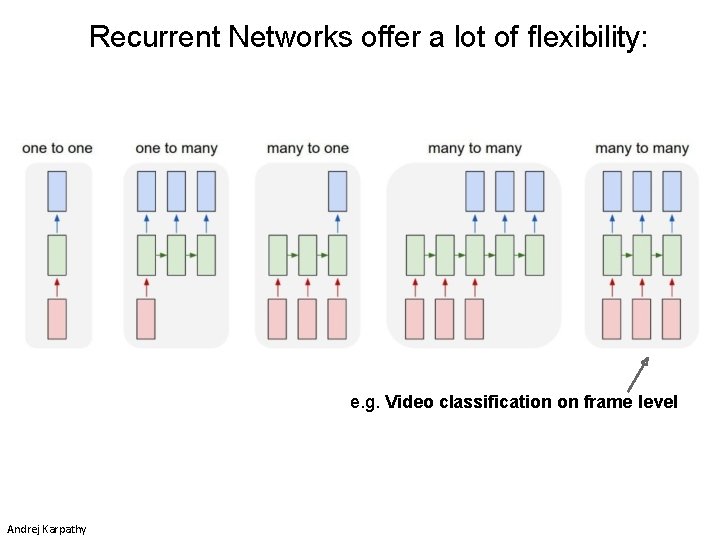

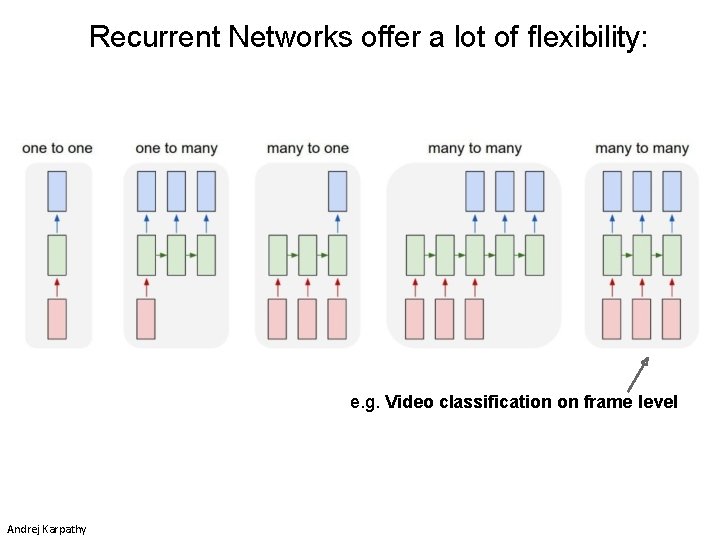

Recurrent Networks offer a lot of flexibility: e.g. Video classification on frame level. Andrej Karpathy

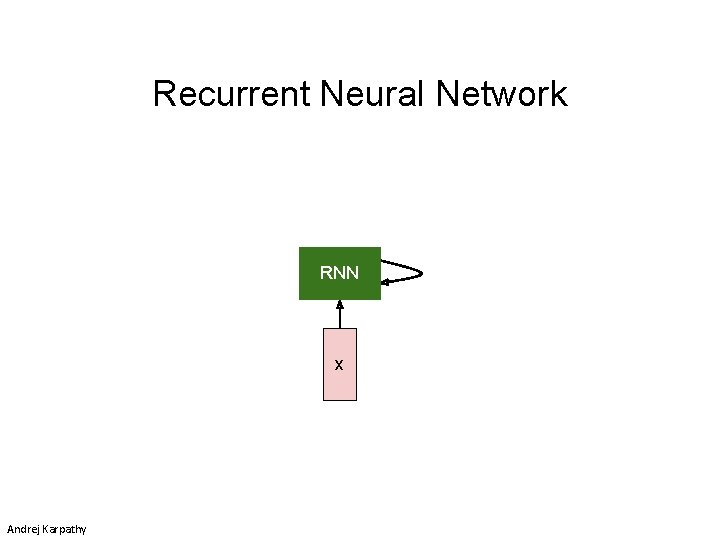

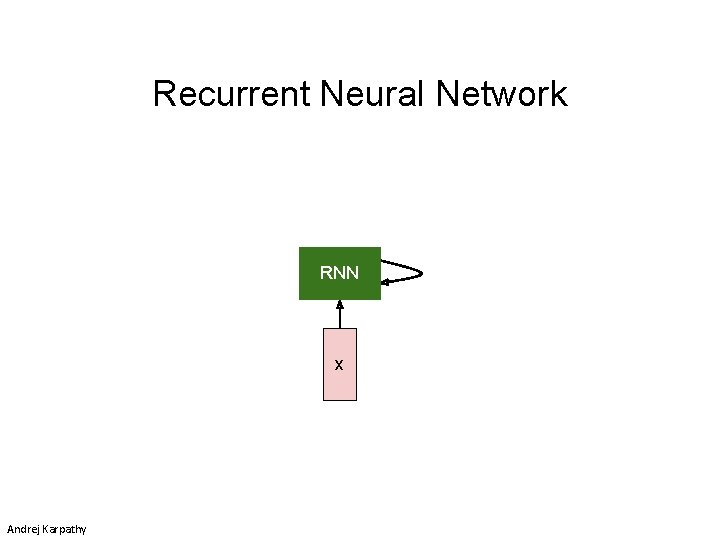

Recurrent Neural Network RNN x Andrej Karpathy

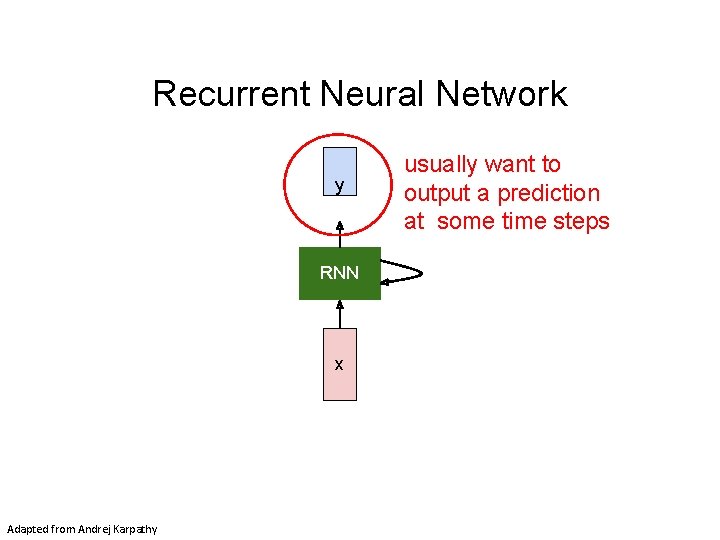

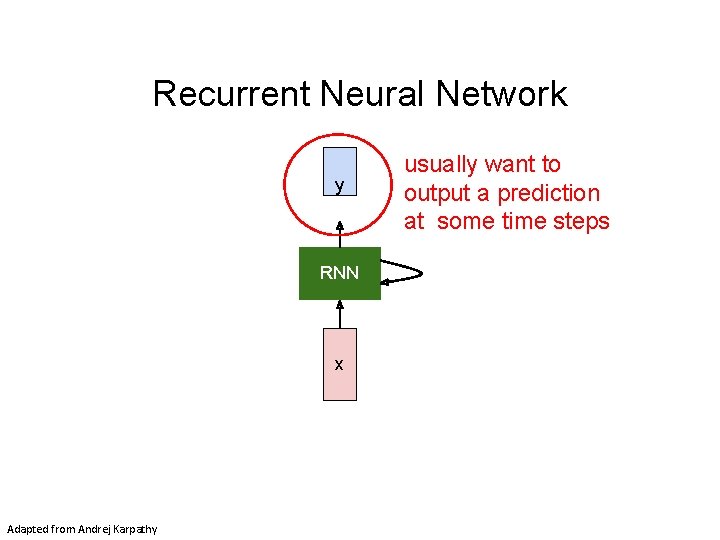

Recurrent Neural Network: we usually want to output a prediction $y$ at some time steps. Adapted from Andrej Karpathy

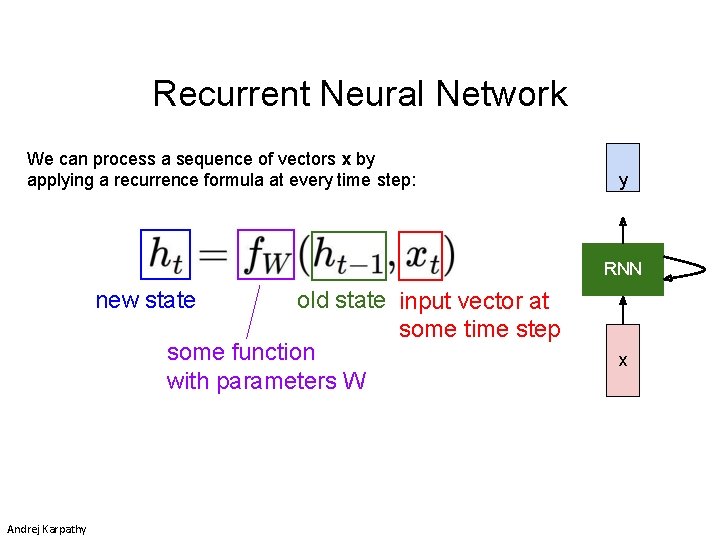

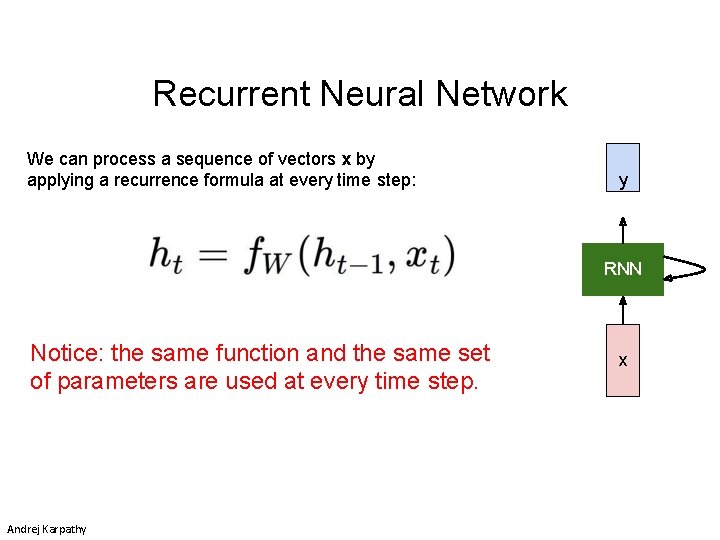

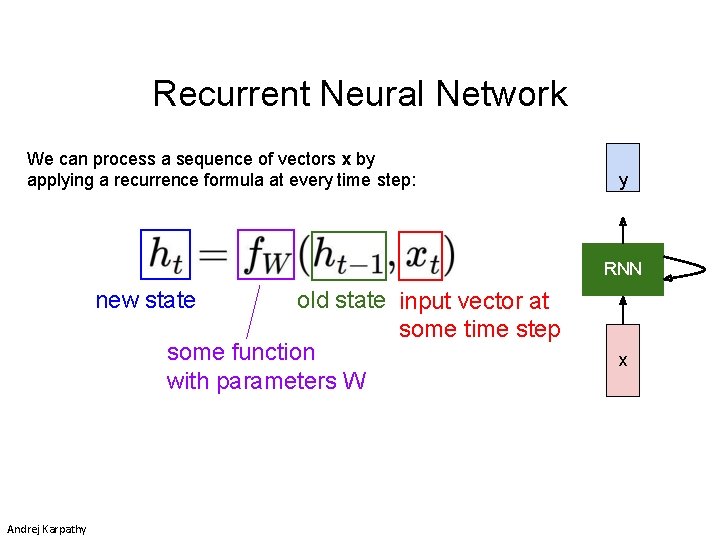

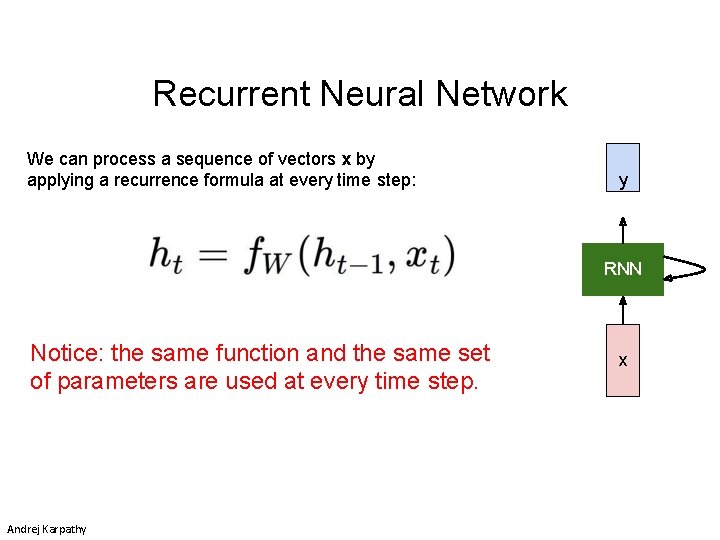

Recurrent Neural Network: we can process a sequence of vectors $x$ by applying a recurrence formula at every time step, $h_t = f_W(h_{t-1}, x_t)$, where $h_t$ is the new state, $h_{t-1}$ the old state, $x_t$ the input vector at some time step, and $f_W$ some function with parameters $W$. Andrej Karpathy

Recurrent Neural Network: we process a sequence of vectors $x$ by applying the recurrence $h_t = f_W(h_{t-1}, x_t)$ at every time step. Notice: the same function and the same set of parameters are used at every time step. Andrej Karpathy

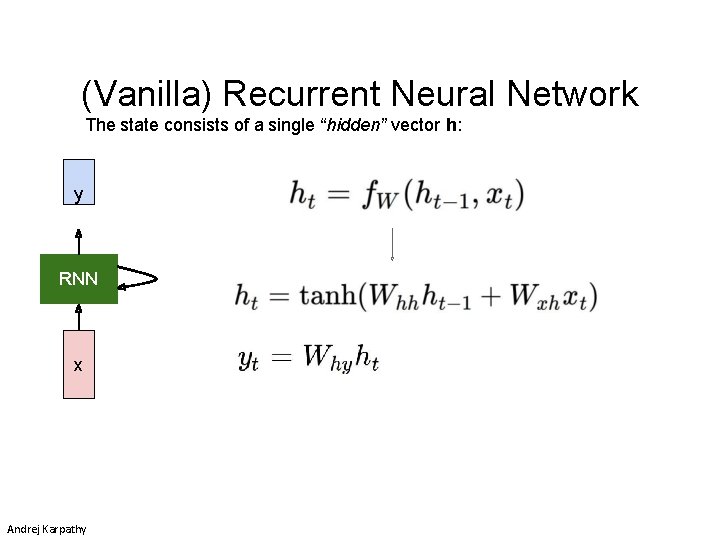

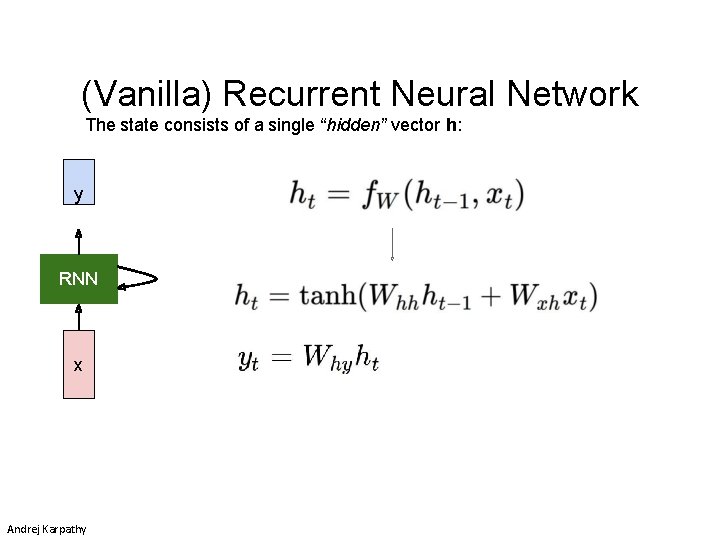

(Vanilla) Recurrent Neural Network: the state consists of a single “hidden” vector $h$, updated as $h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t)$, with output $y_t = W_{hy} h_t$. Andrej Karpathy
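The vanilla RNN update can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions: the class name `VanillaRNN`, the small sizes, and the random initialization are all hypothetical, and bias terms are omitted to match the formula above. The point it shows is that one set of weights (`W_xh`, `W_hh`, `W_hy`) is reused at every time step.

```python
import numpy as np

class VanillaRNN:
    """Hypothetical minimal vanilla RNN; sizes and init are illustrative only."""

    def __init__(self, input_size, hidden_size, output_size, seed=0):
        rng = np.random.default_rng(seed)
        # A single set of parameters, shared across all time steps.
        self.W_xh = rng.normal(0.0, 0.01, (hidden_size, input_size))
        self.W_hh = rng.normal(0.0, 0.01, (hidden_size, hidden_size))
        self.W_hy = rng.normal(0.0, 0.01, (output_size, hidden_size))

    def step(self, x, h_prev):
        # h_t = tanh(W_hh h_{t-1} + W_xh x_t)
        h = np.tanh(self.W_hh @ h_prev + self.W_xh @ x)
        # y_t = W_hy h_t
        y = self.W_hy @ h
        return h, y

    def forward(self, xs, h0=None):
        # Start from a zero hidden state unless one is given.
        h = np.zeros(self.W_hh.shape[0]) if h0 is None else h0
        hs, ys = [], []
        for x in xs:
            h, y = self.step(x, h)  # same step function every time
            hs.append(h)
            ys.append(y)
        return hs, ys

rnn = VanillaRNN(input_size=4, hidden_size=3, output_size=4)
xs = [np.eye(4)[i] for i in [0, 1, 2]]  # three one-hot input vectors
hs, ys = rnn.forward(xs)
print(len(hs), hs[0].shape, ys[0].shape)  # 3 (3,) (4,)
```

Because `tanh` squashes each hidden unit into (-1, 1), the hidden state stays bounded no matter how long the sequence is.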

![Character-level language model example. Vocabulary: [h, e, l, o]. Training sequence: “hello”](https://slidetodoc.com/presentation_image/1d4640596eb575c13ec2fe82cbaa4f1c/image-24.jpg)

Example: character-level language model. Vocabulary: [h, e, l, o]. Example training sequence: “hello”. Andrej Karpathy

![Character-level language model example. Vocabulary: [h, e, l, o]. Training sequence: “hello”](https://slidetodoc.com/presentation_image/1d4640596eb575c13ec2fe82cbaa4f1c/image-25.jpg)


![Character-level language model example. Vocabulary: [h, e, l, o]. Training sequence: “hello”](https://slidetodoc.com/presentation_image/1d4640596eb575c13ec2fe82cbaa4f1c/image-26.jpg)


![Character-level language model example. Vocabulary: [h, e, l, o]. Training sequence: “hello”](https://slidetodoc.com/presentation_image/1d4640596eb575c13ec2fe82cbaa4f1c/image-27.jpg)
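The character-level example can be made concrete with a short sketch, assuming the vanilla RNN update from earlier and untrained random weights. The hidden size of 3 and the helper names `one_hot` and `softmax` are illustrative choices, not taken from the slides. For the sequence “hello”, the inputs are h, e, l, l and the targets are the next characters e, l, l, o. At each step the loss is the cross-entropy of the true next character.

```python
import numpy as np

vocab = ["h", "e", "l", "o"]
char_to_ix = {c: i for i, c in enumerate(vocab)}

def one_hot(ix, n):
    v = np.zeros(n)
    v[ix] = 1.0
    return v

def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()

seq = "hello"
inputs = [char_to_ix[c] for c in seq[:-1]]   # h, e, l, l
targets = [char_to_ix[c] for c in seq[1:]]   # e, l, l, o

rng = np.random.default_rng(0)
V, H = len(vocab), 3  # hidden size 3 is an arbitrary illustrative choice
W_xh = rng.normal(0.0, 0.1, (H, V))
W_hh = rng.normal(0.0, 0.1, (H, H))
W_hy = rng.normal(0.0, 0.1, (V, H))

h = np.zeros(H)
loss = 0.0
for ix, target in zip(inputs, targets):
    h = np.tanh(W_hh @ h + W_xh @ one_hot(ix, V))  # update hidden state
    p = softmax(W_hy @ h)                           # distribution over next char
    loss -= np.log(p[target])                       # cross-entropy for true next char

print(f"average loss per char: {loss / len(inputs):.3f}")
```

With untrained weights the predictions are nearly uniform, so the average loss should land near log 4 ≈ 1.386. Note that the input “l” appears twice, once followed by “l” and once by “o”. Only the hidden state, which carries the history, lets the model tell these two cases apart.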


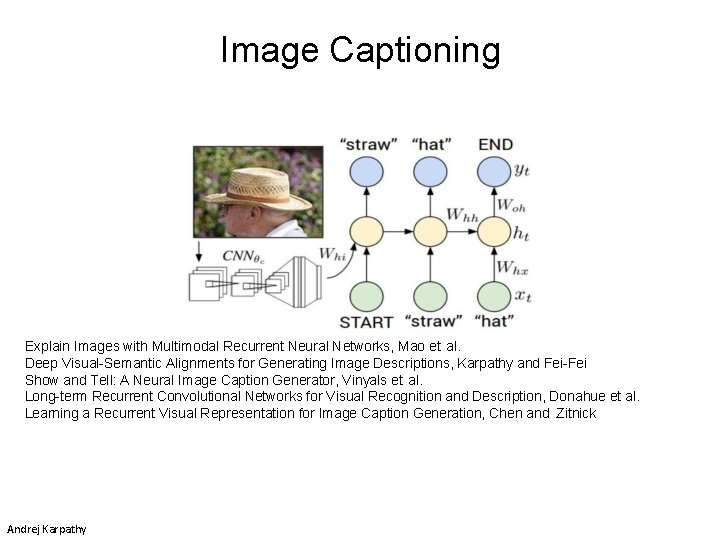

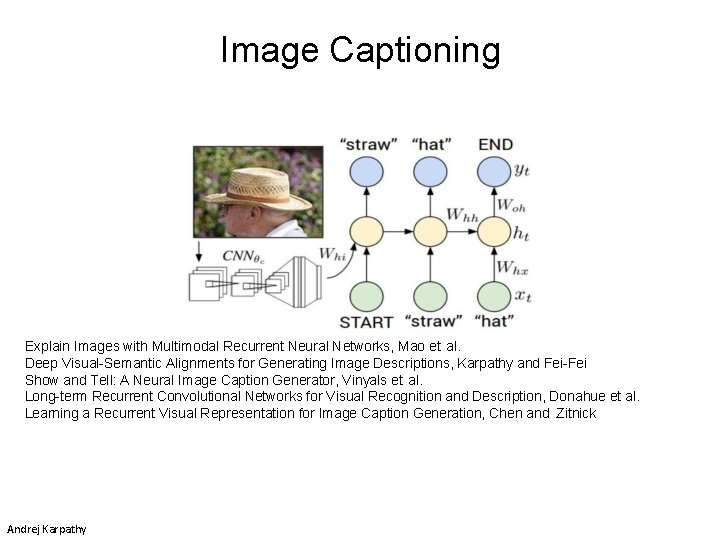

Image Captioning

- Explain Images with Multimodal Recurrent Neural Networks, Mao et al.
- Deep Visual-Semantic Alignments for Generating Image Descriptions, Karpathy and Fei-Fei
- Show and Tell: A Neural Image Caption Generator, Vinyals et al.
- Long-term Recurrent Convolutional Networks for Visual Recognition and Description, Donahue et al.
- Learning a Recurrent Visual Representation for Image Caption Generation, Chen and Zitnick

Andrej Karpathy

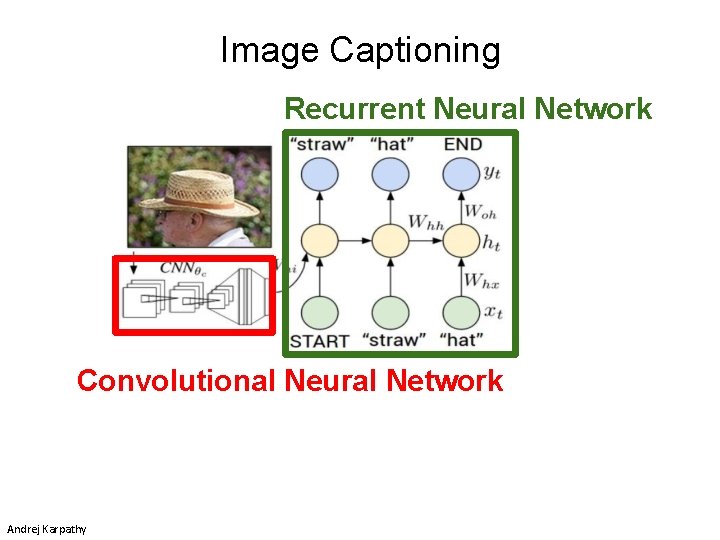

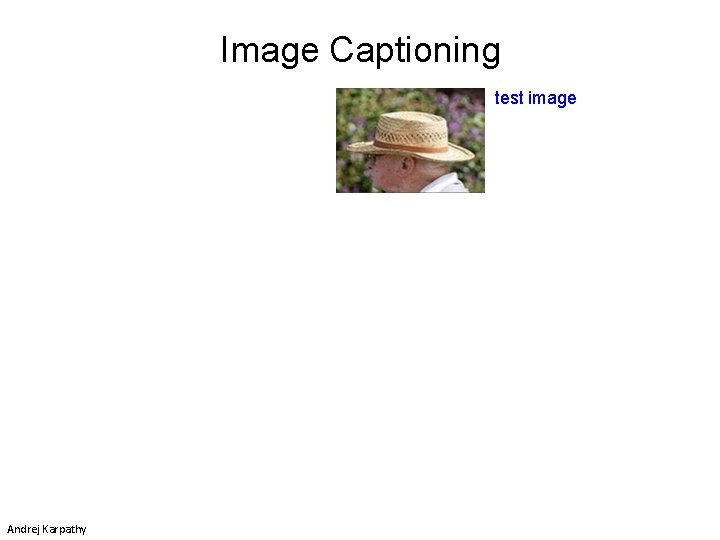

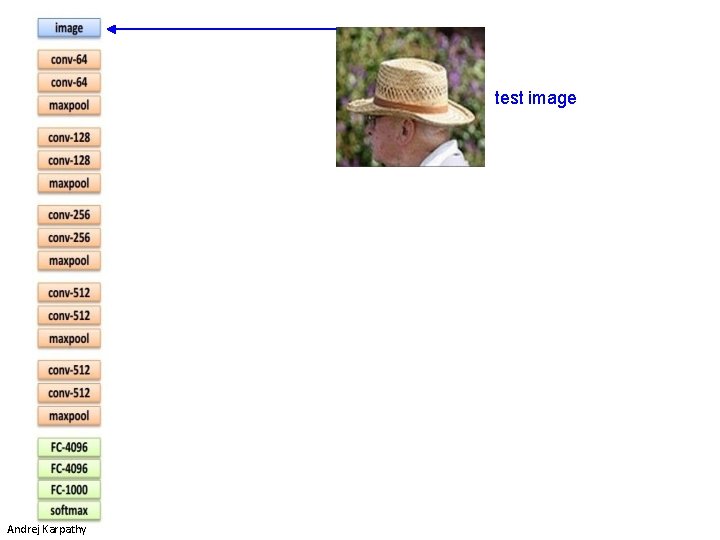

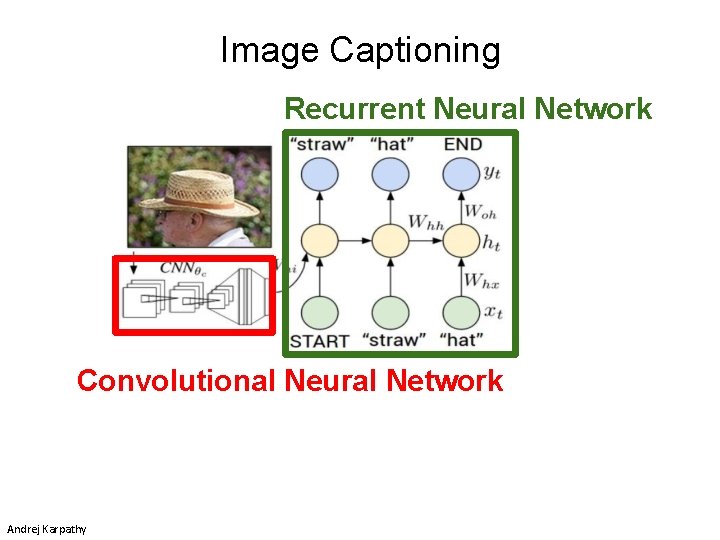

Image Captioning Recurrent Neural Network Convolutional Neural Network Andrej Karpathy

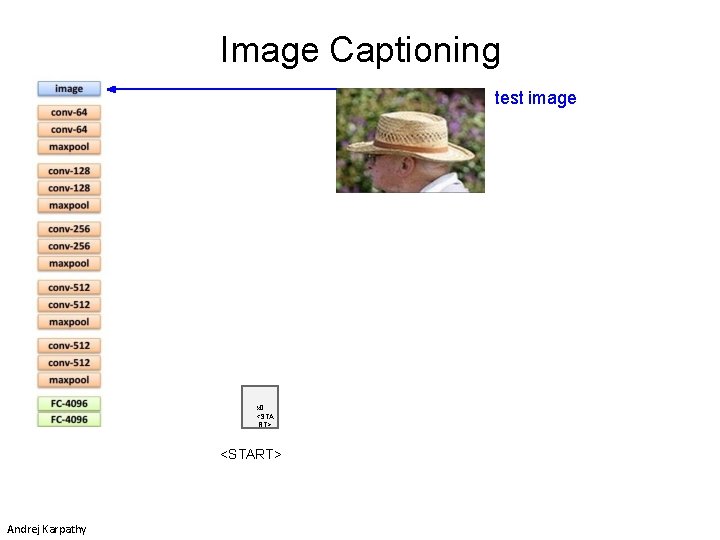

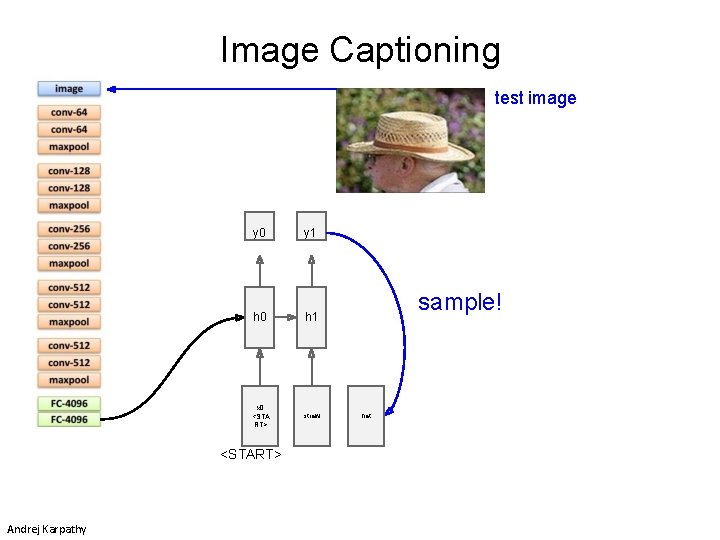

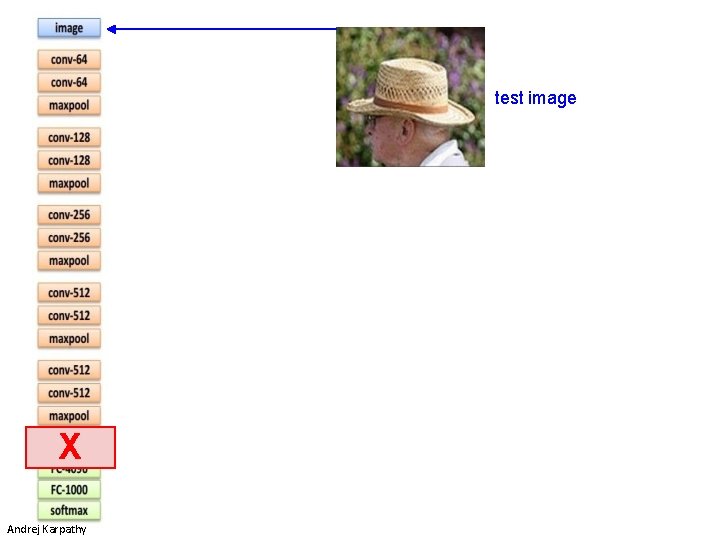

Image Captioning test image Andrej Karpathy

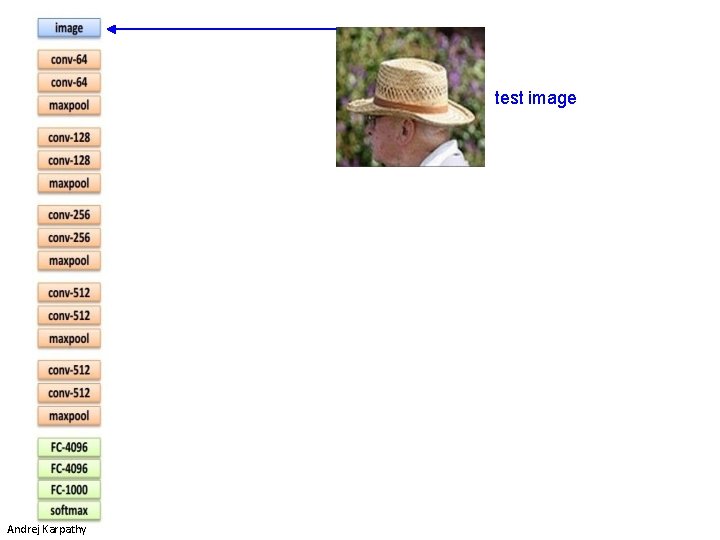


test image X Andrej Karpathy

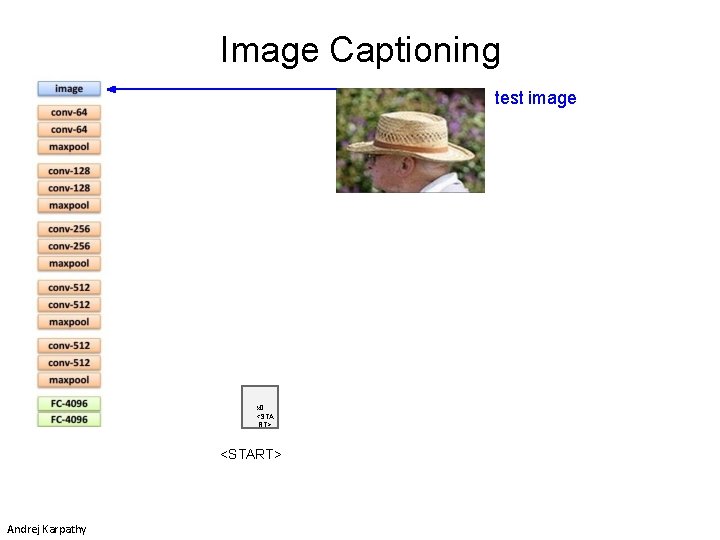

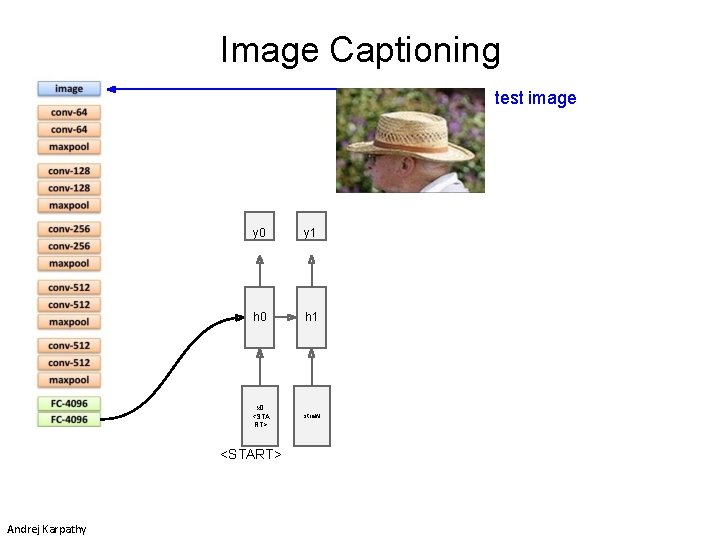

Image Captioning: test image. The first input $x_0$ is a special <START> token. Andrej Karpathy

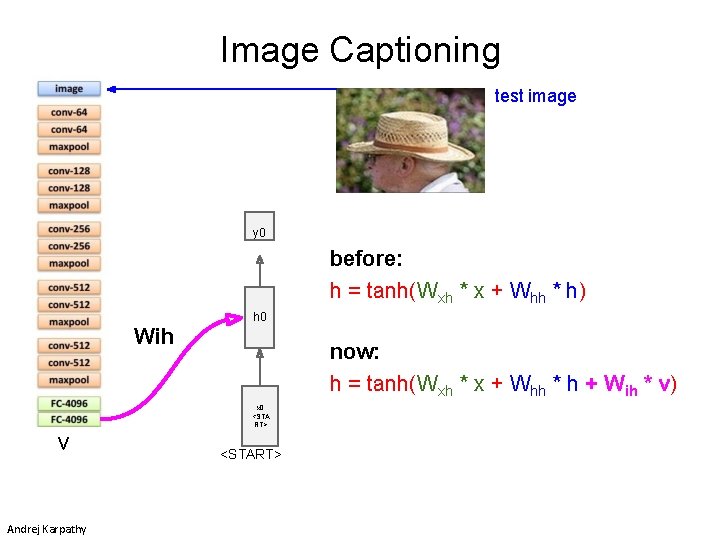

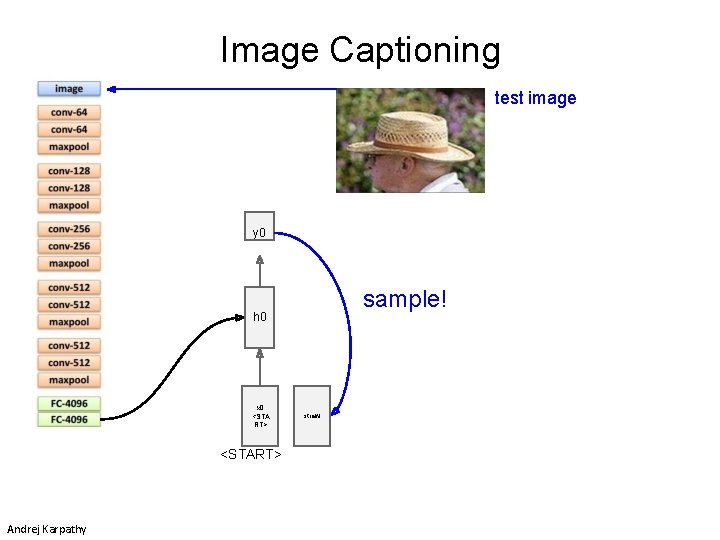

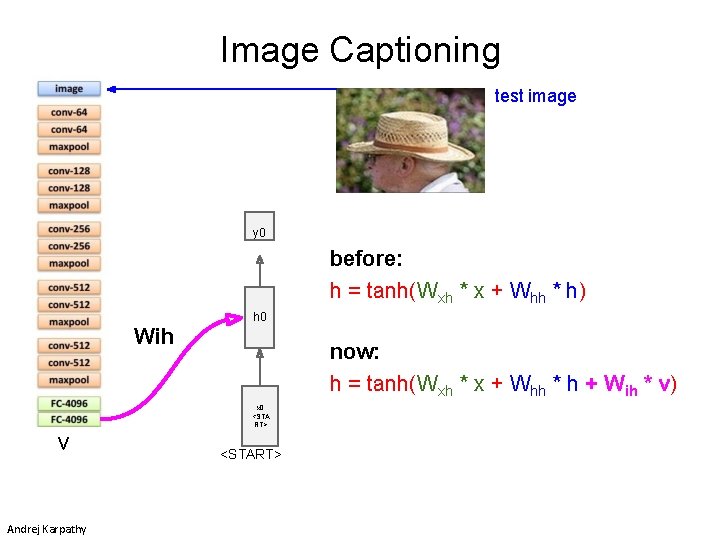

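Image Captioning: test image. Before: $h = \tanh(W_{xh} x + W_{hh} h)$. Now: $h = \tanh(W_{xh} x + W_{hh} h + W_{ih} v)$, where $v$ is the image feature vector computed by the CNN and $W_{ih}$ feeds it into the hidden state $h_0$. The first input $x_0$ is the <START> token and $y_0$ is the first output. Andrej Karpathy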
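The “before” and “now” updates differ by a single extra term, which the following sketch makes explicit. Everything here is assumed for illustration: the sizes, the random weights, and the random stand-ins for the CNN feature `v` and the <START> embedding `x0`. The figure shows $W_{ih}$ entering at $h_0$, so the image term would be supplied at the first step.

```python
import numpy as np

rng = np.random.default_rng(0)
D_word, D_img, H = 8, 16, 5  # illustrative sizes; real CNN features are much larger
W_xh = rng.normal(0.0, 0.1, (H, D_word))
W_hh = rng.normal(0.0, 0.1, (H, H))
W_ih = rng.normal(0.0, 0.1, (H, D_img))

def step(x, h_prev, v=None):
    """One captioning-RNN step.

    Without v: h = tanh(W_xh x + W_hh h_prev)            (plain RNN)
    With v:    h = tanh(W_xh x + W_hh h_prev + W_ih v)   (image-conditioned)
    """
    pre = W_xh @ x + W_hh @ h_prev
    if v is not None:
        pre = pre + W_ih @ v  # extra term injecting the image features
    return np.tanh(pre)

v = rng.normal(size=D_img)    # hypothetical stand-in for the CNN feature vector
x0 = rng.normal(size=D_word)  # hypothetical stand-in for the <START> embedding
h_prev = np.zeros(H)

h0_plain = step(x0, h_prev)
h0_image = step(x0, h_prev, v)
print(np.allclose(h0_plain, h0_image))  # False: the image changes the hidden state
```

Since the text input is identical in both calls, any difference between `h0_plain` and `h0_image` comes entirely from the image. That is what lets the same language model produce different captions for different images.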

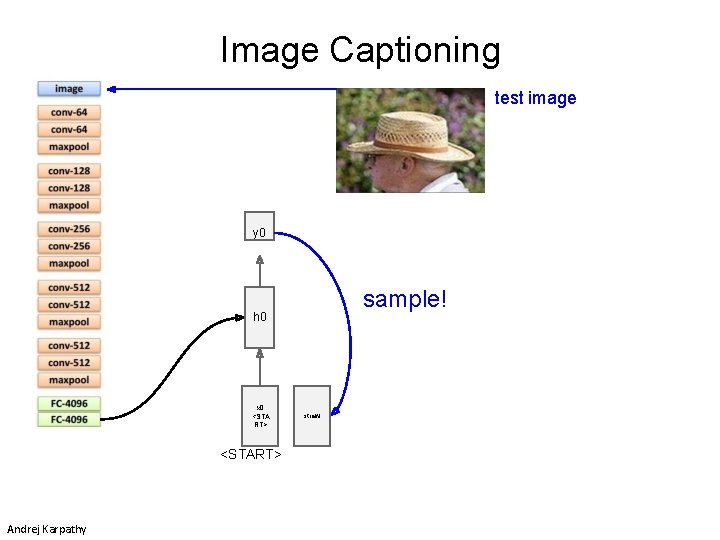

Image Captioning: test image. Sample the first word from the output distribution $y_0$ (computed from $h_0$, with $x_0$ = <START>): “straw”. Andrej Karpathy

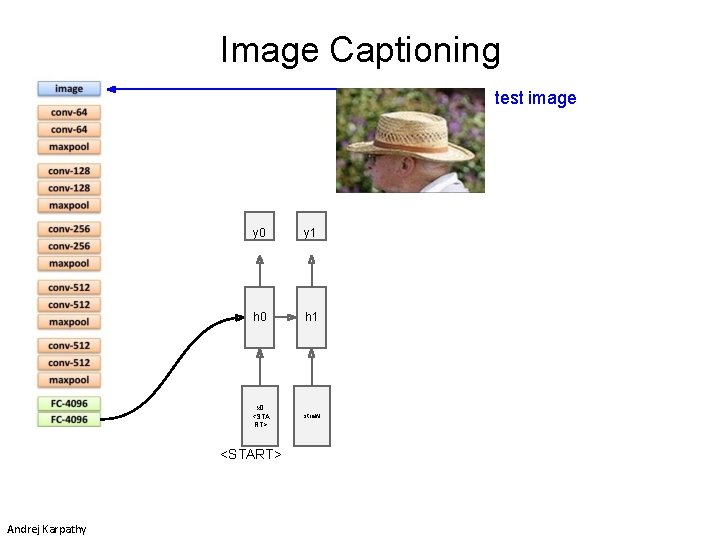

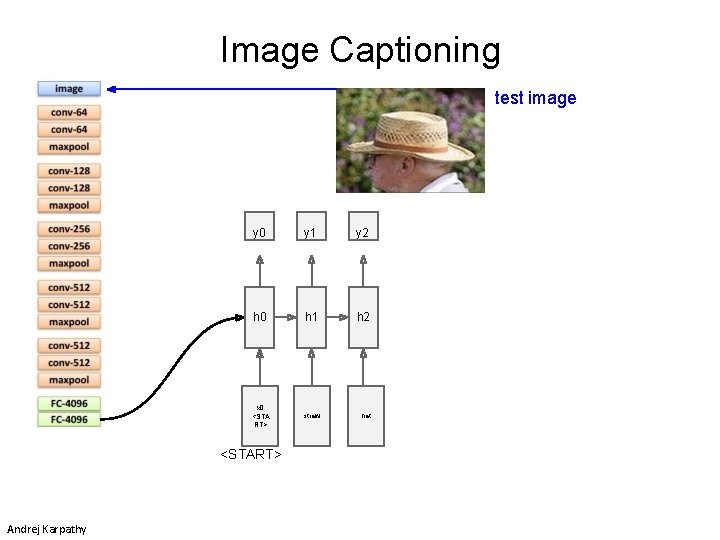

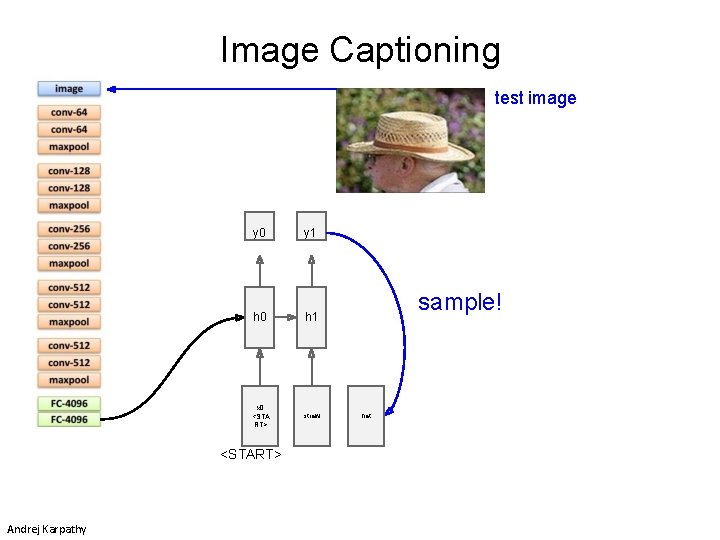

Image Captioning: test image. The sampled word “straw” becomes the next input $x_1$, which updates the hidden state to $h_1$ and produces $y_1$. Andrej Karpathy

Image Captioning: test image. Sample the next word from $y_1$: “hat”. Andrej Karpathy

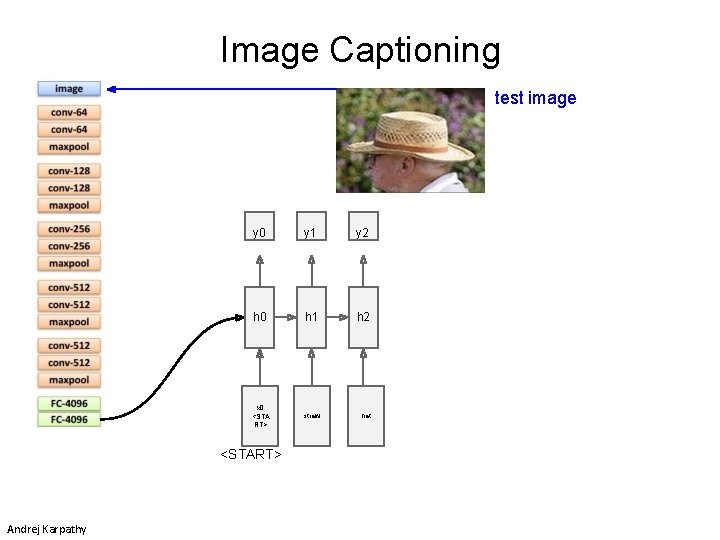

Image Captioning: test image. “hat” becomes the input $x_2$, producing $h_2$ and $y_2$. Andrej Karpathy

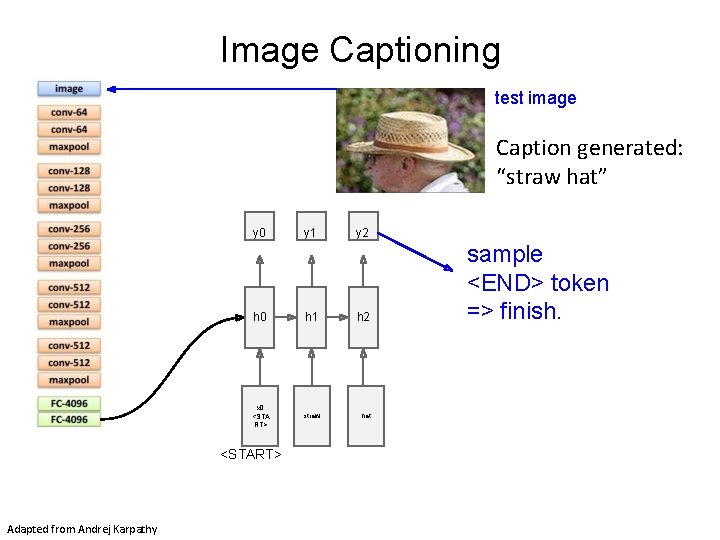

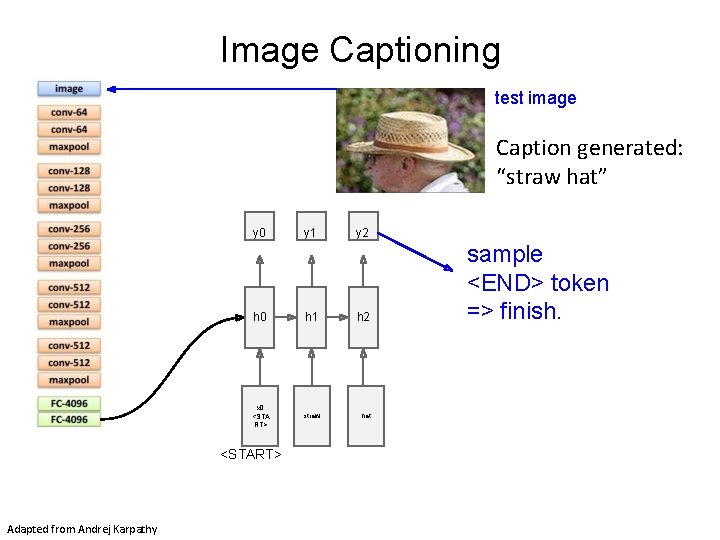

Image Captioning: test image. Sampling the <END> token finishes generation. Caption generated: “straw hat”. Adapted from Andrej Karpathy
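The generation procedure just walked through can be sketched as a loop: start from <START>, sample a word, feed it back in, and stop when <END> is sampled or a length limit is hit. This is a toy with untrained random weights, so its captions are random words. The vocabulary, sizes, `max_len`, the function name `generate_caption`, and masking <START> out of the output distribution are all hypothetical choices made for the sketch. The image enters only at the first step, following our reading of the figure.

```python
import numpy as np

# Hypothetical toy vocabulary: index 0 is <START>, index 1 is <END>.
vocab = ["<START>", "<END>", "straw", "hat", "a", "man"]
START, END = 0, 1
V, H, D_img = len(vocab), 8, 16
rng = np.random.default_rng(0)
W_xh = rng.normal(0.0, 0.5, (H, V))
W_hh = rng.normal(0.0, 0.5, (H, H))
W_ih = rng.normal(0.0, 0.5, (H, D_img))
W_hy = rng.normal(0.0, 0.5, (V, H))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def generate_caption(v, max_len=10, rng=rng):
    h = np.zeros(H)
    word = START
    caption = []
    for t in range(max_len):
        x = np.eye(V)[word]                  # one-hot of the previous word
        pre = W_xh @ x + W_hh @ h
        if t == 0:
            pre = pre + W_ih @ v             # image features enter at the first step
        h = np.tanh(pre)
        p = softmax(W_hy @ h)
        p[START] = 0.0                       # never emit <START> as a word
        p = p / p.sum()
        word = rng.choice(V, p=p)            # sample the next word
        if word == END:                      # sampled <END> token => finish
            break
        caption.append(vocab[word])
    return caption

v = rng.normal(size=D_img)  # stand-in for the test image's CNN features
caption = generate_caption(v)
print(" ".join(caption))
```

Replacing `rng.choice` with `int(np.argmax(p))` would give greedy decoding instead of sampling. The `max_len` cap guarantees termination even if <END> is never drawn.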

![Image Sentence Datasets: Microsoft COCO [Tsung-Yi Lin et al. 2014], mscoco.org](https://slidetodoc.com/presentation_image/1d4640596eb575c13ec2fe82cbaa4f1c/image-40.jpg)

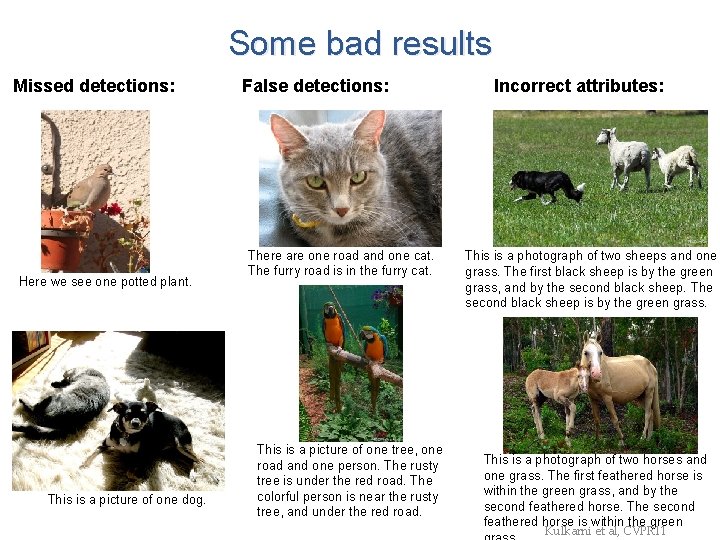

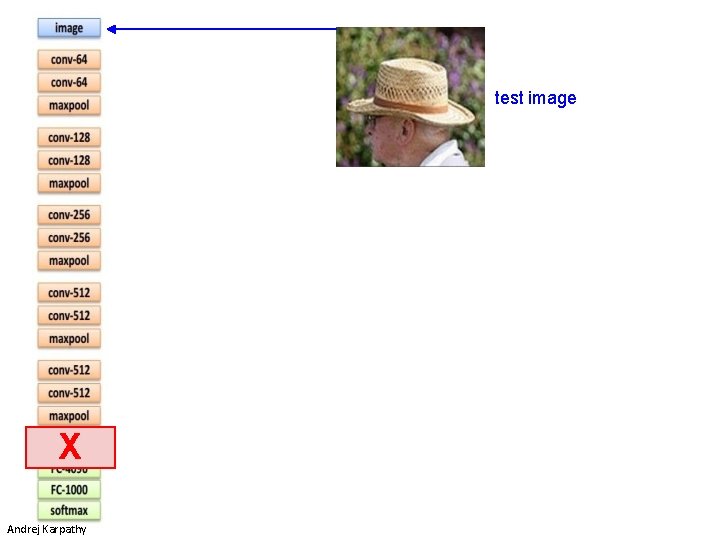

Image Sentence Datasets: Microsoft COCO [Tsung-Yi Lin et al. 2014], mscoco.org. Currently ~120K images, ~5 sentences each. Andrej Karpathy

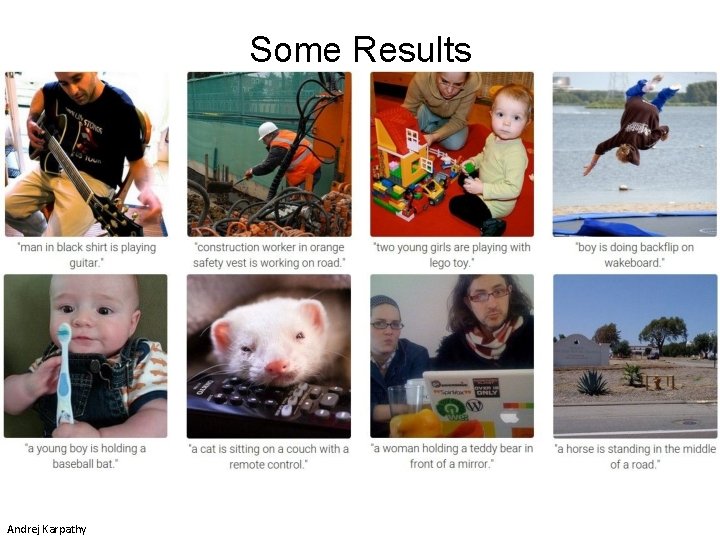

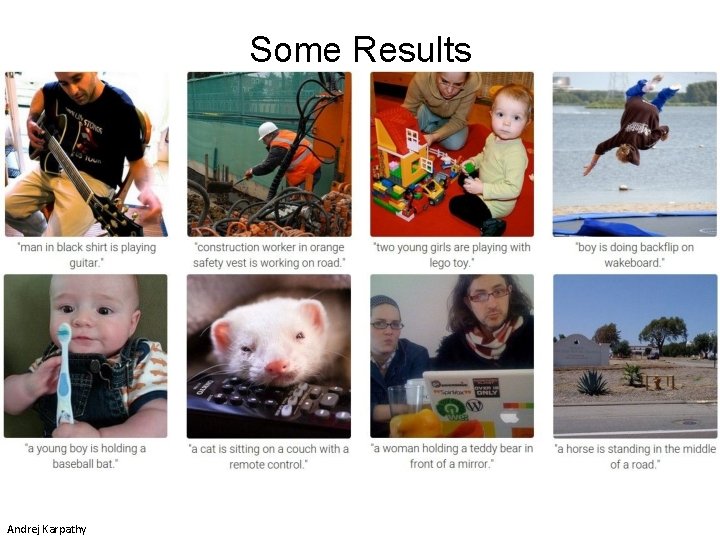

Some Results Andrej Karpathy

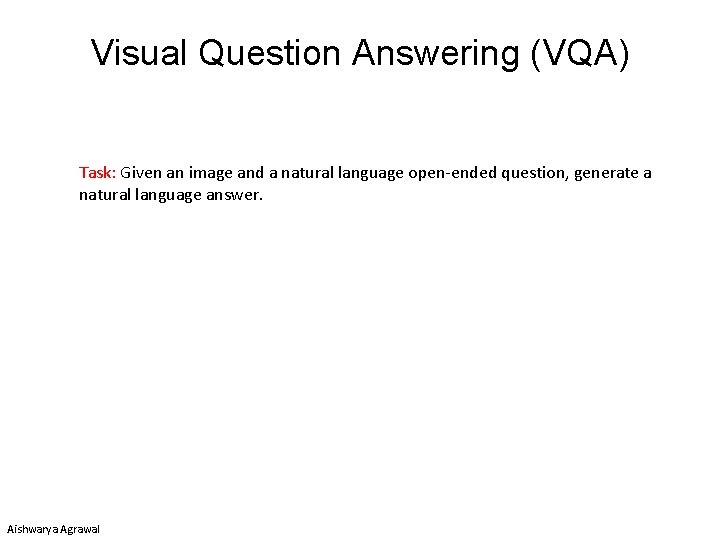

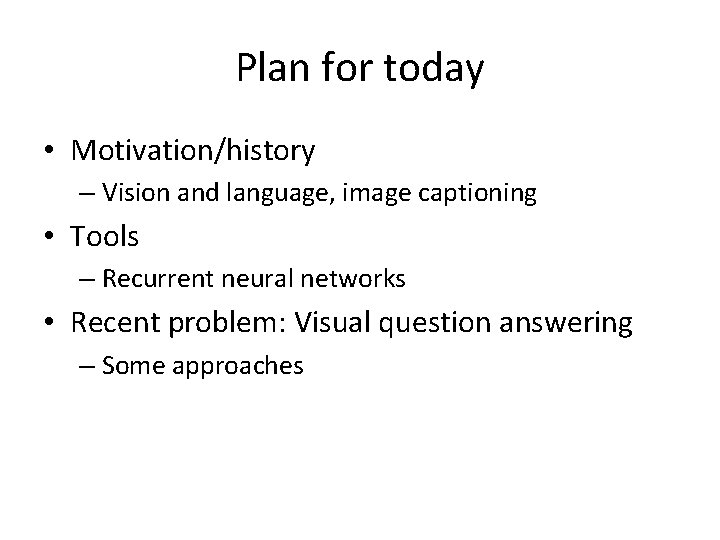

Visual Question Answering (VQA) Task: Given an image and a natural language open-ended question, generate a natural language answer. Aishwarya Agrawal

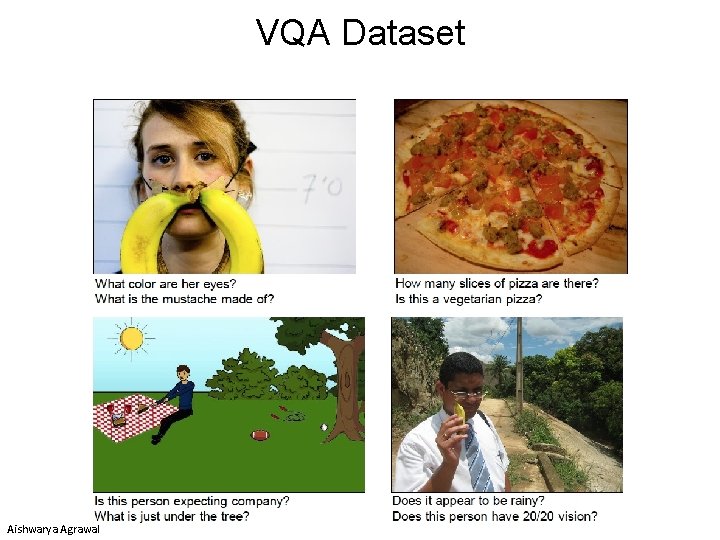

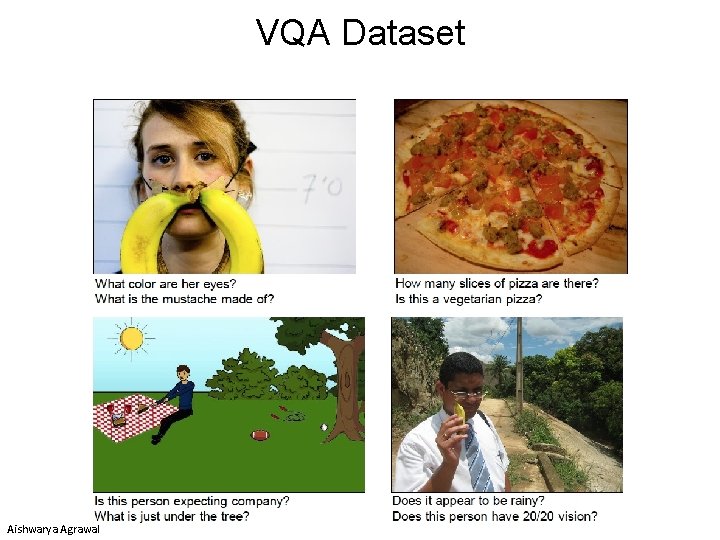

VQA Dataset Aishwarya Agrawal

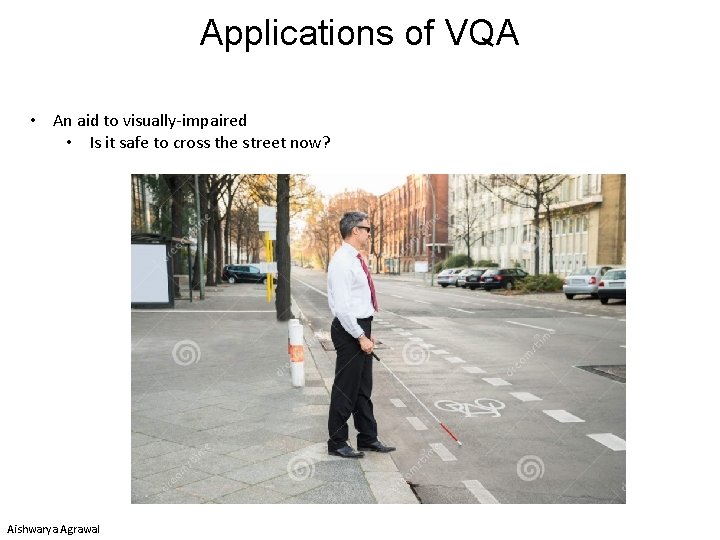

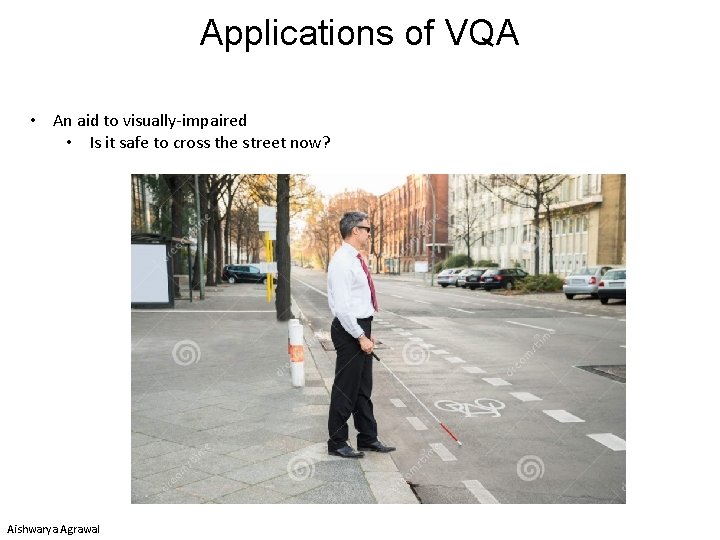

Applications of VQA • An aid to the visually impaired • Is it safe to cross the street now? Aishwarya Agrawal

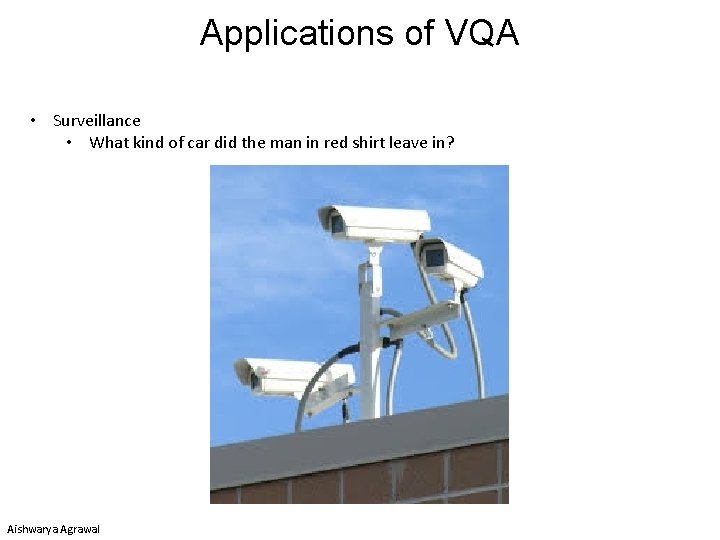

Applications of VQA • Surveillance • What kind of car did the man in the red shirt leave in? Aishwarya Agrawal

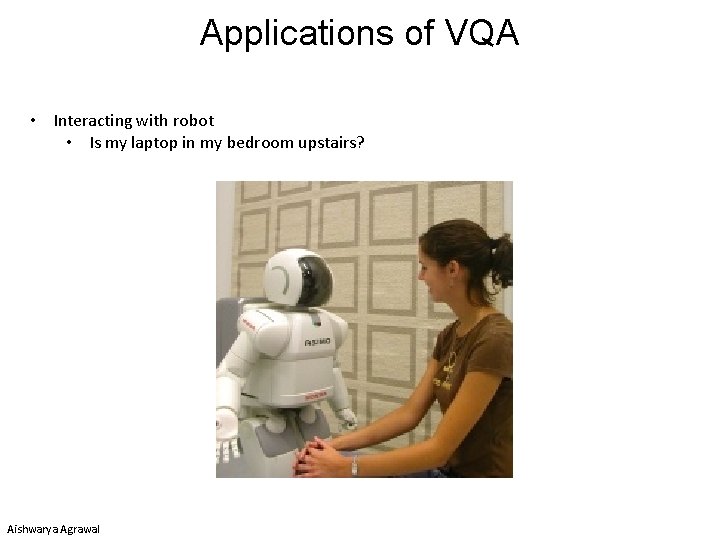

Applications of VQA • Interacting with a robot • Is my laptop in my bedroom upstairs? Aishwarya Agrawal

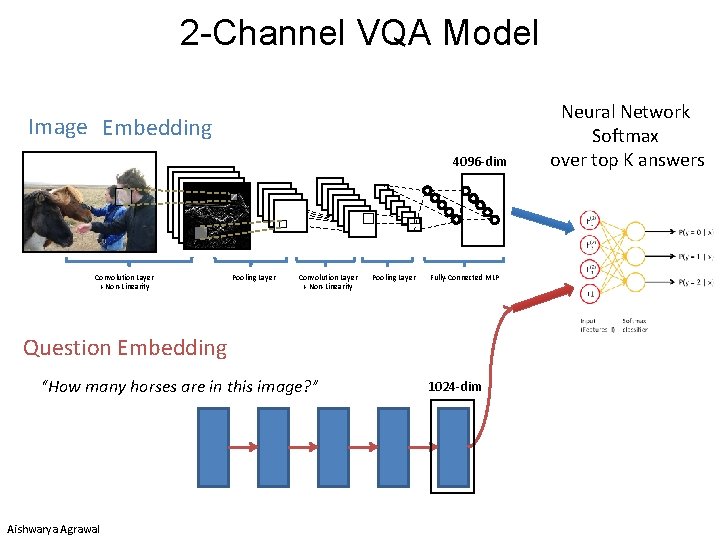

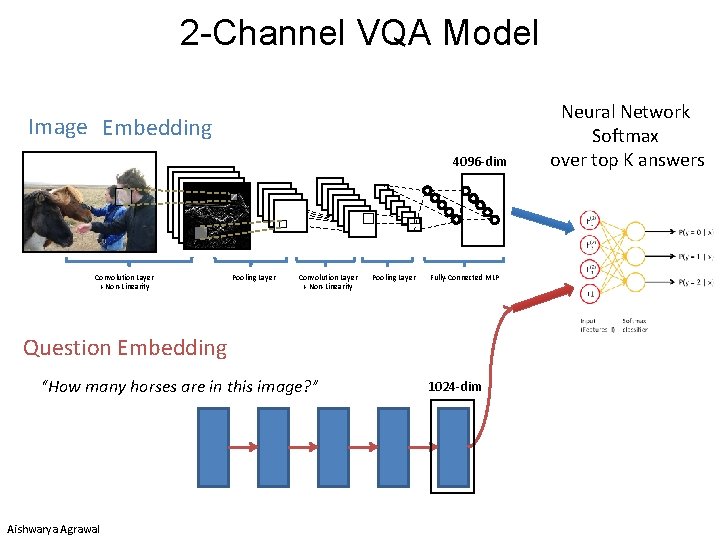

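2-Channel VQA Model: an image channel (convolution layer + non-linearity, pooling layer, fully-connected layer) produces a 4096-dim image embedding. A question channel turns the question (e.g., “How many horses are in this image?”) into a 1024-dim question embedding. Both embeddings feed a fully-connected MLP (neural network) whose output is a softmax over the top K answers. Aishwarya Agrawal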
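The two-channel design can be sketched as a forward pass in NumPy. The slide gives the embedding sizes (4096 and 1024) and the softmax over the top K answers, but it does not say how the two channels are combined. This sketch therefore makes several assumptions, labeled here and in the code:

- The image embedding is projected to 1024 dimensions and merged with the question embedding by an element-wise product (concatenation would be an equally plausible choice).
- K = 1000.
- The random weights and inputs are placeholders for learned parameters and real CNN/question encoders.

```python
import numpy as np

rng = np.random.default_rng(0)
K = 1000                      # assumed number of candidate answers ("top K")
D_img, D_q, D_hidden = 4096, 1024, 1024

# Placeholder parameters; in a real model these are learned.
W_img = rng.normal(0.0, 0.01, (D_q, D_img))   # project image embedding to question size
W1 = rng.normal(0.0, 0.01, (D_hidden, D_q))   # MLP hidden layer
W2 = rng.normal(0.0, 0.01, (K, D_hidden))     # MLP output layer (one score per answer)

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def vqa_forward(img_emb, q_emb):
    img = np.tanh(W_img @ img_emb)   # image channel brought to 1024-dim
    fused = img * q_emb              # ASSUMED fusion: element-wise product
    hidden = np.tanh(W1 @ fused)     # fully-connected MLP layer
    return softmax(W2 @ hidden)      # probability over the top-K answers

img_emb = rng.normal(size=D_img)  # stand-in for the 4096-dim CNN image embedding
q_emb = rng.normal(size=D_q)      # stand-in for the 1024-dim question embedding
probs = vqa_forward(img_emb, q_emb)
print(probs.shape, int(probs.argmax()))  # index of the predicted answer
```

Treating VQA as classification over a fixed answer set keeps the output layer simple. The trade-off is that the model cannot produce an answer outside those K candidates.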

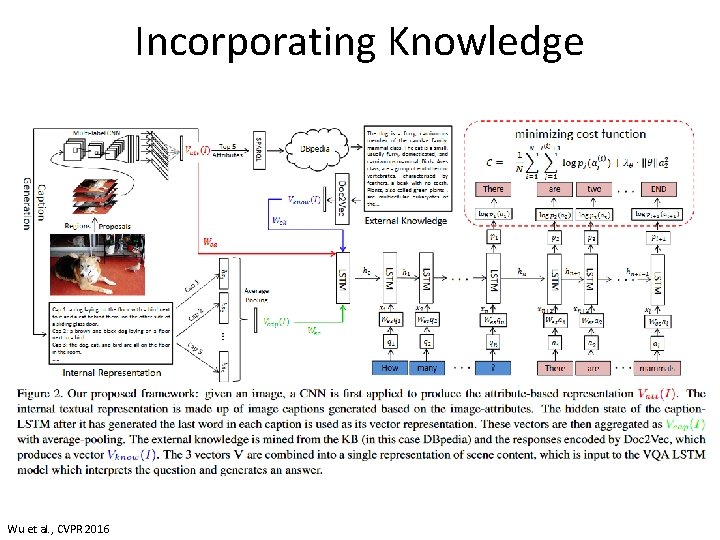

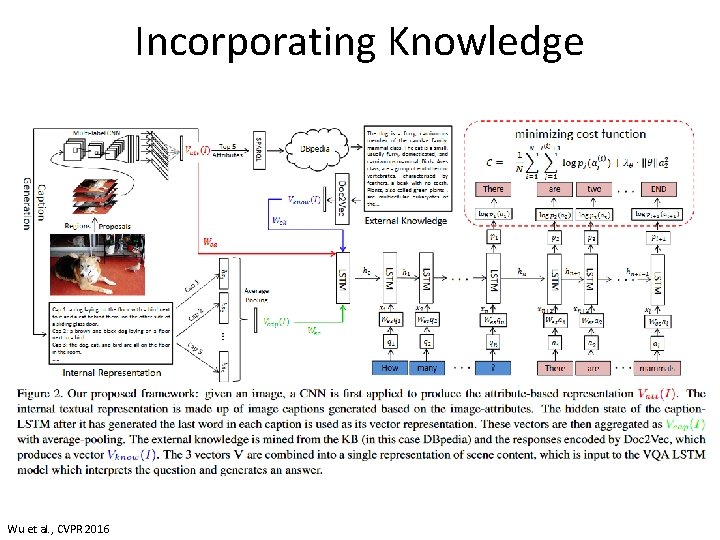

Incorporating Knowledge: Wu et al., CVPR 2016

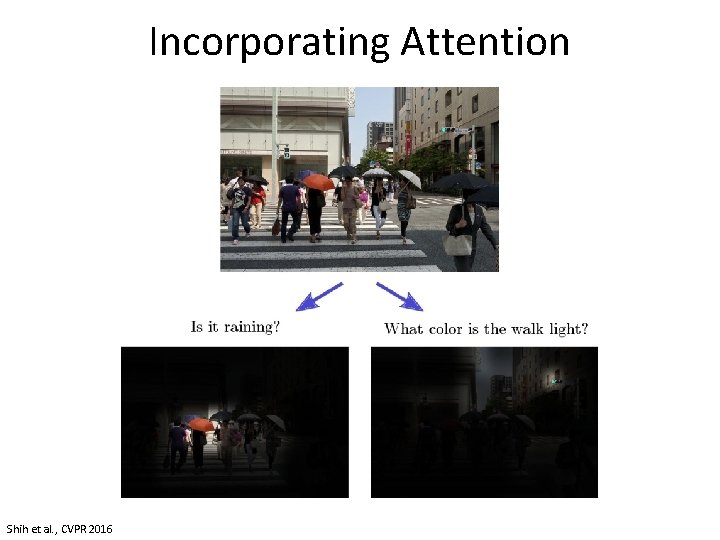

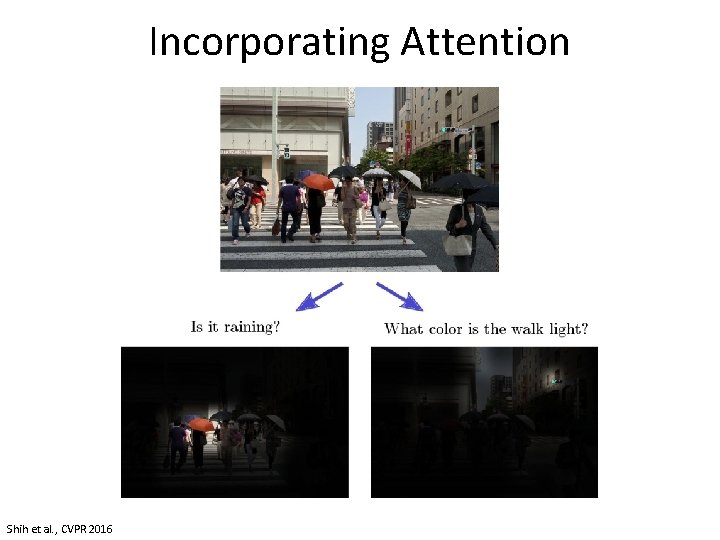

Incorporating Attention: Shih et al., CVPR 2016

Visual Question Answering Demo Aishwarya Agrawal