CS 160 Lecture 24 Professor John Canny Fall


CS 160: Lecture 24 Professor John Canny Fall 2004 3/7/2021 1

Speech: the Ultimate Interface?
- In the early days of HCI, people assumed that speech/natural language would be the ultimate UI (Licklider’s OLIVER).
- There have been sophisticated attempts to duplicate such behavior (e.g. Extempo systems, Verbot), but text seems to be the preferred communication medium.
- MS Agents are an open architecture (you can write new ones). They can do speech I/O.

- Critique that assertion…

Advantages of GUIs
- Support menus (recognition over recall).
- Support scanning for keyword/icon.
- Faster information acquisition (cursory readings).
- Fewer affective cues.
- Quiet!


Advantages of speech?
- Less effort and faster for output (vs. writing).
- Allows a natural repair process for error recovery (if computers knew how to deal with that...).
- Richer channel: conveys the speaker’s disposition and emotional state (if computers knew how to deal with that...).

Multimodal Interfaces
- Multimodal refers to interfaces that support non-GUI interaction.
- Speech and pen input are two common examples, and they are complementary.

Speech+pen Interfaces
- Speech is the preferred medium for subject, verb, object expression.
- Writing or gesture provides locative information (pointing, etc.).

Speech+pen Interfaces
- Speech+pen for visual-spatial tasks (compared to speech only):
  - 10% faster.
  - 36% fewer task-critical errors.
  - Shorter and simpler linguistic constructions.
  - 90-100% user preference to interact this way.

Put-That-There
- The user points at an object and says “put that” (grab), then points to the destination and says “there” (drop).
  - Very good for deictic actions (speak and point), but these are only 20% of actions. The rest need complex gestures.
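
A minimal Python sketch of how a Put-That-There style system might bind deictic words to pointing events: each “that” or “there” takes the target of the pointing event nearest to it in time. The class names, the `resolve_command` helper, and the 1-second `max_gap` are hypothetical choices for illustration, not the original system's implementation.

```python
from __future__ import annotations
from dataclasses import dataclass
from typing import Optional

DEICTIC = {"this", "that", "here", "there"}  # words that need a pointing referent

@dataclass
class PointEvent:
    t: float      # time of the pointing gesture (seconds)
    target: str   # object or location under the pointer

@dataclass
class SpokenWord:
    t: float      # time the word was recognized (seconds)
    word: str

def nearest_point(t: float, points: list[PointEvent],
                  max_gap: float) -> Optional[PointEvent]:
    """Return the pointing event closest in time to t, or None if the
    closest one is more than max_gap seconds away."""
    best = min(points, key=lambda p: abs(p.t - t), default=None)
    if best is None or abs(best.t - t) > max_gap:
        return None
    return best

def resolve_command(words: list[SpokenWord], points: list[PointEvent],
                    max_gap: float = 1.0) -> list[str]:
    """Replace each deictic word with the target of the nearest pointing event."""
    resolved = []
    for w in words:
        if w.word in DEICTIC:
            p = nearest_point(w.t, points, max_gap)
            resolved.append(p.target if p else f"<unresolved {w.word}>")
        else:
            resolved.append(w.word)
    return resolved

if __name__ == "__main__":
    words = [SpokenWord(0.0, "put"), SpokenWord(0.3, "that"),
             SpokenWord(1.5, "there")]
    points = [PointEvent(0.4, "blue_square"), PointEvent(1.6, "top_left")]
    print(resolve_command(words, points))  # ['put', 'blue_square', 'top_left']
```

Nearest-in-time binding only covers the deictic 20%; anything richer than “speak and point” needs a gesture recognizer in front of this step.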

Multimodal advantages
- Advantages for error recovery:
  - Users intuitively pick the mode that is less error-prone.
  - Language is often simplified.
  - Users intuitively switch modes after an error, so the same problem is not repeated.

Multimodal advantages
- Other situations where mode choice helps:
  - Users with disabilities.
  - People with a strong accent or a cold.
  - People with repetitive strain injury (RSI).
  - Young children or non-literate users.

Multimodal advantages
- For collaborative work, multimodal interfaces can communicate a lot more than text:
  - Speech contains prosodic information.
  - Gesture communicates emotion.
  - Writing has several expressive dimensions.

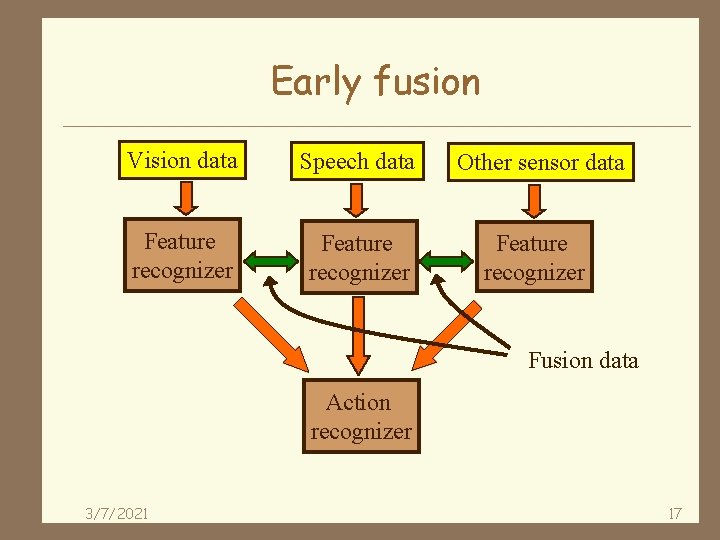

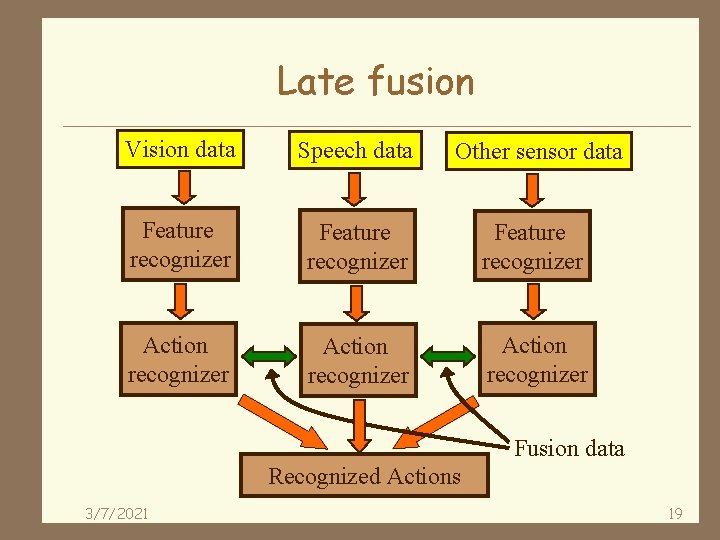

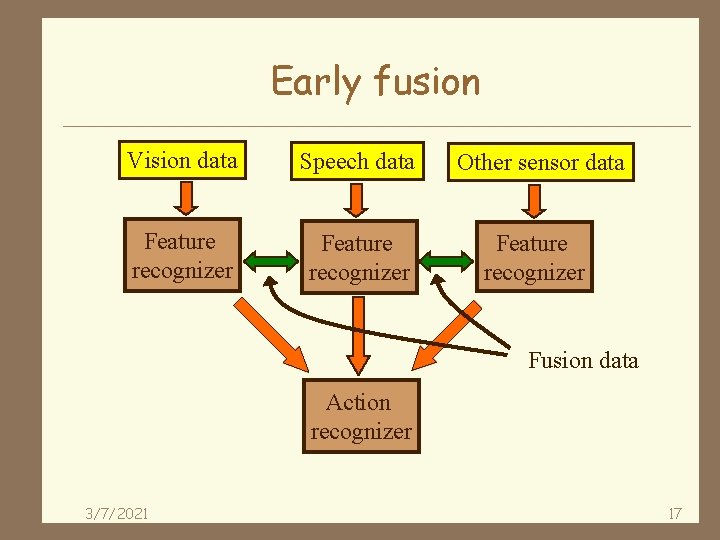

Multimodal challenges
- Using multimodal input generally requires advanced recognition methods:
  - For each mode.
  - For combining redundant information.
  - For combining non-redundant information: “open this file” (pointing).
- Information is combined at two levels:
  - Feature level (early fusion).
  - Semantic level (late fusion).

Break

Administrative
- Final project presentations on Dec 6 and 8.
- Presentations go by group number: groups 6-10 on Monday Dec 6, groups 1-5 on Wednesday Dec 8.
- Presentations are due on the Swiki on Weds Dec 8. Final reports due Friday Dec 3rd. Posters are due Mon Dec 13.

Early fusion (diagram): vision, speech, and other sensor data → feature recognizers → fusion → action recognizer

Early fusion
- Early fusion applies to combinations like speech+lip movement. It is difficult because of:
  - The need for multimodal training data.
  - The need for closely synchronized data.
  - Computational and training costs.
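
To see why synchronization is a burden, here is a minimal sketch of early fusion for speech+lip features, assuming audio frames at about 100 Hz and lip-shape features at a video rate of about 30 Hz (both rates are assumptions). Each audio frame is paired with the most recent lip vector (a zero-order hold), and the two are concatenated into one vector that a single, jointly trained recognizer would consume. The function names are hypothetical; real systems often interpolate rather than hold.

```python
from bisect import bisect_right

def hold_value(times, values, t):
    """Zero-order hold: return the latest value sampled at or before time t."""
    i = bisect_right(times, t) - 1
    if i < 0:
        raise ValueError(f"no sample at or before t={t}")
    return values[i]

def early_fuse(audio_times, audio_feats, lip_times, lip_feats):
    """Return one joint feature vector per audio frame: the audio features
    followed by the lip features that were current at that frame's time.
    Both time lists must be sorted and on the same clock (seconds)."""
    return [list(a) + list(hold_value(lip_times, lip_feats, t))
            for t, a in zip(audio_times, audio_feats)]

if __name__ == "__main__":
    audio_times = [0.00, 0.01, 0.02, 0.03, 0.04]    # ~100 Hz audio frames
    audio_feats = [[1.0], [2.0], [3.0], [4.0], [5.0]]
    lip_times = [0.0, 0.033]                        # ~30 Hz video frames
    lip_feats = [[10.0, 11.0], [20.0, 21.0]]
    for row in early_fuse(audio_times, audio_feats, lip_times, lip_feats):
        print(row)
```

Any clock skew between the two streams shifts which lip vector lands next to which audio frame, and the recognizer must be trained on exactly this joint representation, hence the need for multimodal training data.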

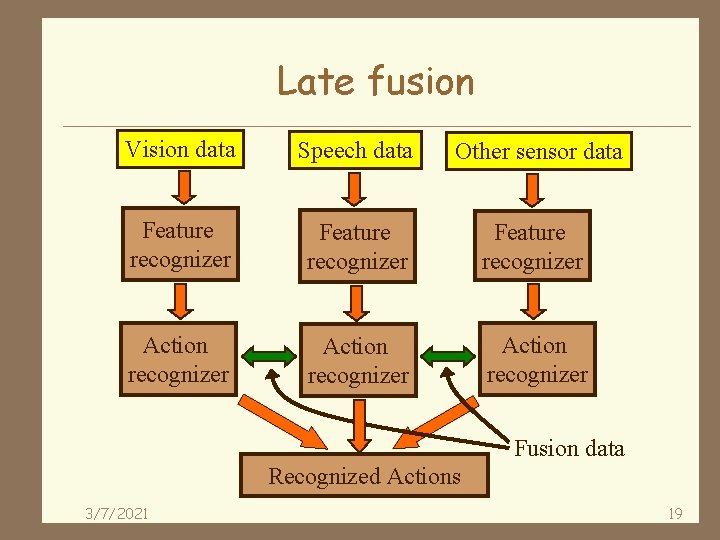

Late fusion (diagram): vision, speech, and other sensor data → feature recognizers → action recognizers → fusion → recognized actions

Late fusion
- Late fusion is appropriate for combinations of complementary information, like pen+speech.
  - Recognizers are trained and used separately.
  - Unimodal recognizers are available off-the-shelf.
  - It's still important to accurately time-stamp all inputs: typical delays between, e.g., gesture and speech are known.
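
A minimal late-fusion sketch, assuming each unimodal recognizer returns an n-best list of (label, probability, timestamp) hypotheses on a shared clock. Pairs whose timestamps differ by more than an assumed `max_lag` are discarded, and the remaining pairs are ranked by the product of their probabilities, which treats the recognizers' errors as independent. The names and the 2-second window are hypothetical; a real system would use measured, possibly asymmetric, delays between modes.

```python
from dataclasses import dataclass
from itertools import product

@dataclass
class Hypothesis:
    label: str    # recognized command or gesture
    prob: float   # recognizer confidence, treated as a probability
    t: float      # timestamp of the input event (seconds, shared clock)

def late_fuse(speech, gesture, max_lag=2.0):
    """Pair independently recognized speech and gesture hypotheses.

    Pairs more than max_lag seconds apart are dropped; the rest are scored
    by p_speech * p_gesture (assumes independent recognizer errors) and
    returned best-first as (score, speech_label, gesture_label)."""
    pairs = [(s.prob * g.prob, s.label, g.label)
             for s, g in product(speech, gesture)
             if abs(s.t - g.t) <= max_lag]
    pairs.sort(reverse=True)
    return pairs

if __name__ == "__main__":
    speech = [Hypothesis("move here", 0.6, 1.2),
              Hypothesis("remove here", 0.4, 1.2)]
    gesture = [Hypothesis("point:A", 0.7, 0.8),
               Hypothesis("point:B", 0.3, 0.8),
               Hypothesis("point:C", 0.9, -5.0)]  # too old: ignored
    for score, s, g in late_fuse(speech, gesture):
        print(f"{score:.2f}  {s!r} + {g!r}")
```

The time window is what keeps a stale but confident gesture (`point:C`) from being paired with the current utterance.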

Examples
- Speech understanding:
  - Feature recognizers = Phoneme, “Moveme”
  - Action recognizer = word recognizer
- Gesture recognition:
  - Feature recognizers = Movemes (from different cameras)
  - Action recognizers = gesture (like stop, start, raise, lower)

Exercise
- What method would be more appropriate for:
  - Pen gesture recognition using a combination of pen motion and pen tip pressure?
  - Destination selection from a map, where the user points at the map and says the name of the destination?

Contrast between MM and GUIs
- GUIs often restrict input to single, non-overlapping events, while MM interfaces handle all inputs at once.
- GUI events are unambiguous; MM inputs are (usually) based on recognition and require a probabilistic approach.
- MM interfaces are often distributed on a network.

Agent architectures
- Allow parts of an MM system to be written separately, in the most appropriate language, and integrated easily.
- OAA: the Open Agent Architecture (Cohen et al.) supports MM interfaces.
- Blackboards and message queues are often used to simplify inter-agent communication.
  - Jini, JavaSpaces, TSpaces, JXTA, JMS, MSMQ...
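
A blackboard can be sketched as a Linda-style tuple space, the model that JavaSpaces and TSpaces follow: agents post tuples and take tuples that match a template, with `None` acting as a wildcard. This in-process Python version (threads standing in for networked agents) is an illustration under those assumptions, not the API of any of the systems listed; the agent roles and tuple layout are hypothetical.

```python
import threading

class Blackboard:
    """In-process tuple space: agents post tuples and take tuples that match
    a template; None in a template matches any value in that position."""

    def __init__(self):
        self._tuples = []
        self._cond = threading.Condition()

    def post(self, tup):
        """Add a tuple and wake any agents waiting in take()."""
        with self._cond:
            self._tuples.append(tup)
            self._cond.notify_all()

    @staticmethod
    def _matches(template, tup):
        return len(template) == len(tup) and all(
            want is None or want == have for want, have in zip(template, tup))

    def _find(self, template):
        """Index of the first matching tuple, or None (caller holds the lock)."""
        for i, tup in enumerate(self._tuples):
            if self._matches(template, tup):
                return i
        return None

    def take(self, template, timeout=None):
        """Remove and return the first matching tuple, blocking until one is
        posted; return None if timeout (seconds) expires first."""
        with self._cond:
            if not self._cond.wait_for(
                    lambda: self._find(template) is not None, timeout):
                return None
            return self._tuples.pop(self._find(template))

if __name__ == "__main__":
    bb = Blackboard()
    # Hypothetical speech agent running in its own thread.
    speech_agent = threading.Thread(
        target=bb.post, args=(("speech", "open this file", 1.2),))
    speech_agent.start()
    # Hypothetical fusion agent waits for any speech result.
    print(bb.take(("speech", None, None), timeout=5))
    speech_agent.join()
```

Because agents only agree on tuple shapes, a speech agent and a fusion agent never call each other directly, which is what lets them be written separately.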

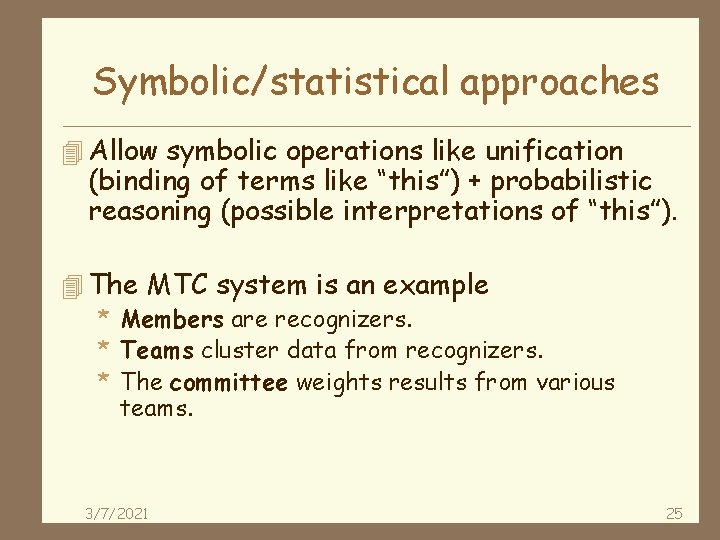

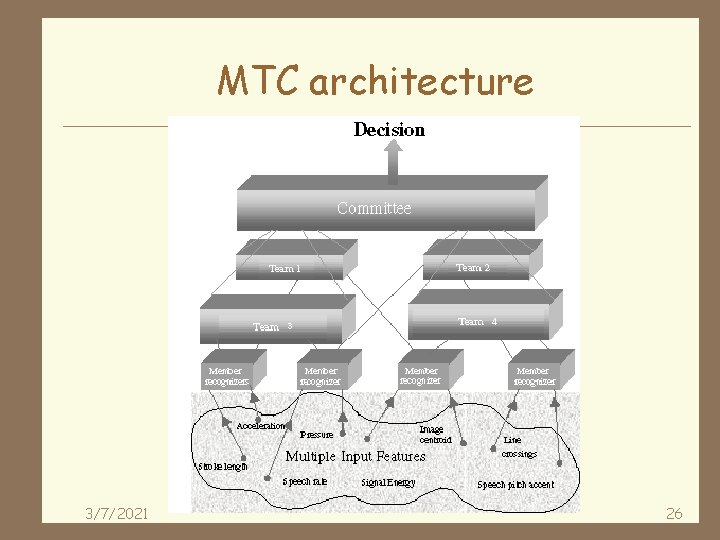

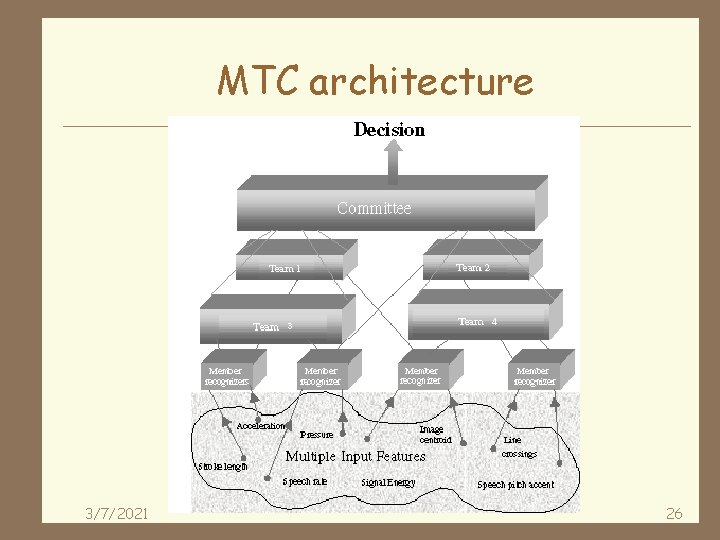

Symbolic/statistical approaches
- Combine symbolic operations like unification (binding of terms like “this”) with probabilistic reasoning (over possible interpretations of “this”).
- The MTC (Members-Teams-Committee) system is an example:
  - Members are recognizers.
  - Teams cluster data from recognizers.
  - The committee weights results from various teams.
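
A minimal sketch of the symbolic+statistical combination, assuming feature structures are nested dicts and each recognizer supplies (structure, probability) hypotheses. Unification rejects pairs that assign conflicting values to the same feature, and the survivors are ranked by joint probability. The feature names are hypothetical, and this is a generic illustration rather than the MTC system itself.

```python
def unify(a, b):
    """Unify two feature structures (nested dicts). Returns the merged
    structure, or None if they assign conflicting values to a feature."""
    result = dict(a)
    for key, bval in b.items():
        if key not in result:
            result[key] = bval
            continue
        aval = result[key]
        if isinstance(aval, dict) and isinstance(bval, dict):
            sub = unify(aval, bval)
            if sub is None:
                return None
            result[key] = sub
        elif aval != bval:
            return None  # symbolic conflict: this pairing is impossible
    return result

def interpret(speech_hyps, gesture_hyps):
    """Unify every speech/gesture pair; rank survivors by p_speech * p_gesture."""
    out = []
    for s_fs, ps in speech_hyps:
        for g_fs, pg in gesture_hyps:
            merged = unify(s_fs, g_fs)
            if merged is not None:
                out.append((ps * pg, merged))
    out.sort(key=lambda x: x[0], reverse=True)
    return out

if __name__ == "__main__":
    # Speech: "open this file" (likely) or "delete this file"; "this" is unbound.
    speech = [({"action": "open", "object": {"type": "file"}}, 0.8),
              ({"action": "delete", "object": {"type": "file"}}, 0.2)]
    # Gesture: the pointer is over a folder (likely) or a file.
    gesture = [({"object": {"type": "folder", "name": "src"}}, 0.7),
               ({"object": {"type": "file", "name": "notes.txt"}}, 0.3)]
    best_score, best = interpret(speech, gesture)[0]
    print(best)  # open the file notes.txt
```

In the usage example, the gesture recognizer's top guess (a folder) conflicts with the spoken request for a file, so unification discards it and the lower-ranked file hypothesis wins.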

MTC architecture

Probabilistic Toolkits
- The Graphical Models Toolkit (GMTK) from U. Washington (Bilmes and Zweig).
  - Good for speech and time-series data.
- MSBNx Bayes net toolkit from Microsoft (Kadie et al.).
- UCLA MUSE: middleware for sensor fusion (also using Bayes nets).
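
For intuition about what these toolkits compute, here is inference by enumeration in a tiny Bayes net: a hidden user intent with speech and gesture observations that are conditionally independent given the intent. All labels and probabilities are made-up illustration values, and the code does not use or mimic GMTK, MSBNx, or MUSE, which handle far larger networks and time series.

```python
def posterior(prior, p_speech, p_gesture, speech_obs, gesture_obs):
    """P(intent | speech_obs, gesture_obs) by enumeration in the net
    Intent -> SpeechObs, Intent -> GestureObs, where the two observations
    are conditionally independent given the intent."""
    joint = {i: prior[i] * p_speech[i][speech_obs] * p_gesture[i][gesture_obs]
             for i in prior}
    z = sum(joint.values())  # P(speech_obs, gesture_obs)
    if z == 0:
        raise ValueError("observations have zero probability under the model")
    return {i: v / z for i, v in joint.items()}

if __name__ == "__main__":
    # Made-up model parameters for illustration only.
    prior = {"select": 0.5, "move": 0.5}
    p_speech = {"select": {"this": 0.7, "there": 0.3},
                "move":   {"this": 0.4, "there": 0.6}}
    p_gesture = {"select": {"tap": 0.8, "drag": 0.2},
                 "move":   {"tap": 0.3, "drag": 0.7}}
    print(posterior(prior, p_speech, p_gesture, "this", "drag"))
```

With these numbers, the word “this” alone favors `select`, but the drag gesture shifts the posterior to `move` (about 2/3), which is the kind of cross-modal evidence combination the toolkits automate.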

MM systems
- Designers’ Outpost (Berkeley)

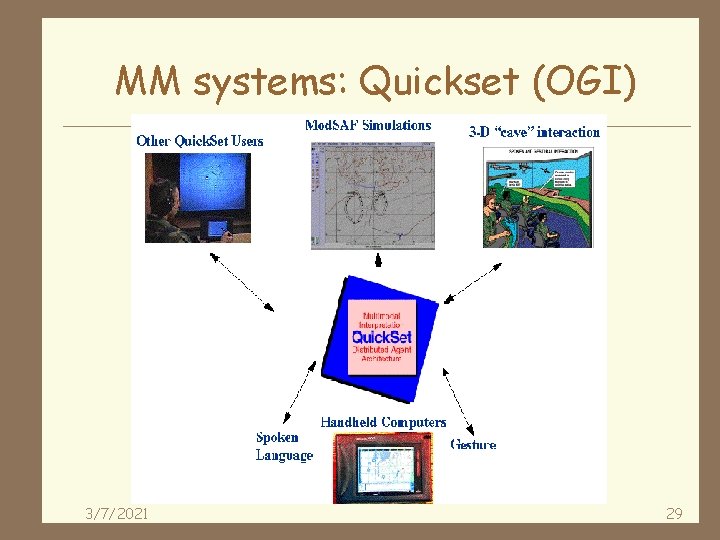

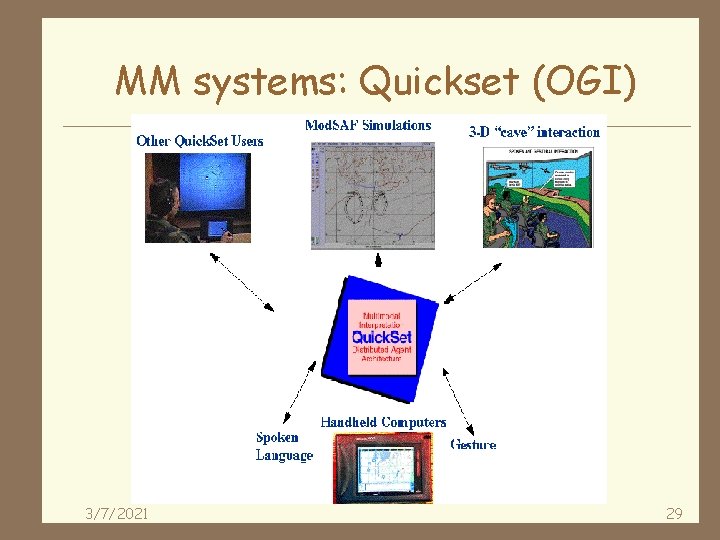

MM systems: QuickSet (OGI)


CrossWeaver (Berkeley)
- CrossWeaver is a prototyping system for multimodal (primarily pen and speech) UIs.
- It also allows cross-platform development (for PDAs, Tablet PCs, and desktops).

Summary
- Multimodal systems provide several advantages.
- Speech and pointing are complementary.
- Challenges for multimodal systems.
- Early vs. late fusion.
- MM architectures, fusion approaches.
- Examples of MM systems.