CS 152 Computer Architecture and Engineering Lecture 23

- Slides: 26

CS 152 Computer Architecture and Engineering, Lecture 23: Synchronization

2005-11-17

John Lazzaro (www.cs.berkeley.edu/~lazzaro)

TAs: David Marquardt and Udam Saini

www-inst.eecs.berkeley.edu/~cs152/

CS 152 L23: Synchronization, UC Regents Fall 2005 © UCB

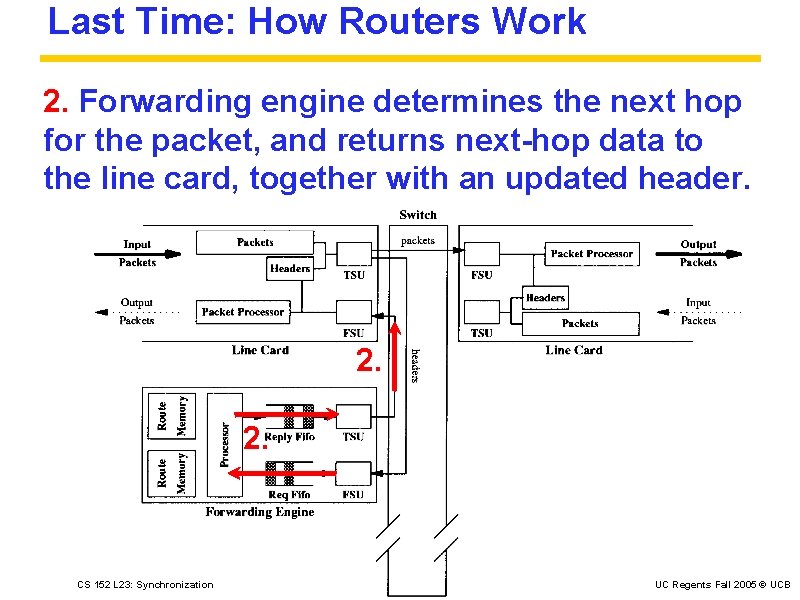

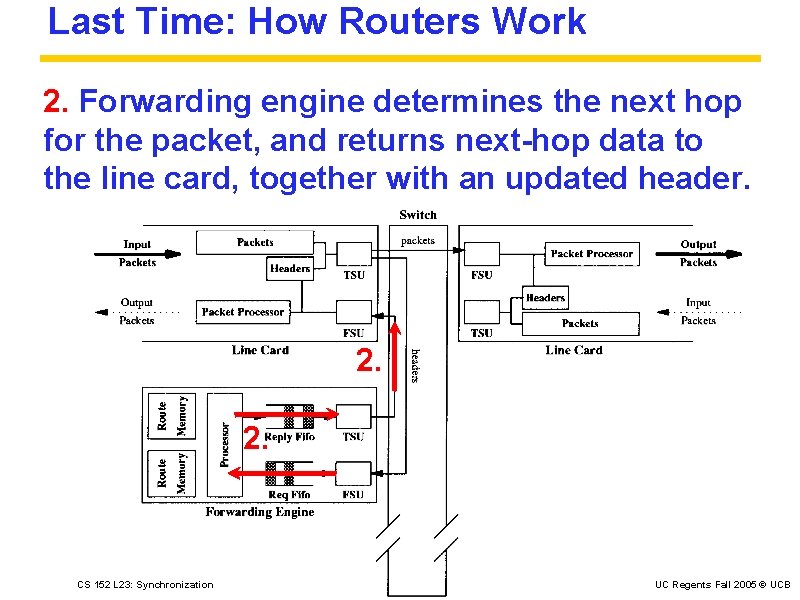

Last Time: How Routers Work

Step 2: The forwarding engine determines the next hop for the packet, and returns next-hop data to the line card, together with an updated header.

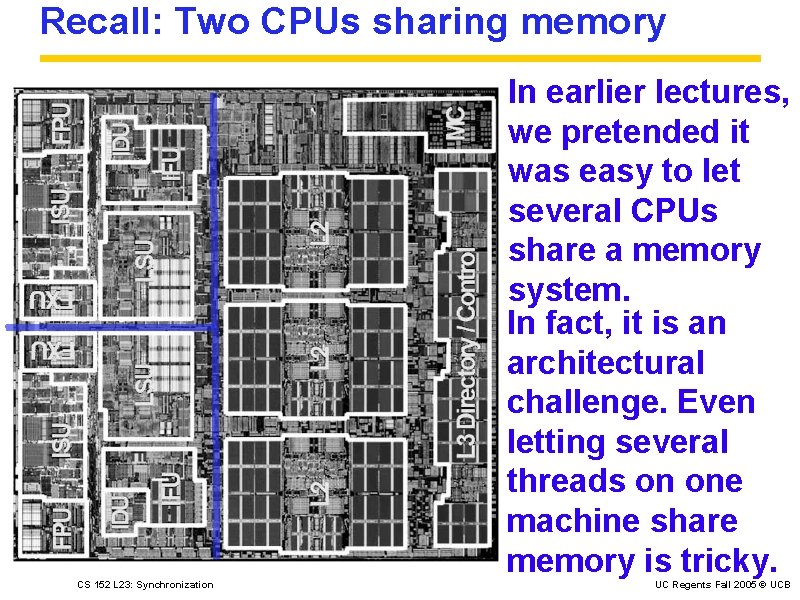

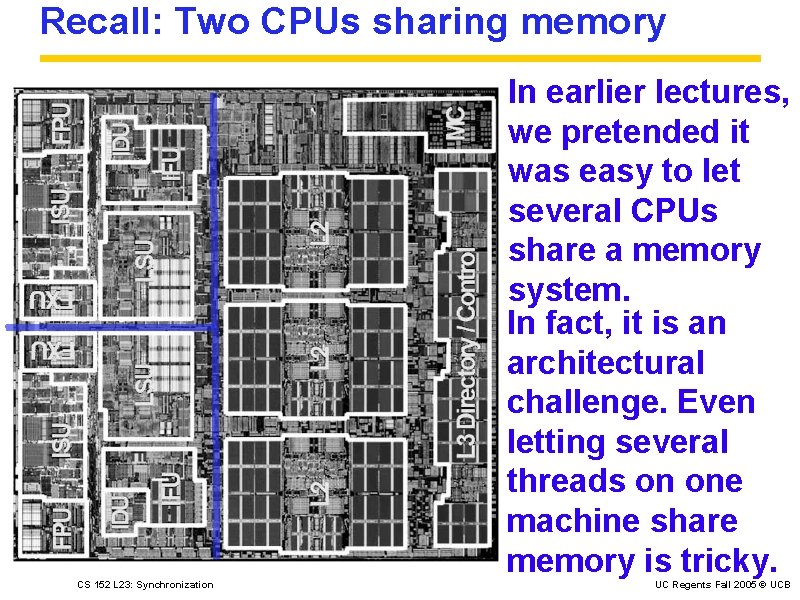

Recall: Two CPUs sharing memory

In earlier lectures, we pretended it was easy to let several CPUs share a memory system. In fact, it is an architectural challenge. Even letting several threads on one machine share memory is tricky.

Today: Hardware Thread Support

- Producer/Consumer: One thread writes A, one thread reads A.
- Locks: Two threads share write access to A.

On Tuesday: Multiprocessor memory system design and synchronization issues. Tuesday is a simplified overview; graduate-level architecture courses spend weeks on this topic.

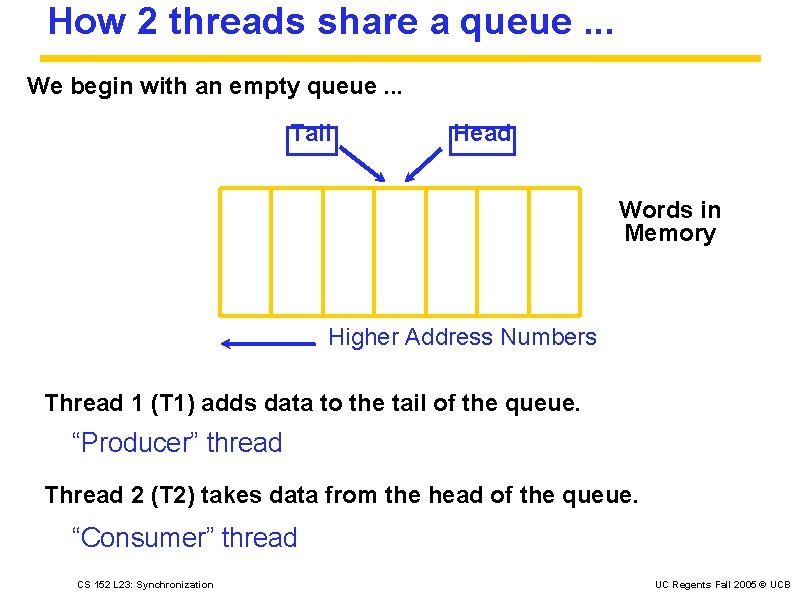

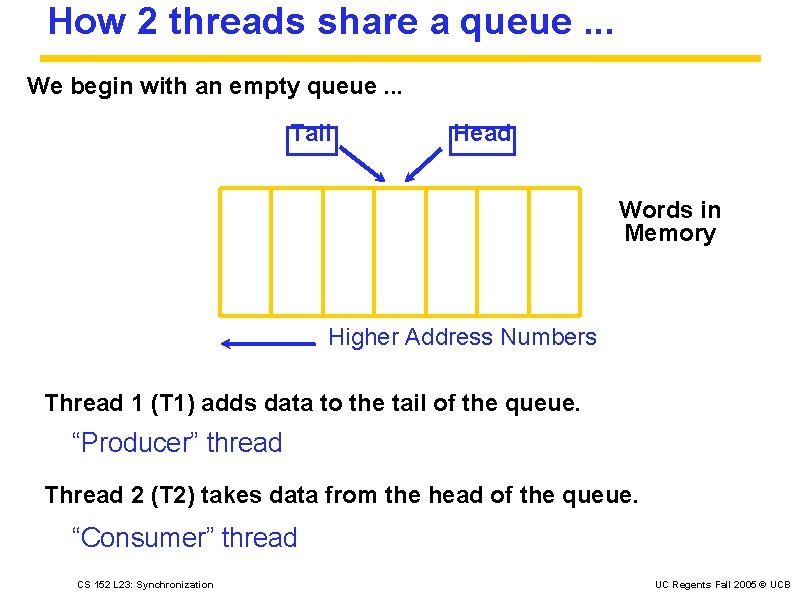

How 2 threads share a queue...

We begin with an empty queue...

[Figure: the queue as words in memory, ordered by address number, with Tail and Head pointers marked.]

- Thread 1 (T1) adds data to the tail of the queue. “Producer” thread.
- Thread 2 (T2) takes data from the head of the queue. “Consumer” thread.

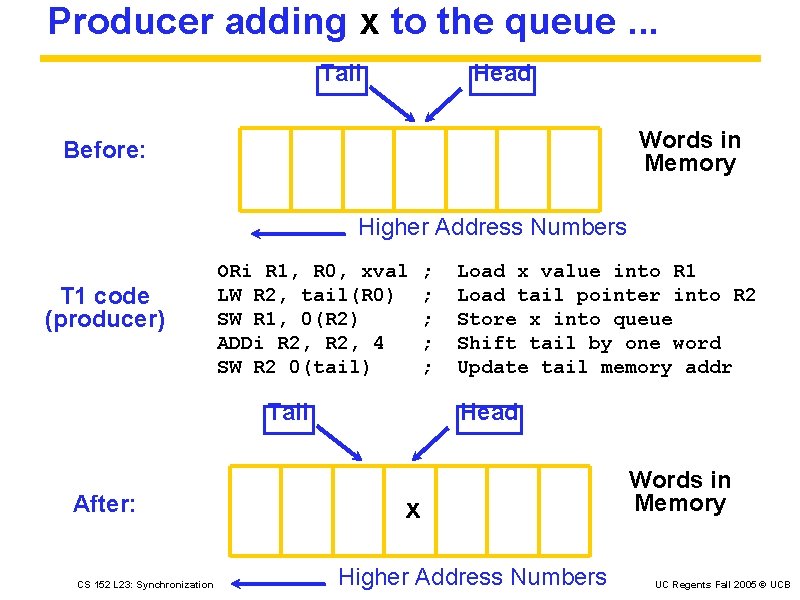

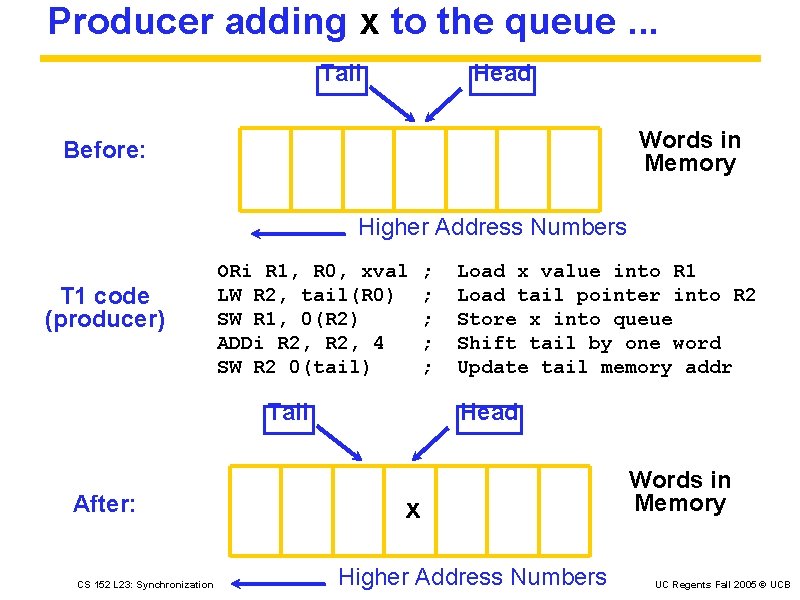

Producer adding x to the queue...

[Figure: the queue as words in memory, before and after. Before: empty queue. After: x in the queue.]

T1 code (producer):

    ORi  R1, R0, xval     ; Load x value into R1
    LW   R2, tail(R0)     ; Load tail pointer into R2
    SW   R1, 0(R2)        ; Store x into queue
    ADDi R2, R2, 4        ; Shift tail by one word
    SW   R2, tail(R0)     ; Update tail memory addr

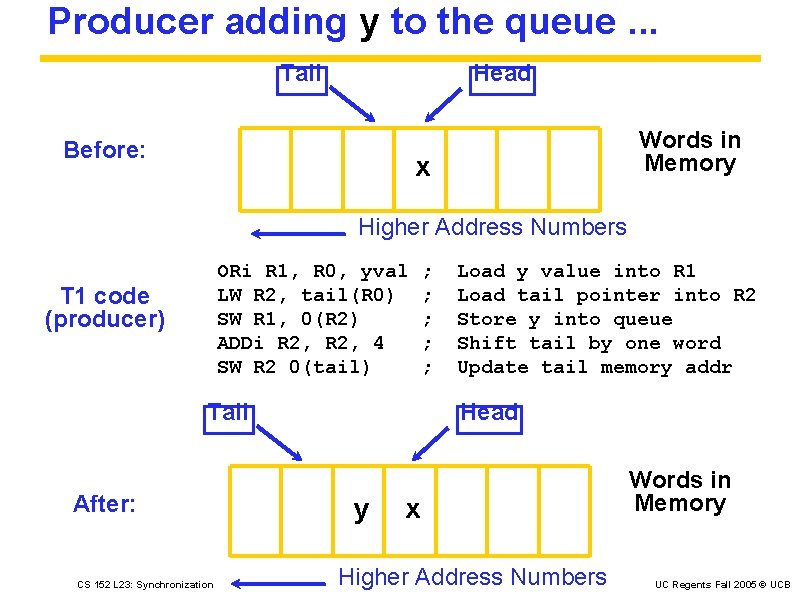

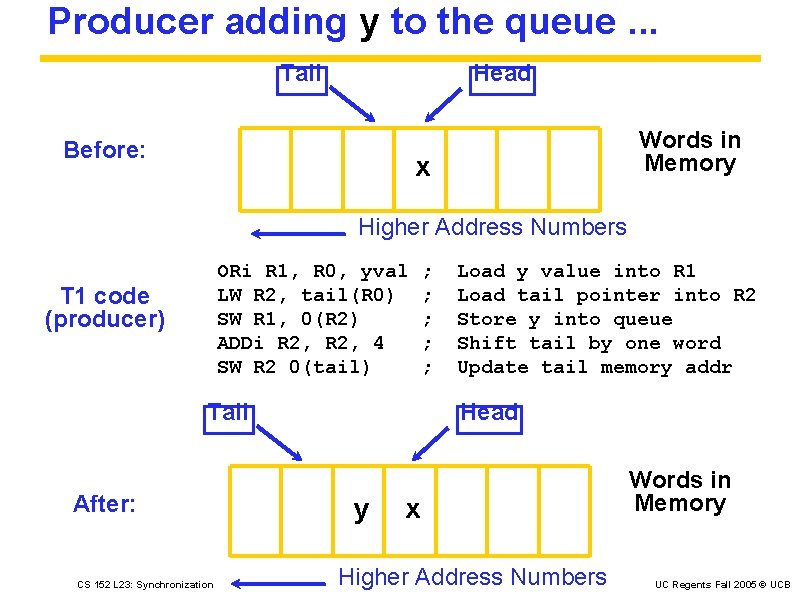

Producer adding y to the queue...

[Figure: the queue as words in memory, before and after. Before: x in the queue. After: y and x in the queue.]

T1 code (producer):

    ORi  R1, R0, yval     ; Load y value into R1
    LW   R2, tail(R0)     ; Load tail pointer into R2
    SW   R1, 0(R2)        ; Store y into queue
    ADDi R2, R2, 4        ; Shift tail by one word
    SW   R2, tail(R0)     ; Update tail memory addr

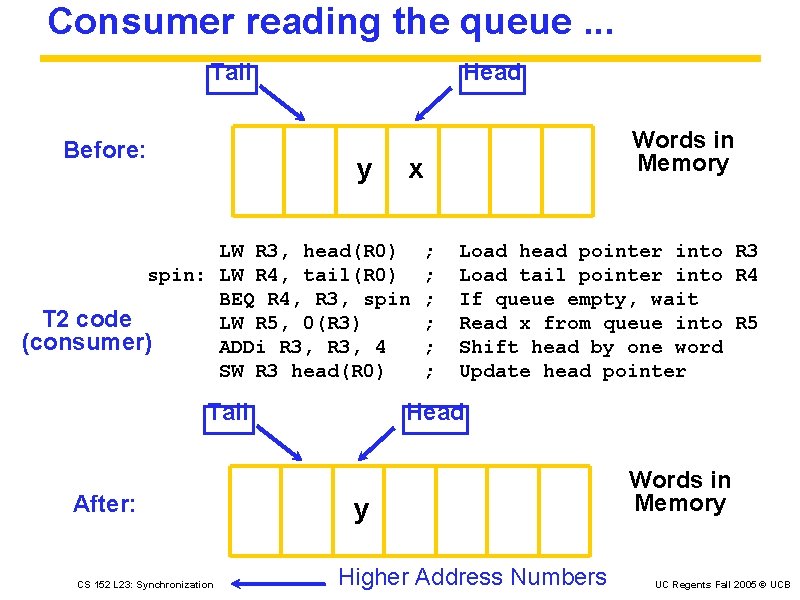

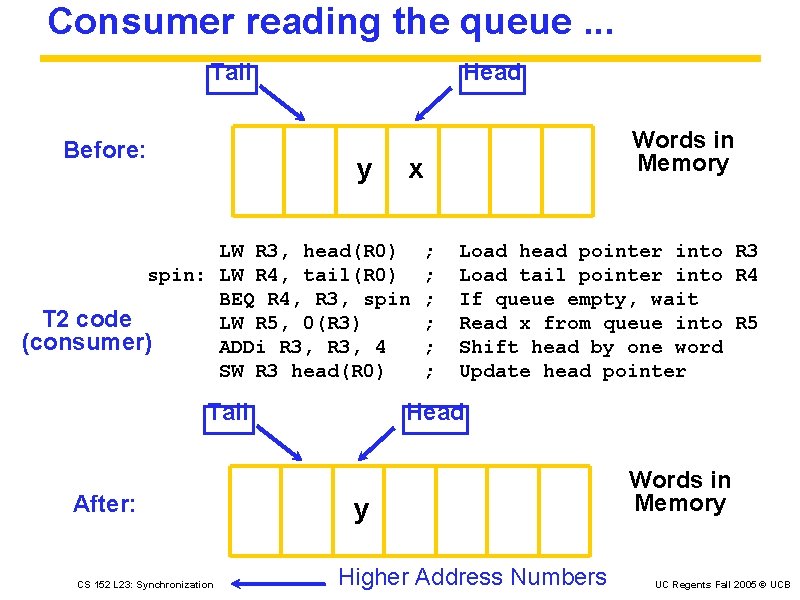

Consumer reading the queue...

[Figure: the queue as words in memory, before and after. Before: y and x in the queue. After: only y in the queue.]

T2 code (consumer):

          LW   R3, head(R0)     ; Load head pointer into R3
    spin: LW   R4, tail(R0)     ; Load tail pointer into R4
          BEQ  R4, R3, spin     ; If queue empty, wait
          LW   R5, 0(R3)        ; Read x from queue into R5
          ADDi R3, R3, 4        ; Shift head by one word
          SW   R3, head(R0)     ; Update head pointer
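The producer and consumer steps on these slides can be sketched in a few lines of Python. This is a single-threaded toy model, not the lecture's code: the class name `SharedQueue`, the base address `0x100`, and the dictionary standing in for memory are all assumptions made for illustration. Where the assembly consumer would spin on an empty queue, this sketch raises an error instead.

```python
WORD = 4  # bytes per word, matching the "shift by one word" steps on the slides


class SharedQueue:
    """Toy model of the slide's queue: words in memory plus head/tail pointers."""

    def __init__(self, base=0x100):  # base address is an arbitrary assumption
        self.mem = {}      # address -> stored word (hypothetical sparse memory)
        self.head = base   # address of the next word to read
        self.tail = base   # address of the next free word

    def produce(self, value):
        self.mem[self.tail] = value  # SW R1, 0(R2): store value into queue
        self.tail += WORD            # shift tail by one word, then update tail

    def is_empty(self):
        return self.head == self.tail  # the BEQ R4, R3 test

    def consume(self):
        if self.is_empty():
            raise IndexError("queue is empty; the assembly version would spin here")
        value = self.mem[self.head]  # LW R5, 0(R3): read value from queue
        self.head += WORD            # shift head by one word, then update head
        return value


q = SharedQueue()
q.produce("x")
q.produce("y")
first = q.consume()  # x was added first, so it is read first
```

The empty test mirrors the consumer's spin loop: the queue is empty exactly when head and tail hold the same address.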

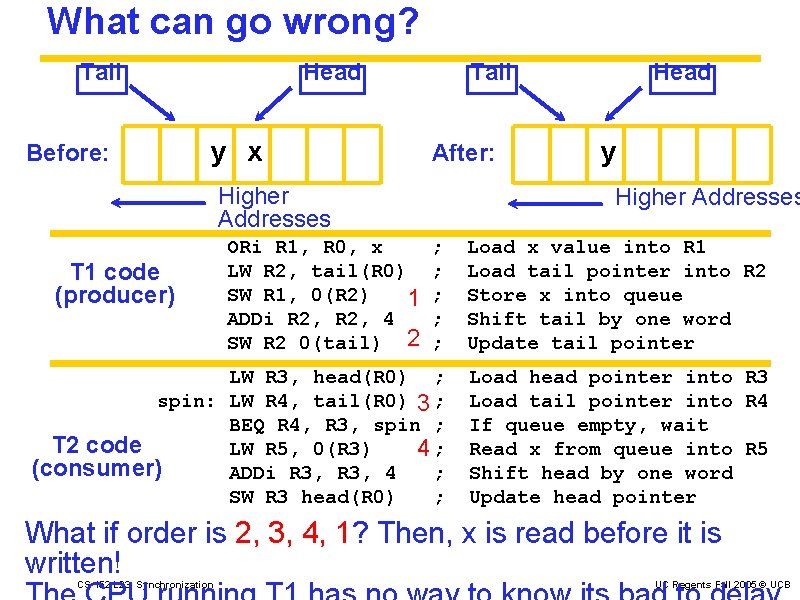

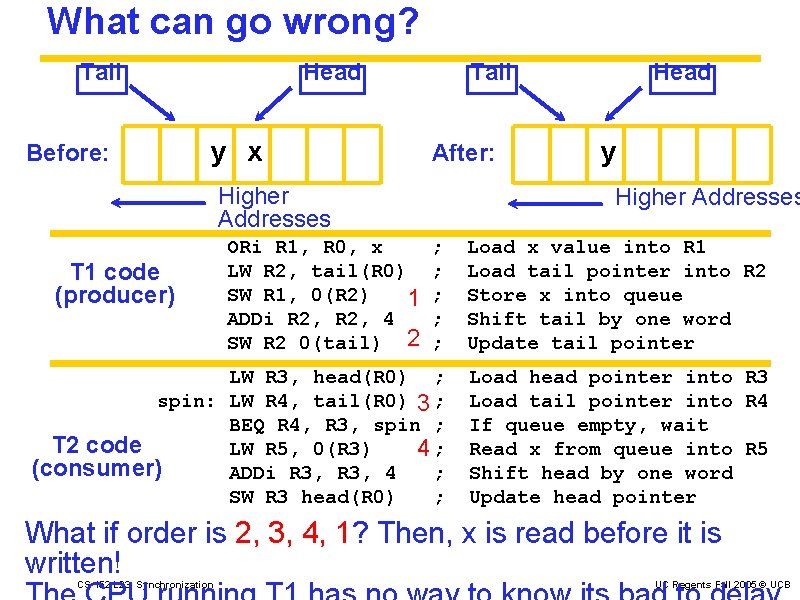

What can go wrong?

[Figure: the queue as words in memory, before and after, with Tail and Head pointers and higher addresses marked.]

T1 code (producer):

    ORi  R1, R0, x        ; Load x value into R1
    LW   R2, tail(R0)     ; Load tail pointer into R2
    SW   R1, 0(R2)        ; [1] Store x into queue
    ADDi R2, R2, 4        ; Shift tail by one word
    SW   R2, tail(R0)     ; [2] Update tail pointer

T2 code (consumer):

          LW   R3, head(R0)     ; Load head pointer into R3
    spin: LW   R4, tail(R0)     ; [3] Load tail pointer into R4
          BEQ  R4, R3, spin     ; If queue empty, wait
          LW   R5, 0(R3)        ; [4] Read x from queue into R5
          ADDi R3, R3, 4        ; Shift head by one word
          SW   R3, head(R0)     ; Update head pointer

What if the order is 2, 3, 4, 1? Then x is read before it is written!

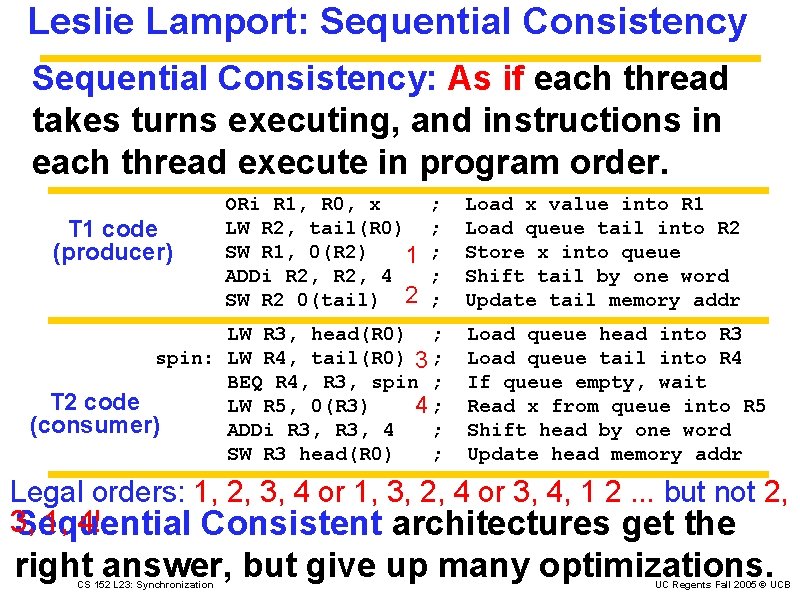

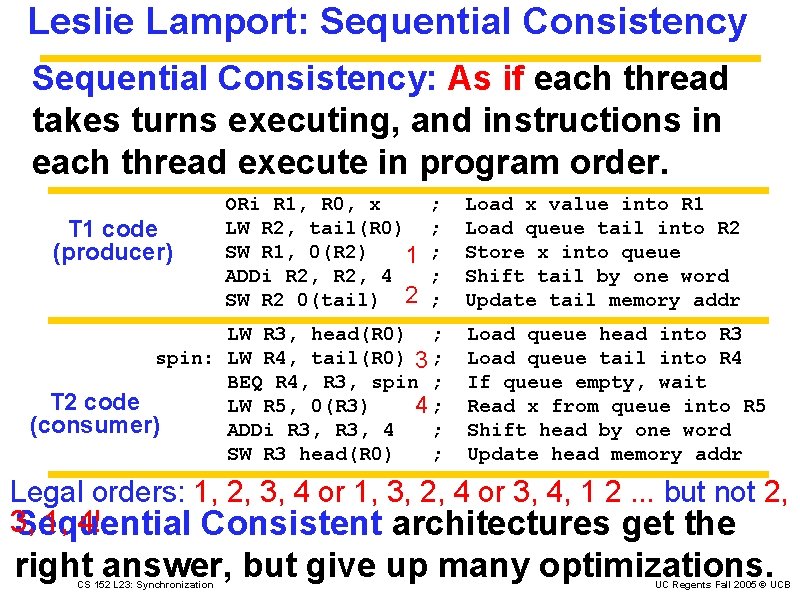

Leslie Lamport: Sequential Consistency

Sequential consistency: As if each thread takes turns executing, and instructions in each thread execute in program order.

T1 code (producer):

    ORi  R1, R0, x        ; Load x value into R1
    LW   R2, tail(R0)     ; Load queue tail into R2
    SW   R1, 0(R2)        ; [1] Store x into queue
    ADDi R2, R2, 4        ; Shift tail by one word
    SW   R2, tail(R0)     ; [2] Update tail memory addr

T2 code (consumer):

          LW   R3, head(R0)     ; Load queue head into R3
    spin: LW   R4, tail(R0)     ; [3] Load queue tail into R4
          BEQ  R4, R3, spin     ; If queue empty, wait
          LW   R5, 0(R3)        ; [4] Read x from queue into R5
          ADDi R3, R3, 4        ; Shift head by one word
          SW   R3, head(R0)     ; Update head memory addr

Legal orders: 1, 2, 3, 4 or 1, 3, 2, 4 or 3, 4, 1, 2 ... but not 2, 3, 1, 4!

Sequentially consistent architectures get the right answer, but give up many optimizations.
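To make the "legal orders" concrete, the sketch below enumerates every interleaving of the four labeled operations that keeps each thread in program order (1 before 2 in the producer, 3 before 4 in the consumer). It is an illustration only: the function names are invented here, and the check covers program order alone, not whether the consumer's spin loop would actually let operation 4 proceed.

```python
from itertools import permutations

# Program order within each thread, using the slide's labels.
PRODUCER = [1, 2]  # 1: store x into queue, 2: update tail
CONSUMER = [3, 4]  # 3: load tail,          4: read x from queue


def respects_program_order(order, thread):
    """True if the thread's operations appear in `order` in program order."""
    positions = [order.index(op) for op in thread]
    return positions == sorted(positions)


def sequentially_consistent_orders():
    """All global orders that interleave the two threads without reordering either."""
    ops = PRODUCER + CONSUMER
    return [
        order
        for order in permutations(ops)
        if respects_program_order(order, PRODUCER)
        and respects_program_order(order, CONSUMER)
    ]


legal = sequentially_consistent_orders()
```

Under these assumptions `legal` holds six orders, including the three named on the slide. Orders such as 2, 3, 1, 4 and 2, 3, 4, 1 are excluded because they place operation 2 ahead of operation 1.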

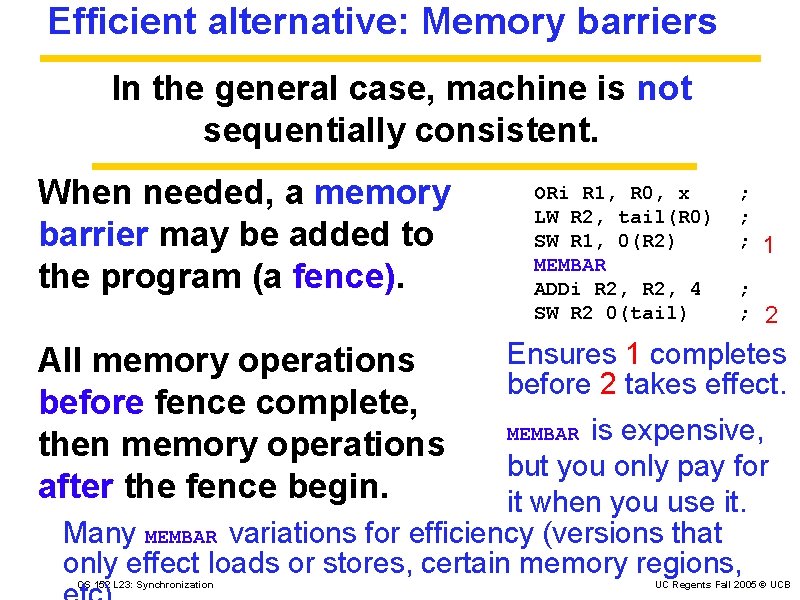

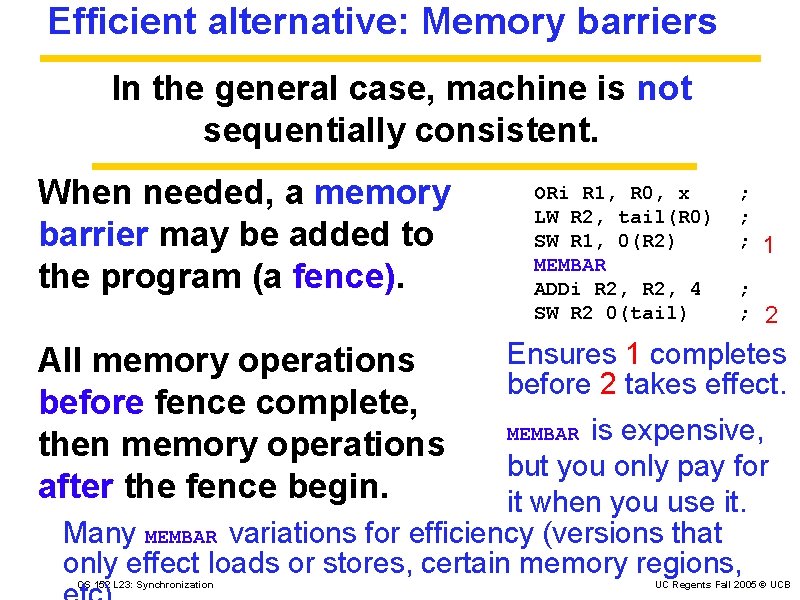

Efficient alternative: Memory barriers

In the general case, a machine is not sequentially consistent. When needed, a memory barrier (a fence) may be added to the program. All memory operations before the fence complete, then memory operations after the fence begin.

    ORi  R1, R0, x
    LW   R2, tail(R0)
    SW   R1, 0(R2)        ; [1]
    MEMBAR
    ADDi R2, R2, 4
    SW   R2, tail(R0)     ; [2]

Ensures 1 completes before 2 takes effect.

MEMBAR is expensive, but you only pay for it when you use it.

Many MEMBAR variations for efficiency (versions that only affect loads or stores, certain memory regions, ...

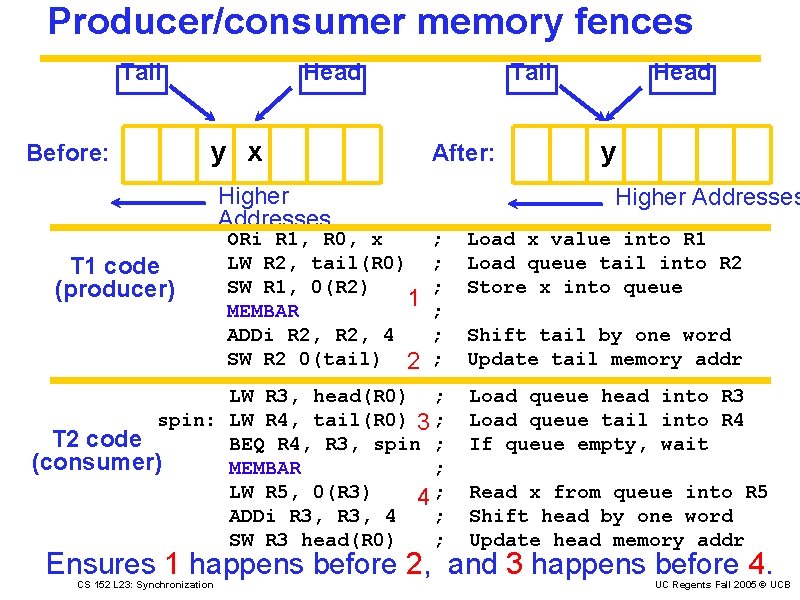

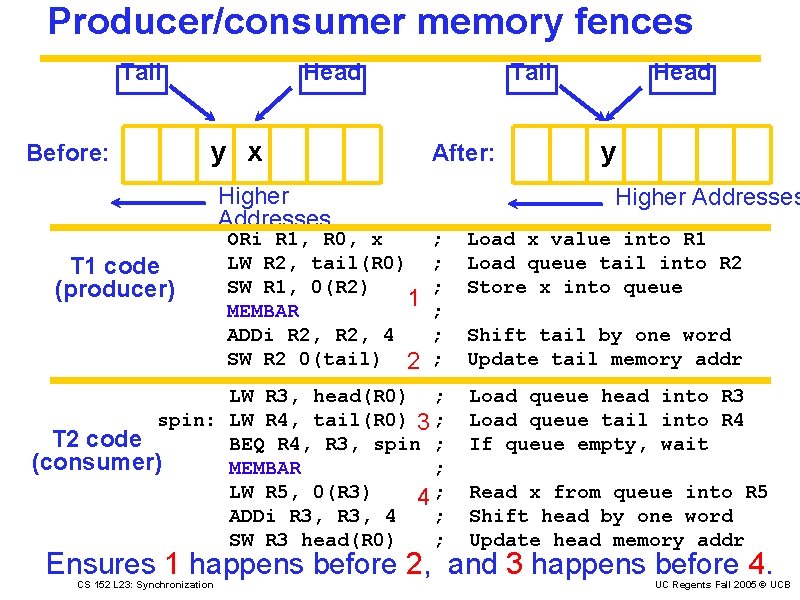

Producer/consumer memory fences

[Figure: the queue as words in memory, before and after. Before: y and x in the queue. After: only y in the queue.]

T1 code (producer):

    ORi  R1, R0, x        ; Load x value into R1
    LW   R2, tail(R0)     ; Load queue tail into R2
    SW   R1, 0(R2)        ; [1] Store x into queue
    MEMBAR
    ADDi R2, R2, 4        ; Shift tail by one word
    SW   R2, tail(R0)     ; [2] Update tail memory addr

T2 code (consumer):

          LW   R3, head(R0)     ; Load queue head into R3
    spin: LW   R4, tail(R0)     ; [3] Load queue tail into R4
          BEQ  R4, R3, spin     ; If queue empty, wait
          MEMBAR
          LW   R5, 0(R3)        ; [4] Read x from queue into R5
          ADDi R3, R3, 4        ; Shift head by one word
          SW   R3, head(R0)     ; Update head memory addr

Ensures 1 happens before 2, and 3 happens before 4.
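The effect of a fence can be illustrated with a deliberately crude model. The assumption here, which is much simpler than any real memory model, is that a relaxed machine may perform one thread's memory operations in any order, except that no operation moves across a MEMBAR. The function `allowed_orders` and the operation names are hypothetical.

```python
from itertools import permutations

FENCE = "MEMBAR"


def allowed_orders(program):
    """Orders in which a toy relaxed machine may perform one thread's memory ops.

    Assumption: operations reorder freely, but never across a MEMBAR.
    """
    # Split the program into groups of operations separated by fences.
    groups, current = [], []
    for op in program:
        if op == FENCE:
            groups.append(current)
            current = []
        else:
            current.append(op)
    groups.append(current)

    # Any permutation inside a group is allowed; the groups stay in order.
    orders = [()]
    for group in groups:
        orders = [prefix + perm for prefix in orders for perm in permutations(group)]
    return orders


# The producer's two stores, labeled 1 and 2 on the slides.
without_fence = allowed_orders(["store x", "store tail"])
with_fence = allowed_orders(["store x", FENCE, "store tail"])
```

Without the fence, the model permits the tail update to become visible before x is stored, which is the failure described earlier. With the fence, only the program order remains.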

Reminder: Final Checkoff this Friday!

- Final report due following Monday, 11:59 PM.
- TAs will provide “secret” MIPS machine code tests.
- Bonus points if these tests run by end of section. If not, TAs give you test code to use over the weekend.
- Mid-term and project presentations after Thanksgiving.

CS 152: What’s left...

- Monday 11/21: Final report due, 11:59 PM.
- Class as normal on Tuesday, then Thanksgiving.
- Tuesday 11/29: Architecture @ Cal. Team evaluations due 11:59 PM Tuesday...
- Thursday 12/1: Mid-term review in-class.
- Tuesday 12/6: Mid-term II, 6:00-9:00 PM. No class 11-12:30 that day.
- Thursday 12/8: Final presentations.

If you get done early on Monday...

Sun’s chief architect, key player in Niagara...

Sun officially announced T1 (Niagara) last week.

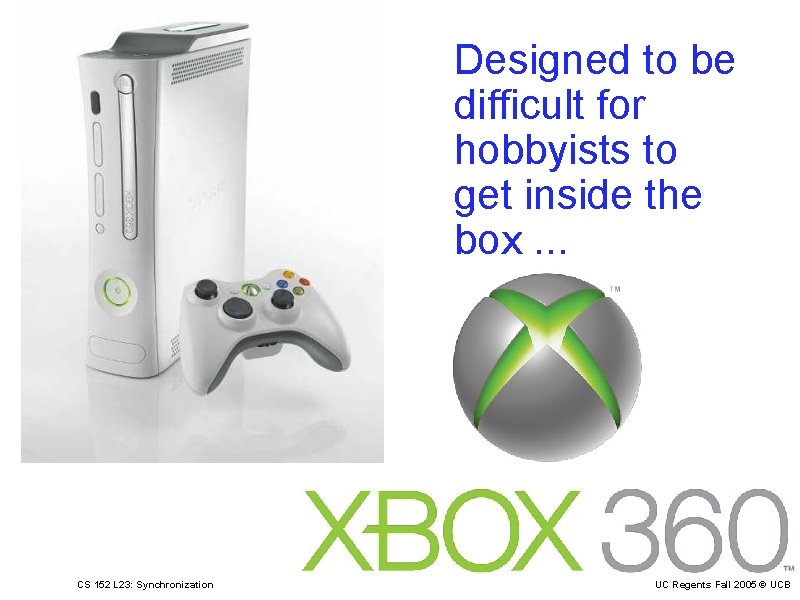

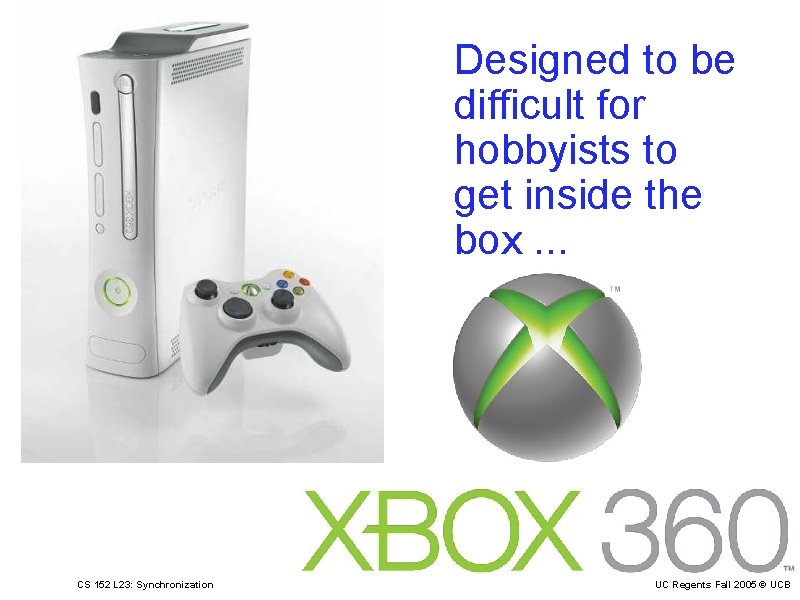

Designed to be difficult for hobbyists to get inside the box...

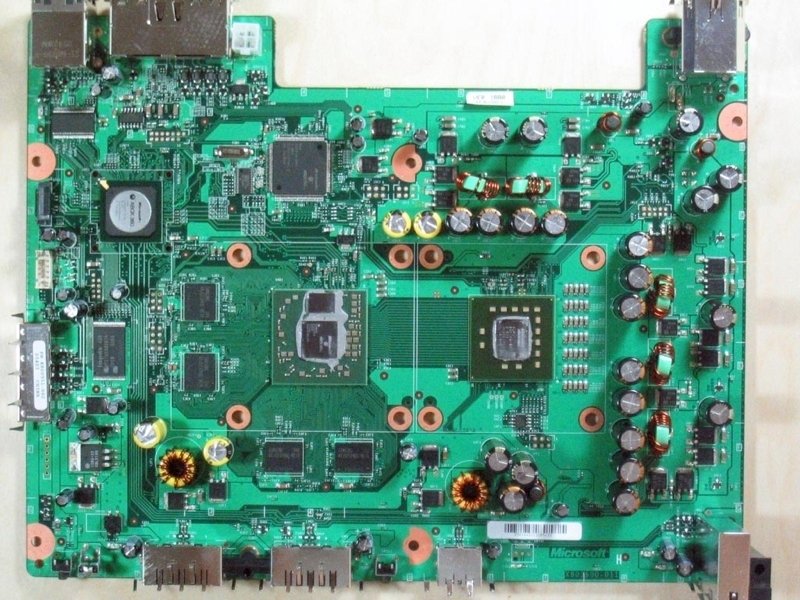


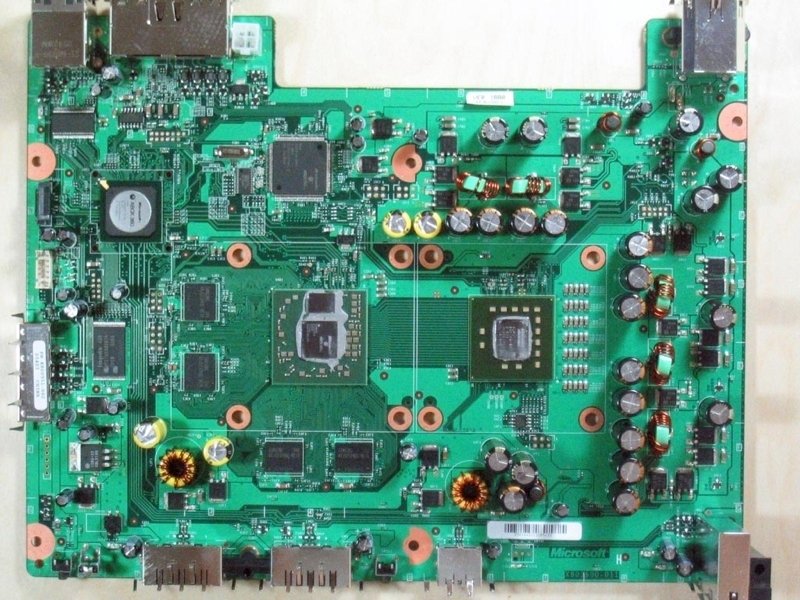

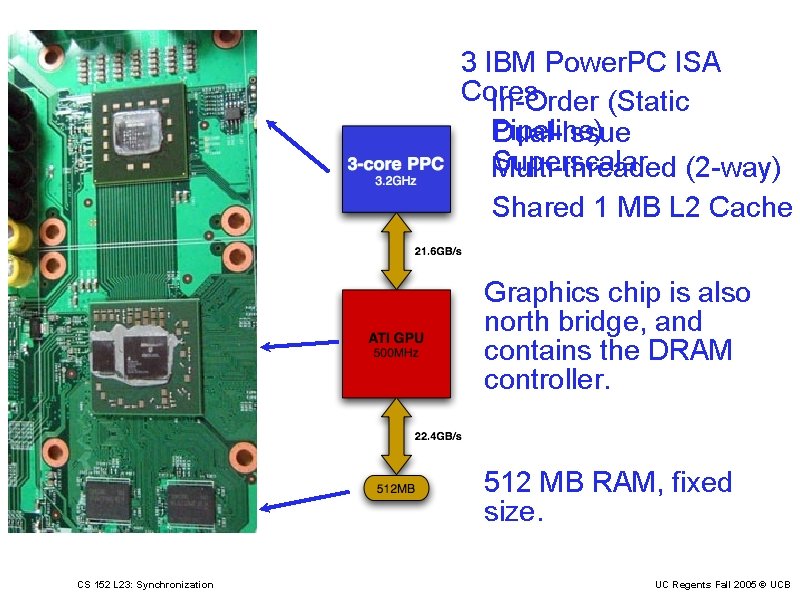

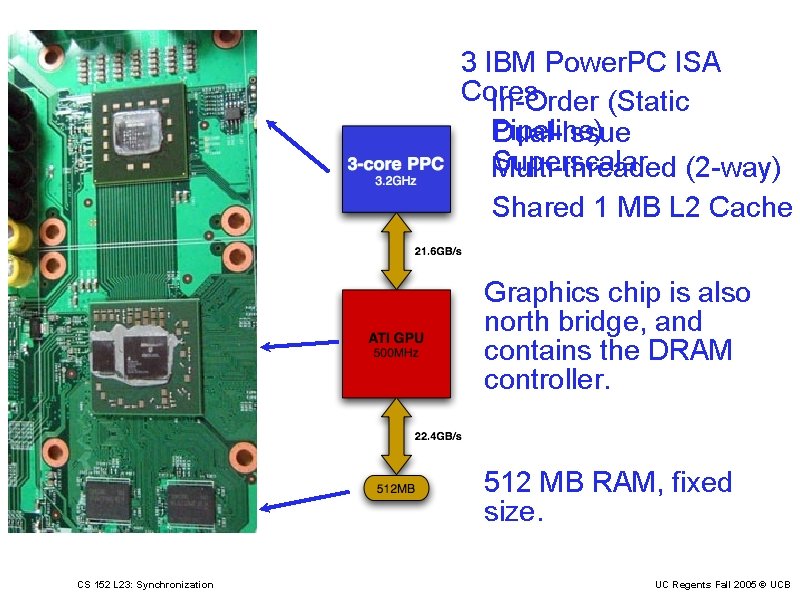

3 IBM PowerPC ISA cores:

- In-order (static pipeline)
- Dual-issue superscalar
- (2-way) multi-threaded
- Shared 1 MB L2 cache

Graphics chip is also the north bridge, and contains the DRAM controller.

512 MB RAM, fixed size.

With heat sinks attached...

Sharing Write Access

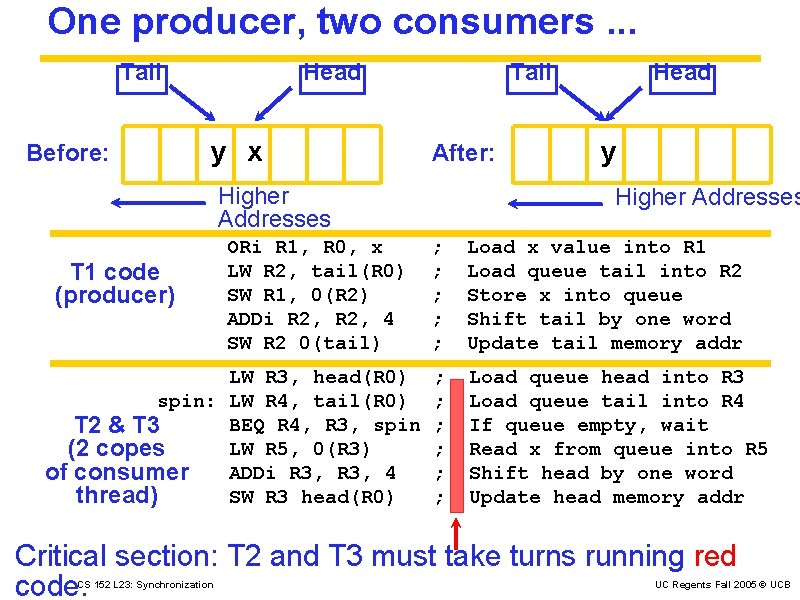

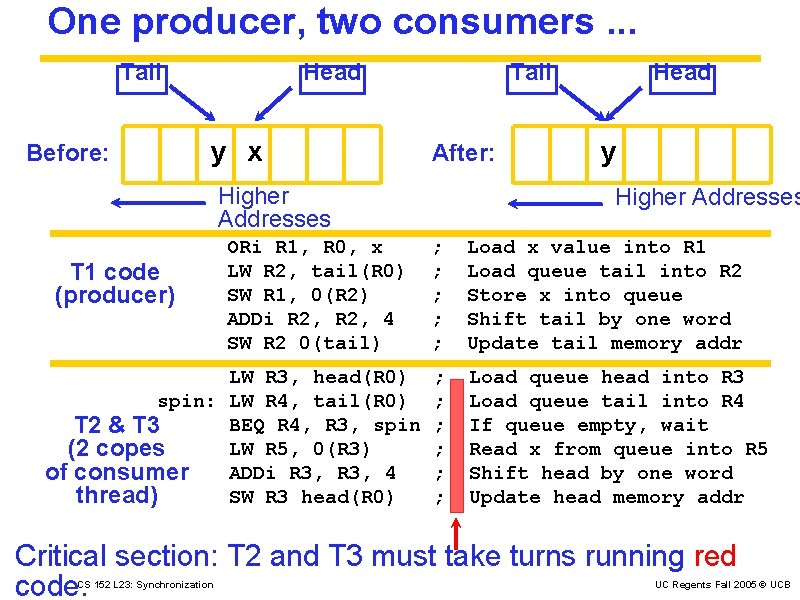

One producer, two consumers...

[Figure: the queue as words in memory, before and after. Before: y and x in the queue. After: only y in the queue.]

T1 code (producer):

    ORi  R1, R0, x        ; Load x value into R1
    LW   R2, tail(R0)     ; Load queue tail into R2
    SW   R1, 0(R2)        ; Store x into queue
    ADDi R2, R2, 4        ; Shift tail by one word
    SW   R2, tail(R0)     ; Update tail memory addr

T2 & T3 (2 copies of consumer thread):

          LW   R3, head(R0)     ; Load queue head into R3
    spin: LW   R4, tail(R0)     ; Load queue tail into R4
          BEQ  R4, R3, spin     ; If queue empty, wait
          LW   R5, 0(R3)        ; Read x from queue into R5
          ADDi R3, R3, 4        ; Shift head by one word
          SW   R3, head(R0)     ; Update head memory addr

Critical section: T2 and T3 must take turns running the critical-section code (shown in red on the slide).

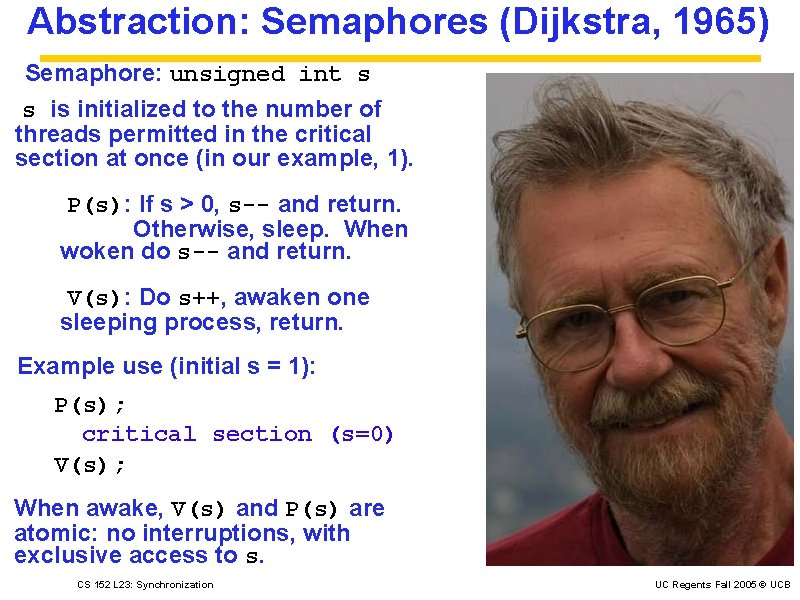

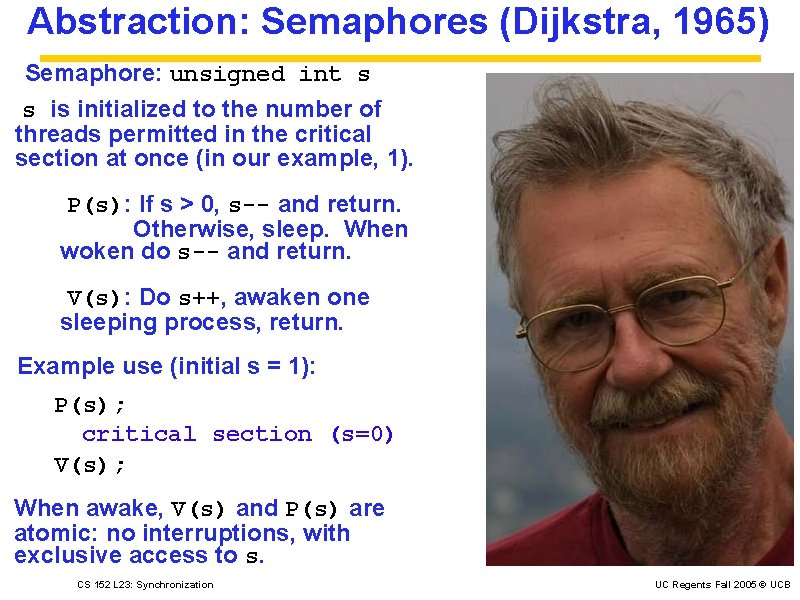

Abstraction: Semaphores (Dijkstra, 1965)

Semaphore: unsigned int s

s is initialized to the number of threads permitted in the critical section at once (in our example, 1).

- P(s): If s > 0, s-- and return. Otherwise, sleep. When woken, do s-- and return.
- V(s): Do s++, awaken one sleeping process, return.

Example use (initial s = 1):

    P(s);
    critical section (s=0)
    V(s);

When awake, V(s) and P(s) are atomic: no interruptions, with exclusive access to s.
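A minimal sketch of P and V in Python follows, assuming the standard library's `threading.Condition` supplies both the atomicity and the sleep/wake mechanism. The class name `CountingSemaphore` and the demo function are inventions for this example. One difference from the slide's wording: a woken thread rechecks `s` in a loop before decrementing, which is the usual defensive pattern with condition variables.

```python
import threading


class CountingSemaphore:
    """Sketch of a semaphore s with P and V, built on a condition variable."""

    def __init__(self, initial=1):
        self.s = initial
        self._cond = threading.Condition()  # gives exclusive access to s

    def P(self):
        with self._cond:
            while self.s == 0:      # nothing available: sleep until woken
                self._cond.wait()
            self.s -= 1             # s-- and return

    def V(self):
        with self._cond:
            self.s += 1             # s++
            self._cond.notify()     # awaken one sleeping thread


def run_semaphore_demo(n_threads=4, rounds=200):
    """Run several threads through a critical section guarded by s = 1."""
    sem = CountingSemaphore(1)
    stats = {"inside": 0, "max_inside": 0, "entries": 0}

    def worker():
        for _ in range(rounds):
            sem.P()
            stats["inside"] += 1    # start of critical section
            stats["max_inside"] = max(stats["max_inside"], stats["inside"])
            stats["entries"] += 1
            stats["inside"] -= 1    # end of critical section
            sem.V()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stats
```

With the initial value 1, `max_inside` should never exceed 1: at most one thread is in the critical section at a time.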

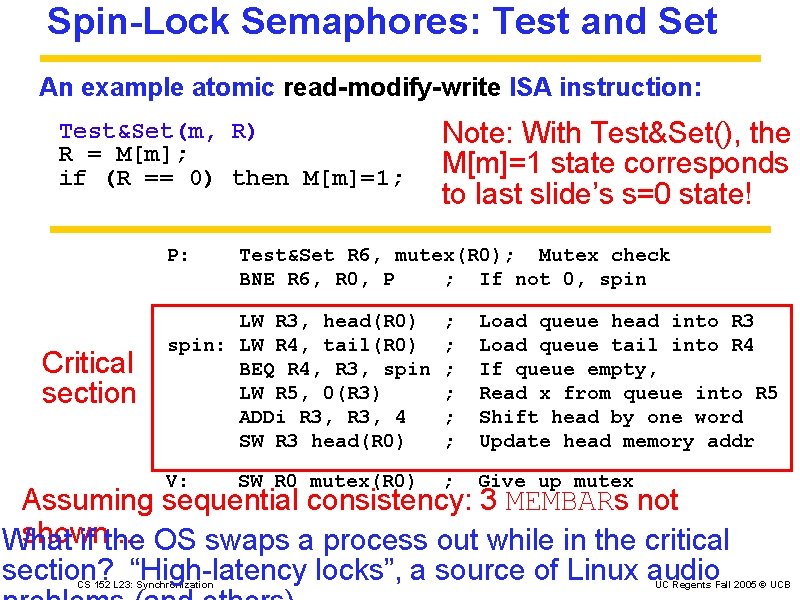

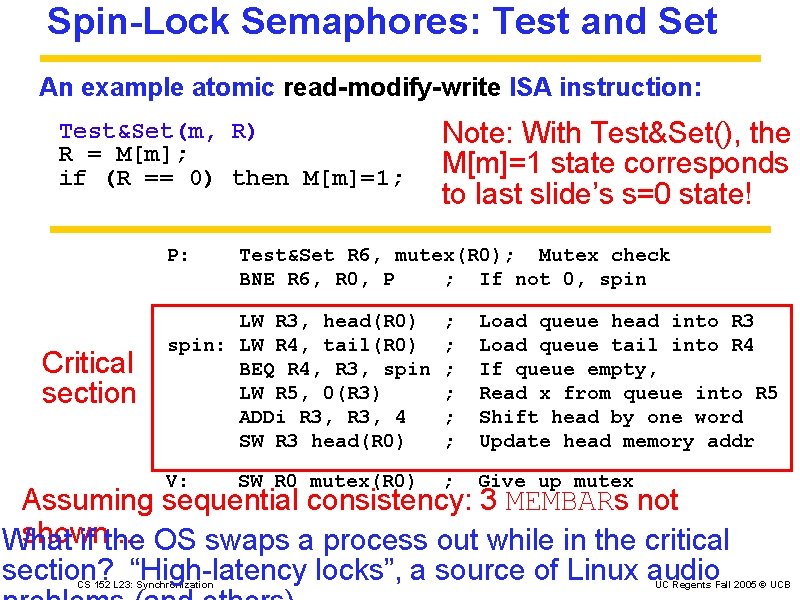

Spin-Lock Semaphores: Test and Set

An example atomic read-modify-write ISA instruction:

    Test&Set(m, R)
      R = M[m];
      if (R == 0) then M[m] = 1;

Note: With Test&Set(), the M[m]=1 state corresponds to last slide’s s=0 state!

    P:    Test&Set R6, mutex(R0) ; Mutex check
          BNE  R6, R0, P         ; If not 0, spin

          ; Critical section
          LW   R3, head(R0)      ; Load queue head into R3
    spin: LW   R4, tail(R0)      ; Load queue tail into R4
          BEQ  R4, R3, spin      ; If queue empty, wait
          LW   R5, 0(R3)         ; Read x from queue into R5
          ADDi R3, R3, 4         ; Shift head by one word
          SW   R3, head(R0)      ; Update head memory addr

    V:    SW   R0, mutex(R0)     ; Give up mutex

Assuming sequential consistency: 3 MEMBARs not shown...

What if the OS swaps a process out while in the critical section? “High-latency locks”, a source of Linux audio ...
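The spin lock can be imitated in Python as a sketch. Python has no Test&Set instruction, so a `threading.Lock` inside the hypothetical `ToyMemory` class stands in for the hardware guarantee that the read and the conditional write happen as one indivisible step. Unlike the slide's consumer, which spins forever on an empty queue, these consumers exit once the queue is drained so the demo terminates.

```python
import threading
import time


class ToyMemory:
    """Toy shared memory with an atomic test_and_set on named words."""

    def __init__(self):
        self.words = {"mutex": 0}
        self._atomic = threading.Lock()  # assumption: models hardware atomicity

    def test_and_set(self, m):
        with self._atomic:
            r = self.words[m]        # R = M[m]
            if r == 0:
                self.words[m] = 1    # if (R == 0) then M[m] = 1
            return r


def run_spinlock_consumers(items, n_consumers=2):
    """Several consumers drain one queue, taking turns via the spin lock."""
    mem = ToyMemory()
    queue = list(items)
    head = [0]      # shared head index, standing in for the head pointer
    taken = []

    def consumer():
        while True:
            while mem.test_and_set("mutex") != 0:  # P: spin until we read 0
                time.sleep(0)                      # let other threads run
            if head[0] == len(queue):              # queue drained: stop
                mem.words["mutex"] = 0             # V: give up mutex
                return
            taken.append(queue[head[0]])           # read item at head
            head[0] += 1                           # shift head by one
            mem.words["mutex"] = 0                 # V: give up mutex

    threads = [threading.Thread(target=consumer) for _ in range(n_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return taken
```

Because the read of the head and its update both happen while the mutex is held, each item should be taken exactly once.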

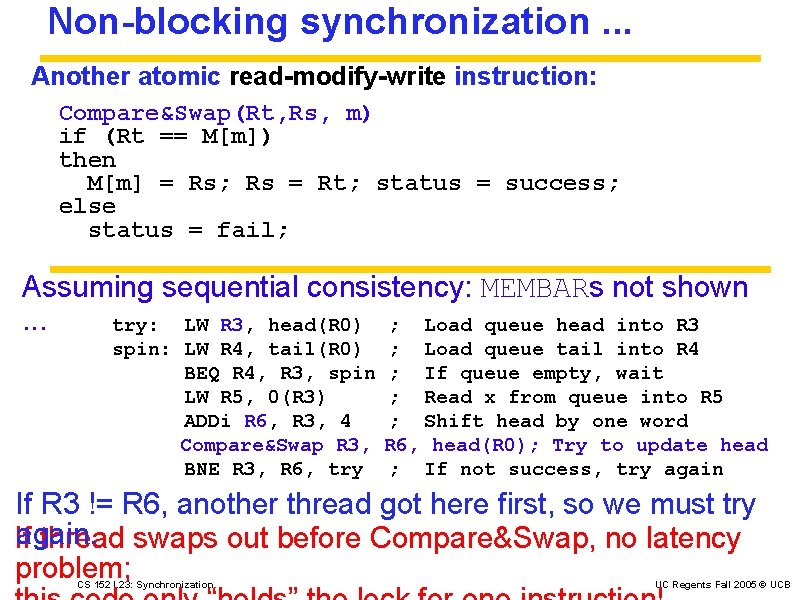

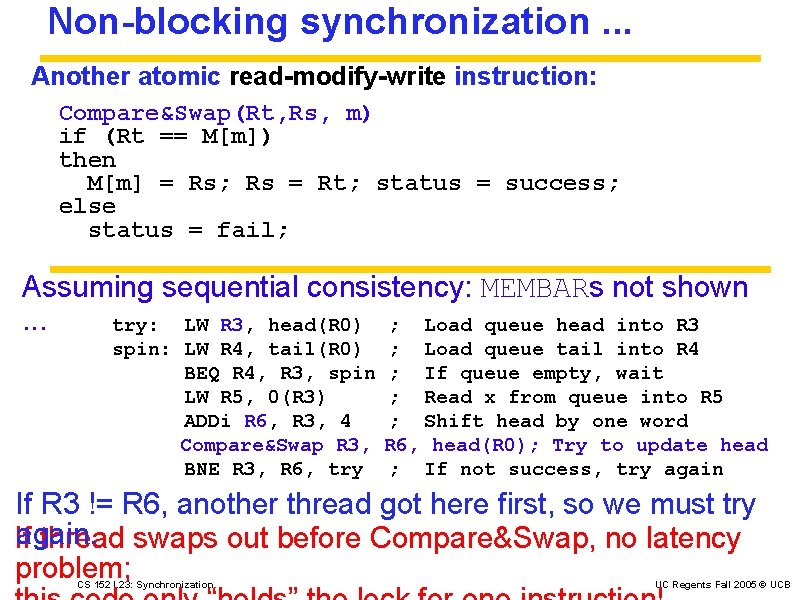

Non-blocking synchronization...

Another atomic read-modify-write instruction:

    Compare&Swap(Rt, Rs, m)
      if (Rt == M[m])
      then
        M[m] = Rs;
        Rs = Rt;
        status = success;
      else
        status = fail;

Assuming sequential consistency: MEMBARs not shown...

    try:  LW   R3, head(R0)               ; Load queue head into R3
    spin: LW   R4, tail(R0)               ; Load queue tail into R4
          BEQ  R4, R3, spin               ; If queue empty, wait
          LW   R5, 0(R3)                  ; Read x from queue into R5
          ADDi R6, R3, 4                  ; Shift head by one word
          Compare&Swap R3, R6, head(R0)   ; Try to update head
          BNE  R3, R6, try                ; If not success, try again

If R3 != R6, another thread got here first, so we must try again.

If a thread swaps out before Compare&Swap, no latency problem.
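The retry loop can be sketched in Python under stated assumptions. The class `AtomicWord` is hypothetical, and its internal lock only models the atomicity of the instruction itself; no consumer holds a lock across the read of the queue item. The sketch also reports success as a boolean rather than through a register, as the slide's version does, and consumers exit when the queue is drained instead of spinning.

```python
import threading


class AtomicWord:
    """Toy memory word with an atomic compare_and_swap."""

    def __init__(self, value=0):
        self.value = value
        self._atomic = threading.Lock()  # assumption: models hardware atomicity

    def compare_and_swap(self, expected, new):
        with self._atomic:
            if self.value == expected:   # if (Rt == M[m])
                self.value = new         #   M[m] = Rs; status = success
                return True
            return False                 # else status = fail


def run_cas_consumers(items, n_consumers=2):
    """Several consumers drain one queue without holding a lock."""
    queue = list(items)
    head = AtomicWord(0)   # shared head index, standing in for the head pointer
    per_thread = [[] for _ in range(n_consumers)]

    def consumer(mine):
        while True:
            old = head.value                 # load queue head
            if old == len(queue):            # queue drained: stop
                return
            item = queue[old]                # read item before claiming it
            if head.compare_and_swap(old, old + 1):
                mine.append(item)            # we moved head, so the item is ours
            # Otherwise another thread got here first: loop and try again.

    threads = [
        threading.Thread(target=consumer, args=(mine,)) for mine in per_thread
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return [item for mine in per_thread for item in mine]
```

A thread that is descheduled between reading the head and attempting the swap delays no one else: other threads simply advance the head, and the late thread's swap fails and retries.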

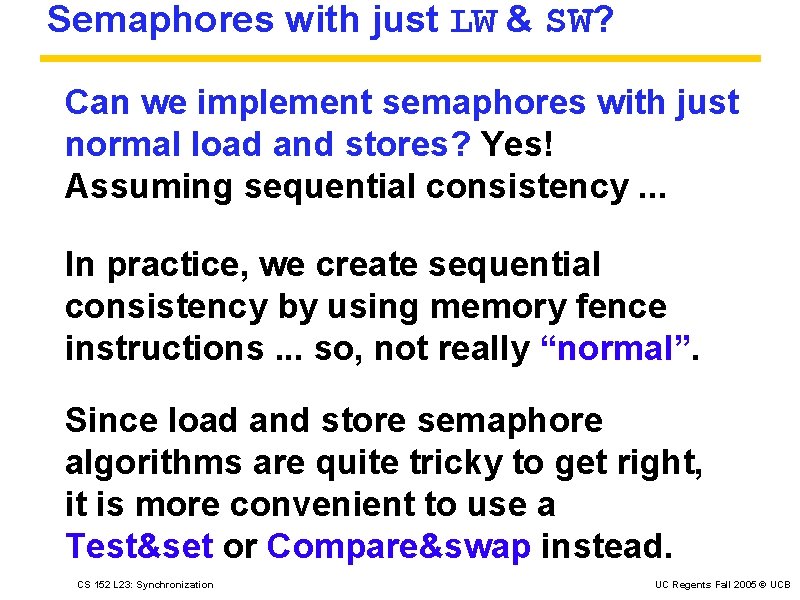

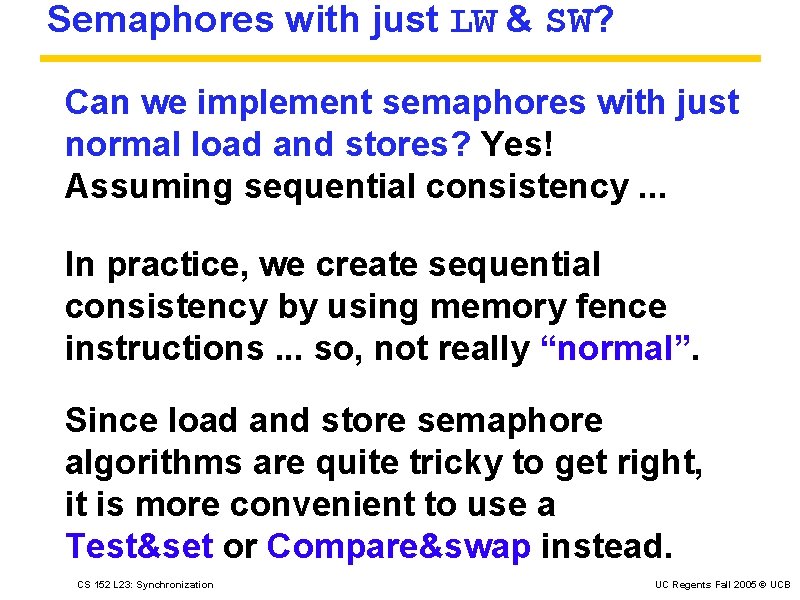

Semaphores with just LW & SW?

Can we implement semaphores with just normal loads and stores? Yes! Assuming sequential consistency...

In practice, we create sequential consistency by using memory fence instructions... so, not really “normal”.

Since load and store semaphore algorithms are quite tricky to get right, it is more convenient to use Test&Set or Compare&Swap instead.

Conclusions: Synchronization

- MEMBAR: Memory fences, in lieu of full sequential consistency.
- Test&Set: A spin-lock instruction for sharing write access.
- Compare&Swap: A non-blocking alternative to share write access.