CS 152 Computer Architecture and Engineering Lecture 22

- Slides: 34

CS 152 Computer Architecture and Engineering Lecture 22 -- GPU + SIMD + Vectors I, 2014-4-15. John Lazzaro (not a prof - “John” is always OK). TA: Eric Love. www-inst.eecs.berkeley.edu/~cs152/ CS 152 L22: GPU + SIMD + Vectors UC Regents Spring 2014 © UCB

Today: Architecture for data parallelism
- The Landscape: Three chips that deliver TeraOps/s in 2014, and how they differ.
- E5-2600 v2: Stretching the Xeon server approach for compute-intensive apps.
- Short Break
- GK110: nVidia’s flagship Kepler GPU, customized for compute applications.

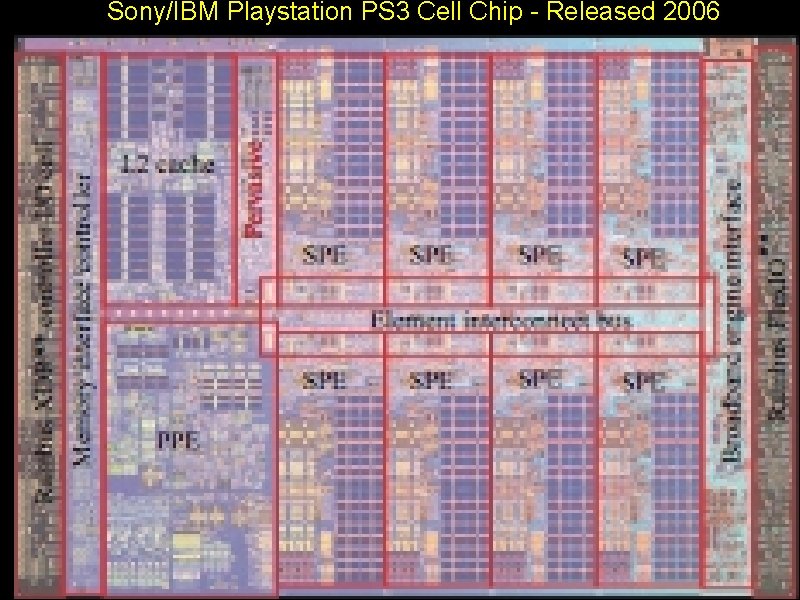

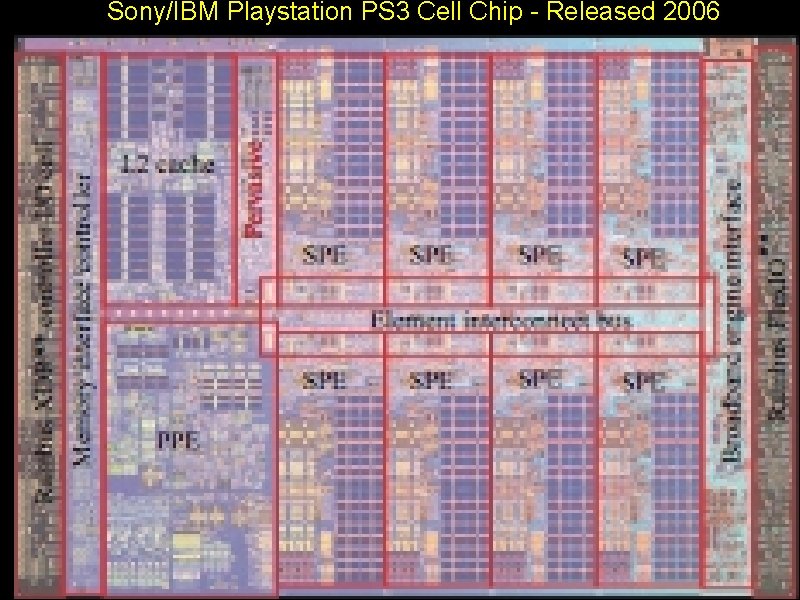

Sony/IBM PlayStation 3 (PS3) Cell chip, released 2006

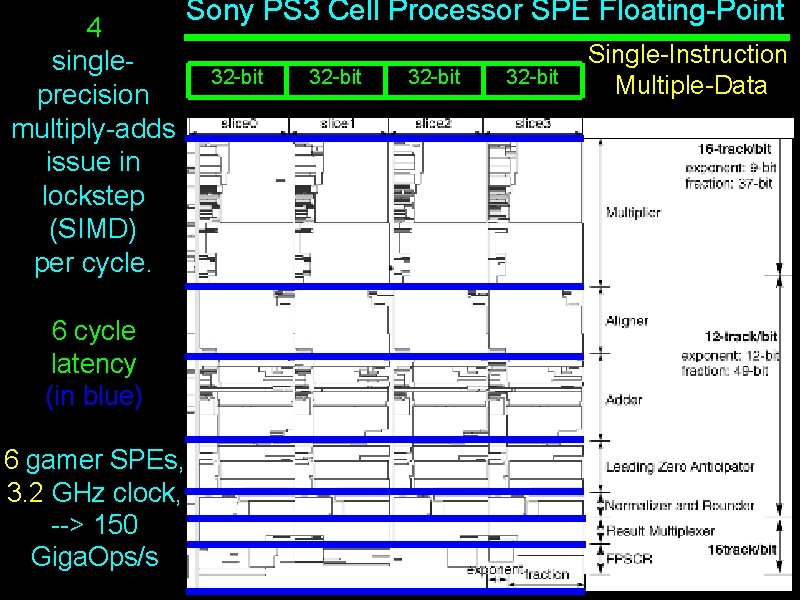

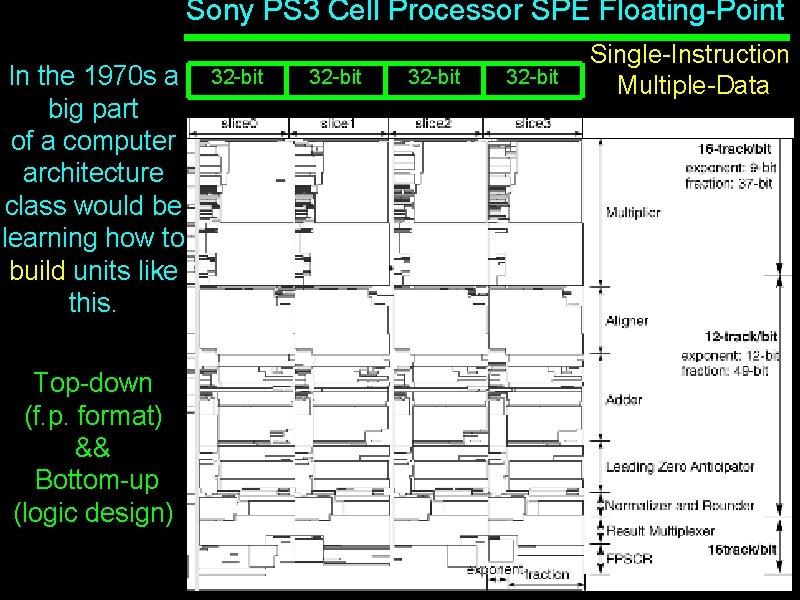

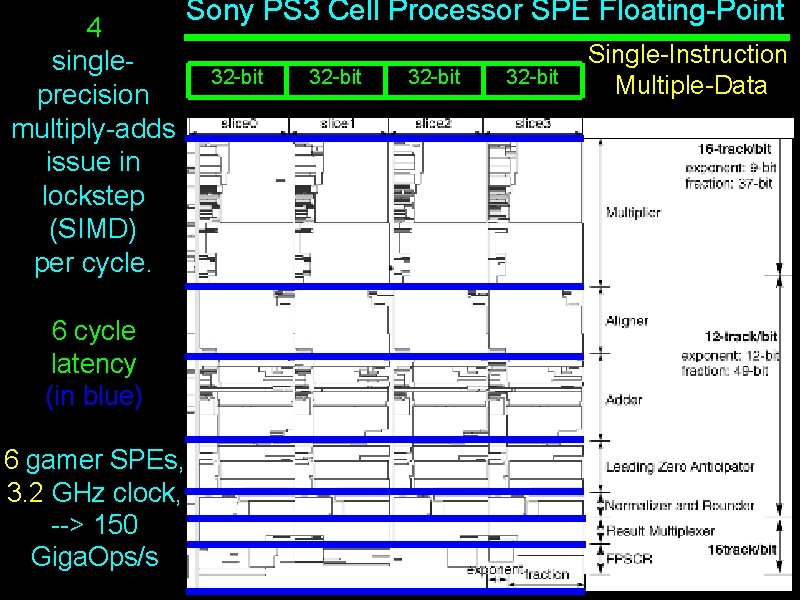

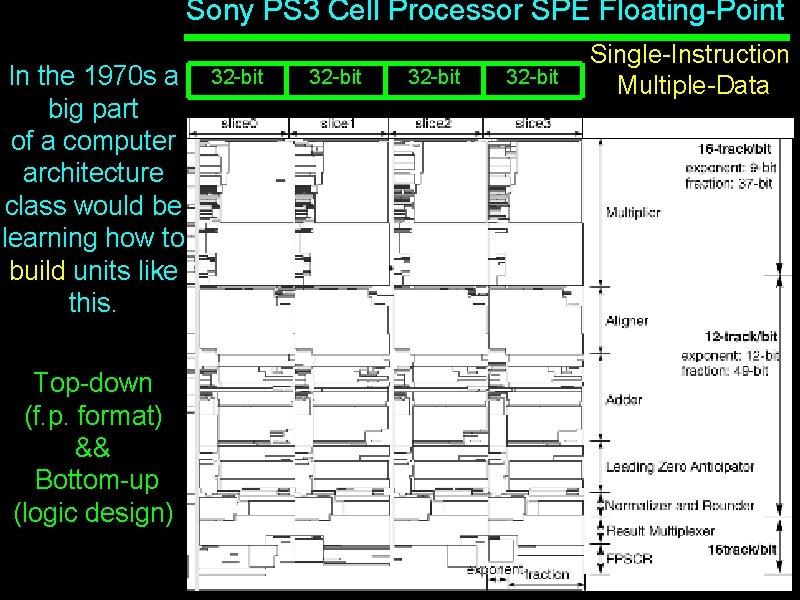

Sony PS3 Cell Processor SPE floating-point unit (32-bit Single-Instruction Multiple-Data): 4 single-precision multiply-adds issue in lockstep (SIMD) per cycle, with a 6-cycle latency (in blue). 6 SPEs available to games at a 3.2 GHz clock --> ~150 GigaOps/s.
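The ~150 GigaOps/s figure is the product of the numbers on the slide. A minimal sketch of the arithmetic follows. It assumes each multiply-add counts as two operations and that every SPE issues a full 4-wide multiply-add every cycle, which is a peak rather than a sustained rate.

```python
# Peak throughput of the PS3 Cell SPEs usable by games, counting each
# multiply-add (MAC) as two operations (one multiply, one add).
SPES = 6            # SPEs available to game code (per the slide)
MACS_PER_CYCLE = 4  # 4-wide single-precision SIMD multiply-add per SPE
OPS_PER_MAC = 2
CLOCK_HZ = 3.2e9

def peak_ops_per_sec(units, macs_per_cycle, clock_hz, ops_per_mac=OPS_PER_MAC):
    """Peak operations per second if every unit issues a full MAC every cycle."""
    return units * macs_per_cycle * ops_per_mac * clock_hz

ps3 = peak_ops_per_sec(SPES, MACS_PER_CYCLE, CLOCK_HZ)
print(f"{ps3 / 1e9:.1f} GigaOps/s")  # 153.6, which the slide rounds to ~150
```

Because the latency is 6 cycles, reaching this peak also requires about 6 independent multiply-adds in flight per SPE.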

Sony PS3 Cell Processor SPE floating-point (32-bit Single-Instruction Multiple-Data): In the 1970s, a big part of a computer architecture class would be learning how to build units like this, both top-down (floating-point format) and bottom-up (logic design).
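The top-down half of that exercise starts from the number format. The sketch below uses only Python's standard `struct` module to split an IEEE 754 single-precision value into its three fields. This is the 32-bit format the SPE operates on. The sketch rebuilds the value of normal numbers only and does not handle zeros, denormals, infinities, or NaNs.

```python
import struct

def decode_float32(x):
    """Split an IEEE 754 single-precision value into sign, exponent, fraction fields."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF   # 8-bit biased exponent (bias 127)
    fraction = bits & 0x7FFFFF       # 23 stored fraction bits (leading 1 is implicit)
    return sign, exponent, fraction

def value_of(sign, exponent, fraction):
    """Rebuild a normal number: (-1)^s * 1.f * 2^(e - 127)."""
    assert 0 < exponent < 255, "sketch handles normal numbers only"
    return (-1) ** sign * (1 + fraction / 2**23) * 2.0 ** (exponent - 127)

print(decode_float32(-6.5))             # (1, 129, 5242880)
print(value_of(*decode_float32(-6.5)))  # -6.5
```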

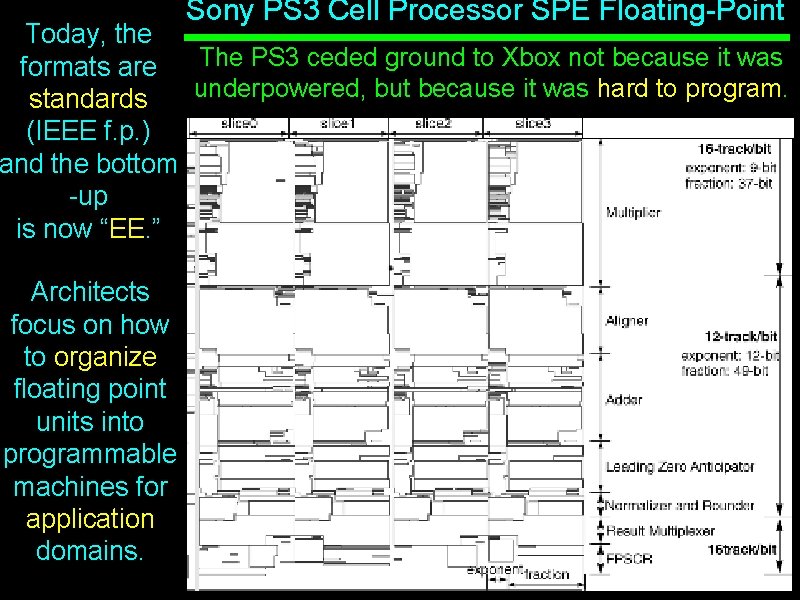

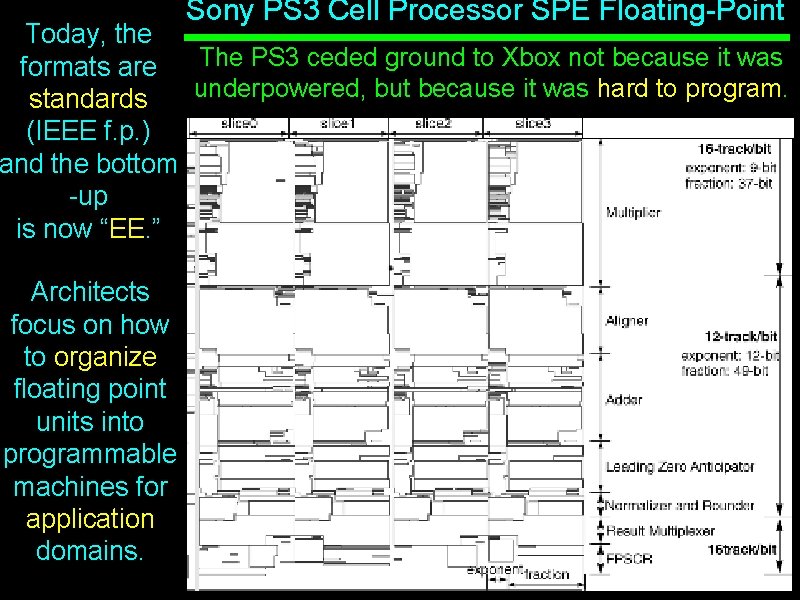

Sony PS3 Cell Processor SPE floating-point: Today, the formats are standards (IEEE floating point), and the bottom-up work is now “EE.” Architects focus on how to organize floating-point units into programmable machines for application domains. The PS3 ceded ground to the Xbox not because it was underpowered, but because it was hard to program.

2014: TeraOps/Sec Chips

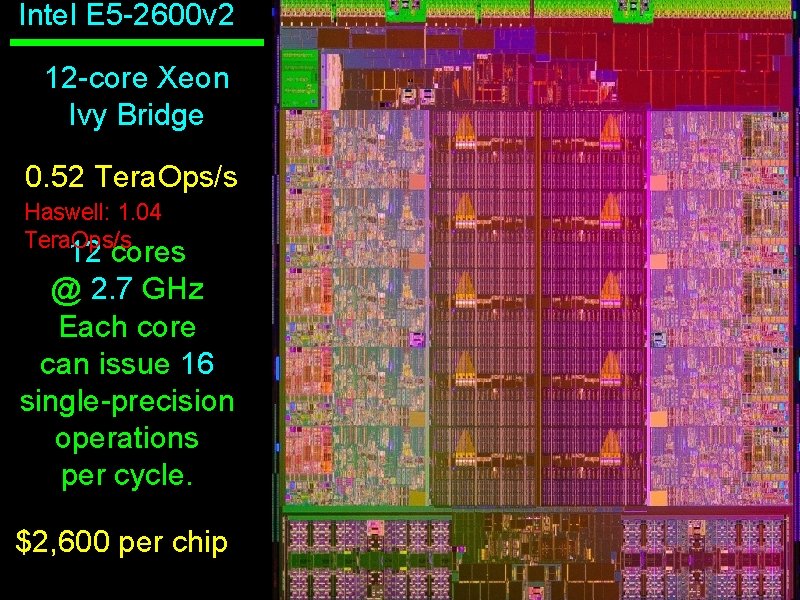

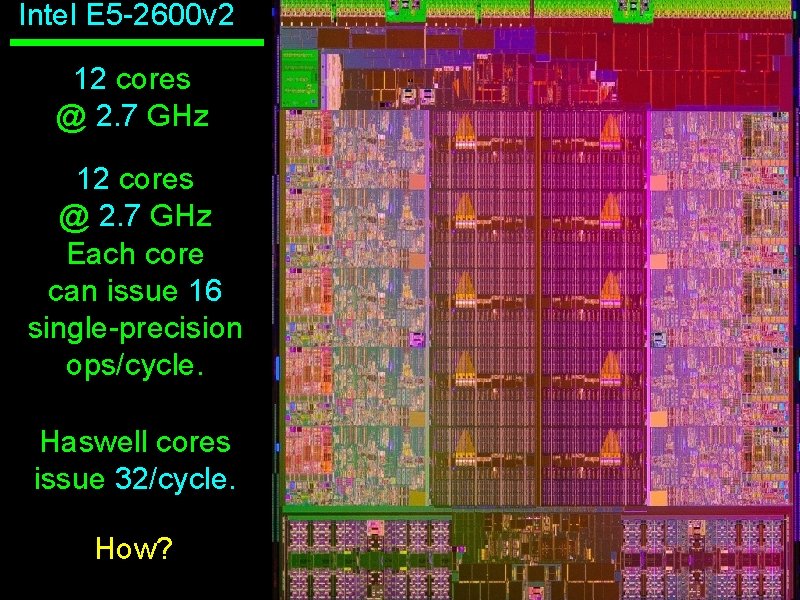

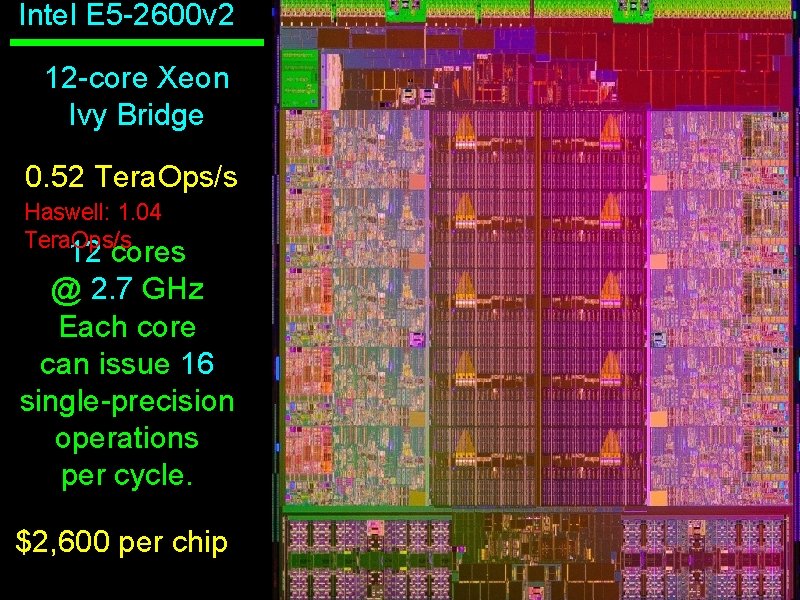

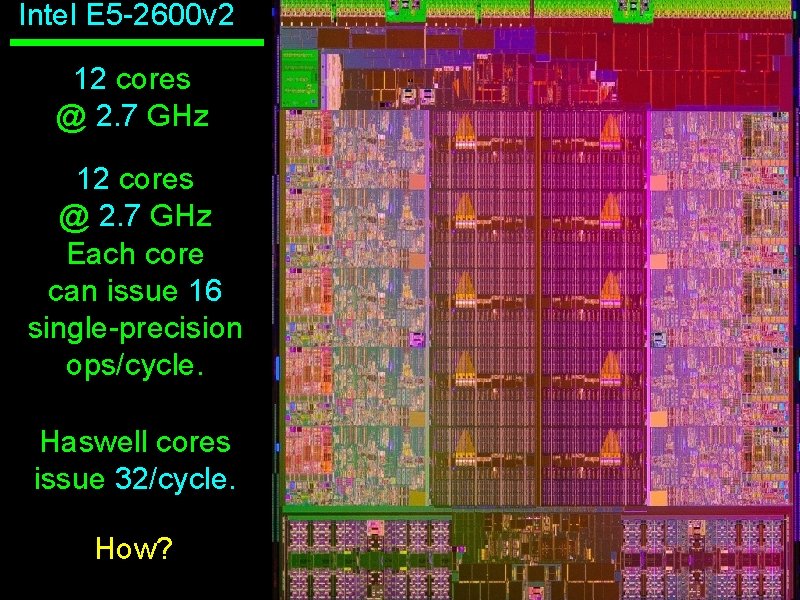

Intel E5-2600 v2: 12-core Xeon. Ivy Bridge: 0.52 TeraOps/s; Haswell: 1.04 TeraOps/s. 12 cores @ 2.7 GHz. Each core can issue 16 single-precision operations per cycle. $2,600 per chip.

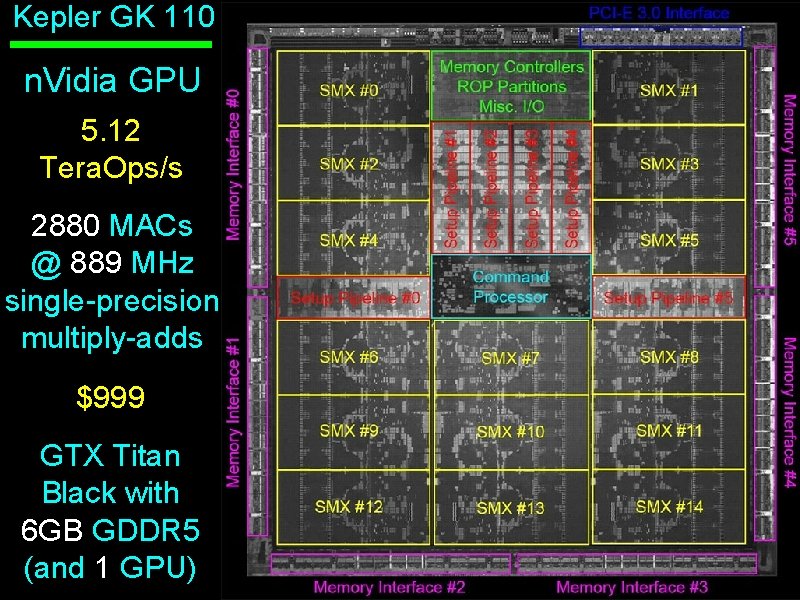

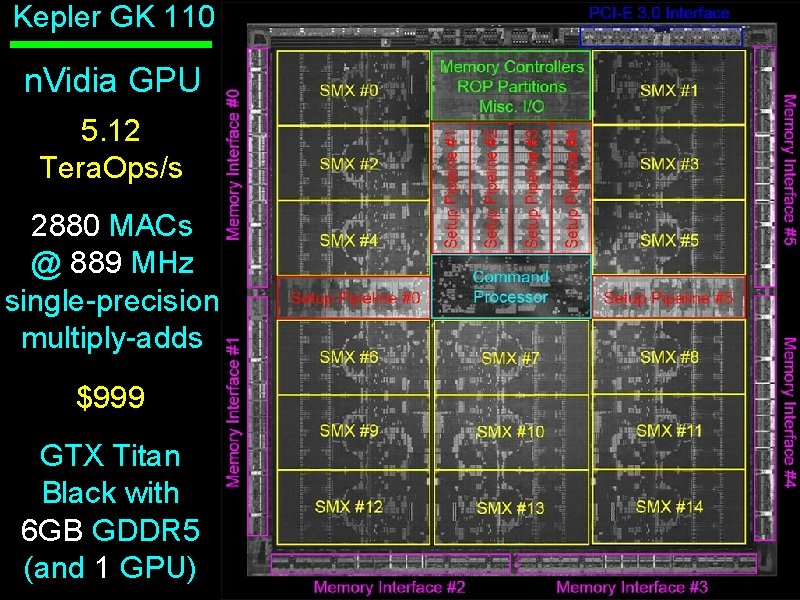

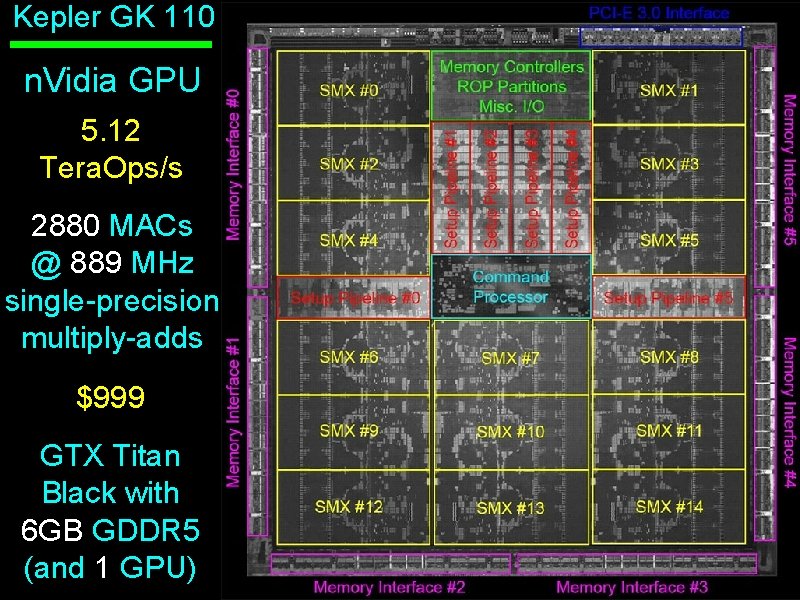

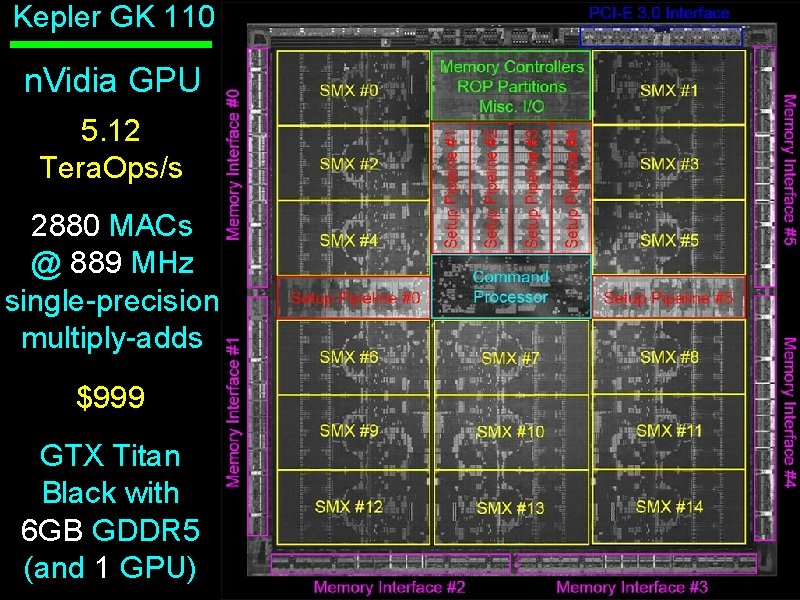

Kepler GK110 nVidia GPU: 5.12 TeraOps/s. 2880 single-precision multiply-adds (MACs) @ 889 MHz. $999 GTX Titan Black with 6 GB GDDR5 (and 1 GPU). EECS 150: Graphics Processors UC Regents Fall 2013 © UCB

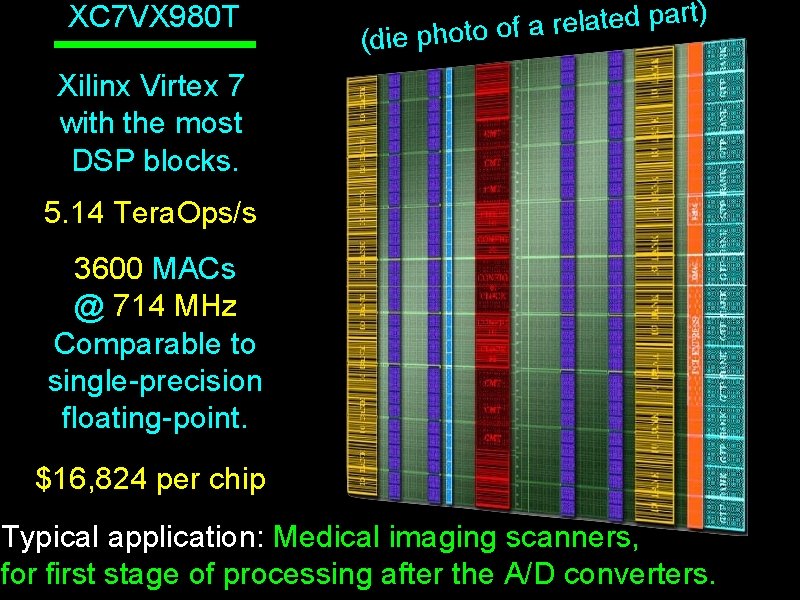

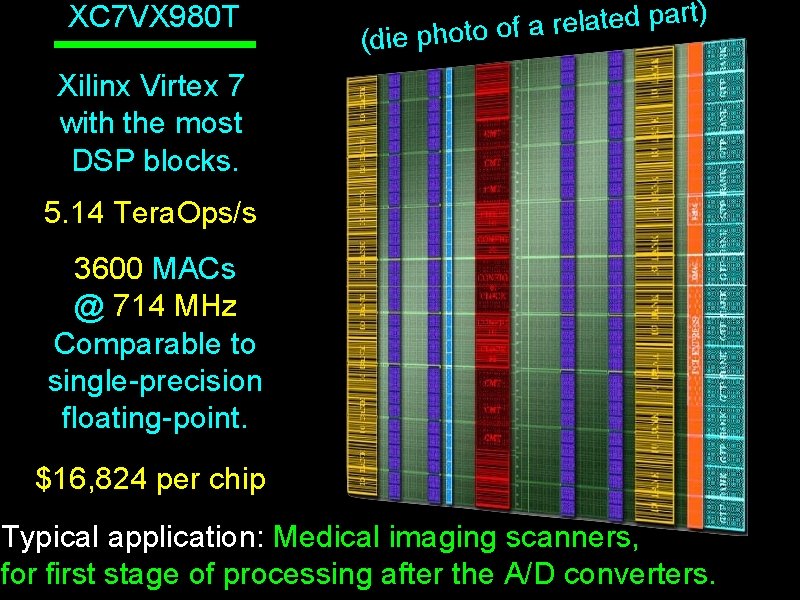

XC7VX980T: the Xilinx Virtex 7 with the most DSP blocks (die photo shows a related part). 5.14 TeraOps/s: 3600 MACs @ 714 MHz, comparable to single-precision floating point. $16,824 per chip. Typical application: medical imaging scanners, for the first stage of processing after the A/D converters.
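The three TeraOps/s figures follow from ops per cycle times clock. The sketch below recomputes them from the numbers on the three slides and adds a dollars-per-TeraOp/s column. Two assumptions apply. First, each MAC counts as two operations. Second, the prices are the ones quoted: chip-only prices for the Xeon and Virtex, and a full card price for the GTX Titan Black.

```python
# Peak single-precision throughput of the three 2014 chips, using the
# figures quoted on the slides. A multiply-add (MAC) counts as 2 ops.
chips = {
    # name: (single-precision ops per cycle, clock in Hz, price in USD)
    "Xeon E5-2600 v2": (12 * 16, 2.7e9, 2600),      # 12 cores x 16 ops/cycle; chip price
    "Kepler GK110": (2880 * 2, 889e6, 999),          # 2880 MACs; Titan Black card price
    "Virtex 7 XC7VX980T": (3600 * 2, 714e6, 16824),  # 3600 DSP MACs; chip price
}

peak_tops = {}
for name, (ops_per_cycle, clock_hz, price_usd) in chips.items():
    tops = ops_per_cycle * clock_hz / 1e12
    peak_tops[name] = tops
    print(f"{name}: {tops:.2f} TeraOps/s, about ${price_usd / tops:,.0f} per TeraOp/s")
```

All three reach the same order of magnitude of peak. The cost per TeraOp/s differs far more: roughly $5,000 for the Xeon, $200 for the GPU, and $3,300 for the FPGA.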

Intel E5-2600 v2: 12 cores @ 2.7 GHz. Each core can issue 16 single-precision ops/cycle; Haswell cores issue 32/cycle. How?

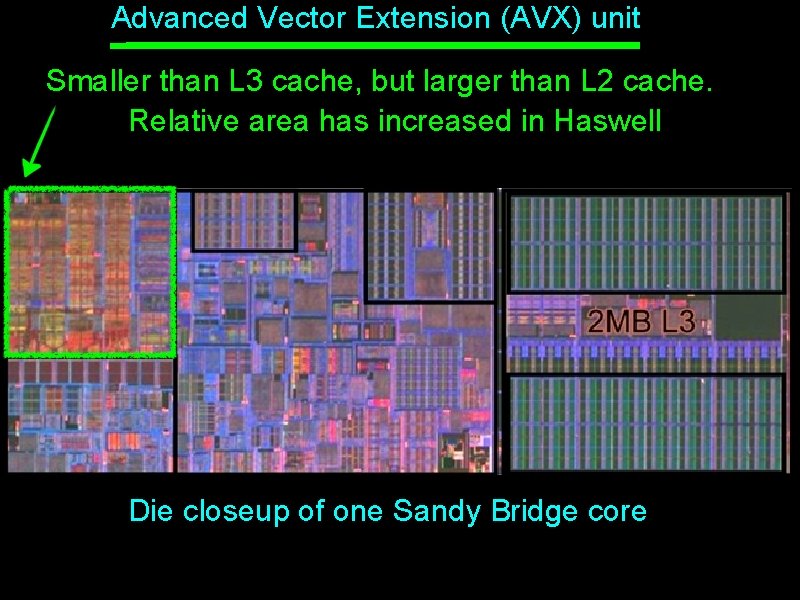

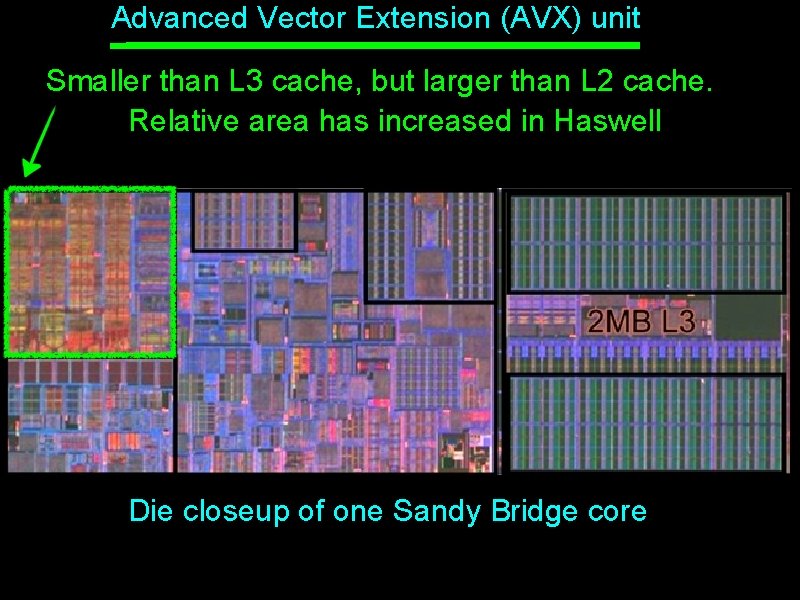

Advanced Vector Extension (AVX) unit: smaller than the L3 cache, but larger than the L2 cache. Its relative area has increased in Haswell. (Die closeup of one Sandy Bridge core.)

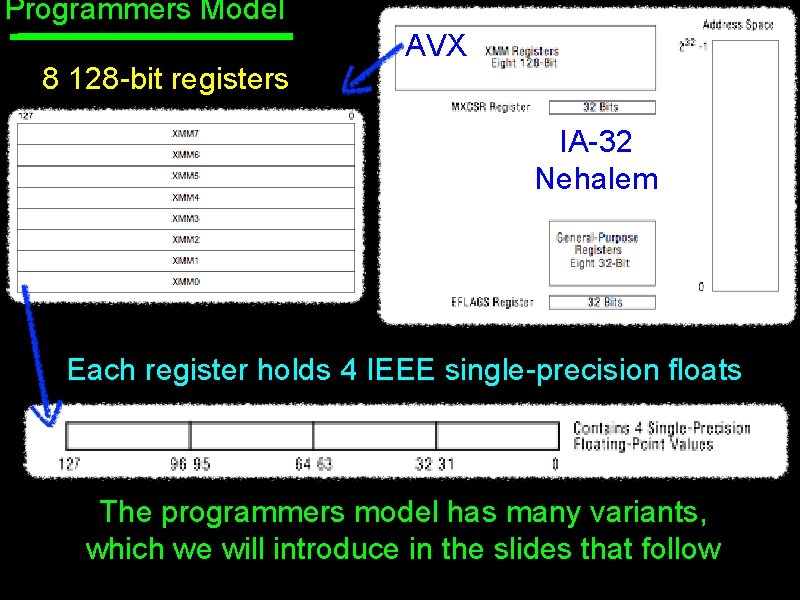

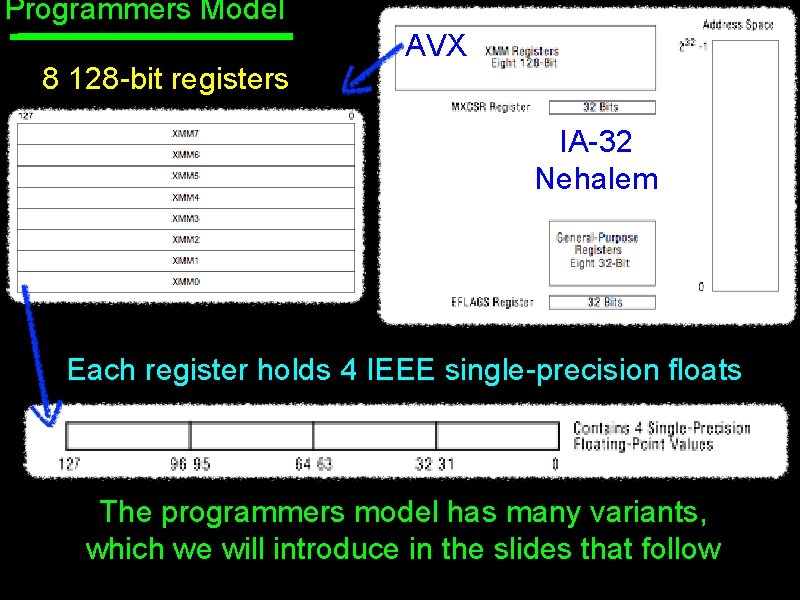

Programmer’s model (AVX, IA-32, Nehalem): 8 128-bit registers. Each register holds 4 IEEE single-precision floats. The programmer’s model has many variants, which we will introduce in the slides that follow.

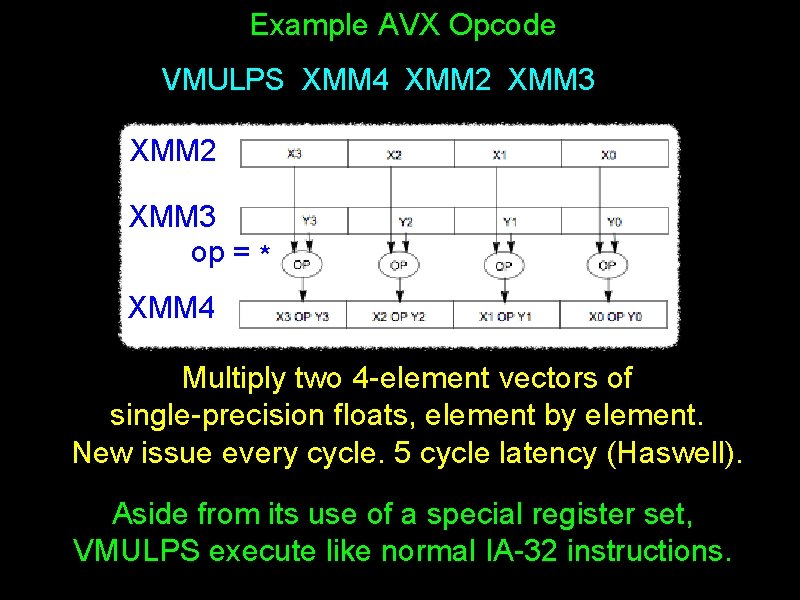

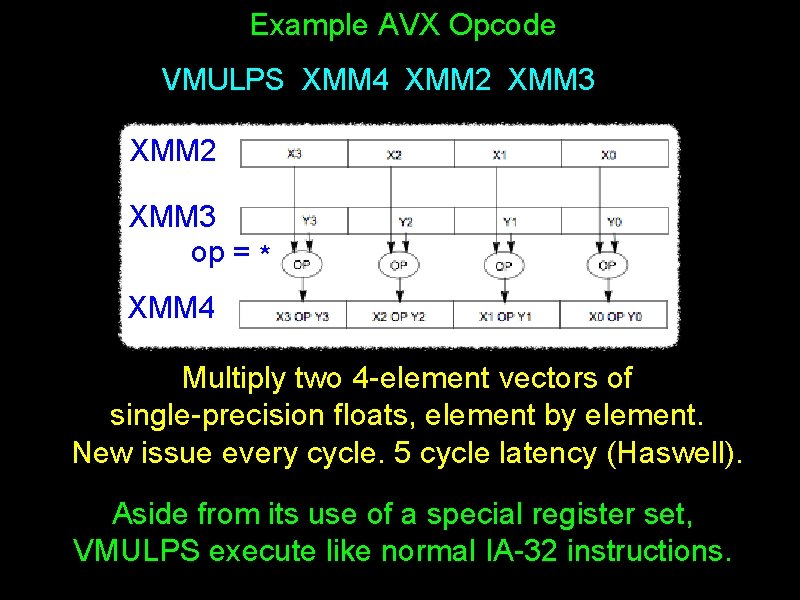

Example AVX opcode: VMULPS XMM4, XMM2, XMM3 (op = *, result in XMM4). Multiply two 4-element vectors of single-precision floats, element by element. A new issue every cycle; 5-cycle latency (Haswell). Aside from its use of a special register set, VMULPS executes like a normal IA-32 instruction.
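The element-by-element semantics can be modeled in a few lines. In this Python sketch, the register file is a hypothetical dict keyed by XMM number, and single-precision rounding is emulated with the standard `struct` module. Overflow, denormals, and non-default MXCSR rounding modes are not modeled.

```python
import struct

def to_f32(x: float) -> float:
    """Round a Python float (a double) to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def vmulps_128(a, b):
    """Model of VMULPS on 128-bit registers: 4 independent single-precision
    multiplies, one per element. (struct.pack raises OverflowError for
    results beyond the float32 range; real hardware would produce Inf.)"""
    assert len(a) == len(b) == 4
    # The product of two float32 values is exact in a double, so rounding it
    # once to float32 gives the correctly rounded single-precision product.
    return [to_f32(to_f32(x) * to_f32(y)) for x, y in zip(a, b)]

xmm = {2: [1.5, 2.0, -3.0, 0.1], 3: [2.0, 0.25, 4.0, 10.0]}  # hypothetical register file
xmm[4] = vmulps_128(xmm[2], xmm[3])   # VMULPS XMM4, XMM2, XMM3
print(xmm[4])  # [3.0, 0.5, -12.0, 1.0]
```

The last lane shows single-precision rounding at work. 0.1 is not exact in float32, and its product with 10 rounds to exactly 1.0.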

Sandy Bridge, Haswell: Sandy Bridge extends the register set to 256 bits, so vectors are twice the size. In 64-bit mode (x86-64), AVX/AVX2 has 16 registers (IA-32: 8). Haswell adds three-input instructions, a*b + c: fused multiply-add (FMA). 2 execution units with FMA --> 2X increase in ops/cycle.
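The 16 vs. 32 ops/cycle answer to “How?” can be reproduced with a short count. This sketch makes two assumptions. On Ivy Bridge, each core has one 256-bit multiply unit and one 256-bit add unit. On Haswell, each core has two 256-bit FMA units. `fma8_model` is a hypothetical name that models only the lane-wise a*b + c result. It does not model the single rounding that real FMA performs.

```python
def fma8_model(a, b, c):
    """Hypothetical model of an 8-wide single-precision FMA on 256-bit
    registers: each lane computes a*b + c. Real FMA rounds once to float32;
    this sketch uses Python doubles and does not model that rounding."""
    assert len(a) == len(b) == len(c) == 8
    return [x * y + z for x, y, z in zip(a, b, c)]

def sp_ops_per_cycle(lanes, fma_units=0, mul_units=0, add_units=0):
    """Single-precision ops/cycle for one core: an FMA lane counts as
    2 ops (multiply + add); a plain multiply or add lane counts as 1."""
    return lanes * (2 * fma_units + mul_units + add_units)

LANES = 256 // 32                                               # 8 floats per 256-bit register
ivy_bridge = sp_ops_per_cycle(LANES, mul_units=1, add_units=1)  # 16
haswell = sp_ops_per_cycle(LANES, fma_units=2)                  # 32

# Assumption: 12 Haswell cores at 2.7 GHz, the same core count and clock as the Ivy Bridge part.
print(haswell * 12 * 2.7e9 / 1e12)  # about 1.04 TeraOps/s
```

Doubling ops/cycle at the same core count and clock doubles the chip's peak, which matches the 0.52 vs. 1.04 TeraOps/s figures quoted for the E5-2600 v2.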

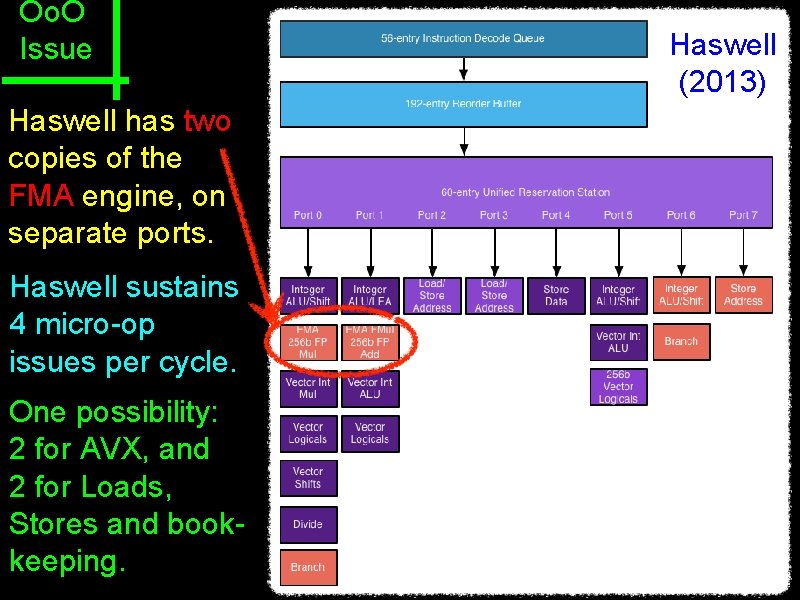

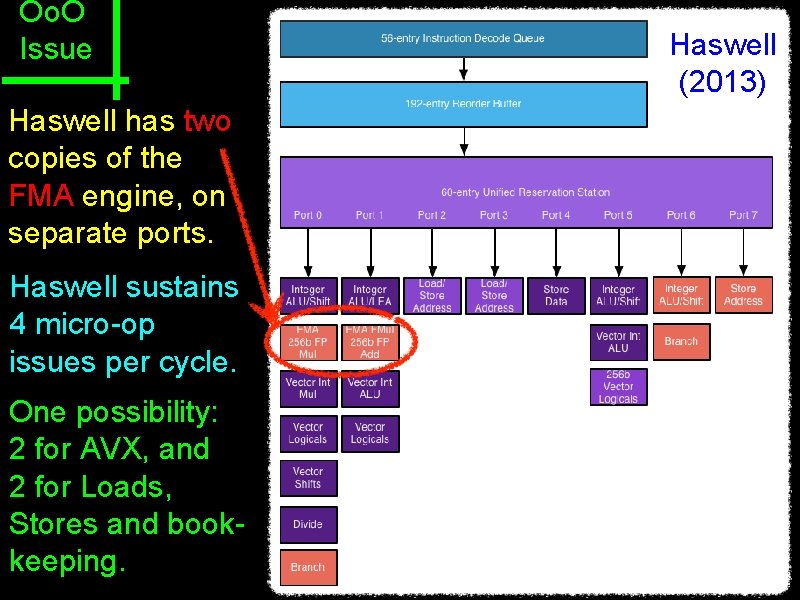

OoO issue: Haswell has two copies of the FMA engine, on separate ports. Haswell sustains 4 micro-op issues per cycle. One possibility: 2 for AVX, and 2 for loads, stores, and bookkeeping. Haswell (2013)

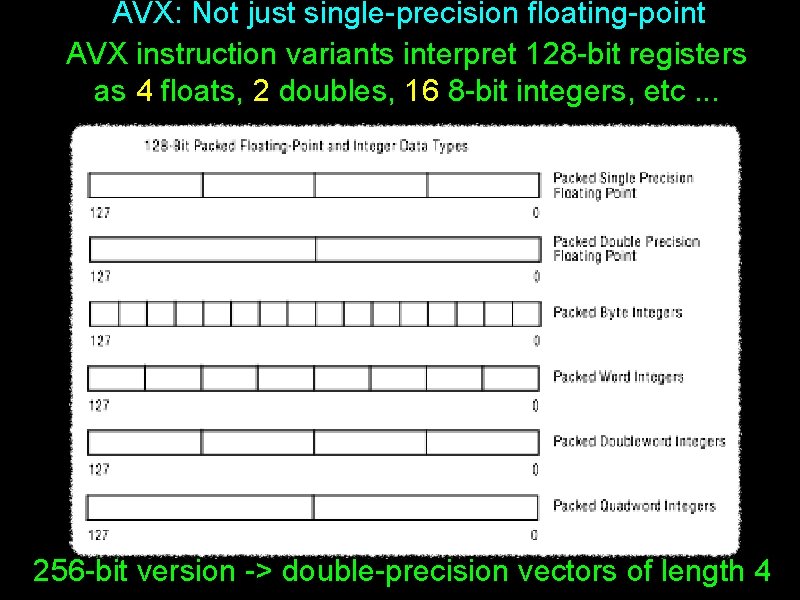

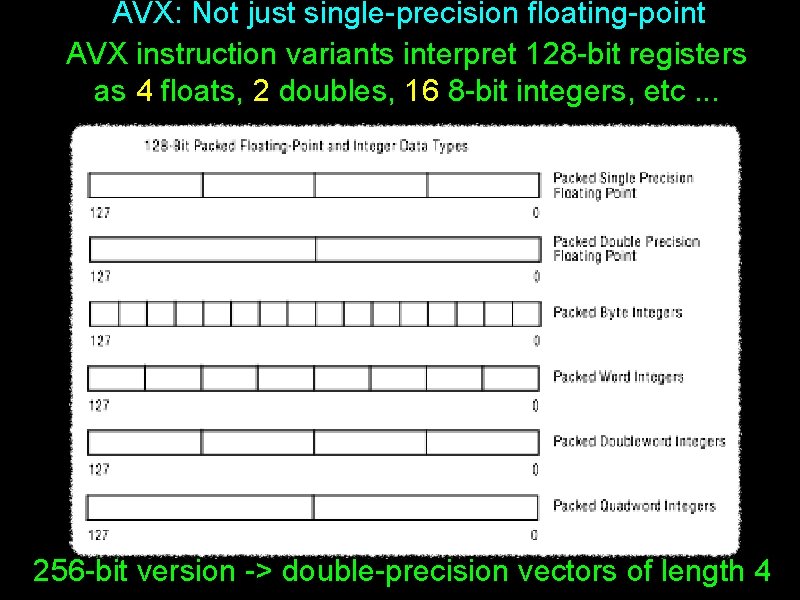

AVX: Not just single-precision floating point. AVX instruction variants interpret 128-bit registers as 4 floats, 2 doubles, 16 8-bit integers, etc. The 256-bit version gives double-precision vectors of length 4.
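The register bits do not change between these views. Only the instruction’s interpretation of them does. This Python sketch uses the standard `struct` module to read the same 16-byte image three ways, assuming x86 little-endian byte order. The function name `reinterpret_xmm` is hypothetical.

```python
import struct

def reinterpret_xmm(raw: bytes):
    """View one 128-bit register image three ways (little-endian, as on x86)."""
    assert len(raw) == 16
    return {
        "4 x float32": struct.unpack("<4f", raw),
        "2 x float64": struct.unpack("<2d", raw),
        "16 x int8": struct.unpack("<16b", raw),
    }

raw = struct.pack("<4f", 1.0, 2.0, 3.0, 4.0)   # write the register as 4 floats
views = reinterpret_xmm(raw)
for name, values in views.items():
    print(name, values)

# A 256-bit register holds 4 doubles.
ymm = struct.pack("<4d", 1.0, 2.0, 3.0, 4.0)
print(len(ymm) * 8, "bits")  # 256 bits
```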

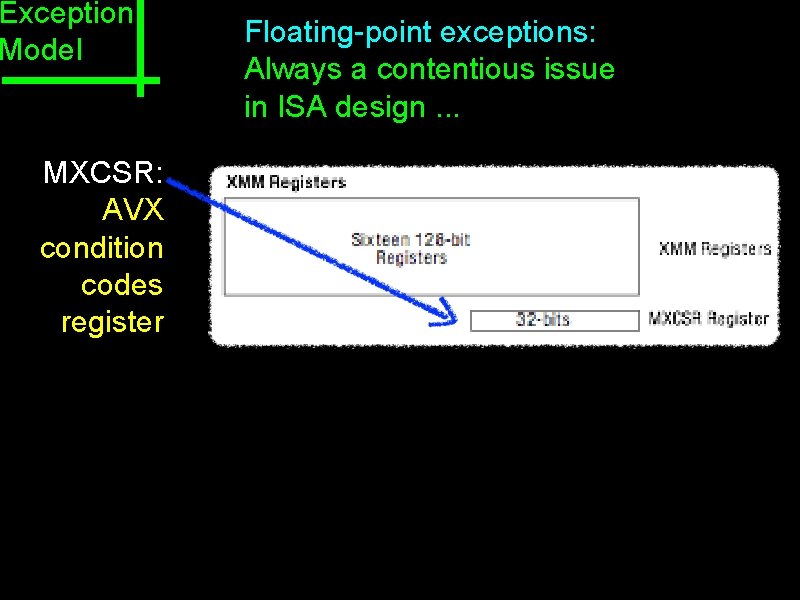

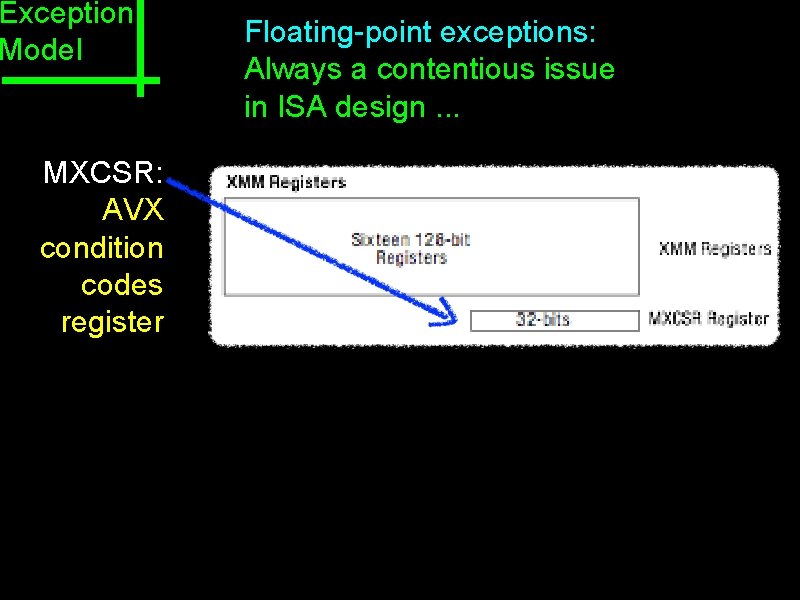

Exception model: MXCSR, the AVX control and status register (exception flags and masks). Floating-point exceptions: always a contentious issue in ISA design...

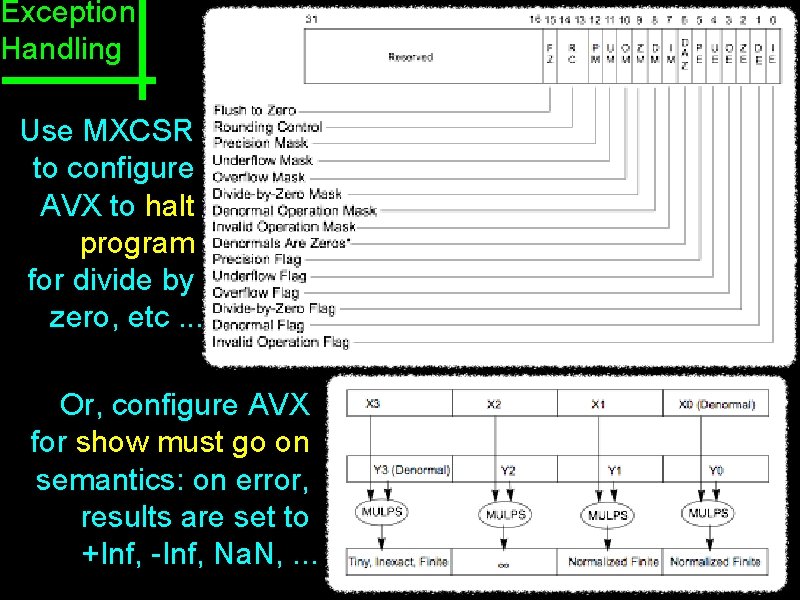

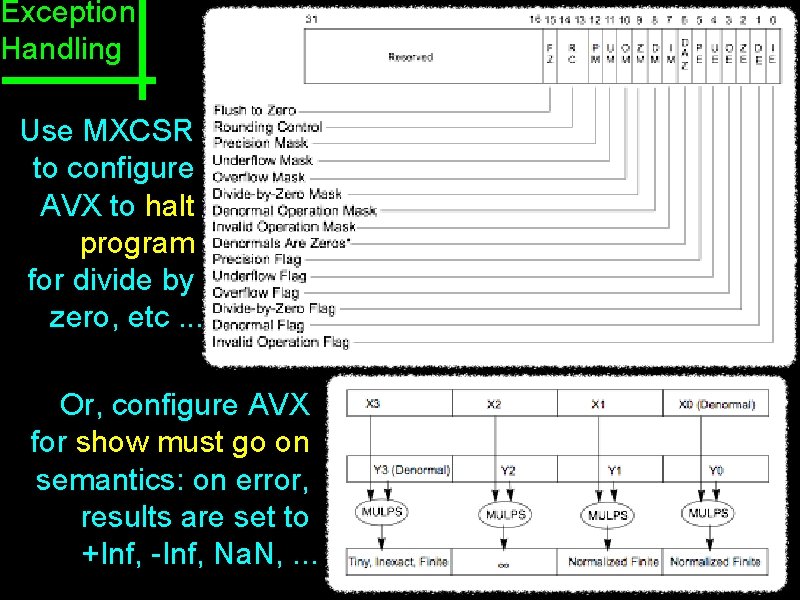

Exception handling: Use MXCSR to configure AVX to halt the program on divide by zero, etc. Or, configure AVX for “show must go on” semantics: on error, results are set to +Inf, -Inf, NaN, ...
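The two policies can be contrasted with a toy model of one exception class. This Python sketch is a hypothetical model, not an interface to MXCSR. The flag `trap_on_divide_by_zero` stands in for unmasking the divide-by-zero exception. The sketch follows IEEE 754 default results: 0/0 is an invalid operation (a separate exception class, not modeled here) that yields NaN, and Inf/0 is exactly Inf.

```python
import math

class FloatingPointTrap(Exception):
    """Raised when the (modeled) divide-by-zero exception is unmasked."""

def divide(a, b, trap_on_divide_by_zero=False):
    """Toy model of masked vs. unmasked divide-by-zero handling."""
    if b == 0.0 and math.isfinite(a) and a != 0.0:
        # IEEE divide-by-zero: finite nonzero value divided by (signed) zero.
        if trap_on_divide_by_zero:
            raise FloatingPointTrap(f"{a} / {b}")   # "halt the program"
        return math.copysign(math.inf, a) * math.copysign(1.0, b)  # show goes on
    if b == 0.0:
        if a == 0.0 or math.isnan(a):
            return math.nan   # 0/0 or NaN/0: invalid operation, default result NaN
        return math.copysign(math.inf, a) * math.copysign(1.0, b)  # Inf/0 is exact
    return a / b

print(divide(1.0, 0.0), divide(-1.0, 0.0), divide(1.0, -0.0), divide(0.0, 0.0))
# inf -inf -inf nan
```

With the masked default, the computation keeps running, and Inf or NaN values propagate into later results. With the unmasked setting, the first bad division stops it.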

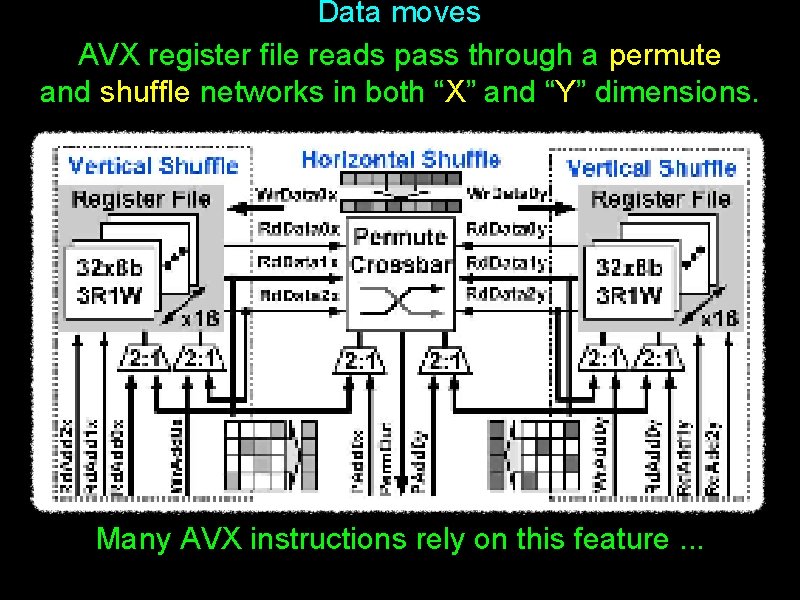

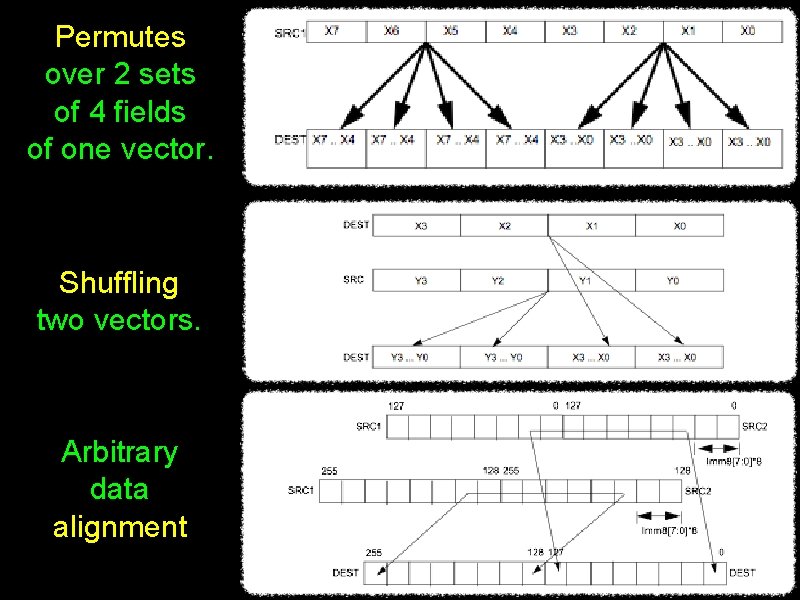

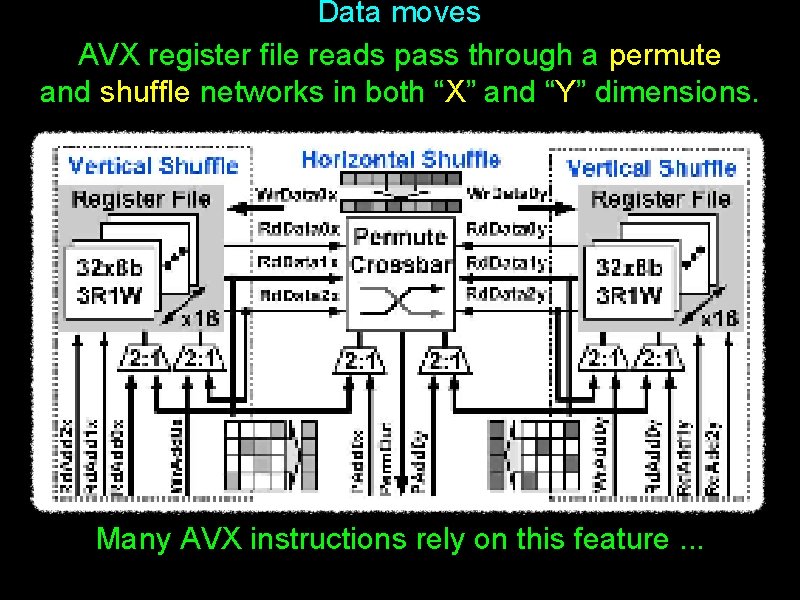

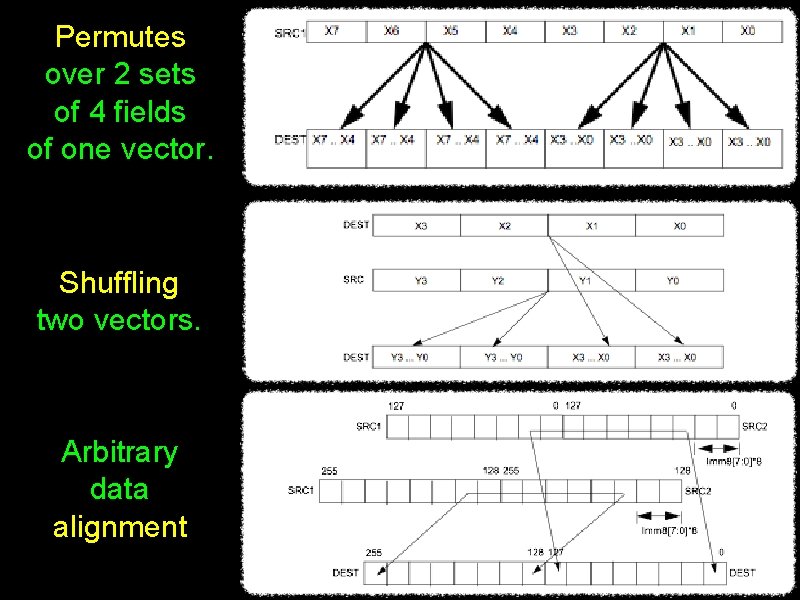

Data moves: AVX register file reads pass through permute and shuffle networks in both “X” and “Y” dimensions. Many AVX instructions rely on this feature...

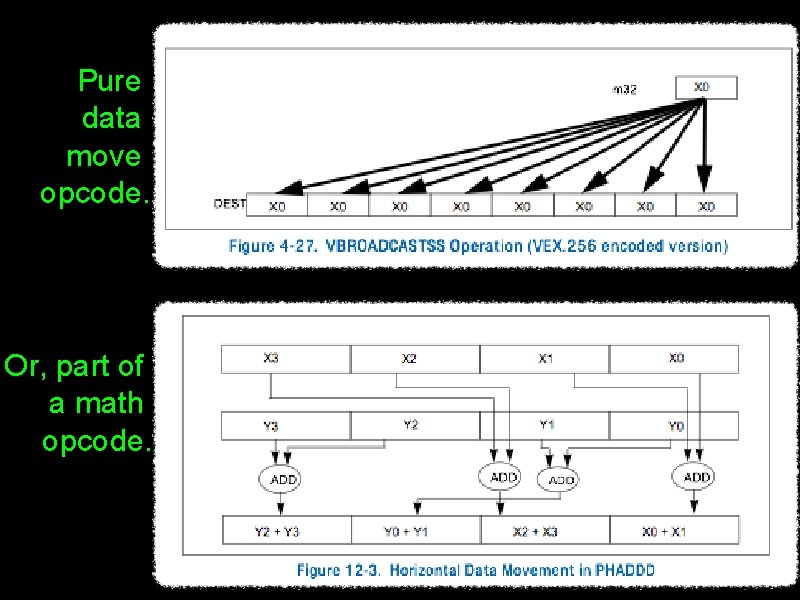

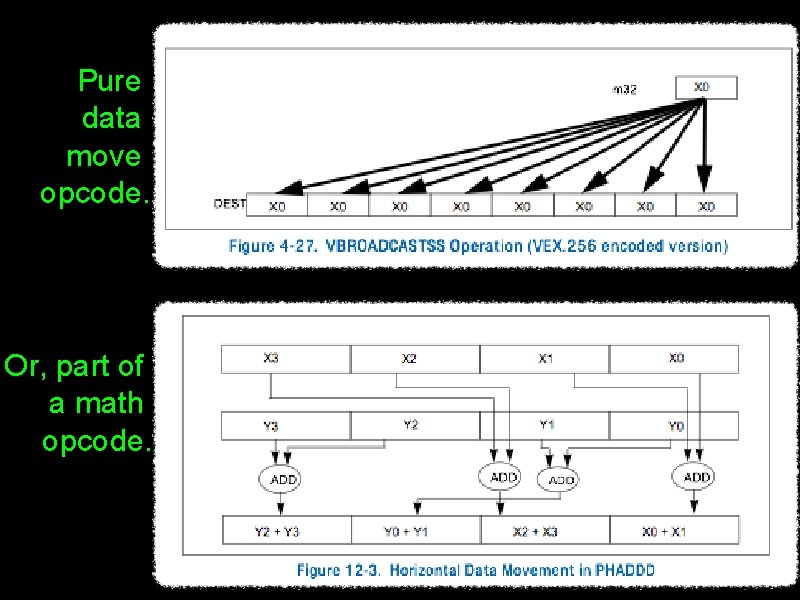

Pure data move opcode. Or, part of a math opcode.

Permutes over 2 sets of 4 fields of one vector. Shuffling two vectors. Arbitrary data alignment
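The two pictured patterns can be modeled as index selection driven by 2-bit fields of an 8-bit immediate. The two-vector shuffle follows the SHUFPS selection rule: two result elements come from the first source and two from the second. The single-vector permute over 2 sets of 4 fields applies the same selectors to each 128-bit half, as the immediate form of VPERMILPS does on 256-bit registers. Both functions are hypothetical models, not Intel’s definitions, and they ignore masking and encoding details.

```python
def shufps(a, b, imm8):
    """Two-vector shuffle on one 128-bit lane: result elements 0-1 are
    picked from a, elements 2-3 from b, by 2-bit fields of imm8."""
    return [
        a[imm8 & 3],
        a[(imm8 >> 2) & 3],
        b[(imm8 >> 4) & 3],
        b[(imm8 >> 6) & 3],
    ]

def permute_in_lanes(v, imm8):
    """Permute of one 256-bit vector viewed as 2 sets of 4 fields: the same
    2-bit selectors are applied independently within each 4-element half."""
    out = []
    for base in (0, 4):
        lane = v[base:base + 4]
        out += [lane[(imm8 >> (2 * i)) & 3] for i in range(4)]
    return out

print(shufps([0, 1, 2, 3], [10, 11, 12, 13], 0x1B))  # [3, 2, 11, 10]
print(permute_in_lanes(list(range(8)), 0x1B))         # [3, 2, 1, 0, 7, 6, 5, 4]
```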

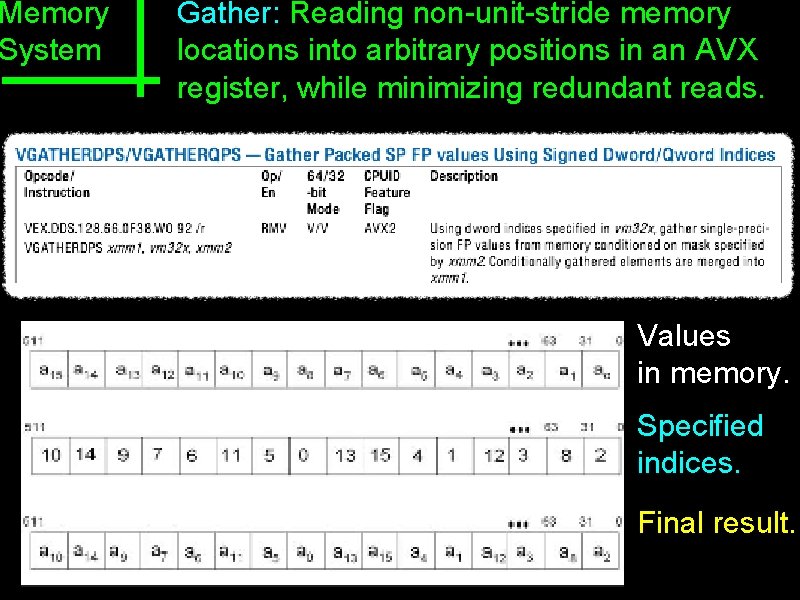

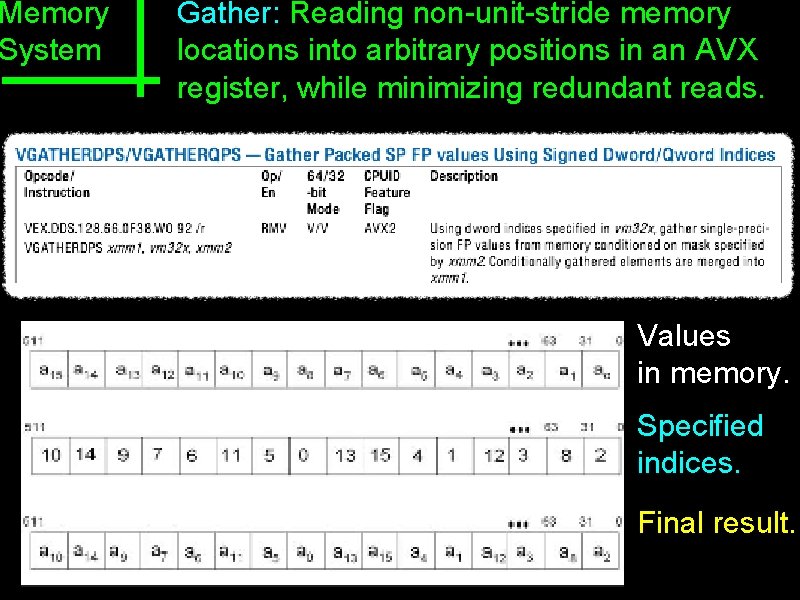

Memory system: Gather reads non-unit-stride memory locations into arbitrary positions in an AVX register, while minimizing redundant reads. (Figure: values in memory, specified indices, final result.)
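A gather’s result is defined lane by lane as `result[i] = memory[indices[i]]`. The slide does not say how the hardware avoids redundant reads. As an illustration only, this Python sketch fetches each distinct index once and reuses the fetched value for repeated lanes. The function name, memory contents, and indices are hypothetical.

```python
def gather(memory, indices):
    """Model of a gather: result[i] = memory[indices[i]]. Each distinct
    address is read once; duplicate lanes reuse the value already fetched.
    (This is an illustration, not the hardware's actual mechanism.)"""
    fetched = {}
    result = []
    for idx in indices:
        if idx not in fetched:
            fetched[idx] = memory[idx]
        result.append(fetched[idx])
    return result, len(fetched)

memory = [100 + i for i in range(16)]
indices = [7, 2, 2, 5, 0, 7, 3, 1]   # 8 lanes, non-unit stride, with repeats
values, reads = gather(memory, indices)
print(values, reads)  # [107, 102, 102, 105, 100, 107, 103, 101] 6
```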

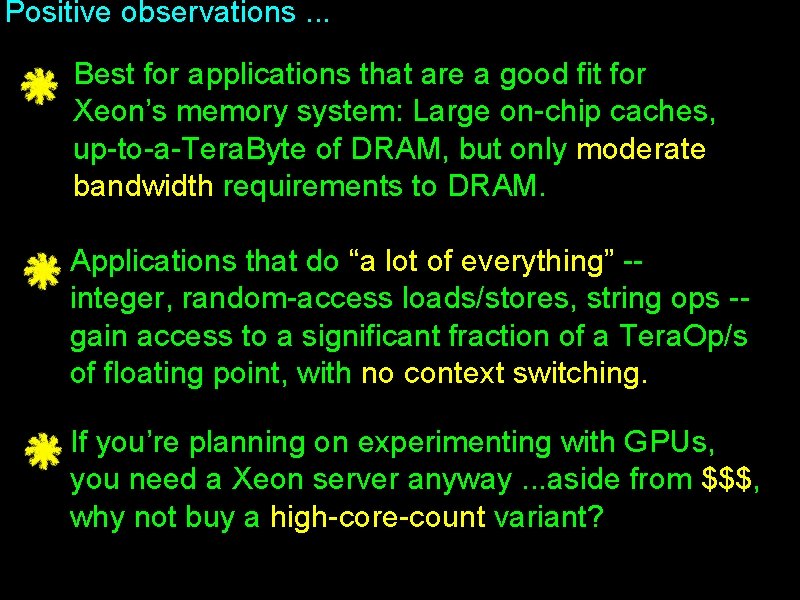

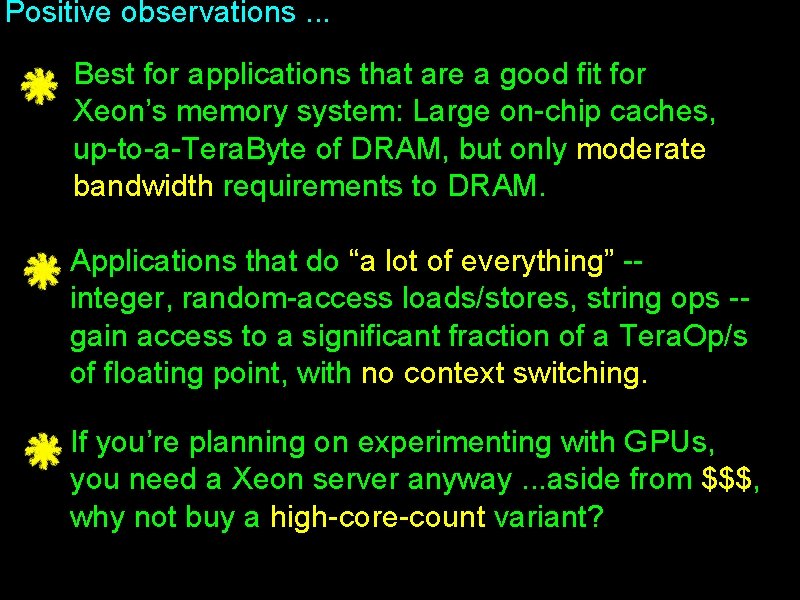

Positive observations...
- Best for applications that are a good fit for Xeon’s memory system: large on-chip caches, up to a TeraByte of DRAM, but only moderate bandwidth requirements to DRAM.
- Applications that do “a lot of everything” (integer, random-access loads/stores, string ops) gain access to a significant fraction of a TeraOp/s of floating point, with no context switching.
- If you’re planning on experimenting with GPUs, you need a Xeon server anyway... aside from $$$, why not buy a high-core-count variant?

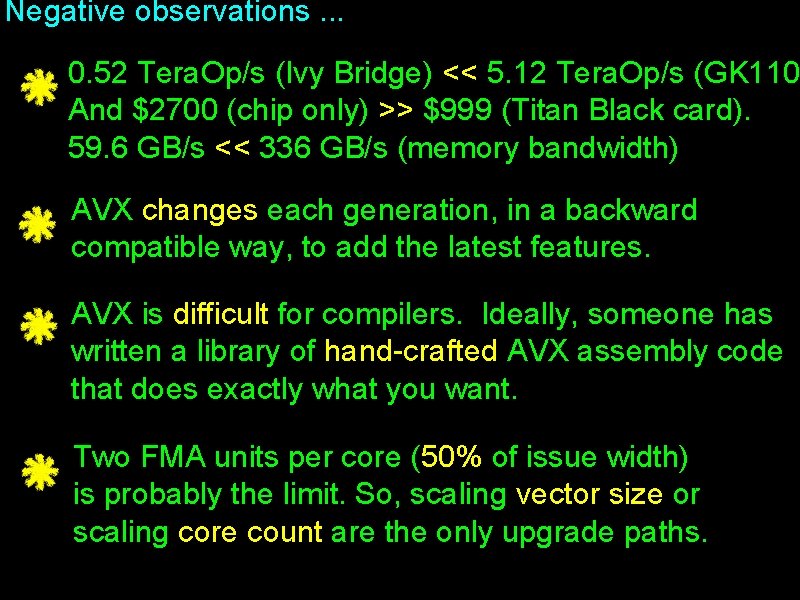

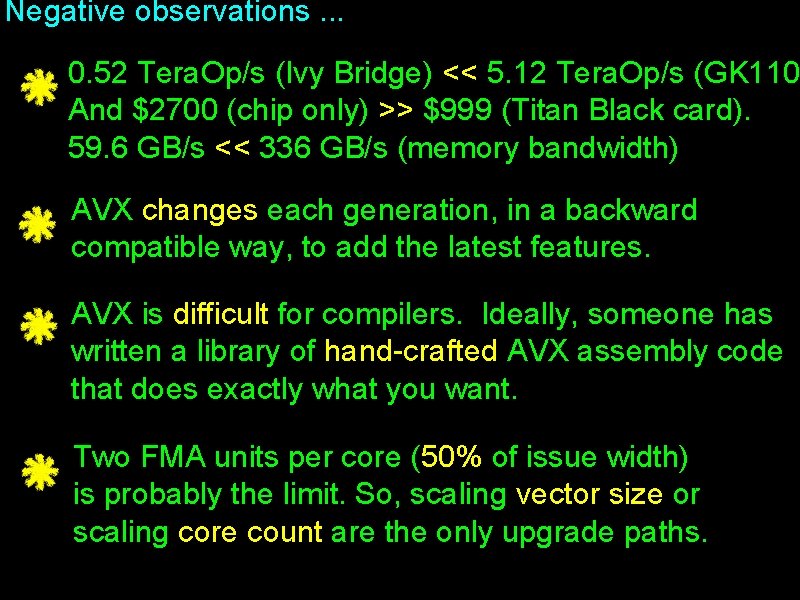

Negative observations...
- 0.52 TeraOp/s (Ivy Bridge) << 5.12 TeraOp/s (GK110).
- And $2700 (chip only) >> $999 (Titan Black card).
- 59.6 GB/s << 336 GB/s (memory bandwidth).
- AVX changes each generation, in a backward-compatible way, to add the latest features.
- AVX is difficult for compilers. Ideally, someone has written a library of hand-crafted AVX assembly code that does exactly what you want.
- Two FMA units per core (50% of issue width) is probably the limit. So, scaling vector size or scaling core count are the only upgrade paths.
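Taking the ratios of the figures on this slide makes the size of each gap explicit. As on the slide, the Xeon’s chip-only price is compared against the price of a complete GPU card. The ops-per-dollar ratio is simply the product of the peak ratio and the price ratio.

```python
# Ratios behind the negative observations, using the numbers on this slide.
xeon = {"peak_tops": 0.52, "price_usd": 2700, "dram_gb_per_s": 59.6}    # Ivy Bridge, chip only
titan = {"peak_tops": 5.12, "price_usd": 999, "dram_gb_per_s": 336.0}   # GK110 Titan Black card

peak_ratio = titan["peak_tops"] / xeon["peak_tops"]               # GPU peak advantage
price_ratio = xeon["price_usd"] / titan["price_usd"]              # Xeon price premium
bandwidth_ratio = titan["dram_gb_per_s"] / xeon["dram_gb_per_s"]  # GPU DRAM bandwidth advantage
ops_per_dollar_ratio = peak_ratio * price_ratio

print(f"peak {peak_ratio:.1f}x, price {price_ratio:.1f}x, "
      f"bandwidth {bandwidth_ratio:.1f}x, ops per dollar {ops_per_dollar_ratio:.1f}x")
```

The bandwidth ratio of about 5.6X is the figure quoted for GK110 vs. the Ivy Bridge Xeon on the later bandwidth slide.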

Break

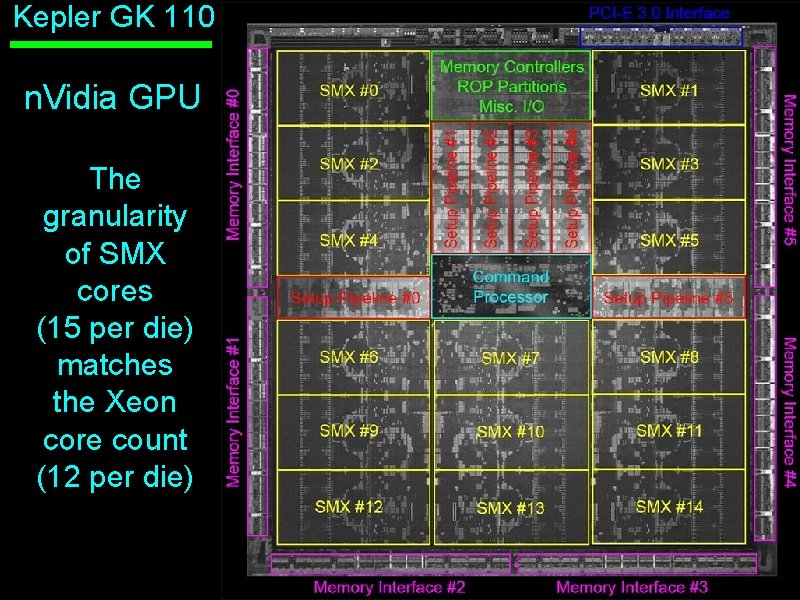

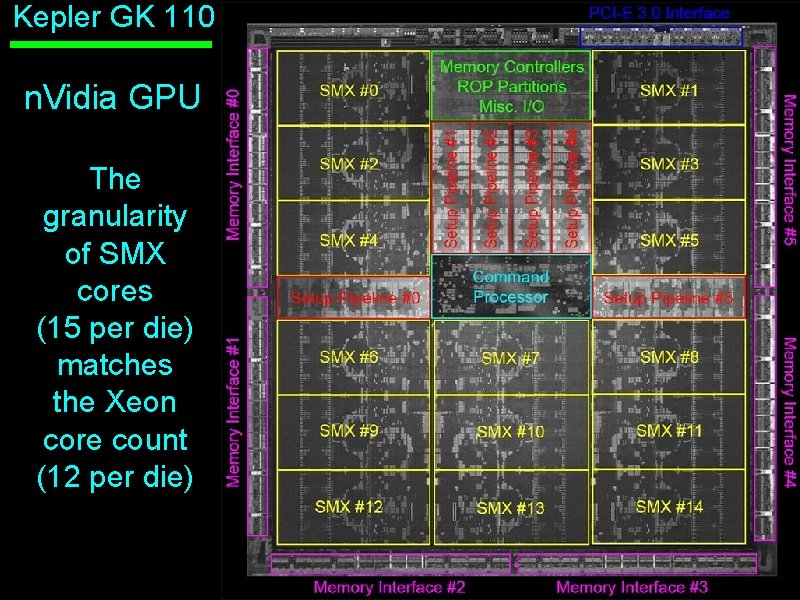

Kepler GK110 nVidia GPU: The granularity of SMX cores (15 per die) matches the Xeon core count (12 per die).

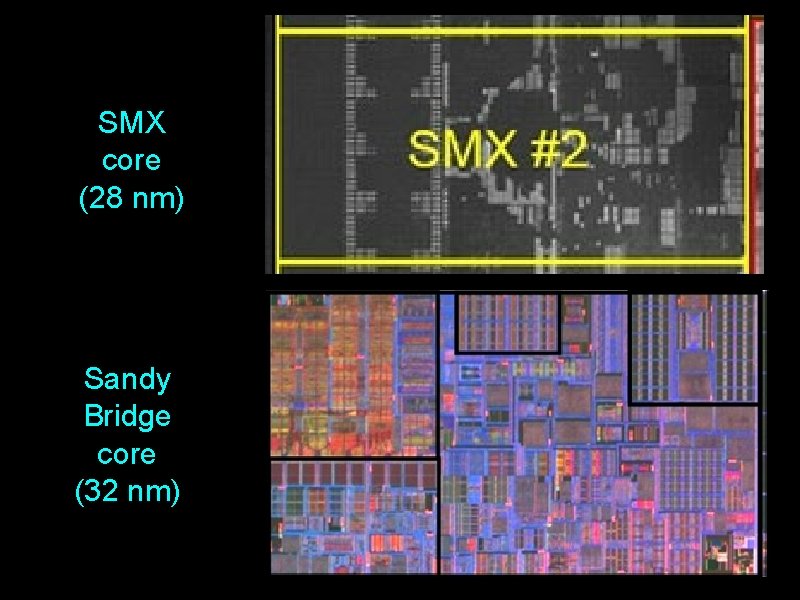

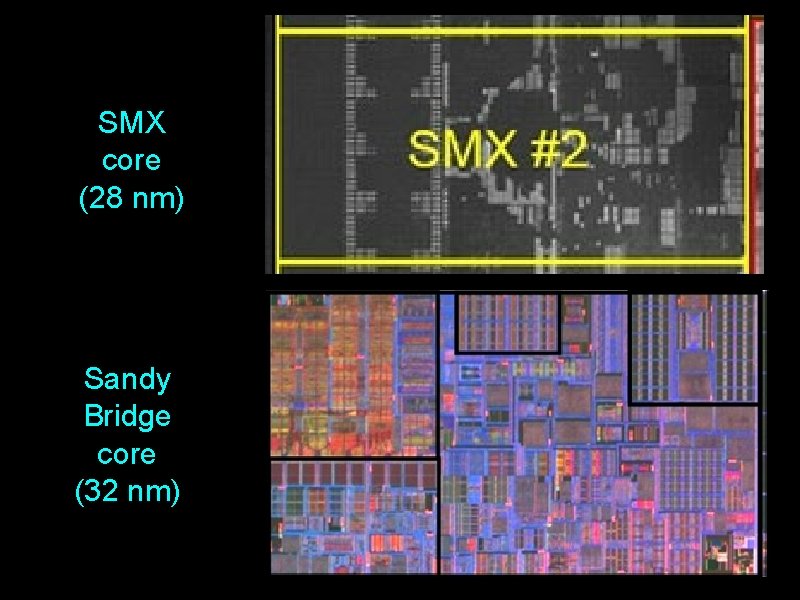

SMX core (28 nm) Sandy Bridge core (32 nm)

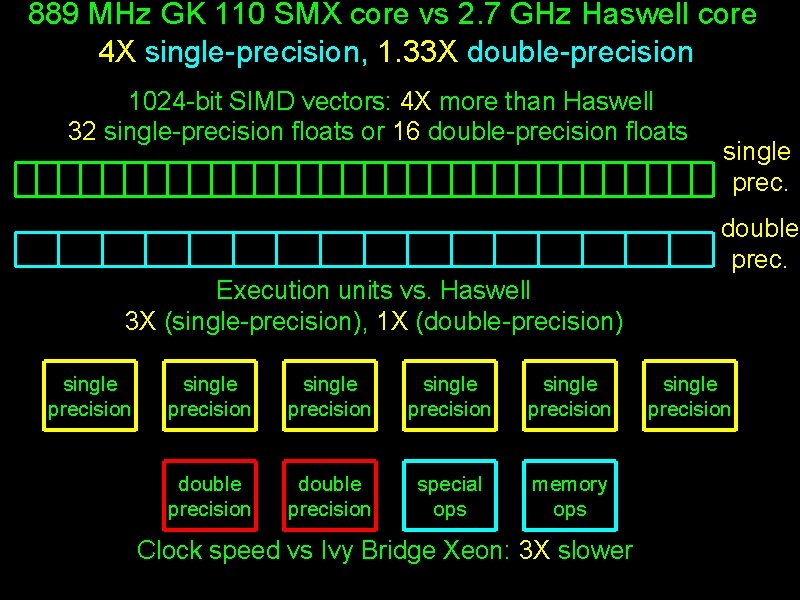

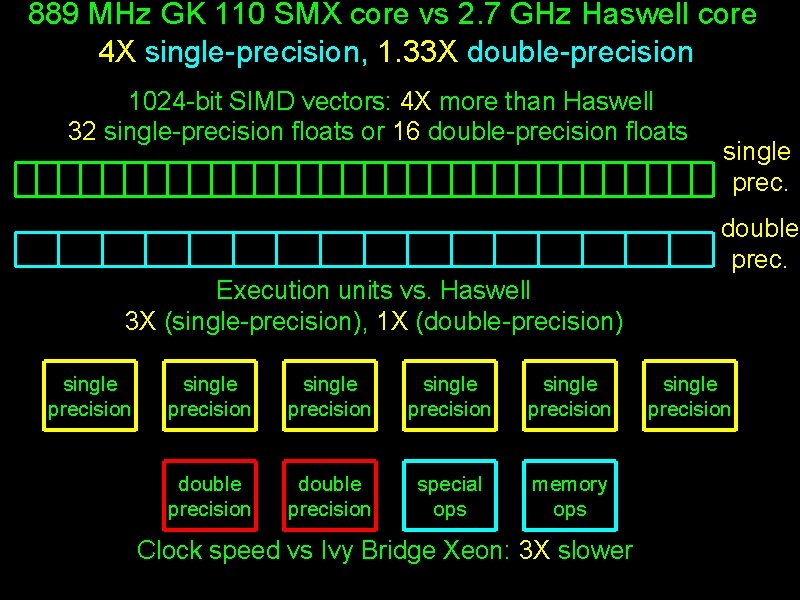

889 MHz GK110 SMX core vs. 2.7 GHz Haswell core: 4X single-precision, 1.33X double-precision throughput.
- 1024-bit SIMD vectors: 4X more than Haswell (32 single-precision floats or 16 double-precision floats).
- Execution units vs. Haswell: 3X (single-precision), 1X (double-precision).
- Clock speed vs. Ivy Bridge Xeon: 3X slower.
(Figure labels: single precision, double precision, special ops, memory ops.)
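The 4X and 1.33X throughput figures follow from multiplying the three ratios on the slide: execution units, vector width, and clock. This derivation assumes the comparison uses Haswell’s 256-bit AVX vectors and the 2.7 GHz clock quoted above.

```latex
\begin{aligned}
\frac{\text{SMX throughput}}{\text{Haswell core throughput}}
  &= \frac{\text{units}_{\text{SMX}}}{\text{units}_{\text{Haswell}}}
     \times \frac{1024\ \text{bits}}{256\ \text{bits}}
     \times \frac{0.889\ \text{GHz}}{2.7\ \text{GHz}} \\
\text{single precision:}\quad & 3 \times 4 \times 0.329 \approx 3.95 \approx 4\text{X} \\
\text{double precision:}\quad & 1 \times 4 \times 0.329 \approx 1.32 \approx 1.33\text{X}
\end{aligned}
```

Using the rounded “3X slower” clock ratio instead of 0.329 gives exactly 4X and 4/3 ≈ 1.33X.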

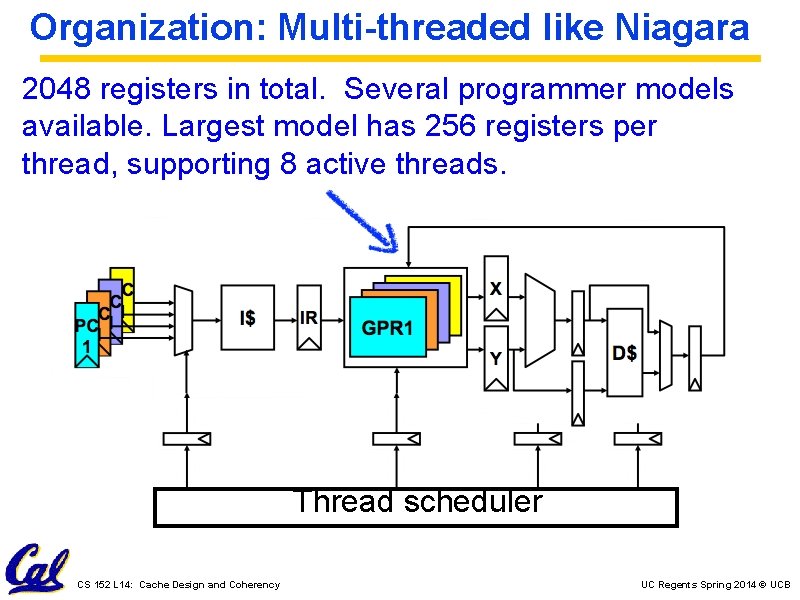

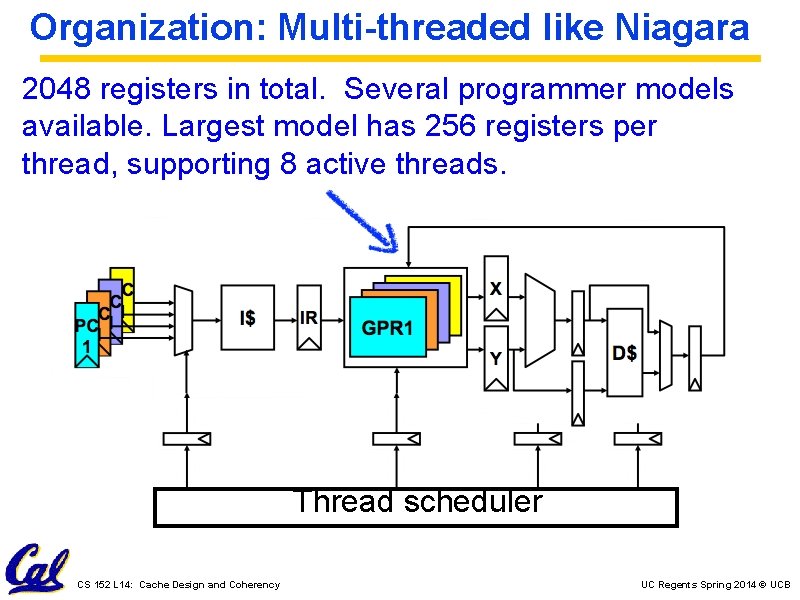

Organization: Multi-threaded like Niagara. 2048 registers in total. Several programmer models are available; the largest model has 256 registers per thread, supporting 8 active threads. (Figure label: thread scheduler.) CS 152 L14: Cache Design and Coherency UC Regents Spring 2014 © UCB
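The trade-off between registers per thread and active threads is a division over a fixed register file: 2048 / 256 = 8. The slide gives only the 256-register model. The smaller per-thread sizes in this Python sketch are hypothetical points on the same curve, and the sketch ignores any other per-SMX limits on active threads.

```python
TOTAL_REGISTERS = 2048  # per SMX, as counted on the slide

def max_active_threads(registers_per_thread, total=TOTAL_REGISTERS):
    """Threads the register file can hold at once if each thread owns a fixed slice."""
    return total // registers_per_thread

# 256 is the slide's largest model; 32, 64, and 128 are hypothetical comparison points.
for regs in (32, 64, 128, 256):
    print(regs, "registers/thread ->", max_active_threads(regs), "threads")
```

More registers per thread means fewer threads available to the scheduler for hiding latency. This is the same trade-off a multi-threaded design like Niagara faces.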

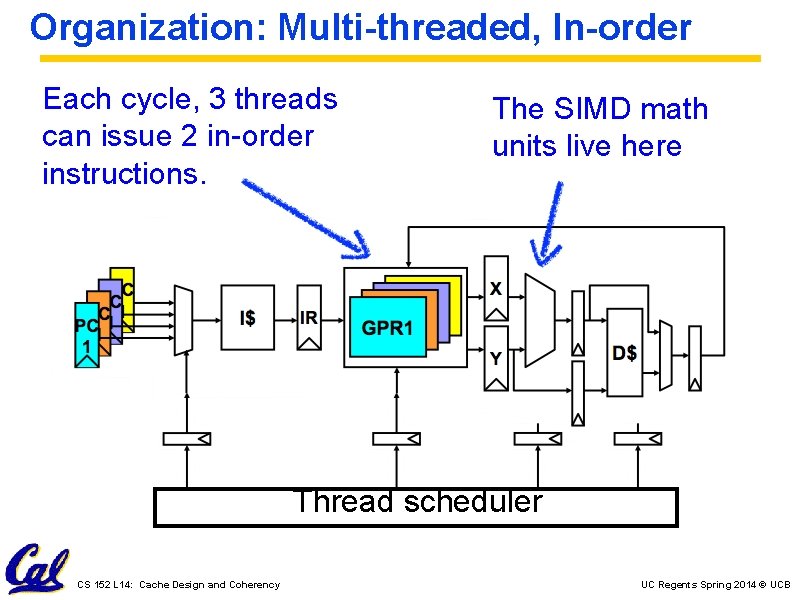

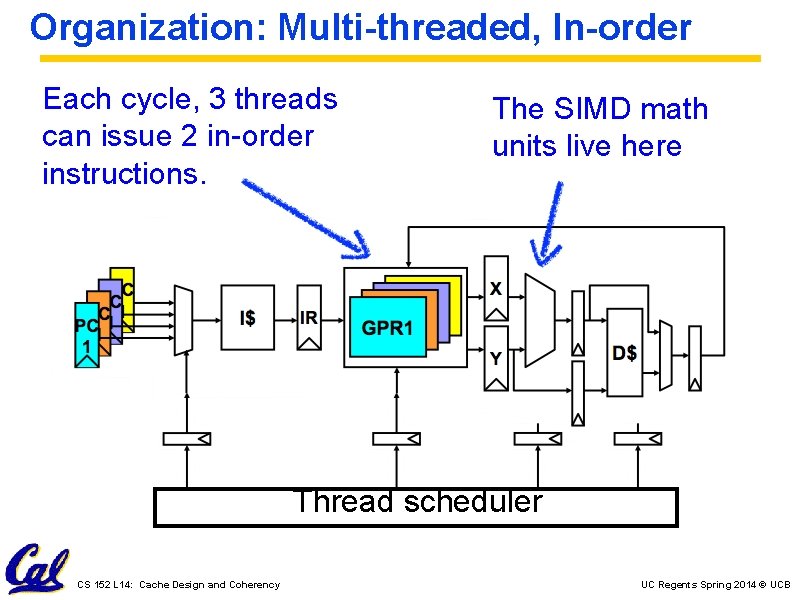

Organization: Multi-threaded, in-order. Each cycle, 3 threads can issue 2 in-order instructions. The SIMD math units live here. (Figure label: thread scheduler.)

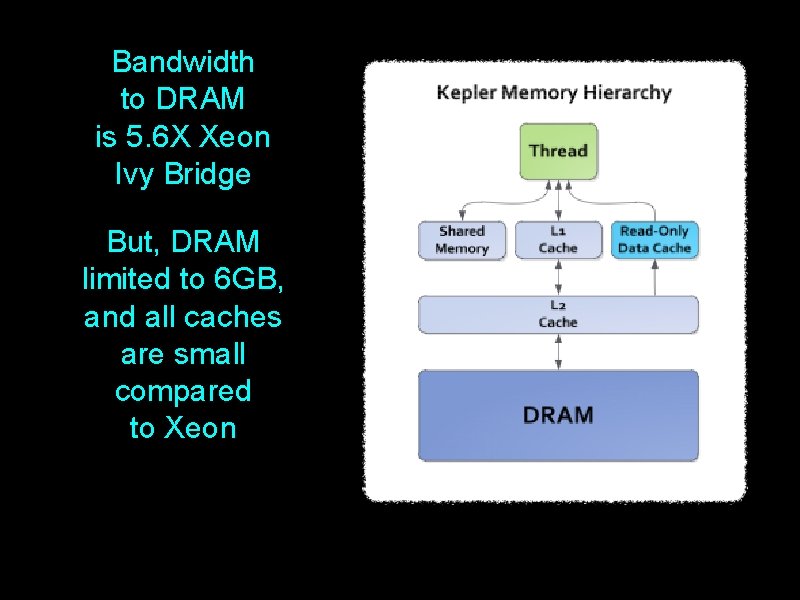

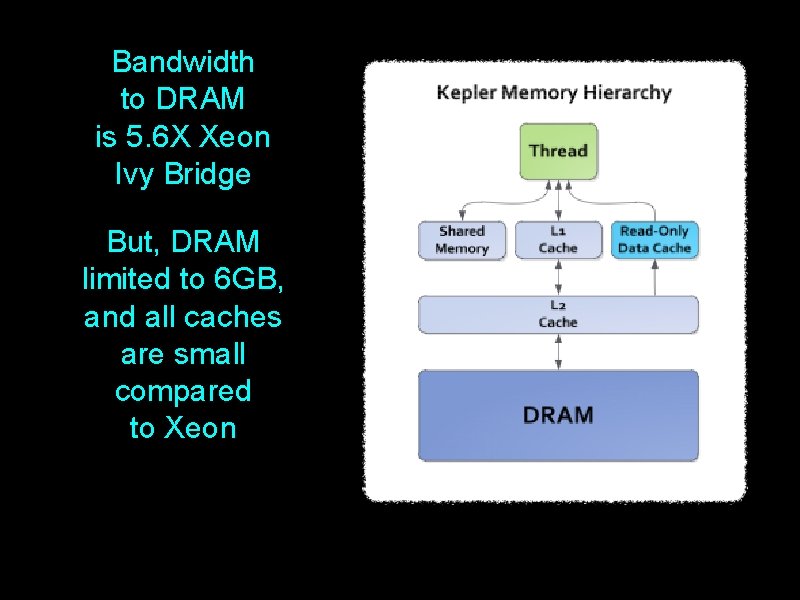

Bandwidth to DRAM is 5.6X that of the Ivy Bridge Xeon. But DRAM is limited to 6 GB, and all caches are small compared to the Xeon’s.


On Thursday: to be continued... Have fun in section!