CS 152 Computer Architecture and Engineering Lecture 19

- Slides: 40

CS 152 Computer Architecture and Engineering Lecture 19 – Advanced Processors III. 2006-11-2. John Lazzaro (www.cs.berkeley.edu/~lazzaro). TAs: Udam Saini and Jue Sun. www-inst.eecs.berkeley.edu/~cs152/ CS 152 L19: Advanced Processors III UC Regents Fall 2006 © UCB

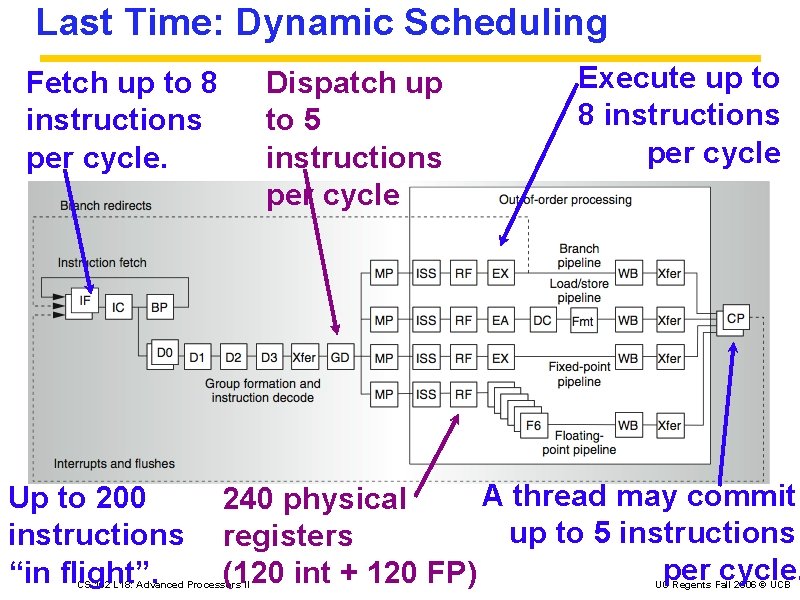

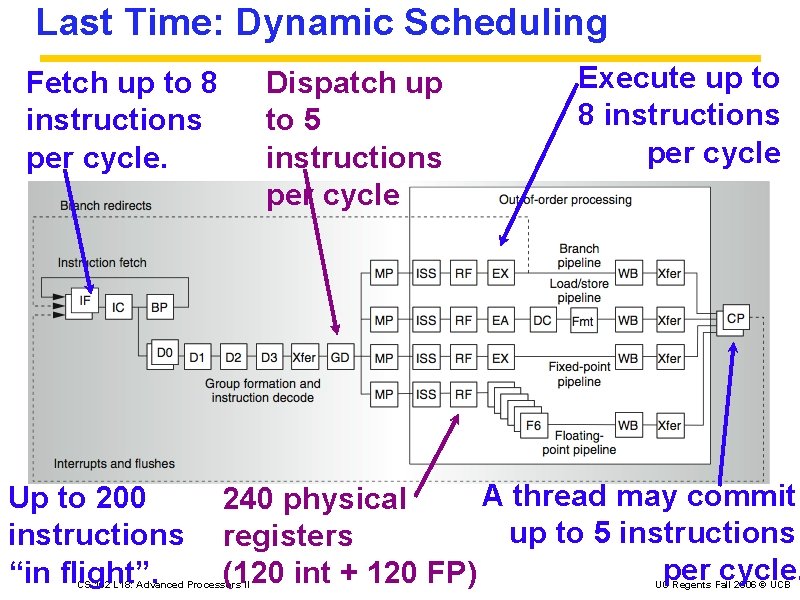

Last Time: Dynamic Scheduling. Fetch up to 8 instructions per cycle. Up to 200 instructions “in flight”. Dispatch up to 5 instructions per cycle. Execute up to 8 instructions per cycle. A thread may commit up to 5 instructions per cycle. 240 physical registers (120 int + 120 FP). CS 152 L18: Advanced Processors II UC Regents Fall 2006 © UCB

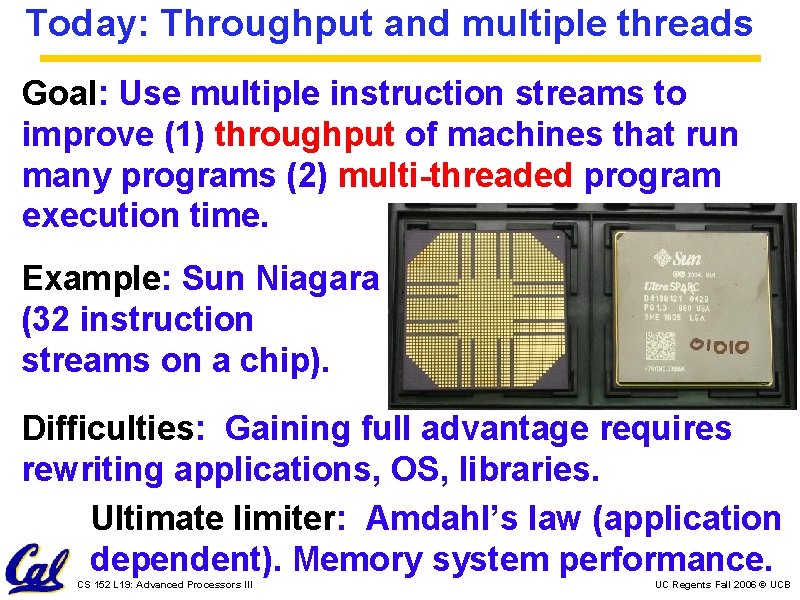

Today: Throughput and multiple threads. Goal: Use multiple instruction streams to improve (1) throughput of machines that run many programs and (2) multi-threaded program execution time. Example: Sun Niagara (32 instruction streams on a chip). Difficulties: Gaining full advantage requires rewriting applications, OS, libraries. Ultimate limiter: Amdahl’s law (application dependent). Memory system performance.
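Amdahl’s law, named above as the ultimate limiter, can be made concrete with a short calculation. The sketch below is illustrative only: the function name `amdahl_speedup` and the 90% parallel fraction are assumptions, not figures from the lecture. The stream count of 32 matches the Niagara example.

```python
def amdahl_speedup(parallel_fraction: float, n_streams: int) -> float:
    """Speedup when only `parallel_fraction` of the work scales across n_streams."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_streams)


if __name__ == "__main__":
    # Assumed workload: 90% of execution time is parallelizable.
    for n in (1, 2, 8, 32):
        print(f"{n:2d} streams: {amdahl_speedup(0.9, n):.2f}x")
```

Under this assumption, 32 streams yield a speedup of only about 7.8x, and no number of streams can exceed 10x, because the serial 10% never shrinks.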

Throughput Computing. Multithreading: Interleave instructions from separate threads on the same hardware. Seen by OS as several CPUs. Multi-core: Integrating several processors that (partially) share a memory system on the same chip.

Multi-Threading (Static Pipelines)

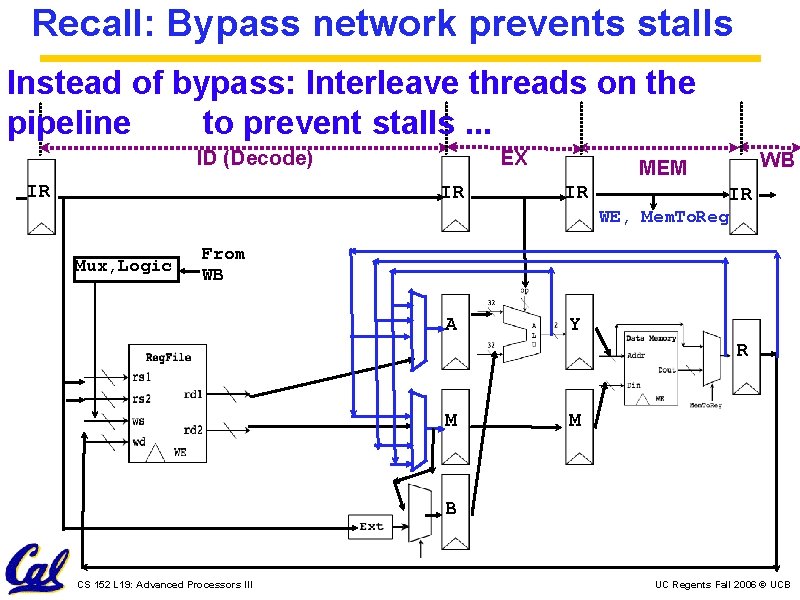

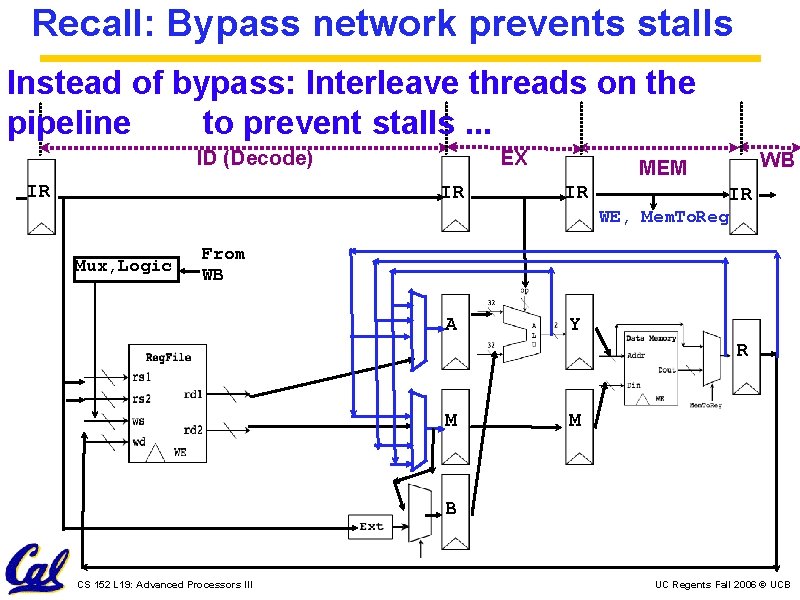

Recall: Bypass network prevents stalls. Instead of bypass: Interleave threads on the pipeline to prevent stalls... Figure labels: pipeline stages ID (Decode), EX, MEM, WB, each with its IR; WE, MemToReg; Mux, Logic; From WB.
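The interleaving idea can be sketched as a round-robin issue schedule. This is a minimal model, not the lecture's design: the function names are hypothetical, and it assumes a 5-stage pipeline in which an instruction's write-back happens 4 cycles after its fetch. With 4 threads, two instructions from the same thread are always 4 cycles apart, so a result is written back before the next instruction of that thread reads its registers.

```python
def interleave(threads, n_cycles):
    """Round-robin issue: cycle c issues the next instruction of thread c % len(threads)."""
    next_index = [0] * len(threads)
    schedule = []
    for cycle in range(n_cycles):
        t = cycle % len(threads)
        i = next_index[t]
        if i < len(threads[t]):
            schedule.append((cycle, t, threads[t][i]))
            next_index[t] += 1
        else:
            # This thread has run out of work; its slot is wasted.
            schedule.append((cycle, t, "nop"))
    return schedule


def min_same_thread_gap(schedule):
    """Smallest distance in cycles between two issues from the same thread."""
    last_seen = {}
    gaps = []
    for cycle, t, _ in schedule:
        if t in last_seen:
            gaps.append(cycle - last_seen[t])
        last_seen[t] = cycle
    return min(gaps)


if __name__ == "__main__":
    programs = [["a0", "a1"], ["b0", "b1"], ["c0", "c1"], ["d0", "d1"]]
    for entry in interleave(programs, 8):
        print(entry)
```

The cost is visible in the same model: each thread receives only every fourth issue slot, so a single thread runs at a quarter of the pipeline's rate.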

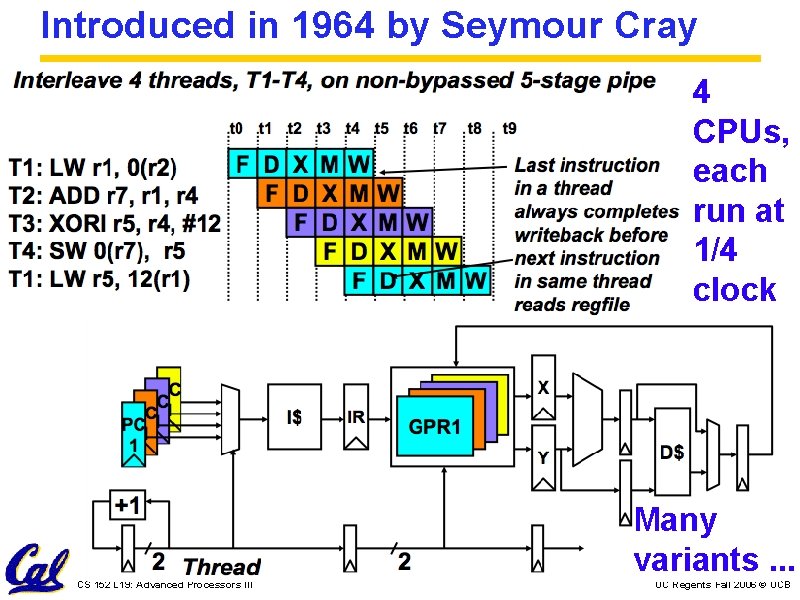

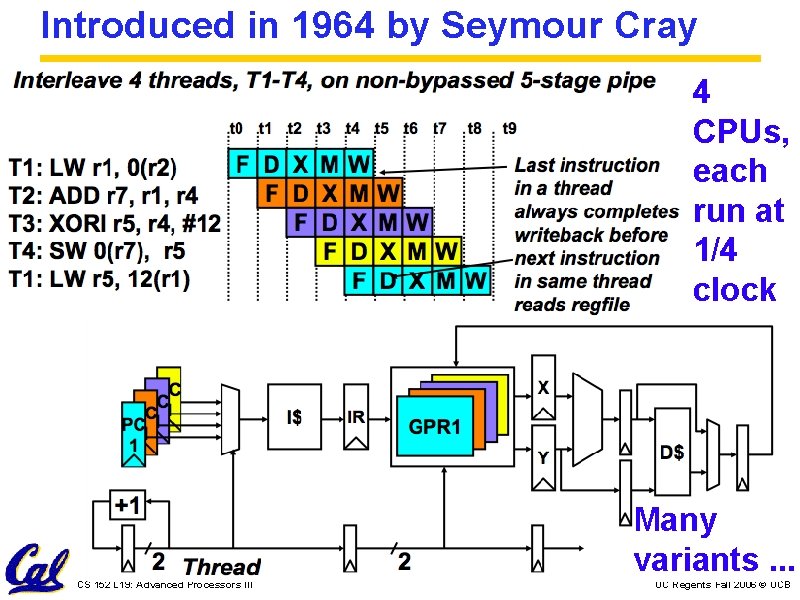

Introduced in 1964 by Seymour Cray. 4 CPUs, each running at 1/4 clock. Many variants...

Multi-Threading (Dynamic Scheduling)

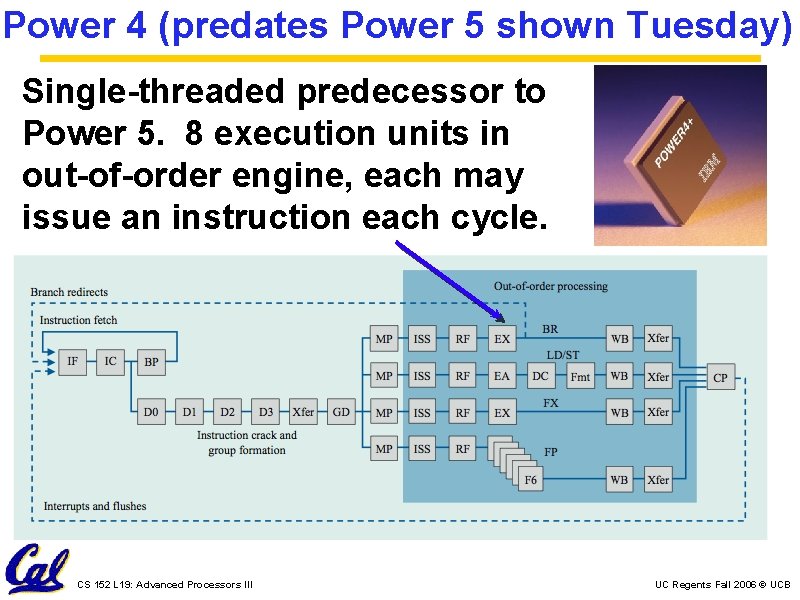

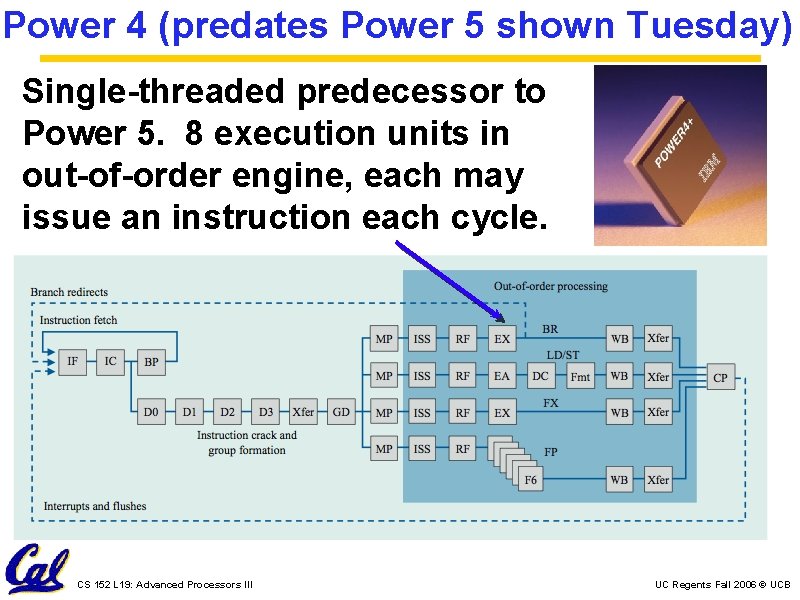

Power 4 (predates Power 5 shown Tuesday). Single-threaded predecessor to Power 5. 8 execution units in out-of-order engine; each may issue an instruction each cycle.

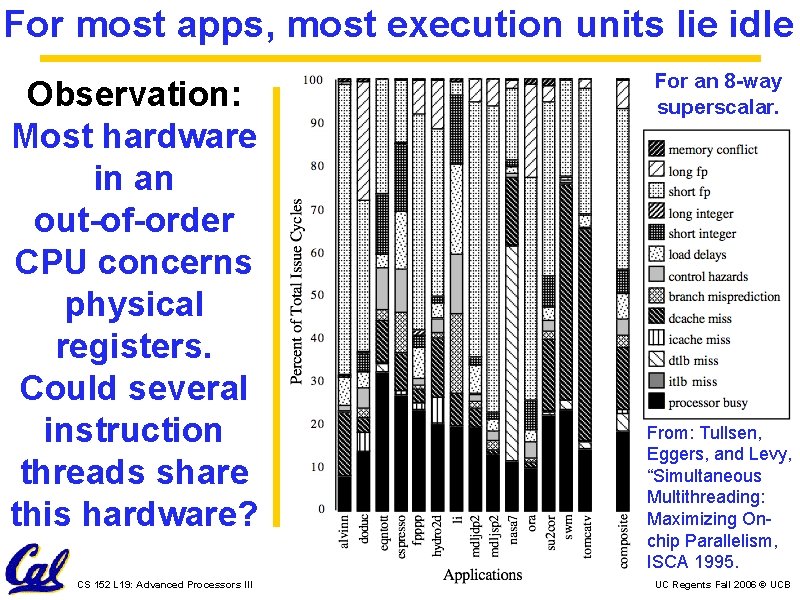

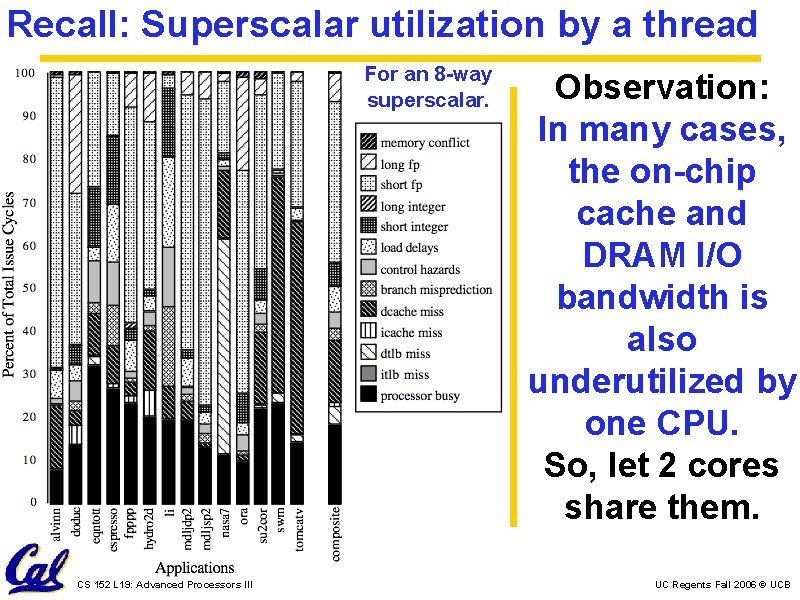

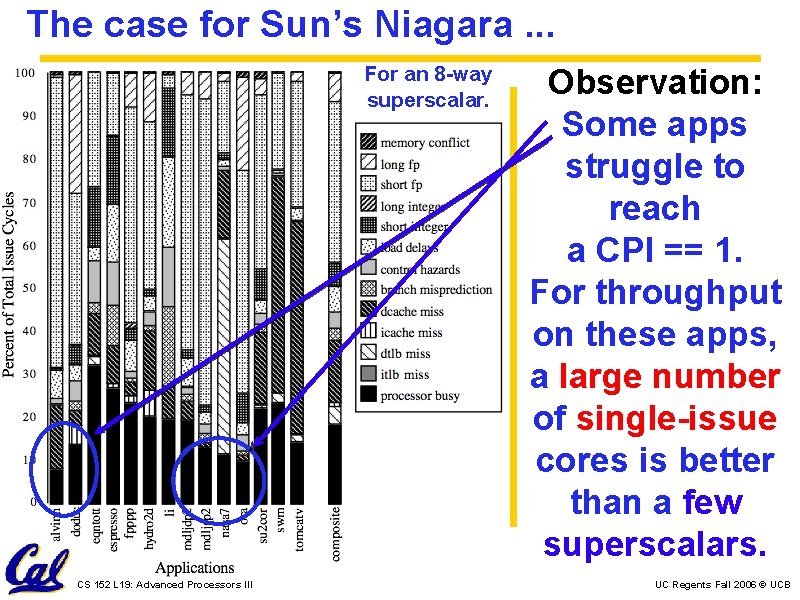

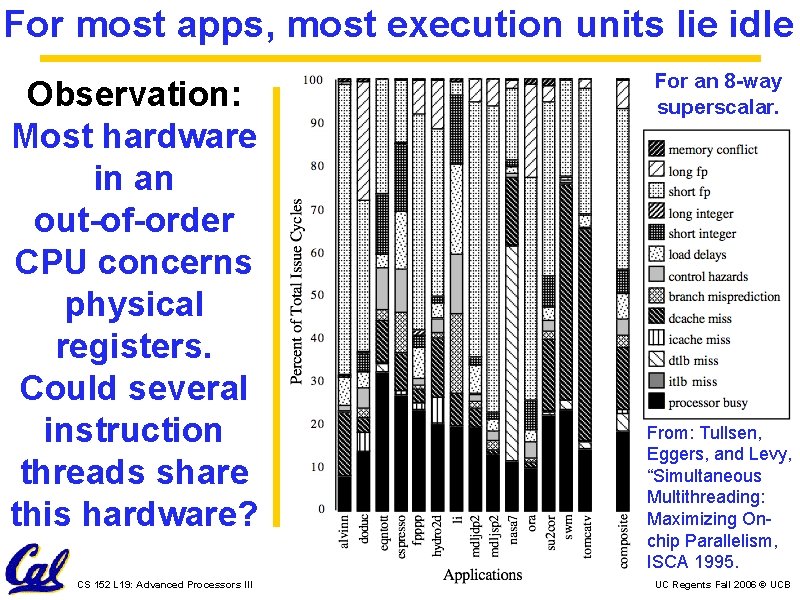

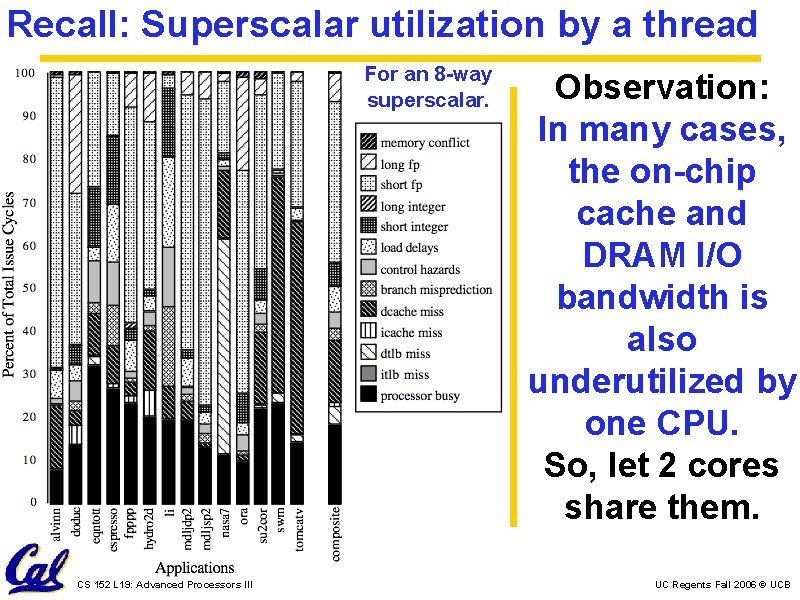

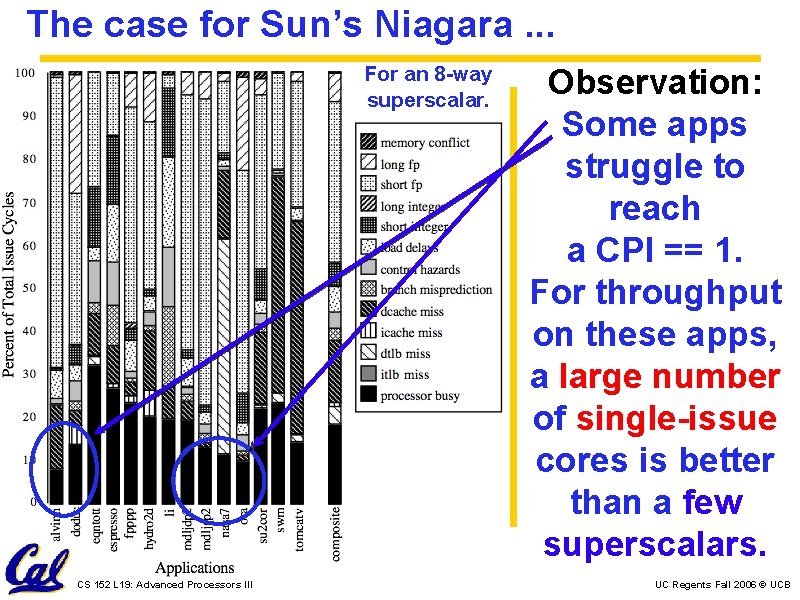

For most apps, most execution units lie idle (for an 8-way superscalar). From: Tullsen, Eggers, and Levy, “Simultaneous Multithreading: Maximizing On-Chip Parallelism,” ISCA 1995. Observation: Most hardware in an out-of-order CPU concerns physical registers. Could several instruction threads share this hardware?

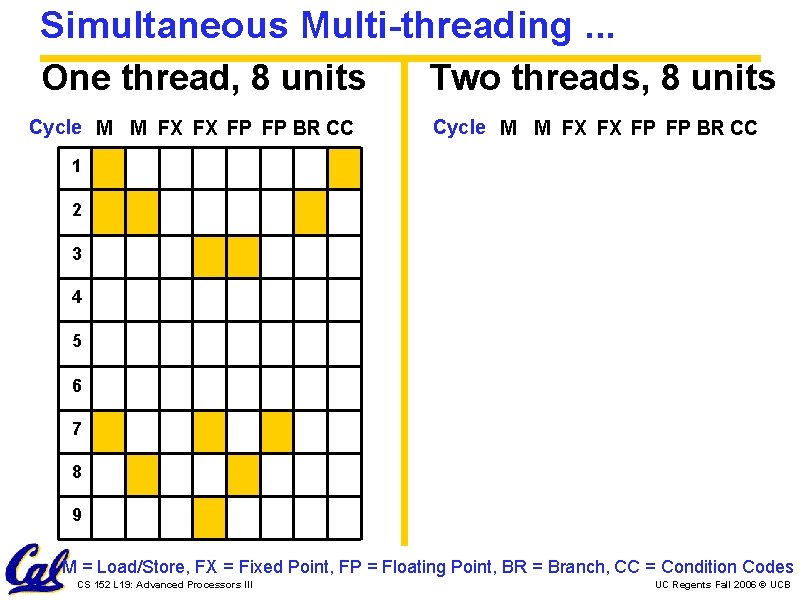

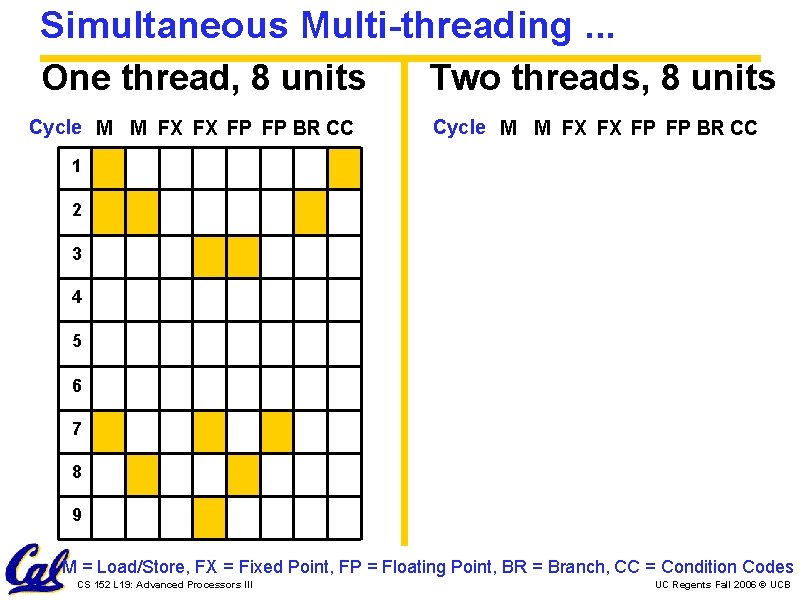

Simultaneous Multi-threading... Figure: two panels, “One thread, 8 units” and “Two threads, 8 units”, each plotting cycles 1-9 against the units M, M, FX, FX, FP, FP, BR, CC. M = Load/Store, FX = Fixed Point, FP = Floating Point, BR = Branch, CC = Condition Codes.
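The effect of a second thread on unit utilization can be sketched with a toy issue model. The unit mix below follows the legend above; everything else is an assumption for illustration: the function names, the fixed thread order, and the simplification that an instruction which finds no free unit is dropped rather than retried in a later cycle.

```python
# 8 execution units, grouped by type as in the slide legend.
UNITS = {"M": 2, "FX": 2, "FP": 2, "BR": 1, "CC": 1}


def issue_cycle(ready_per_thread):
    """Count instructions issued in one cycle; threads claim free units in list order."""
    free = dict(UNITS)
    issued = 0
    for ready in ready_per_thread:
        for unit in ready:
            if free[unit] > 0:
                free[unit] -= 1
                issued += 1
    return issued


def utilization(trace):
    """Fraction of unit-cycles used; `trace` holds one list of per-thread ready lists per cycle."""
    total_slots = sum(UNITS.values()) * len(trace)
    return sum(issue_cycle(cycle) for cycle in trace) / total_slots


if __name__ == "__main__":
    # Hypothetical ready instructions for two threads over three cycles.
    thread_a = [["M", "FX"], ["FP"], ["M", "M", "BR"]]
    thread_b = [["FX", "FP"], ["M", "FX", "CC"], ["FP", "FP"]]
    print(utilization([[a] for a in thread_a]))
    print(utilization([[a, b] for a, b in zip(thread_a, thread_b)]))
```

In this made-up trace the second thread fills slots the first leaves empty, raising utilization from 6 of 24 unit-cycles to 13 of 24. When both threads want the same single unit (for example BR), only one of them issues.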

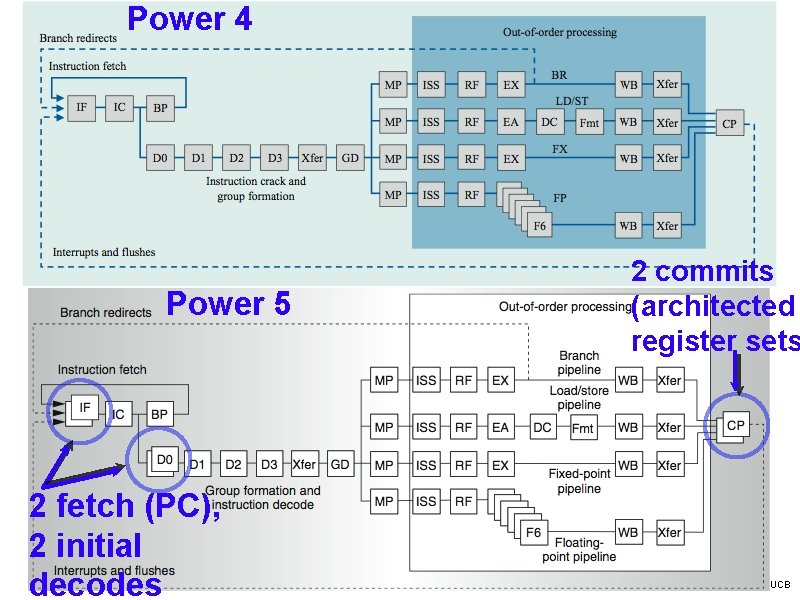

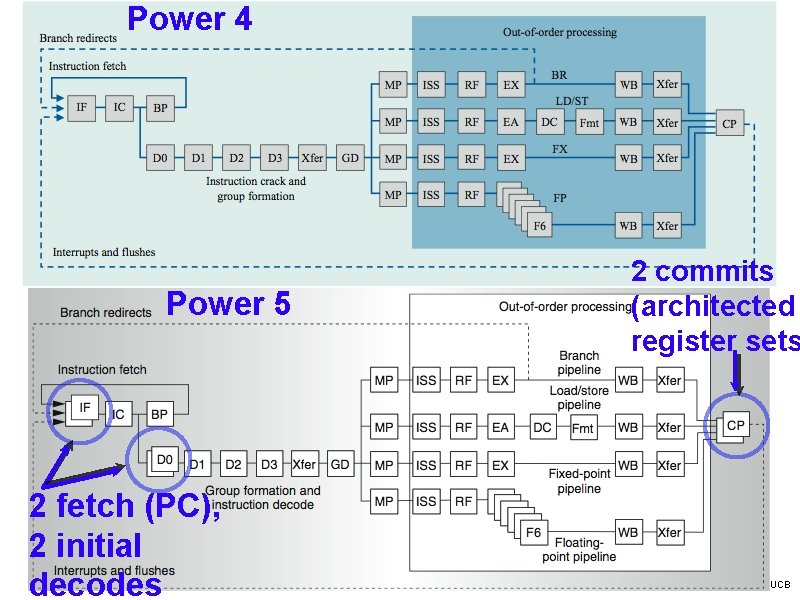

Power 4 and Power 5. Power 5: 2 fetch (PC), 2 initial decodes, 2 commits (architected register sets).

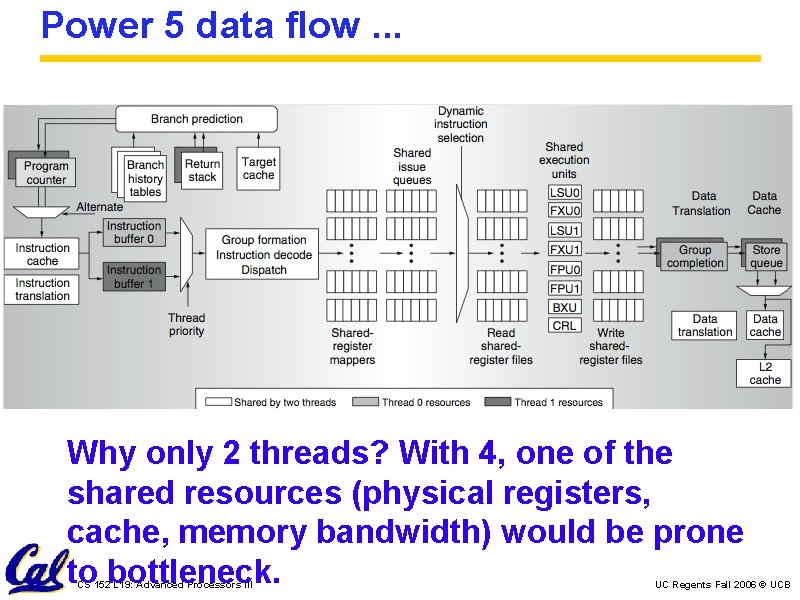

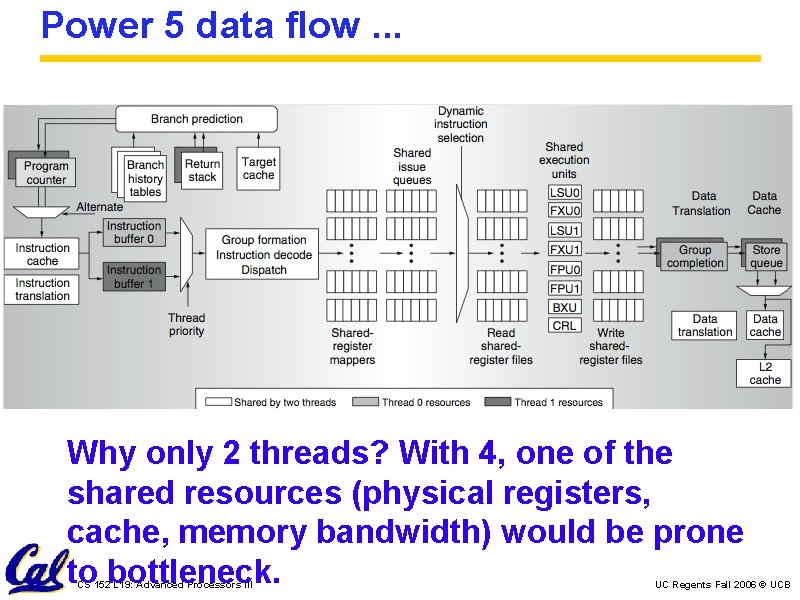

Power 5 data flow... Why only 2 threads? With 4, one of the shared resources (physical registers, cache, memory bandwidth) would be prone to bottleneck.

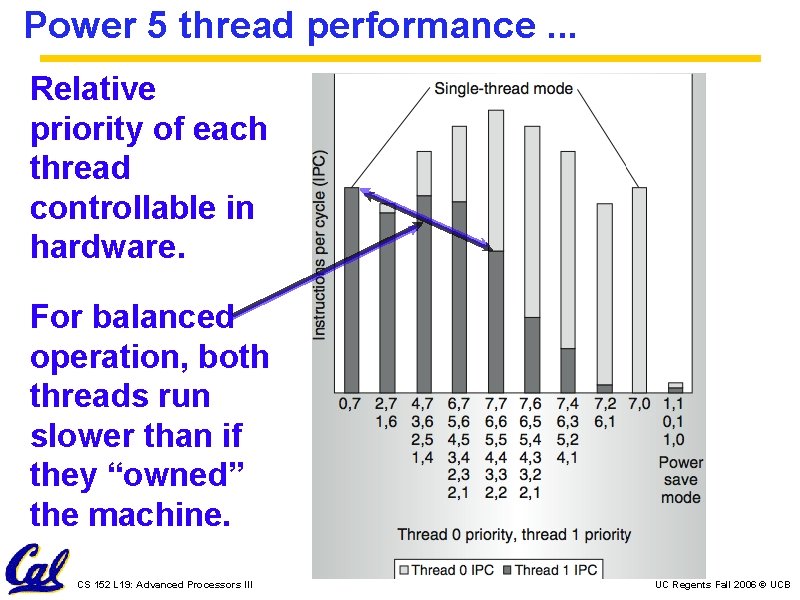

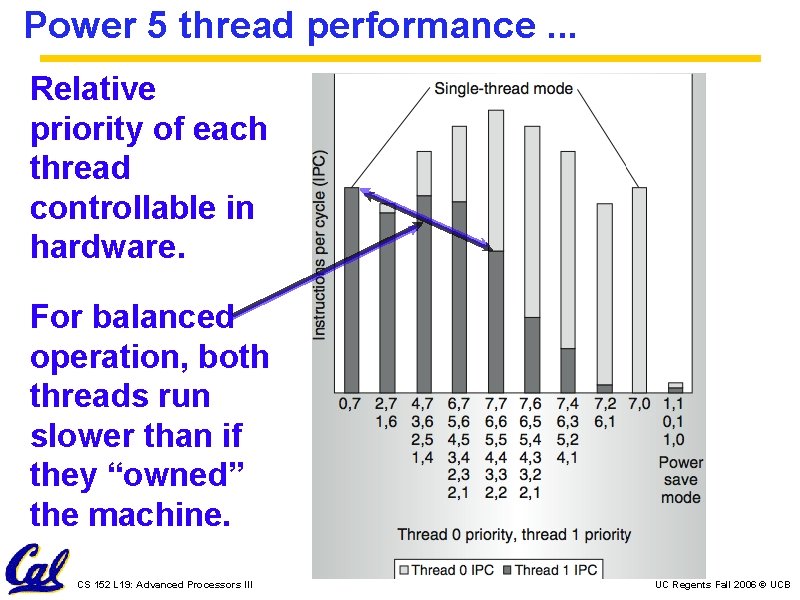

Power 5 thread performance... Relative priority of each thread controllable in hardware. For balanced operation, both threads run slower than if they “owned” the machine.

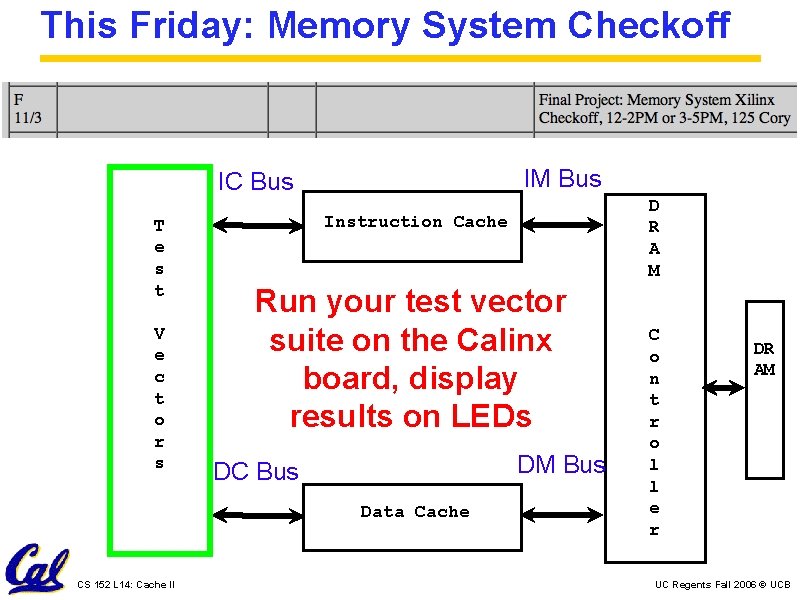

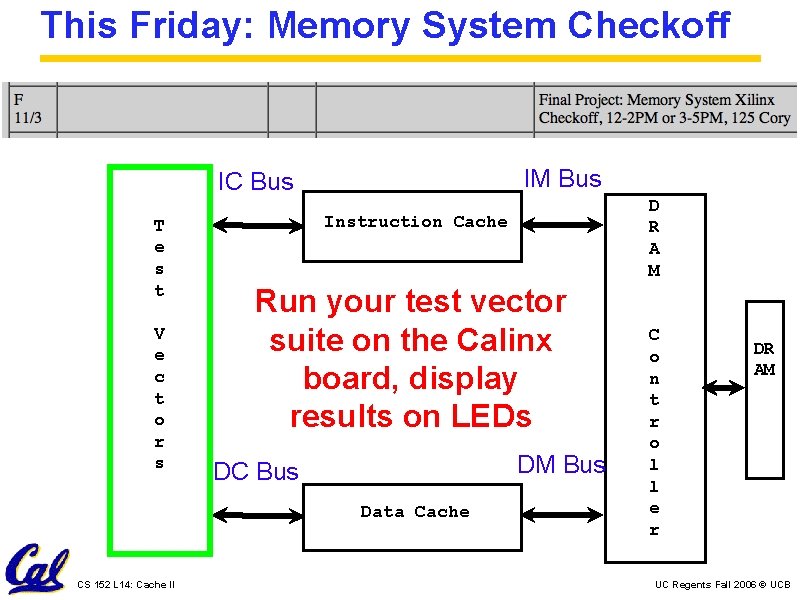

This Friday: Memory System Checkoff. Run your test vector suite on the Calinx board, display results on LEDs. Diagram labels: Test Vectors, IM Bus, IC Bus, Instruction Cache, DM Bus, DC Bus, Data Cache, DRAM Controller, DRAM. CS 152 L14: Cache II UC Regents Fall 2006 © UCB

Multi-Core

Recall: Superscalar utilization by a thread (for an 8-way superscalar). Observation: In many cases, the on-chip cache and DRAM I/O bandwidth are also underutilized by one CPU. So, let 2 cores share them.

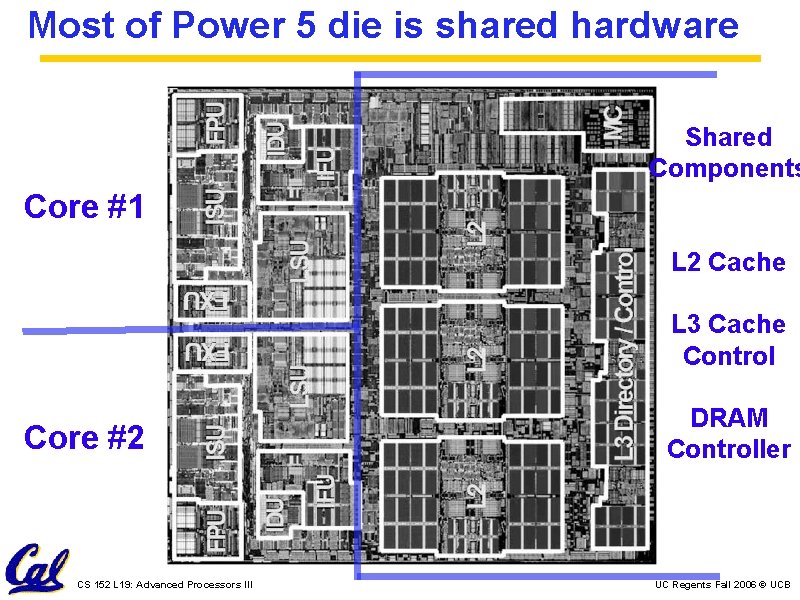

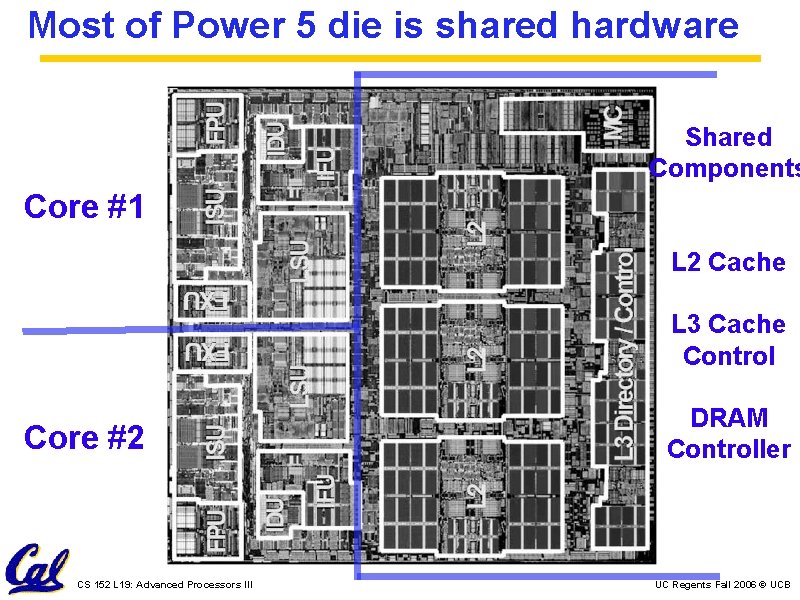

Most of Power 5 die is shared hardware. Die labels: Core #1, Core #2, and the shared components: L2 Cache, L3 Cache Control, DRAM Controller.

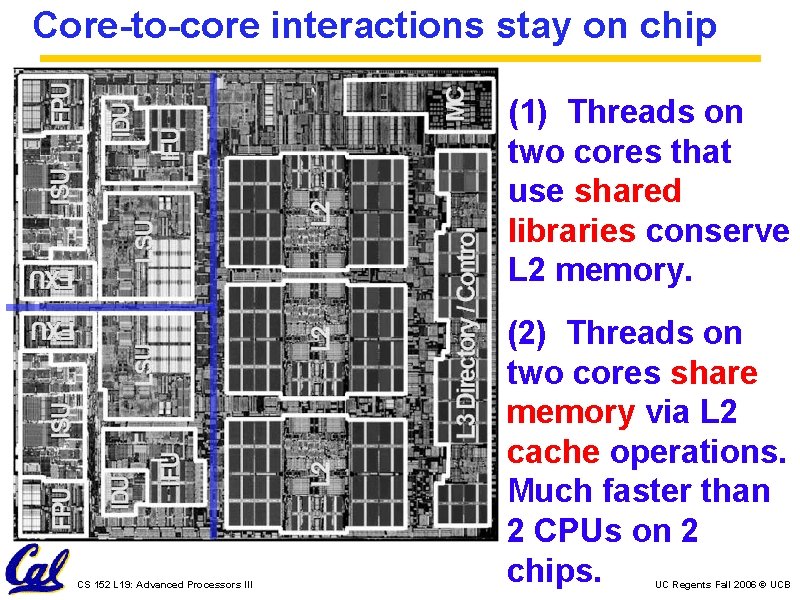

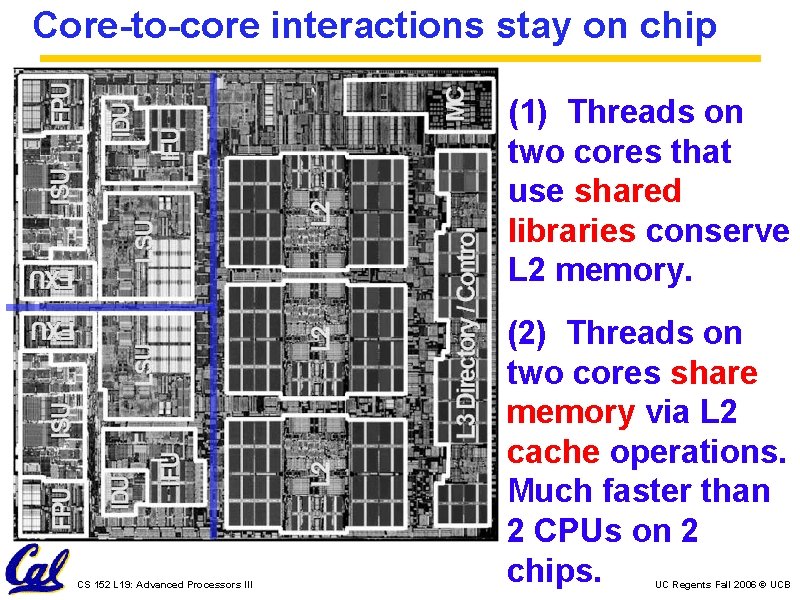

Core-to-core interactions stay on chip. (1) Threads on two cores that use shared libraries conserve L2 memory. (2) Threads on two cores share memory via L2 cache operations. Much faster than 2 CPUs on 2 chips.

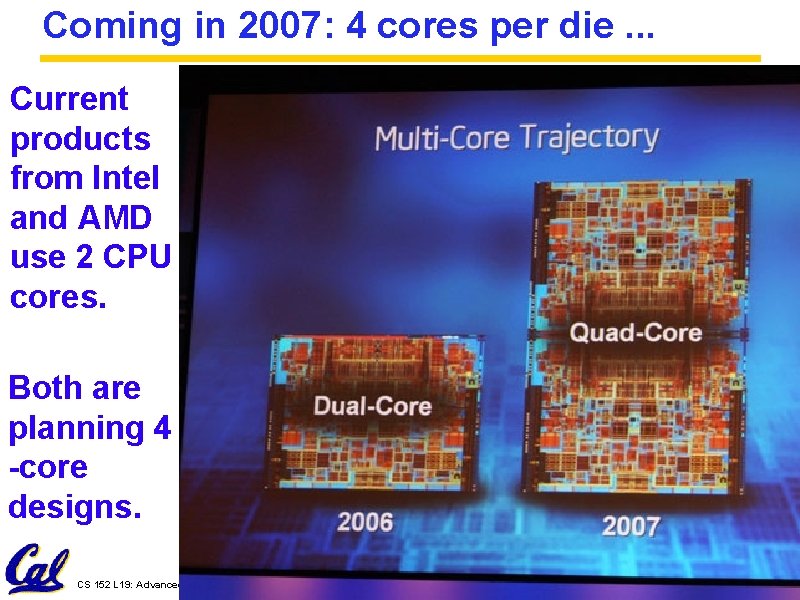

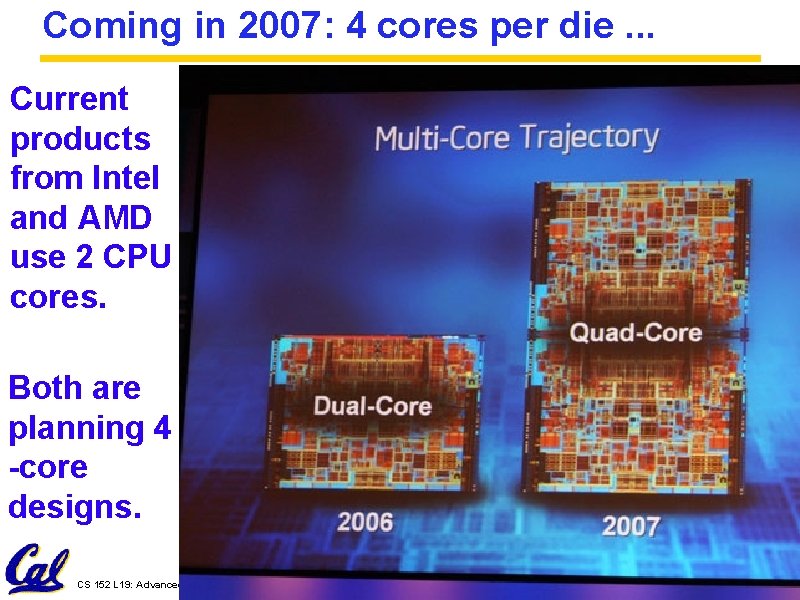

Coming in 2007: 4 cores per die... Current products from Intel and AMD use 2 CPU cores. Both are planning 4-core designs.

Sun Niagara

The case for Sun’s Niagara... (The utilization figure is for an 8-way superscalar.) Observation: Some apps struggle to reach a CPI of 1. For throughput on these apps, a large number of single-issue cores is better than a few superscalars.
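The throughput argument can be illustrated with back-of-the-envelope arithmetic. Every number below is a hypothetical assumption, not data from the lecture: a chip with 2 wide superscalar cores is compared against a chip with 8 single-issue cores, and clock rate and die area are taken to be comparable. The helper name `chip_ipc` is also invented for this sketch.

```python
from fractions import Fraction


def chip_ipc(n_cores: int, cpi_per_core: Fraction) -> Fraction:
    """Aggregate instructions per cycle for n_cores, each sustaining cpi_per_core."""
    return n_cores * (1 / cpi_per_core)


if __name__ == "__main__":
    # Assumed: the app reaches only CPI = 1 even on a wide superscalar core.
    print("2 superscalar cores, CPI 1:", chip_ipc(2, Fraction(1)))
    # Assumed: multithreading keeps each single-issue core at CPI = 1.
    print("8 single-issue cores, CPI 1:", chip_ipc(8, Fraction(1)))
    # Pessimistic case: each simple core sustains only CPI = 2.
    print("8 single-issue cores, CPI 2:", chip_ipc(8, Fraction(2)))
```

Under these assumptions the many-core chip wins on aggregate throughput even in the pessimistic case, because the superscalar's extra issue width goes unused on such apps.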

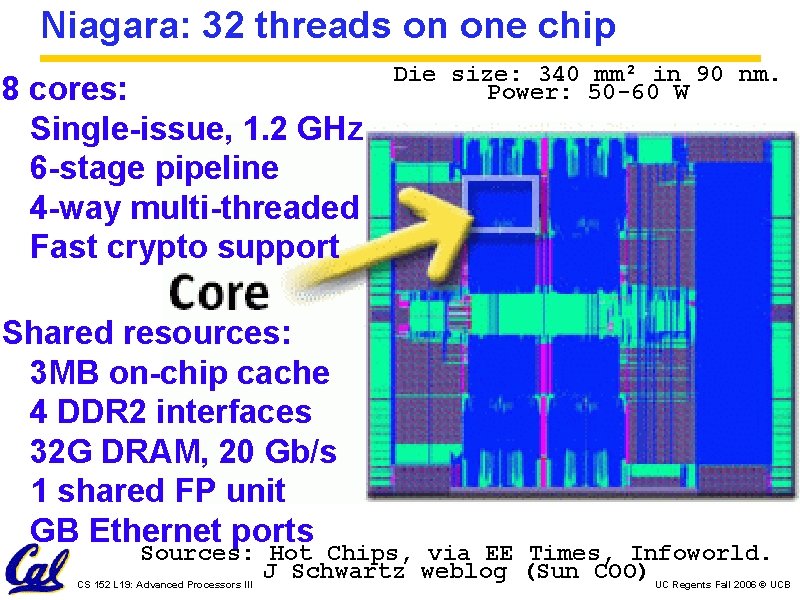

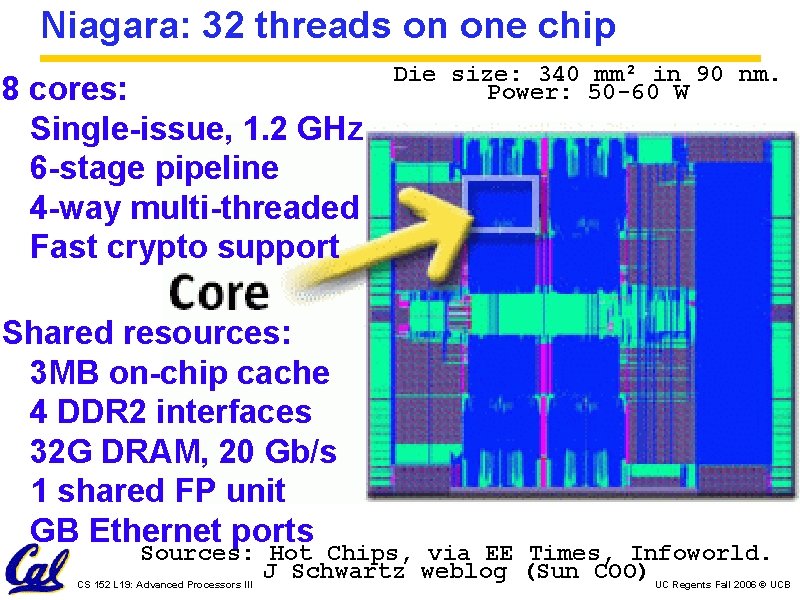

Niagara: 32 threads on one chip

- 8 cores: single-issue, 1.2 GHz, 6-stage pipeline, 4-way multi-threaded, fast crypto support
- Shared resources: 3 MB on-chip cache, 4 DDR2 interfaces, 32 G DRAM, 20 Gb/s, 1 shared FP unit, GB Ethernet ports
- Die size: 340 mm² in 90 nm
- Power: 50-60 W

Sources: Hot Chips, via EE Times, Infoworld. J Schwartz weblog (Sun COO)

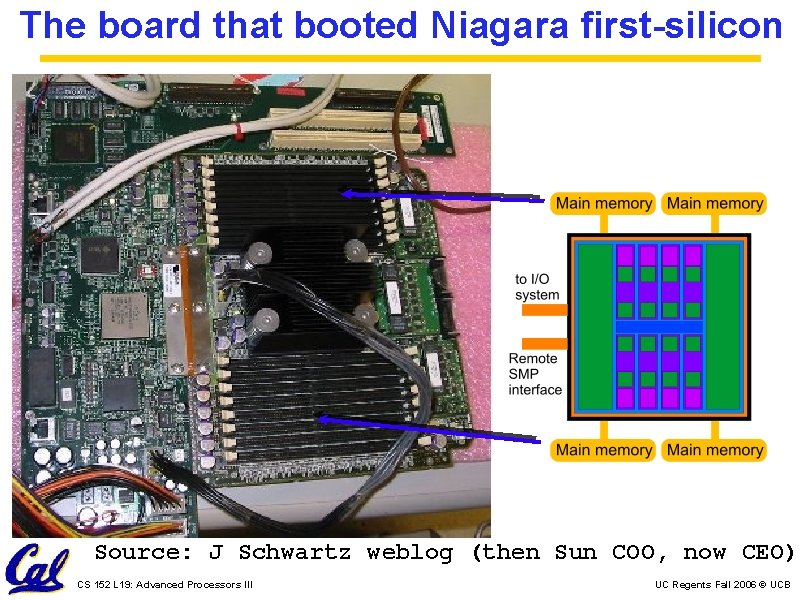

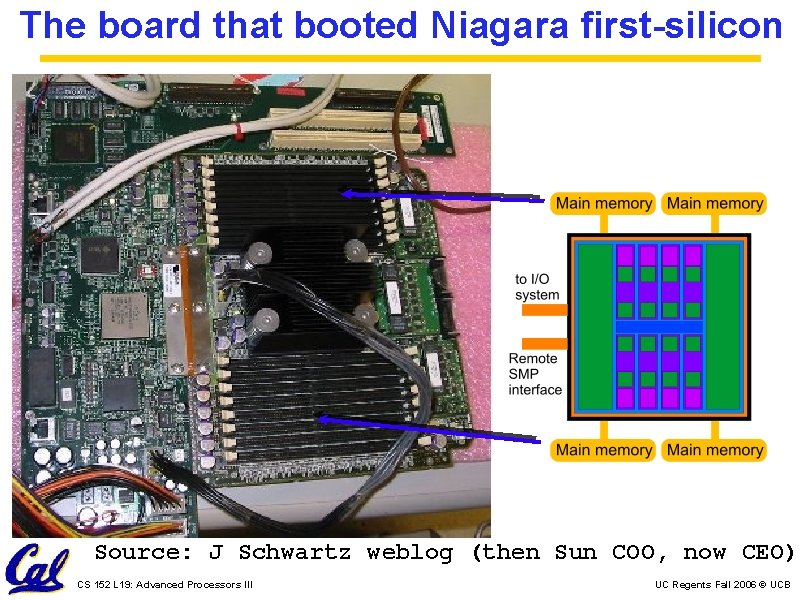

The board that booted Niagara first-silicon. Source: J Schwartz weblog (then Sun COO, now CEO)

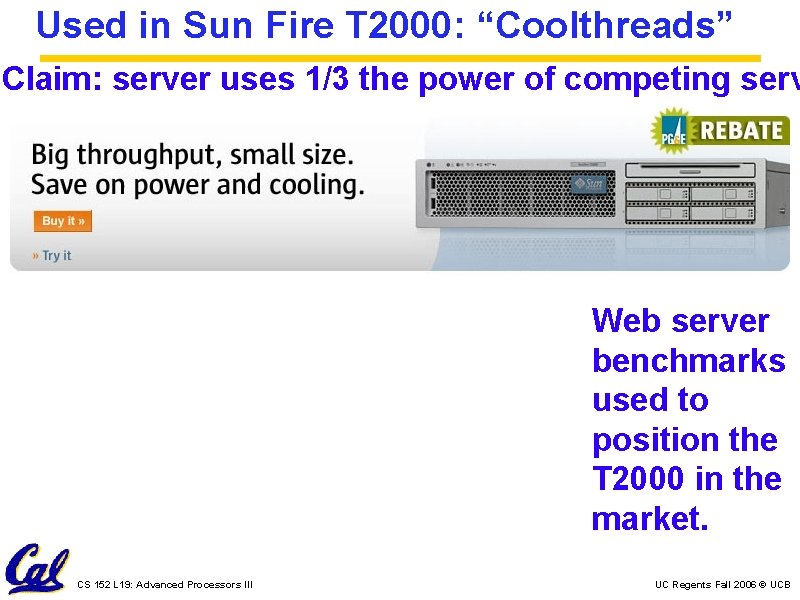

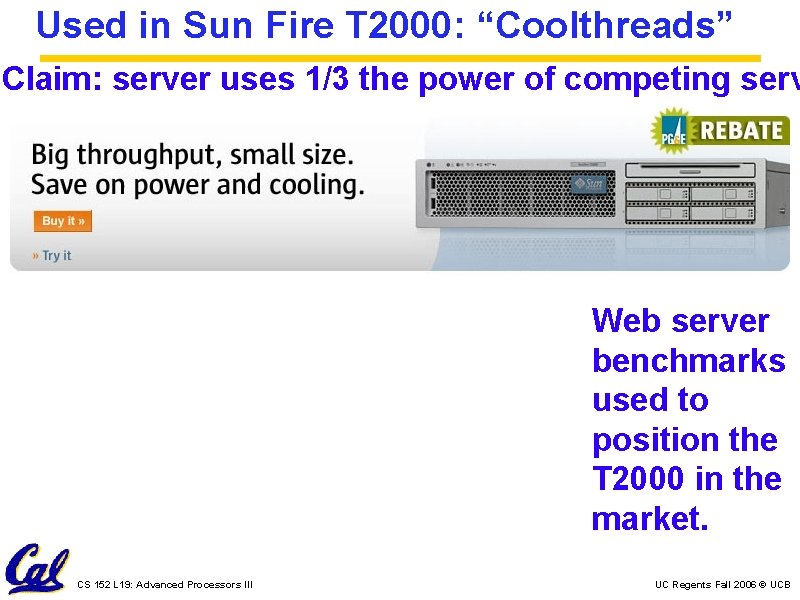

Used in Sun Fire T2000: “Coolthreads”. Claim: server uses 1/3 the power of competing servers. Web server benchmarks used to position the T2000 in the market.

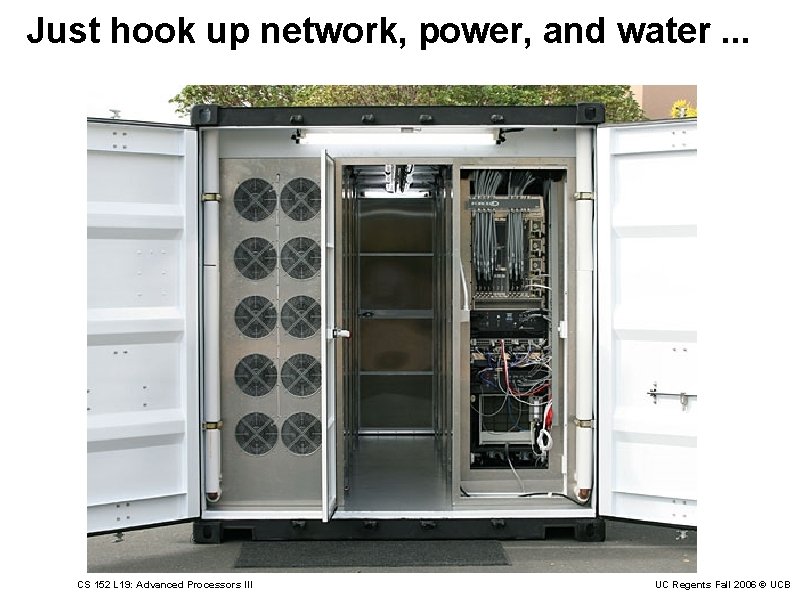

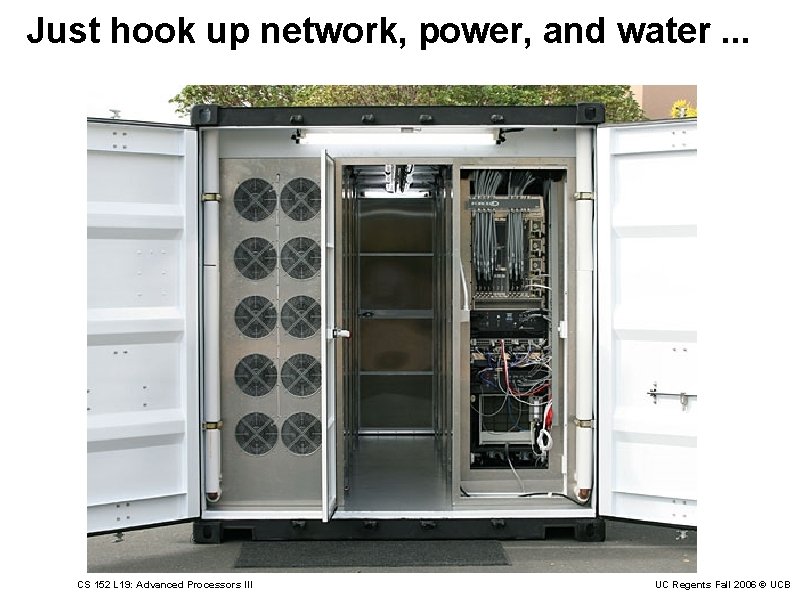

Project Blackbox: A data center in a 20-ft shipping container. Servers, air-conditioners, power distribution.

Just hook up network, power, and water...

Holds 250 T1000 servers. 2000 CPU cores, 8000 threads.
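The container totals follow from the Niagara per-chip figures given earlier (8 cores, 4-way multi-threaded). The check below assumes one Niagara chip per T1000 server, which the slide does not state explicitly; the function name `container_totals` is likewise invented for this sketch.

```python
def container_totals(servers, chips_per_server=1, cores_per_chip=8, threads_per_core=4):
    """Return (cores, threads) for a container of Niagara-based servers."""
    cores = servers * chips_per_server * cores_per_chip
    threads = cores * threads_per_core
    return cores, threads


if __name__ == "__main__":
    cores, threads = container_totals(250)  # 250 servers, from the slide
    print(cores, threads)
```

With 250 servers this reproduces the slide's 2000 cores and 8000 threads.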

Cell: The PS3 chip

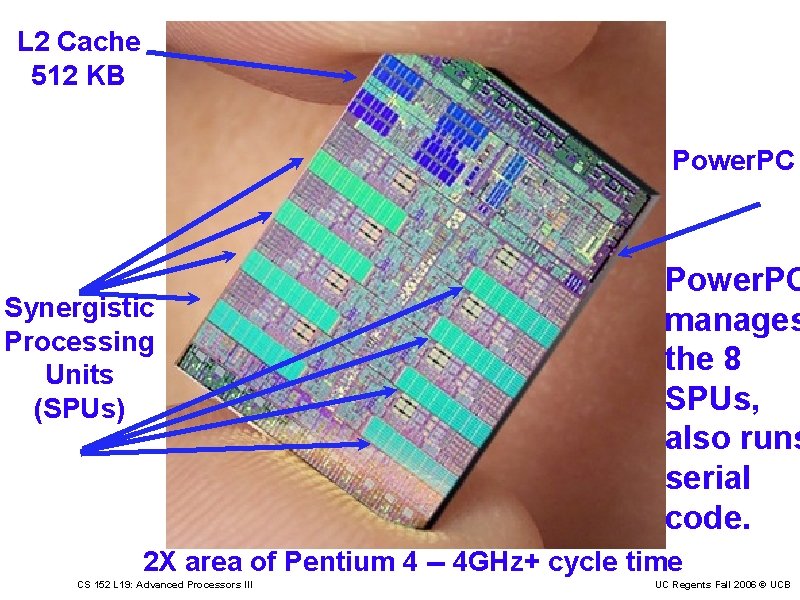

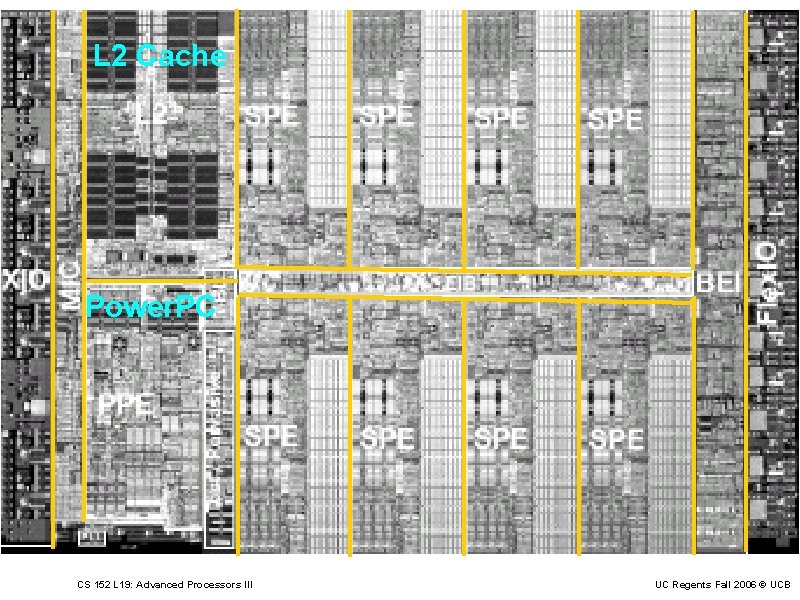

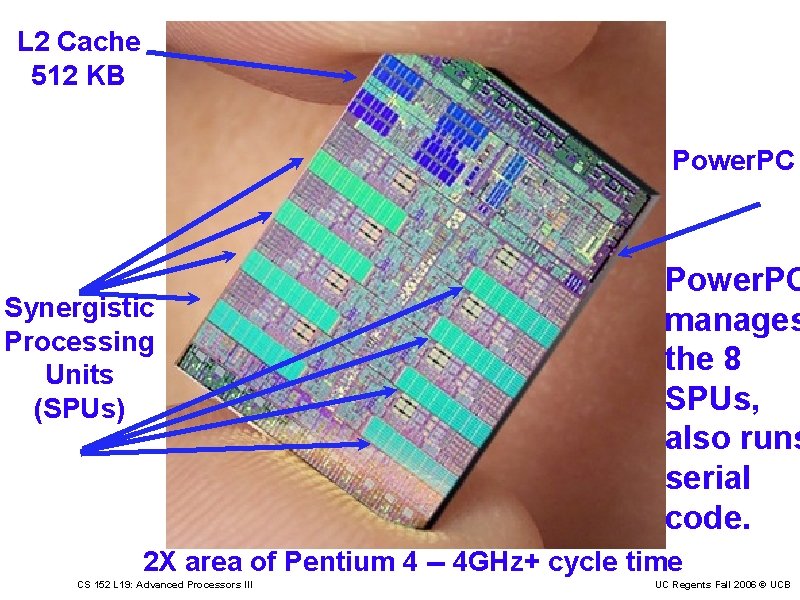

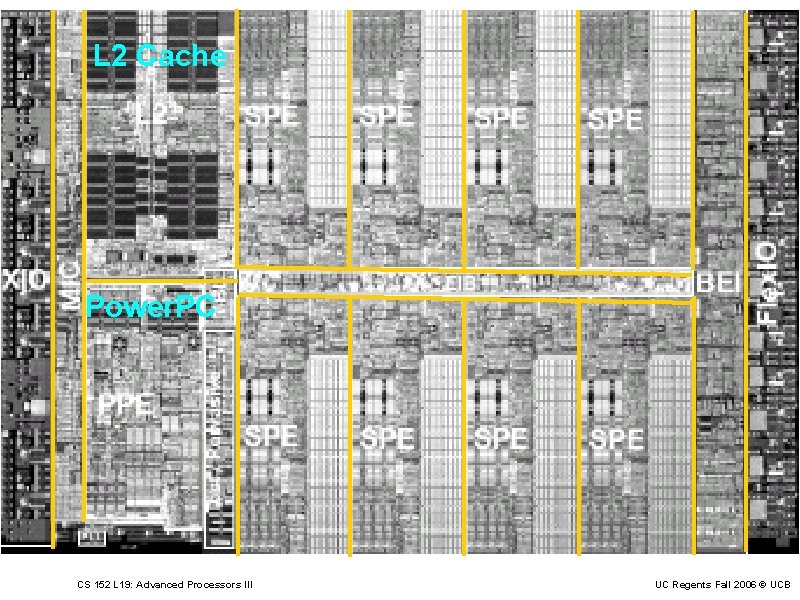

Die labels: L2 Cache (512 KB), PowerPC, Synergistic Processing Units (SPUs). PowerPC manages the 8 SPUs, also runs serial code. 2X area of Pentium 4; 4 GHz+ clock rate.

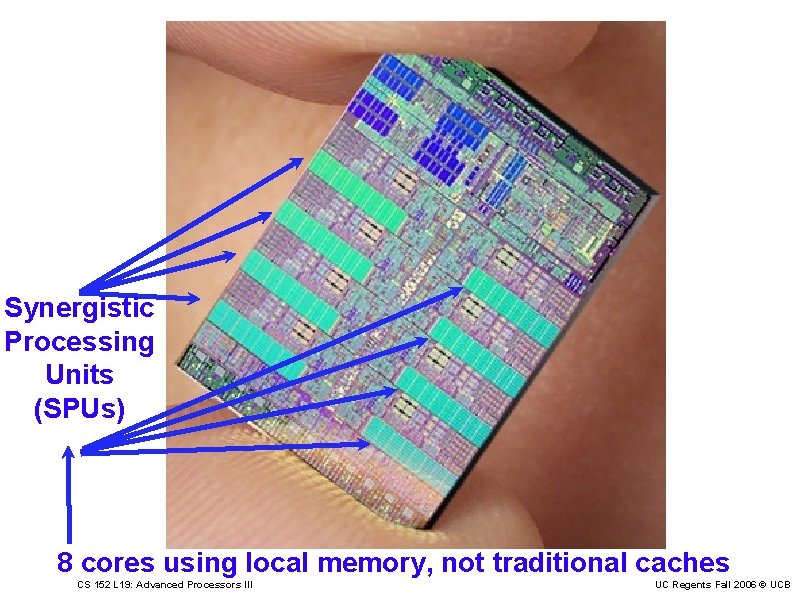

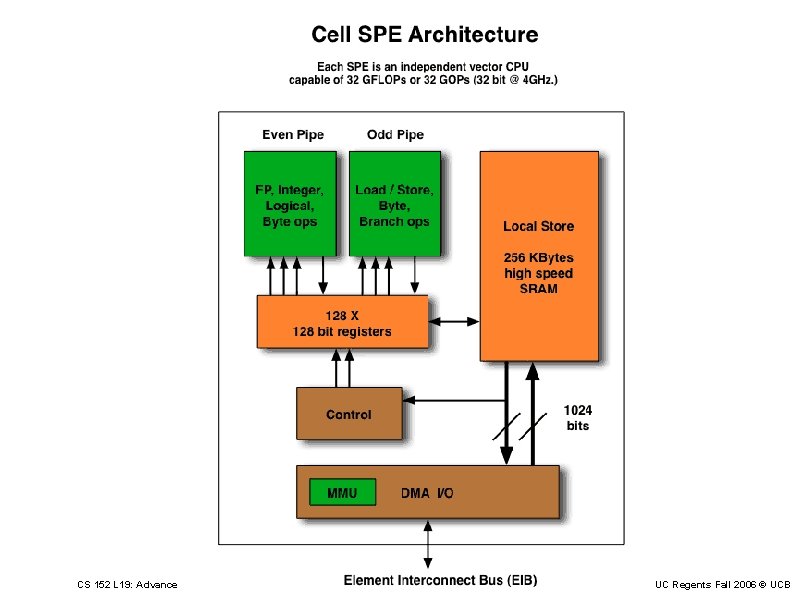

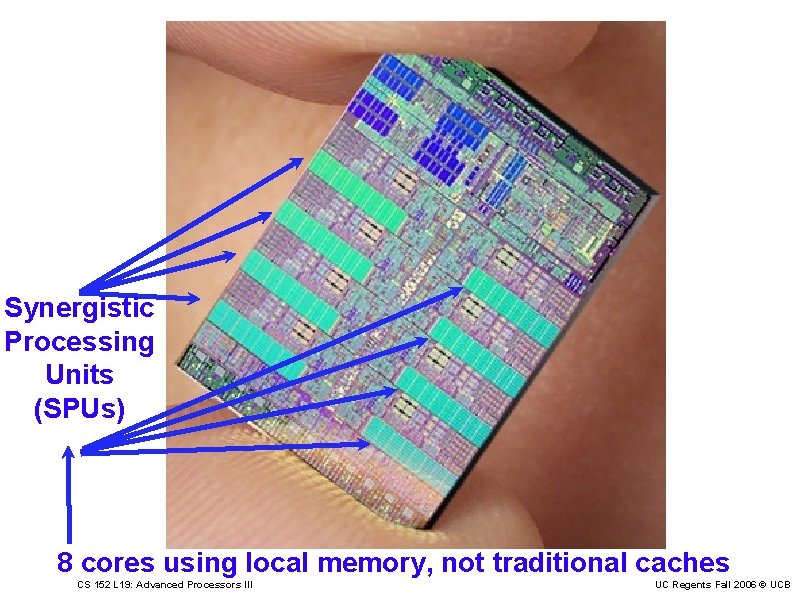

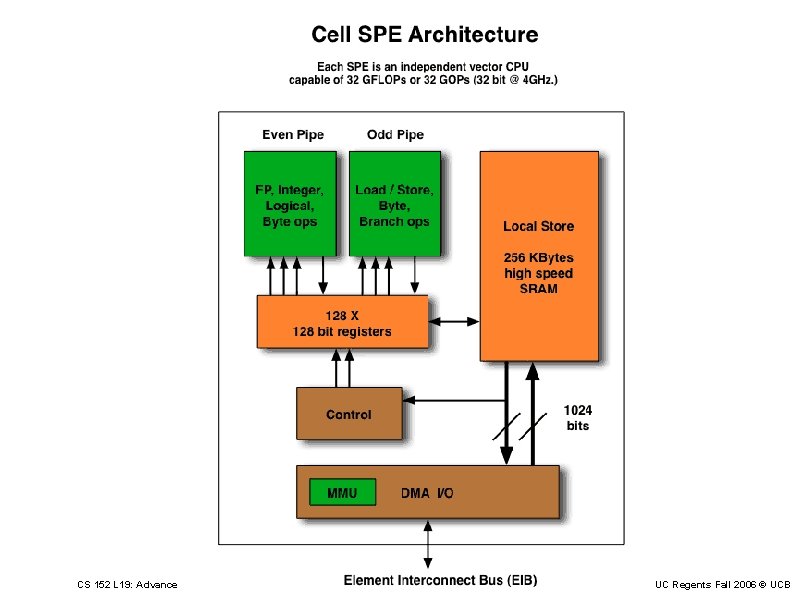

Synergistic Processing Units (SPUs): 8 cores using local memory, not traditional caches.

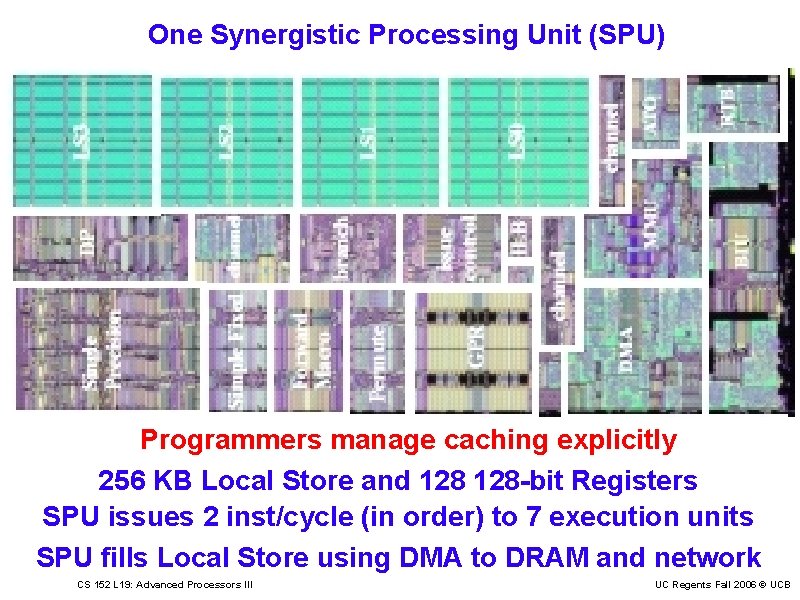

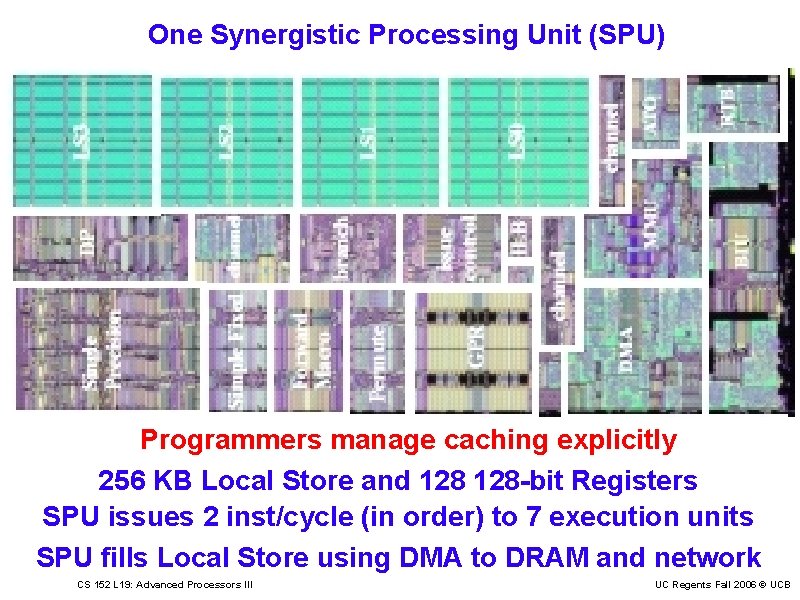

One Synergistic Processing Unit (SPU). Programmers manage caching explicitly. 256 KB Local Store and 128-bit registers. SPU issues 2 inst/cycle (in order) to 7 execution units. SPU fills Local Store using DMA to DRAM and network.
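Explicitly managed caching means the program, not the hardware, decides what sits in the Local Store. The sketch below mimics that pattern in plain Python and is not Cell SDK code: `dma_get` is a hypothetical stand-in for a DMA transfer, the 32-bit element size is an assumption, and only a quarter of the 256 KB Local Store is used as the data buffer to leave room for code and other data.

```python
LOCAL_STORE_BYTES = 256 * 1024  # Local Store size, from the slide
ELEM_BYTES = 4                  # assumption: 32-bit elements


def dma_get(dram, start, count):
    """Stand-in for a DMA transfer: copy up to `count` elements from DRAM into a local buffer."""
    return list(dram[start:start + count])


def spu_sum(dram, buffer_bytes=LOCAL_STORE_BYTES // 4):
    """Sum a DRAM-resident array by staging fixed-size chunks through the local buffer."""
    chunk = buffer_bytes // ELEM_BYTES
    total = 0
    for start in range(0, len(dram), chunk):
        local = dma_get(dram, start, chunk)  # explicit fill; no hardware cache involved
        total += sum(local)                  # compute touches only local data
    return total


if __name__ == "__main__":
    data = list(range(100_000))
    print(spu_sum(data) == sum(data))
```

A real implementation would typically overlap the next transfer with the current computation (double buffering); this sketch issues them one after the other for clarity.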

Die labels: L2 Cache, PowerPC.

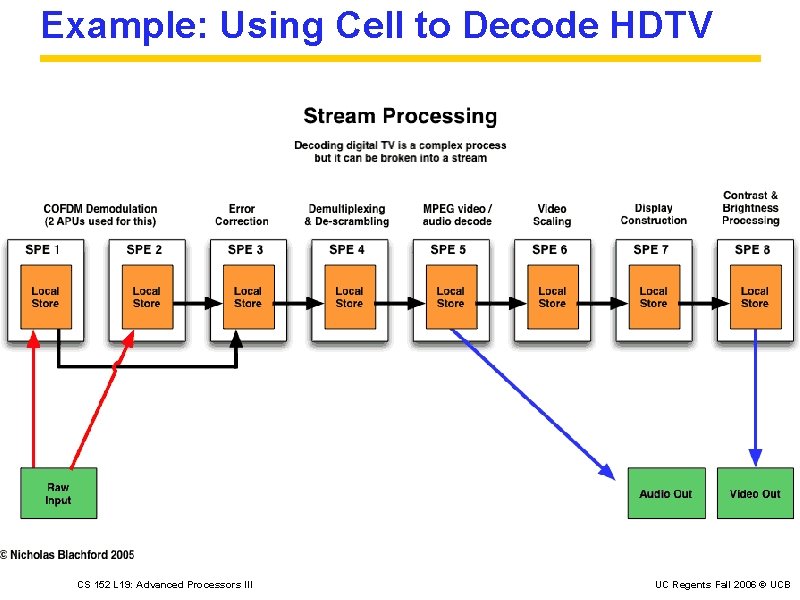

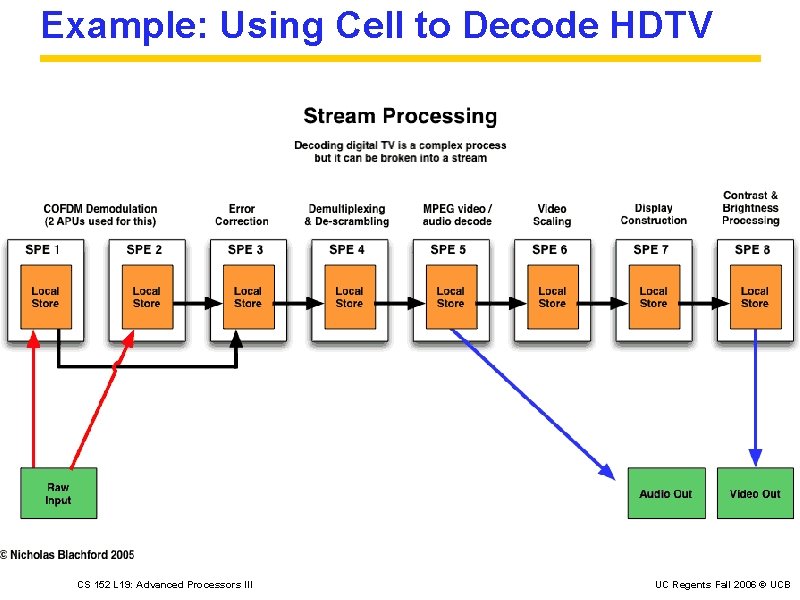

Example: Using Cell to Decode HDTV

Conclusions: Throughput processing. Simultaneous Multithreading: Instruction streams can share an out-of-order engine economically. Multi-core: Once instruction-level parallelism runs dry, thread-level parallelism is a good use of die area.