CS 152 Computer Architecture and Engineering Lecture 12


CS 152 Computer Architecture and Engineering
Lecture 12: Introduction to Pipelining
February 27, 2001
John Kubiatowicz (http.cs.berkeley.edu/~kubitron)
lecture slides: http://www-inst.eecs.berkeley.edu/~cs152/
2/27/01 ©UCB Spring 2001 CS 152 / Kubiatowicz Lec 12.1

Recap: Microprogramming
° Microprogramming is a convenient method for implementing structured control state diagrams:
  • Random logic replaced by µPC sequencer and ROM
  • Each line of ROM is called a µinstruction: contains sequencer control + values for control points
  • Limited state transitions: branch to zero, next sequential, branch to µinstruction address from dispatch ROM
° Horizontal µCode: one control bit in µInstruction for every control line in datapath
° Vertical µCode: groups of control lines coded together in µInstruction (e.g. possible ALU dest)
° Control design reduces to microprogramming
  • Part of the design process is to develop a “language” that describes control and is easy for humans to understand

Recap: Microprogramming
[Figure: µPC sequencer (fetch, dispatch, sequential), dispatch ROM indexed by opcode, µCode ROM holding microinstructions with sequencer control and datapath control, and decoders that implement our µcode language (for instance rt-ALU, rd-ALU, mem-ALU) driving the datapath]
° Microprogramming is a fundamental concept
  • implement an instruction set by building a very simple processor and interpreting the instructions
  • essential for very complex instructions and when few register transfers are possible
  • overkill when ISA matches datapath 1-1

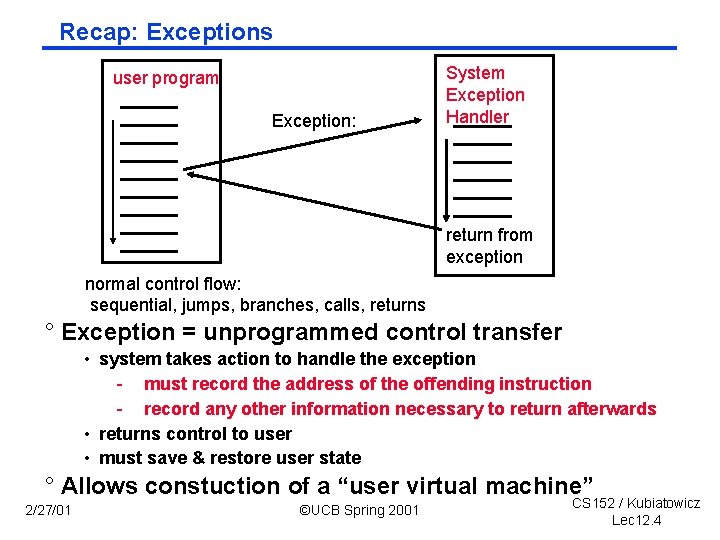

Recap: Exceptions
[Figure: a user program transfers to the system exception handler on an exception, then returns from exception; normal control flow is sequential, jumps, branches, calls, returns]
° Exception = unprogrammed control transfer
  • system takes action to handle the exception
    - must record the address of the offending instruction
    - record any other information necessary to return afterwards
  • returns control to user
  • must save & restore user state
° Allows construction of a “user virtual machine”

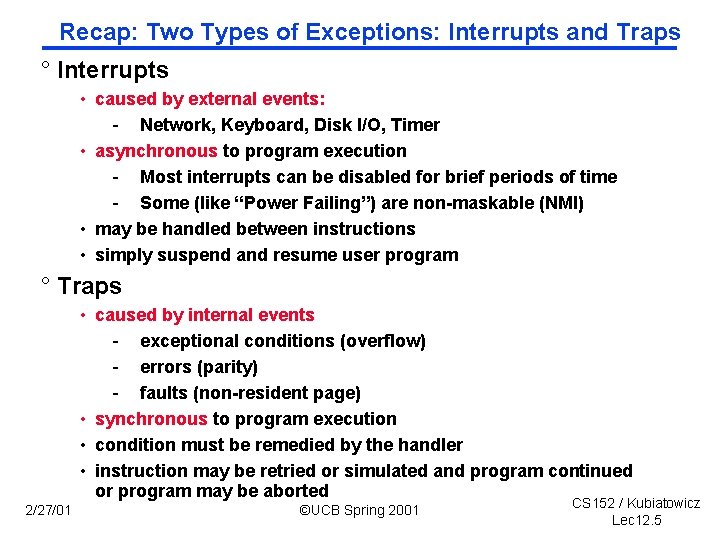

Recap: Two Types of Exceptions: Interrupts and Traps
° Interrupts
  • caused by external events:
    - Network, Keyboard, Disk I/O, Timer
  • asynchronous to program execution
    - Most interrupts can be disabled for brief periods of time
    - Some (like “Power Failing”) are non-maskable (NMI)
  • may be handled between instructions
  • simply suspend and resume user program
° Traps
  • caused by internal events
    - exceptional conditions (overflow)
    - errors (parity)
    - faults (non-resident page)
  • synchronous to program execution
  • condition must be remedied by the handler
  • instruction may be retried or simulated and program continued, or program may be aborted

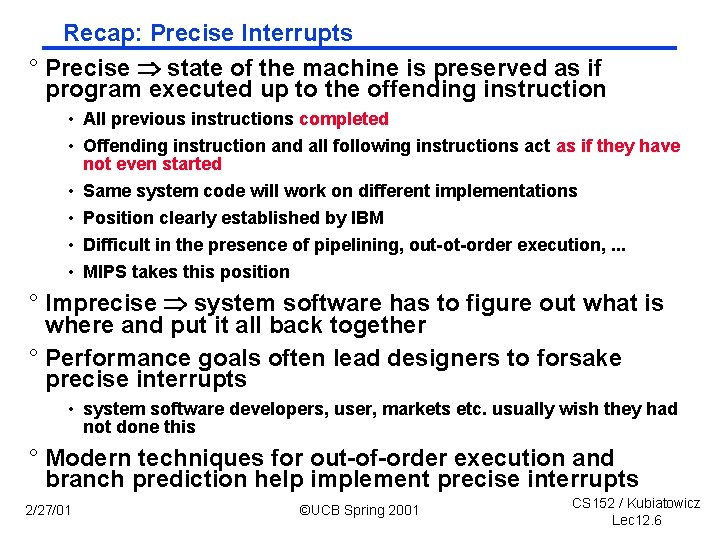

Recap: Precise Interrupts
° Precise: state of the machine is preserved as if program executed up to the offending instruction
  • All previous instructions completed
  • Offending instruction and all following instructions act as if they have not even started
  • Same system code will work on different implementations
  • Position clearly established by IBM
  • Difficult in the presence of pipelining, out-of-order execution, ...
  • MIPS takes this position
° Imprecise: system software has to figure out what is where and put it all back together
° Performance goals often lead designers to forsake precise interrupts
  • system software developers, users, markets etc. usually wish they had not done this
° Modern techniques for out-of-order execution and branch prediction help implement precise interrupts

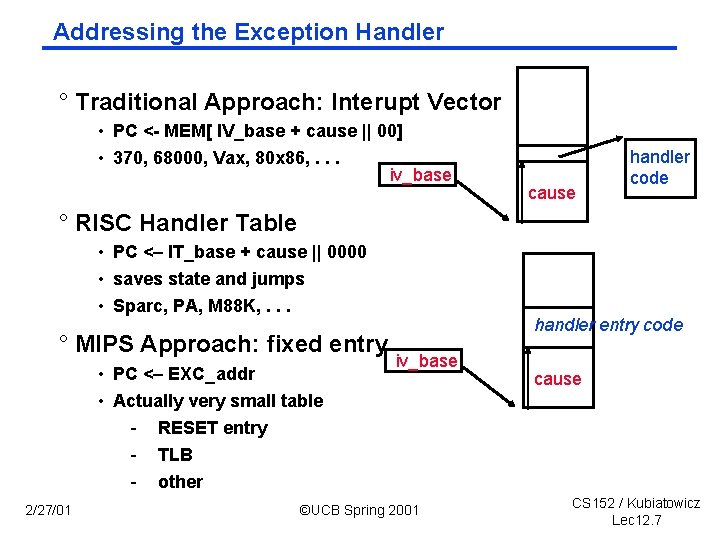

Addressing the Exception Handler
° Traditional Approach: Interrupt Vector
  • PC <– MEM[IV_base + cause || 00]
  • 370, 68000, Vax, 80x86, ...
° RISC Handler Table
  • PC <– IT_base + cause || 0000
  • saves state and jumps
  • Sparc, PA, M88K, ...
° MIPS Approach: fixed entry
  • PC <– EXC_addr
  • Actually very small table
    - RESET entry
    - TLB
    - other
[Figures: an interrupt vector at iv_base, indexed by cause, pointing to handler code; a handler table at iv_base, indexed by cause, holding handler entry code]
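
The three dispatch schemes on this slide can be sketched in Python. This is only an illustration under stated assumptions: `cause || 00` is read as the cause code concatenated with two zero bits (a left shift by 2), the function names and the toy memory contents are hypothetical, and the default fixed entry address is the 8000 0080 hex value quoted on the MIPS slide of this lecture.

```python
def vectored_pc(mem, iv_base, cause):
    """Interrupt vector: PC <- MEM[IV_base + cause || 00] (one word per cause)."""
    return mem[iv_base + (cause << 2)]


def handler_table_pc(it_base, cause):
    """RISC handler table: PC <- IT_base + cause || 0000 (16 bytes of code per cause)."""
    return it_base + (cause << 4)


def fixed_entry_pc(exc_addr=0x80000080):
    """MIPS-style fixed entry: PC <- EXC_addr; software then inspects the cause."""
    return exc_addr


# Toy memory (hypothetical): the vector slot for cause 12 holds a handler address.
IV_BASE = 0x100
mem = {IV_BASE + (12 << 2): 0x4000}

if __name__ == "__main__":
    print(hex(vectored_pc(mem, IV_BASE, 12)))
    print(hex(handler_table_pc(0x2000, 3)))
    print(hex(fixed_entry_pc()))
```

The vectored scheme costs a memory read before the jump; the handler table jumps straight into code, which is why each slot needs room for a few instructions.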

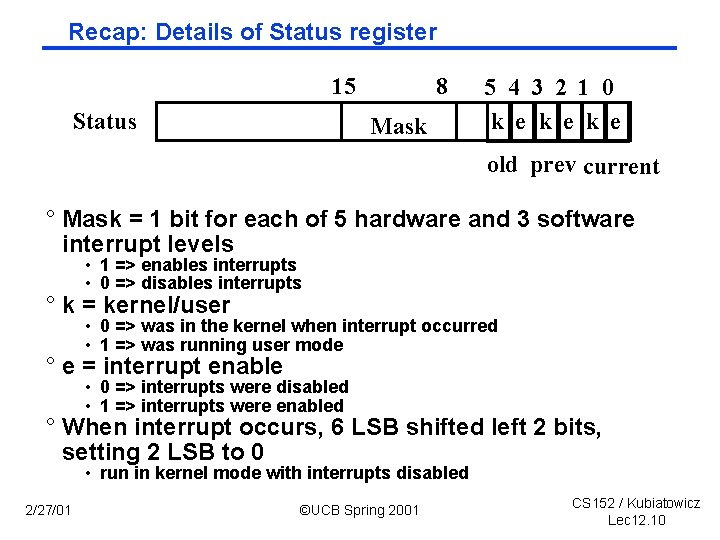

Saving State
° Push it onto the stack
  • Vax, 68k, 80x86
° Save it in special registers
  • MIPS EPC, BadVaddr, Status, Cause
° Shadow Registers
  • M88k
  • Save state in a shadow of the internal pipeline registers

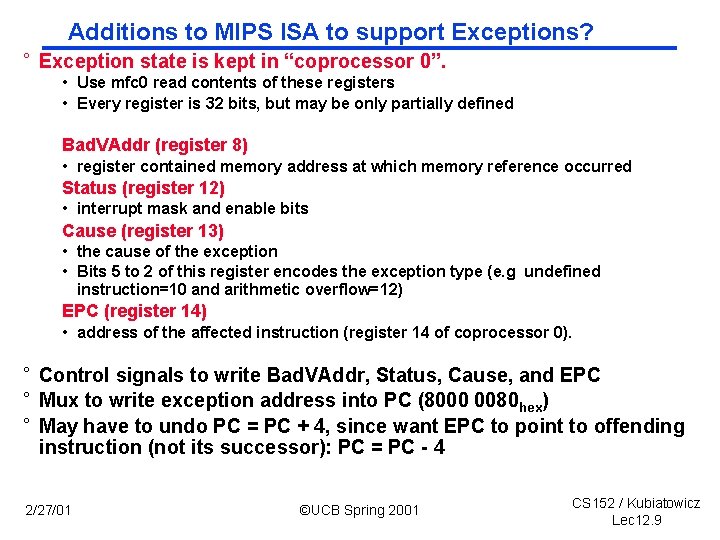

Additions to MIPS ISA to support Exceptions?
° Exception state is kept in “coprocessor 0”
  • Use mfc0 to read contents of these registers
  • Every register is 32 bits, but may be only partially defined
° BadVAddr (register 8)
  • register contains the memory address at which the memory reference occurred
° Status (register 12)
  • interrupt mask and enable bits
° Cause (register 13)
  • the cause of the exception
  • Bits 5 to 2 of this register encode the exception type (e.g. undefined instruction = 10 and arithmetic overflow = 12)
° EPC (register 14)
  • address of the affected instruction (register 14 of coprocessor 0)
° Control signals to write BadVAddr, Status, Cause, and EPC
° Mux to write exception address into PC (8000 0080 hex)
° May have to undo PC = PC + 4, since want EPC to point to offending instruction (not its successor): PC = PC - 4

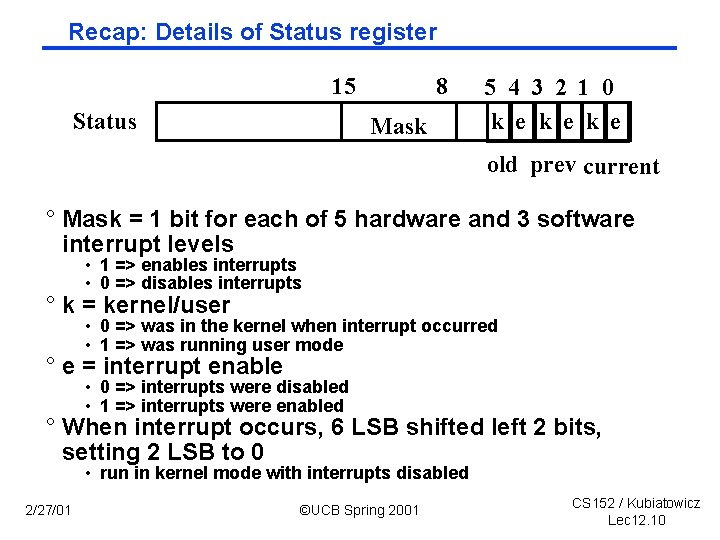

Recap: Details of Status register
[Register layout: Status; bits 15–8 Mask; bits 5–0 hold three k/e pairs: old (bits 5–4), prev (bits 3–2), current (bits 1–0)]
° Mask = 1 bit for each of 5 hardware and 3 software interrupt levels
  • 1 => enables interrupts
  • 0 => disables interrupts
° k = kernel/user
  • 0 => was in the kernel when interrupt occurred
  • 1 => was running user mode
° e = interrupt enable
  • 0 => interrupts were disabled
  • 1 => interrupts were enabled
° When interrupt occurs, 6 LSB shifted left 2 bits, setting 2 LSB to 0
  • run in kernel mode with interrupts disabled
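
A small Python sketch of the shift described on this slide, assuming the k/e pairs sit in bits 5–0 with the current pair in bits 1–0. The function name is hypothetical and the rest of the register is simply passed through.

```python
LOW6 = 0x3F  # the three k/e pairs: old, prev, current


def status_on_interrupt(status):
    """Shift the 6 LSBs left by 2 and zero the 2 LSBs.

    current -> prev, prev -> old, old is dropped; the new current pair is
    k = 0 (kernel mode), e = 0 (interrupts disabled).
    """
    shifted = ((status & LOW6) << 2) & LOW6
    return (status & ~LOW6) | shifted


if __name__ == "__main__":
    # All mask bits set, currently in user mode (k = 1) with interrupts on (e = 1).
    before = 0xFF03
    after = status_on_interrupt(before)
    print(f"{before:#06x} -> {after:#06x}")
```

Because the old pair is shifted out, this stack of pairs supports only a limited depth of nesting before software has to save Status itself.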

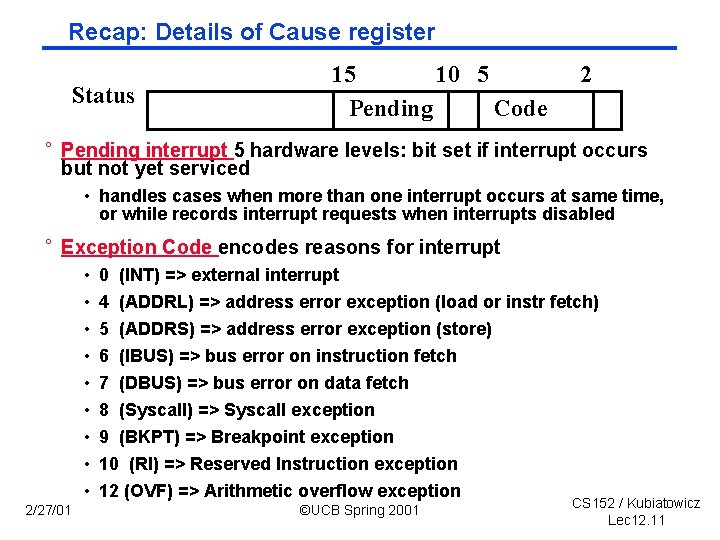

Recap: Details of Cause register
[Register layout: Cause; bits 15–10 Pending, bits 5–2 Code]
° Pending interrupt, 5 hardware levels: bit set if interrupt occurs but not yet serviced
  • handles cases when more than one interrupt occurs at the same time, or records interrupt requests while interrupts are disabled
° Exception Code encodes reasons for interrupt
  • 0 (INT) => external interrupt
  • 4 (ADDRL) => address error exception (load or instr fetch)
  • 5 (ADDRS) => address error exception (store)
  • 6 (IBUS) => bus error on instruction fetch
  • 7 (DBUS) => bus error on data fetch
  • 8 (Syscall) => Syscall exception
  • 9 (BKPT) => Breakpoint exception
  • 10 (RI) => Reserved Instruction exception
  • 12 (OVF) => Arithmetic overflow exception
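
As a rough illustration, a handler could decode the Cause register like this in Python. The exception code is taken from bits 5–2 as the slides state; reading the pending field from bits 15–10 is an assumption based on the bit positions in the slide's layout, and the function and table names are hypothetical.

```python
EXC_NAMES = {
    0: "INT", 4: "ADDRL", 5: "ADDRS", 6: "IBUS", 7: "DBUS",
    8: "Syscall", 9: "BKPT", 10: "RI", 12: "OVF",
}


def decode_cause(cause):
    """Return (exception name, pending-interrupt bits) for a Cause value."""
    code = (cause >> 2) & 0xF       # bits 5..2: exception code
    pending = (cause >> 10) & 0x3F  # bits 15..10: pending field (assumed width)
    return EXC_NAMES.get(code, f"unknown({code})"), pending


if __name__ == "__main__":
    print(decode_cause(12 << 2))               # arithmetic overflow, nothing pending
    print(decode_cause((10 << 2) | (1 << 10)))  # reserved instruction, one pending bit
```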

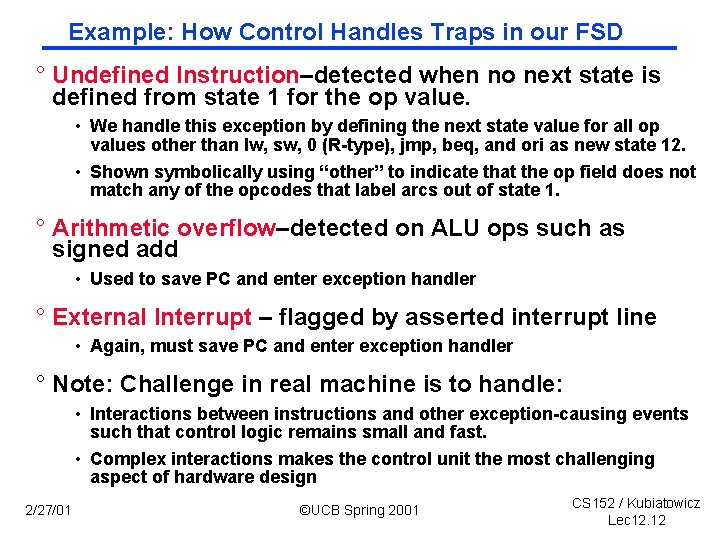

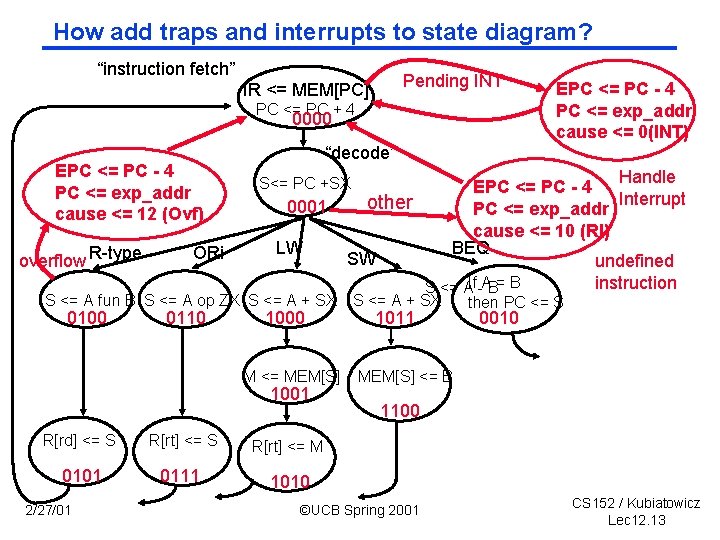

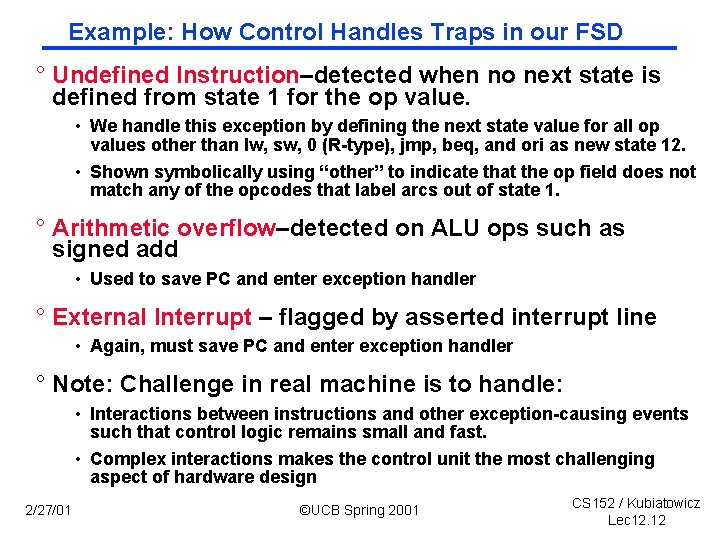

Example: How Control Handles Traps in our FSD
° Undefined Instruction: detected when no next state is defined from state 1 for the op value
  • We handle this exception by defining the next state value for all op values other than lw, sw, 0 (R-type), jmp, beq, and ori as new state 12
  • Shown symbolically using “other” to indicate that the op field does not match any of the opcodes that label arcs out of state 1
° Arithmetic overflow: detected on ALU ops such as signed add
  • Used to save PC and enter exception handler
° External Interrupt: flagged by asserted interrupt line
  • Again, must save PC and enter exception handler
° Note: Challenge in real machine is to handle:
  • Interactions between instructions and other exception-causing events such that control logic remains small and fast
  • Complex interactions make the control unit the most challenging aspect of hardware design

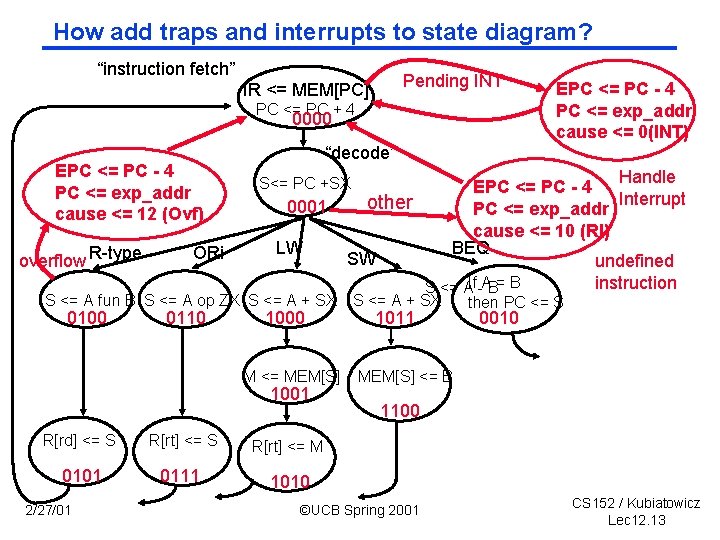

How to add traps and interrupts to the state diagram?
[Figure: the multicycle state diagram (“instruction fetch” 0000: IR <= MEM[PC], PC <= PC + 4; “decode” 0001; R-type, ORi, LW, SW, BEQ paths) extended with three exception states]
° overflow (from the R-type state S <= A fun B): EPC <= PC - 4; PC <= exp_addr; cause <= 12 (Ovf)
° undefined instruction (“other” arc out of decode): EPC <= PC - 4; PC <= exp_addr; cause <= 10 (RI)
° Pending INT (Handle Interrupt): EPC <= PC - 4; PC <= exp_addr; cause <= 0 (INT)

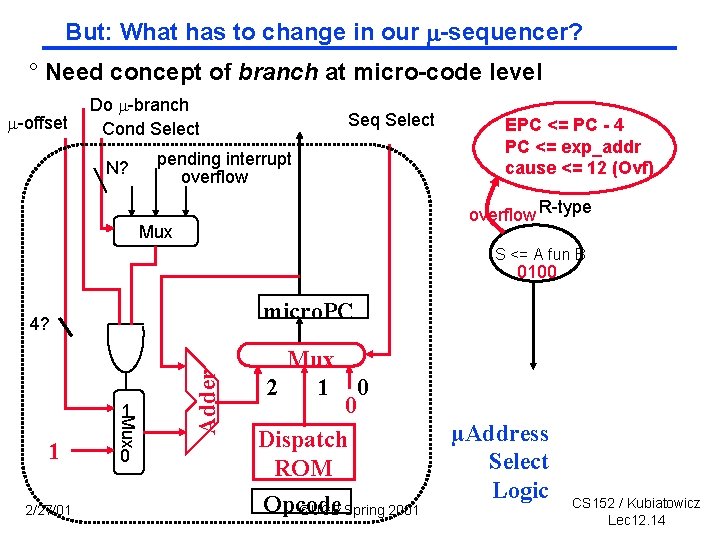

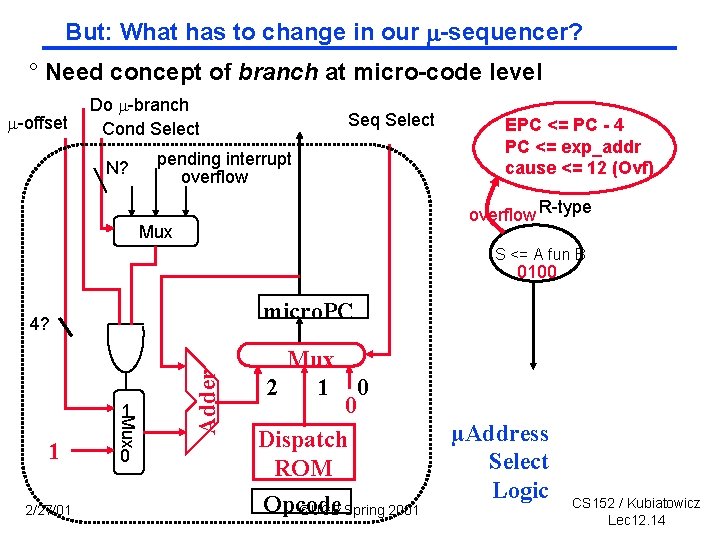

But: What has to change in our sequencer?
° Need concept of branch at µcode level
[Figure: µAddress select logic. The microPC is loaded through muxes from an adder (+1), the dispatch ROM (indexed by opcode), or a µoffset; Cond Select (pending interrupt, overflow) and Seq Select choose the source. Example: the R-type state S <= A fun B (0100) branches on overflow to EPC <= PC - 4; PC <= exp_addr; cause <= 12 (Ovf)]

![Example Can easily use with for non ideal memory instruction fetch IR MEMPC Example: Can easily use with for non ideal memory “instruction fetch” IR <= MEM[PC]](https://slidetodoc.com/presentation_image/102c92ec4a7ed4f6b2e54c55b69bce13/image-15.jpg)

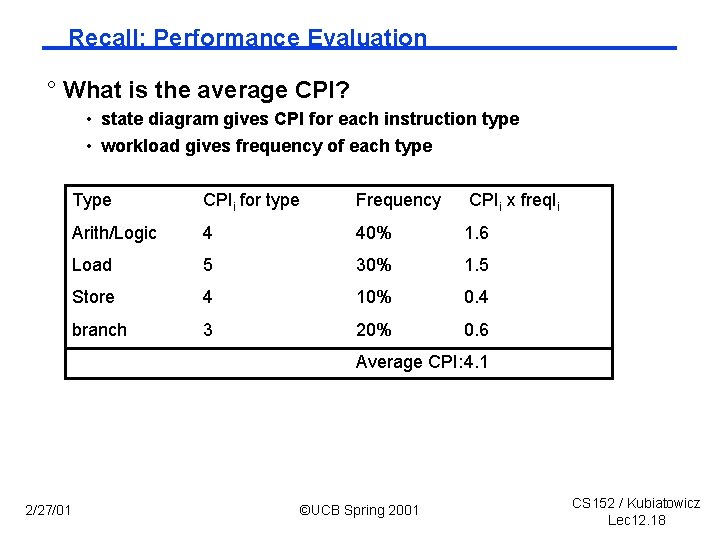

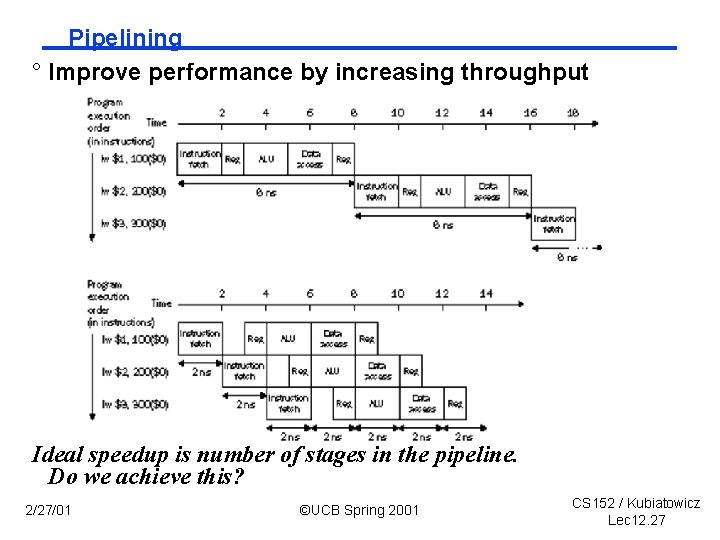

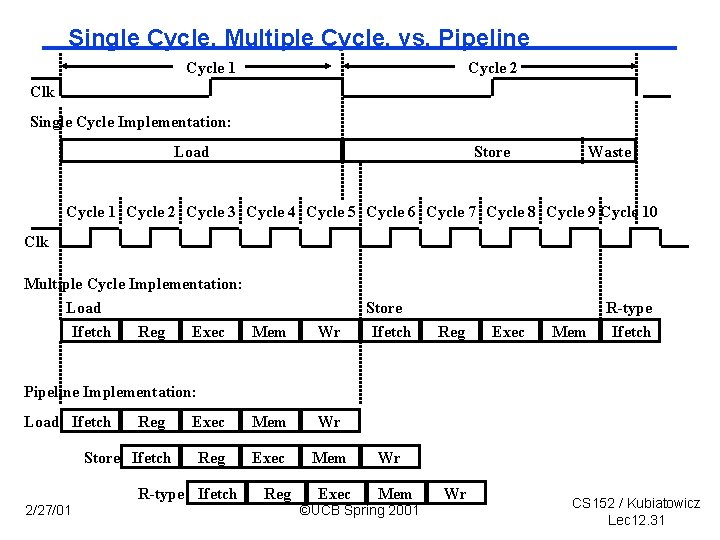

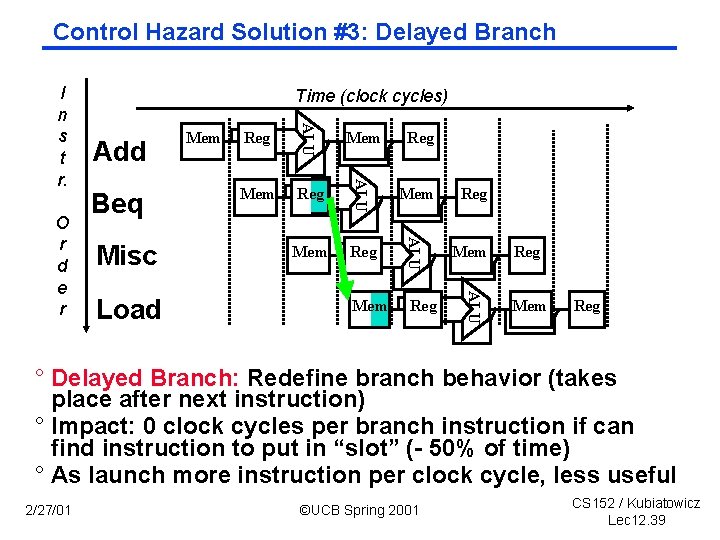

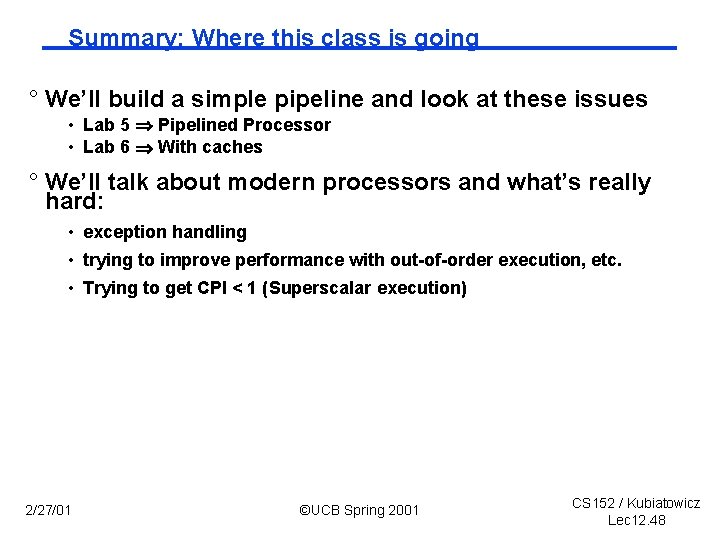

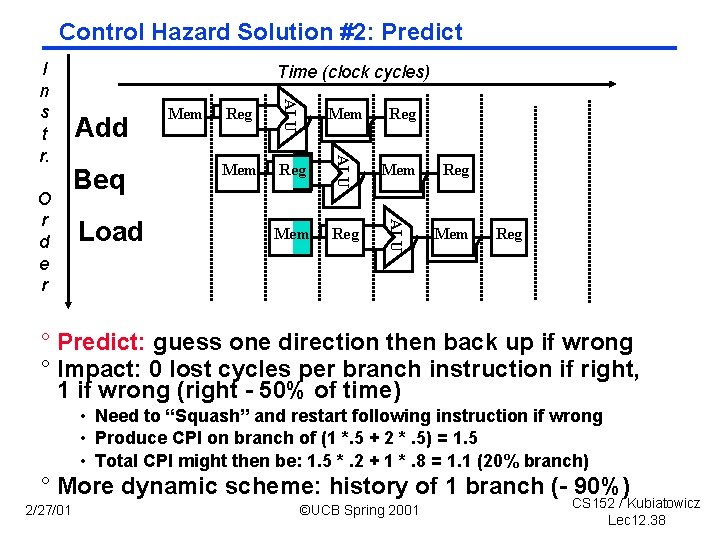

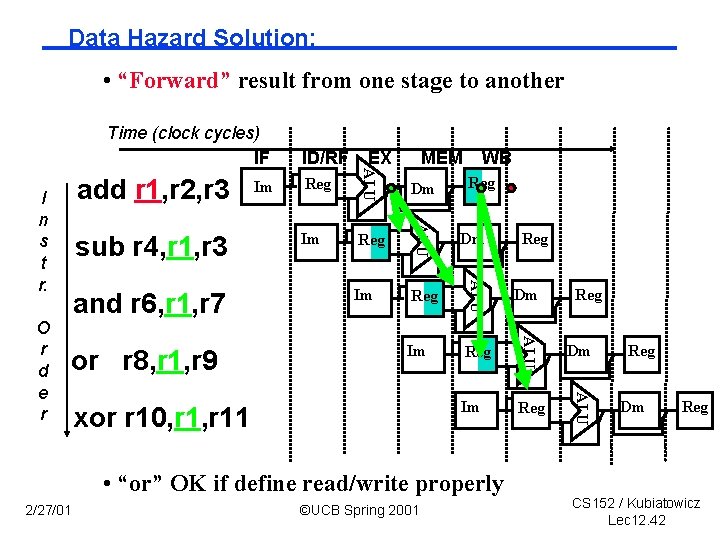

Example: Can easily be used for non-ideal memory
[Figure: state diagram in which every memory state loops on wait and advances on ~wait]
° “instruction fetch”: IR <= MEM[PC] (wait / ~wait)
° “decode / operand fetch”: A <= R[rs]; B <= R[rt]
° Execute: R-type S <= A fun B; ORi S <= A or ZX; LW S <= A + SX; SW S <= A + SX; BEQ PC <= Next(PC)
° Memory: LW M <= MEM[S] (wait / ~wait); SW MEM[S] <= B (wait / ~wait), PC <= PC + 4
° Write-back: R[rd] <= S; R[rt] <= M; PC <= PC + 4

Administrative Issues
° Midterm I: Thursday 3/1 (day after tomorrow)
  • 5:30 – 8:30 in 277 Cory
  • Closed book, but can have one 8½ x 11 sheet of handwritten notes
  • Covers: Chapters 1 – 5, Appendices A – C
  • Make sure to check out the sample quizzes on the Web!
° Get started reading Chapter 6!
  • Complete chapter on Pipelining...
° Sections:
  • This week: sections in 433 Latimer (as usual)
  • Next week: sections in 119 Cory
    - You will demonstrate your processors on a mystery program
    - Report still due at midnight
° Lab Reports:
  • Up to you to do a good job of summarizing your work
  • Part of grade will be on quality of your writing
    - Put code and schematics in appendices, appropriately referenced
    - Use actual wordprocessor (Microsoft Word online)

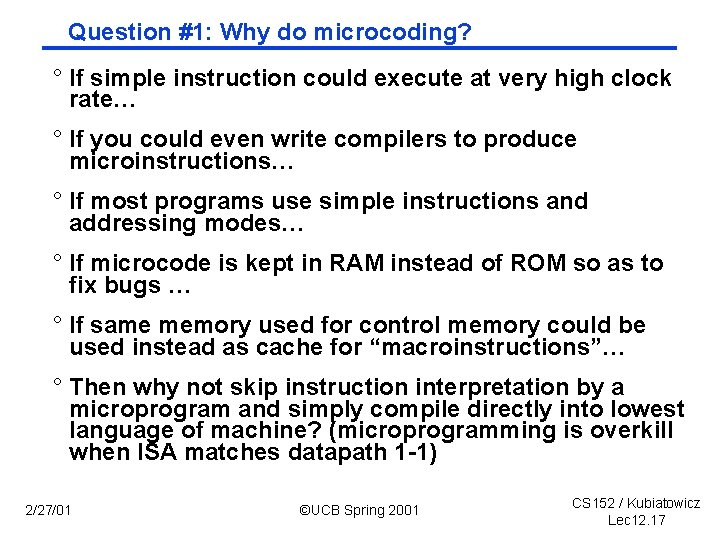

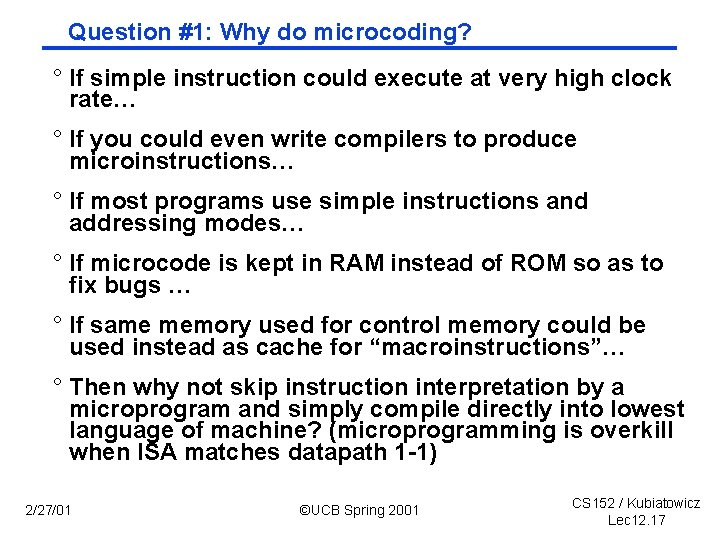

Question #1: Why do microcoding?
° If simple instructions could execute at very high clock rate…
° If you could even write compilers to produce microinstructions…
° If most programs use simple instructions and addressing modes…
° If microcode is kept in RAM instead of ROM so as to fix bugs…
° If same memory used for control memory could be used instead as cache for “macroinstructions”…
° Then why not skip instruction interpretation by a microprogram and simply compile directly into lowest language of machine? (microprogramming is overkill when ISA matches datapath 1-1)

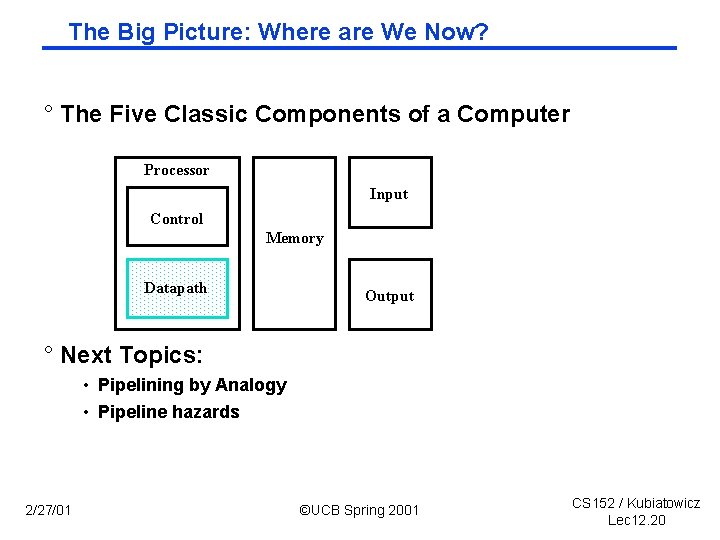

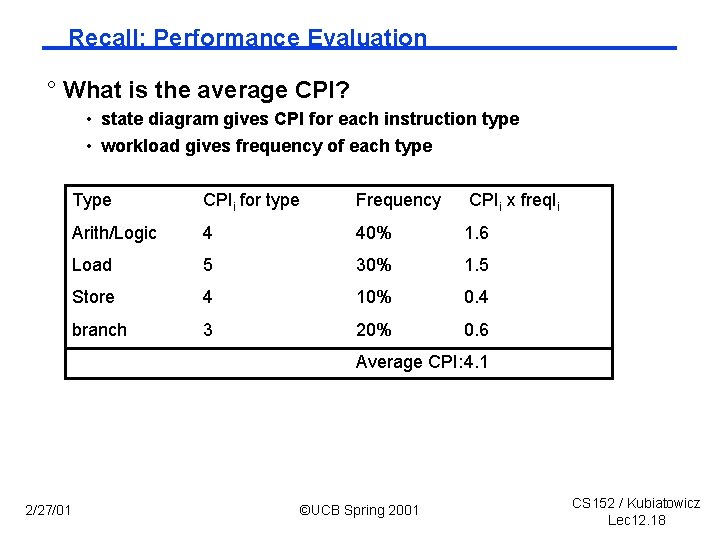

Recall: Performance Evaluation
° What is the average CPI?
  • state diagram gives CPI for each instruction type
  • workload gives frequency of each type

| Type | CPIi for type | Frequency | CPIi x freq. Ii |
|---|---|---|---|
| Arith/Logic | 4 | 40% | 1.6 |
| Load | 5 | 30% | 1.5 |
| Store | 4 | 10% | 0.4 |
| branch | 3 | 20% | 0.6 |
| Average CPI | | | 4.1 |
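
The average CPI is just a frequency-weighted sum, which a few lines of Python can check. The dictionary layout and function name are illustrative; the numbers are the ones from the slide.

```python
# (cycles per instruction, fraction of the workload) for each instruction type
MIX = {
    "Arith/Logic": (4, 0.40),
    "Load": (5, 0.30),
    "Store": (4, 0.10),
    "Branch": (3, 0.20),
}


def average_cpi(mix):
    """Sum of CPI_i * frequency_i over all instruction types."""
    return sum(cpi * freq for cpi, freq in mix.values())


if __name__ == "__main__":
    print(f"Average CPI: {average_cpi(MIX):.1f}")
```

Changing the mix shows how sensitive the result is to the workload: a program with more loads pays more, since loads take the most cycles.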

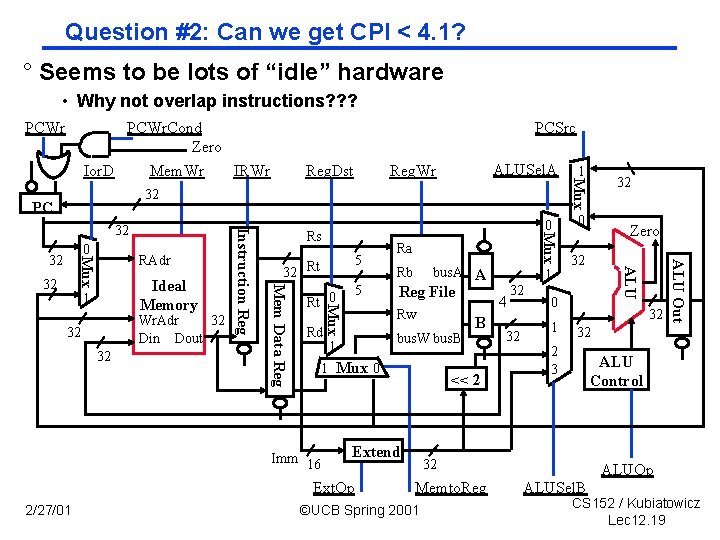

Question #2: Can we get CPI < 4.1?
° Seems to be lots of “idle” hardware
  • Why not overlap instructions???
[Figure: multicycle datapath (PC, ideal memory, instruction register, memory data register, register file, extender, ALU and ALU control, ALU Out) with control signals PCWr, PCWrCond, PCSrc, IorD, MemWr, IRWr, RegDst, RegWr, ALUSelA, ALUSelB, ALUOp, ExtOp, MemtoReg]

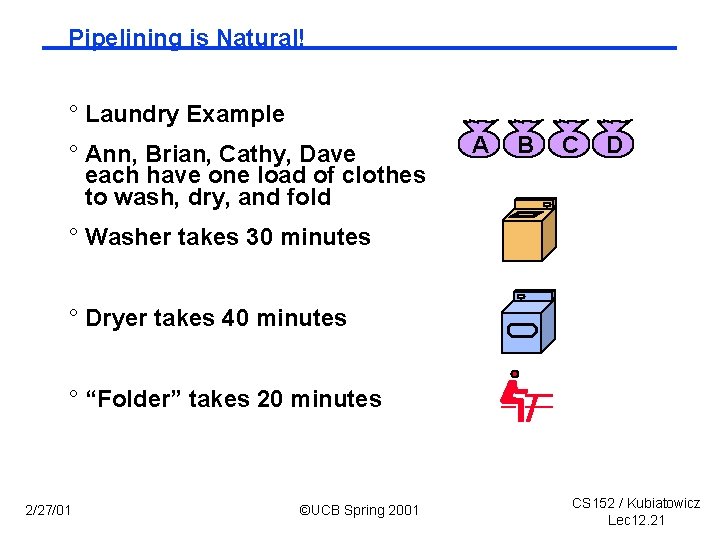

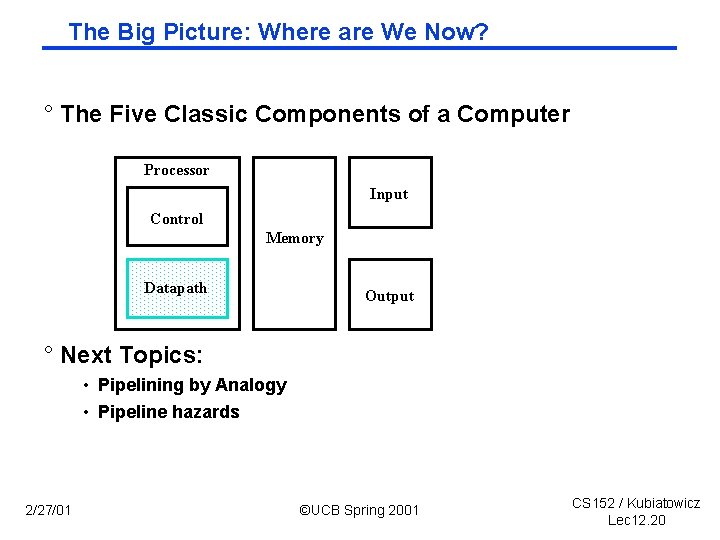

The Big Picture: Where are We Now?
° The Five Classic Components of a Computer
[Figure: Processor (Control, Datapath), Memory, Input, Output]
° Next Topics:
  • Pipelining by Analogy
  • Pipeline hazards

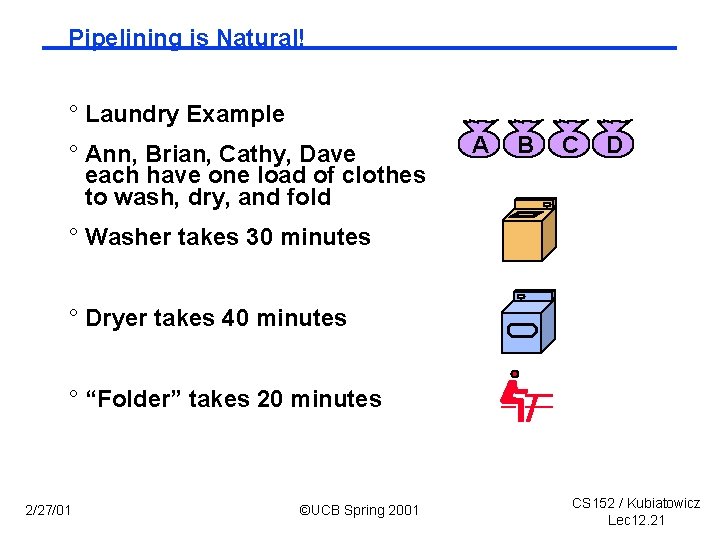

Pipelining is Natural!
° Laundry Example
° Ann, Brian, Cathy, Dave (A, B, C, D) each have one load of clothes to wash, dry, and fold
° Washer takes 30 minutes
° Dryer takes 40 minutes
° “Folder” takes 20 minutes

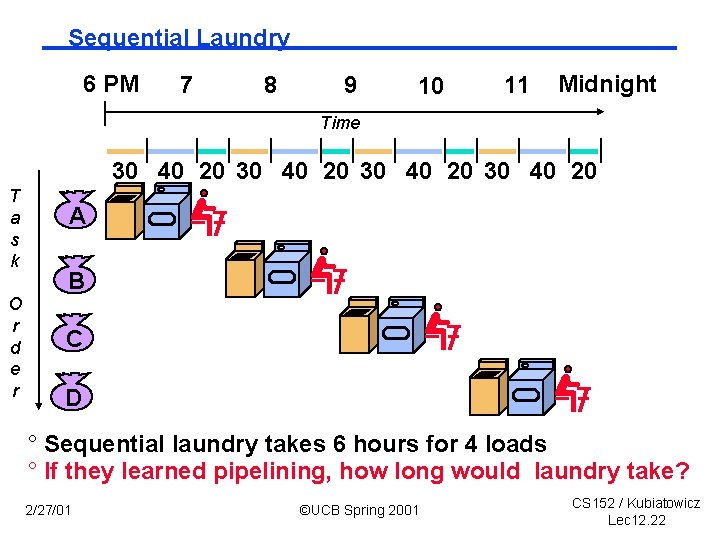

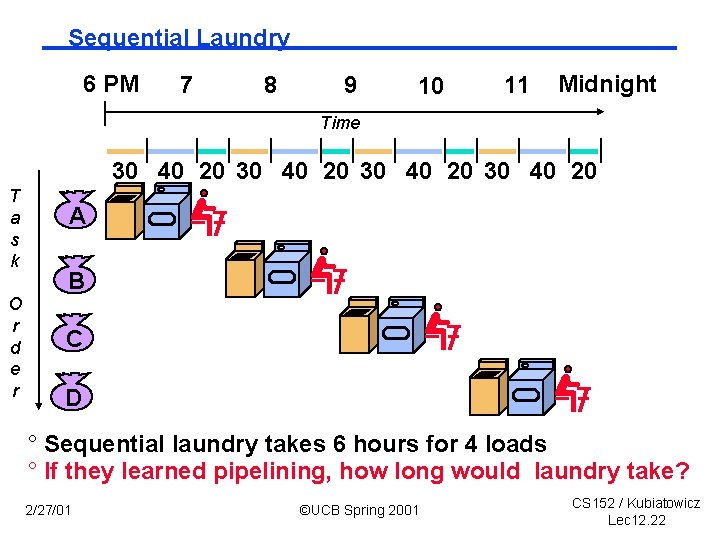

Sequential Laundry
[Figure: timeline from 6 PM to midnight; loads A, B, C, D in task order, each taking 30 + 40 + 20 minutes, one after another]
° Sequential laundry takes 6 hours for 4 loads
° If they learned pipelining, how long would laundry take?

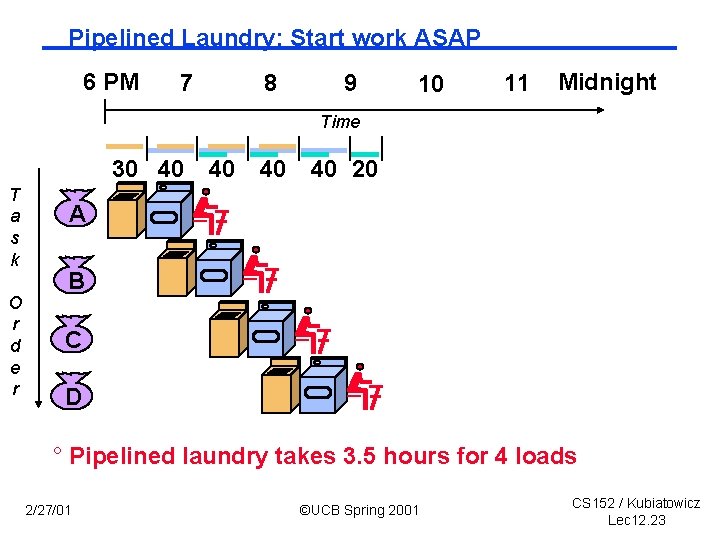

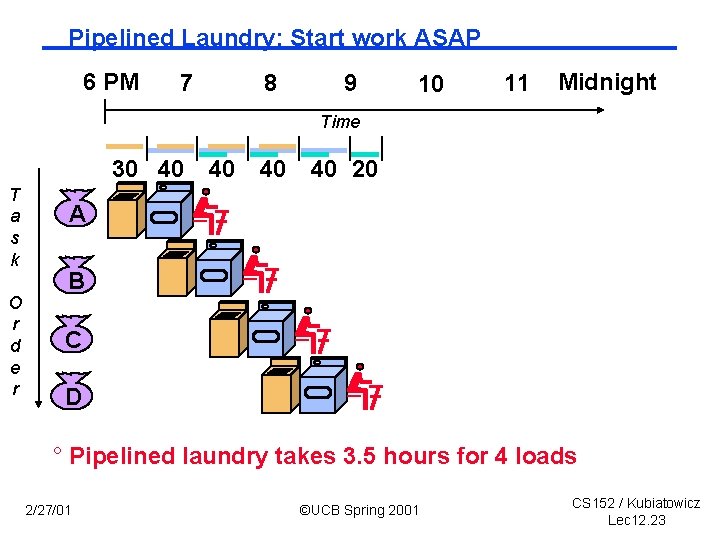

Pipelined Laundry: Start work ASAP
[Figure: timeline starting at 6 PM; loads A, B, C, D in task order overlap, giving segments of 30, 40, 40, 40, 40, 20 minutes]
° Pipelined laundry takes 3.5 hours for 4 loads
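
The 6-hour and 3.5-hour figures can be reproduced with a small Python simulation. This is a sketch under one assumption not stated on the slides: a finished load can wait in a basket between machines, so a stage never blocks the one before it. Function names are illustrative.

```python
STAGE_MINUTES = [30, 40, 20]  # washer, dryer, "folder"


def sequential_minutes(stage_minutes, loads):
    """Each load runs start to finish before the next one begins."""
    return loads * sum(stage_minutes)


def pipelined_minutes(stage_minutes, loads):
    """Each load starts a stage as soon as it is ready and the machine is free."""
    free_at = [0] * len(stage_minutes)  # time at which each machine is next free
    finish = 0
    for _ in range(loads):
        t = 0  # time at which this load is ready for its next stage
        for i, duration in enumerate(stage_minutes):
            start = max(t, free_at[i])
            t = start + duration
            free_at[i] = t
        finish = t
    return finish


if __name__ == "__main__":
    print(sequential_minutes(STAGE_MINUTES, 4) / 60, "hours sequential")
    print(pipelined_minutes(STAGE_MINUTES, 4) / 60, "hours pipelined")
```

The pipelined total is 30 + 4 x 40 + 20 minutes: after the first wash, the 40-minute dryer sets the pace.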

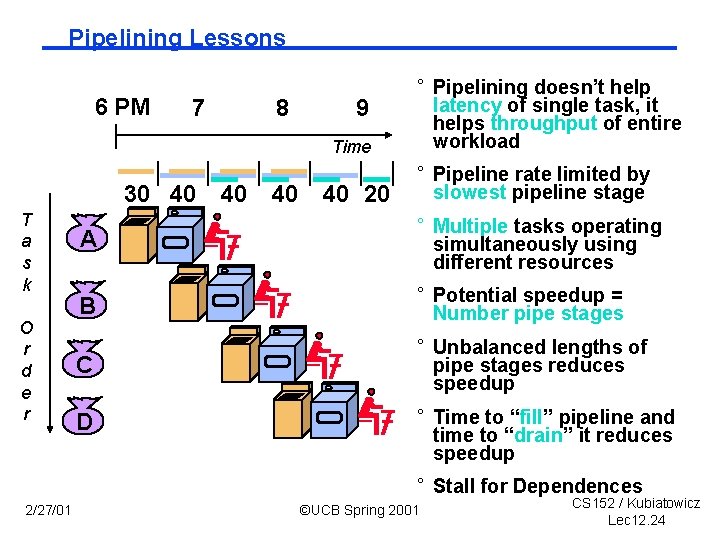

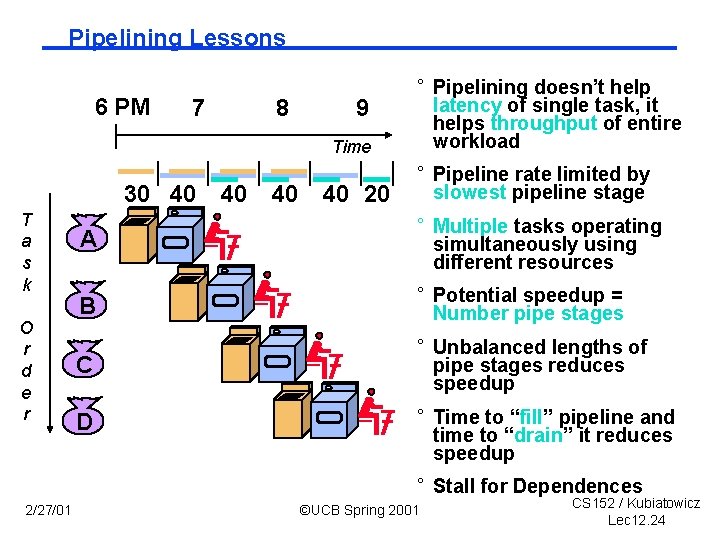

Pipelining Lessons
[Figure: pipelined laundry timeline, 6 PM to 9 PM; loads A, B, C, D in task order with segments of 30, 40, 40, 40, 40, 20 minutes]
° Pipelining doesn’t help latency of single task, it helps throughput of entire workload
° Pipeline rate limited by slowest pipeline stage
° Multiple tasks operating simultaneously using different resources
° Potential speedup = Number pipe stages
° Unbalanced lengths of pipe stages reduces speedup
° Time to “fill” pipeline and time to “drain” it reduces speedup
° Stall for Dependences

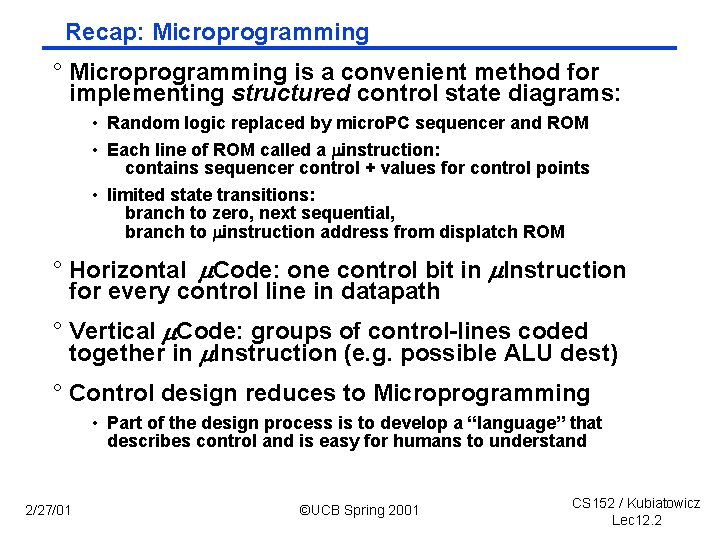

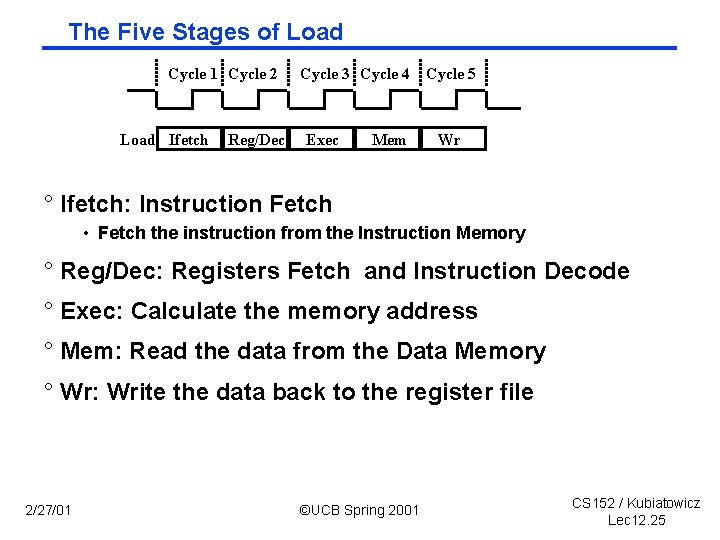

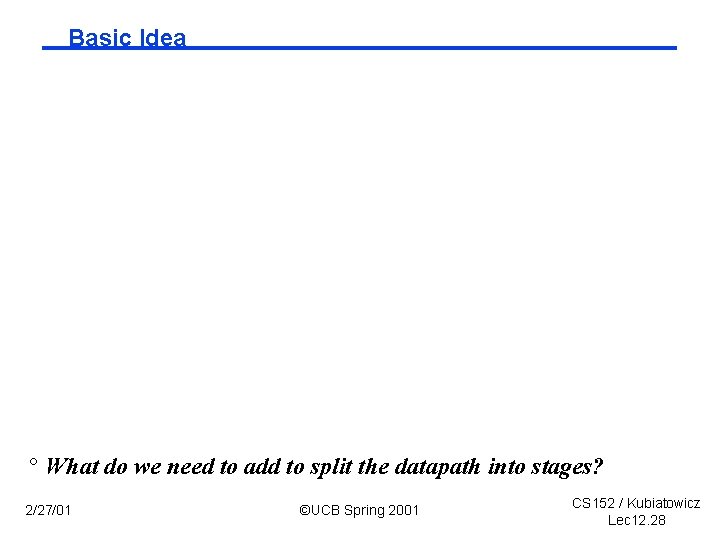

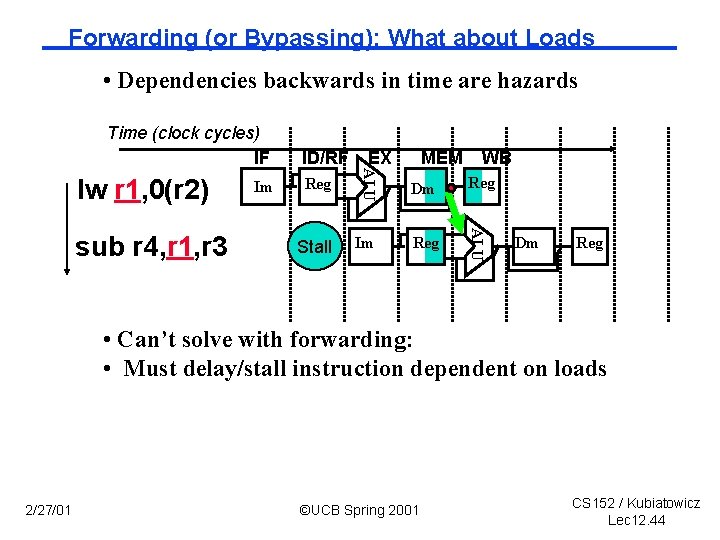

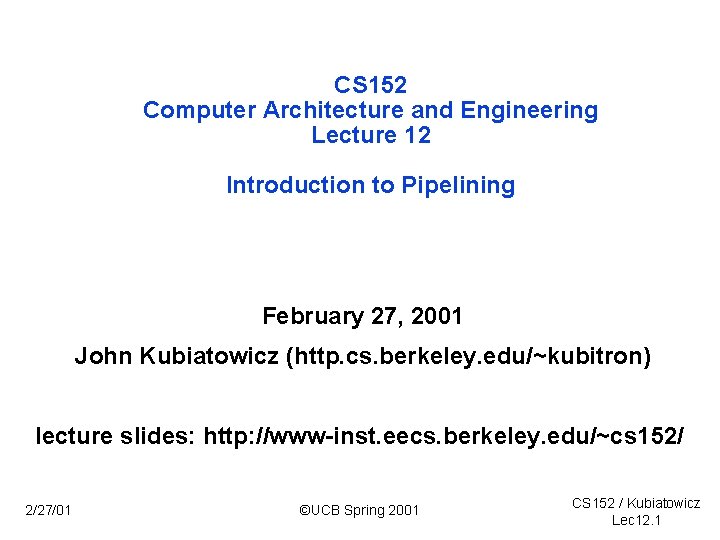

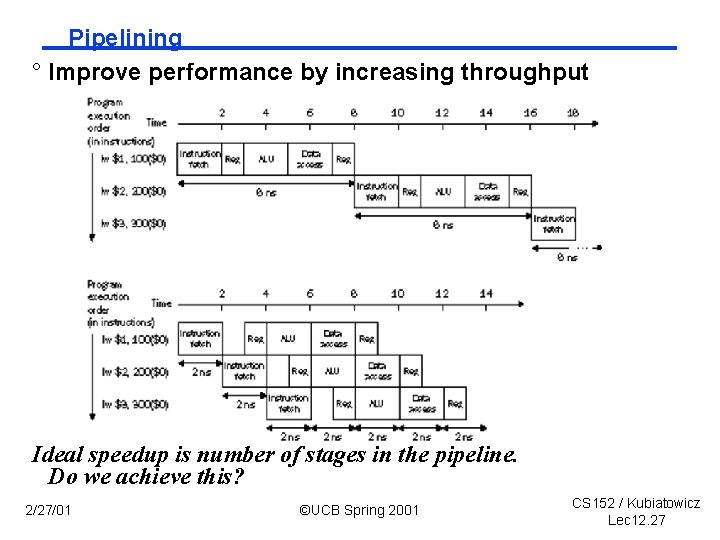

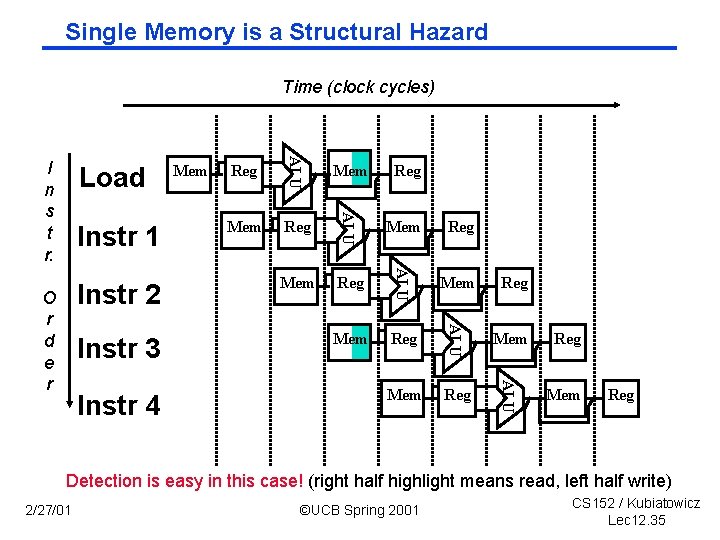

The Five Stages of Load
[Figure: Load occupies Ifetch, Reg/Dec, Exec, Mem, Wr in Cycles 1 through 5]
° Ifetch: Instruction Fetch
  • Fetch the instruction from the Instruction Memory
° Reg/Dec: Registers Fetch and Instruction Decode
° Exec: Calculate the memory address
° Mem: Read the data from the Data Memory
° Wr: Write the data back to the register file

![Fetch Note These 5 stages were there all along IR MEMPC PC Fetch Note: These 5 stages were there all along! IR <= MEM[PC] PC <=](https://slidetodoc.com/presentation_image/102c92ec4a7ed4f6b2e54c55b69bce13/image-26.jpg)

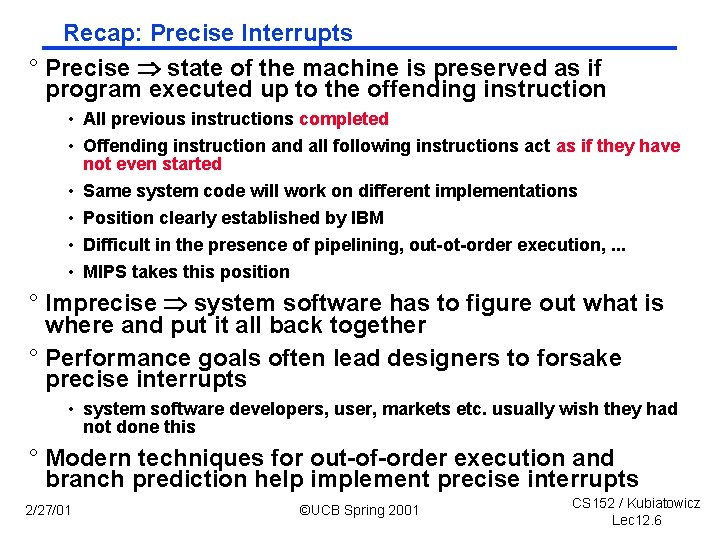

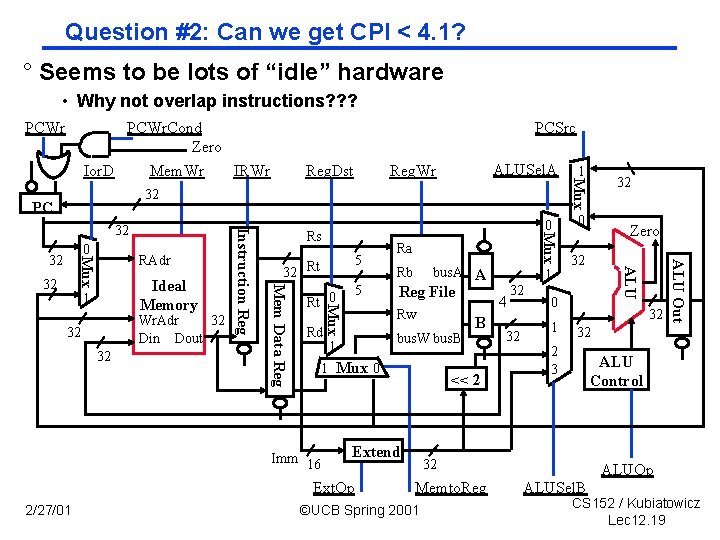

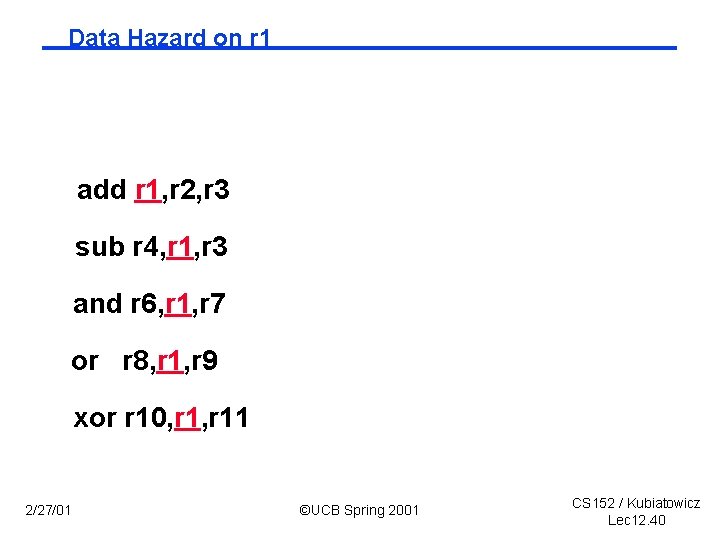

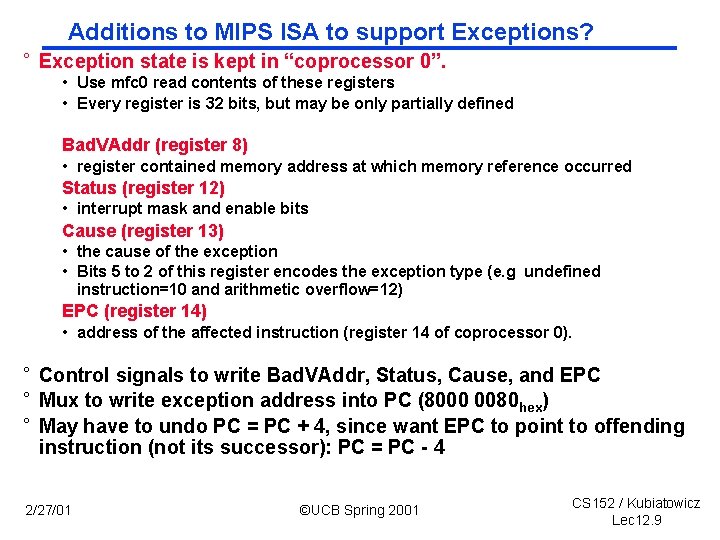

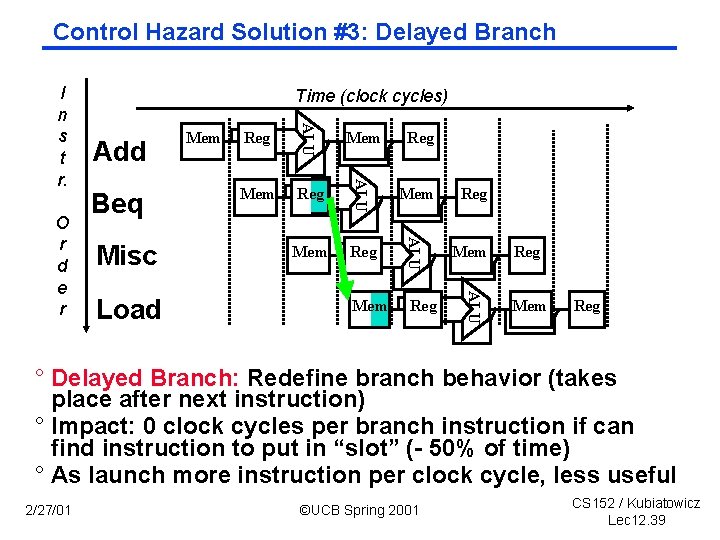

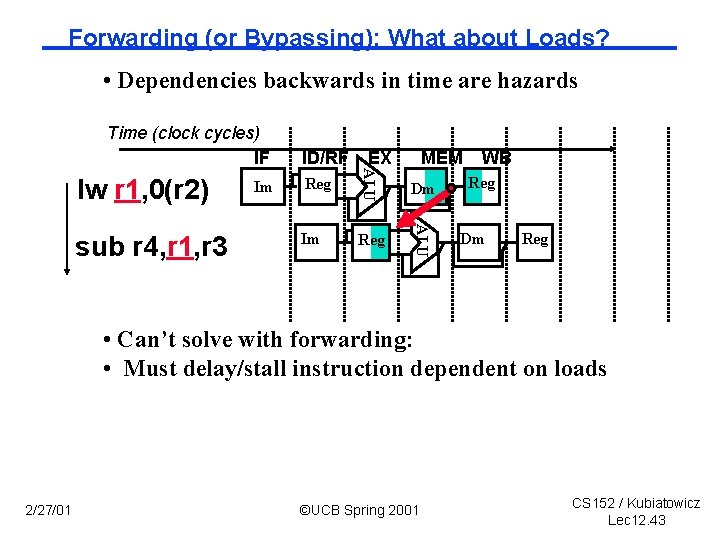

Note: These 5 stages were there all along!
[Figure: multicycle state diagram grouped into Fetch, Decode, Execute, Memory, Write-back; state numbers in parentheses]
° Fetch (0000): IR <= MEM[PC]; PC <= PC + 4
° Decode (0001): ALUout <= PC + SX
° R-type: ALUout <= A fun B (0100); R[rd] <= ALUout (0101)
° ORi: ALUout <= A op ZX (0110); R[rt] <= ALUout (0111)
° LW: ALUout <= A + SX (1000); M <= MEM[ALUout] (1001); R[rt] <= M (1010)
° SW: ALUout <= A + SX (1011); MEM[ALUout] <= B (1100)
° BEQ: If A = B then PC <= ALUout (0010)

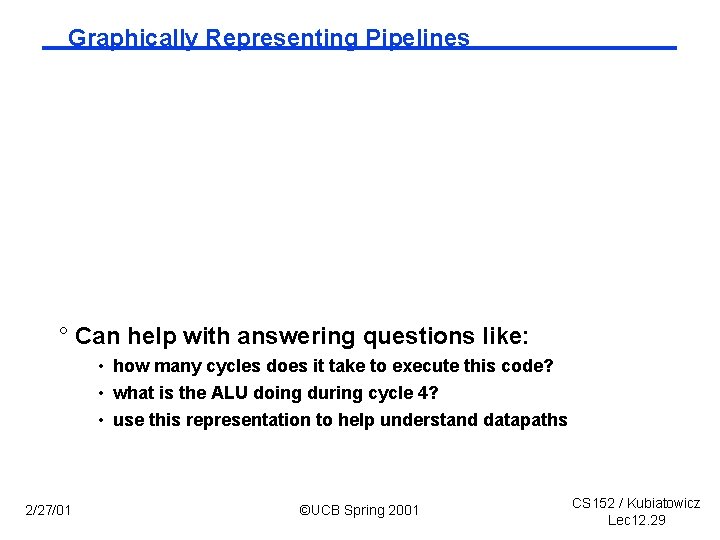

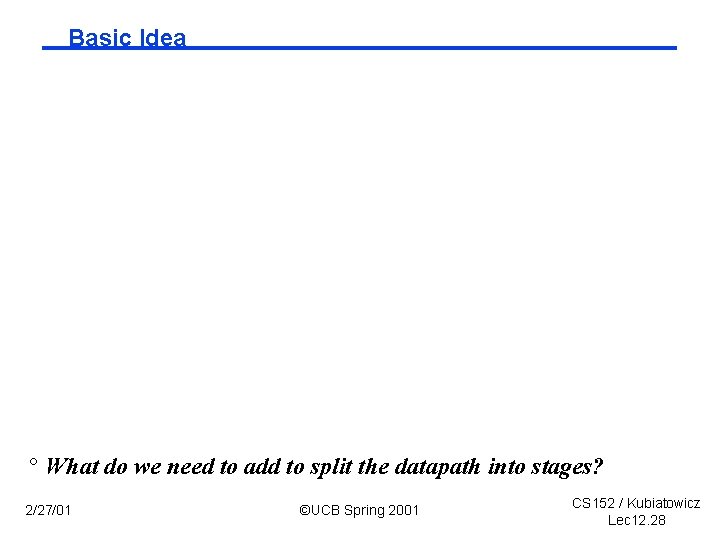

Pipelining
° Improve performance by increasing throughput
° Ideal speedup is number of stages in the pipeline. Do we achieve this?

Basic Idea
° What do we need to add to split the datapath into stages?

Graphically Representing Pipelines
° Can help with answering questions like:
  • how many cycles does it take to execute this code?
  • what is the ALU doing during cycle 4?
  • use this representation to help understand datapaths

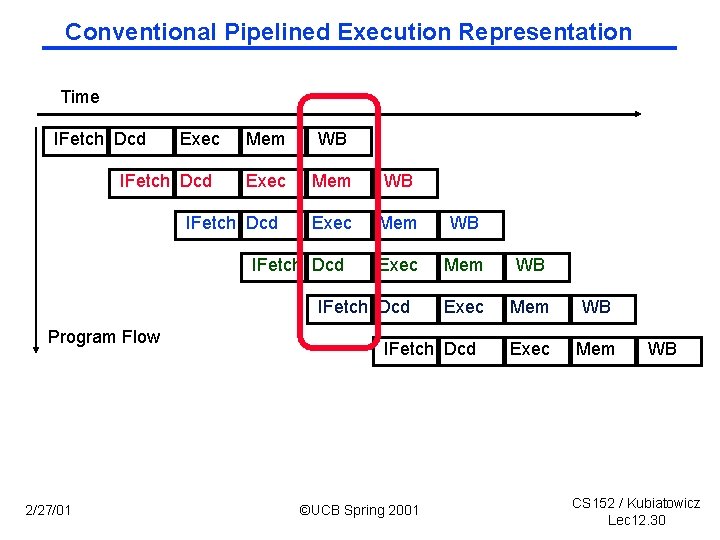

Conventional Pipelined Execution Representation
[Figure: time runs left to right, program flow top to bottom; each instruction passes through IFetch, Dcd, Exec, Mem, WB, starting one cycle after the instruction before it]
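
This staircase diagram is easy to generate, and doing so answers questions such as "how many cycles?" and "what is the ALU doing during cycle 4?". The Python below is an illustrative sketch for an ideal pipeline with no stalls; the function names are hypothetical.

```python
STAGES = ["IFetch", "Dcd", "Exec", "Mem", "WB"]


def pipeline_chart(num_instructions, stages=STAGES):
    """One row per instruction, one column per cycle; instruction i starts in cycle i."""
    total_cycles = num_instructions + len(stages) - 1
    chart = []
    for i in range(num_instructions):
        row = [""] * total_cycles
        for s, name in enumerate(stages):
            row[i + s] = name
        chart.append(row)
    return chart


def stage_user(chart, stage, cycle):
    """Index of the instruction occupying `stage` in 1-based `cycle`, or None."""
    for i, row in enumerate(chart):
        if row[cycle - 1] == stage:
            return i
    return None


if __name__ == "__main__":
    chart = pipeline_chart(4)
    for row in chart:
        print(" ".join(f"{cell:6}" for cell in row))
    print("Exec in cycle 4 is used by instruction", stage_user(chart, "Exec", 4))
```

Reading a column of the chart shows which instruction holds each resource in that cycle; reading a row shows one instruction's progress.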

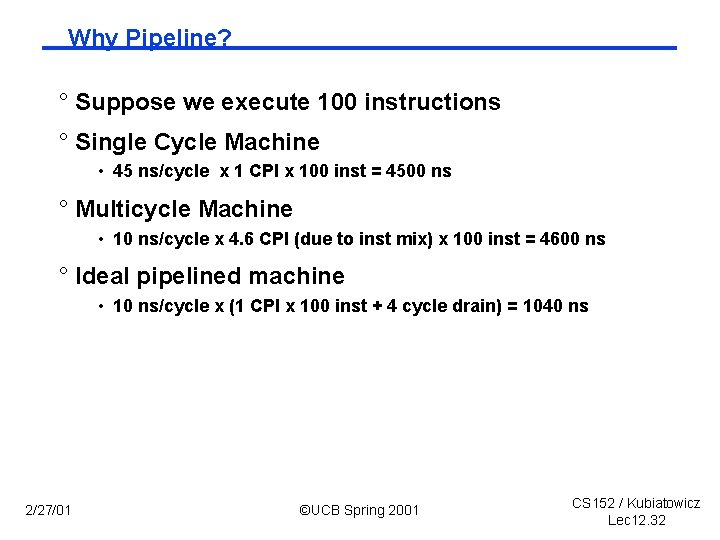

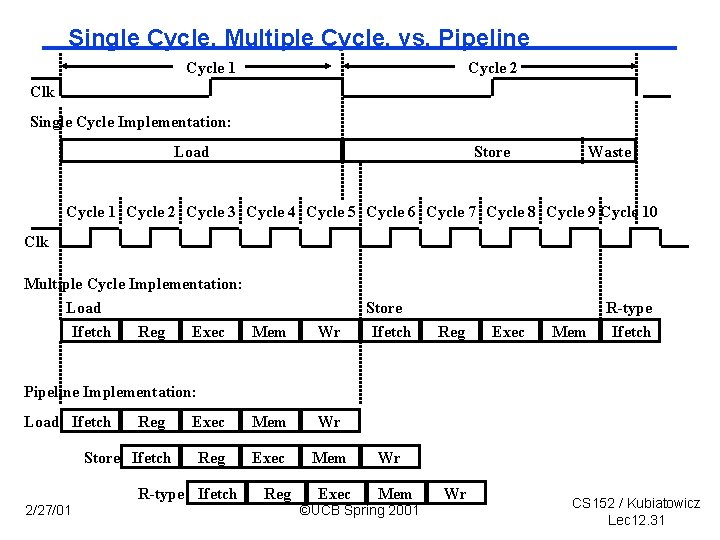

Single Cycle, Multiple Cycle, vs. Pipeline
[Figure: clock timing for three implementations]
° Single Cycle Implementation: Load and Store each take one long cycle (Cycle 1, Cycle 2); part of the Store cycle is waste
° Multiple Cycle Implementation (Cycle 1 through Cycle 10): Load takes Ifetch, Reg, Exec, Mem, Wr; Store takes Ifetch, Reg, Exec, Mem; R-type then starts with Ifetch
° Pipeline Implementation: Load (Ifetch, Reg, Exec, Mem, Wr), Store (Ifetch, Reg, Exec, Mem, Wr), and R-type (Ifetch, Reg, Exec, Mem, Wr) overlap, each starting one cycle after the previous

Why Pipeline?
° Suppose we execute 100 instructions
° Single Cycle Machine
  • 45 ns/cycle x 1 CPI x 100 inst = 4500 ns
° Multicycle Machine
  • 10 ns/cycle x 4.6 CPI (due to inst mix) x 100 inst = 4600 ns
° Ideal pipelined machine
  • 10 ns/cycle x (1 CPI x 100 inst + 4 cycle drain) = 1040 ns
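
The three execution times follow from time = cycle time x cycles. A short Python sketch, with the slide's numbers as defaults and hypothetical function names:

```python
def single_cycle_ns(instructions, cycle_ns=45):
    """One long cycle per instruction (CPI = 1)."""
    return cycle_ns * 1 * instructions


def multicycle_ns(instructions, cycle_ns=10, cpi=4.6):
    """Short cycles, but several of them per instruction."""
    return cycle_ns * cpi * instructions


def pipelined_ns(instructions, cycle_ns=10, drain_cycles=4):
    """One instruction completes per cycle, plus cycles to drain the pipeline."""
    return cycle_ns * (1 * instructions + drain_cycles)


if __name__ == "__main__":
    n = 100
    print(single_cycle_ns(n), multicycle_ns(n), pipelined_ns(n))
    print(f"speedup over single cycle: {single_cycle_ns(n) / pipelined_ns(n):.2f}x")
```

With 100 instructions the drain cost is already small; it shrinks further as the instruction count grows, so the speedup approaches 45/10 for long runs.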

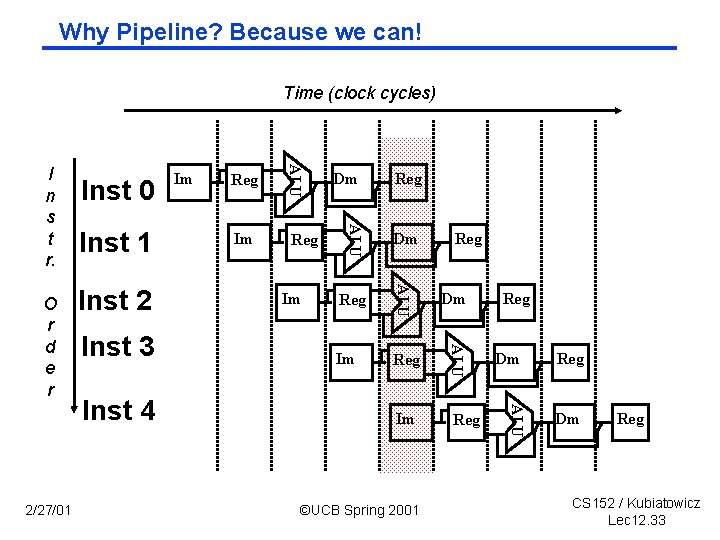

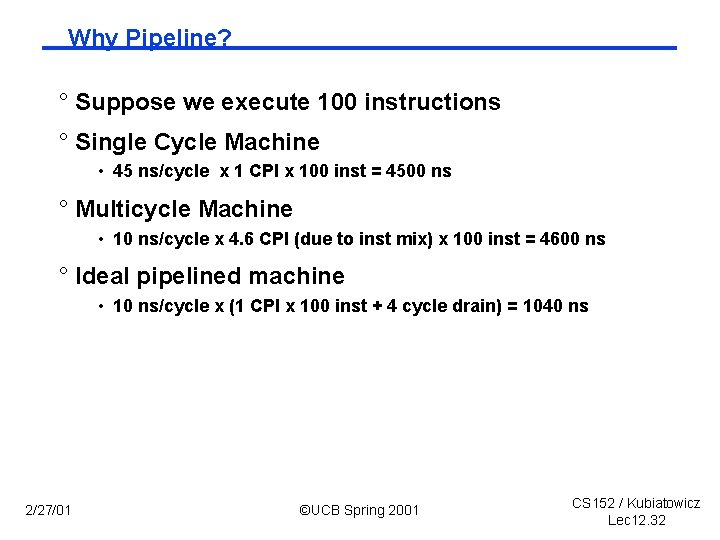

Why Pipeline? Because we can!
[Figure: Inst 0 through Inst 4 in instruction order, each flowing through Im, Reg, ALU, Dm, Reg over time (clock cycles), each offset by one cycle from the previous]

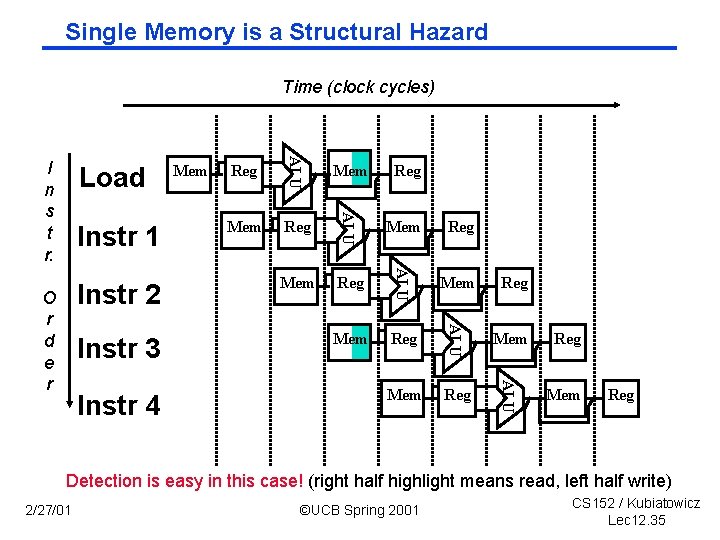

Can pipelining get us into trouble?
° Yes: Pipeline Hazards
  • structural hazards: attempt to use the same resource two different ways at the same time
    - E.g., combined washer/dryer would be a structural hazard, or folder busy doing something else (watching TV)
  • control hazards: attempt to make a decision before condition is evaluated
    - E.g., washing football uniforms and need to get proper detergent level; need to see after dryer before next load in
    - branch instructions
  • data hazards: attempt to use item before it is ready
    - E.g., one sock of pair in dryer and one in washer; can’t fold until get sock from washer through dryer
    - instruction depends on result of prior instruction still in the pipeline
° Can always resolve hazards by waiting
  • pipeline control must detect the hazard
  • take action (or delay action) to resolve hazards

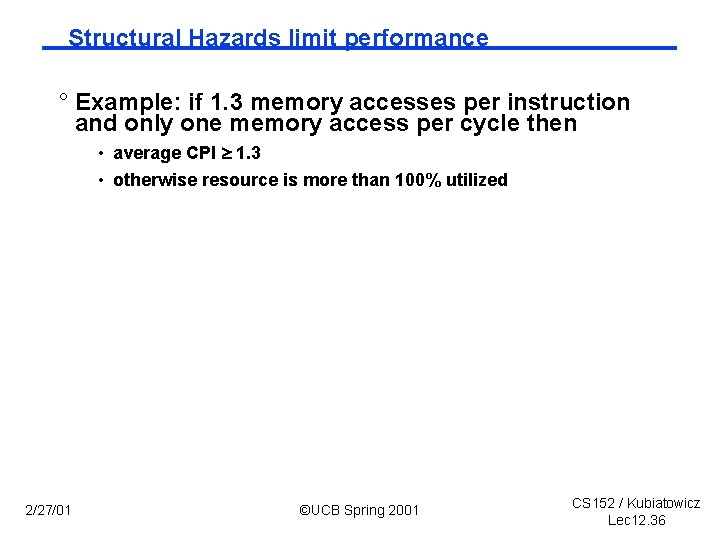

Single Memory is a Structural Hazard
[Figure: Load followed by Instr 1 through Instr 4 in instruction order, each using Mem, Reg, ALU, Mem, Reg over time (clock cycles); the data access of Load and the fetch of a later instruction need the single memory in the same cycle]
° Detection is easy in this case! (right half highlight means read, left half write)

Structural Hazards limit performance
° Example: if 1.3 memory accesses per instruction and only one memory access per cycle then
  • average CPI ≥ 1.3
  • otherwise resource is more than 100% utilized
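
The bound comes from utilization: a resource that serves a fixed number of accesses per cycle cannot be busy more than 100% of the time. A minimal Python sketch, with a hypothetical function name and an assumed ideal CPI of 1:

```python
def min_cpi(accesses_per_instruction, accesses_per_cycle=1, ideal_cpi=1.0):
    """Lower bound on CPI imposed by a shared resource.

    Each instruction needs accesses_per_instruction uses of the resource, and the
    resource can serve accesses_per_cycle of them per cycle.
    """
    return max(ideal_cpi, accesses_per_instruction / accesses_per_cycle)


if __name__ == "__main__":
    print(min_cpi(1.3))      # single memory port
    print(min_cpi(1.3, 2))   # two ports (e.g. separate instruction and data memories)
```

With two ports the memory stops being the limit, which is one motivation for splitting instruction and data memory.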

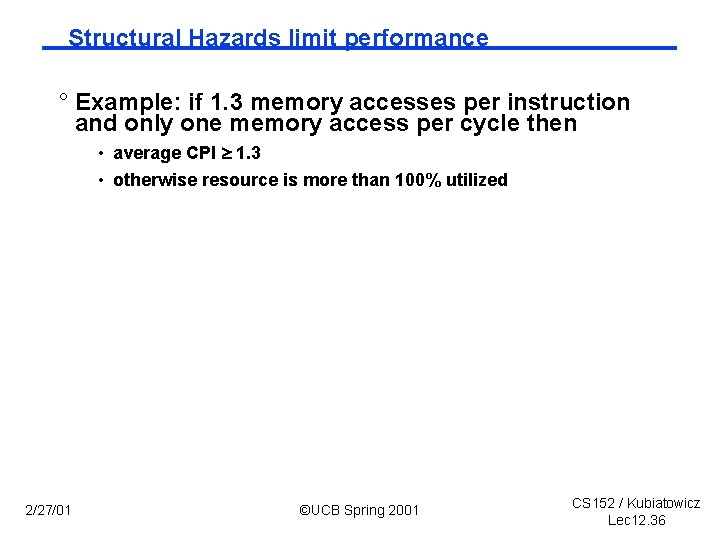

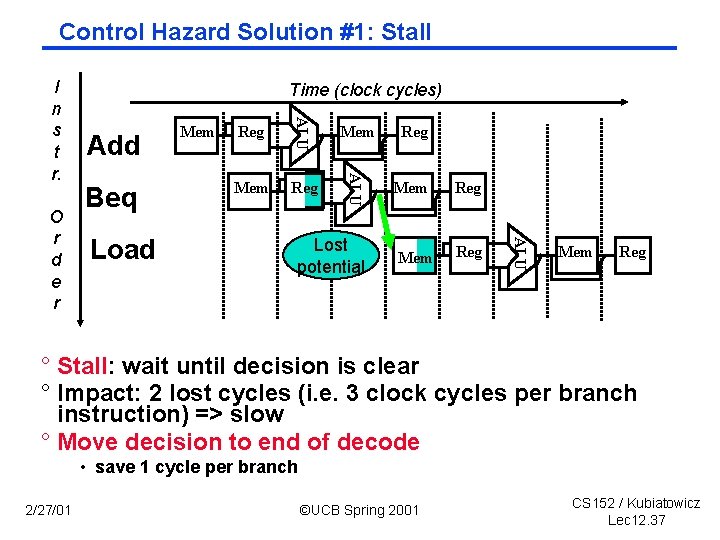

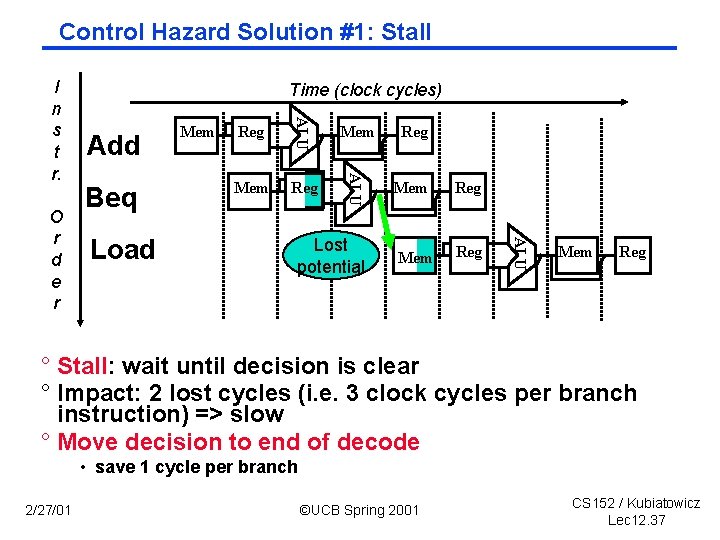

Control Hazard Solution #1: Stall
[Figure: Add, Beq, Load in instruction order over time (clock cycles); Load is delayed after Beq, with the gap marked “Lost potential”]
° Stall: wait until decision is clear
° Impact: 2 lost cycles (i.e. 3 clock cycles per branch instruction) => slow
° Move decision to end of decode
  • save 1 cycle per branch

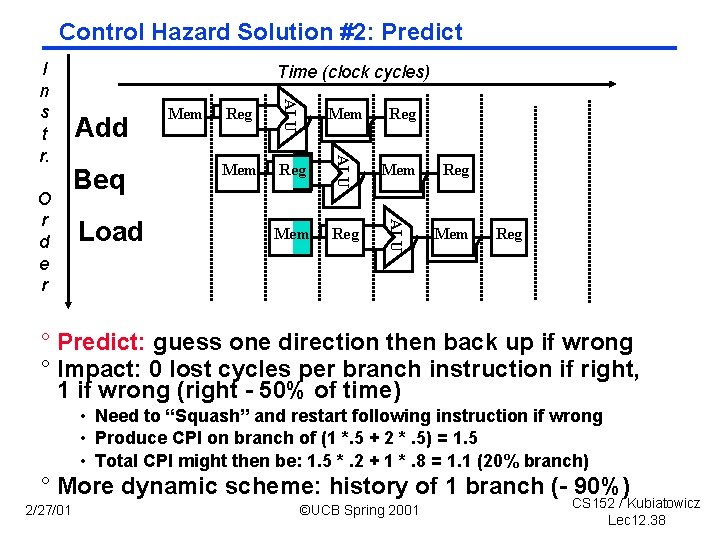

Control Hazard Solution #2: Predict
[Figure: Add, Beq, Load in instruction order over time (clock cycles); Load follows Beq without a gap]
° Predict: guess one direction then back up if wrong
° Impact: 0 lost cycles per branch instruction if right, 1 if wrong (right 50% of time)
  • Need to “Squash” and restart following instruction if wrong
  • Produce CPI on branch of (1 x 0.5 + 2 x 0.5) = 1.5
  • Total CPI might then be: 1.5 x 0.2 + 1 x 0.8 = 1.1 (20% branch)
° More dynamic scheme: history of 1 branch (~90%)
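
The CPI arithmetic on this slide generalizes to any prediction accuracy. A Python sketch with hypothetical function names; the 1-cycle misprediction penalty and 20% branch frequency are the slide's numbers, and the 90% case simply plugs the accuracy quoted for the 1-branch history scheme into the same formula.

```python
def branch_cpi(p_correct, penalty_cycles=1):
    """Cycles per branch: 1 if predicted correctly, 1 + penalty otherwise."""
    return 1 * p_correct + (1 + penalty_cycles) * (1 - p_correct)


def total_cpi(cpi_of_branch, branch_fraction=0.2, other_cpi=1.0):
    """Weighted average over branches and all other instructions."""
    return cpi_of_branch * branch_fraction + other_cpi * (1 - branch_fraction)


if __name__ == "__main__":
    for accuracy in (0.5, 0.9):
        b = branch_cpi(accuracy)
        print(f"accuracy {accuracy:.0%}: branch CPI {b:.2f}, total CPI {total_cpi(b):.2f}")
```

For comparison, always stalling at 3 cycles per branch gives `total_cpi(3)`, so even a coin-flip predictor is a clear improvement.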

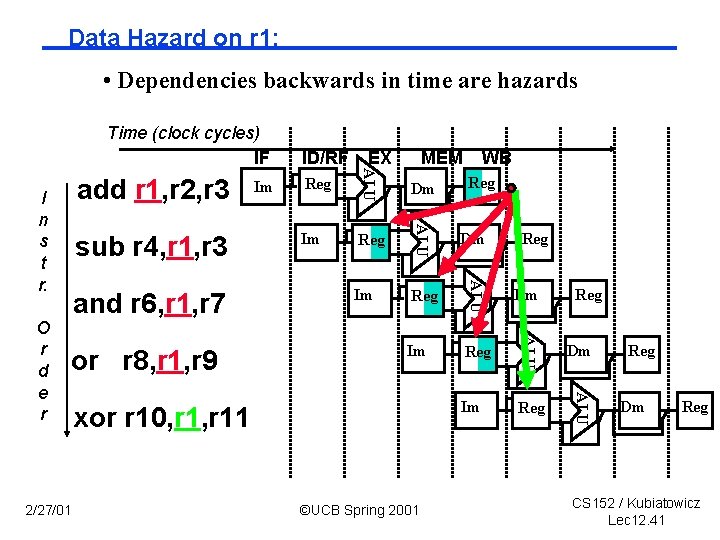

Control Hazard Solution #3: Delayed Branch
[Figure: Add, Beq, Misc, Load in instruction order over time (clock cycles); Misc fills the slot after Beq]
° Delayed Branch: Redefine branch behavior (takes place after next instruction)
° Impact: 0 clock cycles per branch instruction if can find instruction to put in “slot” (~50% of time)
° As launch more instructions per clock cycle, less useful

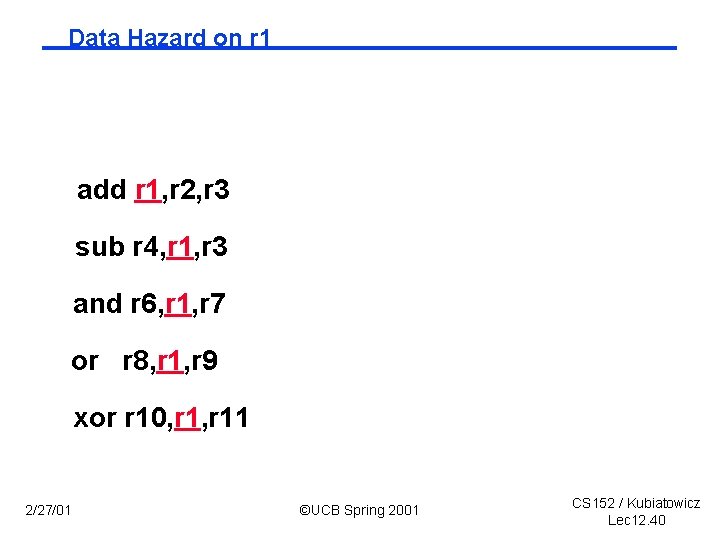

Data Hazard on r1
add r1, r2, r3
sub r4, r1, r3
and r6, r1, r7
or r8, r1, r9
xor r10, r1, r11

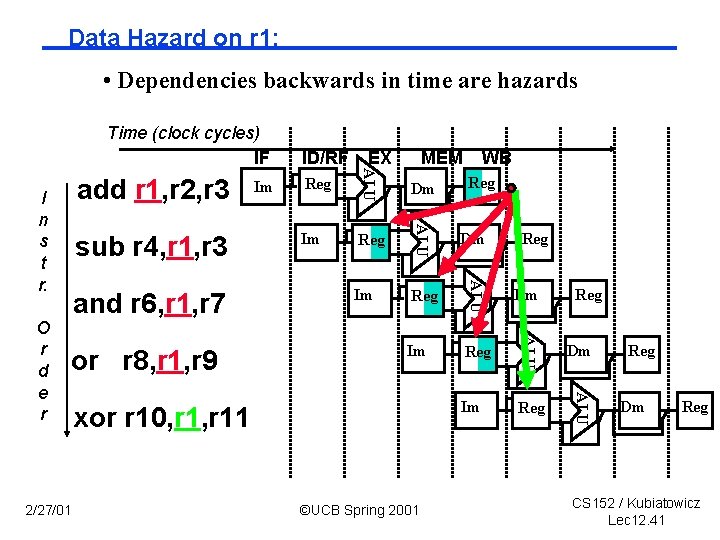

Data Hazard on r1:
• Dependencies backwards in time are hazards
[Figure: pipeline diagram over time (clock cycles) with stages IF, ID/RF, EX, MEM, WB for add r1, r2, r3; sub r4, r1, r3; and r6, r1, r7; or r8, r1, r9; xor r10, r1, r11 in instruction order]
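
A hazard detector only needs to compare each destination register with the sources of the next few instructions. The Python sketch below is an illustration, not the lecture's hardware: the tuple encoding and function name are hypothetical, the xor is assumed to read r1 like the other instructions, and the default window of 2 assumes the register file writes in the first half of a cycle and reads in the second (so the third instruction after the add is safe).

```python
# (opcode, destination, source 1, source 2)
PROGRAM = [
    ("add", "r1", "r2", "r3"),
    ("sub", "r4", "r1", "r3"),
    ("and", "r6", "r1", "r7"),
    ("or", "r8", "r1", "r9"),
    ("xor", "r10", "r1", "r11"),  # assumed operands
]


def raw_hazards(program, max_distance=2):
    """List (producer index, consumer index, register) read-after-write hazards.

    A consumer is hazardous if it reads the producer's destination and follows
    it by at most max_distance instructions.
    """
    hazards = []
    for i, (_, dest, *_srcs) in enumerate(program):
        last = min(i + max_distance, len(program) - 1)
        for j in range(i + 1, last + 1):
            if dest in program[j][2:]:
                hazards.append((i, j, dest))
    return hazards


if __name__ == "__main__":
    for producer, consumer, reg in raw_hazards(PROGRAM):
        print(f"{PROGRAM[consumer][0]} needs {reg} from {PROGRAM[producer][0]}")
```

With `max_distance=3` the `or` is flagged too, which models a register file that cannot write and read the same register in one cycle.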

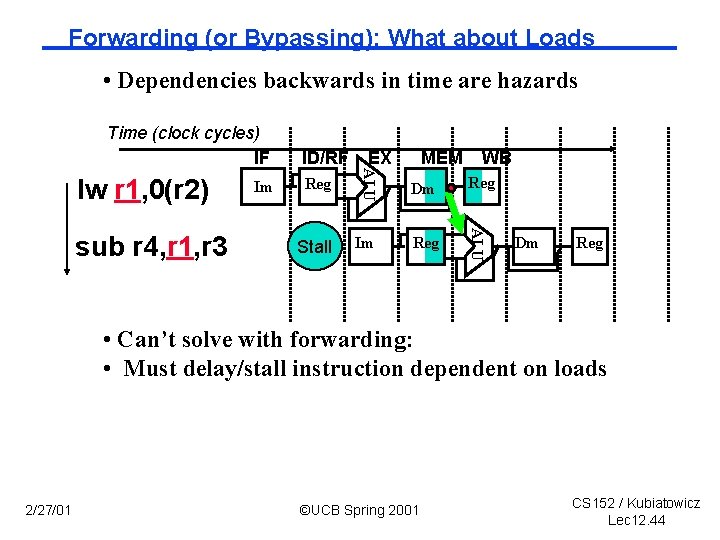

Data Hazard Solution:
• “Forward” result from one stage to another
[Figure: pipeline diagram over time (clock cycles) with stages IF, ID/RF, EX, MEM, WB for add r1, r2, r3; sub r4, r1, r3; and r6, r1, r7; or r8, r1, r9; xor r10, r1, r11, with the add result forwarded to the following instructions]
• “or” OK if define read/write properly

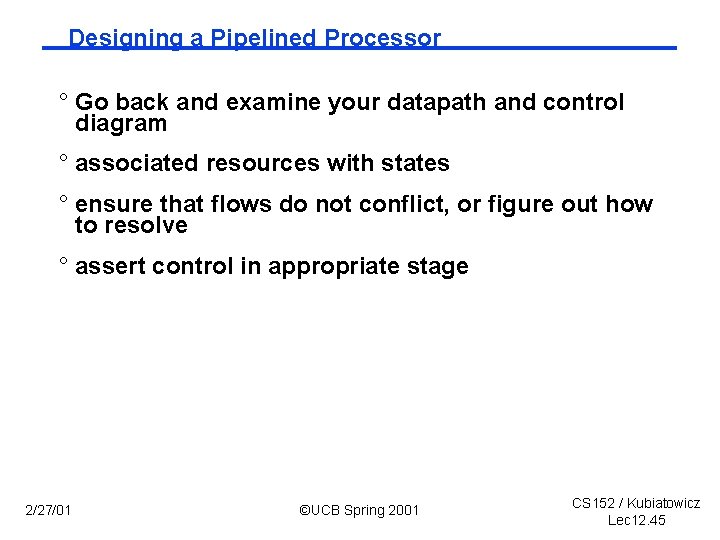

Forwarding (or Bypassing): What about Loads?
• Dependencies backwards in time are hazards
[Figure: pipeline diagram over time (clock cycles) with stages IF, ID/RF, EX, MEM, WB for lw r1, 0(r2) followed by sub r4, r1, r3]
• Can’t solve with forwarding:
• Must delay/stall instruction dependent on loads

Forwarding (or Bypassing): What about Loads
• Dependencies backwards in time are hazards
[Figure: the same diagram for lw r1, 0(r2) and sub r4, r1, r3, with a Stall inserted before the sub]
• Can’t solve with forwarding:
• Must delay/stall instruction dependent on loads
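
The load-use case can be detected by comparing a load's destination with the sources of the instruction right behind it. A Python sketch under assumptions: instructions are hypothetical tuples of (opcode, destination, sources...), forwarding handles every other dependence, and a single bubble is enough once the loaded value can be forwarded from the memory stage.

```python
def insert_load_stalls(program):
    """Return the program with a ("stall",) bubble after each load-use pair."""
    scheduled = []
    for instr in program:
        prev = scheduled[-1] if scheduled else None
        # Stall only when the previous instruction is a load whose destination
        # is one of this instruction's source registers.
        if prev is not None and prev[0] == "lw" and prev[1] in instr[2:]:
            scheduled.append(("stall",))
        scheduled.append(instr)
    return scheduled


# lw r1, 0(r2) ; sub r4, r1, r3  (the base register r2 is the load's only source)
PROGRAM = [("lw", "r1", "r2"), ("sub", "r4", "r1", "r3")]

if __name__ == "__main__":
    for instr in insert_load_stalls(PROGRAM):
        print(instr)
```

A compiler can often avoid the bubble by moving an independent instruction between the load and its first use.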

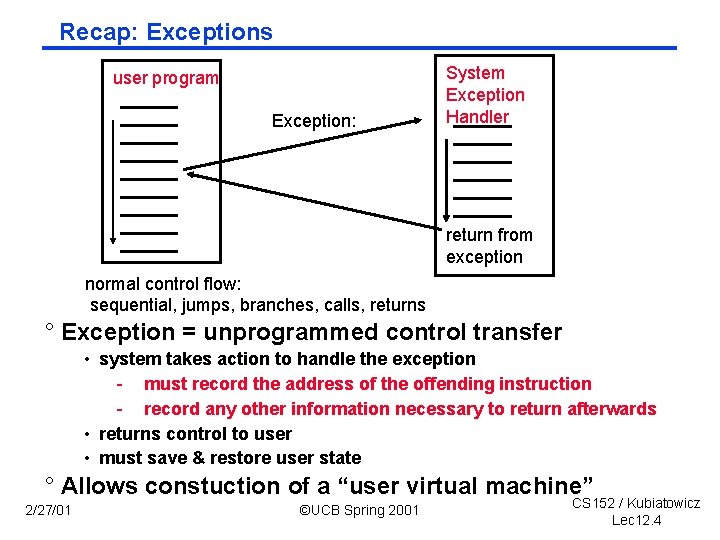

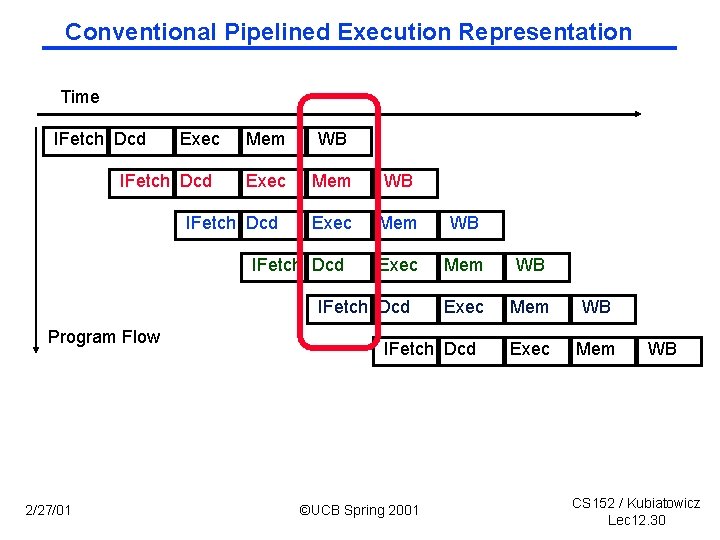

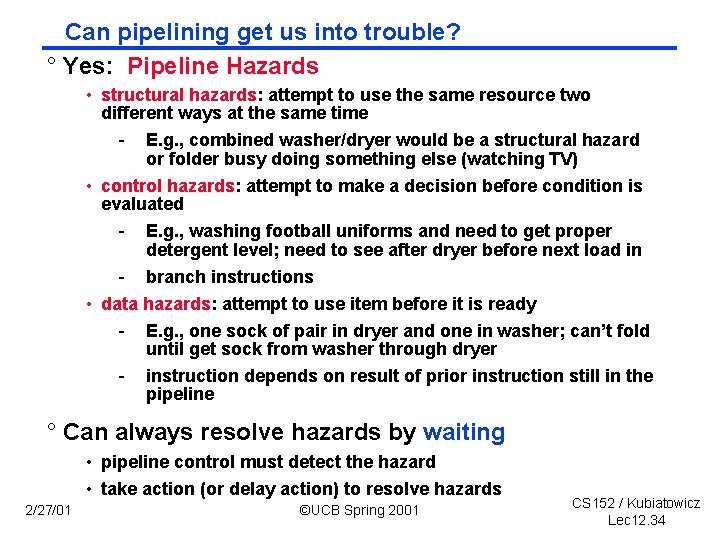

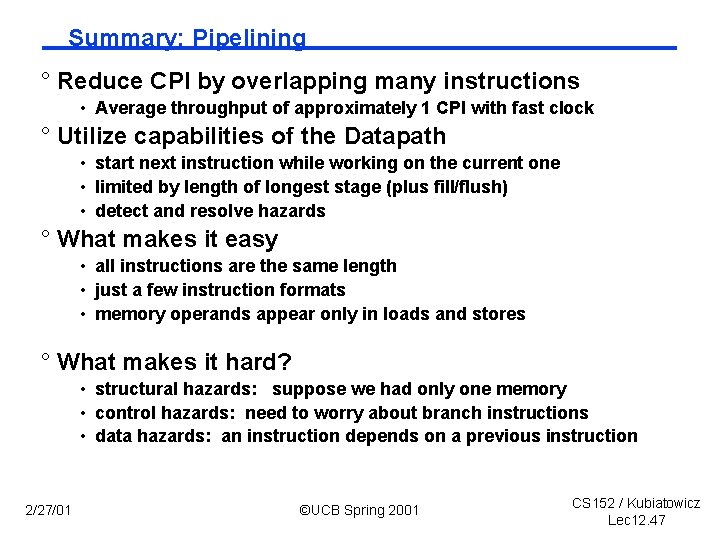

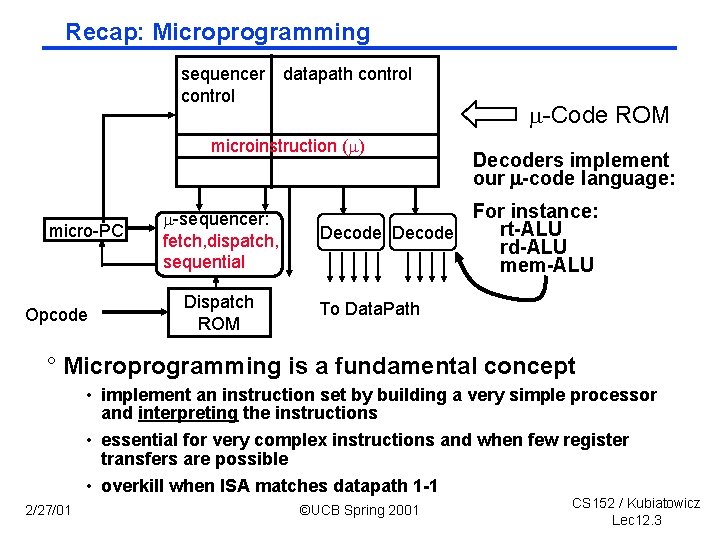

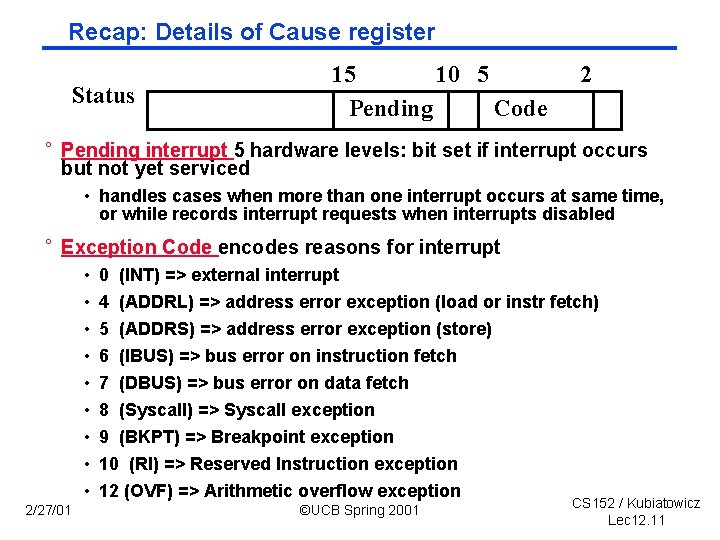

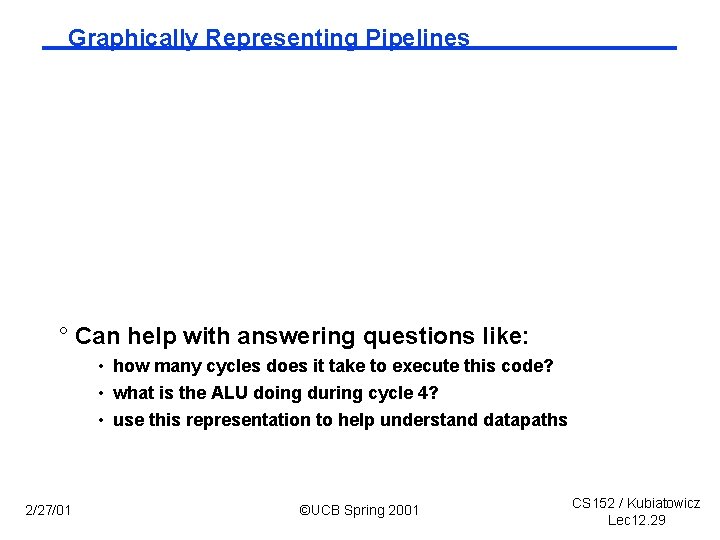

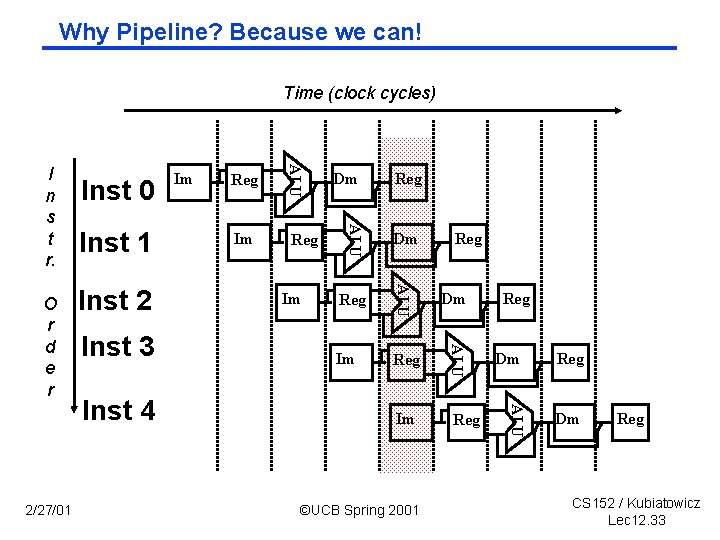

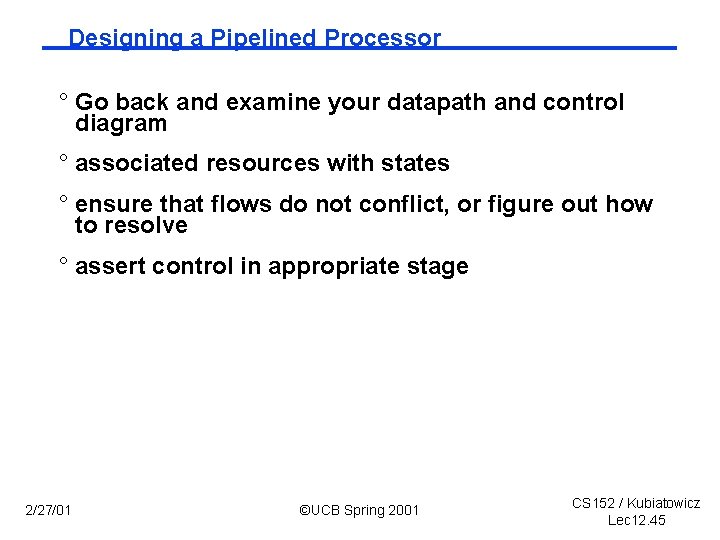

Designing a Pipelined Processor
° Go back and examine your datapath and control diagram
° Associate resources with states
° Ensure that flows do not conflict, or figure out how to resolve
° Assert control in appropriate stage

![Control and Datapath Split state diag into 5 pieces IR MemPC PC Control and Datapath: Split state diag into 5 pieces IR < Mem[PC]; PC <–](https://slidetodoc.com/presentation_image/102c92ec4a7ed4f6b2e54c55b69bce13/image-46.jpg)

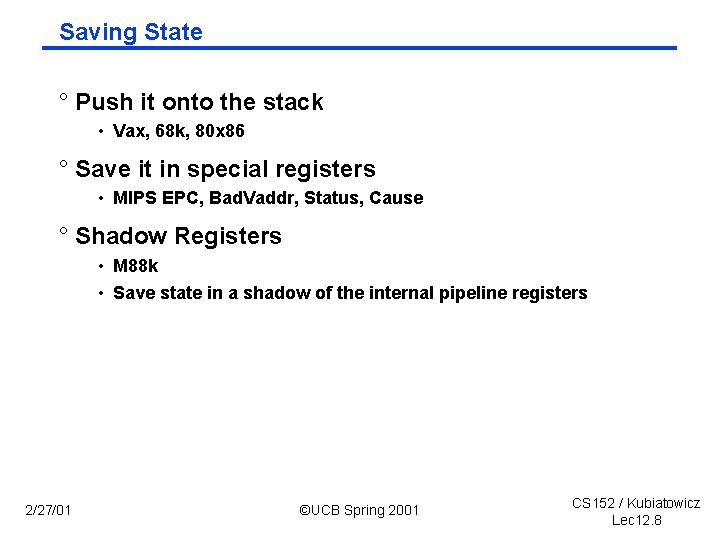

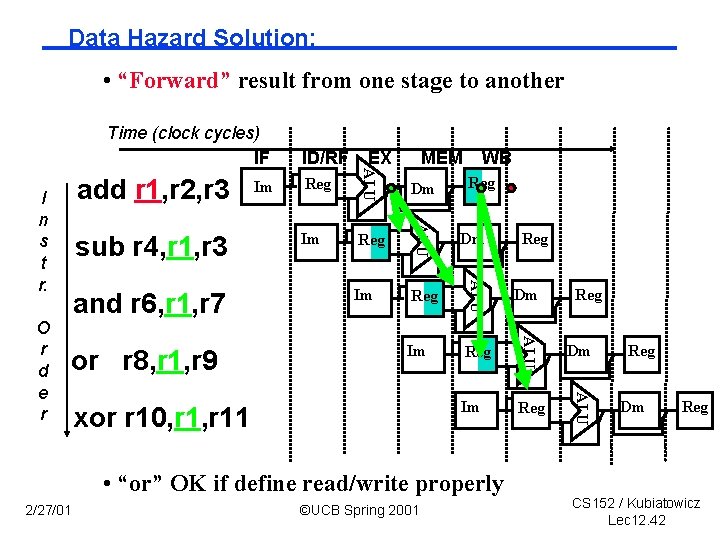

Control and Datapath: Split state diag into 5 pieces
[Figure: pipelined datapath (Next PC, PC, Inst. Mem, IR, Reg File, A, B, Exec, Equal, S, Mem Access, Data Mem, M, D, Reg. File) with the register transfers of the state diagram assigned to stages]
° Fetch: IR <– Mem[PC]; PC <– PC+4
° Decode: A <– R[rs]; B <– R[rt]
° Exec: S <– A + B; S <– A or ZX; S <– A + SX; S <– A + SX; If Cond PC <– PC+SX
° Mem: M <– Mem[S]; Mem[S] <– B
° Write-back: R[rd] <– S; R[rt] <– S; R[rt] <– M

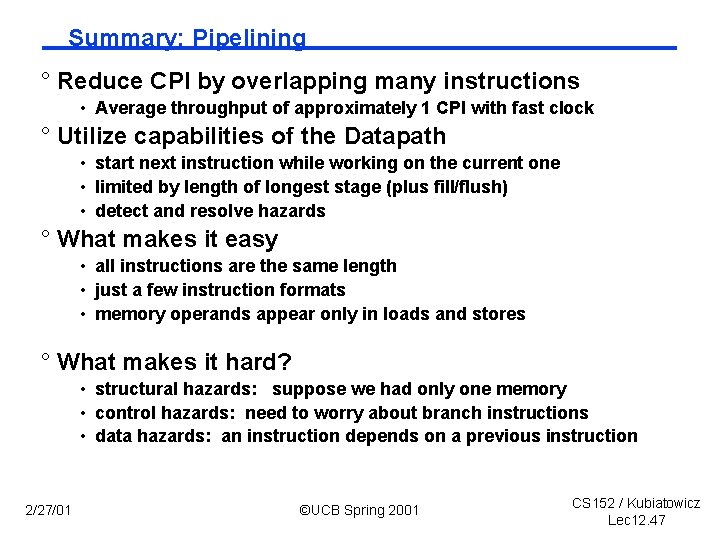

Summary: Pipelining
° Reduce CPI by overlapping many instructions
  • Average throughput of approximately 1 CPI with fast clock
° Utilize capabilities of the Datapath
  • start next instruction while working on the current one
  • limited by length of longest stage (plus fill/flush)
  • detect and resolve hazards
° What makes it easy
  • all instructions are the same length
  • just a few instruction formats
  • memory operands appear only in loads and stores
° What makes it hard?
  • structural hazards: suppose we had only one memory
  • control hazards: need to worry about branch instructions
  • data hazards: an instruction depends on a previous instruction

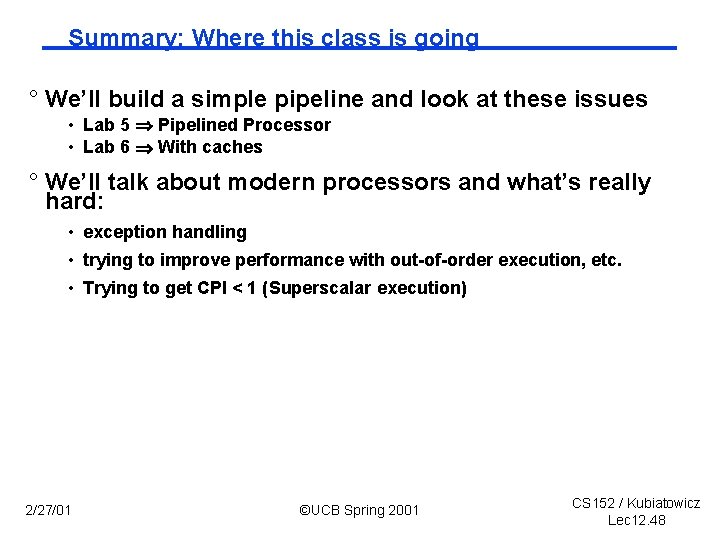

Summary: Where this class is going
° We’ll build a simple pipeline and look at these issues
  • Lab 5: Pipelined Processor
  • Lab 6: With caches
° We’ll talk about modern processors and what’s really hard:
  • exception handling
  • trying to improve performance with out-of-order execution, etc.
  • trying to get CPI < 1 (Superscalar execution)