Cryptography Lecture 4, Arpita Patra

- Slides: 25


Recall

- Perfect Security
  - Various definitions and their equivalence (Shannon's Theorem)
  - Inherent drawbacks
  - Cannot afford perfect security
- Relaxing Perfect Security (towards Computational Security)
  - Make the adversary bounded/efficient/polynomial time
  - Allow the break with some small/negligible probability
  - Are they necessary?

Today's Goal

- Both relaxations are necessary.
- Computational/Cryptographic Security: "impossible to break" is relaxed to "infeasible to break with high prob."
  - Will make 'polynomially bounded/efficient' and 'small/negligible prob.' precise
  - Paradigm I: Semantic Security for SKE, the computational analogue of Shannon's perfect security
  - Paradigm II: Indistinguishability-based Security for SKE, the computational analogue of the game/experiment-based security definition of perfect security
  - Look for the assumptions needed for a construction, and construct a scheme

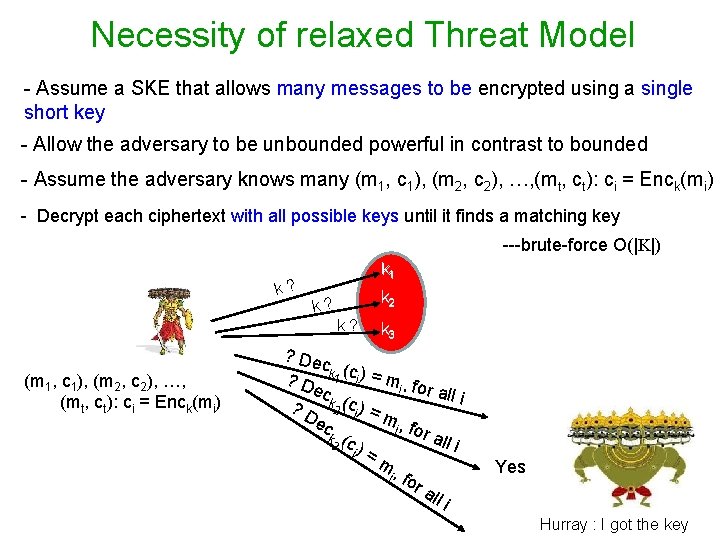

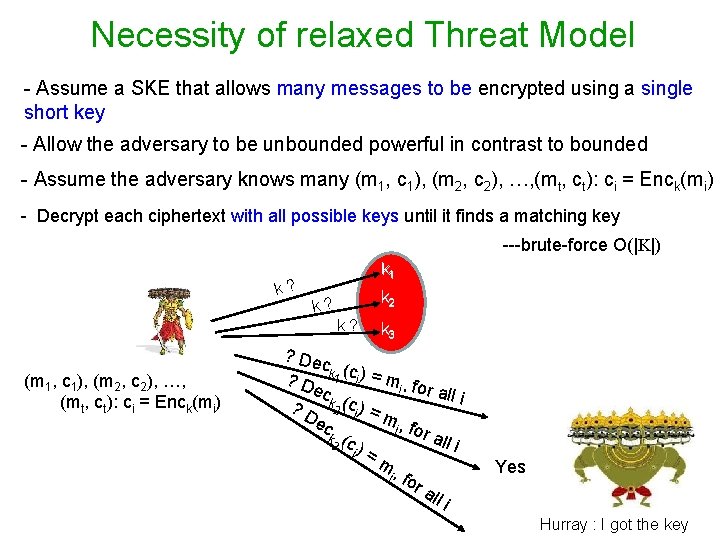

Necessity of relaxed Threat Model

- Assume an SKE that allows many messages to be encrypted using a single short key
- Allow the adversary to be unboundedly powerful, in contrast to bounded
- Assume the adversary knows many pairs $(m_1, c_1), (m_2, c_2), \ldots, (m_t, c_t)$ with $c_i = \mathsf{Enc}_k(m_i)$
- Decrypt each ciphertext with all possible keys until it finds a matching key: brute force, $O(|K|)$ time

Figure: the adversary holds the pairs $(m_i, c_i)$ and tries the candidate keys $k_1, k_2, k_3, \ldots$ in turn, asking each time "is $\mathsf{Dec}_{k_j}(c_i) = m_i$ for all $i$?". On the first "Yes": "Hurray: I got the key".
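The brute-force attack above can be sketched in a few lines. This is only an illustration under stated assumptions: the toy cipher (`enc`, XOR with a repeated 2-byte key), the key size `KEY_BYTES`, and the sample messages are hypothetical and not part of the lecture; they stand in for "an SKE that encrypts many messages under one short key".

```python
from itertools import product

KEY_BYTES = 2  # hypothetical toy key space: |K| = 2**16


def enc(key: bytes, msg: bytes) -> bytes:
    """Toy cipher (an assumption): XOR the message with the short key repeated."""
    return bytes(m ^ key[i % len(key)] for i, m in enumerate(msg))


def dec(key: bytes, ct: bytes) -> bytes:
    """XOR is its own inverse, so decryption is the same operation."""
    return enc(key, ct)


def brute_force(pairs):
    """Try every key in K; return the first one consistent with all known pairs."""
    for candidate in product(range(256), repeat=KEY_BYTES):
        key = bytes(candidate)
        if all(dec(key, c) == m for m, c in pairs):
            return key  # "Hurray: I got the key"
    return None


secret = bytes([0x5A, 0xC3])
messages = [b"attack at dawn", b"retreat at dusk", b"hold position"]
known_pairs = [(m, enc(secret, m)) for m in messages]
recovered = brute_force(known_pairs)
```

The loop visits at most $|K|$ keys, which is cheap here only because the toy key is 16 bits long; the point of the slide is that an unbounded adversary can afford this for any key size.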

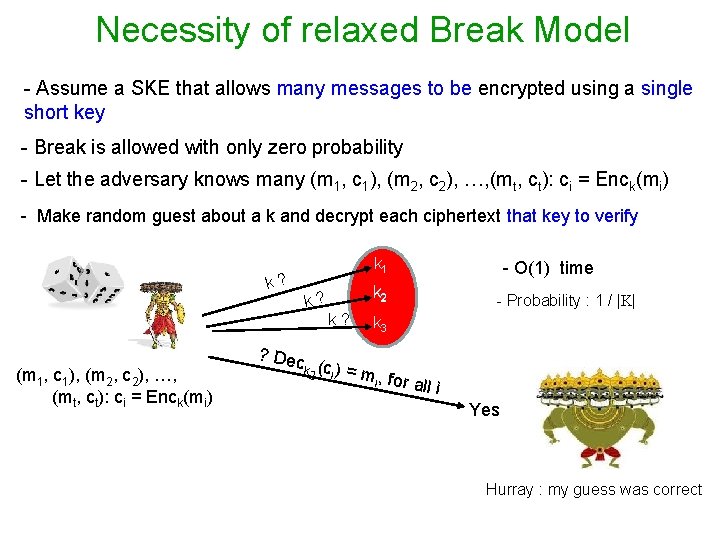

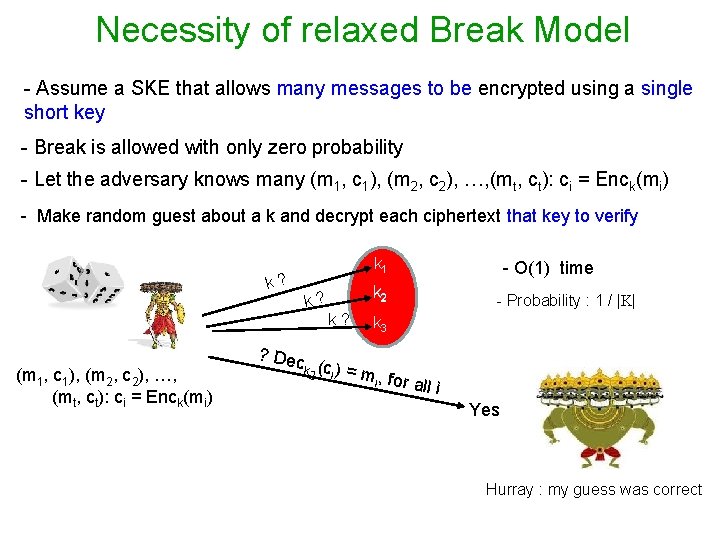

Necessity of relaxed Break Model

- Assume an SKE that allows many messages to be encrypted using a single short key
- A break is allowed only with zero probability
- Let the adversary know many pairs $(m_1, c_1), (m_2, c_2), \ldots, (m_t, c_t)$ with $c_i = \mathsf{Enc}_k(m_i)$
- Make a random guess about $k$ and decrypt each ciphertext with that key to verify
  - $O(1)$ time
  - Success probability: $1/|K|$

Figure: the adversary picks one candidate key at random (say $k_2$) and checks whether $\mathsf{Dec}_{k_2}(c_i) = m_i$ for all $i$. On "Yes": "Hurray: my guess was correct".
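A minimal sketch of the guessing attack, again on a hypothetical toy cipher (single-byte XOR, so $|K| = 2^8$; the names `enc`, `verify`, and `random_guess_attack` are illustrative assumptions). Instead of sampling, the success probability is computed exactly by counting how many keys pass verification.

```python
import secrets
from fractions import Fraction

KEY_BITS = 8                      # hypothetical toy key space: |K| = 2**8
KEY_SPACE = range(2 ** KEY_BITS)


def enc(key: int, msg: bytes) -> bytes:
    """Toy cipher (an assumption): XOR every byte with the one-byte key."""
    return bytes(b ^ key for b in msg)


def verify(guess: int, pairs) -> bool:
    """Check a guessed key against every known (message, ciphertext) pair."""
    return all(enc(guess, c) == m for m, c in pairs)


def random_guess_attack(pairs) -> bool:
    """One uniformly random guess followed by verification."""
    return verify(secrets.randbelow(2 ** KEY_BITS), pairs)


secret = 0xA7
pairs = [(m, enc(secret, m)) for m in (b"pay 100", b"pay 250")]

# Exact success probability of a single uniform guess: (#keys that verify) / |K|.
matching = [k for k in KEY_SPACE if verify(k, pairs)]
success_probability = Fraction(len(matching), len(KEY_SPACE))
```

Only the true key verifies, so one guess succeeds with probability $1/|K|$: tiny, but not zero, which is why a zero-probability break requirement cannot be met.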

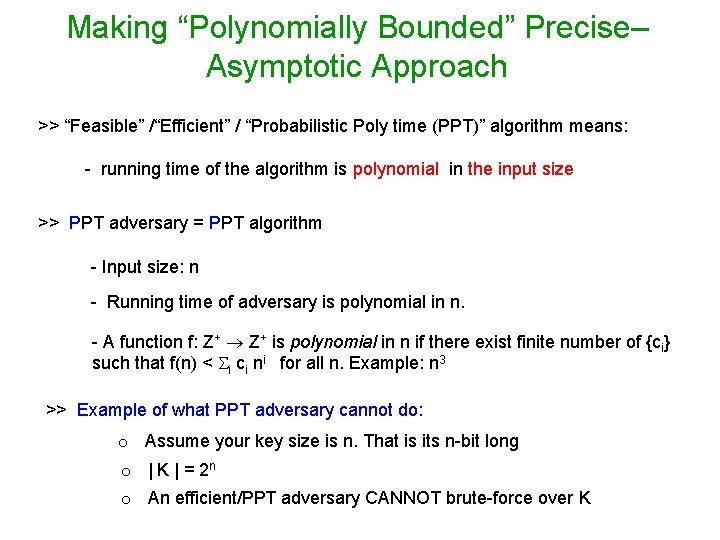

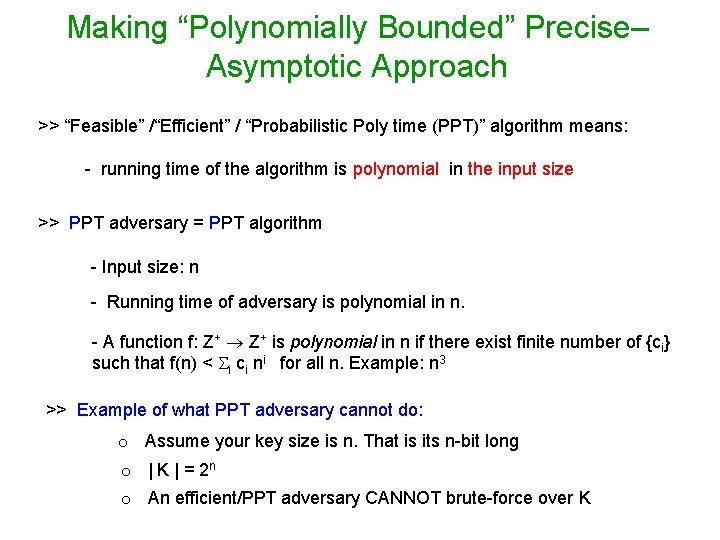

Making "Polynomially Bounded" Precise: Asymptotic Approach

- "Feasible" / "Efficient" / "Probabilistic Poly time (PPT)" algorithm means: the running time of the algorithm is polynomial in the input size
- PPT adversary = PPT algorithm
  - Input size: $n$
  - Running time of the adversary is polynomial in $n$.
  - A function $f$ on $\mathbb{Z}^+$ is polynomial in $n$ if there exist a finite number of constants $\{c_i\}$ such that $f(n) < \sum_i c_i n^i$ for all $n$. Example: $n^3$
- Example of what a PPT adversary cannot do:
  - Assume your key size is $n$, i.e. the key is $n$ bits long
  - $|K| = 2^n$
  - An efficient/PPT adversary CANNOT brute-force over $K$

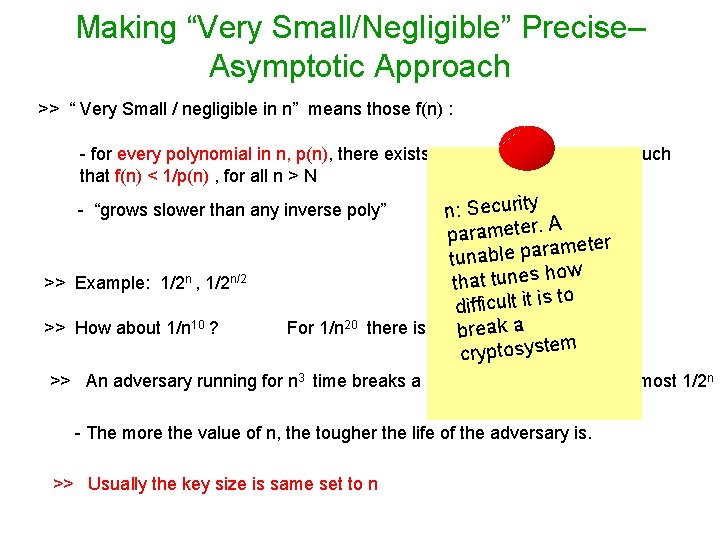

Making "Very Small/Negligible" Precise: Asymptotic Approach

- $n$: security parameter, a tunable parameter that tunes how difficult it is to break the cryptosystem
- "Very small / negligible in $n$" means those $f(n)$ such that for every polynomial $p(n)$ there exists some positive integer $N$ with $f(n) < 1/p(n)$ for all $n > N$
  - In words: $f$ is eventually smaller than any inverse polynomial
- Example: $1/2^n$, $1/2^{n/2}$
- How about $1/n^{10}$? Not negligible: for $p(n) = n^{20}$ there is no $N$ s.t. $1/n^{10} < 1/n^{20}$ for all $n > N$
- An adversary running for $n^3$ time breaks a scheme with probability at most $1/2^n$
  - The larger the value of $n$, the tougher the life of the adversary.
- Usually the key size is set to $n$
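The definition can be probed numerically. The sketch below is an illustration only (the helper `last_failure` and the scan limit of 500 are assumptions introduced here): it scans $n = 1, \ldots, 500$ and reports the last $n$ at which $f(n) < 1/p(n)$ fails. A finite scan cannot prove negligibility; it only shows where a threshold $N$ appears to lie, or that none shows up within the range.

```python
from fractions import Fraction


def last_failure(f, p, limit):
    """Largest n in 1..limit with f(n) >= 1/p(n); 0 if the bound never fails."""
    last = 0
    for n in range(1, limit + 1):
        if f(n) >= Fraction(1, p(n)):
            last = n
    return last


LIMIT = 500

# f(n) = 1/2^n against p(n) = n^10: the bound holds for every n above the result.
N_exp = last_failure(lambda n: Fraction(1, 2 ** n), lambda n: n ** 10, LIMIT)

# f(n) = 1/n^10 against p(n) = n^20: the bound fails all the way up to LIMIT.
N_poly = last_failure(lambda n: Fraction(1, n ** 10), lambda n: n ** 20, LIMIT)
```

Here `N_exp` is 58, so $1/2^n < 1/n^{10}$ for all $59 \le n \le 500$, while `N_poly` equals the scan limit because $1/n^{10} < 1/n^{20}$ never holds.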

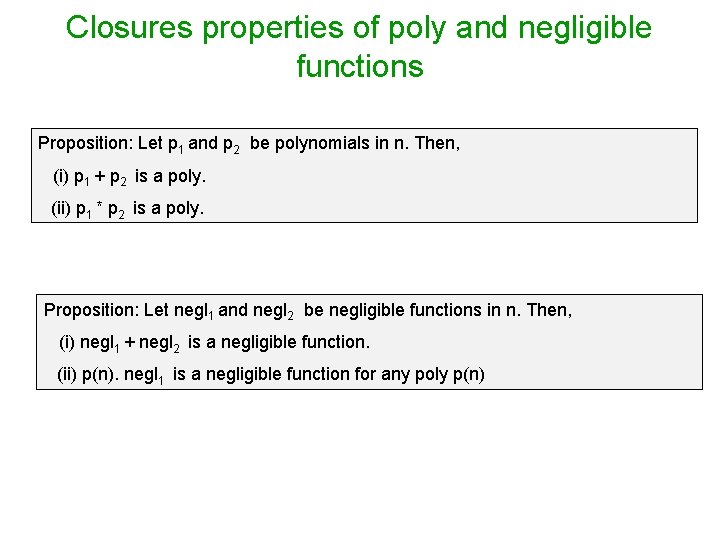

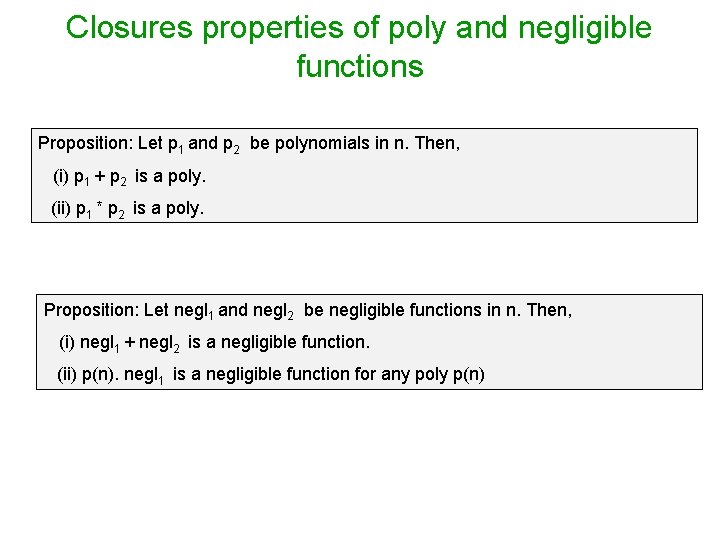

Closure properties of poly and negligible functions

Proposition: Let $p_1$ and $p_2$ be polynomials in $n$. Then,
(i) $p_1 + p_2$ is a polynomial.
(ii) $p_1 \cdot p_2$ is a polynomial.

Proposition: Let $\mathsf{negl}_1$ and $\mathsf{negl}_2$ be negligible functions in $n$. Then,
(i) $\mathsf{negl}_1 + \mathsf{negl}_2$ is a negligible function.
(ii) $p(n) \cdot \mathsf{negl}_1$ is a negligible function for any polynomial $p(n)$.
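The slide states the second proposition without proof. A short proof sketch, using only the definition of negligible given earlier, is below; the polynomial $q$ and the thresholds $N_1, N_2, N$ are names introduced here, and polynomials are assumed to take positive values.

(i) Fix any polynomial $q$. Since $2q$ is also a polynomial, there are $N_1, N_2$ with $\mathsf{negl}_i(n) < 1/(2q(n))$ for all $n > N_i$. Hence for all $n > \max(N_1, N_2)$:

$$\mathsf{negl}_1(n) + \mathsf{negl}_2(n) < \frac{1}{2q(n)} + \frac{1}{2q(n)} = \frac{1}{q(n)}.$$

(ii) Fix any polynomial $q$. Since $p \cdot q$ is a polynomial, there is $N$ with $\mathsf{negl}_1(n) < 1/(p(n)\,q(n))$ for all $n > N$. Hence for all $n > N$:

$$p(n) \cdot \mathsf{negl}_1(n) < \frac{p(n)}{p(n)\,q(n)} = \frac{1}{q(n)}.$$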

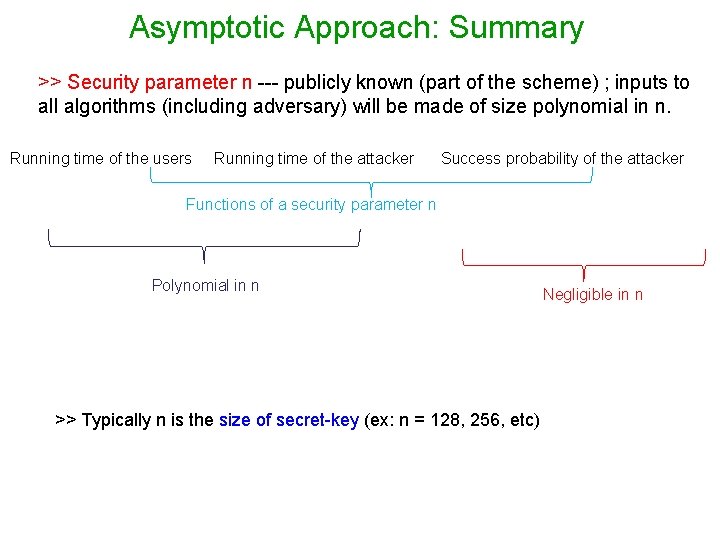

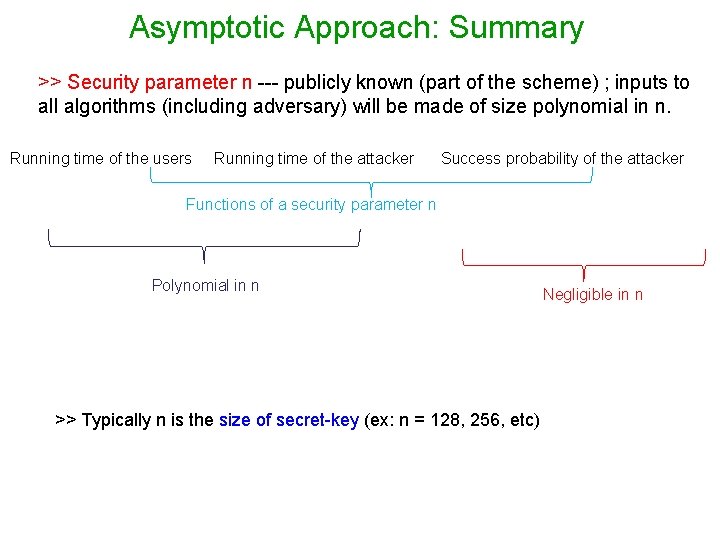

Asymptotic Approach: Summary

- Security parameter $n$: publicly known (part of the scheme); inputs to all algorithms (including the adversary) will be of size polynomial in $n$.
- Typically $n$ is the size of the secret key (e.g. $n$ = 128, 256, etc.)
- The following are all functions of the security parameter $n$:
  - Running time of the users: polynomial in $n$
  - Running time of the attacker: polynomial in $n$
  - Success probability of the attacker: negligible in $n$

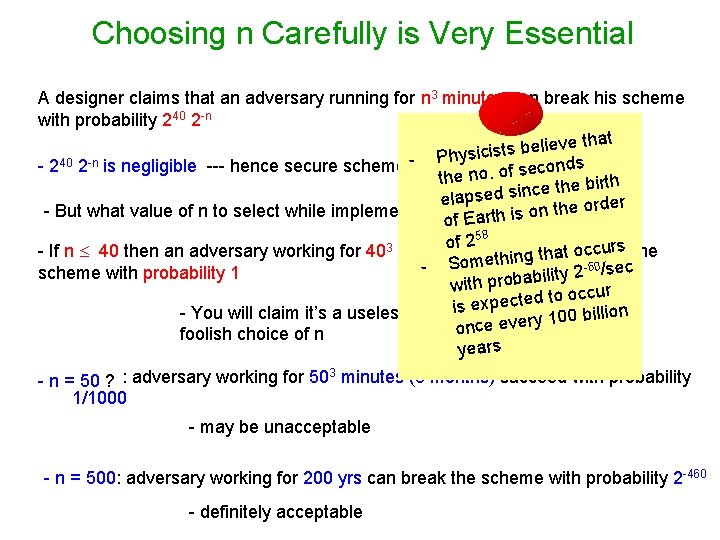

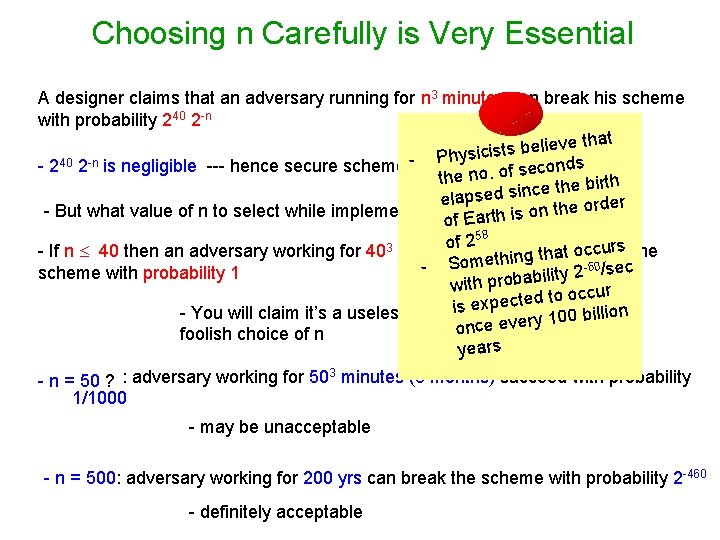

Choosing n Carefully is Very Essential

A designer claims that an adversary running for $n^3$ minutes can break his scheme with probability $2^{40} \cdot 2^{-n}$.

- $2^{40} \cdot 2^{-n}$ is negligible, hence a secure scheme
- But what value of $n$ to select while implementing?
- If $n \le 40$ then an adversary working for $40^3$ minutes (6 weeks) can break the scheme with probability 1
  - You will claim it's a useless scheme, but you just made a foolish choice of $n$
- $n = 50$? An adversary working for $50^3$ minutes (3 months) succeeds with probability 1/1000: may be unacceptable
- $n = 500$: an adversary working for 200 yrs can break the scheme with probability $2^{-460}$: definitely acceptable

Side notes:

- Physicists believe that the no. of seconds elapsed since the birth of Earth is on the order of $2^{58}$
- Something that occurs with probability $2^{-60}$/sec is expected to occur once every 100 billion years
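The arithmetic behind these three choices of $n$ can be checked with a few lines. This is a sketch of the designer's claimed bound only; the function name `attack_cost` and the cap of the probability at 1 are conveniences introduced here.

```python
from fractions import Fraction

MINUTES_PER_DAY = 60 * 24


def attack_cost(n: int):
    """Return (running time in days, success probability) for security parameter n.

    Uses the designer's claim: n^3 minutes of work, probability 2^40 * 2^-n,
    capped at 1 because a probability cannot exceed 1.
    """
    days = Fraction(n ** 3, MINUTES_PER_DAY)
    probability = min(Fraction(1), Fraction(2) ** (40 - n))
    return days, probability


for n in (40, 50, 500):
    days, probability = attack_cost(n)
    print(f"n={n}: about {float(days):.0f} days, success probability {float(probability):.3g}")
```

For $n = 40$ this gives roughly 44 days (about 6 weeks) and probability 1; for $n = 50$ roughly 87 days (about 3 months) and probability $2^{-10} = 1/1024$; for $n = 500$ the running time is over 200 years and the probability is $2^{-460}$.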

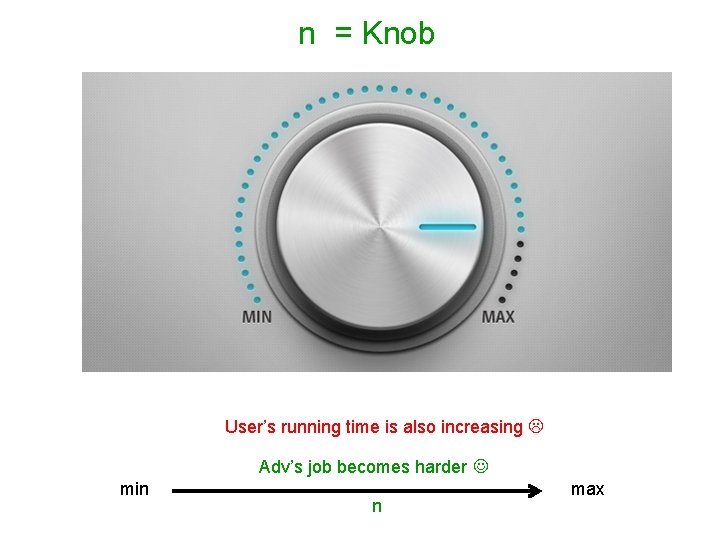

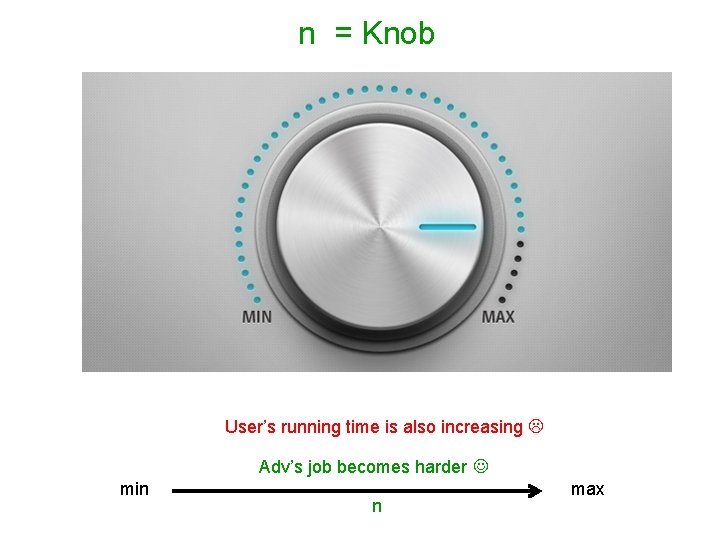

n = Knob: turning $n$ from min towards max makes the adversary's job harder, but the user's running time also increases.

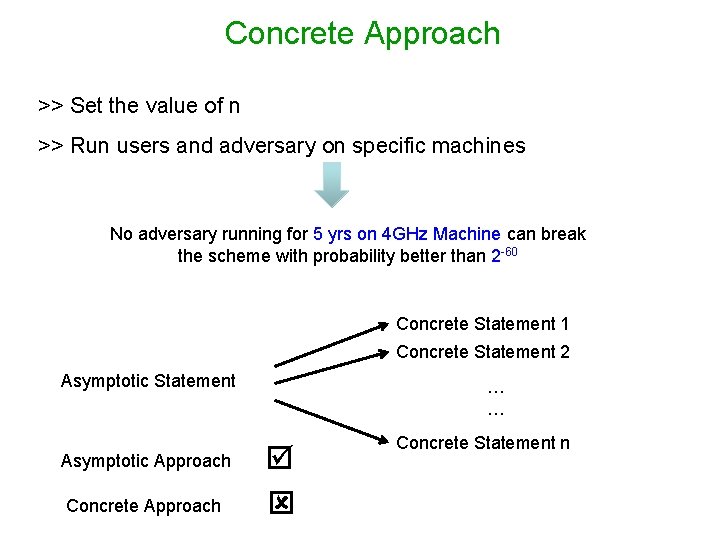

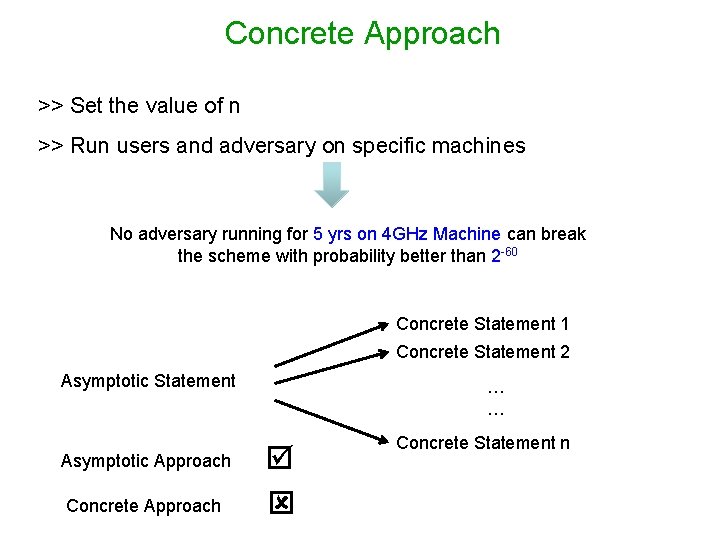

Concrete Approach

- Set the value of $n$
- Run users and adversary on specific machines
- Example of a concrete statement: no adversary running for 5 yrs on a 4 GHz machine can break the scheme with probability better than $2^{-60}$

Figure: under the Asymptotic Approach a single asymptotic statement corresponds to many concrete statements under the Concrete Approach (Concrete Statement 1, Concrete Statement 2, …, Concrete Statement n).

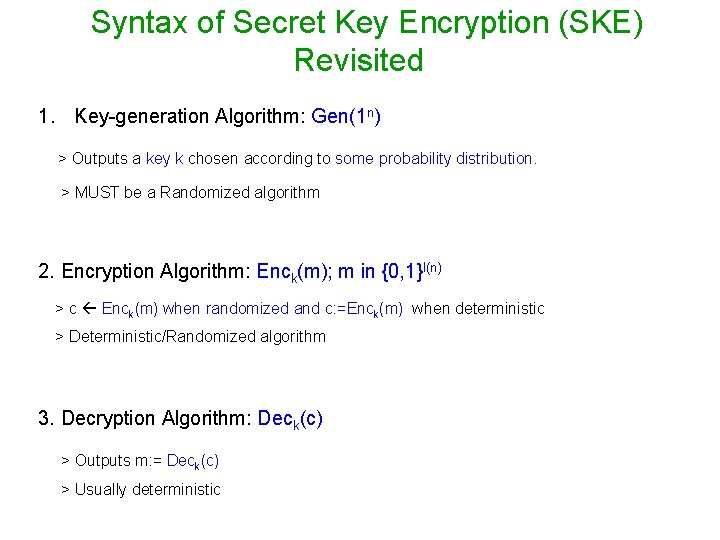

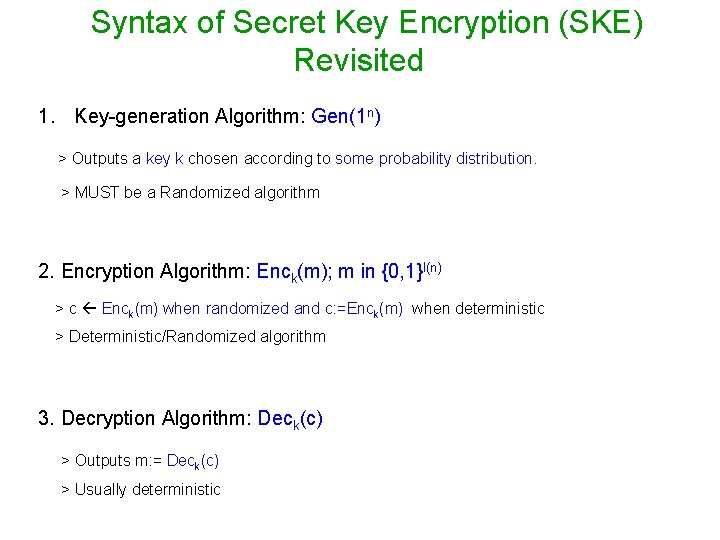

Syntax of Secret Key Encryption (SKE) Revisited

1. Key-generation Algorithm: $\mathsf{Gen}(1^n)$
   - Outputs a key $k$ chosen according to some probability distribution.
   - MUST be a randomized algorithm
2. Encryption Algorithm: $\mathsf{Enc}_k(m)$; $m \in \{0,1\}^{\ell(n)}$
   - $c \leftarrow \mathsf{Enc}_k(m)$ when randomized and $c := \mathsf{Enc}_k(m)$ when deterministic
   - Deterministic/randomized algorithm
3. Decryption Algorithm: $\mathsf{Dec}_k(c)$
   - Outputs $m := \mathsf{Dec}_k(c)$
   - Usually deterministic

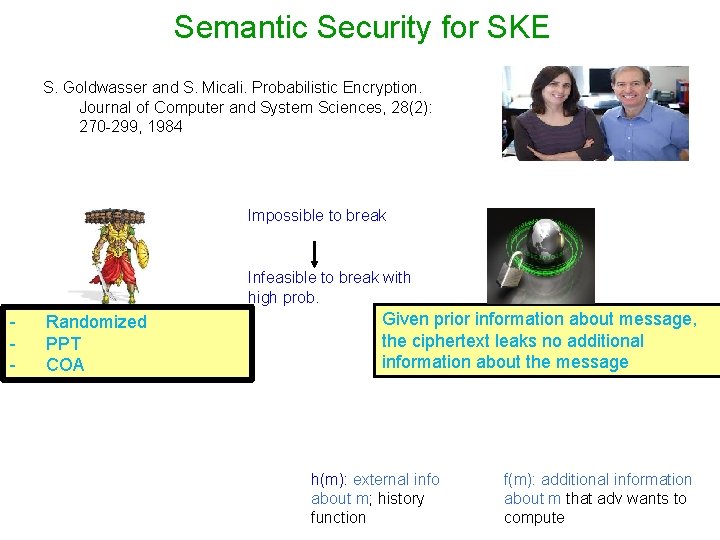

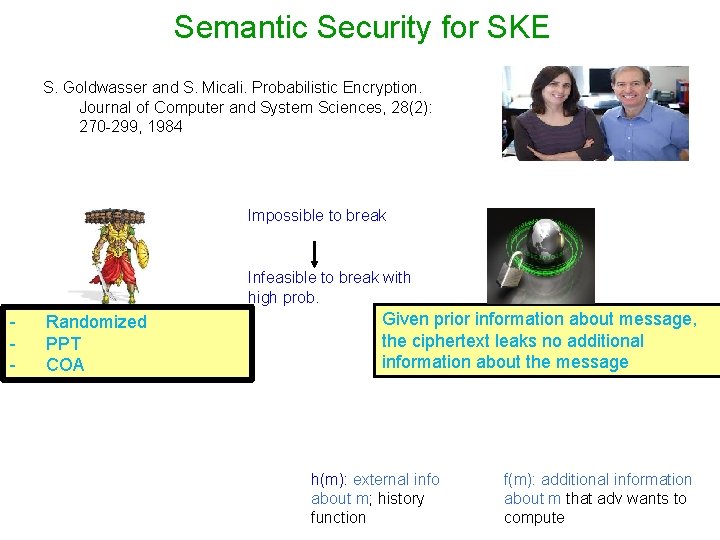

Semantic Security for SKE

S. Goldwasser and S. Micali. Probabilistic Encryption. Journal of Computer and System Sciences, 28(2): 270-299, 1984.

- Break model: "impossible to break" is relaxed to "infeasible to break with high prob."
- Threat model: randomized PPT adversary, COA (ciphertext-only attack)
- Given prior information about the message, the ciphertext leaks no additional information about the message
  - $h(m)$: external info about $m$; history function
  - $f(m)$: additional information about $m$ that the adversary wants to compute

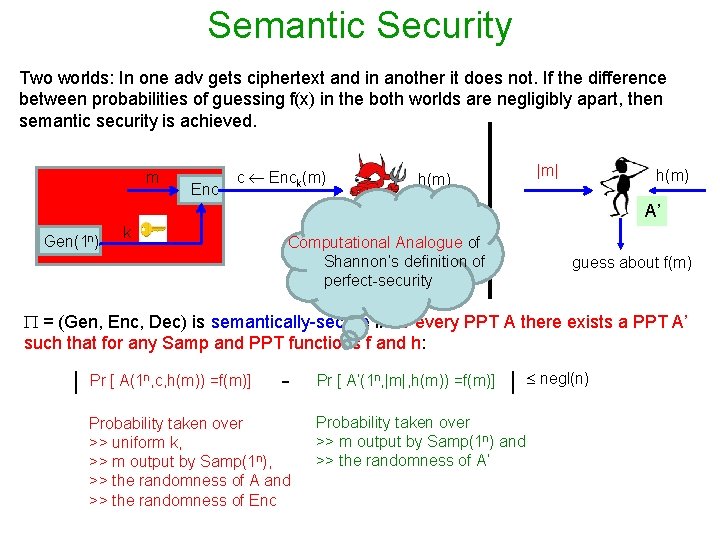

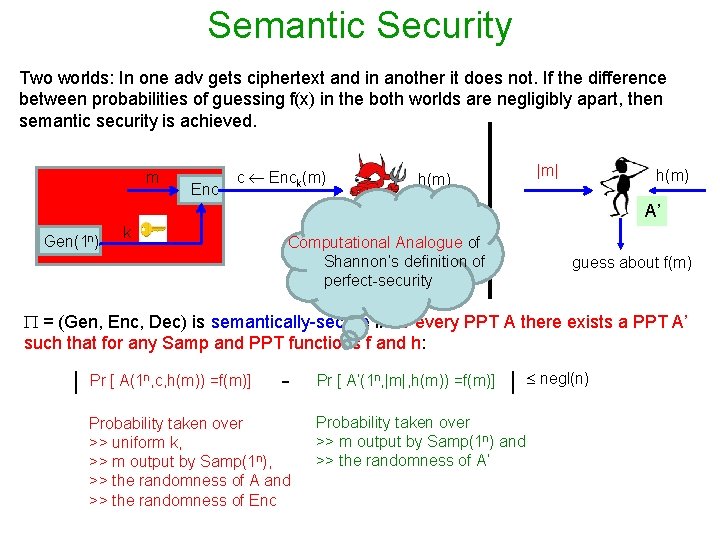

Semantic Security

Two worlds: in one the adversary gets the ciphertext and in the other it does not. If the probabilities of guessing $f(m)$ in the two worlds are negligibly apart, then semantic security is achieved.

- World 1: $k \leftarrow \mathsf{Gen}(1^n)$, $c \leftarrow \mathsf{Enc}_k(m)$; $A$ gets $c$ and $h(m)$ and outputs a guess about $f(m)$
- World 2: $A'$ gets only $|m|$ and $h(m)$ and outputs a guess about $f(m)$

$\Pi = (\mathsf{Gen}, \mathsf{Enc}, \mathsf{Dec})$ is semantically secure if for every PPT $A$ there exists a PPT $A'$ such that for any Samp and PPT functions $f$ and $h$:

$$\left| \Pr[A(1^n, c, h(m)) = f(m)] - \Pr[A'(1^n, |m|, h(m)) = f(m)] \right| \le \mathsf{negl}(n)$$

- The first probability is taken over uniform $k$, $m$ output by $\mathsf{Samp}(1^n)$, the randomness of $A$, and the randomness of $\mathsf{Enc}$
- The second probability is taken over $m$ output by $\mathsf{Samp}(1^n)$ and the randomness of $A'$

This is the computational analogue of Shannon's definition of perfect security.

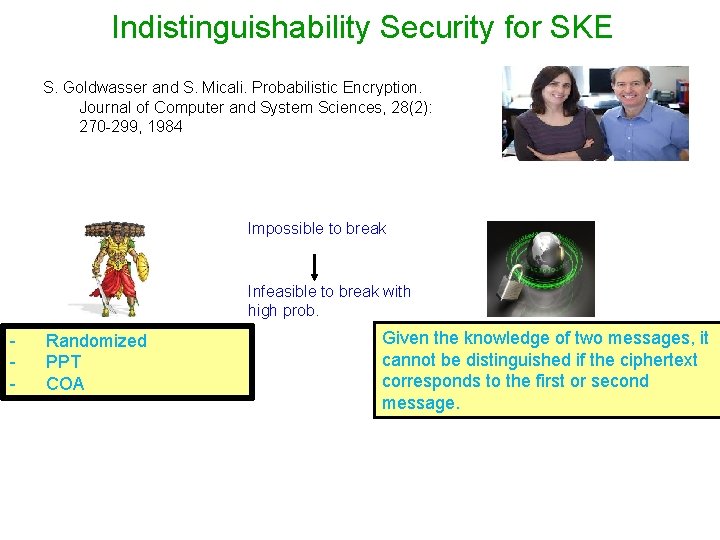

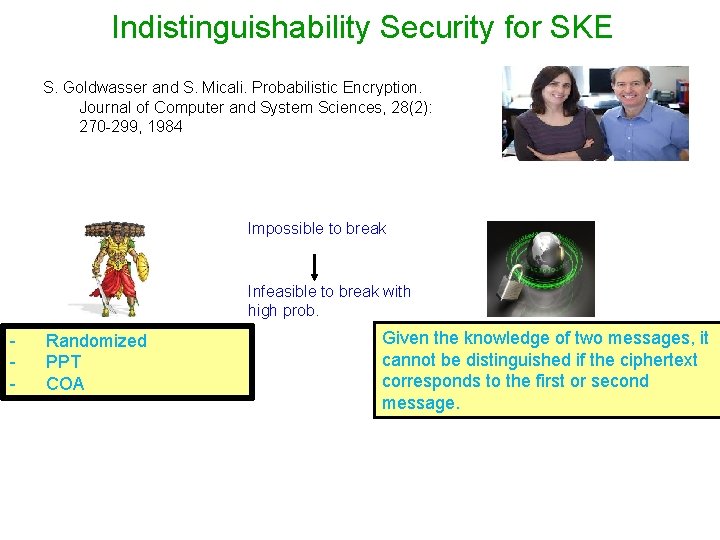

Indistinguishability Security for SKE

S. Goldwasser and S. Micali. Probabilistic Encryption. Journal of Computer and System Sciences, 28(2): 270-299, 1984.

- Break model: "impossible to break" is relaxed to "infeasible to break with high prob."
- Threat model: randomized PPT adversary, COA (ciphertext-only attack)
- Given the knowledge of two messages, it cannot be distinguished whether the ciphertext corresponds to the first or the second message.

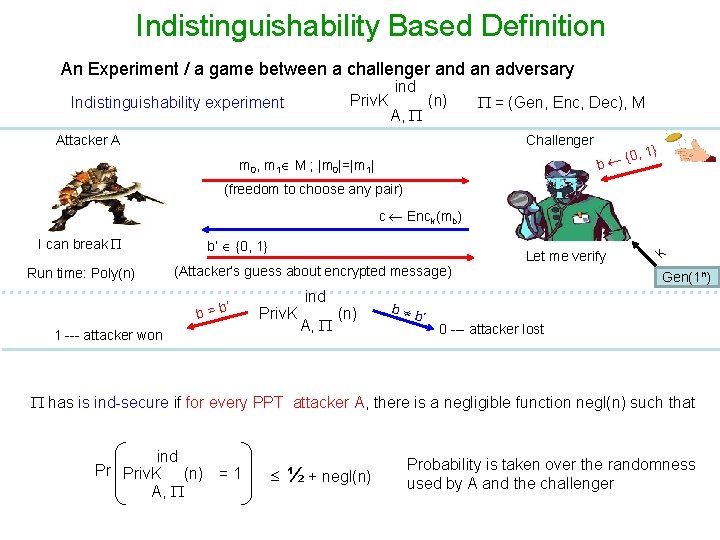

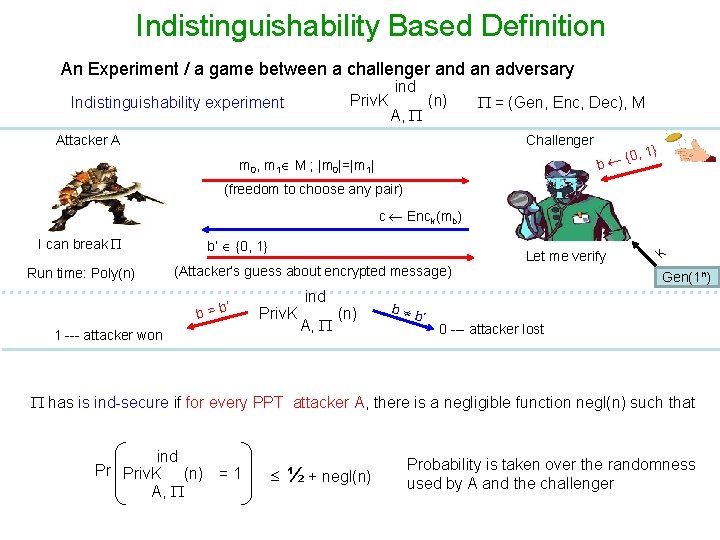

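Indistinguishability Based Definition

An experiment / a game between a challenger and an adversary: the indistinguishability experiment $\mathsf{PrivK}^{ind}_{A,\Pi}(n)$, for $\Pi = (\mathsf{Gen}, \mathsf{Enc}, \mathsf{Dec})$ with message space $M$.

1. The attacker $A$ ("I can break $\Pi$"; run time: poly($n$)) sends $m_0, m_1 \in M$ with $|m_0| = |m_1|$ (freedom to choose any pair).
2. The challenger ("Let me verify") computes $k \leftarrow \mathsf{Gen}(1^n)$, picks $b \leftarrow \{0,1\}$, and sends $c \leftarrow \mathsf{Enc}_k(m_b)$.
3. The attacker outputs $b' \in \{0,1\}$ (its guess about the encrypted message).
4. Game output: $\mathsf{PrivK}^{ind}_{A,\Pi}(n) = 1$ if $b' = b$ (attacker won), and $0$ if $b' \ne b$ (attacker lost).

$\Pi$ is ind-secure if for every PPT attacker $A$, there is a negligible function $\mathsf{negl}(n)$ such that

$$\Pr\left[\mathsf{PrivK}^{ind}_{A,\Pi}(n) = 1\right] \le \tfrac{1}{2} + \mathsf{negl}(n)$$

The probability is taken over the randomness used by $A$ and the challenger.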
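The experiment can be made concrete on toy schemes. The sketch below is an illustration under stated assumptions: the two schemes (a one-byte one-time pad, and a one-byte key stretched by repetition over a two-byte message), the deterministic adversaries, and all function names are hypothetical. Because the key spaces are tiny, the winning probability is computed exactly by enumerating every key and every bit $b$ rather than by sampling.

```python
from fractions import Fraction
from itertools import product


def xor_repeat(key: bytes, msg: bytes) -> bytes:
    """Enc for both toy schemes: XOR the message with the key repeated as needed."""
    return bytes(m ^ key[i % len(key)] for i, m in enumerate(msg))


def win_probability(keys, enc, m0, m1, guess):
    """Exact Pr[PrivK = 1] for uniform k and uniform b, with a deterministic attacker."""
    assert len(m0) == len(m1)          # the game requires |m0| = |m1|
    wins = total = 0
    for k, b in product(keys, (0, 1)):
        c = enc(k, (m0, m1)[b])        # challenger encrypts m_b
        wins += guess(c) == b          # attacker wins iff b' = b
        total += 1
    return Fraction(wins, total)


ONE_BYTE_KEYS = [bytes([k]) for k in range(256)]

# Scheme A: one-time pad on one-byte messages. Attacker guesses the low bit of c.
otp_win = win_probability(
    ONE_BYTE_KEYS, xor_repeat, b"\x00", b"\x01", lambda c: c[0] & 1
)

# Scheme B: the same one-byte key reused for both bytes of a two-byte message.
# Attacker guesses 0 exactly when the two ciphertext bytes are equal.
reuse_win = win_probability(
    ONE_BYTE_KEYS, xor_repeat, b"\x00\x00", b"\x00\x01",
    lambda c: 0 if c[0] == c[1] else 1,
)
```

Against the one-time pad this attacker wins with probability exactly $1/2$, while against the key-repeating scheme it wins with probability 1, so the latter is not ind-secure.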

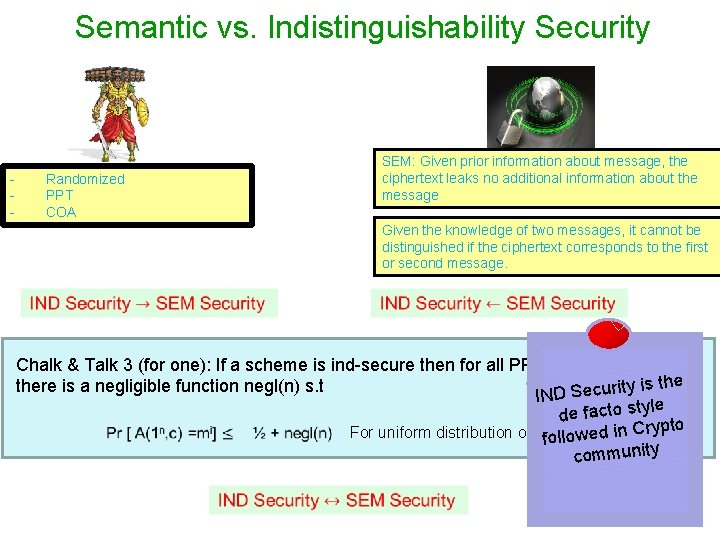

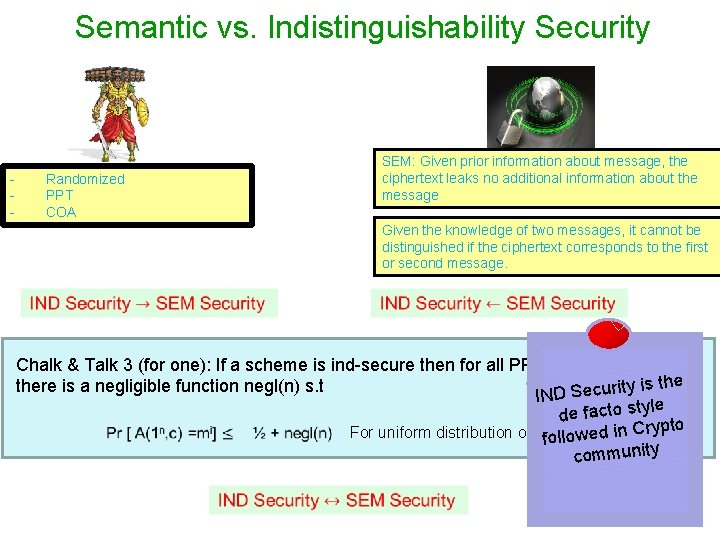

Semantic vs. Indistinguishability Security

- SEM: Given prior information about the message, the ciphertext leaks no additional information about the message.
- IND: Given the knowledge of two messages, it cannot be distinguished whether the ciphertext corresponds to the first or the second message.
- Both are stated for a randomized PPT adversary in the COA setting.
- IND-style security is the de facto style followed in the crypto community.

Chalk & Talk 3 (for one): If a scheme $\Pi$ is ind-secure then for all PPT $A$ and any index $i$, there is a negligible function $\mathsf{negl}(n)$ s.t. [bound given in the slide figure], for uniform distribution of $k$ and $m$.

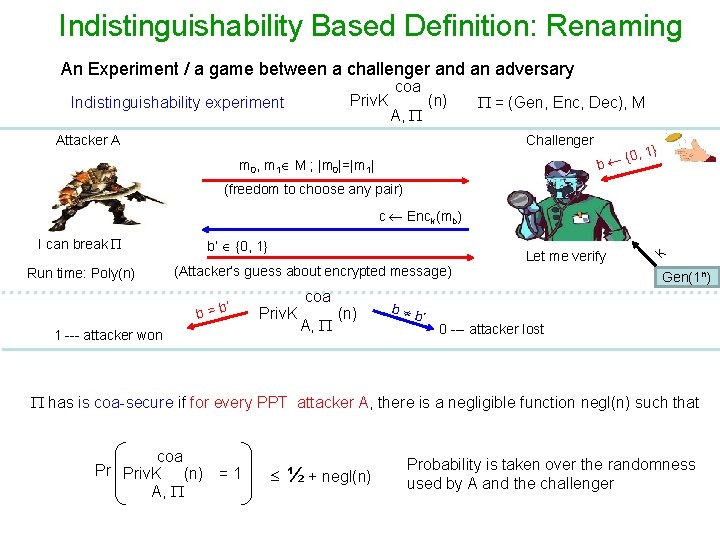

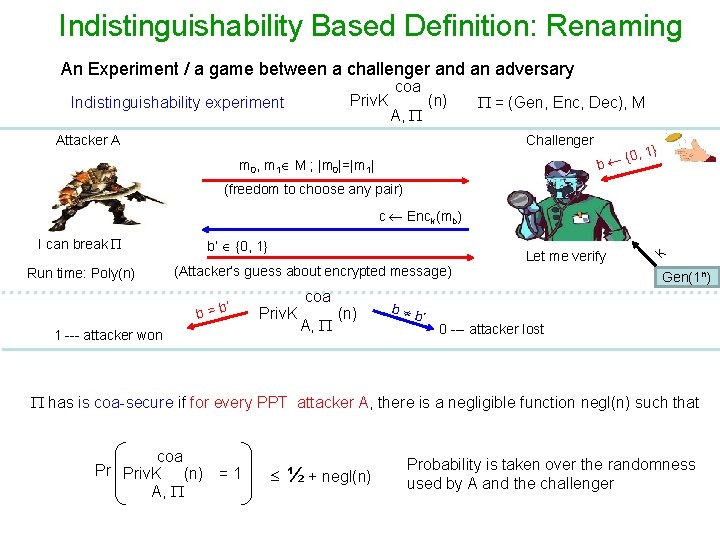

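Indistinguishability Based Definition: Renaming

An experiment / a game between a challenger and an adversary: the indistinguishability experiment, now named $\mathsf{PrivK}^{coa}_{A,\Pi}(n)$, for $\Pi = (\mathsf{Gen}, \mathsf{Enc}, \mathsf{Dec})$ with message space $M$.

1. The attacker $A$ ("I can break $\Pi$"; run time: poly($n$)) sends $m_0, m_1 \in M$ with $|m_0| = |m_1|$ (freedom to choose any pair).
2. The challenger ("Let me verify") computes $k \leftarrow \mathsf{Gen}(1^n)$, picks $b \leftarrow \{0,1\}$, and sends $c \leftarrow \mathsf{Enc}_k(m_b)$.
3. The attacker outputs $b' \in \{0,1\}$ (its guess about the encrypted message).
4. Game output: $\mathsf{PrivK}^{coa}_{A,\Pi}(n) = 1$ if $b' = b$ (attacker won), and $0$ if $b' \ne b$ (attacker lost).

$\Pi$ is coa-secure if for every PPT attacker $A$, there is a negligible function $\mathsf{negl}(n)$ such that

$$\Pr\left[\mathsf{PrivK}^{coa}_{A,\Pi}(n) = 1\right] \le \tfrac{1}{2} + \mathsf{negl}(n)$$

The probability is taken over the randomness used by $A$ and the challenger.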

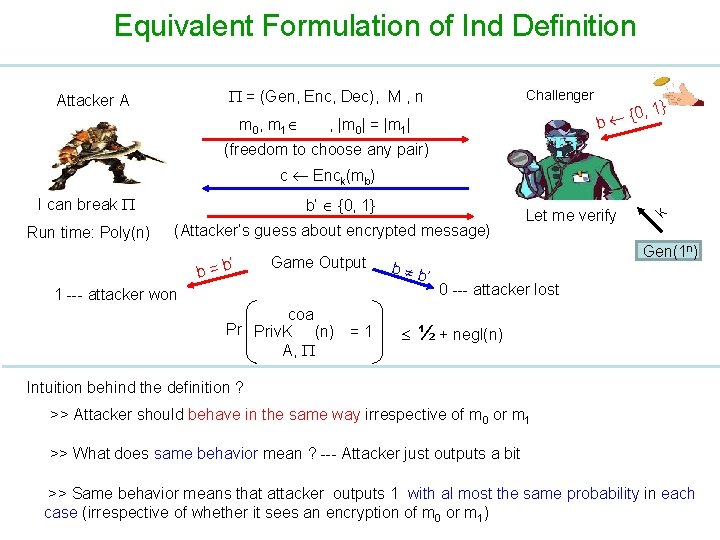

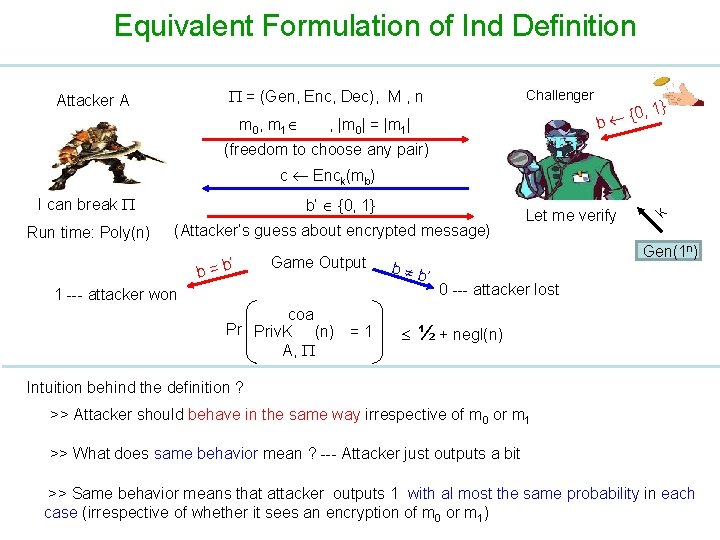

Equivalent Formulation of Ind Definition

Game recap, for $\Pi = (\mathsf{Gen}, \mathsf{Enc}, \mathsf{Dec})$, $M$, $n$: the attacker $A$ (run time: poly($n$)) sends $m_0, m_1$ with $|m_0| = |m_1|$ (freedom to choose any pair); the challenger computes $k \leftarrow \mathsf{Gen}(1^n)$, $b \leftarrow \{0,1\}$, $c \leftarrow \mathsf{Enc}_k(m_b)$; the attacker outputs its guess $b' \in \{0,1\}$. The game output is 1 if $b' = b$ (attacker won) and 0 otherwise (attacker lost), and security requires $\Pr[\mathsf{PrivK}^{coa}_{A,\Pi}(n) = 1] \le \tfrac{1}{2} + \mathsf{negl}(n)$.

Intuition behind the definition?

- The attacker should behave in the same way irrespective of $m_0$ or $m_1$
- What does same behavior mean? The attacker just outputs a bit
- Same behavior means that the attacker outputs 1 with almost the same probability in each case (irrespective of whether it sees an encryption of $m_0$ or $m_1$)

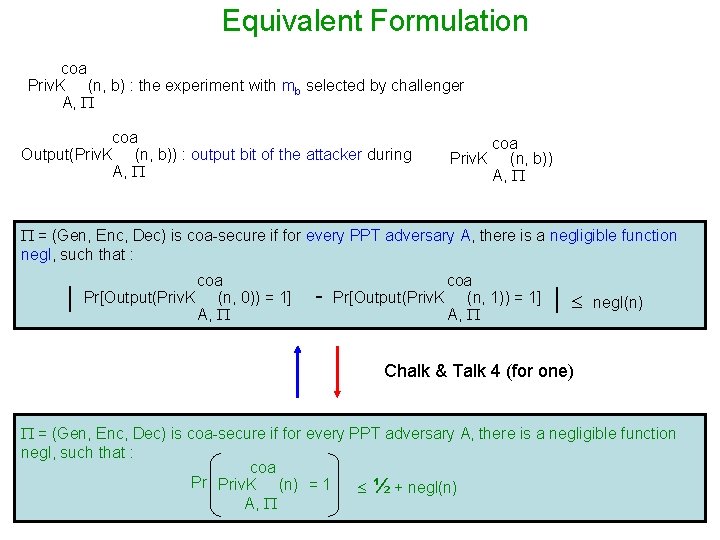

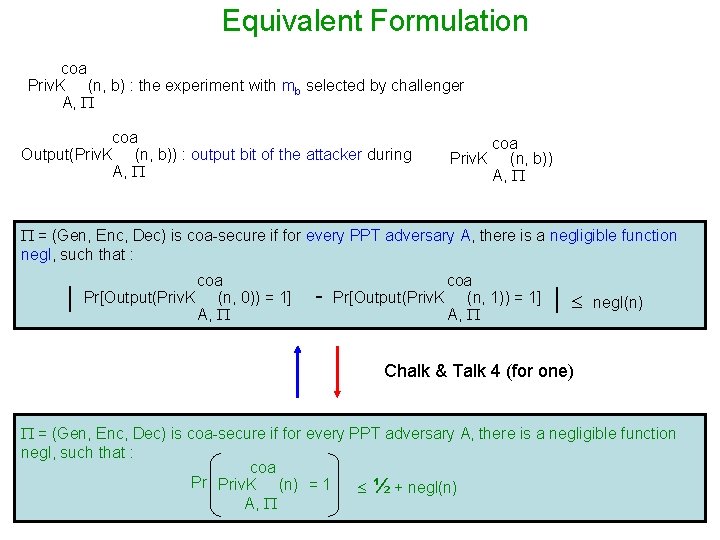

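Equivalent Formulation

- $\mathsf{PrivK}^{coa}_{A,\Pi}(n, b)$: the experiment with $m_b$ selected by the challenger
- $\mathrm{Output}(\mathsf{PrivK}^{coa}_{A,\Pi}(n, b))$: output bit of the attacker during $\mathsf{PrivK}^{coa}_{A,\Pi}(n, b)$

$\Pi = (\mathsf{Gen}, \mathsf{Enc}, \mathsf{Dec})$ is coa-secure if for every PPT adversary $A$, there is a negligible function $\mathsf{negl}$ such that:

$$\left| \Pr[\mathrm{Output}(\mathsf{PrivK}^{coa}_{A,\Pi}(n, 0)) = 1] - \Pr[\mathrm{Output}(\mathsf{PrivK}^{coa}_{A,\Pi}(n, 1)) = 1] \right| \le \mathsf{negl}(n)$$

Chalk & Talk 4 (for one): show that this is equivalent to the earlier formulation, namely that $\Pi = (\mathsf{Gen}, \mathsf{Enc}, \mathsf{Dec})$ is coa-secure if for every PPT adversary $A$, there is a negligible function $\mathsf{negl}$ such that:

$$\Pr\left[\mathsf{PrivK}^{coa}_{A,\Pi}(n) = 1\right] \le \tfrac{1}{2} + \mathsf{negl}(n)$$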
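The key identity linking the two formulations can be worked out directly. This is a sketch, with the shorthand $p_b$ introduced here for $\Pr[\mathrm{Output}(\mathsf{PrivK}^{coa}_{A,\Pi}(n, b)) = 1]$. Since the challenger picks $b$ uniformly and the attacker wins exactly when its output bit equals $b$:

$$\Pr\left[\mathsf{PrivK}^{coa}_{A,\Pi}(n) = 1\right] = \tfrac{1}{2}(1 - p_0) + \tfrac{1}{2}\,p_1 = \tfrac{1}{2} + \tfrac{1}{2}(p_1 - p_0)$$

So the attacker's winning probability exceeds $\tfrac{1}{2}$ by exactly half the gap $p_1 - p_0$. If $|p_0 - p_1| \le \mathsf{negl}(n)$, the winning probability is at most $\tfrac{1}{2} + \tfrac{1}{2}\mathsf{negl}(n)$. Conversely, applying the winning-probability bound both to $A$ and to the attacker that flips $A$'s output bit bounds $p_1 - p_0$ and $p_0 - p_1$, hence $|p_0 - p_1|$, by a negligible function.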

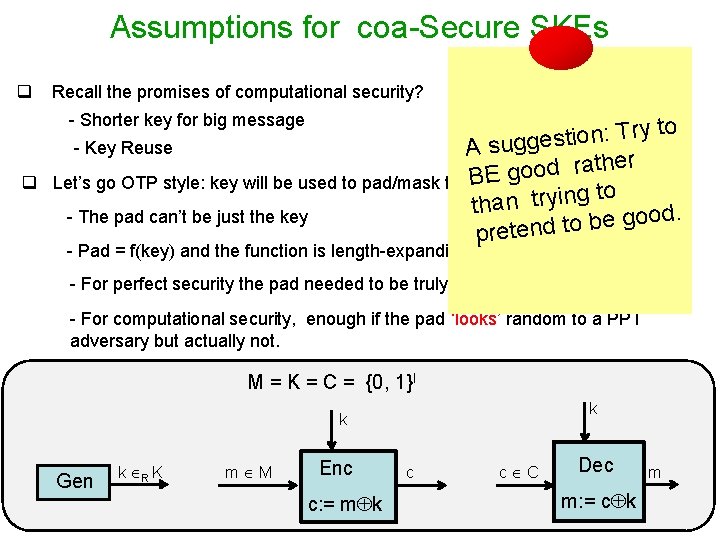

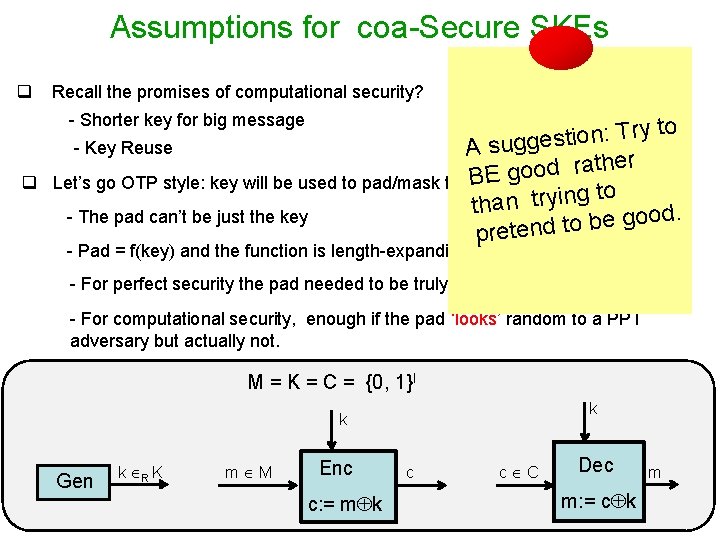

Assumptions for coa-Secure SKEs

Recall the promises of computational security?

- Shorter key for a big message
- Key reuse

E.g. let's go OTP style: the key will be used to pad/mask the message.

- The pad can't be just the key
- Pad = f(key), and the function is length-expanding
- For perfect security the pad needed to be truly random
- For computational security, it is enough if the pad 'looks' random to a PPT adversary although it actually is not

OTP recap, with $M = K = C = \{0,1\}^{\ell}$:

- Gen: $k \leftarrow_R K$
- Enc: given $m \in M$, output $c := m \oplus k$
- Dec: given $c \in C$, output $m := c \oplus k$

A suggestion: Try to be good rather than trying to pretend to be good.
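The "pad = f(key)" idea can be sketched as follows. This is an illustration under loud assumptions: SHAKE-256 from Python's `hashlib` is used only as a heuristic stand-in for a length-expanding function whose output looks random; the lecture does not name any concrete function, and nothing here is a security proof. The names `gen`, `pad`, `enc`, and `dec` are chosen to mirror the SKE syntax.

```python
import hashlib
import secrets


def gen(n: int) -> bytes:
    """Gen(1^n): sample a uniform n-bit key (n assumed to be a multiple of 8)."""
    return secrets.token_bytes(n // 8)


def pad(key: bytes, length: int) -> bytes:
    """Length-expanding f(key). SHAKE-256 is an assumed stand-in, not a proven PRG."""
    return hashlib.shake_256(key).digest(length)


def enc(key: bytes, msg: bytes) -> bytes:
    """OTP-style masking: c := m XOR f(k), with the pad stretched to len(msg)."""
    return bytes(m ^ p for m, p in zip(msg, pad(key, len(msg))))


def dec(key: bytes, ct: bytes) -> bytes:
    """m := c XOR f(k); XOR masking is its own inverse."""
    return enc(key, ct)


key = gen(128)                                   # 16-byte key
message = b"a message much longer than the sixteen-byte key that masks it"
ciphertext = enc(key, message)
```

The message here is longer than the key, which is the first promise on the slide. The scheme is deterministic, so this sketch says nothing about encrypting several messages under the same key.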

Assumptions for coa-Secure SKEs

M. Blum, S. Micali. How to Generate Cryptographically Strong Sequences of Pseudo-Random Bits. SIAM Journal on Computing, 13(4): 850-864, 1984.

A. C.-C. Yao. Theory and Applications of Trapdoor Functions. FOCS, 80-91, 1982.

Pseudorandom Generators (PRGs): a tool to cheat PPT adversaries

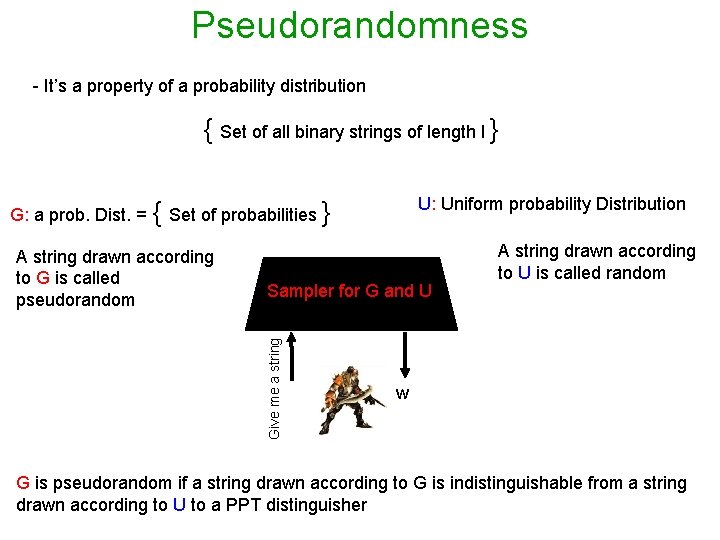

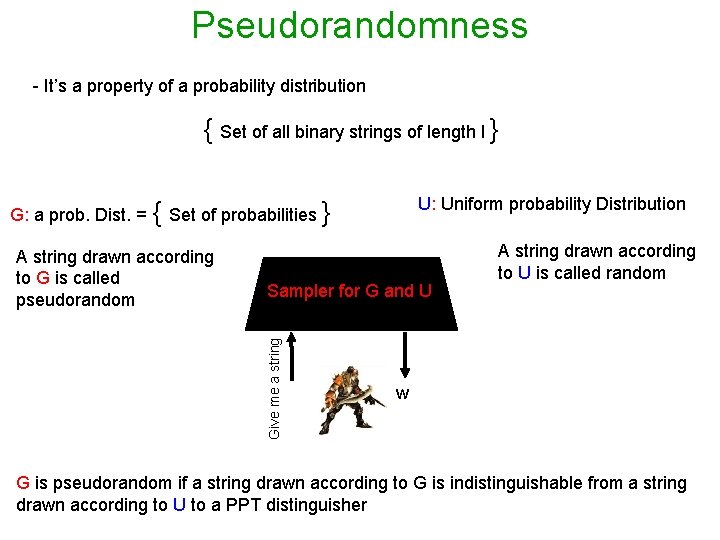

Pseudorandomness

- It's a property of a probability distribution
- Sample space: the set of all binary strings of length $\ell$
- U: the uniform probability distribution on this set
- G: a probability distribution on the same set (a set of probabilities, one per string)
- A sampler for G and U answers the request "Give me a string":
  - A string drawn according to G is called pseudorandom
  - A string drawn according to U is called random
- G is pseudorandom if a string drawn according to G is indistinguishable from a string drawn according to U to a PPT distinguisher
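To see what "distinguishable" means, it helps to look at a distribution that is clearly not pseudorandom. In the sketch below (all names and the 4-bit/8-bit sizes are hypothetical choices made here), G is the distribution obtained by repeating a uniform 4-bit seed twice, and the distinguisher outputs 1 when the two halves of its input are equal. Both probabilities are computed exactly by enumeration.

```python
from fractions import Fraction
from itertools import product

SEED_BITS, OUT_BITS = 4, 8


def bad_generator(seed):
    """Toy G: {0,1}^4 -> {0,1}^8 that simply repeats the seed. Deliberately weak."""
    return seed + seed


def distinguisher(s):
    """Output 1 when the first half of the string equals the second half."""
    half = len(s) // 2
    return int(s[:half] == s[half:])


def prob_outputs_one(samples):
    """Exact probability that the distinguisher outputs 1 over equally likely samples."""
    samples = list(samples)
    return Fraction(sum(distinguisher(s) for s in samples), len(samples))


# Strings drawn according to G: one per uniformly chosen seed.
p_G = prob_outputs_one(bad_generator(seed) for seed in product((0, 1), repeat=SEED_BITS))

# Strings drawn according to U: every 8-bit string equally likely.
p_U = prob_outputs_one(product((0, 1), repeat=OUT_BITS))

advantage = abs(p_G - p_U)
```

The distinguisher outputs 1 with probability 1 on G but only $16/256 = 1/16$ on U, a gap of $15/16$. For a pseudorandom G, every PPT distinguisher's gap would have to be negligible.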