Crowdsourcing: Opportunity or New Threat? Major Area Exam

Crowdsourcing: Opportunity or New Threat?
Major Area Exam: June 12th
Gang Wang
Committee: Prof. Ben Y. Zhao (Co-chair), Prof. Heather Zheng (Co-chair), Prof. Christopher Kruegel

Why Crowdsourcing
• Software automation has replaced humans in many areas
  – Storing and retrieving large volumes of information
  – Performing calculations
• Humans still outperform computers in many ways

Searching for Jim Gray (2007)
• Jim Gray, Turing Award winner
• Went missing with his sailboat outside San Francisco Bay, Jan 2007
• No result from searches by the coast guard and private planes
• Use satellite images to search for Jim Gray’s sailboat
• Problem: the search cannot be automated by computer
• Solution
  – Split the satellite image into many small images
  – Volunteers look for his boat in each image
• 100,000 tasks completed in 2 days
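The "split the satellite image into many small images" step can be sketched as follows. This is only an illustration of the decomposition idea, not the procedure used in the actual search; the function name `tile_boxes`, the image size, and the tile size are all assumptions.

```python
from typing import Iterator, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def tile_boxes(width: int, height: int, tile: int) -> Iterator[Box]:
    """Yield bounding boxes that cover a width x height image with square tiles.

    Tiles on the right and bottom edges are clipped to the image bounds.
    """
    if tile <= 0:
        raise ValueError("tile size must be positive")
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            yield (left, top, min(left + tile, width), min(top + tile, height))


# Hypothetical sizes: a 1000 x 600 pixel image cut into 256-pixel tiles.
# Each box would become one micro-task shown to one or more volunteers.
tasks = list(tile_boxes(1000, 600, 256))
print(len(tasks))
```

Each box is small enough for a person to inspect quickly, which is what makes the large search problem suitable for a crowd.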

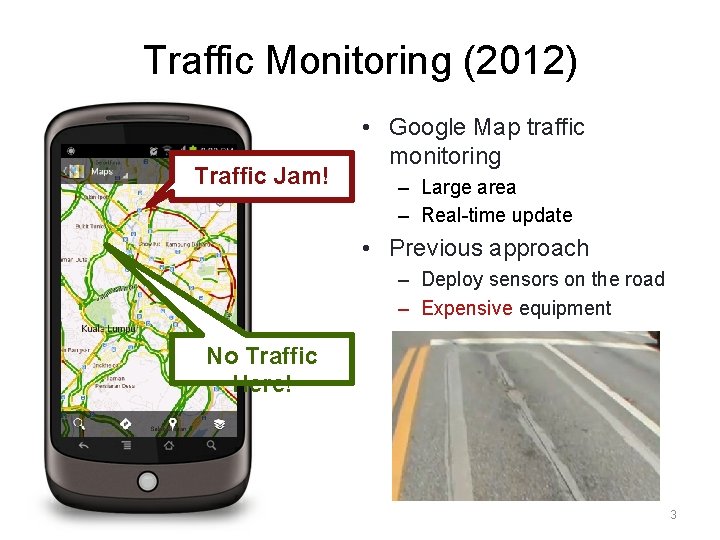

Traffic Monitoring (2012)
• Google Map traffic monitoring
  – Large area
  – Real-time update
• Previous approach
  – Deploy sensors on the road
  – Expensive equipment
[Figure labels: “Traffic Jam!”, “No Traffic Here!”]

User-driven traffic monitoring
  – 200 million Google Map users (mobile) [1]
  – Report location while driving
  – Integrate the traffic map in real-time
Newsflash: Apple is also building a crowdsourced map system in iOS 6 this fall
[1] http://techcrunch.com/2011/05/25/google-maps-for-mobile-stats

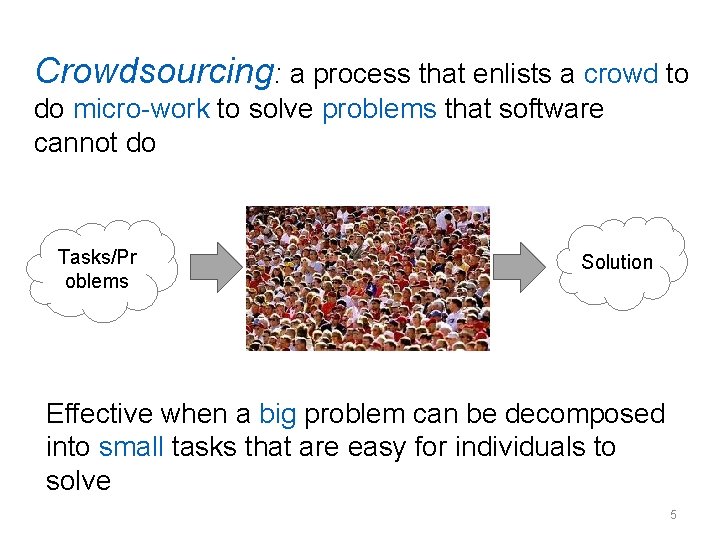

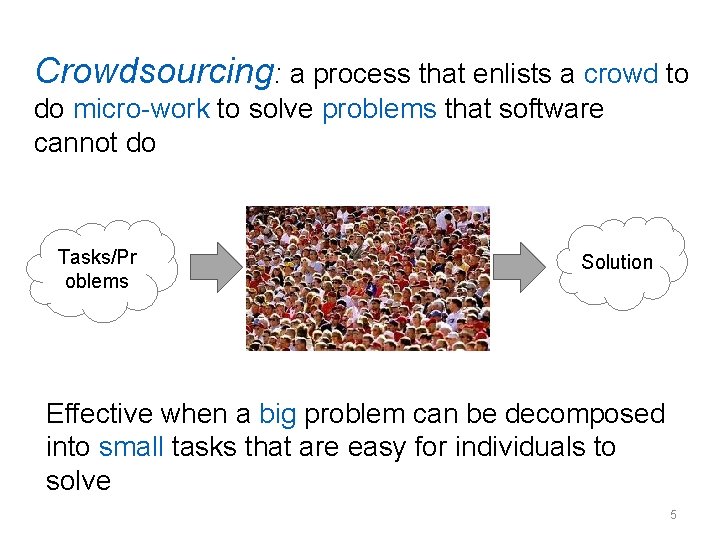

Crowdsourcing: a process that enlists a crowd to do micro-work to solve problems that software cannot solve
[Diagram: Tasks/Problems → Solution]
Effective when a big problem can be decomposed into small tasks that are easy for individuals to solve

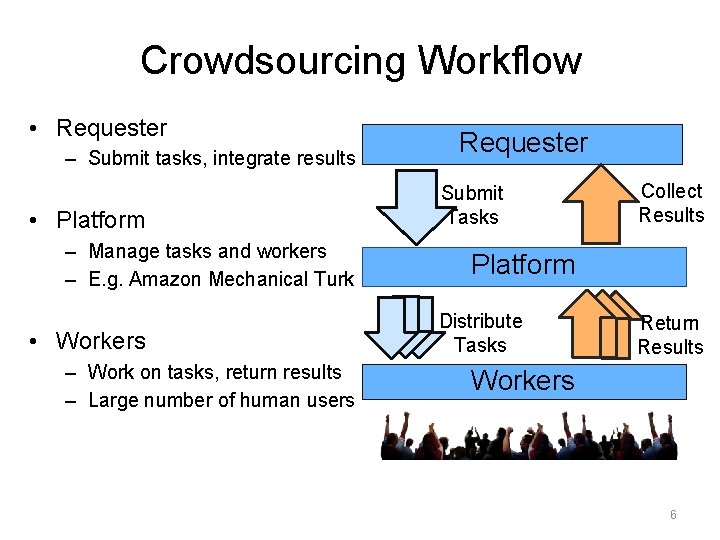

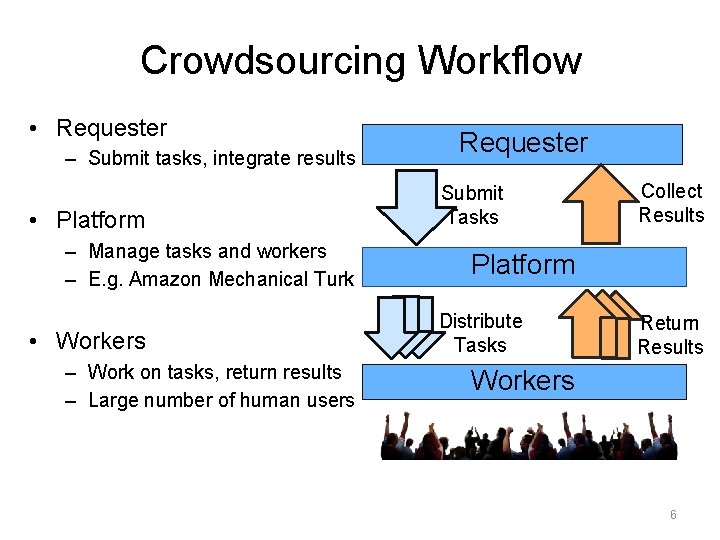

Crowdsourcing Workflow
• Requester
  – Submit tasks, integrate results
• Platform
  – Manage tasks and workers
  – E.g. Amazon Mechanical Turk
• Workers
  – Work on tasks, return results
  – Large number of human users
[Diagram: Requester → Submit Tasks → Platform → Distribute Tasks → Workers; Workers → Return Results → Platform → Collect Results → Requester]
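The three roles in the workflow can be mimicked with a toy model. This is a sketch only: `Platform`, `requester`, and the worker functions are hypothetical names, and nothing here corresponds to the API of Amazon Mechanical Turk or any other real service.

```python
from typing import Callable, Dict, List

Worker = Callable[[str], str]  # a worker maps a task description to an answer


class Platform:
    """Toy stand-in for a crowdsourcing platform (not a real service API)."""

    def __init__(self, workers: List[Worker]) -> None:
        self.workers = workers

    def run(self, tasks: List[str]) -> Dict[str, List[str]]:
        # Distribute every task to every worker and collect the answers.
        return {task: [work(task) for work in self.workers] for task in tasks}


def requester(platform: Platform, tasks: List[str]) -> Dict[str, List[str]]:
    # The requester submits tasks; integrating the answers is left to the caller.
    return platform.run(tasks)


# Hypothetical workers: plain string functions standing in for human users.
workers = [str.upper, str.title, str.upper]
results = requester(Platform(workers), ["label image 1", "label image 2"])
print(results["label image 1"])
```

A real platform would assign each task to only a few workers and handle payment; the sketch keeps just the submit, distribute, return, collect loop from the slide.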

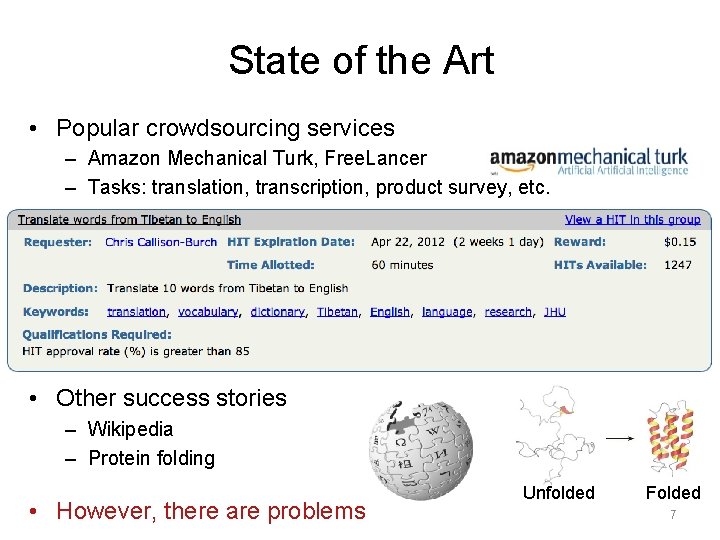

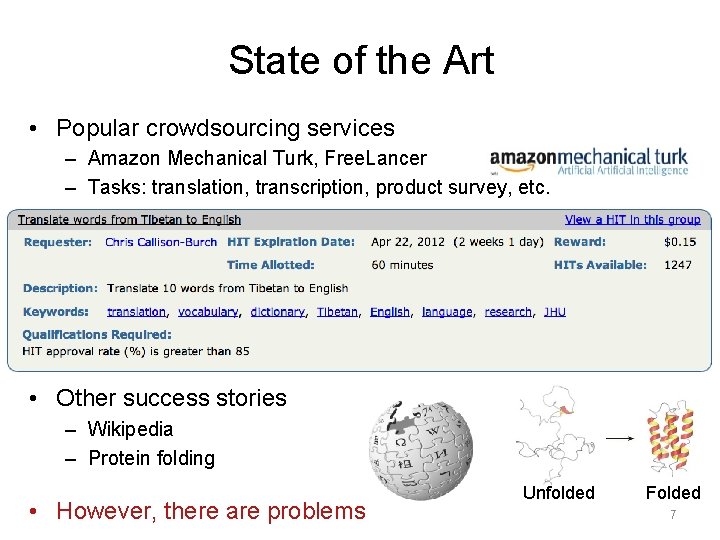

State of the Art
• Popular crowdsourcing services
  – Amazon Mechanical Turk, Freelancer
  – Tasks: translation, transcription, product survey, etc.
• Other success stories
  – Wikipedia
  – Protein folding
• However, there are problems
[Figure labels: Unfolded, Folded]

Misuse of Crowdsourcing
• Headlines
  – “Over 40% of New Mechanical Turk Jobs Involve Spam”
  – “In a Race to Out-Rave, 5-Star Web Reviews Go for $5”
  – “Dairy Giant Mengniu in Smear Scandal”
  – “Hacked Emails Reveal Russian Astroturfing Program”
• Difficult to detect
  – High quality spam
  – Deceptive product reviews
  – Realistic fake accounts
• Emerging threat to online communities

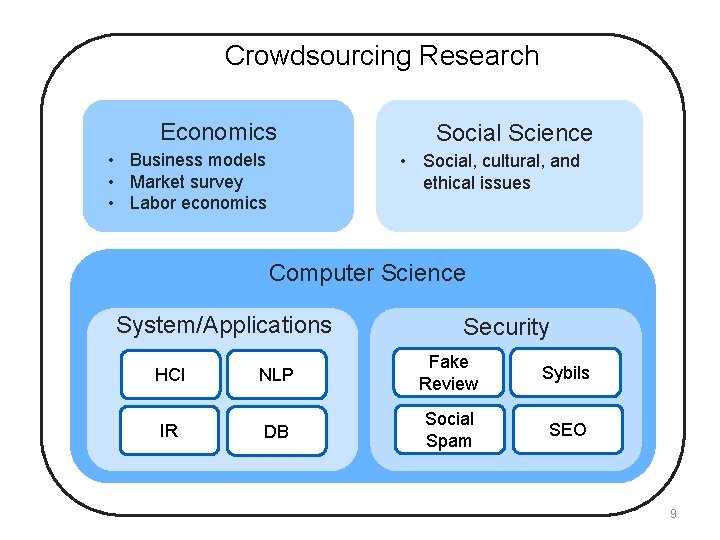

Crowdsourcing Research
• Economics
  – Business models
  – Market survey
  – Labor economics
• Social Science
  – Social, cultural, and ethical issues
• Computer Science
  – System/Applications: HCI, NLP, IR, DB
  – Security: Fake Review, Sybils, Social Spam, SEO

Outline
• Introduction
• Overview of Crowdsourcing Applications
• Research Challenges
• Security and Crowdsourcing

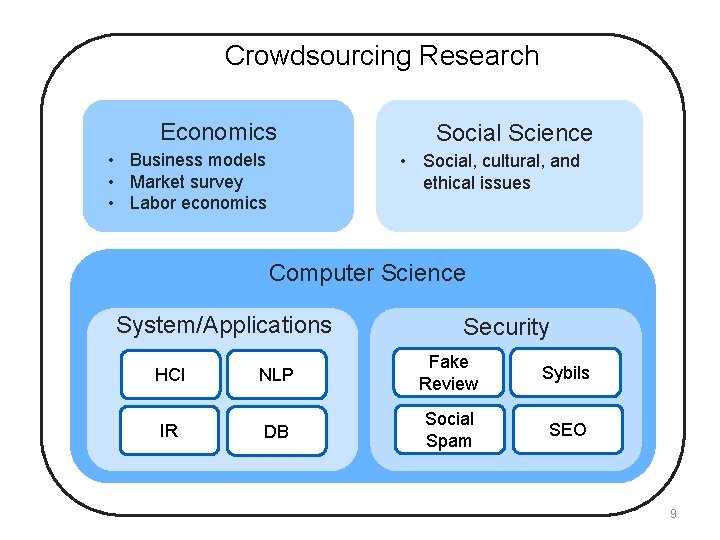

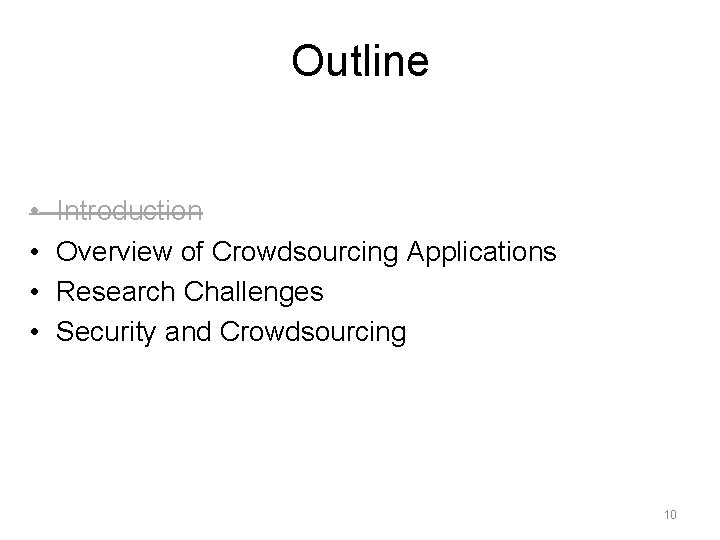

Crowdsourcing Applications
Categorize applications based on human intelligence
• Natural language processing (NLP)
  – Data labeling [Snow 2008] [Callison-Burch 2009]
  – Search result validation [Alonso 2008]
  – Database query: CrowdDB [Franklin 2011], Qurk [Marcus 2011]
• Image processing
  – Image annotation [Ahn 2004] [Chen 2009]
  – Image search [Yan 2010]
• Content generation/knowledge sharing
  – Wikipedia, Quora, Yahoo! Answers, StackOverflow
  – Real-time Q&A: VizWiz [Bigham 2010], Mimir [Hsieh 2009]
• Human sensor
  – Google Map traffic monitoring
  – Twitter earthquake report [Sakaki 2010]

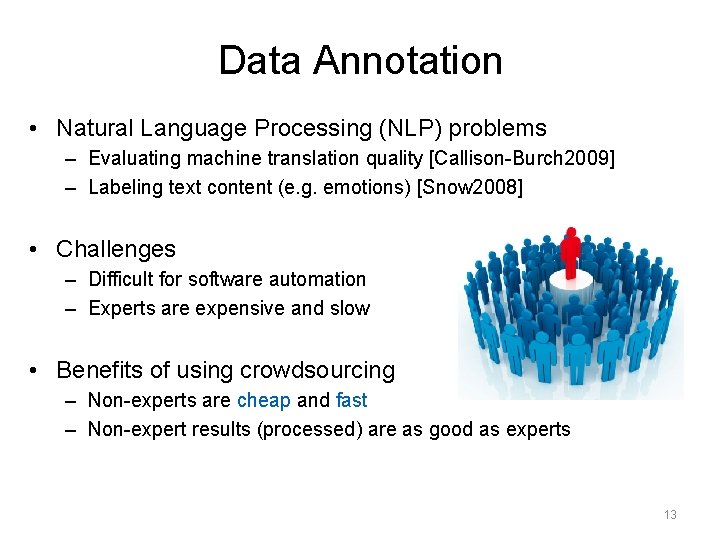

Data Annotation
• Natural Language Processing (NLP) problems
  – Evaluating machine translation quality [Callison-Burch 2009]
  – Labeling text content (e.g. emotions) [Snow 2008]
• Challenges
  – Difficult for software automation
  – Experts are expensive and slow
• Benefits of using crowdsourcing
  – Non-experts are cheap and fast
  – Non-expert results (after processing) are as good as expert results

![Image Search Crowd Search Yan 2010 Accurate image searching for mobile devices by Image Search Crowd. Search [Yan 2010] • Accurate image searching for mobile devices by](https://slidetodoc.com/presentation_image_h/3626010ee89b4d65c46dd8da20a4af25/image-15.jpg)

Image Search
CrowdSearch [Yan 2010]
• Accurate image searching for mobile devices by combining
  – Automated image searching
  – Human validation of search results via crowdsourcing
[Diagram: Query Image → Automated Image Search → Candidate Images (only 25% accuracy) → Crowd Validation → Result (accuracy > 95%)]
Use human intelligence to improve automation

![Question and Answer Viz Wiz Bigham 2010 Help blind people Answer questions Question and Answer Viz. Wiz [Bigham 2010] • Help blind people • Answer questions](https://slidetodoc.com/presentation_image_h/3626010ee89b4d65c46dd8da20a4af25/image-16.jpg)

Question and Answer
VizWiz [Bigham 2010]
• Help blind people
• Answer questions
• Near real-time
• Example
  – Shopping scenario
[Diagram: user (“Take a photo”, “Record the question”: “Which item is corn?”) → Server → answer (“Right side”)]
• Use the crowd to help people in need
• Replace an “expensive” personal assistant

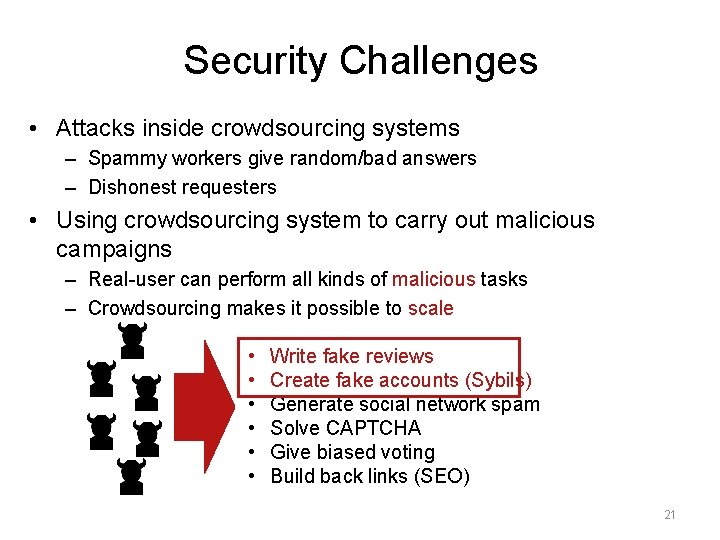

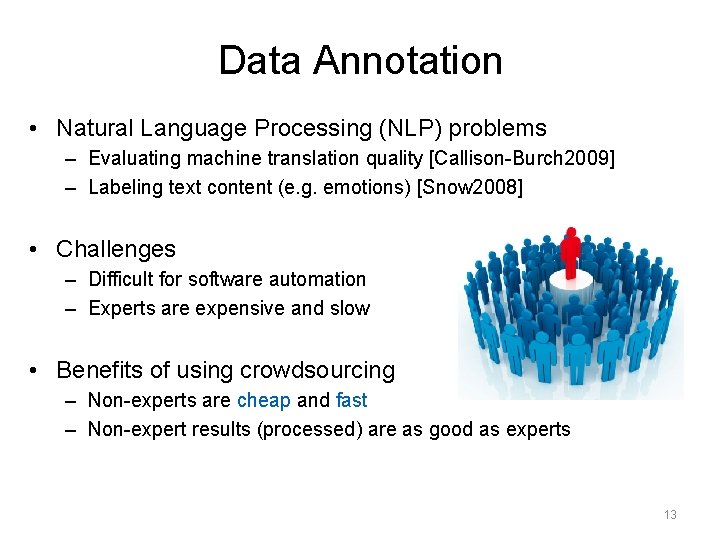

Challenges in Crowdsourcing
• Quality control
  – High diversity in worker background and expertise
• Incentives
  – Encourage participation
  – Improve work quality
• Task management
  – Perform complex/real-time tasks
  – Coordinate workers and requesters
• Security
  – Spammy/cheating workers, fraudulent requesters
  – Using crowdsourcing systems for malicious attacks

![Quality Control Fundamental problem the crowd is not reliable Oleson 2011 Snow Quality Control • Fundamental problem: the crowd is not reliable – [Oleson 2011] [Snow](https://slidetodoc.com/presentation_image_h/3626010ee89b4d65c46dd8da20a4af25/image-19.jpg)

Quality Control
• Fundamental problem: the crowd is not reliable
  – [Oleson 2011] [Snow 2008] [Callison-Burch 2009] [Yan 2010] [Franklin 2011]
  – Workers make mistakes
  – Workers spam the system
• Existing strategies
  – Majority voting
  – Pre-screening to test workers
  – Statistical models to correct data bias
[Figure labels: majority vote (Yes, Yes, No); screening test against ground truth]
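Two of the strategies listed above, pre-screening and majority voting, can be combined in a few lines. The sketch below is a minimal illustration with made-up data; the function names, the accuracy threshold, and the answer format are assumptions rather than anything taken from the cited papers.

```python
from collections import Counter
from typing import Dict, List

Answers = Dict[str, Dict[str, str]]  # worker id -> {question id -> answer}


def screen_workers(answers: Answers, gold: Dict[str, str], min_acc: float) -> List[str]:
    """Keep workers whose accuracy on gold (ground-truth) questions is >= min_acc."""
    kept = []
    for worker, given in answers.items():
        graded = [q for q in gold if q in given]
        if not graded:
            continue  # no screening evidence for this worker
        correct = sum(given[q] == gold[q] for q in graded)
        if correct / len(graded) >= min_acc:
            kept.append(worker)
    return kept


def majority_vote(answers: Answers, workers: List[str], question: str) -> str:
    """Return the most common answer to `question` among the given workers."""
    votes = Counter(answers[w][question] for w in workers if question in answers[w])
    if not votes:
        raise ValueError(f"no votes for {question!r}")
    return votes.most_common(1)[0][0]


# Made-up data: g1 is a gold question, q1 is the real task.
answers = {
    "w1": {"g1": "yes", "q1": "yes"},
    "w2": {"g1": "yes", "q1": "yes"},
    "w3": {"g1": "yes", "q1": "no"},
    "w4": {"g1": "no", "q1": "no"},  # fails the screening question
    "w5": {"g1": "no", "q1": "no"},  # fails the screening question
}
trusted = screen_workers(answers, {"g1": "yes"}, min_acc=1.0)
label = majority_vote(answers, trusted, "q1")
print(trusted, label)
```

In this toy data the unscreened majority on q1 is "no", while the screened majority is "yes", which shows why the two strategies are often used together. Ties are not handled specially here; `Counter.most_common` simply returns the answer it saw first.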

Incentives
• Basic question: how to set the right price for tasks?
  – Can you improve work quality by raising payment?
  – Can you attract more workers by raising payment?
• Empirical studies on worker incentives [Mason 2010] [Hsieh 2010]
  – Higher payment helps recruit workers faster and increases participation
  – Money does not improve quality
  – Punishment/bonus-based quality control
    • Pay the minimum $0.01 to all workers and $0.01 as a bonus
• Common problem for all applications

Task Management
• Crowdsource complex tasks [Kittur 2011]
  – Partition tasks
  – Execute each workflow in parallel
  – Integrate results
  – Example: writing a travel book for New York City
    • Partition the complex task (outline): Brief History, Attractions, …
    • Map (gather facts)
    • Reduce (collect text into a paragraph)
• Implement algorithms on the crowd [Little 2010]
  – Regard the crowd as a computation unit
  – Design/organize the tasks in a way to run algorithms
  – Example: use the bubble sort algorithm to sort pictures (“Which one is better?”)
• Open problems
  – Real-time crowdsourcing, parallel task execution, synchronization
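The bubble sort example can be sketched by treating each pairwise comparison as one crowd task. This is a simulation under stated assumptions: `ask_crowd` is a hypothetical callback, and the hidden scores replace real human judgments. It is not the implementation from [Little 2010].

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def crowd_bubble_sort(items: List[T], ask_crowd: Callable[[T, T], bool]) -> List[T]:
    """Bubble sort where each comparison is one crowd task.

    ask_crowd(a, b) should return True when the crowd judges a better than b.
    The result is ordered from best to worst.
    """
    result = list(items)
    for end in range(len(result) - 1, 0, -1):
        swapped = False
        for i in range(end):
            # One micro-task: "Which one is better?"
            if ask_crowd(result[i + 1], result[i]):
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:
            break  # no swaps in this pass means the list is already ordered
    return result


# Simulated crowd: a hidden quality score replaces real human judgments.
hidden_score = {"a.jpg": 2, "b.jpg": 5, "c.jpg": 1, "d.jpg": 4}
asked: List[Tuple[str, str]] = []  # log of the questions sent to the crowd


def simulated_crowd(x: str, y: str) -> bool:
    asked.append((x, y))
    return hidden_score[x] > hidden_score[y]


ranked = crowd_bubble_sort(list(hidden_score), simulated_crowd)
print(ranked, len(asked))
```

The length of `asked` is the number of paid comparisons. Real workers can disagree or answer inconsistently, so a practical version would probably ask several workers per comparison and aggregate their answers.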

Security Challenges
• Attacks inside crowdsourcing systems
  – Spammy workers give random/bad answers
  – Dishonest requesters
• Using crowdsourcing systems to carry out malicious campaigns
  – Real users can perform all kinds of malicious tasks
  – Crowdsourcing makes it possible to scale
    • Write fake reviews
    • Create fake accounts (Sybils)
    • Generate social network spam
    • Solve CAPTCHAs
    • Give biased votes
    • Build back links (SEO)

Outline
• Introduction
• Overview of Crowdsourcing Applications
• Research Challenges
• Security and Crowdsourcing
  – Malicious Crowdsourcing Systems
  – Fake Reviews Generation by the Crowd
  – Detecting Sybils in Online Social Networks

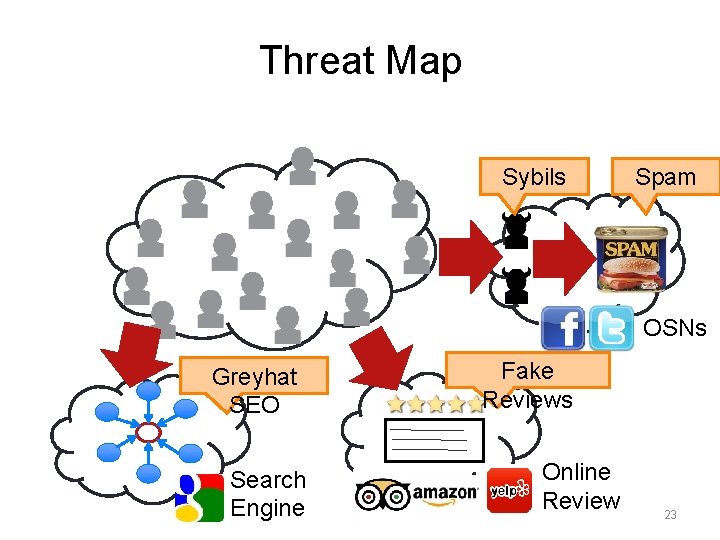

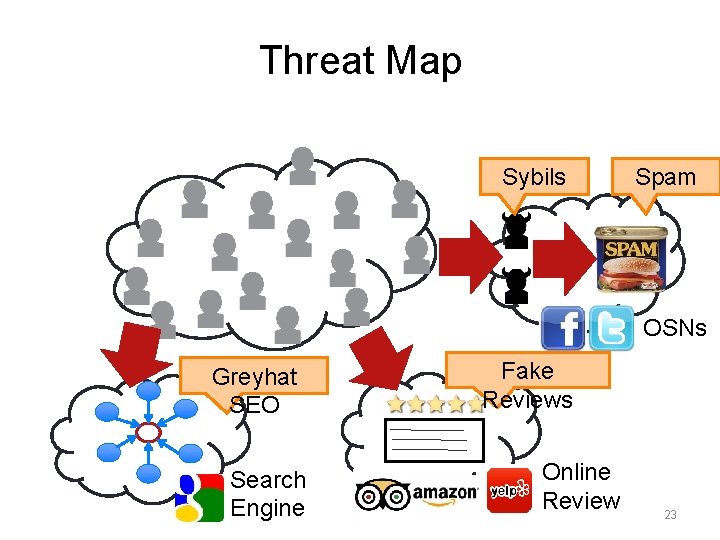

Threat Map
[Diagram: Sybils and spam → OSNs; greyhat SEO → search engines; fake reviews → online review sites]

Dark Side of Crowdsourcing
• Crowdsourcing
  – Large number of workers
  – Easy, cheap, fast
  – Real users can do bad jobs
[Figure labels: 5-star rating and positive review; use different IP addresses; bypass existing spam filter]

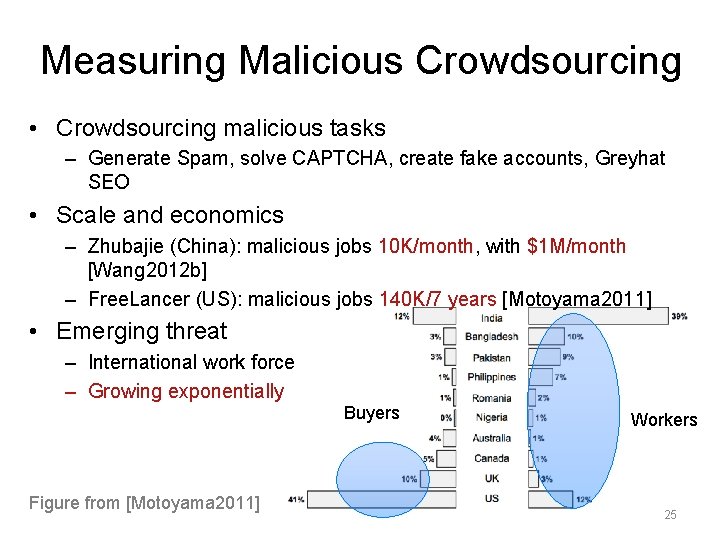

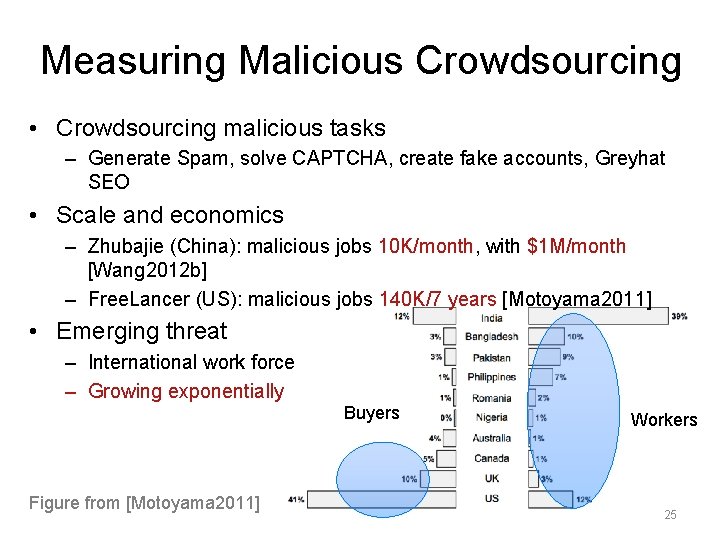

Measuring Malicious Crowdsourcing
• Crowdsourcing malicious tasks
  – Generate spam, solve CAPTCHAs, create fake accounts, greyhat SEO
• Scale and economics
  – Zhubajie (China): malicious jobs 10K/month, with $1M/month [Wang 2012b]
  – Freelancer (US): malicious jobs 140K over 7 years [Motoyama 2011]
• Emerging threat
  – International work force
  – Growing exponentially
[Figure from [Motoyama 2011]: buyers and workers]

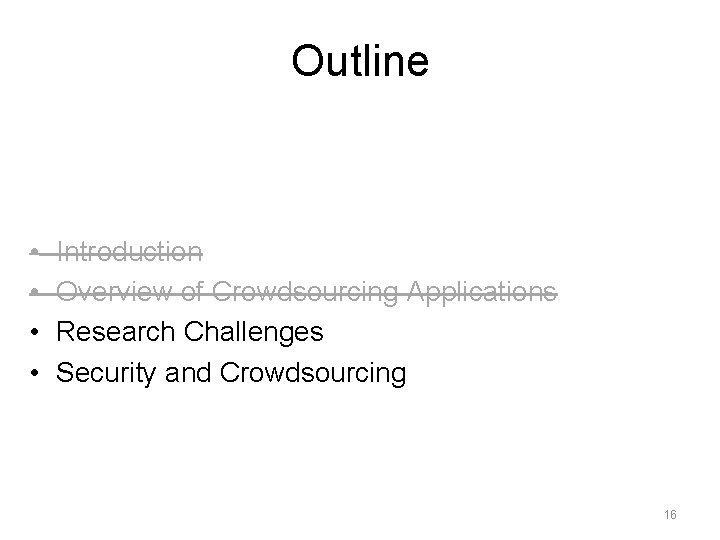

Online Reviews: Why Important?
• 80% of people check online reviews before purchasing products/travel online. [1]
• Independent restaurants: a one-star increase in Yelp rating leads to a 9% increase in revenue. [2]
[1] http://www.coneinc.com/negative-reviews-online-reverse-purchase-decisions
[2] Michael Luca. Reviews, Reputation, and Revenue: The Case of Yelp.com. Harvard Business School Working Paper, 2011

![Detecting Fake Reviews Detecting review spam Jindal 2008 DuplicatedNearduplicated reviews Dataset Reviewer Detecting Fake Reviews • Detecting review spam [Jindal 2008] – Duplicated/Near-duplicated reviews Dataset Reviewer](https://slidetodoc.com/presentation_image_h/3626010ee89b4d65c46dd8da20a4af25/image-29.jpg)

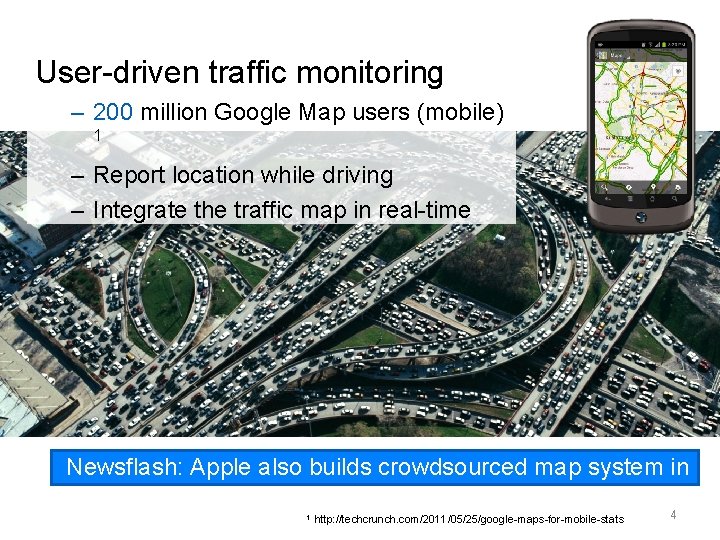

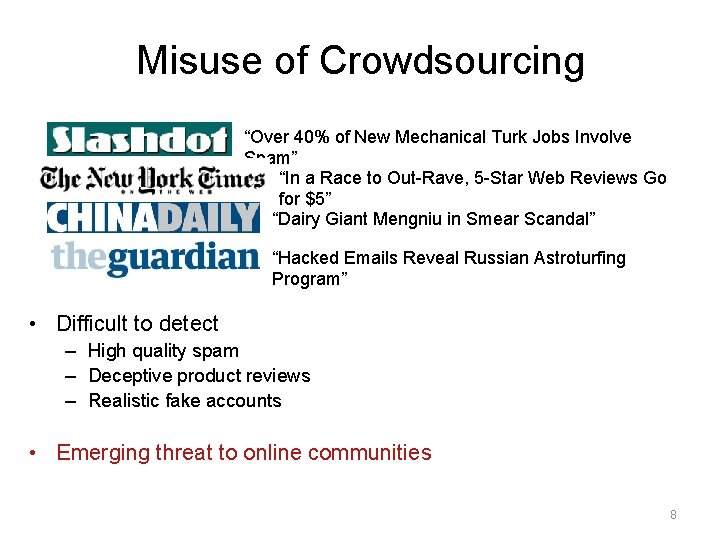

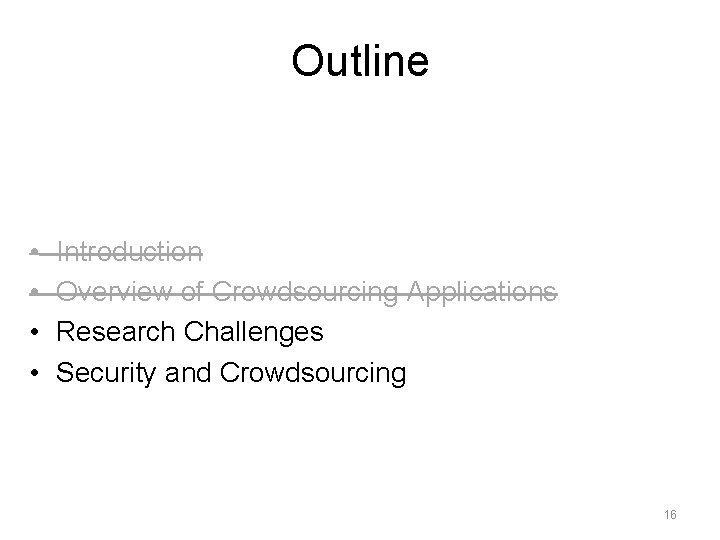

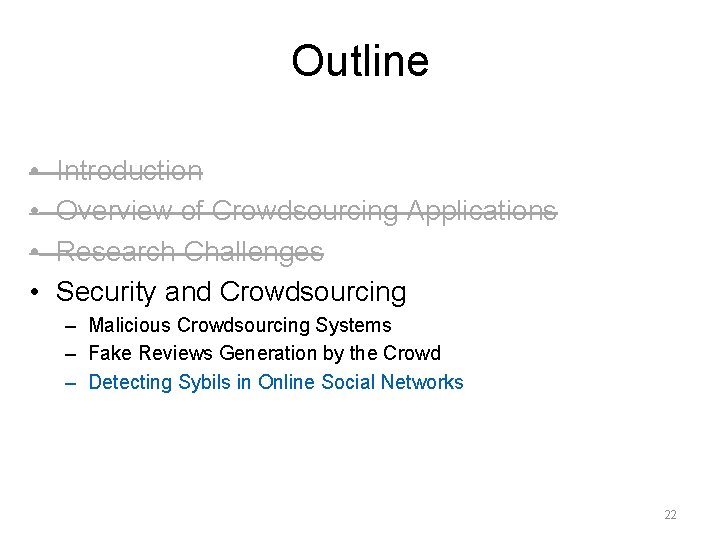

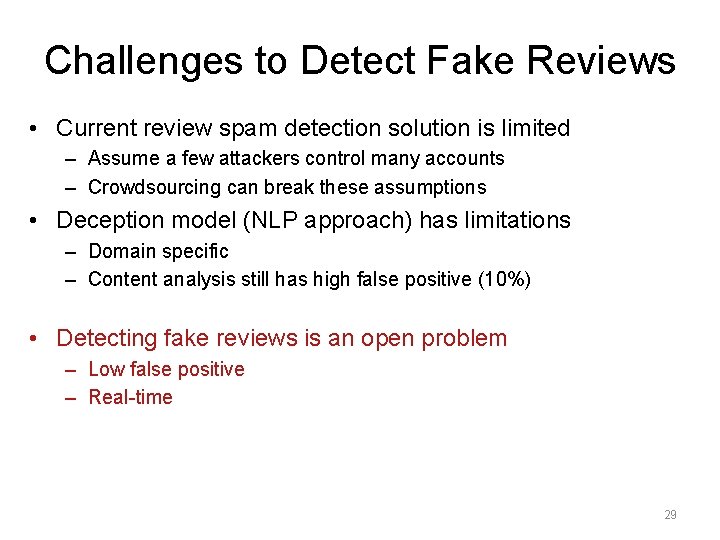

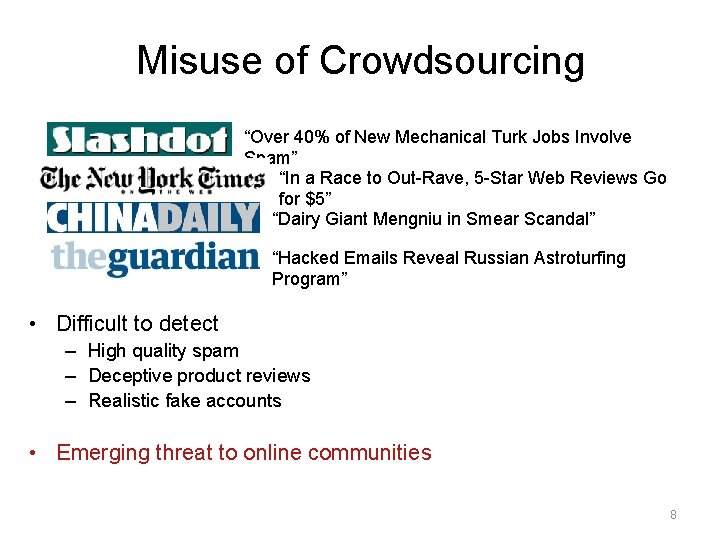

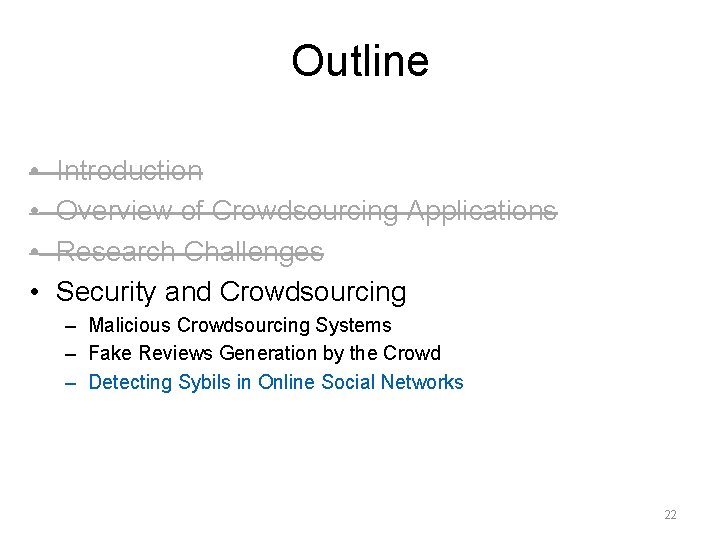

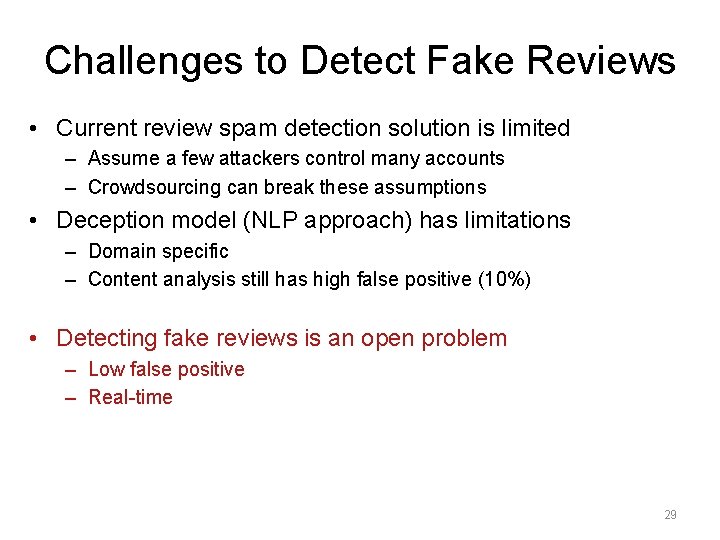

Detecting Fake Reviews
• Detecting review spam [Jindal 2008]
  – Duplicated/near-duplicated reviews

| Dataset | Reviewers | Products | Reviews | Spam Reviews |
|---|---|---|---|---|
| Amazon | 2,146,048 | 1,195,133 | 5,838,032 | 55,319 |

  – The spam review count is a lower bound
• Detecting review spammers
  – Classify rating/review behaviors [Lim 2010]
  – Detect synchronized reviews in groups [Mukherjee 2012]
• Deception models [Ott 2011]
  – Content classification using trained data
  – Psycholinguistic deception detection
  – Human accuracy 60%, classifier accuracy 90%
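One common way to flag duplicated or near-duplicated reviews is to compare sets of word shingles with Jaccard similarity. The slide does not describe the exact procedure in [Jindal 2008], so the sketch below is a generic illustration; the shingle size of 2, the 0.9 threshold, and the sample reviews are assumptions.

```python
import re
from itertools import combinations
from typing import List, Set, Tuple

Shingle = Tuple[str, ...]


def shingles(text: str, k: int = 2) -> Set[Shingle]:
    """Return the set of k-word shingles of a lower-cased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: Set[Shingle], b: Set[Shingle]) -> float:
    """Jaccard similarity |a & b| / |a | b|, defined as 0.0 for two empty sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(reviews: List[str], threshold: float = 0.9) -> List[Tuple[int, int]]:
    """Return index pairs whose shingle sets have Jaccard similarity >= threshold."""
    sets = [shingles(r) for r in reviews]
    return [(i, j) for i, j in combinations(range(len(reviews)), 2)
            if jaccard(sets[i], sets[j]) >= threshold]


# Made-up reviews for illustration only.
reviews = [
    "Great phone, battery lasts all day and the camera is sharp.",
    "great phone battery lasts all day and the camera is sharp",
    "The hotel room was small but the staff were friendly.",
]
pairs = near_duplicates(reviews)
print(pairs)
```

Comparing all pairs is quadratic in the number of reviews, so a dataset with millions of reviews would need an approximate method such as MinHash with locality-sensitive hashing. Reviews written independently by many crowd workers share little text, which is one reason duplicate detection gives only a lower bound on spam.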

Challenges in Detecting Fake Reviews
• Current review spam detection solutions are limited
  – They assume a few attackers control many accounts
  – Crowdsourcing can break these assumptions
• Deception models (NLP approach) have limitations
  – Domain specific
  – Content analysis still has a high false positive rate (10%)
• Detecting fake reviews is an open problem
  – Low false positives
  – Real-time

Social Network Sybils
• Sybils in Online Social Networks (OSNs)
  – Cheating in social games
  – Spreading spam/malware
  – [Thomas 2011], [Gao 2010], [Nazir 2010]
• Challenges to detect Sybils in the wild
  – Various/adaptive Sybil behavior patterns/attack strategies
  – Increasingly sophisticated/realistic Sybil account profiles
  – Automated mechanisms are losing effectiveness
• Use crowdsourcing for Sybil detection

Crowdsourced Sybil Detection
• Basic idea: build a crowdsourced Sybil detector
  – Resilient to changing attacker strategies
• Question: can humans identify Sybil profiles? (answered with a user study)
  – Ground truth datasets of full user profiles
    • 200 real + 180 fake accounts (Renren, Facebook-India)
  – Segmented user groups
    • Renren users (Chinese), Facebook (US), Facebook (Indian)
    • Experts (conscientious, motivated), Turkers (paid per profile, $-driven)
• High-level results
  – Experts are accurate; both experts and turkers have near-zero false positives
  – Quality control can bring turker accuracy close to that of experts
  – Accurate, scalable, cost-effective

Conclusion
• An alternative solution to various problems
  – That are difficult to automate with software
  – That can be decomposed into small tasks
• Many challenges in crowdsourcing systems
  – Quality control against unreliable workers
  – Task management for complex tasks
  – Incentive models to reduce cost and optimize performance
• Malicious crowdsourcing and related attacks
  – Serious threat to existing security mechanisms
  – Measurement study to understand the problem
  – Defense is still an open problem

Possible Research Areas
• Defend against malicious crowdsourcing systems
  – Attacking malicious crowdsourcing systems
  – Detecting crowdsourcing campaigns in real-time
• Spot fake reviews/reviewers
  – Resilient to changing behaviors
  – Real-time
  – Scalability
• Using crowdsourcing to solve security problems
  – Crowdsourcing to detect social Sybils

Thank you! Questions?
