CROWD CENTRALITY

David Karger, Sewoong Oh, Devavrat Shah (MIT and UIUC)

CROWD SOURCING

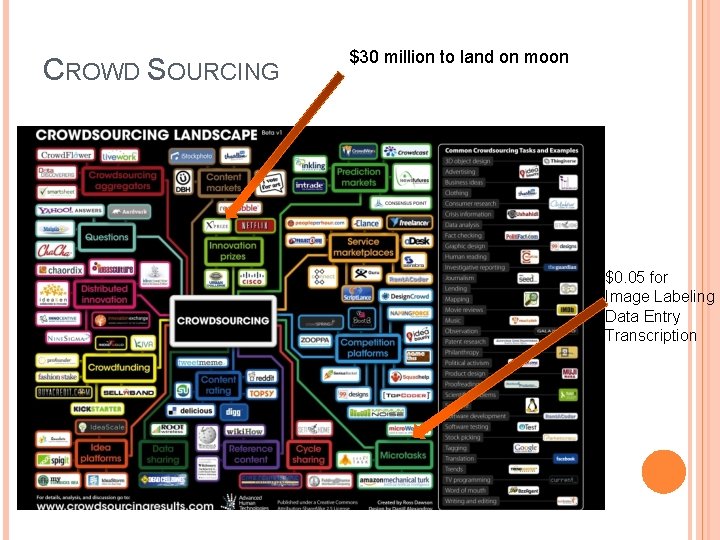

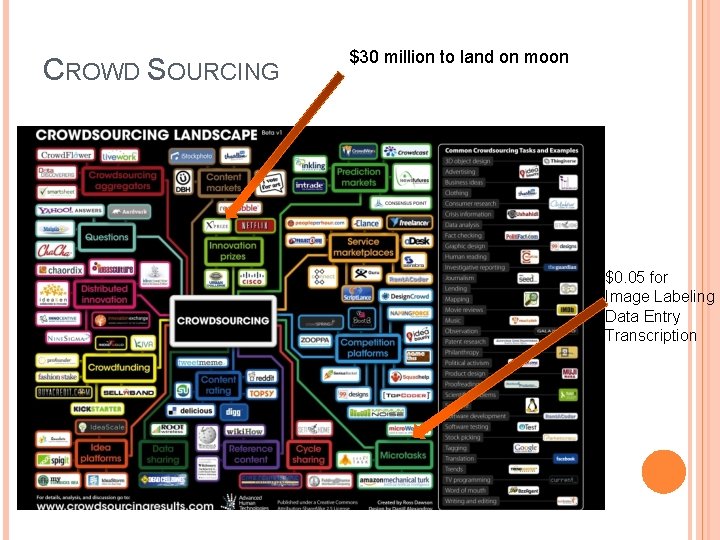

CROWD SOURCING

- $30 million to land on the moon
- $0.05 for image labeling, data entry, transcription

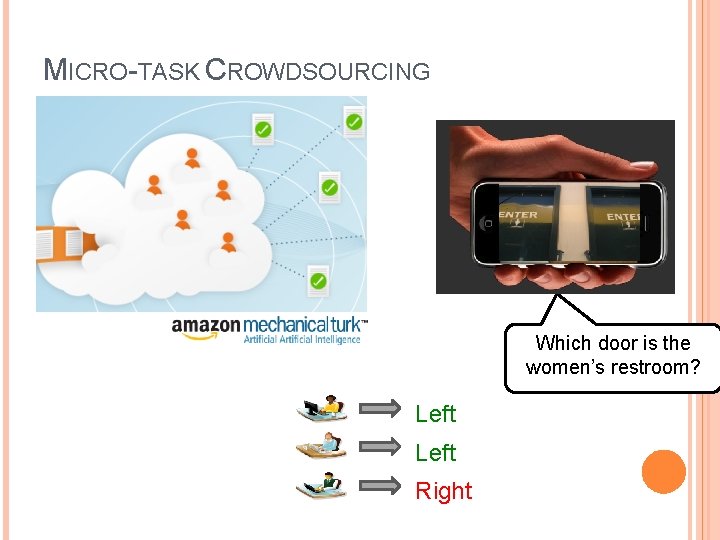

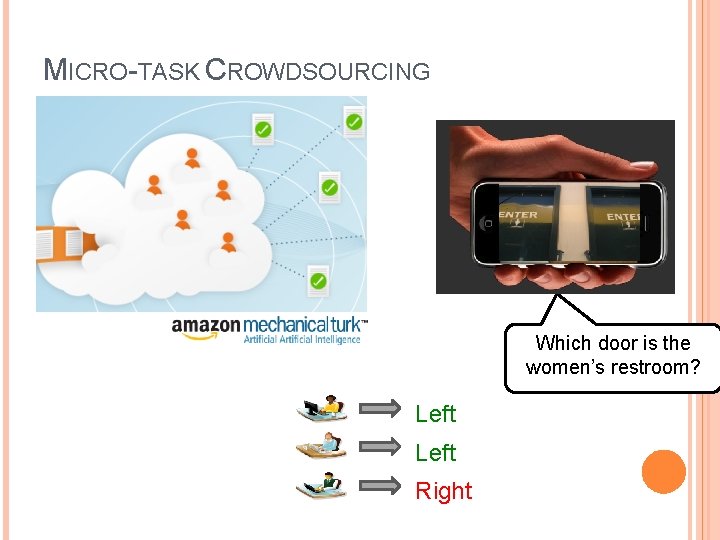

MICRO-TASK CROWDSOURCING

MICRO-TASK CROWDSOURCING

Which door is the women’s restroom? Left / Right

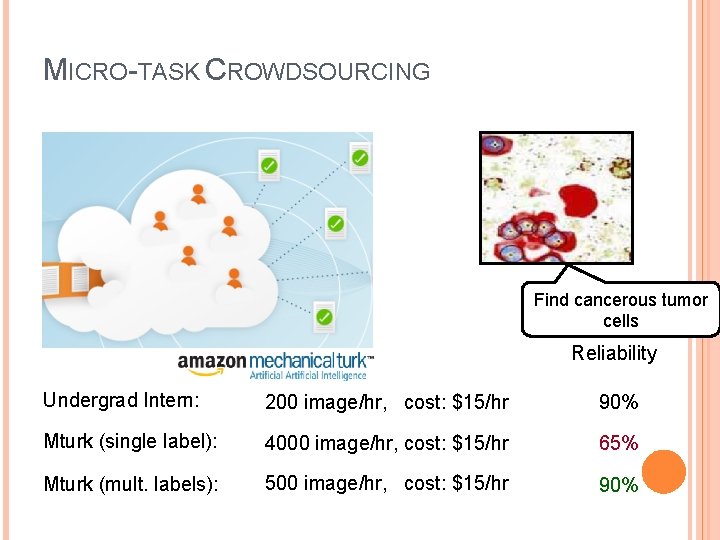

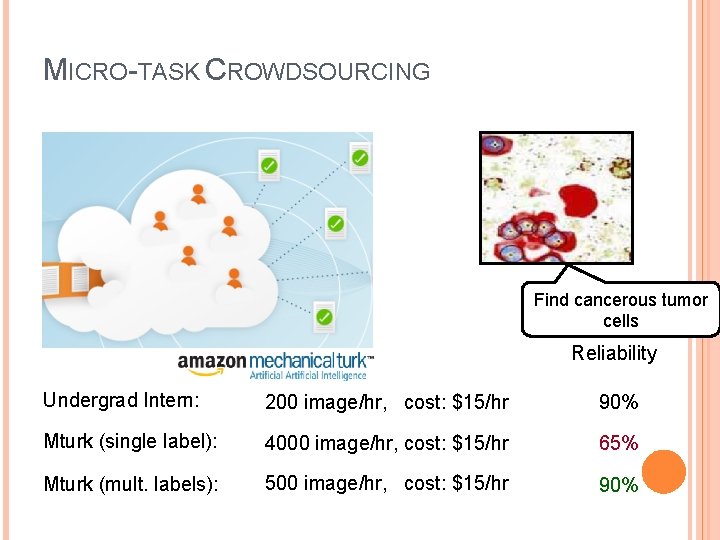

MICRO-TASK CROWDSOURCING

Task: find cancerous tumor cells.

| Labeler | Throughput | Cost | Reliability |
|---|---|---|---|
| Undergrad intern | 200 images/hr | $15/hr | 90% |
| MTurk (single label) | 4000 images/hr | $15/hr | 65% |
| MTurk (multiple labels) | 500 images/hr | $15/hr | 90% |
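The trade-off in the table is easier to see as cost per image. The sketch below only restates the slide's three rows; `LABELERS` and `cost_per_1000_images` are illustrative names, not from the talk.

```python
# Rows copied from the slide: (images per hour, dollars per hour, reliability).
LABELERS = {
    "Undergrad intern": (200, 15.0, 0.90),
    "MTurk (single label)": (4000, 15.0, 0.65),
    "MTurk (multiple labels)": (500, 15.0, 0.90),
}


def cost_per_1000_images(images_per_hour: int, dollars_per_hour: float) -> float:
    """Dollars spent to label 1000 images at the given throughput and hourly cost."""
    return dollars_per_hour * 1000 / images_per_hour


for name, (rate, cost, reliability) in LABELERS.items():
    print(f"{name}: ${cost_per_1000_images(rate, cost):.2f} per 1000 images, "
          f"{reliability:.0%} reliable")
```

At the same 90% reliability, collecting multiple MTurk labels costs $30 per 1000 images versus $75 for the intern, i.e. 40% of the cost.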

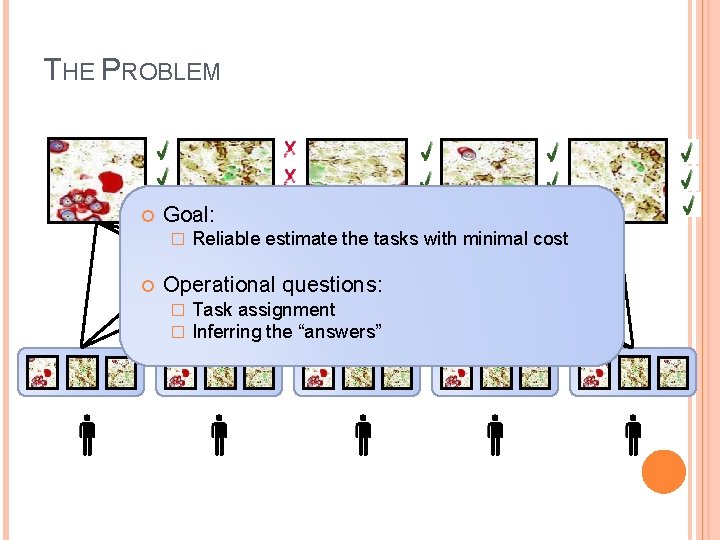

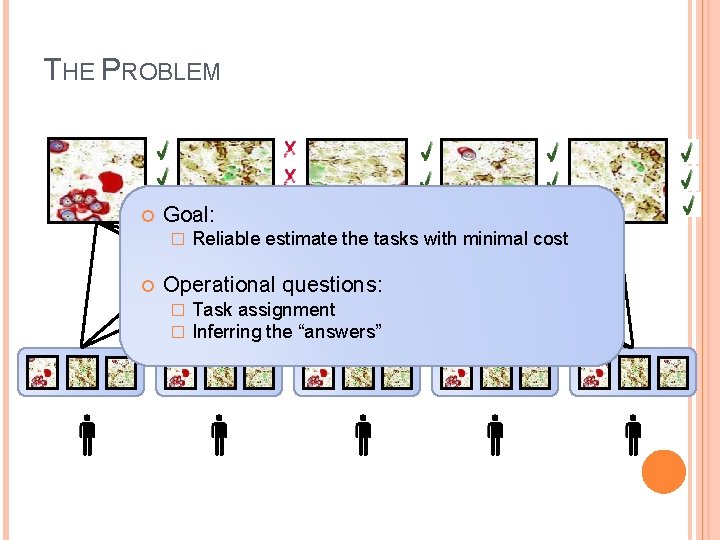

THE PROBLEM

Goal: reliably estimate the answers to the tasks at minimal cost.

Operational questions:
- Task assignment
- Inferring the “answers”

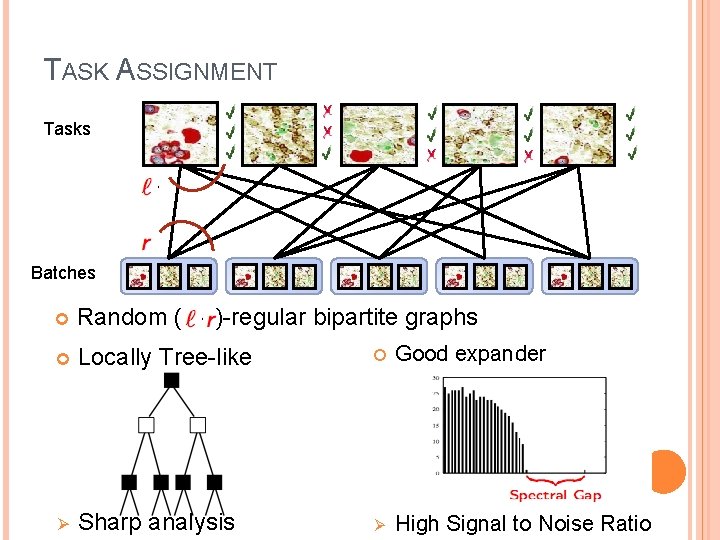

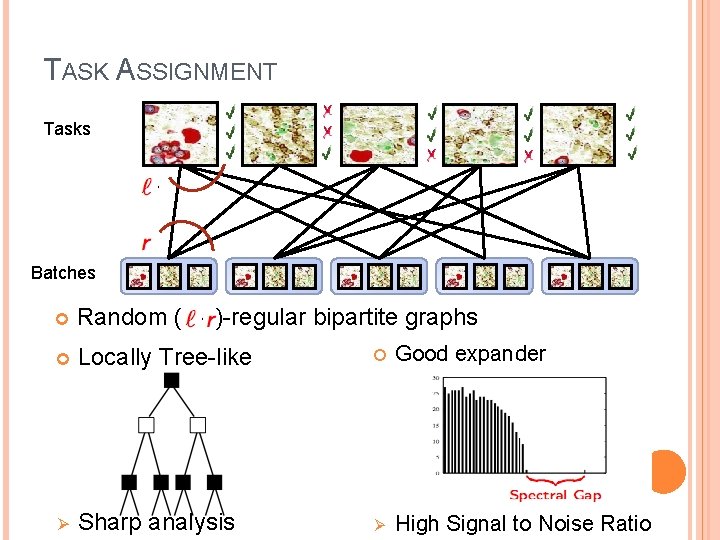

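TASK ASSIGNMENT

Tasks are grouped into batches (one batch per worker) using random (l, r)-regular bipartite graphs: each task is assigned to l workers and each worker receives r tasks.
- Locally tree-like ⇒ sharp analysis
- Good expander ⇒ high signal-to-noise ratio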
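One standard way to sample a random bipartite graph in which every task has l workers and every worker has r tasks is the configuration model: give each task l edge "stubs", each worker r stubs, and match stubs uniformly at random. This is a minimal sketch under that assumption (the slides do not say how the graphs were generated); `random_regular_bipartite` is a hypothetical helper, and it keeps the occasional repeated task-worker pair instead of resampling.

```python
import random


def random_regular_bipartite(n_tasks: int, l: int, r: int, seed: int = 0):
    """Configuration-model sample of an (l, r)-regular bipartite task-worker graph.

    Every task gets l stubs and every worker r stubs; worker stubs are shuffled and
    paired with task stubs in order. Repeated (task, worker) pairs may occur and are
    kept rather than resampled.
    """
    if (n_tasks * l) % r != 0:
        raise ValueError("n_tasks * l must be divisible by r")
    n_workers = n_tasks * l // r
    rng = random.Random(seed)
    task_stubs = [i for i in range(n_tasks) for _ in range(l)]
    worker_stubs = [j for j in range(n_workers) for _ in range(r)]
    rng.shuffle(worker_stubs)
    return n_workers, list(zip(task_stubs, worker_stubs))


n_workers, edges = random_regular_bipartite(n_tasks=1000, l=5, r=10)
print(n_workers, "workers,", len(edges), "task-worker edges")
```

Shuffling only the worker side is enough to make the stub matching uniform.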

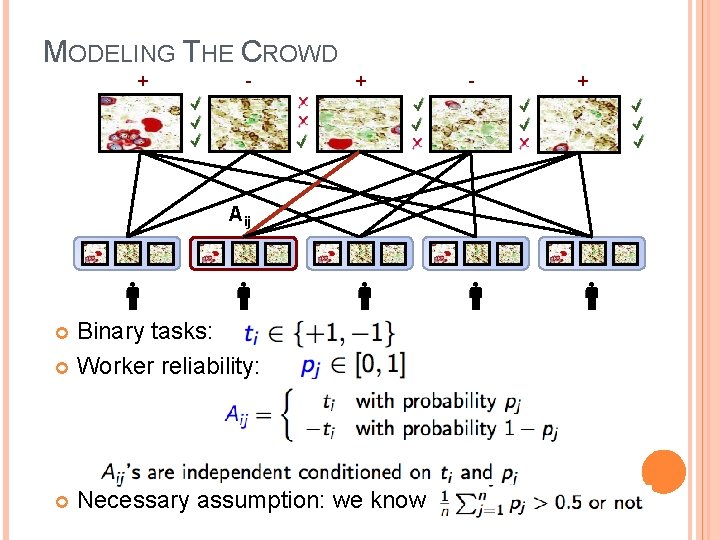

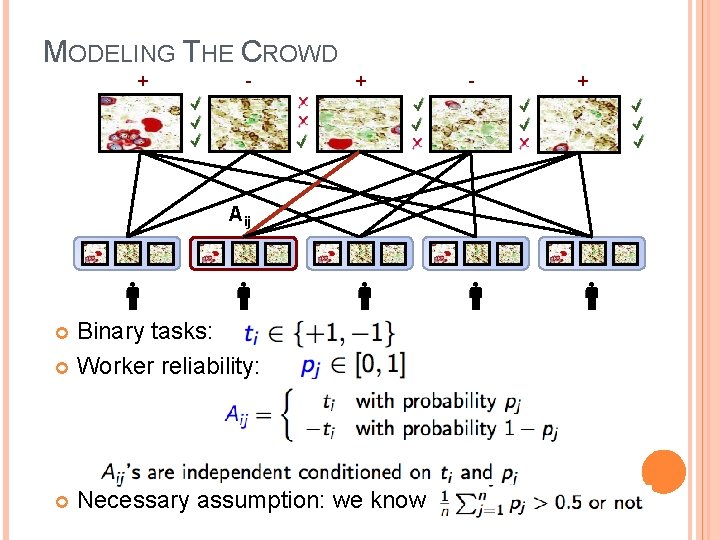

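MODELING THE CROWD

- Binary tasks: $t_i \in \{+1, -1\}$
- Worker reliability: worker $j$ gives the correct answer with probability $p_j$, i.e. $A_{ij} = t_i$ with probability $p_j$ and $A_{ij} = -t_i$ with probability $1 - p_j$
- Necessary assumption: we know the orientation of the crowd, i.e. whether workers are on average more often right (+) than wrong (−)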
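The crowd model can be simulated directly: on every task-worker edge, worker $j$ reports $t_i$ with probability $p_j$ and $-t_i$ otherwise. A minimal sketch; `simulate_answers` and the toy crowd below are hypothetical.

```python
import random


def simulate_answers(t, p, edges, seed=0):
    """Draw one answer per (task i, worker j) edge under the binary model:
    A_ij = t_i with probability p_j, and -t_i with probability 1 - p_j."""
    rng = random.Random(seed)
    return [(i, j, t[i] if rng.random() < p[j] else -t[i]) for i, j in edges]


# Toy crowd (hypothetical): two perfect workers, one adversary, one coin-flipper.
t = [+1, -1, +1]
p = [1.0, 1.0, 0.0, 0.5]
edges = [(i, j) for i in range(len(t)) for j in range(len(p))]
answers = simulate_answers(t, p, edges)
for i, j, a in answers:
    print(f"task {i}, worker {j}: A_ij = {a:+d}")
```

Flipping every $t_i$ and replacing every $p_j$ by $1 - p_j$ would produce answers with exactly the same distribution, which is why the model needs an assumption about the crowd's orientation.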

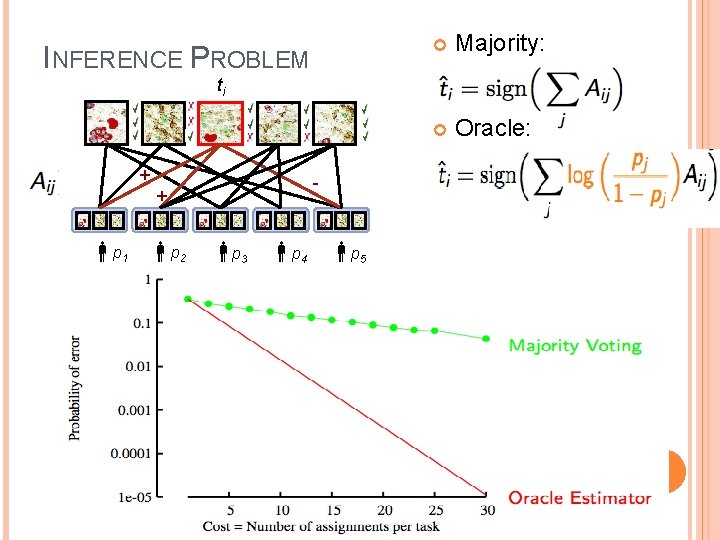

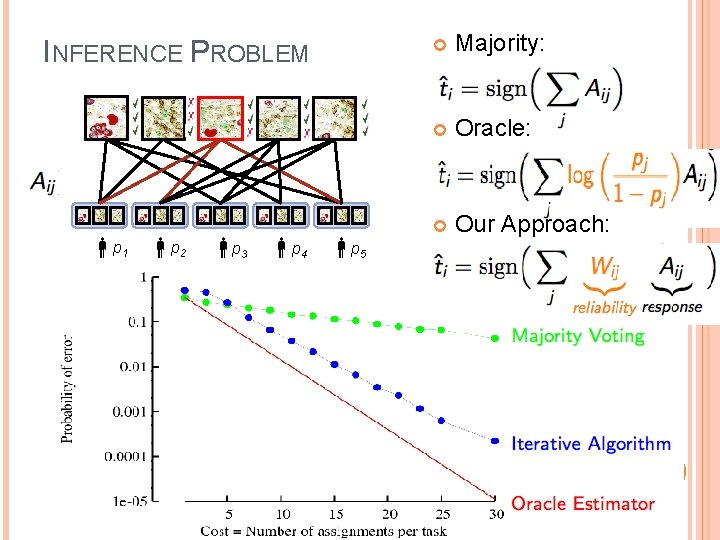

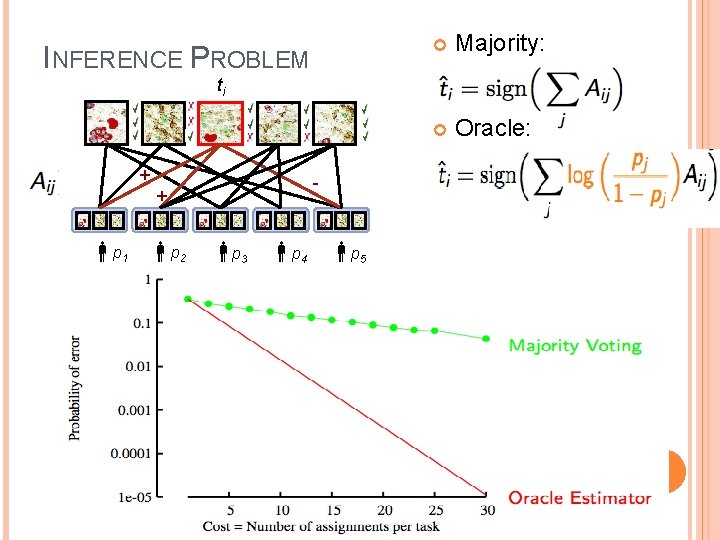

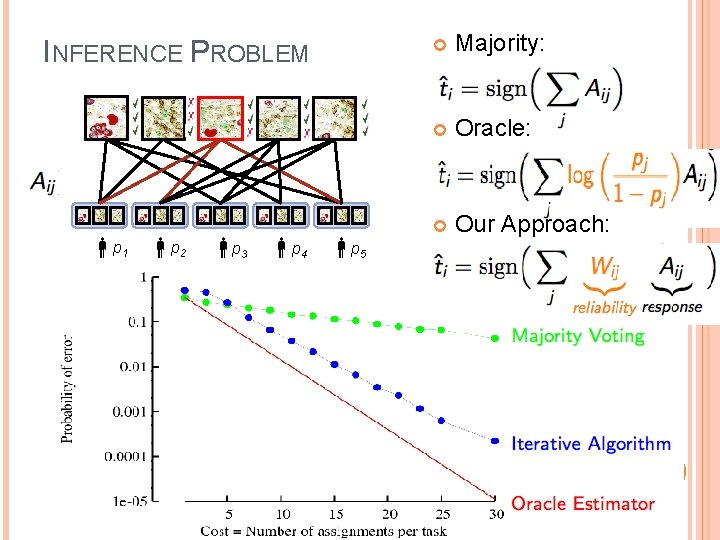

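INFERENCE PROBLEM

Workers $p_1, \dots, p_5$ (figure) answer each task $t_i$ with $A_{ij} \in \{+, -\}$. Writing $\partial i$ for the set of workers assigned to task $i$:
- Majority: $\hat t_i = \mathrm{sign}\big(\sum_{j \in \partial i} A_{ij}\big)$
- Oracle (knows every $p_j$): $\hat t_i = \mathrm{sign}\big(\sum_{j \in \partial i} \log\frac{p_j}{1 - p_j} A_{ij}\big)$
- Our approach: $\hat t_i = \mathrm{sign}\big(\sum_{j \in \partial i} w_j A_{ij}\big)$, with the worker weights $w_j$ learned from the answers themselves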
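Majority voting and the oracle rule differ only in the weight given to each vote: 1 for majority, $\log\frac{p_j}{1-p_j}$ for an oracle that knows every $p_j$ (log-odds weighting is the standard likelihood-ratio rule when the reliabilities are known). The simulation below is a sketch on a hypothetical crowd (half the workers have $p_j = 0.95$, half are coin-flippers with $p_j = 0.5$); each task goes to 5 distinct workers drawn at random, which is not the regular graph used in the talk.

```python
import math
import random


def simulate(n_tasks, n_workers, l, p, seed=0):
    """Each task is answered by l distinct workers chosen uniformly at random;
    worker j reports t_i with probability p_j and -t_i otherwise."""
    rng = random.Random(seed)
    t = [rng.choice((-1, 1)) for _ in range(n_tasks)]
    answers = []
    for i in range(n_tasks):
        for j in rng.sample(range(n_workers), l):
            answers.append((i, j, t[i] if rng.random() < p[j] else -t[i]))
    return t, answers


def weighted_vote(answers, n_tasks, weight):
    """t_hat_i = sign(sum_j weight(j) * A_ij), ties broken toward +1."""
    score = [0.0] * n_tasks
    for i, j, a in answers:
        score[i] += weight(j) * a
    return [1 if s >= 0 else -1 for s in score]


def error_rate(t_hat, t):
    return sum(x != y for x, y in zip(t_hat, t)) / len(t)


n_workers = 100
p = [0.95 if j % 2 == 0 else 0.5 for j in range(n_workers)]  # hypothetical crowd
t, answers = simulate(n_tasks=2000, n_workers=n_workers, l=5, p=p)

majority = weighted_vote(answers, len(t), lambda j: 1.0)
# Log-odds weights need 0 < p_j < 1; a coin-flipper (p_j = 0.5) gets weight 0.
oracle = weighted_vote(answers, len(t), lambda j: math.log(p[j] / (1 - p[j])))
print("majority error:", error_rate(majority, t))
print("oracle error:  ", error_rate(oracle, t))
```

The oracle simply ignores the coin-flippers, which is exactly the information majority voting lacks.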

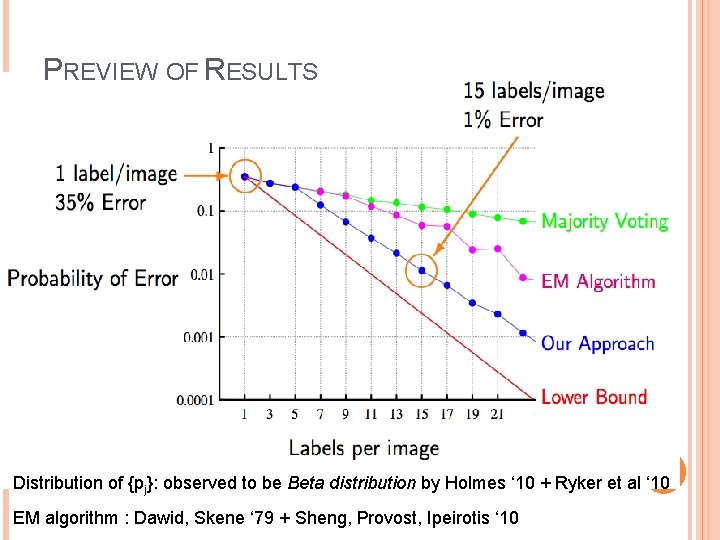

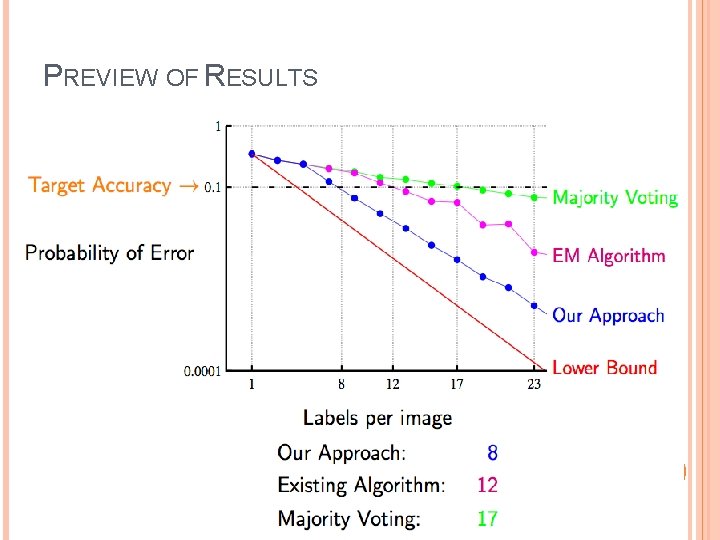

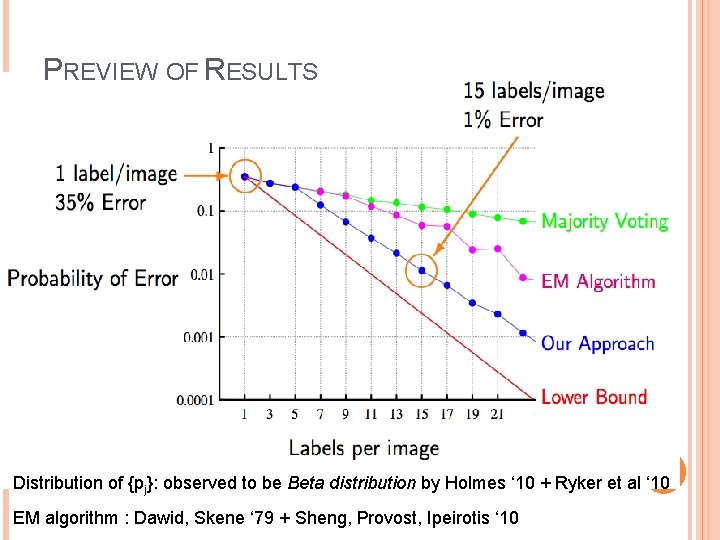

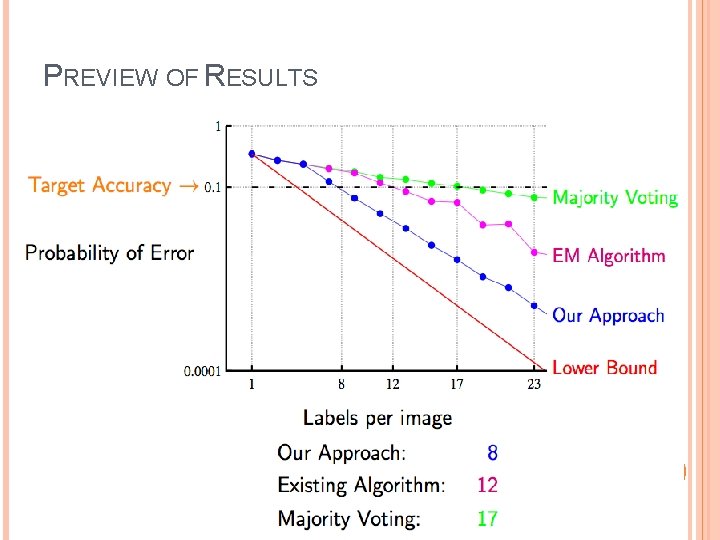

PREVIEW OF RESULTS

- Distribution of $\{p_j\}$: observed to be a Beta distribution (Holmes ’10; Ryker et al. ’10)
- EM algorithm: Dawid, Skene ’79; Sheng, Provost, Ipeirotis ’10

PREVIEW OF RESULTS

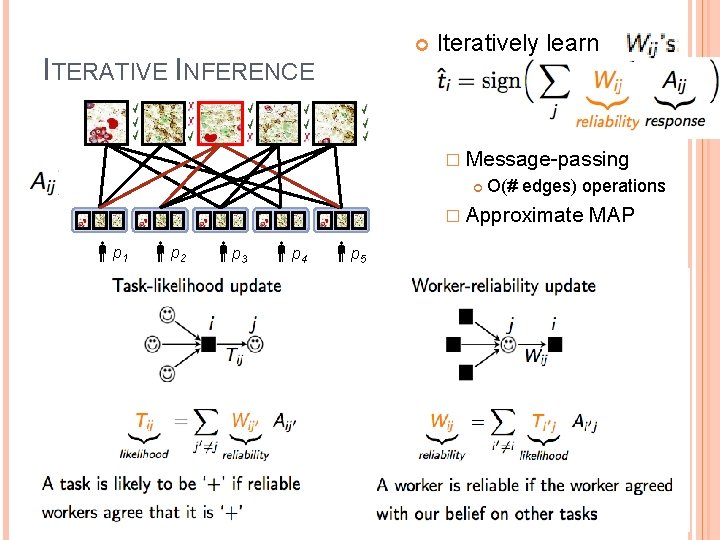

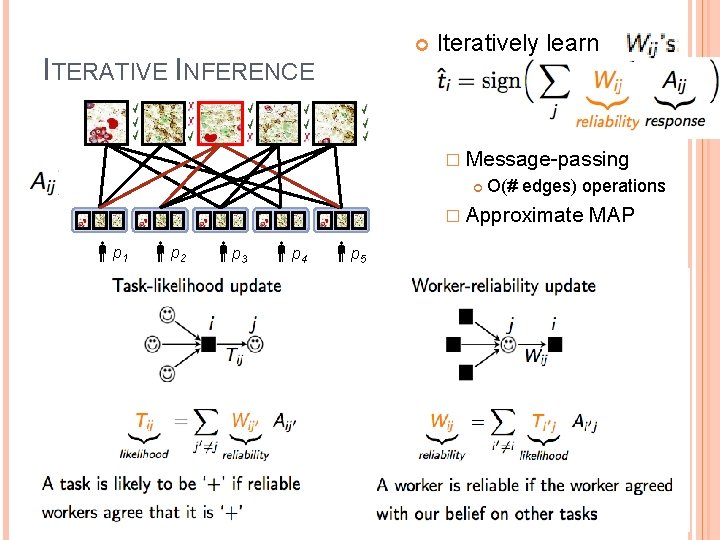

ITERATIVE INFERENCE

Iteratively learn the answers and the worker reliabilities ($p_1, \dots, p_5$ in the figure):
- Message passing
- $O(\#\text{edges})$ operations per iteration
- Approximate MAP
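A sketch of such a message-passing scheme, written in the form of the Karger-Oh-Shah iterative algorithm: task-to-worker messages $T_{i \to j} = \sum_{j' \ne j} A_{ij'} W_{j' \to i}$, worker-to-task messages $W_{j \to i} = \sum_{i' \ne i} A_{i'j} T_{i' \to j}$, and a final estimate $\hat t_i = \mathrm{sign}(\sum_j A_{ij} W_{j \to i})$. Each sweep touches every edge a constant number of times, so it costs $O(\#\text{edges})$. The Gaussian $N(1, 1)$ initialization, the iteration count, the per-sweep rescaling and the simulated spammer-hammer crowd ($p_j \in \{1, 0.5\}$) are assumptions for illustration, not details taken from the slides.

```python
import random


def iterative_inference(answers, n_tasks, n_workers, iters=10, seed=0):
    """Message passing on the task-worker graph.

    answers: list of (task i, worker j, A_ij) with A_ij in {+1, -1}, one entry per edge.
    Returns the estimates t_hat_i = sign(sum_j A_ij W_{j->i}).
    """
    rng = random.Random(seed)
    a = [ans for _, _, ans in answers]
    by_task = [[] for _ in range(n_tasks)]      # edge indices at each task
    by_worker = [[] for _ in range(n_workers)]  # edge indices at each worker
    for e, (i, j, _) in enumerate(answers):
        by_task[i].append(e)
        by_worker[j].append(e)
    W = [rng.gauss(1.0, 1.0) for _ in answers]  # worker->task messages (assumed N(1, 1) init)
    T = [0.0] * len(answers)                    # task->worker messages
    for _ in range(iters):
        for edges in by_task:
            total = sum(a[e] * W[e] for e in edges)
            for e in edges:  # T_{i->j}: sum over the other workers j' of task i
                T[e] = total - a[e] * W[e]
        for edges in by_worker:
            total = sum(a[e] * T[e] for e in edges)
            for e in edges:  # W_{j->i}: sum over the other tasks i' of worker j
                W[e] = total - a[e] * T[e]
        scale = max(abs(w) for w in W) or 1.0  # positive rescaling leaves all signs unchanged
        W = [w / scale for w in W]
    return [1 if sum(a[e] * W[e] for e in edges) >= 0 else -1 for edges in by_task]


# Hypothetical spammer-hammer instance on a configuration-model (l, r)-regular graph.
rng = random.Random(1)
n_tasks, l, r = 1000, 5, 20
n_workers = n_tasks * l // r
p = [1.0 if rng.random() < 0.5 else 0.5 for _ in range(n_workers)]
t = [rng.choice((-1, 1)) for _ in range(n_tasks)]
task_stubs = [i for i in range(n_tasks) for _ in range(l)]
worker_stubs = [j for j in range(n_workers) for _ in range(r)]
rng.shuffle(worker_stubs)
answers = [(i, j, t[i] if rng.random() < p[j] else -t[i])
           for i, j in zip(task_stubs, worker_stubs)]

majority = [0] * n_tasks
for i, _, a_ij in answers:
    majority[i] += a_ij
majority = [1 if s >= 0 else -1 for s in majority]
estimate = iterative_inference(answers, n_tasks, n_workers)


def error(t_hat):
    return sum(x != y for x, y in zip(t_hat, t)) / n_tasks


print("majority error: ", error(majority))
print("iterative error:", error(estimate))
```

Subtracting the recipient's own term from a node total is what keeps each sweep linear in the number of edges.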

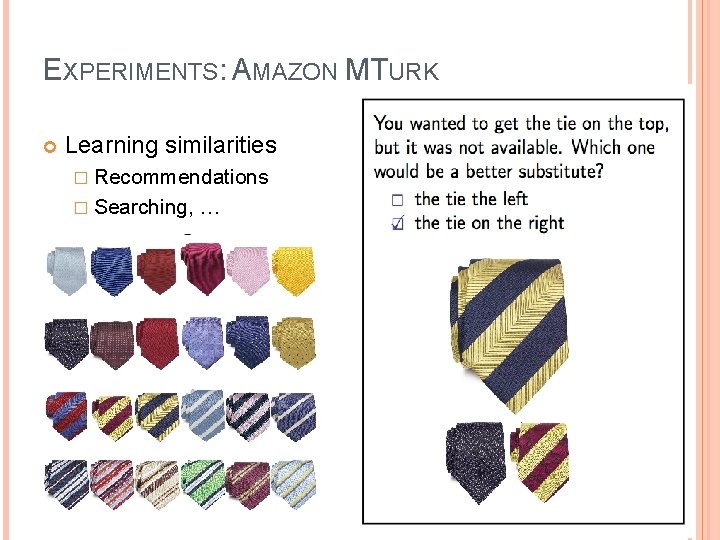

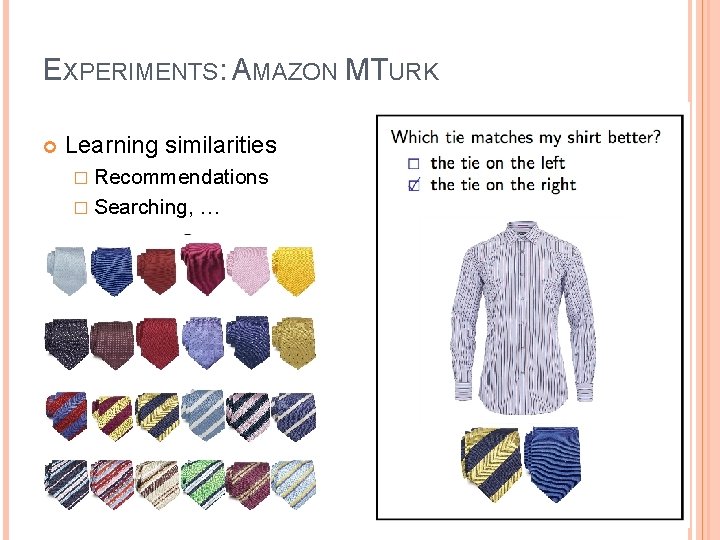

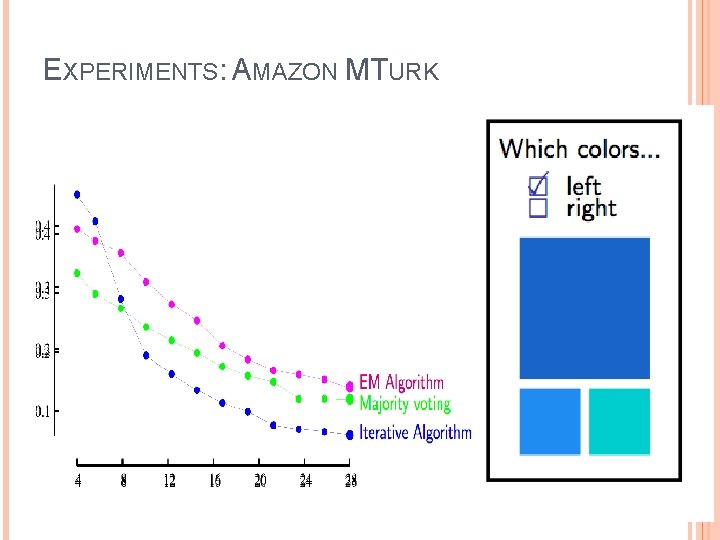

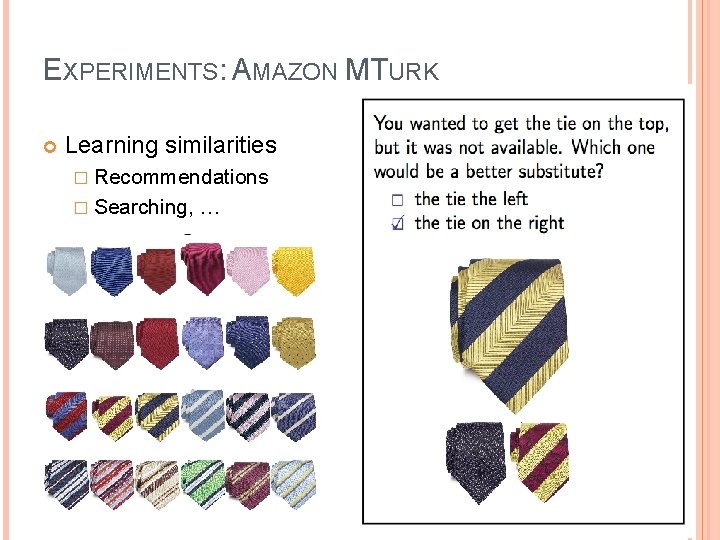

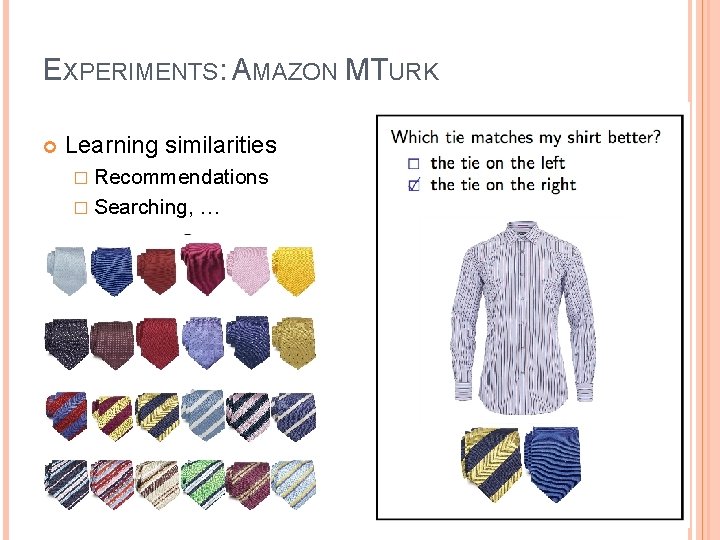

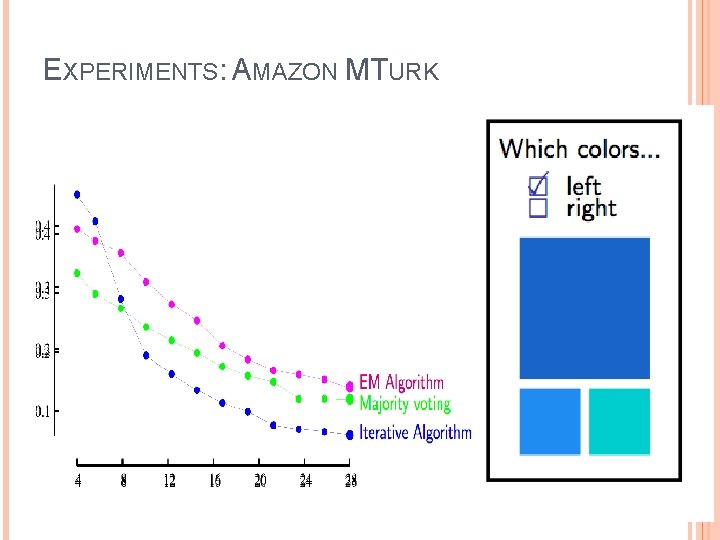

EXPERIMENTS: AMAZON MTURK

Learning similarities
- Recommendations
- Searching, …

EXPERIMENTS: AMAZON MTURK

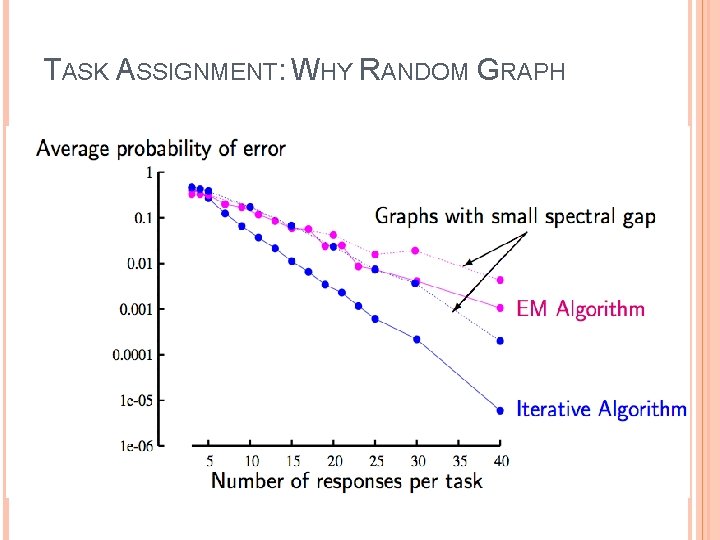

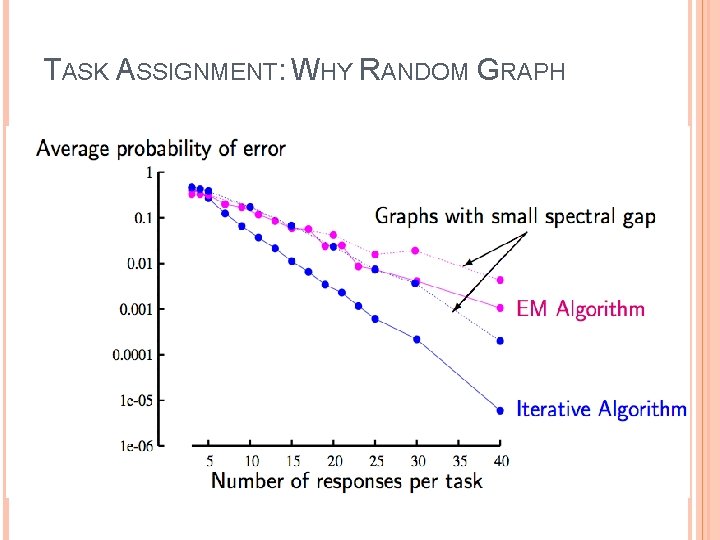

TASK ASSIGNMENT: WHY RANDOM GRAPH

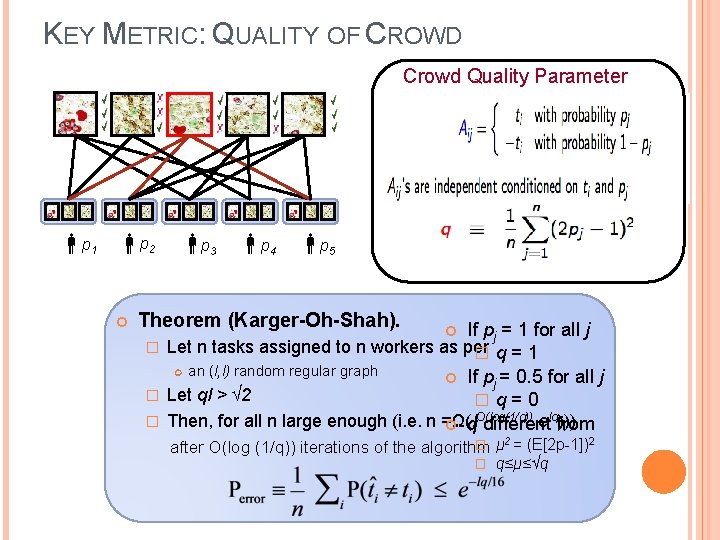

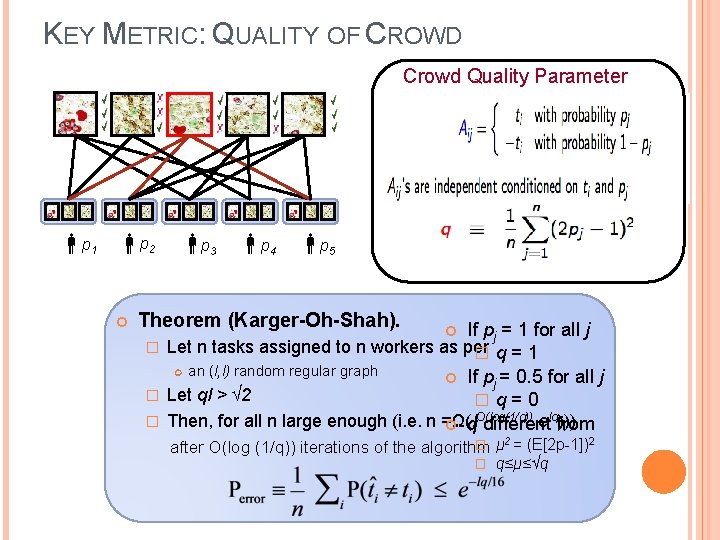

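KEY METRIC: QUALITY OF CROWD

Crowd quality parameter (workers $p_1, \dots, p_5$ in the figure): $q = \mathbb{E}[(2p - 1)^2]$
- If $p_j = 1$ for all $j$: $q = 1$
- If $p_j = 0.5$ for all $j$: $q = 0$
- $q$ is different from $\mu^2 = (\mathbb{E}[2p - 1])^2$; when $p_j \ge 0.5$ for all $j$, $q \le \mu \le \sqrt{q}$

Theorem (Karger-Oh-Shah). Let $n$ tasks be assigned to $n$ workers as per an $(l, l)$ random regular graph, and let $ql > \sqrt{2}$. Then, for all $n$ large enough (i.e. $n = \Omega(l^{O(\log(1/q))} e^{lq})$), after $O(\log(1/q))$ iterations of the algorithm, $P_{\text{error}} \le \exp(-lq/16)$.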
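The crowd-quality parameter $q = \mathbb{E}[(2p-1)^2]$ is an average over the distribution of worker reliabilities, as is $\mu = \mathbb{E}[2p-1]$. The sketch computes both for a few hypothetical discrete crowds (names and values are illustrative, not data from the talk), using exact fractions; the "mixed" crowd shows $q = 17/50$ differing from $\mu^2 = 1/4$.

```python
from fractions import Fraction
from math import sqrt


def crowd_quality(ps, weights):
    """Return (q, mu) for a discrete reliability distribution:
    q = E[(2p - 1)^2], mu = E[2p - 1]; weights are relative frequencies."""
    total = sum(weights)
    q = sum(w * (2 * p - 1) ** 2 for p, w in zip(ps, weights)) / total
    mu = sum(w * (2 * p - 1) for p, w in zip(ps, weights)) / total
    return q, mu


# Hypothetical crowds; reliabilities are exact fractions to avoid rounding noise.
crowds = {
    "all perfect": ([Fraction(1)], [1]),
    "all coin-flippers": ([Fraction(1, 2)], [1]),
    "spammer-hammer": ([Fraction(1), Fraction(1, 2)], [1, 1]),
    "mixed": ([Fraction(3, 5), Fraction(9, 10)], [1, 1]),
}
for name, (ps, ws) in crowds.items():
    q, mu = crowd_quality(ps, ws)
    print(f"{name}: q = {q}, mu = {mu}, mu^2 = {mu ** 2}, sqrt(q) = {sqrt(q):.3f}")
```

Since $0 \le 2p - 1 \le 1$ when $p \ge 0.5$, each term $(2p-1)^2$ is at most $2p-1$, giving $q \le \mu$; Jensen's inequality gives $\mu \le \sqrt{q}$.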

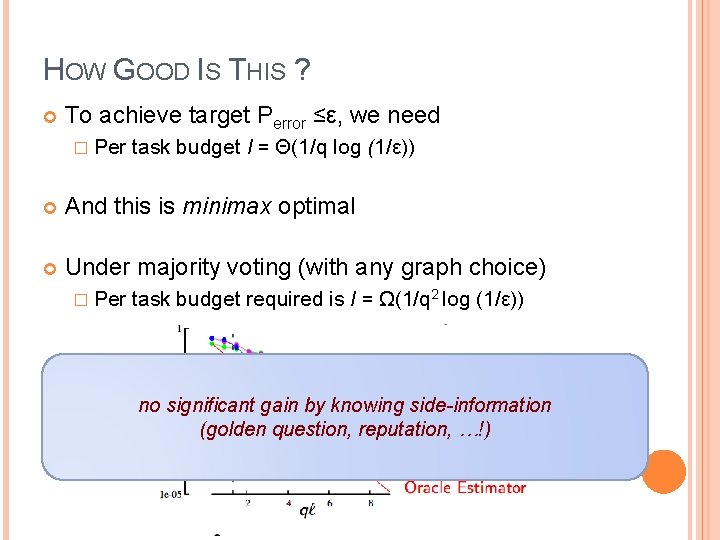

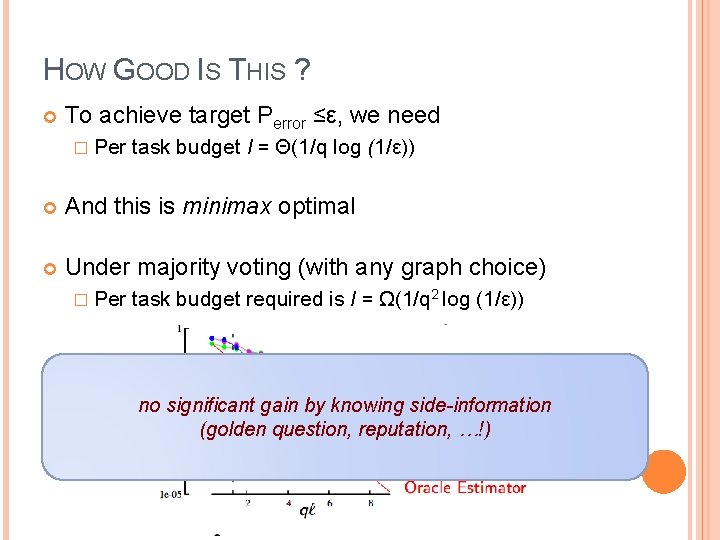

HOW GOOD IS THIS?

To achieve a target $P_{\text{error}} \le \varepsilon$, we need a per-task budget $l = \Theta\big(\frac{1}{q}\log\frac{1}{\varepsilon}\big)$, and this is minimax optimal.

Under majority voting (with any choice of graph), the per-task budget required is $l = \Omega\big(\frac{1}{q^2}\log\frac{1}{\varepsilon}\big)$.

There is no significant gain from knowing side information (golden questions, reputation, …!).
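Taking the binary-task bound quoted in this deck, $P_{\text{error}} \le \exp(-lq/16)$, at face value gives a concrete per-task budget $l \ge \frac{16}{q}\ln\frac{1}{\varepsilon}$. The sketch computes it with a hypothetical helper `budget_from_bound`; the constant 16 comes from that bound, and the majority-voting comparison is only a scaling ratio because the slide gives no constant for the $\Omega(\frac{1}{q^2}\log\frac{1}{\varepsilon})$ lower bound.

```python
import math


def budget_from_bound(q: float, eps: float) -> int:
    """Smallest integer l with exp(-l * q / 16) <= eps, i.e. l >= (16 / q) * ln(1 / eps)."""
    return math.ceil(16 / q * math.log(1 / eps))


for q in (0.5, 0.3, 0.1):
    l = budget_from_bound(q, eps=0.01)
    # Majority voting needs on the order of 1/q^2 (rather than 1/q) workers per task,
    # so its budget grows by an extra factor of roughly 1/q as the crowd gets noisier.
    print(f"q = {q}: l = {l} workers per task; majority-voting scaling is ~{1 / q:.0f}x worse")
```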

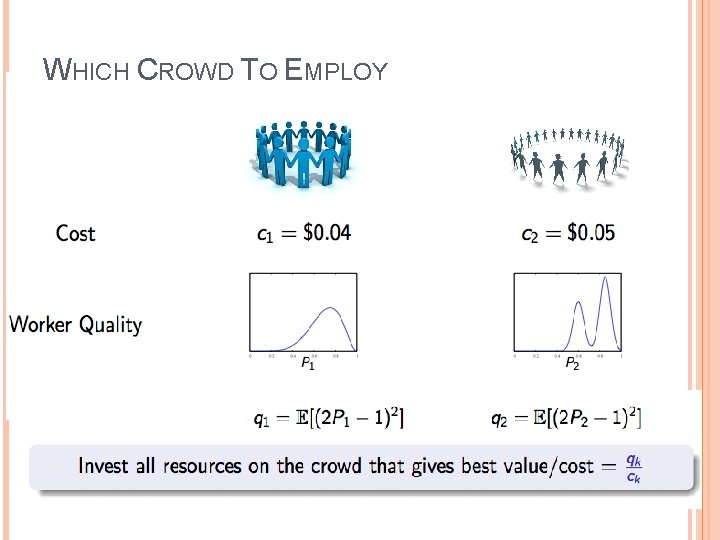

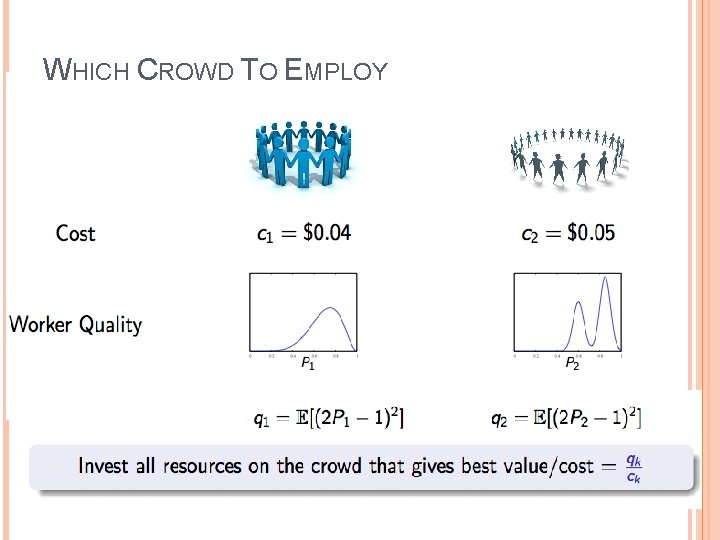

ADAPTIVE TASK ASSIGNMENT: DOES IT HELP?

Theorem (Karger-Oh-Shah). Given any adaptive algorithm, let $\Delta$ be the average number of workers required per task to achieve a desired $P_{\text{error}} \le \varepsilon$. Then there exists $\{p_j\}$ with quality $q$ such that the gain through adaptivity is limited.

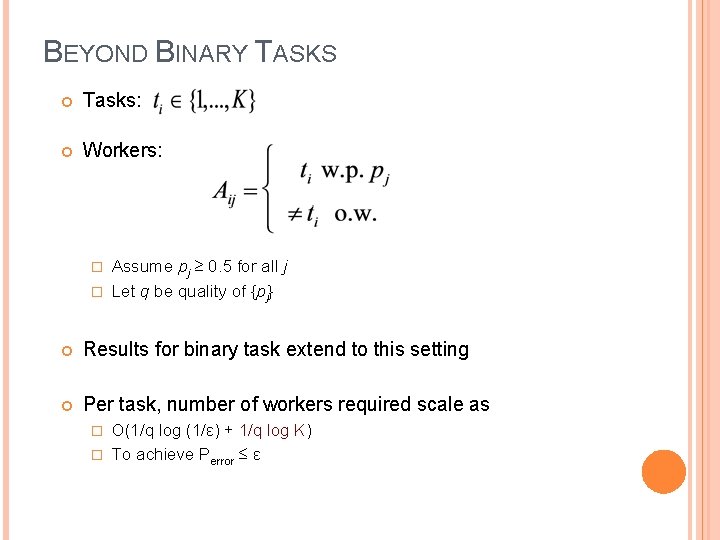

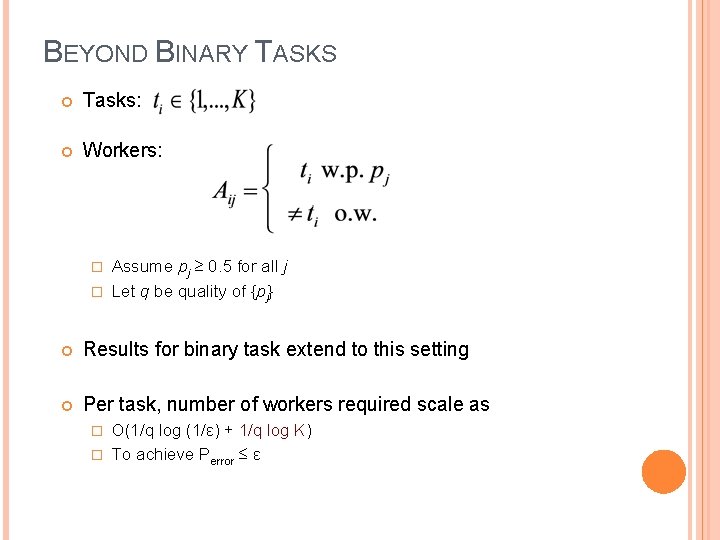

WHICH CROWD TO EMPLOY

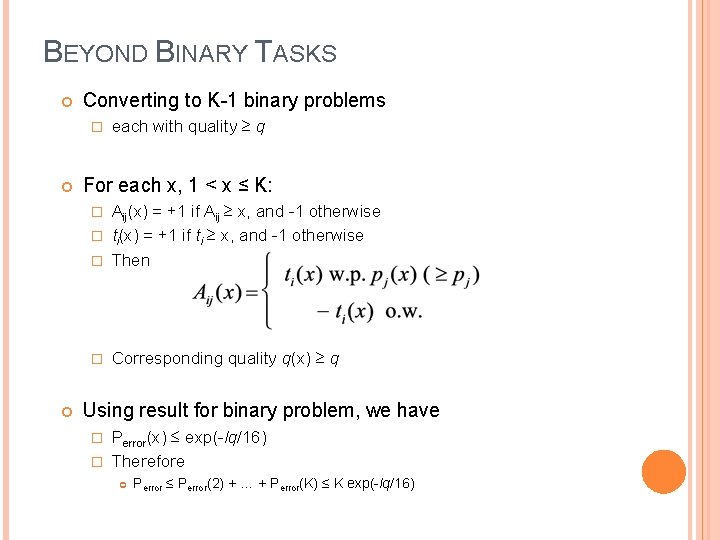

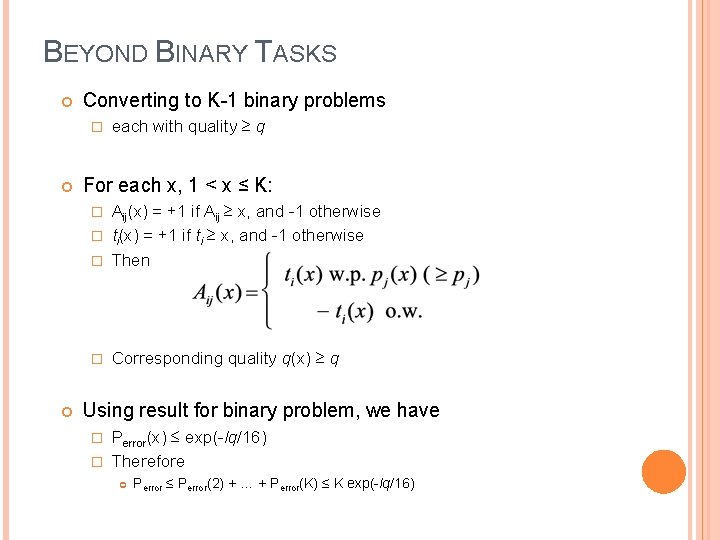

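BEYOND BINARY TASKS

Tasks: $t_i \in \{1, \dots, K\}$. Workers: reliability $p_j$.
- Assume $p_j \ge 0.5$ for all $j$, and let $q$ be the quality of $\{p_j\}$
- Results for binary tasks extend to this setting: to achieve $P_{\text{error}} \le \varepsilon$, the number of workers required per task scales as $O\big(\frac{1}{q}\log\frac{1}{\varepsilon} + \frac{1}{q}\log K\big)$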

BEYOND BINARY TASKS

Convert to $K - 1$ binary problems, each with quality $\ge q$. For each $x$ with $1 < x \le K$:
- $A_{ij}(x) = +1$ if $A_{ij} \ge x$, and $-1$ otherwise
- $t_i(x) = +1$ if $t_i \ge x$, and $-1$ otherwise

Then:
- the corresponding quality satisfies $q(x) \ge q$
- using the result for the binary problem, $P_{\text{error}}(x) \le \exp(-lq/16)$

Therefore $P_{\text{error}} \le P_{\text{error}}(2) + \dots + P_{\text{error}}(K) \le K \exp(-lq/16)$.
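The threshold construction can be checked mechanically. In the sketch, `to_binary` is the slide's threshold map; `from_binary`, which rebuilds a label by counting how many thresholds fire, is a hypothetical decoder added for illustration (it is exact for noiseless bits, but the slides do not say how the $K - 1$ estimates are combined); `kary_budget` solves $K \exp(-lq/16) \le \varepsilon$ for $l$, matching the $O(\frac{1}{q}\log\frac{1}{\varepsilon} + \frac{1}{q}\log K)$ scaling.

```python
import math


def to_binary(label: int, x: int) -> int:
    """Threshold map: +1 if label >= x, else -1 (for 1 < x <= K)."""
    return 1 if label >= x else -1


def from_binary(bits) -> int:
    """Hypothetical decoder (not from the slides): bits holds the +/-1 estimates for
    thresholds x = 2..K; count the thresholds that fire. Exact for noiseless bits."""
    return 1 + sum(1 for b in bits if b == 1)


def kary_budget(q: float, eps: float, K: int) -> int:
    """Smallest l with K * exp(-l * q / 16) <= eps (union bound over the binary problems)."""
    return math.ceil(16 / q * (math.log(1 / eps) + math.log(K)))


K = 4
for t in range(1, K + 1):
    bits = [to_binary(t, x) for x in range(2, K + 1)]
    print(t, bits, from_binary(bits))
print(kary_budget(q=0.3, eps=0.01, K=K))
```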

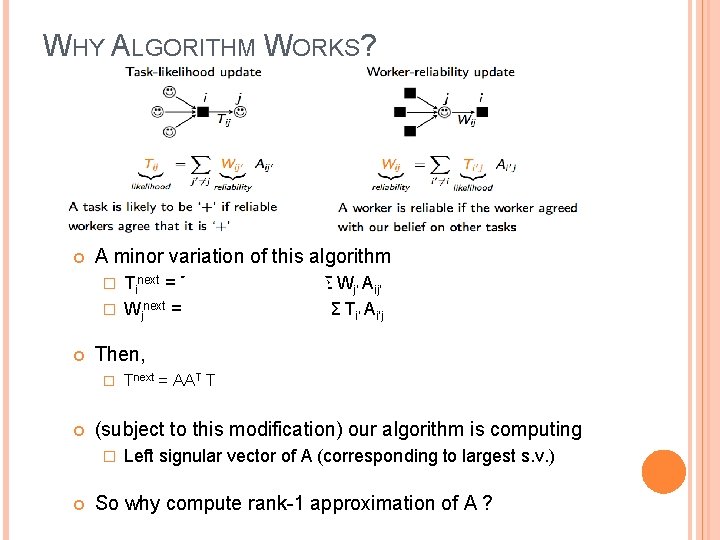

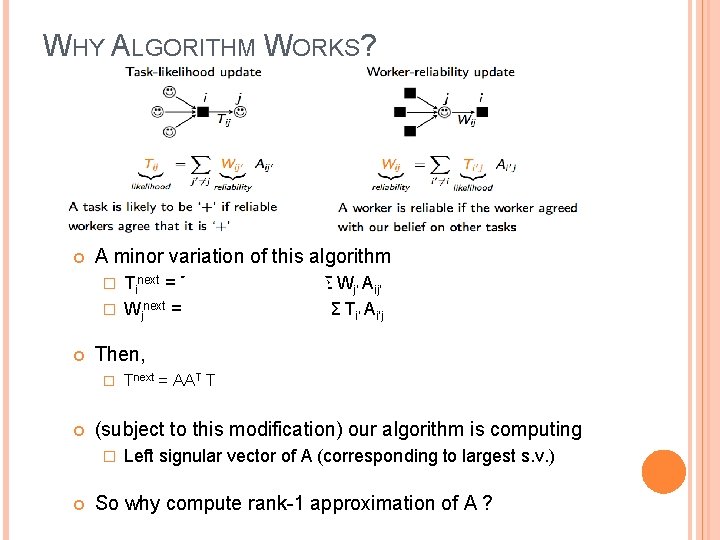

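WHY ALGORITHM WORKS?

MAP estimation
- Prior on the reliabilities $\{p_j\}$: let $f(p)$ be a density over $[0, 1]$
- Answers $A = [A_{ij}]$ on the edge set $E$ of the task assignment graph
- Then, with a uniform prior on $t$, the posterior is $P(t, p \mid A) \propto \prod_j f(p_j) \prod_{(i,j) \in E} p_j^{\mathbb{1}[A_{ij} = t_i]} (1 - p_j)^{\mathbb{1}[A_{ij} \ne t_i]}$

Belief propagation (max-product) algorithm for MAP
- With the Haldane prior: $p_j$ is 0 or 1 with equal probability
- Iteration $k+1$: for all task-worker pairs $(i, j)$, $X_{i \to j}^{k+1} = \sum_{j' \in \partial i \setminus j} A_{ij'} Y_{j' \to i}^{k}$ and $Y_{j \to i}^{k+1} = \sum_{i' \in \partial j \setminus i} A_{i'j} X_{i' \to j}^{k+1}$, where $\partial i$ ($\partial j$) are the neighbors of task $i$ (worker $j$)
- $X$ / $Y$ represent log-likelihood ratios for $t_i = +1$ vs. $-1$ and for $p_j = 1$ vs. $0$, respectively

This is exactly the same as our algorithm! And our random task assignment graph is locally tree-like. That is, our algorithm is effectively MAP for the Haldane prior.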

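WHY ALGORITHM WORKS?

Consider a minor variation of this algorithm in which each node sends the same message to all of its neighbors:
- $T_i^{\text{next}} = T_{ij}^{\text{next}} = \sum_{j'} W_{ij'} A_{ij'} = \sum_{j'} W_{j'} A_{ij'}$
- $W_j^{\text{next}} = W_{ij}^{\text{next}} = \sum_{i'} T_{i'j} A_{i'j} = \sum_{i'} T_{i'} A_{i'j}$

Then (subject to this modification) our algorithm is computing $T^{\text{next}} = A A^{\top} T$, i.e. power iteration for the left singular vector of $A$ corresponding to the largest singular value.

So why compute a rank-1 approximation of $A$?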
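With messages collapsed to node values, one round is $W \leftarrow A^{\top} T$ followed by $T \leftarrow A W$, which is power iteration on $A A^{\top}$. A minimal pure-Python sketch on a small hypothetical answer matrix (entries $\pm 1$, with 0 for unassigned task-worker pairs); starting from the majority-vote scores and renormalizing each round are choices made here to keep numbers bounded.

```python
import math


def mat_vec(A, v):
    """A v for a dense list-of-rows matrix."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def mat_t_vec(A, v):
    """A^T v."""
    return [sum(A[i][j] * v[i] for i in range(len(A))) for j in range(len(A[0]))]


def modified_iteration(A, iters=50):
    """Node-level updates W <- A^T T, T <- A W, renormalized each round,
    starting from T = row sums of A (the majority-vote scores)."""
    T = [float(sum(row)) for row in A]
    for _ in range(iters):
        W = mat_t_vec(A, T)
        T = mat_vec(A, W)
        norm = math.sqrt(sum(x * x for x in T))
        T = [x / norm for x in T]
    return T


# Hypothetical 3 tasks x 4 workers answer matrix (0 = worker not assigned to the task).
A = [[1, 1, 1, 0],
     [1, 1, -1, 1],
     [-1, -1, -1, 0]]
T = modified_iteration(A)
print([round(x, 4) for x in T], "estimates:", [1 if x >= 0 else -1 for x in T])
```

For this matrix $A A^{\top}$ has eigenvalues $5 \pm \sqrt{3}$ and 0, so the iteration converges quickly to the top left singular vector, whose signs give the task estimates.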

![WHY ALGORITHM WORKS?](https://slidetodoc.com/presentation_image/172c5949c5a67355825db6114a1b64a0/image-27.jpg)

WHY ALGORITHM WORKS?

Random graph + probabilistic model: $\mathbb{E}[A_{ij}] = \big(t_i p_j - (1 - p_j) t_i\big)\frac{l}{n} = t_i (2p_j - 1)\frac{l}{n}$, so $\mathbb{E}[A] = t\,(2p - 1)^{\top}\frac{l}{n}$.

That is:
- $\mathbb{E}[A]$ is a rank-1 matrix, and $t$ is the left singular vector of $\mathbb{E}[A]$
- If $A \approx \mathbb{E}[A]$, then computing the left singular vector of $A$ makes sense

Building upon Friedman-Kahn-Szemerédi ’89, the singular vector of $A$ provides a reasonable approximation: $P_{\text{error}} = O(1/(lq))$ (Ghosh, Kale, McAfee ’12).

For a sharper result we use belief propagation.
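The rank-1 argument can be checked numerically: draw $A$ from a random-graph model in which each task-worker pair is an edge independently with probability $l/n$, so that $\mathbb{E}[A] = t(2p-1)^{\top} l/n$, and take the signs of the leading left singular vector of $A$, computed by power iteration. This sketch uses a hypothetical crowd (half the workers with $p_j = 0.9$, half with $p_j = 0.5$), independent Bernoulli edges, and resolves the global sign using the orientation assumption $\mathbb{E}[2p - 1] > 0$; none of these choices come from the slides.

```python
import math
import random


def simulate(n, l, p, seed=0):
    """Random-graph instance: each (task i, worker j) pair is an edge with probability l/n;
    on an edge A_ij = t_i w.p. p_j, else -t_i; off-edge entries are 0.
    Hence E[A_ij] = t_i (2 p_j - 1) l / n, a rank-1 matrix."""
    rng = random.Random(seed)
    t = [rng.choice((-1, 1)) for _ in range(n)]
    edges = [(i, j, t[i] if rng.random() < p[j] else -t[i])
             for i in range(n) for j in range(n) if rng.random() < l / n]
    return t, edges


def leading_left_signs(edges, n, iters=50):
    """Power iteration T <- A (A^T T) on the sparse matrix A, then sign(T)."""
    T = [0.0] * n
    for i, _, a in edges:          # start from the majority-vote scores
        T[i] += a
    for _ in range(iters):
        W = [0.0] * n
        for i, j, a in edges:      # W = A^T T
            W[j] += a * T[i]
        T = [0.0] * n
        for i, j, a in edges:      # T = A W
            T[i] += a * W[j]
        norm = math.sqrt(sum(x * x for x in T)) or 1.0
        T = [x / norm for x in T]
    # Singular vectors are defined only up to sign; pick the sign under which the
    # implied worker scores A^T T are positive on average (orientation assumption).
    worker_total = sum(a * T[i] for i, _, a in edges)
    s = 1 if worker_total >= 0 else -1
    return [s * (1 if x >= 0 else -1) for x in T]


n, l = 500, 20
p = [0.9 if j % 2 == 0 else 0.5 for j in range(n)]  # hypothetical crowd
t, edges = simulate(n, l, p)
t_hat = leading_left_signs(edges, n)
accuracy = sum(x == y for x, y in zip(t_hat, t)) / n
print(f"{len(edges)} answers, fraction of tasks recovered: {accuracy:.3f}")
```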

CONCLUDING REMARKS

Budget-optimal micro-task crowdsourcing via
- Random regular task allocation graph
- Belief propagation

Key messages
- All that matters is the quality of the crowd
- Worker reputation is not useful for non-adaptive tasks
- Adaptation does not help due to the fleeting nature of workers; reputation + worker ID are needed for adaptation to be effective
- The inference algorithm can be useful for assigning reputation
- The model of binary tasks is equivalent to K-ary tasks

ON THAT NOTE…