
The Basics of Evaluating Models

Geoff Hulten

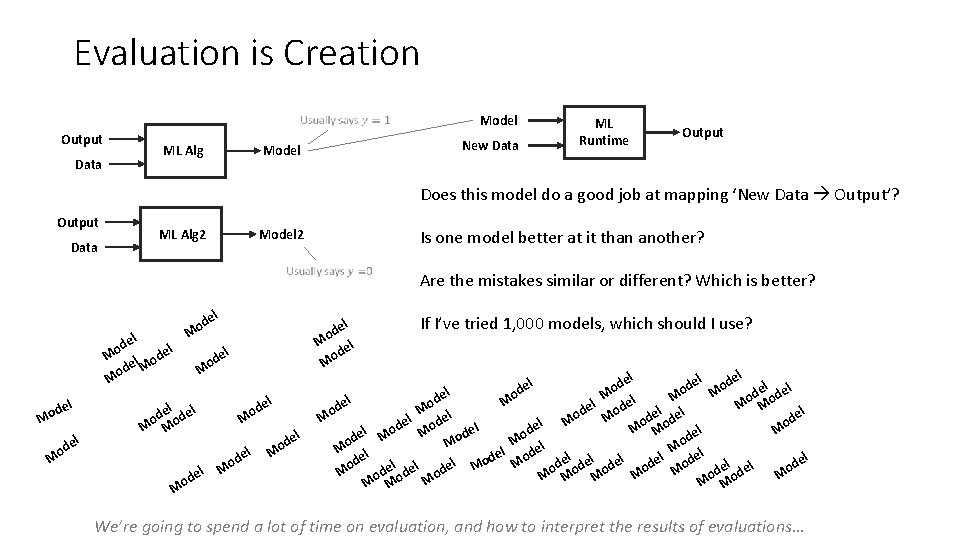

Evaluation is Creation

[Figure: Data → ML Alg → Model; New Data → ML Runtime (using the Model) → Output. A second algorithm, ML Alg 2, produces Model 2. A pile of "Model" labels stands for many candidate models.]

- Does this model do a good job at mapping new data to outputs?
- Is one model better at it than another? Are the mistakes similar or different? Which is better?
- If I’ve tried 1,000 models, which should I use?

We’re going to spend a lot of time on evaluation, and on how to interpret the results of evaluations…
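The question "Are the mistakes similar or different?" can be made concrete by comparing which examples each model gets wrong. The following is a minimal sketch, assuming binary labels stored in Python lists; `mistake_indices`, `compare_mistakes`, and the data are hypothetical, made up for illustration.

```python
def mistake_indices(actual, predicted):
    """Indices of the examples a model gets wrong."""
    return {i for i, (a, p) in enumerate(zip(actual, predicted)) if a != p}


def compare_mistakes(actual, predictions_1, predictions_2):
    """Split two models' mistakes into shared and model-specific ones."""
    wrong_1 = mistake_indices(actual, predictions_1)
    wrong_2 = mistake_indices(actual, predictions_2)
    return {
        "only_model_1": sorted(wrong_1 - wrong_2),
        "only_model_2": sorted(wrong_2 - wrong_1),
        "both": sorted(wrong_1 & wrong_2),
    }


# Hypothetical labels and predictions from two models on the same six examples.
actual = [0, 0, 1, 1, 1, 0]
model_1 = [0, 1, 1, 0, 1, 0]
model_2 = [0, 1, 1, 1, 0, 0]
print(compare_mistakes(actual, model_1, model_2))
# {'only_model_1': [3], 'only_model_2': [4], 'both': [1]}
```

Here both models make two mistakes, so their accuracy is identical. However, they share only one of those mistakes, and accuracy alone would hide that they fail on different examples.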


Getting Data for Evaluation

Split the full data set into three parts:

1) Training set: to build the model
2) Validation set: to tune the hyperparameters
3) Test set: to estimate how well the model works

Common pattern:

```python
accuracies = {}
# Fit on the training set; score each hyperparameter setting on the validation set.
for p in hyperparametersToTry:
    model.fit(trainX, trainY, p)
    accuracies[p] = evaluate(validationY, model.predict(validationX))
# Keep the hyperparameters with the highest validation accuracy.
bestHyperparameters = max(accuracies, key=lambda key: accuracies[key])
# Refit on training + validation data with the chosen hyperparameters.
finalModel.fit(trainX + validationX, trainY + validationY, bestHyperparameters)
# Use the test set once, at the end, to estimate generalization performance.
estimateOfGeneralizationPerformance = evaluate(testY, finalModel.predict(testX))
```
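To see the pattern run end to end, here is a minimal sketch that fills in the pieces the pseudocode leaves abstract. The following choices are hypothetical:

- The model is a toy one-dimensional k-nearest-neighbor classifier, and its only hyperparameter is k.
- `evaluate` computes accuracy.
- The data are made up and already split.

Names are snake_case versions of the pseudocode's names (`train_x` for `trainX`, and so on).

```python
from collections import Counter


class KNearestNeighbors:
    """Toy 1-D k-nearest-neighbor classifier (hypothetical stand-in model).

    The hyperparameter passed to fit() is k, the number of neighbors that vote.
    """

    def fit(self, xs, ys, k):
        # k-NN "training" just memorizes the data.
        self.xs, self.ys, self.k = list(xs), list(ys), k
        return self

    def predict(self, xs):
        predictions = []
        for x in xs:
            # Indices of the k closest training points (ties broken by index).
            nearest = sorted(range(len(self.xs)),
                             key=lambda i: (abs(self.xs[i] - x), i))[: self.k]
            # Majority vote among those neighbors' labels.
            votes = Counter(self.ys[i] for i in nearest)
            predictions.append(votes.most_common(1)[0][0])
        return predictions


def evaluate(ys, predictions):
    """Accuracy: fraction of predictions that match the true labels."""
    return sum(y == p for y, p in zip(ys, predictions)) / len(ys)


# Made-up data, already split into training, validation, and test sets.
train_x, train_y = [10, 20, 30, 40, 50, 60, 70, 80], [0, 0, 0, 1, 0, 1, 1, 1]
validation_x, validation_y = [44, 46], [0, 1]
test_x, test_y = [13, 54, 77], [0, 0, 1]
hyperparameters_to_try = [1, 3, 5]

# 1) Fit on the training set; score each hyperparameter on the validation set.
accuracies = {}
for p in hyperparameters_to_try:
    model = KNearestNeighbors().fit(train_x, train_y, p)
    accuracies[p] = evaluate(validation_y, model.predict(validation_x))

# 2) Pick the best hyperparameter (max() returns the first key on ties).
best_hyperparameters = max(accuracies, key=lambda key: accuracies[key])

# 3) Refit on training + validation data with the chosen hyperparameter.
final_model = KNearestNeighbors().fit(train_x + validation_x,
                                      train_y + validation_y,
                                      best_hyperparameters)

# 4) Touch the test set once, at the end, to estimate generalization.
estimate_of_generalization_performance = evaluate(test_y, final_model.predict(test_x))
print(accuracies, best_hyperparameters, estimate_of_generalization_performance)
```

On this data, k = 1 scores 0.0 on validation, while k = 3 and k = 5 both score 1.0. Because `max` returns the first maximal key, k = 3 is chosen. The refit model then gets 2 of the 3 test examples right.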

Risks with Evaluation

Failure to generalize:

1) If you test on the same data you train on, you’ll be too optimistic.
2) If you evaluate on test data a lot as you’re debugging, you’ll be too optimistic.

Failure to learn the best model you can:

3) If you reserve too much data for testing, you might not learn as good a model.

We’ll get into more detail on how to make the tradeoff. For now:

1) If you have very little data (hundreds of examples), maybe use up to 50% for validation + test.
2) If you have tons of data (millions+), maybe use ten thousand examples for validation + test.
3) For the assignments in Module 01 (thousands of examples), we’ll use 20% for validation + test.
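This sketch follows the Module 01 rule of thumb (20% for validation + test): it shuffles the examples and carves off the holdout. The details are assumptions:

- The 20% is divided evenly between validation and test.
- The shuffle uses a fixed seed so the split is reproducible.
- `split_data` is a hypothetical helper, not part of any course library.

```python
import random


def split_data(xs, ys, holdout_fraction=0.2, seed=0):
    """Shuffle, then split into train / validation / test.

    holdout_fraction of the examples is reserved for validation + test,
    divided evenly between the two (an assumption; the split is a choice).
    """
    indices = list(range(len(xs)))
    random.Random(seed).shuffle(indices)  # fixed seed -> reproducible split

    n_holdout = int(len(xs) * holdout_fraction)
    n_validation = n_holdout // 2
    validation_idx = indices[:n_validation]
    test_idx = indices[n_validation:n_holdout]
    train_idx = indices[n_holdout:]

    def take(idx):
        return [xs[i] for i in idx], [ys[i] for i in idx]

    return take(train_idx), take(validation_idx), take(test_idx)


# Hypothetical data set of 1,000 examples.
xs = list(range(1000))
ys = [x % 2 for x in xs]
(train_x, train_y), (validation_x, validation_y), (test_x, test_y) = split_data(xs, ys)
print(len(train_x), len(validation_x), len(test_x))  # 800 100 100
```

Shuffling first matters if the data arrive in some order (for example, sorted by label). It assumes the examples are independent of one another.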

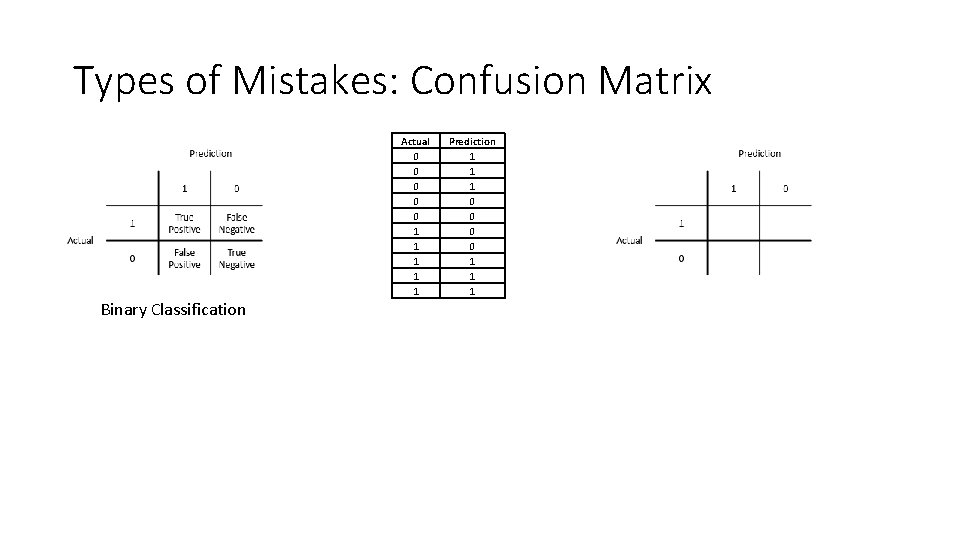

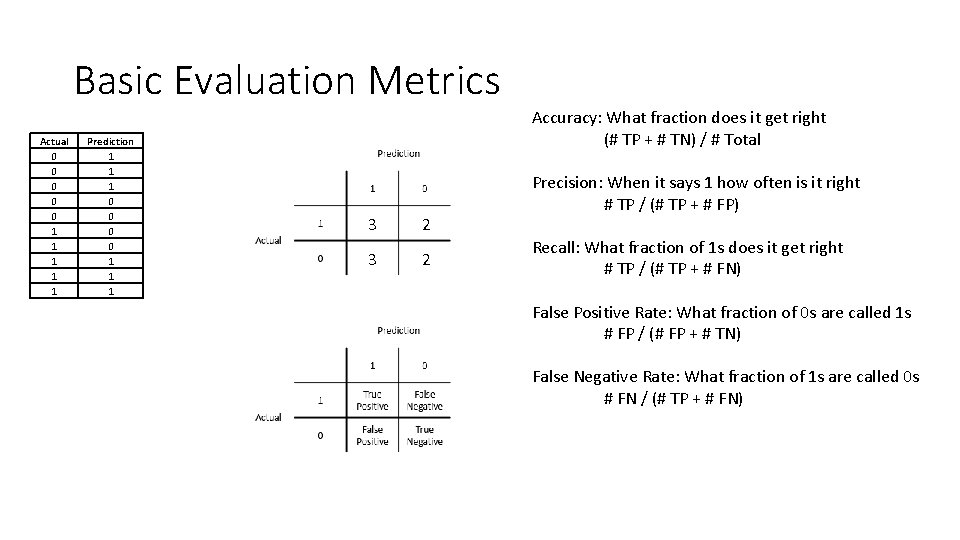

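Types of Mistakes: Confusion Matrix

For binary classification, every prediction falls into one of four cells:

| | Prediction: 1 | Prediction: 0 |
|---|---|---|
| Actual: 1 | True Positive (TP) | False Negative (FN) |
| Actual: 0 | False Positive (FP) | True Negative (TN) |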
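Counting the four cells takes a single pass over paired labels. This is a minimal sketch, assuming 0/1 labels in Python lists with 1 as the positive class. `confusion_matrix` here is a hypothetical helper; scikit-learn has a function of the same name with a different, array-based return value.

```python
# (actual, predicted) -> cell name; 1 is the positive class.
CELLS = {(1, 1): "TP", (1, 0): "FN", (0, 1): "FP", (0, 0): "TN"}


def confusion_matrix(actual, predicted):
    """Count TP, FN, FP, TN for binary 0/1 labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = {"TP": 0, "FN": 0, "FP": 0, "TN": 0}
    for pair in zip(actual, predicted):
        counts[CELLS[pair]] += 1  # KeyError if a label is not 0 or 1
    return counts


# Hypothetical labels, made up for illustration.
actual = [1, 1, 1, 0, 0, 0]
predicted = [1, 1, 0, 1, 0, 0]
print(confusion_matrix(actual, predicted))
# {'TP': 2, 'FN': 1, 'FP': 1, 'TN': 2}
```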

Basic Evaluation Metrics

- Accuracy: what fraction does it get right? (# TP + # TN) / # Total
- Precision: when it says 1, how often is it right? # TP / (# TP + # FP)
- Recall: what fraction of 1s does it get right? # TP / (# TP + # FN)
- False Positive Rate: what fraction of 0s are called 1s? # FP / (# FP + # TN)
- False Negative Rate: what fraction of 1s are called 0s? # FN / (# TP + # FN)
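Once the four counts are known, the five formulas translate directly into code. This is a minimal sketch with a hypothetical name, `basic_metrics`. When a denominator is zero (for example, precision when the model never predicts 1), it reports None; that choice is an assumption, since tools differ on this case.

```python
def basic_metrics(tp, fn, fp, tn):
    """Accuracy, precision, recall, FPR, and FNR from confusion-matrix counts.

    A metric whose denominator is zero is reported as None (a choice;
    other tools use 0 or NaN).
    """
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else None

    return {
        "accuracy": ratio(tp + tn, tp + fn + fp + tn),
        "precision": ratio(tp, tp + fp),            # when it says 1, how often right
        "recall": ratio(tp, tp + fn),               # fraction of actual 1s it gets right
        "false_positive_rate": ratio(fp, fp + tn),  # fraction of 0s called 1s
        "false_negative_rate": ratio(fn, tp + fn),  # fraction of 1s called 0s
    }


print(basic_metrics(tp=2, fn=1, fp=1, tn=2))
```

For TP = 2, FN = 1, FP = 1, TN = 2, accuracy, precision, and recall all come out to 2/3, and both error rates come out to 1/3. When defined, recall and the false negative rate always sum to 1, because they share the denominator # TP + # FN.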

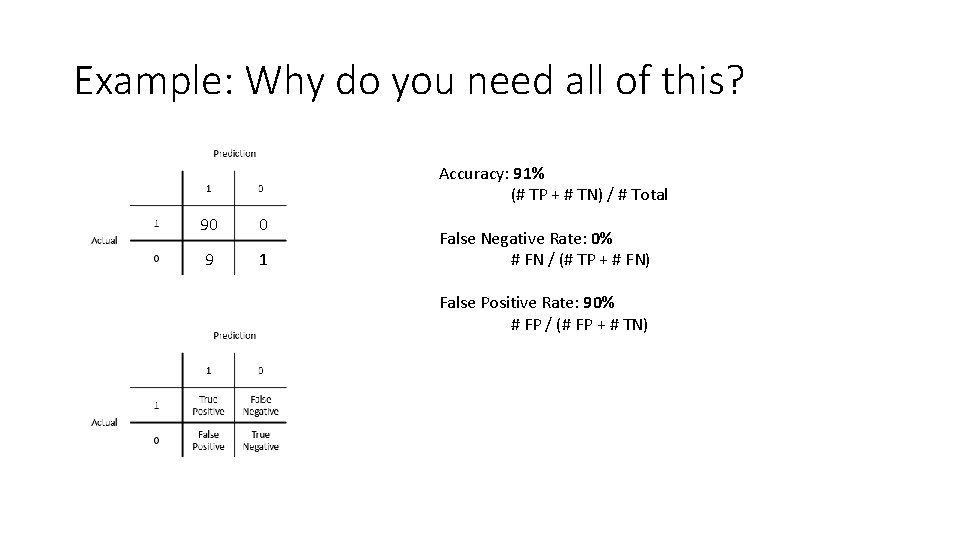

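Example: Why do you need all of this?

| | Prediction: 1 | Prediction: 0 |
|---|---|---|
| Actual: 1 | 90 | 0 |
| Actual: 0 | 9 | 1 |

- Accuracy = (# TP + # TN) / # Total = 91%
- False Negative Rate = # FN / (# TP + # FN) = 0%
- False Positive Rate = # FP / (# FP + # TN) = 90%

Accuracy alone looks good, but the model calls 9 of the 10 actual 0s a 1. Only the false positive rate exposes that.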
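Read the example's counts as TP = 90, FN = 0, FP = 9, TN = 1, which is consistent with all three rates shown. The arithmetic is then:

$$
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{\text{Total}} = \frac{90 + 1}{100} = 91\% \\
\text{False Negative Rate} &= \frac{FN}{TP + FN} = \frac{0}{90 + 0} = 0\% \\
\text{False Positive Rate} &= \frac{FP}{FP + TN} = \frac{9}{9 + 1} = 90\%
\end{aligned}
$$

The same counts give precision 90 / 99 ≈ 90.9% and recall 90 / 90 = 100%, so those metrics also look strong. The 90% false positive rate is what reveals that the model almost never predicts 0: it does so for only 1 of the 100 examples.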

Summary

- Evaluation is creation
- Training data, validation data, test data
  - Learn the reasons & common pattern for using them
- There are many types of mistakes
  - False positive, false negative, precision, recall, etc.
