Creating Assessments AKA how to write a test

- Slides: 17

Creating Assessments
All good assessments have three key features:
- Validity
- Reliability
- Usability

Reliability
Next to validity, reliability is the most important characteristic of assessment results. Why?
1. It provides the consistency that makes validity possible.
2. It indicates the degree to which various kinds of generalizations are justifiable.

Reliability
re·li·a·ble adj. Capable of being relied on; dependable. re·li′a·bil′i·ty or re·li′a·ble·ness n. re·li′a·bly adv. (American Heritage Dictionary)

Reliability: the consistency of measurement, i.e., how consistent test scores or other assessment results are from one measurement to another.

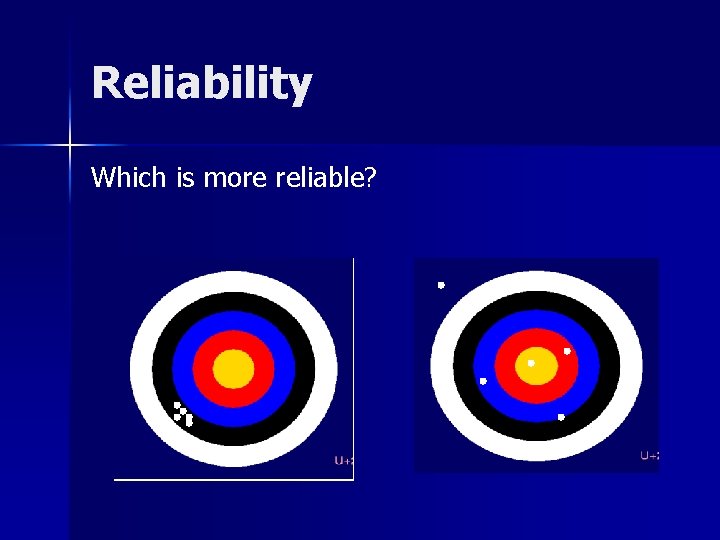

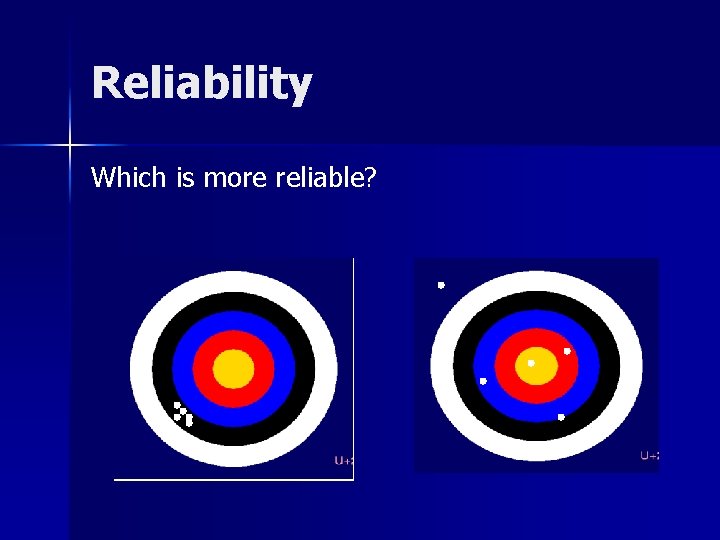

Reliability
Which is more reliable?

Reliability
Classical Test Theory: X = T + e, where:
- X = observed score
- T = “true score”
- e = error
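A minimal simulation of the X = T + e model, assuming (as Classical Test Theory does) that the error e is random, averages zero, and is unrelated to the true score T. The class size, mean, and spreads below are made-up illustration values, not data from this deck. Under those assumptions the spread of observed scores is, approximately, the spread of true scores plus the spread of the error.

```python
import random
import statistics

def simulate_ctt(n_students=100_000, true_mean=70.0, true_sd=10.0, error_sd=5.0, seed=1):
    """Simulate observed scores X = T + e for a hypothetical class (illustrative values)."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(true_mean, true_sd) for _ in range(n_students)]  # T
    errors = [rng.gauss(0.0, error_sd) for _ in range(n_students)]            # e: random, mean 0
    observed = [t + e for t, e in zip(true_scores, errors)]                  # X = T + e
    return true_scores, errors, observed

T, e, X = simulate_ctt()
# Variance of X versus variance of T plus variance of e (close when e is unrelated to T).
print(round(statistics.pvariance(X), 1), round(statistics.pvariance(T) + statistics.pvariance(e), 1))
```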

Reliability
- X = observed score: the score the student receives on the exam.
- T = “true score”: what the student “really” knows.

Reliability
e = error
Error variance is the variability that exists in a set of scores and is due to factors other than the one being assessed.
- Systematic: errors that are consistent.
- Random: errors that have no pattern.

Reliability
e = error
Positive error (i.e., raises the score):
- Lucky guesses.
- Items that give clues to the answer.
- Cheating (students, aides, teachers).

Reliability
e = error
Negative error (i.e., lowers the score):
- Not following directions.
- Mismarking items.
- Room climate/atmosphere.
- Hunger, fatigue, illness, “need to go potty”.
- Assemblies, ball games, fire drills, etc.
- Break-up of a relationship.

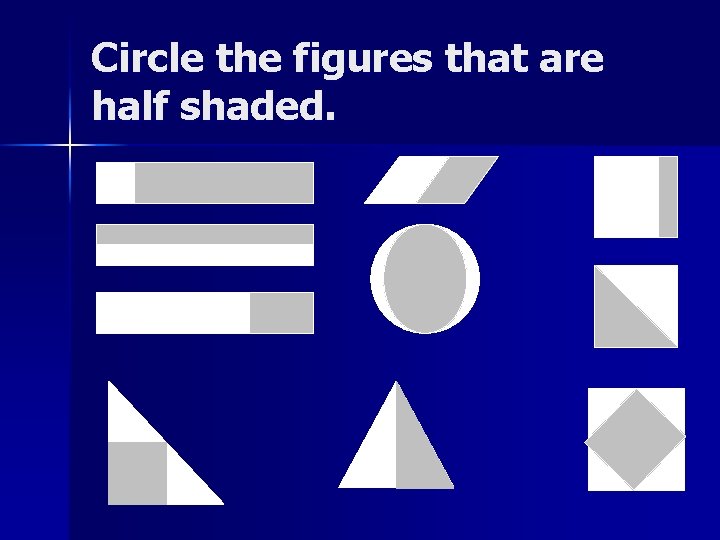

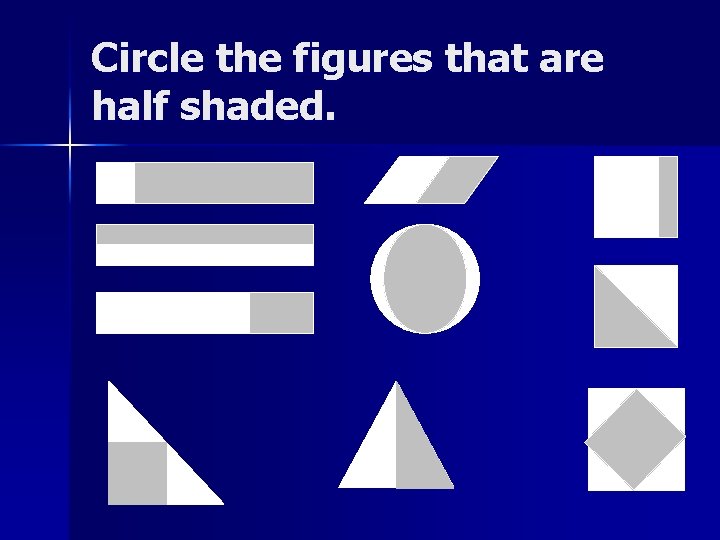

Circle the figures that are half shaded.

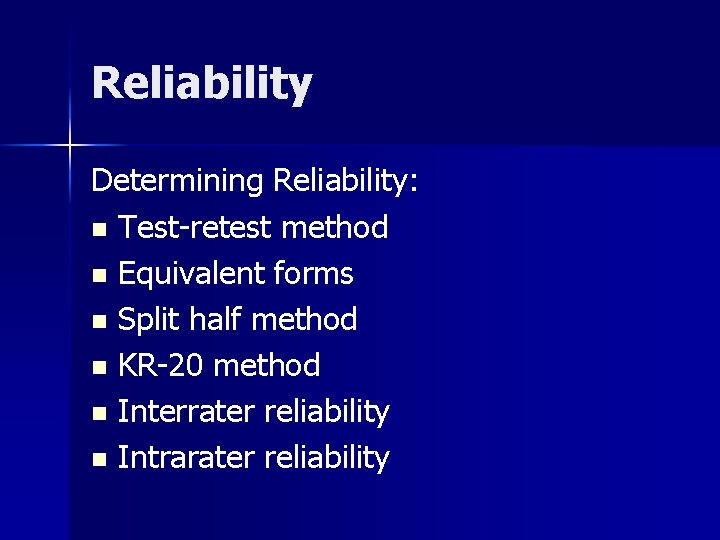

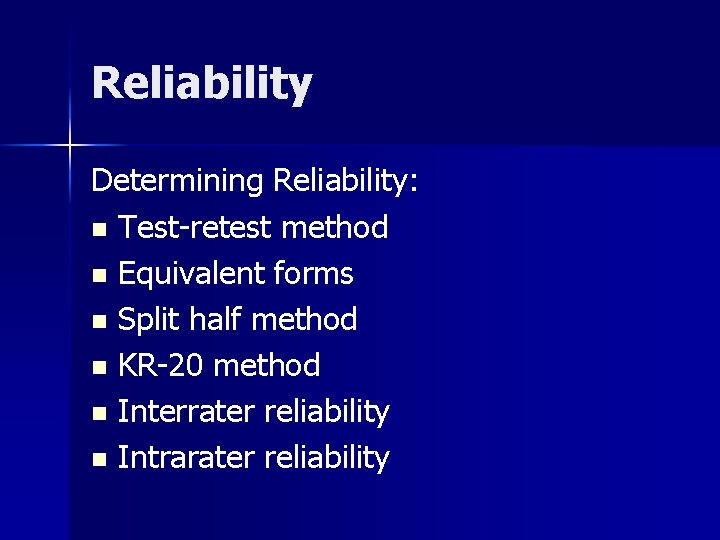

Reliability
Determining reliability:
- Test-retest method
- Equivalent forms
- Split-half method
- KR-20 method
- Interrater reliability
- Intrarater reliability

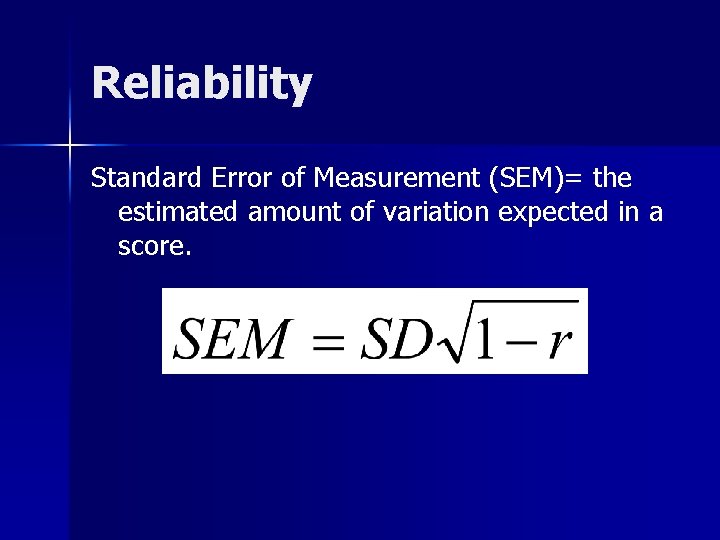

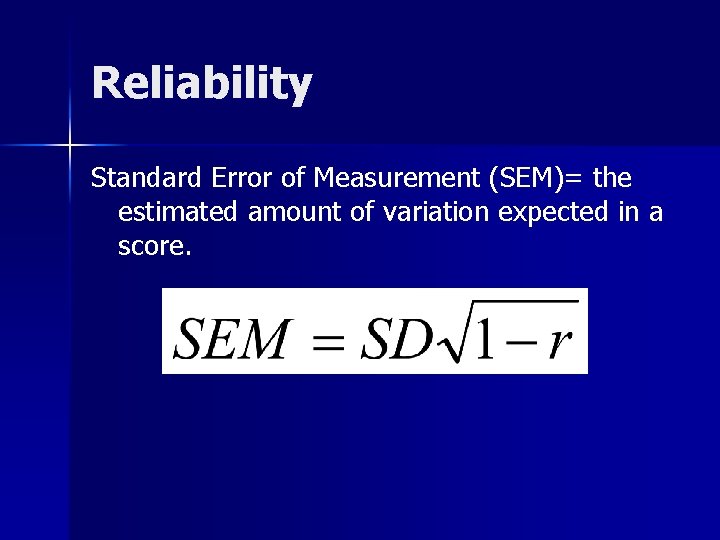

Reliability
Standard Error of Measurement (SEM): the estimated amount of variation expected in a student's observed score around their true score, i.e., the standard deviation of the error e.
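The slide gives no formula, but in Classical Test Theory the SEM is usually estimated from the spread of the group's scores and the test's reliability coefficient: SEM = SD × √(1 − r). A minimal sketch; the SD of 15 and reliability of 0.84 are hypothetical values chosen so the SEM comes out to 6, the figure used in the Sara example.

```python
import math

def standard_error_of_measurement(score_sd, reliability):
    """SEM = SD * sqrt(1 - reliability), with reliability between 0 and 1."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return score_sd * math.sqrt(1.0 - reliability)

sem = standard_error_of_measurement(score_sd=15, reliability=0.84)
print(round(sem, 2))  # 6.0
```

At the extremes, a perfectly reliable test (r = 1) has an SEM of 0, while a test with r = 0 has an SEM equal to the full SD, which is why higher reliability means narrower bands around a student's score.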

Reliability
Example: If Sara scored 78 on a standardized test with an SEM of 6, we can be approximately:
- 68% certain her true score is between 72 and 84 (78 ± 1 SEM)
- 95% certain her true score is between 66 and 90 (78 ± 2 SEM)
- 99% certain her true score is between 60 and 96 (78 ± 3 SEM)
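The bands in Sara's example are observed score ± 1, 2, or 3 SEM. The percentages are rule-of-thumb values that assume errors are normally distributed; the exact normal coverage of ±2 SEM is about 95.4% (a strict 95% band uses ±1.96 SEM), and ±3 SEM covers about 99.7%. A minimal sketch using the standard library's `NormalDist`; the helper names are hypothetical.

```python
from statistics import NormalDist

def true_score_band(observed, sem, n_sems):
    """Interval observed ± n_sems * SEM."""
    return (observed - n_sems * sem, observed + n_sems * sem)

def normal_coverage(n_sems):
    """Share of a normal distribution lying within ± n_sems standard deviations."""
    z = NormalDist()
    return z.cdf(n_sems) - z.cdf(-n_sems)

for k in (1, 2, 3):
    low, high = true_score_band(78, 6, k)
    print(f"±{k} SEM: {low} to {high} (about {normal_coverage(k):.1%})")
```

This prints 72 to 84 (about 68.3%), 66 to 90 (about 95.4%), and 60 to 96 (about 99.7%).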

Reliability
Summary of reliability:
1. Reliability refers to the results, not to the instrument itself.
2. Reliability is a necessary but not sufficient condition for validity.
3. The more reliable the assessment, the better.

Usability
The practical aspects of a test cannot be neglected:
- Ease of administration
- Time
  - Administration
  - Scoring
- Ease of interpretation
- Availability of equivalent forms
- Cost