Crawler AKA Spider AKA Robot AKA Bot


What is a Web Crawler?
• A system for bulk downloading of Web pages
• Used for:
  – Creating the corpus of a search engine
  – Web archiving
  – Data mining of the Web for statistical properties (e.g., Attributor monitors the Web for copyright infringement)

Push and Pull Models
• Push model:
  – Web content providers push content of interest to aggregators
• Pull model:
  – Aggregators scour the Web for new or updated information
• Crawlers use the pull model:
  – Low overhead for content providers
  – Easier for new aggregators

Basic Crawler
• Start with a seed set of URLs
• Download all pages with these URLs
• Extract URLs from the downloaded pages
• Repeat

Basic Crawler: Frontier
(Figure: the crawl loop around the frontier, with the steps removeURL(), getPage(), getLinksFromPage())
• Will this terminate?

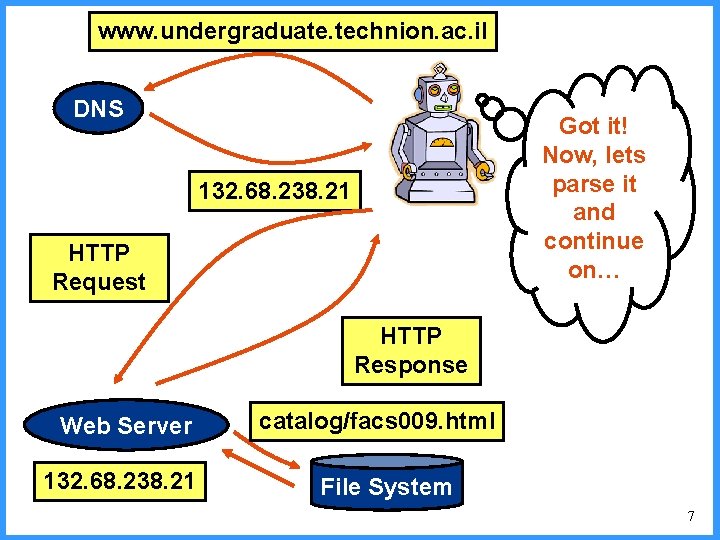
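The loop in the figure can be sketched in Python. This is a minimal illustration and not the lecture's implementation. `crawl`, `TOY_WEB`, and the page budget `max_pages` are hypothetical names. The "Web" is an in-memory dictionary, so the sketch runs without network access. The `seen` set stops the crawler from re-adding URLs it already knows. `max_pages` bounds the loop, which is one way to guarantee termination when the link graph is, in practice, unbounded.

```python
from collections import deque
from typing import Callable, Dict, Iterable, List

# Hypothetical toy "Web": each URL maps to the URLs its page links to.
TOY_WEB: Dict[str, List[str]] = {
    "http://a.example/": ["http://b.example/", "http://c.example/"],
    "http://b.example/": ["http://a.example/", "http://d.example/"],
    "http://c.example/": ["http://d.example/"],
    "http://d.example/": [],
}

def get_page(url: str) -> str:
    """Stand-in for getPage(): a real crawler would issue an HTTP request here."""
    return " ".join(TOY_WEB.get(url, []))

def get_links_from_page(page: str) -> List[str]:
    """Stand-in for getLinksFromPage(): a real crawler would parse HTML anchors."""
    return page.split()

def crawl(seeds: Iterable[str],
          fetch: Callable[[str], str] = get_page,
          extract: Callable[[str], List[str]] = get_links_from_page,
          max_pages: int = 1000) -> List[str]:
    frontier = deque(dict.fromkeys(seeds))   # dedupe seeds, keep their order
    seen = set(frontier)                     # every URL ever added to the frontier
    downloaded: List[str] = []
    while frontier and len(downloaded) < max_pages:
        url = frontier.popleft()             # removeURL()
        page = fetch(url)                    # getPage()
        downloaded.append(url)
        for link in extract(page):           # getLinksFromPage()
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return downloaded
```

`crawl(["http://a.example/"])` visits a, b, c, d in that order. Because the frontier is used first-in first-out, this particular sketch crawls in breadth-first order.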

Reminder: Downloading Web Pages
• Suppose our robot wants to download: http://www.undergraduate.technion.ac.il/catalog/facs009.html

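(Figure: the robot asks DNS for the IP address of www.undergraduate.technion.ac.il and gets 132.68.238.21. It sends an HTTP request to the Web server at 132.68.238.21, which reads catalog/facs009.html from its file system and returns it in an HTTP response. “Got it! Now, let's parse it and continue on…”)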
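The three steps in the figure (DNS lookup, sending an HTTP request, reading the response) map directly onto Python's standard `socket` and `urllib.parse` modules. This is a minimal sketch that assumes plain HTTP on port 80, with no HTTPS, redirects, or response parsing. `build_request`, `fetch`, and the `User-Agent` string `ExampleCrawler/0.1` are hypothetical.

```python
import socket
from typing import Tuple
from urllib.parse import urlsplit

def build_request(url: str, user_agent: str = "ExampleCrawler/0.1") -> Tuple[str, int, str]:
    """Split a URL into (host, port, raw HTTP/1.1 GET request text)."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    request = (f"GET {path} HTTP/1.1\r\n"
               f"Host: {host}\r\n"
               f"User-Agent: {user_agent}\r\n"
               "Connection: close\r\n"
               "\r\n")
    return host, parts.port or 80, request

def fetch(url: str, timeout: float = 10.0) -> bytes:
    """Download a page over plain HTTP; returns the raw response (headers + body)."""
    host, port, request = build_request(url)
    ip = socket.gethostbyname(host)                      # 1. resolve host name via DNS
    with socket.create_connection((ip, port), timeout=timeout) as sock:  # 2. connect
        sock.sendall(request.encode("ascii"))            #    and send the request
        chunks = []
        while True:                                      # 3. wait for the response
            data = sock.recv(4096)
            if not data:                                 # server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)
```

`build_request` is pure and easy to inspect. `fetch` performs real network I/O, and each of its three commented steps is a separate source of delay.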

Challenges
• Scale: the Web is huge and changes constantly
• Content selection tradeoffs:
  – We cannot download the entire Web and keep it constantly up to date
  – Balance coverage and freshness against per-site limitations

Challenges
• Social obligations:
  – Do not overburden the Web sites being crawled
  – (Avoid mistakenly performing denial-of-service attacks)
• Adversaries:
  – Content providers may try to misrepresent their content (e.g., cloaking)

Goals of a Crawler
• Download a large set of pages
• Refresh downloaded pages
• Find new pages
• Make sure to have as many “good” pages as possible

Structure of the Web
• What does this mean for seed choice?
• http://www9.org/w9cdrom/160.html

Topics
• Crawler architecture
• Traversal order
• Duplicate detection

Crawler Architecture and Issues

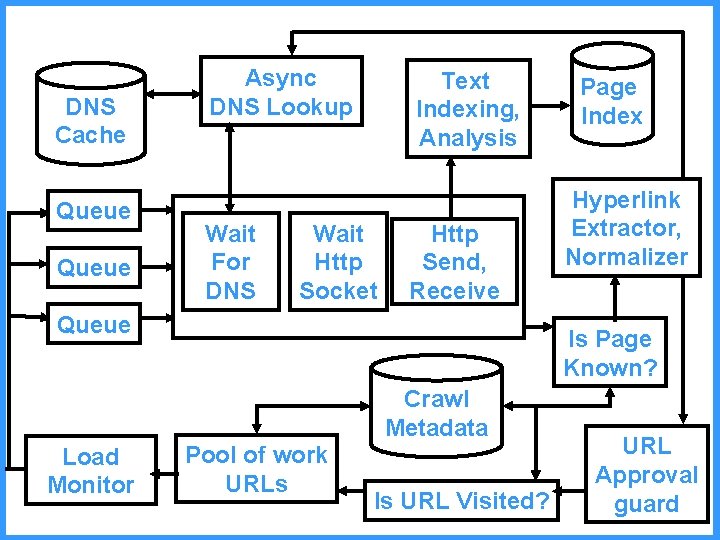

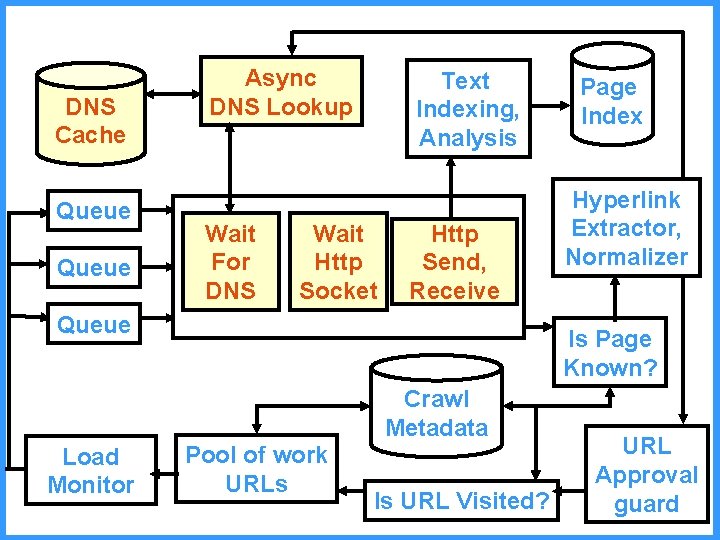

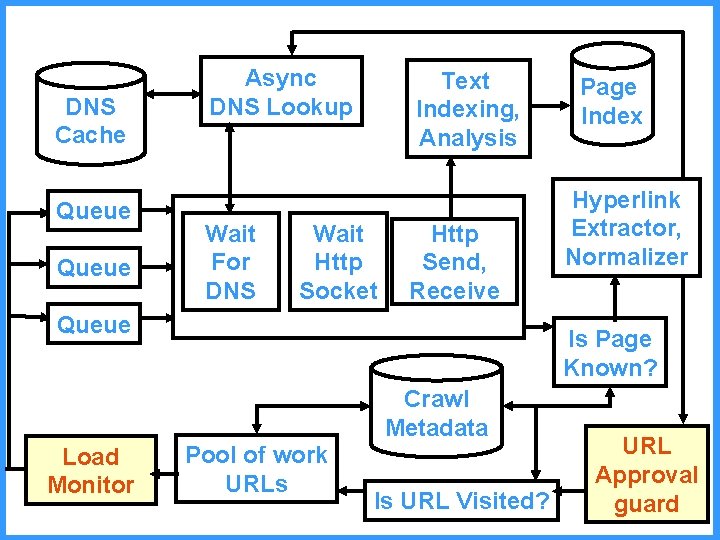

(Figure: crawler architecture. Components: pool of work URLs; URL approval guard; Is URL visited?; DNS cache; async DNS lookup queue; wait for DNS; HTTP socket send/receive queue; wait; hyperlink extractor and normalizer; Is page known?; text indexing and analysis; page index; crawl metadata; load monitor.)

Delays
• The crawler should fetch many pages quickly. Delays come from:
  – Resolving the IP address of the URL's host using DNS
  – Connecting to the server and sending the request
  – Waiting for the response

Reducing Time for DNS Lookup
• Cache DNS addresses
• Perform prefetching:
  – Look up the address as soon as the URL is inserted into the pool, so that it is ready when the URL is downloaded
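Here is a sketch of both ideas, assuming a thread pool performs lookups asynchronously. `prefetch` starts resolving a host as soon as its URL enters the pool. `ip_for` reuses the cached or in-flight result at download time. `DnsCache` is a hypothetical name. The resolver defaults to `socket.gethostbyname` but can be swapped out. For simplicity, entries never expire; a real cache should honor DNS TTLs.

```python
import socket
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict
from urllib.parse import urlsplit

class DnsCache:
    """Caches host -> IP lookups and lets them start before the URL is fetched.

    Not thread-safe: call prefetch()/ip_for() from a single scheduler thread.
    Entries never expire; a failed lookup is cached too and re-raised by ip_for().
    """

    def __init__(self, resolve: Callable[[str], str] = socket.gethostbyname,
                 workers: int = 8) -> None:
        self._resolve = resolve
        self._pool = ThreadPoolExecutor(max_workers=workers)
        self._entries: Dict[str, Future] = {}    # host -> pending or finished lookup
        self.lookups = 0                          # resolver calls actually issued

    def prefetch(self, url: str) -> None:
        """Call when a URL is inserted into the pool: start resolving its host now."""
        host = urlsplit(url).hostname
        if host and host not in self._entries:
            self.lookups += 1
            self._entries[host] = self._pool.submit(self._resolve, host)

    def ip_for(self, url: str) -> str:
        """Call at download time: reuse the cached or in-flight lookup."""
        host = urlsplit(url).hostname
        if not host:
            raise ValueError(f"no host in {url!r}")
        self.prefetch(url)                        # no-op if the host is already known
        return self._entries[host].result()       # blocks only if still resolving

    def close(self) -> None:
        self._pool.shutdown(wait=True)
```

Several URLs on the same host trigger a single lookup. The download step waits only if the prefetch has not finished yet.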


How and Where Should the Crawler Crawl?
• When a crawler crawls a site, it uses the site's resources:
  – The Web server needs to find the file in the file system
  – The Web server needs to send the file over the network
• If a crawler asks for many pages at a high rate, it may:
  – crash the site's Web server, or
  – be banned from the site (how?)
• Do not ask for too many pages from the same site without waiting long enough in between!
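One simple way to enforce the last rule is to remember when each host was last contacted and delay the next request to it. This is a minimal sketch that assumes a fixed per-host delay (`min_delay`, with a hypothetical default of 2 seconds). The current time is passed in explicitly so the logic is easy to test. `PolitenessGate` is a hypothetical name, not a standard API.

```python
from typing import Dict
from urllib.parse import urlsplit

class PolitenessGate:
    """Tracks the last request time per host and enforces a minimum gap."""

    def __init__(self, min_delay: float = 2.0) -> None:
        self.min_delay = min_delay             # seconds between requests to one host
        self._last: Dict[str, float] = {}      # host -> time of its last request

    def wait_time(self, url: str, now: float) -> float:
        """Seconds to wait before this URL's host may be contacted again."""
        last = self._last.get(urlsplit(url).hostname or "")
        if last is None:
            return 0.0
        return max(0.0, last + self.min_delay - now)

    def record(self, url: str, now: float) -> None:
        """Call right after sending a request to the URL's host."""
        self._last[urlsplit(url).hostname or ""] = now
```

In a crawl loop, call `time.sleep(gate.wait_time(url, time.monotonic()))` before each request and `gate.record(url, time.monotonic())` after it. Sleeping blocks the whole loop. A crawler with many hosts would rather pick a URL whose host is ready now, which is one reason to keep a separate queue per host.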

Directing Crawlers
• Sometimes people want to direct automatic crawling over their resources:
  – “Do not visit my files!”
  – “Do not index my files!”
  – “Only my crawler may visit my files!”
  – “Please, follow my useful links…”
  – “Please update your data after X time…”
• Solution: publish instructions in some known format
• Crawlers are expected to follow these instructions

Robots Exclusion Protocol
• A method that allows Web servers to indicate which of their resources should not be visited by crawlers
• Put the file robots.txt at the root directory of the server:
  – http://www.cnn.com/robots.txt
  – http://www.w3.org/robots.txt
  – http://www.ynet.co.il/robots.txt
  – http://www.google.com/robots.txt

robots.txt Format
• A robots.txt file consists of several records
• Each record consists of a set of crawler IDs and a set of URLs these crawlers are not allowed to visit:
  – User-agent lines: names of crawlers
  – Disallow lines: which URLs are not to be visited by these crawlers (agents)

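Examples
• No robot may visit any page:
  User-agent: *
  Disallow: /
• All robots may visit all pages:
  User-agent: *
  Disallow:
• Only Google may visit the site:
  User-agent: Google
  Disallow:
  User-agent: *
  Disallow: /
• No robot may visit these directories:
  User-agent: *
  Disallow: /cgi-bin/
  Disallow: /tmp/
  Disallow: /junk/
• BadBot may not visit any page:
  User-agent: BadBot
  Disallow: /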
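Python's standard library includes a parser for this format, `urllib.robotparser.RobotFileParser`. The sketch below feeds it two files modeled on the examples above, using `parse()`. A real crawler would instead call `set_url()` and `read()` to download `/robots.txt` from each host. `GOOGLE_ONLY`, `NO_PRIVATE_DIRS`, and `make_parser` are illustrative names. This parser treats an empty `Disallow:` line as allowing everything.

```python
from urllib.robotparser import RobotFileParser

# Files modeled on the examples above.
GOOGLE_ONLY = """\
User-agent: Google
Disallow:

User-agent: *
Disallow: /
"""

NO_PRIVATE_DIRS = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /junk/
"""

def make_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser
```

Under `GOOGLE_ONLY`, `make_parser(GOOGLE_ONLY).can_fetch("Google", "http://example.com/index.html")` is allowed, while any other agent falls through to the `*` record and is refused.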

Robots Meta Tag
• A Web page author can also publish directions for crawlers
• These are expressed by the meta tag with name robots, inside the HTML file
• Format: <meta name="robots" content="options"/>
• Options:
  – index or noindex: index or do not index this file
  – follow or nofollow: follow or do not follow the links of this file

Robots Meta Tag
• An example:
<html>
<head>
<meta name="robots" content="noindex, follow">
<title>...</title>
</head>
<body>
…
• How should a crawler act when it visits this page?
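For the example above, a crawler should not index the page but may follow its links. Below is a sketch of extracting these directives with Python's standard `html.parser`. `RobotsMetaParser` and `robots_meta` are hypothetical names. The sketch handles only the `index`/`noindex` and `follow`/`nofollow` options listed earlier; when no tag says otherwise, both default to allowed.

```python
from html.parser import HTMLParser
from typing import Set, Tuple

class RobotsMetaParser(HTMLParser):
    """Collects the comma-separated directives of <meta name="robots" content="...">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":                         # tag and attribute names arrive lowercased
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())

def robots_meta(html: str) -> Tuple[bool, bool]:
    """Return (may_index, may_follow) for a page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" not in parser.directives, "nofollow" not in parser.directives
```

Self-closing tags such as `<meta .../>` are also reported through `handle_starttag`, so both forms shown on these slides are covered.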

Revisit Meta Tag
• Web page authors may want Web applications to have an up-to-date copy of their page
• Using the revisit meta tag, page authors can give crawlers some idea of how often the page is updated
• For example: <meta name="revisit-after" content="10 days" />

Stronger Restrictions
• A (non-polite) crawler can ignore the restrictions imposed by robots.txt and robots meta directives
• Therefore, to ensure that automatic robots do not visit her resources, a site owner has to use other mechanisms:
  – For example, password protection

Interesting Questions
• Can you be sued for copyright infringement if you follow the robots.txt protocol?
• Can you be sued for copyright infringement if you don't follow the robots.txt protocol?
• Can the site owner determine who is actually crawling their site?
• Can the site owner protect against unauthorized access by specific robots?

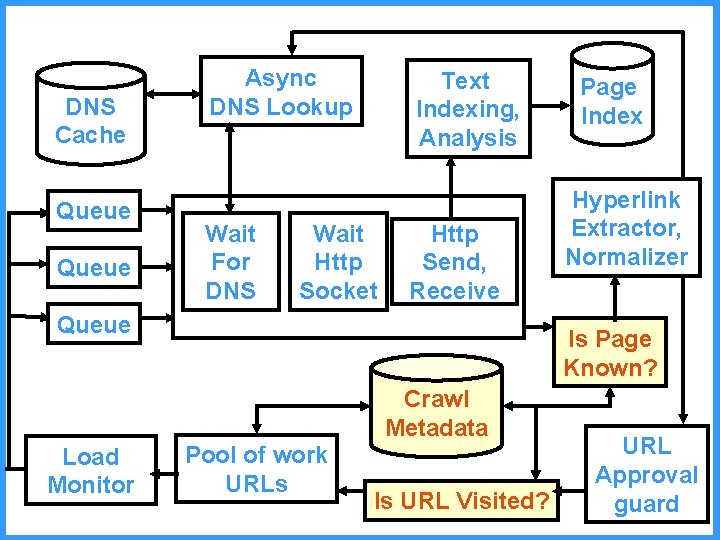


Duplication
• There are many “near-duplicate” pages on the Web, e.g., mirror sites
• Different host names can resolve to the same IP address
• Different URLs can resolve to the same physical address
• Challenge: avoid repeat downloads


Multiple Crawlers
• Normally, multiple crawlers must work together to download pages
• URLs are divided among the crawlers:
  – If there are k crawlers, compute a hash of the host name (with a value in 1, …, k) and assign each page to the corresponding crawler
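Here is a sketch of host-based partitioning, assuming MD5 as the hash. Python's built-in `hash()` is randomized per process for strings, so different crawler processes would not agree on the assignment. Hashing the host name rather than the full URL keeps all pages of a site on one crawler. That crawler can then handle per-site politeness and DNS caching locally. `crawler_for` is a hypothetical name.

```python
import hashlib
from urllib.parse import urlsplit

def crawler_for(url: str, k: int) -> int:
    """Assign a URL to one of k crawlers (numbered 1..k) by hashing its host name."""
    host = urlsplit(url).hostname or ""          # hostname is already lowercased
    digest = hashlib.md5(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % k + 1
```

Every URL on `www.cnn.com` maps to the same crawler, whichever process computes it.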

Ordering the URL Queues
• Politeness: do not hit a Web server too frequently
• Freshness: crawl some pages more often than others
  – E.g., pages (such as news sites) whose content changes often
• These goals may conflict with each other.


Traversal Order

Choosing Traversal Order
• We are constantly inserting links into the list (frontier) and removing links from it
• In which order should this be done?
• Why does it matter?

Importance Metrics
Efficient Crawling Through URL Ordering, Cho, Garcia-Molina, Page, 1998.
• Similarity to a driving query
• Backlink count
• PageRank
• Location metric
• Each of these implies an importance Imp(p) for pages, and we want to crawl the best pages

Measuring Success: Crawler Models (1)
• Crawl and Stop: the crawler starts at page $P_0$, crawls $k$ pages $p_1, \dots, p_k$, and stops
• Suppose the “best” $k$ pages on the Web are $r_1, \dots, r_k$, ordered so that $\mathrm{Imp}(r_1) \ge \dots \ge \mathrm{Imp}(r_k)$
• Suppose that $|\{p_i : \mathrm{Imp}(p_i) \ge \mathrm{Imp}(r_k)\}| = M$
• Performance: $M / k$
• What is ideal performance?
• How would a random crawler perform?

Measuring Success: Crawler Models (2)
• Crawl and Stop with Threshold: the crawler visits $k$ pages $p_1, \dots, p_k$ and stops
• We are given an importance target $G$
• Suppose that the total number of pages on the Web with importance $\ge G$ is $H$
• Suppose that $|\{p_i : \mathrm{Imp}(p_i) \ge G\}| = M$
• Performance: $M / H$
• What is ideal performance?
• How would a random crawler perform?
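Both performance measures are easy to compute once importance values are known, for example in a simulation where Imp(p) has been precomputed for every page. Here is a sketch under that assumption. `imp` is a hypothetical dictionary from page to importance, the function names are illustrative, and the crawled pages are assumed to be distinct.

```python
from typing import Dict, List

def crawl_and_stop_performance(crawled: List[str], imp: Dict[str, float]) -> float:
    """Model (1): M / k, where M counts crawled pages with Imp >= Imp(r_k).

    Assumes 1 <= k <= number of pages in imp.
    """
    k = len(crawled)
    best = sorted(imp.values(), reverse=True)
    threshold = best[k - 1]                       # Imp(r_k), the k-th best importance
    m = sum(1 for p in crawled if imp[p] >= threshold)
    return m / k

def threshold_performance(crawled: List[str], imp: Dict[str, float], g: float) -> float:
    """Model (2): M / H, the fraction of all pages with Imp >= G that were crawled.

    Assumes at least one page has Imp >= G (so H > 0).
    """
    h = sum(1 for v in imp.values() if v >= g)
    m = sum(1 for p in crawled if imp[p] >= g)
    return m / h
```

With importances a = 0.9, b = 0.7, c = 0.4, d = 0.1, crawling {a, c} scores 0.5 under model (1), since only a reaches Imp(r_2) = 0.7. It also scores 0.5 under model (2) with G = 0.5.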

Measuring Success: Crawler Models (3)
• Limited Buffer Crawl: the crawler can keep B pages in its buffer. After the buffer fills up, it must flush some out.
  – Can visit T pages, where T is the number of pages on the Web
• Performance: measured by the percentage of pages in the buffer at the end whose importance is at least G

Ordering Metrics
• The crawler stores a queue of URLs and chooses the best one from this queue to download and traverse
• An ordering metric O(p) is used to define the ordering: we traverse the page with the highest O(p)
• Should we choose O(p) = Imp(p)?
• Can we choose O(p) = Imp(p)?

Example Ordering Metrics: BFS, DFS
• DFS (Depth-First Search)
• BFS (Breadth-First Search)
• Advantages? Disadvantages?

BFS versus DFS
• Which is better?
• What advantages does each have?
  – DFS: locality of requests (saves time on DNS)
  – BFS: gets shallow pages first (usually more important); pages are distributed among many sites, which distributes the load
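The two orders differ only in how the frontier is used: a FIFO queue gives BFS, and a LIFO stack gives DFS. A small sketch on a hypothetical two-site link graph (`GRAPH`) makes the trade-off visible. DFS finishes site a before moving on, which gives locality. BFS reaches the shallow home page of site b earlier.

```python
from collections import deque
from typing import Dict, List

# Hypothetical link graph spanning two sites.
GRAPH: Dict[str, List[str]] = {
    "a.example/": ["a.example/1", "b.example/"],
    "a.example/1": ["a.example/2"],
    "a.example/2": [],
    "b.example/": ["b.example/1"],
    "b.example/1": [],
}

def bfs(graph: Dict[str, List[str]], seed: str) -> List[str]:
    """Frontier as a FIFO queue: shallow pages first."""
    frontier, seen, order = deque([seed]), {seed}, []
    while frontier:
        url = frontier.popleft()
        order.append(url)
        for link in graph.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order

def dfs(graph: Dict[str, List[str]], seed: str) -> List[str]:
    """Frontier as a LIFO stack: follow one path as deep as possible."""
    stack, seen, order = [seed], set(), []
    while stack:
        url = stack.pop()
        if url in seen:
            continue
        seen.add(url)
        order.append(url)
        # push in reverse so the first link on the page is explored first
        for link in reversed(graph.get(url, [])):
            if link not in seen:
                stack.append(link)
    return order
```

Starting from `a.example/`, BFS visits `a.example/`, `a.example/1`, `b.example/`, `a.example/2`, `b.example/1`. DFS visits `a.example/`, `a.example/1`, `a.example/2`, `b.example/`, `b.example/1`.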

Example Ordering Metrics: Estimated Importance
• Each URL has a ranking that determines how important it is
• Always remove the highest-ranking URL first
• How is the ranking defined?
  – By estimating importance measures. How?
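Always removing the highest-ranking URL is naturally a priority queue. Here is a sketch using Python's `heapq`, which is a min-heap, so scores are negated. It assumes a URL's estimate can change while it waits, for example as more backlinks to it are discovered. Updates are handled lazily: push a new entry and skip stale ones when popping. `PriorityFrontier` is a hypothetical name, and the caller is assumed to track already-visited URLs separately.

```python
import heapq
import itertools
from typing import Dict, List, Optional, Tuple

class PriorityFrontier:
    """Frontier that always removes the URL with the highest current estimate."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        self._score: Dict[str, float] = {}        # latest estimate per queued URL
        self._counter = itertools.count()         # tie-breaker: earlier insertion first

    def push(self, url: str, score: float) -> None:
        """Insert a URL, or update its estimate if it is already queued."""
        self._score[url] = score
        heapq.heappush(self._heap, (-score, next(self._counter), url))

    def pop(self) -> Optional[str]:
        """Remove and return the best URL, or None if the frontier is empty."""
        while self._heap:
            neg_score, _, url = heapq.heappop(self._heap)
            if self._score.get(url) == -neg_score:   # ignore outdated entries
                del self._score[url]
                return url
        return None

    def __len__(self) -> int:
        return len(self._score)
```

Raising a queued URL's estimate moves it ahead immediately. Its old, lower entry is discarded when it eventually reaches the top of the heap.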

Similarity to a Driving Query
• Compile a list of words Q that describes a topic of interest
• Define the importance of a page P by its textual similarity to Q, using a formula that combines:
  – The number of appearances of words from Q in P
  – For each word of Q, its frequency throughout the Web (why is this important?)
• Problem: we must decide whether a link is important without (1) seeing the page and (2) knowing how rare a word is
• Estimated importance: use an estimate, e.g., the context of the link, or the entire page containing the link
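The slide leaves the exact formula open. One common choice, used here purely as an illustrative assumption, is a TF-IDF-style score: count each query word in the text and weight it by how rare the word is across a document collection. `similarity`, `doc_freq`, and `n_docs` are hypothetical names. Because the crawler has not seen the whole Web, `doc_freq` would have to be estimated from the pages downloaded so far. Query words are assumed to be lowercase.

```python
import math
import re
from collections import Counter
from typing import Dict, Iterable, List

def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def similarity(query: Iterable[str], text: str,
               doc_freq: Dict[str, int], n_docs: int) -> float:
    """TF-IDF-style score: occurrences of each query word, weighted by its rarity."""
    counts = Counter(tokenize(text))
    score = 0.0
    for word in set(query):
        df = doc_freq.get(word, 0)                    # documents containing the word
        idf = math.log((n_docs + 1) / (df + 1))       # smoothed: rarer words weigh more
        score += counts[word] * idf
    return score
```

To estimate a link's importance before downloading its target, the same function could be applied to the link's context, such as its anchor text or the entire page containing it. A word that appears in almost every document gets a weight near zero, which is why rarity matters.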

Backlink Count, PageRank
• The importance of a page P is proportional to the number of pages with a link to P, or to its PageRank
• As before, we need to estimate this amount
• The estimated importance is computed by evaluating these values over the portion of the Web already downloaded
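Here is a sketch of the estimated backlink count over the already-downloaded portion of the Web. It assumes we have recorded, for each downloaded page, the links extracted from it. The sketch counts each linking page once and ignores self-links; both are assumptions, not part of the slide. `estimated_backlinks` is a hypothetical name.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set

def estimated_backlinks(downloaded_links: Dict[str, Iterable[str]]) -> Dict[str, int]:
    """For every URL seen so far, count the downloaded pages that link to it."""
    linkers: Dict[str, Set[str]] = defaultdict(set)
    for source, targets in downloaded_links.items():
        for target in targets:
            if target != source:                 # ignore self-links
                linkers[target].add(source)      # count each linking page once
    return {url: len(sources) for url, sources in linkers.items()}
```

These counts can serve directly as scores in a priority-ordered frontier and are recomputed or updated as more pages arrive.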

Location Metric
• The importance of P is a function of its URL
• Examples:
  – Words appearing in the URL (e.g., edu or ac)
  – Number of “/” in the URL
• We can compute this precisely and use it as an ordering metric, if desired (no need for estimation)
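A location metric needs only the URL string. The sketch below combines the two examples from the slide. It gives a bonus when a host label is one of the listed words and subtracts a penalty for each "/" in the path. The word list, weights, and the name `location_score` are hypothetical choices for illustration.

```python
from typing import Iterable
from urllib.parse import urlsplit

def location_score(url: str, good_words: Iterable[str] = ("edu", "ac"),
                   word_bonus: float = 1.0, slash_penalty: float = 0.1) -> float:
    """Score a URL from the URL alone: bonus for listed host labels, penalty per '/' in the path."""
    parts = urlsplit(url)
    labels = (parts.hostname or "").split(".")
    bonus = word_bonus if any(w in labels for w in good_words) else 0.0
    return bonus - slash_penalty * parts.path.count("/")
```

Because nothing has to be estimated, this score is available the moment a link is extracted.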

Bottom Line: What Ordering Metric to Use?
• In general, Cho, Garcia-Molina, and Page showed that PageRank outperforms BFS and estimated backlinks if our importance metric is PageRank or backlinks
• Estimated similarity to the query (combined with PageRank for cases in which the estimated similarity is 0) works well for finding pages that are similar to a query
• For more details, see the paper

Duplicate Detection

How to Avoid Duplicates
• Store normalized versions of URLs
• Store hash values of the pages; compare each new page to the hashes of previous pages
  – Example: use the MD5 hash function
  – Does not detect near duplicates
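Both techniques fit in a few lines of Python. The normalization rules below are one possible set, chosen as an assumption. They resolve relative links against the page they came from, lowercase the scheme and host, drop default ports and `#fragments`, and use "/" for an empty path. Page hashing uses MD5 from `hashlib`. `normalize_url` and `SeenPages` are hypothetical names. As the slide notes, an exact hash only catches byte-identical pages.

```python
import hashlib
from typing import Set
from urllib.parse import urljoin, urlsplit, urlunsplit

DEFAULT_PORTS = {"http": 80, "https": 443}

def normalize_url(url: str, base: str = "") -> str:
    """Resolve against base, lowercase scheme/host, drop default port and #fragment."""
    parts = urlsplit(urljoin(base, url))
    host = parts.hostname or ""                   # lowercased; any user:password is dropped
    port = parts.port
    netloc = host if port in (None, DEFAULT_PORTS.get(parts.scheme)) else f"{host}:{port}"
    return urlunsplit((parts.scheme, netloc, parts.path or "/", parts.query, ""))

class SeenPages:
    """Remembers MD5 digests of downloaded page contents."""

    def __init__(self) -> None:
        self._digests: Set[bytes] = set()

    def is_new(self, content: bytes) -> bool:
        """True the first time a given content is seen, False for exact repeats."""
        digest = hashlib.md5(content).digest()
        if digest in self._digests:
            return False
        self._digests.add(digest)
        return True
```

For example, `HTTP://WWW.Example.COM:80/a/b.html#sec` and the relative link `b.html` on `http://www.example.com/a/index.html` both normalize to `http://www.example.com/a/b.html`. Adding a single space to a page, however, gives it an entirely different MD5 digest.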

Detecting Approximate Duplicates
• We can compare pages using known distance metrics, e.g., edit distance
  – Takes far too long when we have millions of pages!
• One solution: create a sketch for each page that is much smaller than the page
• Assume: we have converted each page into a sequence of tokens
  – Eliminate punctuation, HTML markup, etc.

Shingling
• Given a document D, a w-shingle is a contiguous subsequence of w tokens
• The w-shingling S(D, w) of D is the set of all w-shingles in D
• Example: D = (a, rose, is, a, rose, is, a, rose)
  – The 4-shingles of D, in order: (a rose is a), (rose is a rose), (is a rose is), (a rose is a), (rose is a rose)
  – S(D, 4) = {(a, rose, is, a), (rose, is, a, rose), (is, a, rose, is)}
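The definition translates directly into code. This sketch assumes the page has already been tokenized as described on the previous slide; `shingles` is a hypothetical name. Because S(D, w) is a set, the repeated shingles in the example collapse into three elements.

```python
from typing import List, Set, Tuple

Shingle = Tuple[str, ...]

def shingles(tokens: List[str], w: int) -> Set[Shingle]:
    """S(D, w): the set of all contiguous w-token subsequences of D."""
    return {tuple(tokens[i:i + w]) for i in range(len(tokens) - w + 1)}
```

`shingles("a rose is a rose is a rose".split(), 4)` returns the three shingles listed above. A document shorter than w tokens has no shingles at all.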

Resemblance
• Remember this?
• The resemblance of documents A and B is defined as:
$$r_w(A, B) = \frac{|S(A, w) \cap S(B, w)|}{|S(A, w) \cup S(B, w)|}$$
• In general, $0 \le r_w(A, B) \le 1$
  – Note that $r_w(A, A) = 1$
  – But $r_w(A, B) = 1$ does not mean that A and B are identical!
• What is a good value for w?
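Resemblance is the size of the shared shingle set divided by the size of the combined set. This sketch computes it on token lists. The last test case in particular shows why $r_w(A, B) = 1$ does not imply identical documents. The function names are hypothetical. Returning 1.0 for two documents with no shingles is an arbitrary convention chosen for the sketch.

```python
from typing import List, Set, Tuple

def shingles(tokens: List[str], w: int) -> Set[Tuple[str, ...]]:
    """S(D, w): the set of all contiguous w-token subsequences."""
    return {tuple(tokens[i:i + w]) for i in range(len(tokens) - w + 1)}

def resemblance(a: List[str], b: List[str], w: int) -> float:
    """r_w(A, B) = |S(A, w) & S(B, w)| / |S(A, w) | S(B, w)|."""
    sa, sb = shingles(a, w), shingles(b, w)
    union = sa | sb
    if not union:
        return 1.0        # convention (assumption): two shingle-less docs count as identical
    return len(sa & sb) / len(union)
```

"a rose is a rose is a rose" and "a rose is a rose is a rose is a rose" are different documents. Yet with w = 4 they have the same three shingles, so their resemblance is 1.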

Sketches
• The set of all shingles is large
  – Bigger than the original document
• We create a document sketch by sampling only a few shingles
• Requirement:
  – Sketch resemblance should be a good estimate of document resemblance
• Notation: from now on, w is a fixed value, so we omit w from $r_w$ and $S(A, w)$:
  – $r(A, B) = r_w(A, B)$ and $S(A) = S(A, w)$

Choosing a sketch
• Random sampling does not work!
  – Suppose we have identical documents A and B, each with n shingles
  – Let $m_A$ be a shingle from A, chosen uniformly at random; similarly $m_B$
• For a sketch of size k = 1: $E\big[\,|\{m_A\} \cap \{m_B\}|\,\big] = 1/n$
  – But $r(A, B) = 1$
  – So the sketch overlap is an underestimate

Choosing a sketch
• Assume the set of all possible shingles is totally ordered
• Define:
  – $m_A$ is the “smallest” shingle in A
  – $m_B$ is the “smallest” shingle in B
  – $r'(A, B) = |\{m_A\} \cap \{m_B\}|$
• For identical documents A and B:
  – $r'(A, B) = 1 = r(A, B)$

Problems
• With a sketch of size 1, there is still a high probability of error
  – Especially if we choose the minimum shingle in a fixed order, e.g., alphabetical order
• The size of each shingle is still large
  – E.g., if w = 7, about 40–50 bytes
  – 100 shingles = 4–5 KB

Solution
• We can improve the estimate by picking more than one shingle
• Idea:
  – Choose many different random orderings on the set of all shingles
  – For each ordering, take the minimal shingle, as explained before
• How do we choose random orderings of shingles?

Solution (cont)
• Compute K fingerprints for each shingle
  – For example, use the MD5 hash function with different parameters
• For each choice of parameters to the hash function, choose the shingle whose fingerprint is lexicographically first (smallest) in each document
• Near-duplication likelihood is computed by checking how many of the K minimal shingles are common to both documents
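Here is a sketch of the scheme. It assumes MD5 salted with the ordering index plays the role of "different parameters," and that fingerprints are truncated to 40 bits. `fingerprint`, `sketch`, and `estimated_resemblance` are hypothetical names. Each document must contain at least w tokens, and both sketches must use the same K.

```python
import hashlib
from typing import List, Set, Tuple

def shingles(tokens: List[str], w: int) -> Set[Tuple[str, ...]]:
    """S(D, w): the set of all contiguous w-token subsequences."""
    return {tuple(tokens[i:i + w]) for i in range(len(tokens) - w + 1)}

def fingerprint(shingle: Tuple[str, ...], seed: int, bits: int = 40) -> int:
    """MD5 salted with `seed`, truncated to `bits` bits; each seed defines one ordering."""
    data = f"{seed}\x00" + "\x1f".join(shingle)
    digest = hashlib.md5(data.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") >> (128 - bits)   # keep the top `bits` bits

def sketch(tokens: List[str], w: int, k: int) -> List[int]:
    """For each of the K orderings, keep the minimal fingerprint among the document's shingles."""
    s = shingles(tokens, w)
    return [min(fingerprint(sh, seed) for sh in s) for seed in range(k)]

def estimated_resemblance(sk_a: List[int], sk_b: List[int]) -> float:
    """Fraction of the K orderings on which both documents share the same minimal shingle."""
    return sum(x == y for x, y in zip(sk_a, sk_b)) / len(sk_a)
```

Under a truly random ordering, the probability that A and B share the same minimal shingle equals r(A, B). The fraction of matching positions therefore estimates resemblance, approximately so when hashes stand in for random orderings, and a larger K tightens the estimate. Each document is stored as K 40-bit numbers instead of its full shingle set.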

Solution (cont)
• Observe that this gives us:
  – An efficient way to compute random orderings of shingles
  – A significant reduction in the size of the stored shingles (40-bit fingerprints are usually enough to keep estimates reasonably accurate)
