CPU Scheduling


CPU Scheduling CS 519: Operating System Theory Computer Science, Rutgers University Instructor: Thu D. Nguyen TA: Xiaoyan Li Spring 2002

What and Why?

- What is processor scheduling, and why do we need it?
- At first: to share an expensive resource (multiprogramming).
- Now: to perform concurrent tasks, because the processor is so powerful.
- The future looks like past + now:
  - Rent-a-computer approach: large data/processing centers use multiprogramming to maximize resource utilization.
  - Systems are still powerful enough for each user to run multiple concurrent tasks.

Assumptions

- A pool of jobs contends for the CPU.
- The CPU is a scarce resource.
- Jobs are independent and compete for resources (this assumption is not always used).
- The scheduler mediates between jobs to optimize some performance criteria.

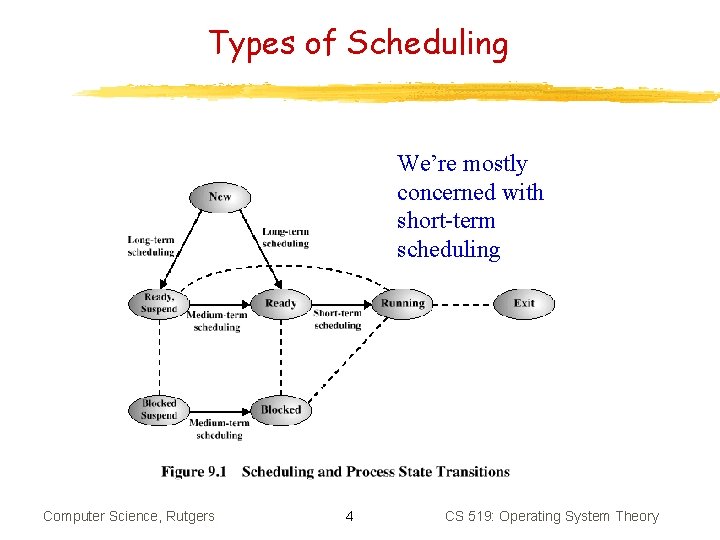

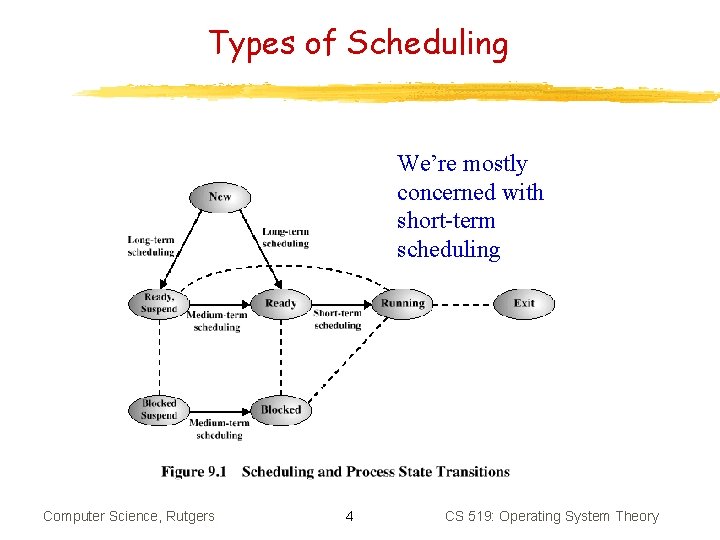

Types of Scheduling

We are mostly concerned with short-term scheduling.

What Do We Optimize?

- System-oriented metrics:
  - Processor utilization: percentage of time the processor is busy.
  - Throughput: number of processes completed per unit of time.
- User-oriented metrics:
  - Turnaround time: interval between submission and termination, including any waiting time. Appropriate for batch jobs.
  - Response time: for interactive jobs, the time from the submission of a request until the response begins to be received.
  - Deadlines: when process completion deadlines are specified, the percentage of deadlines met should be maximized.

Design Space

Two dimensions:

- Selection function: which of the ready jobs should be run next?
- Preemption:
  - Preemptive: the currently running job may be interrupted and moved to the Ready state.
  - Non-preemptive: once a process is in the Running state, it continues to execute until it terminates or blocks for I/O or a system service.

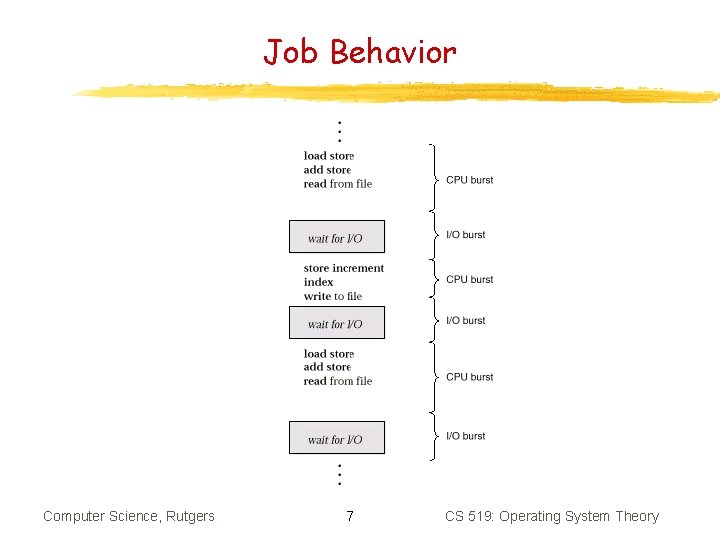

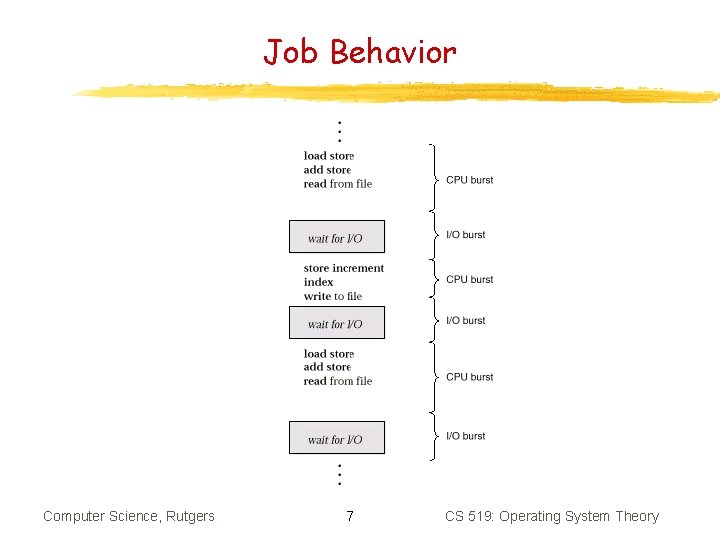

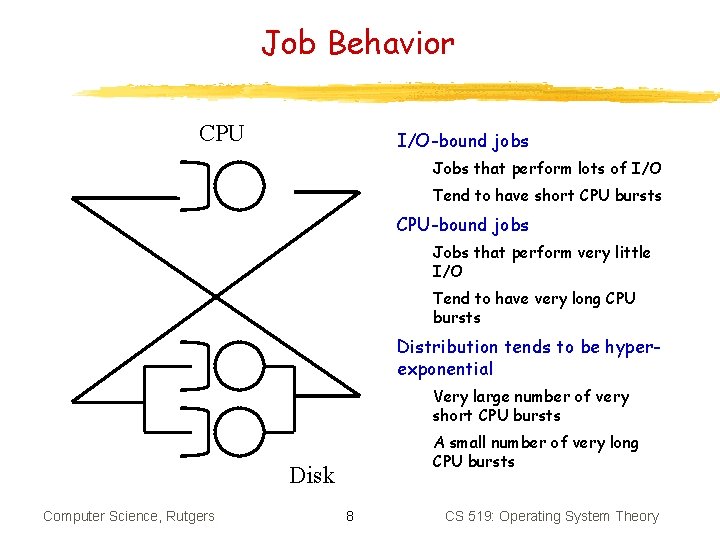

Job Behavior

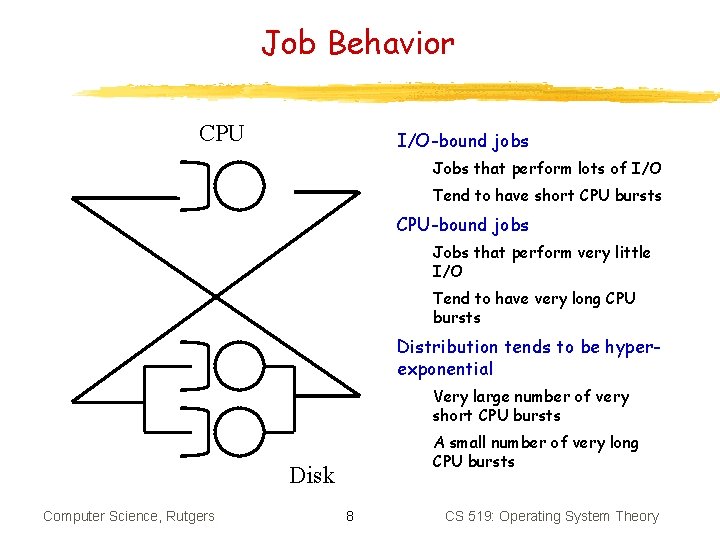

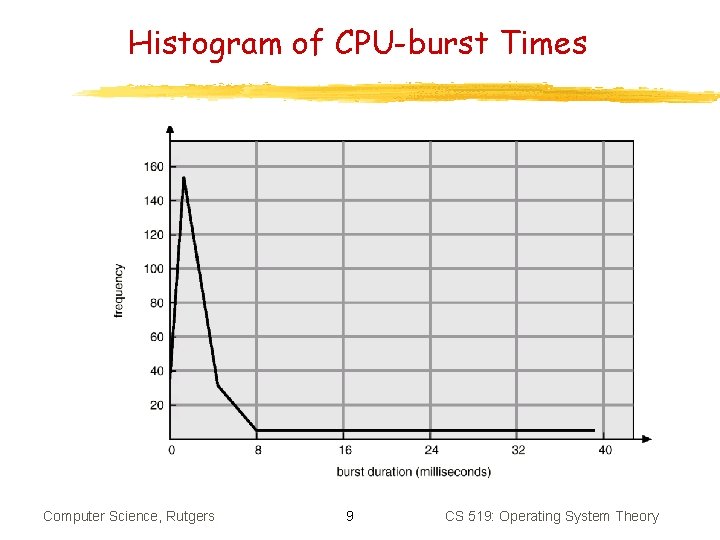

Job Behavior

- I/O-bound jobs: perform lots of I/O and tend to have short CPU bursts.
- CPU-bound jobs: perform very little I/O and tend to have very long CPU bursts.
- The distribution of CPU-burst lengths tends to be hyperexponential: a very large number of very short CPU bursts and a small number of very long ones.

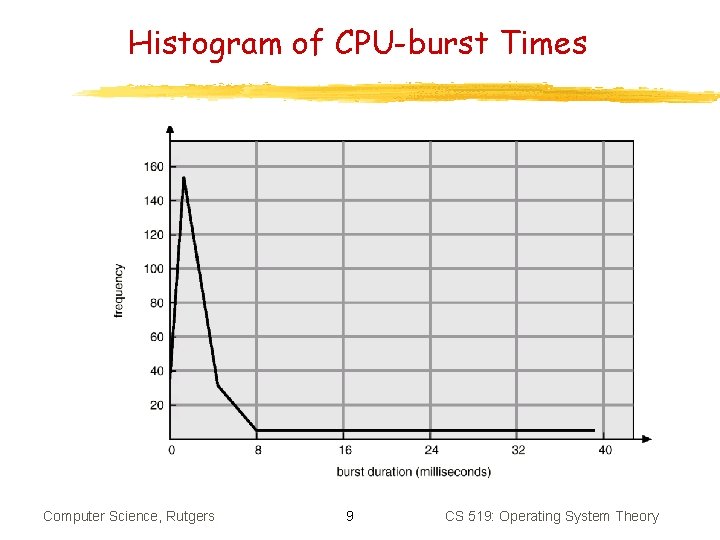

Histogram of CPU-burst Times

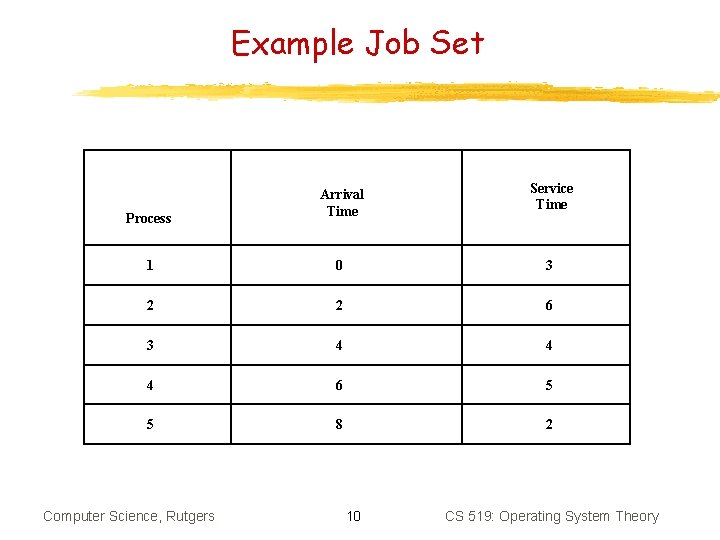

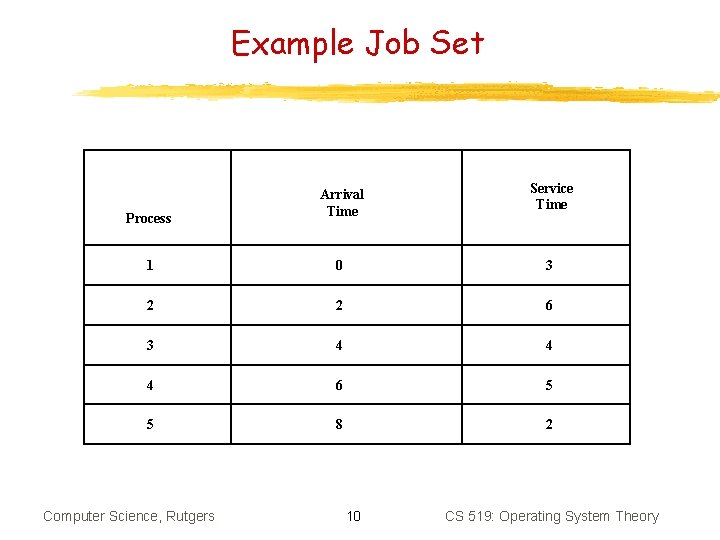

Example Job Set

| Process | Arrival Time | Service Time |
|---------|--------------|--------------|
| 1       | 0            | 3            |
| 2       | 2            | 6            |
| 3       | 4            | 4            |
| 4       | 6            | 5            |
| 5       | 8            | 2            |
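To make the metrics concrete, here is a minimal sketch that runs the example job set under first-come-first-served and reports finish and turnaround times. The `Job` class and the `fcfs` function are illustrative names, not part of the course material.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    arrival: int
    service: int


# The five processes from the example job set.
JOBS = [
    Job("1", 0, 3),
    Job("2", 2, 6),
    Job("3", 4, 4),
    Job("4", 6, 5),
    Job("5", 8, 2),
]


def fcfs(jobs):
    """Return {name: (finish_time, turnaround)} under first-come-first-served."""
    clock = 0
    result = {}
    for job in sorted(jobs, key=lambda j: j.arrival):
        clock = max(clock, job.arrival)  # the CPU idles until the job arrives
        clock += job.service             # non-preemptive: run to completion
        result[job.name] = (clock, clock - job.arrival)
    return result


schedule = fcfs(JOBS)
mean_turnaround = sum(t for _, t in schedule.values()) / len(schedule)
print(schedule, mean_turnaround)
```

Turnaround here is finish time minus arrival time, matching the user-oriented metric defined earlier.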

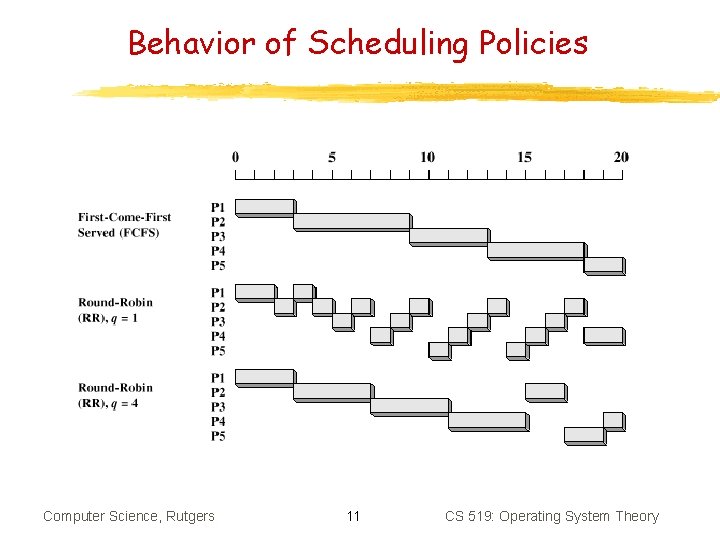

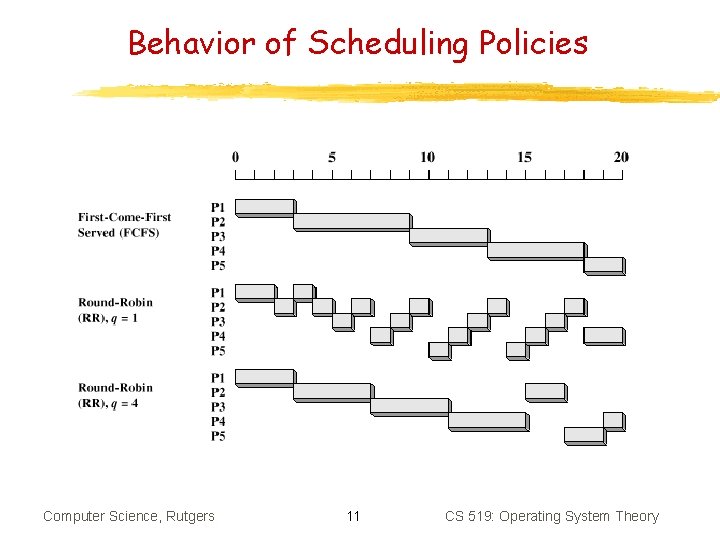

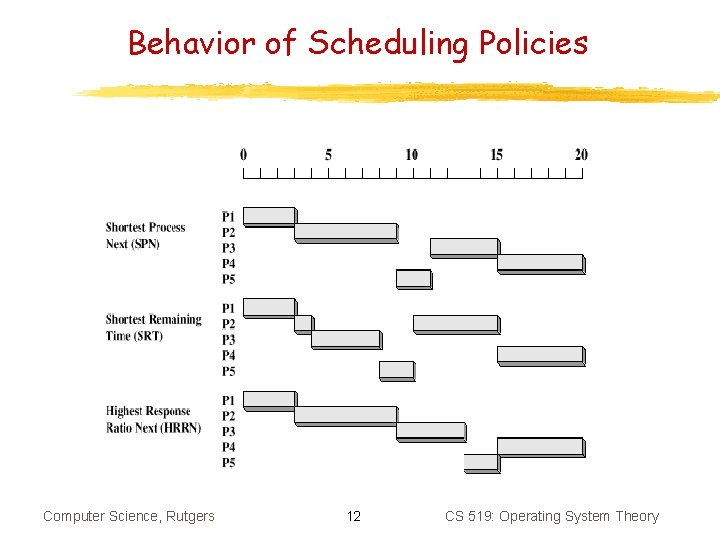

Behavior of Scheduling Policies

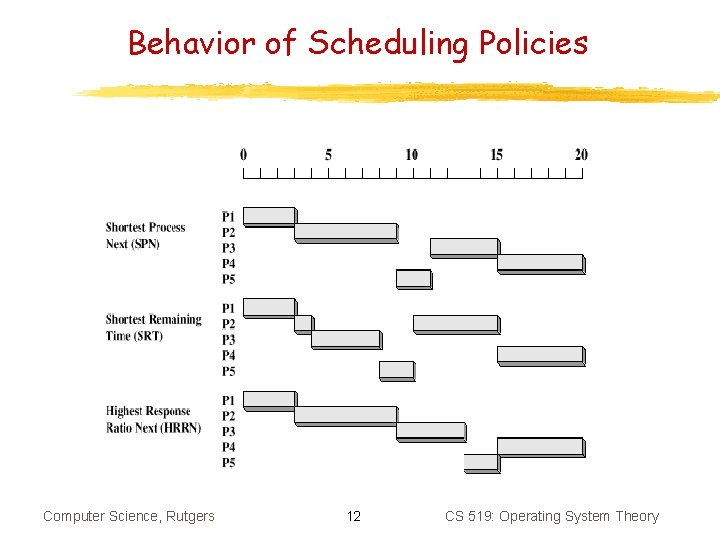

Behavior of Scheduling Policies (continued)

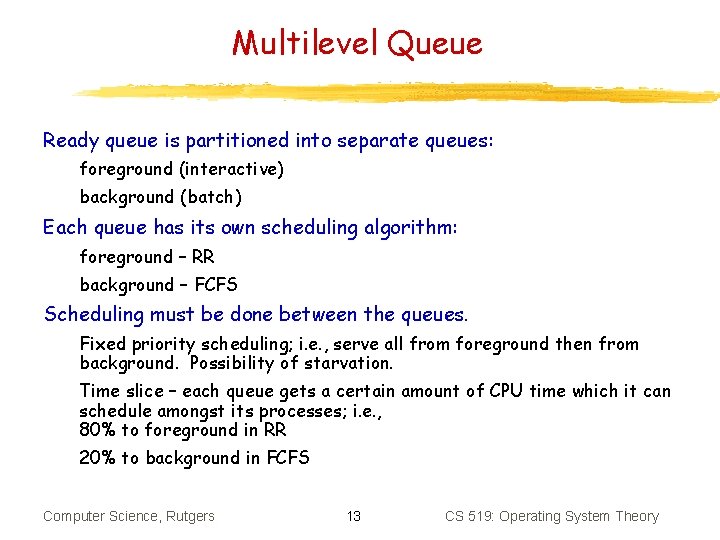

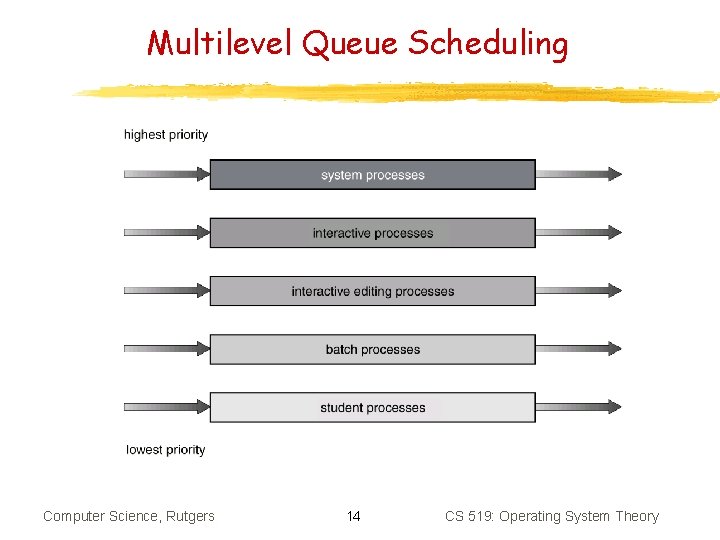

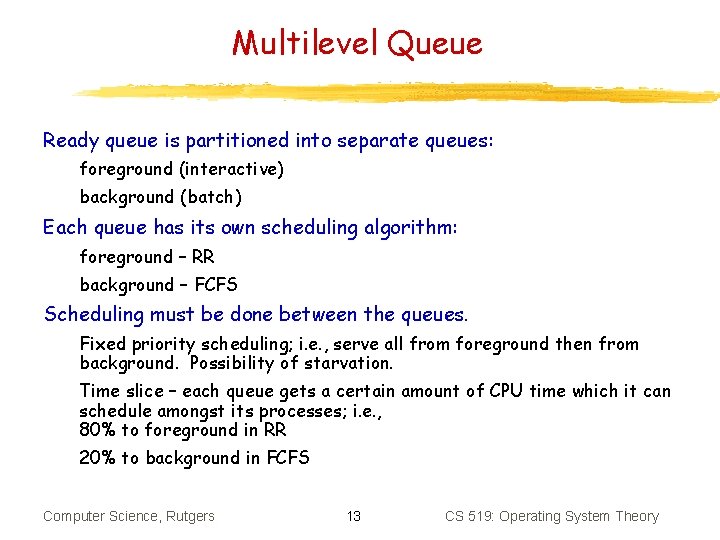

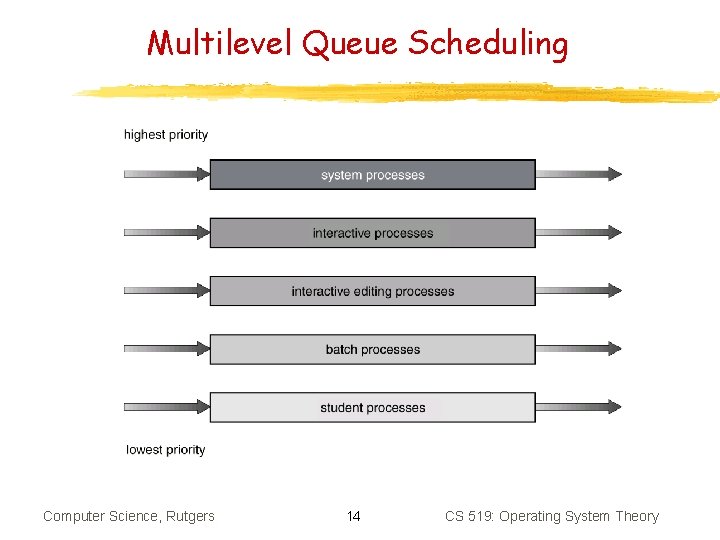

Multilevel Queue

- The ready queue is partitioned into separate queues: foreground (interactive) and background (batch).
- Each queue has its own scheduling algorithm: RR for foreground, FCFS for background.
- Scheduling must also be done between the queues:
  - Fixed-priority scheduling: serve all jobs from foreground, then from background. This can starve background jobs.
  - Time slice: each queue gets a certain amount of CPU time that it can schedule among its processes, e.g., 80% to foreground in RR and 20% to background in FCFS.

Multilevel Queue Scheduling

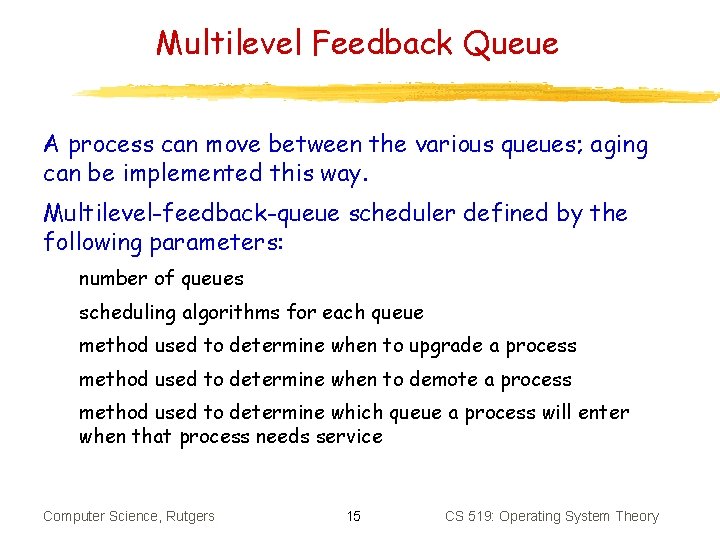

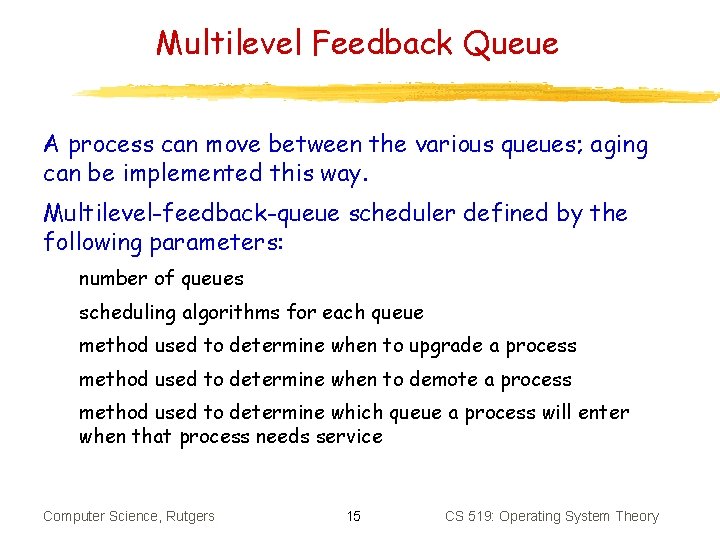

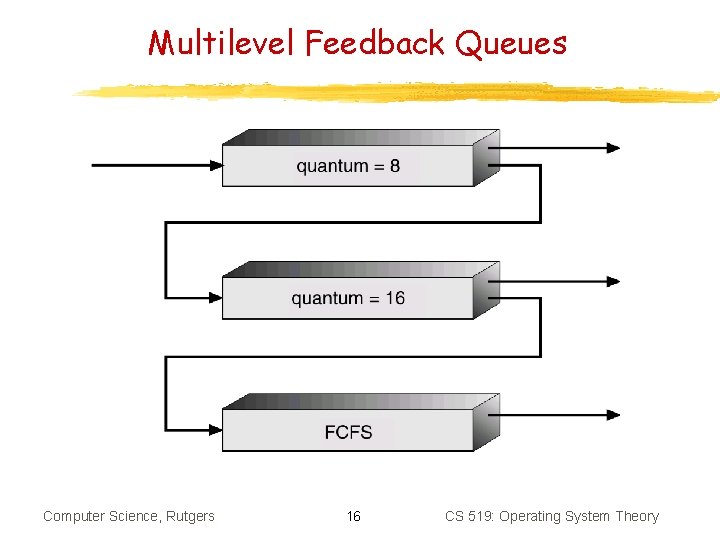

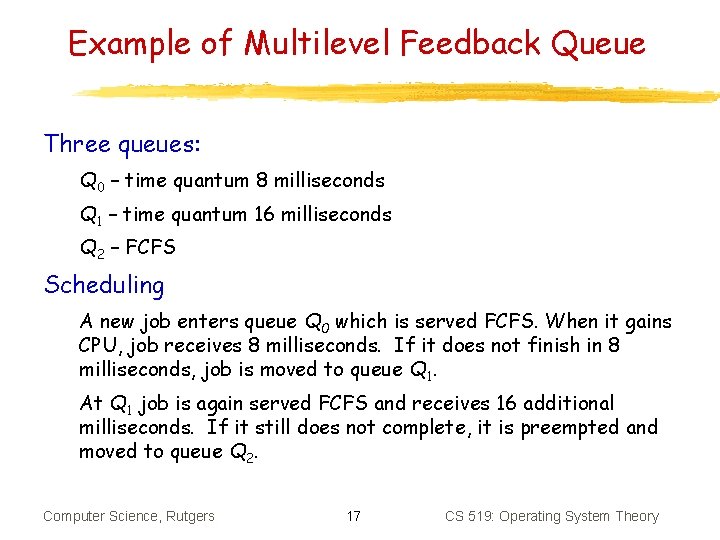

Multilevel Feedback Queue

A process can move between the various queues; aging can be implemented this way. A multilevel-feedback-queue scheduler is defined by the following parameters:

- the number of queues
- the scheduling algorithm for each queue
- the method used to determine when to upgrade a process
- the method used to determine when to demote a process
- the method used to determine which queue a process enters when it needs service

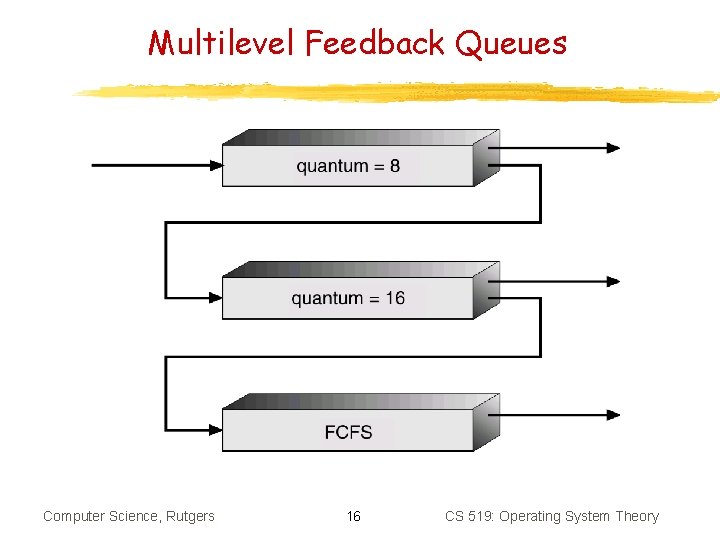

Multilevel Feedback Queues

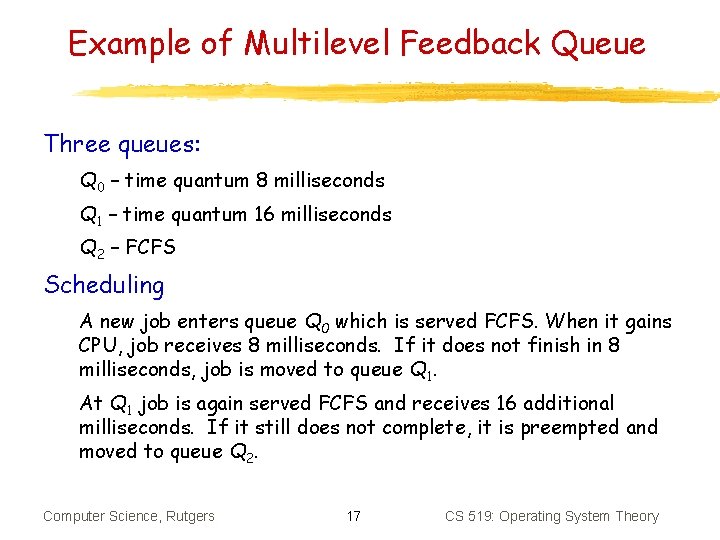

Example of Multilevel Feedback Queue

Three queues:

- Q0: time quantum of 8 milliseconds
- Q1: time quantum of 16 milliseconds
- Q2: FCFS

Scheduling:

- A new job enters queue Q0, which is served FCFS. When it gains the CPU, the job receives 8 milliseconds. If it does not finish in 8 milliseconds, it is moved to queue Q1.
- At Q1 the job is again served FCFS and receives 16 additional milliseconds. If it still does not complete, it is preempted and moved to queue Q2.
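The three-queue example can be sketched as a small simulation. This is a simplified illustration, not a full scheduler: it assumes every job arrives at time 0 and never blocks for I/O, so a higher queue never needs to preempt a lower one. The function name `run_mlfq` and the job names are hypothetical.

```python
from collections import deque

# Quantum per queue in milliseconds; None means run to completion (FCFS).
QUANTA = [8, 16, None]


def run_mlfq(jobs):
    """jobs: dict name -> CPU time needed (ms); all jobs arrive at time 0.

    Returns dict name -> (finish_time_ms, index_of_queue_where_it_finished).
    """
    queues = [deque() for _ in QUANTA]
    for name, need in jobs.items():
        queues[0].append((name, need))  # every new job enters Q0

    clock = 0
    done = {}
    while any(queues):
        # Always serve the highest-priority (lowest-index) non-empty queue.
        level = next(i for i, q in enumerate(queues) if q)
        name, remaining = queues[level].popleft()
        quantum = QUANTA[level]
        run = remaining if quantum is None else min(quantum, remaining)
        clock += run
        remaining -= run
        if remaining == 0:
            done[name] = (clock, level)
        else:
            queues[level + 1].append((name, remaining))  # demote one level
    return done


result = run_mlfq({"short": 5, "medium": 20, "long": 40})
print(result)
```

A 5 ms job finishes in Q0, a 20 ms job finishes in Q1 (8 + 12 ms), and a 40 ms job uses 8 + 16 ms before completing in Q2.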

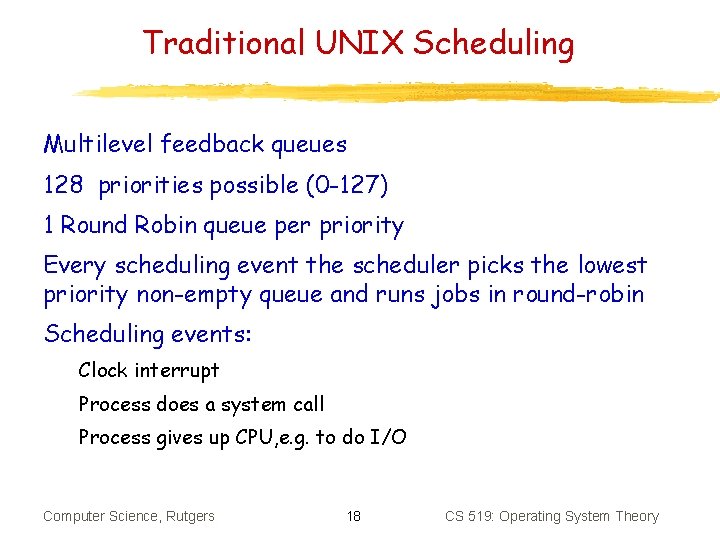

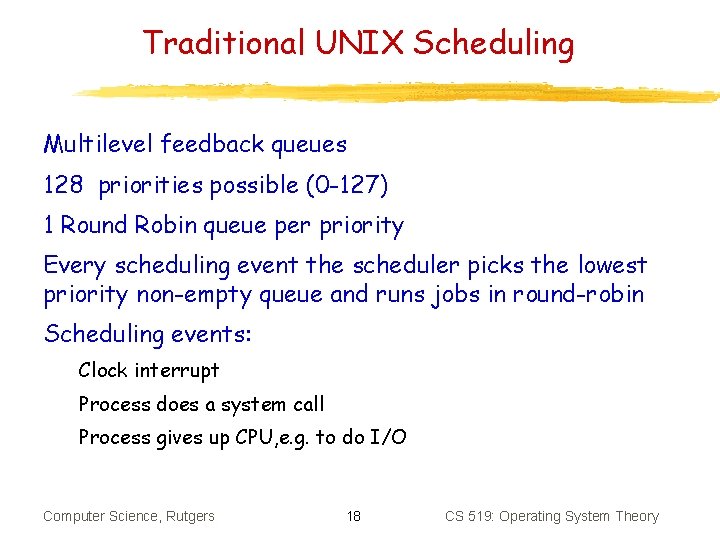

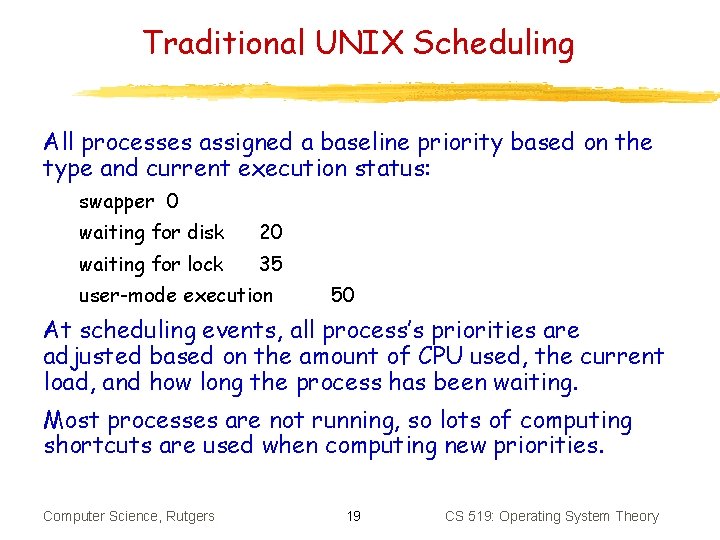

Traditional UNIX Scheduling

- Multilevel feedback queues.
- 128 priorities possible (0-127), with one round-robin queue per priority.
- At every scheduling event, the scheduler picks the lowest-numbered (that is, highest-priority) non-empty queue and runs its jobs in round-robin.
- Scheduling events:
  - Clock interrupt
  - Process makes a system call
  - Process gives up the CPU, e.g., to do I/O

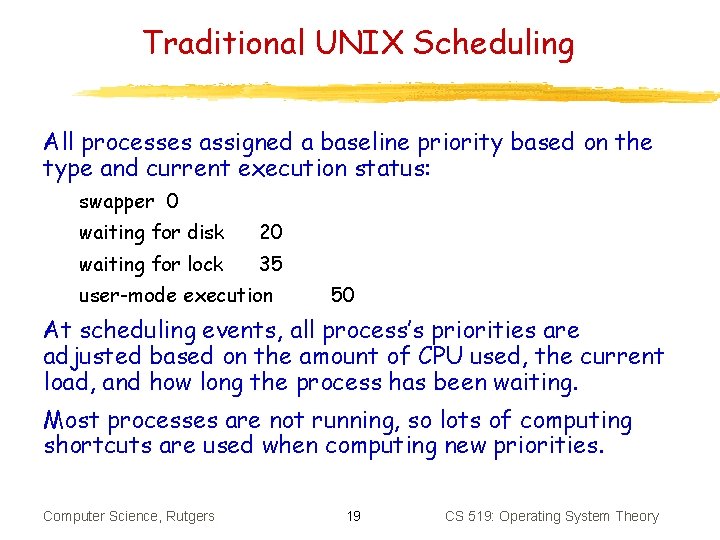

Traditional UNIX Scheduling

All processes are assigned a baseline priority based on their type and current execution status:

- swapper: 0
- waiting for disk: 20
- waiting for lock: 35
- user-mode execution: 50

At scheduling events, all processes' priorities are adjusted based on the amount of CPU used, the current load, and how long the process has been waiting. Most processes are not running, so many shortcuts are used when computing new priorities.

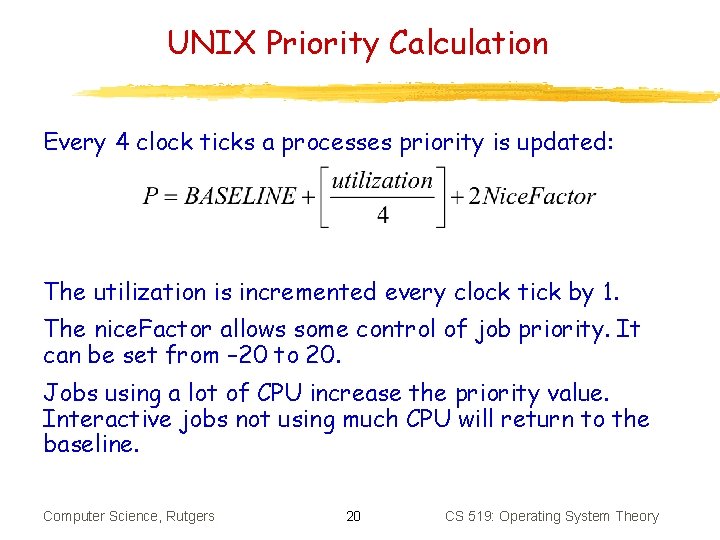

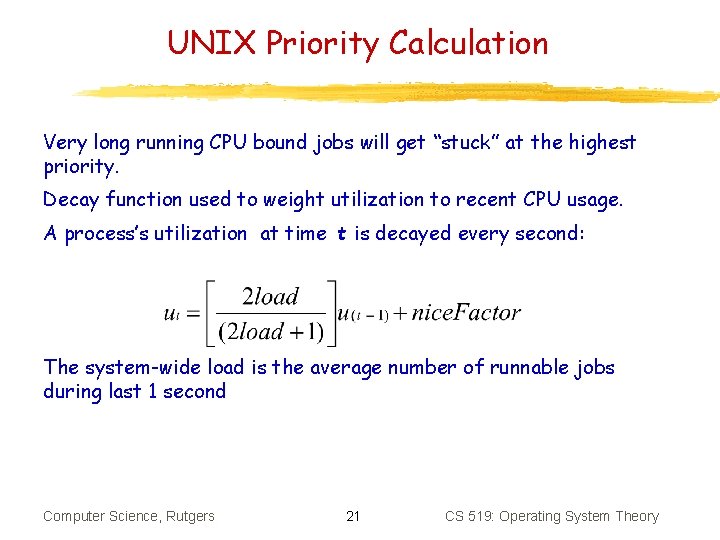

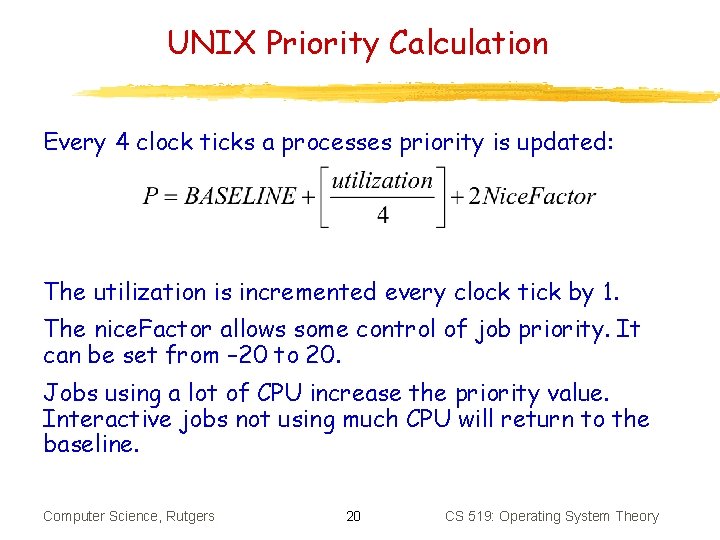

UNIX Priority Calculation

- Every 4 clock ticks a process's priority is updated (the formula appears on the slide image).
- The utilization is incremented by 1 every clock tick.
- The niceFactor allows some control of job priority. It can be set from -20 to 20.
- Jobs using a lot of CPU increase the priority value.
- Interactive jobs not using much CPU will return to the baseline.

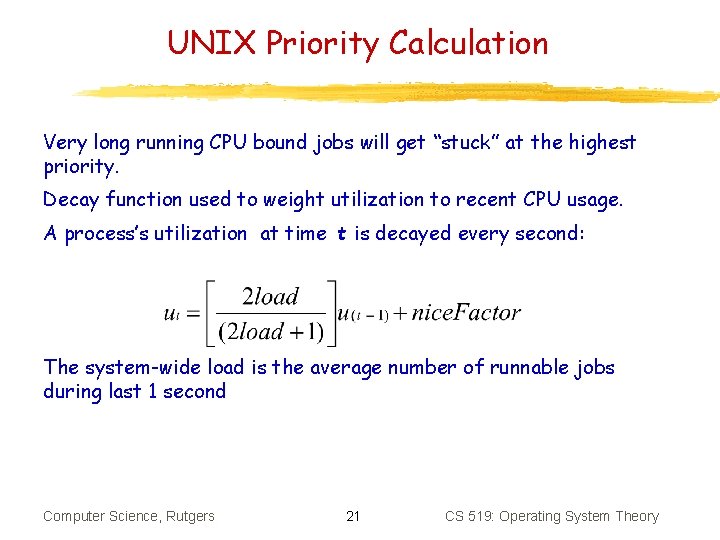

UNIX Priority Calculation

- Without correction, very long-running CPU-bound jobs would get "stuck" at the highest priority value (the least favored level).
- A decay function is used to weight utilization toward recent CPU usage.
- A process's utilization at time t is decayed every second (the formula appears on the slide image).
- The system-wide load is the average number of runnable jobs during the last 1 second.

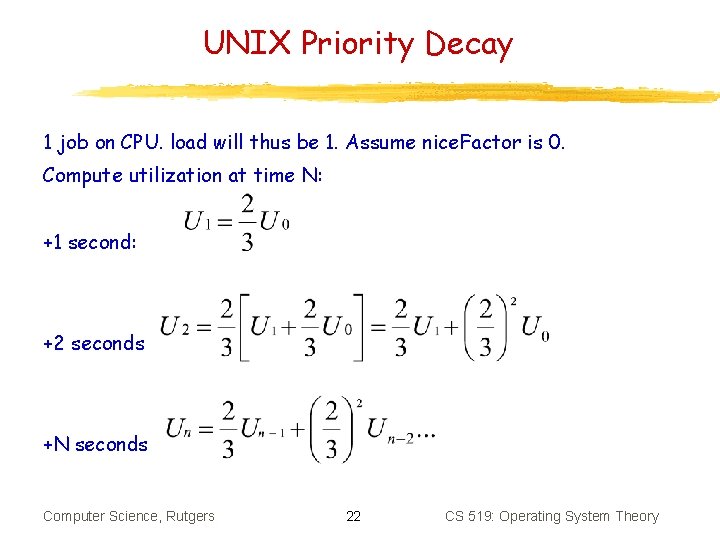

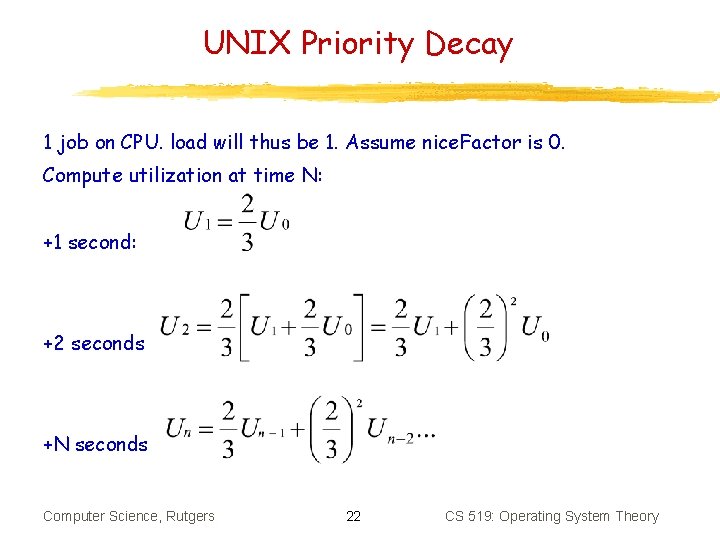

UNIX Priority Decay

- 1 job on the CPU, so the load is 1.
- Assume niceFactor is 0.
- Compute the utilization at time N by applying the decay at +1 second, +2 seconds, ..., +N seconds (the worked expressions appear on the slide image).
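The priority and decay formulas are not reproduced in the text here, so the following sketch assumes the 4.3BSD forms, which match the baseline of 50 and the "every 4 ticks" wording: priority = baseline + utilization/4 + 2·niceFactor, and a once-per-second decay of utilization by 2·load / (2·load + 1) plus niceFactor. Treat both formulas and all function names as assumptions rather than as the exact content of the slides. The sketch shows only how utilization accumulated earlier fades; ticks added while a job keeps running would come on top.

```python
BASELINE_USER = 50  # user-mode baseline priority from the list of baselines


def priority(utilization, nice=0, baseline=BASELINE_USER):
    """Priority value (assumed 4.3BSD form); a larger value is less favored."""
    return baseline + utilization / 4 + 2 * nice


def decay(utilization, load, nice=0):
    """One once-per-second decay step (assumed 4.3BSD form)."""
    return (2 * load) / (2 * load + 1) * utilization + nice


def utilization_after(u0, seconds, load=1, nice=0):
    """Apply the decay step `seconds` times, starting from utilization u0."""
    u = u0
    for _ in range(seconds):
        u = decay(u, load, nice)
    return u


# With load = 1 and niceFactor = 0, each second keeps 2/3 of the old value.
for n in range(6):
    u = utilization_after(243, n)
    print(n, round(u, 2), round(priority(u), 2))
```

Under these assumptions, with a load of 1 the utilization shrinks by a factor of 2/3 per second, so a job that stops using the CPU drifts back toward its baseline priority within a few seconds.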

Scheduling Algorithms

- FIFO is simple but leads to poor average response times: short processes are delayed by long processes that arrive before them.
- RR eliminates this problem, but favors CPU-bound jobs, which have longer CPU bursts than I/O-bound jobs.
- SJN, SRT, and HRRN alleviate the problem with FIFO, but require information on the length of each process. This information is not always available (although it can sometimes be approximated based on past history or user input).
- Feedback is a way of alleviating the problem with FIFO without information on process length.
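The differences among the non-preemptive policies can be checked on the example job set from earlier in the deck. The sketch below is an illustration under stated assumptions: service times are known exactly in advance, SJN picks the ready job with the shortest service time, and HRRN picks the ready job with the highest response ratio, (waiting time + service time) / service time. SRT and RR are preemptive and are not modeled. All function names are hypothetical.

```python
# name: (arrival_time, service_time), the example job set from earlier.
JOBS = {"1": (0, 3), "2": (2, 6), "3": (4, 4), "4": (6, 5), "5": (8, 2)}


def run_nonpreemptive(jobs, choose):
    """Simulate a non-preemptive policy; returns {name: turnaround_time}.

    choose(ready, clock, jobs) returns the name of the next job to run.
    """
    pending = dict(jobs)
    clock = 0
    turnaround = {}
    while pending:
        ready = [n for n, (arrival, _) in pending.items() if arrival <= clock]
        if not ready:
            # Nothing has arrived yet: idle until the next arrival.
            clock = min(arrival for arrival, _ in pending.values())
            continue
        name = choose(ready, clock, jobs)
        arrival, service = pending.pop(name)
        clock += service
        turnaround[name] = clock - arrival
    return turnaround


def fifo(ready, clock, jobs):
    return min(ready, key=lambda n: jobs[n][0])  # earliest arrival


def sjn(ready, clock, jobs):
    return min(ready, key=lambda n: jobs[n][1])  # shortest service time


def hrrn(ready, clock, jobs):
    # Response ratio = (waiting time + service time) / service time.
    return max(ready, key=lambda n: (clock - jobs[n][0] + jobs[n][1]) / jobs[n][1])


def mean_turnaround(policy):
    times = run_nonpreemptive(JOBS, policy)
    return sum(times.values()) / len(times)


for policy in (fifo, sjn, hrrn):
    print(policy.__name__, mean_turnaround(policy))
```

On this job set SJN lets the short process 5 jump ahead of processes 3 and 4, while HRRN lets it pass only process 4, so waiting jobs are not pushed back indefinitely.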

It’s a Changing World

- The assumption of a bimodal workload no longer holds: interactive continuous-media applications are sometimes processor-bound but still require good response times.
- The new computing model requires more flexibility:
  - How do we match the priorities of cooperating jobs, such as client/server jobs?
  - How do we balance execution between multiple threads of a single process?

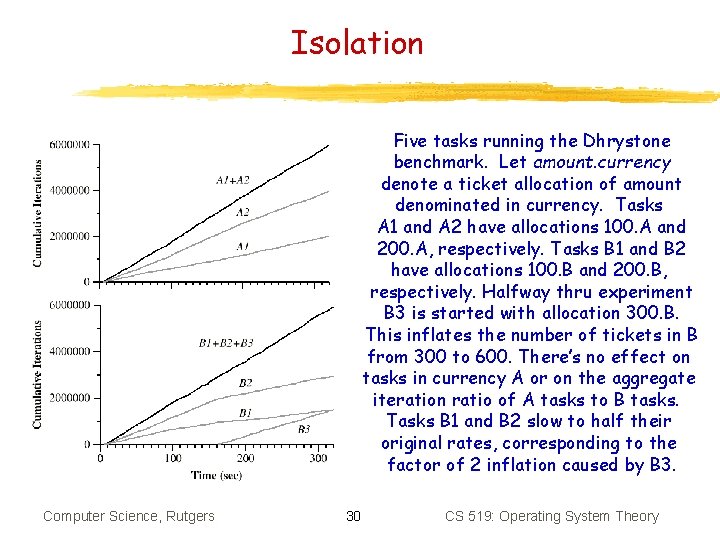

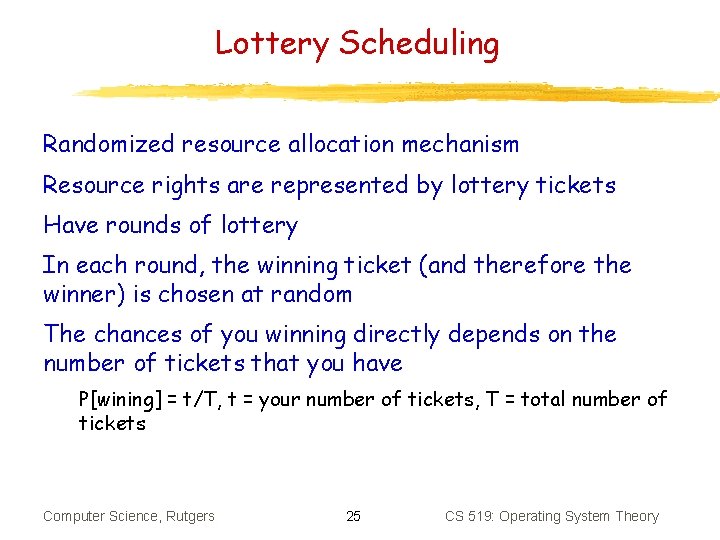

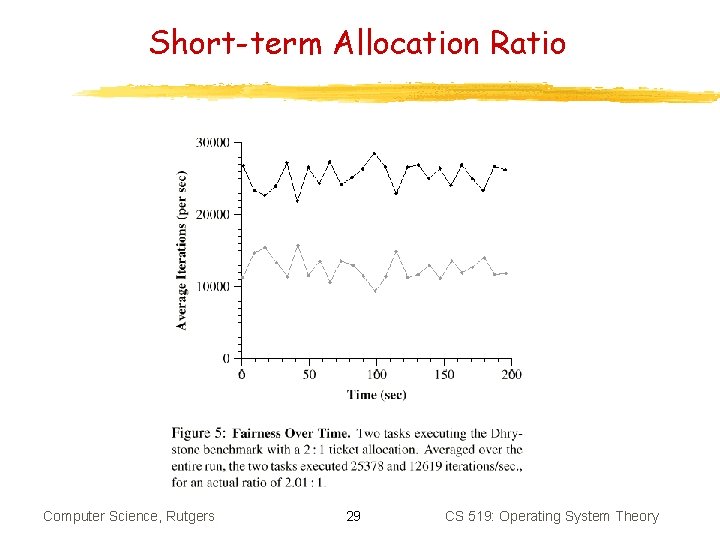

Lottery Scheduling

- A randomized resource allocation mechanism.
- Resource rights are represented by lottery tickets.
- Lotteries are held in rounds. In each round, the winning ticket (and therefore the winner) is chosen at random.
- Your chance of winning depends directly on the number of tickets you hold: P[winning] = t/T, where t is your number of tickets and T is the total number of tickets.

![Lottery Scheduling slide: expected number of wins after n rounds](https://slidetodoc.com/presentation_image_h2/bd987e9c1b22be1a05f60fe0c4cd5608/image-26.jpg)

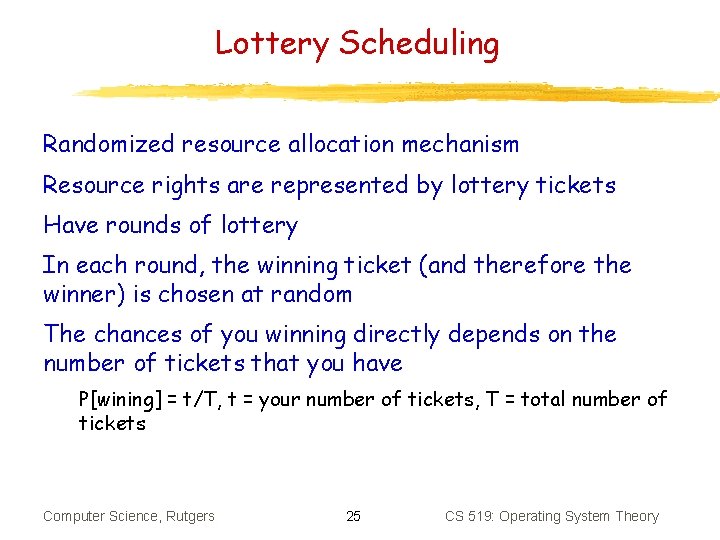

Lottery Scheduling

- After n rounds, your expected number of wins is E[win] = n · P[winning].
- The expected number of lotteries that a client must wait before its first win is E[wait] = 1/P[winning].
- Lottery scheduling implements proportional-share resource management.
- Ticket currencies allow isolation between users, processes, and threads.
- OK, so how do we actually schedule the processor using lottery scheduling?
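One simple way to hold a lottery is to draw a ticket number uniformly from [0, T) and walk the client list until the running ticket count passes it. The sketch below is a minimal illustration of that idea, not the implementation from the lottery scheduling paper; the function names and the example ticket counts are assumptions.

```python
import random


def holder_of(tickets, winning_ticket):
    """Map a ticket number in [0, T) to the client that holds it."""
    for client, count in tickets.items():
        if winning_ticket < count:
            return client
        winning_ticket -= count
    raise ValueError("winning ticket out of range")


def hold_lottery(tickets, rng):
    """Draw one winner; each client wins with probability t/T."""
    total = sum(tickets.values())
    return holder_of(tickets, rng.randrange(total))


def expected_wins(tickets, client, rounds):
    """E[win] = n * P[winning]."""
    return rounds * tickets[client] / sum(tickets.values())


def expected_wait(tickets, client):
    """E[wait] = 1 / P[winning]."""
    return sum(tickets.values()) / tickets[client]


tickets = {"A": 75, "B": 25}
rng = random.Random(519)  # fixed seed so repeated runs draw the same winners
wins = {"A": 0, "B": 0}
for _ in range(1000):
    wins[hold_lottery(tickets, rng)] += 1
print(wins, expected_wins(tickets, "B", 1000), expected_wait(tickets, "B"))
```

The observed win counts fluctuate around the expected values, which is why short-term allocation ratios are noisier than long-term ones.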

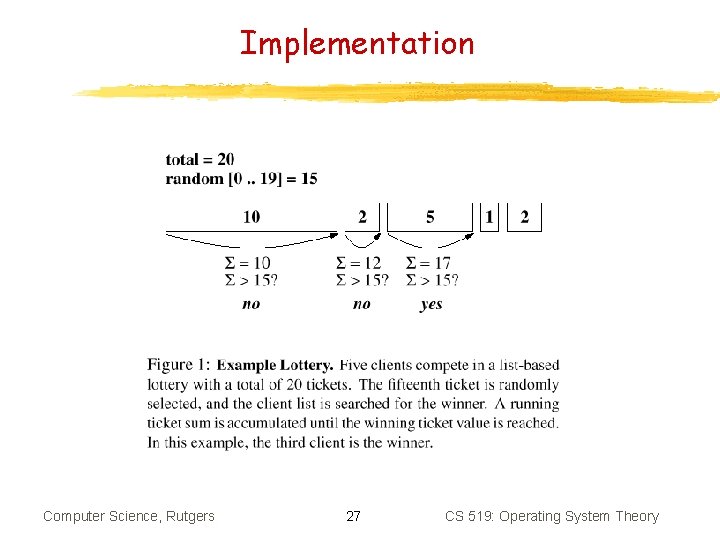

Implementation

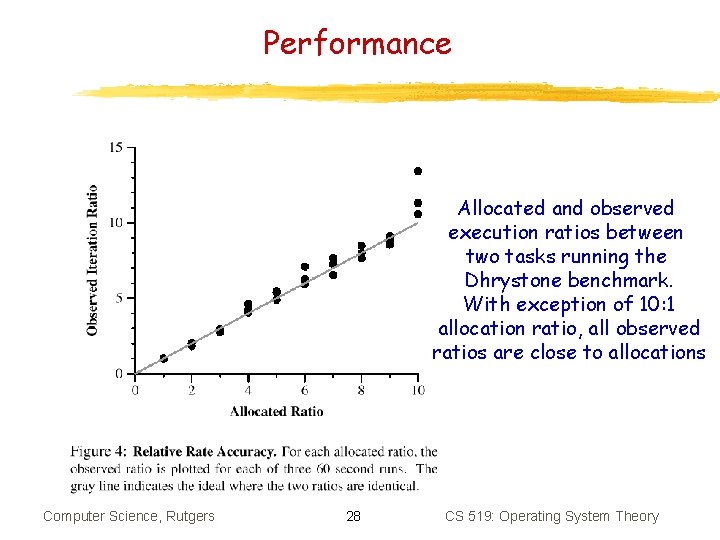

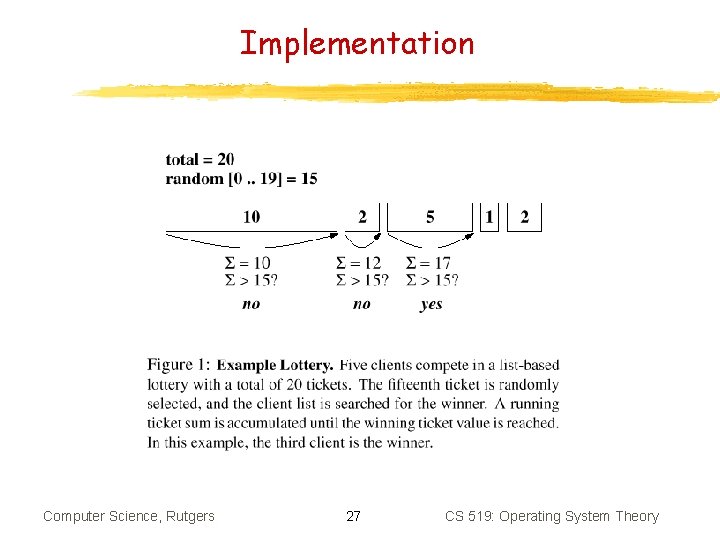

Performance

Allocated and observed execution ratios between two tasks running the Dhrystone benchmark. With the exception of the 10:1 allocation ratio, all observed ratios are close to their allocations.

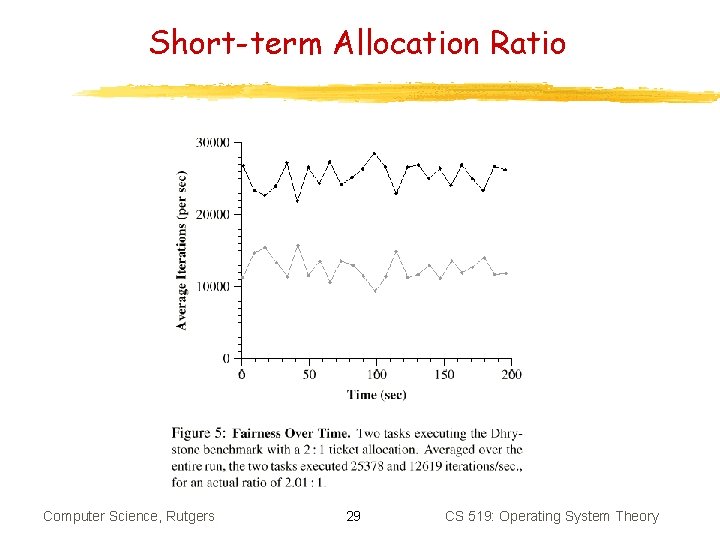

Short-term Allocation Ratio

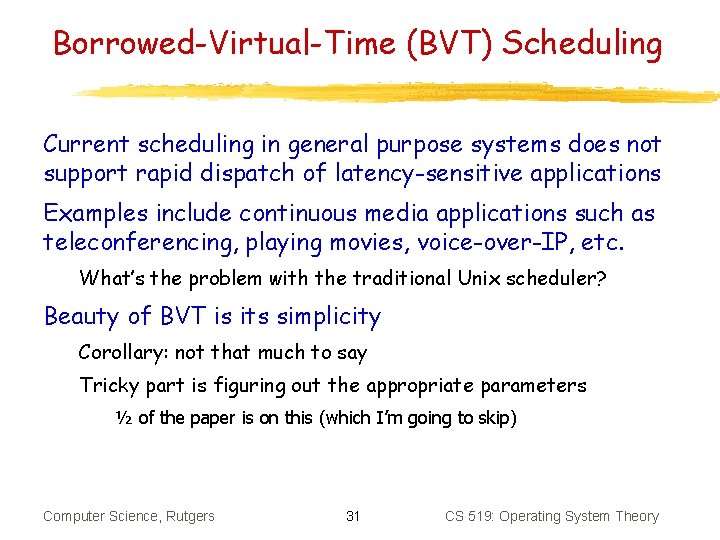

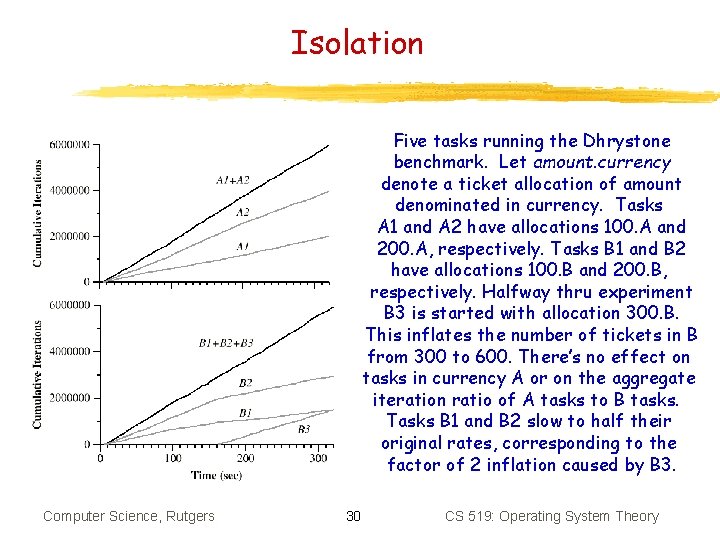

Isolation

- Five tasks running the Dhrystone benchmark.
- Let amount.currency denote a ticket allocation of amount denominated in currency.
- Tasks A1 and A2 have allocations 100.A and 200.A, respectively.
- Tasks B1 and B2 have allocations 100.B and 200.B, respectively.
- Halfway through the experiment, B3 is started with allocation 300.B. This inflates the number of tickets in B from 300 to 600.
- There is no effect on tasks in currency A or on the aggregate iteration ratio of A tasks to B tasks.
- Tasks B1 and B2 slow to half their original rates, corresponding to the factor-of-2 inflation caused by B3.
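The isolation result follows from how currency-denominated tickets convert to base tickets. The sketch below assumes, purely for illustration, that each currency is funded with 1000 base tickets; the slide does not give the funding amounts, and `base_value` is a hypothetical helper.

```python
def base_value(funding, allocations):
    """Convert allocations in one currency into base-ticket values.

    funding: base tickets backing the currency (an assumed figure).
    allocations: dict task -> amount denominated in that currency.
    """
    issued = sum(allocations.values())
    return {task: funding * amount / issued for task, amount in allocations.items()}


FUNDING = 1000  # assumption: currencies A and B each hold 1000 base tickets

a_tasks = base_value(FUNDING, {"A1": 100, "A2": 200})
b_before = base_value(FUNDING, {"B1": 100, "B2": 200})
b_after = base_value(FUNDING, {"B1": 100, "B2": 200, "B3": 300})

print(a_tasks)   # unaffected by anything that happens inside currency B
print(b_before)
print(b_after)   # B1 and B2 are worth half as much once B3 starts
```

Starting B3 doubles the tickets issued in currency B, so each existing B ticket is worth half as many base tickets, while the total backing B and everything in currency A stay the same.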

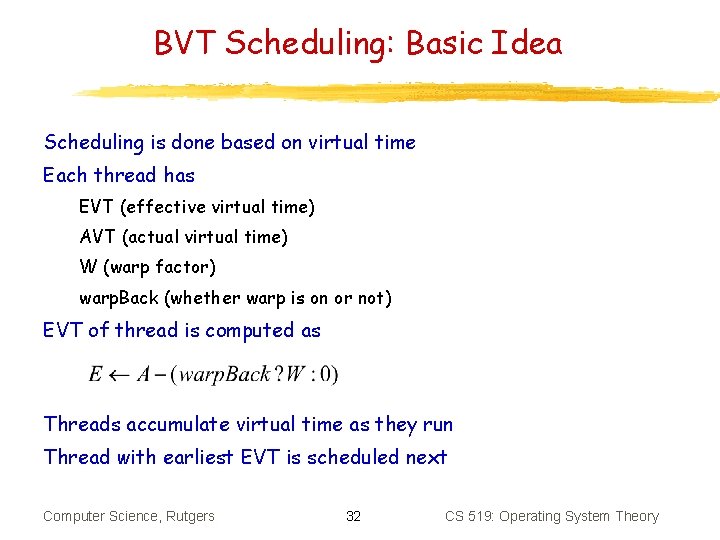

Borrowed-Virtual-Time (BVT) Scheduling

- Current scheduling in general-purpose systems does not support rapid dispatch of latency-sensitive applications. Examples include continuous-media applications such as teleconferencing, playing movies, and voice-over-IP.
- What is the problem with the traditional UNIX scheduler?
- The beauty of BVT is its simplicity. Corollary: there is not that much to say.
- The tricky part is figuring out the appropriate parameters. Half of the paper is on this (which I am going to skip).

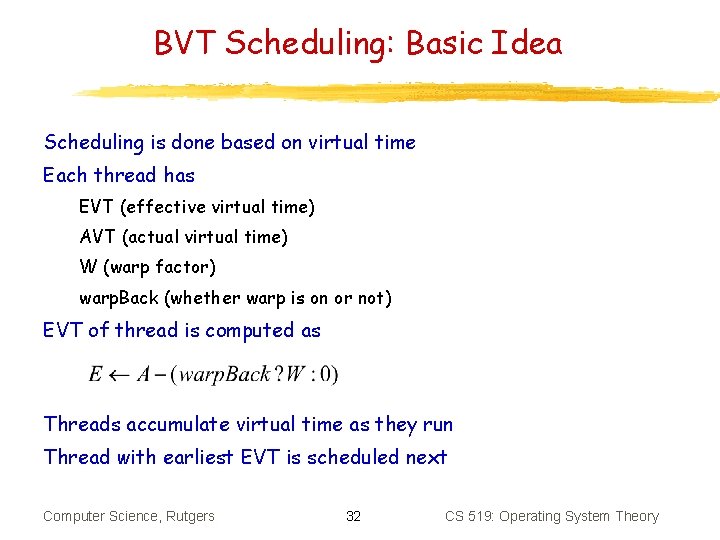

BVT Scheduling: Basic Idea

- Scheduling is done based on virtual time.
- Each thread has:
  - EVT (effective virtual time)
  - AVT (actual virtual time)
  - W (warp factor)
  - warpBack (whether warp is on or not)
- The EVT of a thread is computed from its AVT, W, and warpBack (the formula appears on the slide image).
- Threads accumulate virtual time as they run.
- The thread with the earliest EVT is scheduled next.

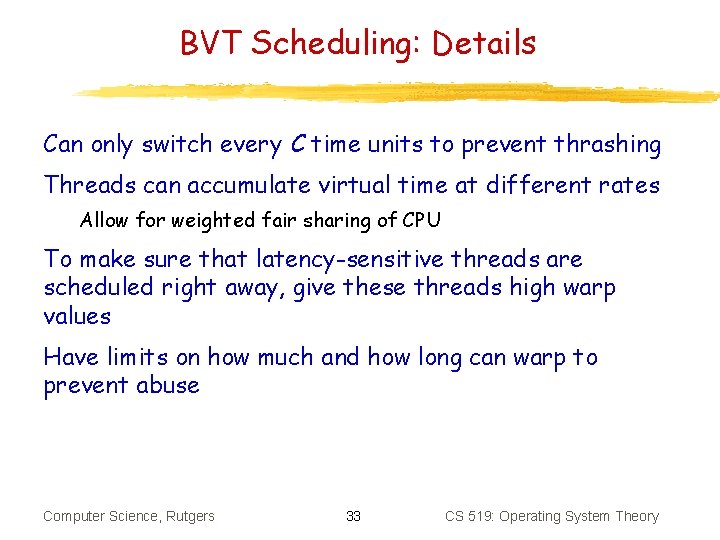

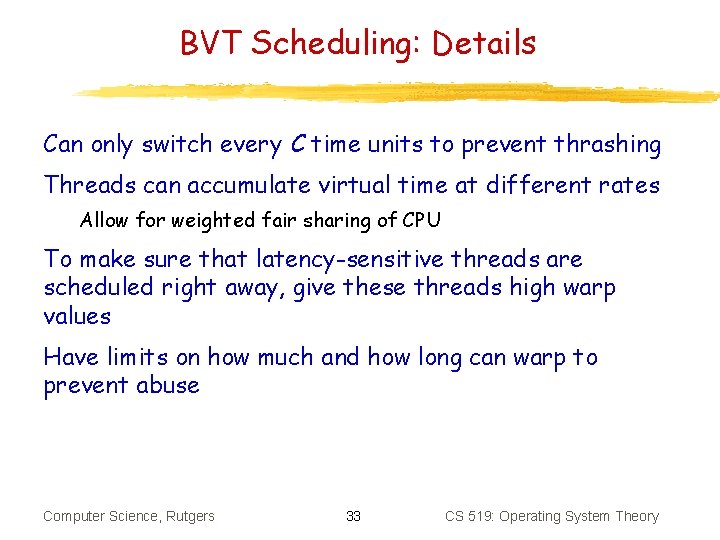

BVT Scheduling: Details

- The scheduler can only switch every C time units, to prevent thrashing.
- Threads can accumulate virtual time at different rates, which allows weighted fair sharing of the CPU.
- To make sure that latency-sensitive threads are scheduled right away, give these threads high warp values.
- There are limits on how much and for how long a thread can warp, to prevent abuse.
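The mechanism can be sketched in a few lines. This is a simplified illustration under stated assumptions: EVT is taken to be AVT minus W while warpBack is on (the form given in the BVT paper), a thread's virtual clock advances by real time divided by its weight, and the context-switch allowance C is approximated by making one decision per fixed-length slice. Warp limits are not modeled, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Thread:
    name: str
    weight: float            # CPU share; a larger weight gives a slower virtual clock
    avt: float = 0.0         # actual virtual time
    warp: float = 0.0        # W, the warp factor
    warp_back: bool = False  # whether warp is currently on

    @property
    def evt(self):
        """Effective virtual time: AVT, pulled back by W while warping."""
        return self.avt - (self.warp if self.warp_back else 0.0)


def pick_next(threads):
    """The thread with the earliest EVT runs next (ties go to the first listed)."""
    return min(threads, key=lambda t: t.evt)


def simulate(threads, slices, slice_len=1.0):
    """Run `slices` scheduling decisions and return the order of thread names."""
    order = []
    for _ in range(slices):
        thread = pick_next(threads)
        thread.avt += slice_len / thread.weight  # accumulate virtual time
        order.append(thread.name)
    return order


order = simulate([Thread("a", weight=2.0), Thread("b", weight=1.0)], slices=6)
print(order)
```

With weights 2 and 1, thread a receives twice as many slices as thread b. A thread that wakes with warp enabled gets an EVT earlier than its peers and is dispatched immediately, which is how latency-sensitive work jumps the queue without gaining long-term share.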

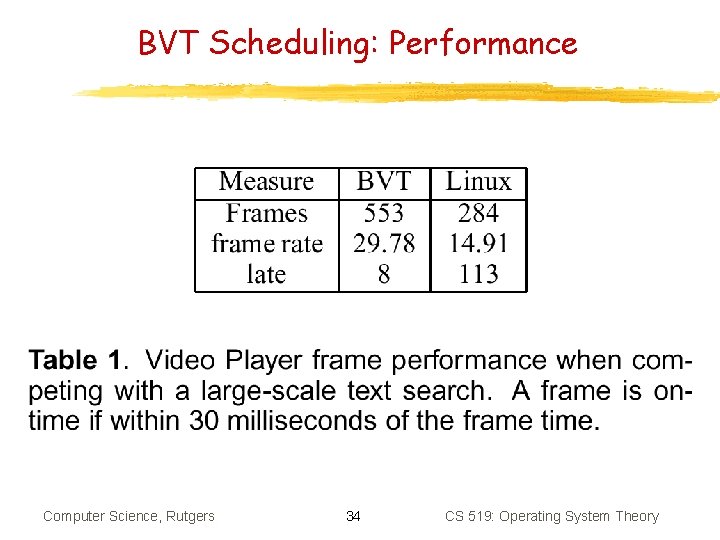

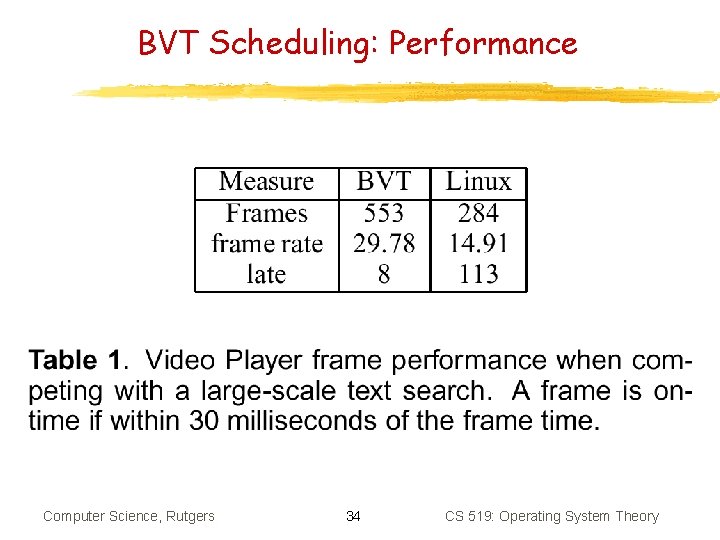

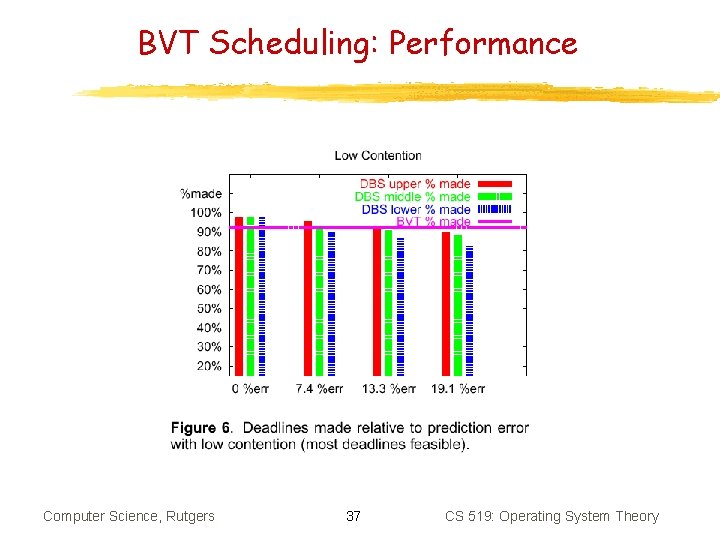

BVT Scheduling: Performance

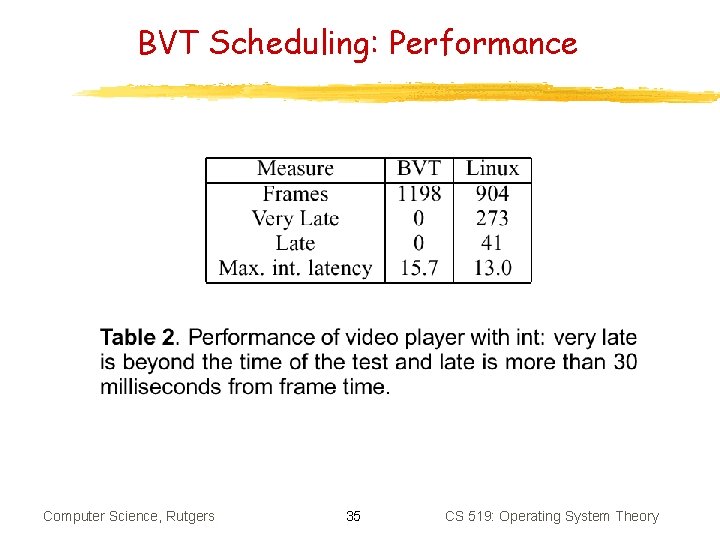

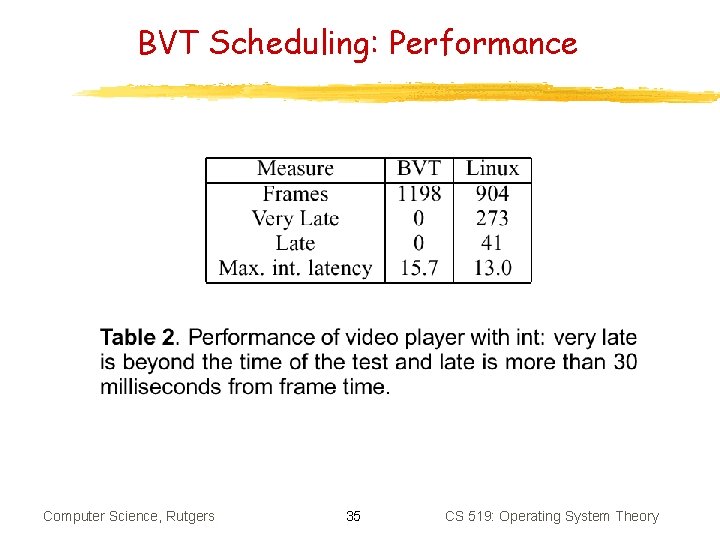

BVT Scheduling: Performance (continued)

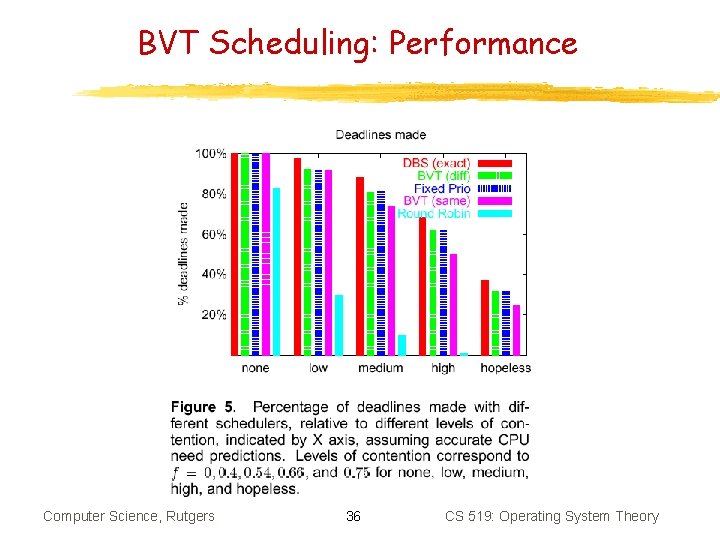

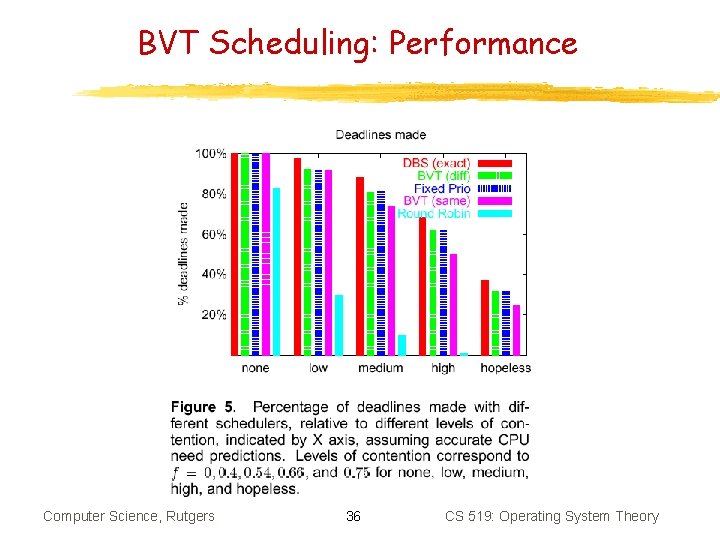

BVT Scheduling: Performance (continued)

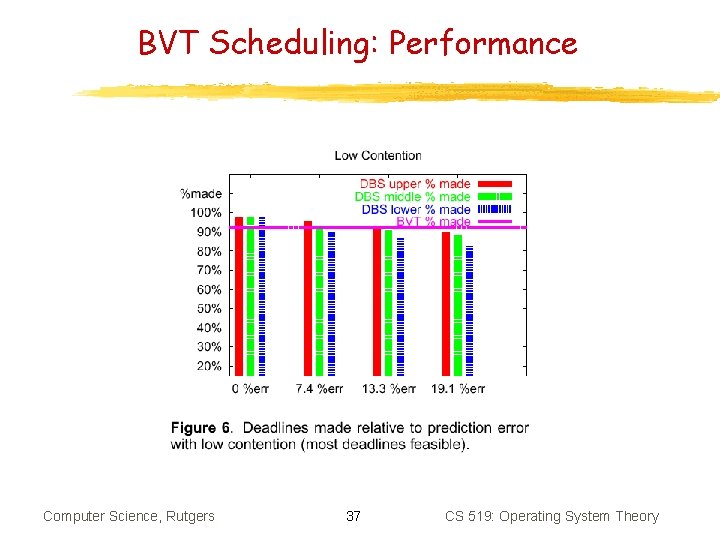

BVT Scheduling: Performance (continued)

BVT vs. Lottery

How do the two compare?

Parallel Processor Scheduling

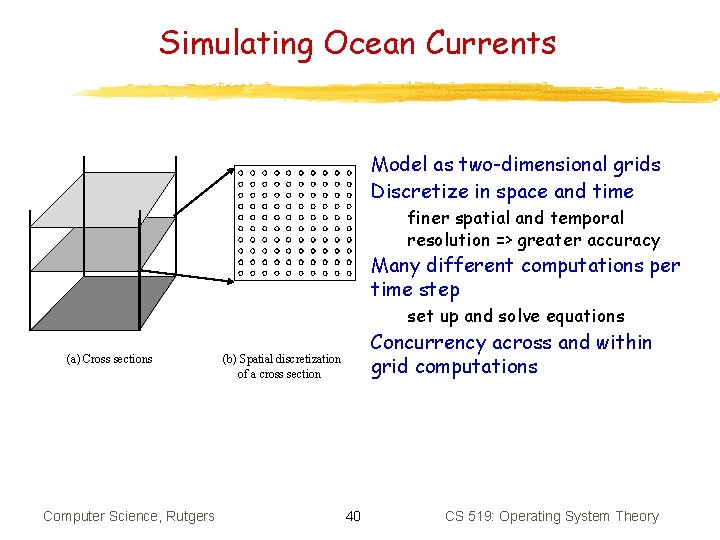

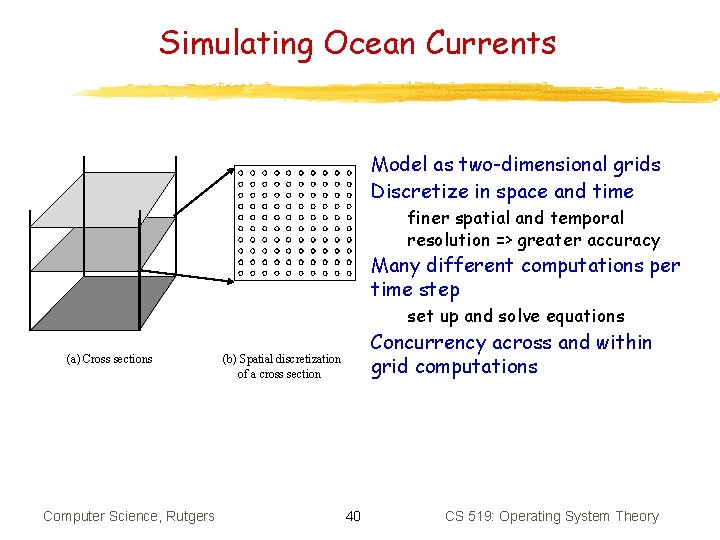

Simulating Ocean Currents

- Model as two-dimensional grids.
- Discretize in space and time: finer spatial and temporal resolution gives greater accuracy.
- Many different computations per time step: set up and solve equations.
- Concurrency across and within grid computations.

Figure panels: (a) cross sections; (b) spatial discretization of a cross section.

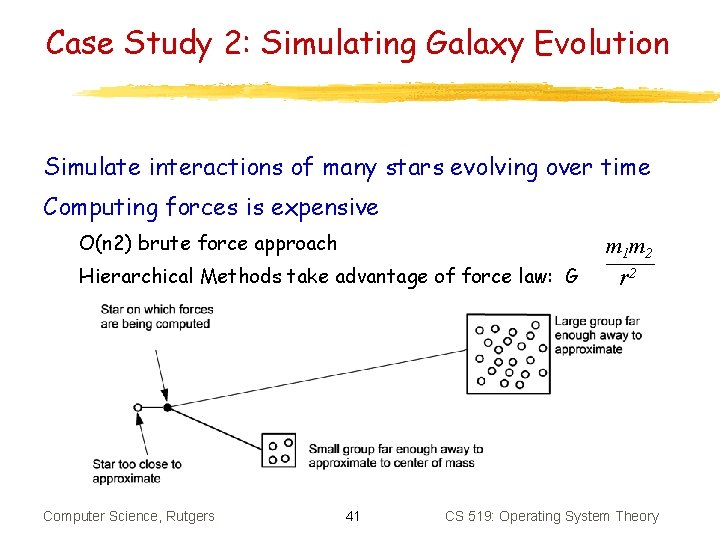

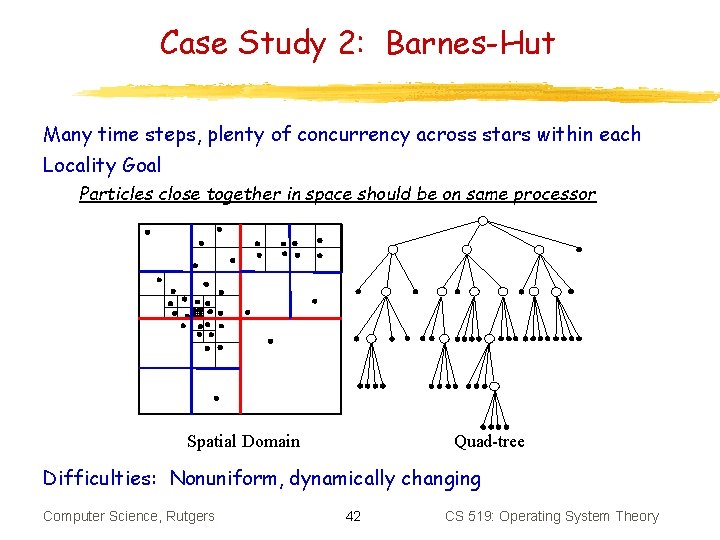

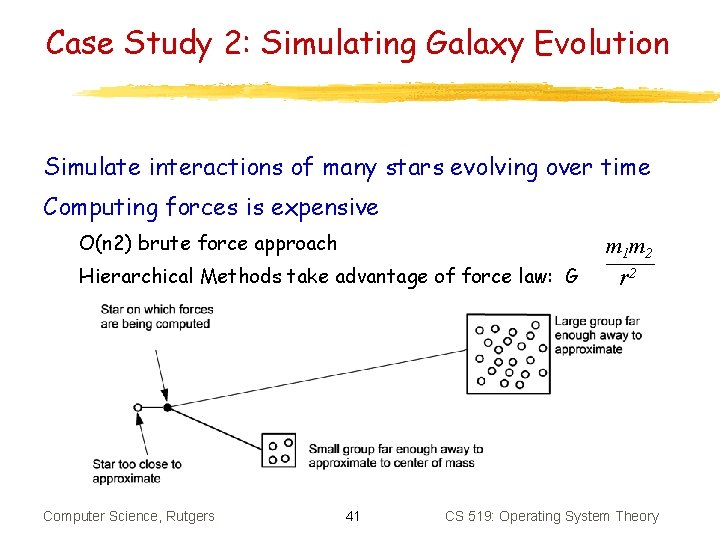

Case Study 2: Simulating Galaxy Evolution

- Simulate the interactions of many stars evolving over time.
- Computing forces is expensive: the brute-force approach is $O(n^2)$.
- Hierarchical methods take advantage of the force law: $G \frac{m_1 m_2}{r^2}$.
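The brute-force cost is easy to see in code: every pair of bodies contributes one force evaluation, giving n(n-1)/2 evaluations per time step. The sketch below is a two-dimensional illustration with hypothetical names; it is the baseline that hierarchical methods improve on, not Barnes-Hut itself.

```python
import math

G = 6.674e-11  # gravitational constant in N m^2 / kg^2


def pairwise_forces(bodies):
    """bodies: list of (mass, x, y). Returns the net [fx, fy] on each body."""
    n = len(bodies)
    forces = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        mi, xi, yi = bodies[i]
        for j in range(i + 1, n):      # visit each unordered pair once
            mj, xj, yj = bodies[j]
            dx, dy = xj - xi, yj - yi
            r = math.hypot(dx, dy)
            f = G * mi * mj / (r * r)  # magnitude from the force law
            fx, fy = f * dx / r, f * dy / r
            forces[i][0] += fx         # body i is pulled toward body j
            forces[i][1] += fy
            forces[j][0] -= fx         # equal and opposite force on body j
            forces[j][1] -= fy
    return forces


two_bodies = [(1.0, 0.0, 0.0), (1.0, 2.0, 0.0)]
print(pairwise_forces(two_bodies))
```

Because the force falls off as 1/r², a distant cluster of stars can be approximated by a single body at its center of mass, which is the observation hierarchical methods exploit.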

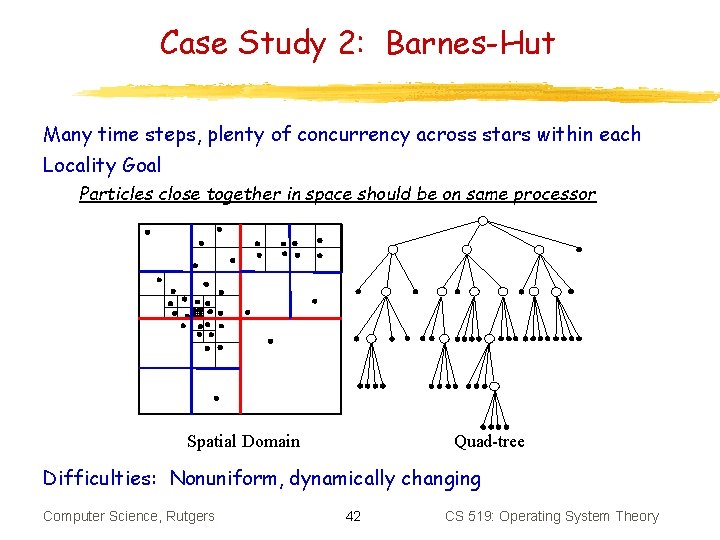

Case Study 2: Barnes-Hut

- Many time steps, with plenty of concurrency across stars within each step.
- Locality goal: particles close together in space should be on the same processor.
- Difficulties: the distribution is nonuniform and dynamically changing.

Figure panels: spatial domain; quad-tree.

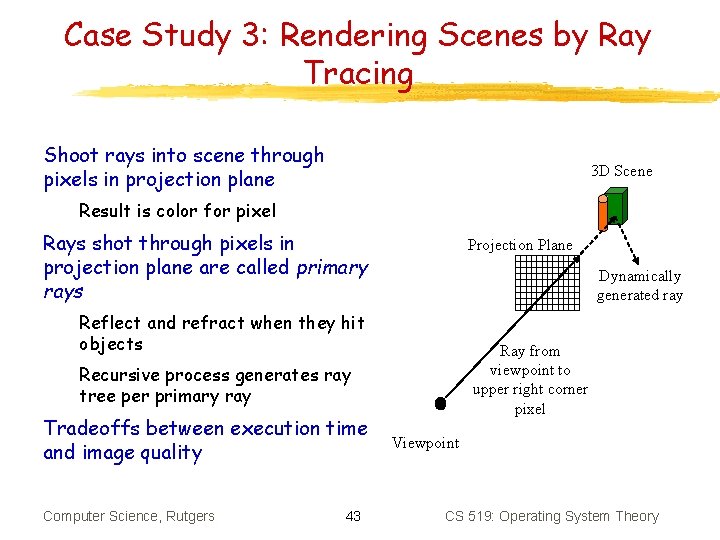

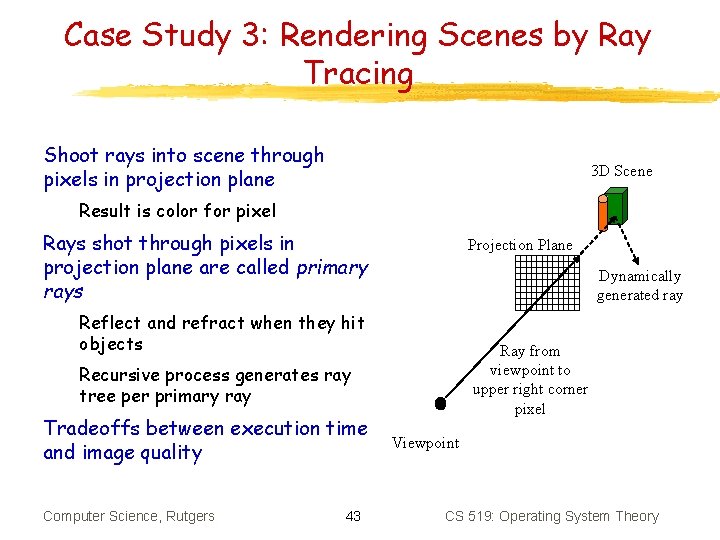

Case Study 3: Rendering Scenes by Ray Tracing

- Shoot rays into the scene through pixels in the projection plane; the result is a color for each pixel.
- Rays shot through pixels in the projection plane are called primary rays.
- Rays reflect and refract when they hit objects, dynamically generating new rays.
- The recursive process generates a ray tree per primary ray.
- There are tradeoffs between execution time and image quality.

Figure labels: viewpoint, projection plane, 3D scene, ray from the viewpoint to the upper-right corner pixel, dynamically generated ray.

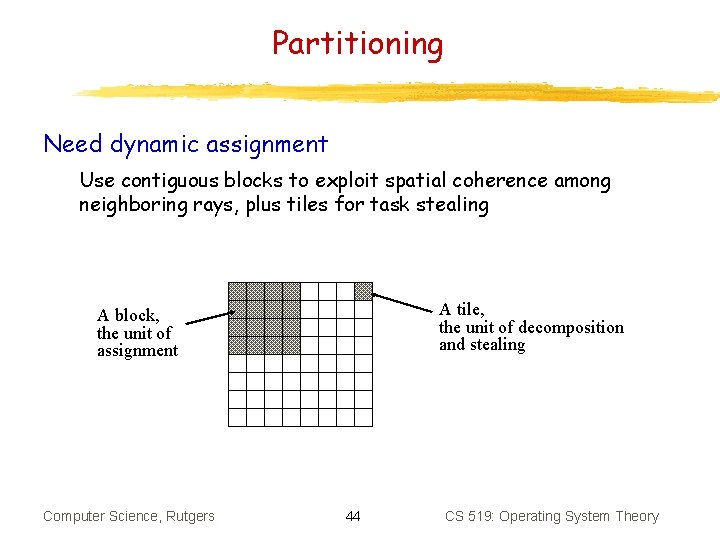

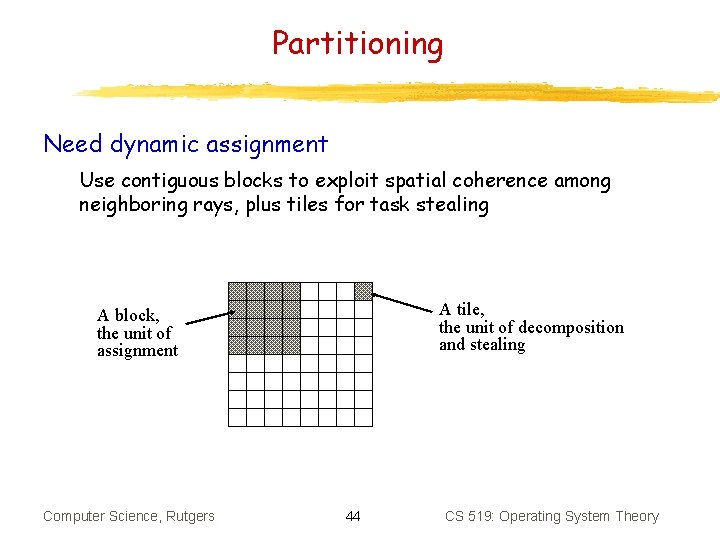

Partitioning

- Need dynamic assignment.
- Use contiguous blocks to exploit spatial coherence among neighboring rays, plus tiles for task stealing.
- A block is the unit of assignment; a tile is the unit of decomposition and stealing.
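The block-and-tile scheme can be sketched as follows. This is an illustration under simplifying assumptions: the image dimensions divide evenly among the blocks, each processor starts with one contiguous block, and an idle processor steals a single tile from whichever processor has the most tiles left. The names `partition` and `steal` and the victim-selection rule are hypothetical.

```python
def partition(width, height, blocks_x, blocks_y, tile):
    """Split an image into one contiguous block per processor, then into tiles.

    Returns {processor_id: [(x0, y0, x1, y1), ...]} with half-open pixel ranges.
    Assumes width and height divide evenly by blocks_x and blocks_y.
    """
    block_w, block_h = width // blocks_x, height // blocks_y
    assignment = {}
    for by in range(blocks_y):
        for bx in range(blocks_x):
            x_start, y_start = bx * block_w, by * block_h
            tiles = [
                (x, y, min(x + tile, x_start + block_w), min(y + tile, y_start + block_h))
                for y in range(y_start, y_start + block_h, tile)
                for x in range(x_start, x_start + block_w, tile)
            ]
            assignment[by * blocks_x + bx] = tiles
    return assignment


def steal(assignment, thief):
    """An idle processor takes one tile from the most heavily loaded processor."""
    victim = max(assignment, key=lambda p: len(assignment[p]))
    if victim == thief or not assignment[victim]:
        return None  # nothing left to steal
    return assignment[victim].pop()


work = partition(64, 64, blocks_x=2, blocks_y=2, tile=16)
print({proc: len(tiles) for proc, tiles in work.items()})
```

Assigning whole blocks keeps neighboring rays on the same processor, while the finer tile granularity gives idle processors something small to take when ray costs turn out to be uneven.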

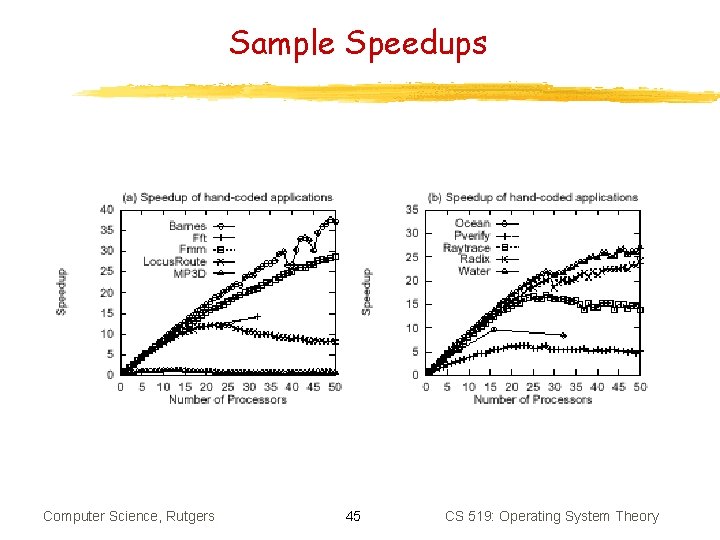

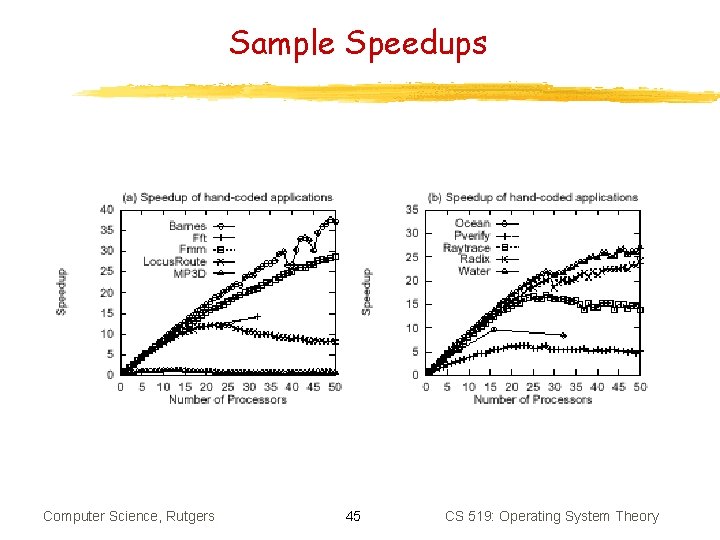

Sample Speedups

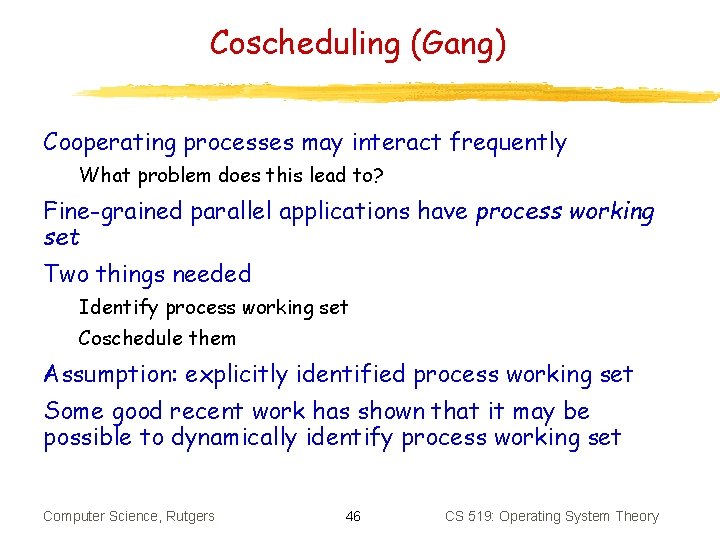

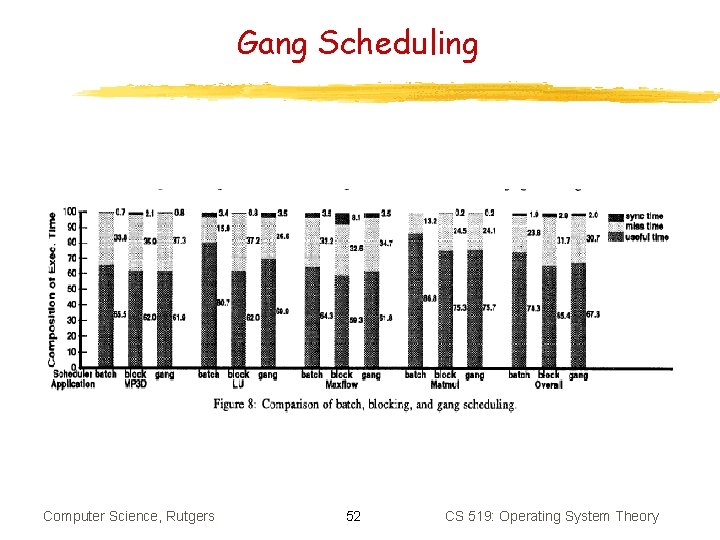

Coscheduling (Gang)

- Cooperating processes may interact frequently. What problem does this lead to?
- Fine-grained parallel applications have a process working set.
- Two things are needed:
  - Identify the process working set.
  - Coschedule its members.
- Assumption: the process working set is explicitly identified.
- Some good recent work has shown that it may be possible to identify the process working set dynamically.

Coscheduling

- What is coscheduling? Coordinating across nodes to make sure that processes belonging to the same process working set are scheduled simultaneously.
- How might we do this?

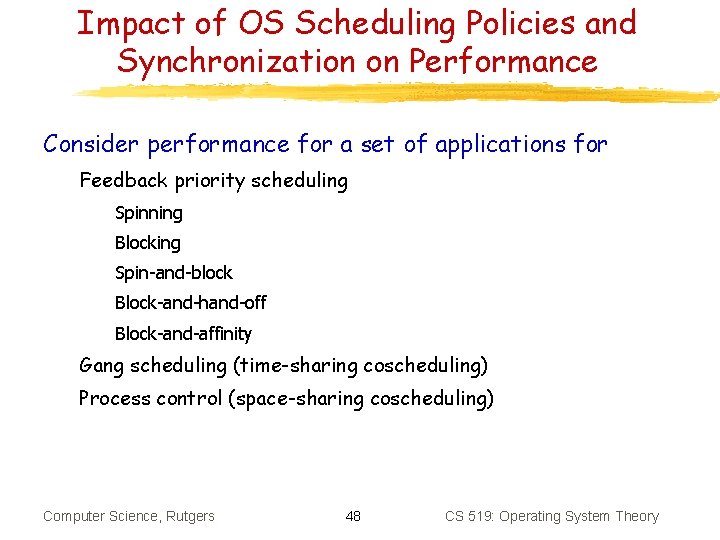

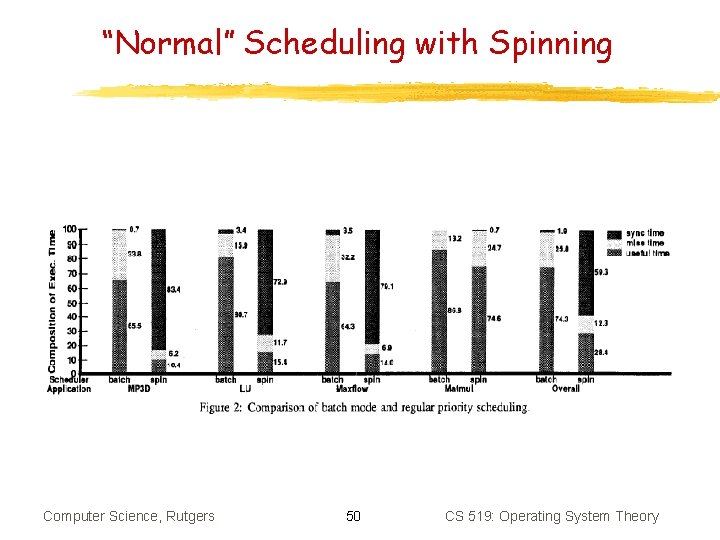

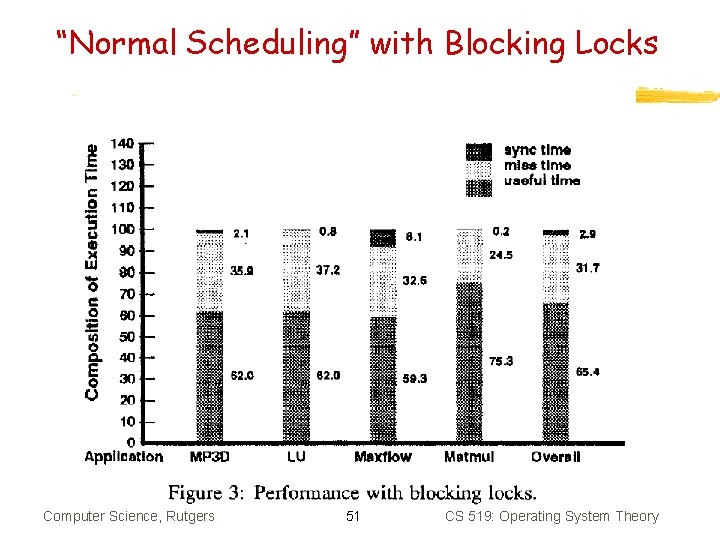

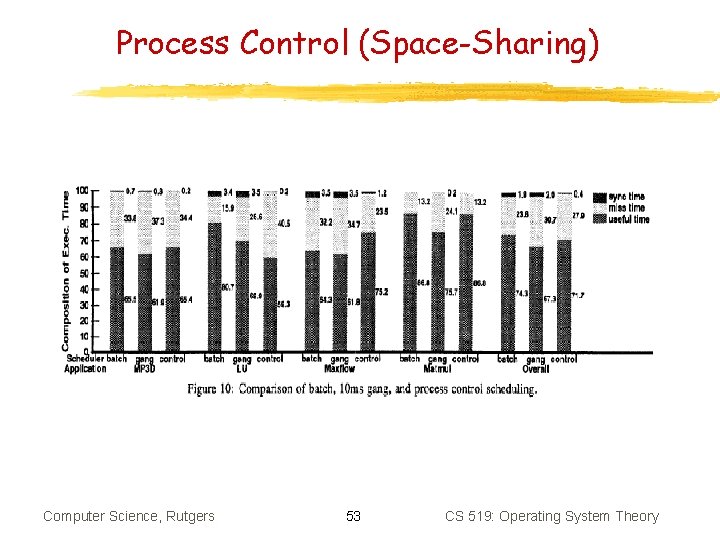

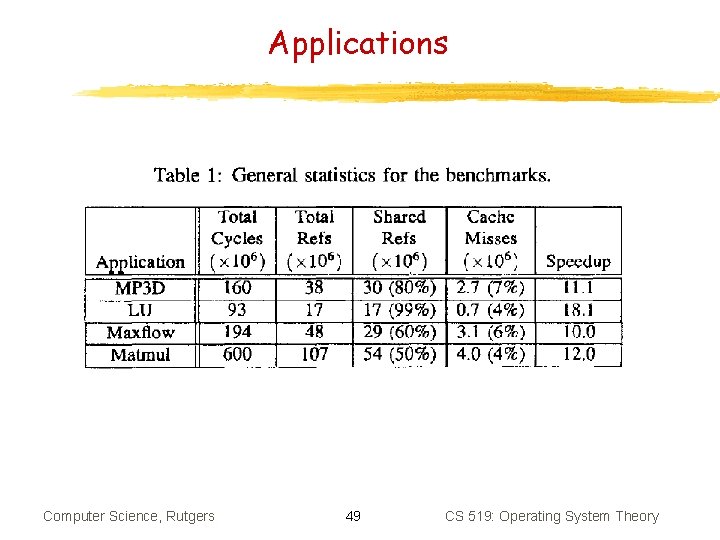

Impact of OS Scheduling Policies and Synchronization on Performance

Consider performance for a set of applications under:

- Feedback priority scheduling, with each of these synchronization strategies:
  - Spinning
  - Blocking
  - Spin-and-block
  - Block-and-hand-off
  - Block-and-affinity
- Gang scheduling (time-sharing coscheduling)
- Process control (space-sharing coscheduling)

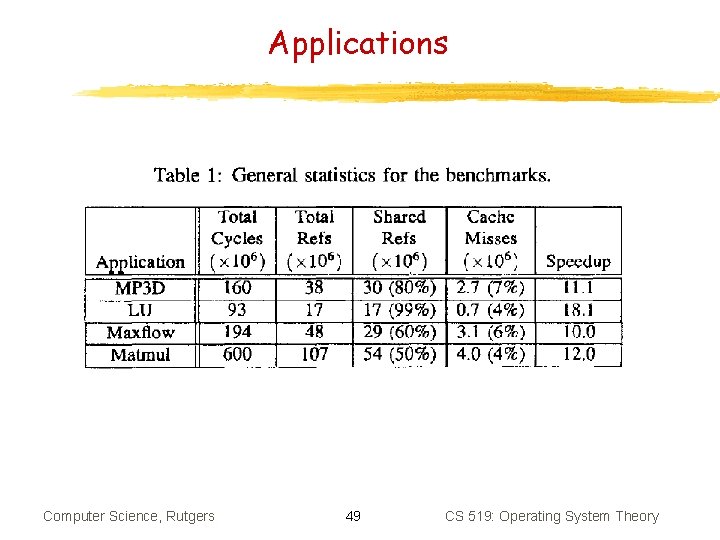

Applications

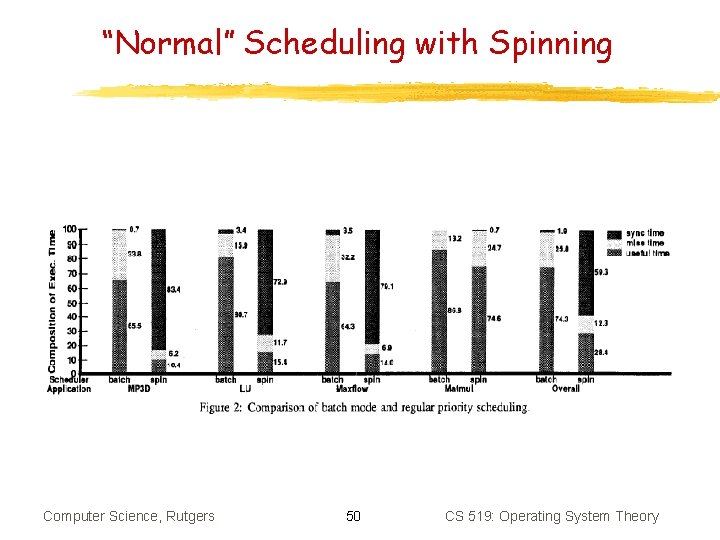

“Normal” Scheduling with Spinning

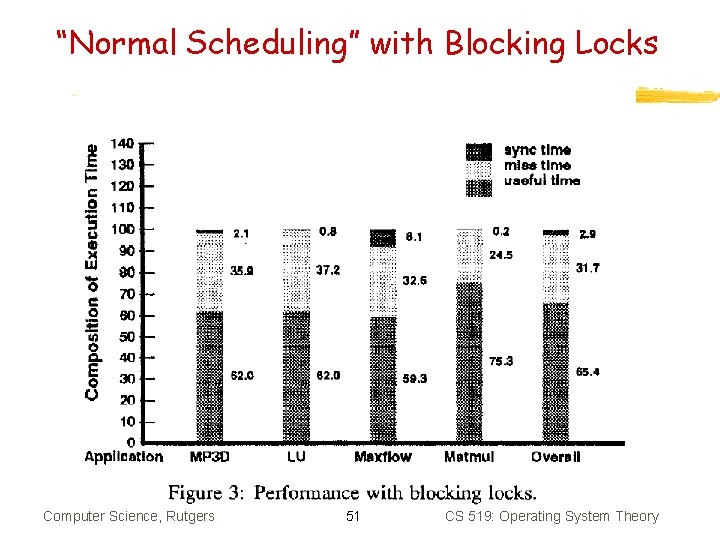

“Normal” Scheduling with Blocking Locks

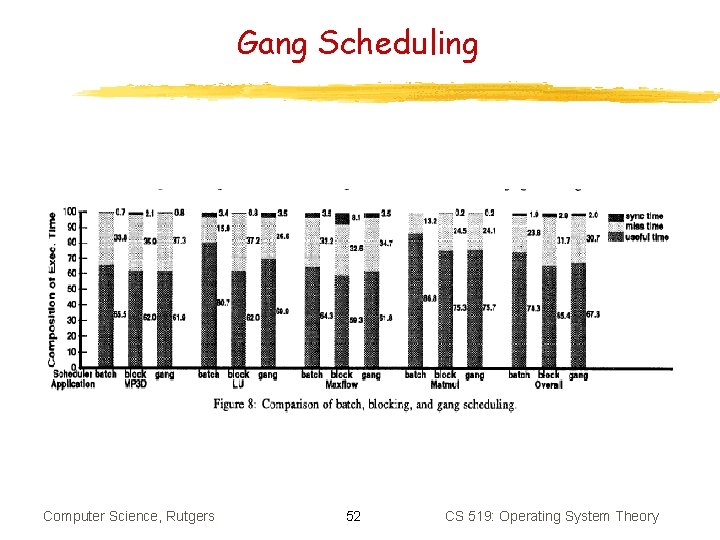

Gang Scheduling

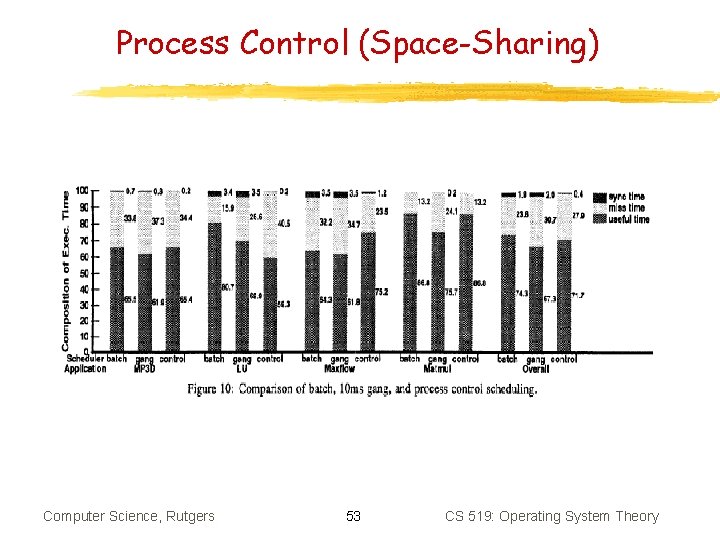

Process Control (Space-Sharing)

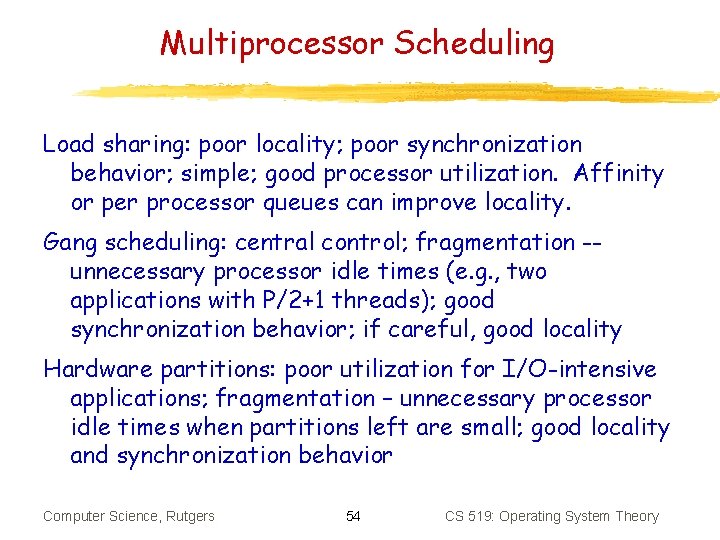

Multiprocessor Scheduling

- Load sharing: simple, with good processor utilization, but poor locality and poor synchronization behavior. Affinity or per-processor queues can improve locality.
- Gang scheduling: central control; fragmentation, i.e., unnecessary processor idle time (e.g., two applications with P/2+1 threads each); good synchronization behavior; good locality if careful.
- Hardware partitions: poor utilization for I/O-intensive applications; fragmentation, i.e., unnecessary processor idle time when the remaining partitions are small; good locality and synchronization behavior.
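The fragmentation example for gang scheduling can be checked with a small packing sketch. It assumes a first-fit policy that places each gang entirely within one time slot spanning all P processors; the policy and the function names are illustrative assumptions, not a description of a particular system.

```python
def pack_gangs(gang_sizes, processors):
    """First-fit packing of gangs into time slots.

    gang_sizes: dict application -> number of threads to run simultaneously.
    Returns a list of slots, each a list of (application, size) pairs.
    """
    slots = []
    for name, size in gang_sizes.items():
        if size > processors:
            raise ValueError(f"gang {name} needs more than {processors} processors")
        for slot in slots:
            if sum(s for _, s in slot) + size <= processors:
                slot.append((name, size))  # the gang fits next to earlier ones
                break
        else:
            slots.append([(name, size)])   # otherwise open a new time slot
    return slots


def idle_fraction(slots, processors):
    """Fraction of processor time left idle across one pass through the slots."""
    used = sum(size for slot in slots for _, size in slot)
    return 1 - used / (len(slots) * processors)


P = 8
tight = pack_gangs({"app1": P // 2, "app2": P // 2}, P)
wasteful = pack_gangs({"app1": P // 2 + 1, "app2": P // 2 + 1}, P)
print(len(tight), idle_fraction(tight, P))
print(len(wasteful), idle_fraction(wasteful, P))
```

Two gangs of P/2 threads share a single slot with no idle processors, but two gangs of P/2+1 threads each need their own slot, leaving P/2-1 processors idle in every slot.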