CPSC 6730 Big Data Analytics Lecture 3 Map

CPSC 6730 Big Data Analytics Lecture # 3

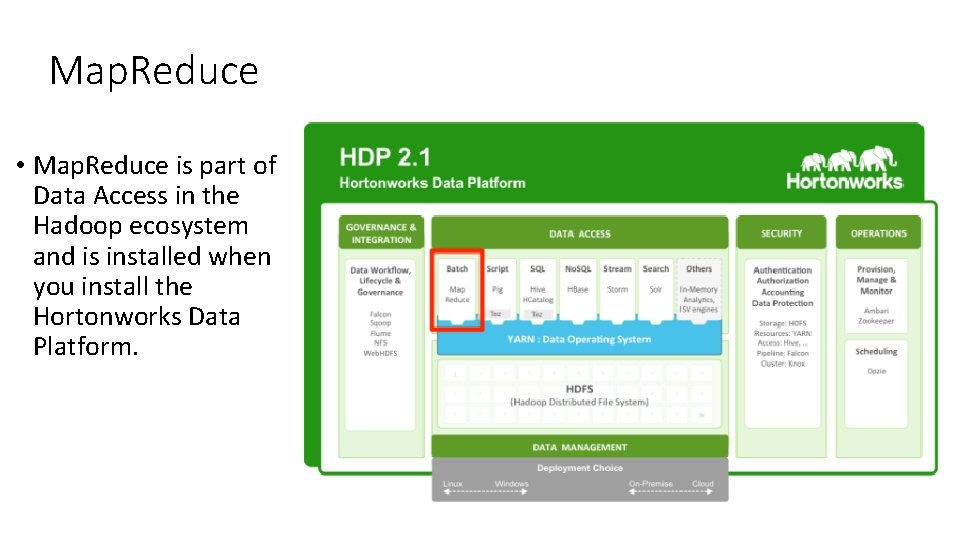

MapReduce • MapReduce is part of Data Access in the Hadoop ecosystem and is installed when you install the Hortonworks Data Platform.

The Problem of Processing Big Data • In the past, processing ever-larger datasets was a problem because all of your data and computation had to fit on a single machine. To work on more data you had to buy a bigger, more expensive machine, and the expense did not end with the purchase price: big machines typically come with expensive support contracts too. Using increasingly large machines to process ever-growing data is called scaling up, or vertical scaling. Even if you can afford big machines, they only scale up so far, because there are technical limits to how large a single machine can be. So what is the solution for processing a large volume of data when it is no longer technically or financially feasible to do it on a single machine?

A Cluster of Machines • Using a cluster of smaller machines is called scaling out, or horizontal scaling. Using a cluster of smaller, commodity machines is often the only practical way to process a large volume of data. However, using a cluster of machines creates a problem of its own: you must write new programs that support distributed data processing.

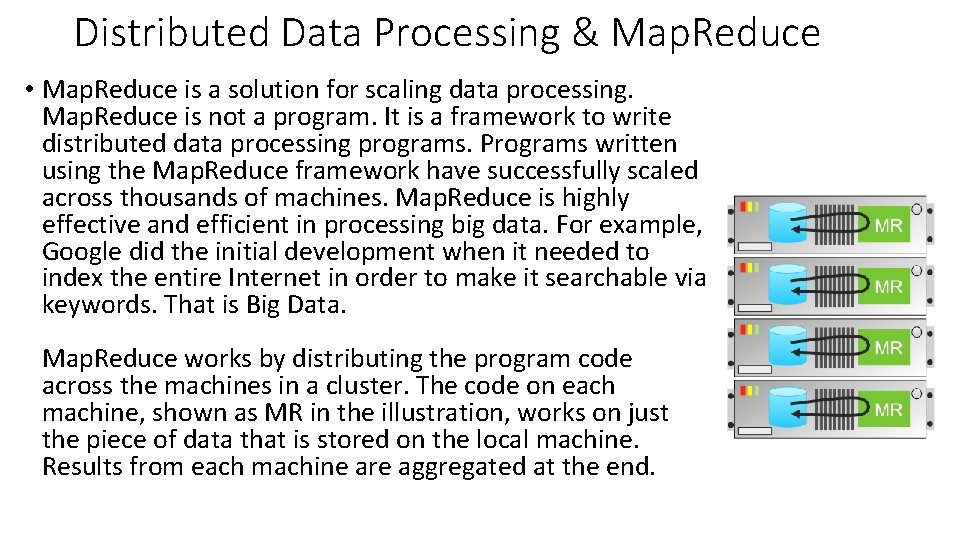

Distributed Data Processing & MapReduce • MapReduce is a solution for scaling data processing. MapReduce is not a program. It is a framework for writing distributed data processing programs. Programs written using the MapReduce framework have successfully scaled across thousands of machines. MapReduce is highly effective and efficient in processing big data. For example, Google did the initial development when it needed to index the Web in order to make it searchable via keywords. That is Big Data. MapReduce works by distributing the program code across the machines in a cluster. The code on each machine, shown as MR in the illustration, works on just the piece of data that is stored on the local machine. Results from each machine are aggregated at the end.

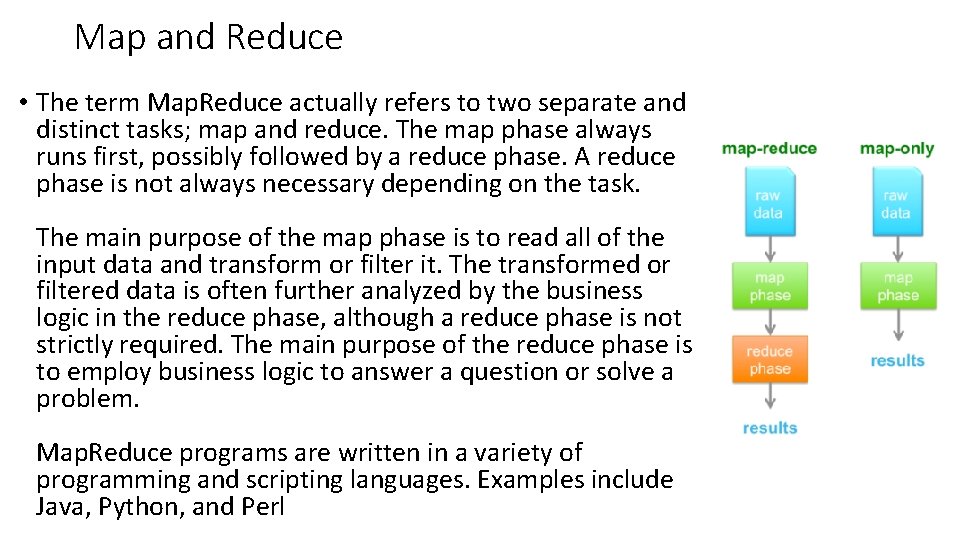

Map and Reduce • The term MapReduce refers to two separate and distinct tasks: map and reduce. The map phase always runs first, possibly followed by a reduce phase; depending on the task, a reduce phase is not always necessary. The main purpose of the map phase is to read all of the input data and transform or filter it. The transformed or filtered data is often further analyzed in the reduce phase, whose main purpose is to employ business logic to answer a question or solve a problem. MapReduce programs can be written in a variety of programming and scripting languages. Examples include Java, Python, and Perl.

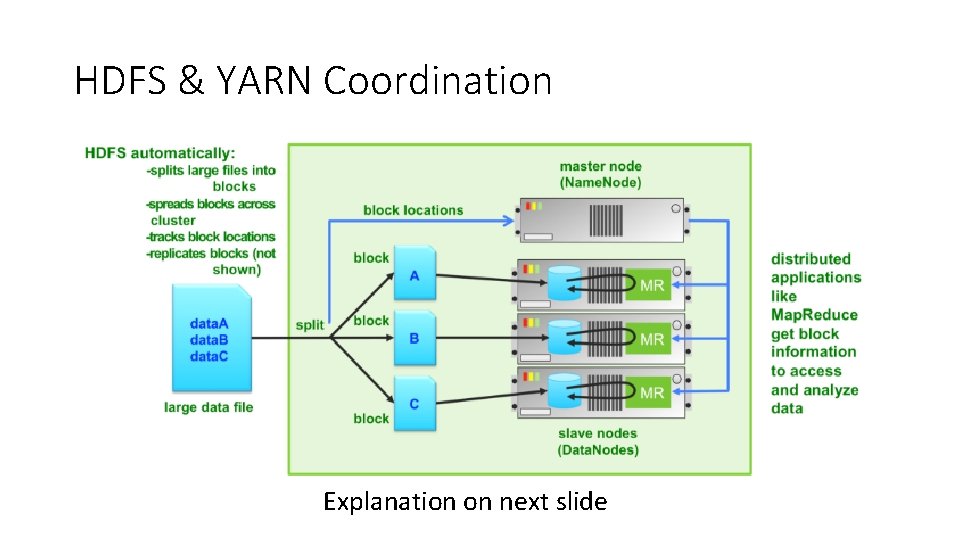

HDFS & YARN Coordination • Explanation on next slide

HDFS & YARN Coordination (continued) • The Hadoop distributed file system, or HDFS, automatically splits large data files into smaller chunks of data called blocks and distributes them across the slave nodes in a cluster. Distributing file data across slave nodes provides a crucial foundation for MapReduce performance and scalability. The location of the blocks is tracked by the master node in HDFS. The master HDFS node runs the NameNode process. Whenever an application like MapReduce needs to read a block, it gets the block location information from the NameNode.
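
The block splitting and location lookup described above can be pictured with a small toy model. This is only a sketch under stated assumptions: the 128 MB block size is a common HDFS default, while the round-robin placement, the node names, and the helper names `split_into_blocks` and `place_blocks` are hypothetical and ignore replication. It is not how the NameNode is actually implemented.

```python
from dataclasses import dataclass
from itertools import cycle

MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # assumed block size (a common HDFS default)


@dataclass(frozen=True)
class Block:
    index: int   # position of the block within the file
    length: int  # size of the block in bytes


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Cut a file of file_size bytes into fixed-size blocks; the last may be shorter."""
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append(Block(index=len(blocks), length=length))
        offset += length
    return blocks


def place_blocks(blocks, nodes):
    """Toy stand-in for the NameNode's block map: block index -> node name.

    Round-robin placement is a simplification chosen for illustration only.
    """
    node_cycle = cycle(nodes)
    return {block.index: next(node_cycle) for block in blocks}


blocks = split_into_blocks(300 * MB)
block_map = place_blocks(blocks, ["node1", "node2", "node3"])

print([b.length // MB for b in blocks])  # [128, 128, 44]
print(block_map[2])                      # node3
```

A reader of the file would consult `block_map` first, which mirrors the way an application asks the NameNode where a block lives before reading it.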

HDFS & YARN Coordination (continued) • YARN, the Hadoop scheduler and resource manager, coordinates with HDFS to make every attempt to run the mapper as close to the data as possible. As a result, when the MapReduce job’s ApplicationMaster requests containers from YARN, YARN tries to provide those containers on the DataNodes that have the data blocks. Moving the application code to the data rather than the data to the application code enables higher performance and greater scalability. A MapReduce job is massively scalable. By default, MapReduce runs one map task for each 128 megabyte block. For example, using the default settings, if a 512 gigabyte dataset is split into blocks of 128 megabytes each, then it is possible for 4096 mappers to run in parallel, assuming that the cluster has 4096 machines. Multiple map and reduce tasks can run on the same machine, so 4096 machines are not strictly necessary to complete this compute task.
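
The arithmetic behind the 4096 figure can be checked with a few lines of Python. This is a minimal sketch: the `waves` helper and the idea of a fixed number of containers per machine are simplifying assumptions added for illustration, not something YARN exposes under that name.

```python
BLOCK_SIZE_MB = 128  # default block size quoted in the text


def map_task_count(dataset_mb, block_mb=BLOCK_SIZE_MB):
    """One map task per block, rounding up for a partial final block."""
    return -(-dataset_mb // block_mb)  # integer ceiling division


def waves(map_tasks, machines, containers_per_machine):
    """Hypothetical helper: rounds of map tasks needed when the cluster
    can run machines * containers_per_machine tasks at once."""
    capacity = machines * containers_per_machine
    return -(-map_tasks // capacity)


dataset_mb = 512 * 1024  # 512 gigabytes expressed in megabytes
tasks = map_task_count(dataset_mb)

print(tasks)                  # 4096
print(waves(tasks, 4096, 1))  # 1
print(waves(tasks, 512, 4))   # 2
```

With 4096 machines running one mapper each, every block is processed in a single round. A smaller cluster still completes the job, it just takes more rounds.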

MapReduce Operation • Explanation on next slide

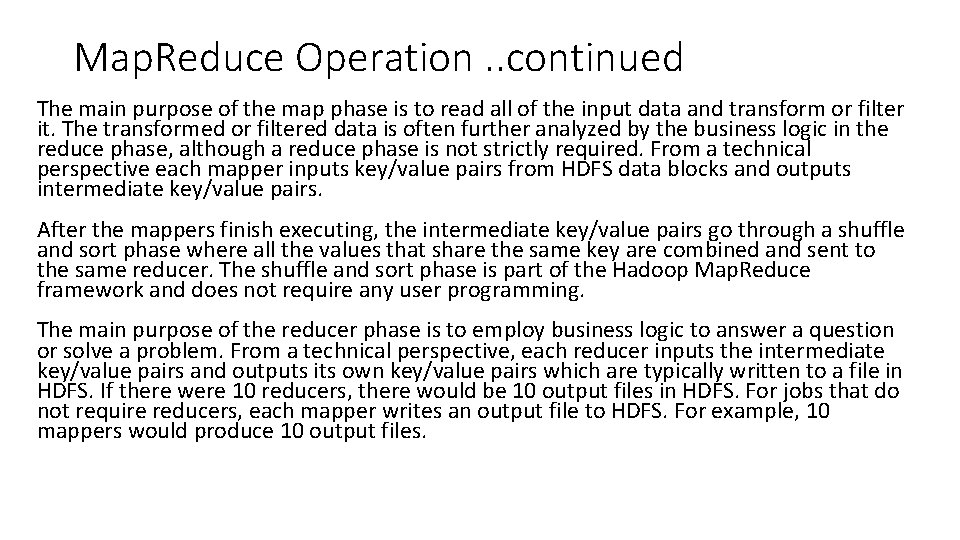

MapReduce Operation (continued) • The main purpose of the map phase is to read all of the input data and transform or filter it. The transformed or filtered data is often further analyzed by the business logic in the reduce phase, although a reduce phase is not strictly required. From a technical perspective, each mapper reads key/value pairs from HDFS data blocks and outputs intermediate key/value pairs. After the mappers finish executing, the intermediate key/value pairs go through a shuffle and sort phase where all the values that share the same key are grouped and sent to the same reducer. The shuffle and sort phase is part of the Hadoop MapReduce framework and does not require any user programming. The main purpose of the reduce phase is to employ business logic to answer a question or solve a problem. From a technical perspective, each reducer reads the intermediate key/value pairs and outputs its own key/value pairs, which are typically written to a file in HDFS. If there were 10 reducers, there would be 10 output files in HDFS. For jobs that do not require reducers, each mapper writes an output file to HDFS. For example, 10 mappers would produce 10 output files.

MapReduce Operation (continued) • The output data in the output files can be aggregated and processed by a program in Hadoop or on an external system. While not shown in the illustration, the intermediate key/value pairs output by the mappers, called map output, are temporarily written to the local disk of each slave node. This data is eventually sorted and shuffled across the network and used as input for the reducers. The additional writing to and reading from local disk adds overhead to MapReduce. In the illustration here, the input data is read into each mapper. Each mapper found two keys in the data: k1 and k2. However, each mapper found different values associated with those keys. The top mapper found the values v1 and v4. The middle mapper found the values v2 and v5. The bottom mapper found the values v3 and v6. The shuffle and sort phase sent k1 and all its values to the top reducer. It also sent k2 and all its values to the bottom reducer. In this illustration, each reducer simply counted the number of values associated with each key. The counts are represented by the values v7 and v8. Each reducer produced an output file with its results. The results in the output files were aggregated by another program.
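
The flow in the illustration can be mimicked in plain Python, with no Hadoop involved. This is a sketch under one assumption the slide does not state explicitly: that v1, v2, and v3 belong to key k1 and v4, v5, and v6 belong to key k2. The function names are illustrative only.

```python
from collections import defaultdict

# Intermediate key/value pairs emitted by the top, middle, and bottom mappers.
# Assumption: v1-v3 go with k1 and v4-v6 go with k2.
mapper_outputs = [
    [("k1", "v1"), ("k2", "v4")],  # top mapper
    [("k1", "v2"), ("k2", "v5")],  # middle mapper
    [("k1", "v3"), ("k2", "v6")],  # bottom mapper
]


def shuffle_and_sort(outputs):
    """Group every value under its key, then order the groups by key."""
    grouped = defaultdict(list)
    for pairs in outputs:
        for key, value in pairs:
            grouped[key].append(value)
    return dict(sorted(grouped.items()))


def count_reducer(key, values):
    """Reducer from the illustration: count the values seen for one key."""
    return key, len(values)


grouped = shuffle_and_sort(mapper_outputs)
results = [count_reducer(key, values) for key, values in grouped.items()]

print(grouped)  # {'k1': ['v1', 'v2', 'v3'], 'k2': ['v4', 'v5', 'v6']}
print(results)  # [('k1', 3), ('k2', 3)]
```

Under that assumption, the counts labelled v7 and v8 in the illustration would both be 3.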

MapReduce Use-Cases • MapReduce is best suited for batch processing. There are time delays associated with MapReduce operation. For example, there is overhead when setting up the mappers and reducers. There is also overhead when writing and reading the intermediate key/value pairs to the slave nodes’ local disks. HDFS is not used for intermediate data because HDFS automatically replicates data. To avoid the automatic and unnecessary replication of the intermediate data, MapReduce uses local disk space instead. When multiple MapReduce jobs are chained together, they use HDFS to pass data from the first job to the next job. Lastly, there is also some time delay associated with sorting and shuffling data over the network.

MapReduce Use-Cases • Even though MapReduce is batch-oriented, there are still many ways to use it. MapReduce is used for data analytics. For example, you could calculate the most common reasons for product failure or determine the most visited Web pages. MapReduce supports data mining, for example, finding correlations between data in the same or different datasets. MapReduce is used to create the indexes necessary to support full-text search. Full-text indexes permit full-text searches based on keywords.

A MapReduce Example • Sometimes it is easier to understand the power of MapReduce using an example. Consider the following scenario. As a company you want to know what people are saying about your company and its products. As a first step, you want to know how often your products are mentioned in social media. The problem is the massive size of social media data. The good news is that Hadoop and MapReduce can analyze the raw data and provide an answer.

Define Your Search Words • First, you need to define the keywords that you want to search for in social media data. The keywords in this example would be your product names. You currently sell four products: Widget, WidgetPlus, Widgety, and Widgeter.

Write the Map & Reduce Programs • Now you need to write your map and reduce programs. Write a map function that searches for a product name in each line of data. Each time it finds a product name it outputs a key/value pair in the format (product_name, 1). The number 1 indicates that one occurrence of the product name has been found. Then write a reduce function that counts the number of occurrences of each product name using the key/value pairs output from the map function.
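
A map function and a reduce function of this kind might look like the following in Python. This is a minimal sketch rather than Hadoop API code: the names `map_line` and `reduce_counts` are hypothetical, the product names are treated as single case-sensitive tokens, and a word-boundary regular expression is an assumption made here so that "Widget" is not also counted inside "Widgety" or "Widgeter".

```python
import re

# The four product names from the example, written as single tokens.
PRODUCT_NAMES = ("Widget", "WidgetPlus", "Widgety", "Widgeter")

# \b ensures only whole words match, so "Widget" does not match inside "Widgety".
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(name) for name in PRODUCT_NAMES) + r")\b"
)


def map_line(line):
    """Map step: emit (product_name, 1) for every product mention in one line."""
    for match in _PATTERN.finditer(line):
        yield match.group(1), 1


def reduce_counts(product_name, ones):
    """Reduce step: add up the 1s collected for a single product name."""
    return product_name, sum(ones)


print(list(map_line("I love my Widget, and Widgety support is great.")))
# [('Widget', 1), ('Widgety', 1)]
print(reduce_counts("Widget", [1, 1, 1]))
# ('Widget', 3)
```

The reduce function only ever sees the values for one key at a time, which is why it needs no knowledge of the other product names.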

Capture Social Media Data • With your map and reduce programs ready to go, you need to capture the social media data. We will use Twitter tweets as an example. Notice that some of the tweets have your product names in them.
• I love my Widget.
• My parents don’t understand me.
• WidgetPlus is a minus.
• I am going to the Eagles concert.
• Widgety support is great.
• Widgeter works well on my tablet
• What did you think about the new British prime minister?
• How did I ever live without Widget?
• WidgetPlus 6 more months of dev work would have made it better.

While the captured social media data is large, it is automatically split and spread across multiple slave nodes in your cluster.

Processing by MapReduce • Now the data can be processed by MapReduce. The data on each slave node is processed locally by your mapper program, which has been automatically distributed to the slave nodes containing portions of the Twitter data. The key/value pairs output from each mapper are automatically shuffled and sorted across the network by the MapReduce framework. All key/value pairs with the same key, which is a product name, are sent to the same reducer. The reducers count the number of times each key occurs in a key/value pair and calculate a total.
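
The whole flow can be simulated on a single machine to see how the pieces fit together. This is a sketch only: the three-way split of the tweets, the choice of two reducers, and the CRC32-based `partition` function are assumptions made for illustration, and the real framework handles distribution, shuffling, and partitioning for you.

```python
import re
import zlib
from collections import defaultdict

PRODUCTS = ("Widget", "WidgetPlus", "Widgety", "Widgeter")
PATTERN = re.compile(r"\b(" + "|".join(PRODUCTS) + r")\b")

# The sample tweets, spread across three nodes (hypothetical split).
NODE_DATA = [
    ["I love my Widget.",
     "My parents don't understand me.",
     "WidgetPlus is a minus."],
    ["I am going to the Eagles concert.",
     "Widgety support is great.",
     "Widgeter works well on my tablet"],
    ["What did you think about the new British prime minister?",
     "How did I ever live without Widget?",
     "WidgetPlus 6 more months of dev work would have made it better."],
]
NUM_REDUCERS = 2  # assumed reducer count


def mapper(lines):
    """Runs against one node's local data and emits (product_name, 1) pairs."""
    for line in lines:
        for match in PATTERN.finditer(line):
            yield match.group(1), 1


def partition(key, num_reducers):
    """Deterministically choose a reducer so equal keys always meet."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(map_outputs, num_reducers):
    """Group values by key inside the bucket of the reducer that owns the key."""
    buckets = [defaultdict(list) for _ in range(num_reducers)]
    for pairs in map_outputs:
        for key, value in pairs:
            buckets[partition(key, num_reducers)][key].append(value)
    return buckets


def reducer(grouped):
    """Total the 1s for each key in this reducer's bucket, in key order."""
    return {key: sum(values) for key, values in sorted(grouped.items())}


map_outputs = [list(mapper(lines)) for lines in NODE_DATA]
reducer_outputs = [reducer(b) for b in shuffle(map_outputs, NUM_REDUCERS)]

for index, output in enumerate(reducer_outputs):
    print(f"reducer {index}: {output}")
```

Which reducer receives which product name depends on the partition function, but every product name ends up at exactly one reducer, so each total is complete.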

Getting Your Answer • The results from each reducer are collected into separate output files in HDFS. To finish, aggregate the information in each output file to calculate the total number of times each product name has appeared in social media. While you could manually aggregate this information, it is far more likely that you would write a program to automate the task. This was a simple example of using MapReduce to count words. More sophisticated map and reduce logic could analyze the data for other types of information about your products.
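
A program that automates the final aggregation could be as small as the sketch below. It assumes the reducer output files have already been copied to a local directory, that they follow the usual `part-r-NNNNN` naming, and that each line holds a key and a count separated by a tab. The sample file contents are made up for illustration and `aggregate_outputs` is a hypothetical helper name.

```python
import tempfile
from collections import Counter
from pathlib import Path


def aggregate_outputs(output_dir):
    """Sum the per-key counts found in every part-* file under output_dir."""
    totals = Counter()
    for part in sorted(Path(output_dir).glob("part-*")):
        for line in part.read_text().splitlines():
            if not line.strip():
                continue  # skip blank lines
            key, value = line.split("\t")
            totals[key] += int(value)
    return totals


# Stand-in for a job output directory with two reducer files (made-up data).
with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp)
    (out / "part-r-00000").write_text("Widget\t2\nWidgety\t1\n")
    (out / "part-r-00001").write_text("WidgetPlus\t2\nWidgeter\t1\n")
    totals = aggregate_outputs(out)

for name, count in sorted(totals.items()):
    print(name, count)
```

Because each key is handled by exactly one reducer, the aggregation here amounts to merging the files. The summing step only matters if the same key can appear in more than one file, for example in a map-only job.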

Summary
• MapReduce is a framework, and not a program, used to process data in parallel across many machines.
• It works in cooperation with HDFS to achieve scalability and performance.
• MapReduce is so named because there is a map phase and a reduce phase.
• The main purpose of the map phase is to read all of the input data and transform or filter it.
• The main purpose of the reduce phase is to employ business logic to answer a question or solve a problem.
• Use cases for MapReduce include data analytics, data mining, and full-text indexing.
