
- Slides: 30

CPS 110: Implementing threads on a uni-processor
Landon Cox
January 29, 2008

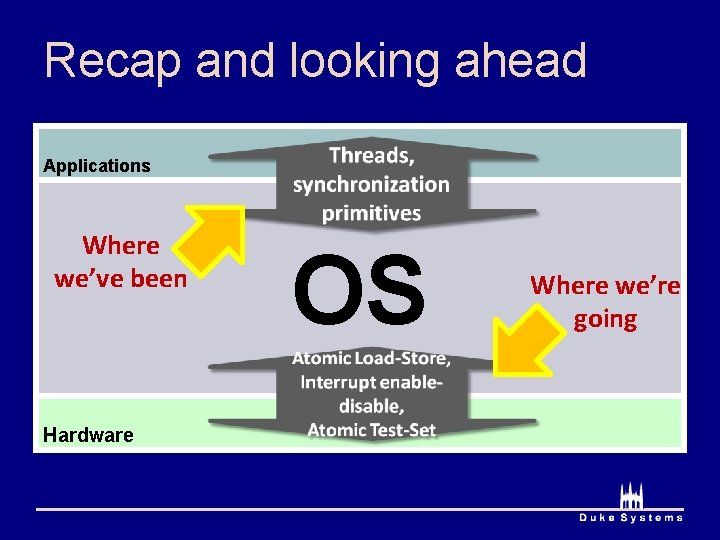

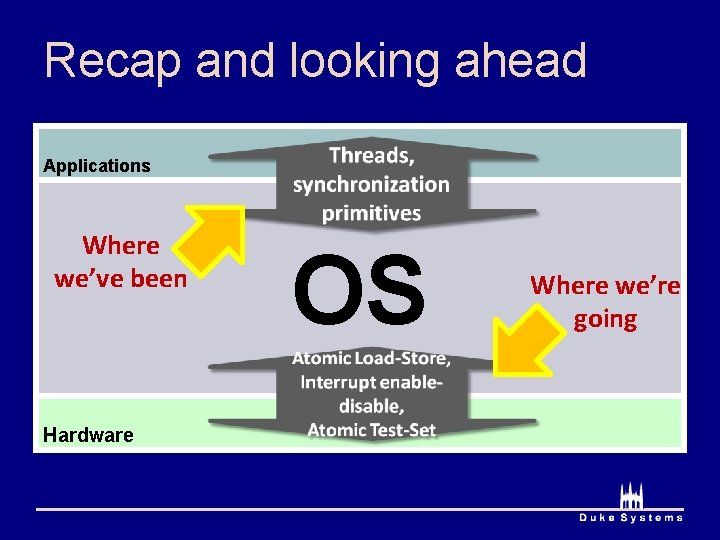

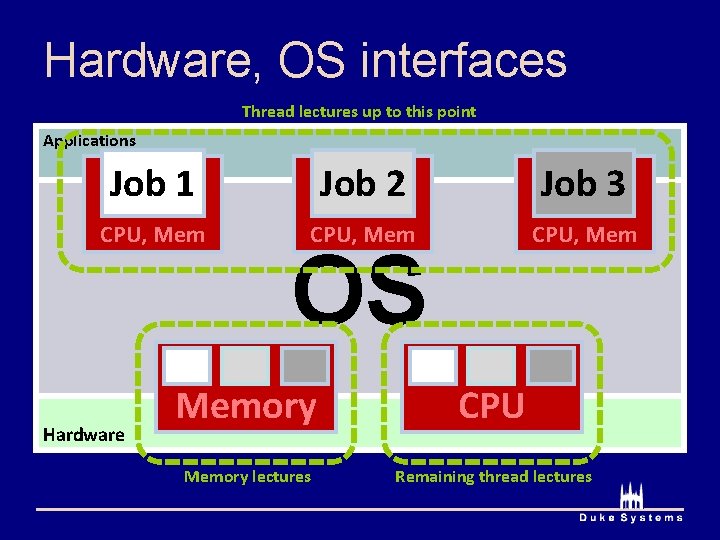

Recap and looking ahead

(Diagram: layers labeled Applications, OS, and Hardware, annotated “Where we’ve been” and “Where we’re going”.)

Recall, thread interactions
1. Threads can access shared data
   - Use locks, monitors, and semaphores
   - What we’ve done so far
2. Threads also share hardware
   - CPU (uni-processor)
   - Memory

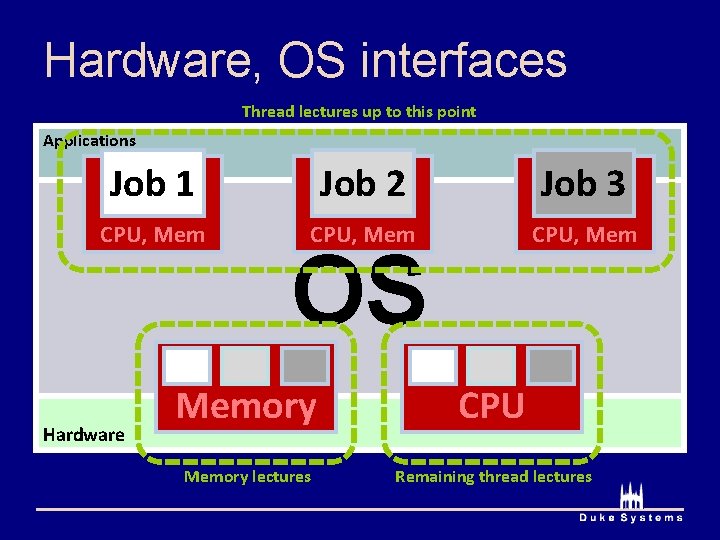

Hardware, OS interfaces

(Diagram: Applications (Job 1, Job 2, Job 3) sit above the OS, which sits above the Hardware (CPU, Memory). Annotations: “Thread lectures up to this point”, “Remaining thread lectures”, “Memory lectures”.)

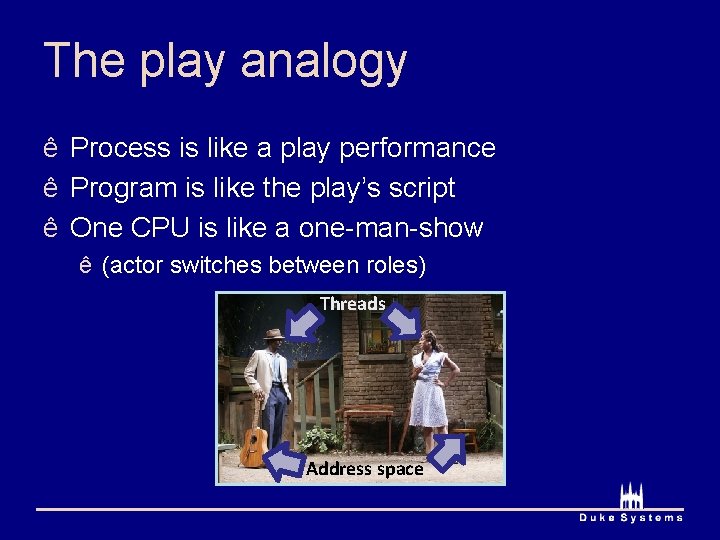

The play analogy
- Process is like a play performance
- Program is like the play’s script
- One CPU is like a one-man show
  - (actor switches between roles)

(Diagram labels: Threads, Address space)

Threads that aren’t running
- What is a non-running thread?
  - thread = “sequence of executing instructions”
  - non-running thread = “paused execution”
- Must save thread’s private state
- To re-run, re-load private state
- Want thread to start where it left off

Private vs global thread state
- What state is private to each thread?
  - Code (like lines of a play)
  - PC (where actor is in his/her script)
  - Stack, SP (actor’s mindset)
- What state is shared?
  - Global variables, heap
  - (props on set)

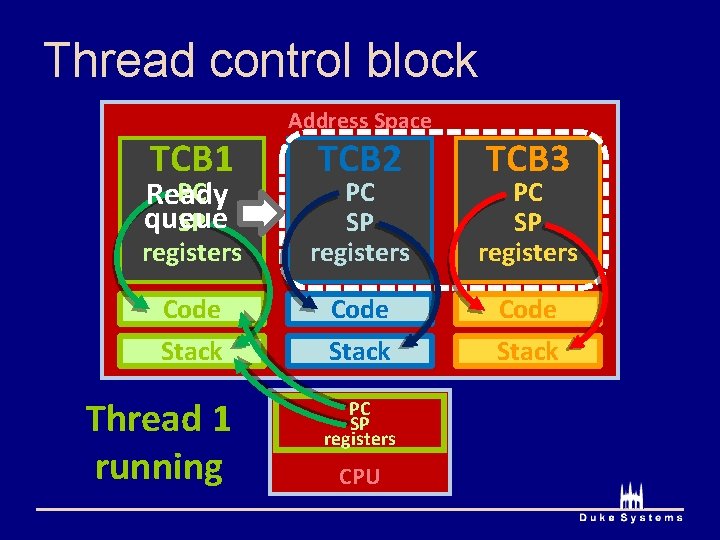

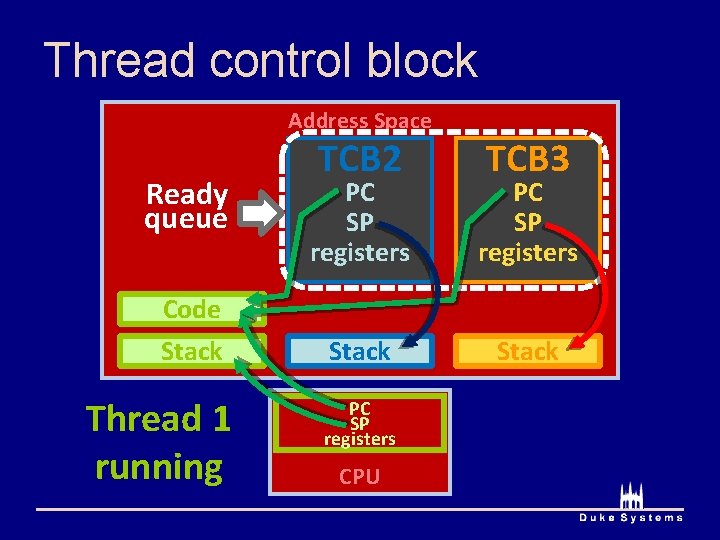

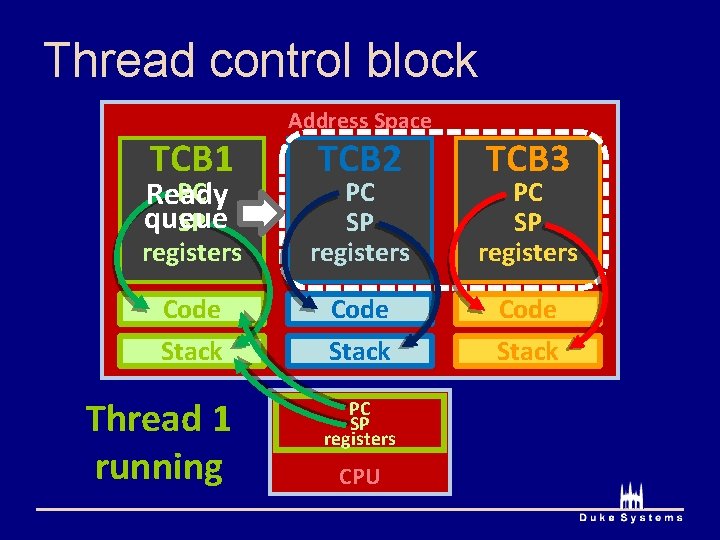

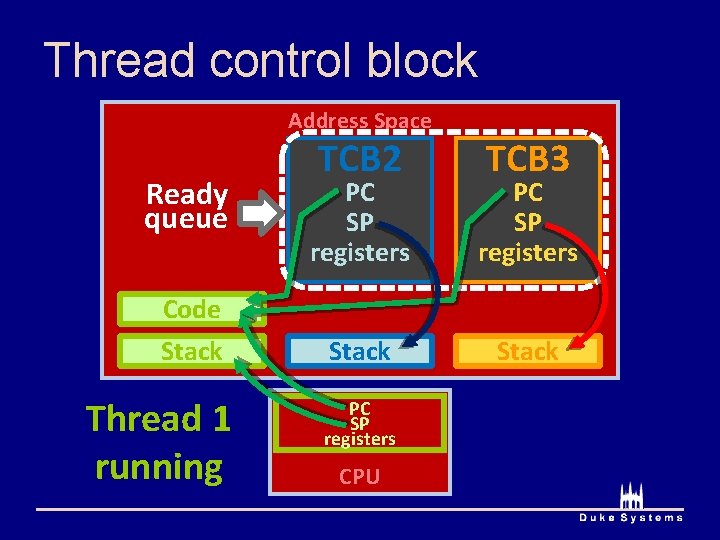

Thread control block (TCB)
- What needs to access threads’ private data?
  - The CPU
    - This info is stored in the PC, SP, other registers
  - The OS needs pointers to non-running threads’ data
- Thread control block (TCB)
  - Container for non-running thread’s private data
  - Values of PC, code, SP, stack, registers
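
A minimal sketch of a TCB and a ready queue, assuming a simulated CPU modelled as a plain dictionary. The names `TCB`, `cpu`, and `ready_queue`, and all the addresses, are made up for illustration; a real thread library would keep machine registers here.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class TCB:
    """Saved private state of one non-running thread (field names are illustrative)."""
    tid: int
    pc: int = 0                                     # saved program counter
    sp: int = 0                                     # saved stack pointer
    registers: dict = field(default_factory=dict)   # other saved registers


# The running thread's state lives in the (simulated) CPU, not in a TCB.
cpu = {"tid": 1, "pc": 0x400, "sp": 0x7F00, "registers": {"r0": 7}}

# Threads 2 and 3 are not running, so the OS keeps one TCB for each of them.
ready_queue = deque([
    TCB(tid=2, pc=0x480, sp=0x6F00, registers={"r0": 1}),
    TCB(tid=3, pc=0x4C0, sp=0x5F00, registers={"r0": 2}),
])

if __name__ == "__main__":
    for tcb in ready_queue:
        print(f"thread {tcb.tid}: pc={tcb.pc:#x} sp={tcb.sp:#x}")
```

The ready queue holds only TCBs of threads that are not running; the running thread's values are whatever is currently loaded in the CPU.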

Thread control block

(Diagram labels: Address space containing Code and Stack; TCB 1, TCB 2, TCB 3; Ready queue; PC, SP, registers in the TCBs and in the CPU; Thread 1 running.)

Thread control block

(Diagram labels: Address space containing Code and two Stacks; Ready queue holding TCB 2 and TCB 3, each with PC, SP, registers; Thread 1 running on the CPU.)

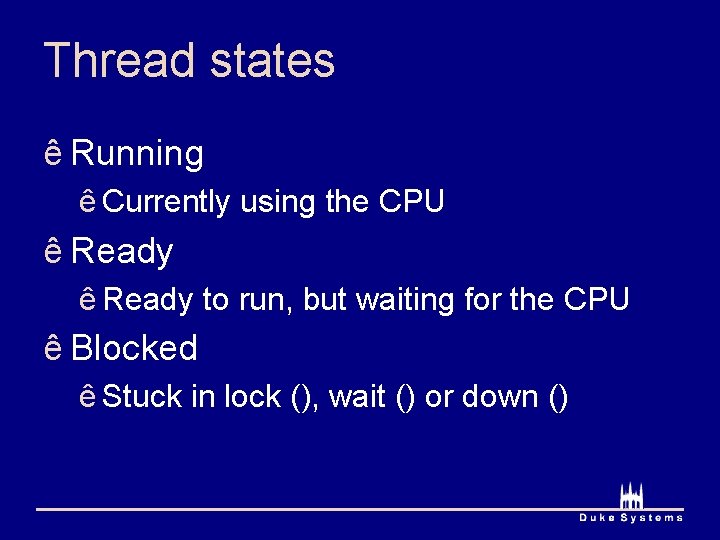

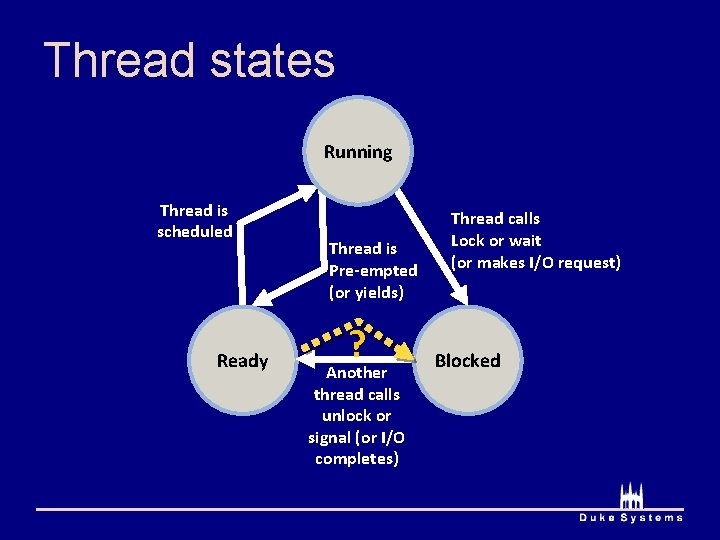

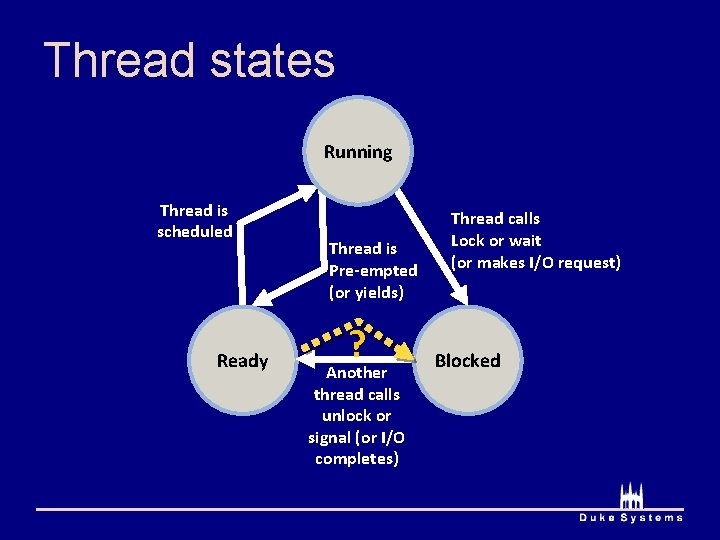

Thread states
- Running
  - Currently using the CPU
- Ready
  - Ready to run, but waiting for the CPU
- Blocked
  - Stuck in lock(), wait(), or down()

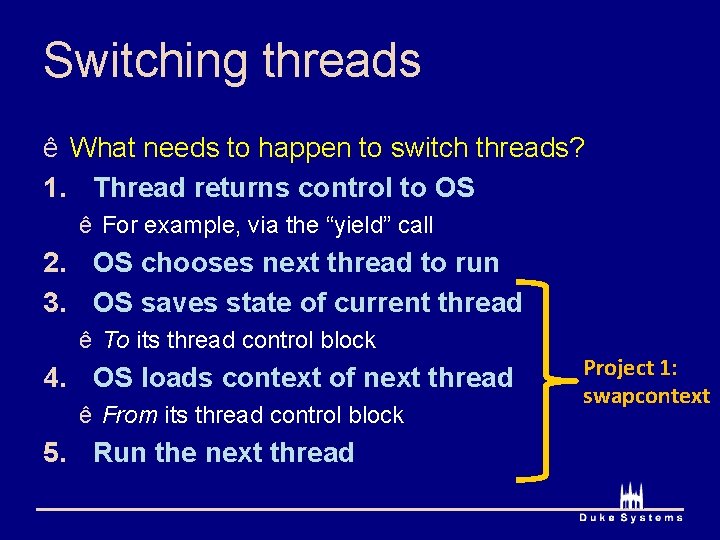

Switching threads
- What needs to happen to switch threads?

1. Thread returns control to OS
   - For example, via the “yield” call
2. OS chooses next thread to run
3. OS saves state of current thread
   - To its thread control block
4. OS loads context of next thread
   - From its thread control block
5. Run the next thread

Project 1: swapcontext
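
The steps of a thread switch can be sketched on a simulated CPU. This is an illustration only: `TCB`, `switch_threads`, and the dictionary used as the CPU are hypothetical names, the FIFO choice in step 2 is an assumption, and a real library (Project 1, for instance) would rely on swapcontext rather than copying a dictionary.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class TCB:
    tid: int
    context: dict = field(default_factory=dict)  # saved PC, SP, registers


def switch_threads(cpu, current, ready_queue):
    """Steps 2-5 of a thread switch on a simulated CPU.

    Step 1 (the thread returning control) is modelled by the caller invoking
    this function, for example from a hypothetical yield().
    Returns the TCB of the thread that is running afterwards.
    """
    if not ready_queue:
        return current                 # no other ready thread: keep running
    nxt = ready_queue.popleft()        # 2. choose next thread (FIFO here)
    current.context = dict(cpu)        # 3. save current state to its TCB
    ready_queue.append(current)        #    current is now ready, not running
    cpu.clear()
    cpu.update(nxt.context)            # 4. load next thread's context
    return nxt                         # 5. next thread now owns the CPU


if __name__ == "__main__":
    cpu = {"pc": 102, "sp": 9000}
    t1 = TCB(1)
    ready = deque([TCB(2, {"pc": 200, "sp": 8000})])
    running = switch_threads(cpu, t1, ready)
    print(running.tid, cpu)
```

Calling `switch_threads` a second time restores thread 1's saved values, which is what lets a thread start where it left off.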

1. Thread returns control to OS
- How does the thread system get control?
- Voluntary internal events
  - Thread might block inside lock or wait
  - Thread might call into kernel for service (system call)
  - Thread might call yield
- Are internal events enough?

1. Thread returns control to OS
- Involuntary external events
  - (events not initiated by the thread)
- Hardware interrupts
  - Transfer control directly to OS interrupt handlers
- From 104
  - CPU checks for interrupts while executing
  - Jumps to OS code with interrupt mask set
- Interrupts lead to pre-emption (a forced yield)
- Common interrupt: timer interrupt

2. Choosing the next thread
- If no ready threads, just spin
  - Modern CPUs: execute a “halt” instruction
  - Project 1: exit if no ready threads
- Loop switches to thread if one is ready
- Many ways to prioritize ready threads
  - Will discuss a little later in the semester

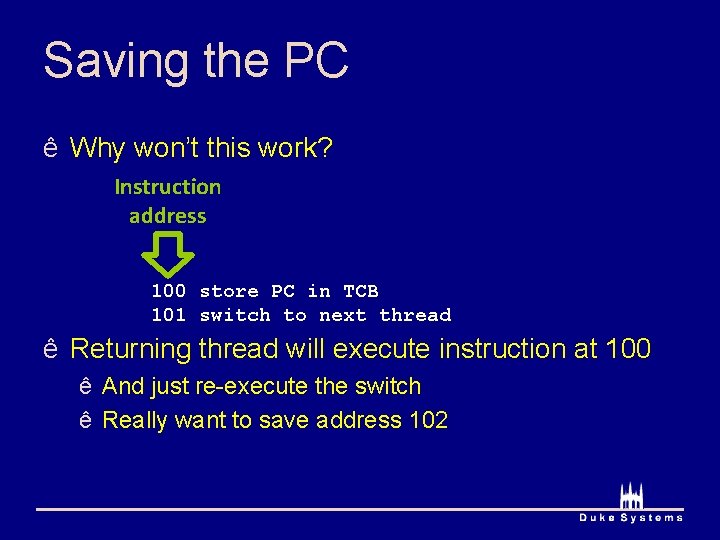

3. Saving state of current thread
- What needs to be saved?
  - Registers, PC, SP
- What makes this tricky?
  - Self-referential sequence of actions
  - Need registers to save state
  - But you’re trying to save all the registers
- Saving the PC is particularly tricky

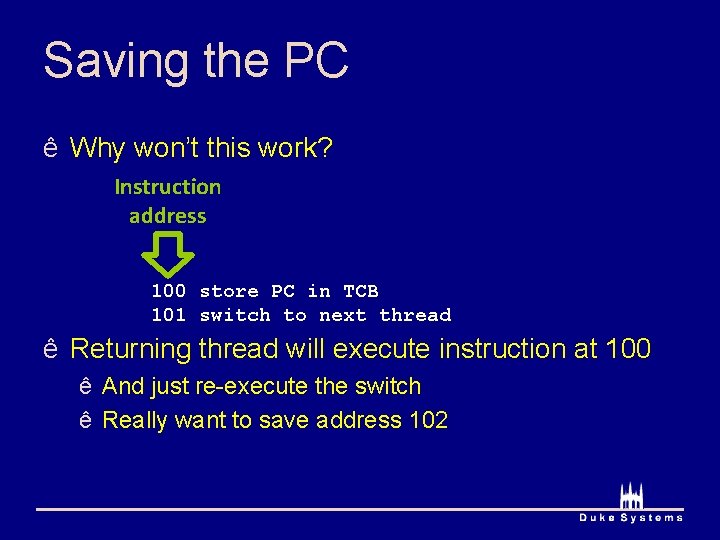

Saving the PC
- Why won’t this work?
  - Instruction address 100: store PC in TCB
  - Instruction address 101: switch to next thread
- Returning thread will execute instruction at 100
  - And just re-execute the switch
- Really want to save address 102

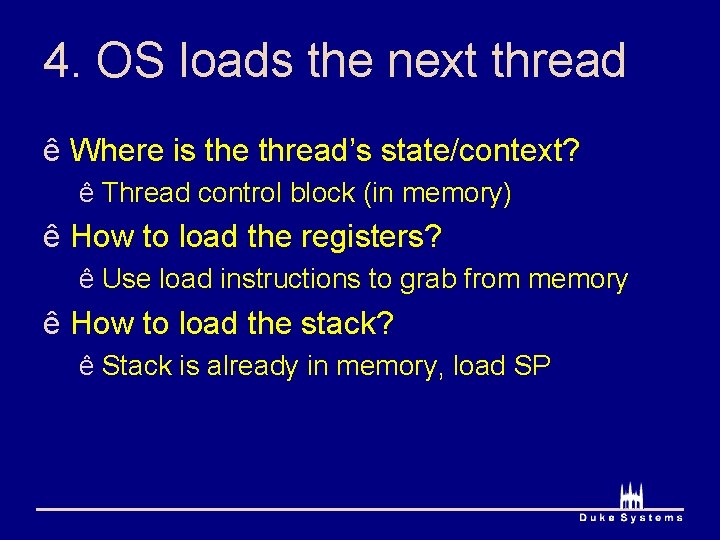

4. OS loads the next thread
- Where is the thread’s state/context?
  - Thread control block (in memory)
- How to load the registers?
  - Use load instructions to grab from memory
- How to load the stack?
  - Stack is already in memory, load SP

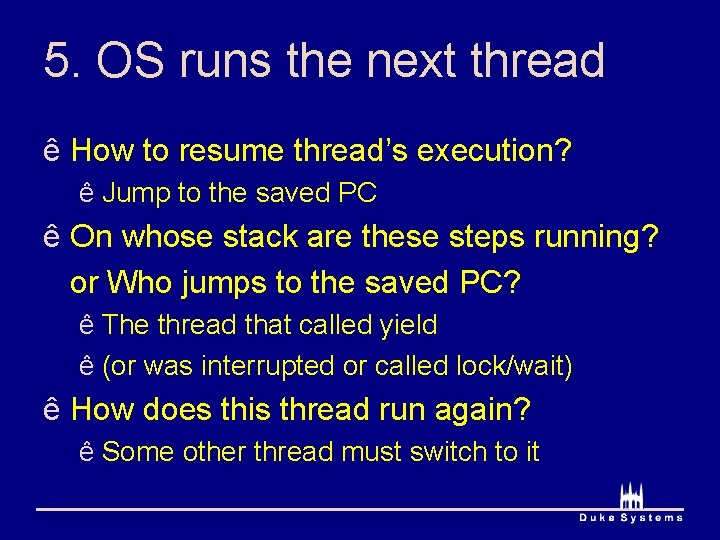

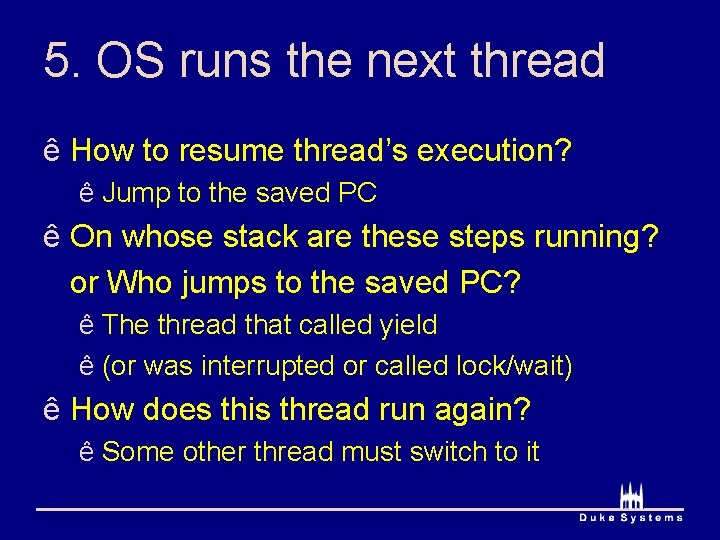

5. OS runs the next thread
- How to resume thread’s execution?
  - Jump to the saved PC
- On whose stack are these steps running? Or: who jumps to the saved PC?
  - The thread that called yield
  - (or was interrupted or called lock/wait)
- How does this thread run again?
  - Some other thread must switch to it

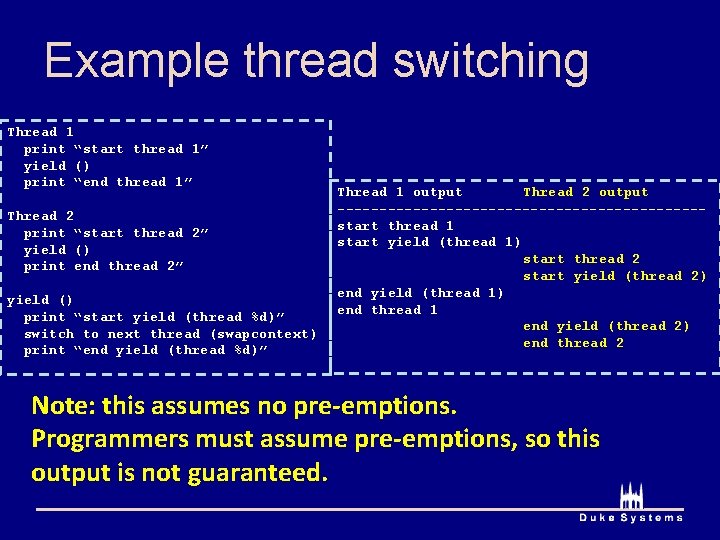

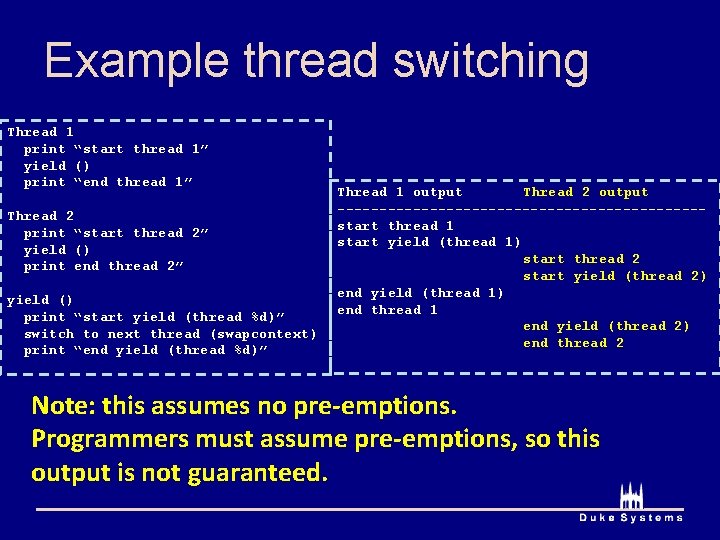

Example thread switching

Thread 1:

    print "start thread 1"
    yield ()
    print "end thread 1"

Thread 2:

    print "start thread 2"
    yield ()
    print "end thread 2"

yield ():

    print "start yield (thread %d)"
    switch to next thread (swapcontext)
    print "end yield (thread %d)"

Output (Thread 1 and Thread 2 interleaved):

    start thread 1
    start yield (thread 1)
    start thread 2
    start yield (thread 2)
    end yield (thread 1)
    end thread 1
    end yield (thread 2)
    end thread 2

Note: this assumes no pre-emptions. Programmers must assume pre-emptions, so this output is not guaranteed.
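
The interleaving in this example can be reproduced with a small cooperative scheduler. This is a sketch under stated assumptions: Python generators stand in for saved thread contexts, a bare `yield` stands in for swapcontext, the ready queue is FIFO, and nothing is ever pre-empted. All function names are hypothetical.

```python
from collections import deque

ready_queue = deque()   # generators stand in for saved thread contexts
output = []             # lines are also collected so they can be inspected


def emit(line):
    output.append(line)
    print(line)


def thread_yield(tid):
    """Library-level yield: log, hand control to the scheduler, log again."""
    emit(f"start yield (thread {tid})")
    yield                           # stand-in for swapcontext to the next thread
    emit(f"end yield (thread {tid})")


def thread_body(tid):
    emit(f"start thread {tid}")
    yield from thread_yield(tid)
    emit(f"end thread {tid}")


def run():
    """Round-robin over the ready queue until every thread has finished."""
    while ready_queue:
        thread = ready_queue.popleft()
        try:
            next(thread)                 # resume where the thread left off
            ready_queue.append(thread)   # it yielded, so it is ready again
        except StopIteration:
            pass                         # thread ran to completion


if __name__ == "__main__":
    ready_queue.extend([thread_body(1), thread_body(2)])
    run()
```

Because the scheduler only regains control at an explicit yield, the order is deterministic here; with pre-emption it would not be.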

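Thread states

(State diagram with three states: Ready, Running, Blocked.)
- Ready -> Running: thread is scheduled
- Running -> Ready: thread is pre-empted (or yields)
- Running -> Blocked: thread calls lock or wait (or makes I/O request)
- Blocked -> Ready: another thread calls unlock or signal (or I/O completes)
- (one label in the diagram is “?”)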
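
The state diagram can be written down as a transition table. This is a sketch only: the event names are invented for illustration, and the table assumes a blocked thread always goes back to Ready before it can run again.

```python
from enum import Enum


class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"


# (current state, event) -> next state; event names are illustrative.
TRANSITIONS = {
    (State.READY, "scheduled"): State.RUNNING,
    (State.RUNNING, "preempted"): State.READY,
    (State.RUNNING, "yield"): State.READY,
    (State.RUNNING, "lock_or_wait"): State.BLOCKED,
    (State.RUNNING, "io_request"): State.BLOCKED,
    (State.BLOCKED, "unlock_or_signal"): State.READY,
    (State.BLOCKED, "io_complete"): State.READY,
}


def step(state, event):
    """Return the next state, or raise ValueError if the event is not allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"{event!r} is not valid in state {state.name}") from None


if __name__ == "__main__":
    s = State.READY
    for event in ["scheduled", "lock_or_wait", "unlock_or_signal", "scheduled"]:
        s = step(s, event)
        print(event, "->", s.name)
```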

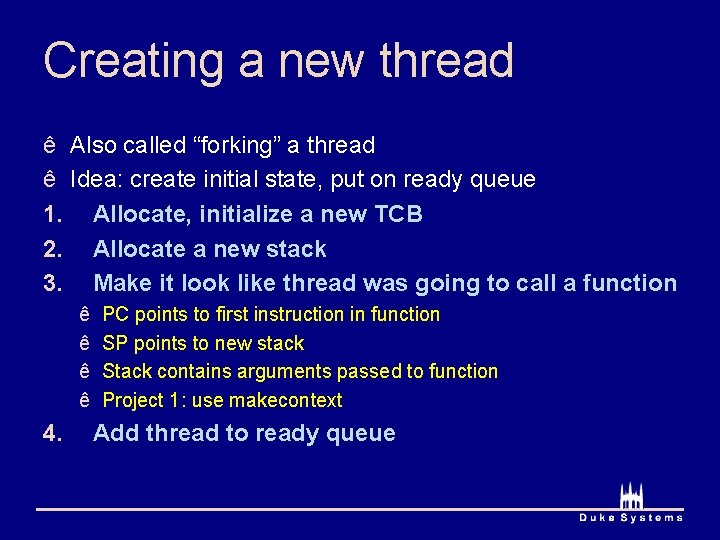

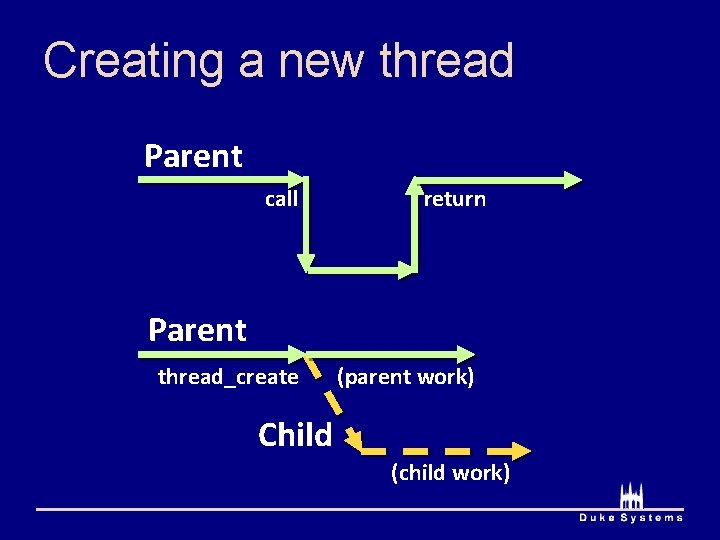

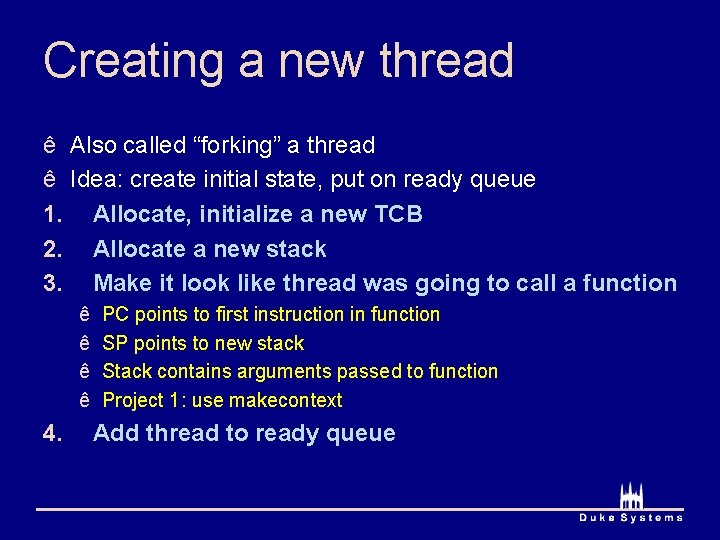

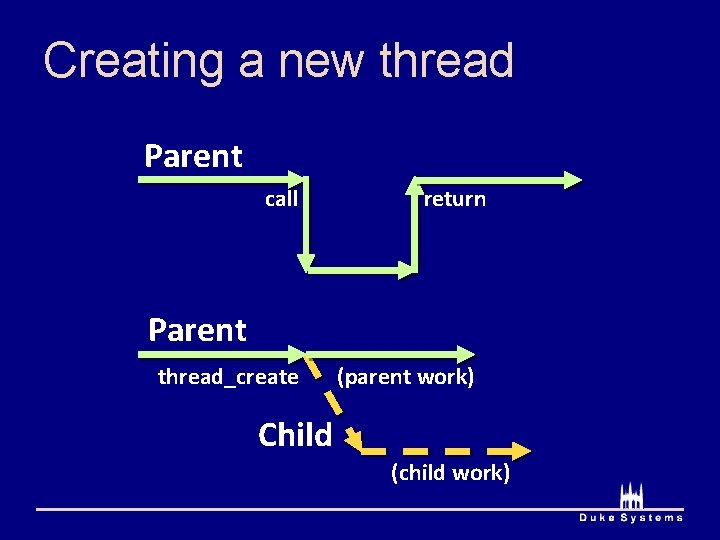

Creating a new thread
- Also called “forking” a thread
- Idea: create initial state, put on ready queue

1. Allocate, initialize a new TCB
2. Allocate a new stack
3. Make it look like thread was going to call a function
   - PC points to first instruction in function
   - SP points to new stack
   - Stack contains arguments passed to function
   - Project 1: use makecontext
4. Add thread to ready queue
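
A sketch of the four creation steps on a simulated stack. Everything here is an assumption made for illustration: the stack is a Python list that grows downward, the "PC" is simply a reference to the function, and `thread_create`, `start`, and `STACK_SIZE` are hypothetical names (Project 1 would use makecontext for step 3).

```python
from collections import deque
from dataclasses import dataclass, field

STACK_SIZE = 16  # words; tiny on purpose, so at most 16 arguments fit


@dataclass
class TCB:
    pc: object = None                          # function the thread starts in
    sp: int = 0                                # index of the top of the stack
    stack: list = field(default_factory=list)


ready_queue = deque()


def thread_create(func, *args):
    tcb = TCB()                          # 1. allocate, initialize a new TCB
    tcb.stack = [None] * STACK_SIZE      # 2. allocate a new stack
    tcb.pc = func                        # 3. PC points to the function's start
    tcb.sp = STACK_SIZE                  #    SP points to the new, empty stack
    for arg in reversed(args):           #    push arguments, last one first
        tcb.sp -= 1
        tcb.stack[tcb.sp] = arg
    ready_queue.append(tcb)              # 4. add thread to ready queue
    return tcb


def start(tcb):
    """What a scheduler would do the first time it runs the thread."""
    args = tcb.stack[tcb.sp:]            # arguments, in their original order
    return tcb.pc(*args)


if __name__ == "__main__":
    def greet(name, punctuation):
        return f"hello {name}{punctuation}"

    thread_create(greet, "child", "!")
    print(start(ready_queue.popleft()))
```

Note that `thread_create` never runs the function; it only builds state that makes the thread look as if it were about to call it.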

Course administration
- Second UTA
  - Jason Lee
  - Office hours: Thursday, 4:30-6:30, Teer lab
- Lots of questions on newsgroup
  - Great resource

Course administration
- Project 1 disk scheduler (1d)
  - How to use concurrency primitives
  - Disk scheduler should be done or almost done
  - Two/seven groups are done with 1d
- Project 1 thread library (1t)
  - How concurrency primitives actually work
  - Best way to learn something is to build it! (no such thing as magic)
  - Should be able to start after class
  - Time management is really, really important (start soon!!)
- Happy to answer any Project 1 questions

Creating a new thread

(Diagram: the parent calls thread_create and the call returns to the parent, which continues with the parent work; the child runs the child work.)

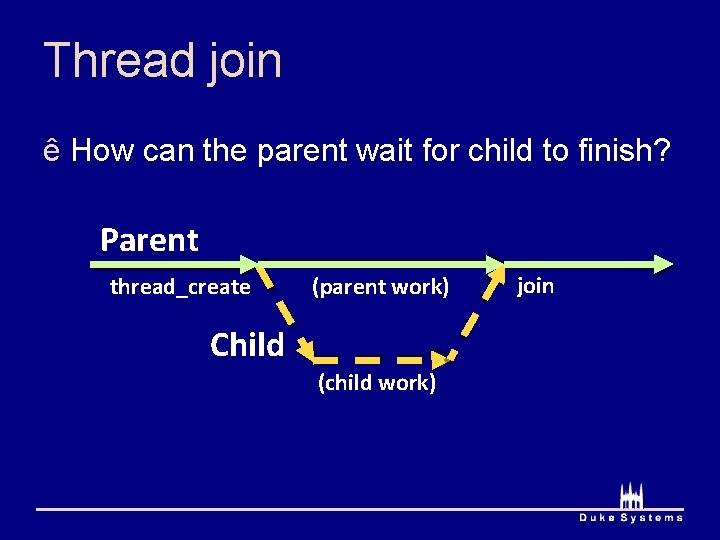

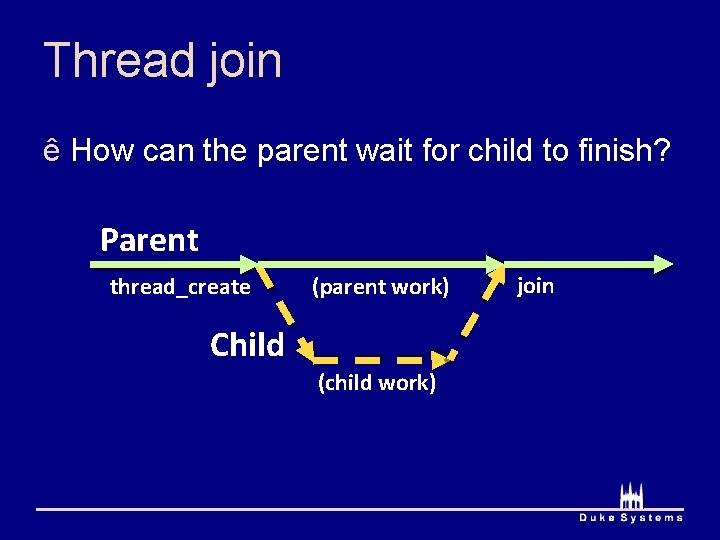

Thread join
- How can the parent wait for child to finish?

(Diagram: the parent calls thread_create and does the parent work; the child does the child work; the parent then joins with the child.)

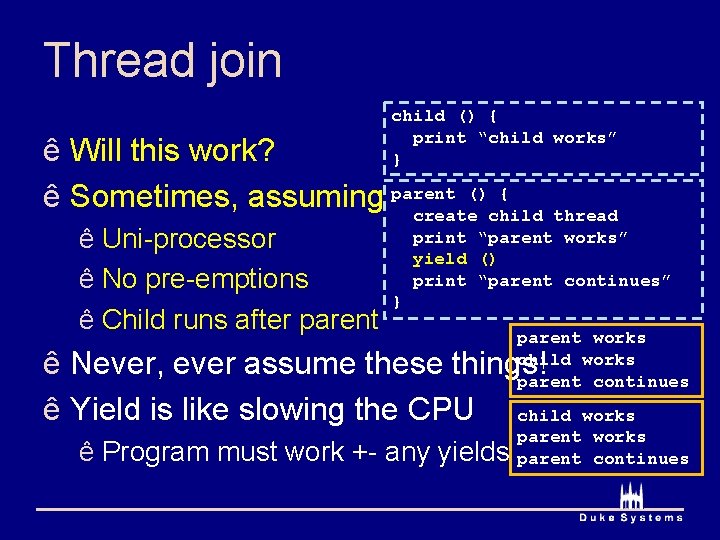

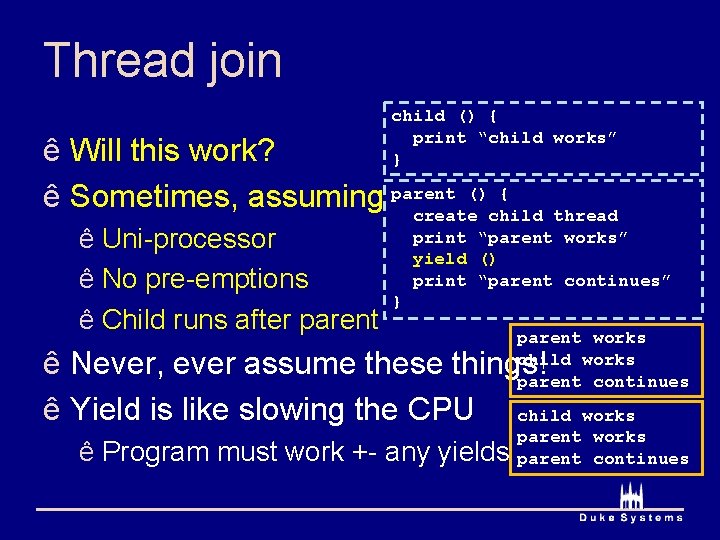

Thread join

    parent () {
        create child thread
        print "parent works"
        yield ()
        print "parent continues"
    }

    child () {
        print "child works"
    }

Intended output:

    parent works
    child works
    parent continues

- Will this work?
  - Sometimes, assuming
    - Uni-processor
    - No pre-emptions
    - Child runs after parent
- Never, ever assume these things!
  - Yield is like slowing the CPU
  - Program must work +- any yields
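
The fragility of the yield-based approach shows up as soon as one extra yield is added. The sketch below assumes a cooperative FIFO scheduler built from Python generators (a stand-in for a real thread library; all names are hypothetical) and runs the same parent and child with and without an unplanned yield in the child.

```python
from collections import deque


def run(parent_factory):
    """Run generator 'threads' round-robin and return the lines they produced."""
    out, ready = [], deque()
    ready.append(parent_factory(out, ready))
    while ready:
        thread = ready.popleft()
        try:
            next(thread)            # resume the thread until its next yield
            ready.append(thread)    # it yielded, so it is ready again
        except StopIteration:
            pass                    # thread finished
    return out


def child(out, extra_yield):
    if extra_yield:
        yield                       # a yield (or pre-emption) nobody planned for
    out.append("child works")


def make_parent(extra_yield):
    def parent(out, ready):
        ready.append(child(out, extra_yield))   # create child thread
        out.append("parent works")
        yield                                   # yield(), hoping child finishes
        out.append("parent continues")
    return parent


if __name__ == "__main__":
    print(run(make_parent(extra_yield=False)))
    print(run(make_parent(extra_yield=True)))
```

With the extra yield, the parent continues before the child has done its work, so the program is only correct for one particular schedule.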

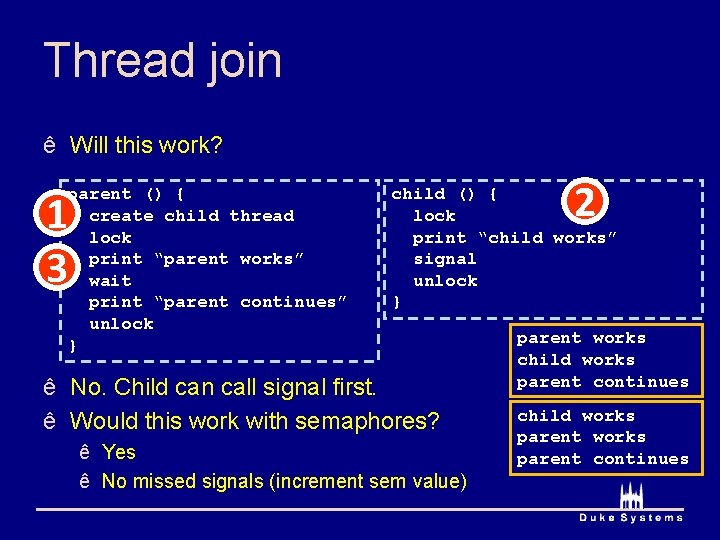

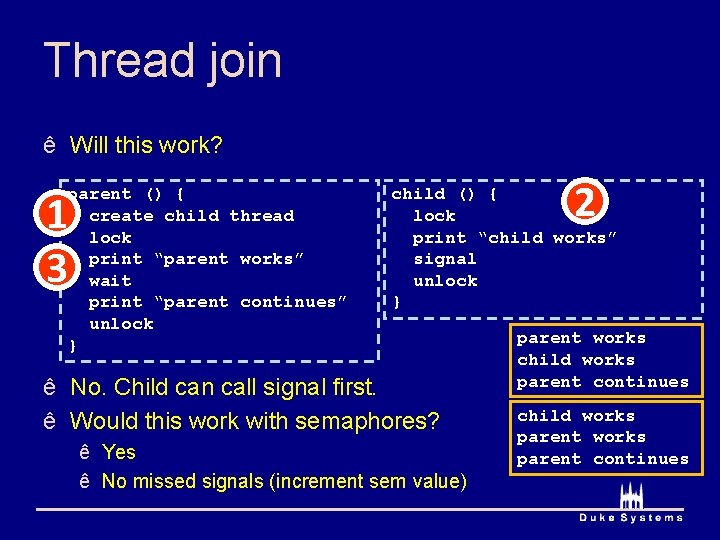

Thread join
- Will this work?

    parent () {
        create child thread
        lock
        print "parent works"
        wait
        print "parent continues"
        unlock
    }

    child () {
        lock
        print "child works"
        signal
        unlock
    }

Intended output:

    parent works
    child works
    parent continues

- No. Child can call signal first.
- Would this work with semaphores?
  - Yes
  - No missed signals (increment sem value)
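
A sketch of the semaphore variant, using Python's `threading.Semaphore` as a stand-in for the course's semaphores (`release` plays the role of up, `acquire` the role of down). The function and variable names are hypothetical. The point is that the child's up is remembered in the semaphore's value, so it cannot be missed even if it happens before the parent waits.

```python
import threading


def parent_and_child():
    events = []
    child_done = threading.Semaphore(0)   # value 0: nothing to wait for yet

    def child():
        events.append("child works")
        child_done.release()              # up(): recorded even if nobody waits yet

    threading.Thread(target=child).start()   # create child thread
    events.append("parent works")
    child_done.acquire()                  # down(): blocks until the child's up()
    events.append("parent continues")
    return events


if __name__ == "__main__":
    print(parent_and_child())
```

The relative order of "parent works" and "child works" still depends on the schedule; only "parent continues" is guaranteed to come after "child works".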

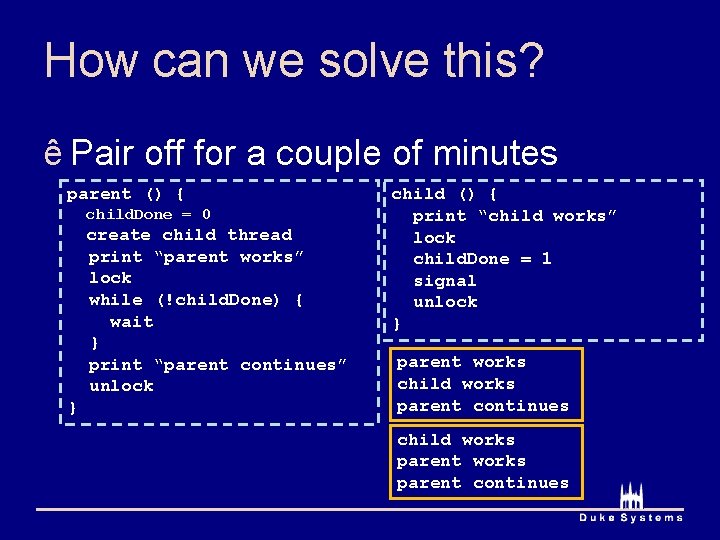

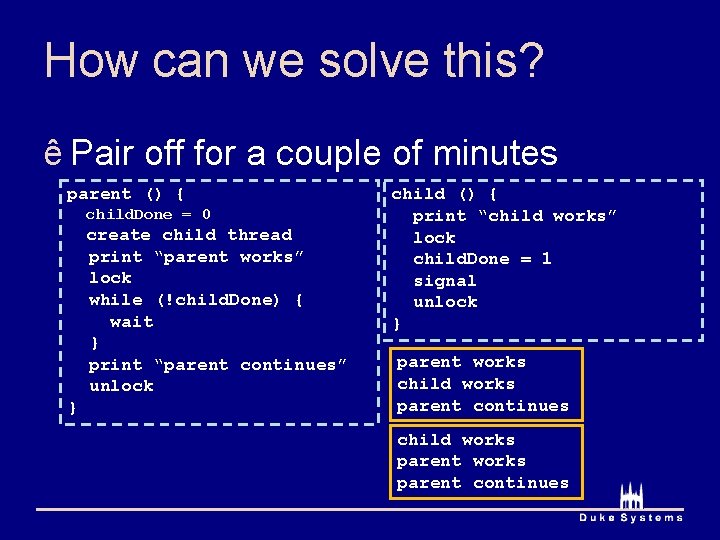

How can we solve this?
- Pair off for a couple of minutes

    parent () {
        childDone = 0
        create child thread
        print "parent works"
        lock
        while (!childDone) {
            wait
        }
        print "parent continues"
        unlock
    }

    child () {
        print "child works"
        lock
        childDone = 1
        signal
        unlock
    }

Output:

    parent works
    child works
    parent continues
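
The same idea, a shared flag checked in a loop around wait, can be sketched with Python's `threading.Condition` standing in for the lock and condition variable. The names are hypothetical, and this is one possible rendering rather than the course's thread library.

```python
import threading


def parent_and_child():
    events = []
    cond = threading.Condition()          # a lock plus a condition variable
    state = {"child_done": False}         # shared flag, protected by the lock

    def child():
        events.append("child works")
        with cond:                        # lock
            state["child_done"] = True
            cond.notify()                 # signal
                                          # unlock on leaving the with-block

    threading.Thread(target=child).start()   # create child thread
    events.append("parent works")
    with cond:                            # lock
        while not state["child_done"]:    # flag survives even if signal came first
            cond.wait()
        events.append("parent continues")
    return events


if __name__ == "__main__":
    print(parent_and_child())
```

If the child signals before the parent takes the lock, the parent sees the flag already set and never waits, which is exactly the case the plain wait/signal version gets wrong.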

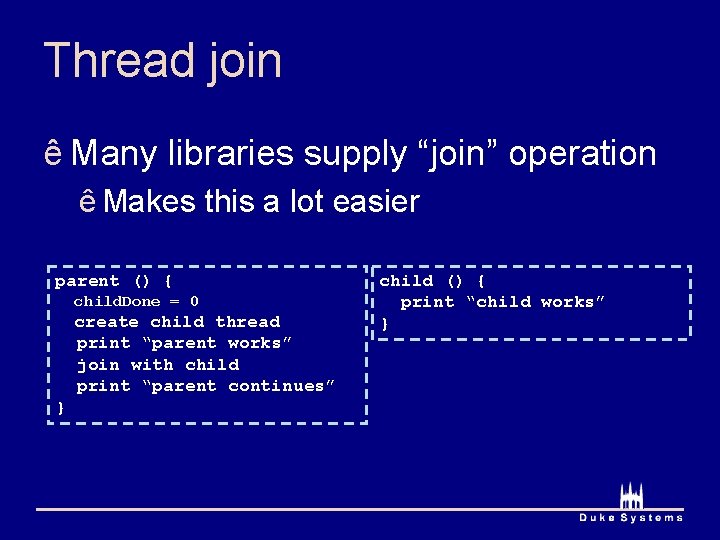

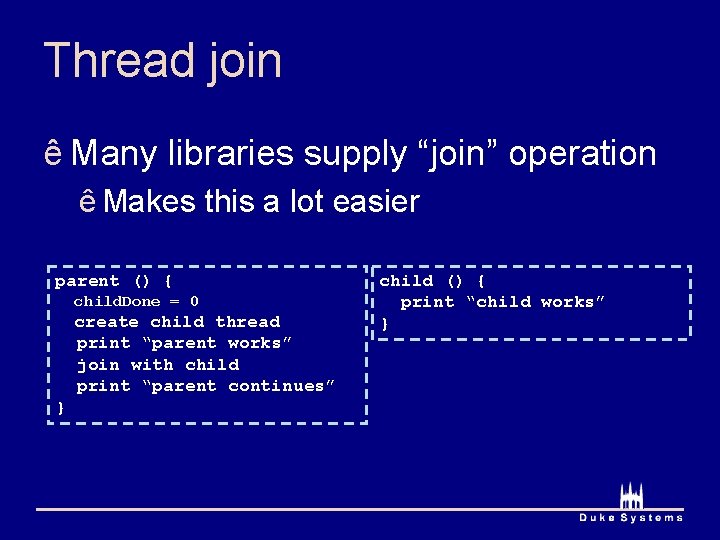

Thread join
- Many libraries supply “join” operation
  - Makes this a lot easier

    parent () {
        childDone = 0
        create child thread
        print "parent works"
        join with child
        print "parent continues"
    }

    child () {
        print "child works"
    }
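
As one example of a library-supplied join, Python's `threading.Thread.join` blocks the caller until the target thread has finished. The sketch below mirrors the pseudocode; the function names are hypothetical and no flag or explicit lock is needed.

```python
import threading


def parent():
    events = []

    def child():
        events.append("child works")

    t = threading.Thread(target=child)   # create child thread
    t.start()
    events.append("parent works")
    t.join()                             # join with child: returns after child exits
    events.append("parent continues")
    return events


if __name__ == "__main__":
    print(parent())
```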