CPE 626 CPU Resources: ARM Cache Memories


Aleksandar Milenkovic, E-mail: milenka@ece.uah.edu, Web: http://www.ece.uah.edu/~milenka

On-chip RAM
- On-chip memory is essential if a processor is to deliver its best performance:
  - zero-wait-state access speed
  - power efficiency
  - reduced electromagnetic interference
- In many embedded systems, simple on-chip RAM is preferred to a cache
  - Advantages: simpler, cheaper, and lower power; more deterministic behavior
  - Disadvantages: requires explicit control by the programmer

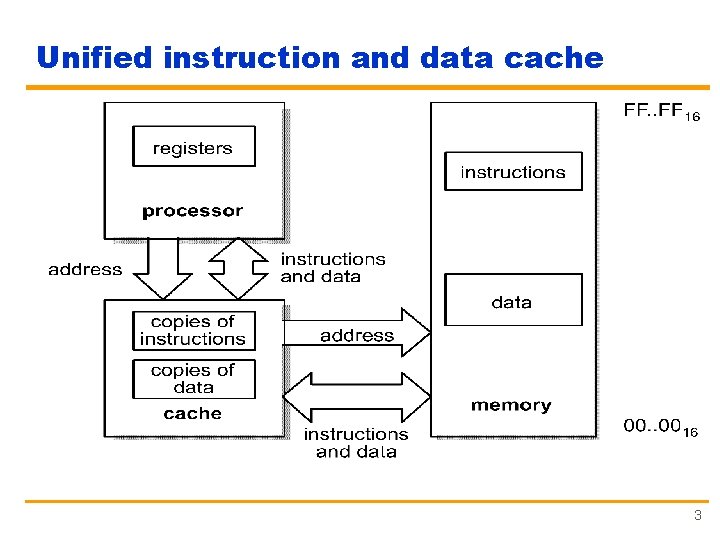

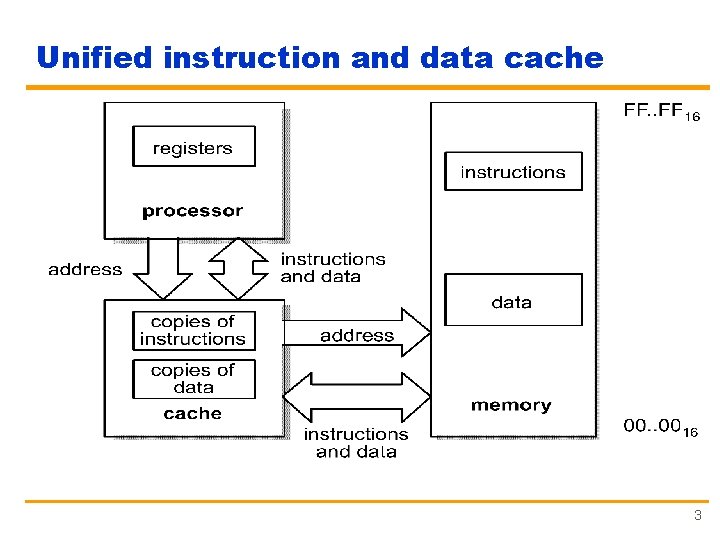

Unified instruction and data cache

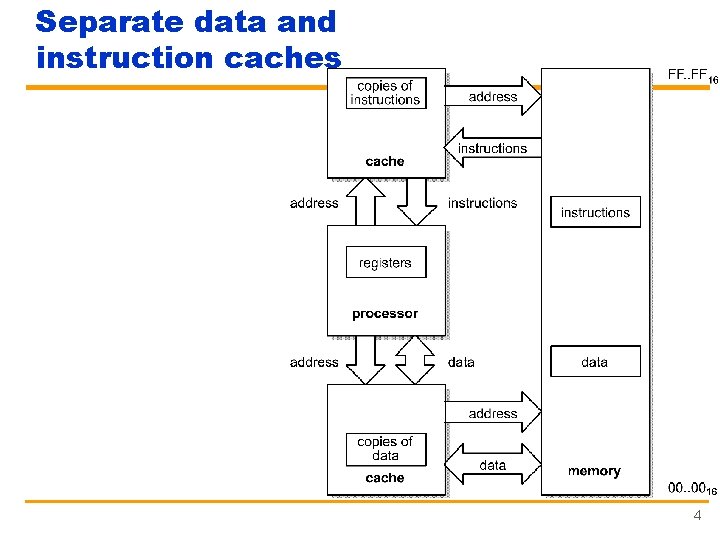

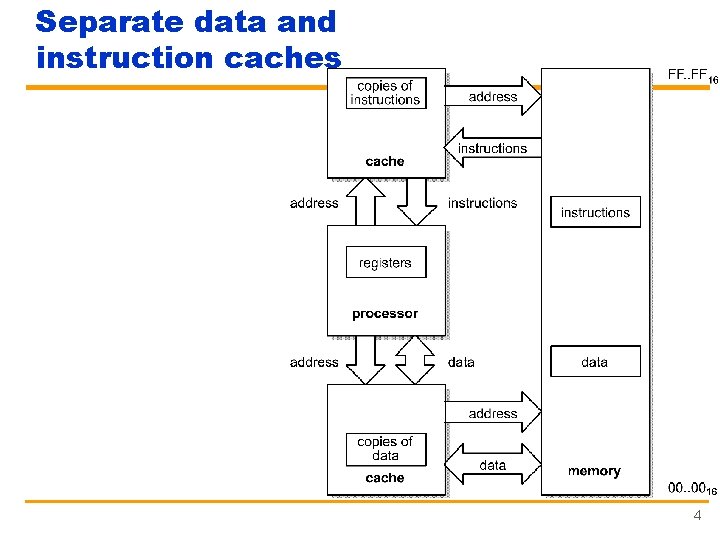

Separate data and instruction caches

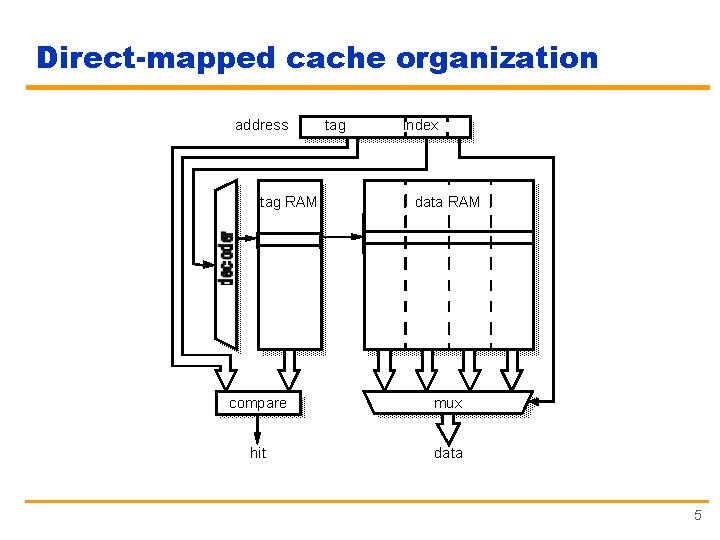

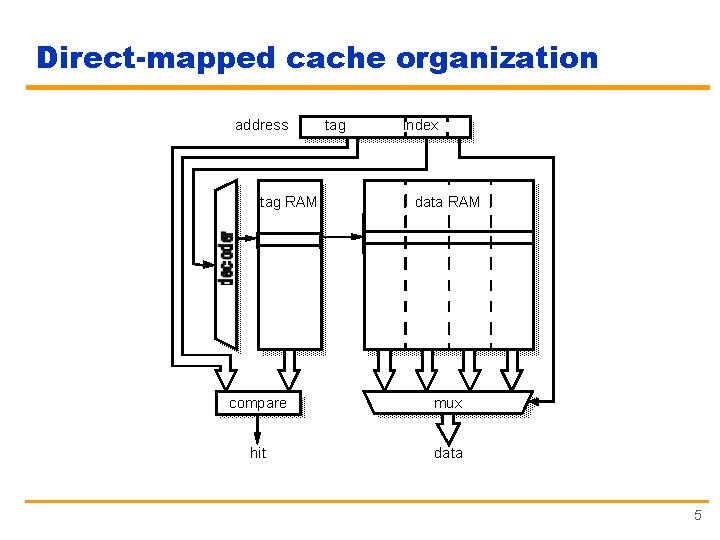

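Direct-mapped cache organization (figure: the address is split into tag and index; the index selects an entry in the tag RAM and the data RAM, a comparator checks the stored tag to produce the hit signal, and a multiplexer delivers the data)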
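The lookup in the figure can be sketched in Python. This is a minimal, tag-only model (no data array) under assumed parameters: 32-bit byte addresses, a 4 KB cache and 16-byte lines, which gives 256 lines, a 4-bit offset, an 8-bit index and a 20-bit tag. The class and method names are hypothetical.

```python
class DirectMappedCache:
    """Tag-only model of a direct-mapped cache (no data storage)."""

    def __init__(self, size_bytes=4096, line_bytes=16):
        self.line_bytes = line_bytes
        self.num_lines = size_bytes // line_bytes
        self.offset_bits = line_bytes.bit_length() - 1     # log2(line size), power of two assumed
        self.index_bits = self.num_lines.bit_length() - 1  # log2(number of lines)
        self.tags = [None] * self.num_lines                # None marks an invalid line

    def split(self, addr):
        """Return the (tag, index, offset) fields of a byte address."""
        offset = addr & (self.line_bytes - 1)
        index = (addr >> self.offset_bits) & (self.num_lines - 1)
        tag = addr >> (self.offset_bits + self.index_bits)
        return tag, index, offset

    def access(self, addr):
        """Return True on a hit; on a miss, fill the line and return False."""
        tag, index, _ = self.split(addr)
        if self.tags[index] == tag:  # the single tag comparison
            return True
        self.tags[index] = tag       # the only possible place for this line
        return False


cache = DirectMappedCache()
print(cache.split(0x12345678))  # (74565, 103, 8)
```

Addresses exactly 4 KB apart share an index, so alternating between them misses every time even when the rest of the cache is empty; this conflict behavior is what associativity is meant to reduce.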

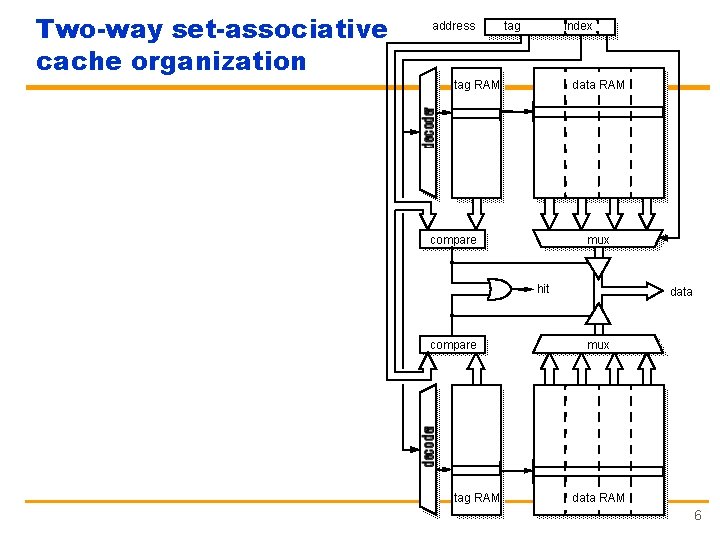

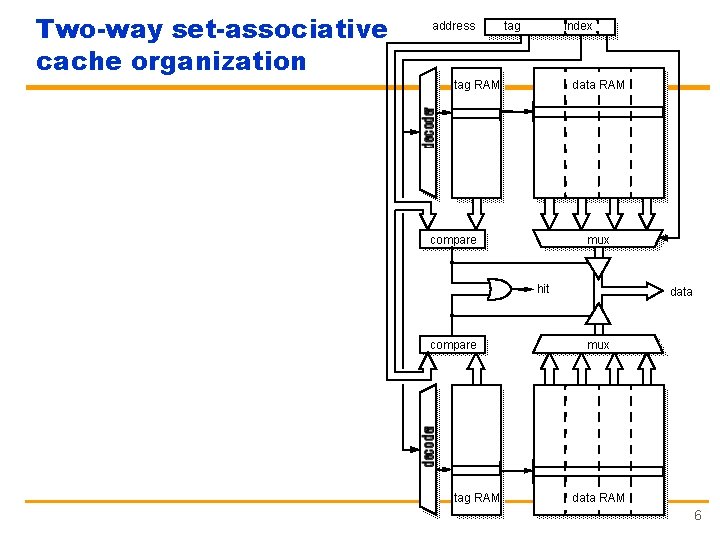

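Two-way set-associative cache organization (figure: the address tag and index; the index selects one entry in each of two tag RAMs and two data RAMs, each way has its own comparator, and a multiplexer delivers the data from the way that hits)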
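A two-way set-associative lookup compares the tag in both ways of the selected set and, on a miss, must choose a victim. The sketch below is a hypothetical, tag-only model; LRU replacement is an assumption (the figure does not fix a policy), as are the 16-byte lines and the 4 KB default size.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Tag-only model of an N-way set-associative cache with LRU replacement."""

    def __init__(self, size_bytes=4096, line_bytes=16, ways=2):
        self.line_bytes = line_bytes
        self.ways = ways
        self.num_sets = size_bytes // (line_bytes * ways)
        # One OrderedDict per set; keys are tags, ordered least to most recently used.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]

    def access(self, addr):
        """Return True on a hit, False on a miss (the line is then allocated)."""
        line_addr = addr // self.line_bytes
        index = line_addr % self.num_sets
        tag = line_addr // self.num_sets
        s = self.sets[index]
        if tag in s:                # hardware compares all ways of the set in parallel
            s.move_to_end(tag)      # mark as most recently used
            return True
        if len(s) == self.ways:
            s.popitem(last=False)   # evict the least recently used way
        s[tag] = True
        return False


cache = SetAssociativeCache()
print([cache.access(a) for a in (0x0, 0x800, 0x0, 0x800)])  # [False, False, True, True]
```

With `ways=1` the model behaves as a direct-mapped cache, and with a single set (`ways` equal to the number of lines) it becomes fully associative. Real two-way hardware needs only one LRU bit per set rather than an ordered dictionary.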

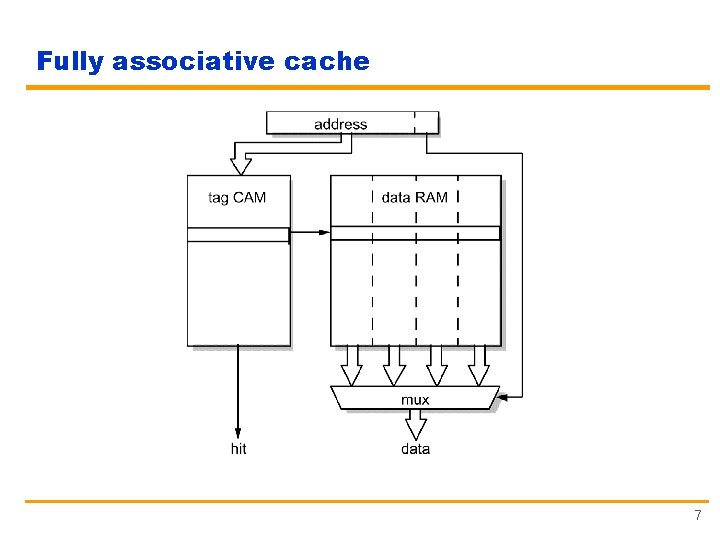

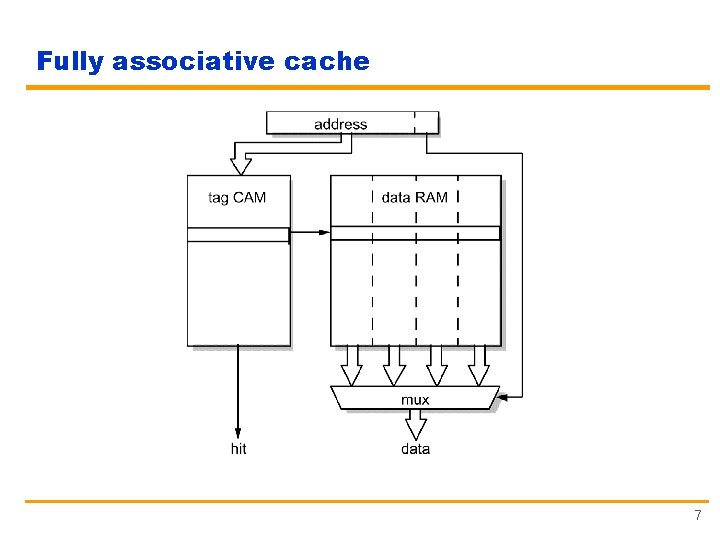

Fully associative cache

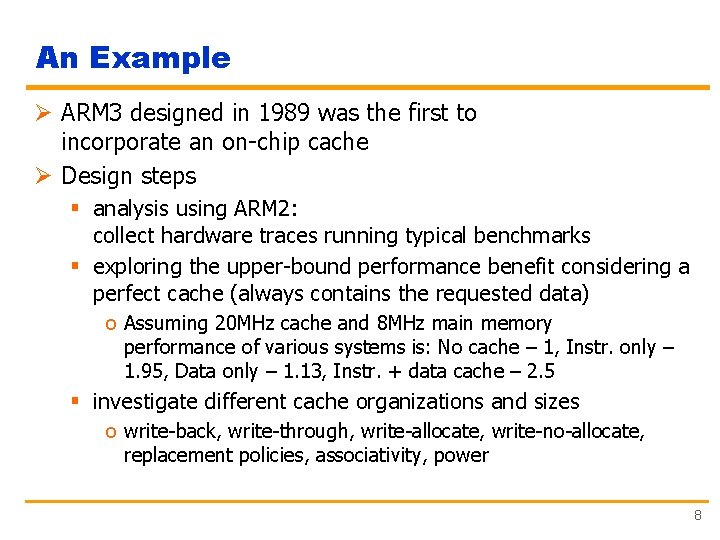

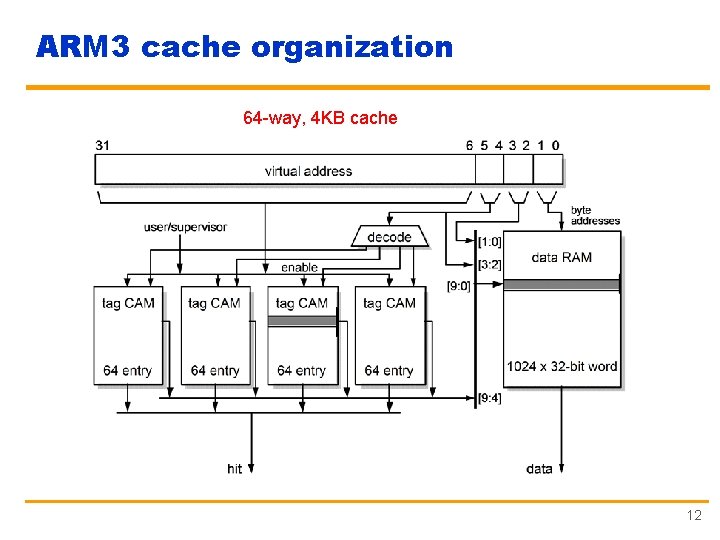

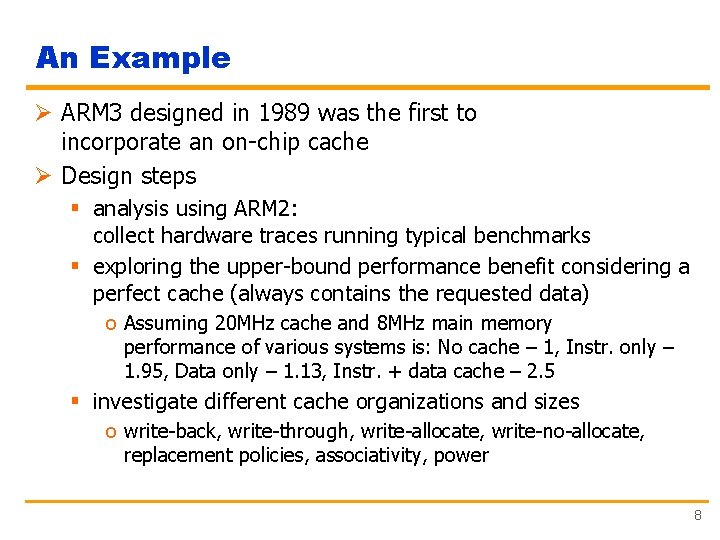

An Example
- ARM3, designed in 1989, was the first ARM processor to incorporate an on-chip cache
- Design steps
  - analysis using ARM2: collect hardware traces while running typical benchmarks
  - explore the upper-bound performance benefit by considering a perfect cache (one that always contains the requested data)
    - assuming a 20 MHz cache and 8 MHz main memory, the relative performance of various systems is: no cache 1, instruction cache only 1.95, data cache only 1.13, instruction + data cache 2.5
  - investigate different cache organizations and sizes
    - write-back, write-through, write-allocate, write-no-allocate, replacement policies, associativity, power

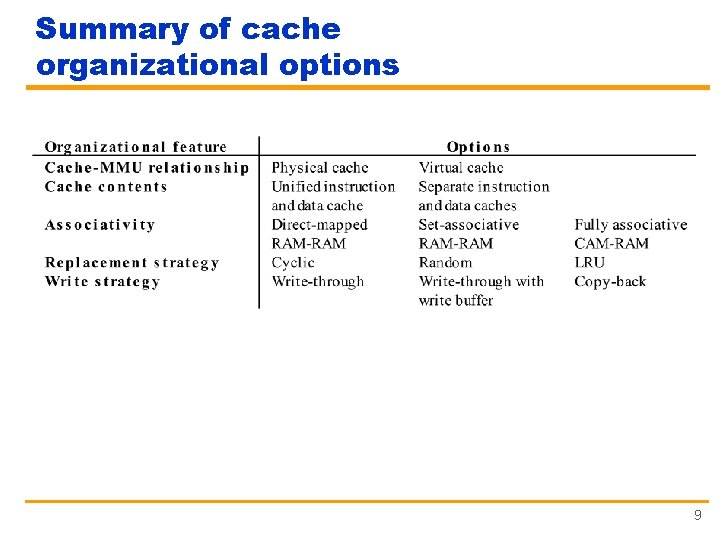

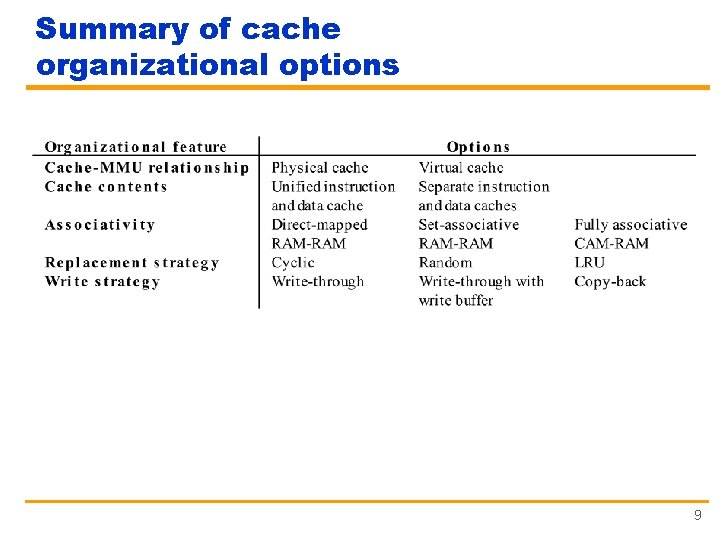

Summary of cache organizational options

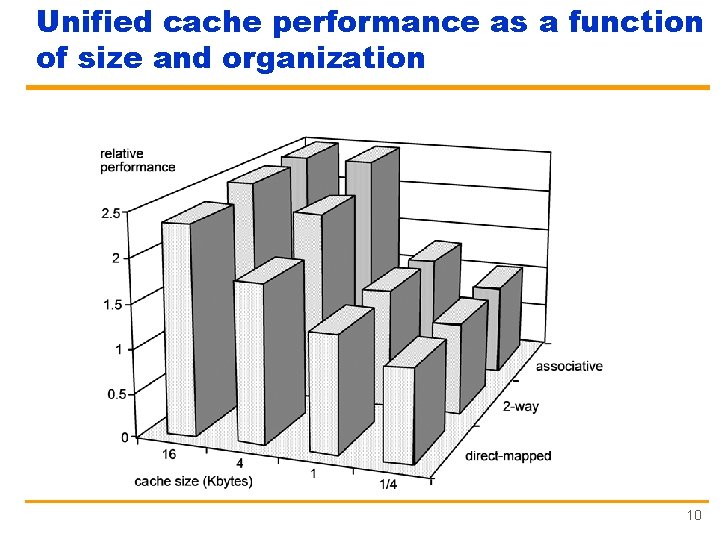

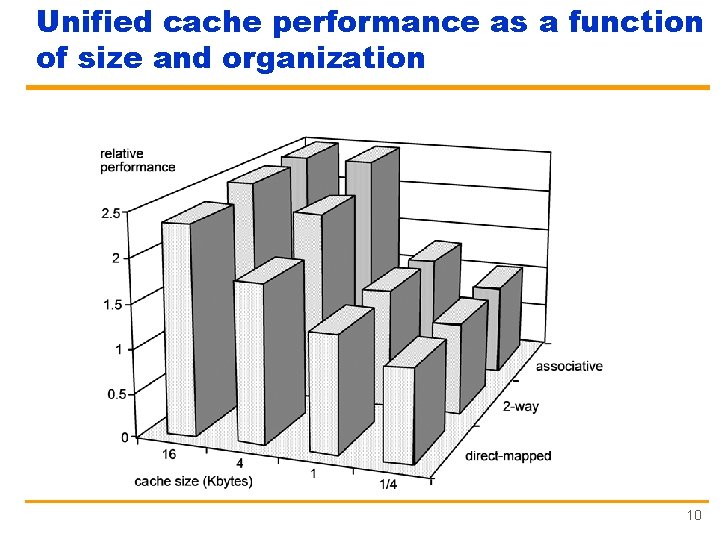

Unified cache performance as a function of size and organization

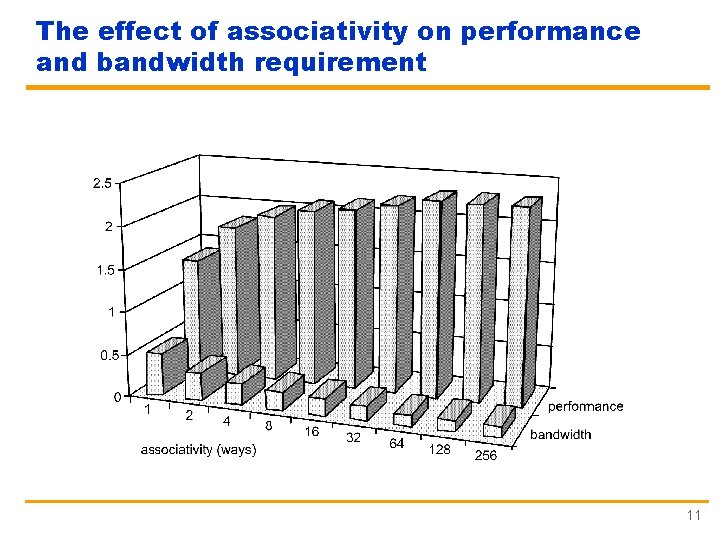

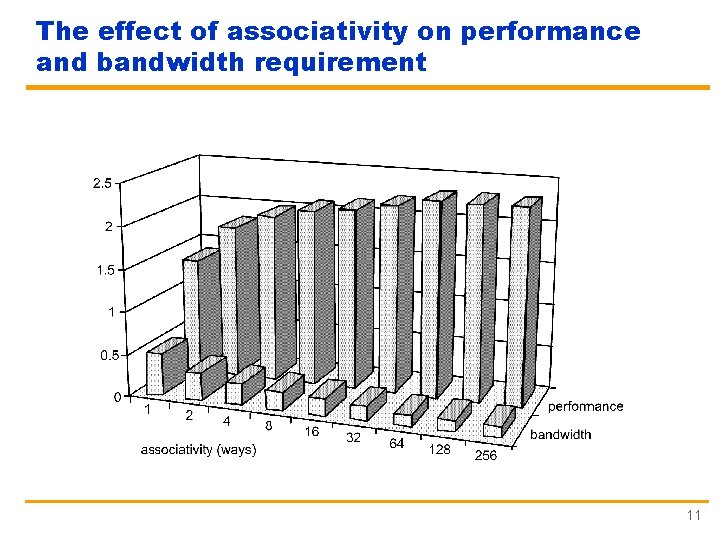

The effect of associativity on performance and bandwidth requirement

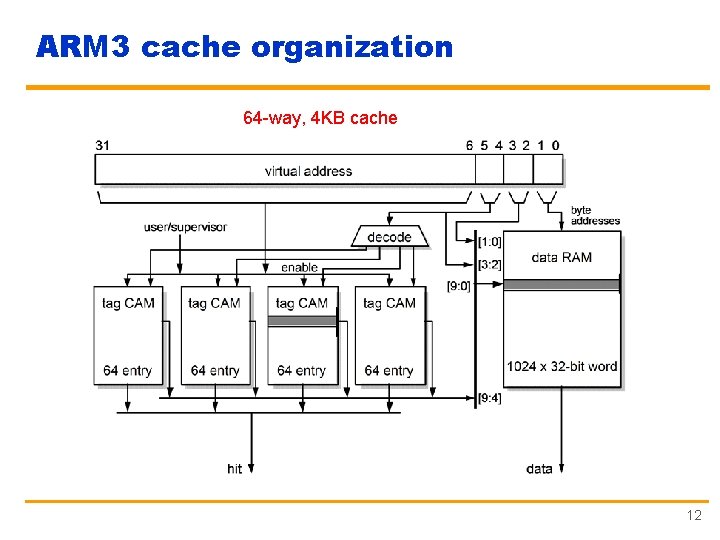

ARM3 cache organization: 64-way set-associative, 4 KB cache
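To see what "64-way, 4 KB" implies for the address fields, the geometry can be computed. The slide gives only capacity and associativity; the 16-byte (four-word) line is an assumption, and the 26-bit address width reflects the 26-bit address space of the early ARM architecture that ARM3 implemented. `cache_geometry` is a hypothetical helper that assumes power-of-two sizes.

```python
def cache_geometry(size_bytes, ways, line_bytes, addr_bits=32):
    """Return (lines, sets, offset_bits, index_bits, tag_bits) for a cache."""
    lines = size_bytes // line_bytes
    sets = lines // ways
    offset_bits = line_bytes.bit_length() - 1  # log2(line size)
    index_bits = sets.bit_length() - 1         # log2(number of sets)
    tag_bits = addr_bits - index_bits - offset_bits
    return lines, sets, offset_bits, index_bits, tag_bits


# ARM3: 4 KB and 64-way from the slide; 16-byte lines assumed; 26-bit addresses.
print(cache_geometry(4096, 64, 16, addr_bits=26))  # (256, 4, 4, 2, 20)
```

With only 4 sets, every lookup must compare the address tag against the 64 stored tags of the selected set in parallel.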

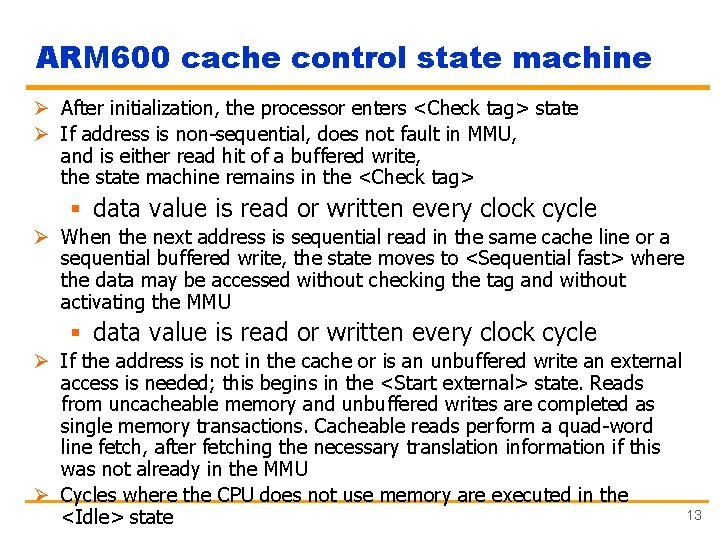

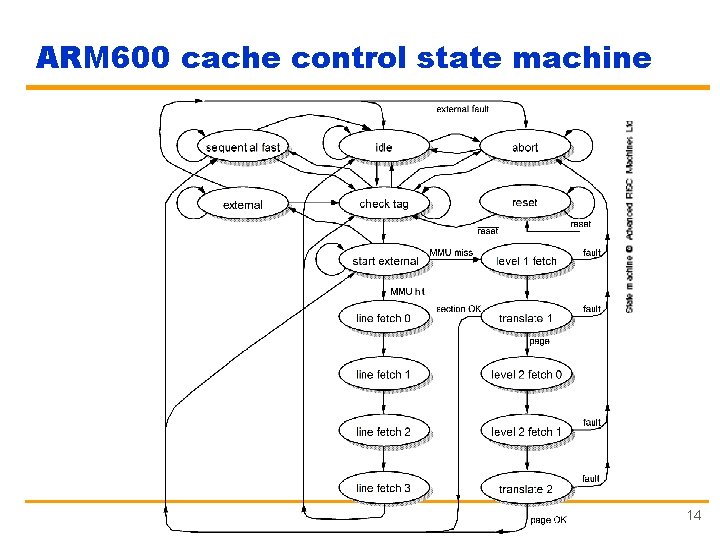

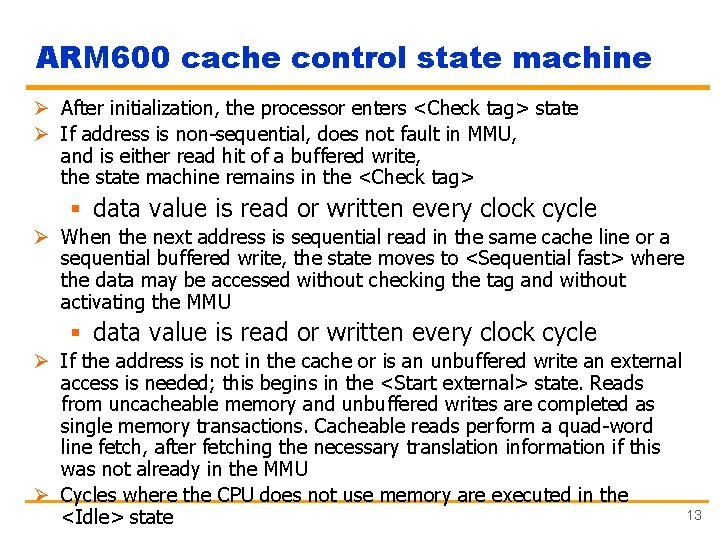

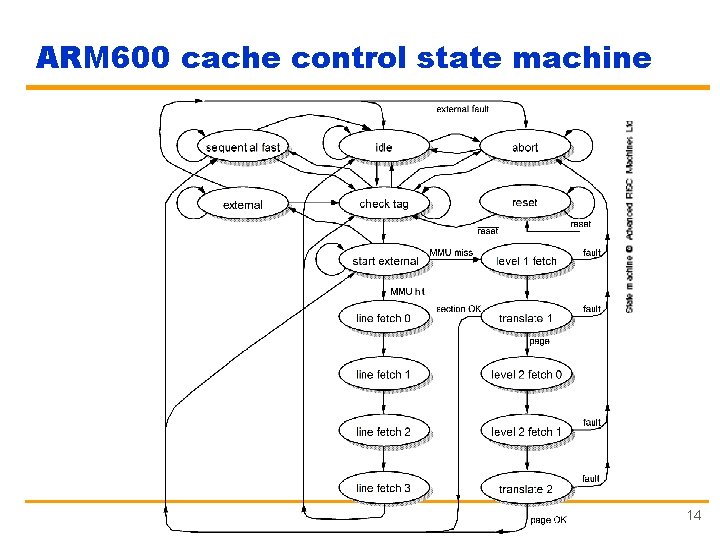

ARM600 cache control state machine
- After initialization, the processor enters the <Check tag> state
- If the address is non-sequential, does not fault in the MMU, and is either a read hit or a buffered write, the state machine remains in <Check tag>
  - a data value is read or written every clock cycle
- When the next address is a sequential read in the same cache line or a sequential buffered write, the state moves to <Sequential fast>, where the data may be accessed without checking the tag and without activating the MMU
  - a data value is read or written every clock cycle
- If the address is not in the cache or is an unbuffered write, an external access is needed; this begins in the <Start external> state. Reads from uncacheable memory and unbuffered writes are completed as single memory transactions. Cacheable reads perform a quad-word line fetch, after first fetching the necessary translation information if it is not already in the MMU
- Cycles in which the CPU does not use memory are executed in the <Idle> state
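The transitions described above can be summarized as a next-state function. This is a simplified Python sketch: the boolean inputs are hypothetical names, MMU faults (aborts) and the multi-cycle progress of an external access are not modelled, and a sequential read in the same line is assumed to follow an access that has already checked the tag.

```python
from enum import Enum, auto


class State(Enum):
    CHECK_TAG = auto()
    SEQUENTIAL_FAST = auto()
    START_EXTERNAL = auto()
    IDLE = auto()


def next_state(uses_memory, is_write=False, sequential=False,
               same_line=False, buffered=False, hit=False):
    """Choose the controller state for the next access (simplified, no MMU faults)."""
    if not uses_memory:
        return State.IDLE             # the CPU does not use memory this cycle
    if sequential and ((not is_write and same_line) or (is_write and buffered)):
        return State.SEQUENTIAL_FAST  # no tag check, MMU not activated
    if (not is_write and hit) or (is_write and buffered):
        return State.CHECK_TAG        # read hit or buffered write: one access per clock
    return State.START_EXTERNAL       # read miss, uncacheable read, or unbuffered write


print(next_state(True, sequential=True, same_line=True).name)  # SEQUENTIAL_FAST
```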

ARM600 cache control state machine

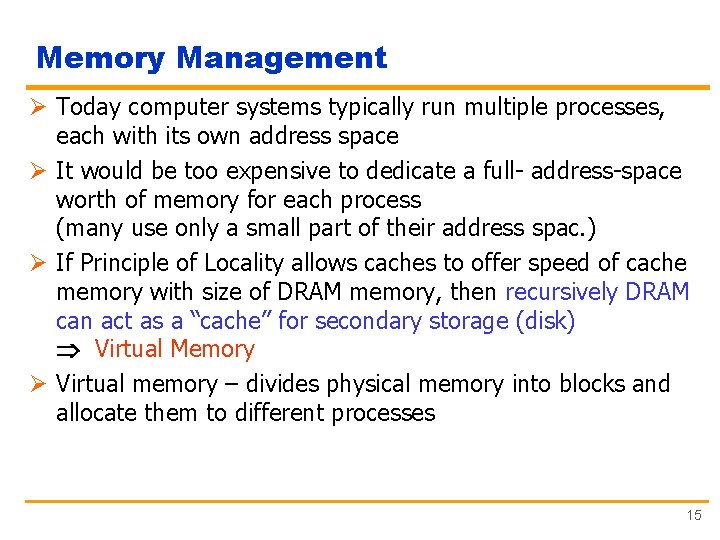

Memory Management
- Today's computer systems typically run multiple processes, each with its own address space
- It would be too expensive to dedicate a full address space's worth of memory to each process (many use only a small part of their address space)
- If the principle of locality allows caches to offer the speed of cache memory with the size of DRAM, then, recursively, DRAM can act as a “cache” for secondary storage (disk)

Virtual Memory
- Virtual memory divides physical memory into blocks and allocates them to different processes

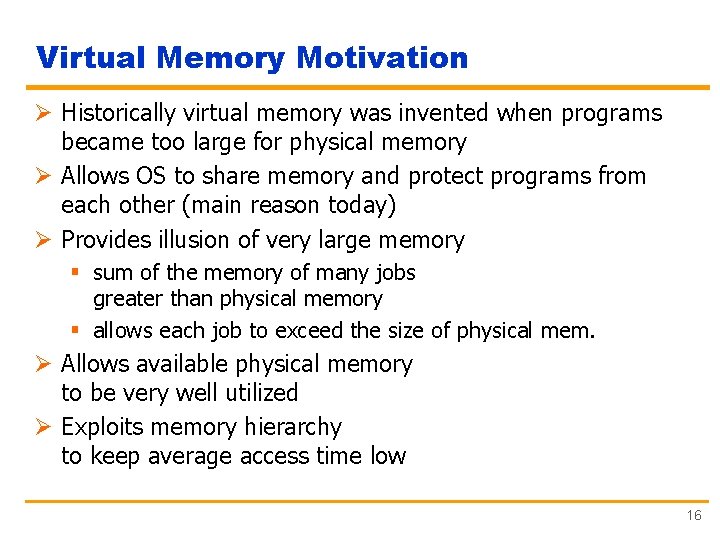

Virtual Memory Motivation
- Historically, virtual memory was invented when programs became too large for physical memory
- Allows the OS to share memory and protect programs from each other (the main reason today)
- Provides the illusion of a very large memory
  - the sum of the memory of many jobs can be greater than physical memory
  - allows each job to exceed the size of physical memory
- Allows available physical memory to be very well utilized
- Exploits the memory hierarchy to keep the average access time low

Virtual Memory Terminology
- Virtual address: the address used by the programmer; the CPU produces virtual addresses
- Virtual address space: the collection of such addresses
- Memory (physical or real) address: the address of a word in physical memory
- Memory mapping or address translation: the process of translating virtual addresses to physical addresses

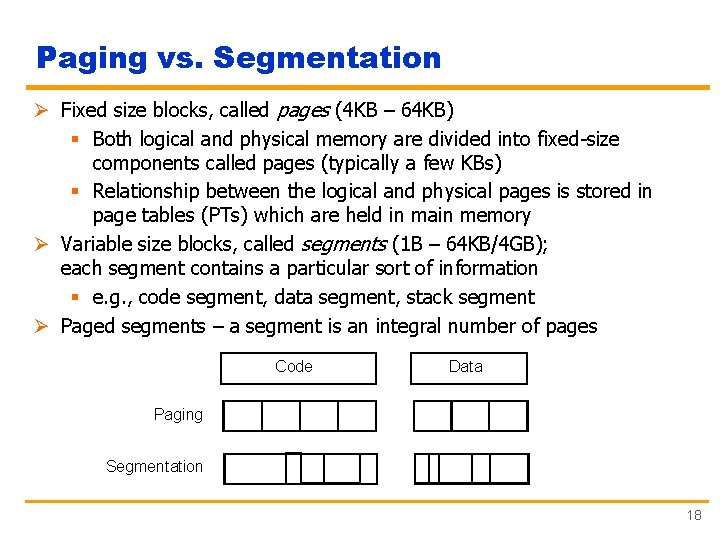

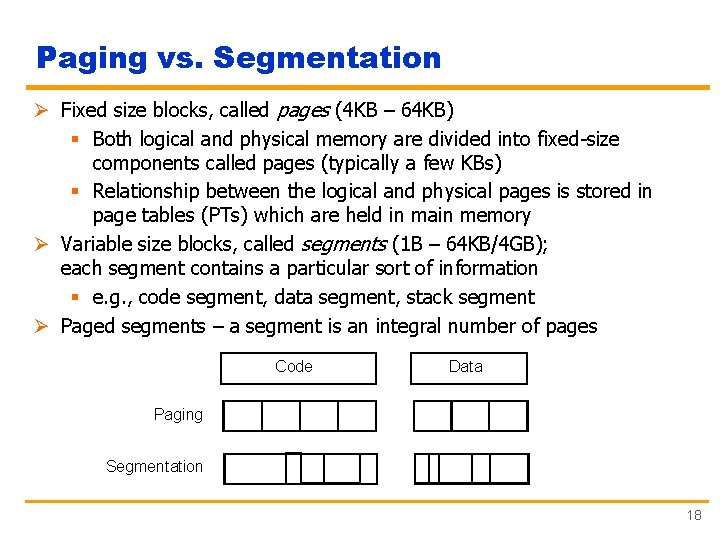

Paging vs. Segmentation
- Fixed-size blocks, called pages (4 KB – 64 KB)
  - Both logical and physical memory are divided into fixed-size components called pages (typically a few KB)
  - The relationship between logical and physical pages is stored in page tables (PTs), which are held in main memory
- Variable-size blocks, called segments (1 B – 64 KB/4 GB); each segment contains a particular sort of information
  - e.g., code segment, data segment, stack segment
- Paged segments: a segment is an integral number of pages

(Figure: code and data placed in memory under paging and under segmentation)

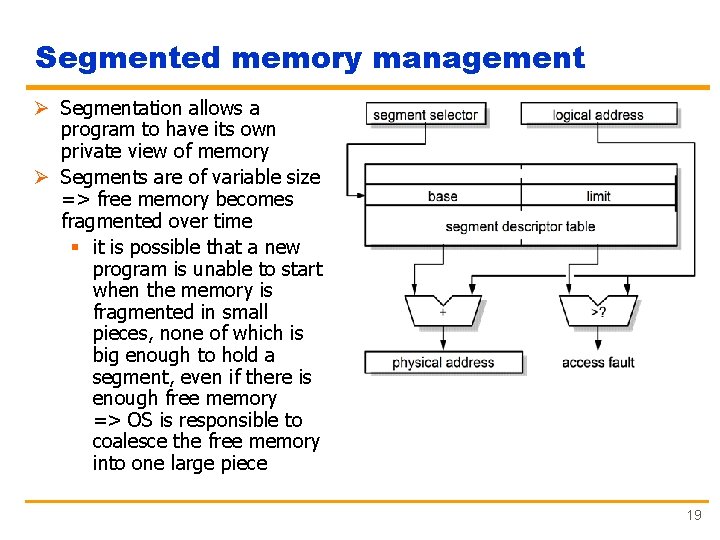

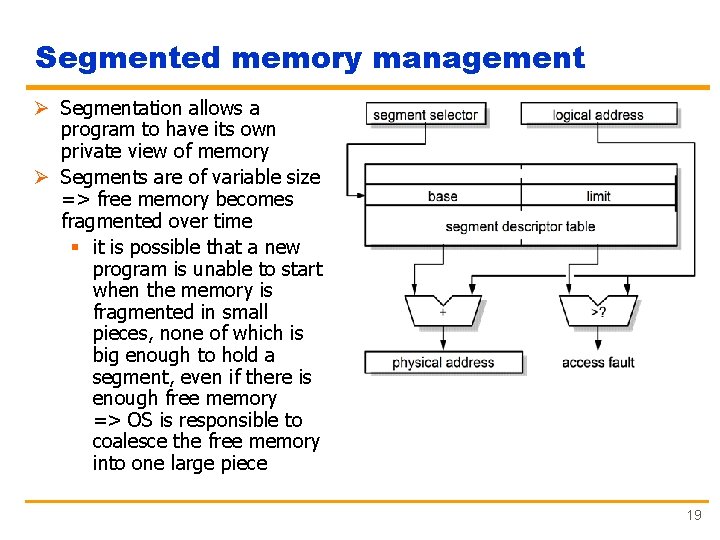

Segmented memory management
- Segmentation allows a program to have its own private view of memory
- Segments are of variable size, so free memory becomes fragmented over time
  - a new program may be unable to start when memory is fragmented into small pieces, none of which is big enough to hold a segment, even though there is enough free memory in total; the OS is then responsible for coalescing the free memory into one large piece
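The fragmentation problem can be reproduced with a simple first-fit allocator. First-fit is an assumption chosen for illustration (the slide names no allocation policy), and the hole list and sizes (in KB) are made-up values; `first_fit` is a hypothetical helper.

```python
def first_fit(holes, size):
    """Allocate `size` units from the first hole large enough; return its start or None.

    `holes` is a list of (start, length) pairs and is updated in place.
    """
    for i, (start, length) in enumerate(holes):
        if length >= size:
            if length == size:
                holes.pop(i)                             # hole used up exactly
            else:
                holes[i] = (start + size, length - size)  # shrink the hole
            return start
    return None  # no single hole is big enough


# Free memory (in KB) left between segments loaded earlier.
holes = [(8, 12), (40, 10), (70, 14)]
print(sum(length for _, length in holes), first_fit(holes, 20))  # 36 None
```

Although 36 KB is free in total, no single hole can take a 20 KB segment; the OS would have to move segments to merge the holes first.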

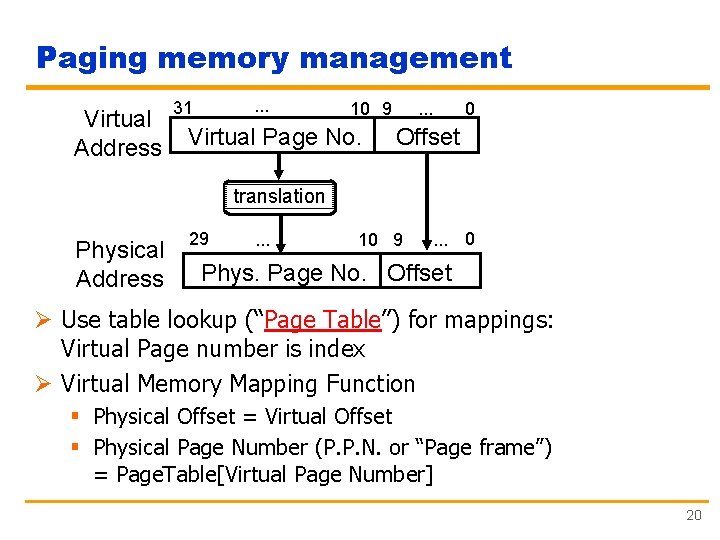

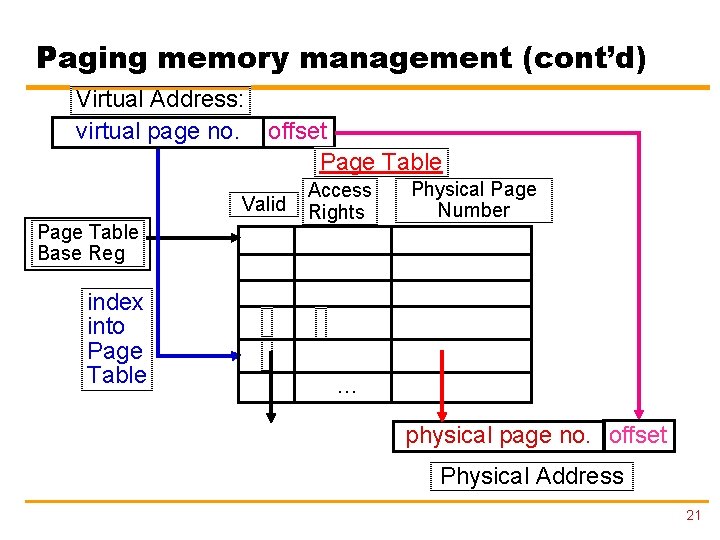

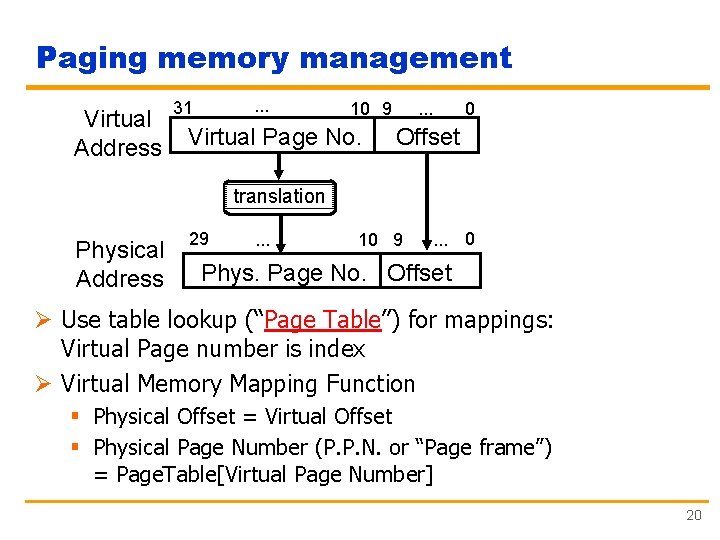

Paging memory management

(Figure: the virtual address, with the virtual page number in bits 31 to 10 and the offset in bits 9 to 0, is translated into the physical address, with the physical page number in bits 29 to 10 and the same offset in bits 9 to 0)
- Use a table lookup (the "page table") for the mappings: the virtual page number is the index
- Virtual memory mapping function
  - Physical offset = virtual offset
  - Physical page number (PPN, or "page frame") = PageTable[virtual page number]

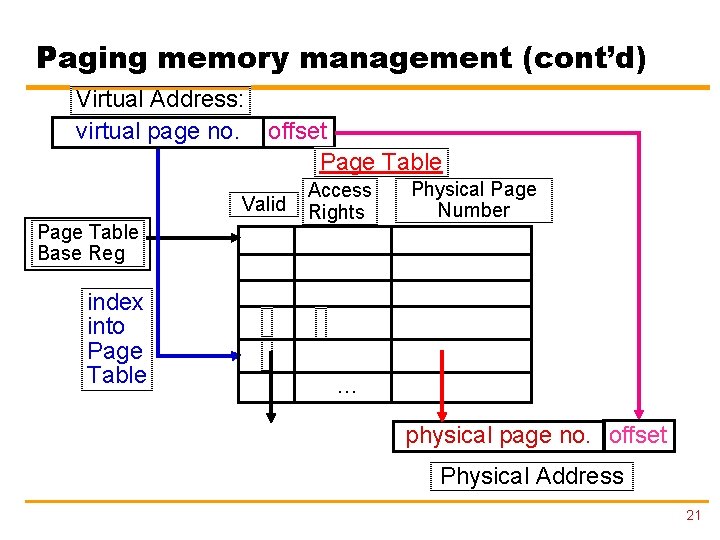

Paging memory management (cont’d)

(Figure: the page table base register locates the page table; the virtual page number of the virtual address indexes into it; each entry holds a valid bit, access rights, and the physical page number, which is combined with the offset to form the physical address)
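The mapping function can be sketched as a one-level table lookup using the field split from the figure: a 10-bit offset (1 KB pages) and the remaining upper bits as the virtual page number. In this hypothetical model a Python dict stands in for the page table located by the page table base register, and a single `writable` flag stands in for the access rights.

```python
from dataclasses import dataclass

OFFSET_BITS = 10                    # bits 9..0 are the page offset (1 KB pages)
PAGE_MASK = (1 << OFFSET_BITS) - 1


@dataclass
class PTE:
    valid: bool
    writable: bool  # hypothetical one-bit access right (reads always allowed)
    ppn: int        # physical page number ("page frame")


class PageFault(Exception):
    """Raised when the entry is missing or its valid bit is clear."""


def translate(page_table, va, write=False):
    """One-level translation; `page_table` stands for the table at the base register."""
    vpn = va >> OFFSET_BITS   # the virtual page number is the table index
    offset = va & PAGE_MASK   # physical offset = virtual offset
    pte = page_table.get(vpn)
    if pte is None or not pte.valid:
        raise PageFault(hex(va))
    if write and not pte.writable:
        raise PermissionError(f"write to read-only page at {hex(va)}")
    return (pte.ppn << OFFSET_BITS) | offset


page_table = {0x5: PTE(valid=True, writable=False, ppn=0x3A)}
print(hex(translate(page_table, 0x1404)))  # 0xe804
```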

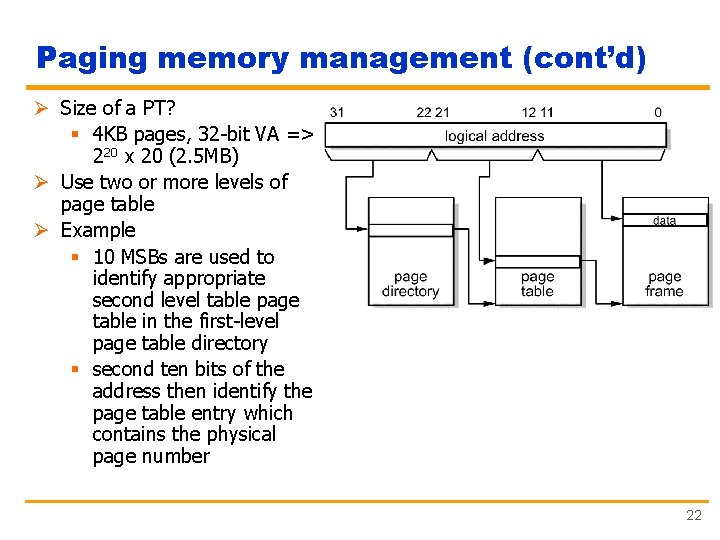

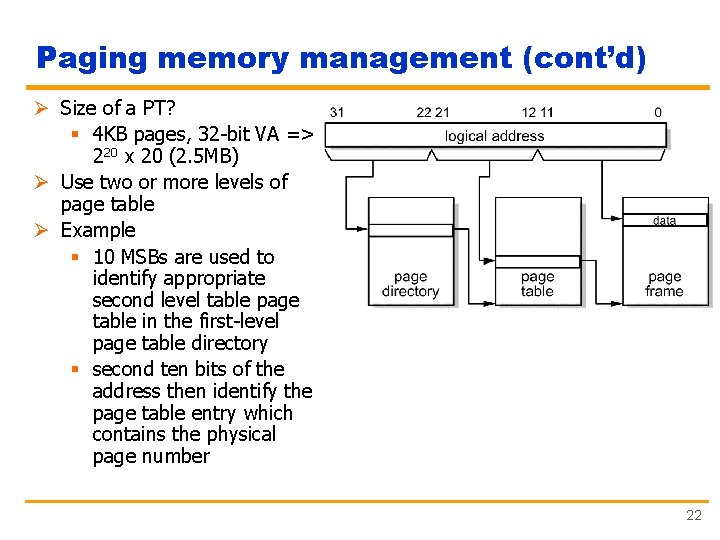

Paging memory management (cont’d)
- Size of a PT?
  - 4 KB pages and a 32-bit VA give a 20-bit virtual page number, so a flat table has 2^20 entries; at 20 bits (one physical page number) per entry, that is 2^20 × 20 bits = 2.5 MB
- Use two or more levels of page table
- Example
  - the 10 MSBs of the address index the first-level page table (the page table directory) to find the appropriate second-level page table
  - the next ten bits of the address then identify the entry in that second-level table, which contains the physical page number
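The size estimate and the two-level lookup can be checked with a short sketch. It assumes 4 KB pages and a 10/10/12 split of the 32-bit virtual address as described above; nested dicts stand in for the directory and the second-level tables, and `walk` is a hypothetical name.

```python
# Flat table size from the text: 2**20 entries of 20 bits each.
flat_bytes = (2**20 * 20) // 8
print(flat_bytes / 2**20)  # 2.5 (MB)


def walk(directory, va):
    """Two-level walk: return the physical address, or None if an entry is missing."""
    l1 = (va >> 22) & 0x3FF   # 10 MSBs select the second-level table
    l2 = (va >> 12) & 0x3FF   # next 10 bits select the entry in that table
    offset = va & 0xFFF       # 12-bit offset within a 4 KB page
    table = directory.get(l1)
    if table is None:
        return None           # no second-level table for this region: page fault
    ppn = table.get(l2)
    if ppn is None:
        return None           # page not mapped: page fault
    return (ppn << 12) | offset


directory = {0x001: {0x002: 0x7F}}
print(hex(walk(directory, 0x402345)))  # 0x7f345
```

Only second-level tables for regions that are actually in use need to exist, which is where the saving over the flat 2.5 MB table comes from.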

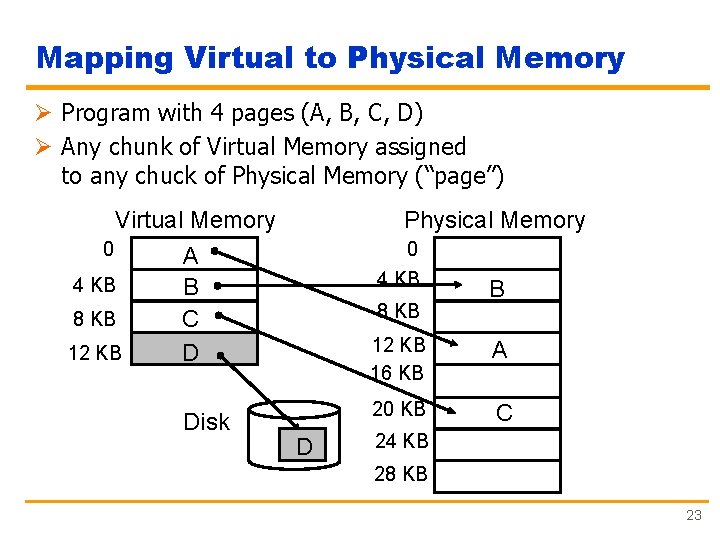

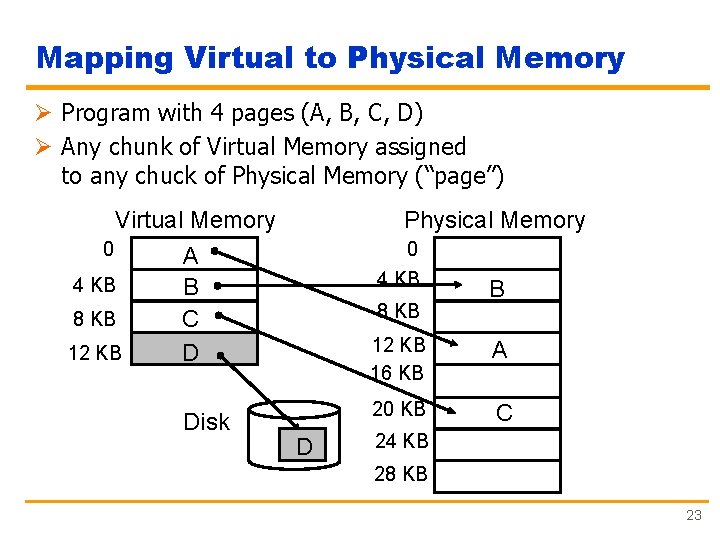

Mapping Virtual to Physical Memory
- Program with 4 pages (A, B, C, D)
- Any chunk of virtual memory can be assigned to any chunk of physical memory (a "page")

(Figure: the four 4 KB virtual pages A, B, C, D, at virtual addresses 0, 4 KB, 8 KB and 12 KB, are placed in arbitrary 4 KB frames of a physical memory spanning 0 to 28 KB, or on disk)

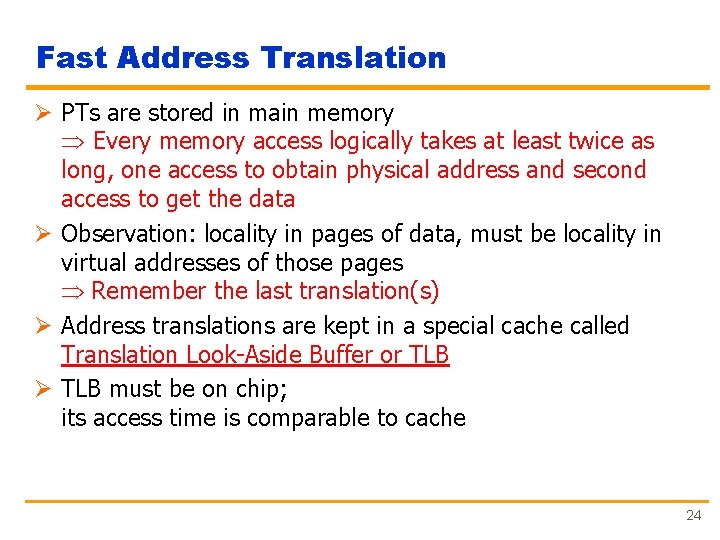

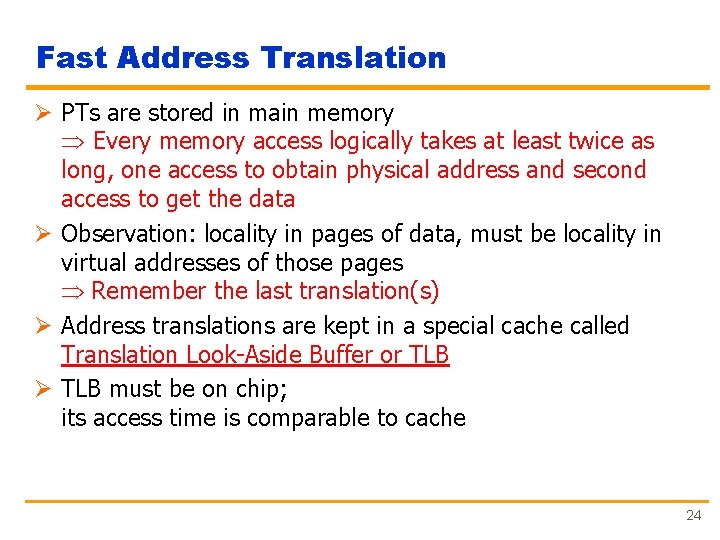

Fast Address Translation
- PTs are stored in main memory, so every memory access logically takes at least twice as long: one access to obtain the physical address and a second access to get the data
- Observation: since there is locality in pages of data, there must be locality in the virtual addresses of those pages, so remember the last translation(s)
- Address translations are kept in a special cache called the Translation Look-Aside Buffer, or TLB
- The TLB must be on chip; its access time is comparable to that of the cache

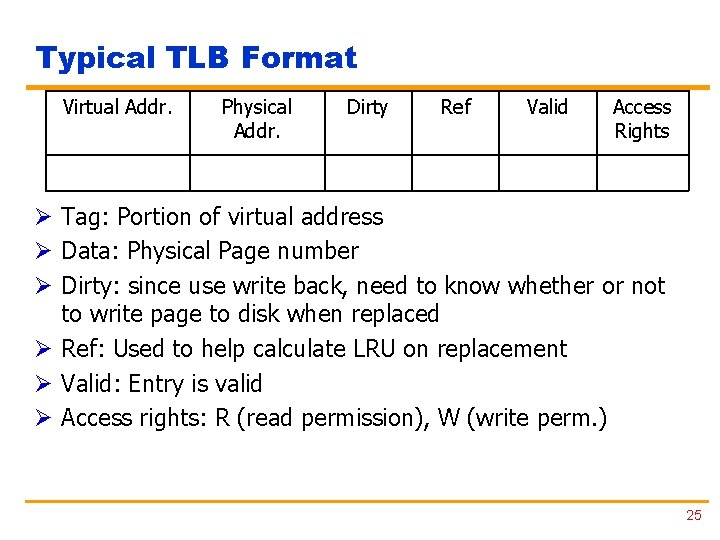

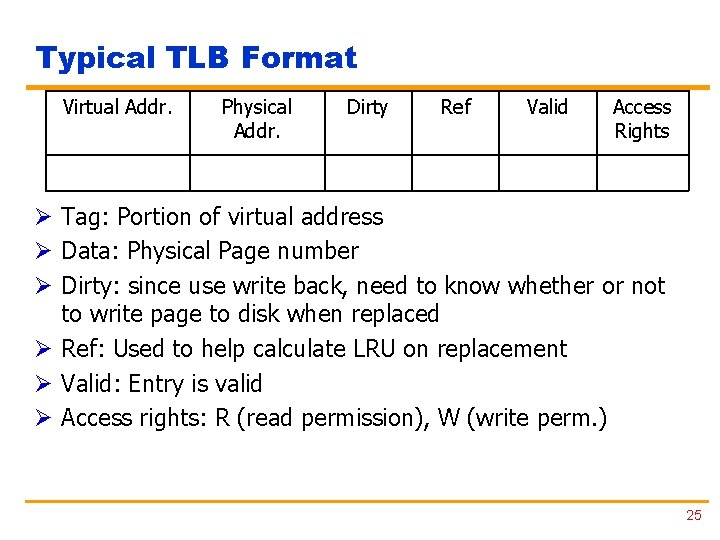

Typical TLB Format

| Virtual Addr. | Physical Addr. | Dirty | Ref | Valid | Access Rights |
|---|---|---|---|---|---|

- Tag: portion of the virtual address
- Data: physical page number
- Dirty: since pages are written back, we need to know whether or not to write the page to disk when it is replaced
- Ref: used to help approximate LRU on replacement
- Valid: the entry is valid
- Access rights: R (read permission), W (write permission)

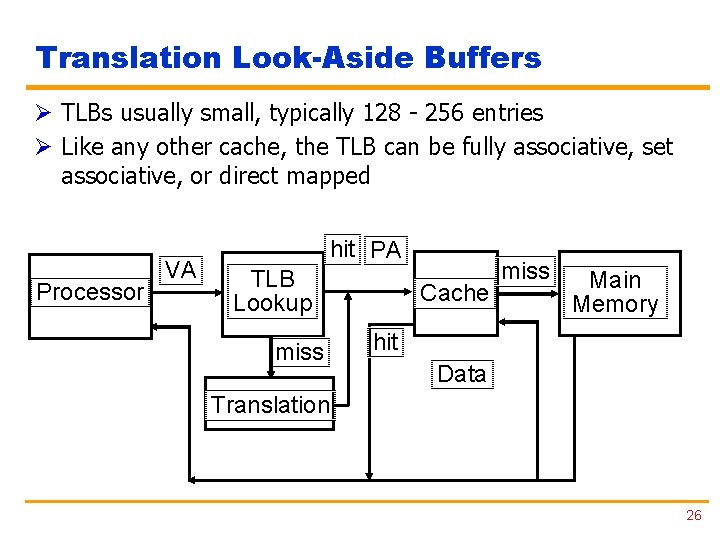

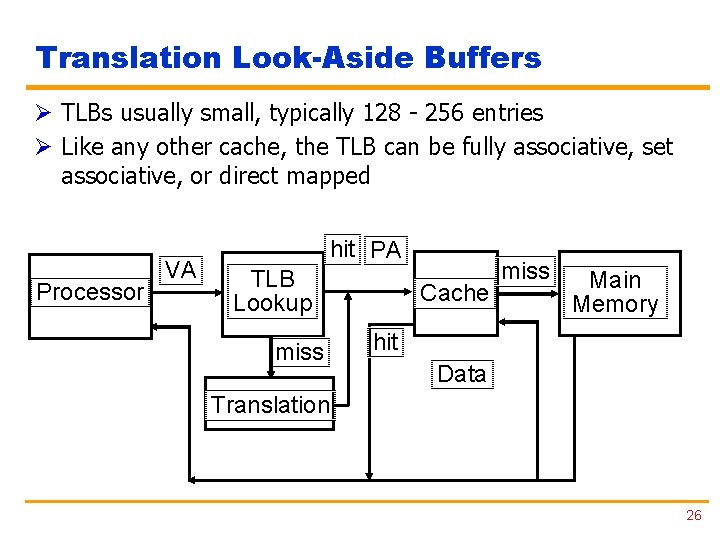

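Translation Look-Aside Buffers
- TLBs are usually small, typically 128 - 256 entries
- Like any other cache, the TLB can be fully associative, set associative, or direct mapped

(Figure: the processor sends the VA to the TLB lookup; on a hit the PA goes to the cache, on a miss the translation is obtained from main memory; a cache hit returns the data, a cache miss goes to main memory)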

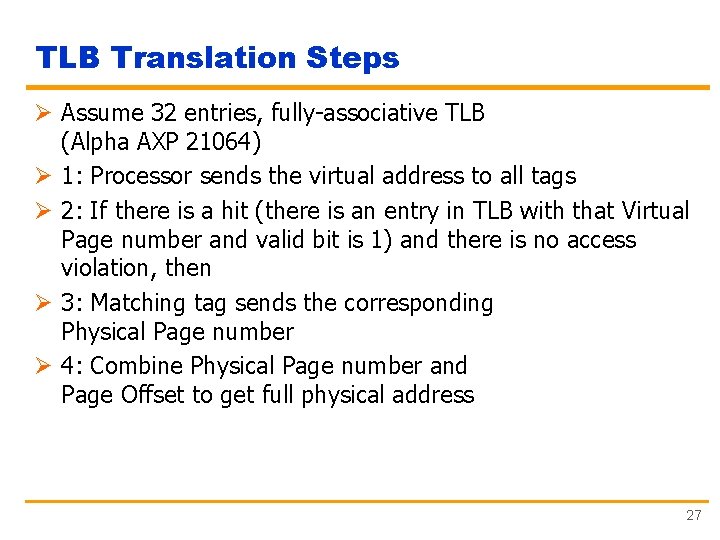

TLB Translation Steps
- Assume a 32-entry, fully associative TLB (Alpha AXP 21064)

1. The processor sends the virtual page number of the virtual address to all tags
2. If there is a hit (an entry in the TLB with that virtual page number and its valid bit set to 1) and there is no access violation, then
3. the matching tag sends out the corresponding physical page number
4. The physical page number and the page offset are combined to form the full physical address
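The four steps map directly onto a lookup function. In hardware all tags are compared in parallel; the loop below only models that. The 8 KB page size (13 offset bits) is an assumption matching the Alpha AXP page size, the `writable` flag simplifies the access rights, and `TLBEntry` and `tlb_lookup` are hypothetical names.

```python
from dataclasses import dataclass

PAGE_BITS = 13  # assumed 8 KB pages


@dataclass
class TLBEntry:
    vpn: int                 # tag: virtual page number
    ppn: int                 # data: physical page number
    valid: bool = True
    dirty: bool = False
    ref: bool = False
    writable: bool = False   # simplified access rights: reads always allowed


def tlb_lookup(tlb, va, write=False):
    """Return the physical address on a TLB hit, or None on a miss."""
    vpn, offset = va >> PAGE_BITS, va & ((1 << PAGE_BITS) - 1)
    for e in tlb:                               # step 1: compare against every tag
        if e.valid and e.vpn == vpn:            # step 2: hit on a valid entry
            if write and not e.writable:
                raise PermissionError(hex(va))  # access violation
            e.ref = True
            e.dirty = e.dirty or write
            return (e.ppn << PAGE_BITS) | offset  # steps 3-4: PPN + page offset
    return None  # miss: the page table must be consulted


tlb = [TLBEntry(vpn=3, ppn=0x41, writable=True)]  # a real TLB would hold up to 32
print(hex(tlb_lookup(tlb, 0x6010)))  # 0x82010
```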

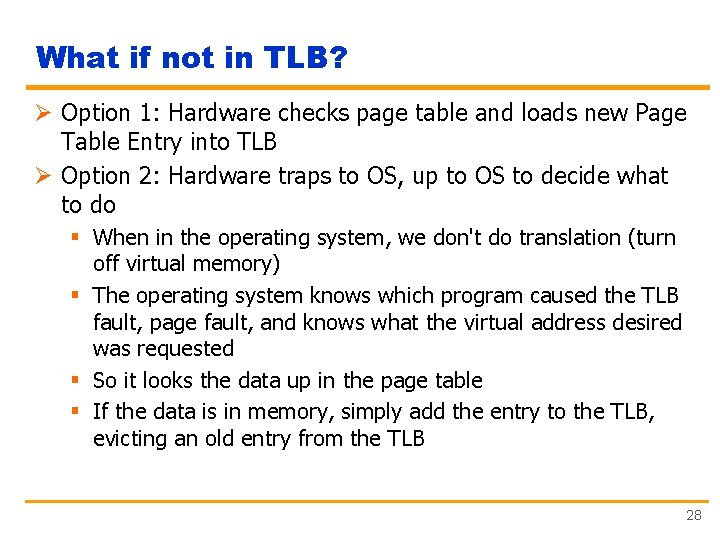

What if not in TLB?
- Option 1: hardware checks the page table and loads the new page table entry into the TLB
- Option 2: hardware traps to the OS, and it is up to the OS to decide what to do
  - While in the operating system, we don't do translation (virtual memory is turned off)
  - The operating system knows which program caused the TLB fault or page fault, and which virtual address was requested
  - So it looks the data up in the page table
  - If the data is in memory, it simply adds the entry to the TLB, evicting an old entry from the TLB
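Option 2 (a software-managed TLB) can be sketched as a refill routine. The eviction choice is an assumption: the entry that has been in the TLB longest is removed (FIFO order kept by an `OrderedDict`), since the slide only says that an old entry is evicted. The 32-entry default echoes the 21064 example; the function and parameter names are hypothetical.

```python
from collections import OrderedDict


def tlb_refill(tlb, page_table, vpn, capacity=32):
    """Software TLB-miss handler.

    tlb: OrderedDict vpn -> ppn, oldest entry first.
    page_table: dict vpn -> ppn for pages currently in memory.
    Returns the PPN, or None if the page is not in memory (a page fault).
    """
    ppn = page_table.get(vpn)
    if ppn is None:
        return None               # page fault: the page must come from disk
    if len(tlb) >= capacity:
        tlb.popitem(last=False)   # evict the oldest TLB entry
    tlb[vpn] = ppn                # install the new translation
    return ppn
```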

What if the data is on disk?
- We load the page from disk into a free block of memory, using a DMA transfer
  - In the meantime, we switch to some other process waiting to be run
- When the DMA is complete, we get an interrupt and update the process's page table
  - So when we switch back to the task, the desired data will be in memory

What if we don't have enough memory?
- We choose some other page belonging to a program and transfer it to disk if it is dirty
  - If it is clean (the copy on disk is up to date), we just overwrite that data in memory
  - We choose the page to evict based on the replacement policy (e.g., LRU)
- And we update that program's page table to reflect the fact that its memory moved somewhere else
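The steps on this and the previous slide can be combined into a small demand-paging model. It is a hypothetical sketch: disk reads and DMA are only indicated by a comment, LRU is used as the replacement policy (the example the slide gives), and a counter stands in for writing dirty victims back to disk.

```python
from collections import OrderedDict


class PhysicalMemory:
    """Demand paging with LRU eviction and dirty-page write-back (simplified)."""

    def __init__(self, num_frames):
        self.free = list(range(num_frames))
        self.resident = OrderedDict()  # (pid, vpn) -> [frame, dirty], LRU first
        self.page_tables = {}          # pid -> {vpn: frame}
        self.disk_writes = 0

    def access(self, pid, vpn, write=False):
        """Return the frame holding (pid, vpn), faulting the page in if needed."""
        key = (pid, vpn)
        if key in self.resident:
            self.resident.move_to_end(key)  # now the most recently used page
        else:
            if self.free:
                frame = self.free.pop()     # a free frame is available
            else:
                (old_pid, old_vpn), (frame, dirty) = self.resident.popitem(last=False)
                if dirty:
                    self.disk_writes += 1   # dirty victim must be written to disk
                del self.page_tables[old_pid][old_vpn]  # victim's owner loses the mapping
            # (The page would be read from disk into `frame` by DMA here.)
            self.resident[key] = [frame, False]
            self.page_tables.setdefault(pid, {})[vpn] = frame
        entry = self.resident[key]
        entry[1] = entry[1] or write        # record that the page was modified
        return entry[0]
```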

Page Replacement Algorithms
- First-In/First-Out
  - in response to a page fault, replace the page that has been in memory for the longest period of time
  - does not make use of the principle of locality: an old but frequently used page could be replaced
  - easy to implement (the OS maintains a history thread through the page table entries)
  - usually exhibits the worst behavior
- Least Recently Used
  - selects the least recently used page for replacement
  - requires knowledge of past references
  - more difficult to implement, good performance
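The difference between the two policies shows up when a page is old but heavily used. The sketch counts page faults for a reference string; the reference string and the frame count are made-up inputs chosen to show that effect, and `count_faults` is a hypothetical helper.

```python
from collections import OrderedDict


def count_faults(refs, frames, policy):
    """Count page faults for a reference string under 'fifo' or 'lru'."""
    resident = OrderedDict()  # iteration order = eviction order (front is evicted first)
    faults = 0
    for page in refs:
        if page in resident:
            if policy == "lru":
                resident.move_to_end(page)  # LRU refreshes a page on every use; FIFO does not
            continue
        faults += 1
        if len(resident) == frames:
            resident.popitem(last=False)    # evict oldest load (FIFO) or least recent use (LRU)
        resident[page] = True
    return faults


refs = [1, 2, 3, 1, 4, 1, 5, 1]
print(count_faults(refs, 3, "fifo"), count_faults(refs, 3, "lru"))  # 6 5
```

FIFO evicts page 1 because it was loaded first, even though it is used on every other reference; LRU keeps it resident.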

Page Replacement Algorithms
- Not Recently Used (an approximation of LRU)
  - A reference bit flag is associated with each page table entry such that
    - Ref flag = 1 if the page has been referenced in the recent past
    - Ref flag = 0 otherwise
  - If replacement is necessary, choose any page frame whose reference bit is 0
  - The OS periodically clears the reference bits
  - The reference bit is set whenever a page is accessed
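A minimal NRU sketch, assuming the reference bits are kept in a dict and that, if every bit happens to be set, any page may be chosen (the slide does not cover that case). In a real system the hardware sets the bit and the OS clears it periodically; here both are explicit method calls on a hypothetical `NRU` class.

```python
import random


class NRU:
    """Not Recently Used replacement using one reference bit per page."""

    def __init__(self, pages):
        self.ref = {page: False for page in pages}  # page -> reference bit

    def touch(self, page):
        self.ref[page] = True       # set whenever the page is accessed

    def clear_bits(self):
        for page in self.ref:       # done periodically by the OS
            self.ref[page] = False

    def choose_victim(self):
        """Any page whose reference bit is 0; if none, fall back to any page (assumed)."""
        candidates = [p for p, bit in self.ref.items() if not bit]
        return random.choice(candidates or list(self.ref))
```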

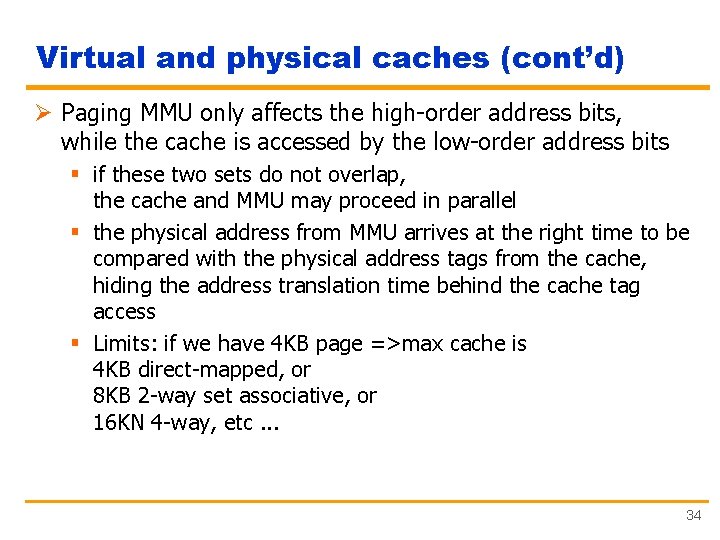

Virtual and physical caches
- When a system incorporates both an MMU and a cache, the cache may operate with either virtual or physical addresses
- Virtual cache
  - +: cache access may start immediately; there is no need to activate the MMU if the data is found in the cache, which saves power and removes address translation from a cache hit
  - -: drawbacks
    - every time a process is switched, the same VAs refer to different physical addresses, so the cache must be flushed on each process switch
      - alternatively, widen the cache address tag with a PID
    - the OS and user programs may use two different VAs for the same physical location (synonyms, aliases), which may result in two copies of the same data in the cache
      - if we modify one, the other will have the wrong value
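The process-switch problem, and the PID-extended tag that avoids flushing, can be illustrated with a virtually tagged direct-mapped cache. This tag-only `VirtualCache` model is hypothetical (256 lines of 16 bytes are assumed) and does not model synonyms.

```python
class VirtualCache:
    """Virtually tagged direct-mapped cache; tags optionally extended with a PID."""

    def __init__(self, num_lines=256, line_bytes=16, use_pid=True):
        self.num_lines = num_lines
        self.line_bytes = line_bytes
        self.use_pid = use_pid
        self.tags = [None] * num_lines

    def access(self, pid, va):
        """Return True on a hit; on a miss, fill the line and return False."""
        line = va // self.line_bytes
        index = line % self.num_lines
        vtag = line // self.num_lines
        tag = (pid, vtag) if self.use_pid else vtag  # PID widens the tag
        hit = self.tags[index] == tag
        self.tags[index] = tag
        return hit

    def flush(self):
        self.tags = [None] * self.num_lines  # required on a process switch without PIDs
```

Without the PID, process 2 hits on a line filled by process 1 at the same virtual address and would read the wrong data; with the PID in the tag, the access correctly misses.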

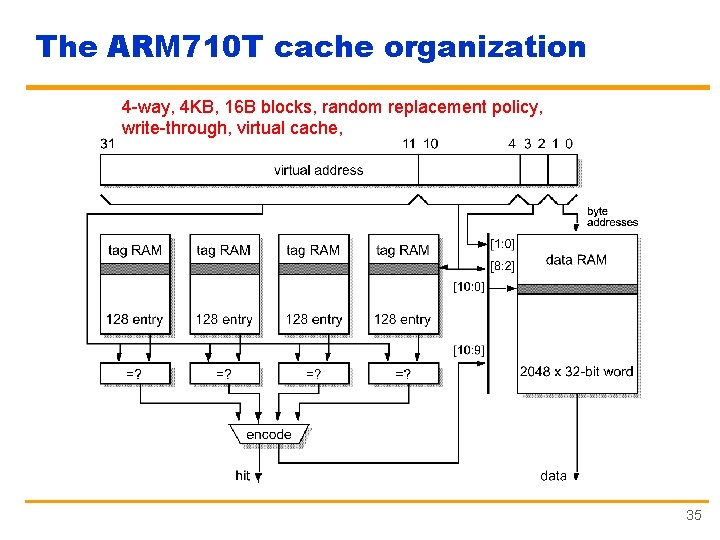

Virtual and physical caches (cont’d)
- A paging MMU only affects the high-order address bits, while the cache is accessed with the low-order address bits
  - if these two sets of bits do not overlap, the cache and the MMU may proceed in parallel
  - the physical address from the MMU arrives at the right time to be compared with the physical address tags from the cache, hiding the address translation time behind the cache tag access
  - Limits: each cache way can be at most one page, so with 4 KB pages the maximum cache is 4 KB direct-mapped, 8 KB 2-way set-associative, 16 KB 4-way, etc.
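The limit follows from requiring the cache index and line-offset bits to come entirely from the page offset, so each way can be at most one page. A small sketch of that rule; the function names are hypothetical.

```python
def fits_in_page_offset(cache_bytes, ways, page_bytes):
    """True if index + line-offset bits lie within the page offset (one way <= one page)."""
    return cache_bytes // ways <= page_bytes


def max_parallel_cache_bytes(page_bytes, ways):
    """Largest cache that can be indexed in parallel with address translation."""
    return page_bytes * ways


for ways in (1, 2, 4):
    print(f"{ways}-way: {max_parallel_cache_bytes(4096, ways) // 1024} KB")
# 1-way: 4 KB
# 2-way: 8 KB
# 4-way: 16 KB
```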

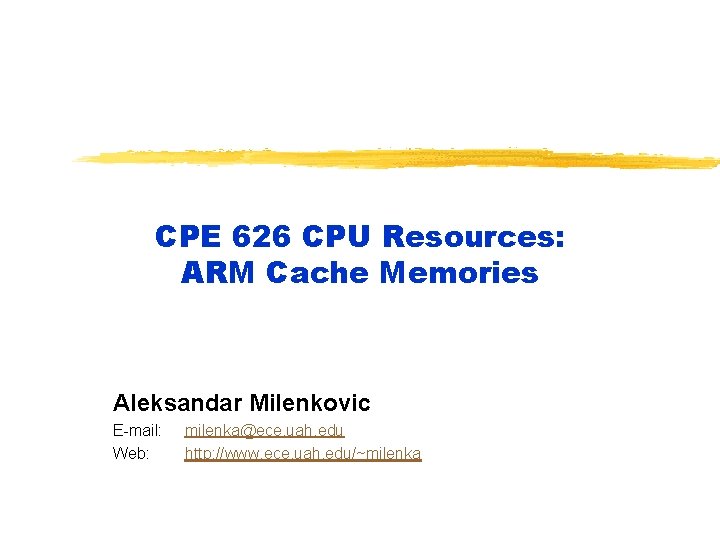

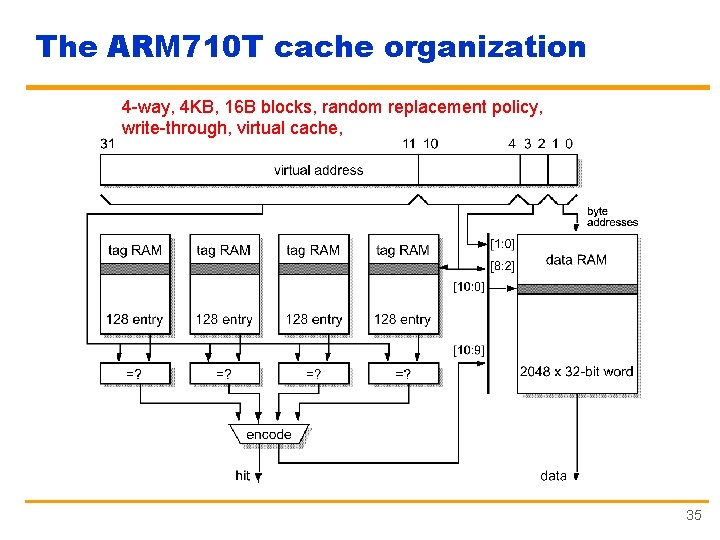

The ARM710T cache organization: 4-way set-associative, 4 KB, 16-byte blocks, random replacement policy, write-through, virtual cache
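The listed parameters can be combined into a tag-only model. This is a hypothetical sketch: write misses are assumed not to allocate a line (the slide does not state the write-miss policy), no data is stored, addresses are simply treated as virtual (no MMU is modelled), and a seeded random generator picks the victim way.

```python
import random


class WriteThroughRandomCache:
    """Tag-only 4-way set-associative cache with random replacement and write-through."""

    def __init__(self, size_bytes=4096, ways=4, line_bytes=16, seed=0):
        self.ways = ways
        self.line_bytes = line_bytes
        self.num_sets = size_bytes // (ways * line_bytes)  # 64 sets for the defaults
        self.sets = [[None] * ways for _ in range(self.num_sets)]
        self.rng = random.Random(seed)
        self.memory_writes = 0

    def _locate(self, addr):
        line = addr // self.line_bytes
        return line % self.num_sets, line // self.num_sets  # (index, tag)

    def read(self, addr):
        """Return True on a hit; on a miss, fill a randomly chosen way."""
        index, tag = self._locate(addr)
        ways = self.sets[index]
        if tag in ways:
            return True
        ways[self.rng.randrange(self.ways)] = tag  # random victim, no history kept
        return False

    def write(self, addr):
        """Write-through: memory is always written; return True if the line was cached."""
        index, tag = self._locate(addr)
        self.memory_writes += 1
        return tag in self.sets[index]  # a hit would also update the cached copy
```

Because every write goes to memory, the cached copy is never newer than memory, so no dirty bits are needed and a victim can be discarded without a write-back; random replacement also needs no per-set history.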