Course Roadmap Introduction to Data mining Introduction Preprocessing


- Slides: 30

Course Roadmap

- Introduction to data mining
  - Introduction, Preprocessing, Clustering, Association Rules, Classification, …
- Web structure mining
  - Authoritative Sources in a Hyperlinked Environment, by Jon M. Kleinberg
  - The Anatomy of a Large-Scale Hypertextual Web Search Engine, by Sergey Brin and Lawrence Page
  - Efficient Crawling Through URL Ordering, by J. Cho, H. Garcia-Molina and L. Page
  - Focused Crawling: A New Approach to Topic-Specific Web Resource Discovery, by Soumen Chakrabarti et al.
  - Trawling the Web for Emerging Cyber-Communities, by Ravi Kumar…
  - Building a Cyber-Community Hierarchy Based on Link Analysis, by P. Krishna Reddy and Masaru Kitsuregawa
  - Efficient Identification of Web Communities, by G. W. Flake, S. Lawrence, et al.
  - Finding Related Pages in WWW, by J. Dean and M. R. Henzinger
- Web content mining/Information retrieval
- Web log mining/Recommendation systems/E-commerce

Association Rules

- Reference: Chapter 6 of Data Mining: Concepts and Techniques, by Jiawei Han and Micheline Kamber, Morgan Kaufmann

Mining Association Rules in Large Databases

- Association rule mining
- Mining single-dimensional Boolean association rules from transactional databases
- Multilevel association rules
- Summary

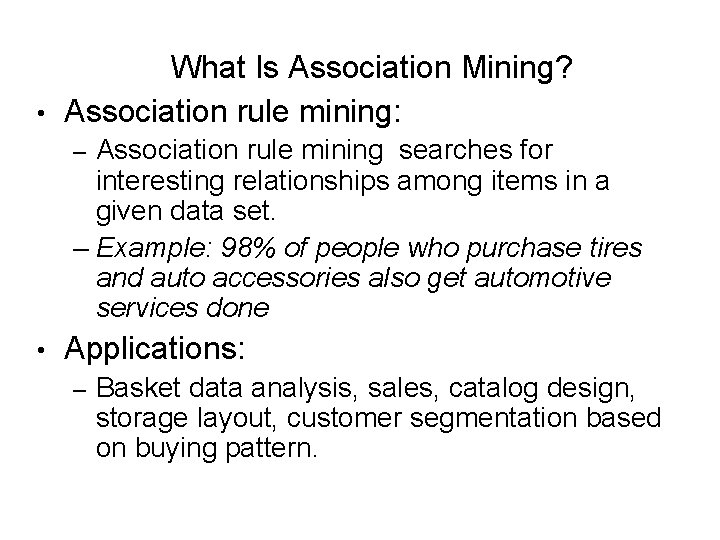

What Is Association Mining?

- Association rule mining searches for interesting relationships among items in a given data set.
  - Example: 98% of people who purchase tires and auto accessories also get automotive services done.
- Applications:
  - Basket data analysis, sales, catalog design, storage layout, and customer segmentation based on buying patterns.

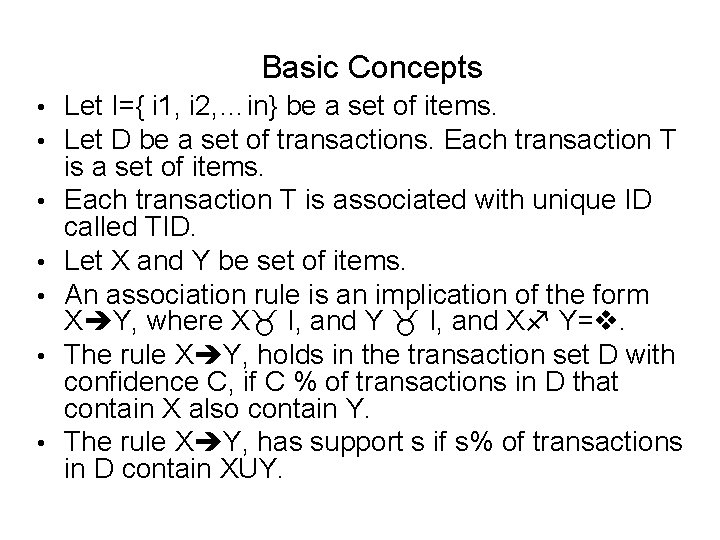

Association Rule: Basic Concepts

- Given: (1) a database of transactions, where (2) each transaction is a list of items (purchased by a customer in one visit).
- Find: all rules that correlate the presence of one set of items with that of another set of items.
  - E.g., 98% of people who purchase tires and auto accessories also get automotive services done.
- Applications:
  - * ⇒ Maintenance Agreement (what should the store do to boost Maintenance Agreement sales?)
  - Home Electronics ⇒ * (what other products should the store stock up on?)
  - Attached mailing in direct marketing

![Format of Rules](https://slidetodoc.com/presentation_image_h2/92dae45c60d832313a4f32c6017951cb/image-6.jpg)

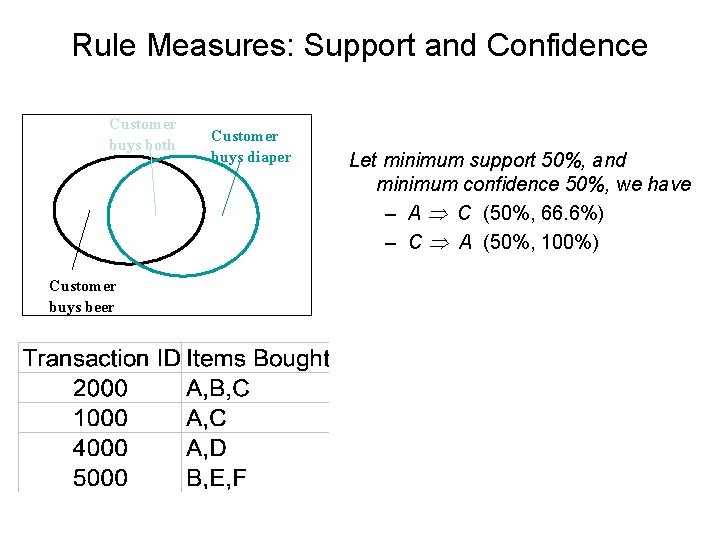

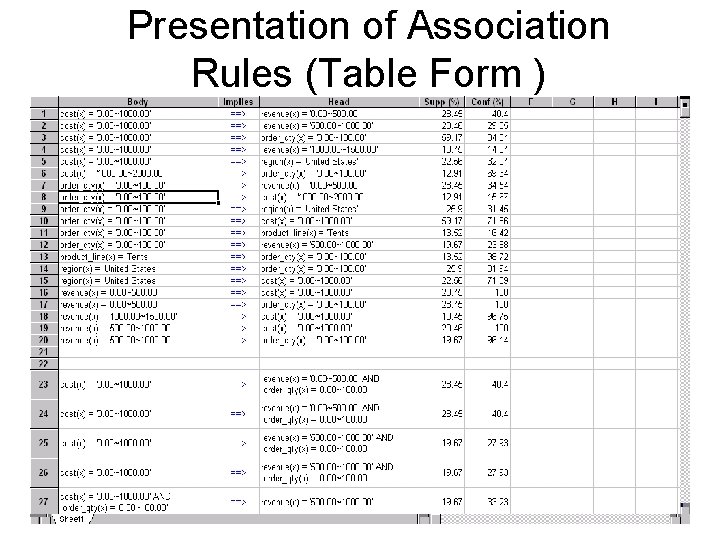

Format of Rules

- Rule form: “Body ⇒ Head [support, confidence]”.
- Examples:
  - buys(x, “diapers”) ⇒ buys(x, “beers”) [0.5%, 60%]
  - major(x, “CS”) ∧ takes(x, “DB”) ⇒ grade(x, “A”) [1%, 75%]

Basic Concepts

- Let $I = \{i_1, i_2, \ldots, i_n\}$ be a set of items.
- Let $D$ be a set of transactions. Each transaction $T$ is a set of items and is associated with a unique identifier, called its TID.
- Let $X$ and $Y$ be sets of items. An association rule is an implication of the form $X \Rightarrow Y$, where $X \subset I$, $Y \subset I$, and $X \cap Y = \emptyset$.
- The rule $X \Rightarrow Y$ holds in the transaction set $D$ with confidence $c$ if $c\%$ of the transactions in $D$ that contain $X$ also contain $Y$.
- The rule $X \Rightarrow Y$ has support $s$ if $s\%$ of the transactions in $D$ contain $X \cup Y$.

Basic Concepts

- Problem: given a set of transactions $D$, generate all association rules whose support and confidence are at least the user-specified minimum support (minsup) and minimum confidence (minconf), respectively.
- Find all rules $X \wedge Y \Rightarrow Z$ with minimum confidence and support:
  - support, $s$: the probability that a transaction contains $\{X, Y, Z\}$, i.e. the percentage of transactions that contain $\{X, Y, Z\}$.
  - confidence, $c$: the conditional probability that a transaction containing $\{X, Y\}$ also contains $Z$, i.e. $c = \mathrm{support}(\{X, Y, Z\}) / \mathrm{support}(\{X, Y\})$.

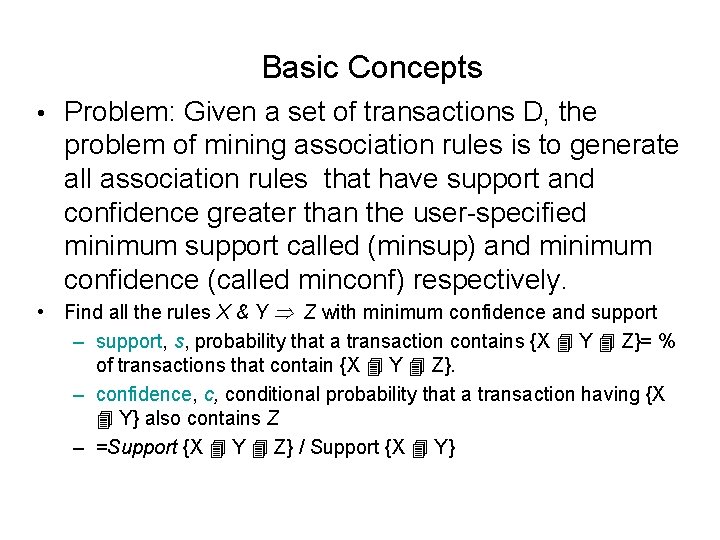

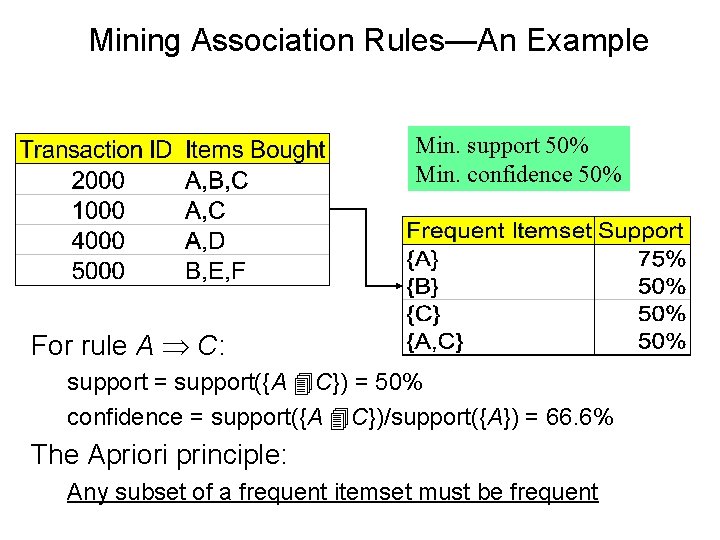

Rule Measures: Support and Confidence

[Figure: Venn diagram of customers who buy beer, customers who buy diapers, and customers who buy both]

- With minimum support 50% and minimum confidence 50%, we have:
  - $A \Rightarrow C$ (50%, 66.6%)
  - $C \Rightarrow A$ (50%, 100%)

Confidence of a rule

- The confidence of a rule measures how reliably the head of the rule can be predicted from its body.
- The support of a rule indicates how frequently the rule applies in the transactions.
- Rules with small support are usually uninteresting, since they describe only a small part of the population.

Association Rule Mining

- Boolean vs. quantitative associations (based on the types of values handled)
  - buys(x, “SQLServer”) ∧ buys(x, “DMBook”) ⇒ buys(x, “DBMiner”) [0.2%, 60%]
  - age(x, “30..39”) ∧ income(x, “42..48K”) ⇒ buys(x, “PC”) [1%, 75%]
- Single-level vs. multiple-level analysis
  - What brands of beers are associated with what brands of diapers?

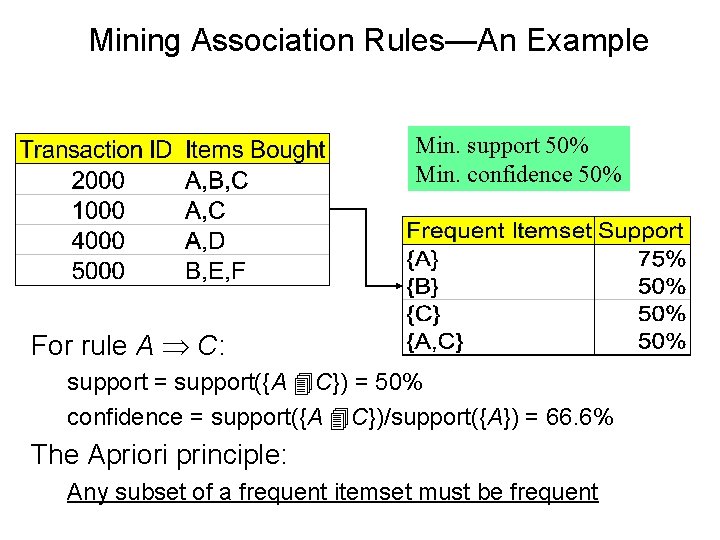

Mining Association Rules: An Example

- Min. support 50%, min. confidence 50%
- For rule $A \Rightarrow C$:
  - support = support({A, C}) = 50%
  - confidence = support({A, C}) / support({A}) = 66.6%
- The Apriori principle: any subset of a frequent itemset must be frequent.
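A minimal Python sketch of the two measures used in this example. The four-transaction database below is an assumption (the slide's own table is not reproduced in the text); it was chosen so that the rule A ⇒ C comes out at 50% support and 66.6% confidence. The function names are illustrative.

```python
from typing import FrozenSet, Iterable, List

# Hypothetical toy database (an assumption, not taken from the slides).
TRANSACTIONS: List[FrozenSet[str]] = [
    frozenset({"A", "B", "C"}),
    frozenset({"A", "C"}),
    frozenset({"A", "D"}),
    frozenset({"B", "E", "F"}),
]


def support(itemset: Iterable[str], transactions=TRANSACTIONS) -> float:
    """Fraction of transactions that contain every item in `itemset`."""
    items = frozenset(itemset)
    return sum(items <= t for t in transactions) / len(transactions)


def confidence(body: Iterable[str], head: Iterable[str],
               transactions=TRANSACTIONS) -> float:
    """support(body ∪ head) / support(body) for the rule body ⇒ head."""
    body, head = frozenset(body), frozenset(head)
    return support(body | head, transactions) / support(body, transactions)


print(support({"A", "C"}))          # 0.5
print(confidence({"A"}, {"C"}))     # 0.666..., i.e. the 66.6% quoted above
print(confidence({"C"}, {"A"}))     # 1.0
```

Both rules share the same support because support depends only on the union of body and head; the confidences differ because the denominators, support({A}) and support({C}), differ.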

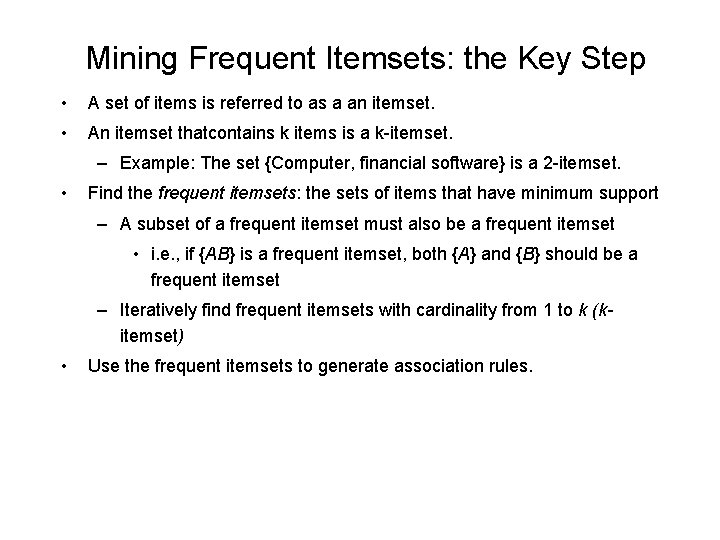

Mining Frequent Itemsets: the Key Step

- A set of items is referred to as an itemset.
- An itemset that contains k items is a k-itemset.
  - Example: the set {Computer, financial software} is a 2-itemset.
- Find the frequent itemsets: the sets of items that have minimum support.
  - A subset of a frequent itemset must also be a frequent itemset, i.e., if {A, B} is a frequent itemset, both {A} and {B} must be frequent itemsets.
  - Iteratively find frequent itemsets with cardinality from 1 to k (k-itemsets).
- Use the frequent itemsets to generate association rules.

Association rule mining

- It is a two-step process:
  - Step 1: find all frequent itemsets. Each of these itemsets occurs at least as frequently as a predetermined minimum support count.
  - Step 2: generate association rules from the frequent itemsets.
- The second step is easier than the first step.

The algorithm

- The Apriori algorithm uses prior knowledge of frequent itemset properties.
- Apriori employs a level-wise approach:
  - k-itemsets are used to explore (k+1)-itemsets.
  - First, the set of frequent 1-itemsets is found ($L_1$).
  - $L_1$ is used to find $L_2$.
  - $L_2$ is used to find $L_3$, and so on.
- Finding each $L_k$ requires one full scan of the database.

Apriori property

- All nonempty subsets of a frequent itemset must also be frequent.
  - If an itemset $I$ does not satisfy the minimum support threshold, minsup, then $I$ is not frequent, i.e. support($I$) < minsup.
  - If an item $A$ is added to the itemset $I$, the resulting itemset $I \cup \{A\}$ cannot occur more frequently than $I$, so it is not frequent either.
- Monotonic functions are functions that move in only one direction.
- This property is called anti-monotone: if a set cannot pass a test, all of its supersets will fail the same test as well.
- In other words, the property is monotonic in the context of failing the test.
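The anti-monotone property can be checked by brute force on a small example. The sketch below uses a hypothetical four-transaction database (an assumption, not taken from the slides) and confirms that adding an item to an itemset never raises its support count.

```python
from itertools import combinations

# Hypothetical toy database (an assumption for illustration only).
transactions = [{1, 3, 4}, {2, 3, 5}, {1, 2, 3, 5}, {2, 5}]


def support_count(itemset, db):
    """Number of transactions that contain every item in `itemset`."""
    s = set(itemset)
    return sum(s <= t for t in db)


items = sorted(set().union(*transactions))

# Collect every (itemset, extra item) pair where the superset is MORE
# frequent than the original itemset. Anti-monotonicity says there are none.
violations = []
for k in range(1, len(items)):
    for itemset in combinations(items, k):
        for extra in items:
            if extra in itemset:
                continue
            superset = itemset + (extra,)
            if support_count(superset, transactions) > support_count(itemset, transactions):
                violations.append((itemset, extra))

print(violations)  # []
```

The list is empty for any database, because every transaction that contains the superset also contains the original itemset.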

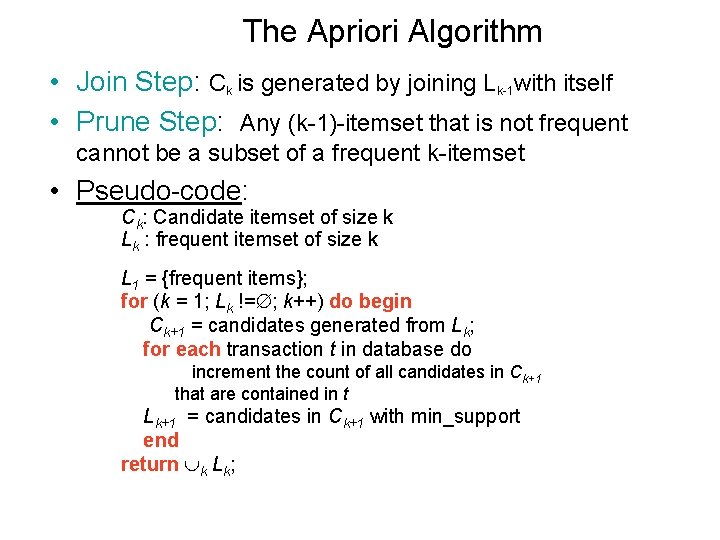

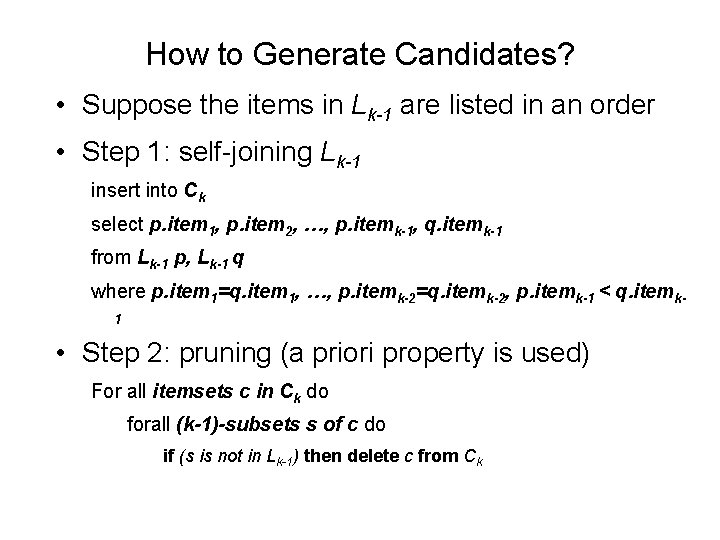

The Apriori Algorithm

- Join step: $C_k$ is generated by joining $L_{k-1}$ with itself.
- Prune step: any (k-1)-itemset that is not frequent cannot be a subset of a frequent k-itemset.
- Pseudo-code ($C_k$: candidate itemsets of size k; $L_k$: frequent itemsets of size k):

      L_1 = {frequent items};
      for (k = 1; L_k != ∅; k++) do begin
          C_{k+1} = candidates generated from L_k;
          for each transaction t in database do
              increment the count of all candidates in C_{k+1} that are contained in t
          L_{k+1} = candidates in C_{k+1} with min_support
      end
      return ∪_k L_k;
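The pseudo-code above can be sketched in Python as follows. This is an illustrative implementation under stated assumptions, not the authors' code: the function name `apriori`, the use of an absolute `min_count` instead of a percentage, and the toy database are all hypothetical, and the join is written as a simple set union rather than the ordered self-join.

```python
from itertools import combinations


def apriori(transactions, min_count):
    """Return {itemset: support_count} for every frequent itemset."""
    transactions = [frozenset(t) for t in transactions]

    # L1: count single items in one scan of the database.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: c for s, c in counts.items() if c >= min_count}

    frequent = dict(current)
    k = 1
    while current:
        # Join: unions of two frequent k-itemsets that have exactly k+1 items.
        candidates = {a | b for a in current for b in current
                      if len(a | b) == k + 1}
        # Prune: every k-subset of a candidate must itself be frequent.
        candidates = {c for c in candidates
                      if all(frozenset(s) in current for s in combinations(c, k))}
        # One scan of the database to count the surviving candidates.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1
        current = {s: c for s, c in counts.items() if c >= min_count}
        frequent.update(current)
        k += 1
    return frequent


# Hypothetical toy database; min_count=2 corresponds to 50% support here.
db = [{1, 3, 4}, {2, 3, 5}, {1, 2, 3, 5}, {2, 5}]
frequent = apriori(db, min_count=2)
for itemset in sorted(frequent, key=lambda s: (len(s), sorted(s))):
    print(sorted(itemset), frequent[itemset])
```

On this database the loop stops after the third level: {2, 3, 5} is the only frequent 3-itemset, so no 4-item candidate can be formed.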

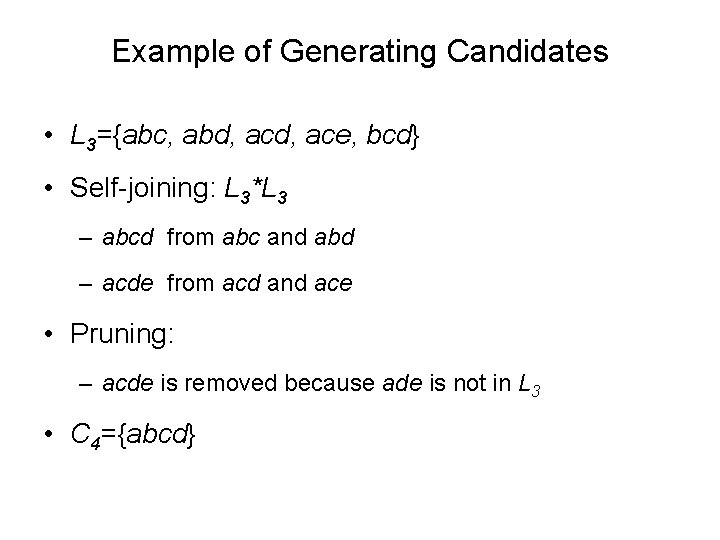

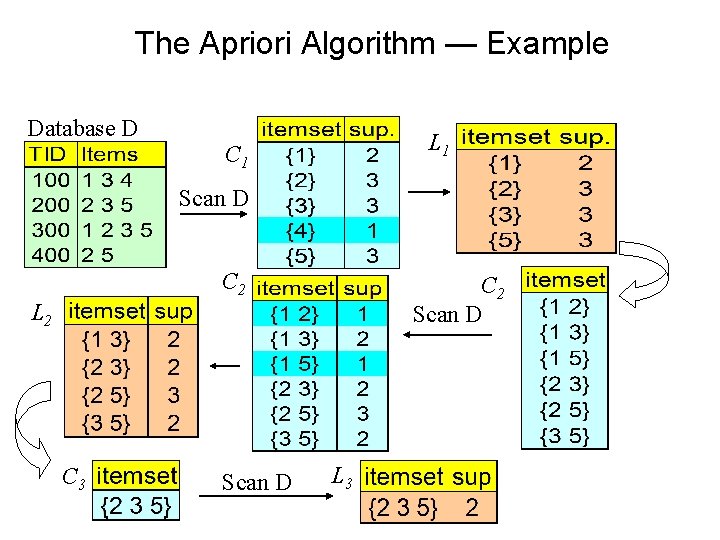

How to Generate Candidates?

- Suppose the items in $L_{k-1}$ are listed in an order.
- Step 1: self-joining $L_{k-1}$

      insert into C_k
      select p.item_1, p.item_2, …, p.item_{k-1}, q.item_{k-1}
      from L_{k-1} p, L_{k-1} q
      where p.item_1 = q.item_1, …, p.item_{k-2} = q.item_{k-2}, p.item_{k-1} < q.item_{k-1}

- Step 2: pruning (the Apriori property is used)

      forall itemsets c in C_k do
          forall (k-1)-subsets s of c do
              if (s is not in L_{k-1}) then delete c from C_k

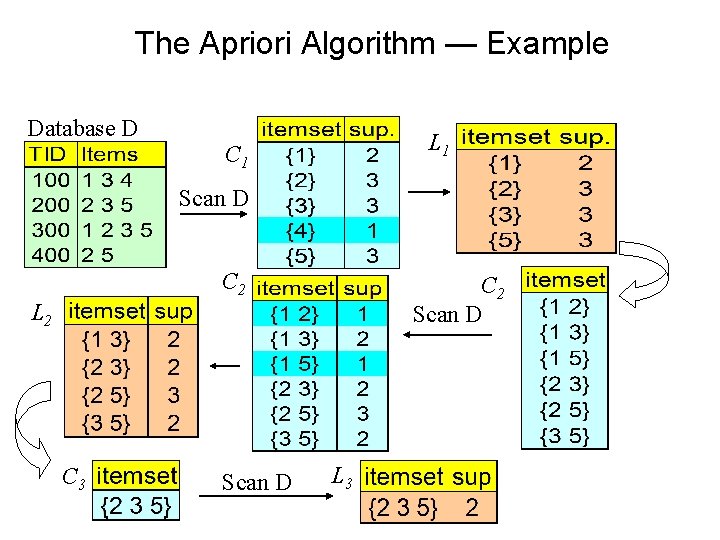

The Apriori Algorithm: Example

[Figure: worked example on database D. Scan D to count C1 and obtain L1; generate C2, scan D, obtain L2; generate C3, scan D, obtain L3]

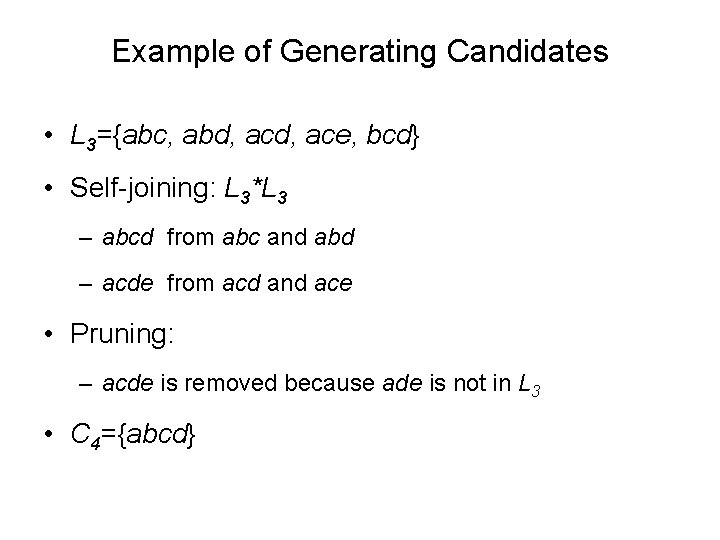

Example of Generating Candidates

- $L_3$ = {abc, abd, acd, ace, bcd}
- Self-joining: $L_3 * L_3$
  - abcd from abc and abd
  - acde from acd and ace
- Pruning:
  - acde is removed because ade is not in $L_3$
- $C_4$ = {abcd}
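The ordered self-join and the prune step can be sketched in Python as below. The function name `apriori_gen` and the tuple representation of itemsets are assumptions made for illustration. The input L3 includes acd, which the join "acde from acd and ace" presupposes.

```python
from itertools import combinations


def apriori_gen(prev_frequent):
    """Build the candidate set C_k from L_{k-1}.

    Each itemset is a tuple of items in sorted order.
    """
    prev = sorted(prev_frequent)
    if not prev:
        return []
    prev_set = set(prev)
    size = len(prev[0])  # this is k-1
    candidates = []
    for i, p in enumerate(prev):
        for q in prev[i + 1:]:
            # Join: first k-2 items equal, last item of p < last item of q.
            if p[:-1] == q[:-1] and p[-1] < q[-1]:
                c = p + (q[-1],)
                # Prune: keep c only if all its (k-1)-subsets are in L_{k-1}.
                if all(s in prev_set for s in combinations(c, size)):
                    candidates.append(c)
    return candidates


L3 = [tuple("abc"), tuple("abd"), tuple("acd"), tuple("ace"), tuple("bcd")]
C4 = apriori_gen(L3)
print(["".join(c) for c in C4])  # ['abcd']
```

The join produces abcd and acde; acde is discarded because its subsets ade and cde are not in L3.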

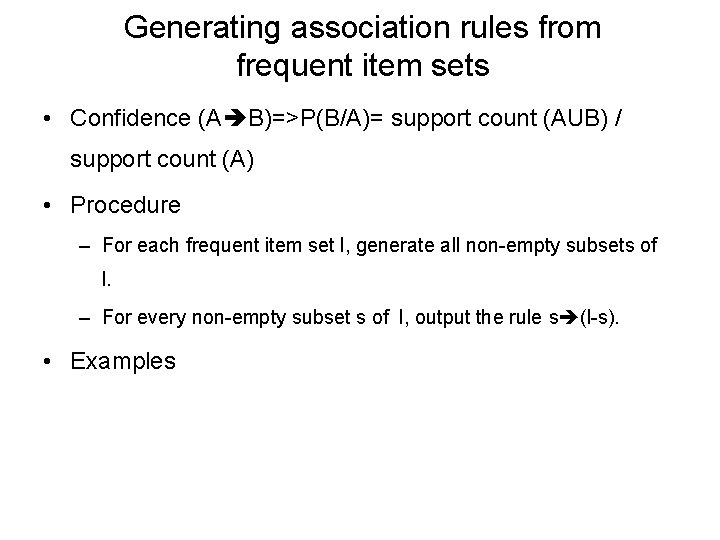

Generating association rules from frequent itemsets

- confidence($A \Rightarrow B$) = $P(B \mid A)$ = support_count($A \cup B$) / support_count($A$)
- Procedure:
  - For each frequent itemset $l$, generate all nonempty proper subsets of $l$.
  - For every such subset $s$, output the rule $s \Rightarrow (l - s)$ if support_count($l$) / support_count($s$) is at least the minimum confidence.
- Examples
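The procedure above can be sketched in Python as follows. The function name `generate_rules` and the support counts are hypothetical; the dictionary is assumed to hold the count of every frequent itemset, so every subset lookup succeeds (this is what the Apriori property guarantees).

```python
from itertools import combinations


def generate_rules(support_counts, min_conf):
    """Return (body, head, confidence) for each rule with confidence >= min_conf."""
    rules = []
    for itemset, count in support_counts.items():
        if len(itemset) < 2:
            continue  # a rule needs a nonempty body and a nonempty head
        for r in range(1, len(itemset)):
            for body in combinations(sorted(itemset), r):
                body = frozenset(body)
                # confidence = support_count(l) / support_count(s)
                conf = count / support_counts[body]
                if conf >= min_conf:
                    rules.append((body, itemset - body, conf))
    return rules


# Hypothetical support counts for the frequent itemsets over items 2, 3 and 5.
support_counts = {
    frozenset({2}): 3, frozenset({3}): 3, frozenset({5}): 3,
    frozenset({2, 3}): 2, frozenset({2, 5}): 3, frozenset({3, 5}): 2,
    frozenset({2, 3, 5}): 2,
}

rules = generate_rules(support_counts, min_conf=1.0)
for body, head, conf in rules:
    print(sorted(body), "=>", sorted(head), f"{conf:.0%}")
```

With these counts, four rules reach 100% confidence: {2} ⇒ {5}, {5} ⇒ {2}, {2, 3} ⇒ {5} and {3, 5} ⇒ {2}. Lowering `min_conf` to 0.6 would admit the remaining rules, whose confidence is 2/3.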

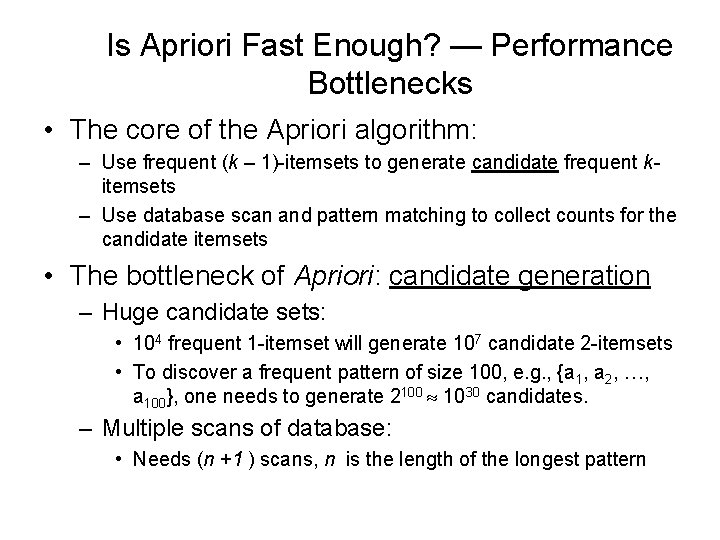

Methods to Improve Apriori’s Efficiency

- Hash-based itemset counting: a k-itemset whose corresponding hashing bucket count is below the threshold cannot be frequent.
- Transaction reduction: a transaction that does not contain any frequent k-itemset is useless in subsequent scans.
- Partitioning: any itemset that is potentially frequent in DB must be frequent in at least one of the partitions of DB.
- Sampling: mine a subset of the given data with a lower support threshold, plus a method to determine completeness.
- Dynamic itemset counting: add new candidate itemsets only when all of their subsets are estimated to be frequent.
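As an illustration of the first technique, the sketch below hashes every 2-itemset of each transaction into a small bucket table during the first scan and then discards candidate pairs whose bucket count is below the threshold. The hash function, the number of buckets, the function name and the toy database are all assumptions chosen for illustration.

```python
from itertools import combinations


def hash_filtered_pairs(transactions, min_count, n_buckets=7):
    """Return (all candidate pairs, pairs that survive the bucket filter)."""
    item_counts = {}
    buckets = [0] * n_buckets

    def bucket(pair):
        x, y = pair
        return (x * 10 + y) % n_buckets  # illustrative hash (an assumption)

    # First scan: count single items and hash every pair into a bucket.
    for t in transactions:
        for item in t:
            item_counts[item] = item_counts.get(item, 0) + 1
        for pair in combinations(sorted(t), 2):
            buckets[bucket(pair)] += 1

    frequent_items = sorted(i for i, c in item_counts.items() if c >= min_count)
    all_pairs = list(combinations(frequent_items, 2))
    # A bucket count is an upper bound on the count of any pair hashed into it,
    # so a pair in a bucket below the threshold cannot be frequent.
    kept = [p for p in all_pairs if buckets[bucket(p)] >= min_count]
    return all_pairs, kept


db = [{1, 3, 4}, {2, 3, 5}, {1, 2, 3, 5}, {2, 5}]  # hypothetical toy data
all_pairs, kept = hash_filtered_pairs(db, min_count=2)
print(len(all_pairs), "candidate pairs before filtering")
print(len(kept), "after filtering:", kept)
```

The filter can only remove infrequent pairs. Pairs that collide with frequent ones may survive, so the remaining candidates still have to be counted in the second scan.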

Is Apriori Fast Enough? Performance Bottlenecks

- The core of the Apriori algorithm:
  - Use frequent (k-1)-itemsets to generate candidate frequent k-itemsets.
  - Use database scans and pattern matching to collect counts for the candidate itemsets.
- The bottleneck of Apriori: candidate generation
  - Huge candidate sets:
    - $10^4$ frequent 1-itemsets will generate more than $10^7$ candidate 2-itemsets.
    - To discover a frequent pattern of size 100, e.g. $\{a_1, a_2, \ldots, a_{100}\}$, one needs to generate $2^{100} \approx 10^{30}$ candidates.
  - Multiple scans of the database:
    - Needs (n + 1) scans, where n is the length of the longest pattern.
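The two candidate counts quoted above can be checked with a few lines of Python. This is only an arithmetic check: the number of candidate 2-itemsets is taken to be the number of unordered pairs of frequent items.

```python
import math

# Candidate 2-itemsets from 10^4 frequent 1-itemsets: all unordered pairs.
pairs = math.comb(10**4, 2)
print(pairs)             # 49995000, i.e. more than 10^7

# Subsets of a 100-item frequent pattern.
print(f"{2**100:.3e}")   # 1.268e+30, i.e. about 10^30
```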

FP-tree algorithm

- The FP-tree (FP-growth) algorithm mines frequent itemsets without generating candidate sets; association rules are then generated from those itemsets.

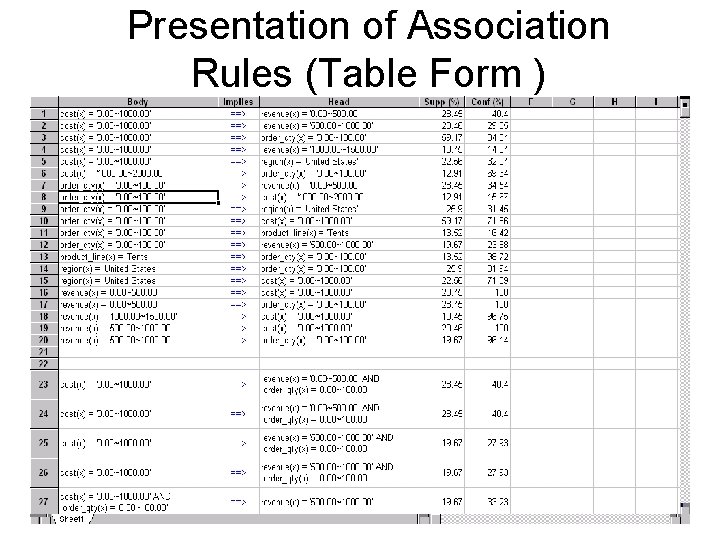

Presentation of Association Rules (Table Form)

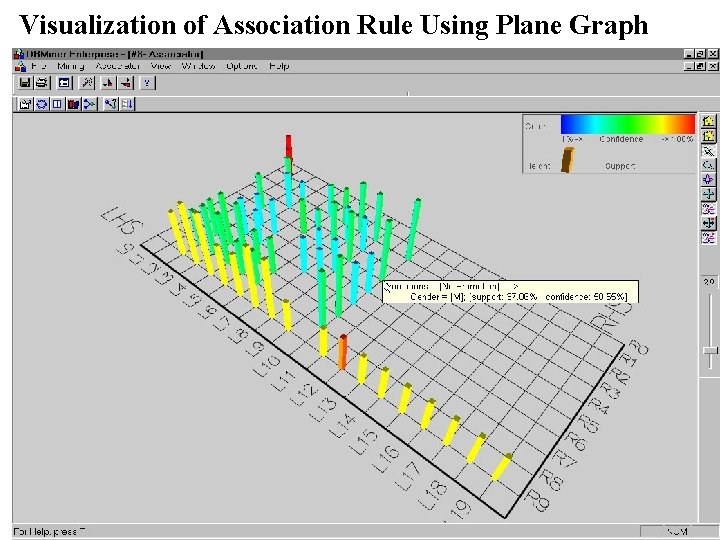

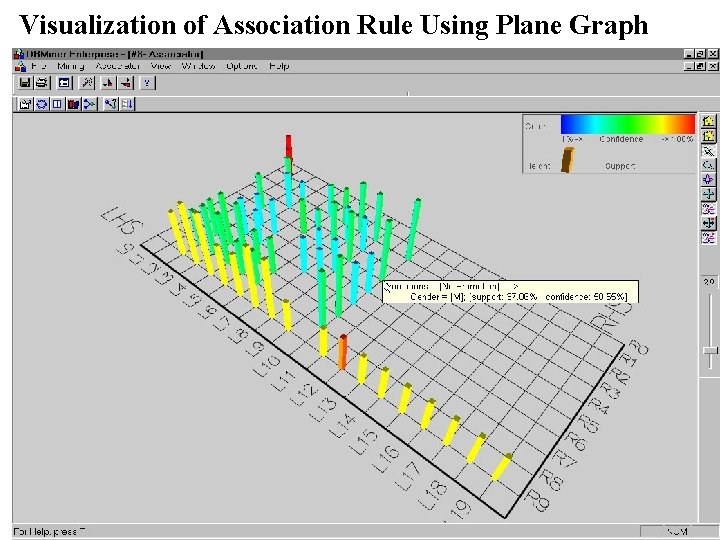

Visualization of Association Rule Using Plane Graph

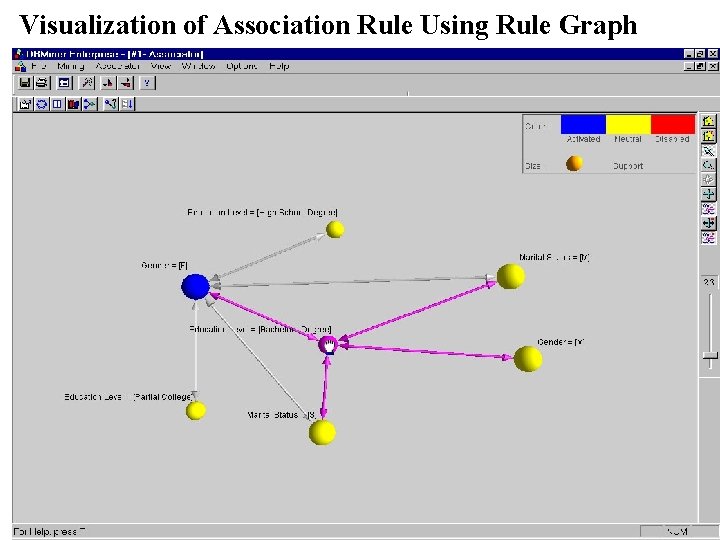

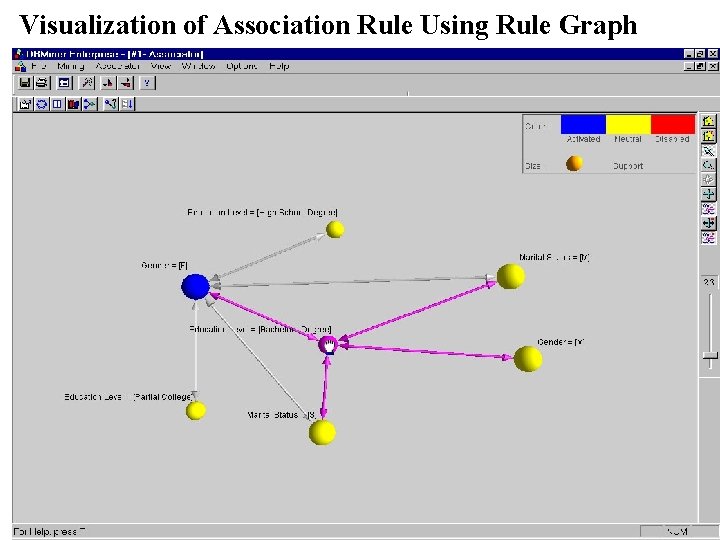

Visualization of Association Rule Using Rule Graph

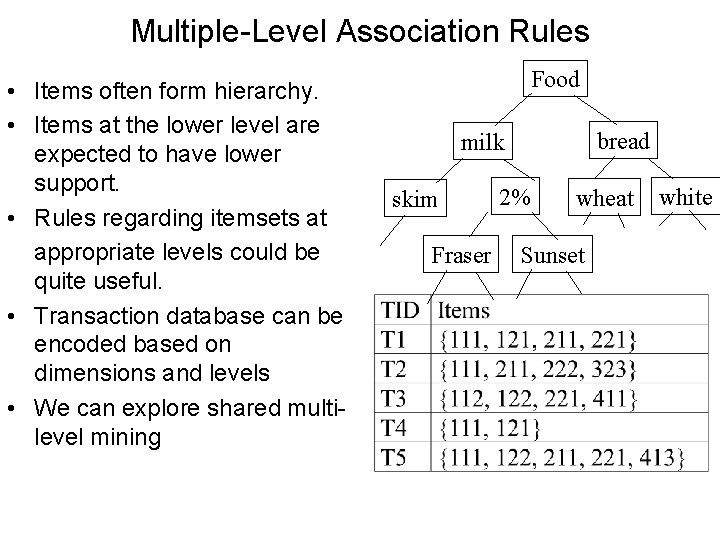

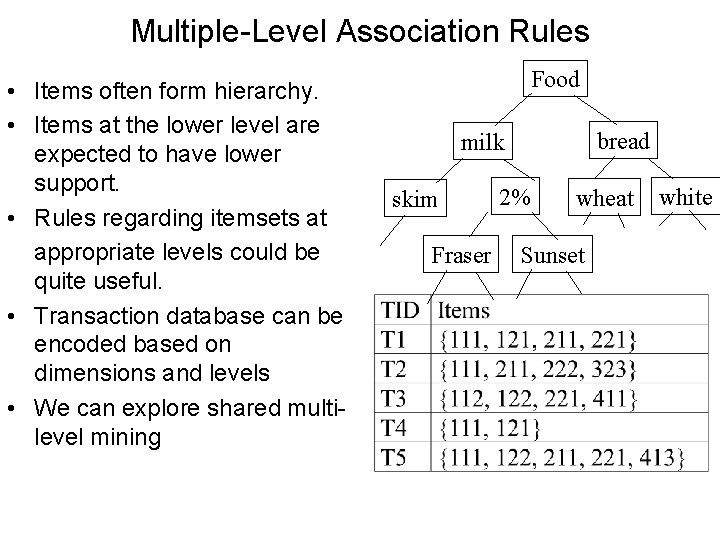

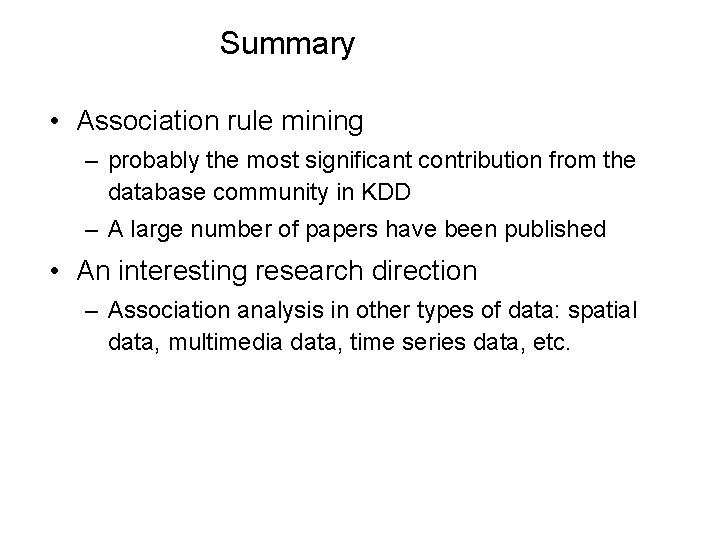

Multiple-Level Association Rules

- Items often form a hierarchy.
- Items at the lower level are expected to have lower support.
- Rules regarding itemsets at appropriate levels could be quite useful.
- The transaction database can be encoded based on dimensions and levels.
- We can explore shared multilevel mining.

[Figure: concept hierarchy with Food at the top, bread and milk below it, then skim and 2% (milk) and wheat and white (bread), with brand names such as Fraser and Sunset at the lowest level]

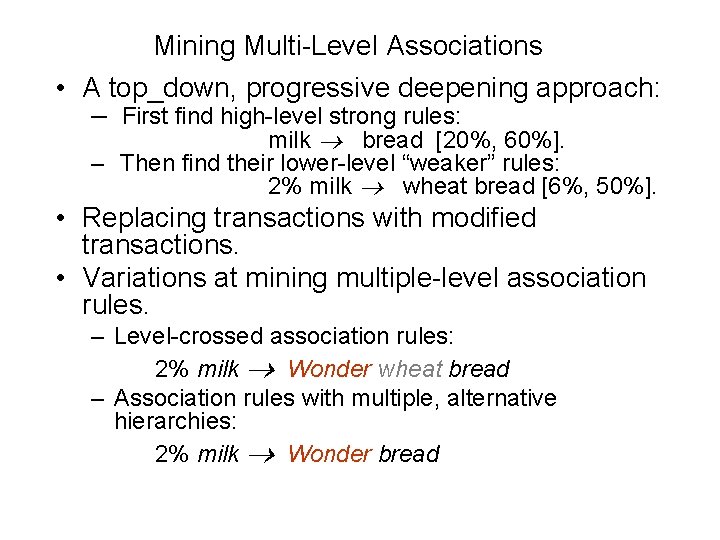

Mining Multi-Level Associations

- A top-down, progressive deepening approach:
  - First find high-level strong rules: milk ⇒ bread [20%, 60%].
  - Then find their lower-level “weaker” rules: 2% milk ⇒ wheat bread [6%, 50%].
- Replacing transactions with modified transactions.
- Variations of mining multiple-level association rules:
  - Level-crossed association rules: 2% milk ⇒ Wonder wheat bread
  - Association rules with multiple, alternative hierarchies: 2% milk ⇒ Wonder bread
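A small Python sketch of the top-down idea: each item is replaced by its parent in the hierarchy to obtain high-level transactions, and the support of a rule's itemset is compared at both levels. The hierarchy, the transactions and the function names are hypothetical, so the resulting numbers differ from the percentages quoted above.

```python
# Hypothetical two-level hierarchy: item -> parent category.
PARENT = {
    "2% milk": "milk",
    "skim milk": "milk",
    "wheat bread": "bread",
    "white bread": "bread",
}

# Hypothetical low-level transactions.
transactions = [
    {"2% milk", "wheat bread"},
    {"skim milk", "white bread"},
    {"2% milk", "white bread"},
    {"skim milk"},
    {"wheat bread"},
]


def generalize(transaction):
    """Replace every item by its parent category (items without one are kept)."""
    return {PARENT.get(item, item) for item in transaction}


def support(itemset, db):
    """Fraction of transactions in `db` that contain every item in `itemset`."""
    s = set(itemset)
    return sum(s <= t for t in db) / len(db)


high_level = [generalize(t) for t in transactions]

print(support({"milk", "bread"}, high_level))             # 0.6
print(support({"2% milk", "wheat bread"}, transactions))  # 0.2
```

The lower-level itemset has lower support than its high-level generalization, which is why a reduced minimum support is often used at deeper levels.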

Summary

- Association rule mining
  - Probably the most significant contribution from the database community in KDD
  - A large number of papers have been published
- An interesting research direction
  - Association analysis in other types of data: spatial data, multimedia data, time series data, etc.