Counterfactual impact evaluation: What is it, why do it?

- Slides: 20

Counterfactual impact evaluation: What is it, why do it?

Daniel Mouqué, Evaluation Unit, DG REGIO

An example: support to enterprise & innovation

- Some €79 billion of cohesion policy funding in 2007-13: the largest broad category of expenditure
- Key instrument: investment/research grant
- But also significant spending on loans/venture capital, advice, networking, incubators
- With all this at stake, we should know exactly what we’re doing, right?

What should we know about enterprise support?

We’re managing a programme – what should we know?

- The context and needs (productive base, sectors, weaknesses, etc.)
- What we plan to change (Investment? Productivity? Employment?)
- How we will change it: instruments, delivery, financial allocations
- Activity/outputs (number of enterprises assisted, etc.)

Question: is this enough?

In the long term, we want to know about impacts

- Do the instruments work? In terms of increasing long-run investment, productivity, employment, etc.?
- What is the optimum level of support?
- Different effects of different tools? Is a single instrument or a mix better?
- SMEs only or include larger enterprises?

In other words, we want to know...

- What works?
- How much impact does it have?
- How to change/fine-tune it to get more impact?

These questions apply to all cohesion policy fields: human resources, infrastructure, environment

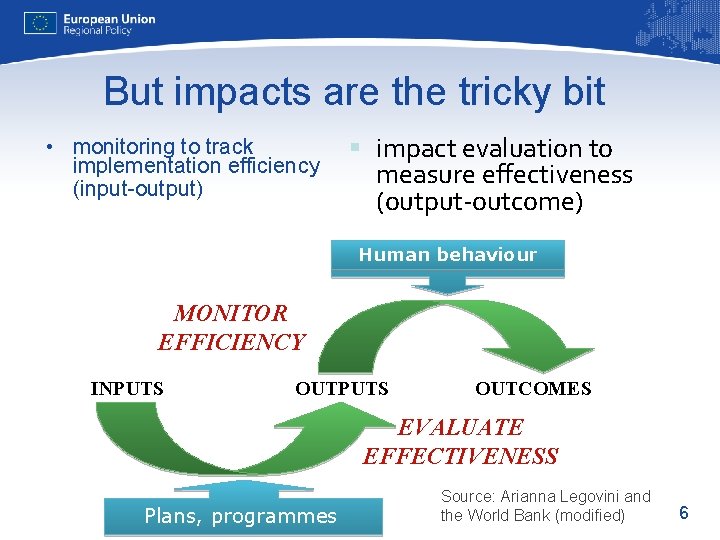

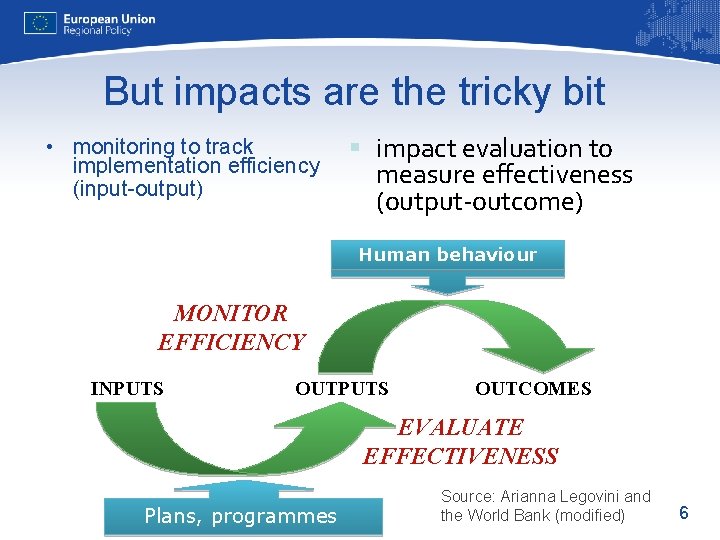

But impacts are the tricky bit

- Monitoring to track implementation efficiency (input-output)
- Impact evaluation to measure effectiveness (output-outcome)

[Diagram labels: Plans, programmes; INPUTS; OUTCOMES; MONITOR EFFICIENCY; EVALUATE EFFECTIVENESS; Human behaviour]

Source: Arianna Legovini and the World Bank (modified)

How do we assess impacts?

Traditionally in enterprise support:

- Monitoring (but: "before/after" problem)
- Beneficiary surveys
- Opinion

And enterprise support is one of the "good" areas: the situation is no better in training, infrastructure, environment

To truly know impacts, you must know...

...what would have happened without the intervention

Or in other words: the counterfactual
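The gap between a "before/after" reading and an impact measured against the counterfactual can be shown with a few lines of arithmetic. This is only an illustrative sketch: the three employment figures are invented for the example and do not come from the slides, and in practice the counterfactual value is never observed directly and has to be estimated.

```python
# Hypothetical figures for one assisted firm (invented for illustration).
employment_before = 50          # jobs before the grant
employment_after = 60           # jobs observed after the grant
employment_counterfactual = 57  # jobs the firm would have had WITHOUT the grant
                                # (assumed here; never observed directly)


def before_after_change(before, after):
    """Naive reading: attributes the whole change to the intervention."""
    return after - before


def impact(after, counterfactual):
    """Impact: observed outcome minus the outcome without the intervention."""
    return after - counterfactual


naive = before_after_change(employment_before, employment_after)
effect = impact(employment_after, employment_counterfactual)

print(f"Before/after change: {naive} jobs")
print(f"Impact against the counterfactual: {effect} jobs")
```

In this made-up case most of the observed growth would have happened anyway, which is the "before/after" problem in miniature.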

- How do we find this mysterious counterfactual?
- Can it be observed?

A time machine?

Sadly, only in Hollywood… Maybe someday?

What do scientists do?

One thing scientists do to find counterfactuals: Compare twins

Source: www.webmd.com – smoking and sun are responsible here

Twins in cohesion policy?

- Does this mean we can only provide training to twins? And only one of the two?
- And what about enterprises? Or urban neighbourhoods in crisis?

Solution 1: large "n", mobilising the power of statistics

The "law of large numbers": as n increases, random differences tend to average out

NB: "large" varies, but 20 or 50 may be enough
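A small simulation can make the "averaging out" visible. The sketch below draws two groups at random from the same hypothetical population, so any gap between their averages is pure chance, and shows how that chance gap shrinks as n grows. The population mean and spread (100 and 20), the number of repetitions and the function name `typical_gap` are all assumptions made for the illustration.

```python
import random
import statistics


def typical_gap(n, repetitions=500, seed=0):
    """Average absolute gap in mean outcome between two random groups of
    size n drawn from the SAME population (no intervention at all)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    gaps = []
    for _ in range(repetitions):
        group_a = [rng.gauss(100, 20) for _ in range(n)]
        group_b = [rng.gauss(100, 20) for _ in range(n)]
        gaps.append(abs(statistics.fmean(group_a) - statistics.fmean(group_b)))
    return statistics.fmean(gaps)


# Chance gap for a pair of "twins"-sized groups up to a large sample.
chance_gaps = {n: typical_gap(n) for n in (2, 20, 50, 500)}
for n, gap in chance_gaps.items():
    print(f"n = {n:>3}: typical chance gap = {gap:.2f}")
```

How large n needs to be depends on how noisy the outcome is relative to the effect being looked for, which is one way to read the remark that "large" varies.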

Solution 2: clever statistical matching techniques

- Sometimes solution 1 is enough
- But sometimes we need to use statistical techniques to find matches between the treated and non-treated populations

We’ll come back to how this is done tomorrow…
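As a rough idea of what "finding matches" can mean, here is a minimal sketch of nearest-neighbour matching on a single characteristic (firm size). It is not the method presented in the follow-up session, which the slides do not describe. The firms, their figures and the function names are hypothetical, and real applications typically match on many characteristics at once.

```python
from statistics import fmean

# Hypothetical firms: (employees before support, later productivity growth in %).
treated = [(12, 4.0), (45, 6.5), (200, 3.0)]
non_treated = [(10, 2.5), (50, 5.0), (180, 2.0), (900, 1.0)]


def nearest_match(firm, pool):
    """Return the firm in `pool` closest in size to `firm`."""
    return min(pool, key=lambda candidate: abs(candidate[0] - firm[0]))


def matched_effect(treated_firms, comparison_pool):
    """Mean outcome gap between each treated firm and its nearest match."""
    gaps = []
    for size, outcome in treated_firms:
        _, matched_outcome = nearest_match((size, outcome), comparison_pool)
        gaps.append(outcome - matched_outcome)
    return fmean(gaps)


# Unmatched comparison: all treated firms against all non-treated firms.
naive_gap = fmean([o for _, o in treated]) - fmean([o for _, o in non_treated])
matched_gap = matched_effect(treated, non_treated)

print(f"Unmatched difference in means: {naive_gap:.2f} points")
print(f"Matched difference:            {matched_gap:.2f} points")
```

In this invented data the very large non-treated firm has no treated counterpart, so it drags down the unmatched comparison; matching simply leaves it unused.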

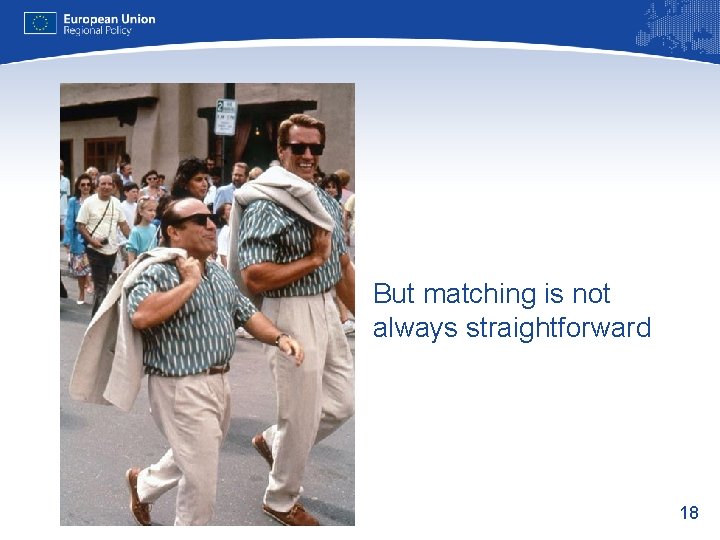

But matching is not always straightforward

Examples of counterfactuals in practice

- 100 innovation vouchers are randomly distributed between ~900 applicant firms, performance tracked
- 500 long-term unemployed in poor mental health: 250 receive standard support, 250 receive extra counselling
- 70 deprived urban areas assisted. Performance on unemployment etc. compared to neighbouring areas
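The first example, a voucher lottery, can be sketched as a simulation. Because the vouchers are allocated at random, the firms that miss out stand in for the counterfactual, and the impact estimate is simply the difference in average performance. Everything numeric below other than the 100 vouchers and roughly 900 applicants is an assumption invented for the sketch: the outcome scale, the noise and the "true" effect of 5.0.

```python
import random
from statistics import fmean

rng = random.Random(42)       # fixed seed so the sketch is reproducible
N_APPLICANTS = 900            # "~900 applicant firms" taken as exactly 900
N_VOUCHERS = 100
ASSUMED_EFFECT = 5.0          # hypothetical true effect, invented for the simulation

applicants = list(range(N_APPLICANTS))
winners = set(rng.sample(applicants, N_VOUCHERS))  # the lottery


def simulated_outcome(firm_id):
    """Made-up performance score: random baseline plus the effect for winners."""
    baseline = rng.gauss(20.0, 5.0)
    return baseline + (ASSUMED_EFFECT if firm_id in winners else 0.0)


outcomes = {firm: simulated_outcome(firm) for firm in applicants}

treated_mean = fmean([outcomes[f] for f in applicants if f in winners])
control_mean = fmean([outcomes[f] for f in applicants if f not in winners])
estimated_effect = treated_mean - control_mean

print(f"Voucher firms:     {treated_mean:.2f}")
print(f"Non-voucher firms: {control_mean:.2f}")
print(f"Estimated impact:  {estimated_effect:.2f} (assumed true effect: {ASSUMED_EFFECT})")
```

The estimate will not hit the assumed effect exactly; with 100 treated firms some chance variation remains, which is where the law of large numbers comes back in.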

To recap

- It is crucial to know about impacts
- But measuring impacts is far from straightforward, as it depends on human behaviour
- "Traditional" techniques do not measure impact
- We need a counterfactual, comparing performance of treated and non-treated
- But counterfactuals are not the only useful technique…