
Counter Braids: A novel counter architecture for network measurement

Data Measurement: Background

Accurate data measurement is needed in network and database systems.

- Internet backbones
  - Accounting/billing by ISPs: usage-based pricing
  - Traffic engineering
  - Network diagnostics and forensics: intrusion detection, denial-of-service attacks
  - Products: NetFlow (Cisco), cflowd (Juniper), NetStream (Huawei)
  - Ref. Lin, Xu, Kumar, Sung, Ramabhadran, Varghese, Estan, Varghese, Shah, Iyer, Prabhakar, McKeown, etc.
- Database systems
  - Sketches, synopses of data streams
  - Usage and access logs
  - Ref. Cormode, Muthukrishnan, Babcock, Babu, Motwani, Widom, Charikar, Chen, Farach-Colton, Alon, etc.
- Data centers, cloud computing
  - Monitoring network usage, diagnostics, real-time load balancing, network planning
  - E.g. Amazon, Facebook, Google, Microsoft, Yahoo!

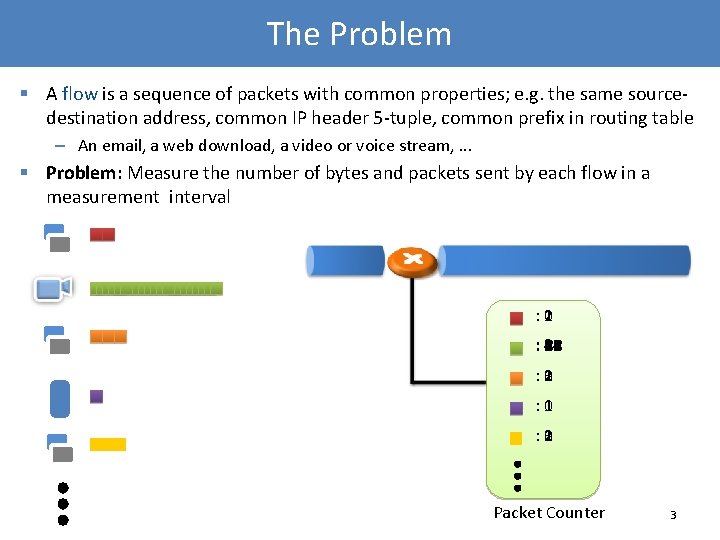

The Problem

- A flow is a sequence of packets with common properties, e.g. the same source-destination address, a common IP header 5-tuple, or a common prefix in the routing table
  - An email, a web download, a video or voice stream, ...
- Problem: measure the number of bytes and packets sent by each flow in a measurement interval

[Figure: example flows with packet counts 2, 24, 3, 1 and 3, tallied by a packet counter]

The Constraints

- Large number of active flows in a measurement interval
  - Several million over a 5-min interval on backbone links (CAIDA)
  - Problem: need lots of counters, i.e. large memory
- Very high link speeds, so memory updates must be fast
  - E.g. the per-packet update time on 40 Gbps links is roughly 15 nanoseconds
  - Problem: need memory with fast access times
- Memories are either large (DRAM) or fast (SRAM); we can't get both
  - DRAMs have access times of 50-60 ns
  - SRAMs have access times of 4.5-7 ns, but hold only around 50-60 Mb (Micron Tech.)
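As a quick check on the 15 ns figure, the per-packet time budget is the packet length in bits divided by the line rate. This minimal sketch assumes minimum-size 64-byte Ethernet frames, with and without the 20 bytes of preamble and inter-frame gap that also occupy the wire; those packet sizes are assumptions, not values from the slides.

```python
def per_packet_time_ns(packet_bytes: int, link_gbps: float) -> float:
    """Time budget for one packet, in nanoseconds.

    At 1 Gbit/s one bit takes 1 ns, so bits divided by Gbit/s gives ns.
    """
    return packet_bytes * 8 / link_gbps


# Assumed packet sizes: a minimum 64-byte Ethernet frame, and the same frame
# plus 8 bytes of preamble and a 12-byte inter-frame gap as seen on the wire.
for size in (64, 64 + 20):
    print(f"{size} bytes at 40 Gbps: {per_packet_time_ns(size, 40):.1f} ns")
```

The two cases print 12.8 ns and 16.8 ns, bracketing the roughly 15 ns quoted above; a single 50-60 ns DRAM access does not fit in either budget.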

Building Counter Arrays: A Naïve Approach

- Allocate one counter per flow
  - When a packet arrives, look up its flow ID in memory and update the corresponding counter

[Figure: Flows 1 to 5, each with its own counter (values 2, 24, 3, 1 and 3)]

- Problems
  - Counters take up too much space, especially because not all flows are large
  - The flow-to-counter association must be performed for every packet, i.e. a perfect hash function is needed
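A minimal sketch of the naïve approach, assuming flows are keyed by the IP 5-tuple and packets arrive as (key, length) pairs; `FiveTuple` and `count_flows` are illustrative names, not from the slides. The dictionary plays the role of the per-packet flow-to-counter association; in hardware it would be a hash table in SRAM, which is where both listed problems come from.

```python
from collections import defaultdict
from typing import NamedTuple


class FiveTuple(NamedTuple):
    """Hypothetical flow key: the IP header 5-tuple."""
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: int


def count_flows(packets):
    """Exact counting with one (packets, bytes) counter pair per flow.

    The dict lookup is the flow-to-counter association that must be
    performed for every packet.
    """
    counters = defaultdict(lambda: [0, 0])  # flow -> [packet count, byte count]
    for key, length in packets:
        entry = counters[key]
        entry[0] += 1
        entry[1] += length
    return dict(counters)


# Two made-up flows: a web transfer and a DNS query.
a = FiveTuple("10.0.0.1", "10.0.0.2", 1234, 80, 6)
b = FiveTuple("10.0.0.3", "10.0.0.2", 5678, 53, 17)
print(count_flows([(a, 1500), (b, 80), (a, 40)]))
```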

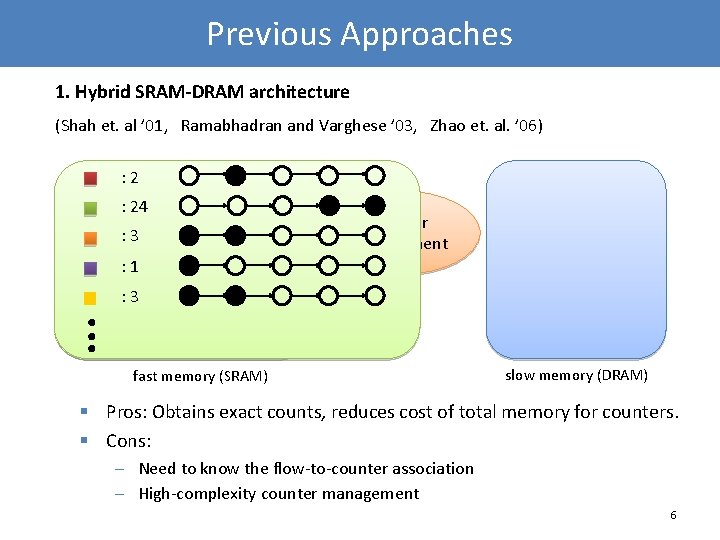

Previous Approaches

1. Hybrid SRAM-DRAM architecture (Shah et al. '01, Ramabhadran and Varghese '03, Zhao et al. '06)

[Figure: counters split between fast memory (SRAM) and slow memory (DRAM), coordinated by counter management]

- Pros: obtains exact counts and reduces the cost of the total memory for counters
- Cons:
  - Needs to know the flow-to-counter association
  - High-complexity counter management
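The split between shallow SRAM counters and deep DRAM counters can be sketched as below. This is a deliberately naive flush-on-overflow policy under assumed parameters, not the counter management algorithm of any of the cited papers; note that it still needs an `index` per flow, i.e. the flow-to-counter association.

```python
class HybridCounters:
    """Simplified hybrid SRAM/DRAM counter array (illustrative only).

    Each flow has a narrow SRAM counter; when it would overflow, its value is
    added to a wide DRAM counter and the SRAM counter is reset.
    """

    def __init__(self, num_counters: int, sram_bits: int):
        self.limit = (1 << sram_bits) - 1   # largest value an SRAM counter holds
        self.sram = [0] * num_counters      # fast, shallow counters
        self.dram = [0] * num_counters      # slow, deep counters
        self.dram_writes = 0                # how often slow memory was touched

    def increment(self, index: int, amount: int = 1) -> None:
        if amount > self.limit:
            raise ValueError("increment larger than an SRAM counter can hold")
        if self.sram[index] + amount > self.limit:
            # Flush the SRAM value into DRAM before it would overflow.
            self.dram[index] += self.sram[index]
            self.sram[index] = 0
            self.dram_writes += 1
        self.sram[index] += amount

    def read(self, index: int) -> int:
        # Exact count = DRAM part + SRAM part.
        return self.dram[index] + self.sram[index]


hc = HybridCounters(num_counters=4, sram_bits=4)  # SRAM counters hold 0..15
for _ in range(40):
    hc.increment(2)
print(hc.read(2), hc.dram_writes)  # prints: 40 2
```

Real schemes choose which counter to flush so that DRAM bandwidth is never exceeded; that choice is the "high-complexity counter management" listed as a con.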

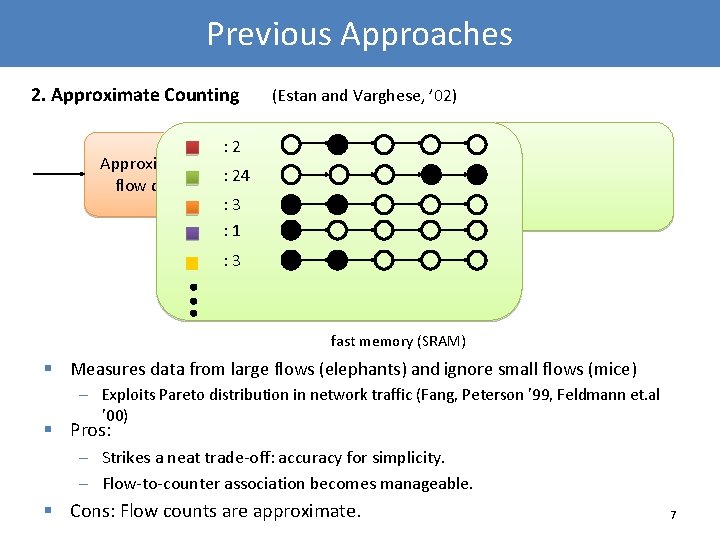

Previous Approaches

2. Approximate counting (Estan and Varghese '02)

[Figure: approximate large-flow detection in fast memory (SRAM)]

- Measures data from large flows (elephants) and ignores small flows (mice)
  - Exploits the Pareto distribution in network traffic (Fang and Peterson '99, Feldmann et al. '00)
- Pros:
  - Strikes a neat trade-off: accuracy for simplicity
  - Flow-to-counter association becomes manageable
- Cons: flow counts are approximate

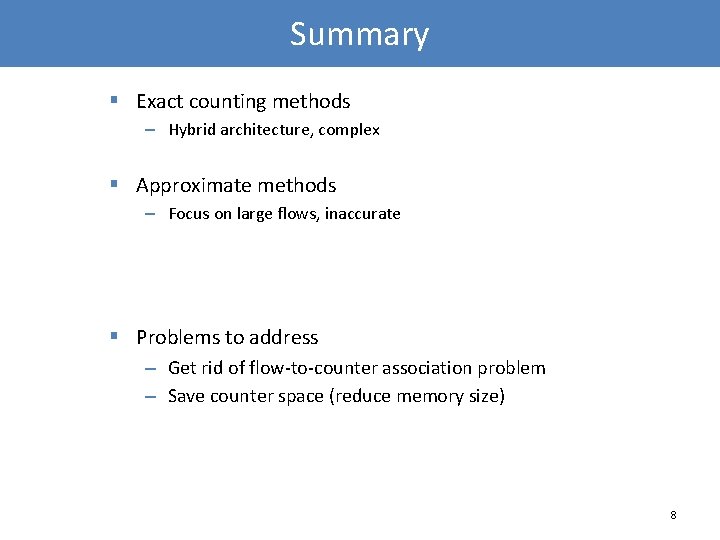

Summary

- Exact counting methods
  - Hybrid architecture, complex
- Approximate methods
  - Focus on large flows, inaccurate
- Problems to address
  - Get rid of the flow-to-counter association problem
  - Save counter space (reduce memory size)

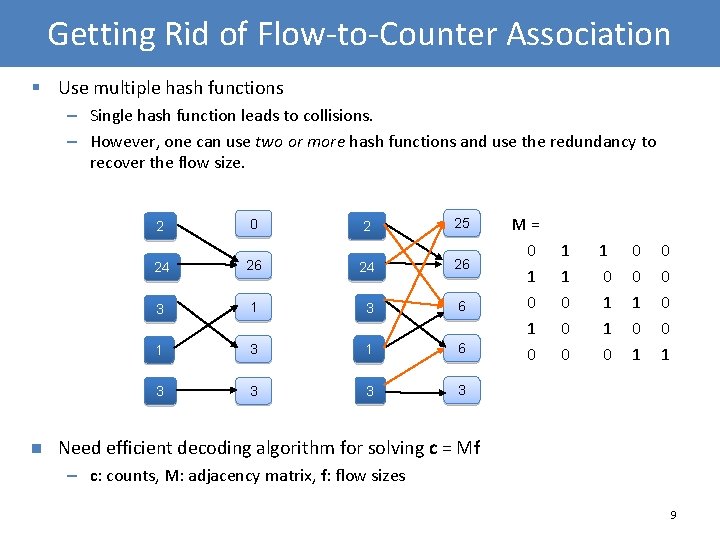

Getting Rid of Flow-to-Counter Association

- Use multiple hash functions
  - A single hash function leads to collisions.
  - However, one can use two or more hash functions and use the redundancy to recover the flow sizes.

[Figure: example flows hashed to counters by multiple hash functions, with the corresponding 0/1 adjacency matrix M]

- Need an efficient decoding algorithm for solving c = Mf
  - c: counts, M: adjacency matrix, f: flow sizes
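The encoding side of c = Mf can be sketched as follows, assuming k hash functions built from SHA-256 with a per-function salt (an illustrative choice, not the slides'). The check at the end confirms that incrementing each packet's k counters online yields exactly c = Mf, so no flow-to-counter table is ever stored; the decoder's job is to invert this.

```python
import hashlib


def counter_indices(flow_id: str, k: int, m: int) -> list[int]:
    """Counters hit by a flow under k hypothetical salted hash functions."""
    out = []
    for h in range(k):
        digest = hashlib.sha256(f"{h}:{flow_id}".encode()).digest()
        out.append(int.from_bytes(digest[:8], "big") % m)
    return out


def adjacency_matrix(flow_ids: list[str], k: int, m: int) -> list[list[int]]:
    """M[a][i] = number of hash functions that map flow i to counter a."""
    M = [[0] * len(flow_ids) for _ in range(m)]
    for i, fid in enumerate(flow_ids):
        for a in counter_indices(fid, k, m):
            M[a][i] += 1
    return M


def online_counts(packets: list[str], k: int, m: int) -> list[int]:
    """Counter values after each packet increments its flow's k counters."""
    c = [0] * m
    for fid in packets:
        for a in counter_indices(fid, k, m):
            c[a] += 1
    return c


def mat_vec(M: list[list[int]], f: list[int]) -> list[int]:
    """c = M f."""
    return [sum(m_ai * f_i for m_ai, f_i in zip(row, f)) for row in M]


flows = ["A", "B", "C", "D", "E"]
sizes = [2, 24, 3, 1, 3]  # example sizes, as in the earlier figures
packets = [fid for fid, s in zip(flows, sizes) for _ in range(s)]
M = adjacency_matrix(flows, k=2, m=4)
assert online_counts(packets, k=2, m=4) == mat_vec(M, sizes)
```

If two hash functions pick the same counter for a flow, the corresponding entry of M is 2 rather than 1; the identity c = Mf still holds.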

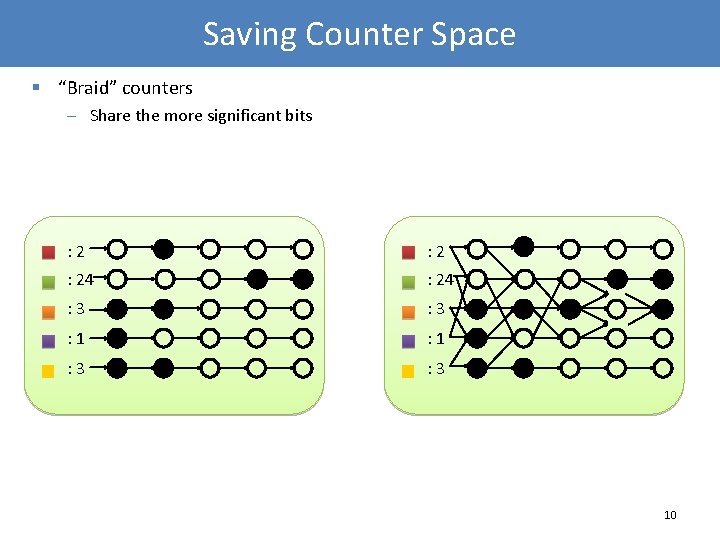

Saving Counter Space

- "Braid" counters
  - Share the more significant bits

[Figure: the example flows (sizes 2, 24, 3, 1 and 3) with counters whose more significant bits are shared]

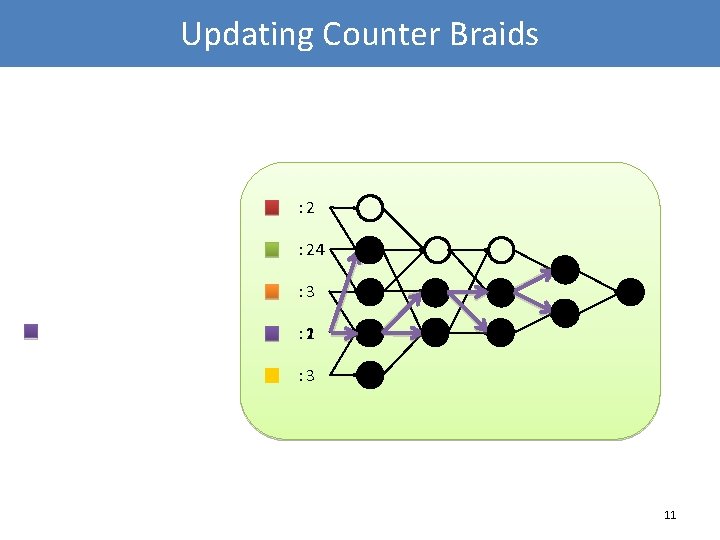

Updating Counter Braids

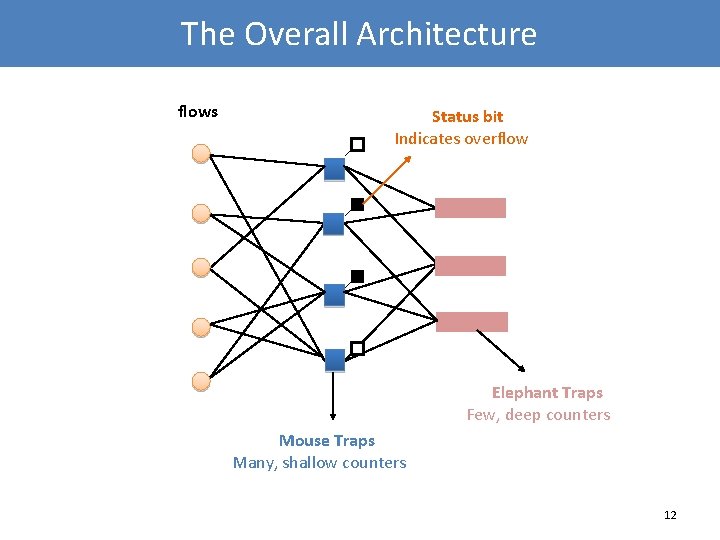

The Overall Architecture

[Figure: flows feeding two layers of counters]

- Status bit: indicates overflow
- Elephant traps: few, deep counters
- Mouse traps: many, shallow counters
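The update path of a two-layer braid can be sketched as below. This is a minimal sketch under stated assumptions: salted BLAKE2b stands in for the hash functions, and an overflowing first-layer counter is assumed to set its status bit and send one carry to the second layer, treating the first-layer counter index as a conceptual 2nd-layer flow. Parameter names (`m1`, `bits1`, `k1`, `m2`, `k2`) are hypothetical.

```python
import hashlib


def _hash(key: str, salt: int, size: int) -> int:
    """Hypothetical salted hash onto range(size)."""
    digest = hashlib.blake2b(f"{salt}:{key}".encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big") % size


class CounterBraid:
    """Two-layer Counter Braid update path (a simplified sketch).

    Layer 1 (mouse traps): many shallow counters of `bits1` bits, each with a
    status bit recording whether it has ever overflowed.
    Layer 2 (elephant traps): few deep counters (unbounded here) that absorb
    the carries from layer 1.
    """

    def __init__(self, m1: int, bits1: int, k1: int, m2: int, k2: int):
        self.mod1 = 1 << bits1
        self.layer1 = [0] * m1
        self.status = [False] * m1
        self.layer2 = [0] * m2
        self.k1, self.k2 = k1, k2

    def update(self, flow_id: str) -> None:
        """Count one packet of `flow_id`."""
        for h in range(self.k1):
            a = _hash(flow_id, h, len(self.layer1))
            self.layer1[a] = (self.layer1[a] + 1) % self.mod1
            if self.layer1[a] == 0:  # wrapped around: overflow
                self.status[a] = True
                # The carry is one "packet" of the 2nd-layer flow named by a.
                for h2 in range(self.k2):
                    self.layer2[_hash(str(a), h2, len(self.layer2))] += 1


cb = CounterBraid(m1=8, bits1=2, k1=2, m2=2, k2=1)
for _ in range(10):
    cb.update("x")
print(cb.layer1, cb.status, cb.layer2)
```

With one second-layer hash, no information is lost: the sum of the first-layer values plus `mod1` times the sum of the second-layer values equals `k1` times the number of packets. Decoding would run on the second layer first to recover the carries, then on the first layer.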

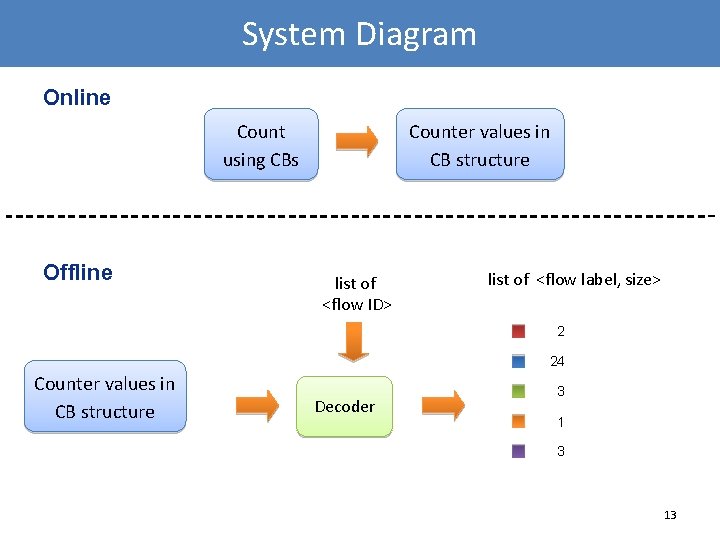

System Diagram

- Online: count using CBs (input: list of <flow ID>; output: counter values in the CB structure)
- Offline: decoder (input: counter values in the CB structure; output: list of <flow label, size>)

Will Describe

- Decoding algorithms
- Sizing of Counter Braids
  - Comparison with the Linear Programming decoder

Decoding Algorithms

1. Typical set decoder
2. Message-passing decoder

Typical Set Decoder

- Assumptions on the flow size distribution P:
  1. At most power-law tails: P(f_i > x) decays at least as fast as a power law in x
  2. Decreasing digit entropy: write each flow size in base q with digits $f_i^{(l)}$, $l = 1, 2, \dots$; the entropy of $f_i^{(l)}$ is decreasing in $l$
- Both assumptions are satisfied by real traffic distributions
- Typical set decoder: let $f' = (f_1', \dots, f_n')$ and let $P'$ be its empirical distribution. If $f'$ is the unique vector that satisfies both $c = Mf'$ and $D(P' \| P) < \delta$ for a small $\delta > 0$, output $f'$; otherwise, output an error.
- Theorem (Lu, Montanari, Prabhakar '07), asymptotic optimality: for any rate $r > H(P)$ there exists a sequence of reliable sparse Counter Braids with asymptotic rate $r$. Hence, with the typical set decoder, Counter Braids is optimal.
- However, the typical set decoder is too complex to implement.
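To get a feel for the bound H(P) in the optimality theorem, the sketch below computes the entropy, in bits, of an empirical flow-size distribution. Reading the rate r as bits of counter memory per flow is an interpretation; the toy distribution (90% of flows of size 1) is made up to mimic a heavy-tailed mix of mice and elephants.

```python
import math
from collections import Counter


def empirical_entropy_bits(flow_sizes) -> float:
    """Entropy H(P), in bits, of the empirical flow-size distribution P."""
    n = len(flow_sizes)
    counts = Counter(flow_sizes)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# Made-up heavy-tailed sample: mostly 1-packet flows, a few large ones.
sizes = [1] * 90 + [2] * 5 + [100] * 5
print(round(empirical_entropy_bits(sizes), 2))  # prints 0.57
```

A fixed-width counter of, say, 32 bits per flow is far above this figure, which is the gap that sharing counter bits aims to close.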

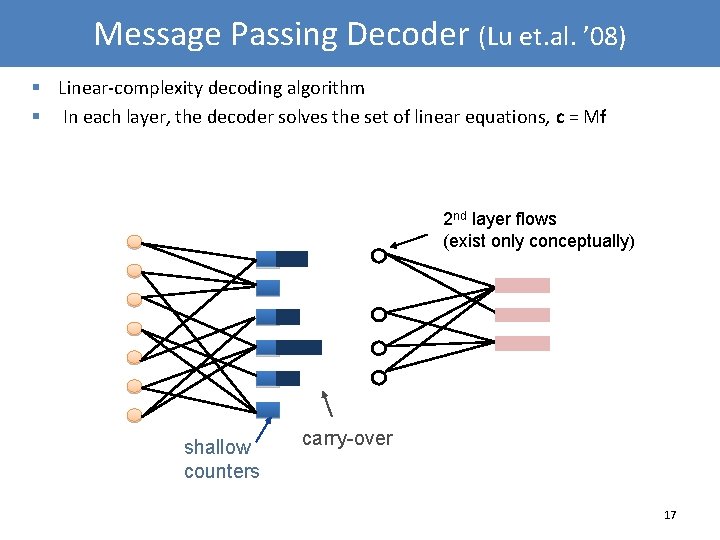

Message Passing Decoder (Lu et al. '08)

- Linear-complexity decoding algorithm
- In each layer, the decoder solves the set of linear equations c = Mf

[Figure: two-layer decoding; the carry-overs from the shallow counters act as 2nd-layer flows, which exist only conceptually]

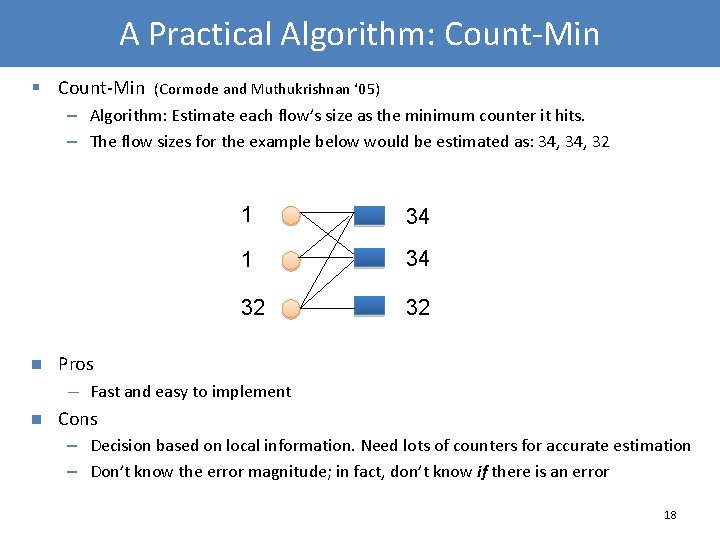

A Practical Algorithm: Count-Min

- Count-Min (Cormode and Muthukrishnan '05)
  - Algorithm: estimate each flow's size as the minimum counter it hits.
  - The flow sizes for the example below would be estimated as 34, 32.

[Figure: Count-Min example]

- Pros
  - Fast and easy to implement
- Cons
  - Decisions are based on local information, so many counters are needed for accurate estimation
  - The error magnitude is unknown; in fact, it is not even known whether there is an error
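A minimal Count-Min sketch, assuming salted BLAKE2b as a stand-in for the pairwise-independent hash functions of the original construction. Each counter a flow hits is an upper bound on its size, and the estimate is the smallest of them; nothing in the structure indicates whether that bound is tight.

```python
import hashlib


class CountMin:
    """Count-Min sketch: `depth` rows of `width` counters."""

    def __init__(self, depth: int, width: int):
        self.rows = [[0] * width for _ in range(depth)]
        self.width = width

    def _index(self, row: int, key: str) -> int:
        digest = hashlib.blake2b(f"{row}:{key}".encode(), digest_size=8).digest()
        return int.from_bytes(digest, "big") % self.width

    def add(self, key: str, amount: int = 1) -> None:
        # One counter per row is incremented for every packet (or byte).
        for r, row in enumerate(self.rows):
            row[self._index(r, key)] += amount

    def estimate(self, key: str) -> int:
        # Every counter hit is >= the true size, so take the smallest;
        # the sketch cannot tell whether this upper bound is exact.
        return min(row[self._index(r, key)] for r, row in enumerate(self.rows))


cm = CountMin(depth=3, width=16)
cm.add("flow-1", 34)
cm.add("flow-2", 32)
cm.add("flow-3", 1)
print(cm.estimate("flow-1"), cm.estimate("flow-2"))
```

With only one counter per row, every flow collides with every other and each estimate becomes the total count, which illustrates why Count-Min needs many counters for accuracy.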

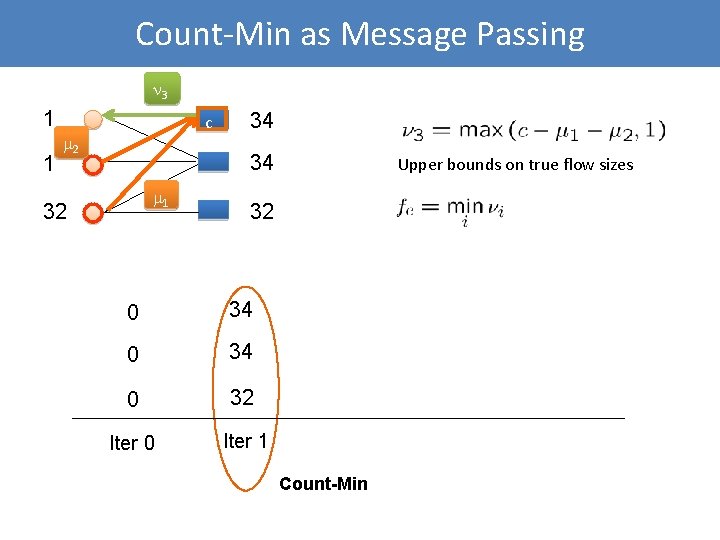

Count-Min as Message Passing

[Figure: Count-Min viewed as iteration 1 of message passing on the example (iterations 0 and 1); the messages are upper bounds on the true flow sizes]

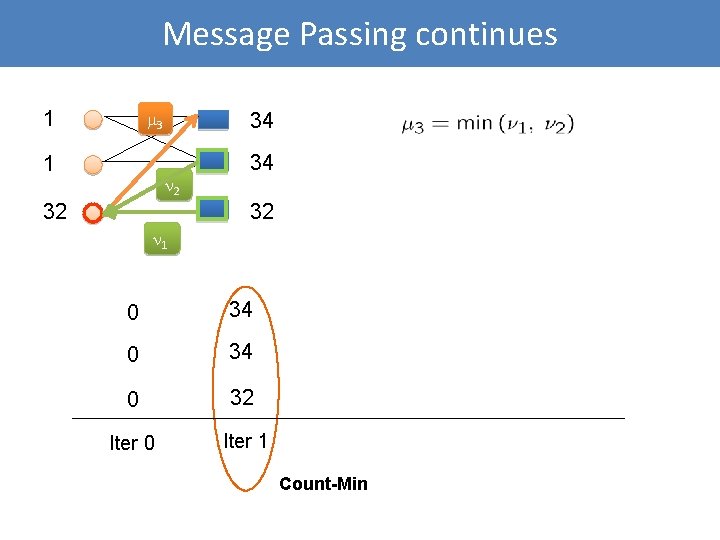

Message Passing (continued)

[Figure: message passing on the example, continued from Count-Min (iterations 0 and 1)]

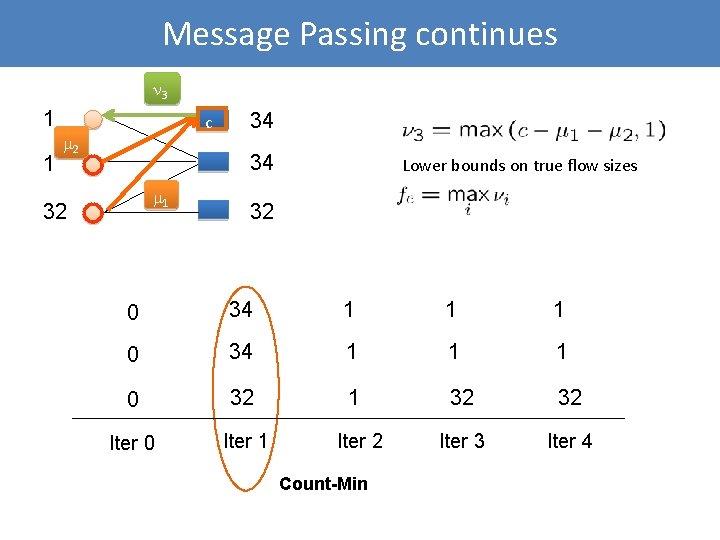

Message Passing (continued)

[Figure: message passing on the example over iterations 0 to 4, now producing lower bounds on the true flow sizes; iteration 1 corresponds to Count-Min]

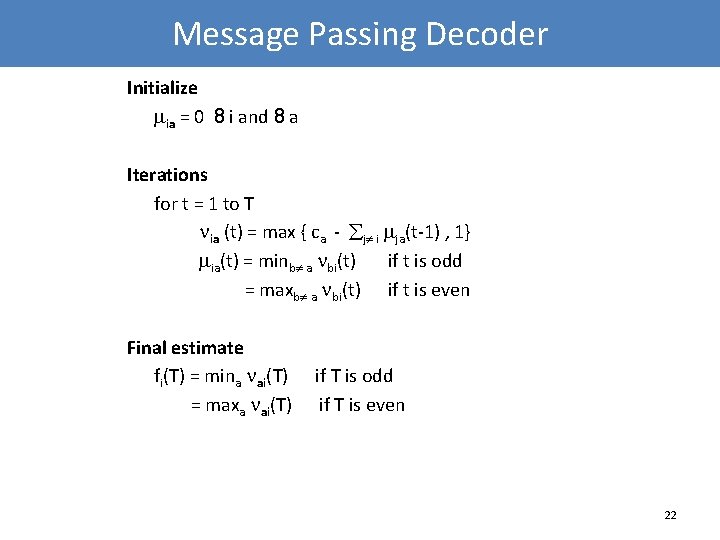

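Message Passing Decoder

Notation: $\nu_{ia}$ is the message from flow $i$ to counter $a$, and $\mu_{ai}$ is the message from counter $a$ to flow $i$; sums, minima and maxima range over neighbours in the flow-counter graph.

- Initialize: $\nu_{ia}(0) = 0$ for all $i$ and all $a$
- Iterations: for $t = 1$ to $T$,

$$\mu_{ai}(t) = \max\Big\{ c_a - \sum_{j \neq i} \nu_{ja}(t-1),\ 1 \Big\}$$

$$\nu_{ia}(t) = \begin{cases} \min_{b \neq a} \mu_{bi}(t) & \text{if } t \text{ is odd} \\ \max_{b \neq a} \mu_{bi}(t) & \text{if } t \text{ is even} \end{cases}$$

- Final estimate:

$$\hat{f}_i(T) = \begin{cases} \min_{a} \mu_{ai}(T) & \text{if } T \text{ is odd} \\ \max_{a} \mu_{ai}(T) & \text{if } T \text{ is even} \end{cases}$$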
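The iterations above translate almost line for line into code. This is a sketch for a single layer under assumptions: each flow is attached to k ≥ 2 distinct counters chosen at random (the helper `random_graph` is hypothetical), every flow has size at least 1 (which the floor of 1 in the counter update relies on), and T ≥ 1. Running one odd and one even T gives upper and lower bounds; flows where they agree are decoded exactly.

```python
import random


def random_graph(n, m, k, seed=0):
    """Hypothetical helper: connect each of n flows to k distinct counters."""
    assert k >= 2, "each flow needs at least two counters"
    rng = random.Random(seed)
    flow_nbrs = [rng.sample(range(m), k) for _ in range(n)]
    counter_nbrs = [[] for _ in range(m)]
    for i, nbrs in enumerate(flow_nbrs):
        for a in nbrs:
            counter_nbrs[a].append(i)
    return flow_nbrs, counter_nbrs


def encode(f, flow_nbrs, m):
    """Counter values c = M f."""
    c = [0] * m
    for i, nbrs in enumerate(flow_nbrs):
        for a in nbrs:
            c[a] += f[i]
    return c


def mp_decode(c, flow_nbrs, counter_nbrs, T):
    """Message-passing decoder; assumes T >= 1 and all flow sizes >= 1."""
    nu = {(i, a): 0 for i, nbrs in enumerate(flow_nbrs) for a in nbrs}  # nu_ia(0)
    mu = {}
    for t in range(1, T + 1):
        # Counter-to-flow: mu_ai = max(c_a - sum_{j != i} nu_ja, 1).
        for a, flows in enumerate(counter_nbrs):
            total = sum(nu[(j, a)] for j in flows)
            for i in flows:
                mu[(a, i)] = max(c[a] - (total - nu[(i, a)]), 1)
        # Flow-to-counter: min over the other counters if t is odd, max if even.
        pick = min if t % 2 == 1 else max
        for i, nbrs in enumerate(flow_nbrs):
            for a in nbrs:
                nu[(i, a)] = pick(mu[(b, i)] for b in nbrs if b != a)
    pick = min if T % 2 == 1 else max
    return [pick(mu[(a, i)] for a in nbrs) for i, nbrs in enumerate(flow_nbrs)]


rng = random.Random(42)
n, m, k = 1000, 800, 3  # hypothetical sizes: beta = m/n = 0.8
f = [1 if rng.random() < 0.7 else rng.randint(2, 500) for _ in range(n)]
flow_nbrs, counter_nbrs = random_graph(n, m, k, seed=7)
c = encode(f, flow_nbrs, m)
upper = mp_decode(c, flow_nbrs, counter_nbrs, T=31)  # odd T: upper bounds
lower = mp_decode(c, flow_nbrs, counter_nbrs, T=30)  # even T: lower bounds
undecided = [i for i in range(n) if upper[i] != lower[i]]
print(f"{len(undecided)} of {n} flows still have a gap between the bounds")
```

Each iteration touches every edge a constant number of times, so the work per iteration is linear in nk, matching the linear-complexity claim for the decoder.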

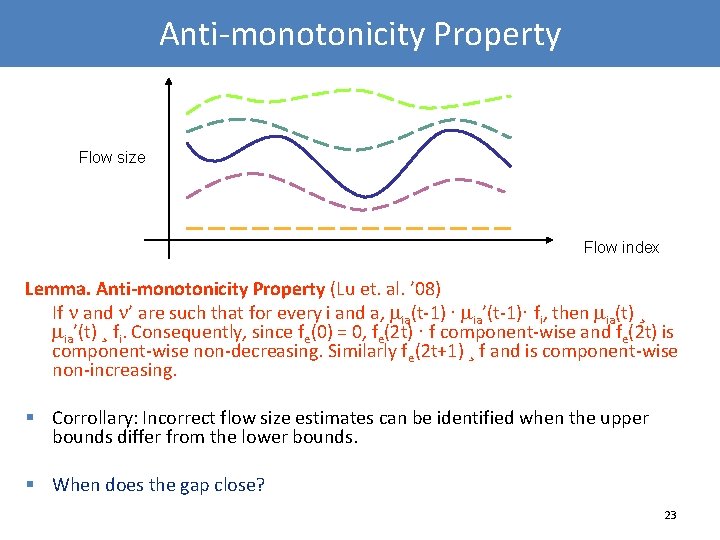

Anti-monotonicity Property

[Figure: flow size versus flow index]

- Lemma (anti-monotonicity property, Lu et al. '08). If $\nu$ and $\nu'$ are such that for every $i$ and $a$, $\nu_{ia}(t-1) \le \nu'_{ia}(t-1) \le f_i$, then $\nu_{ia}(t) \ge \nu'_{ia}(t) \ge f_i$. Consequently, since $\hat{f}(0) = 0$, $\hat{f}(2t) \le f$ component-wise and $\hat{f}(2t)$ is component-wise non-decreasing. Similarly, $\hat{f}(2t+1) \ge f$ and is component-wise non-increasing.
- Corollary: incorrect flow size estimates can be identified when the upper bounds differ from the lower bounds.
- When does the gap close?

Convergence: Sizing of Counter Braids

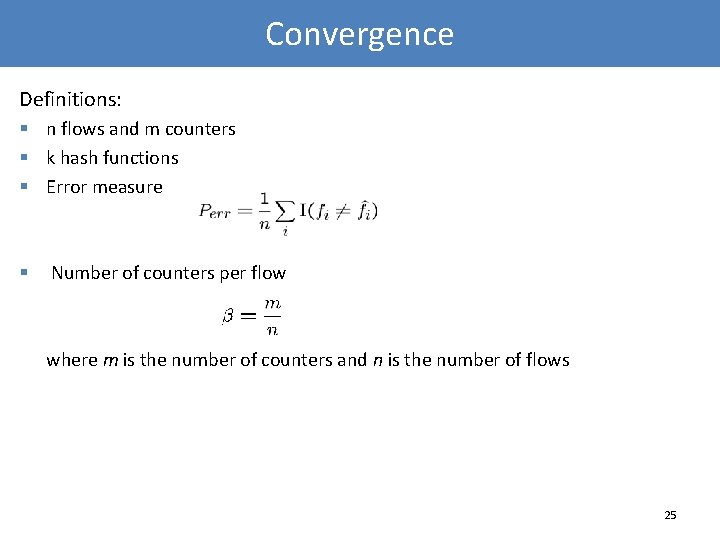

Convergence

Definitions:

- $n$ flows and $m$ counters
- $k$ hash functions
- Error measure: $P_{\text{err}}$, the fraction of flows incorrectly decoded
- Number of counters per flow: $\beta = m/n$, where $m$ is the number of counters and $n$ is the number of flows

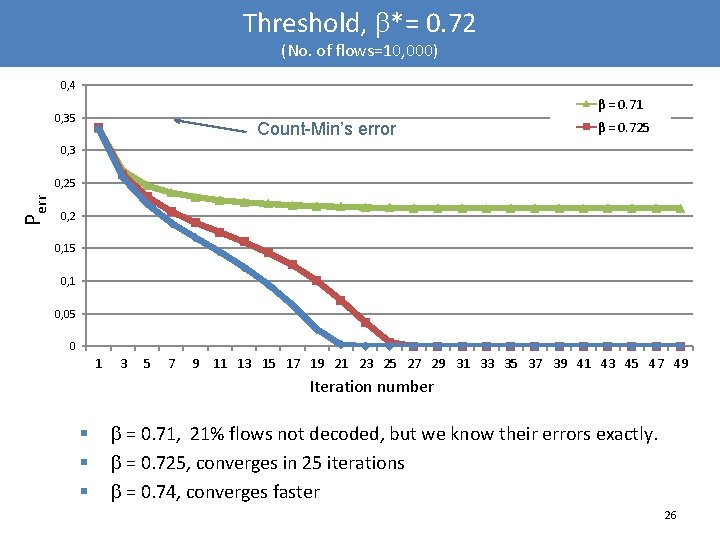

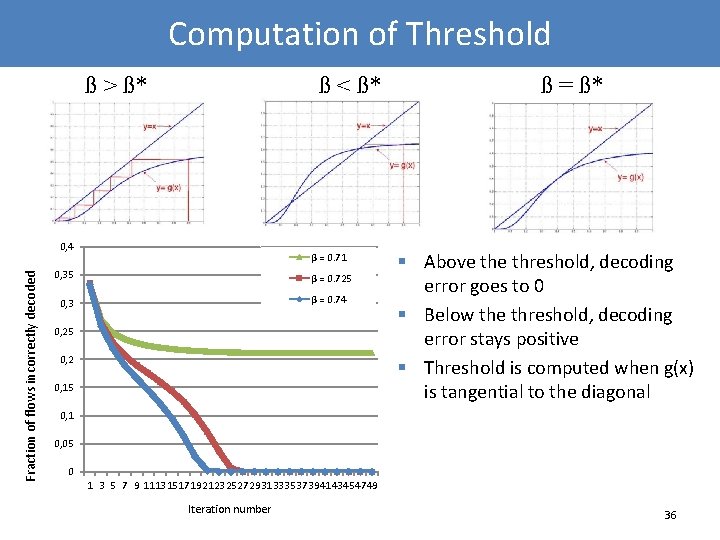

Threshold: β* = 0.72 (number of flows = 10,000)

[Figure: P_err versus iteration number (1 to 49) for β = 0.71 and β = 0.725, with Count-Min's error for comparison]

- β = 0.71: 21% of flows are not decoded, but we know their errors exactly
- β = 0.725: converges in 25 iterations
- β = 0.74: converges faster

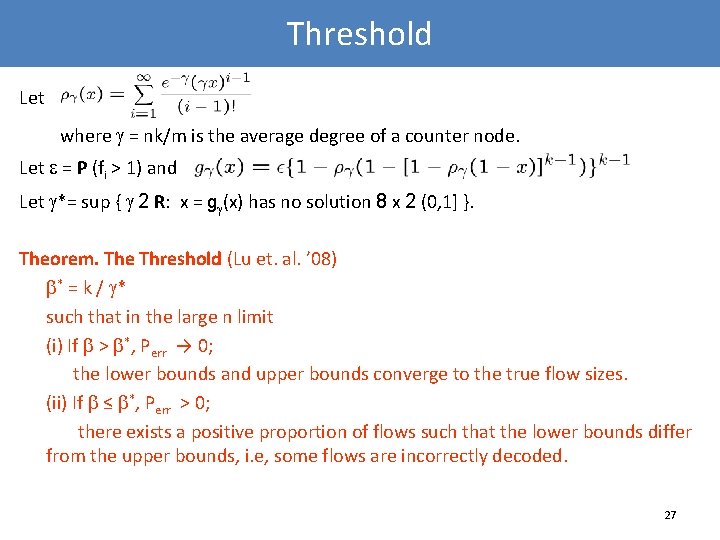

Threshold

Let $\gamma = nk/m$ be the average degree of a counter node, let $\pi = P(f_i > 1)$, and let

$$g(x) = \pi\Big(1 - \exp\big(-\gamma\,(1 - e^{-\gamma x})^{k-1}\big)\Big)^{k-1}.$$

Let $\gamma^* = \sup\{\gamma \in \mathbb{R} : x = g(x) \text{ has no solution for any } x \in (0, 1]\}$.

Theorem (the threshold, Lu et al. '08). Let $\beta^* = k/\gamma^*$. In the large-$n$ limit:

- (i) If $\beta > \beta^*$, $P_{\text{err}} \to 0$; the lower bounds and upper bounds converge to the true flow sizes.
- (ii) If $\beta \le \beta^*$, $P_{\text{err}} > 0$; there is a positive proportion of flows whose lower bounds differ from their upper bounds, i.e. some flows are incorrectly decoded.

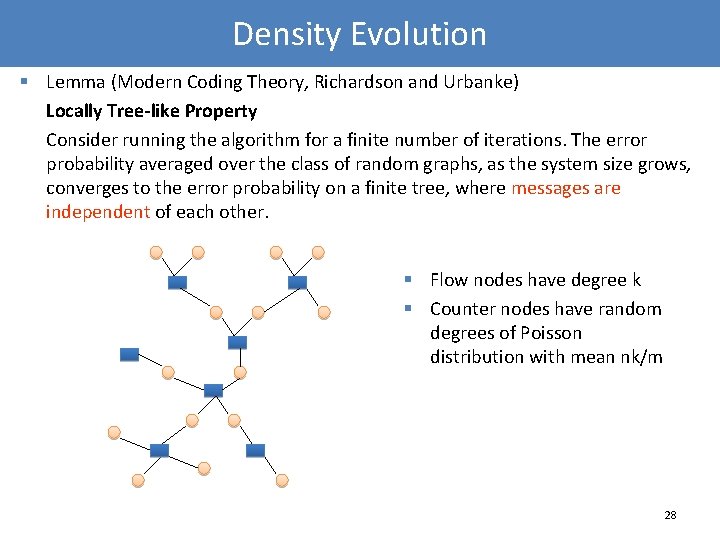

Density Evolution

- Lemma (Modern Coding Theory, Richardson and Urbanke), locally tree-like property: consider running the algorithm for a finite number of iterations. As the system size grows, the error probability averaged over the class of random graphs converges to the error probability on a finite tree, where messages are independent of each other.
- Flow nodes have degree k
- Counter nodes have random degrees with a Poisson distribution of mean nk/m

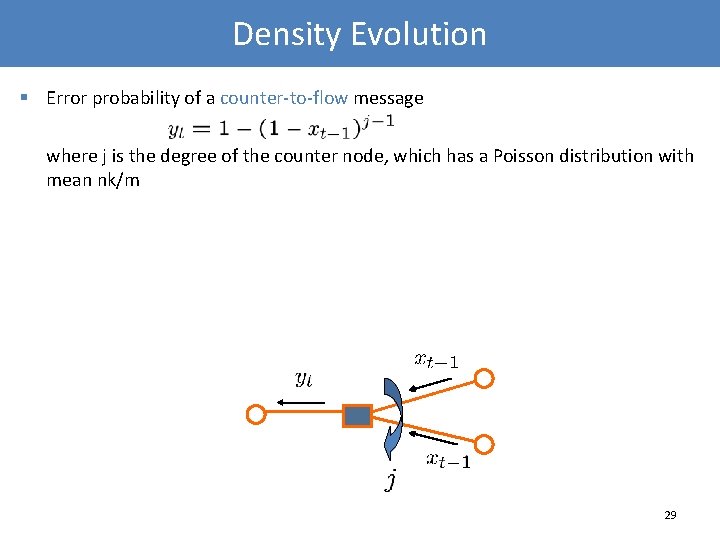

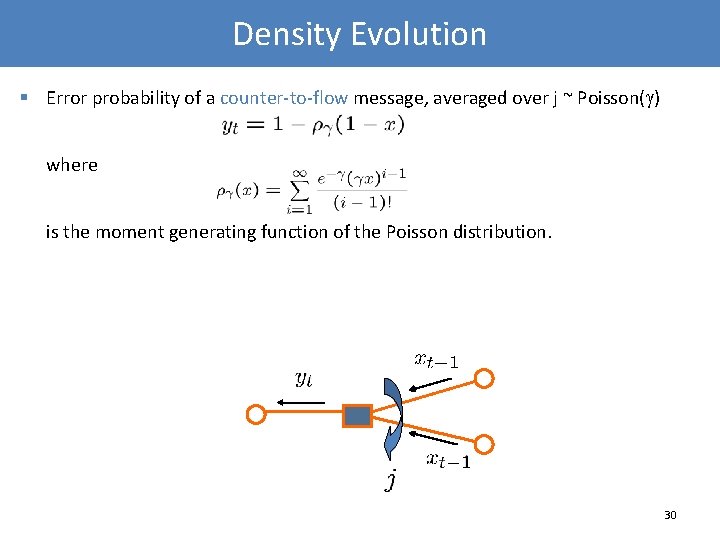

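Density Evolution

- Error probability of a counter-to-flow message: the message is treated as correct exactly when all messages from the other flows at that counter are correct, so

$$y_t = 1 - (1 - x_{t-1})^{j},$$

where $x_{t-1}$ is the error probability of a flow-to-counter message at iteration $t-1$ and $j$ is the number of other flows attached to the counter node, which has a Poisson distribution with mean nk/m.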

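Density Evolution

- Error probability of a counter-to-flow message, averaged over $j \sim \text{Poisson}(\gamma)$ with $\gamma = nk/m$:

$$y_t = 1 - \mathbb{E}\big[(1 - x_{t-1})^{j}\big] = 1 - M_j\big(\ln(1 - x_{t-1})\big) = 1 - e^{-\gamma x_{t-1}},$$

where $M_j(s) = \mathbb{E}[e^{sj}] = e^{\gamma(e^{s} - 1)}$ is the moment generating function of the Poisson distribution.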

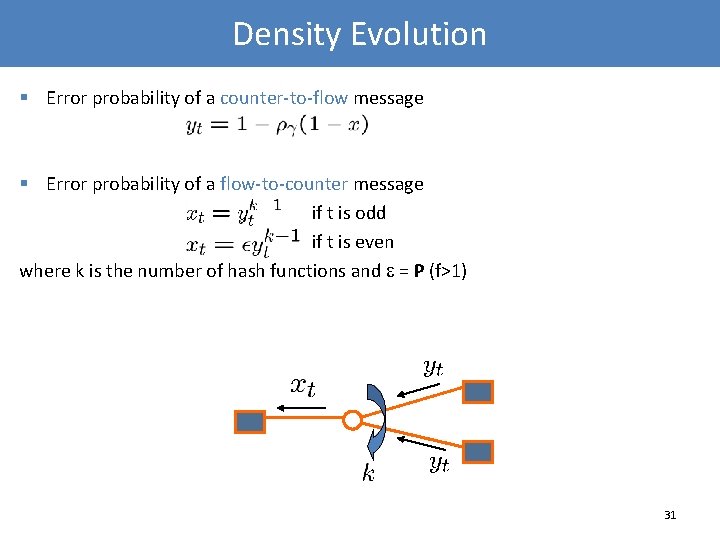

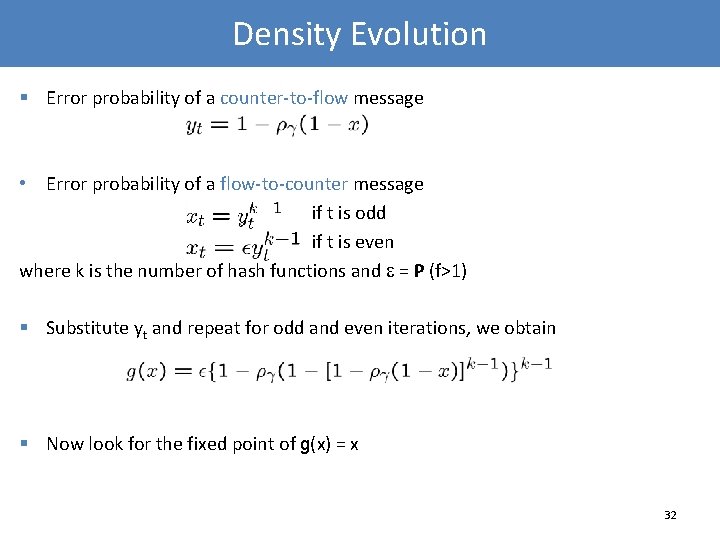


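Density Evolution

- Error probability of a counter-to-flow message: $y_t = 1 - e^{-\gamma x_{t-1}}$, with $\gamma = nk/m$
- Error probability of a flow-to-counter message:

$$x_t = \begin{cases} y_t^{\,k-1} & \text{if } t \text{ is odd} \\ \pi\, y_t^{\,k-1} & \text{if } t \text{ is even} \end{cases}$$

where $k$ is the number of hash functions and $\pi = P(f > 1)$. At odd iterations a flow takes the minimum of $k-1$ upper bounds, which is wrong only if all of them are wrong; at even iterations it takes the maximum of $k-1$ lower bounds, which is additionally always correct when $f = 1$.

- Substituting $y_t$ and repeating for an odd and an even iteration, we obtain, for even $t$,

$$x_{t+2} = g(x_t), \qquad g(x) = \pi\Big(1 - \exp\big(-\gamma\,(1 - e^{-\gamma x})^{k-1}\big)\Big)^{k-1}$$

- Now look for the fixed points of $g(x) = x$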

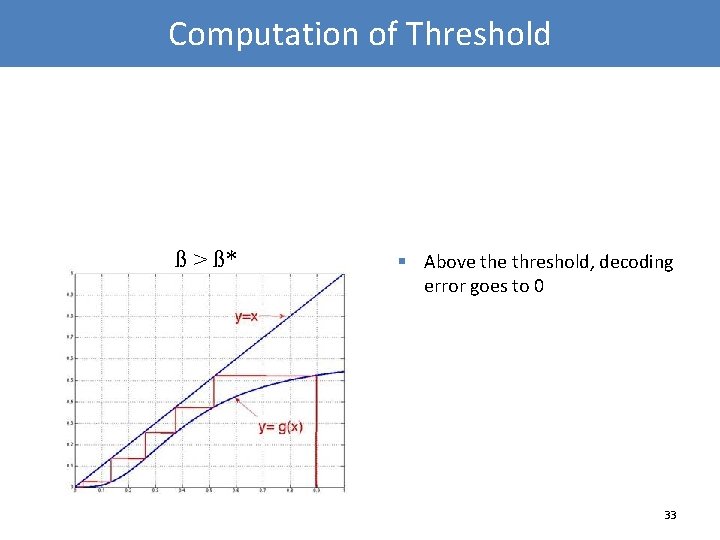



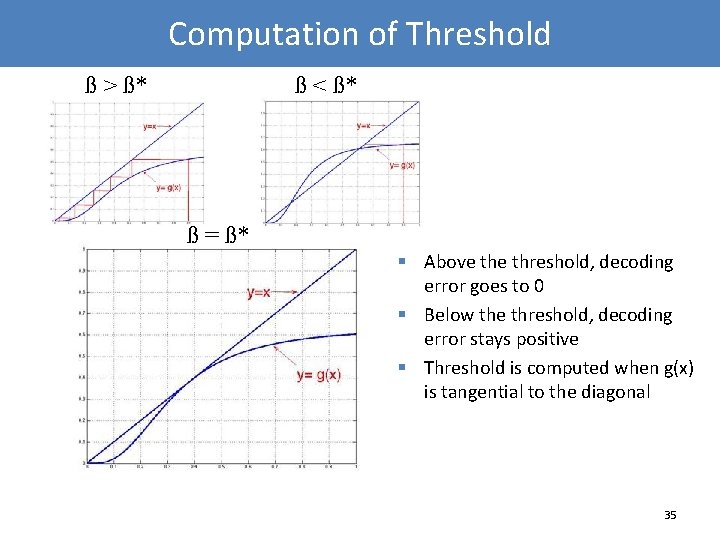


Computation of Threshold

- Above the threshold ($\beta > \beta^*$), the decoding error goes to 0
- Below the threshold ($\beta < \beta^*$), the decoding error stays positive
- The threshold ($\beta = \beta^*$) is computed where $g(x)$ is tangential to the diagonal

[Figure: fraction of flows incorrectly decoded versus iteration number (1 to 49) for β = 0.71, 0.725 and 0.74]
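The fixed-point search can be sketched numerically. This minimal sketch assumes the two-iteration density-evolution map $g(x) = \pi\big(1 - \exp(-\gamma(1 - e^{-\gamma x})^{k-1})\big)^{k-1}$, obtained by composing the counter-to-flow error $1 - e^{-\gamma x}$ with the odd and even flow-to-counter rules; the values of `k` and `pi` in the usage are hypothetical. Because g is increasing in both x and γ and g(0) = 0, iterating from x = 1 (every initial message ν = 0 is wrong) converges to the largest fixed point in [0, 1], so bisection on γ locates γ*, and β* = k/γ*.

```python
import math


def g(x, gamma, k, pi):
    """Two-iteration density-evolution map x_t -> x_{t+2} (assumed form)."""
    x_odd = (-math.expm1(-gamma * x)) ** (k - 1)       # (1 - e^{-gamma x})^{k-1}
    return pi * (-math.expm1(-gamma * x_odd)) ** (k - 1)


def limiting_error(gamma, k, pi, iters=2000):
    """Iterate g from x = 1 for a fixed number of steps."""
    x = 1.0
    for _ in range(iters):
        x = g(x, gamma, k, pi)
    return x


def threshold_beta(k, pi, lo=1e-3, hi=100.0, tol=1e-6):
    """Bisect for gamma* and return beta* = k / gamma*.

    Assumes the error dies out at gamma = lo and persists at gamma = hi.
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if limiting_error(mid, k, pi) < 1e-9:
            lo = mid   # error dies out: gamma* is at least mid
        else:
            hi = mid   # a positive fixed point survives
    return k / lo


beta_star = threshold_beta(k=3, pi=0.5)
print(f"beta* is approximately {beta_star:.3f} counters per flow")
```

Because convergence is slow close to the tangency point, a finite iteration count can misclassify γ slightly below γ*, which biases the estimate of β* slightly upward, i.e. toward more counters.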

Comparison with Linear Programming Decoder

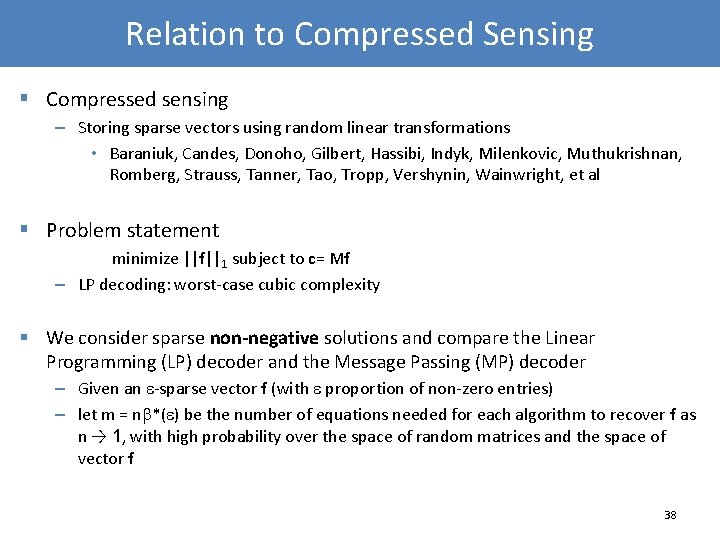

Relation to Compressed Sensing

- Compressed sensing: storing sparse vectors using random linear transformations
  - Baraniuk, Candes, Donoho, Gilbert, Hassibi, Indyk, Milenkovic, Muthukrishnan, Romberg, Strauss, Tanner, Tao, Tropp, Vershynin, Wainwright, et al.
- Problem statement: minimize $\|f\|_1$ subject to $c = Mf$
  - LP decoding: worst-case cubic complexity
- We consider sparse non-negative solutions and compare the Linear Programming (LP) decoder and the Message Passing (MP) decoder
  - Given an $\epsilon$-sparse vector $f$ (with a proportion $\epsilon$ of non-zero entries),
  - let $m = n\beta^*(\epsilon)$ be the number of equations each algorithm needs to recover $f$ as $n \to \infty$, with high probability over the space of random matrices and the space of vectors $f$

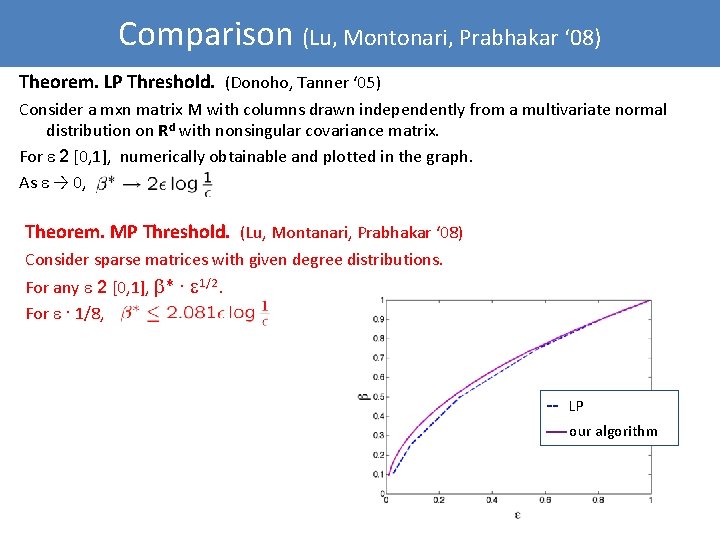

Comparison (Lu, Montanari, Prabhakar '08)

- Theorem (LP threshold; Donoho and Tanner '05). Consider an $m \times n$ matrix $M$ with columns drawn independently from a multivariate normal distribution on $\mathbb{R}^m$ with a nonsingular covariance matrix. For $\epsilon \in [0, 1]$, $\beta^*_{LP}(\epsilon)$ is numerically obtainable and is plotted in the graph, along with its asymptotic behaviour as $\epsilon \to 0$.
- Theorem (MP threshold; Lu, Montanari, Prabhakar '08). Consider sparse matrices with given degree distributions. For any $\epsilon \in [0, 1]$, $\beta^*_{MP}(\epsilon) \le 1/2$, with a further bound for $\epsilon \le 1/8$.

[Figure: β* as a function of ε for LP and for our algorithm]

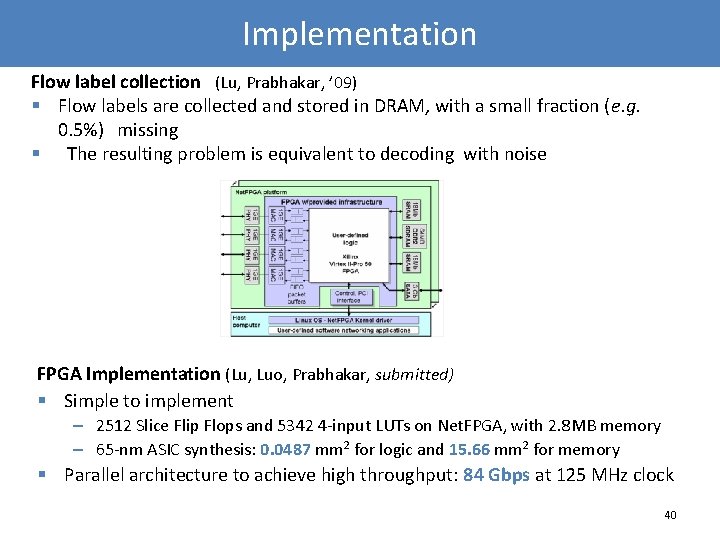

Implementation

- Flow label collection (Lu, Prabhakar '09)
  - Flow labels are collected and stored in DRAM, with a small fraction (e.g. 0.5%) missing
  - The resulting problem is equivalent to decoding with noise
- FPGA implementation (Lu, Luo, Prabhakar, submitted)
  - Simple to implement
    - 2512 slice flip-flops and 5342 4-input LUTs on NetFPGA, with 2.8 MB memory
    - 65-nm ASIC synthesis: 0.0487 mm² for logic and 15.66 mm² for memory
  - Parallel architecture to achieve high throughput: 84 Gbps at a 125 MHz clock

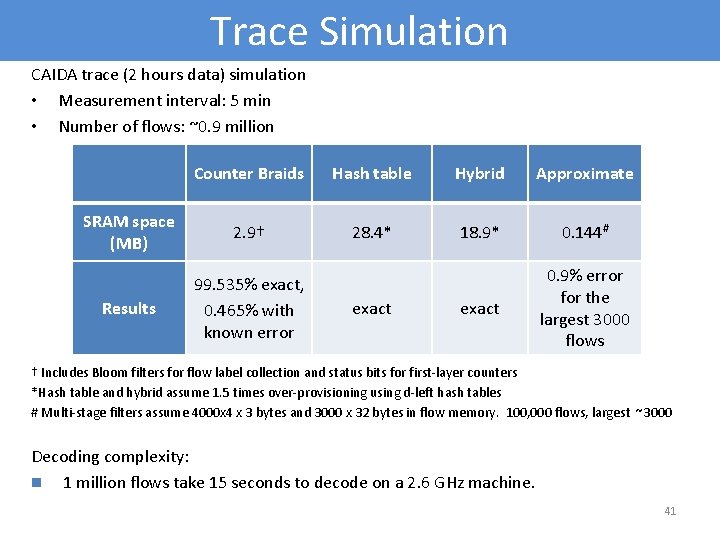

Trace Simulation

- CAIDA trace (2 hours of data)
- Measurement interval: 5 min
- Number of flows: ~0.9 million

| | Counter Braids | Hash table | Hybrid | Approximate |
|---|---|---|---|---|
| SRAM space (MB) | 2.9† | 28.4* | 18.9* | 0.144# |
| Results | 99.535% exact, 0.465% with known error | exact | exact | 0.9% error for the largest 3000 flows |

† Includes Bloom filters for flow label collection and status bits for first-layer counters.

\* Hash table and hybrid assume 1.5 times over-provisioning using d-left hash tables.

\# Multi-stage filters assume 4000 x 4 x 3 bytes and 3000 x 32 bytes in flow memory. 100,000 flows, largest ~3000.

Decoding complexity: 1 million flows take 15 seconds to decode on a 2.6 GHz machine.
