COTS Testing: Differences from In-House Components

- Slides: 77

COTS Testing

Differences from in-house components
- The interface (pre- and postconditions) is not clearly specified.
- No architecture or source code is available.
- Components are black boxes to the component user.

Why use COTS

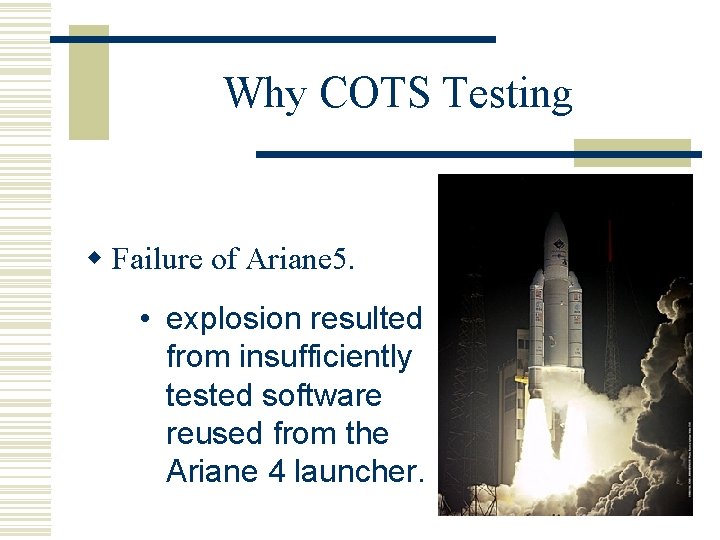

Why COTS Testing
- Failure of Ariane 5: the explosion resulted from insufficiently tested software reused from the Ariane 4 launcher.

COTS Evaluation and selection

Why rigorous evaluation of COTS?
- Large number of alternative products.
- Multiple stakeholders.
- Large number of quality criteria.
- Compatibility with other products.

Why evaluation is difficult
- Large number of evaluation criteria.
- Different stakeholders usually hold different opinions.
- Evaluation criteria are not easily measurable at evaluation time.
- Gathering relevant information is prohibitively expensive.
- The COTS market changes fast, so evaluation must be performed several times during the lifecycle.
- Evaluation deals with uncertain information.

AHP (Analytic Hierarchy Process) Technique
- Originally designed for the economic and political science domains.
- Requires pairwise comparison of alternatives and pairwise weighting of selection criteria.
- Enables consistency analysis of comparisons and weights, making it possible to assess the quality of the gathered information.

AHP Technique (contd.)
- Allows alternatives to be measured on a ratio scale, so we can determine how much better one alternative is than another.
- Practically usable only if the number of alternatives and criteria is sufficiently low, because comparisons are made by experts.
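As a rough illustration of pairwise comparison and consistency analysis, the sketch below derives priority weights from a comparison matrix and computes a consistency ratio. It assumes the row geometric mean approximation of the principal eigenvector and Saaty's commonly quoted random index values; the judgments for three candidate products are invented for the example.

```python
from math import prod

# Saaty's commonly quoted random consistency index for matrix sizes 3..5
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12}


def ahp_weights(matrix):
    """Approximate AHP priority weights using the row geometric mean."""
    n = len(matrix)
    geo_means = [prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def consistency_ratio(matrix, weights):
    """Estimate lambda_max, then return CR = CI / RI."""
    n = len(matrix)
    if n < 3:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    # Averaging (A w)_i / w_i over i approximates the principal eigenvalue
    lambda_max = sum(
        sum(matrix[i][j] * weights[j] for j in range(n)) / weights[i]
        for i in range(n)
    ) / n
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


# Hypothetical judgments: product A vs. B vs. C on a single criterion
comparisons = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 2.0],
    [1 / 5, 1 / 2, 1.0],
]
weights = ahp_weights(comparisons)
cr = consistency_ratio(comparisons, weights)
```

A consistency ratio below roughly 0.1 is usually taken to mean the expert judgments are coherent enough to use; the weights then express, on a ratio scale, how strongly each alternative is preferred.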

Selection in practice
Follows three stages:
1. Informal screening against a set of requirements using selection thresholds.
2. More systematic evaluation using the AHP process.
3. Detailed information gathering, which involves testing, prototyping, and reading technical documents.

State of the art in COTS testing

How to provide information to user
- Component meta-data approach.
- Retro-components approach.
- Component test bench approach.
- Built-in test approach.
- Component+ approach.
- STECC strategy.

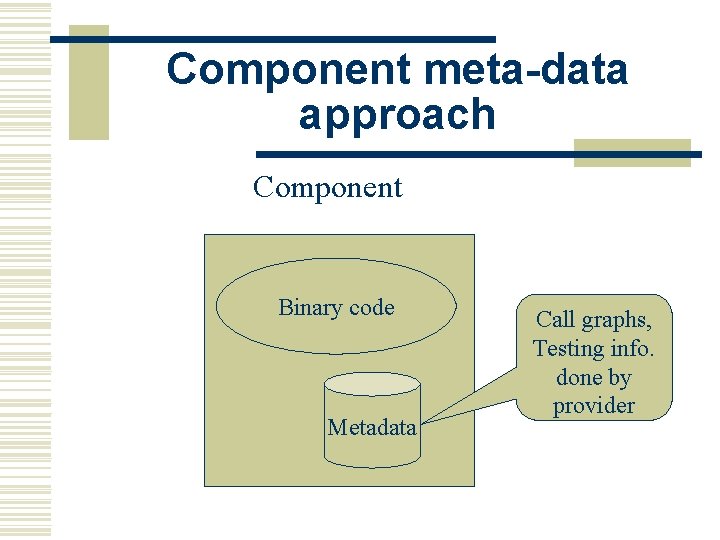

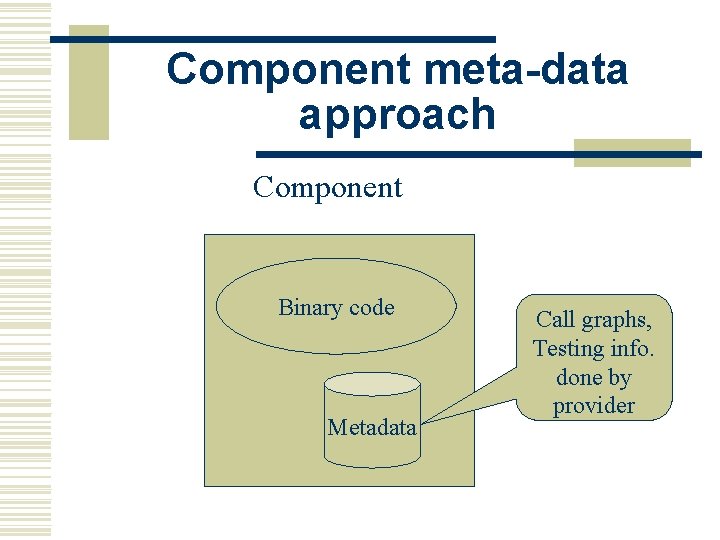

Component meta-data approach
Diagram labels: Component, Binary code, Metadata (call graphs, testing info. done by provider).

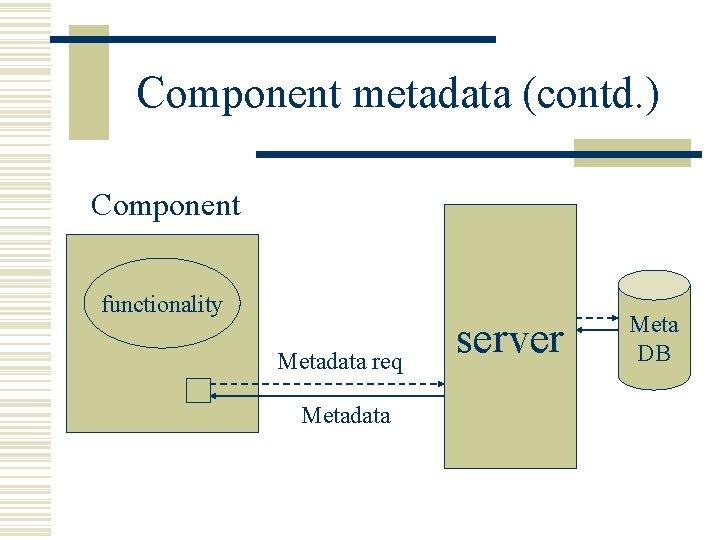

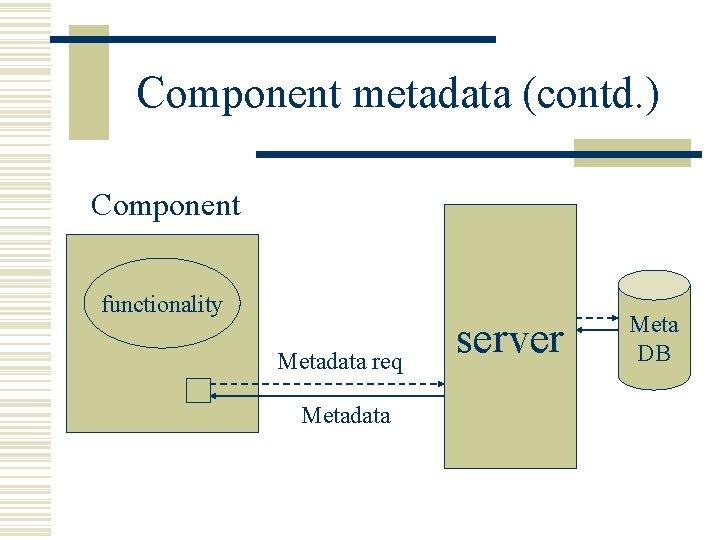

Component meta-data approach (contd.)
Diagram labels: Component, Functionality, Metadata request, Metadata server, Meta DB.

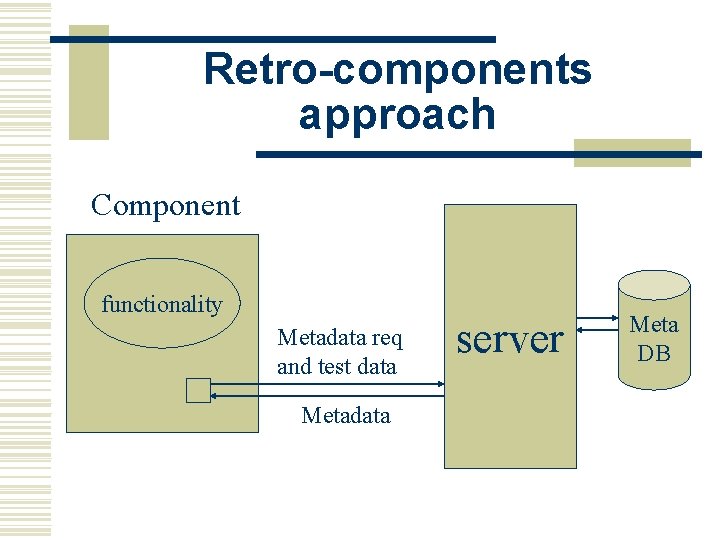

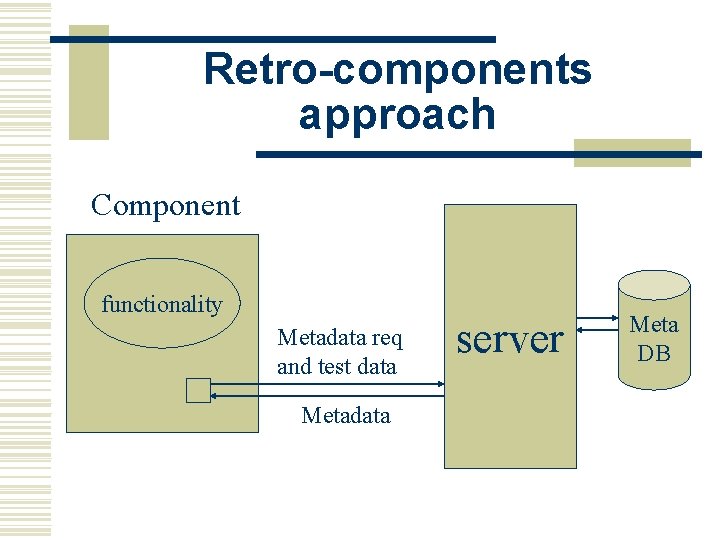

Retro-components approach
Diagram labels: Component, Functionality, Metadata request and test data, Metadata server, Meta DB.

Component test bench approach
- A set of test cases, called a test operation, is associated with each interface of a component.
- A test operation defines the necessary steps for testing a specific method.
- The concrete test inputs and expected test outputs are packaged in a test operation.

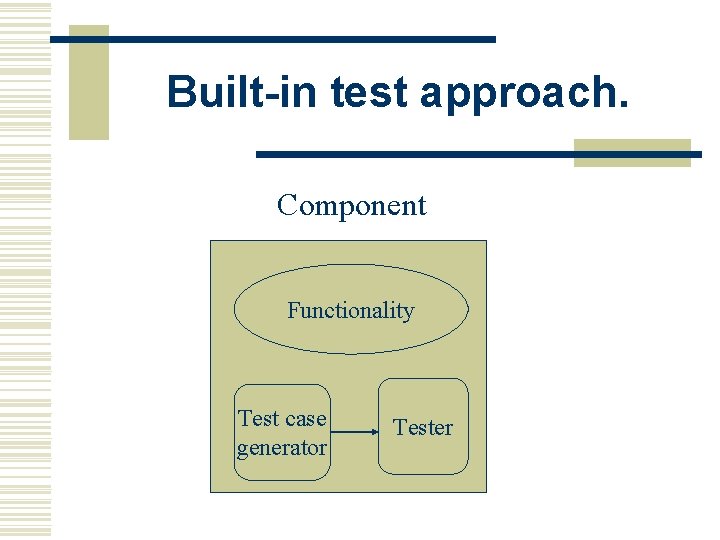

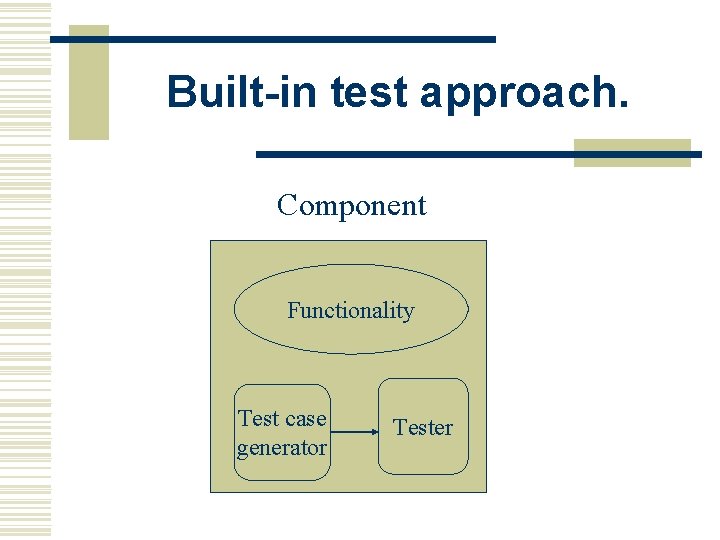
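The test operation idea from the component test bench approach can be sketched as a small data structure that packages concrete inputs with expected outputs for one method. The class names and the `TemperatureConverter` component are hypothetical and only illustrate the packaging, not any particular test bench tool.

```python
from dataclasses import dataclass, field
from typing import Any, List, Tuple


@dataclass
class TestOperation:
    """Steps for testing one interface method: concrete inputs plus expected outputs."""
    method_name: str
    cases: List[Tuple[tuple, Any]] = field(default_factory=list)

    def run(self, component) -> List[bool]:
        """Call the method with each input and compare against the expected output."""
        method = getattr(component, self.method_name)
        return [method(*args) == expected for args, expected in self.cases]


class TemperatureConverter:
    """Hypothetical component with a single interface method."""

    def to_fahrenheit(self, celsius):
        return celsius * 9 / 5 + 32


# One test operation per interface method, shipped alongside the component
to_fahrenheit_test = TestOperation(
    method_name="to_fahrenheit",
    cases=[((0,), 32.0), ((100,), 212.0), ((-40,), -40.0)],
)
results = to_fahrenheit_test.run(TemperatureConverter())
```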

Built-in test approach
Diagram labels: Component, Functionality, Test case generator, Tester.

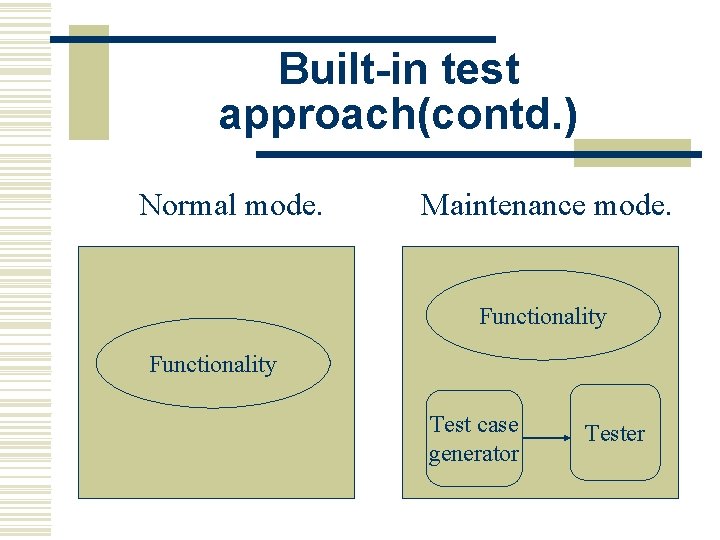

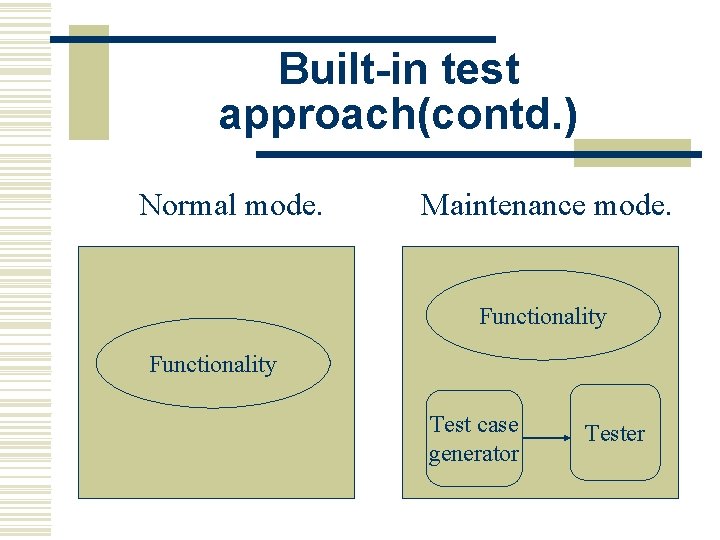

Built-in test approach (contd.)
Diagram labels: Normal mode, Maintenance mode, Functionality, Test case generator, Tester.

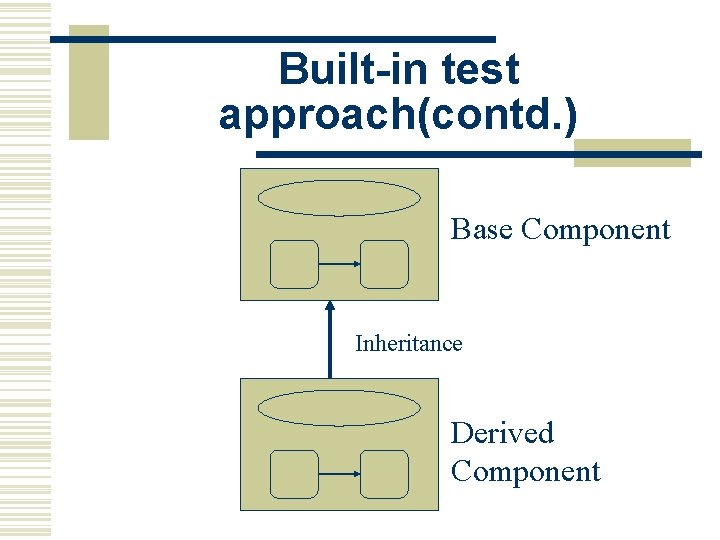

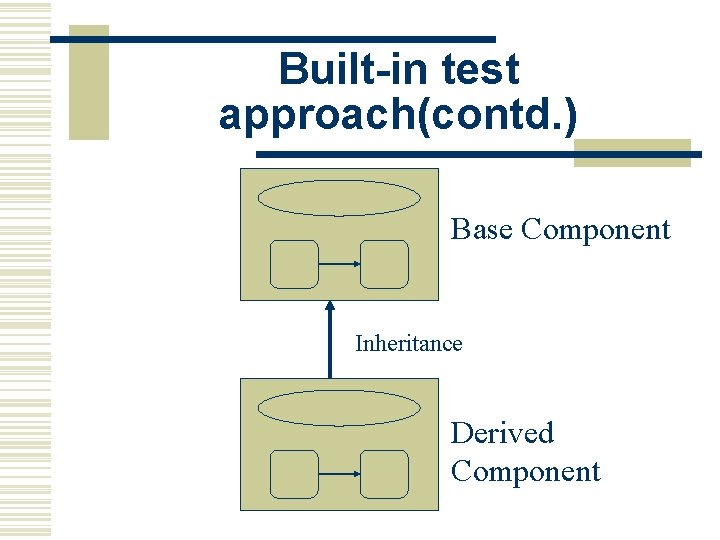

Built-in test approach (contd.)
Diagram labels: Base component, Inheritance, Derived component.

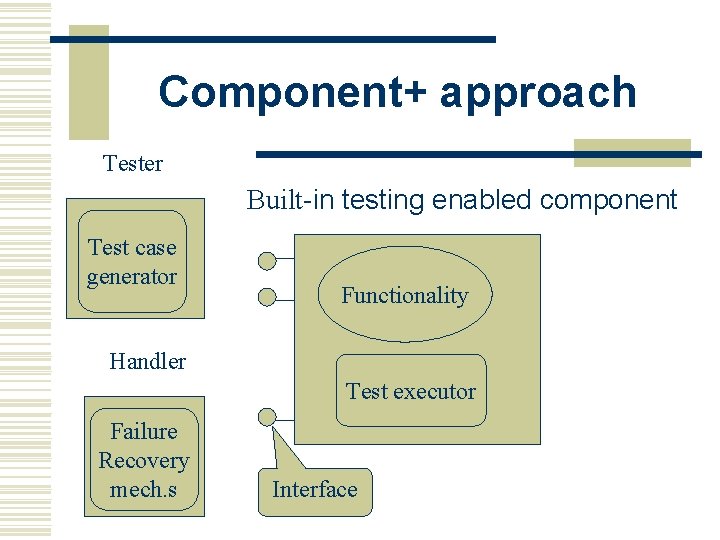

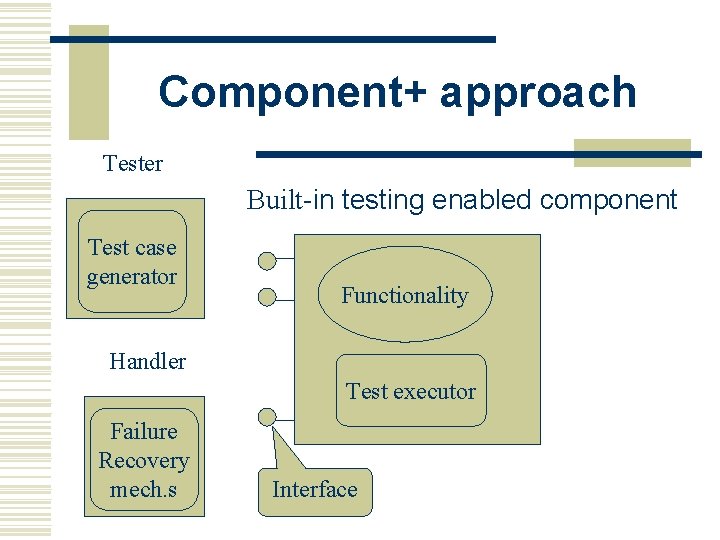
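One possible reading of the built-in test slides is sketched below: the component carries its own tests, exposes them only in maintenance mode, and a derived component inherits them together with the functionality. `Account`, `SavingsAccount`, and `self_test` are hypothetical names chosen for the example.

```python
class Account:
    """Base component with built-in tests (hypothetical example)."""

    def __init__(self):
        self.balance = 0
        self.maintenance_mode = False  # normal mode by default

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balance += amount
        return self.balance

    def self_test(self):
        """Built-in test: callable only in maintenance mode."""
        if not self.maintenance_mode:
            raise RuntimeError("built-in tests are disabled in normal mode")
        probe = type(self)()  # fresh instance, so this object's state is untouched
        results = {"deposit_adds": probe.deposit(5) == 5}
        try:
            probe.deposit(-1)
            results["rejects_non_positive"] = False
        except ValueError:
            results["rejects_non_positive"] = True
        return results


class SavingsAccount(Account):
    """Derived component: inherits both functionality and built-in tests."""

    def add_interest(self, rate):
        self.balance += self.balance * rate
        return self.balance


account = SavingsAccount()
account.maintenance_mode = True  # switch from normal to maintenance mode
report = account.self_test()
```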

Component+ approach
Diagram labels: Tester, Built-in-testing-enabled component, Test case generator, Functionality, Handler, Test executor, Failure recovery mechanisms, Interface.

Disadvantages of BIT and Component+
- Static nature.
- They generally do not ensure that tests are conducted as required by the component user.
- The component provider makes assumptions about the requirements of the component user, which may be wrong or inaccurate.

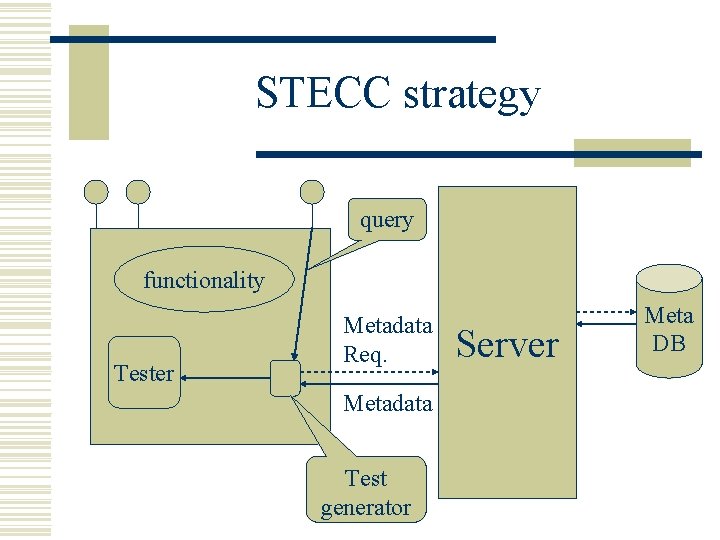

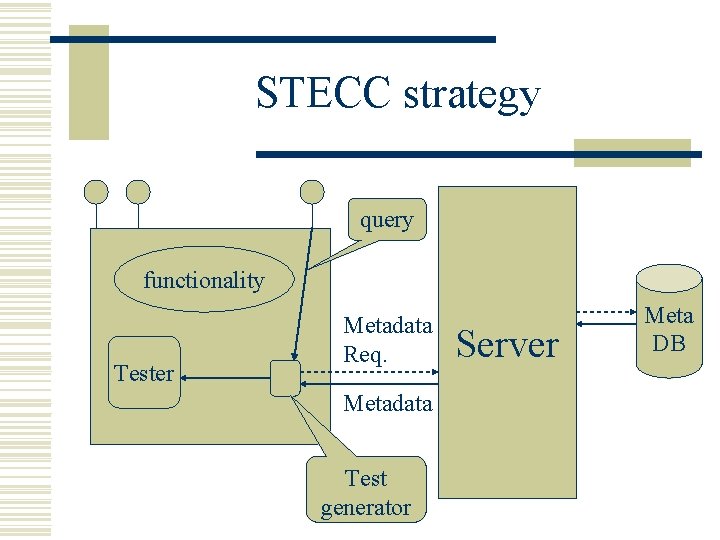

STECC strategy
Diagram labels: Query, Functionality, Tester, Metadata request, Metadata, Test generator, Server, Meta DB.

Levels of Testing
- Unit testing.
- Integration testing.
- System testing.

Types of testing
- Functionality testing.
- Reliability testing.
- Robustness testing.
- Performance testing.
- Load testing.
- Stress testing.
- Stability testing.
- Security testing.

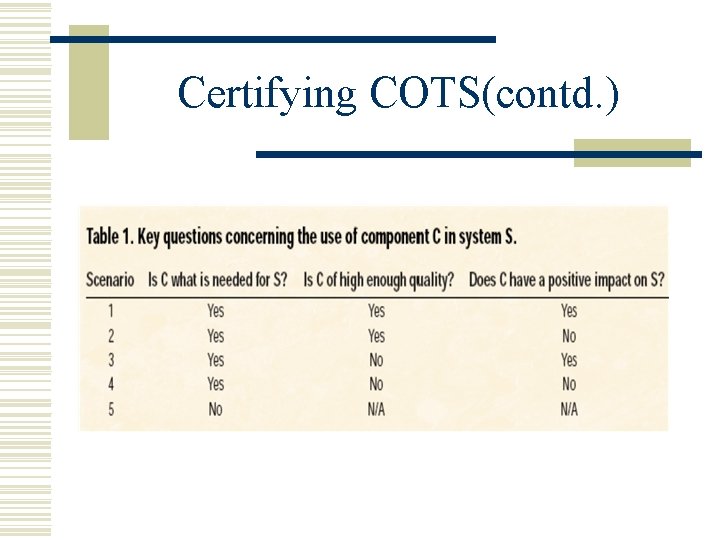

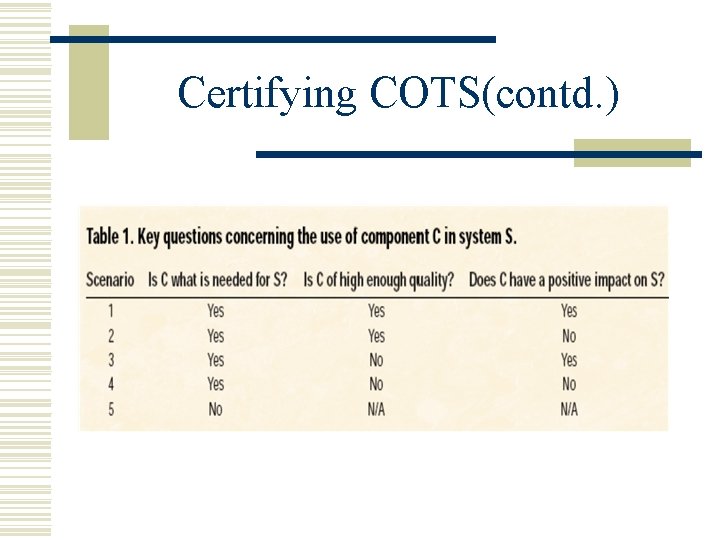

Certifying COTS
When considering a candidate component, developers need to ask three key questions:
- Does component C fill the developer’s needs?
- Is the quality of component C high enough?
- What impact will component C have on system S?

Certifying COTS (contd.)

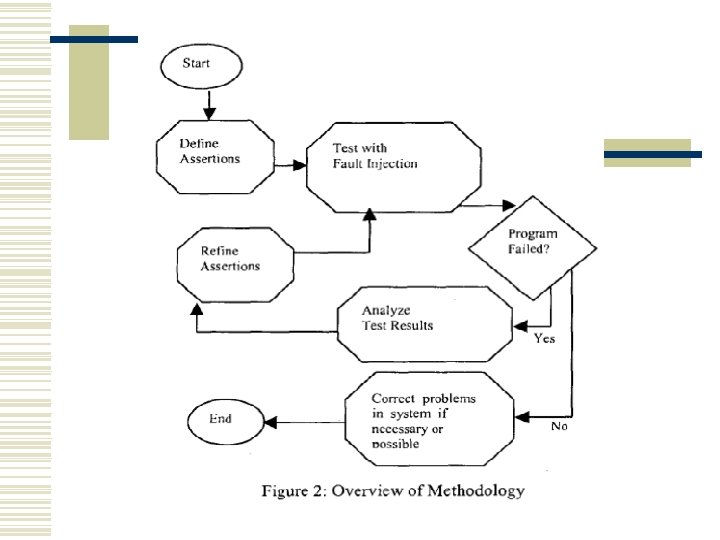

CERTIFICATION TECHNIQUES
- Black-box component testing.
- System-level fault injection.
- Operational system testing.
- Software wrapping.
- Interface propagation analysis.

Black box Testing
- To understand the behavior of a component, various inputs are executed and the outputs are analyzed.
- To catch all types of errors, all possible combinations of input values would have to be executed.
- To make testing feasible, test cases are selected randomly from the test case space.
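A minimal sketch of random black-box testing follows. The component is treated as opaque; here a hypothetical sorting routine stands in for it, and the oracle checks only observable output properties. The trial count, value ranges, and seed are arbitrary choices for the example.

```python
import random


def component_under_test(values):
    """Stand-in for an opaque COTS routine (hypothetical): sorts a list."""
    return sorted(values)


def random_black_box_test(component, trials=200, seed=0):
    """Draw random inputs, run the component, and collect inputs that fail the oracle."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    failures = []
    for _ in range(trials):
        data = [rng.randint(-1000, 1000) for _ in range(rng.randint(0, 20))]
        output = component(list(data))
        # Oracle: output is ordered and is a permutation of the input
        ordered = all(a <= b for a, b in zip(output, output[1:]))
        same_items = sorted(data) == sorted(output)
        if not (ordered and same_items):
            failures.append(data)
    return failures


failures = random_black_box_test(component_under_test)
```

Because the inputs are sampled, an empty failure list only says that no sampled input exposed a problem; it does not show the component is correct.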

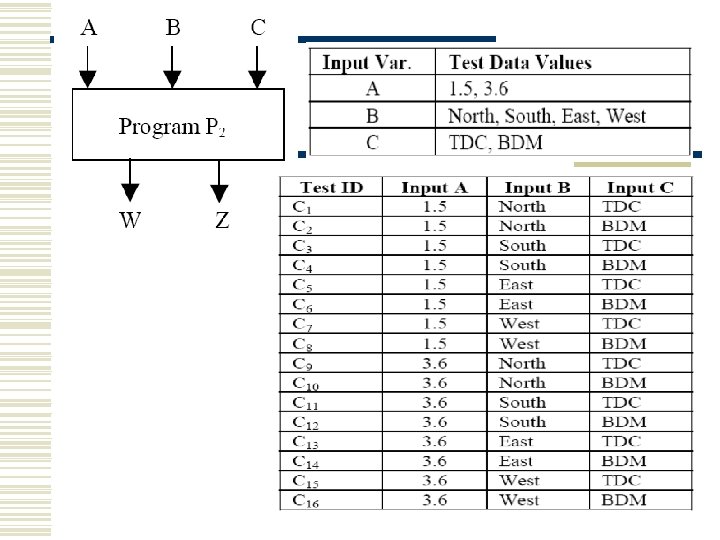

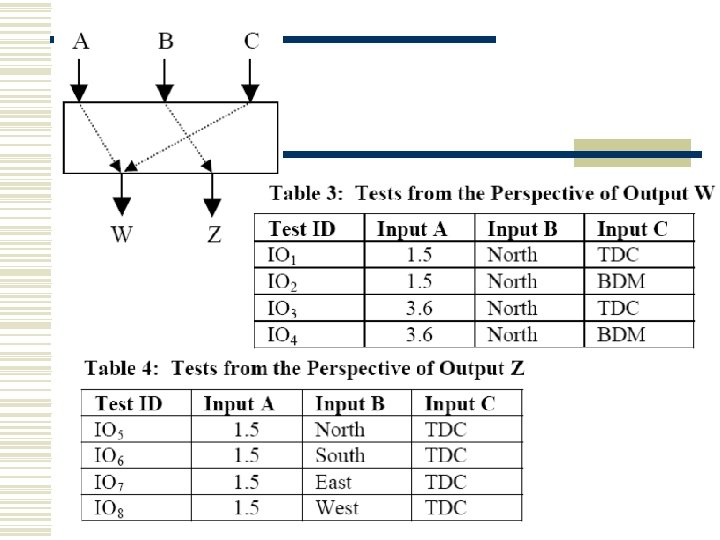

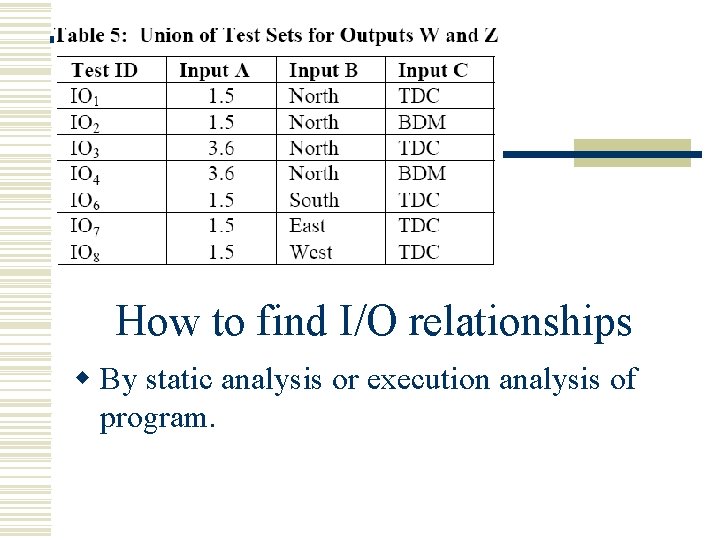

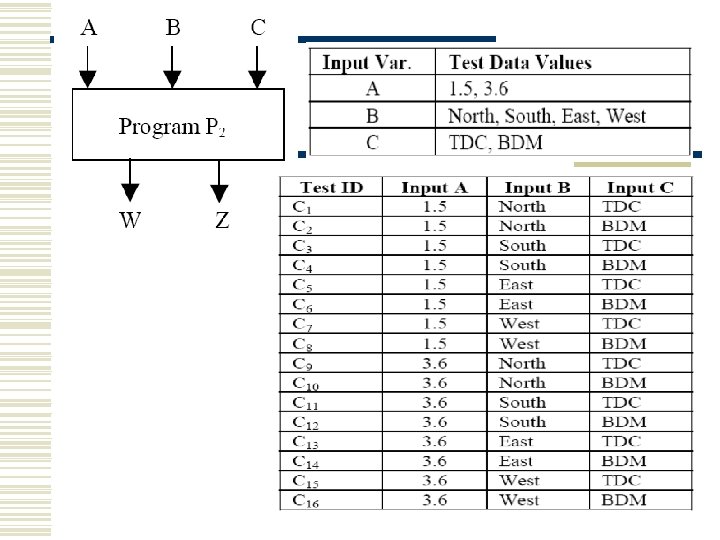

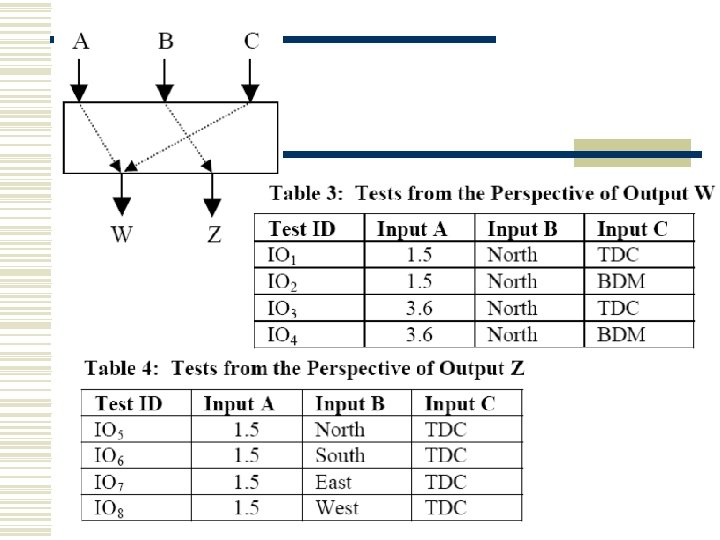

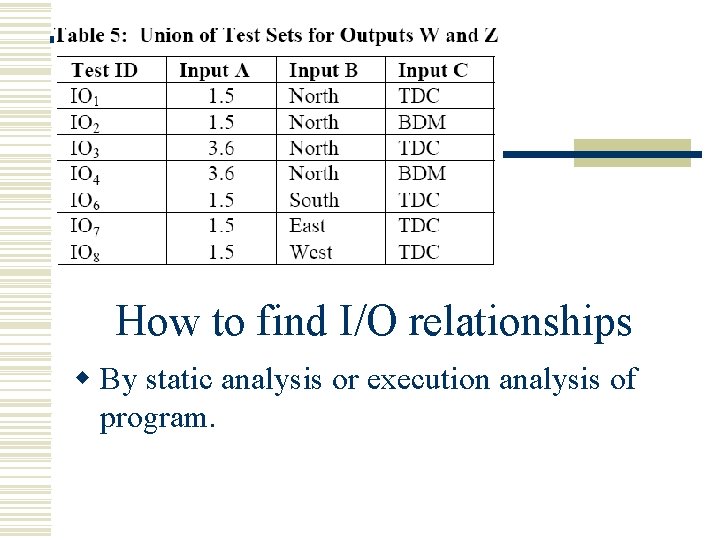

Black box test reduction using Input-output Analysis
- Random testing is not complete.
- To perform complete functional testing, the number of test cases can be reduced by input-output analysis.

How to find I/O relationships
- By static analysis or execution analysis of the program.

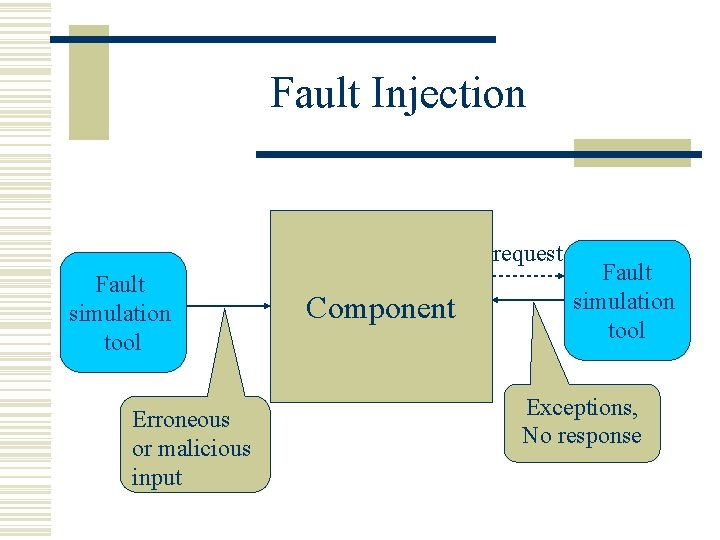

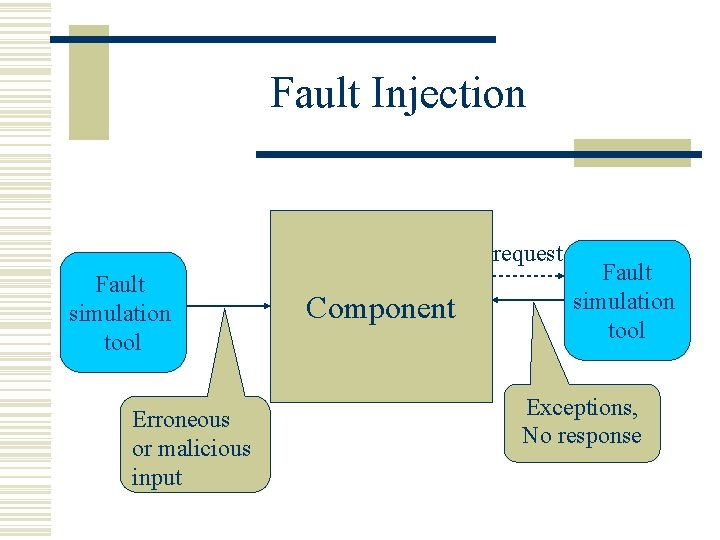
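The effect of input-output analysis on test count can be illustrated with a simplified sketch. It assumes the I/O relationships are already known (for example from static or execution analysis) and that each output can be checked independently; the input domains and the `influences` map are invented for the example.

```python
from itertools import product

# Hypothetical input domains of a component with three inputs
domains = {"a": [0, 1, 2], "b": [0, 1, 2], "c": [0, 1, 2, 3]}

# Assumed I/O relationships: which inputs can influence which output
influences = {"out1": ["a", "b"], "out2": ["c"]}


def exhaustive_count(domains):
    """Number of tests when every combination of all inputs is executed."""
    count = 1
    for values in domains.values():
        count *= len(values)
    return count


def reduced_tests(domains, influences):
    """Per output, enumerate only combinations of the inputs that influence it."""
    tests = {}
    for output, inputs in influences.items():
        combos = product(*(domains[name] for name in inputs))
        tests[output] = [dict(zip(inputs, combo)) for combo in combos]
    return tests


all_combinations = exhaustive_count(domains)
reduced = reduced_tests(domains, influences)
reduced_total = sum(len(cases) for cases in reduced.values())
```

With these assumed domains, exhaustive testing needs 3 × 3 × 4 = 36 combinations, while covering each output over only its influencing inputs needs 9 + 4 = 13.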

Fault Injection
Diagram labels: Request, Fault simulation tool, Erroneous or malicious input, Component, Fault simulation tool, Exceptions or no response.

Operational System Testing
- Complements system-level fault injection.
- The system is operated with random inputs (both valid and invalid).
- Provides a more accurate assessment of COTS quality.
- Helps ensure that a component is a good match for the system.

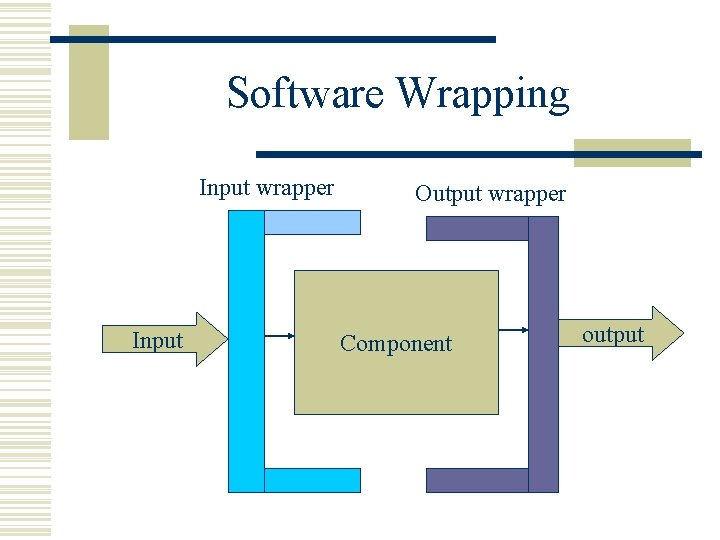

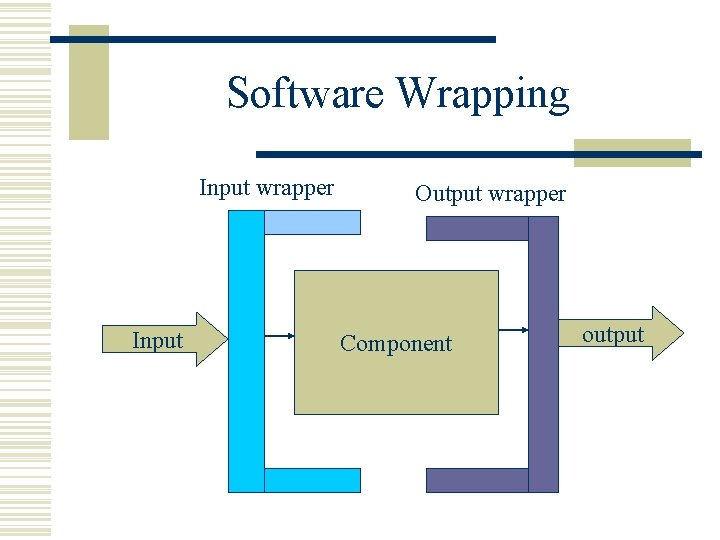

Software Wrapping
Diagram labels: Input, Input wrapper, Component, Output wrapper, Output.

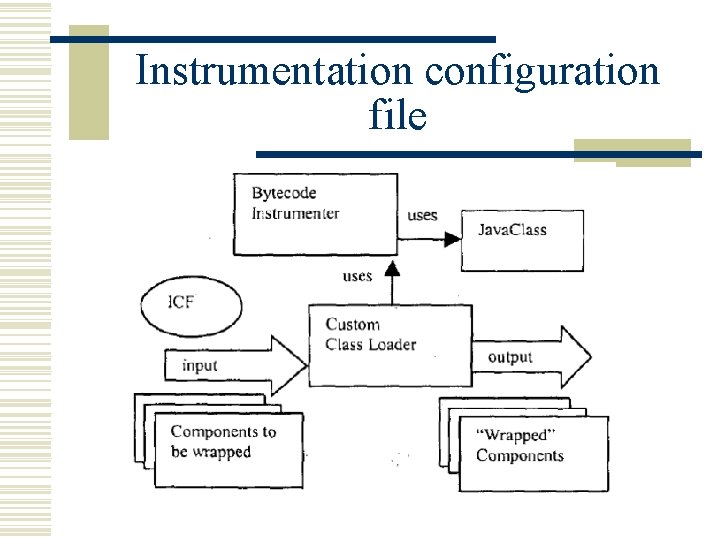

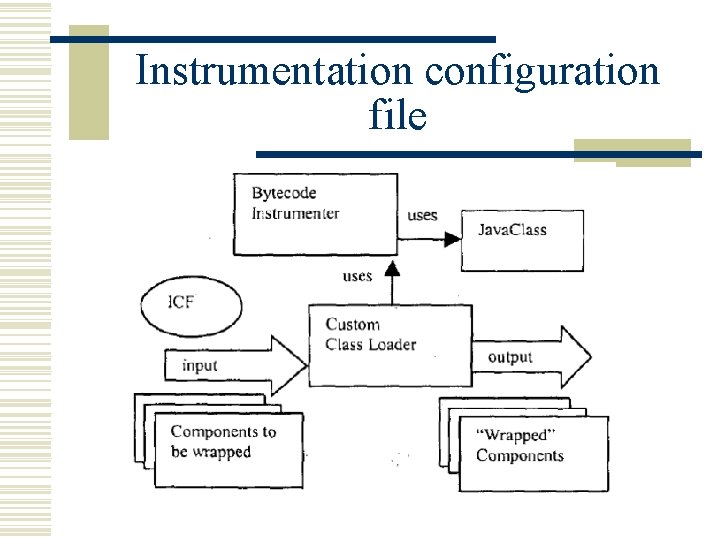
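Software wrapping can be sketched as a pair of checks placed around an opaque component: an input wrapper that rejects inputs the system should never pass in, and an output wrapper that rejects results the system cannot tolerate. The names `cots_sqrt`, `wrap`, and `WrapperViolation` are hypothetical.

```python
import math


def cots_sqrt(x):
    """Stand-in for an opaque COTS function (hypothetical)."""
    return math.sqrt(x)


class WrapperViolation(Exception):
    """Raised when the input or output wrapper rejects a value."""


def wrap(component, input_ok, output_ok):
    """Return a callable that filters inputs and outputs around the component."""
    def wrapped(*args):
        if not input_ok(*args):  # input wrapper
            raise WrapperViolation(f"input rejected: {args!r}")
        result = component(*args)
        if not output_ok(result):  # output wrapper
            raise WrapperViolation(f"output rejected: {result!r}")
        return result
    return wrapped


safe_sqrt = wrap(
    cots_sqrt,
    input_ok=lambda x: isinstance(x, (int, float)) and x >= 0,
    output_ok=lambda y: y >= 0,
)
value = safe_sqrt(9)
```

The component itself is left untouched, which matters for COTS software whose source code is not available.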

Instrumentation configuration file

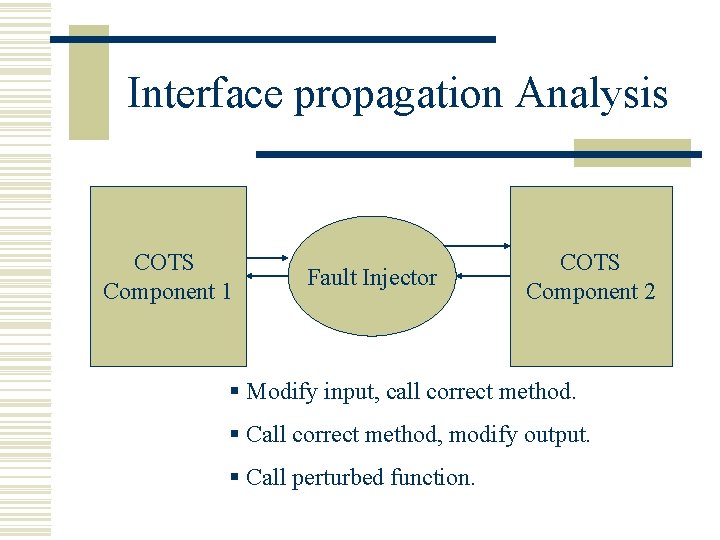

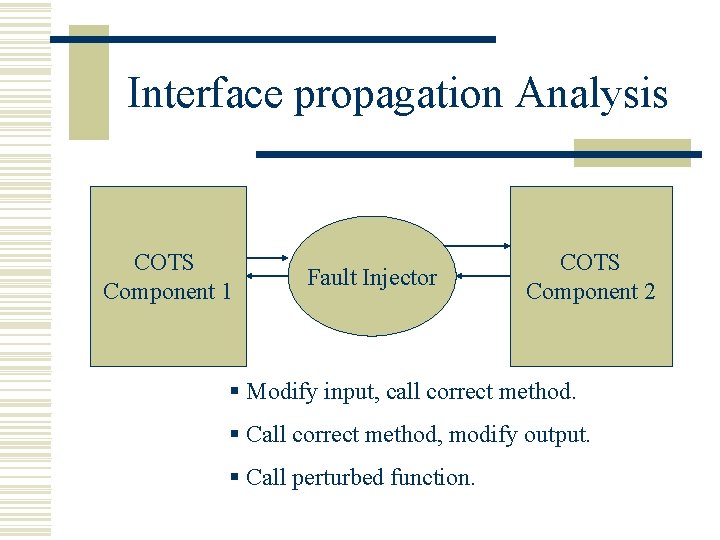

Interface propagation Analysis
Diagram labels: COTS Component 1, Fault Injector, COTS Component 2.
- Modify input, call correct method.
- Call correct method, modify output.
- Call perturbed function.

Fault Injection used for
- Robustness testing.
- Error propagation analysis.
- Reliability testing.
- Security testing.
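The three injection modes can be sketched with a fault injector placed between two components. Everything here is hypothetical: `lookup_price` plays COTS component 2, `order_total` plays COTS component 1, and the specific corruptions (shifted id, negated price, NaN) are arbitrary choices.

```python
def lookup_price(item_id):
    """Hypothetical COTS component 2: returns the price of a known item."""
    prices = {1: 10.0, 2: 25.0}
    return prices[item_id]


def perturbed_lookup(item_id):
    """Perturbed replacement for lookup_price."""
    return float("nan")


class FaultInjector:
    """Sits on the interface between the two components."""

    def __init__(self, target, mode):
        self.target = target
        self.mode = mode

    def __call__(self, item_id):
        if self.mode == "modify_input":
            return self.target(item_id + 1000)  # corrupt input, call correct method
        if self.mode == "modify_output":
            return -self.target(item_id)  # call correct method, corrupt output
        if self.mode == "perturbed_function":
            return perturbed_lookup(item_id)  # call a perturbed function instead
        return self.target(item_id)  # no fault injected


def order_total(lookup, item_id, quantity):
    """Hypothetical COTS component 1: observed to see how it handles the fault."""
    try:
        price = lookup(item_id)
    except KeyError:
        return "rejected: unknown item"
    if not (price >= 0):  # also catches NaN
        return "rejected: bad price"
    return price * quantity


observations = {
    mode: order_total(FaultInjector(lookup_price, mode), 1, 2)
    for mode in ("none", "modify_input", "modify_output", "perturbed_function")
}
```

Comparing the observations against the fault-free run shows whether the corrupted data propagates into the caller's result or is contained.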

Robustness Testing

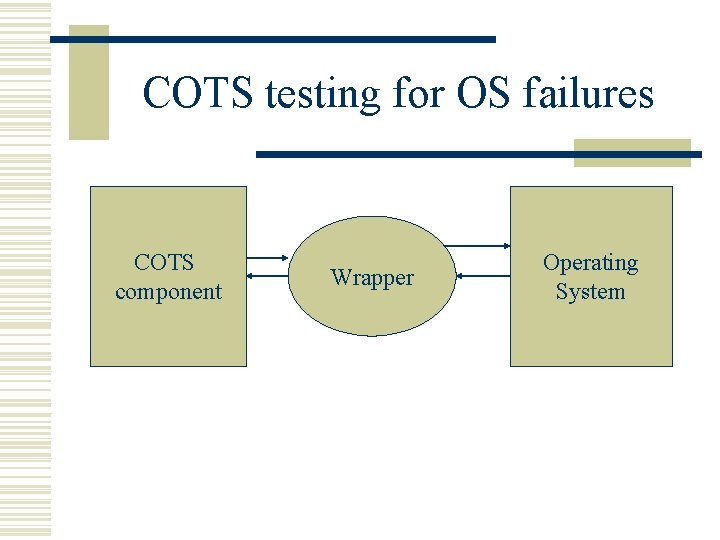

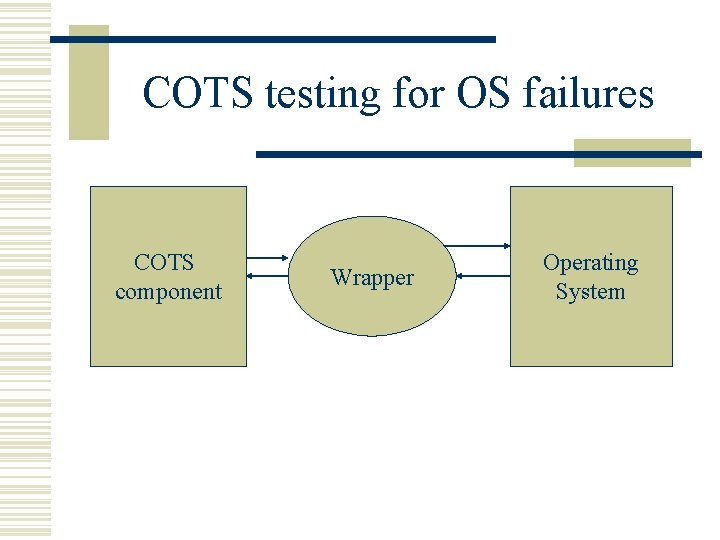

COTS testing for OS failures
Diagram labels: COTS component, Wrapper, Operating System.

Ballista approach
- Based on the fault injection technique.
- Test cases are generated using the parameter types of an interface.
- Independent of internal functionality.
- Testing is not complete.

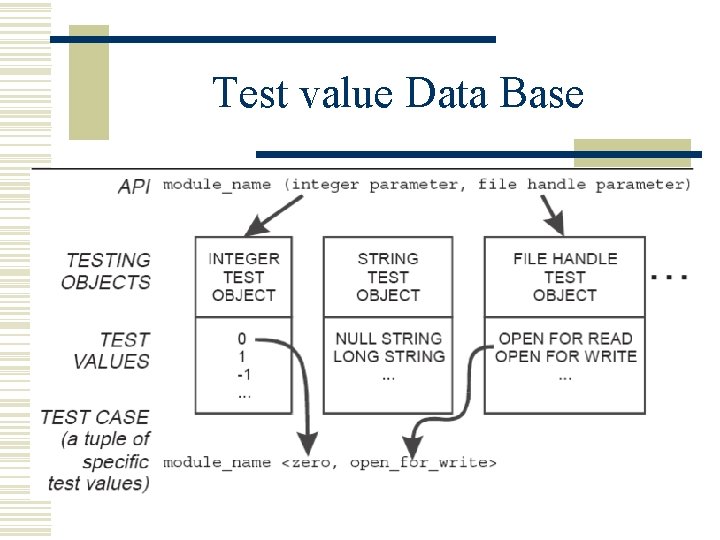

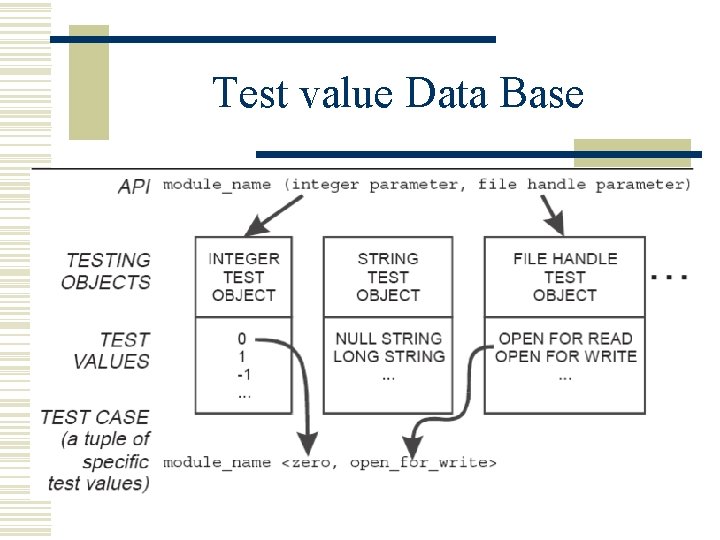

Test value Data Base

Test value Data Base (contd.)
- Integer data type: 0, 1, -1, MAXINT, -MAXINT, selected powers of two, powers of two minus one, and powers of two plus one.
- Float data type: 0, 1, -1, +/-DBL_MIN, +/-DBL_MAX, pi, and e.
- Pointer data type: NULL, -1 (cast to a pointer), pointer to free’d memory, and pointers to malloc’ed buffers of various powers of two in size.

Test value Data Base (contd.)
- String data type (based on the pointer base type): includes NULL, -1 (cast to a pointer), pointer to an empty string, a string as large as a virtual memory page, a string 64 K bytes in length.
- File descriptor (based on integer base type): includes 1; MAXINT; and various descriptors: to a file open for reading, to a file open for writing, to a file whose offset is set to end of file, to an empty file, and to a file deleted after the file descriptor was assigned.

Test case generation
- All combinations of values for the parameter types are generated.
- The number of test cases generated is the product of the number of test values available for each parameter's type.
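Type-driven test case generation of this kind can be sketched with a per-type pool of test values and a Cartesian product over the parameter list. This is an illustration in the spirit of the slides, not the Ballista tool itself: the pools are shortened, `MAXINT` assumes a 32-bit integer, and `int_divide` is a hypothetical function under test.

```python
import math
import sys
from itertools import product

MAXINT = 2**31 - 1  # assumption: 32-bit signed integer

# Shortened test value pools, keyed by parameter type
TEST_VALUES = {
    "int": [0, 1, -1, MAXINT, -MAXINT],
    "float": [0.0, 1.0, -1.0, sys.float_info.min, sys.float_info.max, math.pi, math.e],
}


def generate_test_cases(param_types):
    """All combinations of test values for the given parameter types."""
    return list(product(*(TEST_VALUES[t] for t in param_types)))


def run_robustness(func, param_types):
    """Call func with every generated case and count normal returns vs. exceptions."""
    outcomes = {"ok": 0, "exception": 0}
    for args in generate_test_cases(param_types):
        try:
            func(*args)
            outcomes["ok"] += 1
        except Exception:
            outcomes["exception"] += 1
    return outcomes


def int_divide(a, b):
    """Hypothetical function under test."""
    return a // b


cases = generate_test_cases(["int", "int", "float"])
outcomes = run_robustness(int_divide, ["int", "int"])
```

For the signature (int, int, float) the pools above give 5 × 5 × 7 = 175 cases, which shows how quickly the count grows with the number of parameters.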

Error propagation analysis
- Interface propagation analysis is used, injecting faults at one component.
- This is done at the component integration level.
- A known faulty input is injected into the system using a fault injector.
- Components affected by this input are observed to see how they handle the faulty input.

Performance Testing

Middleware
- An application’s execution and its middleware cannot be divorced in any meaningful way.
- To predict the performance of an application component, the performance of its middleware should be analyzed.

Performance prediction Methodology
Predicting an application’s performance is a three-step process:
1. Obtaining the technology performance.
2. Analyzing architecture-specific behavioral characteristics.
3. Analyzing application-specific behavioral characteristics.

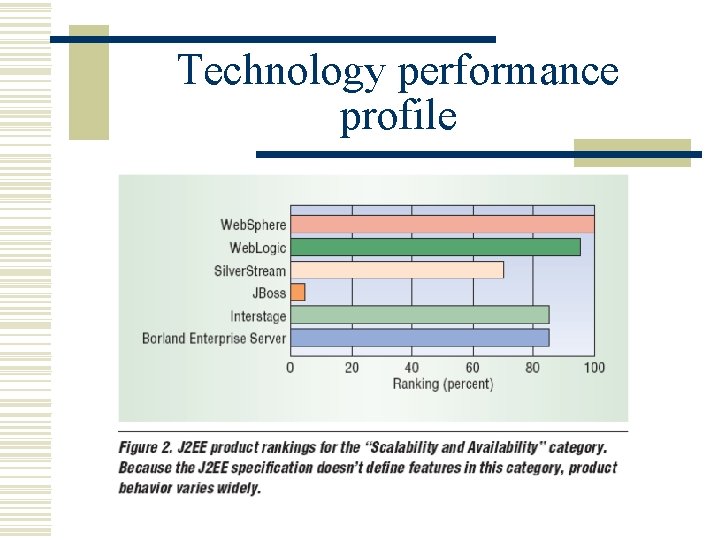

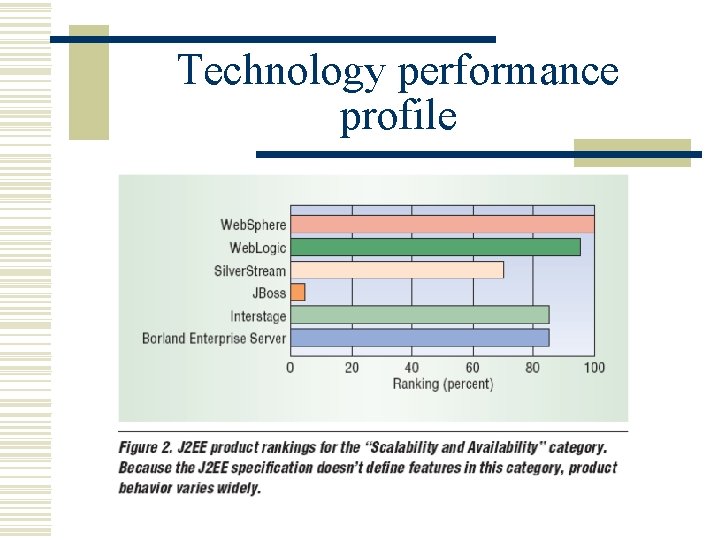

Technology performance profile

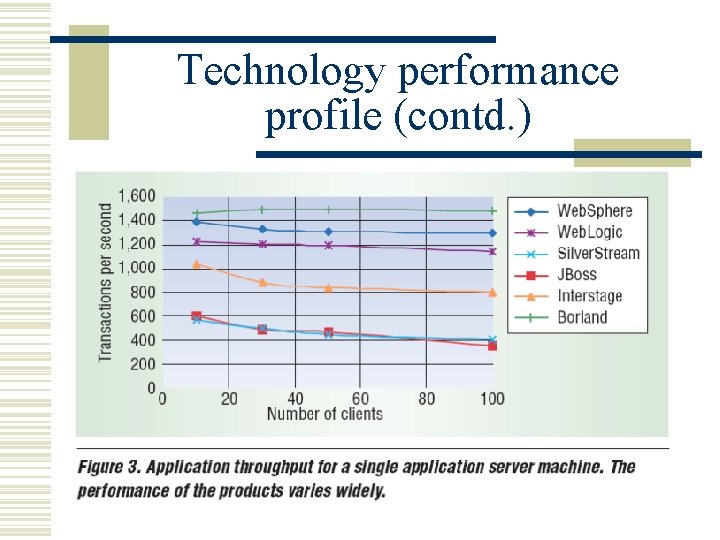

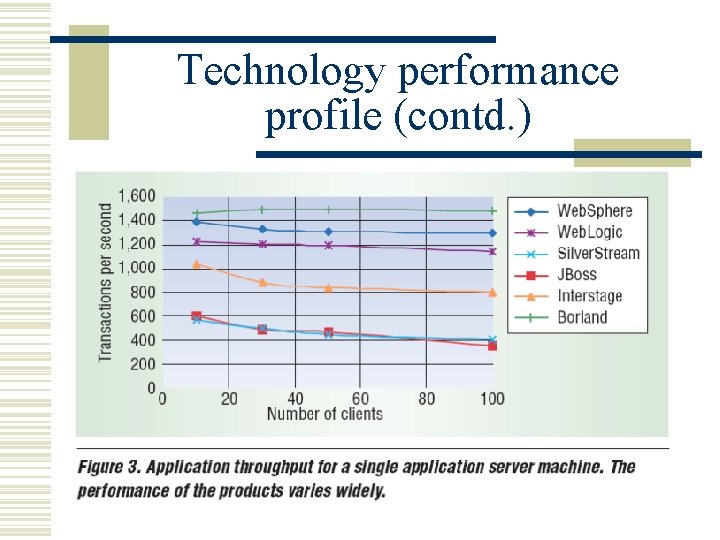

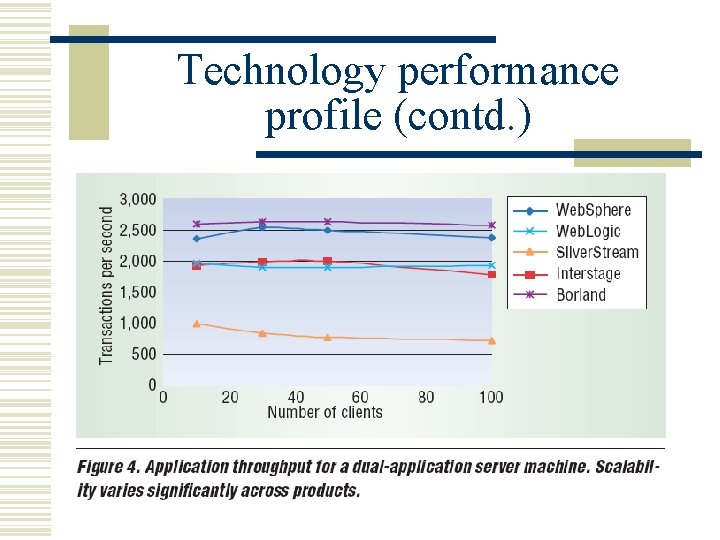

Technology performance profile (contd.)

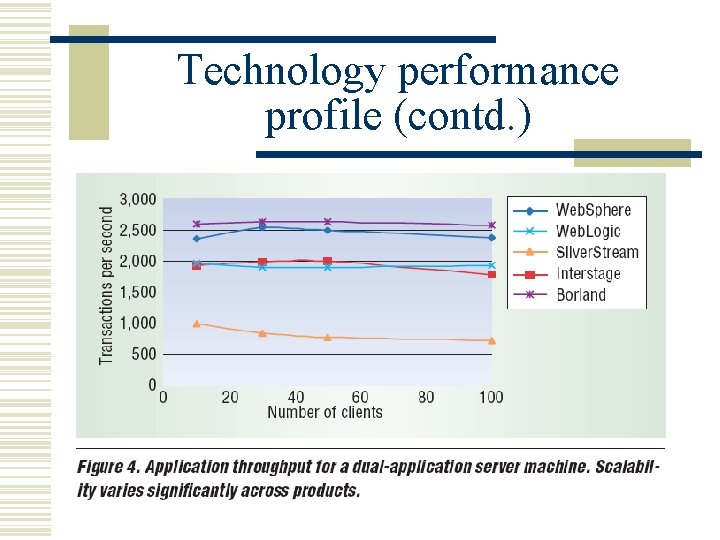

Technology performance profile (contd.)

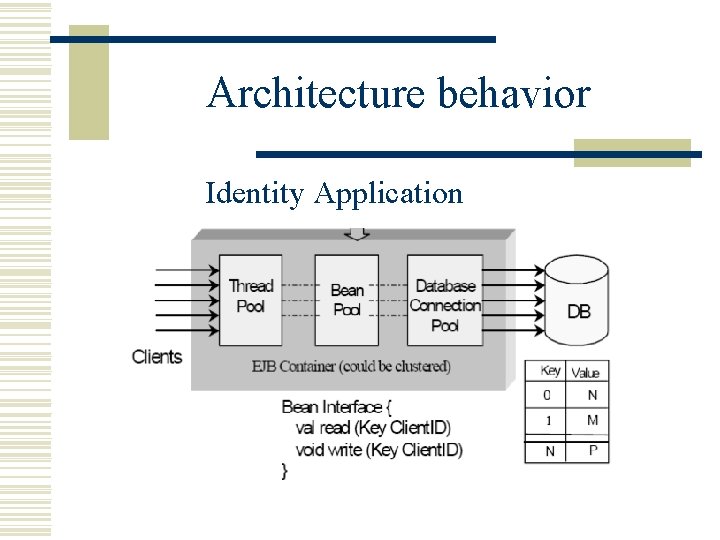

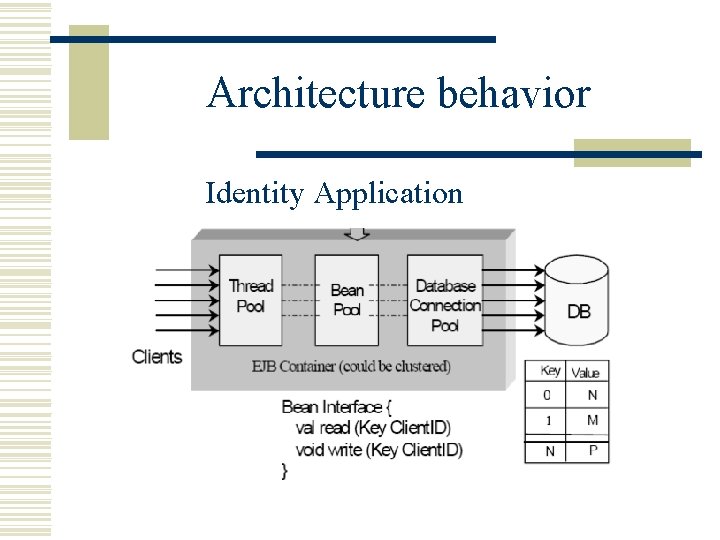

Architecture behavior Identity Application

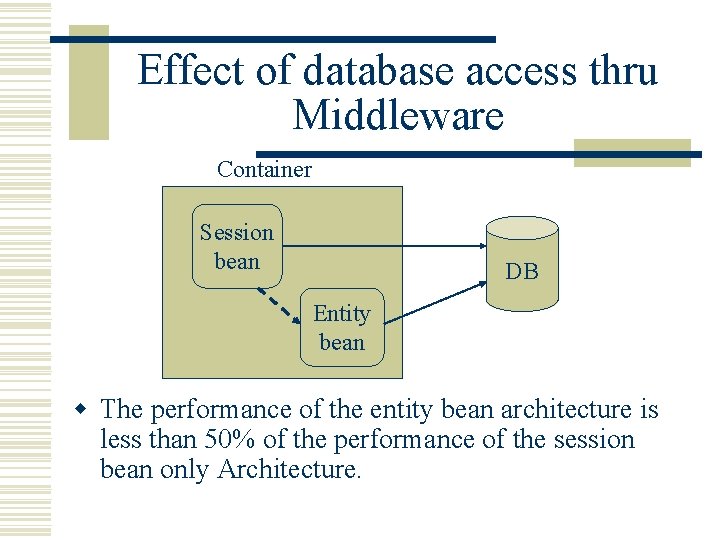

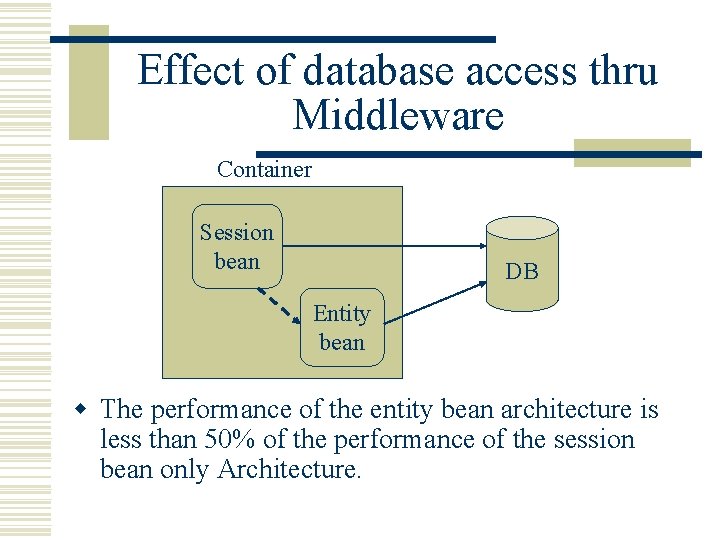

Effect of database access through Middleware
Diagram labels: Container, Session bean, Entity bean, DB.
- The performance of the entity bean architecture is less than 50% of the performance of the session-bean-only architecture.

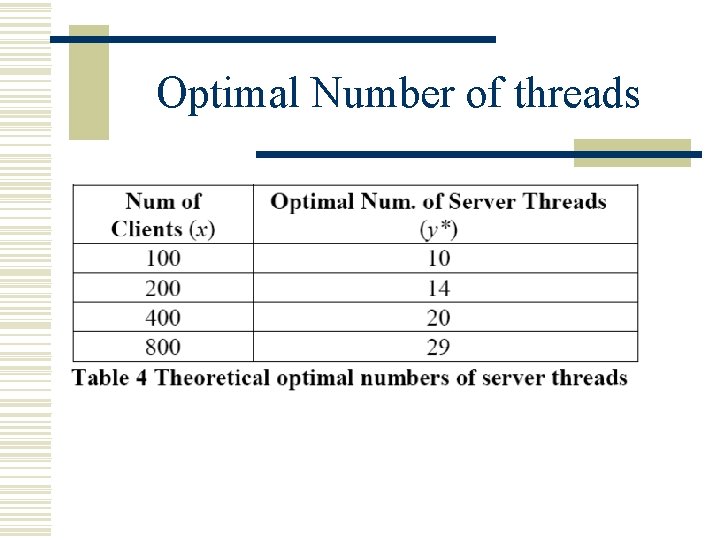

Effect of Server Threads
- Performance increases from 2 threads to 32 threads, stabilizes around 32 to 64 threads, and gradually decreases as more threads are added, due to contention.

The Effect of Client Request Load
- Client response time increases with the concurrent client request rate, due to contention for server threads.

Effect of Database Contention
- Database contention leads to performance that is between 20% and 49%.

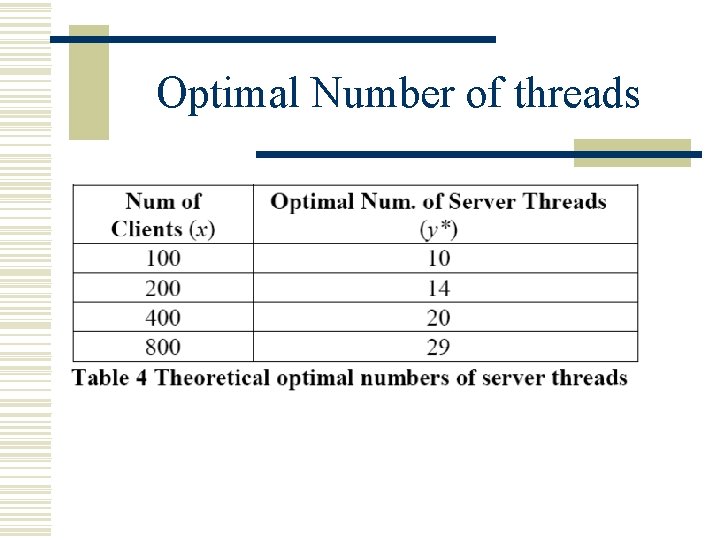

Optimal Number of threads

Load Testing

Load Testing
- It is simply performance testing under various loads.
- Performance is measured as connections per second (CPS), throughput in bytes per second, and round trip time (RTT).

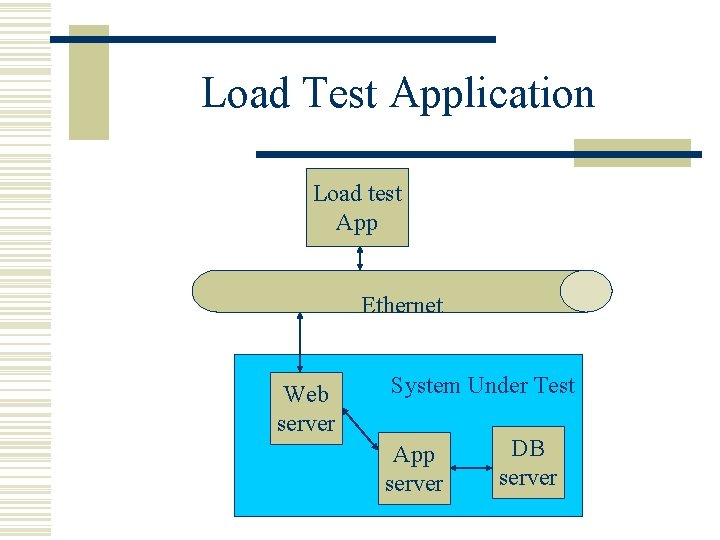

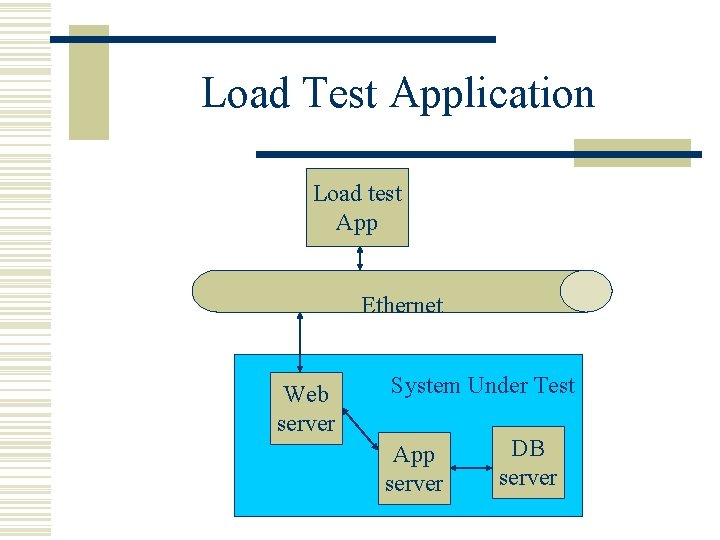

Load Test Application
Diagram labels: Load test app, Ethernet, System under test (Web server, App server, DB server).

Testing strategy
Load tests will be conducted in three phases:
1. Consumption of server resources as a function of the volume of incoming requests will be measured.
2. Response time for sequential requests will be measured.
3. Response time for concurrent client request load will be measured.
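Phases 2 and 3 of this strategy can be sketched with a small timing harness. The `handle_request` function is a hypothetical stand-in for a call to the system under test (a real load test would issue network requests), and phase 1, server resource consumption, is not covered because it has to be measured on the server itself.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(payload):
    """Stand-in for a call to the system under test (hypothetical)."""
    time.sleep(0.001)  # simulated processing delay
    return len(payload)


def timed_call(payload):
    """Return the response time of one request in seconds."""
    start = time.perf_counter()
    handle_request(payload)
    return time.perf_counter() - start


def sequential_response_times(n):
    """Phase 2: issue n requests one after another."""
    return [timed_call("x" * 10) for _ in range(n)]


def concurrent_response_times(n, clients):
    """Phase 3: issue n requests from a pool of concurrent clients."""
    with ThreadPoolExecutor(max_workers=clients) as pool:
        return list(pool.map(timed_call, ["x" * 10] * n))


sequential = sequential_response_times(5)
concurrent = concurrent_response_times(8, clients=4)
mean_sequential = sum(sequential) / len(sequential)
mean_concurrent = sum(concurrent) / len(concurrent)
```

Comparing the two means across increasing client counts is one simple way to see where contention starts to affect response time.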

Security Testing

Security Risks with COTS
- Component design.
- Component procurement.
- Component integration.
- System maintenance.

Component Design
- Inadvertently flawed component design.
- Intentionally flawed component design.
- Excessive component functionality.
- Open or widely spread component design.
- Insufficient or incorrect documentation.

Component integration
- Mismatch between product security levels, e.g. UNIX and CORBA security integration.

System maintenance
- Insecure updating.
- Unexpected side effects.
- Maintenance backdoors.

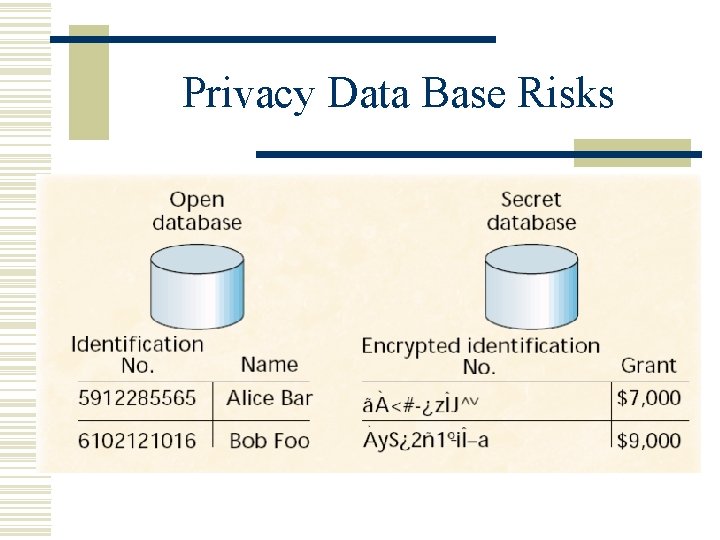

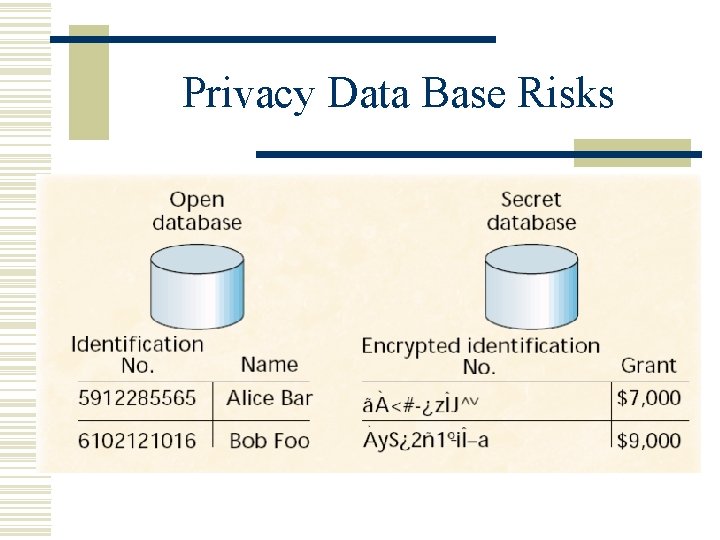

Privacy Data Base Risks

Risks revealed
- Trojan horse in client.
- Information leaking to swap file.
- DBMS log files.
- DBMS ordering of records.

Piracy avoidance techniques
- Hardware and software tokens.
- Dynamic decryption of code.
- Watermarking.
- Code partitioning.

Regression testing for COTS

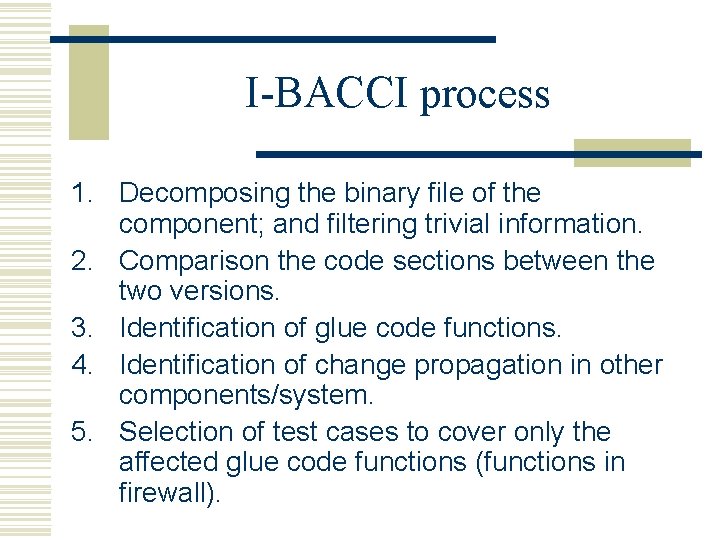

I-BACCI process
1. Decomposing the binary file of the component and filtering trivial information.
2. Comparing the code sections between the two versions.
3. Identification of glue code functions.
4. Identification of change propagation in other components/system.
5. Selection of test cases to cover only the affected glue code functions (functions in the firewall).
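The selection logic of steps 2 to 5 can be sketched on toy data. The real process works on decomposed binaries; here a mapping from function name to a code hash stands in for the binary code sections, and all names, hashes, and coverage data are hypothetical.

```python
# Function name -> hash of its code section, for two component versions
old_version = {"parse": "a1", "render": "b2", "save": "c3"}
new_version = {"parse": "a1", "render": "b9", "save": "c3", "export": "d4"}

# Glue code function -> component functions it calls
glue_calls = {"show_page": {"parse", "render"}, "store_doc": {"save"}}

# Test case -> glue code functions it exercises
test_coverage = {"t1": {"show_page"}, "t2": {"store_doc"}, "t3": {"show_page", "store_doc"}}


def changed_functions(old, new):
    """Functions that were added, removed, or whose code section differs."""
    return {name for name in old.keys() | new.keys() if old.get(name) != new.get(name)}


def affected_glue(changed, glue_calls):
    """Glue code functions that call at least one changed component function."""
    return {glue for glue, callees in glue_calls.items() if callees & changed}


def select_tests(affected, test_coverage):
    """Test cases that cover at least one affected glue code function."""
    return sorted(test for test, covered in test_coverage.items() if covered & affected)


changed = changed_functions(old_version, new_version)
affected = affected_glue(changed, glue_calls)
selected = select_tests(affected, test_coverage)
```

In this toy case only `render` and `export` differ, so only tests touching `show_page` are rerun and `t2` is skipped.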

Black box understanding of COTS

Methods for understanding
- Binary reverse engineering.
- Interface probing.
- Partial automation of interface probing.

Binary reverse engineering
- Derives the design structure (call graph, control graph) from binary code.
- Source code can also be partially extracted using decompilation.
- Decompiled source code will have no comments, and variable names will not be meaningful.
- Licenses typically forbid decompilation back to source code.

Interface probing
- The system developer designs a set of test cases, executes them, and analyzes the outputs.
- Done in an iterative manner.

Disadvantages
- A large number of test cases have to be generated and analyzed.
- Some properties may require significant probing, which may be tedious, labor intensive, and expensive.
- Developers may miss certain limitations and make incorrect assumptions.

Partial Automation of interface probing
- Based on interface probing.
- Test cases are generated based on scenarios.
- Testing is done in three phases:
  1. Scenario description phase.
  2. Search space specification phase.
  3. Test case generation phase.
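The three phases can be sketched as follows, under the assumption that a scenario is a property of the output the developer wants to observe and that generation means searching the specified space for inputs that trigger it. `cots_find` is a hypothetical opaque component, and plain exhaustive enumeration stands in for whatever generation technique a real tool would use.

```python
from itertools import product


def cots_find(text, pattern):
    """Stand-in for an opaque COTS routine (hypothetical); wraps str.find."""
    return text.find(pattern)


# Phase 1: scenario description, a property of the output we want to observe
def scenario_not_found(result):
    return result == -1


# Phase 2: search space specification, candidate values per parameter
search_space = {
    "text": ["", "abc", "abcabc"],
    "pattern": ["", "a", "bc", "x"],
}


# Phase 3: test case generation, here a plain exhaustive enumeration
def probe(component, scenario, space):
    """Return every input combination in the space that triggers the scenario."""
    names = list(space)
    hits = []
    for combo in product(*(space[name] for name in names)):
        if scenario(component(*combo)):
            hits.append(dict(zip(names, combo)))
    return hits


hits = probe(cots_find, scenario_not_found, search_space)
```

The developer then reads the hits to learn how the component behaves, for instance that an empty pattern is never reported as not found.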