Cost-Sensitive Bayesian Network algorithm, Eman Nashnush

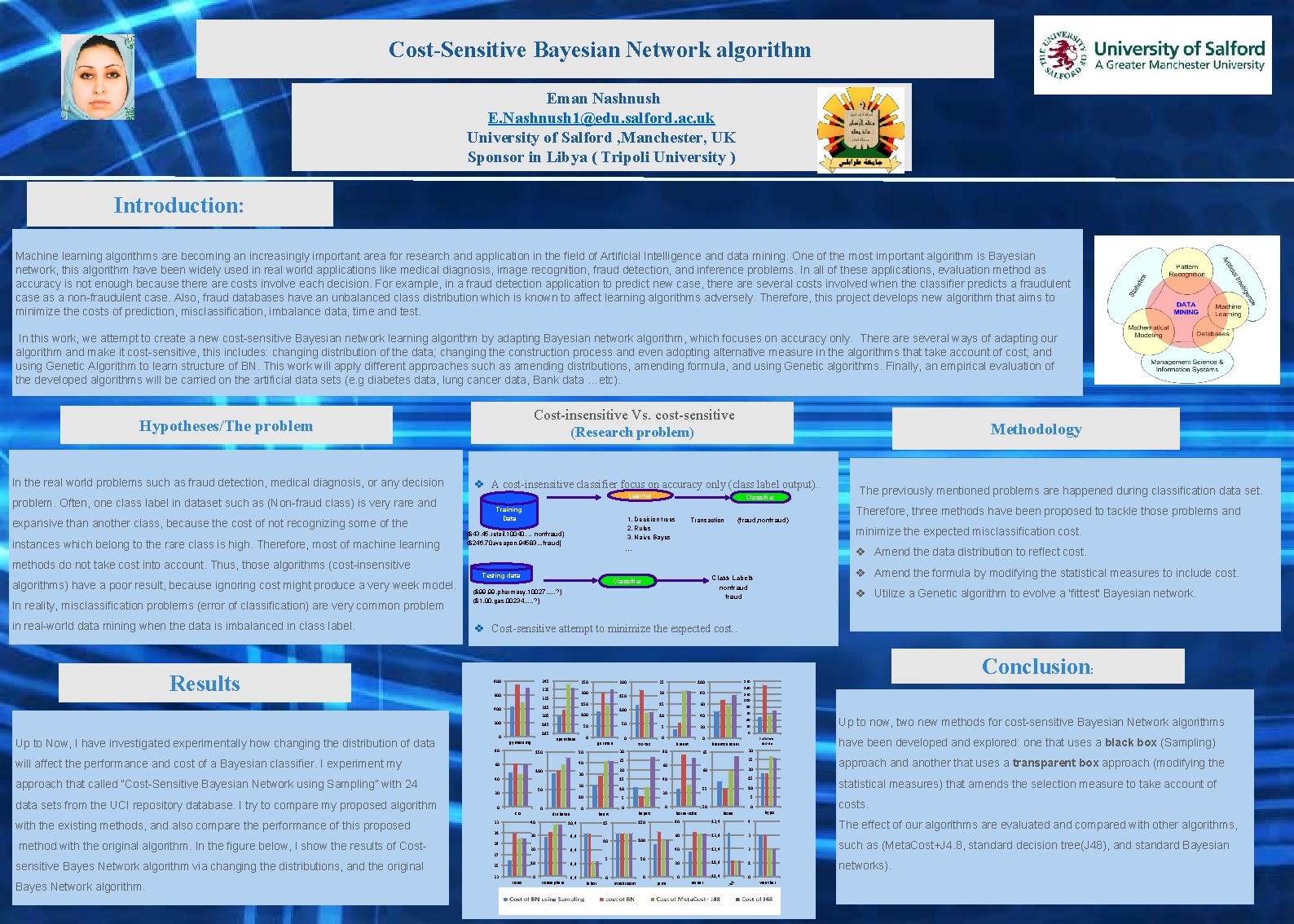

E.Nashnush1@edu.salford.ac.uk, University of Salford, Manchester, UK. Sponsor in Libya (Tripoli University).

Introduction:

Machine learning algorithms are an increasingly important area of research and application in artificial intelligence and data mining. One of the most important is the Bayesian network, which has been widely used in real-world applications such as medical diagnosis, image recognition, fraud detection, and inference problems. In all of these applications, accuracy alone is not a sufficient evaluation measure, because every decision carries a cost. For example, in a fraud detection application, a cost is incurred whenever the classifier predicts a fraudulent case as non-fraudulent. Fraud databases also have an unbalanced class distribution, which is known to affect learning algorithms adversely. This project therefore develops a new algorithm that aims to minimize the costs of prediction, misclassification, imbalanced data, time, and tests.

In this work, we attempt to create a new cost-sensitive Bayesian network learning algorithm by adapting the standard Bayesian network algorithm, which focuses on accuracy only. There are several ways of making the algorithm cost-sensitive: changing the distribution of the data; changing the construction process, including adopting alternative measures that take account of cost; and using a genetic algorithm to learn the structure of the Bayesian network (BN). This work will apply all three approaches: amending the distribution, amending the formula, and using genetic algorithms. Finally, an empirical evaluation of the developed algorithms will be carried out on the artificial data sets (e.g. diabetes data, lung cancer data, bank data, etc.).

Cost-insensitive vs.
cost-sensitive (Research problem)

Hypotheses/The problem

In real-world problems such as fraud detection, medical diagnosis, or any other decision problem, one class label in the data set (for example, the fraud class) is often much rarer and more expensive to misclassify than the other, because the cost of failing to recognize instances of the rare class is high. Misclassification (classification error) is a very common problem in real-world data mining when the class labels are imbalanced, and it arises whenever such a data set is classified. Most machine learning methods do not take cost into account, so these cost-insensitive algorithms give poor results: ignoring cost can produce a very weak model. Three methods have therefore been proposed to tackle these problems and minimize the expected misclassification cost.

Methodology

- A cost-insensitive classifier focuses on accuracy only (class label output).
- A cost-sensitive classifier attempts to minimize the expected cost.

Training data, e.g. ($43.45, retail, 10040, ..., nonfraud) and ($246,70, weapon, 94583, ..., fraud), is passed to a learner (1. decision trees, 2. rules, 3. Naive Bayes), which produces a classifier. The classifier then assigns a class label from {fraud, nonfraud} to each transaction in the testing data, e.g. ($99.99, pharmacy, 10027, ..., ?) and ($1.00, gas, 00234, ..., ?).

The three proposed methods are:

- Amend the data distribution to reflect cost.
- Amend the formula by modifying the statistical measures to include cost.
- Utilize a genetic algorithm to evolve a 'fittest' Bayesian network.

Results

So far, I have investigated experimentally how changing the data distribution affects the performance and cost of a Bayesian classifier. I tested my approach, called "Cost-Sensitive Bayesian Network using Sampling", on 24 data sets from the UCI repository, comparing it with existing methods and with the original algorithm. The figure below shows the results of the cost-sensitive Bayes Network algorithm (via changing the distribution) and of the original Bayes Network algorithm.

[Figure: per-data-set results for the cost-sensitive and the original Bayes Network algorithm. Panel titles: german, crx, iono, heart, ionosphere, labor, breast cancer, bupa liver disorder, hypo, horse, hepati, horse-colic, pima, sonar, mushroom, breast, diabetes, tic-tac, spambase, gymexamg, weather.]

Conclusion:

So far, two new cost-sensitive Bayesian network algorithms have been developed and explored: one that uses a black-box (sampling) approach, and another that uses a transparent-box approach (modifying the statistical measures) that amends the selection measure to take account of costs. The effect of our algorithms is evaluated and compared with other algorithms, such as MetaCost+J4.8, a standard decision tree (J48), and standard Bayesian networks.
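To make the sampling idea and the notion of expected cost concrete, here is a minimal Python sketch. It is not the poster's implementation: the function names `replicate_by_cost` and `min_expected_cost_label`, the toy transactions, the posterior probabilities, and all cost values are assumptions chosen purely for illustration. The first function changes the training distribution by repeating instances in proportion to the cost of misclassifying their class; the second picks the label with the lowest expected cost instead of the most probable one.

```python
from collections import Counter


def replicate_by_cost(instances, miss_cost):
    """Repeat each (features, label) pair in proportion to the cost of
    misclassifying its class (hypothetical helper, illustration only).

    miss_cost maps label -> positive cost of misclassifying that label.
    """
    cheapest = min(miss_cost.values())
    resampled = []
    for features, label in instances:
        # The cheapest class keeps one copy; costlier classes get more.
        copies = max(1, round(miss_cost[label] / cheapest))
        resampled.extend([(features, label)] * copies)
    return resampled


def min_expected_cost_label(posterior, cost):
    """Return the label whose prediction has the lowest expected cost.

    posterior maps label -> P(label | x), e.g. from a Bayesian classifier.
    cost[predicted][actual] is the cost of predicting `predicted`
    when the true class is `actual`.
    """
    def expected_cost(predicted):
        return sum(p * cost[predicted][actual] for actual, p in posterior.items())

    return min(posterior, key=expected_cost)


# Toy, made-up data: 8 non-fraud and 2 fraud transactions.
train = [((43.45, "retail"), "nonfraud")] * 8 + [((246.0, "weapon"), "fraud")] * 2
miss_cost = {"fraud": 4, "nonfraud": 1}  # assumed costs
balanced = replicate_by_cost(train, miss_cost)
class_counts = Counter(label for _, label in balanced)

# Assumed posterior for one new transaction, and an assumed cost matrix.
posterior = {"fraud": 0.2, "nonfraud": 0.8}
cost = {
    "fraud": {"fraud": 0, "nonfraud": 1},      # false alarm costs 1
    "nonfraud": {"fraud": 10, "nonfraud": 0},  # missed fraud costs 10
}
most_probable = max(posterior, key=posterior.get)            # accuracy-only choice
cheapest_decision = min_expected_cost_label(posterior, cost)  # cost-sensitive choice
```

With these assumed numbers the accuracy-only choice is `nonfraud`, while the cost-sensitive choice is `fraud`, because the expected cost of predicting `nonfraud` (0.2 × 10 = 2.0) exceeds that of predicting `fraud` (0.8 × 1 = 0.8). Simple replication was chosen here because it is deterministic; random cost-proportionate sampling would be another option.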
