COSC 121 Computer Systems ISA and Performance Jeremy


COSC 121: Computer Systems. ISA and Performance. Jeremy Bolton, Ph.D., Assistant Teaching Professor. Constructed using materials: - Patt and Patel, Introduction to Computing Systems (2nd ed.) - Patterson and Hennessy, Computer Organization and Design (4th ed.) **A special thanks to Eric Roberts and Mary Jane Irwin

Notes • Project 3 due soon. • Next HW posted soon. • Read PH.1 and PH.4

Outline • ISA and performance • CISC • RISC • Details of Pipelining – Avoiding Hazards (and avoiding stalls) • Data – Stalls and no-ops – Forwarding • Branch – Branch Delay Scheduling – Prediction » Prediction schemes – Unrolling loops

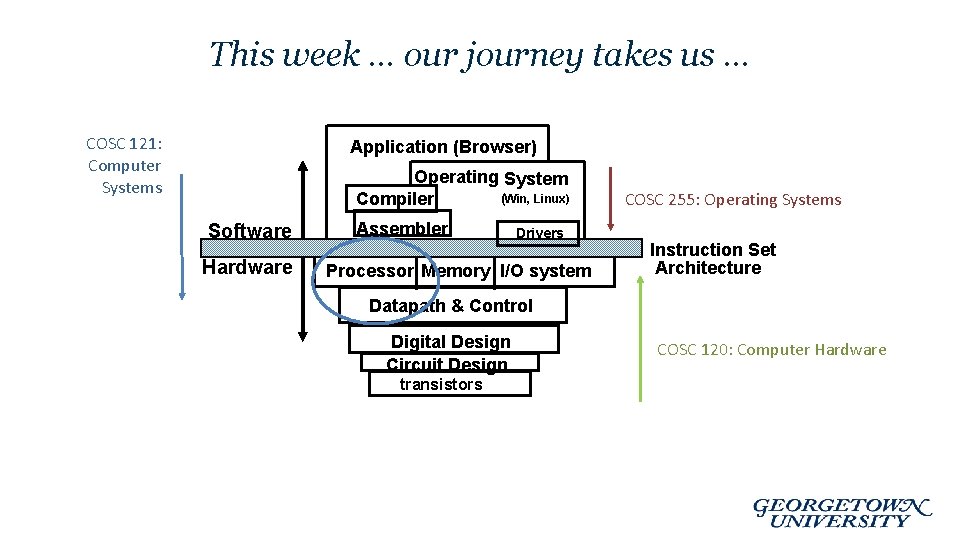

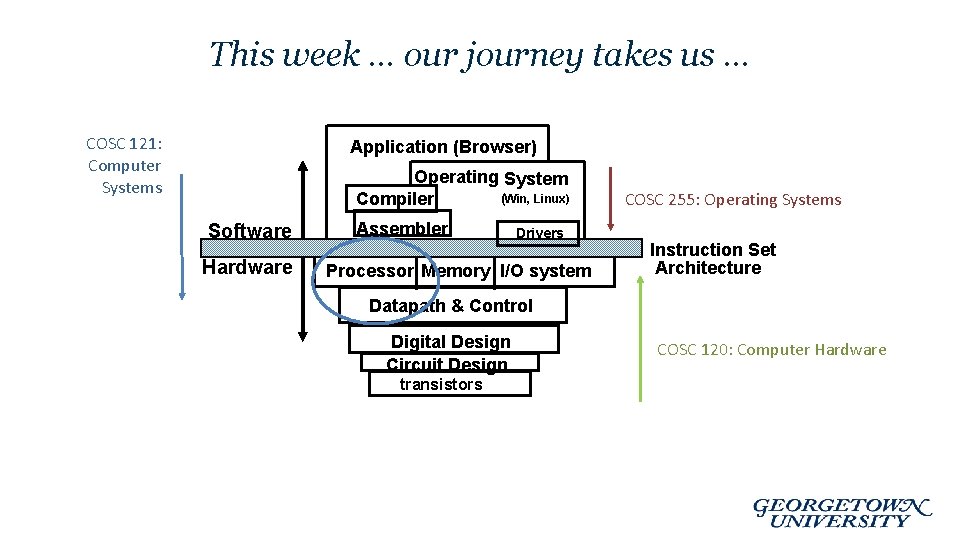

This week … our journey takes us … [Figure: layers of a computer system. Software: Application (Browser), Operating System (Win, Linux), Compiler, Assembler, Drivers. Interface: Instruction Set Architecture. Hardware: Processor, Memory, I/O system, Datapath & Control, Digital Design, Circuit Design, transistors. Course coverage: COSC 255: Operating Systems; COSC 121: Computer Systems; COSC 120: Computer Hardware]

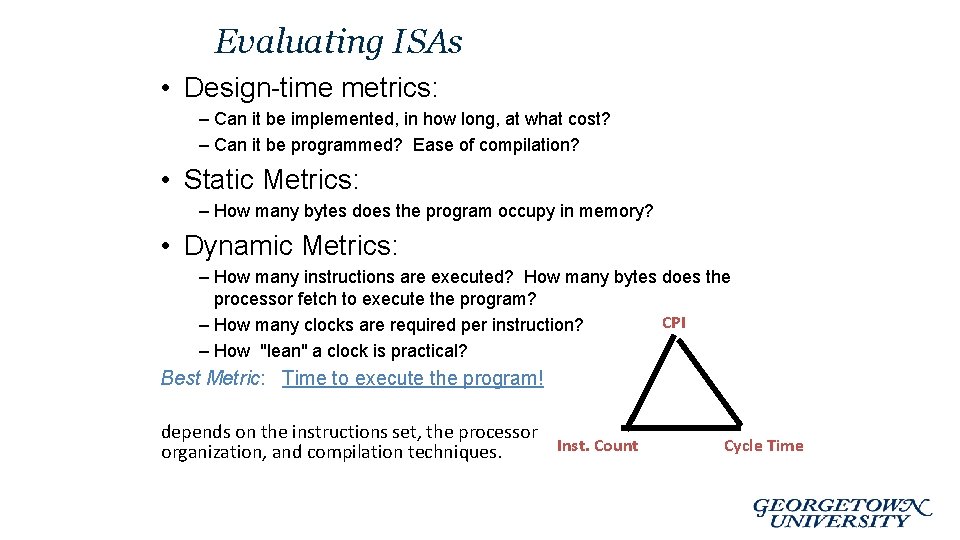

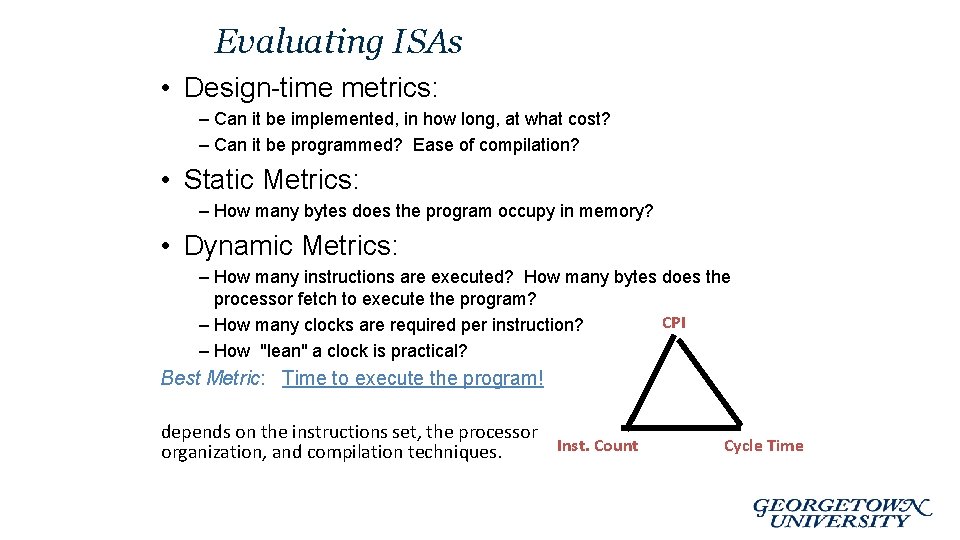

Evaluating ISAs • Design-time metrics: – Can it be implemented, in how long, at what cost? – Can it be programmed? Ease of compilation? • Static Metrics: – How many bytes does the program occupy in memory? • Dynamic Metrics: – How many instructions are executed? How many bytes does the processor fetch to execute the program? – How many clocks are required per instruction? – How "lean" a clock is practical? • Best Metric: Time to execute the program! This depends on the instruction set, the processor organization, and compilation techniques. [Figure labels: Inst. Count, CPI, Cycle Time]
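The three figure labels on this slide combine in the usual performance equation: time = instruction count × CPI × cycle time. The sketch below only illustrates that arithmetic. The function name `cpu_time_ps` and all of the numbers are made up for illustration and do not come from the slides.

```python
def cpu_time_ps(inst_count: int, cpi: float, cycle_time_ps: float) -> float:
    """Execution time in picoseconds: instructions x cycles/instruction x ps/cycle."""
    return inst_count * cpi * cycle_time_ps

# Hypothetical comparison: a program built from few, slow instructions
# versus the same task built from more, faster instructions.
few_complex = cpu_time_ps(inst_count=1_000, cpi=4.0, cycle_time_ps=500)
many_simple = cpu_time_ps(inst_count=3_000, cpi=1.5, cycle_time_ps=400)

print(few_complex, many_simple)
```

With these invented inputs the program with three times as many instructions still finishes sooner, which is why instruction count alone is not a sufficient metric.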

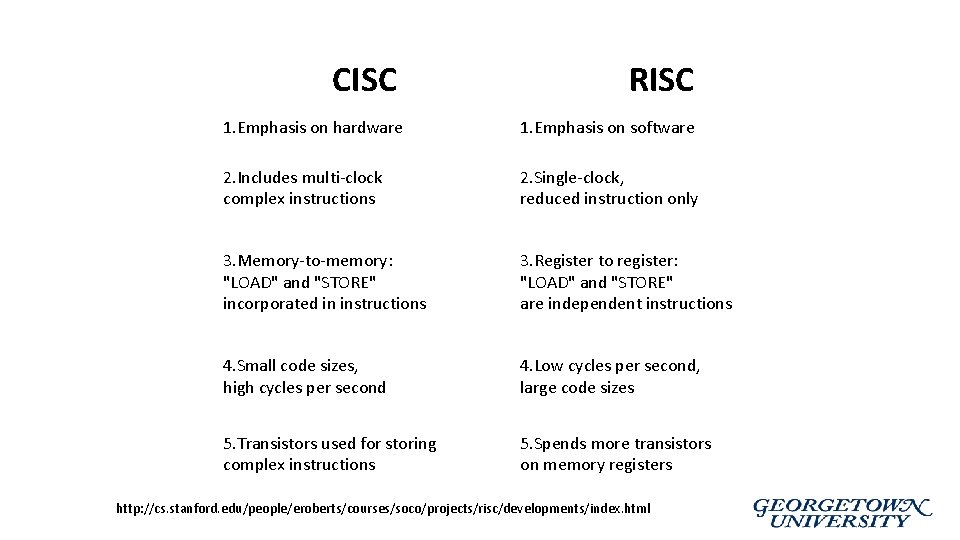

RISC vs CISC • Ideologies for ISA design • Two extremes: – Build very complex instructions that can execute multiple or complex operations as 1 instruction (CISC) – Build very simple instructions that execute quickly (RISC)

CISC Architecture • The simplest way to examine the advantages and disadvantages of RISC architecture is by contrasting it with its predecessor: CISC (Complex Instruction Set Computers) architecture.

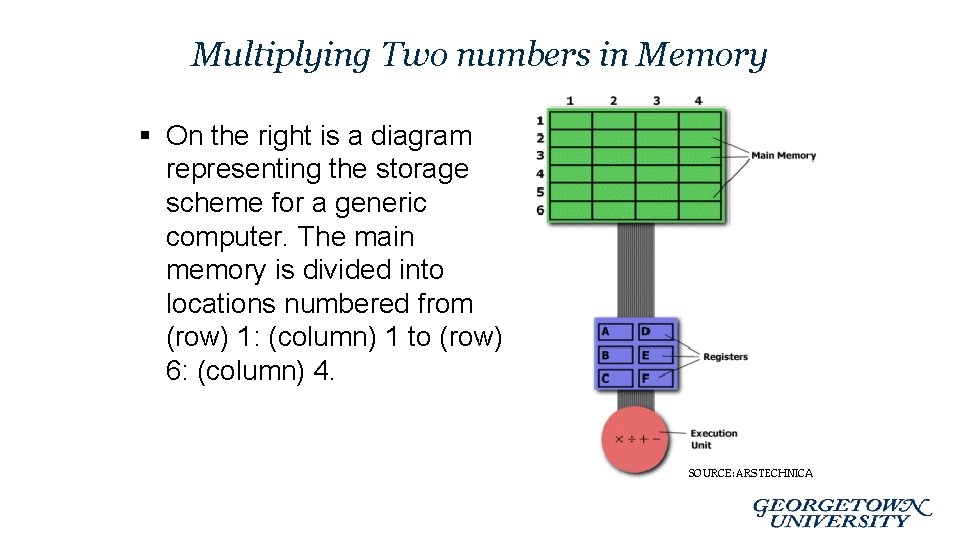

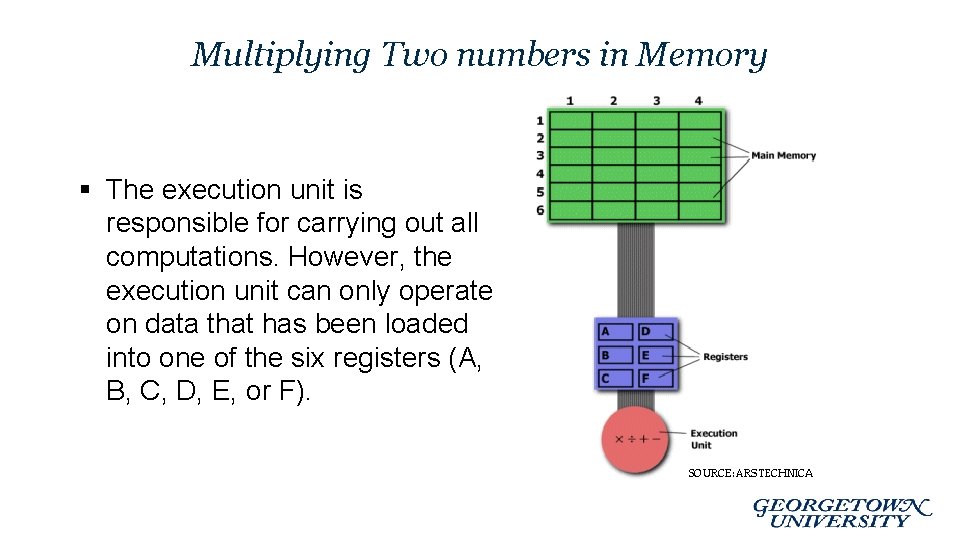

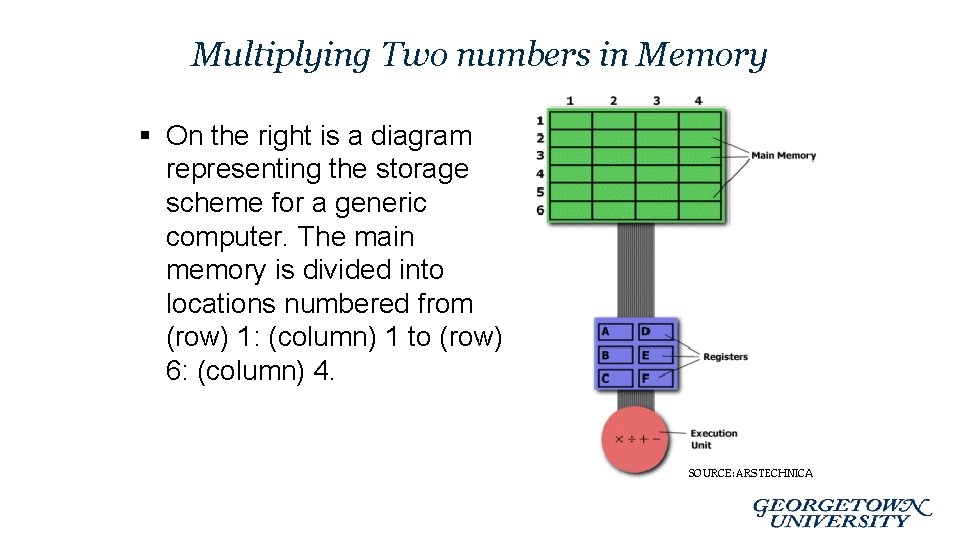

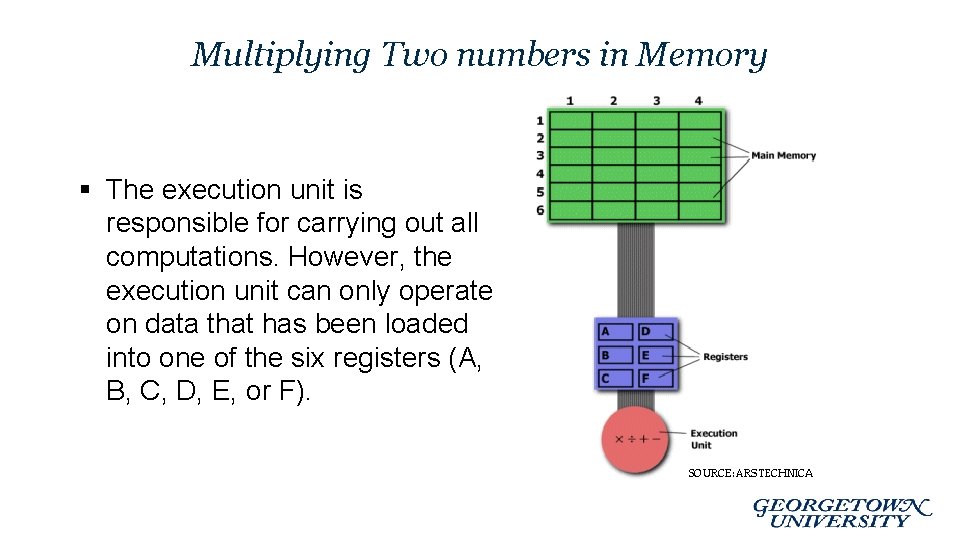

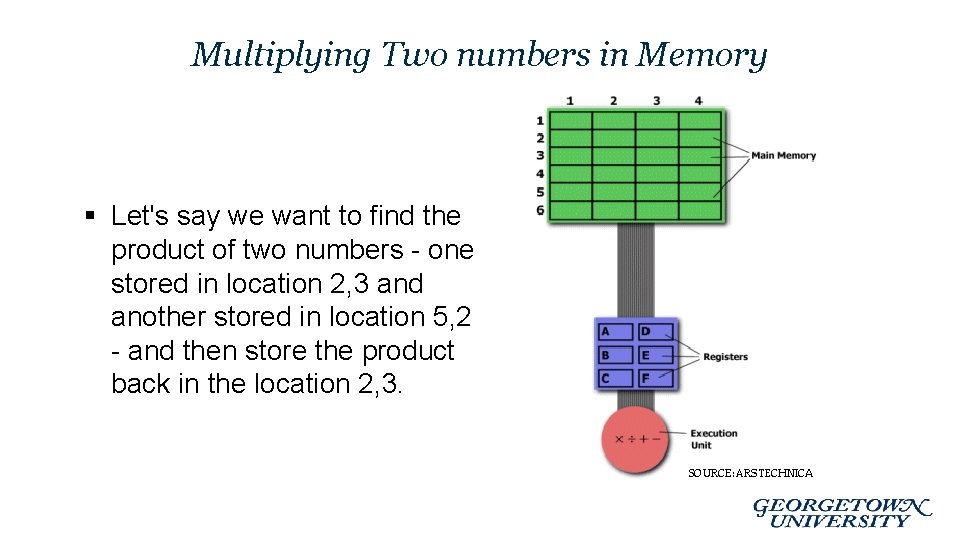

Multiplying Two numbers in Memory § On the right is a diagram representing the storage scheme for a generic computer. The main memory is divided into locations numbered from (row) 1: (column) 1 to (row) 6: (column) 4.

Multiplying Two numbers in Memory § The execution unit is responsible for carrying out all computations. However, the execution unit can only operate on data that has been loaded into one of the six registers (A, B, C, D, E, or F).

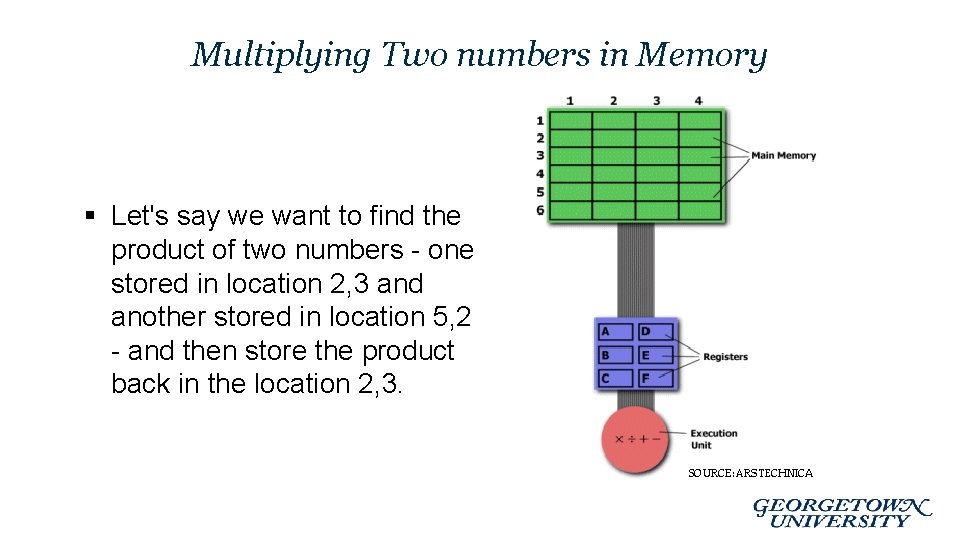

Multiplying Two numbers in Memory § Let's say we want to find the product of two numbers, one stored in location 2:3 and another stored in location 5:2, and then store the product back in location 2:3.

The CISC Approach • The primary goal of CISC architecture is to complete a task in as few lines of assembly as possible. • This is achieved by building processor hardware that is capable of understanding and executing a series of operations.

The CISC Approach • For this particular task, a CISC processor would come prepared with a specific instruction (we'll call it "MULT"). • When executed, this instruction loads the two values into separate registers, multiplies the operands in the execution unit, and then stores the product in the appropriate register. • Thus, the entire task of multiplying two numbers can be completed with one instruction: MULT 2:3, 5:2

The CISC Approach • MULT is what is known as a "complex instruction." • It operates directly on the computer's memory banks and does not require the programmer to explicitly call any loading or storing functions. • It closely resembles a command in a higher level language. • For instance, if we let "a" represent the value of 2:3 and "b" represent the value of 5:2, then this command is identical to the C statement "a = a * b."

The CISC Approach • One of the primary advantages of this system is that the compiler has to do very little work to translate a high-level language statement into assembly. • Because the length of the code is relatively short, very little RAM is required to store instructions. • The emphasis is put on building complex instructions directly into the hardware.

The RISC Approach • RISC processors only use simple instructions that can be executed within one clock cycle (amortized via pipelining). • Thus, the "MULT" command described above could be divided into three separate commands: "LOAD," which moves data from the memory bank to a register, "PROD," which finds the product of two operands located within the registers, and "STORE," which moves data from a register to the memory banks.

The RISC Approach • In order to perform the exact series of steps described in the CISC approach, a programmer would need to code four lines of assembly: LOAD A, 2:3; LOAD B, 5:2; PROD A, B; STORE 2:3, A
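The contrast between the single MULT instruction and the four-instruction sequence can be sketched with a toy model of the slide's generic machine. Everything below is illustrative: the dictionaries, the function names, and the stored values 6 and 7 are assumptions, and the model says nothing about timing.

```python
# Toy model: memory cells addressed "row:col", six registers A-F.
memory = {"2:3": 6, "5:2": 7}          # hypothetical operand values
registers = {name: 0 for name in "ABCDEF"}

def load(reg, addr):
    """LOAD: copy a memory cell into a register."""
    registers[reg] = memory[addr]

def prod(dst, src):
    """PROD: multiply two registers, leaving the product in dst."""
    registers[dst] = registers[dst] * registers[src]

def store(addr, reg):
    """STORE: copy a register back into a memory cell."""
    memory[addr] = registers[reg]

def mult(addr_a, addr_b):
    """CISC-style MULT: one instruction that loads, multiplies, and stores."""
    load("A", addr_a)
    load("B", addr_b)
    prod("A", "B")
    store(addr_a, "A")

# RISC-style: the programmer (or compiler) issues the four steps explicitly.
load("A", "2:3")
load("B", "5:2")
prod("A", "B")
store("2:3", "A")
risc_result = memory["2:3"]

# CISC-style: reset the operand, then issue a single complex instruction.
memory["2:3"] = 6
mult("2:3", "5:2")
cisc_result = memory["2:3"]

print(risc_result, cisc_result)
```

Both paths leave the same product in location 2:3. The difference is where the sequencing lives: inside the hardware instruction for CISC, in the instruction stream for RISC.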

The RISC Approach • At first, this may seem like a much less efficient way of completing the operation. • Because there are more lines of code, more RAM is needed to store the assembly level instructions. • The compiler must also perform more work to convert a high-level language statement into code of this form.

The RISC Approach • …However, the RISC strategy also brings some very important advantages. • Because each instruction requires only one clock cycle to execute, the entire program will execute in approximately the same amount of time as the multi-cycle "MULT" command. • These RISC "reduced instructions" require fewer transistors of hardware space than the complex instructions, leaving more room for general purpose registers. • Because all of the instructions execute in a uniform amount of time (i.e., one clock), pipelining is possible and effective.

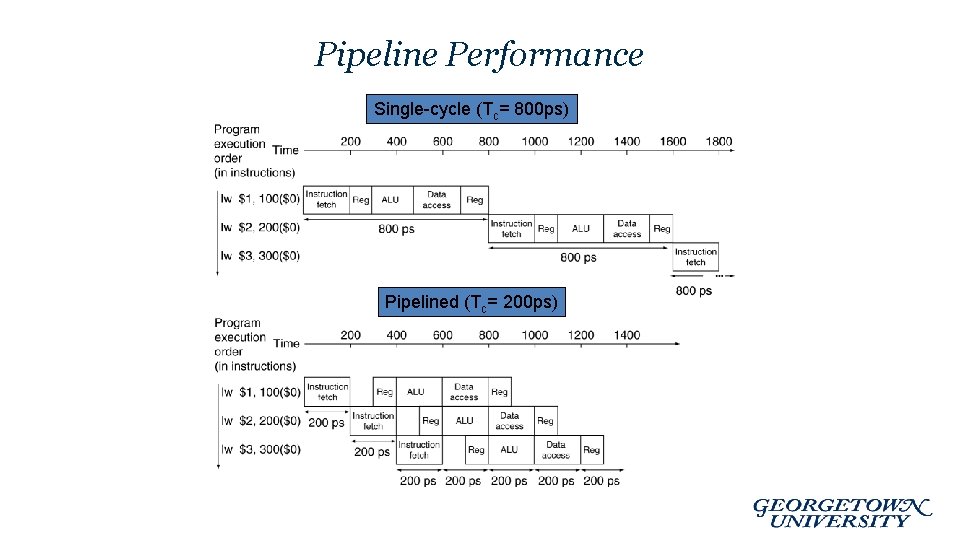

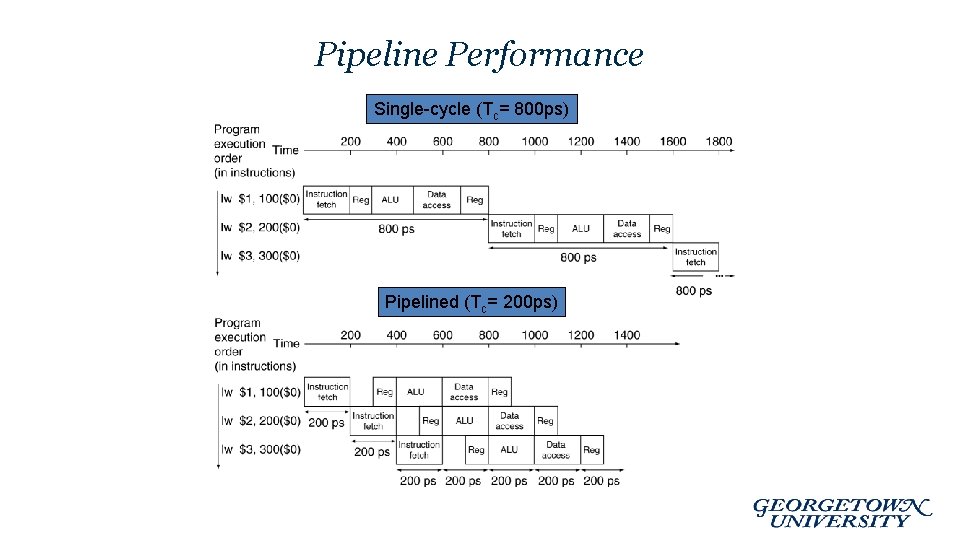

Pipeline Performance • Single-cycle (Tc = 800 ps) • Pipelined (Tc = 200 ps)

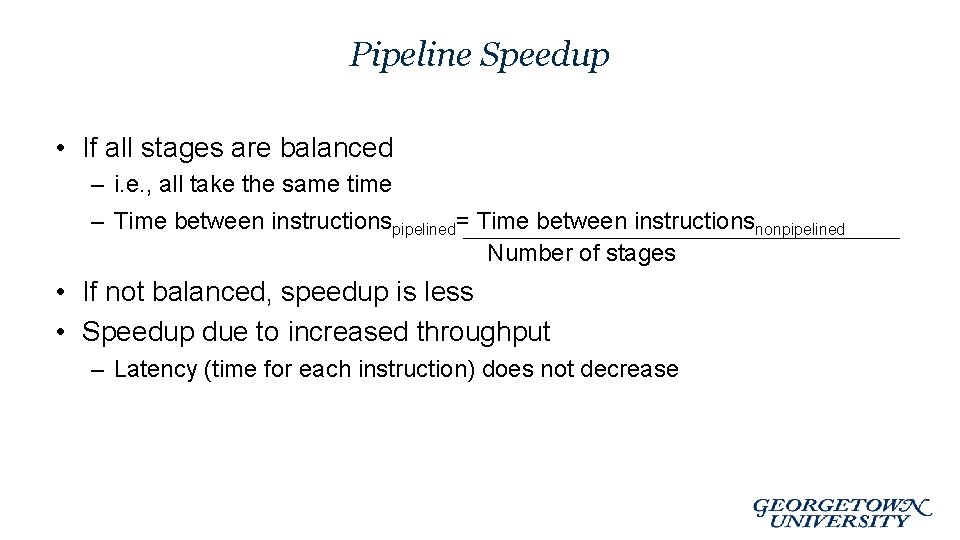

Pipeline Speedup • If all stages are balanced – i.e., all take the same time – Time between instructions (pipelined) = Time between instructions (nonpipelined) / Number of stages • If not balanced, speedup is less • Speedup due to increased throughput – Latency (time for each instruction) does not decrease
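As a rough check of these claims, the sketch below uses the two clock periods from the Pipeline Performance slide (800 ps single-cycle, 200 ps pipelined). The five-stage depth and the function names are assumptions made for illustration. With unbalanced stages the speedup approaches 800/200 = 4 rather than the number of stages.

```python
def total_time_ps(n_instr: int, cycle_ps: int, stages: int) -> int:
    """Time to finish n_instr instructions; stages=1 models the single-cycle design.

    The first instruction needs `stages` cycles; each later one completes
    one cycle after its predecessor.
    """
    return (n_instr + stages - 1) * cycle_ps

def speedup(n_instr: int, single_cycle_ps: int = 800,
            pipelined_ps: int = 200, stages: int = 5) -> float:
    """Ratio of single-cycle time to pipelined time for the same program."""
    unpipelined = total_time_ps(n_instr, single_cycle_ps, 1)
    pipelined = total_time_ps(n_instr, pipelined_ps, stages)
    return unpipelined / pipelined

for n in (3, 1_000, 1_000_000):
    print(n, round(speedup(n), 2))
```

Short programs see much less than 4x because the time to fill the pipeline dominates. Each individual instruction still takes 5 × 200 ps = 1000 ps, so latency does not improve.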

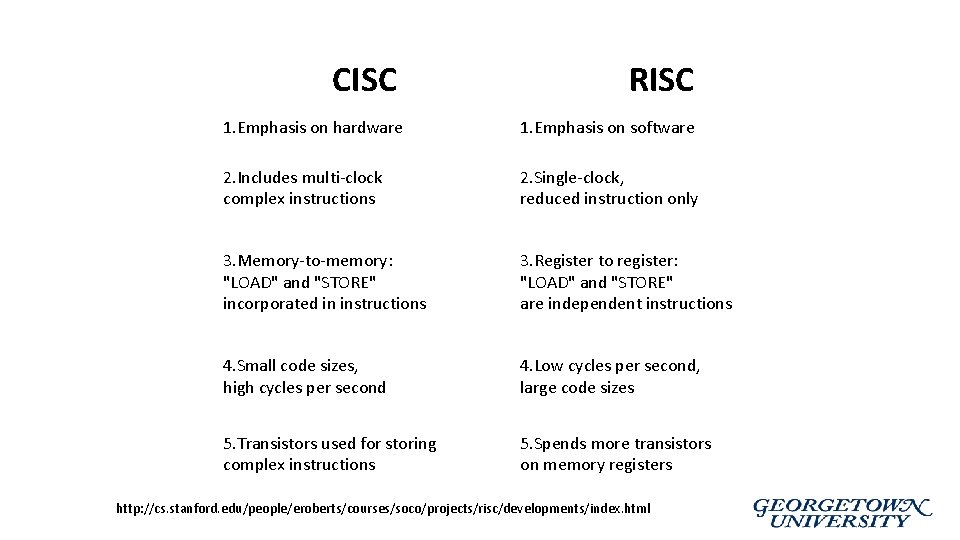

| | CISC | RISC |
|---|---|---|
| 1 | Emphasis on hardware | Emphasis on software |
| 2 | Includes multi-clock complex instructions | Single-clock, reduced instruction only |
| 3 | Memory-to-memory: "LOAD" and "STORE" incorporated in instructions | Register to register: "LOAD" and "STORE" are independent instructions |
| 4 | Small code sizes, high cycles per second | Low cycles per second, large code sizes |
| 5 | Transistors used for storing complex instructions | Spends more transistors on memory registers |

Source: http://cs.stanford.edu/people/eroberts/courses/soco/projects/risc/developments/index.html

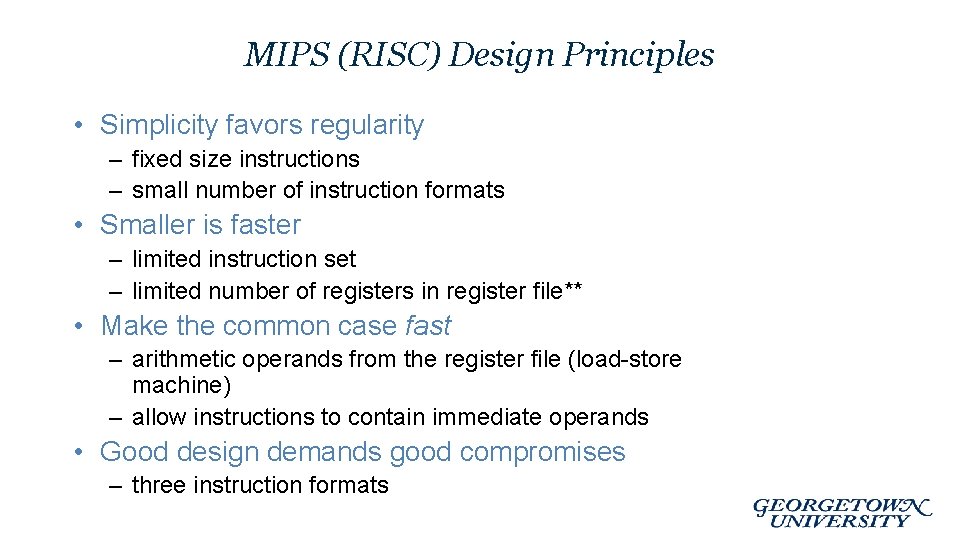

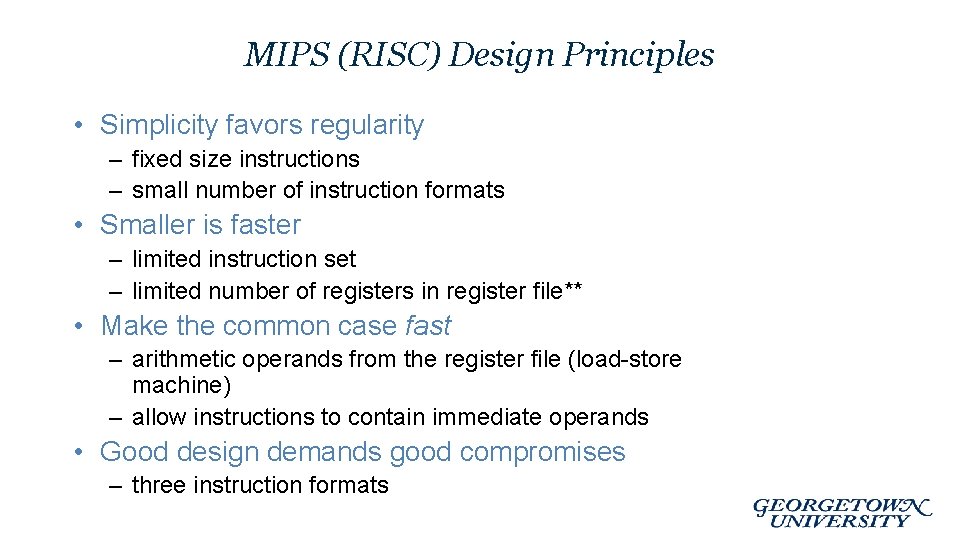

MIPS (RISC) Design Principles • Simplicity favors regularity – fixed size instructions – small number of instruction formats • Smaller is faster – limited instruction set – limited number of registers in register file** • Make the common case fast – arithmetic operands from the register file (load-store machine) – allow instructions to contain immediate operands • Good design demands good compromises – three instruction formats

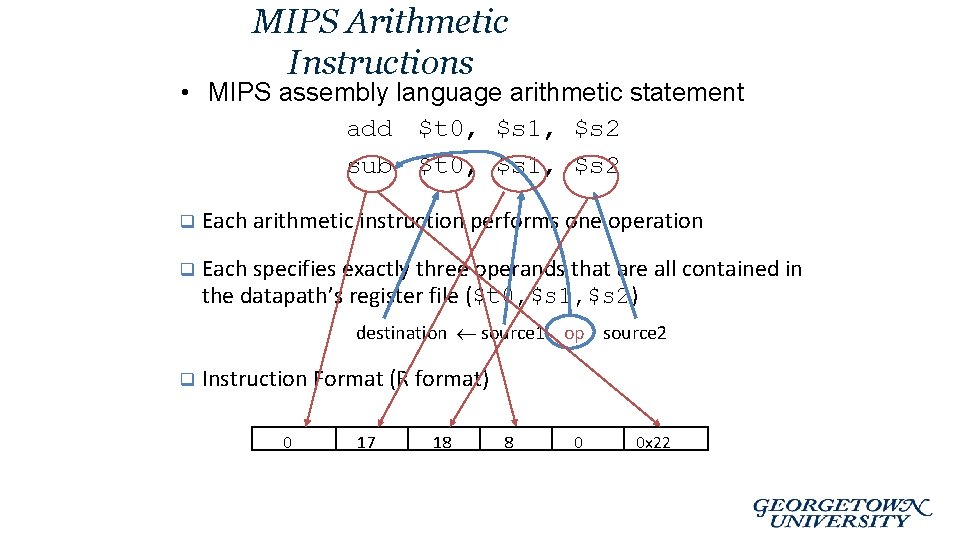

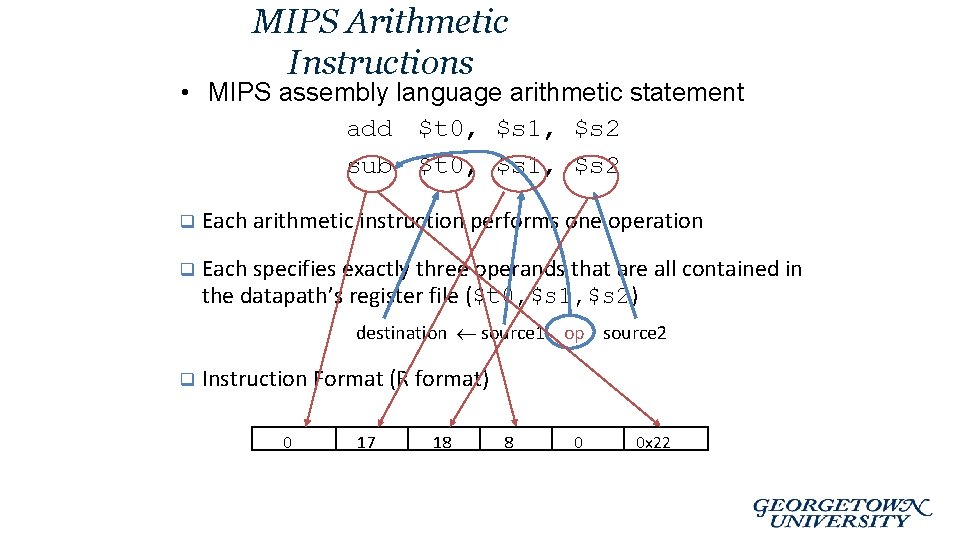

MIPS Arithmetic Instructions • MIPS assembly language arithmetic statements: add $t0, $s1, $s2 and sub $t0, $s1, $s2 • Each arithmetic instruction performs one operation • Each specifies exactly three operands that are all contained in the datapath’s register file ($t0, $s1, $s2): destination = source1 op source2 • Instruction Format (R format), shown for sub $t0, $s1, $s2: 0 | 17 | 18 | 8 | 0 | 0x22

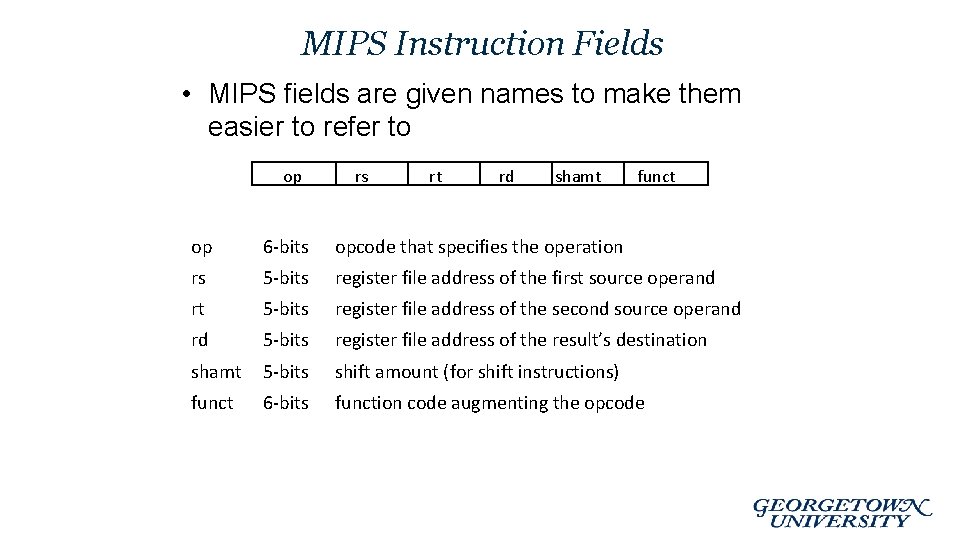

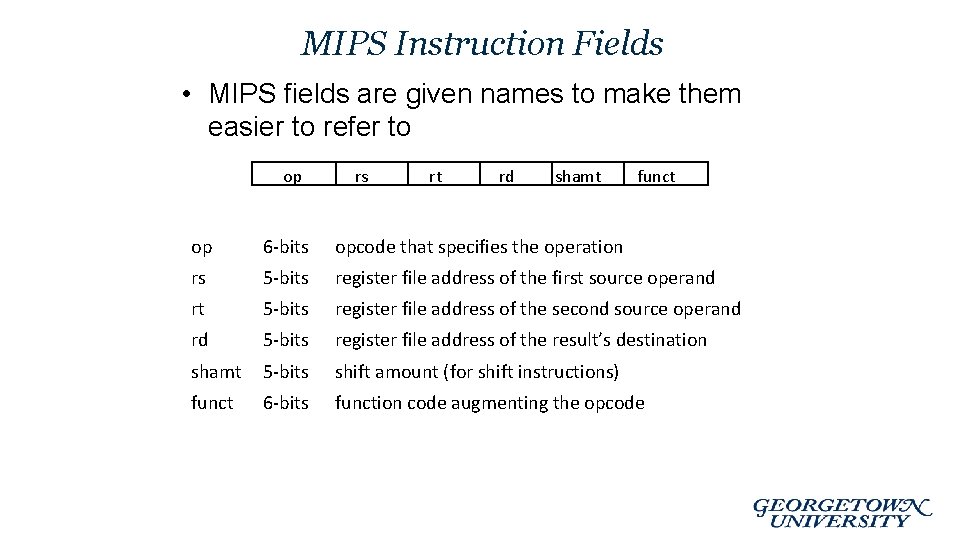

MIPS Instruction Fields • MIPS fields are given names to make them easier to refer to: op | rs | rt | rd | shamt | funct – op: 6 bits, opcode that specifies the operation – rs: 5 bits, register file address of the first source operand – rt: 5 bits, register file address of the second source operand – rd: 5 bits, register file address of the result’s destination – shamt: 5 bits, shift amount (for shift instructions) – funct: 6 bits, function code augmenting the opcode
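The field widths above are enough to pack an R-format instruction into a 32-bit word. The following is a minimal sketch: the function name `encode_r` is hypothetical, and the field values are the ones shown on the R-format slide (op 0, rs 17, rt 18, rd 8, shamt 0, funct 0x22).

```python
def encode_r(op: int, rs: int, rt: int, rd: int, shamt: int, funct: int) -> int:
    """Pack the six R-format fields into one 32-bit word, op in the top bits."""
    fields = [(op, 6), (rs, 5), (rt, 5), (rd, 5), (shamt, 5), (funct, 6)]
    word = 0
    for value, width in fields:
        if not 0 <= value < (1 << width):
            raise ValueError(f"value {value} does not fit in {width} bits")
        # Shift earlier fields left and append this field in the low bits.
        word = (word << width) | value
    return word

# sub $t0, $s1, $s2  ->  rs = $s1 (17), rt = $s2 (18), rd = $t0 (8)
sub_word = encode_r(op=0, rs=17, rt=18, rd=8, shamt=0, funct=0x22)
print(f"{sub_word:#010x}")
```

The widths sum to 32 bits, which is what makes the fixed-size instruction format possible.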

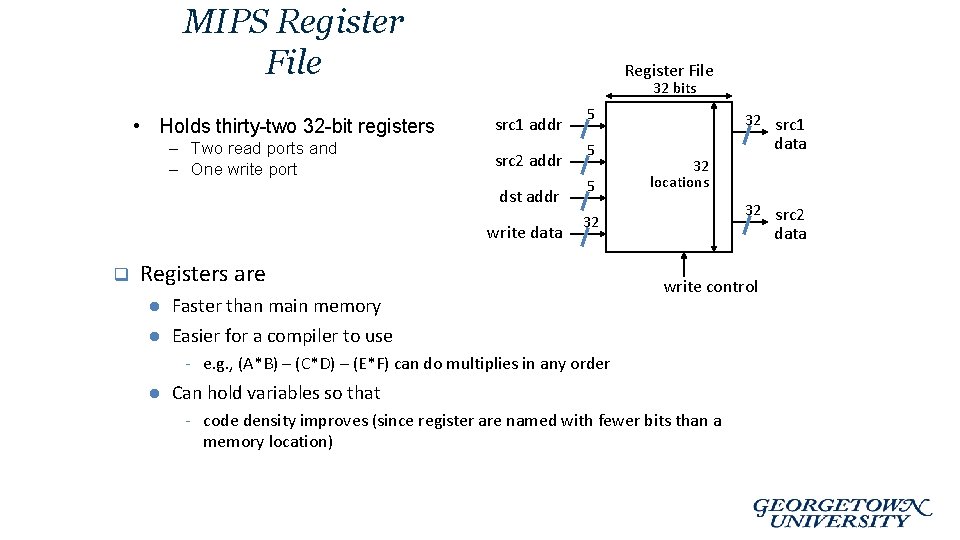

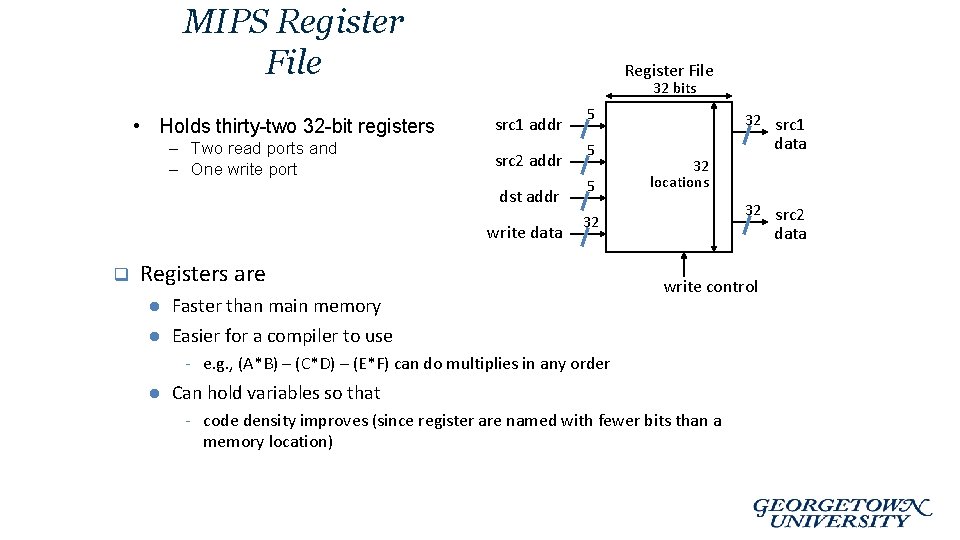

MIPS Register File • Holds thirty-two 32-bit registers – Two read ports and – One write port • Registers are – Faster than main memory – Easier for a compiler to use, e.g., (A*B) – (C*D) – (E*F) can do multiplies in any order – Can hold variables so that code density improves (since registers are named with fewer bits than a memory location) [Figure: Register File with 32 locations of 32 bits; 5-bit src1 addr, src2 addr, and dst addr inputs; 32-bit write data input; write control; 32-bit src1 data and src2 data outputs]

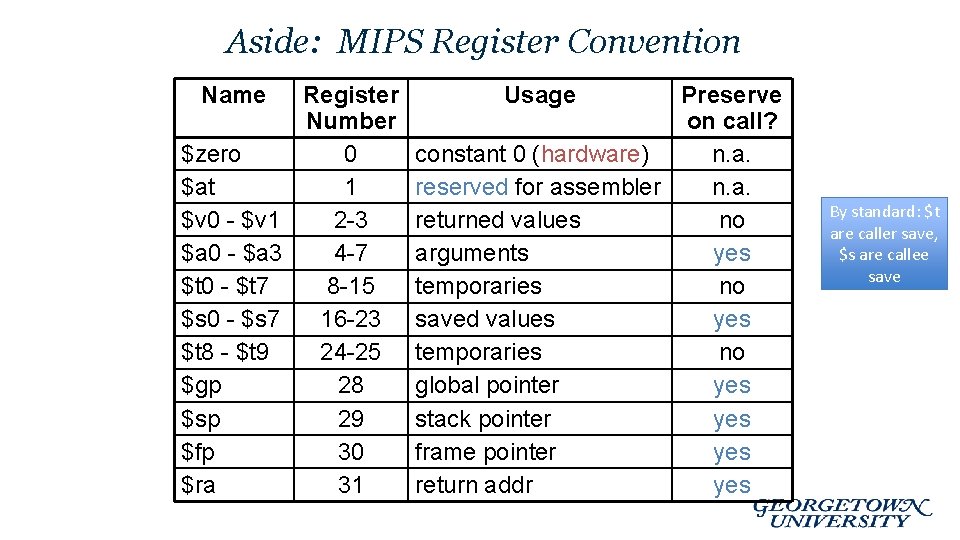

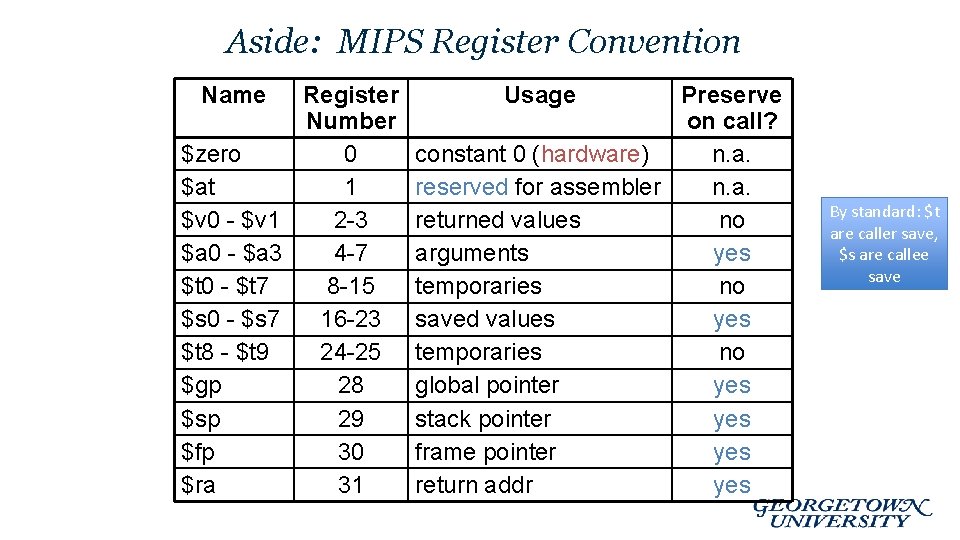

Aside: MIPS Register Convention

| Name | Register Number | Usage | Preserve on call? |
|---|---|---|---|
| $zero | 0 | constant 0 (hardware) | n.a. |
| $at | 1 | reserved for assembler | n.a. |
| $v0 - $v1 | 2-3 | returned values | no |
| $a0 - $a3 | 4-7 | arguments | yes |
| $t0 - $t7 | 8-15 | temporaries | no |
| $s0 - $s7 | 16-23 | saved values | yes |
| $t8 - $t9 | 24-25 | temporaries | no |
| $gp | 28 | global pointer | yes |
| $sp | 29 | stack pointer | yes |
| $fp | 30 | frame pointer | yes |
| $ra | 31 | return addr | yes |

By standard: $t are caller save, $s are callee save

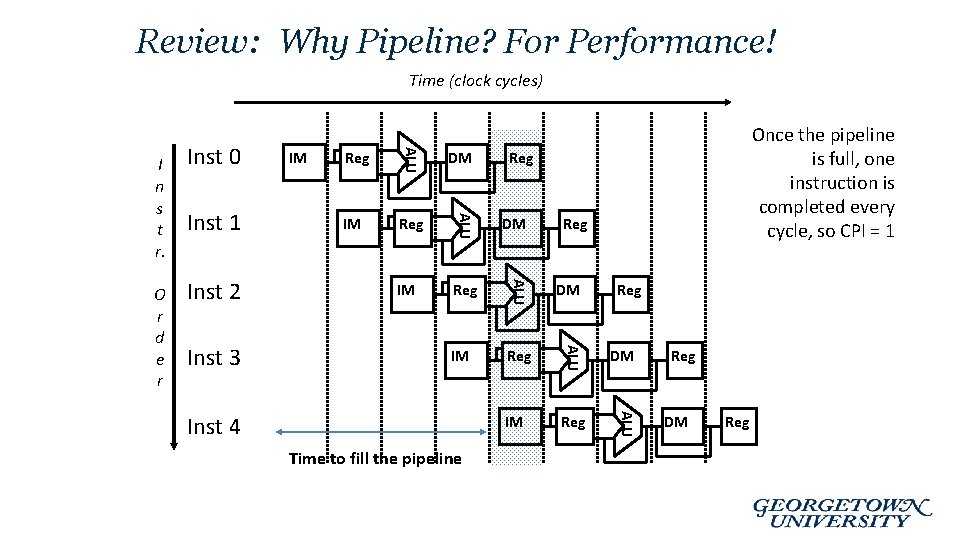

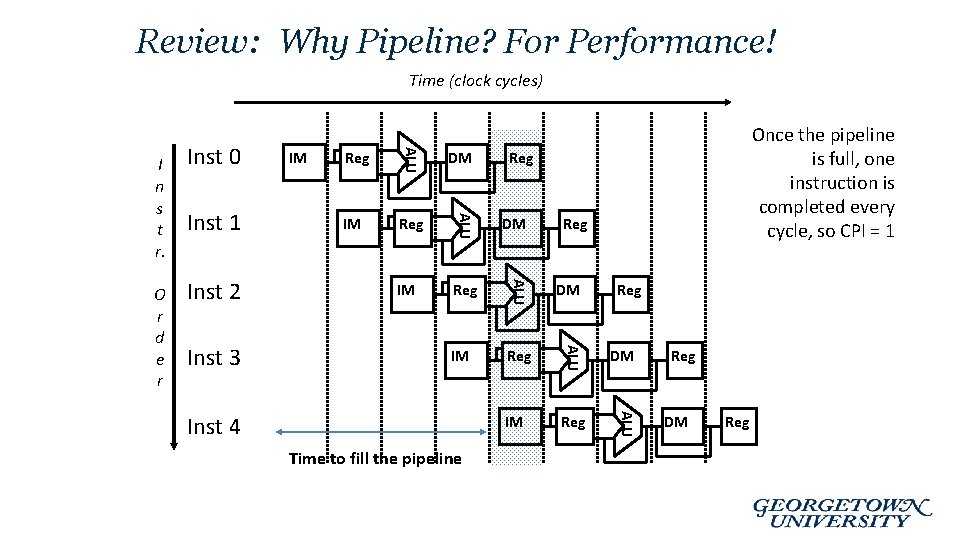

Review: Why Pipeline? For Performance! • Once the pipeline is full, one instruction is completed every cycle, so CPI = 1 [Figure: pipeline diagram, time (clock cycles) versus instruction order. Inst 0 through Inst 4 each pass through IM, Reg, ALU, DM, Reg, each starting one cycle after the previous one; the initial cycles are labeled "Time to fill the pipeline"]

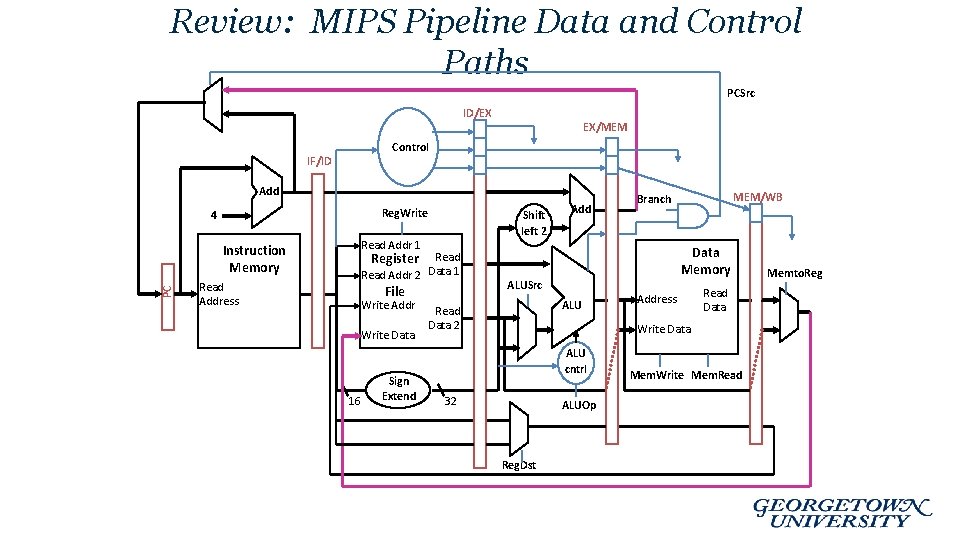

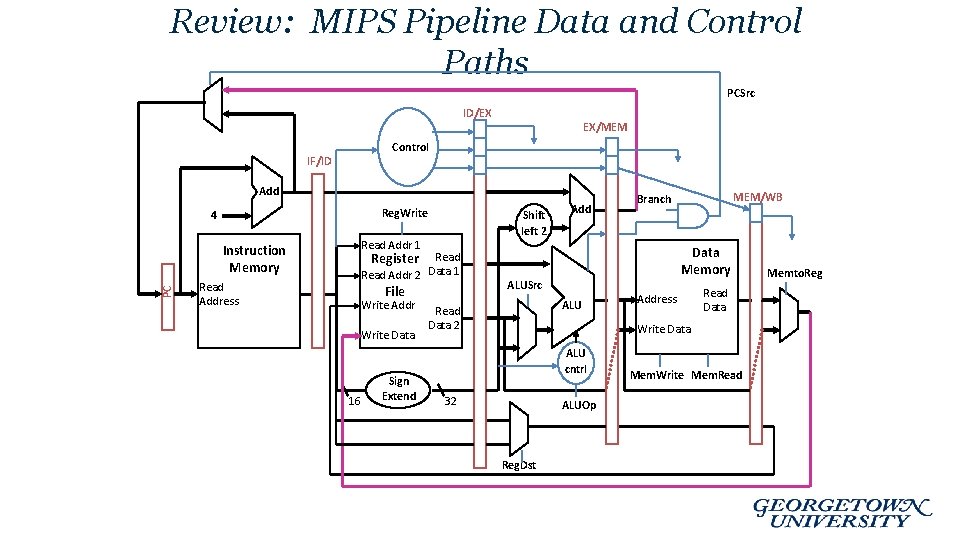

Review: MIPS Pipeline Data and Control Paths [Figure: pipelined MIPS datapath. Pipeline registers: IF/ID, ID/EX, EX/MEM, MEM/WB. Components: PC, Instruction Memory, Add (PC + 4), Register File, Sign Extend (16 to 32), Shift left 2, branch target Add, ALU, ALU cntrl, Data Memory, Control. Control signals: PCSrc, RegWrite, ALUSrc, ALUOp, RegDst, Branch, MemWrite, MemRead, MemtoReg]

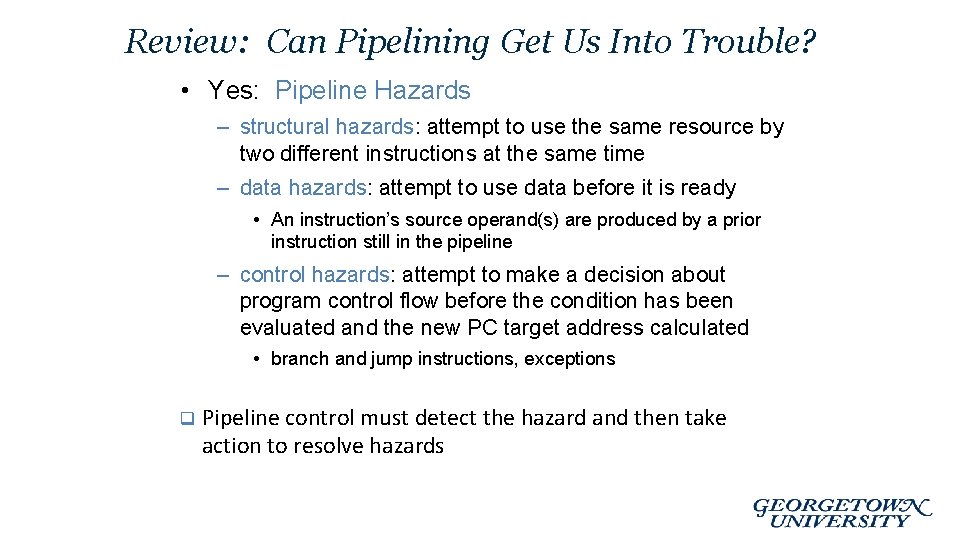

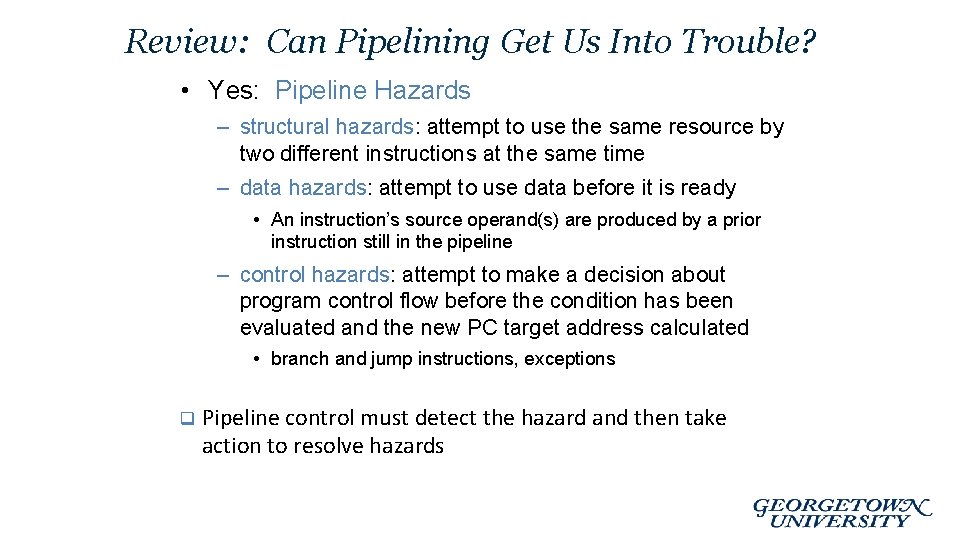

Review: Can Pipelining Get Us Into Trouble? • Yes: Pipeline Hazards – structural hazards: attempt to use the same resource by two different instructions at the same time – data hazards: attempt to use data before it is ready • An instruction’s source operand(s) are produced by a prior instruction still in the pipeline – control hazards: attempt to make a decision about program control flow before the condition has been evaluated and the new PC target address calculated • branch and jump instructions, exceptions • Pipeline control must detect the hazard and then take action to resolve hazards

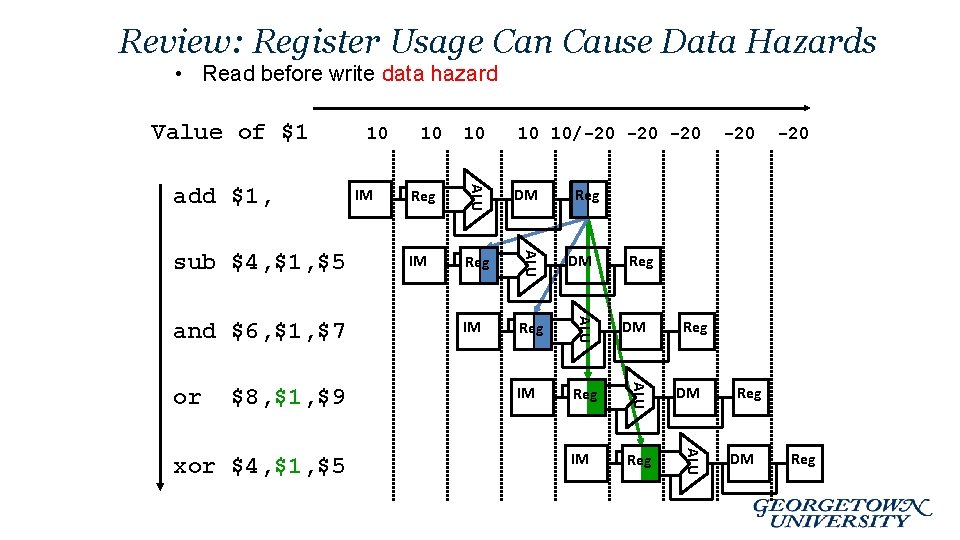

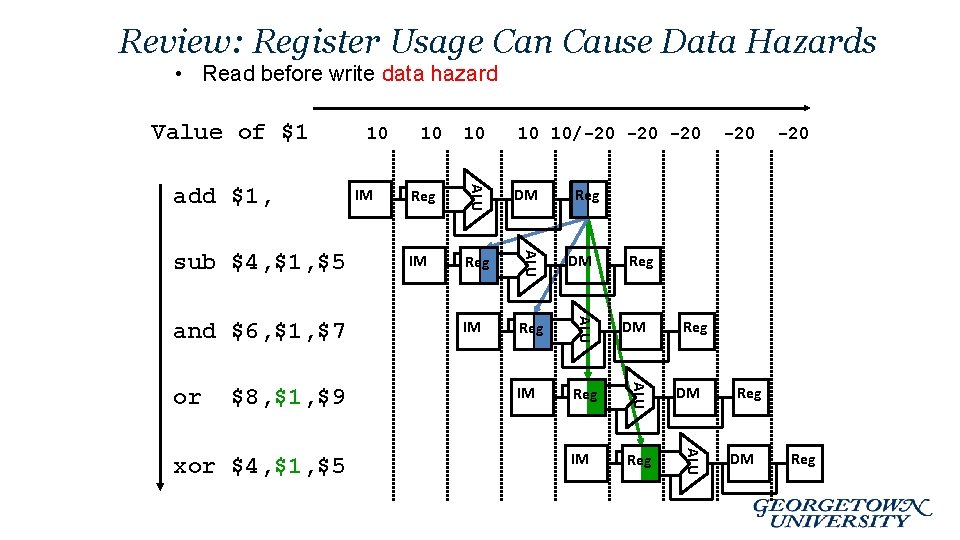

Review: Register Usage Can Cause Data Hazards • Read before write data hazard [Figure: pipeline diagram of add $1, (operands truncated in the source) followed by sub $4, $1, $5; and $6, $1, $7; or $8, $1, $9; xor $4, $1, $5. Across the cycles the value of $1 changes from 10 to -20, with one cycle marked 10/-20]

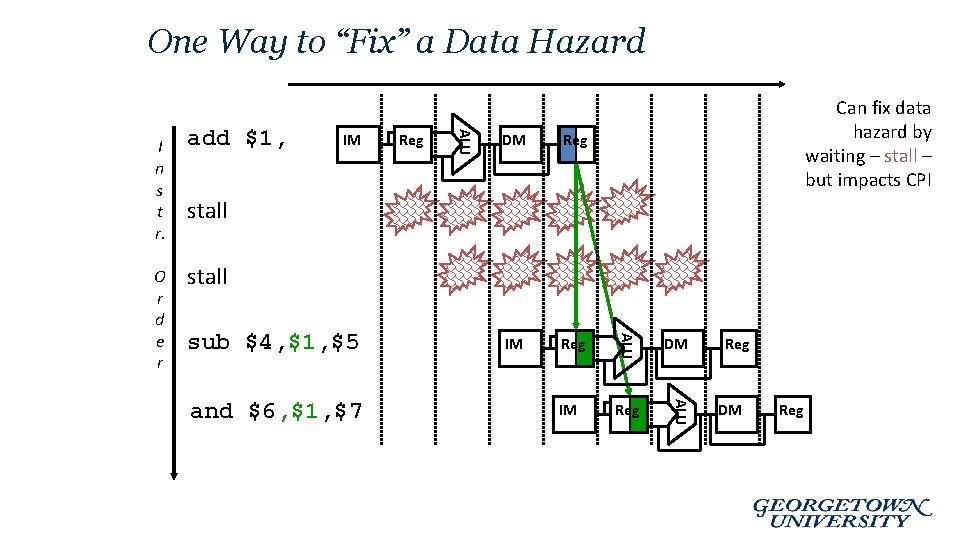

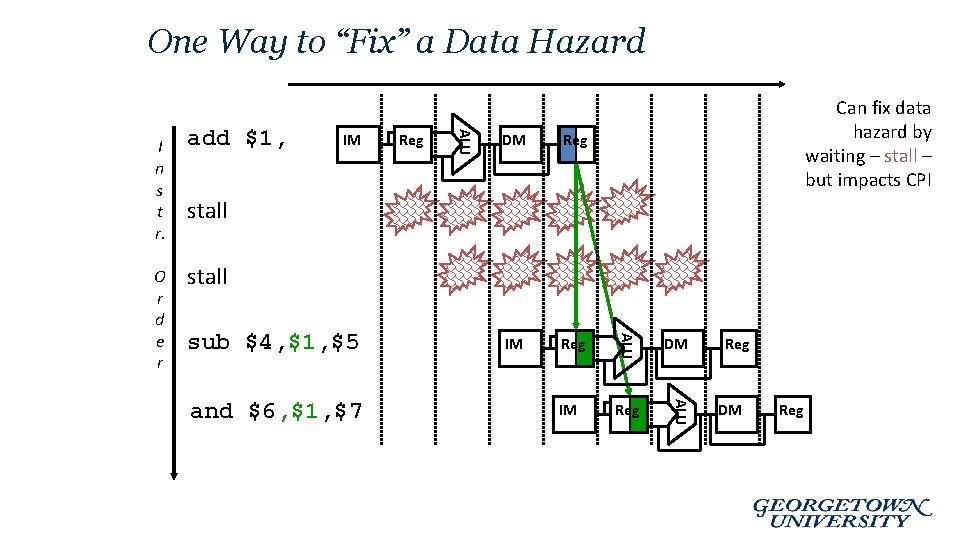

One Way to “Fix” a Data Hazard • Can fix data hazard by waiting – stall – but impacts CPI [Figure: pipeline diagram in which add $1, (operands truncated in the source) is followed by stall cycles before sub $4, $1, $5 and and $6, $1, $7]

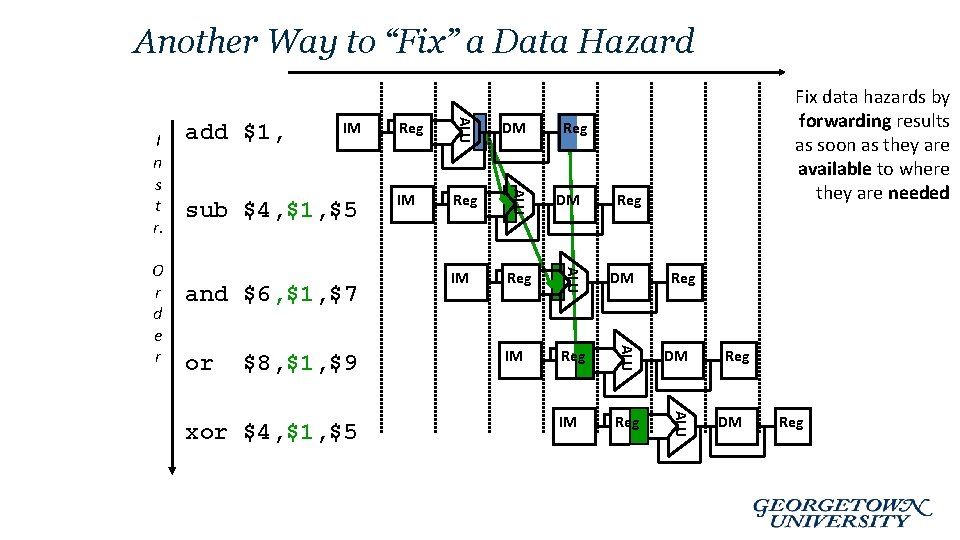

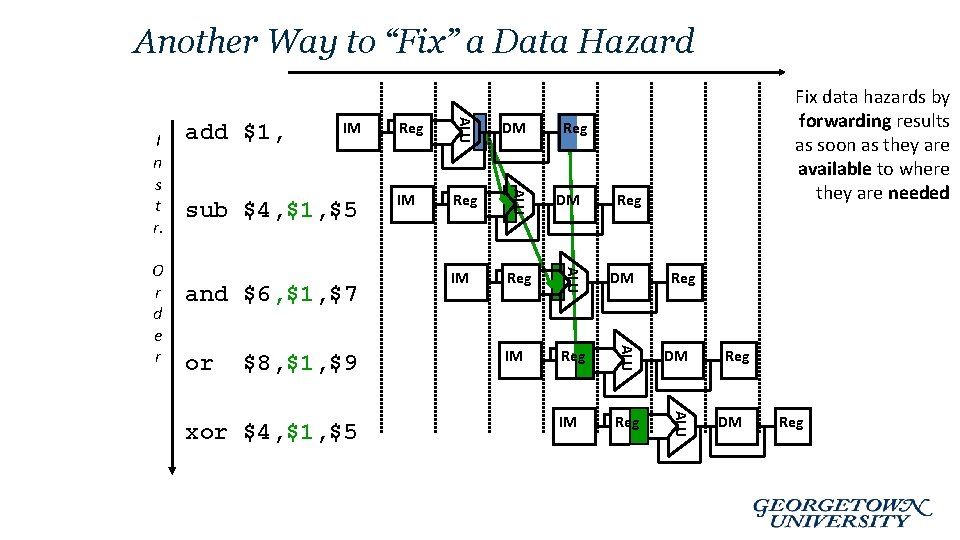

Another Way to “Fix” a Data Hazard • Fix data hazards by forwarding results as soon as they are available to where they are needed [Figure: pipeline diagram of add $1, (operands truncated in the source) followed by sub $4, $1, $5; and $6, $1, $7; or $8, $1, $9; xor $4, $1, $5, with forwarding paths from the add result to the later instructions]

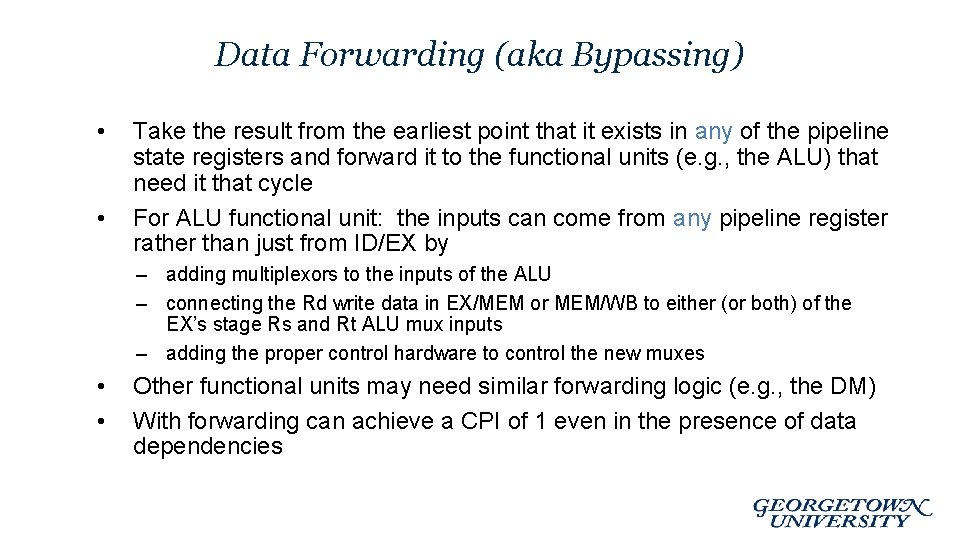

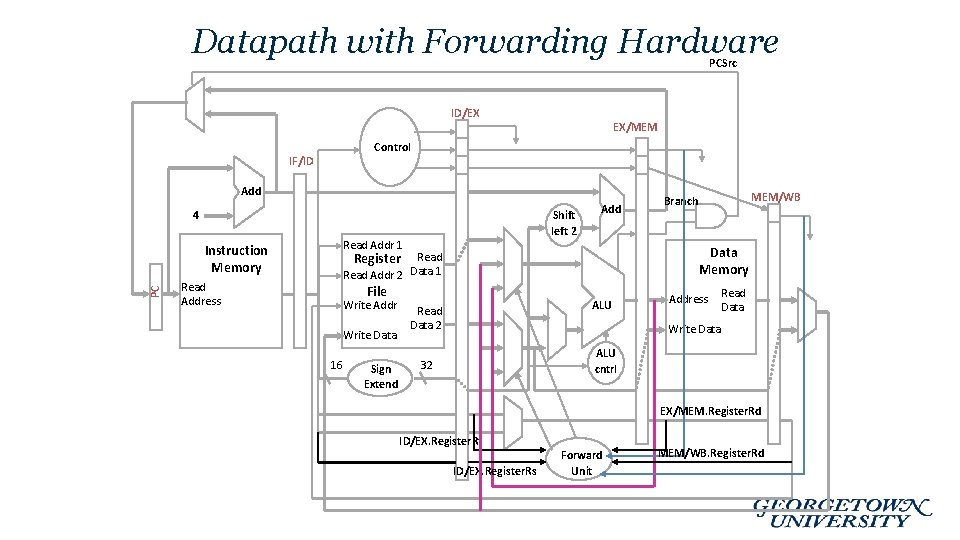

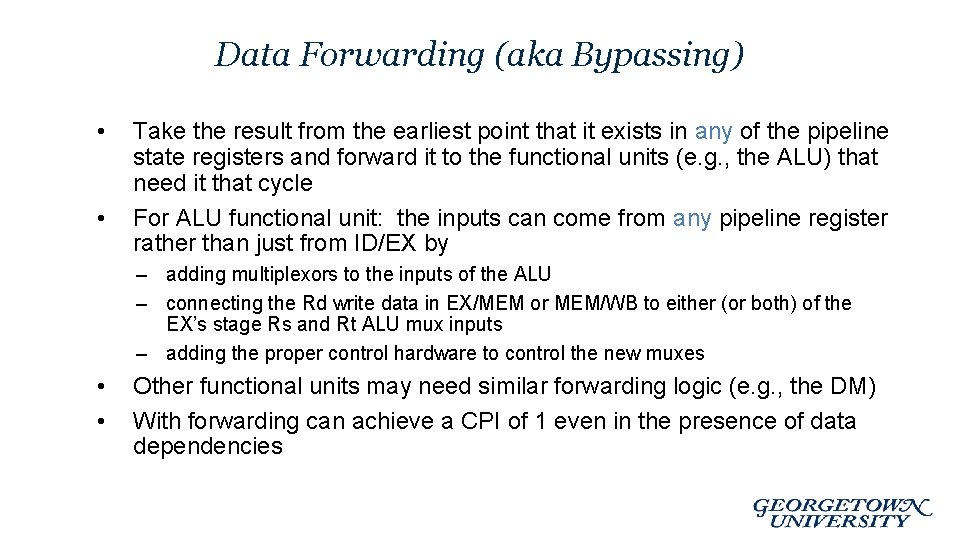

Data Forwarding (aka Bypassing) • Take the result from the earliest point that it exists in any of the pipeline state registers and forward it to the functional units (e.g., the ALU) that need it that cycle • For ALU functional unit: the inputs can come from any pipeline register rather than just from ID/EX by – adding multiplexors to the inputs of the ALU – connecting the Rd write data in EX/MEM or MEM/WB to either (or both) of the EX’s stage Rs and Rt ALU mux inputs – adding the proper control hardware to control the new muxes • Other functional units may need similar forwarding logic (e.g., the DM) • With forwarding can achieve a CPI of 1 even in the presence of data dependencies

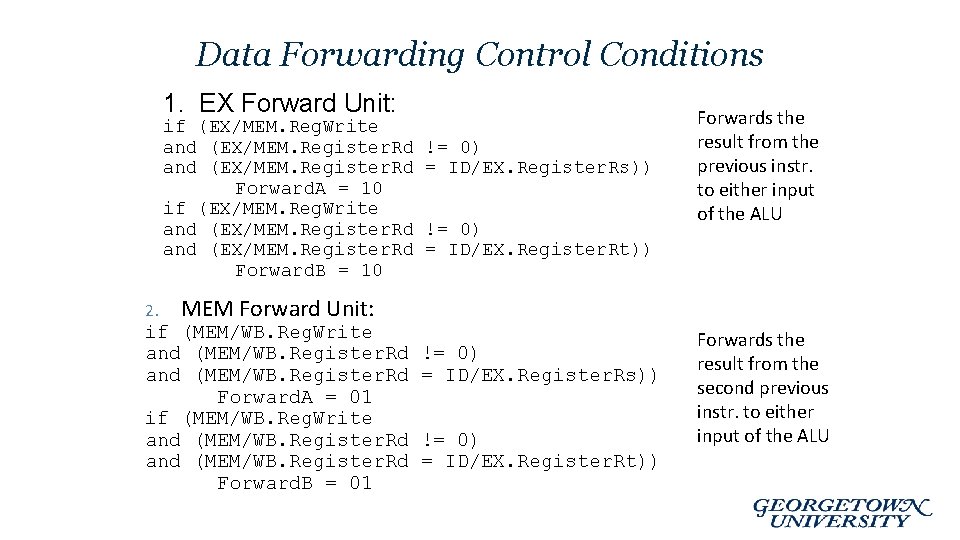

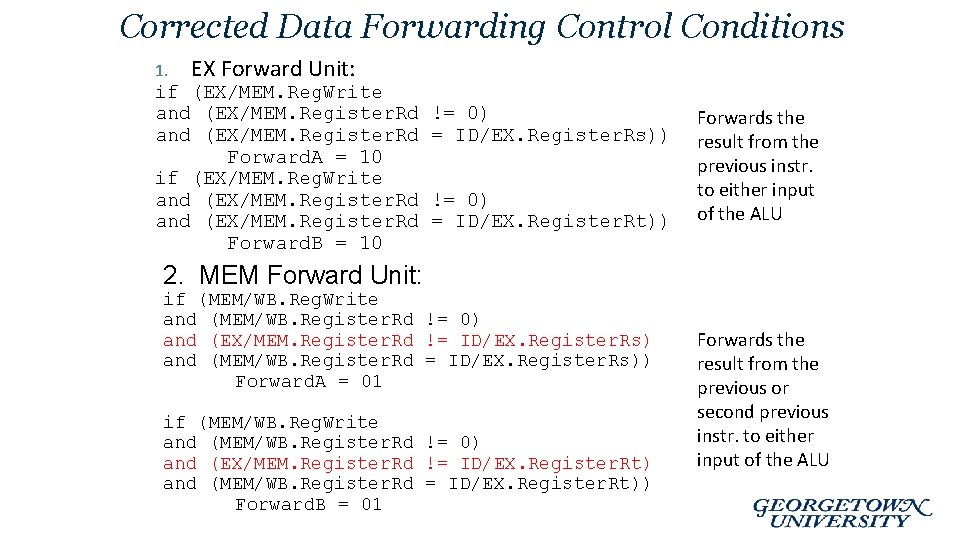

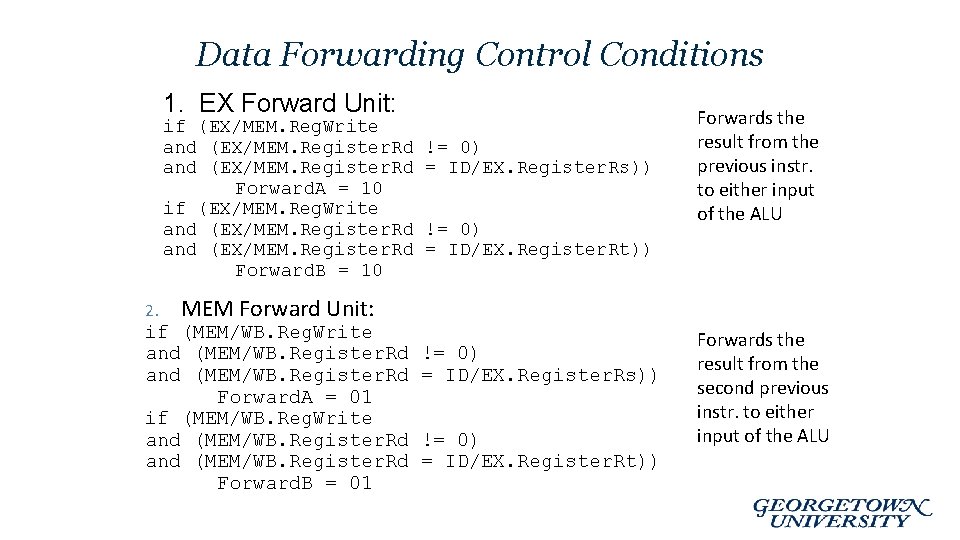

Data Forwarding Control Conditions
1. EX Forward Unit: forwards the result from the previous instr. to either input of the ALU
   if (EX/MEM.RegWrite and (EX/MEM.RegisterRd != 0) and (EX/MEM.RegisterRd = ID/EX.RegisterRs)) ForwardA = 10
   if (EX/MEM.RegWrite and (EX/MEM.RegisterRd != 0) and (EX/MEM.RegisterRd = ID/EX.RegisterRt)) ForwardB = 10
2. MEM Forward Unit: forwards the result from the second previous instr. to either input of the ALU
   if (MEM/WB.RegWrite and (MEM/WB.RegisterRd != 0) and (MEM/WB.RegisterRd = ID/EX.RegisterRs)) ForwardA = 01
   if (MEM/WB.RegWrite and (MEM/WB.RegisterRd != 0) and (MEM/WB.RegisterRd = ID/EX.RegisterRt)) ForwardB = 01

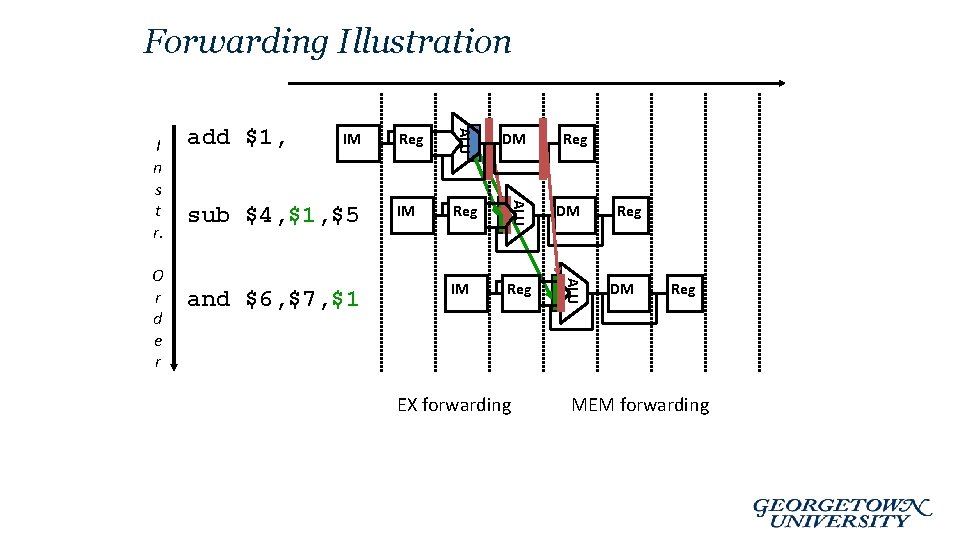

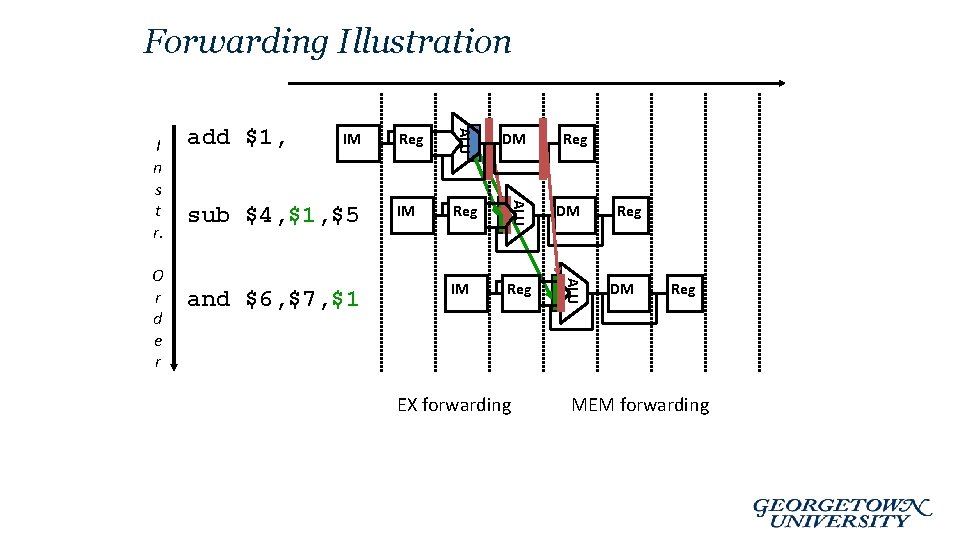

Forwarding Illustration [Figure: pipeline diagram of add $1, (operands truncated in the source); sub $4, $1, $5; and $6, $7, $1. The sub receives $1 by EX forwarding and the and receives $1 by MEM forwarding]

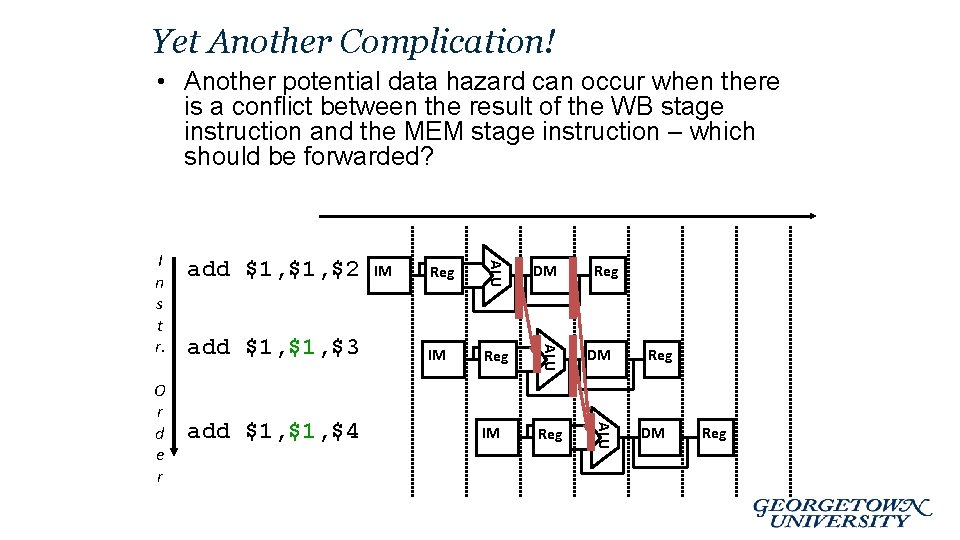

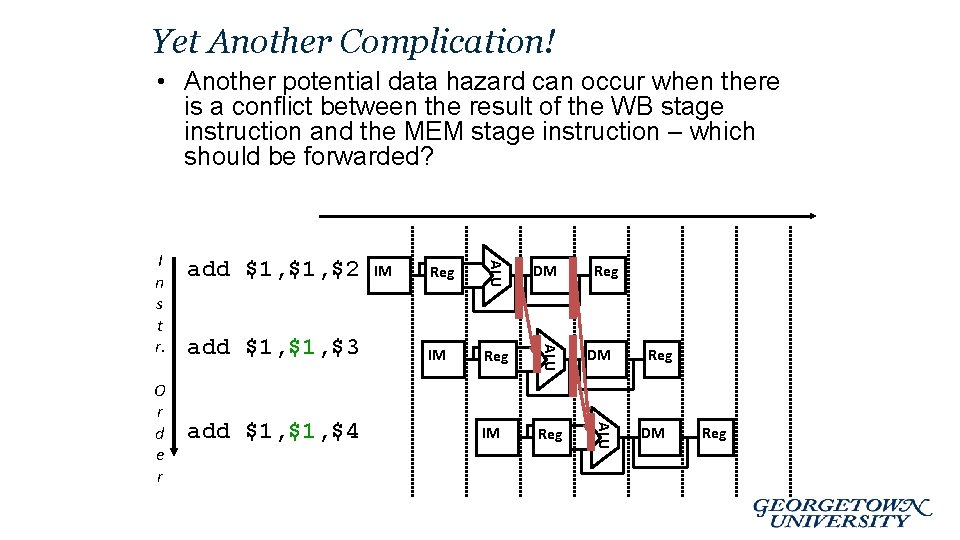

Yet Another Complication! • Another potential data hazard can occur when there is a conflict between the result of the WB stage instruction and the MEM stage instruction – which should be forwarded? [Figure: pipeline diagram of three consecutive add instructions that all write $1, shown in the source as add $1, $2; add $1, $3; add $1, $4]

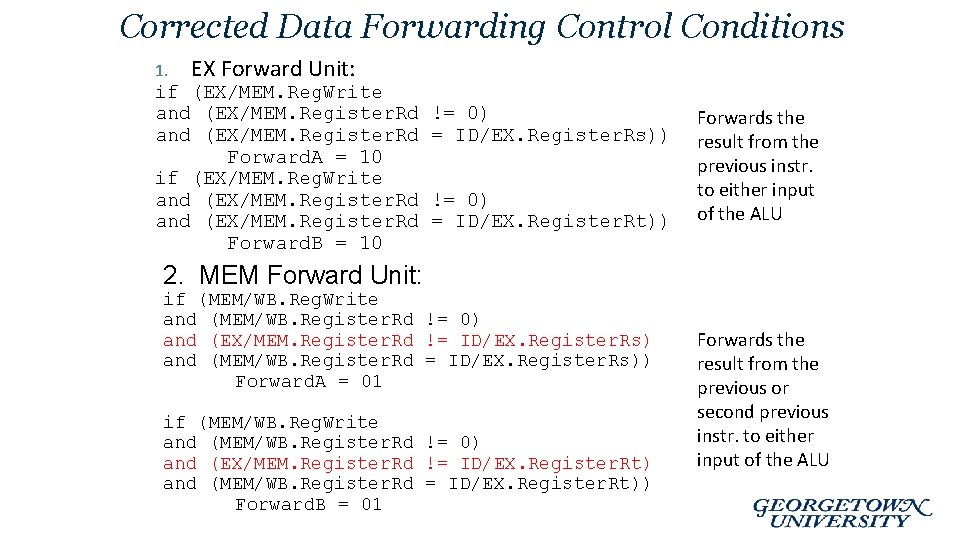

Corrected Data Forwarding Control Conditions
1. EX Forward Unit: forwards the result from the previous instr. to either input of the ALU
   if (EX/MEM.RegWrite and (EX/MEM.RegisterRd != 0) and (EX/MEM.RegisterRd = ID/EX.RegisterRs)) ForwardA = 10
   if (EX/MEM.RegWrite and (EX/MEM.RegisterRd != 0) and (EX/MEM.RegisterRd = ID/EX.RegisterRt)) ForwardB = 10
2. MEM Forward Unit: forwards the result from the previous or second previous instr. to either input of the ALU
   if (MEM/WB.RegWrite and (MEM/WB.RegisterRd != 0) and (EX/MEM.RegisterRd != ID/EX.RegisterRs) and (MEM/WB.RegisterRd = ID/EX.RegisterRs)) ForwardA = 01
   if (MEM/WB.RegWrite and (MEM/WB.RegisterRd != 0) and (EX/MEM.RegisterRd != ID/EX.RegisterRt) and (MEM/WB.RegisterRd = ID/EX.RegisterRt)) ForwardB = 01
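The corrected conditions can be read as a priority rule: prefer the EX/MEM result, and fall back to MEM/WB only when EX/MEM does not hold the needed register. Below is a minimal software sketch of that rule for a single ALU input. The `PipeReg` class and `forward_select` function are hypothetical names, and the MEM check follows the slide's simplified form (it compares register numbers without re-testing EX/MEM.RegWrite).

```python
from dataclasses import dataclass

@dataclass
class PipeReg:
    """The two fields of a pipeline register that the Forward Unit inspects."""
    reg_write: bool   # RegWrite control bit
    rd: int           # destination register number (RegisterRd)

def forward_select(src_reg: int, ex_mem: PipeReg, mem_wb: PipeReg) -> int:
    """Return the mux select for one ALU input (ForwardA for Rs, ForwardB for Rt)."""
    # EX hazard: the previous instruction produces the value we need.
    if ex_mem.reg_write and ex_mem.rd != 0 and ex_mem.rd == src_reg:
        return 0b10
    # MEM hazard: the second previous instruction produces it, and the
    # more recent EX/MEM result does not target the same register.
    if (mem_wb.reg_write and mem_wb.rd != 0
            and ex_mem.rd != src_reg
            and mem_wb.rd == src_reg):
        return 0b01
    # No hazard: use the value read from the register file.
    return 0b00

# Three back-to-back writers of $1: the newest value (EX/MEM) must win.
print(forward_select(1, PipeReg(True, 1), PipeReg(True, 1)))
```

Calling the function once with ID/EX.RegisterRs and once with ID/EX.RegisterRt gives ForwardA and ForwardB respectively. The `rd != 0` tests keep a write to $zero from being forwarded.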

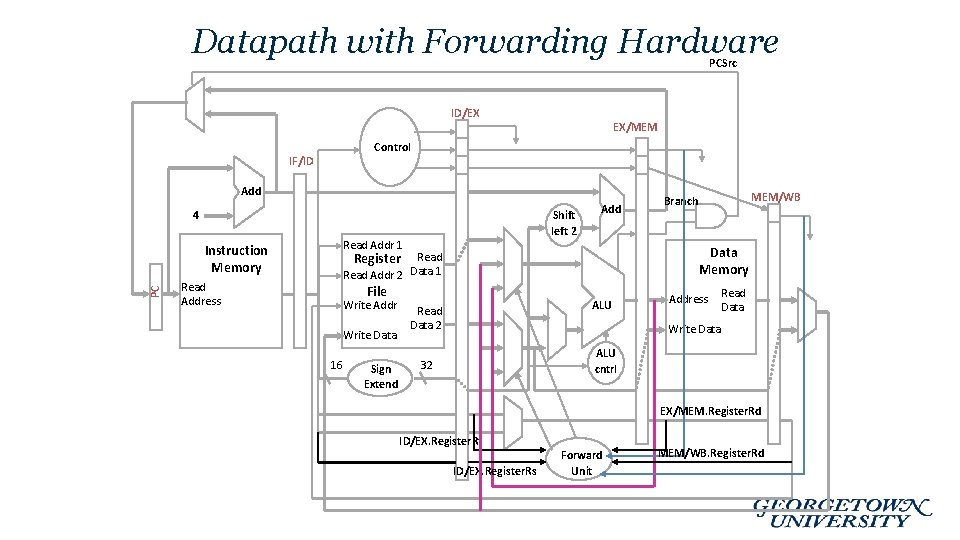

Datapath with Forwarding Hardware [Figure: pipelined MIPS datapath (IF/ID, ID/EX, EX/MEM, MEM/WB) with a Forward Unit added. Forward Unit inputs: ID/EX.RegisterRs, ID/EX.RegisterRt, EX/MEM.RegisterRd, MEM/WB.RegisterRd; its outputs control the muxes on the ALU inputs]

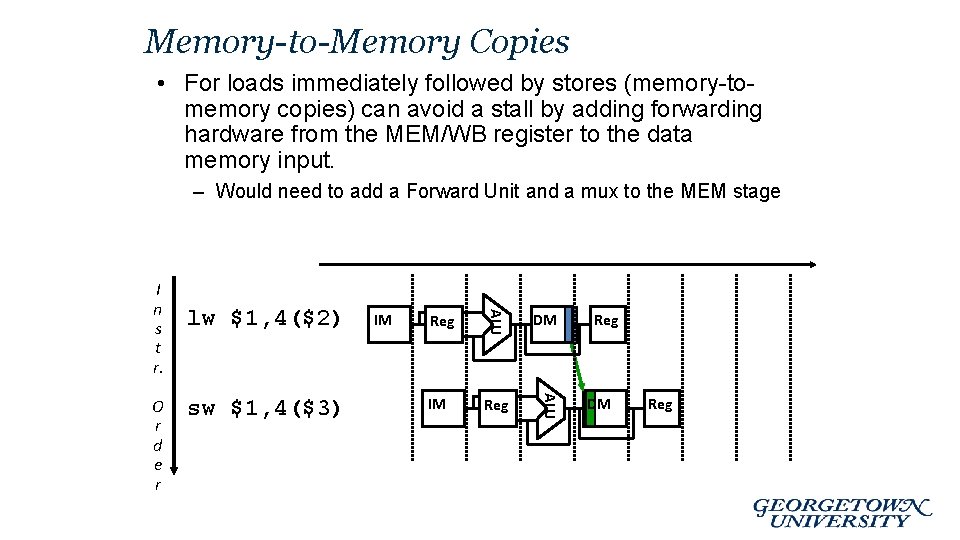

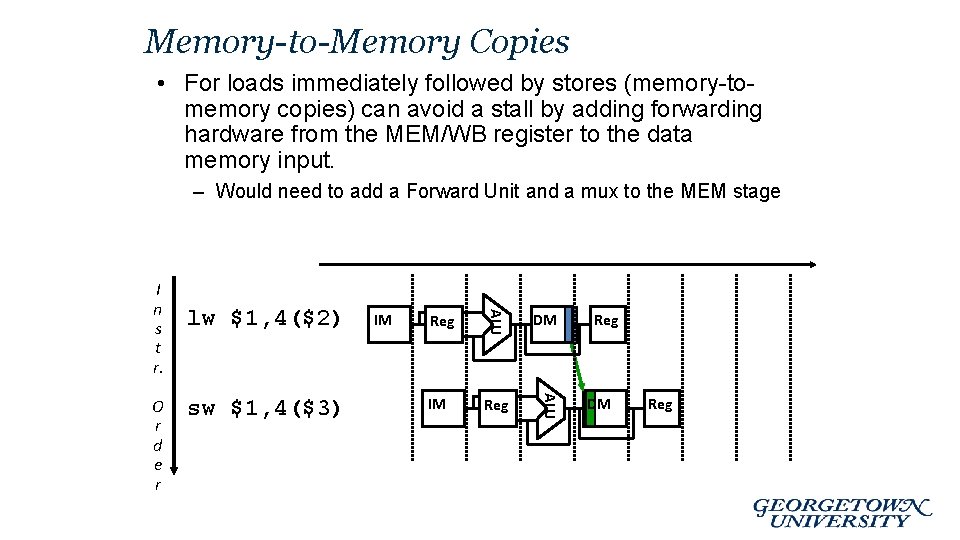

Memory-to-Memory Copies • For loads immediately followed by stores (memory-to-memory copies), a stall can be avoided by adding forwarding hardware from the MEM/WB register to the data memory input. – Would need to add a Forward Unit and a mux to the MEM stage [Figure: pipeline diagram of lw $1, 4($2) followed by sw $1, 4($3)]

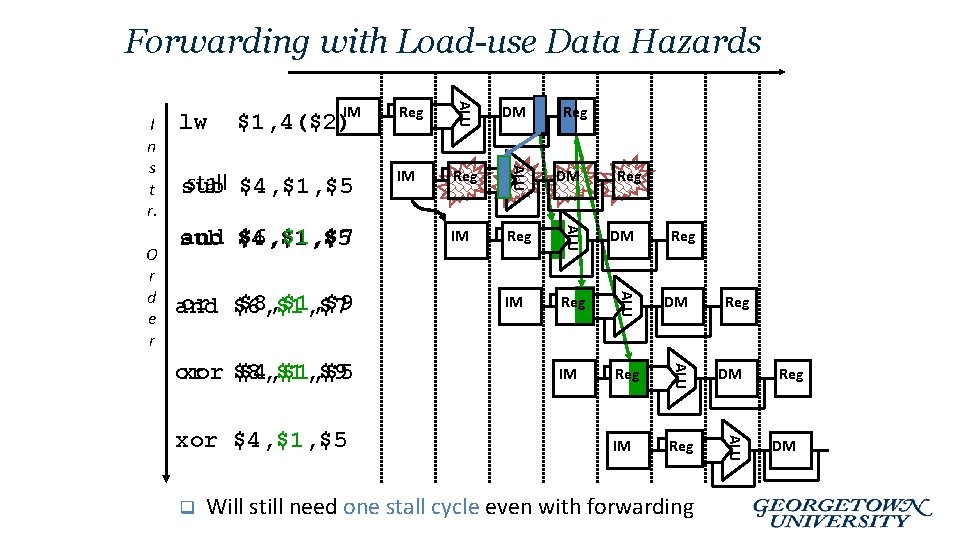

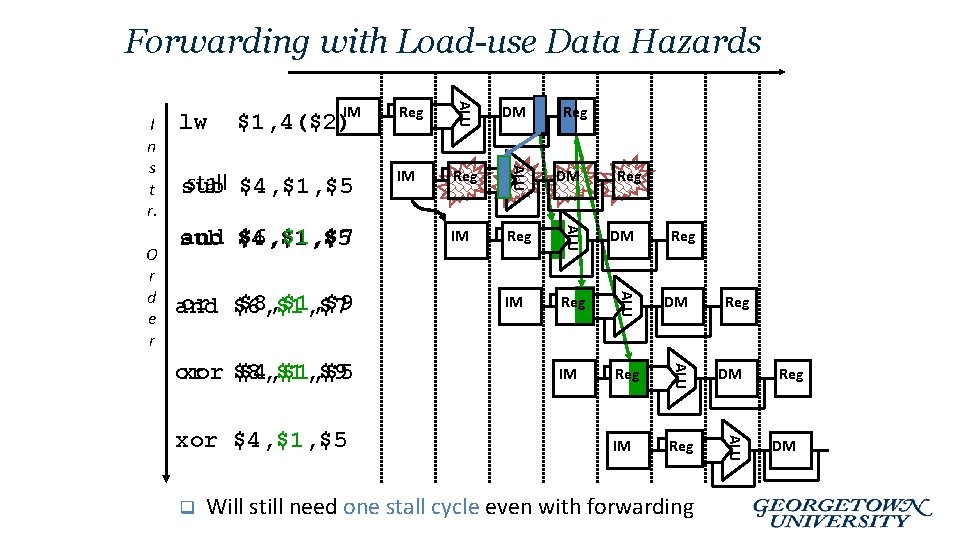

Forwarding with Load-use Data Hazards • Will still need one stall cycle even with forwarding [Figure: pipeline diagram of lw $1, 4($2) followed by one stall, then sub $4, $1, $5; and $6, $1, $7; or $8, $1, $9; xor $4, $1, $5]

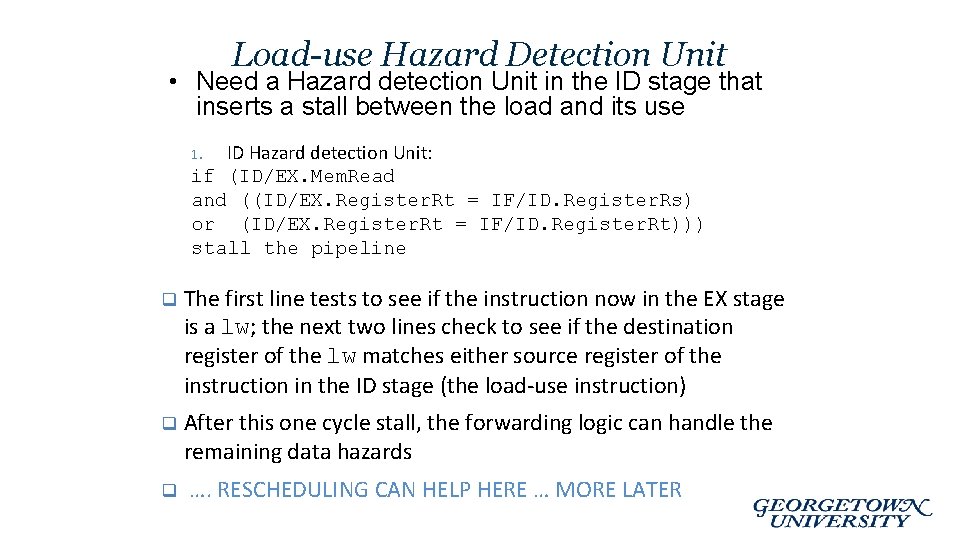

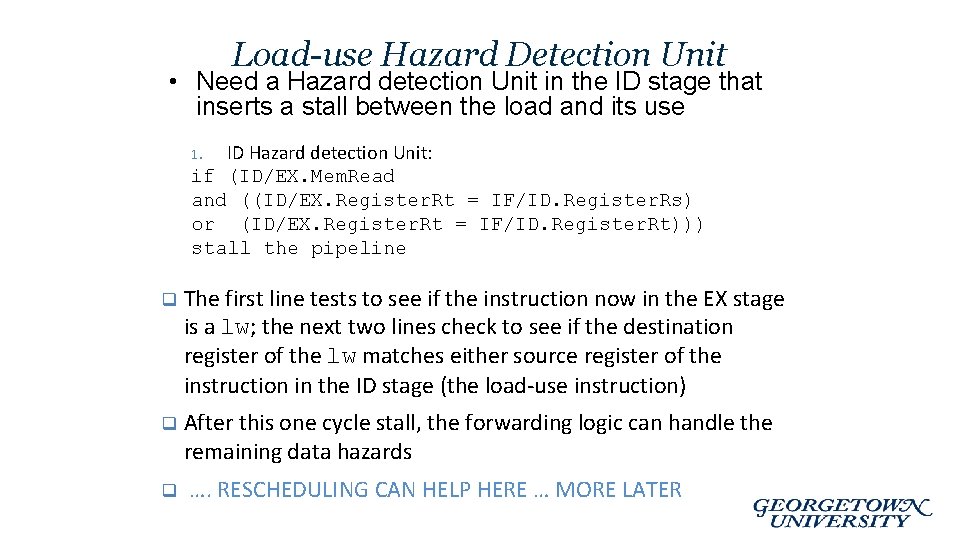

Load-use Hazard Detection Unit • Need a Hazard detection Unit in the ID stage that inserts a stall between the load and its use
1. ID Hazard detection Unit:
   if (ID/EX.MemRead
   and ((ID/EX.RegisterRt = IF/ID.RegisterRs)
   or (ID/EX.RegisterRt = IF/ID.RegisterRt)))
   stall the pipeline

• The first line tests to see if the instruction now in the EX stage is a lw; the next two lines check to see if the destination register of the lw matches either source register of the instruction in the ID stage (the load-use instruction) • After this one cycle stall, the forwarding logic can handle the remaining data hazards • Rescheduling can help here; more later
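The detection condition is a single boolean expression over three register numbers and one control bit. A minimal sketch follows; the function and parameter names are hypothetical, chosen to mirror the pipeline register fields named on the slide.

```python
def must_stall(id_ex_mem_read: bool, id_ex_rt: int,
               if_id_rs: int, if_id_rt: int) -> bool:
    """True when the instruction in ID uses the result of a lw that is now in EX."""
    # ID/EX.MemRead is set only for loads; ID/EX.RegisterRt is the load's destination.
    return id_ex_mem_read and (id_ex_rt == if_id_rs or id_ex_rt == if_id_rt)

# lw $1, 4($2) is in EX; sub $4, $1, $5 is in ID (rs = 1, rt = 5).
print(must_stall(id_ex_mem_read=True, id_ex_rt=1, if_id_rs=1, if_id_rt=5))
```

When the function returns True, the hardware would hold the PC and IF/ID registers and zero the control bits entering ID/EX, creating the one-cycle bubble.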

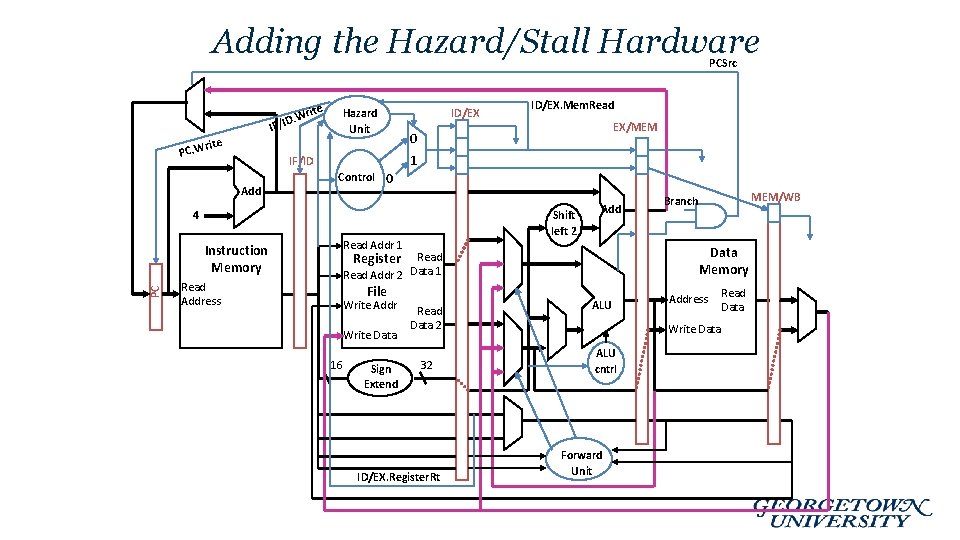

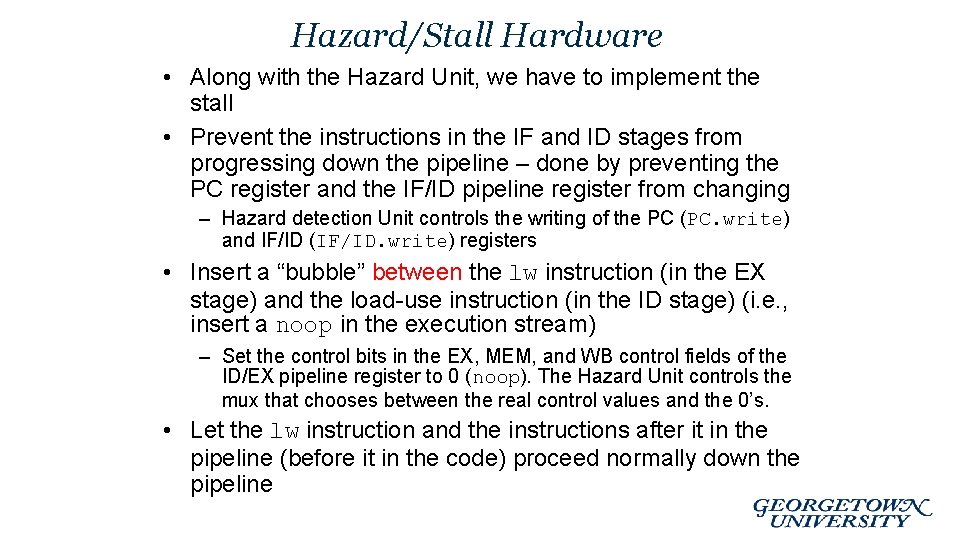

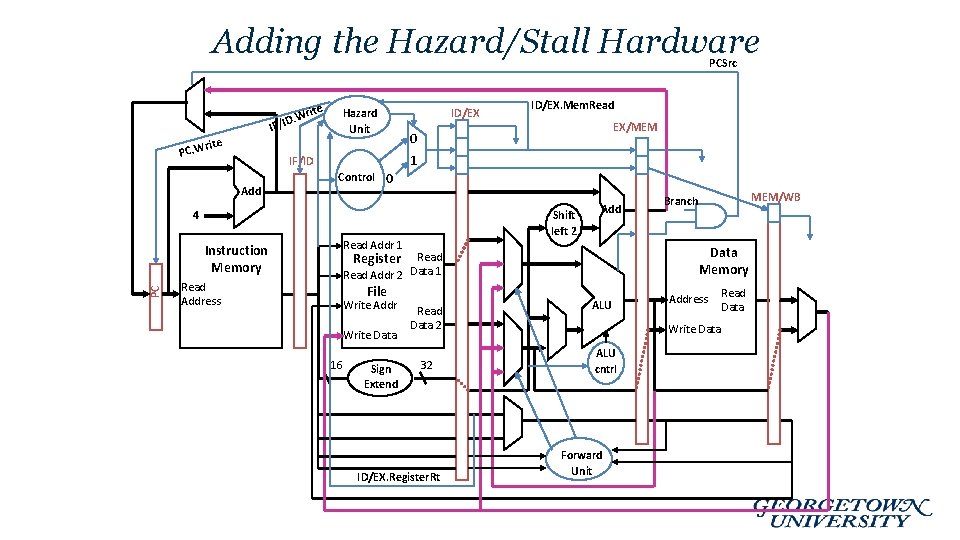

Hazard/Stall Hardware • Along with the Hazard Unit, we have to implement the stall • Prevent the instructions in the IF and ID stages from progressing down the pipeline – done by preventing the PC register and the IF/ID pipeline register from changing – Hazard detection Unit controls the writing of the PC (PC.write) and IF/ID (IF/ID.write) registers • Insert a “bubble” between the lw instruction (in the EX stage) and the load-use instruction (in the ID stage) (i.e., insert a noop in the execution stream) – Set the control bits in the EX, MEM, and WB control fields of the ID/EX pipeline register to 0 (noop). The Hazard Unit controls the mux that chooses between the real control values and the 0’s. • Let the lw instruction and the instructions after it in the pipeline (before it in the code) proceed normally down the pipeline

Adding the Hazard/Stall Hardware [Figure: pipelined datapath with Forward Unit, extended with a Hazard Unit. Hazard Unit inputs include ID/EX.MemRead and ID/EX.RegisterRt; its outputs are PC.Write, IF/ID.Write, and the select of a mux that chooses between the Control outputs and 0s for the ID/EX register]

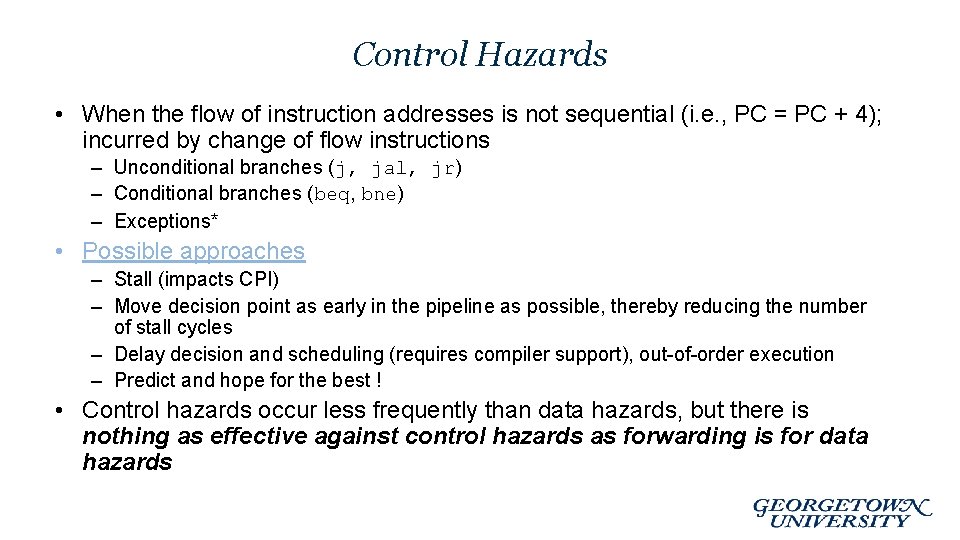

Control Hazards • Occur when the flow of instruction addresses is not sequential (i.e., not PC = PC + 4); incurred by change of flow instructions – Unconditional branches (j, jal, jr) – Conditional branches (beq, bne) – Exceptions* • Possible approaches – Stall (impacts CPI) – Move decision point as early in the pipeline as possible, thereby reducing the number of stall cycles – Delay decision and scheduling (requires compiler support), out-of-order execution – Predict and hope for the best! • Control hazards occur less frequently than data hazards, but there is nothing as effective against control hazards as forwarding is for data hazards

Control Hazards • Stall / Flush • Add hardware / optimize ISA – Determine branch condition and target as early as possible – VLIW • Scheduling / Out-of-Order Execution – Static (by compiler) – Dynamic (need to implement hardware) • Register renaming • Predict branch – Static or dynamic – Don’t commit until branch outcome determined • Loop Unrolling – Done by compiler

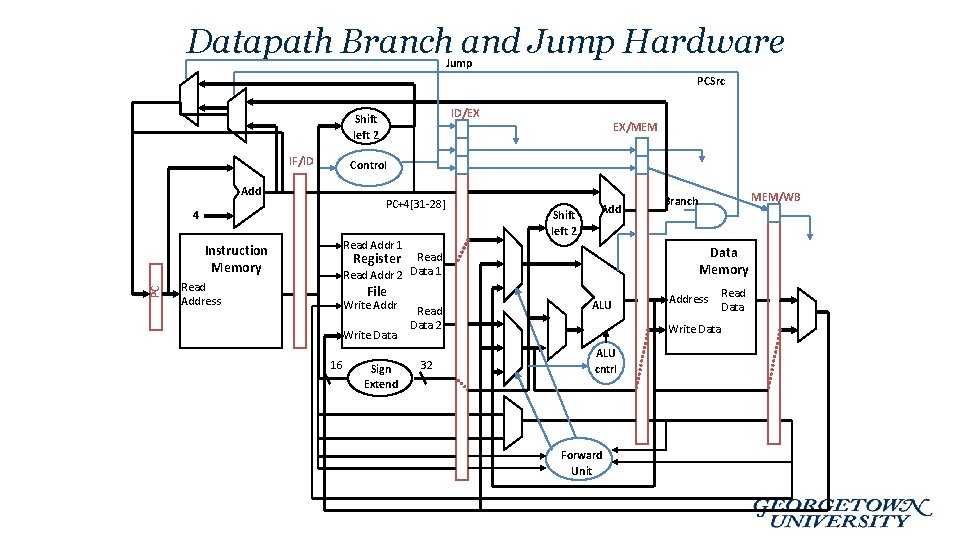

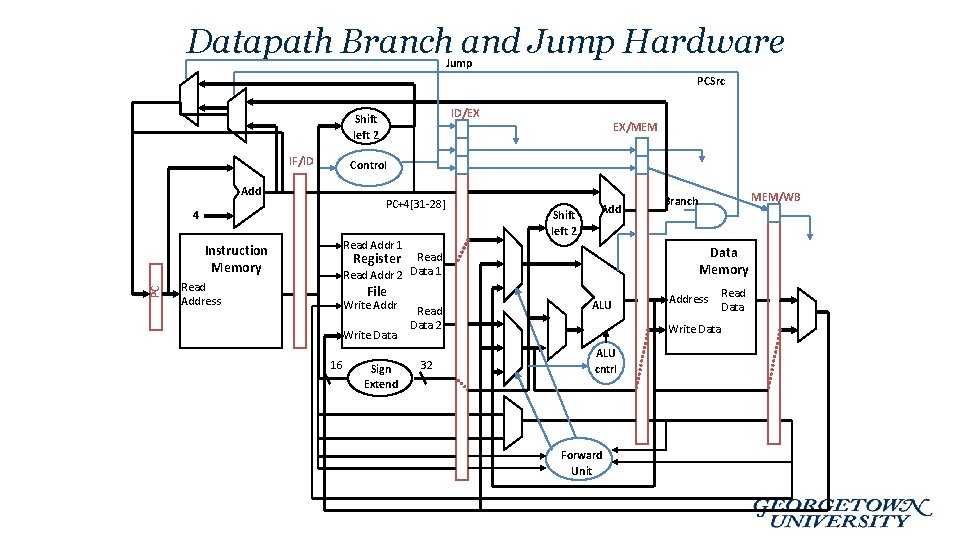

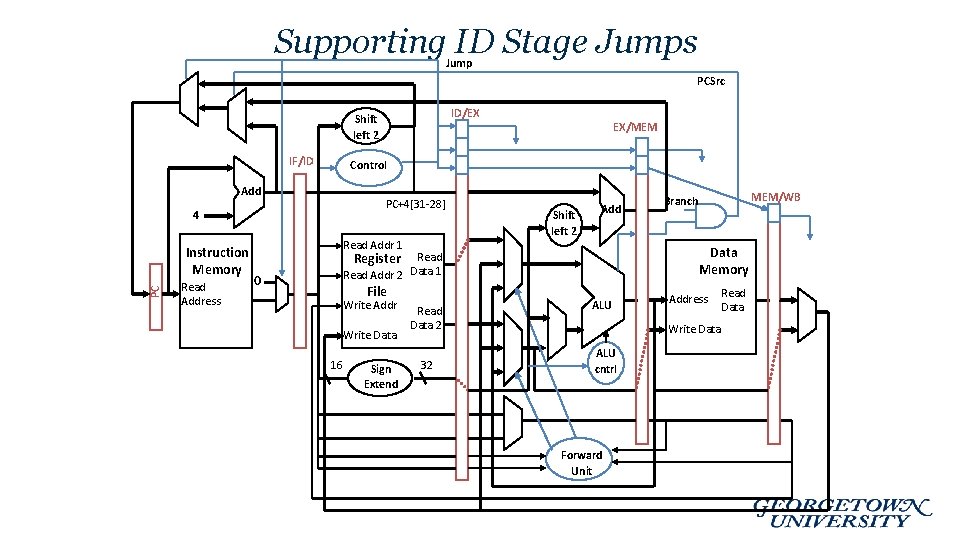

Datapath Branch and Jump Hardware [Figure: pipelined datapath with Forward Unit, extended with jump hardware. The jump target is formed from PC+4[31-28] and the instruction's address field shifted left 2; Jump and PCSrc select the next PC; the Branch signal is evaluated in the MEM stage]

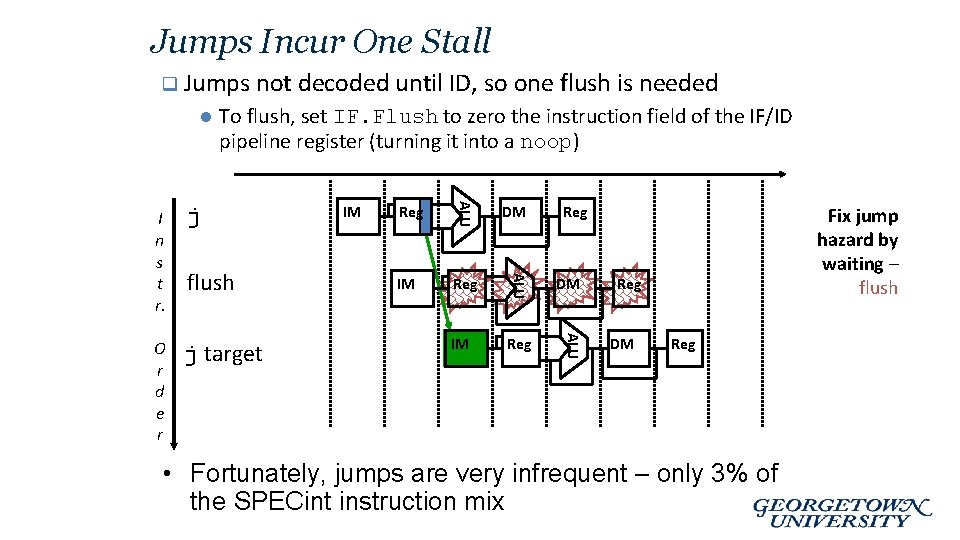

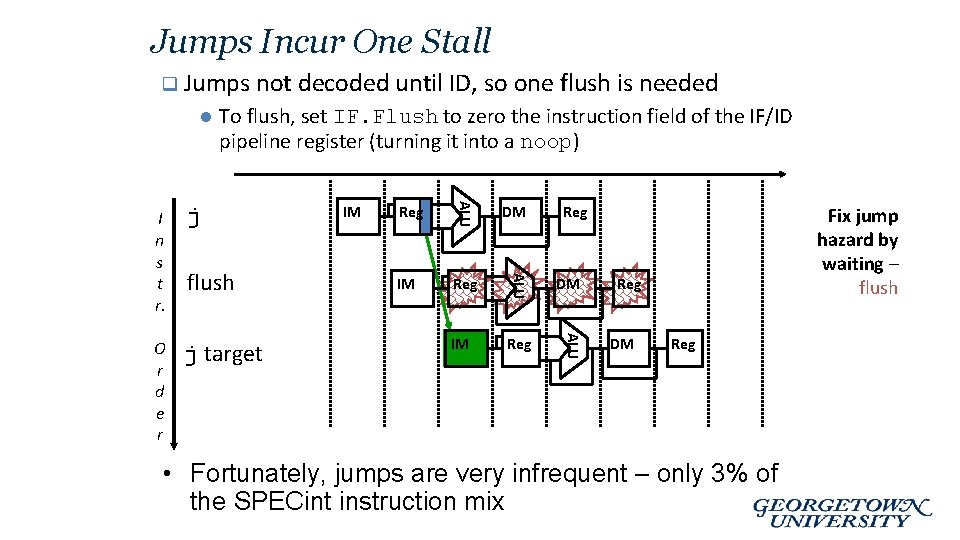

Jumps Incur One Stall • Jumps not decoded until ID, so one flush is needed – To flush, set IF.Flush to zero the instruction field of the IF/ID pipeline register (turning it into a noop) • Fix jump hazard by waiting – flush • Fortunately, jumps are very infrequent – only 3% of the SPECint instruction mix [Figure: pipeline diagram of j, one flushed instruction, then j target]

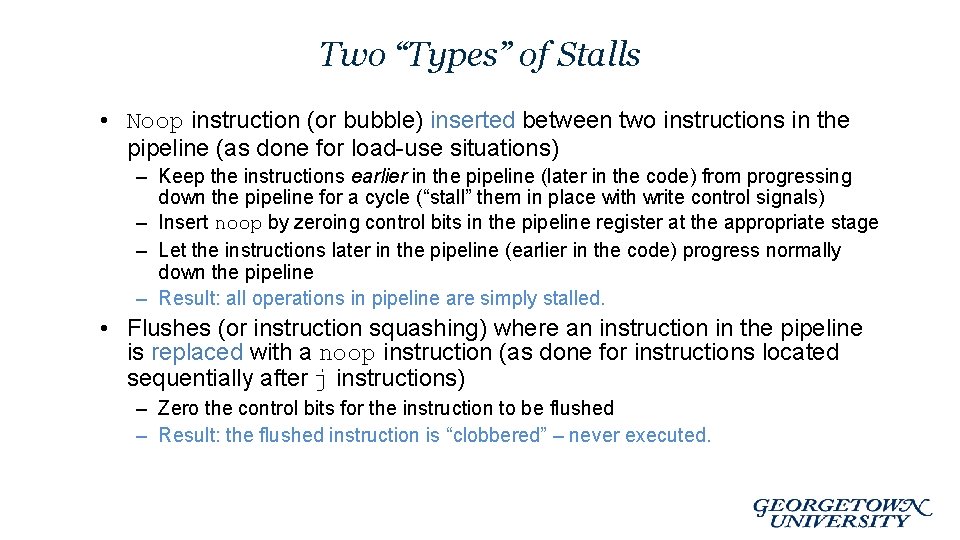

Two “Types” of Stalls • Noop instruction (or bubble) inserted between two instructions in the pipeline (as done for load-use situations) – Keep the instructions earlier in the pipeline (later in the code) from progressing down the pipeline for a cycle (“stall” them in place with write control signals) – Insert noop by zeroing control bits in the pipeline register at the appropriate stage – Let the instructions later in the pipeline (earlier in the code) progress normally down the pipeline – Result: all operations in pipeline are simply stalled. • Flushes (or instruction squashing) where an instruction in the pipeline is replaced with a noop instruction (as done for instructions located sequentially after j instructions) – Zero the control bits for the instruction to be flushed – Result: the flushed instruction is “clobbered” – never executed.

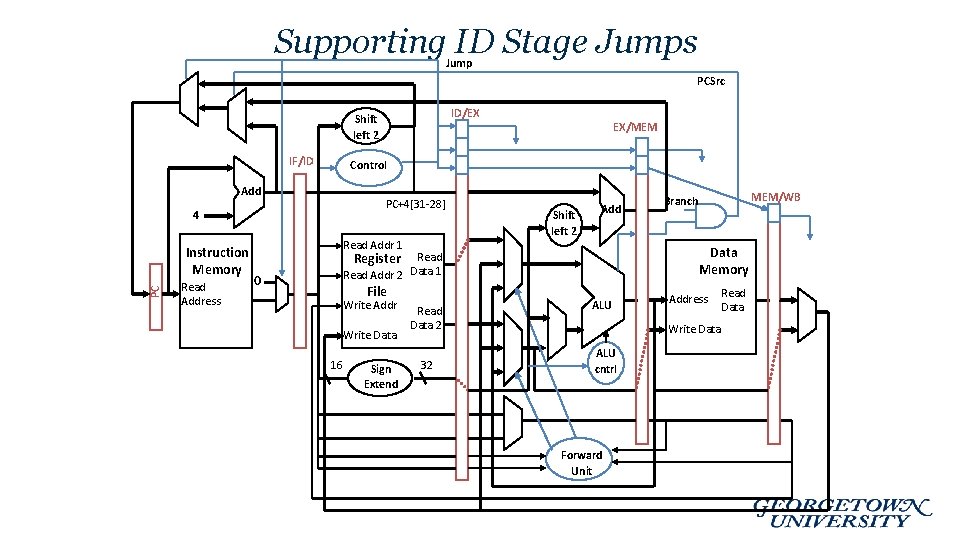

Supporting ID Stage Jumps [Figure: the branch and jump datapath with the jump resolved in the ID stage; Jump and PCSrc select the next PC, the jump target is formed from PC+4[31-28] and the shifted address field, and a 0 input allows the fetched instruction to be zeroed]

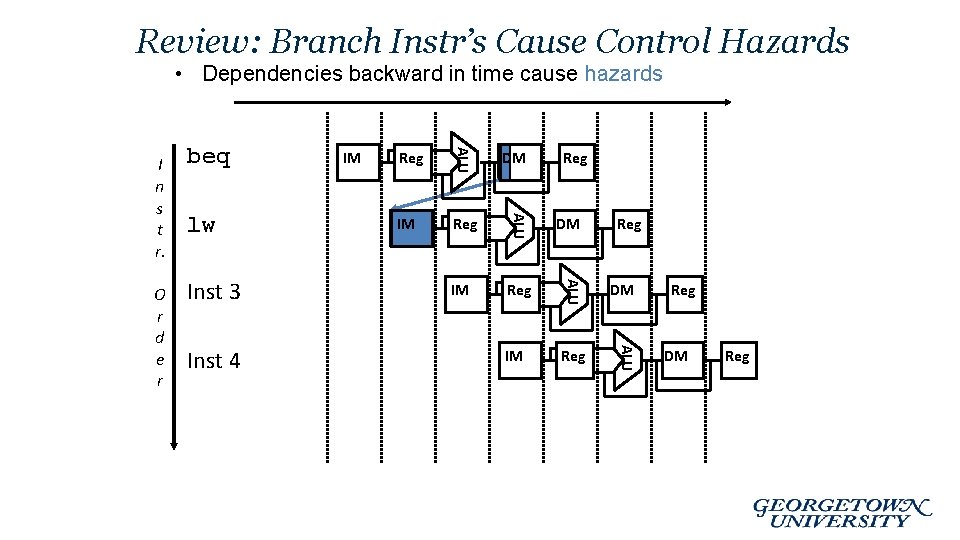

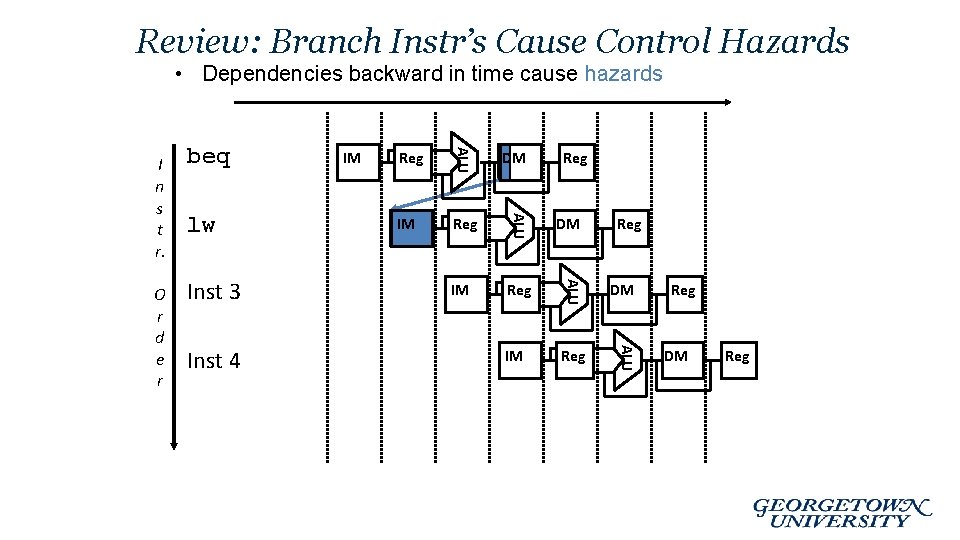

Review: Branch Instr’s Cause Control Hazards • Dependencies backward in time cause hazards [Figure: pipeline diagram of beq followed by lw, Inst 3, and Inst 4, which enter the pipeline before the branch outcome is known]

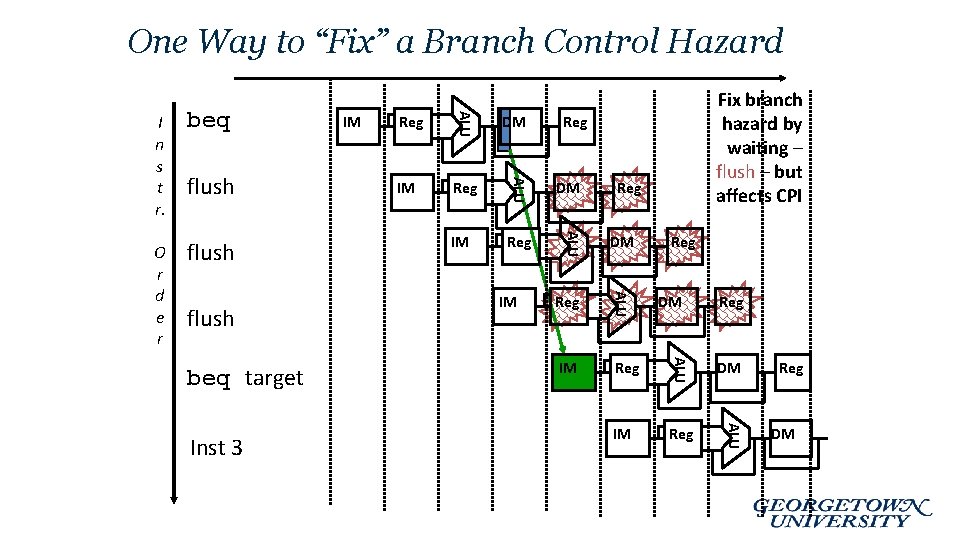

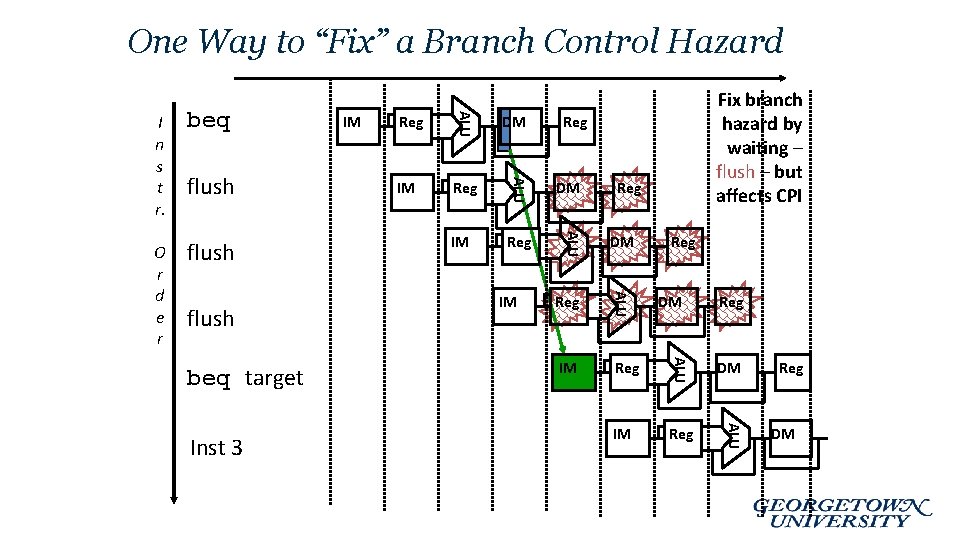

One Way to “Fix” a Branch Control Hazard • Fix branch hazard by waiting – flush – but affects CPI [Figure: pipeline diagram of beq followed by three flushed instructions, then beq target and Inst 3]

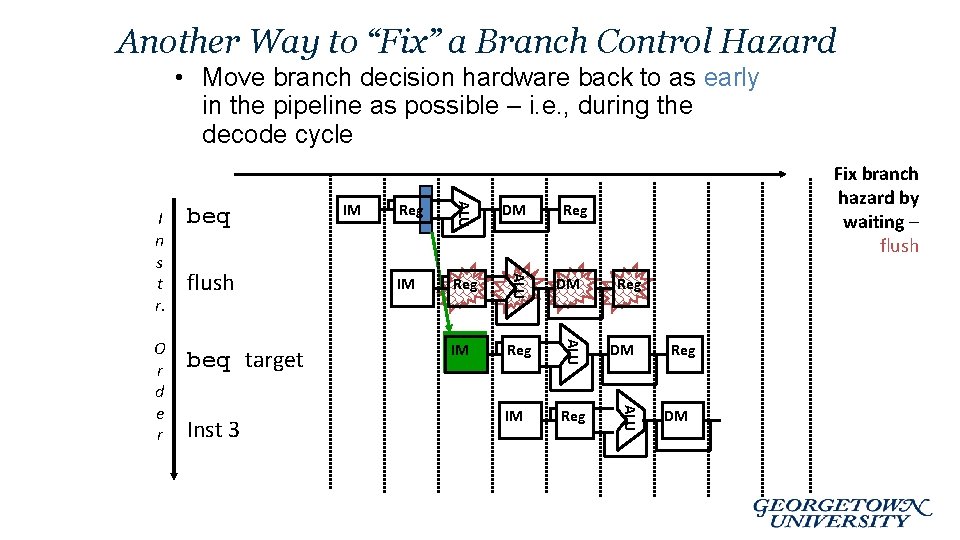

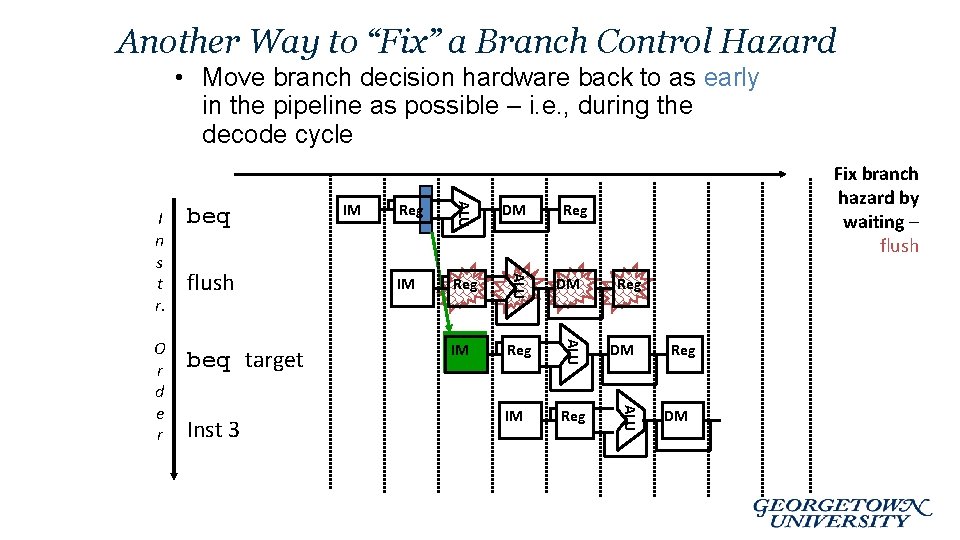

Another Way to “Fix” a Branch Control Hazard • Move branch decision hardware back to as early in the pipeline as possible – i.e., during the decode cycle • Fix branch hazard by waiting – flush [Figure: pipeline diagram of beq followed by one flushed instruction, then beq target and Inst 3]

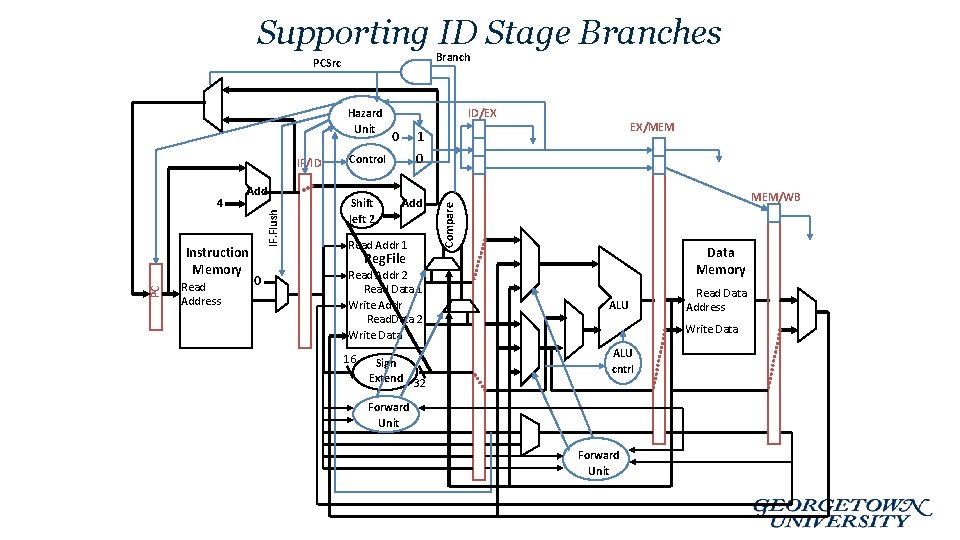

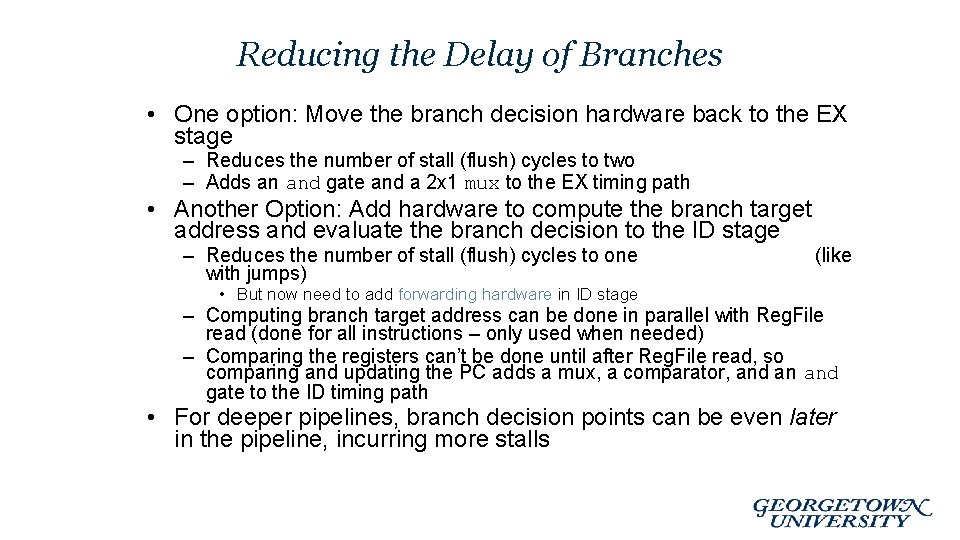

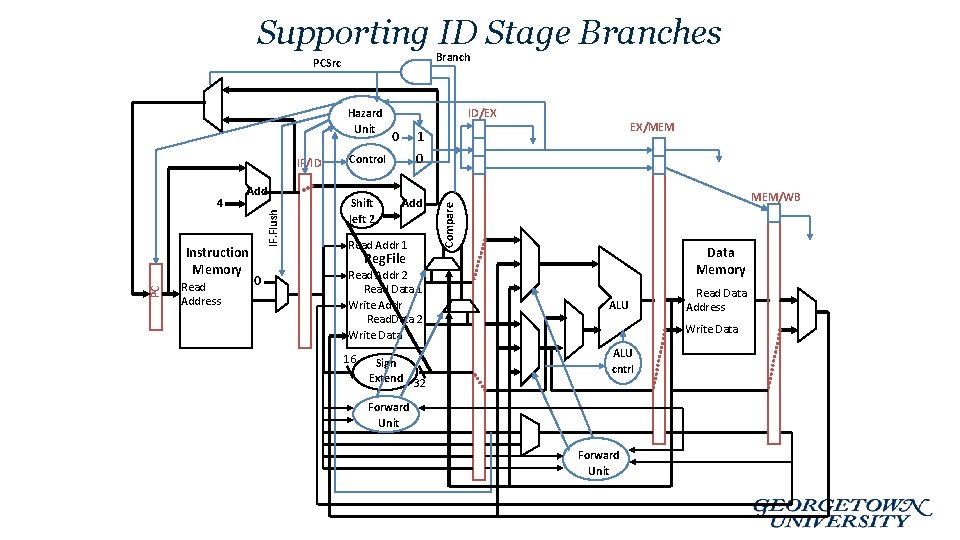

Reducing the Delay of Branches • One option: Move the branch decision hardware back to the EX stage – Reduces the number of stall (flush) cycles to two – Adds an and gate and a 2x1 mux to the EX timing path • Another option: Add hardware to compute the branch target address and evaluate the branch decision to the ID stage – Reduces the number of stall (flush) cycles to one (like with jumps) • But now need to add forwarding hardware in ID stage – Computing branch target address can be done in parallel with RegFile read (done for all instructions – only used when needed) – Comparing the registers can’t be done until after RegFile read, so comparing and updating the PC adds a mux, a comparator, and an and gate to the ID timing path • For deeper pipelines, branch decision points can be even later in the pipeline, incurring more stalls

Supporting ID Stage Branches [Figure: pipelined datapath with the branch resolved in ID. A Compare unit on the RegFile read data and the branch target adder (sign-extended offset shifted left 2) sit in the ID stage; Branch and PCSrc select the next PC; IF.Flush zeroes the IF/ID instruction; the Hazard Unit and Forward Unit remain]

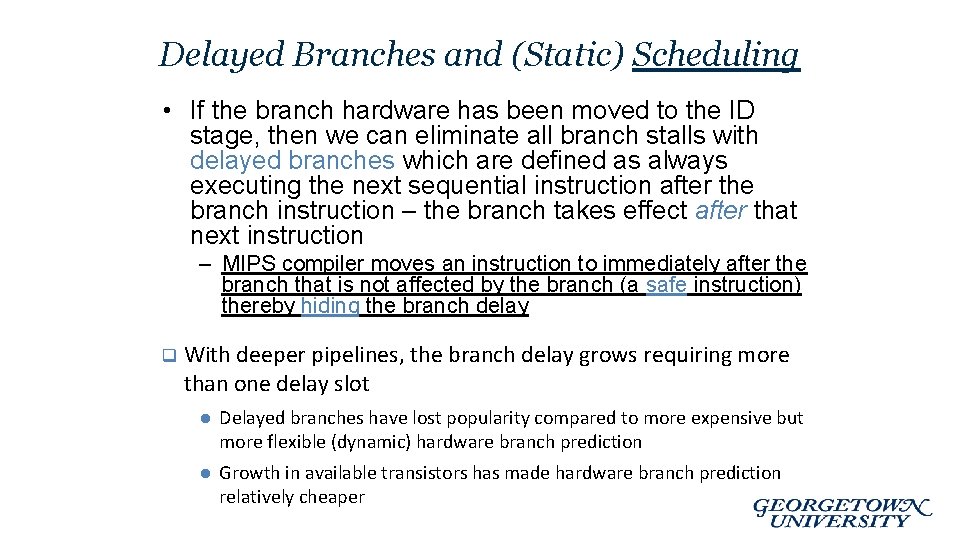

Delayed Branches and (Static) Scheduling • If the branch hardware has been moved to the ID stage, then we can eliminate all branch stalls with delayed branches, which are defined as always executing the next sequential instruction after the branch instruction – the branch takes effect after that next instruction – MIPS compiler moves an instruction to immediately after the branch that is not affected by the branch (a safe instruction), thereby hiding the branch delay • With deeper pipelines, the branch delay grows, requiring more than one delay slot – Delayed branches have lost popularity compared to more expensive but more flexible (dynamic) hardware branch prediction – Growth in available transistors has made hardware branch prediction relatively cheaper

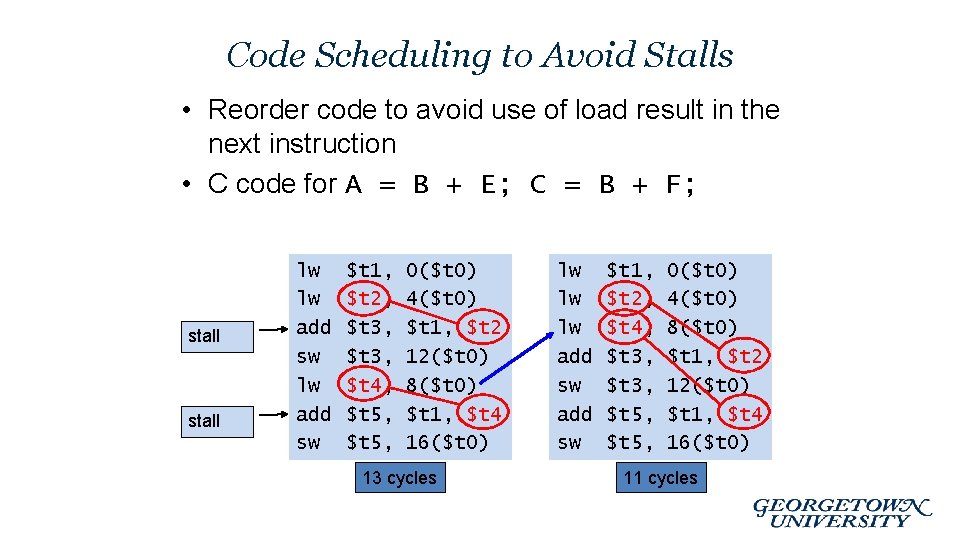

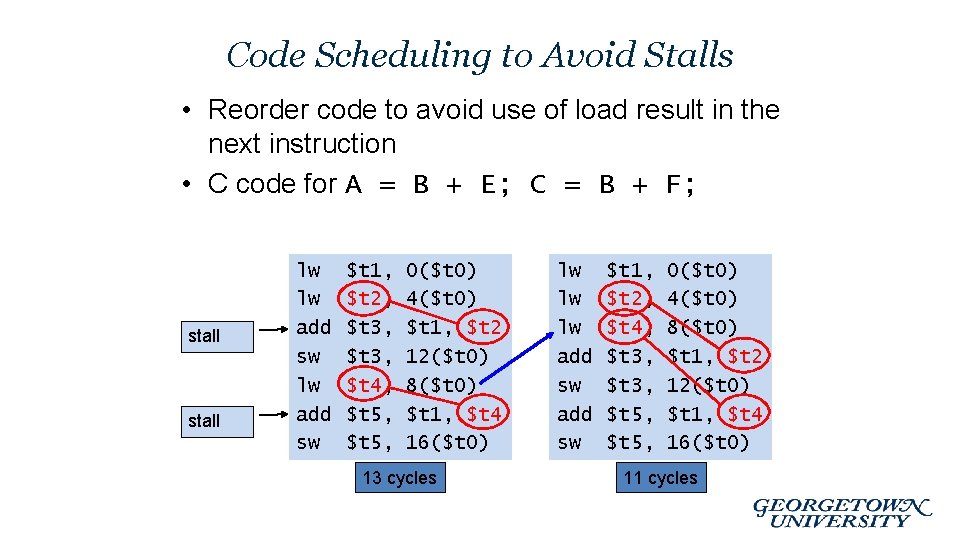

Code Scheduling to Avoid Stalls • Reorder code to avoid use of load result in the next instruction • C code for A = B + E; C = B + F; • Original order (13 cycles): lw $t1, 0($t0); lw $t2, 4($t0); [stall]; add $t3, $t1, $t2; sw $t3, 12($t0); lw $t4, 8($t0); [stall]; add $t5, $t1, $t4; sw $t5, 16($t0) • Reordered (11 cycles): lw $t1, 0($t0); lw $t2, 4($t0); lw $t4, 8($t0); add $t3, $t1, $t2; sw $t3, 12($t0); add $t5, $t1, $t4; sw $t5, 16($t0)
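The 13-cycle and 11-cycle figures can be reproduced with a small counter, sketched below under stated assumptions: a five-stage pipeline with full forwarding, so the only stall is a load immediately followed by a use of its result. The two instruction lists are a reconstruction of the slide's listings for A = B + E; C = B + F, and the tuple layout and function name are illustrative.

```python
PIPELINE_STAGES = 5  # assumption: classic five-stage MIPS pipeline

def count_cycles(program):
    """Cycles to run `program`, a list of (op, dest, sources) tuples."""
    stalls = 0
    for prev, cur in zip(program, program[1:]):
        prev_op, prev_dest, _ = prev
        _, _, cur_sources = cur
        # Load-use hazard: a lw result is needed by the very next instruction.
        if prev_op == "lw" and prev_dest in cur_sources:
            stalls += 1
    # One instruction completes per cycle after the pipeline has filled.
    return len(program) + (PIPELINE_STAGES - 1) + stalls

original = [
    ("lw",  "t1", ["t0"]),
    ("lw",  "t2", ["t0"]),
    ("add", "t3", ["t1", "t2"]),   # uses t2 right after its load
    ("sw",  None, ["t3", "t0"]),
    ("lw",  "t4", ["t0"]),
    ("add", "t5", ["t1", "t4"]),   # uses t4 right after its load
    ("sw",  None, ["t5", "t0"]),
]

reordered = [
    ("lw",  "t1", ["t0"]),
    ("lw",  "t2", ["t0"]),
    ("lw",  "t4", ["t0"]),         # third load hoisted above the first add
    ("add", "t3", ["t1", "t2"]),
    ("sw",  None, ["t3", "t0"]),
    ("add", "t5", ["t1", "t4"]),
    ("sw",  None, ["t5", "t0"]),
]

print(count_cycles(original), count_cycles(reordered))
```

Hoisting the third load gives each loaded value at least one instruction of separation from its use, which removes both stalls without changing the instruction count.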

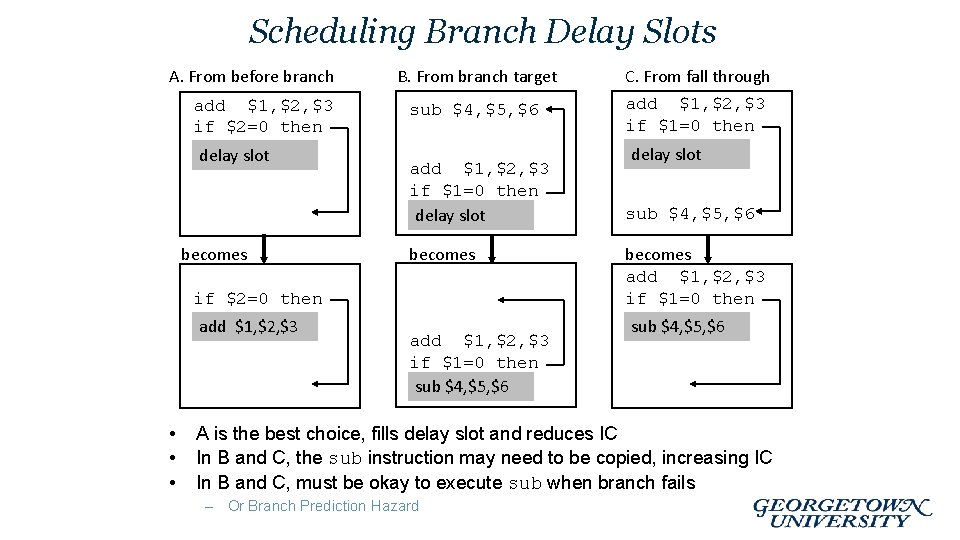

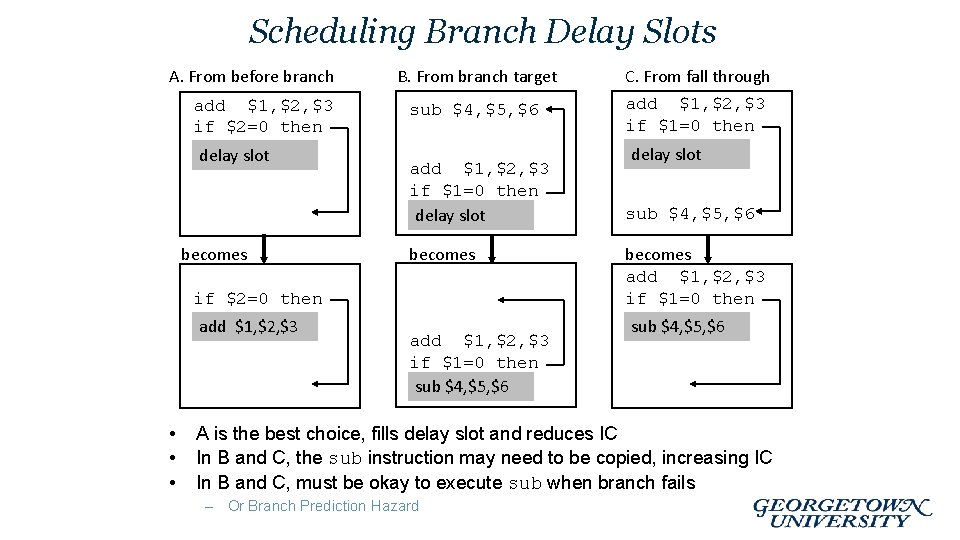

Scheduling Branch Delay Slots • A. From before branch: add $1, $2, $3; if $2=0 then [delay slot] becomes if $2=0 then [add $1, $2, $3] • B. From branch target: sub $4, $5, $6 (at the target); add $1, $2, $3; if $1=0 then [delay slot] becomes add $1, $2, $3; if $1=0 then [sub $4, $5, $6] • C. From fall through: add $1, $2, $3; if $1=0 then [delay slot]; sub $4, $5, $6 becomes add $1, $2, $3; if $1=0 then [sub $4, $5, $6] • A is the best choice, fills delay slot and reduces IC • In B and C, the sub instruction may need to be copied, increasing IC • In B and C, must be okay to execute sub when branch fails – Or Branch Prediction Hazard

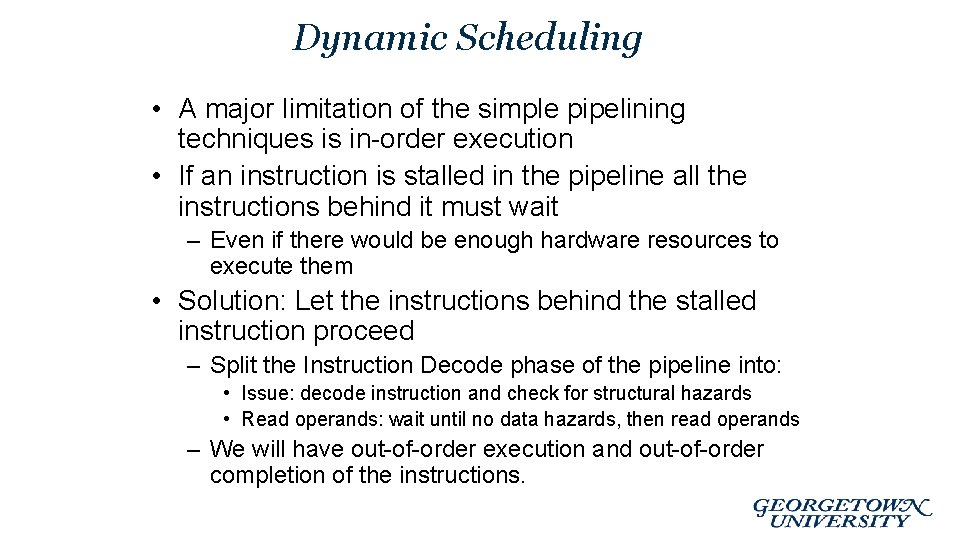

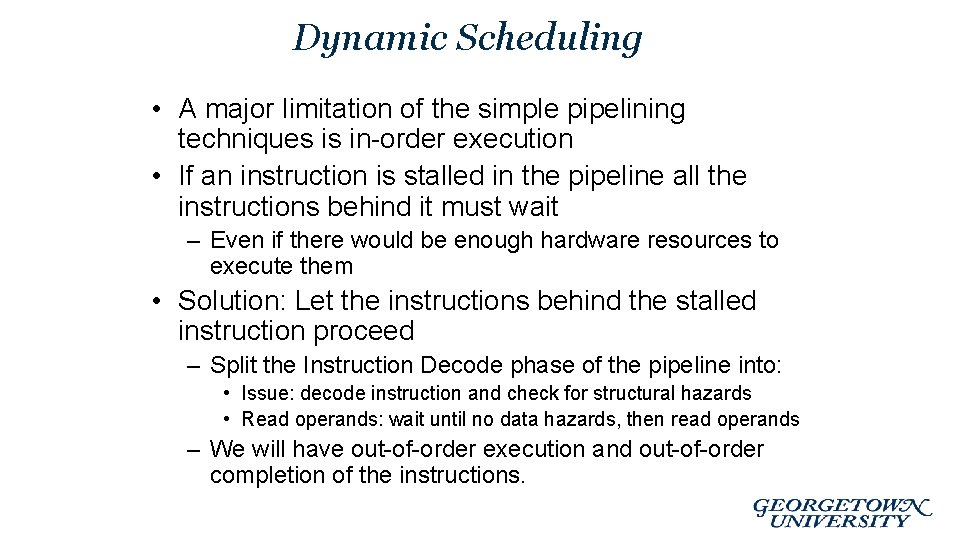

Dynamic Scheduling • A major limitation of the simple pipelining techniques is in-order execution • If an instruction is stalled in the pipeline all the instructions behind it must wait – Even if there would be enough hardware resources to execute them • Solution: Let the instructions behind the stalled instruction proceed – Split the Instruction Decode phase of the pipeline into: • Issue: decode instruction and check for structural hazards • Read operands: wait until no data hazards, then read operands – We will have out-of-order execution and out-of-order completion of the instructions.

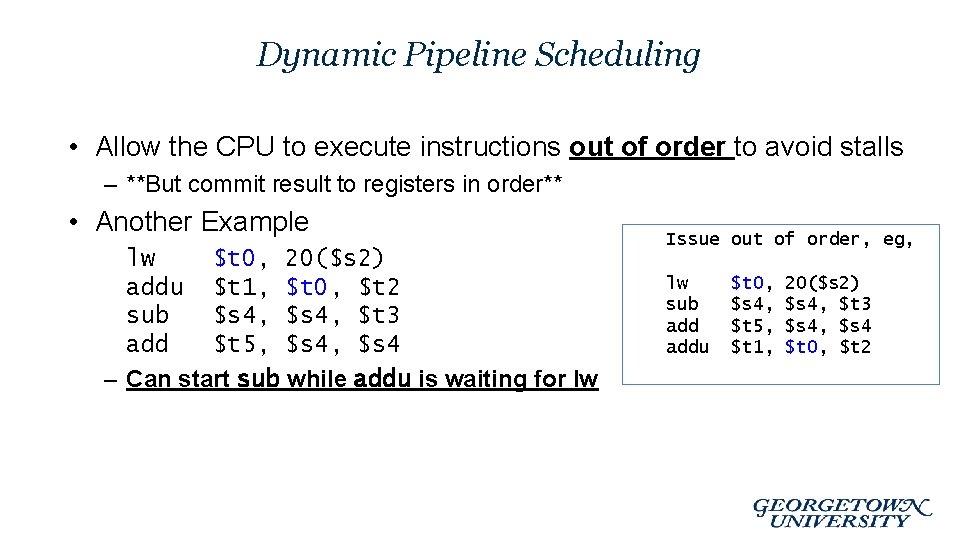

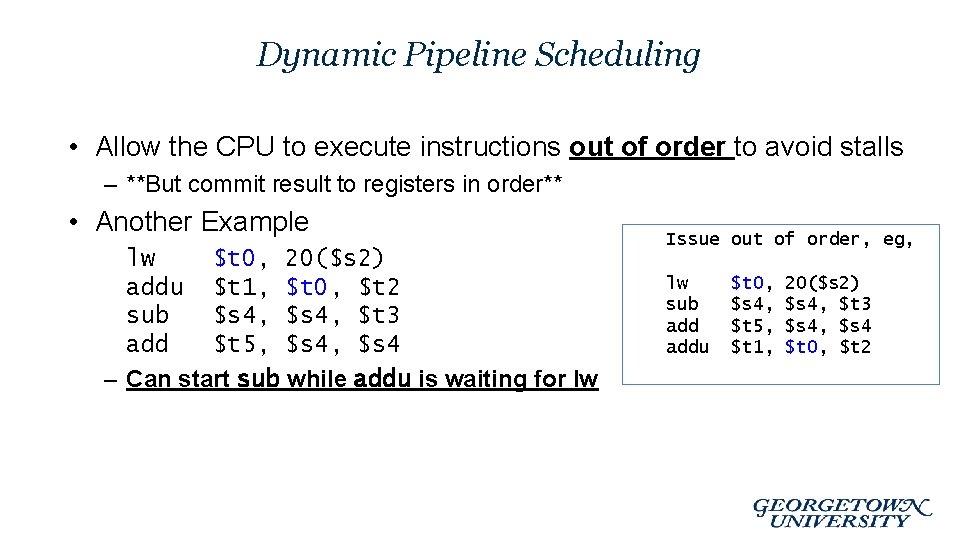

Dynamic Pipeline Scheduling • Allow the CPU to execute instructions out of order to avoid stalls – **But commit result to registers in order** • Another example: lw $t0, 20($s2); addu $t1, $t0, $t2; sub $s4, $s4, $t3; add $t5, $s4, $s4 – Can start sub while addu is waiting for lw • Issue out of order, e.g.: lw $t0, 20($s2); sub $s4, $s4, $t3; add $t5, $s4, $s4; addu $t1, $t0, $t2

Why Do Dynamic Scheduling? • Why not just let the compiler schedule code? • Not all stalls are predictable • Can’t always schedule around branches – Branch outcome is dynamically determined • Different implementations of an ISA have different latencies and hazards • E.g.: Scoreboarding and Tomasulo's Algorithm** – An example upcoming if time (See appendix)

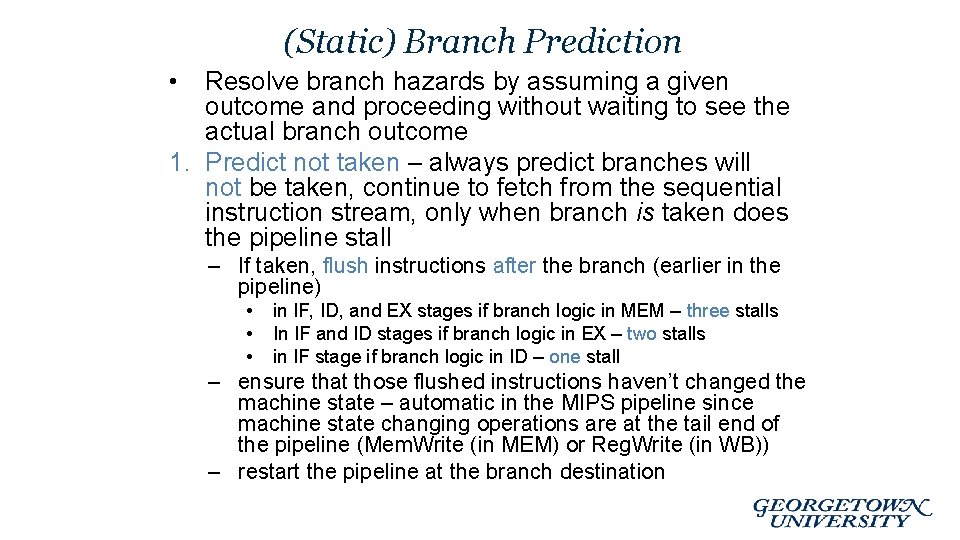

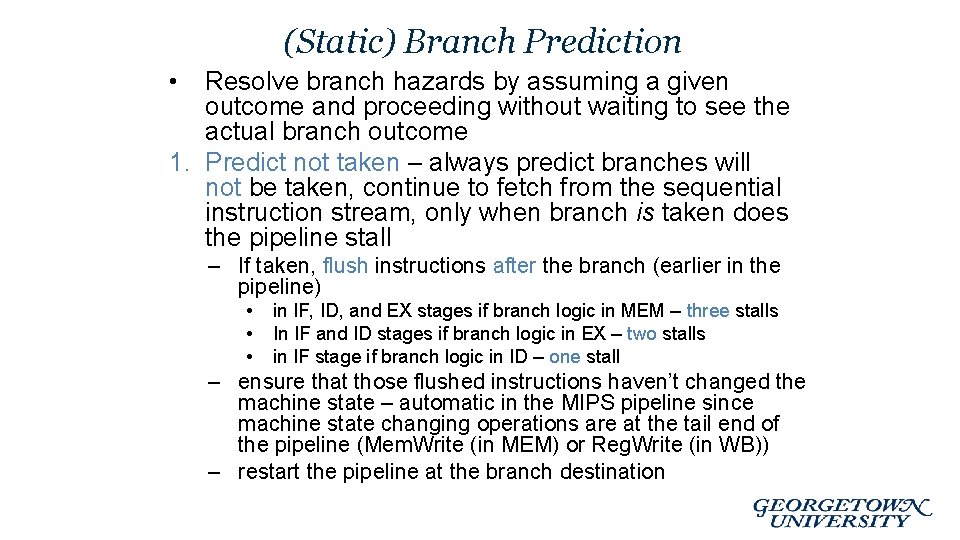

(Static) Branch Prediction • Resolve branch hazards by assuming a given outcome and proceeding without waiting to see the actual branch outcome 1. Predict not taken – always predict branches will not be taken, continue to fetch from the sequential instruction stream, only when branch is taken does the pipeline stall – If taken, flush instructions after the branch (earlier in the pipeline) • in IF, ID, and EX stages if branch logic in MEM – three stalls • in IF and ID stages if branch logic in EX – two stalls • in IF stage if branch logic in ID – one stall – ensure that those flushed instructions haven’t changed the machine state – automatic in the MIPS pipeline since machine state changing operations are at the tail end of the pipeline (MemWrite (in MEM) or RegWrite (in WB)) – restart the pipeline at the branch destination

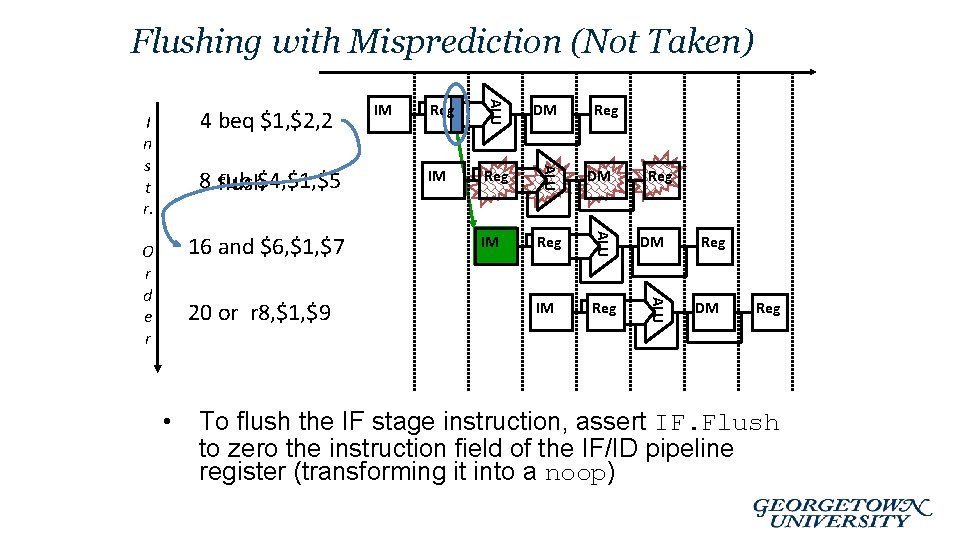

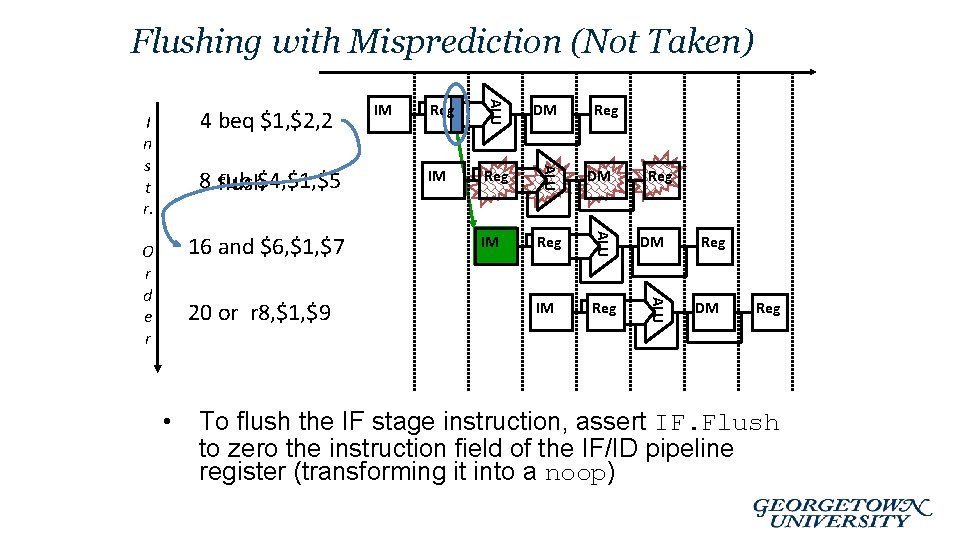

Flushing with Misprediction (Not Taken) • To flush the IF stage instruction, assert IF.Flush to zero the instruction field of the IF/ID pipeline register (transforming it into a noop) [Figure: pipeline diagram with instruction addresses: 4 beq $1, $2, 2; 8 sub $4, $1, $5 (flushed); 16 and $6, $1, $7; 20 or $8, $1, $9]

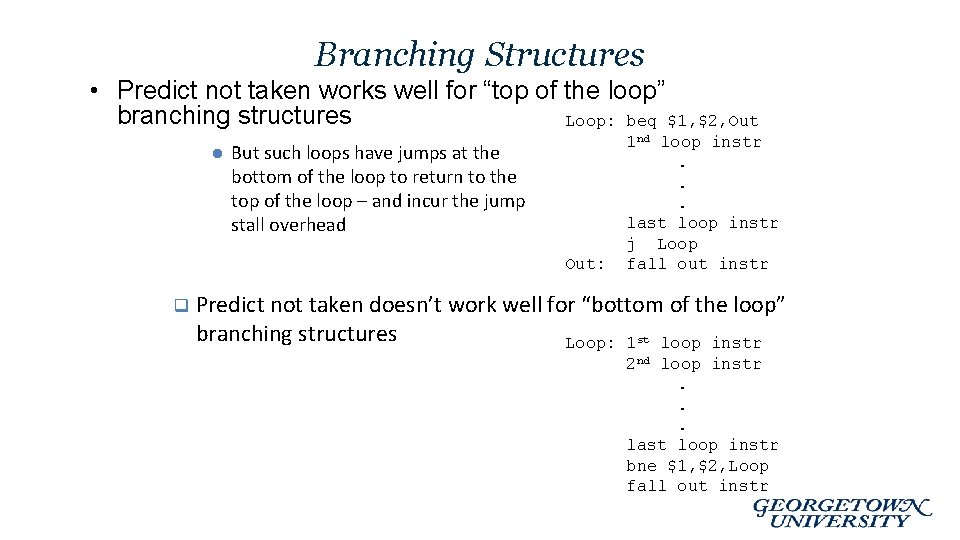

Branching Structures • Predict not taken works well for “top of the loop” branching structures: Loop: beq $1, $2, Out; 1st loop instr; ...; last loop instr; j Loop; Out: fall out instr – But such loops have jumps at the bottom of the loop to return to the top of the loop – and incur the jump stall overhead • Predict not taken doesn’t work well for “bottom of the loop” branching structures: Loop: 1st loop instr; 2nd loop instr; ...; last loop instr; bne $1, $2, Loop; fall out instr

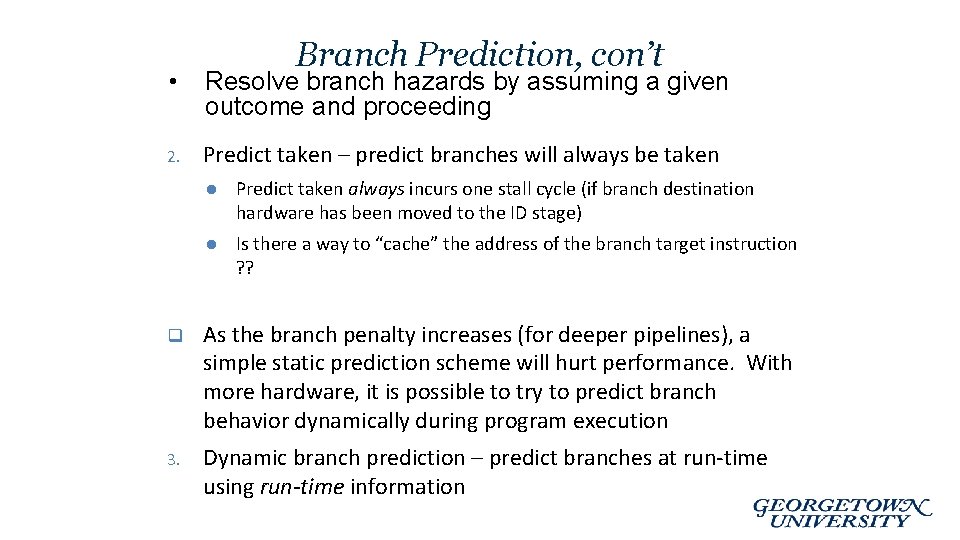

Branch Prediction, con’t • Resolve branch hazards by assuming a given outcome and proceeding 2. Predict taken – predict branches will always be taken – Predict taken always incurs one stall cycle (if branch destination hardware has been moved to the ID stage) – Is there a way to “cache” the address of the branch target instruction? • As the branch penalty increases (for deeper pipelines), a simple static prediction scheme will hurt performance. With more hardware, it is possible to try to predict branch behavior dynamically during program execution 3. Dynamic branch prediction – predict branches at run-time using run-time information

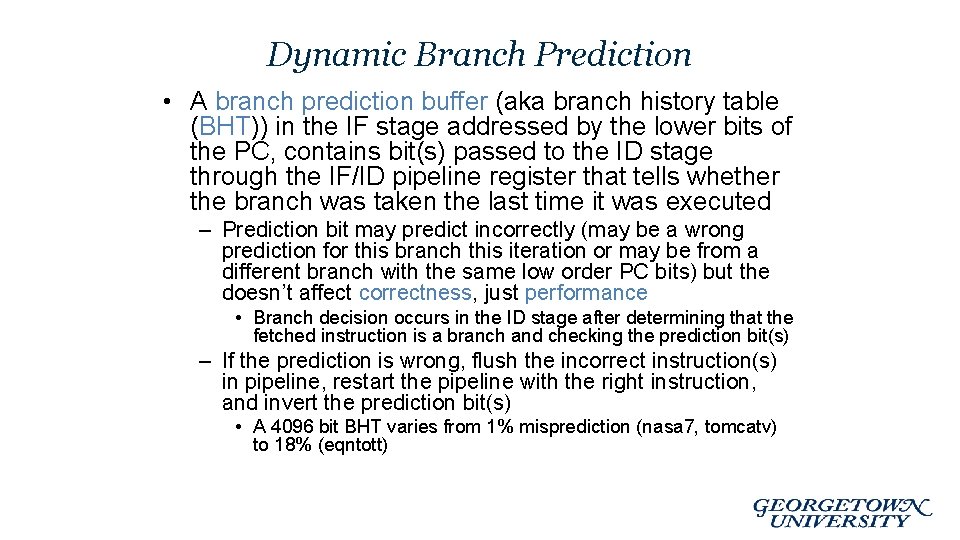

Dynamic Branch Prediction • A branch prediction buffer (aka branch history table (BHT)) in the IF stage, addressed by the lower bits of the PC, contains bit(s) passed to the ID stage through the IF/ID pipeline register that tell whether the branch was taken the last time it was executed – Prediction bit may predict incorrectly (may be a wrong prediction for this branch this iteration or may be from a different branch with the same low order PC bits) but this doesn’t affect correctness, just performance • Branch decision occurs in the ID stage after determining that the fetched instruction is a branch and checking the prediction bit(s) – If the prediction is wrong, flush the incorrect instruction(s) in pipeline, restart the pipeline with the right instruction, and invert the prediction bit(s) • A 4096 bit BHT varies from 1% misprediction (nasa7, tomcatv) to 18% (eqntott)

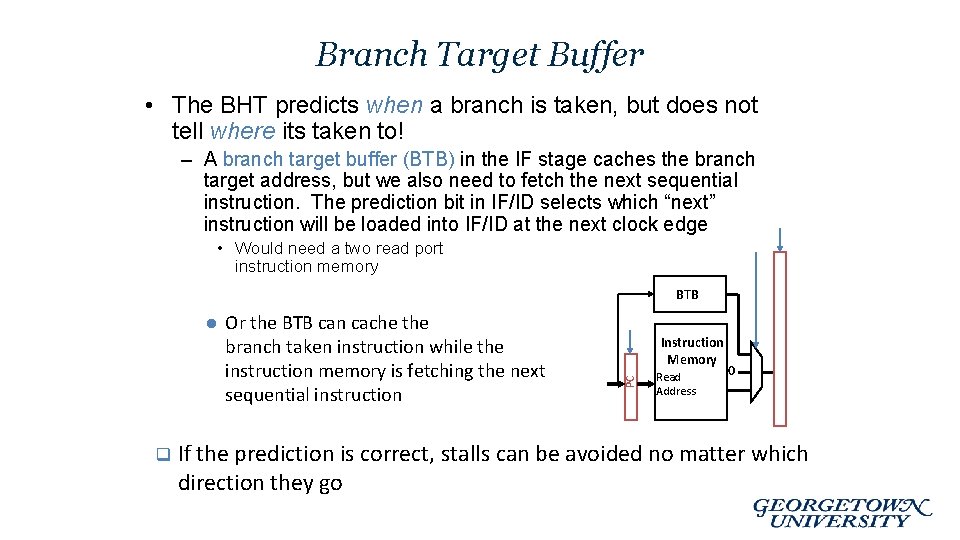

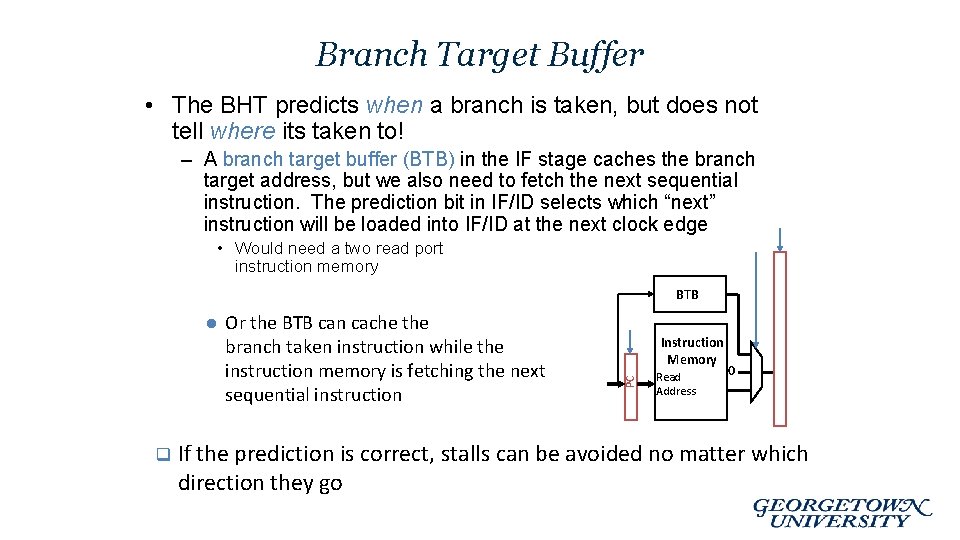

Branch Target Buffer • The BHT predicts when a branch is taken, but does not tell where it's taken to! – A branch target buffer (BTB) in the IF stage caches the branch target address, but we also need to fetch the next sequential instruction. The prediction bit in IF/ID selects which “next” instruction will be loaded into IF/ID at the next clock edge • Would need a two read port instruction memory • Or the BTB can cache the branch taken instruction while the instruction memory is fetching the next sequential instruction • If the prediction is correct, stalls can be avoided no matter which direction they go [Figure: BTB and Instruction Memory, both addressed by the PC (Read Address)]

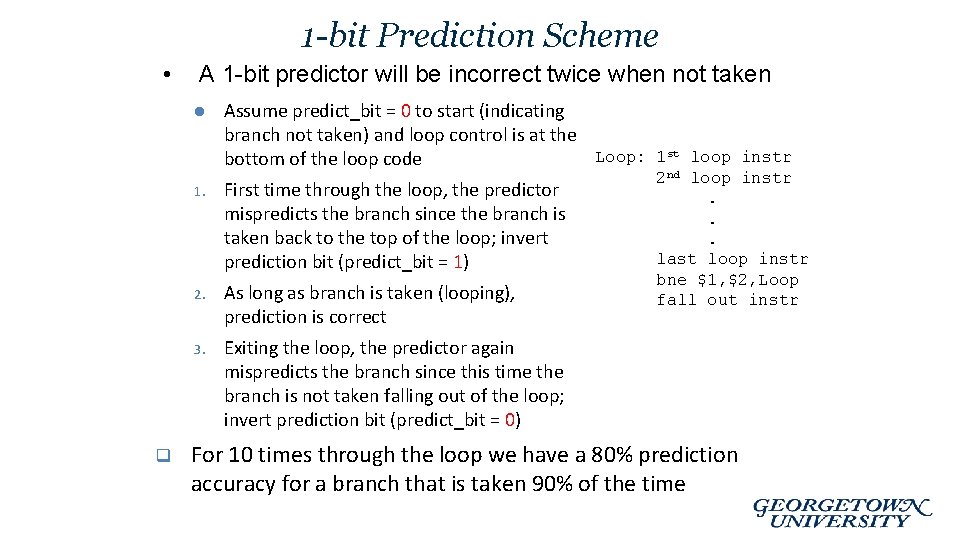

1-bit Prediction Scheme • A 1-bit predictor will be incorrect twice when not taken – Assume predict_bit = 0 to start (indicating branch not taken) and loop control is at the bottom of the loop code 1. First time through the loop, the predictor mispredicts the branch since the branch is taken back to the top of the loop; invert prediction bit (predict_bit = 1) 2. As long as branch is taken (looping), prediction is correct 3. Exiting the loop, the predictor again mispredicts the branch since this time the branch is not taken falling out of the loop; invert prediction bit (predict_bit = 0) • Loop: 1st loop instr; 2nd loop instr; ...; last loop instr; bne $1, $2, Loop; fall out instr • For 10 times through the loop we have an 80% prediction accuracy for a branch that is taken 90% of the time
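The 80% figure can be checked by replaying the branch outcomes through a 1-bit predictor. This is a minimal sketch: the function name is hypothetical, and the outcome list models ten executions of the bottom-of-loop bne (nine taken, then one not taken).

```python
def one_bit_correct(outcomes, predict_bit=0):
    """Count correct predictions; the bit is inverted on every misprediction."""
    correct = 0
    for taken in outcomes:
        if predict_bit == int(taken):
            correct += 1
        else:
            predict_bit = int(taken)   # wrong guess: flip the prediction bit
    return correct

# Ten trips through the loop: the branch is taken 9 times, then falls out.
loop_outcomes = [True] * 9 + [False]
hits = one_bit_correct(loop_outcomes)
print(hits, "of", len(loop_outcomes))
```

The two misses are the first iteration (bit still says not taken) and the loop exit (bit says taken), matching steps 1 and 3 above.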

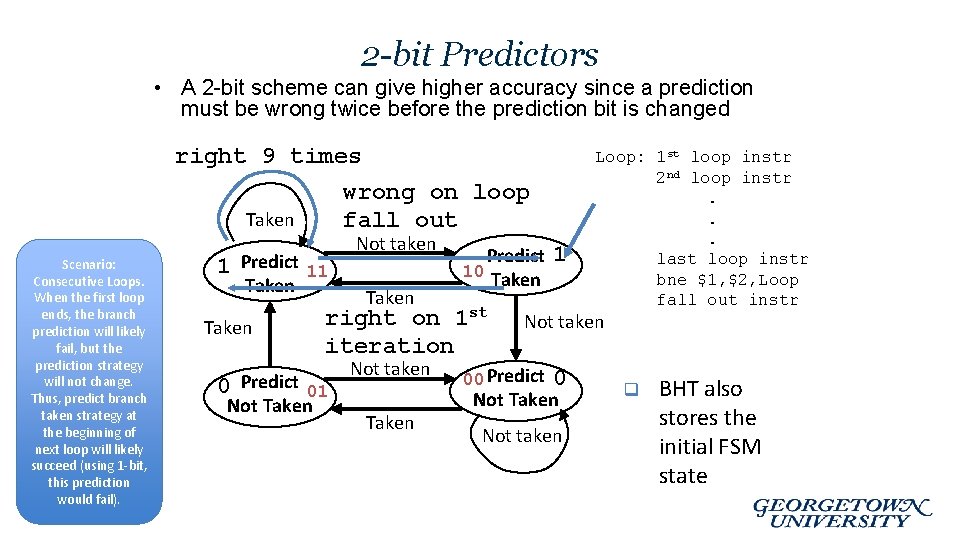

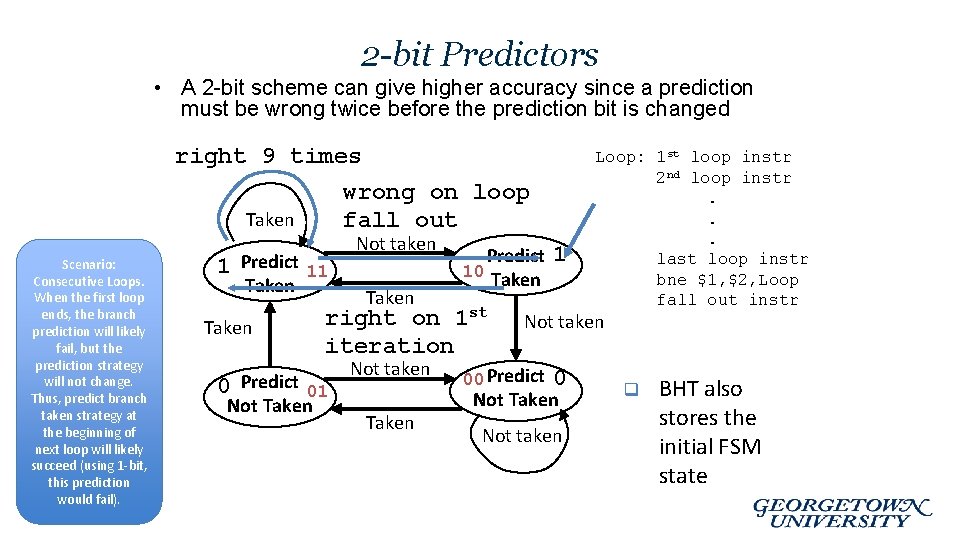

2-bit Predictors • A 2-bit scheme can give higher accuracy since a prediction must be wrong twice before the prediction bit is changed • Scenario: Consecutive Loops. When the first loop ends, the branch prediction will likely fail, but the prediction strategy will not change. Thus, the predict branch taken strategy at the beginning of the next loop will likely succeed (using 1-bit, this prediction would fail). • For the loop Loop: 1st loop instr; 2nd loop instr; ...; last loop instr; bne $1, $2, Loop; fall out instr, the predictor is right on the 1st iteration, right 9 times, and wrong on loop fall out • BHT also stores the initial FSM state [Figure: four-state FSM with states 11 and 10 (Predict Taken, output 1) and 01 and 00 (Predict Not Taken, output 0), connected by Taken and Not taken transitions]

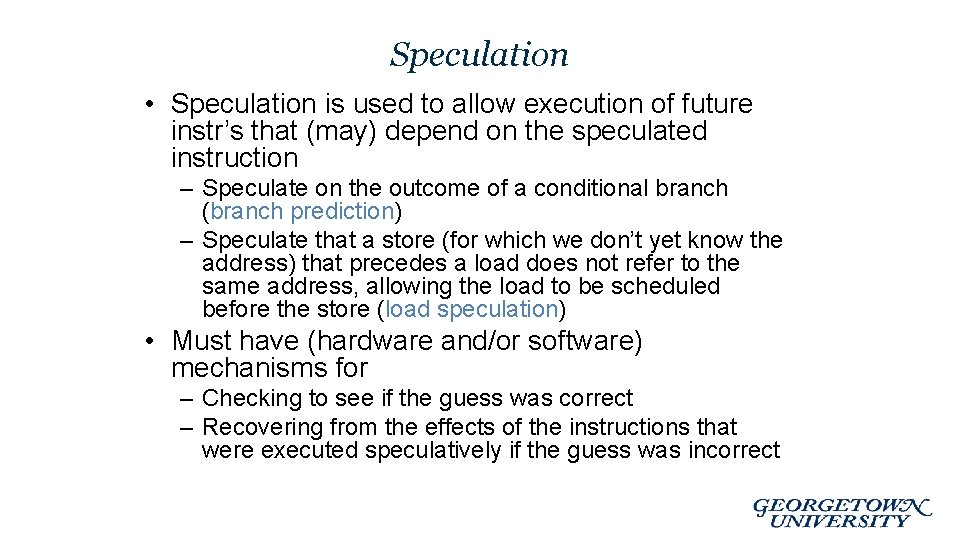

Speculation • Speculation is used to allow execution of future instr’s that (may) depend on the speculated instruction – Speculate on the outcome of a conditional branch (branch prediction) – Speculate that a store (for which we don’t yet know the address) that precedes a load does not refer to the same address, allowing the load to be scheduled before the store (load speculation) • Must have (hardware and/or software) mechanisms for – Checking to see if the guess was correct – Recovering from the effects of the instructions that were executed speculatively if the guess was incorrect

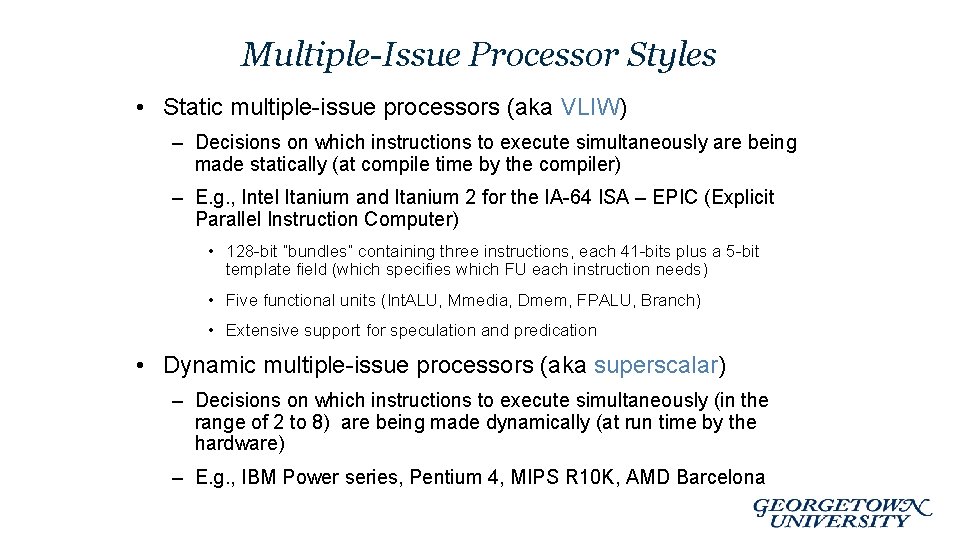

Multiple-Issue Processor Styles
• Static multiple-issue processors (aka VLIW)
  – Decisions on which instructions to execute simultaneously are being made statically (at compile time by the compiler)
  – E.g., Intel Itanium and Itanium 2 for the IA-64 ISA
  – EPIC (Explicit Parallel Instruction Computer)
    • 128-bit "bundles" containing three instructions, each 41 bits, plus a 5-bit template field (which specifies which FU each instruction needs)
    • Five functional units (IntALU, Mmedia, Dmem, FPALU, Branch)
    • Extensive support for speculation and predication
• Dynamic multiple-issue processors (aka superscalar)
  – Decisions on which instructions to execute simultaneously (in the range of 2 to 8) are being made dynamically (at run time by the hardware)
  – E.g., IBM Power series, Pentium 4, MIPS R10K, AMD Barcelona

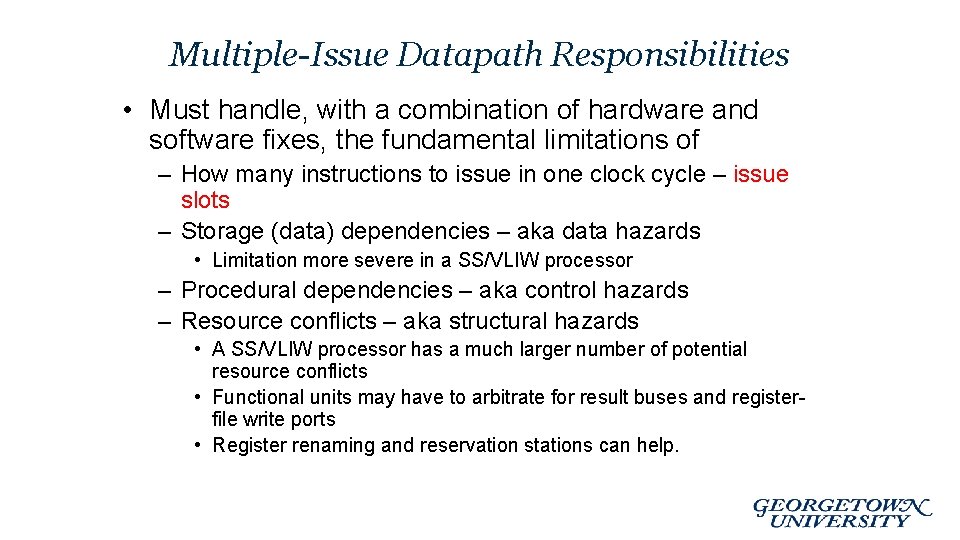

Multiple-Issue Datapath Responsibilities
• Must handle, with a combination of hardware and software fixes, the fundamental limitations of
  – How many instructions to issue in one clock cycle – issue slots
  – Storage (data) dependencies – aka data hazards
    • Limitation more severe in a SS/VLIW processor
  – Procedural dependencies – aka control hazards
  – Resource conflicts – aka structural hazards
    • A SS/VLIW processor has a much larger number of potential resource conflicts
    • Functional units may have to arbitrate for result buses and register file write ports
    • Register renaming and reservation stations can help.

Static Multiple Issue Machines (VLIW)
• Static multiple-issue processors (aka VLIW) use the compiler (at compile time) to statically decide which instructions to issue and execute simultaneously
  – Issue packet – the set of instructions that are bundled together and issued in one clock cycle – think of it as one large instruction with multiple operations
  – The mix of instructions in the packet (bundle) is usually restricted – a single "instruction" with several predefined fields
  – The compiler does static branch prediction and code scheduling to reduce (control) or eliminate (data) hazards
• VLIWs have
  – Multiple functional units
  – Multi-ported register files
  – Wide program bus

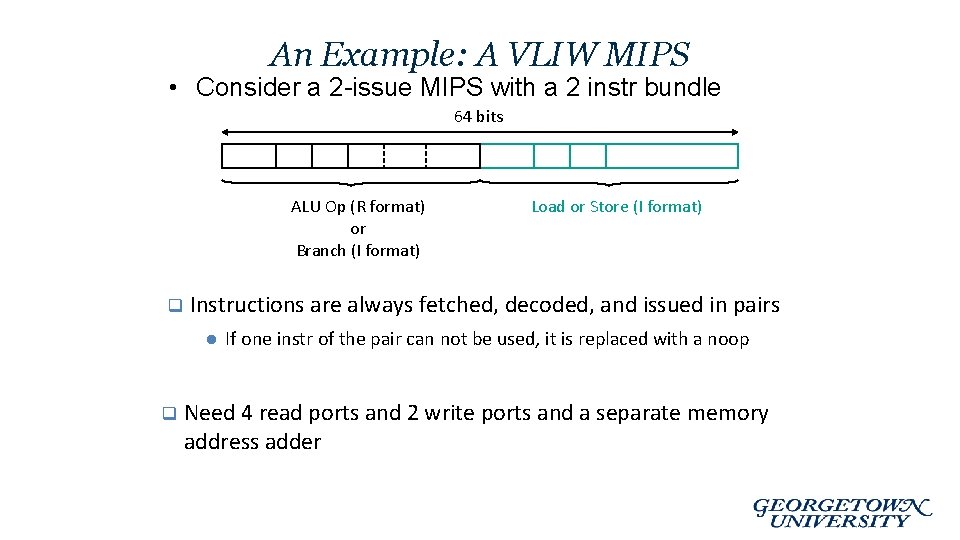

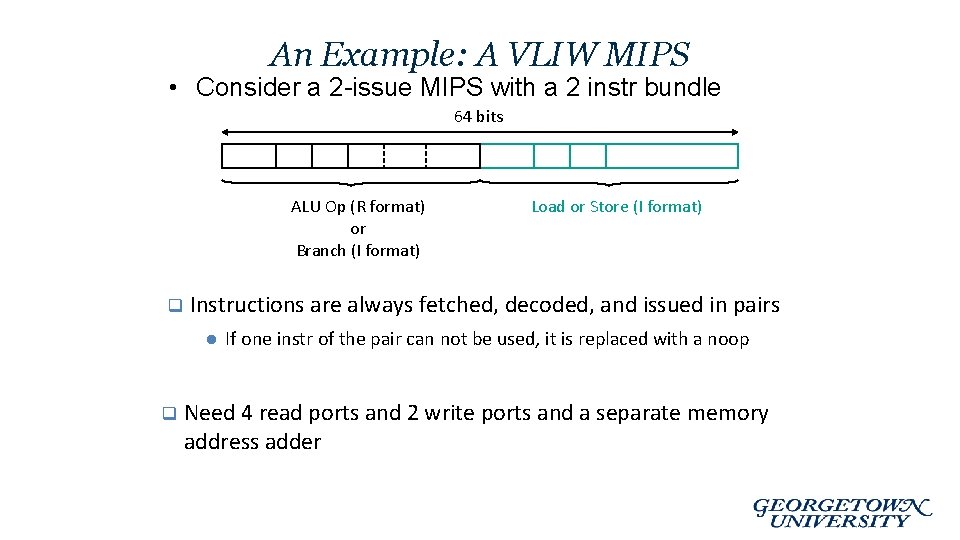

An Example: A VLIW MIPS
• Consider a 2-issue MIPS with a 2-instr, 64-bit bundle: one ALU op (R format) or branch (I format), plus one load or store (I format)
  – Instructions are always fetched, decoded, and issued in pairs
    • If one instr of the pair cannot be used, it is replaced with a noop
  – Need 4 read ports and 2 write ports and a separate memory address adder

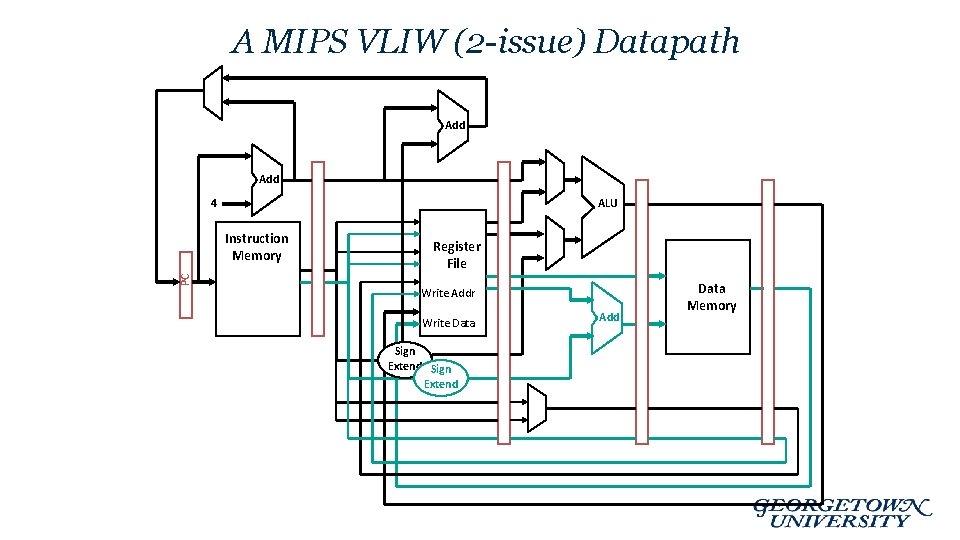

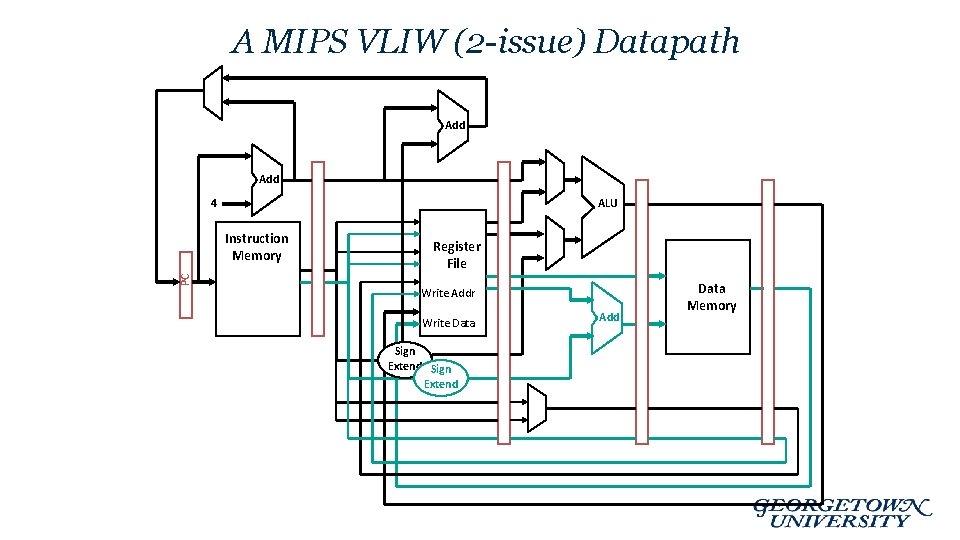

A MIPS VLIW (2-issue) Datapath
[Figure labels: PC, Add (+4), Instruction Memory, Register File (Write Addr, Write Data), Sign Extend, ALU, Add, Data Memory]

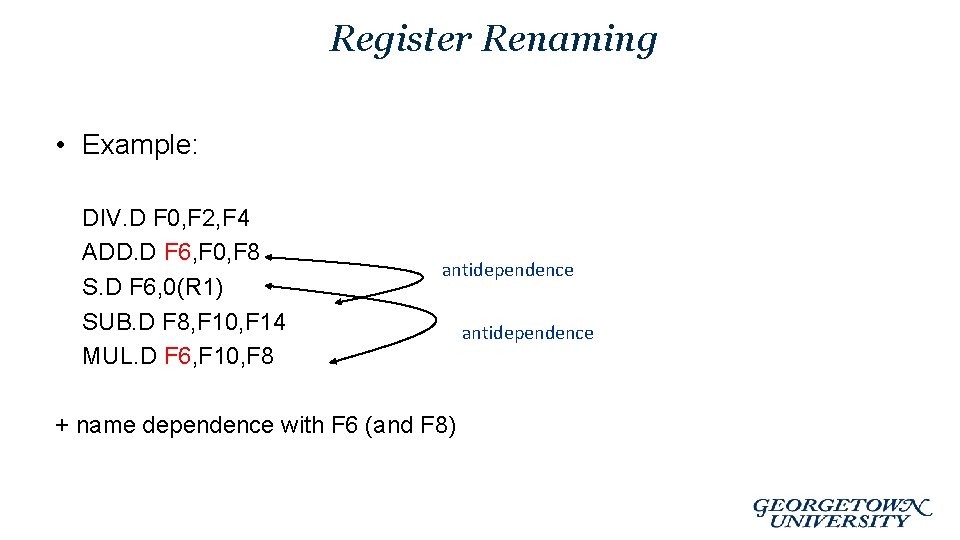

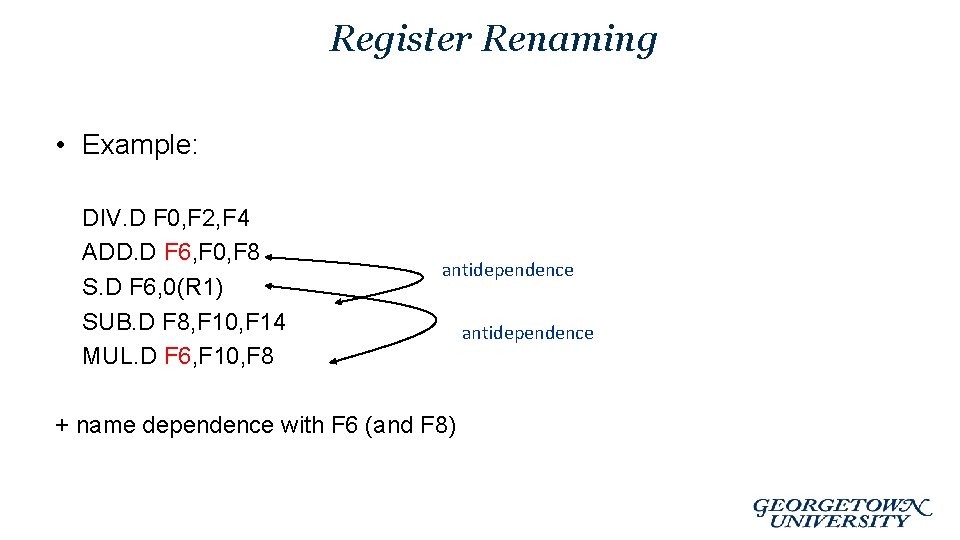

Register Renaming
• Example:

    DIV.D F0, F2, F4
    ADD.D F6, F0, F8
    S.D   F6, 0(R1)
    SUB.D F8, F10, F14
    MUL.D F6, F10, F8

• Antidependences: on F8 (ADD.D reads it before SUB.D writes it) and on F6 (S.D reads it before MUL.D writes it)
• Name dependence on F6: ADD.D and MUL.D both write it

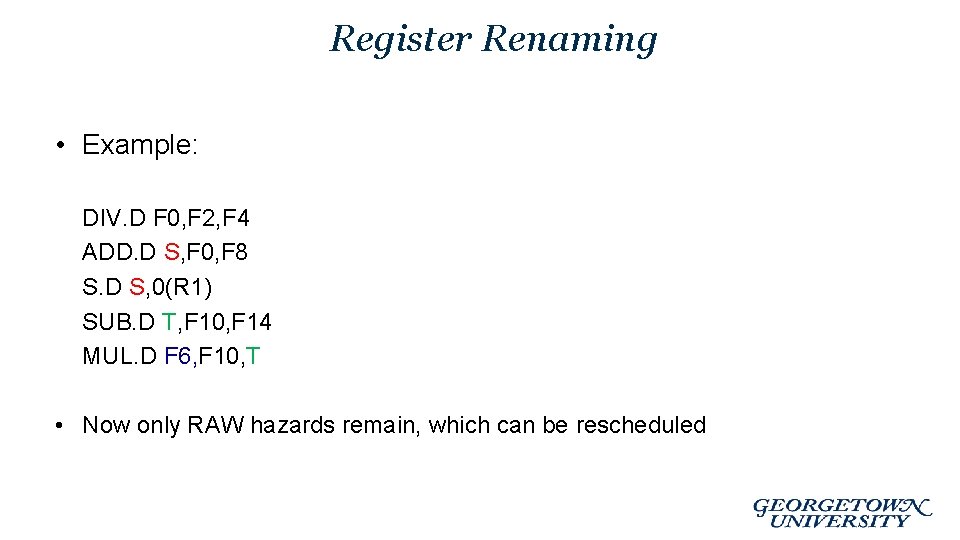

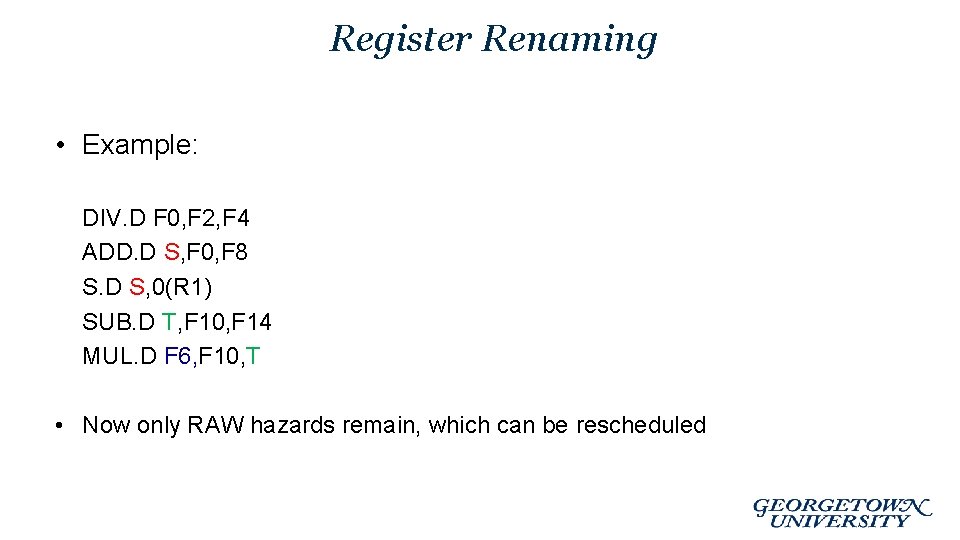

Register Renaming
• Example (F6 renamed to S, F8 renamed to T):

    DIV.D F0, F2, F4
    ADD.D S, F0, F8
    S.D   S, 0(R1)
    SUB.D T, F10, F14
    MUL.D F6, F10, T

• Now only RAW hazards remain, and the instructions can be rescheduled around them
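The effect of renaming on this five-instruction sequence can be checked mechanically. The sketch below is illustrative only: the tuple encoding `(op, destination, sources)`, the helper names, and the fresh names `P0, P1, ...` are assumptions, and unlike the slide (which renames only F6 and F8) it gives every destination a fresh name.

```python
from itertools import count


def find_hazards(instrs):
    """List (kind, earlier, later, register) for every pair of instructions.

    Simplification: pairs are compared directly, without checking for an
    intervening write, which is sufficient for this short example.
    """
    hazards = []
    for j, (_, dst_j, srcs_j) in enumerate(instrs):
        for i in range(j):
            _, dst_i, srcs_i = instrs[i]
            if dst_i is not None and dst_i in srcs_j:
                hazards.append(("RAW", i, j, dst_i))   # true dependence
            if dst_j is not None and dst_j in srcs_i:
                hazards.append(("WAR", i, j, dst_j))   # antidependence
            if dst_j is not None and dst_j == dst_i:
                hazards.append(("WAW", i, j, dst_j))   # output dependence
    return hazards


def rename(instrs):
    """Give each destination a fresh name and redirect later reads to it."""
    fresh = (f"P{n}" for n in count())
    current = {}  # architectural register -> most recent fresh name
    renamed = []
    for op, dst, srcs in instrs:
        new_srcs = tuple(current.get(s, s) for s in srcs)
        new_dst = None
        if dst is not None:
            new_dst = next(fresh)
            current[dst] = new_dst
        renamed.append((op, new_dst, new_srcs))
    return renamed


program = [
    ("DIV.D", "F0", ("F2", "F4")),
    ("ADD.D", "F6", ("F0", "F8")),
    ("S.D", None, ("F6", "R1")),      # a store writes no register
    ("SUB.D", "F8", ("F10", "F14")),
    ("MUL.D", "F6", ("F10", "F8")),
]

before = sorted(kind for kind, *_ in find_hazards(program))
after = sorted(kind for kind, *_ in find_hazards(rename(program)))
print(before)  # ['RAW', 'RAW', 'RAW', 'WAR', 'WAR', 'WAW']
print(after)   # ['RAW', 'RAW', 'RAW']
```

The three RAW hazards are true data flow and survive renaming; the WAR and WAW hazards existed only because register names were reused.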

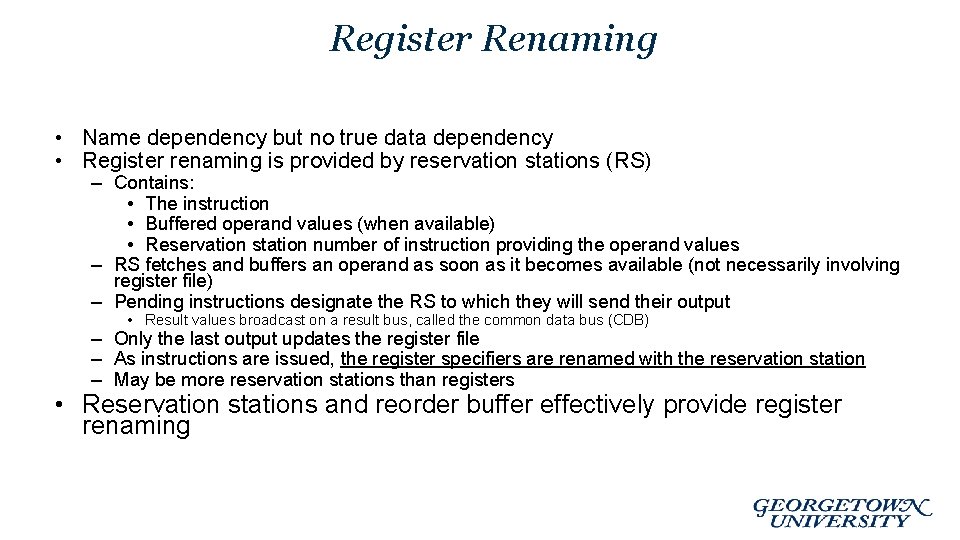

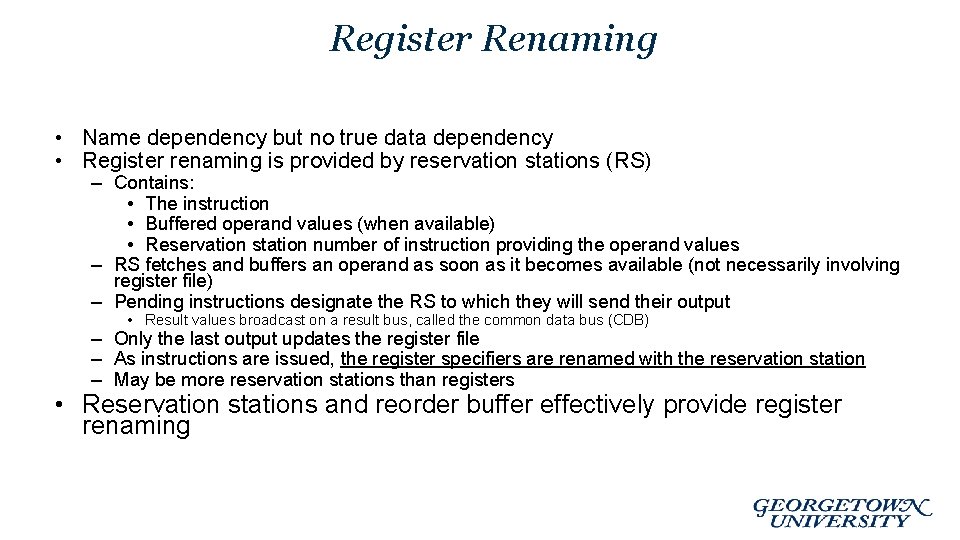

Register Renaming • Name dependency but no true data dependency • Register renaming is provided by reservation stations (RS) – Contains: • The instruction • Buffered operand values (when available) • Reservation station number of instruction providing the operand values – RS fetches and buffers an operand as soon as it becomes available (not necessarily involving register file) – Pending instructions designate the RS to which they will send their output • Result values broadcast on a result bus, called the common data bus (CDB) – Only the last output updates the register file – As instructions are issued, the register specifiers are renamed with the reservation station – May be more reservation stations than registers • Reservation stations and reorder buffer effectively provide register renaming

Loop Unrolling • Replicate loop body to expose more parallelism – Reduces loop-control overhead • Use different registers per replication – Called “register renaming” – Avoid loop-carried “anti-dependencies” • Store followed by a load of the same register • Aka “name dependence” – Reuse of a register name

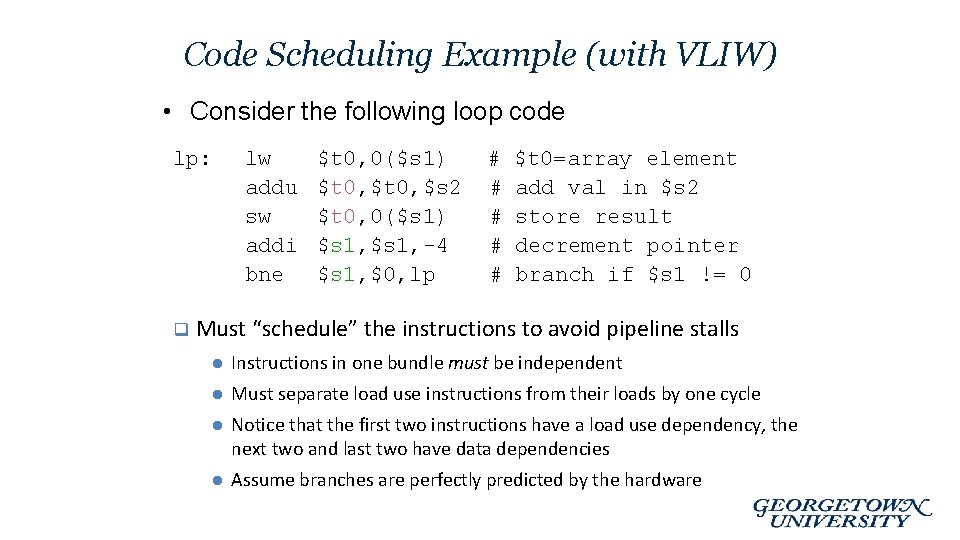

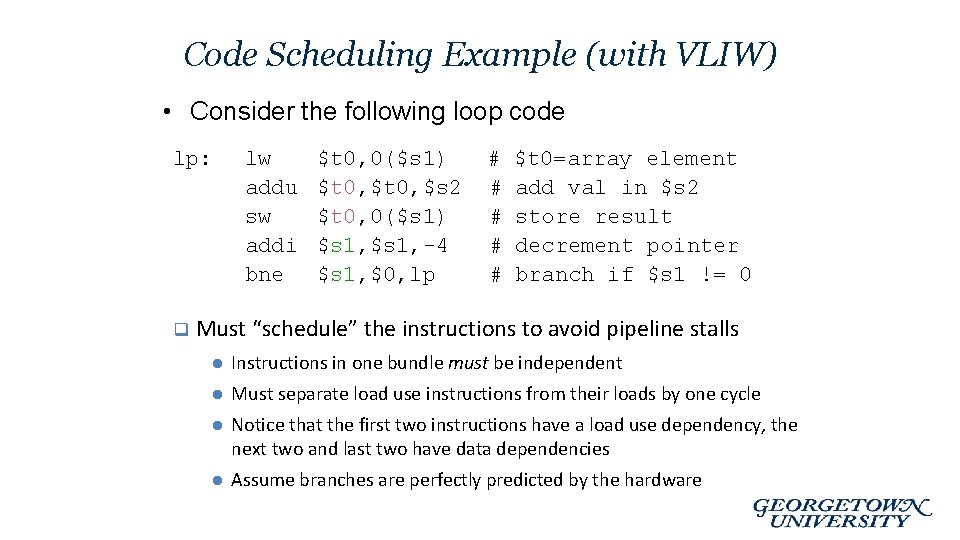

Code Scheduling Example (with VLIW)
• Consider the following loop code

    lp: lw   $t0, 0($s1)      # $t0 = array element
        addu $t0, $t0, $s2    # add val in $s2
        sw   $t0, 0($s1)      # store result
        addi $s1, $s1, -4     # decrement pointer
        bne  $s1, $0, lp      # branch if $s1 != 0

• Must "schedule" the instructions to avoid pipeline stalls
  – Instructions in one bundle must be independent
  – Must separate load-use instructions from their loads by one cycle
  – Notice that the first two instructions have a load-use dependency, and the next two and the last two have data dependencies
  – Assume branches are perfectly predicted by the hardware

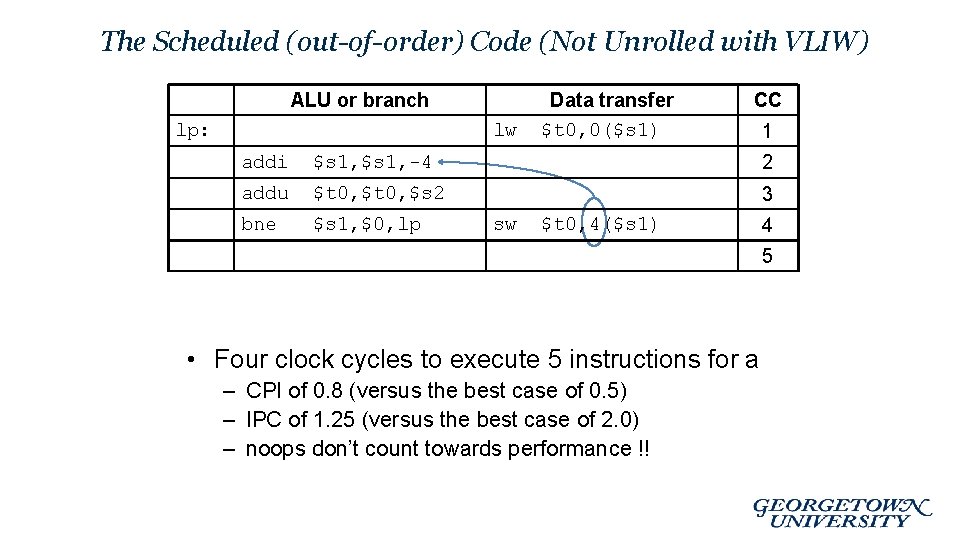

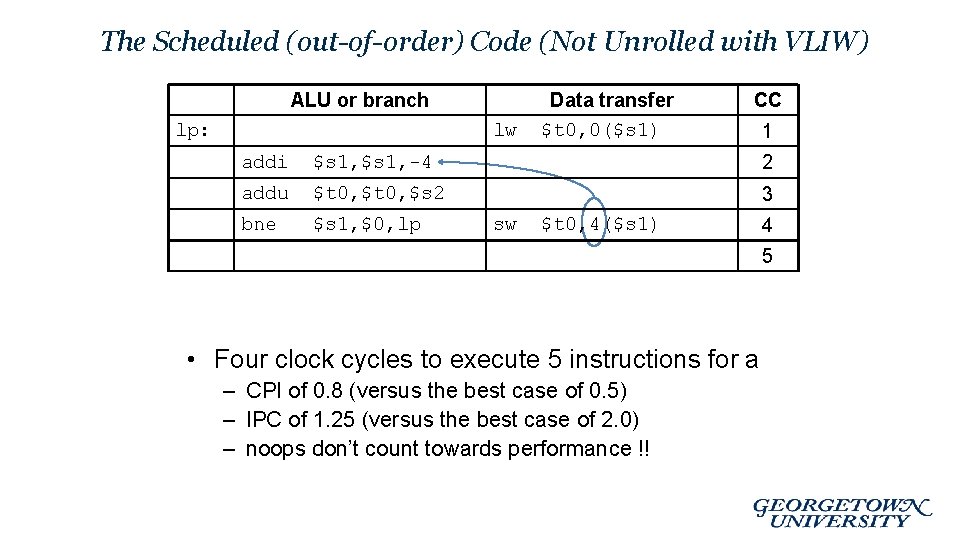

The Scheduled (out-of-order) Code (Not Unrolled with VLIW)

|     | ALU or branch       | Data transfer     | CC |
|-----|---------------------|-------------------|----|
| lp: |                     | lw $t0, 0($s1)    | 1  |
|     | addi $s1, $s1, -4   |                   | 2  |
|     | addu $t0, $t0, $s2  |                   | 3  |
|     | bne $s1, $0, lp     | sw $t0, 4($s1)    | 4  |

• Four clock cycles to execute 5 instructions for a
  – CPI of 0.8 (versus the best case of 0.5)
  – IPC of 1.25 (versus the best case of 2.0)
  – noops don't count towards performance!

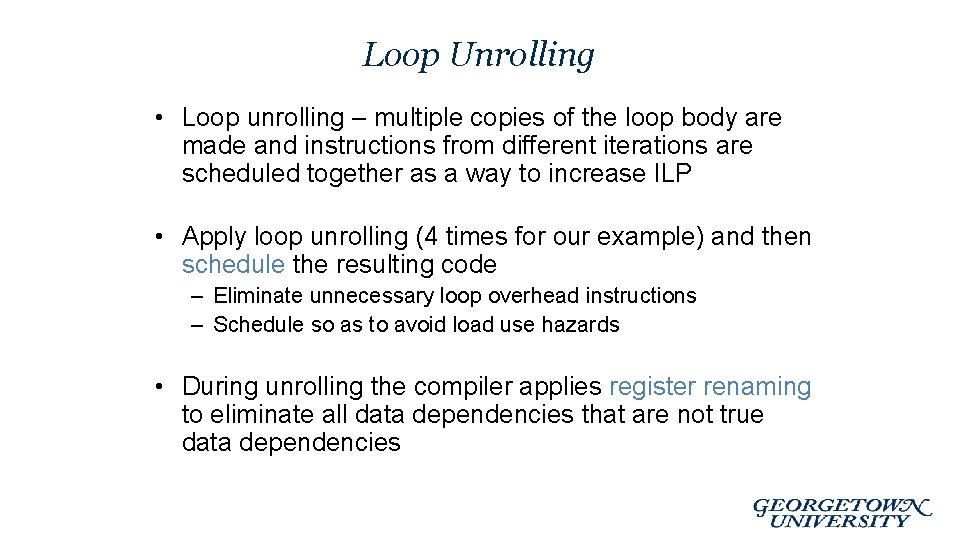

Loop Unrolling • Loop unrolling – multiple copies of the loop body are made and instructions from different iterations are scheduled together as a way to increase ILP • Apply loop unrolling (4 times for our example) and then schedule the resulting code – Eliminate unnecessary loop overhead instructions – Schedule so as to avoid load use hazards • During unrolling the compiler applies register renaming to eliminate all data dependencies that are not true data dependencies

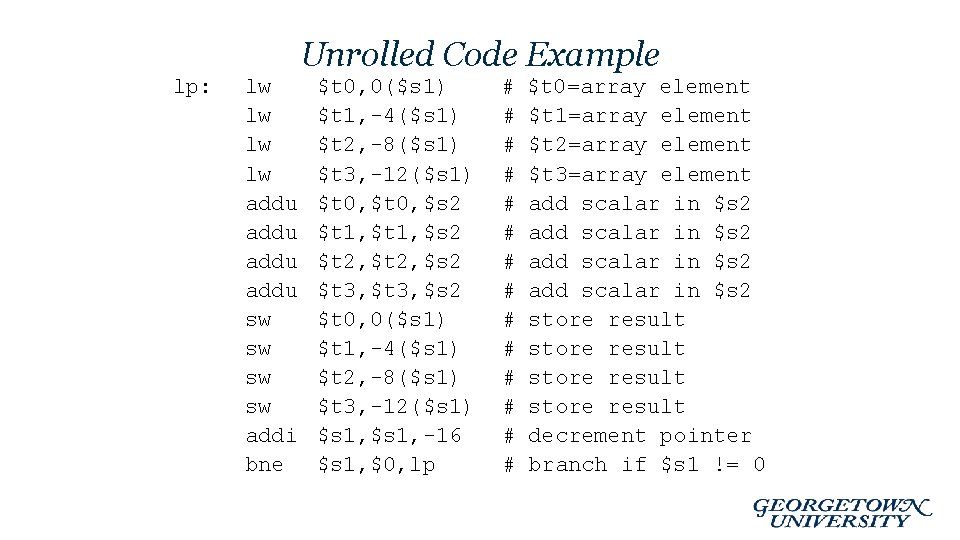

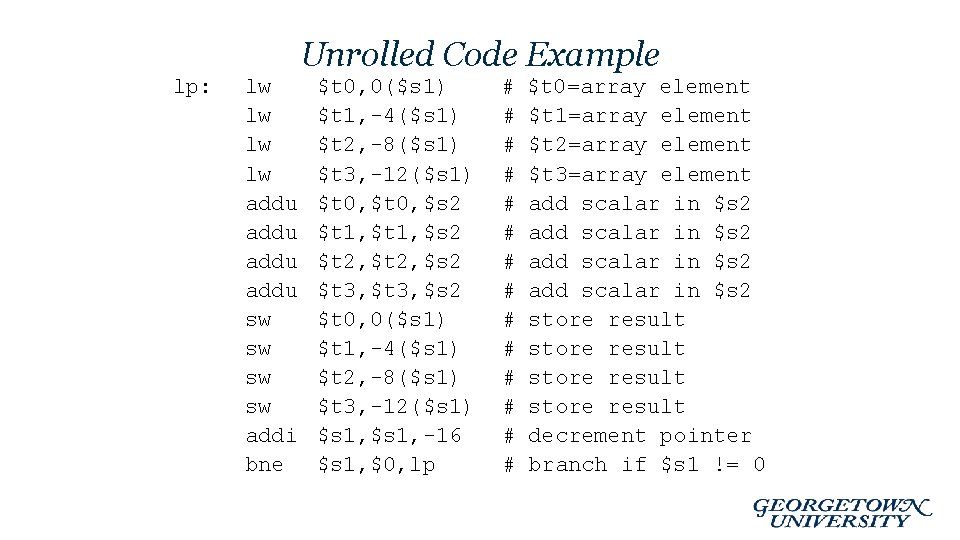

Unrolled Code Example

    lp: lw   $t0, 0($s1)      # $t0 = array element
        lw   $t1, -4($s1)     # $t1 = array element
        lw   $t2, -8($s1)     # $t2 = array element
        lw   $t3, -12($s1)    # $t3 = array element
        addu $t0, $t0, $s2    # add scalar in $s2
        addu $t1, $t1, $s2
        addu $t2, $t2, $s2
        addu $t3, $t3, $s2
        sw   $t0, 0($s1)      # store result
        sw   $t1, -4($s1)
        sw   $t2, -8($s1)
        sw   $t3, -12($s1)
        addi $s1, $s1, -16    # decrement pointer
        bne  $s1, $0, lp      # branch if $s1 != 0
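The same transformation can be written at a higher level to show that unrolling preserves the result while cutting loop-control work. This is a hedged Python analogue, not the MIPS code itself: the function names are made up for illustration, the array index plays the role of the pointer in $s1, and the unrolled version assumes the length is a multiple of 4.

```python
def add_scalar_rolled(values, s):
    """One element per iteration; returns (result, loop iterations)."""
    out = list(values)
    i = len(out) - 1
    iterations = 0
    while i >= 0:
        out[i] += s
        i -= 1              # one decrement and one branch per element
        iterations += 1
    return out, iterations


def add_scalar_unrolled4(values, s):
    """Four elements per iteration, each copy using its own temporary."""
    if len(values) % 4 != 0:
        raise ValueError("this sketch assumes a length that is a multiple of 4")
    out = list(values)
    i = len(out) - 1
    iterations = 0
    while i >= 0:
        # Four independent "loads" into distinct names (t0..t3), mirroring
        # the use of different registers per replication.
        t0, t1, t2, t3 = out[i], out[i - 1], out[i - 2], out[i - 3]
        t0, t1, t2, t3 = t0 + s, t1 + s, t2 + s, t3 + s
        out[i], out[i - 1], out[i - 2], out[i - 3] = t0, t1, t2, t3
        i -= 4              # one decrement and one branch per four elements
        iterations += 1
    return out, iterations


data = [10, 20, 30, 40, 50, 60, 70, 80]
rolled = add_scalar_rolled(data, 5)
unrolled = add_scalar_unrolled4(data, 5)
print(rolled[1], unrolled[1])  # 8 2
```

Both versions produce the same array; the unrolled one executes the decrement-and-branch pair a quarter as often, and its four additions are independent of one another.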

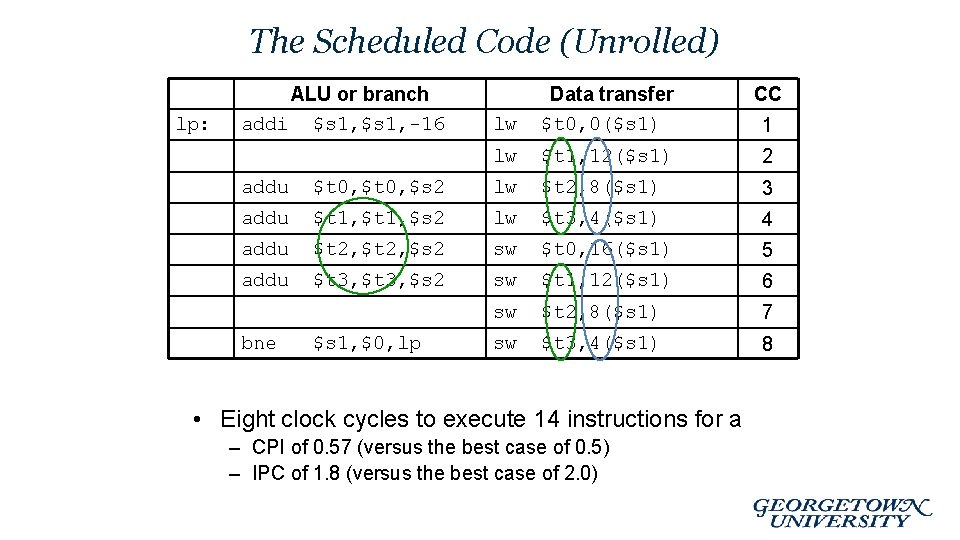

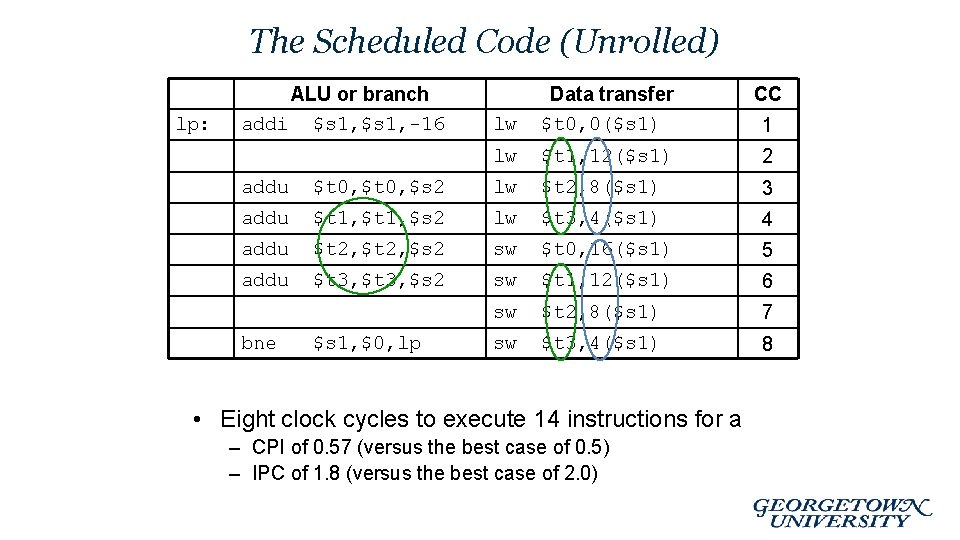

The Scheduled Code (Unrolled)

|     | ALU or branch       | Data transfer     | CC |
|-----|---------------------|-------------------|----|
| lp: | addi $s1, $s1, -16  | lw $t0, 0($s1)    | 1  |
|     |                     | lw $t1, 12($s1)   | 2  |
|     | addu $t0, $t0, $s2  | lw $t2, 8($s1)    | 3  |
|     | addu $t1, $t1, $s2  | lw $t3, 4($s1)    | 4  |
|     | addu $t2, $t2, $s2  | sw $t0, 16($s1)   | 5  |
|     | addu $t3, $t3, $s2  | sw $t1, 12($s1)   | 6  |
|     |                     | sw $t2, 8($s1)    | 7  |
|     | bne $s1, $0, lp     | sw $t3, 4($s1)    | 8  |

• Eight clock cycles to execute 14 instructions for a
  – CPI of 0.57 (versus the best case of 0.5)
  – IPC of 1.75 (versus the best case of 2.0)
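The CPI and IPC figures for the two schedules follow directly from the cycle and instruction counts. A small sketch of the arithmetic (the helper name `cpi_ipc` is an illustrative assumption):

```python
def cpi_ipc(cycles, instructions):
    """Return (cycles per instruction, instructions per cycle)."""
    return cycles / instructions, instructions / cycles


# Not unrolled: 5 useful instructions in 4 clock cycles.
rolled_cpi, rolled_ipc = cpi_ipc(cycles=4, instructions=5)

# Unrolled four times: 14 useful instructions in 8 clock cycles.
unrolled_cpi, unrolled_ipc = cpi_ipc(cycles=8, instructions=14)

print(rolled_cpi, rolled_ipc)                  # 0.8 1.25
print(round(unrolled_cpi, 2), unrolled_ipc)    # 0.57 1.75
```

Noops are left out of the instruction counts, so the gap to the 2-issue ideal (CPI 0.5, IPC 2.0) reflects the issue slots the scheduler could not fill.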

Summary • All modern-day processors use pipelining for performance (a CPI of 1 and a fast CC) • Pipeline clock rate limited by slowest pipeline stage – so designing a balanced pipeline is important • Must detect and resolve hazards – Structural hazards – resolved by designing the pipeline correctly – Data hazards • Stall (impacts CPI) • Forward (requires hardware support) – Control hazards – put the branch decision hardware in as early a stage in the pipeline as possible • Stall (impacts CPI) • Delay decision (requires compiler support) • Static and dynamic prediction (requires hardware support) • Scheduling and speculation can reduce stalls • Multiple-issue and VLIW can improve ILP

Appendix

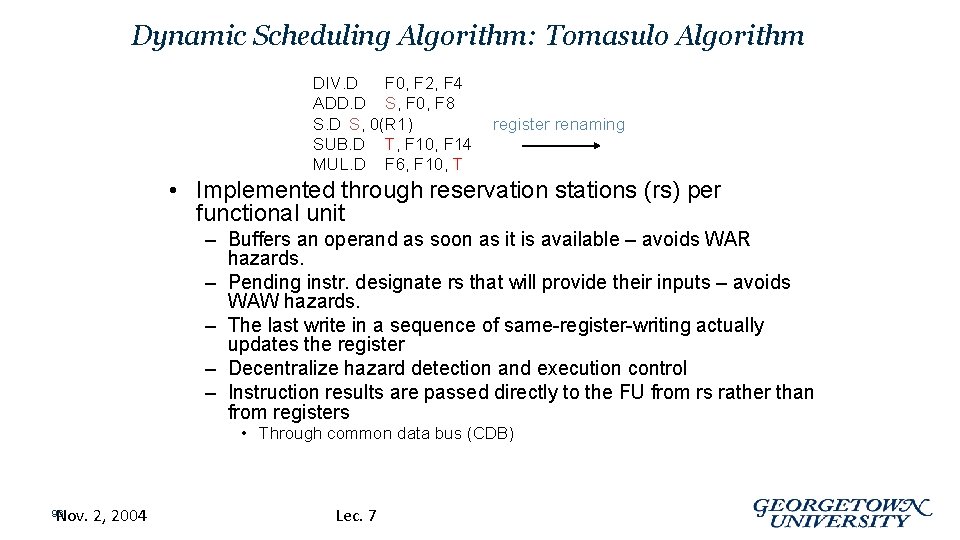

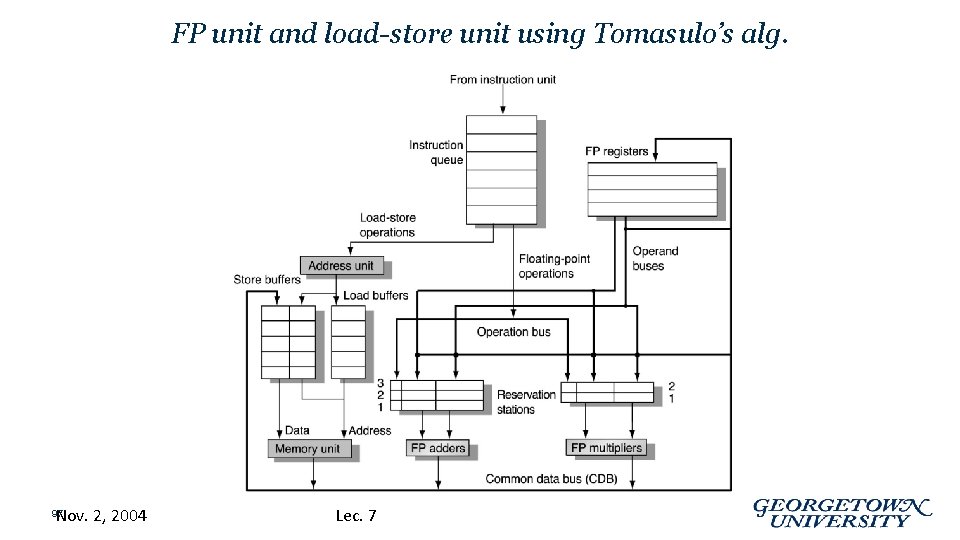

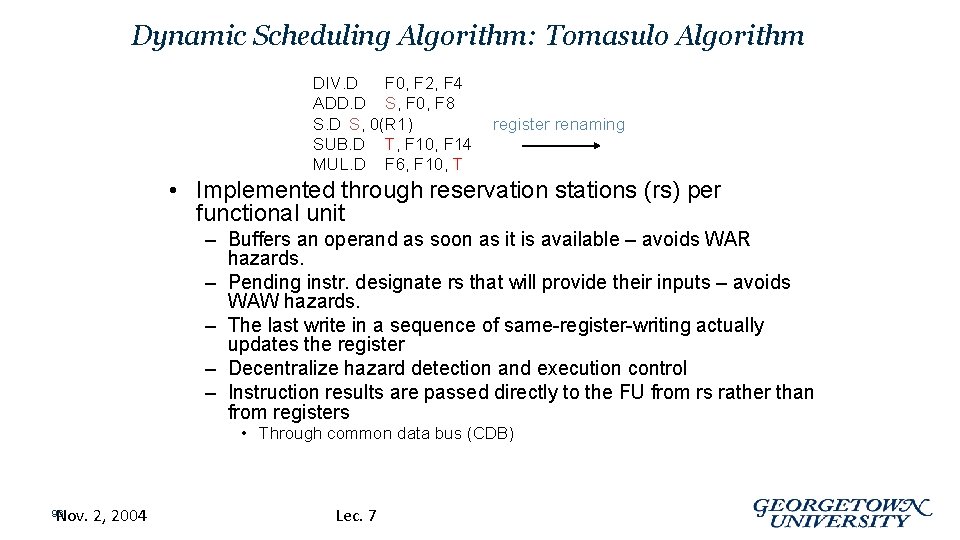

Dynamic Scheduling Algorithm: Tomasulo Algorithm

    DIV.D F0, F2, F4
    ADD.D S, F0, F8
    S.D   S, 0(R1)
    SUB.D T, F10, F14
    MUL.D F6, F10, T

• Register renaming, implemented through reservation stations (rs) per functional unit
  – Buffers an operand as soon as it is available – avoids WAR hazards.
  – Pending instr. designate the rs that will provide their inputs – avoids WAW hazards.
  – The last write in a sequence of writes to the same register is the one that actually updates the register
  – Decentralizes hazard detection and execution control
  – Instruction results are passed directly to the FU from the rs rather than from registers
    • Through the common data bus (CDB)
Nov. 2, 2004, Lec. 7

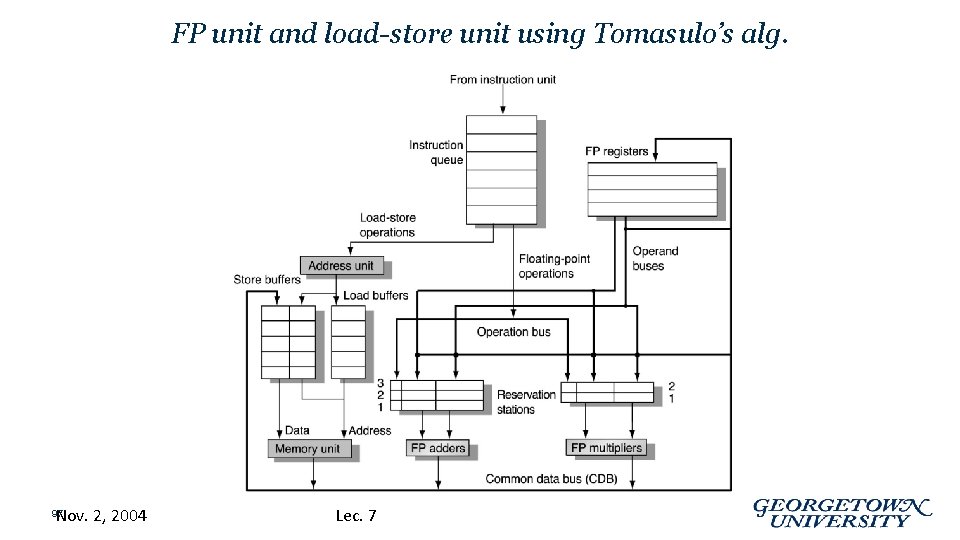

FP unit and load-store unit using Tomasulo's algorithm

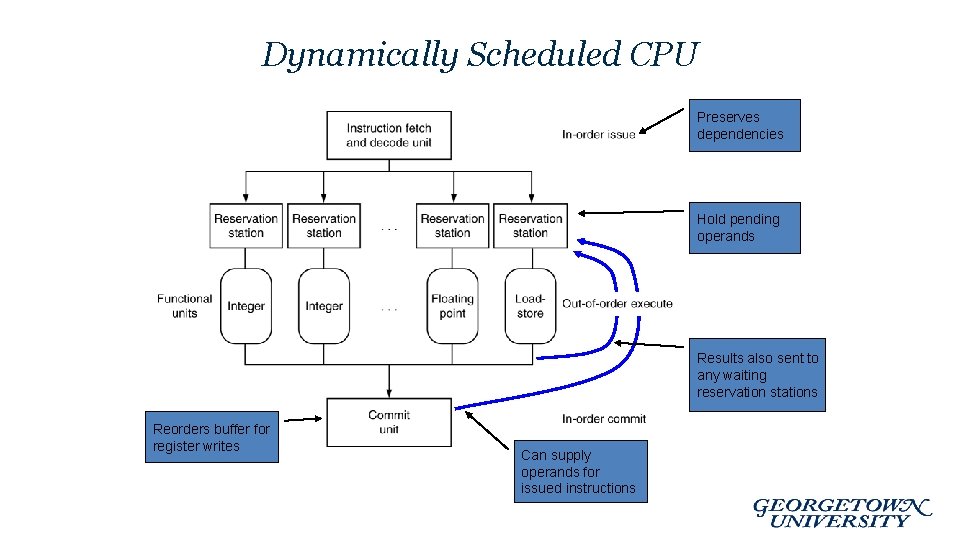

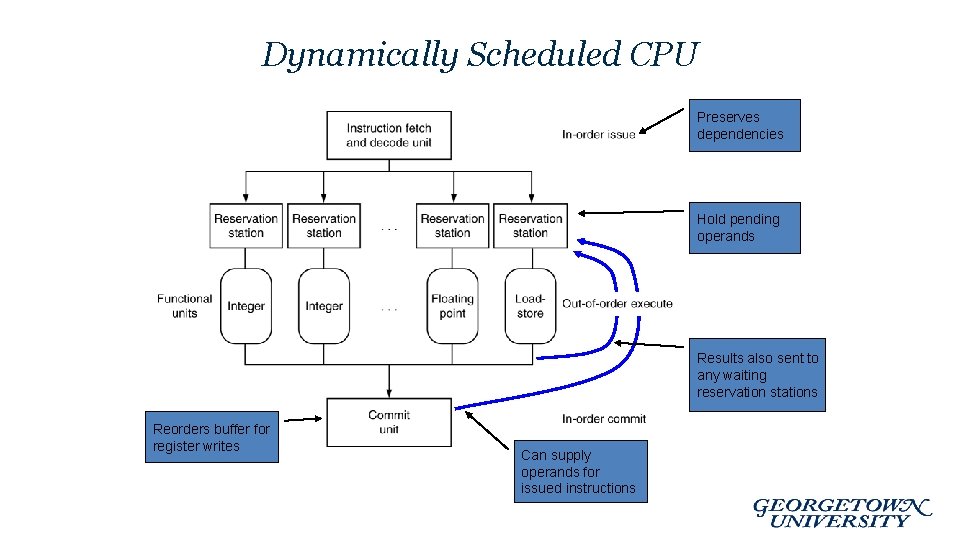

Dynamically Scheduled CPU
[Figure annotations:]
• Preserves dependencies
• Hold pending operands
• Results also sent to any waiting reservation stations
• Reorder buffer for register writes
• Can supply operands for issued instructions

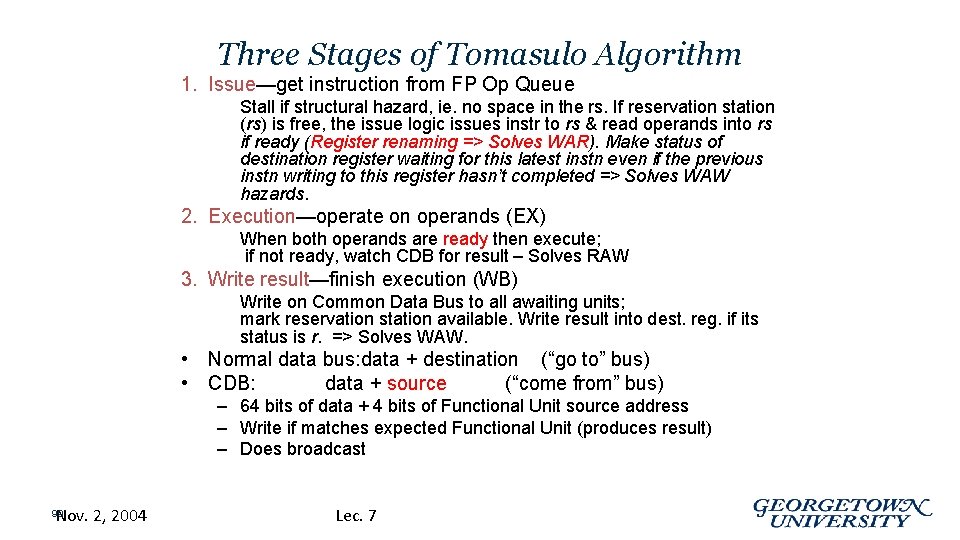

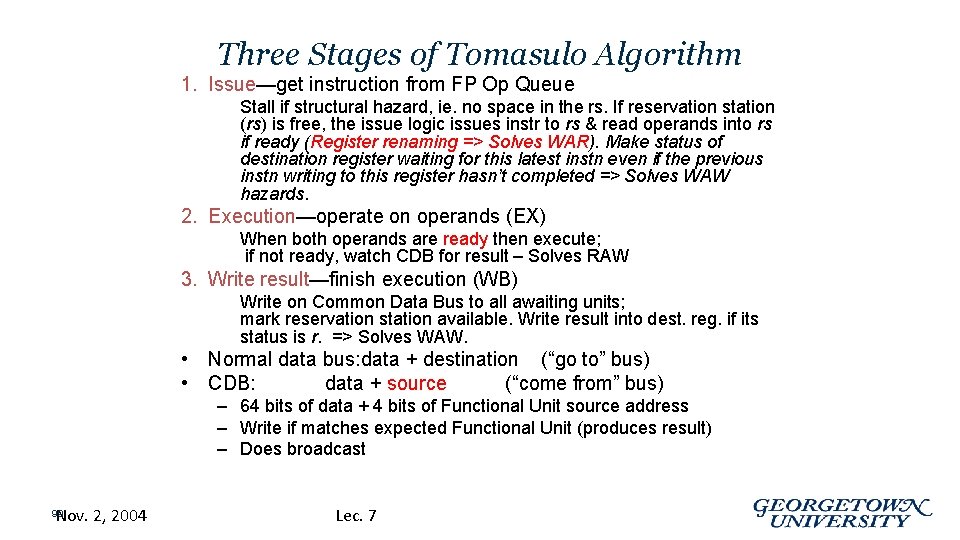

Three Stages of Tomasulo Algorithm
1. Issue: get instruction from FP Op Queue
  – Stall if there is a structural hazard, i.e. no space in the rs.
  – If a reservation station (rs) is free, the issue logic issues the instr to the rs and reads operands into the rs if ready (register renaming => solves WAR).
  – Mark the destination register as waiting for this latest instruction, even if the previous instruction writing to this register hasn't completed => solves WAW hazards.
2. Execution: operate on operands (EX)
  – When both operands are ready then execute; if not ready, watch the CDB for the result => solves RAW
3. Write result: finish execution (WB)
  – Write on the Common Data Bus to all awaiting units; mark reservation station available.
  – Write result into dest. reg. if its status is r, i.e. the register is still waiting on this reservation station => solves WAW.
• Normal data bus: data + destination ("go to" bus)
• CDB: data + source ("come from" bus)
  – 64 bits of data + 4 bits of Functional Unit source address
  – Write if matches expected Functional Unit (produces result)
  – Does broadcast

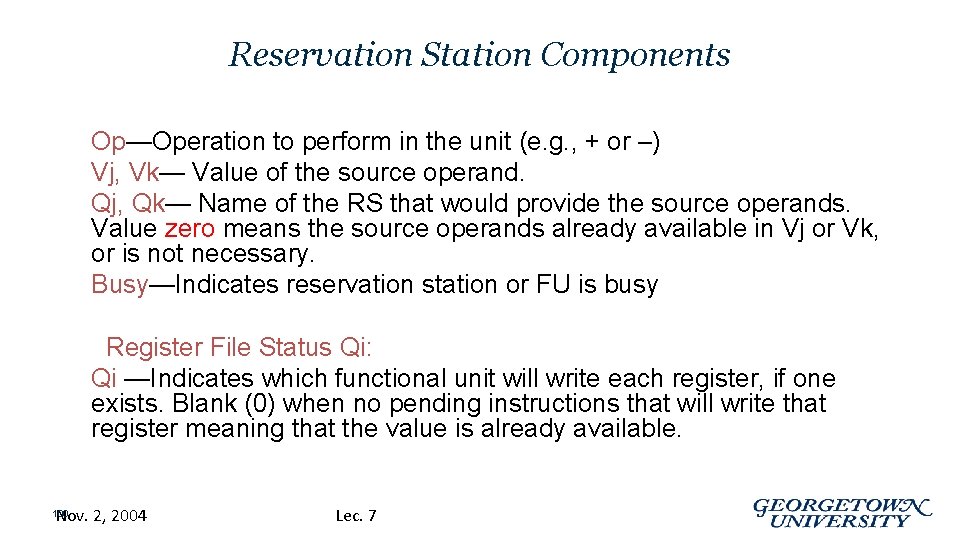

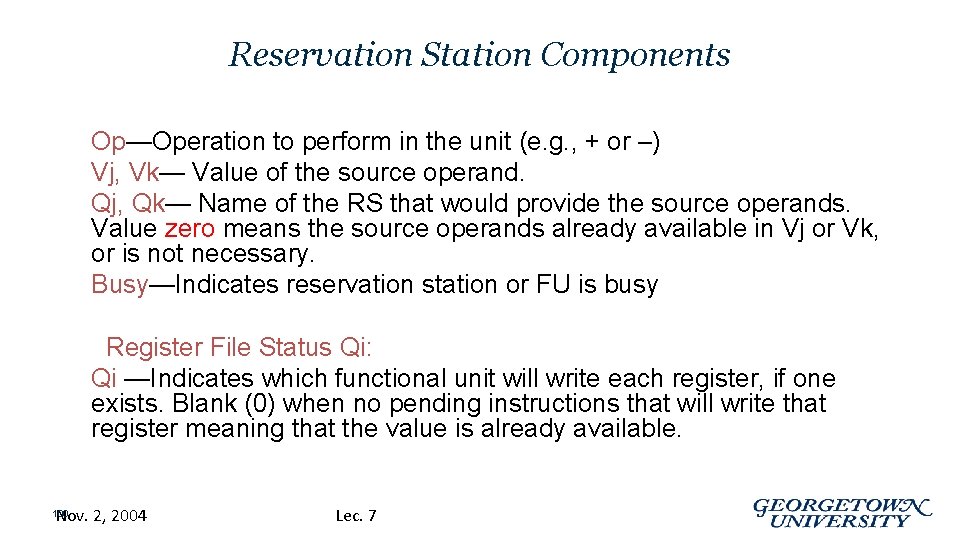

Reservation Station Components
• Op: Operation to perform in the unit (e.g., + or –)
• Vj, Vk: Values of the source operands.
• Qj, Qk: Names of the RSs that will provide the source operands. A value of zero means the source operand is already available in Vj or Vk, or is not necessary.
• Busy: Indicates reservation station or FU is busy
Register File Status Qi
• Qi: Indicates which functional unit will write each register, if one exists. Blank (0) when no pending instruction will write that register, meaning that the value is already available.
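The fields above, together with the issue and write-result stages, can be sketched as a toy model. This is an illustrative sketch only, not a full Tomasulo implementation: the class names, station names (RS1..RS4), register values, and result values are assumptions; execution latency is not modelled (results are broadcast by hand); the store is omitted; and `None` stands in for the "0 / blank" tag.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Station:
    busy: bool = False
    op: Optional[str] = None
    vj: Optional[float] = None   # buffered operand values
    vk: Optional[float] = None
    qj: Optional[str] = None     # station that will produce the operand
    qk: Optional[str] = None


class Tomasulo:
    def __init__(self, regs, station_names):
        self.regs = dict(regs)
        self.qi = {r: None for r in regs}            # register status
        self.rs = {name: Station() for name in station_names}

    def _operand(self, reg):
        """Return (value, None) if ready, else (None, producing station)."""
        tag = self.qi[reg]
        return (self.regs[reg], None) if tag is None else (None, tag)

    def issue(self, op, dst, src1, src2):
        for name, st in self.rs.items():
            if not st.busy:
                break
        else:
            return None                              # structural hazard: stall
        st.busy, st.op = True, op
        st.vj, st.qj = self._operand(src1)           # copy now => no WAR
        st.vk, st.qk = self._operand(src2)
        self.qi[dst] = name                          # latest writer => no WAW
        return name

    def write_result(self, tag, value):
        """Broadcast (source tag, value) on the CDB."""
        for st in self.rs.values():
            if st.qj == tag:
                st.vj, st.qj = value, None
            if st.qk == tag:
                st.vk, st.qk = value, None
        for reg in self.regs:
            if self.qi[reg] == tag:                  # only if still the last writer
                self.regs[reg], self.qi[reg] = value, None
        self.rs[tag] = Station()                     # station becomes available


m = Tomasulo(
    {"F0": 0.0, "F2": 8.0, "F4": 2.0, "F6": 0.0, "F8": 1.0, "F10": 3.0, "F14": 5.0},
    ["RS1", "RS2", "RS3", "RS4"],
)
div = m.issue("DIV.D", "F0", "F2", "F4")
add = m.issue("ADD.D", "F6", "F0", "F8")    # waits on div, buffers old F8
sub = m.issue("SUB.D", "F8", "F10", "F14")
mul = m.issue("MUL.D", "F6", "F10", "F8")   # waits on sub, becomes last writer of F6

m.write_result(sub, -2.0)                   # SUB.D finishes first, out of order
buffered_f8 = m.rs[add].vk                  # ADD.D still holds the old F8
m.write_result(div, 4.0)
m.write_result(add, 5.0)                    # not the last writer of F6
print(buffered_f8, m.regs["F6"], m.qi["F6"])  # 1.0 0.0 RS4
```

ADD.D keeps the old F8 value even though SUB.D overwrote the register first (no WAR stall), and ADD.D's result never reaches F6 because the register status already names MUL.D's station (no WAW hazard).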

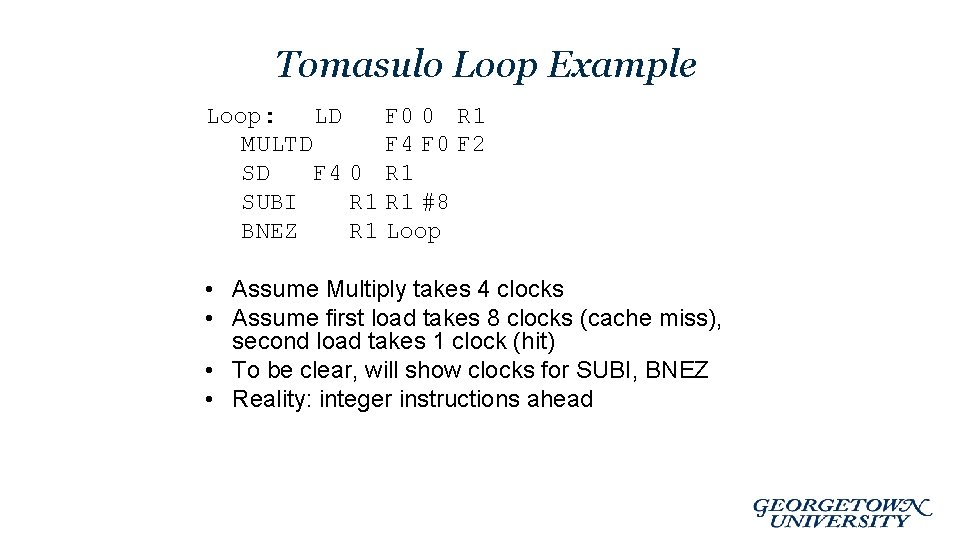

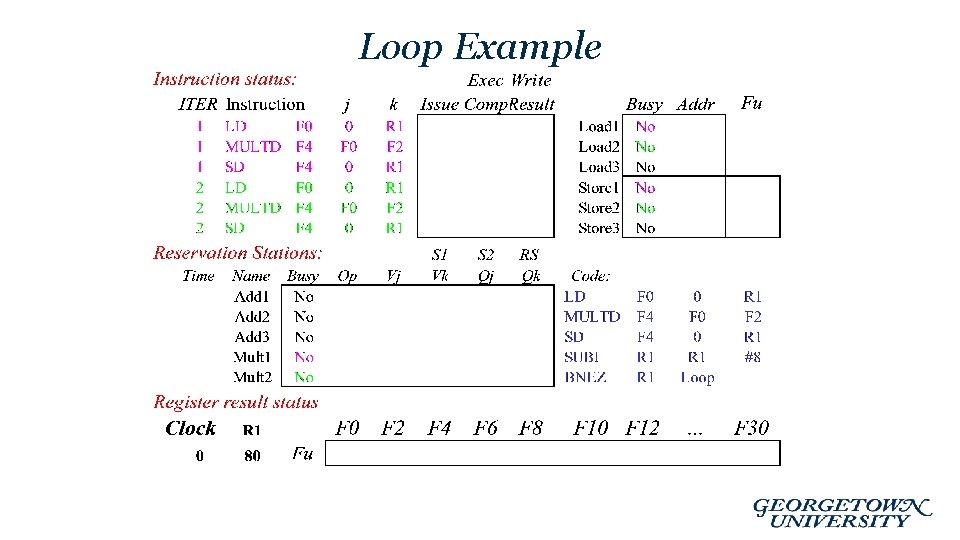

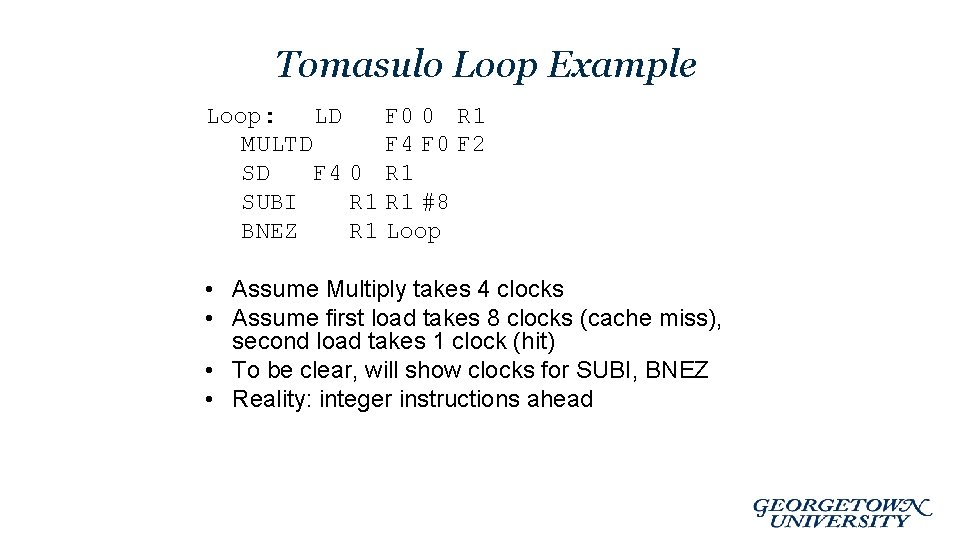

Tomasulo Loop Example

    Loop: LD    F0, 0(R1)
          MULTD F4, F0, F2
          SD    F4, 0(R1)
          SUBI  R1, R1, #8
          BNEZ  R1, Loop

• Assume Multiply takes 4 clocks
• Assume first load takes 8 clocks (cache miss), second load takes 1 clock (hit)
• To be clear, will show clocks for SUBI, BNEZ
• Reality: integer instructions ahead

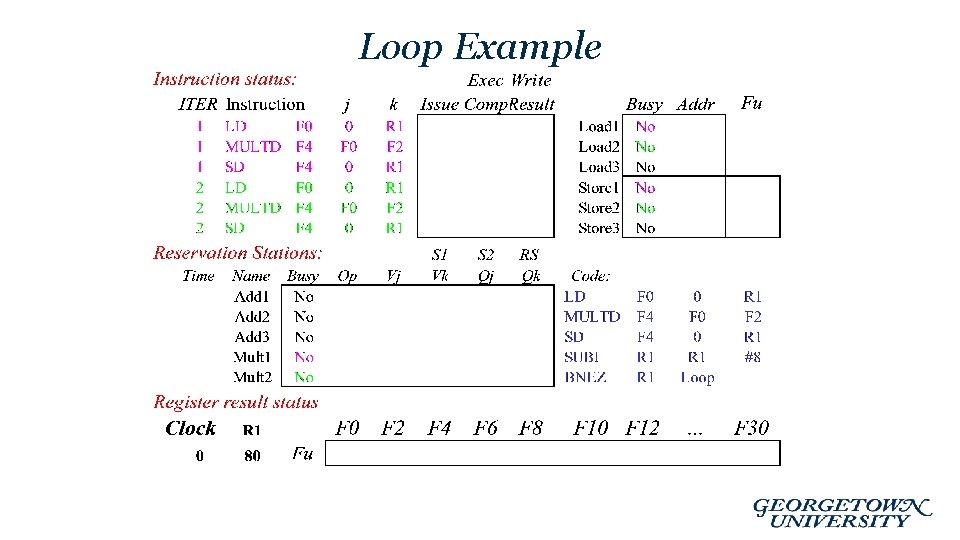

Loop Example

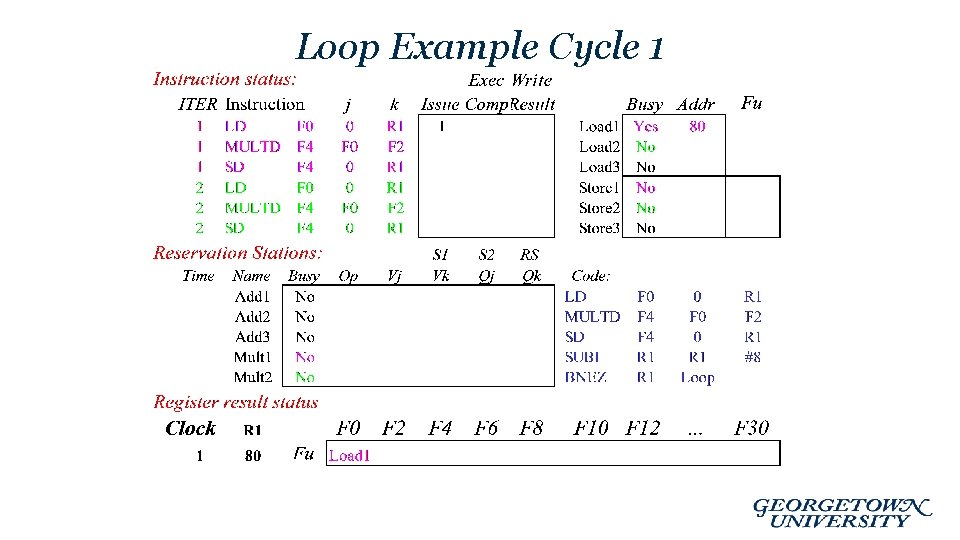

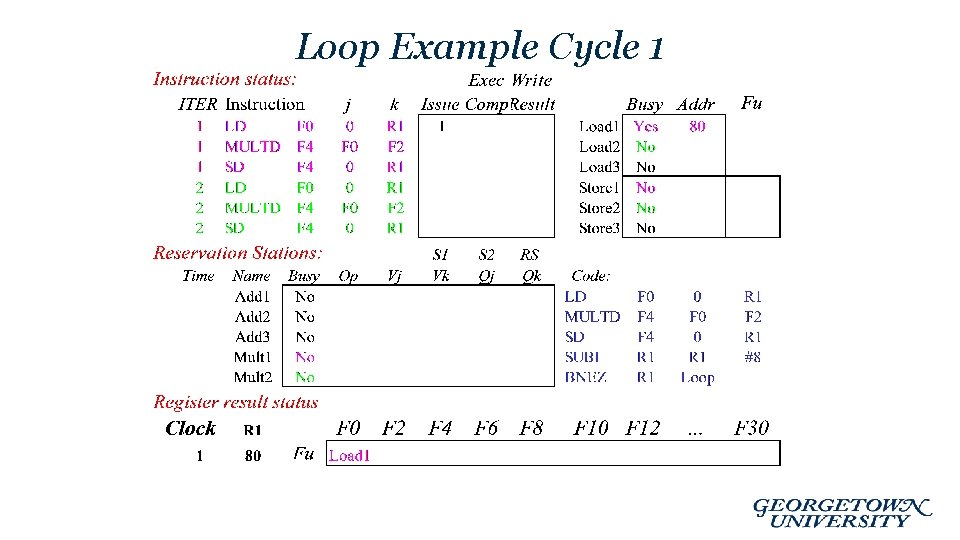

Loop Example Cycle 1

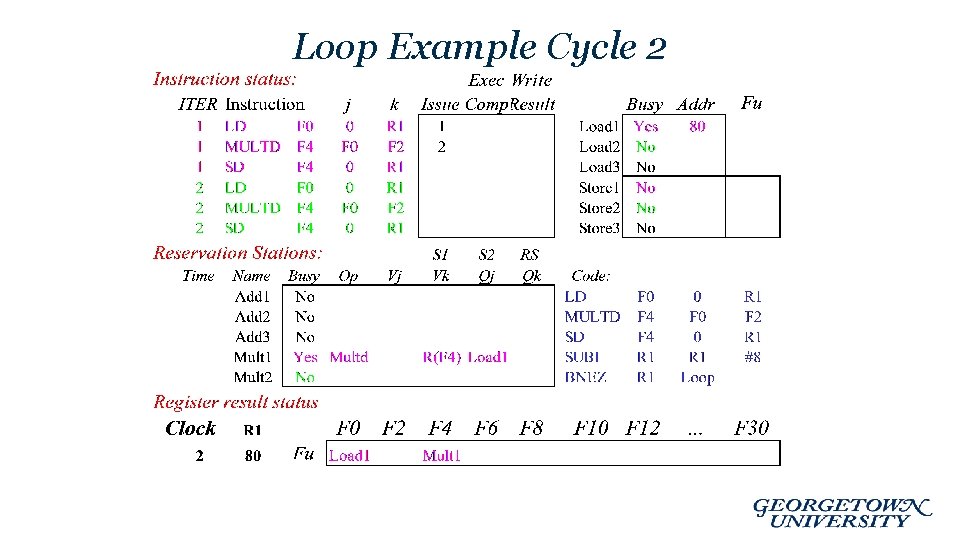

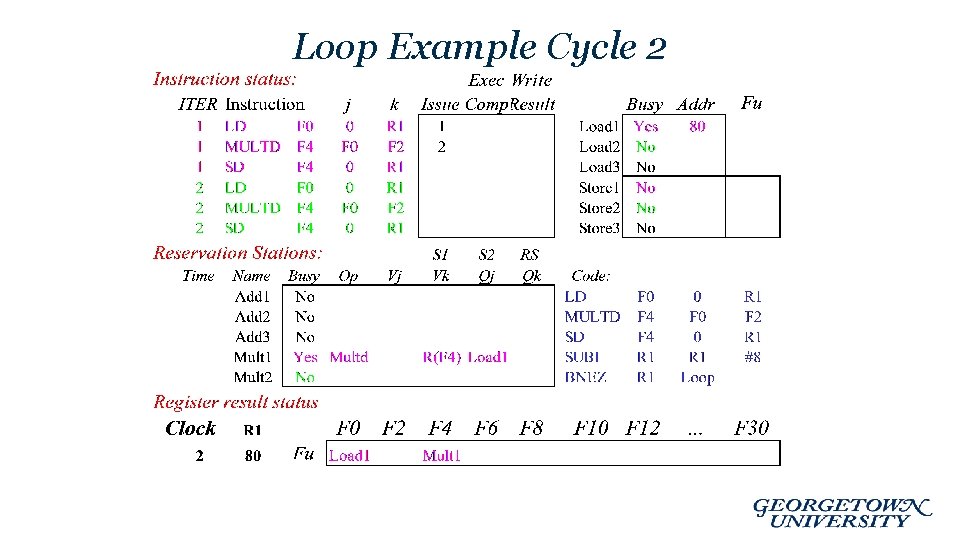

Loop Example Cycle 2

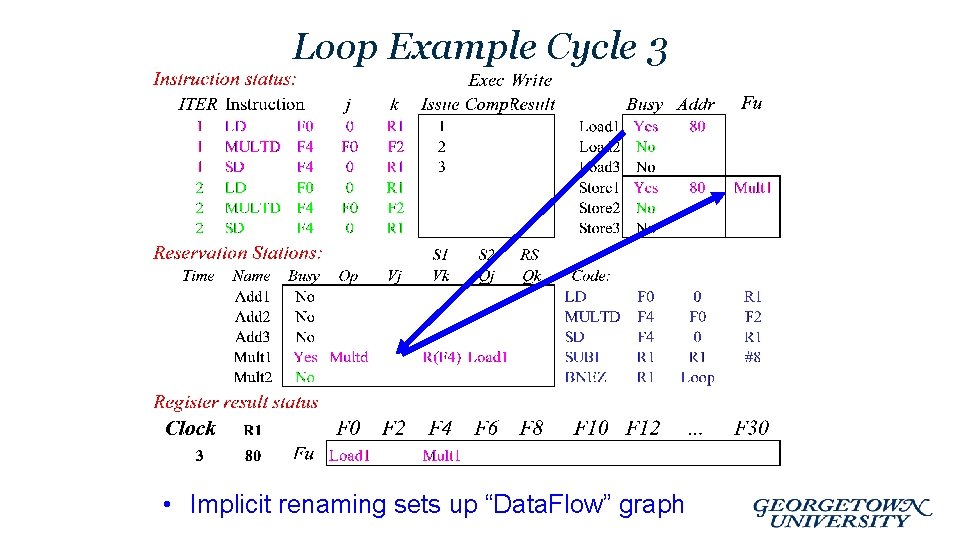

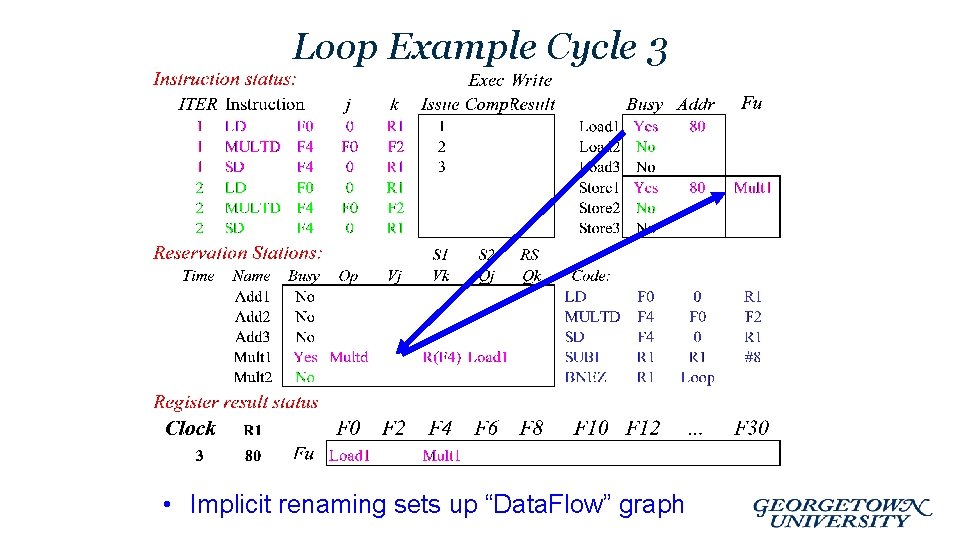

Loop Example Cycle 3 • Implicit renaming sets up "DataFlow" graph

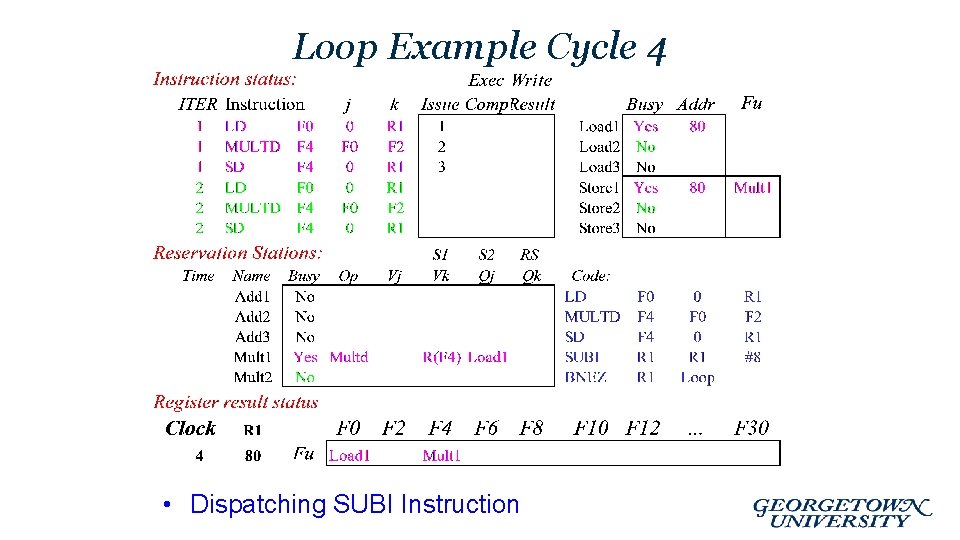

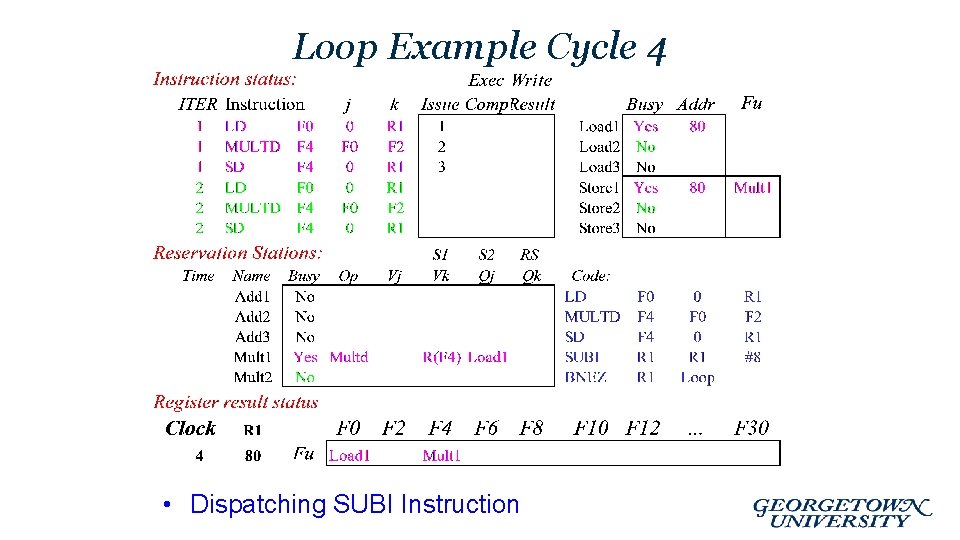

Loop Example Cycle 4 • Dispatching SUBI Instruction

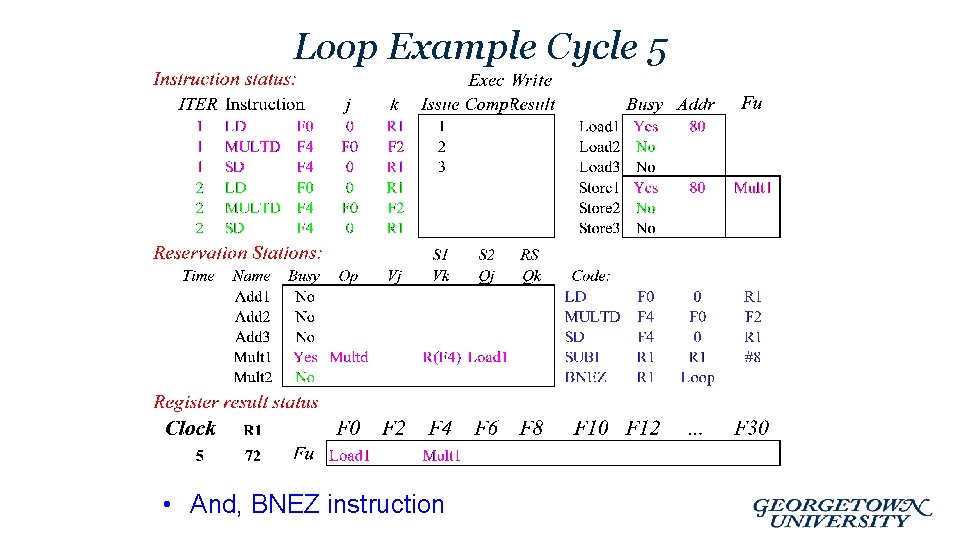

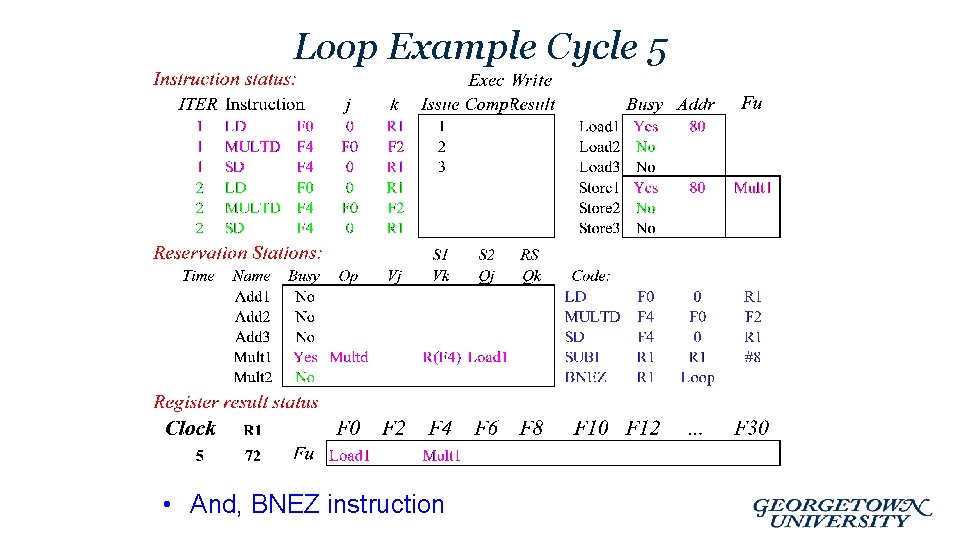

Loop Example Cycle 5 • And, BNEZ instruction

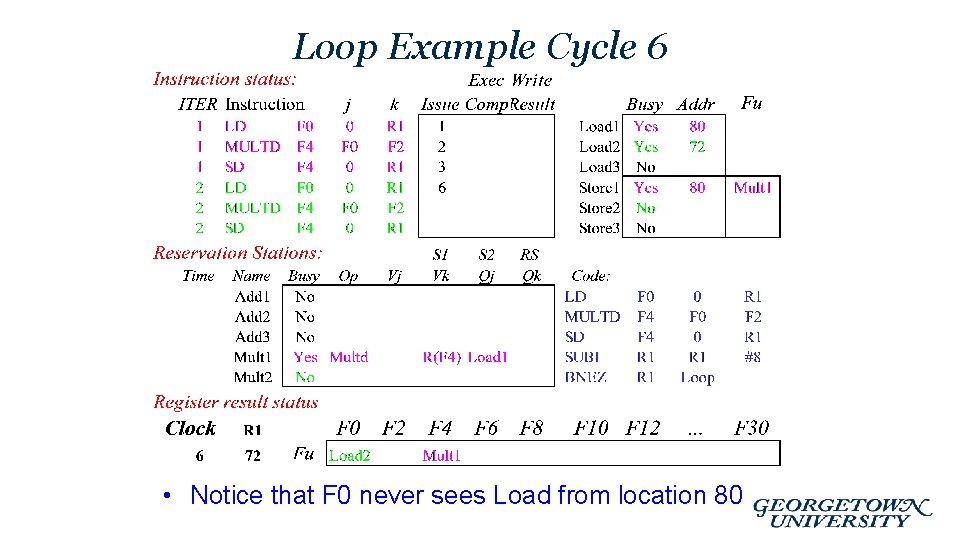

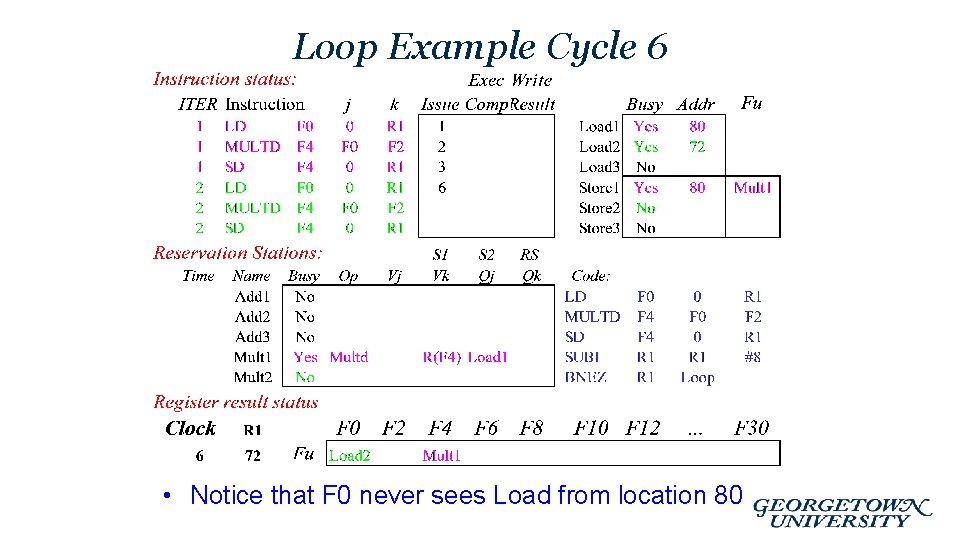

Loop Example Cycle 6 • Notice that F 0 never sees Load from location 80

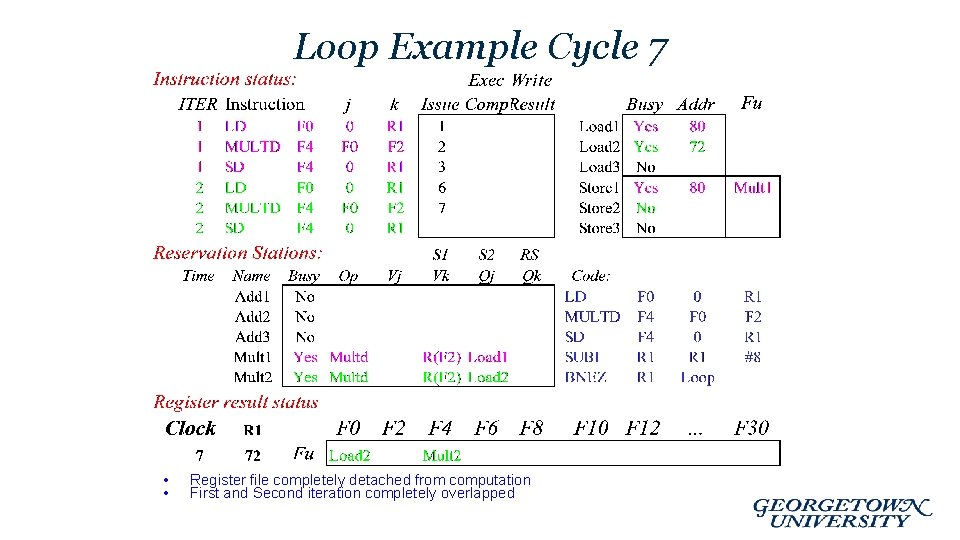

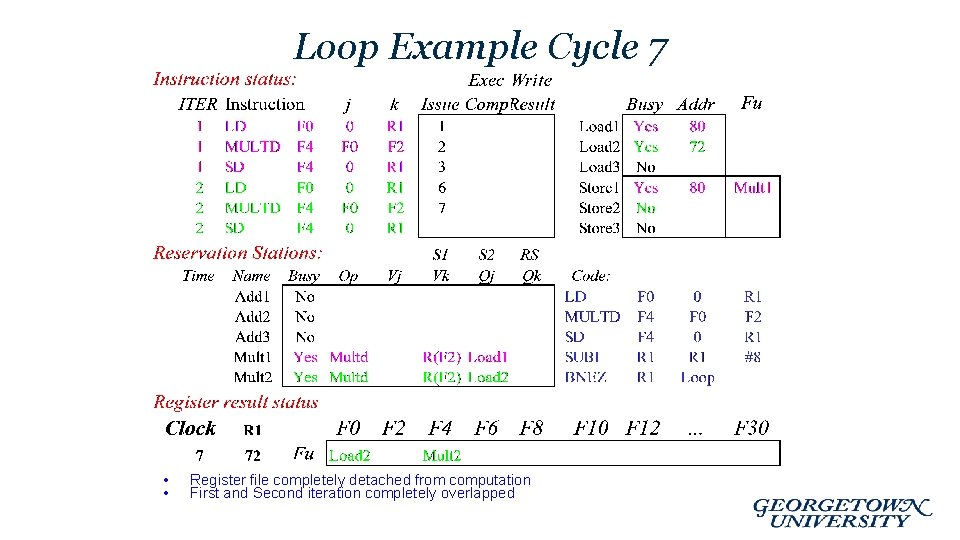

Loop Example Cycle 7 • Register file completely detached from computation • First and second iterations completely overlapped

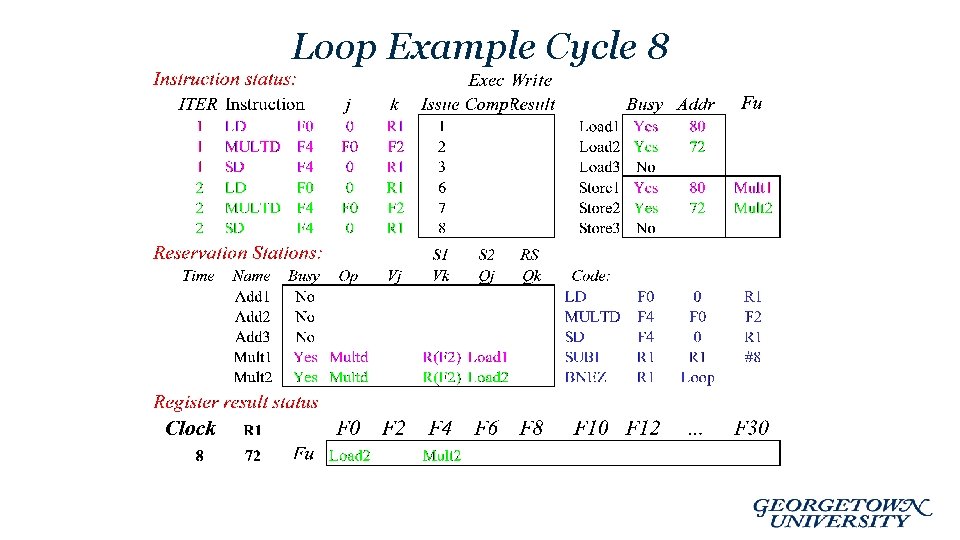

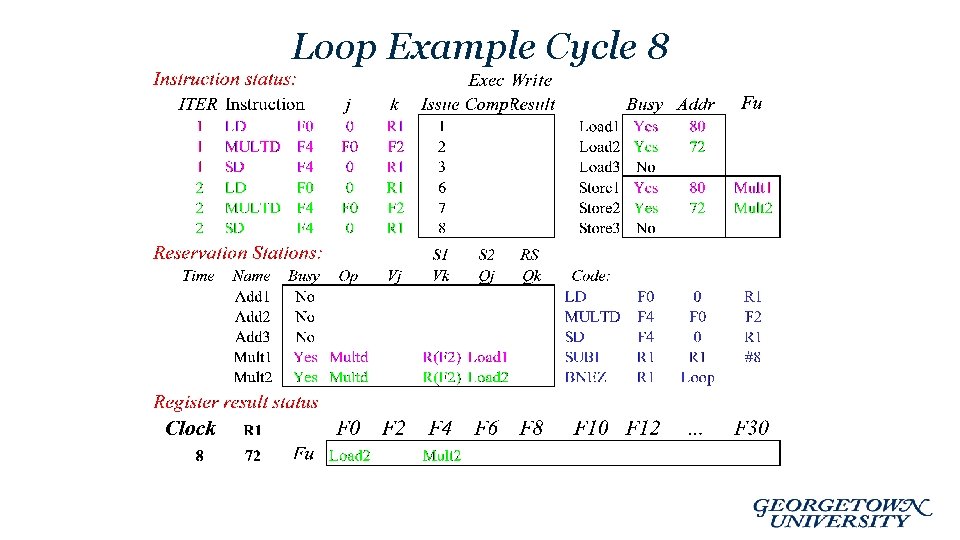

Loop Example Cycle 8

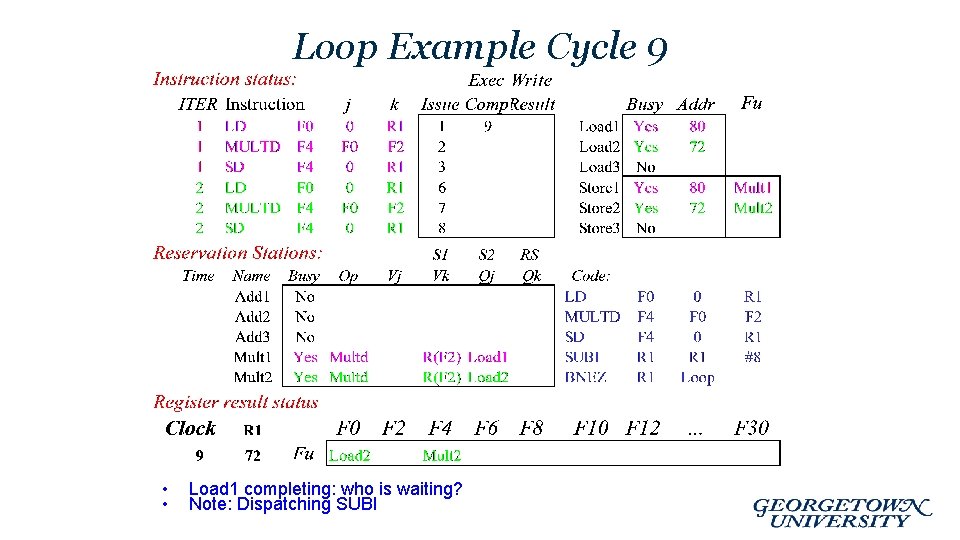

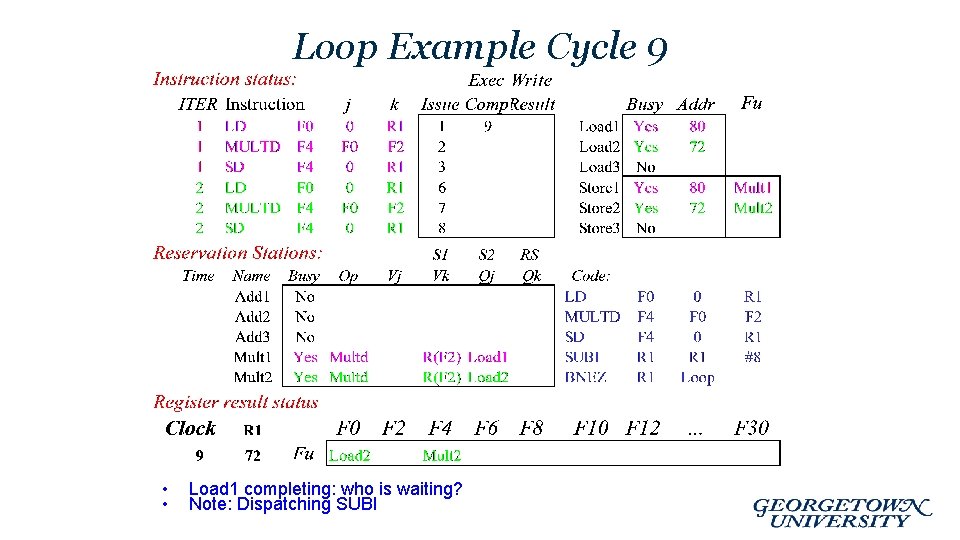

Loop Example Cycle 9 • Load 1 completing: who is waiting? • Note: Dispatching SUBI

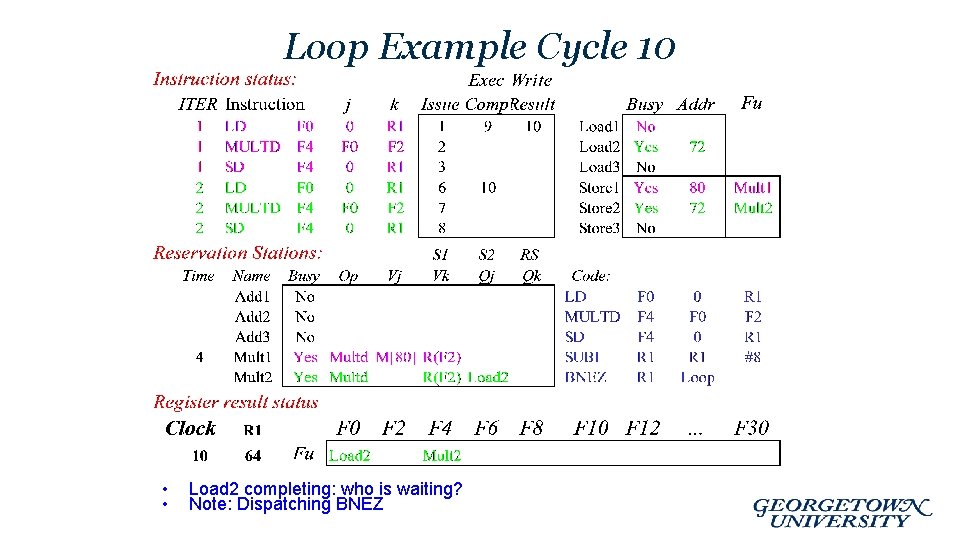

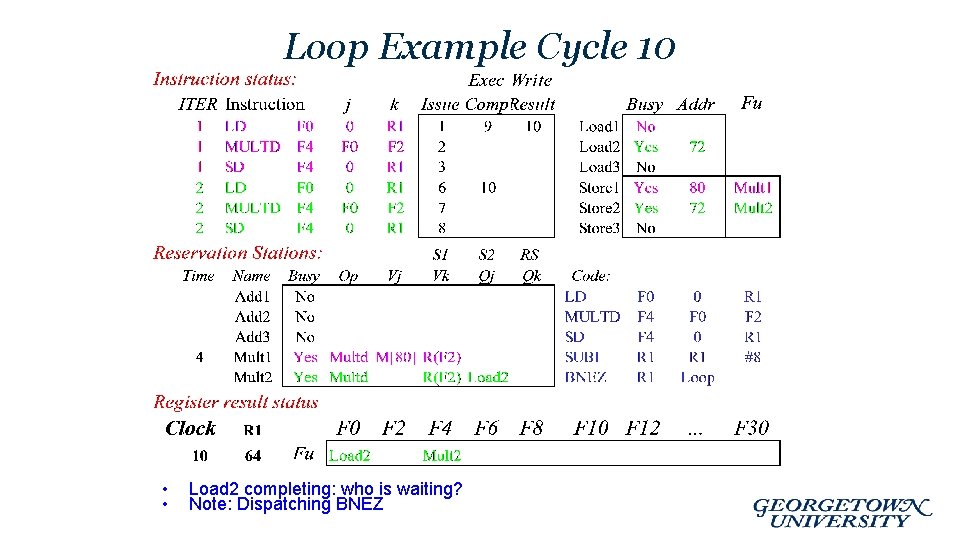

Loop Example Cycle 10 • Load 2 completing: who is waiting? • Note: Dispatching BNEZ

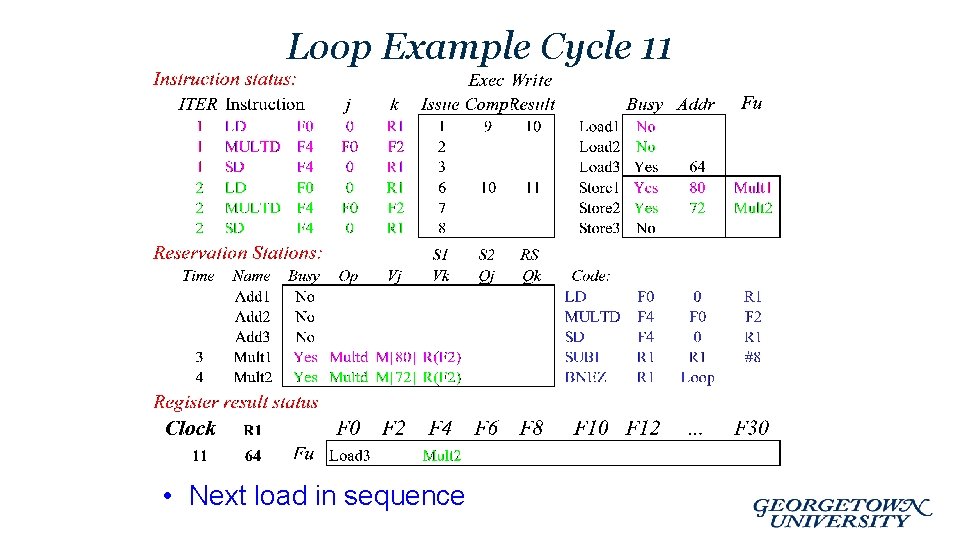

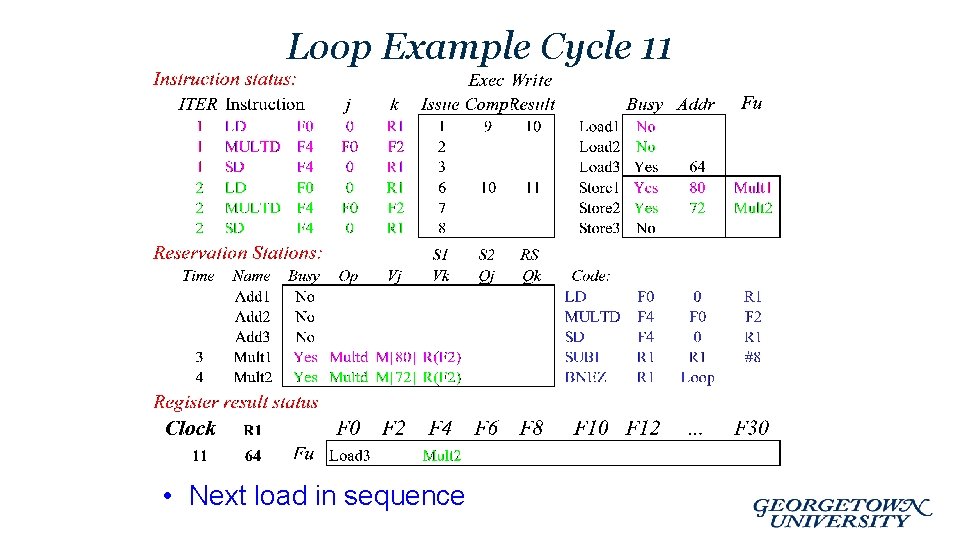

Loop Example Cycle 11 • Next load in sequence

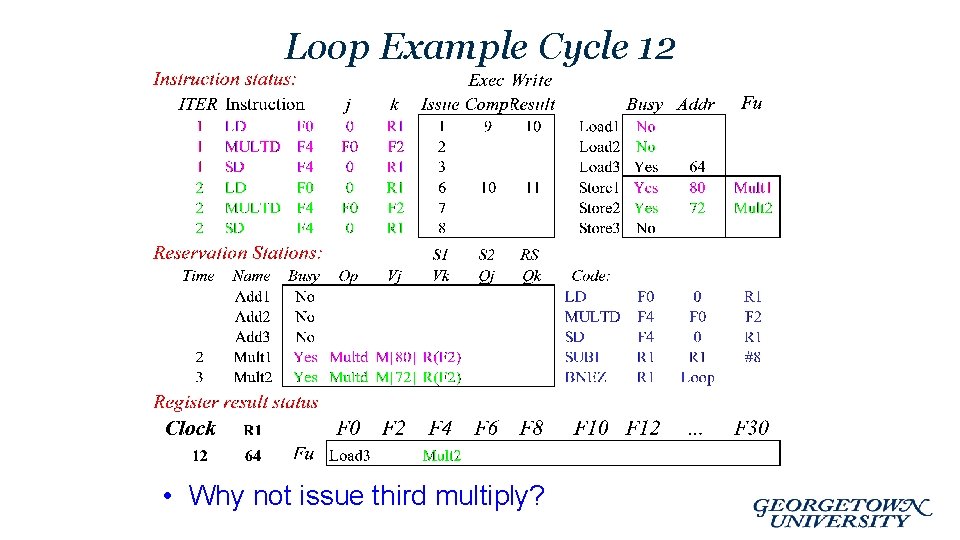

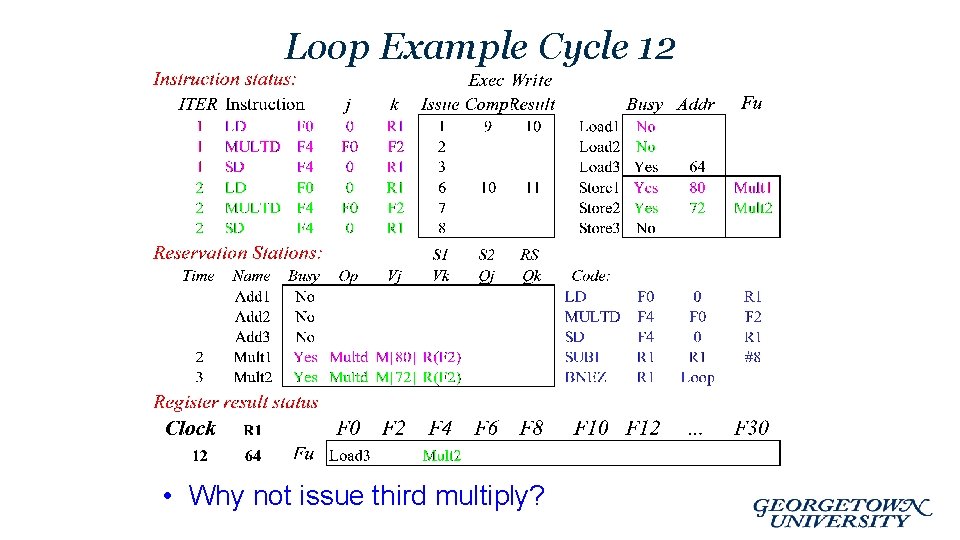

Loop Example Cycle 12 • Why not issue third multiply?

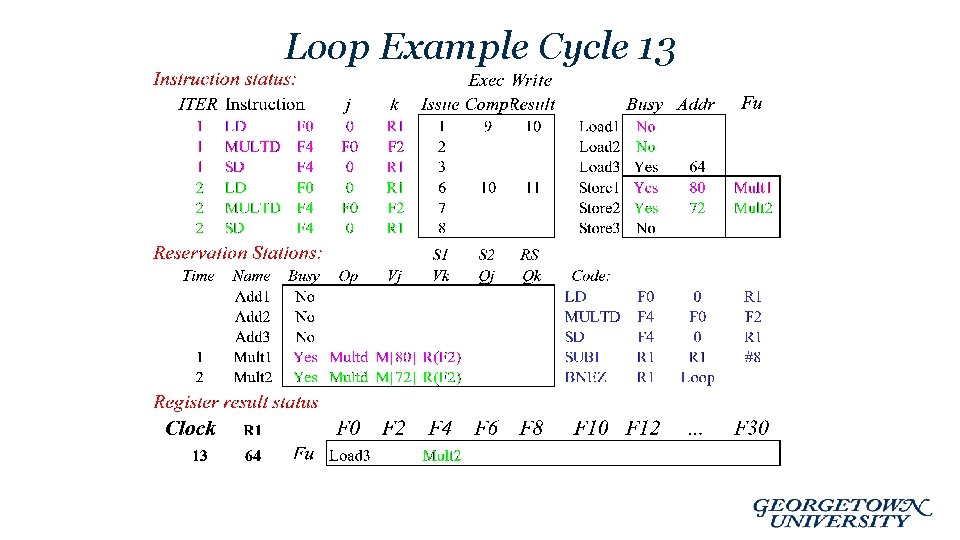

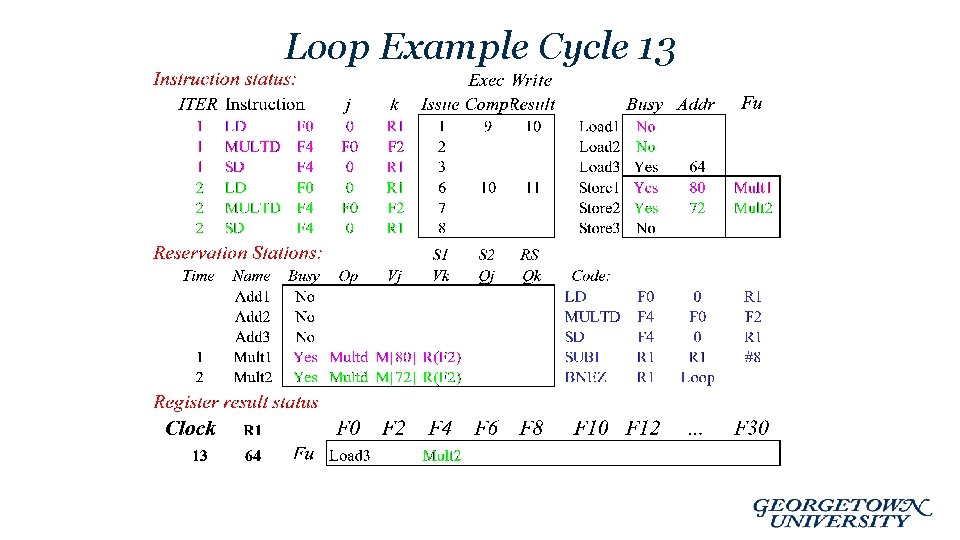

Loop Example Cycle 13

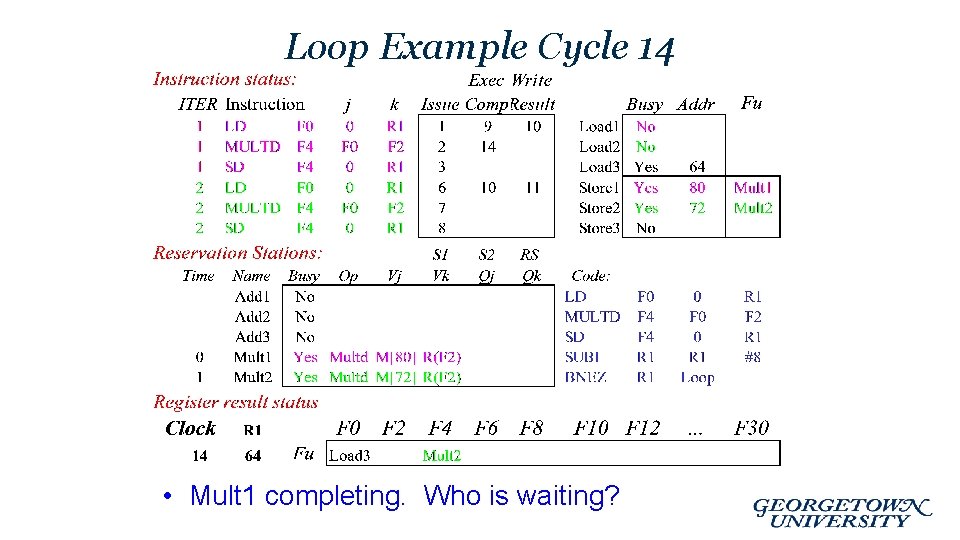

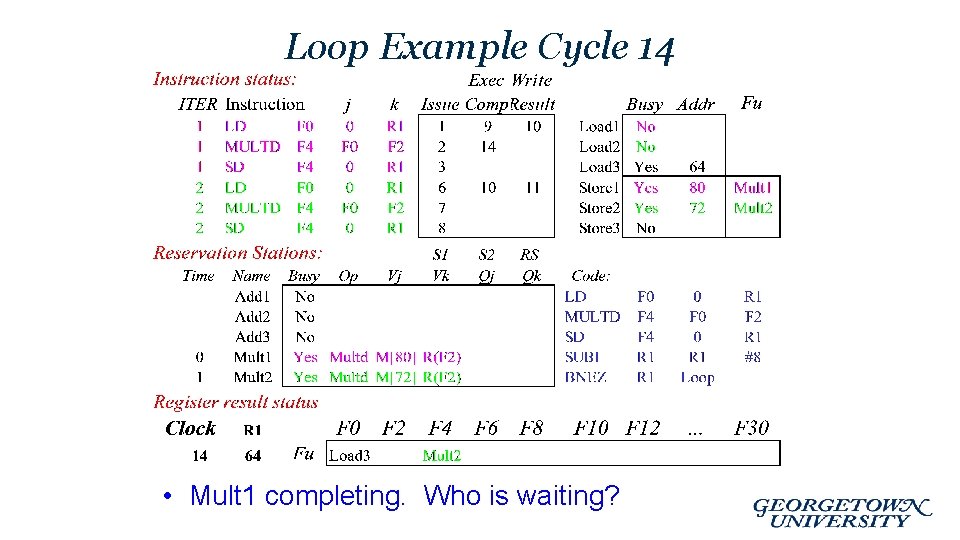

Loop Example Cycle 14 • Mult 1 completing. Who is waiting?

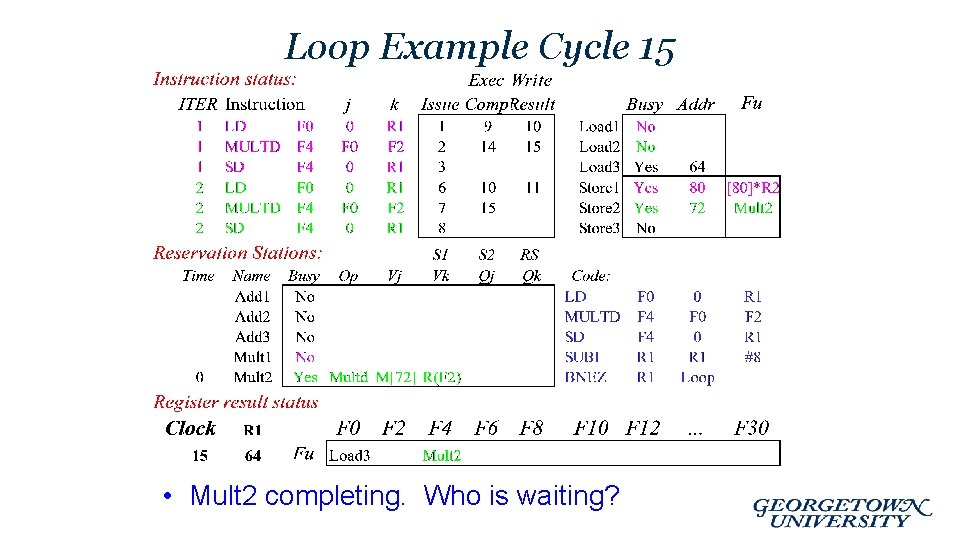

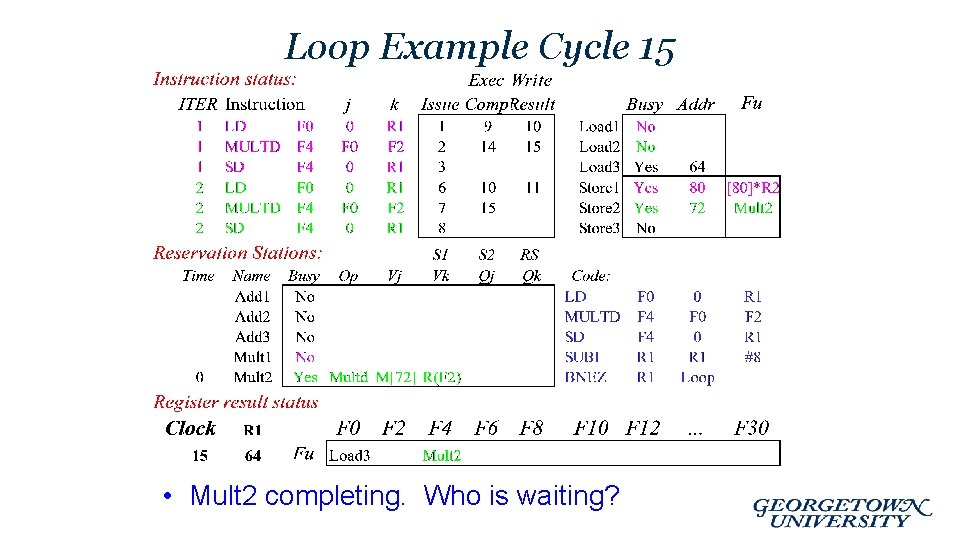

Loop Example Cycle 15 • Mult 2 completing. Who is waiting?

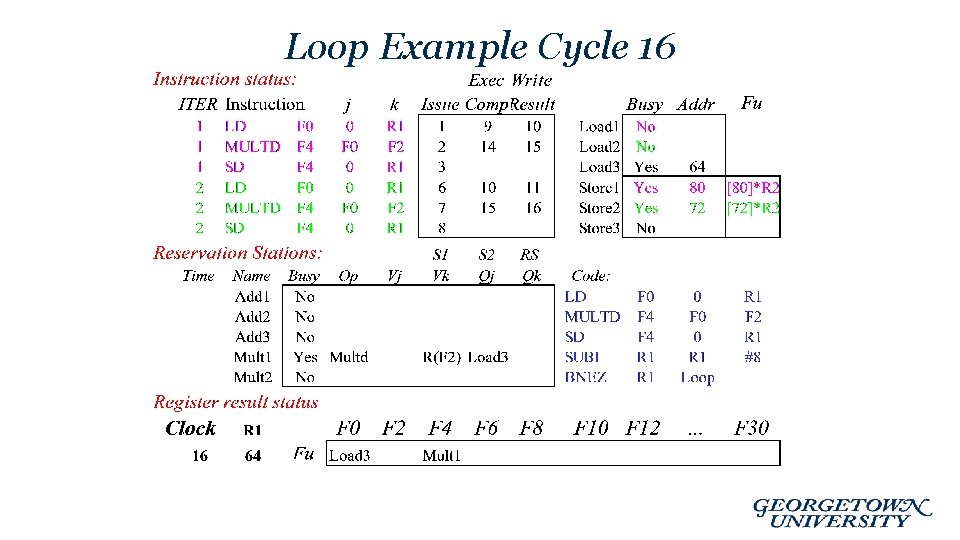

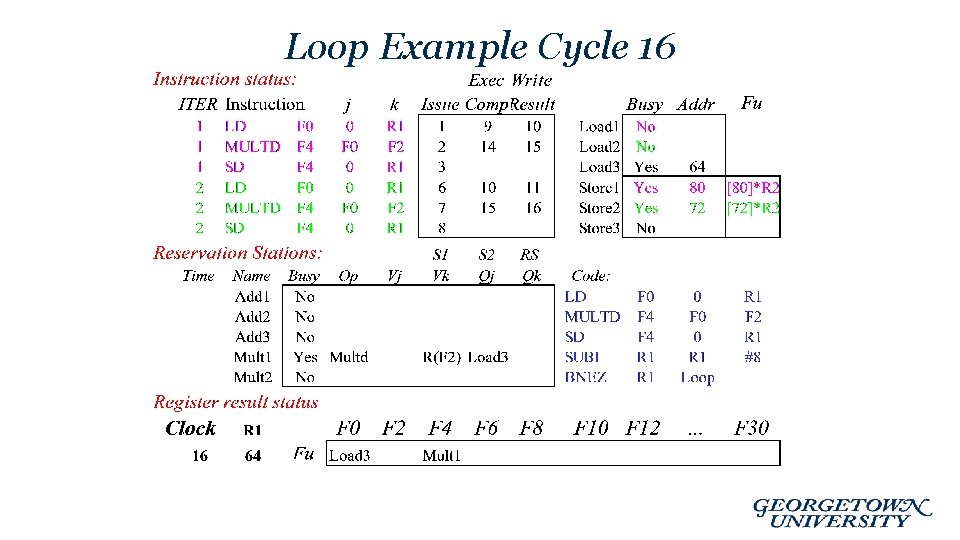

Loop Example Cycle 16

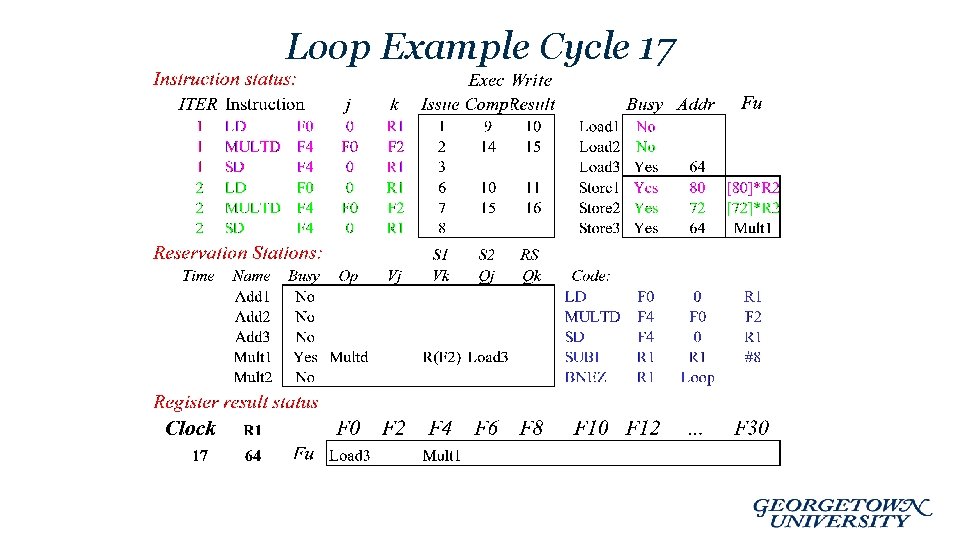

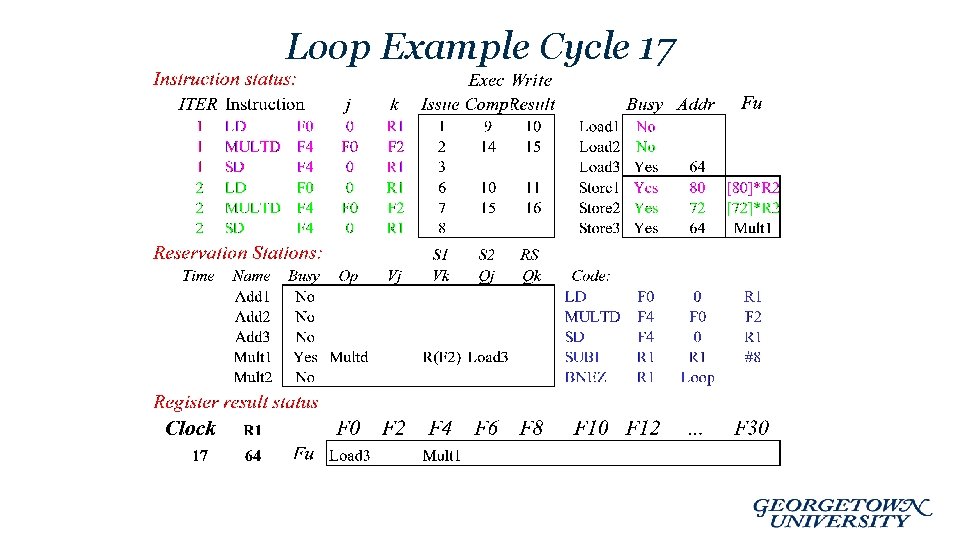

Loop Example Cycle 17

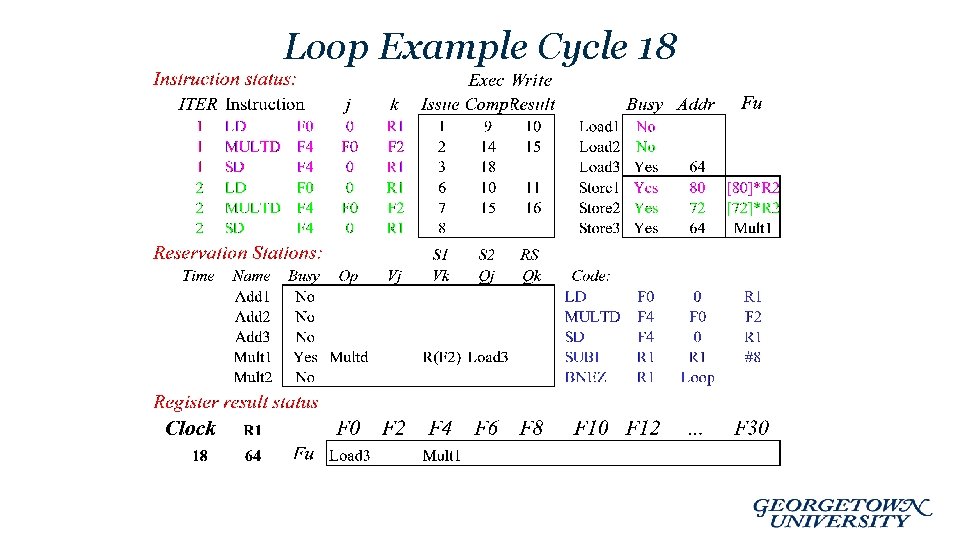

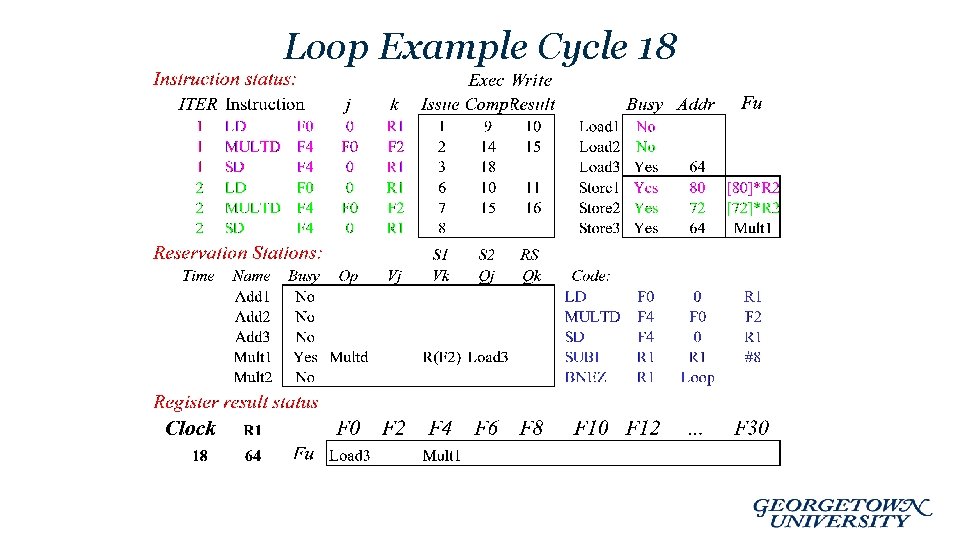

Loop Example Cycle 18

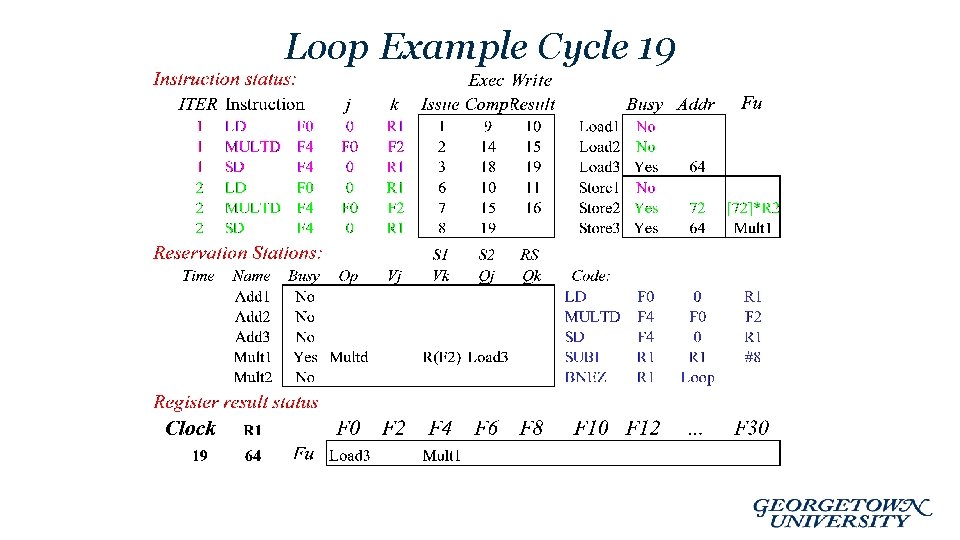

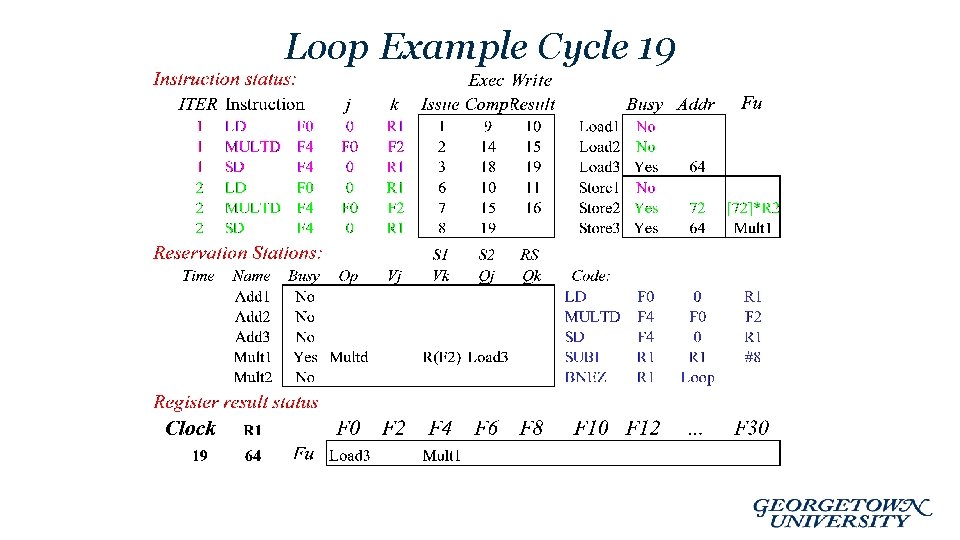

Loop Example Cycle 19

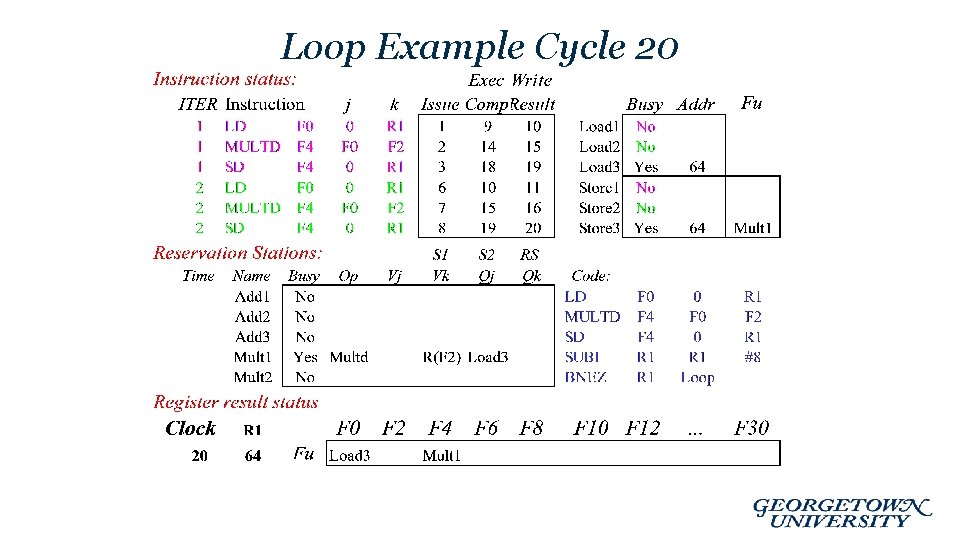

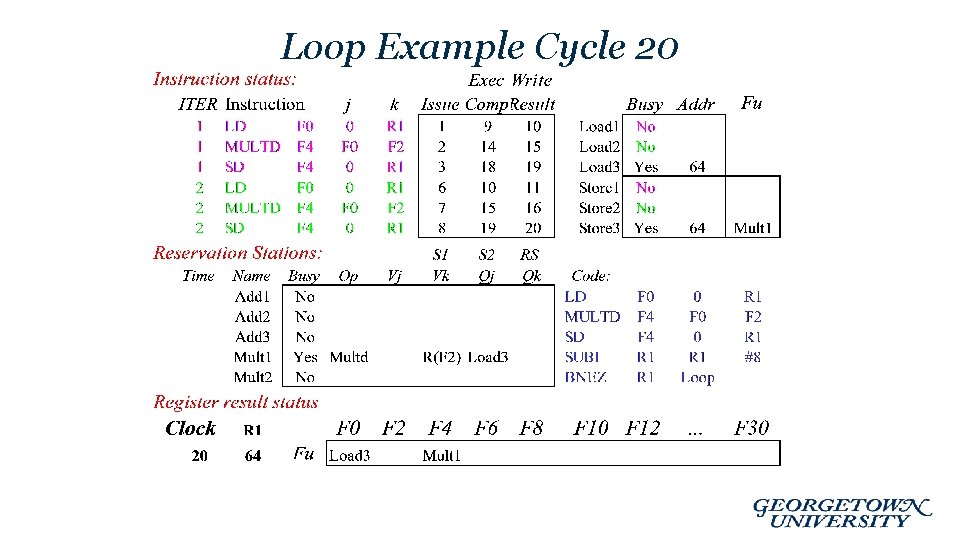

Loop Example Cycle 20

Why can Tomasulo overlap iterations of loops? • Register renaming – Multiple iterations use different physical destinations for registers (dynamic loop unrolling). • Reservation stations – Permit instruction issue to advance past integer control flow operations – Also buffer old values of registers, totally avoiding the WAR stall that we saw in the scoreboard. • Other idea: Tomasulo builds the "DataFlow" graph on the fly.

Register Renaming • Reservation stations and reorder buffer effectively provide register renaming • On instruction issue to reservation station – If operand is available in register file or reorder buffer • Copied to reservation station • No longer required in the register; can be overwritten – If operand is not yet available • It will be provided to the reservation station by a functional unit • Register update may not be required