


Correlation Clustering

Shuchi Chawla, Carnegie Mellon University

Joint work with Nikhil Bansal and Avrim Blum

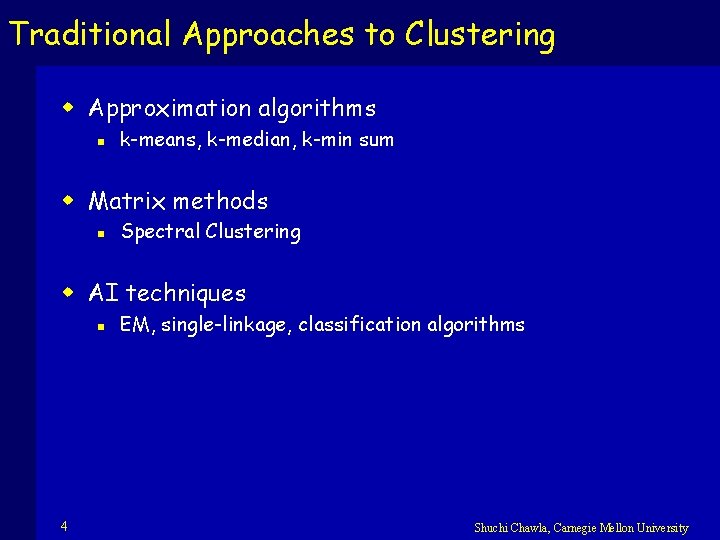

Natural Language Processing: Co-reference Analysis

- To understand an article automatically, we need to figure out which entities are one and the same.
- Is “his” in the second line the same person as “The secretary” in the first line?

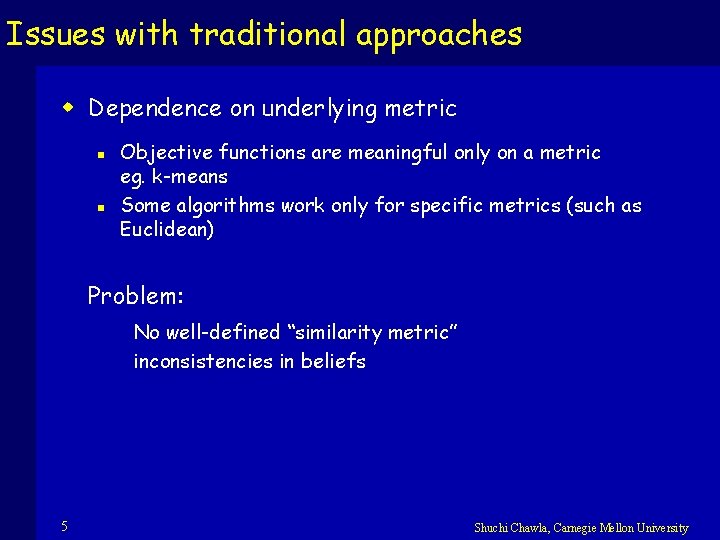

Other real-world clustering problems

- Web document clustering: given a collection of documents, classify them into salient topics.
- Computer vision: distinguish boundaries between different objects and the background in a picture.
- Research communities: given data on research papers, divide researchers into communities by co-authorship.
- Authorship (Citeseer/DBLP): given authors of documents, figure out which authors are really the same person.

Traditional Approaches to Clustering

- Approximation algorithms
  - k-means, k-median, k-min sum
- Matrix methods
  - Spectral clustering
- AI techniques
  - EM, single-linkage, classification algorithms

Issues with traditional approaches

- Dependence on underlying metric
  - Objective functions are meaningful only on a metric, e.g. k-means
  - Some algorithms work only for specific metrics (such as Euclidean)

Problem: No well-defined “similarity metric”; inconsistencies in beliefs

Issues with traditional approaches

- Fixed number of clusters/known topics
  - Meaningless without a prespecified number of clusters, e.g. for k-means or k-median, if k is unspecified, it is best to put every item in its own cluster

Problem: The number of clusters is usually unknown. There are no predefined topics; it is desirable to figure them out as part of the algorithm.

Issues with traditional approaches

- No clean notion of “quality” of clustering
  - Approximations do not directly translate to how many items have been grouped wrongly
- Reliance on generative model
  - e.g. data arising from a mixture of Gaussians
  - Typically don’t work well in the case of fuzzy boundaries

Problem: Fuzzy boundaries; how to cluster may depend on the given set of objects

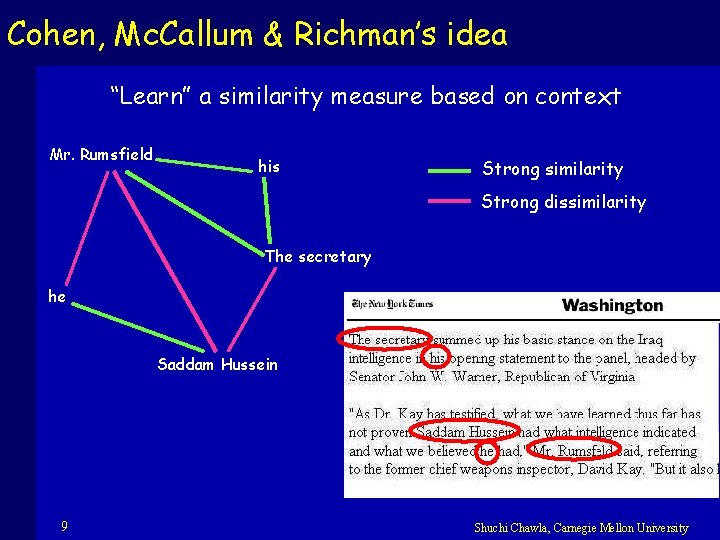

Cohen, McCallum & Richman’s idea

- “Learn” a similarity function based on context
- f(x, y) = amount of similarity between x and y. Not necessarily a metric!
  1. Use labeled data to train up this function
  2. Classify all pairs with the learned function
  3. Find the clustering that agrees most with the function
- The problem is divided into two separate phases
- We deal with the second phase

Cohen, McCallum & Richman’s idea

“Learn” a similarity measure based on context

[Figure: a graph over the mentions “Mr. Rumsfield”, “his”, “The secretary”, “he” and “Saddam Hussein”; edges are labeled as strong similarity or strong dissimilarity.]

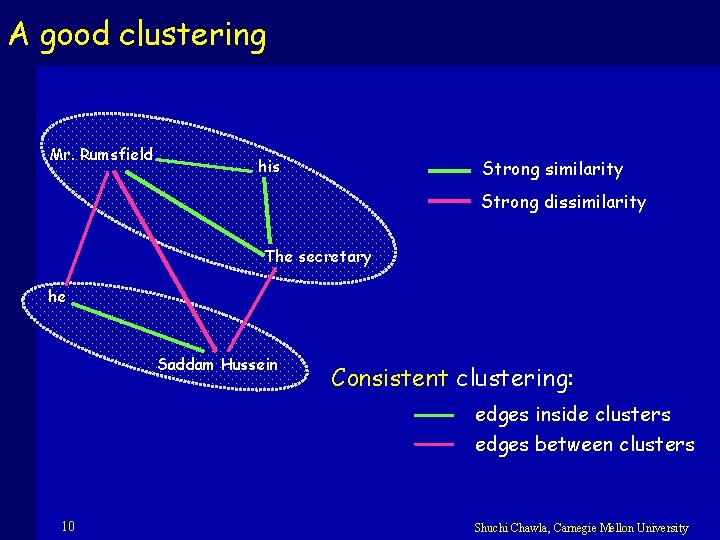

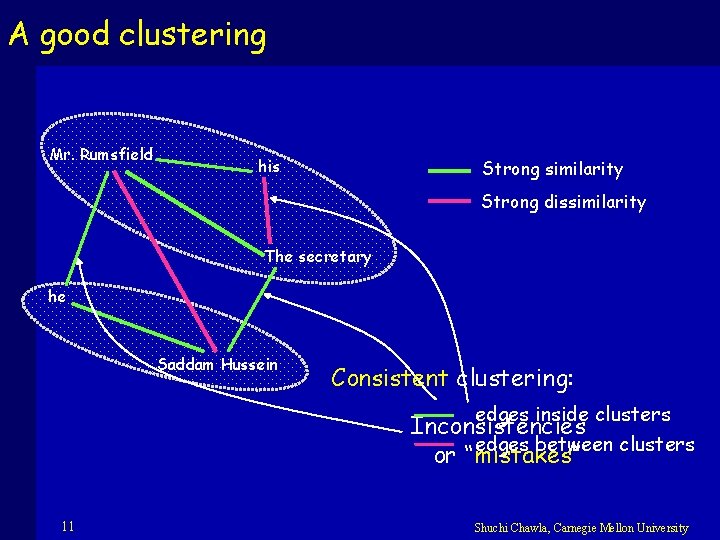

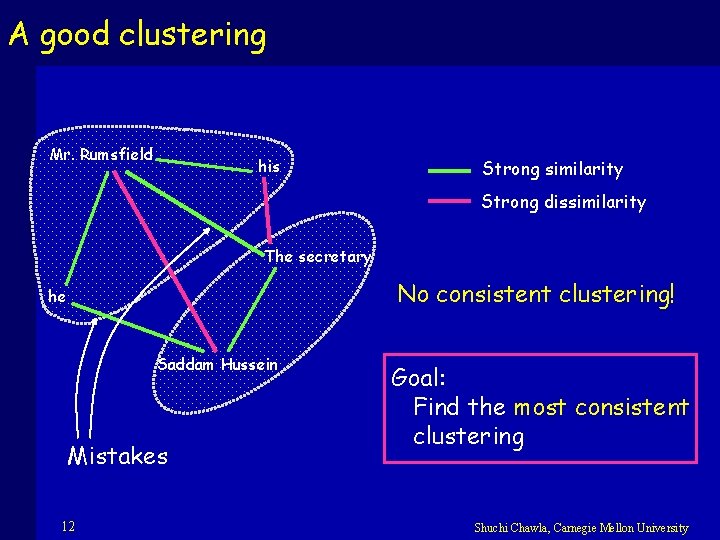

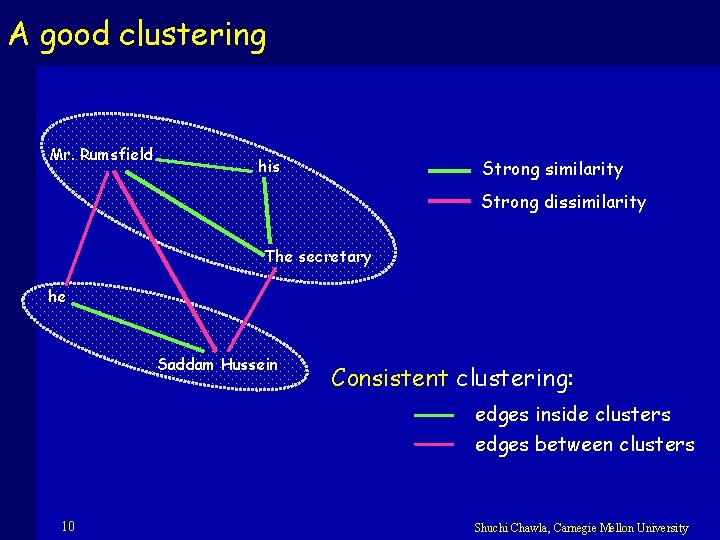

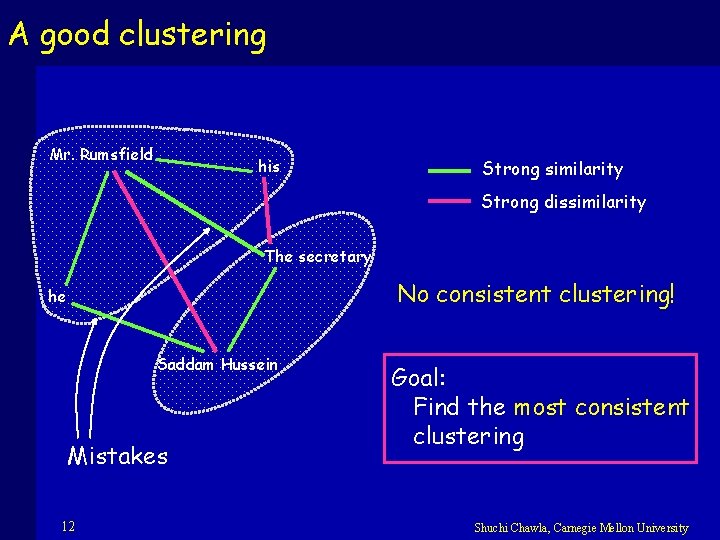

A good clustering

[Figure: a graph over the mentions “Mr. Rumsfield”, “his”, “The secretary”, “he” and “Saddam Hussein”, with strong-similarity and strong-dissimilarity edges, grouped into clusters.]

Consistent clustering: similar (+) edges inside clusters, dissimilar (–) edges between clusters

A good clustering

[Figure: a graph over the mentions “Mr. Rumsfield”, “his”, “The secretary”, “he” and “Saddam Hussein”, with strong-similarity and strong-dissimilarity edges, grouped into clusters; some edges are marked as inconsistencies, or “mistakes”.]

Consistent clustering: similar (+) edges inside clusters, dissimilar (–) edges between clusters

A good clustering

[Figure: a graph over the mentions “Mr. Rumsfield”, “his”, “The secretary”, “he” and “Saddam Hussein”, with strong-similarity and strong-dissimilarity edges; the mistakes are marked.]

No consistent clustering!

Goal: Find the most consistent clustering

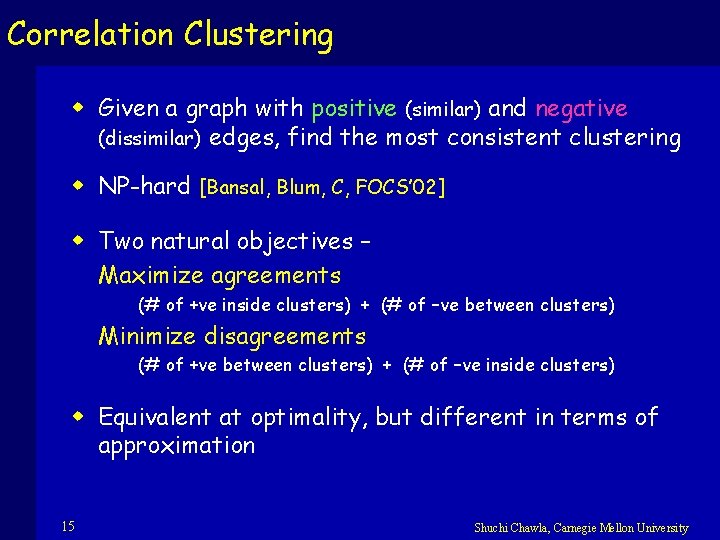

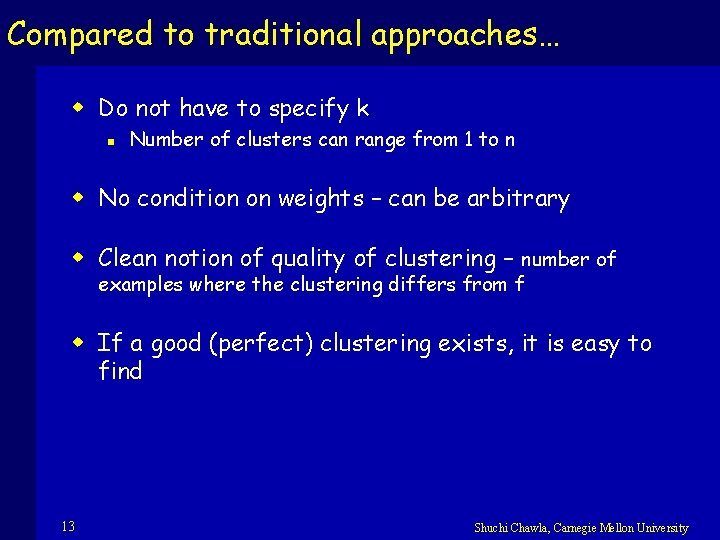

Compared to traditional approaches…

- Do not have to specify k
  - Number of clusters can range from 1 to n
- No condition on weights: they can be arbitrary
- Clean notion of quality of clustering: the number of examples where the clustering differs from f
- If a good (perfect) clustering exists, it is easy to find
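The last point can be illustrated with a short sketch. It assumes a complete graph in which every pair not listed as positive is negative; the function name and the edge representation are illustrative choices, not taken from the talk. If a zero-mistake clustering exists, its clusters must be exactly the connected components of the positive edges, so it suffices to compute those components and check that no negative edge falls inside one.

```python
from itertools import combinations


def find_perfect_clustering(vertices, positive_edges):
    """Return a zero-mistake clustering of a complete signed graph, or None.

    Assumption: every pair not listed in positive_edges is a negative edge.
    """
    positive = {frozenset(edge) for edge in positive_edges}

    # Adjacency lists over positive edges only.
    neighbors = {v: set() for v in vertices}
    for u, v in positive_edges:
        neighbors[u].add(v)
        neighbors[v].add(u)

    # Connected components of the positive subgraph are the only candidates.
    clusters, seen = [], set()
    for start in vertices:
        if start in seen:
            continue
        component, stack = set(), [start]
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(neighbors[node] - component)
        seen |= component
        clusters.append(component)

    # Perfect only if no negative edge lies inside a component.
    for component in clusters:
        for u, v in combinations(component, 2):
            if frozenset((u, v)) not in positive:
                return None
    return clusters
```

When the function returns None, no perfect clustering exists and the approximation question discussed in the rest of the talk becomes relevant.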

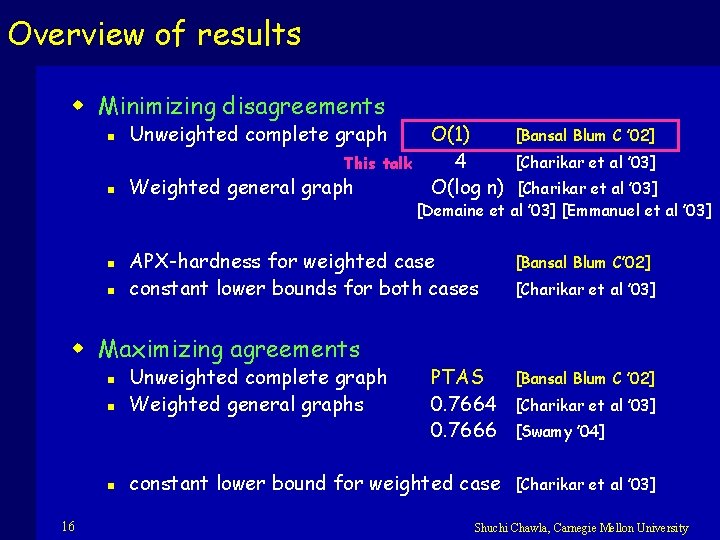

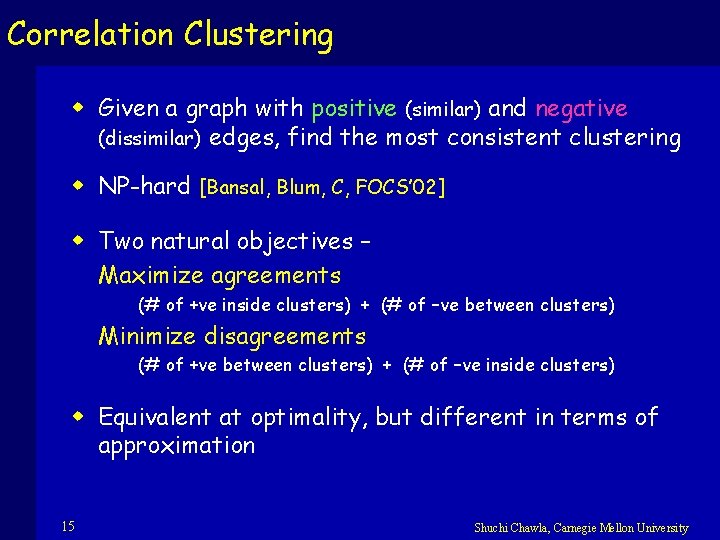

Correlation Clustering

- Given a graph with positive (similar) and negative (dissimilar) edges, find the most consistent clustering
- NP-hard [Bansal, Blum, C, FOCS ’02]
- Two natural objectives:
  - Maximize agreements: (# of +ve inside clusters) + (# of –ve between clusters)
  - Minimize disagreements: (# of +ve between clusters) + (# of –ve inside clusters)
- Equivalent at optimality, but different in terms of approximation
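A minimal sketch of the two objectives, assuming edge labels are stored as a dictionary from unordered pairs to +1 or -1 (a representation chosen here for illustration). Every edge is either an agreement or a disagreement, so the two counts always sum to the number of edges, which is why the objectives coincide at optimality.

```python
def count_agreements_and_disagreements(labels, cluster_of):
    """Count agreements and disagreements of a clustering with the edge labels.

    labels maps frozenset({u, v}) to +1 (similar) or -1 (dissimilar);
    cluster_of maps each vertex to a cluster identifier.
    """
    agreements = disagreements = 0
    for pair, sign in labels.items():
        u, v = tuple(pair)
        same_cluster = cluster_of[u] == cluster_of[v]
        # A positive edge agrees when it is inside a cluster,
        # a negative edge agrees when it runs between clusters.
        if (sign > 0) == same_cluster:
            agreements += 1
        else:
            disagreements += 1
    return agreements, disagreements


# Hypothetical three-vertex example: a-b and b-c similar, a-c dissimilar.
labels = {
    frozenset(("a", "b")): +1,
    frozenset(("b", "c")): +1,
    frozenset(("a", "c")): -1,
}
print(count_agreements_and_disagreements(labels, {"a": 0, "b": 0, "c": 0}))  # (2, 1)
print(count_agreements_and_disagreements(labels, {"a": 0, "b": 1, "c": 2}))  # (1, 2)
```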

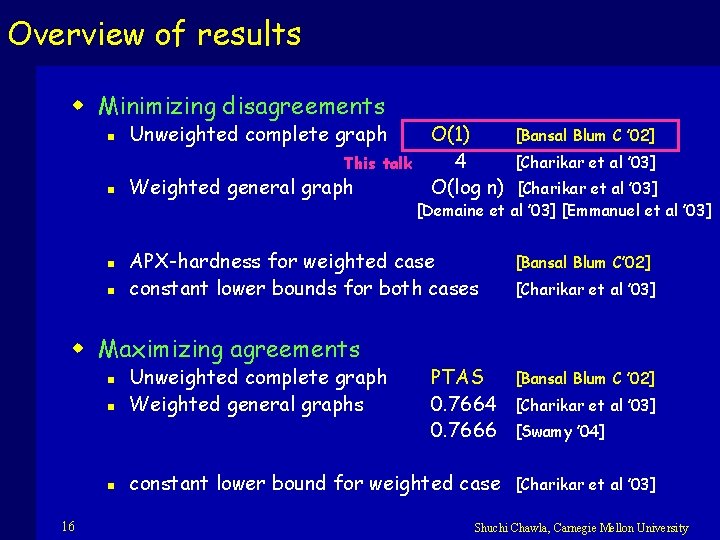

Overview of results

- Minimizing disagreements
  - Unweighted complete graph (this talk): O(1) [Bansal Blum C ’02]; 4 [Charikar et al ’03]
  - Weighted general graph: O(log n) [Charikar et al ’03] [Demaine et al ’03] [Emanuel et al ’03]
  - APX-hardness for the weighted case [Bansal Blum C ’02]; constant lower bounds for both cases [Charikar et al ’03]
- Maximizing agreements
  - Unweighted complete graph: PTAS [Bansal Blum C ’02]
  - Weighted general graphs: 0.7664 [Charikar et al ’03]; 0.7666 [Swamy ’04]
  - Constant lower bound for the weighted case [Charikar et al ’03]

![Minimizing Disagreements [Bansal, Blum, C, FOCS ’02]](https://slidetodoc.com/presentation_image_h2/ae82213b6c751d3d5bfa8383d12cb935/image-16.jpg)

Minimizing Disagreements [Bansal, Blum, C, FOCS ’02]

- Goal: approximately minimize the number of “mistakes”
- Assumption: the graph is unweighted and complete
- A lower bound on OPT: erroneous triangles
  - Consider an “erroneous triangle”: two + edges and one – edge
  - Any clustering disagrees with at least one of these edges
  - If there are several edge-disjoint erroneous triangles, then any clustering makes a mistake on each one
  - D_opt ≥ maximum fractional packing of erroneous triangles
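The lower bound can be made concrete with a small sketch. It greedily collects edge-disjoint erroneous triangles; this is an integral packing, which is never larger than the best fractional packing, so the count it returns is a valid (though possibly weak) lower bound on the number of disagreements of any clustering. The greedy strategy, the function names and the edge-label dictionary are assumptions made for illustration, not the method from the talk.

```python
from itertools import combinations


def is_erroneous(sign, a, b, c):
    """True if triangle (a, b, c) has exactly two positive edges and one negative."""
    signs = [sign[frozenset(pair)] for pair in ((a, b), (b, c), (a, c))]
    return signs.count(+1) == 2 and signs.count(-1) == 1


def greedy_triangle_lower_bound(vertices, sign):
    """Count a greedily chosen set of edge-disjoint erroneous triangles.

    sign maps frozenset({u, v}) to +1 or -1 for every pair of vertices.
    """
    used_edges = set()
    count = 0
    for a, b, c in combinations(vertices, 3):
        edges = {frozenset((a, b)), frozenset((b, c)), frozenset((a, c))}
        # Accept the triangle only if it shares no edge with earlier picks.
        if is_erroneous(sign, a, b, c) and not (edges & used_edges):
            used_edges |= edges
            count += 1
    return count
```

Any clustering of the input must then make at least `greedy_triangle_lower_bound(vertices, sign)` mistakes.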

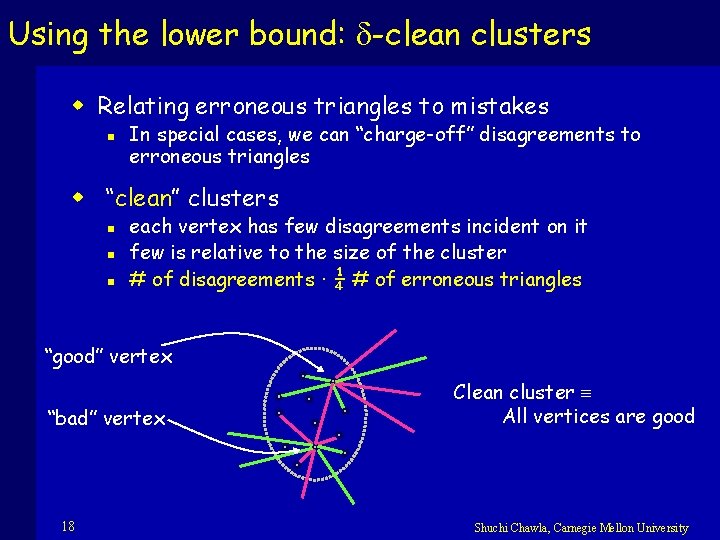

Using the lower bound: δ-clean clusters

- Relating erroneous triangles to mistakes
  - In special cases, we can “charge off” disagreements to erroneous triangles
- “Clean” clusters
  - Each vertex has few disagreements incident on it
  - “Few” is relative to the size of the cluster
  - # of disagreements ≲ # of erroneous triangles

[Figure: a clean cluster, in which all vertices are good; a “good” vertex and a “bad” vertex are marked.]

Using the lower bound: δ-clean clusters

- Relating erroneous triangles to mistakes
  - In special cases, we can “charge off” disagreements to erroneous triangles
- δ-clean clusters
  - Each vertex in cluster C has fewer than δ|C| positive and δ|C| negative mistakes
  - # of disagreements ≲ # of erroneous triangles
  - A high density of positive edges: we can easily spot them in the graph
- Possible solution: find a δ-clean clustering, and charge disagreements to erroneous triangles
- Caveat: it may not exist
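The cleanliness condition can be checked directly. The sketch below reads a “positive mistake” as a positive edge leaving the cluster and a “negative mistake” as a negative edge inside it; that reading, the parameter name `delta` and the set-of-positive-pairs representation are assumptions made for illustration.

```python
def is_delta_clean(cluster, vertices, positive, delta):
    """Check whether every vertex of `cluster` has few mistakes.

    positive is a set of frozenset({u, v}) pairs; any other pair is negative.
    A vertex passes if it has fewer than delta * |cluster| positive edges
    leaving the cluster and fewer than delta * |cluster| negative edges inside.
    """
    cluster = set(cluster)
    limit = delta * len(cluster)
    for v in cluster:
        positive_mistakes = sum(
            1 for u in vertices
            if u not in cluster and frozenset((u, v)) in positive
        )
        negative_mistakes = sum(
            1 for u in cluster
            if u != v and frozenset((u, v)) not in positive
        )
        if positive_mistakes >= limit or negative_mistakes >= limit:
            return False
    return True
```

For a four-vertex positive clique with a single positive edge to an outside vertex, the check passes for `delta = 0.5` and fails for `delta = 0.25`, which shows how the tolerance scales with the cluster size.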

Using the lower bound: δ-clean clusters

- Caveat: a δ-clean clustering may not exist
- An almost-δ-clean clustering: all clusters are either δ-clean or contain a single node
- An almost-δ-clean clustering always exists, trivially
- We show: there is an almost-δ-clean clustering, OPT(δ), that is almost as good as OPT. Its nice structure helps us find it easily.

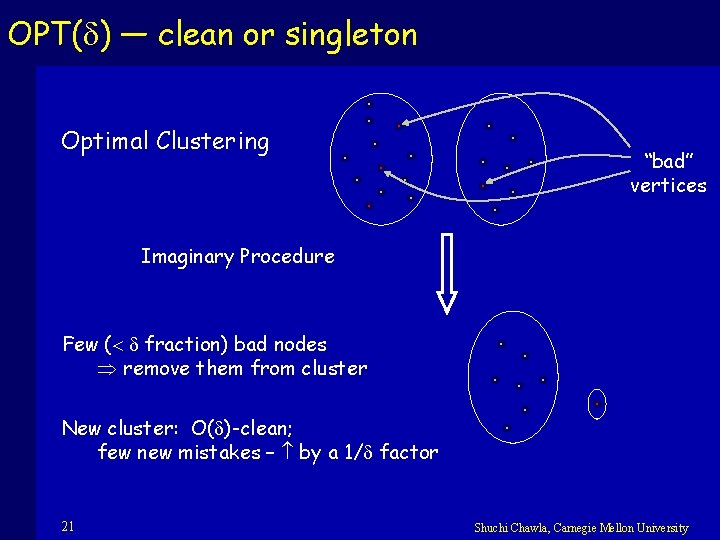

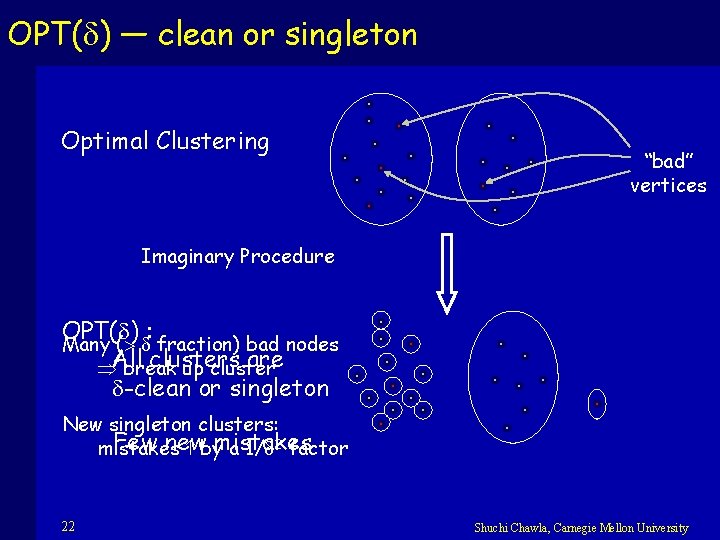

OPT(δ): clean or singleton

[Figure: a cluster of the optimal clustering with its “bad” vertices marked.]

Imaginary procedure:

- Few (δ fraction) bad nodes: remove them from the cluster
- New cluster: O(δ)-clean; few new mistakes, by a 1/δ factor

OPT(δ): clean or singleton

[Figure: a cluster of the optimal clustering with its “bad” vertices marked.]

Imaginary procedure:

- Many (δ fraction) bad nodes: break up the cluster
- New singleton clusters: few new mistakes, by a 1/δ² factor

OPT(δ): all clusters are δ-clean or singleton

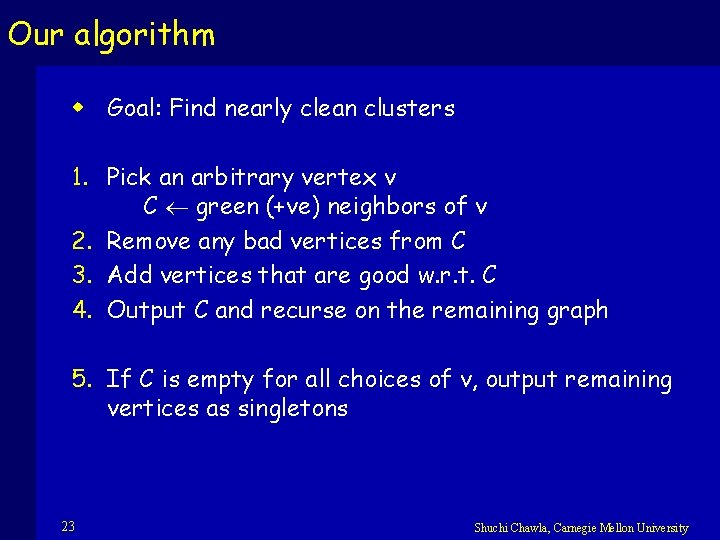

Our algorithm

- Goal: find nearly clean clusters
  1. Pick an arbitrary vertex v; C ← green (+ve) neighbors of v
  2. Remove any bad vertices from C
  3. Add vertices that are good w.r.t. C
  4. Output C and recurse on the remaining graph
  5. If C is empty for all choices of v, output the remaining vertices as singletons
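The five steps can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the goodness test (few negative edges into C and few positive edges leaving C, both relative to |C|), the single threshold `delta` used for both removal and addition, the default value 0.25, and the choice to include v itself in its starting cluster are all simplifications introduced here.

```python
def is_good(v, cluster, remaining, positive, delta):
    """Assumed test: v is good w.r.t. cluster if it has at most
    delta * |cluster| negative edges into it and positive edges out of it."""
    limit = delta * len(cluster)
    negative_inside = sum(
        1 for u in cluster if u != v and frozenset((u, v)) not in positive
    )
    positive_outside = sum(
        1 for u in remaining
        if u not in cluster and u != v and frozenset((u, v)) in positive
    )
    return negative_inside <= limit and positive_outside <= limit


def grow_cluster(v, remaining, positive, delta):
    # Step 1: start from v and its positive neighbors.
    cluster = {v} | {u for u in remaining if frozenset((u, v)) in positive}
    # Step 2: remove bad vertices one at a time, rechecking after each removal.
    changed = True
    while changed and cluster:
        changed = False
        for u in sorted(cluster):
            if not is_good(u, cluster, remaining, positive, delta):
                cluster.remove(u)
                changed = True
                break
    if not cluster:
        return cluster
    # Step 3: add outside vertices that are good w.r.t. the cleaned cluster.
    additions = {
        u for u in remaining - cluster
        if is_good(u, cluster, remaining, positive, delta)
    }
    return cluster | additions


def cluster_graph(vertices, positive_edges, delta=0.25):
    """Cluster a complete signed graph; vertices must be sortable."""
    positive = {frozenset(edge) for edge in positive_edges}
    remaining = set(vertices)
    clusters = []
    while remaining:
        for v in sorted(remaining):
            cluster = grow_cluster(v, remaining, positive, delta)
            if cluster:
                # Step 4: output the cluster and continue on the rest.
                clusters.append(cluster)
                remaining -= cluster
                break
        else:
            # Step 5: every choice of v gave an empty cluster.
            clusters.extend({v} for v in remaining)
            remaining = set()
    return clusters
```

On two disjoint positive cliques the sketch returns the two cliques; on a single erroneous triangle every candidate cluster empties out and the vertices are returned as singletons.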

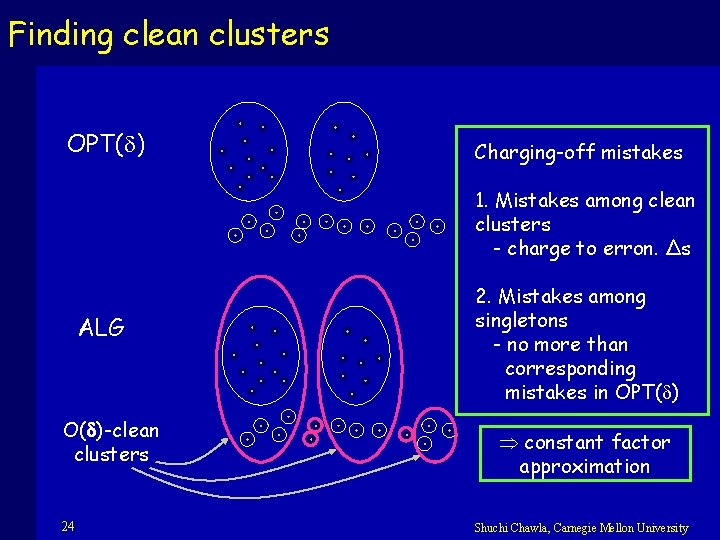

Finding clean clusters

[Figure: the clustering OPT(δ) next to the algorithm’s output ALG, which consists of O(δ)-clean clusters.]

Charging off mistakes:

1. Mistakes among clean clusters: charge to erroneous triangles
2. Mistakes among singletons: no more than the corresponding mistakes in OPT(δ)

Result: a constant factor approximation

![Experimental Results [Wellner & McCallum ’03]](https://slidetodoc.com/presentation_image_h2/ae82213b6c751d3d5bfa8383d12cb935/image-24.jpg)

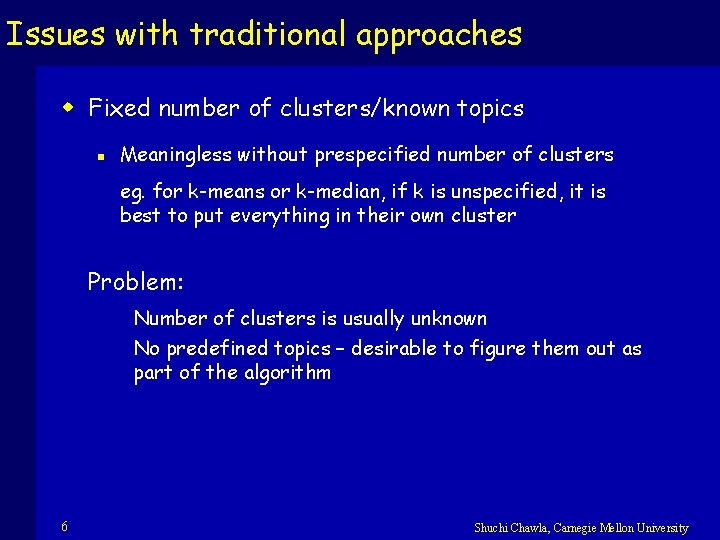

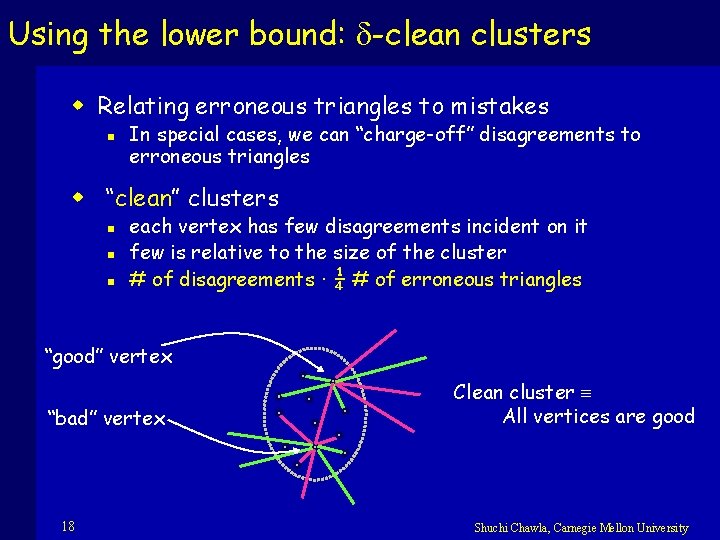

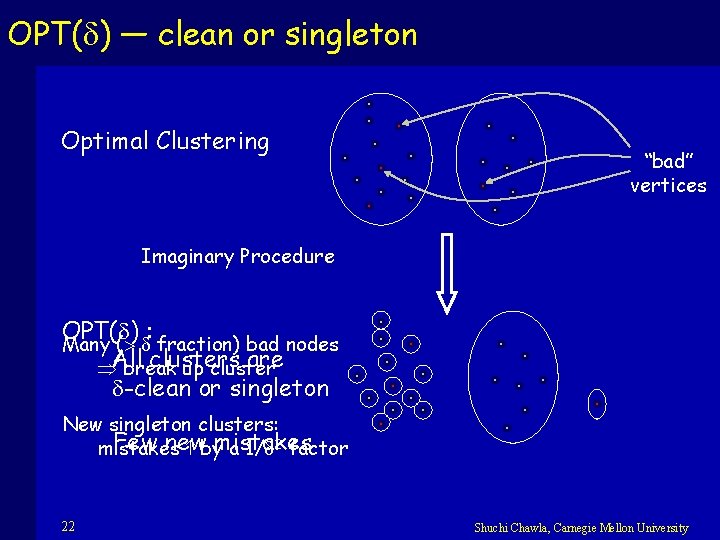

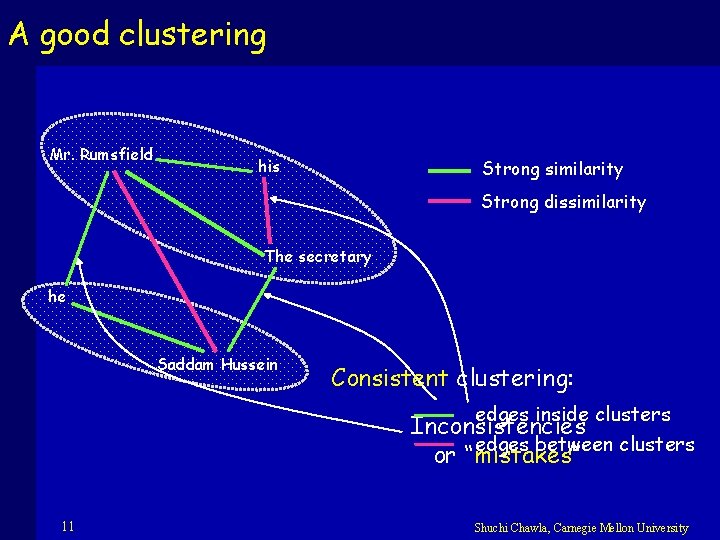

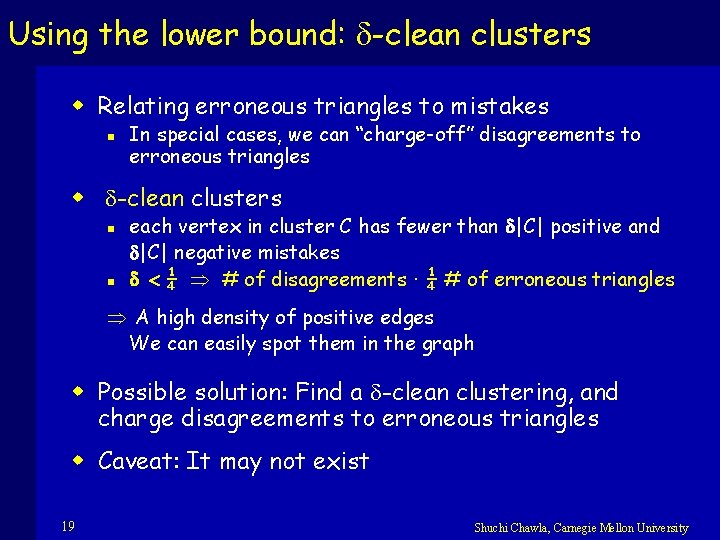

Experimental Results [Wellner & McCallum ’03]

| Method | Dataset 1 | Dataset 2 | Dataset 3 |
| --- | --- | --- | --- |
| Best-previous-match | 90.98 | 88.83 | 70.41 |
| Single-link-threshold | 91.65 | 88.90 | 60.83 |
| Correlation clustering | 93.96 | 91.59 | 73.42 |
| % error reduction over previous best | 28 | 24 | 10 |

(Values in the first three rows are % accuracy of classification.)
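The last row can be reproduced from the accuracies, assuming it reports the relative reduction in error (100 minus accuracy) against the better of the two baseline methods on each dataset. That reading is an inference from the numbers, and the function name below is illustrative.

```python
def error_reduction(previous_best_accuracy, new_accuracy):
    """Relative reduction in error, in percent, when accuracy improves."""
    previous_error = 100.0 - previous_best_accuracy
    new_error = 100.0 - new_accuracy
    return 100.0 * (previous_error - new_error) / previous_error


# (best-previous-match, single-link-threshold, correlation clustering)
accuracies = {
    "Dataset 1": (90.98, 91.65, 93.96),
    "Dataset 2": (88.83, 88.90, 91.59),
    "Dataset 3": (70.41, 60.83, 73.42),
}

for name, (match, single_link, correlation) in accuracies.items():
    baseline = max(match, single_link)  # the previous best on this dataset
    print(name, round(error_reduction(baseline, correlation)))
```

For Dataset 1, for example, the error drops from 8.35 to 6.04 points, a reduction of about 28%.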

Future Directions

- Better combinatorial approximation
- A good “iterative” approximation
  - On few changes to the graph, quickly recompute a good clustering
- Minimizing Correlation
  - number of agreements – number of disagreements
  - log-approx known; can we get a constant factor approx?

Questions?