CONVOLUTIONAL NEURAL NETWORKS FOR SENTENCE CLASSIFICATION EMNLP 2014

- Slides: 13

CONVOLUTIONAL NEURAL NETWORKS FOR SENTENCE CLASSIFICATION EMNLP 2014 • YOON KIM, NEW YORK UNIVERSITY • DANKOOK UNIVERSITY EDUAI CENTER • PRESENTER: KIM TAE-KYUNG (김태경)

OUTLINE • Abstract • Introduction • CNN (convolutional neural network) • Collobert-Weston style CNN • Neural Word Embeddings • Model • Regularization • Dataset and experimental setup • Result • Conclusion

ABSTRACT • Convolutional neural networks (CNNs) are trained on top of pre-trained word vectors for sentence-level classification tasks • Simple CNN architecture • Two settings for the word vectors: static (kept fixed) and non-static (fine-tuned) • Improves on the state of the art on 4 out of 7 tasks

INTRODUCTION • A simple CNN applied to NLP • Builds on the Collobert-Weston style CNN • Pre-trained (static) vectors + fine-tuned vectors -> multiple channels • Fine-tuning the word vectors results in further improvements

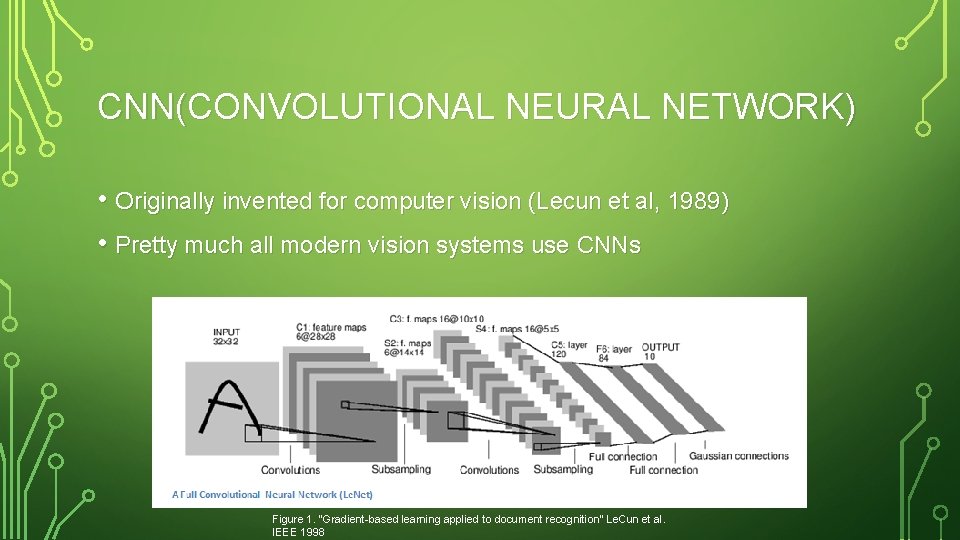

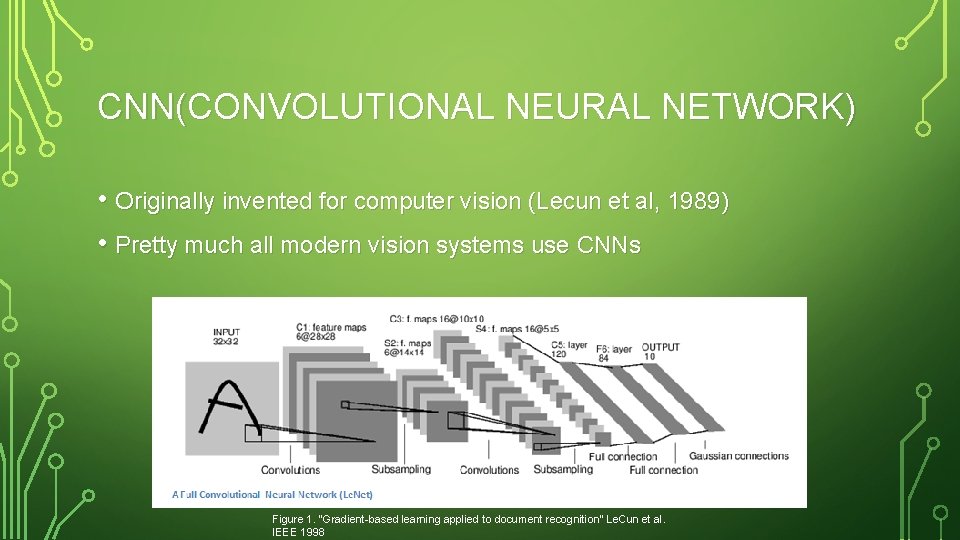

CNN (CONVOLUTIONAL NEURAL NETWORK) • Originally invented for computer vision (LeCun et al., 1989) • Pretty much all modern vision systems use CNNs • Figure 1. "Gradient-based learning applied to document recognition", LeCun et al., Proceedings of the IEEE, 1998

COLLOBERT-WESTON STYLE CNN • CNN at the bottom + CRF on top • A unified neural network architecture and learning algorithm • Most of these networks are quite complex, with multiple convolutional layers. • The system learns internal representations on the basis of vast amounts of mostly unlabeled training data

NEURAL WORD EMBEDDINGS • Word vectors: a continuous vector space where semantically similar words are mapped to nearby points • word2vec: a particularly computationally efficient predictive model for learning word embeddings from raw text • CBOW (Continuous Bag-of-Words) model • Skip-gram model

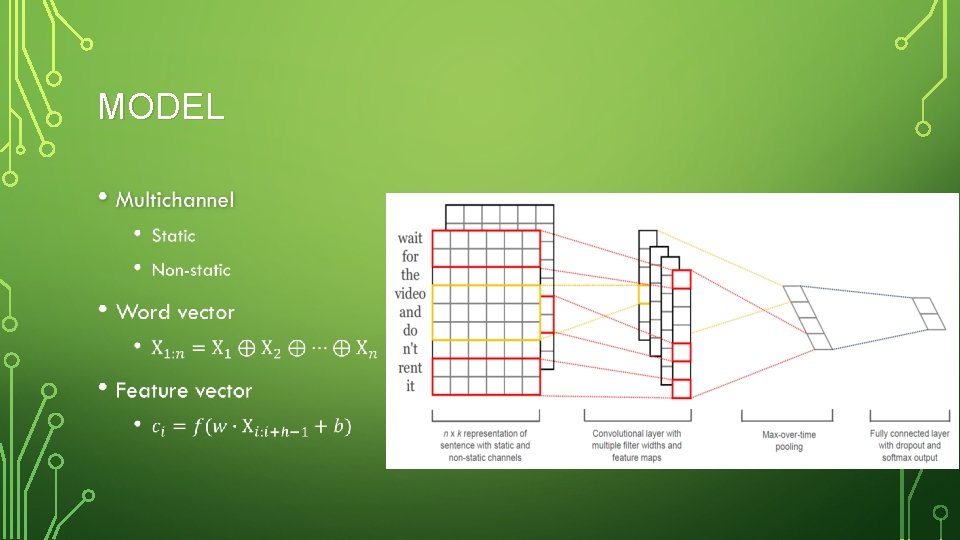

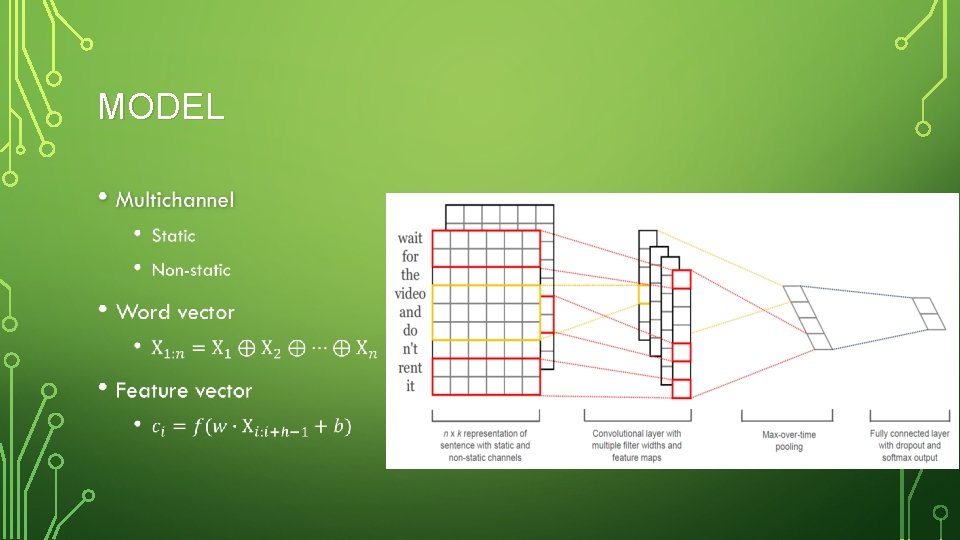
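The difference between the CBOW and skip-gram models comes down to which side of a (word, context) pair is predicted. The following is a minimal sketch of how the training pairs could be built from raw text; the function names, the `window` parameter, and the example sentence are assumptions made for illustration and are not taken from the slides or from any word2vec implementation.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: predict each context word from the center word.

    Returns a list of (center, context_word) pairs.
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_pairs(tokens, window=2):
    """CBOW: predict the center word from all of its context words.

    Returns a list of (context_words, center) pairs.
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        pairs.append((context, center))
    return pairs


# Hypothetical example sentence
tokens = "the movie was surprisingly good".split()
sg = skipgram_pairs(tokens, window=1)
cb = cbow_pairs(tokens, window=1)
```

Skip-gram produces one training example per context word, while CBOW produces one example per position with the whole context as input.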

MODEL
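As a rough illustration of the one-layer architecture the talk describes, the sketch below slides each filter over every window of h consecutive word vectors and then keeps the largest response (max-over-time pooling), so each filter contributes one feature per sentence. It is a simplified pure-Python sketch, not the author's implementation: the function names and toy numbers are hypothetical, ReLU is assumed as the non-linearity, and padding of sentences shorter than h is omitted.

```python
def conv_feature_map(sentence, filt, bias=0.0):
    """Apply one filter to every window of h consecutive word vectors.

    sentence: list of n word vectors, each of length k
    filt:     list of h weight vectors, each of length k
    Returns n - h + 1 features c_i = relu(w . x_{i:i+h-1} + b).
    Assumes the sentence has at least h words (no padding here).
    """
    h = len(filt)
    features = []
    for i in range(len(sentence) - h + 1):
        total = bias
        for row, word in zip(filt, sentence[i:i + h]):
            total += sum(w * x for w, x in zip(row, word))
        features.append(max(0.0, total))  # ReLU, an assumed choice
    return features


def max_over_time(features):
    """Keep only the strongest response of a filter over the sentence."""
    return max(features)


def sentence_features(sentence, filters):
    """One pooled feature per filter; filters may have different widths."""
    return [max_over_time(conv_feature_map(sentence, f)) for f in filters]


# Toy sentence: 4 words, 2-dimensional vectors (made-up values)
sentence = [[1, 0], [0, 1], [1, 1], [0, 0]]
filters = [
    [[1, 0], [0, 1]],             # window of h = 2 words
    [[1, 1], [-1, -1], [1, 0]],   # window of h = 3 words
]
pooled = sentence_features(sentence, filters)
```

Because pooling takes a single maximum per filter, the pooled vector has a fixed length regardless of sentence length, which is what lets a fully connected softmax layer sit on top.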

REGULARIZATION
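The paper this talk presents regularizes with dropout on the penultimate layer and a constraint on the l2-norm of the weight vectors. The sketch below shows both ideas in plain Python. The function names and the `keep_prob` parameter are hypothetical; the defaults of 0.5 and 3 mirror the settings reported in the paper, and everything else is a simplification for illustration.

```python
import math
import random


def clip_l2_norm(weights, s=3.0):
    """Rescale a weight vector to have l2-norm s whenever its norm exceeds s.

    Intended to be applied after each gradient step.
    """
    norm = math.sqrt(sum(w * w for w in weights))
    if norm > s:
        return [w * s / norm for w in weights]
    return list(weights)


def dropout(features, keep_prob=0.5, rng=None):
    """Training-time dropout: keep each unit with probability keep_prob,
    otherwise set it to zero. No rescaling is done here; the weights
    would be scaled by keep_prob at test time instead."""
    rng = rng or random.Random()
    return [f if rng.random() < keep_prob else 0.0 for f in features]


clipped = clip_l2_norm([3.0, 4.0], s=3.0)      # norm 5 is rescaled to norm 3
unchanged = clip_l2_norm([1.0, 2.0], s=3.0)    # norm below s is left as is
dropped = dropout([1.0, 2.0, 3.0, 4.0], keep_prob=0.5, rng=random.Random(0))
```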

DATASET AND EXPERIMENTAL SETUP

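RESULT • CNN-rand: baseline model; all word vectors are randomly initialized and learned during training • CNN-static: simple model; pre-trained word vectors are kept fixed • CNN-nonstatic: fine-tuning model; pre-trained word vectors are updated for each task • CNN-multichannel: proposed model; two sets of word vectors, one kept static and one fine-tuned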
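The four variants above differ only in how the word-vector channels are initialized and whether they are updated during training. A minimal sketch of that distinction follows; the `EmbeddingChannel` class, the `VARIANTS` table, and the plain gradient step in `sgd_step` are hypothetical names and simplifications chosen for illustration, not the optimizer or code used in the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmbeddingChannel:
    pretrained: bool  # initialized from pre-trained vectors (True) or randomly (False)
    trainable: bool   # updated by backpropagation during training


VARIANTS = {
    "CNN-rand": [EmbeddingChannel(pretrained=False, trainable=True)],
    "CNN-static": [EmbeddingChannel(pretrained=True, trainable=False)],
    "CNN-nonstatic": [EmbeddingChannel(pretrained=True, trainable=True)],
    "CNN-multichannel": [
        EmbeddingChannel(pretrained=True, trainable=False),  # static channel
        EmbeddingChannel(pretrained=True, trainable=True),   # fine-tuned channel
    ],
}


def sgd_step(vector, grad, channel, lr=0.1):
    """Apply one gradient step to a word vector unless its channel is frozen."""
    if not channel.trainable:
        return list(vector)
    return [v - lr * g for v, g in zip(vector, grad)]


static_ch, tuned_ch = VARIANTS["CNN-multichannel"]
kept = sgd_step([1.0, 2.0], [1.0, -1.0], static_ch, lr=0.5)
moved = sgd_step([1.0, 2.0], [1.0, -1.0], tuned_ch, lr=0.5)
```

In the multichannel case both channels start from the same vectors, but only the second one moves away from them as training proceeds.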

STATIC VS NON-STATIC • After fine-tuning, "good" is arguably closer to "nice" than it is to "great" for expressing sentiment • For tokens without pre-trained vectors, fine-tuning allows them to learn more meaningful representations • The network learns that exclamation marks are associated with effusive expressions and that commas are conjunctive
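Statements such as one word being "closer" to another are usually checked by ranking neighbours by cosine similarity. The sketch below shows the mechanics of that comparison. The two-dimensional vectors in `static` and `tuned` are invented purely for illustration and are not values from the paper; only the ranking procedure is the point.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest(word, vectors):
    """Rank all other words by cosine similarity to `word`, most similar first."""
    others = [w for w in vectors if w != word]
    return sorted(others, key=lambda w: cosine(vectors[word], vectors[w]), reverse=True)


# Hypothetical toy vectors (made up): before and after fine-tuning "good"
static = {"good": [1.0, 0.0], "great": [0.9, 0.1], "nice": [0.5, 0.5]}
tuned = {"good": [0.6, 0.6], "great": [0.9, 0.1], "nice": [0.5, 0.5]}

before = nearest("good", static)
after = nearest("good", tuned)
```

With these toy values the neighbour ranking of "good" flips from "great" first to "nice" first, which is the kind of shift the slide attributes to fine-tuning.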

CONCLUSION • Convolutional neural networks built on top of word2vec • A simple CNN with one layer of convolution performs remarkably well • The results add to the evidence that unsupervised pre-training of word vectors is an important ingredient in deep learning for NLP