Contrastive Estimation: Efficiently Training Log-Linear Models of Sequences

- Slides: 35

Contrastive Estimation: (Efficiently) Training Log-Linear Models (of Sequences) on Unlabeled Data. Noah A. Smith and Jason Eisner, Department of Computer Science / Center for Language and Speech Processing, Johns Hopkins University, {nasmith, jason}@cs.jhu.edu. ACL 2005 • N. A. Smith and J. Eisner • Contrastive Estimation

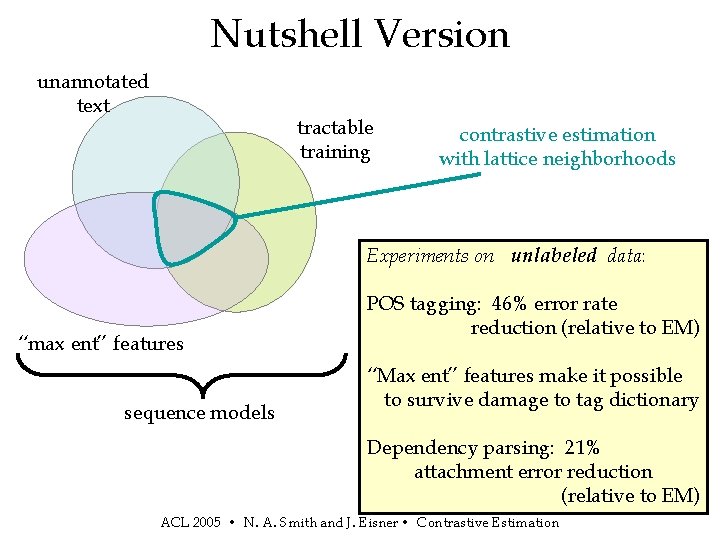

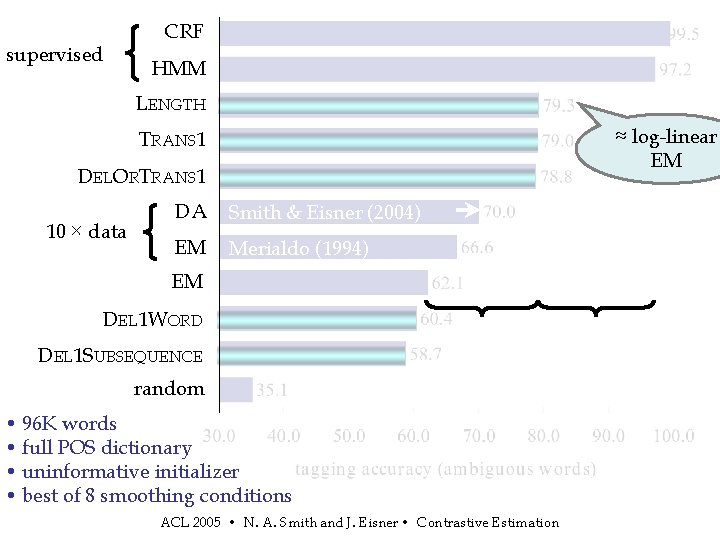

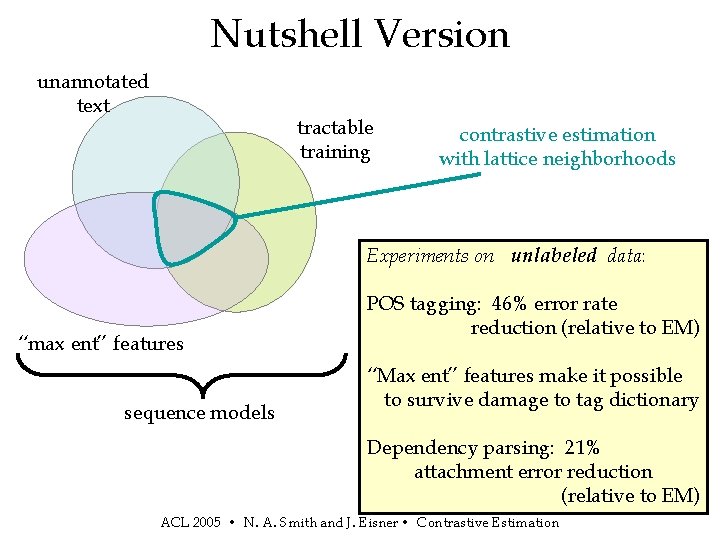

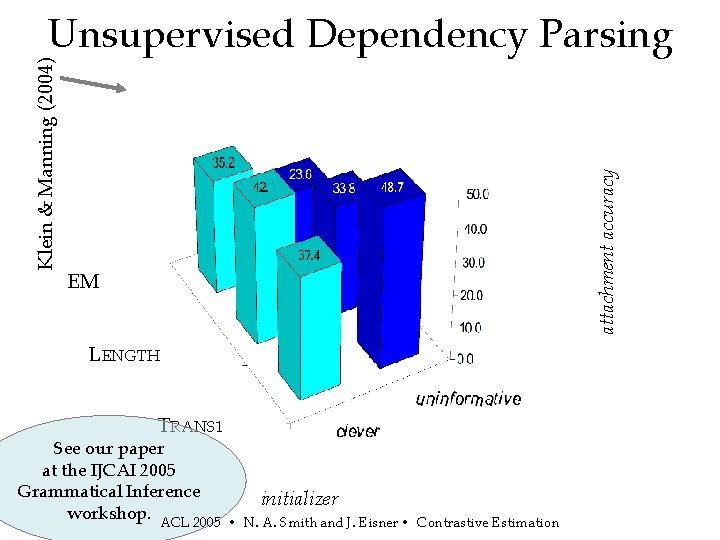

Nutshell Version: contrastive estimation with lattice neighborhoods brings together unannotated text, tractable training, “max ent” features, and sequence models.

Experiments on unlabeled data:

- POS tagging: 46% error rate reduction (relative to EM)
- “Max ent” features make it possible to survive damage to the tag dictionary
- Dependency parsing: 21% attachment error reduction (relative to EM)

“Red leaves don’t hide blue jays.”

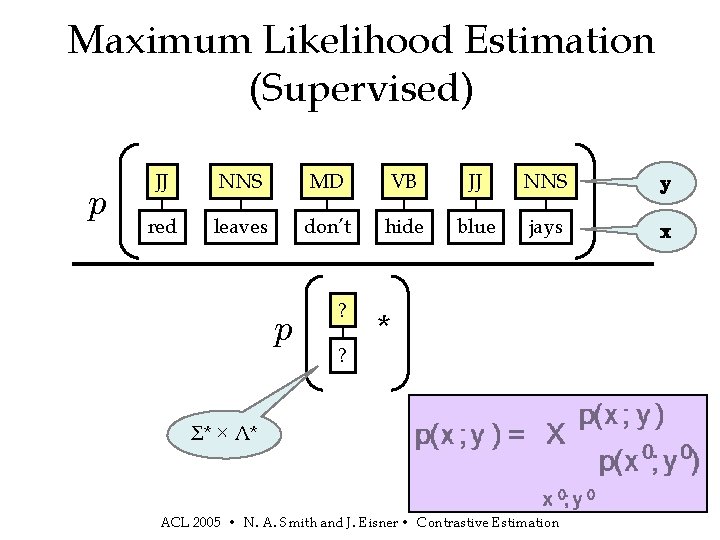

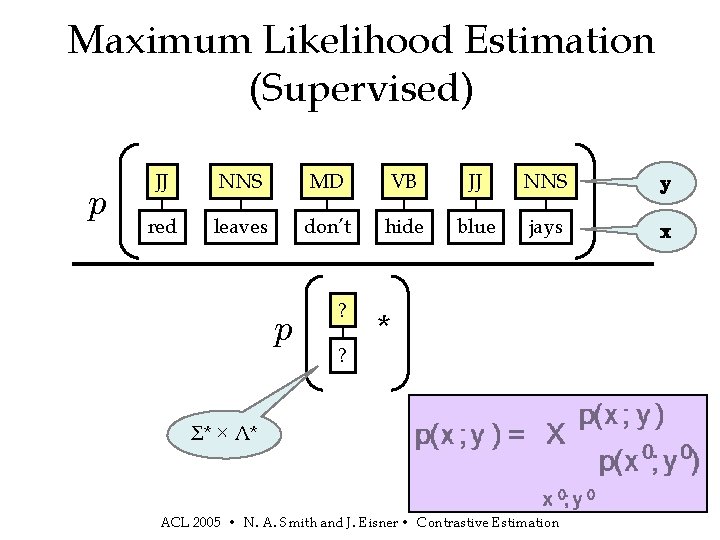

Maximum Likelihood Estimation (Supervised). Numerator: p(x = red leaves don’t hide blue jays, y = JJ NNS MD VB JJ NNS). Denominator: p summed over every sentence and tagging in Σ* × Λ*.

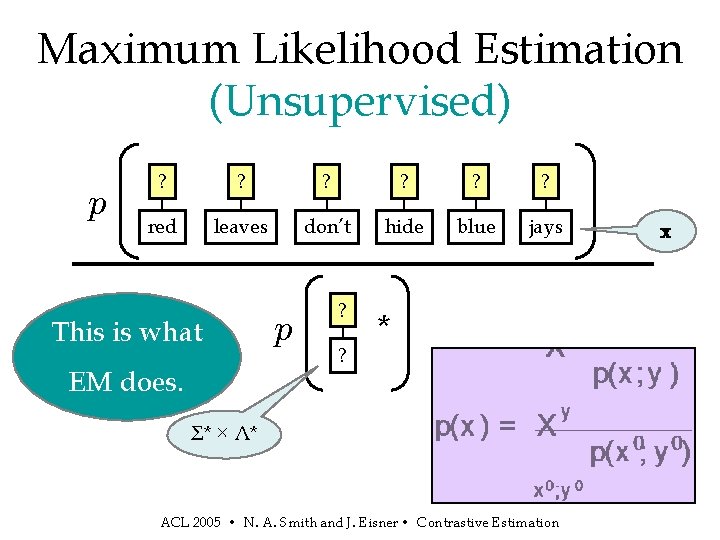

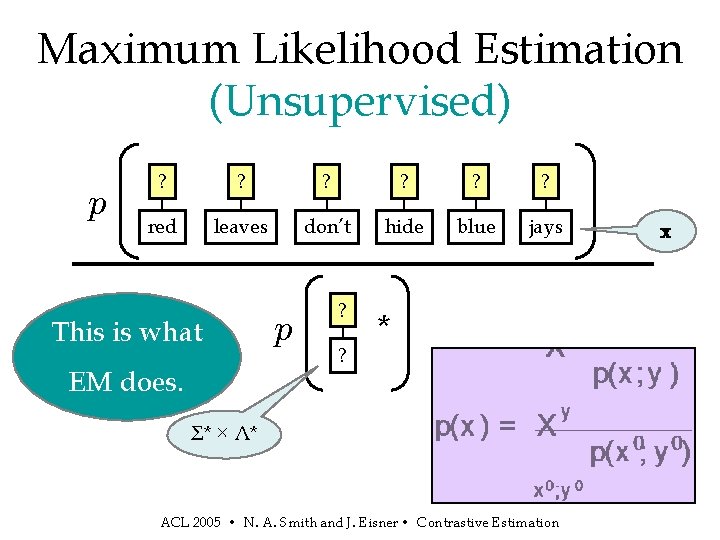

Maximum Likelihood Estimation (Unsupervised). Numerator: p(x = red leaves don’t hide blue jays) with the tags unobserved (? ? ? ? ? ?), i.e. summed over all taggings. Denominator: p summed over every sentence and tagging in Σ* × Λ*. This is what EM does.

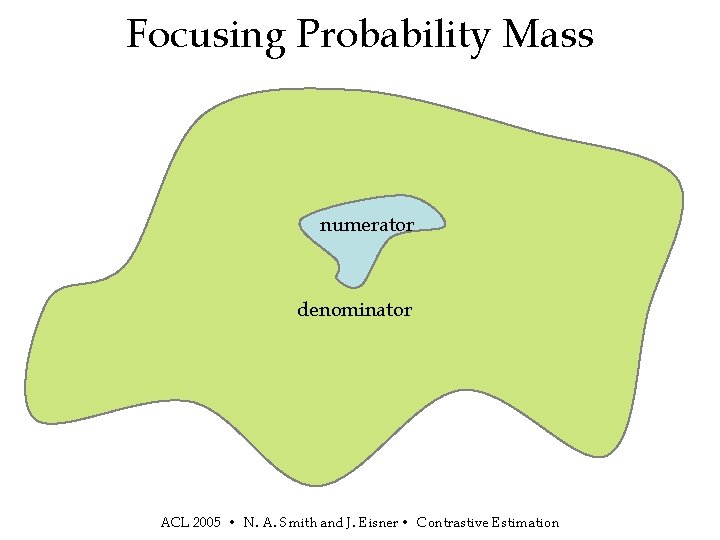

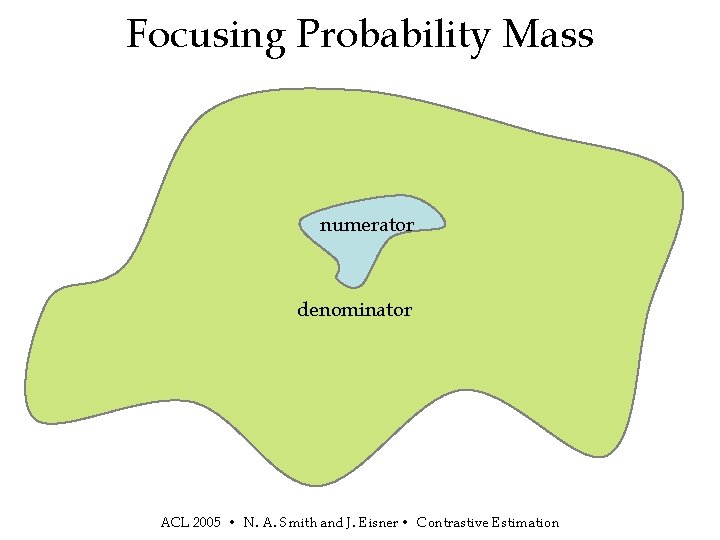

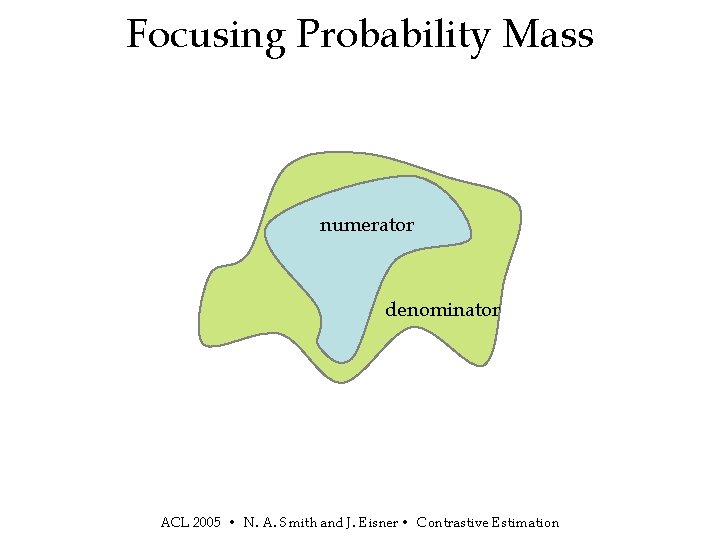

Focusing Probability Mass: numerator, denominator

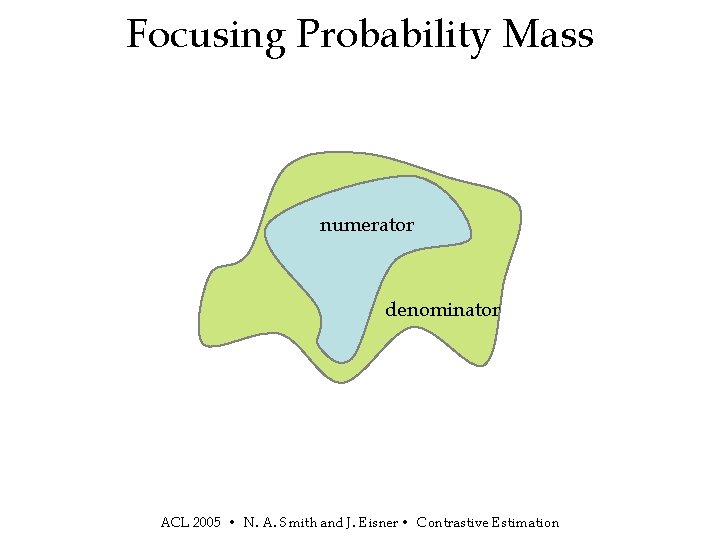


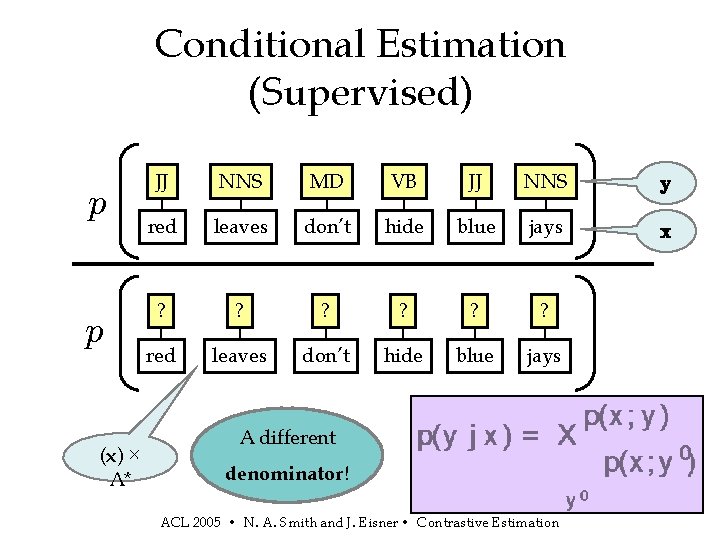

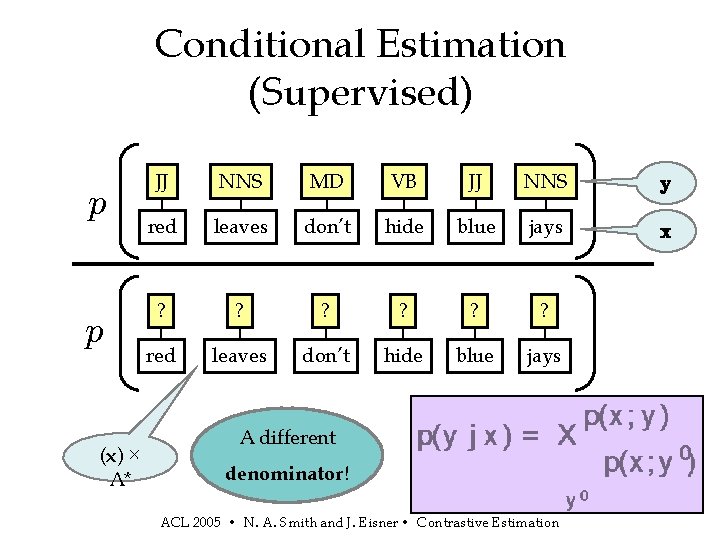

Conditional Estimation (Supervised). Numerator: p(x = red leaves don’t hide blue jays, y = JJ NNS MD VB JJ NNS). Denominator: p summed over (x) × Λ*, i.e. the same sentence, red leaves don’t hide blue jays, under any tagging (? ? ? ? ? ?). A different denominator!

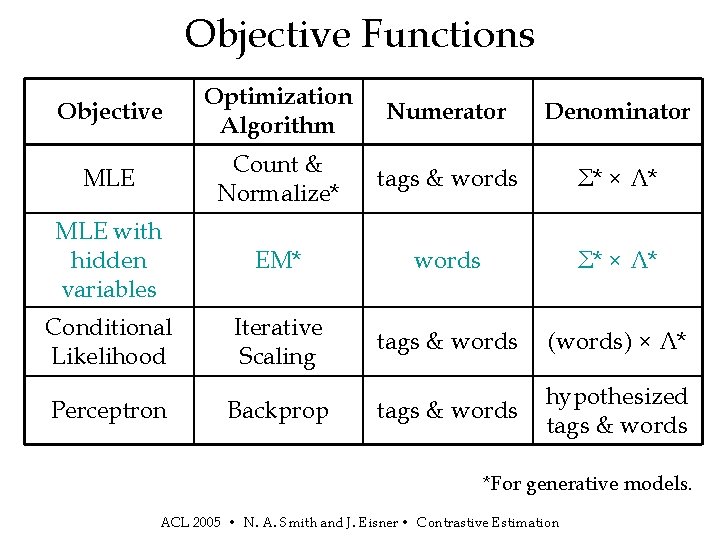

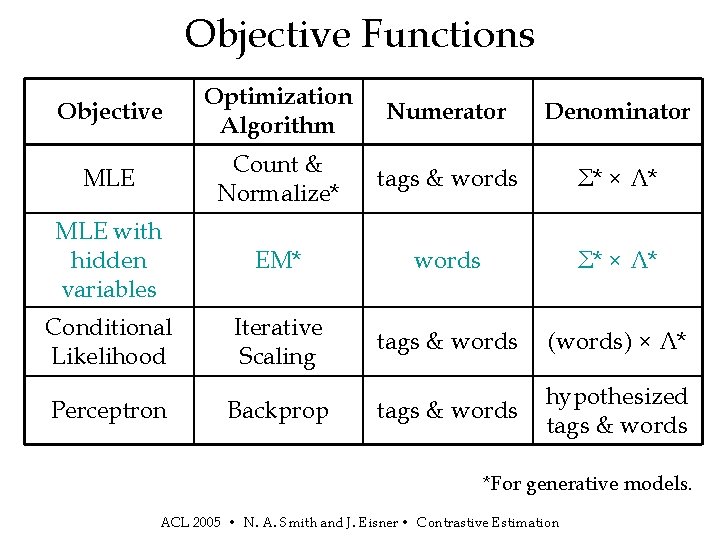

Objective Functions

| Objective | Optimization Algorithm | Numerator | Denominator |
|---|---|---|---|
| MLE | Count & Normalize* | tags & words | Σ* × Λ* |
| MLE with hidden variables | EM* | words | Σ* × Λ* |
| Conditional Likelihood | Iterative Scaling | tags & words | (words) × Λ* |
| Perceptron | Backprop | tags & words | hypothesized tags & words |

*For generative models.

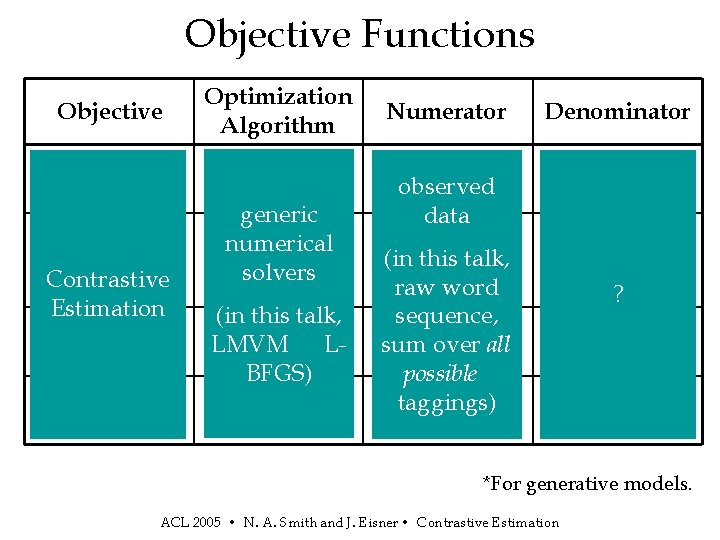

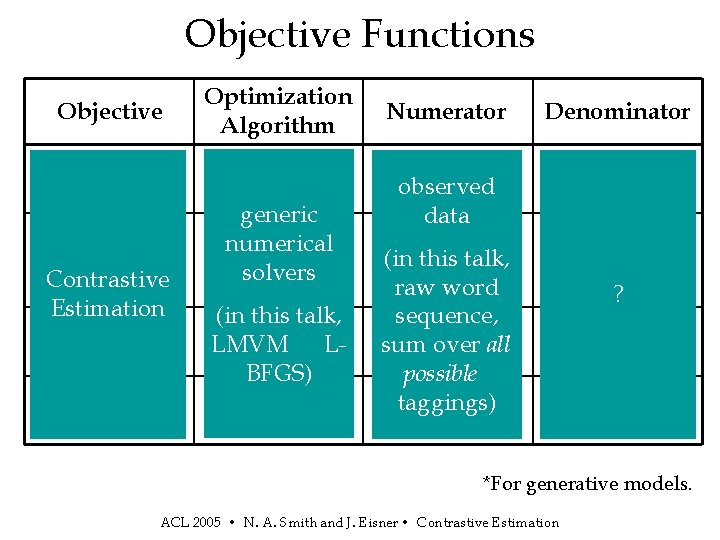

Objective Functions

| Objective | Optimization Algorithm | Numerator | Denominator |
|---|---|---|---|
| MLE | Count & Normalize* | tags & words | Σ* × Λ* |
| MLE with hidden variables | EM* | words | Σ* × Λ* |
| Conditional Likelihood | Iterative Scaling | tags & words | (words) × Λ* |
| Perceptron | Backprop | tags & words | hypothesized tags & words |
| Contrastive Estimation | generic numerical solvers (in this talk, LMVM L-BFGS) | observed data (in this talk, raw word sequence, sum over all possible taggings) | ? |

*For generative models.

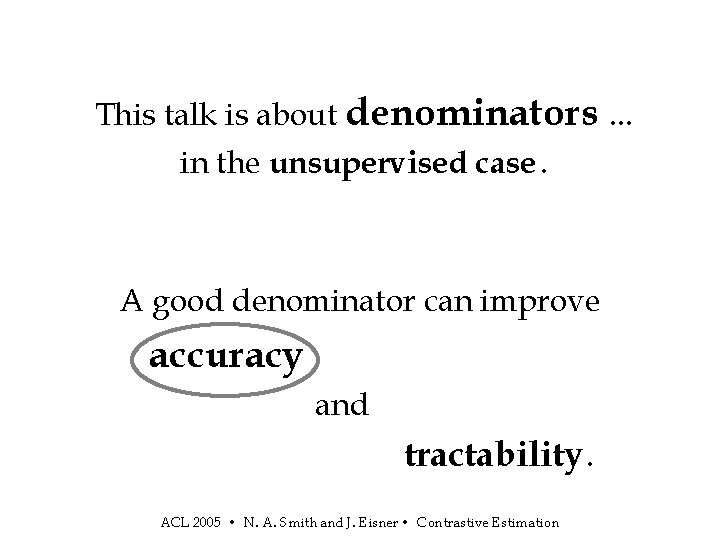

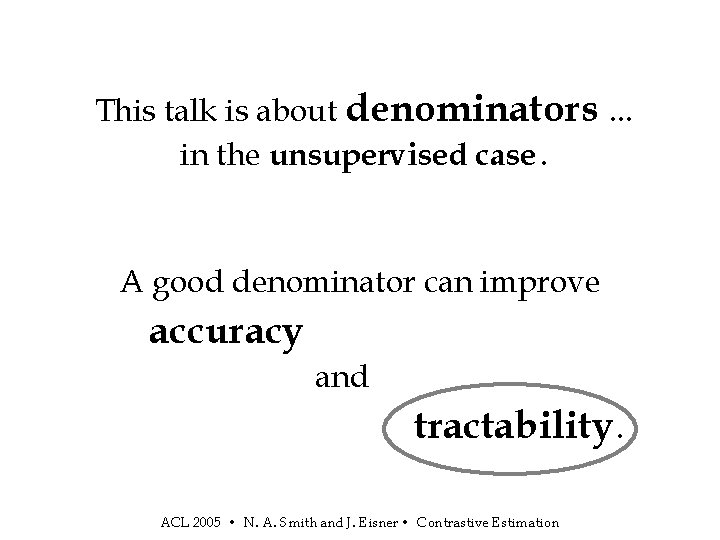

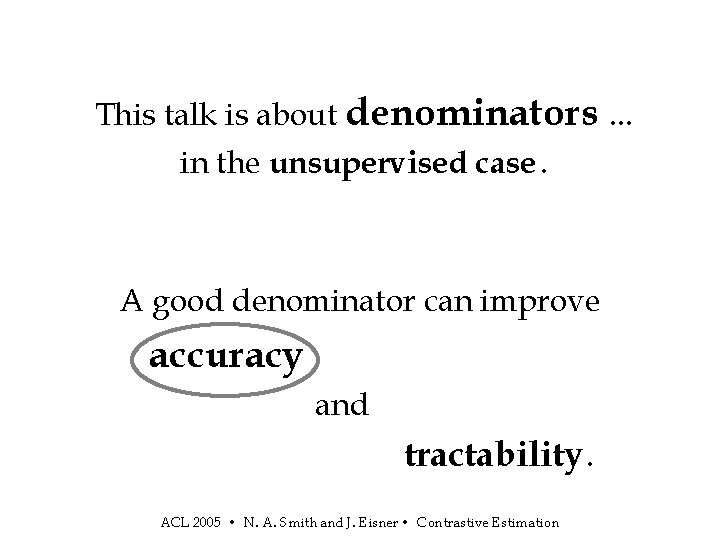

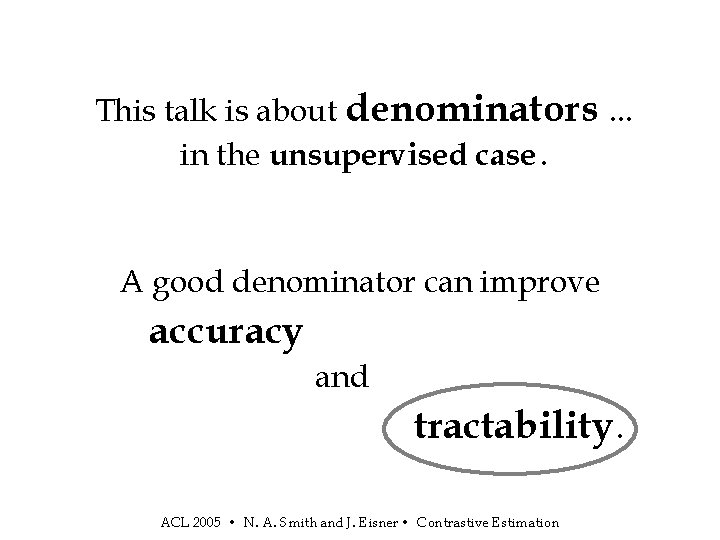

This talk is about denominators ... in the unsupervised case. A good denominator can improve accuracy and tractability.

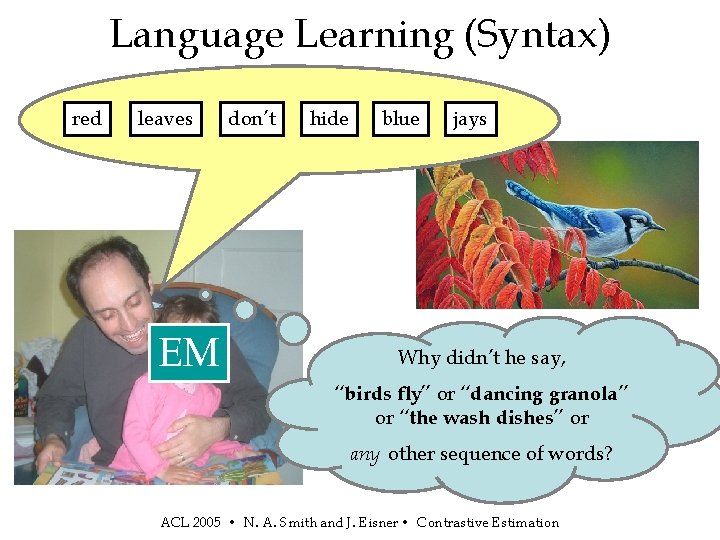

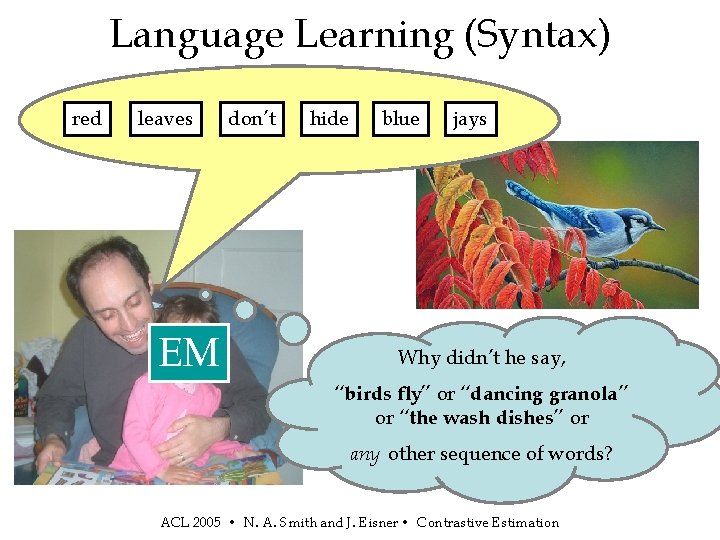

Language Learning (Syntax), the EM view: “red leaves don’t hide blue jays.” Why didn’t he say, “birds fly” or “dancing granola” or “the wash dishes” or any other sequence of words?

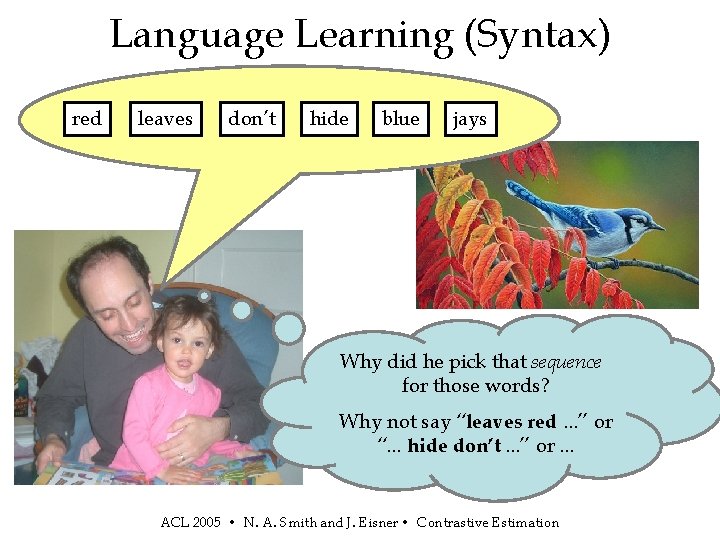

Language Learning (Syntax): “red leaves don’t hide blue jays.” Why did he pick that sequence for those words? Why not say “leaves red ...” or “... hide don’t ...” or ...?

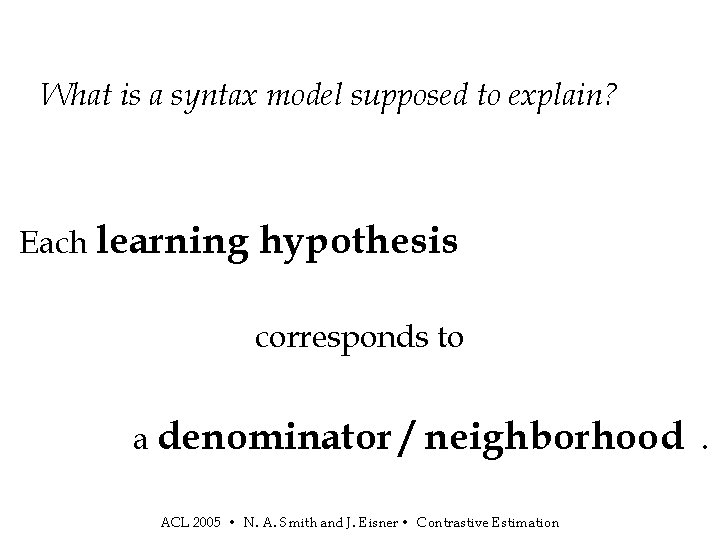

What is a syntax model supposed to explain? Each learning hypothesis corresponds to a denominator / neighborhood.

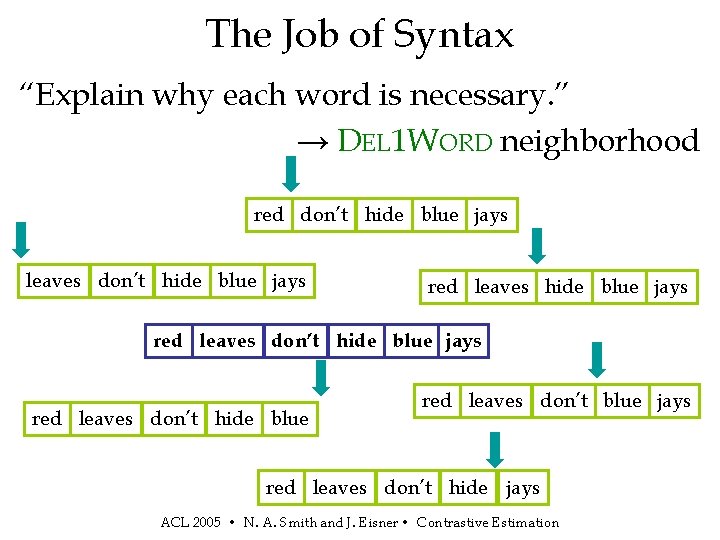

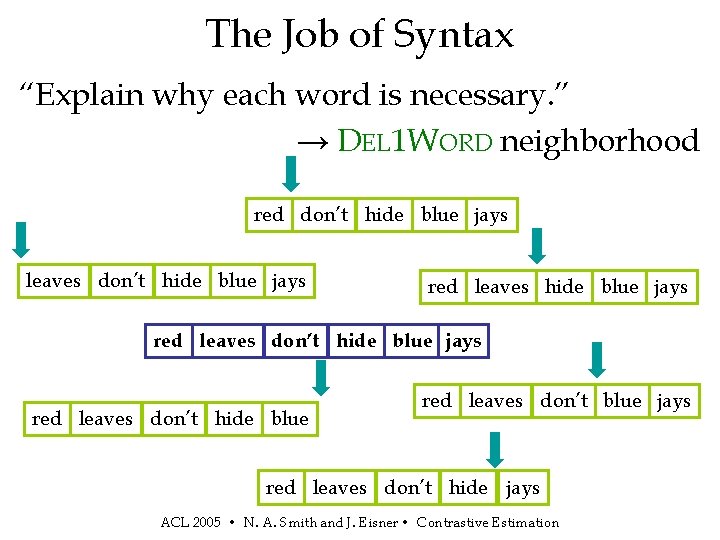

The Job of Syntax: “Explain why each word is necessary.” → DEL1WORD neighborhood of “red leaves don’t hide blue jays”:

- red don’t hide blue jays
- leaves don’t hide blue jays
- red leaves don’t hide blue
- red leaves don’t blue jays
- red leaves don’t hide jays

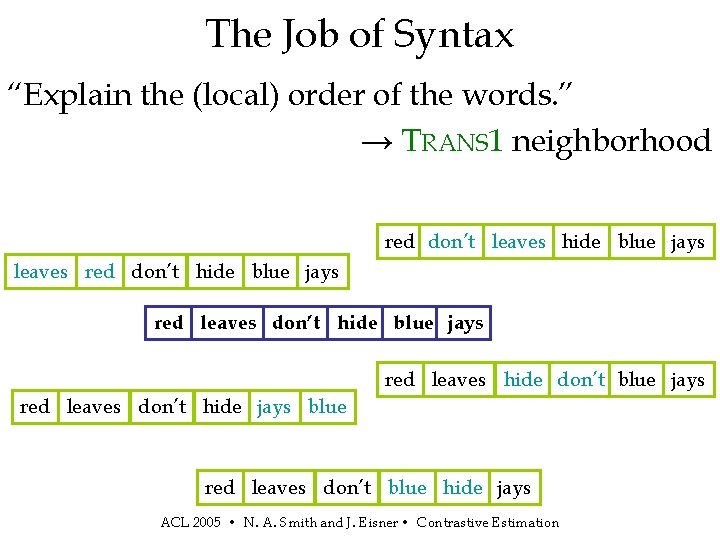

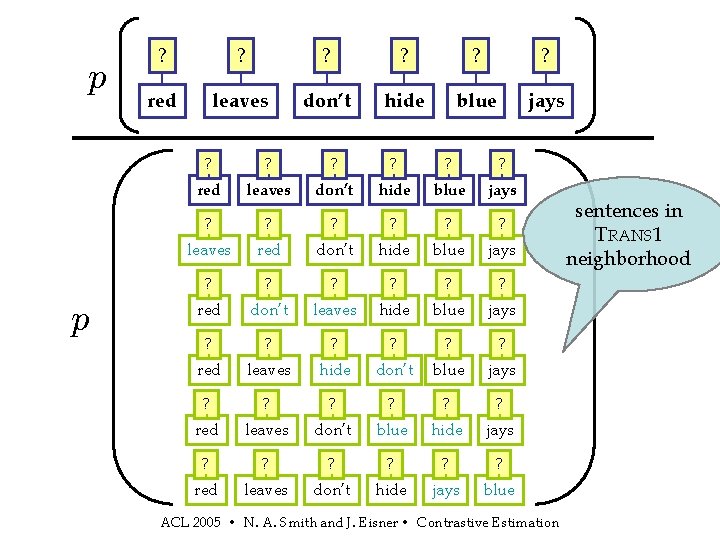

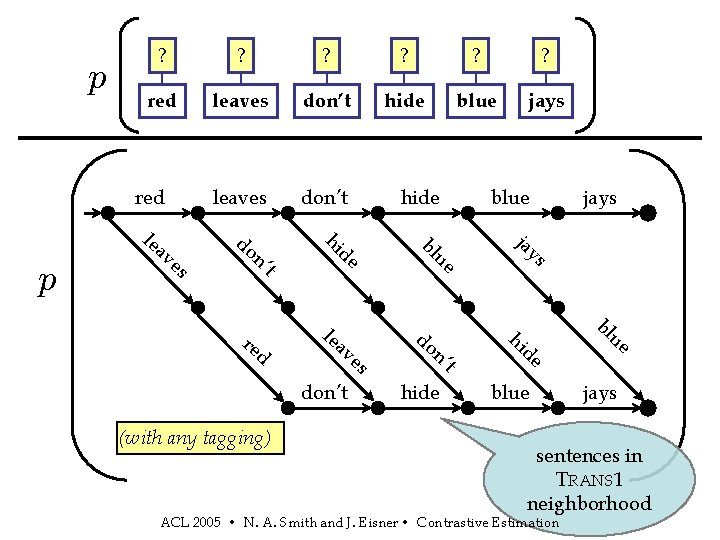

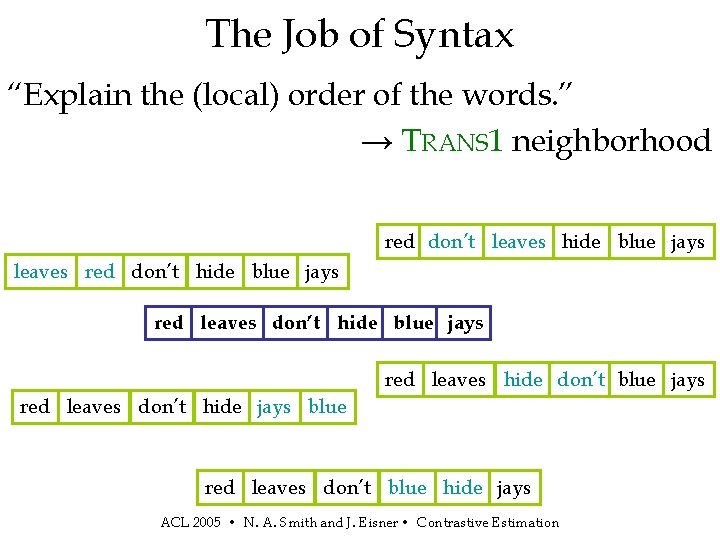

The Job of Syntax: “Explain the (local) order of the words.” → TRANS1 neighborhood of “red leaves don’t hide blue jays”:

- red don’t leaves hide blue jays
- leaves red don’t hide blue jays
- red leaves hide don’t blue jays
- red leaves don’t hide jays blue
- red leaves don’t blue hide jays

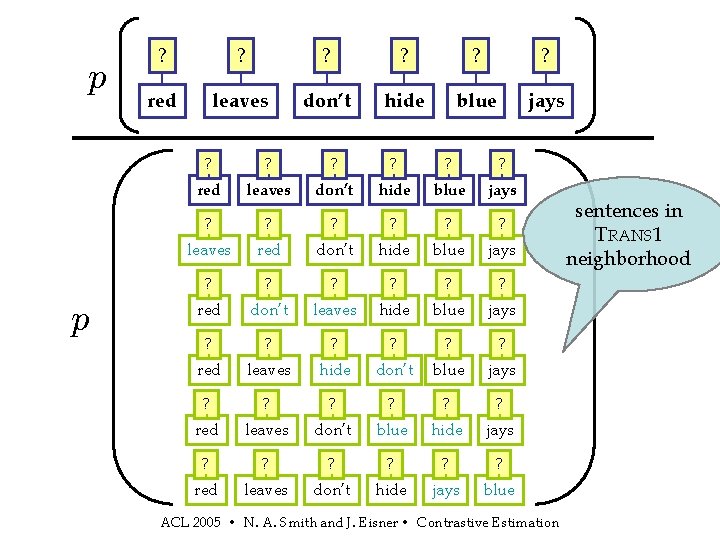

Numerator: p of the observed sentence under any tagging (? ? ? ? ? ?): red leaves don’t hide blue jays.

Denominator: p summed over the sentences in the TRANS1 neighborhood, each under any tagging:

- red leaves don’t hide blue jays
- leaves red don’t hide blue jays
- red don’t leaves hide blue jays
- red leaves hide don’t blue jays
- red leaves don’t blue hide jays
- red leaves don’t hide jays blue

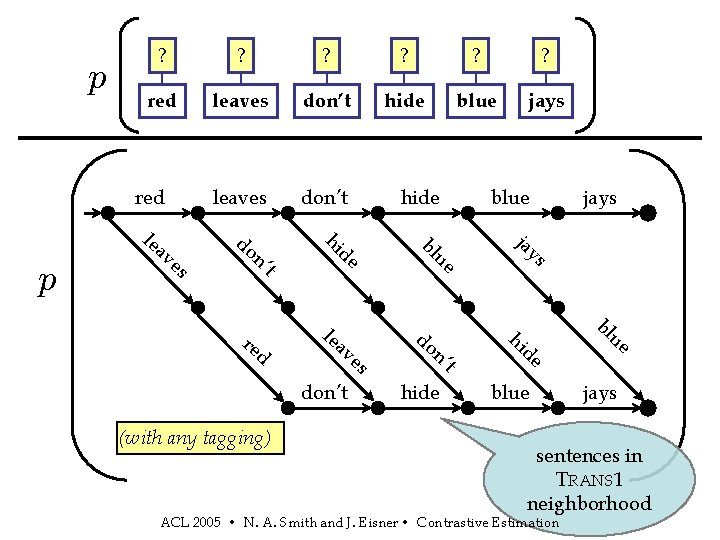

[Figure: numerator is p of “red leaves don’t hide blue jays” with any tagging; denominator is p of a lattice encoding the sentences in the TRANS1 neighborhood (with any tagging).]

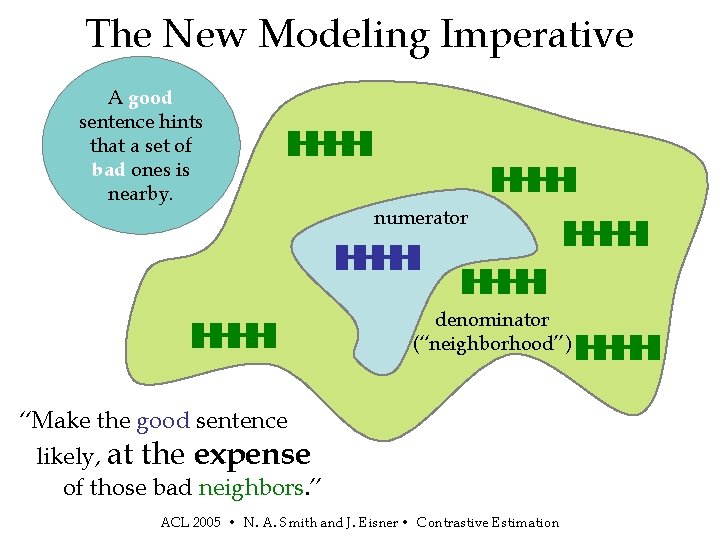

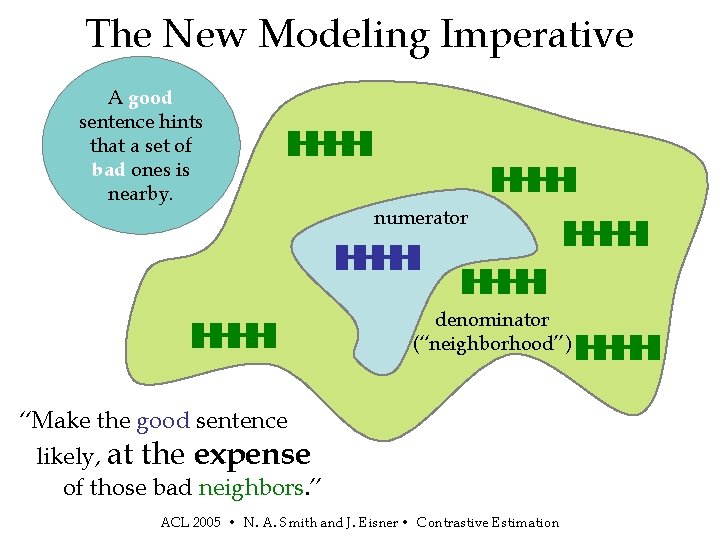

The New Modeling Imperative: A good sentence (numerator) hints that a set of bad ones (denominator, the “neighborhood”) is nearby. “Make the good sentence likely, at the expense of those bad neighbors.”
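To make the imperative concrete, here is a minimal brute-force sketch of the contrastive objective for one sentence. It is not the authors' implementation: the toy tag set, the bigram (rather than trigram) feature set, the feature names, and the function names are all assumptions for illustration, and the sums enumerate every tagging instead of using a lattice.

```python
import itertools
import math
from typing import Dict, Sequence, Set, Tuple

TAGS = ("JJ", "NNS", "MD", "VB")  # toy tag set (assumption)
Weights = Dict[tuple, float]


def score(words: Sequence[str], tags: Sequence[str], weights: Weights) -> float:
    """Unnormalized log-linear score: sum of weights of the features that fire."""
    total, prev = 0.0, "<s>"
    for word, tag in zip(words, tags):
        total += weights.get(("emit", tag, word), 0.0)   # tag/word pair feature
        total += weights.get(("trans", prev, tag), 0.0)  # tag bigram feature
        prev = tag
    return total


def log_sum_exp(values: Sequence[float]) -> float:
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_sum_over_taggings(words: Sequence[str], weights: Weights) -> float:
    """log of sum_y exp(score(words, y)), by explicit enumeration (exponential)."""
    return log_sum_exp([score(words, tags, weights)
                        for tags in itertools.product(TAGS, repeat=len(words))])


def trans1(words: Sequence[str]) -> Set[Tuple[str, ...]]:
    """The sentence itself plus every adjacent-pair transposition."""
    x = tuple(words)
    out = {x}
    for i in range(len(x) - 1):
        out.add(x[:i] + (x[i + 1], x[i]) + x[i + 2:])
    return out


def ce_log_likelihood(words: Sequence[str], weights: Weights) -> float:
    """log [ sum_y u(x, y) / sum_{x' in N(x)} sum_y u(x', y) ] with N = TRANS1."""
    numerator = log_sum_over_taggings(tuple(words), weights)
    denominator = log_sum_exp([log_sum_over_taggings(n, weights)
                               for n in trans1(words)])
    return numerator - denominator


sentence = "red leaves don't hide blue jays".split()
uniform = ce_log_likelihood(sentence, {})
```

With all weights at zero, every neighbor is equally likely, so the objective equals the log of one over the neighborhood size. Weights that depend only on tag/word pairs cannot change that under TRANS1, because they ignore word order; only order-sensitive features can move probability mass toward the observed sentence.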

This talk is about denominators ... in the unsupervised case. A good denominator can improve accuracy and tractability.

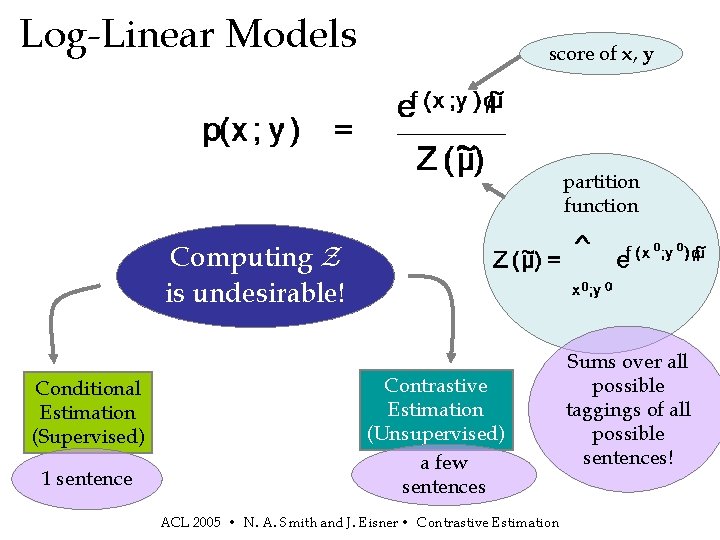

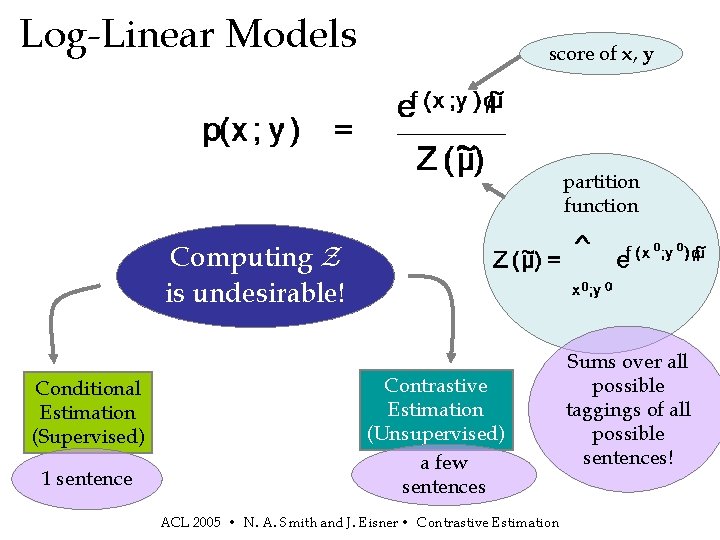

Log-Linear Models: the numerator is the score of x, y; the denominator is the partition function Z, which sums over all possible taggings of all possible sentences! Computing Z is undesirable! Conditional Estimation (Supervised) sums over 1 sentence. Contrastive Estimation (Unsupervised) sums over a few sentences.
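The equations on this slide did not survive extraction. As a hedged reconstruction in standard log-linear notation (the symbols θ for weights, f for the feature vector, and N(x) for the neighborhood of x are assumed here, not copied from the slide), the three quantities being compared can be written as:

```latex
% Log-linear joint model: score of (x, y) over the partition function Z
p_\theta(x, y) = \frac{\exp\bigl(\theta \cdot f(x, y)\bigr)}{Z(\theta)},
\qquad
Z(\theta) = \sum_{(x', y') \in \Sigma^* \times \Lambda^*} \exp\bigl(\theta \cdot f(x', y')\bigr)

% Conditional estimation (supervised): the denominator covers 1 sentence
\prod_i \frac{\exp\bigl(\theta \cdot f(x_i, y_i)\bigr)}
             {\sum_{y \in \Lambda^*} \exp\bigl(\theta \cdot f(x_i, y)\bigr)}

% Contrastive estimation (unsupervised): the denominator covers a few sentences
\prod_i \frac{\sum_{y \in \Lambda^*} \exp\bigl(\theta \cdot f(x_i, y)\bigr)}
             {\sum_{x' \in N(x_i)} \sum_{y \in \Lambda^*} \exp\bigl(\theta \cdot f(x', y)\bigr)}
```

In both ratios Z(θ) cancels between numerator and denominator, which is why neither objective needs the sum over all possible taggings of all possible sentences.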

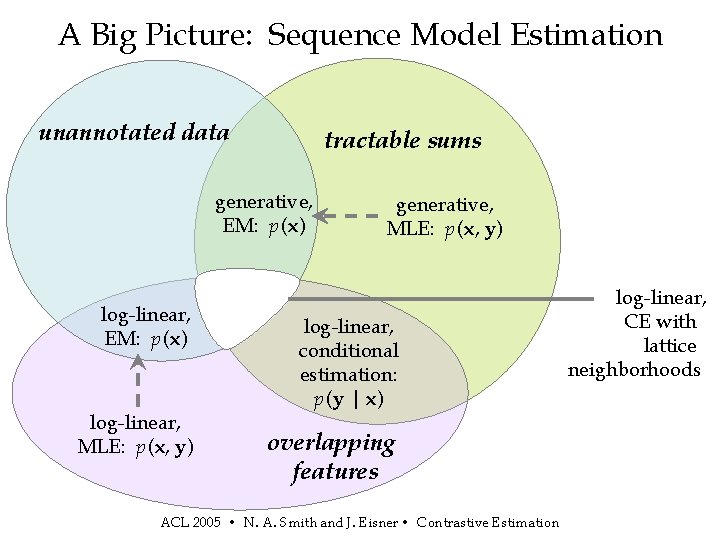

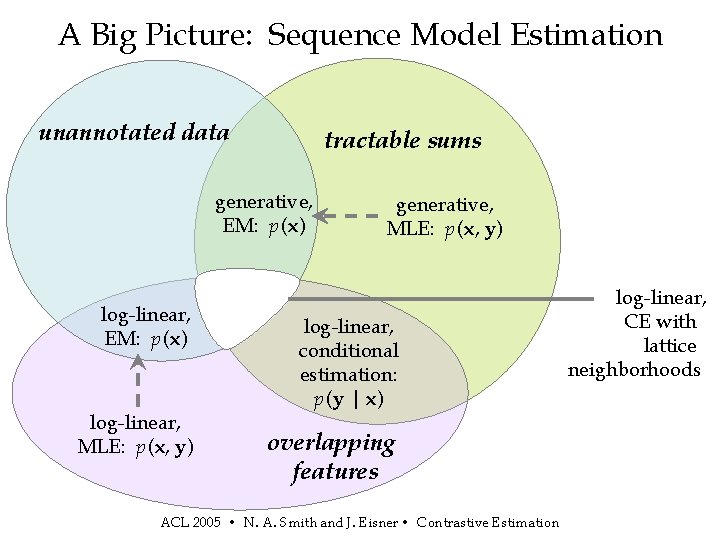

A Big Picture: Sequence Model Estimation. Desiderata: unannotated data, tractable sums, overlapping features. Methods placed against them: generative, EM: p(x); generative, MLE: p(x, y); log-linear, MLE: p(x, y); log-linear, conditional estimation: p(y | x); log-linear, CE with lattice neighborhoods.

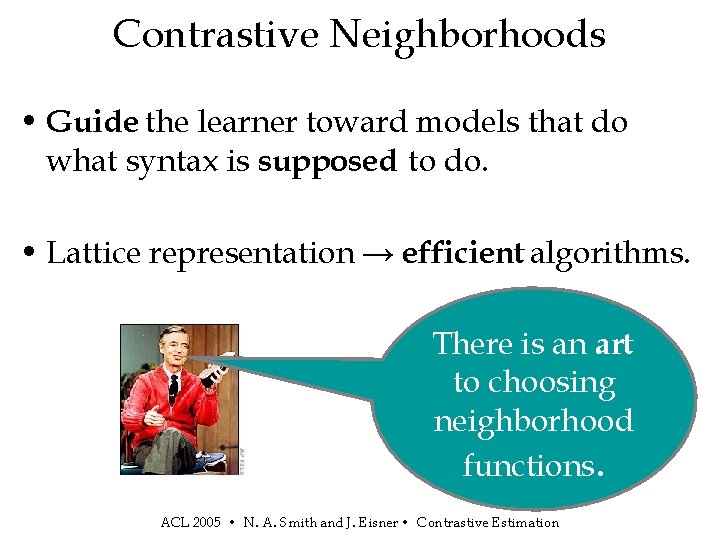

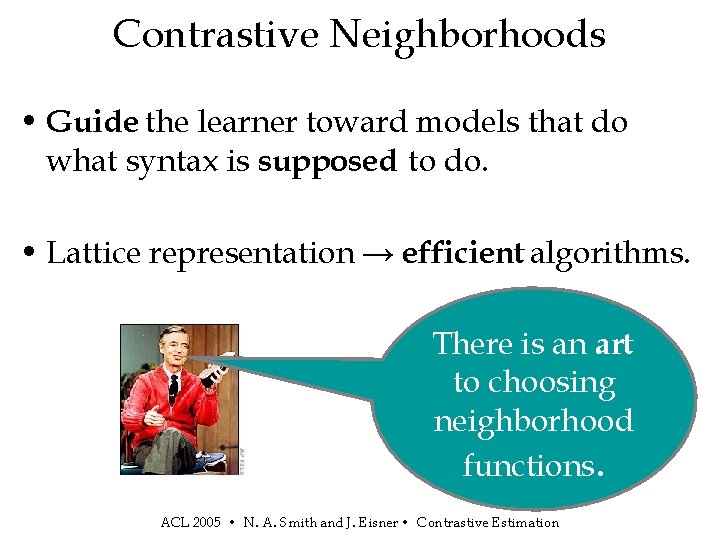

Contrastive Neighborhoods • Guide the learner toward models that do what syntax is supposed to do. • Lattice representation → efficient algorithms. There is an art to choosing neighborhood functions.

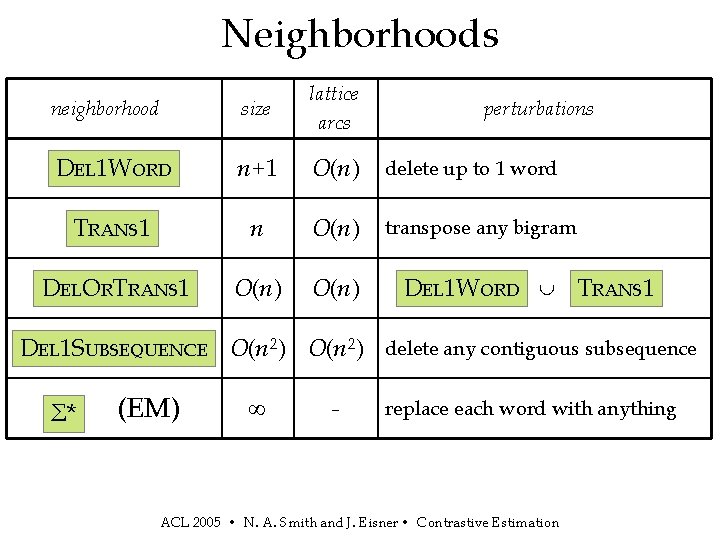

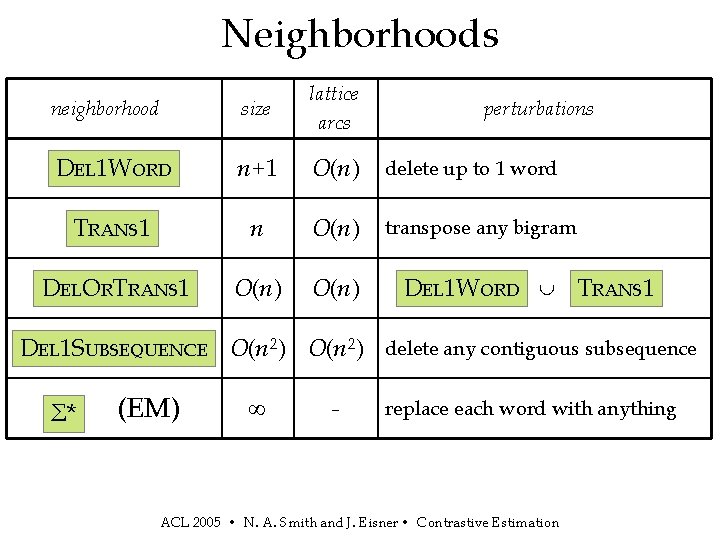

Neighborhoods

| neighborhood | size | lattice arcs | perturbations |
|---|---|---|---|
| DEL1WORD | n+1 | O(n) | delete up to 1 word |
| TRANS1 | n | O(n) | transpose any bigram |
| DELORTRANS1 | O(n) | | DEL1WORD ∪ TRANS1 |
| DEL1SUBSEQUENCE | O(n²) | | delete any contiguous subsequence |
| Σ* (EM) | ∞ | - | replace each word with anything |
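The finite neighborhoods listed above can be sketched as explicit sets of sentences. This is only an illustration: the function names are hypothetical, the talk represents neighborhoods as lattices rather than enumerated sets, and excluding the empty sentence from DEL1SUBSEQUENCE is an assumption made here.

```python
from typing import Sequence, Set, Tuple

Sentence = Tuple[str, ...]


def del1word(words: Sequence[str]) -> Set[Sentence]:
    """Delete up to 1 word: the sentence itself plus each single-word deletion."""
    x = tuple(words)
    out = {x}
    for i in range(len(x)):
        out.add(x[:i] + x[i + 1:])
    return out


def trans1(words: Sequence[str]) -> Set[Sentence]:
    """Transpose any bigram: the sentence itself plus each adjacent swap."""
    x = tuple(words)
    out = {x}
    for i in range(len(x) - 1):
        out.add(x[:i] + (x[i + 1], x[i]) + x[i + 2:])
    return out


def delortrans1(words: Sequence[str]) -> Set[Sentence]:
    """Union of the DEL1WORD and TRANS1 neighborhoods."""
    return del1word(words) | trans1(words)


def del1subsequence(words: Sequence[str]) -> Set[Sentence]:
    """Delete any contiguous subsequence (assumption: never the whole sentence)."""
    x = tuple(words)
    out = {x}
    for i in range(len(x)):
        for j in range(i + 1, len(x) + 1):
            rest = x[:i] + x[j:]
            if rest:  # skip the empty sentence
                out.add(rest)
    return out


sentence = "red leaves don't hide blue jays".split()
sizes = {
    "DEL1WORD": len(del1word(sentence)),
    "TRANS1": len(trans1(sentence)),
    "DELORTRANS1": len(delortrans1(sentence)),
    "DEL1SUBSEQUENCE": len(del1subsequence(sentence)),
}
```

For the six-word example sentence (all words distinct), DEL1WORD has n+1 = 7 members and TRANS1 has n = 6. Sentences with repeated words can yield smaller sets, since identical neighbors collapse.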

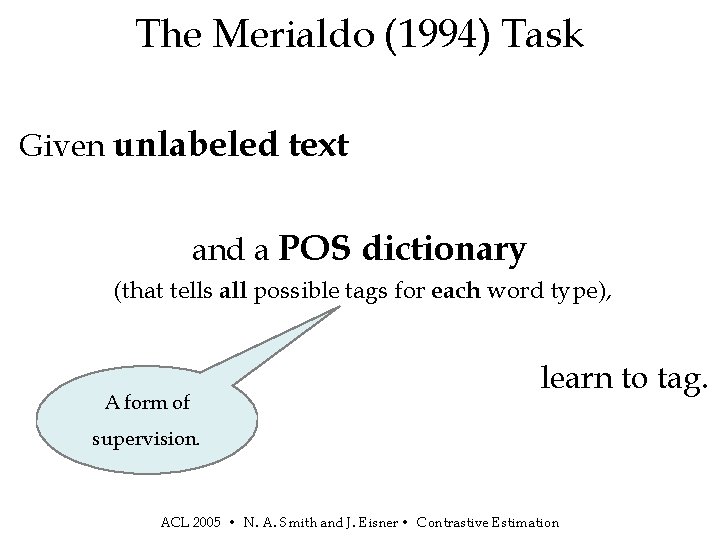

The Merialdo (1994) Task: Given unlabeled text and a POS dictionary (that tells all possible tags for each word type), learn to tag. The dictionary is a form of supervision.

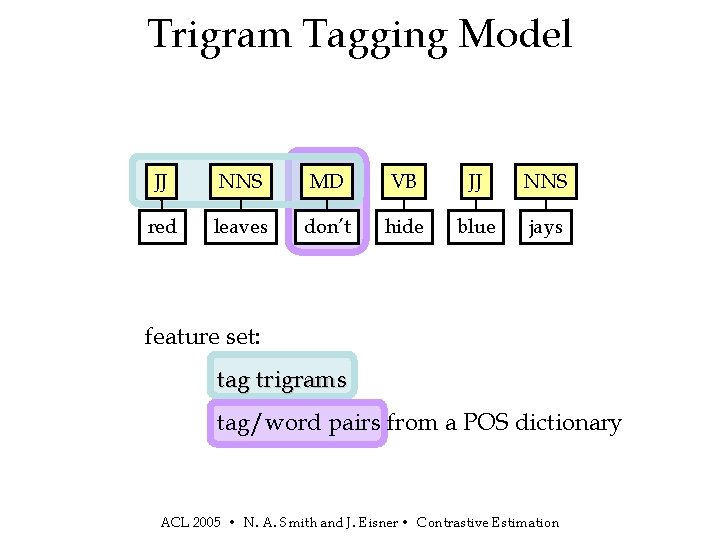

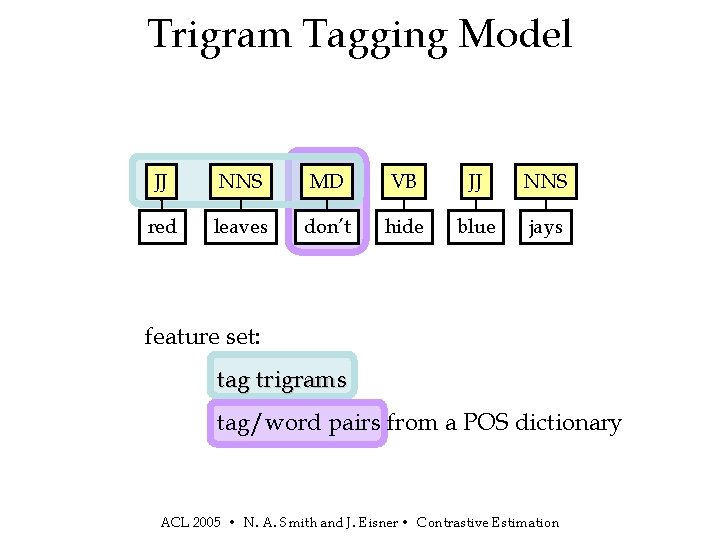

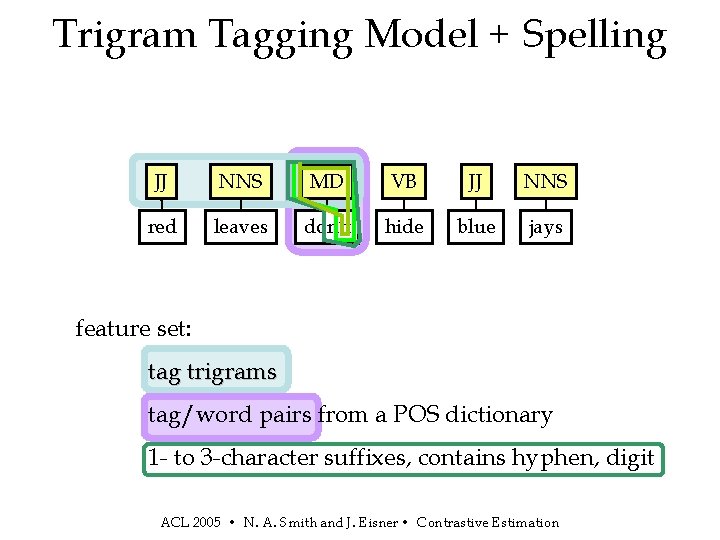

Trigram Tagging Model. Example: JJ NNS MD VB JJ NNS over “red leaves don’t hide blue jays”. Feature set: tag trigrams; tag/word pairs from a POS dictionary.

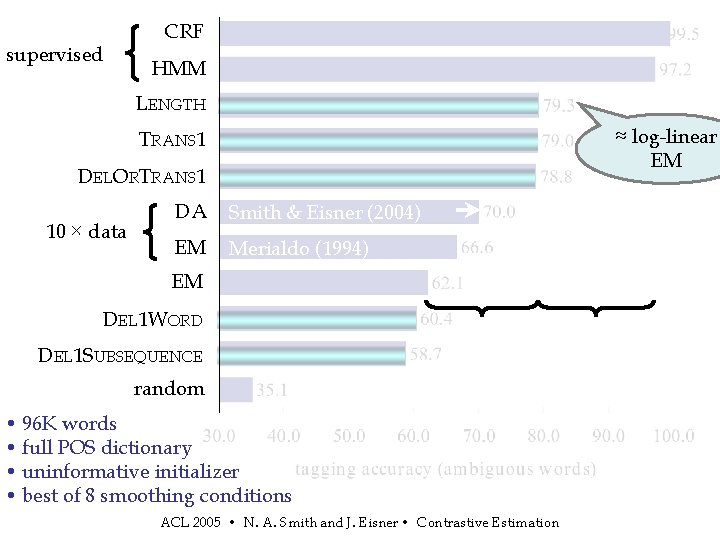

[Figure: tagging results chart. Bar labels: supervised CRF; supervised HMM; LENGTH (≈ log-linear EM); TRANS1; DELORTRANS1; DA, Smith & Eisner (2004); EM, Merialdo (1994); 10 × data; EM; DEL1WORD; DEL1SUBSEQUENCE; random.] Setup: • 96K words • full POS dictionary • uninformative initializer • best of 8 smoothing conditions

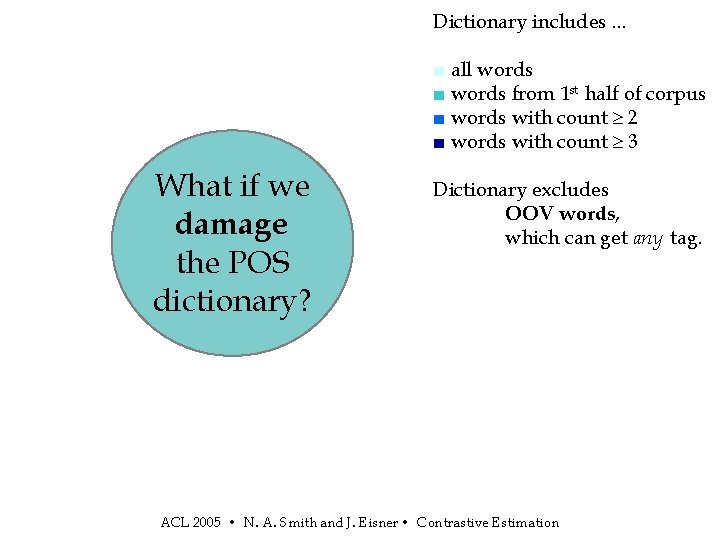

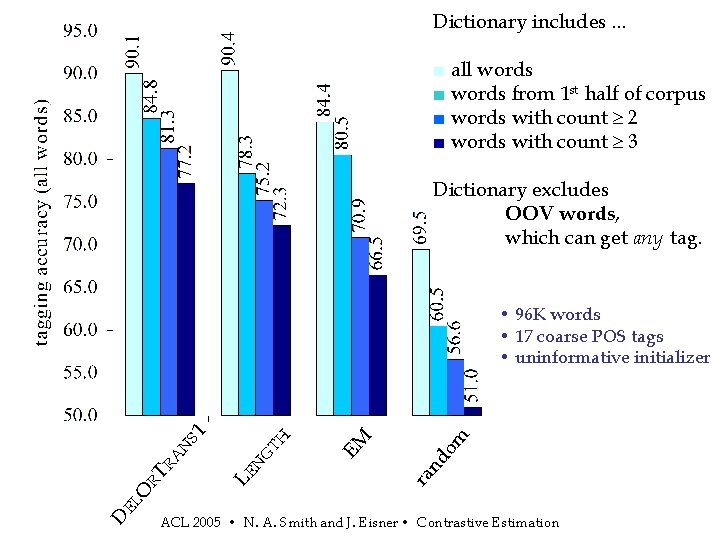

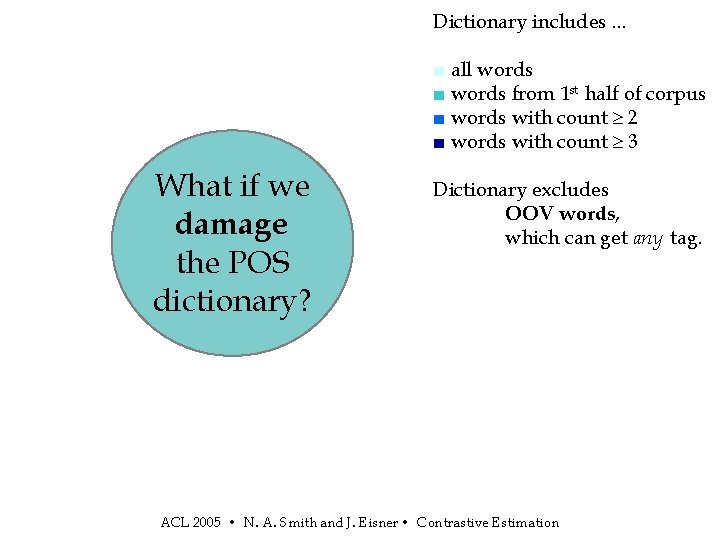

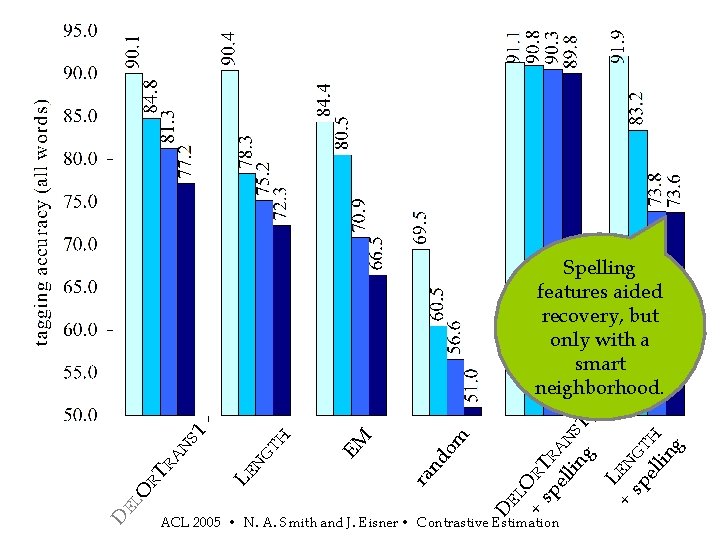

What if we damage the POS dictionary? Dictionary includes ... ■ all words ■ words from 1st half of corpus ■ words with count 2 ■ words with count 3. Dictionary excludes OOV words, which can get any tag.
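One way to picture the damaged-dictionary setup is the sketch below. It assumes the dictionary is derived from a tagged corpus and reads the count conditions as a minimum-count threshold; both are assumptions made for illustration, as are the names `build_tag_dictionary`, `allowed_tags`, and `min_count`.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Set, Tuple

TaggedSentence = List[Tuple[str, str]]  # (word, tag) pairs


def build_tag_dictionary(tagged_corpus: Iterable[TaggedSentence],
                         min_count: int = 1) -> Dict[str, Set[str]]:
    """Map each sufficiently frequent word type to the set of tags seen with it."""
    corpus = list(tagged_corpus)
    counts = Counter(word for sent in corpus for word, _ in sent)
    dictionary: Dict[str, Set[str]] = defaultdict(set)
    for sent in corpus:
        for word, tag in sent:
            if counts[word] >= min_count:  # rarer words are left out (OOV)
                dictionary[word].add(tag)
    return dict(dictionary)


def allowed_tags(word: str, dictionary: Dict[str, Set[str]],
                 all_tags: Set[str]) -> Set[str]:
    """Words missing from the dictionary (OOV) can get any tag."""
    return dictionary.get(word, set(all_tags))


corpus = [
    [("red", "JJ"), ("leaves", "NNS")],
    [("red", "NN"), ("jays", "NNS")],
]
all_tags = {"JJ", "NN", "NNS"}
damaged = build_tag_dictionary(corpus, min_count=2)
```

Here `damaged` keeps only "red", so "leaves" and "jays" become OOV and `allowed_tags` returns the full tag set for them. That enlarges the space of taggings the learner has to consider.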

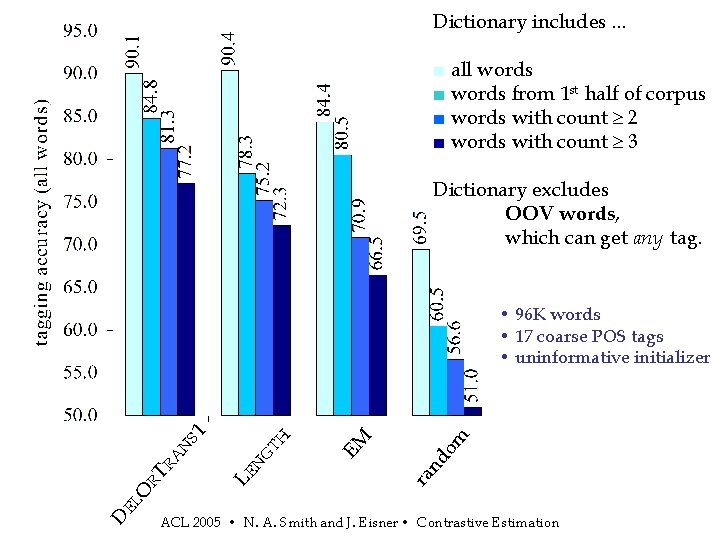

[Figure: results for random, EM, LENGTH, and DELORTRANS1 under each dictionary condition.] Dictionary includes ... ■ all words ■ words from 1st half of corpus ■ words with count 2 ■ words with count 3. Dictionary excludes OOV words, which can get any tag. Setup: • 96K words • 17 coarse POS tags • uninformative initializer

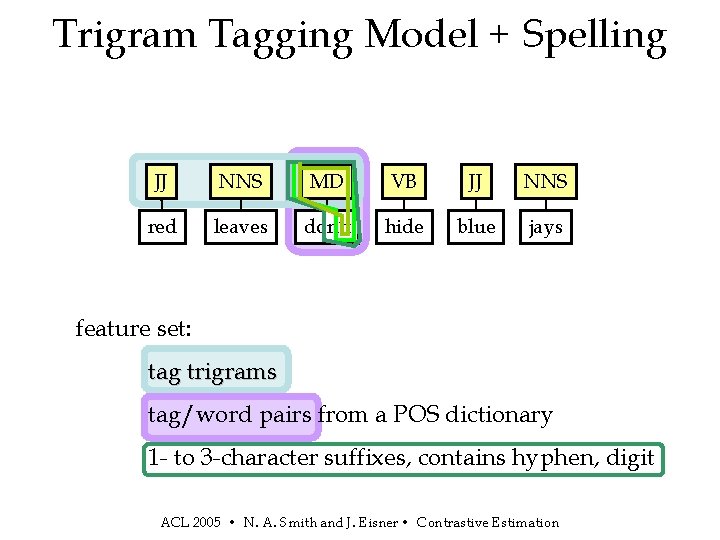

Trigram Tagging Model + Spelling. Example: JJ NNS MD VB JJ NNS over “red leaves don’t hide blue jays”. Feature set: tag trigrams; tag/word pairs from a POS dictionary; 1- to 3-character suffixes, contains hyphen, digit.
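The feature set above might be extracted roughly as follows. This is a sketch under stated assumptions: the feature tuple layout, the `<s>` padding symbol, reading “digit” as “contains a digit”, and pairing each spelling feature with the current tag are illustrative choices, not details confirmed by the slides.

```python
from typing import List, Sequence, Tuple

Feature = Tuple[str, ...]


def spelling_features(word: str) -> List[Feature]:
    """1- to 3-character suffixes, plus hyphen and digit indicators."""
    feats: List[Feature] = [("suffix", word[-k:]) for k in (1, 2, 3) if len(word) >= k]
    if "-" in word:
        feats.append(("contains_hyphen",))
    if any(ch.isdigit() for ch in word):
        feats.append(("contains_digit",))
    return feats


def extract_features(words: Sequence[str], tags: Sequence[str]) -> List[Feature]:
    """Tag trigrams, tag/word pairs, and tag-conjoined spelling features."""
    padded = ("<s>", "<s>") + tuple(tags)  # two start symbols for the first trigram
    feats: List[Feature] = []
    for i, (word, tag) in enumerate(zip(words, tags)):
        feats.append(("trigram", padded[i], padded[i + 1], tag))
        feats.append(("tag_word", tag, word))
        feats.extend(("spelling", tag) + sf for sf in spelling_features(word))
    return feats


example = extract_features(["red", "leaves"], ["JJ", "NNS"])
```

Because the spelling features overlap with the tag/word features, a word missing from the dictionary can still receive evidence about its tag from its suffix. This kind of overlap is what the “max ent” (log-linear) parameterization allows.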

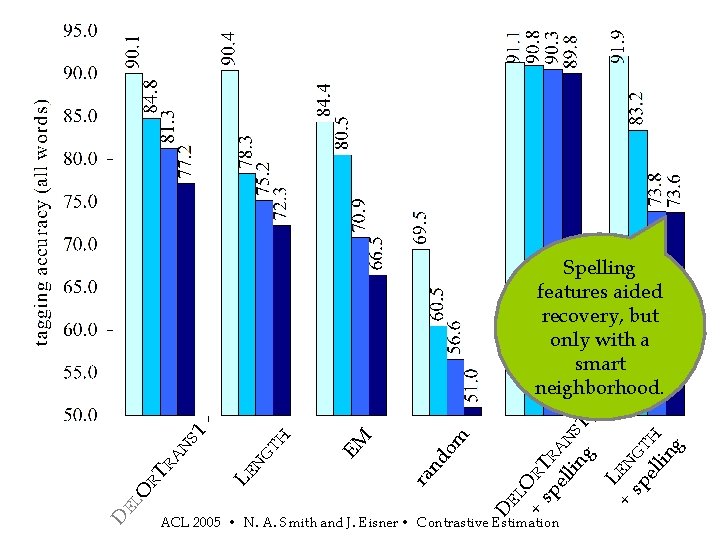

[Figure: results chart with bars for random, EM, LENGTH, DELORTRANS1, LENGTH + spelling, and DELORTRANS1 + spelling.] Spelling features aided recovery, but only with a smart neighborhood.

The model need not be finite-state.

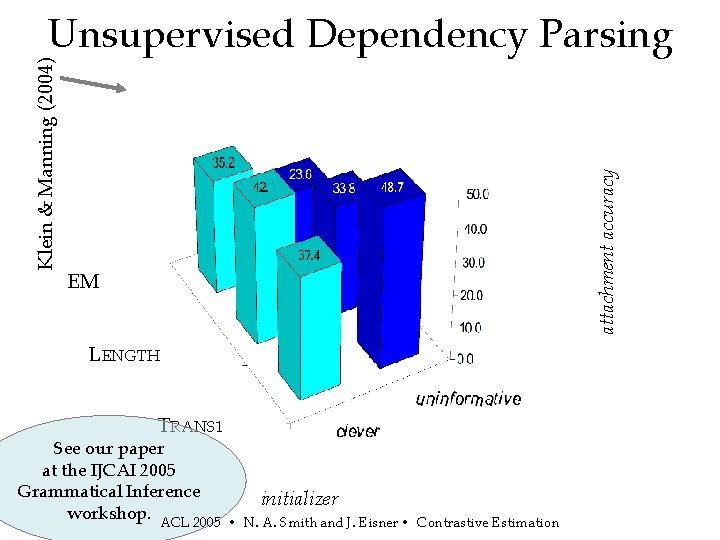

Unsupervised Dependency Parsing, Klein & Manning (2004). [Figure: attachment accuracy by initializer for EM, LENGTH, and TRANS1.] See our paper at the IJCAI 2005 Grammatical Inference workshop.

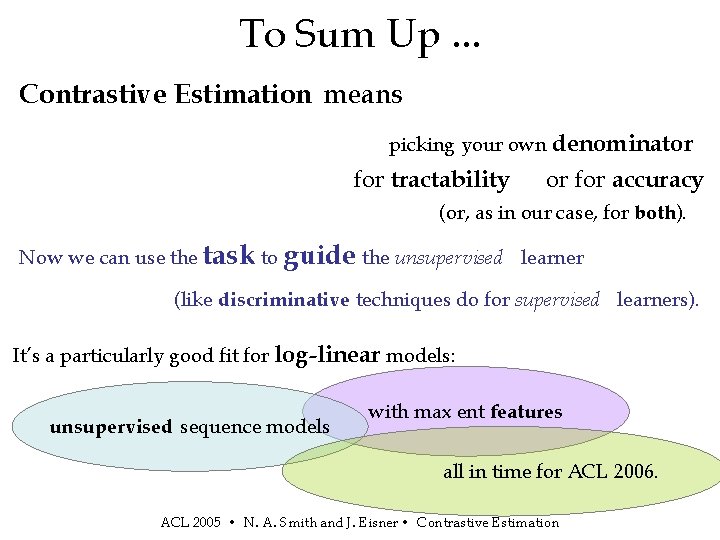

To Sum Up ... Contrastive Estimation means picking your own denominator for tractability or for accuracy (or, as in our case, for both). Now we can use the task to guide the unsupervised learner (like discriminative techniques do for supervised learners). It’s a particularly good fit for log-linear models: unsupervised sequence models with max ent features, all in time for ACL 2006.
